Cray XT3 Programming Introduction, Sarah Anderson, Cray

- Slides: 14

Cray XT3 Programming Introduction

Sarah Anderson, Cray, Inc.
saraha@cray.com

Oak Ridge National Laboratory XT3 Workshop, June 14-17, 2005

XT3 Programming Environment

- Compiler, basic libraries and communication libraries
  - PGI C/C++/Fortran compiler 6.0.1
  - MPI-2 message passing library
  - Cray SHMEM library
- Execution environment (“yod” launcher and batch scheduler)

Other talks will address the following:

- Math libraries
  - ACML 2.5: BLAS, LAPACK, FFT and other numerical routines
  - Cray SciLib: ScaLAPACK, BLACS and SuperLU subroutine libraries
- Debugger: Etnus TotalView 6.5.0
- Performance tools: CrayPat and Apprentice²

06/11/2005 2

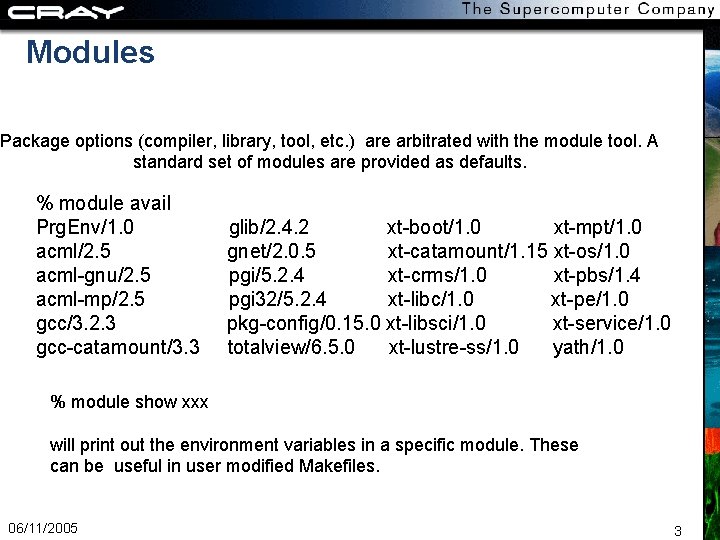

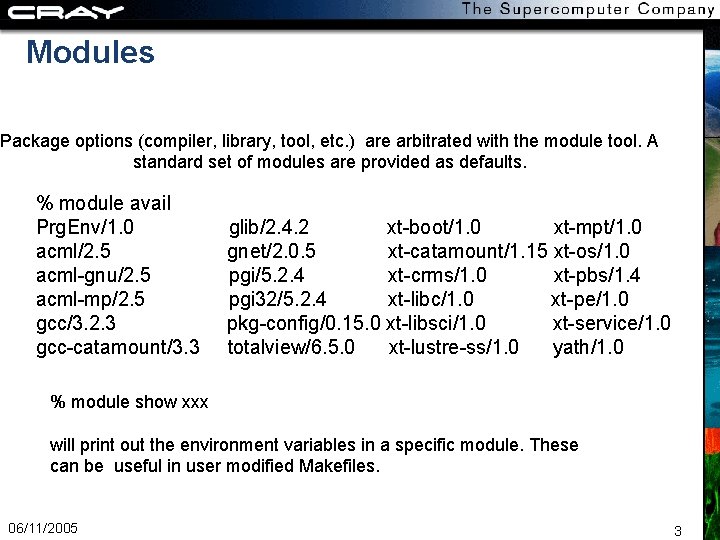

Modules

Package options (compiler, library, tool, etc.) are arbitrated with the module tool. A standard set of modules is provided as defaults.

    % module avail
    PrgEnv/1.0          acml/2.5            acml-gnu/2.5
    acml-mp/2.5         gcc/3.2.3           gcc-catamount/3.3
    glib/2.4.2          xt-boot/1.0         xt-mpt/1.0
    gnet/2.0.5          xt-catamount/1.15   xt-os/1.0
    pgi/5.2.4           xt-crms/1.0         xt-pbs/1.4
    pgi32/5.2.4         xt-libc/1.0         xt-pe/1.0
    pkg-config/0.15.0   xt-libsci/1.0       xt-service/1.0
    totalview/6.5.0     xt-lustre-ss/1.0    yath/1.0

The command “module show xxx” prints the environment variables set by a specific module. These can be useful in user-modified Makefiles.

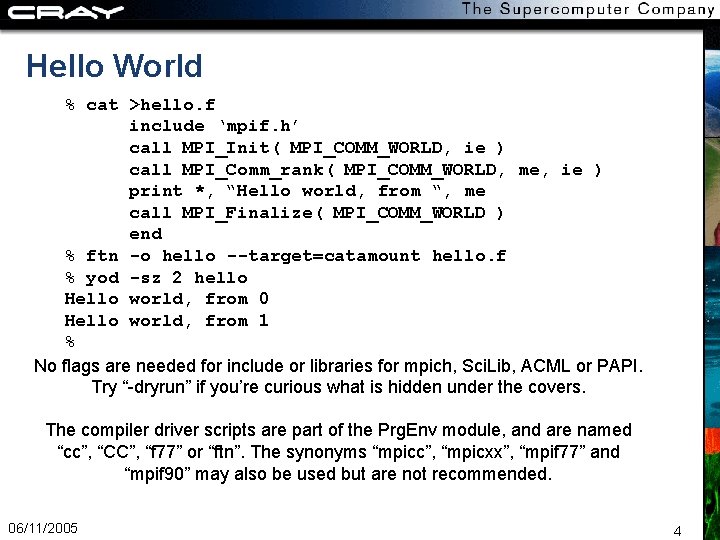

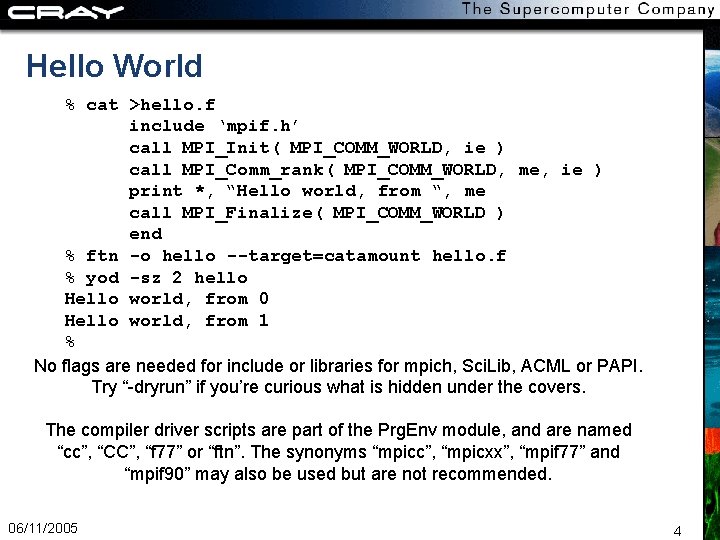

Hello World

    % cat >hello.f
          include 'mpif.h'
          call MPI_Init( ie )
          call MPI_Comm_rank( MPI_COMM_WORLD, me, ie )
          print *, "Hello world, from ", me
          call MPI_Finalize( ie )
          end
    % ftn -o hello --target=catamount hello.f
    % yod -sz 2 hello
    Hello world, from 0
    Hello world, from 1
    %

No flags are needed for include files or libraries for MPICH, SciLib, ACML or PAPI. Try “-dryrun” if you’re curious about what is hidden under the covers.

The compiler driver scripts are part of the PrgEnv module and are named “cc”, “CC”, “f77” and “ftn”. The synonyms “mpicc”, “mpicxx”, “mpif77” and “mpif90” may also be used but are not recommended.

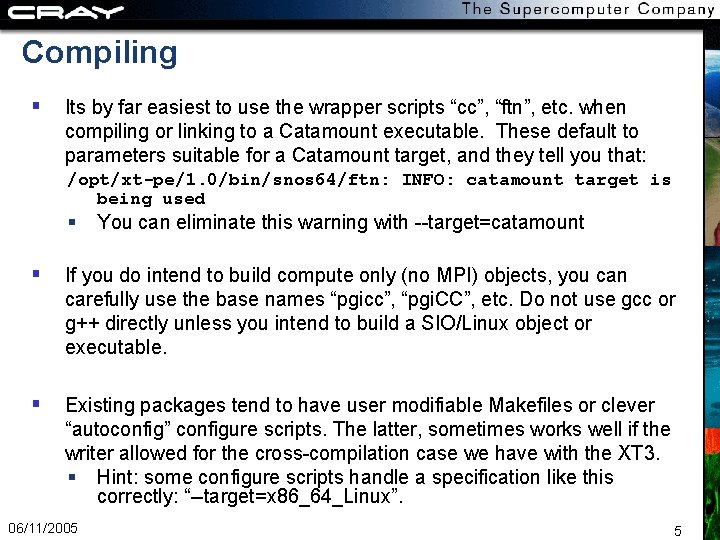

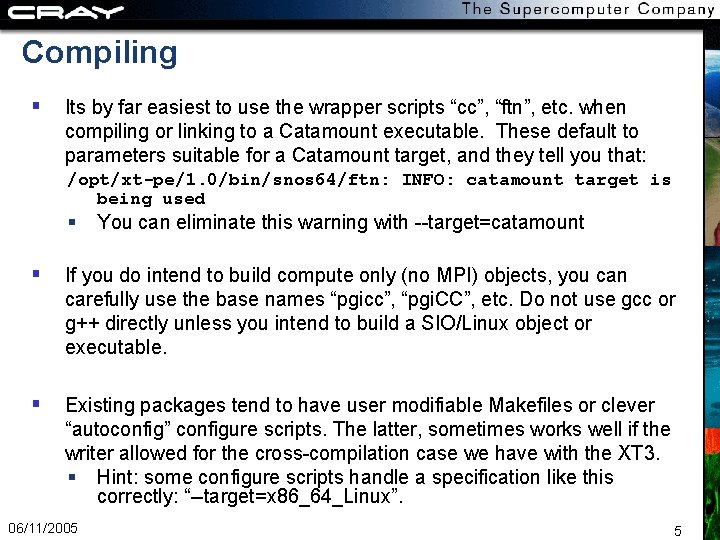

Compiling

It is by far easiest to use the wrapper scripts “cc”, “ftn”, etc. when compiling or linking a Catamount executable. These default to parameters suitable for a Catamount target, and they tell you so:

    /opt/xt-pe/1.0/bin/snos64/ftn: INFO: catamount target is being used

You can eliminate this message with --target=catamount.

- If you do intend to build compute-only (no MPI) objects, you can carefully use the base names “pgicc”, “pgiCC”, etc.
- Do not use gcc or g++ directly unless you intend to build an SIO/Linux object or executable.
- Existing packages tend to have user-modifiable Makefiles or clever “autoconfig” configure scripts. The latter sometimes work well if the writer allowed for the cross-compilation case we have with the XT3. Hint: some configure scripts handle a specification like this correctly: “--target=x86_64_Linux”.

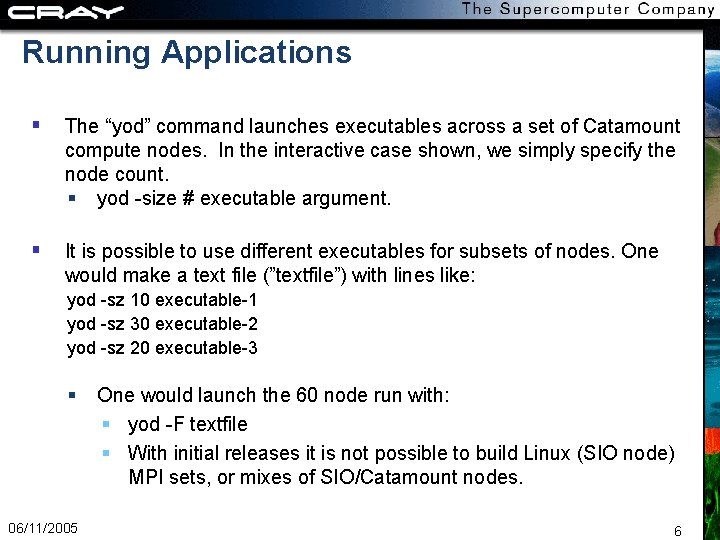

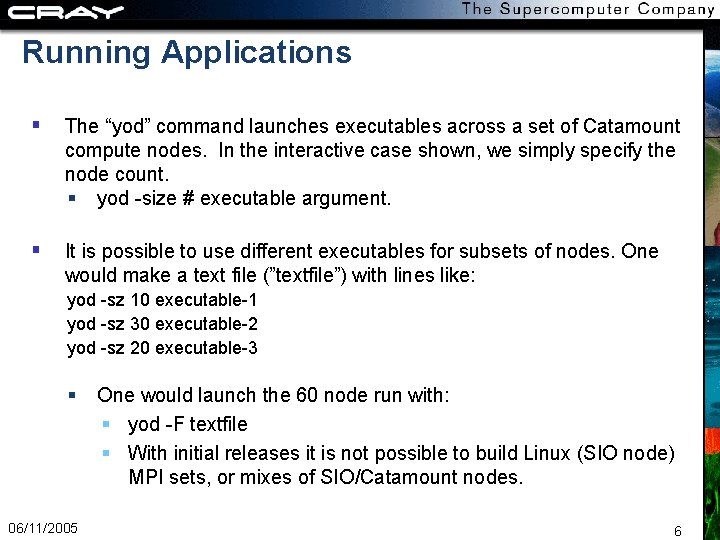

Running Applications

The “yod” command launches executables across a set of Catamount compute nodes. In the interactive case shown, we simply specify the node count:

    yod -size # executable argument

It is possible to use different executables for subsets of nodes. One would make a text file (“textfile”) with lines like:

    yod -sz 10 executable-1
    yod -sz 30 executable-2
    yod -sz 20 executable-3

One would launch the 60-node run with:

    yod -F textfile

With initial releases it is not possible to build Linux (SIO node) MPI sets, or mixes of SIO/Catamount nodes.

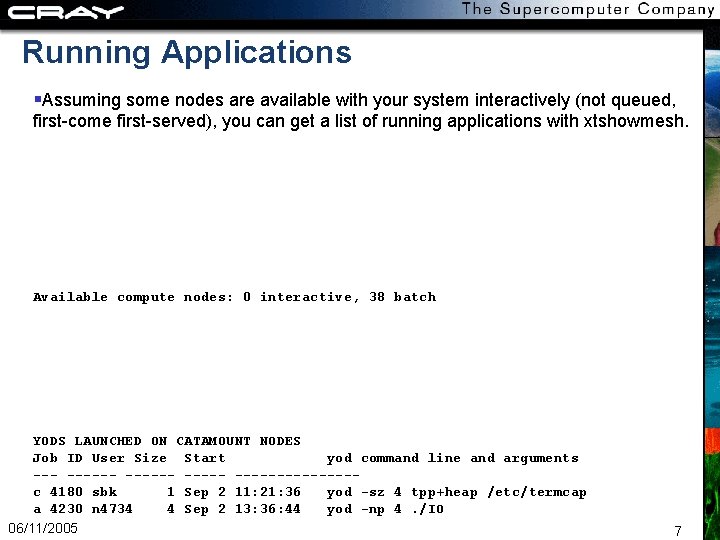

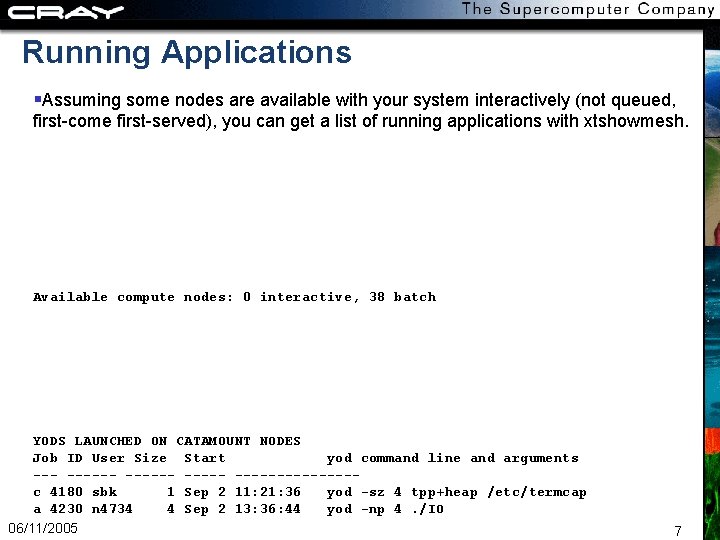

Running Applications

Assuming your system makes some nodes available interactively (not queued; first-come, first-served), you can get a list of running applications with xtshowmesh.

    Available compute nodes: 0 interactive, 38 batch

    YODS LAUNCHED ON CATAMOUNT NODES
    Job ID   User    Size  Start            yod command line and arguments
    ------   -------
    c 4180   sbk     1     Sep 2 11:21:36   yod -sz 4 tpp+heap /etc/termcap
    a 4230   n4734   4     Sep 2 13:36:44   yod -np 4 ./IO

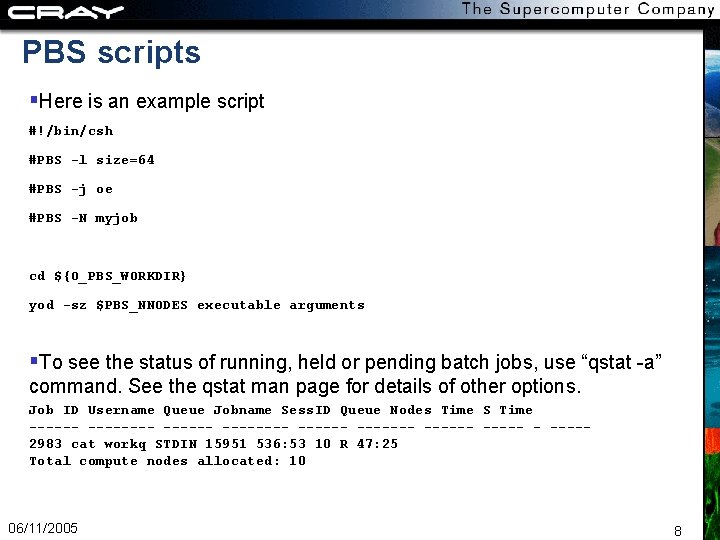

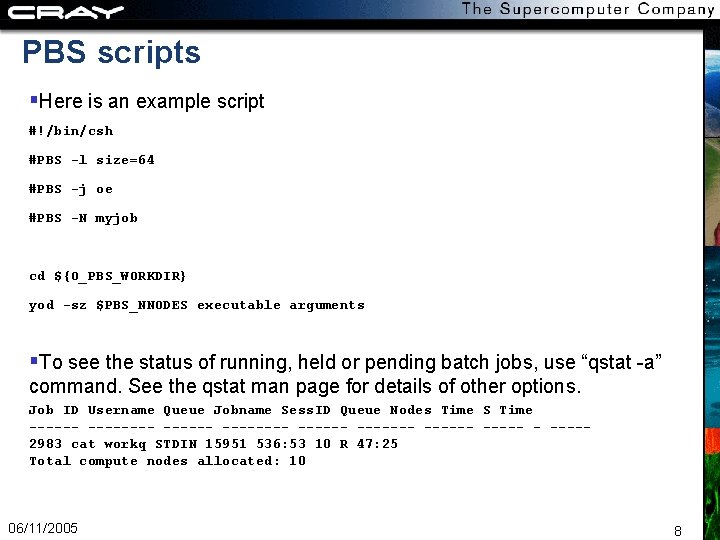

PBS scripts

Here is an example script:

    #!/bin/csh
    #PBS -l size=64
    #PBS -j oe
    #PBS -N myjob
    cd ${PBS_O_WORKDIR}
    yod -sz $PBS_NNODES executable arguments

To see the status of running, held or pending batch jobs, use the “qstat -a” command. See the qstat man page for details of other options.

    Job ID Username Queue Jobname SessID Queue Nodes Time S Time
    2983 cat workq STDIN 15951 536:53 10 R 47:25

    Total compute nodes allocated: 10

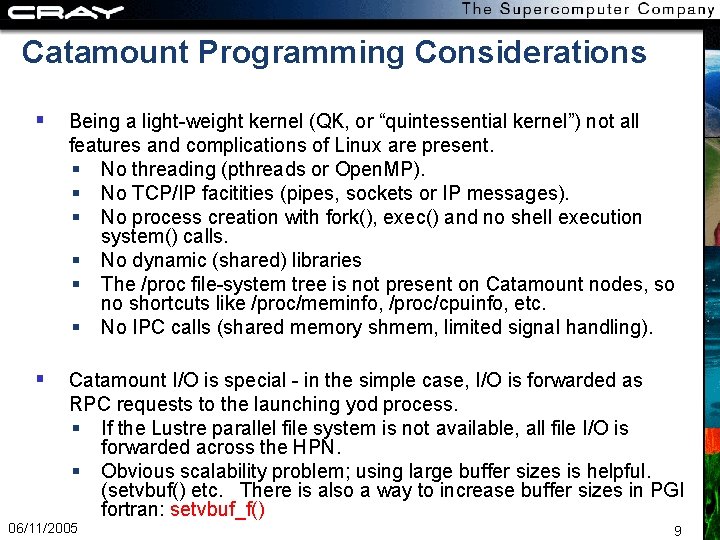

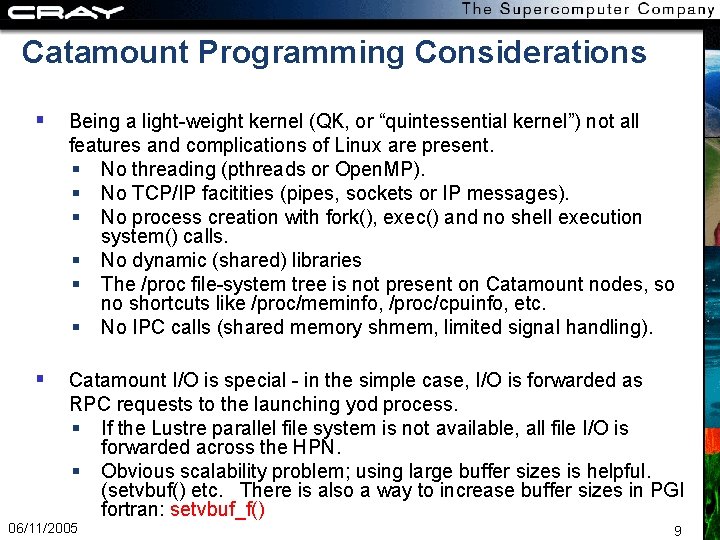

Catamount Programming Considerations

Because Catamount is a lightweight kernel (QK, or “quintessential kernel”), not all features and complications of Linux are present.

- No threading (pthreads or OpenMP).
- No TCP/IP facilities (pipes, sockets or IP messages).
- No process creation with fork() or exec(), and no shell execution through system() calls.
- No dynamic (shared) libraries.
- The /proc file-system tree is not present on Catamount nodes, so no shortcuts like /proc/meminfo, /proc/cpuinfo, etc.
- No IPC calls (shared memory shmem), and only limited signal handling.

Catamount I/O is special: in the simple case, I/O is forwarded as RPC requests to the launching yod process. If the Lustre parallel file system is not available, all file I/O is forwarded across the HPN. This is an obvious scalability problem; using large buffer sizes helps (setvbuf(), etc.). There is also a way to increase buffer sizes in PGI Fortran: setvbuf_f().
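The large-buffer advice can be sketched in C with the standard setvbuf() call. This is only an illustration, not code from the talk: the helper names open_buffered and close_buffered are hypothetical, and the right buffer size for a given system is an assumption you would have to tune.

```c
#include <stdio.h>
#include <stdlib.h>

/* Hypothetical helper (not from the slides): open a file for writing and
 * attach a caller-sized, fully buffered stdio buffer, so that many small
 * writes are combined into fewer, larger forwarded I/O requests. */
FILE *open_buffered(const char *path, size_t buf_bytes, char **buf_out)
{
    FILE *fp = fopen(path, "w");
    if (fp == NULL)
        return NULL;

    char *buf = malloc(buf_bytes);
    if (buf == NULL) {
        fclose(fp);
        return NULL;
    }

    /* setvbuf() must be called before any other operation on the stream. */
    if (setvbuf(fp, buf, _IOFBF, buf_bytes) != 0) {
        free(buf);
        fclose(fp);
        return NULL;
    }

    *buf_out = buf;
    return fp;
}

/* Close the stream first (this flushes the buffer), then release it. */
int close_buffered(FILE *fp, char *buf)
{
    int rc = fclose(fp);
    free(buf);
    return rc;
}
```

A caller would obtain the stream with something like open_buffered("out.dat", 8 << 20, &buf), write with fprintf() or fwrite() as usual, and finish with close_buffered(fp, buf). The buffer must stay allocated until the stream is closed, which is why the helper hands it back to the caller.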

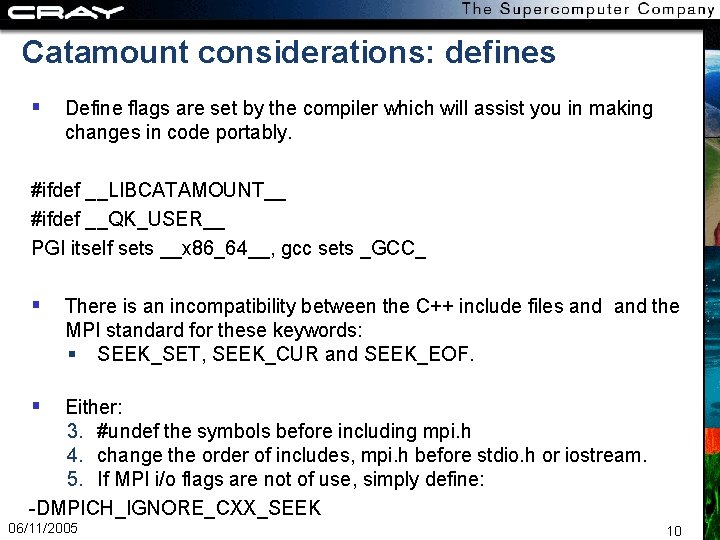

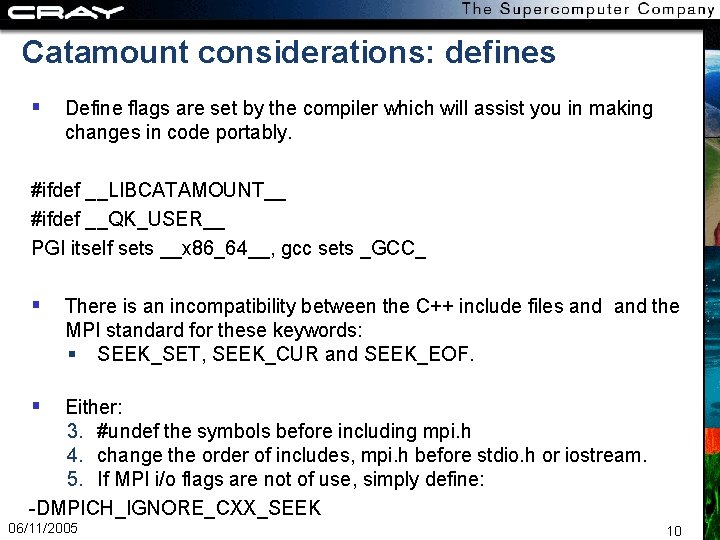

Catamount considerations: defines

The compiler sets define flags that help you make code changes portably:

    #ifdef __LIBCATAMOUNT__
    #ifdef __QK_USER__

PGI itself sets __x86_64__; gcc sets _GCC_.

There is an incompatibility between the C++ include files and the MPI standard for these keywords: SEEK_SET, SEEK_CUR and SEEK_END. Either:

1. #undef the symbols before including mpi.h.
2. Change the order of includes: mpi.h before stdio.h or iostream.
3. If the MPI I/O flags are not of use, simply define -DMPICH_IGNORE_CXX_SEEK.
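As a rough sketch of how the __LIBCATAMOUNT__ define might be used, the C fragment below guards a system() call, which is unavailable on Catamount nodes. The function names built_for_catamount and run_shell_command are hypothetical and chosen only for illustration; the fallback behaviour (print a message and return -1) is an assumption, not something the slides prescribe.

```c
#include <stdio.h>
#include <stdlib.h>

/* Hypothetical helper: report whether this object was compiled for a
 * Catamount target, based on the __LIBCATAMOUNT__ define. */
int built_for_catamount(void)
{
#ifdef __LIBCATAMOUNT__
    return 1;
#else
    return 0;
#endif
}

/* Hypothetical helper: run a shell command where the platform allows it.
 * On Catamount there is no system(), so the call is skipped and -1 is
 * returned; elsewhere the result of system() is passed through. */
int run_shell_command(const char *cmd)
{
#ifdef __LIBCATAMOUNT__
    fprintf(stderr, "skipping \"%s\": system() is unavailable on Catamount\n", cmd);
    return -1;
#else
    return system(cmd);
#endif
}
```

Keeping the conditional inside a small wrapper means the rest of the code can call run_shell_command() unconditionally and be built for either a Catamount or a Linux target.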

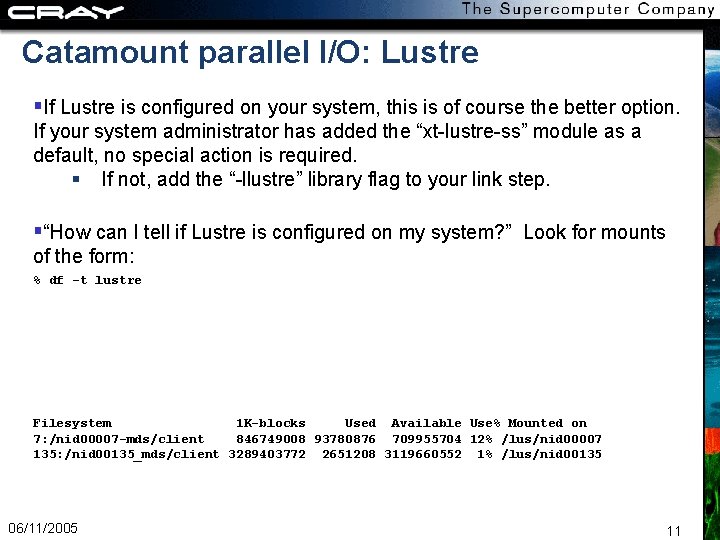

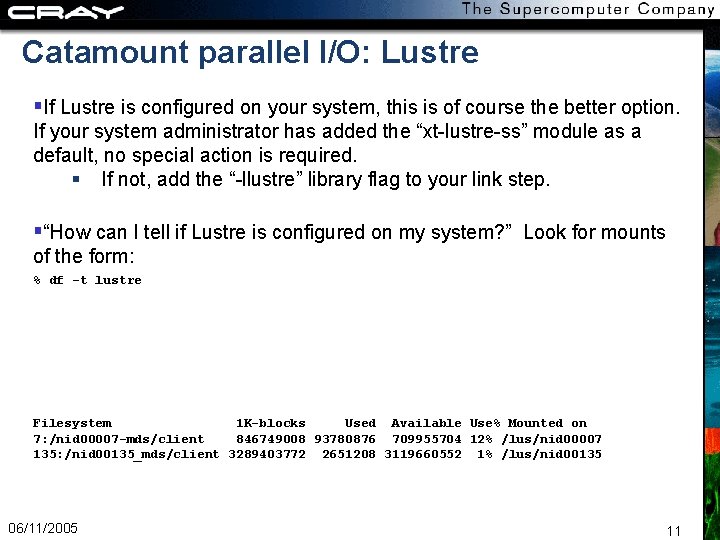

Catamount parallel I/O: Lustre

If Lustre is configured on your system, it is of course the better option. If your system administrator has added the “xt-lustre-ss” module as a default, no special action is required. If not, add the “-llustre” library flag to your link step.

“How can I tell if Lustre is configured on my system?” Look for mounts of the form:

    % df -t lustre
    Filesystem                 1K-blocks      Used   Available  Use%  Mounted on
    7:/nid00007_mds/client     846749008  93780876   709955704   12%  /lus/nid00007
    135:/nid00135_mds/client  3289403772   2651208  3119660552    1%  /lus/nid00135

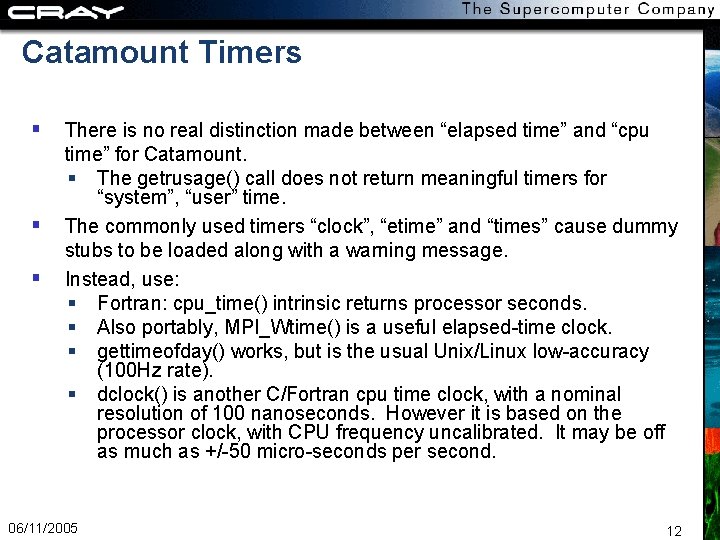

Catamount Timers

There is no real distinction between “elapsed time” and “cpu time” on Catamount.

- The getrusage() call does not return meaningful “system” or “user” times.
- The commonly used timers “clock”, “etime” and “times” cause dummy stubs to be loaded, along with a warning message.

Instead, use:

- Fortran: the cpu_time() intrinsic returns processor seconds.
- MPI_Wtime(): a useful, portable elapsed-time clock.
- gettimeofday(): works, but has the usual Unix/Linux low accuracy (100 Hz rate).
- dclock(): another C/Fortran cpu-time clock, with a nominal resolution of 100 nanoseconds. However, it is based on the processor clock, and the CPU frequency is uncalibrated, so it may be off by as much as +/-50 microseconds per second.
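To make the timer trade-offs concrete, here is a small C sketch. It wraps gettimeofday() as a wall-clock timer and computes how large an uncalibrated-clock error of 50 microseconds per second could grow over a run. The function names wall_seconds and drift_bound_seconds are hypothetical; dclock() itself is not called, since its exact prototype is not given in the slides.

```c
#include <stddef.h>
#include <sys/time.h>

/* Wall-clock time in seconds since the epoch, from gettimeofday().
 * Resolution is limited by the system clock tick, as noted above. */
double wall_seconds(void)
{
    struct timeval tv;
    gettimeofday(&tv, NULL);
    return (double)tv.tv_sec + (double)tv.tv_usec * 1.0e-6;
}

/* Worst-case accumulated error, in seconds, for a clock that drifts by
 * drift_us_per_s microseconds per second over `elapsed` seconds. */
double drift_bound_seconds(double elapsed, double drift_us_per_s)
{
    return elapsed * drift_us_per_s * 1.0e-6;
}
```

A region is timed by taking the difference of two wall_seconds() calls. For the drift figure quoted above, drift_bound_seconds(3600.0, 50.0) gives 0.18, so a one-hour measurement could be off by up to about 0.18 seconds.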

Catamount Error Handling

“User error” can generate SIGSEGV and related signals. Generally these produce a call trace-back for the faulting process. Other options are covered in the CORE man page (“man CORE”): it is possible to produce a core file for the first faulting process or for all of them (be cautious with this option!).

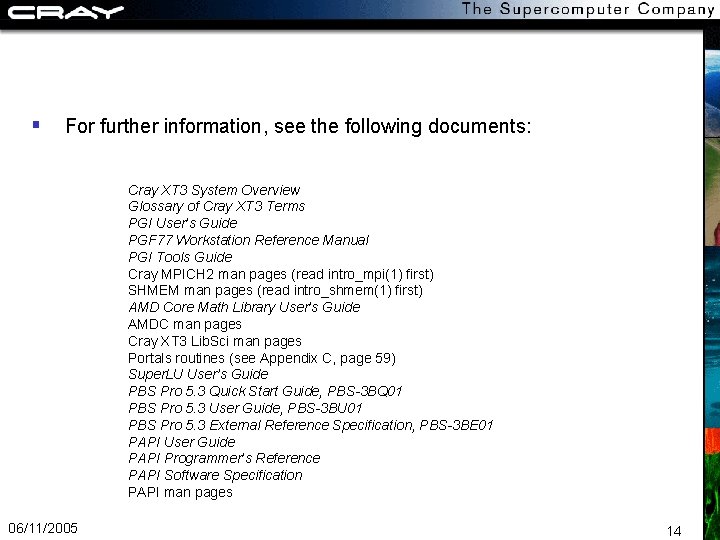

For further information, see the following documents:

- Cray XT3 System Overview
- Glossary of Cray XT3 Terms
- PGI User’s Guide
- PGF77 Workstation Reference Manual
- PGI Tools Guide
- Cray MPICH2 man pages (read intro_mpi(1) first)
- SHMEM man pages (read intro_shmem(1) first)
- AMD Core Math Library User’s Guide
- AMDC man pages
- Cray XT3 LibSci man pages
- Portals routines (see Appendix C, page 59)
- SuperLU User’s Guide
- PBS Pro 5.3 Quick Start Guide, PBS-3BQ01
- PBS Pro 5.3 User Guide, PBS-3BU01
- PBS Pro 5.3 External Reference Specification, PBS-3BE01
- PAPI User Guide
- PAPI Programmer’s Reference
- PAPI Software Specification
- PAPI man pages