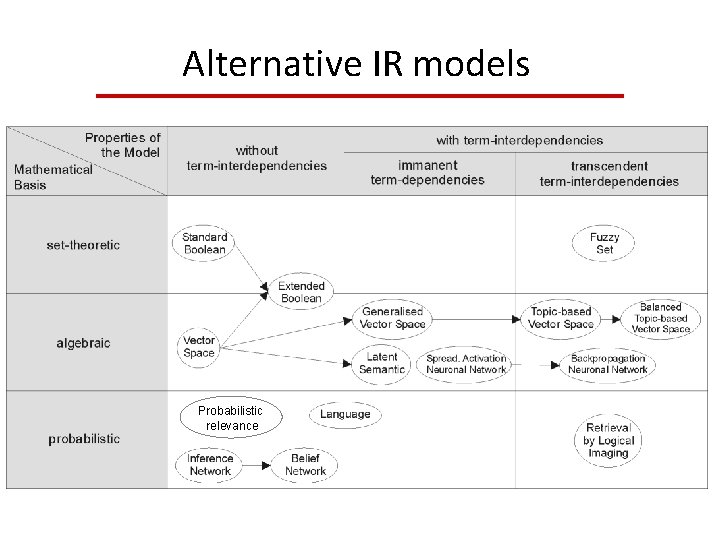

STMIK ERESHA: Dr. Yeni Herdiyeni, M.Kom. Alternative IR models

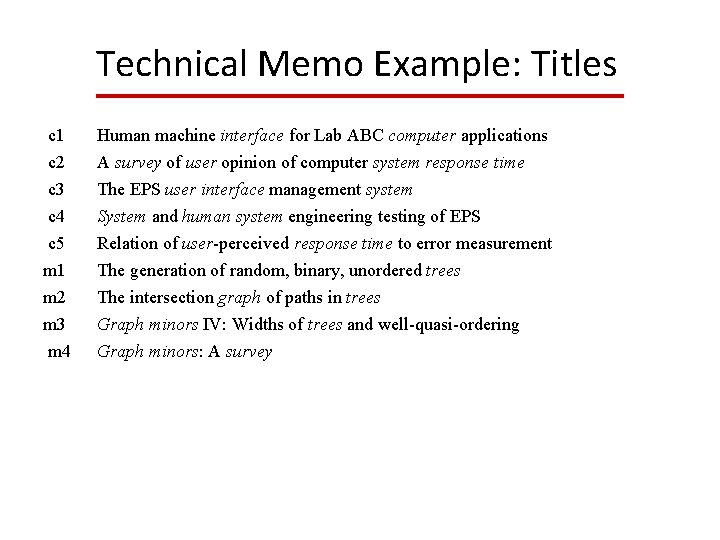

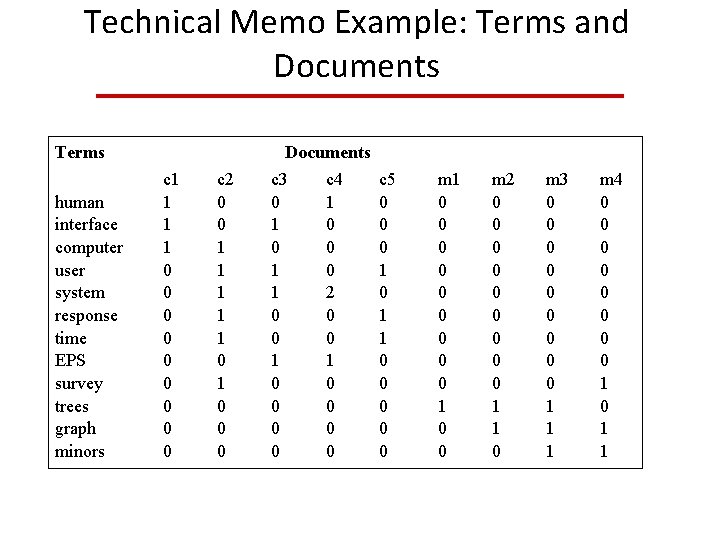

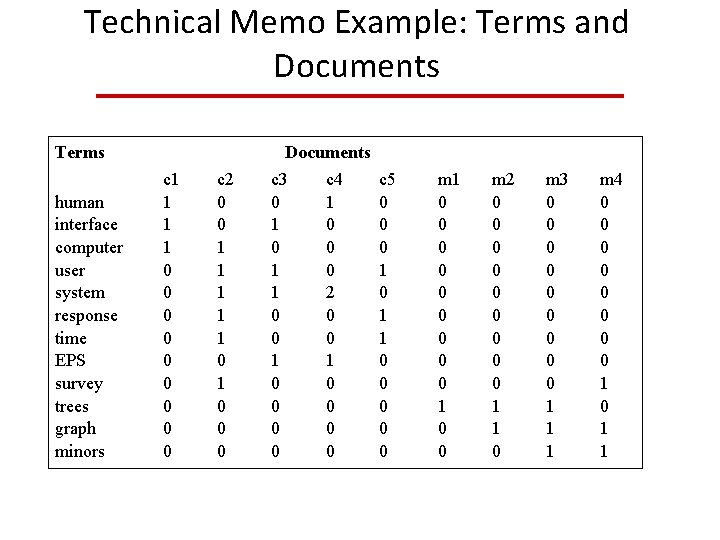

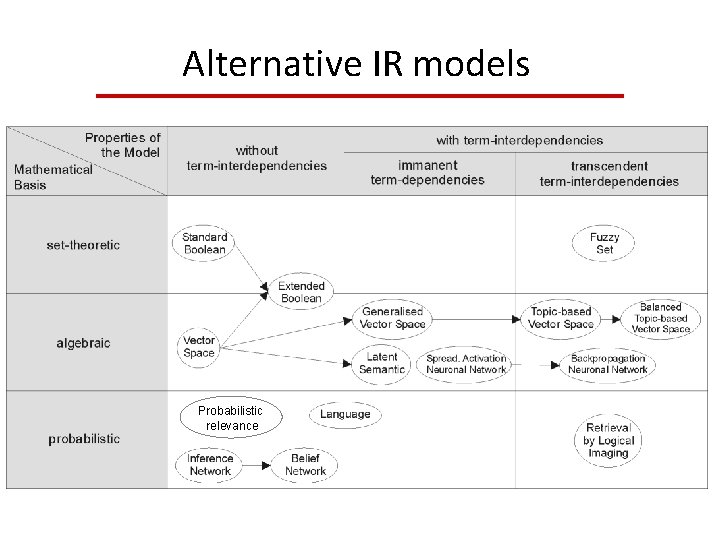

Alternative IR models: Probabilistic relevance

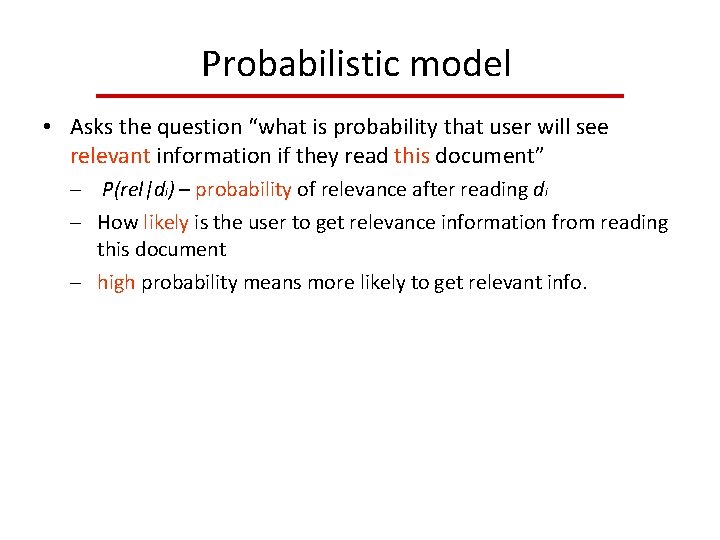

Probabilistic model

- Asks the question: "What is the probability that the user will see relevant information if they read this document?"
- $P(\mathrm{rel} \mid d_i)$ is the probability of relevance after reading document $d_i$, that is, how likely the user is to get relevant information from reading this document.
- A high probability means the user is more likely to get relevant information.

Probabilistic model

- Probability ranking principle: "If a reference retrieval system's response to each request is a ranking of the documents in the collection in order of decreasing probability of relevance ... the overall effectiveness of the system to its user will be the best that is obtainable ..."
  - Rank documents by decreasing probability of relevance to the user.
  - Calculate $P(\mathrm{rel} \mid d_i)$ for each document and rank.
- Suggests a mathematical approach to IR and matching.
  - Good retrieval models can be predicted (to some extent) from their estimates of $P(\mathrm{rel} \mid d_i)$.
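As a minimal sketch of the ranking principle, assuming some model has already produced an estimate of P(rel|d) for each document: the document ids and probability values below are made up for illustration, and `rank_by_relevance` is a hypothetical helper name.

```python
from typing import Dict, List, Tuple


def rank_by_relevance(p_rel: Dict[str, float]) -> List[Tuple[str, float]]:
    """Return (doc_id, P(rel|d)) pairs sorted by decreasing probability."""
    return sorted(p_rel.items(), key=lambda item: item[1], reverse=True)


# Hypothetical estimates of P(rel|d), invented for this example.
estimates = {"d1": 0.20, "d2": 0.75, "d3": 0.40}

ranking = rank_by_relevance(estimates)
for doc_id, prob in ranking:
    print(doc_id, prob)
```

The ranking step itself is trivial; the hard part of a probabilistic model is producing good estimates to feed into it.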

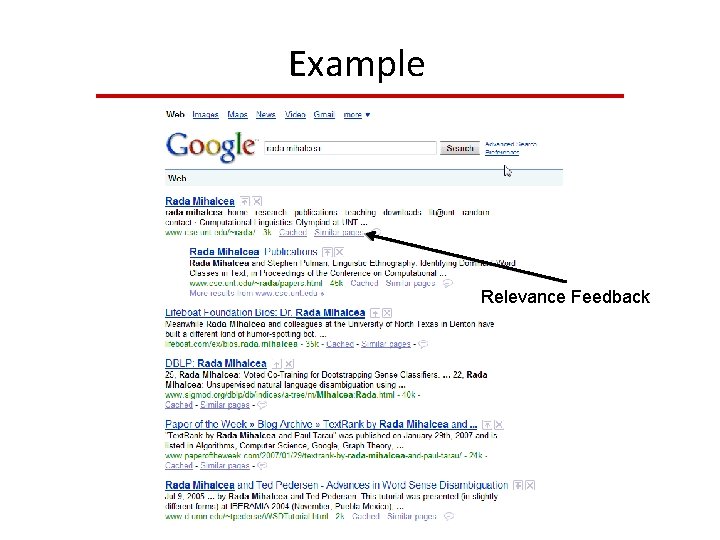

Example: Relevance Feedback

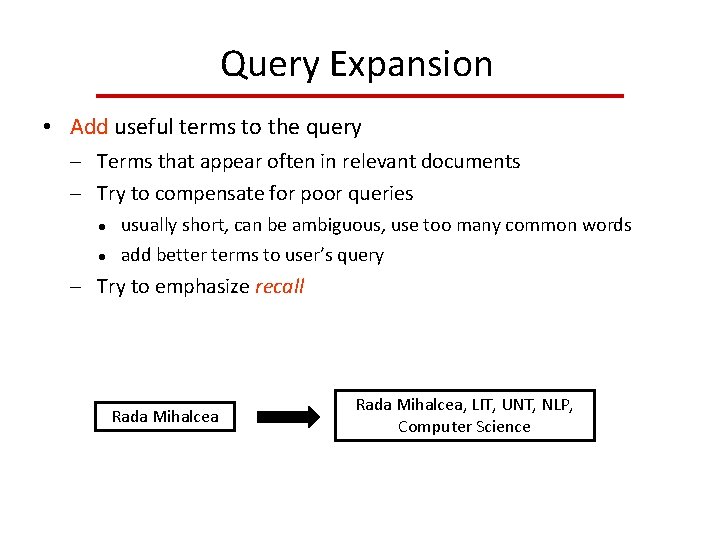

Relevance Feedback

- These systems use examples of documents the user likes in order to:
  - detect which words are useful new query words (query expansion);
  - detect how useful these words are and change the weights of the query words (term reweighting).
- The new query is then used for retrieval.

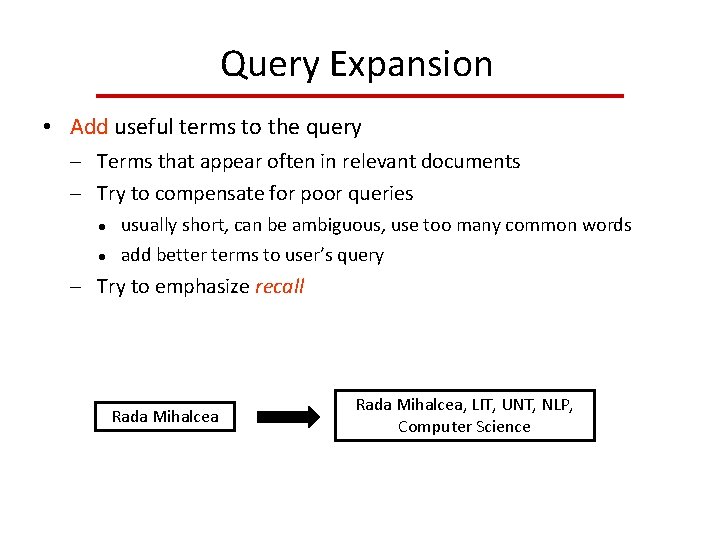

Query Expansion

- Add useful terms to the query: terms that appear often in relevant documents.
- Tries to compensate for poor queries, which are usually short, can be ambiguous, or use too many common words, by adding better terms to the user's query.
- Tries to emphasize recall.
- Example of an expanded query: Rada Mihalcea, LIT, UNT, NLP, Computer Science
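One simple way to pick "terms that appear often in relevant documents" is to count, for each candidate term, how many relevant documents contain it. The sketch below assumes documents are already tokenized into lowercase word lists; the function name `expand_query`, the stopword set, and the toy documents are illustrative assumptions, not part of the slides.

```python
from collections import Counter
from typing import Iterable, List, Set


def expand_query(query: List[str], relevant_docs: Iterable[List[str]],
                 stopwords: Set[str], n_new: int = 3) -> List[str]:
    """Append the n_new terms that occur in the most relevant documents."""
    doc_freq: Counter = Counter()
    for doc in relevant_docs:
        # Count each term once per document, skipping stopwords and
        # terms that are already in the query.
        doc_freq.update(set(doc) - stopwords - set(query))
    # Sort by decreasing frequency, then alphabetically to break ties.
    ranked = sorted(doc_freq, key=lambda term: (-doc_freq[term], term))
    return query + ranked[:n_new]


# Toy relevant documents, invented for this example.
relevant = [
    ["rada", "mihalcea", "nlp", "unt", "the"],
    ["mihalcea", "nlp", "unt", "teaching"],
    ["rada", "nlp", "lit", "of"],
]
expanded = expand_query(["rada", "mihalcea"], relevant, {"the", "of"})
print(expanded)
```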

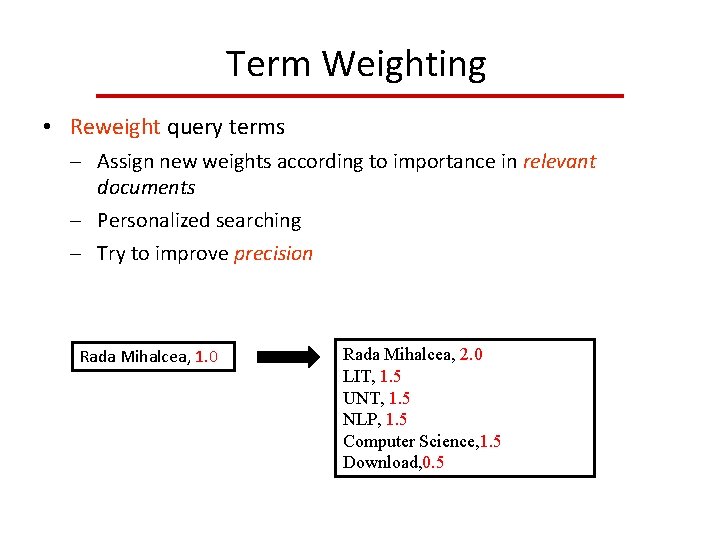

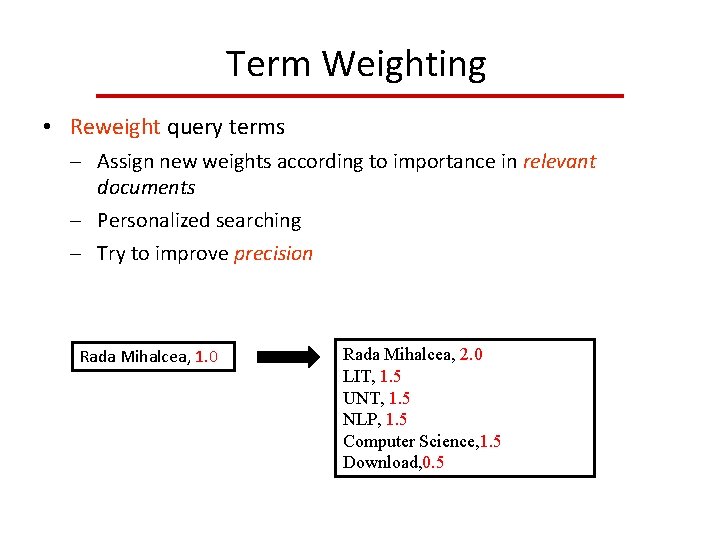

Term Weighting

- Reweight query terms: assign new weights according to importance in relevant documents.
- Supports personalized searching.
- Tries to improve precision.
- Example:
  - original query: Rada Mihalcea, 1.0
  - reweighted query: Rada Mihalcea, 2.0; LIT, 1.5; UNT, 1.5; NLP, 1.5; Computer Science, 1.5; Download, 0.5

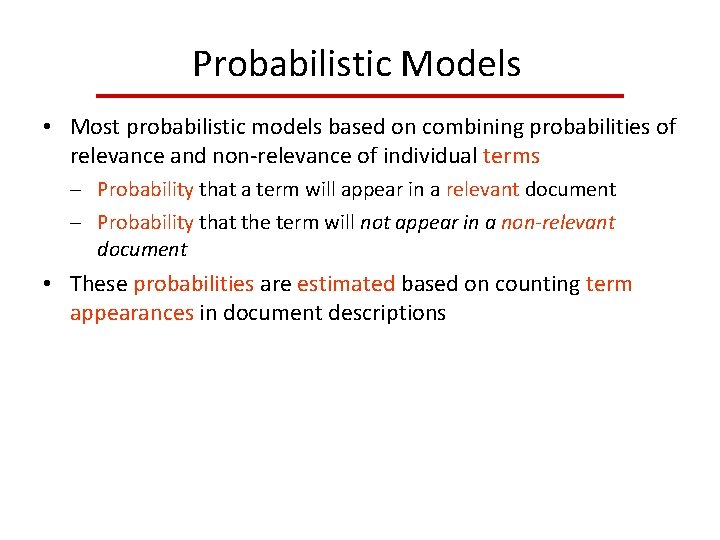

Probabilistic Models

- Most probabilistic models are based on combining the probabilities of relevance and non-relevance of individual terms:
  - the probability that a term will appear in a relevant document;
  - the probability that the term will not appear in a non-relevant document.
- These probabilities are estimated by counting term appearances in document descriptions.

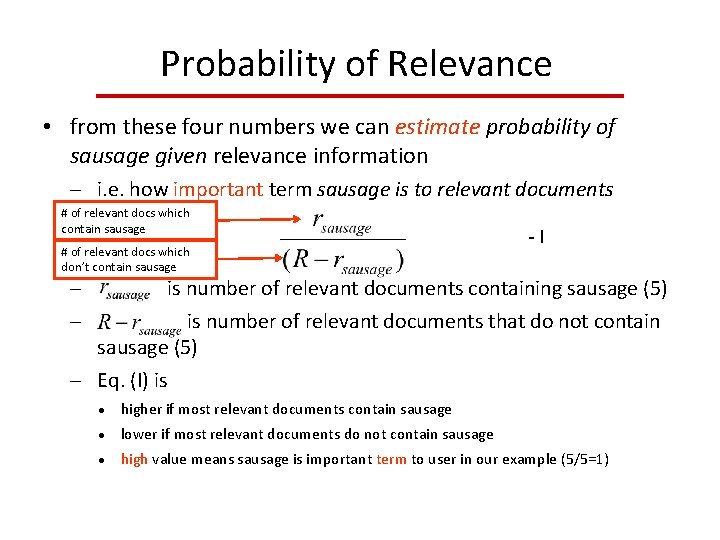

Example

- Assume we have a collection of 100 documents: N = 100.
- 20 of the documents contain the term "sausage".
- The searcher has read and marked 10 documents as relevant: R = 10.
- Of these relevant documents, 5 contain the term "sausage".
- How important is the word "sausage" to the searcher?

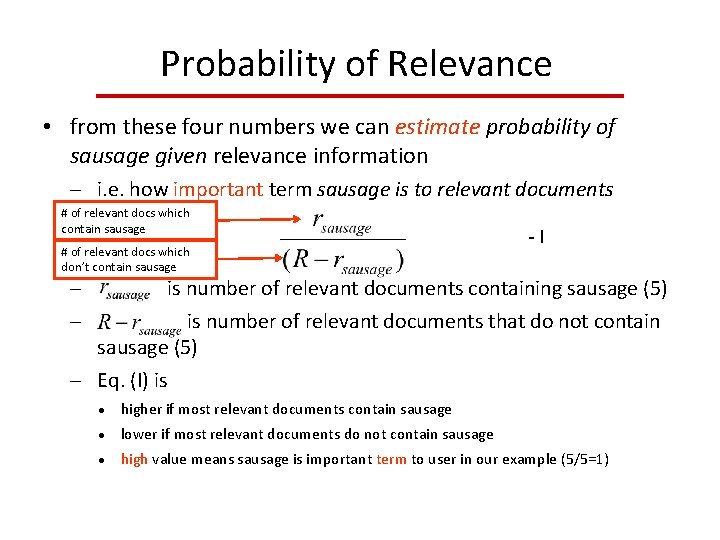

Probability of Relevance

- From these four numbers we can estimate the probability of "sausage" given relevance information, i.e. how important the term "sausage" is to relevant documents:

$$\frac{r}{R - r} = \frac{\text{number of relevant docs which contain sausage}}{\text{number of relevant docs which don't contain sausage}} \qquad \text{(I)}$$

- $r$ is the number of relevant documents containing "sausage" (5).
- $R - r$ is the number of relevant documents that do not contain "sausage" (10 - 5 = 5).
- Eq. (I) is higher if most relevant documents contain "sausage" and lower if most relevant documents do not contain it.
- A high value means "sausage" is an important term to the user. In our example the value is 5/5 = 1.

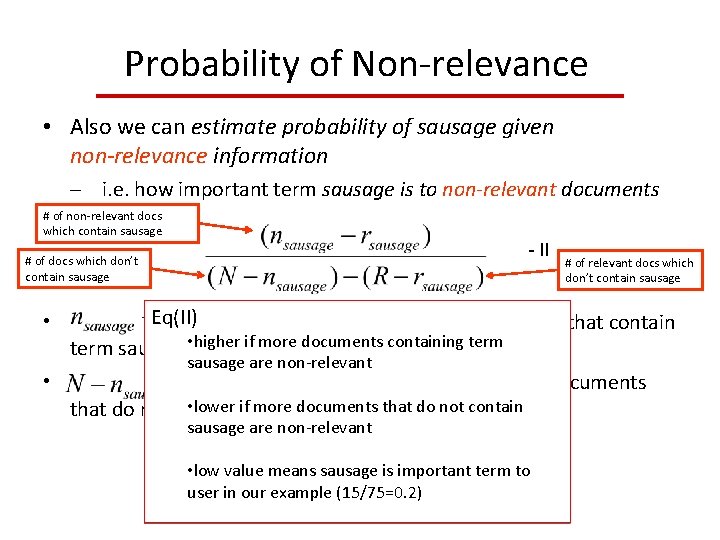

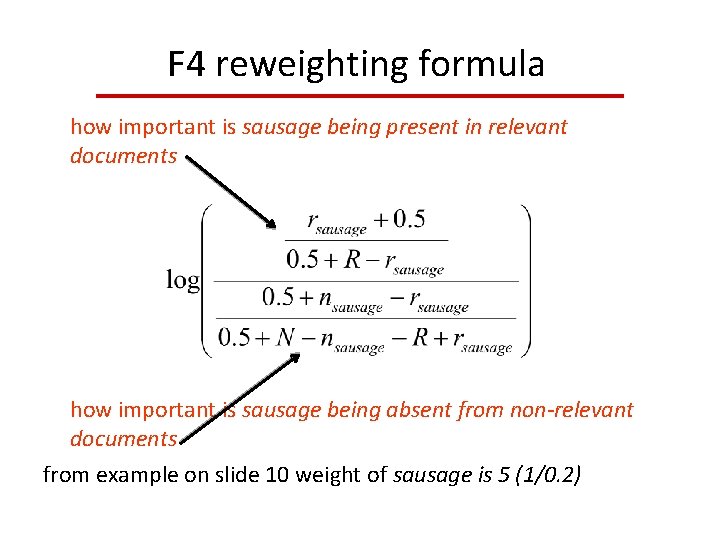

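Probability of Non-relevance

- We can also estimate the probability of "sausage" given non-relevance information, i.e. how important the term "sausage" is to non-relevant documents:

$$\frac{n - r}{N - n - R + r} = \frac{\text{number of non-relevant docs which contain sausage}}{\text{number of non-relevant docs which don't contain sausage}} \qquad \text{(II)}$$

- Here $N$ is the collection size (100), $R$ the number of relevant documents (10), $n$ the number of documents containing "sausage" (20), and $r$ the number of relevant documents containing "sausage" (5).
- $n - r$ is the number of non-relevant documents that contain "sausage": 20 - 5 = 15.
- $N - n - R + r$ is the number of non-relevant documents that do not contain "sausage": 100 - 20 - 10 + 5 = 75.
- Eq. (II) is higher if more documents containing "sausage" are non-relevant, and lower if more documents that do not contain "sausage" are non-relevant.
- A low value means "sausage" is an important term to the user. In our example the value is 15/75 = 0.2.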

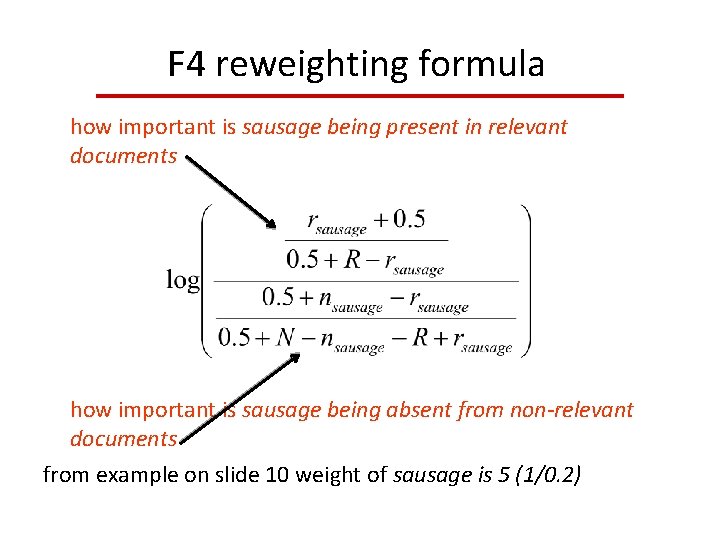

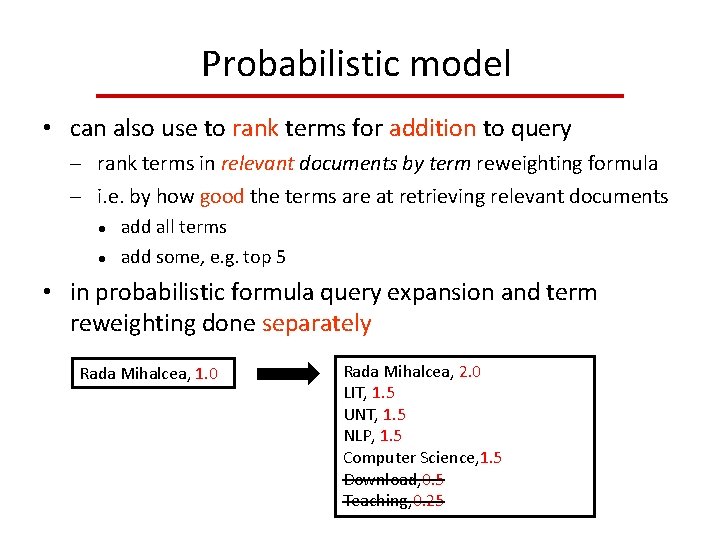

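F4 reweighting formula

$$w = \frac{r / (R - r)}{(n - r) / (N - n - R + r)}$$

- Here $N$ is the number of documents in the collection, $R$ the number marked relevant, $n$ the number containing the term, and $r$ the number that are both relevant and contain the term.
- The numerator measures how important it is that "sausage" is present in relevant documents.
- The denominator measures how important it is that "sausage" is absent from non-relevant documents.
- In the sausage example, the weight of "sausage" is 1/0.2 = 5.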
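The arithmetic of the sausage example can be checked with a few lines of Python. This is a sketch of the ratio as the slides compute it (1/0.2 = 5), using the assumed symbol names N, R, n, and r for the four counts; the function names are illustrative. It is worth noting that the Robertson and Sparck Jones form of this weight, as usually published, takes the logarithm of the ratio and adds 0.5 to each count to avoid division by zero, which this sketch does not do.

```python
def relevance_odds(r: int, R: int) -> float:
    """Eq. (I): relevant docs containing the term / relevant docs without it."""
    return r / (R - r)


def non_relevance_odds(n: int, r: int, N: int, R: int) -> float:
    """Eq. (II): non-relevant docs containing the term / non-relevant docs without it."""
    return (n - r) / (N - n - R + r)


def f4_ratio(n: int, r: int, N: int, R: int) -> float:
    """Ratio of Eq. (I) to Eq. (II); raises ZeroDivisionError on empty cells."""
    return relevance_odds(r, R) / non_relevance_odds(n, r, N, R)


# Counts from the sausage example.
N, R, n, r = 100, 10, 20, 5

eq1 = relevance_odds(r, R)            # 5 / 5
eq2 = non_relevance_odds(n, r, N, R)  # 15 / 75
weight = f4_ratio(n, r, N, R)         # Eq. (I) / Eq. (II)
print(eq1, eq2, weight)
```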

F4 reweighting formula

- F4 gives new weights to all terms in the collection (or just to the query terms): high weights to important terms, low weights to unimportant terms.
- It replaces idf, tf, or any other weights.
- The document score is based on the sum of the weights of the query terms that occur in the document.
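A document score of this kind can be sketched as a sum over the query terms present in the document. In the example below only the weight 5.0 for "sausage" comes from the slides; the other weights, the document, and the name `score_document` are invented for illustration.

```python
from typing import Dict, Iterable


def score_document(doc_terms: Iterable[str],
                   query_weights: Dict[str, float]) -> float:
    """Sum the weights of the query terms that occur in the document."""
    present = set(doc_terms)
    return sum(w for term, w in query_weights.items() if term in present)


# "sausage" uses the weight from the worked example; the rest are hypothetical.
weights = {"sausage": 5.0, "recipe": 2.0, "grill": 1.5}
doc = ["sausage", "and", "mash", "recipe"]

score = score_document(doc, weights)
print(score)
```

Each query term contributes at most once here, regardless of how often it occurs in the document, which matches the later remark that this model has no term frequency weighting.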

Probabilistic model

- The model can also be used to rank terms for addition to the query:
  - rank the terms in relevant documents by the term reweighting formula, i.e. by how good the terms are at retrieving relevant documents;
  - add all terms, or add only some, e.g. the top 5.
- In the probabilistic formulation, query expansion and term reweighting are done separately.
- Example:
  - original query: Rada Mihalcea, 1.0
  - expanded and reweighted query: Rada Mihalcea, 2.0; LIT, 1.5; UNT, 1.5; NLP, 1.5; Computer Science, 1.5; Download, 0.5; Teaching, 0.25
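Ranking candidate expansion terms by their reweighting score might look like the sketch below. It assumes the unsmoothed ratio form of the weight used in the sausage example, with n the number of documents containing a term and r the number of relevant documents containing it. Apart from the sausage counts, the per-term counts and the helper names are hypothetical.

```python
from typing import Dict, List, Tuple


def f4_ratio(n: int, r: int, N: int, R: int) -> float:
    """Unsmoothed ratio form: (r / (R - r)) / ((n - r) / (N - n - R + r))."""
    return (r / (R - r)) / ((n - r) / (N - n - R + r))


def top_expansion_terms(stats: Dict[str, Tuple[int, int]],
                        N: int, R: int, k: int) -> List[str]:
    """Return the k candidate terms with the highest weight."""
    weights = {term: f4_ratio(n, r, N, R) for term, (n, r) in stats.items()}
    return sorted(weights, key=weights.get, reverse=True)[:k]


# term -> (n, r); only the sausage counts come from the worked example.
candidates = {
    "sausage": (20, 5),
    "mustard": (10, 4),
    "bread": (50, 6),
    "the": (95, 9),
}
best = top_expansion_terms(candidates, N=100, R=10, k=2)
print(best)
```

A very common word such as "the" appears in most relevant documents but also in most non-relevant ones, so it ends up with a low weight and is not selected.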

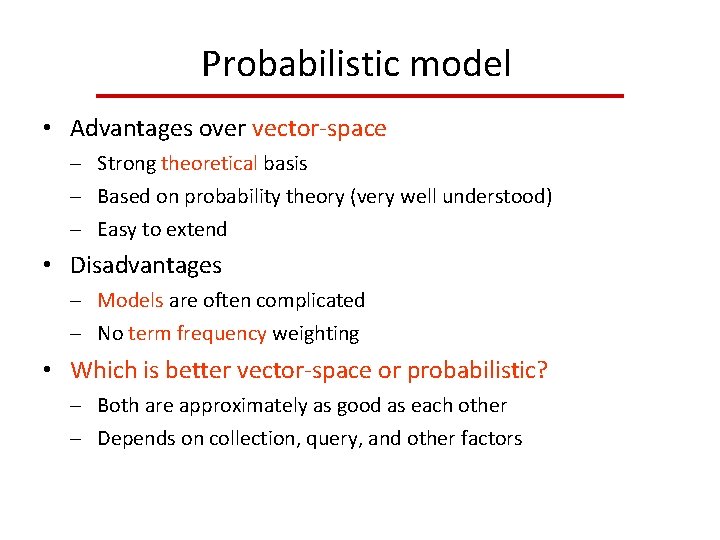

Probabilistic model

- Advantages over vector-space:
  - strong theoretical basis;
  - based on probability theory (very well understood);
  - easy to extend.
- Disadvantages:
  - models are often complicated;
  - no term frequency weighting.
- Which is better, vector-space or probabilistic?
  - Both are approximately as good as each other.
  - It depends on the collection, the query, and other factors.

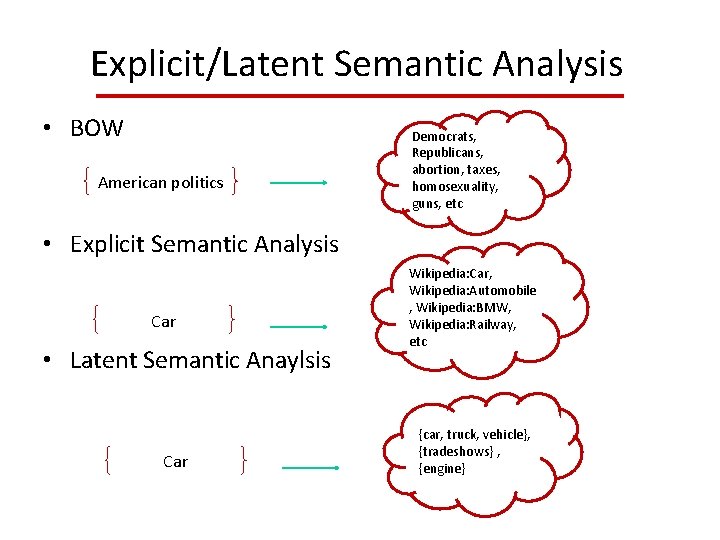

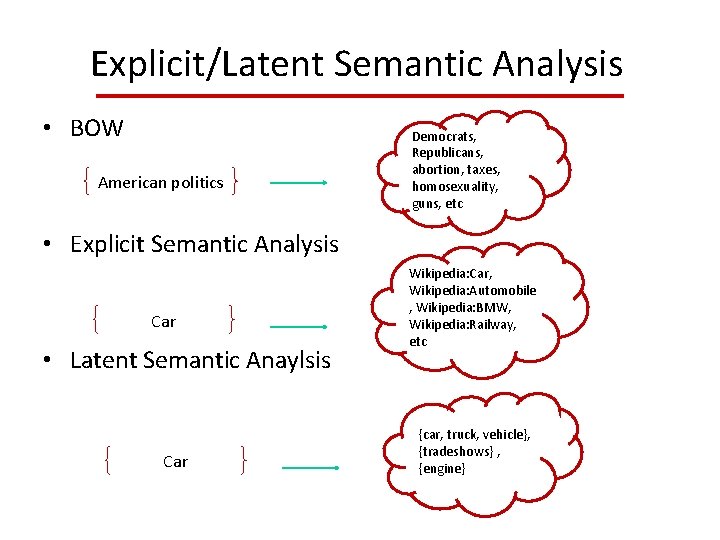

Explicit/Latent Semantic Analysis

- Bag of words (BOW): American politics → Democrats, Republicans, abortion, taxes, homosexuality, guns, etc.
- Explicit Semantic Analysis: Car → Wikipedia:Car, Wikipedia:Automobile, Wikipedia:BMW, Wikipedia:Railway, etc.
- Latent Semantic Analysis: Car → {car, truck, vehicle}, {tradeshows}, {engine}

Explicit/Latent Semantic Analysis

- Objective: replace indexes that use sets of index terms/docs by indexes that use concepts.
- Approach: map the term vector space into a lower-dimensional space, using singular value decomposition. Each dimension in the new space corresponds to an explicit/latent concept in the original data.

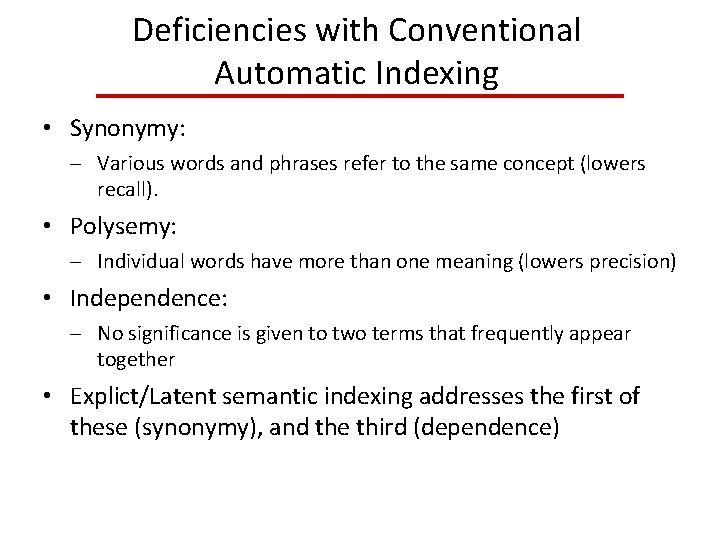

Deficiencies with Conventional Automatic Indexing

- Synonymy: various words and phrases refer to the same concept (lowers recall).
- Polysemy: individual words have more than one meaning (lowers precision).
- Independence: no significance is given to two terms that frequently appear together.
- Explicit/latent semantic indexing addresses the first of these (synonymy) and the third (dependence).

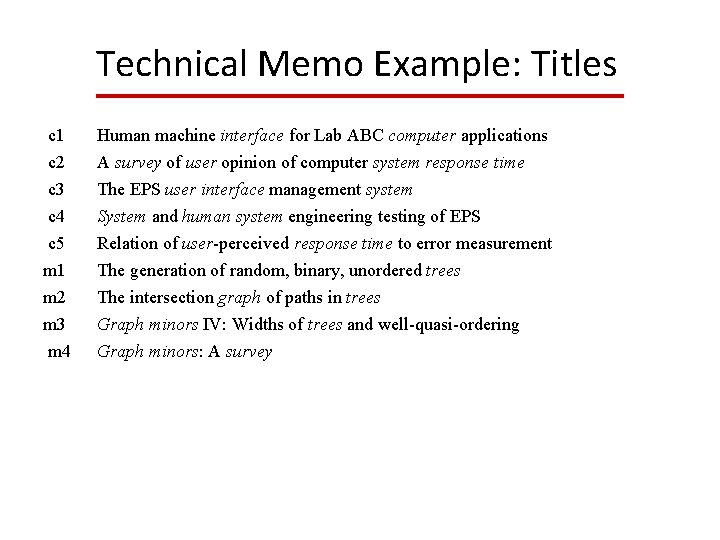

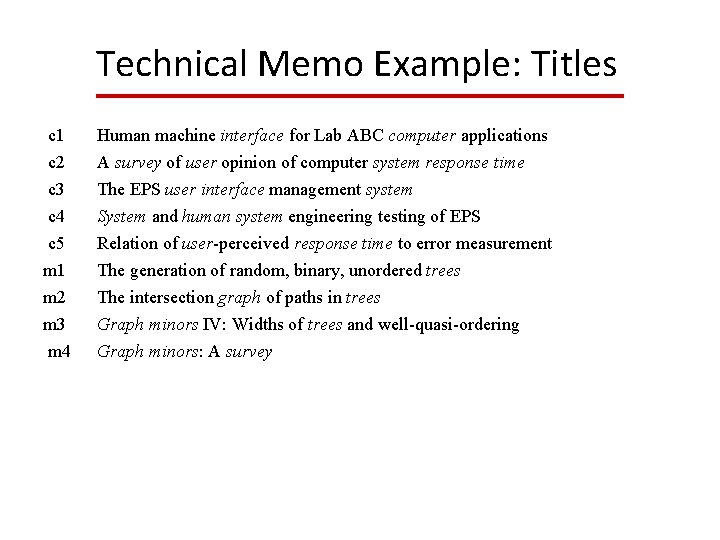

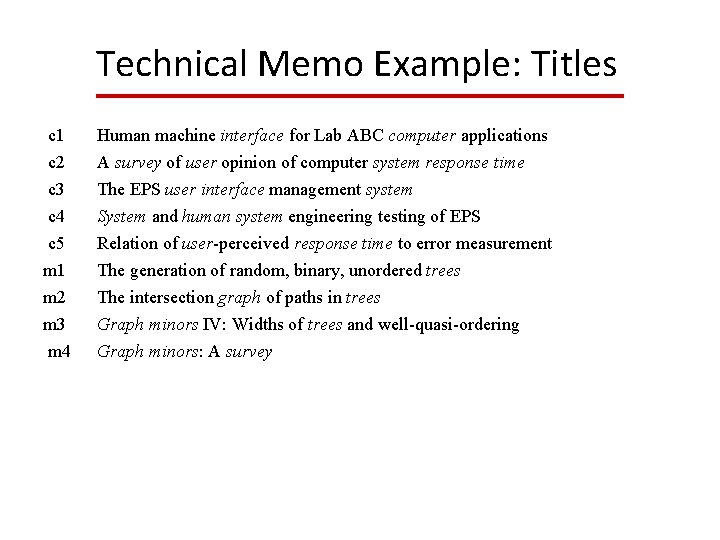

Technical Memo Example: Titles

- c1: Human machine interface for Lab ABC computer applications
- c2: A survey of user opinion of computer system response time
- c3: The EPS user interface management system
- c4: System and human system engineering testing of EPS
- c5: Relation of user-perceived response time to error measurement
- m1: The generation of random, binary, unordered trees
- m2: The intersection graph of paths in trees
- m3: Graph minors IV: Widths of trees and well-quasi-ordering
- m4: Graph minors: A survey

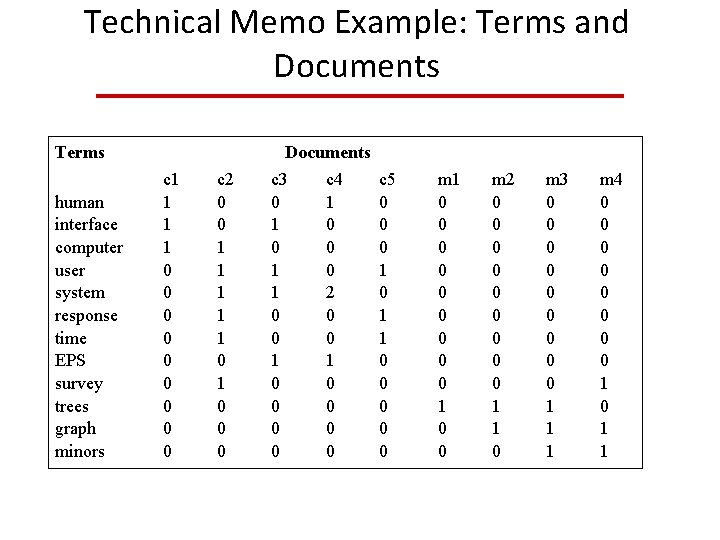

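Technical Memo Example: Terms and Documents

| Terms | c1 | c2 | c3 | c4 | c5 | m1 | m2 | m3 | m4 |
|---|---|---|---|---|---|---|---|---|---|
| human | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |
| interface | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| computer | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| user | 0 | 1 | 1 | 0 | 1 | 0 | 0 | 0 | 0 |
| system | 0 | 1 | 1 | 2 | 0 | 0 | 0 | 0 | 0 |
| response | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |
| time | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |
| EPS | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 |
| survey | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 |
| trees | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 0 |
| graph | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 |
| minors | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 |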
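The term-document counts follow directly from the nine titles, so they can be rebuilt with a short script. This is a sketch under simple assumptions: titles are lowercased and split on runs of letters, and only the twelve index terms are counted. The names `TERMS`, `TITLES`, and `term_counts` are illustrative.

```python
import re
from typing import Dict, List

TERMS = ["human", "interface", "computer", "user", "system", "response",
         "time", "eps", "survey", "trees", "graph", "minors"]

TITLES = {
    "c1": "Human machine interface for Lab ABC computer applications",
    "c2": "A survey of user opinion of computer system response time",
    "c3": "The EPS user interface management system",
    "c4": "System and human system engineering testing of EPS",
    "c5": "Relation of user-perceived response time to error measurement",
    "m1": "The generation of random, binary, unordered trees",
    "m2": "The intersection graph of paths in trees",
    "m3": "Graph minors IV: Widths of trees and well-quasi-ordering",
    "m4": "Graph minors: A survey",
}


def term_counts(title: str, terms: List[str]) -> List[int]:
    """Count how often each index term occurs in one title."""
    tokens = re.findall(r"[a-z]+", title.lower())
    return [tokens.count(term) for term in terms]


# One column of the term-document matrix per document.
columns: Dict[str, List[int]] = {
    doc: term_counts(title, TERMS) for doc, title in TITLES.items()
}

# Rows of X: one per index term, one entry per document (c1..c5, m1..m4).
docs = list(TITLES)
X = [[columns[doc][i] for doc in docs] for i in range(len(TERMS))]

for term, row in zip(TERMS, X):
    print(f"{term:10s}", row)
```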

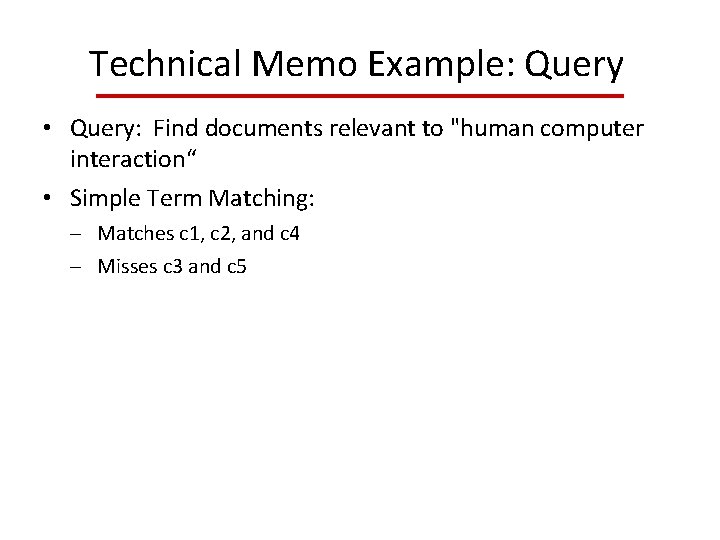

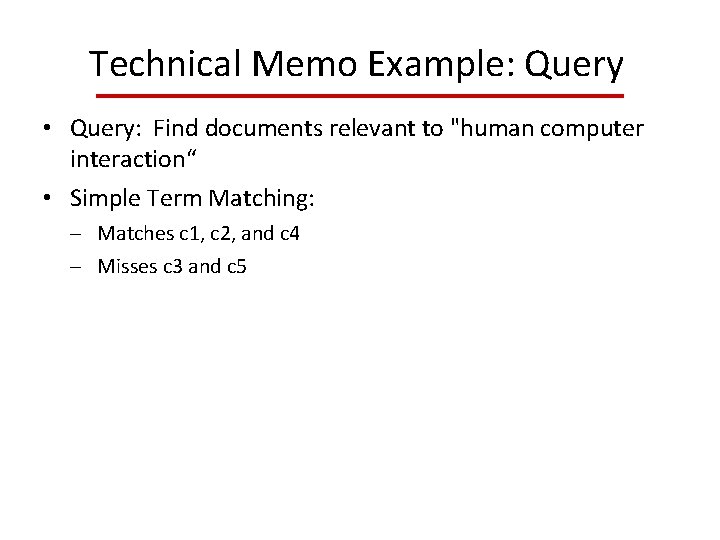

Technical Memo Example: Query

- Query: find documents relevant to "human computer interaction".
- Simple term matching: matches c1, c2, and c4; misses c3 and c5.
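Simple term matching can be sketched as a set intersection between the query words and each document's index terms. The `DOC_TERMS` mapping below lists, for each memo, the index terms that occur in its title; the function name is an illustrative choice.

```python
from typing import Dict, List, Set

# Index terms occurring in each technical memo title.
DOC_TERMS: Dict[str, Set[str]] = {
    "c1": {"human", "interface", "computer"},
    "c2": {"survey", "user", "computer", "system", "response", "time"},
    "c3": {"eps", "user", "interface", "system"},
    "c4": {"system", "human", "eps"},
    "c5": {"user", "response", "time"},
    "m1": {"trees"},
    "m2": {"graph", "trees"},
    "m3": {"graph", "minors", "trees"},
    "m4": {"graph", "minors", "survey"},
}


def simple_term_match(query: str, doc_terms: Dict[str, Set[str]]) -> List[str]:
    """Return the documents that share at least one term with the query."""
    words = set(query.lower().split())
    return sorted(doc for doc, terms in doc_terms.items() if words & terms)


matches = simple_term_match("human computer interaction", DOC_TERMS)
print(matches)
```

The word "interaction" is not an index term, so it contributes nothing. Documents c3 and c5 are about the same topic but share no word with the query, which is why exact matching misses them.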

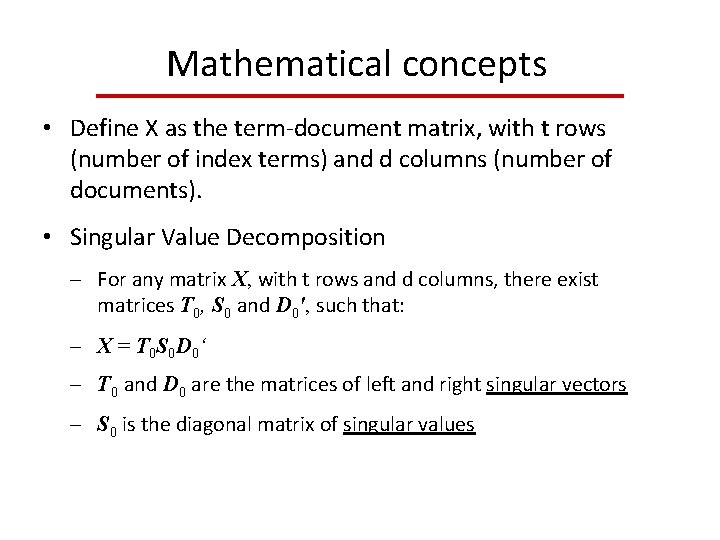

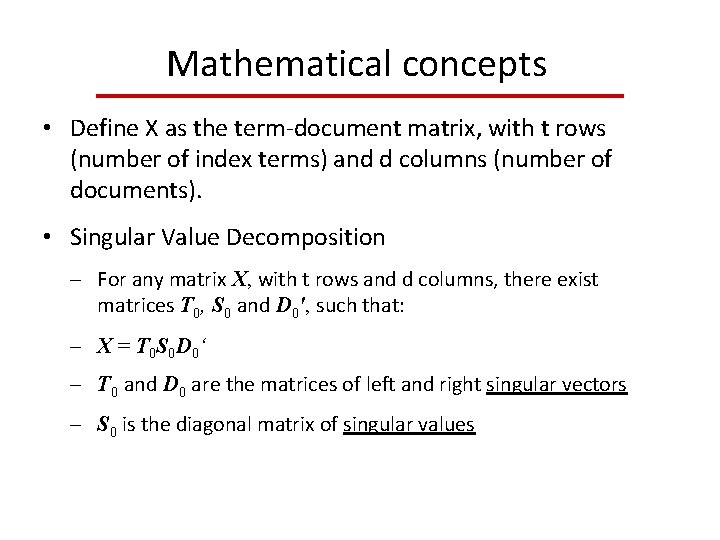

Mathematical concepts

- Define $X$ as the term-document matrix, with $t$ rows (number of index terms) and $d$ columns (number of documents).
- Singular value decomposition: for any matrix $X$ with $t$ rows and $d$ columns, there exist matrices $T_0$, $S_0$ and $D_0'$ such that

$$X = T_0 S_0 D_0'$$

- $T_0$ and $D_0$ are the matrices of left and right singular vectors.
- $S_0$ is the diagonal matrix of singular values.

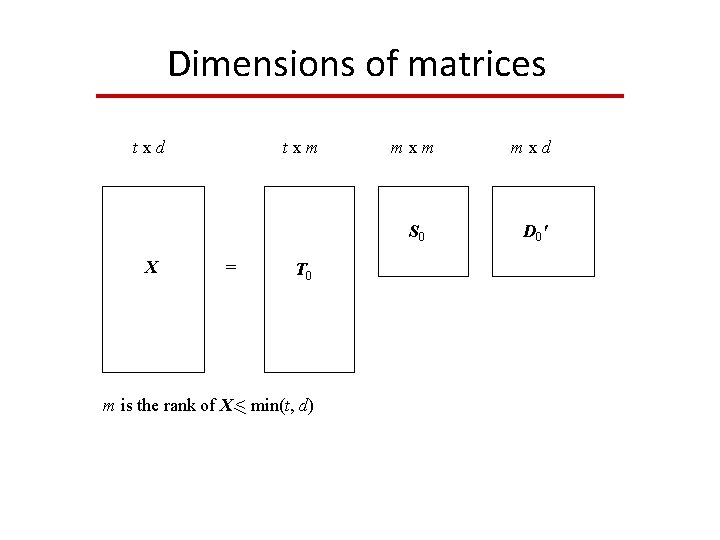

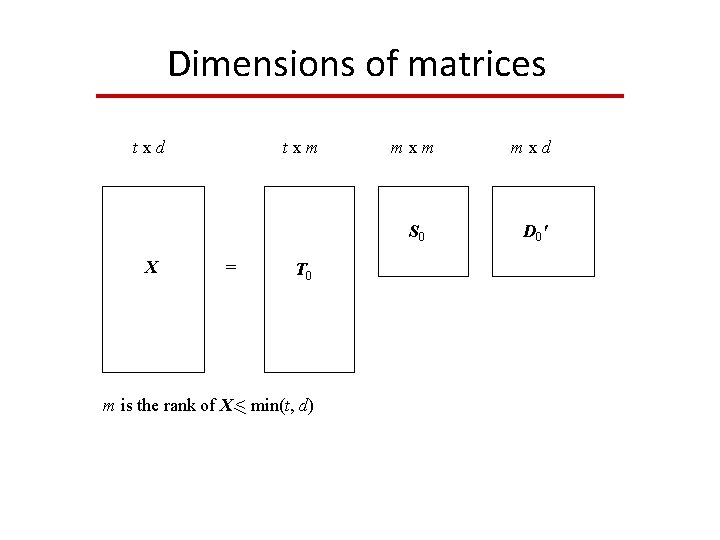

Dimensions of matrices

$$\underset{t \times d}{X} = \underset{t \times m}{T_0}\;\underset{m \times m}{S_0}\;\underset{m \times d}{D_0'}$$

$m$ is the rank of $X$, with $m \le \min(t, d)$.
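These dimensions can be checked numerically. The sketch below uses NumPy's `numpy.linalg.svd` on a small made-up matrix whose third column is the sum of the first two, so its rank is 2 even though it has 3 columns. The relative cutoff of `1e-10` for treating a singular value as zero is an arbitrary choice for this illustration.

```python
import numpy as np

# A made-up 4 x 3 matrix (t = 4, d = 3); column 3 = column 1 + column 2.
X = np.array([
    [1.0, 0.0, 1.0],
    [0.0, 1.0, 1.0],
    [1.0, 1.0, 2.0],
    [2.0, 0.0, 2.0],
])

# Economy-size SVD: U is t x min(t, d), s has min(t, d) entries.
U, s, Vt = np.linalg.svd(X, full_matrices=False)

# m = number of singular values that are not numerically zero.
m = int(np.sum(s > 1e-10 * s[0]))

T0 = U[:, :m]          # t x m, left singular vectors
S0 = np.diag(s[:m])    # m x m, singular values on the diagonal
D0t = Vt[:m, :]        # m x d, transposed right singular vectors

print(m, T0.shape, S0.shape, D0t.shape)
print(np.allclose(T0 @ S0 @ D0t, X))
```

NumPy returns the singular values in decreasing order, so keeping the leading `m` of them keeps exactly the nonzero ones.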

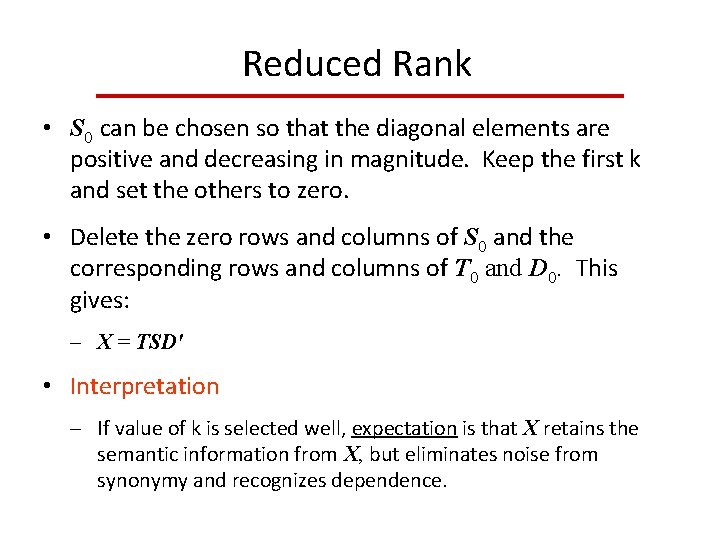

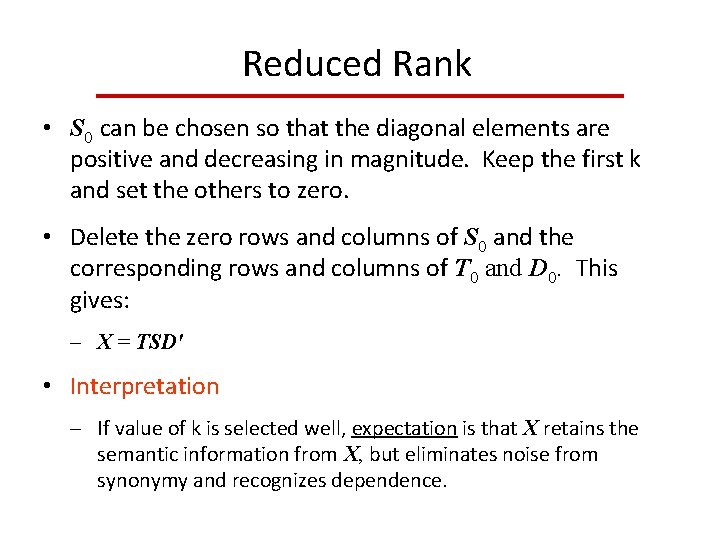

Reduced Rank

- $S_0$ can be chosen so that the diagonal elements are positive and decreasing in magnitude. Keep the first $k$ and set the others to zero.
- Delete the zero rows and columns of $S_0$ and the corresponding columns of $T_0$ and $D_0$. This gives:

$$X \approx \hat{X} = TSD'$$

- Interpretation: if the value of $k$ is selected well, the expectation is that $\hat{X}$ retains the semantic information from $X$, but eliminates noise from synonymy and recognizes dependence.

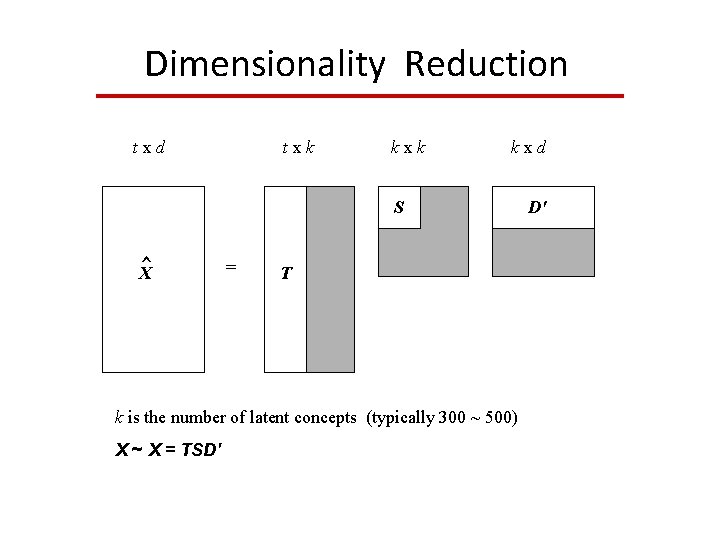

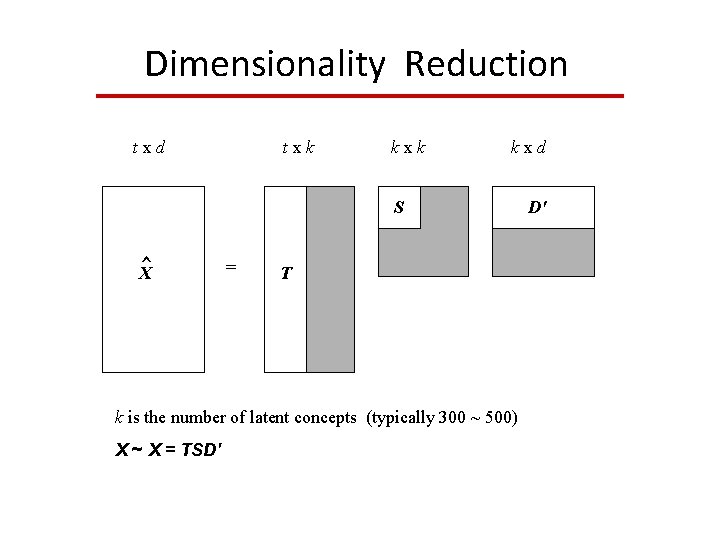

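Dimensionality Reduction

$$\underset{t \times d}{\hat{X}} = \underset{t \times k}{T}\;\underset{k \times k}{S}\;\underset{k \times d}{D'}$$

$k$ is the number of latent concepts (typically 300 to 500).

$$X \approx \hat{X} = TSD'$$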
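A rank-k reduction of the technical memo matrix can be sketched with NumPy as follows. The matrix rows are the twelve index terms (human ... minors) and the columns are the nine memos (c1..c5, m1..m4). The tiny value k = 2 is chosen only because the example has nine documents; it is not the 300 to 500 quoted for real collections. The helper name `reduce_rank` is illustrative.

```python
import numpy as np

# Term-document counts, one string per term row, one digit per document.
ROWS = [
    "100100000",  # human
    "101000000",  # interface
    "110000000",  # computer
    "011010000",  # user
    "011200000",  # system
    "010010000",  # response
    "010010000",  # time
    "001100000",  # EPS
    "010000001",  # survey
    "000001110",  # trees
    "000000111",  # graph
    "000000011",  # minors
]
X = np.array([[int(ch) for ch in row] for row in ROWS], dtype=float)


def reduce_rank(X: np.ndarray, k: int):
    """Keep the k largest singular values and the matching singular vectors."""
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    return U[:, :k], np.diag(s[:k]), Vt[:k, :]


k = 2
T, S, Dt = reduce_rank(X, k)
X_hat = T @ S @ Dt  # t x d, same shape as X but rank k

print(T.shape, S.shape, Dt.shape, X_hat.shape)
print(np.round(X_hat[0], 2))  # smoothed row for the term "human"
```

The entries of `X_hat` are no longer integer counts: a term can receive a nonzero value for a document it never occurs in, which is how the reduced matrix captures associations between co-occurring terms.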

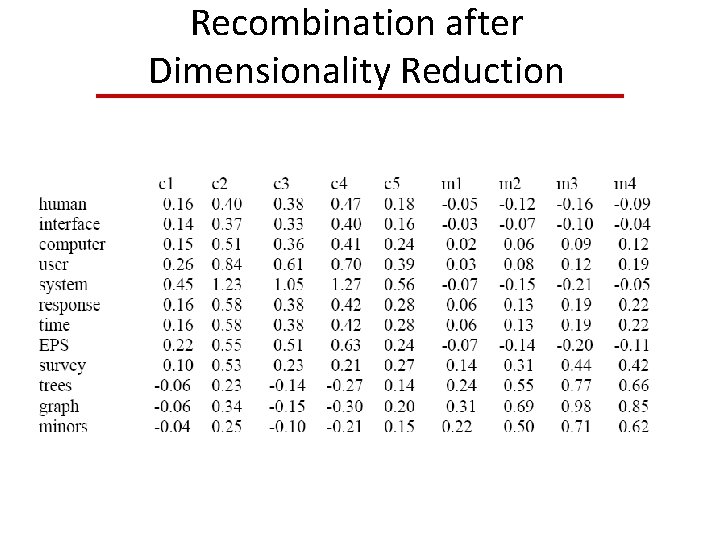

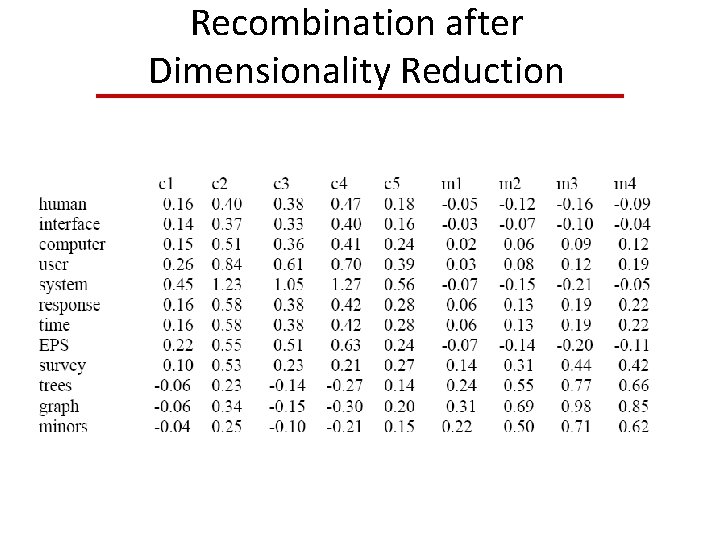

Recombination after Dimensionality Reduction

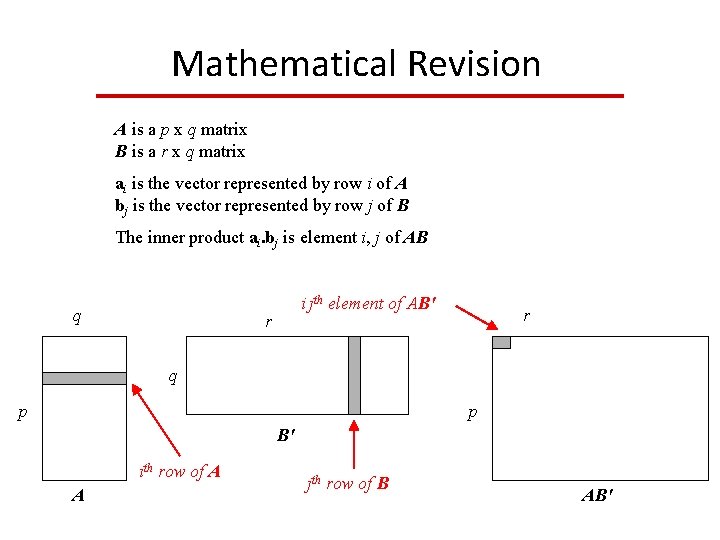

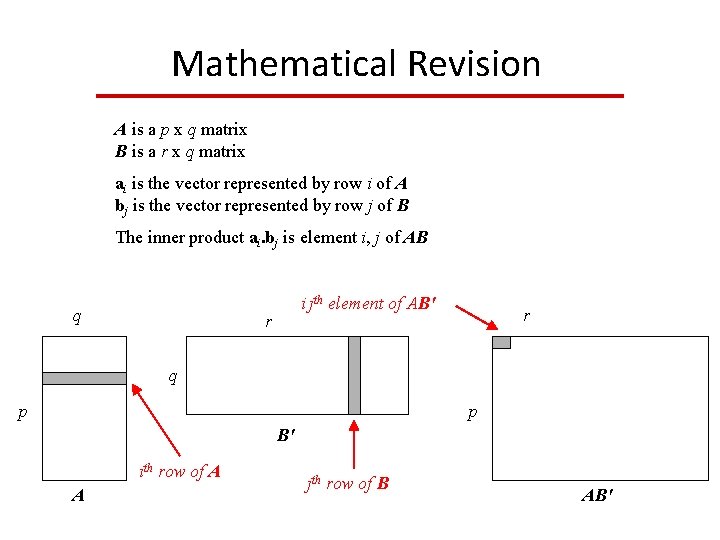

Mathematical Revision

- $A$ is a $p \times q$ matrix and $B$ is an $r \times q$ matrix.
- $a_i$ is the vector represented by row $i$ of $A$.
- $b_j$ is the vector represented by row $j$ of $B$.
- The inner product $a_i \cdot b_j$ is element $(i, j)$ of the $p \times r$ matrix $AB'$.
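The identity between row inner products and the entries of AB' can be verified on a small example. The matrices below are arbitrary values chosen for illustration, and the helper names are hypothetical; plain Python lists are used so that each inner product is visible.

```python
from typing import List

Matrix = List[List[float]]


def inner(u: List[float], v: List[float]) -> float:
    """Inner product of two vectors of equal length."""
    return sum(x * y for x, y in zip(u, v))


def a_b_transpose(A: Matrix, B: Matrix) -> Matrix:
    """Element (i, j) is the inner product of row i of A with row j of B."""
    return [[inner(a_i, b_j) for b_j in B] for a_i in A]


A = [[1, 2, 3],
     [4, 5, 6]]          # p = 2, q = 3
B = [[1, 0, 1],
     [0, 1, 0],
     [2, 2, 2],
     [1, 1, 0]]          # r = 4, q = 3

P = a_b_transpose(A, B)  # p x r = 2 x 4
for row in P:
    print(row)
```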

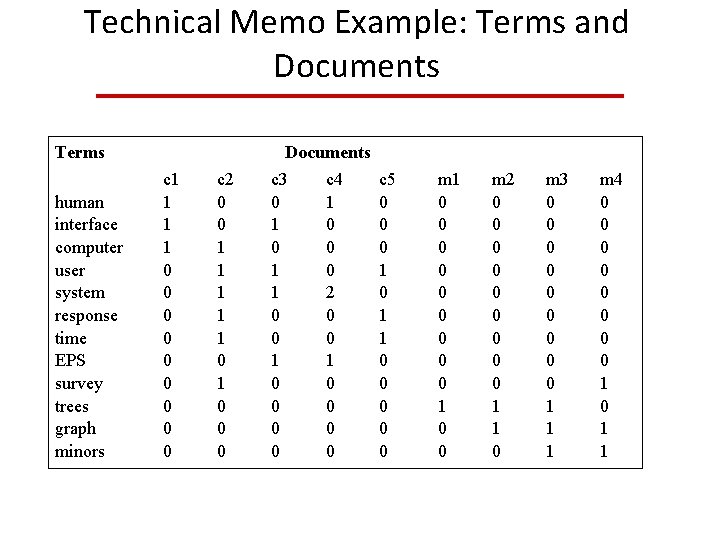

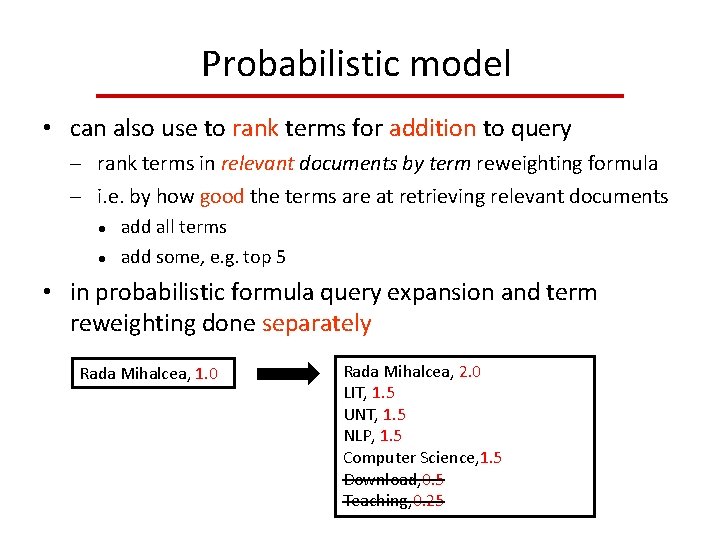

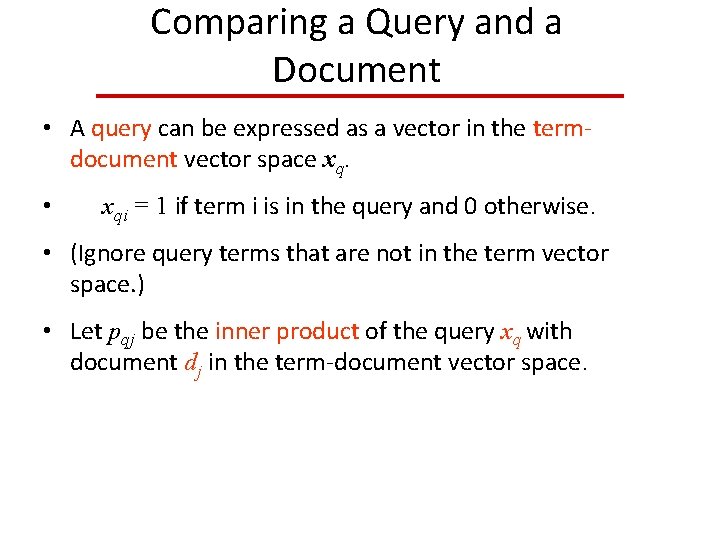

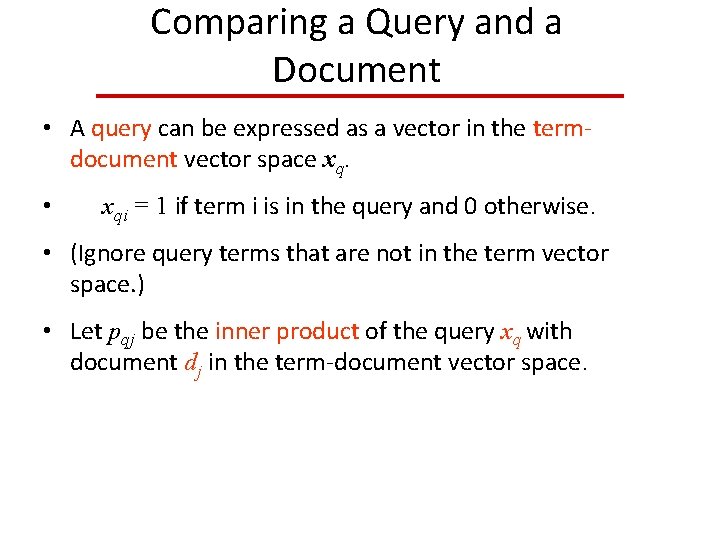

Comparing a Query and a Document

- A query can be expressed as a vector $x_q$ in the term-document vector space.
- $x_{qi} = 1$ if term $i$ is in the query and 0 otherwise. (Ignore query terms that are not in the term vector space.)
- Let $p_{qj}$ be the inner product of the query $x_q$ with document $d_j$ in the term-document vector space.

![Comparing a Query and a Document pq 1 pqj pqt Comparing a Query and a Document [pq 1. . . pqj. . . pqt]](https://slidetodoc.com/presentation_image/1a81d1c718105c061cb5f200af987f7e/image-32.jpg)

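Comparing a Query and a Document

$$[\,p_{q1} \;\dots\; p_{qj} \;\dots\; p_{qd}\,] = [\,x_{q1} \; x_{q2} \;\dots\; x_{qt}\,]\,\hat{X}$$

- $p_{qj}$ is the inner product of query $q$ with document $d_j$.
- Document $d_j$ is column $j$ of $\hat{X}$, written $\hat{x}_j$ below.
- The cosine of the angle is the inner product divided by the lengths of the vectors:

$$\cos\theta_{qj} = \frac{p_{qj}}{\lVert x_q \rVert \, \lVert \hat{x}_j \rVert}$$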
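Putting the pieces together, the query "human computer interaction" can be compared with every technical memo in the reduced space. The sketch below assumes k = 2 and uses the memo term-document counts (rows are terms, columns are c1..c5, m1..m4); the function names are illustrative. On this example, the reduced-space scores for c3 and c5 should come out clearly higher than those of the graph-theory memos, even though neither shares a word with the query.

```python
import numpy as np

TERMS = ["human", "interface", "computer", "user", "system", "response",
         "time", "eps", "survey", "trees", "graph", "minors"]
DOCS = ["c1", "c2", "c3", "c4", "c5", "m1", "m2", "m3", "m4"]
ROWS = ["100100000", "101000000", "110000000", "011010000",
        "011200000", "010010000", "010010000", "001100000",
        "010000001", "000001110", "000000111", "000000011"]
X = np.array([[int(ch) for ch in row] for row in ROWS], dtype=float)

# Rank-k approximation X_hat = T S D' (k = 2 is an illustrative choice).
k = 2
U, s, Vt = np.linalg.svd(X, full_matrices=False)
X_hat = U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]


def query_vector(query: str, terms) -> np.ndarray:
    """x_qi = 1 if term i is in the query, else 0; unknown words are ignored."""
    words = query.lower().split()
    return np.array([1.0 if term in words else 0.0 for term in terms])


def compare(x_q: np.ndarray, M: np.ndarray):
    """Return inner products p_qj and cosines against every column of M."""
    p_q = x_q @ M
    lengths = np.linalg.norm(x_q) * np.linalg.norm(M, axis=0)
    return p_q, p_q / lengths


x_q = query_vector("human computer interaction", TERMS)
raw_scores = x_q @ X                 # plain term matching on the raw counts
p_q, cosines = compare(x_q, X_hat)   # comparison in the reduced space

for doc, raw, p, c in zip(DOCS, raw_scores, p_q, cosines):
    print(f"{doc}: raw={raw:.0f}  p={p:.2f}  cos={c:.2f}")
```

Comparing `raw_scores` with `p_q` shows the effect of the rank reduction: the raw scores for c3 and c5 are zero, while their reduced-space scores are positive.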

Stmik eresha

Stmik eresha Kom heer jezus kom

Kom heer jezus kom Stmik pranata indonesia kampus pengasinan

Stmik pranata indonesia kampus pengasinan Stmik mercusuar

Stmik mercusuar Stmik ganesha

Stmik ganesha Webpunt posthof

Webpunt posthof Hoe kom je op het dark web

Hoe kom je op het dark web Alek i basia

Alek i basia Www,xxx,kom

Www,xxx,kom Kumar kom jet

Kumar kom jet Diketahui sudut aec = 500, maka besar sudut boc adalah ....

Diketahui sudut aec = 500, maka besar sudut boc adalah .... Komponen kunci sig

Komponen kunci sig Menti dot kom

Menti dot kom Kom tot rus

Kom tot rus Sinstipes

Sinstipes Menti.com.

Menti.com. Kom hitt

Kom hitt Lief vrouwke ik kom niet om te bidden

Lief vrouwke ik kom niet om te bidden Kom til mig alle i som slider jer trætte

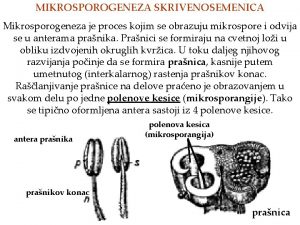

Kom til mig alle i som slider jer trætte Makrosporogeneza

Makrosporogeneza Kom syllabus

Kom syllabus Kom o skepper gees

Kom o skepper gees Sinta swastikawara, m.i.kom

Sinta swastikawara, m.i.kom Dubai koji je kontinent

Dubai koji je kontinent Na kojim kontinentima se nalazi egipat

Na kojim kontinentima se nalazi egipat Menti.doc

Menti.doc Go to www menti com and use

Go to www menti com and use Menti.com

Menti.com Stichting kom leren

Stichting kom leren Po kom je pojmenována amerika

Po kom je pojmenována amerika Yeni salma barlinti

Yeni salma barlinti Dseases

Dseases Yeni stargate projesi

Yeni stargate projesi