Semantic Hacking and Information Infrastructure Protection Paul Thompson

- Slides: 32

Semantic Hacking and Information Infrastructure Protection Paul Thompson 27 August 2009

Outline • ISTS and the I3P • Cognitive or Semantic Hacking • Intelligence and Security Informatics • Detecting Deception in Language • Socio-Cultural Content in Language

Security and Critical Infrastructure Protection at Dartmouth • Institute for Security Technology Studies, Dartmouth College (ISTS), January 2000 – Funded by National Infrastructure Protection Center (FBI) and National Institute of Justice – Computer Security Focus • Institute for Information Infrastructure Protection (I3P), January 2002 – Consortium of about 28 non-profit organizations based at Dartmouth

Semantic Hacking Project • ISTS project • Funded from 2001 through 2003 • Cybenko, Giani, and Thompson • DoD Critical Infrastructure Protection Fellowship

Types of Attacks on Computer Systems • Physical • Syntactic • Semantic (Cognitive) - Martin Libicki: The mesh and the net: Speculations on armed conflict in an age of free silicon. 1994.

Background • Perception Management • Computer Security Taxonomies • Definitions of Semantic and Cognitive Hacking

Perception Management • “... Information operations that aim to affect the perceptions of others in order to influence their emotions, reasoning, decisions, and ultimately actions.” – Denning, p. 101 • Continuum – Propaganda -> Psychological Operations -> -> Advertising -> Education • Has existed long before computers

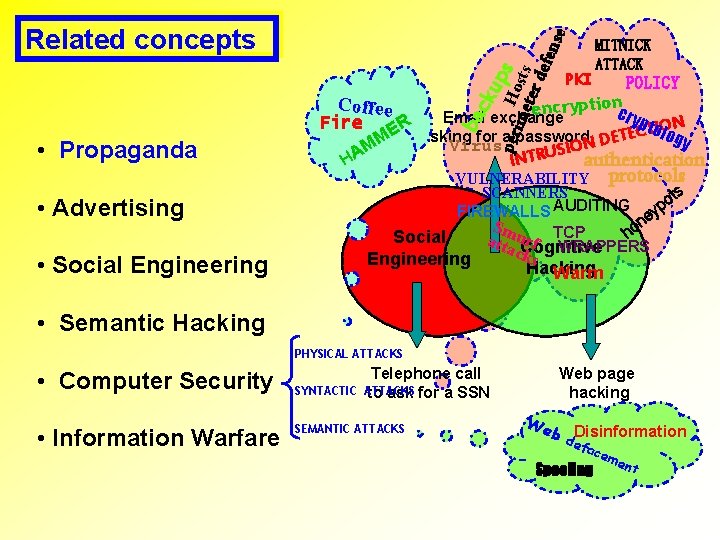

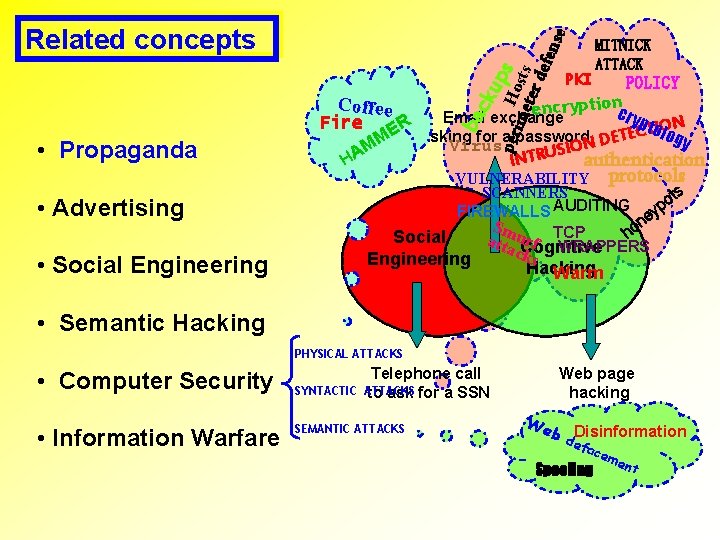

Related concepts • Propaganda • Advertising • Social Engineering • Semantic Hacking • Computer Security • Information Warfare

[Figure: diagram positioning physical attacks, syntactic attacks, and semantic attacks relative to cognitive hacking and social engineering. Legible example labels include a telephone call to ask for a SSN, an email exchange asking for a password, web page hacking, web defacement, disinformation, and spoofing, alongside computer security terms such as PKI, encryption, firewalls, auditing, vulnerability scanners, authentication protocols, honeypots, and TCP wrappers.]

Computer Security Taxonomies “Information contained in an automated system must be protected from three kinds of threats: (1) the unauthorized disclosure of information, (2) the unauthorized modification of information, and (3) the unauthorized withholding of information (usually called denial of service).” - Landwehr, 1981

COGNITIVE HACKING Definition A networked information system attack that relies on changing human users' perceptions and corresponding behaviors in order to be successful. • Requires the use of an information system - not true for all social engineering • Requires a user to change some behavior - not true for all hacking • Exploits our growing reliance on networked information sources

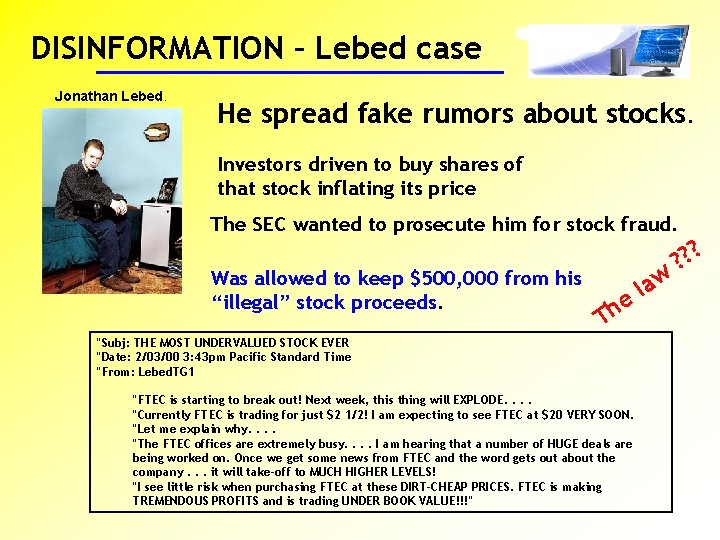

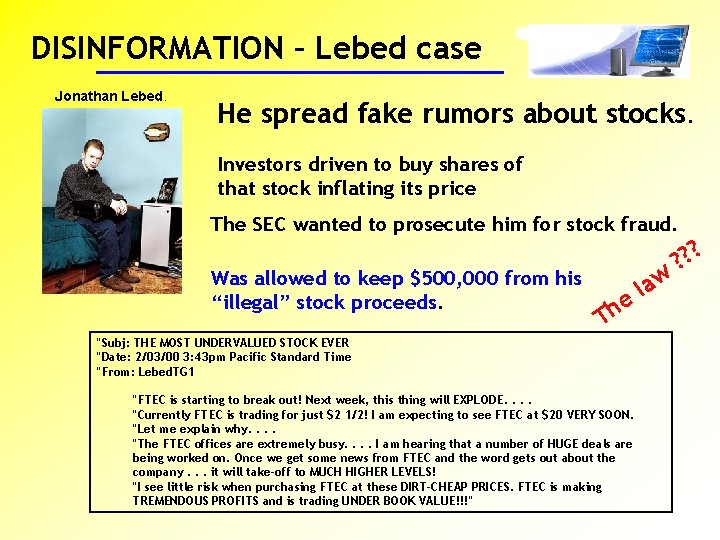

DISINFORMATION – Lebed case • Jonathan Lebed spread fake rumors about stocks. • Investors were driven to buy shares of the stock, inflating its price. • The SEC wanted to prosecute him for stock fraud. • He was allowed to keep $500,000 from his “illegal” stock proceeds. • The law ???

"Subj: THE MOST UNDERVALUED STOCK EVER "Date: 2/03/00 3:43 pm Pacific Standard Time "From: LebedTG1 "FTEC is starting to break out! Next week, this thing will EXPLODE... "Currently FTEC is trading for just $2 1/2! I am expecting to see FTEC at $20 VERY SOON. "Let me explain why... "The FTEC offices are extremely busy... I am hearing that a number of HUGE deals are being worked on. Once we get some news from FTEC and the word gets out about the company... it will take-off to MUCH HIGHER LEVELS! "I see little risk when purchasing FTEC at these DIRT-CHEAP PRICES. FTEC is making TREMENDOUS PROFITS and is trading UNDER BOOK VALUE!!!"

Emulex Exploit • Mark Jakob shorted 3,000 shares of Emulex stock for $72 and $92 • Price rose to $100 • Jakob lost almost $100,000 • Sent false press release to Internet Wire Inc. • Claimed Emulex Corporation was being investigated by the SEC • Claimed company was forced to restate 1998 and 1999 earnings • Jakob earned $236,000

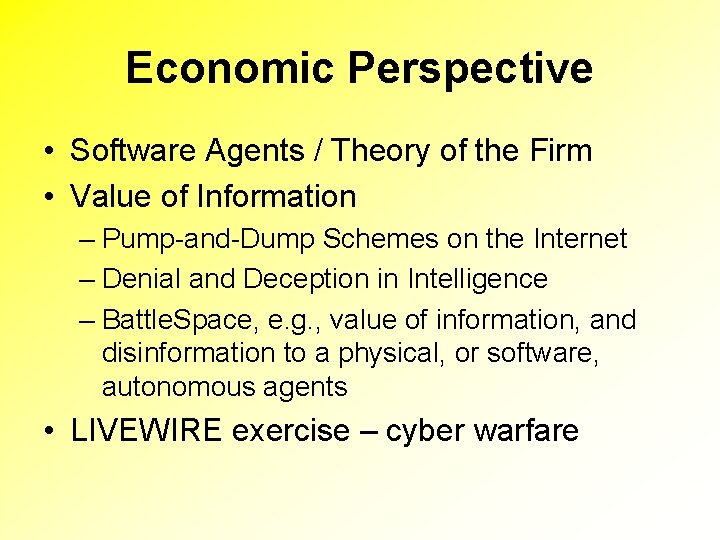

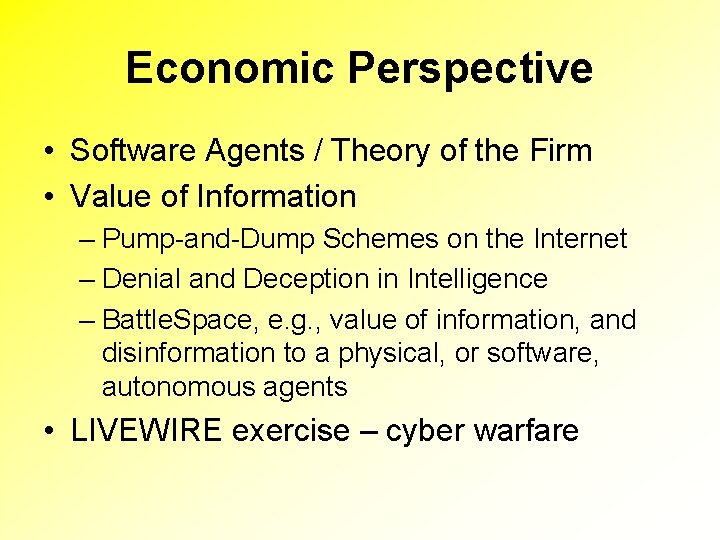

Economic Perspective • Software Agents / Theory of the Firm • Value of Information – Pump-and-Dump Schemes on the Internet – Denial and Deception in Intelligence – BattleSpace, e.g., value of information, and disinformation, to physical or software autonomous agents • LIVEWIRE exercise – cyber warfare

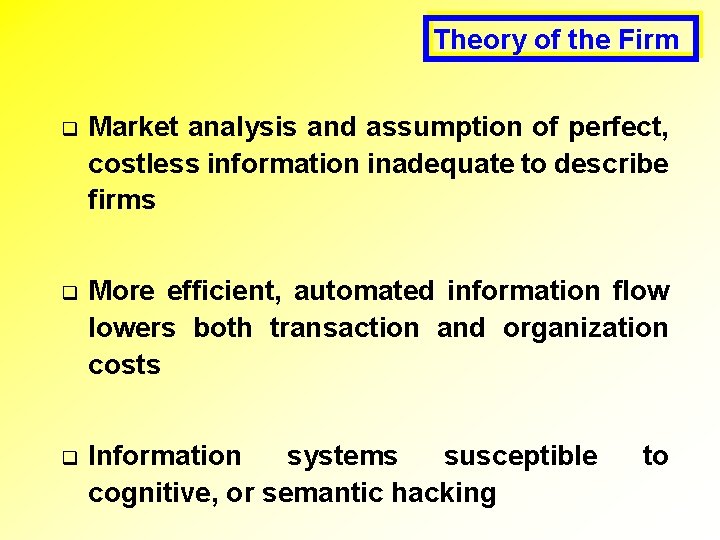

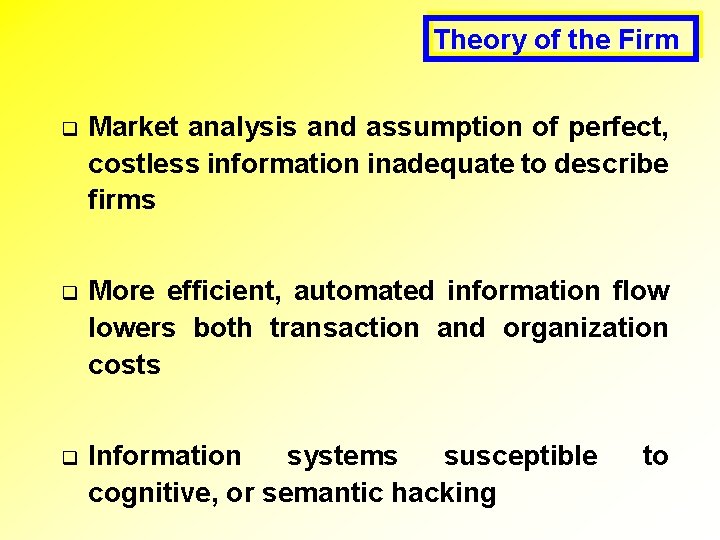

Theory of the Firm • Market analysis and the assumption of perfect, costless information are inadequate to describe firms • More efficient, automated information flow lowers both transaction and organization costs • Information systems are susceptible to cognitive, or semantic, hacking

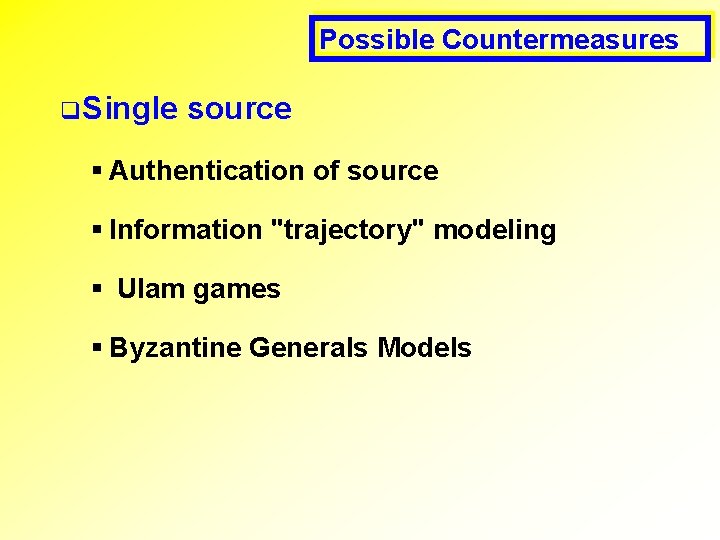

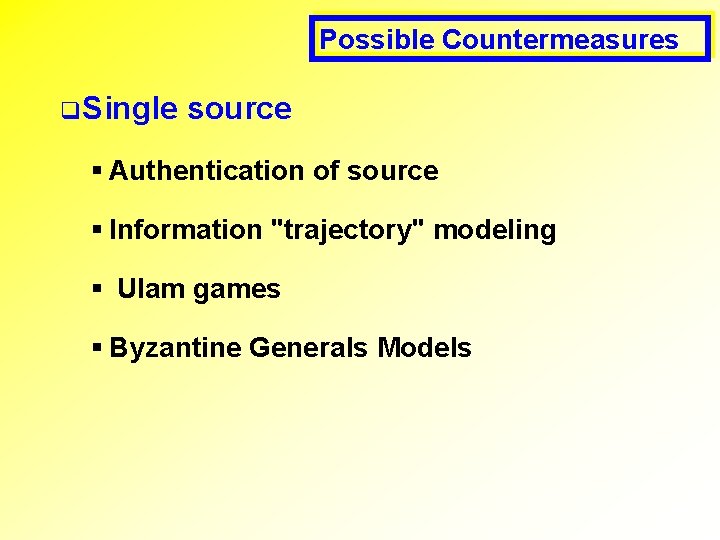

Possible Countermeasures • Single source – Authentication of source – Information "trajectory" modeling – Ulam games – Byzantine Generals Models

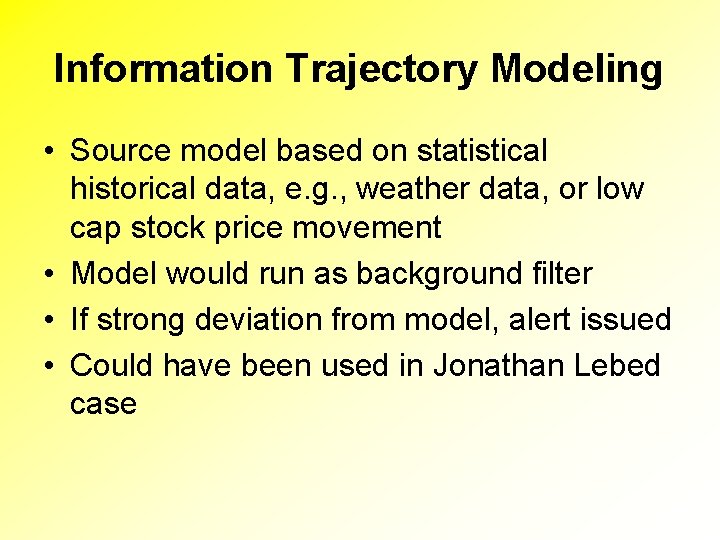

Information Trajectory Modeling • Source model based on statistical historical data, e.g., weather data, or low cap stock price movement • Model would run as background filter • If strong deviation from model, alert issued • Could have been used in Jonathan Lebed case
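
The background filter described on this slide can be sketched in a few lines. This is only an illustration under stated assumptions: the source model is reduced to the mean and standard deviation of historical daily returns, the alert rule is a simple z-score threshold, and the function names, the threshold of 3.0, and the sample prices are all hypothetical rather than taken from the project.

```python
from statistics import mean, stdev


def build_source_model(history):
    """Summarize a price history as (mean, standard deviation) of daily returns."""
    returns = [(b - a) / a for a, b in zip(history, history[1:])]
    return mean(returns), stdev(returns)


def check_observation(model, previous_price, new_price, threshold=3.0):
    """Return True (issue an alert) when the new return deviates strongly from the model."""
    mu, sigma = model
    new_return = (new_price - previous_price) / previous_price
    if sigma == 0:
        # A perfectly flat history: any change at all counts as a deviation.
        return new_return != mu
    return abs(new_return - mu) / sigma > threshold


# Hypothetical low-cap stock history (made-up numbers).
history = [2.50, 2.52, 2.49, 2.51, 2.50, 2.53, 2.51, 2.52]
model = build_source_model(history)

alert_small = check_observation(model, 2.52, 2.53)  # ordinary movement
alert_large = check_observation(model, 2.52, 3.40)  # sudden jump
```

A real filter would need a richer model (volume, seasonality, news volume), but the structure is the same: fit on history, compare each new observation, alert on strong deviation.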

Possible Countermeasures • Multiple Sources – Source Reliability via Collaborative Filtering and Reliability reporting – Detection of Collusion by Information Sources – Linguistic Analysis, e.g., Determination of common authorship

Linguistic Analysis • Determination of common authorship – Jonathan Lebed case – Mosteller and Wallace – Federalist papers – IBM researchers – Recent NSF KDD program – Madigan et al. at DIMACS • Automatic genre detection – Information v. disinformation – Schuetze and Nunberg
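
One simple way to illustrate a common-authorship check is to compare function-word frequency profiles of two texts. The sketch below is a swapped-in, simplified technique (cosine similarity of relative frequencies), not the Bayesian analysis of Mosteller and Wallace or any method from the cited programs. The word list and function names are assumptions chosen for illustration.

```python
import math
import re
from collections import Counter

# Small illustrative function-word list (an assumption, not a published list).
FUNCTION_WORDS = ["the", "of", "and", "to", "a", "in", "that", "is",
                  "it", "by", "on", "upon", "while", "i", "this"]


def profile(text):
    """Relative frequency of each function word in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(words)
    total = len(words) or 1  # avoid division by zero on empty text
    return [counts[w] / total for w in FUNCTION_WORDS]


def cosine_similarity(p, q):
    """Cosine similarity of two profiles; 0.0 if either profile is all zeros."""
    dot = sum(x * y for x, y in zip(p, q))
    norm = math.sqrt(sum(x * x for x in p)) * math.sqrt(sum(y * y for y in q))
    return dot / norm if norm else 0.0


# Hypothetical message-board postings (made-up text).
post_a = "I see little risk in this stock and I am hearing that it is about to move."
post_b = "This is the most undervalued stock on the board and it is about to move."
similarity = cosine_similarity(profile(post_a), profile(post_b))
```

A high similarity across many postings under different screen names would be one weak signal of common authorship; short texts give noisy profiles, so any threshold would have to be calibrated on known data.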

Countering Disinforming News: Information Trajectory Modeling • AEnalyst – An Electronic Analyst of Financial Markets – Used for market prediction – Dataset of news stories, stock prices, and automatically generated trends for 121 publicly traded stocks – Center for Intelligent Information Retrieval, U. of Massachusetts, Amherst http://ciir.cs.umass.edu/~lavrenko/aenalyst

Disinforming News Countermeasure • Mining of concurrent text and time series – Time stamps of news articles – Stock prices • Based on Activity Monitoring – Fawcett and Provost – Monitoring behavior of large population for interesting events requiring action – Automated adaptive fraud detection, e.g., cell phone fraud
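
Mining concurrent text and time series starts with aligning each news time stamp to the price series. The following is a minimal sketch of that alignment step only, assuming prices arrive as a time-sorted list of (datetime, price) pairs. The function names, the one-hour window, and the sample data are hypothetical.

```python
from bisect import bisect_right
from datetime import datetime, timedelta


def price_at_or_before(prices, when):
    """Last known price at or before `when`; None if the series starts later.

    `prices` is a list of (datetime, price) tuples sorted by time.
    """
    times = [t for t, _ in prices]
    i = bisect_right(times, when)
    return prices[i - 1][1] if i else None


def reaction(prices, story_time, window=timedelta(hours=1)):
    """Relative price change from the story's time stamp to the end of the window."""
    before = price_at_or_before(prices, story_time)
    after = price_at_or_before(prices, story_time + window)
    if before is None or after is None:
        return None
    return (after - before) / before


# Made-up intraday prices and a story time stamp.
prices = [
    (datetime(2000, 8, 25, 9, 0), 100.0),
    (datetime(2000, 8, 25, 9, 30), 100.0),
    (datetime(2000, 8, 25, 10, 0), 60.0),
    (datetime(2000, 8, 25, 10, 30), 62.0),
]
story_time = datetime(2000, 8, 25, 9, 35)
change = reaction(prices, story_time)
```

An activity-monitoring system would compute such reactions for every story in a large population of stocks and raise an alarm only for the unusual ones.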

Countermeasures for Disinforming News • User finds possibly disinforming news item • User wants to act quickly to make huge profit, but does not want to be victim of pump-and-dump scheme • Query automatically generated and submitted to Google News to create collection of related news items
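
The query-generation step can be pictured as picking the most frequent content words of the suspicious item. The sketch below assumes exactly that heuristic. The stopword list, the function name, and the sample text are hypothetical, and the code only builds the query string; it does not contact any news search service.

```python
import re
from collections import Counter

# Tiny illustrative stopword list (an assumption).
STOPWORDS = {"the", "a", "an", "is", "are", "was", "of", "to", "and", "in",
             "for", "that", "its", "says", "said", "by", "on", "with", "has", "been"}


def build_query(text, max_terms=5):
    """Build a keyword query from the most frequent non-stopword terms."""
    words = re.findall(r"[A-Za-z]+", text)
    terms = [w.lower() for w in words
             if w.lower() not in STOPWORDS and len(w) > 2]
    counts = Counter(terms)
    return " ".join(term for term, _ in counts.most_common(max_terms))


# Made-up news item text for illustration.
item = "Emulex shares fall. Emulex says the SEC is investigating Emulex earnings."
query = build_query(item, max_terms=3)
```

The resulting string would then be submitted to the news search engine to assemble the collection of related items.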

Countermeasure 1: News Verifier • Stories optimally re-ranked and presented to user • User scans top two or three stories and decides whether or not original news item is reliable • Countermeasure fails if user cannot determine reliability based on top two or three ranked stories • News Verifier – prototype implementation

Countermeasure 2 • Collection assembled by search of Google News is analyzed automatically, e.g., via information trajectory modeling • Countermeasure system extracts monetary amounts or price movement references from text and compares to database of stock prices • If movement is out of range, user is alerted • Can countermeasure 2 work better than countermeasure 1?
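
The extraction-and-comparison step might look like the sketch below. It assumes dollar amounts written in decimal form (fractional quotes such as "$2 1/2" are not handled), stands in a simple low/high trading range for the price database, and uses a hypothetical 50% tolerance. The names and the sample text are illustrative only.

```python
import re

# Matches amounts such as $20, $2.50, or $1,000.75 (decimal notation only).
MONEY = re.compile(r"\$\s?(\d+(?:,\d{3})*(?:\.\d+)?)")


def extract_amounts(text):
    """Return all dollar amounts mentioned in the text as floats."""
    return [float(m.replace(",", "")) for m in MONEY.findall(text)]


def out_of_range(text, low, high, tolerance=0.5):
    """Amounts in the text that fall outside the recent trading range.

    `low` and `high` stand in for a lookup in a price database; `tolerance`
    widens the range by a fraction on each side.
    """
    lower, upper = low * (1 - tolerance), high * (1 + tolerance)
    return [a for a in extract_amounts(text) if a < lower or a > upper]


# Made-up posting text; the recent range of 2.00 to 3.00 is also hypothetical.
posting = "The stock is trading for just $2.50! I am expecting to see it at $20 VERY SOON."
suspicious = out_of_range(posting, low=2.0, high=3.0)
```

A non-empty result would trigger the alert to the user; a production system would also need to tie each amount to the right ticker and date.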

Cognitive Hacking and a New Science of Intelligence and Security Informatics • 1st NSF / NIJ Symposium on Intelligence and Security Informatics, Tucson, Arizona, 1-3 June 2003 (annual IEEE conference) • Traditional Information Retrieval – Developed to serve needs of scientific researchers and attorneys • Utility-Theoretic Retrieval taking into account disinformation – Cognitive hacking, e.g., Internet stock trading pump-and-dump schemes – Deception and denial in open source intelligence – Semantic attacks on software agents in warfare

Deception Detection • Reflections on Intelligence, R. V. Jones – WW2 electronic deception • Whaley’s taxonomy – Bell and Whaley, Cheating and Deception • Libicki - Semantic Attacks and Information Warfare • Rattray - Strategic Information Warfare Defense • Kott - Reading the Opponent’s Mind

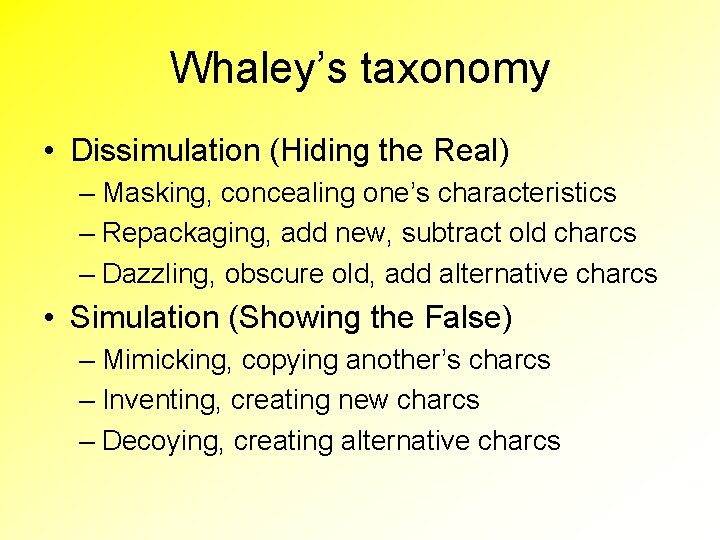

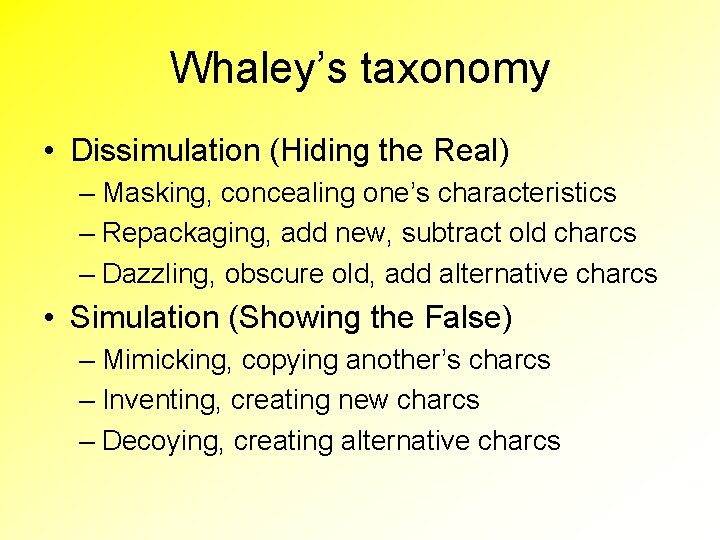

Whaley’s taxonomy • Dissimulation (Hiding the Real) – Masking: concealing one’s characteristics – Repackaging: adding new, subtracting old characteristics – Dazzling: obscuring old, adding alternative characteristics • Simulation (Showing the False) – Mimicking: copying another’s characteristics – Inventing: creating new characteristics – Decoying: creating alternative characteristics

Detecting Deception in Language • First of Seven NSF OSTP Workshops on Security Evaluations – summer 2005 • Most participants psychologists • Funding was anticipated, but did not materialize, in FY ’07

WORKSHOP RESEARCH AGENDA RECOMMENDATIONS • Language Measurement Development within a Deception Context • Corpus Development • Theoretical Development in Language and Deception • Embedded Scholar Project • Rapid Funding Mechanism

Deception: Methods, Motives, Contexts & Consequences • Santa Fe Institute workshop held on 1-3 March 2007 • Earlier related workshop held in 2005 • Organized by Brooke Harrington • Stanford University Press book based on workshops published in 2009

Deception Workshop: Some Participants • Brooke Harrington - Max Planck Institute for the Study of Societies, economic sociologist, workshop organizer, studies stock brokers • Mark Frank - social psychology, SUNY Buffalo, thoughts, feelings, and deception • Jeffrey Hancock, Cornell, communication, digital deception

Socio-Cultural Content in Language • IARPA program began this year • Goals – to use existing social science theories to identify the social goals of a group and its members – to correlate these social goals with the language used by the members – to automate this correlation

Conclusions • Semantic hacking is widespread • We are only at the beginning stages of developing effective countermeasures for semantic hacking • Semantic hacking countermeasures will play an important role in cyber security and in intelligence and security informatics