Self-Improving Computer Chips: Warp Processing, Frank Vahid

- Slides: 45

Self-Improving Computer Chips: Warp Processing

Frank Vahid, Dept. of CS&E, University of California, Riverside; Associate Director, Center for Embedded Computer Systems, UC Irvine

Contributing Ph.D. students:
- Roman Lysecky (Ph.D. 2005, now Asst. Prof. at Univ. of Arizona)
- Greg Stitt (Ph.D. 2007, now Asst. Prof. at Univ. of Florida, Gainesville)
- Scotty Sirowy (current)
- David Sheldon (current)
- ______ ??? ______

This research was supported in part by the National Science Foundation and the Semiconductor Research Corporation, Intel, Freescale, and IBM.

Self-Improving Cars? Frank Vahid, UC Riverside 2

Self-Improving Chips?
- Moore's Law: 2x capacity growth every 18 months

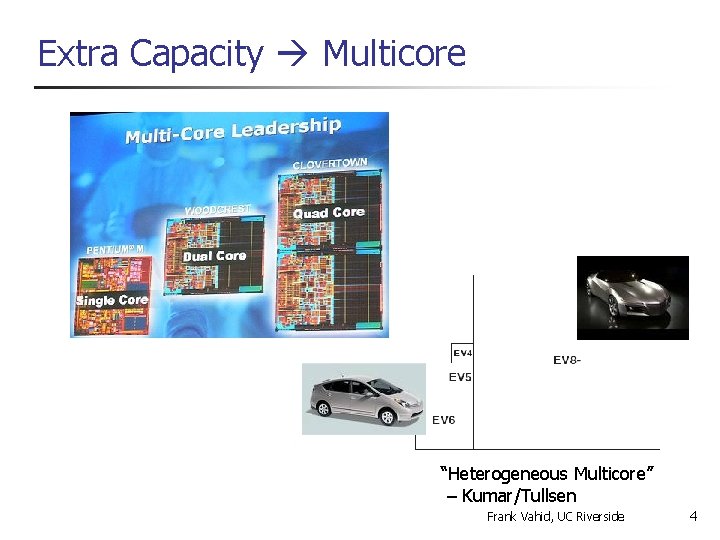

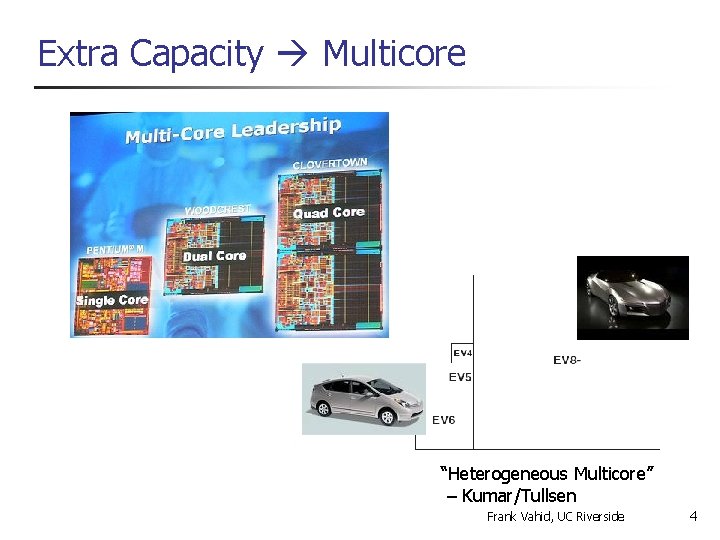

Extra Capacity: Multicore
- "Heterogeneous multicore" (Kumar/Tullsen)

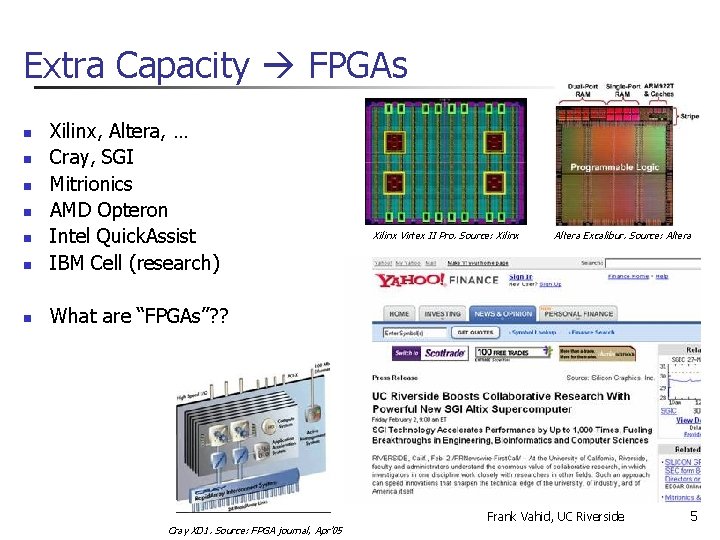

Extra Capacity: FPGAs
- Xilinx, Altera, …
- Cray, SGI
- Mitrionics
- AMD Opteron
- Intel QuickAssist
- IBM Cell (research)
- What are "FPGAs"?

(Figures: Cray XD1, source: FPGA Journal, Apr '05; Xilinx Virtex-II Pro, source: Xilinx; Altera Excalibur, source: Altera.)

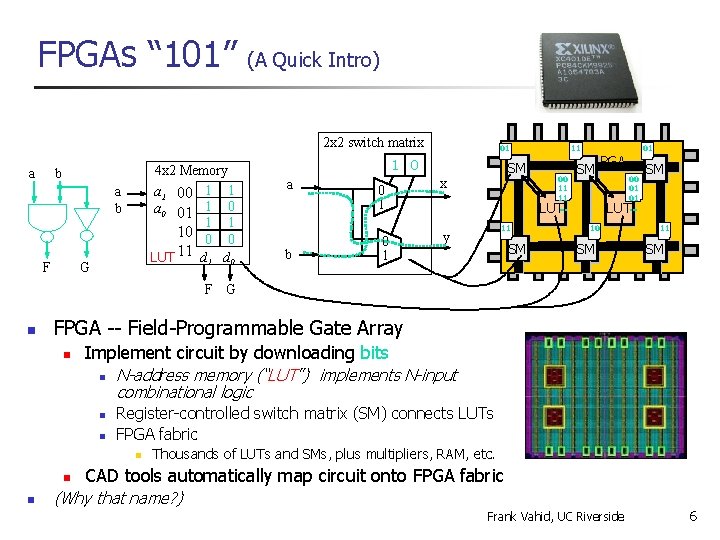

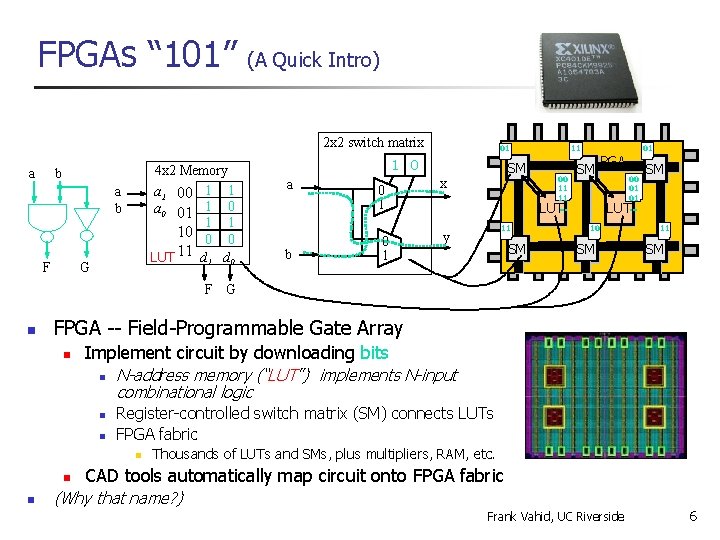

FPGAs "101" (A Quick Intro)

FPGA: Field-Programmable Gate Array (why that name?)
- Implement a circuit by downloading bits
  - A memory with N address inputs (a lookup table, "LUT") implements any N-input combinational logic function
  - A register-controlled switch matrix (SM) connects LUTs
- FPGA fabric
  - Thousands of LUTs and SMs, plus multipliers, RAM, etc.
  - CAD tools automatically map a circuit onto the FPGA fabric

(Figure: a 4 x 2 memory used as a LUT, with inputs a and b driving the address lines and outputs F and G; a 2 x 2 switch matrix; an FPGA fabric of LUTs connected through SMs.)
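To make the LUT idea concrete, here is a minimal C sketch of a 4 x 2 memory used as a lookup table: inputs a and b form the 2-bit address, and each stored word holds the two outputs F and G. The contents (F = a AND b, G = a XOR b) and the name `lut_eval` are hypothetical, chosen only for illustration; downloading different bits would implement different functions with the same "hardware".

```c
#include <stdint.h>

/* Hypothetical 4 x 2 LUT: 4 words addressed by inputs (a, b), 2 output bits each.
   Bit 1 of each word is F, bit 0 is G. Illustrative contents:
   F = a AND b, G = a XOR b. */
static const uint8_t lut[4] = {
    0x0,  /* a b = 0 0 -> F=0, G=0 */
    0x1,  /* a b = 0 1 -> F=0, G=1 */
    0x1,  /* a b = 1 0 -> F=0, G=1 */
    0x2   /* a b = 1 1 -> F=1, G=0 */
};

/* Evaluating the logic is just a memory read: a is address bit 1, b is bit 0. */
static void lut_eval(int a, int b, int *f, int *g) {
    uint8_t word = lut[(a << 1) | b];
    *f = (word >> 1) & 1;
    *g = word & 1;
}
```

Reprogramming the four words, rather than rewiring gates, is what makes the fabric "field-programmable".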

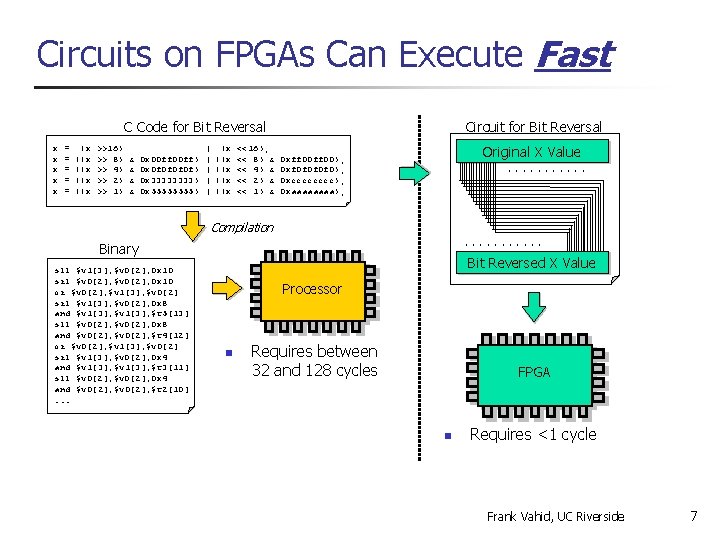

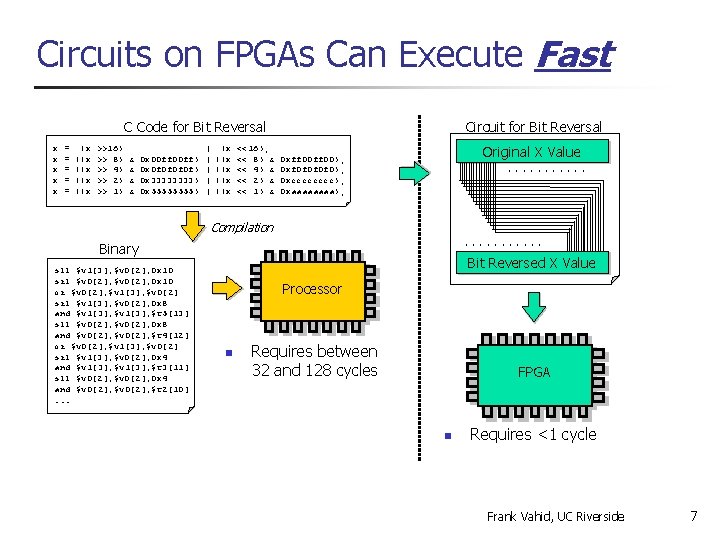

Circuits on FPGAs Can Execute Fast

C code for bit reversal (32-bit x):

```c
#include <stdint.h>
uint32_t reverse_bits(uint32_t x) {
    x = (x >> 16) | (x << 16);
    x = ((x >> 8) & 0x00ff00ff) | ((x << 8) & 0xff00ff00);
    x = ((x >> 4) & 0x0f0f0f0f) | ((x << 4) & 0xf0f0f0f0);
    x = ((x >> 2) & 0x33333333) | ((x << 2) & 0xcccccccc);
    x = ((x >> 1) & 0x55555555) | ((x << 1) & 0xaaaaaaaa);
    return x;
}
```

Compilation produces a binary:

```
sll $v1[3], $v0[2], 0x10
srl $v0[2], 0x10
or  $v0[2], $v1[3], $v0[2]
srl $v1[3], $v0[2], 0x8
and $v1[3], $t5[13]
sll $v0[2], 0x8
and $v0[2], $t4[12]
or  $v0[2], $v1[3], $v0[2]
srl $v1[3], $v0[2], 0x4
and $v1[3], $t3[11]
sll $v0[2], 0x4
and $v0[2], $t2[10]
. . .
```

- Processor: requires between 32 and 128 cycles
- Processor + FPGA: requires <1 cycle, because the bit-reversal circuit simply wires each bit of the original X value to its reversed position

(Figure: circuit for bit reversal, original X value wired to bit-reversed X value.)
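For comparison, a straightforward bit-at-a-time version (our own illustration, not from the slides; the name `reverse_bits_loop` is hypothetical) shows where processor cycles go: one loop iteration, with its shift, mask, OR, and branch, per bit. On the FPGA the same function needs no logic at all, only 32 crossed wires.

```c
#include <stdint.h>

/* Bit-by-bit reversal: 32 iterations, each moving the lowest remaining
   bit of x into the bottom of r. */
static uint32_t reverse_bits_loop(uint32_t x) {
    uint32_t r = 0;
    for (int i = 0; i < 32; i++) {
        r = (r << 1) | (x & 1u);
        x >>= 1;
    }
    return r;
}
```

For example, `reverse_bits_loop(0x12345678u)` yields `0x1e6a2c48u`, the same result as the shift-and-mask version.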

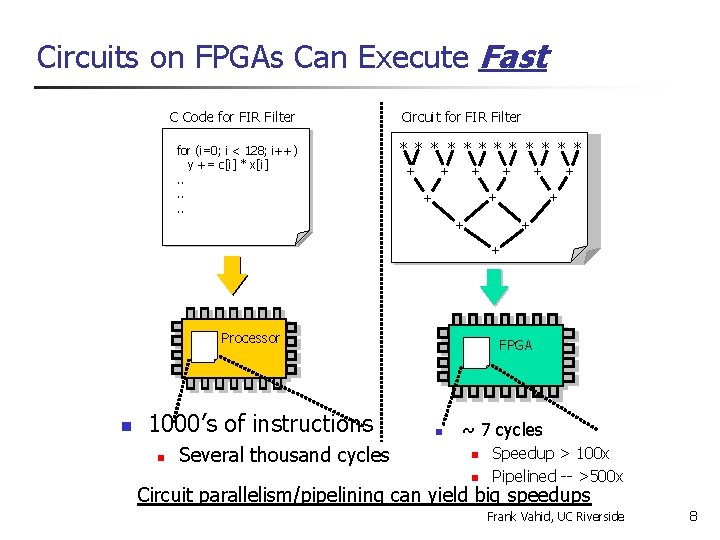

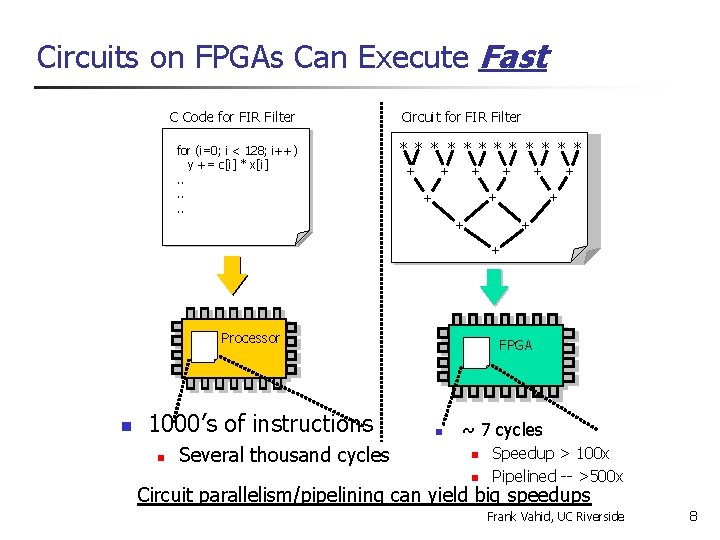

Circuits on FPGAs Can Execute Fast

C code for FIR filter:

```c
for (i = 0; i < 128; i++) y[i] += c[i] * x[i];
. . .
```

(Figure: circuit for FIR filter, multipliers feeding a tree of adders.)

- Processor: 1000's of instructions, several thousand cycles
- Processor + FPGA: ~7 cycles
  - Speedup >100x
  - Pipelined: >500x
- Circuit parallelism/pipelining can yield big speedups
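One way to see why the circuit is so shallow: assuming the 128 products are computed by parallel multipliers and then accumulated into a single sum (as in a standard FIR tap computation), a balanced adder tree needs only log2(128) = 7 levels. That depth is consistent with the slide's ~7 cycles, although the slide does not say how its figure was derived. The sketch below (function name hypothetical) sums values level by level; in hardware all adders within one level operate at the same time.

```c
/* Sum n values (n a power of two) as a balanced adder tree, in place.
   Each pass of the outer loop is one level of adders that a circuit
   would evaluate in parallel. The number of levels is returned via *levels. */
static long tree_sum(long v[], int n, int *levels) {
    int lv = 0;
    for (int width = n; width > 1; width /= 2) {
        for (int i = 0; i < width / 2; i++)
            v[i] = v[2 * i] + v[2 * i + 1];   /* one adder per pair */
        lv++;
    }
    *levels = lv;
    return v[0];
}
```

A processor, by contrast, performs the 128 multiply-adds largely one after another, which is where its thousands of cycles come from.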

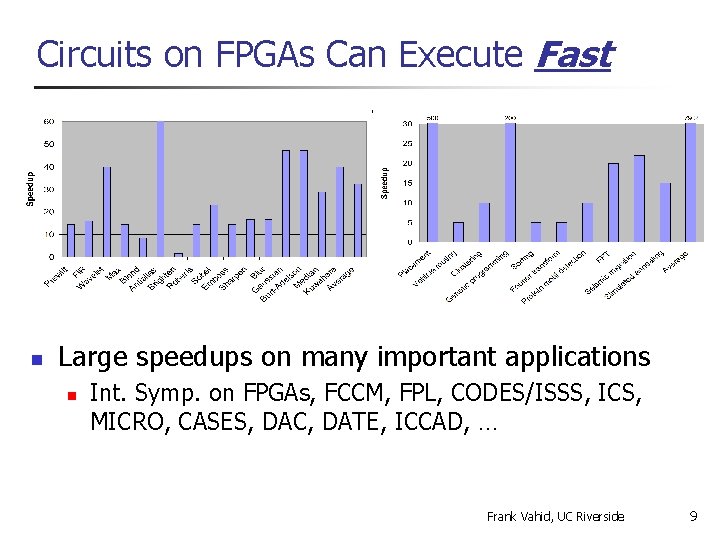

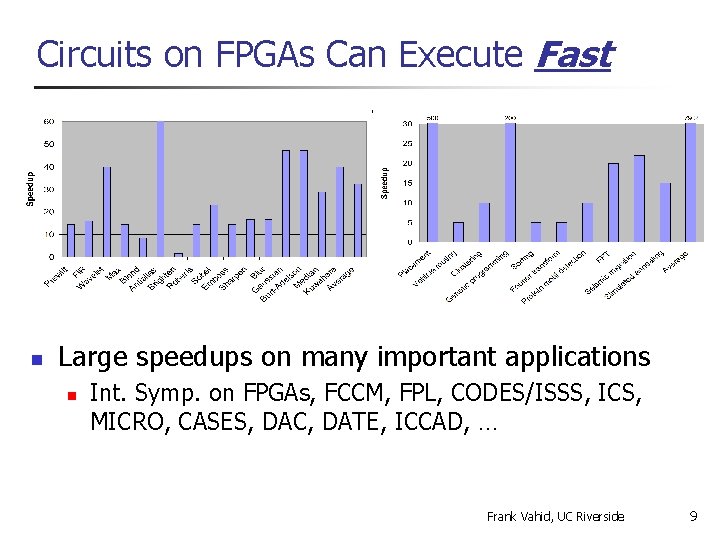

Circuits on FPGAs Can Execute Fast
- Large speedups on many important applications
- Int. Symp. on FPGAs, FCCM, FPL, CODES/ISSS, ICS, MICRO, CASES, DAC, DATE, ICCAD, …

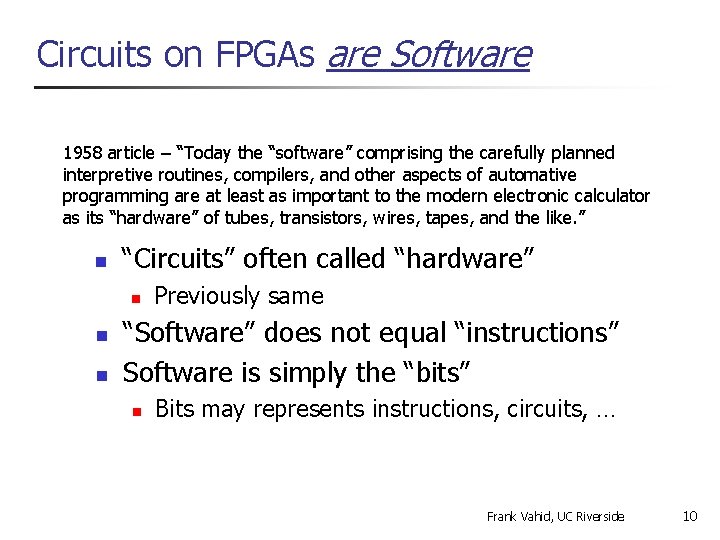

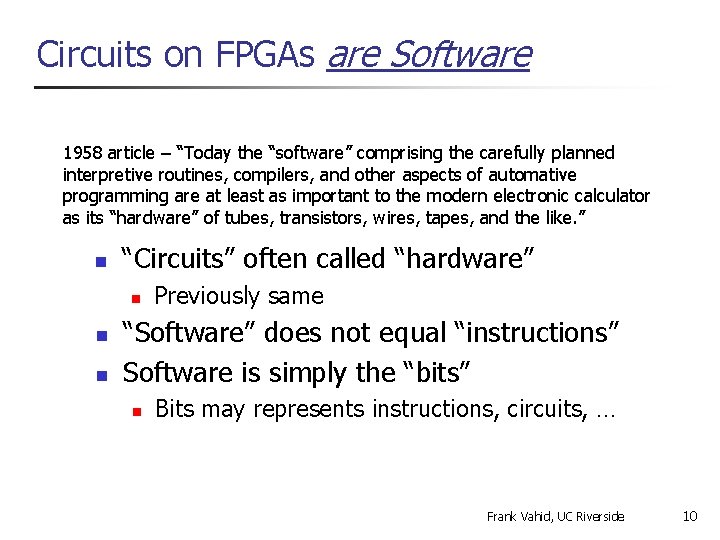

Circuits on FPGAs are Software

1958 article: "Today the "software" comprising the carefully planned interpretive routines, compilers, and other aspects of automative programming are at least as important to the modern electronic calculator as its "hardware" of tubes, transistors, wires, tapes, and the like."

- "Circuits" often called "hardware"
  - Previously the same
- "Software" does not equal "instructions"
- Software is simply the "bits"
  - Bits may represent instructions, circuits, …

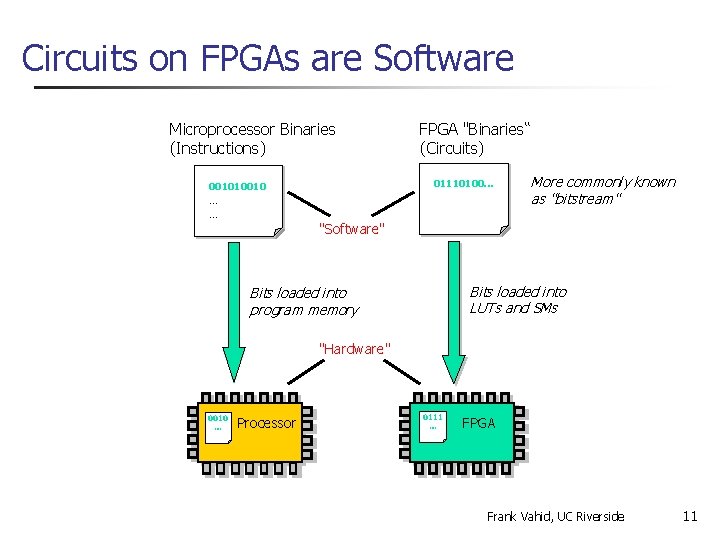

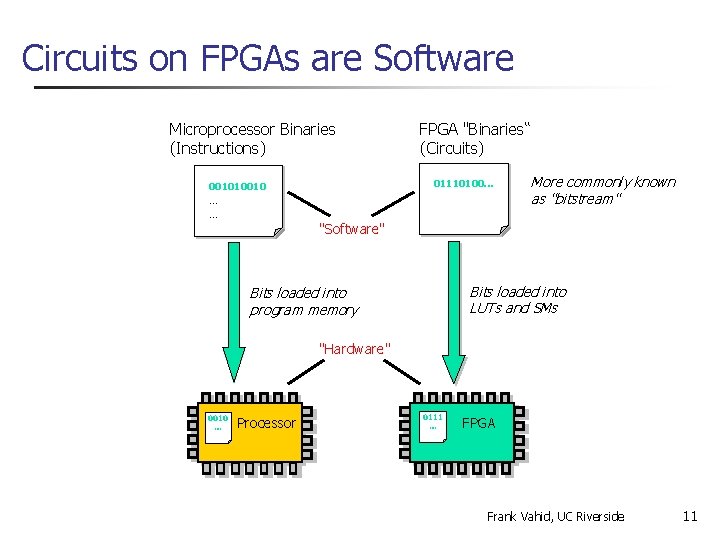

Circuits on FPGAs are Software
- Microprocessor binaries (instructions), e.g., 01110100..., 001010010...: bits loaded into program memory
- FPGA "binaries" (circuits), more commonly known as a "bitstream": bits loaded into LUTs and SMs
- Both kinds of bits are "software"; the processor and the FPGA are the "hardware"

Circuits on FPGAs are Software (Sep 2007, IEEE Computer)

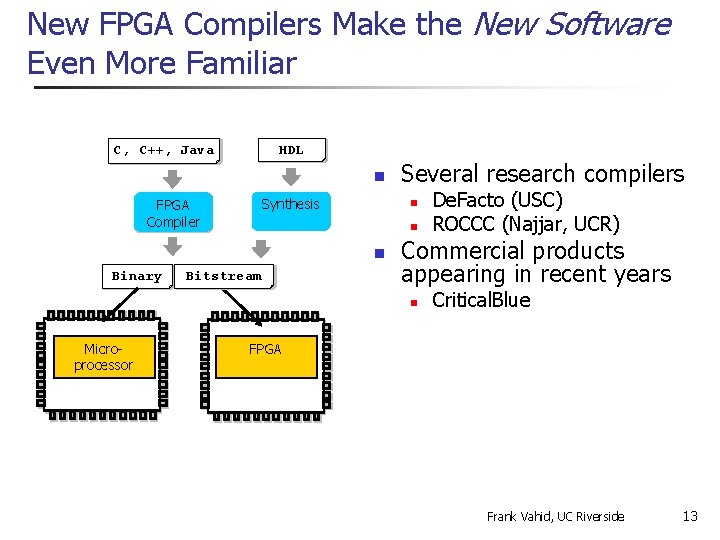

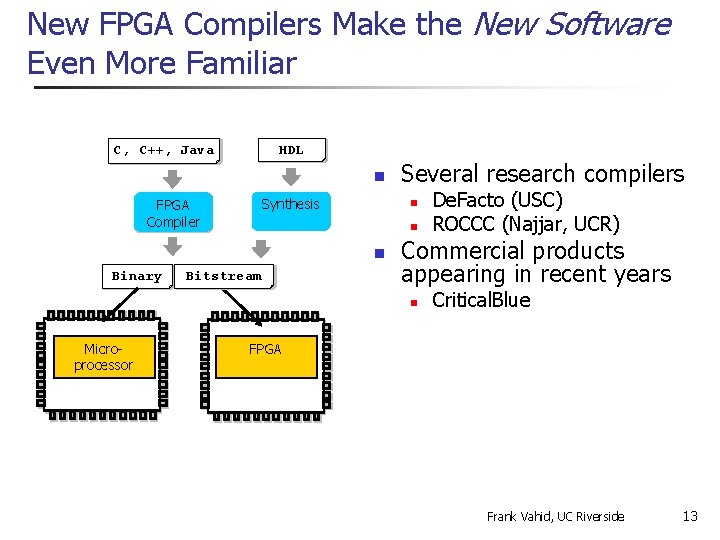

New FPGA Compilers Make the New Software Even More Familiar

(Figure: C, C++, or Java source passes through a profiling compiler and synthesis, producing a microprocessor binary plus HDL and an FPGA bitstream.)

- Several research compilers
  - DeFacto (USC)
  - ROCCC (Najjar, UCR)
- Commercial products appearing in recent years
  - CriticalBlue

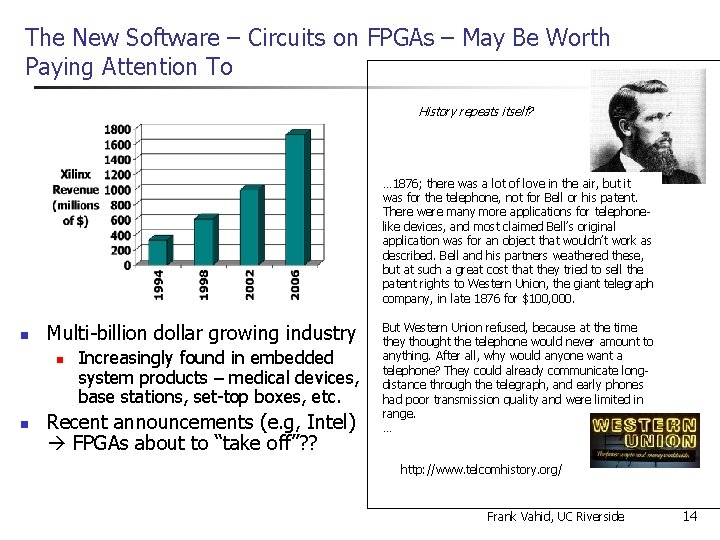

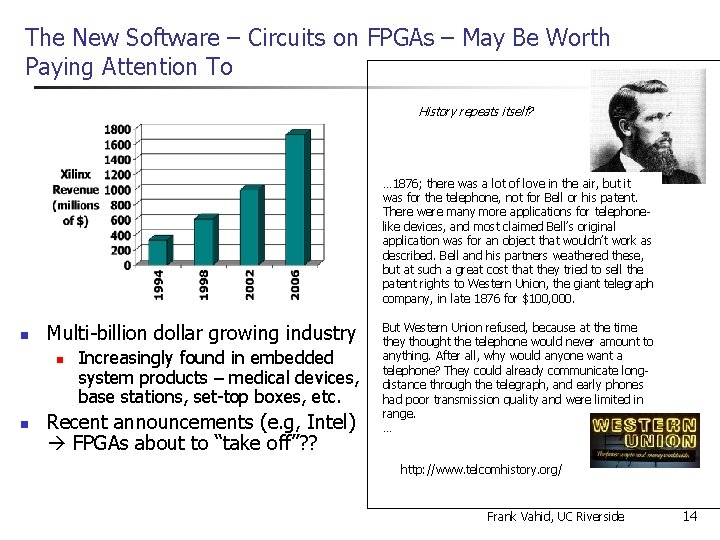

The New Software (Circuits on FPGAs) May Be Worth Paying Attention To
- Multi-billion dollar growing industry
- Increasingly found in embedded system products: medical devices, base stations, set-top boxes, etc.
- Recent announcements (e.g., Intel)
- FPGAs about to "take off"??

History repeats itself?

"… 1876; there was a lot of love in the air, but it was for the telephone, not for Bell or his patent. There were many more applications for telephonelike devices, and most claimed Bell's original application was for an object that wouldn't work as described. Bell and his partners weathered these, but at such a great cost that they tried to sell the patent rights to Western Union, the giant telegraph company, in late 1876 for $100,000. But Western Union refused, because at the time they thought the telephone would never amount to anything. After all, why would anyone want a telephone? They could already communicate long-distance through the telegraph, and early phones had poor transmission quality and were limited in range. …" (http://www.telcomhistory.org/)

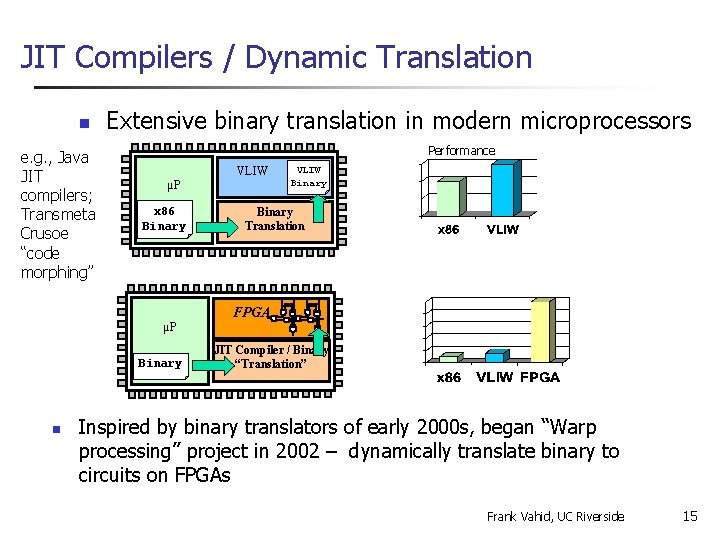

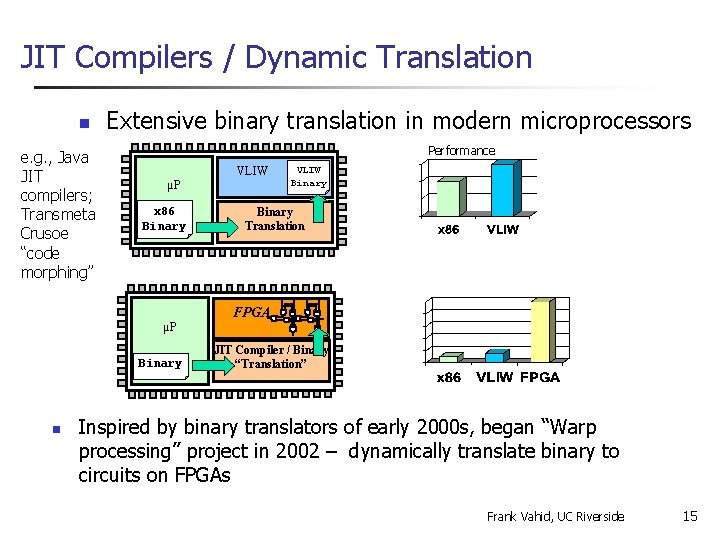

JIT Compilers / Dynamic Translation
- e.g., Java JIT compilers; Transmeta Crusoe "code morphing"
- Extensive binary translation in modern microprocessors

(Figure: an x86 binary translated to a VLIW binary running on a VLIW µP for performance; analogously, a µP binary "translated" by an FPGA JIT compiler to run on µP + FPGA.)

Inspired by the binary translators of the early 2000s, we began the "Warp processing" project in 2002: dynamically translate binaries to circuits on FPGAs.

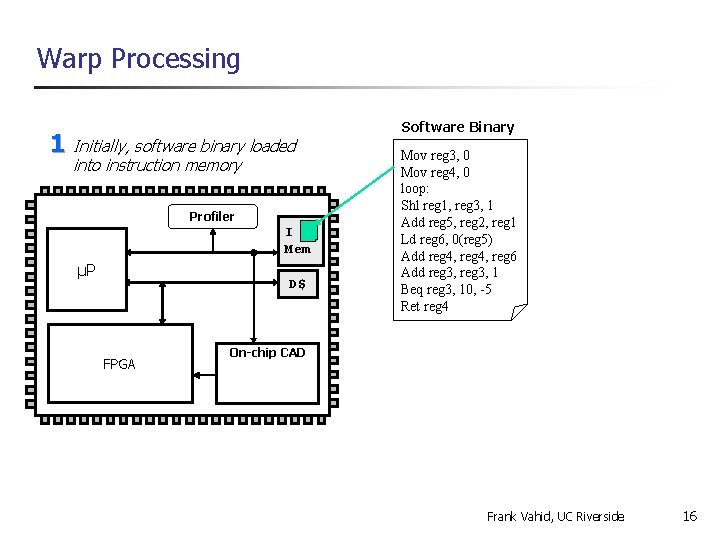

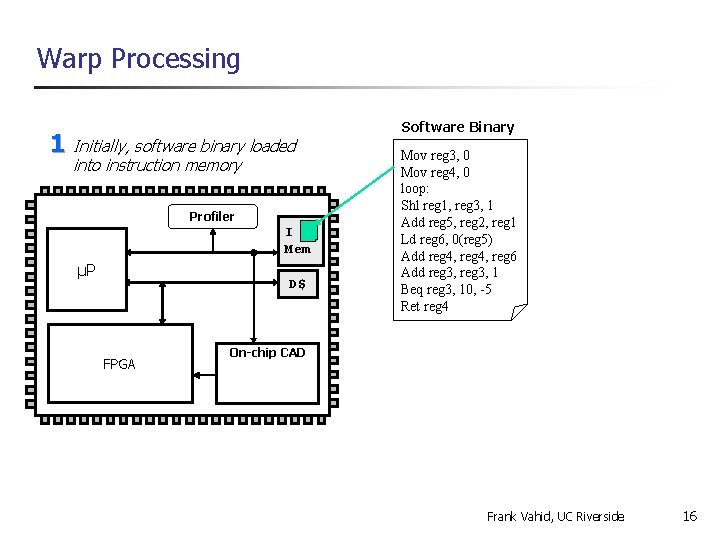

Warp Processing, Step 1

Initially, the software binary is loaded into instruction memory.

(Figure: microprocessor (µP) with instruction memory (I Mem), data cache (D$), profiler, FPGA, and on-chip CAD.)

Software binary:

```
Mov reg3, 0
Mov reg4, 0
loop:
Shl reg1, reg3, 1
Add reg5, reg2, reg1
Ld reg6, 0(reg5)
Add reg4, reg6
Add reg3, 1
Beq reg3, 10, -5
Ret reg4
```

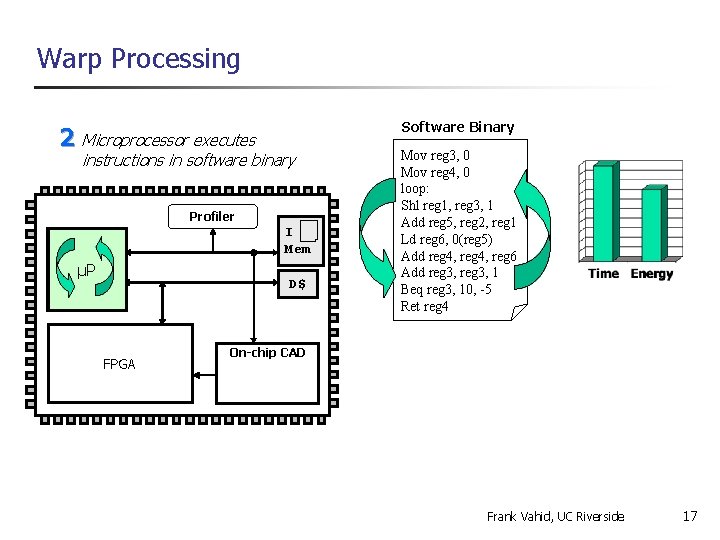

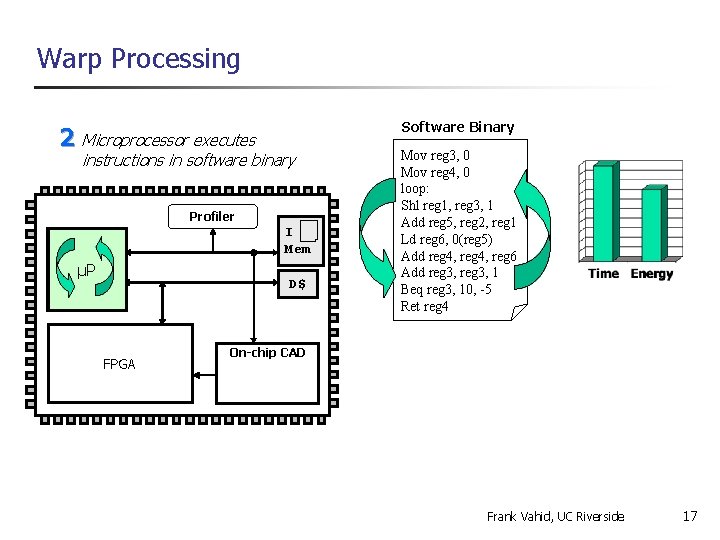

Warp Processing, Step 2

The microprocessor executes the instructions in the software binary.

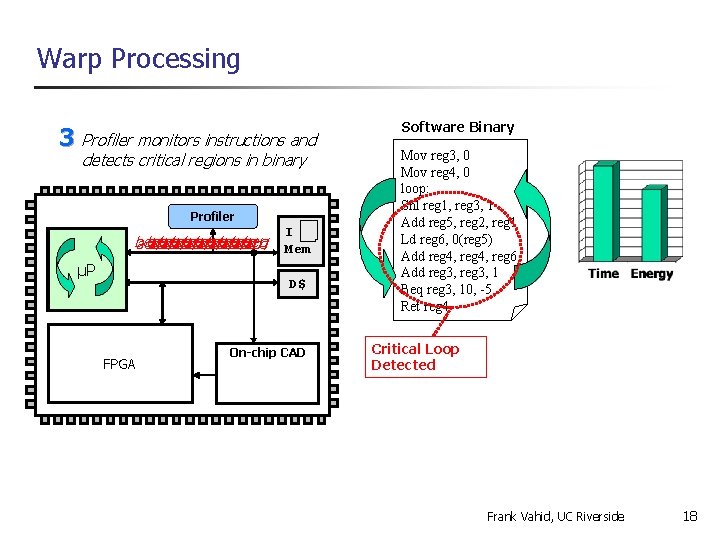

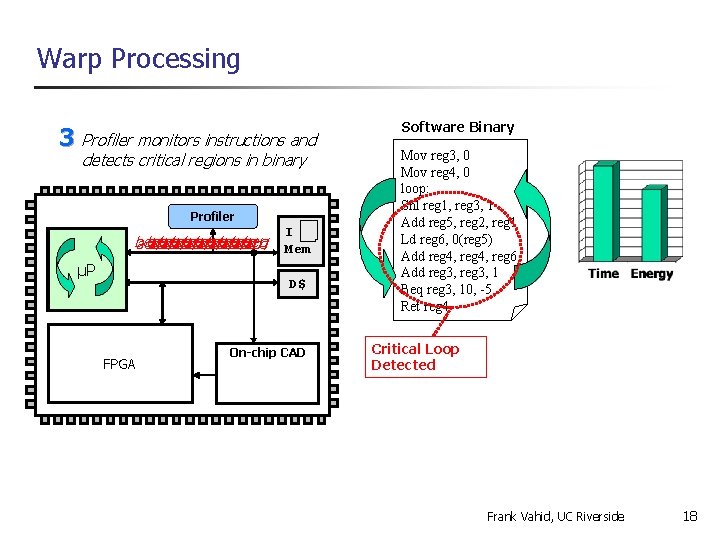

Warp Processing, Step 3

The profiler monitors the executed instructions (e.g., the repeated beq and add instructions) and detects critical regions in the binary. Here, a critical loop is detected: the instructions from `loop:` through `Beq`.
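A common way for a lightweight profiler to spot hot loops is to count taken backward branches, since each one marks another loop iteration (the example's `Beq reg3, 10, -5` is such a branch). The C model below is a sketch of that idea only; the table size, the names `profiler`, `profile_branch`, and `hottest_loop`, and the addresses in the usage are hypothetical, and the actual warp profiler's design may differ.

```c
#include <stdint.h>

#define MAX_LOOPS 16   /* hypothetical table size */

typedef struct {
    uint32_t target;   /* backward-branch target = loop header address */
    uint32_t count;    /* times that backward branch was taken */
} loop_entry;

typedef struct {
    loop_entry e[MAX_LOOPS];
    int n;
} profiler;

/* Called for every executed branch; only taken backward branches
   (target < pc) count as loop iterations. */
static void profile_branch(profiler *p, uint32_t pc, uint32_t target, int taken) {
    if (!taken || target >= pc) return;
    for (int i = 0; i < p->n; i++) {
        if (p->e[i].target == target) { p->e[i].count++; return; }
    }
    if (p->n < MAX_LOOPS) {                 /* new loop: add an entry */
        p->e[p->n].target = target;
        p->e[p->n].count = 1;
        p->n++;
    }
}

/* The critical region is the loop whose backward branch was taken most often. */
static uint32_t hottest_loop(const profiler *p) {
    uint32_t best = 0, best_count = 0;
    for (int i = 0; i < p->n; i++) {
        if (p->e[i].count > best_count) {
            best_count = p->e[i].count;
            best = p->e[i].target;
        }
    }
    return best;
}
```

A hardware profiler would implement the table as a small cache beside the µP, observing the instruction bus without slowing execution.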

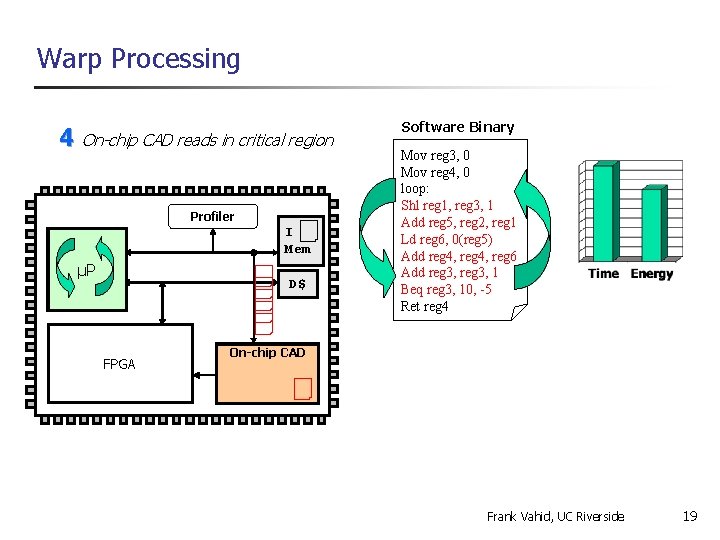

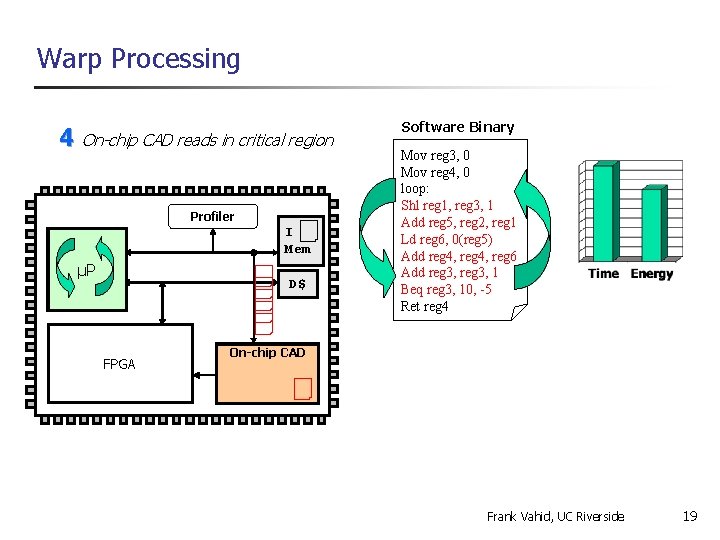

Warp Processing, Step 4

The on-chip CAD reads in the critical region.

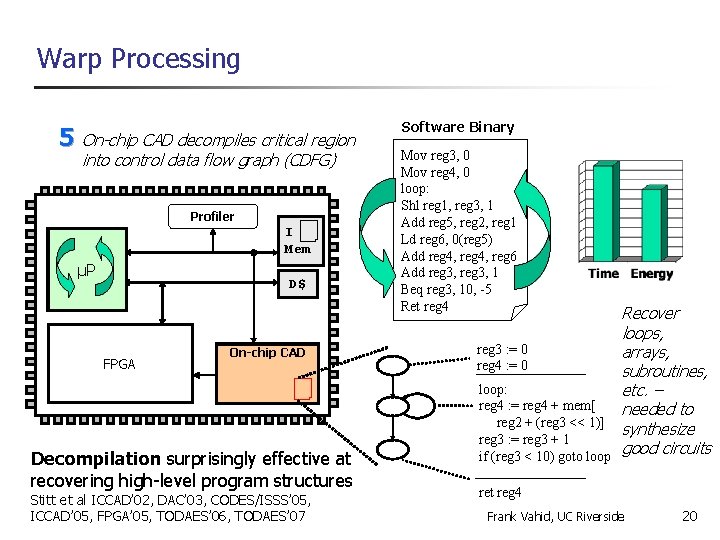

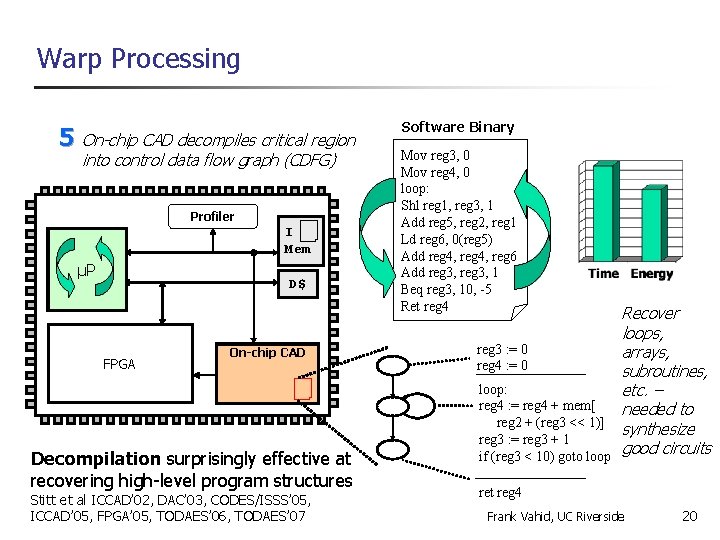

Warp Processing, Step 5

The on-chip CAD, part of the Dynamic Partitioning Module (DPM), decompiles the critical region into a control/data flow graph (CDFG).
- Decompilation is surprisingly effective at recovering high-level program structures (Stitt et al., ICCAD'02, DAC'03, CODES/ISSS'05, ICCAD'05, FPGA'05, TODAES'06, TODAES'07)
- Recovering loops, arrays, subroutines, etc. is needed to synthesize good circuits

Decompiled CDFG:

```
reg3 := 0
reg4 := 0
loop:
reg4 := reg4 + mem[reg2 + (reg3 << 1)]
reg3 := reg3 + 1
if (reg3 < 10) goto loop
ret reg4
```
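Recovering a loop starts from the control flow graph. A standard compiler technique, not necessarily the one used in this work, is a depth-first search that flags back edges: an edge into a block still on the DFS stack marks that block as a loop header. The sketch applies it to the example binary split into three basic blocks (setup, the loop body ending in `Beq`, and `Ret`); the `block` type and function names are hypothetical.

```c
#include <stdbool.h>

#define MAX_BLOCKS 8   /* sketch assumes nblocks <= MAX_BLOCKS */

/* Each basic block has up to two successors; -1 means "none". */
typedef struct {
    int succ[2];
} block;

/* state: 0 = unvisited, 1 = on DFS stack, 2 = finished. */
static void dfs(const block *cfg, int u, int state[], bool is_header[]) {
    state[u] = 1;
    for (int k = 0; k < 2; k++) {
        int v = cfg[u].succ[k];
        if (v < 0) continue;
        if (state[v] == 1) is_header[v] = true;          /* back edge u -> v */
        else if (state[v] == 0) dfs(cfg, v, state, is_header);
    }
    state[u] = 2;
}

/* Marks every loop header reachable from the entry block 0. */
static void find_loop_headers(const block *cfg, int nblocks, bool is_header[]) {
    int state[MAX_BLOCKS] = {0};
    for (int i = 0; i < nblocks; i++) is_header[i] = false;
    dfs(cfg, 0, state, is_header);
}
```

For the example, block 1 branches back to itself, so it is reported as the loop header; the decompiler can then rebuild the `goto` form above into a structured loop.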

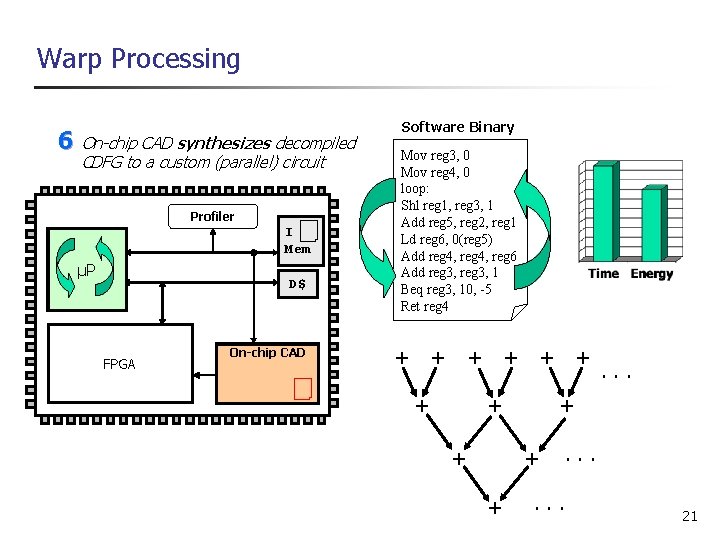

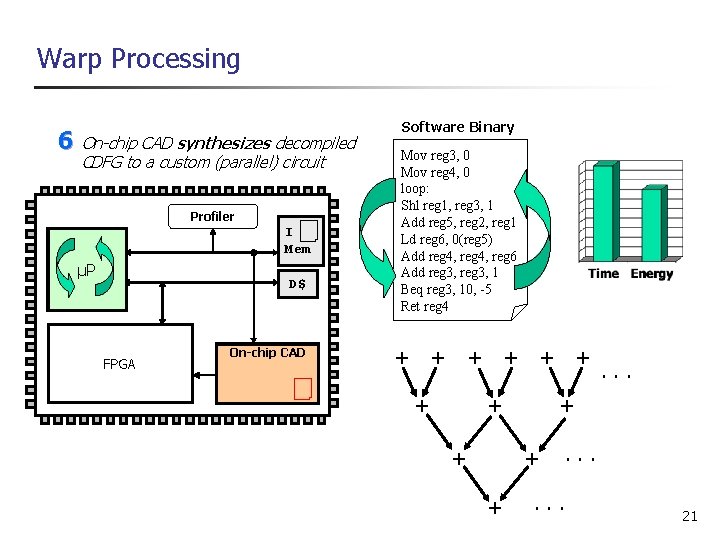

Warp Processing, Step 6

The on-chip CAD synthesizes the decompiled CDFG into a custom (parallel) circuit.

(Figure: the decompiled loop mapped to a tree of adders.)

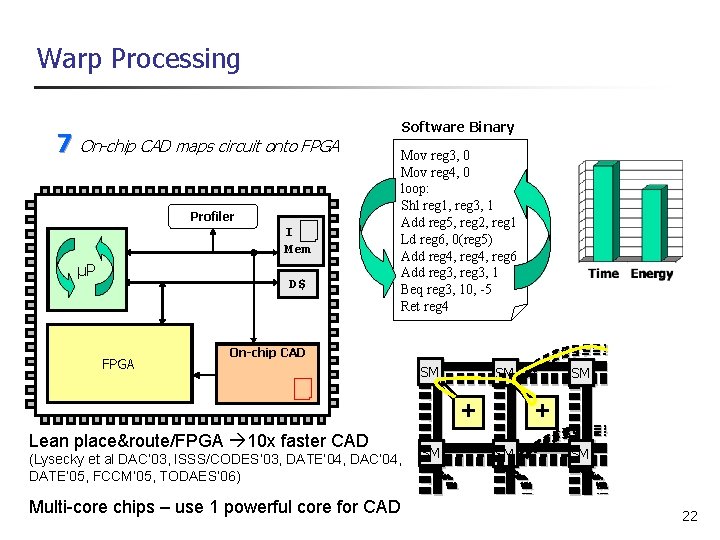

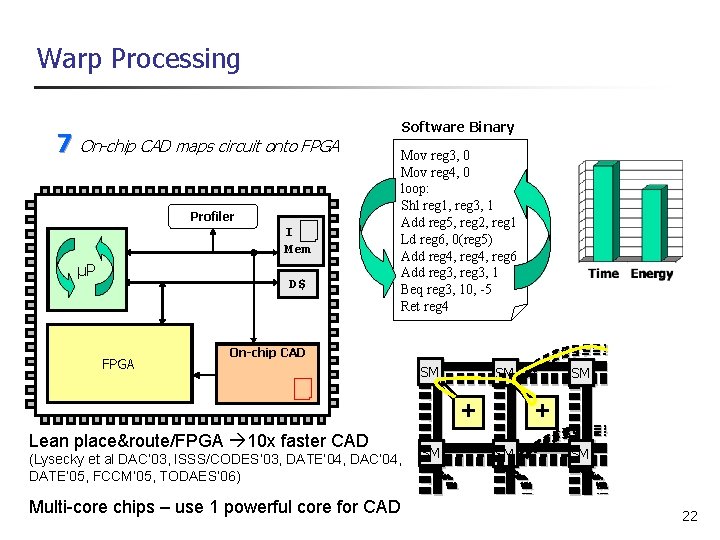

Warp Processing, Step 7

The on-chip CAD maps the circuit onto the FPGA (configurable logic blocks, CLBs, connected through switch matrices, SMs).
- Lean place & route / FPGA: 10x faster CAD (Lysecky et al., DAC'03, ISSS/CODES'03, DATE'04, DAC'04, DATE'05, FCCM'05, TODAES'06)
- Multi-core chips: use 1 powerful core for CAD

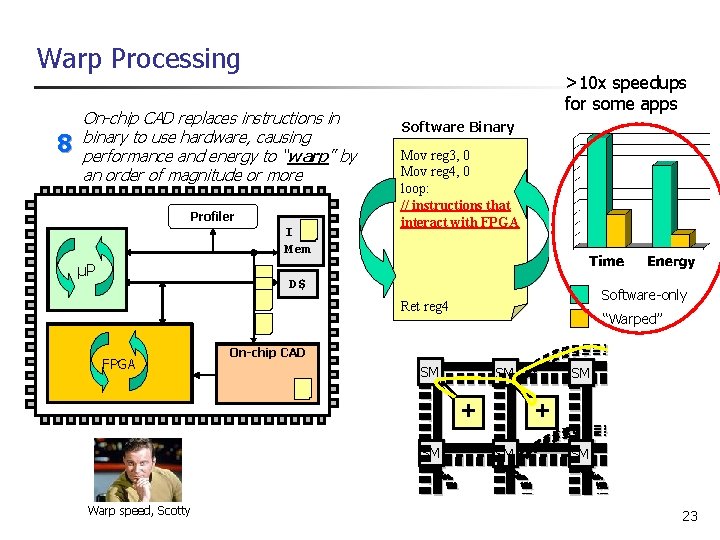

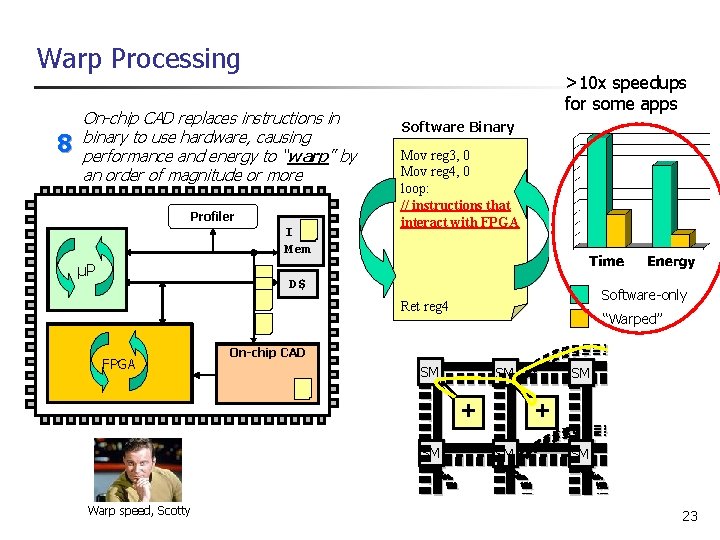

Warp Processing, Step 8

The on-chip CAD replaces instructions in the binary to use the hardware, causing performance and energy to "warp" by an order of magnitude or more.
- In the updated binary, the loop body is replaced by instructions that interact with the FPGA
- >10x speedups for some apps

(Figure: software-only vs. "warped" execution.)

Warp speed, Scotty

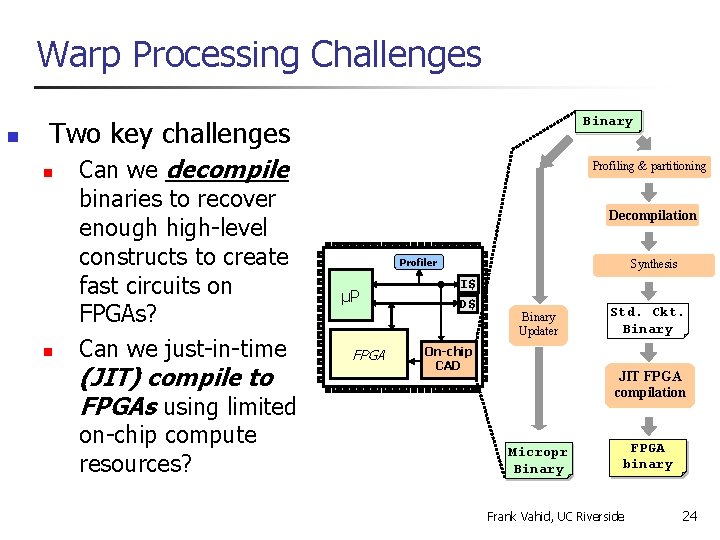

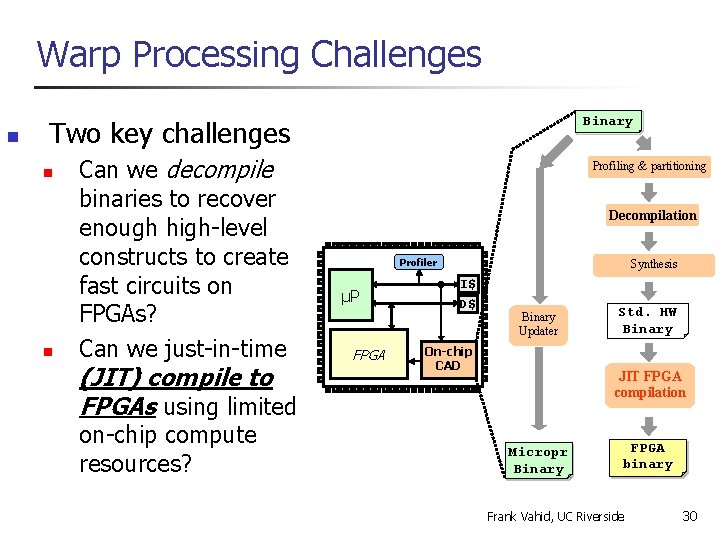

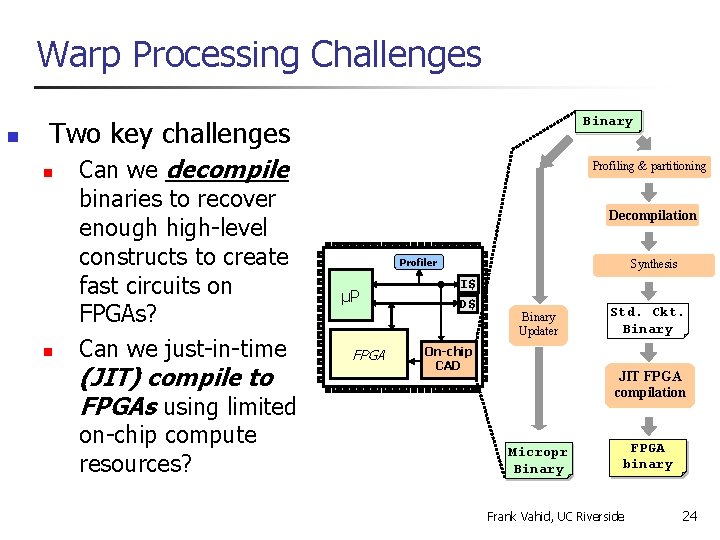

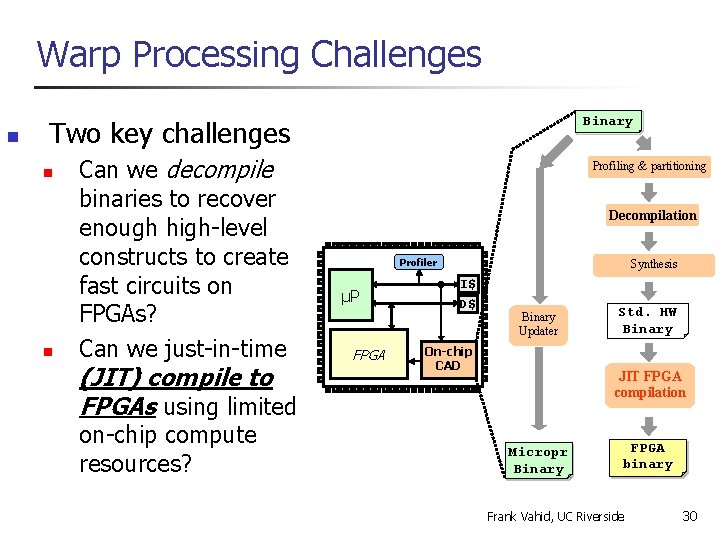

Warp Processing Challenges

Two key challenges:
- Can we decompile binaries to recover enough high-level constructs to create fast circuits on FPGAs?
- Can we just-in-time (JIT) compile to FPGAs using limited on-chip compute resources?

(Figure: binary, profiling & partitioning, decompilation, synthesis, and JIT FPGA compilation producing an FPGA binary, with a binary updater producing the updated microprocessor binary; hardware: µP with I$, D$, profiler, FPGA, and on-chip CAD.)

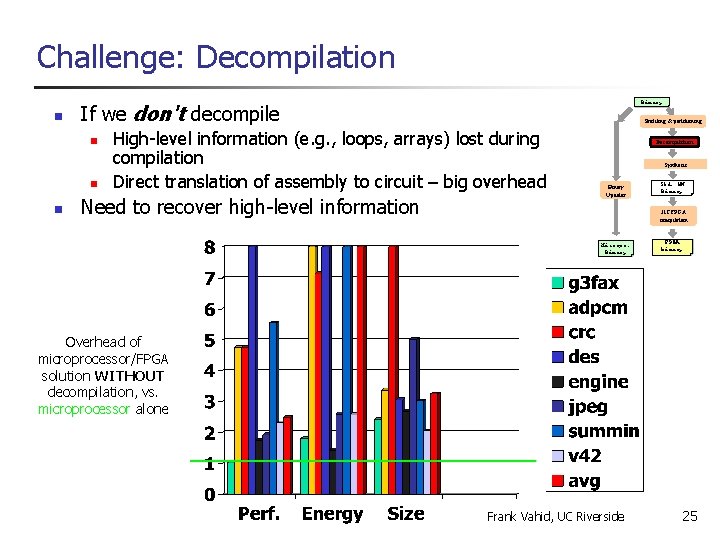

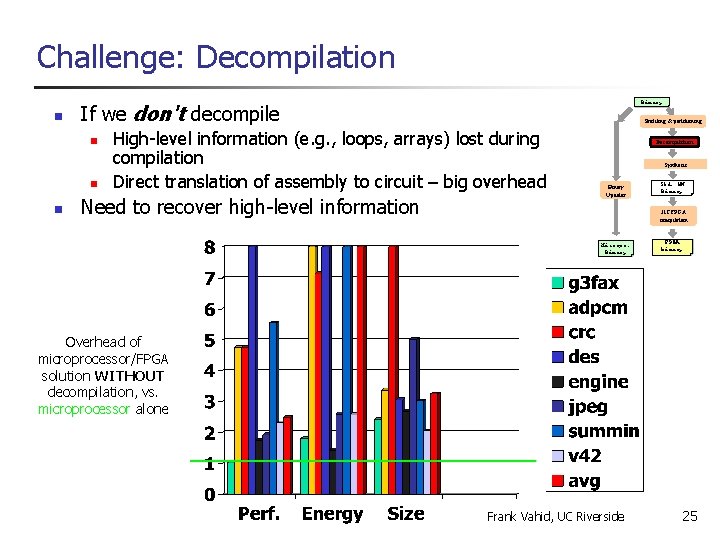

Challenge: Decompilation
- If we don't decompile
  - High-level information (e.g., loops, arrays) is lost during compilation
  - Direct translation of assembly to circuit has big overhead
- Need to recover high-level information

(Figure: overhead of a microprocessor/FPGA solution WITHOUT decompilation, vs. microprocessor alone.)

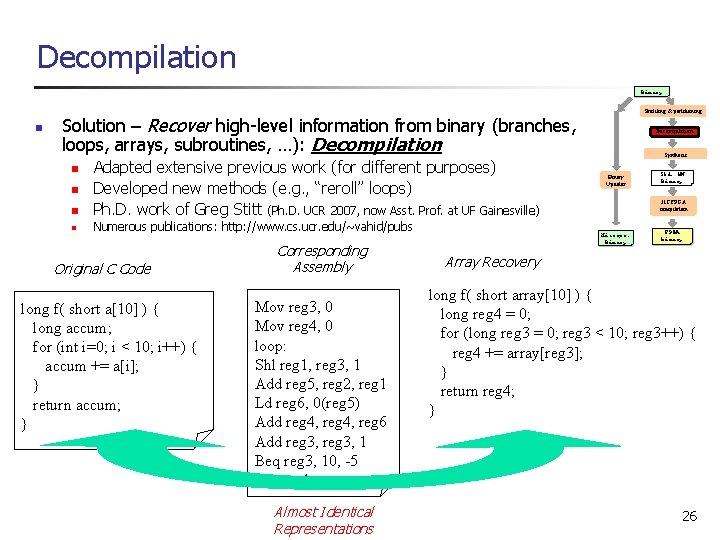

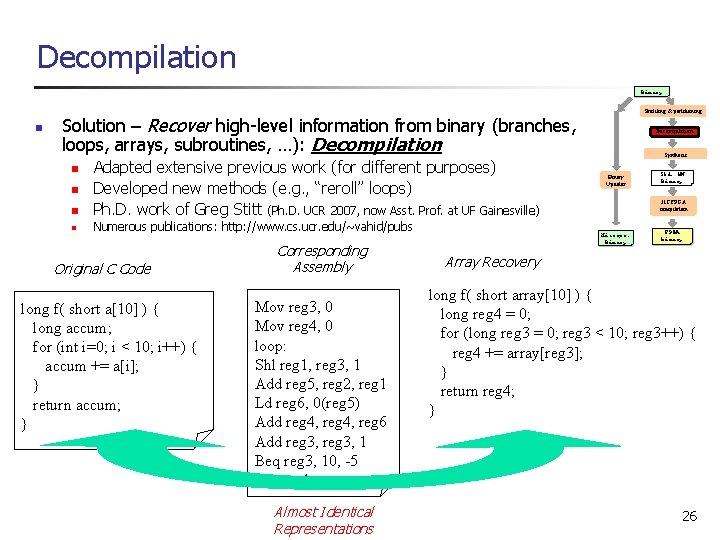

Decompilation
- Solution: recover high-level information from the binary (branches, loops, arrays, subroutines, …): decompilation
  - Adapted extensive previous work (for different purposes)
  - Developed new methods (e.g., "reroll" loops)
- Ph.D. work of Greg Stitt (Ph.D. UCR 2007, now Asst. Prof. at UF Gainesville)
  - Numerous publications: http://www.cs.ucr.edu/~vahid/pubs

Original C code:

```c
long f( short a[10] ) {
  long accum = 0;
  for (int i = 0; i < 10; i++) {
    accum += a[i];
  }
  return accum;
}
```

Corresponding assembly:

```
Mov reg3, 0
Mov reg4, 0
loop:
Shl reg1, reg3, 1
Add reg5, reg2, reg1
Ld reg6, 0(reg5)
Add reg4, reg6
Add reg3, 1
Beq reg3, 10, -5
Ret reg4
```

Decompilation (control/data flow graph creation, data flow analysis, function recovery, control structure recovery, array recovery) yields an almost identical representation:

```
// Control/data flow graph creation
reg3 := 0
reg4 := 0
loop:
reg1 := reg3 << 1
reg5 := reg2 + reg1
reg6 := mem[reg5 + 0]
reg4 := reg4 + reg6
reg3 := reg3 + 1
if (reg3 < 10) goto loop
ret reg4

// Data flow analysis
reg3 := 0
reg4 := 0
loop:
reg4 := reg4 + mem[reg2 + (reg3 << 1)]
reg3 := reg3 + 1
if (reg3 < 10) goto loop
ret reg4

// Function recovery
long f( long reg2 ) {
  int reg3 = 0;
  int reg4 = 0;
  loop:
  reg4 = reg4 + mem[reg2 + (reg3 << 1)];
  reg3 = reg3 + 1;
  if (reg3 < 10) goto loop;
  return reg4;
}

// Control structure recovery
long f( long reg2 ) {
  long reg4 = 0;
  for (long reg3 = 0; reg3 < 10; reg3++) {
    reg4 += mem[reg2 + (reg3 << 1)];
  }
  return reg4;
}

// Array recovery
long f( short array[10] ) {
  long reg4 = 0;
  for (long reg3 = 0; reg3 < 10; reg3++) {
    reg4 += array[reg3];
  }
  return reg4;
}
```
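The slide lists loop "rerolling" among the new methods. As a much-simplified sketch (our own, not the method from the cited papers), rerolling can be viewed as spotting a run of identical statements whose memory offsets advance by a constant stride. If a compiler had fully unrolled the example loop, the ten loads at byte offsets 0, 2, ..., 18 from reg2 would collapse back into a 10-iteration loop with a 2-byte (one `short`) stride. The `stmt` and `rerolled_loop` types and `try_reroll` are hypothetical.

```c
#include <stdbool.h>

/* One straight-line statement from unrolled code, e.g. "reg4 += mem[reg2 + offset]". */
typedef struct {
    int op;      /* hypothetical opcode id */
    int offset;  /* constant byte offset from the base register */
} stmt;

typedef struct {
    int start, stride, count;
} rerolled_loop;

/* Reroll n statements into one loop if they perform the same operation
   and their offsets form an arithmetic progression. */
static bool try_reroll(const stmt *s, int n, rerolled_loop *out) {
    if (n < 2) return false;
    int stride = s[1].offset - s[0].offset;
    for (int i = 1; i < n; i++) {
        if (s[i].op != s[0].op) return false;
        if (s[i].offset - s[i - 1].offset != stride) return false;
    }
    out->start = s[0].offset;
    out->stride = stride;
    out->count = n;
    return true;
}
```

A rerolled loop gives synthesis a compact, regular structure to parallelize or pipeline, instead of a long chain of unrelated-looking instructions.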

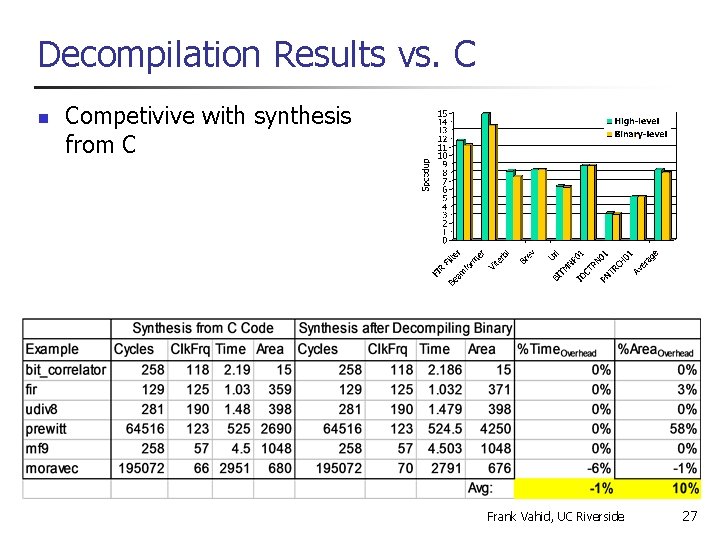

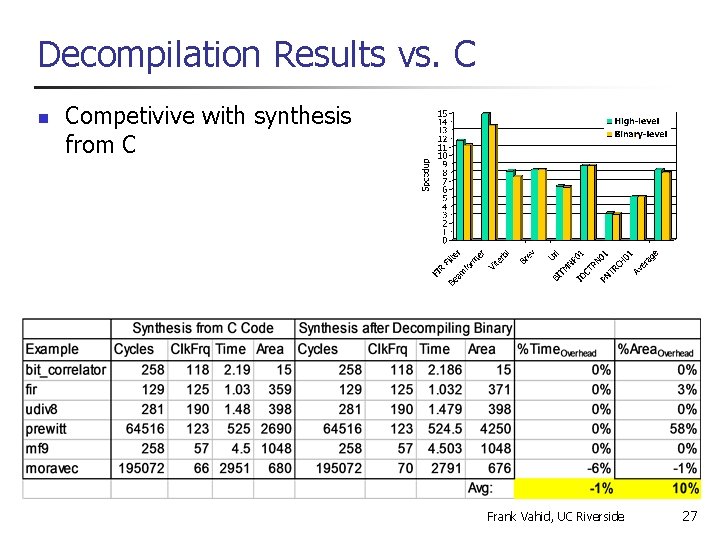

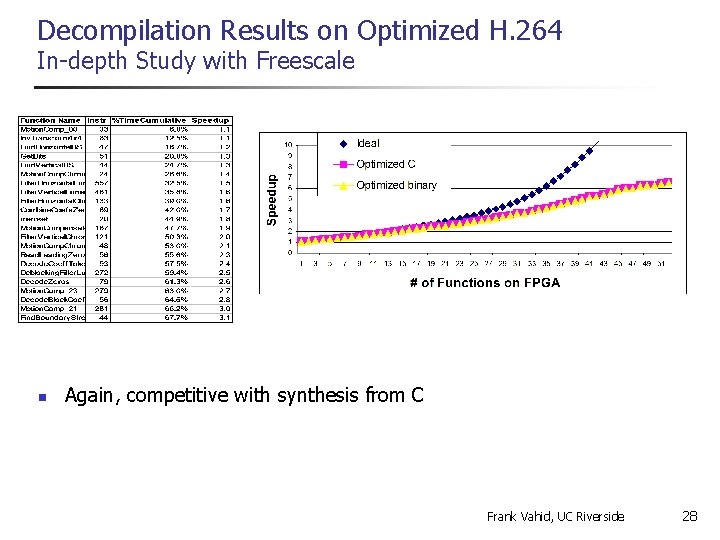

Decompilation Results vs. C
- Competitive with synthesis from C

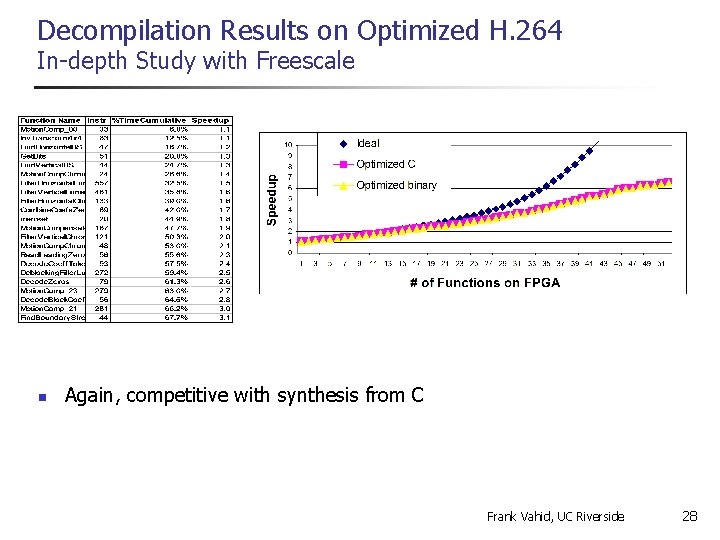

Decompilation Results on Optimized H.264: In-depth Study with Freescale
- Again, competitive with synthesis from C

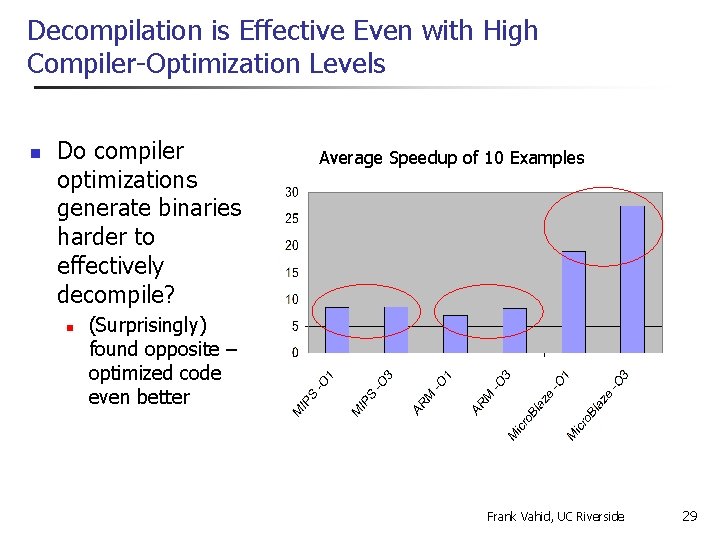

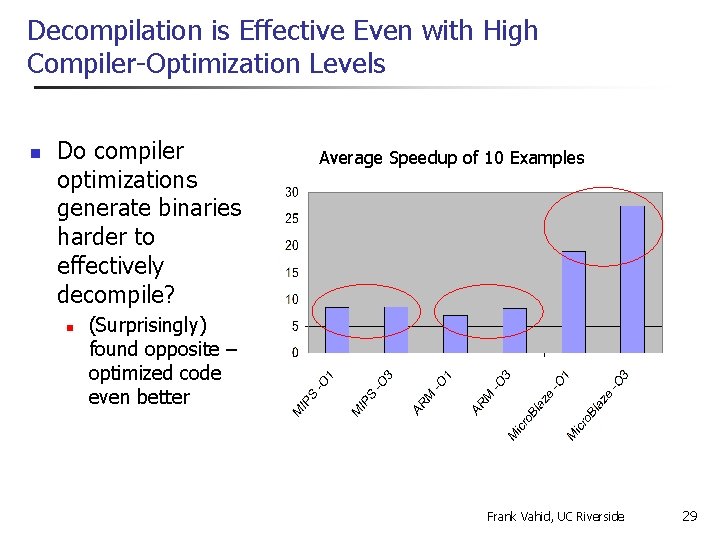

Decompilation is Effective Even with High Compiler-Optimization Levels
- Do compiler optimizations generate binaries that are harder to decompile effectively?
- (Surprisingly) found the opposite: optimized code is even better

(Figure: average speedup of 10 examples.)

Warp Processing Challenges (revisited)
- Second challenge: can we just-in-time (JIT) compile to FPGAs using limited on-chip compute resources?

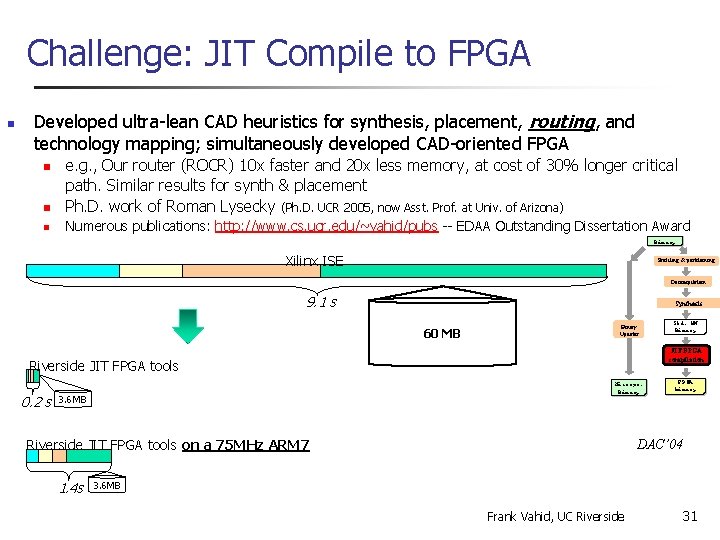

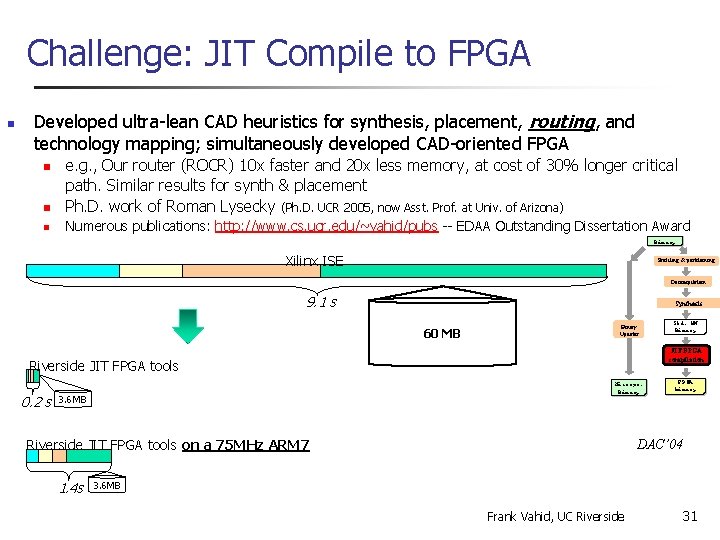

Challenge: JIT Compile to FPGA
- Developed ultra-lean CAD heuristics for synthesis, placement, routing, and technology mapping; simultaneously developed a CAD-oriented FPGA
  - e.g., our router (ROCR) is 10x faster and uses 20x less memory, at the cost of a 30% longer critical path. Similar results for synthesis & placement
- Ph.D. work of Roman Lysecky (Ph.D. UCR 2005, now Asst. Prof. at Univ. of Arizona)
  - Numerous publications: http://www.cs.ucr.edu/~vahid/pubs
  - EDAA Outstanding Dissertation Award

| Tool | Time | Memory |
|---|---|---|
| Xilinx ISE | 9.1 s | 60 MB |
| Riverside JIT FPGA tools | 0.2 s | 3.6 MB |
| Riverside JIT FPGA tools on a 75 MHz ARM7 | 1.4 s | 3.6 MB |

(DAC'04)

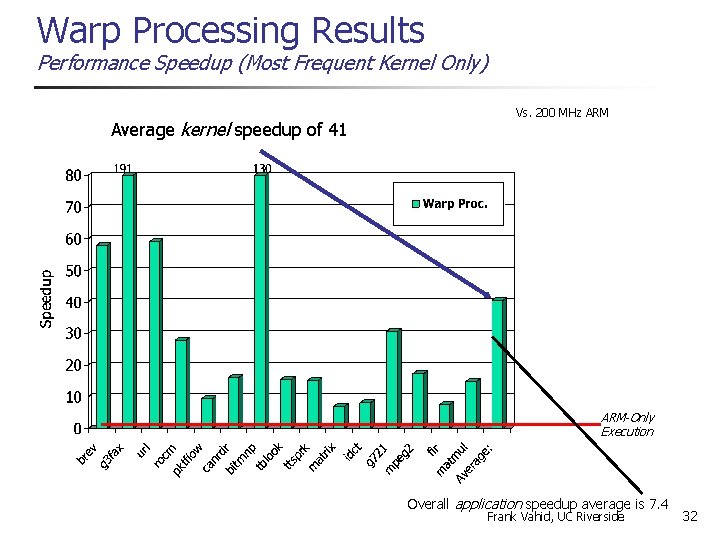

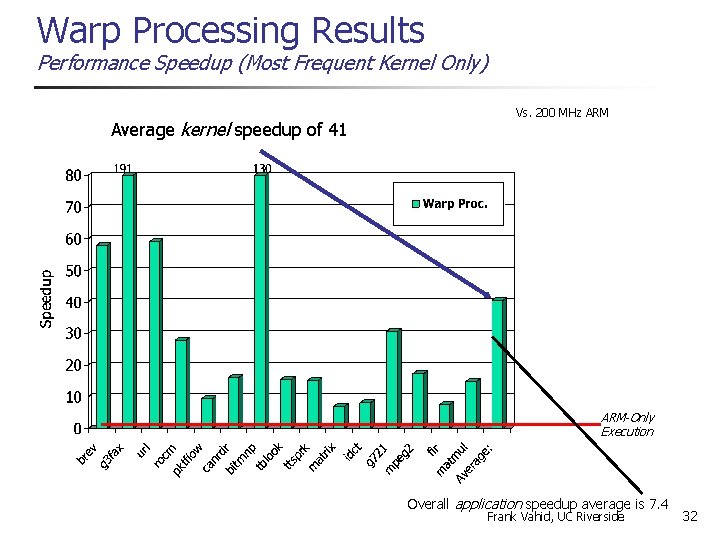

Warp Processing Results

Performance speedup (most frequent kernel only), vs. 200 MHz ARM-only execution:
- Average kernel speedup of 41
- Overall application speedup average is 7.4
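Amdahl's law explains the gap between the two averages: when only the most frequent kernel is accelerated, the rest of the application still runs on the ARM and caps the overall gain. The sketch is illustrative only (function names are ours): treating the averages as if they described a single application, a 41x kernel speedup yields 7.4x overall only if that kernel accounted for roughly 89% of the original runtime. Averages across different benchmarks do not combine exactly this way.

```c
/* Amdahl's law: overall speedup when a fraction `frac` of the original
   runtime is accelerated by `kernel_speedup` and the rest is unchanged. */
static double amdahl(double frac, double kernel_speedup) {
    return 1.0 / ((1.0 - frac) + frac / kernel_speedup);
}

/* Inverse: the runtime fraction the kernel must cover to reach
   `app_speedup` given `kernel_speedup`.
   From 1/A = 1 - f * (1 - 1/K):  f = (1 - 1/A) / (1 - 1/K). */
static double kernel_fraction(double app_speedup, double kernel_speedup) {
    return (1.0 - 1.0 / app_speedup) / (1.0 - 1.0 / kernel_speedup);
}
```

The same formula shows why accelerating additional kernels matters: the remaining software fraction, not the kernel speedup, becomes the limit.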

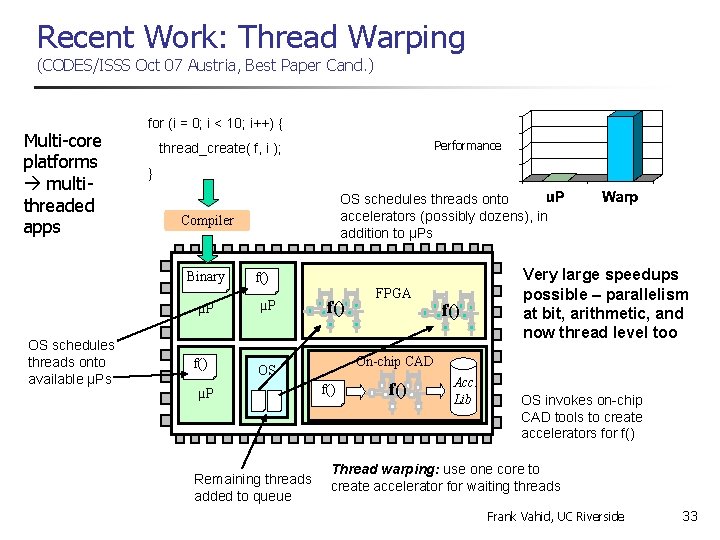

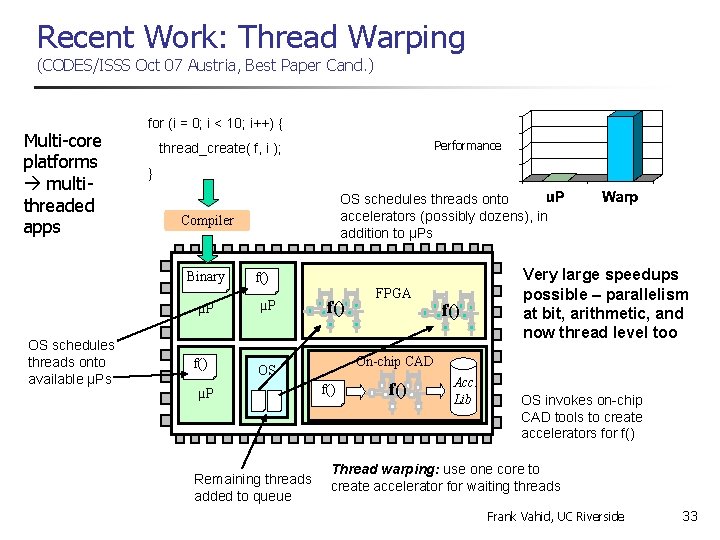

Recent Work: Thread Warping (CODES/ISSS Oct 07, Austria, Best Paper Candidate)
- Multi-core platforms lead to multithreaded apps:

```
for (i = 0; i < 10; i++) {
  thread_create( f, i );
}
```

- The compiler produces a binary; the OS schedules threads onto available µPs, and remaining threads are added to a queue
- The OS invokes on-chip CAD tools to create accelerators for f()
- The OS then schedules threads onto accelerators (possibly dozens), in addition to µPs
- Thread warping: use one core to create accelerators for waiting threads
- Very large speedups possible: parallelism at the bit, arithmetic, and now thread level too

(Figure: µPs, FPGA, on-chip CAD, OS, and accelerator library; performance grows as accelerators are added.)
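The thread warping slide assumes an ordinary multithreaded binary. A minimal POSIX-threads version of the slide's loop might look like the sketch below, where `pthread_create` plays the role of the slide's `thread_create` shorthand. The array contents, the `results` buffer, and `run_threads` are hypothetical; compile with `-pthread`.

```c
#include <pthread.h>
#include <stdint.h>

#define NUM_THREADS 10
#define N 10

static const int a[N] = {1, 2, 3, 4, 5, 6, 7, 8, 9, 10};
static long results[NUM_THREADS];

/* Thread function f(): every thread reads the same shared array a[]
   and scales it by its own index, passed through the void* argument. */
static void *f(void *arg) {
    intptr_t val = (intptr_t)arg;
    long result = 0;
    for (int i = 0; i < N; i++)
        result += a[i] * val;
    results[val] = result;
    return NULL;
}

/* Mirrors the slide's loop: one thread per iteration, then wait for all.
   Returns 0 if every thread was created, -1 otherwise. */
static int run_threads(void) {
    pthread_t tids[NUM_THREADS];
    int created = 0;
    for (intptr_t i = 0; i < NUM_THREADS; i++) {
        if (pthread_create(&tids[i], NULL, f, (void *)i) != 0)
            break;
        created++;
    }
    for (int i = 0; i < created; i++)
        pthread_join(tids[i], NULL);
    return created == NUM_THREADS ? 0 : -1;
}
```

Under thread warping, the OS would queue these threads and, once on-chip CAD has built accelerators for f(), run some of them on the FPGA; the source code itself does not change.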

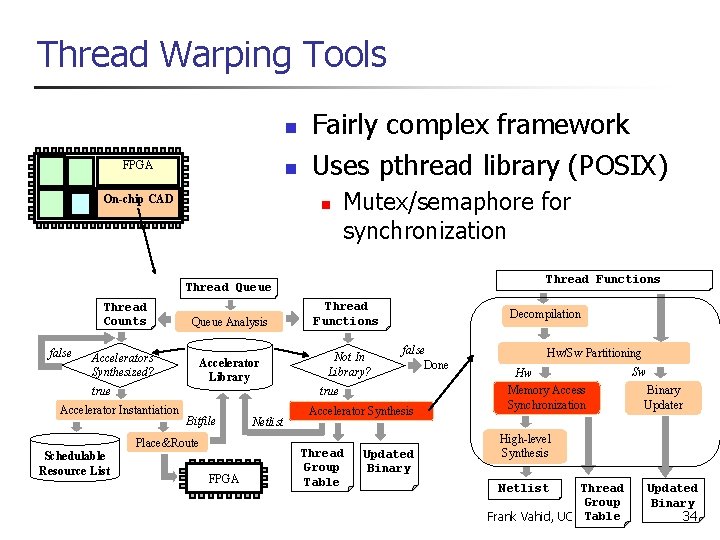

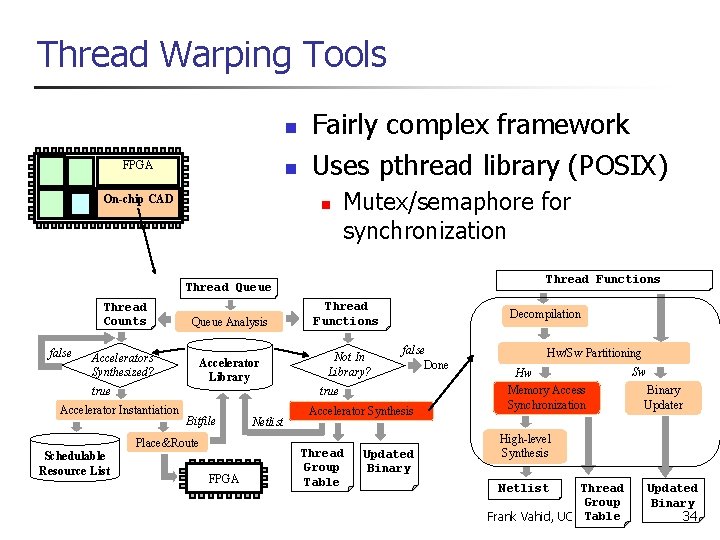

Thread Warping Tools
- Uses the pthread library (POSIX)
  - Mutex/semaphore for synchronization
- Fairly complex framework

(Figure: on-chip CAD flow beside the µP and FPGA. Queue analysis reads the thread queue and produces thread functions and thread counts; if accelerators are not yet synthesized and not in the accelerator library, accelerator synthesis (decompilation, HW/SW partitioning, high-level synthesis, memory access synchronization, binary updater) produces a netlist, and place & route produces a bitfile; accelerator instantiation then yields a schedulable resource list, a thread group table, and an updated binary.)

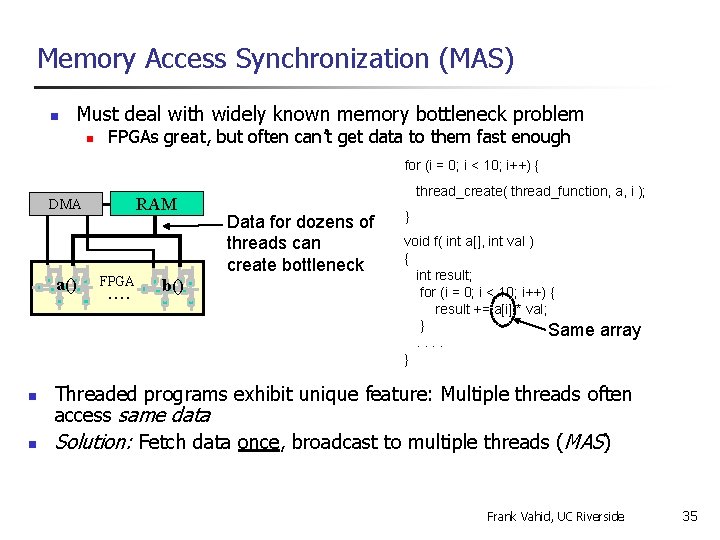

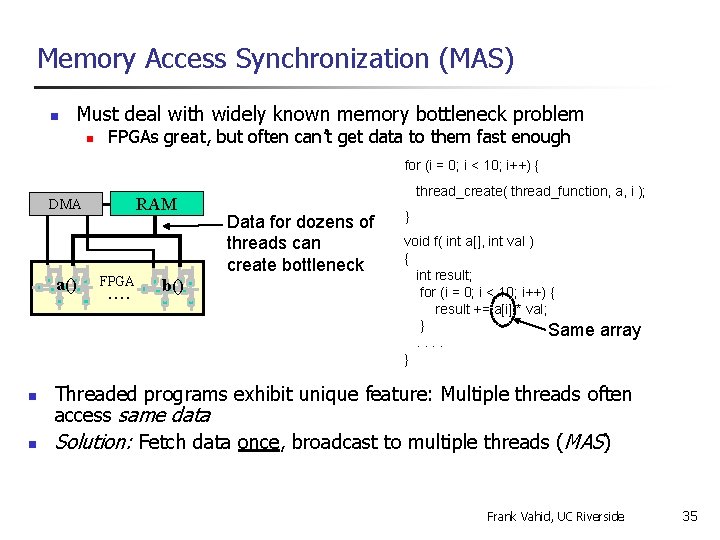

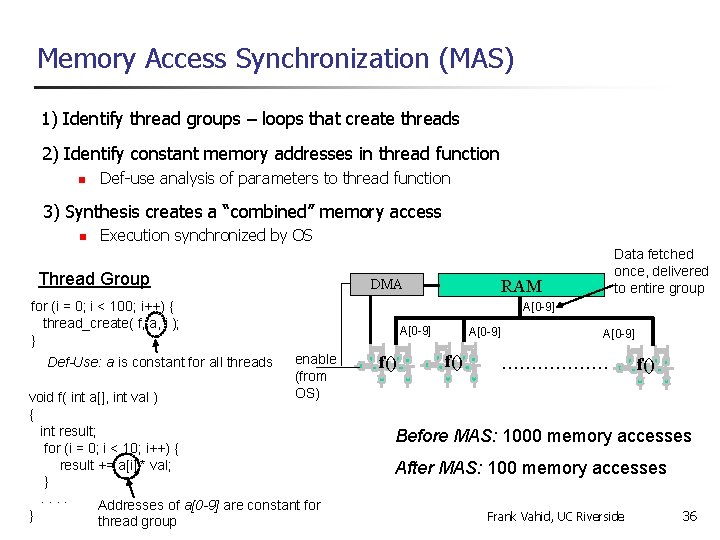

Memory Access Synchronization (MAS)
- Must deal with the widely known memory bottleneck problem
  - FPGAs are great, but often can't get data to them fast enough
  - Data for dozens of threads can create a bottleneck
- Threaded programs exhibit a unique feature: multiple threads often access the same data
- Solution: fetch data once, broadcast to multiple threads (MAS)

```
for (i = 0; i < 10; i++) {
  thread_create( f, a, i );
}

void f( int a[], int val ) {
  int result = 0;
  for (int i = 0; i < 10; i++) {
    result += a[i] * val;   // every thread reads the same array
  }
  . . .
}
```

(Figure: RAM feeding accelerators a(), b(), … on the FPGA through DMA.)

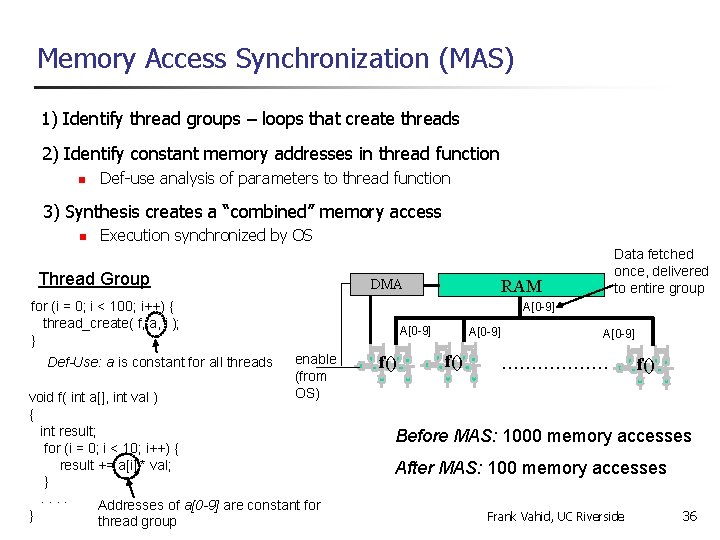

Memory Access Synchronization (MAS)
1) Identify thread groups: loops that create threads
2) Identify constant memory addresses in the thread function
   - Def-use analysis of parameters to the thread function
3) Synthesis creates a "combined" memory access
   - Execution synchronized by the OS

```
for (i = 0; i < 100; i++) {
  thread_create( f, a, i );
}

void f( int a[], int val ) {
  int result = 0;
  for (int i = 0; i < 10; i++) {
    result += a[i] * val;
  }
  . . .
}
```

- Def-use: a is constant for all threads, so the addresses of a[0-9] are constant for the thread group
- Data (A[0-9]) is fetched once through DMA and delivered to the entire group (enable from OS)
- Before MAS: 1000 memory accesses; after MAS: 100 memory accesses
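A back-of-the-envelope counting model of MAS follows. It is a sketch, not the tool's algorithm: each thread reads the same `len` elements, and with MAS each element is fetched once per group and broadcast to every thread in the group. The `group_size` parameter (how many threads share one broadcast fetch) and both function names are our assumptions.

```c
/* Without MAS: every thread issues its own reads of the shared array. */
static long reads_without_mas(long threads, long len) {
    return threads * len;
}

/* With MAS: each element is read once per thread group and broadcast. */
static long reads_with_mas(long threads, long len, long group_size) {
    long groups = (threads + group_size - 1) / group_size;  /* round up */
    return groups * len;
}
```

In this model, 100 threads reading 10 elements each cost 1000 reads without MAS; with groups of 10 threads the count drops to 100, and a single group of all 100 threads would need only 10.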

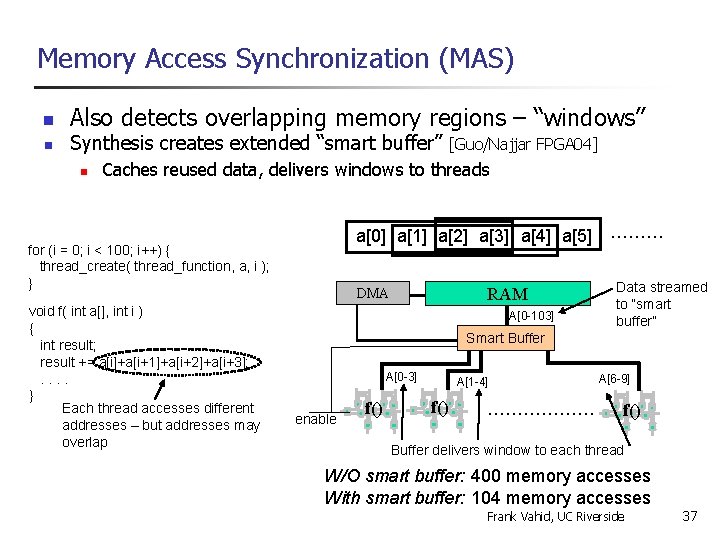

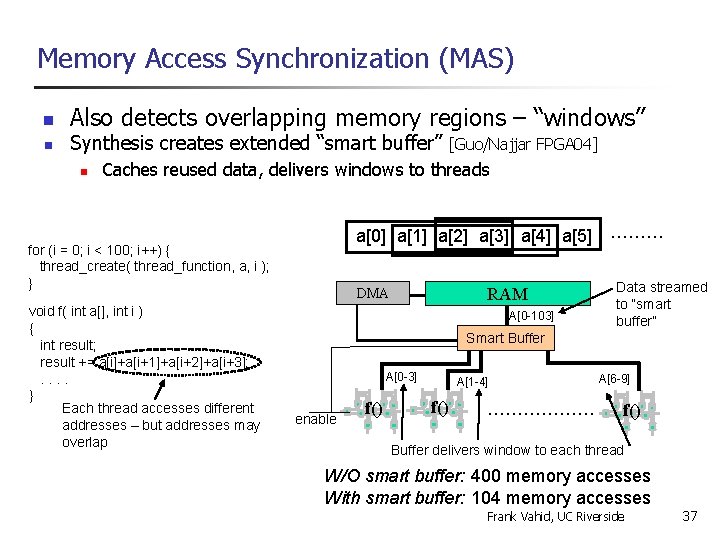

Memory Access Synchronization (MAS)
- Also detects overlapping memory regions ("windows")
- Synthesis creates an extended "smart buffer" [Guo/Najjar FPGA 04]
  - Caches reused data, delivers windows to threads

```
for (i = 0; i < 100; i++) {
  thread_create( f, a, i );
}

void f( int a[], int i ) {
  int result = 0;
  result += a[i] + a[i+1] + a[i+2] + a[i+3];
  . . .
}
```

- Each thread accesses different addresses, but the addresses may overlap
- Data (A[0-103]) is streamed through DMA into the smart buffer, which delivers a window to each thread (A[0-3], A[1-4], …)
- Without smart buffer: 400 memory accesses; with smart buffer: 104 memory accesses
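The smart buffer can be sketched as a sliding window: each array element is read from memory once, and every newly streamed element completes the window for one more thread. Here W = 4 matches the slide's a[i] through a[i+3], and each delivered window is consumed by computing f()'s sum. The name `smart_buffer_run` and the `results` array are hypothetical, and real hardware would serve many threads in parallel rather than in a loop. For n threads the sketch performs n + W - 1 reads instead of n * W.

```c
#define W 4   /* window size: thread i reads a[i .. i+W-1] */

/* Streams a[0 .. nthreads+W-2] exactly once. After the first W elements,
   each new element completes the window for thread k-(W-1), which here
   computes the slide's f(): a[i] + a[i+1] + a[i+2] + a[i+3].
   Returns the number of memory reads performed. */
static long smart_buffer_run(const int *a, int nthreads, int results[]) {
    int buf[W] = {0};
    long reads = 0;
    for (int k = 0; k < nthreads + W - 1; k++) {
        for (int j = 0; j < W - 1; j++)   /* slide the window by one element */
            buf[j] = buf[j + 1];
        buf[W - 1] = a[k];                /* the only read of a[k] */
        reads++;
        if (k >= W - 1) {                 /* window for thread k-(W-1) is complete */
            int sum = 0;
            for (int j = 0; j < W; j++)
                sum += buf[j];
            results[k - (W - 1)] = sum;
        }
    }
    return reads;
}
```

The shift loop stands in for a hardware shift register, in which every position moves in the same clock cycle.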

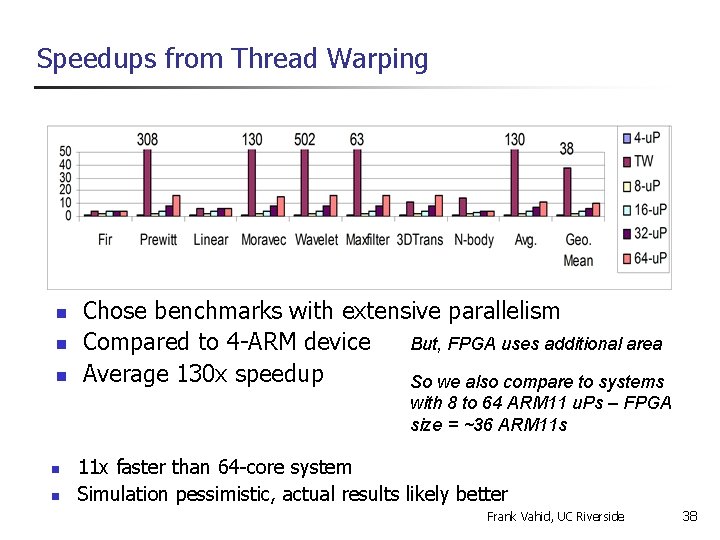

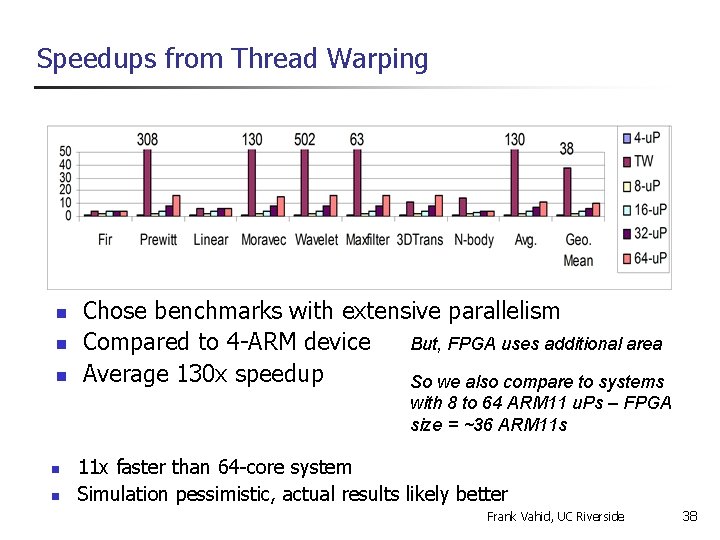

Speedups from Thread Warping
- Chose benchmarks with extensive parallelism
- Compared to a 4-ARM device: average 130x speedup
- But the FPGA uses additional area, so we also compare to systems with 8 to 64 ARM11 µPs (FPGA size = ~36 ARM11s)
  - 11x faster than a 64-core system
- Simulation pessimistic, actual results likely better

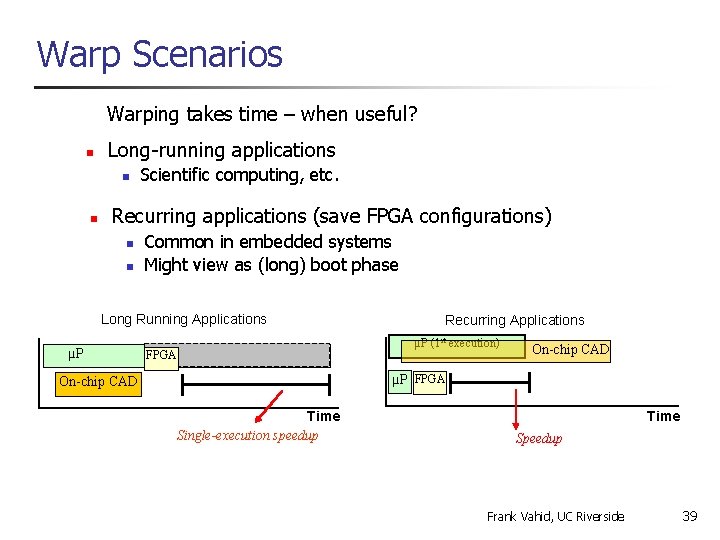

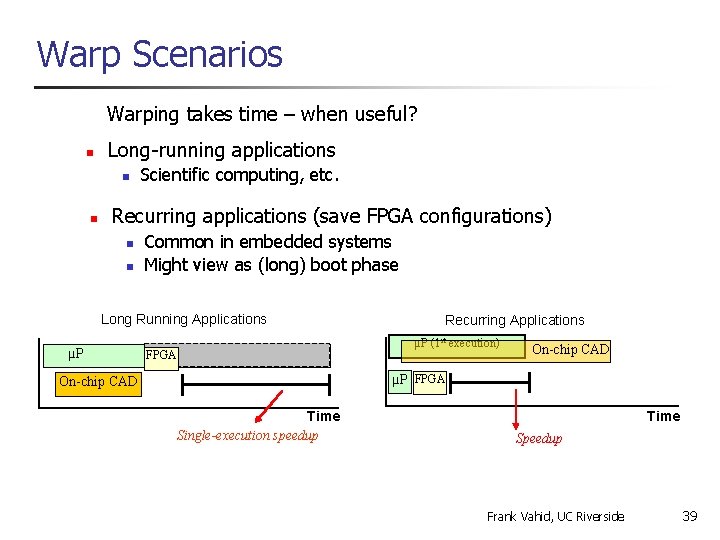

Warp Scenarios

Warping takes time: when is it useful?
- Long-running applications
  - Scientific computing, etc.
- Recurring applications (save FPGA configurations)
  - Common in embedded systems
  - Might view as a (long) boot phase

(Figure: timelines. Long-running applications: the µP runs while on-chip CAD builds the circuit, then µP + FPGA give a single-execution speedup. Recurring applications: on-chip CAD runs during the 1st execution, and later executions on µP + FPGA get the speedup.)
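The two scenarios can be compared with a deliberately simple timing model, which is our assumption rather than the talk's: a run takes `t_sw` seconds in software, on-chip CAD needs `t_cad` seconds on a separate core while the application keeps running in software (in the spirit of "use 1 powerful core for CAD"), and afterwards the remaining work runs `speedup` times faster. Real applications only accelerate their kernels, so this model is optimistic. Function names are hypothetical.

```c
/* Long-running case: one execution that switches to hardware when CAD finishes. */
static double warped_time(double t_sw, double t_cad, double speedup) {
    if (t_cad >= t_sw) return t_sw;          /* run ended before the circuit was ready */
    return t_cad + (t_sw - t_cad) / speedup;
}

/* Recurring case: the first run pays for CAD; later runs reuse the saved
   FPGA configuration and see the full speedup from the start. */
static double recurring_total(double t_sw, double t_cad, double speedup, int runs) {
    if (runs <= 0) return 0.0;
    return warped_time(t_sw, t_cad, speedup) + (runs - 1) * (t_sw / speedup);
}
```

For example, with `t_sw = 100`, `t_cad = 10`, and `speedup = 10`, a single warped run takes 19 s instead of 100 s, while an application shorter than the CAD time sees no benefit. Three recurring runs take 39 s in total.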

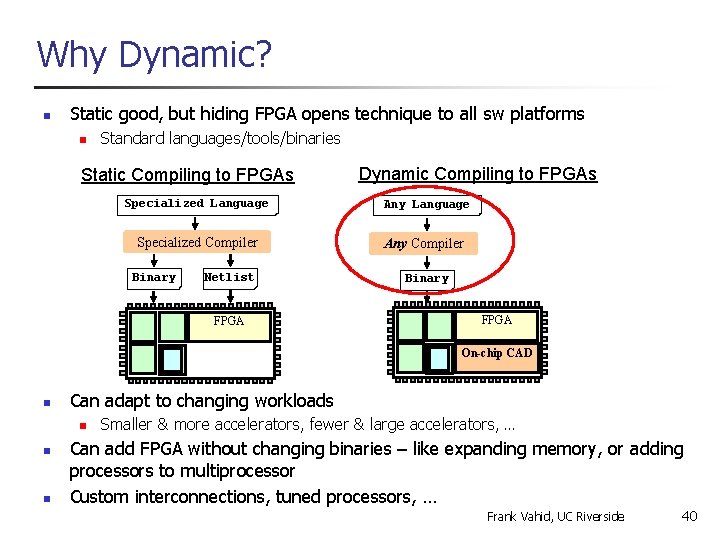

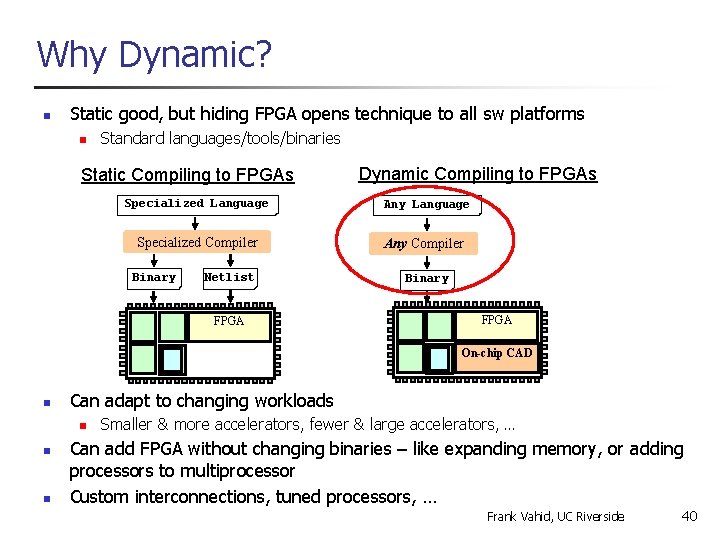

Why Dynamic?
- Static is good, but hiding the FPGA opens the technique to all software platforms
  - Standard languages/tools/binaries
- Can adapt to changing workloads
  - Smaller & more accelerators, fewer & larger accelerators, …
- Can add FPGA without changing binaries, like expanding memory or adding processors to a multiprocessor
- Custom interconnections, tuned processors, …

(Figure: static compiling to FPGAs uses a specialized language and specialized compiler to produce a binary plus a netlist for µP + FPGA; dynamic compiling to FPGAs accepts any language and any compiler, producing a binary that on-chip CAD maps onto µP + FPGA.)

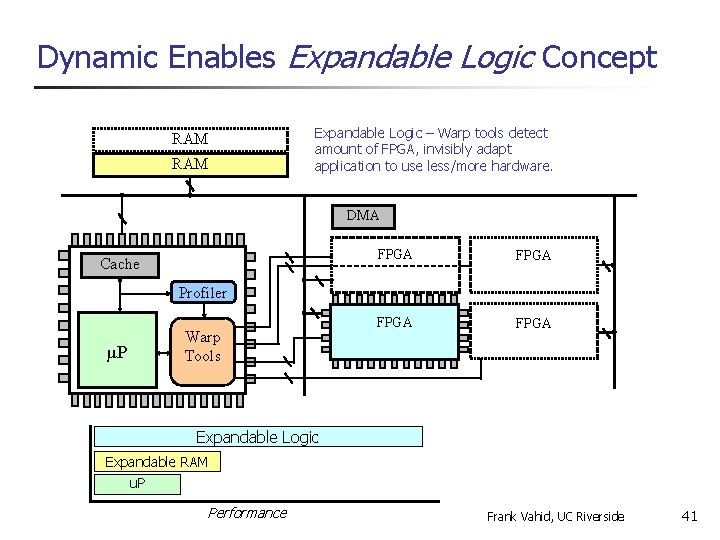

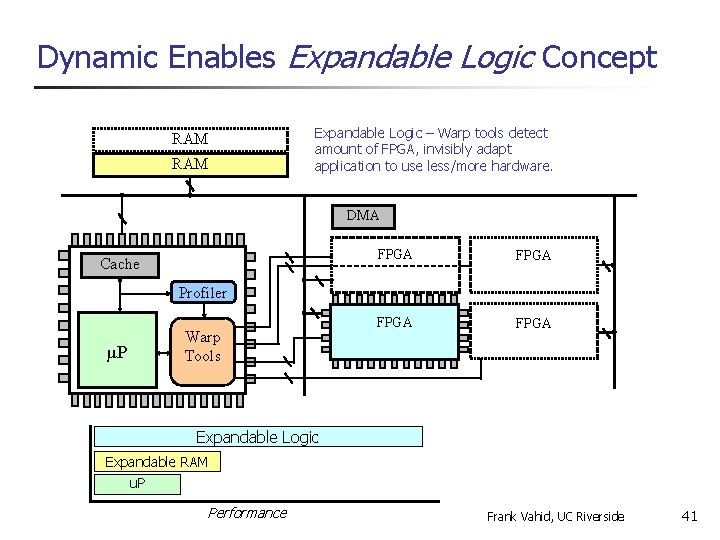

Dynamic Enables Expandable Logic Concept
- Expandable RAM: the system detects RAM during start and improves performance invisibly
- Expandable logic: warp tools detect the amount of FPGA and invisibly adapt the application to use less/more hardware

(Figure: µP with cache, DMA, RAM, profiler, warp tools, and expandable FPGA; performance vs. amount of expandable logic.)

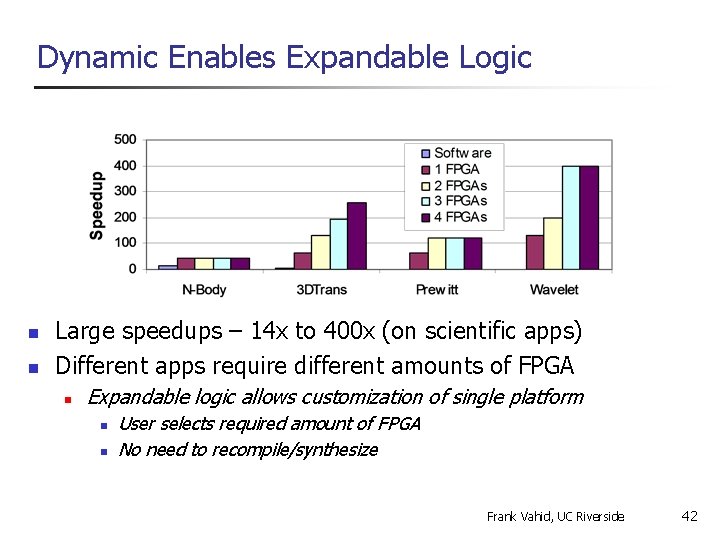

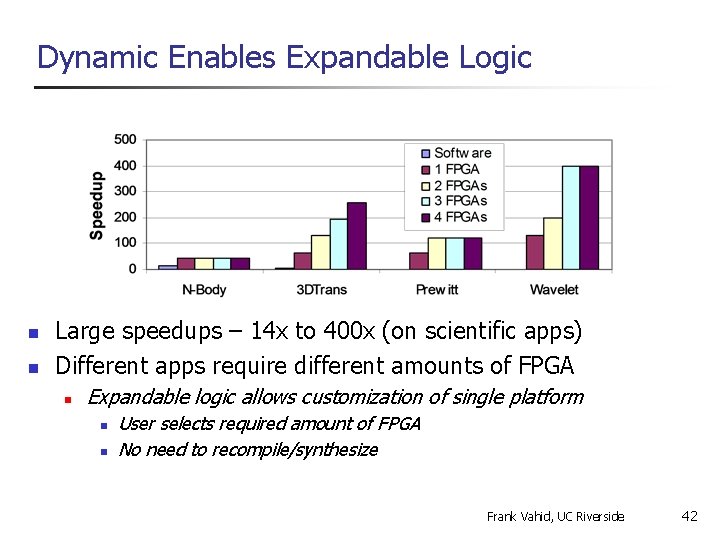

Dynamic Enables Expandable Logic
- Large speedups: 14x to 400x (on scientific apps)
- Different apps require different amounts of FPGA
- Expandable logic allows customization of a single platform
  - User selects the required amount of FPGA
  - No need to recompile/synthesize

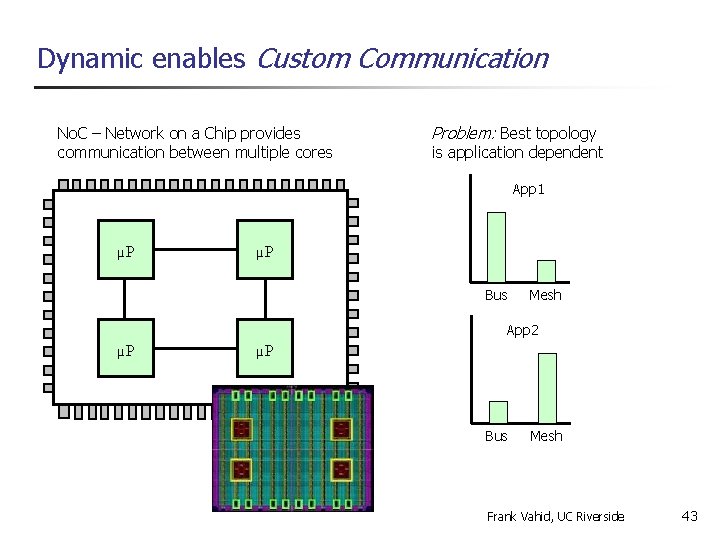

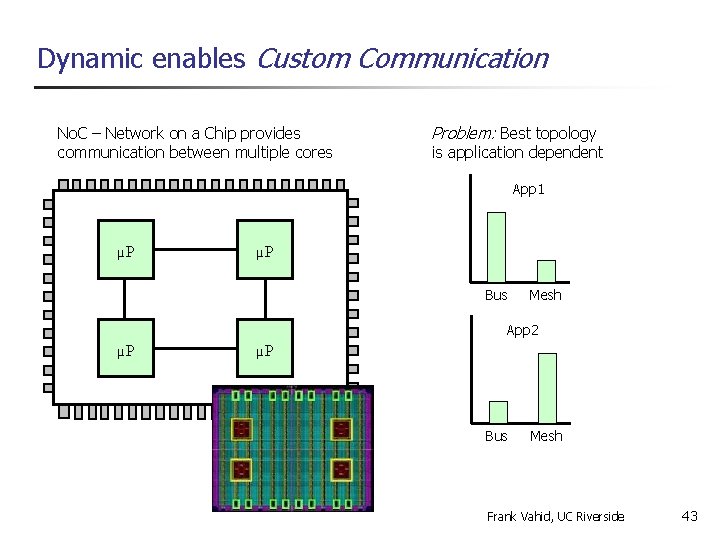

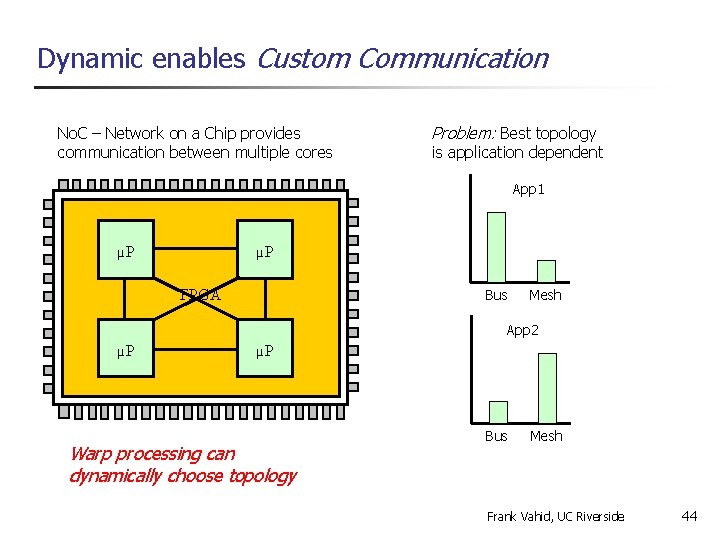

Dynamic Enables Custom Communication
- NoC (network on a chip) provides communication between multiple cores
- Problem: the best topology is application dependent

(Figure: App 1 and App 2, each running on µPs connected by a bus vs. a mesh.)

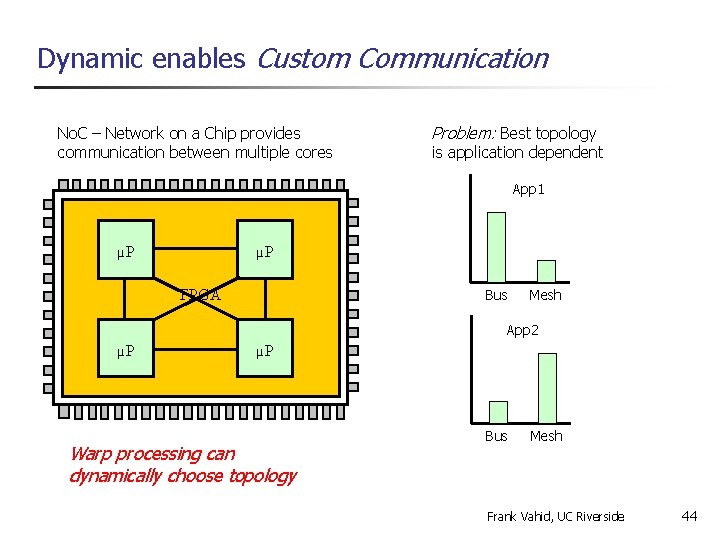

Dynamic Enables Custom Communication (continued)
- Warp processing can dynamically choose the topology

(Figure: the same two applications, with an FPGA implementing a bus or a mesh as each application requires.)

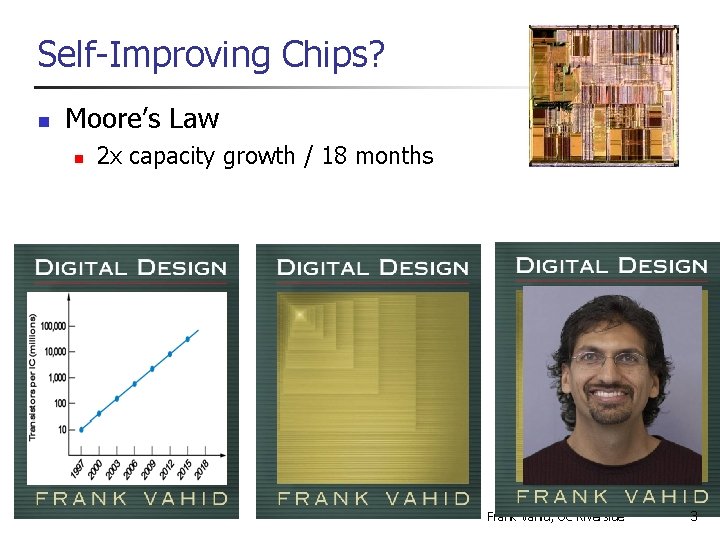

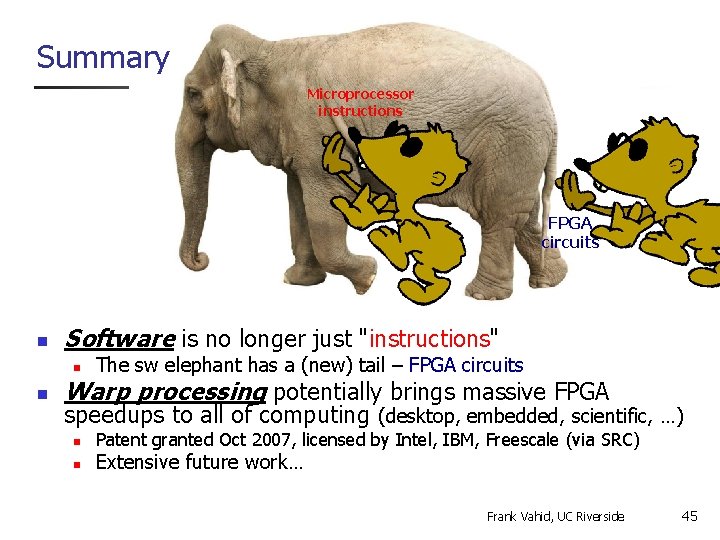

Summary
- Software is no longer just "instructions"
  - The sw elephant has a (new) tail: FPGA circuits
- Warp processing potentially brings massive FPGA speedups to all of computing (desktop, embedded, scientific, …)
- Patent granted Oct 2007, licensed by Intel, IBM, Freescale (via SRC)
- Extensive future work…

(Figure: software as microprocessor instructions plus FPGA circuits.)