
Seeking Serendipity with Summon
Jennifer DeJonghe, Michelle Filkins and Alec Sonsteby
Metropolitan State University

Agenda
• Our implementation and branding of Summon
• Usability studies of discovery tools
• Getting buy-in/demonstrating value
• Break
• Hands-on activity
• Educational opportunities
• Ways to change reference and teaching
• Q&A

Discovery
• A discovery tool is a unified index. It includes scholarly content that you subscribe to and local content such as a catalog.
• A discovery tool is not federated search. It does not “search databases”; it is an index of content that may or may not align with your database subscriptions. Focus on full text.
• Faster, more relevant, higher maintenance
• Summon, EBSCO Discovery Service, WorldCat Local, Primo, VuFind (catalog layer)
• Questions?
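To make the unified-index vs. federated-search distinction concrete, here is a minimal Python sketch. It is illustrative only: the toy records and the helpers `build_unified_index`, `search_index`, `make_live_source` and `federated_search` are hypothetical and say nothing about how Summon or any other product is actually built. The unified index does its work ahead of time (harvesting and indexing every record once), so a query is a single local lookup; federated search sends each query out to every source at search time and merges whatever comes back.

```python
from collections import defaultdict

# Hypothetical toy records; in a real discovery tool these would be
# harvested from the catalog and from publishers ahead of time.
RECORDS = [
    {"id": "cat-1", "source": "catalog", "text": "computer science textbook"},
    {"id": "art-1", "source": "publisher", "text": "computer networks journal article"},
    {"id": "art-2", "source": "publisher", "text": "history of science"},
]


def build_unified_index(records):
    """Unified index: harvest once, mapping each term to the ids containing it."""
    index = defaultdict(set)
    for rec in records:
        for term in rec["text"].lower().split():
            index[term].add(rec["id"])
    return index


def search_index(index, query):
    """Query time is one local lookup: ids containing every query term."""
    terms = query.lower().split()
    if not terms:
        return set()
    hits = set(index.get(terms[0], set()))  # copy so the index is not mutated
    for term in terms[1:]:
        hits &= index.get(term, set())
    return hits


def make_live_source(name, records):
    """Stand-in for a remote database that is searched live at query time."""
    def search(query):
        terms = query.lower().split()
        return {
            r["id"]
            for r in records
            if r["source"] == name and all(t in r["text"].lower().split() for t in terms)
        }
    return search


def federated_search(sources, query):
    """Federated search: send the query to every source now, then merge results."""
    results = set()
    for search_source in sources:
        results |= search_source(query)
    return results


if __name__ == "__main__":
    index = build_unified_index(RECORDS)
    sources = [make_live_source("catalog", RECORDS), make_live_source("publisher", RECORDS)]
    print(search_index(index, "computer science"))
    print(federated_search(sources, "computer science"))
```

Both approaches return the same hit for this toy data; the difference is where and when the work happens, which is one way to read the slide's "faster, higher maintenance" trade-off: the index answers quickly but only covers what has been harvested into it.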

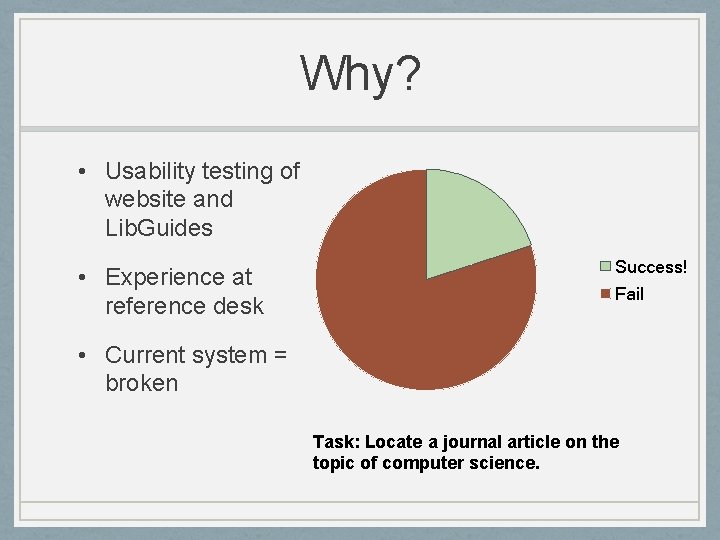

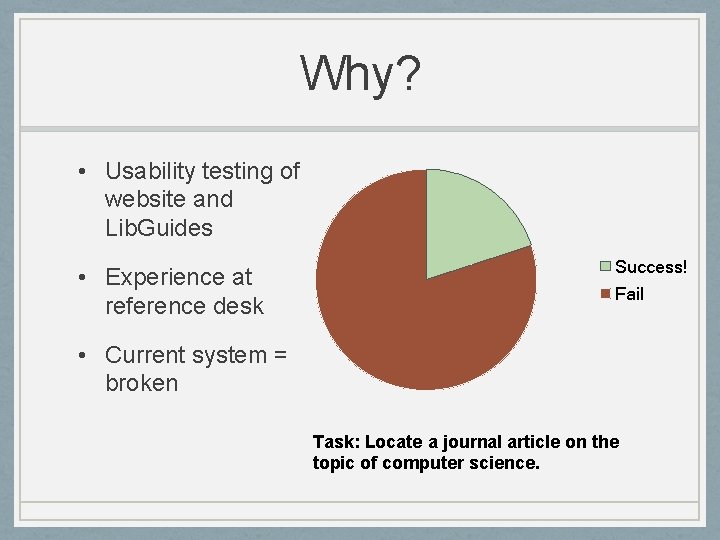

Why?
• Usability testing of website and LibGuides
• Experience at reference desk
• Current system = broken
Success! Fail
Task: Locate a journal article on the topic of computer science.

Implementation: Metro State Timeline
• Metro State’s implementation team: two reference & instruction librarians and the assistant director of technical services, with web and e-resource expertise.
• Started meeting in late August 2012.
• Met once weekly during fall semester.
• Sharing and transparency: reported progress at biweekly librarians’ meetings.
• Soft launch of Summon/One Search: October 31, 2012.
• Staff training: third week of February 2013.
• Official launch: last week of February 2013.

Implementation: The Index
• “A discovery tool is supposed to make all of the resources available to the library ‘discoverable’. What are all of the resources? This question is at the heart of the planning process” (Brennan, 2012, p. 47).
• Time-consuming process: determining what resources, i.e., databases, to include in the index. “Nonbibliographic” and primary source databases are problematic.
• Also time-consuming: verifying subscriptions and holdings of individual e-journal subscriptions and e-reference sources.

Implementation: The Index
• Two other considerations (Welch, 2012)
  • Patron-driven acquisitions placeholder records
    • Con: May increase price of discovery service.
    • Con: Requires a two-step process of adding and deleting records in the catalog and in the index.
  • Open access publications
    • Con: OA records may “dilute” the records for the library’s paid resources.
    • Metro State: Selectively turned some “on,” e.g., National Academies publications.

Implementation: The Index
• Items not in the index risk becoming orphans, “particularly if the library promotes the discovery service as the primary method of accessing its collections” (Welch, 2012, p. 326).
• Solutions
  • Talk to vendors/publishers
  • Subject guides
  • A-Z list of resources
  • User education
  • Database recommender system

Implementation: Data Integrity (Brennan, 2012)
• Quality of metadata harvested by discovery tool?
• Proper mapping between the discovery tool’s facets and the corresponding fields and terms in harvested records.
  • Metro State: Spreadsheet/worksheet.
• How often is data refreshed, e.g., adds and deletes?
  • Metro State: Catalog records four times daily.
• Local availability data: real-time or delay?

Implementation: Interoperability
• How well can your authentication system, e.g., proxy server, and link resolver interoperate with your discovery tool? (Brennan, 2012)
  • Metro State: EZproxy and 360 Link (Serials Solutions product)

Implementation: Customization
• Customizing and branding options (Brennan, 2012)
  • Metro State: Main logo, link at top, inclusion of LibraryH3lp chat widget. Few other options in Summon.
• Labels and terminology (Welch, 2012)
  • Metro State: Some options available, but nothing has been changed yet.
• Authentication (Welch, 2012)
  • Metro State: Pre-authenticate or not? ArtStor issue. Alumni and ILL service.

Implementation: Branding
• What to call it?
• Library One Search
  • Clear meaning
  • But not quite true
  • Very common name in the literature

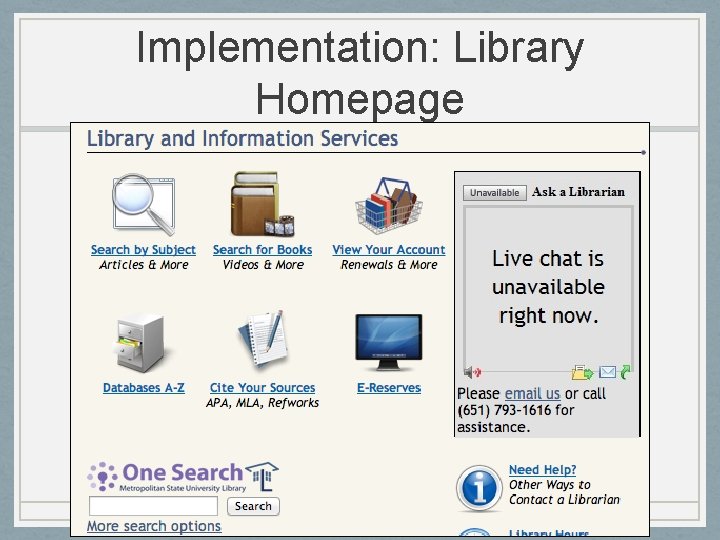

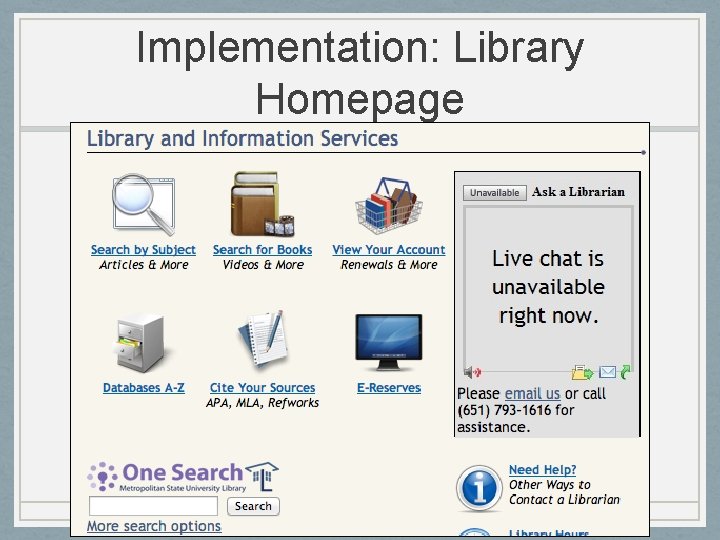

Implementation: Library Homepage

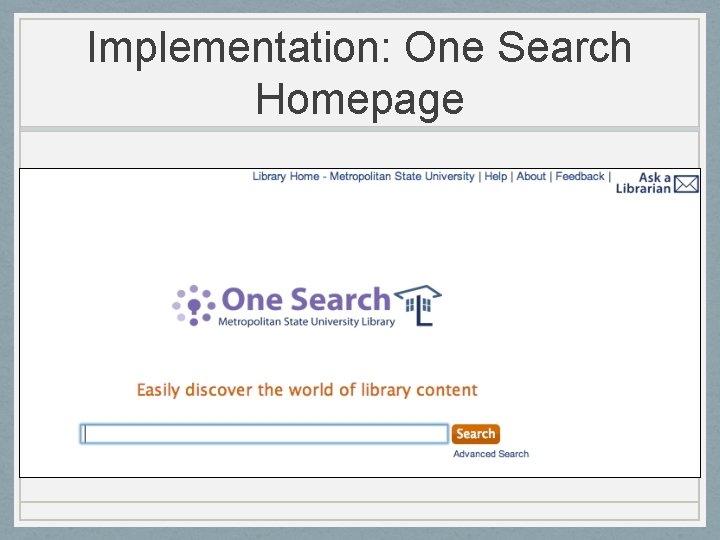

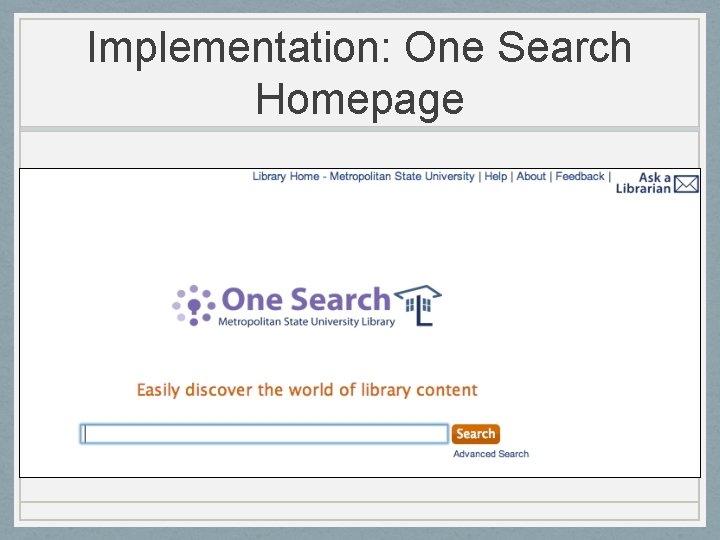

Implementation: One Search Homepage

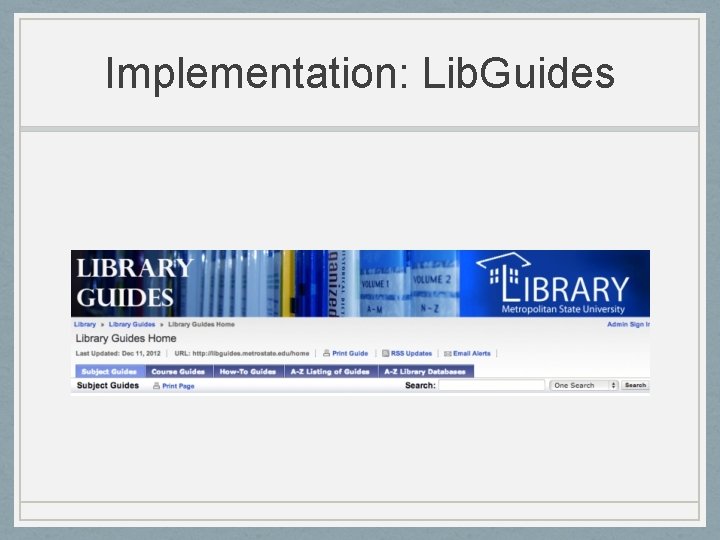

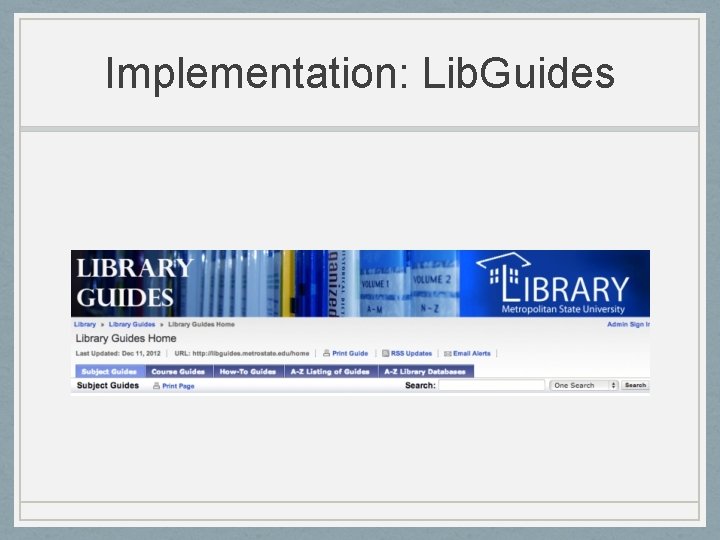

Implementation: LibGuides

Marketing: Soft Launch
• Notes to liaisons
• Facebook announcement (promoted post)

Marketing: Social Media

Marketing: Official Launch
• Facebook announcement
• LMS announcement
• Student and faculty/staff newsletters
• Library newsletter

Marketing: Social Media

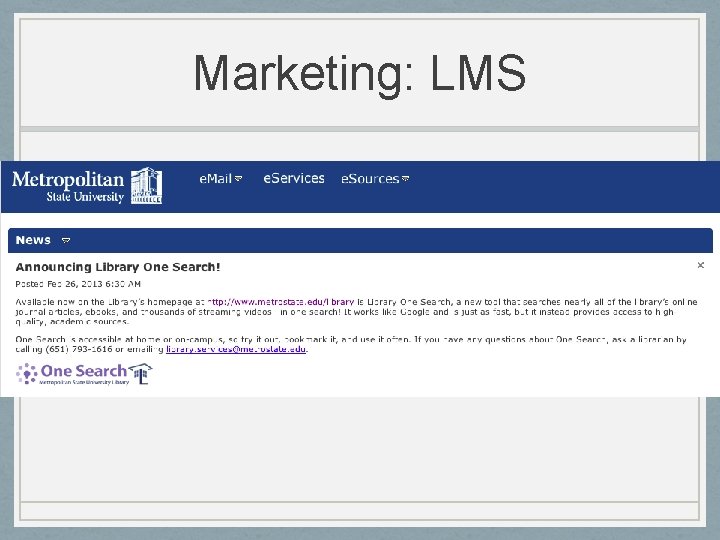

Marketing: LMS

Marketing: University Newsletters

Marketing: University Newsletters

Marketing: Library Newsletter

Usability
• Metro State: No studies conducted yet.
• Univ. System of MD (USMAI) (Holman et al., 2012)
  • Interface: Users failed to recognize “visual cues such as format icons or full-text availability” (p. 260).
  • Search: Irrelevant results. Users did not see suggested spellings.
  • Facets: Half of users “did not engage with facets” (p. 260). (But half did!)
  • Save features: Few used them at all.

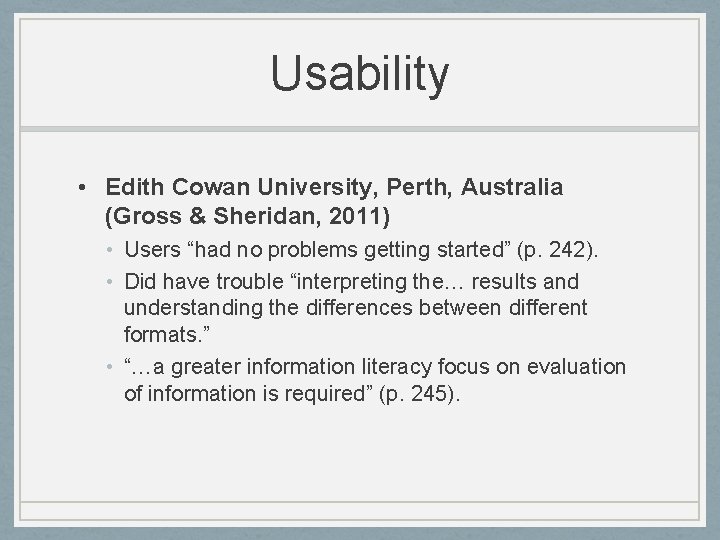

Usability
• Edith Cowan University, Perth, Australia (Gross & Sheridan, 2011)
  • Users “had no problems getting started” (p. 242).
  • Did have trouble “interpreting the… results and understanding the differences between different formats.”
  • “…a greater information literacy focus on evaluation of information is required” (p. 245).

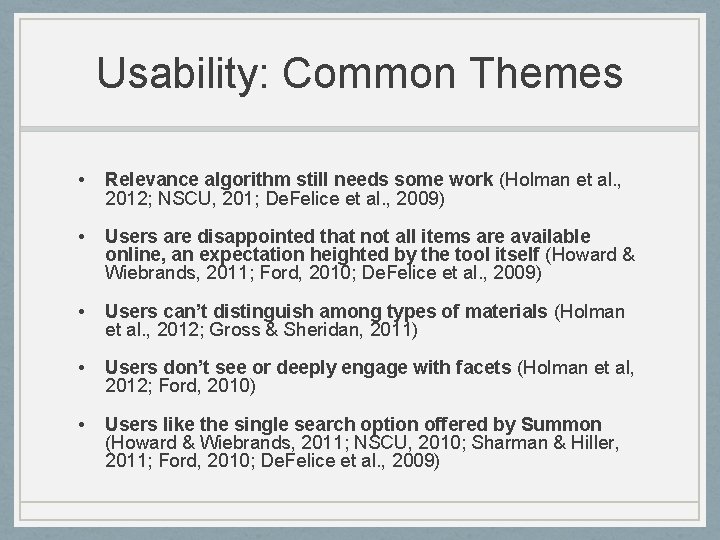

Usability: Common Themes
• Relevance algorithm still needs some work (Holman et al., 2012; NCSU, 2010; DeFelice et al., 2009)
• Users are disappointed that not all items are available online, an expectation heightened by the tool itself (Howard & Wiebrands, 2011; Ford, 2010; DeFelice et al., 2009)
• Users can’t distinguish among types of materials (Holman et al., 2012; Gross & Sheridan, 2011)
• Users don’t see or deeply engage with facets (Holman et al., 2012; Ford, 2010)
• Users like the single search option offered by Summon (Howard & Wiebrands, 2011; NCSU, 2010; Sharman & Hiller, 2011; Ford, 2010; DeFelice et al., 2009)

Usability: Some Instruments
• Gross & Sheridan, 2011
• Fagan et al., 2012

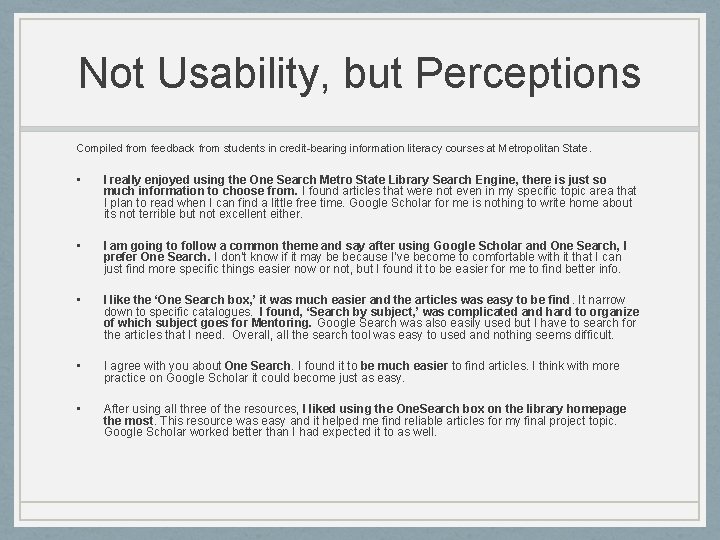

Not Usability, but Perceptions
Compiled from feedback from students in credit-bearing information literacy courses at Metropolitan State.
• I really enjoyed using the One Search Metro State Library Search Engine, there is just so much information to choose from. I found articles that were not even in my specific topic area that I plan to read when I can find a little free time. Google Scholar for me is nothing to write home about its not terrible but not excellent either.
• I am going to follow a common theme and say after using Google Scholar and One Search, I prefer One Search. I don't know if it may be because I've become to comfortable with it that I can just find more specific things easier now or not, but I found it to be easier for me to find better info.
• I like the ‘One Search box,’ it was much easier and the articles was easy to be find. It narrow down to specific catalogues. I found, ‘Search by subject,’ was complicated and hard to organize of which subject goes for Mentoring. Google Search was also easily used but I have to search for the articles that I need. Overall, all the search tool was easy to used and nothing seems difficult.
• I agree with you about One Search. I found it to be much easier to find articles. I think with more practice on Google Scholar it could become just as easy.
• After using all three of the resources, I liked using the OneSearch box on the library homepage the most. This resource was easy and it helped me find reliable articles for my final project topic. Google Scholar worked better than I had expected it to as well.

Return on Investment
• Pro
  • Materials that are easily discovered will get greater use (Vaughn, 2011)
  • Increased usage makes the investment in library resources more justifiable to administrators
• Con (?)
  • Decreased use of subject databases could mean that specialized resources become underutilized
  • Some librarians fear Summon could encourage the use of “good enough” sources, undermining the value of library collections (Buck & Mellinger, 2011)

Impact on Collection Usage
Significant increase in full-text downloads and link resolver click-throughs:
• Grand Valley State University (Way, 2010)
  • 66% increase in downloads from top 5,000 journals, totaling 81,371 click-throughs in first semester of implementation
• University of Texas at San Antonio (Kemp, 2012)
  • Link resolver use increased 84%, full-text downloads increased 23% in first year

Impact on Collection Usage
Unclear impact on circulation:
• Grand Valley State University (Way, 2010)
  • Users found and selected monographs via Summon
• University of Texas at San Antonio (Kemp, 2012)
  • -13.7% direct catalog usage in first year after implementation; -3% circulation

Impact on Collection Usage
Significant increase in newspaper access and downloads:
• Grand Valley State University (Way, 2010)
  • NY Times use increased 202%; use of the local newspaper, the Grand Rapids Press, increased 400%. Increased access of other newspaper titles.

Impact on Collection Usage
Decrease in use of indexing and abstracting databases:
• Grand Valley State University (Way, 2010)
  • Large drop in use regardless of platform, subject, or discipline
• University of Texas at San Antonio (Kemp, 2012)
  • -5% first year after implementation

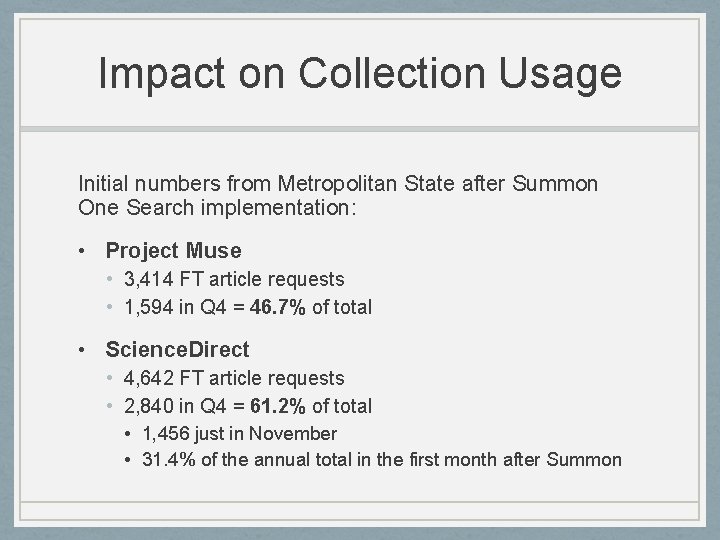

Impact on Collection Usage
Initial numbers from Metropolitan State after Summon One Search implementation:
• Project Muse
  • 3,414 FT article requests
  • 1,594 in Q4 = 46.7% of total
• ScienceDirect
  • 4,642 FT article requests
  • 2,840 in Q4 = 61.2% of total
  • 1,456 just in November
    • 31.4% of the annual total in the first month after Summon
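The percentages on this slide are simple shares of each resource's annual full-text (FT) request total. A short Python check, assuming the annual totals above are the denominators (the helper name `share_of_total` is hypothetical), reproduces them:

```python
def share_of_total(part: int, total: int) -> float:
    """Return part as a percentage of total, rounded to one decimal place."""
    return round(100 * part / total, 1)


# Annual FT article requests from the Metropolitan State figures above.
PROJECT_MUSE_TOTAL = 3414
SCIENCEDIRECT_TOTAL = 4642

print(share_of_total(1594, PROJECT_MUSE_TOTAL))   # Project Muse, Q4 share → 46.7
print(share_of_total(2840, SCIENCEDIRECT_TOTAL))  # ScienceDirect, Q4 share → 61.2
print(share_of_total(1456, SCIENCEDIRECT_TOTAL))  # ScienceDirect, November share → 31.4
```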

Planning and Implementation
Dahl and MacDonald (2012) suggest that discovery tools are a disruptive technology:
• Expect some resistance from staff
• May receive resistance from users
• Catalogs and indexes were designed for librarians and scholars; discovery tools are designed for library users

Planning and Implementation
• Strategies that can positively impact staff buy-in:
  • Build trust through transparency and shared information (Boyer & Besaw, 2012; Dahl & MacDonald, 2012)
  • Clarify how the tool fits the mission and vision of the library (Mandernach & Fagan, 2012)
  • Involve staff in all areas of the library in the initial planning and implementation process (Dahl & MacDonald, 2012)
  • Develop a strategy for implementation (Boyer & Besaw, 2012; Dahl & MacDonald, 2012)
  • Provide training in initial stages of implementation (Boyer & Besaw, 2012; Dahl & MacDonald, 2012)

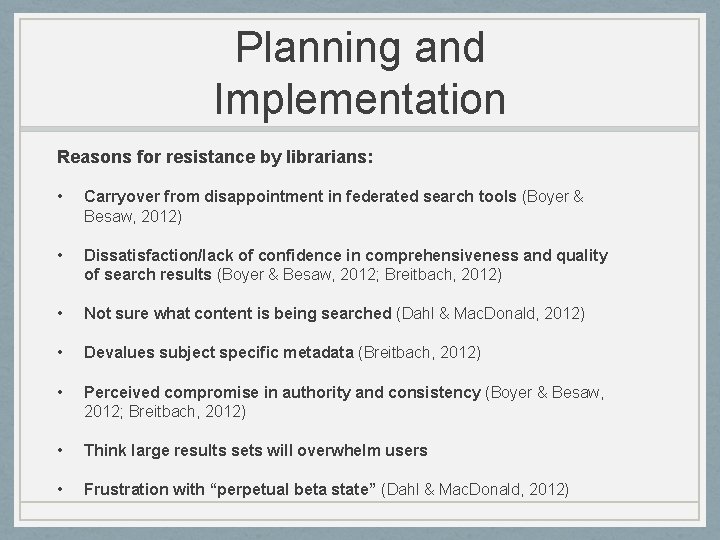

Planning and Implementation
Reasons for resistance by librarians:
• Carryover from disappointment in federated search tools (Boyer & Besaw, 2012)
• Dissatisfaction/lack of confidence in comprehensiveness and quality of search results (Boyer & Besaw, 2012; Breitbach, 2012)
• Not sure what content is being searched (Dahl & MacDonald, 2012)
• Devalues subject-specific metadata (Breitbach, 2012)
• Perceived compromise in authority and consistency (Boyer & Besaw, 2012; Breitbach, 2012)
• Think large result sets will overwhelm users
• Frustration with “perpetual beta state” (Dahl & MacDonald, 2012)

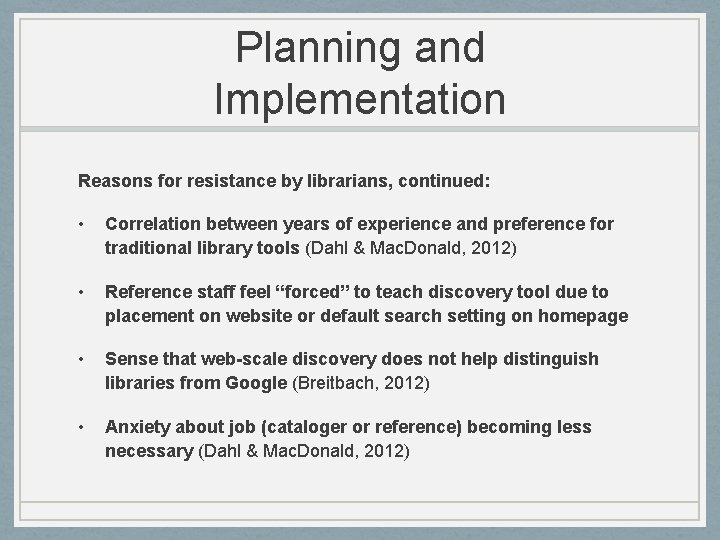

Planning and Implementation
Reasons for resistance by librarians, continued:
• Correlation between years of experience and preference for traditional library tools (Dahl & MacDonald, 2012)
• Reference staff feel “forced” to teach the discovery tool due to its placement on the website or the default search setting on the homepage
• Sense that web-scale discovery does not help distinguish libraries from Google (Breitbach, 2012)
• Anxiety about jobs (cataloging or reference) becoming less necessary (Dahl & MacDonald, 2012)

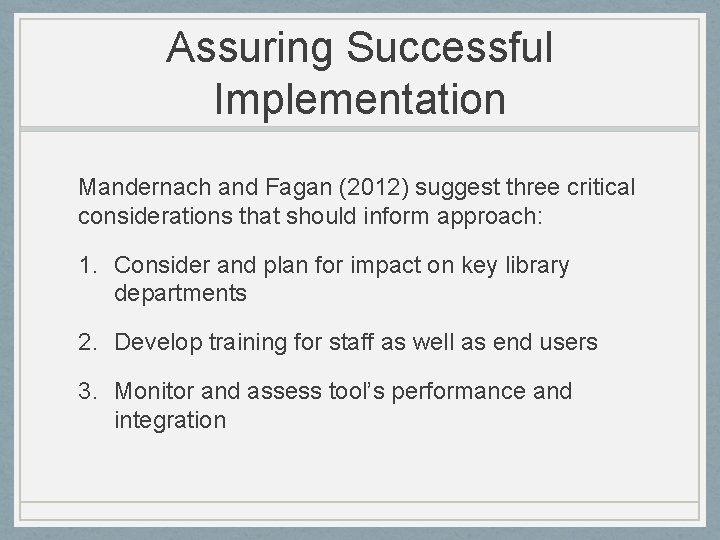

Assuring Successful Implementation
Mandernach and Fagan (2012) suggest three critical considerations that should inform the approach:
1. Consider and plan for impact on key library departments
2. Develop training for staff as well as end users
3. Monitor and assess the tool’s performance and integration

Questions?

Break

Activity: Comparing Search Results
www.metrostate.edu/library

Advantages for Users and Implications for Information Literacy
• Discovery is cross-disciplinary/reduces silos
• Searches across content types, locations, and publishers
• Better access to full text, more intuitive, saves time (Ranganathan’s 4th Law)
• Serendipity/“gateway to creative exploration” (Race, 2012)
• “Zone of curiosity,” juxtaposition, play, meaning (Borowske, 2005)
• End users only need to learn one interface
• Better transition to life after school
• Good enough/satisficing. Users know the value of the library but still choose Google for ease of use (Cmor et al., 2010)
• Less need for mediation. Empowering

Advantages for Librarians
• Less teaching to the tool... less “click here, do that” (Cmor & Li, 2012)
• Less time on Boolean and specialized database “tricks”
• Ability to showcase valuable “hidden” content and collections
• More time to focus on “higher level” skills:
  • Research as an iterative process
  • Search refinement
  • Content and source selection, scholarly vs. popular
  • Source evaluation
  • Choosing keywords, broadening and narrowing
  • Synthesis

How Does Discovery Foster Serendipity?
• Serendipity means a "happy accident" or "pleasant surprise"; specifically, the accident of finding something good or useful while not specifically searching for it. (Wikipedia)
• Old: Browsing books
  • Stimulates creativity, helps create novel connections and frameworks. Messy, unpredictable, personal (Race, 2012). Users bring themselves to the search
• Discovery Tools and Serendipity
  • “Large result sets stimulate accidental information discovery” (Race, 2012). Challenge your assumptions!
  • Facets aid exploration, recursive searching (Race, 2012)
  • Serendipity is more likely to occur in informal environments than in highly controlled systems (McBirnie, 2008)

Strategies: BIs
• Stop teaching the catalog (?) Teach discovery first
• Think about needs of class: what level in school/subject of study? Type of assignment? Are specialized databases necessary? Can you scaffold?
• Use broad keyword searches; teach refining with facets, “pearling” or “following the tracings”
• Emphasize critical thinking and evaluation
• Use subject guides in conjunction for advanced users (?)
• The hope is that you don’t need to just add it on to everything else you do… use your imagination!

Beyond the Basics (Credit-Bearing Courses)
• Use facets as a way to teach the information cycle and different formats (Fawley & Krysak, 2012)
• Use the option to “search beyond collection” to teach Interlibrary Loan
• Stop teaching mechanics of citations (?)
• Talk about the important ways Summon is different from Google
• Use hands-on, comparative exercises. When you see failure, don’t fix the lesson, fix the tool

Reference Desk
• Document for yourself: what works well? For which subjects? Keep a record at the desk. Watch the student. Test your assumptions about their level of expertise
• Try discovery first: you might be surprised at the databases you find results in. Try known-item searching; it works better than you might expect, and is perhaps an improvement on what you taught before
• What message do you send when you show complex search “tricks”? Can your users repeat them?
• Reference interview: what has the user tried already? Did they use discovery?
• E-reference books often appear near the top of results, giving them more exposure
• Break the bad library assignment (but facets can help when you get them anyway)
• Reduce anxiety = foster creativity

Final Thoughts
• Focus on the end user, not the discovery tool “innards”. How did your old way work? Did students know where they were before? What is your end goal?
• Be careful what you wish for: your “fixes” could break the tool
• Have an open, flexible mind
• Be curious
• Have fun!
CC by JamesJen

References
Borowske, K. (2005). Curiosity and motivation-to-learn. Paper presented at the ACRL Twelfth National Conference, Minneapolis, MN. Retrieved from http://three.umfglobal.org/resources/1976/curiosity_article.pdf
Brennan, D. P. (2012). Details, details: Issues in planning for, implementing, and using resource discovery tools. In M. P. Popp & D. Dallis (Eds.), Planning and implementing resource discovery tools in academic libraries (pp. 44-56). Hershey, PA: IGI Global. doi:10.4018/978-1-4666-1821-3.ch003
Buck, S., & Mellinger, M. (2011). The impact of Serial Solutions’ Summon on information literacy instruction: Librarian perceptions. Internet Reference Services Quarterly, 16(4), 159-181. doi:10.1080/10875301.2011.621864
Cmor, D., & Li, X. (2012). Beyond Boolean, towards thinking: Discovery systems and information literacy. Library Management, 33(8), 450-457. doi:10.1108/01435121211279812
Cmor, D., Chan, A., & Kong, T. (2010). Course-integrated learning outcomes for library database searching: Three assessment points on the path of evidence. Evidence Based Library and Information Practice, 5(1), 64-68.

Dahl, D., & MacDonald, P. (2012). Implementation and acceptance of a discovery tool: Lessons learned. In M. P. Popp & D. Dallis (Eds.), Planning and implementing resource discovery tools in academic libraries (pp. 366-387). Hershey, PA: IGI Global. doi:10.4018/978-1-4666-1821-3.ch021
DeFelice, B., et al. (2009). An evaluation of Serials Solutions Summon as a discovery tool for the Dartmouth College Library. Retrieved from http://www.dartmouth.edu/~library/admin/docs/Summon_Report.pdf
Fagan, J. C., Mandernach, M., Nelson, C. S., Paulo, J. R., & Saunders, G. (2012). Usability test results for a discovery tool in an academic library. Information Technology and Libraries, 31(1), 83-112. doi:10.6017/ital.v31i1.1855
Fawley, N., & Krysak, N. (2012). Information literacy opportunities within the discovery tool environment. College & Undergraduate Libraries, 19(2-4), 207-214. doi:10.1080/10691316.2012.693439
Ford, L. (2010). Better than Google Scholar? Presented at Internet Librarian 2010. Retrieved from http://conferences.infotoday.com/documents/111/a105_ford.pdf

Gross, J., & Sheridan, L. (2011). Web scale discovery: The user experience. New Library World, 112(5/6), 236-247. doi:10.1108/03074801111136275
Holman, L., et al. (2012). How users approach discovery tools. In M. P. Popp & D. Dallis (Eds.), Planning and implementing resource discovery tools in academic libraries (pp. 252-265). Hershey, PA: IGI Global. doi:10.4018/978-1-4666-1821-3.ch014
Howard, D., & Wiebrands, C. (2011). Culture shock: Librarians’ response to web scale search. Retrieved from http://ro.ecu.edu.au/cgi/viewcontent.cgi?article=7208&context=ecuworks
Kemp, J. (2012). Does web scale discovery make a difference? Changes in collections use after implementing Summon. In M. P. Popp & D. Dallis (Eds.), Planning and implementing resource discovery tools in academic libraries (pp. 456-468). Hershey, PA: IGI Global. doi:10.4018/978-1-4666-1821-3.ch026
Mandernach, M., & Fagan, J. C. (2012). Creating organizational buy-in: Overcoming challenges to a library-wide discovery tool implementation. In M. P. Popp & D. Dallis (Eds.), Planning and implementing resource discovery tools in academic libraries (pp. 419-437). Hershey, PA: IGI Global. doi:10.4018/978-1-4666-1821-3.ch024
McBirnie, A. (2008). Seeking serendipity: The paradox of control. Aslib Proceedings: New Information Perspectives, 60(6), 600-618. doi:10.1108/00012530810924294

North Carolina State University. (2010). Summon usability testing. Retrieved from http://www.lib.ncsu.edu/userstudies/2010_summon/
Race, T. (2012). Resource discovery tools: Supporting serendipity. In M. P. Popp & D. Dallis (Eds.), Planning and implementing resource discovery tools in academic libraries (pp. 139-152). Hershey, PA: IGI Global. doi:10.4018/978-1-4666-1821-3.ch009
Sharman, A., & Hiller, E. (2011). Implementation of Summon at the University of Huddersfield. SCONUL Focus, 51, 50-52.
Thomsett-Scott, B., & Reese, P. (2012). Academic libraries and discovery tools: A survey of the literature. College & Undergraduate Libraries, 19, 123-143. doi:10.1080/10691316.2012.697009
Way, D. (2010). The impact of web-scale discovery on the use of a library collection. Retrieved from http://scholarworks.gvsu.edu/library_sp/9
Welch, A. J. (2012). Implementing library discovery: A balancing act. In M. P. Popp & D. Dallis (Eds.), Planning and implementing resource discovery tools in academic libraries (pp. 322-337). Hershey, PA: IGI Global. doi:10.4018/978-1-4666-1821-3.ch018

Thank You!
• Questions or comments? Please write us!
• Jennifer DeJonghe <jennifer.dejonghe@metrostate.edu>
• Michelle Filkins <michelle.filkins@metrostate.edu>
• Alec Sonsteby <alexander.sonsteby@metrostate.edu>
