Schedule: Wednesday, 9/11 and Monday, 9/16: Lecture; Wednesday, 9/18: Exam

- Slides: 71

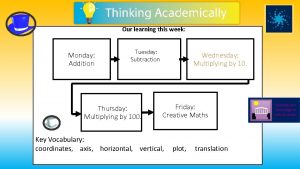

Schedule: Wednesday, 9/11 and Monday, 9/16: Lecture; Wednesday, 9/18: Exam

Unit 2, PSY 6450: Traditional Performance Appraisal, Performance Measurement, Performance Assessment, Task Clarification

Unit 2
- Aamodt: traditional performance appraisal
- Daniels chapter: measurement and the performance matrix
- Pampino et al. article: a great example of performance assessment, a multiple-component intervention, and social validity assessment. The only problem is the short-term nature of the study.
- Anderson et al. article: a great example of task clarification and the importance of feedback
- Komaki: a tool to assess effective supervisors and managers from an operant perspective

Additional Readings
- I have included seven references for additional readings that I highly recommend if you are interested in pursuing any of the topics.
  - Alyce's hit list
- I have also included "Major Take-Home Points" in the study objectives, or the World According to Alyce.
  - Hopefully this will help explain why I chose the articles I did and tie the material together for you.
  - There are a lot of different topics covered.

SO 1 (NFE): Why it is important to determine the reason for evaluation
- Performance is assessed for a number of reasons (primarily, administrative reasons and performance improvement).
- Various performance appraisal methods are appropriate for some purposes but not for others.
  - Employee comparison methods such as forced distribution and ranking: good for administrative purposes, not good for performance improvement
  - 360-degree evaluation: good for performance improvement, not good for administrative purposes

(Start with traditional performance appraisal: two very good articles in the 2011 JOBM IO/OBM special issue, DeNisi and Gravina & Siers. Administrative purposes: pay increases, promotions, termination.)

Traditional Performance Appraisal: Who does it?
- 90% of appraisals are done by supervisors.
- Others, however, may also participate in the appraisal:
  - Peers
  - Subordinates/direct reports (upward feedback)
  - Customers
  - The employee himself or herself (rather rare)
- 28% of large companies use some form of multisource evaluation.

(Start with some data regarding the source of the appraisal, that is, who actually does the appraisal.)

SO 2: Different results
- There is often little agreement when different sources evaluate the same employee:
  - Supervisors and peers: .34 correlation
  - Supervisors and subordinates: .22 correlation
  - Supervisors and the employee: .22 correlation
  - Peers and subordinates: .22 correlation
  - Peers and the employee: .19 correlation

(Start with some data regarding the source of the appraisal; note the correlation of only .22 between the supervisor and the employee, which often leads to problems.)
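One way to see how little these sources agree is to square each correlation: r² is the proportion of variance the two sets of ratings share. The r² interpretation is standard statistics rather than something stated on the slide; the sketch below simply applies it to the correlations listed above.

```python
# Correlations between rating sources, as listed on the SO 2 slide.
correlations = {
    "supervisors-peers": 0.34,
    "supervisors-subordinates": 0.22,
    "supervisors-employee": 0.22,
    "peers-subordinates": 0.22,
    "peers-employee": 0.19,
}


def shared_variance(r: float) -> float:
    """Coefficient of determination: proportion of variance two measures share."""
    return r ** 2


for pair, r in correlations.items():
    print(f"{pair}: r = {r:.2f}, shared variance = {shared_variance(r):.1%}")
```

Even the strongest pair (supervisors and peers) shares only about 12% of its variance, and a supervisor and the employee share under 5%.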

SO 2: Two reasons why results may differ
- Those groups see different aspects of the person's performance.
  - The supervisor may see the results, but peers may not.
- The employee may interact very differently with the supervisor than with peers and subordinates.
- This is why we have our current graduate students evaluate applicants to our graduate programs.

(Why are the evaluations so different? To get an accurate evaluation, tap into different sources.)

SO 3: Peer assessments
- 3A. In general, when peers are similar and know the person well, peer ratings have good reliability and validity.
  - Reliability: different peers rate the employee the same way (inter-observer agreement)
  - Validity: peer ratings predict the future success of employees
- 3B. However, employees react worse to negative feedback from peers than from supervisors and experts.

SO 4: Main obstacle to peer assessments
- Acceptance by employees: we don't like to do this.
- WMU faculty merit system: union vs. administration
  - Not enough money to go around; many just split the $$.
- How would you like to be given the responsibility for evaluating your peers (the other students advised by your faculty advisor) and have rewards (i.e., assistantships, grades in classes, opportunities for practica and projects) based on that?

SO 5: Ratings by subordinates
- 5A. Not surprisingly, accurate subordinate ratings can be hard to obtain. Why? Employees fear a backlash, particularly if there are only a few of them and the supervisor is likely to be able to identify them.
- 5B. Data also indicate that feedback/ratings from subordinates bring about the greatest performance change when compared to feedback/ratings by supervisors and peers (quite interesting!).

(Used in 360-degree feedback programs, which are quite popular right now; however, subordinate rating systems are not common or well regarded by managers. Same problem with getting evaluations of faculty advisors.)

360-degree feedback (NFE)
- A manager/supervisor:
  - Rates himself
  - Is rated by his manager/supervisor
  - Is rated by his peers
  - Is rated by his subordinates
- What percentage of managers saw themselves as others saw them? Only 10%!
- Overrating was most noteworthy on what scale? People.

(NFE. Devastating, but why is this good news for us? Use for administrative purposes is increasing, but there are some problems with this. As Aamodt advised, it is best used for development purposes, with coaching by a coach who is not the manager's supervisor.)

SO 6: Self-assessment
- 6A. Main problem? Inflation: we think we are better than we are.
- 6B. Self-ratings correlate:
  - only moderately with actual performance
  - poorly with subordinate ratings
  - poorly with management ratings
- NFE, but interesting cross-cultural data:
  - Ratings of Japanese, Korean, and Taiwanese workers suffer from strictness (modesty).
  - Ratings from workers in the US, mainland China, India, Singapore, and Hong Kong suffer from leniency.

SO 6: Self-assessments, cont.
- 6C. There is little agreement between supervisory and self-assessments. What are the important implications of this from a behavioral perspective? If employees believe they are performing better than their supervisor believes, then they will not get the rewards they feel they deserve from the supervisor. Thus, employees will not believe that rewards are contingent upon their performance. Because of that, the discrepancy is likely to hurt performance and strain relations with the supervisor.

NFE: Common appraisal methods
- Employee comparison methods
  - Evaluate employees by comparing them against each other rather than against a standard
- Objective performance
- Ratings
  - Graphic rating scales (most common)
  - Behavioral checklists
  - Less common, in the Appendix (due to complexity): (a) behaviorally anchored rating scales, (b) forced choice rating scales, (c) mixed standard scales, (d) behavioral observation scales

(Aamodt does a superb job of presenting the different types and discussing the strengths and weaknesses of each.)

SO 8: Why use employee comparisons rather than rating scales?
- To reduce leniency
  - Forces variability into the ratings
  - With graphic scales, it is not uncommon for a supervisor to rate employees pretty much the same (everyone is a 5 or a 6 on a 7-point scale), making it difficult to make personnel decisions.

(Rank order, paired comparison, and forced distribution; I want to look at ranking and forced distribution in detail.)

SO 9: Forced distribution
- A predetermined percentage of employees is placed in each rating category.
- More than 20% of Fortune 1000 companies use this type of system.
  - General Electric, former CEO Jack Welch
  - "Rank and yank": required that the bottom 10% be fired

(Unfortunately, you have probably experienced this in some of your classes: 10% get As, 20% get Bs, 40% get Cs, 20% get Ds, and 10% get Es. How do you feel about that system? Quote from article on the next slide?)

SO 9: The problem with forced distribution
- Assumes that the performance of employees is normally distributed
- Probably not the case if the selection system is good, poor performers have been fired, and there is a good management system (if performance is normally distributed, the organization is doing something very wrong) (include material in italics for exam)
- Example: my undergraduate class distribution
  - Oops: only the top 10% in fall get an A, when in reality typically 20% of students earn 92% of the points or better.
  - Also, my fall grade distribution tends to be better than my spring grade distribution: students who take the class in fall could do just as well as (or better than) students in spring, but get a lower grade.

(Assumes 10% are performing great, 20% good, 40% average, etc.)
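A minimal Python sketch of the mechanism, assuming the 10/20/40/20/10 split from the class example: grades are assigned purely by rank, so a student with a high absolute score can still be pushed down once a quota fills. The ten scores are hypothetical, chosen so that 20% of the class earns 92% or better.

```python
from typing import Dict, List, Tuple

# Forced distribution from the class example (integer percentages):
# top 10% A, next 20% B, middle 40% C, next 20% D, bottom 10% E.
QUOTAS: List[Tuple[str, int]] = [("A", 10), ("B", 20), ("C", 40), ("D", 20), ("E", 10)]


def forced_distribution(scores: Dict[str, float]) -> Dict[str, str]:
    """Assign grades purely by rank order, filling each category's quota in turn."""
    ranked = sorted(scores, key=lambda name: scores[name], reverse=True)  # best first
    n = len(ranked)
    grades: Dict[str, str] = {}
    start, cumulative_pct = 0, 0
    for grade, pct in QUOTAS:
        cumulative_pct += pct
        end = cumulative_pct * n // 100  # cumulative cut point; the last one is always n
        for name in ranked[start:end]:
            grades[name] = grade
        start = end
    return grades


# Hypothetical class of 10 in which 2 students (20%) earn 92% or better.
scores = {"s1": 97, "s2": 93, "s3": 91, "s4": 88, "s5": 85,
          "s6": 84, "s7": 80, "s8": 78, "s9": 74, "s10": 70}
grades = forced_distribution(scores)
print(grades["s1"], grades["s2"])  # A B: s2 earned 93%, but the A quota is already full
```

Because the cut points depend only on rank, the same 93% would earn an A in a weaker section, which is the fall-versus-spring problem described above.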

SO 9: Forced distribution
- A legal caution about forced distribution systems!
  - Two major lawsuits have been brought against companies because members of minority groups were disproportionately ranked in the low category.
  - Ford and Goodyear dropped them.

SO 10: What's the problem with employee comparisons?
- May create a false impression that differences between individuals are larger than they actually are, because you do not get actual performance data
- Six employees, rank ordered: 1. Jan, 2. Shakira, 3. Paul, 4. Mike, 5. Emilio, 6. Susan
- The actual performance difference between Jan and Mike, or even Susan, may be very small, yet look big. This can affect salary increases, promotions, etc.

(Employee comparisons in general, of which ranking and forced distribution are types: limited $ for merit increases, promotions across departments. Is #3 in one department comparable in performance to #3 in another? Also, these are competitive systems: only one person can be #1, which can lead to sabotage or at least decrease the willingness to cooperate/help.)
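To make the point concrete, here is a small Python sketch that attaches hypothetical scores to the six ranked employees; the slide gives only the rank order, so every number below is invented for illustration. A rank list reports positions 1 through 6 while hiding that the whole group falls within about two points.

```python
# Hypothetical scores (the slide gives only the rank order); all fall within 2 points.
performance = {
    "Jan": 96.0, "Shakira": 95.6, "Paul": 95.2,
    "Mike": 94.9, "Emilio": 94.5, "Susan": 94.1,
}

ranked = sorted(performance, key=lambda name: performance[name], reverse=True)
for rank, name in enumerate(ranked, start=1):
    gap = performance[ranked[0]] - performance[name]
    print(f"{rank}. {name}: {performance[name]:.1f} ({gap:.1f} behind #1)")

spread = max(performance.values()) - min(performance.values())
print(f"Ranks run from 1 to {len(ranked)}, but scores differ by only {spread:.1f} points")
```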

SO 11: Main problem with graphic rating systems
- Leniency and halo
  - Leniency: supervisors rate employees better than they should
  - Halo: a rating in one area of performance affects ratings in other areas
    - Example: a person has good social skills, so the supervisor rates him/her higher on performance than the supervisor should

(In general, there are many rating errors, but these are the two most common. Pressures on raters: how would you like to be the supervisor who gives one of your direct reports a poor evaluation, placing the employee in jeopardy of being fired, given MI's unemployment rate right now?)

SO 12: Behavioral checklists
- Behavioral checklists and scales are assumed to be more accurate because they are less vague and target "objective" behaviors that are readily observable, but they are not more accurate. Why?
  - They are still based on subjective judgments.
  - They are completed once a year.
- That is also why we should not consider them adequate from a behavioral perspective, which calls for:
  - Objective measures of behavior/performance
  - Measurement over time, as behavior/performance occurs on the job

(Only 5% of the variance between individuals when rated is due to the type of performance appraisal. That means the type of performance appraisal format really does not matter; one form is not better than another, people just think it is.)

SO 13: Which method is better?
- Research suggests that none is really better than another.
- Methods that are complicated and time-consuming to develop have only occasionally been found to be better than uncomplicated, inexpensive graphic rating scales.
- We have known this for years (Landy & Farr, 1980).

Dissatisfaction with performance appraisal (NFE)
- Companies tend to switch frequently from one type of form to another.
  - Recall that performance appraisal was the third-ranked topic addressed in JAP.
  - IBM abandoned employee ranking in favor of graphic scales, then returned to ranking a few years ago.
    - Made the front page of the Wall Street Journal: ranking was THE way to go
- Why do you think IBM may have made the switch? (Nothing to do with reliability or validity.)

NFE: Main problem with performance appraisals
- Inflation of ratings
- Main assumptions about the cause of errors:
  - Design of the form (hence why so much time and money is spent redesigning forms to "design out" rating errors)
  - Lack of knowledge on the part of supervisors/managers (hence training)
- Rarely are the consequences for ratings examined or considered.

(The Director of Personnel at WMU ranks this among the worst problems she had: fire/good ratings.)

NFE: Consequences for inflated ratings
- No rewards for accuracy; no sanctions for inaccurate ratings (how does the organization know whether the ratings are accurate or inaccurate? A problem.)
- Most common reason: high ratings are necessary to get salary increases, promotions, and other rewards for employees and now, even more importantly, to prevent employees from losing their jobs.
- Avoids aversive interactions with subordinates
- Ratings of subordinates reflect the competence of the supervisor as a supervisor/manager

(High unemployment, like now; servers, etc.)

SO 14 (NFE): Legal issues
- Performance appraisals are subject to federal and state EEO laws, and more cases are being filed.
- Protected classes under Title VII of the Civil Rights Act of 1964? African Americans, Hispanics, Asians, Native Americans, and females (sex)
  - Note: Obama extended this to sexual orientation and gender identity as well (under "sex"); Trump rescinded this extension. These are in the courts now.

(On click. Aamodt gives a very nice list of the factors that will increase the likelihood that you will "win" a legal challenge; note those well, but there are too many for you to memorize. But see the next slide.)

For your entertainment only: Actual statements
- His men would follow him anywhere, but only out of morbid curiosity.
- I would not allow this employee to breed.
- Works well when under constant supervision and cornered like a rat in a trap.
- He would be out of his depth in a parking lot puddle.
- This young lady has delusions of adequacy.
- This employee is depriving a village somewhere of an idiot.

(See next slide as well.)

What some others say*
- "By only infrequently rating personnel we only infrequently demotivate them" (anonymous blogger on the ADI web page)
- A 1994 survey by the Society for Human Resource Management found that 90% of appraisal systems were not successful (Shellhardt, 1994).
- "The best way to double the effectiveness of the typical annual performance appraisal is to do it once every two years" (Daniels, 2009)

*All taken from Daniels, A. (2009). Oops! 13 management practices that waste time and money (and what to do about them). Atlanta: Performance Management Publications.

Unit 2, Part 2: Performance Matrix, Functional Assessment, Task Clarification

SO 18: Performance Matrix (Daniels)
- Based on Riggs' Objectives Matrix (1986)
  - Felix & Riggs
  - Morphed into the Balanced Scorecard
- Abernathy: Performance Scorecard
  - Unique feature: performance indexing (weighting/prioritizing of multiple measures and inclusion of sub-goals for each measure)

Skip to SO 18 Performance Matrix PowerPoint slides: © AUBREY DANIELS INTERNATIONAL, INC. FOR USE EXCLUSIVELY BY EDUCATORS IN ACADEMIC INSTITUTIONS.

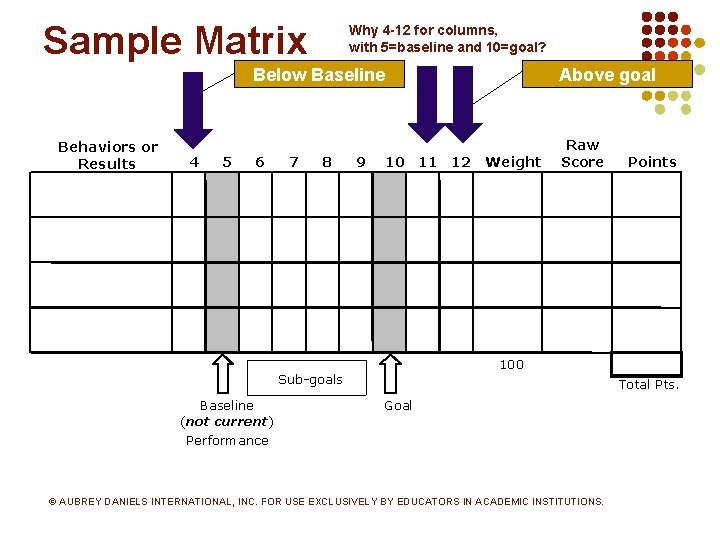

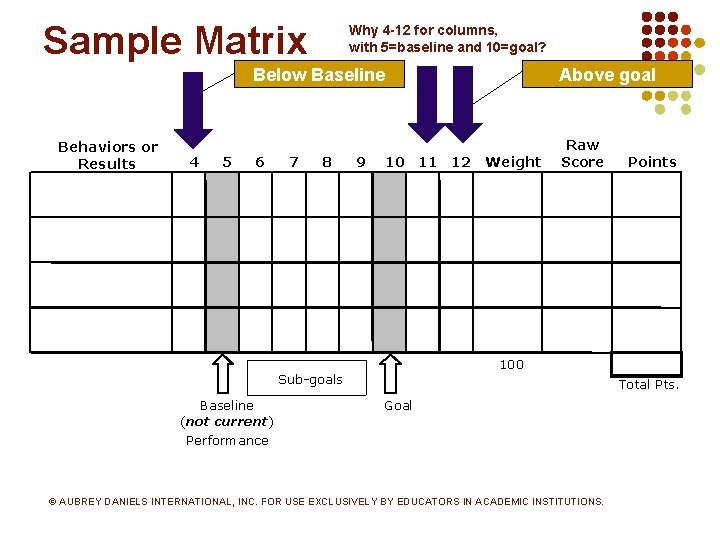

Sample Matrix (template)
- Rows: behaviors or results, each with a weight, a raw score, points, and total points; weights total 100
- Columns: performance levels 4-12, with 5 = baseline (not current performance) and 10 = goal; column 4 is below baseline, columns 6-9 are sub-goals, and columns 11-12 are above goal
- Question: Why 4-12 for columns, with 5 = baseline and 10 = goal?

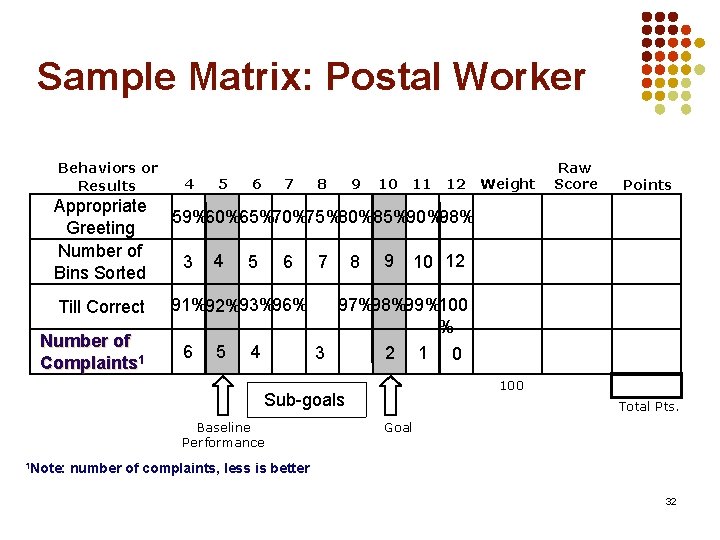

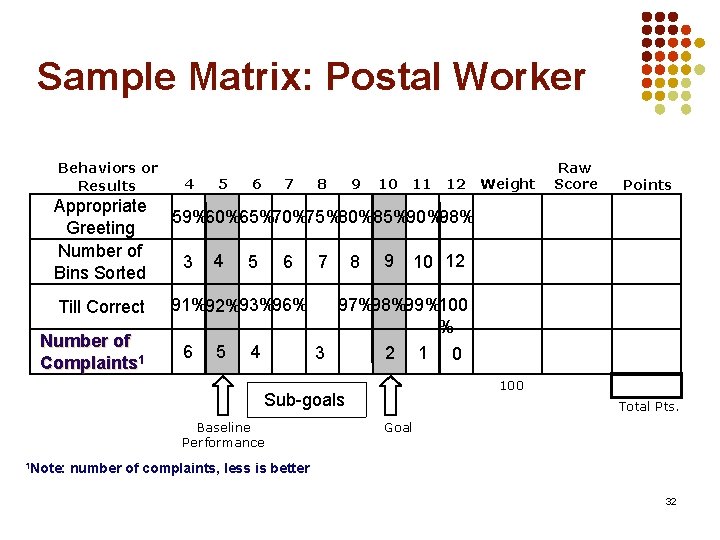

Sample Matrix: Postal Worker

Criteria, listed from the lowest to the highest performance column:
- Appropriate greeting: 59%, 60%, 65%, 70%, 75%, 80%, 85%, 90%, 98%
- Number of bins sorted: 3, 4, 5, 6, 7, 8, 9, 10, 12
- Till correct: 91%, 92%, 93%, 96%, 97%, 98%, 99%, 100%
- Number of complaints: 6, 5, 4, 3, 2, 1, 0 (note: for number of complaints, less is better)

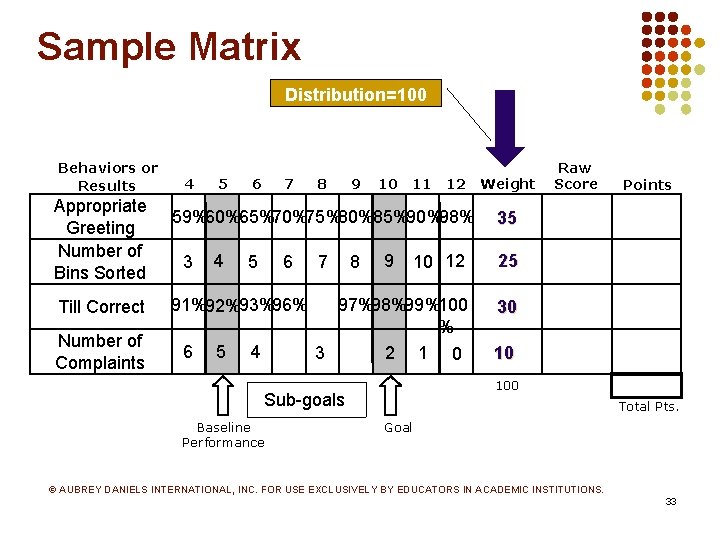

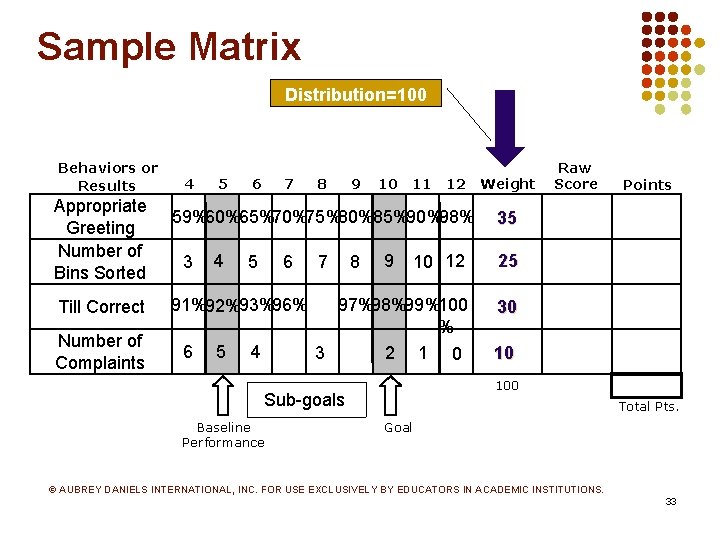

Sample Matrix: Weight distribution = 100
- Appropriate greeting: weight 35
- Number of bins sorted: weight 25
- Till correct: weight 30
- Number of complaints: weight 10

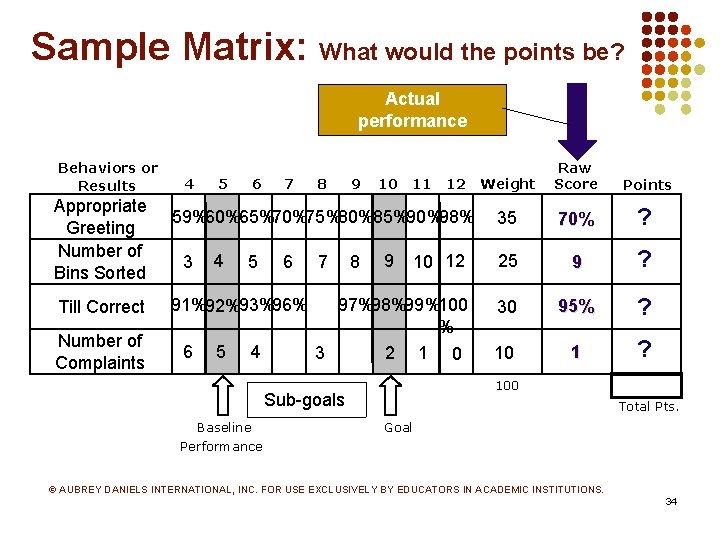

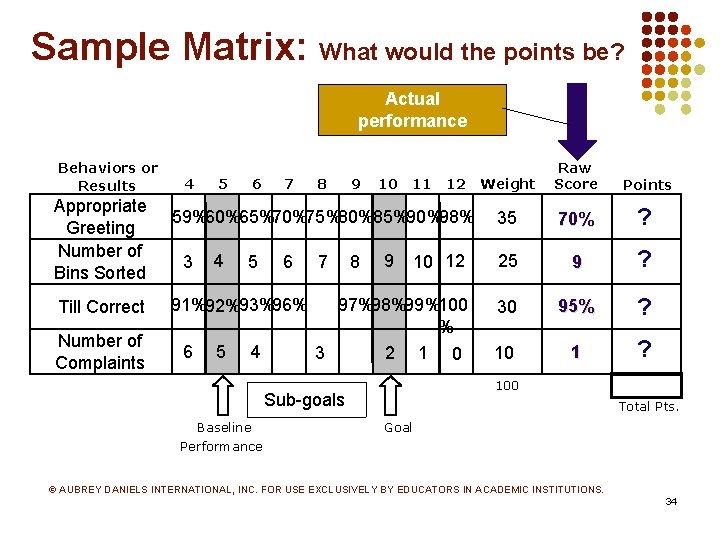

Sample Matrix: What would the points be?

Actual performance:
- Appropriate greeting (weight 35): raw score 70%, points ?
- Number of bins sorted (weight 25): raw score 9, points ?
- Till correct (weight 30): raw score 95%, points ?
- Number of complaints (weight 10): raw score 1, points ?
- Total points: ?
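The scoring arithmetic can be sketched in Python under several stated assumptions: each row's criteria map left to right onto columns 4-12 (as the template's 4-12 layout suggests); a raw score earns the highest column whose criterion it meets; points = column × weight; and the matrix total is the sum of the rows' points. That reading is consistent with the 1000-1200 reward tier (goal = 10 × 100 = 1000, maximum = 12 × 100 = 1200), but it is an interpretation, not Daniels' published procedure. Only the greeting and bins rows are used, because their nine criteria read cleanly from the slides; the 0-point rule for scores below every criterion is hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence

FIRST_COLUMN = 4  # assumption: each row's criteria fill columns 4-12 left to right


@dataclass
class MatrixRow:
    name: str
    weight: int                    # weights across the whole matrix sum to 100
    criteria: Sequence[float]      # criterion for column 4, 5, ..., 12
    lower_is_better: bool = False  # e.g. number of complaints

    def level(self, raw: float) -> int:
        """Highest column whose criterion the raw score meets (0 if none: hypothetical rule)."""
        best = 0
        for offset, criterion in enumerate(self.criteria):
            met = raw <= criterion if self.lower_is_better else raw >= criterion
            if met:
                best = FIRST_COLUMN + offset
        return best

    def points(self, raw: float) -> int:
        """Points for the row = column level x row weight."""
        return self.level(raw) * self.weight


greeting = MatrixRow("Appropriate Greeting", 35, [59, 60, 65, 70, 75, 80, 85, 90, 98])
bins = MatrixRow("Number of Bins Sorted", 25, [3, 4, 5, 6, 7, 8, 9, 10, 12])

print(greeting.level(70), greeting.points(70))  # 7 245
print(bins.level(9), bins.points(9))            # 10 250
```

Under these assumptions, 70% on greeting lands in column 7 (245 points) and 9 bins lands at the goal column (250 points); the `lower_is_better` flag covers rows such as complaints.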

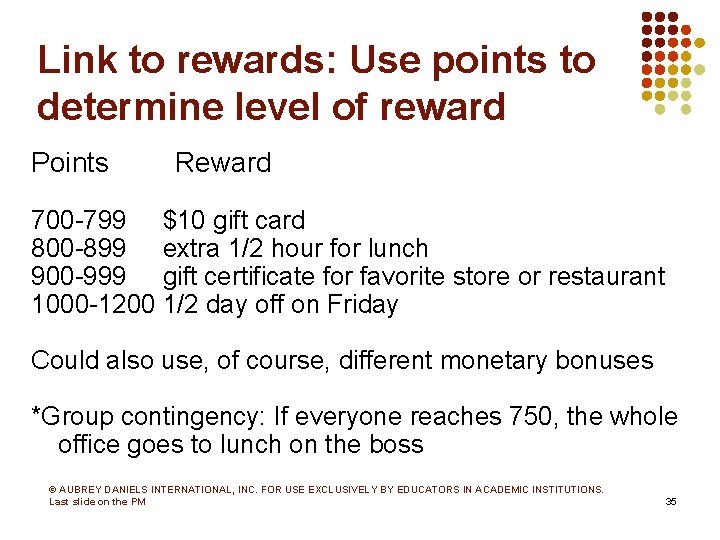

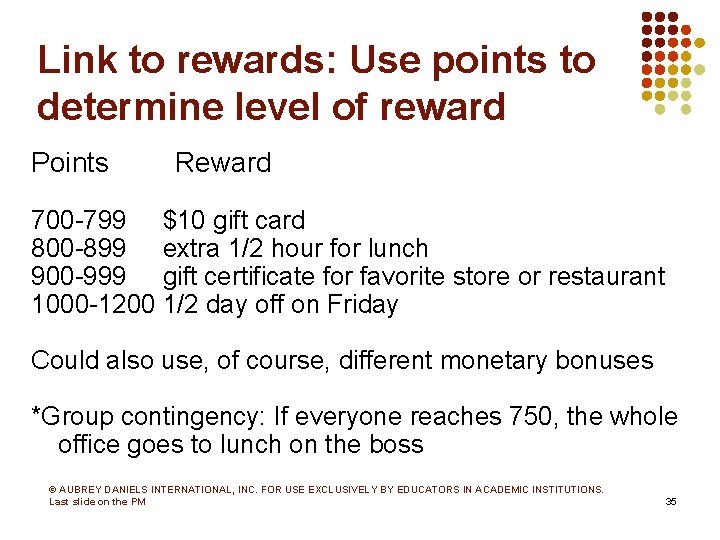

Link to rewards: Use points to determine the level of reward

| Points | Reward |
|---|---|
| 700-799 | $10 gift card |
| 800-899 | Extra 1/2 hour for lunch |
| 900-999 | Gift certificate for favorite store or restaurant |
| 1000-1200 | 1/2 day off on Friday |

- Could also use, of course, different monetary bonuses.
- *Group contingency: if everyone reaches 750, the whole office goes to lunch on the boss.

(Last slide on the PM.)
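The reward tiers and the group contingency translate directly into a lookup plus an "everyone met the bar" check. A minimal Python sketch; the tiers and the 750 threshold come from the slide, while the team totals are hypothetical, and treating totals below 700 as earning no reward is an assumption (the slide lists no tier there).

```python
from typing import Optional, Sequence

# Reward tiers from the slide (inclusive ranges of total matrix points).
REWARD_TIERS = [
    (1000, 1200, "1/2 day off on Friday"),
    (900, 999, "Gift certificate for favorite store or restaurant"),
    (800, 899, "Extra 1/2 hour for lunch"),
    (700, 799, "$10 gift card"),
]
GROUP_THRESHOLD = 750  # group contingency: everyone must reach 750 points


def individual_reward(points: int) -> Optional[str]:
    """Reward for a total, or None when no tier covers it (e.g. below 700)."""
    for low, high, reward in REWARD_TIERS:
        if low <= points <= high:
            return reward
    return None


def office_lunch_earned(all_points: Sequence[int]) -> bool:
    """True only if the office has members and every one of them reached 750."""
    return len(all_points) > 0 and all(p >= GROUP_THRESHOLD for p in all_points)


team = [820, 760, 1010]  # hypothetical monthly totals
for total in team:
    print(total, individual_reward(total))
print("Office lunch on the boss:", office_lunch_earned(team))
```

Note that the group threshold (750) sits inside the lowest individual tier, so an employee can earn a gift card while still keeping the office from its lunch.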

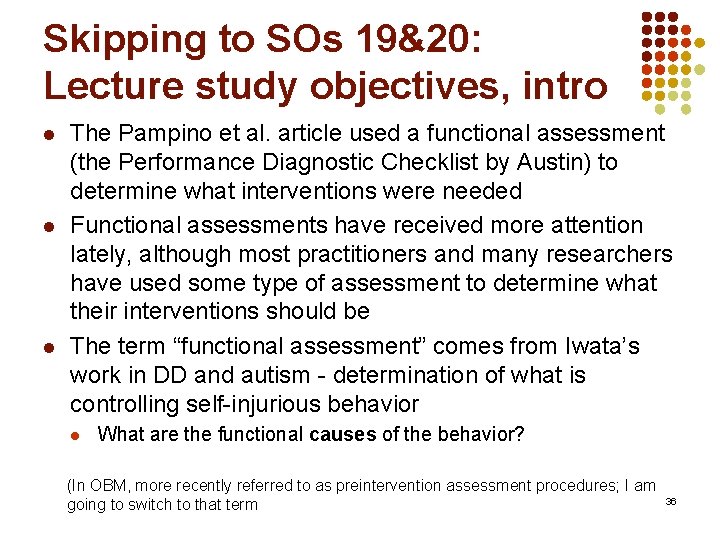

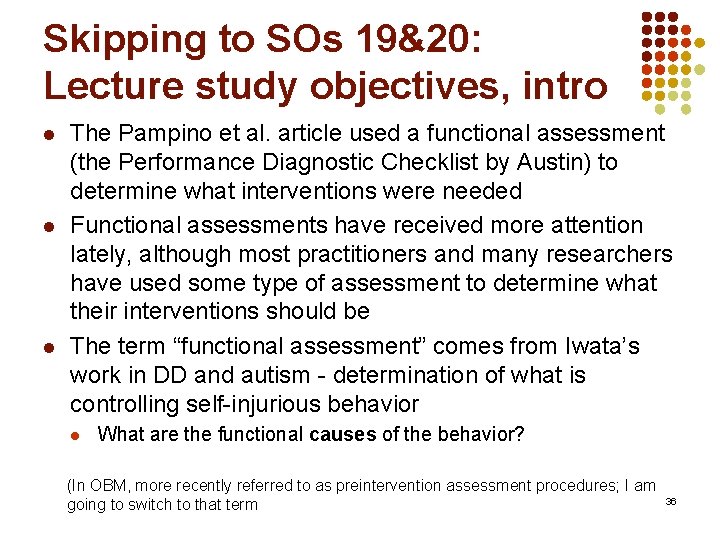

Skipping to SOs 19 & 20: Lecture study objectives, intro
- The Pampino et al. article used a functional assessment (Austin's Performance Diagnostic Checklist) to determine what interventions were needed.
- Functional assessments have received more attention lately, although most practitioners and many researchers have used some type of assessment to determine what their interventions should be.
- The term "functional assessment" comes from Iwata's work in DD and autism: determining what is controlling self-injurious behavior.
  - What are the functional causes of the behavior?

(In OBM, these are more recently referred to as preintervention assessment procedures; I am going to switch to that term.)

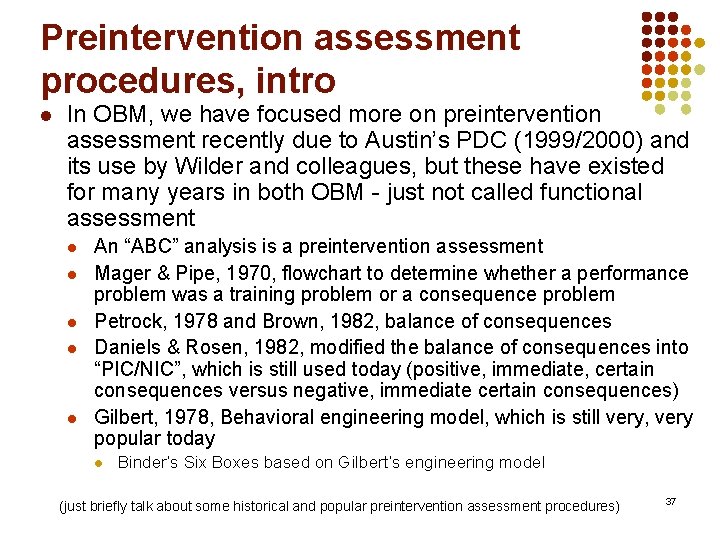

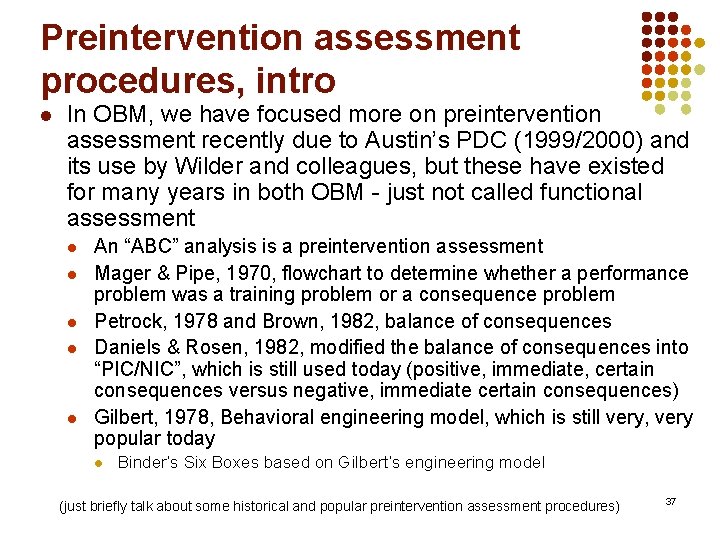

Preintervention assessment procedures, intro
- In OBM, we have focused more on preintervention assessment recently due to Austin's PDC (1999/2000) and its use by Wilder and colleagues, but these procedures have existed in OBM for many years; they just were not called functional assessment.
  - An "ABC" analysis is a preintervention assessment.
  - Mager & Pipe, 1970: flowchart to determine whether a performance problem was a training problem or a consequence problem
  - Petrock, 1978, and Brown, 1982: balance of consequences
  - Daniels & Rosen, 1982: modified the balance of consequences into "PIC/NIC," which is still used today (positive, immediate, certain consequences versus negative, immediate, certain consequences)
  - Gilbert, 1978: Behavioral Engineering Model, which is still very, very popular today
    - Binder's Six Boxes, based on Gilbert's engineering model

(Just briefly talk about some historical and popular preintervention assessment procedures.)

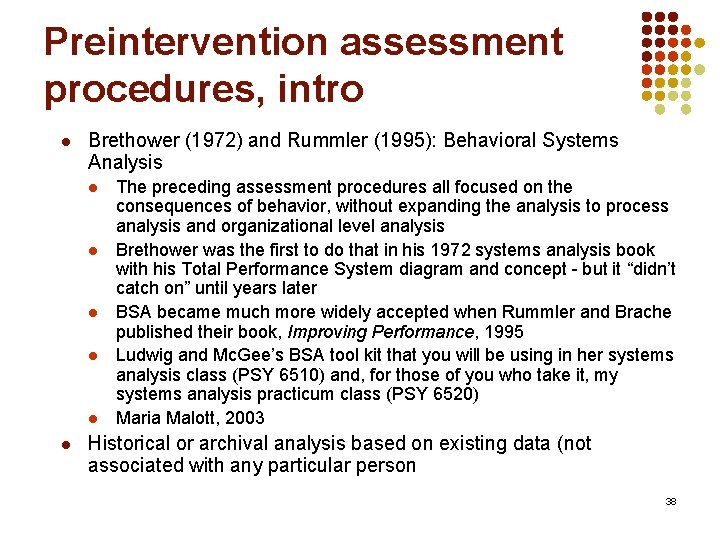

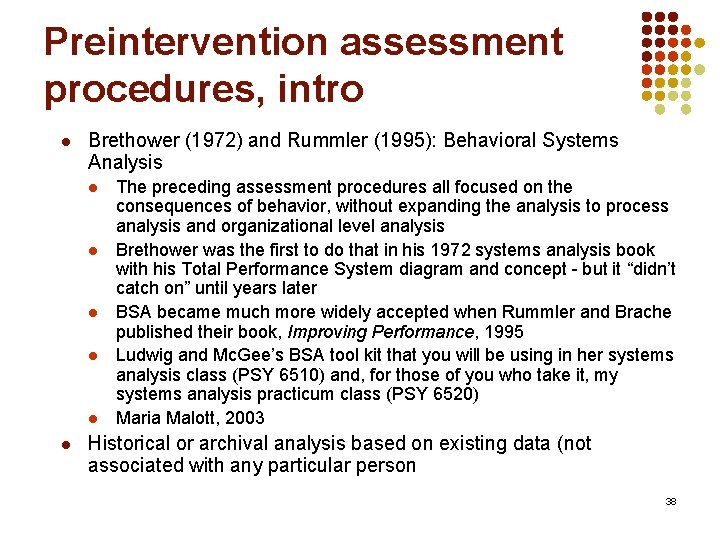

Preintervention assessment procedures, intro (cont.)
- Brethower (1972) and Rummler (1995): Behavioral Systems Analysis
  - The preceding assessment procedures all focused on the consequences of behavior, without expanding the analysis to process-level and organizational-level analysis.
  - Brethower was the first to do that, in his 1972 systems analysis book with his Total Performance System diagram and concept, but it "didn't catch on" until years later.
  - BSA became much more widely accepted when Rummler and Brache published their book, Improving Performance, in 1995.
  - Ludwig and McGee's BSA tool kit, which you will be using in her systems analysis class (PSY 6510) and, for those of you who take it, my systems analysis practicum class (PSY 6520)
- Maria Malott, 2003
- Historical or archival analysis based on existing data (not associated with any particular person)

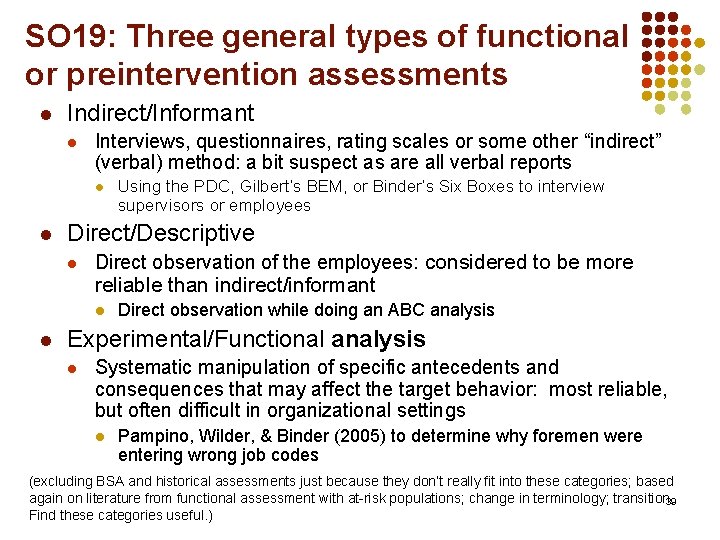

SO 19: Three general types of functional or preintervention assessments
- Indirect/informant
  - Interviews, questionnaires, rating scales, or some other "indirect" (verbal) method: a bit suspect, as are all verbal reports
  - Example: using the PDC, Gilbert's BEM, or Binder's Six Boxes to interview supervisors or employees
- Direct/descriptive
  - Direct observation of the employees: considered to be more reliable than indirect/informant
  - Example: direct observation while doing an ABC analysis
- Experimental/functional analysis
  - Systematic manipulation of specific antecedents and consequences that may affect the target behavior: most reliable, but often difficult in organizational settings
  - Example: Pampino, Wilder, & Binder (2005), to determine why foremen were entering wrong job codes

(Excluding BSA and historical assessments just because they don't really fit into these categories; based again on the literature on functional assessment with at-risk populations; change in terminology; transition. I find these categories useful.)

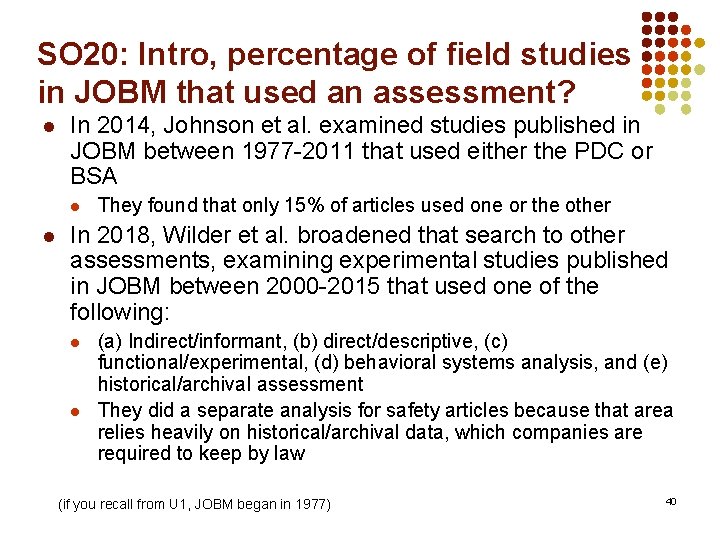

SO 20: Intro: Percentage of field studies in JOBM that used an assessment?
- In 2014, Johnson et al. examined studies published in JOBM between 1977 and 2011 that used either the PDC or BSA.
  - They found that only 15% of articles used one or the other.
- In 2018, Wilder et al. broadened that search to other assessments, examining experimental studies published in JOBM between 2000 and 2015 that used one of the following:
  - (a) indirect/informant, (b) direct/descriptive, (c) functional/experimental, (d) behavioral systems analysis, and (e) historical/archival assessment
  - They did a separate analysis for safety articles because that area relies heavily on historical/archival data, which companies are required to keep by law.

(If you recall from Unit 1, JOBM began in 1977.)

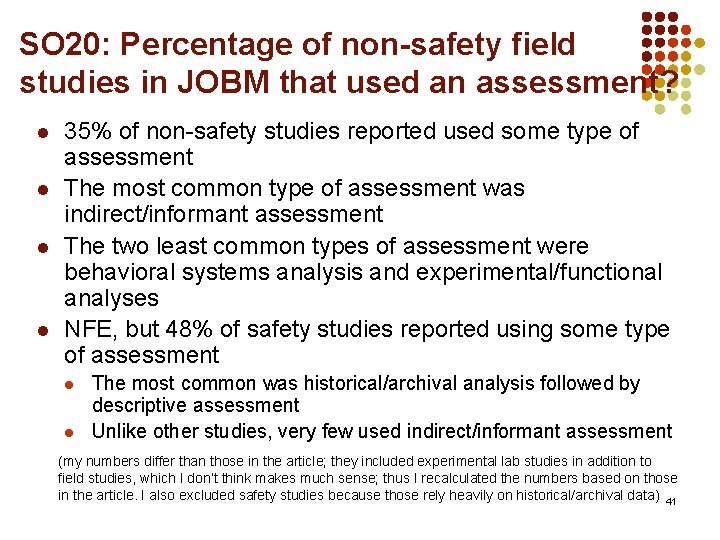

SO 20: Percentage of non-safety field studies in JOBM that used an assessment?
- 35% of non-safety studies reported using some type of assessment.
- The most common type of assessment was indirect/informant assessment.
- The two least common types of assessment were behavioral systems analysis and experimental/functional analysis.
- NFE, but 48% of safety studies reported using some type of assessment.
  - The most common was historical/archival analysis, followed by descriptive assessment.
  - Unlike other studies, very few used indirect/informant assessment.

(My numbers differ from those in the article: they included experimental lab studies in addition to field studies, which I don't think makes much sense, so I recalculated the numbers based on those in the article. I also excluded safety studies because those rely heavily on historical/archival data.)

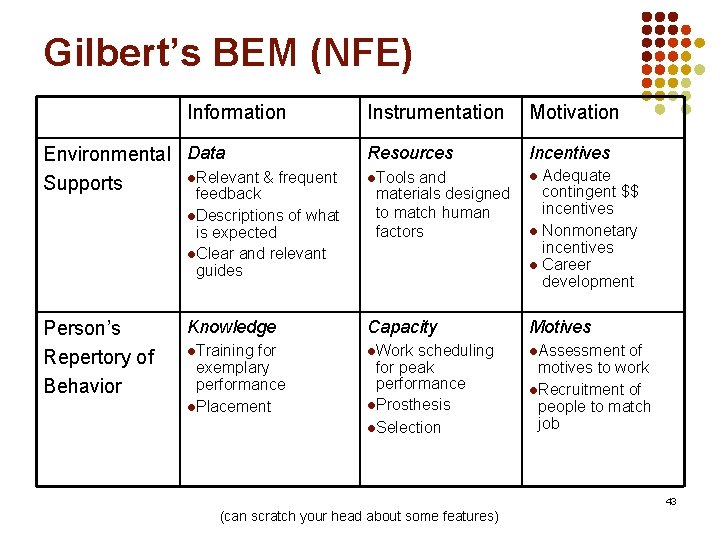

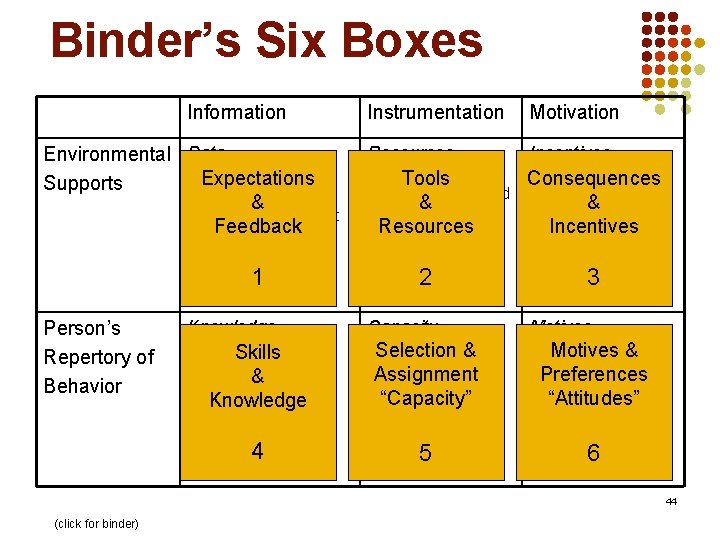

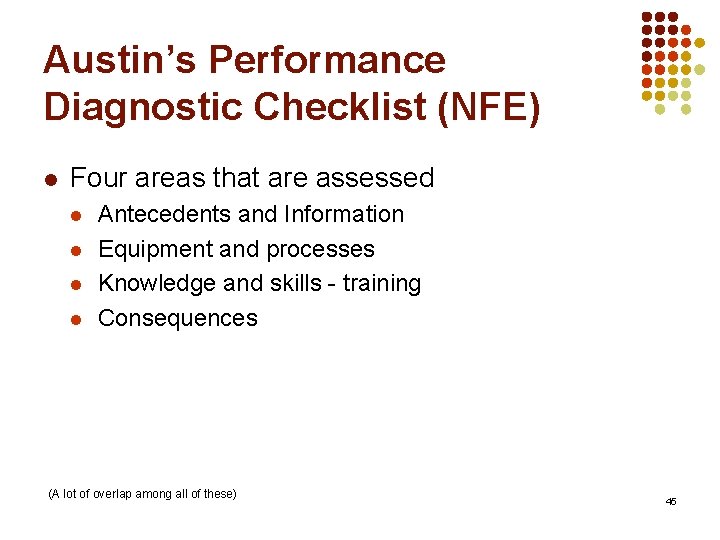

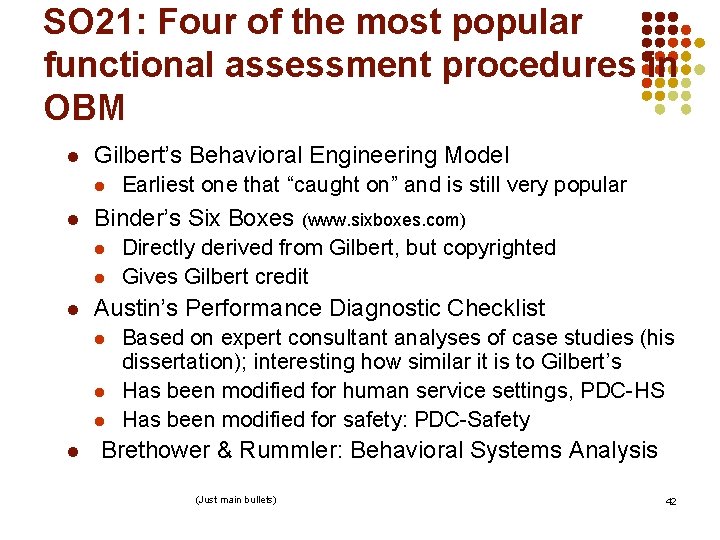

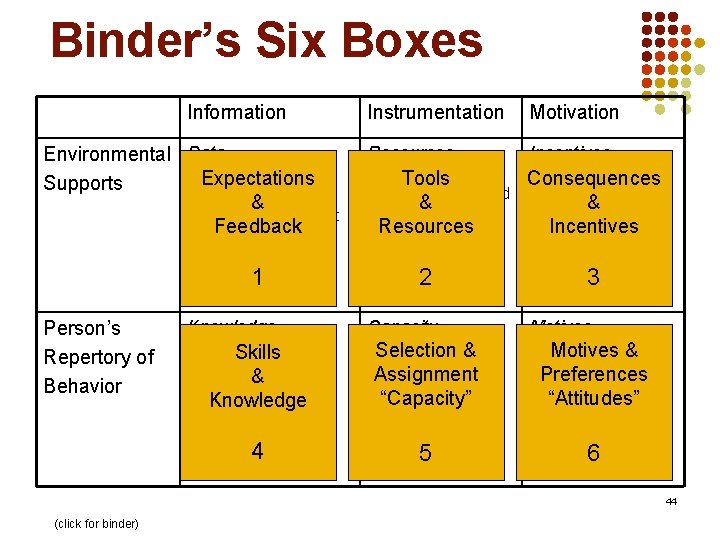

SO 21: Four of the most popular functional assessment procedures in OBM
- Gilbert's Behavioral Engineering Model
- Binder's Six Boxes
  - www.sixboxes.com
  - Directly derived from Gilbert, but copyrighted
  - Gives Gilbert credit
- Austin's Performance Diagnostic Checklist
  - Earliest one that "caught on" and is still very popular
  - Based on expert consultant analyses of case studies (his dissertation); interesting how similar it is to Gilbert's
  - Has been modified for human service settings (PDC-HS)
  - Has been modified for safety (PDC-Safety)
- Brethower & Rummler: Behavioral Systems Analysis

(Just main bullets.)

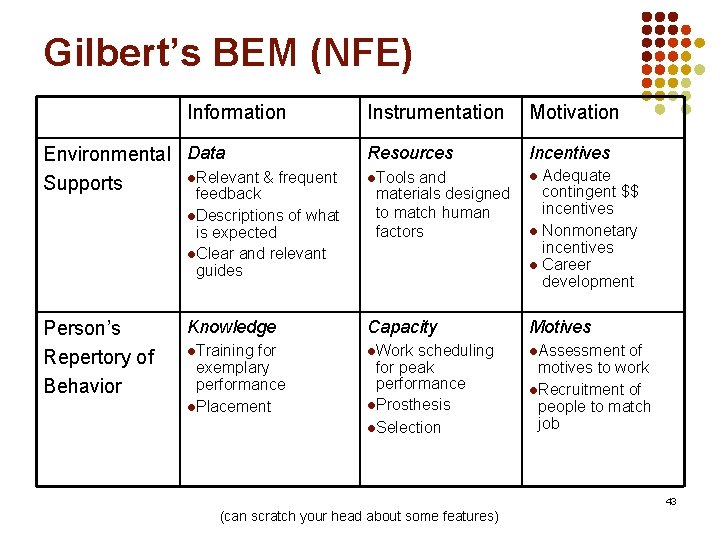

Gilbert's BEM (NFE)

| | Information | Instrumentation | Motivation |
|---|---|---|---|
| Environmental supports | Data: relevant & frequent feedback; descriptions of what is expected; clear and relevant guides | Resources: tools and materials designed to match human factors | Incentives: adequate contingent $$ incentives; nonmonetary incentives; career development |
| Person's repertory of behavior | Knowledge: training for exemplary performance; placement | Capacity: work scheduling for peak performance; prosthesis; selection | Motives: assessment of motives to work; recruitment of people to match job |

(Can scratch your head about some features.)

Binder's Six Boxes (NFE), mapped onto Gilbert's BEM

| Six Boxes | Gilbert's BEM cell |
|---|---|
| 1. Expectations & Feedback | Information: Data |
| 2. Tools & Resources | Instrumentation: Resources |
| 3. Consequences & Incentives | Motivation: Incentives |
| 4. Skills & Knowledge | Information: Knowledge |
| 5. Selection & Assignment ("Capacity") | Instrumentation: Capacity |
| 6. Motives & Preferences ("Attitudes") | Motivation: Motives |

(Click for Binder.)

Austin's Performance Diagnostic Checklist (NFE)
- Four areas that are assessed:
  - Antecedents and information
  - Equipment and processes
  - Knowledge and skills (training)
  - Consequences

(A lot of overlap among all of these.)

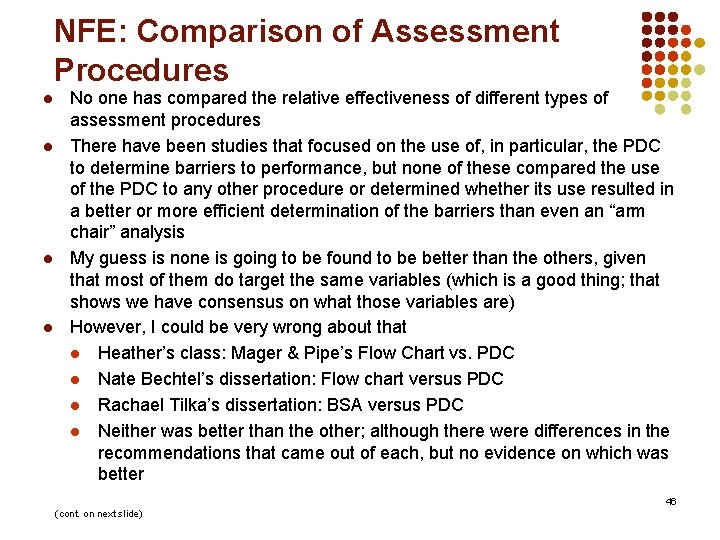

NFE: Comparison of Assessment Procedures
- No one has compared the relative effectiveness of different types of assessment procedures.
- There have been studies that focused on the use of, in particular, the PDC to determine barriers to performance, but none of these compared the use of the PDC to any other procedure or determined whether its use resulted in a better or more efficient determination of the barriers than even an "armchair" analysis.
- My guess is that none is going to be found to be better than the others, given that most of them target the same variables (which is a good thing; it shows we have consensus on what those variables are).
- However, I could be very wrong about that.
  - Heather's class: Mager & Pipe's flowchart vs. the PDC
  - Nate Bechtel's dissertation: flowchart vs. the PDC
  - Rachael Tilka's dissertation: BSA vs. the PDC
  - In each comparison, neither procedure was better than the other; there were differences in the recommendations that came out of each, but no evidence on which was better.

(Continued on next slide.)

NFE: Comparison of Assessment Procedures (cont.)
- That said, I would suggest that, from an organizational/systems perspective, the assessment tools that include an analysis of systems and processes would be better than those that don't include such an analysis.
- In other words, assessment tools that focus on organizational improvement rather than performance improvement should produce better results for the entire organization, but both types of assessment tools may be equally effective if only looking at particular/targeted performance issues within a unit/department.
  - The latter will no doubt be more efficient/cost-effective if you are only looking at very particular/targeted performance issues.

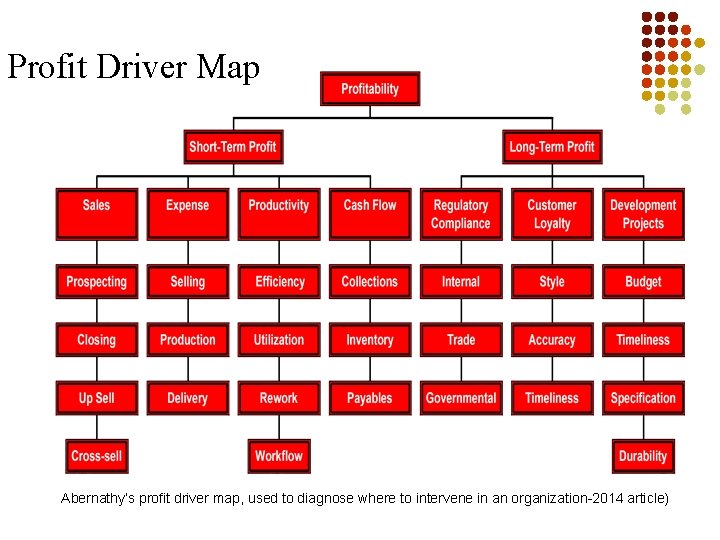

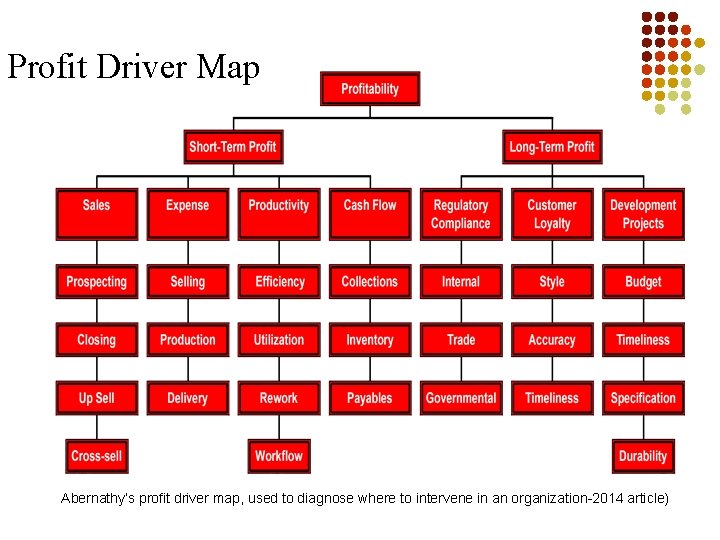

Profit Driver Map (Abernathy's profit driver map, used to diagnose where to intervene in an organization; 2014 article)

Pampino et al.: SOs 22, 23, & 25 on your own. In other words, I am only going to cover SO 24!

SO 24: Intro to Pampino et al.
- Pampino et al. is a very nice demonstration of the use of a functional assessment procedure (the PDC) and an effective multi-component package to improve closing tasks in a coffee shop:
  - PDC to select the intervention
  - Task clarification through the use of a task checklist
  - Public posting of performance
  - Monthly lottery
- They also assessed social validity.
- The weakness is the short-term nature of the study.

SO 24: Intro to Pampino et al. (cont.)
- They used a monthly lottery in which each of 5 employees had an opportunity to win $20.00, based on the number of lottery tickets they earned for each shift they worked.
  - Employees earned 1 lottery ticket for completing 90%-99% of the 95 tasks and 2 tickets for completing 100% of the tasks.
  - (U 5) Gaetani et al. used state lottery tickets to decrease cash shortages in a retail beverage chain.
  - (U 8) Green et al. used a lottery to increase the extent to which human service staff conducted scheduled training with consumers in a residential facility for individuals with DD and profound handicaps.

(Lotteries have been used in a number of studies and appear effective. Benefit? They are cost-effective, which certainly has advantages in human service settings. A few articles in this class demonstrate the effectiveness of lotteries; in fact, one of the very first articles in OBM, Pedalino & Gamboa (1974, JAP), used a lottery system based on the best poker hand to increase attendance. Really cool study!)
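The ticket rule can be sketched in Python. The 95 tasks and the 1-ticket/2-ticket thresholds come from the slide; the drawing procedure is not described there, so "one monthly drawing in which every ticket is equally likely" is an assumption, and the employees and shift counts below are hypothetical.

```python
from fractions import Fraction
from typing import Dict, List

TOTAL_TASKS = 95  # closing tasks on the Pampino et al. checklist


def tickets_for_shift(completed: int, total: int = TOTAL_TASKS) -> int:
    """2 tickets for 100% of tasks, 1 ticket for 90%-99%, otherwise 0."""
    if completed >= total:
        return 2
    if completed * 100 >= 90 * total:  # integer comparison avoids float rounding
        return 1
    return 0


def win_probabilities(shifts: Dict[str, List[int]]) -> Dict[str, Fraction]:
    """ASSUMPTION: one drawing per month, every ticket equally likely to be drawn."""
    tickets = {name: sum(tickets_for_shift(c) for c in done) for name, done in shifts.items()}
    pool = sum(tickets.values())
    return {name: Fraction(t, pool) if pool else Fraction(0) for name, t in tickets.items()}


# Hypothetical month: tasks completed on each shift worked by five employees.
shifts = {"A": [95, 95, 90], "B": [86, 85], "C": [95], "D": [80, 88], "E": []}
for name, p in win_probabilities(shifts).items():
    print(name, p)
```

With 95 tasks, 86 is the fewest completed tasks that earns a ticket (85/95 is about 89.5%). Under the equal-ticket assumption, every added eligible employee enlarges the pool and lowers everyone else's odds.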

SO 24A: Describe the method used to post the number of tickets earned
- They publicly posted a data sheet with:
  - The name of each employee
  - Stickers, corresponding to each lottery ticket the employee earned, placed next to his/her name

(In the past, students have had trouble with this, perhaps because the SO says "be sure to include all relevant components," but some may not realize what those are. Emphasize each factor: public posting; the name, not a code; number of stickers = number of tickets; not just a lottery, but public accountability.)

SO 24B: What influences the effectiveness of lotteries?
- What factors could influence how effective a lottery is?
  1. Amount of money/strength of the reward
  2. Frequency of the lottery: the more frequent, the better
  3. Number of eligible employees (affects the chances of winning)

(How/why? Clearly; however, be careful about the "gambling" issue, e.g., in the Bible Belt.)

NFE: Recent study examining the odds of winning a lottery
- Wine, Edgerton, Inzana, & Newcomb (JOBM, 2017)
  - Participants: two teaching assistants at a school for individuals diagnosed with autism
  - DV: number of scenarios evaluated in a 30-min session
    - Participants read work-related scenarios consisting of client caregiver notes and checked "yes" or "no" to indicate whether the notes contained concerns that needed to be passed on to the teacher the same day (change in medication, illness, leaving early, etc.)
  - IV: probability of winning a $10.00 lottery after each session: 25%, 12%, 6%, or 3%
    - Multi-element design for 14 or 15 sessions (a different probability each session, semi-randomly determined)
  - Results: the 6%, 12%, and 25% probabilities all increased performance; performance was similar under all three

(This is a cool study: a "rich schedule," with lotteries held after each 30-minute session and $10 winnings for 30 minutes of work. Only 2 participants, but we need this type of research. Next slide: Anderson et al.)
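A short Python sketch of the expected value of each condition (probability × $10 prize) shows how different the conditions were on paper. Expected value is a standard calculation and not one the slide reports; amounts are kept in integer cents so the arithmetic is exact.

```python
from fractions import Fraction

PRIZE_CENTS = 1000                  # $10.00 lottery after each 30-min session
PROBABILITIES_PCT = [25, 12, 6, 3]  # probability of winning, per session


def expected_cents(prob_pct: int, prize_cents: int = PRIZE_CENTS) -> Fraction:
    """Expected winnings per session = probability of winning x prize."""
    return Fraction(prob_pct, 100) * prize_cents


for pct in PROBABILITIES_PCT:
    cents = expected_cents(pct)
    print(f"{pct}% chance: expected ${float(cents) / 100:.2f} per 30-min session")
```

The 25% condition is worth about four times as much per session as the 6% condition ($2.50 vs. $0.60), yet performance was similar under the 6%, 12%, and 25% probabilities.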

Anderson et al.: Task Clarification
- Task clarification improves performance moderately.
- When combined with feedback, much better!

(Really should come as no surprise; task clarification is an antecedent intervention.)

Anderson et al. article, one of Hantula's first studies
- Fun study, because it was done in a student-run university bar
- "Cleaning was an ancillary requirement and also preempted time that could be spent with peers or studying. The result was a conspicuous, pervasive accumulation of grease and various sticky materials on virtually every surface. Garbage areas were strewn with debris."
- University officials said the bar had been a near-impossible management situation for years, and the State Board of Health threatened closure.

(Again, I am just going to go over a few selected SOs.)

Anderson et al. overview
- 30 student employees
- 11 work areas, each with a separate task list
  - Each task list had 5-10 yes/no items on it.
- Assistant managers scored the cleanliness of each area after line employees finished work.
- Task clarification: checklists were posted in relevant areas.
- Feedback: individual line graphs, coded by number, were publicly posted.
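Scoring a yes/no checklist reduces to the percentage of items marked "yes." A minimal Python sketch, assuming that each area's score is the percentage of its checklist items completed; the slide does not say exactly how Anderson et al. aggregated scores, and the two areas and their item results below are hypothetical.

```python
from typing import Dict, List


def percent_completed(checklist: List[bool]) -> float:
    """Percentage of yes/no checklist items scored 'yes' (task completed)."""
    if not checklist:
        raise ValueError("checklist must contain at least one item")
    return 100 * sum(checklist) / len(checklist)


# Hypothetical scoring for two of the 11 work areas (each list had 5-10 items).
areas: Dict[str, List[bool]] = {
    "area 1": [True, True, False, True, True],                      # 4 of 5 items
    "area 2": [True, False, False, True, True, True, False, True],  # 5 of 8 items
}
for area, items in areas.items():
    print(f"{area}: {percent_completed(items):.1f}%")

all_items = [item for items in areas.values() for item in items]
print(f"overall: {percent_completed(all_items):.1f}%")
```

Pooling items (as in the last line) weights longer checklists more heavily than averaging the area percentages would; which choice fits depends on how the feedback graphs are meant to be read.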

SOs 27 and 29: Effects of task clarification and task clarification plus feedback
- Task clarification increased task completion 13% over baseline.
- Task clarification plus feedback increased task completion 62% over baseline.

(Last slide on this study; not covering SO 29 in lecture; moving on to Komaki.)

Komaki: The OSTI (Operant Supervisory Taxonomy & Index)

Intro: Komaki's Operant Supervisory Taxonomy & Index (OSTI)
- This is the only supervisory/managerial assessment tool based on behavioral principles that is available in the literature.
- Komaki and colleagues published a series of three articles and a book containing research results from field studies:
  - First article: development and psychometric soundness of the OSTI
  - Second article: the one I have assigned
  - Third article: the one I mention in the SOs as one of my all-time favorite articles (sailboat regatta)

(Companies and consulting firms have their own assessment questionnaires, but these are proprietary, whereas the OSTI comes from published research. Judi is now retired, but was one of the best field researchers in OBM.)

Intro: Komaki’s OSTI

- An article in JOBM (2011) summarizes all of the research, discusses an in-basket assessment procedure, and then reports the results of a training program for managers/supervisors
  - Full reference is in the study objectives
  - In my personal opinion, this should be required reading for all students who study OBM
- Finally, there is also the book: Komaki, J. L. (1998). *Leadership from an operant perspective*. London: Routledge.
- For this class, I chose one of Komaki’s earlier studies because it provides more detail about the actual assessment tool

Intro: Komaki’s OSTI

- Leadership consists of more than supervisory behavior, but clearly one function of a leader is to be an effective supervisor
- Many non-behavioral theories exist:
  - Trait theories: general traits that make a person an effective supervisor
  - Situational theories: factors that interact with the traits/characteristics of the person
  - Transformational leadership: leaders work to enhance the motivation and engagement of followers by directing behavior toward a shared vision

(See Aamodt, Chapter 12, for more! There are also OBMN conference presentations and JOBM articles on leadership, but we still don't have a coherent or agreed-upon definition of even the term "leadership.")

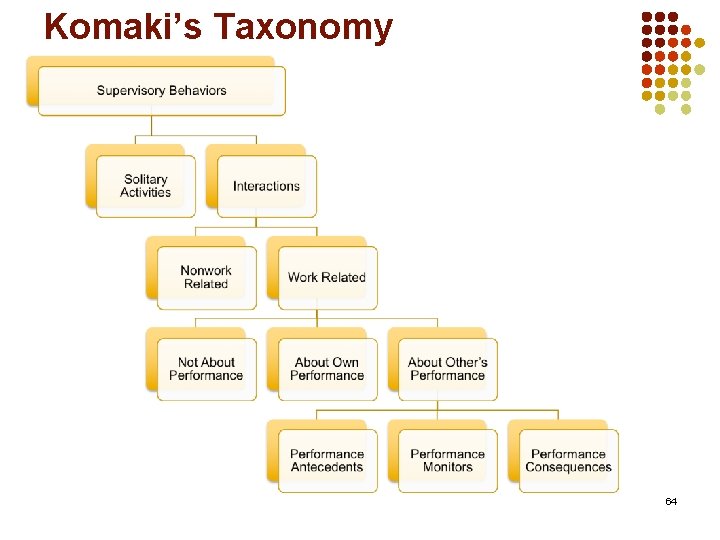

Intro: Komaki’s OSTI

- Komaki’s analysis is based on the extent to which supervisors:
  - Provide antecedents (task clarification and clear expectations for performance)
  - Monitor performance (measure and collect information about the performance of direct reports)
  - Provide consequences for performance

(AMC, antecedents, monitoring, consequences, as an analogy to ABC, antecedents, behavior, consequences.)

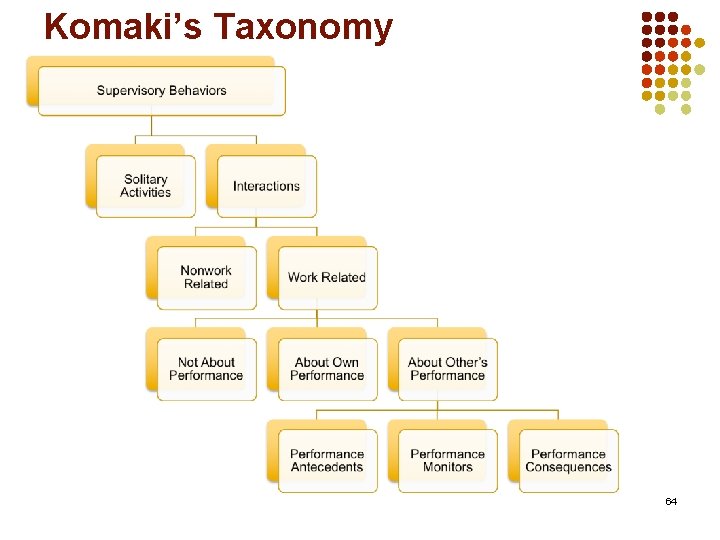

Komaki’s Taxonomy

Summary of Komaki article

- Will the following three categories of supervisory behavior distinguish between effective and marginally effective supervisors with respect to motivating others?
  - Providing antecedents
  - Monitoring performance
  - Providing consequences

Summary of Komaki article

- Hypotheses
  - Providing antecedents: No
    - Antecedents do not reliably affect performance
    - There won’t be any difference, because both good and bad managers provide a lot of antecedents (the same number), regardless of any differences in monitoring and providing consequences
  - Monitoring performance: Yes
    - Monitoring is a prerequisite to providing contingent consequences
  - Providing consequences: Yes
    - Behavior is a function of its consequences
    - Effective managers will indicate knowledge of subordinate performance, whether positive, negative, or neutral

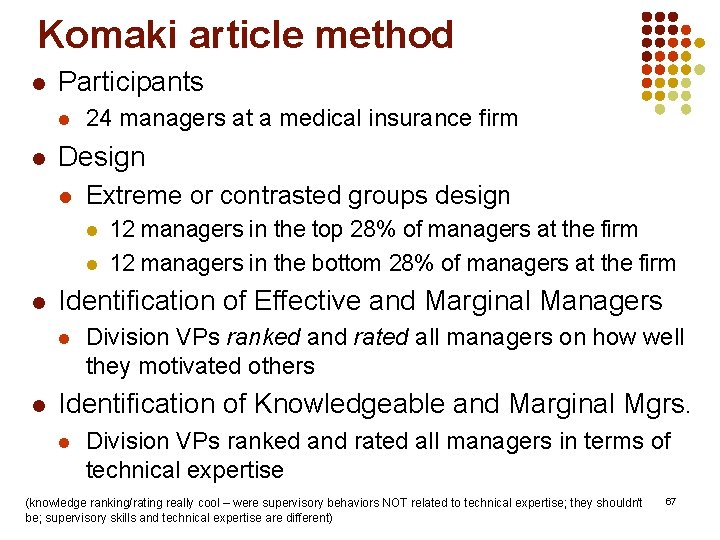

Komaki article method

- Participants
  - 24 managers at a medical insurance firm
- Design
  - Extreme or contrasted groups design
    - 12 managers in the top 28% of managers at the firm
    - 12 managers in the bottom 28% of managers at the firm
- Identification of effective and marginal managers
  - Division VPs ranked and rated all managers on how well they motivated others
- Identification of knowledgeable and marginal managers
  - Division VPs ranked and rated all managers in terms of technical expertise

(The knowledge ranking/rating is really cool: it let us check whether supervisory behaviors were related to technical expertise. They shouldn't be; supervisory skills and technical expertise are different.)
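The contrasted-groups selection can be sketched in a few lines of Python. The slide says only that division VPs ranked and rated managers, so this sketch assumes each manager has already been reduced to a single numeric "motivates others" score; the function name `contrasted_groups` and the sample scores are hypothetical.

```python
import math


def contrasted_groups(scores: dict[str, float], fraction: float) -> tuple[list[str], list[str]]:
    """Split managers into top and bottom groups for an extreme-groups design.

    scores   -- hypothetical single rating per manager (higher = better at motivating others)
    fraction -- share of managers to take from each tail, e.g. 0.28
    """
    if not 0 < fraction <= 0.5:
        raise ValueError("fraction must be in (0, 0.5] so the groups cannot overlap")
    # Best-rated first; ties keep their original order because sorted() is stable.
    ranked = sorted(scores, key=lambda name: scores[name], reverse=True)
    k = math.floor(len(ranked) * fraction)
    if k == 0:
        return [], []
    # Top k and bottom k; everyone in the middle is left out of the comparison.
    return ranked[:k], ranked[-k:]


if __name__ == "__main__":
    scores = {"A": 4.5, "B": 2.0, "C": 3.8, "D": 1.2,
              "E": 4.9, "F": 3.1, "G": 2.6, "H": 4.0}
    top, bottom = contrasted_groups(scores, 0.25)
    print(top, bottom)  # ['E', 'A'] ['B', 'D']
```

Dropping the middle of the distribution is the point of the design: it sharpens the contrast between groups, at the cost of saying little about the average manager.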

Komaki results

- Managers spent only ~13% of their time dealing with the performance of others (SO 33)*
  - This is important to remember when we intervene: managers have many other responsibilities, so time management and the labor-intensiveness of our interventions become important issues
- Effective managers monitored performance significantly more than marginal managers
  - Effective managers used work sampling rather than self-report or a secondary source (e.g., asking someone else "Is Mary doing the accounts well?") when monitoring performance

*In other studies, bank managers spent ~15% of their time dealing with the performance of others; newspaper managers spent ~14%.
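Percentages like the ~13% come from coding observation samples of what a manager is doing and then counting. A minimal Python sketch of that tally follows. Only the three performance categories (antecedents, monitors, consequences) and the three monitoring sources (work sampling, self-report, secondary source) come from the lecture; the code labels, the catch-all "other" code, and the sample counts are invented for illustration and are not Komaki's coding data.

```python
from collections import Counter

# The three performance-related categories from the lecture (labels are hypothetical).
PERFORMANCE_CODES = ("antecedent", "monitor", "consequence")


def percent_performance_related(samples: list[str]) -> float:
    """Percent of observation samples coded as an antecedent, monitor, or consequence."""
    if not samples:
        raise ValueError("no observation samples")
    counts = Counter(samples)
    related = sum(counts[code] for code in PERFORMANCE_CODES)  # missing codes count as 0
    return 100.0 * related / len(samples)


def monitoring_source_breakdown(sources: list[str]) -> dict[str, float]:
    """Percent of monitoring samples by source, e.g. work sampling vs. self-report."""
    if not sources:
        raise ValueError("no monitoring samples")
    counts = Counter(sources)
    return {src: 100.0 * n / len(sources) for src, n in counts.items()}


if __name__ == "__main__":
    # Invented set of 100 samples, chosen to mirror the ~13% figure.
    day = ["other"] * 87 + ["antecedent"] * 4 + ["monitor"] * 6 + ["consequence"] * 3
    print(percent_performance_related(day))  # 13.0
    print(monitoring_source_breakdown(
        ["work_sampling", "work_sampling", "work_sampling", "self_report"]))
    # {'work_sampling': 75.0, 'self_report': 25.0}
```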

Komaki results

- Performance monitoring was not related to technical expertise (as predicted) (SO 34)
  - Implication: just because people are experts in their field doesn't mean they are good managers
- Performance consequences did not distinguish between effective and marginal supervisors
  - The results may mean that what matters is not the number of consequences provided but whether contingent consequences are provided, which performance monitoring makes possible
  - Performance monitoring may be an establishing operation for employees that makes consequences more reinforcing or punishing (more on this next unit)

(If a supervisor monitors your performance, his or her consequences become more effective.)

Results, subsequent studies (NFE)

- Performance
  - When performance monitoring was sufficient, consequences did distinguish between effective and marginal managers
  - In a sailboat regatta, both performance monitoring and consequences by skippers were significantly related to race results
  - In an Australian police force, both performance monitoring and consequences by patrol sergeants were significantly related to higher-performing teams
- Attitudes and perceptions
  - In a national movie theater management company, managers who monitored work were perceived as more fair
  - Workers rated managers as more positive and less negative when they monitored work and provided consequences than when managers only monitored work or only provided consequences (simulated study in a post office)

THE END

Questions?

What does id card mld mean

What does id card mld mean Monday tuesday wednesday thursday friday calendar

Monday tuesday wednesday thursday friday calendar Monday tuesday wednesday thursday friday saturday sunday

Monday tuesday wednesday thursday friday saturday sunday Marvelous monday terrific tuesday wonderful wednesday

Marvelous monday terrific tuesday wonderful wednesday Enum days sunday monday=5 tuesday=6 wednesday=7

Enum days sunday monday=5 tuesday=6 wednesday=7 Sunday monday tuesday thursday friday saturday

Sunday monday tuesday thursday friday saturday Tuesday-sunday

Tuesday-sunday Monday=621 tuesday=732 wednesday=933

Monday=621 tuesday=732 wednesday=933 Monday=621 tuesday=732 wednesday=933

Monday=621 tuesday=732 wednesday=933 01:640:244 lecture notes - lecture 15: plat, idah, farad

01:640:244 lecture notes - lecture 15: plat, idah, farad Bmfp lecture

Bmfp lecture Contoh kertas kerja pemeriksaan perusahaan

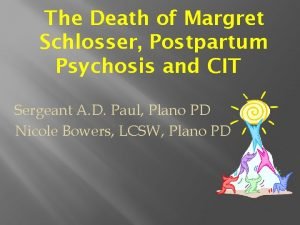

Contoh kertas kerja pemeriksaan perusahaan Margaret schlosser death

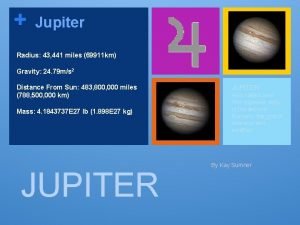

Margaret schlosser death Jupiter radius km

Jupiter radius km Jref 911

Jref 911 734-147-911

734-147-911 Mark petitt 911

Mark petitt 911 Yitzhak rabin

Yitzhak rabin Exotic car rental new orleans

Exotic car rental new orleans Ley 911 enfermeria

Ley 911 enfermeria 911 ip

911 ip 911 lbs

911 lbs Plastix 911

Plastix 911 Nep 911

Nep 911 Alarm notification software

Alarm notification software 911 in asl

911 in asl 911 call routing diagram

911 call routing diagram 01 8000 911 119

01 8000 911 119 Nep 911 et 912

Nep 911 et 912 Please enter the missing figure: 13, 57, 911, 1315, 1719

Please enter the missing figure: 13, 57, 911, 1315, 1719 Van jones facebook

Van jones facebook Gamess error code 911

Gamess error code 911 Telephone 911

Telephone 911 Telephone 911

Telephone 911 øyou

øyou Neci 911

Neci 911 Art and music are my favorite subject

Art and music are my favorite subject Dua monday

Dua monday On monday and tuesday

On monday and tuesday Wednesday evening prayer

Wednesday evening prayer Wednesday seminar

Wednesday seminar Ib history ia grade boundaries

Ib history ia grade boundaries Web analytics wednesday

Web analytics wednesday How to write wednesday

How to write wednesday Good morning happy february

Good morning happy february Wednesday is my favorite day

Wednesday is my favorite day Skinny wednesday

Skinny wednesday Flmsg download

Flmsg download Wise wednesday

Wise wednesday Happy wednesday march

Happy wednesday march English class is wednesday

English class is wednesday Wednesday writing prompts

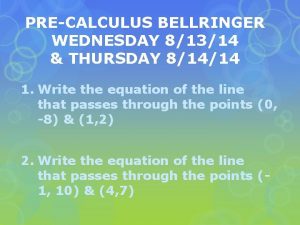

Wednesday writing prompts Wednesday bell work

Wednesday bell work Thursday bellringer

Thursday bellringer Wednesday bellwork

Wednesday bellwork Wednesday good morning

Wednesday good morning Thursday prayer images

Thursday prayer images Wednesday bell ringer

Wednesday bell ringer Wednesday syllables

Wednesday syllables Wednesday lunch

Wednesday lunch Happy monday answer

Happy monday answer Happy wednesday

Happy wednesday Thursday prayer images

Thursday prayer images Fe exam results wednesday

Fe exam results wednesday Wednesday phonics

Wednesday phonics Wednesday lo

Wednesday lo Wednesday at 6

Wednesday at 6 Function of lipids

Function of lipids Wednesday

Wednesday Monday welcome back

Monday welcome back What did you do last week?

What did you do last week? Monday evening prayers

Monday evening prayers