Safe and Efficient Cluster Communication in Java using Explicit Memory Management

- Slides: 46

Safe and Efficient Cluster Communication in Java using Explicit Memory Management

Chi-Chao Chang, Dept. of Computer Science, Cornell University

Goal

- High-performance cluster computing with safe languages
  - parallel and distributed applications
- Use off-the-shelf technologies
  - Java
    - safe: "better C++"
    - "write once, run everywhere"
    - growing interest for high-performance applications (Java Grande)
  - User-level network interfaces (UNIs)
    - direct, protected access to network devices
    - prototypes: U-Net (Cornell), Shrimp (Princeton), FM (UIUC)
    - industry standard: Virtual Interface Architecture (VIA)
    - cost-effective clusters: new 256-processor cluster @ Cornell TC

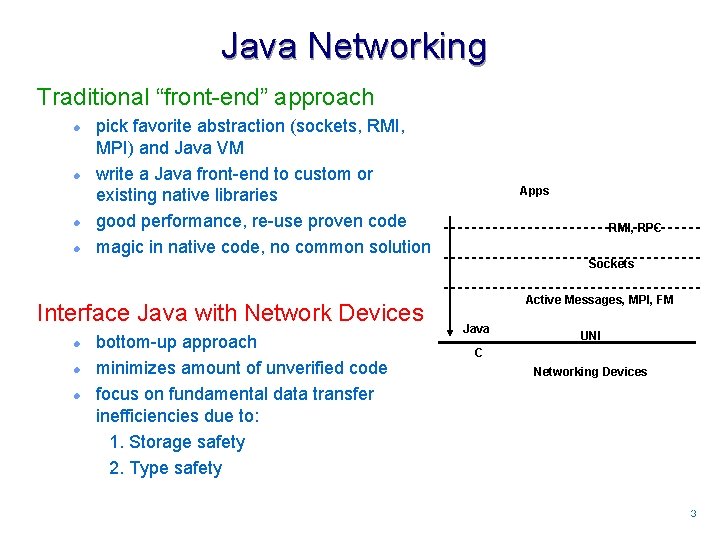

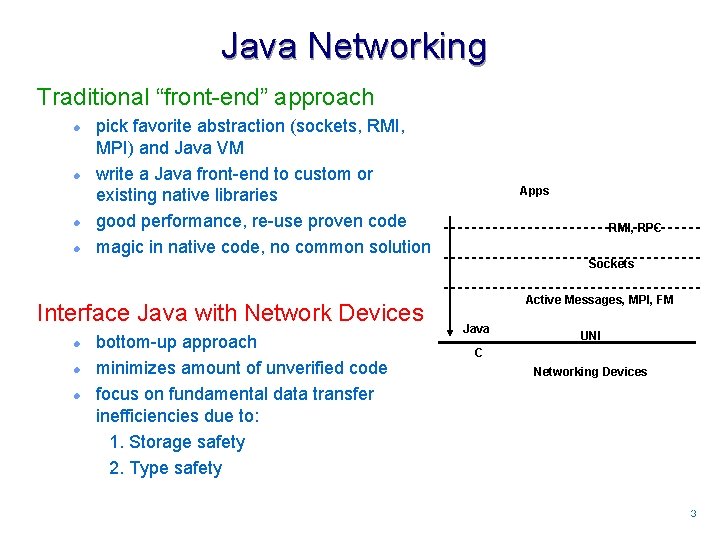

Java Networking

- Traditional "front-end" approach
  - pick a favorite abstraction (sockets, RMI, MPI) and Java VM
  - write a Java front-end to custom or existing native libraries
  - good performance, re-use of proven code
  - magic in native code, no common solution
- Interface Java with network devices
  - bottom-up approach
  - minimizes the amount of unverified code
  - focus on fundamental data transfer inefficiencies due to:
    1. Storage safety
    2. Type safety

(Figure: layered stack: Apps; RMI, RPC; Sockets; Active Messages, MPI, FM; Java; UNI; C; Networking Devices.)

Outline

- Thesis Overview
  - GC/native heap separation, object serialization
- Experimental Setup: VI Architecture and Marmot
- Part I: Array Transfers
  - (1) Javia-I: Java Interface to VI Architecture
    - respects heap separation
  - (2) Jbufs: Safe and Explicit Management of Buffers
    - Javia-II, matrix multiplication, Active Messages
- Part II: Object Transfers
  - (3) A Case For Specialization
    - micro-benchmarks, RMI using Javia-I/II, impact on application suite
  - (4) Jstreams: in-place de-serialization
    - micro-benchmarks, RMI using Javia-III, impact on application suite
- Conclusions

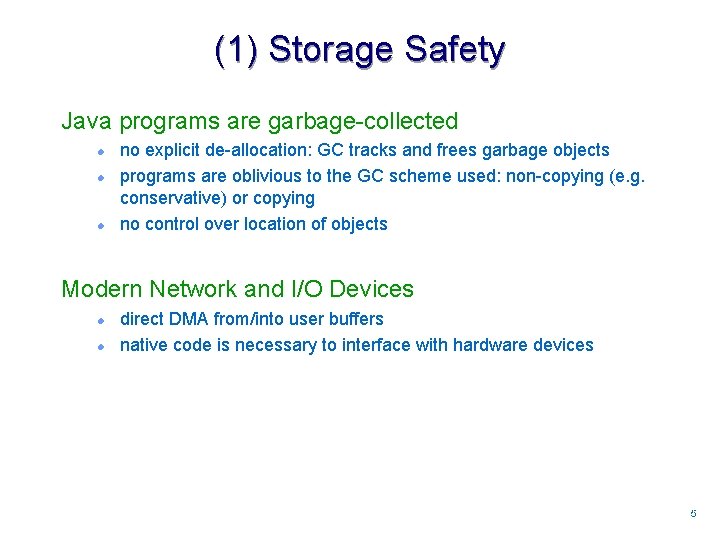

(1) Storage Safety

- Java programs are garbage-collected
  - no explicit de-allocation: the GC tracks and frees garbage objects
  - programs are oblivious to the GC scheme used: non-copying (e.g., conservative) or copying
  - no control over the location of objects
- Modern network and I/O devices
  - direct DMA from/into user buffers
  - native code is necessary to interface with hardware devices

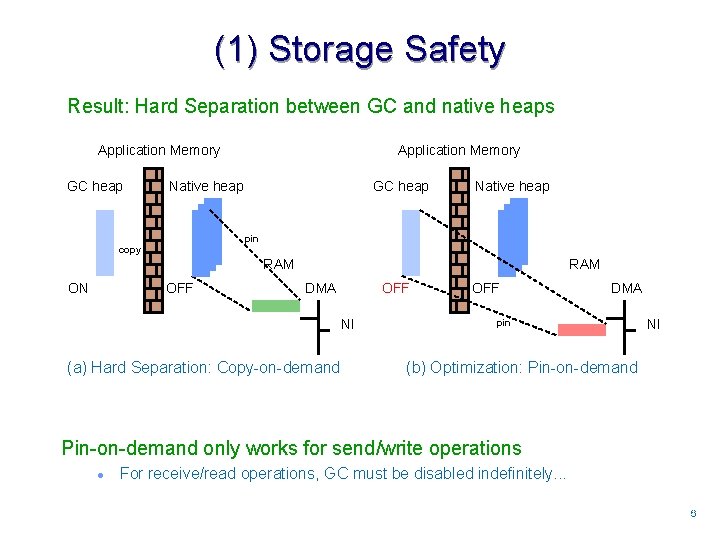

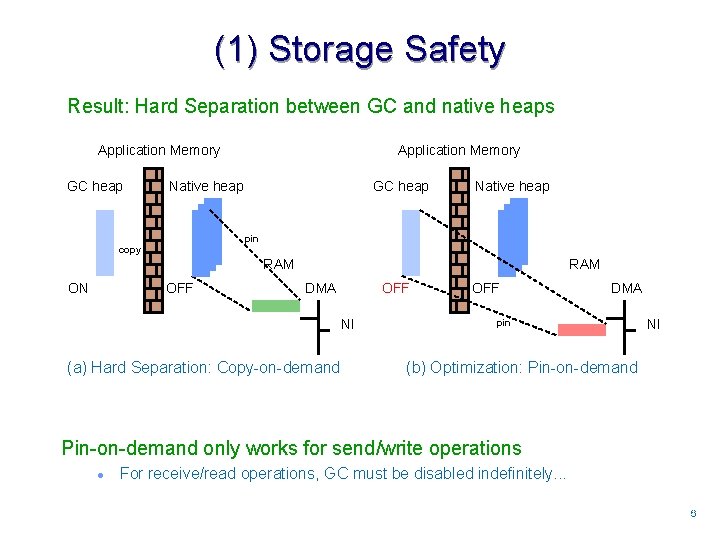

(1) Storage Safety

Result: hard separation between the GC and native heaps

(Figure: (a) Hard Separation: Copy-on-demand; (b) Optimization: Pin-on-demand. In both panels application memory is split into a GC heap and a native heap, and the NI accesses RAM by DMA; in (a) data is copied into a pinned native-heap buffer, in (b) the Java array is pinned in place.)

- Pin-on-demand only works for send/write operations
  - for receive/read operations, GC must be disabled indefinitely...
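Copy-on-demand, panel (a), can be sketched with `java.nio`, which postdates Marmot but draws a similar line: `ByteBuffer.allocateDirect` returns a buffer whose contents typically live outside the garbage-collected heap, standing in here for the pinned native-heap buffer the NI would DMA from. The class and method names are hypothetical and no device is involved; the point is the extra copy on every send.

```java
import java.nio.ByteBuffer;

/** Hypothetical sender-side staging area illustrating copy-on-demand (panel (a)). */
final class CopyOnDemandSender {
    // Off-heap buffer allocated once and re-used; stands in for a pinned native-heap buffer.
    private final ByteBuffer staging;

    CopyOnDemandSender(int capacity) {
        staging = ByteBuffer.allocateDirect(capacity);
    }

    /** Copies a GC-heap array into the off-heap buffer; a real NI would DMA from there. */
    ByteBuffer stage(byte[] data) {
        if (data.length > staging.capacity()) {
            throw new IllegalArgumentException("message larger than staging buffer");
        }
        staging.clear();   // position = 0, limit = capacity
        staging.put(data); // the extra copy that hard separation forces on every send
        staging.flip();    // limit = bytes written, ready to be read by the "device"
        return staging;
    }
}
```

Re-using one staging buffer avoids pinning per message, but the `put` copy remains on every send.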

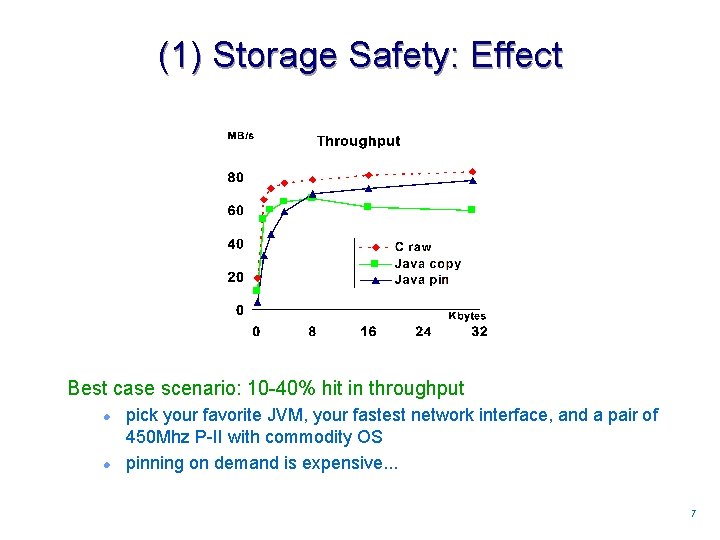

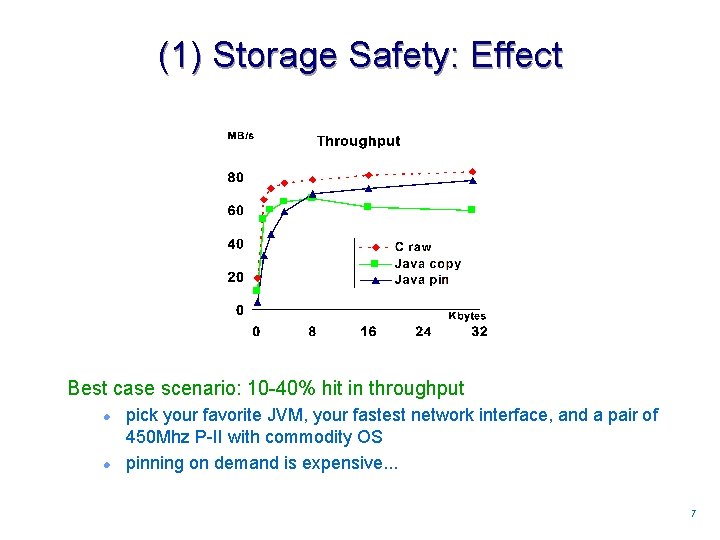

(1) Storage Safety: Effect

- Best-case scenario: 10-40% hit in throughput
  - pick your favorite JVM, your fastest network interface, and a pair of 450 MHz P-IIs with a commodity OS
  - pinning on demand is expensive...

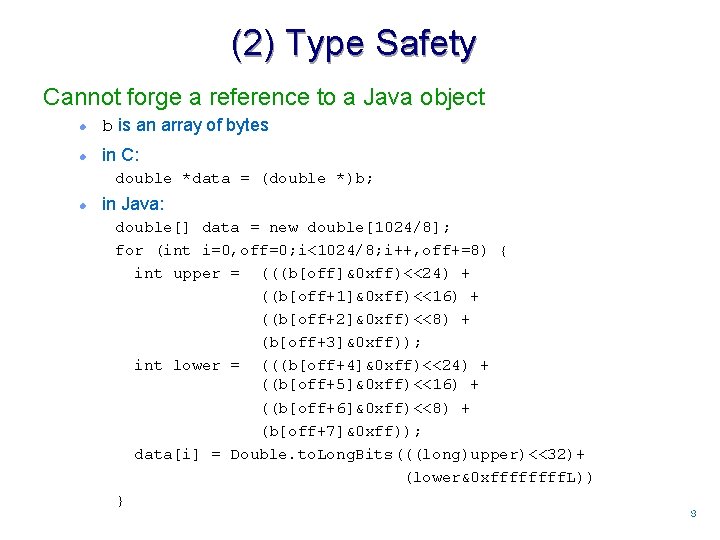

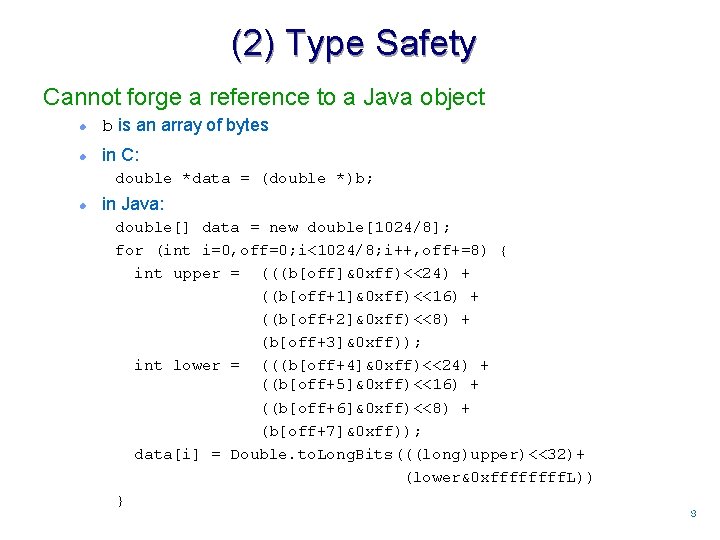

(2) Type Safety

- Cannot forge a reference to a Java object
  - b is an array of bytes

In C:

    double *data = (double *)b;

In Java:

    double[] data = new double[1024/8];
    for (int i = 0, off = 0; i < 1024/8; i++, off += 8) {
        int upper = ((b[off]&0xff)<<24) + ((b[off+1]&0xff)<<16) + ((b[off+2]&0xff)<<8) + (b[off+3]&0xff);
        int lower = ((b[off+4]&0xff)<<24) + ((b[off+5]&0xff)<<16) + ((b[off+6]&0xff)<<8) + (b[off+7]&0xff);
        // mask lower to 32 bits so its sign bit does not corrupt the upper half
        data[i] = Double.longBitsToDouble((((long)upper)<<32) + (lower&0xffffffffL));
    }
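The Java loop above assembles each big-endian double from eight bytes by hand. A self-contained version, checked against `java.nio.ByteBuffer`, which also reads big-endian by default, might look like this; the class and method names are illustrative.

```java
import java.nio.ByteBuffer;

final class ByteDecoding {
    /** Decodes big-endian doubles from b, eight bytes each, without any unchecked cast. */
    static double[] toDoubles(byte[] b) {
        double[] data = new double[b.length / 8];
        for (int i = 0, off = 0; i < data.length; i++, off += 8) {
            int upper = ((b[off] & 0xff) << 24) | ((b[off + 1] & 0xff) << 16)
                      | ((b[off + 2] & 0xff) << 8) | (b[off + 3] & 0xff);
            int lower = ((b[off + 4] & 0xff) << 24) | ((b[off + 5] & 0xff) << 16)
                      | ((b[off + 6] & 0xff) << 8) | (b[off + 7] & 0xff);
            // Mask lower to 32 bits so its sign bit does not leak into the upper half.
            long bits = ((long) upper << 32) | (lower & 0xffffffffL);
            data[i] = Double.longBitsToDouble(bits);
        }
        return data;
    }

    /** Same result via the library: a wrapped ByteBuffer defaults to big-endian order. */
    static double[] toDoublesNio(byte[] b) {
        double[] data = new double[b.length / 8];
        ByteBuffer.wrap(b).asDoubleBuffer().get(data);
        return data;
    }
}
```

Either way, every element is copied and converted; a C program would simply reinterpret the buffer.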

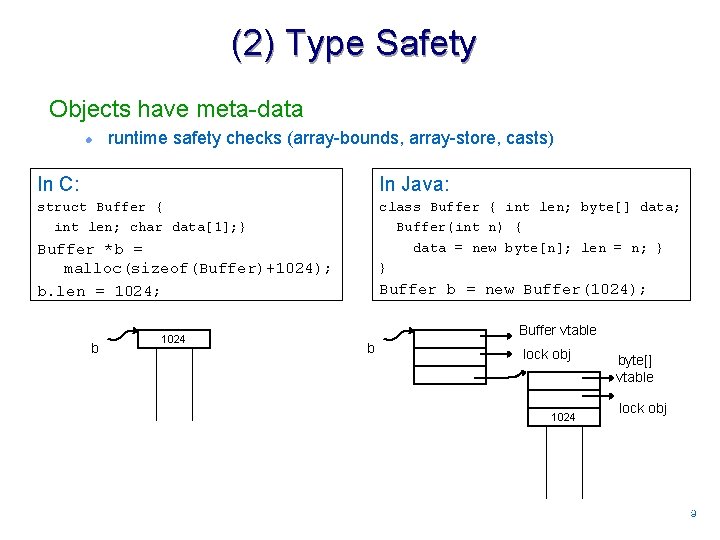

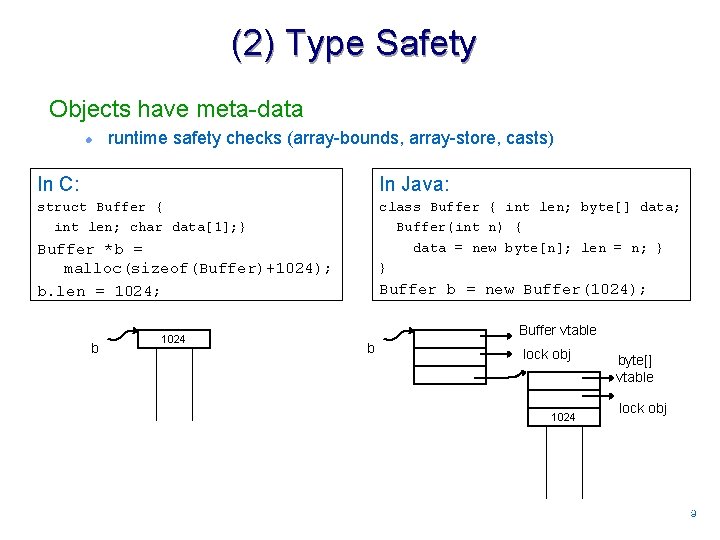

(2) Type Safety

- Objects have meta-data
  - runtime safety checks (array-bounds, array-store, casts)

In C:

    struct Buffer {
        int len;
        char data[1];
    };

    struct Buffer *b = malloc(sizeof(struct Buffer) + 1024);
    b->len = 1024;

In Java:

    class Buffer {
        int len;
        byte[] data;
        Buffer(int n) { data = new byte[n]; len = n; }
    }

    Buffer b = new Buffer(1024);

(Figure: in C, b points to one block holding len = 1024 followed by the data; in Java, b refers to a Buffer object (vtable, lock, len = 1024, reference to data) and a separate byte[] object with its own vtable and lock.)

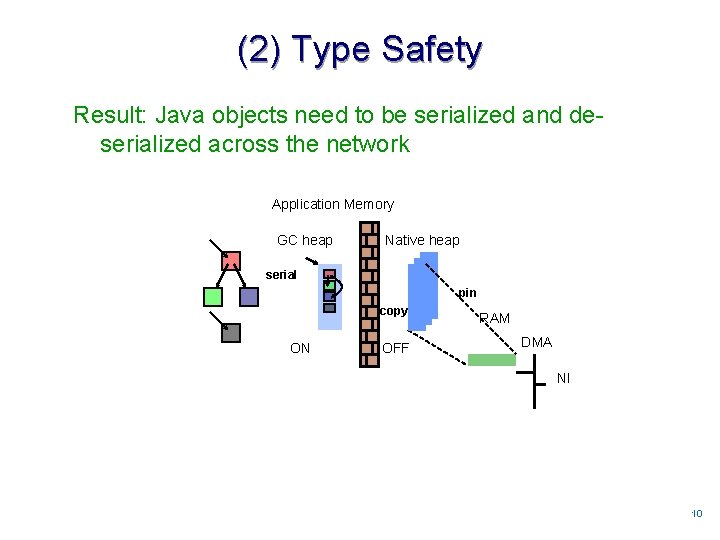

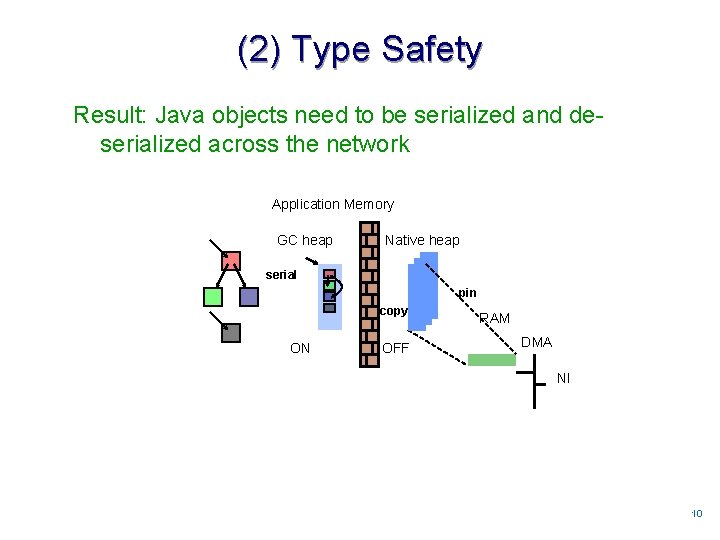

(2) Type Safety

Result: Java objects need to be serialized and de-serialized across the network

(Figure: objects are serialized within the GC heap, then pinned or copied into the native heap, from which the NI transfers them by DMA.)

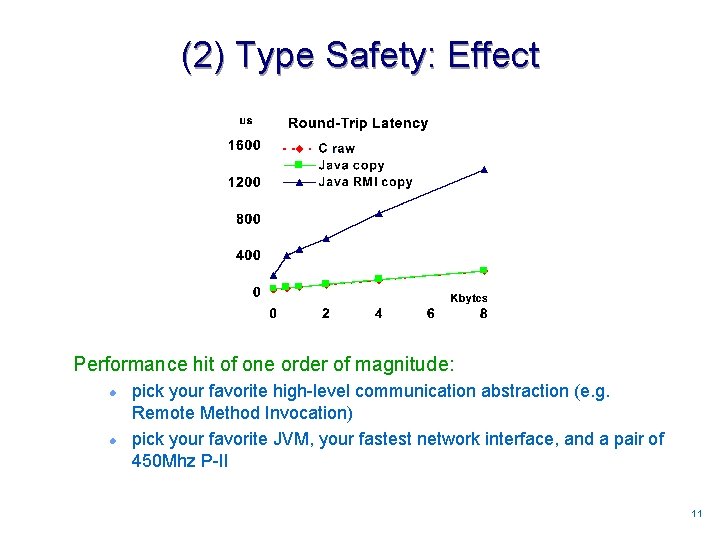

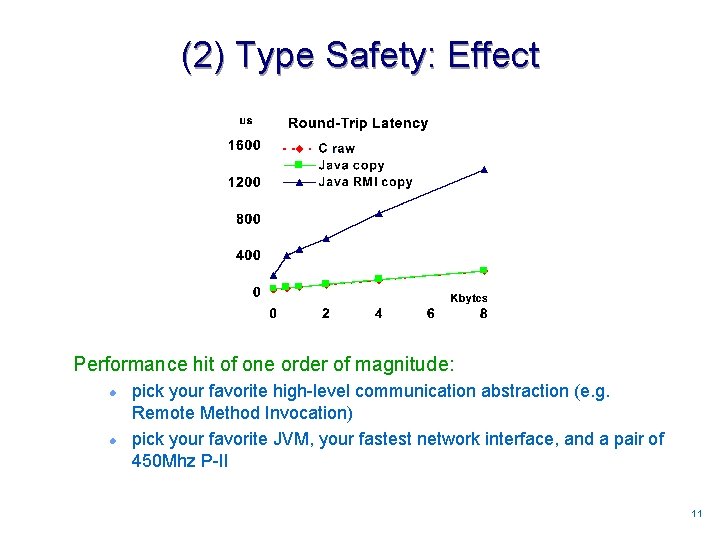

(2) Type Safety: Effect

- Performance hit of one order of magnitude:
  - pick your favorite high-level communication abstraction (e.g., Remote Method Invocation)
  - pick your favorite JVM, your fastest network interface, and a pair of 450 MHz P-IIs

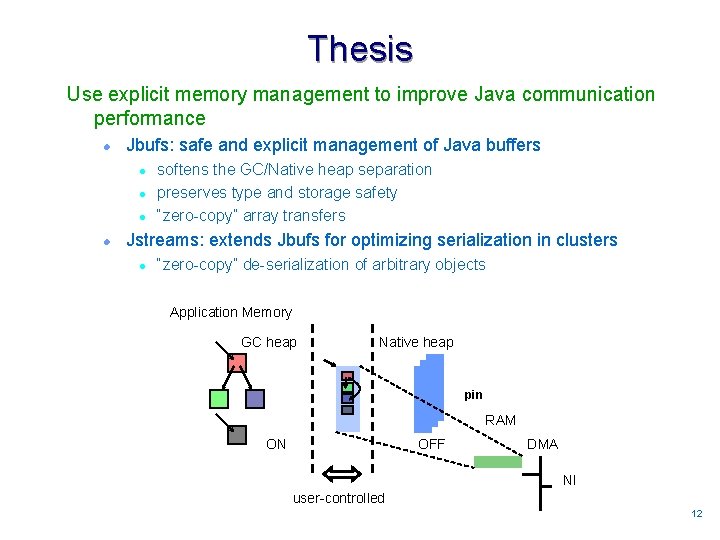

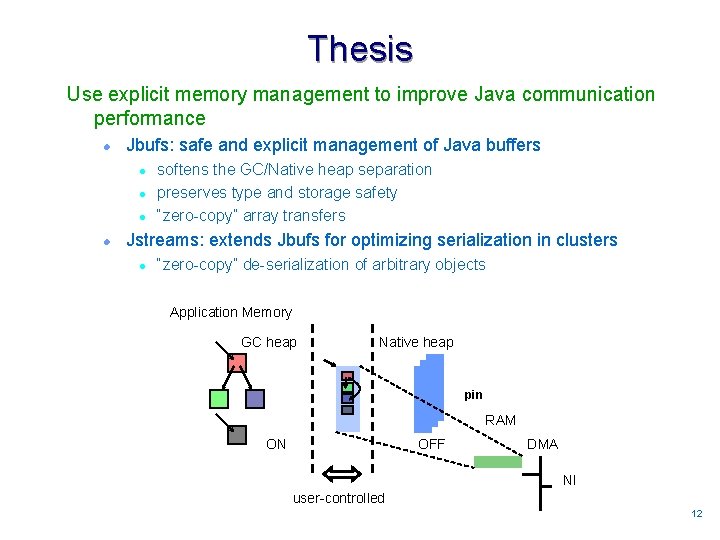

Thesis

Use explicit memory management to improve Java communication performance

- Jbufs: safe and explicit management of Java buffers
  - softens the GC/native heap separation
  - preserves type and storage safety
  - "zero-copy" array transfers
- Jstreams: extends Jbufs for optimizing serialization in clusters
  - "zero-copy" de-serialization of arbitrary objects

(Figure: the boundary between the GC heap and the native heap is user-controlled; buffers are pinned and the NI transfers them by DMA.)

Outline

- Thesis Overview
  - GC/native heap separation, object serialization
- Experimental Setup: Giganet cluster and Marmot
- Part I: Array Transfers
  - (1) Javia-I: Java Interface to VI Architecture
    - respects heap separation
  - (2) Jbufs: Safe and Explicit Management of Buffers
    - Javia-II, matrix multiplication, Active Messages
- Part II: Object Transfers
  - (3) A Case For Specialization
    - micro-benchmarks, RMI using Javia-I/II, impact on application suite
  - (4) Jstreams: in-place de-serialization
    - micro-benchmarks, RMI using Javia-III, impact on application suite
- Conclusions

Giganet Cluster

- Configuration
  - 8 P-II 450 MHz, 128 MB RAM
  - 8 Giganet GNN-1000 adapters (1.25 Gbps)
  - one Giganet switch
- GNN-1000 adapter: user-level network interface
  - Virtual Interface Architecture implemented as a library (Win32 DLL)
- Base-line point-to-point performance
  - 14 µs round-trip latency, 16 µs with switch
  - over 100 MBytes/s peak, 85 MBytes/s with switch

Marmot

- Java system from Microsoft Research
  - not a VM
  - static compiler: bytecode (.class) to x86 (.asm)
  - linker: asm files + runtime libraries -> executable (.exe)
  - no dynamic loading of classes
  - most Dragon Book optimizations, some OO and Java-specific optimizations
- Advantages
  - source code
  - good performance
  - two types of non-concurrent GC (copying, conservative)
  - native interface "close enough" to JNI


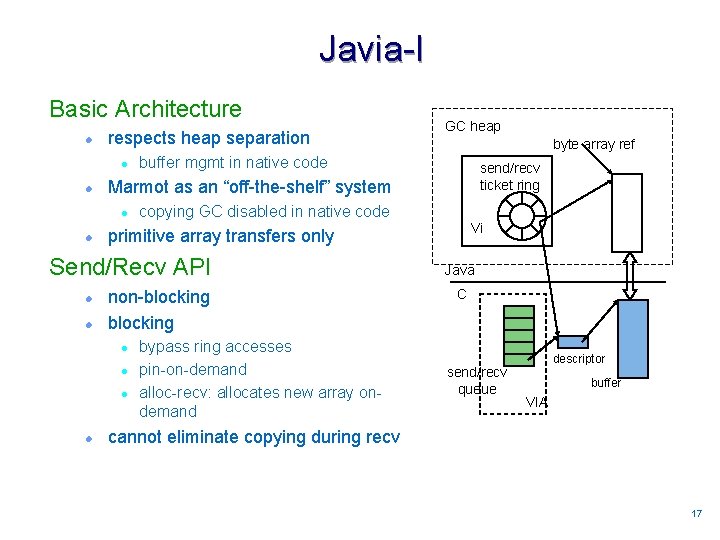

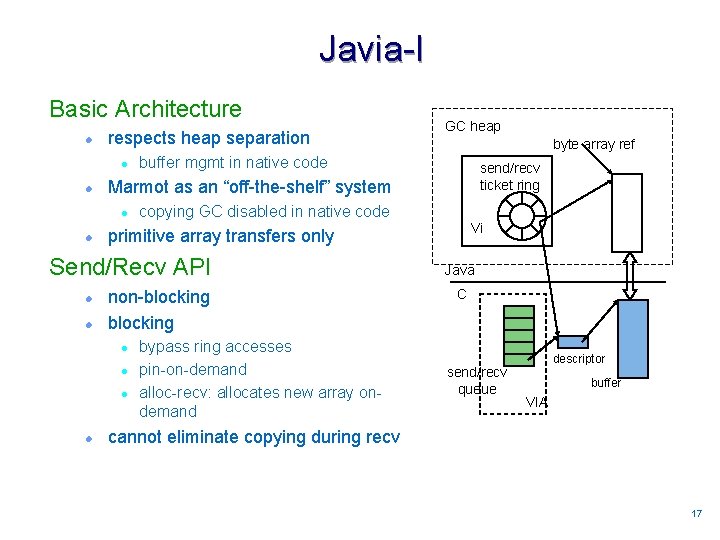

Javia-I

- Basic architecture
  - respects heap separation
    - buffer management in native code
    - Marmot as an "off-the-shelf" system
    - copying GC disabled in native code
  - primitive array transfers only
- Send/Recv API
  - non-blocking
  - bypass ring accesses
  - pin-on-demand
  - alloc-recv: allocates a new array on demand
    - cannot eliminate copying during recv

(Figure: the Java side holds the GC heap, a byte array ref, the send/recv ticket ring, and the Vi; the C side holds the send/recv queue, descriptors, and buffers on top of VIA.)
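The non-blocking send/recv path is organized around a ticket ring. The sketch below is a hypothetical, Java-only model of such a ring; `TicketRing`, `post`, `markComplete`, and `poll` are illustrative names, not Javia's API. Posting hands out a ticket, the (simulated) device marks tickets done, and polling retires them in FIFO order, which is an assumption of this sketch.

```java
/**
 * Hypothetical ring of tickets for non-blocking send/recv: post() hands out a ticket,
 * the device marks it complete, and poll() retires tickets in posting order.
 */
final class TicketRing {
    private final boolean[] complete;
    private long next = 0;   // next ticket number to hand out
    private long oldest = 0; // oldest ticket that has not been retired

    TicketRing(int capacity) {
        complete = new boolean[capacity];
    }

    /** Posts a request; returns its ticket, or -1 if every slot is still outstanding. */
    long post() {
        if (next - oldest == complete.length) return -1;
        complete[(int) (next % complete.length)] = false;
        return next++;
    }

    /** Called when the device finishes the request for ticket t. */
    void markComplete(long t) {
        if (t < oldest || t >= next) throw new IllegalArgumentException("unknown ticket " + t);
        complete[(int) (t % complete.length)] = true;
    }

    /** Non-blocking: retires and returns the oldest ticket if it is done, else -1. */
    long poll() {
        if (oldest == next || !complete[(int) (oldest % complete.length)]) return -1;
        return oldest++;
    }
}
```

A fixed ring keeps the Java side free of per-message allocation; a full ring simply makes `post` fail until earlier tickets are retired.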

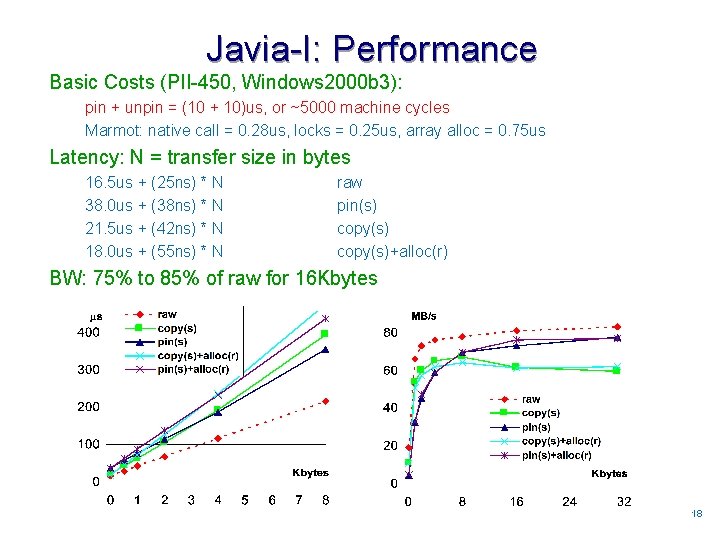

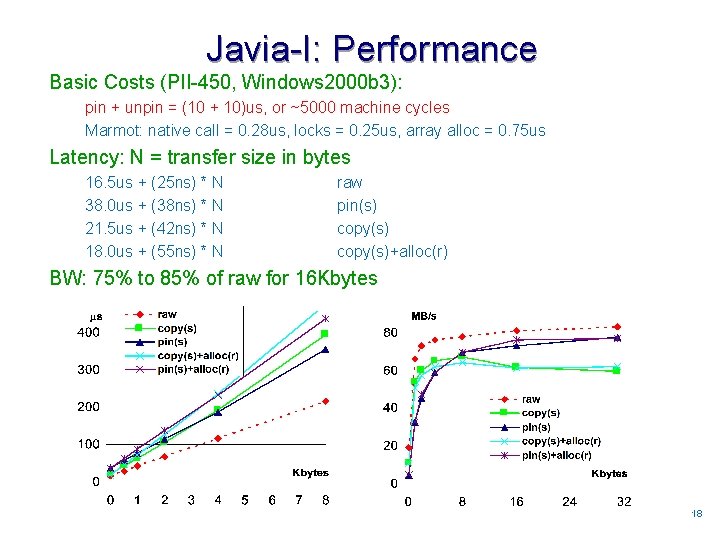

Javia-I: Performance

- Basic costs (PII-450, Windows 2000 b3):
  - pin + unpin = (10 + 10) µs, i.e. roughly 5000 machine cycles each at 450 MHz
  - Marmot: native call = 0.28 µs, locks = 0.25 µs, array alloc = 0.75 µs
- Latency (N = transfer size in bytes):
  - raw: 16.5 µs + (25 ns) * N
  - pin(s): 38.0 µs + (38 ns) * N
  - copy(s): 21.5 µs + (42 ns) * N
  - copy(s) + alloc(r): 18.0 µs + (55 ns) * N
- BW: 75% to 85% of raw for 16 KBytes

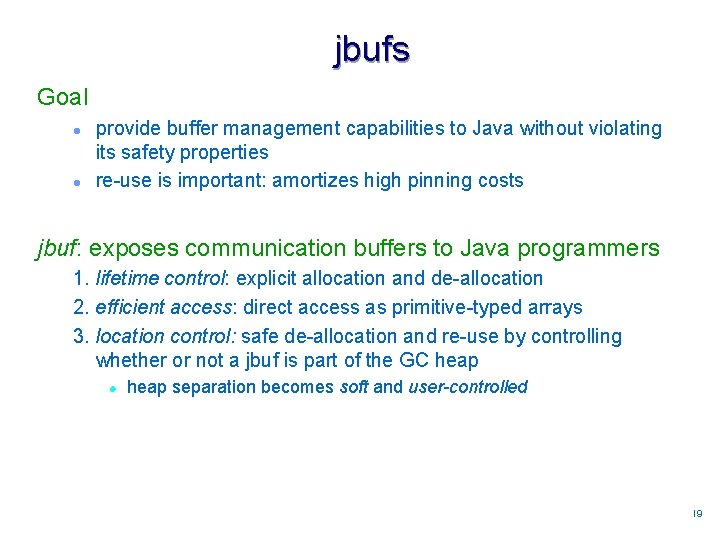

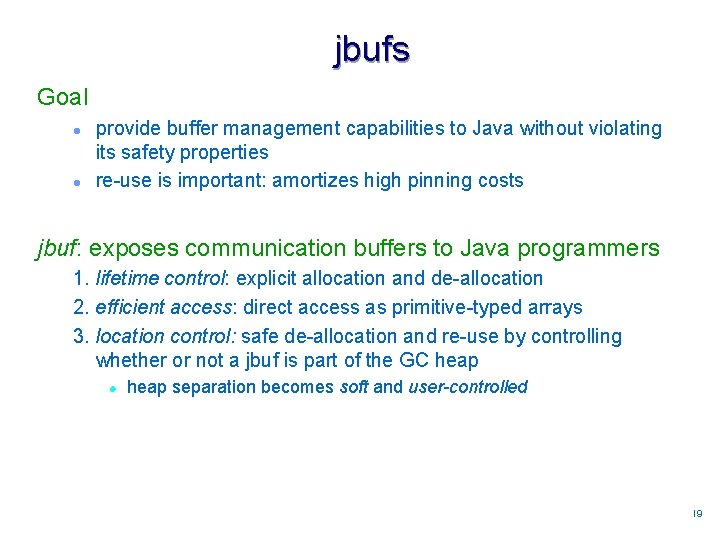

jbufs

- Goal
  - provide buffer management capabilities to Java without violating its safety properties
  - re-use is important: amortizes high pinning costs
- jbuf: exposes communication buffers to Java programmers
  1. lifetime control: explicit allocation and de-allocation
  2. efficient access: direct access as primitive-typed arrays
  3. location control: safe de-allocation and re-use by controlling whether or not a jbuf is part of the GC heap
     - heap separation becomes soft and user-controlled

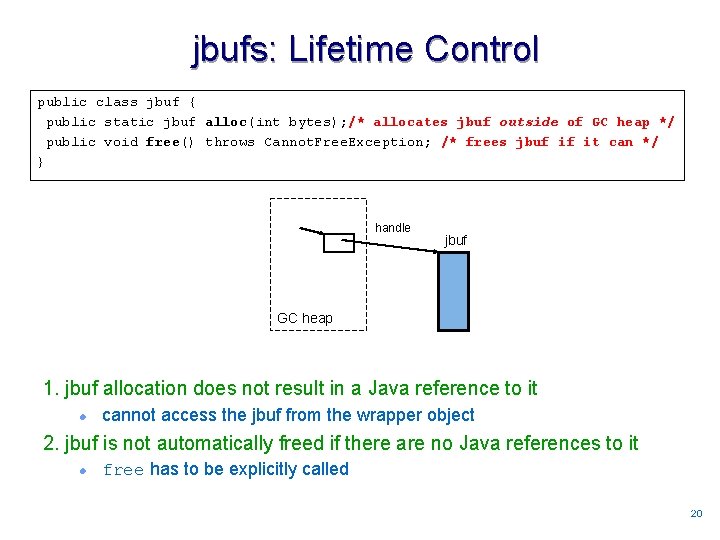

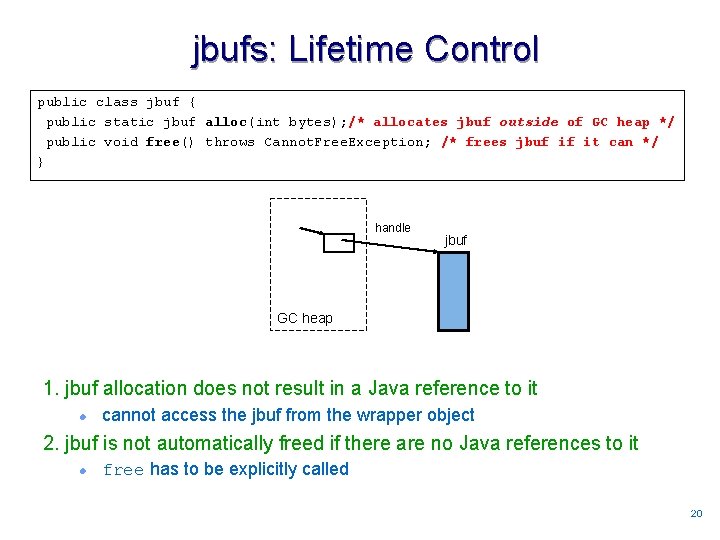

jbufs: Lifetime Control

    public class jbuf {
        public static jbuf alloc(int bytes);             /* allocates jbuf outside of GC heap */
        public void free() throws CannotFreeException;   /* frees jbuf if it can */
    }

(Figure: a handle in the GC heap refers to the jbuf, which lies outside the GC heap.)

1. jbuf allocation does not result in a Java reference to it
   - cannot access the jbuf from the wrapper object
2. jbuf is not automatically freed if there are no Java references to it
   - free has to be explicitly called

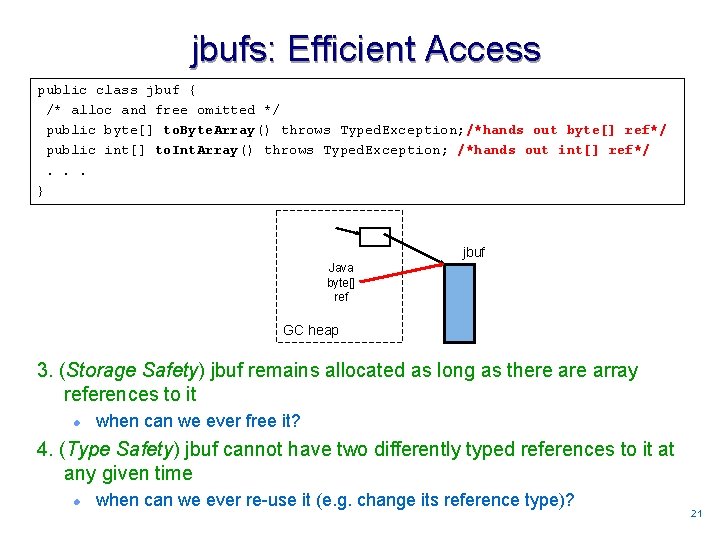

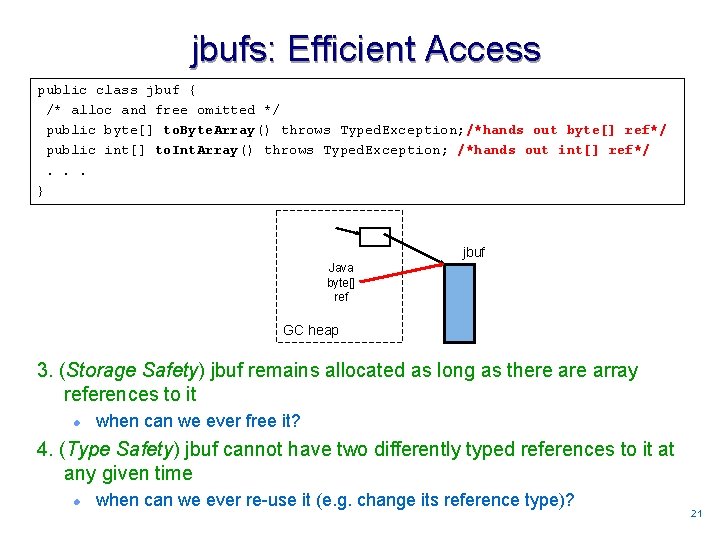

jbufs: Efficient Access

    public class jbuf {
        /* alloc and free omitted */
        public byte[] toByteArray() throws TypedException;  /* hands out byte[] ref */
        public int[] toIntArray() throws TypedException;    /* hands out int[] ref */
        ...
    }

(Figure: a Java byte[] ref in the GC heap points into the jbuf.)

3. (Storage safety) a jbuf remains allocated as long as there are array references to it
   - when can we ever free it?
4. (Type safety) a jbuf cannot have two differently typed references to it at any given time
   - when can we ever re-use it (e.g., change its reference type)?

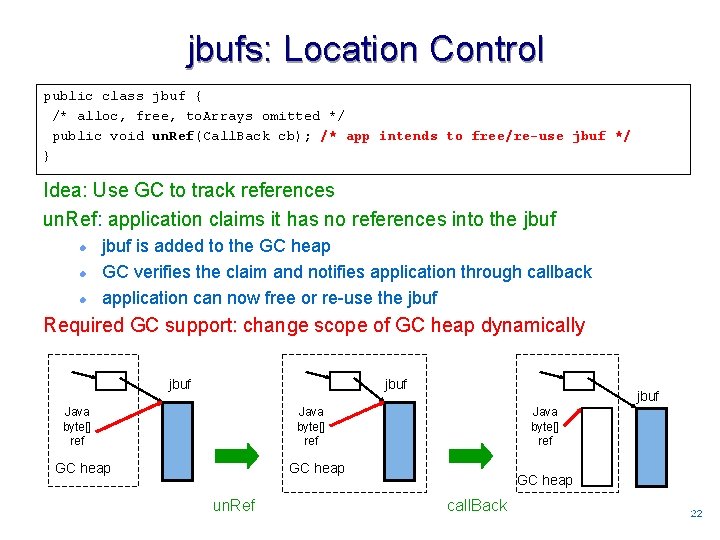

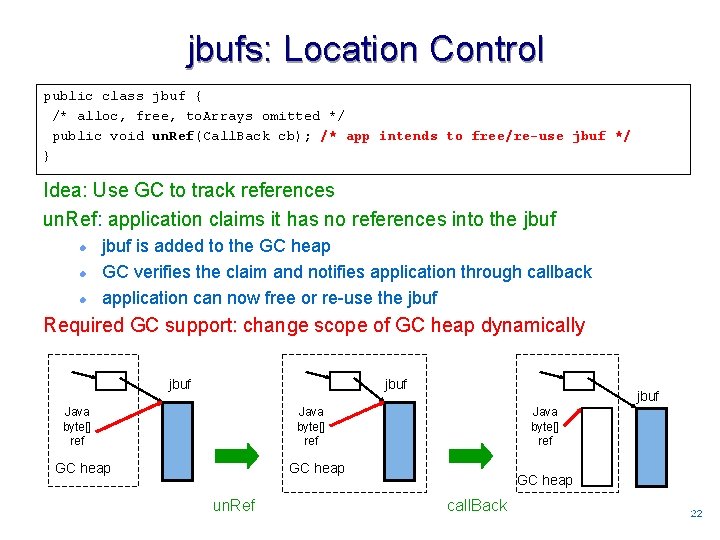

jbufs: Location Control

    public class jbuf {
        /* alloc, free, toArrays omitted */
        public void unRef(CallBack cb);   /* app intends to free/re-use jbuf */
    }

- Idea: use the GC to track references
- unRef: the application claims it has no references into the jbuf
  - the jbuf is added to the GC heap
  - the GC verifies the claim and notifies the application through a callback
  - the application can now free or re-use the jbuf
- Required GC support: change the scope of the GC heap dynamically

(Figure: after unRef, the jbuf and its byte[] ref are inside the GC heap, which later invokes callBack.)

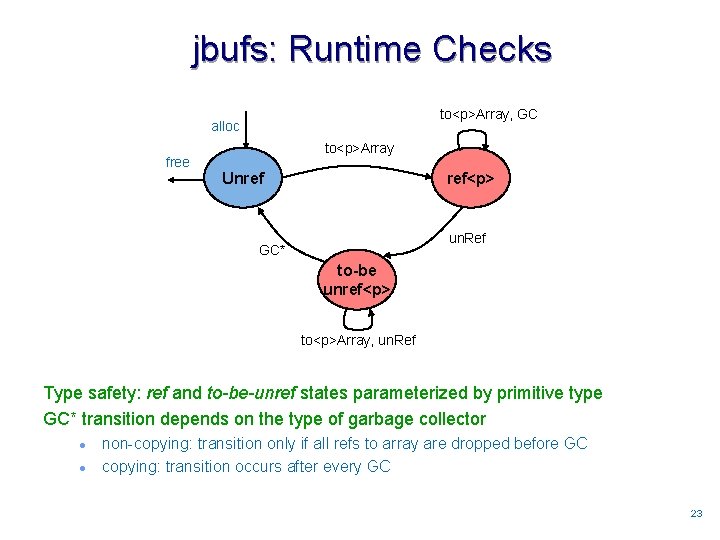

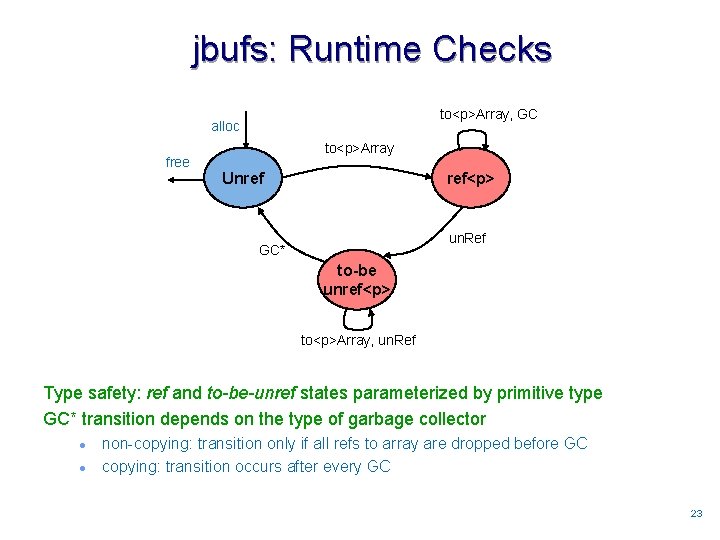

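jbufs: Runtime Checks

State diagram, reconstructed from the slide:

| From | Event | To |
|---|---|---|
| (new) | `alloc` | Unref |
| Unref | `to<p>Array` | `ref<p>` |
| `ref<p>` | `to<p>Array`, GC | `ref<p>` |
| `ref<p>` | `unRef` | `to-be-unref<p>` |
| `to-be-unref<p>` | `to<p>Array`, `unRef` | `to-be-unref<p>` |
| `to-be-unref<p>` | GC* | Unref |
| Unref | `free` | (deallocated) |

- Type safety: the ref and to-be-unref states are parameterized by primitive type p
- The GC* transition depends on the type of garbage collector:
  - non-copying: transition only if all refs to the array are dropped before GC
  - copying: transition occurs after every GC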
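The transitions above can be modeled as a small state machine. This sketch is hypothetical: `JbufModel`, `gc(copyingCollector, arrayRefsRemain)`, and the use of `IllegalStateException` in place of the slides' `TypedException`/`CannotFreeException` are assumptions. It tracks states only and does not pin, move, or free memory.

```java
/** Hypothetical model of the jbuf state machine; tracks states only. */
final class JbufModel {
    enum State { UNREF, REF, TO_BE_UNREF, FREED }

    private State state = State.UNREF; // alloc leaves the jbuf in Unref
    private Class<?> elemType;         // primitive type p while in ref<p> / to-be-unref<p>
    private Runnable callback;         // supplied to unRef, run when GC* fires

    State state() { return state; }

    /** to<p>Array: the first call fixes p; a differently typed view is rejected. */
    void toArray(Class<?> p) {
        if (state == State.FREED) throw new IllegalStateException("jbuf already freed");
        if (state == State.UNREF) {
            state = State.REF;
            elemType = p;
        } else if (elemType != p) {
            throw new IllegalStateException("TypedException: jbuf is viewed as " + elemType);
        }
    }

    /** unRef: the application claims it holds no more array references into the jbuf. */
    void unRef(Runnable cb) {
        if (state != State.REF && state != State.TO_BE_UNREF) {
            throw new IllegalStateException("unRef in state " + state);
        }
        state = State.TO_BE_UNREF;
        callback = cb;
    }

    /**
     * GC*: per the slide, a copying collector always fires the transition (presumably
     * because it copies any live array out of the jbuf); a non-copying one fires it
     * only if no array reference into the jbuf survived.
     */
    void gc(boolean copyingCollector, boolean arrayRefsRemain) {
        if (state != State.TO_BE_UNREF) return; // Unref and ref<p> are unchanged by GC
        if (copyingCollector || !arrayRefsRemain) {
            state = State.UNREF;
            elemType = null;
            Runnable cb = callback;
            callback = null;
            if (cb != null) cb.run();
        }
    }

    /** free: legal only in Unref. */
    void free() {
        if (state != State.UNREF) throw new IllegalStateException("CannotFreeException in " + state);
        state = State.FREED;
    }
}
```

Once the callback has run, the jbuf is back in Unref and may be viewed with a different primitive type or freed.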

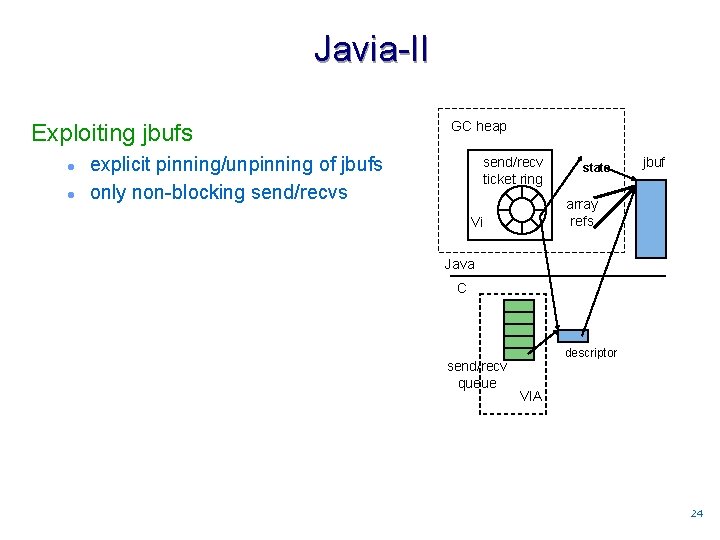

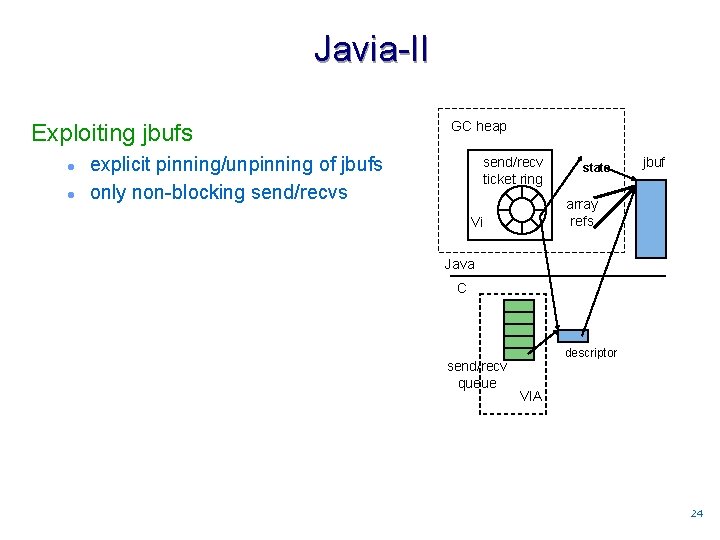

Javia-II

- Exploiting jbufs
  - explicit pinning/unpinning of jbufs
  - only non-blocking send/recvs

(Figure: the Java side holds the GC heap, jbufs with their state and array refs, the send/recv ticket ring, and the Vi; the C side holds the send/recv queue and descriptors on top of VIA.)

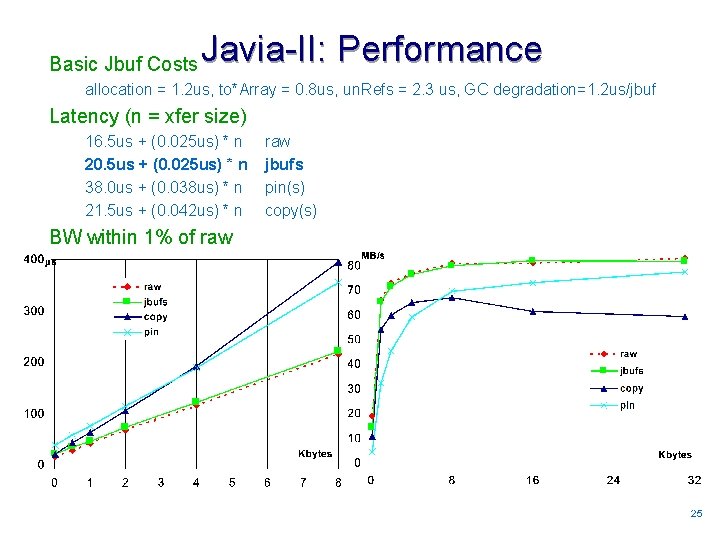

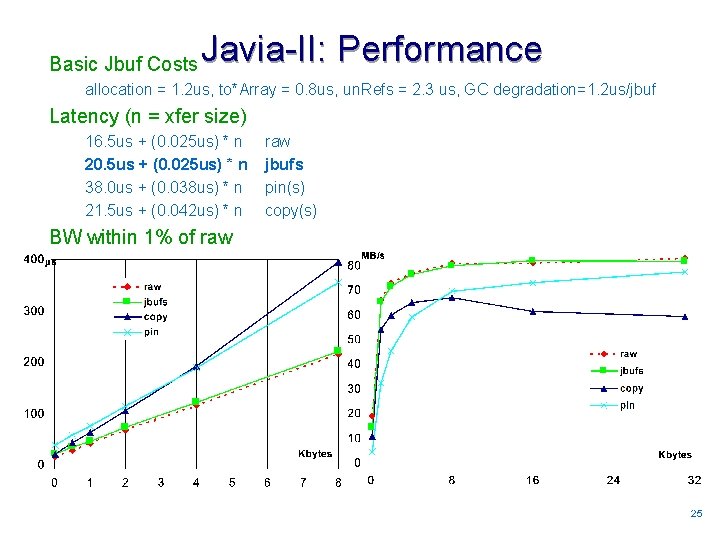

Javia-II: Performance

- Basic jbuf costs:
  - allocation = 1.2 µs, to*Array = 0.8 µs, unRefs = 2.3 µs, GC degradation = 1.2 µs/jbuf
- Latency (n = transfer size in bytes):
  - raw: 16.5 µs + (0.025 µs) * n
  - jbufs: 20.5 µs + (0.025 µs) * n
  - pin(s): 38.0 µs + (0.038 µs) * n
  - copy(s): 21.5 µs + (0.042 µs) * n
- BW within 1% of raw
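Both performance slides fit latency to a straight line, t(n) = base + perByte × n. The hypothetical helper below plugs in the slides' coefficients (the `copy(s)+alloc(r)` line comes from the Javia-I slide) to compare variants. For example, jbufs add a constant 4 µs over raw regardless of n, and `copy(s)+alloc(r)` beats `copy(s)` only below roughly 269 bytes.

```java
/** Linear latency model t(n) = base + perByte * n, using the slides' fitted numbers. */
final class LatencyModel {
    final String name;
    final double baseUs;    // fixed per-message cost in microseconds
    final double perByteUs; // incremental cost per byte in microseconds

    LatencyModel(String name, double baseUs, double perByteUs) {
        this.name = name;
        this.baseUs = baseUs;
        this.perByteUs = perByteUs;
    }

    static final LatencyModel RAW = new LatencyModel("raw", 16.5, 0.025);
    static final LatencyModel JBUFS = new LatencyModel("jbufs", 20.5, 0.025);
    static final LatencyModel PIN_S = new LatencyModel("pin(s)", 38.0, 0.038);
    static final LatencyModel COPY_S = new LatencyModel("copy(s)", 21.5, 0.042);
    static final LatencyModel COPY_S_ALLOC_R = new LatencyModel("copy(s)+alloc(r)", 18.0, 0.055);

    /** Predicted latency in microseconds for an n-byte transfer. */
    double latencyUs(int n) {
        return baseUs + perByteUs * n;
    }

    /** Transfer size where both models predict the same latency; NaN if the lines are parallel. */
    double crossoverBytes(LatencyModel other) {
        double slopeDiff = other.perByteUs - perByteUs;
        if (slopeDiff == 0.0) return Double.NaN;
        return (baseUs - other.baseUs) / slopeDiff;
    }
}
```

Parallel lines (raw and jbufs share a slope) never cross, which is the model's way of saying the jbuf overhead is purely per-message.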

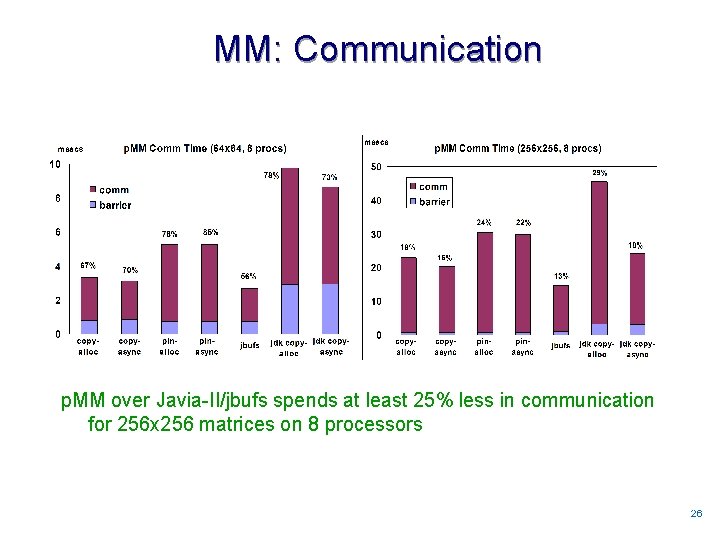

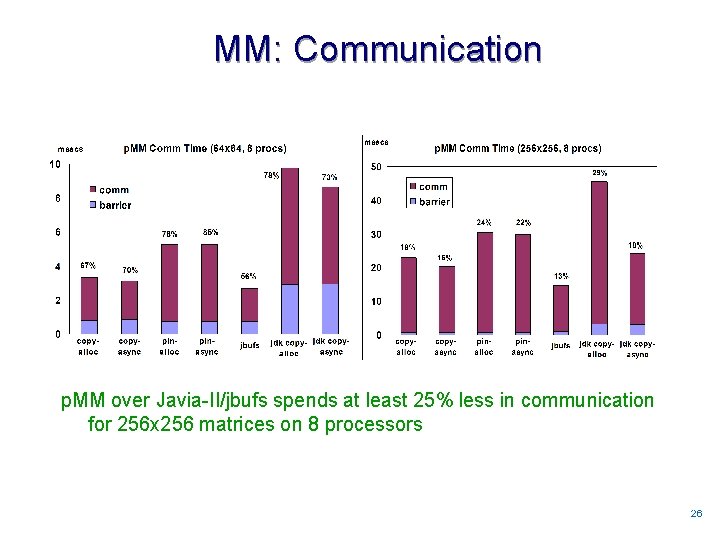

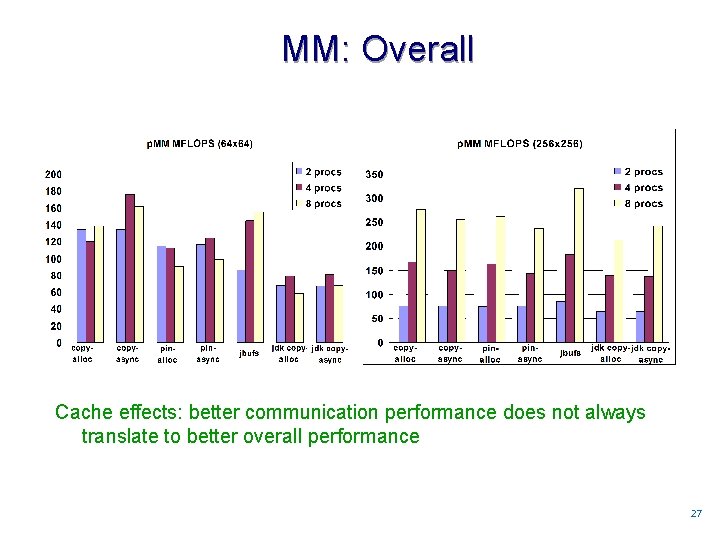

MM: Communication

pMM over Javia-II/jbufs spends at least 25% less time in communication for 256x256 matrices on 8 processors.

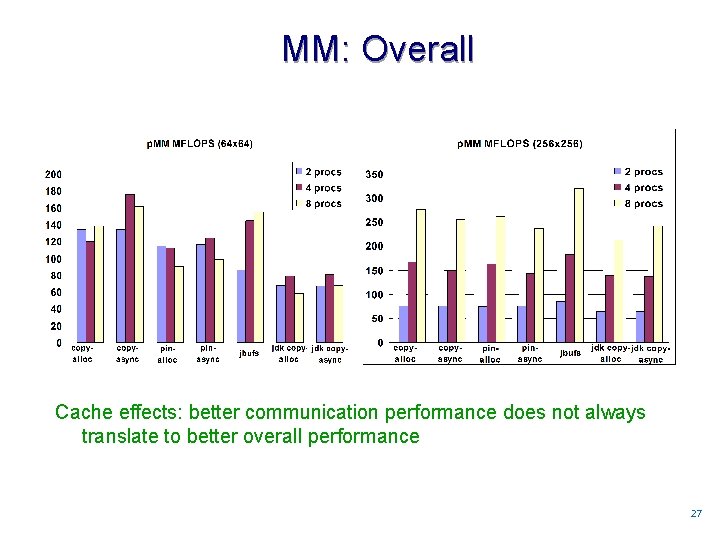

MM: Overall

Cache effects: better communication performance does not always translate into better overall performance.

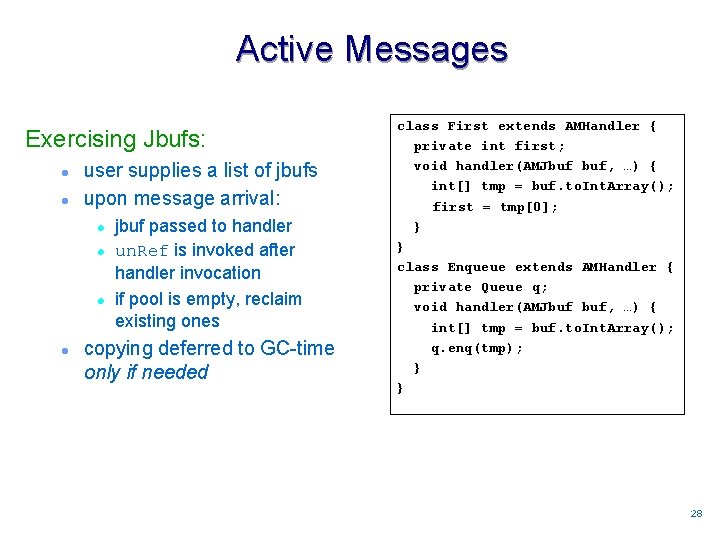

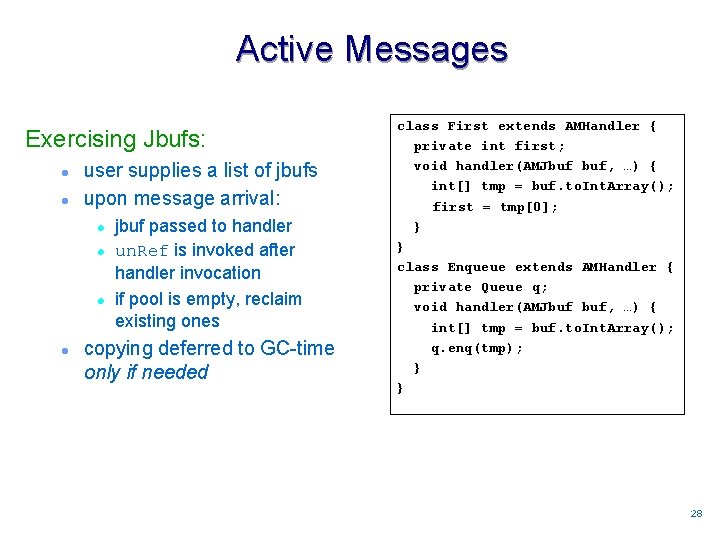

Active Messages

- Exercising jbufs:
  - the user supplies a list of jbufs
  - upon message arrival:
    - the jbuf is passed to the handler
    - unRef is invoked after handler invocation
    - if the pool is empty, reclaim existing ones
  - copying is deferred to GC time, only if needed

Handler examples:

    class First extends AMHandler {
        private int first;
        void handler(AMJbuf buf, …) {
            int[] tmp = buf.toIntArray();
            first = tmp[0];
        }
    }

    class Enqueue extends AMHandler {
        private Queue q;
        void handler(AMJbuf buf, …) {
            int[] tmp = buf.toIntArray();
            q.enq(tmp);
        }
    }
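The dispatch side of an AM layer (handler-id lookup plus a pool of receive buffers) can be sketched in plain Java. This is a hypothetical model, not Jam's API: `IntHandler`, `AmDispatcher`, and `deliver` are illustrative names. Because ordinary Java cannot ask the GC whether a handler kept a reference, a handler that wants to keep data (like `Enqueue`) must copy it here; that is the copy jbufs defer to GC time.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical handler interface standing in for AMHandler. */
interface IntHandler {
    void handle(int[] buf, int len);
}

/** Minimal AM-style dispatcher: handler-id lookup plus a fixed pool of receive buffers. */
final class AmDispatcher {
    private final List<IntHandler> handlers = new ArrayList<>();
    private final ArrayDeque<int[]> pool = new ArrayDeque<>();

    AmDispatcher(int poolSize, int bufInts) {
        for (int i = 0; i < poolSize; i++) pool.push(new int[bufInts]);
    }

    /** Registers a handler and returns its id. */
    int register(IntHandler h) {
        handlers.add(h);
        return handlers.size() - 1;
    }

    int freeBuffers() {
        return pool.size();
    }

    /** Simulates arrival of a message for handler id: take a buffer, run the handler, return it. */
    void deliver(int id, int[] payload) {
        int[] buf = pool.poll();
        if (buf == null) throw new IllegalStateException("receive pool empty");
        if (payload.length > buf.length) {
            pool.push(buf);
            throw new IllegalArgumentException("message too large");
        }
        System.arraycopy(payload, 0, buf, 0, payload.length); // stands in for the NI's DMA
        try {
            handlers.get(id).handle(buf, payload.length);      // handler-id lookup
        } finally {
            pool.push(buf); // analogue of unRef after the handler: buffer returns to the pool
        }
    }
}
```

Handlers in this sketch must treat `buf` as borrowed, since the same array is handed to the next message.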

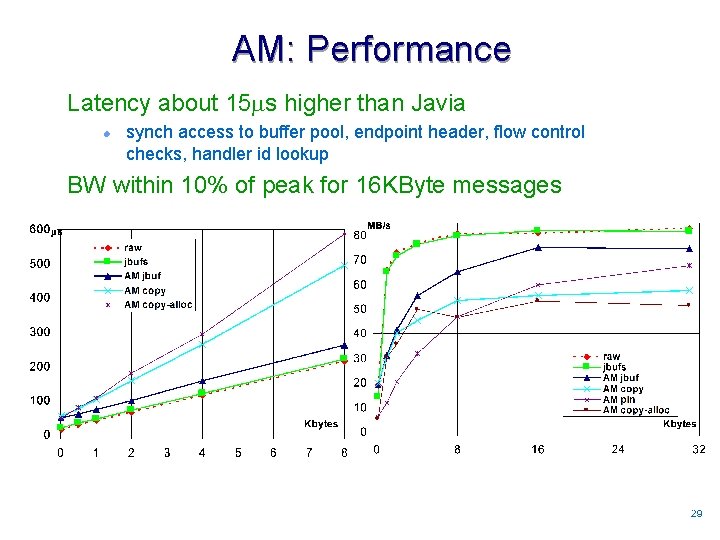

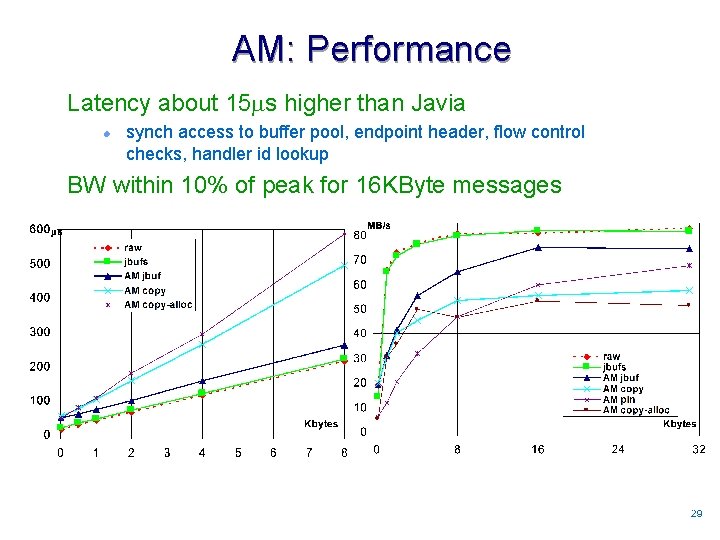

AM: Performance

- Latency about 15 µs higher than Javia
  - synchronized access to the buffer pool, endpoint header, flow-control checks, handler-id lookup
- BW within 10% of peak for 16 KByte messages

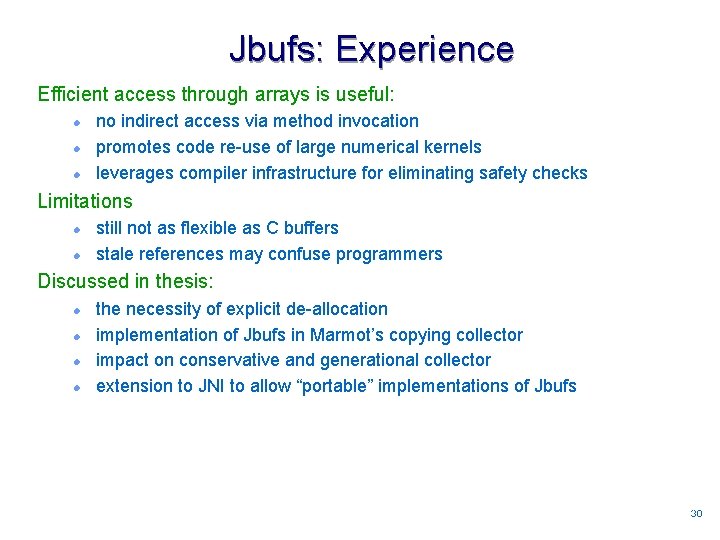

Jbufs: Experience

- Efficient access through arrays is useful:
  - no indirect access via method invocation
  - promotes code re-use of large numerical kernels
  - leverages compiler infrastructure for eliminating safety checks
- Limitations
  - still not as flexible as C buffers
  - stale references may confuse programmers
- Discussed in thesis:
  - the necessity of explicit de-allocation
  - implementation of Jbufs in Marmot's copying collector
  - impact on conservative and generational collectors
  - extension to JNI to allow "portable" implementations of Jbufs

Outline

- Thesis Overview
  - GC/native heap separation, object serialization
- Experimental Setup: VI Architecture and Marmot
- Part I: Array Transfers
  - (1) Javia-I: Java Interface to VI Architecture
    - respects heap separation
  - (2) Jbufs: Safe and Explicit Management of Buffers
    - Javia-II, matrix multiplication, Active Messages
- Part II: Object Transfers
  - (3) A Case For Specialization on Homogeneous Clusters
    - micro-benchmarks, RMI using Javia-I/II, impact on application suite
  - (4) Jstreams: in-place de-serialization
    - micro-benchmarks, RMI using Javia-III, impact on application suite
- Conclusions

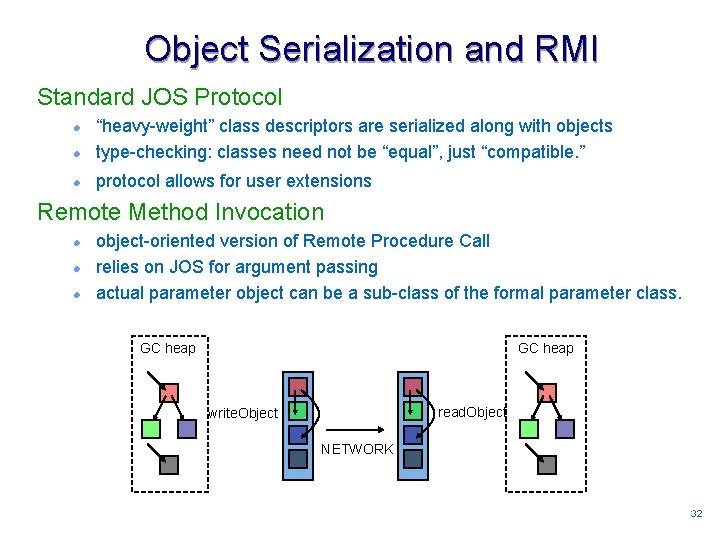

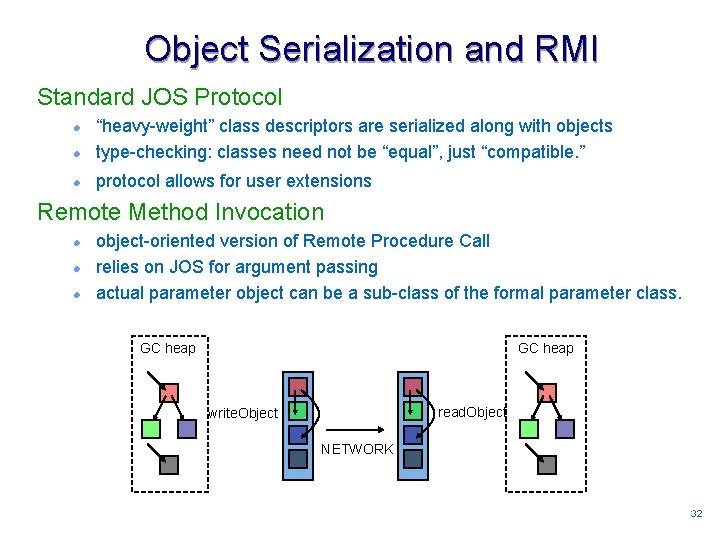

Object Serialization and RMI

- Standard JOS protocol
  - "heavy-weight" class descriptors are serialized along with objects
  - type-checking: classes need not be "equal", just "compatible"
  - protocol allows for user extensions
- Remote Method Invocation
  - object-oriented version of Remote Procedure Call
  - relies on JOS for argument passing
  - the actual parameter object can be a sub-class of the formal parameter class

(Figure: writeObject on the sender's GC heap and readObject on the receiver's GC heap, connected by the NETWORK.)
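The writeObject/readObject round trip can be reproduced with the standard `java.io` classes on a modern JDK (not Marmot). The helper class name is illustrative. Serializing a `double[64]` produces more than its 512 payload bytes because the stream also carries a header and the array's class descriptor; the exact overhead depends on the JDK, and the receiver always gets a freshly allocated copy.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.UncheckedIOException;

/** Round-trips an object through standard Java Object Serialization (JOS). */
final class JosRoundTrip {
    /** writeObject side: class descriptors are written along with the object's data. */
    static byte[] serialize(Object obj) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(obj);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    /** readObject side: allocates a fresh copy of every object on the receiving heap. */
    static Object deserialize(byte[] wire) {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(wire))) {
            return in.readObject();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }
}
```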

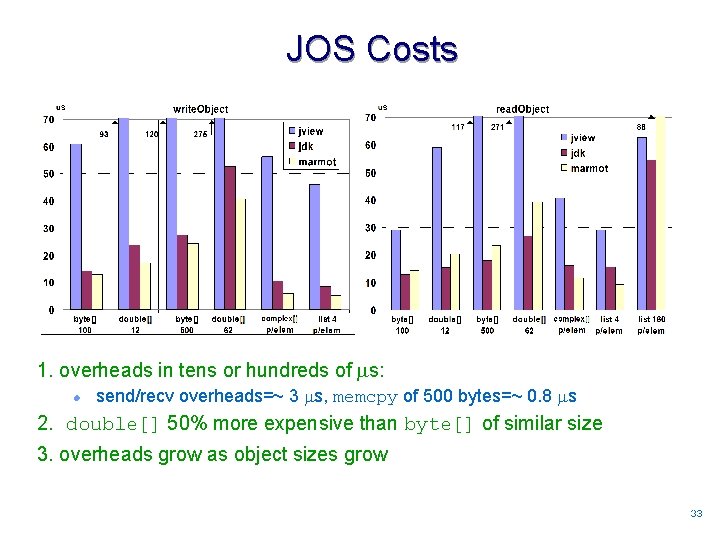

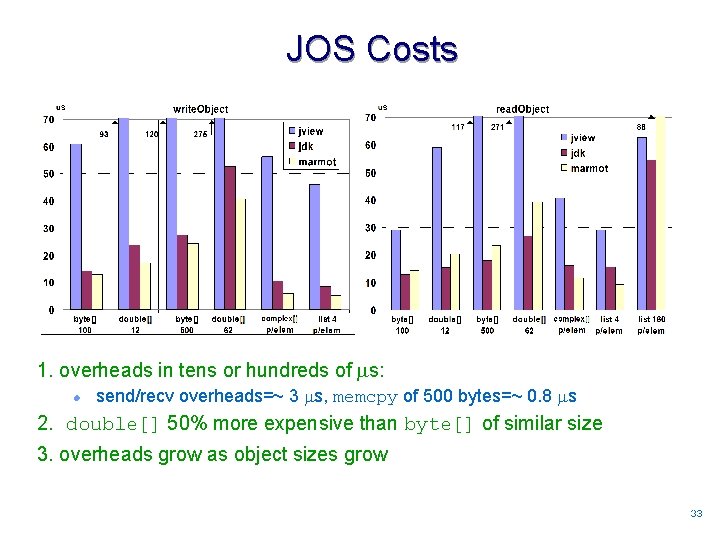

JOS Costs

1. Overheads in the tens or hundreds of µs:
   - send/recv overheads = ~3 µs, memcpy of 500 bytes = ~0.8 µs
2. double[] is 50% more expensive than a byte[] of similar size
3. Overheads grow as object sizes grow

Impact of Marmot's optimizations:

- Method inlining: up to 66% improvement (already deployed)
- No synchronization whatsoever: up to 21% improvement
- No safety checks whatsoever: up to 15% combined
- Better compilation technology is unlikely to reduce overheads substantially

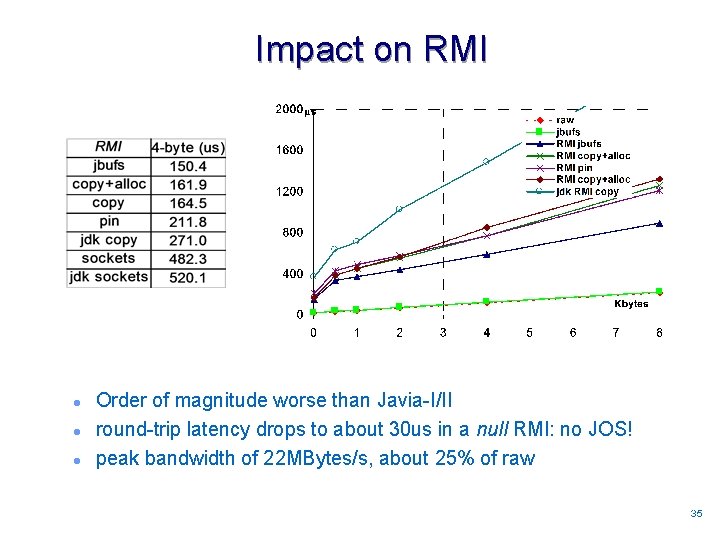

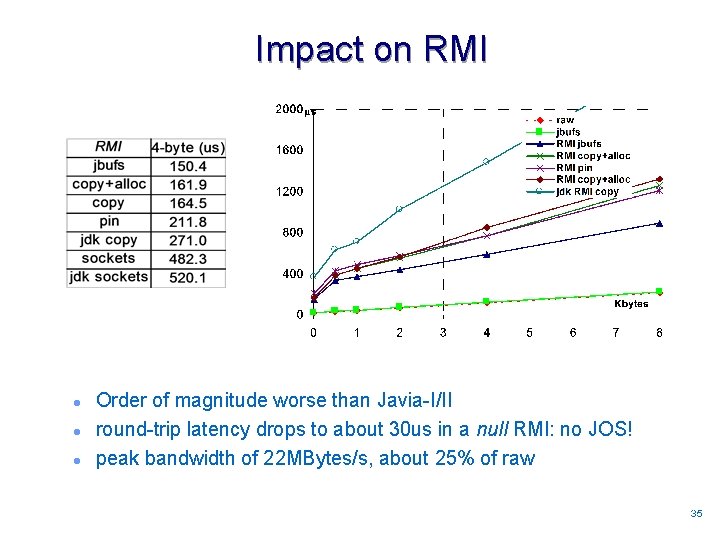

Impact on RMI

- Order of magnitude worse than Javia-I/II
- Round-trip latency drops to about 30 µs in a null RMI: no JOS!
- Peak bandwidth of 22 MBytes/s, about 25% of raw

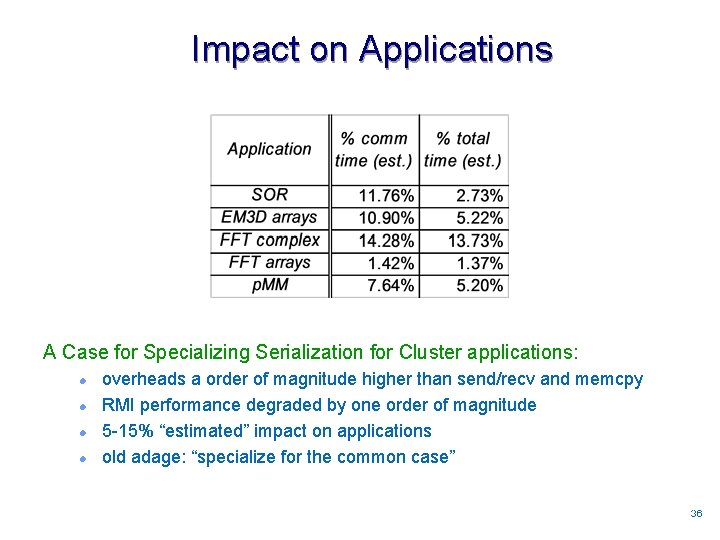

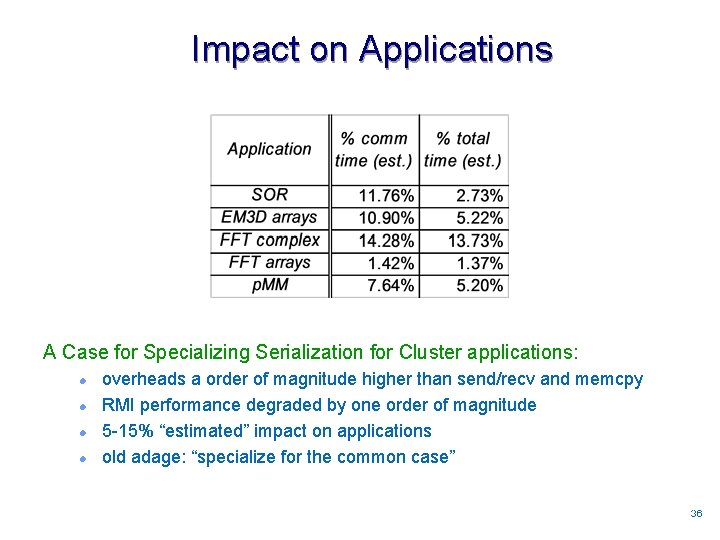

Impact on Applications

- A case for specializing serialization for cluster applications:
  - overheads an order of magnitude higher than send/recv and memcpy
  - RMI performance degraded by one order of magnitude
  - 5-15% "estimated" impact on applications
  - old adage: "specialize for the common case"

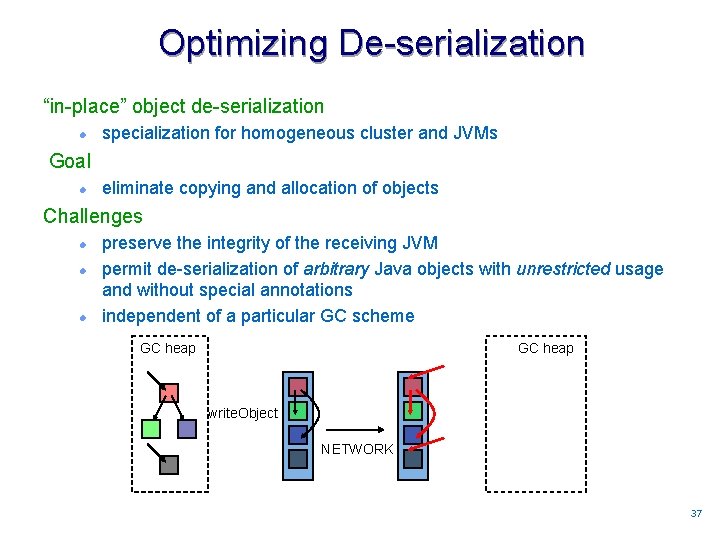

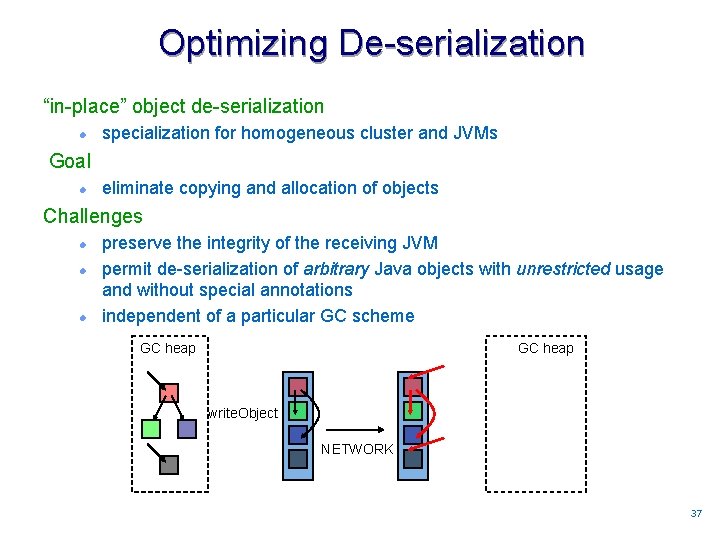

Optimizing De-serialization

- "In-place" object de-serialization
  - specialization for homogeneous clusters and JVMs
- Goal
  - eliminate copying and allocation of objects
- Challenges
  - preserve the integrity of the receiving JVM
  - permit de-serialization of arbitrary Java objects with unrestricted usage and without special annotations
  - independent of a particular GC scheme

(Figure: writeObject on the sender's GC heap, across the NETWORK, directly into the receiver's GC heap.)

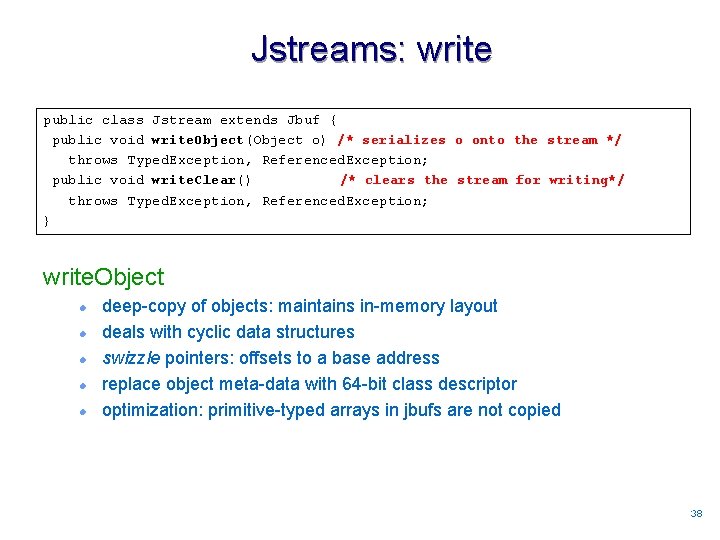

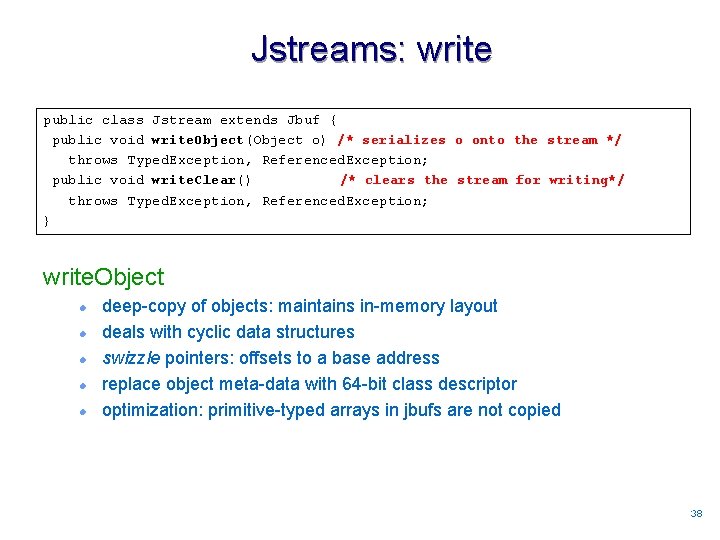

Jstreams: write

    public class Jstream extends Jbuf {
        public void writeObject(Object o)    /* serializes o onto the stream */
            throws TypedException, ReferencedException;
        public void writeClear()             /* clears the stream for writing */
            throws TypedException, ReferencedException;
    }

writeObject:

- deep-copy of objects: maintains the in-memory layout
  - deals with cyclic data structures
- swizzles pointers: offsets to a base address
- replaces object meta-data with a 64-bit class descriptor
- optimization: primitive-typed arrays in jbufs are not copied
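Pointer swizzling can be illustrated without JVM internals by flattening a small object graph into an `int[]`. In this hypothetical sketch, `ListCell`, `Swizzler`, the three-word layout, and `CLASS_ID` are assumptions; the real `writeObject` copies the JVM's own object layout. Every reference becomes an offset from the start of the stream, and an identity map ensures each object is written once, so cycles terminate.

```java
import java.util.ArrayDeque;
import java.util.IdentityHashMap;
import java.util.Map;

/** Hypothetical heap object: one cell of a (possibly cyclic) linked list. */
final class ListCell {
    int value;
    ListCell next;
    ListCell(int value, ListCell next) { this.value = value; this.next = next; }
}

/** Each cell becomes three words: [CLASS_ID, value, offset of next cell or NULL_OFFSET]. */
final class Swizzler {
    static final int CLASS_ID = 0x0CE11; // stands in for the 64-bit class descriptor
    static final int NULL_OFFSET = -1;
    static final int WORDS = 3;

    static int[] write(ListCell root) {
        if (root == null) return new int[0];
        // Pass 1: give every reachable cell one offset; the identity map makes cycles terminate.
        Map<ListCell, Integer> offsets = new IdentityHashMap<>();
        ArrayDeque<ListCell> work = new ArrayDeque<>();
        offsets.put(root, 0);
        work.add(root);
        while (!work.isEmpty()) {
            ListCell c = work.poll();
            if (c.next != null && !offsets.containsKey(c.next)) {
                offsets.put(c.next, offsets.size() * WORDS);
                work.add(c.next);
            }
        }
        // Pass 2: copy fields, replacing each reference by its offset from the stream base.
        int[] stream = new int[offsets.size() * WORDS];
        for (Map.Entry<ListCell, Integer> e : offsets.entrySet()) {
            ListCell c = e.getKey();
            int off = e.getValue();
            stream[off] = CLASS_ID;
            stream[off + 1] = c.value;
            stream[off + 2] = (c.next == null) ? NULL_OFFSET : offsets.get(c.next);
        }
        return stream;
    }
}
```

Because offsets are relative to the stream base, the receiver can relocate the stream anywhere and still resolve every pointer.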

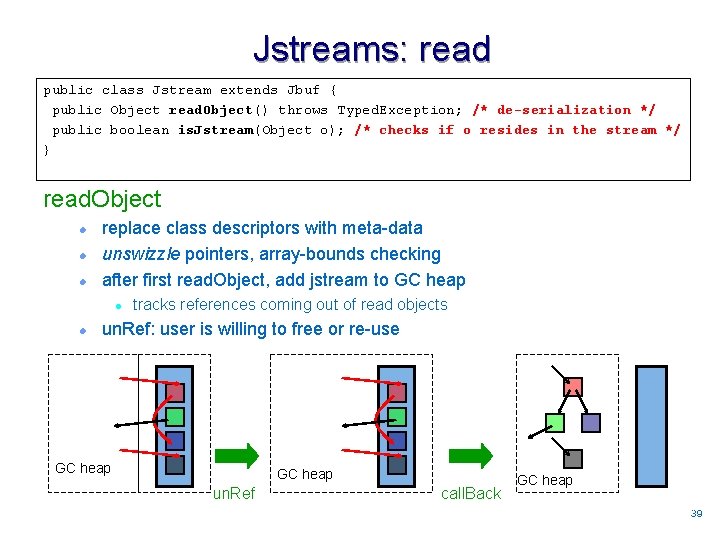

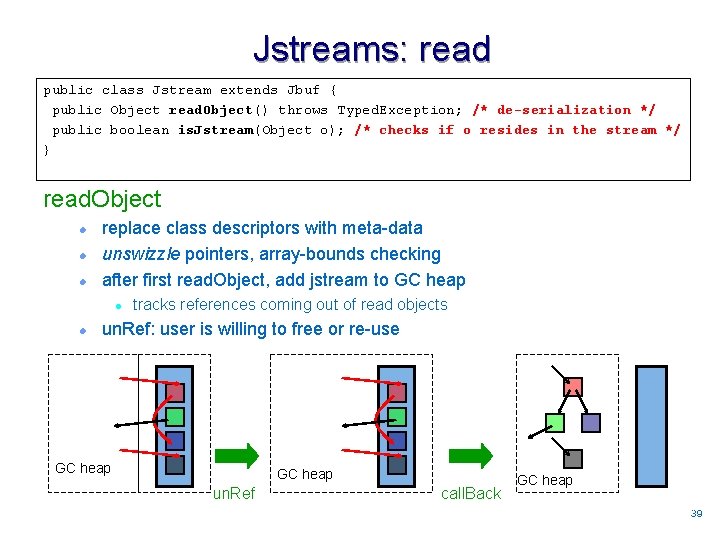

Jstreams: read

    public class Jstream extends Jbuf {
        public Object readObject() throws TypedException;  /* de-serialization */
        public boolean isJstream(Object o);                 /* checks if o resides in the stream */
    }

readObject:

- replaces class descriptors with meta-data
- unswizzles pointers, with array-bounds checking
- after the first readObject, adds the jstream to the GC heap
  - tracks references coming out of read objects
- unRef: the user is willing to free or re-use the jstream

(Figure: after readObject the jstream is part of the GC heap; unRef leads to a callBack from the GC.)
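On the receiving side, the checks listed above (class descriptors, unswizzling with bounds checks) can be sketched against a hypothetical three-word layout, `[CLASS_ID, value, next offset or -1]`. Plain Java cannot reinterpret a buffer as objects, so unlike an in-place jstream this version allocates one `Node` per cell. It only shows how every offset is validated (range, alignment, descriptor) before it becomes a reference again. `Node`, `Unswizzler`, and `CLASS_ID` are illustrative names.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical receiving-side cell type. */
final class Node {
    final int value;
    Node next;
    Node(int value) { this.value = value; }
}

/** Rebuilds a linked list from a swizzled int stream, validating every offset first. */
final class Unswizzler {
    static final int CLASS_ID = 0x0CE11;
    static final int NULL_OFFSET = -1;
    static final int WORDS = 3;

    static Node read(int[] stream) {
        if (stream.length == 0) return null;
        Map<Integer, Node> byOffset = new HashMap<>();
        Node root = nodeAt(stream, 0, byOffset);
        Node cur = root;
        int off = 0;
        while (stream[off + 2] != NULL_OFFSET) {
            int nextOff = stream[off + 2];
            boolean seen = byOffset.containsKey(nextOff);
            Node next = nodeAt(stream, nextOff, byOffset);
            cur.next = next; // unswizzle: offset -> reference
            if (seen) break; // cycle back into an already rebuilt cell
            cur = next;
            off = nextOff;
        }
        return root;
    }

    /** Checks bounds, alignment, and class descriptor before trusting an offset. */
    private static Node nodeAt(int[] stream, int off, Map<Integer, Node> byOffset) {
        if (off < 0 || off % WORDS != 0 || off + WORDS > stream.length || stream[off] != CLASS_ID) {
            throw new IllegalArgumentException("corrupt stream at offset " + off);
        }
        Node n = byOffset.get(off);
        if (n == null) {
            n = new Node(stream[off + 1]);
            byOffset.put(off, n);
        }
        return n;
    }
}
```

Rejecting a malformed offset up front is what keeps a corrupted or malicious message from forging a reference on the receiving JVM.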

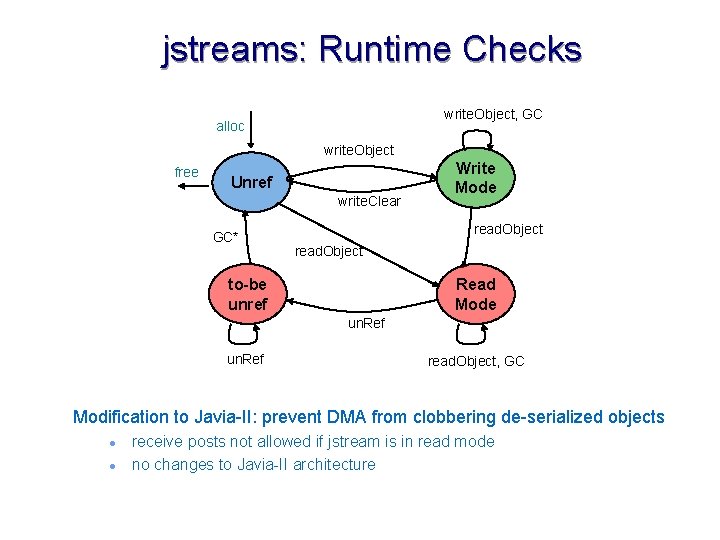

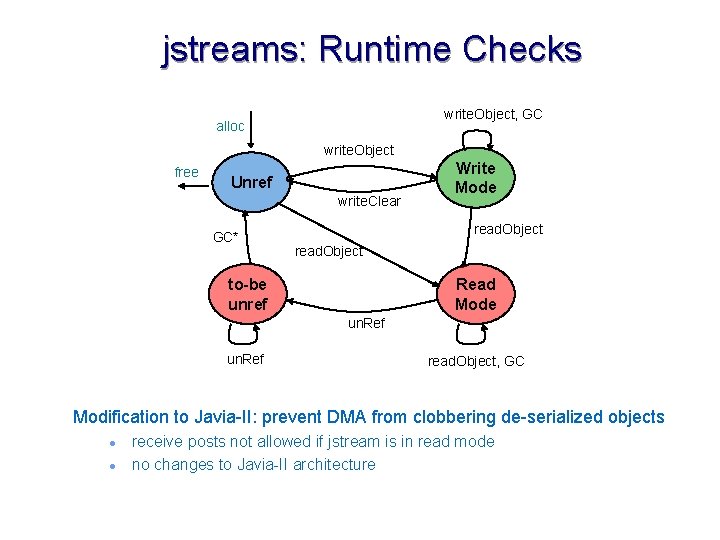

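jstreams: Runtime Checks

State diagram, reconstructed from the slide:

| From | Event | To |
|---|---|---|
| (new) | `alloc` | Unref |
| Unref | `writeObject` | Write Mode |
| Write Mode | `writeObject`, GC | Write Mode |
| Write Mode | `writeClear` | Unref |
| Unref | `readObject` | Read Mode |
| Read Mode | `readObject`, GC | Read Mode |
| Read Mode | `unRef` | to-be-unref |
| to-be-unref | GC* | Unref |
| Unref | `free` | (deallocated) |

Modification to Javia-II: prevent DMA from clobbering de-serialized objects

- receive posts are not allowed if the jstream is in read mode
- no changes to the Javia-II architecture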
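The jstream modes, together with the Javia-II rule that no receive may be posted in read mode, can be modeled the same way. This hypothetical `JstreamModel` follows the reconstructed table and tracks states only. As a conservative assumption it allows receive posts only in Unref, so it also refuses them in write and to-be-unref modes, which the slide does not specify.

```java
/** Hypothetical model of the jstream modes; tracks states only. */
final class JstreamModel {
    enum Mode { UNREF, WRITE, READ, TO_BE_UNREF, FREED }

    private Mode mode = Mode.UNREF; // alloc leaves the jstream in Unref
    private Runnable callback;

    Mode mode() { return mode; }

    private void require(boolean ok, String op) {
        if (!ok) throw new IllegalStateException(op + " not allowed in mode " + mode);
    }

    void writeObject() {
        require(mode == Mode.UNREF || mode == Mode.WRITE, "writeObject");
        mode = Mode.WRITE;
    }

    void writeClear() {
        require(mode == Mode.WRITE, "writeClear");
        mode = Mode.UNREF;
    }

    /** The first readObject is where the real system adds the jstream to the GC heap. */
    void readObject() {
        require(mode == Mode.UNREF || mode == Mode.READ, "readObject");
        mode = Mode.READ;
    }

    void unRef(Runnable cb) {
        require(mode == Mode.READ, "unRef");
        mode = Mode.TO_BE_UNREF;
        callback = cb;
    }

    /** GC*: leaves to-be-unref once no references into the stream's objects survive. */
    void gc(boolean referencesRemain) {
        if (mode == Mode.TO_BE_UNREF && !referencesRemain) {
            mode = Mode.UNREF;
            Runnable cb = callback;
            callback = null;
            if (cb != null) cb.run();
        }
    }

    void free() {
        require(mode == Mode.UNREF, "free");
        mode = Mode.FREED;
    }

    /** Conservative Javia-II check: DMA may target the stream only when nothing can be live in it. */
    boolean canPostReceive() {
        return mode == Mode.UNREF;
    }
}
```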

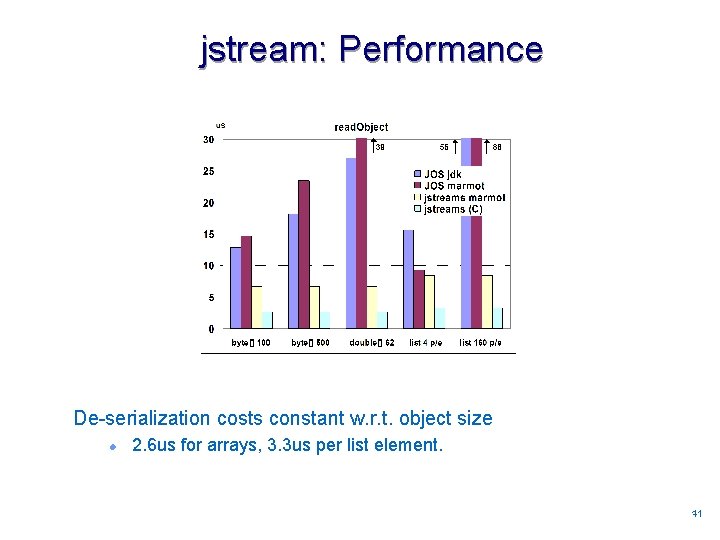

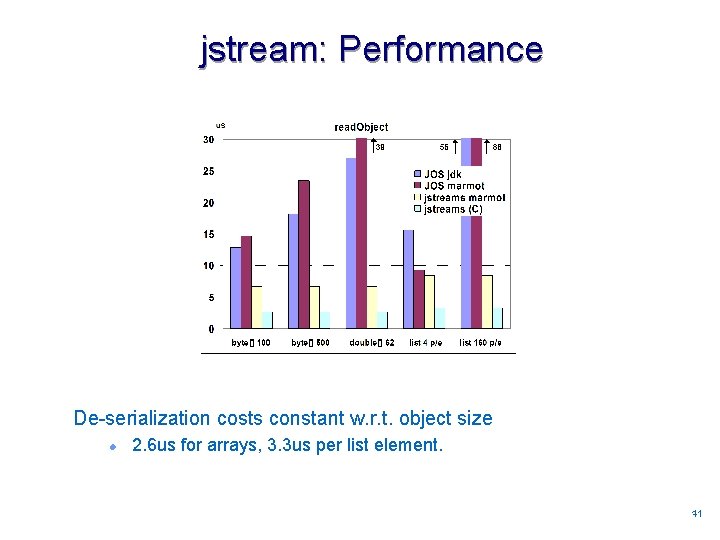

jstream: Performance

- De-serialization costs are constant w.r.t. object size
  - 2.6 µs for arrays, 3.3 µs per list element

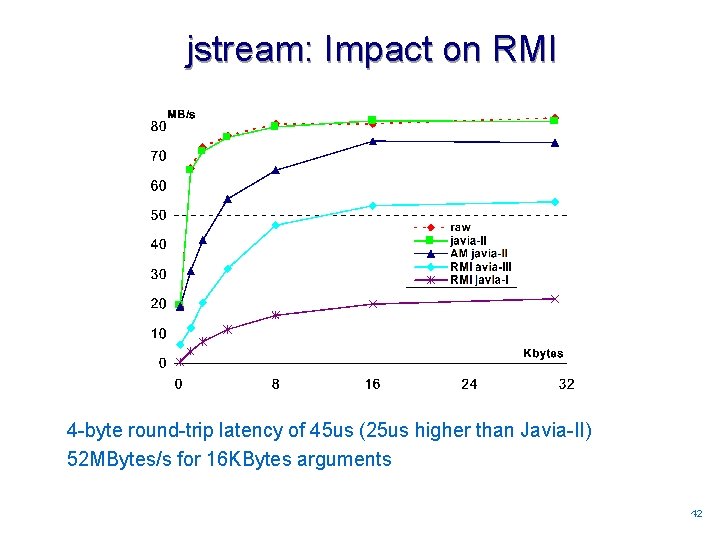

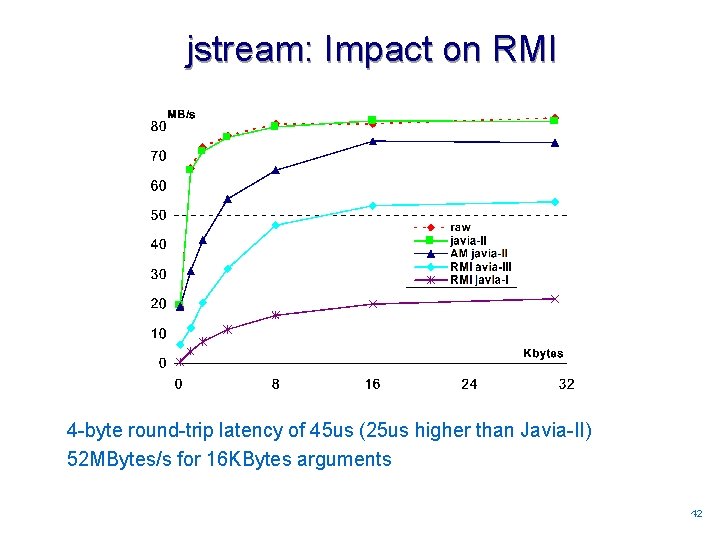

jstream: Impact on RMI

- 4-byte round-trip latency of 45 µs (25 µs higher than Javia-II)
- 52 MBytes/s for 16 KByte arguments

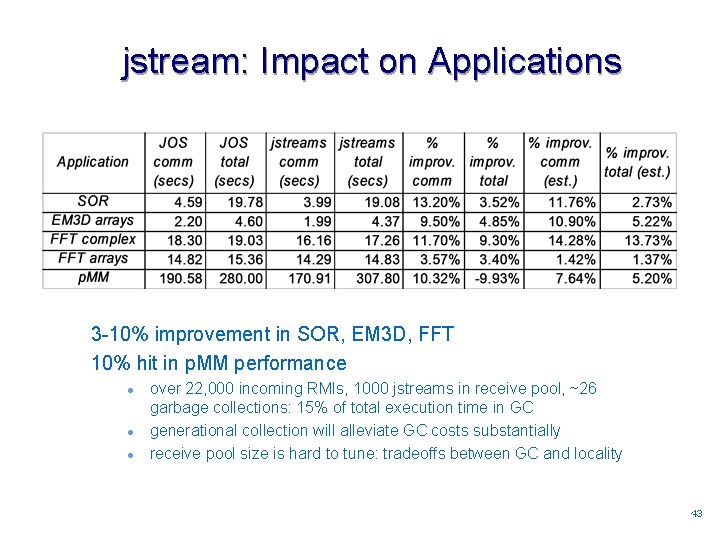

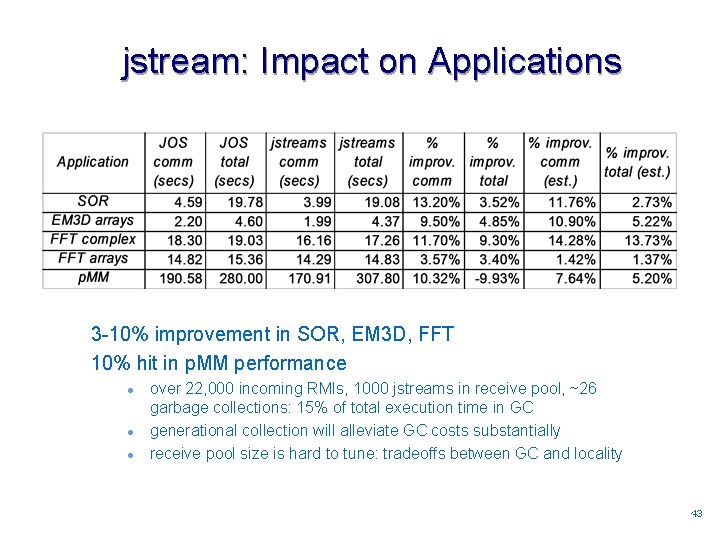

jstream: Impact on Applications

- 3-10% improvement in SOR, EM3D, FFT
- 10% hit in pMM performance
  - over 22,000 incoming RMIs, 1000 jstreams in the receive pool, ~26 garbage collections: 15% of total execution time in GC
  - generational collection will alleviate GC costs substantially
  - receive pool size is hard to tune: tradeoffs between GC and locality

Jstreams: Experience

- Implementation of readObject and writeObject integrated into the JVM
  - protocol is JVM-specific
  - native implementation is faster
- Limitations
  - not as flexible as Java streams: cannot read and write at the same time
  - no "extensible" wire protocols
- Discussed in thesis:
  - implementation of Jstreams in Marmot's copying collector
  - support for polymorphic RMI: minor changes to the stub compiler
  - JNI extensions to allow "portable" implementations of Jstreams

Related Work

- Microsoft J-Direct
  - "pinned" arrays defined using source-level annotations
  - JIT produces code to "redirect" array access: expensive
- Berkeley's Jaguar: efficient code generation with JIT extensions
  - security concern: JIT "hacks" may break Java or byte-code
- Custom JVMs
  - many "tricks" are possible (e.g., pinned array factories, pinned and non-pinned heaps, etc.): they depend on a particular GC scheme
  - Jbufs: isolates the minimal support needed from the GC
- Memory Management
  - Safe Regions (Gay and Aiken): reference counting, no GC
- Fast Serialization and RMI
  - KaRMI (Karlsruhe): fixed JOS, ground-up RMI implementation
  - Manta (Vrije U): fast RMI but a Java dialect

Summary

- Use of explicit memory management to improve Java communication performance in clusters
  - softens the GC/native heap separation
  - preserves type and storage safety
  - independent of GC scheme
  - jbufs: zero-copy array transfers
  - jstreams: zero-copy de-serialization of arbitrary objects
- Framework for building communication software and applications in Java
  - Javia-I/II
  - parallel matrix multiplication
  - Jam: active messages
  - Java RMI
  - cluster applications: TSP, IDA, SOR, EM3D, FFT, and MM