Quality of Service in California K-20 Networking

- Slides: 30

Quality of Service in California K-20 Networking
Dave Reese
A Gathering of State Networks, April 30, 2001

Quietly on the Sidelines
- What traffic is most important?
  - Video (of course)
  - Voice (is this really coming?)
  - Research, business, admissions transactions? (depends on who decides)
- We can't just create one queue; everyone will demand special treatment
- How many queues are needed (practically)?
- How do we prioritize multiple queues?
- Will there really be a national QoS (and what will it cost)?

What are we waiting for?
- Bandwidth guarantees, like ATM CBR
- Stable router software (does this exist?)
- Reservations, limits/controls on usage
- A method to decide who gets to use it
- Who enforces/patrols usage?
- New planning/forecasting tools for network design

What California is doing now
- Building a shared statewide “Intranet” to serve research, education, and business applications for K-20
- Keeping intrastate bandwidth ahead of demand
- Using ATM to guarantee quality for video conferences/distance education
- Moving critical applications off the Internet and onto the Intranet

How is this working?
- We are only buying time: we want to move from ATM to IP
- The bottlenecks are between campus and backbone, and between backbone and Internet
- Pilot project for “eContent” management to push multimedia servers closer to the user

Quality of Service

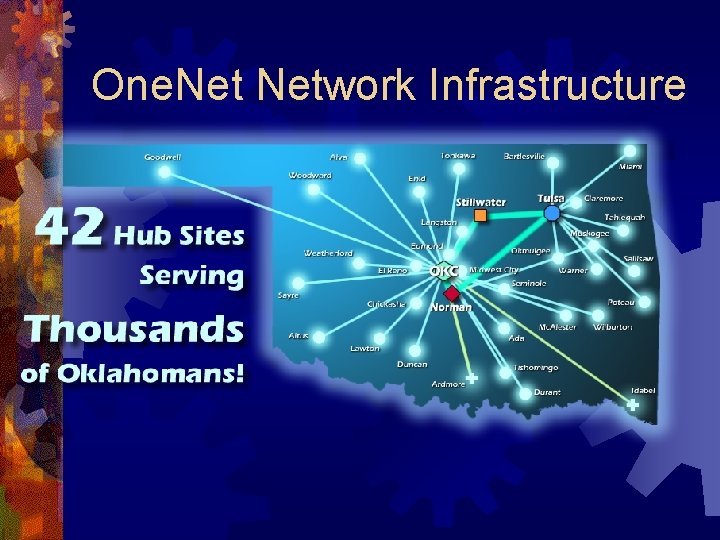

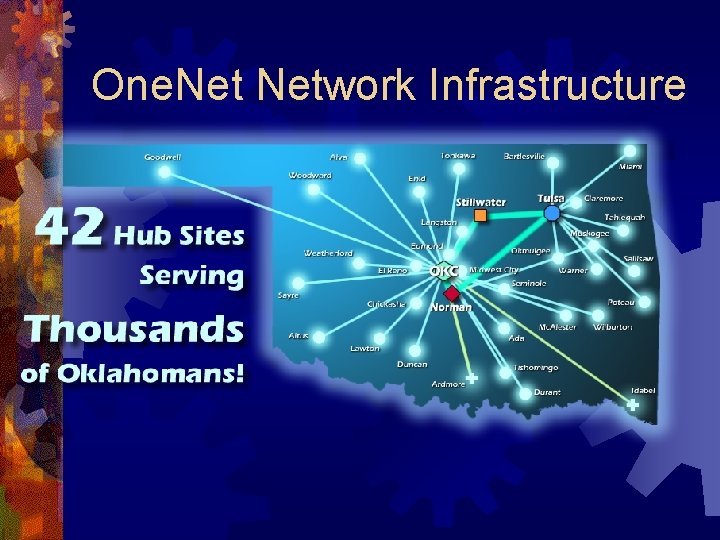

OneNetwork Infrastructure

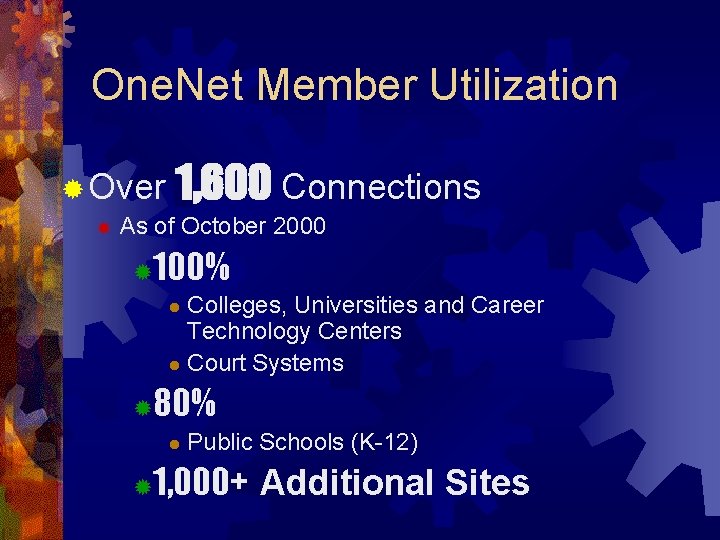

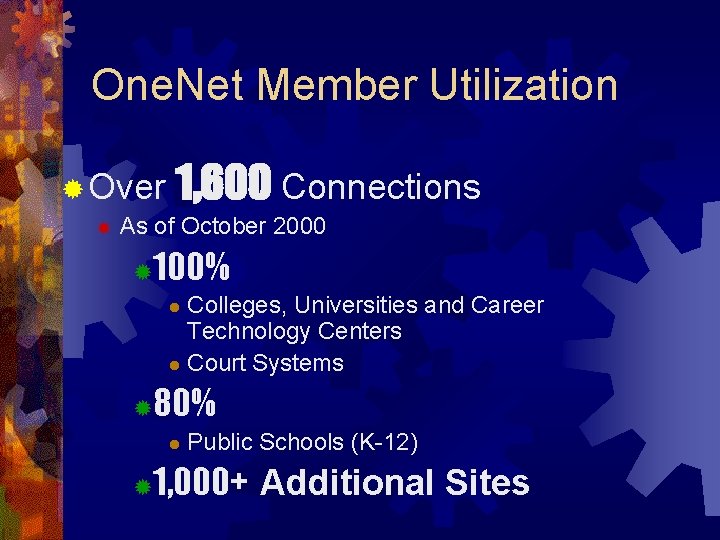

OneNet Member Utilization
- Over 1,600 connections as of October 2000
- 100%
  - Colleges, Universities and Career Technology Centers
  - Court Systems
- 80%
  - Public Schools (K-12)
- 1,000+ additional sites

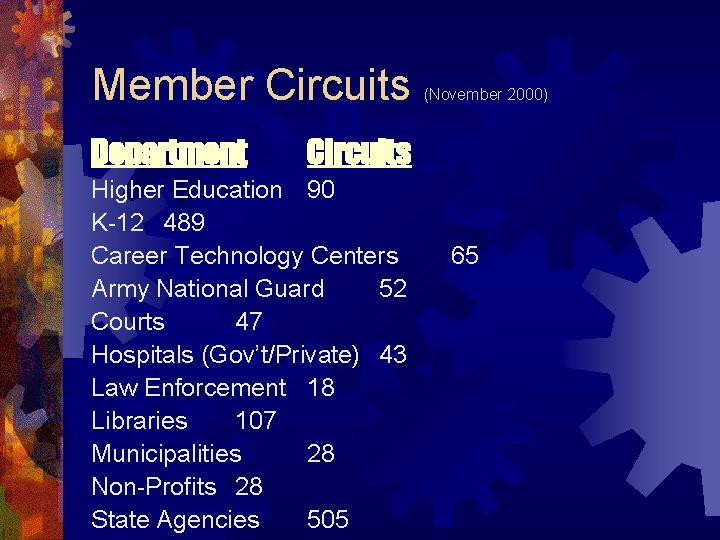

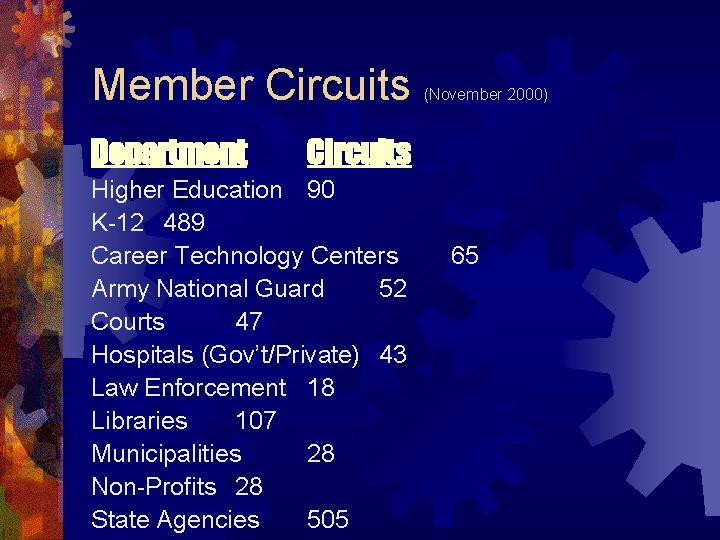

Member Circuits (November 2000)

| Department | Circuits |
|---|---|
| Higher Education | 90 |
| K-12 | 489 |
| Career Technology Centers | 65 |
| Army National Guard | 52 |
| Courts | 47 |
| Hospitals (Gov’t/Private) | 43 |
| Law Enforcement | 18 |
| Libraries | 107 |
| Municipalities | 28 |
| Non-Profits | 28 |
| State Agencies | 505 |

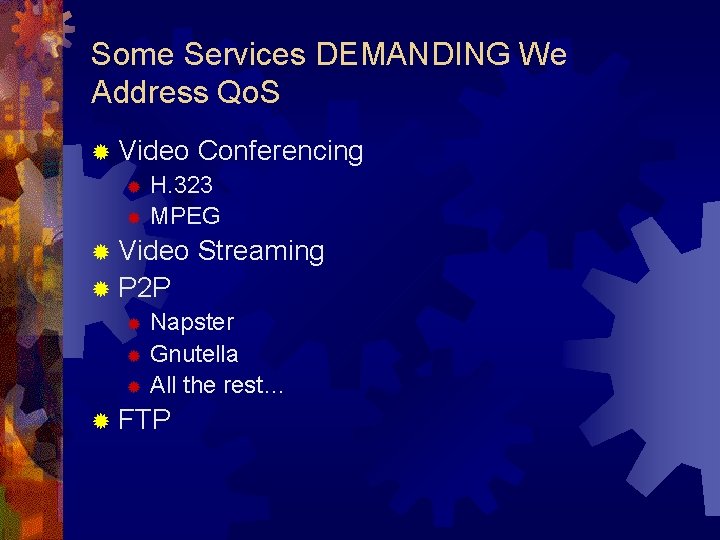

Some Services DEMANDING We Address QoS
- Video conferencing
  - H.323
  - MPEG
- Video streaming
- P2P
  - Napster
  - Gnutella
- All the rest…
  - FTP

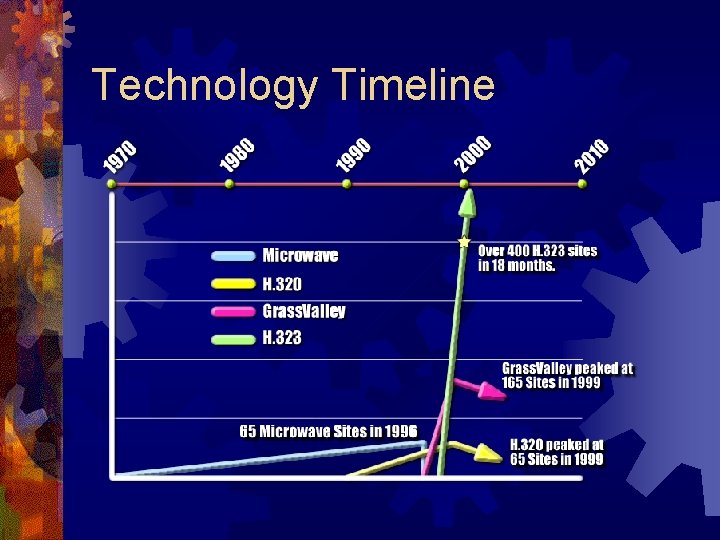

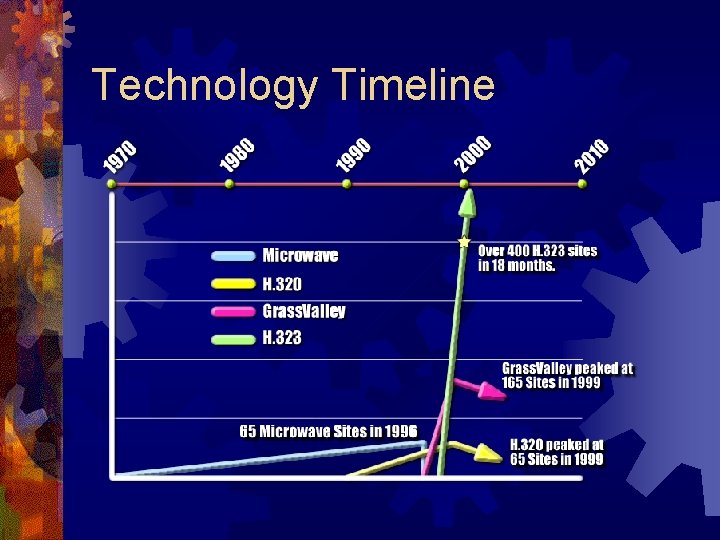

Technology Timeline

It All Adds Up Quickly
Examples:
- We now have over 800 H.323 endpoints registered as distance-learning classrooms.
- Every higher education institution is wiring its dorms or building new, wired dorms.
- Traffic management expertise is somewhat limited in many of our members’ networks, so hip new applications can quickly congest links.

Identifying the Causes
- SNMP
  - Falls short in classification
- Sniffers
  - Deployment is costly/difficult in the wider area
- NetFlow
  - Can be used anywhere you can export flow information and have time to wait for results

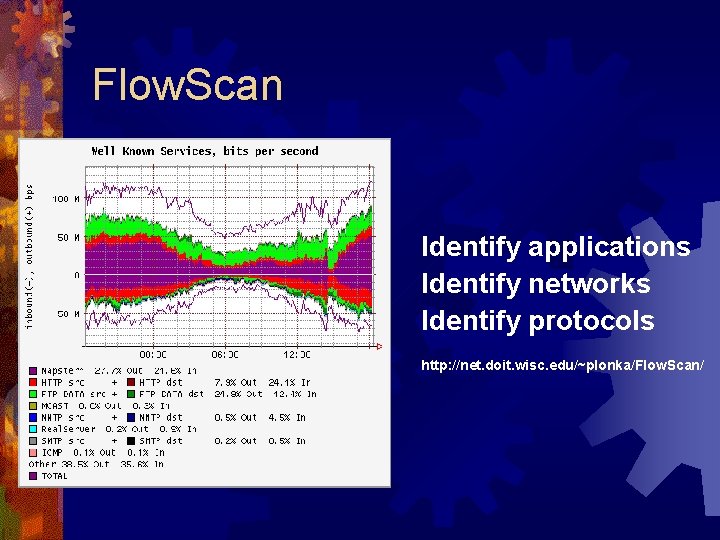

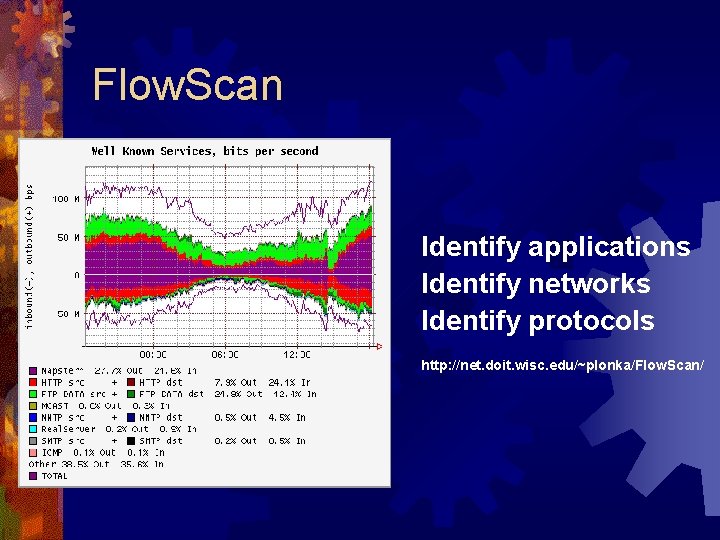

FlowScan
- Identify applications
- Identify networks
- Identify protocols
- http://net.doit.wisc.edu/~plonka/FlowScan/
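FlowScan’s core job is to roll exported flow records up into totals per application, network, and protocol. The sketch below shows that aggregation in Python. It rests on assumptions made for illustration: the `FlowRecord` fields, the port-to-application table, and the grouping of destinations into /24 networks are hypothetical, not FlowScan’s actual data model. FlowScan itself is a Perl tool that works from exported flow files.

```python
from collections import Counter
from dataclasses import dataclass
import ipaddress


@dataclass
class FlowRecord:
    """One exported flow (hypothetical subset of NetFlow fields)."""
    src: str
    dst: str
    protocol: int   # IP protocol number: 6 = TCP, 17 = UDP
    dst_port: int
    octets: int


# Illustrative port-to-application map; port-based guessing misses
# applications that use dynamic or non-standard ports.
APPS = {21: "ftp", 80: "http", 1720: "h323", 6346: "gnutella"}
PROTOCOLS = {1: "icmp", 6: "tcp", 17: "udp"}


def summarize(flows, prefix_len=24):
    """Return octet totals keyed by application, destination network, and protocol."""
    by_app, by_net, by_proto = Counter(), Counter(), Counter()
    for f in flows:
        by_app[APPS.get(f.dst_port, "other")] += f.octets
        net = ipaddress.ip_network(f"{f.dst}/{prefix_len}", strict=False)
        by_net[str(net)] += f.octets
        by_proto[PROTOCOLS.get(f.protocol, str(f.protocol))] += f.octets
    return by_app, by_net, by_proto


flows = [
    FlowRecord("10.0.0.5", "192.0.2.10", 6, 80, 1500),
    FlowRecord("10.0.0.6", "192.0.2.20", 6, 6346, 9000),
    FlowRecord("10.0.0.7", "198.51.100.1", 17, 5004, 4000),
]
by_app, by_net, by_proto = summarize(flows)
print(by_app.most_common(1))  # [('gnutella', 9000)]
```

Port 5004 is not in the table, so that UDP flow lands in "other". This is exactly the classification gap that tools like NBAR try to close.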

Recent Specific Issue
- Until recently, congestion at the T1 level was handled very well with WFQ alone.
- Per-packet load-balanced T1s at some hub sites are becoming congested.
- Distance learning is our primary concern at these locations.
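WFQ serves backlogged flows in proportion to their weights. It stamps each packet with a virtual finish time and always sends the packet with the smallest stamp next. Below is a minimal sketch of that textbook idea. It assumes every packet is already queued at time zero, so each finish time is simply the flow’s previous finish time plus size divided by weight. The flow names and weights are hypothetical. Router implementations work differently: Cisco’s flow-based WFQ, for example, classifies flows itself and derives weights from IP precedence.

```python
import heapq


def wfq_order(packets, weights):
    """Return packets in WFQ transmission order.

    packets: (flow, size_bytes) tuples in arrival order, all queued at time 0.
    weights: flow -> relative share of the link.
    """
    last_finish = {}
    heap = []
    for seq, (flow, size) in enumerate(packets):
        # Finish tag: when this packet would complete under ideal fair sharing.
        finish = last_finish.get(flow, 0.0) + size / weights[flow]
        last_finish[flow] = finish
        # seq breaks ties so packets with equal tags keep arrival order.
        heapq.heappush(heap, (finish, seq, flow, size))
    order = []
    while heap:
        _, _, flow, size = heapq.heappop(heap)
        order.append((flow, size))
    return order


arrivals = [("ftp", 1000), ("video", 1000)] * 3
order = wfq_order(arrivals, {"video": 4, "ftp": 1})
print([flow for flow, _ in order])
# ['video', 'video', 'video', 'ftp', 'ftp', 'ftp']
```

Here the video backlog drains first because it fits within its 4:1 share before FTP’s first packet would finish. With longer queues, the two flows interleave at roughly four video packets per FTP packet.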

Current Solution
- Congested T1s moved to a PQ-WFQ scenario via 'ip rtp priority'
  - Not ideal: RTP traffic of any sort can starve out other activities. Fortunately, this is not an issue at the troubled locations.
- Load-balanced T1s moved to a per-destination PQ-WFQ scenario
  - Adding queuing on top of per-packet balancing caused more out-of-sequence packets than many endpoints could handle.
  - The maximum bandwidth available to a flow is now limited to a single T1.
  - MOVE to greater bandwidth!
- WRED used on DS3s and greater
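A small simulation illustrates the trade-off between per-packet and per-destination balancing. The sketch assumes two T1s with different one-way delays and one flow that sends a packet every millisecond. The 2 ms and 7 ms delays are hypothetical and stand in for one link having a deeper queue. The CRC-32 hash is likewise an assumed stand-in for whatever a router uses to pick a link per destination.

```python
import zlib

LINK_DELAY_MS = [2.0, 7.0]  # hypothetical: the second T1 has a deeper queue


def per_packet_link(seq, dst):
    """Round-robin: alternate links packet by packet."""
    return seq % len(LINK_DELAY_MS)


def per_destination_link(seq, dst):
    """Stable hash of the destination, so one flow always uses one link."""
    return zlib.crc32(dst.encode()) % len(LINK_DELAY_MS)


def arrival_order(n_packets, dst, choose_link, gap_ms=1.0):
    """Sequence numbers in the order they reach the far end."""
    arrivals = []
    for seq in range(n_packets):
        link = choose_link(seq, dst)
        arrivals.append((seq * gap_ms + LINK_DELAY_MS[link], seq))
    arrivals.sort()
    return [seq for _, seq in arrivals]


def out_of_order(order):
    """Count packets that arrive after a higher-numbered packet."""
    highest, late = -1, 0
    for seq in order:
        if seq < highest:
            late += 1
        else:
            highest = seq
    return late


print(arrival_order(6, "192.0.2.1", per_packet_link))  # [0, 2, 4, 1, 3, 5]
print(out_of_order(arrival_order(6, "192.0.2.1", per_destination_link)))  # 0
```

Round-robin delivers the packets out of order, while hashing keeps the flow on one link. That preserves order, but it also caps the flow at a single T1, which matches the constraint noted above.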

Current Work in the Lab…
- CAR
  - Start policing some applications to provide more assurance
- NBAR
  - Anything that helps automate identifying what is going on makes classification simpler
- DiffServ, RSVP
  - Watching the QBone and other I2 initiatives
- MPLS
  - Traffic engineering is not QoS, but it is integral to many of the decisions we have to make
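CAR polices traffic with a token bucket. Tokens accumulate at the committed rate up to a burst depth, and each packet either conforms (spending tokens) or exceeds. Below is a minimal single-rate sketch. The 64 kbit/s rate and 3,000-byte burst are assumptions chosen only for illustration. Real CAR also has an extended-burst setting and configurable conform/exceed actions (such as transmit, drop, or set precedence), which this sketch omits.

```python
class TokenBucketPolicer:
    """Single-rate token-bucket policer (simplified, CAR-like)."""

    def __init__(self, rate_bps, burst_bytes):
        self.rate_bytes = rate_bps / 8   # token refill rate in bytes per second
        self.burst = burst_bytes         # bucket depth in bytes
        self.tokens = burst_bytes        # start with a full bucket
        self.last = 0.0                  # time of the previous packet, seconds

    def conforms(self, size_bytes, now):
        """Return True if the packet conforms (transmit), False if it exceeds."""
        elapsed = now - self.last
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate_bytes)
        self.last = now
        if size_bytes <= self.tokens:
            self.tokens -= size_bytes
            return True
        return False  # exceed action: drop or re-mark, per local policy


policer = TokenBucketPolicer(rate_bps=64_000, burst_bytes=3000)
print([policer.conforms(1500, now=0.0) for _ in range(3)])  # [True, True, False]
print(policer.conforms(1500, now=0.1))   # False: only about 800 bytes refilled
print(policer.conforms(1500, now=0.25))  # True: about 2000 bytes available
```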

Issues
- Quality of Service is managed unfairness
- Many decisions must be made about what is rate-limited, what is dropped, and what gets prioritized
- How do we check our trust in pre-marked traffic?

QUALITY OF SERVICE & TRAFFIC MANAGEMENT
Ben Colley
http://www.more.net
ben@more.net
(800) 509-6673

The Problem
- As elsewhere, Napster started it all.
- What began as a traffic-limiting need expanded into a traffic prioritization goal.
- Excess recreational traffic was impacting production services.
- Growth in bandwidth requirements still exceeds available funding.

The Project
- What solutions exist:
  - At the backbone level?
  - At the customer edge?
    - Via the router?
    - Via other devices?
- Goals
  - QoS: ensure delivery of mission-critical traffic
  - TM: provide tools enabling local traffic management policies

QoS Direction
- Implement “Differentiated Services” in core and edge routers
- Mark “state level” applications as top priority in the edge router
  - H.323 traffic to/from the MOREnet MCU farm
  - Library automation traffic to/from the server farm
  - Other future applications, e.g. VoIP
- MOREnet will not mark or remark any other traffic
- Campuses can mark other traffic as desired at the source device, or elsewhere in their network
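This marking policy reduces to a small edge classifier: mark only “state level” traffic and leave everything else as the campus set it. The sketch rests on several assumptions. The MCU and library server-farm prefixes are hypothetical documentation ranges, and any traffic to or from the MCU farm is treated as H.323. The DSCP values (AF41 for video, AF21 for library automation) are placeholders, since the code-point plan is still to be set at a later technical meeting.

```python
import ipaddress
from typing import Optional

# Hypothetical addresses; the real MCU and server-farm prefixes are not given.
MCU_FARM = ipaddress.ip_network("192.0.2.0/28")
LIBRARY_FARM = ipaddress.ip_network("198.51.100.0/28")

# Placeholder code points pending an agreed DSCP strategy.
AF41 = 34  # often used for interactive video
AF21 = 18  # illustrative choice for library automation


def _touches(net, src, dst):
    """True if either endpoint of the packet is inside net."""
    return ipaddress.ip_address(src) in net or ipaddress.ip_address(dst) in net


def state_level_dscp(src, dst) -> Optional[int]:
    """DSCP for a 'state level' application, or None for all other traffic."""
    if _touches(MCU_FARM, src, dst):
        return AF41
    if _touches(LIBRARY_FARM, src, dst):
        return AF21
    return None


def edge_mark(src, dst, current_dscp):
    """Mark state-level traffic; pass everything else through unchanged."""
    dscp = state_level_dscp(src, dst)
    return current_dscp if dscp is None else dscp


print(edge_mark("10.1.1.1", "192.0.2.5", 0))     # 34: H.323 to the MCU farm
print(edge_mark("10.1.1.1", "203.0.113.9", 10))  # 10: campus marking kept
```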

QoS Alphabet Soup
- At the network core (current best thinking)
  - Modified Deficit Round Robin (MDRR)
  - But queue mapping and forwarding strategy are still to be determined!
- At the customer premise (current best thinking)
  - CAR and WFQ
    - CAR to ensure marking of “state level” application traffic
    - WFQ to forward appropriately
- Technical meeting in May
  - Establish a common DiffServ Code Point (DSCP) strategy, and a queue mapping and forwarding plan
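MDRR builds on deficit round robin. Each queue earns a byte quantum per round and sends packets for as long as its deficit counter covers the packet at the head of the queue. The sketch below is plain DRR, and the queue names and quanta are assumptions. Cisco’s MDRR additionally provides a low-latency (priority) queue, which is left out here.

```python
from collections import deque


def drr(queues, quantum, rounds):
    """Deficit round robin over named queues of packet sizes (bytes).

    queues: name -> deque of packet sizes; quantum: name -> bytes added per round.
    Returns (queue_name, size) pairs in transmission order.
    """
    deficit = {name: 0 for name in queues}
    sent = []
    for _ in range(rounds):
        for name, q in queues.items():
            if not q:
                continue
            deficit[name] += quantum[name]
            # Send while the accumulated credit covers the head-of-line packet.
            while q and q[0] <= deficit[name]:
                size = q.popleft()
                deficit[name] -= size
                sent.append((name, size))
            if not q:
                deficit[name] = 0  # an emptied queue does not bank credit
    return sent


queues = {"video": deque([1500] * 4), "bulk": deque([1500] * 4)}
order = drr(queues, quantum={"video": 3000, "bulk": 1500}, rounds=2)
print([name for name, _ in order])
# ['video', 'video', 'bulk', 'video', 'video', 'bulk']
```

With a 2:1 quantum, the video queue gets twice the bytes per round. When a head packet exceeds a queue’s credit, that queue carries its remaining credit into the next round, which keeps DRR fair when packet sizes vary.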

Traffic Management
- Mark packets for QoS (and unmark!)
- Policy administration by:
  - Physical network interface
  - Server or workstation network address
  - Application signature
- Multiple network interfaces provide the ability to:
  - Isolate critical servers; load-balance servers, caches, and/or intrusion detection devices
  - Aggregate like kinds of traffic
- (Future) API available for time-of-day policies

Traffic Management Research
- Several products reviewed
  - Many good, focused products available
- Recommendation: the Top Layer AppSwitch
  - Multiple interfaces support a broader range of network design and architecture opportunities
  - Excellent H.323 “flow” management
  - Commitment to enhancing application recognition
  - Commitment to expanding usability

TM Implementation Strategy
- Focus on sites that will experience congestion soon
- Acquire and install in 1-2 lead sites and learn
- Deploy to remaining sites throughout the year
- Vendor training and support
- MOREnet-supported product
- Campus determines local policy and manages the platform
- MOREnet is only interested in “state level” services

Deployment Plan
- Implement QoS for lead sites before summer school begins.
- Test through the summer to be ready for fall.
- Implement the 2nd round of QoS in August, before the fall semester.
- Traffic Management deployment will proceed as needed, customer by customer, starting this summer.

Lessons Learned
- Still an emerging technology; it’s not cookie-cutter yet
  - And here we go with a statewide deployment (again)
- There will be bumps along the way, like:
  - Who gets to decide whose packets are important?
  - How one organization prioritizes traffic can have an impact on another
- Build a “Community of Interest”

Lessons Learned (continued)
- We believe future funding increases will be linked to ‘good stewardship’ of current funding
- Ask us in six months what the real lessons were!

Peroxidos formulacion

Peroxidos formulacion Dicloruro de hierro

Dicloruro de hierro Inverse z-transform solved examples

Inverse z-transform solved examples K20 education

K20 education Sdn vs traditional network

Sdn vs traditional network Class of service vs quality of service

Class of service vs quality of service California central service association

California central service association California service center laguna niguel

California service center laguna niguel Perform quality assurance

Perform quality assurance Plan quality management pmp

Plan quality management pmp Pmbok quality assurance vs quality control

Pmbok quality assurance vs quality control Define seminar in nursing management

Define seminar in nursing management Quality improvement vs quality assurance

Quality improvement vs quality assurance Quality control basics

Quality control basics Quality definition by quality gurus

Quality definition by quality gurus Crosby quality is free

Crosby quality is free Old quality vs new quality

Old quality vs new quality Quality weld service

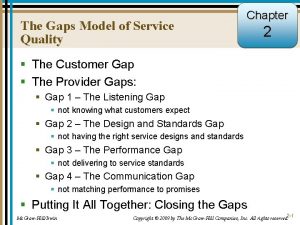

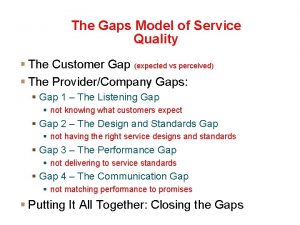

Quality weld service Listening gap definition

Listening gap definition Listening gap definition

Listening gap definition Irates complainers

Irates complainers Internet quality of service

Internet quality of service Rater service quality

Rater service quality Service encounter cascade

Service encounter cascade Improving service quality and productivity

Improving service quality and productivity Service quality and productivity

Service quality and productivity Dimension of service quality

Dimension of service quality 5 service quality dimensions

5 service quality dimensions Transit capacity and quality of service manual

Transit capacity and quality of service manual Provider gap 4

Provider gap 4 Servqual

Servqual