PIPELINING TO SUPERSCALAR



PIPELINING

- What makes it easy?
  - All instructions are the same length
  - Just a few instruction formats
  - Memory operands appear only in loads and stores
- What makes it hard?
  - Structural hazards: suppose we had only one memory
  - Control hazards: need to worry about branch instructions
  - Data hazards: an instruction depends on a previous instruction

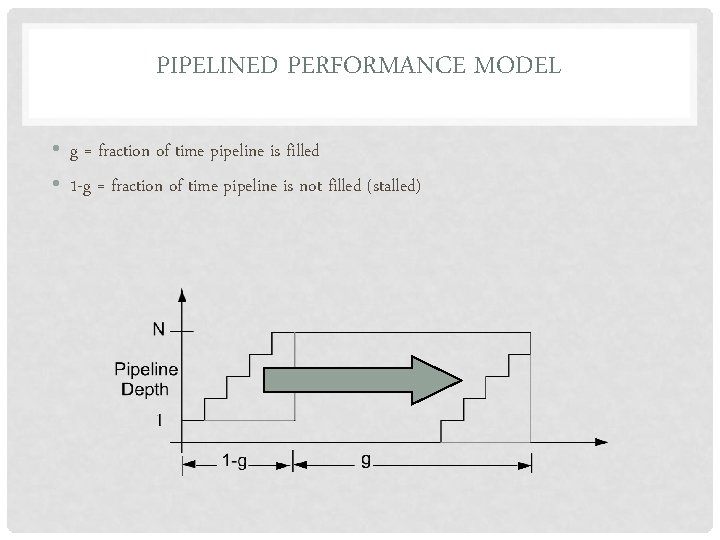

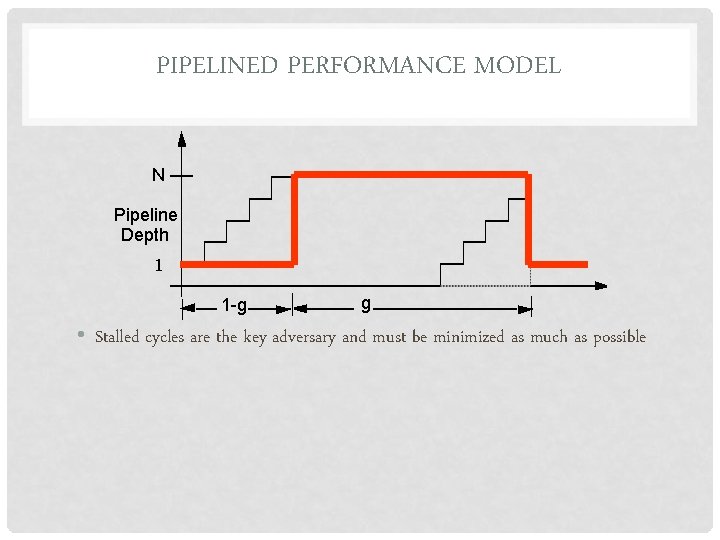

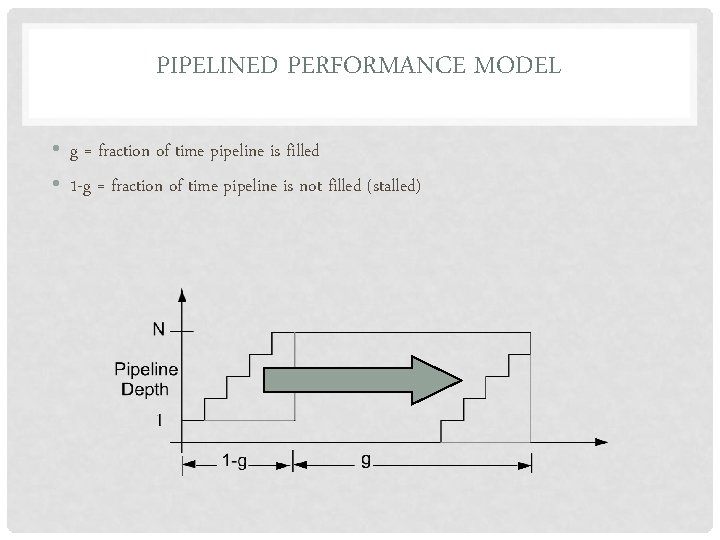

PIPELINED PERFORMANCE MODEL

- g = fraction of time the pipeline is filled
- 1 - g = fraction of time the pipeline is not filled (stalled)

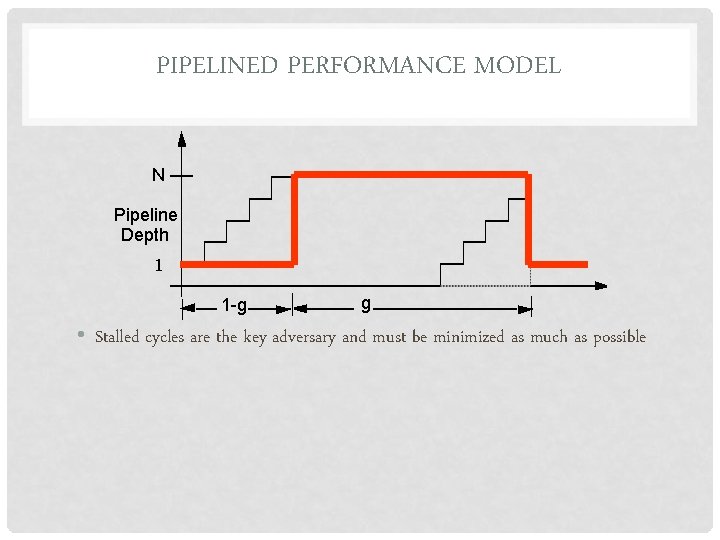

PIPELINED PERFORMANCE MODEL

(Figure labels: pipeline depth N; 1; 1 - g; g)

- Stalled cycles are the key adversary and must be minimized as much as possible
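
The extracted text does not spell the model out as a formula. A minimal sketch, assuming the usual Amdahl-style reading in which the machine runs at full speed N for a fraction g of the time and at speed 1 for the remaining 1 - g, gives speedup = 1 / ((1 - g) + g / N). The function name and the sample depth of 6 are illustrative choices, not taken from the slides.

```python
def pipeline_speedup(g: float, depth: int) -> float:
    """Speedup over a non-pipelined machine under an assumed Amdahl-style model.

    g     -- fraction of time the pipeline is filled (0..1)
    depth -- pipeline depth N
    """
    if not 0.0 <= g <= 1.0:
        raise ValueError("g must be between 0 and 1")
    if depth < 1:
        raise ValueError("depth must be at least 1")
    # Stalled fraction contributes at speed 1, filled fraction at speed N.
    return 1.0 / ((1.0 - g) + g / depth)


# Illustrative 6-stage pipeline: even a small stalled fraction costs a lot.
for g in (1.0, 0.9, 0.5):
    print(f"g = {g:.2f}: speedup = {pipeline_speedup(g, 6):.2f}")
```

Under this assumption a 6-stage pipeline that is filled 90% of the time reaches a speedup of about 4 rather than 6, which is why stalled cycles matter so much.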

LIMITS OF PIPELINING

- IBM RISC experience
  - Control and data dependences add 15%
  - Best case CPI of 1.15, IPC of 0.87
  - Deeper pipelines (higher frequency) magnify dependence penalties
- This analysis assumes 100% cache hit rates
  - Hit rates approach 100% for some programs
  - Many important programs have much worse hit rates
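
The arithmetic behind these figures can be sketched as follows, assuming the 15% is added to an ideal CPI of 1. The cache-miss term is an additional assumption for illustration only: `misses_per_instruction` and `miss_penalty_cycles` are hypothetical parameters, and the values 0.02 and 20 are made up, not measurements from the slides.

```python
def effective_cpi(dependence_overhead: float,
                  misses_per_instruction: float = 0.0,
                  miss_penalty_cycles: float = 0.0) -> float:
    """Ideal CPI of 1 plus stall cycles per instruction (simple additive model)."""
    ideal_cpi = 1.0
    cache_stalls = misses_per_instruction * miss_penalty_cycles
    return ideal_cpi + dependence_overhead + cache_stalls


def ipc(cpi: float) -> float:
    """Instructions per cycle is the reciprocal of cycles per instruction."""
    return 1.0 / cpi


best_case = effective_cpi(0.15)
print(f"CPI = {best_case:.2f}, IPC = {ipc(best_case):.2f}")  # CPI = 1.15, IPC = 0.87

# Hypothetical miss rate and penalty, only to show how quickly misses erode IPC.
with_misses = effective_cpi(0.15, misses_per_instruction=0.02, miss_penalty_cycles=20)
print(f"CPI = {with_misses:.2f}, IPC = {ipc(with_misses):.2f}")
```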

SUPERSCALAR PROPOSAL

- Go beyond the single-instruction pipeline, achieve IPC > 1
- Dispatch multiple instructions per cycle
- Provide a more generally applicable form of concurrency
- Geared for sequential code that is hard to parallelize otherwise
- Exploit fine-grained or instruction-level parallelism (ILP)

SCALAR PIPELINES

- A single k-stage pipeline capable of executing at most one instruction per clock cycle.
- All instructions, regardless of type, traverse the same set of pipeline stages.
- Instructions advance through the pipeline stages in lockstep fashion.
- Except when stalled, an instruction remains in a stage for only one clock cycle and then advances to the next stage.

SUPERPIPELINING

- Many pipeline stages need less than half a clock cycle
- Doubling the internal clock speed gets two tasks done per external clock cycle
- Superpipelining is based on dividing the stages of a pipeline into substages, thus increasing the number of instructions that the pipeline supports at a given moment

SUPERPIPELINING

- By dividing each stage into two, the clock cycle period t is halved to t/2; hence, at maximum capacity, the pipeline produces a result every t/2 seconds
- For a given architecture and its instruction set there is an optimal number of pipeline stages; increasing the number of stages beyond this limit reduces performance
- A solution to further improve speed is the superscalar architecture
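
The effect of splitting stages can be sketched with the standard ideal timing formula, in which n instructions on a k-stage pipeline with cycle time t take (k + n - 1) * t. This is an assumption-laden model: it ignores hazards and latch overhead, so it does not reproduce the optimal stage count mentioned above. The function names and sample numbers are illustrative.

```python
def execution_time(n_instructions: int, n_stages: int, cycle_time: float) -> float:
    """Ideal (hazard-free) time: fill the pipeline once, then one result per cycle."""
    return (n_stages + n_instructions - 1) * cycle_time


def superpipelined_time(n_instructions: int, n_stages: int,
                        cycle_time: float, split: int = 2) -> float:
    """Each stage is divided into `split` substages, so the cycle shrinks by `split`."""
    return execution_time(n_instructions, n_stages * split, cycle_time / split)


# 100 instructions on a 4-stage pipeline with t = 1.0 time unit.
base = execution_time(100, 4, 1.0)            # (4 + 99) * 1.0
deeper = superpipelined_time(100, 4, 1.0)     # (8 + 99) * 0.5
print(base, deeper, base / deeper)
```

In this ideal model the superpipelined version approaches twice the throughput for long instruction streams, at the cost of a longer fill time measured in cycles.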

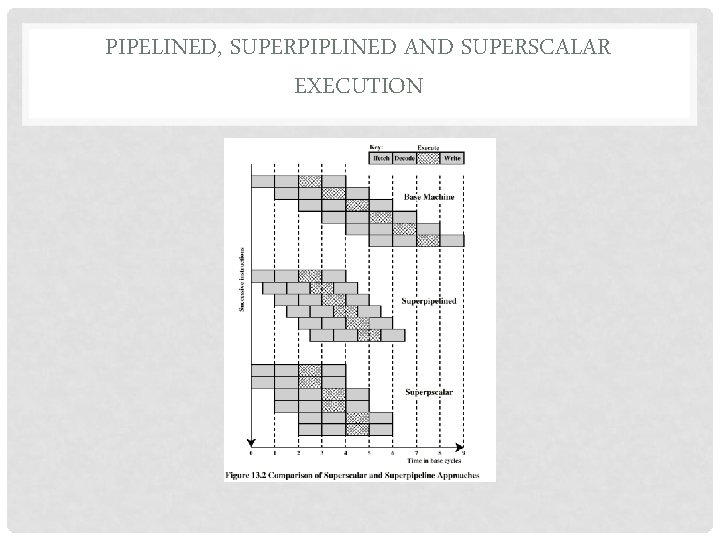

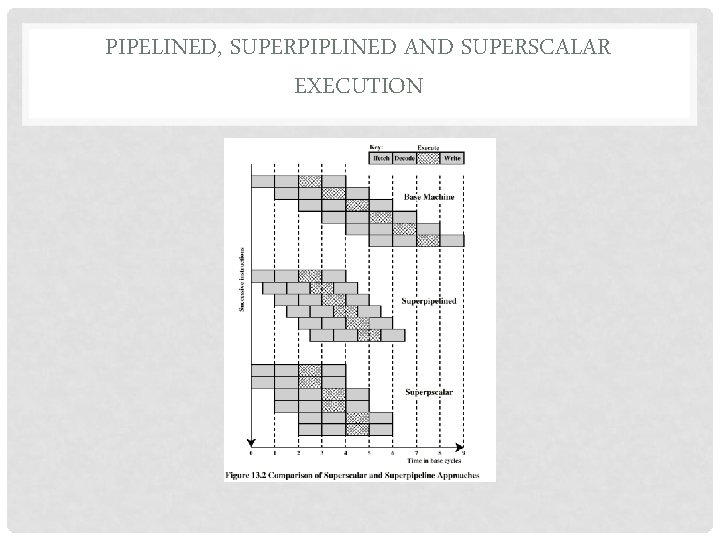

PIPELINED, SUPERPIPELINED AND SUPERSCALAR EXECUTION

SUPERSCALAR PIPELINES

- The natural descendants of scalar pipelines.
- They consist of:
  - Parallel pipelines that are able to initiate the processing of multiple instructions in every machine cycle.
  - Diversified pipelines whose execution stage contains different types of functional units.
- They may be implemented as dynamic pipelines, which change the execution order of instructions without the compiler reordering them.

PARALLEL PIPELINES

- The degree of parallelism of a machine can be measured by the maximum number of instructions that can be concurrently in progress at any one time.
- A k-stage pipeline can have k instructions concurrently resident in the machine.
- The potential speedup is k, the same as using k non-pipelined processors.
- The pipeline requires much less hardware.

DIVERSIFIED PIPELINES

- The hardware required to support different instruction types can vary significantly (particularly in a CISC).
- A scalar pipeline requires all of these diverse requirements to be unified into a single pipeline, resulting in inefficiencies.
- Each instruction type has different requirements in the execution stages.
- In parallel pipelines, instead of using s identical pipes in an s-wide pipeline, diversified pipes can be employed for different instruction types.

DYNAMIC PIPELINES

- Stalled instructions can be bypassed, eliminating the stall.
- This causes instructions to be executed out of order.
- After execution, instructions are reordered into the proper completion sequence.
- This is a much more complicated process than in scalar pipelines.

DYNAMIC PIPELINES

- In any pipeline, buffers (registers) are required between stages.
- In rigid scalar pipelines, a single-entry buffer is placed between each pair of stages, as shown in Figure 4.8a.
- Except when stalled, a new instruction enters the buffer on each clock.
- All instructions enter and leave each buffer in the same order as the original code.
- In a parallel pipeline, multi-entry buffers are placed between each pair of stages, as shown in Figure 4.8b.
- If all instructions are required to advance simultaneously, a stall of one instruction stalls the entire buffer.
- Dynamic pipelines help eliminate unnecessary stalling.

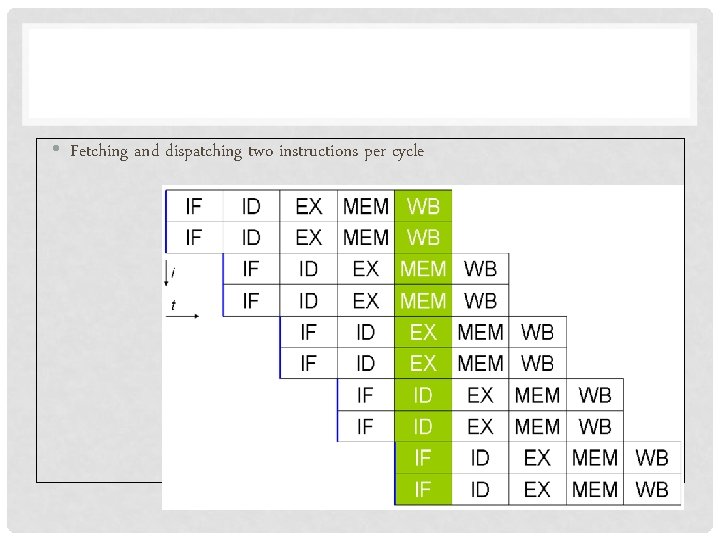

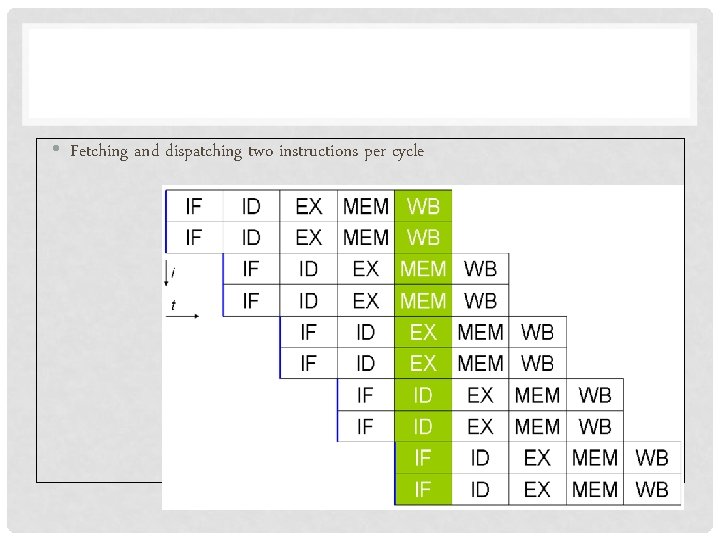

• Fetching and dispatching two instructions per cycle

WHAT IS A SUPERSCALAR ARCHITECTURE?

- A superscalar architecture is one in which several instructions can be initiated simultaneously and executed independently.
- Pipelining allows several instructions to be executed at the same time, but they have to be in different pipeline stages at a given moment.
- Superscalar architectures include all features of pipelining but, in addition, several instructions can be executing simultaneously in the same pipeline stage.

SUPERSCALAR ARCHITECTURES

- Superscalar architectures allow several instructions to be issued and completed per clock cycle
- A superscalar architecture consists of a number of pipelines that work in parallel
- Depending on the number and kind of parallel units available, a certain number of instructions can be executed in parallel
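
To make "several instructions per clock cycle" concrete, here is a minimal sketch of the ideal cycle count for a k-stage machine that issues up to w instructions per cycle. It assumes no hazards and that every group of w instructions can always be issued together, which real code rarely allows. `ideal_cycles` and the sample numbers are illustrative, not from the slides.

```python
import math


def ideal_cycles(n_instructions: int, n_stages: int, issue_width: int = 1) -> int:
    """Cycles to run n instructions when `issue_width` of them enter per cycle.

    Hazard-free model: the first issue group needs n_stages cycles to drain,
    and each further group finishes one cycle after the previous one.
    """
    issue_groups = math.ceil(n_instructions / issue_width)
    return n_stages + issue_groups - 1


# 100 instructions on a 4-stage machine at issue widths 1, 2, and 4.
for width in (1, 2, 4):
    cycles = ideal_cycles(100, 4, width)
    print(f"width {width}: {cycles} cycles, IPC = {100 / cycles:.2f}")
```

With width 1 this reduces to the scalar pipeline formula k + n - 1; wider issue is what allows IPC to exceed 1.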

LIMITATIONS ON PARALLEL EXECUTION

- The situations that prevent instructions from being executed in parallel by a superscalar architecture are very similar to those that prevent efficient execution on any pipelined architecture
- The consequences of these situations are more severe on superscalar architectures than on simple pipelines, because the potential parallelism is greater and thus a greater opportunity is lost
- Limited by:
  - Resource conflicts
  - Procedural dependency (control dependency)
  - Data dependency

RESOURCE CONFLICTS

- They occur if two or more instructions compete for the same resource (register, memory, functional unit) at the same time; they are similar to the structural hazards discussed with pipelines.
- By introducing several parallel pipelined units, superscalar architectures try to reduce some of the possible resource conflicts.

CONTROL DEPENDENCY

- The presence of branches creates major problems in achieving optimal parallelism
- If instructions are of variable length, they cannot be fetched and issued in parallel; an instruction has to be decoded in order to identify the following one and fetch it
- Superscalar techniques apply efficiently to RISC architectures, which have fixed instruction length and format

DATA CONFLICTS

- Data conflicts are produced by dependencies
- Because superscalar architectures allow great freedom in the order in which instructions are issued and completed, data dependencies have to be handled carefully
- Three types of data dependencies:
  - True data dependency
  - Output dependency
  - Antidependency

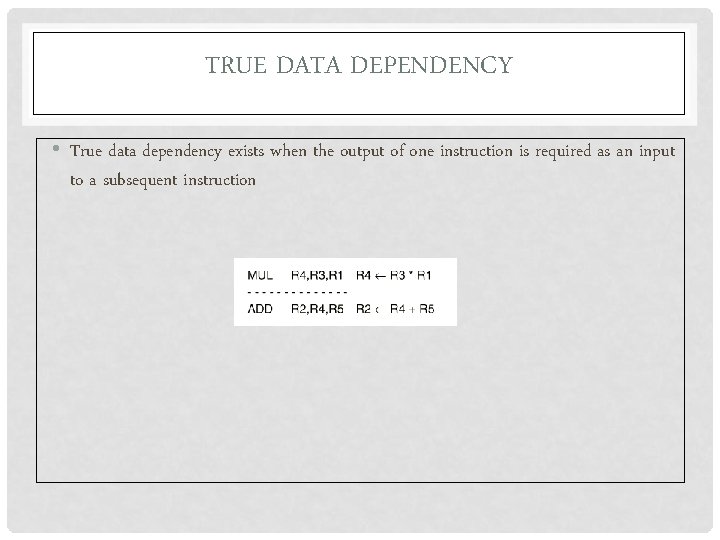

TRUE DATA DEPENDENCY

- A true data dependency exists when the output of one instruction is required as an input to a subsequent instruction

TRUE DATA DEPENDENCY

- True data dependencies are intrinsic features of the user program. They cannot be eliminated by compiler or hardware techniques.
- The simplest solution is to stall the adder until the multiplier has finished.
- To avoid stalling the adder, the compiler or hardware can find other instructions for the adder to execute until the result of the multiplication is available.

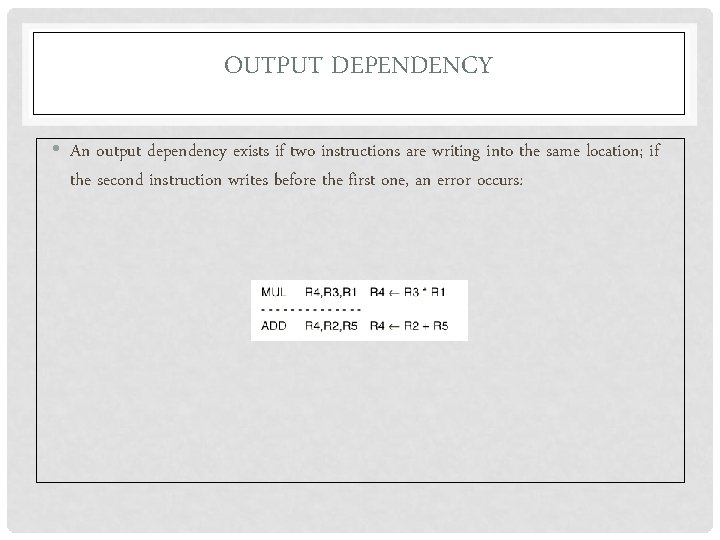

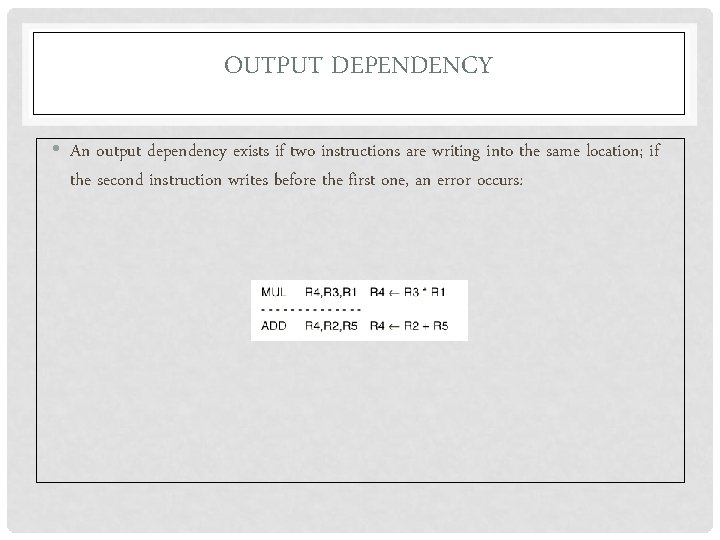

OUTPUT DEPENDENCY

- An output dependency exists if two instructions write into the same location; if the second instruction writes before the first one, an error occurs:

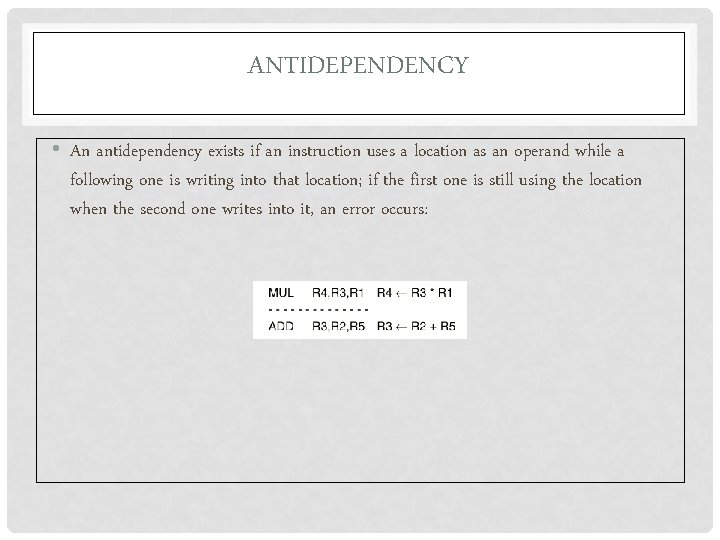

ANTIDEPENDENCY

- An antidependency exists if an instruction uses a location as an operand while a following one writes into that location; if the first one is still using the location when the second one writes into it, an error occurs.
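
The three dependency types defined above can be checked mechanically from the registers each instruction reads and writes. The sketch below is illustrative only: the `Instr` record, the `dependencies` function, and the register names are hypothetical and do not come from the slides' own examples.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Instr:
    dest: str               # register written by the instruction
    srcs: Tuple[str, ...]   # registers read by the instruction


def dependencies(first: Instr, second: Instr) -> List[str]:
    """Classify how `second` depends on an earlier instruction `first`."""
    found = []
    if first.dest in second.srcs:
        found.append("true")    # second reads what first writes
    if first.dest == second.dest:
        found.append("output")  # both write the same location
    if second.dest in first.srcs:
        found.append("anti")    # second overwrites what first reads
    return found


mul = Instr(dest="R4", srcs=("R3", "R1"))
print(dependencies(mul, Instr(dest="R5", srcs=("R4", "R2"))))  # ['true']
print(dependencies(mul, Instr(dest="R4", srcs=("R2", "R5"))))  # ['output']
print(dependencies(mul, Instr(dest="R3", srcs=("R2", "R5"))))  # ['anti']
```

Only the first kind reflects a real flow of data; output dependencies and antidependencies arise from reusing the same storage location.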

EXECUTION POLICIES

- In-order issue with in-order completion
- In-order issue with out-of-order completion
- Out-of-order issue with out-of-order completion
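
The difference between the first two policies can be illustrated with a toy model. It assumes one instruction is issued per cycle in program order, that there are no dependencies between the instructions, and that each instruction has a fixed latency; the function name and the latencies are hypothetical. Out-of-order issue is not modeled here.

```python
from typing import List


def completion_cycles(latencies: List[int], in_order_completion: bool) -> List[int]:
    """Cycle in which each instruction completes under in-order issue.

    Instruction i is issued in cycle i. With in-order completion, an
    instruction may not complete before the one preceding it in the program.
    """
    finished: List[int] = []
    for issue_cycle, latency in enumerate(latencies):
        done = issue_cycle + latency
        if in_order_completion and finished:
            done = max(done, finished[-1])  # wait for the previous instruction
        finished.append(done)
    return finished


latencies = [5, 1, 1]  # a long operation followed by two short ones
print(completion_cycles(latencies, in_order_completion=False))  # [5, 2, 3]
print(completion_cycles(latencies, in_order_completion=True))   # [5, 5, 5]
```

In this toy case out-of-order completion lets the two short instructions finish early, while in-order completion holds them until the long one is done.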