Superscalar Processors Superscalar Execution How it can help

- Slides: 61

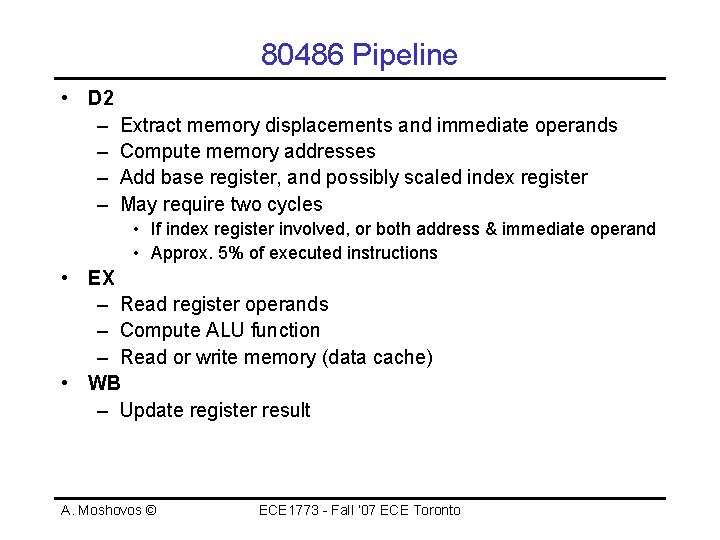

Superscalar Processors

- Superscalar Execution
  - How it can help
  - Issues:
    - Maintaining Sequential Semantics
    - Scheduling
  - Scoreboard
  - Superscalar vs. Pipelining
- Example: Alpha 21164 and 21064

A. Moshovos © ECE 1773 - Fall ‘07, ECE Toronto

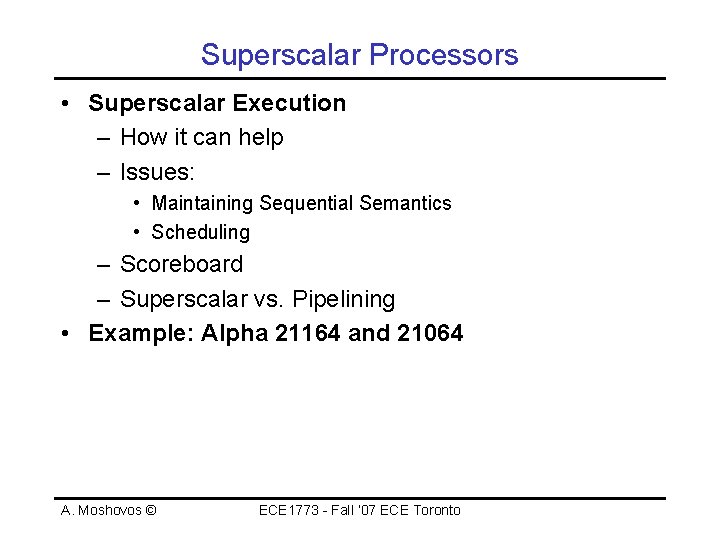

Sequential Semantics - Review

- Instructions appear as if they executed:
  - In the order they appear in the program
  - One after the other
- Pipelining: partial overlap of instructions
  - Initiate one instruction per cycle
  - Subsequent instructions overlap partially
  - Commit one instruction per cycle

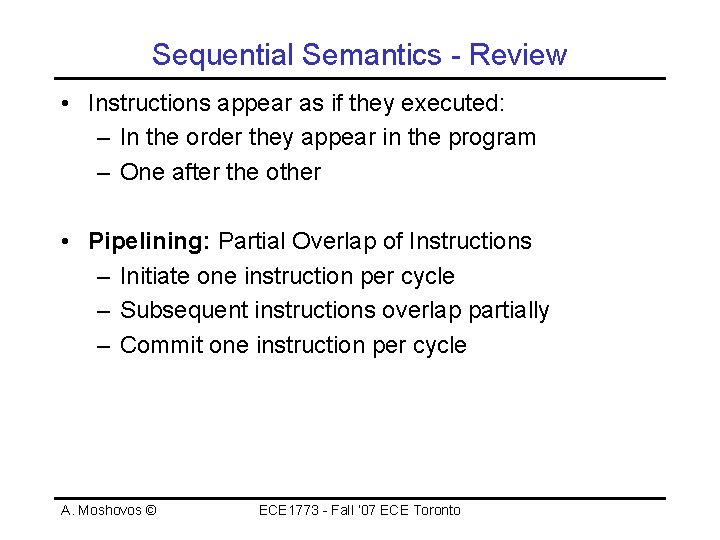

Superscalar - In-order

- Two or more consecutive instructions in the original program order can execute in parallel
  - This is the dynamic execution order
- N-way superscalar
  - Can issue up to N instructions per cycle
  - 2-way, 3-way, …

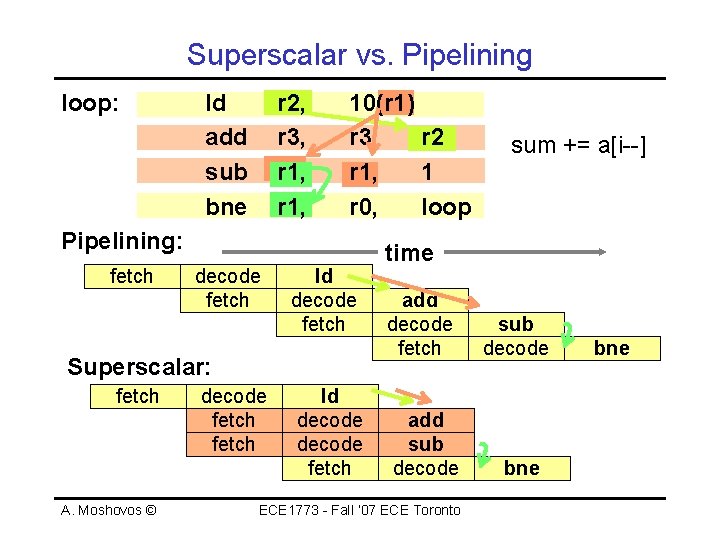

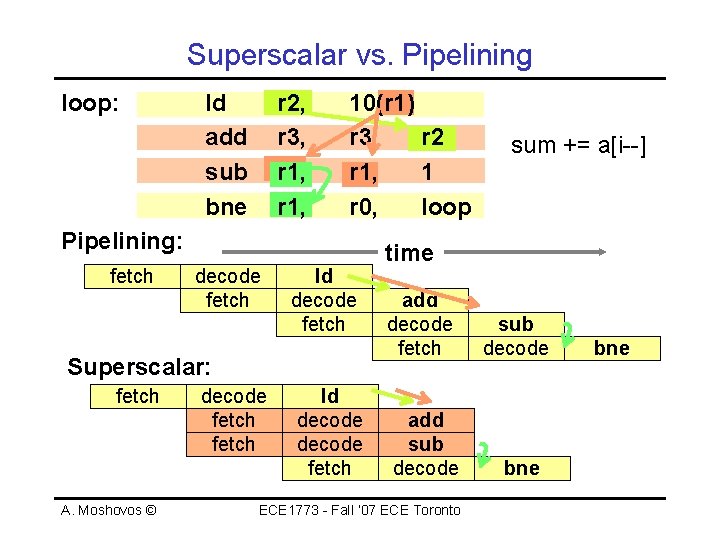

Superscalar vs. Pipelining

Example loop implementing `sum += a[i--]`: `ld r2, 10(r1)`, `add r3, r3, r2`, `sub r1, r1, 1`, and a `bne` against `r0` that branches back to `loop`.

[Figure: timing diagrams of the fetch, decode, and execute stages for this loop under pipelining and under superscalar execution.]

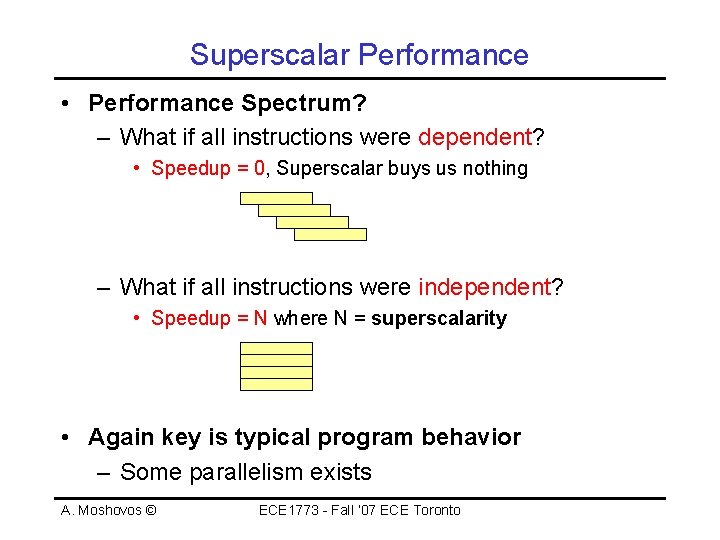

Superscalar Performance

- Performance spectrum?
  - What if all instructions were dependent?
    - No speedup (speedup factor of 1): superscalar buys us nothing
  - What if all instructions were independent?
    - Speedup = N, where N = superscalarity
- Again, the key is typical program behavior
  - Some parallelism exists
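The two ends of this spectrum can be checked with a toy model. The sketch below is an illustration, not part of the original slides: it assumes in-order issue, one-cycle latency for every instruction, and no resource limits other than the issue width. The function name `cycles_in_order` and the `deps` encoding are hypothetical.

```python
def cycles_in_order(deps, width):
    """Cycles needed to issue all instructions in program order.

    deps[i] is the set of earlier instruction indices that instruction i
    reads from. Assumption: every instruction has one-cycle latency, so a
    consumer can issue the cycle after its producer issued.
    """
    issue_cycle = []
    cycle, used = 0, 0
    for producers in deps:
        # Earliest cycle at which all producers have produced their result.
        ready = max((issue_cycle[p] + 1 for p in producers), default=0)
        if used == width:          # current cycle is full: move to the next
            cycle, used = cycle + 1, 0
        if ready > cycle:          # operands not ready yet: stall in order
            cycle, used = ready, 0
        issue_cycle.append(cycle)
        used += 1
    return issue_cycle[-1] + 1 if issue_cycle else 0


def speedup(deps, width):
    """Speedup of a `width`-way machine over a 1-way (scalar) machine."""
    return cycles_in_order(deps, 1) / cycles_in_order(deps, width)


n = 8
chain = [set()] + [{i - 1} for i in range(1, n)]   # every instruction depends on the previous one
independent = [set() for _ in range(n)]            # no dependences at all

print(speedup(chain, 4))        # fully dependent code
print(speedup(independent, 4))  # fully independent code
```

Under these assumptions the dependent chain takes 8 cycles on both machines, while the independent stream takes 8 cycles scalar and 2 cycles 4-way, which matches the two bounds on the slide.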

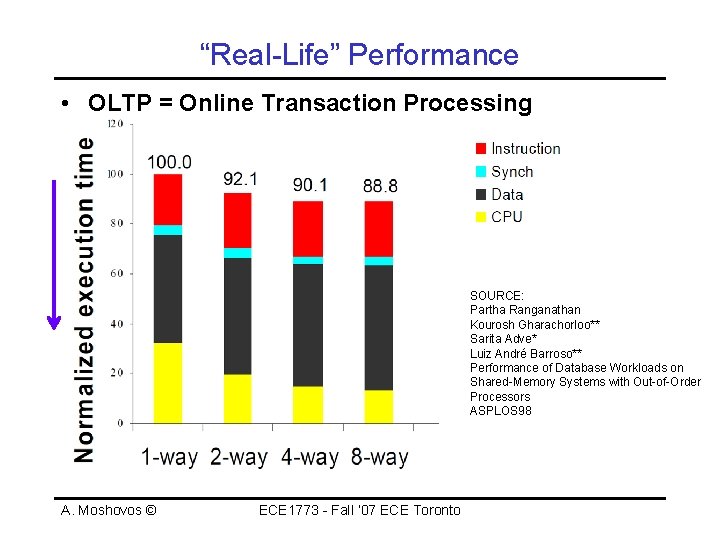

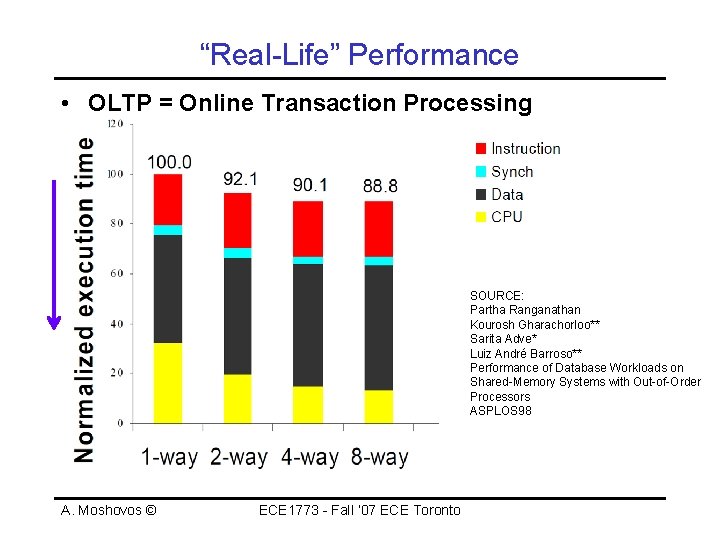

“Real-Life” Performance

- OLTP = Online Transaction Processing
- Source: Partha Ranganathan, Kourosh Gharachorloo, Sarita Adve, Luiz André Barroso, “Performance of Database Workloads on Shared-Memory Systems with Out-of-Order Processors,” ASPLOS ’98

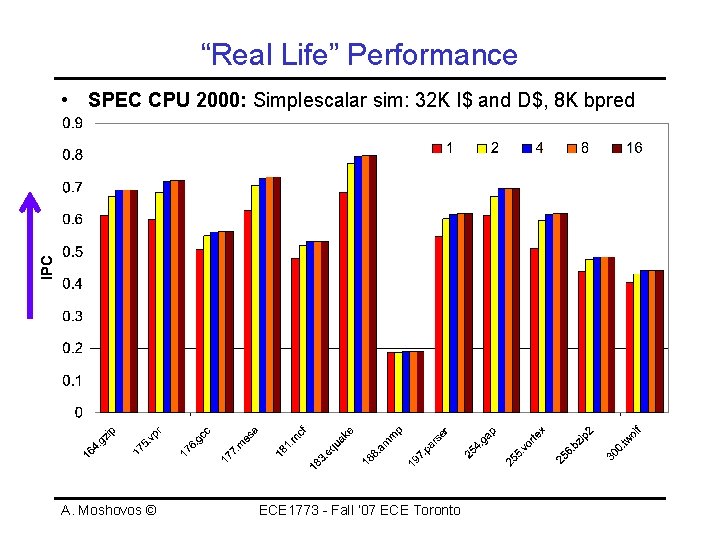

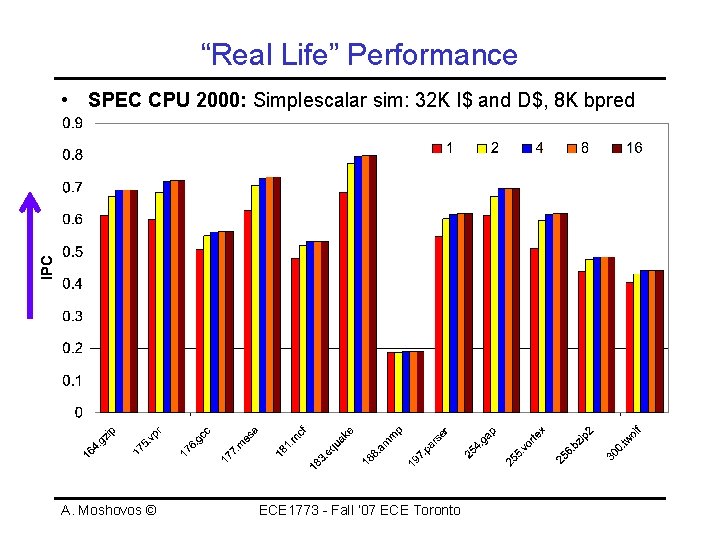

“Real Life” Performance

- SPEC CPU 2000, SimpleScalar simulation: 32K I$ and D$, 8K bpred

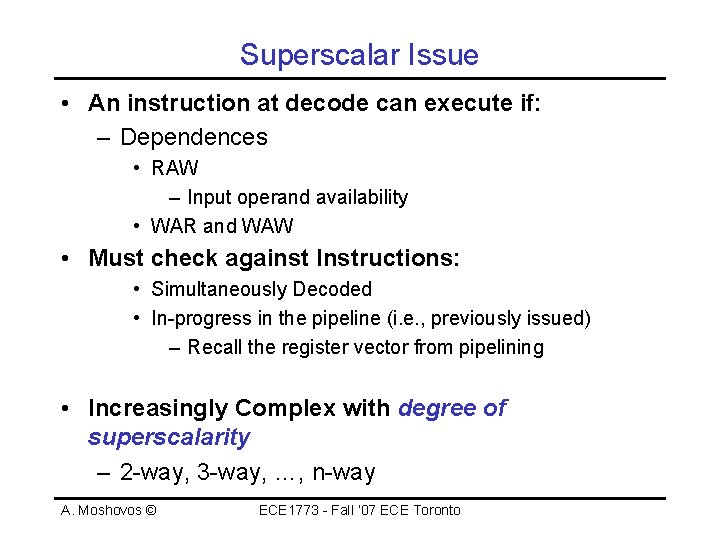

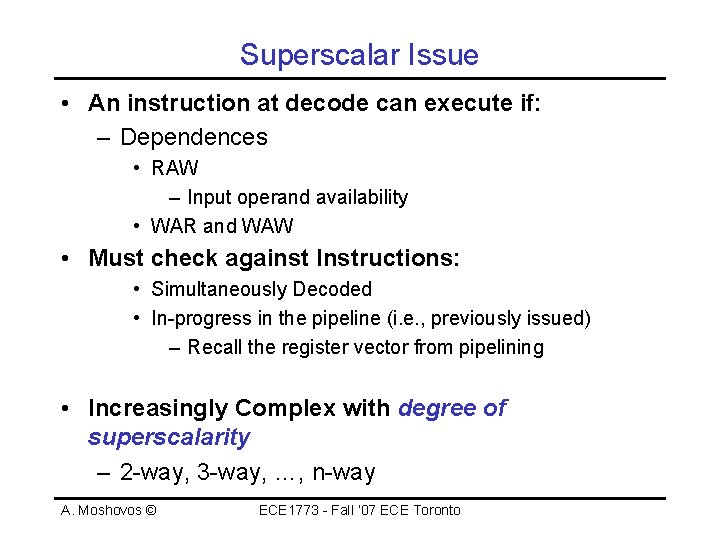

Superscalar Issue

- An instruction at decode can execute if its dependences allow it:
  - RAW: input operand availability
  - WAR and WAW
- Must check against instructions:
  - Simultaneously decoded
  - In progress in the pipeline (i.e., previously issued)
    - Recall the register vector from pipelining
- Increasingly complex with the degree of superscalarity
  - 2-way, 3-way, …, n-way

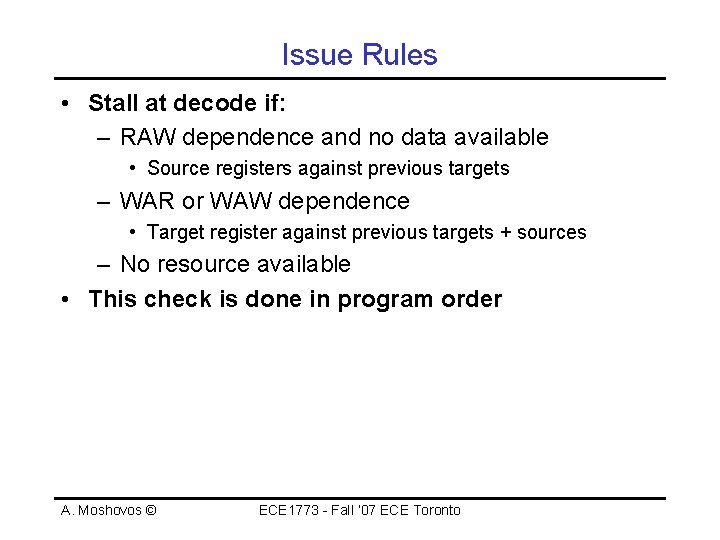

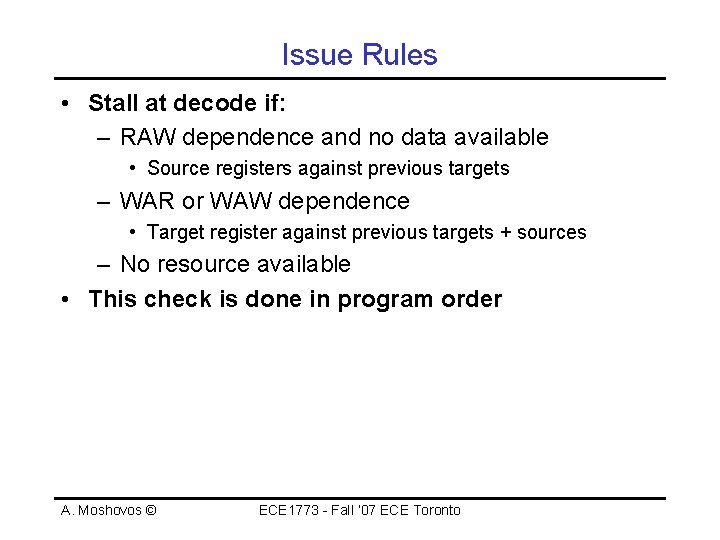

Issue Rules

- Stall at decode if:
  - RAW dependence and no data available
    - Source registers against previous targets
  - WAR or WAW dependence
    - Target register against previous targets + sources
  - No resource available
- This check is done in program order
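The register checks in these rules can be sketched in a few lines. This is an illustrative sketch only: `Instr`, `hazards`, and `issue_group` are hypothetical names, the resource check is left out, and it assumes that a result produced by an instruction in the same decode group is never available in time (no same-cycle forwarding).

```python
from collections import namedtuple

# Hypothetical instruction record: one target register (or None) and a tuple of sources.
Instr = namedtuple("Instr", ["tgt", "srcs"])


def hazards(earlier, instr):
    """Hazards of `instr` against instructions that precede it in program order."""
    found = set()
    for prev in earlier:
        if prev.tgt is not None and prev.tgt in instr.srcs:
            found.add("RAW")   # source register against a previous target
        if instr.tgt is not None:
            if instr.tgt == prev.tgt:
                found.add("WAW")   # target against a previous target
            if instr.tgt in prev.srcs:
                found.add("WAR")   # target against a previous source
    return found


def issue_group(group):
    """Issue a decode group in program order, stopping at the first stalled instruction."""
    issued = []
    for instr in group:
        if hazards(issued, instr):
            break              # this instruction and everything after it waits
        issued.append(instr)
    return issued


group = [
    Instr("r2", ("r1",)),        # ld  r2, 10(r1)
    Instr("r3", ("r3", "r2")),   # add r3, r3, r2  (RAW on r2)
]
print(hazards(group[:1], group[1]))
print(len(issue_group(group)))
```

Because the check walks the group in program order, the first instruction with a hazard also blocks every later instruction, which is what keeps issue in order.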

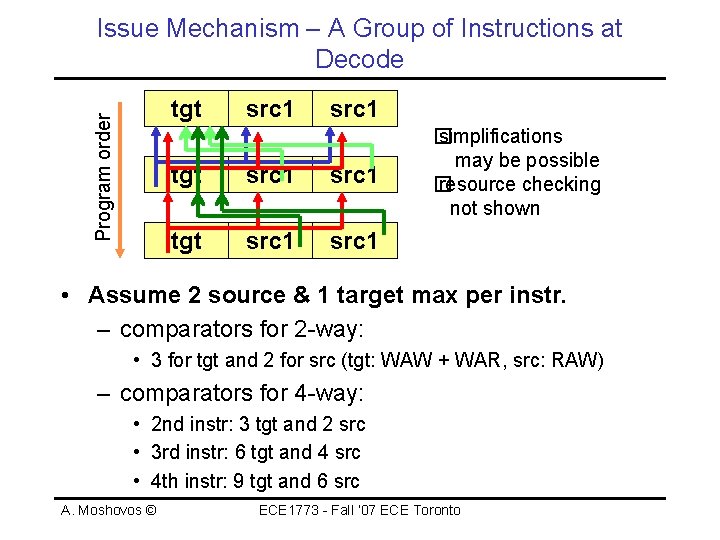

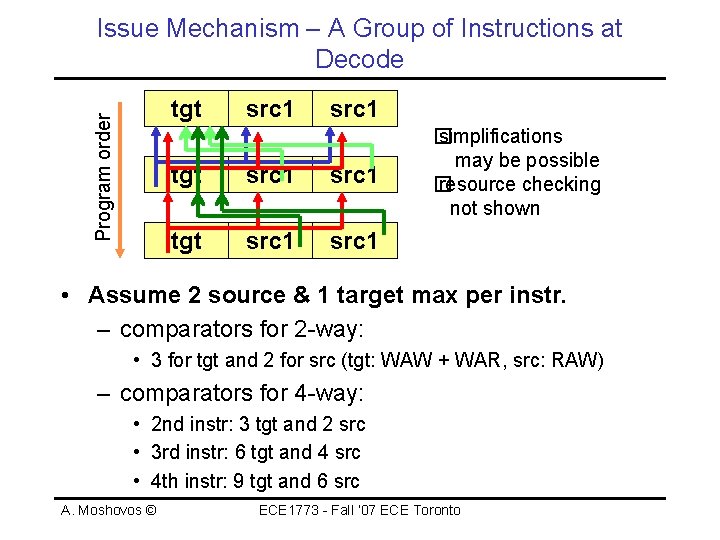

Issue Mechanism: A Group of Instructions at Decode

[Figure: instructions in program order, each with tgt and src fields compared against the earlier instructions. Simplifications may be possible; resource checking not shown.]

- Assume at most 2 sources and 1 target per instruction
  - Comparators for 2-way:
    - 3 for tgt and 2 for src (tgt: WAW + WAR, src: RAW)
  - Comparators for 4-way:
    - 2nd instr: 3 tgt and 2 src
    - 3rd instr: 6 tgt and 4 src
    - 4th instr: 9 tgt and 6 src
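The comparator counts above follow a simple pattern: each instruction is compared against every earlier instruction in the group. A minimal sketch that reproduces the slide's numbers and extends them to wider groups (the function names are hypothetical, and no simplifications are applied):

```python
def comparators_at(position, srcs=2, tgts=1):
    """Comparators needed by the instruction at a 1-based position in the group.

    Returns (tgt_comparators, src_comparators):
    - the target is compared against every earlier target and source (WAW + WAR)
    - each source is compared against every earlier target (RAW)
    """
    earlier = position - 1
    tgt_cmp = earlier * tgts * (tgts + srcs)
    src_cmp = earlier * srcs * tgts
    return tgt_cmp, src_cmp


def total_comparators(width, srcs=2, tgts=1):
    """Total comparators for checking one decode group of `width` instructions."""
    return sum(sum(comparators_at(k, srcs, tgts)) for k in range(2, width + 1))


for width in (2, 4, 8):
    print(width, total_comparators(width))
# 2-way needs 5 comparators, 4-way needs 30, 8-way needs 140
```

The total grows with the square of the issue width, which is one reason the check becomes increasingly complex as superscalarity increases.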

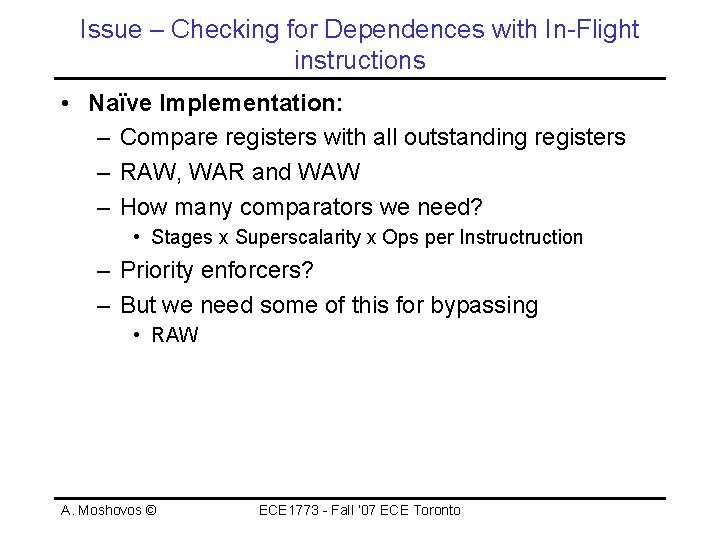

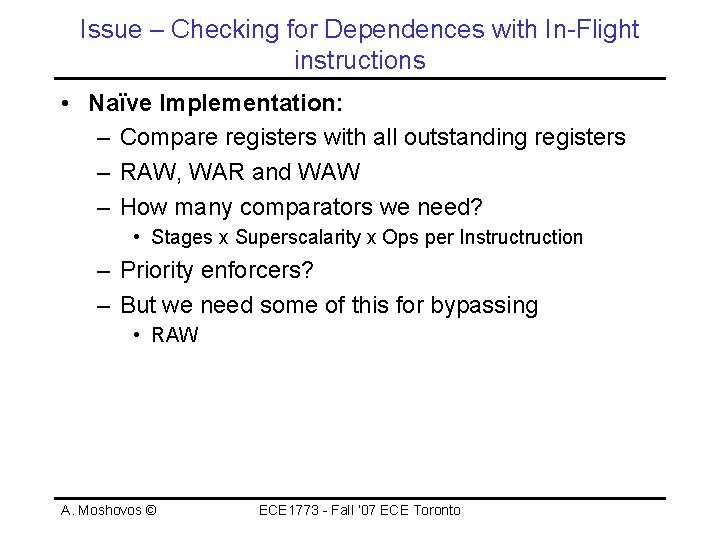

Issue: Checking for Dependences with In-Flight Instructions

- Naïve implementation:
  - Compare registers with all outstanding registers
    - RAW, WAR and WAW
  - How many comparators do we need?
    - Stages x Superscalarity x Ops per Instruction
  - Priority enforcers?
  - But we need some of this for bypassing
    - RAW

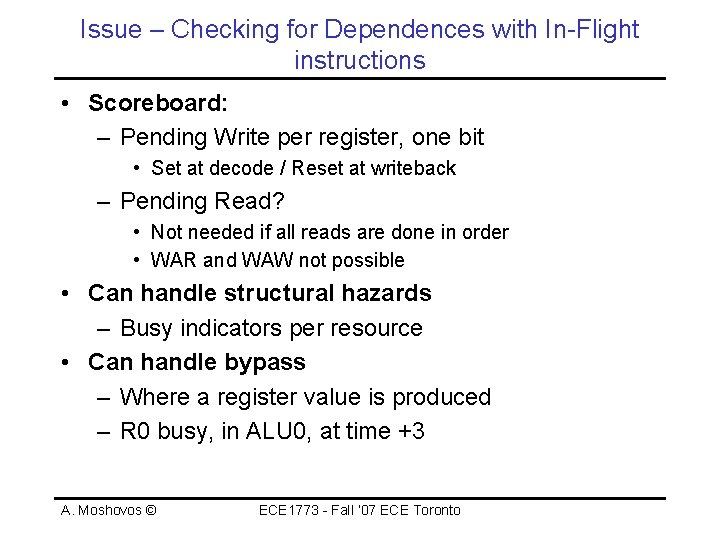

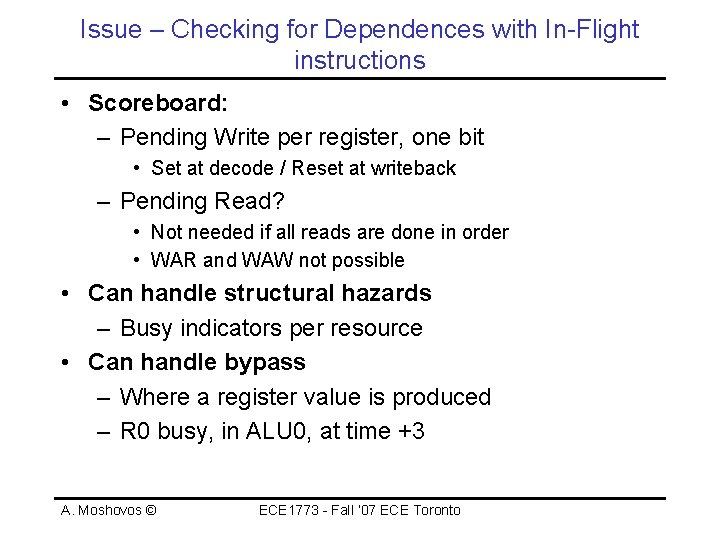

Issue: Checking for Dependences with In-Flight Instructions

- Scoreboard:
  - Pending write per register, one bit
    - Set at decode, reset at writeback
  - Pending read?
    - Not needed if all reads are done in order
    - WAR and WAW not possible
- Can handle structural hazards
  - Busy indicators per resource
- Can handle bypass
  - Where a register value is produced
  - R0 busy, in ALU0, at time +3
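A one-bit-per-register scoreboard is small enough to sketch directly. The class below is an illustration under stated assumptions, not the 21164's actual logic: the `Scoreboard` name and its methods are hypothetical, and it conservatively also stalls when the target register has a pending write, which covers WAW when instruction latencies differ.

```python
class Scoreboard:
    """One pending-write bit per register."""

    def __init__(self, num_regs=32):
        self.pending_write = [False] * num_regs

    def can_issue(self, srcs, tgt=None):
        # RAW: a source still has a write in flight.
        if any(self.pending_write[r] for r in srcs):
            return False
        # Assumption: also stall on a pending write to the target (WAW guard).
        if tgt is not None and self.pending_write[tgt]:
            return False
        return True

    def issue(self, tgt):
        """Set the bit at decode/issue."""
        if tgt is not None:
            self.pending_write[tgt] = True

    def writeback(self, tgt):
        """Reset the bit at writeback."""
        if tgt is not None:
            self.pending_write[tgt] = False


sb = Scoreboard()
sb.issue(2)                        # ld r2, ... is in flight
print(sb.can_issue([3, 2], 3))     # add r3, r3, r2 must wait for r2
sb.writeback(2)
print(sb.can_issue([3, 2], 3))     # r2 has been written, add can issue
```

Compared with the naïve scheme, the lookup cost depends on the number of operands per instruction rather than on the number of instructions in flight.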

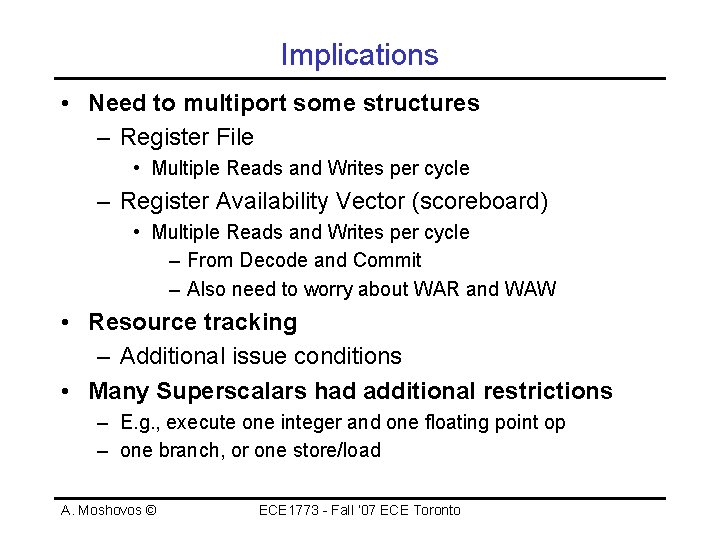

Implications

- Need to multiport some structures
  - Register file
    - Multiple reads and writes per cycle
  - Register availability vector (scoreboard)
    - Multiple reads and writes per cycle
      - From decode and commit
    - Also need to worry about WAR and WAW
- Resource tracking
  - Additional issue conditions
- Many superscalars had additional restrictions
  - E.g., execute one integer and one floating-point op
  - One branch, or one store/load

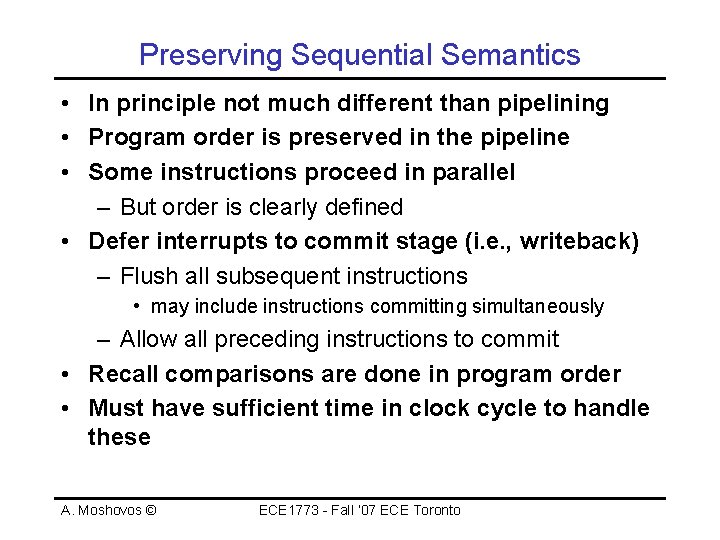

Preserving Sequential Semantics

- In principle not much different from pipelining
- Program order is preserved in the pipeline
- Some instructions proceed in parallel
  - But order is clearly defined
- Defer interrupts to the commit stage (i.e., writeback)
  - Flush all subsequent instructions
    - May include instructions committing simultaneously
  - Allow all preceding instructions to commit
- Recall comparisons are done in program order
- Must have sufficient time in the clock cycle to handle these

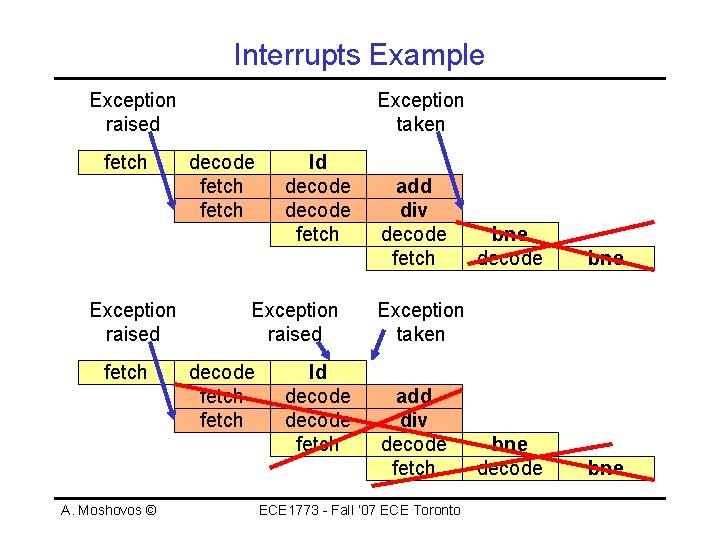

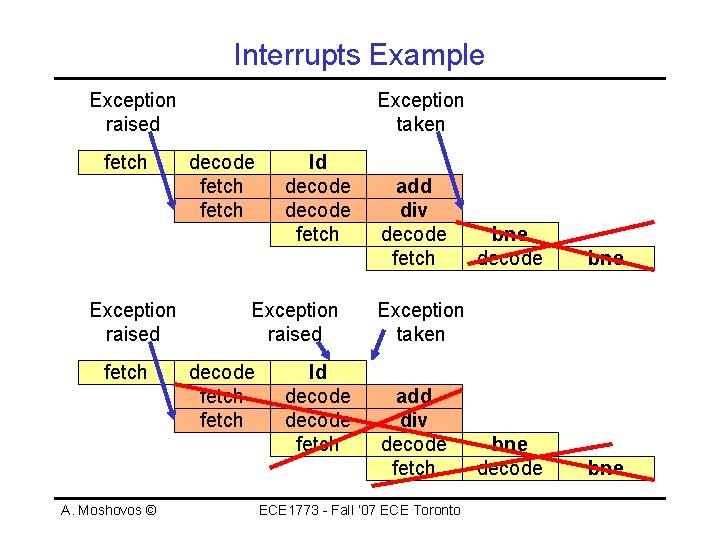

Interrupts Example

[Figure: pipeline timing diagrams for `ld`, `add`, `div`, and `bne`, marking where the exception is raised and where it is taken.]

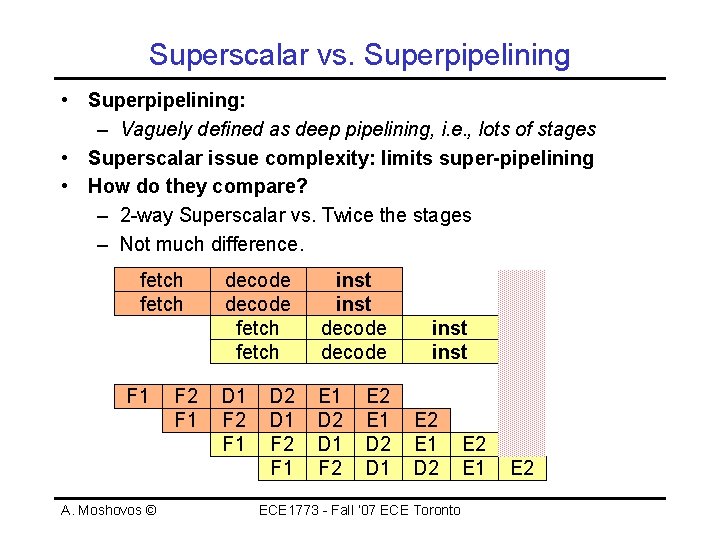

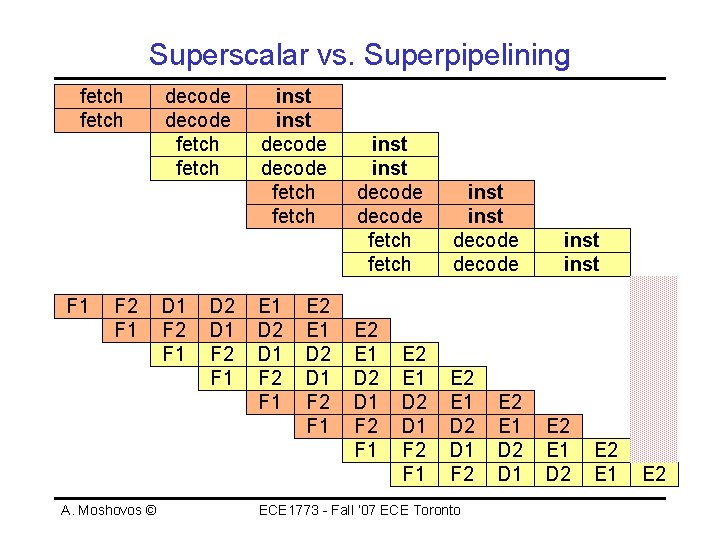

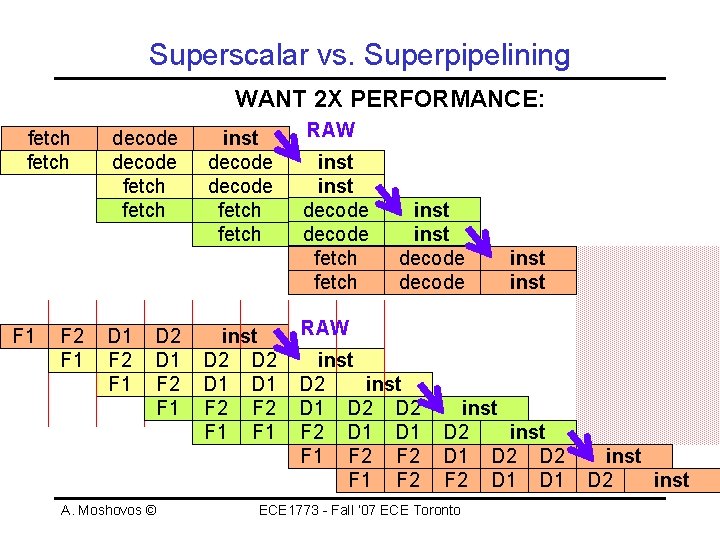

Superscalar and Pipelining

- In principle they are orthogonal
  - Superscalar non-pipelined machine
  - Pipelined non-superscalar
  - Superscalar and pipelined (common)
- Additional functionality needed by superscalar:
  - Another bound on clock cycle
  - At some point it limits the number of pipeline stages

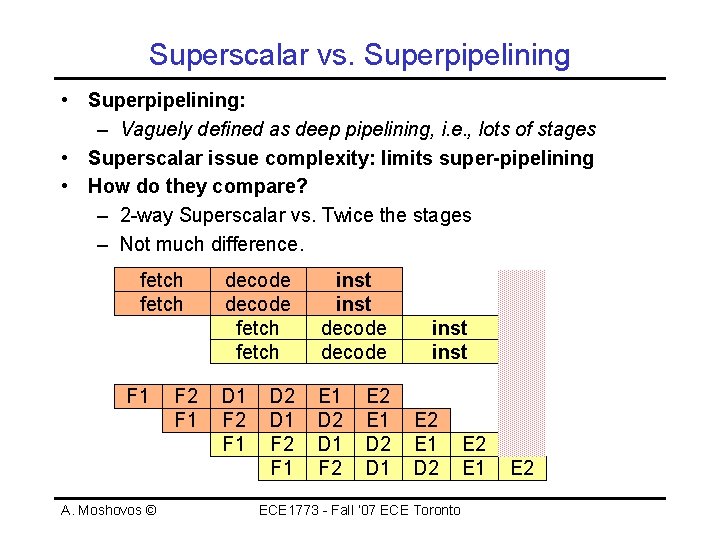

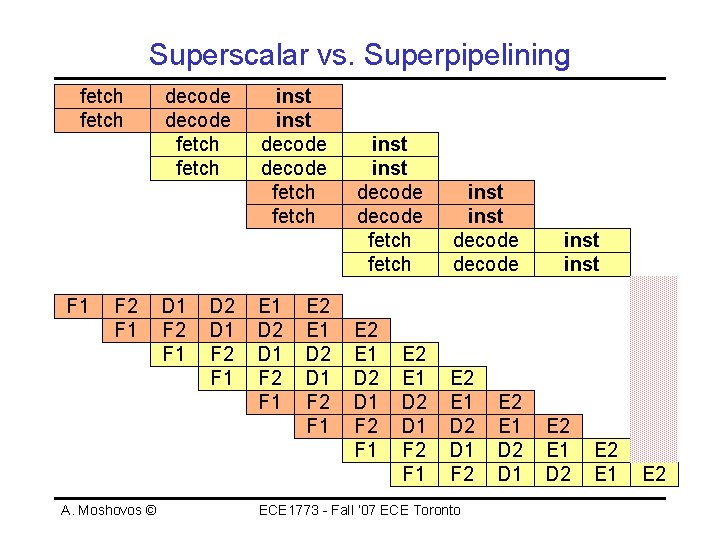

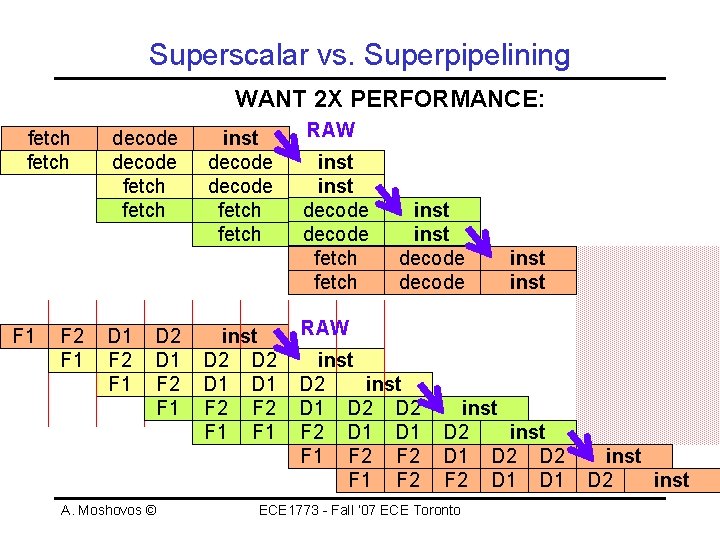

Superscalar vs. Superpipelining

- Superpipelining:
  - Vaguely defined as deep pipelining, i.e., lots of stages
- Superscalar issue complexity limits superpipelining
- How do they compare?
  - 2-way superscalar vs. twice the stages
  - Not much difference

[Figure: timing diagram of a superscalar pipeline (fetch, decode, inst) next to a superpipelined one (F1, F2, D1, D2, E1, E2).]

Superscalar vs. Superpipelining (continued)

[Figure: the same comparison with more instructions in flight, showing the fetch/decode/inst stages against F1, F2, D1, D2, E1, E2.]

Superscalar vs. Superpipelining (continued)

Want 2x performance:

[Figure: the same two timing diagrams with RAW dependences marked between instructions.]

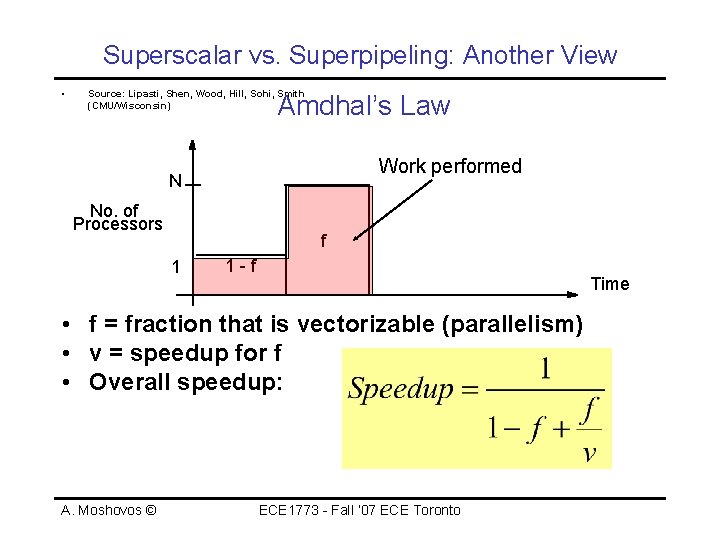

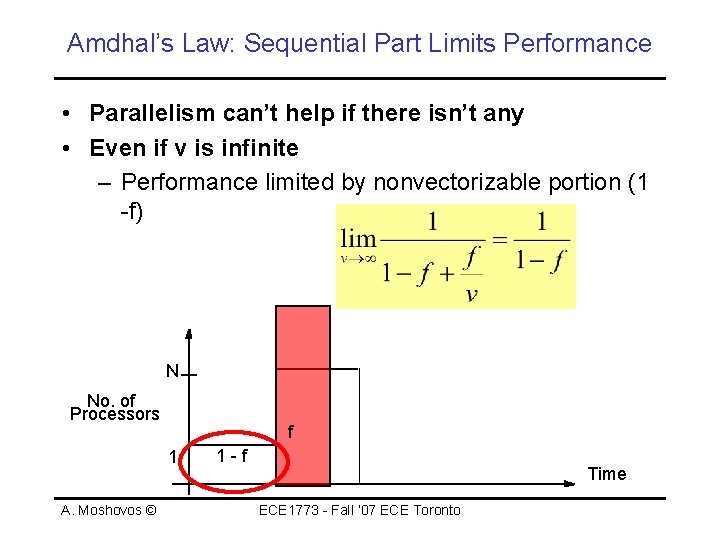

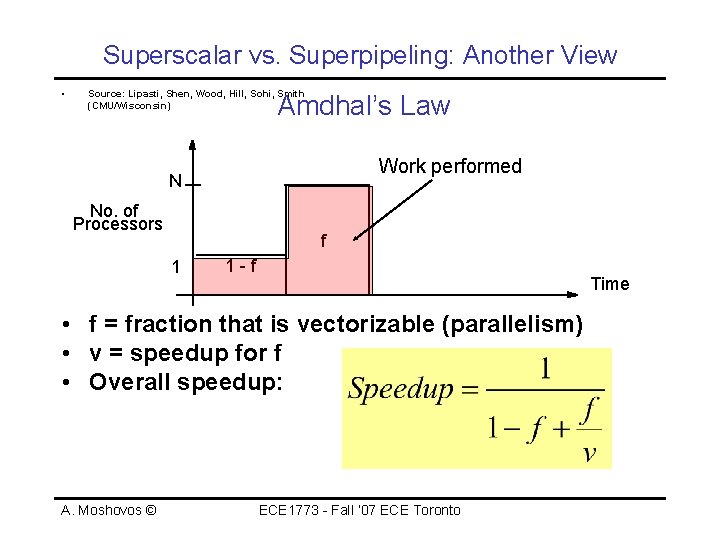

Superscalar vs. Superpipelining: Another View

- Source: Lipasti, Shen, Wood, Hill, Sohi, Smith (CMU/Wisconsin)

Amdahl’s Law

[Figure: work performed (number of processors, 1 to N) against time, split into the fractions 1-f and f.]

- f = fraction that is vectorizable (parallelism)
- v = speedup for f
- Overall speedup: $\text{Speedup} = \dfrac{1}{(1 - f) + f/v}$

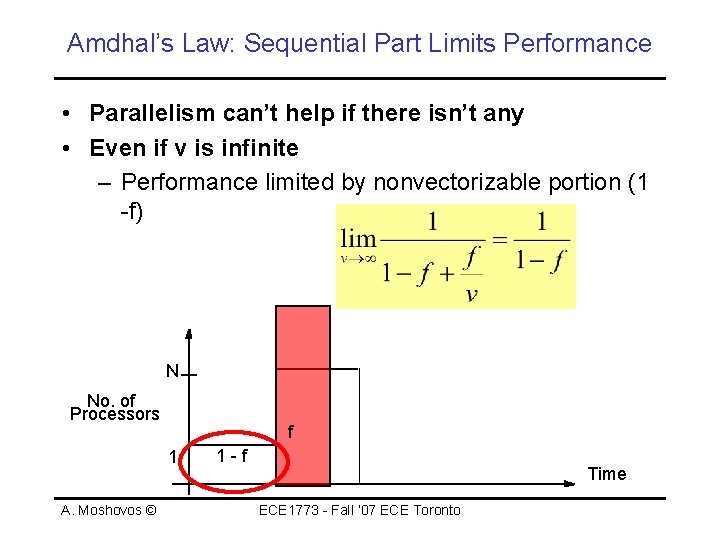

Amdahl’s Law: Sequential Part Limits Performance

- Parallelism can’t help if there isn’t any
- Even if v is infinite
  - Performance is limited by the nonvectorizable portion (1-f)

[Figure: number of processors (1 to N) against time, split into the fractions f and 1-f.]
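A quick numeric check makes the limit concrete. The sketch below uses the standard form of Amdahl's law, speedup = 1 / ((1 - f) + f / v), with f and v as defined on the previous slide; the function names are hypothetical.

```python
def amdahl_speedup(f, v):
    """Overall speedup when a fraction f of the work is sped up by a factor v."""
    if not 0.0 <= f <= 1.0:
        raise ValueError("f must be between 0 and 1")
    if v <= 0:
        raise ValueError("v must be positive")
    return 1.0 / ((1.0 - f) + f / v)


def amdahl_limit(f):
    """Upper bound on the speedup as v grows without bound."""
    return float("inf") if f == 1.0 else 1.0 / (1.0 - f)


for v in (2, 4, 16, 1024):
    print(v, round(amdahl_speedup(0.8, v), 2))
print("limit:", round(amdahl_limit(0.8), 2))
```

With f = 0.8 the speedup approaches, but never exceeds, a factor of about 5 no matter how large v becomes, because the remaining 20% of the work is not sped up at all.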

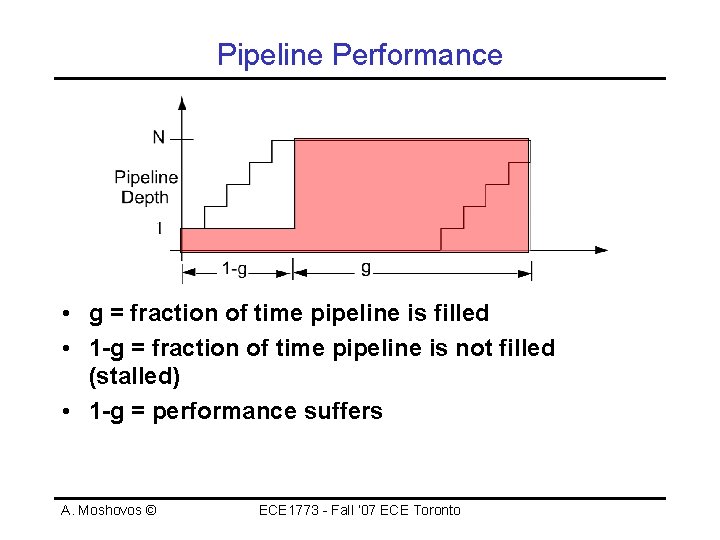

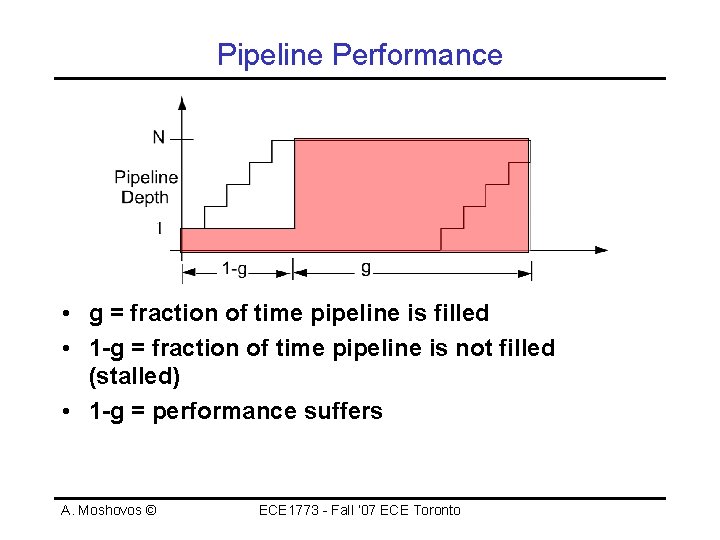

Pipeline Performance

- g = fraction of time the pipeline is filled
- 1-g = fraction of time the pipeline is not filled (stalled)
- During the 1-g fraction, performance suffers

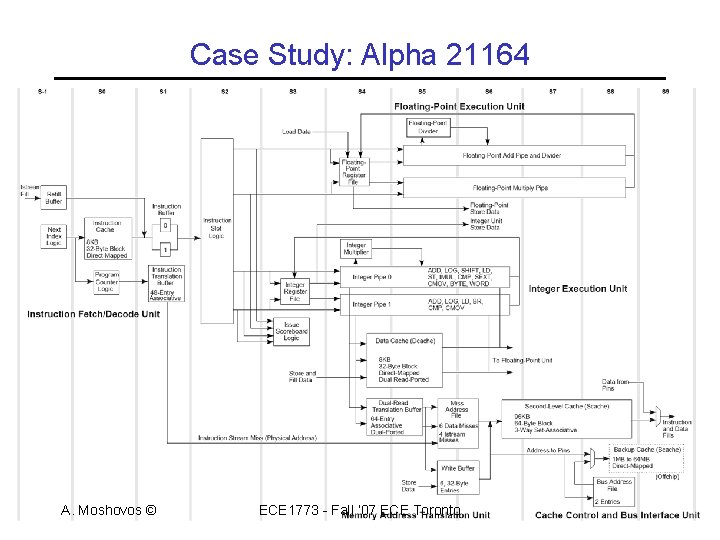

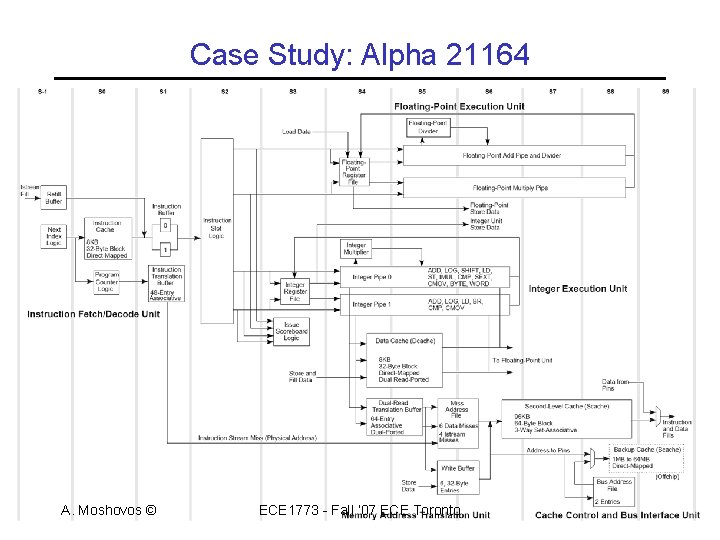

Case Study: Alpha 21164

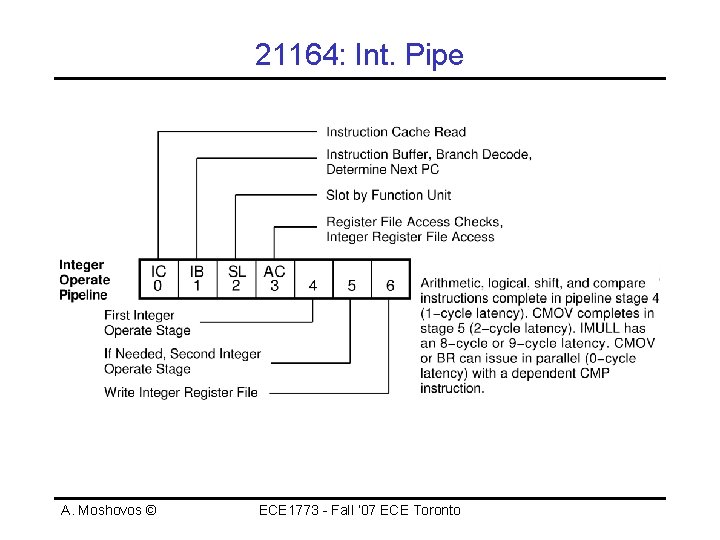

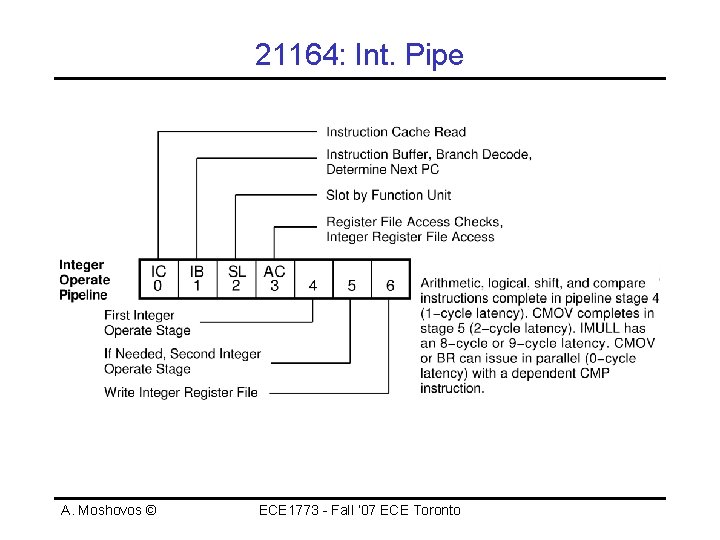

21164: Int. Pipe

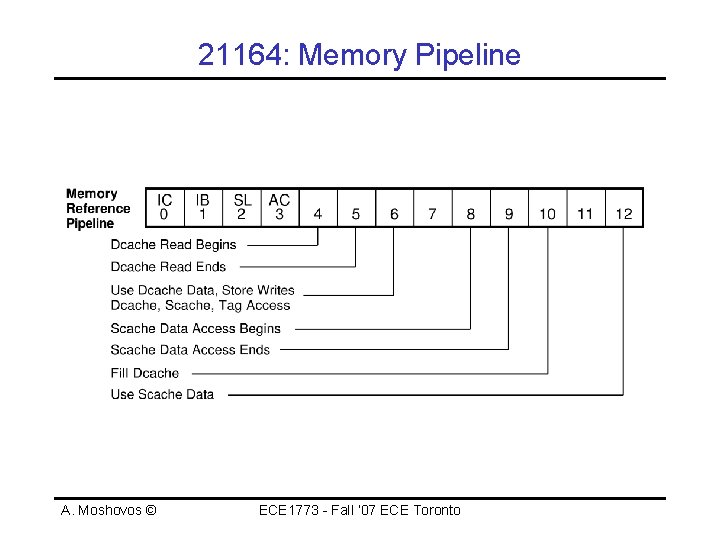

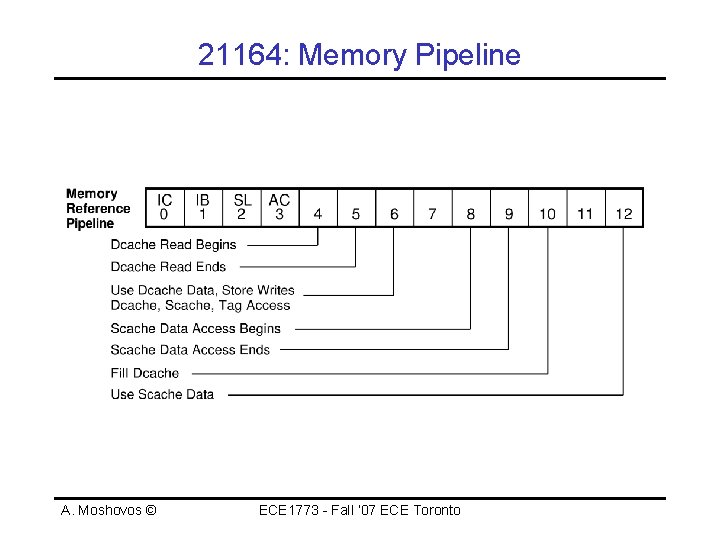

21164: Memory Pipeline

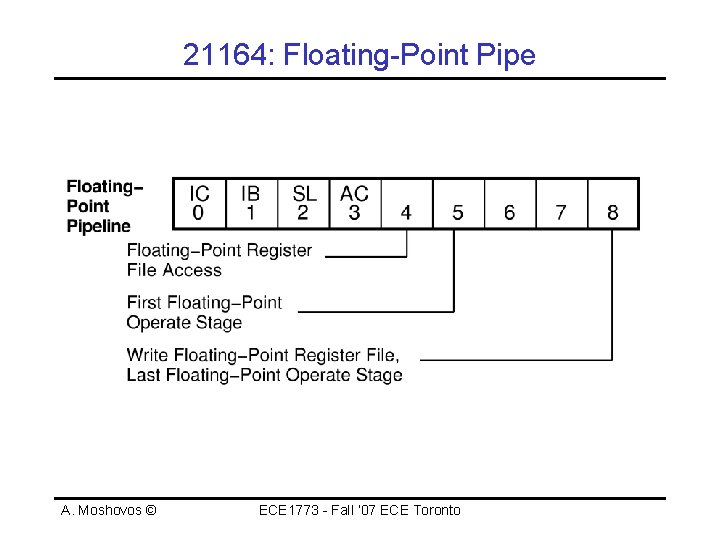

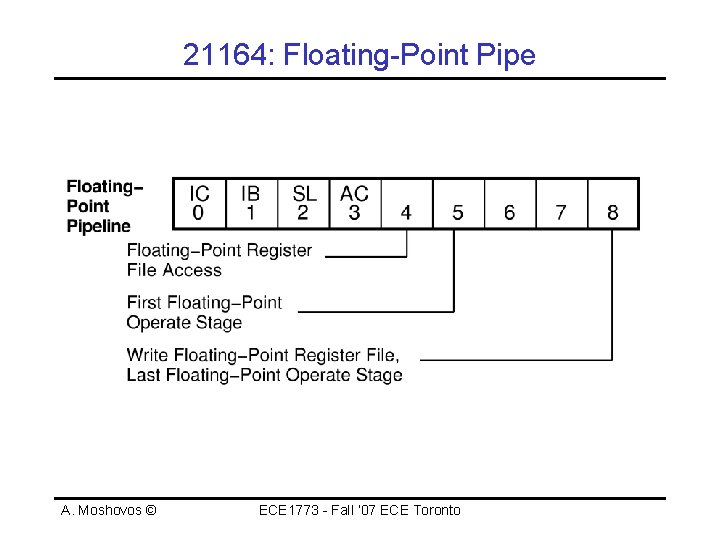

21164: Floating-Point Pipe

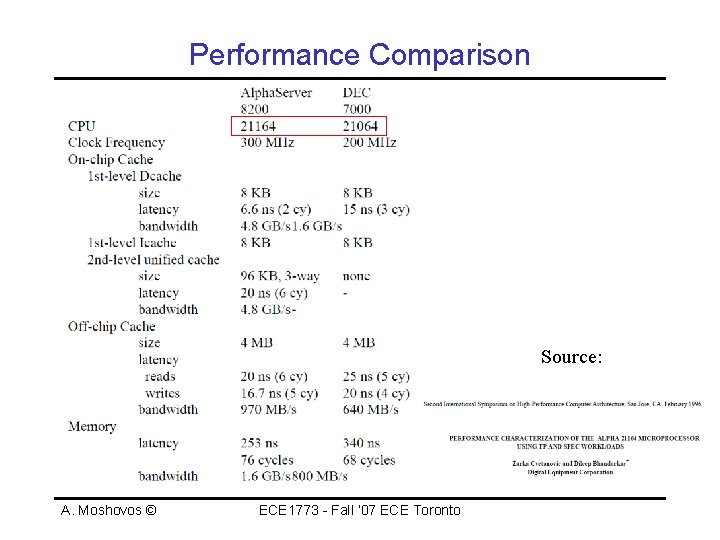

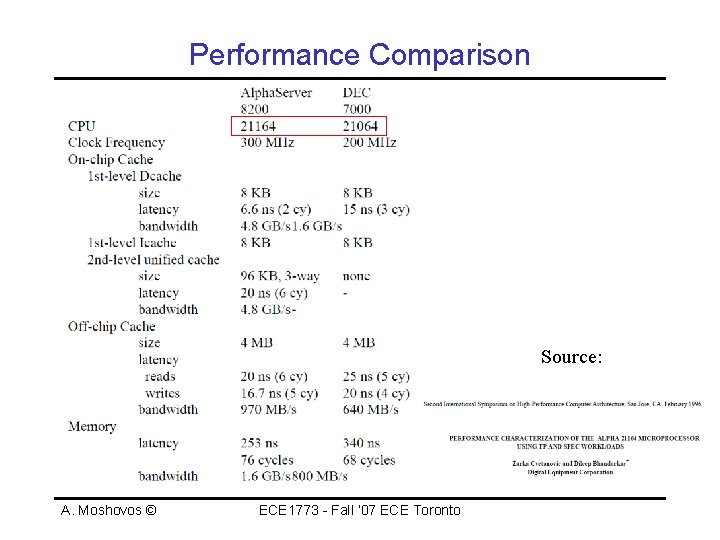

Performance Comparison

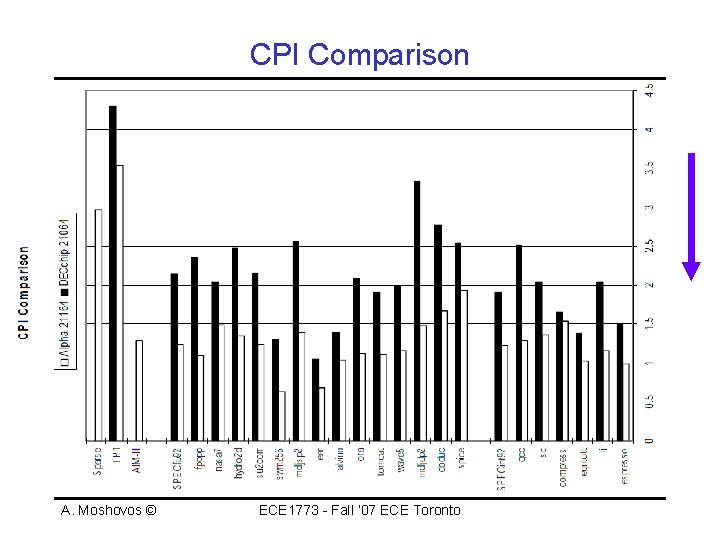

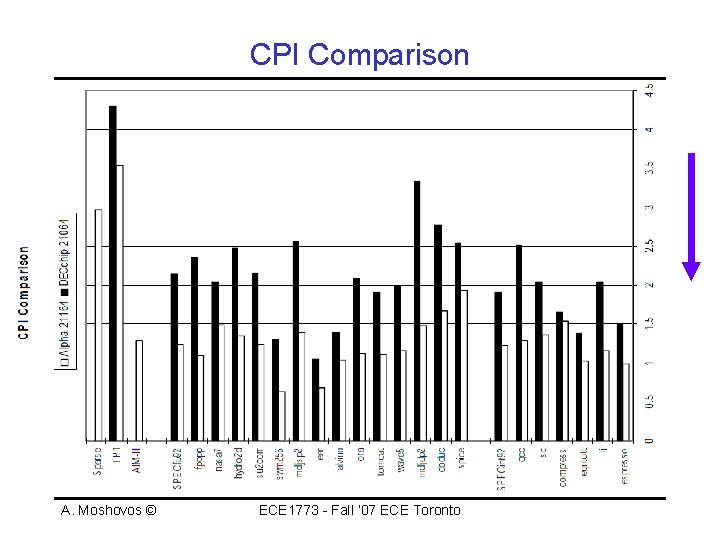

CPI Comparison

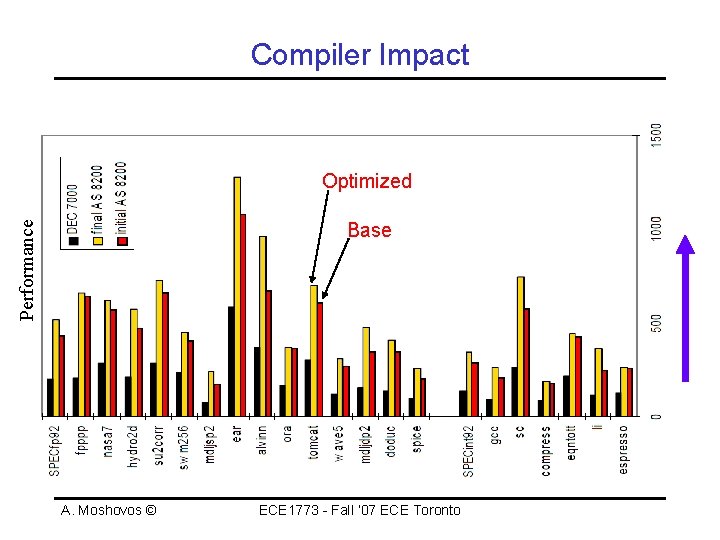

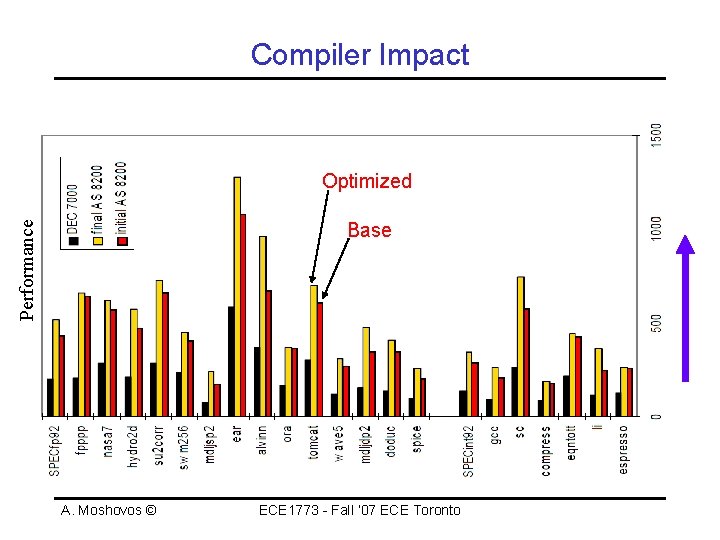

Compiler Impact

[Figure: performance chart with the labels "Optimized" and "Base".]

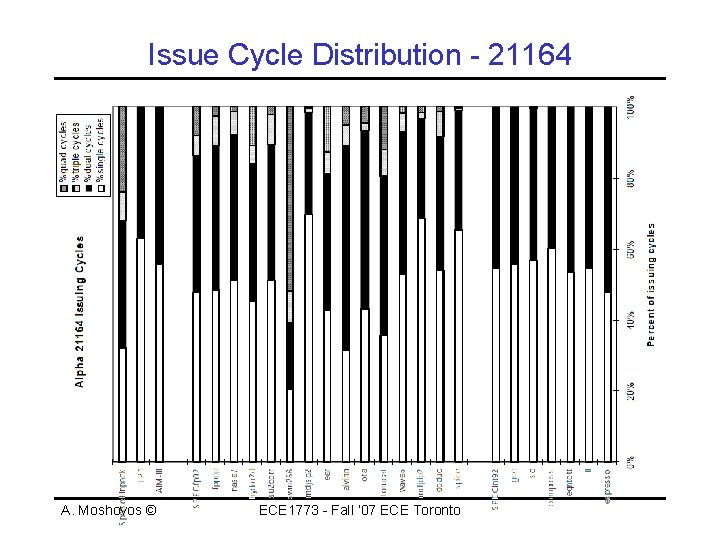

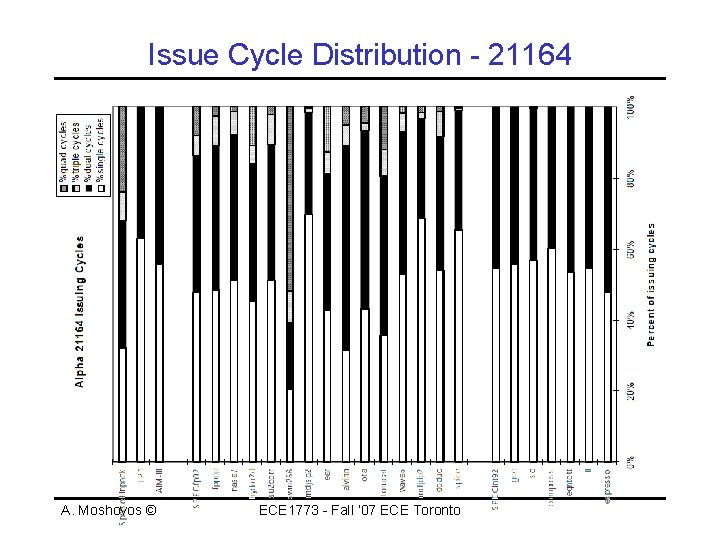

Issue Cycle Distribution - 21164

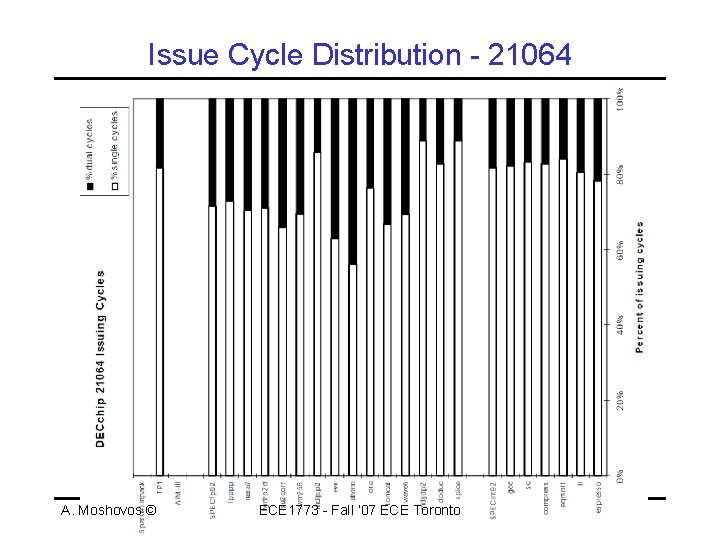

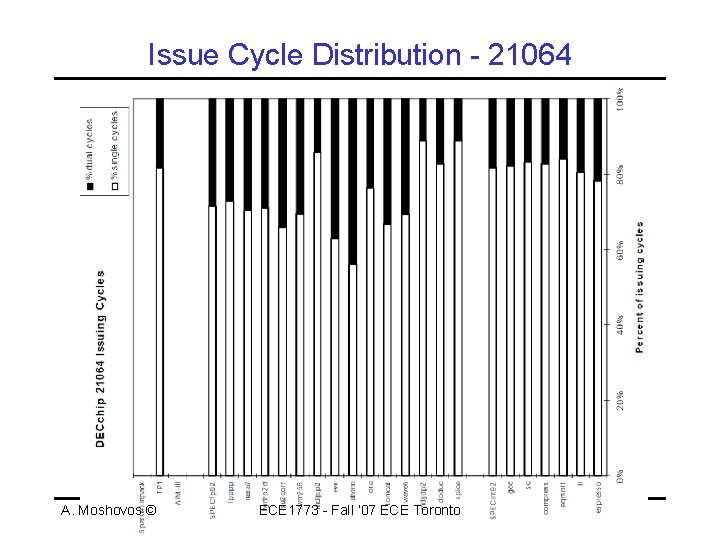

Issue Cycle Distribution - 21064

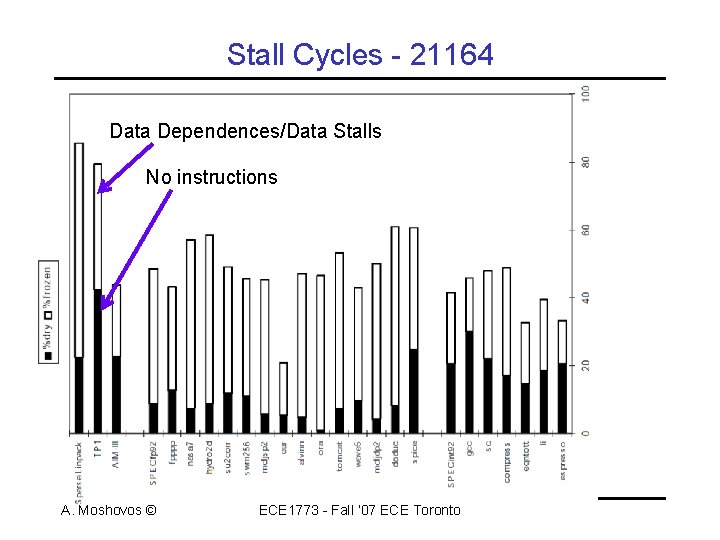

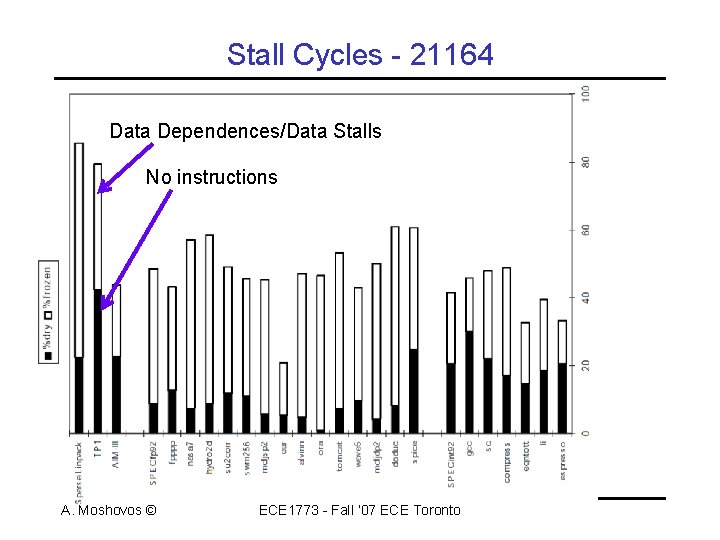

Stall Cycles - 21164

[Figure: stall cycle breakdown with the labels "Data Dependences/Data Stalls" and "No instructions".]

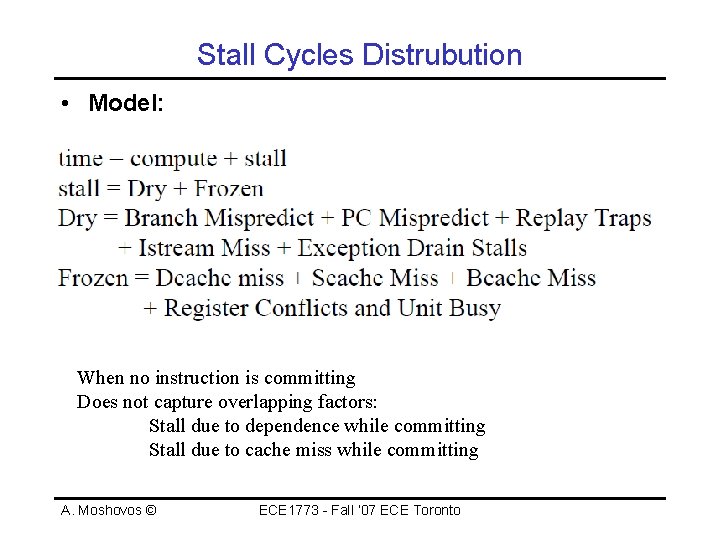

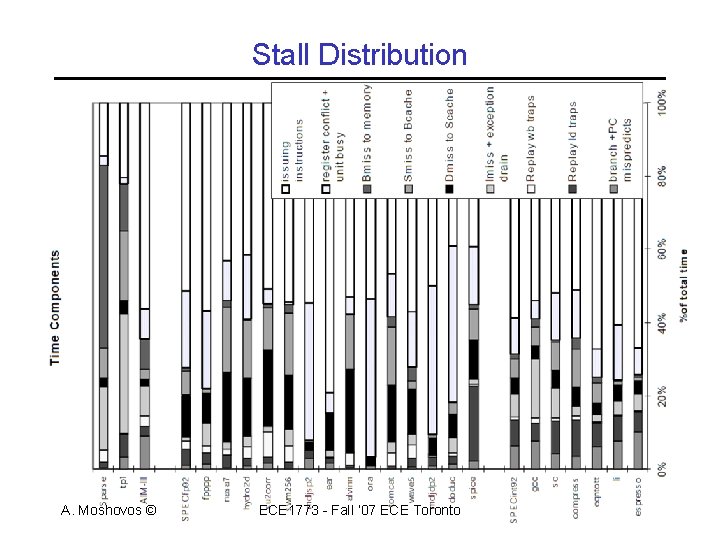

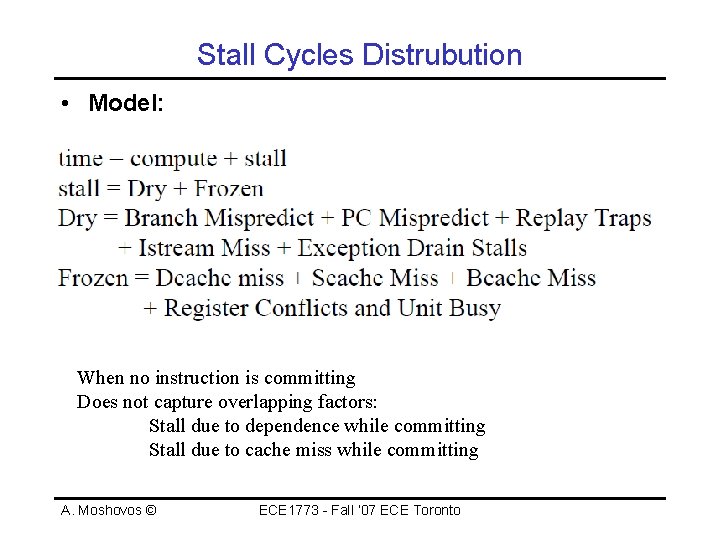

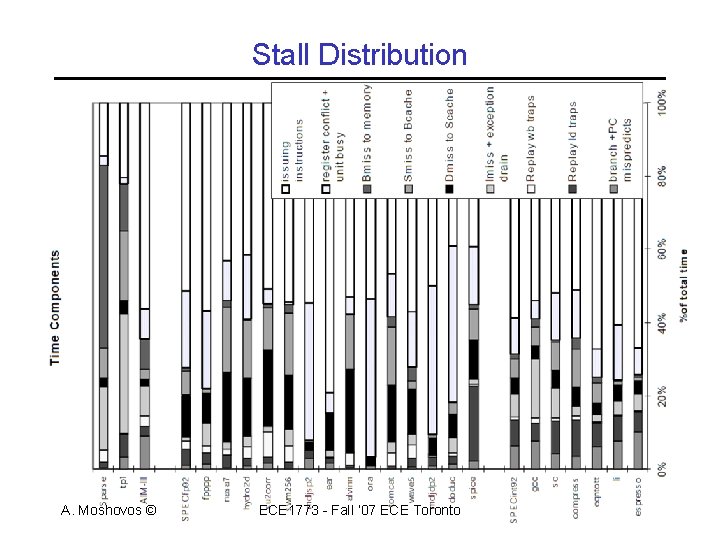

Stall Cycles Distribution

- Model: a cycle counts as a stall when no instruction is committing
- Does not capture overlapping factors:
  - Stall due to dependence while committing
  - Stall due to cache miss while committing

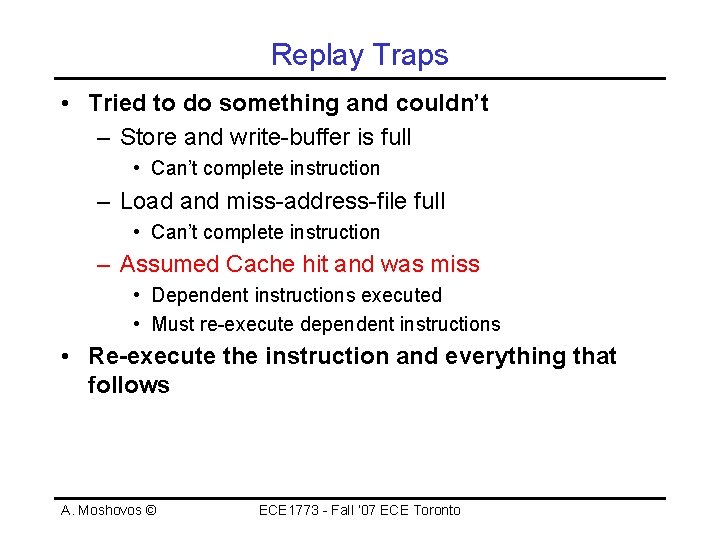

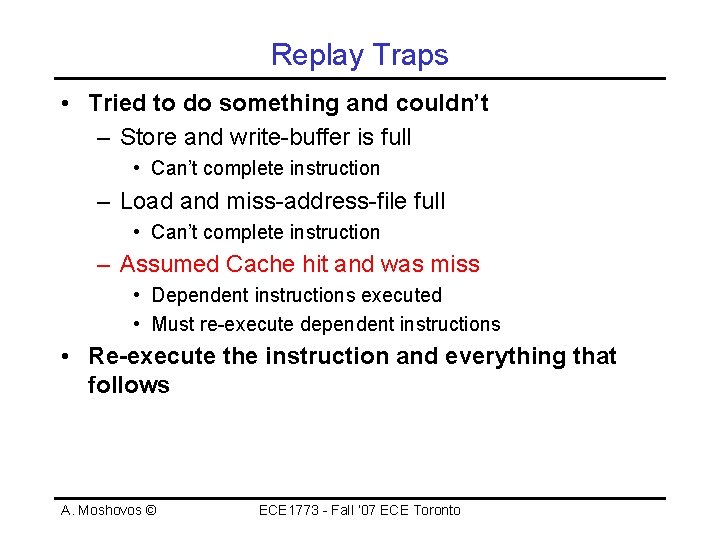

Replay Traps

- Tried to do something and couldn’t
  - Store and the write buffer is full
    - Can’t complete instruction
  - Load and the miss address file is full
    - Can’t complete instruction
  - Assumed cache hit and it was a miss
    - Dependent instructions executed
    - Must re-execute dependent instructions
- Re-execute the instruction and everything that follows

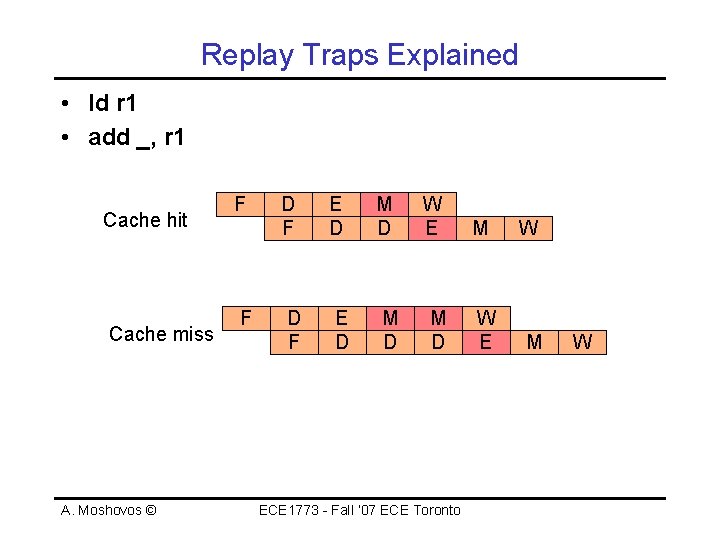

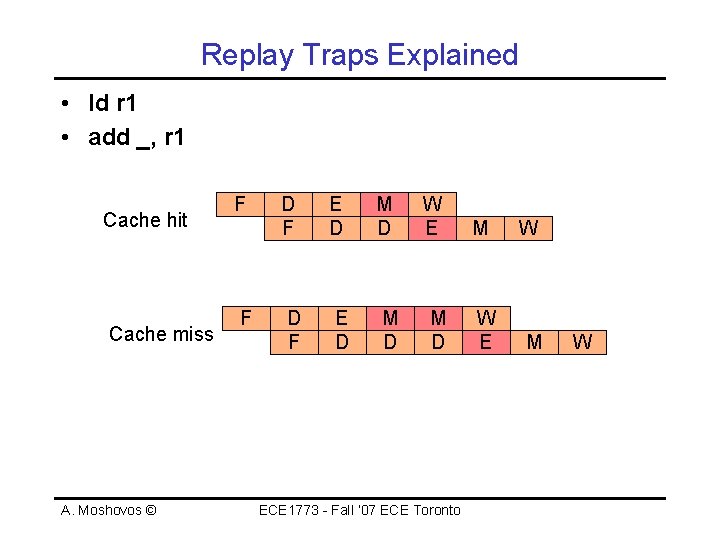

Replay Traps Explained

- `ld r1`
- `add _, r1`

[Figure: F/D/E/M/W pipeline timing for the load and the dependent add, shown for the cache-hit case and for the cache-miss case.]

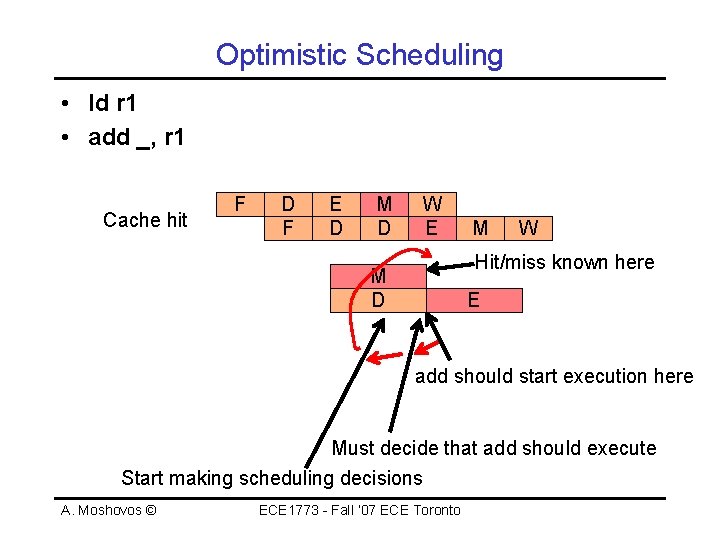

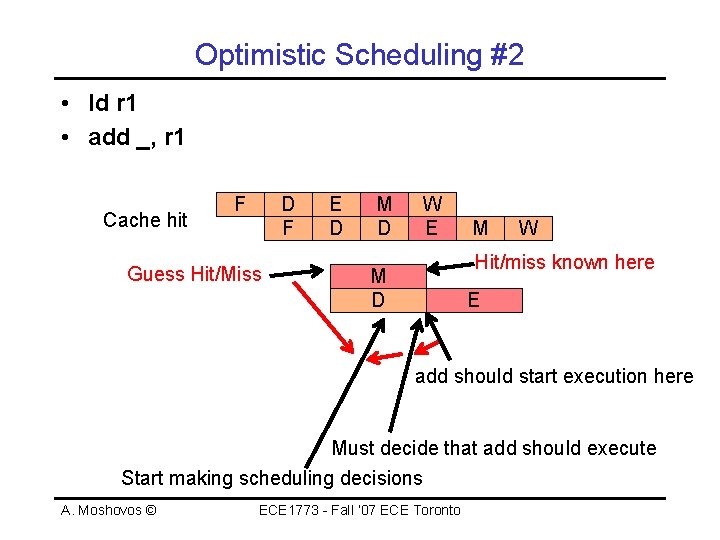

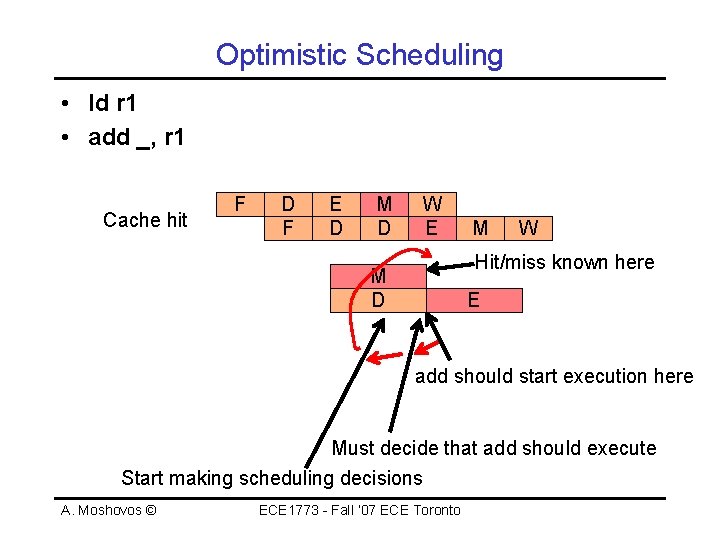

Optimistic Scheduling

- `ld r1`
- `add _, r1`

[Figure: F/D/E/M/W timing for the cache-hit case, annotated with: hit/miss known here; add should start execution here; must decide that add should execute; start making scheduling decisions.]

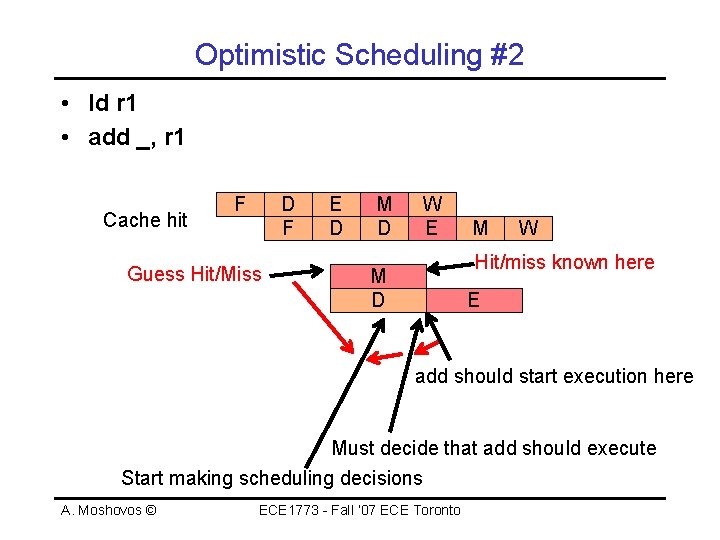

Optimistic Scheduling #2

- `ld r1`
- `add _, r1`

[Figure: the same cache-hit timing, with an added "guess hit/miss" point ahead of the annotations: hit/miss known here; add should start execution here; must decide that add should execute; start making scheduling decisions.]

Stall Distribution

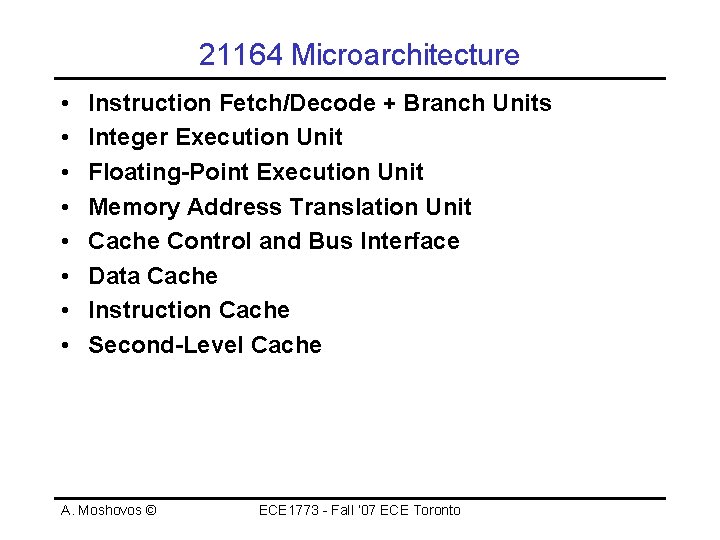

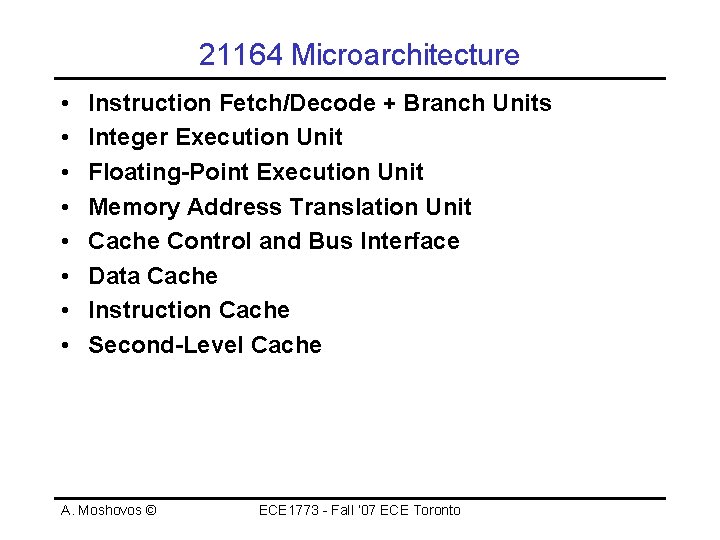

21164 Microarchitecture

- Instruction Fetch/Decode + Branch Units
- Integer Execution Unit
- Floating-Point Execution Unit
- Memory Address Translation Unit
- Cache Control and Bus Interface
- Data Cache
- Instruction Cache
- Second-Level Cache

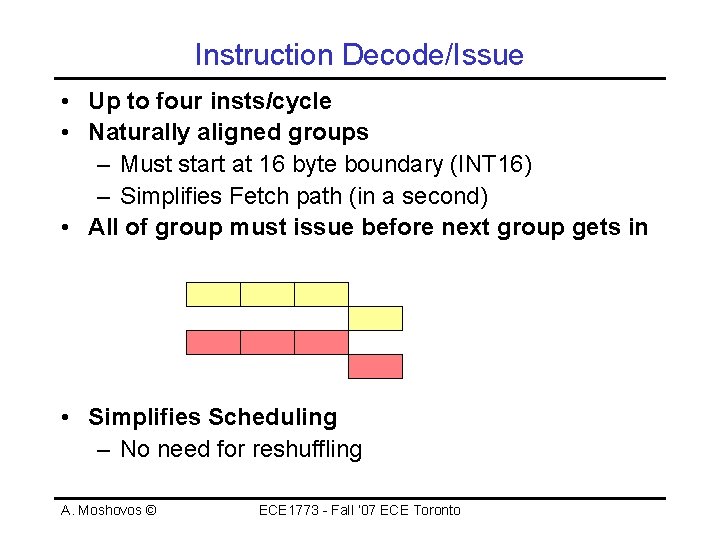

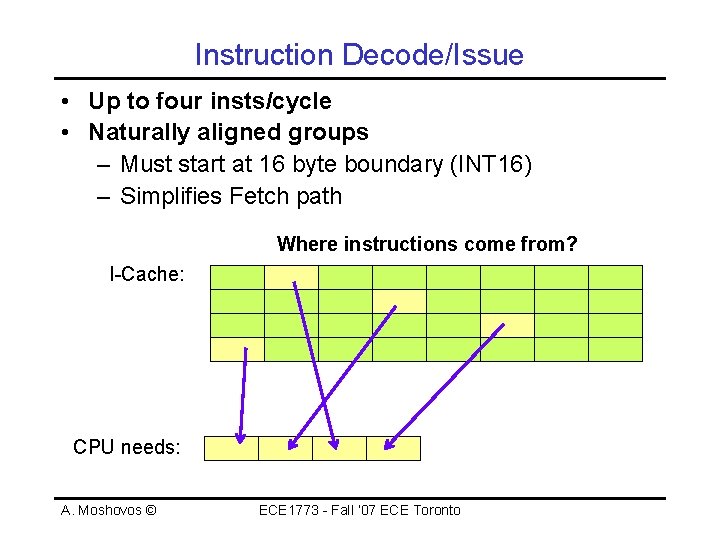

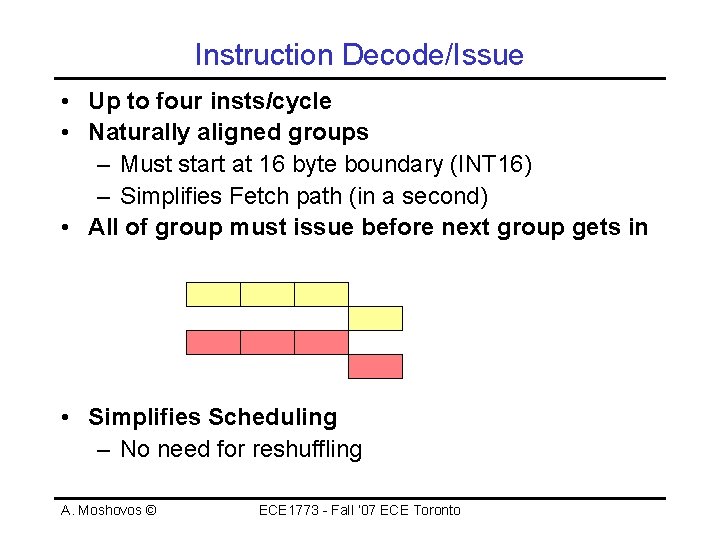

Instruction Decode/Issue

- Up to four insts/cycle
- Naturally aligned groups
  - Must start at a 16-byte boundary (INT16)
  - Simplifies fetch path (in a second)
- All of a group must issue before the next group gets in
- Simplifies scheduling
  - No need for reshuffling

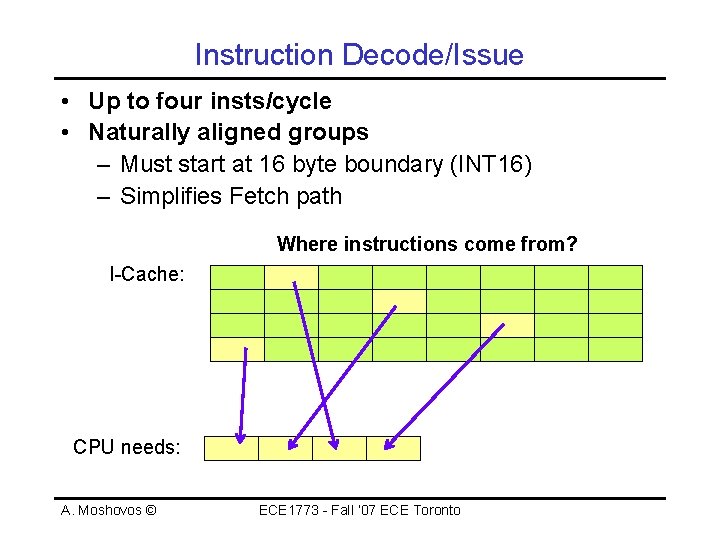

Instruction Decode/Issue (continued)

- Up to four insts/cycle
- Naturally aligned groups
  - Must start at a 16-byte boundary (INT16)
  - Simplifies fetch path
- Where do instructions come from?

[Figure: what the I-Cache supplies compared with what the CPU needs.]

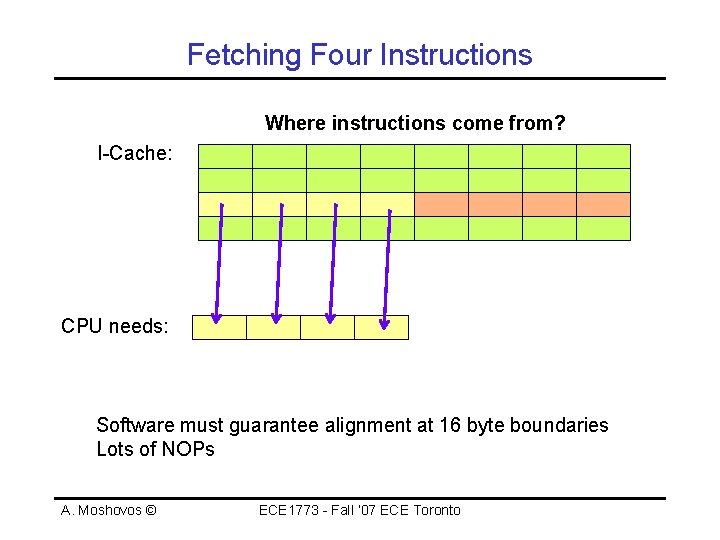

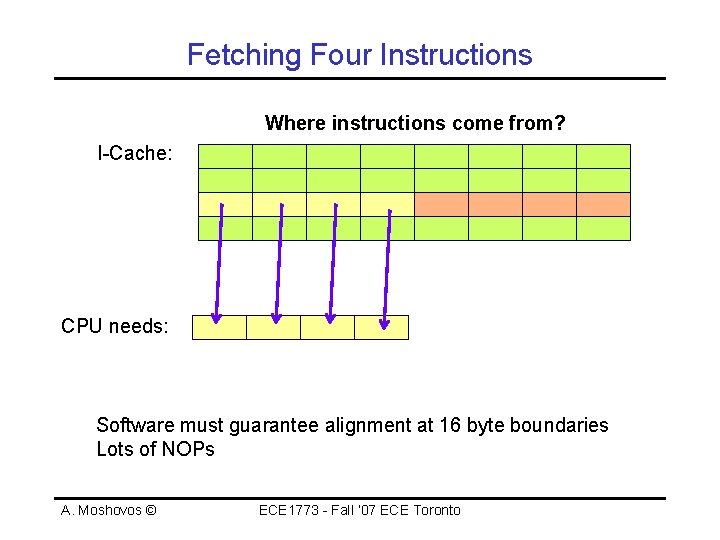

Fetching Four Instructions

- Where do instructions come from?

[Figure: what the I-Cache supplies compared with what the CPU needs.]

- Software must guarantee alignment at 16-byte boundaries
  - Lots of NOPs
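The cost of a misaligned entry point can be worked out with simple address arithmetic. The sketch below assumes 4-byte instructions (as on Alpha) and 16-byte aligned fetch groups; the helper names are hypothetical, and the idea that software pads with NOPs up to the next boundary is an interpretation of the slide, not a statement of what any particular compiler emits.

```python
INSTR_BYTES = 4    # Alpha instructions are 4 bytes
GROUP_BYTES = 16   # one naturally aligned group of four instructions


def useful_slots(pc):
    """Instructions in the aligned group containing `pc` that lie at or after `pc`."""
    offset = pc % GROUP_BYTES
    return (GROUP_BYTES - offset) // INSTR_BYTES


def nops_to_align(pc):
    """NOPs needed so that the code following `pc` starts on a 16-byte boundary."""
    return (-pc % GROUP_BYTES) // INSTR_BYTES


for pc in (0x1000, 0x1004, 0x1008, 0x100C):
    print(hex(pc), useful_slots(pc), nops_to_align(pc))
```

Entering a group at offset 12 leaves a single useful instruction in that fetch, which is why aligning hot entry points can pay off even at the price of extra NOPs.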

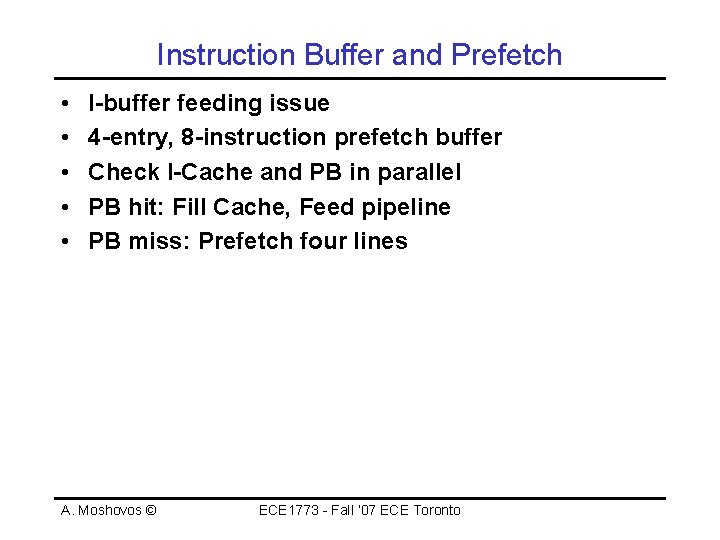

Instruction Buffer and Prefetch

- I-buffer feeding issue
- 4-entry, 8-instruction prefetch buffer (PB)
- Check I-Cache and PB in parallel
- PB hit: fill cache, feed pipeline
- PB miss: prefetch four lines

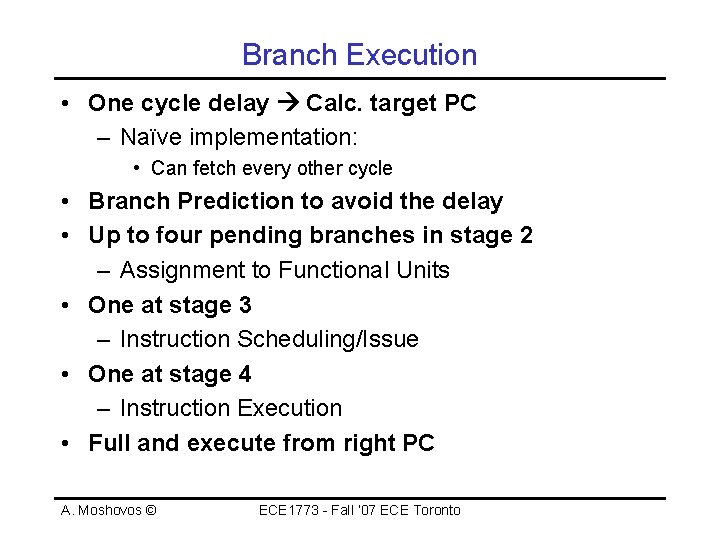

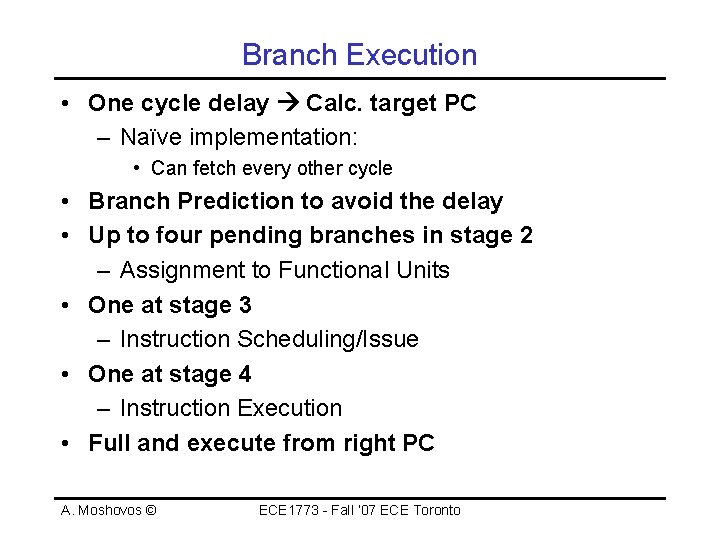

Branch Execution

- One-cycle delay to calculate the target PC
  - Naïve implementation:
    - Can fetch every other cycle
- Branch prediction to avoid the delay
- Up to four pending branches in stage 2
  - Assignment to functional units
- One at stage 3
  - Instruction scheduling/issue
- One at stage 4
  - Instruction execution
- Flush and execute from the right PC

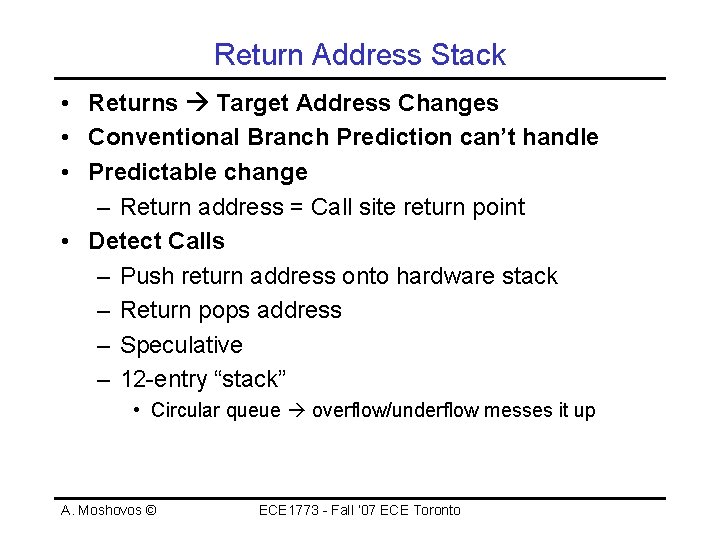

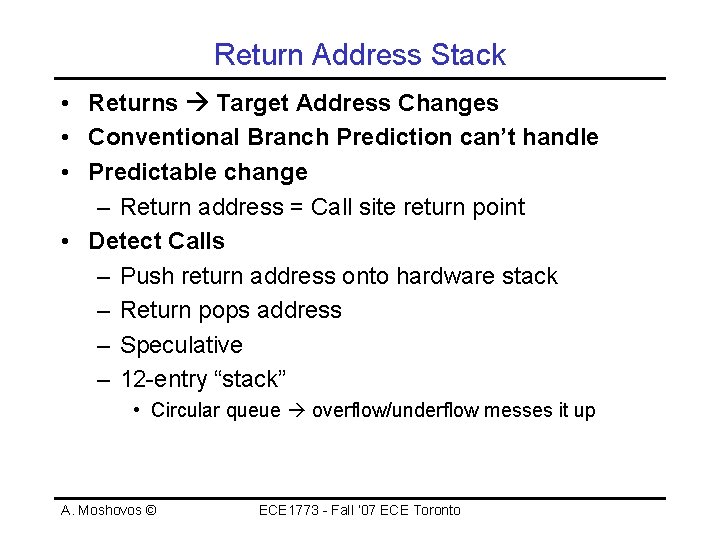

Return Address Stack

- A return’s target address changes from call to call
- Conventional branch prediction can’t handle this
- Predictable change
  - Return address = call-site return point
- Detect calls
  - Push return address onto hardware stack
  - Return pops address
  - Speculative
  - 12-entry “stack”
    - Circular queue: overflow/underflow messes it up
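The push/pop behavior and the overflow problem can be seen in a small model. This is a minimal sketch of a circular return address stack, not the 21164's implementation: the class and method names are hypothetical, and speculation recovery is not modeled.

```python
class ReturnAddressStack:
    """Fixed-size circular stack of predicted return addresses."""

    def __init__(self, entries=12):
        self.slots = [None] * entries
        self.top = 0   # index of the next free slot; wraps around

    def push(self, return_addr):
        """On a call: remember the call-site return point."""
        self.slots[self.top] = return_addr          # may overwrite the oldest entry
        self.top = (self.top + 1) % len(self.slots)

    def pop(self):
        """On a return: predict the most recently pushed address."""
        self.top = (self.top - 1) % len(self.slots)
        return self.slots[self.top]


ras = ReturnAddressStack(entries=2)   # tiny stack to make the overflow visible
for addr in (0x100, 0x200, 0x300):    # three nested calls, only two slots
    ras.push(addr)
print(hex(ras.pop()))   # 0x300, correct
print(hex(ras.pop()))   # 0x200, correct
print(hex(ras.pop()))   # 0x300 again: the entry for 0x100 was overwritten
```

Once nesting exceeds the number of entries, the oldest return addresses are lost and the corresponding returns are mispredicted. Popping more often than pushing (underflow) reads stale or empty slots in the same way.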

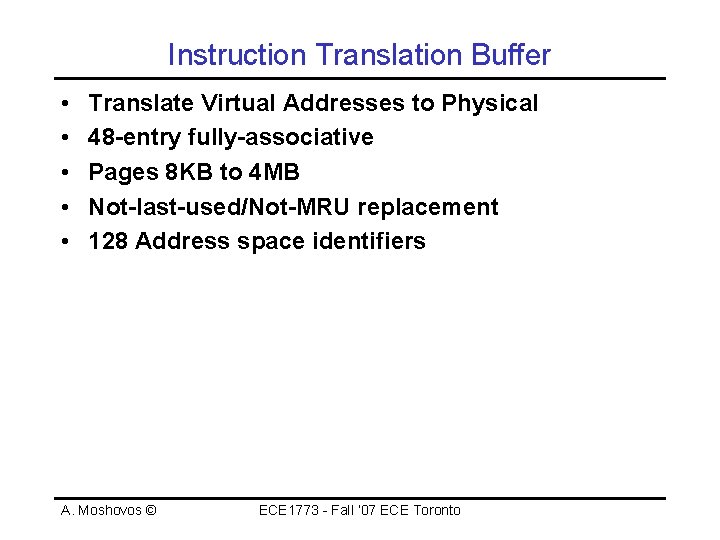

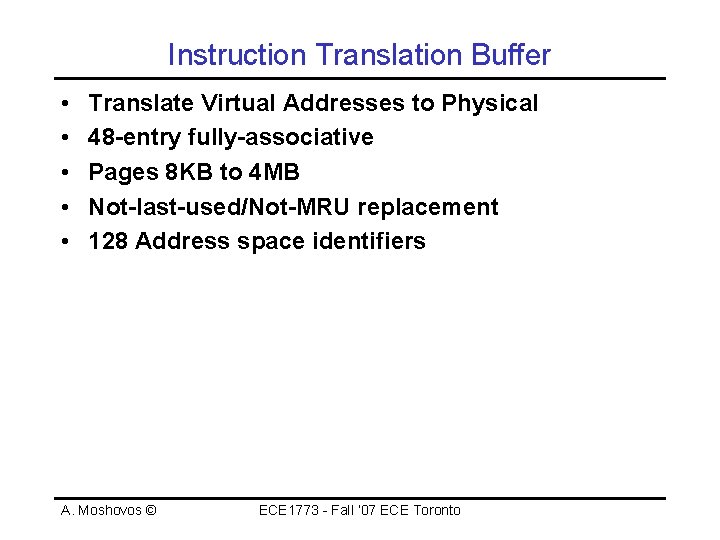

Instruction Translation Buffer

- Translates virtual addresses to physical
- 48-entry, fully associative
- Pages 8 KB to 4 MB
- Not-last-used/not-MRU replacement
- 128 address space identifiers

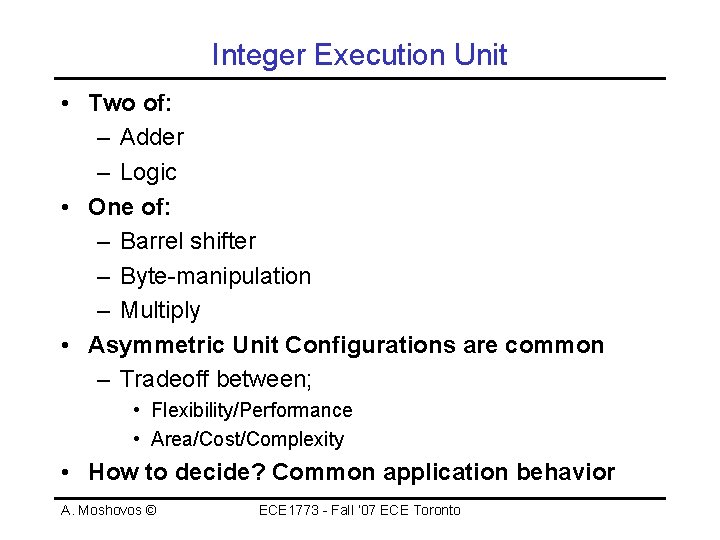

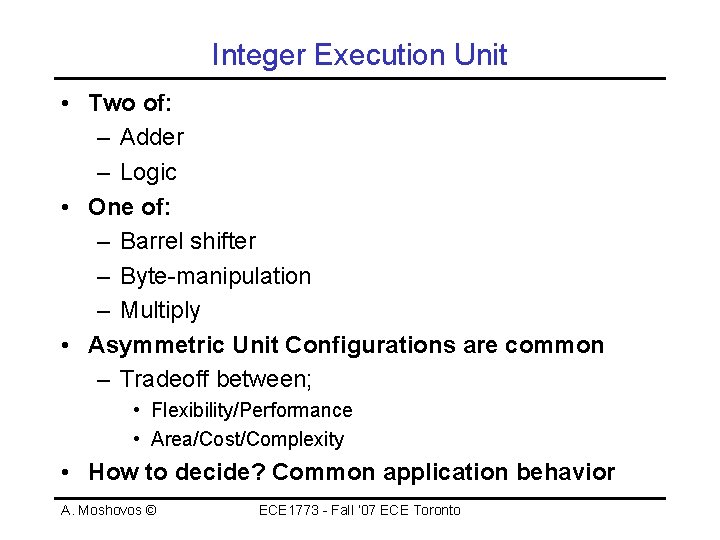

Integer Execution Unit

- Two of:
  - Adder
  - Logic
- One of:
  - Barrel shifter
  - Byte manipulation
  - Multiply
- Asymmetric unit configurations are common
  - Tradeoff between:
    - Flexibility/performance
    - Area/cost/complexity
- How to decide? Common application behavior

Integer Register File

- 32+8 registers
  - 8 are legacy DEC
- Four read ports, two write ports
  - Support for up to two integer ops

Floating-Point Unit

- FPU add
- FPU multiply
- 2 ops per cycle
- Divides take multiple cycles
- 32 registers, five reads, four writes
  - Four reads and two writes for FP pipe
  - One read for stores (handled by integer pipe)
  - One write for loads (handled by integer pipe)

Memory Unit

- Up to two accesses
- Data translation buffer
  - 512 entries, not-MRU
  - Loads access in parallel with D-cache
- Miss address file
  - Pending misses
  - Six data loads
  - Four instruction reads
  - Merges loads to the same block

Store/Load Conflicts

- Load immediately after a store
  - Can’t see the data
  - Detect and replay
    - Flush pipe and re-execute
- Compiler can help
  - Schedule load three cycles after store
  - Two cycles stalls the load at issue/address generation

Write Buffer

- Six 32-byte entries
- Defers stores until a port is available
- Loads can read from the write buffer

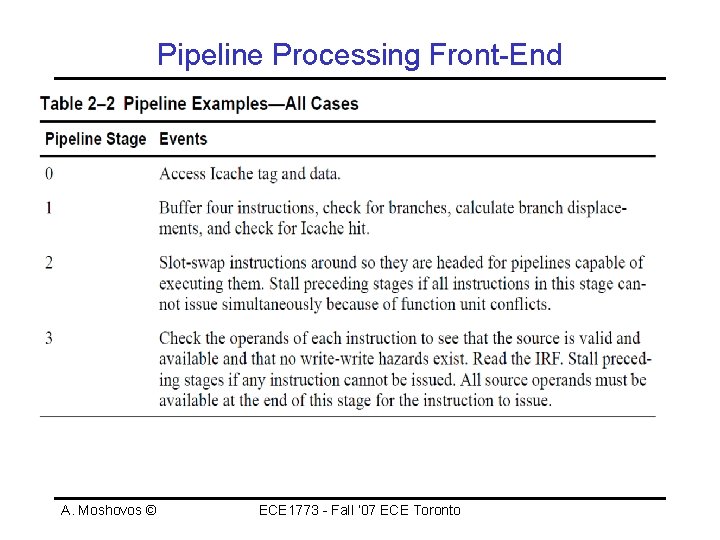

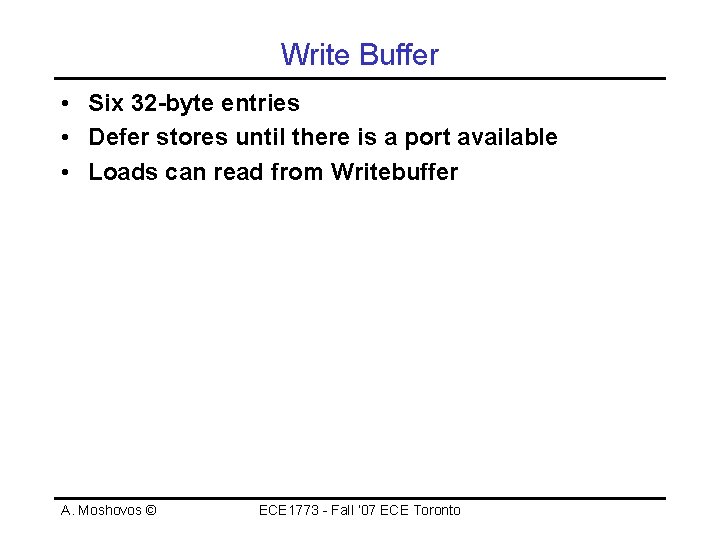

Pipeline Processing Front-End

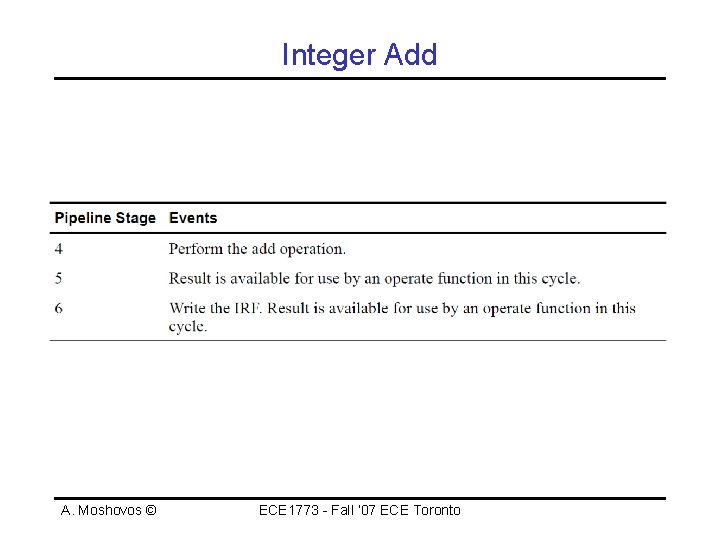

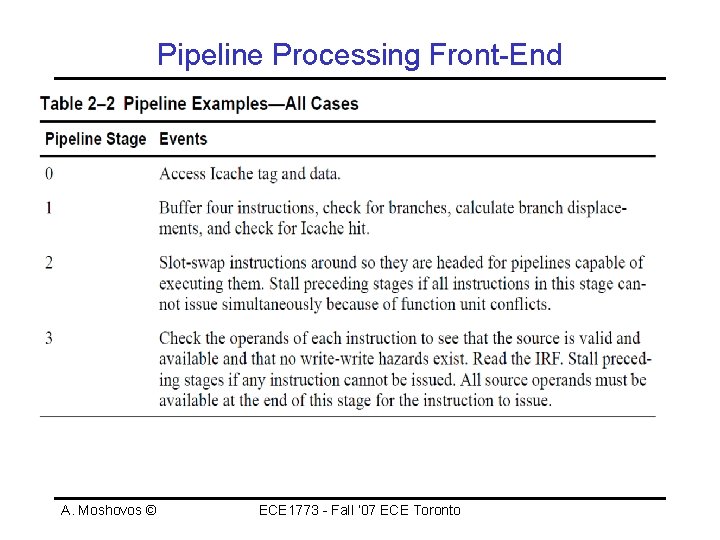

Integer Add

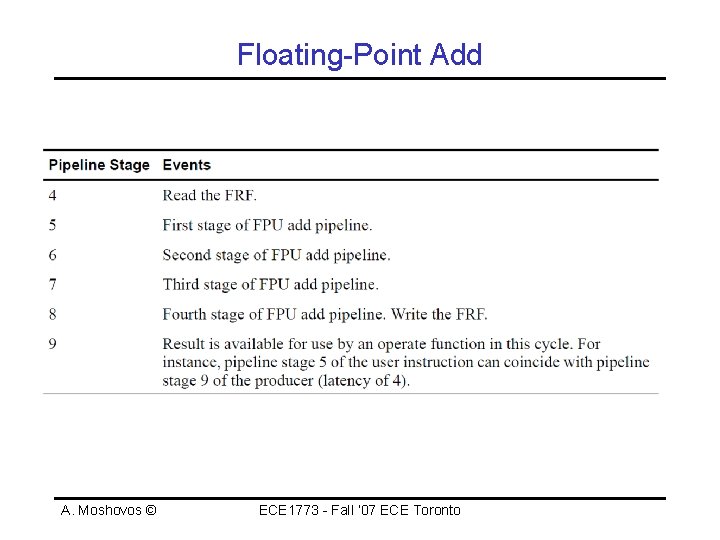

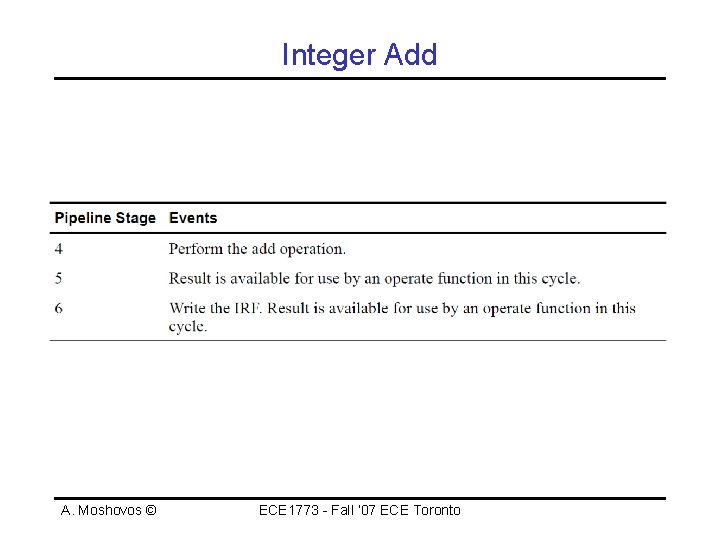

Floating-Point Add

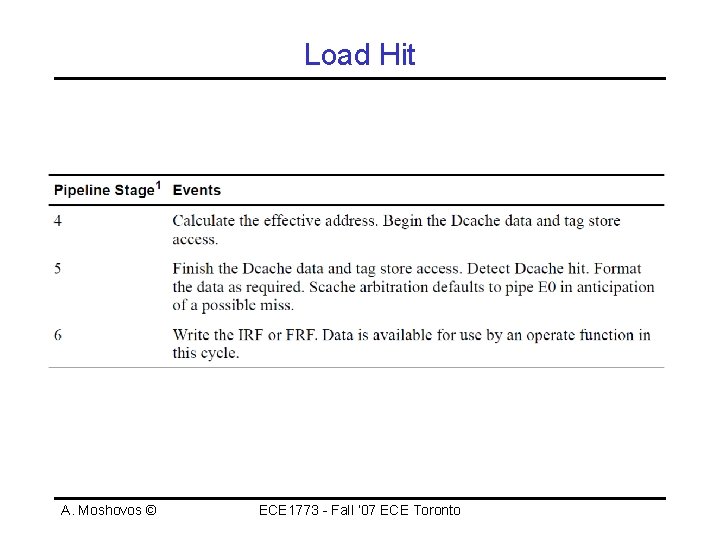

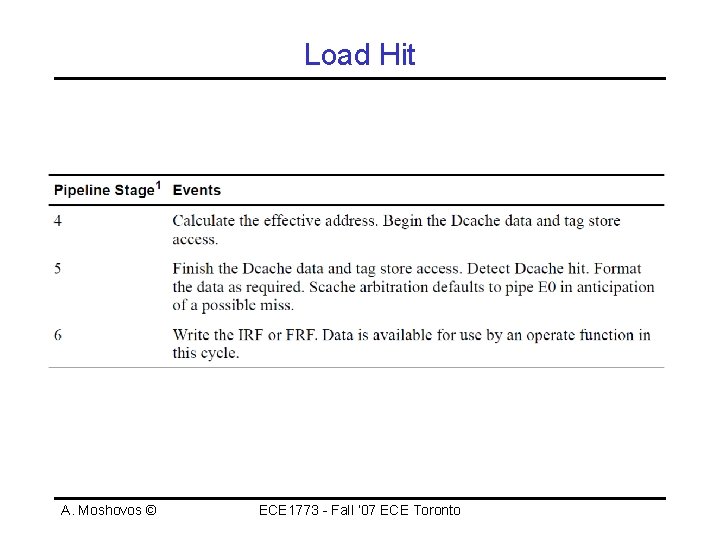

Load Hit

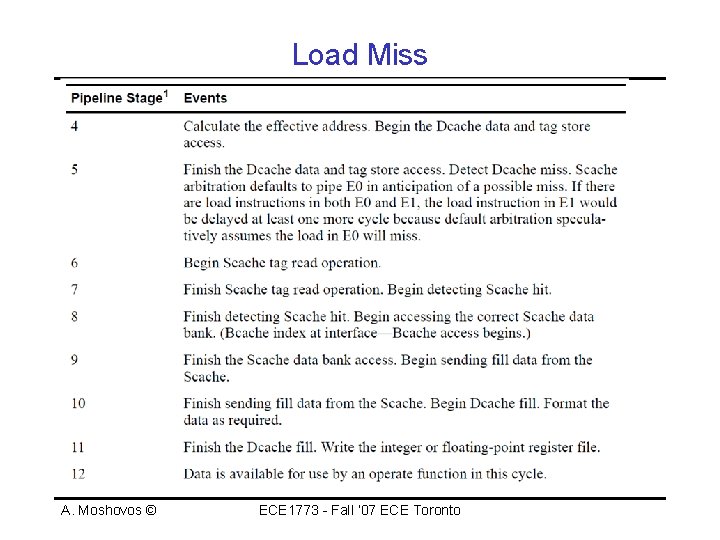

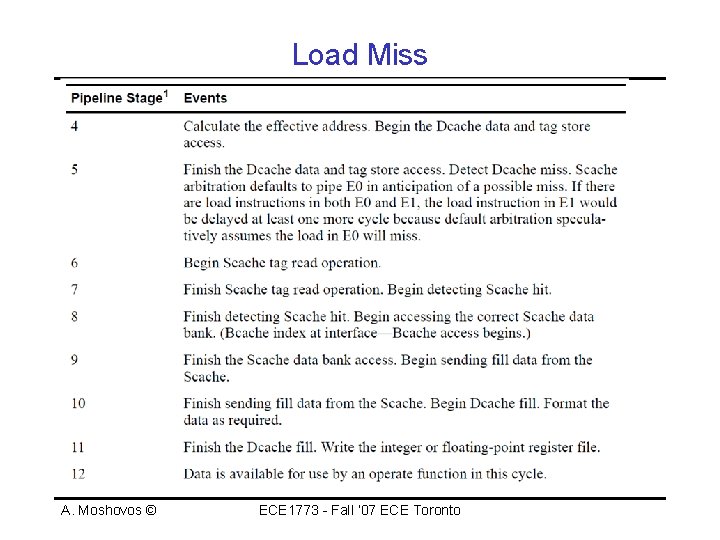

Load Miss

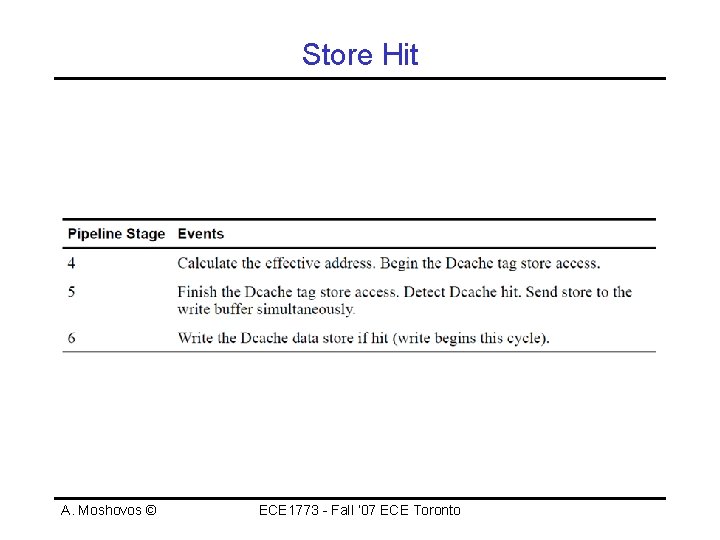

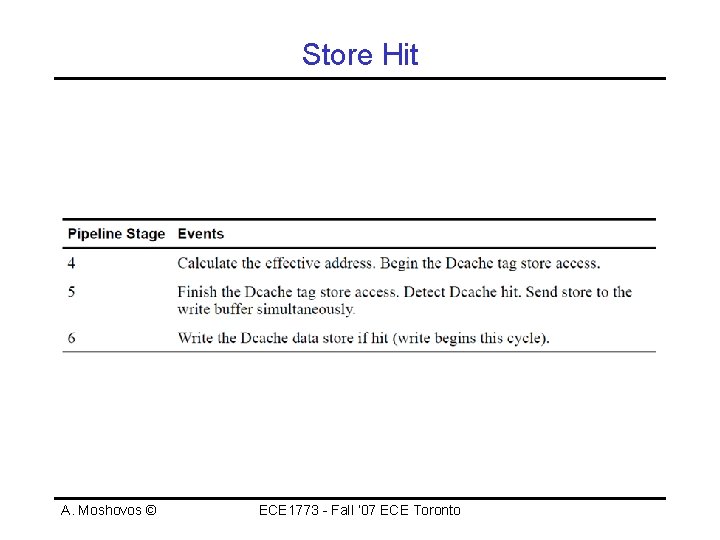

Store Hit

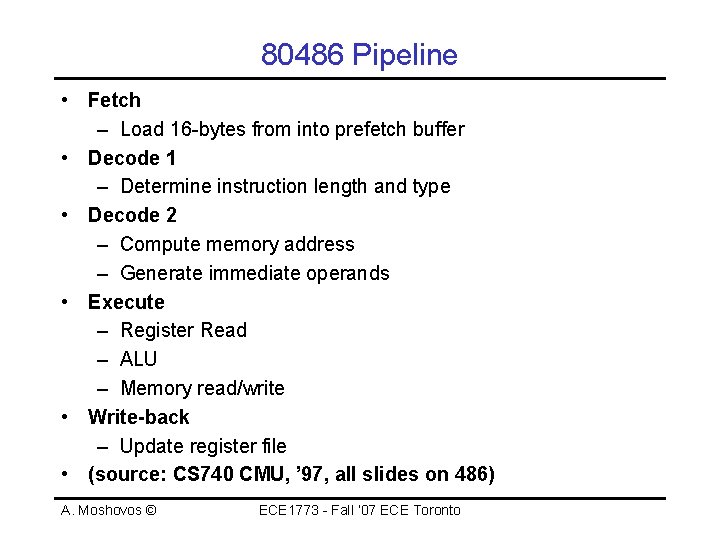

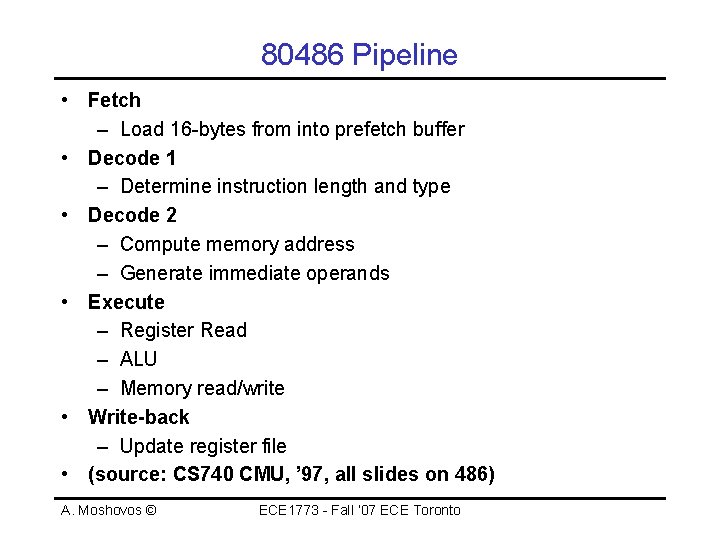

80486 Pipeline

- Fetch
  - Load 16 bytes into the prefetch buffer
- Decode 1
  - Determine instruction length and type
- Decode 2
  - Compute memory address
  - Generate immediate operands
- Execute
  - Register read
  - ALU
  - Memory read/write
- Write-back
  - Update register file
- (Source: CS 740, CMU, ’97, for all slides on the 486)

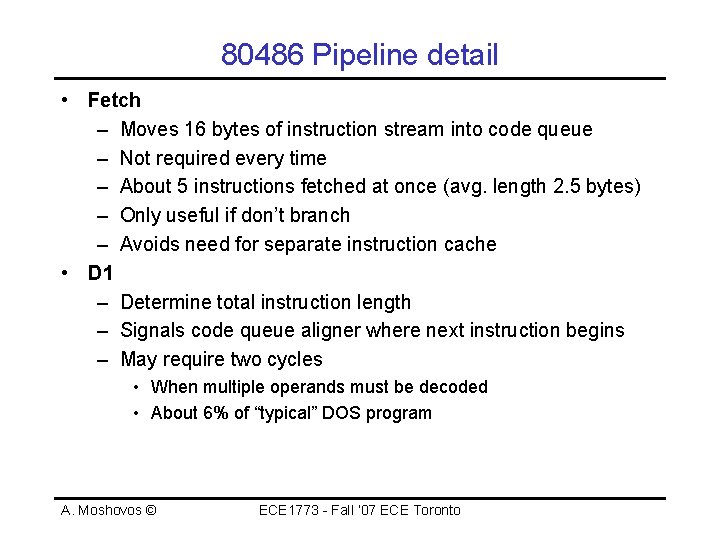

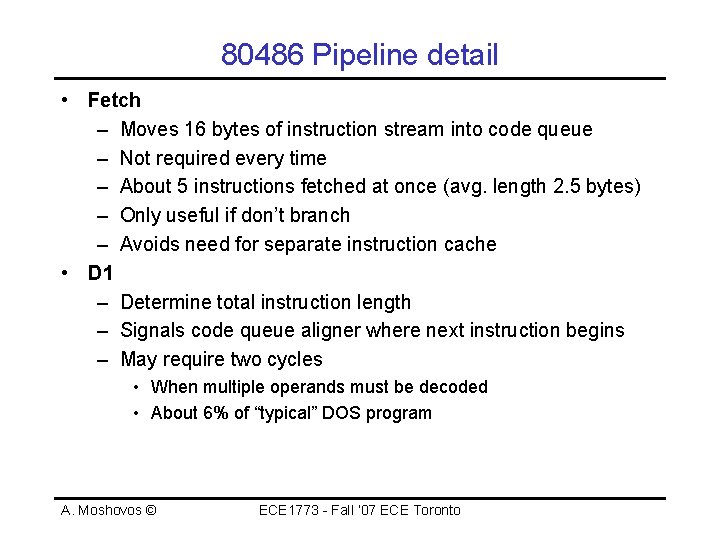

80486 Pipeline Detail

- Fetch
  - Moves 16 bytes of instruction stream into the code queue
  - Not required every time
    - About 5 instructions fetched at once (avg. length 2.5 bytes)
    - Only useful if we don’t branch
  - Avoids the need for a separate instruction cache
- D1
  - Determine total instruction length
  - Signals the code queue aligner where the next instruction begins
  - May require two cycles
    - When multiple operands must be decoded
    - About 6% of a “typical” DOS program

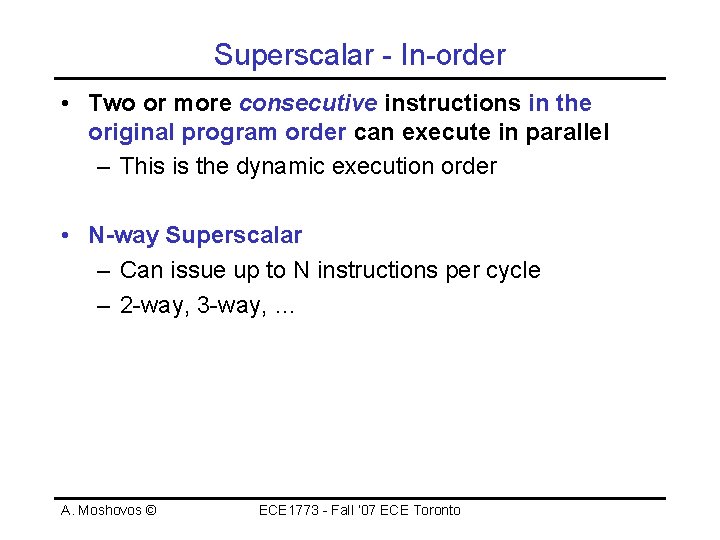

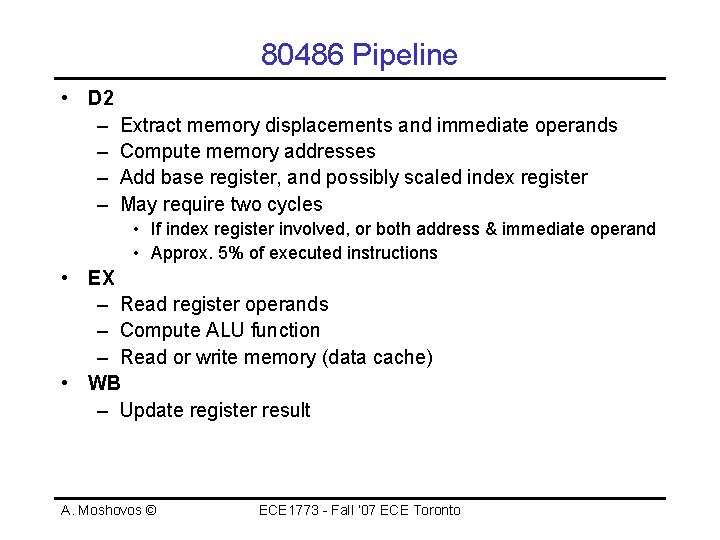

80486 Pipeline Detail (continued)

- D2
  - Extract memory displacements and immediate operands
  - Compute memory addresses
    - Add base register, and possibly scaled index register
  - May require two cycles
    - If an index register is involved, or both address and immediate operand
    - Approx. 5% of executed instructions
- EX
  - Read register operands
  - Compute ALU function
  - Read or write memory (data cache)
- WB
  - Update register result