Paraguin Compiler Introduction July 24 2012 copyright 2012


Paraguin Compiler Introduction July 24, 2012 © copyright 2012, Clayton S. Ferner, UNC Wilmington

Introduction

- The Paraguin Compiler is a compiler that I am developing at UNCW (essentially by myself).
- It is based on the SUIF compiler infrastructure.
- Using pragmas (compiler directives), the user can direct the compiler to produce an MPI program.

SUIF Compiler System

- Created by the SUIF Compiler Group at Stanford (suif.stanford.edu).
- SUIF is an open source compiler intended to promote research in compiler technology.
- Paraguin is built using the SUIF compiler.

Compiler Directives

- The Paraguin compiler is a source-to-source compiler.
- It transforms a sequential program into a parallel program suitable for execution on a distributed-memory system.
- The result is a parallel program with calls to MPI routines.
- Parallelization is not automatic; rather, it is directed via pragmas.

Compiler Directives

- The advantage of using pragmas is that other compilers will ignore them.
- You can provide information to Paraguin that is ignored by other compilers, say gcc.


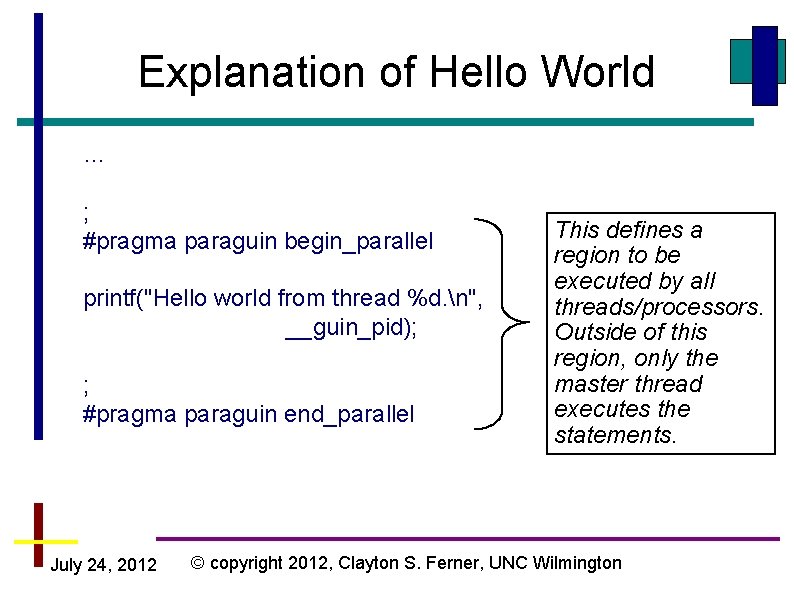

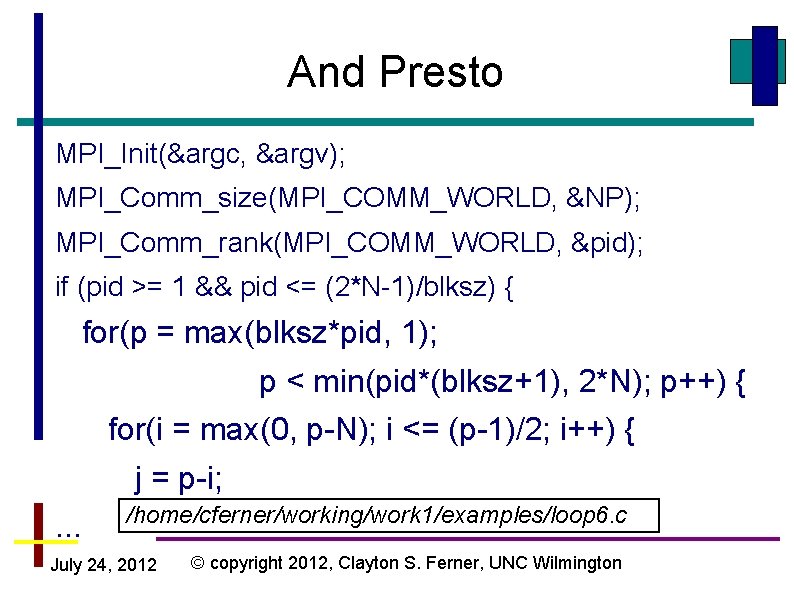

Hello World

    #include <stdio.h>

    int __guin_pid;

    int main(int argc, char *argv[])
    {
        printf("Master thread %d starting.\n", __guin_pid);
        ;
        #pragma paraguin begin_parallel
        printf("Hello world from thread %d.\n", __guin_pid);
        ;
        #pragma paraguin end_parallel
        printf("Goodbye world from thread %d.\n", __guin_pid);
        return 0;
    }

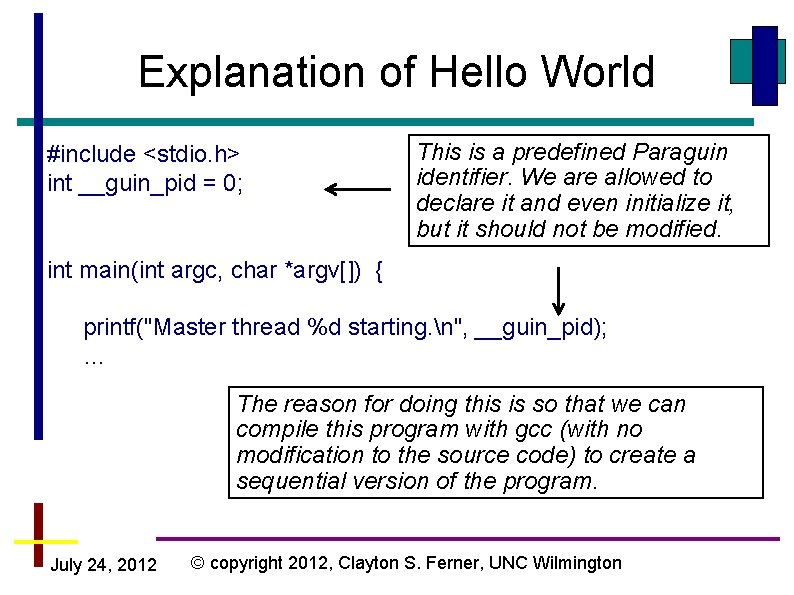

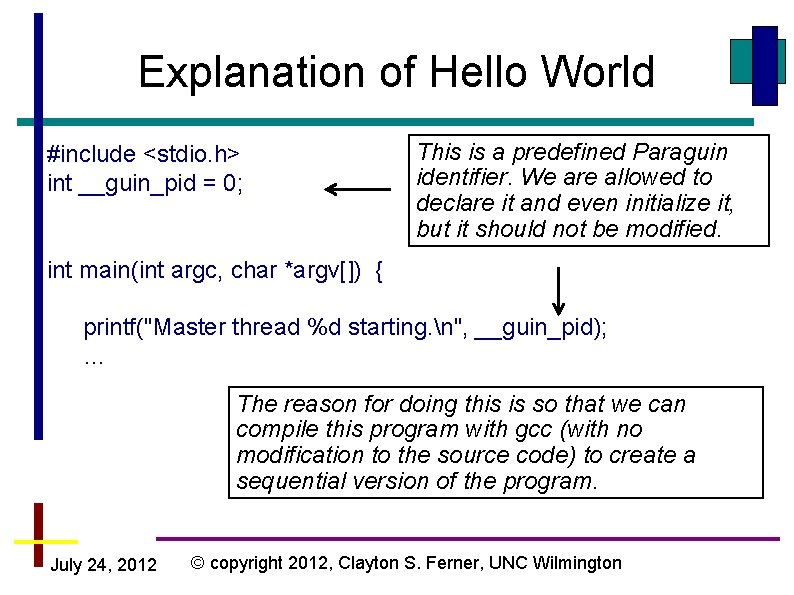

Explanation of Hello World

    #include <stdio.h>
    int __guin_pid = 0;

__guin_pid is a predefined Paraguin identifier. We are allowed to declare it and even initialize it, but it should not be modified.

    int main(int argc, char *argv[])
    {
        printf("Master thread %d starting.\n", __guin_pid);
        …

The reason for declaring it is so that we can compile this program with gcc (with no modification to the source code) to create a sequential version of the program.

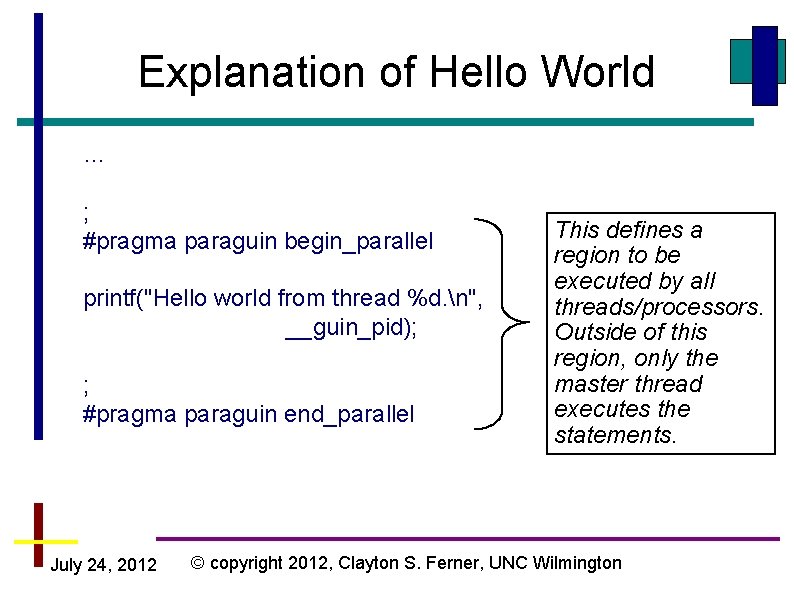

Explanation of Hello World

    …
    ;
    #pragma paraguin begin_parallel
    printf("Hello world from thread %d.\n", __guin_pid);
    ;
    #pragma paraguin end_parallel

This defines a region to be executed by all threads/processors. Outside of this region, only the master thread executes the statements.

Result of Hello World

Compiling:

    $ runparaguin hello.c
    Processing file hello.spd
    Parallelizing procedure: "main"
    $

Running:

    $ mpirun -nolocal -np 8 hello.out
    Hello world from thread 3.
    Hello world from thread 1.
    Hello world from thread 7.
    Hello world from thread 5.
    Hello world from thread 4.
    Hello world from thread 2.
    Hello world from thread 6.
    Master thread 0 starting.
    Hello world from thread 0.
    Goodbye world from thread 0.
    $

Notes on pragmas

- Notice the extra semicolon (;) in front of the pragma statements.
- The reason is to insert a NOOP instruction into the code, to which the pragmas are attached.
- SUIF attaches the pragmas to the last instruction, which may be deeply nested. This makes it difficult for Paraguin to find the pragmas.
- Solution: insert a semicolon on a line by itself before a block of pragma statements.

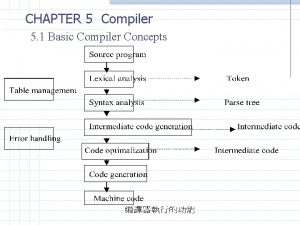

Compiler Internals: Loop Transformations

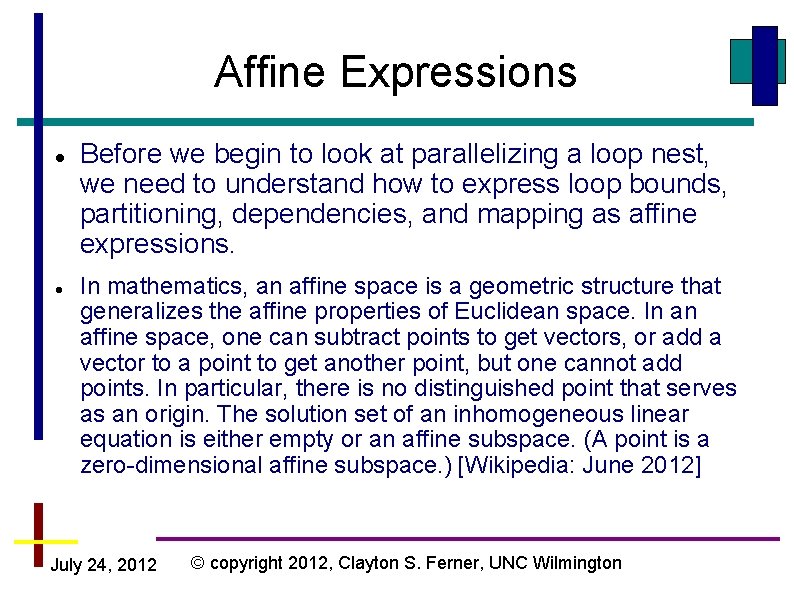

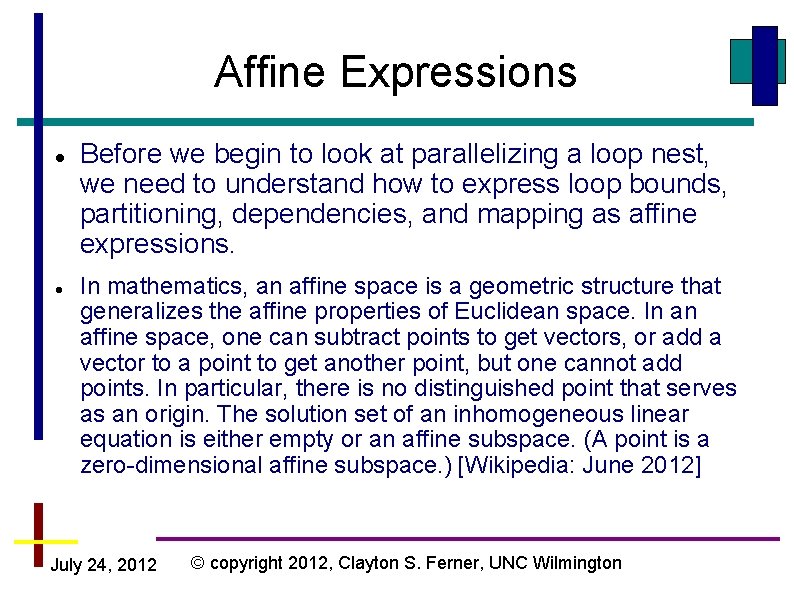

Affine Expressions

Before we begin to look at parallelizing a loop nest, we need to understand how to express loop bounds, partitioning, dependencies, and mapping as affine expressions.

"In mathematics, an affine space is a geometric structure that generalizes the affine properties of Euclidean space. In an affine space, one can subtract points to get vectors, or add a vector to a point to get another point, but one cannot add points. In particular, there is no distinguished point that serves as an origin. The solution set of an inhomogeneous linear equation is either empty or an affine subspace. (A point is a zero-dimensional affine subspace.)" [Wikipedia: June 2012]

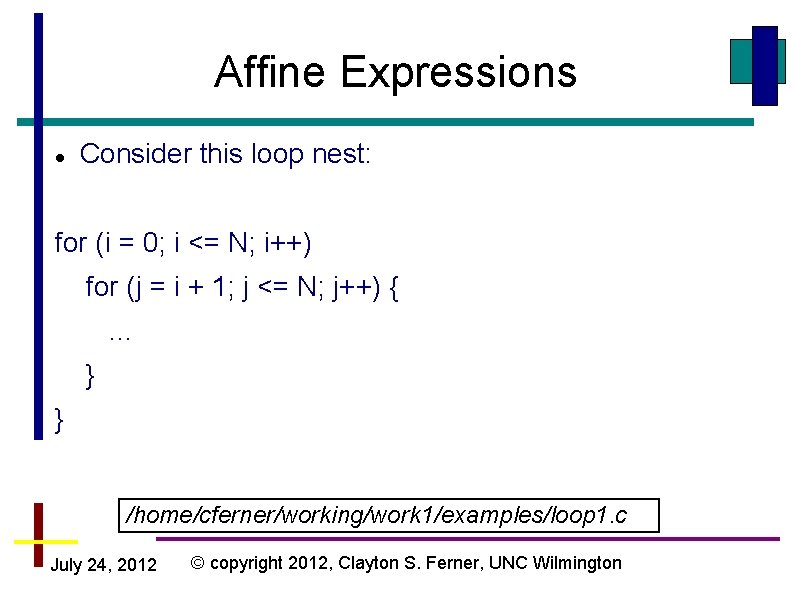

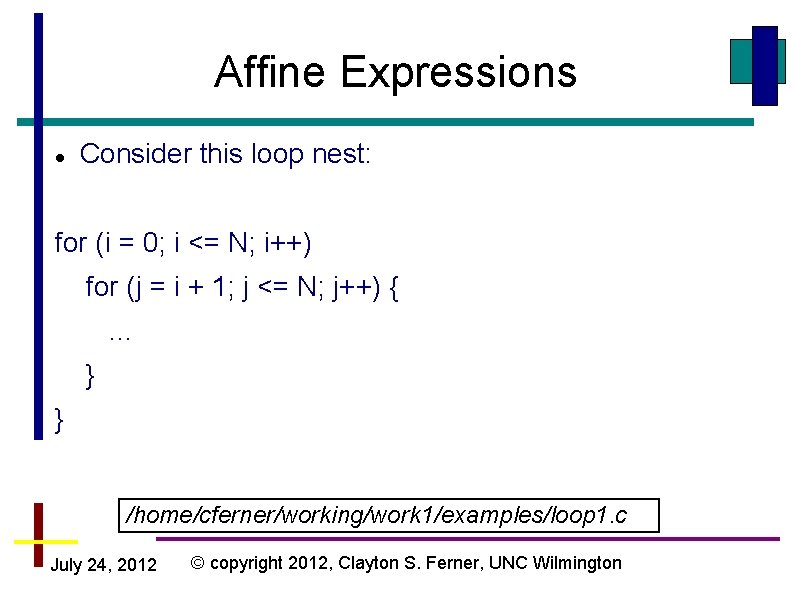

Affine Expressions

Consider this loop nest:

    for (i = 0; i <= N; i++) {
        for (j = i + 1; j <= N; j++) {
            ...
        }
    }

/home/cferner/working/work1/examples/loop1.c

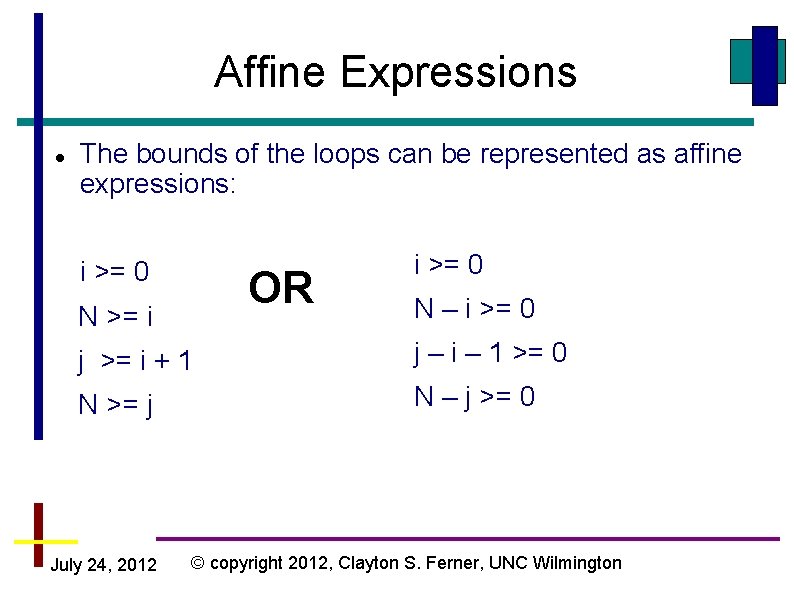

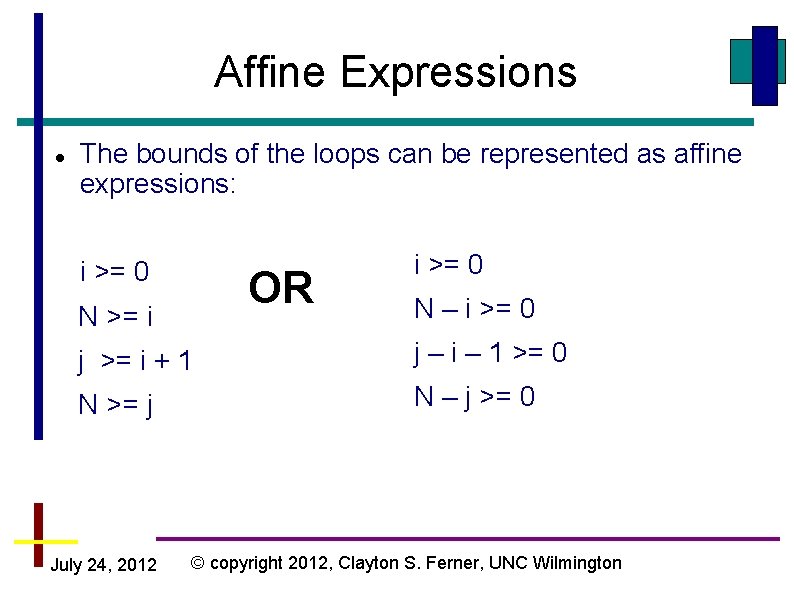

Affine Expressions

The bounds of the loops can be represented as affine expressions:

| Loop bound | Equivalent form |
|---|---|
| i >= 0 | i >= 0 |
| N >= i | N - i >= 0 |
| j >= i + 1 | j - i - 1 >= 0 |
| N >= j | N - j >= 0 |
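As a rough illustration (not taken from Paraguin itself), the four inequalities can be written as a membership test and compared against the loop nest they came from. The names `in_iteration_space`, `count_by_loops`, and `count_by_constraints` are made up for this sketch.

```c
#include <assert.h>
#include <stdbool.h>

/* True when (i, j) satisfies all four affine bounds of the loop nest:
   i >= 0, N - i >= 0, j - i - 1 >= 0, N - j >= 0. */
bool in_iteration_space(int i, int j, int N)
{
    return i >= 0 && N - i >= 0 && j - i - 1 >= 0 && N - j >= 0;
}

/* Count iterations by running the original loop nest. */
int count_by_loops(int N)
{
    assert(N >= 0);
    int count = 0;
    for (int i = 0; i <= N; i++)
        for (int j = i + 1; j <= N; j++)
            count++;
    return count;
}

/* Count iterations by testing every point of a slightly larger
   bounding box against the constraints. */
int count_by_constraints(int N)
{
    assert(N >= 0);
    int count = 0;
    for (int i = -1; i <= N + 1; i++)
        for (int j = -1; j <= N + 1; j++)
            if (in_iteration_space(i, j, N))
                count++;
    return count;
}
```

Both counts should agree for any non-negative N, which is what it means for the inequalities to describe the loop bounds.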

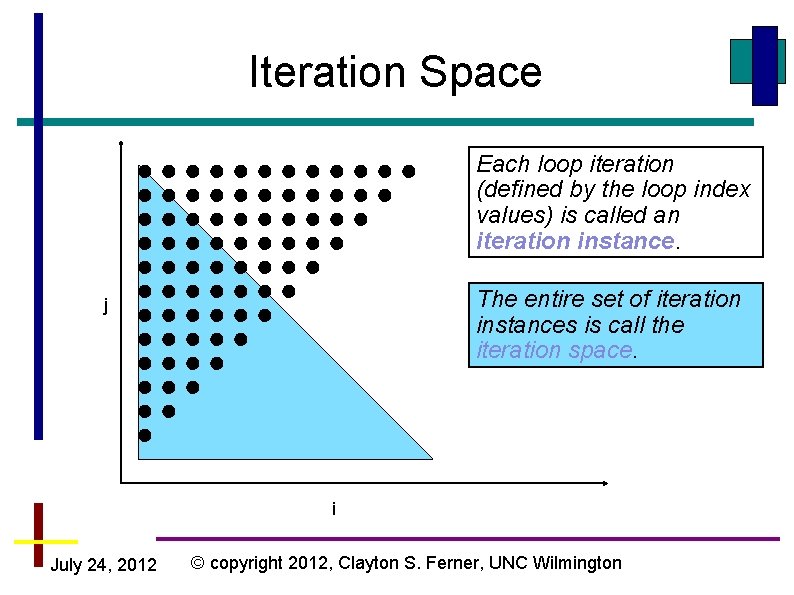

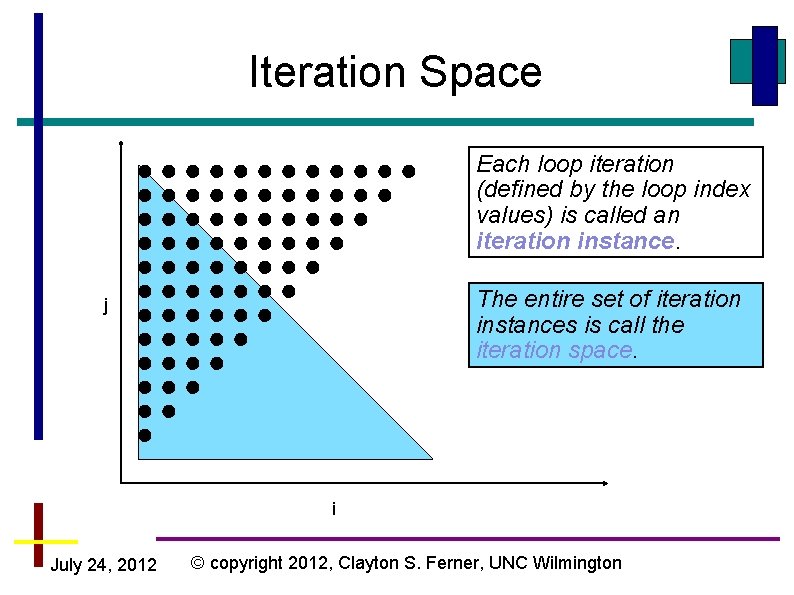

Iteration Space

Each loop iteration (defined by the loop index values) is called an iteration instance. The entire set of iteration instances is called the iteration space.

[Figure: the iteration space plotted on i and j axes.]

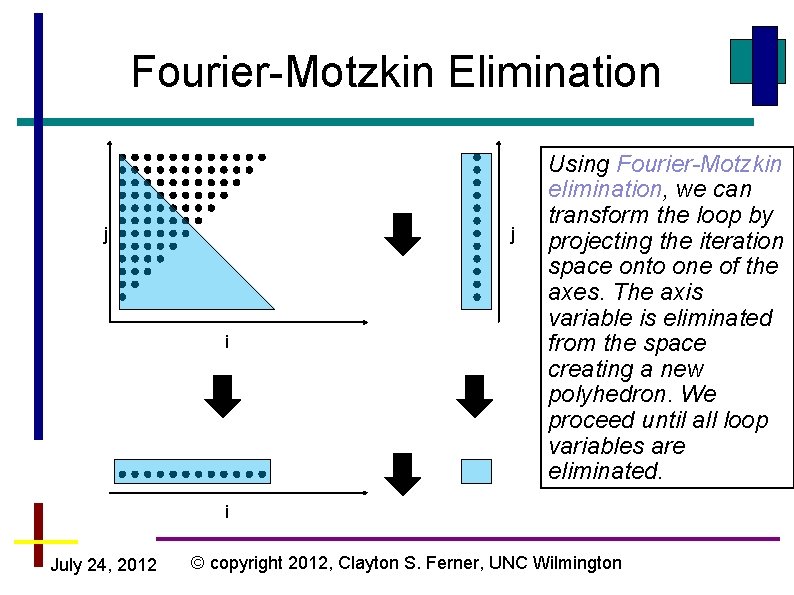

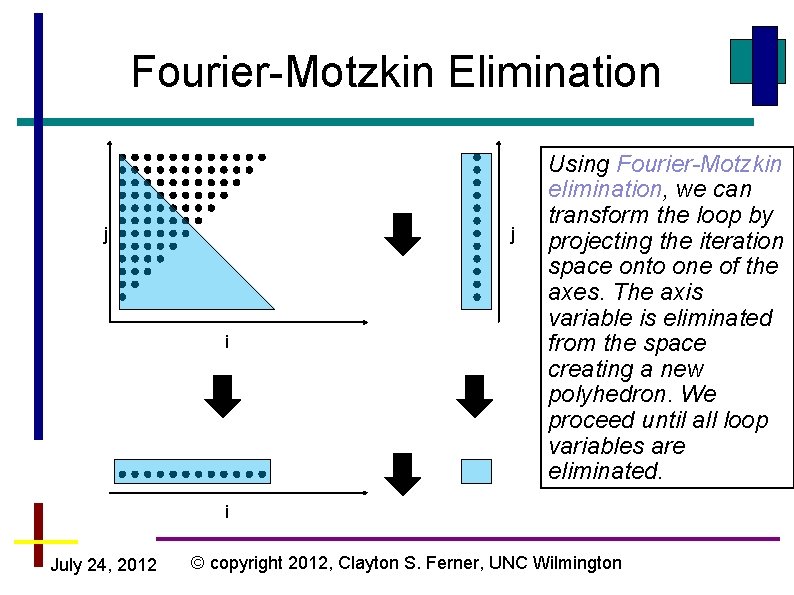

Fourier-Motzkin Elimination

[Figure: the iteration space on i and j axes, before and after projection.]

Using Fourier-Motzkin elimination, we can transform the loop by projecting the iteration space onto one of the axes. The axis variable is eliminated from the space, creating a new polyhedron. We proceed until all loop variables are eliminated.
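As a small worked illustration using the bounds of the example loop nest (0 <= i <= N, i + 1 <= j <= N), eliminating a variable amounts to pairing each of its lower bounds with each of its upper bounds and keeping the inequalities that result:

```latex
\begin{align*}
\text{Eliminate } j:&\quad i + 1 \le j \le N
  \;\Rightarrow\; i + 1 \le N
  \;\Rightarrow\; 0 \le i \le N - 1 \\
\text{Eliminate } i:&\quad 0 \le i \le \min(N,\; j - 1)
  \;\Rightarrow\; 0 \le j - 1
  \;\Rightarrow\; 1 \le j \le N
\end{align*}
```

The first line gives the range of i when i is the outer loop; the second gives the range of j when j is the outer loop.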

Affine Expressions

Loops ordered i then j:

    for (i = 0; i <= N-1; i++) {
        for (j = i + 1; j <= N; j++) {
            ...
        }
    }

Loops ordered j then i:

    for (j = 1; j <= N; j++) {
        for (i = 0; i <= -1+j; i++) {
            ...
        }
    }

loop2.c and loop3.c

These two loops iterate over the same iteration space.
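One way to convince yourself that the two orderings cover the same points is to mark the visited instances for each and compare. This is only a checking sketch; `MAX_N` and the function names are assumptions introduced here.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

#define MAX_N 64

/* Mark the instances visited with i as the outer loop. */
static void visit_i_then_j(int N, bool seen[MAX_N + 1][MAX_N + 1])
{
    for (int i = 0; i <= N - 1; i++)
        for (int j = i + 1; j <= N; j++)
            seen[i][j] = true;
}

/* Mark the instances visited with j as the outer loop. */
static void visit_j_then_i(int N, bool seen[MAX_N + 1][MAX_N + 1])
{
    for (int j = 1; j <= N; j++)
        for (int i = 0; i <= j - 1; i++)
            seen[i][j] = true;
}

/* True when both loop orders mark exactly the same set of (i, j) points. */
bool same_iteration_space(int N)
{
    assert(N >= 0 && N <= MAX_N);
    static bool a[MAX_N + 1][MAX_N + 1];
    static bool b[MAX_N + 1][MAX_N + 1];
    memset(a, 0, sizeof a);
    memset(b, 0, sizeof b);
    visit_i_then_j(N, a);
    visit_j_then_i(N, b);
    return memcmp(a, b, sizeof a) == 0;
}
```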

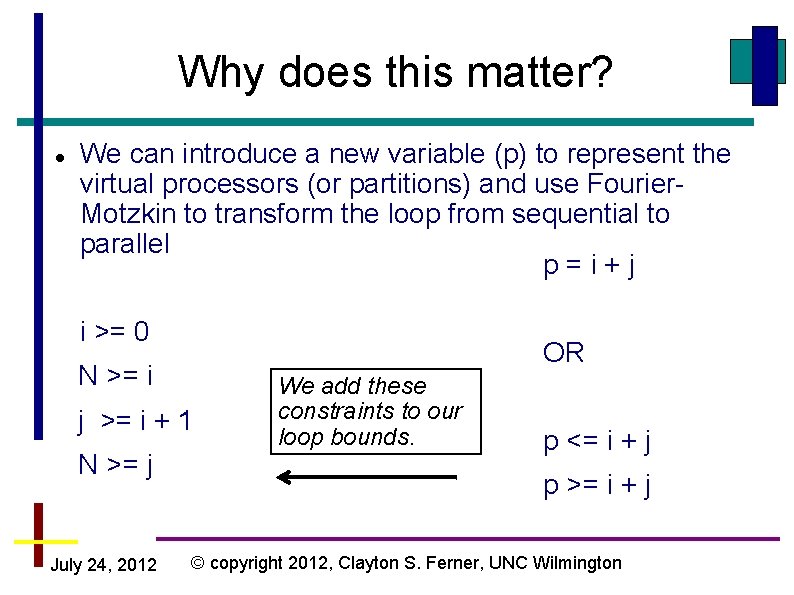

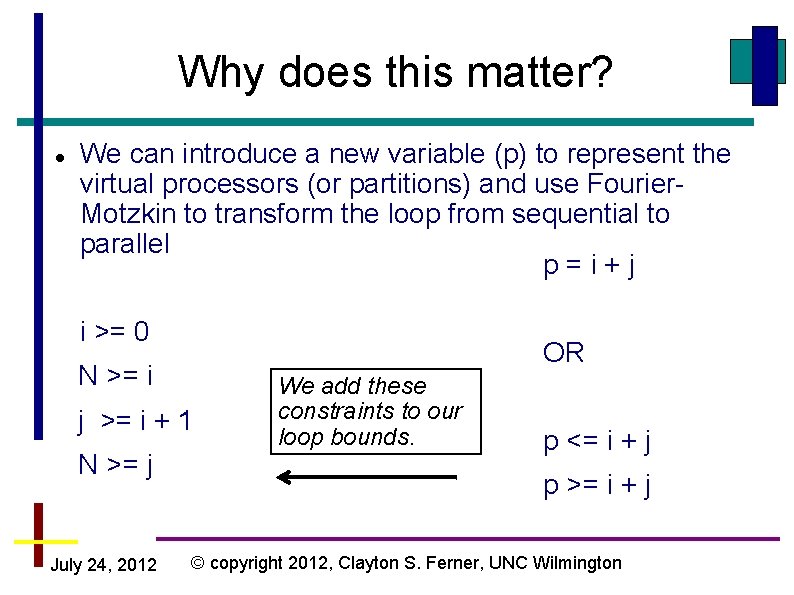

Why does this matter?

We can introduce a new variable (p) to represent the virtual processors (or partitions) and use Fourier-Motzkin to transform the loop from sequential to parallel.

Partitioning: p = i + j, or equivalently:

- p <= i + j
- p >= i + j

We add these constraints to our loop bounds:

- i >= 0
- N >= i
- j >= i + 1
- N >= j

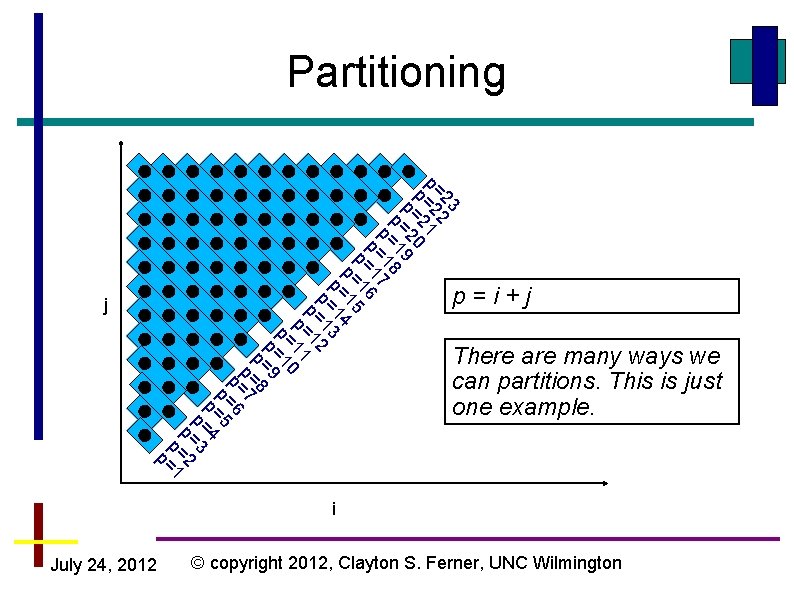

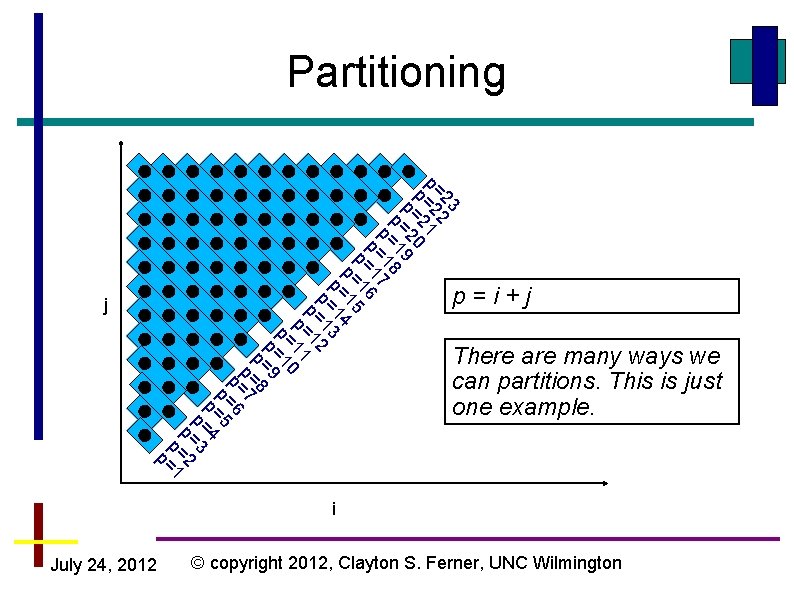

Partitioning

When we use Fourier-Motzkin elimination to rebuild the loop we get:

    for (p = 1; p <= 2*N - 1; p++) {
        for (i = max(p-N, 0); i <= (p-1)/2; i++) {
            j = p-i;
        }
    }

/home/cferner/working/work1/examples/loop4.c
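The rebuilt loop can be checked against the original nest (i from 0 to N, j from i + 1 to N) by counting how often each instance is visited. The sketch below is a sanity check only; `max_int`, `MAX_N`, and `partitioned_loop_matches` are names assumed for illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

#define MAX_N 64

static int max_int(int a, int b) { return a > b ? a : b; }

/* Walk the iteration space partition by partition (p = i + j) and check that
   every instance (i, j) with j >= i + 1 is visited exactly once, and that
   no other point is visited at all. */
bool partitioned_loop_matches(int N)
{
    assert(N >= 0 && N <= MAX_N);
    static int visits[MAX_N + 1][MAX_N + 1];
    memset(visits, 0, sizeof visits);

    for (int p = 1; p <= 2 * N - 1; p++)
        for (int i = max_int(p - N, 0); i <= (p - 1) / 2; i++) {
            int j = p - i;
            visits[i][j]++;
        }

    for (int i = 0; i <= N; i++)
        for (int j = 0; j <= N; j++) {
            int expected = (j >= i + 1) ? 1 : 0;
            if (visits[i][j] != expected)
                return false;
        }
    return true;
}
```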

Partitioning

[Figure: the iteration space plotted on i and j axes, with diagonal lines marking the partitions p = i + j for p = 1 through p = 23.]

There are many ways we can partition. This is just one example.

Partition ~ Virtual Processors

p is the virtual processor (or partition). But let's pretend that p is the physical processor. We can replace the for loop on p with an if statement, because p is the processor number and is pre-determined:

    if (p >= 1 && p <= 2*N - 1) {    /* replaces: for(p = 1; p <= 2*N - 1; p++) { */
        for (i = max(p-N, 0); i <= (p-1)/2; i++) {
            j = p-i;
        }
    }

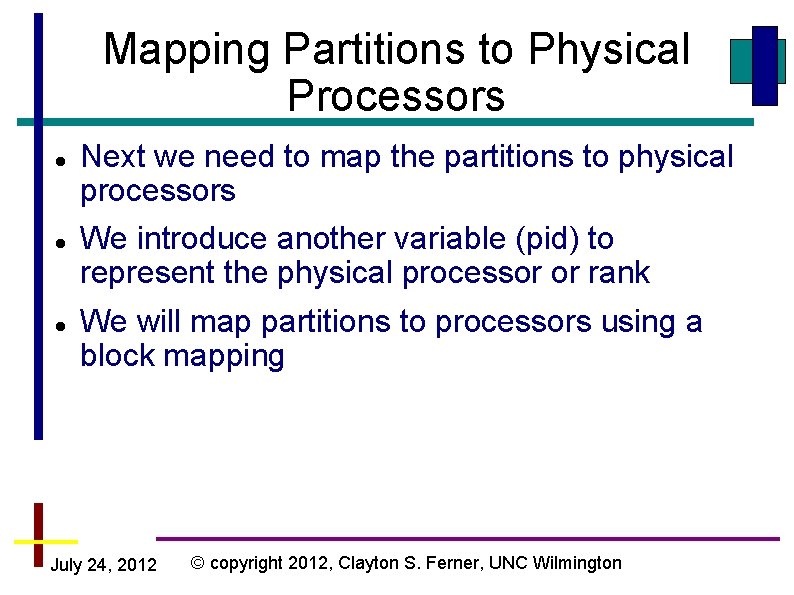

Mapping Partitions to Physical Processors

- Next we need to map the partitions to physical processors.
- We introduce another variable (pid) to represent the physical processor or rank.
- We will map partitions to processors using a block mapping.

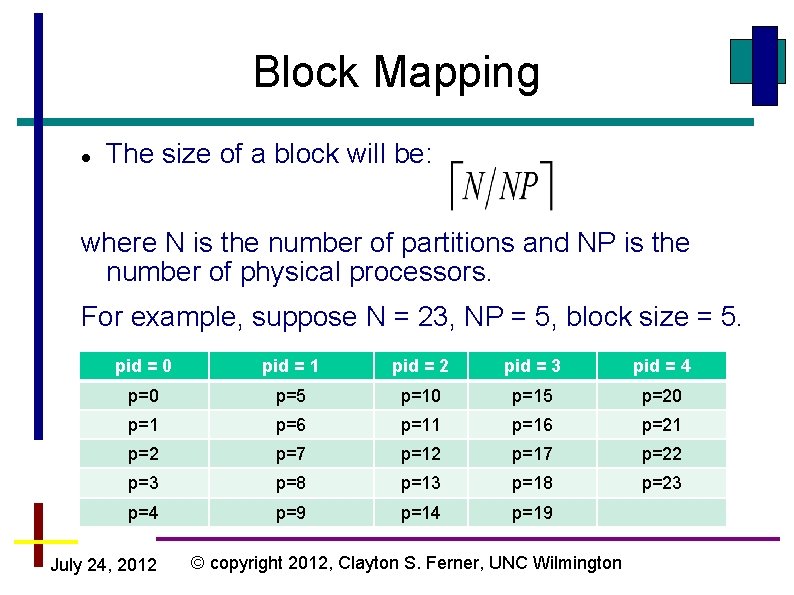

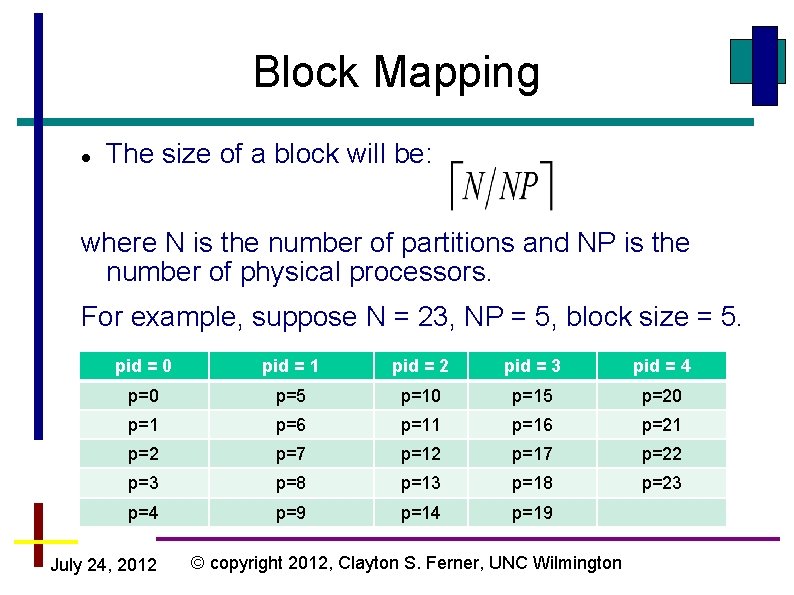

Block Mapping

The size of a block will be:

$blksz = \lceil N / NP \rceil$

where N is the number of partitions and NP is the number of physical processors. For example, suppose N = 23, NP = 5, block size = 5.

| pid = 0 | pid = 1 | pid = 2 | pid = 3 | pid = 4 |
|---|---|---|---|---|
| p=0 | p=5 | p=10 | p=15 | p=20 |
| p=1 | p=6 | p=11 | p=16 | p=21 |
| p=2 | p=7 | p=12 | p=17 | p=22 |
| p=3 | p=8 | p=13 | p=18 | p=23 |
| p=4 | p=9 | p=14 | p=19 | |

Block Mapping

Notice that we need to make sure processors don't go beyond the number of iterations. The load may not be perfectly balanced.

To represent the mapping, we add the constraint:

blksz*pid <= p < blksz*(pid+1)

or equivalently:

- p >= blksz*pid
- p <= blksz*pid + blksz - 1
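A minimal sketch of the block mapping arithmetic, assuming integer inputs; the helper names `block_size`, `block_first`, and `block_last` are hypothetical and not part of Paraguin. The clamp in `block_last` is what keeps the last processor from running past the final partition.

```c
#include <assert.h>

/* Block size: ceiling of (partitions / processors) in integer arithmetic. */
int block_size(int num_partitions, int num_procs)
{
    assert(num_partitions >= 0 && num_procs > 0);
    return (num_partitions + num_procs - 1) / num_procs;
}

/* First partition owned by processor pid: p >= blksz*pid. */
int block_first(int blksz, int pid)
{
    return blksz * pid;
}

/* Last partition owned by pid: p <= blksz*pid + blksz - 1,
   clamped so it never exceeds last_partition. */
int block_last(int blksz, int pid, int last_partition)
{
    int last = blksz * pid + blksz - 1;
    return last < last_partition ? last : last_partition;
}
```

With the numbers from the example (N = 23, NP = 5), `block_size(23, 5)` gives 5, and processor 4 ends at partition 23 instead of 24, so its block is one partition shorter than the others.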

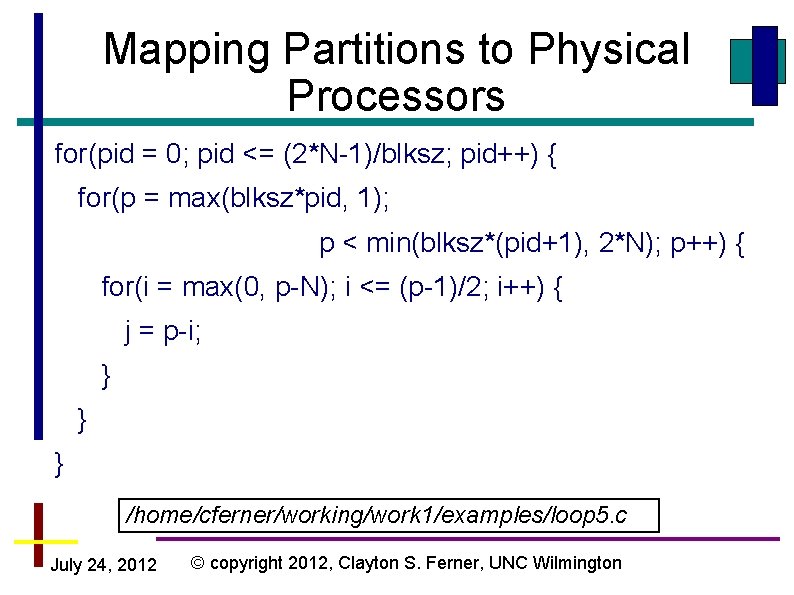

Mapping Partitions to Physical Processors

All of the constraints put together are:

- i >= 0
- N >= i
- j >= i + 1
- N >= j
- p <= i + j
- p >= i + j
- p >= blksz*pid
- p <= blksz*pid + blksz - 1

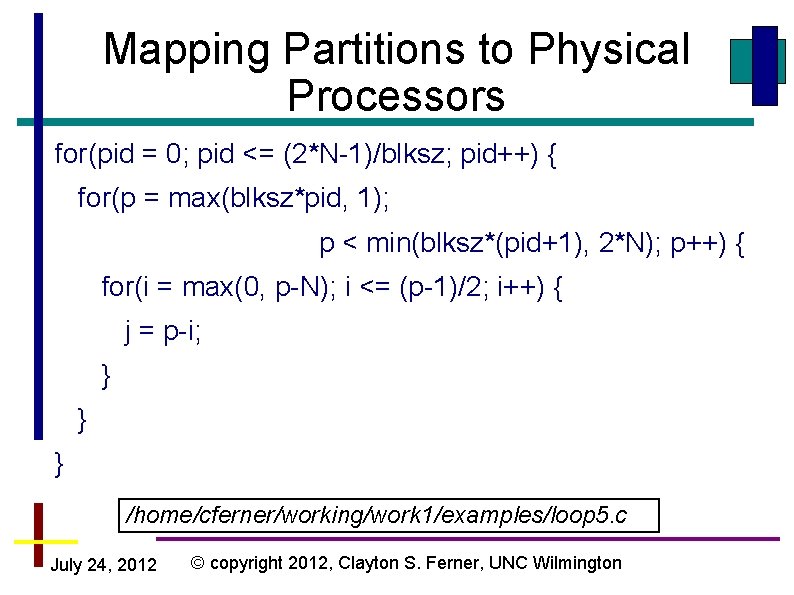

Mapping Partitions to Physical Processors

    for (pid = 0; pid <= (2*N-1)/blksz; pid++) {
        for (p = max(blksz*pid, 1); p < min(blksz*(pid+1), 2*N); p++) {
            for (i = max(0, p-N); i <= (p-1)/2; i++) {
                j = p-i;
            }
        }
    }

/home/cferner/working/work1/examples/loop5.c
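To see that the block mapping hands every iteration instance to exactly one processor, the three-level nest can be run sequentially with the pid loop standing in for the processors. This is a checking sketch under that assumption; the helper names and `MAX_N` are invented for it.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

#define MAX_N 64

static int max_int(int a, int b) { return a > b ? a : b; }
static int min_int(int a, int b) { return a < b ? a : b; }

/* Run the pid/p/i nest for a given N and block size, and check that each
   instance (i, j) with j >= i + 1 is visited exactly once in total. */
bool mapped_loop_matches(int N, int blksz)
{
    assert(N >= 1 && N <= MAX_N && blksz >= 1);
    static int visits[MAX_N + 1][MAX_N + 1];
    memset(visits, 0, sizeof visits);

    for (int pid = 0; pid <= (2 * N - 1) / blksz; pid++)
        for (int p = max_int(blksz * pid, 1);
             p < min_int(blksz * (pid + 1), 2 * N); p++)
            for (int i = max_int(0, p - N); i <= (p - 1) / 2; i++) {
                int j = p - i;
                visits[i][j]++;
            }

    for (int i = 0; i <= N; i++)
        for (int j = 0; j <= N; j++)
            if (visits[i][j] != ((j >= i + 1) ? 1 : 0))
                return false;
    return true;
}
```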

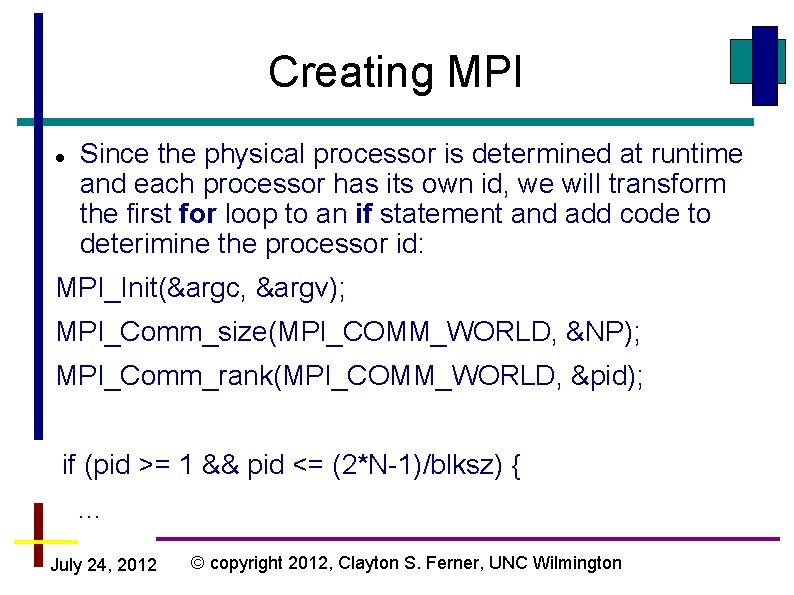

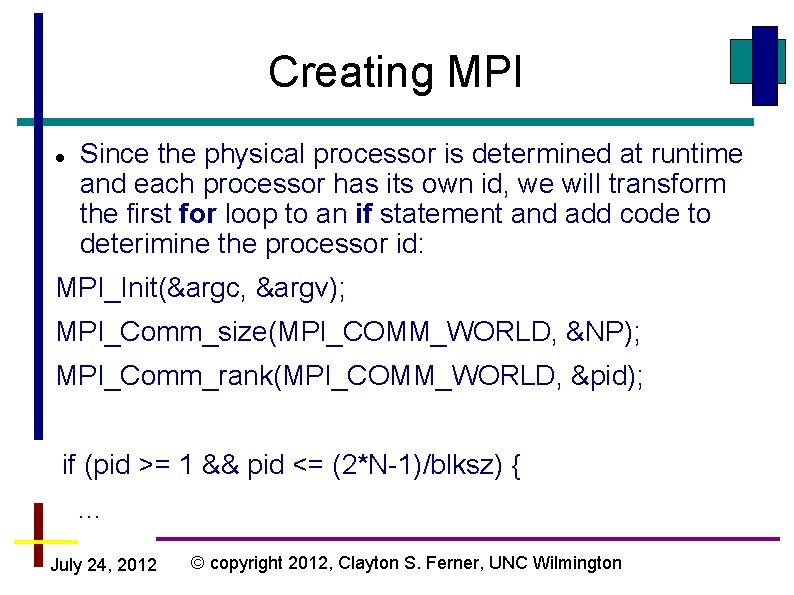

Creating MPI

Since the physical processor is determined at runtime and each processor has its own id, we will transform the first for loop to an if statement and add code to determine the processor id:

    MPI_Init(&argc, &argv);
    MPI_Comm_size(MPI_COMM_WORLD, &NP);
    MPI_Comm_rank(MPI_COMM_WORLD, &pid);

    if (pid >= 0 && pid <= (2*N-1)/blksz) {    /* replaces: for(pid = 0; pid <= (2*N-1)/blksz; pid++) { */
        ...

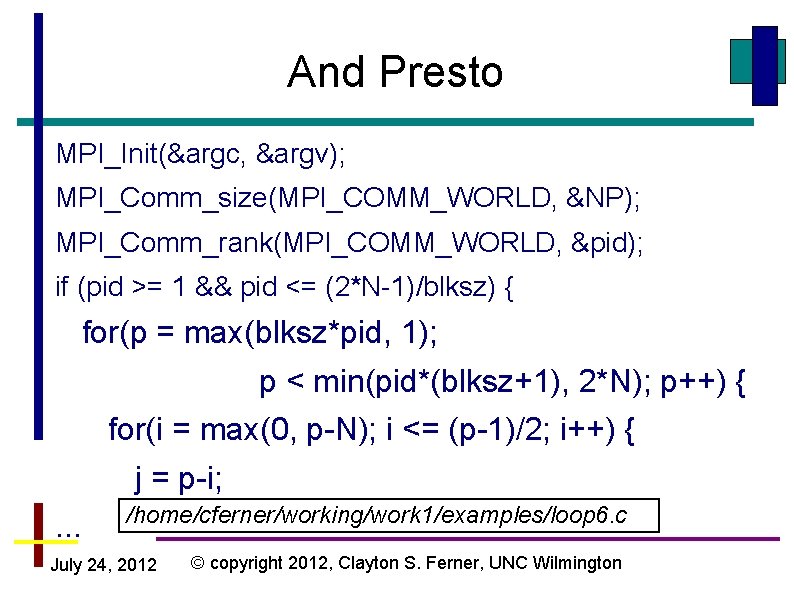

And Presto

    MPI_Init(&argc, &argv);
    MPI_Comm_size(MPI_COMM_WORLD, &NP);
    MPI_Comm_rank(MPI_COMM_WORLD, &pid);

    if (pid >= 0 && pid <= (2*N-1)/blksz) {
        for (p = max(blksz*pid, 1); p < min(blksz*(pid+1), 2*N); p++) {
            for (i = max(0, p-N); i <= (p-1)/2; i++) {
                j = p-i;
                ...

/home/cferner/working/work1/examples/loop6.c

Using the Compiler

What does the compiler need from us?

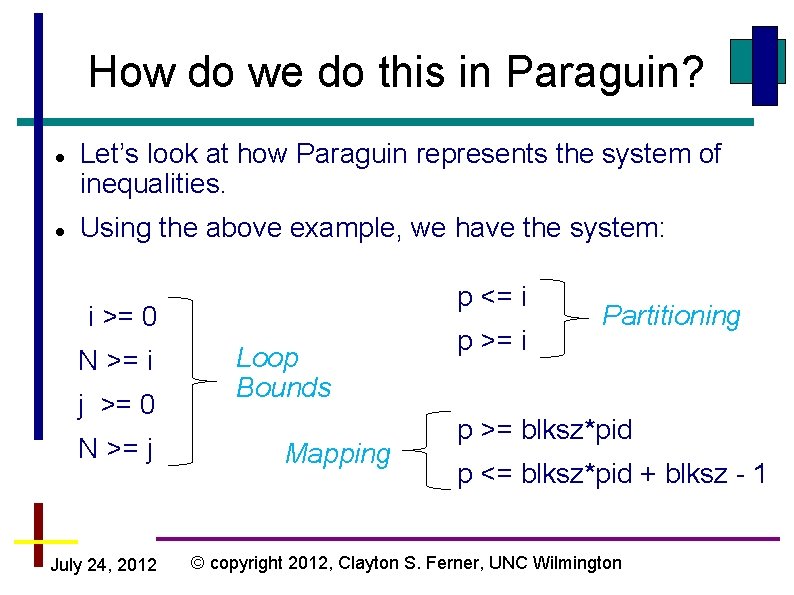

How do we do this in Paraguin?

- Paraguin will create the system of inequalities to represent the bounds of a loop nest.
- We need to tell Paraguin that we want a for loop to be parallelized and what the partitioning should be.
- Paraguin will assume block mapping of partitions to physical processors.

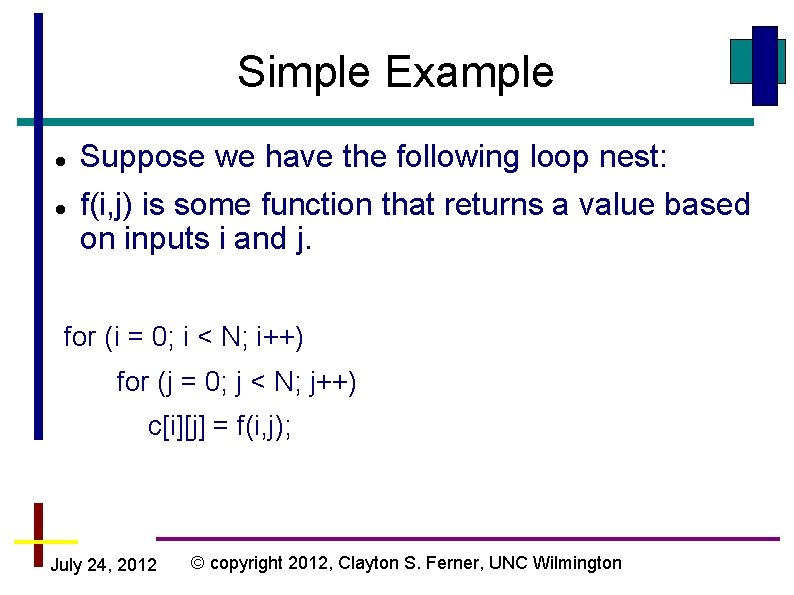

Simple Example

Suppose we have the following loop nest:

    for (i = 0; i < N; i++)
        for (j = 0; j < N; j++)
            c[i][j] = f(i, j);

f(i, j) is some function that returns a value based on inputs i and j.

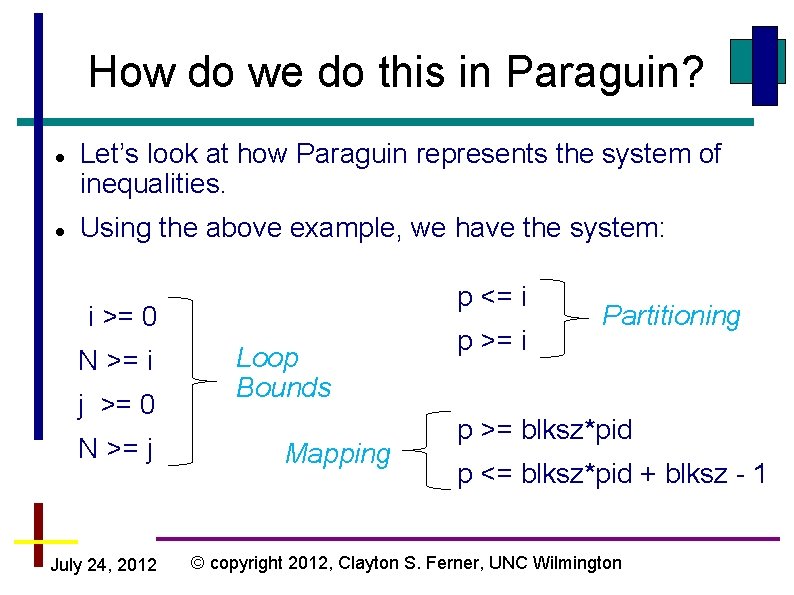

How do we do this in Paraguin?

Let's look at how Paraguin represents the system of inequalities. Using the above example, we have the system:

- Loop bounds: i >= 0, N >= i, j >= 0, N >= j
- Partitioning: p <= i, p >= i
- Mapping: p >= blksz*pid, p <= blksz*pid + blksz - 1

How do we do this in Paraguin?

First we rewrite the constraints such that 0 is on the right-hand side of >=:

- i >= 0
- N - i >= 0
- j >= 0
- N - j >= 0
- -p + i >= 0
- p - i >= 0
- p - blksz*pid >= 0
- -p + blksz*pid + blksz - 1 >= 0

How do we do this in Paraguin?

Next we write this in matrix/vector form:

$A\vec{x} \ge \vec{0}$

where $A$ is a matrix of coefficients, and $\vec{x}$ and $\vec{0}$ are vectors.


How do we do this in Paraguin?

- Paraguin does not need to store the right-hand-side vector because it is always zero. It simply needs the matrix of coefficients, and it derives the vector of variables based on the loop variables.
- In order to get Paraguin to parallelize this loop, we need to use a "forall" pragma and give the partitioning.
- The loop bounds are determined from the loops, and the mapping is assumed to be block mapping, so we only need to give the partitioning.

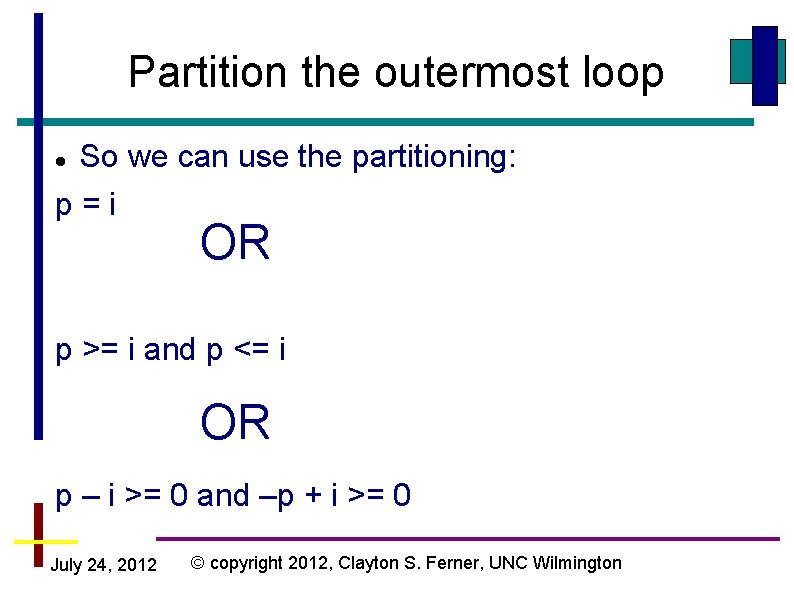

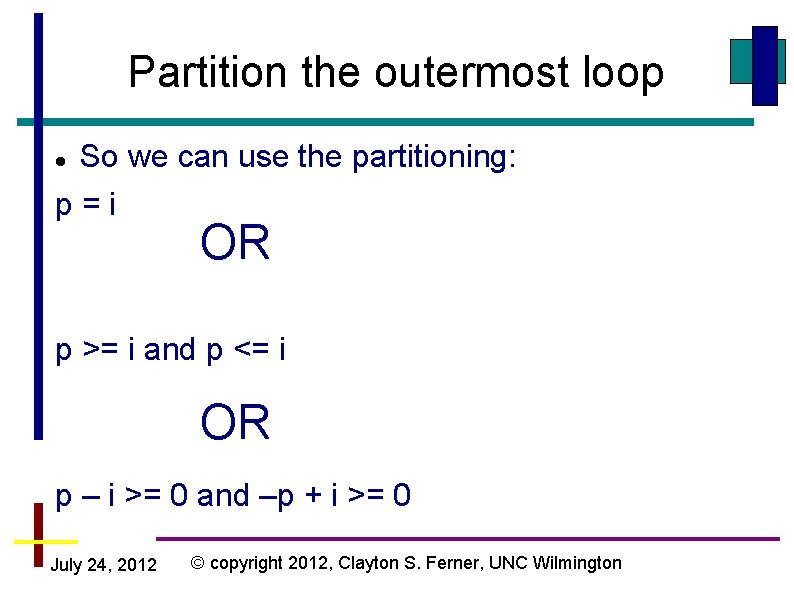

Partition the outermost loop

So we can use the partitioning:

p = i

or equivalently: p >= i and p <= i

or equivalently: p - i >= 0 and -p + i >= 0

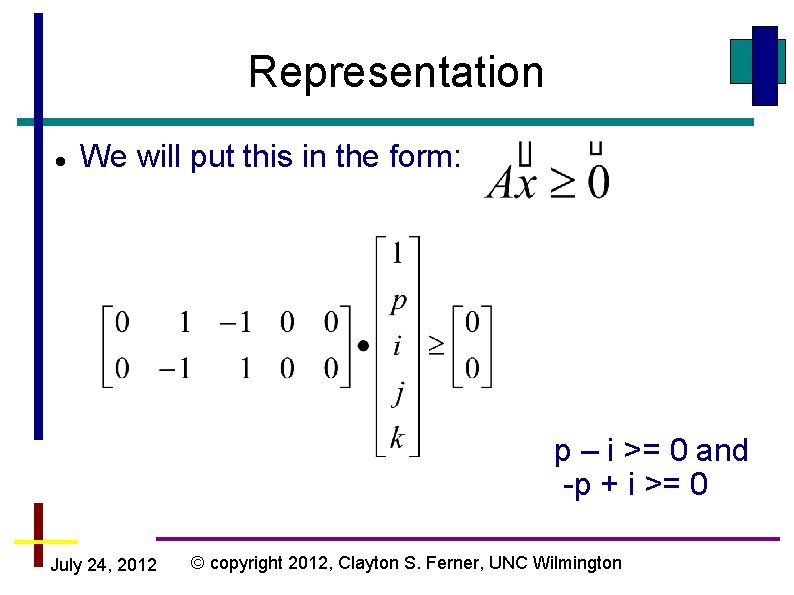

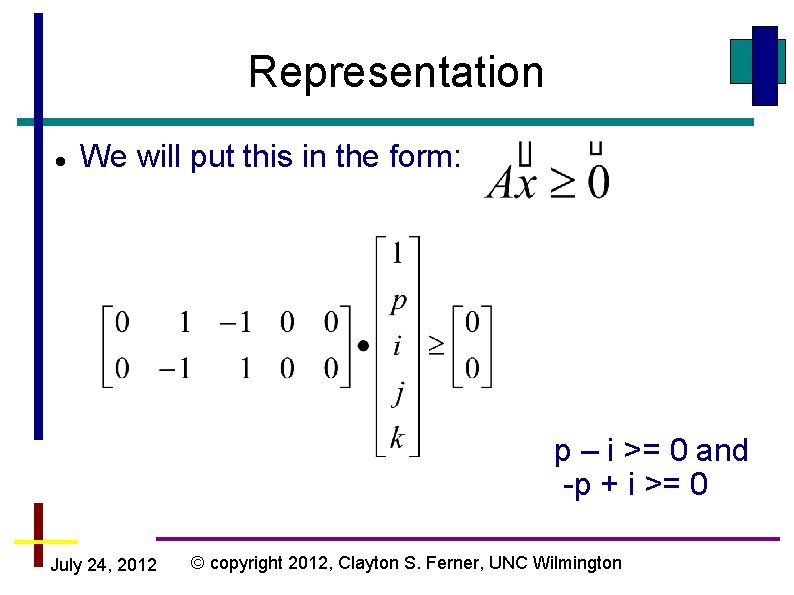

Representation

We will put this in the form: p - i >= 0 and -p + i >= 0

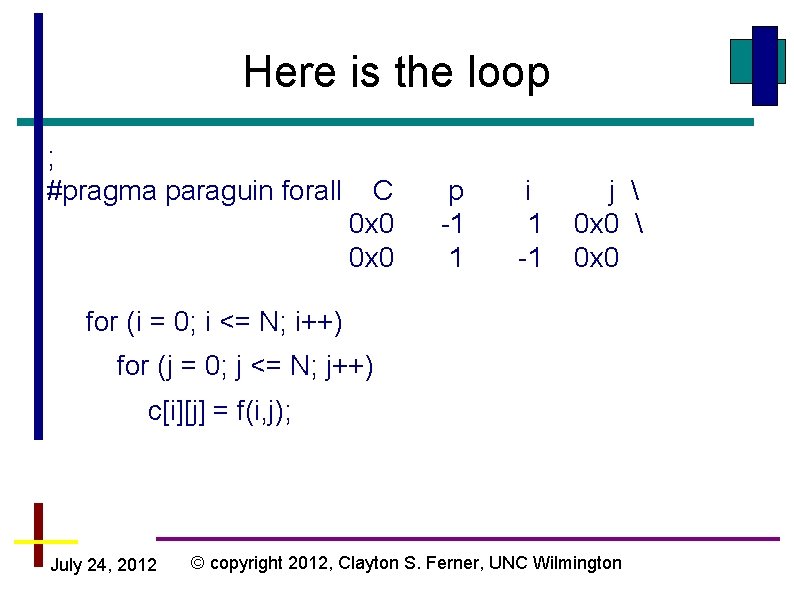

Forall Syntax

The syntax for the forall pragma is as follows:

    #pragma paraguin forall <X> <A>

where <X> is the vector of variables and <A> is the matrix of coefficients.

Forall Example

For example:

    #pragma paraguin forall C p i j k 0x0 -1 1 0x0 0x0 0x0 1 -1 0x0

- We must use a symbol (C) for the constant column instead of 1, because Paraguin assumes a number is the beginning of the matrix.
- 0x0 (hex) must be used instead of 0, because the SUIF compiler will process a 0 as a string.

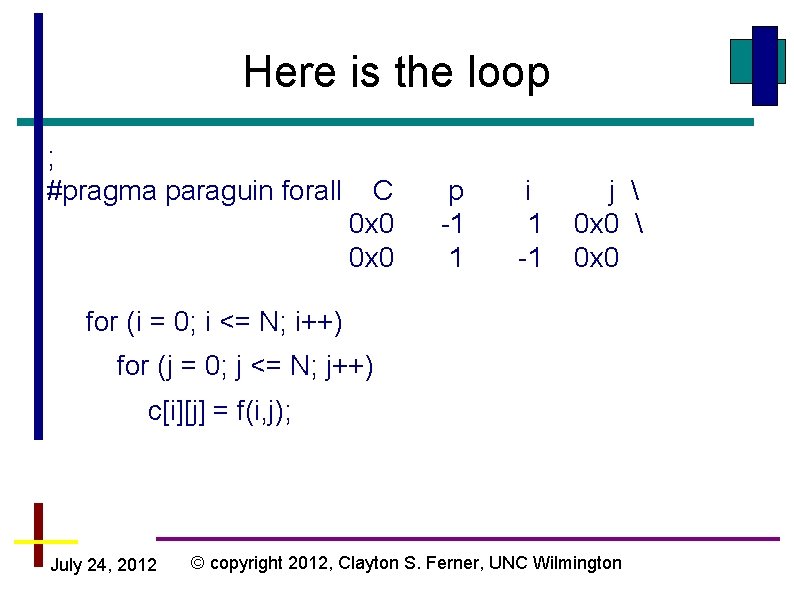

Here is the loop

    ;
    #pragma paraguin forall C p i j 0x0 -1 1 0x0 0x0 1 -1 0x0
    for (i = 0; i <= N; i++)
        for (j = 0; j <= N; j++)
            c[i][j] = f(i, j);

The matrix is read row by row against the variables C, p, i, j: the first row (0x0 -1 1 0x0) is -p + i >= 0 and the second row (0x0 1 -1 0x0) is p - i >= 0.
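To make the reading of the coefficient matrix concrete, here is a sketch that evaluates each row against the variable vector (C, p, i, j), with C standing for the constant 1. It only illustrates the inequality form; it is not how Paraguin stores or processes the pragma, and the names `rows_satisfied` and `satisfies_partitioning` are assumptions.

```c
#include <assert.h>
#include <stdbool.h>

#define NUM_VARS 4   /* columns: C (constant), p, i, j */

/* True when every row r satisfies rows[r] . x >= 0. */
bool rows_satisfied(const int rows[][NUM_VARS], int num_rows,
                    const int x[NUM_VARS])
{
    assert(num_rows >= 0);
    for (int r = 0; r < num_rows; r++) {
        int sum = 0;
        for (int c = 0; c < NUM_VARS; c++)
            sum += rows[r][c] * x[c];
        if (sum < 0)
            return false;
    }
    return true;
}

/* The two rows of the forall matrix for the partitioning p = i. */
bool satisfies_partitioning(int p, int i, int j)
{
    static const int rows[2][NUM_VARS] = {
        { 0, -1,  1, 0 },   /* -p + i >= 0 */
        { 0,  1, -1, 0 },   /*  p - i >= 0 */
    };
    const int x[NUM_VARS] = { 1, p, i, j };
    return rows_satisfied(rows, 2, x);
}
```

The two rows together hold only when p equals i, regardless of j, which is the partitioning of the outermost loop.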

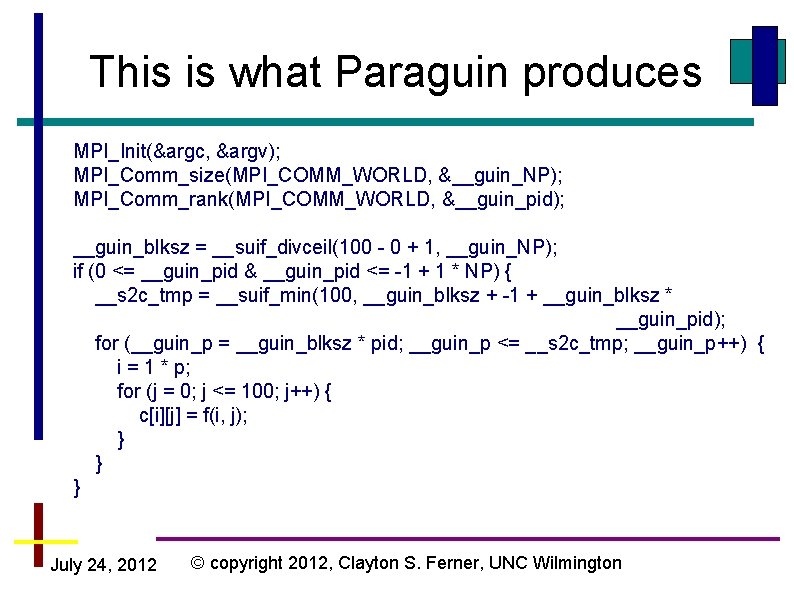

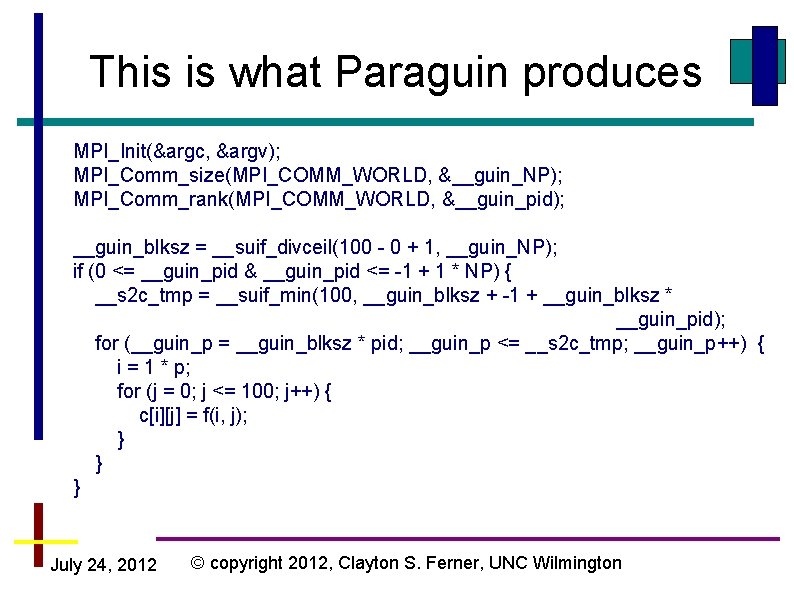

This is what Paraguin produces

    MPI_Init(&argc, &argv);
    MPI_Comm_size(MPI_COMM_WORLD, &__guin_NP);
    MPI_Comm_rank(MPI_COMM_WORLD, &__guin_pid);
    __guin_blksz = __suif_divceil(100 - 0 + 1, __guin_NP);
    if (0 <= __guin_pid & __guin_pid <= -1 + 1 * __guin_NP) {
        __s2c_tmp = __suif_min(100, __guin_blksz + -1 + __guin_blksz * __guin_pid);
        for (__guin_p = __guin_blksz * __guin_pid; __guin_p <= __s2c_tmp; __guin_p++) {
            i = 1 * __guin_p;
            for (j = 0; j <= 100; j++) {
                c[i][j] = f(i, j);
            }
        }
    }

Paraguin Pre-defined Names

In order to make sure the variables Paraguin creates are unique, they are named as:

- __guin_pid
- __guin_NP
- __guin_blksz

Also notice that 100 has been substituted for N because N is a literal.

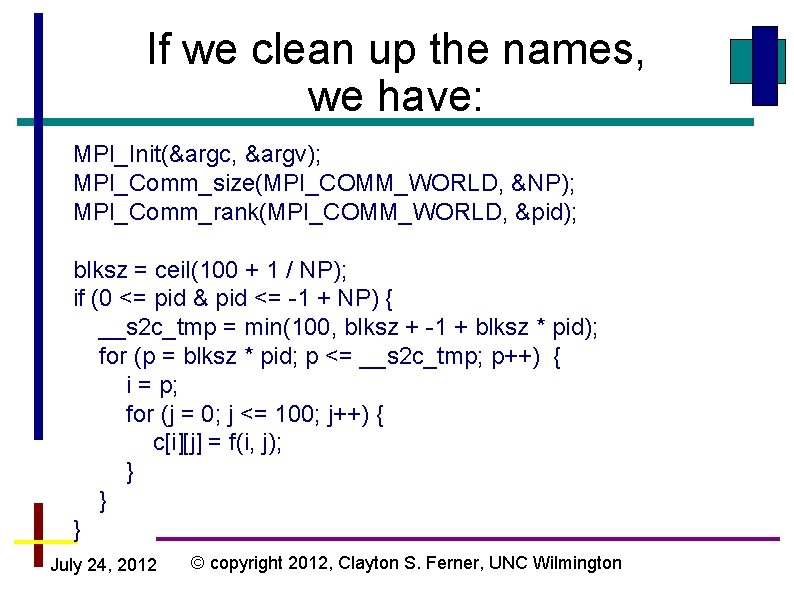

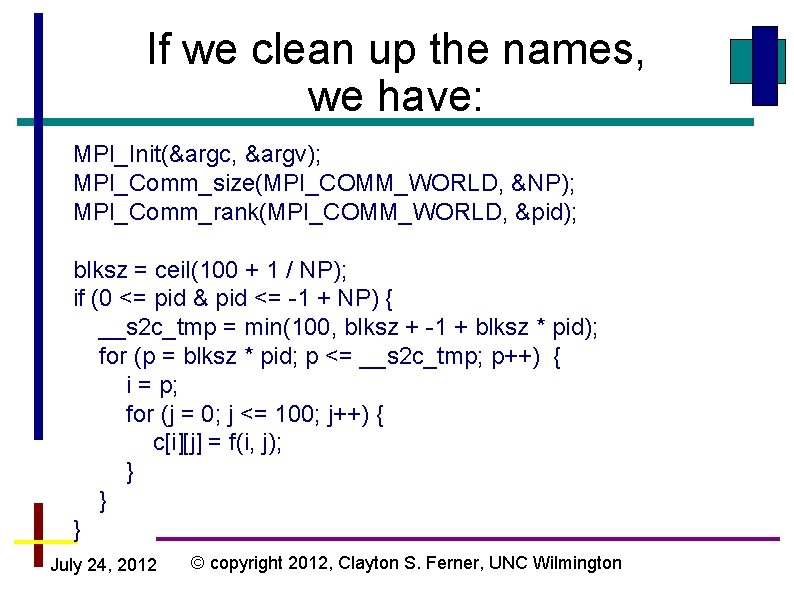

If we clean up the names, we have:

    MPI_Init(&argc, &argv);
    MPI_Comm_size(MPI_COMM_WORLD, &NP);
    MPI_Comm_rank(MPI_COMM_WORLD, &pid);
    blksz = ceil((100 + 1) / (double) NP);
    if (0 <= pid & pid <= -1 + NP) {
        __s2c_tmp = min(100, blksz + -1 + blksz * pid);
        for (p = blksz * pid; p <= __s2c_tmp; p++) {
            i = p;
            for (j = 0; j <= 100; j++) {
                c[i][j] = f(i, j);
            }
        }
    }
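The per-rank logic can be tried out without MPI by calling it once per rank in a plain loop. The sketch below does that under stated assumptions: `run_rank` and `all_rows_covered_once` are hypothetical names, `f` is a stand-in for the slides' unspecified function, and the ranks run one after another rather than in parallel.

```c
#include <assert.h>

#define N 100

/* Stand-in for the slides' f(i, j); any pure function would do here. */
static int f(int i, int j) { return i * (N + 1) + j; }

static int c[N + 1][N + 1];
static int row_owner[N + 1];   /* how many ranks computed each row */

/* What a single rank would execute: its block of rows i = p. */
void run_rank(int pid, int NP)
{
    assert(NP > 0 && pid >= 0);
    int blksz = (N + 1 + NP - 1) / NP;   /* integer ceil((N + 1) / NP) */
    if (0 <= pid && pid <= NP - 1) {
        int last = blksz * pid + blksz - 1;
        if (last > N)
            last = N;                    /* same role as min(100, ...) */
        for (int p = blksz * pid; p <= last; p++) {
            int i = p;
            row_owner[i]++;
            for (int j = 0; j <= N; j++)
                c[i][j] = f(i, j);
        }
    }
}

/* Run every rank in turn and report whether each row of c was
   computed by exactly one rank. Returns 1 on success, 0 otherwise. */
int all_rows_covered_once(int NP)
{
    for (int i = 0; i <= N; i++)
        row_owner[i] = 0;
    for (int pid = 0; pid < NP; pid++)
        run_rank(pid, NP);
    for (int i = 0; i <= N; i++)
        if (row_owner[i] != 1)
            return 0;
    return 1;
}
```

When NP exceeds the number of rows, the higher ranks get an empty block, which matches the earlier note that the load may not be perfectly balanced.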

Questions?

Yet another compiler compiler

Yet another compiler compiler Cross compiler in compiler design

Cross compiler in compiler design Paraguin

Paraguin Paraguin

Paraguin Paraguin

Paraguin Paraguin

Paraguin Paraguin

Paraguin Dentist copyright 2012

Dentist copyright 2012 Copyright 2012

Copyright 2012 Clean leader

Clean leader Copyright 2012

Copyright 2012 Introduction to interpreter

Introduction to interpreter Harris burdick another place another time

Harris burdick another place another time Harris burdick pictures uninvited guest

Harris burdick pictures uninvited guest July 1-4 1863

July 1-4 1863 Tender definition

Tender definition Astronomy picture of the day 11 july 2001

Astronomy picture of the day 11 july 2001 2001 july 15

2001 july 15 2003 july 17

2003 july 17 July 30 2009 nasa

July 30 2009 nasa Sources nso july frenchhowell neill technology...

Sources nso july frenchhowell neill technology... May 1775

May 1775 I am silver and exact i have no preconceptions

I am silver and exact i have no preconceptions The cuban melodrama

The cuban melodrama Poppies in july

Poppies in july Gdje se rodio nikola tesla

Gdje se rodio nikola tesla Ctdssmap payment schedule july 2021

Ctdssmap payment schedule july 2021 July 1969

July 1969 6th july 1988

6th july 1988 Monday 13th july

Monday 13th july On july 18 2001 a train carrying hazardous chemicals

On july 18 2001 a train carrying hazardous chemicals July 4 sermon

July 4 sermon June too soon july stand by

June too soon july stand by July 2 1937 amelia earhart

July 2 1937 amelia earhart June 22 to july 22

June 22 to july 22 July 12 1776

July 12 1776 The hot july sun beat relentlessly down

The hot july sun beat relentlessly down Catawba indian nation bingo

Catawba indian nation bingo January february march april may

January february march april may July 14 1789

July 14 1789 Malaga in july

Malaga in july Leaf yeast experiment

Leaf yeast experiment July 26 1953

July 26 1953 July 16 1776

July 16 1776 Dsl definition wikipedia

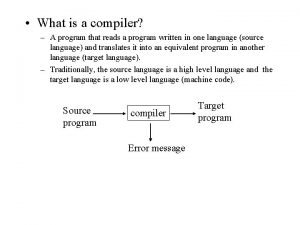

Dsl definition wikipedia What is compiler

What is compiler