Paraguin Compiler Version 2.1, August 30, 2013



© copyright 2013, Clayton S. Ferner, UNC Wilmington

Introduction

The Paraguin Compiler is a compiler that I am developing at UNCW (essentially by myself). It is based on the SUIF compiler infrastructure. Using pragmas (compiler directives), the user directs the compiler to produce an MPI program. The user manual can be accessed at: http://people.uncw.edu/cferner/Paraguin/userman.pdf

SUIF Compiler System

SUIF was created by the SUIF Compiler Group at Stanford (suif.stanford.edu). It is an open-source compiler intended to promote research in compiler technology. Paraguin is built using the SUIF compiler.

Compiler Directives

The Paraguin compiler is a source-to-source compiler. It transforms a sequential program into a parallel program suitable for execution on a distributed-memory system. The result is a parallel program with calls to MPI routines. Parallelization is not automatic; rather, it is directed via pragmas.

Compiler Directives

The advantage of using pragmas is that other compilers ignore them. You can provide information to Paraguin that other compilers, such as gcc, will ignore. You can also create a hybrid program that uses pragmas for different compilers.

Syntax: `#pragma paraguin <type> [<parameters>]`

Running a Parallel Program

When your parallel program is run, you specify how many processors you want on the command line (or in a job submission file). Processes (one per processor) are each given a unique rank in the range [0..NP-1], where NP is the number of processors. Process 0 is considered to be the master.

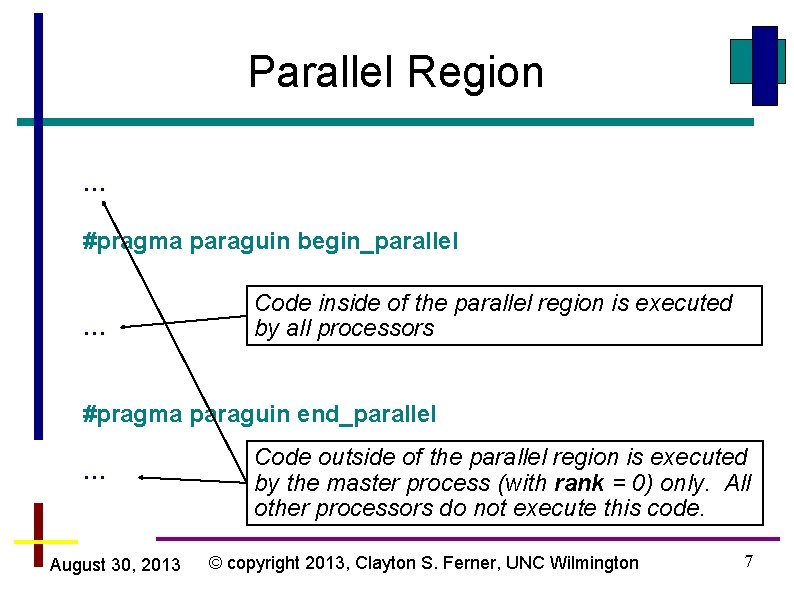

Parallel Region

Code between `#pragma paraguin begin_parallel` and `#pragma paraguin end_parallel` is executed by all processors. Code outside the parallel region is executed by the master process (rank 0) only; all other processors do not execute it.

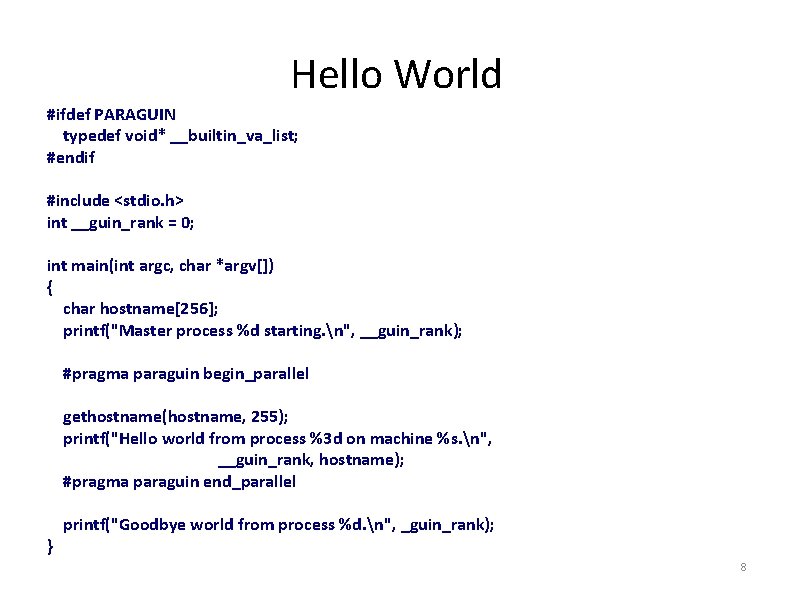

Hello World

#ifdef PARAGUIN
typedef void* __builtin_va_list;
#endif
#include <stdio.h>
#include <unistd.h>   /* for gethostname() */
int __guin_rank = 0;
int main(int argc, char *argv[])
{
    char hostname[256];
    printf("Master process %d starting.\n", __guin_rank);
#pragma paraguin begin_parallel
    gethostname(hostname, 255);
    printf("Hello world from process %3d on machine %s.\n", __guin_rank, hostname);
#pragma paraguin end_parallel
    printf("Goodbye world from process %d.\n", __guin_rank);
    return 0;
}

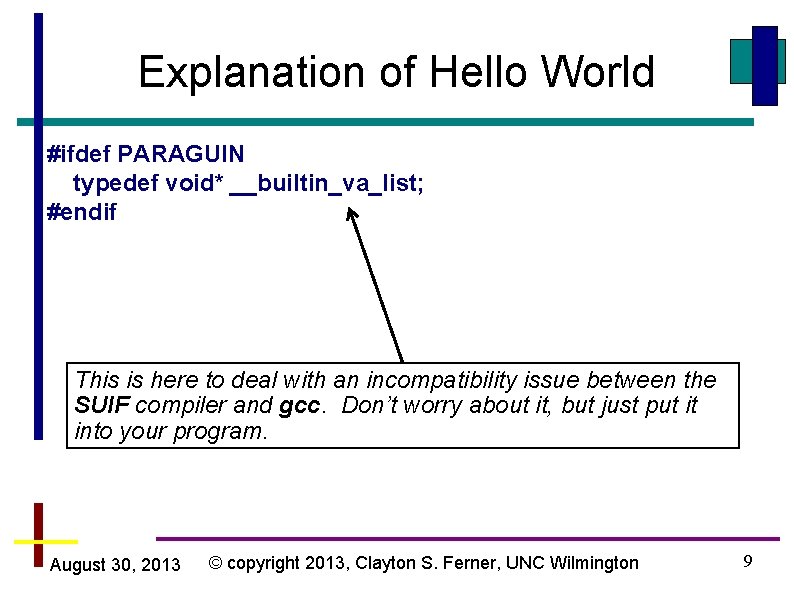

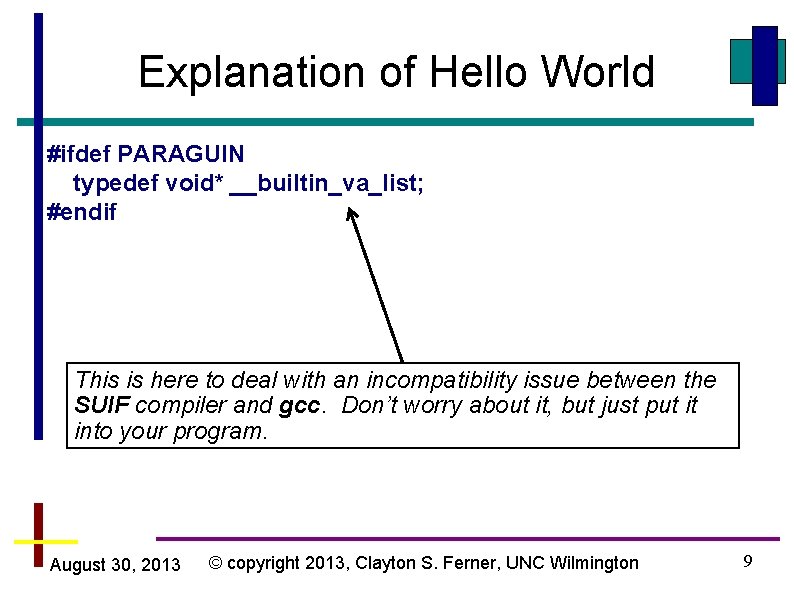

Explanation of Hello World

#ifdef PARAGUIN
typedef void* __builtin_va_list;
#endif

This is here to deal with an incompatibility between the SUIF compiler and gcc. Don't worry about it; just put it in your program.

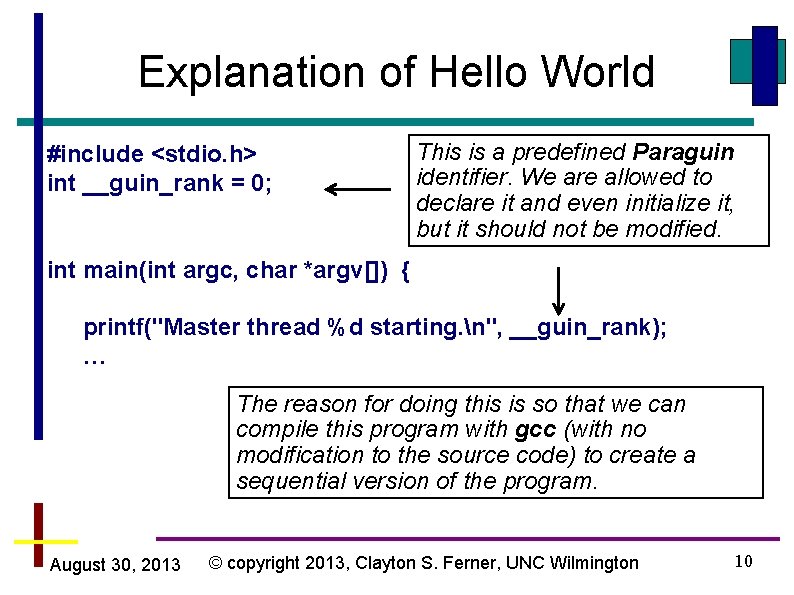

Explanation of Hello World

#include <stdio.h>
int __guin_rank = 0;

`__guin_rank` is a predefined Paraguin identifier that holds the rank of the process. We are allowed to declare it and even initialize it, but it should not be modified.

int main(int argc, char *argv[])
{
    printf("Master process %d starting.\n", __guin_rank);
    …

The reason for declaring it is so that we can compile this program with gcc (with no modification to the source code) to create a sequential version of the program.

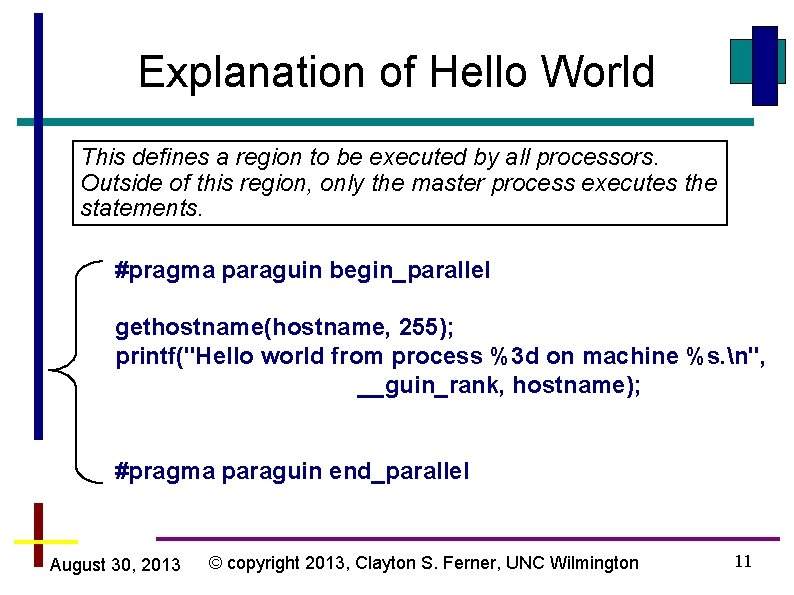

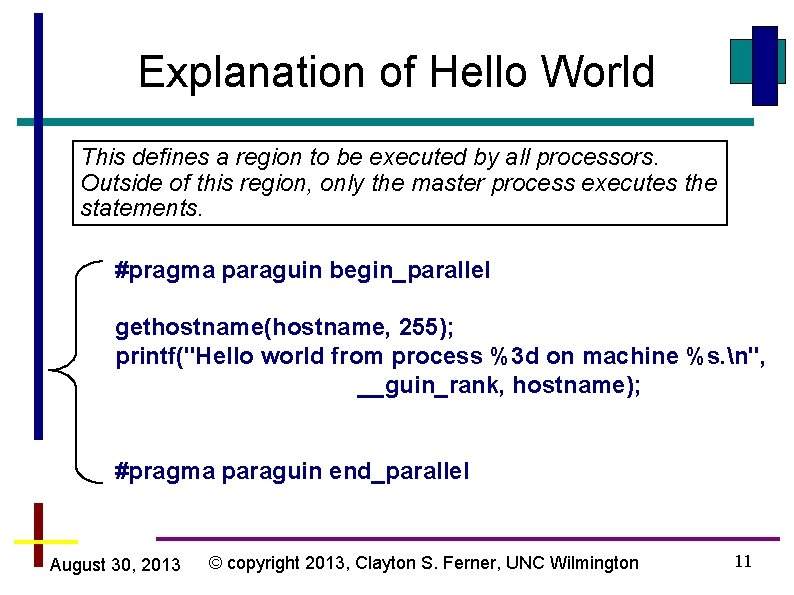

Explanation of Hello World

The following defines a region to be executed by all processors. Outside this region, only the master process executes the statements.

#pragma paraguin begin_parallel
gethostname(hostname, 255);
printf("Hello world from process %3d on machine %s.\n", __guin_rank, hostname);
#pragma paraguin end_parallel

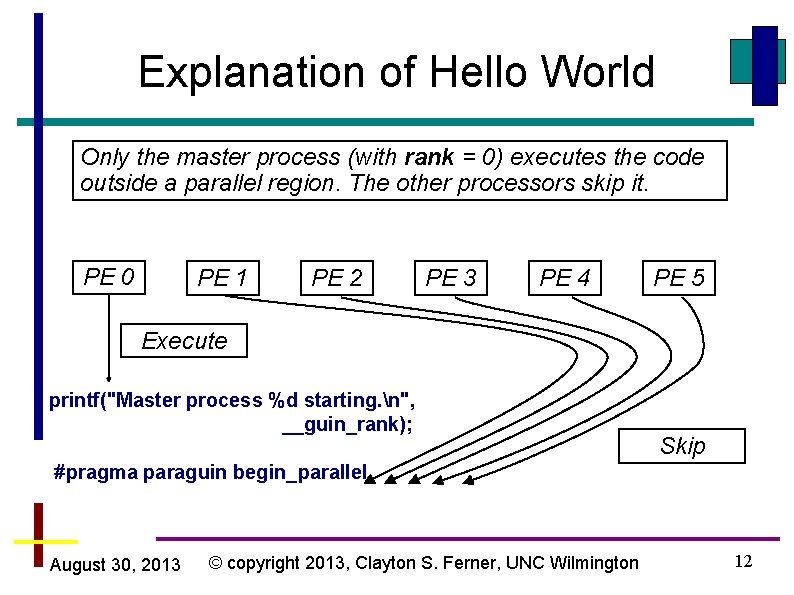

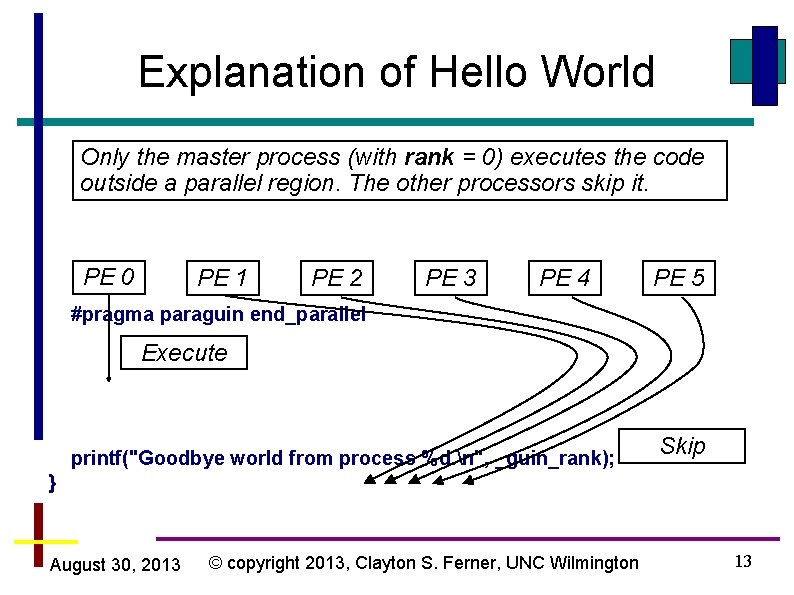

Explanation of Hello World

Only the master process (rank 0) executes the code outside a parallel region; the other processors skip it. With six processors (PE 0 to PE 5), PE 0 executes `printf("Master process %d starting.\n", __guin_rank);`, while PE 1 through PE 5 skip it and proceed to `#pragma paraguin begin_parallel`.

Explanation of Hello World

Only the master process (rank 0) executes the code outside a parallel region; the other processors skip it. After `#pragma paraguin end_parallel`, PE 0 executes `printf("Goodbye world from process %d.\n", __guin_rank);`, while PE 1 through PE 5 skip it and proceed to the closing `}` of `main`.

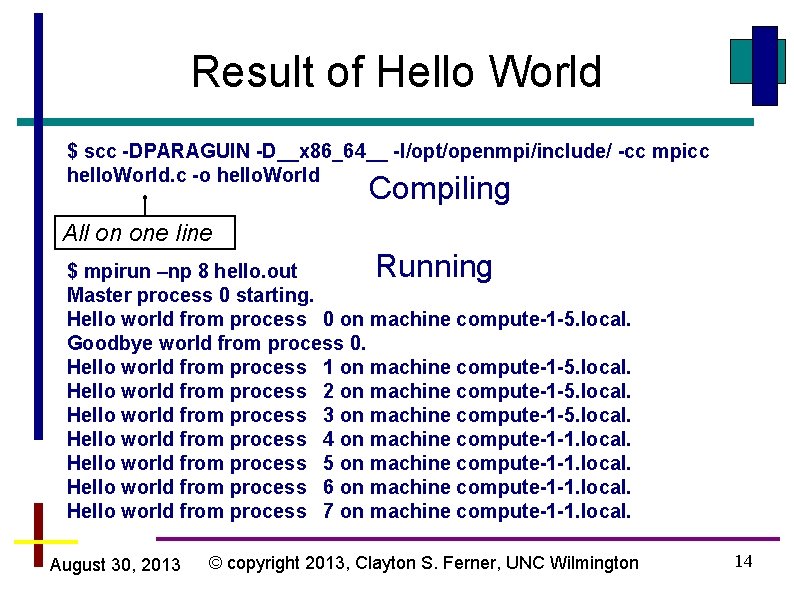

Result of Hello World

Compiling (all on one line):

$ scc -DPARAGUIN -D__x86_64__ -I/opt/openmpi/include/ -cc mpicc hello.World.c -o hello.World

Running:

$ mpirun -np 8 hello.out
Master process 0 starting.
Hello world from process 0 on machine compute-1-5.local.
Goodbye world from process 0.
Hello world from process 1 on machine compute-1-5.local.
Hello world from process 2 on machine compute-1-5.local.
Hello world from process 3 on machine compute-1-5.local.
Hello world from process 4 on machine compute-1-1.local.
Hello world from process 5 on machine compute-1-1.local.
Hello world from process 6 on machine compute-1-1.local.
Hello world from process 7 on machine compute-1-1.local.

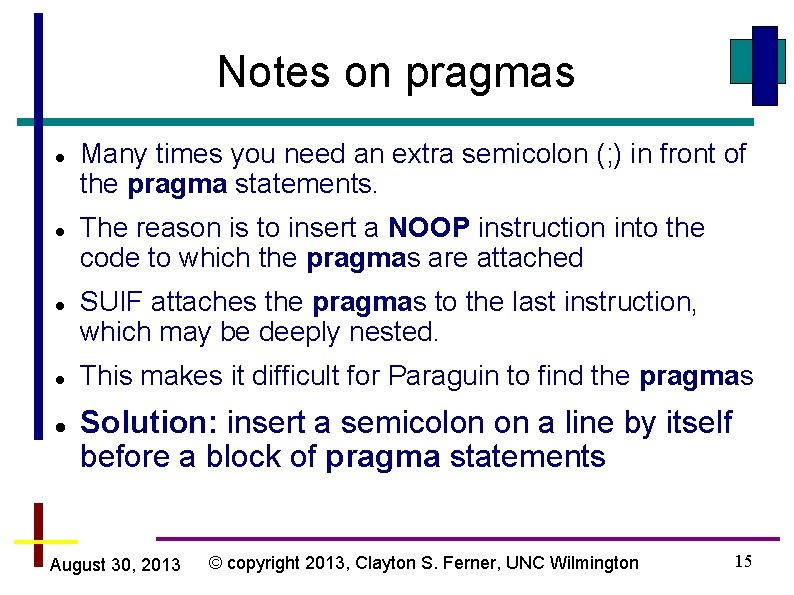

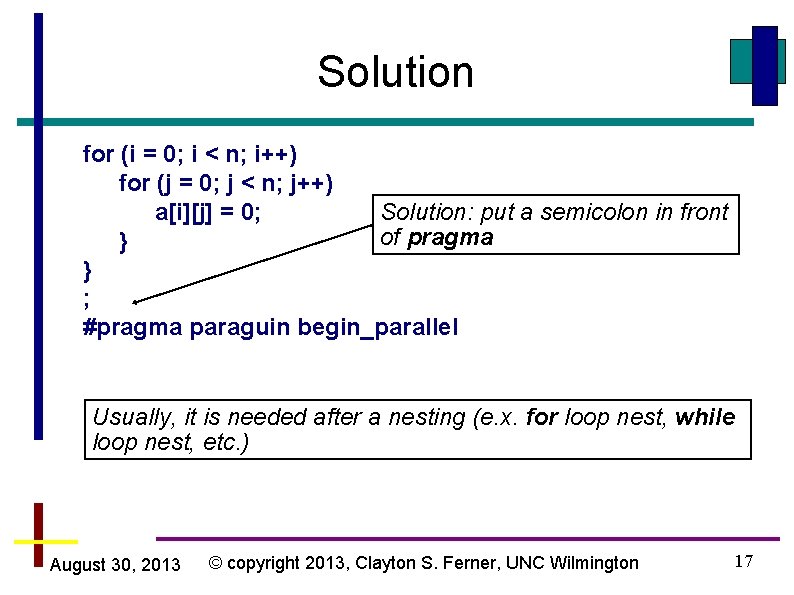

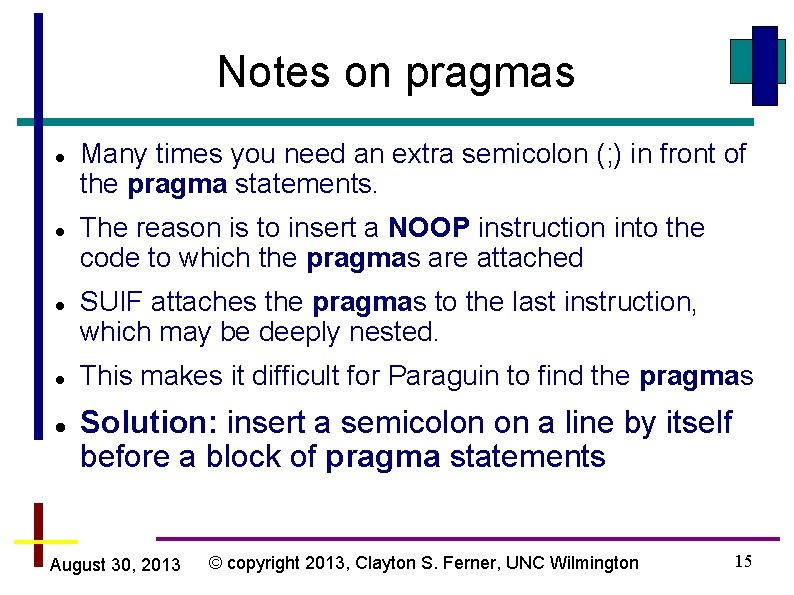

Notes on pragmas

Often you need an extra semicolon (;) in front of the pragma statements. The semicolon inserts a no-op statement into the code, to which the pragmas are then attached. Otherwise, SUIF attaches the pragmas to the last instruction, which may be deeply nested; this makes it difficult for Paraguin to find the pragmas.

Solution: insert a semicolon on a line by itself before a block of pragma statements.

Incorrect Location of Pragma

This code:

for (i = 0; i < n; i++) {
    for (j = 0; j < n; j++) {
        a[i][j] = 0;
    }
}
#pragma paraguin begin_parallel

actually appears like this:

for (i = 0; i < n; i++) {
    for (j = 0; j < n; j++) {
        a[i][j] = 0;
#pragma paraguin begin_parallel
    }
}

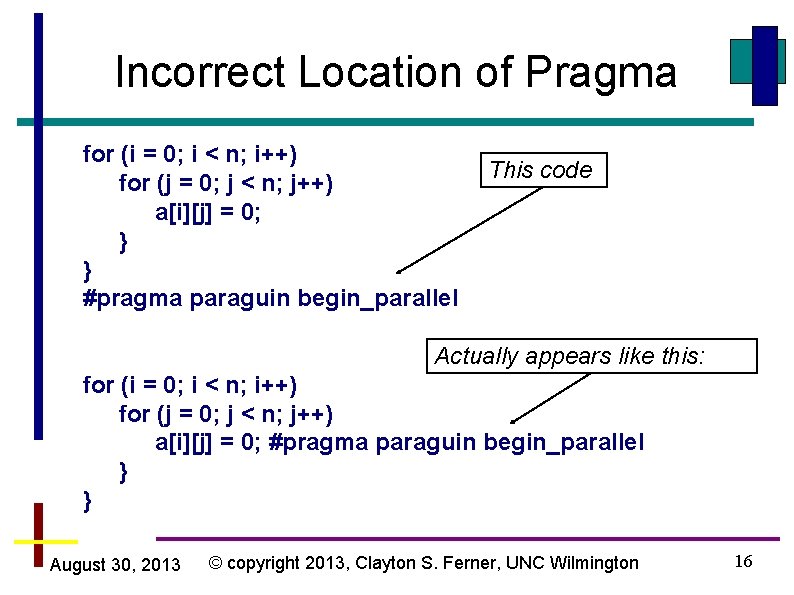

Solution

Put a semicolon in front of the pragma:

for (i = 0; i < n; i++) {
    for (j = 0; j < n; j++) {
        a[i][j] = 0;
    }
}
;
#pragma paraguin begin_parallel

Usually, it is needed after a nesting (e.g., a for-loop nest, while-loop nest, etc.).
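A minimal sketch of the fix above, assuming a hypothetical function `zero_then_parallel` and an arbitrary matrix size `N`: the lone `;` follows the loop nest so the pragma has a top-level statement to attach to. Compiled with an ordinary C compiler such as gcc, the unknown `paraguin` pragmas are ignored (gcc only warns about them when `-Wunknown-pragmas`, which `-Wall` enables, is on), so this is also a valid sequential function.

```c
#include <assert.h>

#define N 4   /* hypothetical matrix size for the sketch */

/* Zero an n x n matrix, then open a parallel region. The lone ';' is a
   no-op statement placed after the loop nest so that the pragma is not
   attached to the innermost assignment. A non-Paraguin compiler simply
   ignores the paraguin pragmas. */
void zero_then_parallel(double a[N][N], int n)
{
    int i, j;
    assert(n >= 0 && n <= N);
    for (i = 0; i < n; i++) {
        for (j = 0; j < n; j++) {
            a[i][j] = 0.0;
        }
    }
    ;
#pragma paraguin begin_parallel
    /* work to be done by all processes would go here */
#pragma paraguin end_parallel
}
```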

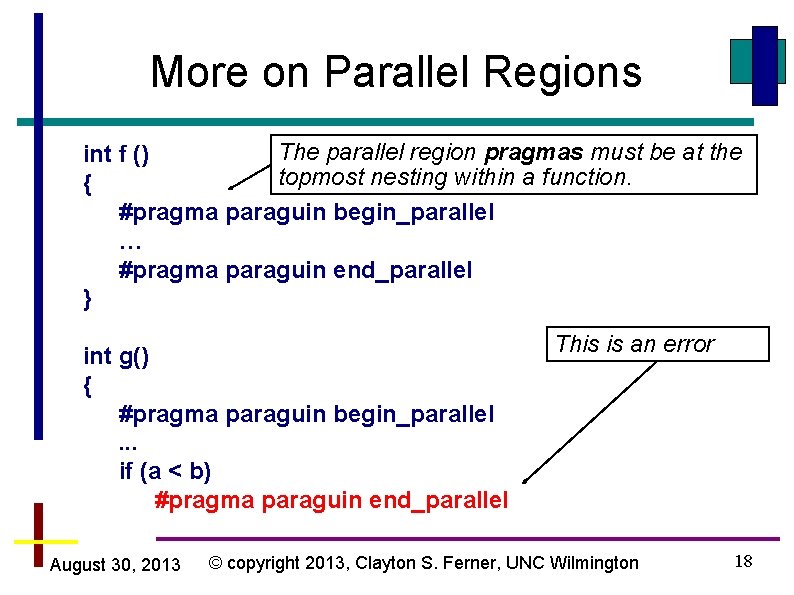

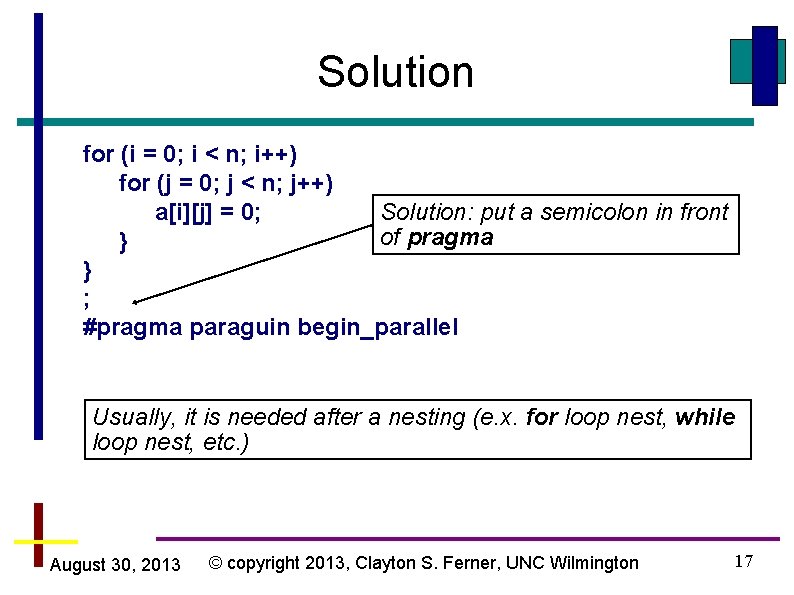

More on Parallel Regions

The parallel region pragmas must be at the topmost nesting level within a function. This is correct:

int f()
{
#pragma paraguin begin_parallel
    …
#pragma paraguin end_parallel
}

This is an error, because `end_parallel` is nested inside the `if` statement:

int g()
{
#pragma paraguin begin_parallel
    ...
    if (a < b)
#pragma paraguin end_parallel

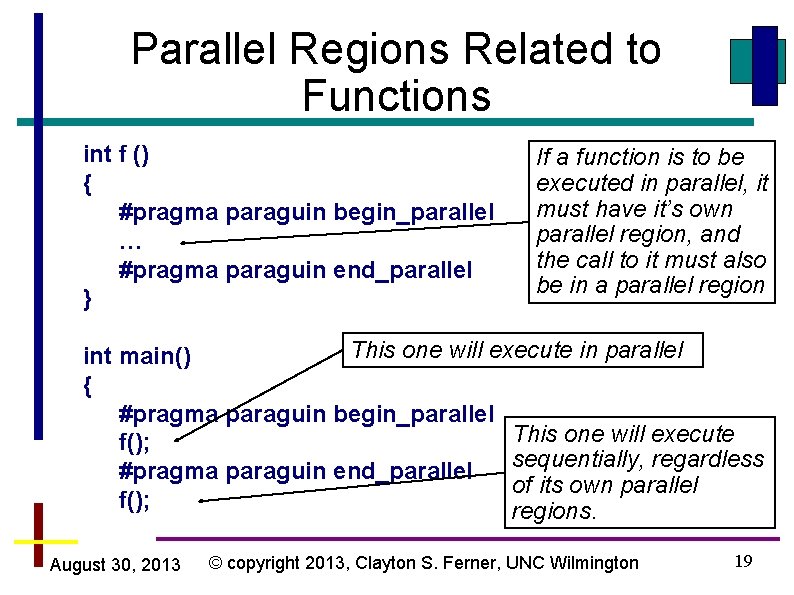

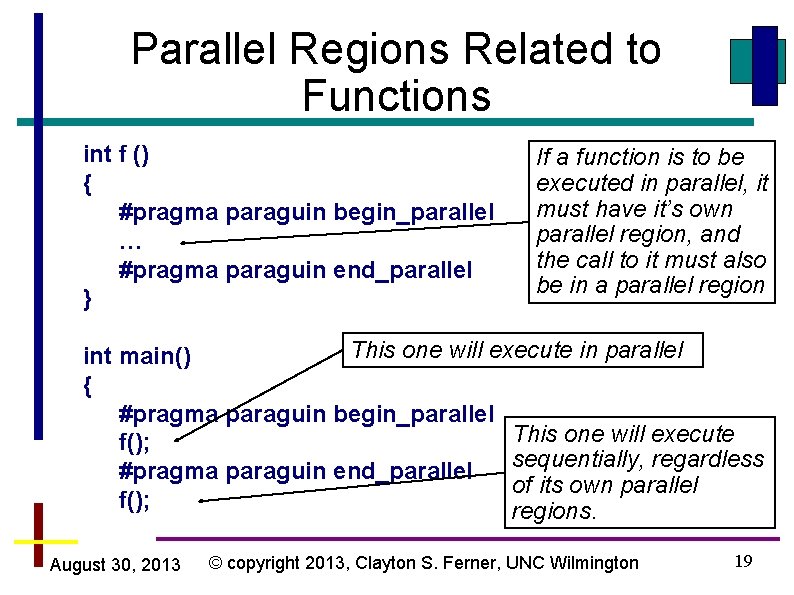

Parallel Regions Related to Functions

If a function is to be executed in parallel, it must have its own parallel region, and the call to it must also be in a parallel region.

int f()
{
#pragma paraguin begin_parallel
    …
#pragma paraguin end_parallel
}
int main()
{
#pragma paraguin begin_parallel
    f();   // This one will execute in parallel
#pragma paraguin end_parallel
    f();   // This one will execute sequentially, regardless of its own parallel regions

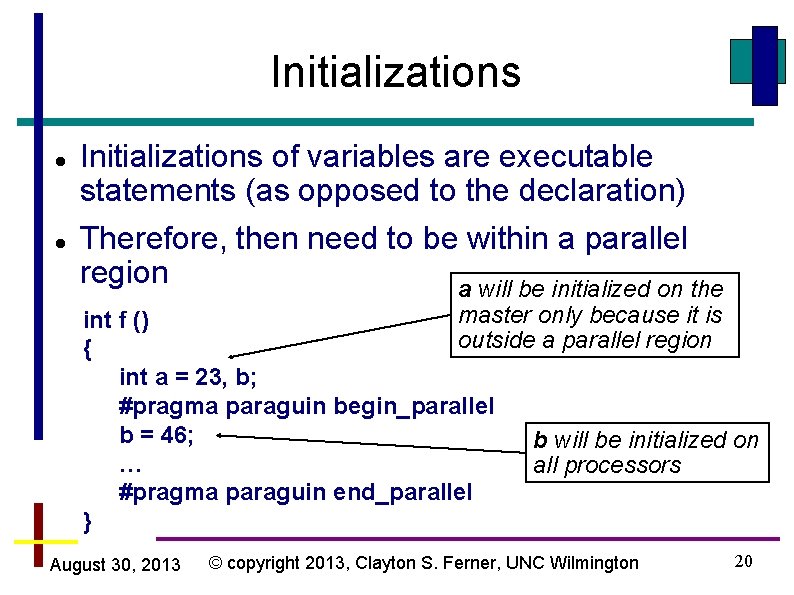

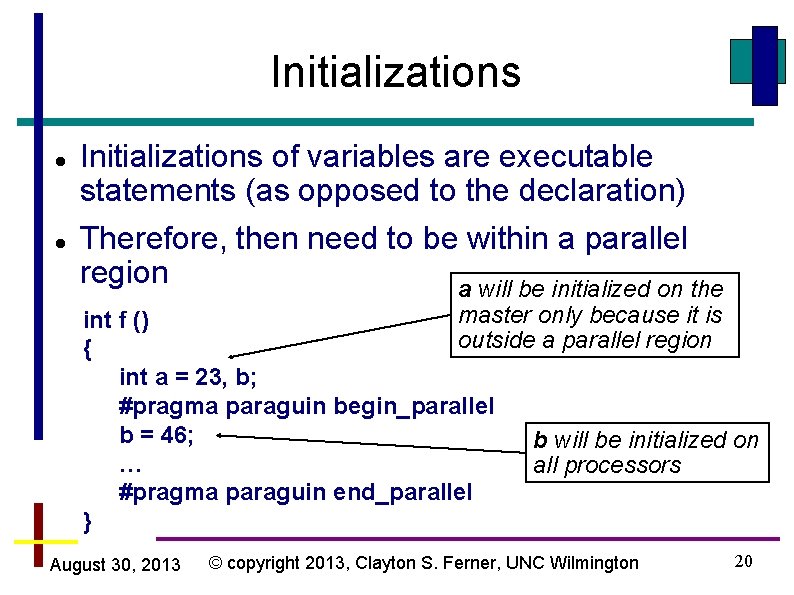

Initializations of variables are executable statements (as opposed to the declarations). Therefore, they need to be within a parallel region if all processors are to perform them.

int f()
{
    int a = 23, b;   // a is initialized on the master only, because it is outside a parallel region
#pragma paraguin begin_parallel
    b = 46;          // b is initialized on all processors
    …
#pragma paraguin end_parallel
}

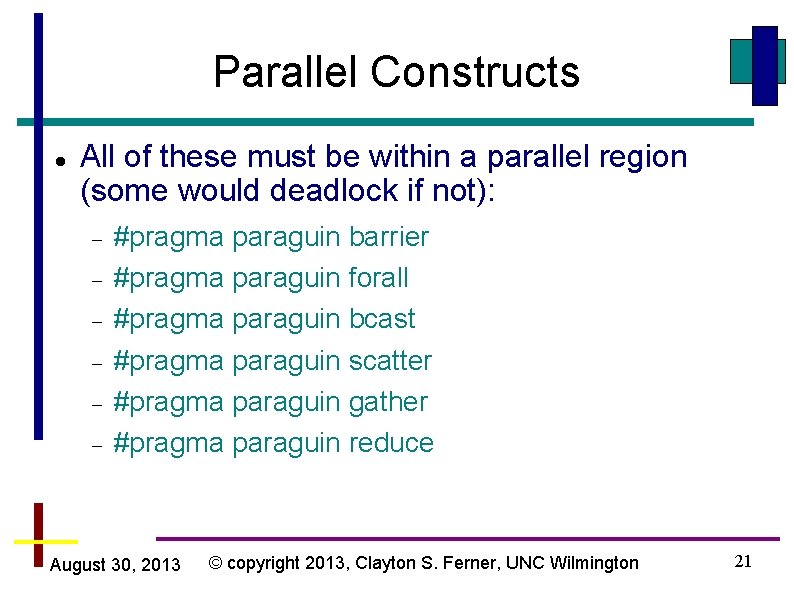

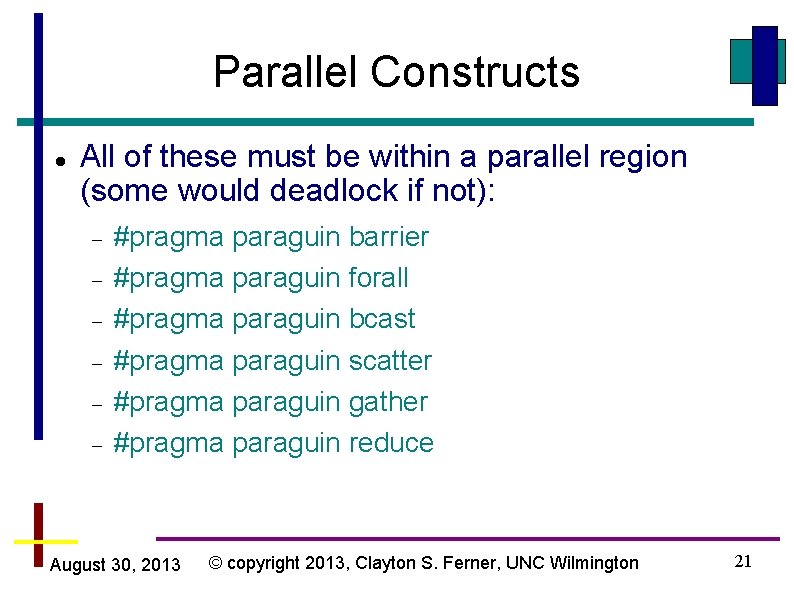

Parallel Constructs

All of these must be within a parallel region (some would deadlock if not):

- `#pragma paraguin barrier`
- `#pragma paraguin forall`
- `#pragma paraguin bcast`
- `#pragma paraguin scatter`
- `#pragma paraguin gather`
- `#pragma paraguin reduce`

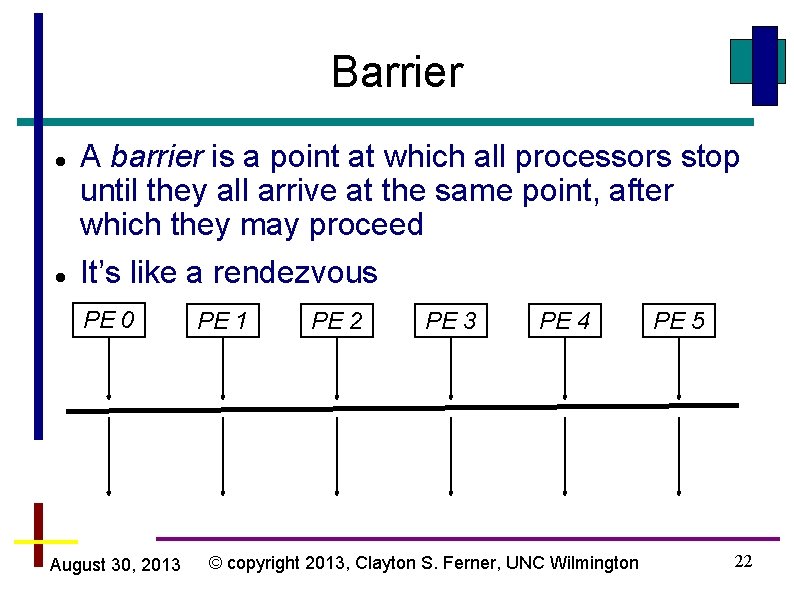

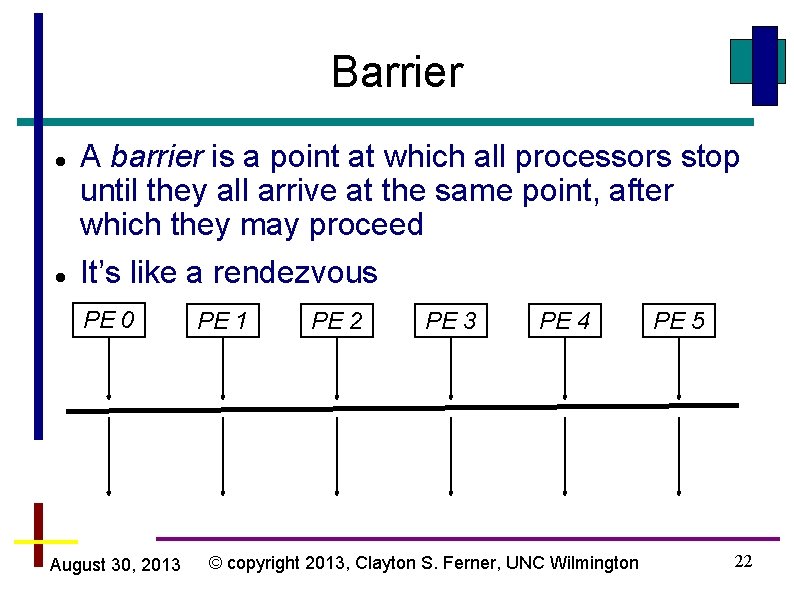

Barrier

A barrier is a point at which all processors stop until they have all arrived, after which they may proceed. It is like a rendezvous. (Figure: processors PE 0 through PE 5 meeting at a barrier.)

Barrier

…
#pragma paraguin barrier
…

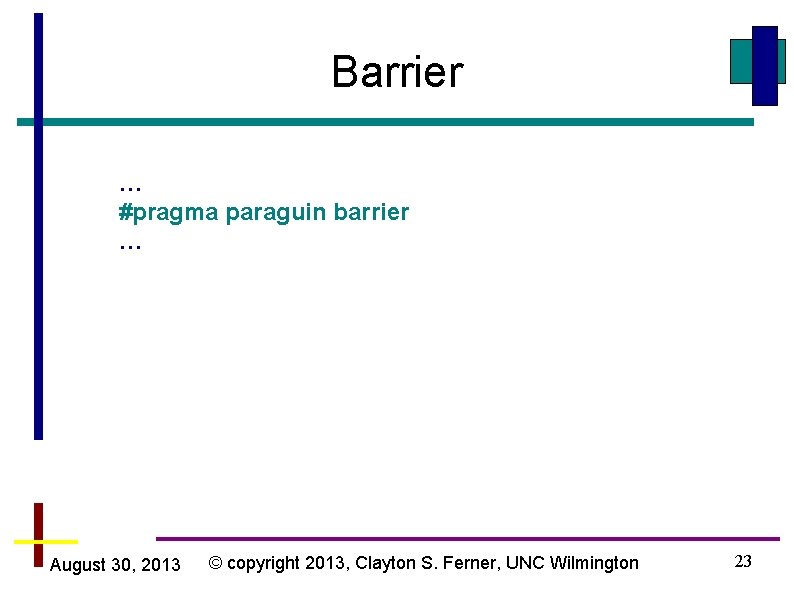

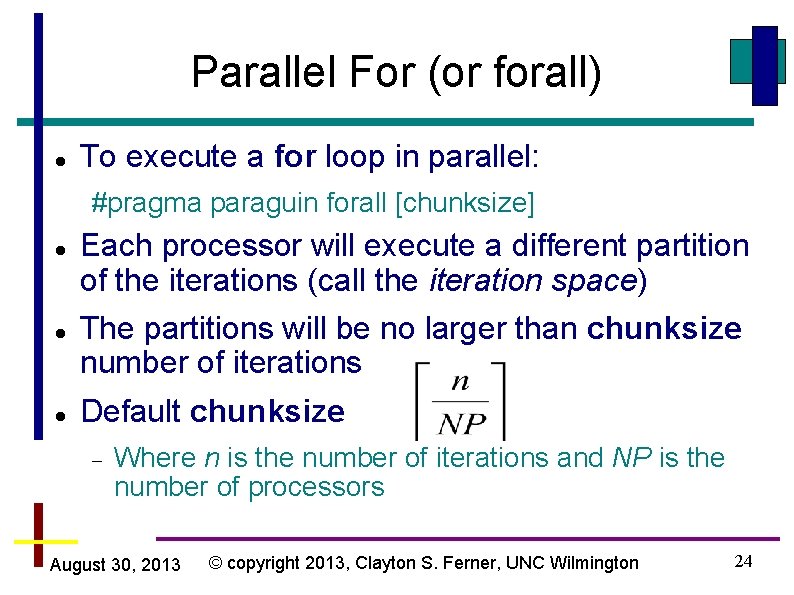

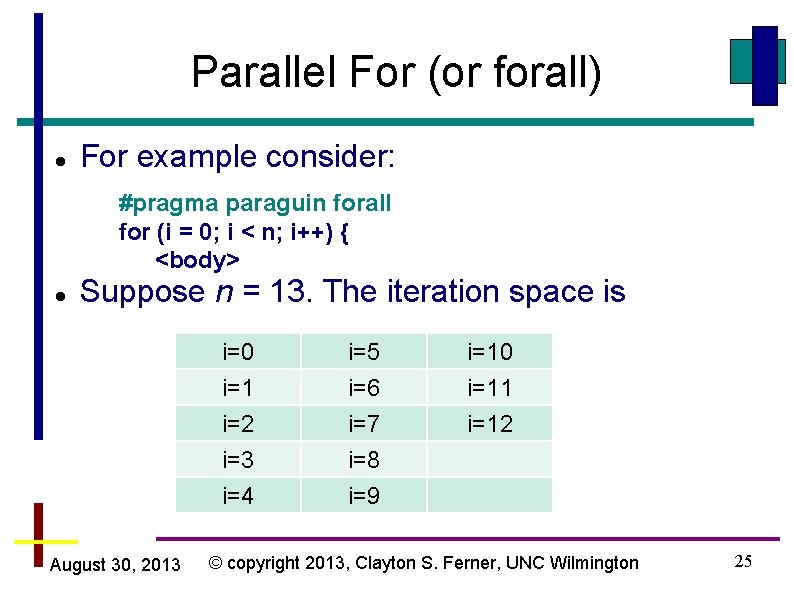

Parallel For (or forall)

To execute a for loop in parallel: `#pragma paraguin forall [chunksize]`

Each processor executes a different partition of the iterations (called the iteration space). No partition is larger than chunksize iterations. The default chunksize is $\lceil n / NP \rceil$, where n is the number of iterations and NP is the number of processors.

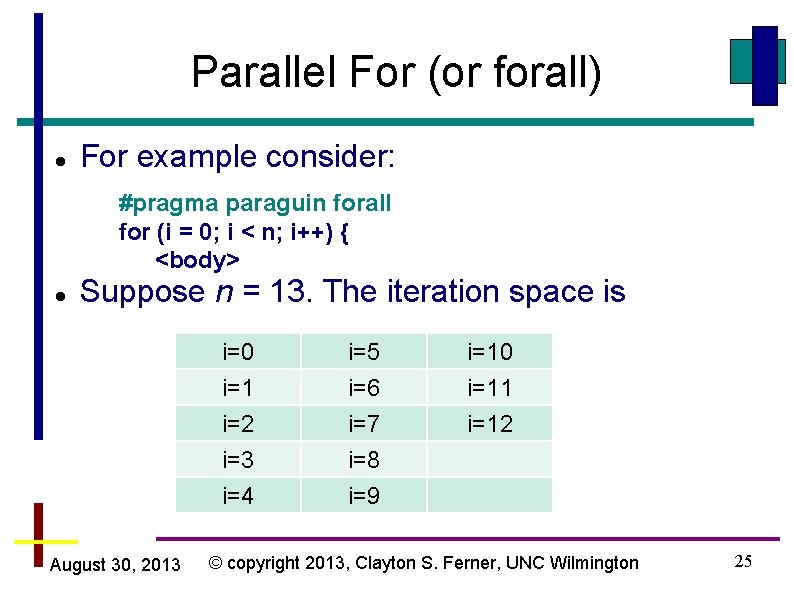

Parallel For (or forall)

For example, consider:

#pragma paraguin forall
for (i = 0; i < n; i++) {
    … loop body …
}

Suppose n = 13. The iteration space is i=0, i=1, i=2, i=3, i=4, i=5, i=6, i=7, i=8, i=9, i=10, i=11, i=12.

Parallel For (or forall)

Also suppose we have 4 processors. The default chunksize is $\lceil 13 / 4 \rceil = 4$. The iteration space will be executed by the 4 processors as:

| Processor | Iterations |
|---|---|
| PE 0 | i=0, i=1, i=2, i=3 |
| PE 1 | i=4, i=5, i=6, i=7 |
| PE 2 | i=8, i=9, i=10, i=11 |
| PE 3 | i=12 |
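The default block partitioning can be sketched in C. This is an illustrative reconstruction, not Paraguin's generated code: `block_range` and `default_chunk` are hypothetical names, and the ceiling formula is the one consistent with the 13-iteration example.

```c
#include <assert.h>

/* Default chunksize: ceil(n / np), computed with integer arithmetic. */
static int default_chunk(int n, int np)
{
    assert(n >= 0 && np > 0);
    return (n + np - 1) / np;
}

/* Stores in [*lo, *hi) the iterations that process `rank` executes.
   With few iterations a process may get an empty range (*lo == *hi). */
void block_range(int n, int np, int rank, int *lo, int *hi)
{
    int chunk = default_chunk(n, np);
    assert(rank >= 0 && rank < np);
    *lo = rank * chunk;
    *hi = *lo + chunk;
    if (*hi > n) *hi = n;   /* the last partition may be shorter */
    if (*lo > n) *lo = n;   /* ranks past the end get nothing */
}
```

For n = 13 and 4 processes, rank 3 gets [12, 13), the single iteration i=12 shown in the table.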

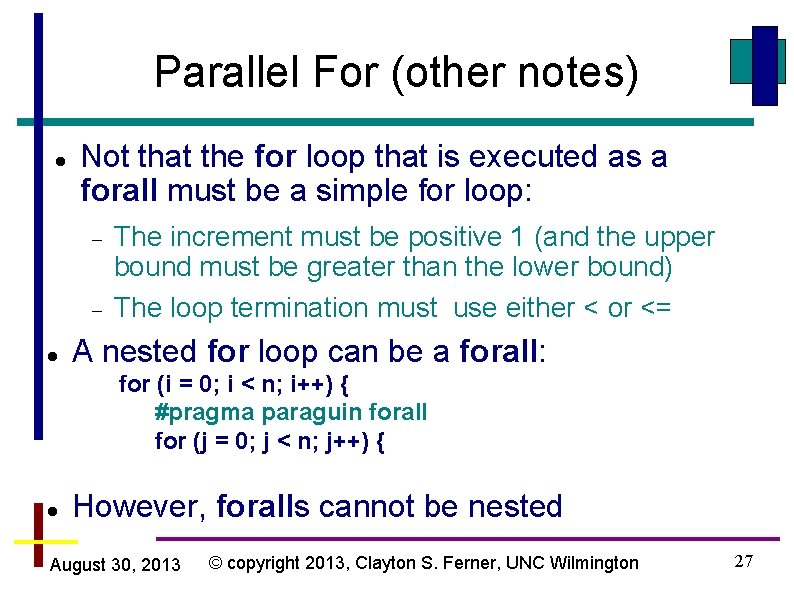

Parallel For (other notes)

Note that a for loop executed as a forall must be a simple for loop:

- The increment must be +1 (and the upper bound must be greater than the lower bound).
- The loop termination condition must use either `<` or `<=`.

A nested for loop can be a forall:

for (i = 0; i < n; i++) {
#pragma paraguin forall
    for (j = 0; j < n; j++) {
        …

However, foralls cannot be nested.

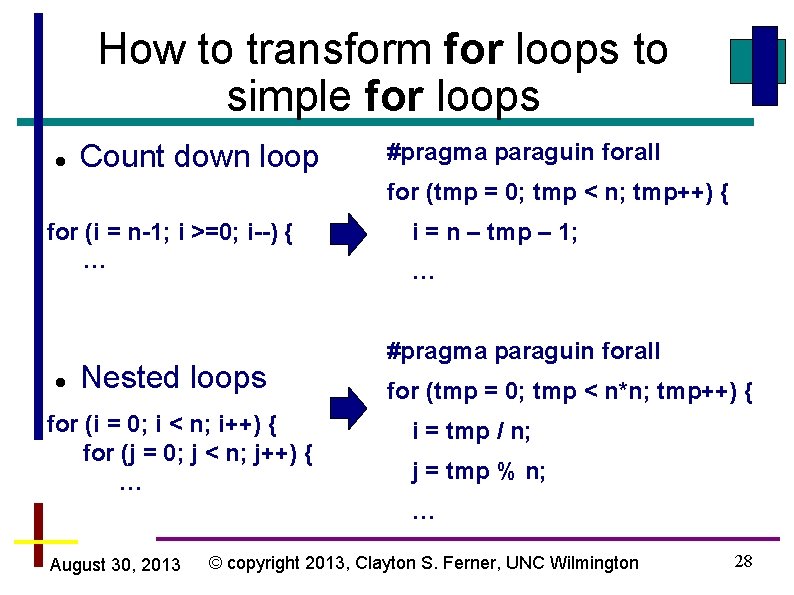

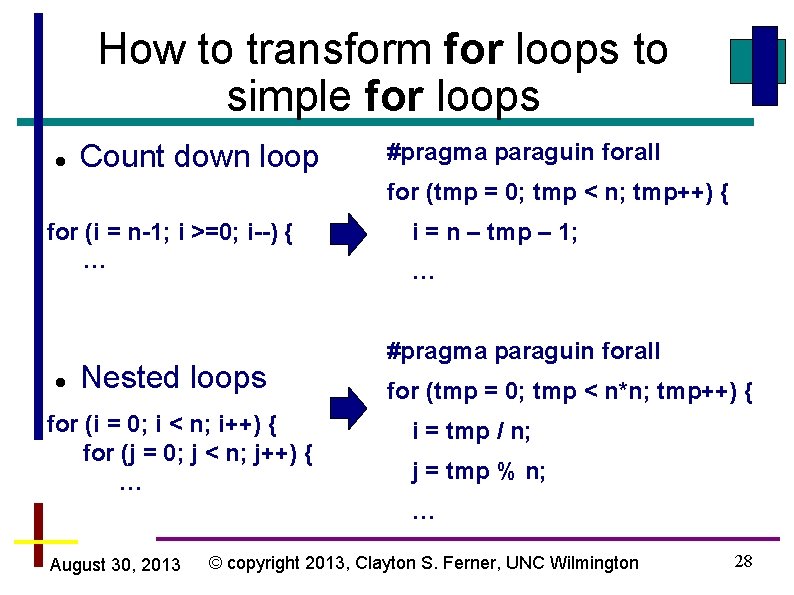

How to transform for loops into simple for loops

Count-down loop. The loop

for (i = n-1; i >= 0; i--) {
    …

becomes

#pragma paraguin forall
for (tmp = 0; tmp < n; tmp++) {
    i = n - tmp - 1;
    …

Nested loops. The loop nest

for (i = 0; i < n; i++) {
    for (j = 0; j < n; j++) {
        …

becomes

#pragma paraguin forall
for (tmp = 0; tmp < n*n; tmp++) {
    i = tmp / n;
    j = tmp % n;
    …
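Both rewrites can be checked sequentially: they must visit exactly the iterations of the original loops. A sketch assuming hypothetical helpers `countdown_as_simple` and `collapsed_visit`; the `forall` pragma is only mentioned in comments, since the point is the loop shape.

```c
#include <assert.h>

/* for (i = n-1; i >= 0; i--) rewritten as a simple loop over tmp with
   i = n - tmp - 1. A '#pragma paraguin forall' would go directly before
   the tmp loop. order[tmp] records which i is visited at step tmp. */
void countdown_as_simple(int n, int *order)
{
    int tmp;
    assert(n >= 0);
    for (tmp = 0; tmp < n; tmp++) {
        int i = n - tmp - 1;
        order[tmp] = i;
    }
}

/* An n x n i/j loop nest collapsed into one simple loop with
   i = tmp / n and j = tmp % n. count[i*n + j] is incremented on each
   visit so the caller can check every (i, j) pair is covered once. */
void collapsed_visit(int n, int *count)
{
    int tmp;
    assert(n > 0);
    for (tmp = 0; tmp < n * n; tmp++) {
        int i = tmp / n;
        int j = tmp % n;
        count[i * n + j]++;
    }
}
```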

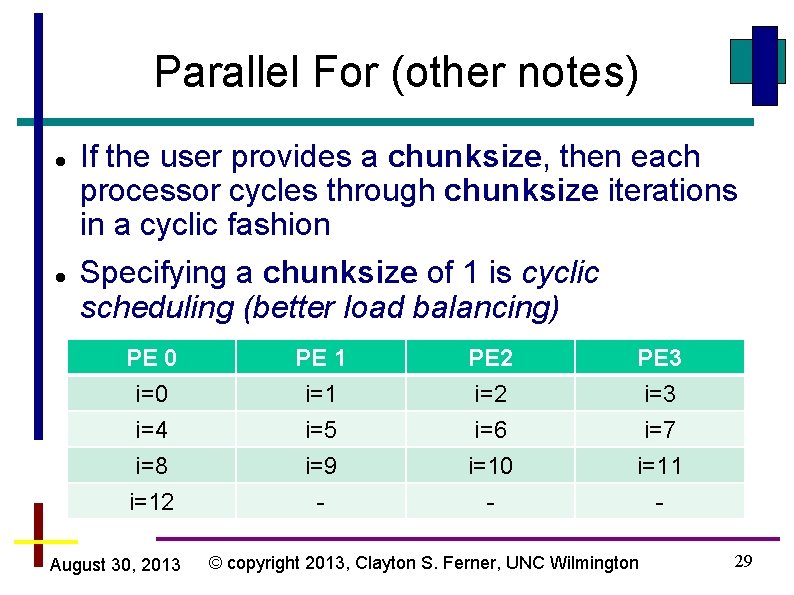

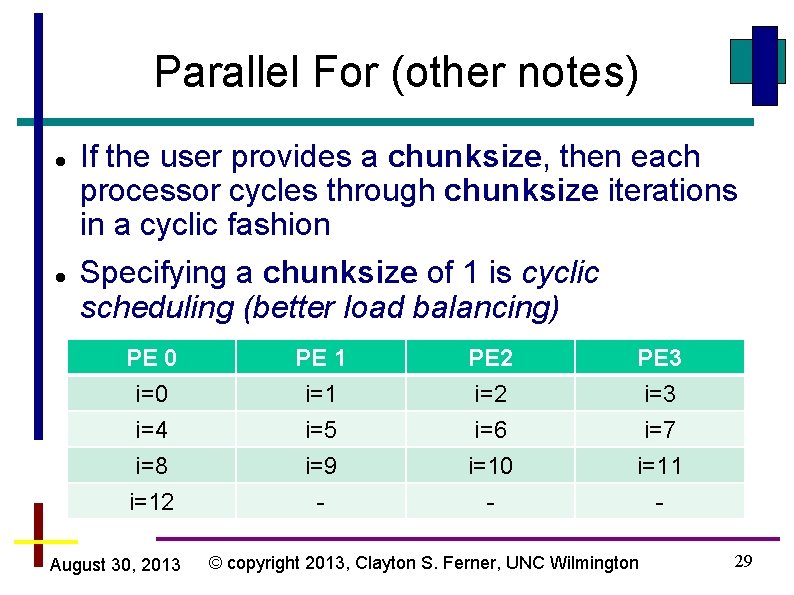

Parallel For (other notes)

If the user provides a chunksize, the iterations are handed out in blocks of chunksize consecutive iterations, and the processors take these blocks in turn in a cyclic (round-robin) fashion. Specifying a chunksize of 1 gives cyclic scheduling (better load balancing). With n = 13, 4 processors, and a chunksize of 1:

| Processor | Iterations |
|---|---|
| PE 0 | i=0, i=4, i=8, i=12 |
| PE 1 | i=1, i=5, i=9 |
| PE 2 | i=2, i=6, i=10 |
| PE 3 | i=3, i=7, i=11 |
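A sketch of the cyclic assignment, assuming iteration i belongs to process (i / chunksize) mod NP, which reproduces the chunksize-1 assignment on this slide. `cyclic_owner` and `cyclic_iterations` are hypothetical names, not Paraguin functions.

```c
#include <assert.h>

/* Owner of iteration i: blocks of `chunk` consecutive iterations are
   dealt to processes 0, 1, ..., np-1, 0, 1, ... in round-robin order. */
int cyclic_owner(int i, int chunk, int np)
{
    assert(i >= 0 && chunk > 0 && np > 0);
    return (i / chunk) % np;
}

/* Writes to `out` the iterations of 0..n-1 owned by `rank`, in order,
   and returns how many there are. */
int cyclic_iterations(int n, int chunk, int np, int rank, int *out)
{
    int i, count = 0;
    for (i = 0; i < n; i++)
        if (cyclic_owner(i, chunk, np) == rank)
            out[count++] = i;
    return count;
}
```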

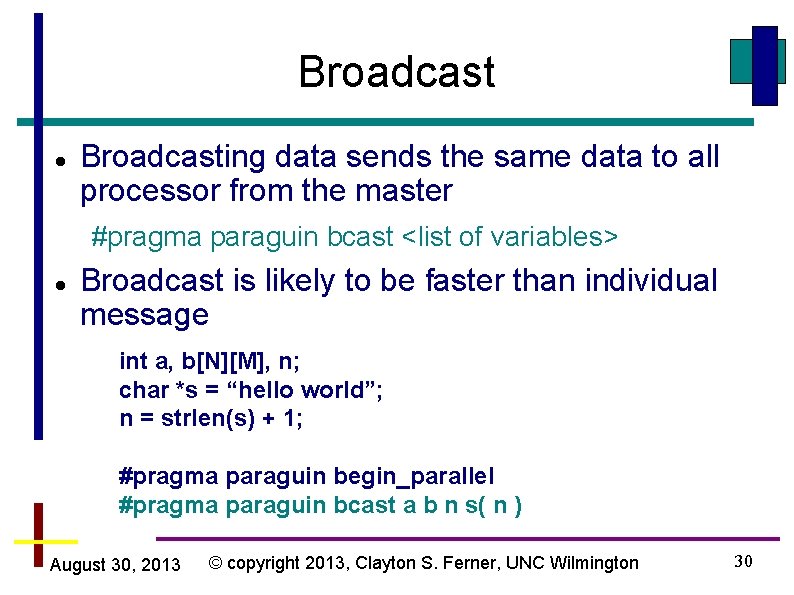

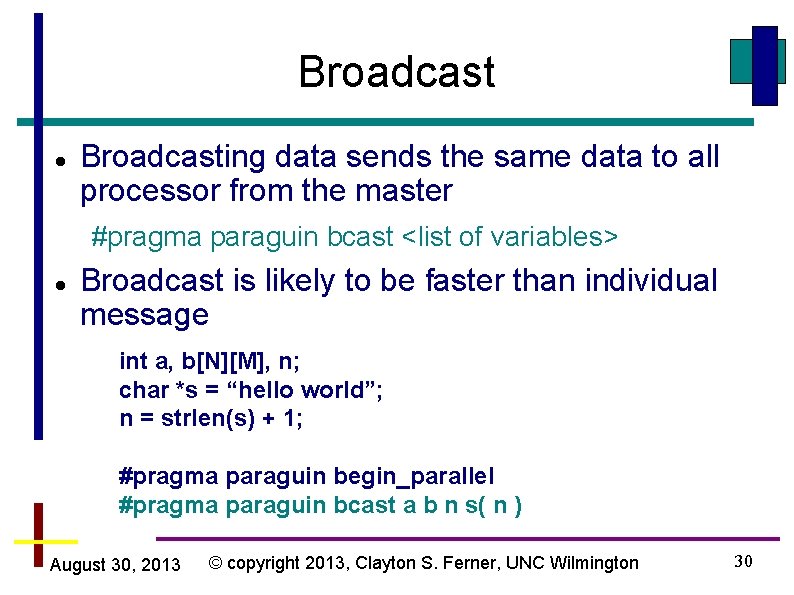

Broadcast

Broadcasting sends the same data from the master to all processors:

`#pragma paraguin bcast <list of variables>`

A broadcast is likely to be faster than sending individual messages. For example:

int a, b[N][M], n;
char *s = "hello world";
n = strlen(s) + 1;
#pragma paraguin begin_parallel
#pragma paraguin bcast a b n s( n )

![Broadcast int a bNM n char s hello world n strlens Broadcast int a, b[N][M], n; char *s = “hello world”; n = strlen(s) +](https://slidetodoc.com/presentation_image_h2/ac4370b5813e0445f3db6b6705fdf012/image-31.jpg)

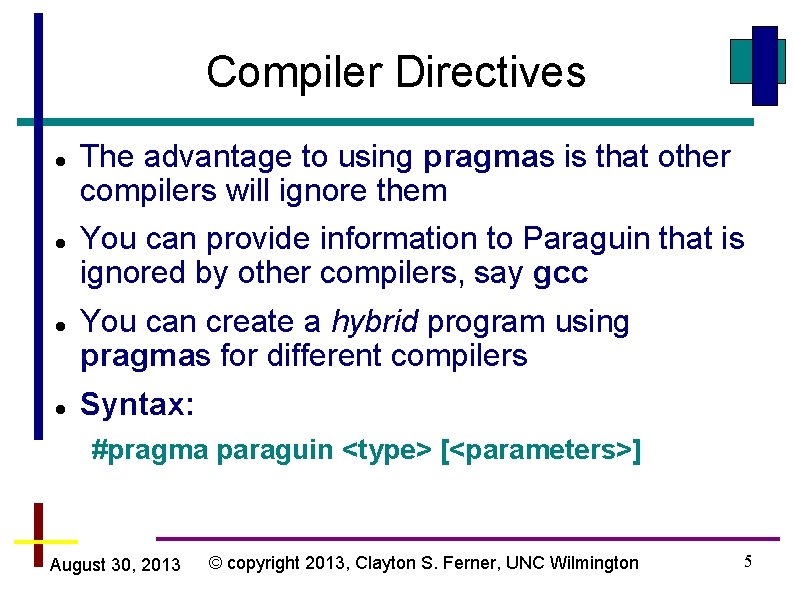

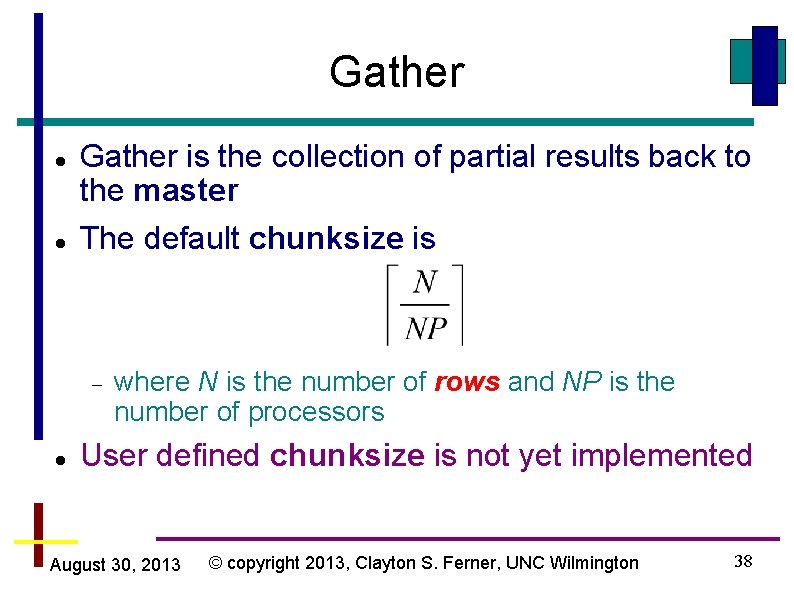

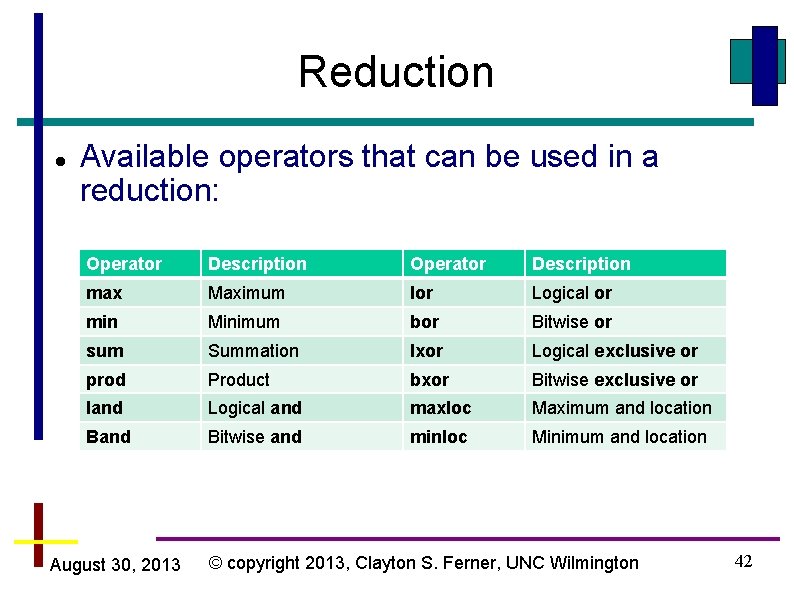

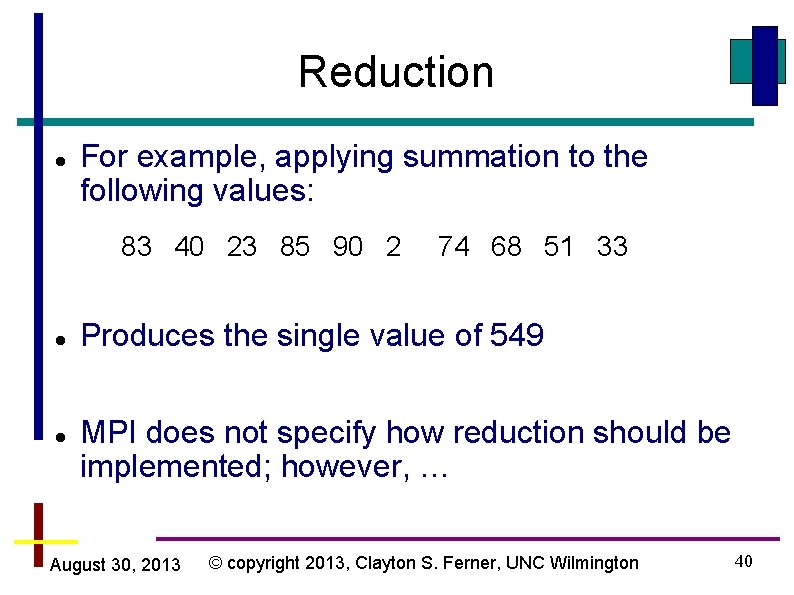

Broadcast

In `#pragma paraguin bcast a b n s( n )`, variable a is a scalar and b is an array, yet the correct number of bytes is broadcast for each: N*M*sizeof(int) bytes are broadcast for variable b.

![Broadcast int a bNM n char s hello world n strlens Broadcast int a, b[N][M], n; char *s = “hello world”; n = strlen(s) +](https://slidetodoc.com/presentation_image_h2/ac4370b5813e0445f3db6b6705fdf012/image-32.jpg)

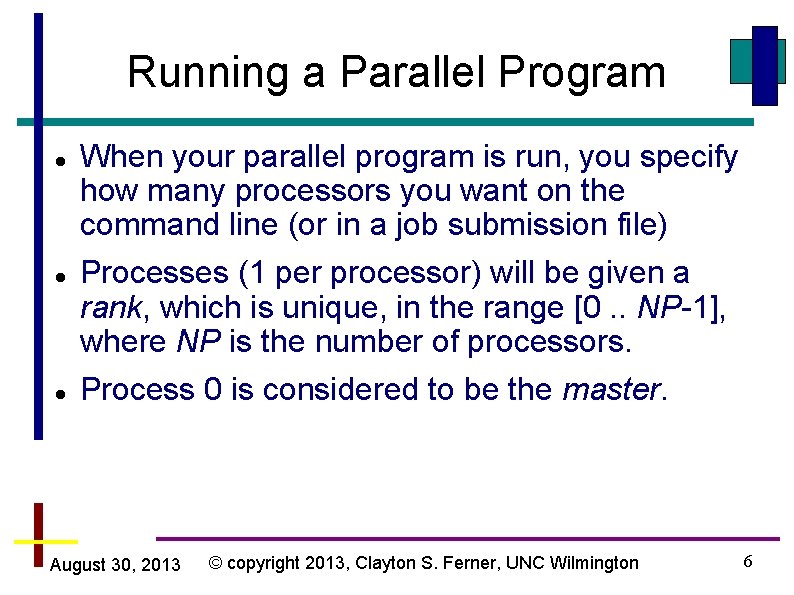

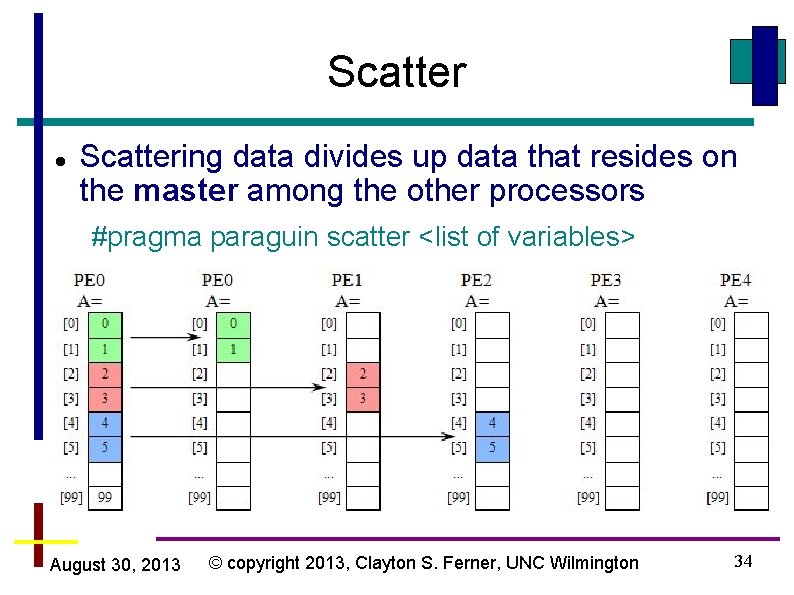

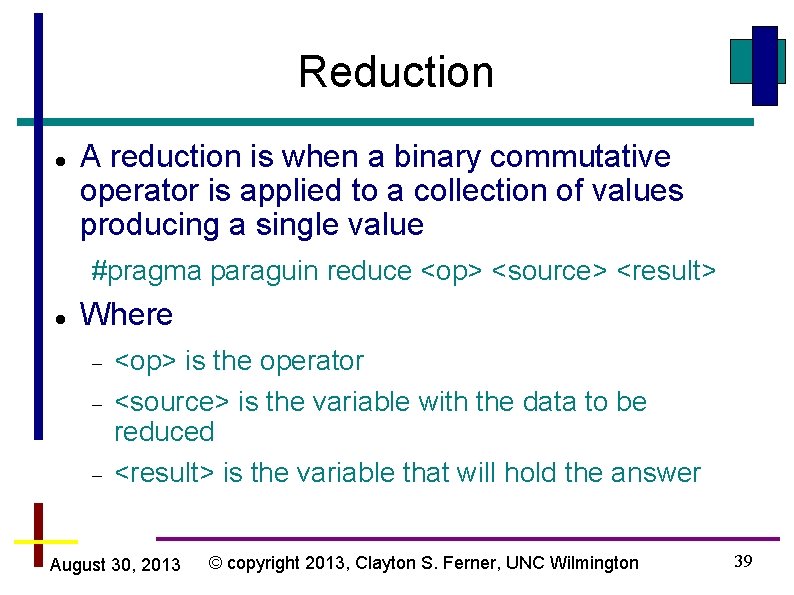

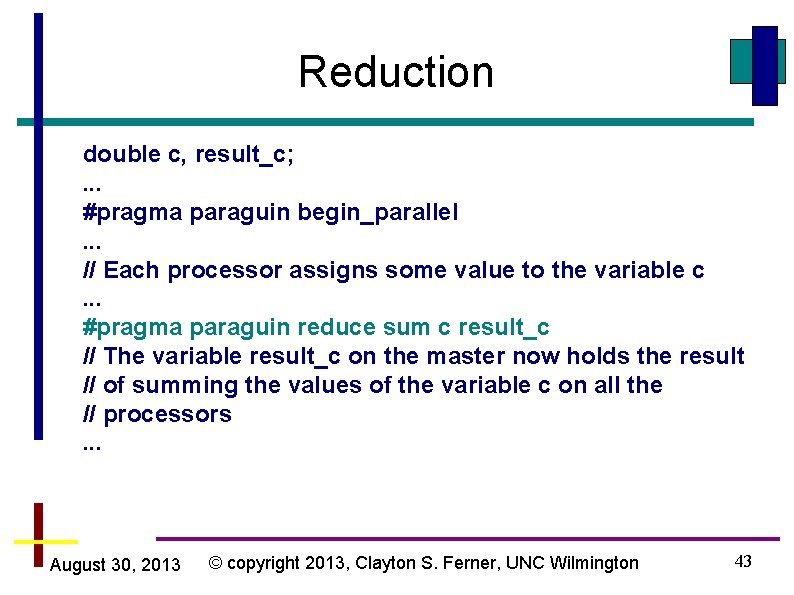

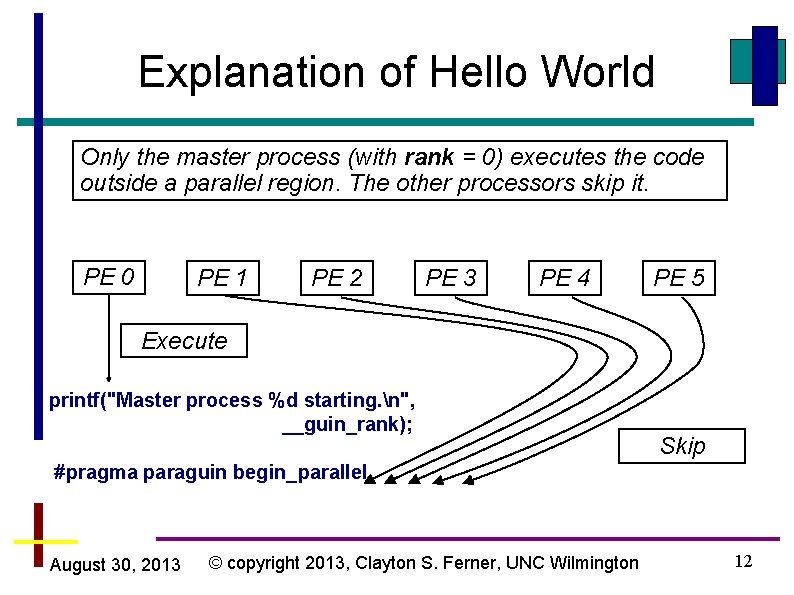

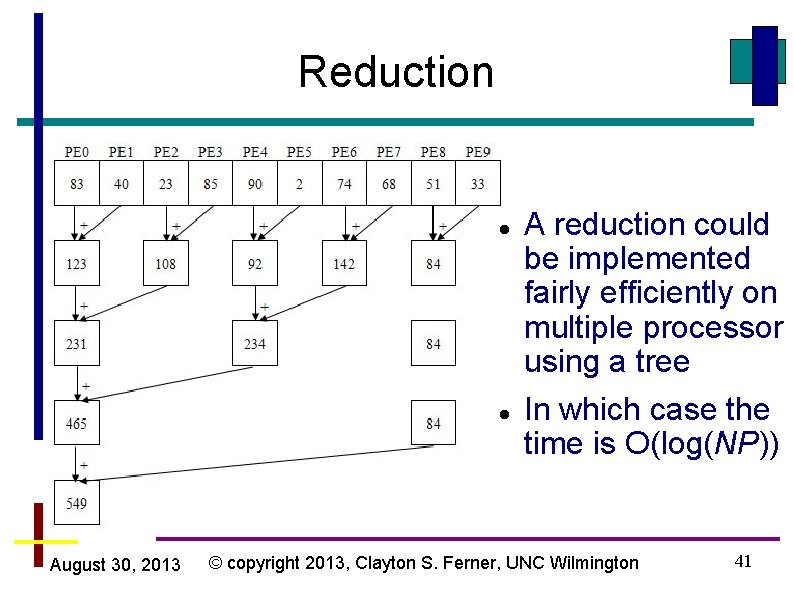

Broadcast

In `#pragma paraguin bcast a b n s( n )`, variable s is a pointer (here, to a string), so there is no way to know how big the data it points to actually is. Pointers therefore require a size, such as `s( n )`. If the size is not given, only one character will be broadcast.
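Why a pointer needs an explicit size can be seen with `sizeof` in plain C. A small sketch, assuming hypothetical helpers `array_bytes` and `string_bytes` and arbitrary dimensions N = 3, M = 5: for an array, the declaration determines the full byte count; for a pointer, `sizeof` yields only the size of the pointer itself, and a string's length has to be computed, including its terminating null character.

```c
#include <assert.h>
#include <string.h>

#define N 3   /* hypothetical dimensions for the sketch */
#define M 5

/* sizeof on a true array gives all of its bytes: N * M * sizeof(int). */
size_t array_bytes(void)
{
    int b[N][M];
    return sizeof b;
}

/* Bytes needed to send a C string, including the terminating '\0'
   (the "+ 1" in n = strlen(s) + 1). */
size_t string_bytes(const char *s)
{
    assert(s != NULL);
    return strlen(s) + 1;
}
```

By contrast, `sizeof s` for `char *s` is just `sizeof(char *)`, whatever string `s` points to.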

![Broadcast int a bNM n char s hello world n strlens Broadcast int a, b[N][M], n; char *s = “hello world”; n = strlen(s) +](https://slidetodoc.com/presentation_image_h2/ac4370b5813e0445f3db6b6705fdf012/image-33.jpg)

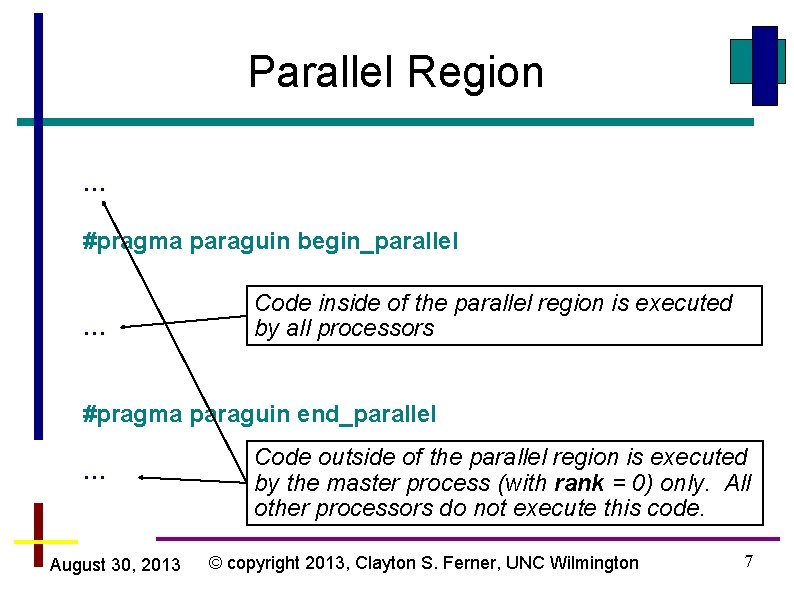

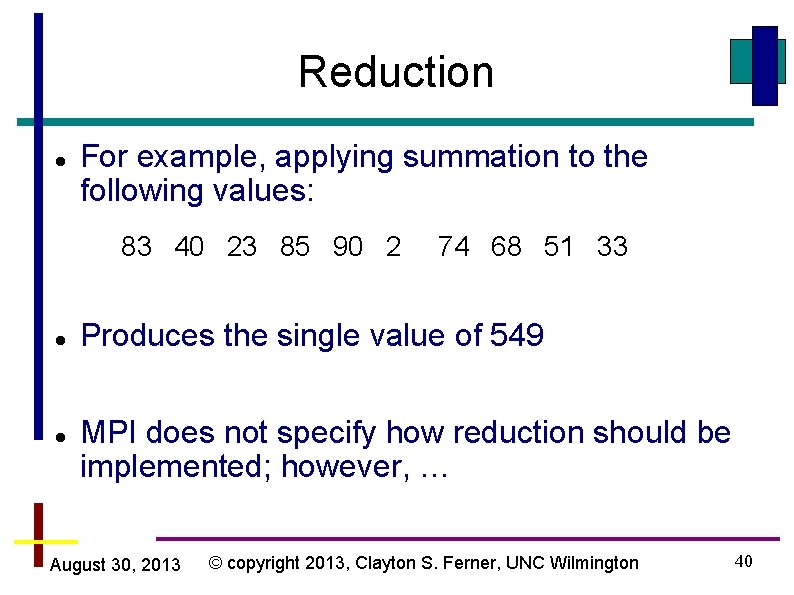

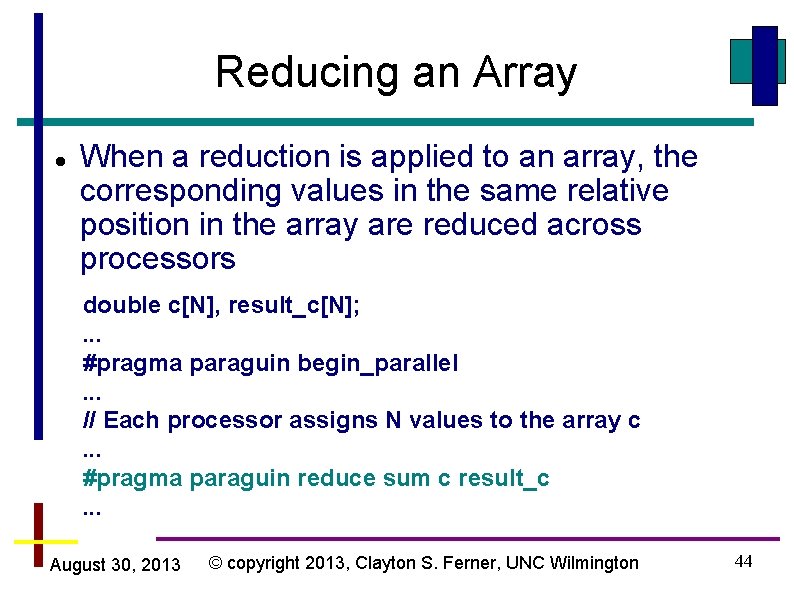

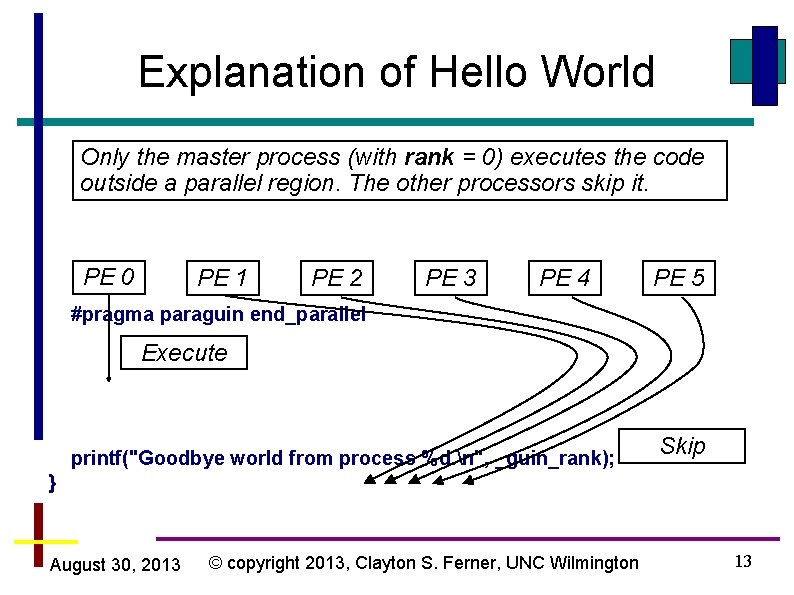

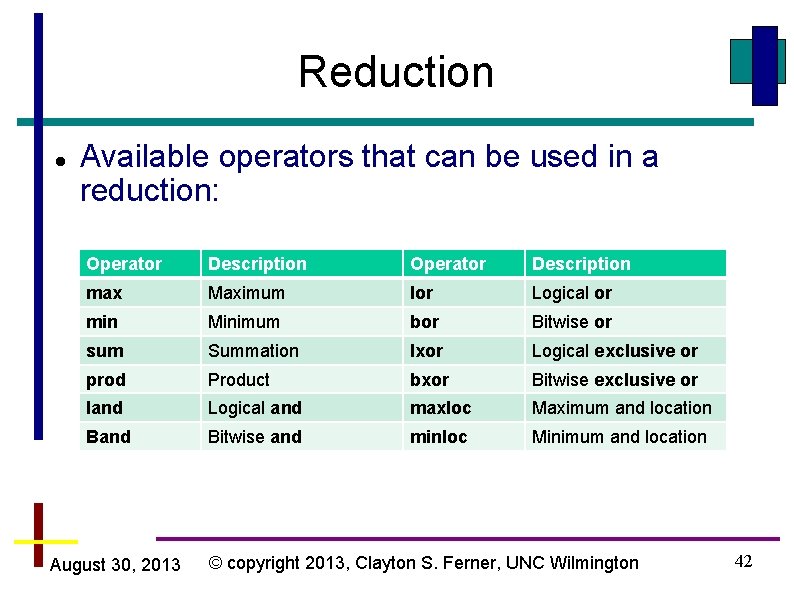

Broadcast

Notes on `#pragma paraguin bcast a b n s( n )`:

- s and n are initialized on the master only.
- 1 is added to `strlen(s)` to include the null character.
- Variable n must be broadcast BEFORE variable s, since the other processors need the value of n to know how many characters of s to receive.
- Put spaces between the parentheses and the size (e.g., `( n )`).

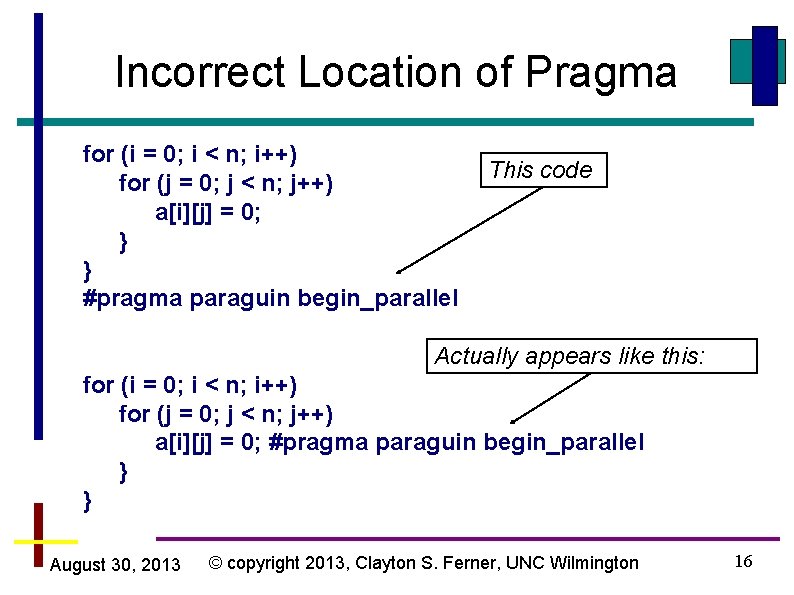

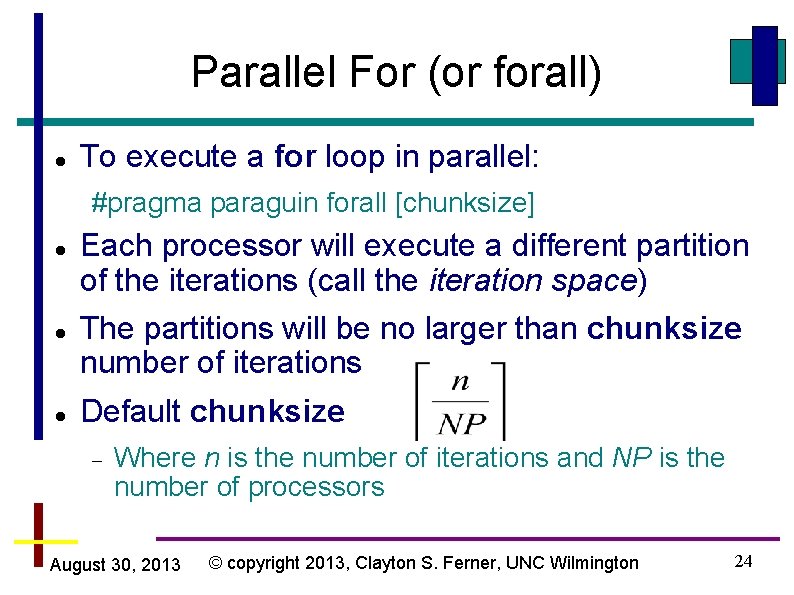

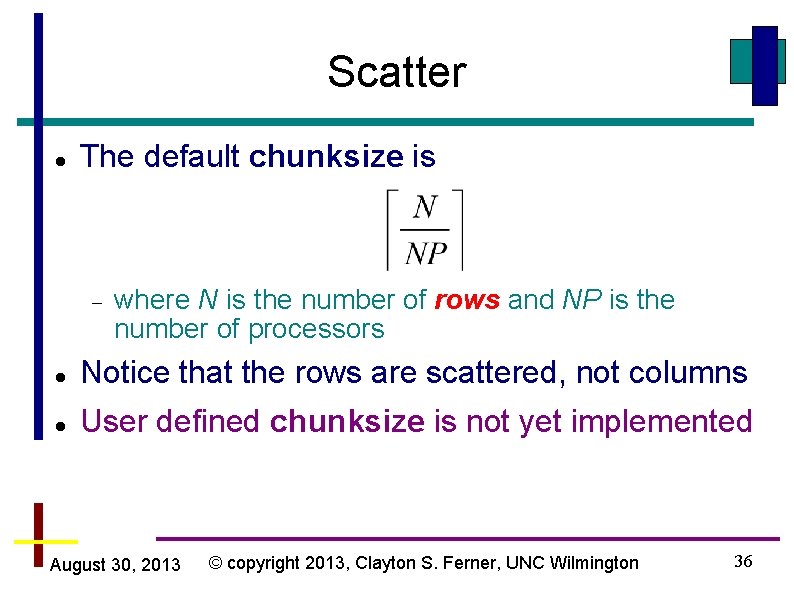

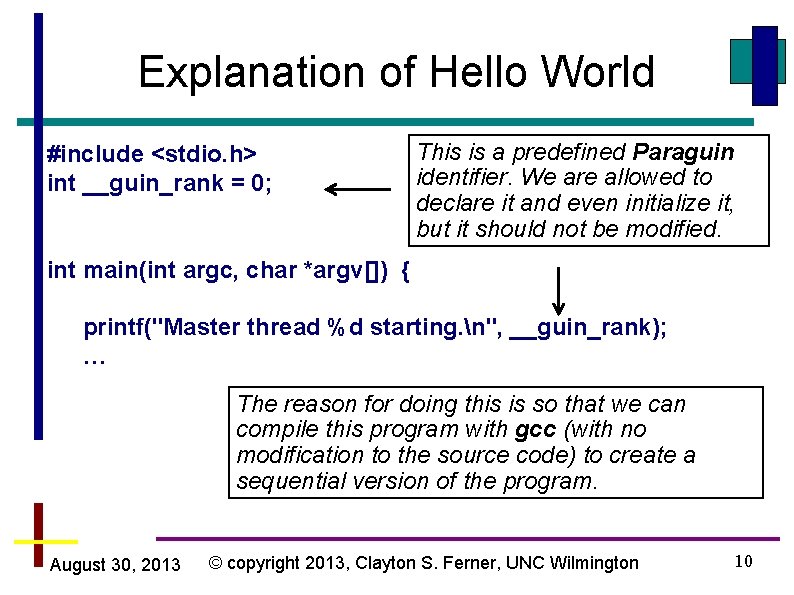

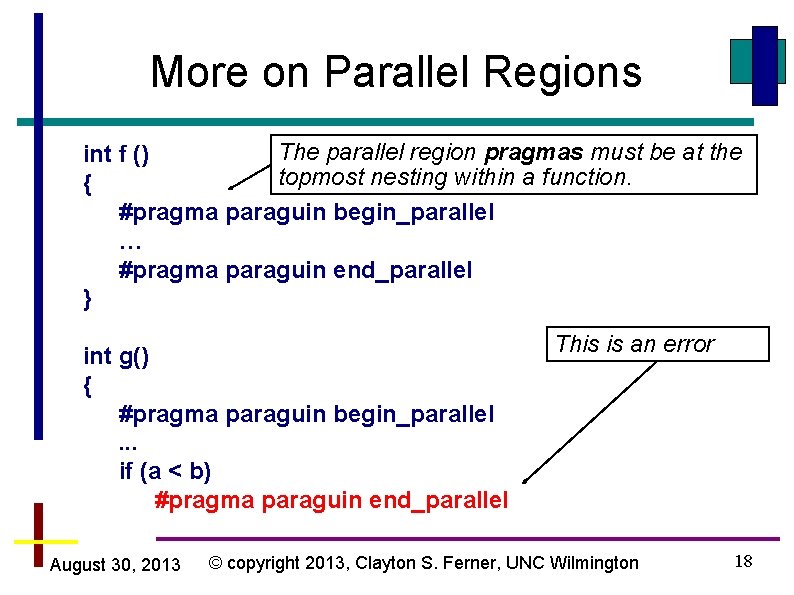

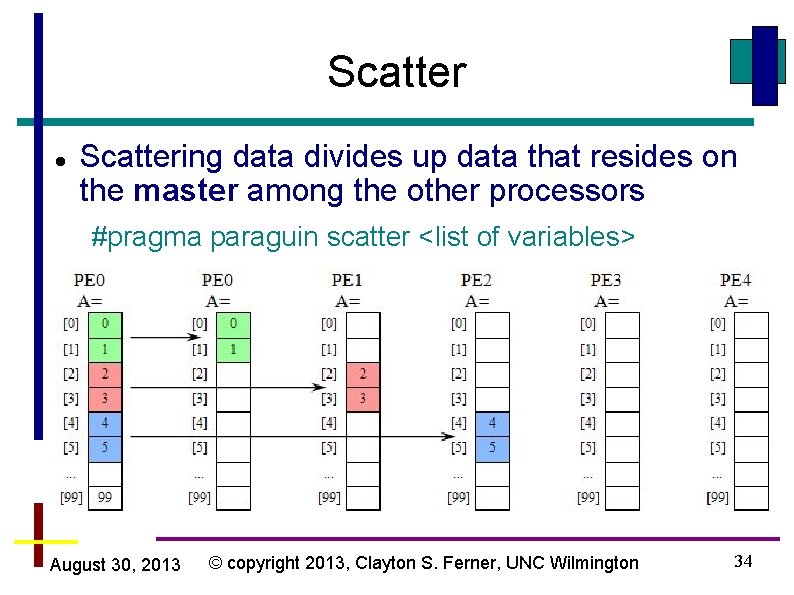

Scatter

Scattering divides up data that resides on the master among the processors:

`#pragma paraguin scatter <list of variables>`

![Scatter void fint A int n int BN Initialize B somehow Scatter void f(int *A, int n) { int B[N]; … // Initialize B somehow](https://slidetodoc.com/presentation_image_h2/ac4370b5813e0445f3db6b6705fdf012/image-35.jpg)

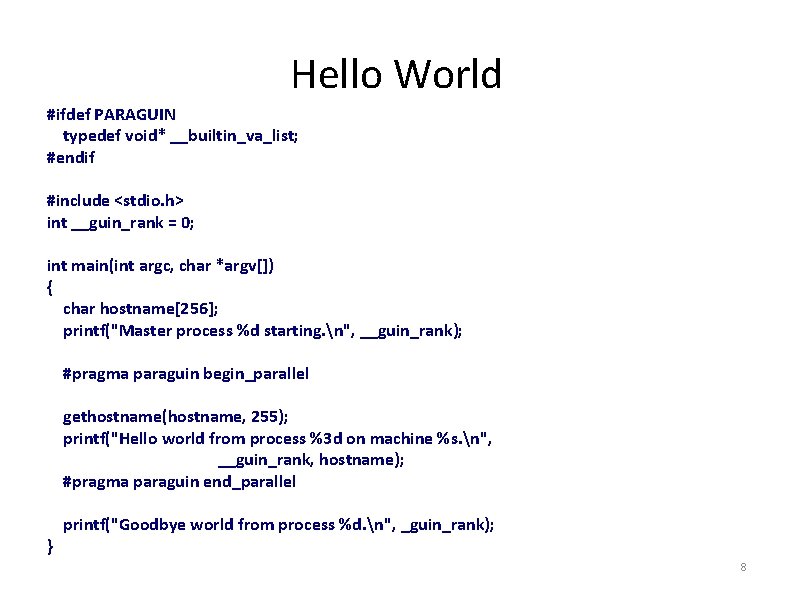

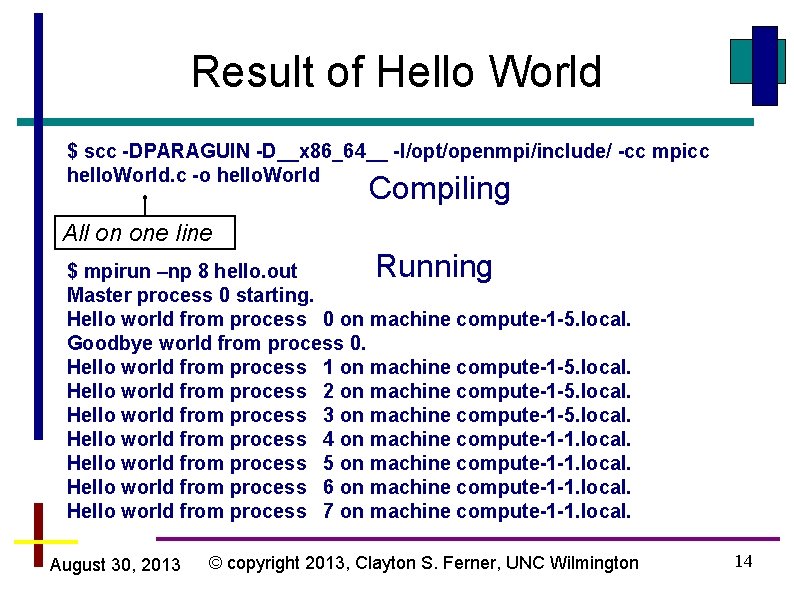

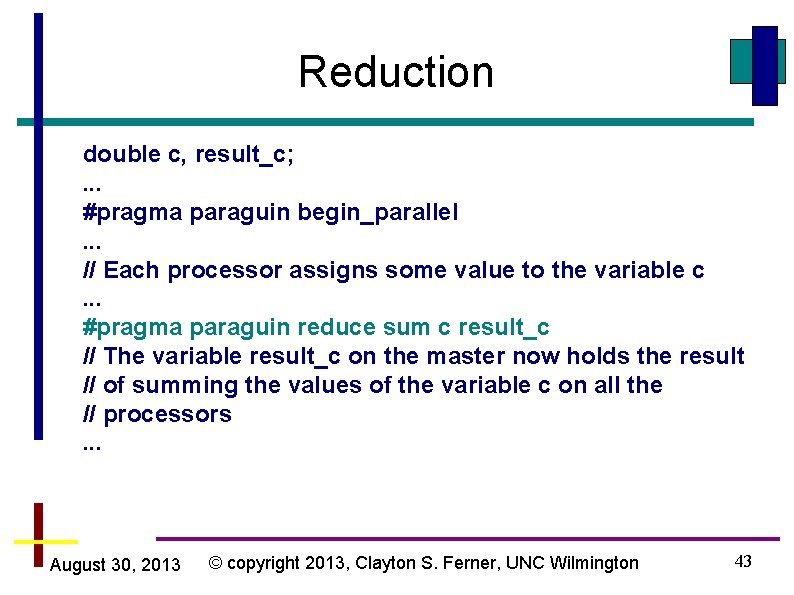

Scatter

void f(int *A, int n)
{
    int B[N];
    … // Initialize B somehow
#pragma paraguin begin_parallel
#pragma paraguin scatter A( n ) B
    ...

The same rule applies to pointers with scatter as with broadcast: the size must be given. Only arrays should be scattered (it makes no sense to scatter a scalar).

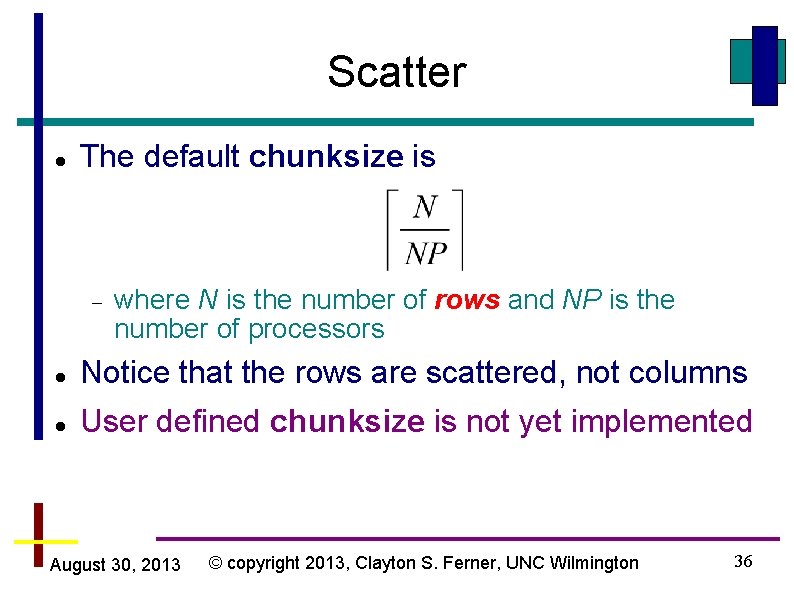

Scatter

The default chunksize is $\lceil N / NP \rceil$, where N is the number of rows and NP is the number of processors. Notice that rows are scattered, not columns. A user-defined chunksize is not yet implemented.
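A single-process simulation of the default row-wise scatter, assuming the ceiling chunksize ⌈N/NP⌉, a fixed column count `M`, and a hypothetical helper `scatter_rows` (not Paraguin's generated code). Because C stores arrays row by row, each process's share of rows is one contiguous block of memory, so a single `memcpy` copies it.

```c
#include <assert.h>
#include <string.h>

#define M 4   /* hypothetical number of columns */

/* Copies into dst the rows of src that process `rank` would receive:
   rows [rank*chunk, min((rank+1)*chunk, nrows)) with
   chunk = ceil(nrows / np). Returns the number of rows copied. */
int scatter_rows(int src[][M], int nrows, int np, int rank, int dst[][M])
{
    int chunk, first, count;
    assert(nrows >= 0 && np > 0 && rank >= 0 && rank < np);
    chunk = (nrows + np - 1) / np;
    first = rank * chunk;
    if (first >= nrows)
        return 0;                        /* no rows left for this rank */
    count = (nrows - first < chunk) ? nrows - first : chunk;
    memcpy(dst, src[first], (size_t)count * sizeof src[0]);
    return count;
}
```

With 6 rows and 4 processes the chunk is 2, so ranks 0 to 2 get two rows each and rank 3 gets none.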

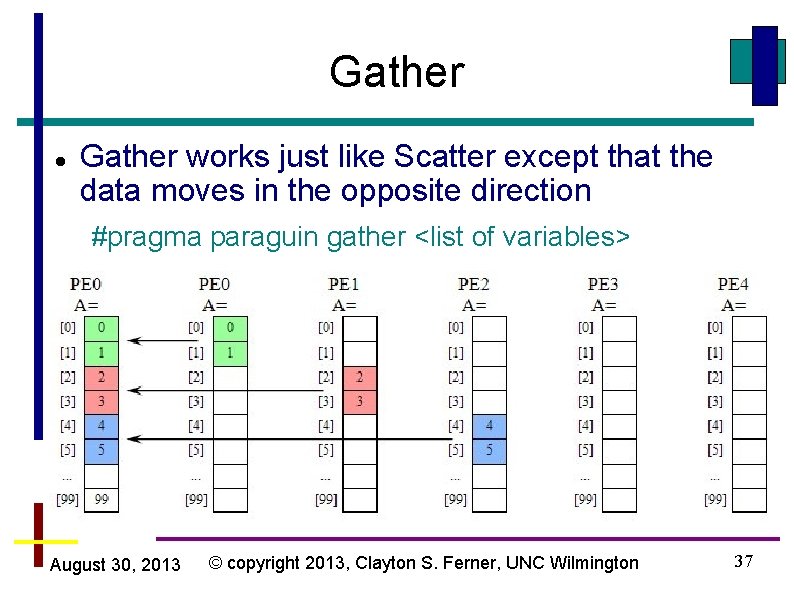

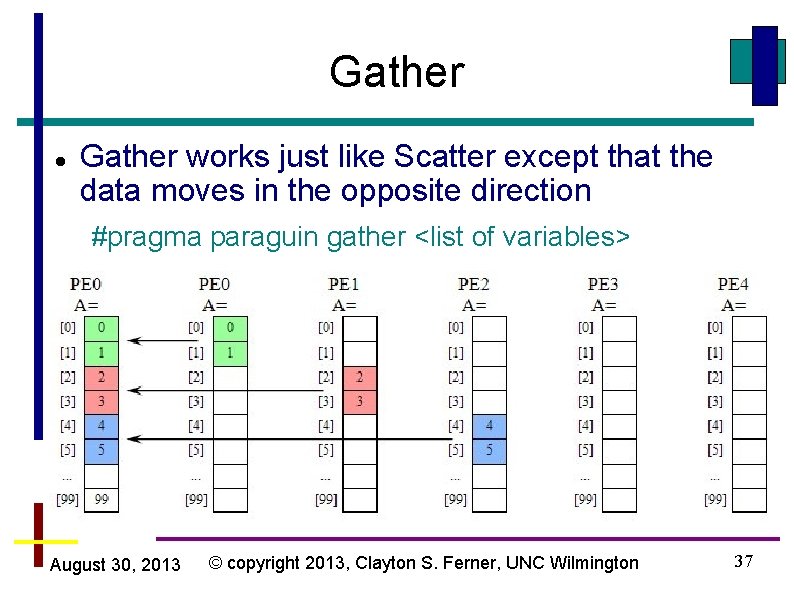

Gather

Gather works just like scatter, except that the data moves in the opposite direction:

`#pragma paraguin gather <list of variables>`

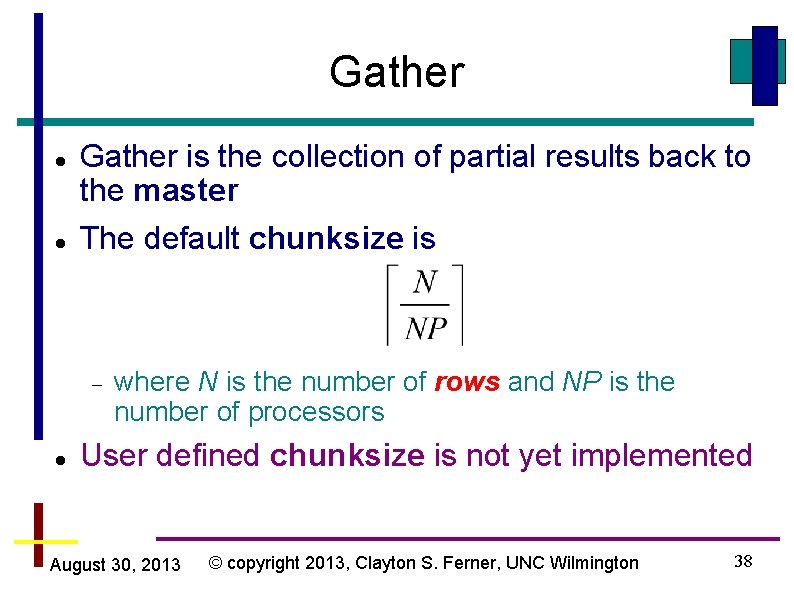

Gather

Gather is the collection of partial results back to the master. The default chunksize is $\lceil N / NP \rceil$, where N is the number of rows and NP is the number of processors. A user-defined chunksize is not yet implemented.
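The reverse direction, again as a single-process simulation under the same assumptions (ceiling chunksize, fixed column count `M`); `gather_rows` is a hypothetical name. The rows held by one process are copied back to their original position in the master's array.

```c
#include <assert.h>
#include <string.h>

#define M 4   /* hypothetical number of columns */

/* Copies the `count` rows held by process `rank` (in part) back into
   the master's array dst, starting at row rank * ceil(nrows / np). */
void gather_rows(int dst[][M], int nrows, int np, int rank,
                 int part[][M], int count)
{
    int chunk, first;
    assert(nrows >= 0 && np > 0 && rank >= 0 && rank < np);
    chunk = (nrows + np - 1) / np;
    first = rank * chunk;
    assert(count >= 0 && count <= chunk);
    assert(count == 0 || first + count <= nrows);
    if (count > 0)
        memcpy(dst[first], part, (size_t)count * sizeof dst[0]);
}
```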

Reduction

A reduction applies a binary commutative operator to a collection of values, producing a single value:

`#pragma paraguin reduce <op> <source> <result>`

where:

- `<op>` is the operator
- `<source>` is the variable with the data to be reduced
- `<result>` is the variable that will hold the answer

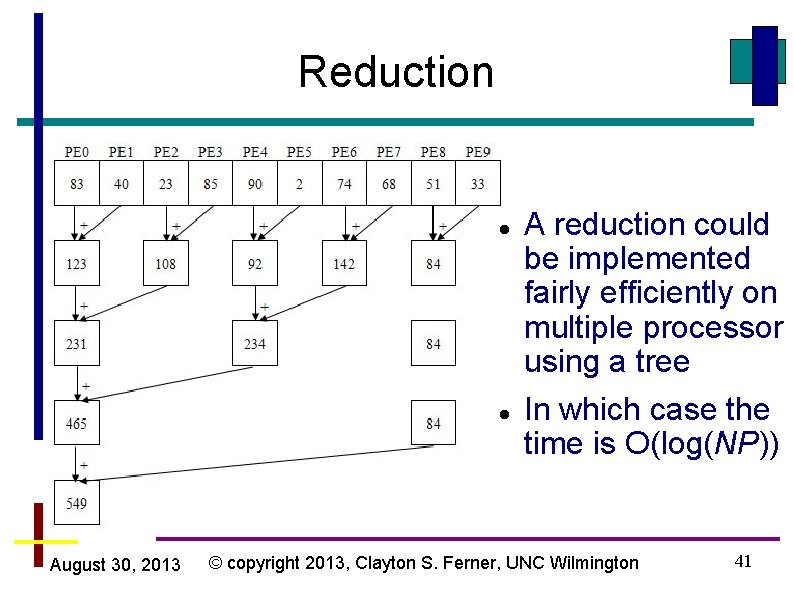

Reduction

For example, applying summation to the values 83, 40, 23, 85, 90, 2, 74, 68, 51, 33 produces the single value 549. MPI does not specify how reduction should be implemented; however, …

Reduction

A reduction can be implemented fairly efficiently on multiple processors using a tree, in which case the time is $O(\log NP)$.
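A sequential simulation of a tree-shaped sum, using the ten values from the previous example; `tree_reduce_sum` is a hypothetical name, and real MPI libraries are free to use a different algorithm. Each round halves the number of partial sums, so np values need ceil(log2(np)) rounds.

```c
#include <assert.h>

/* In each round, position i adds in the partial sum at i + stride, then
   the stride doubles. The additions within a round are independent, so
   on a real machine they would run in parallel. The total ends up in
   v[0] (the master). Returns the number of rounds. */
int tree_reduce_sum(long *v, int np)
{
    int stride, i, rounds = 0;
    assert(v != 0 && np > 0);
    for (stride = 1; stride < np; stride *= 2) {
        for (i = 0; i + stride < np; i += 2 * stride)
            v[i] += v[i + stride];
        rounds++;
    }
    return rounds;
}
```

For the ten values above, this takes 4 rounds and leaves 549 in `v[0]`.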

Reduction

Available operators that can be used in a reduction:

| Operator | Description |
|---|---|
| max | Maximum |
| min | Minimum |
| sum | Summation |
| prod | Product |
| land | Logical and |
| band | Bitwise and |
| lor | Logical or |
| bor | Bitwise or |
| lxor | Logical exclusive or |
| bxor | Bitwise exclusive or |
| maxloc | Maximum and location |
| minloc | Minimum and location |
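To illustrate what `maxloc` computes, here is a sketch assuming the location is the rank holding each value (in MPI the program supplies the location, usually an index). `maxloc` and `val_loc` are hypothetical names; ties go to the smaller location, which is how the MPI standard defines MPI_MAXLOC.

```c
#include <assert.h>

typedef struct {
    double value;   /* the maximum value */
    int loc;        /* where it was found (here: the rank) */
} val_loc;

/* v[r] is the value held by rank r. The strict '>' keeps the lowest
   rank when several ranks hold the same maximum. */
val_loc maxloc(const double *v, int np)
{
    val_loc best;
    int r;
    assert(v != 0 && np > 0);
    best.value = v[0];
    best.loc = 0;
    for (r = 1; r < np; r++) {
        if (v[r] > best.value) {
            best.value = v[r];
            best.loc = r;
        }
    }
    return best;
}
```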

Reduction

double c, result_c;
...
#pragma paraguin begin_parallel
...
// Each processor assigns some value to the variable c
...
#pragma paraguin reduce sum c result_c
// The variable result_c on the master now holds the result
// of summing the values of the variable c on all the
// processors
...

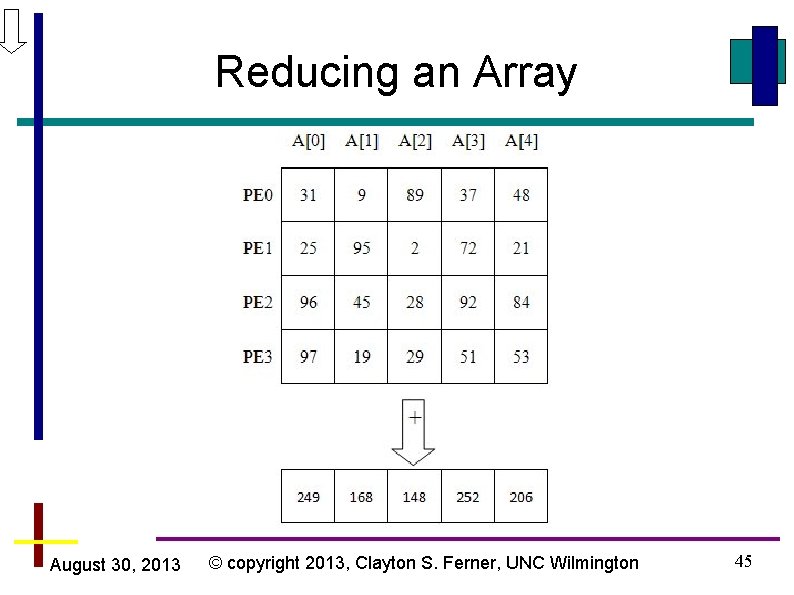

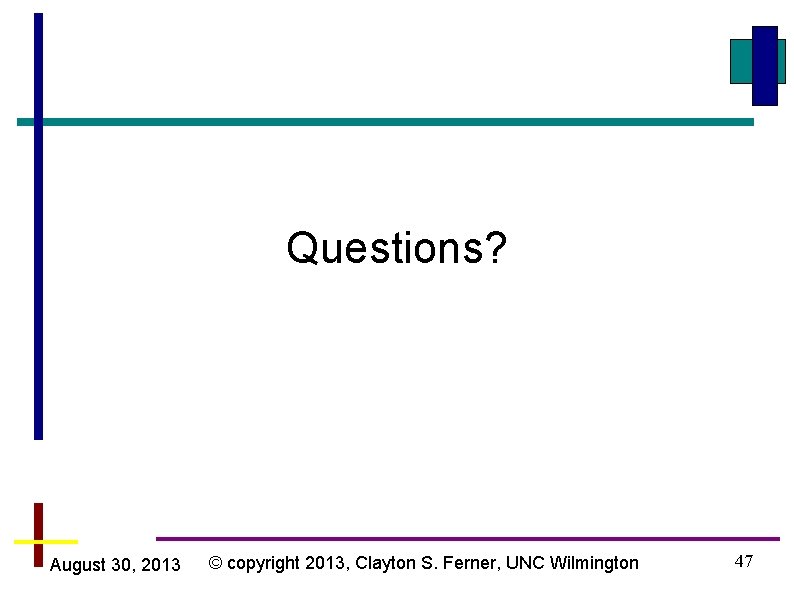

Reducing an Array

When a reduction is applied to an array, the values in the same position of each processor's array are reduced across processors, element by element:

double c[N], result_c[N];
...
#pragma paraguin begin_parallel
...
// Each processor assigns N values to the array c
...
#pragma paraguin reduce sum c result_c
...
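The element-wise behavior can be sketched sequentially, with row p of a 2-D array standing in for processor p's copy of `c`. `reduce_sum_array` and the length `N = 3` are assumptions for illustration only.

```c
#include <assert.h>

#define N 3   /* hypothetical array length */

/* result_c[k] = c[0][k] + c[1][k] + ... + c[np-1][k]:
   each position is summed across processors independently. */
void reduce_sum_array(double c[][N], int np, double result_c[N])
{
    int p, k;
    assert(np > 0);
    for (k = 0; k < N; k++) {
        result_c[k] = 0.0;
        for (p = 0; p < np; p++)
            result_c[k] += c[p][k];
    }
}
```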


Next Topic

Patterns: Scatter/Gather, Stencil

Questions?