Using compiler-directed approach to create MPI code automatically


Paraguin Compiler Patterns

ITCS 4145/5145, Parallel Programming. Clayton Ferner/B. Wilkinson. March 11, 2014. Paragion. Slides 2 abw. ppt

The Paraguin compiler is being developed by Dr. C. Ferner, UNC Wilmington. The following is based upon his slides.

Patterns

As of right now, there are only two patterns implemented in Paraguin:

- Scatter/Gather
- Stencil

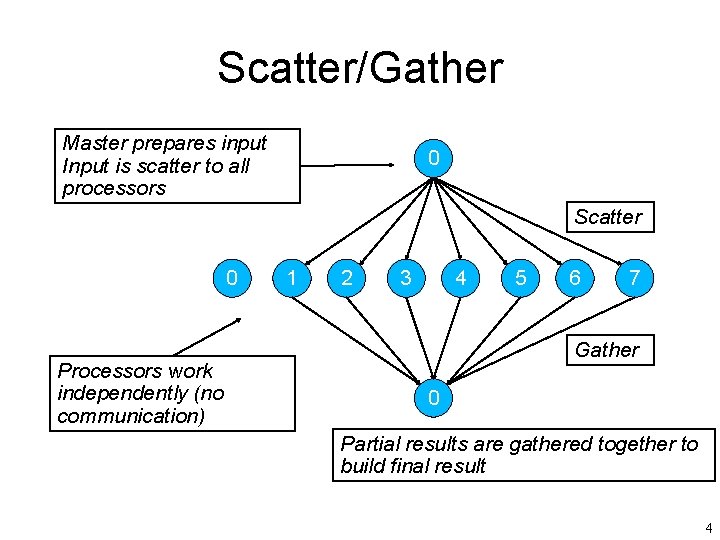

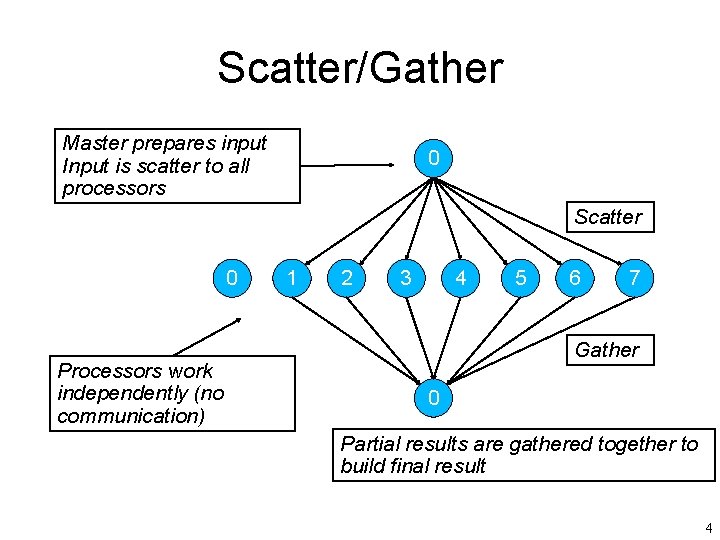

Scatter/Gather

- Master prepares input.
- Scatter: input is scattered from processor 0 to all processors (0 through 7 in the diagram).
- Processors work independently (no communication).
- Gather: partial results are gathered together at processor 0 to build the final result.

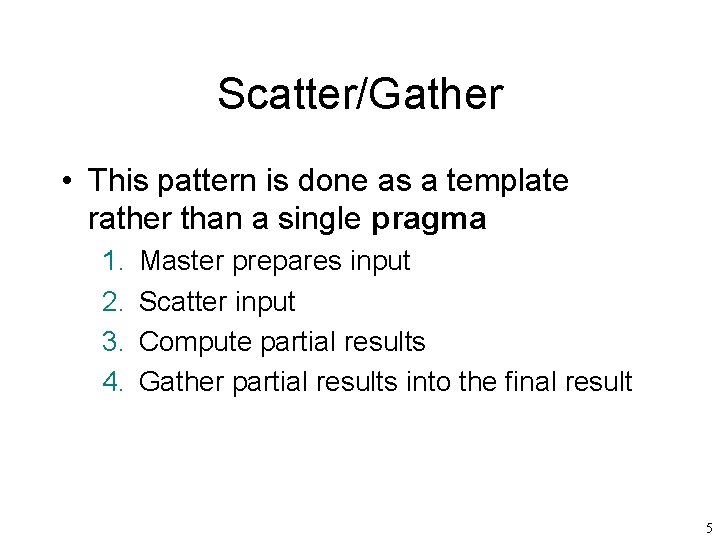

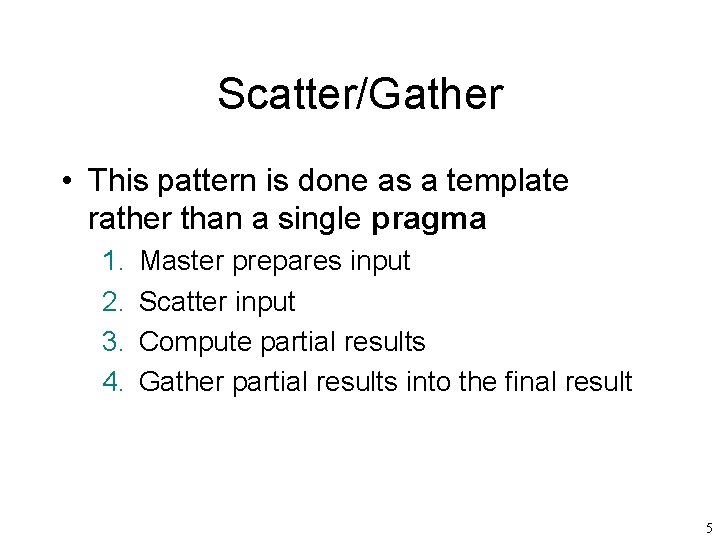

Scatter/Gather

This pattern is done as a template rather than a single pragma:

1. Master prepares input
2. Scatter input
3. Compute partial results
4. Gather partial results into the final result

![Scatter/Gather Example - Matrix Addition](https://slidetodoc.com/presentation_image/e8f3d8dc7fbfd7f5cbdfe1e29ba3c321/image-6.jpg)

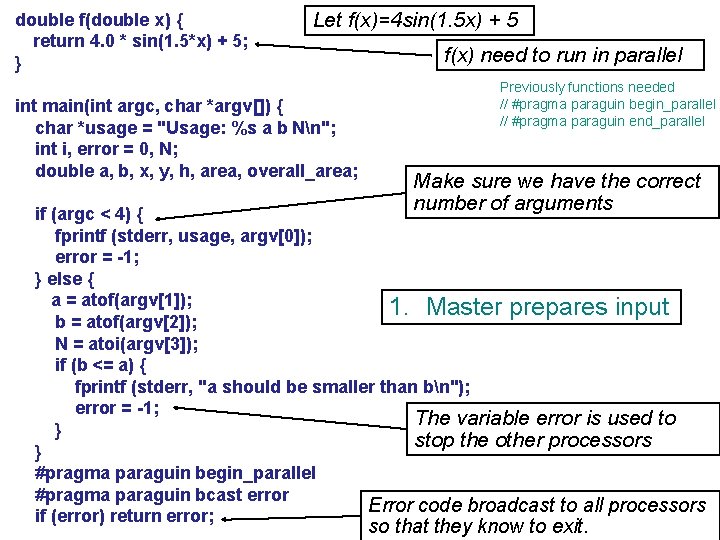

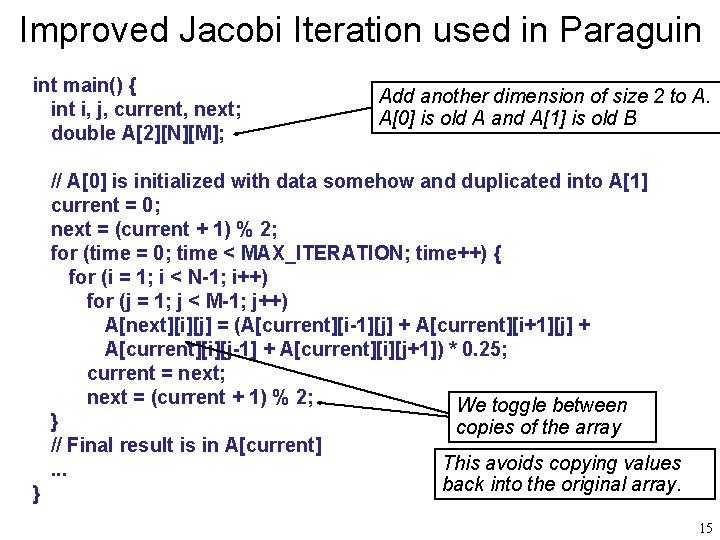

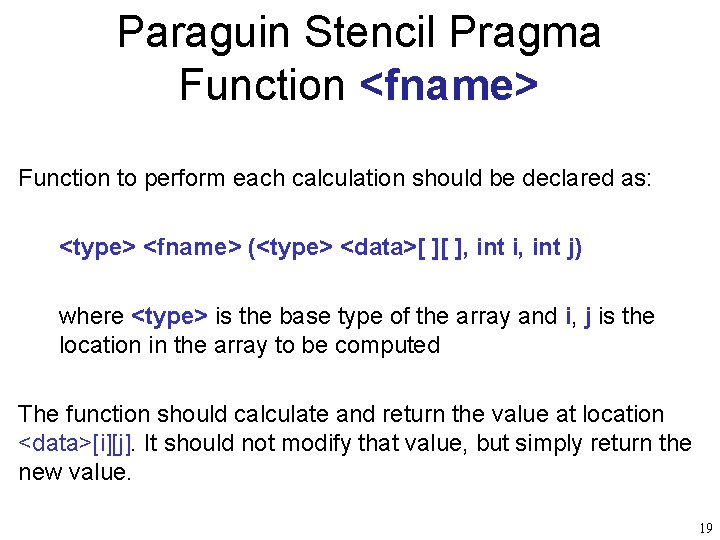

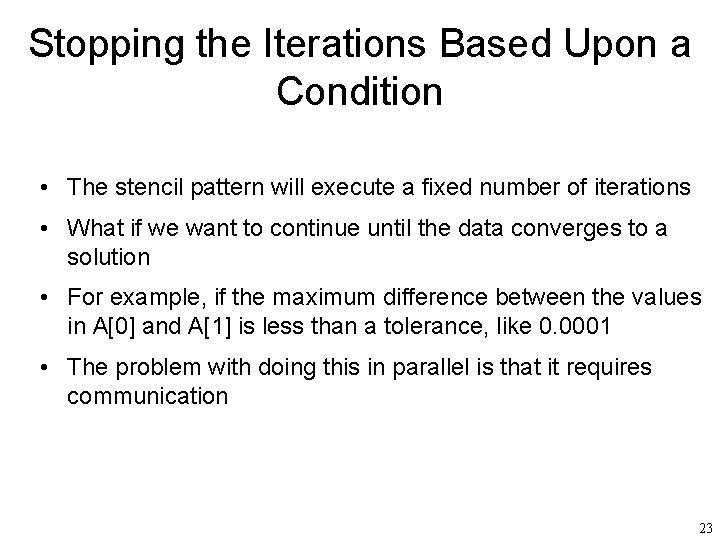

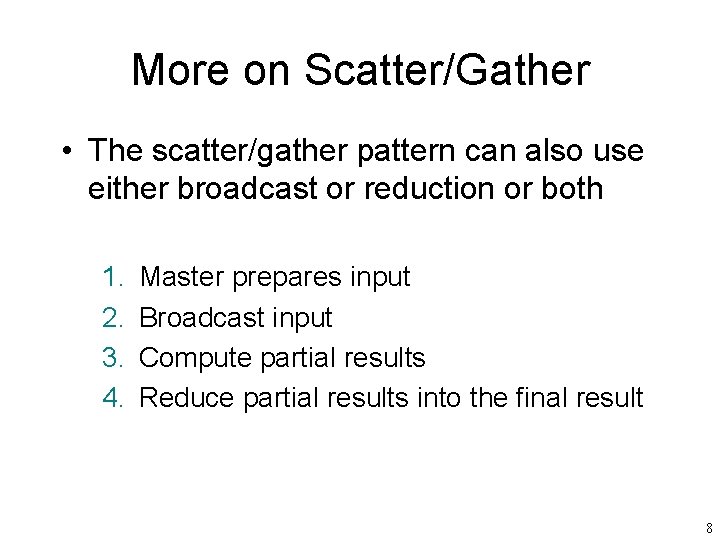

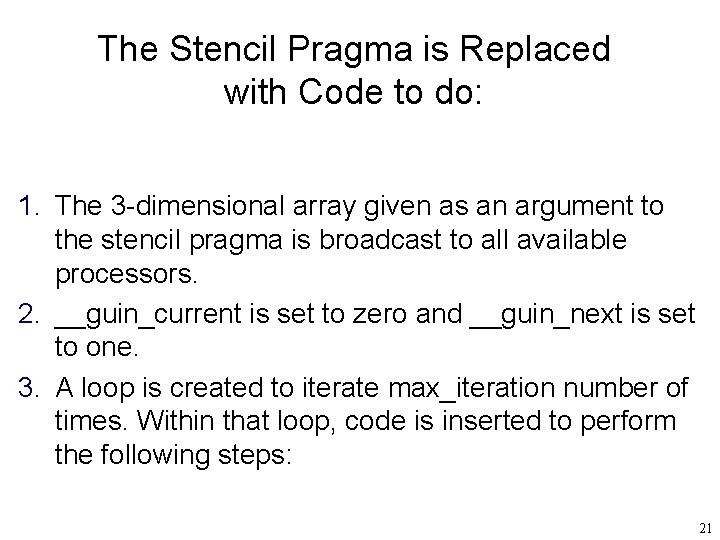

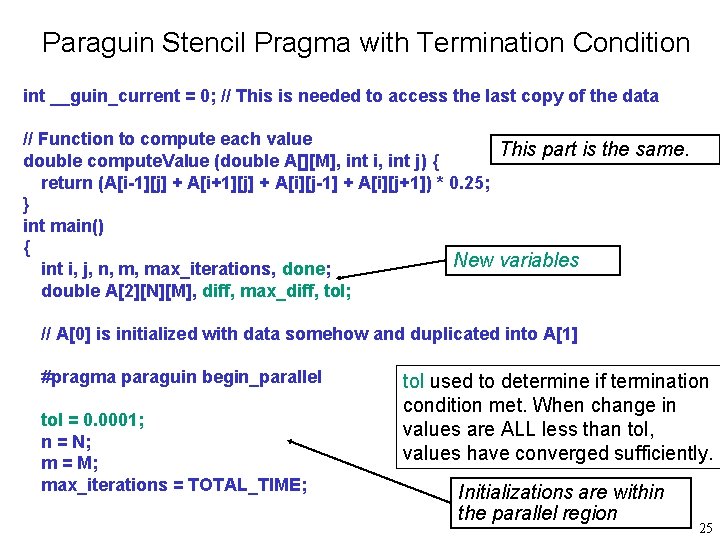

Scatter/Gather Example - Matrix Addition

    int main(int argc, char *argv[])
    {
        int i, j, error = 0;
        double a[N][N], b[N][N], c[N][N];
        char *usage = "Usage: %s file\n";
        FILE *fd;

        // Make sure we have the correct number of arguments
        if (argc < 2) {
            fprintf (stderr, usage, argv[0]);
            error = -1;
        }

        // Make sure we can open the input file
        if (!error && (fd = fopen (argv[1], "r")) == NULL) {
            fprintf (stderr, "%s: Cannot open file %s for reading.\n", argv[0], argv[1]);
            fprintf (stderr, usage, argv[0]);
            error = -1;
        }

        // The variable error is used to stop the other processors.
        #pragma paraguin begin_parallel
        #pragma paraguin bcast error
        if (error) return error;

The error code is broadcast to all processors so that they know to exit. If we just had "return -1" in the above two if statements, then only the master would exit and the workers would not, causing a deadlock.

Scatter/Gather Example - Matrix Addition (continued)

        // 1. Master prepares input
        for (i = 0; i < N; i++)
            for (j = 0; j < N; j++)
                fscanf (fd, "%lf", &a[i][j]);

        for (i = 0; i < N; i++)
            for (j = 0; j < N; j++)
                fscanf (fd, "%lf", &b[i][j]);

        fclose(fd);

        #pragma paraguin begin_parallel

        // 2. Scatter input
        #pragma paraguin scatter a b

        // 3. Compute partial results
        // Parallelize loop nest assigning iterations
        // of outermost loop (i) to different partitions.
        #pragma paraguin forall
        for (i = 0; i < N; i++) {
            for (j = 0; j < N; j++) {
                c[i][j] = a[i][j] + b[i][j];
            }
        }

        ;   // Semicolon to prevent gather pragma from being placed INSIDE above for loop nest.

        // 4. Gather partial results into the final result
        #pragma paraguin gather c

        #pragma paraguin end_parallel
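How the iterations of the outermost loop might be divided among processors can be sketched in plain C. This is only an illustration under stated assumptions: the contiguous block partitioning, the helper names `block_range` and `add_rows`, and their parameters are hypothetical and are not taken from Paraguin itself.

```c
/* Hypothetical helper: compute the half-open range [*lo, *hi) of outer-loop
 * iterations given to processor `rank` out of `nprocs`, when `n` iterations
 * are split into contiguous blocks of ceil(n / nprocs) iterations. */
void block_range(int n, int nprocs, int rank, int *lo, int *hi)
{
    int chunk = (n + nprocs - 1) / nprocs;   /* ceiling division */
    *lo = rank * chunk;
    *hi = *lo + chunk;
    if (*lo > n) *lo = n;                    /* trailing ranks may get nothing */
    if (*hi > n) *hi = n;
}

/* Matrix addition restricted to rows lo..hi-1, i.e. the partial result one
 * processor would compute. Matrices are n x n, stored row by row. */
void add_rows(int n, const double *a, const double *b, double *c, int lo, int hi)
{
    for (int i = lo; i < hi; i++)
        for (int j = 0; j < n; j++)
            c[i * n + j] = a[i * n + j] + b[i * n + j];
}
```

Calling `block_range` once per rank and then `add_rows` with the returned range covers every row exactly once, which is what allows the partial results to be gathered into one matrix.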

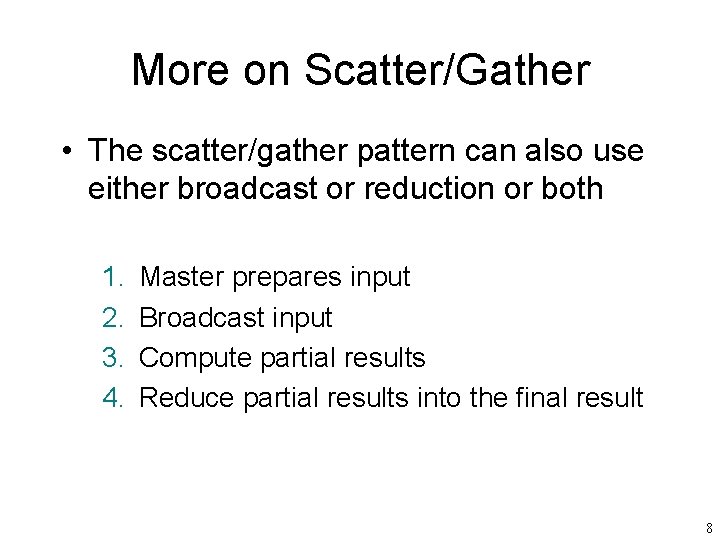

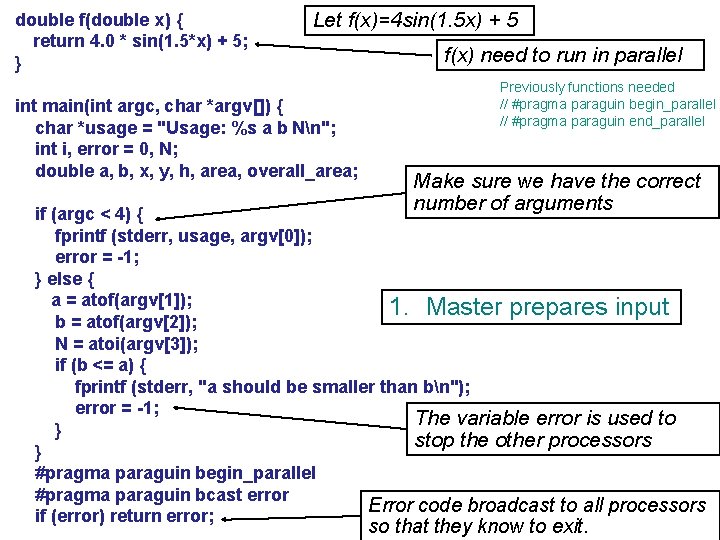

More on Scatter/Gather

The scatter/gather pattern can also use either broadcast or reduction or both:

1. Master prepares input
2. Broadcast input
3. Compute partial results
4. Reduce partial results into the final result

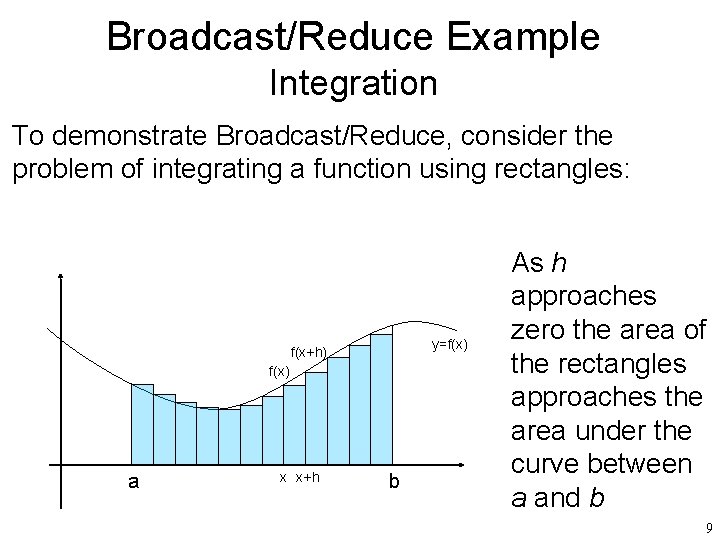

Broadcast/Reduce Example - Integration

To demonstrate Broadcast/Reduce, consider the problem of integrating a function using rectangles.

[Figure: the curve y = f(x) between a and b, with one rectangle of width h drawn from x to x+h; the heights f(x) and f(x+h) are marked.]

As h approaches zero, the area of the rectangles approaches the area under the curve between a and b.
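Written out, the rectangle approximation is the sum below. It assumes $N$ rectangles of equal width $h$ whose heights are taken at their left edges; $N$ is a symbol introduced here for the number of rectangles.

$$
\int_a^b f(x)\,dx \;\approx\; \sum_{i=0}^{N-1} f(a + i\,h)\,h, \qquad h = \frac{b-a}{N}
$$

Each term of the sum is independent of the others, which is why the rectangles can be divided among processors and the partial sums added together afterwards.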

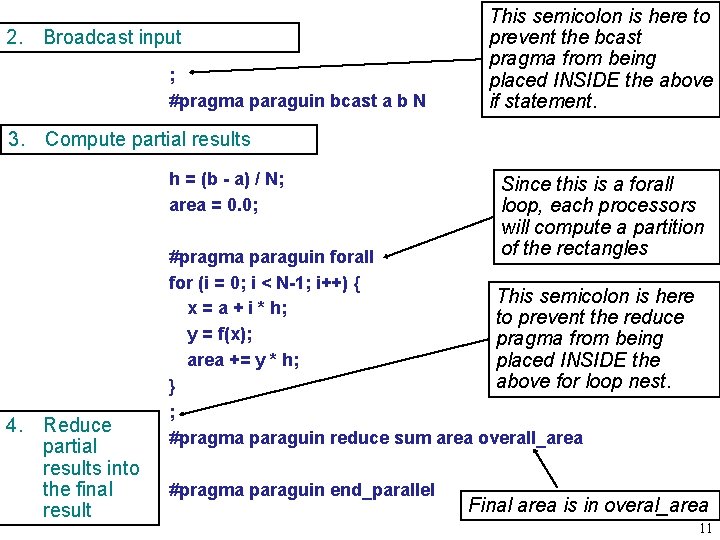

Let f(x) = 4 sin(1.5x) + 5. f(x) needs to run in parallel. Previously, functions needed the pragmas "#pragma paraguin begin_parallel" and "#pragma paraguin end_parallel".

    double f(double x)
    {
        return 4.0 * sin(1.5*x) + 5;
    }

    int main(int argc, char *argv[])
    {
        char *usage = "Usage: %s a b N\n";
        int i, error = 0, N;
        double a, b, x, y, h, area, overall_area;

        // Make sure we have the correct number of arguments
        if (argc < 4) {
            fprintf (stderr, usage, argv[0]);
            error = -1;
        } else {
            // 1. Master prepares input
            a = atof(argv[1]);
            b = atof(argv[2]);
            N = atoi(argv[3]);
            if (b <= a) {
                fprintf (stderr, "a should be smaller than b\n");
                error = -1;
            }
        }

        // The variable error is used to stop the other processors.
        #pragma paraguin begin_parallel

        // Error code is broadcast to all processors so that they know to exit.
        #pragma paraguin bcast error
        if (error) return error;

Broadcast/Reduce Example - Integration (continued)

        ;   // This semicolon is here to prevent the bcast pragma from
            // being placed INSIDE the above if statement.

        // 2. Broadcast input
        #pragma paraguin bcast a b N

        // 3. Compute partial results
        h = (b - a) / N;
        area = 0.0;

        // Since this is a forall loop, each processor will compute
        // a partition of the rectangles. There are N rectangles of width h.
        #pragma paraguin forall
        for (i = 0; i < N; i++) {
            x = a + i * h;
            y = f(x);
            area += y * h;
        }

        ;   // This semicolon is here to prevent the reduce pragma from
            // being placed INSIDE the above for loop nest.

        // 4. Reduce partial results into the final result
        #pragma paraguin reduce sum area overall_area

        #pragma paraguin end_parallel

        // Final area is in overall_area
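The effect of the forall loop followed by a sum reduction can be imitated in plain serial C, as a rough sketch. Everything named here is hypothetical: `sample_f` is a polynomial stand-in for the slides' integrand (chosen so that no math library is needed), and the block partitioning inside `simulated_reduce` is an assumption rather than Paraguin's documented behaviour.

```c
/* Hypothetical stand-in integrand; the slides use 4*sin(1.5*x) + 5. */
double sample_f(double x)
{
    return 3.0 * x * x;
}

/* Area of rectangles lo..hi-1: the share of the forall loop that one
 * processor would execute. */
double partial_area(double (*f)(double), double a, double h, int lo, int hi)
{
    double area = 0.0;
    for (int i = lo; i < hi; i++)
        area += f(a + i * h) * h;
    return area;
}

/* Simulate nprocs processors, each summing a contiguous block of the
 * n rectangles, followed by a sum reduction of the partial areas. */
double simulated_reduce(double (*f)(double), double a, double b, int n, int nprocs)
{
    double h = (b - a) / n;
    double overall_area = 0.0;
    int chunk = (n + nprocs - 1) / nprocs;    /* assumed block partitioning */

    for (int rank = 0; rank < nprocs; rank++) {
        int lo = rank * chunk;
        int hi = lo + chunk;
        if (lo > n) lo = n;
        if (hi > n) hi = n;
        overall_area += partial_area(f, a, h, lo, hi);   /* reduce: sum */
    }
    return overall_area;
}
```

For example, `simulated_reduce(sample_f, 0.0, 1.0, 1000, 4)` adds four partial areas and agrees, up to floating-point rounding, with a single call to `partial_area` over all 1000 rectangles.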

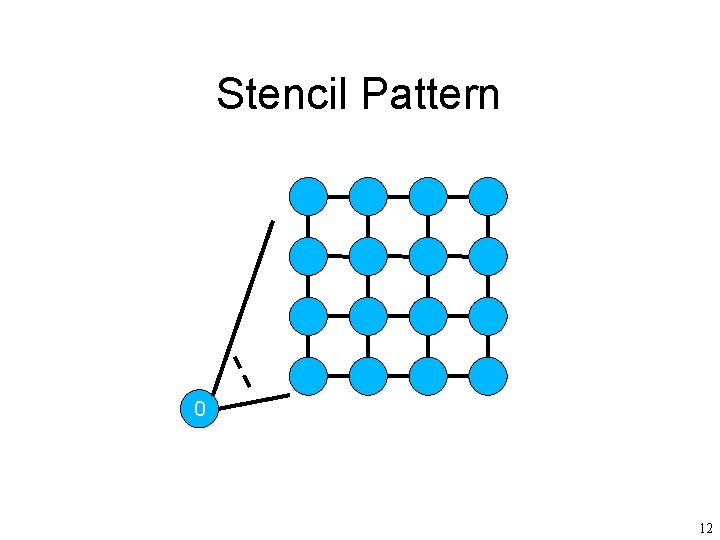

Stencil Pattern

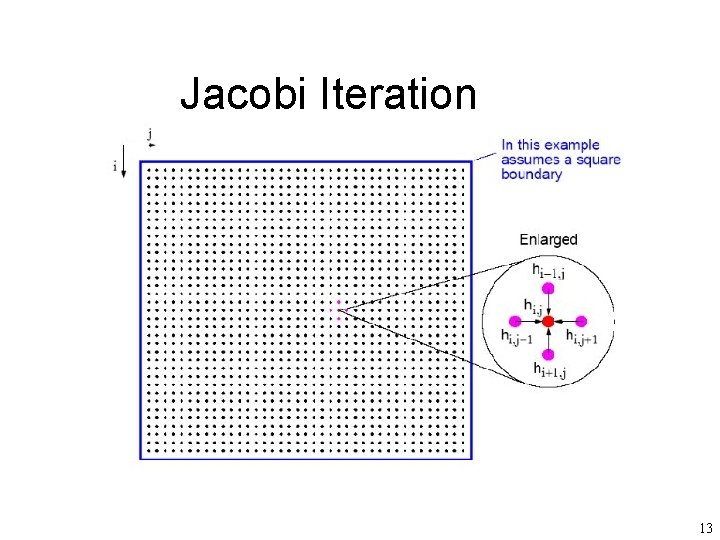

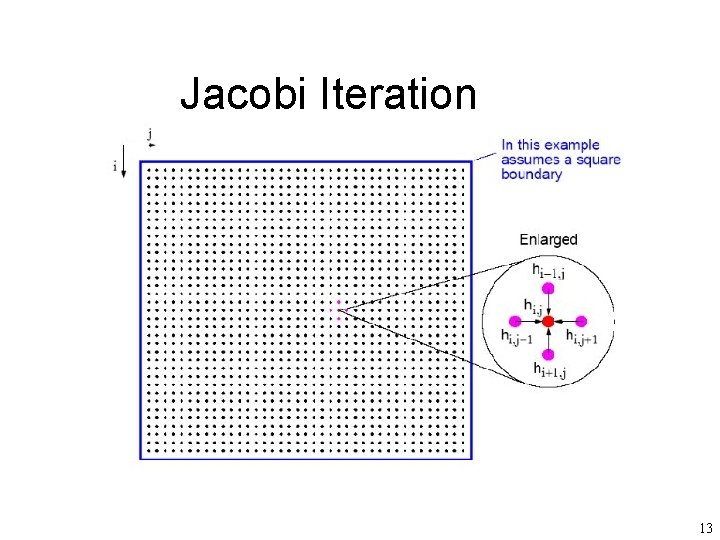

Jacobi Iteration

![Basic Jacobi Iteration](https://slidetodoc.com/presentation_image/e8f3d8dc7fbfd7f5cbdfe1e29ba3c321/image-14.jpg)

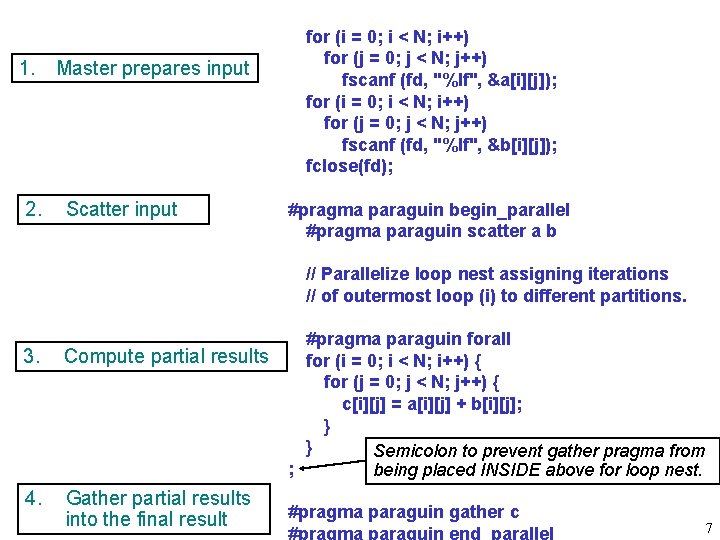

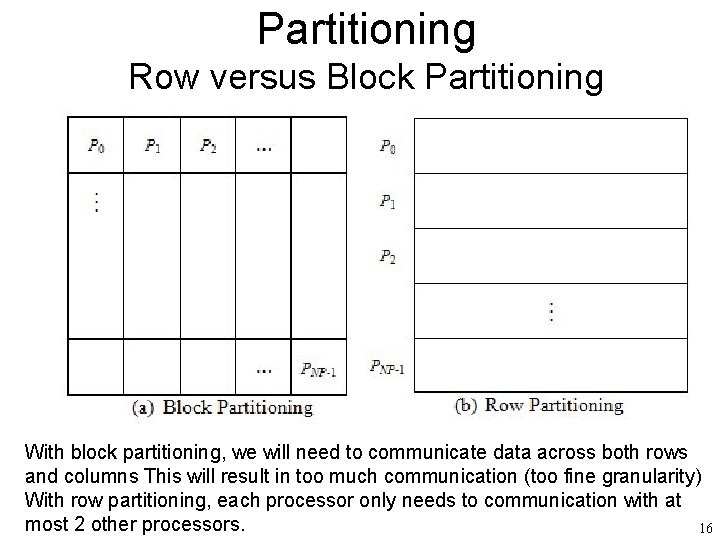

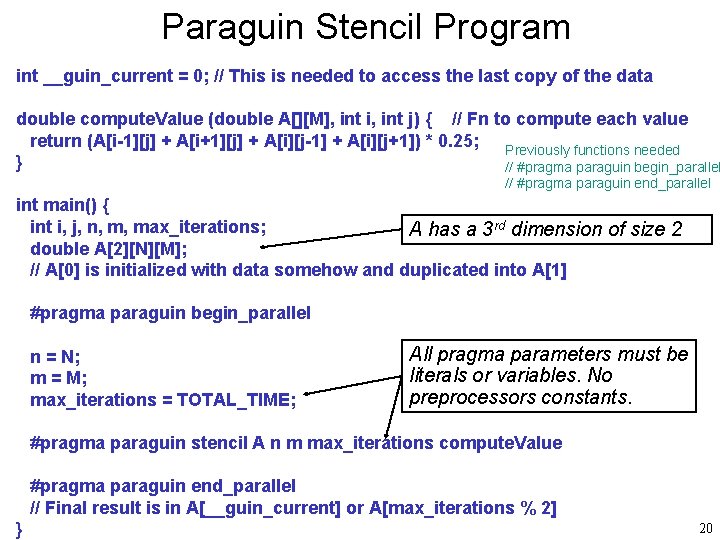

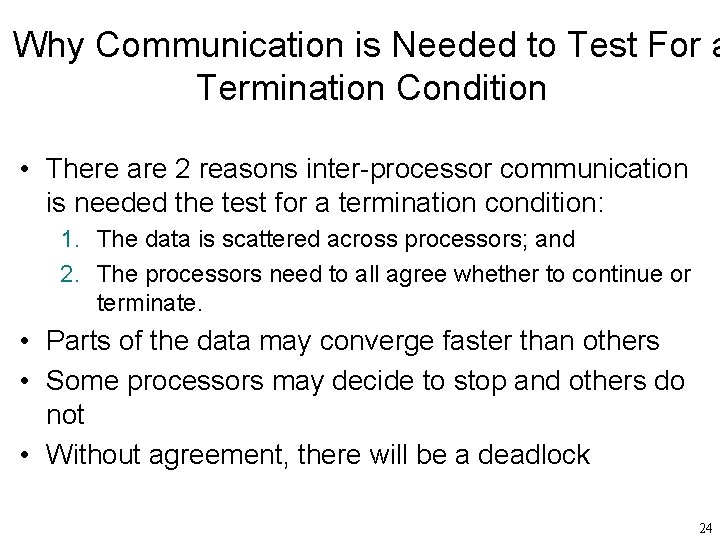

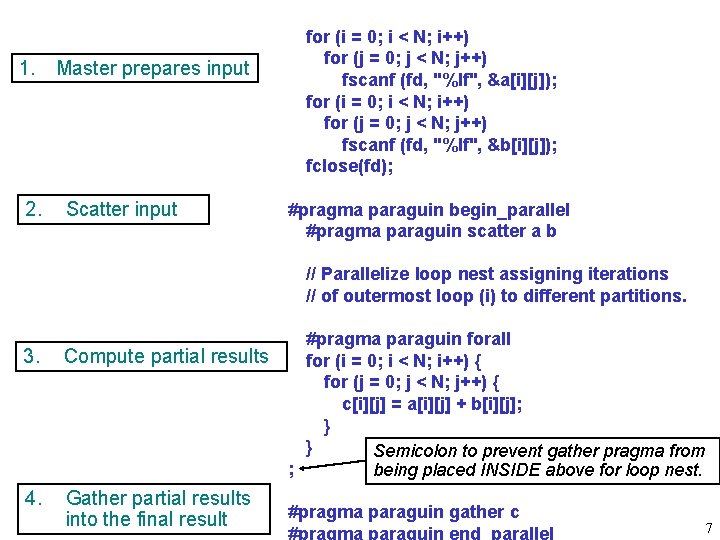

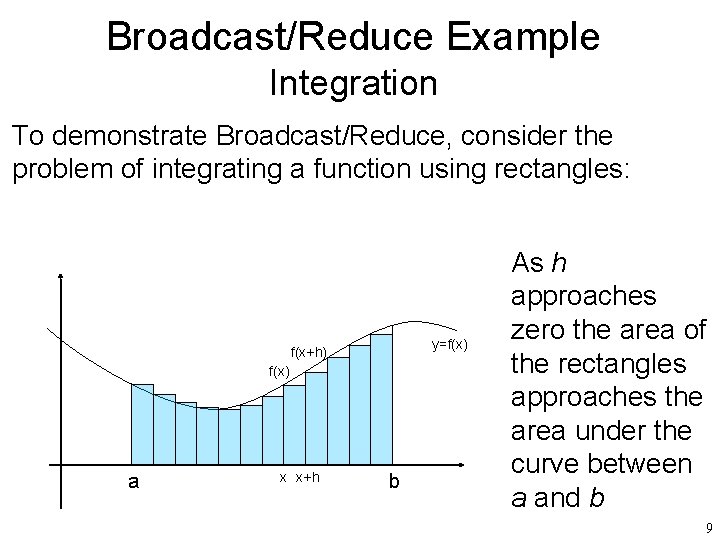

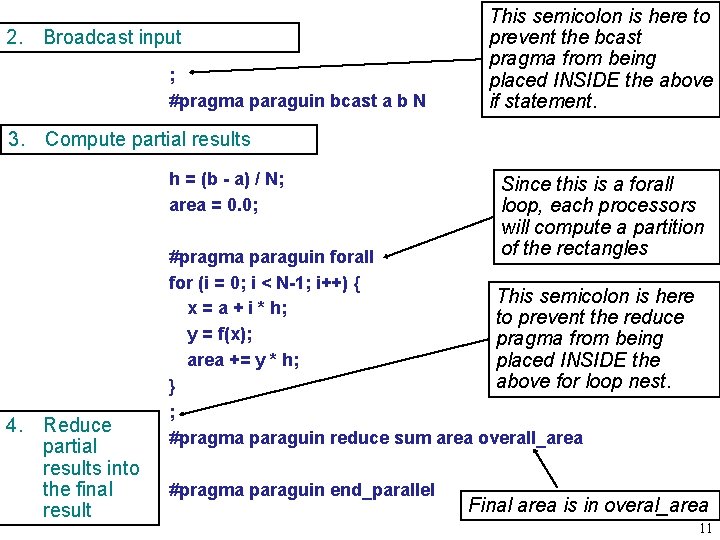

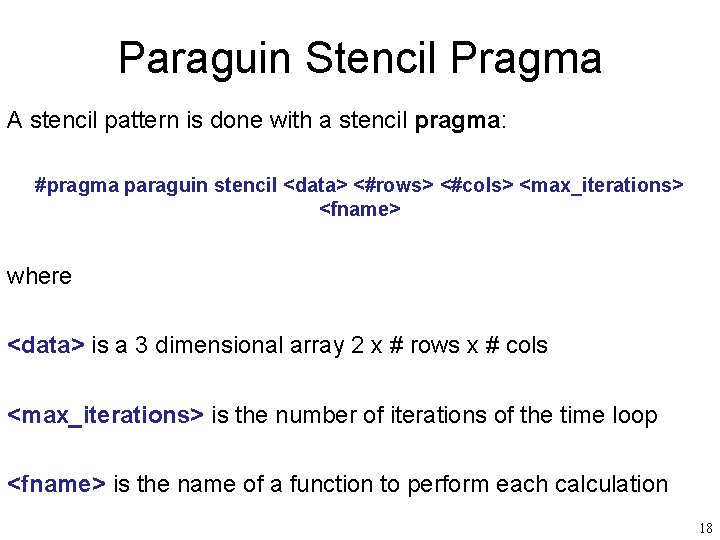

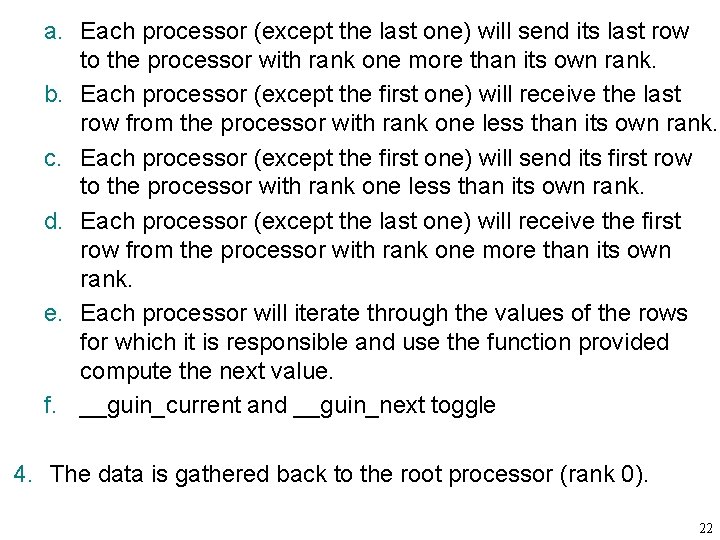

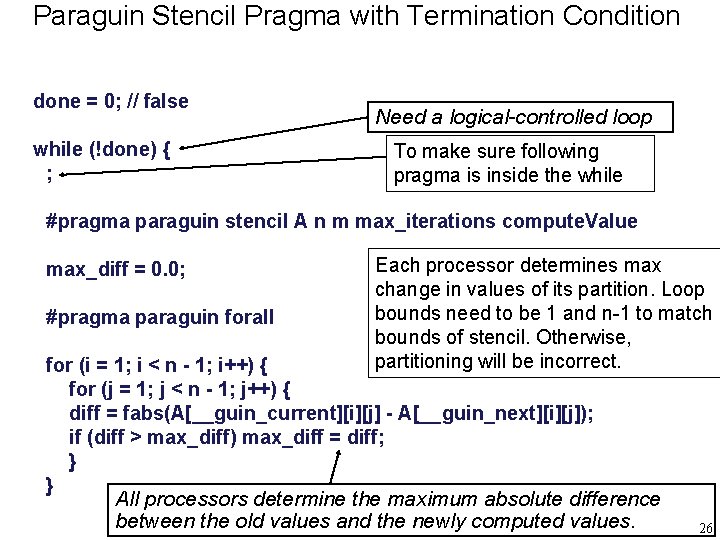

Basic Jacobi Iteration

    int main()
    {
        int i, j, time;
        double A[N][M], B[N][M];

        // A is initialized with data somehow

        for (time = 0; time < MAX_ITERATION; time++) {
            // Skip the boundary values
            for (i = 1; i < N-1; i++)
                for (j = 1; j < M-1; j++)
                    // Newly computed values are placed in a new array.
                    // Multiplying by 0.25 is faster than dividing by 4.0
                    B[i][j] = (A[i-1][j] + A[i+1][j] + A[i][j-1] + A[i][j+1]) * 0.25;

            // Then copied back to the original.
            for (i = 1; i < N-1; i++)
                for (j = 1; j < M-1; j++)
                    A[i][j] = B[i][j];
        }
        ...
    }

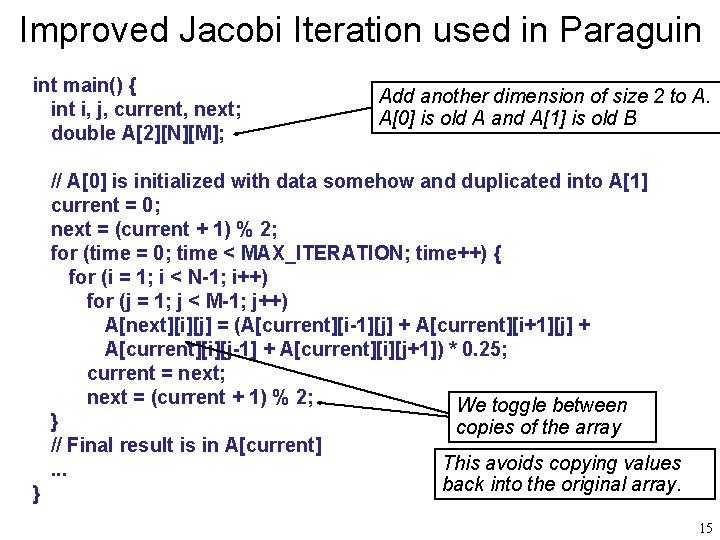

Improved Jacobi Iteration used in Paraguin

    int main()
    {
        int i, j, time, current, next;
        double A[2][N][M];   // Add another dimension of size 2 to A.
                             // A[0] is old A and A[1] is old B

        // A[0] is initialized with data somehow and duplicated into A[1]

        current = 0;
        next = (current + 1) % 2;

        for (time = 0; time < MAX_ITERATION; time++) {
            for (i = 1; i < N-1; i++)
                for (j = 1; j < M-1; j++)
                    A[next][i][j] = (A[current][i-1][j] + A[current][i+1][j] +
                                     A[current][i][j-1] + A[current][i][j+1]) * 0.25;

            // We toggle between copies of the array
            current = next;
            next = (current + 1) % 2;
        }

        // Final result is in A[current]
        // This avoids copying values back into the original array.
        ...
    }
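The toggling scheme can be tried on a small grid with the self-contained sketch below. The grid size, the function names `jacobi_toggle` and `init_grid`, and the boundary values are all made up for illustration. The function returns the index of the copy that holds the final result, which equals the iteration count modulo 2 when the loop always runs to completion.

```c
#define ROWS 5   /* hypothetical grid size */
#define COLS 5

/* Run `iterations` Jacobi sweeps, toggling between A[0] and A[1].
 * A[0] and A[1] must start out identical so that both copies carry the
 * fixed boundary values. Returns the index of the copy with the result. */
int jacobi_toggle(double A[2][ROWS][COLS], int iterations)
{
    int current = 0, next = 1;

    for (int t = 0; t < iterations; t++) {
        for (int i = 1; i < ROWS - 1; i++)
            for (int j = 1; j < COLS - 1; j++)
                A[next][i][j] = (A[current][i-1][j] + A[current][i+1][j] +
                                 A[current][i][j-1] + A[current][i][j+1]) * 0.25;
        current = next;
        next = (current + 1) % 2;
    }
    return current;
}

/* Hypothetical setup: boundary fixed at 1.0, interior starts at 0.0,
 * written into both copies. */
void init_grid(double A[2][ROWS][COLS])
{
    for (int k = 0; k < 2; k++)
        for (int i = 0; i < ROWS; i++)
            for (int j = 0; j < COLS; j++)
                A[k][i][j] = (i == 0 || j == 0 ||
                              i == ROWS - 1 || j == COLS - 1) ? 1.0 : 0.0;
}
```

After one sweep on this grid, the interior point next to a corner has two boundary neighbours at 1.0 and two interior neighbours at 0.0, so its new value is 0.5, while the centre point is still 0.0.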

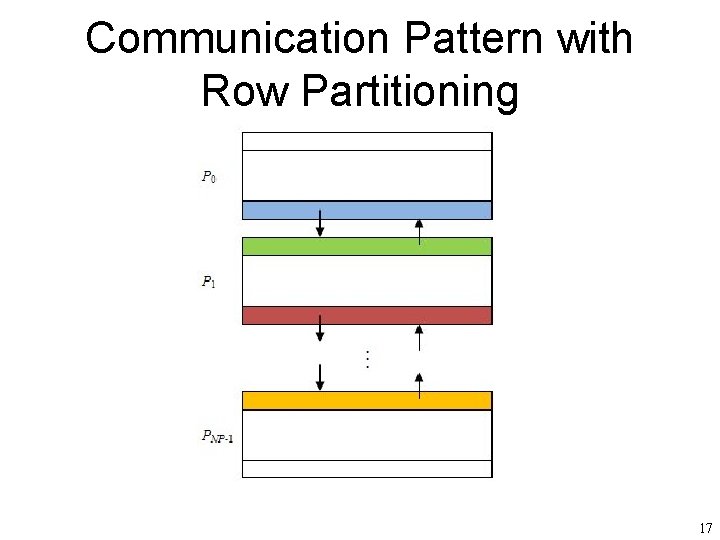

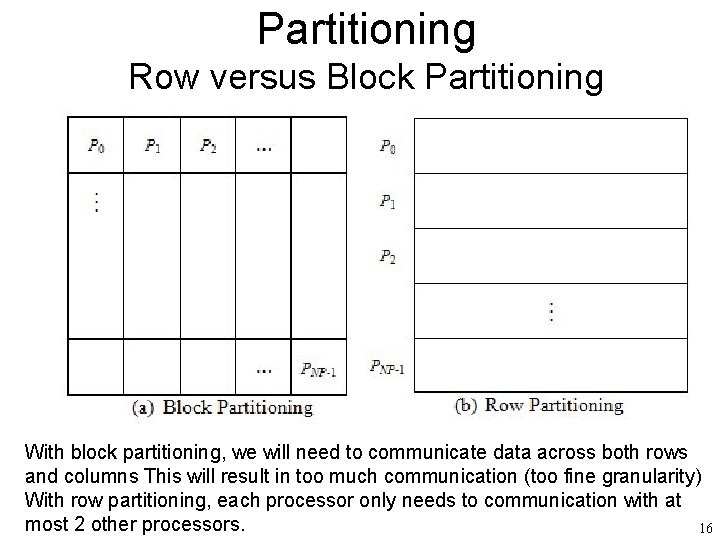

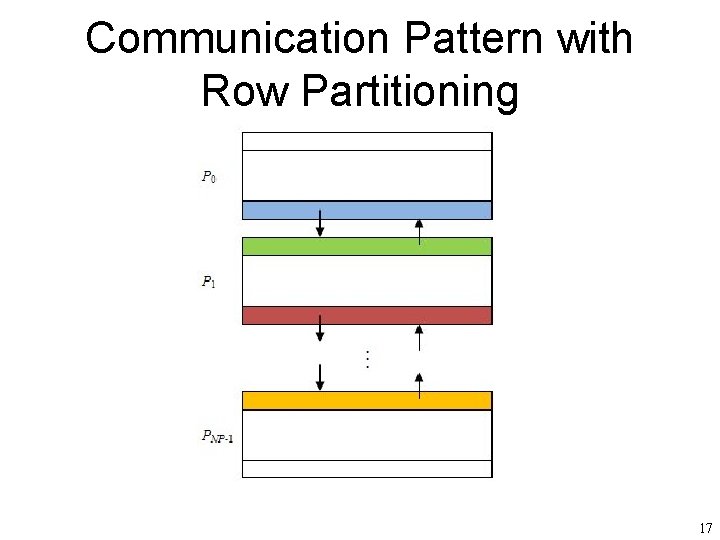

Partitioning: Row versus Block Partitioning

- With block partitioning, we will need to communicate data across both rows and columns. This will result in too much communication (too fine granularity).
- With row partitioning, each processor only needs to communicate with at most 2 other processors.
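The difference in the number of communication partners can be counted with a short sketch. The function names and the row-major layout of ranks on a `pr` x `pc` processor grid are assumptions made for this example only.

```c
/* Number of processors a rank exchanges boundary rows with under row
 * partitioning: the rank above and the rank below, where they exist. */
int row_neighbors(int rank, int nprocs)
{
    return (rank > 0) + (rank < nprocs - 1);
}

/* Same count for block partitioning on a hypothetical pr x pc processor
 * grid (ranks laid out row by row) with a 4-point stencil: a block may
 * need data from the blocks above, below, left and right. */
int block_neighbors(int rank, int pr, int pc)
{
    int r = rank / pc;   /* row of this block in the processor grid */
    int c = rank % pc;   /* column of this block in the processor grid */
    return (r > 0) + (r < pr - 1) + (c > 0) + (c < pc - 1);
}
```

With 9 processors, `row_neighbors` never exceeds 2, while the centre block of a 3 x 3 arrangement has 4 partners.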

Communication Pattern with Row Partitioning

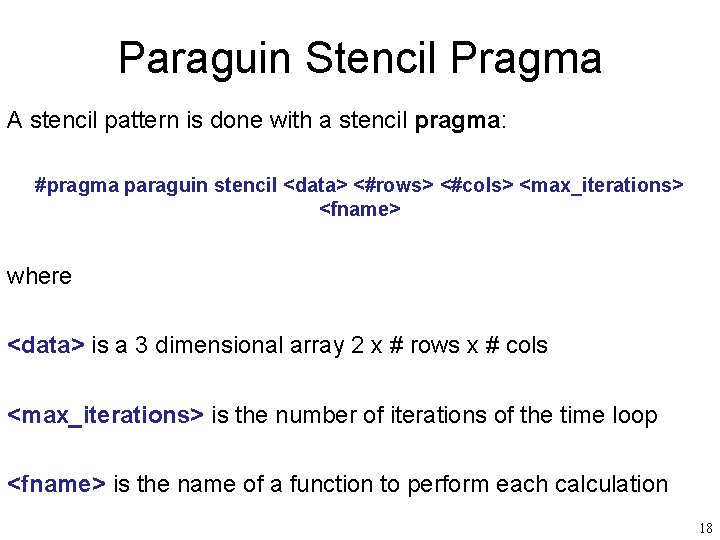

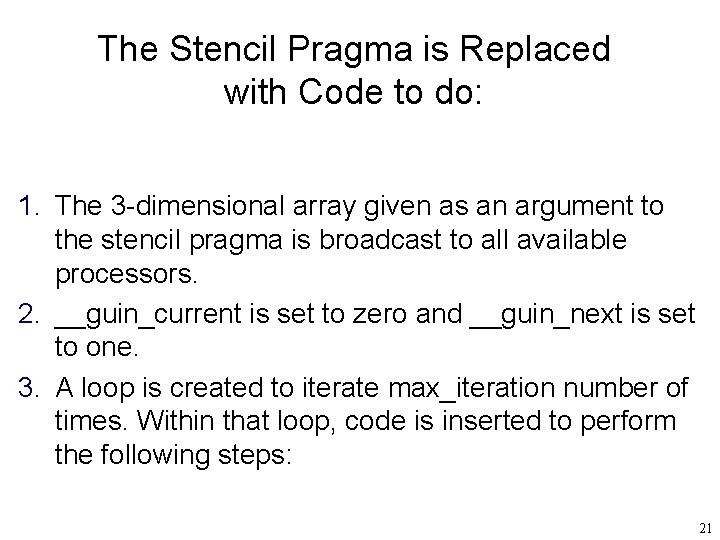

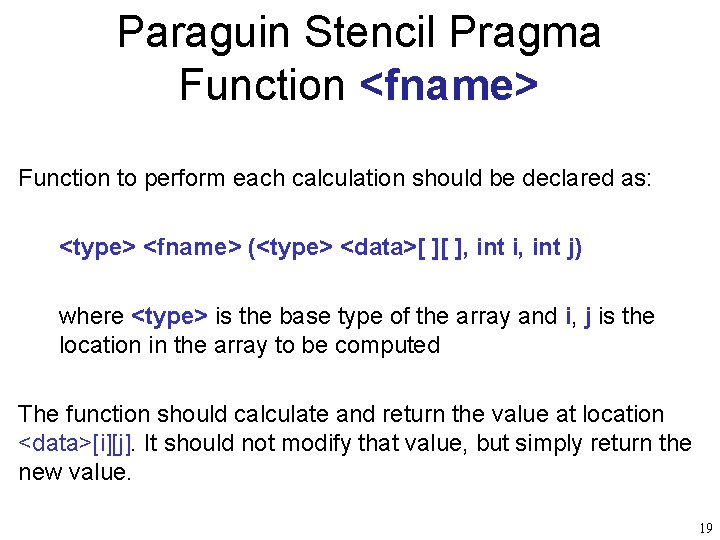

Paraguin Stencil Pragma

A stencil pattern is done with a stencil pragma:

    #pragma paraguin stencil <data> <#rows> <#cols> <max_iterations> <fname>

where

- `<data>` is a 3-dimensional array, 2 x #rows x #cols
- `<max_iterations>` is the number of iterations of the time loop
- `<fname>` is the name of a function to perform each calculation

Paraguin Stencil Pragma: Function `<fname>`

The function to perform each calculation should be declared as:

    <type> <fname> (<type> <data>[ ][ ], int i, int j)

where `<type>` is the base type of the array and i, j is the location in the array to be computed.

The function should calculate and return the value at location `<data>[i][j]`. It should not modify that value, but simply return the new value.

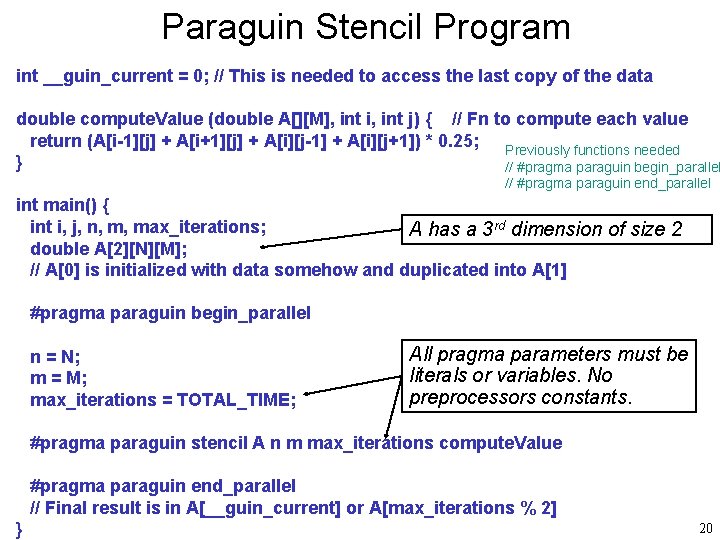

Paraguin Stencil Program

Previously, functions needed the pragmas "#pragma paraguin begin_parallel" and "#pragma paraguin end_parallel".

    int __guin_current = 0;   // This is needed to access the last copy of the data

    // Function to compute each value
    double computeValue (double A[][M], int i, int j)
    {
        return (A[i-1][j] + A[i+1][j] + A[i][j-1] + A[i][j+1]) * 0.25;
    }

    int main()
    {
        int i, j, n, m, max_iterations;
        double A[2][N][M];    // A has a 3rd dimension of size 2

        // A[0] is initialized with data somehow and duplicated into A[1]

        #pragma paraguin begin_parallel

        // All pragma parameters must be literals or variables.
        // No preprocessor constants.
        n = N;
        m = M;
        max_iterations = TOTAL_TIME;

        #pragma paraguin stencil A n m max_iterations computeValue

        #pragma paraguin end_parallel

        // Final result is in A[__guin_current] or A[max_iterations % 2]
    }

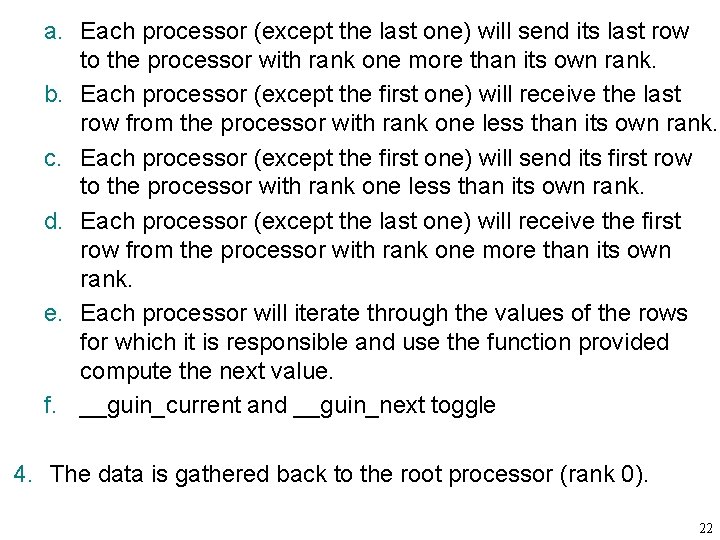

The Stencil Pragma is Replaced with Code to do:

1. The 3-dimensional array given as an argument to the stencil pragma is broadcast to all available processors.
2. `__guin_current` is set to zero and `__guin_next` is set to one.
3. A loop is created to iterate `max_iterations` number of times. Within that loop, code is inserted to perform the following steps:
   a. Each processor (except the last one) will send its last row to the processor with rank one more than its own rank.
   b. Each processor (except the first one) will receive the last row from the processor with rank one less than its own rank.
   c. Each processor (except the first one) will send its first row to the processor with rank one less than its own rank.
   d. Each processor (except the last one) will receive the first row from the processor with rank one more than its own rank.
   e. Each processor will iterate through the values of the rows for which it is responsible and use the function provided to compute the next value.
   f. `__guin_current` and `__guin_next` toggle.
4. The data is gathered back to the root processor (rank 0).
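The row exchange in steps a to f can be imitated serially, as in the sketch below. It is not the code Paraguin generates: the sizes, the names `stencil_sim` and `jacobi_serial`, and the `part` array with one extra ghost row above and below each processor's rows are assumptions for illustration. As a simplification of step 1, each simulated processor is handed only its own rows rather than the whole array.

```c
#define ROWS   8                 /* grid rows (hypothetical size) */
#define COLS   6                 /* grid columns (hypothetical size) */
#define NPROCS 4                 /* simulated processors; must divide ROWS */
#define LOCAL  (ROWS / NPROCS)   /* rows owned by each processor */

/* Per processor: two copies of its LOCAL rows, plus a ghost row above
 * (index 0) and a ghost row below (index LOCAL + 1). */
static double part[NPROCS][2][LOCAL + 2][COLS];

static void copy_row(double *dst, const double *src)
{
    for (int j = 0; j < COLS; j++)
        dst[j] = src[j];
}

/* Partitioned Jacobi with ghost-row exchange; result is left in grid. */
void stencil_sim(double grid[ROWS][COLS], int max_iterations)
{
    int cur = 0, next = 1;

    for (int p = 0; p < NPROCS; p++)          /* distribute rows, both copies */
        for (int r = 1; r <= LOCAL; r++) {
            copy_row(part[p][0][r], grid[p * LOCAL + r - 1]);
            copy_row(part[p][1][r], grid[p * LOCAL + r - 1]);
        }

    for (int t = 0; t < max_iterations; t++) {
        /* a, b: last owned row goes to the next rank's upper ghost row */
        for (int p = 0; p < NPROCS - 1; p++)
            copy_row(part[p + 1][cur][0], part[p][cur][LOCAL]);
        /* c, d: first owned row goes to the previous rank's lower ghost row */
        for (int p = 1; p < NPROCS; p++)
            copy_row(part[p - 1][cur][LOCAL + 1], part[p][cur][1]);

        /* e: every processor updates its own rows, skipping the boundary */
        for (int p = 0; p < NPROCS; p++)
            for (int r = 1; r <= LOCAL; r++) {
                int g = p * LOCAL + r - 1;    /* global row index */
                if (g == 0 || g == ROWS - 1)
                    continue;
                for (int j = 1; j < COLS - 1; j++)
                    part[p][next][r][j] =
                        (part[p][cur][r-1][j] + part[p][cur][r+1][j] +
                         part[p][cur][r][j-1] + part[p][cur][r][j+1]) * 0.25;
            }

        cur = next;                           /* f: toggle */
        next = 1 - cur;
    }

    for (int p = 0; p < NPROCS; p++)          /* 4: gather */
        for (int r = 1; r <= LOCAL; r++)
            copy_row(grid[p * LOCAL + r - 1], part[p][cur][r]);
}

/* Unpartitioned reference version for comparison. */
void jacobi_serial(double grid[ROWS][COLS], int max_iterations)
{
    static double buf[2][ROWS][COLS];
    int cur = 0, next = 1;

    for (int i = 0; i < ROWS; i++) {
        copy_row(buf[0][i], grid[i]);
        copy_row(buf[1][i], grid[i]);
    }
    for (int t = 0; t < max_iterations; t++) {
        for (int i = 1; i < ROWS - 1; i++)
            for (int j = 1; j < COLS - 1; j++)
                buf[next][i][j] = (buf[cur][i-1][j] + buf[cur][i+1][j] +
                                   buf[cur][i][j-1] + buf[cur][i][j+1]) * 0.25;
        cur = next;
        next = 1 - cur;
    }
    for (int i = 0; i < ROWS; i++)
        copy_row(grid[i], buf[cur][i]);
}
```

Running both functions on the same starting grid for the same number of iterations should give the same values, because the ghost rows supply exactly the neighbouring rows that the unpartitioned version reads directly.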

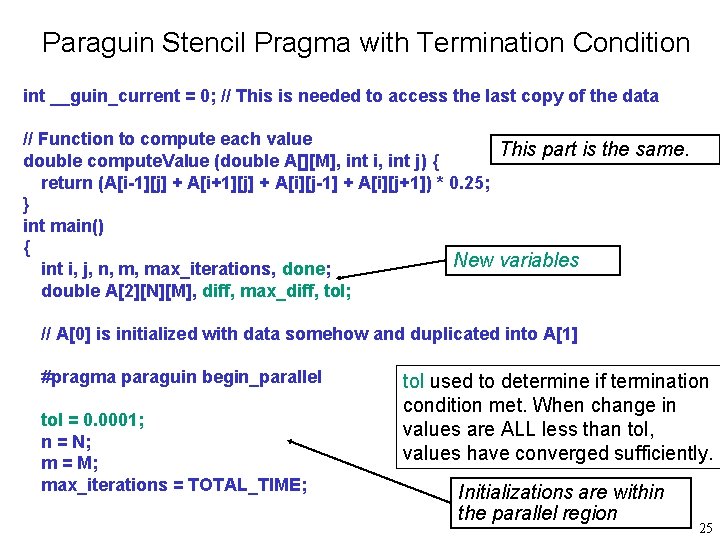

Stopping the Iterations Based Upon a Condition

- The stencil pattern will execute a fixed number of iterations.
- What if we want to continue until the data converges to a solution?
- For example, stop when the maximum difference between the values in A[0] and A[1] is less than a tolerance, like 0.0001.
- The problem with doing this in parallel is that it requires communication.

Why Communication is Needed to Test For a Termination Condition

- There are 2 reasons inter-processor communication is needed to test for a termination condition:
  1. The data is scattered across processors; and
  2. The processors need to all agree whether to continue or terminate.
- Parts of the data may converge faster than others.
- Some processors may decide to stop and others do not.
- Without agreement, there will be a deadlock.
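The disagreement described in the points above can be shown with a few lines of serial C. The function names and the idea of holding each processor's local maximum difference in an array are assumptions for illustration; in a real run these values live on different processors and are combined by a reduction and a broadcast.

```c
/* Reduce step: the largest of the per-processor maximum differences. */
double reduce_max(const double *local_max, int nprocs)
{
    double m = local_max[0];
    for (int p = 1; p < nprocs; p++)
        if (local_max[p] > m)
            m = local_max[p];
    return m;
}

/* Number of processors that would stop if each one tested only its own
 * local maximum against tol, with no communication. */
int count_local_stops(const double *local_max, int nprocs, double tol)
{
    int stops = 0;
    for (int p = 0; p < nprocs; p++)
        if (local_max[p] <= tol)
            stops++;
    return stops;
}

/* Decision that every processor reaches after the reduced maximum has been
 * broadcast: 1 means stop, 0 means continue. */
int agreed_done(const double *local_max, int nprocs, double tol)
{
    return reduce_max(local_max, nprocs) <= tol;
}
```

With local maxima of 0.00005, 0.00002, 0.0003 and 0.00001 and a tolerance of 0.0001, three of the four processors would stop on their own while one would keep going; after the reduction, all four see 0.0003 and continue together.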

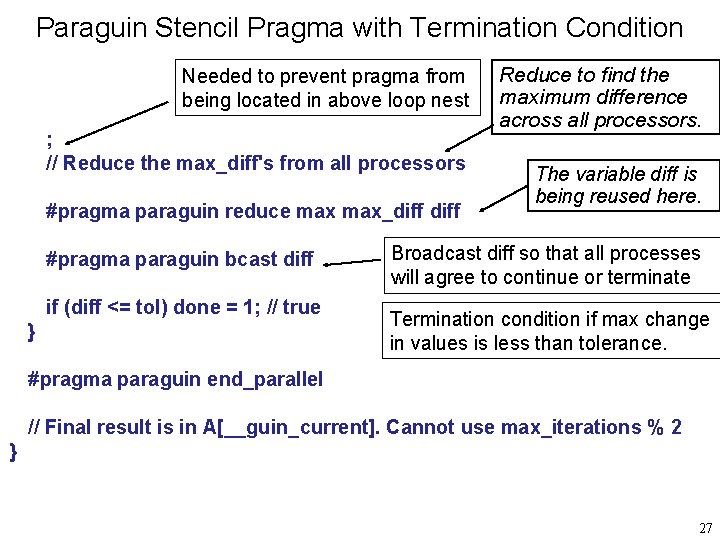

Paraguin Stencil Pragma with Termination Condition

    int __guin_current = 0;   // This is needed to access the last copy of the data

    // Function to compute each value. This part is the same.
    double computeValue (double A[][M], int i, int j)
    {
        return (A[i-1][j] + A[i+1][j] + A[i][j-1] + A[i][j+1]) * 0.25;
    }

    int main()
    {
        // New variables: done, diff, max_diff, tol
        int i, j, n, m, max_iterations, done;
        double A[2][N][M], diff, max_diff, tol;

        // A[0] is initialized with data somehow and duplicated into A[1]

        #pragma paraguin begin_parallel

        // Initializations are within the parallel region.
        // tol is used to determine if the termination condition is met.
        // When the changes in values are ALL less than tol, the values
        // have converged sufficiently.
        tol = 0.0001;
        n = N;
        m = M;
        max_iterations = TOTAL_TIME;

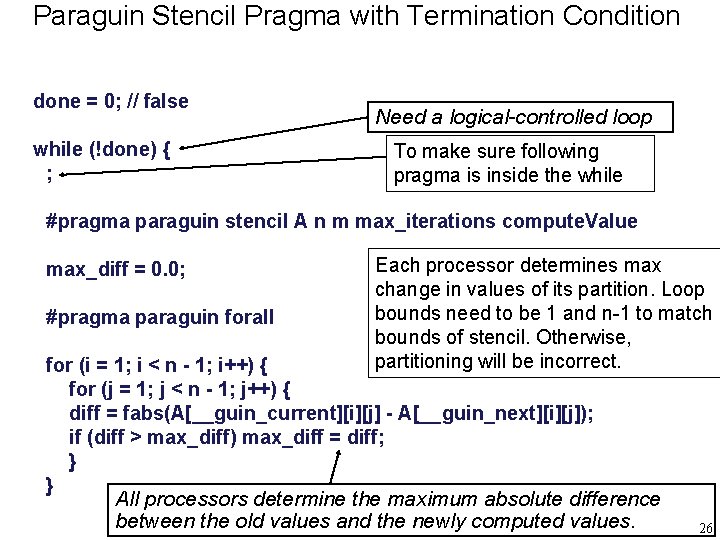

Paraguin Stencil Pragma with Termination Condition (continued)

        done = 0;   // false

        while (!done) {   // Need a logical-controlled loop
            ;   // To make sure following pragma is inside the while
            #pragma paraguin stencil A n m max_iterations computeValue

            max_diff = 0.0;

            // Each processor determines max change in values of its partition.
            // Row loop bounds need to be 1 and n-1 to match bounds of stencil.
            // Otherwise, partitioning will be incorrect.
            #pragma paraguin forall
            for (i = 1; i < n - 1; i++) {
                for (j = 1; j < m - 1; j++) {
                    diff = fabs(A[__guin_current][i][j] - A[__guin_next][i][j]);
                    if (diff > max_diff) max_diff = diff;
                }
            }

All processors determine the maximum absolute difference between the old values and the newly computed values.

Paraguin Stencil Pragma with Termination Condition (continued)

            ;   // Needed to prevent pragma from being located in above loop nest

            // Reduce the max_diff's from all processors to find the maximum
            // difference across all processors. The variable diff is being
            // reused here.
            #pragma paraguin reduce max max_diff diff

            // Broadcast diff so that all processes will agree to continue
            // or terminate
            #pragma paraguin bcast diff

            // Termination condition: max change in values is less than tolerance
            if (diff <= tol) done = 1;   // true
        }

        #pragma paraguin end_parallel

        // Final result is in A[__guin_current]. Cannot use max_iterations % 2
    }

Questions?