NEURAL NETWORKS David Kauchak CS 158 Fall 2019


Admin: Assignment 7A solutions are available on Sakai in Resources; Assignment 7B; assignment grading

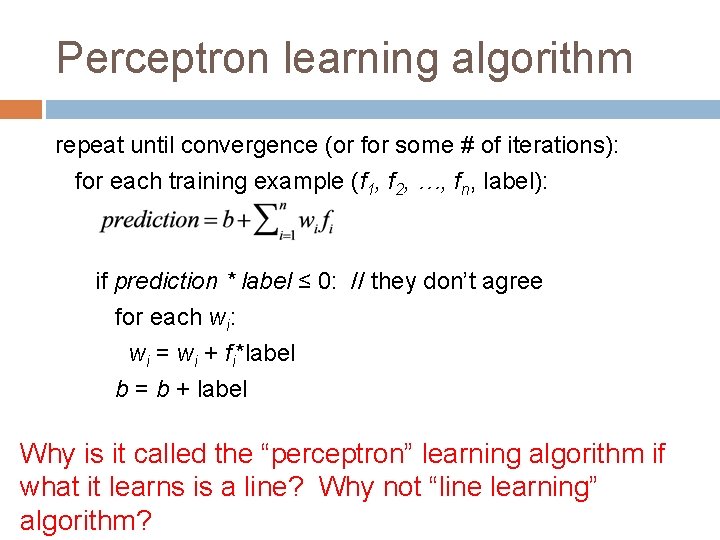

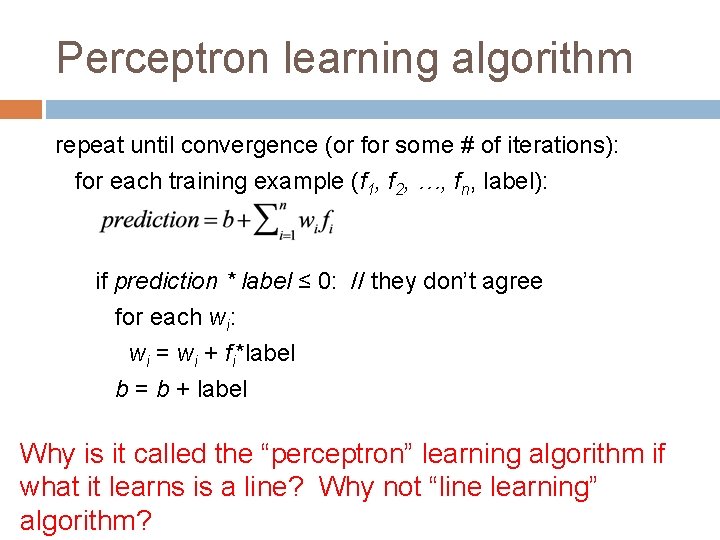

Perceptron learning algorithm

repeat until convergence (or for some # of iterations):
    for each training example (f1, f2, …, fn, label):
        if prediction * label ≤ 0: // they don’t agree
            for each wi:
                wi = wi + fi*label
            b = b + label

Why is it called the “perceptron” learning algorithm if what it learns is a line? Why not the “line learning” algorithm?
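A minimal Python sketch of the loop above, assuming labels are +1/-1 and that "prediction" means b + Σ wi·fi (the slide does not spell this out). The name `train_perceptron`, the `num_iterations` cap, and the AND-style toy data are illustrative choices, not part of the original.

```python
def train_perceptron(examples, num_iterations=100):
    """examples: list of (features, label) pairs with label in {-1, +1}."""
    num_features = len(examples[0][0])
    w = [0.0] * num_features
    b = 0.0
    for _ in range(num_iterations):          # or until convergence
        mistakes = 0
        for features, label in examples:
            prediction = b + sum(wi * fi for wi, fi in zip(w, features))
            if prediction * label <= 0:      # they don't agree
                for i in range(num_features):
                    w[i] += features[i] * label
                b += label
                mistakes += 1
        if mistakes == 0:                    # converged: no example was misclassified
            break
    return w, b

# toy, linearly separable data (AND with -1/+1 labels)
data = [([0, 0], -1), ([0, 1], -1), ([1, 0], -1), ([1, 1], 1)]
w, b = train_perceptron(data)
print(w, b)
```

What gets learned is just a weight vector and a bias, i.e. a line (hyperplane) in feature space.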

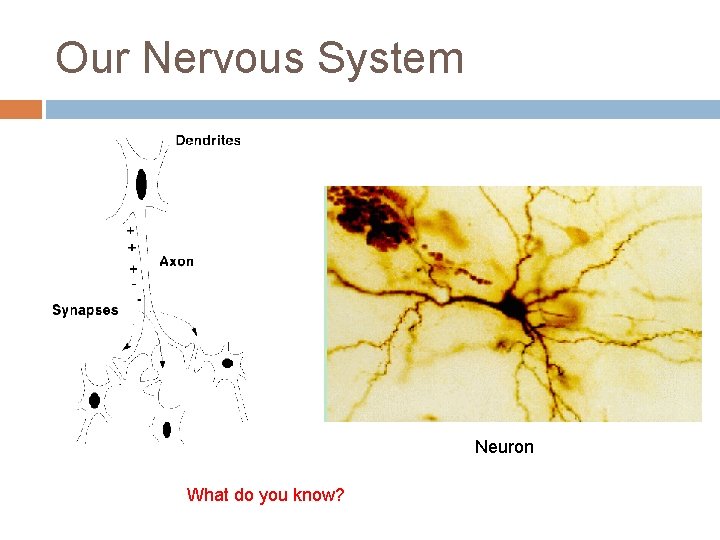

Our Nervous System: Neuron. What do you know?

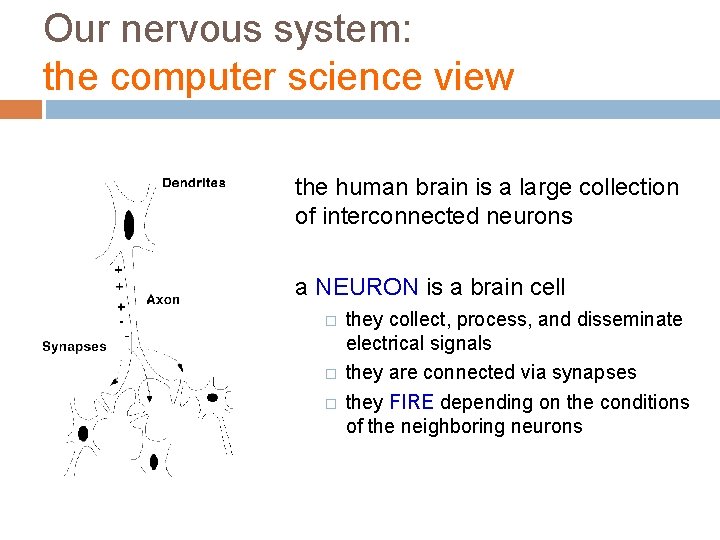

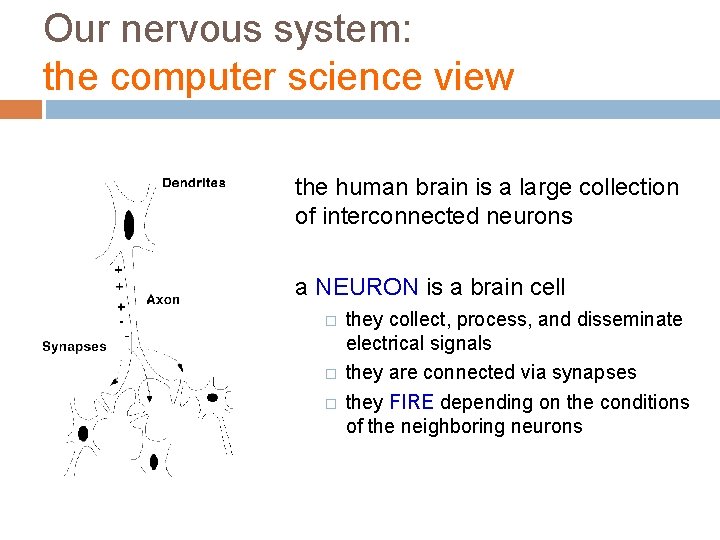

Our nervous system: the computer science view. The human brain is a large collection of interconnected neurons. A NEURON is a brain cell. Neurons collect, process, and disseminate electrical signals; they are connected via synapses; they FIRE depending on the conditions of the neighboring neurons.

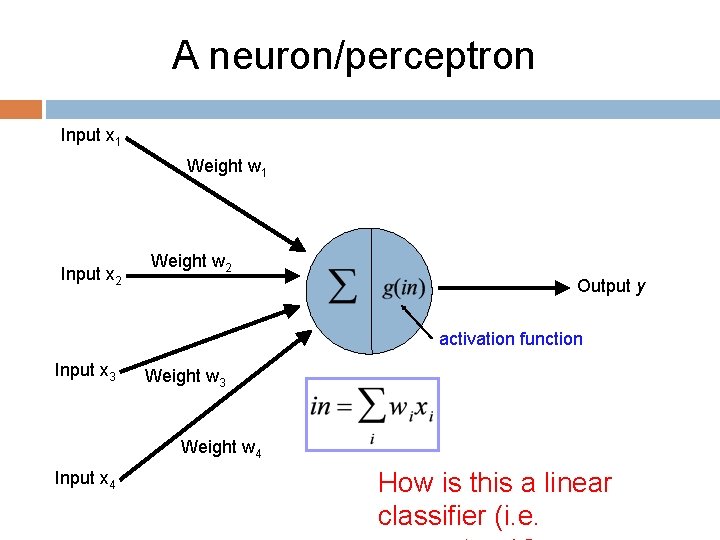

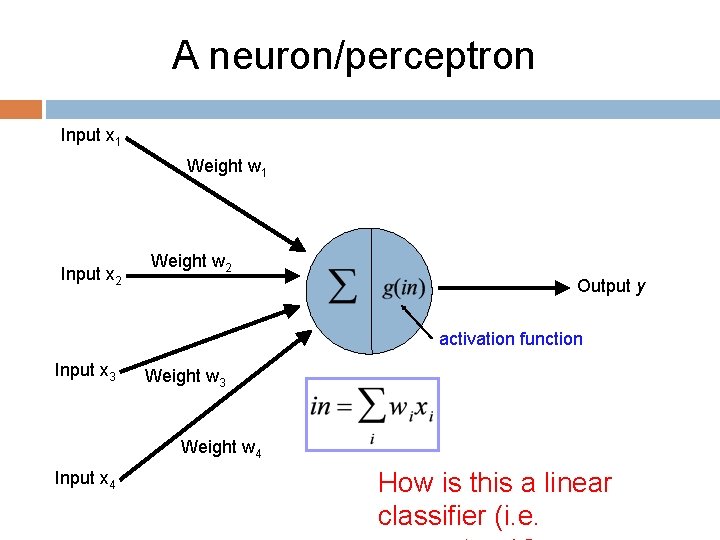

A neuron/perceptron: inputs x1, x2, x3, x4, with weights w1, w2, w3, w4, feed an activation function that produces the output y. How is this a linear classifier (i.e.

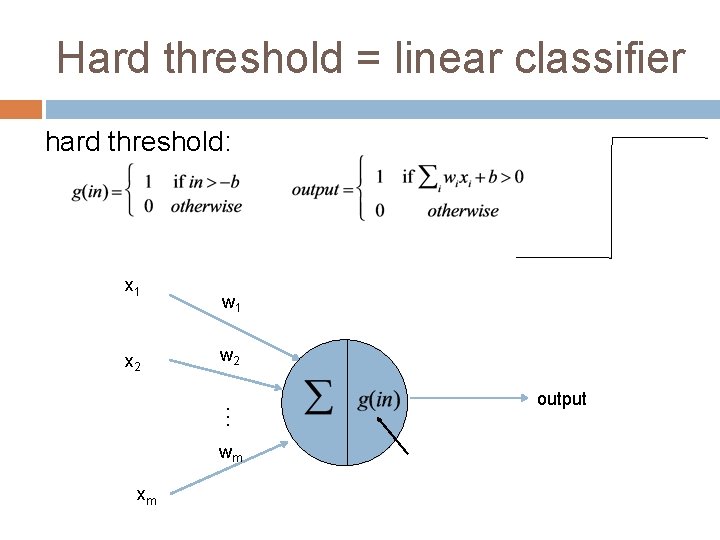

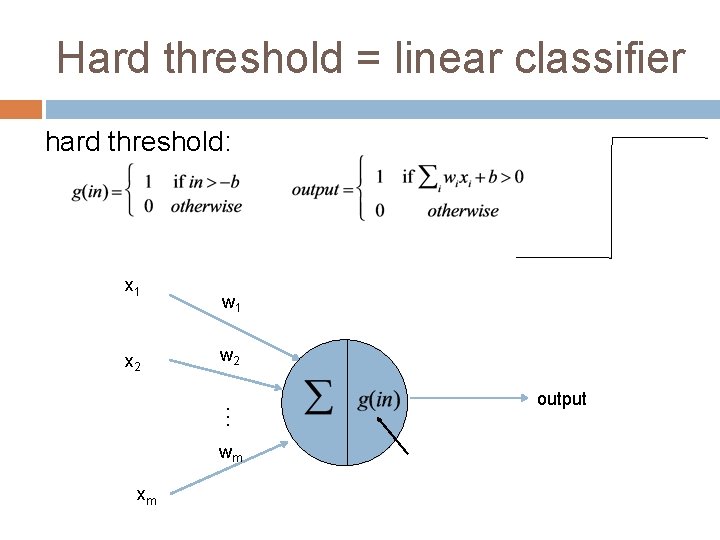

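Hard threshold = linear classifier. Inputs x1, x2, …, xm with weights w1, w2, …, wm feed the output node. Hard threshold: output = 1 if $\sum_{i=1}^{m} w_i x_i + b > 0$, and 0 otherwise.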

Neural networks try to mimic the structure and function of our nervous system. People like biologically motivated approaches.

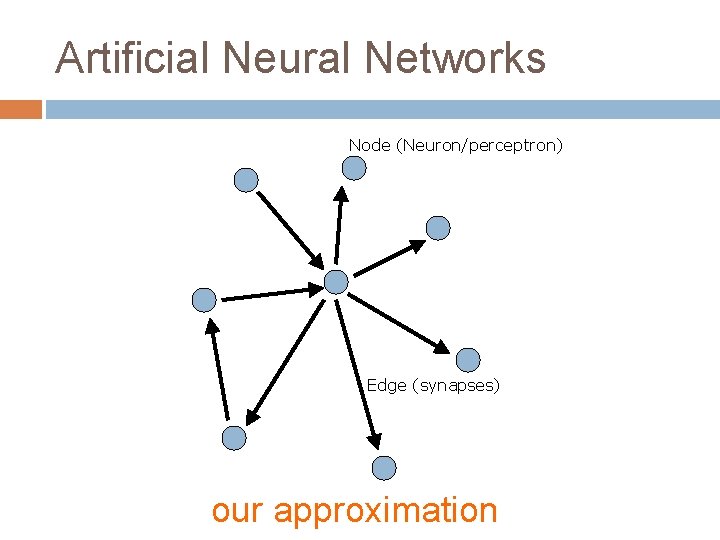

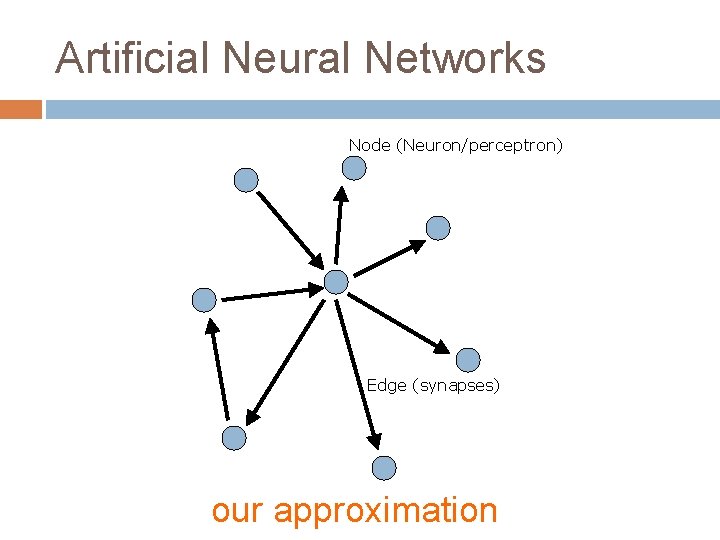

Artificial Neural Networks: our approximation. Node = neuron/perceptron; Edge = synapse.

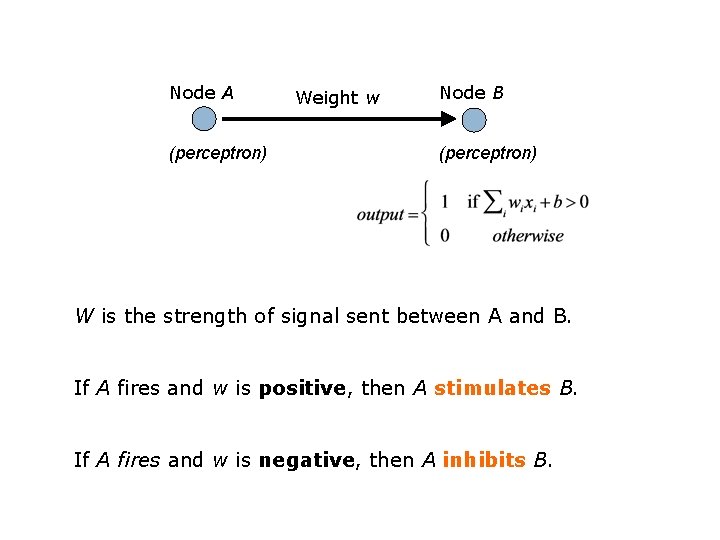

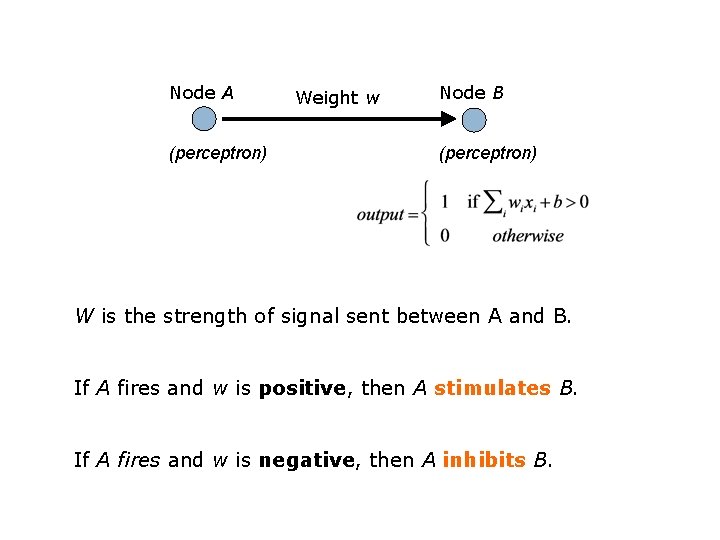

Node A (perceptron) → weight w → Node B (perceptron). w is the strength of the signal sent between A and B. If A fires and w is positive, then A stimulates B. If A fires and w is negative, then A inhibits B.

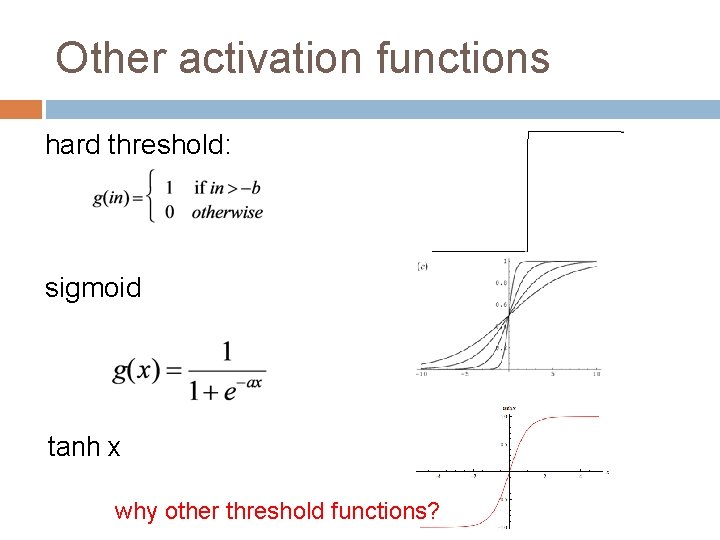

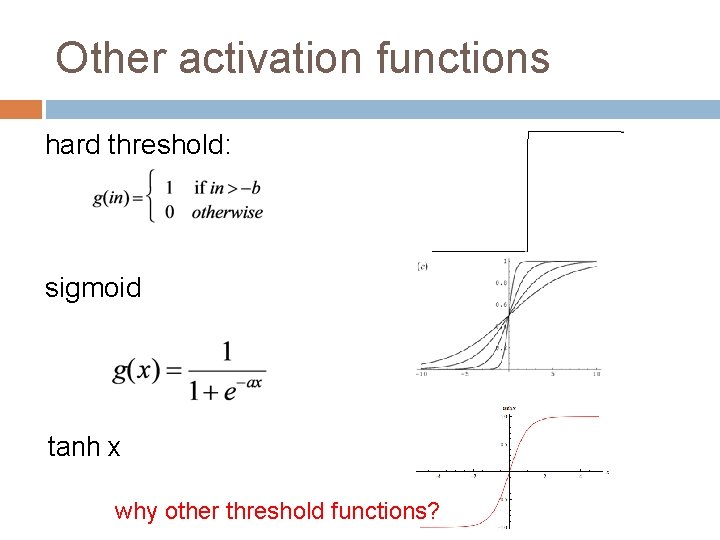

Other activation functions: hard threshold; sigmoid, $\sigma(x) = \frac{1}{1 + e^{-x}}$; tanh, $\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$. Why other threshold functions?
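A small Python sketch comparing the three activation functions. One common answer to "why other threshold functions?" is that sigmoid and tanh are smooth, so their slope is nonzero everywhere, which gradient-based training needs; the hard threshold's slope is 0 almost everywhere. Placing the step at 0 is an assumption; the helper names are illustrative.

```python
import math

def hard_threshold(x):
    """Step function: 1 if x > 0, else 0 (threshold at 0 is an assumption)."""
    return 1.0 if x > 0 else 0.0

def sigmoid(x):
    """Logistic sigmoid: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_slope(x):
    """Derivative of the sigmoid, sigma(x) * (1 - sigma(x)); never exactly 0."""
    s = sigmoid(x)
    return s * (1.0 - s)

def tanh(x):
    """Hyperbolic tangent: squashes any real number into (-1, 1)."""
    return math.tanh(x)

for x in [-2.0, -0.5, 0.0, 0.5, 2.0]:
    print(f"x={x:5.1f}  step={hard_threshold(x):.0f}  sigmoid={sigmoid(x):.3f}  "
          f"tanh={tanh(x):.3f}  sigmoid'={sigmoid_slope(x):.3f}")
```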

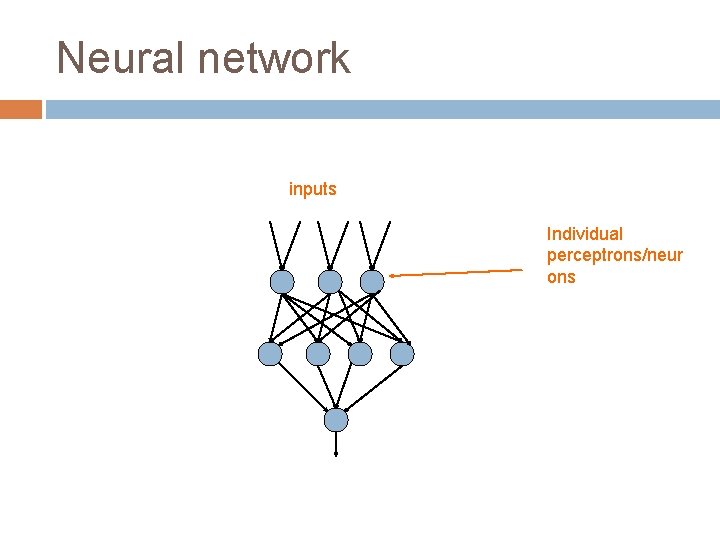

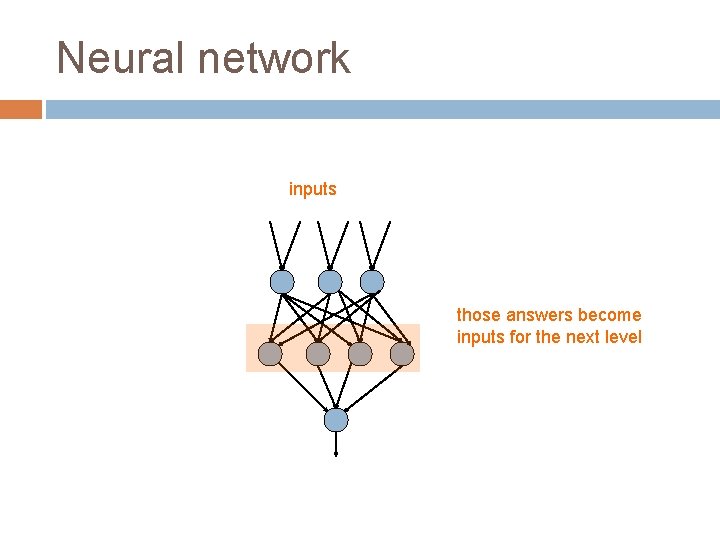

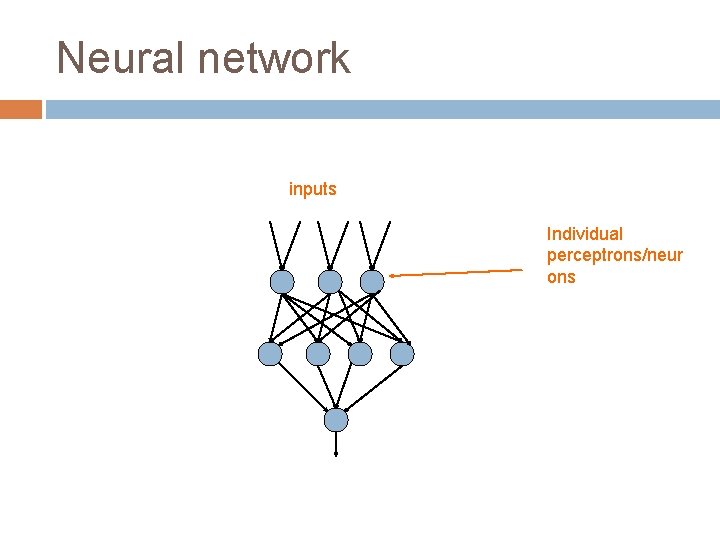

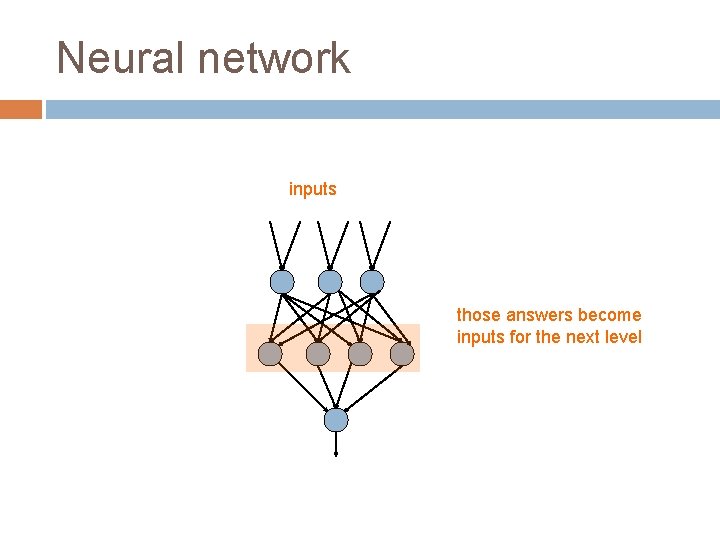

Neural network: inputs feed individual perceptrons/neurons

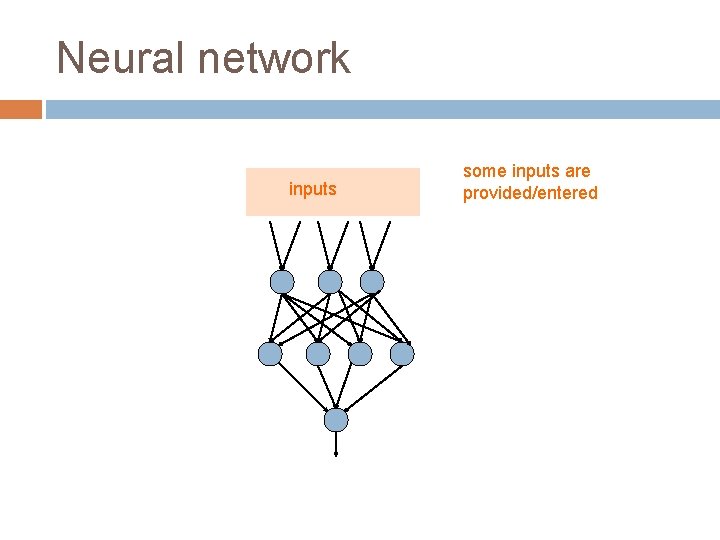

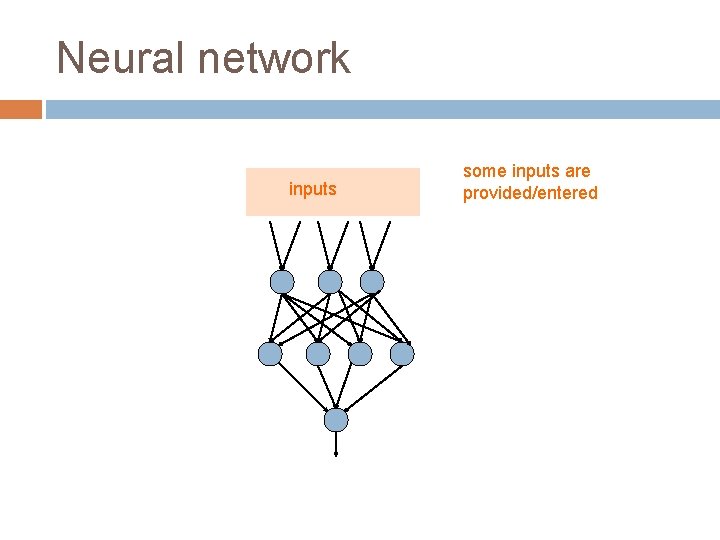

Neural network: some inputs are provided/entered

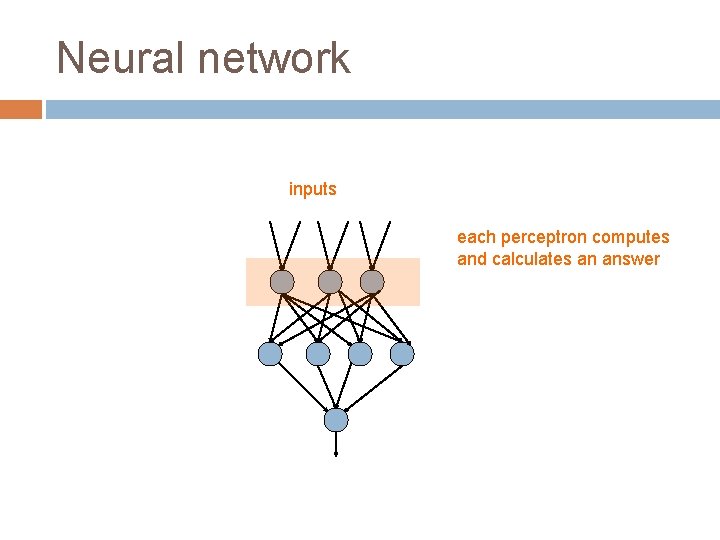

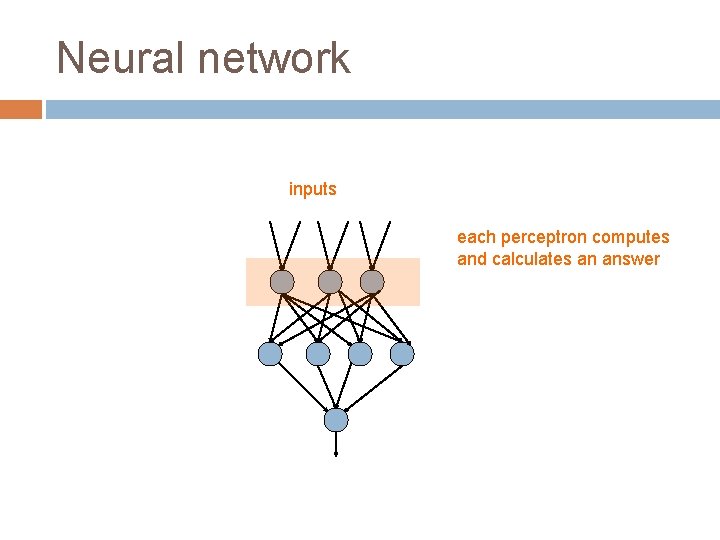

Neural network: each perceptron computes an answer

Neural network: those answers become inputs for the next level

Neural network: finally, we get the answer after all levels compute
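The level-by-level spreading described above can be sketched as a feed-forward pass. This assumes sigmoid activations and stores each node as a hypothetical `(weights, bias)` pair; the example weights are made up for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(inputs, layers):
    """Feed-forward pass.

    layers: one entry per level; each entry is a list of (weights, bias) pairs,
    one pair per node, with one weight per value coming from the level below.
    """
    values = list(inputs)                  # some inputs are provided/entered
    for layer in layers:
        # each perceptron computes an answer from the previous level's values,
        # and those answers become the inputs for the next level
        values = [sigmoid(sum(w * v for w, v in zip(weights, values)) + bias)
                  for weights, bias in layer]
    return values                          # the answer after all levels compute

# hypothetical 2-input network: two hidden nodes, one output node
layers = [
    [([1.0, -1.0], 0.0), ([-1.0, 1.0], 0.0)],  # hidden level
    [([1.0, 1.0], -1.0)],                      # output level
]
print(forward([1.0, 0.0], layers))
```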

Activation spread: http://www.youtube.com/watch?v=Yq7d4ROvZ6I

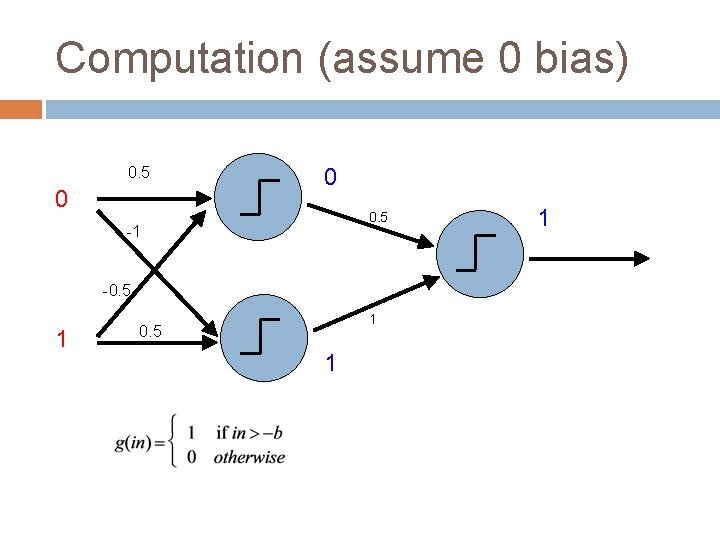

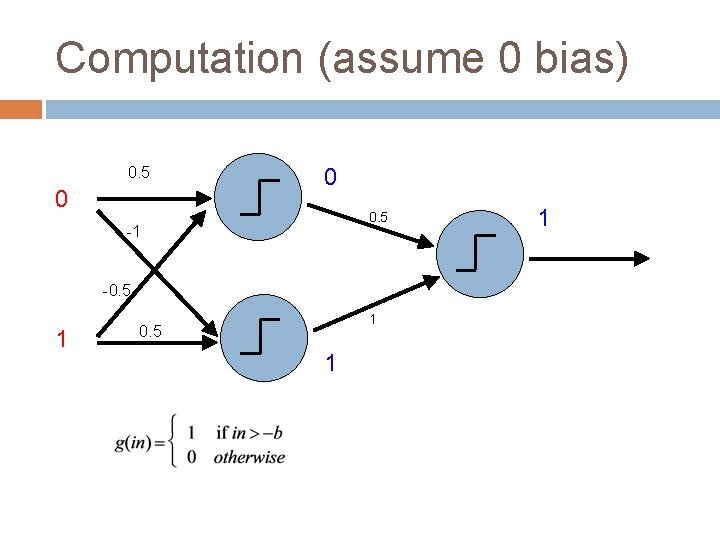

Computation (assume 0 bias): worked example on the network shown in the figure

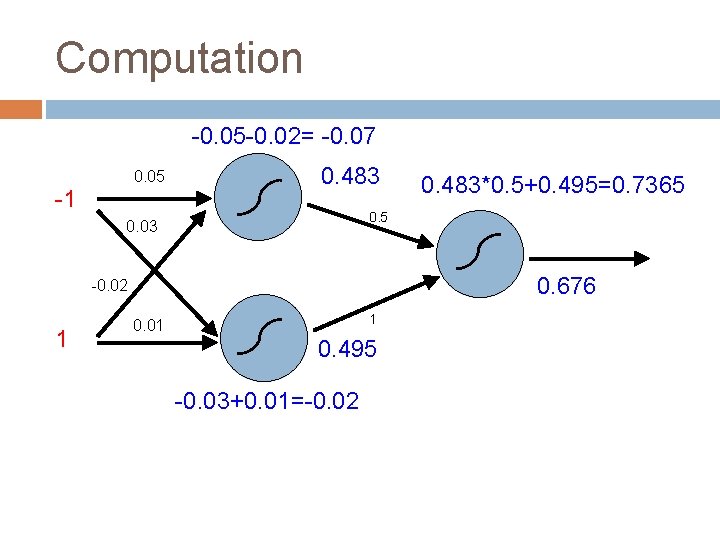

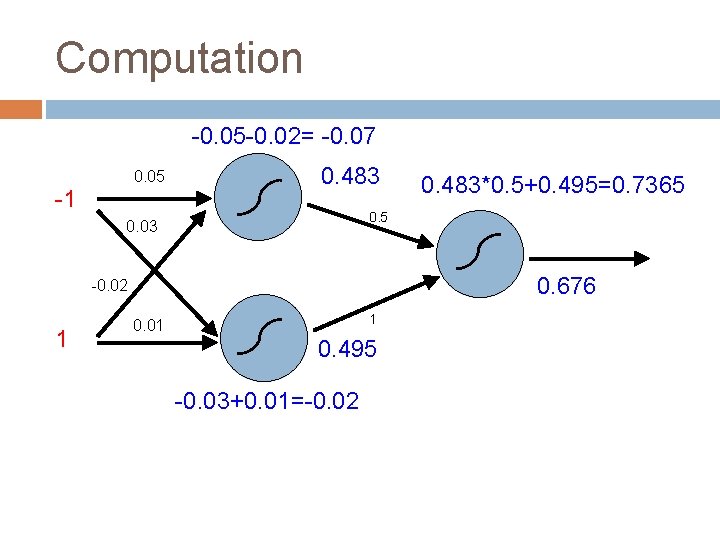

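Computation (sigmoid activation; inputs -1 and 1):

- hidden node 1: 0.05·(-1) + (-0.02)·1 = -0.05 - 0.02 = -0.07, and σ(-0.07) ≈ 0.483
- hidden node 2: 0.03·(-1) + 0.01·1 = -0.03 + 0.01 = -0.02, and σ(-0.02) ≈ 0.495
- output node: 0.483·0.5 + 0.495·1 = 0.7365, and σ(0.7365) ≈ 0.676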
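The arithmetic of this worked computation can be checked in a few lines. The weights are read off the slide's arithmetic (0.05 and 0.03 applied to the input -1; -0.02 and 0.01 applied to the input 1; 0.5 and 1 on the output edges). The sigmoid is an inference, but it reproduces 0.483, 0.495 and 0.676. No bias is used.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

x1, x2 = -1.0, 1.0
h1 = sigmoid(0.05 * x1 + -0.02 * x2)   # sigmoid(-0.07)
h2 = sigmoid(0.03 * x1 + 0.01 * x2)    # sigmoid(-0.02)
out = sigmoid(0.5 * h1 + 1.0 * h2)     # sigmoid(about 0.736)
print(round(h1, 3), round(h2, 3), round(out, 3))  # 0.483 0.495 0.676
```

Using unrounded hidden values gives 0.7363 instead of 0.7365 for the output's weighted sum. The final answer still rounds to 0.676.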

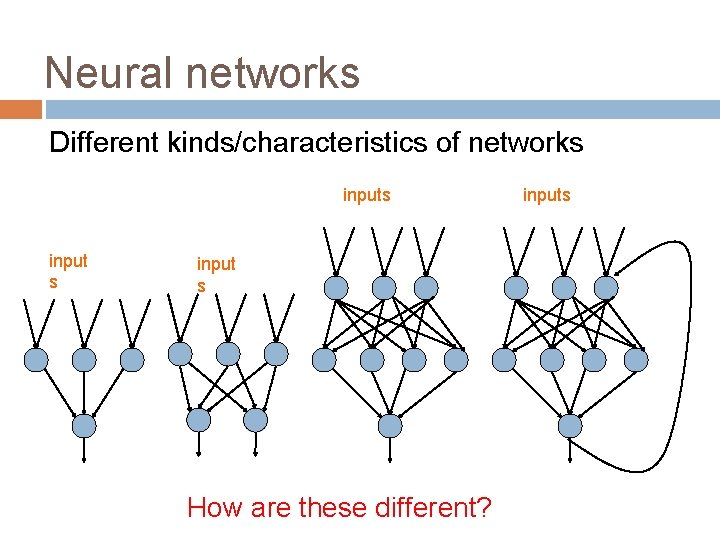

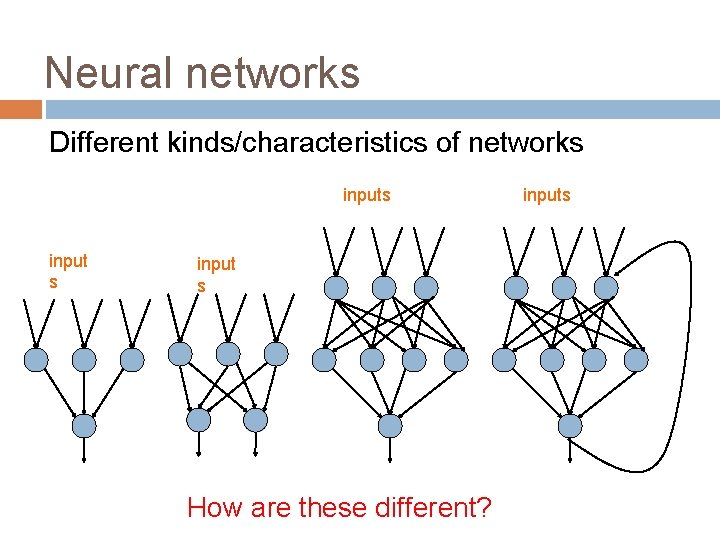

Neural networks: different kinds/characteristics of networks. How are these different?

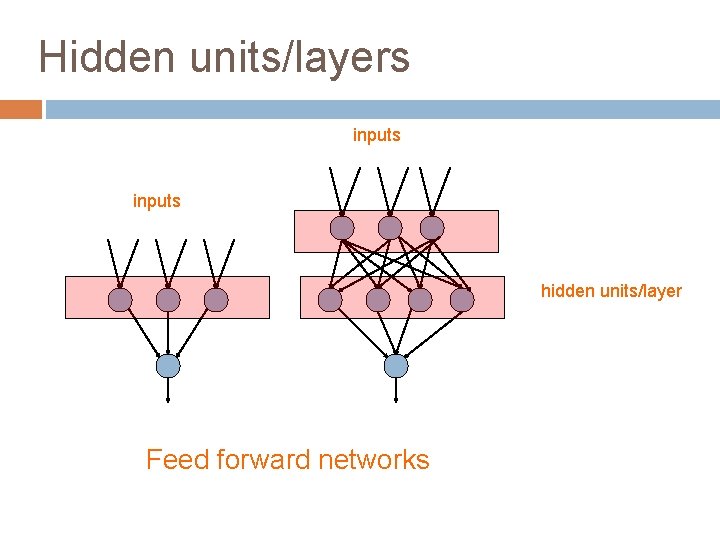

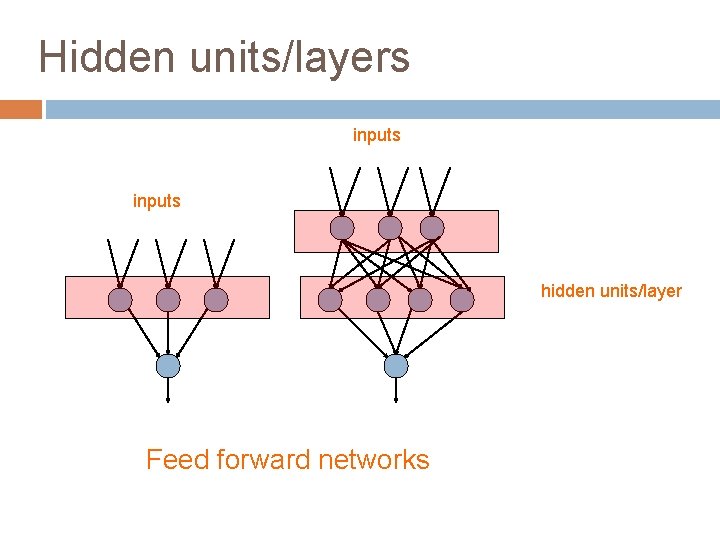

Hidden units/layers: inputs feed a layer of hidden units. Feed forward networks

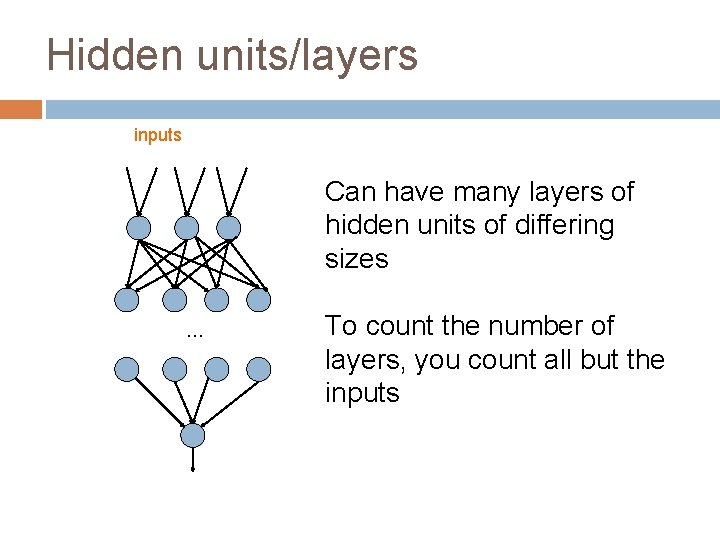

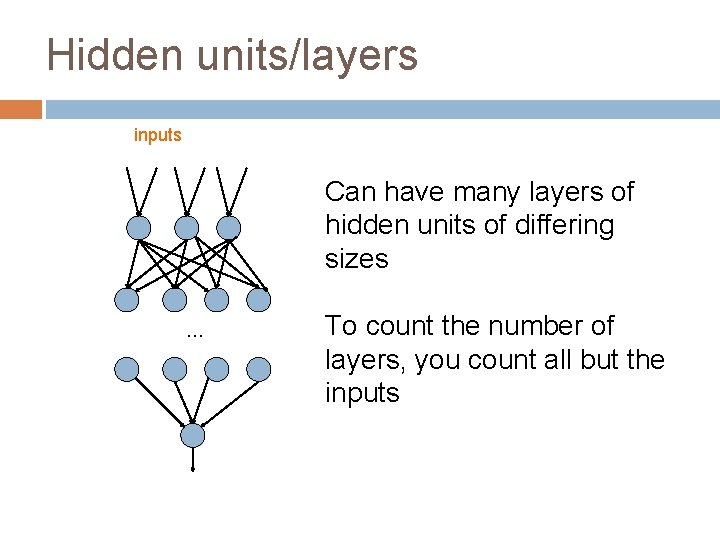

Hidden units/layers: a network can have many layers of hidden units of differing sizes. To count the number of layers, count all layers except the inputs.

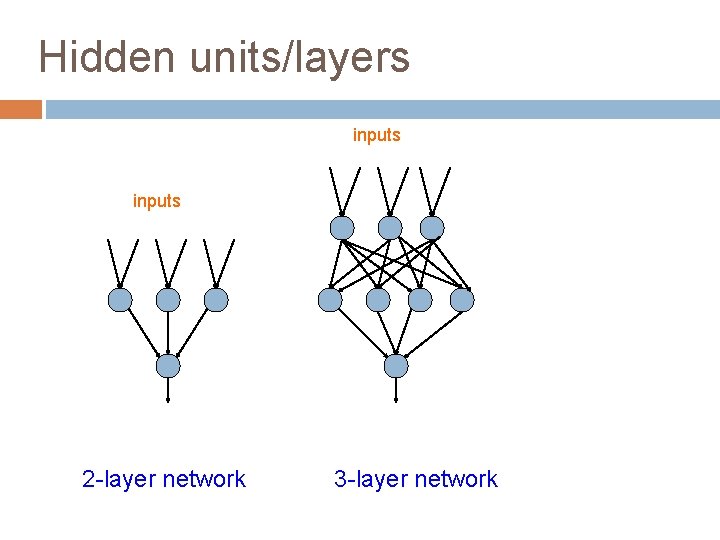

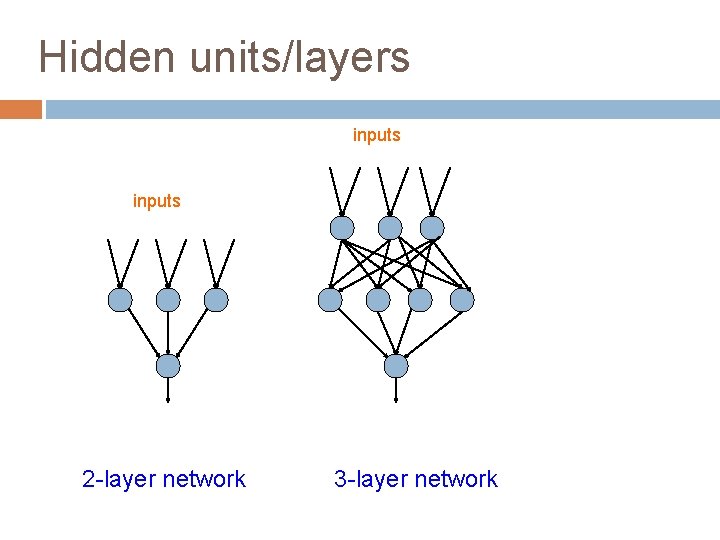

Hidden units/layers: a 2-layer network and a 3-layer network
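A tiny sketch of the counting convention, describing a fully connected network by a hypothetical list of layer sizes (inputs first). The parameter count assumes one weight per incoming edge plus one bias per non-input node. It shows how adding hidden nodes or layers grows the model.

```python
def count_layers(layer_sizes):
    """layer_sizes[0] is the number of inputs; later entries are hidden/output layers.

    Following the slides' convention, the inputs are not counted as a layer.
    """
    return len(layer_sizes) - 1

def count_parameters(layer_sizes):
    """Weights plus one bias per non-input node (assumes fully connected levels)."""
    return sum((n_in + 1) * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

print(count_layers([2, 3, 1]), count_parameters([2, 3, 1]))          # 2 13
print(count_layers([2, 4, 3, 1]), count_parameters([2, 4, 3, 1]))    # 3 31
```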

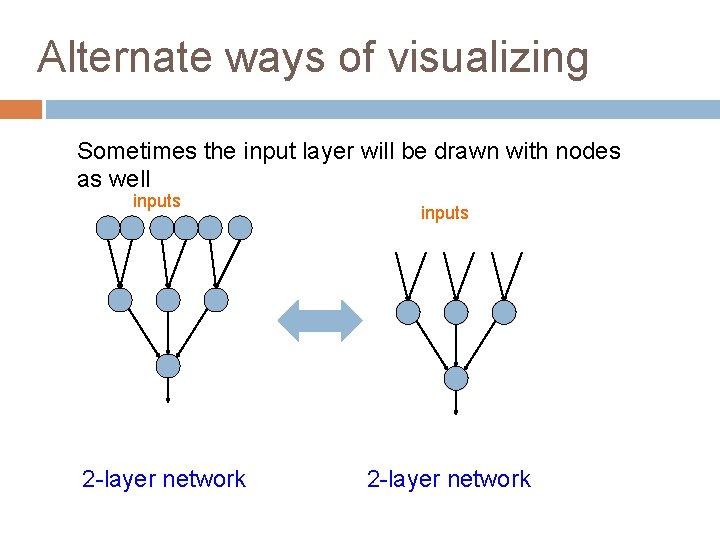

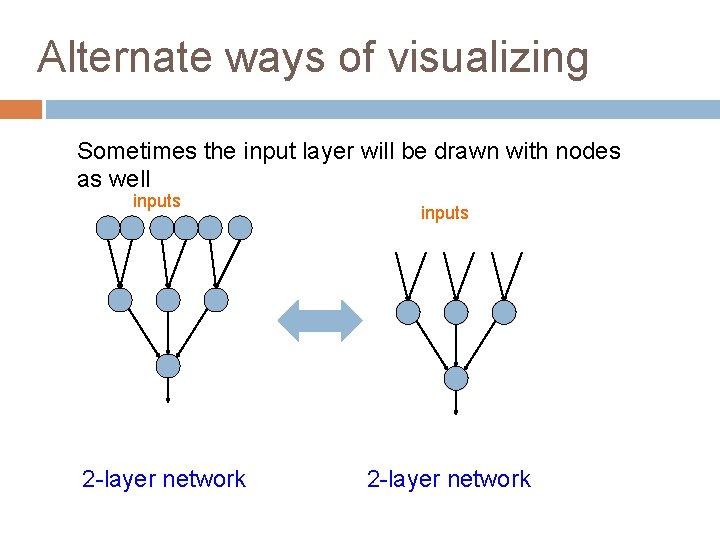

Alternate ways of visualizing: sometimes the input layer will be drawn with nodes as well (this is still a 2-layer network).

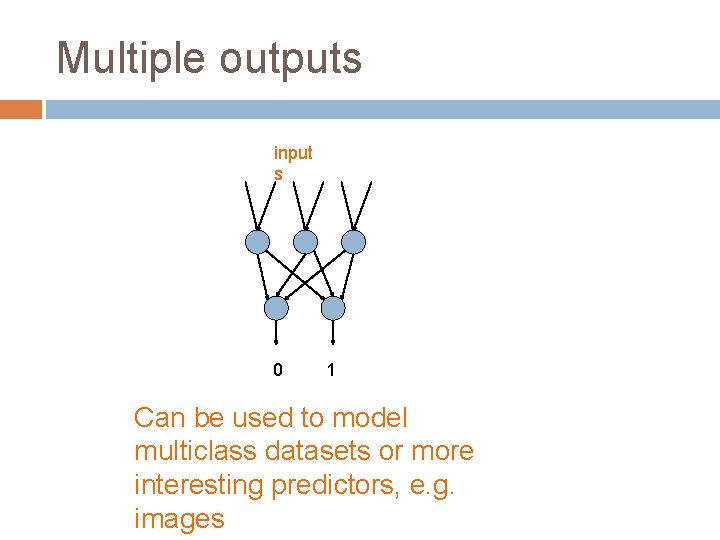

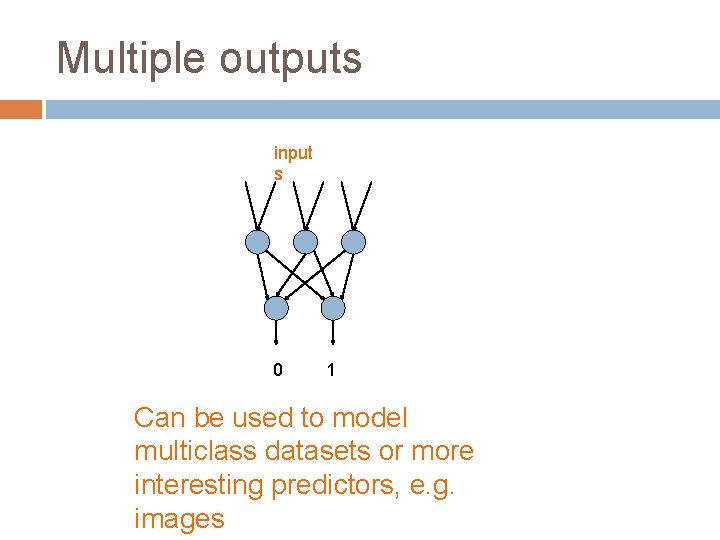

Multiple outputs: can be used to model multiclass datasets or more interesting predictors, e.g. images
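One way to use multiple outputs for a multiclass dataset is to give each class its own output node and predict the class whose node has the largest output. That rule is a common convention assumed here, not something the slide states. `predict_class` and the weights below are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_class(inputs, output_nodes):
    """output_nodes: one (weights, bias) pair per class (hypothetical layout)."""
    scores = [sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)
              for weights, bias in output_nodes]
    # predicted class = index of the output node with the largest activation
    return max(range(len(scores)), key=lambda k: scores[k])

nodes = [([1.0, -1.0], 0.0),   # output node for class 0
         ([-1.0, 1.0], 0.0)]   # output node for class 1
print(predict_class([2.0, 0.0], nodes), predict_class([0.0, 2.0], nodes))  # 0 1
```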

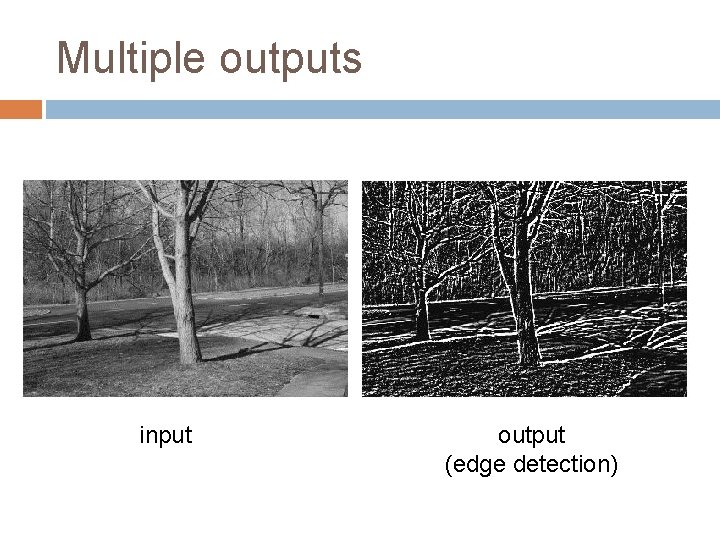

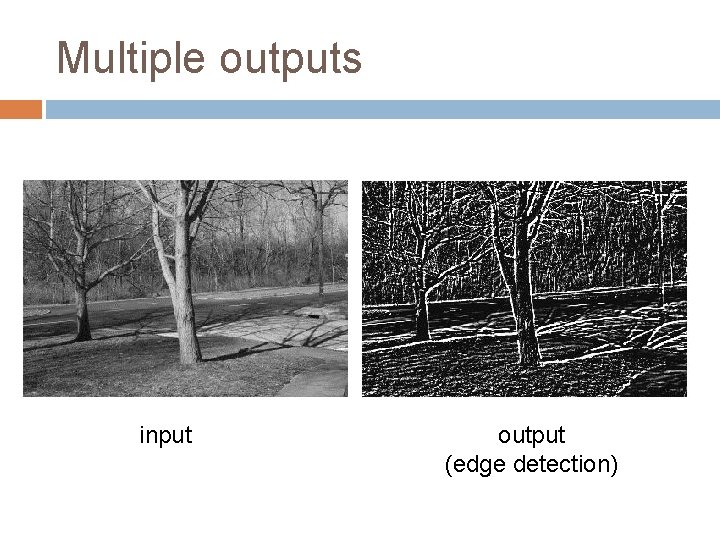

Multiple outputs: input → output (edge detection)

Neural networks: recurrent network. The output is fed back to the input. Can support memory! Good for temporal/sequential data.
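A minimal sketch of why feeding the output back in gives memory. This uses a single hypothetical recurrent node with a tanh activation and made-up weights. The same input (0.0) produces different outputs depending on what the node saw earlier in the sequence.

```python
import math

def run_recurrent_node(sequence, w_in, w_back, bias):
    """One recurrent node: its previous output is fed back in as an extra input."""
    previous = 0.0          # nothing to remember before the first time step
    outputs = []
    for x in sequence:
        previous = math.tanh(w_in * x + w_back * previous + bias)
        outputs.append(previous)
    return outputs

print(run_recurrent_node([1.0, 0.0, 0.0], w_in=1.0, w_back=0.5, bias=0.0))
print(run_recurrent_node([0.0, 0.0, 0.0], w_in=1.0, w_back=0.5, bias=0.0))
```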

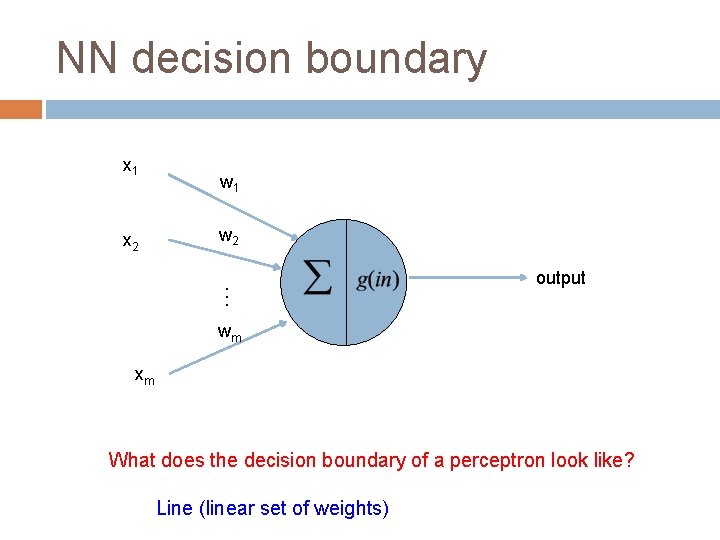

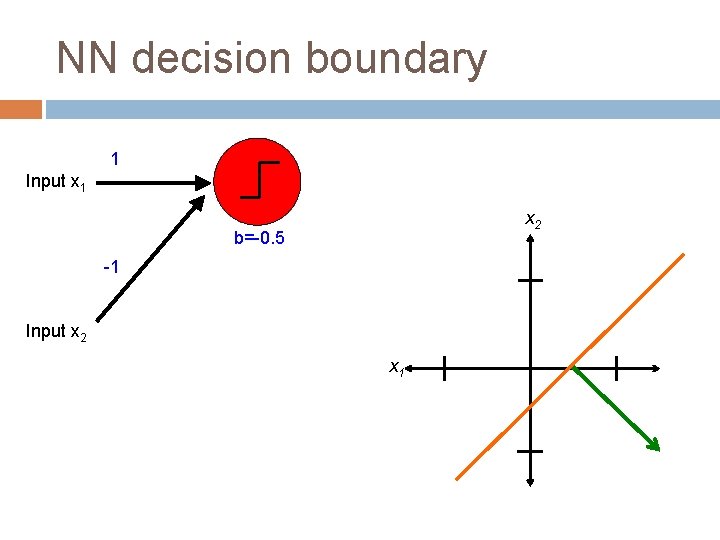

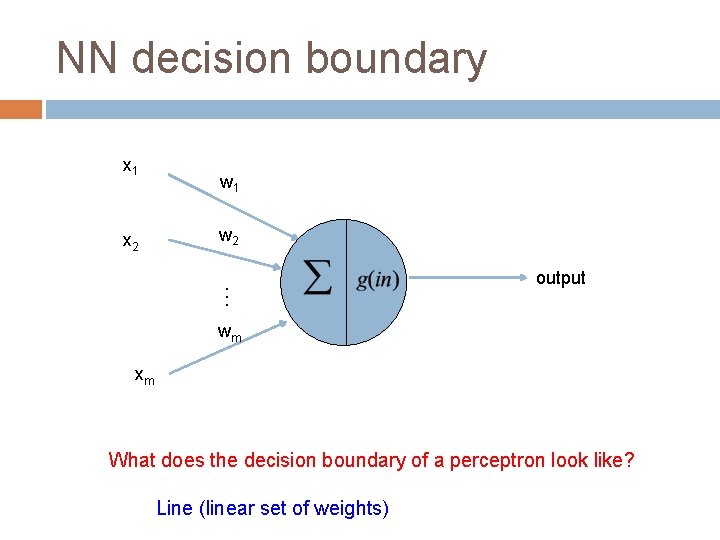

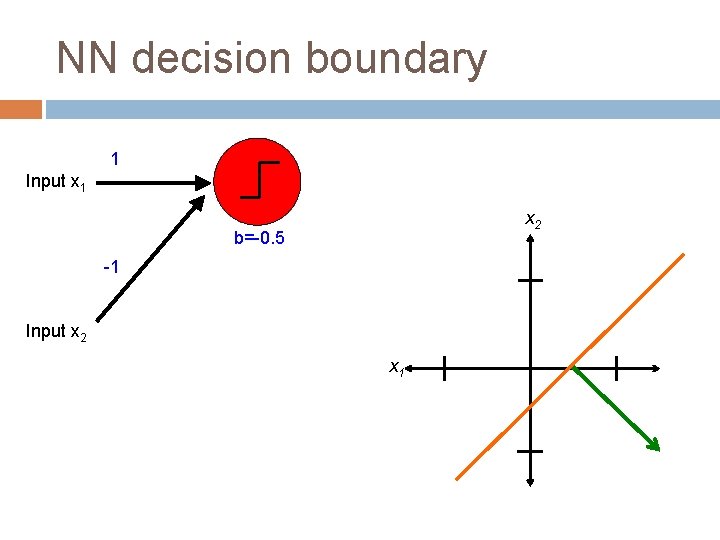

NN decision boundary: a perceptron with inputs x1, x2, …, xm and weights w1, w2, …, wm feeding the output. What does the decision boundary of a perceptron look like? A line (linear set of weights).

NN decision boundary: what does the decision boundary of a 2-layer network look like? Is it linear? What types of things can and can’t it model?

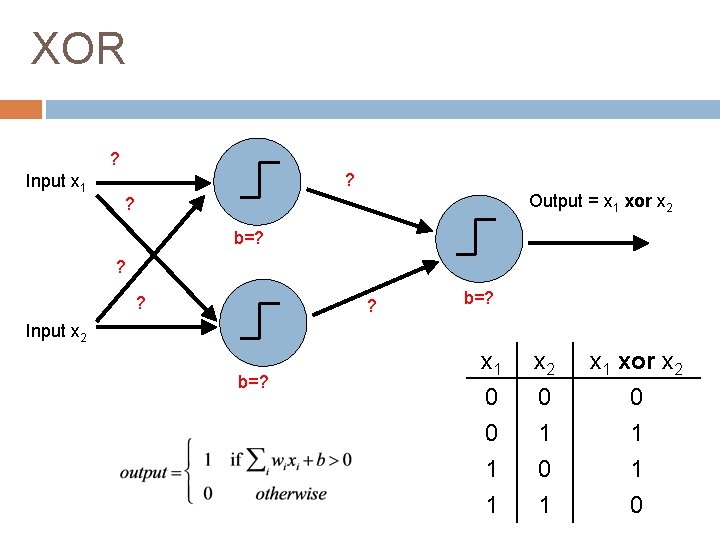

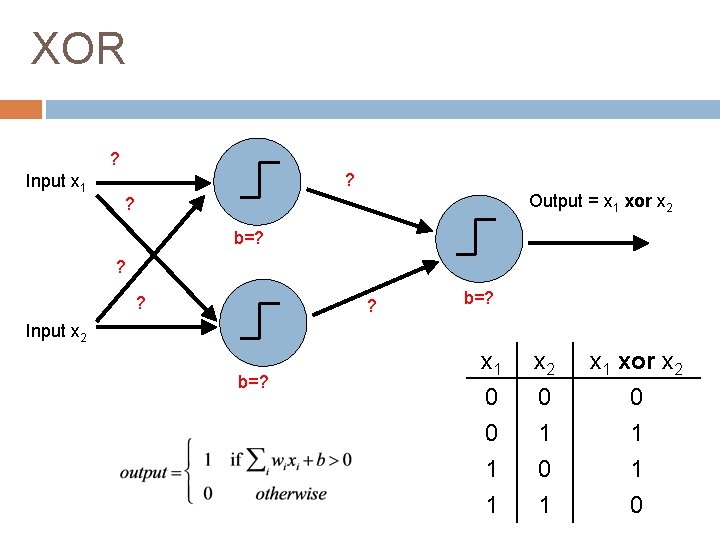

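XOR: what weights (?) and biases (b=?) should the network use? Inputs x1 and x2 feed two hidden nodes, which feed the output node; Output = x1 xor x2.

Truth table (x1, x2 → x1 xor x2): (0, 0) → 0; (0, 1) → 1; (1, 0) → 1; (1, 1) → 0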

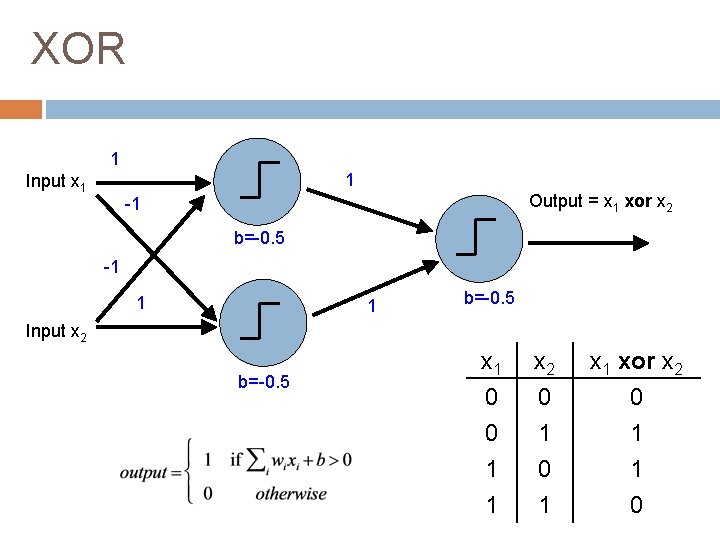

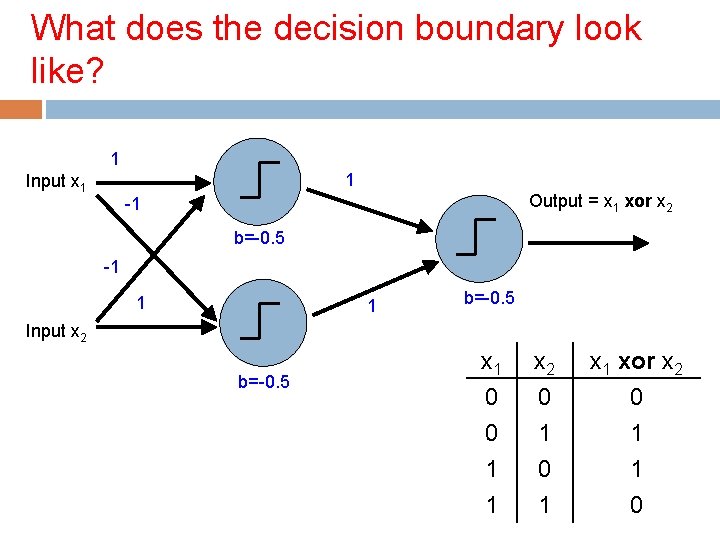

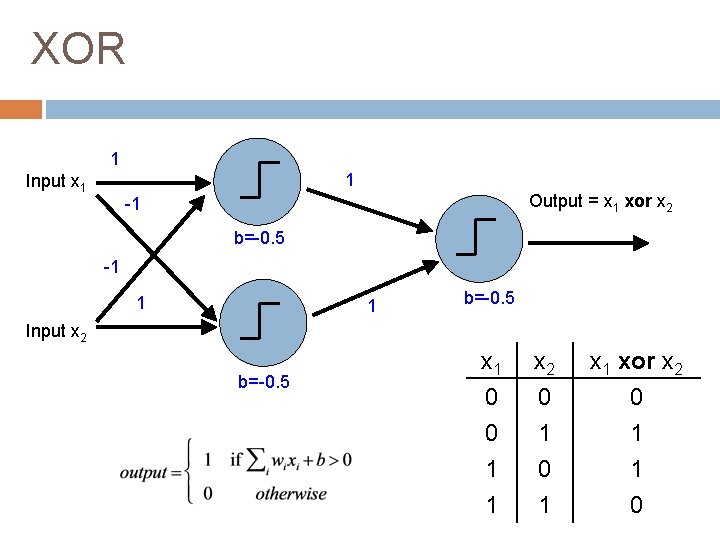

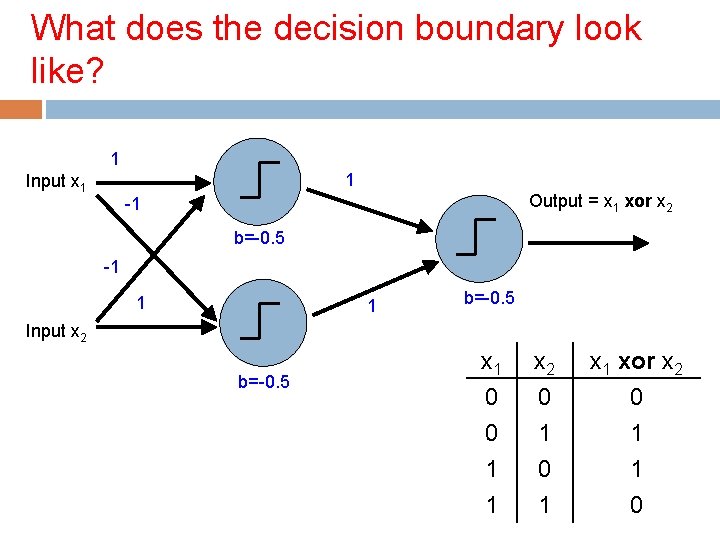

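XOR: one hidden node has weights -1 (from x1) and 1 (from x2) with b=-0.5; the other hidden node has weights 1 (from x1) and -1 (from x2) with b=-0.5; the output node has weights 1 and 1 with b=-0.5. Output = x1 xor x2.

Truth table (x1, x2 → x1 xor x2): (0, 0) → 0; (0, 1) → 1; (1, 0) → 1; (1, 1) → 0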
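A quick check that these weights really compute XOR. This assumes hard-threshold nodes that output 1 when the weighted sum plus bias is greater than 0. The helper names `step`, `perceptron`, and `xor_net` are illustrative.

```python
def step(z):
    return 1 if z > 0 else 0

def perceptron(inputs, weights, bias):
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)

def xor_net(x1, x2):
    h1 = perceptron([x1, x2], [-1, 1], -0.5)   # fires when x2 - x1 > 0.5
    h2 = perceptron([x1, x2], [1, -1], -0.5)   # fires when x1 - x2 > 0.5
    return perceptron([h1, h2], [1, 1], -0.5)  # output node combines the hidden nodes

for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2, xor_net(x1, x2))
```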

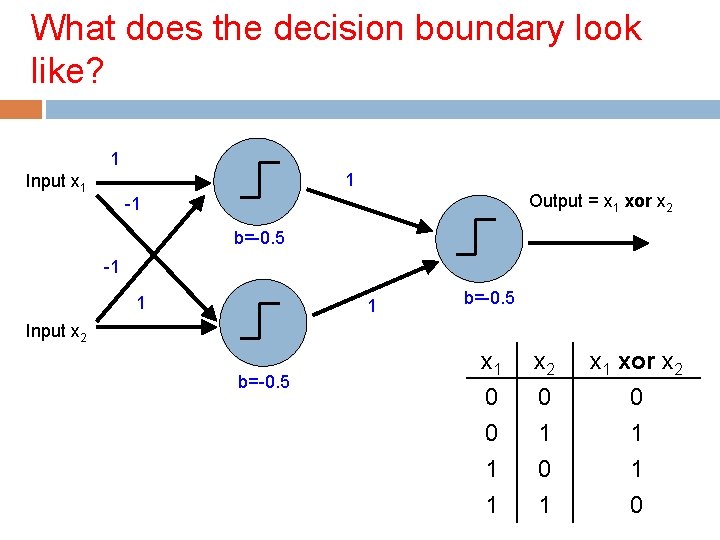

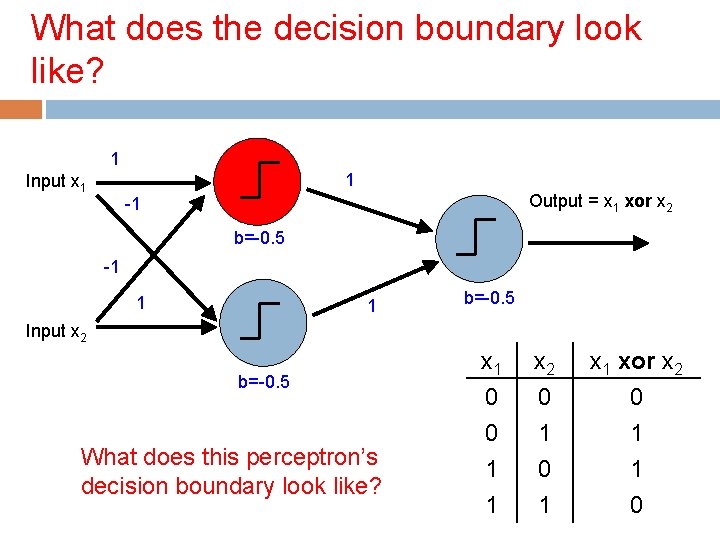

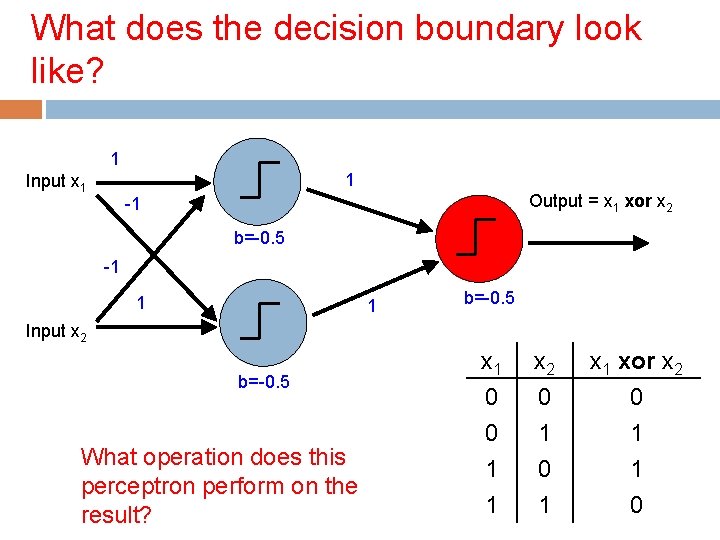

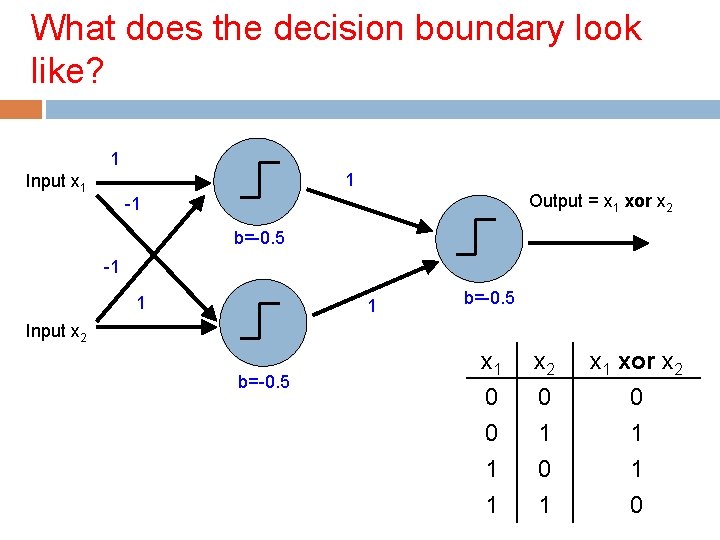

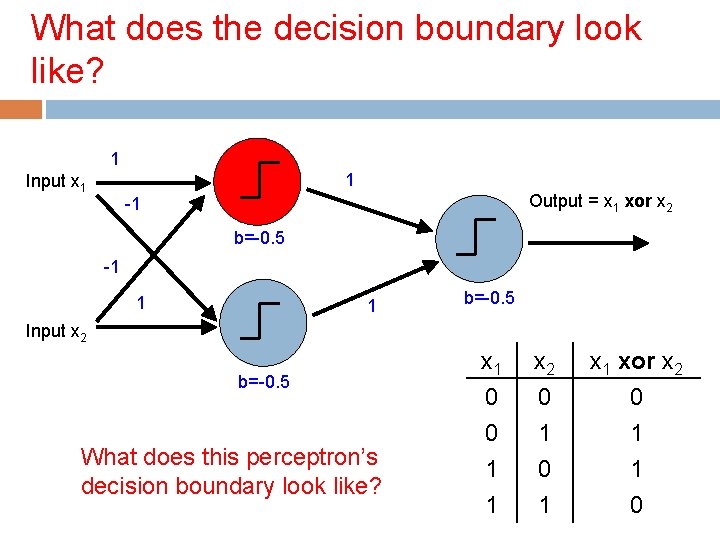

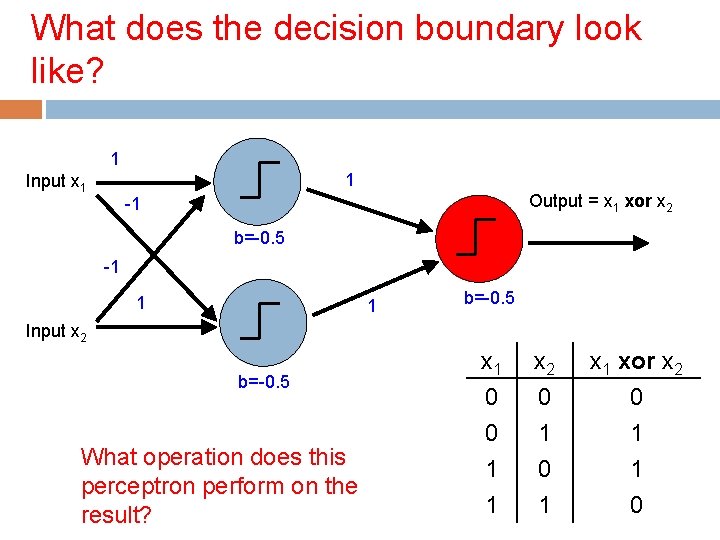

What does the decision boundary look like? (XOR network: hidden nodes with weights -1, 1 and 1, -1 from x1, x2; output node with weights 1, 1; every bias b=-0.5; Output = x1 xor x2.)

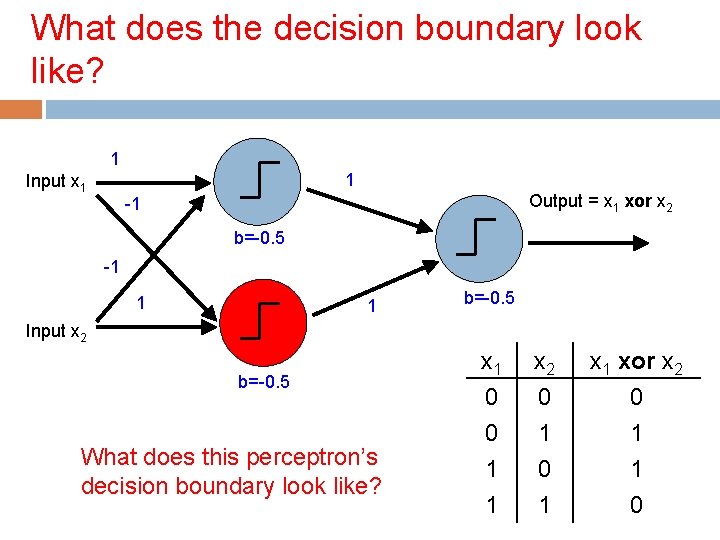

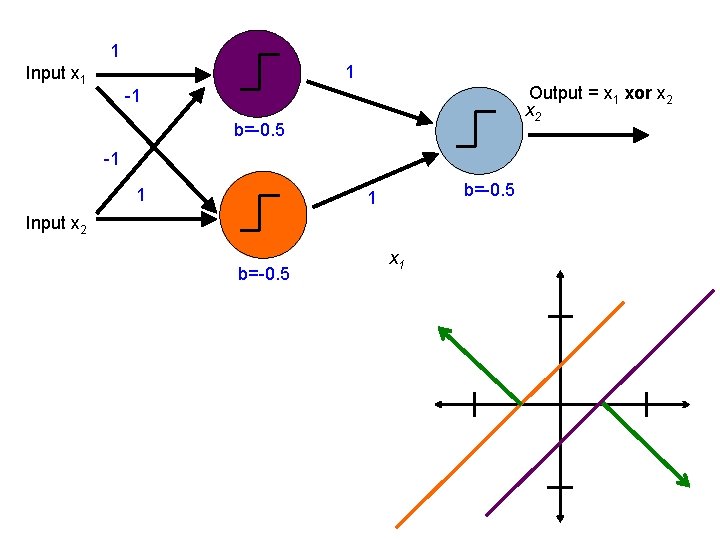

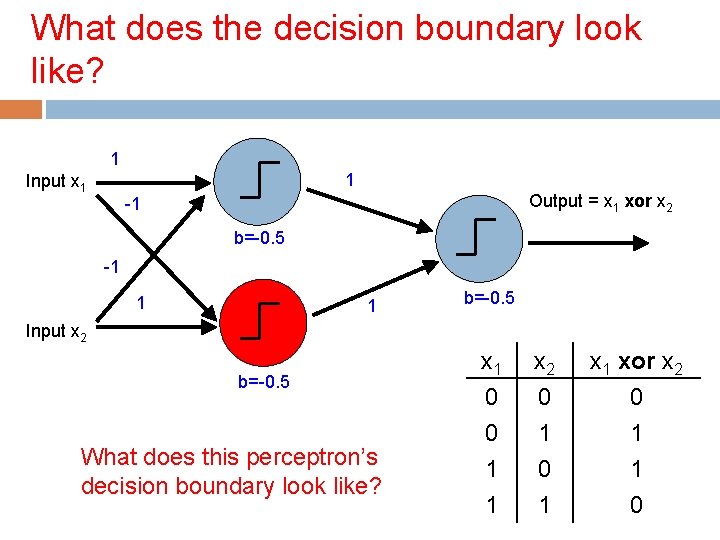

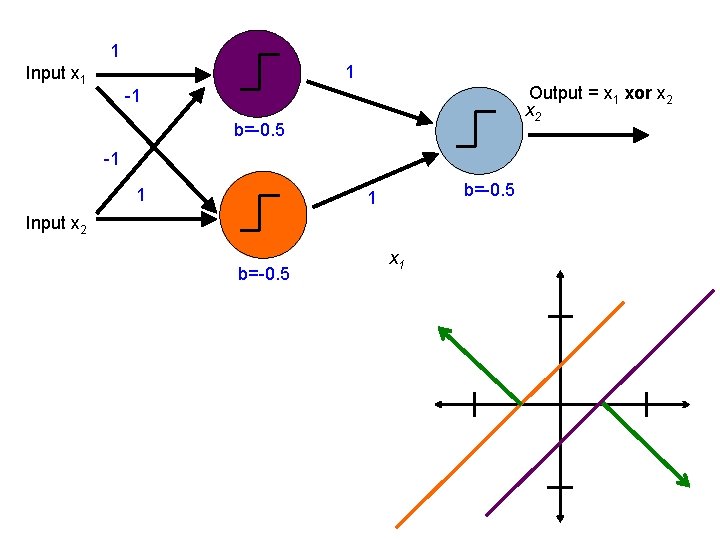

What does this perceptron’s decision boundary look like? (Same XOR network: hidden nodes with weights -1, 1 and 1, -1 from x1, x2; output node with weights 1, 1; every bias b=-0.5.)

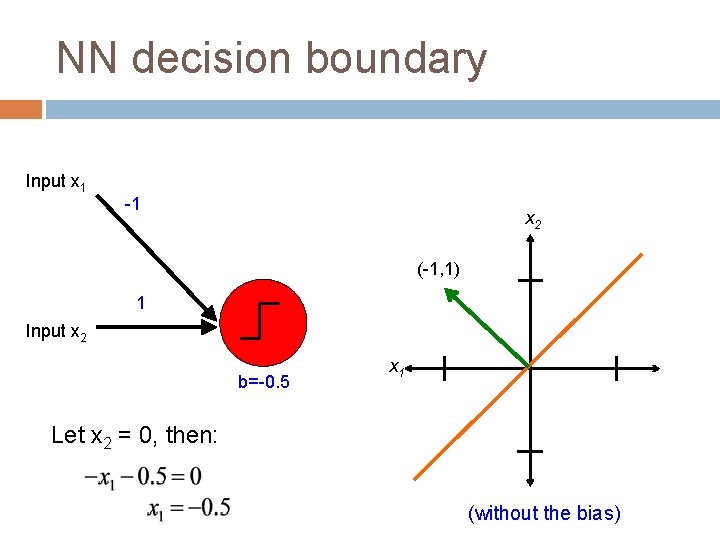

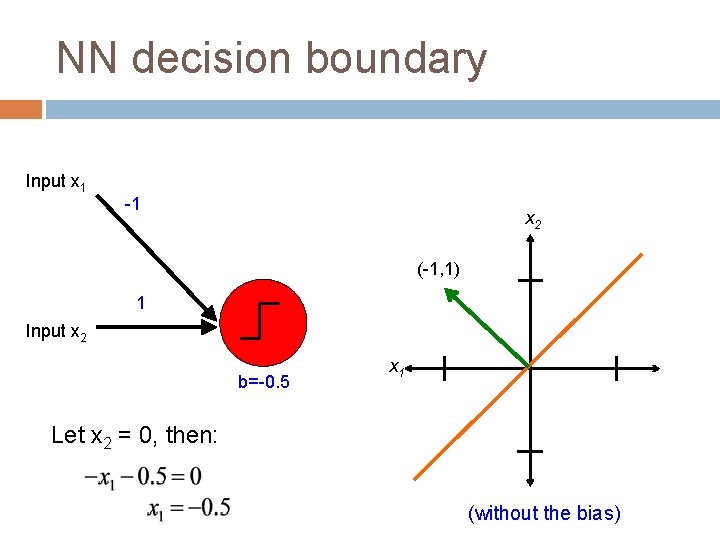

NN decision boundary: the hidden node with weights -1 (from x1) and 1 (from x2) and b=-0.5, i.e. weight vector (-1, 1). Let x2 = 0, then: (without the bias)

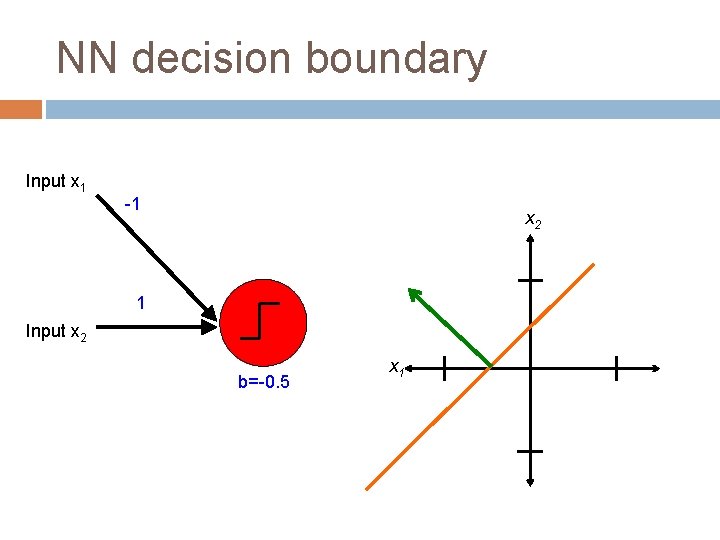

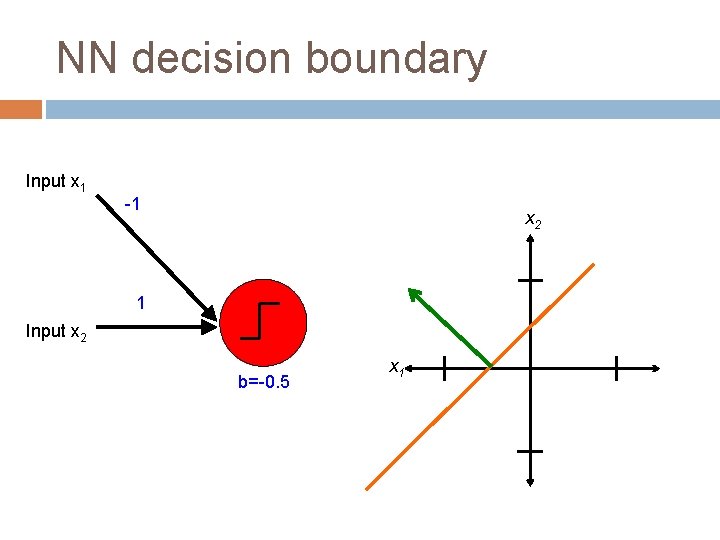

NN decision boundary: the same hidden node (weights -1 from x1, 1 from x2), now with the bias b=-0.5


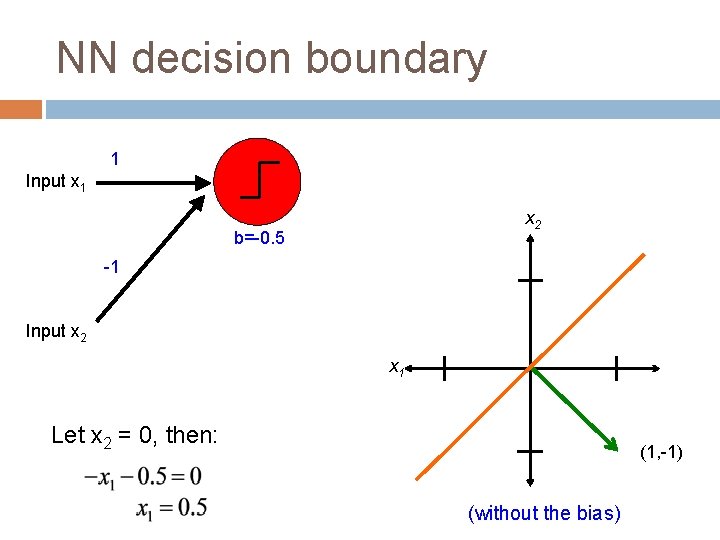

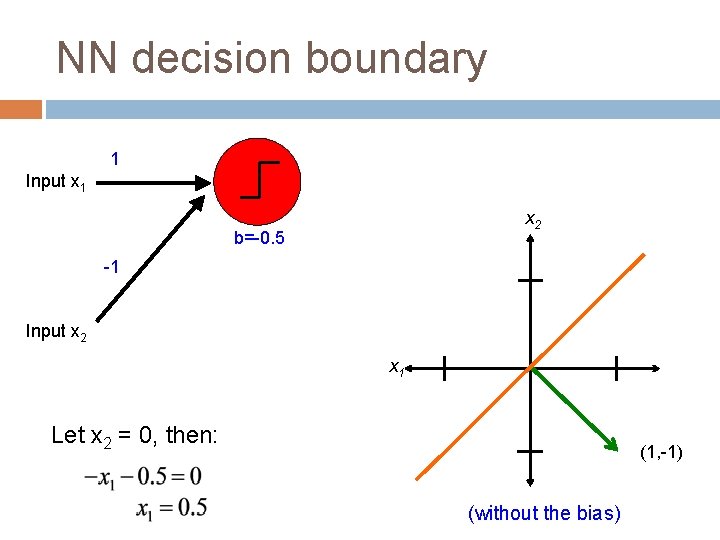

NN decision boundary: the hidden node with weights 1 (from x1) and -1 (from x2) and b=-0.5, i.e. weight vector (1, -1). Let x2 = 0, then: (without the bias)

NN decision boundary: the same hidden node (weights 1 from x1, -1 from x2), now with the bias b=-0.5

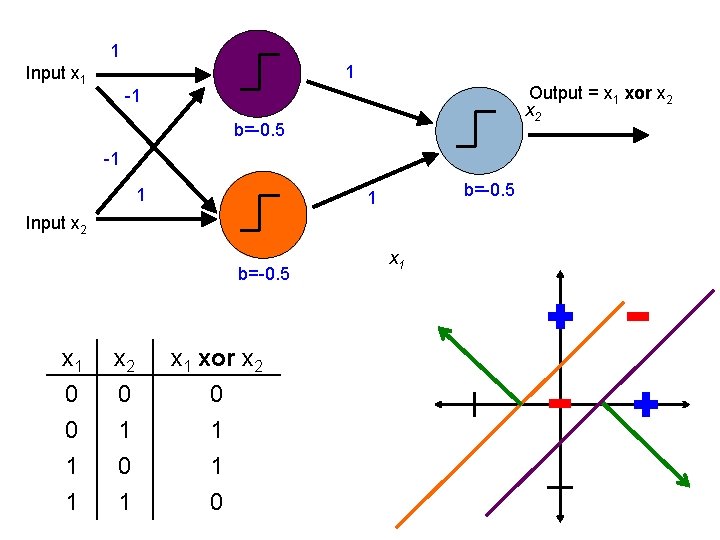

What does the decision boundary look like? What operation does this perceptron (the output node, with weights 1, 1 and b=-0.5) perform on the result of the two hidden nodes? (XOR network; Output = x1 xor x2.)

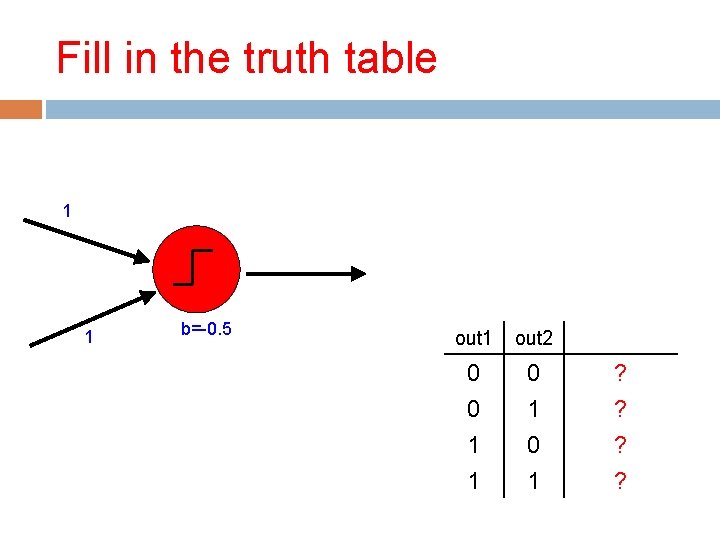

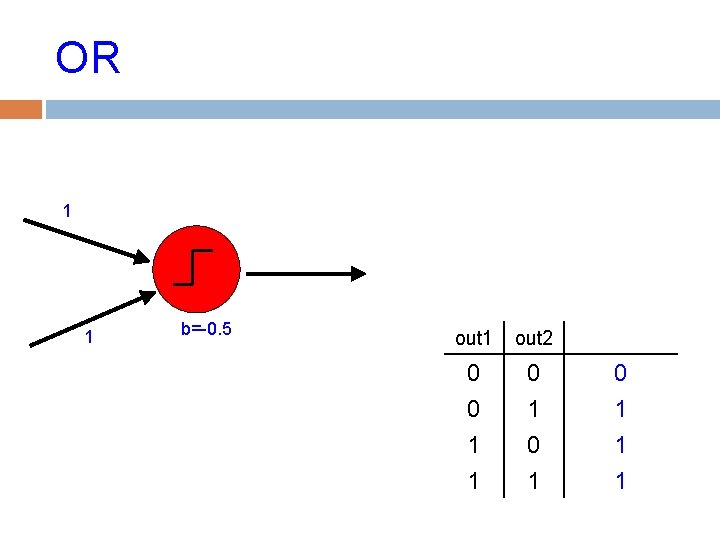

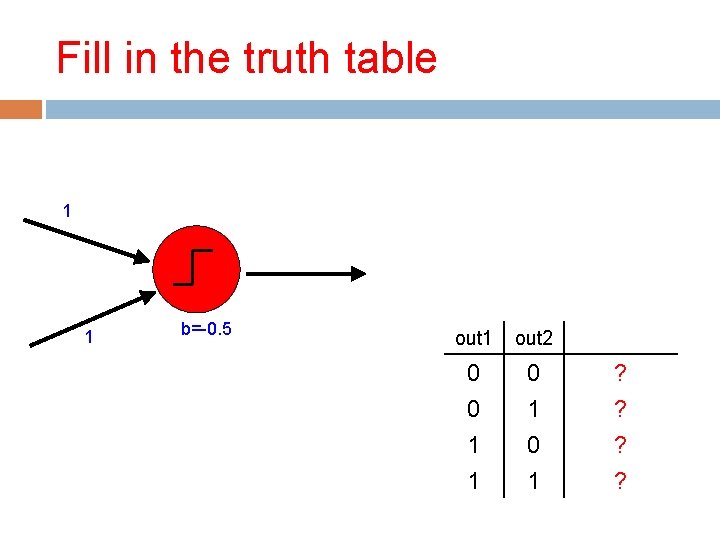

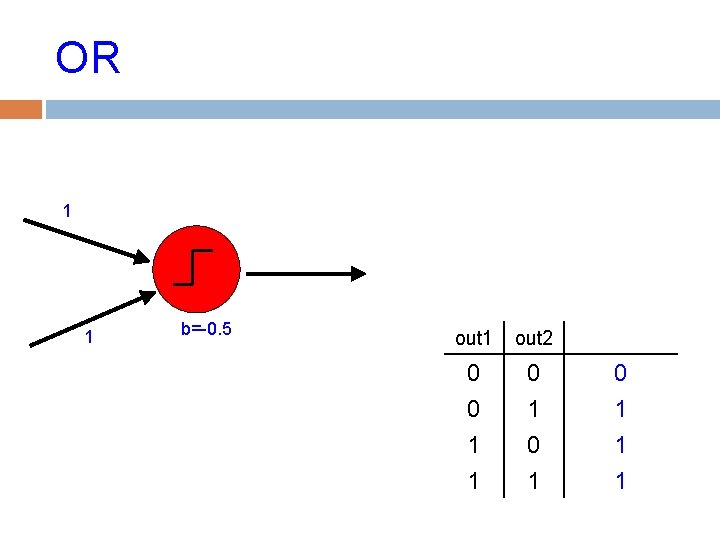

Fill in the truth table for the node with weights 1, 1 and b=-0.5 (out1, out2 → output): (0, 0) → ?; (0, 1) → ?; (1, 0) → ?; (1, 1) → ?

OR (weights 1, 1 and b=-0.5; out1, out2 → output): (0, 0) → 0; (0, 1) → 1; (1, 0) → 1; (1, 1) → 1

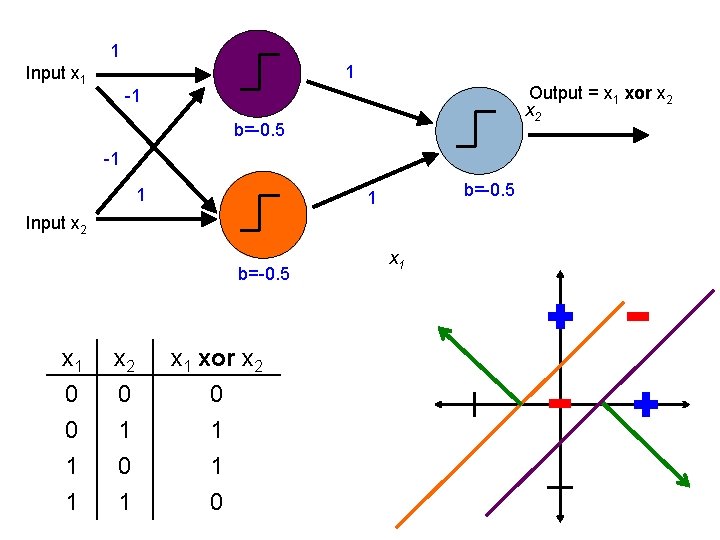


[Figure: the XOR network (Output = x1 xor x2) alongside its decision boundary plotted over x1 and x2]

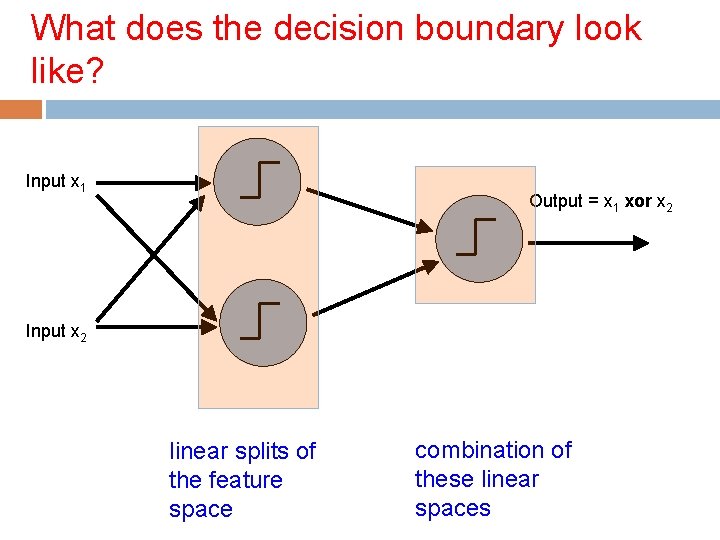

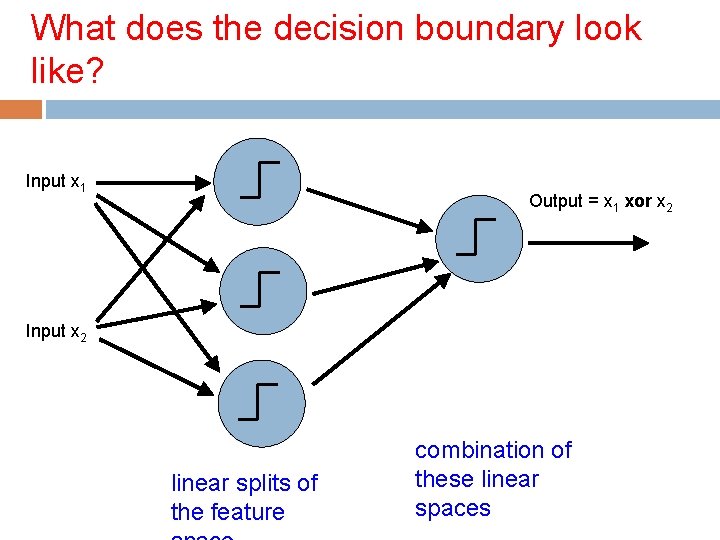

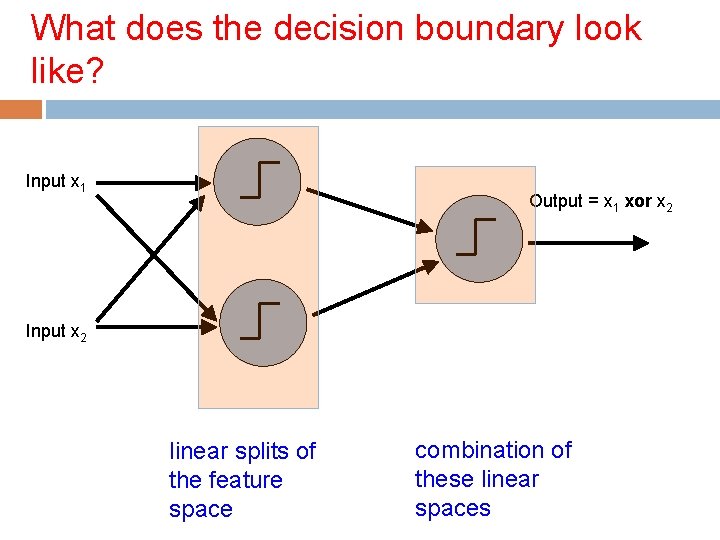

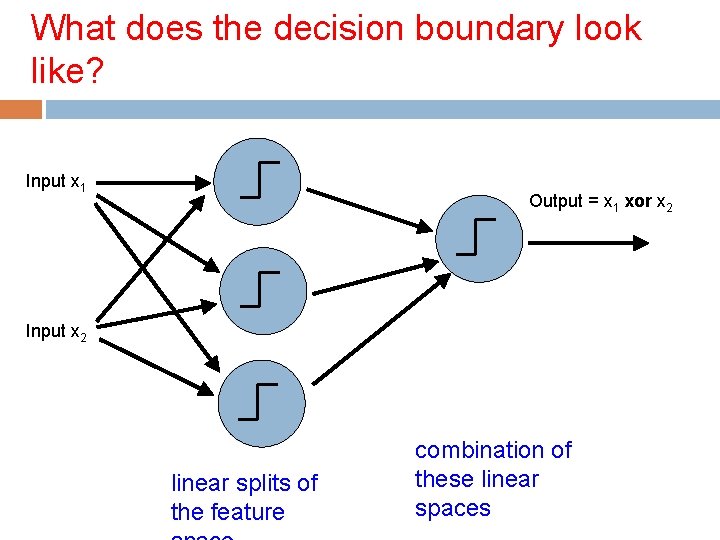

What does the decision boundary look like? (Inputs x1, x2; Output = x1 xor x2.) Each hidden node makes a linear split of the feature space; the output is a combination of these linear spaces.
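Working through the XOR network's weights makes each linear split explicit. The weights are hidden nodes (-1, 1) and (1, -1), output node (1, 1), and every bias -0.5. A hard threshold that fires when the weighted sum plus bias exceeds 0 is assumed.

$$h_1 = 1 \iff -x_1 + x_2 - 0.5 > 0 \iff x_2 > x_1 + 0.5$$

$$h_2 = 1 \iff x_1 - x_2 - 0.5 > 0 \iff x_2 < x_1 - 0.5$$

$$\text{output} = 1 \iff h_1 + h_2 - 0.5 > 0 \iff h_1 = 1 \text{ or } h_2 = 1 \iff |x_1 - x_2| > 0.5$$

Without the bias, each hidden boundary would be the line $x_2 = x_1$, perpendicular to the weight vector. The bias shifts the two boundaries to $x_2 = x_1 + 0.5$ and $x_2 = x_1 - 0.5$. The output node ORs the two half-planes together, so the network predicts 0 inside the band between the lines, where (0, 0) and (1, 1) lie, and 1 outside it.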

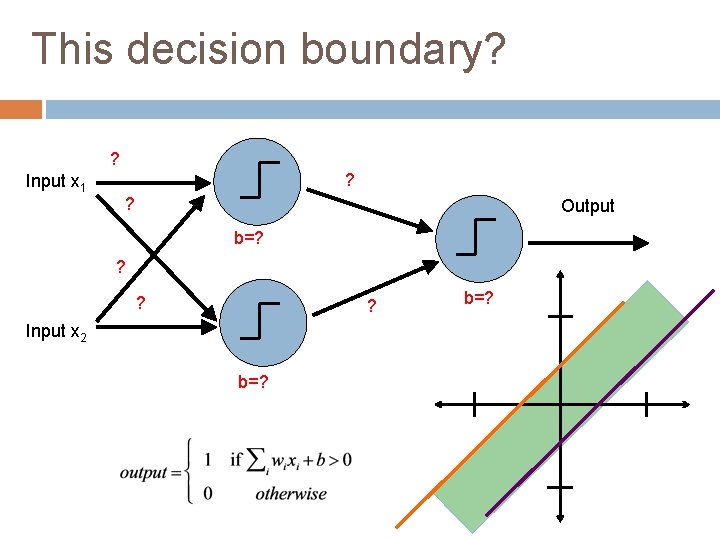

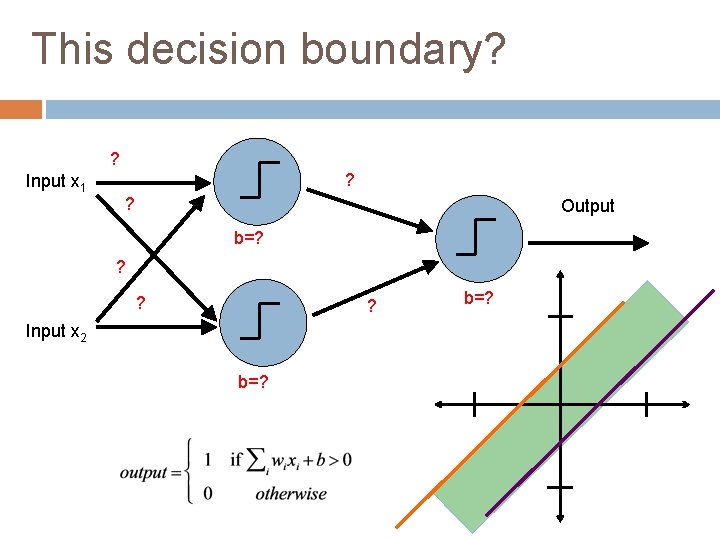

This decision boundary? What weights (?) and biases (b=?) produce it, for a network with inputs x1 and x2?

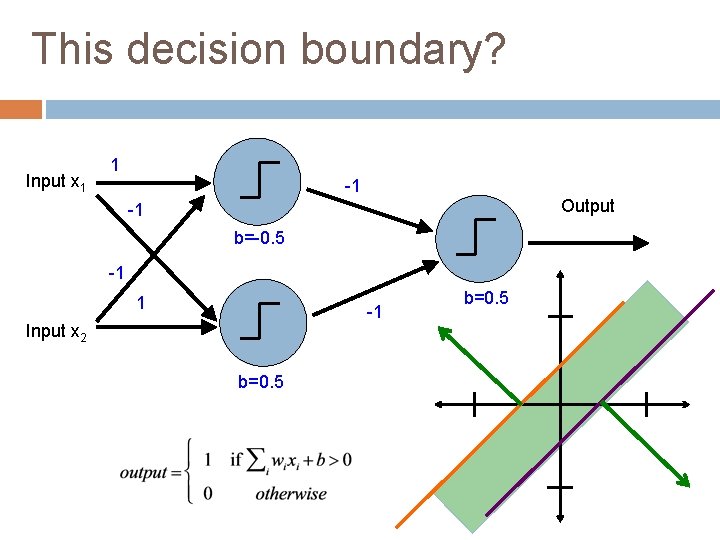

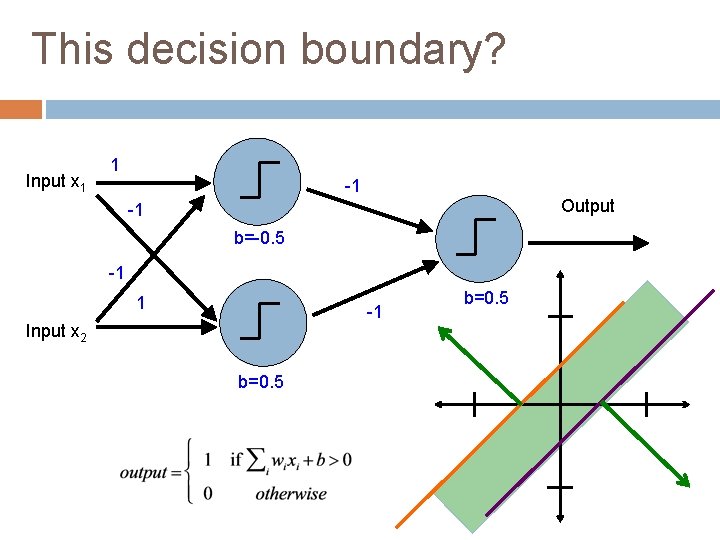

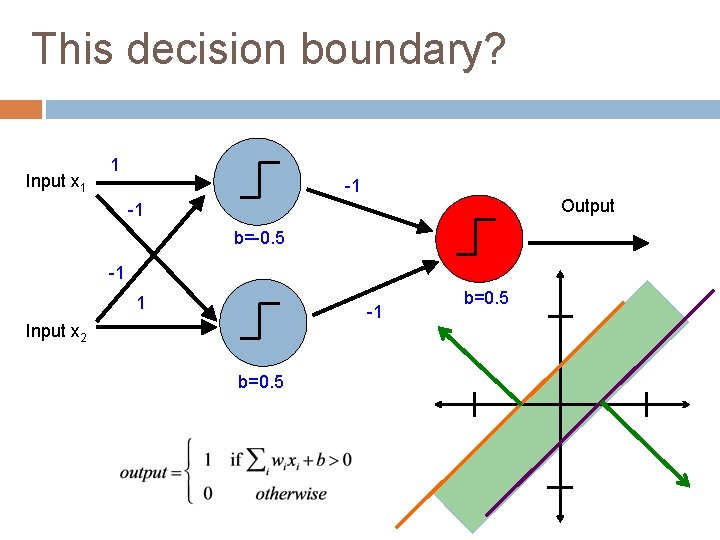

This decision boundary? One solution, shown in the figure, uses inputs x1 and x2, edge weights of 1 and -1, and biases b=-0.5 and b=0.5.

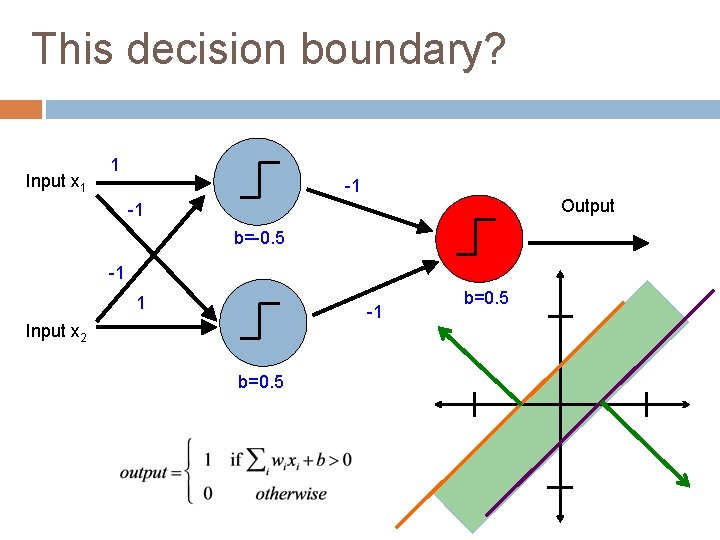


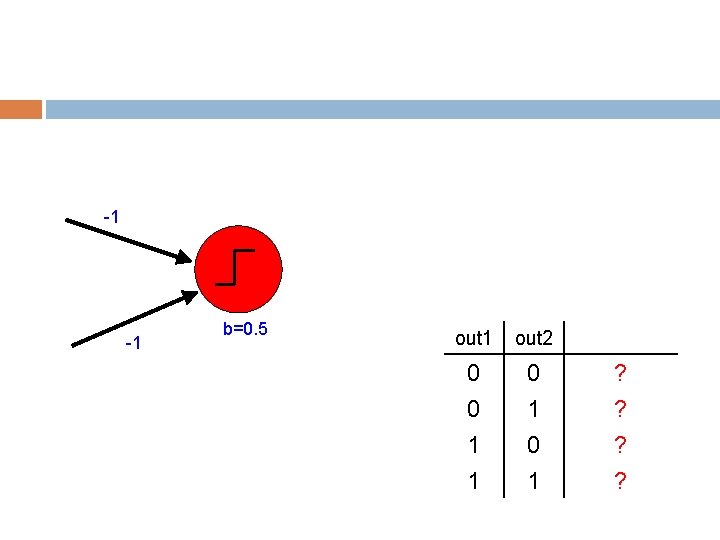

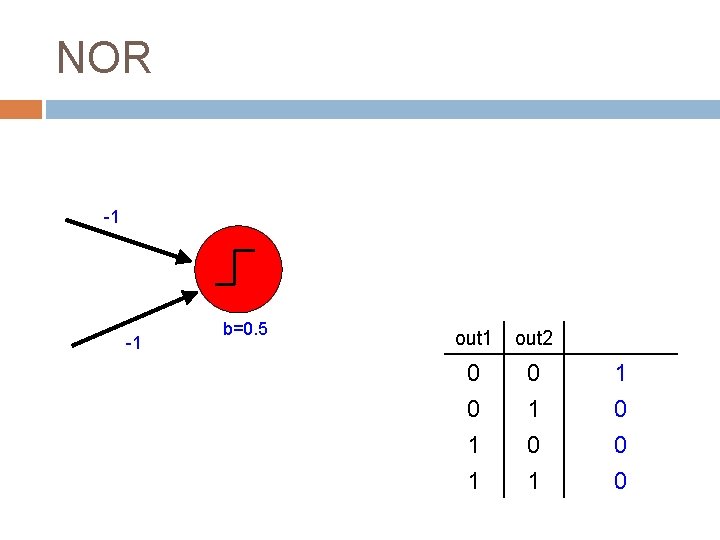

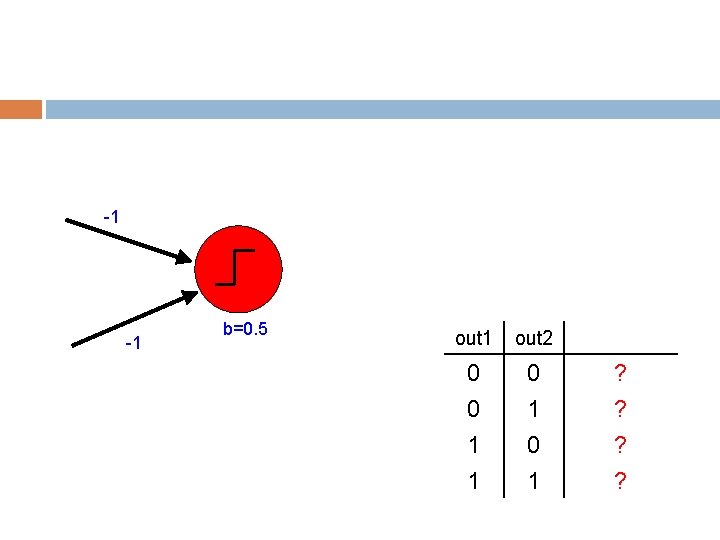

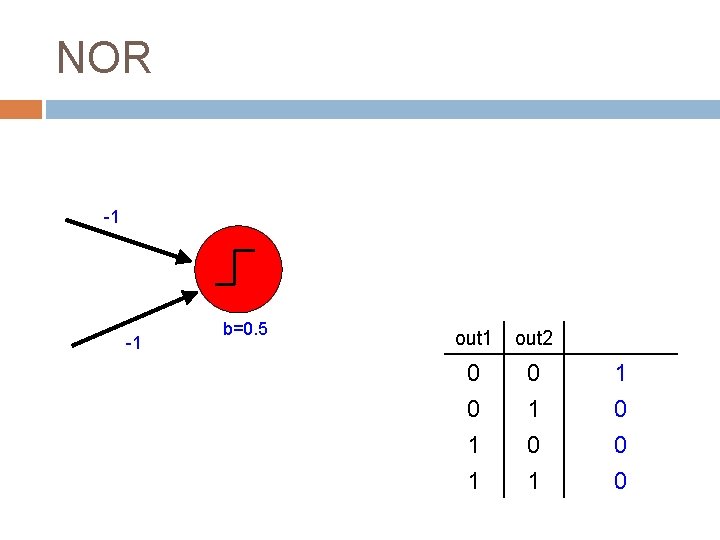

Fill in the truth table for the node with weights -1, -1 and b=0.5 (out1, out2 → output): (0, 0) → ?; (0, 1) → ?; (1, 0) → ?; (1, 1) → ?

NOR (weights -1, -1 and b=0.5; out1, out2 → output): (0, 0) → 1; (0, 1) → 0; (1, 0) → 0; (1, 1) → 0
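The "fill in the truth table" exercises can be checked mechanically by enumerating every input pair for a single hard-threshold node. The step at 0 is an assumption, and the helper names are illustrative.

```python
def threshold_node(inputs, weights, bias):
    """Hard-threshold perceptron: 1 if the weighted sum plus bias is > 0, else 0."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0

def truth_table(weights, bias):
    """Output of a 2-input threshold node for every (out1, out2) combination."""
    return {(a, b): threshold_node((a, b), weights, bias)
            for a in (0, 1) for b in (0, 1)}

print(truth_table((1, 1), -0.5))    # OR
print(truth_table((-1, -1), 0.5))   # NOR
```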

What does the decision boundary look like? (Inputs x1, x2; Output = x1 xor x2.) Linear splits of the feature space; the output is a combination of these linear spaces.

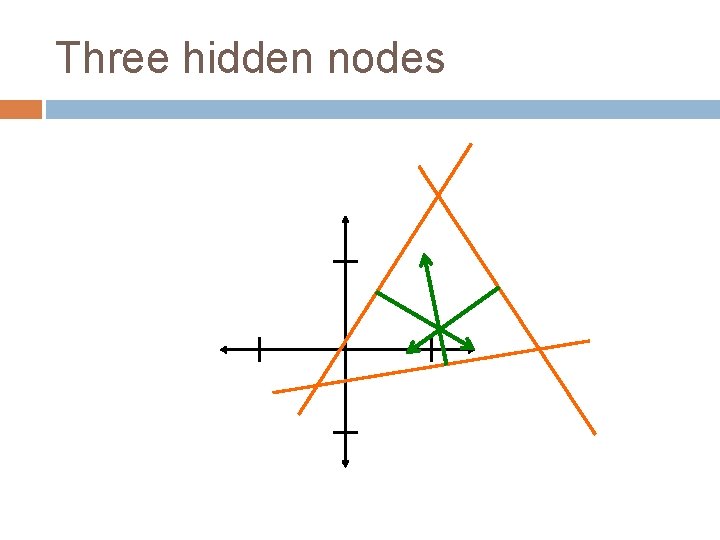

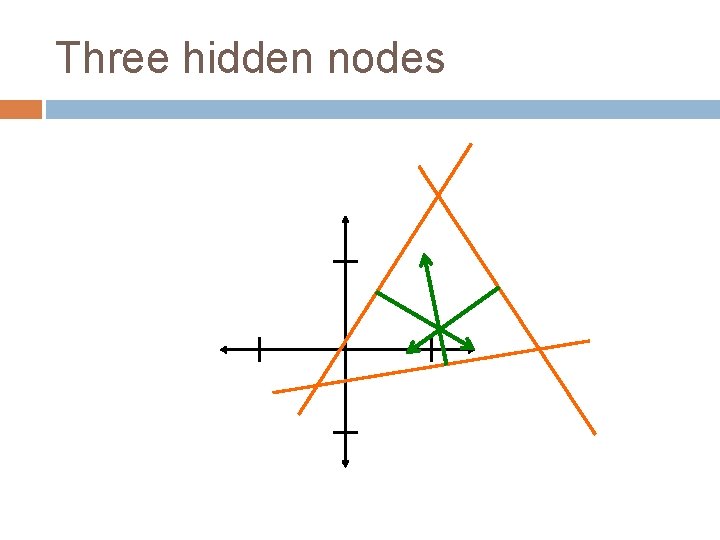

Three hidden nodes

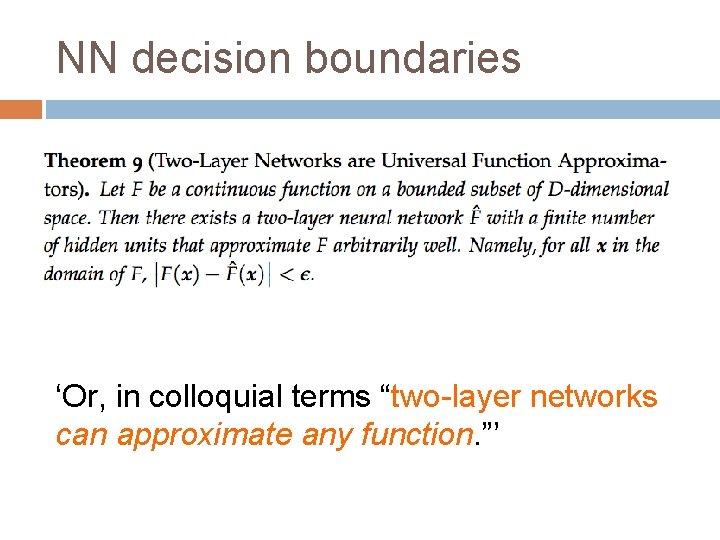

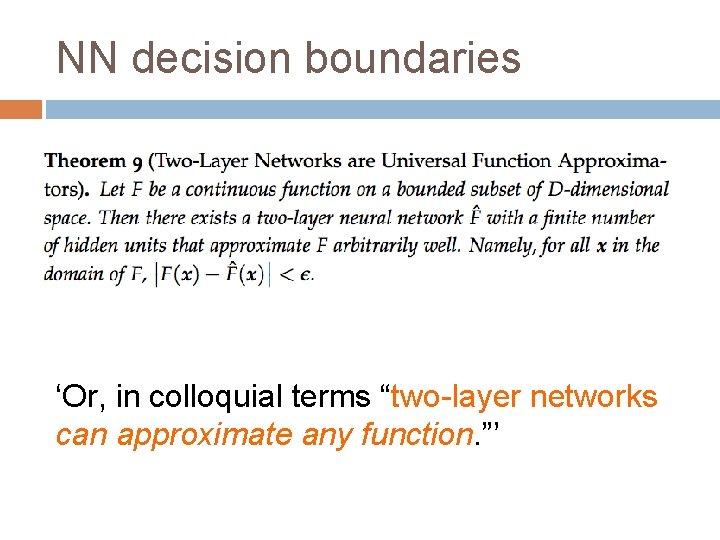

NN decision boundaries: ‘Or, in colloquial terms, “two-layer networks can approximate any function.”’

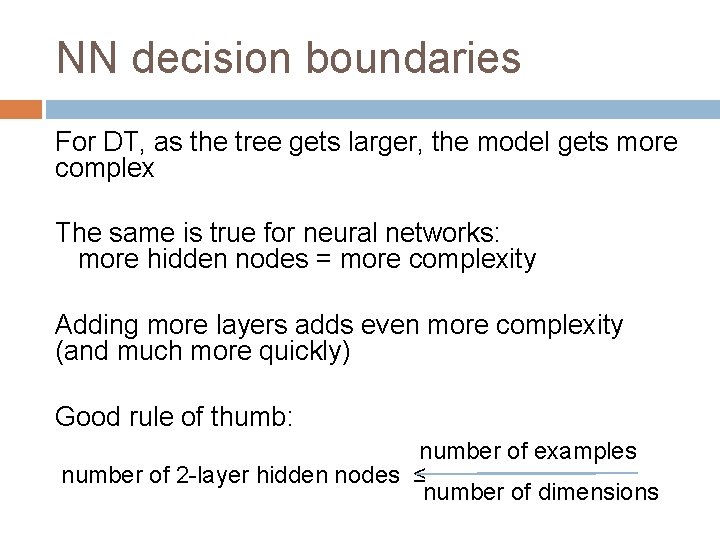

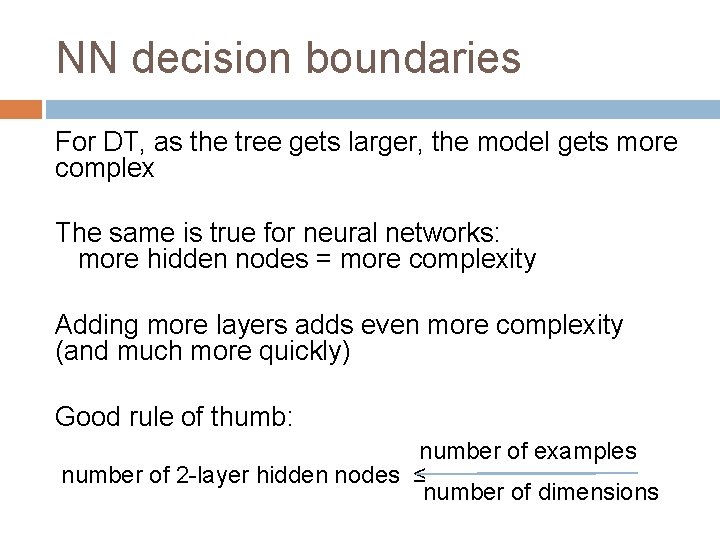

NN decision boundaries

- For decision trees (DT), as the tree gets larger, the model gets more complex.
- The same is true for neural networks: more hidden nodes = more complexity.
- Adding more layers adds even more complexity (and much more quickly).
- Good rule of thumb: $\text{number of 2-layer hidden nodes} \le \frac{\text{number of examples}}{\text{number of dimensions}}$

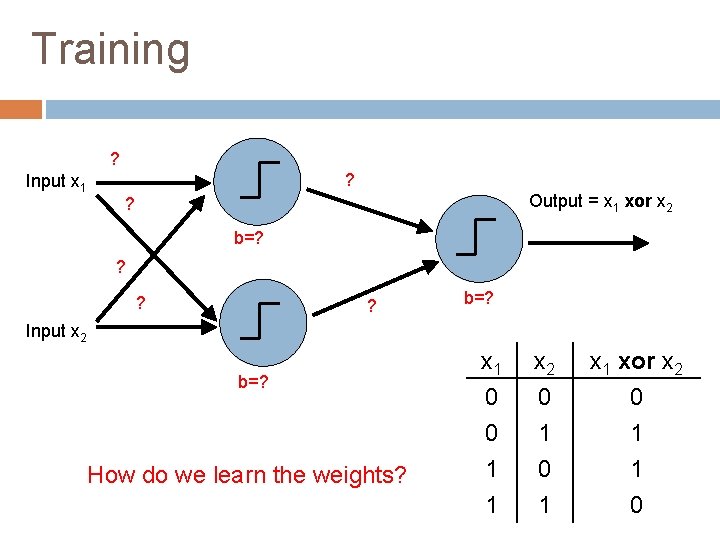

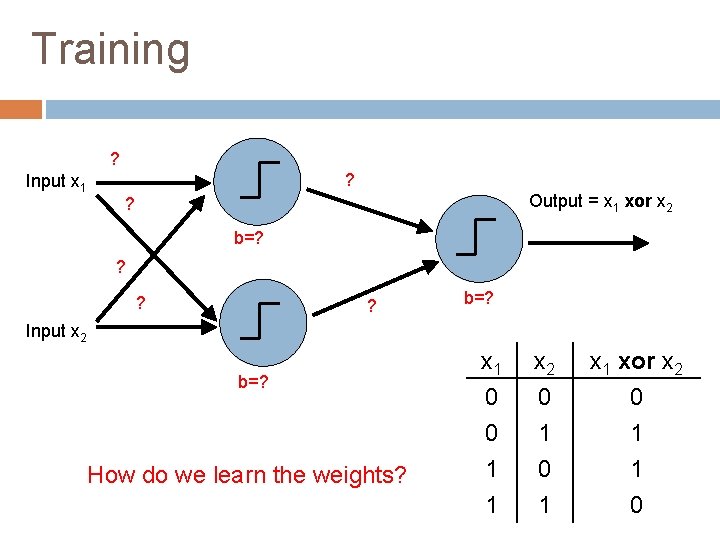

Training: how do we learn the weights (?) and biases (b=?) so that Output = x1 xor x2?

Truth table (x1, x2 → x1 xor x2): (0, 0) → 0; (0, 1) → 1; (1, 0) → 1; (1, 1) → 0

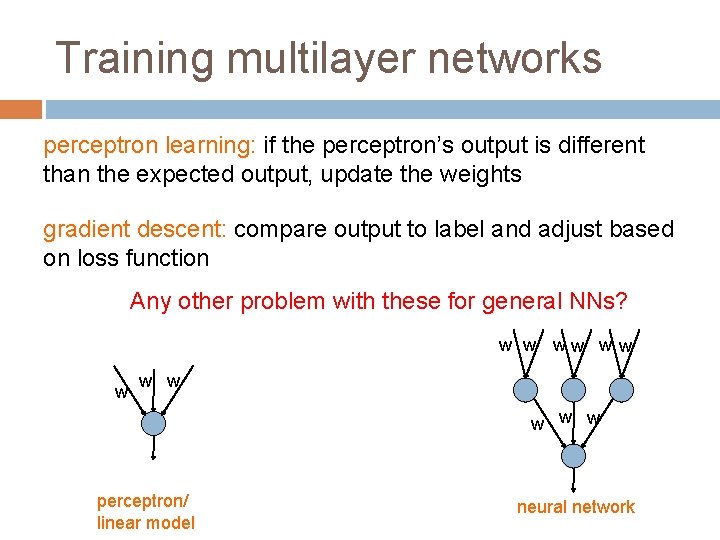

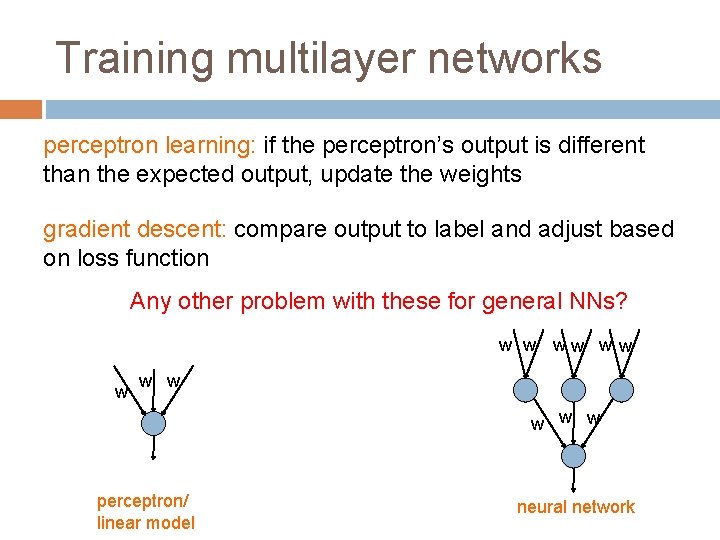

Training multilayer networks

- perceptron learning: if the perceptron’s output is different from the expected output, update the weights
- gradient descent: compare the output to the label and adjust the weights based on a loss function

Any other problem with these for general NNs? (Figure: edges labeled w in a perceptron/linear model vs. in a neural network.)
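For a single unit, the gradient descent option can be sketched as follows. This assumes a sigmoid output and squared error, 0.5·(label - output)², and trains on OR as a toy example. The learning rate, epoch count, and `train_sigmoid_unit` name are illustrative choices.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_sigmoid_unit(data, lr=0.5, epochs=5000):
    """Gradient descent on squared error for one sigmoid unit."""
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, label in data:
            out = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            # compare output to label; out * (1 - out) is the sigmoid's slope (chain rule)
            delta = (label - out) * out * (1.0 - out)
            w = [wi + lr * delta * xi for wi, xi in zip(w, x)]
            b += lr * delta
    return w, b

or_data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
w, b = train_sigmoid_unit(or_data)
for x, label in or_data:
    print(x, label, round(sigmoid(w[0] * x[0] + w[1] * x[1] + b), 2))
```

This works because the unit's output is directly compared to a label. The next slide's challenge is that hidden nodes have no label of their own.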

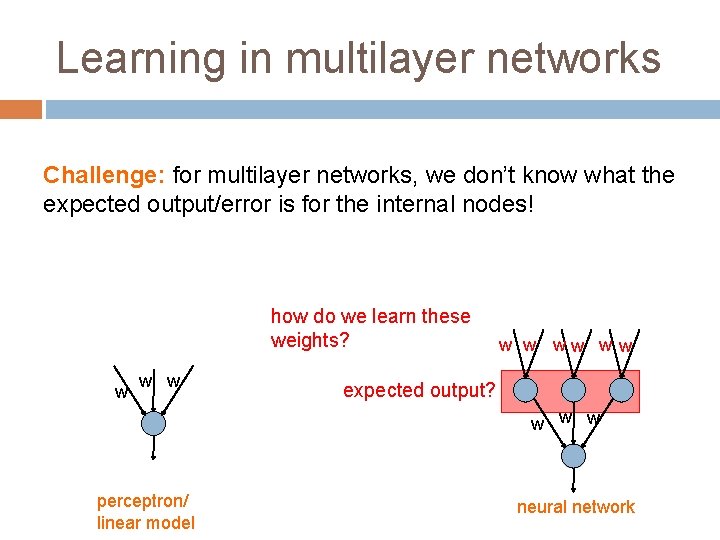

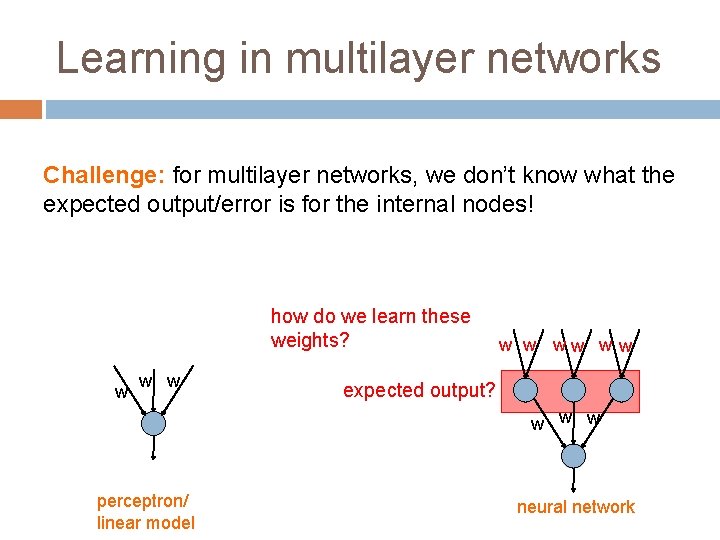

Learning in multilayer networks. Challenge: for multilayer networks, we don’t know what the expected output/error is for the internal nodes! How do we learn these weights? (Figure: perceptron/linear model vs. neural network, with “expected output?” marked at the hidden nodes.)

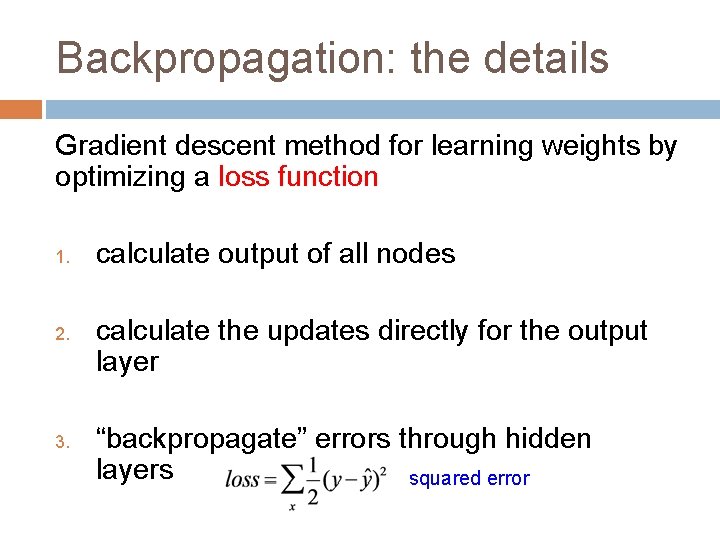

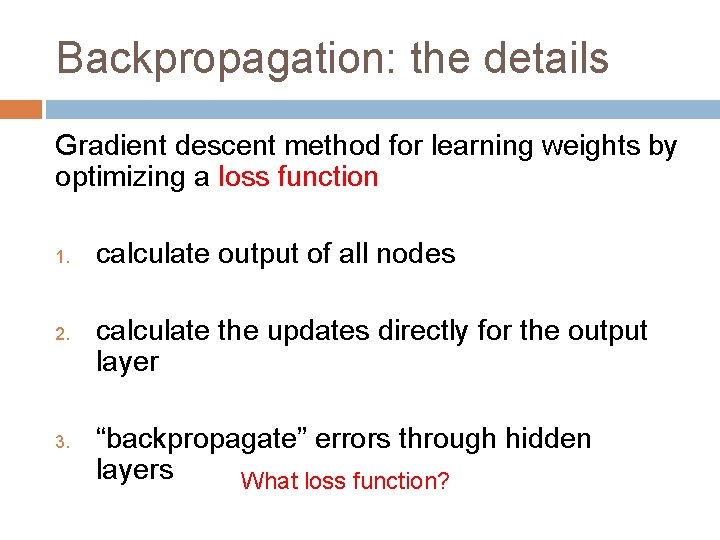

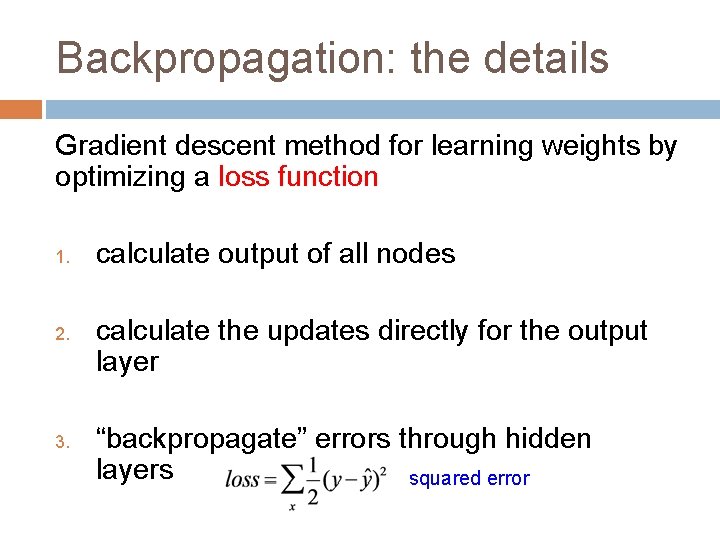

Backpropagation: intuition

Gradient descent method for learning weights by optimizing a loss function:

1. calculate the output of all nodes
2. calculate the updates to the output layer’s weights based on the error
3. “backpropagate” errors through hidden layers

Backpropagation: intuition. We can calculate the actual error here.

Backpropagation: intuition. Key idea: propagate the error back to this layer.

Backpropagation: intuition. “Backpropagate” the error: assume all of these nodes were responsible for some of the error. How can we figure out how much they were responsible for?

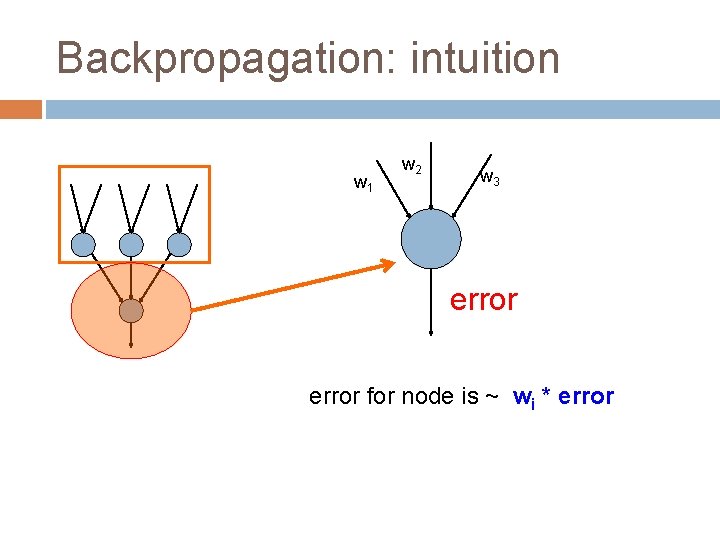

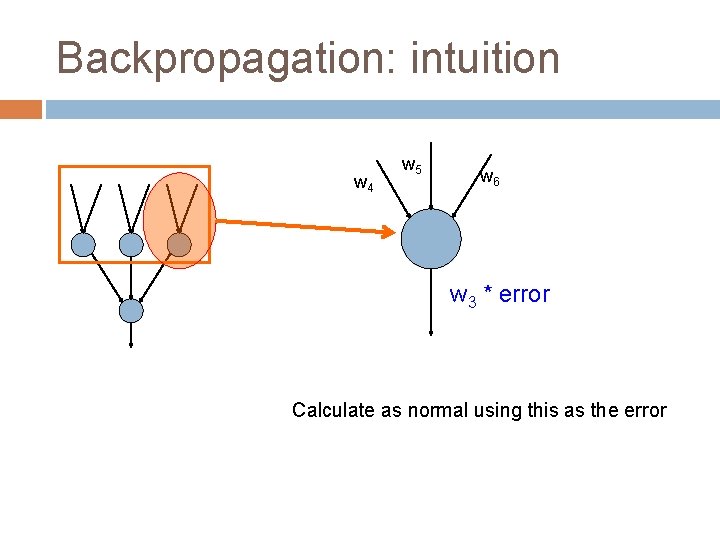

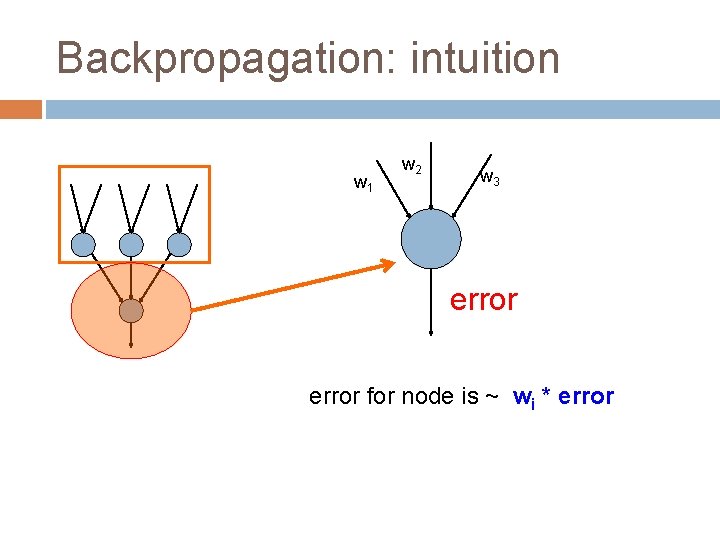

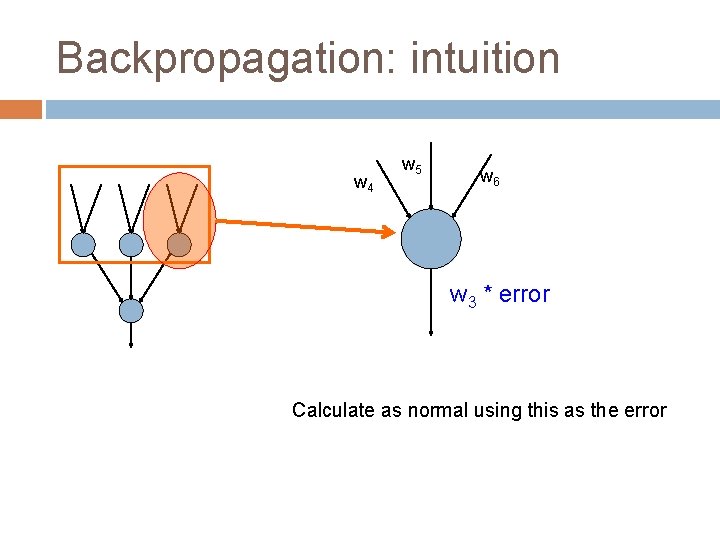

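Backpropagation: intuition. With weights w1, w2, w3 connecting the hidden nodes to the output, the error for hidden node i is ~ wi * error.

Backpropagation: intuition. For the node connected by w3, use w3 * error as its error and calculate the updates for its incoming weights (w4, w5, w6) as normal.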

Backpropagation: the details

Gradient descent method for learning weights by optimizing a loss function:

1. calculate the output of all nodes
2. calculate the updates directly for the output layer
3. “backpropagate” errors through hidden layers

What loss function? Squared error.
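The three steps with squared error can be put together in a small training sketch for XOR. This assumes sigmoid activations, one hidden layer (4 hidden nodes, chosen for illustration), per-example updates, and a fixed random seed. Backpropagation on XOR can stall in a poor local minimum for some starting weights, so the sketch reports the loss rather than promising a perfect fit.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_xor(num_hidden=4, lr=0.5, epochs=5000, seed=0):
    """Backpropagation with squared error on a 2-input, 1-hidden-layer, 1-output network."""
    rng = random.Random(seed)
    # hidden[j] = [weight from x1, weight from x2, bias]
    hidden = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(num_hidden)]
    # out_w[:num_hidden] are weights from the hidden nodes; out_w[num_hidden] is the bias
    out_w = [rng.uniform(-1, 1) for _ in range(num_hidden + 1)]
    data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

    def forward(x):
        h = [sigmoid(hw[0] * x[0] + hw[1] * x[1] + hw[2]) for hw in hidden]
        out = sigmoid(sum(out_w[j] * h[j] for j in range(num_hidden)) + out_w[num_hidden])
        return h, out

    def total_loss():
        return sum(0.5 * (y - forward(x)[1]) ** 2 for x, y in data)

    losses = [total_loss()]
    for _ in range(epochs):
        for x, y in data:
            h, out = forward(x)                                   # 1. outputs of all nodes
            delta_out = (y - out) * out * (1 - out)               # 2. error at the output
            delta_h = [out_w[j] * delta_out * h[j] * (1 - h[j])   # 3. backpropagate,
                       for j in range(num_hidden)]                #    roughly w_j * error
            for j in range(num_hidden):
                out_w[j] += lr * delta_out * h[j]
            out_w[num_hidden] += lr * delta_out
            for j in range(num_hidden):
                hidden[j][0] += lr * delta_h[j] * x[0]
                hidden[j][1] += lr * delta_h[j] * x[1]
                hidden[j][2] += lr * delta_h[j]
        losses.append(total_loss())
    return forward, losses

forward, losses = train_xor()
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, round(forward(x)[1], 2))
```

The hidden-node errors are computed from the output weights before those weights are updated, so every update in one step uses the same forward pass.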

David kauchak

David kauchak Lebensversicherungsgesellschaftsangestellter

Lebensversicherungsgesellschaftsangestellter David kauchak

David kauchak Translation process

Translation process David kauchak

David kauchak David kauchak

David kauchak David kauchak

David kauchak Introduction to teaching: becoming a professional

Introduction to teaching: becoming a professional The verb ser (p. 158) answers

The verb ser (p. 158) answers Krs 158

Krs 158 Me gustan me encantan (p. 135)

Me gustan me encantan (p. 135) Concurrent validity example

Concurrent validity example Scp 158

Scp 158 Cs 158

Cs 158 Visualizing and understanding convolutional neural networks

Visualizing and understanding convolutional neural networks Liran szlak

Liran szlak Ib psychology

Ib psychology Audio super resolution using neural networks

Audio super resolution using neural networks Convolutional neural networks for visual recognition

Convolutional neural networks for visual recognition Leon gatys

Leon gatys Efficient processing of deep neural networks pdf

Efficient processing of deep neural networks pdf Deep neural networks and mixed integer linear optimization

Deep neural networks and mixed integer linear optimization Convolutional neural network presentation

Convolutional neural network presentation Neural networks and learning machines 3rd edition

Neural networks and learning machines 3rd edition Pixelrnn

Pixelrnn Matlab neural network toolbox pdf

Matlab neural network toolbox pdf Neural networks for rf and microwave design

Neural networks for rf and microwave design 11-747 neural networks for nlp

11-747 neural networks for nlp Neural networks simon haykin

Neural networks simon haykin Csrmm

Csrmm On the computational efficiency of training neural networks

On the computational efficiency of training neural networks Tlu in neural network

Tlu in neural network Neural networks and fuzzy logic

Neural networks and fuzzy logic Introduction to convolutional neural networks

Introduction to convolutional neural networks Convolutional neural networks

Convolutional neural networks Few shot learning with graph neural networks

Few shot learning with graph neural networks Deep forest towards an alternative to deep neural networks

Deep forest towards an alternative to deep neural networks Convolutional neural networks

Convolutional neural networks Neuraltools neural networks

Neuraltools neural networks Gated recurrent unit in deep learning

Gated recurrent unit in deep learning Predicting nba games using neural networks

Predicting nba games using neural networks Neural networks and learning machines

Neural networks and learning machines The wake-sleep algorithm for unsupervised neural networks

The wake-sleep algorithm for unsupervised neural networks Audio super resolution using neural networks

Audio super resolution using neural networks Convolutional neural network alternatives

Convolutional neural network alternatives Datagram network vs virtual circuit network

Datagram network vs virtual circuit network Backbone networks in computer networks

Backbone networks in computer networks A neural probabilistic language model

A neural probabilistic language model улмфи

улмфи Cervical and brachial plexus

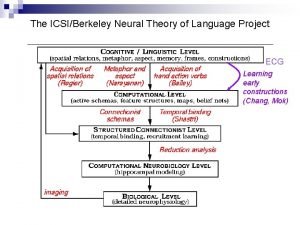

Cervical and brachial plexus Neural theory

Neural theory Textured neural avatars

Textured neural avatars Prosencéfalo mesencéfalo e rombencéfalo

Prosencéfalo mesencéfalo e rombencéfalo Show and tell: a neural image caption generator

Show and tell: a neural image caption generator Student teacher deep learning

Student teacher deep learning Splanchnic mesoderm

Splanchnic mesoderm Neural circuits the organization of neuronal pools

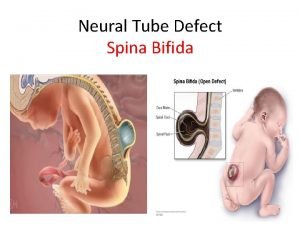

Neural circuits the organization of neuronal pools What is neural tube

What is neural tube Neural strategies pdhpe

Neural strategies pdhpe Neural packet classification

Neural packet classification Cost function neural network

Cost function neural network Mink ph reviews

Mink ph reviews Meshnet: mesh neural network for 3d shape representation

Meshnet: mesh neural network for 3d shape representation Pengertian artificial neural network

Pengertian artificial neural network Neural network in r

Neural network in r Spss neural network

Spss neural network