

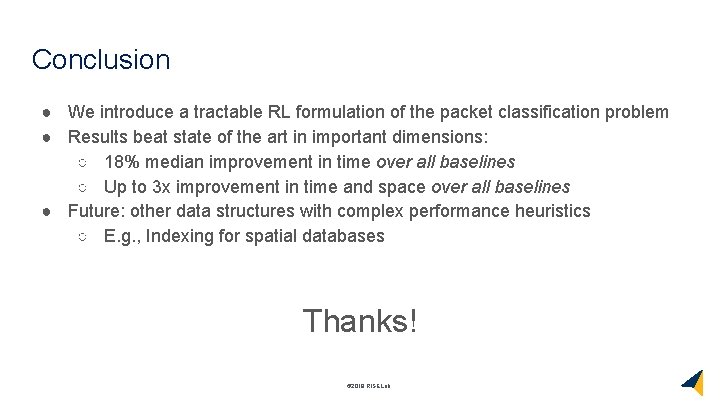

Neural Packet Classification

Eric Liang¹, Hang Zhu², Xin Jin², Ion Stoica¹

¹ UC Berkeley, ² JHU

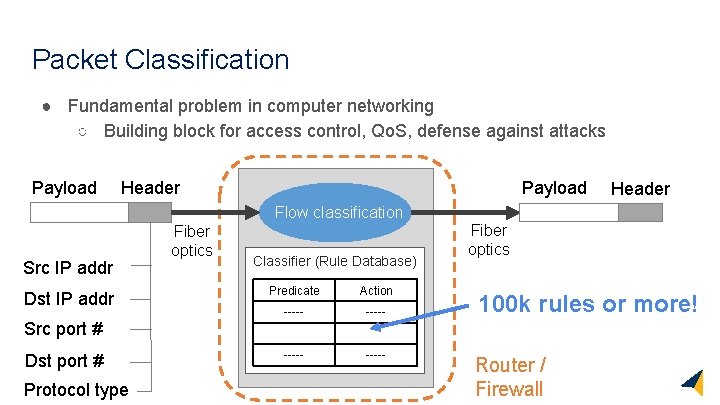

Packet Classification

- Fundamental problem in computer networking
  - Building block for access control, QoS, defense against attacks

[Figure: a packet (header + payload) arrives over fiber optics at a router / firewall. Flow classification matches the header fields (Src IP addr, Dst IP addr, Src port #, Dst port #, Protocol type) against a classifier (rule database) of Predicate / Action entries: 100k rules or more!]

© 2018 RISELab

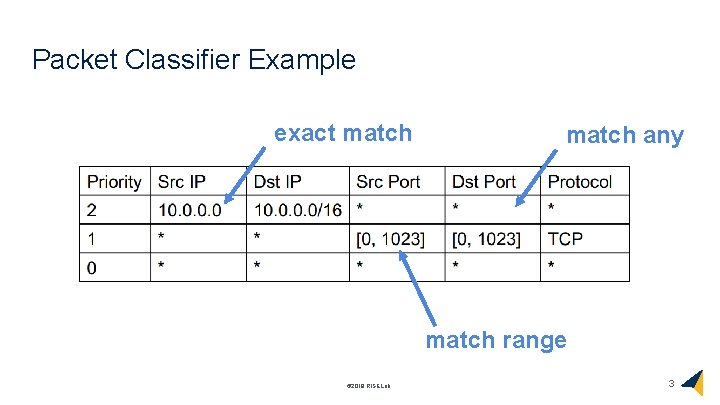

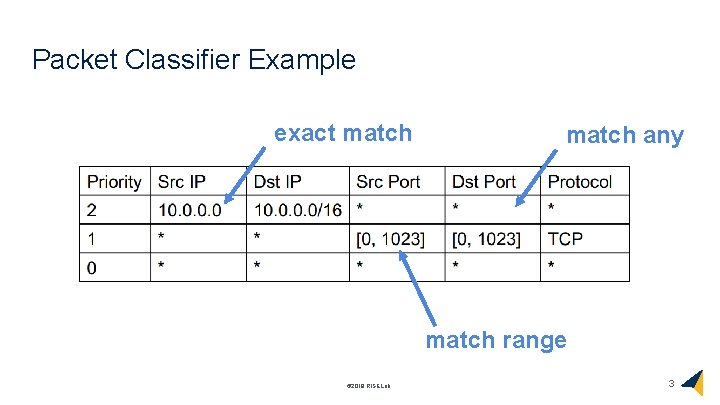

Packet Classifier Example

[Figure: example rule table whose fields are annotated as exact match, any match, and range.]
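
The three match types can all be treated as ranges: an exact match is a one-value range and "any" is the full range of the field. Below is a minimal sketch of a linear-scan classifier under that view. The `Rule` class, the field order, and the convention that a larger `priority` wins are assumptions for illustration, not taken from the slides.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Range = Tuple[int, int]  # inclusive [lo, hi]

# "Any" match = the full range of the field.
ANY_IP: Range = (0, 2**32 - 1)
ANY_PORT: Range = (0, 65535)
ANY_PROTO: Range = (0, 255)


def exact(value: int) -> Range:
    """An exact match is a range containing a single value."""
    return (value, value)


@dataclass(frozen=True)
class Rule:
    priority: int              # assumption: larger number wins
    ranges: Tuple[Range, ...]  # (src_ip, dst_ip, src_port, dst_port, protocol)
    action: str


def matches(rule: Rule, packet: Sequence[int]) -> bool:
    """A packet matches when every header field falls inside the rule's range."""
    return all(lo <= v <= hi for (lo, hi), v in zip(rule.ranges, packet))


def classify(rules: Sequence[Rule], packet: Sequence[int]) -> Optional[Rule]:
    """Linear scan: return the highest-priority matching rule, or None."""
    hits = [r for r in rules if matches(r, packet)]
    return max(hits, key=lambda r: r.priority, default=None)


rules = [
    Rule(2, (ANY_IP, ANY_IP, ANY_PORT, (0, 1023), exact(6)), "deny"),
    Rule(1, (ANY_IP, ANY_IP, ANY_PORT, ANY_PORT, ANY_PROTO), "allow"),
]
ssh_packet = (0x0A000001, 0x0A000002, 40000, 22, 6)
web_packet = (0x0A000001, 0x0A000002, 40000, 8080, 6)
print(classify(rules, ssh_packet).action)  # deny
print(classify(rules, web_packet).action)  # allow
```

A linear scan costs O(N) per packet, which is the cost that tree-based classifiers try to avoid.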

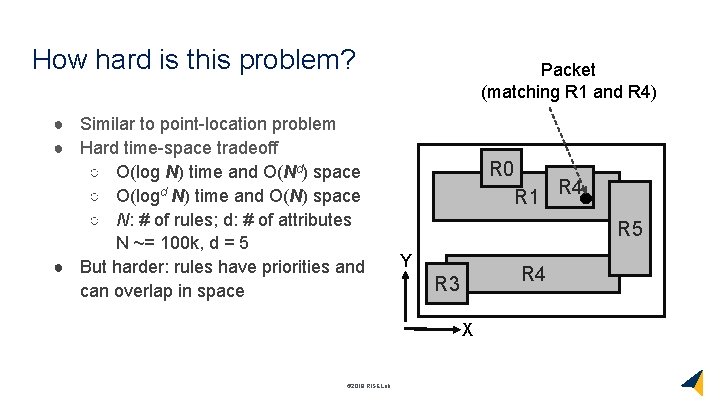

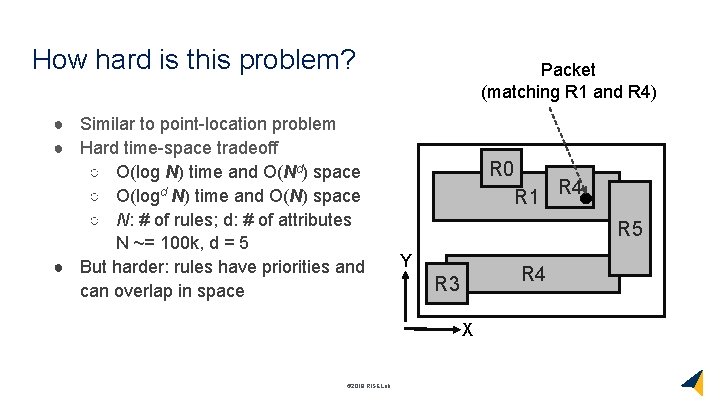

How hard is this problem?

- Similar to the point-location problem
- Hard time-space tradeoff
  - $O(\log N)$ time and $O(N^d)$ space
  - $O(\log^d N)$ time and $O(N)$ space
  - $N$: # of rules; $d$: # of attributes; $N \approx 100\text{k}$, $d = 5$
- But harder: rules have priorities and can overlap in space

[Figure: rules R0, R1, R3, R4, R5 drawn as overlapping rectangles in the X-Y plane, with a packet matching both R1 and R4.]
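
To get a feel for the two ends of the tradeoff, the bounds can be evaluated at the slide's values of N and d. This is only a rough sketch: it drops all constant factors and assumes base-2 logarithms, so the numbers show orders of magnitude, not real costs.

```python
import math

N = 100_000  # number of rules
d = 5        # number of attributes

# Fast lookup, huge memory: O(log N) time, O(N^d) space.
fast_time = math.log2(N)
fast_space = N ** d

# Small memory, slow lookup: O(log^d N) time, O(N) space.
small_time = math.log2(N) ** d
small_space = N

print(f"fast lookup : ~{fast_time:.1f} steps, ~{fast_space:.1e} units of space")
print(f"small memory: ~{small_time:.1e} steps, ~{small_space:.1e} units of space")
```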

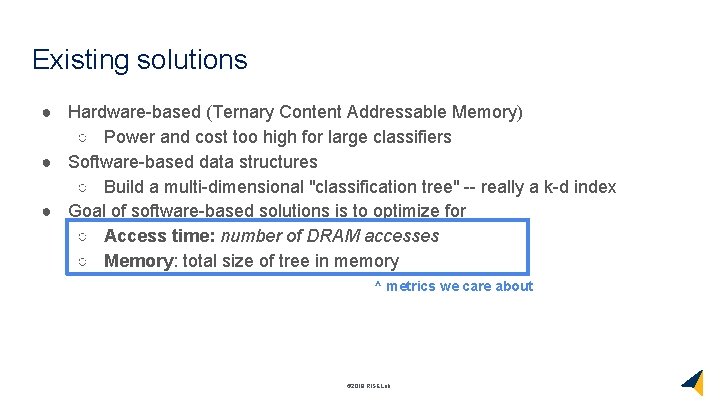

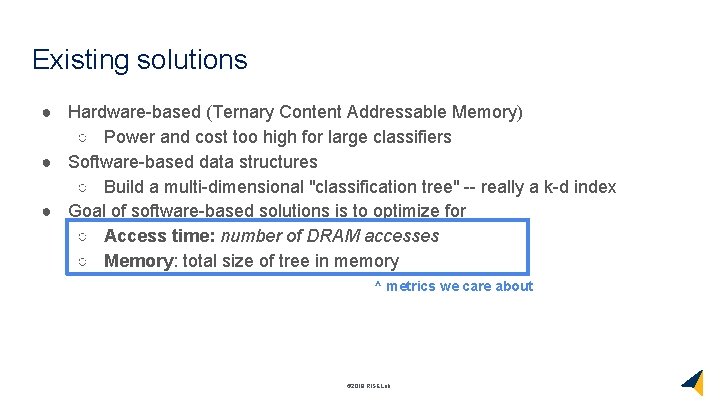

Existing solutions

- Hardware-based (Ternary Content Addressable Memory, TCAM)
  - Power and cost too high for large classifiers
- Software-based data structures
  - Build a multi-dimensional "classification tree" (really a k-d index)
- Software-based solutions optimize for the metrics we care about:
  - Access time: number of DRAM accesses
  - Memory: total size of tree in memory

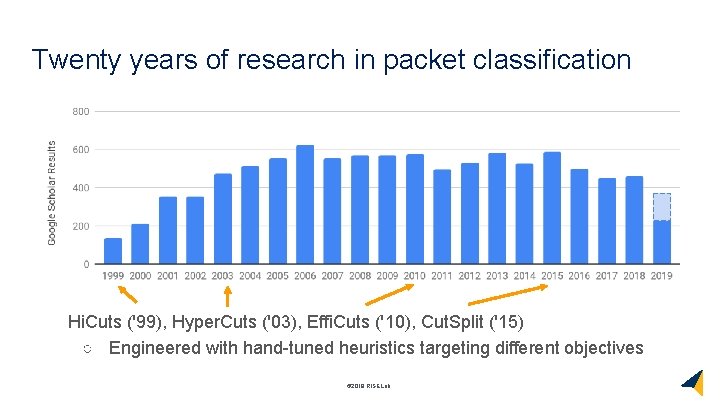

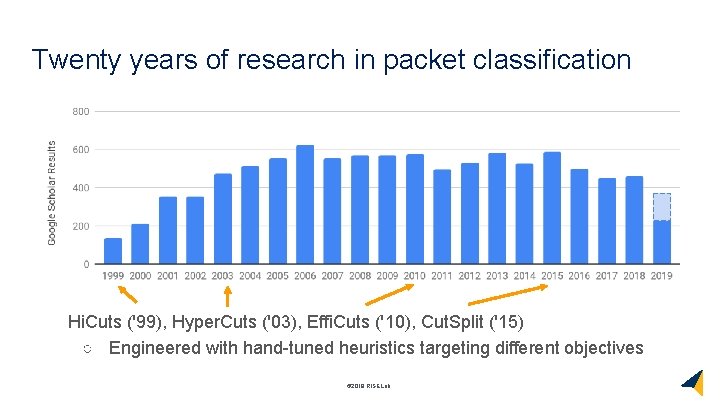

Twenty years of research in packet classification

- HiCuts ('99), HyperCuts ('03), EffiCuts ('10), CutSplit ('15)
  - Engineered with hand-tuned heuristics targeting different objectives

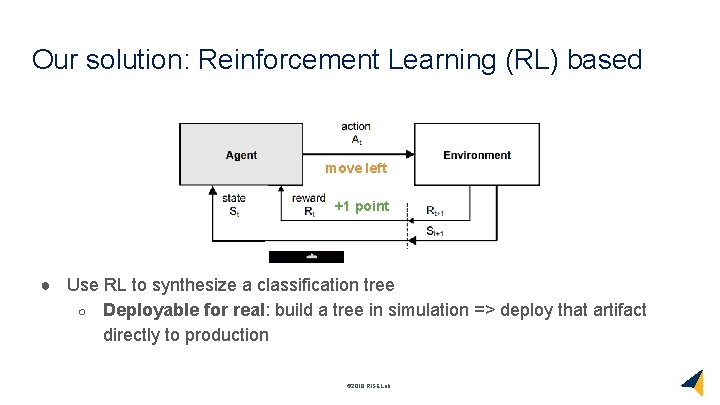

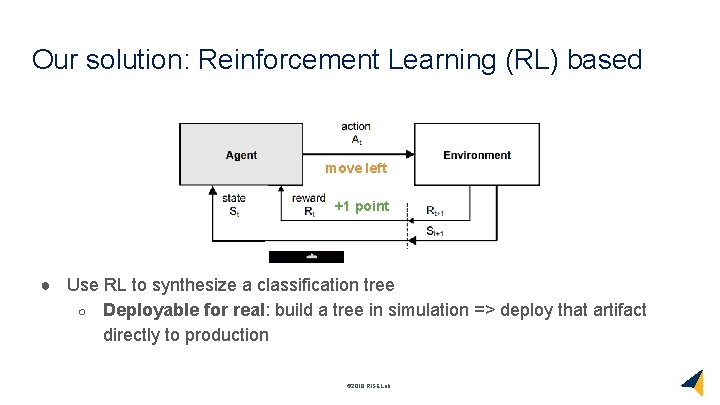

Our solution: Reinforcement Learning (RL) based

[Figure: game illustration of RL, with the action "move left" and the reward "+1 point".]

- Use RL to synthesize a classification tree
  - Deployable for real: build a tree in simulation => deploy that artifact directly to production

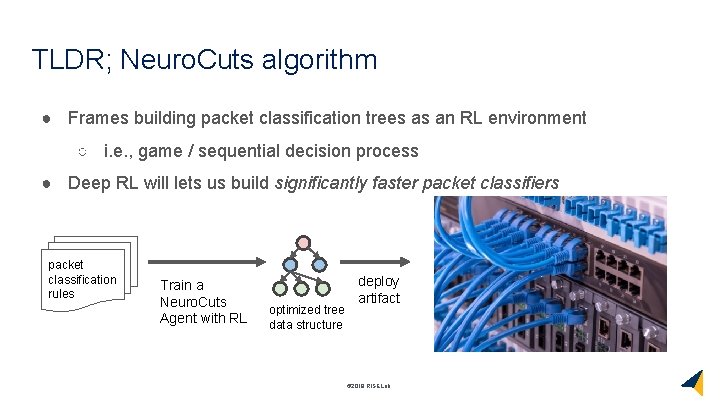

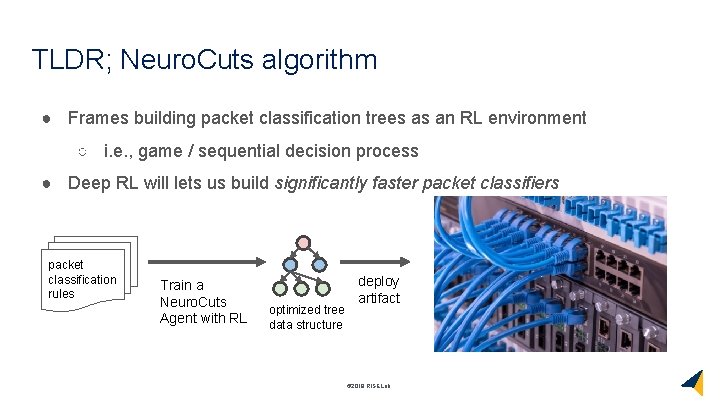

TL;DR: NeuroCuts algorithm

- Frames building packet classification trees as an RL environment
  - i.e., a game / sequential decision process
- Deep RL lets us build significantly faster packet classifiers

[Figure: packet classification rules => train a NeuroCuts agent with RL => optimized tree data structure => deploy artifact.]

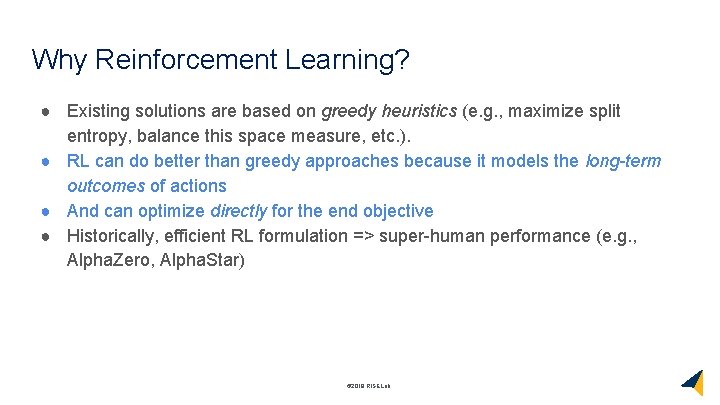

Why Reinforcement Learning?

- Existing solutions are based on greedy heuristics (e.g., maximize split entropy, balance this space measure, etc.)
- RL can do better than greedy approaches because it models the long-term outcomes of actions
- It can also optimize directly for the end objective
- Historically, an efficient RL formulation => super-human performance (e.g., AlphaZero, AlphaStar)

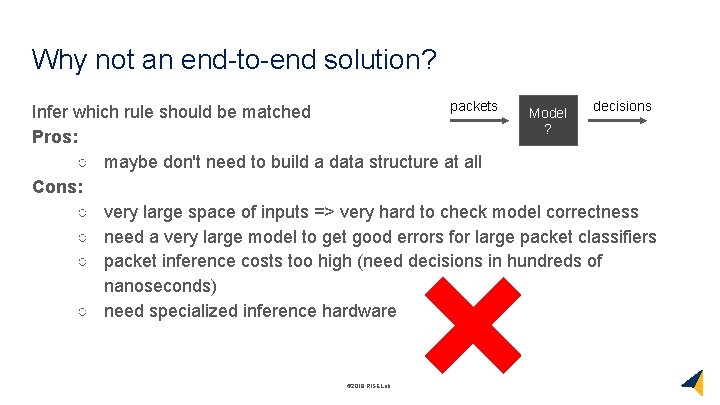

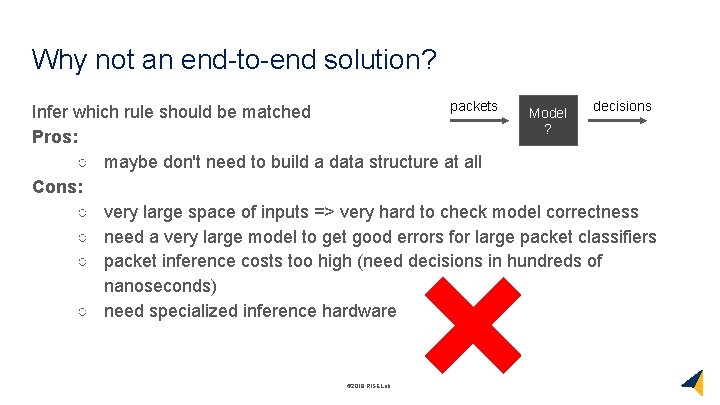

Why not an end-to-end solution?

[Figure: packets => model => decisions; the model infers which rule should be matched.]

Pros:
- Maybe don't need to build a data structure at all

Cons:
- Very large space of inputs => very hard to check model correctness
- Need a very large model to get low error rates for large packet classifiers
- Per-packet inference cost is too high (need decisions in hundreds of nanoseconds)
- Need specialized inference hardware

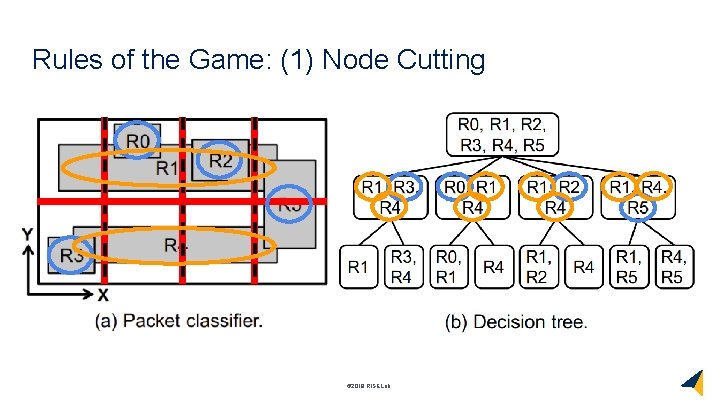

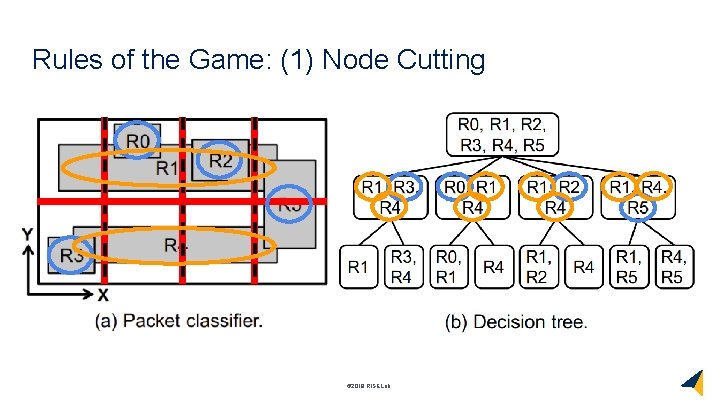

Rules of the Game: (1) Node Cutting
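
A node cut can be sketched as follows, assuming the usual reading of the term: the node's bounding box is split along one dimension into equal-width children, and each child keeps every rule that overlaps it. The function names, the half-open ranges, and the 2-D toy data are illustrative assumptions, not the authors' implementation.

```python
from typing import List, Tuple

Range = Tuple[int, int]  # half-open [lo, hi)
Box = Tuple[Range, ...]  # one range per dimension


def overlaps(a: Box, b: Box) -> bool:
    """Two boxes overlap when their ranges intersect in every dimension."""
    return all(alo < bhi and blo < ahi for (alo, ahi), (blo, bhi) in zip(a, b))


def cut_node(box: Box, rules: List[Box], dim: int,
             num_cuts: int) -> List[Tuple[Box, List[int]]]:
    """Split `box` along `dim` into `num_cuts` children.

    Returns (child_box, indices of rules overlapping that child).
    Assumes num_cuts <= width of the box along `dim`.
    """
    lo, hi = box[dim]
    step = (hi - lo) // num_cuts
    children = []
    for i in range(num_cuts):
        c_lo = lo + i * step
        c_hi = hi if i == num_cuts - 1 else c_lo + step  # last child absorbs remainder
        child_box = box[:dim] + ((c_lo, c_hi),) + box[dim + 1:]
        child_rules = [idx for idx, r in enumerate(rules) if overlaps(r, child_box)]
        children.append((child_box, child_rules))
    return children


# Toy 2-D example: rule 1 is a wildcard covering the whole node.
node_box: Box = ((0, 8), (0, 8))
toy_rules: List[Box] = [((0, 2), (0, 8)), ((0, 8), (0, 8)), ((6, 8), (2, 4))]
for child_box, child_rules in cut_node(node_box, toy_rules, dim=0, num_cuts=4):
    print(child_box, child_rules)
```

In this toy example the wildcard rule (index 1) lands in all four children. That replication is the memory cost that rule partitioning tries to avoid.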

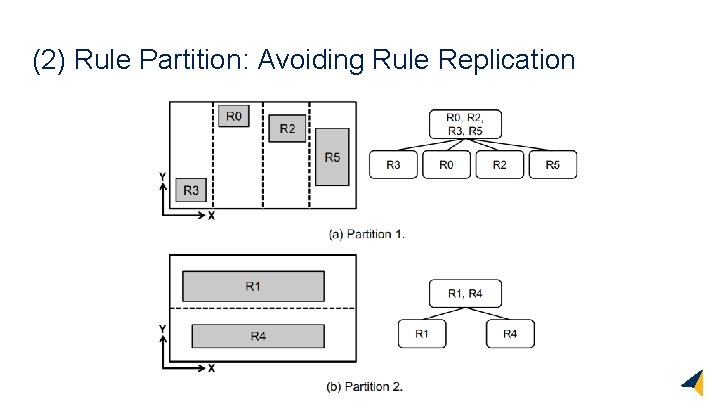

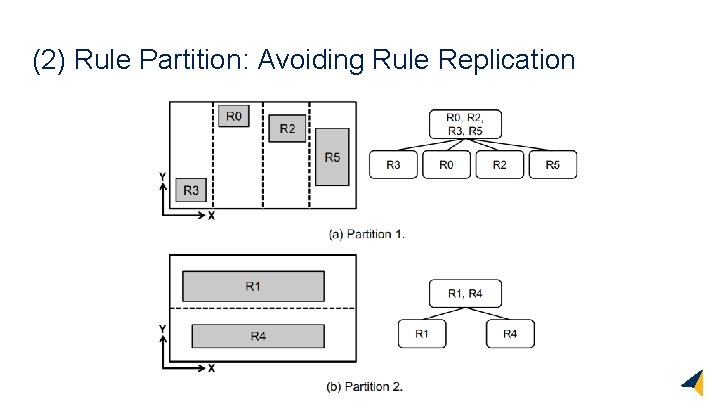

(2) Rule Partition: Avoiding Rule Replication
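
One simple way to partition is by coverage: rules that span more than some fraction of the node along a chosen dimension go into their own group, so later cuts along that dimension do not copy them into every child. The sketch below assumes that scheme. The `threshold` parameter and helper name are hypothetical, and real algorithms such as EffiCuts use more elaborate criteria.

```python
from typing import List, Tuple

Range = Tuple[int, int]  # half-open [lo, hi)
Box = Tuple[Range, ...]  # one range per dimension


def partition_rules(box: Box, rules: List[Box], dim: int,
                    threshold: float) -> Tuple[List[int], List[int]]:
    """Split rule indices into (small, large) by coverage of `box` along `dim`.

    A rule is "large" when it covers more than `threshold` of the node's width.
    """
    lo, hi = box[dim]
    width = hi - lo
    small, large = [], []
    for idx, rule in enumerate(rules):
        r_lo, r_hi = rule[dim]
        # Clip the rule to the node before measuring its coverage.
        coverage = max(0, min(r_hi, hi) - max(r_lo, lo)) / width
        (large if coverage > threshold else small).append(idx)
    return small, large


node_box: Box = ((0, 8), (0, 8))
toy_rules: List[Box] = [((0, 2), (0, 8)), ((0, 8), (0, 8)), ((6, 8), (2, 4))]
small, large = partition_rules(node_box, toy_rules, dim=0, threshold=0.5)
print(small, large)  # [0, 2] [1]
```

Each group then gets its own subtree, so the wildcard rule is stored once instead of once per child.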

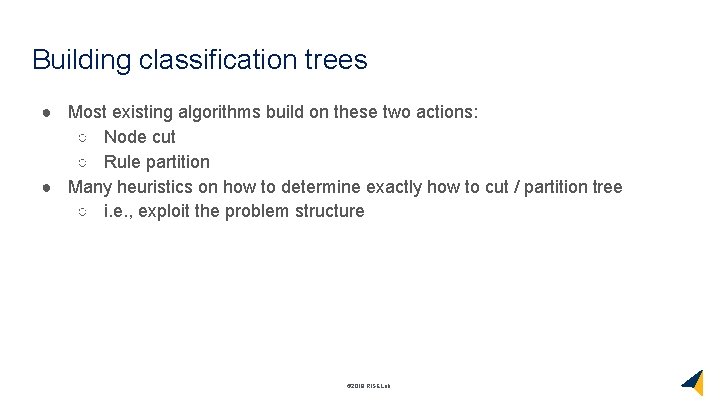

Building classification trees

- Most existing algorithms build on these two actions:
  - Node cut
  - Rule partition
- Many heuristics for deciding exactly how to cut / partition the tree
  - i.e., exploit the problem structure

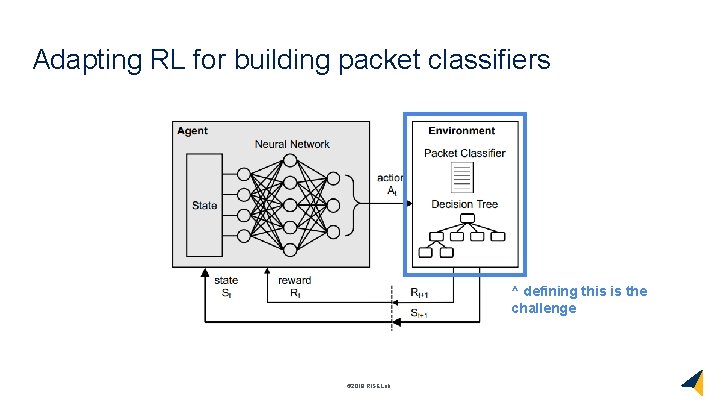

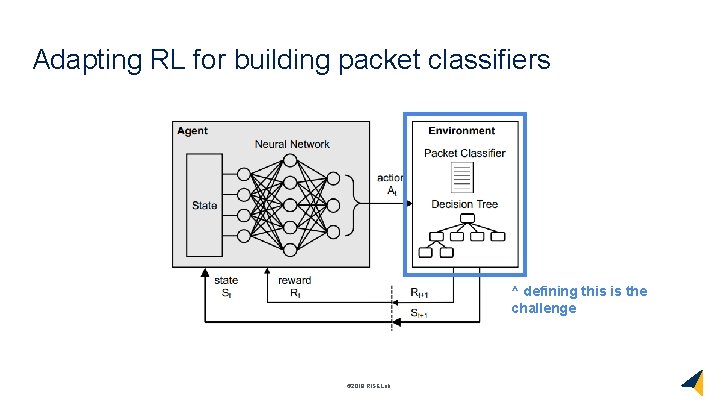

Adapting RL for building packet classifiers

[Figure: diagram with an annotation reading "defining this is the challenge".]

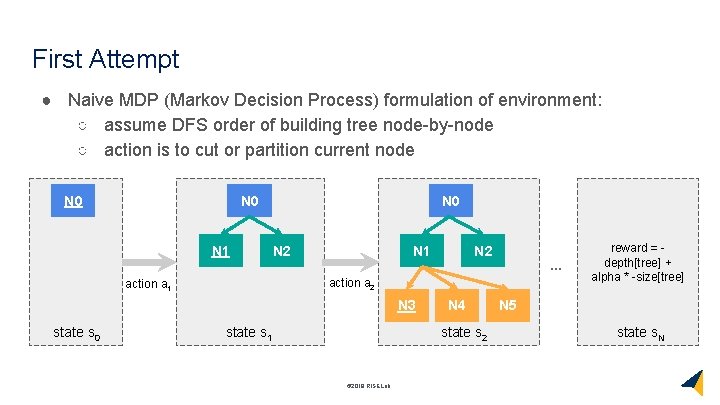

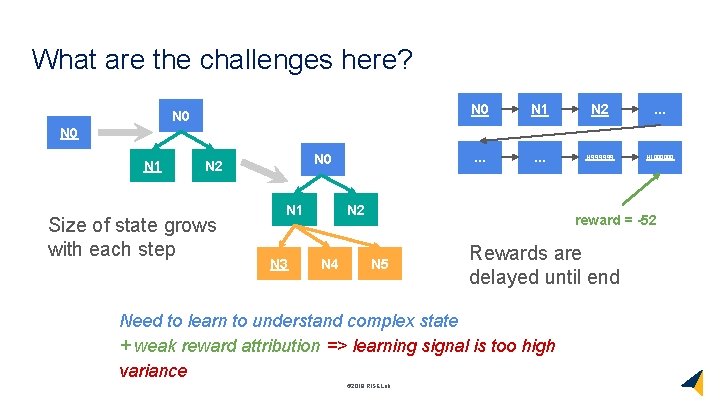

First Attempt

- Naive MDP (Markov Decision Process) formulation of the environment:
  - Assume DFS order of building the tree node-by-node
  - Action is to cut or partition the current node

[Figure: the tree grows from state s0 through actions a1, a2, ... to the final state sN, adding nodes N0 to N5.]

reward = depth[tree] + alpha * -size[tree]

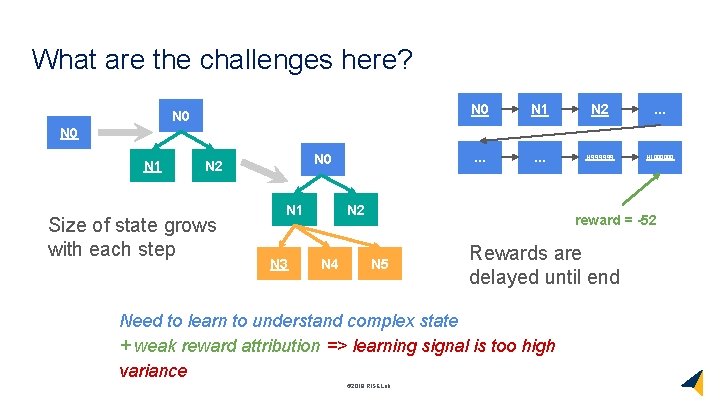

What are the challenges here?

- Size of state grows with each step (N0, N1, N2, ..., N999999, N1000000)
- Rewards are delayed until the end (e.g., reward = -52)
- Need to learn to understand a complex state + weak reward attribution => learning signal is too high variance

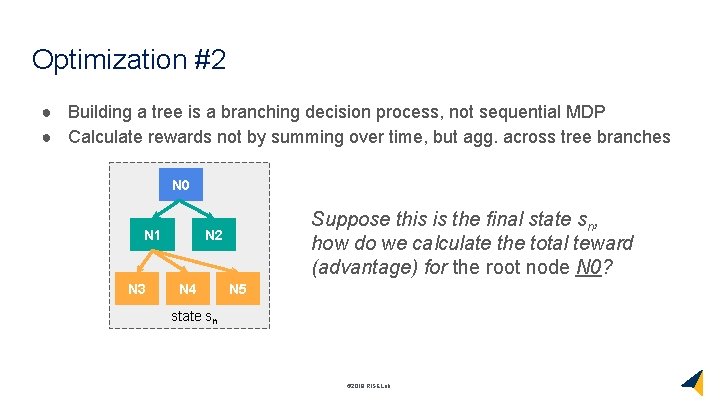

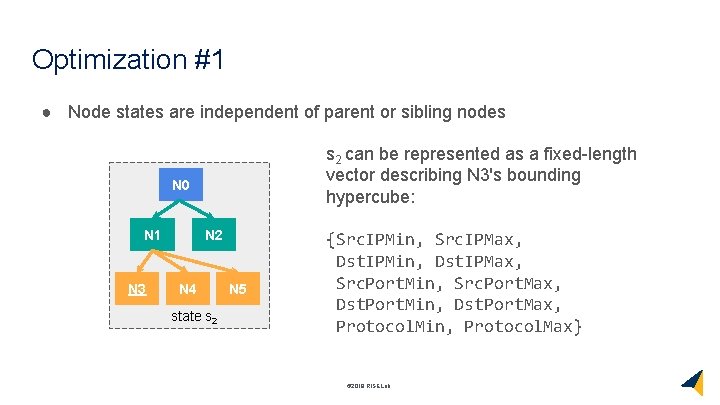

Optimization #1

- Node states are independent of parent or sibling nodes

[Figure: tree with nodes N0 to N5 in state s2.]

s2 can be represented as a fixed-length vector describing N3's bounding hypercube:

{SrcIPMin, SrcIPMax, DstIPMin, DstIPMax, SrcPortMin, SrcPortMax, DstPortMin, DstPortMax, ProtocolMin, ProtocolMax}
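
A fixed-length node state of this kind can be built by flattening the node's per-field (min, max) bounds and, optionally, expanding each bound into bits. The sketch below is illustrative: the field widths are the standard header sizes, but the bit layout and function names are assumptions, since the slides do not specify the exact encoding.

```python
from typing import List, Tuple

Range = Tuple[int, int]  # inclusive [min, max]
Box = Tuple[Range, ...]  # (src_ip, dst_ip, src_port, dst_port, protocol)

FIELD_BITS = (32, 32, 16, 16, 8)  # header field widths in bits


def state_vector(box: Box) -> List[int]:
    """Flatten the bounding hypercube into [SrcIPMin, SrcIPMax, ..., ProtocolMax]."""
    return [bound for rng in box for bound in rng]


def encode_bits(box: Box) -> List[int]:
    """Binary encoding: each bound is written with its field's bit width (assumed layout)."""
    bits: List[int] = []
    for (lo, hi), width in zip(box, FIELD_BITS):
        for bound in (lo, hi):
            bits.extend(int(b) for b in format(bound, f"0{width}b"))
    return bits


# The root node covers the full range of every field.
root: Box = tuple((0, 2**w - 1) for w in FIELD_BITS)
print(len(state_vector(root)))  # 10
print(len(encode_bits(root)))   # 208
```

The length is the same for every node regardless of how large the tree has grown, which is what keeps the observation size constant.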

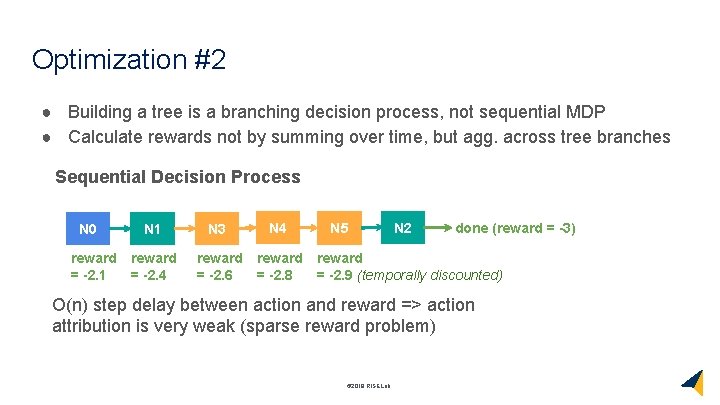

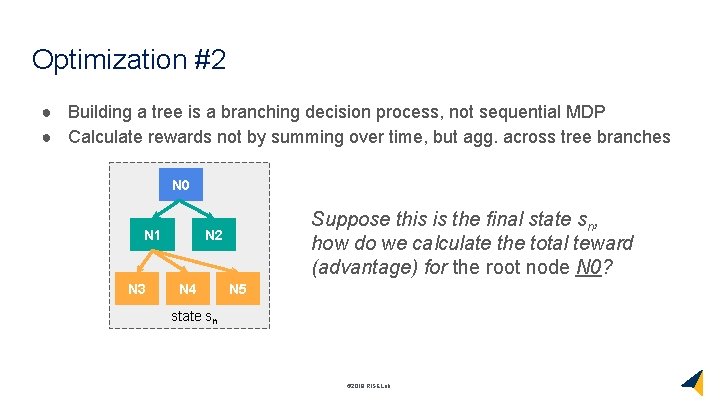

Optimization #2

- Building a tree is a branching decision process, not a sequential MDP
- Calculate rewards not by summing over time, but by aggregating across tree branches

[Figure: tree with nodes N0 to N5 in the final state sn.]

Suppose this is the final state sn: how do we calculate the total reward (advantage) for the root node N0?

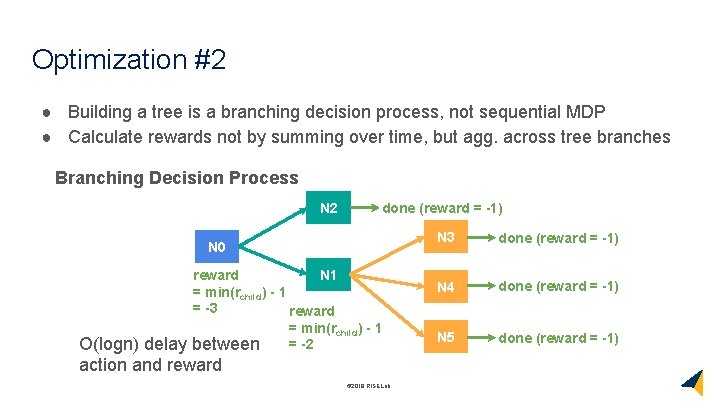

Optimization #2: Sequential Decision Process

[Figure: the nodes laid out as a sequence of steps with temporally discounted rewards of -2.1, -2.6, -2.8 and -2.9 at the earlier steps, ending at N2: done (reward = -3).]

- O(n) step delay between action and reward => action attribution is very weak (sparse reward problem)

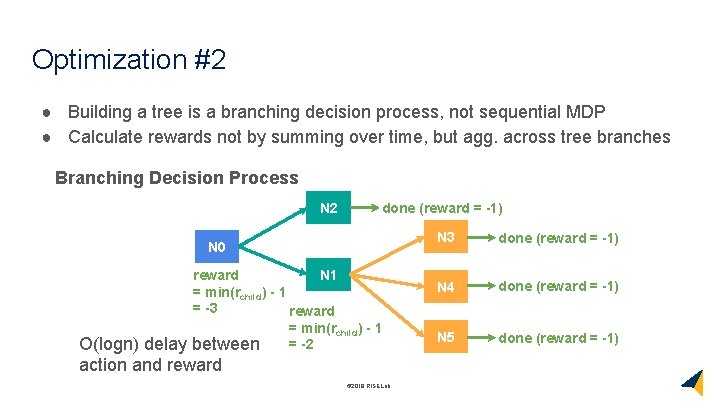

Optimization #2: Branching Decision Process

[Figure: rewards propagated up the tree. Leaves N2, N3, N4, N5: done (reward = -1). N1: reward = min(r_child) - 1 = -2. N0: reward = min(r_child) - 1 = -3.]

- O(log n) delay between action and reward
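
The recursion on this slide is short enough to write out directly. The sketch below assumes the tree shape implied by the numbers (N0 has children N1 and N2; N1 has children N3, N4, N5). The dictionary representation and function name are illustrative.

```python
from typing import Dict, List


def branching_reward(node: str, children: Dict[str, List[str]]) -> int:
    """Reward of a node: -1 for a leaf, otherwise min over children minus 1."""
    kids = children.get(node, [])
    if not kids:
        return -1  # leaf: done
    return min(branching_reward(k, children) for k in kids) - 1


# Assumed tree shape: N0 -> (N1, N2), N1 -> (N3, N4, N5).
tree = {"N0": ["N1", "N2"], "N1": ["N3", "N4", "N5"]}
print(branching_reward("N1", tree))  # -2
print(branching_reward("N0", tree))  # -3
```

Because rewards are negative, taking the minimum picks the deepest branch, so the root's reward is the negated worst-case depth. Each node's reward depends only on its own subtree, not on nodes built later elsewhere in the tree.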

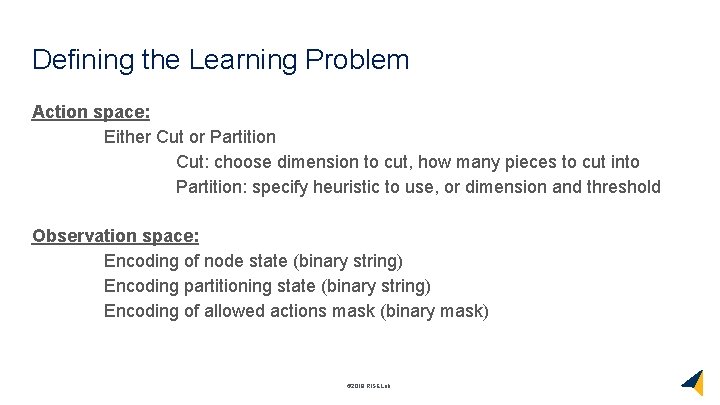

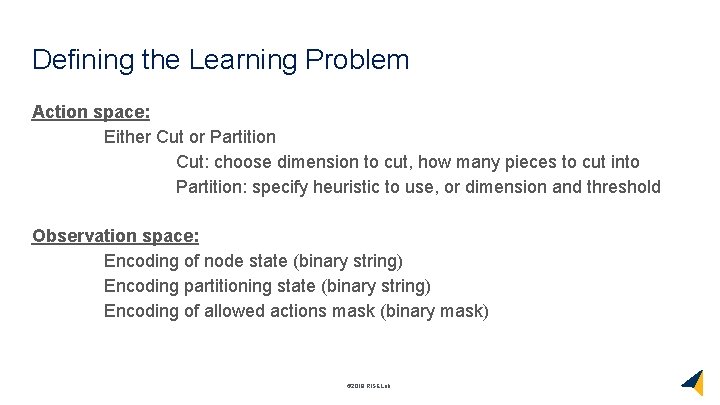

Defining the Learning Problem

Action space: either Cut or Partition
- Cut: choose the dimension to cut and how many pieces to cut into
- Partition: specify the heuristic to use, or a dimension and threshold

Observation space:
- Encoding of node state (binary string)
- Encoding of partitioning state (binary string)
- Encoding of allowed-actions mask (binary mask)

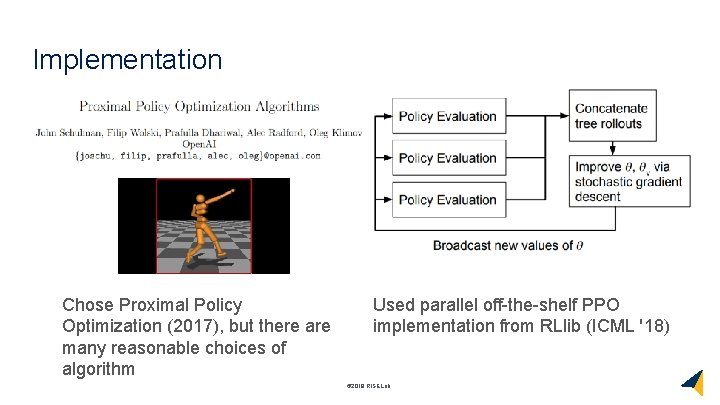

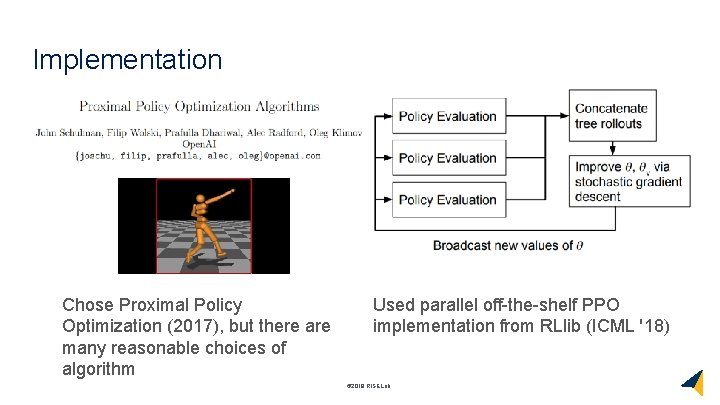

Implementation

- Chose Proximal Policy Optimization (PPO, 2017), but there are many reasonable choices of algorithm
- Used the parallel off-the-shelf PPO implementation from RLlib (ICML '18)

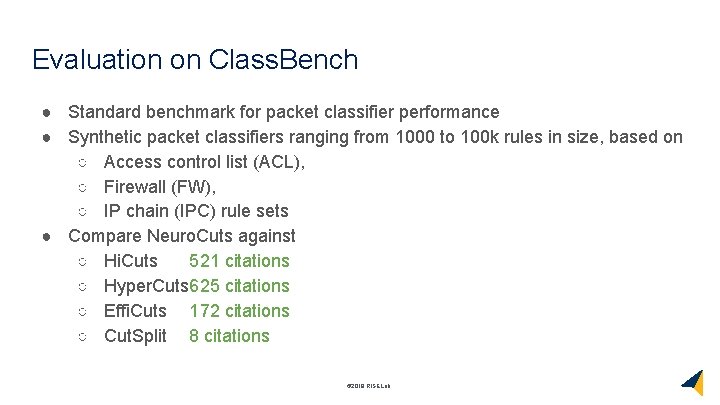

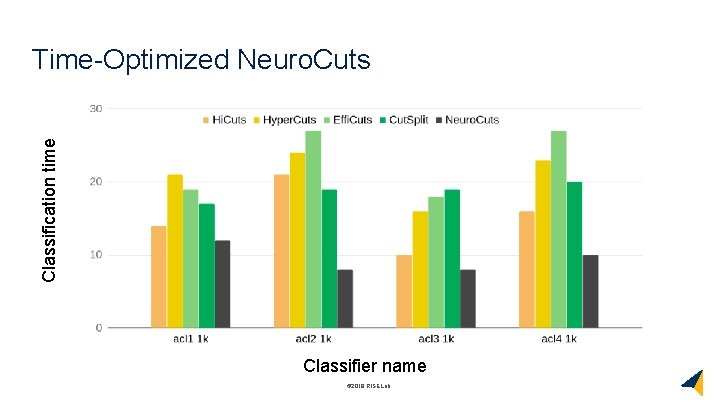

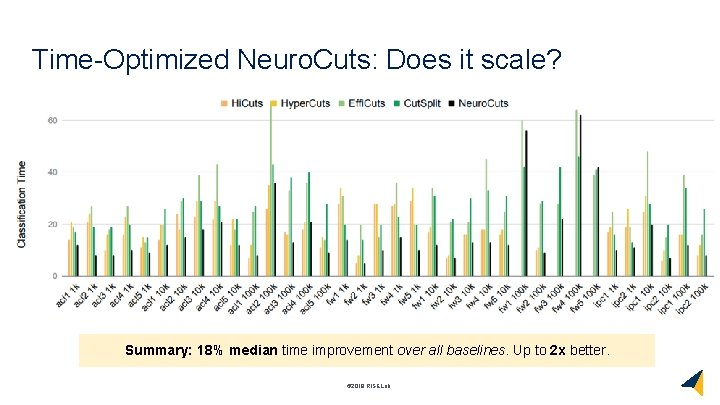

Evaluation on ClassBench

- Standard benchmark for packet classifier performance
- Synthetic packet classifiers ranging from 1000 to 100k rules in size, based on
  - Access control list (ACL),
  - Firewall (FW),
  - IP chain (IPC) rule sets
- Compare NeuroCuts against
  - HiCuts (521 citations)
  - HyperCuts (625 citations)
  - EffiCuts (172 citations)
  - CutSplit (8 citations)

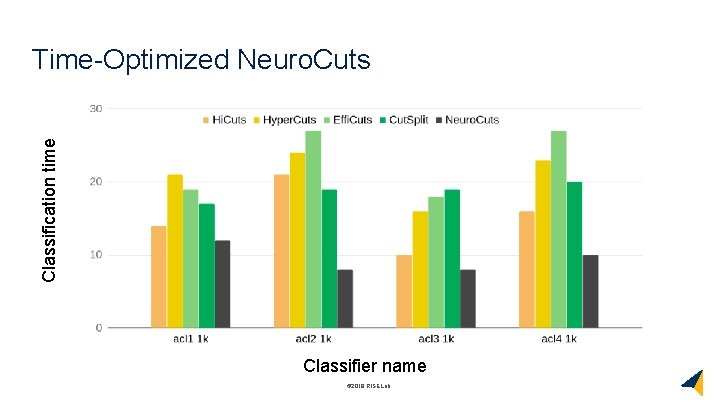

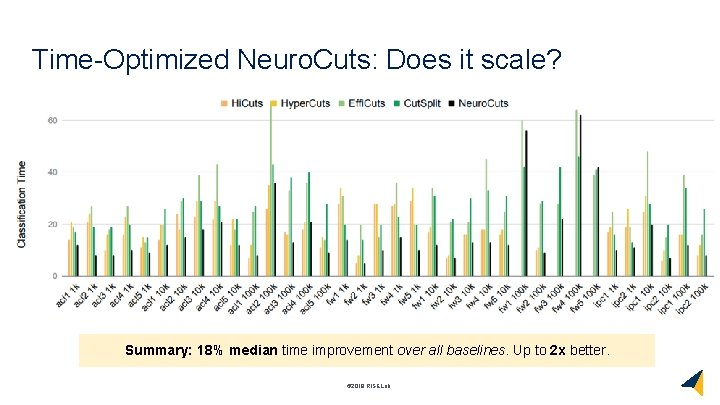

Time-Optimized NeuroCuts

[Figure: classification time (y-axis) by classifier name (x-axis).]

Time-Optimized NeuroCuts: Does it scale?

Summary: 18% median time improvement over all baselines. Up to 2x better.

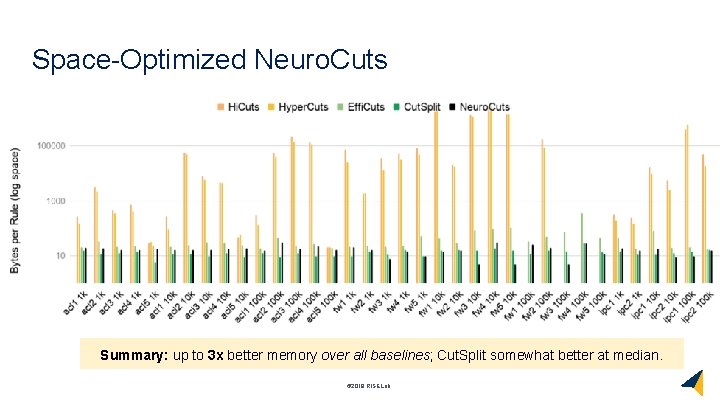

Space-Optimized NeuroCuts

Summary: up to 3x better memory over all baselines; CutSplit somewhat better at median.

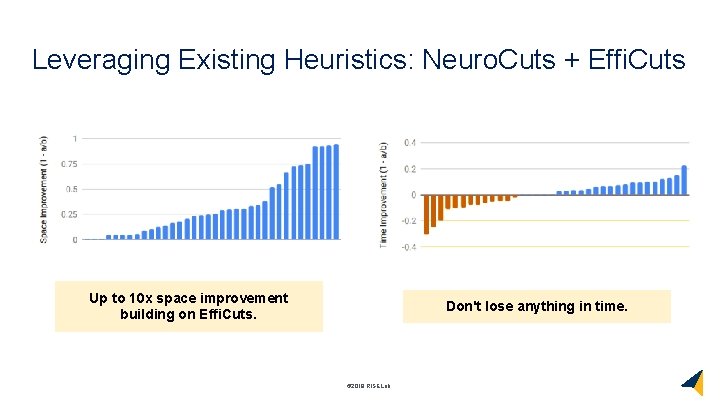

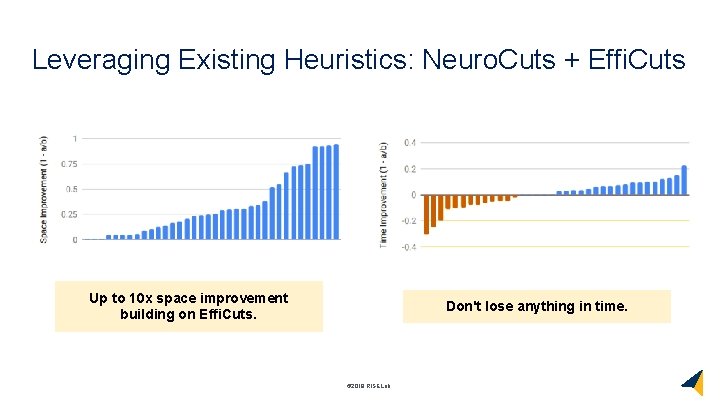

Leveraging Existing Heuristics: NeuroCuts + EffiCuts

Up to 10x space improvement building on EffiCuts. Don't lose anything in time.


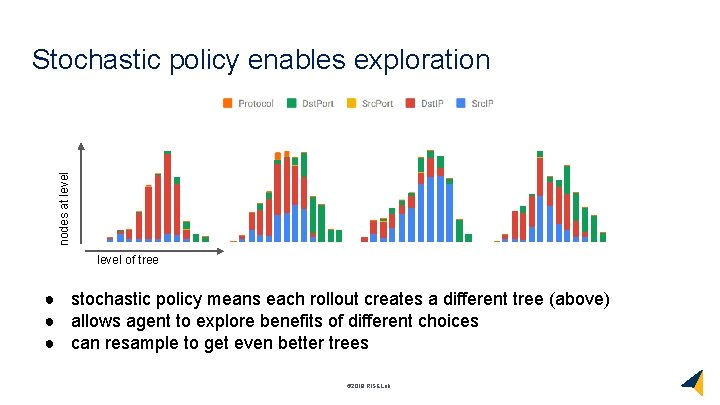

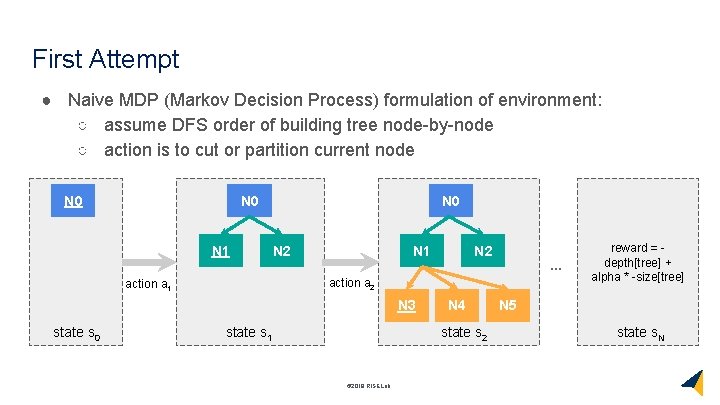

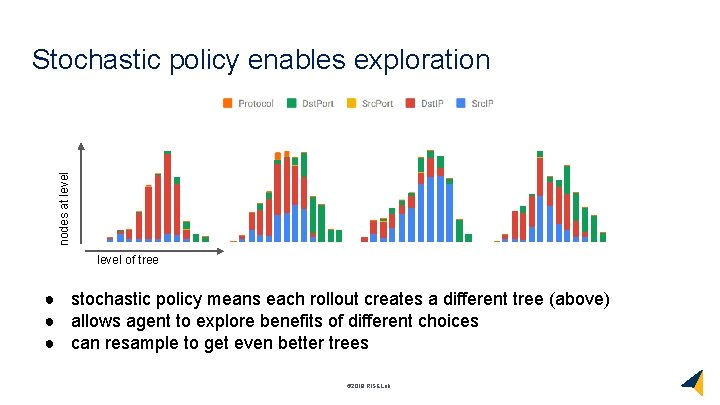

Intuition

[Figure: the encoded current node, s = 010001...11000101, is fed to FCNet([256, 256]), which outputs the action distribution π(a|s): dim=[0.3, 0.1, 0.2, ...], num=[0.05, 0.7, 0.1, ...], type=[0.1, 0.9]. An action is sampled for N3; update tree; repeat until done. Accompanying plot: nodes at level vs. level of tree; color = dimension of node split.]
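
Sampling an action from such a policy output amounts to one categorical draw per head. The sketch below uses only the standard library. The head names follow the slide, but the entries after each ellipsis are made-up placeholders, and the meaning of each index (for example, which `type` index means cut and which means partition) is not given in the slides.

```python
import random
from typing import Dict, List


def sample_action(policy_output: Dict[str, List[float]],
                  rng: random.Random) -> Dict[str, int]:
    """Draw one index per head, weighted by that head's probabilities."""
    return {
        head: rng.choices(range(len(probs)), weights=probs, k=1)[0]
        for head, probs in policy_output.items()
    }


# Hypothetical policy output for one node; tail values are placeholders.
policy_output = {
    "dim": [0.3, 0.1, 0.2, 0.25, 0.15],  # which dimension to act on
    "num": [0.05, 0.7, 0.1, 0.15],       # index into the allowed cut counts
    "type": [0.1, 0.9],                  # action type (index meaning assumed)
}

rng = random.Random(0)  # seeded only to make the sketch reproducible
action = sample_action(policy_output, rng)
print(action)
```

Because the draw is random, repeating the rollout with a different seed yields a different tree.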

Stochastic policy enables exploration

[Figure: nodes at level vs. level of tree, shown for several rollouts.]

- Stochastic policy means each rollout creates a different tree (above)
- Allows the agent to explore the benefits of different choices
- Can resample to get even better trees

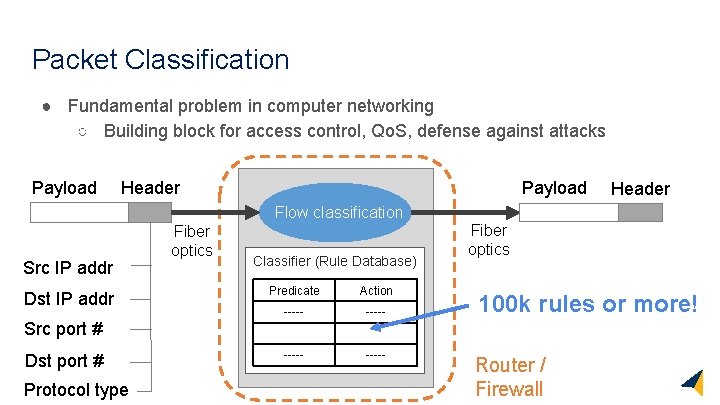

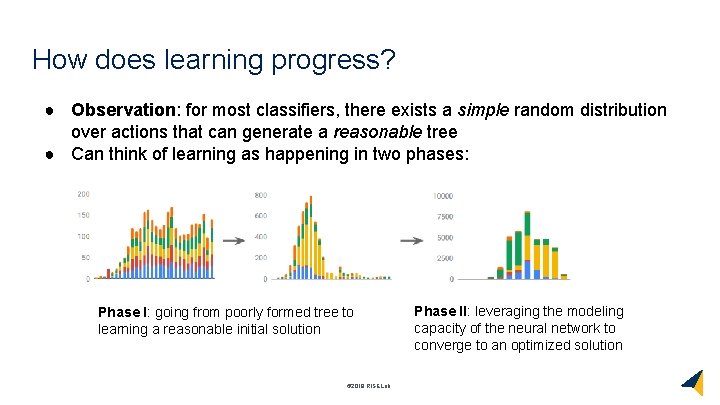

How does learning progress?

- Observation: for most classifiers, there exists a simple random distribution over actions that can generate a reasonable tree
- Can think of learning as happening in two phases:
  - Phase I: going from a poorly formed tree to learning a reasonable initial solution
  - Phase II: leveraging the modeling capacity of the neural network to converge to an optimized solution

Conclusion

- We introduce a tractable RL formulation of the packet classification problem
- Results beat the state of the art in important dimensions:
  - 18% median improvement in time over all baselines
  - Up to 3x improvement in time and space over all baselines
- Future: other data structures with complex performance heuristics
  - E.g., indexing for spatial databases

Thanks!