Machine Translation Factored Models Stephan Vogel Spring Semester

- Slides: 33

Machine Translation Factored Models Stephan Vogel Spring Semester 2011 Stephan Vogel - Machine Translation 1

Overview

- Factored Language Models
- Multi-Stream Word Alignment
- Factored Translation Models

Motivation

- Vocabulary grows dramatically for morphologically rich languages
- Looking only at the surface word form ignores connections (morphological derivations) between words
  - Example: ‘book’ and ‘books’ are as unrelated as ‘book’ and ‘sky’
- Dependencies between words within a sentence are not well captured
  - Example: number or gender agreement
    - Singular: der alte Tisch (the old table)
    - Plural: die alten Tische (the old tables)
- Consider a word as a bundle of factors
  - Surface word form, stem, root, prefix, suffix, POS, gender marker, case marker, number marker, …

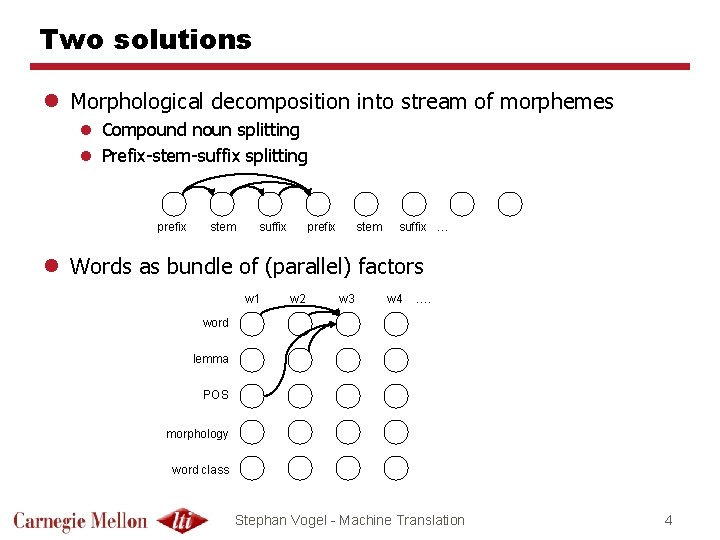

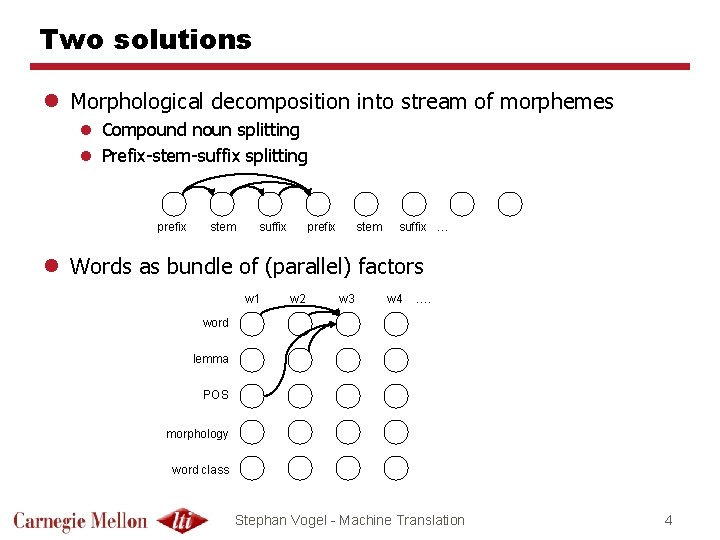

Two solutions

- Morphological decomposition into a stream of morphemes
  - Compound noun splitting
  - Prefix-stem-suffix splitting
  - (Figure: prefix | stem | suffix | …)
- Words as bundles of (parallel) factors
  - (Figure: for each word w1, w2, w3, w4, … a column of factors: word, lemma, POS, morphology, word class)
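A minimal sketch of the "bundle of factors" view, in Python. The `FactoredWord` class, its field names, and the hand-written analyses are illustrative assumptions, not part of the lecture. It shows that ‘book’ and ‘books’ become related once the lemma is available as a factor.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class FactoredWord:
    """One token as a bundle of parallel factors (hypothetical field set)."""
    surface: str
    lemma: str
    pos: str
    morph: str

    def to_string(self) -> str:
        # Pipe-separated notation, e.g. books|book|NN|plural
        return "|".join((self.surface, self.lemma, self.pos, self.morph))

# Hand-written analyses; a real system would obtain them from a tagger or analyzer.
book = FactoredWord("book", "book", "NN", "singular")
books = FactoredWord("books", "book", "NN", "plural")
sky = FactoredWord("sky", "sky", "NN", "singular")

def shared_factors(a: FactoredWord, b: FactoredWord) -> list[str]:
    """Names of the factors on which two words agree."""
    return [name for name in ("surface", "lemma", "pos", "morph")
            if getattr(a, name) == getattr(b, name)]

print(books.to_string())            # books|book|NN|plural
print(shared_factors(book, books))  # ['lemma', 'pos']
print(shared_factors(book, sky))    # ['pos', 'morph']
```

On surface forms alone, all three words are simply different symbols; the lemma factor is what links ‘book’ to ‘books’.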

Questions

- Which information is the most useful?
- How to use this information?
  - In the language model
  - In the translation model
- How to use it at training time?
- How to use it at decoding time?

Factored Models

- Morphological preprocessing
  - A significant body of work
- Factored language models
  - Kirchhoff et al.
- Hierarchical lexicon
  - Nießen et al.
- Bi-stream alignment
  - Zhao et al.
- Factored translation models
  - Koehn et al.

Factored Language Model

Some papers:

- Bilmes and Kirchhoff, 2003: Factored Language Models and Generalized Parallel Backoff
- Duh and Kirchhoff, 2004: Automatic Learning of Language Model Structure
- Kirchhoff and Yang, 2005: Improved Language Modeling for Statistical Machine Translation

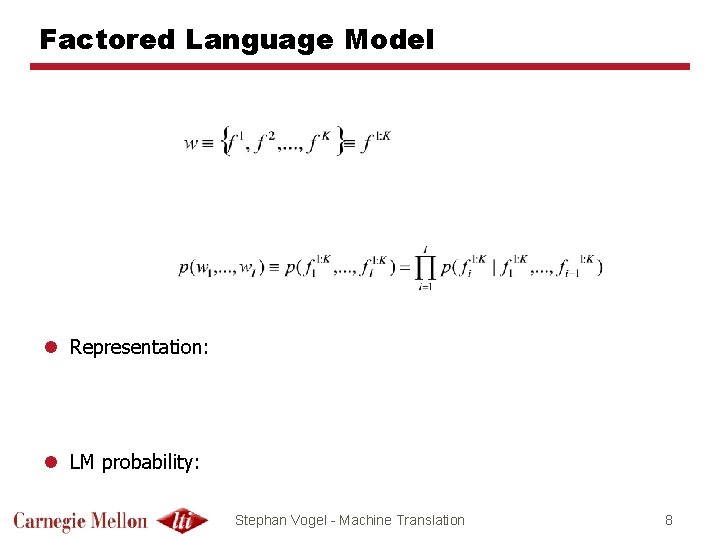

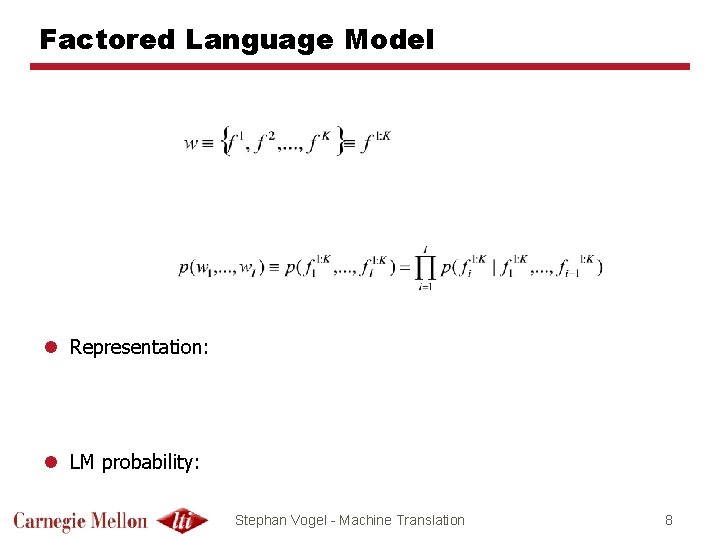

Factored Language Model

- Representation:
- LM probability:
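The formulas for this slide are only available as images. As a hedged reconstruction in the style of Bilmes and Kirchhoff (2003), with $K$ factors per word and a trigram-style history (the symbols $f_t^k$, $K$ and $T$ are notation assumed here, not taken from the slide):

```latex
% Representation: a word is a vector of K factors
w_t \equiv \{ f_t^1, f_t^2, \ldots, f_t^K \} = f_t^{1:K}

% LM probability: factor bundles conditioned on the two preceding bundles
p(f_1^{1:K}, \ldots, f_T^{1:K}) \approx \prod_{t=1}^{T} p\left( f_t^{1:K} \mid f_{t-1}^{1:K}, f_{t-2}^{1:K} \right)
```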

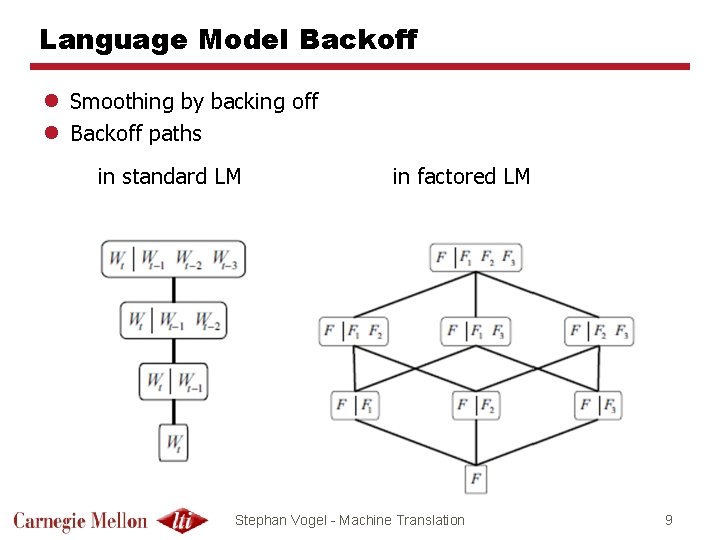

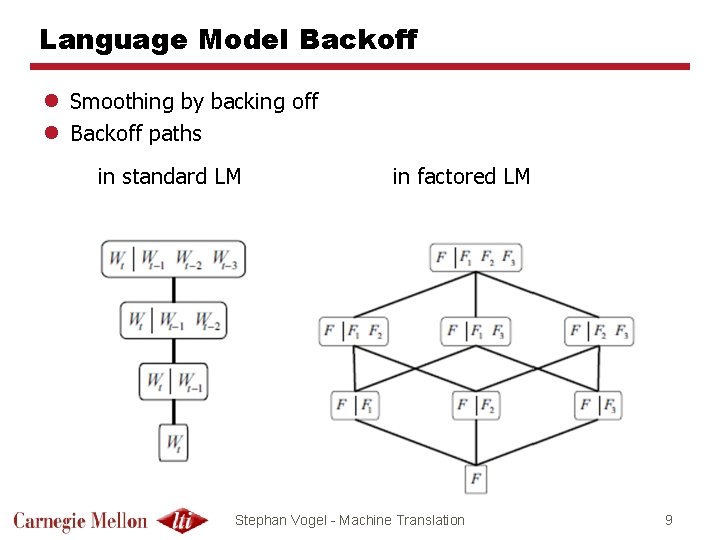

Language Model Backoff

- Smoothing by backing off
- Backoff paths
  - (Figure: backoff path in a standard LM vs. backoff paths in a factored LM)

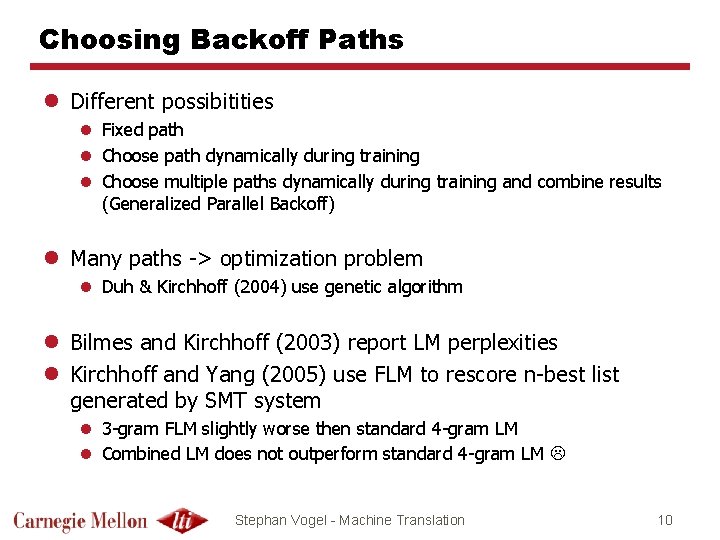

Choosing Backoff Paths

- Different possibilities
  - Fixed path
  - Choose path dynamically during training
  - Choose multiple paths dynamically during training and combine results (Generalized Parallel Backoff)
- Many paths -> optimization problem
  - Duh & Kirchhoff (2004) use a genetic algorithm
- Bilmes and Kirchhoff (2003) report LM perplexities
- Kirchhoff and Yang (2005) use an FLM to rescore n-best lists generated by an SMT system
  - 3-gram FLM slightly worse than standard 4-gram LM
  - Combined LM does not outperform standard 4-gram LM
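To make "fixed backoff path" concrete, here is a minimal Python sketch. It predicts a word from two parent factors (previous word and previous POS) and compares two fixed paths that drop the parents in different order. The toy corpus, the function names, and the omission of discounting and backoff weights are all simplifying assumptions; this is not the Generalized Parallel Backoff of Bilmes and Kirchhoff (2003).

```python
from collections import Counter

# Toy training data: (word, POS) pairs; hand-made for illustration only.
corpus = [("the", "DET"), ("old", "ADJ"), ("table", "NN"),
          ("the", "DET"), ("old", "ADJ"), ("tables", "NN"),
          ("a", "DET"), ("new", "ADJ"), ("table", "NN")]

PARENTS = ("prev_word", "prev_pos")

def events(data):
    """Yield (word, parents) where parents holds the previous word and POS."""
    for (pw, pp), (w, _) in zip(data, data[1:]):
        yield w, {"prev_word": pw, "prev_pos": pp}

def key(parents, kept):
    """Context key that keeps only the parent factors named in `kept`."""
    return tuple((name, parents[name]) for name in PARENTS if name in kept)

# Collect counts for every subset of the parent factors.
joint, context = Counter(), Counter()
subsets = [("prev_word", "prev_pos"), ("prev_word",), ("prev_pos",), ()]
for w, parents in events(corpus):
    for kept in subsets:
        joint[(key(parents, kept), w)] += 1
        context[key(parents, kept)] += 1

def prob(word, parents, path):
    """Walk a fixed backoff path and use the first level where the event was seen.

    No discounting or backoff weights: the result is an unnormalized score.
    """
    for kept in path:
        k = key(parents, kept)
        if joint[(k, word)] > 0:
            return joint[(k, word)] / context[k]
    return 0.0

# Two possible backoff paths through the same parent set.
drop_word_first = [("prev_word", "prev_pos"), ("prev_pos",), ()]
drop_pos_first = [("prev_word", "prev_pos"), ("prev_word",), ()]

unseen = {"prev_word": "new", "prev_pos": "ADJ"}
print(prob("tables", unseen, drop_word_first))  # backs off to prev_pos=ADJ
print(prob("tables", unseen, drop_pos_first))   # backs off to the unigram level
```

The two paths give different estimates for the same event, which is why the choice of path (or a combination of several paths) becomes an optimization problem.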

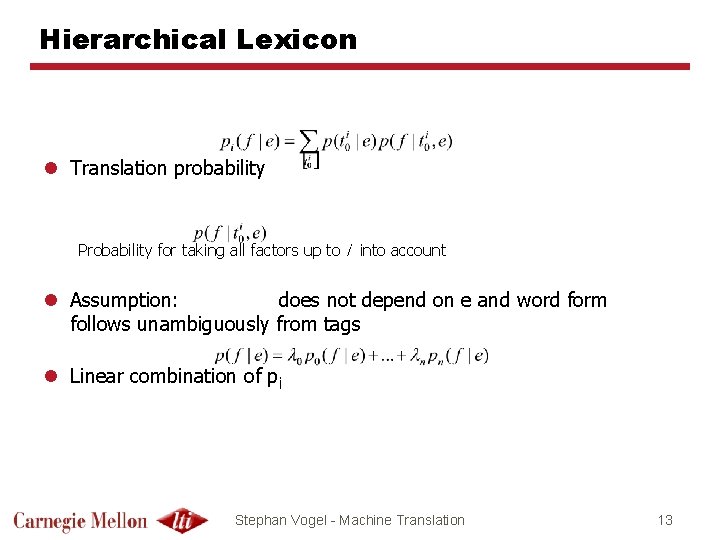

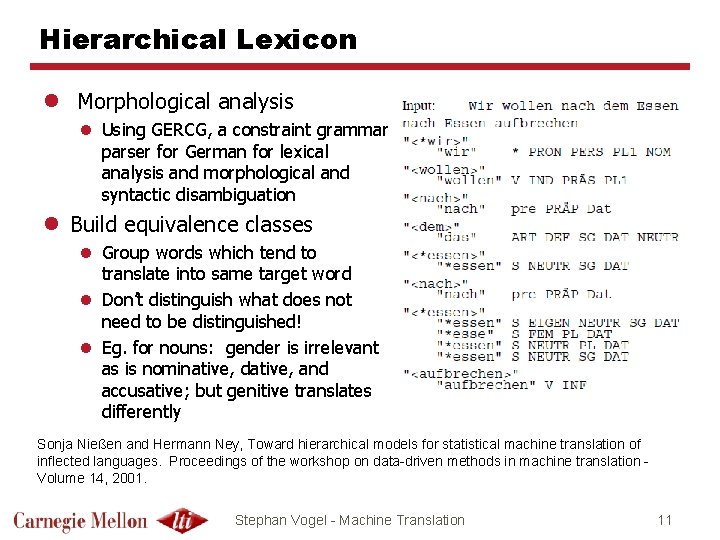

Hierarchical Lexicon

- Morphological analysis
  - Using GERCG, a constraint grammar parser for German, for lexical analysis and morphological and syntactic disambiguation
- Build equivalence classes
  - Group words which tend to translate into the same target word
  - Don’t distinguish what does not need to be distinguished!
  - E.g. for nouns: gender is irrelevant, as are nominative, dative, and accusative; but genitive translates differently

Sonja Nießen and Hermann Ney. Toward hierarchical models for statistical machine translation of inflected languages. Proceedings of the Workshop on Data-Driven Methods in Machine Translation, Volume 14, 2001.

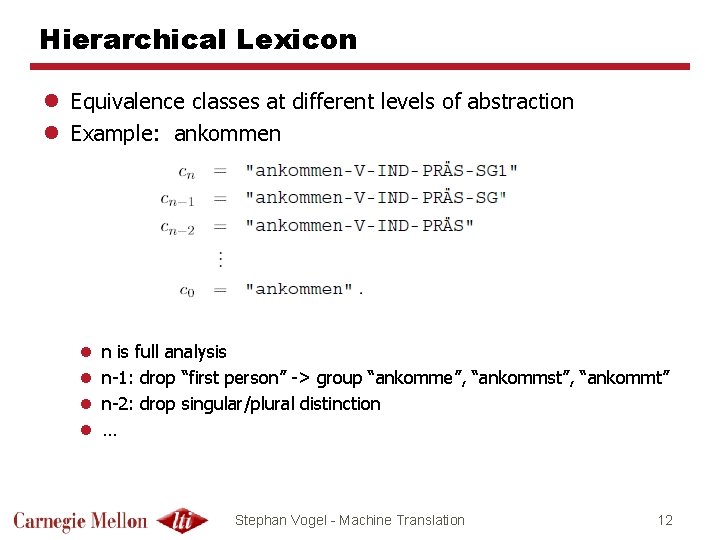

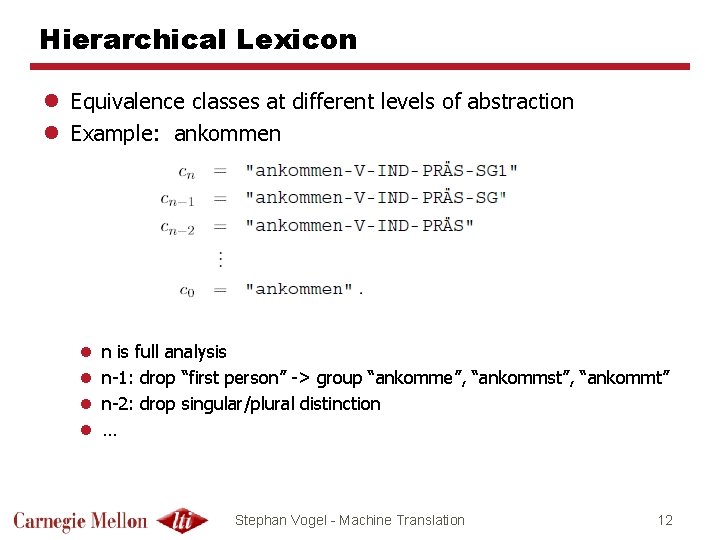

Hierarchical Lexicon

- Equivalence classes at different levels of abstraction
- Example: ankommen
  - Level n: full analysis
  - Level n-1: drop “first person” -> group “ankomme”, “ankommst”, “ankommt”
  - Level n-2: drop singular/plural distinction
  - …
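The levels of abstraction can be sketched as progressively dropping tags from the analysis. The following Python example is a simplified illustration: the tag inventory, the tag order, and the function name `equivalence_classes` are assumptions, and the analyses ignore real ambiguities (for instance, “ankommt” is also a plural form).

```python
from collections import defaultdict

# Hypothetical analyses of German verb forms: lemma plus an ordered tag list.
# The tag order defines the abstraction hierarchy: the last tag is dropped first.
ANALYSES = {
    "ankomme":  ("ankommen", ("verb", "present", "singular", "1st")),
    "ankommst": ("ankommen", ("verb", "present", "singular", "2nd")),
    "ankommt":  ("ankommen", ("verb", "present", "singular", "3rd")),
    "ankommen": ("ankommen", ("verb", "present", "plural", "1st")),
}

def equivalence_classes(analyses, dropped):
    """Group word forms whose analyses agree after dropping the last `dropped` tags."""
    classes = defaultdict(list)
    for form, (lemma, tags) in analyses.items():
        kept = tags[:len(tags) - dropped]
        classes[(lemma, kept)].append(form)
    return dict(classes)

full = equivalence_classes(ANALYSES, 0)       # level n: every form is its own class
no_person = equivalence_classes(ANALYSES, 1)  # level n-1: person dropped
no_number = equivalence_classes(ANALYSES, 2)  # level n-2: number dropped as well

for level, classes in (("n", full), ("n-1", no_person), ("n-2", no_number)):
    print(level, list(classes.values()))
```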

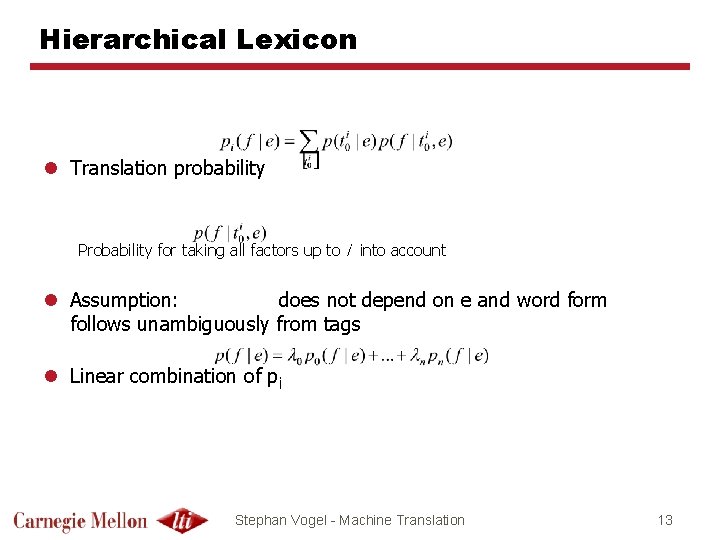

Hierarchical Lexicon

- Translation probability
  - p_i: probability taking all factors up to level i into account
  - Assumption: does not depend on e, and the word form follows unambiguously from the tags
- Linear combination of the p_i
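As a hedged reading of the last bullet, the linear combination over abstraction levels can be written as follows. The interpolation weights $\lambda_i$ and the index range are notation assumed here; the exact definition of each $p_i$ is given in Nießen and Ney (2001).

```latex
p(f \mid e) = \sum_{i=0}^{n} \lambda_i \, p_i(f \mid e),
\qquad \lambda_i \ge 0, \quad \sum_{i=0}^{n} \lambda_i = 1
```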

Multi-Stream Word Alignment

- Use multiple annotations: stem, POS, …
- Consider each annotation as an additional stream or tier
- Use generative alignment models
  - Model each stream
  - But tie streams through the alignment
- Example: Bi-Stream HMM word alignment (Zhao et al. 2005)

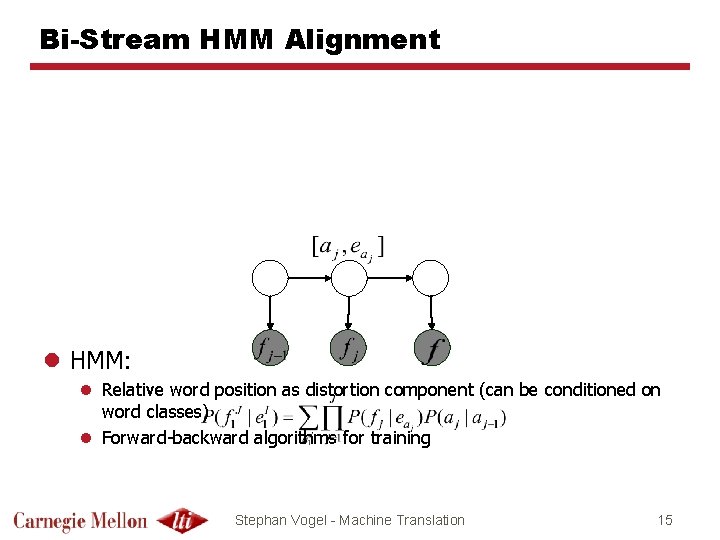

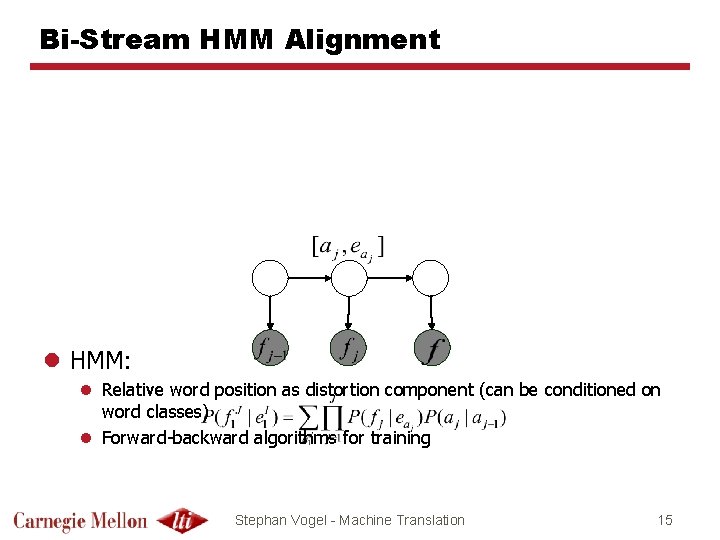

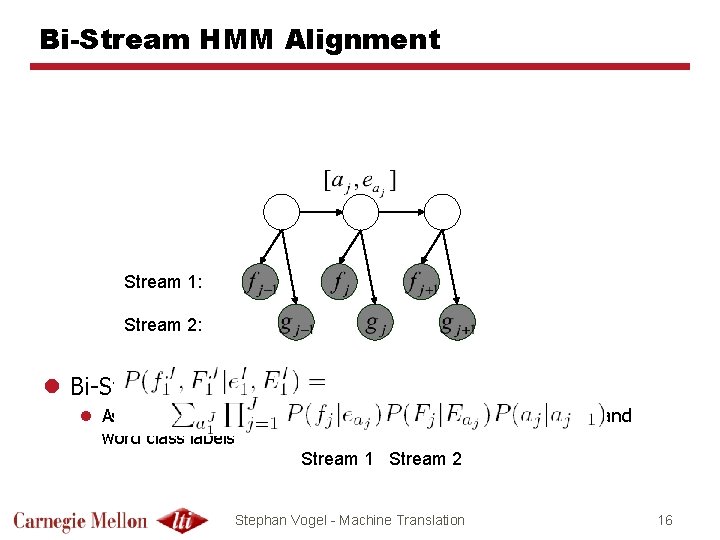

Bi-Stream HMM Alignment

- HMM:
  - Relative word position as distortion component (can be conditioned on word classes)
  - Forward-backward algorithm for training

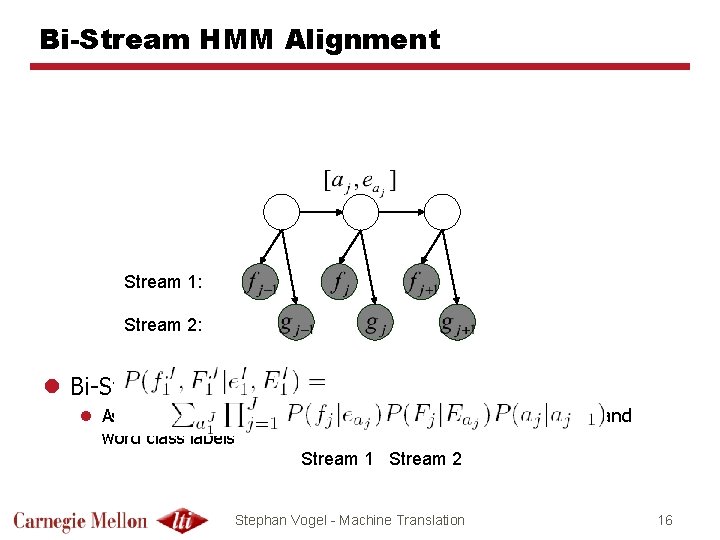

Bi-Stream HMM Alignment

- Bi-Stream HMM:
  - Assume the hidden alignment generates two data streams: words (stream 1) and word class labels (stream 2)
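The model equations are only available as slide images. A hedged sketch in the usual HMM alignment notation follows, with source sentence $f_1^J$, target sentence $e_1^I$, hidden alignment $a_1^J$, and word-class streams $F_j$ and $E_i$; all symbols are assumed here rather than copied from the slide.

```latex
% Standard HMM alignment model
p(f_1^J \mid e_1^I) = \sum_{a_1^J} \prod_{j=1}^{J} p(f_j \mid e_{a_j}) \, p(a_j \mid a_{j-1})

% Bi-stream HMM: the same hidden alignment emits words and word-class labels
p(f_1^J, F_1^J \mid e_1^I, E_1^I) = \sum_{a_1^J} \prod_{j=1}^{J}
    p(f_j \mid e_{a_j}) \, p(F_j \mid E_{a_j}) \, p(a_j \mid a_{j-1})
```

The two emission terms share one alignment variable $a_j$, which is how the streams are tied together.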

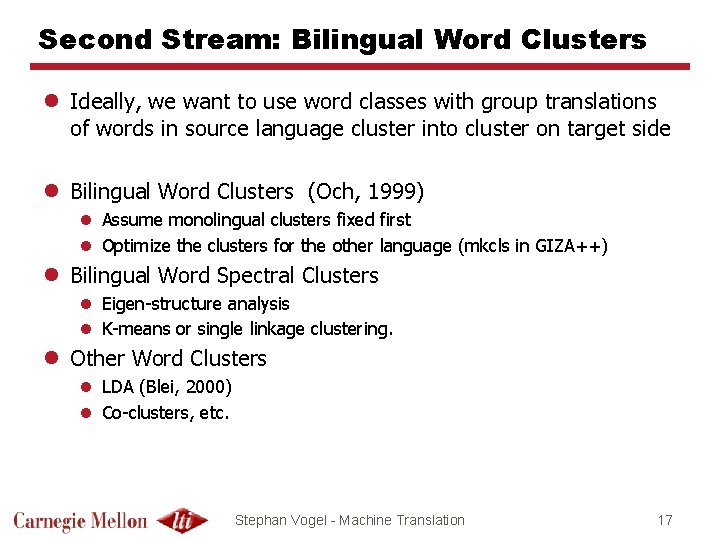

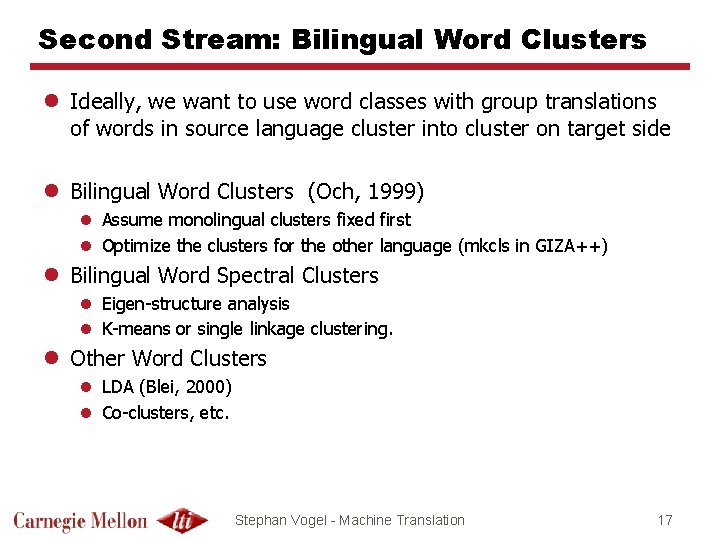

Second Stream: Bilingual Word Clusters

- Ideally, we want word classes that group the translations of the words in a source-language cluster into one cluster on the target side
- Bilingual word clusters (Och, 1999)
  - Assume monolingual clusters fixed first
  - Optimize the clusters for the other language (mkcls in GIZA++)
- Bilingual word spectral clusters
  - Eigen-structure analysis
  - K-means or single linkage clustering
- Other word clusters
  - LDA (Blei, 2000)
  - Co-clusters, etc.

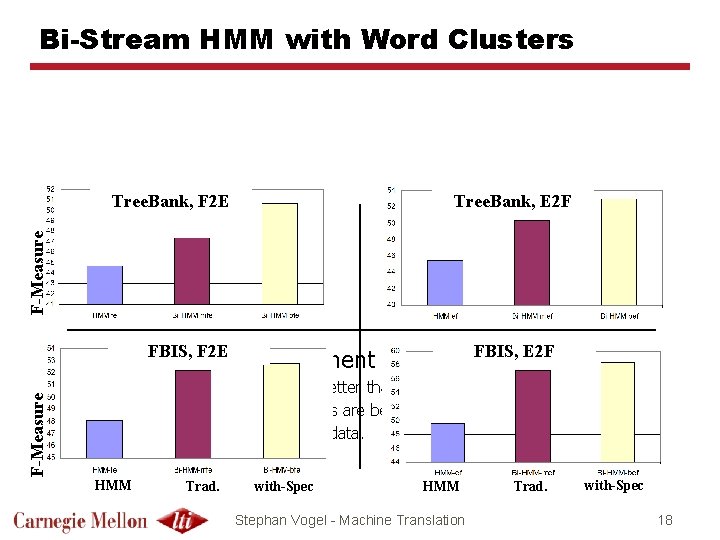

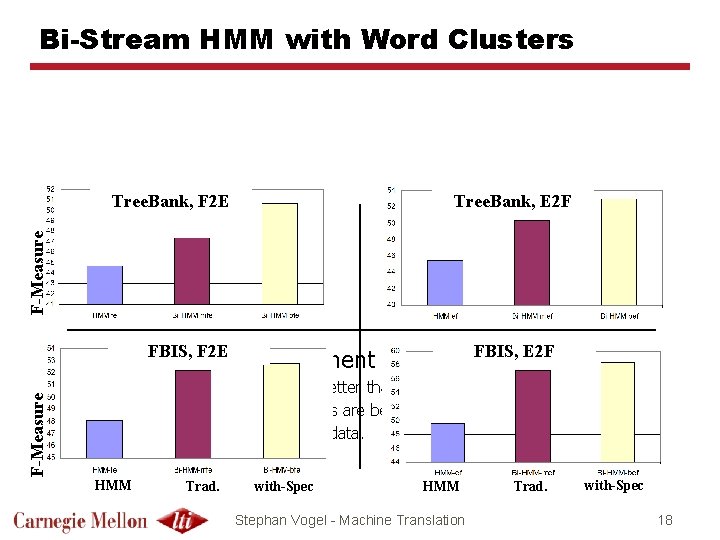

Bi-Stream HMM with Word Clusters

- Evaluating word alignment accuracy: F-measure
- Bi-stream HMM (Bi-HMM) is better than HMM
- Bilingual word-spectral clusters are better than traditional ones
- Helps more for small training data

(Figure panels: TreeBank E2F, TreeBank F2E, FBIS E2F, FBIS F2E; y-axis: F-measure; series: HMM, Trad., with-Spec)

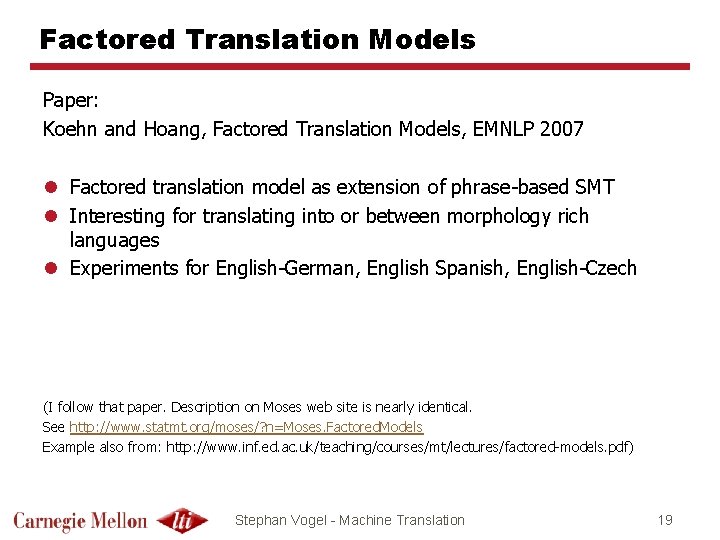

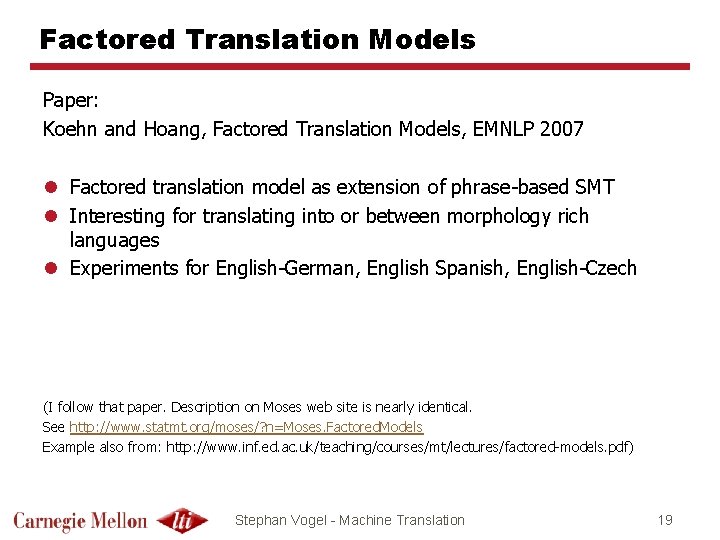

Factored Translation Models

Paper: Koehn and Hoang, Factored Translation Models, EMNLP 2007

- Factored translation model as an extension of phrase-based SMT
- Interesting for translating into or between morphologically rich languages
- Experiments for English-German, English-Spanish, English-Czech

(I follow that paper. The description on the Moses web site is nearly identical; see http://www.statmt.org/moses/?n=Moses.FactoredModels. Example also from: http://www.inf.ed.ac.uk/teaching/courses/mt/lectures/factored-models.pdf)

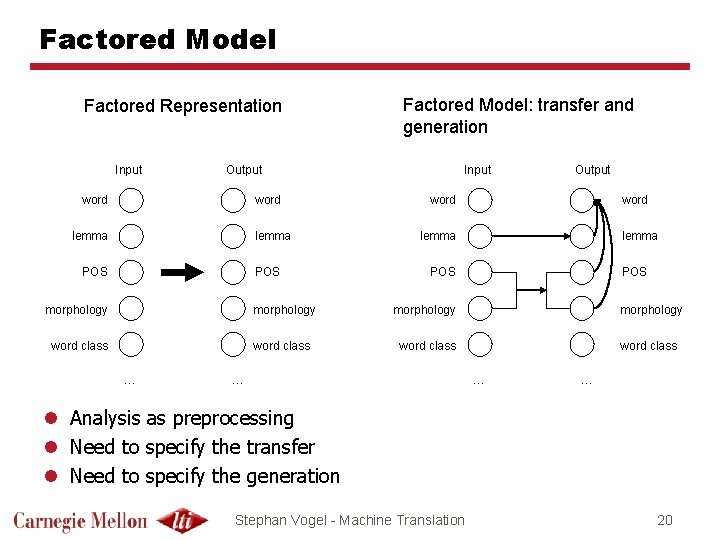

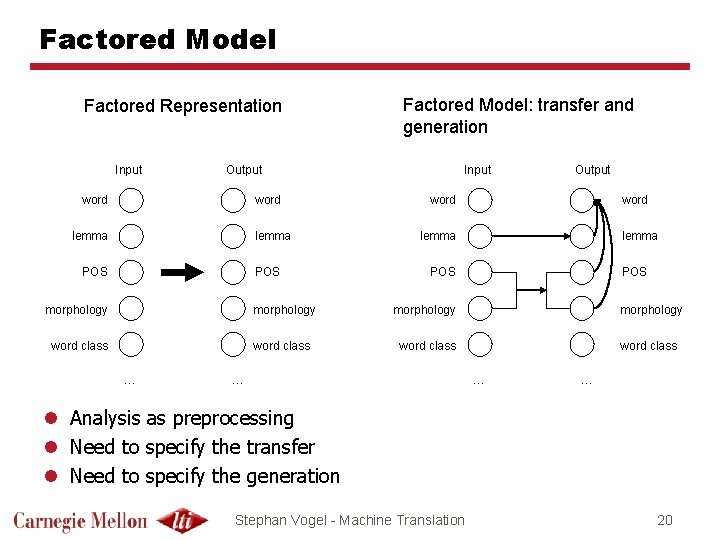

Factored Model

- Factored representation (figure: input and output words, each represented by the factors word, lemma, POS, morphology, word class, …)
- Factored model: transfer and generation (figure: input factors mapped to output factors)
  - Analysis as preprocessing
  - Need to specify the transfer
  - Need to specify the generation

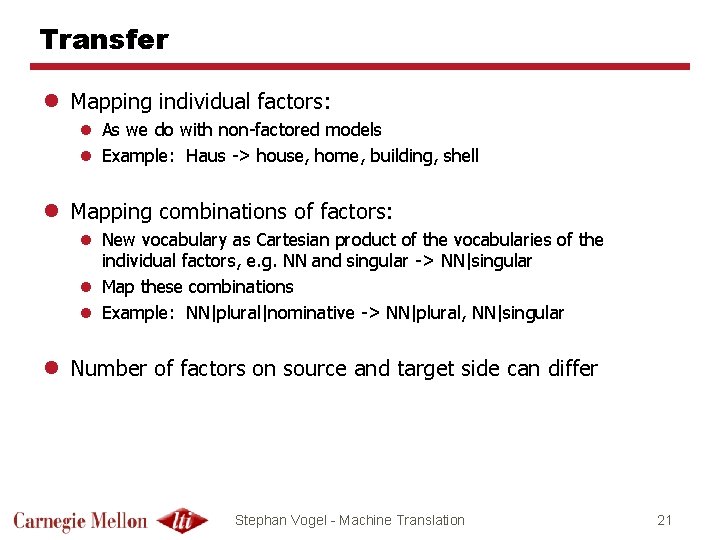

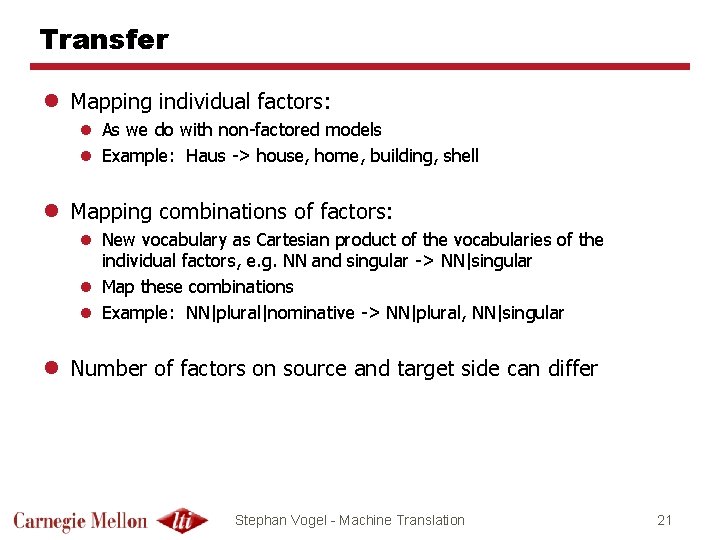

Transfer

- Mapping individual factors:
  - As we do with non-factored models
  - Example: Haus -> house, home, building, shell
- Mapping combinations of factors:
  - New vocabulary as Cartesian product of the vocabularies of the individual factors, e.g. NN and singular -> NN|singular
  - Map these combinations
  - Example: NN|plural|nominative -> NN|plural, NN|singular
- Number of factors on source and target side can differ
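The Cartesian-product vocabulary can be sketched in a few lines of Python. The factor vocabularies and the `transfer` table below are toy assumptions for illustration only.

```python
from itertools import product

# Hypothetical factor vocabularies.
pos_vocab = ["NN", "VB"]
number_vocab = ["singular", "plural"]

# Combined vocabulary: one symbol per combination of factor values.
combined = ["|".join(values) for values in product(pos_vocab, number_vocab)]
print(combined)  # ['NN|singular', 'NN|plural', 'VB|singular', 'VB|plural']

# A toy mapping table over combined symbols; source and target may use
# different numbers of factors (three on the source side, two on the target).
transfer = {"NN|plural|nominative": ["NN|plural", "NN|singular"]}
print(transfer["NN|plural|nominative"])
```

The combined vocabulary grows with the product of the factor vocabulary sizes, which is one source of the sparsity and search-space issues that come up later in decoding.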

Generation

- Generate surface form from factors
- Examples:
  - house|NN|plural -> houses
  - house|NN|singular -> house
  - house|VB|present|3rd-person -> houses

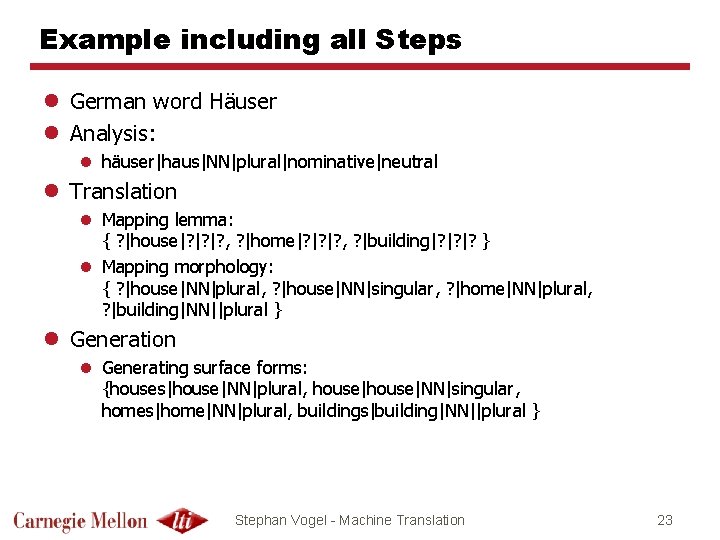

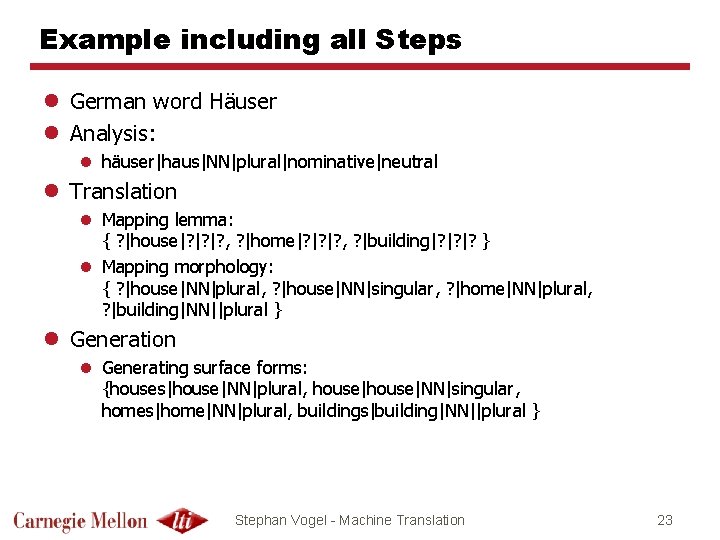

Example including all Steps

- German word: Häuser
- Analysis:
  - häuser|haus|NN|plural|nominative|neuter
- Translation
  - Mapping lemma: { ?|house|?|?|?, ?|home|?|?|?, ?|building|?|?|? }
  - Mapping morphology: { ?|house|NN|plural, ?|house|NN|singular, ?|home|NN|plural, ?|building|NN|plural }
- Generation
  - Generating surface forms: { houses|house|NN|plural, house|house|NN|singular, homes|home|NN|plural, buildings|building|NN|plural }
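The three steps can be traced in a small Python sketch. All four tables are hand-written toy assumptions standing in for learned models, and `translate` is a hypothetical helper, not a Moses function. Combinations without an entry in the toy generation table are simply dropped here, whereas a real system would score them.

```python
# Hypothetical toy tables; real tables are learned from annotated parallel data.
ANALYSIS = {"Häuser": ("haus", "NN", "plural", "nominative", "neuter")}
LEMMA_MAP = {"haus": ["house", "home", "building"]}
MORPH_MAP = {("NN", "plural", "nominative", "neuter"): [("NN", "plural"), ("NN", "singular")]}
GENERATION = {
    ("house", "NN", "plural"): "houses",
    ("house", "NN", "singular"): "house",
    ("home", "NN", "plural"): "homes",
    ("building", "NN", "plural"): "buildings",
}

def translate(word):
    """Expand one source word into factored target candidates."""
    lemma, *morph = ANALYSIS[word]                      # analysis (preprocessing)
    candidates = []
    for target_lemma in LEMMA_MAP[lemma]:               # translation step: lemma
        for target_morph in MORPH_MAP[tuple(morph)]:    # translation step: morphology
            surface = GENERATION.get((target_lemma, *target_morph))  # generation step
            if surface is not None:                     # no surface form known: drop
                candidates.append("|".join((surface, target_lemma, *target_morph)))
    return candidates

print(translate("Häuser"))
```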

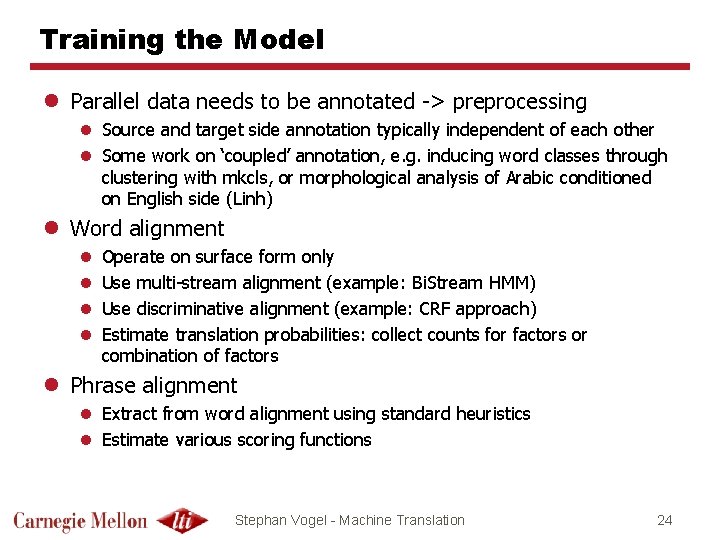

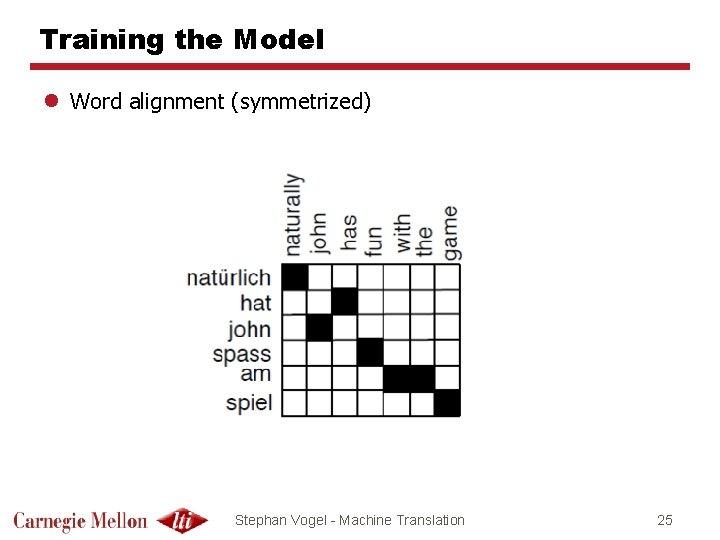

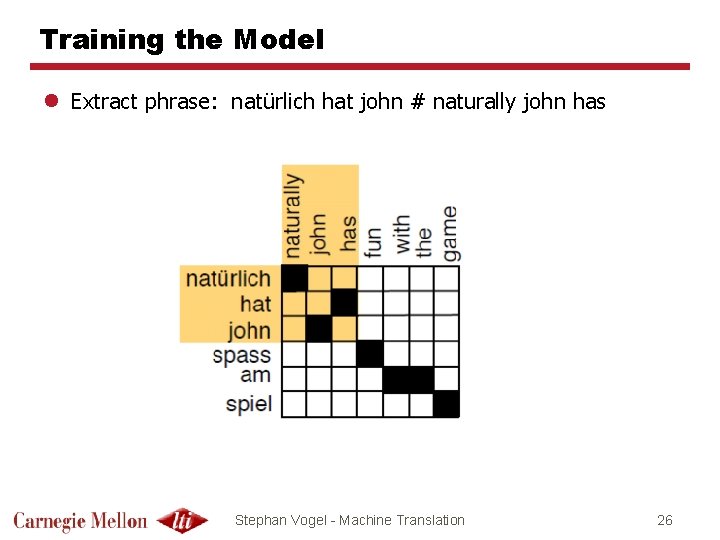

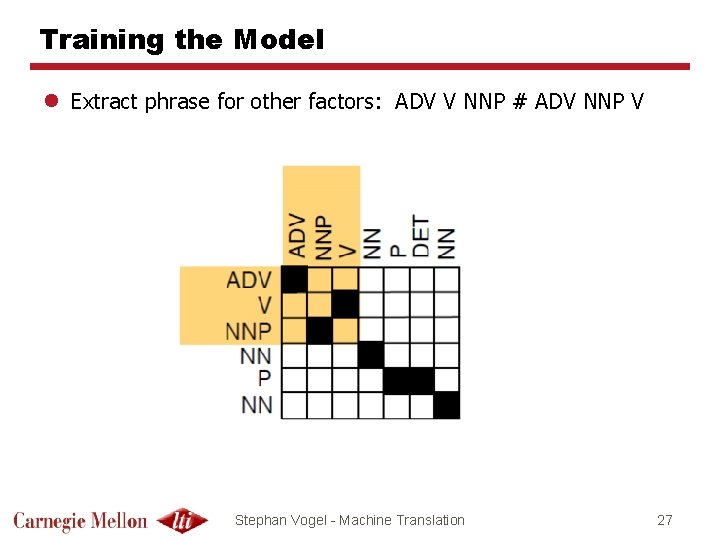

Training the Model

- Parallel data needs to be annotated -> preprocessing
  - Source and target side annotation typically independent of each other
  - Some work on ‘coupled’ annotation, e.g. inducing word classes through clustering with mkcls, or morphological analysis of Arabic conditioned on English side (Linh)
- Word alignment
  - Operate on surface form only
  - Use multi-stream alignment (example: Bi-Stream HMM)
  - Use discriminative alignment (example: CRF approach)
  - Estimate translation probabilities: collect counts for factors or combinations of factors
- Phrase alignment
  - Extract from word alignment using standard heuristics
  - Estimate various scoring functions

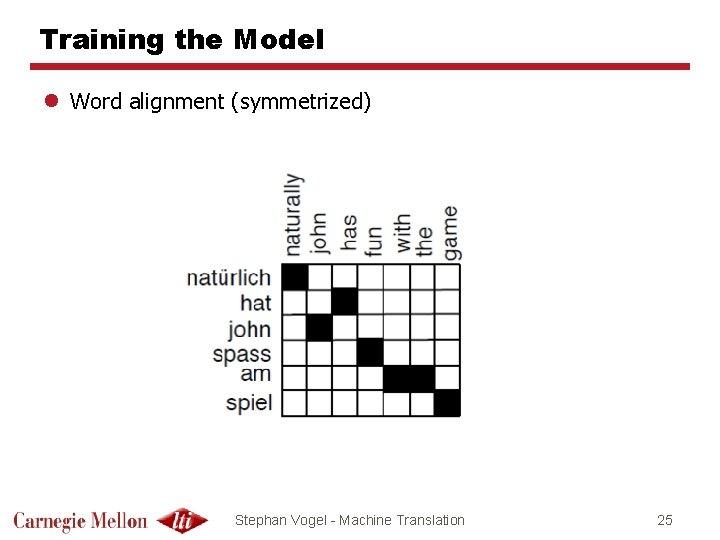

Training the Model

- Word alignment (symmetrized)

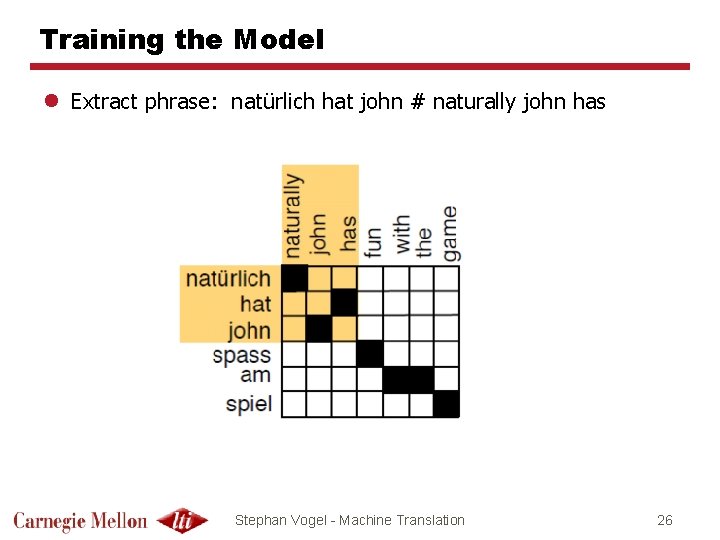

Training the Model

- Extract phrase: natürlich hat john # naturally john has

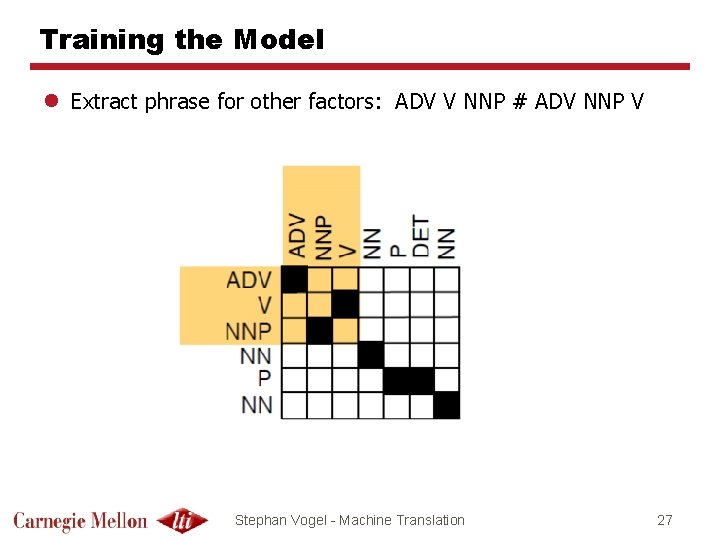

Training the Model

- Extract phrase for other factors: ADV V NNP # ADV NNP V
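Because all factors share one word alignment, a phrase pair extracted on the surface forms fixes the same spans for every other factor. The Python sketch below illustrates this with the three-word example; the token tuples, the span convention, and the `phrase_pair` helper are assumptions for illustration, and the spans are taken as given rather than computed from an alignment.

```python
# Hypothetical factored sentence pair; each token is (surface, POS).
source = [("natürlich", "ADV"), ("hat", "V"), ("john", "NNP")]
target = [("naturally", "ADV"), ("john", "NNP"), ("has", "V")]

def phrase_pair(src_span, tgt_span, factor):
    """Read one factor off an already extracted phrase pair.

    Spans are (start, end) token indices, end exclusive; `factor` is the
    index into each token tuple (0 = surface, 1 = POS).
    """
    src = " ".join(tok[factor] for tok in source[src_span[0]:src_span[1]])
    tgt = " ".join(tok[factor] for tok in target[tgt_span[0]:tgt_span[1]])
    return f"{src} # {tgt}"

span = ((0, 3), (0, 3))  # span pair assumed to come from the word alignment
print(phrase_pair(*span, factor=0))  # natürlich hat john # naturally john has
print(phrase_pair(*span, factor=1))  # ADV V NNP # ADV NNP V
```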

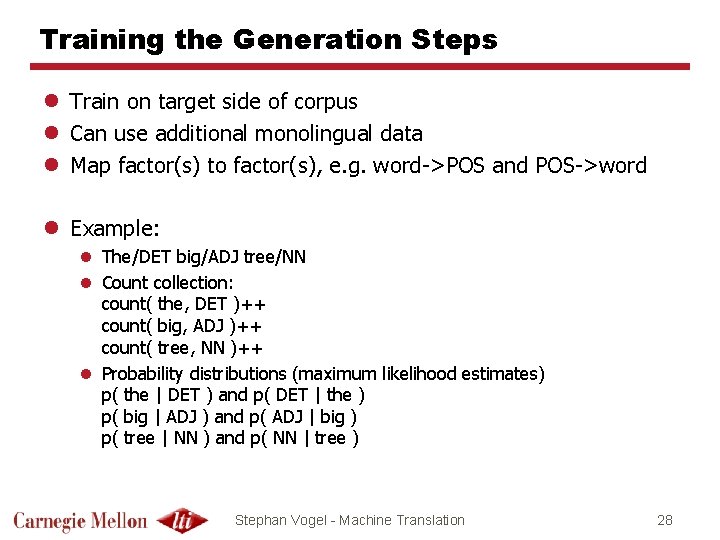

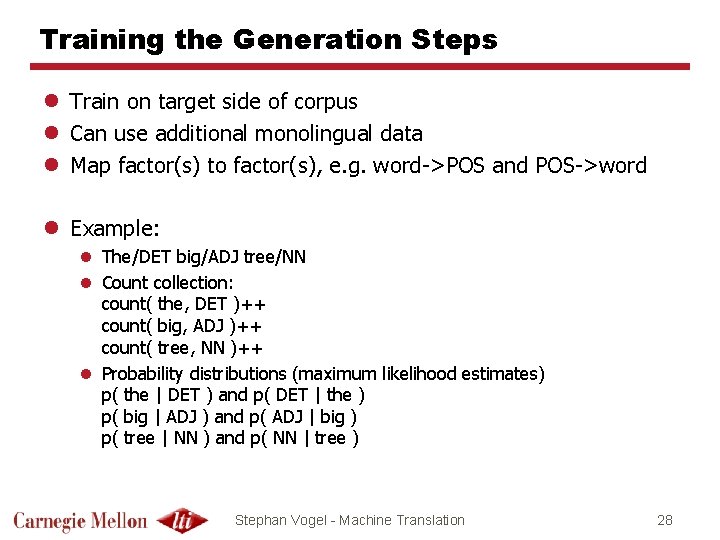

Training the Generation Steps

- Train on target side of corpus
- Can use additional monolingual data
- Map factor(s) to factor(s), e.g. word -> POS and POS -> word
- Example:
  - The/DET big/ADJ tree/NN
  - Count collection:
    - count( the, DET )++
    - count( big, ADJ )++
    - count( tree, NN )++
  - Probability distributions (maximum likelihood estimates):
    - p( the | DET ) and p( DET | the )
    - p( big | ADJ ) and p( ADJ | big )
    - p( tree | NN ) and p( NN | tree )
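The count collection and maximum likelihood estimates can be sketched as follows. The second toy sentence ("the trees") is an addition made here so that the estimates are not all 1.0; the function names are illustrative.

```python
from collections import Counter

# Tagged target-side text: the slide's example plus one extra toy sentence.
tagged = [("the", "DET"), ("big", "ADJ"), ("tree", "NN"),
          ("the", "DET"), ("trees", "NN")]

pair_count, word_count, tag_count = Counter(), Counter(), Counter()
for word, tag in tagged:
    pair_count[(word, tag)] += 1   # count( word, tag )++
    word_count[word] += 1
    tag_count[tag] += 1

def p_word_given_tag(word, tag):
    """Maximum likelihood estimate p(word | tag); assumes the tag was seen."""
    return pair_count[(word, tag)] / tag_count[tag]

def p_tag_given_word(tag, word):
    """Maximum likelihood estimate p(tag | word); assumes the word was seen."""
    return pair_count[(word, tag)] / word_count[word]

print(p_word_given_tag("tree", "NN"))  # 0.5
print(p_tag_given_word("NN", "tree"))  # 1.0
```

Since only the target side is needed, the same counting works on additional monolingual data once it has been tagged.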

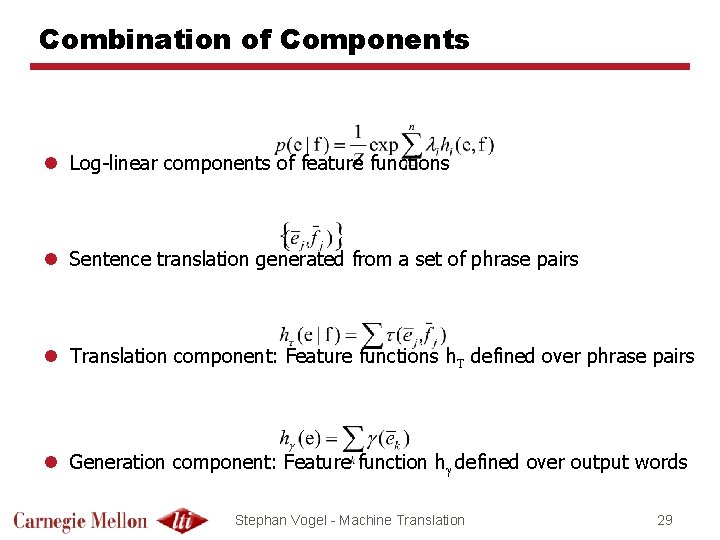

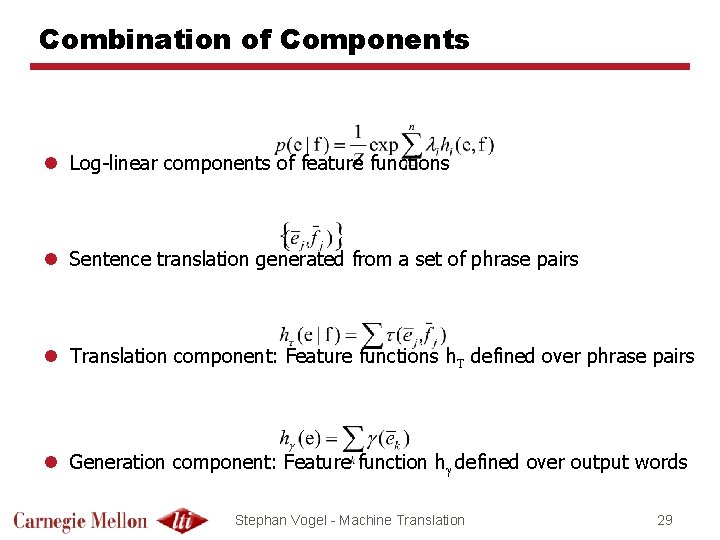

Combination of Components

- Log-linear combination of feature functions
- Sentence translation generated from a set of phrase pairs
- Translation component: feature functions h_T defined over phrase pairs
- Generation component: feature functions h_G defined over output words
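The equations are only available as slide images. A hedged sketch following the formulation in Koehn and Hoang (2007) is given below, where $(\bar{e}_j, \bar{f}_j)$ are the phrase pairs used in the translation, $e_k$ are the output words, and $\tau$ and $\gamma$ are the per-phrase and per-word scoring functions; the symbol names are assumptions of this sketch.

```latex
% Log-linear model over n feature functions
p(e \mid f) = \frac{1}{Z} \exp \sum_{i=1}^{n} \lambda_i \, h_i(e, f)

% Translation feature: sum over phrase pairs
h_T(e, f) = \sum_{j} \tau(\bar{e}_j, \bar{f}_j)

% Generation feature: sum over output words
h_G(e, f) = \sum_{k} \gamma(e_k)
```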

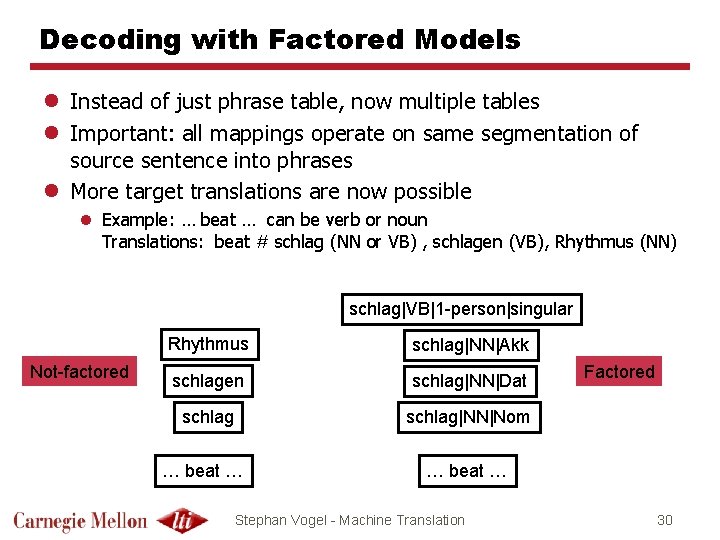

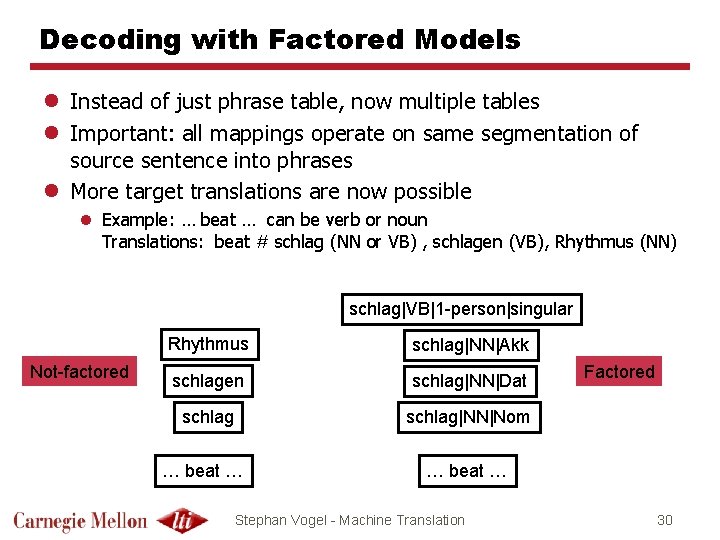

Decoding with Factored Models

- Instead of just one phrase table, now multiple tables
- Important: all mappings operate on the same segmentation of the source sentence into phrases
- More target translations are now possible
- Example: … beat … can be verb or noun
  - Translations: beat # schlag (NN or VB), schlagen (VB), Rhythmus (NN)

(Figure: translation options for “… beat …”, not factored vs. factored; labels shown include Rhythmus, schlagen, schlag|VB|1-person|singular, schlag|NN|Nom, schlag|NN|Dat, schlag|NN|Akk)

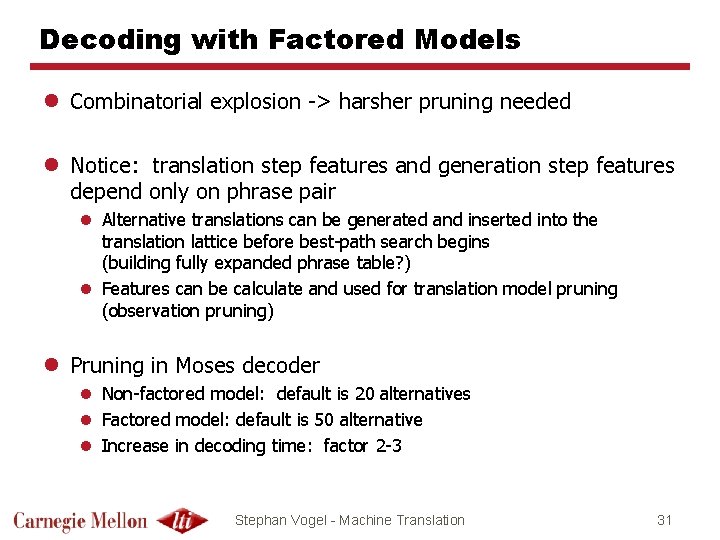

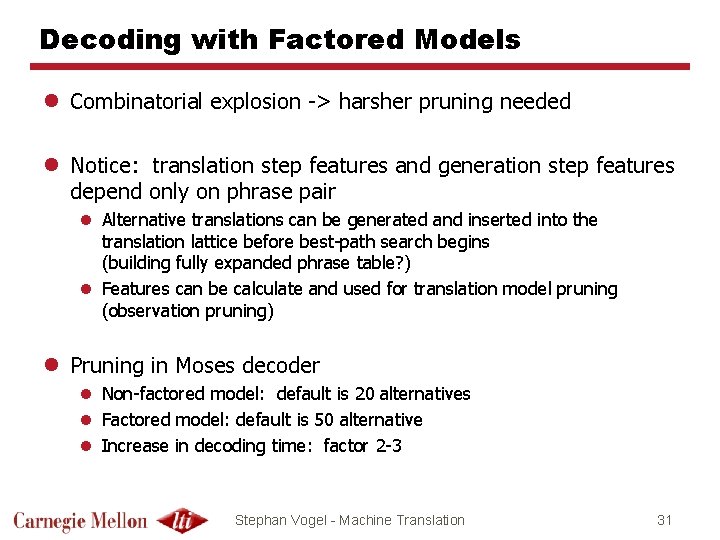

Decoding with Factored Models

- Combinatorial explosion -> harsher pruning needed
- Notice: translation step features and generation step features depend only on the phrase pair
  - Alternative translations can be generated and inserted into the translation lattice before best-path search begins (building a fully expanded phrase table?)
  - Features can be calculated and used for translation model pruning (observation pruning)
- Pruning in the Moses decoder
  - Non-factored model: default is 20 alternatives
  - Factored model: default is 50 alternatives
- Increase in decoding time: factor 2-3
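Observation pruning amounts to keeping only the best few expanded options per source phrase before search starts. The Python sketch below illustrates the idea only; the option strings and scores are made up, `prune_options` is a hypothetical helper, and this is not the Moses implementation.

```python
import heapq

def prune_options(options, limit):
    """Keep the `limit` highest-scoring translation options for one source phrase.

    `options` maps a target phrase to a model score (higher is better).
    """
    return dict(heapq.nlargest(limit, options.items(), key=lambda item: item[1]))

# Hypothetical expanded options for the source word "beat" with made-up log scores.
options = {
    "schlag|NN|Nom": -1.2,
    "schlag|NN|Akk": -1.5,
    "schlag|VB|1-person|singular": -2.0,
    "schlagen|VB": -0.9,
    "Rhythmus|NN": -0.7,
}
print(prune_options(options, limit=3))
```

Because the translation and generation features depend only on the phrase pair, these scores can be computed once per option, ahead of the best-path search.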

Factored LMs in Moses

- The training script allows specifying multiple LMs on different factors, with individual orders (history length)
- Example:
  - `--lm 0:3:factored-corpus/surface.lm` // surface form 3-gram LM
  - `--lm 2:3:factored-corpus/pos.lm` // POS 3-gram LM
- This generates different LMs on the different factors, not a factored LM
  - Different LMs are used as independent features in the decoder
  - No backing-off between different factors
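The difference from a factored LM can be made concrete: each LM scores its own factor sequence independently, and the scores are only added with weights. The Python sketch below uses toy bigram tables instead of 3-gram models; the tables, the weights, the probability floor, and both function names are assumptions for illustration, with factor 0 as the surface form and factor 2 as POS to mirror the example above.

```python
import math

def bigram_logprob(sequence, bigram_probs, floor=1e-6):
    """Log-probability of a sequence under a toy bigram table.

    Unseen bigrams get a small floor probability instead of proper smoothing.
    """
    padded = ["<s>"] + list(sequence)
    return sum(math.log(bigram_probs.get((a, b), floor))
               for a, b in zip(padded, padded[1:]))

# Hypothetical probability tables, one LM per factor.
surface_lm = {("<s>", "the"): 0.5, ("the", "house"): 0.1}
pos_lm = {("<s>", "DET"): 0.6, ("DET", "NN"): 0.7}

def combined_lm_score(tokens, weights=(1.0, 0.5)):
    """Weighted sum of independent LM features; no backoff between the factors."""
    words = [t.split("|")[0] for t in tokens]  # factor 0: surface form
    tags = [t.split("|")[2] for t in tokens]   # factor 2: POS
    return (weights[0] * bigram_logprob(words, surface_lm)
            + weights[1] * bigram_logprob(tags, pos_lm))

print(combined_lm_score(["the|the|DET", "house|house|NN"]))
```

If the surface LM has never seen a word sequence, the POS LM cannot help estimate it; it only contributes its own separate score.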

Summary

- Factored models to
  - Deal with large vocabulary in morphologically rich languages
  - ‘Connect’ words, thereby getting better model estimates
  - Explicitly model morphological dependencies within sentences
- Factored models are not always called factored models
  - Hierarchical model (lexicon)
  - Multi-stream model (alignment)
- Factored LMs introduced for ASR
  - Many backoff paths
- Moses decoder
  - Allows factored TMs and factored LMs
  - But no backing-off between factors, only log-linear combination