Statistical Machine Translation Word Alignment Stephan Vogel MT

- Slides: 29

Statistical Machine Translation Word Alignment Stephan Vogel MT Class Spring Semester 2011 Stephan Vogel - Machine Translation 1

Overview

- Word alignment: some observations
- Models IBM 2 and IBM 1: 0th-order position model
- HMM alignment model: 1st-order position model
- IBM 3: fertility
- IBM 4: plus relative distortion

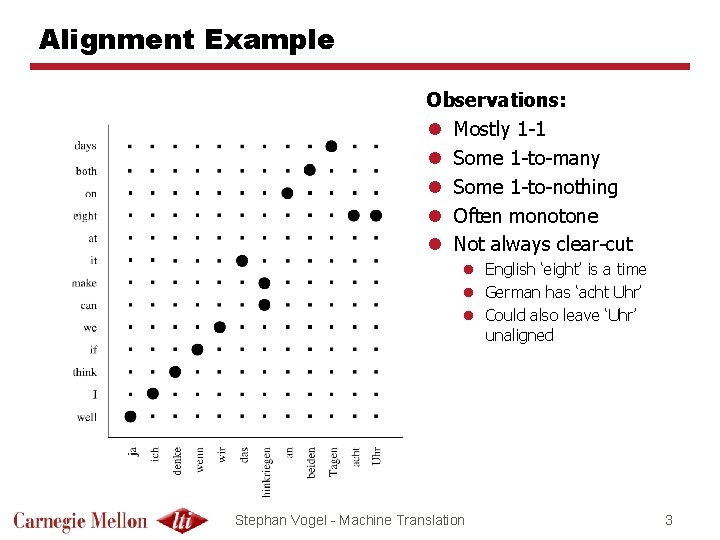

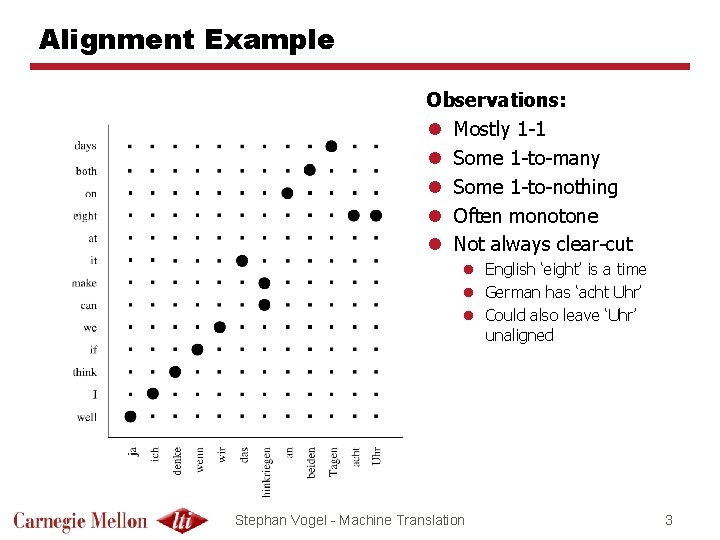

Alignment Example

Observations:
- Mostly 1-to-1
- Some 1-to-many
- Some 1-to-nothing
- Often monotone
- Not always clear-cut
  - English ‘eight’ is a time
  - German has ‘acht Uhr’
  - Could also leave ‘Uhr’ unaligned

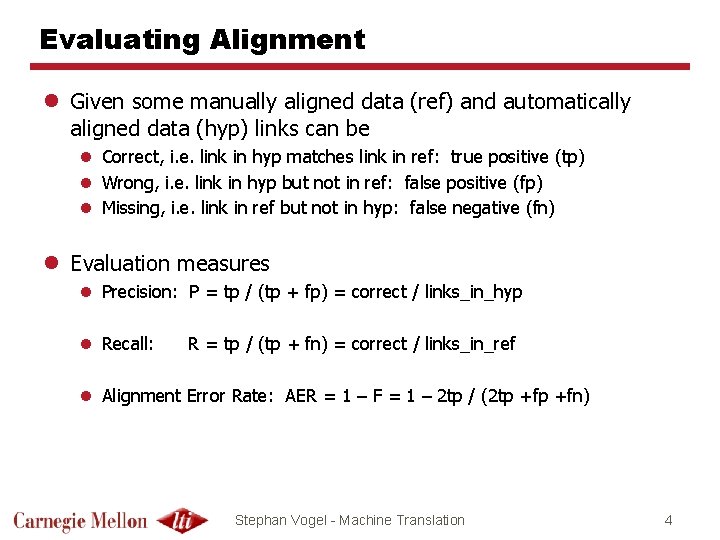

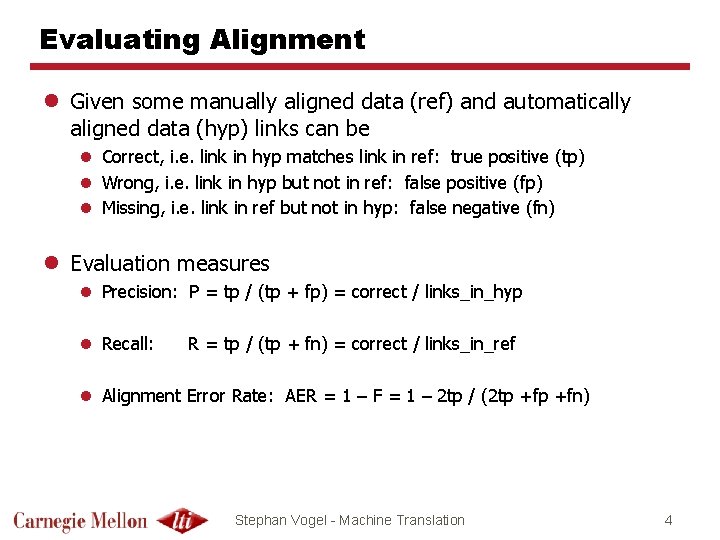

Evaluating Alignment

- Given some manually aligned data (ref) and automatically aligned data (hyp), links can be
  - Correct, i.e. link in hyp matches link in ref: true positive (tp)
  - Wrong, i.e. link in hyp but not in ref: false positive (fp)
  - Missing, i.e. link in ref but not in hyp: false negative (fn)
- Evaluation measures
  - Precision: P = tp / (tp + fp) = correct / links_in_hyp
  - Recall: R = tp / (tp + fn) = correct / links_in_ref
  - Alignment Error Rate: AER = 1 - F = 1 - 2 tp / (2 tp + fp + fn)
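The measures above can be sketched in a few lines of Python. This is a minimal illustration, assuming links are represented as (source position, target position) pairs; the function name and the example links are hypothetical and not part of the slides.

```python
def alignment_scores(ref, hyp):
    """Return (precision, recall, AER) for two collections of (j, i) links."""
    ref, hyp = set(ref), set(hyp)
    tp = len(hyp & ref)   # links in hyp that match ref
    fp = len(hyp - ref)   # links in hyp but not in ref
    fn = len(ref - hyp)   # links in ref but not in hyp
    precision = tp / (tp + fp) if hyp else 0.0
    recall = tp / (tp + fn) if ref else 0.0
    denom = 2 * tp + fp + fn
    f_measure = 2 * tp / denom if denom else 0.0
    return precision, recall, 1.0 - f_measure


# Hypothetical example: 4 reference links, 3 hypothesis links, 2 in common.
ref_links = {(1, 1), (2, 2), (3, 3), (4, 3)}
hyp_links = {(1, 1), (2, 2), (3, 4)}
precision, recall, aer = alignment_scores(ref_links, hyp_links)
print(precision, recall, aer)
```

Here tp = 2, fp = 1 and fn = 2, so F = 4/7 and AER = 3/7.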

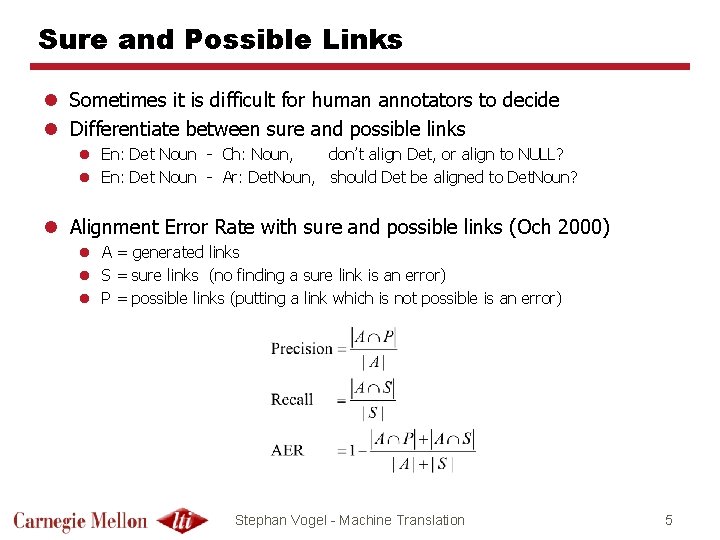

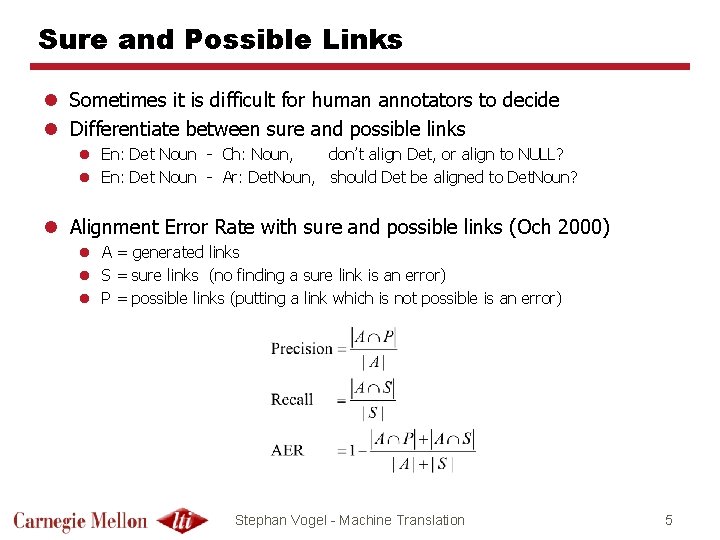

Sure and Possible Links

- Sometimes it is difficult for human annotators to decide
- Differentiate between sure and possible links
  - En: Det Noun - Ch: Noun: don’t align Det, or align it to NULL?
  - En: Det Noun - Ar: DetNoun: should Det be aligned to DetNoun?
- Alignment Error Rate with sure and possible links (Och 2000)
  - A = generated links
  - S = sure links (not finding a sure link is an error)
  - P = possible links (putting a link which is not possible is an error)
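The formula itself is not in the extracted text. The sketch below assumes the commonly cited definition AER = 1 - (|A ∩ S| + |A ∩ P|) / (|A| + |S|), with every sure link also counting as possible. The function name and the example links are hypothetical.

```python
def aer_sure_possible(generated, sure, possible):
    """AER with sure (S) and possible (P) links; S is treated as a subset of P."""
    a = set(generated)
    s = set(sure)
    p = set(possible) | s          # assumption: sure links are also possible
    if not a and not s:
        return 0.0                 # nothing to align, nothing generated
    return 1.0 - (len(a & s) + len(a & p)) / (len(a) + len(s))


# Hypothetical example: one sure link is missed, one generated link is impossible.
sure_links = {(1, 1), (2, 2)}
possible_links = {(3, 2)}
generated_links = {(1, 1), (3, 2), (4, 4)}
aer_sp = aer_sure_possible(generated_links, sure_links, possible_links)
print(aer_sp)
```

In this example |A ∩ S| = 1, |A ∩ P| = 2, |A| = 3 and |S| = 2, giving AER = 1 - 3/5.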

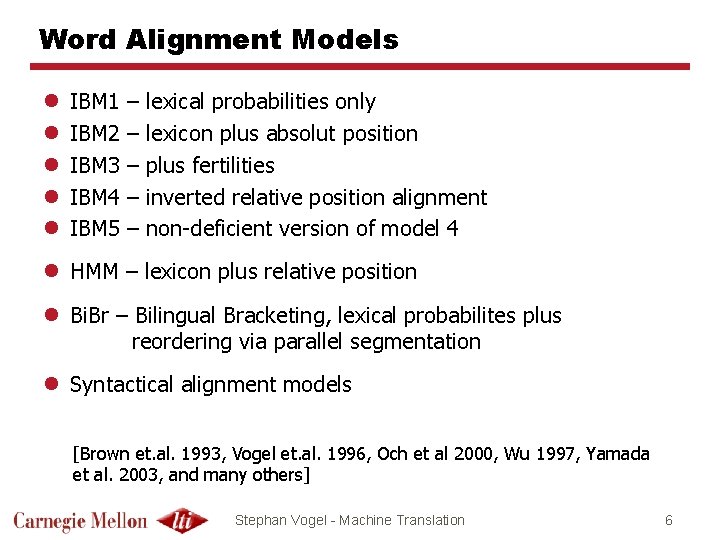

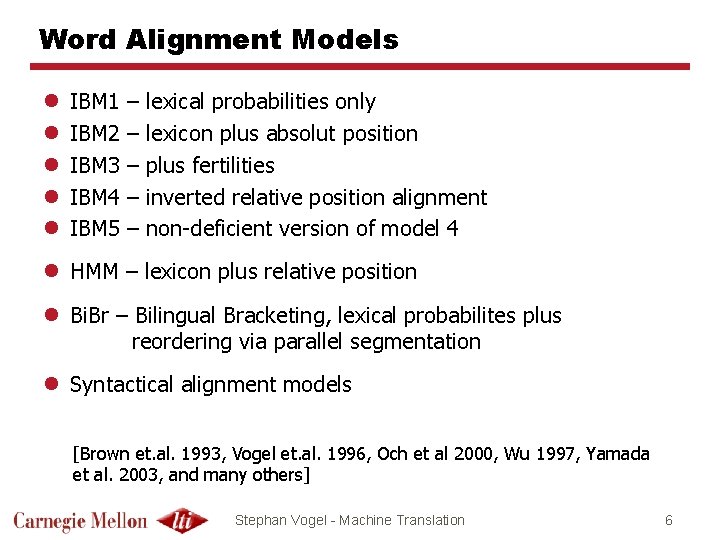

Word Alignment Models

- IBM 1: lexical probabilities only
- IBM 2: lexicon plus absolute position
- IBM 3: plus fertilities
- IBM 4: inverted relative position alignment
- IBM 5: non-deficient version of model 4
- HMM: lexicon plus relative position
- BiBr: Bilingual Bracketing, lexical probabilities plus reordering via parallel segmentation
- Syntactical alignment models

[Brown et al. 1993, Vogel et al. 1996, Och et al. 2000, Wu 1997, Yamada et al. 2003, and many others]

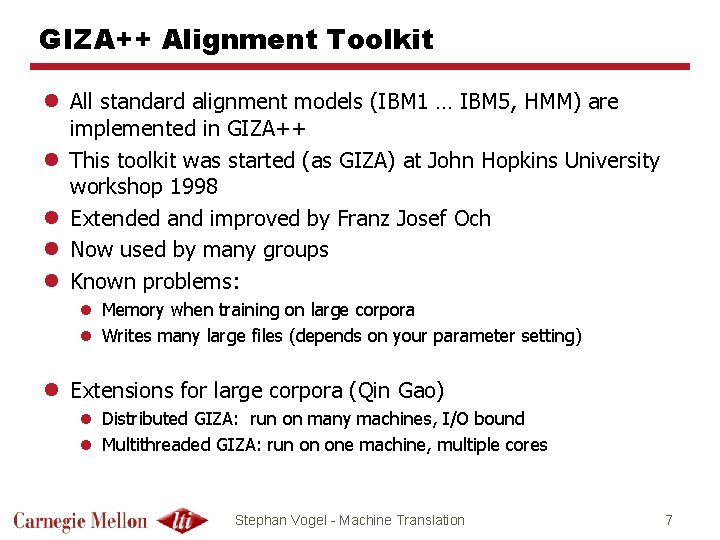

GIZA++ Alignment Toolkit

- All standard alignment models (IBM 1 … IBM 5, HMM) are implemented in GIZA++
  - This toolkit was started (as GIZA) at the Johns Hopkins University workshop 1998
  - Extended and improved by Franz Josef Och
- Now used by many groups
- Known problems:
  - Memory when training on large corpora
  - Writes many large files (depends on your parameter setting)
- Extensions for large corpora (Qin Gao)
  - Distributed GIZA: run on many machines, I/O bound
  - Multithreaded GIZA: run on one machine, multiple cores

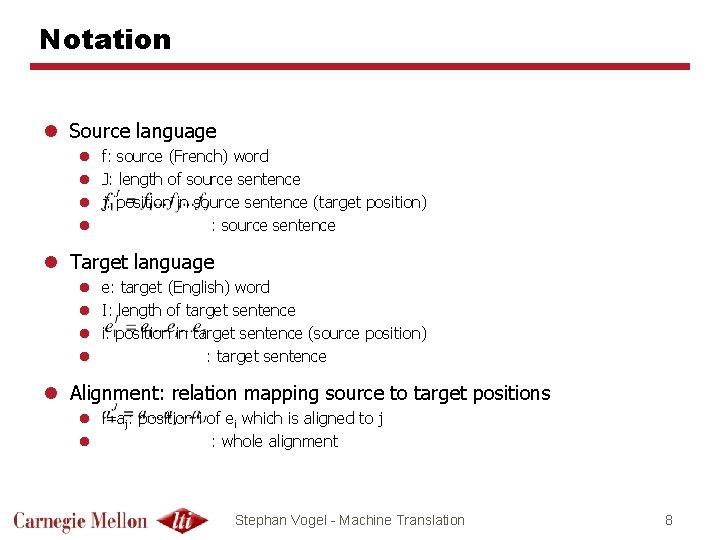

Notation

- Source language
  - $f$: source (French) word
  - $J$: length of source sentence
  - $j$: position in source sentence (target position)
  - $f_1^J = f_1 \dots f_J$: source sentence
- Target language
  - $e$: target (English) word
  - $I$: length of target sentence
  - $i$: position in target sentence (source position)
  - $e_1^I = e_1 \dots e_I$: target sentence
- Alignment: relation mapping source to target positions
  - $i = a_j$: position $i$ of the target word $e_i$ that is aligned to source position $j$
  - $a_1^J = a_1 \dots a_J$: whole alignment

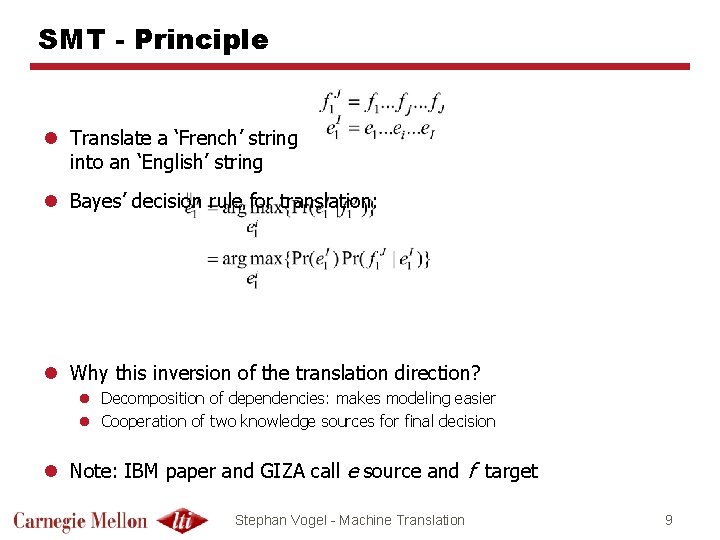

SMT - Principle

- Translate a ‘French’ string $f_1^J$ into an ‘English’ string $e_1^I$
- Bayes’ decision rule for translation: $\hat{e}_1^I = \arg\max_{e_1^I} P(e_1^I \mid f_1^J) = \arg\max_{e_1^I} P(e_1^I)\, P(f_1^J \mid e_1^I)$
- Why this inversion of the translation direction?
  - Decomposition of dependencies: makes modeling easier
  - Cooperation of two knowledge sources for final decision
- Note: IBM paper and GIZA call e source and f target

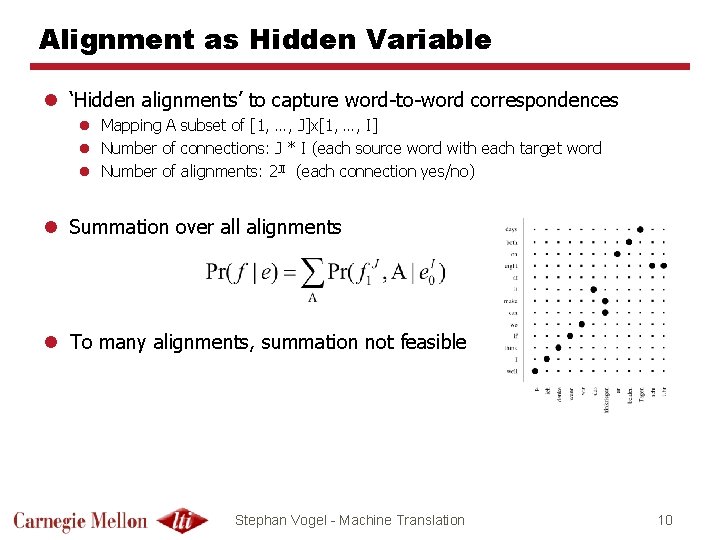

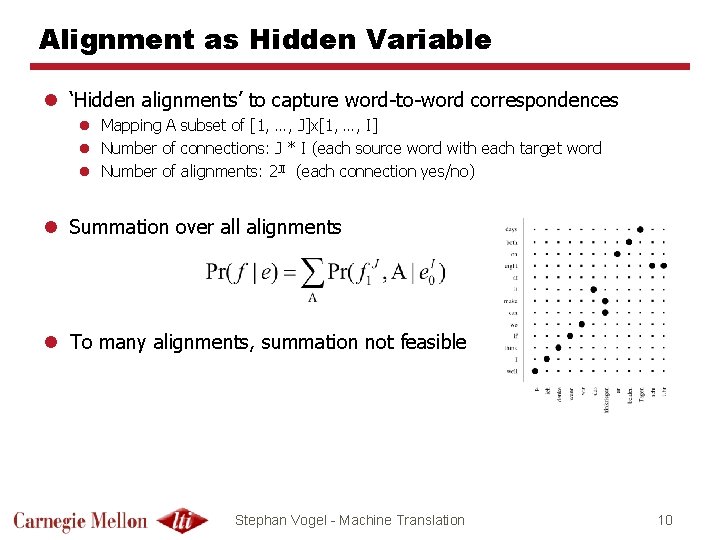

Alignment as Hidden Variable

- ‘Hidden alignments’ to capture word-to-word correspondences
- Mapping: $A \subseteq [1, \dots, J] \times [1, \dots, I]$
- Number of connections: $J \cdot I$ (each source word with each target word)
- Number of alignments: $2^{JI}$ (each connection yes/no)
- Summation over all alignments: $P(f_1^J \mid e_1^I) = \sum_{A} P(f_1^J, A \mid e_1^I)$
- Too many alignments, summation not feasible

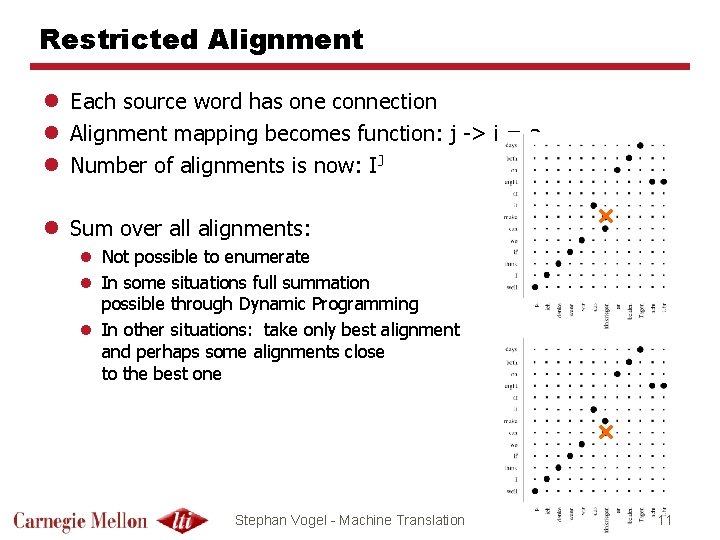

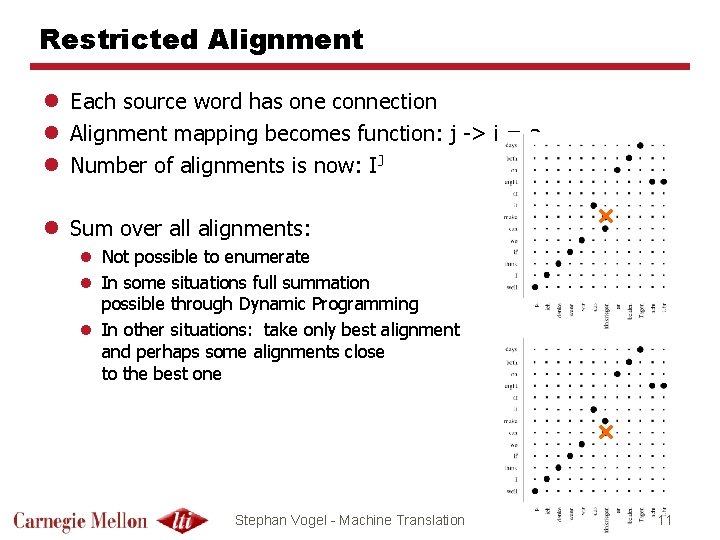

Restricted Alignment

- Each source word has one connection
- Alignment mapping becomes a function: $j \to i = a_j$
- Number of alignments is now: $I^J$
- Sum over all alignments: $P(f_1^J \mid e_1^I) = \sum_{a_1^J} P(f_1^J, a_1^J \mid e_1^I)$
- Not possible to enumerate
- In some situations full summation possible through Dynamic Programming
- In other situations: take only best alignment and perhaps some alignments close to the best one
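To make the counts concrete, the following sketch enumerates the restricted alignments for a tiny sentence pair and compares the numbers. It also illustrates one case in which the full sum is cheap: when the score of an alignment factors over source positions, the sum over all alignments equals a product of per-position sums. The score table `t` is a hypothetical stand-in, not a trained model.

```python
import math
from itertools import product


def restricted_alignments(J, I):
    """Yield every alignment function (a_1, ..., a_J) with a_j in 1..I."""
    return product(range(1, I + 1), repeat=J)


J, I = 3, 4
n_general = 2 ** (J * I)                                    # each of the J*I links on/off
n_restricted = sum(1 for _ in restricted_alignments(J, I))  # I ** J functions

# Hypothetical per-position scores t[j][i]; any non-negative numbers would do.
t = [[0.1, 0.2, 0.3, 0.4],
     [0.5, 0.1, 0.2, 0.2],
     [0.3, 0.3, 0.2, 0.2]]

# Brute force: sum over all I**J alignments of the product over positions.
brute_force = sum(
    math.prod(t[j][a[j] - 1] for j in range(J))
    for a in restricted_alignments(J, I)
)
# Factored form: product over positions of the sum over target positions.
factored = math.prod(sum(row) for row in t)
print(n_general, n_restricted, brute_force, factored)
```

The brute-force sum touches $I^J$ alignments, while the factored form needs only $J \cdot I$ additions, which is why enumeration is avoided whenever the model allows it.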

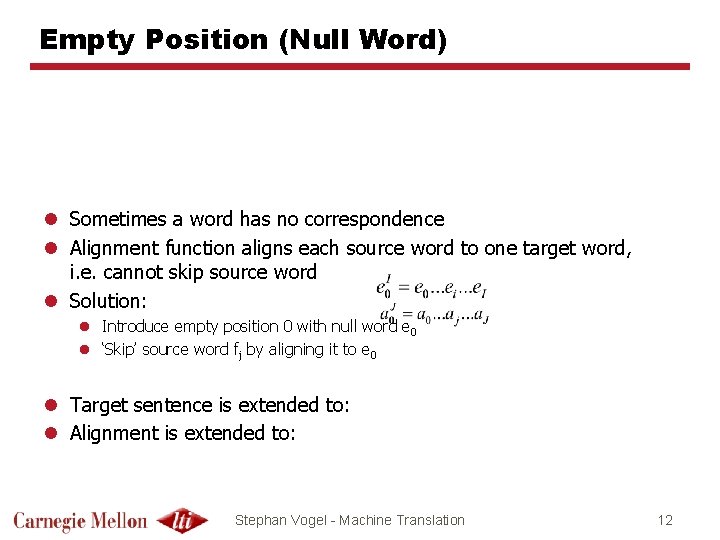

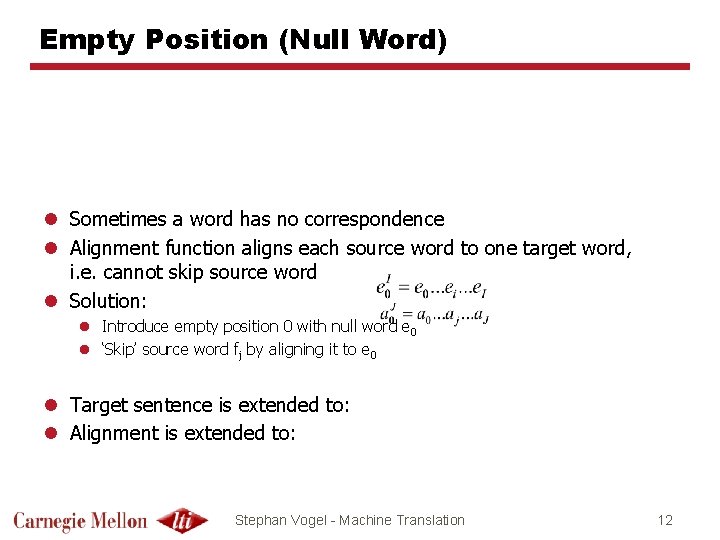

Empty Position (Null Word)

- Sometimes a word has no correspondence
- Alignment function aligns each source word to one target word, i.e. cannot skip a source word
- Solution:
  - Introduce empty position 0 with null word $e_0$
  - ‘Skip’ source word $f_j$ by aligning it to $e_0$
  - Target sentence is extended to: $e_0^I = e_0 e_1 \dots e_I$
  - Alignment is extended to: $a_j \in \{0, 1, \dots, I\}$

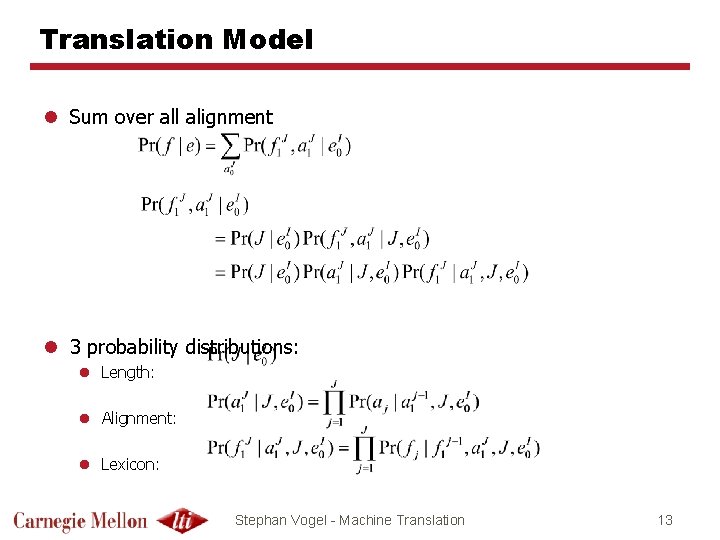

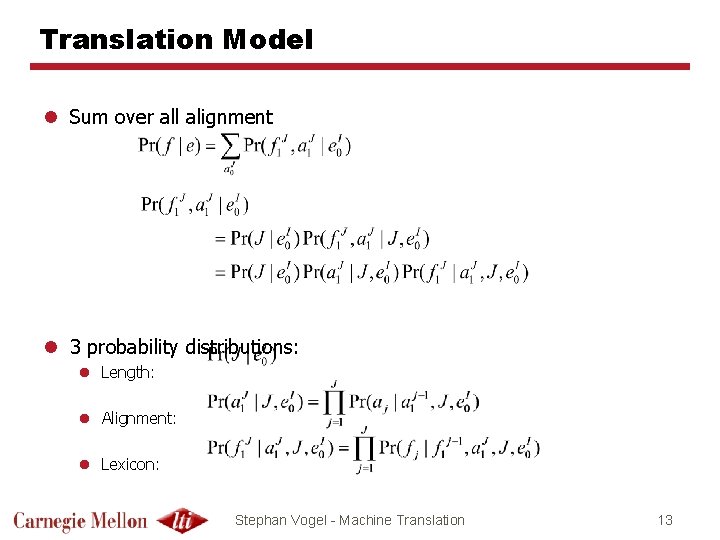

Translation Model

- Sum over all alignments
- 3 probability distributions:
  - Length
  - Alignment
  - Lexicon

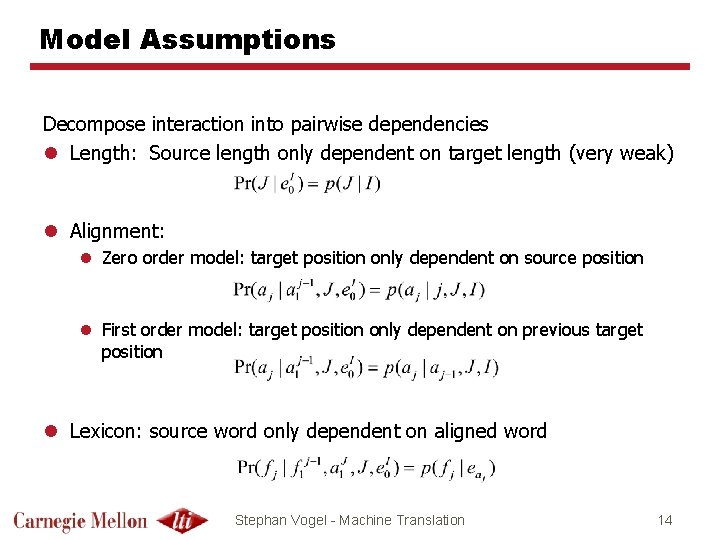

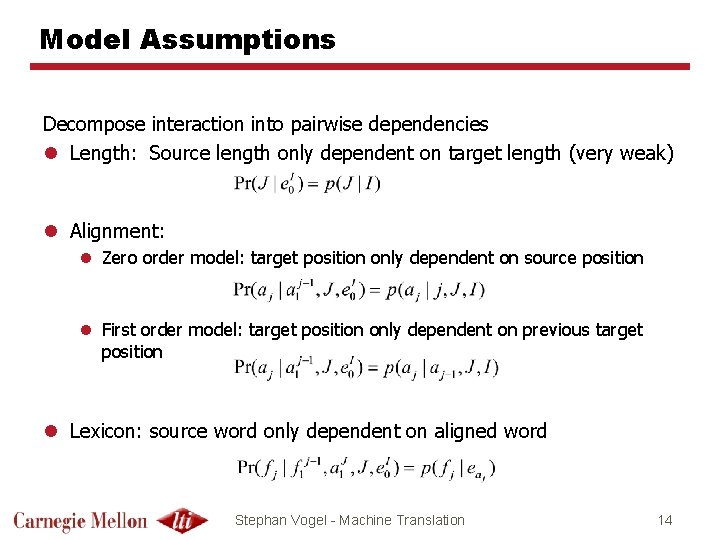

Model Assumptions

Decompose interaction into pairwise dependencies
- Length: source length only dependent on target length (very weak)
- Alignment:
  - Zero-order model: target position only dependent on source position
  - First-order model: target position only dependent on previous target position
- Lexicon: source word only dependent on aligned word
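The slide formulas are not in the extracted text. The block below is a reconstruction in the notation of this deck, not necessarily the exact form shown on the slides. The first two lines are the sum over alignments and an exact chain-rule decomposition into length, alignment and lexicon terms; the remaining lines apply the assumptions listed above. Conditioning the position models on both $I$ and $J$ follows the `p(i|i’, I, J)` used in the Viterbi pseudo code and is otherwise an assumption.

```latex
\begin{align*}
p(f_1^J \mid e_1^I) &= \sum_{a_1^J} p(f_1^J, a_1^J \mid e_1^I) \\
p(f_1^J, a_1^J \mid e_1^I) &= p(J \mid e_1^I)
  \prod_{j=1}^{J} p(a_j \mid a_1^{j-1}, f_1^{j-1}, J, e_1^I)\,
                  p(f_j \mid f_1^{j-1}, a_1^{j}, J, e_1^I) \\[4pt]
\text{Length:} \quad p(J \mid e_1^I) &\approx p(J \mid I) \\
\text{Alignment, zero order:} \quad p(a_j \mid a_1^{j-1}, f_1^{j-1}, J, e_1^I) &\approx p(a_j \mid j, I, J) \\
\text{Alignment, first order:} \quad p(a_j \mid a_1^{j-1}, f_1^{j-1}, J, e_1^I) &\approx p(a_j \mid a_{j-1}, I, J) \\
\text{Lexicon:} \quad p(f_j \mid f_1^{j-1}, a_1^{j}, J, e_1^I) &\approx p(f_j \mid e_{a_j})
\end{align*}
```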

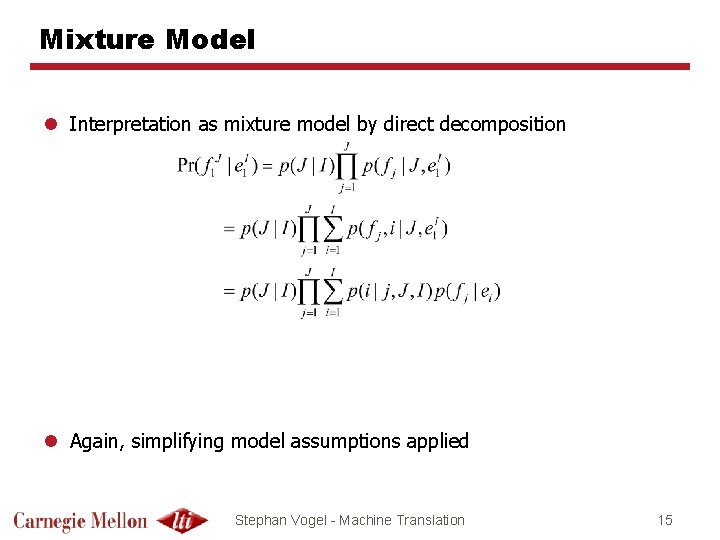

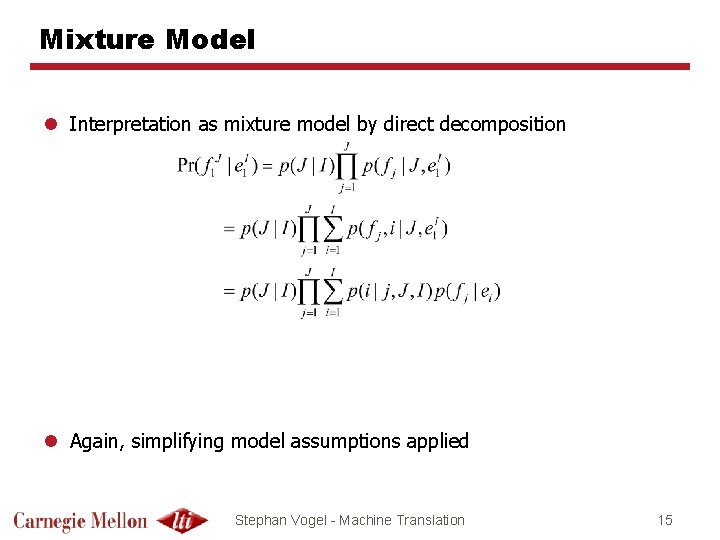

Mixture Model

- Interpretation as mixture model by direct decomposition
- Again, simplifying model assumptions applied

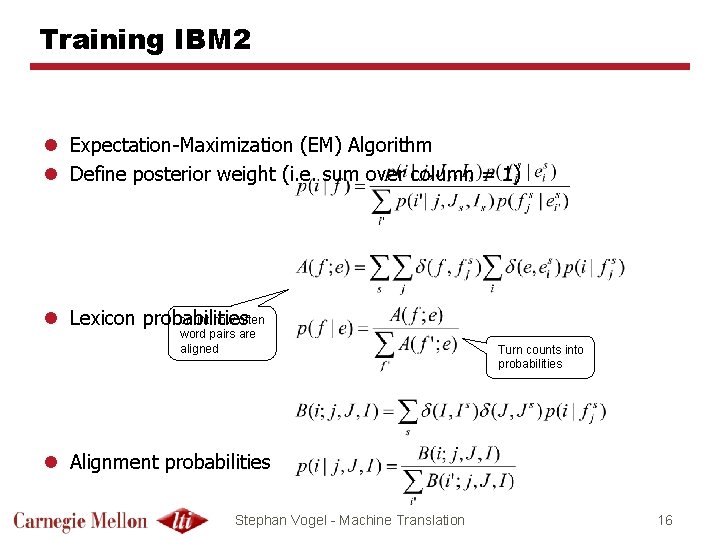

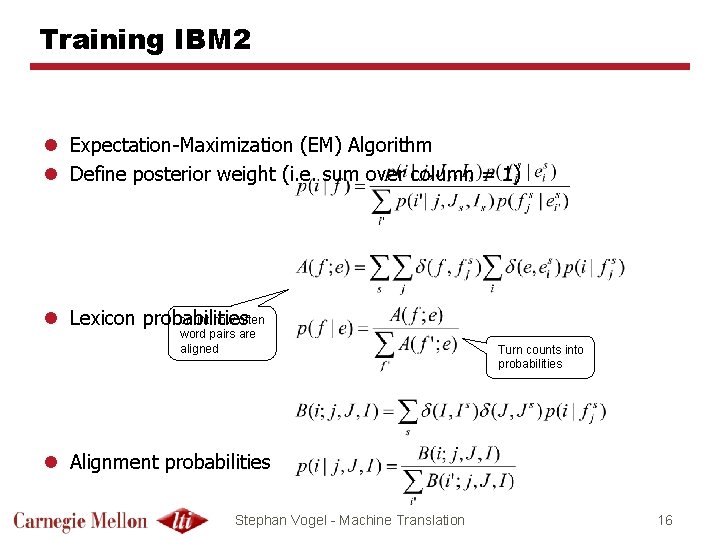

Training IBM 2

- Expectation-Maximization (EM) Algorithm
- Define posterior weight (i.e. sum over column = 1)
- Lexicon probabilities: count how often word pairs are aligned, then turn counts into probabilities
- Alignment probabilities
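A minimal sketch of the posterior weights, assuming the usual zero-order form in which the weight of target position i for source position j is proportional to p(i | j, I, J) · p(f_j | e_i), normalized over i. The dictionary layouts, the function name and all probability values are hypothetical.

```python
def ibm2_posteriors(src, tgt, lex, align):
    """posterior[j][i]: weight of aligning source position j to target position i.

    lex[(f, e)]          = p(f | e)        (lexicon probability)
    align[(i, j, I, J)]  = p(i | j, I, J)  (zero-order position probability)
    For every j, the weights over i sum to 1.
    """
    J, I = len(src), len(tgt)
    posterior = []
    for j, f in enumerate(src):
        scores = [align[(i, j, I, J)] * lex[(f, e)] for i, e in enumerate(tgt)]
        total = sum(scores)
        posterior.append([s / total for s in scores])
    return posterior


# Hypothetical toy parameters for one sentence pair.
src = ["das", "Haus"]
tgt = ["the", "house"]
lex = {("das", "the"): 0.7, ("das", "house"): 0.1,
       ("Haus", "the"): 0.2, ("Haus", "house"): 0.8}
align = {(i, j, 2, 2): (0.6 if i == j else 0.4) for i in range(2) for j in range(2)}
posterior = ibm2_posteriors(src, tgt, lex, align)
print(posterior)
```

In EM training these weights would be added to the lexicon and alignment counts instead of hard 0/1 counts.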

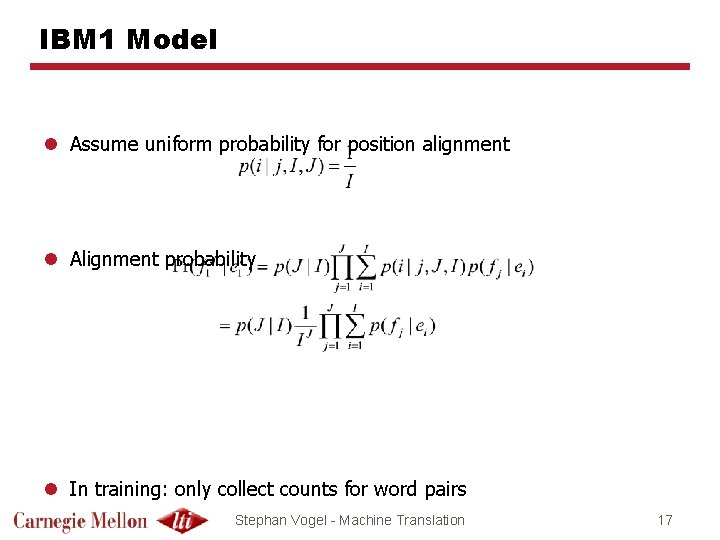

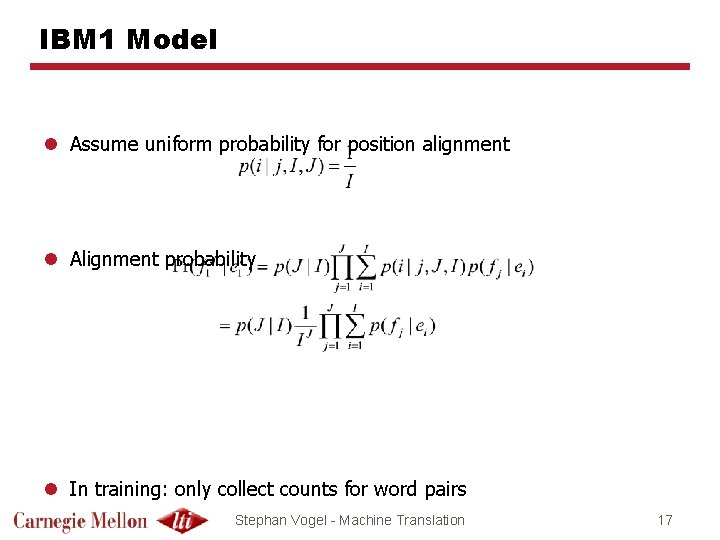

IBM 1 Model

- Assume uniform probability for position alignment
- Alignment probability (uniform over target positions)
- In training: only collect counts for word pairs

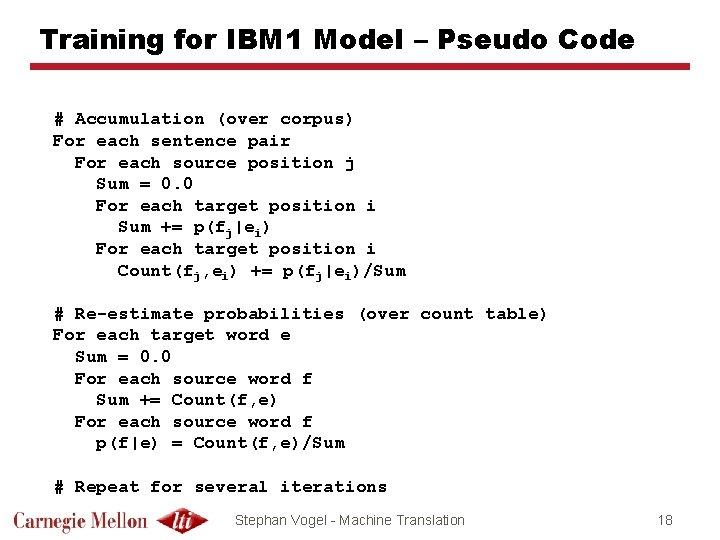

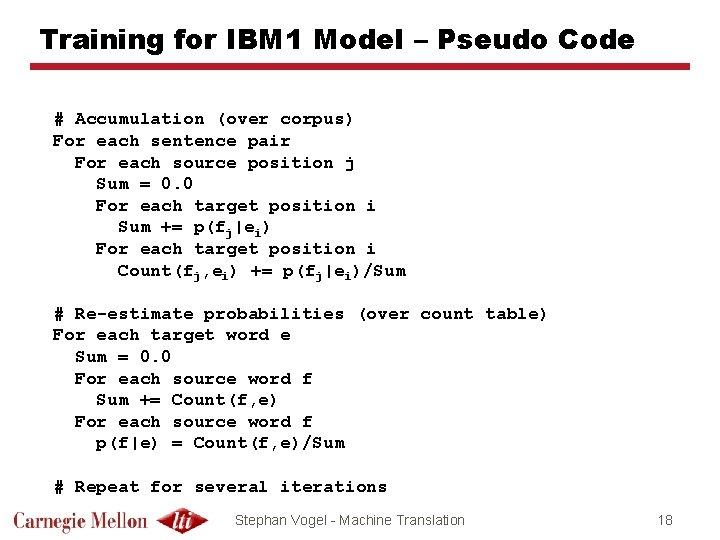

Training for IBM 1 Model – Pseudo Code

    # Accumulation (over corpus)
    For each sentence pair
        For each source position j
            Sum = 0.0
            For each target position i
                Sum += p(fj|ei)
            For each target position i
                Count(fj, ei) += p(fj|ei) / Sum
    # Re-estimate probabilities (over count table)
    For each target word e
        Sum = 0.0
        For each source word f
            Sum += Count(f, e)
        For each source word f
            p(f|e) = Count(f, e) / Sum
    # Repeat for several iterations
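The pseudo code translates almost line by line into Python. The sketch below is a minimal version under some assumptions not stated on the slide: p(f|e) is initialized uniformly, no null word is used, and the three-sentence corpus is a hypothetical toy example.

```python
from collections import defaultdict


def train_ibm1(corpus, iterations=5):
    """EM training of IBM 1 lexicon probabilities.

    corpus: list of (source_words, target_words) pairs.
    Returns a dict p with p[(f, e)] = p(f | e).
    """
    src_vocab = {f for src, _ in corpus for f in src}
    uniform = 1.0 / len(src_vocab)
    p = defaultdict(lambda: uniform)          # uniform start (assumption)
    for _ in range(iterations):
        # Accumulation (over corpus)
        count = defaultdict(float)
        for src, tgt in corpus:
            for f in src:
                total = sum(p[(f, e)] for e in tgt)
                for e in tgt:
                    count[(f, e)] += p[(f, e)] / total
        # Re-estimate probabilities (over count table)
        totals = defaultdict(float)
        for (f, e), c in count.items():
            totals[e] += c
        p = defaultdict(float, {(f, e): c / totals[e] for (f, e), c in count.items()})
    return p


# Hypothetical toy corpus.
corpus = [
    ("das Haus".split(), "the house".split()),
    ("das Buch".split(), "the book".split()),
    ("ein Buch".split(), "a book".split()),
]
p_f_given_e = train_ibm1(corpus, iterations=10)
print(p_f_given_e[("das", "the")], p_f_given_e[("Haus", "house")])
```

Even without any position information, co-occurrence across sentence pairs is enough for the lexicon to prefer pairs such as (das, the) and (Haus, house) on this toy data.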

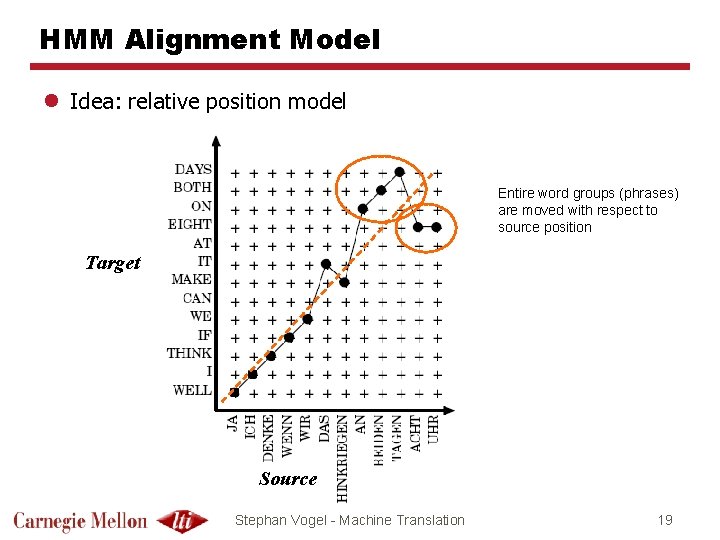

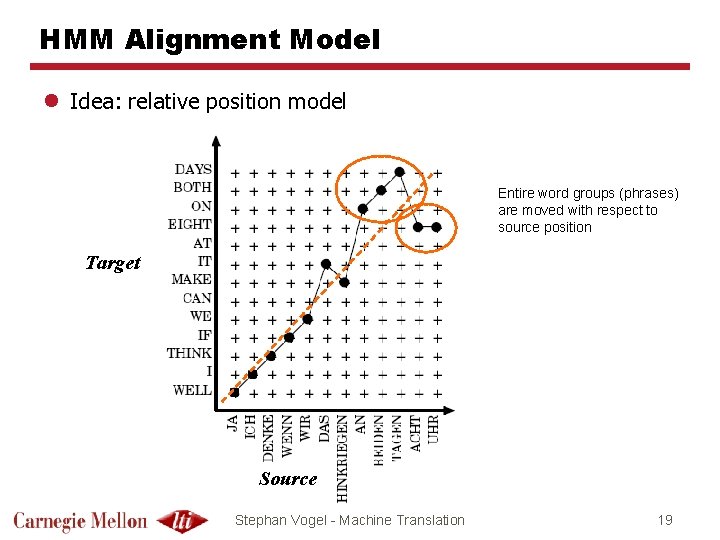

HMM Alignment Model

- Idea: relative position model
- Entire word groups (phrases) are moved with respect to source position

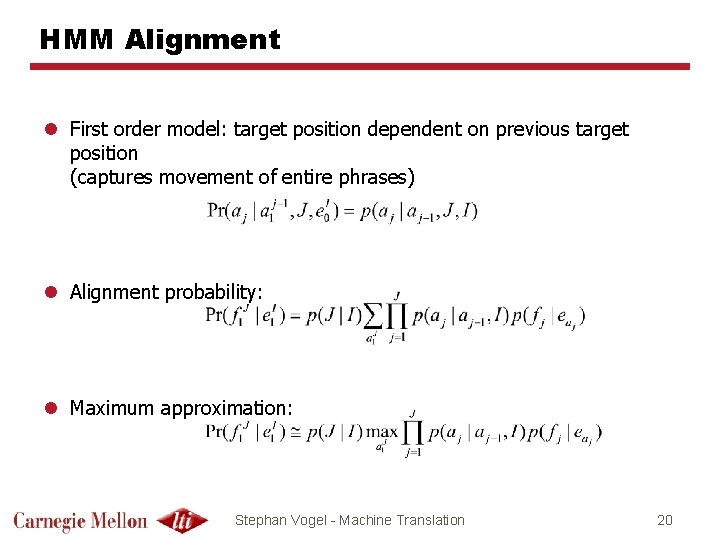

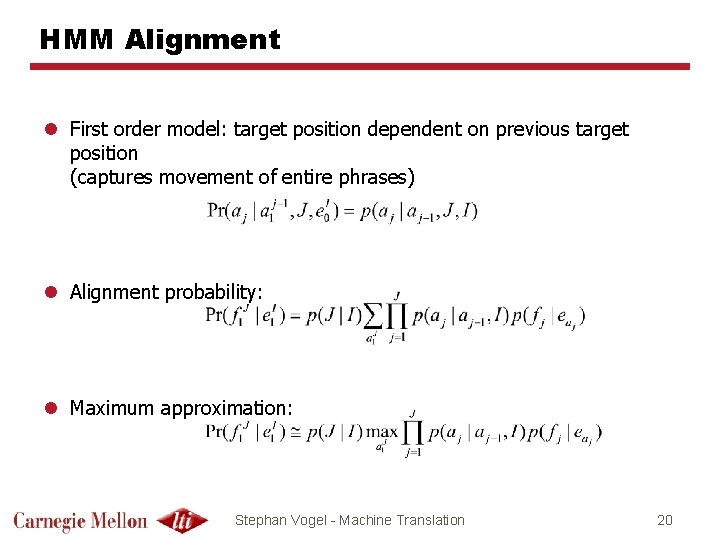

HMM Alignment

- First-order model: target position dependent on previous target position (captures movement of entire phrases)
- Alignment probability
- Maximum approximation: replace the sum over all alignments by the single best alignment

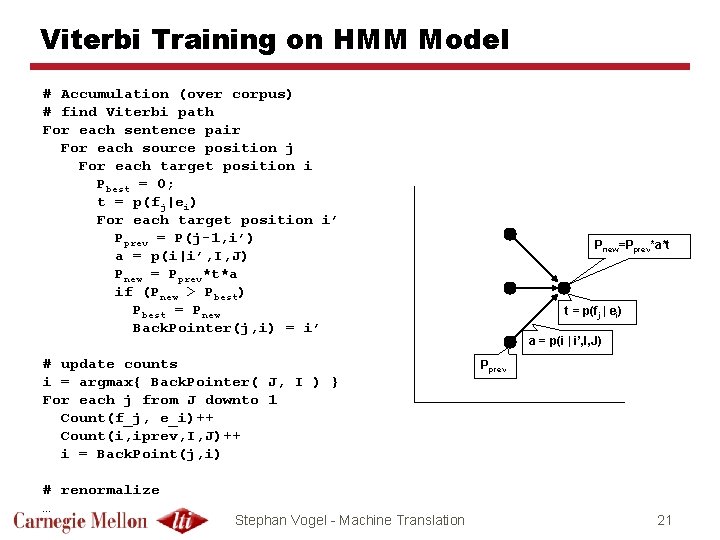

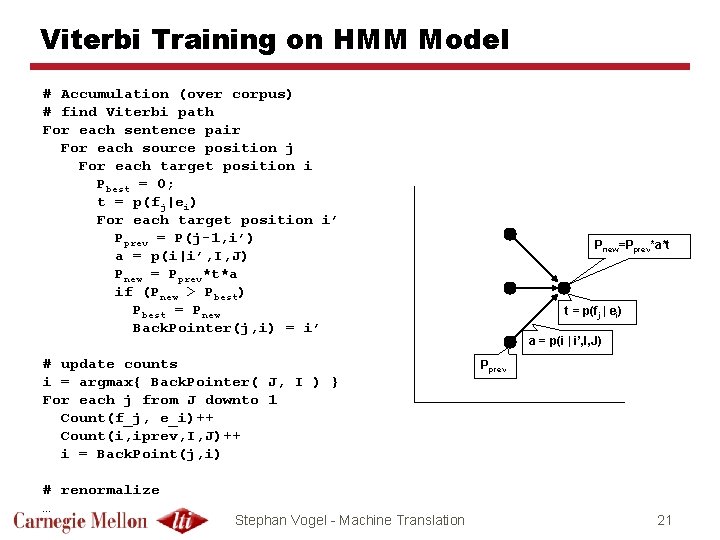

Viterbi Training on HMM Model

    # Accumulation (over corpus)
    For each sentence pair
        # find Viterbi path
        For each source position j
            For each target position i
                Pbest = 0
                t = p(fj|ei)
                For each target position i’
                    Pprev = P(j-1, i’)
                    a = p(i|i’, I, J)
                    Pnew = Pprev * t * a
                    if (Pnew > Pbest)
                        Pbest = Pnew
                        BackPointer(j, i) = i’
                P(j, i) = Pbest
        # update counts
        i = argmax over i of P(J, i)
        For each j from J downto 1
            iprev = BackPointer(j, i)
            Count(fj, ei)++
            Count(i, iprev, I, J)++
            i = iprev
    # renormalize
    …
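The path search in the pseudo code can be sketched as follows. This is an illustration under assumptions not given on the slide: the first position uses a uniform start distribution, the jump model depends only on (i, i’, I), and both the `jump` function and the lexicon values are hypothetical. Only the Viterbi path is computed; count updates and renormalization are left out.

```python
def jump(i, i_prev, I):
    """Hypothetical jump model p(i | i_prev, I): prefers moving one step right."""
    weights = [3.0 if k - i_prev == 1 else 1.0 for k in range(I)]
    return weights[i] / sum(weights)


def viterbi_alignment(src, tgt, lex, jump):
    """Best alignment (0-based target index per source position) under a first-order HMM."""
    J, I = len(src), len(tgt)
    P = [[0.0] * I for _ in range(J)]      # P[j][i]: score of best path ending in (j, i)
    back = [[0] * I for _ in range(J)]     # back[j][i]: best predecessor state i'
    for i in range(I):
        P[0][i] = lex[(src[0], tgt[i])] / I          # uniform start (assumption)
    for j in range(1, J):
        for i in range(I):
            t = lex[(src[j], tgt[i])]
            for i_prev in range(I):
                p_new = P[j - 1][i_prev] * jump(i, i_prev, I) * t
                if p_new > P[j][i]:
                    P[j][i] = p_new
                    back[j][i] = i_prev
    # Backtrace from the best final state.
    i = max(range(I), key=lambda k: P[J - 1][k])
    alignment = [0] * J
    for j in range(J - 1, -1, -1):
        alignment[j] = i
        i = back[j][i]
    return alignment


# Hypothetical lexicon: matching word pairs get 0.9, everything else 0.03.
tgt = ["the", "house", "is", "small"]
german = ["das", "Haus", "ist", "klein"]
lex = {(f, e): (0.9 if m == k else 0.03)
       for m, f in enumerate(german) for k, e in enumerate(tgt)}
monotone = viterbi_alignment(german, tgt, lex, jump)
reordered = viterbi_alignment(["klein", "ist", "das", "Haus"], tgt, lex, jump)
print(monotone, reordered)
```

In Viterbi training, the links on the returned path would each add 1 to the lexicon and jump counts, as in the "update counts" step above.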

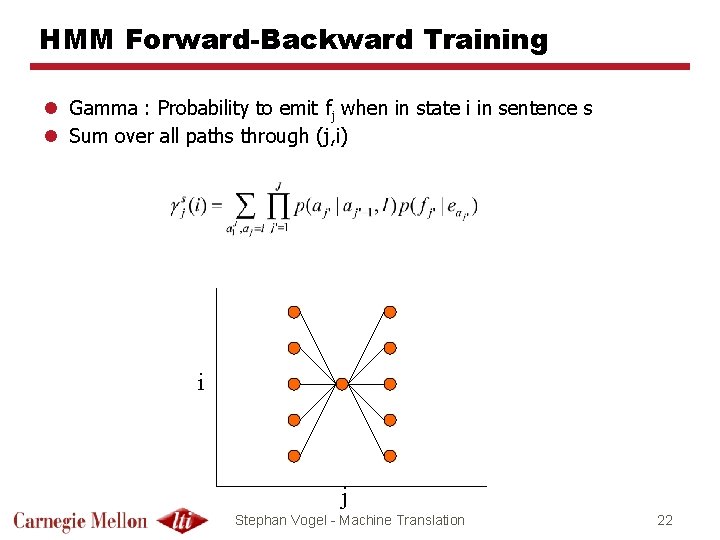

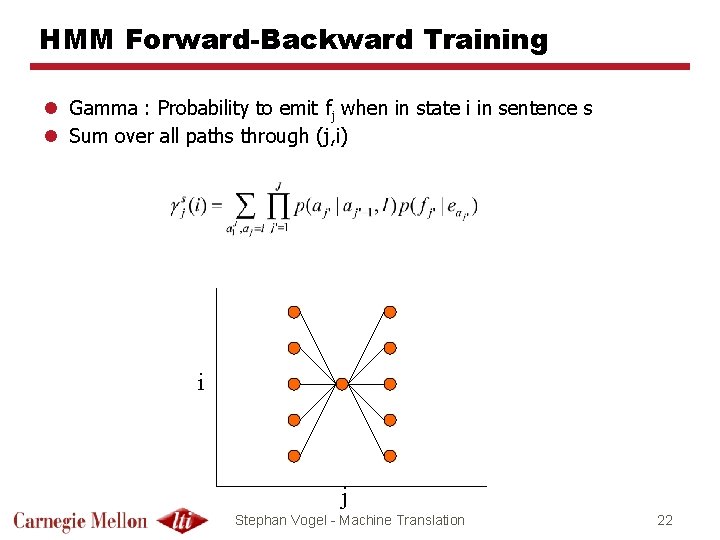

HMM Forward-Backward Training

- Gamma: probability to emit $f_j$ when in state $i$ in sentence $s$
- Sum over all paths through $(j, i)$

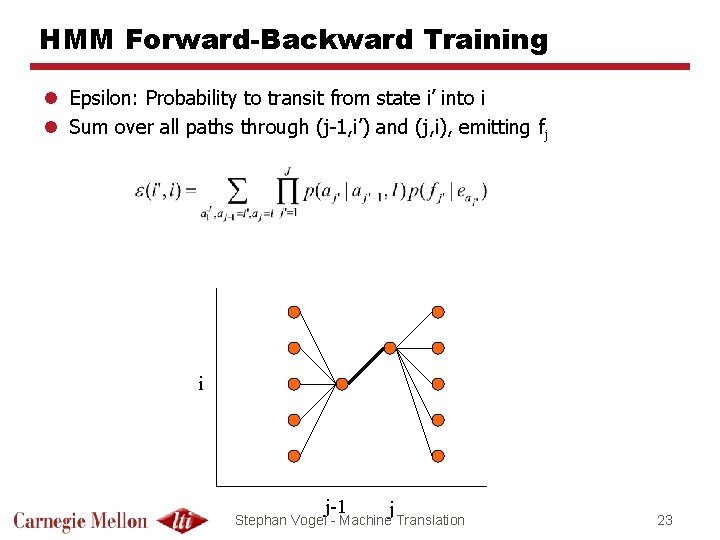

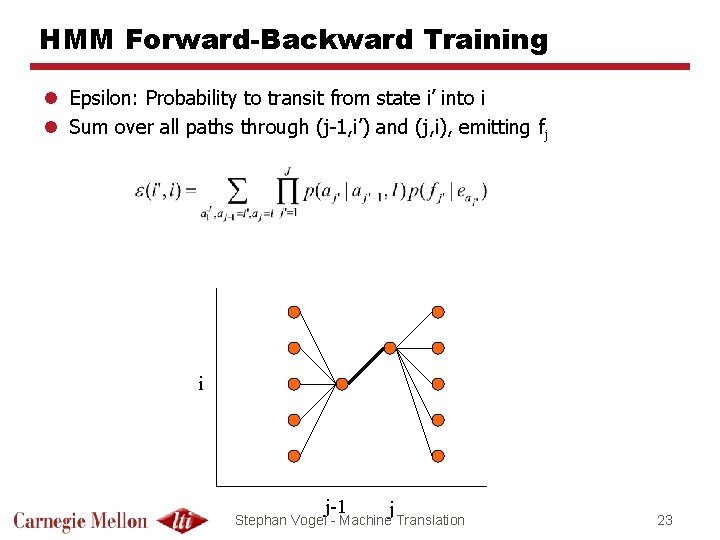

HMM Forward-Backward Training

- Epsilon: probability to transit from state $i'$ into $i$
- Sum over all paths through $(j-1, i')$ and $(j, i)$, emitting $f_j$

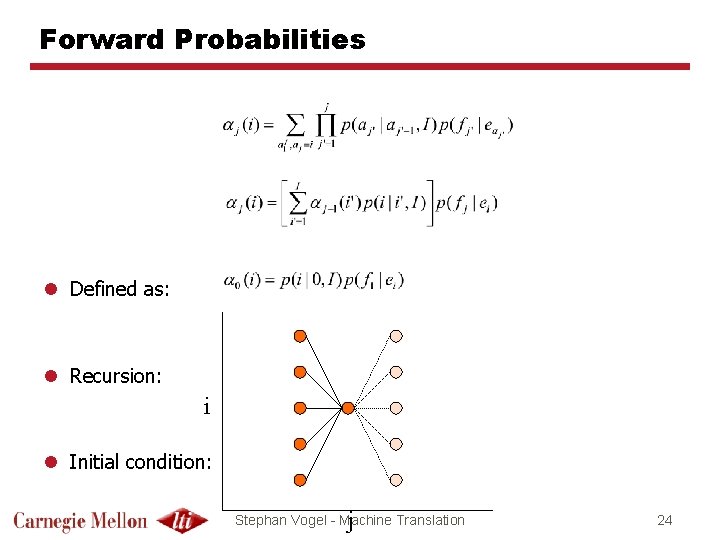

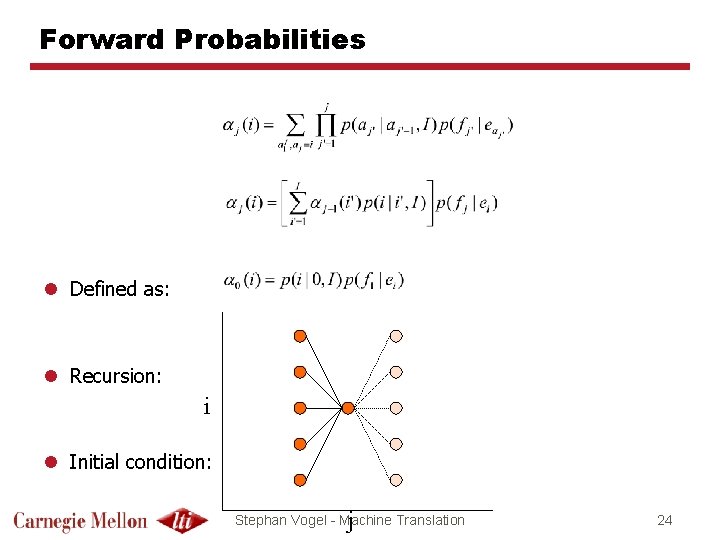

Forward Probabilities

- Defined as:
- Recursion:
- Initial condition:

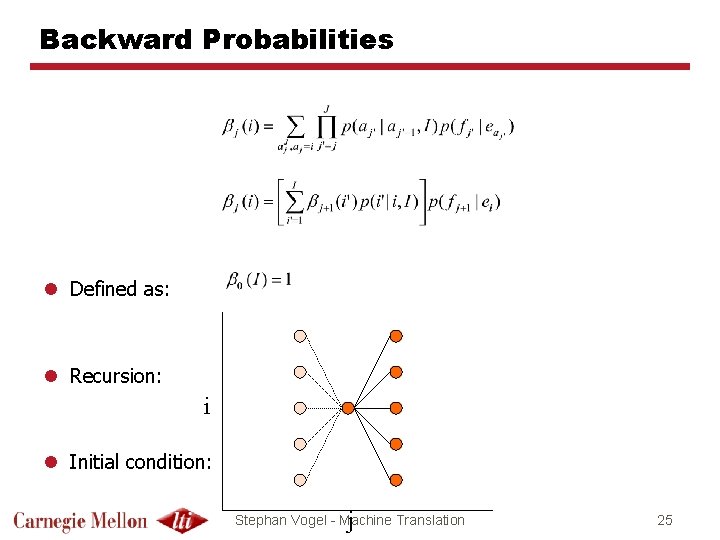

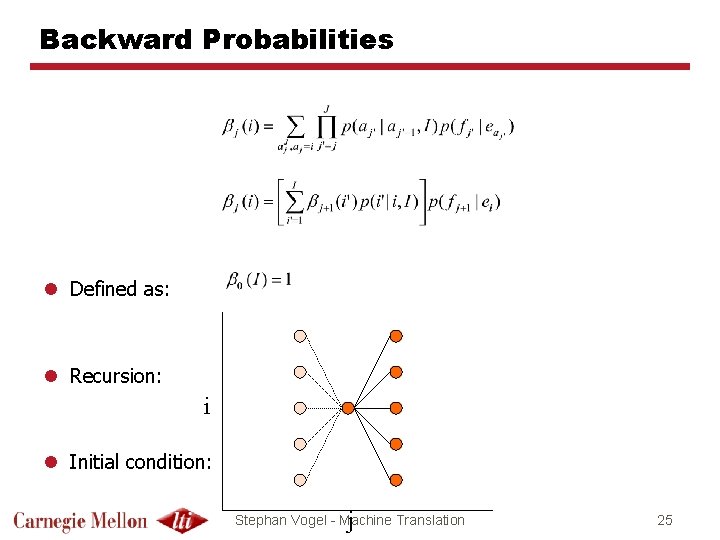

Backward Probabilities

- Defined as:
- Recursion:
- Initial condition:

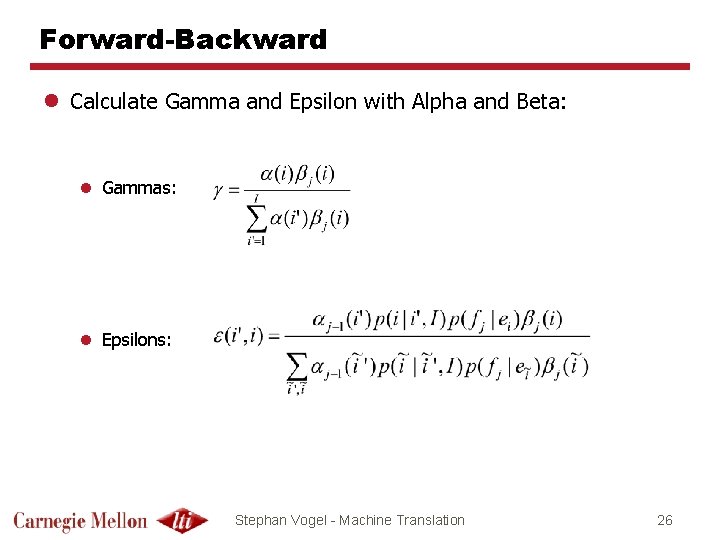

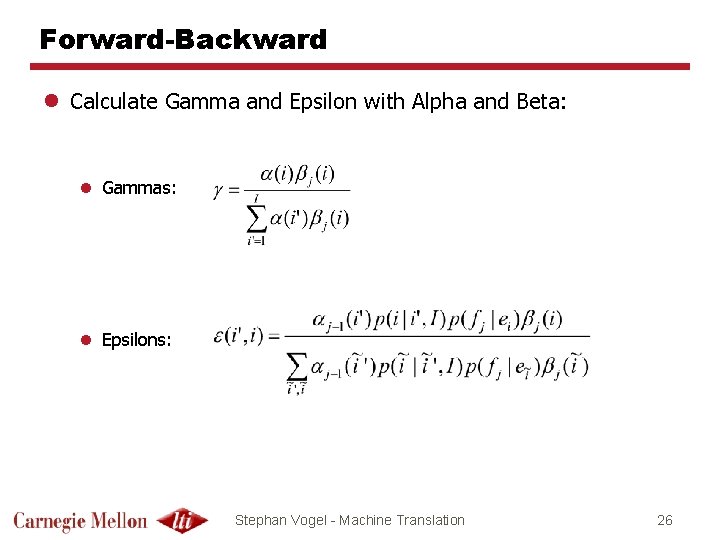

Forward-Backward

- Calculate Gamma and Epsilon with Alpha and Beta:
  - Gammas:
  - Epsilons:
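Since the slide formulas are not in the extracted text, the following sketch uses the textbook HMM definitions, which may differ in detail from the slides: alpha[j][i] sums over all partial paths that end in state i after emitting f_1 … f_j, beta[j][i] sums over all continuations from (j, i), gamma is alpha·beta normalized by the sentence probability, and epsilon combines alpha at j-1, the transition, the emission and beta at j. The uniform start distribution, the `jump` function and the toy parameters are assumptions.

```python
def jump(i, i_prev, I):
    """Hypothetical jump model p(i | i_prev, I): prefers moving one step right."""
    weights = [3.0 if k - i_prev == 1 else 1.0 for k in range(I)]
    return weights[i] / sum(weights)


def forward_backward(src, tgt, lex, jump):
    """Return (gamma, epsilon, sentence_probability) for one sentence pair.

    gamma[j][i]            : posterior of being in state i at source position j
    epsilon[j - 1][k][i]   : posterior of moving from state k at j-1 to state i at j
    """
    J, I = len(src), len(tgt)
    emit = [[lex[(f, e)] for e in tgt] for f in src]                     # emit[j][i]
    trans = [[jump(i, k, I) for i in range(I)] for k in range(I)]        # trans[k][i]
    alpha = [[0.0] * I for _ in range(J)]
    beta = [[0.0] * I for _ in range(J)]
    for i in range(I):
        alpha[0][i] = emit[0][i] / I        # uniform start (assumption)
        beta[J - 1][i] = 1.0
    for j in range(1, J):                   # forward recursion
        for i in range(I):
            alpha[j][i] = emit[j][i] * sum(alpha[j - 1][k] * trans[k][i] for k in range(I))
    for j in range(J - 2, -1, -1):          # backward recursion
        for i in range(I):
            beta[j][i] = sum(trans[i][k] * emit[j + 1][k] * beta[j + 1][k] for k in range(I))
    total = sum(alpha[J - 1])
    gamma = [[alpha[j][i] * beta[j][i] / total for i in range(I)] for j in range(J)]
    epsilon = [[[alpha[j - 1][k] * trans[k][i] * emit[j][i] * beta[j][i] / total
                 for i in range(I)] for k in range(I)] for j in range(1, J)]
    return gamma, epsilon, total


# Hypothetical toy parameters.
src = ["das", "Haus"]
tgt = ["the", "house"]
lex = {("das", "the"): 0.7, ("das", "house"): 0.1,
       ("Haus", "the"): 0.2, ("Haus", "house"): 0.8}
gamma, epsilon, sentence_prob = forward_backward(src, tgt, lex, jump)
print(gamma, sentence_prob)
```

Two useful sanity checks follow from the definitions: each row of gamma sums to 1, and summing epsilon over the previous state gives back gamma at the current position.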

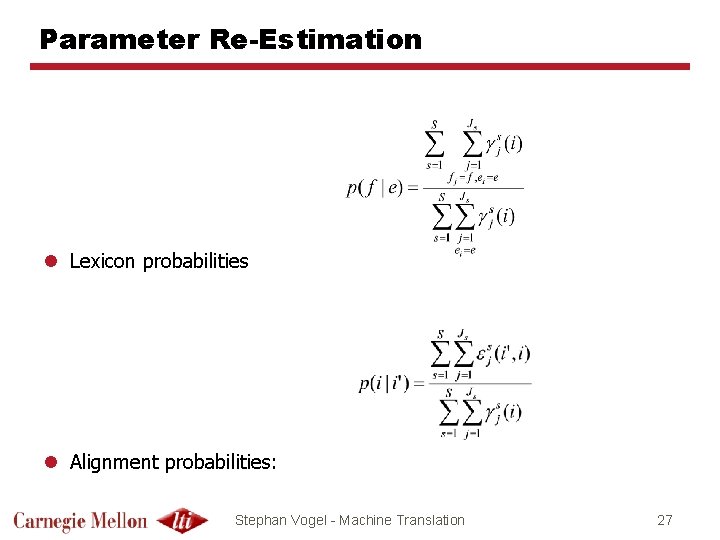

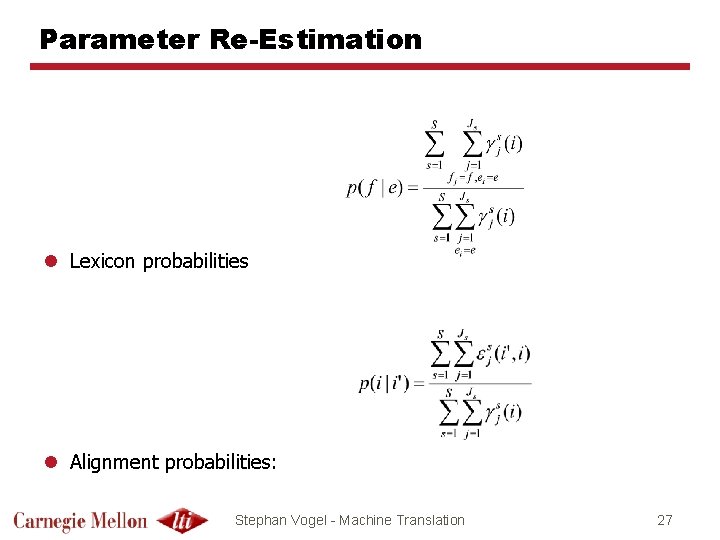

Parameter Re-Estimation

- Lexicon probabilities:
- Alignment probabilities:

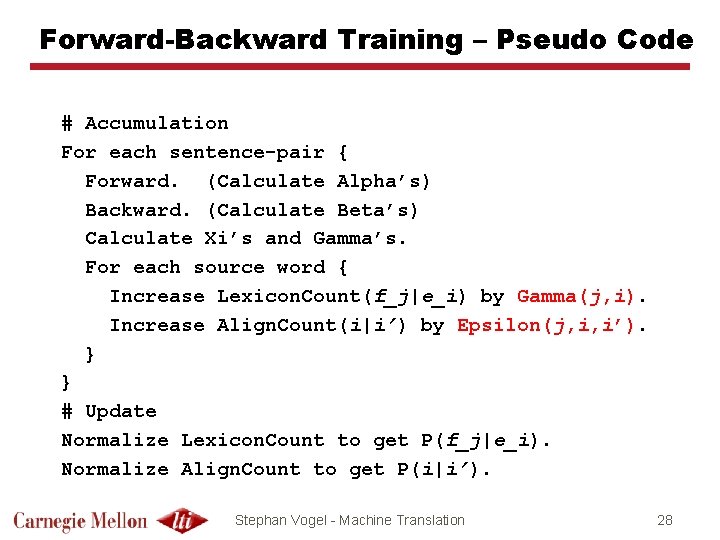

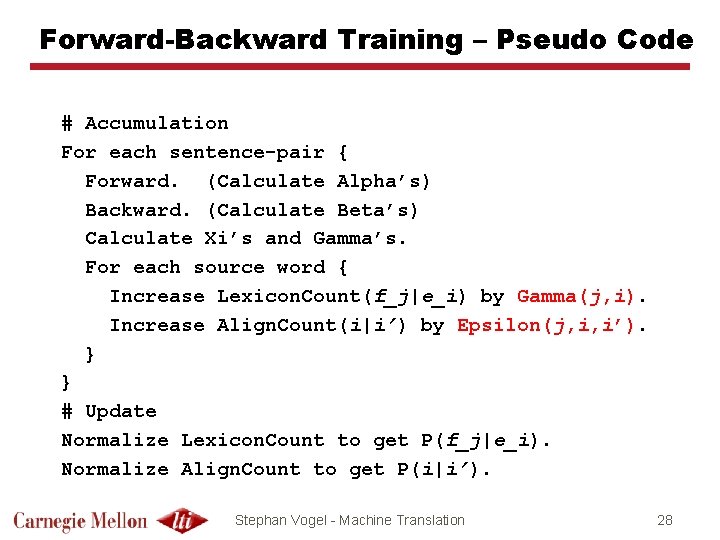

Forward-Backward Training – Pseudo Code

    # Accumulation
    For each sentence pair {
        Forward. (Calculate Alphas)
        Backward. (Calculate Betas)
        Calculate Epsilons and Gammas.
        For each source position j {
            For each target position i:
                Increase LexiconCount(f_j|e_i) by Gamma(j, i).
            For each pair of target positions (i, i’):
                Increase AlignCount(i|i’) by Epsilon(j, i, i’).
        }
    }
    # Update
    Normalize LexiconCount to get P(f_j|e_i).
    Normalize AlignCount to get P(i|i’).

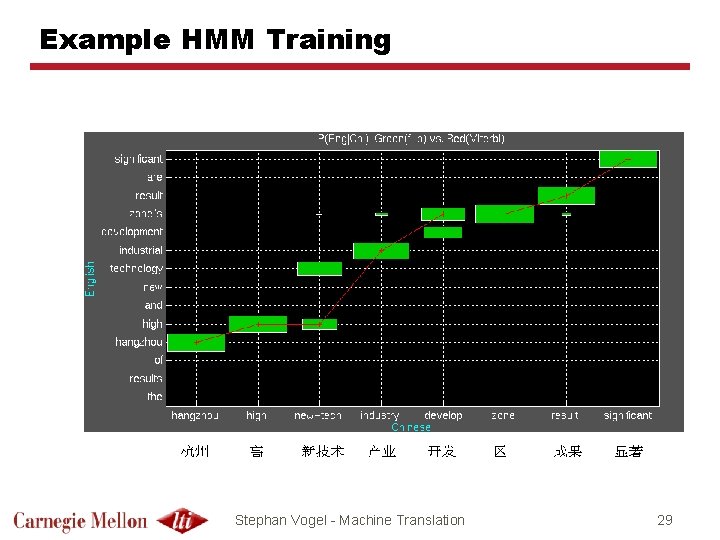

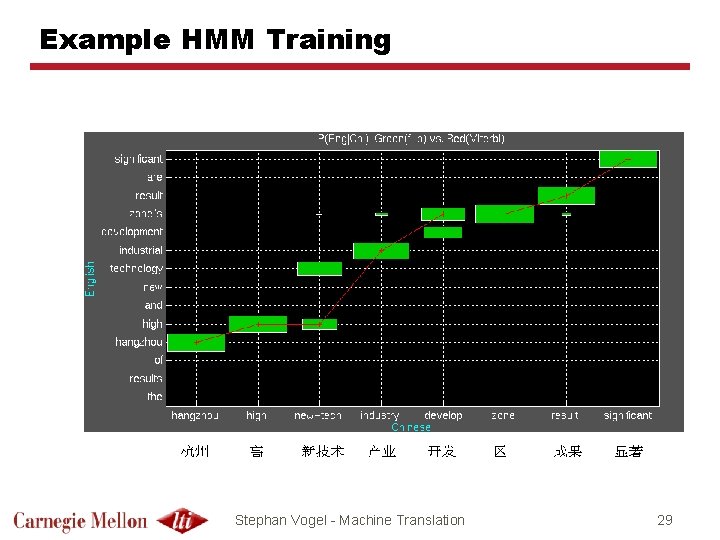

Example HMM Training