IS 2620 UMLSec Lecture 10 March 19 2013

- Slides: 61


Objective
- Overview of UMLsec
- How UML has been extended with security constructs
- Some security constructs in UMLsec
- Validation of design
- Acknowledgement: courtesy of Jan Jürjens

Quality vs. cost
- Systems on which human life and commercial assets depend need careful development.
- Systems operating under possible system failure or attack need to be free from weaknesses/flaws.
- Correctness is in conflict with cost.
- Thorough methods of system design are not used if too expensive.

Problems
- Many flaws found in designs of security-critical systems, sometimes years after publication or use.
- Spectacular example (1997):
  - NSA hacker team breaks into U.S. Department of Defense computers and the U.S. electric power grid system.
  - Simulates power outages and 911 emergency telephone overloads in Washington, D.C.

Causes I
- Designing secure systems correctly is difficult.
- Even experts may fail:
  - Needham-Schroeder protocol (1978)
  - attacks found 1981 (Denning, Sacco), 1995 (Lowe)
- Designers often lack background in security.
- Security as an afterthought.

Causes II
- "Blind" use of mechanisms:
  - Security often compromised by circumventing (rather than breaking) them.
  - Assumptions on system context, physical environment.
- "Those who think that their problem can be solved by simply applying cryptography don't understand cryptography and don't understand their problem" (Lampson, Needham).

Previous approaches
- "Penetrate-and-patch": unsatisfactory.
  - insecure: damage until discovered
  - disruptive: distributing patches costs money, destroys confidence, annoys customers
- Traditional formal methods: expensive.
  - training people
  - constructing formal specifications

Holistic view on Security
- Saltzer, Schroeder 1975:
  - "An expansive view of the problem is most appropriate to help ensure that no gaps appear in the strategy"
  - But "no complete method applicable to the construction of large general-purpose systems exists yet" (since 1975)

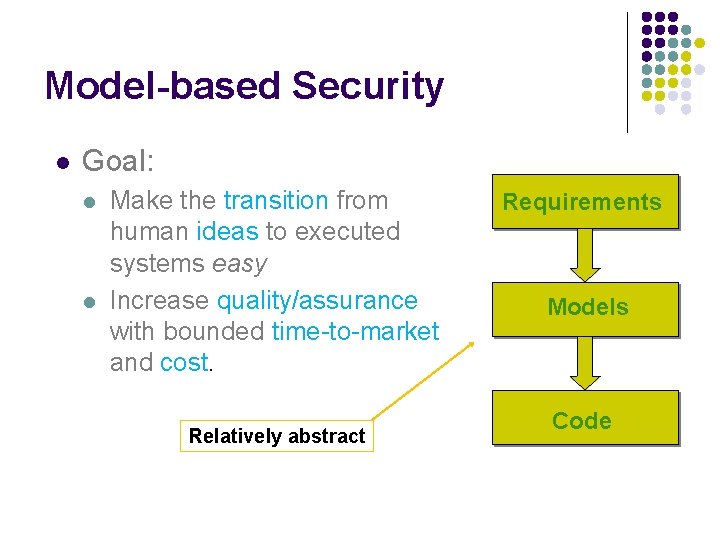

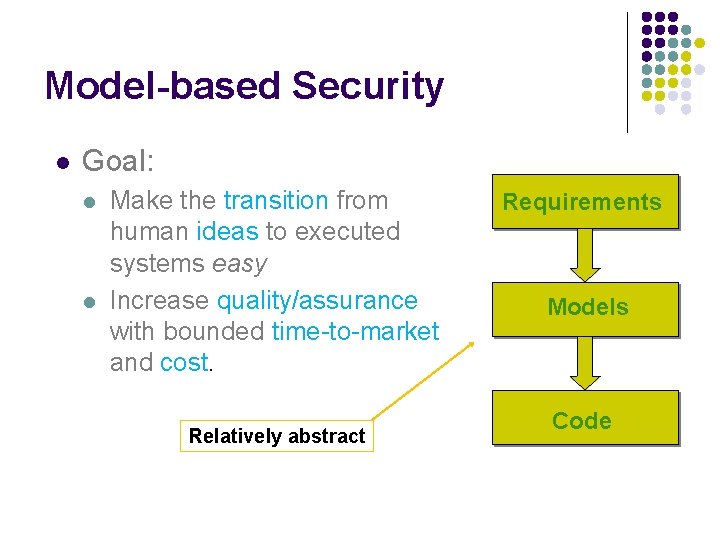

Model-based Security
- Goal:
  - Make the transition from human ideas to executed systems easy.
  - Increase quality/assurance with bounded time-to-market and cost.
- Diagram labels: relatively abstract; Requirements; Models; Code

Goal: Secure by Design
- Consider critical properties
  - from very early stages
  - within development context
  - taking an expansive view
  - seamlessly throughout the development lifecycle.
- High Assurance/Secure design by model analysis.
- High Assurance/Secure implementation by test generation.

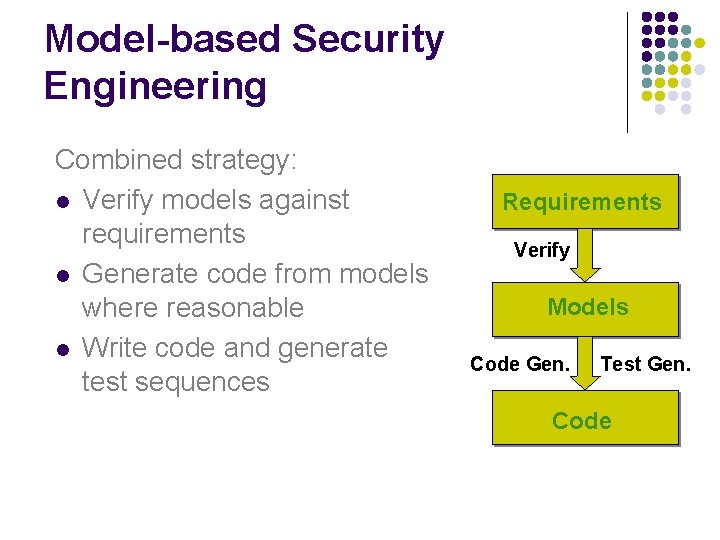

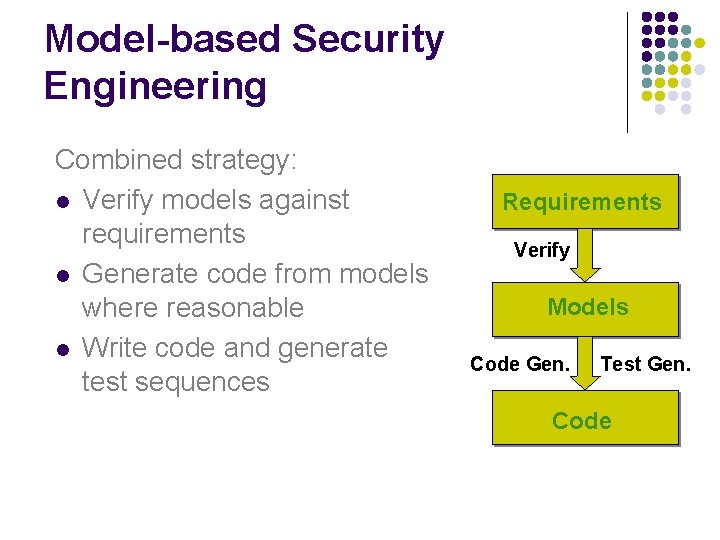

Model-based Security Engineering
- Combined strategy:
  - Verify models against requirements
  - Generate code from models where reasonable
  - Write code and generate test sequences
- Diagram labels: Requirements; Verify; Models; Code Gen.; Test Gen.; Code

Secure by design
- Establish that the system fulfills the security requirements
  - At the design level
  - By analyzing the model
- Make sure the code is secure
  - Generate test sequences from the model

Using UML
- Provides opportunity for high-quality and cost- and time-efficient high-assurance systems development:
  - De-facto standard in industrial modeling: large number of developers trained in UML.
  - Relatively precisely defined.
  - Many tools (specifications, simulation, …).

Challenges
- Adapt UML to critical system application domains.
- Correct use of UML in the application domains.
- Conflict between flexibility and unambiguity in the meaning of a notation.
- Improving tool support for critical systems development with UML (analysis, …).

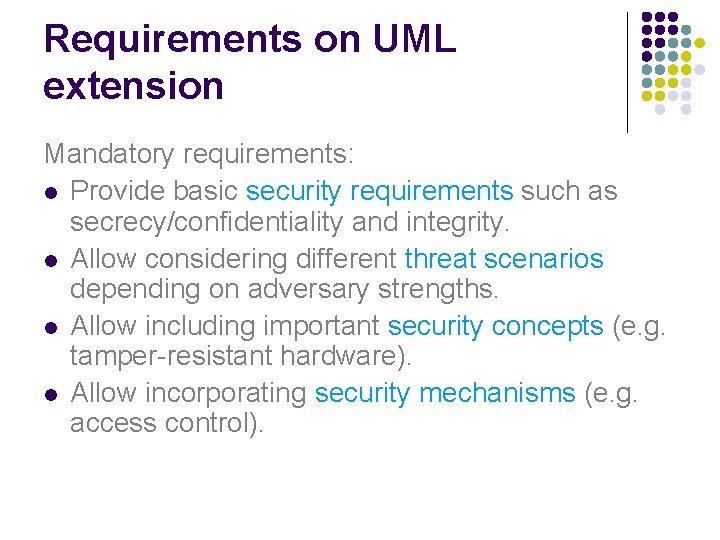

Requirements on UML extension
- Mandatory requirements:
  - Provide basic security requirements such as secrecy/confidentiality and integrity.
  - Allow considering different threat scenarios depending on adversary strengths.
  - Allow including important security concepts (e.g. tamper-resistant hardware).
  - Allow incorporating security mechanisms (e.g. access control).

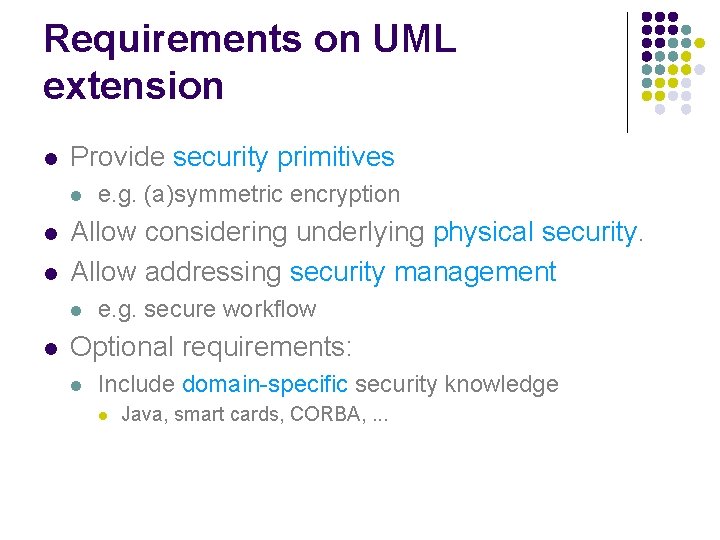

Requirements on UML extension
- Provide security primitives
  - e.g. (a)symmetric encryption
- Allow considering underlying physical security.
- Allow addressing security management
  - e.g. secure workflow
- Optional requirements:
  - Include domain-specific security knowledge
    - Java, smart cards, CORBA, ...

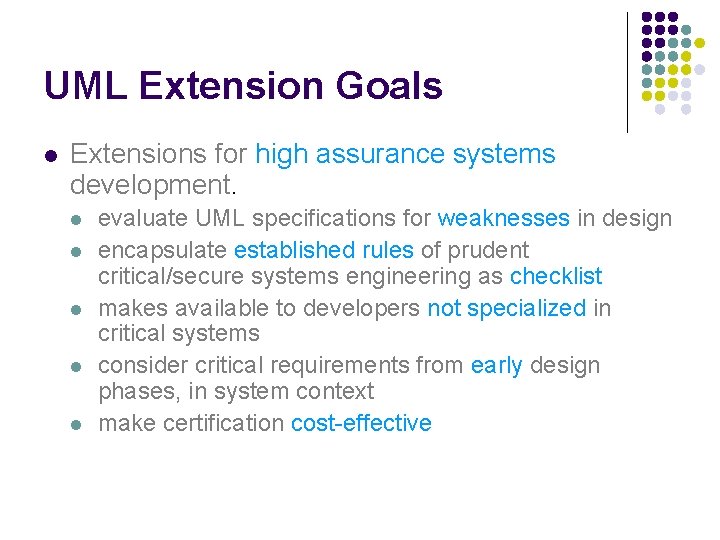

UML Extension Goals
- Extensions for high assurance systems development.
  - evaluate UML specifications for weaknesses in design
  - encapsulate established rules of prudent critical/secure systems engineering as checklist
  - make them available to developers not specialized in critical systems
  - consider critical requirements from early design phases, in system context
  - make certification cost-effective

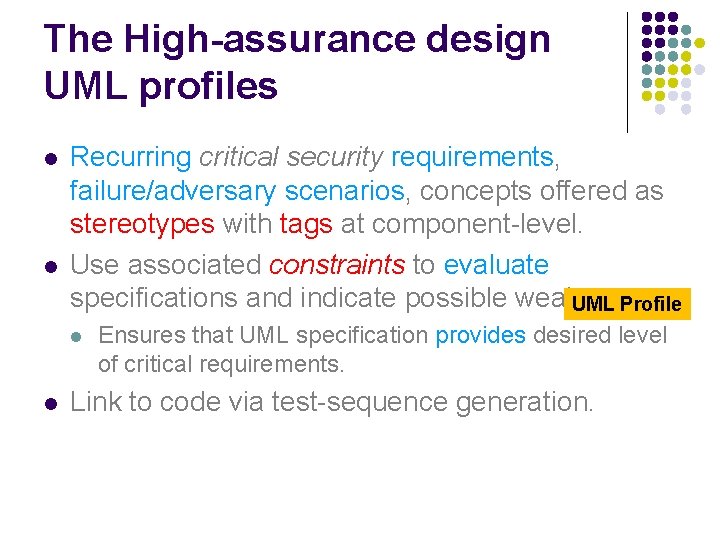

The High-assurance design UML profiles
- Recurring critical security requirements, failure/adversary scenarios, concepts offered as stereotypes with tags at component-level.
- Use associated constraints to evaluate specifications and indicate possible weaknesses.
- UML Profile
  - Ensures that UML specification provides desired level of critical requirements.
  - Link to code via test-sequence generation.

UML - Review
- Unified Modeling Language (UML):
  - visual modeling for OO systems
  - different views on a system
  - high degree of abstraction possible
  - de-facto industry standard (OMG)
  - standard extension mechanisms

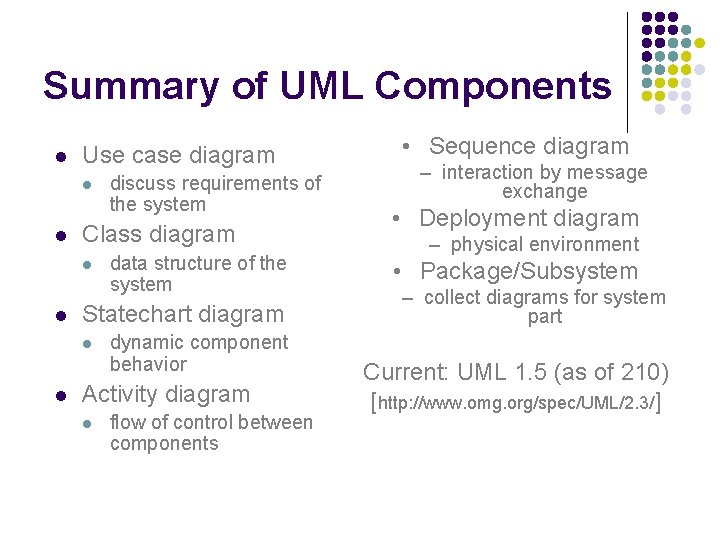

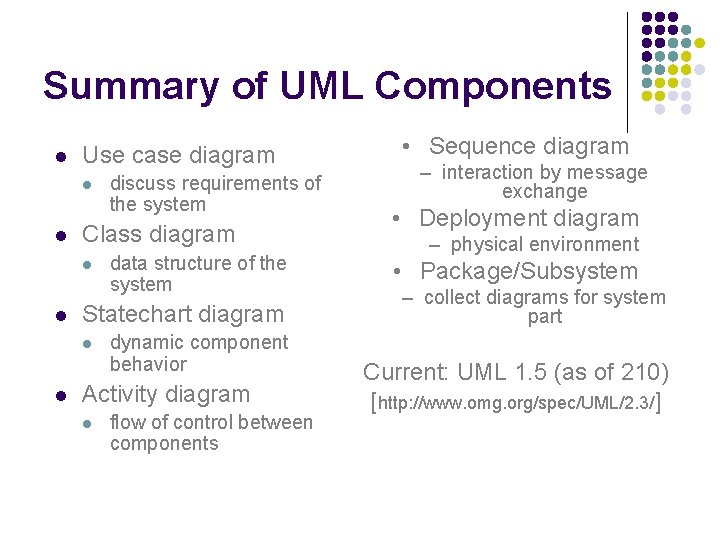

Summary of UML Components
- Use case diagram: discuss requirements of the system
- Class diagram: data structure of the system
- Statechart diagram: dynamic component behavior
- Activity diagram: flow of control between components
- Sequence diagram: interaction by message exchange
- Deployment diagram: physical environment
- Package/Subsystem: collect diagrams for system part
- Current: UML 1.5 (as of 210) [http://www.omg.org/spec/UML/2.3/]

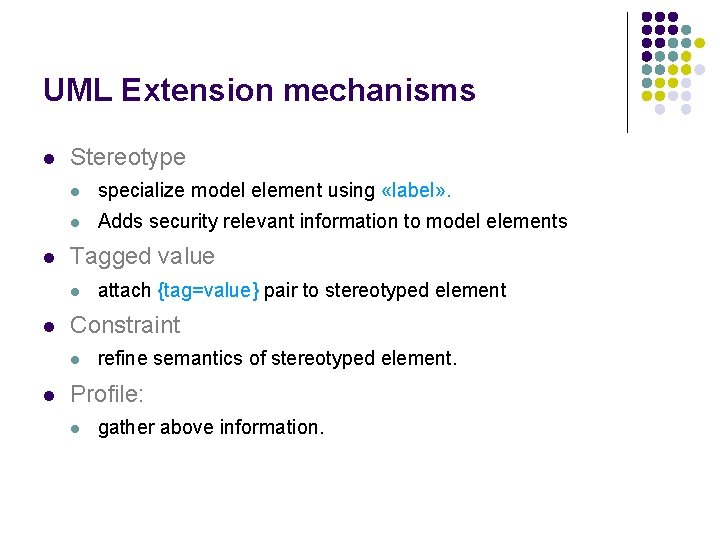

UML Extension mechanisms
- Stereotype
  - specialize model element using «label».
  - adds security relevant information to model elements
- Tagged value
  - attach {tag=value} pair to stereotyped element
- Constraint
  - refine semantics of stereotyped element.
- Profile
  - gather above information.

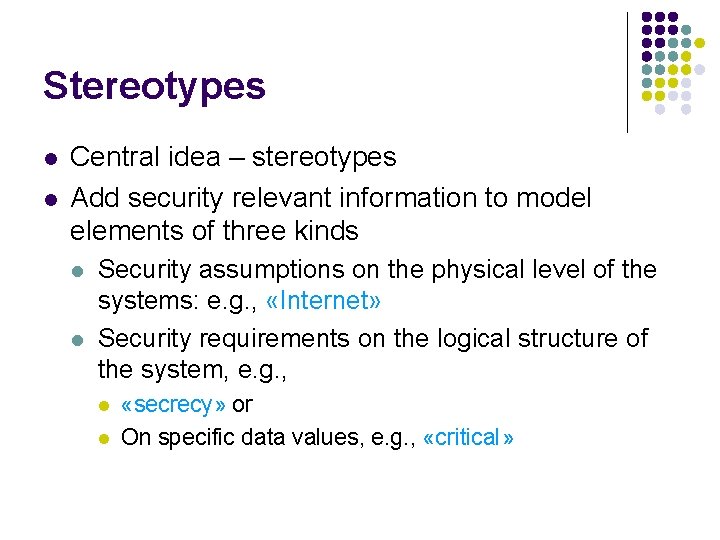

Stereotypes
- Central idea: stereotypes
- Add security relevant information to model elements of three kinds
  - Security assumptions on the physical level of the systems, e.g., «Internet»
  - Security requirements on the logical structure of the system, e.g., «secrecy», or on specific data values, e.g., «critical»

Stereotypes
- Security policies that the system parts are supposed to obey, e.g. «fair exchange», «secure links», «data security», «no down-flow»
- First two cases
  - Simply add some additional information to a model
- Third one
  - Constraints are associated that need to be satisfied by the model

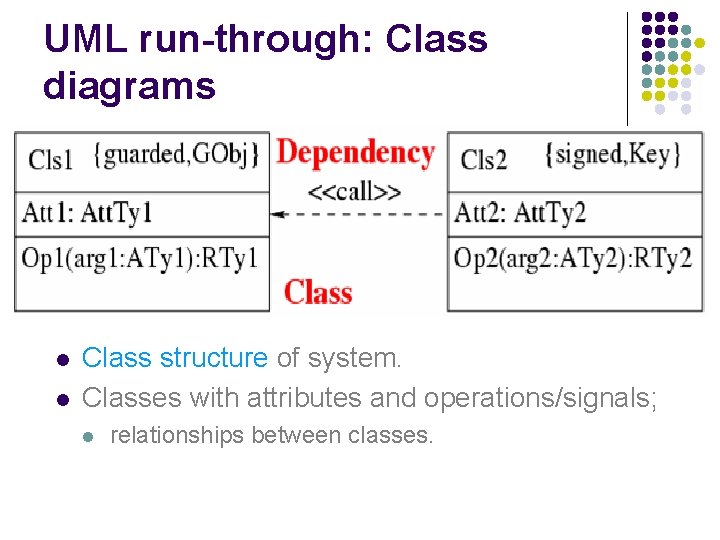

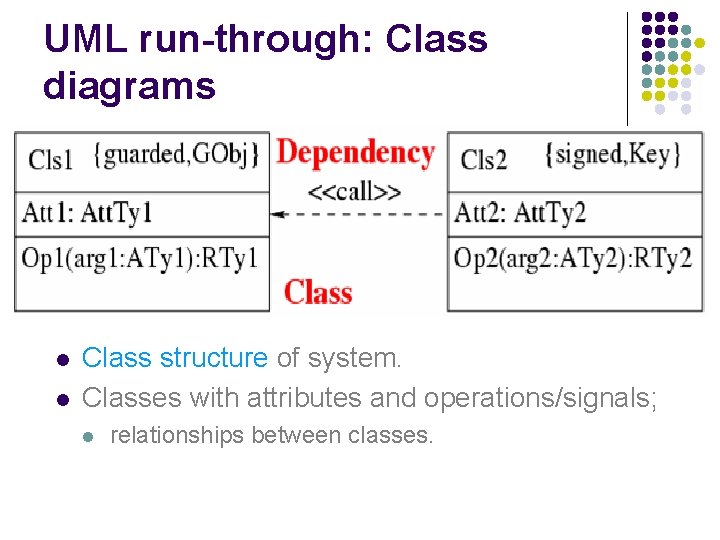

UML run-through: Class diagrams
- Class structure of system.
- Classes with attributes and operations/signals; relationships between classes.

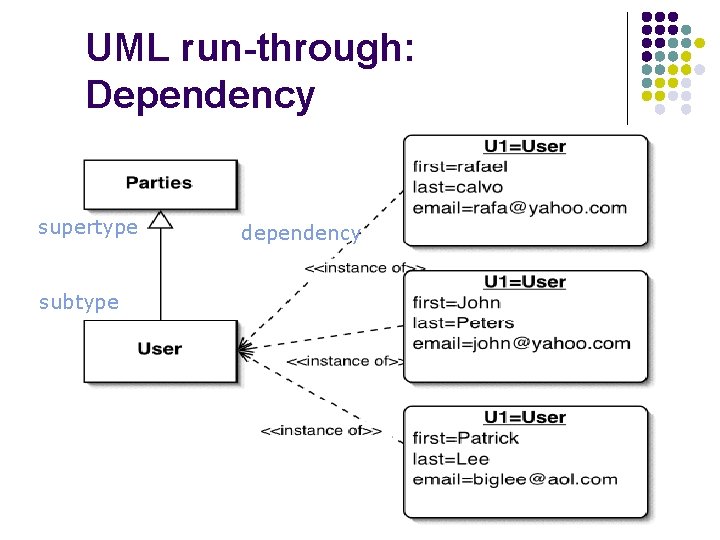

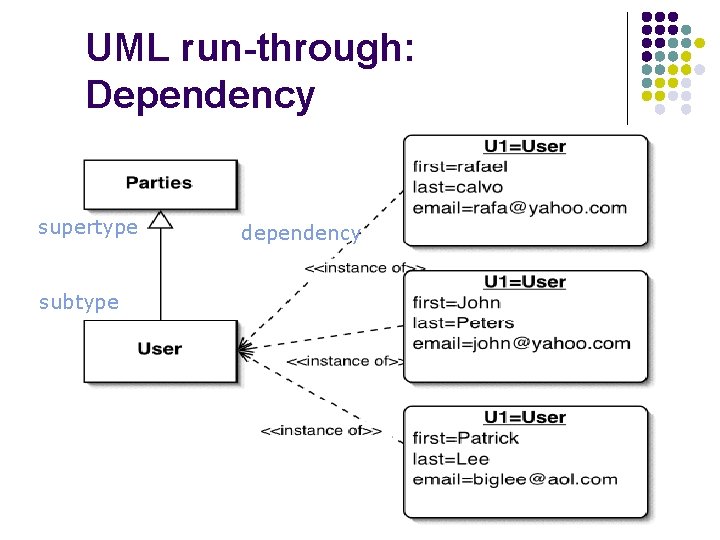

UML run-through: Dependency
- Diagram labels: supertype; subtype; dependency

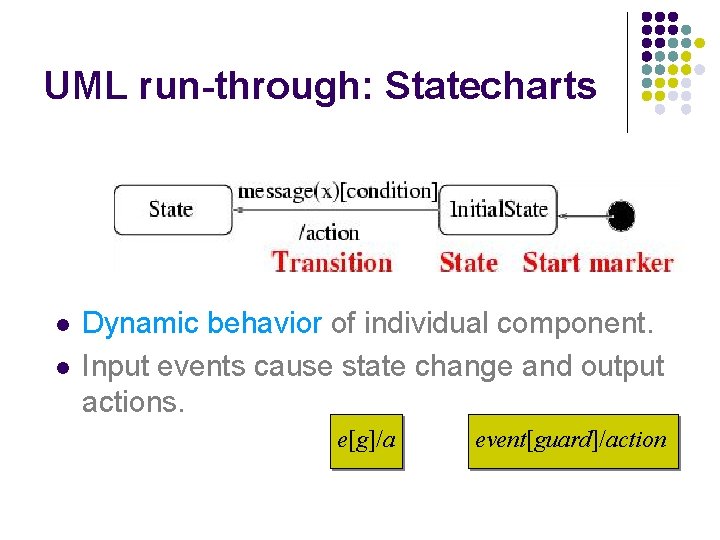

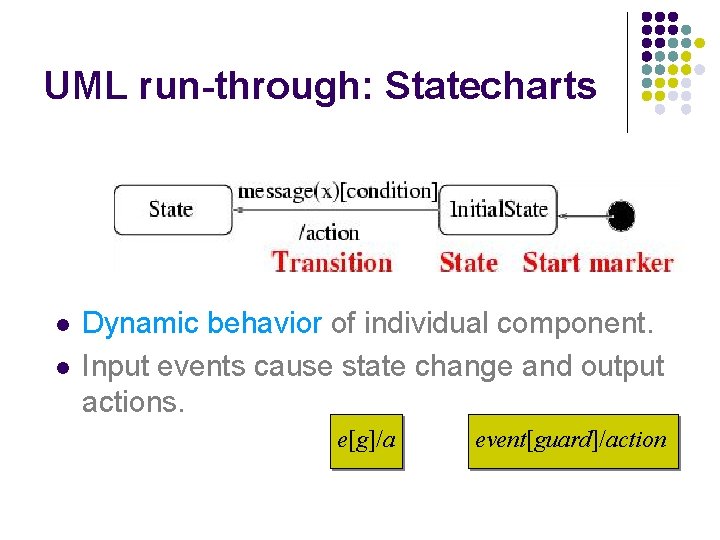

UML run-through: Statecharts
- Dynamic behavior of individual component.
- Input events cause state change and output actions.
- Transition label e[g]/a: event[guard]/action

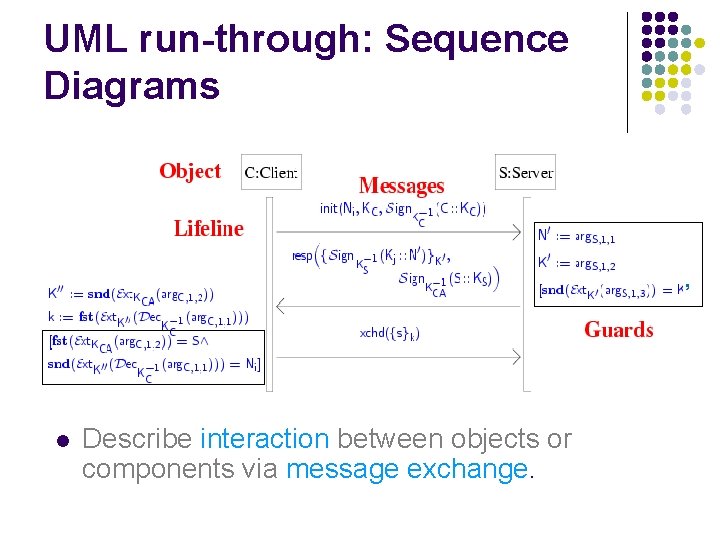

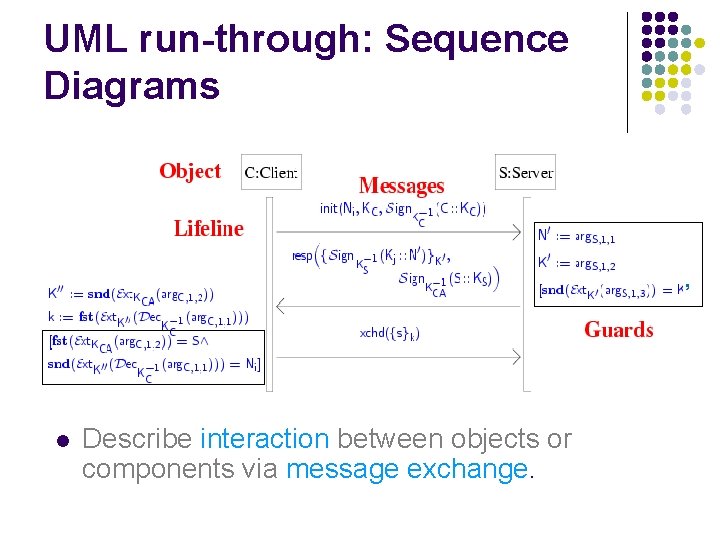

UML run-through: Sequence Diagrams
- Describe interaction between objects or components via message exchange.

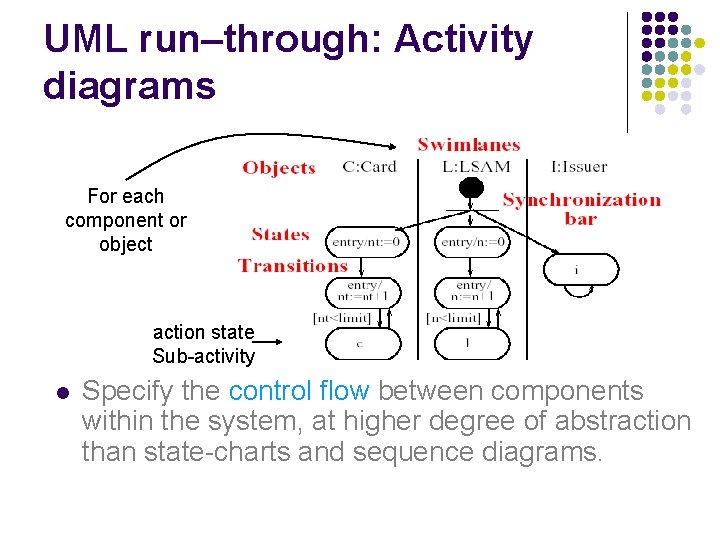

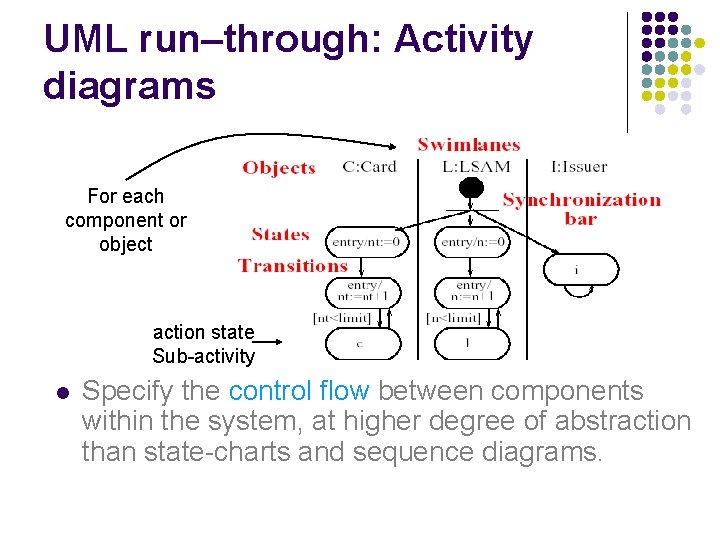

UML run-through: Activity diagrams
- Specify the control flow between components within the system, at higher degree of abstraction than statecharts and sequence diagrams.
- Diagram labels: for each component or object; action state; sub-activity

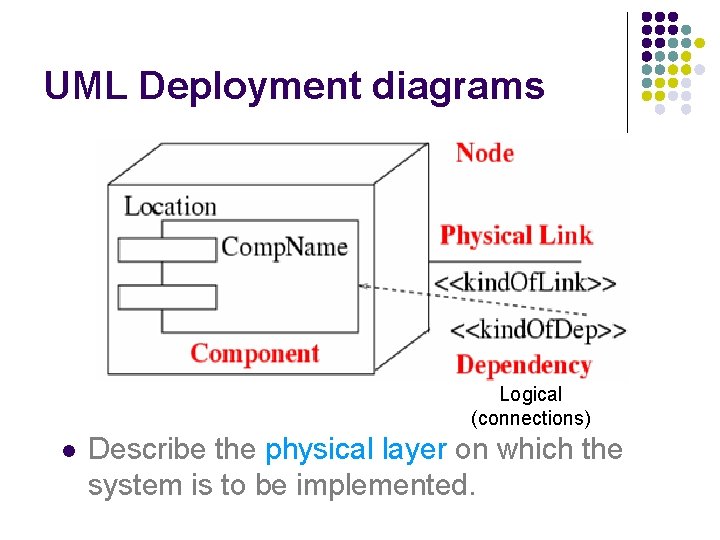

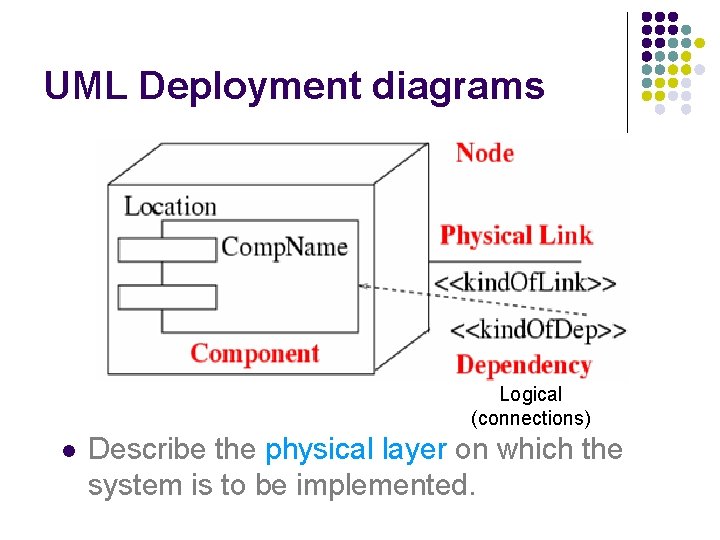

UML Deployment diagrams
- Describe the physical layer on which the system is to be implemented.
- Diagram label: logical (connections)

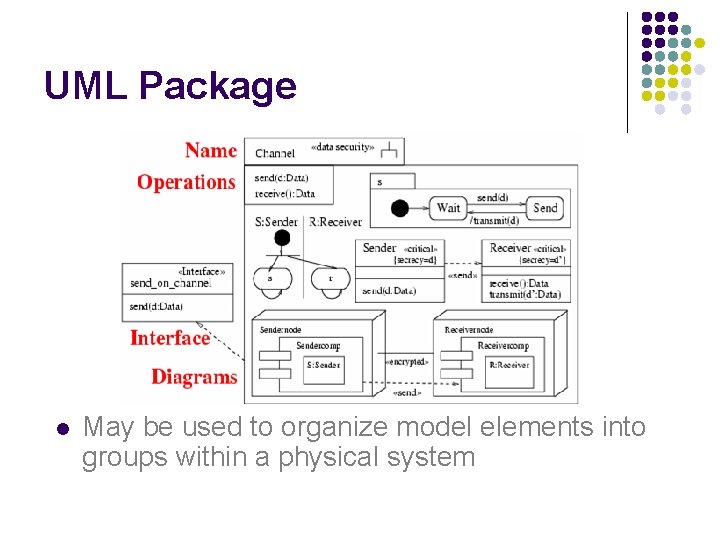

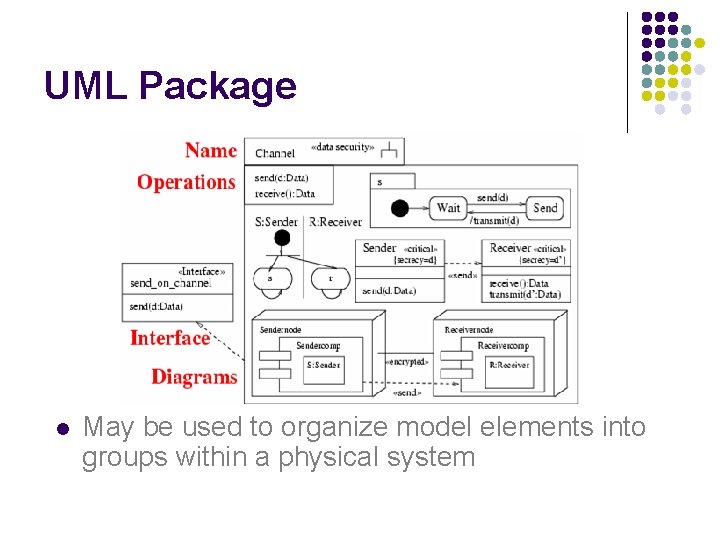

UML Package
- May be used to organize model elements into groups within a physical system

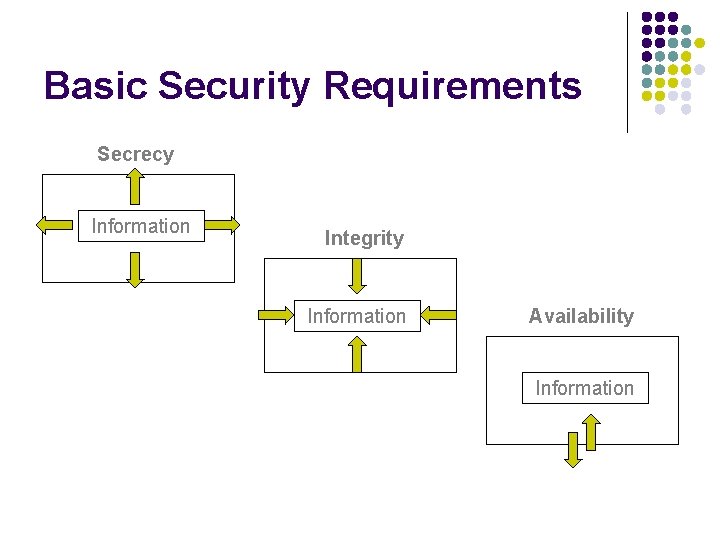

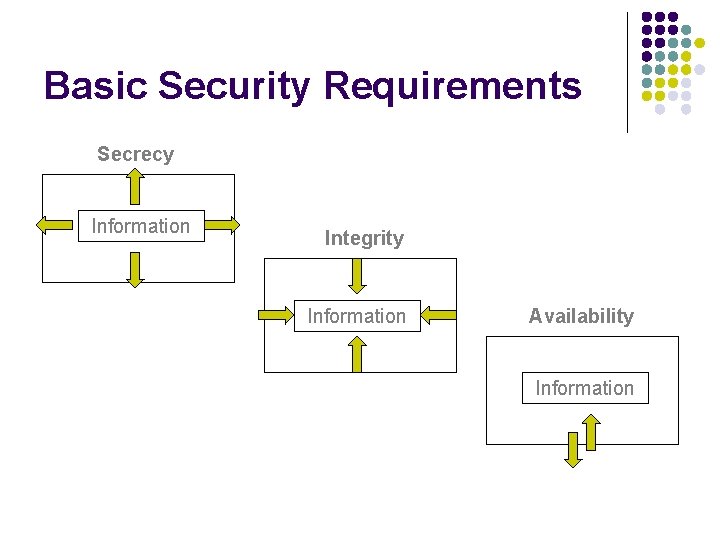

Basic Security Requirements
- Diagram labels: Secrecy (Information); Integrity (Information); Availability (Information)

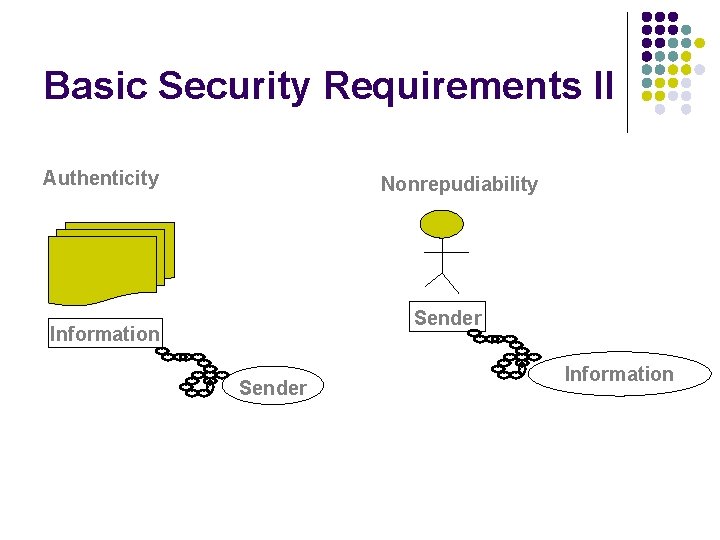

Basic Security Requirements II
- Diagram labels: Authenticity; Nonrepudiability; Sender; Information

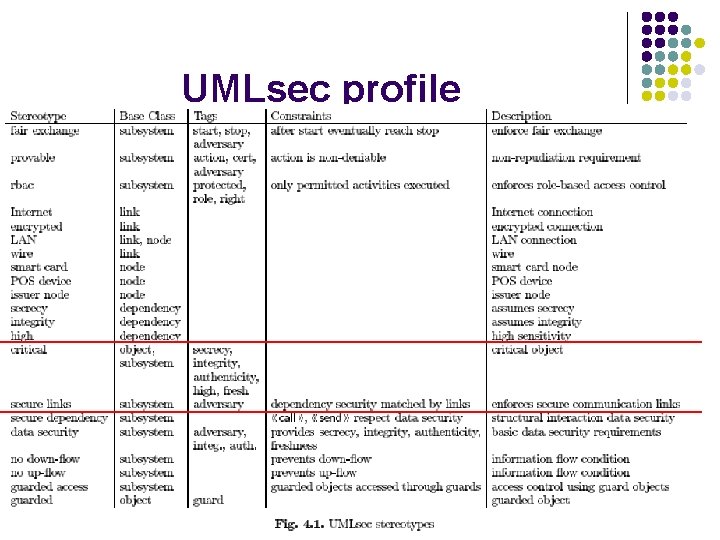

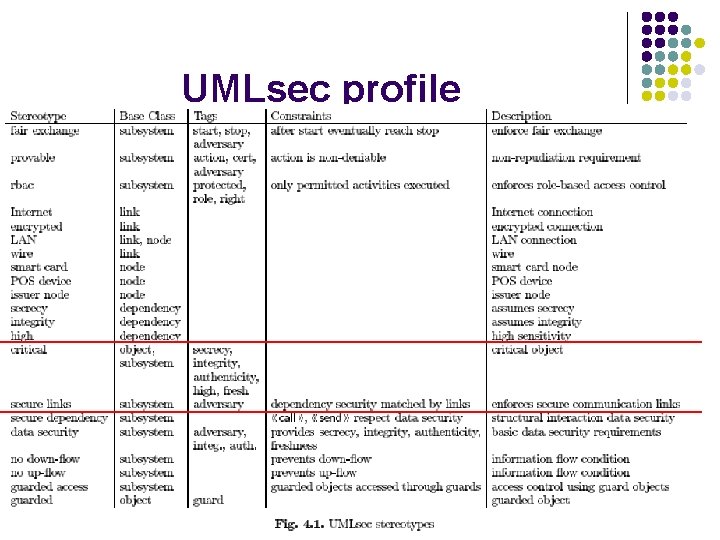

UMLsec profile

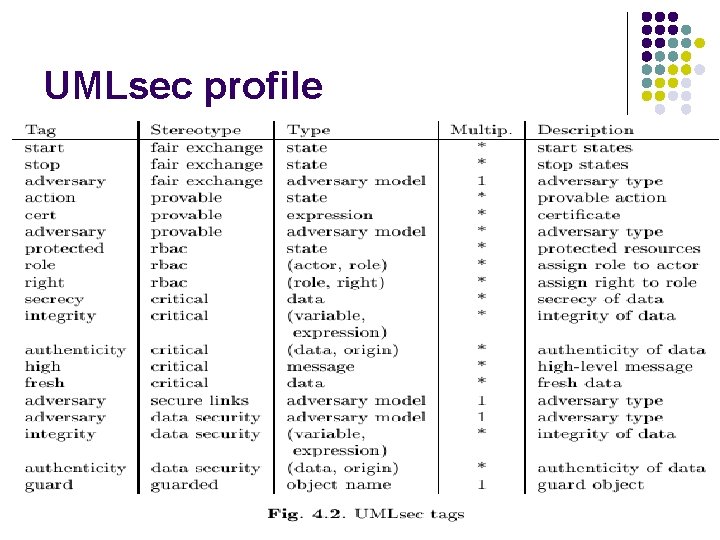

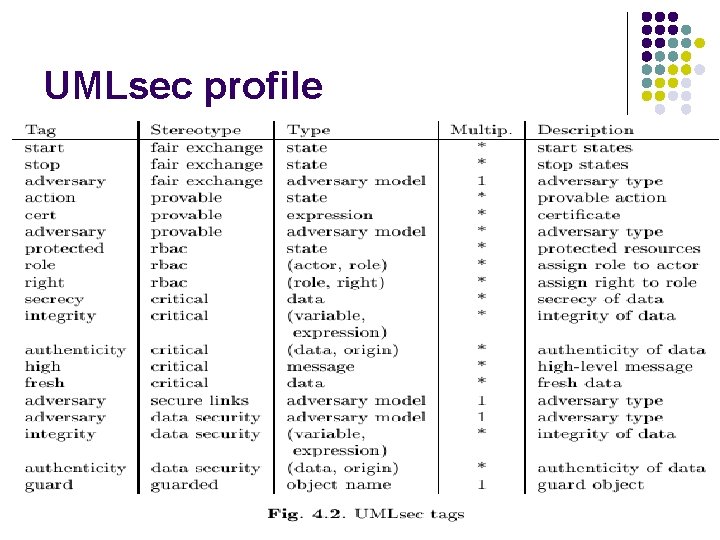

UMLsec profile

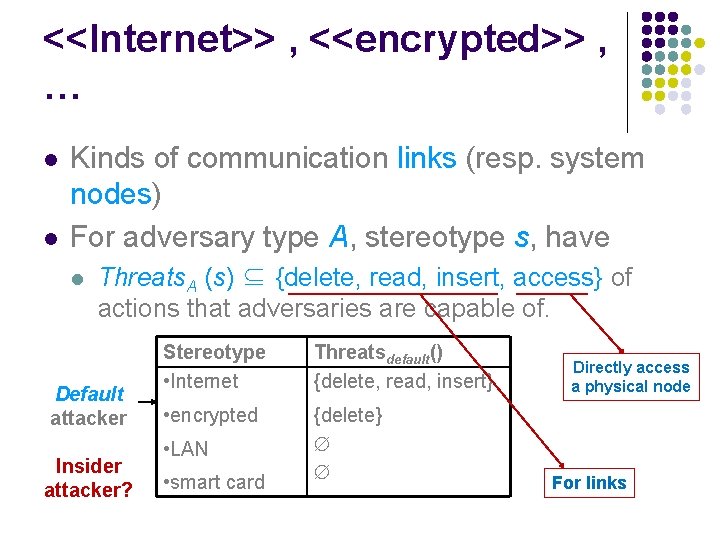

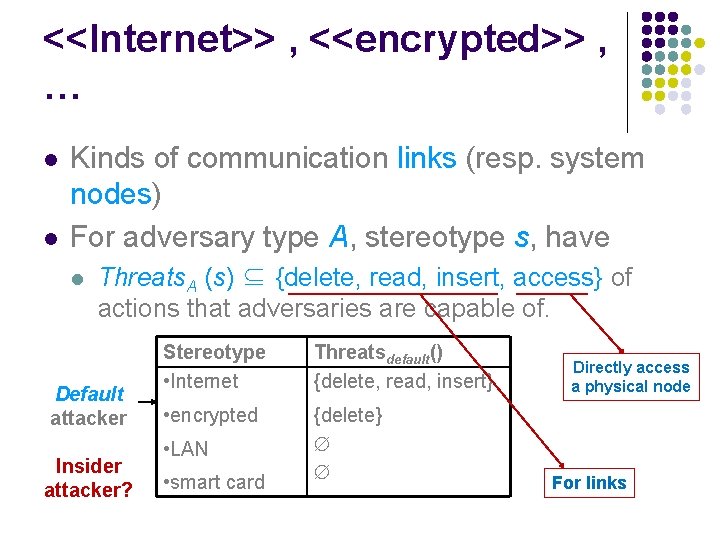

<<Internet>>, <<encrypted>>, …
- Kinds of communication links (resp. system nodes)
- For adversary type A, stereotype s, have
  - Threats_A(s) ⊆ {delete, read, insert, access} of actions that adversaries are capable of.
  - delete, read, insert: for links
  - access: directly access a physical node
- Default attacker, Threats_default() per stereotype:
  - Internet: {delete, read, insert}
  - encrypted: {delete}
  - LAN
  - smart card
- Insider attacker?
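The threat function can be read as a lookup table from link stereotype to a set of adversary actions. A minimal sketch, assuming a plain dictionary encoding; the names `THREATS_DEFAULT`, `ALL_ACTIONS` and `threats_default` are hypothetical, and only the two rows whose values appear on the slide are filled in:

```python
# Hypothetical encoding of Threats_default(s); not part of any UMLsec tool.
ALL_ACTIONS = frozenset({"delete", "read", "insert", "access"})

# Only the rows with values given on the slide are included.
THREATS_DEFAULT = {
    "Internet": frozenset({"delete", "read", "insert"}),
    "encrypted": frozenset({"delete"}),
}


def threats_default(stereotype: str) -> frozenset:
    """Return the actions the default adversary can perform on a link
    carrying the given stereotype. Raises KeyError for unlisted stereotypes."""
    return THREATS_DEFAULT[stereotype]


if __name__ == "__main__":
    for s in THREATS_DEFAULT:
        print(s, sorted(threats_default(s)))
```

Keeping the table as data rather than code makes it easy to swap in a second table for a different adversary type.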

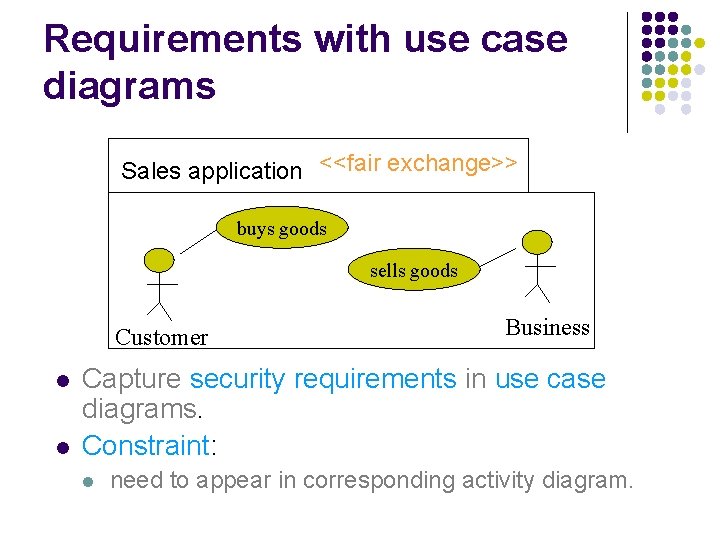

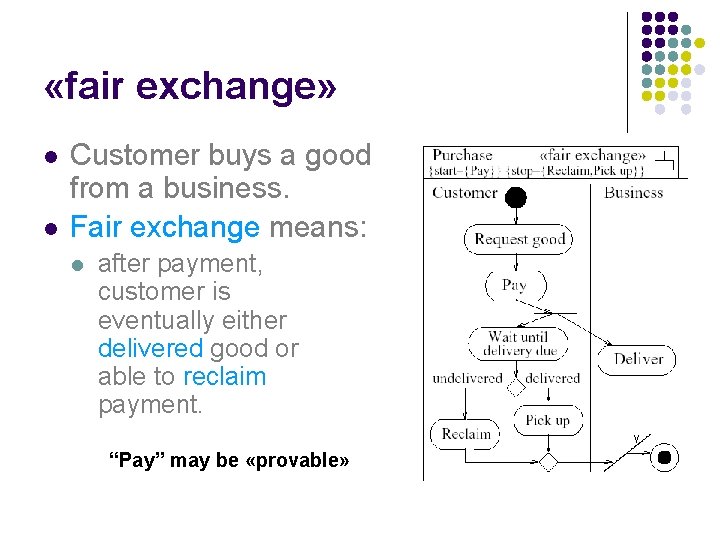

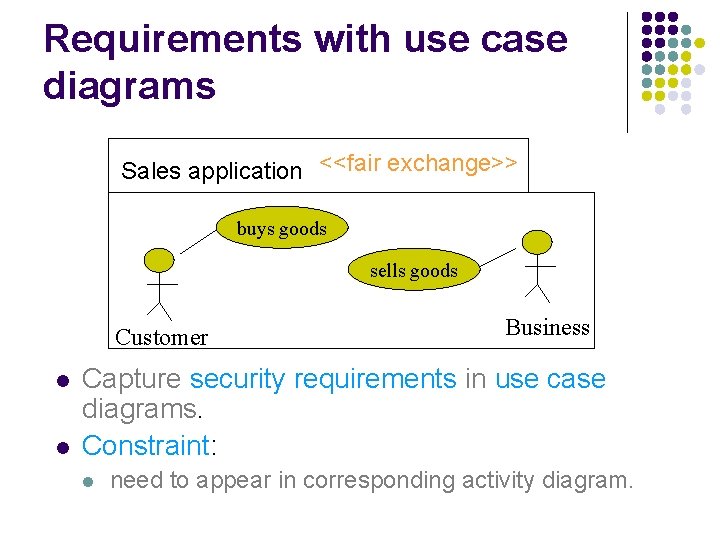

Requirements with use case diagrams
- Capture security requirements in use case diagrams.
- Constraint:
  - need to appear in corresponding activity diagram.
- Diagram labels: Sales application <<fair exchange>>; actors Customer, Business; use cases "buys goods", "sells goods"

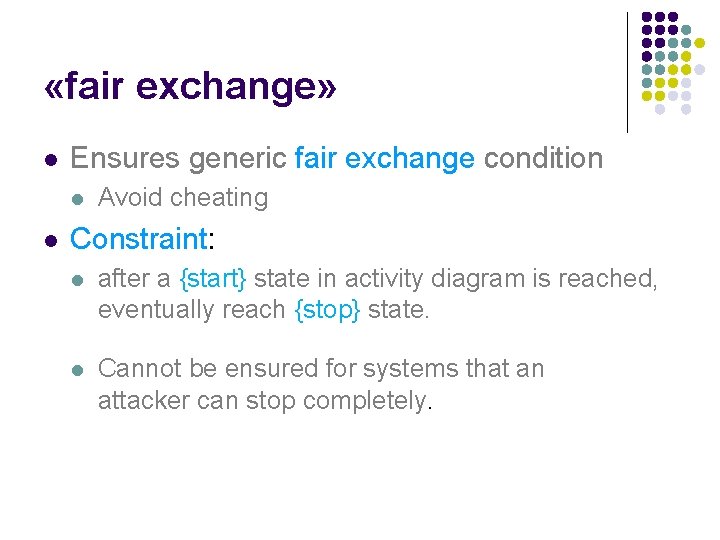

«fair exchange»
- Ensures generic fair exchange condition
  - Avoid cheating
- Constraint:
  - after a {start} state in activity diagram is reached, eventually reach {stop} state.
  - Cannot be ensured for systems that an attacker can stop completely.
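One simplified reading of the {start}/{stop} constraint is a graph property: every maximal path from a {start} state must hit a {stop} state. The sketch below assumes the activity diagram is reduced to a finite directed graph and ignores guards, concurrency and the adversary; the function name and the example states are hypothetical.

```python
def fair_exchange_holds(edges, start, stop):
    """Check that every path from a start state eventually reaches a stop state.

    edges: dict mapping a state to a list of successor states
    start, stop: sets of state names tagged {start} and {stop}
    A path fails if it dead-ends or loops before reaching a stop state.
    """
    GREY, BLACK = 1, 2
    colour = {}

    def visit(state):
        if state in stop:
            return True
        if colour.get(state) == GREY:    # cycle that avoids every stop state
            return False
        if colour.get(state) == BLACK:   # already checked successfully
            return True
        colour[state] = GREY
        successors = edges.get(state, [])
        if not successors:               # dead end before any stop state
            return False
        ok = all(visit(nxt) for nxt in successors)
        colour[state] = BLACK
        return ok

    return all(visit(s) for s in start)


if __name__ == "__main__":
    # Hypothetical purchase flow: after paying, goods are delivered or payment is reclaimed.
    flow = {"pay": ["deliver", "reclaim"]}
    print(fair_exchange_holds(flow, {"pay"}, {"deliver", "reclaim"}))
```

A real check would be run against the formal semantics of the activity diagram; this only shows the shape of the "eventually" requirement.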

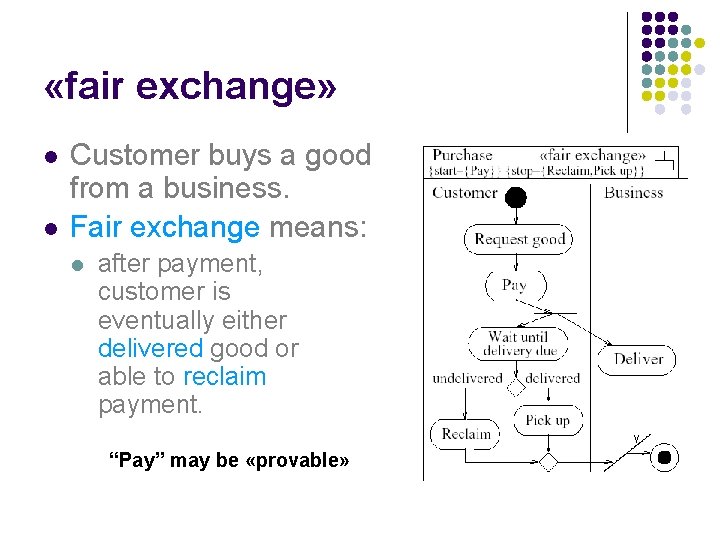

«fair exchange»
- Customer buys a good from a business.
- Fair exchange means:
  - after payment, customer is eventually either delivered good or able to reclaim payment.
- "Pay" may be «provable»

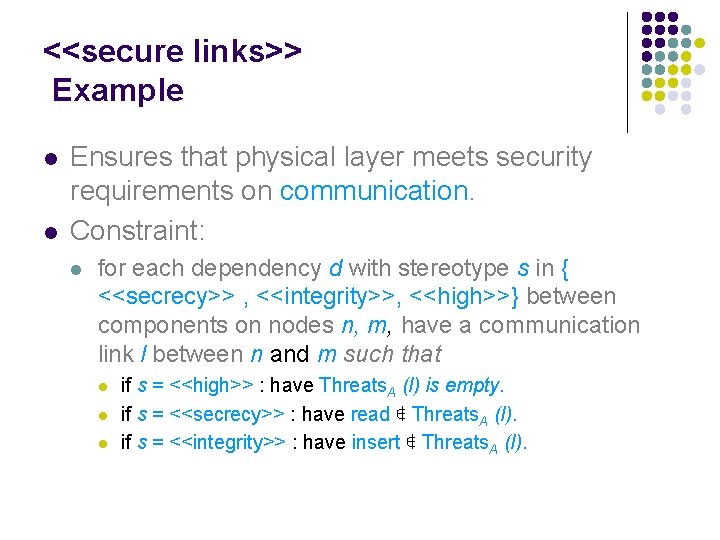

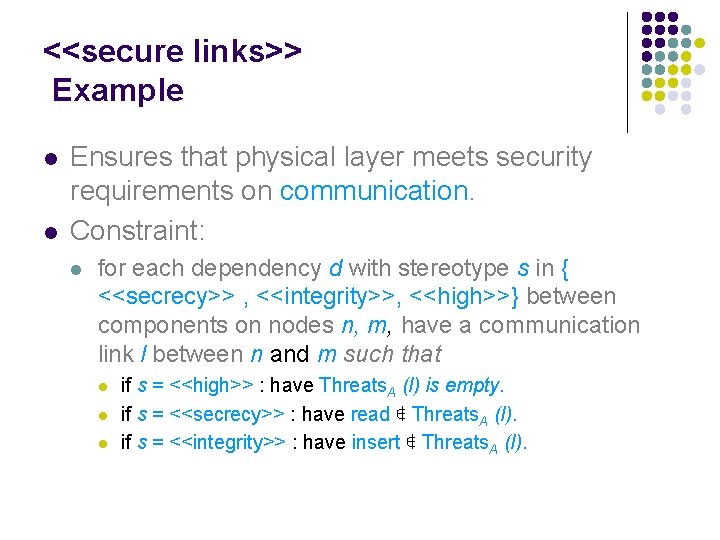

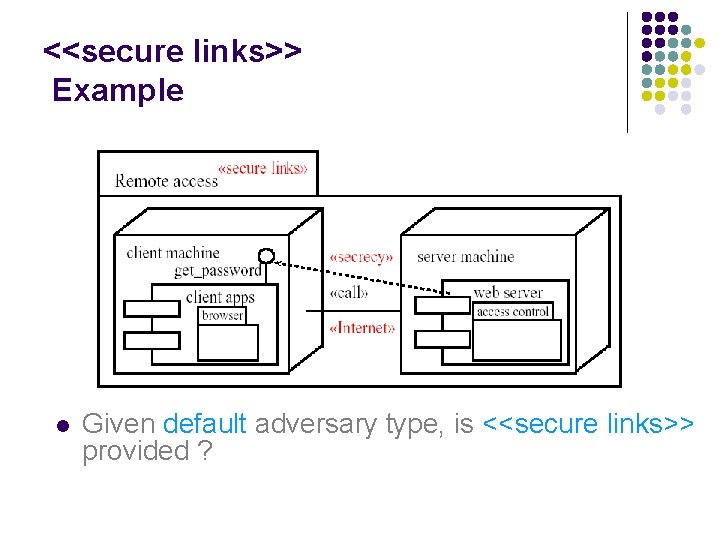

<<secure links>> Example
- Ensures that physical layer meets security requirements on communication.
- Constraint:
  - for each dependency d with stereotype s in {<<secrecy>>, <<integrity>>, <<high>>} between components on nodes n, m, have a communication link l between n and m such that
    - if s = <<high>>: Threats_A(l) is empty.
    - if s = <<secrecy>>: read ∉ Threats_A(l).
    - if s = <<integrity>>: insert ∉ Threats_A(l).
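The constraint translates almost directly into a check over dependencies and links. A minimal sketch, assuming dependencies are given as (stereotype, node, node) triples and links as a mapping from node pairs to link stereotypes; all names are hypothetical, and skipping dependencies within a single node is an assumption, not something stated on the slide.

```python
def secure_links_violations(dependencies, links, threats):
    """Return a list of violated dependencies for one adversary type.

    dependencies: iterable of (stereotype, node_n, node_m)
    links: dict mapping frozenset({n, m}) to the link's stereotype
    threats: dict mapping link stereotype to the adversary's action set
    """
    violations = []
    for stereotype, n, m in dependencies:
        if n == m:
            continue  # assumption: no link is needed inside one node
        link = links.get(frozenset((n, m)))
        if link is None:
            violations.append((stereotype, n, m, "no communication link"))
            continue
        actions = threats[link]
        if stereotype == "high" and actions:
            violations.append((stereotype, n, m, "threat set not empty"))
        elif stereotype == "secrecy" and "read" in actions:
            violations.append((stereotype, n, m, "adversary can read"))
        elif stereotype == "integrity" and "insert" in actions:
            violations.append((stereotype, n, m, "adversary can insert"))
    return violations


if __name__ == "__main__":
    threats = {
        "Internet": {"delete", "read", "insert"},
        "encrypted": {"delete"},
    }
    deps = [("secrecy", "client", "server")]
    over_internet = {frozenset(("client", "server")): "Internet"}
    print(secure_links_violations(deps, over_internet, threats))
```

With the default-adversary values from the earlier slide, a <<secrecy>> dependency over an <<Internet>> link is reported, while the same dependency over an <<encrypted>> link is not.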

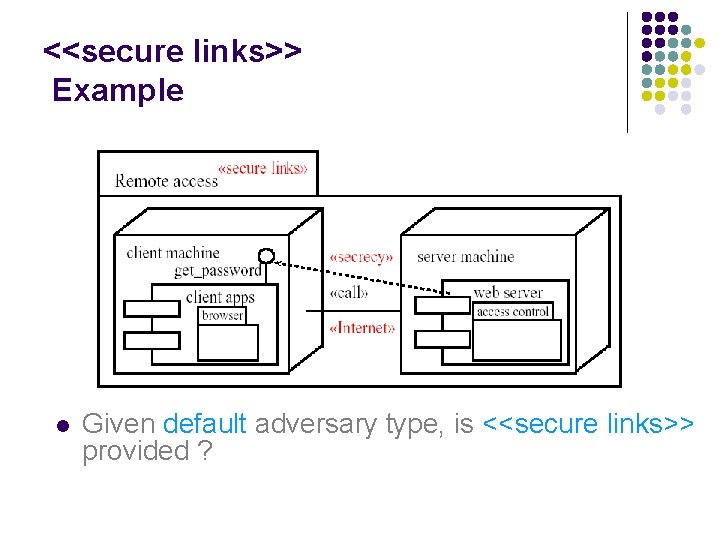

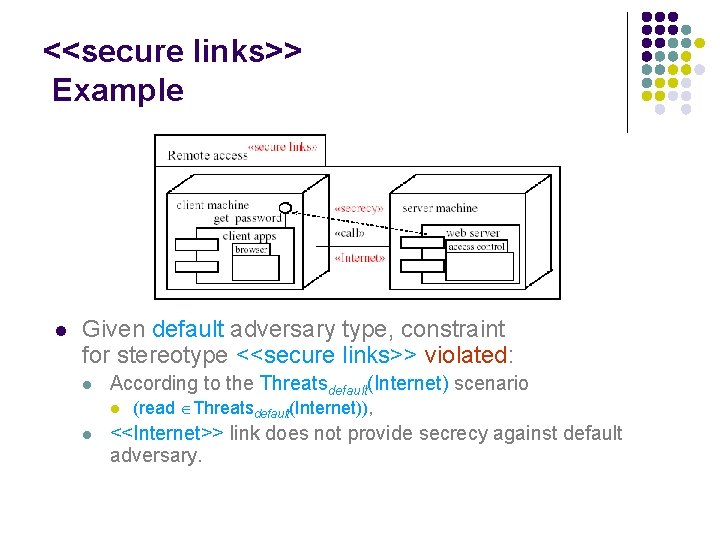

<<secure links>> Example
- Given default adversary type, is <<secure links>> provided?

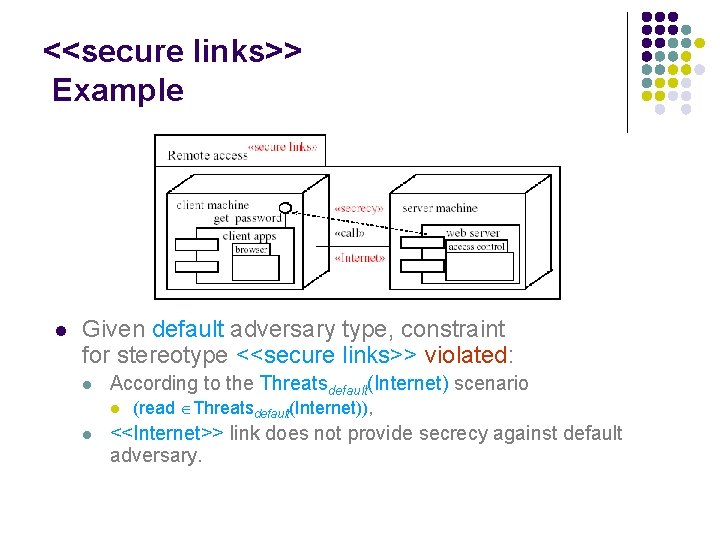

<<secure links>> Example
- Given default adversary type, constraint for stereotype <<secure links>> violated:
  - According to the Threats_default(Internet) scenario (read ∈ Threats_default(Internet)), the <<Internet>> link does not provide secrecy against default adversary.

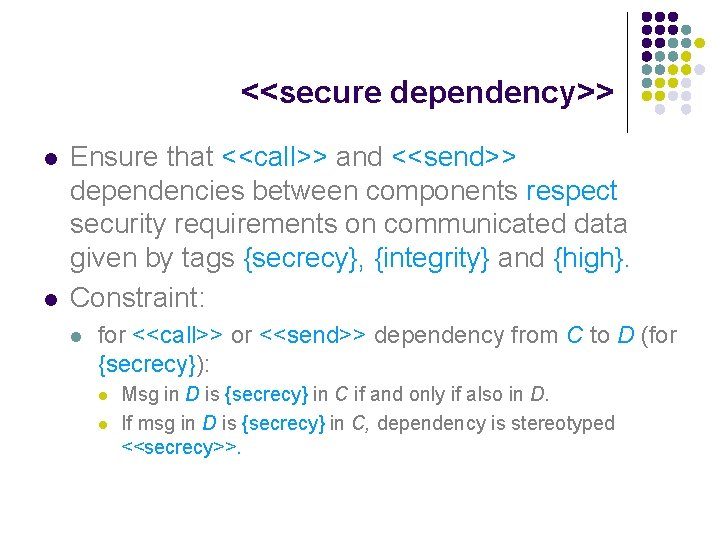

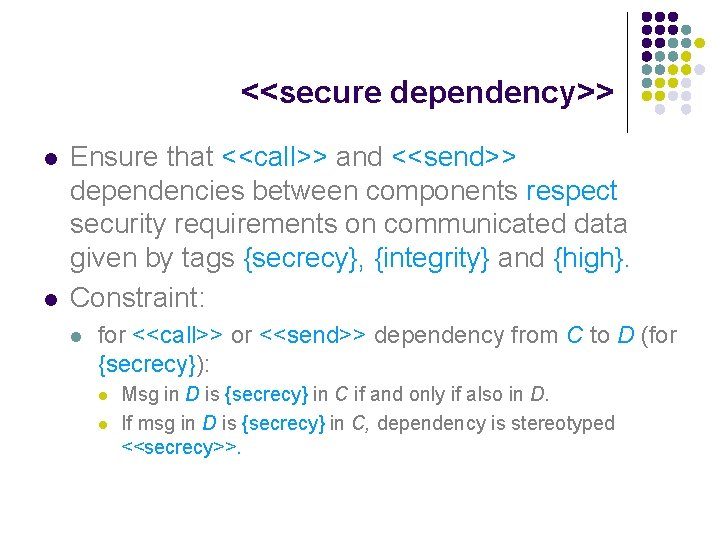

<<secure dependency>>
- Ensure that <<call>> and <<send>> dependencies between components respect security requirements on communicated data given by tags {secrecy}, {integrity} and {high}.
- Constraint:
  - for <<call>> or <<send>> dependency from C to D (for {secrecy}):
    - Msg in D is {secrecy} in C if and only if also in D.
    - If msg in D is {secrecy} in C, dependency is stereotyped <<secrecy>>.
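The two {secrecy} conditions can be checked per message. A minimal sketch, assuming each component's {secrecy} tag is given as a set of message names; the function and parameter names are hypothetical, and only the {secrecy} case from the slide is covered.

```python
def secure_dependency_ok(c_secrecy, d_secrecy, d_messages, dependency_stereotypes):
    """Check the {secrecy} part of <<secure dependency>> for a dependency C -> D.

    c_secrecy, d_secrecy: message names tagged {secrecy} in C and in D
    d_messages: messages offered by D
    dependency_stereotypes: stereotypes attached to the dependency itself
    """
    for msg in d_messages:
        in_c = msg in c_secrecy
        in_d = msg in d_secrecy
        if in_c != in_d:
            return False  # C and D disagree on the secrecy of msg
        if in_c and "secrecy" not in dependency_stereotypes:
            return False  # secret message, but dependency not marked <<secrecy>>
    return True


if __name__ == "__main__":
    # Hypothetical: the caller treats random() as secret, the callee does not.
    print(secure_dependency_ok({"random"}, set(), {"random"}, {"call"}))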

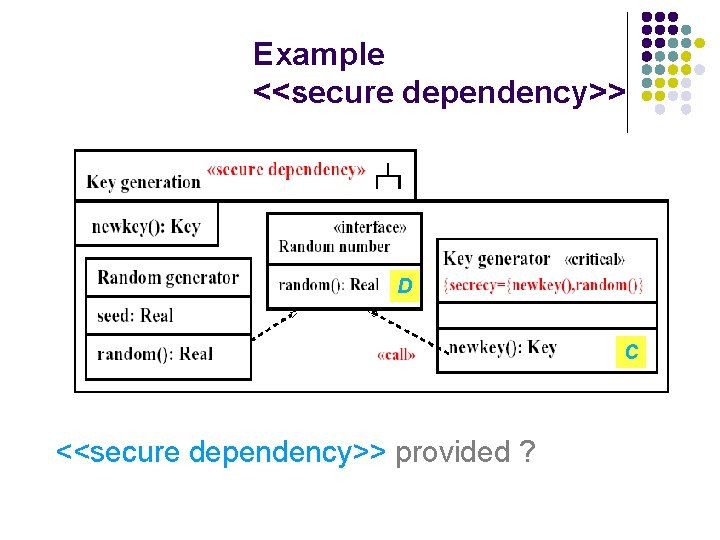

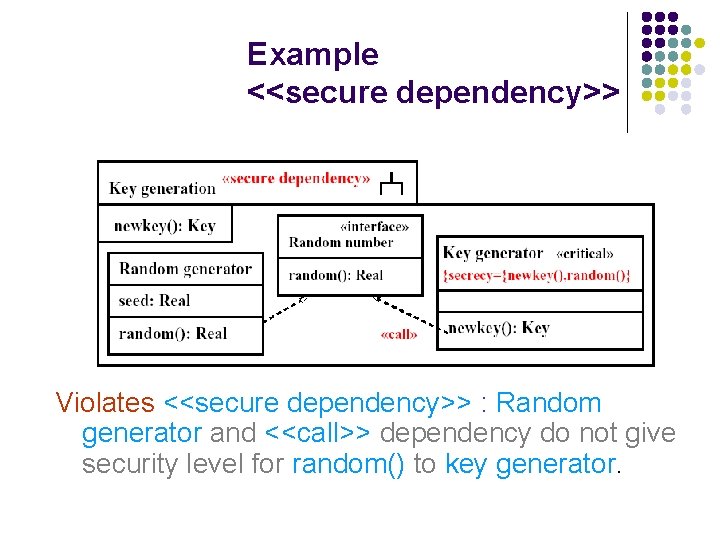

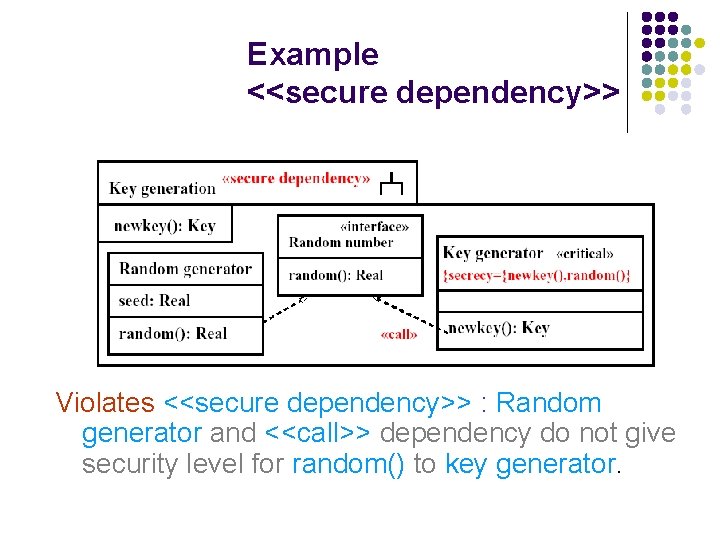

Example <<secure dependency>>
- Is <<secure dependency>> provided?
- Diagram labels: D; C

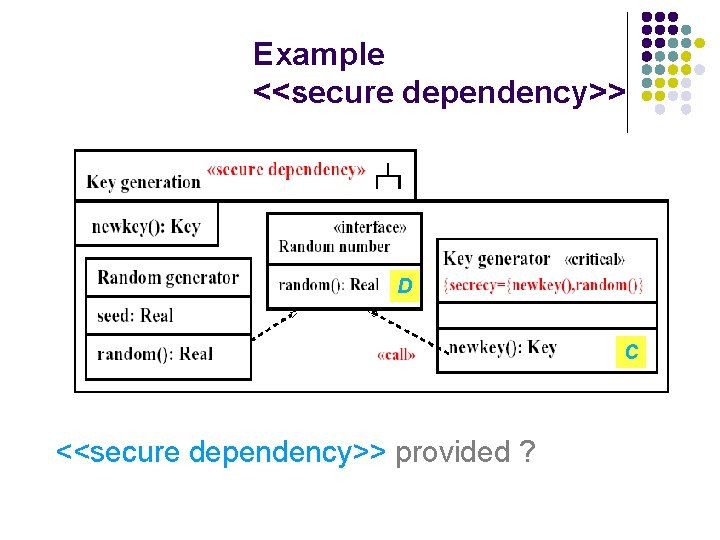

Example <<secure dependency>>
- Violates <<secure dependency>>: Random generator and <<call>> dependency do not give security level for random() to key generator.

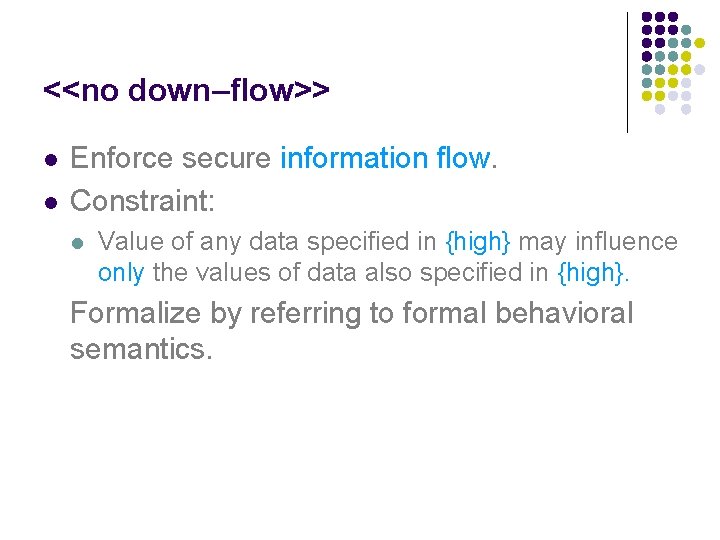

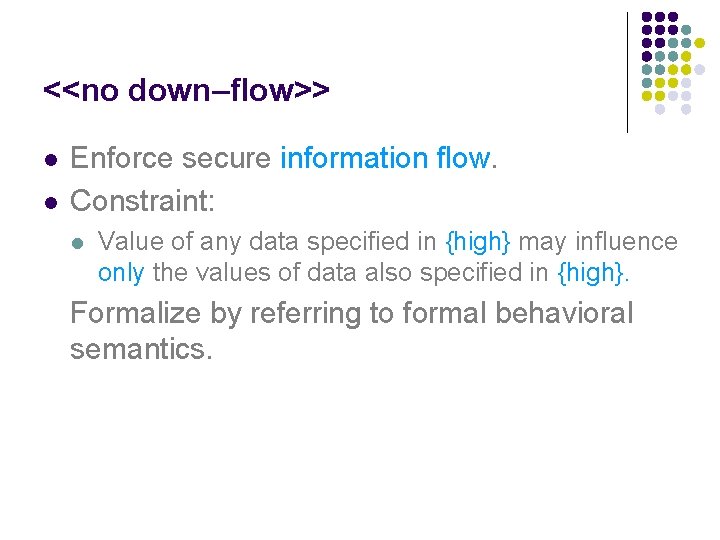

<<no down-flow>>
- Enforce secure information flow.
- Constraint:
  - Value of any data specified in {high} may influence only the values of data also specified in {high}.
  - Formalize by referring to formal behavioral semantics.
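For a small deterministic component the constraint can be illustrated by brute force: vary the {high} input while holding the non-high input fixed, and require that the non-high output never changes. This is a sketch under that simplifying assumption, not the formal behavioral semantics the slide refers to; `no_down_flow` and the example functions are hypothetical.

```python
def no_down_flow(low_output, high_inputs, low_inputs):
    """Return True if low_output(high, low) never depends on the high input.

    low_output: function computing the non-high output (must return a hashable value)
    high_inputs, low_inputs: finite collections of values to enumerate
    """
    for low in low_inputs:
        observed = {low_output(high, low) for high in high_inputs}
        if len(observed) > 1:
            return False  # two high inputs are distinguishable from the low output
    return True


if __name__ == "__main__":
    # Hypothetical leak: a non-high query reveals whether a high balance passes a threshold.
    leaky = lambda balance, _query: balance >= 1000
    safe = lambda _balance, query: query * 2
    print(no_down_flow(leaky, [0, 500, 1500], [0]))
    print(no_down_flow(safe, [0, 500, 1500], [1, 2]))
```

The leaky function exposes only partial information about the high value, which is still enough to violate the constraint.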

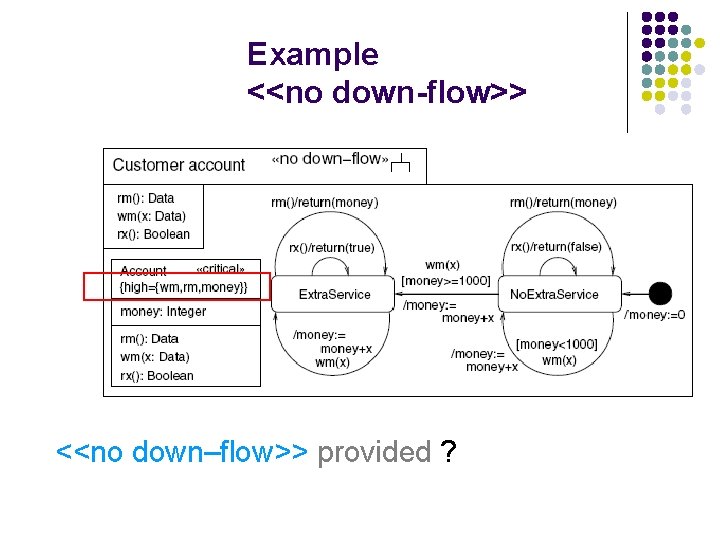

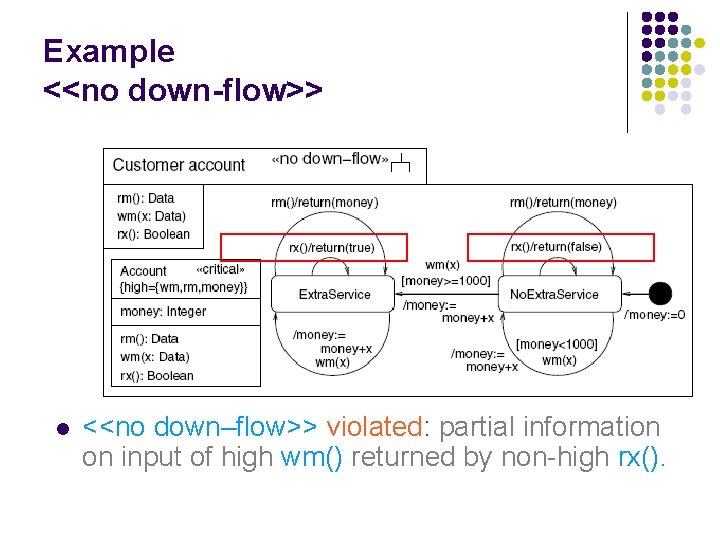

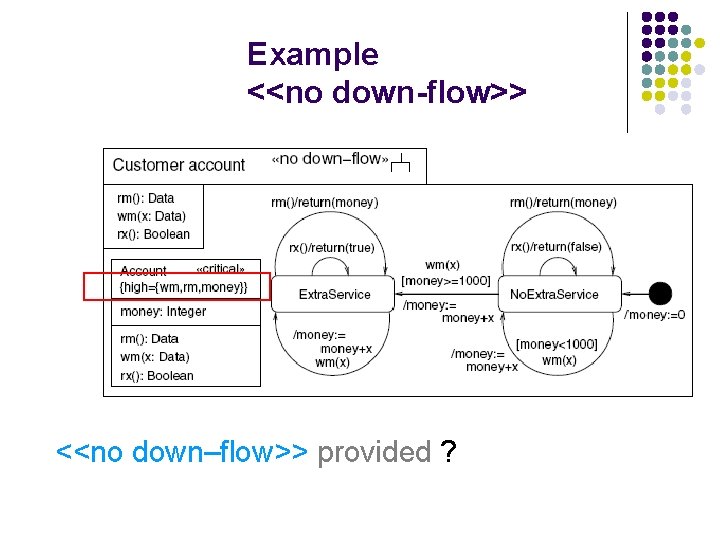

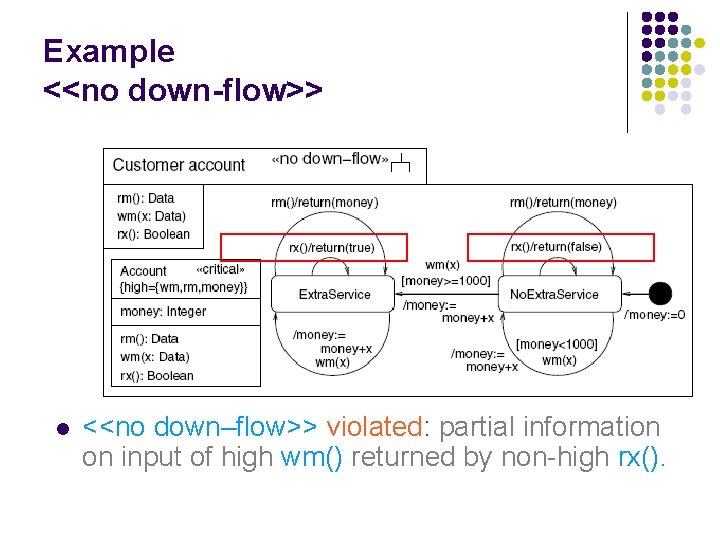

Example <<no down-flow>>
- Is <<no down-flow>> provided?

Example <<no down-flow>>
- <<no down-flow>> violated: partial information on input of high wm() returned by non-high rx().

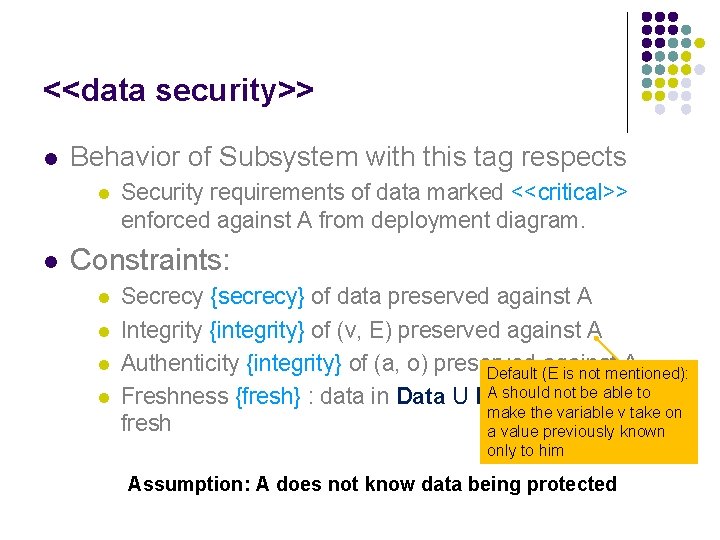

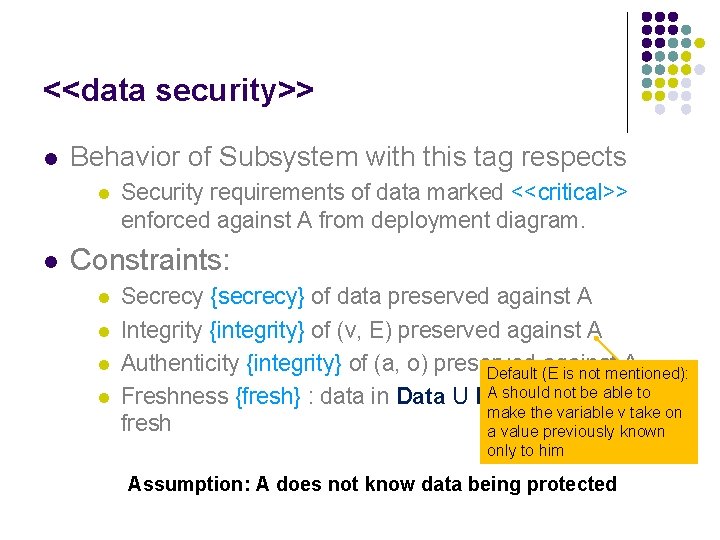

<<data security>>
- Behavior of Subsystem with this tag respects
  - Security requirements of data marked <<critical>> enforced against A from deployment diagram.
- Constraints:
  - Secrecy: {secrecy} of data preserved against A
  - Integrity: {integrity} of (v, E) preserved against A
    - Default (E is not mentioned): A should not be able to make the variable v take on a value previously known only to him
  - Authenticity: {integrity} of (a, o) preserved against A
  - Freshness: {fresh}: data in Data ∪ Keys should be fresh
- Assumption: A does not know data being protected

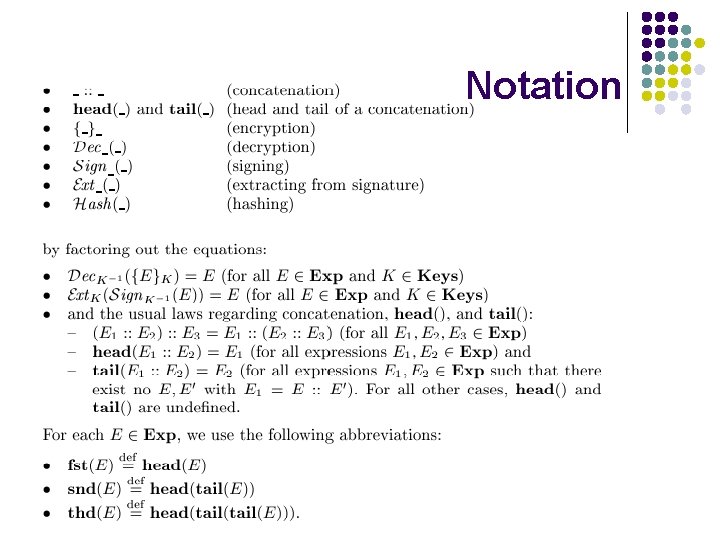

Notation

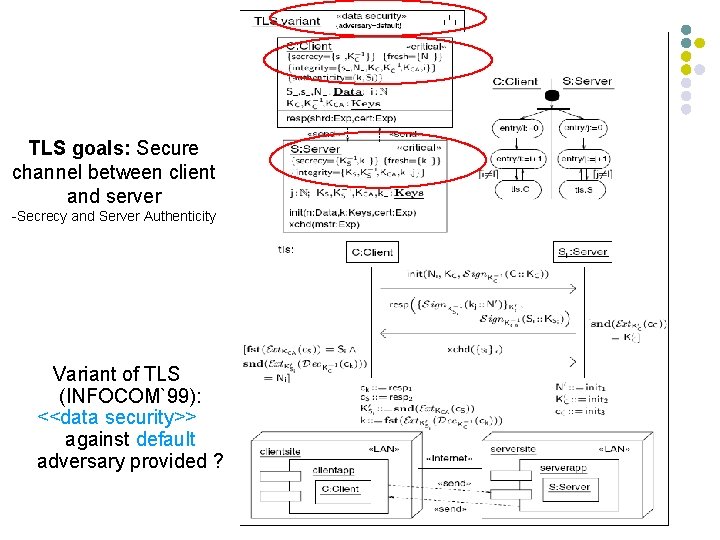

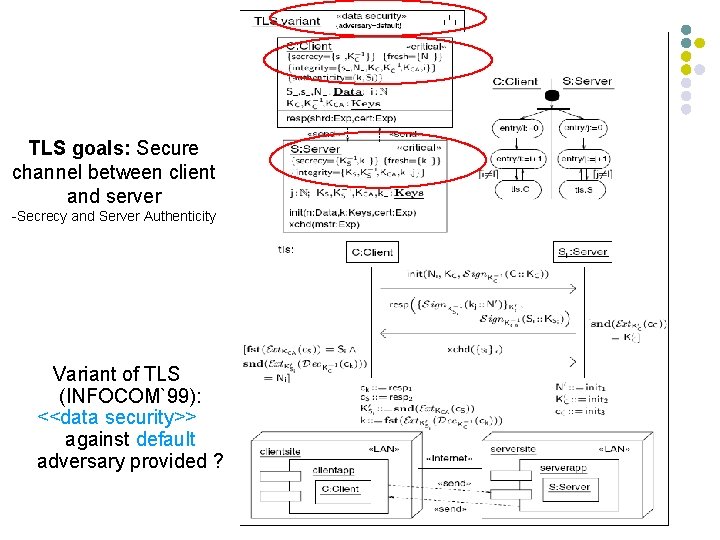

Example <<data security>>
- TLS goals: secure channel between client and server (secrecy and server authenticity)
- Variant of TLS (INFOCOM'99): is <<data security>> against default adversary provided?

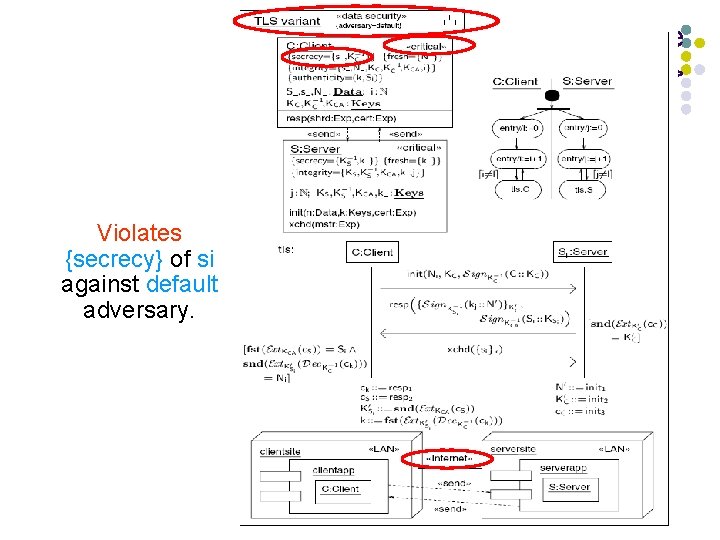

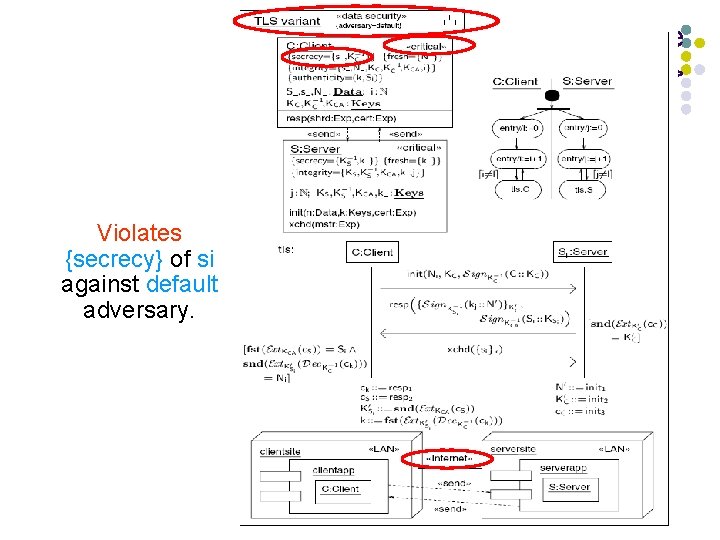

Example <<data security>>
- Violates {secrecy} of s_i against default adversary.

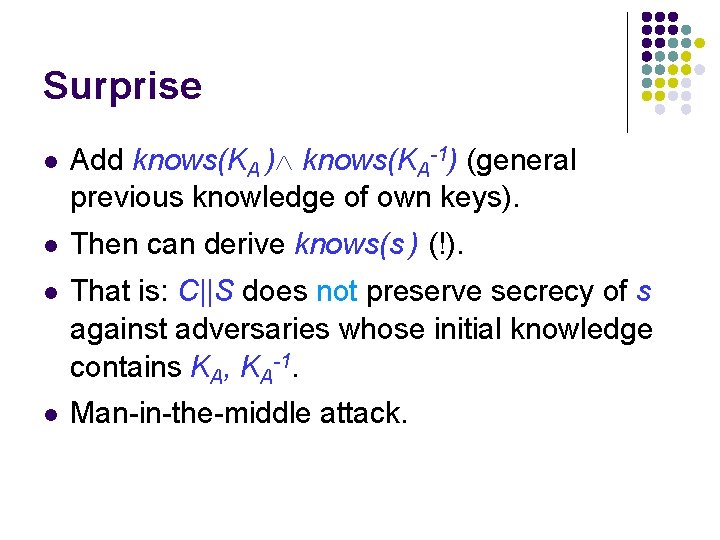

Surprise
- Add knows(K_A) ∧ knows(K_A^-1) (general previous knowledge of own keys).
- Then can derive knows(s) (!).
- That is: C||S does not preserve secrecy of s against adversaries whose initial knowledge contains K_A, K_A^-1.
- Man-in-the-middle attack.
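The knows(...) derivation can be illustrated by closing an adversary's knowledge set under simple analysis rules. The sketch below is a toy model with hypothetical term shapes (`("pair", a, b)` and `("enc", message, key)`), and the observed message is an assumed simplification, not the actual trace of the TLS variant.

```python
def close(knowledge, inverse):
    """Close a knowledge set under projection and decryption.

    knowledge: set of terms; atoms are strings, compound terms are tuples
    inverse: dict mapping an encryption key to its decryption key
    """
    known = set(knowledge)
    changed = True
    while changed:
        changed = False
        for term in list(known):
            derived = []
            if isinstance(term, tuple) and term[0] == "pair":
                derived = [term[1], term[2]]          # both halves of a pair
            elif isinstance(term, tuple) and term[0] == "enc":
                _, message, key = term
                if inverse.get(key) in known:          # decryption key is known
                    derived = [message]
            for item in derived:
                if item not in known:
                    known.add(item)
                    changed = True
    return known


if __name__ == "__main__":
    inverse = {"K_A": "K_A_inv"}
    # Assumed scenario: the secret ends up encrypted under the adversary's own key.
    observed = {("enc", "s", "K_A")}
    print("s" in close(observed, inverse))
    print("s" in close(observed | {"K_A", "K_A_inv"}, inverse))
```

Without the adversary's own key pair the secret stays underivable; adding K_A and K_A^-1 to the initial knowledge is what makes knows(s) derivable.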

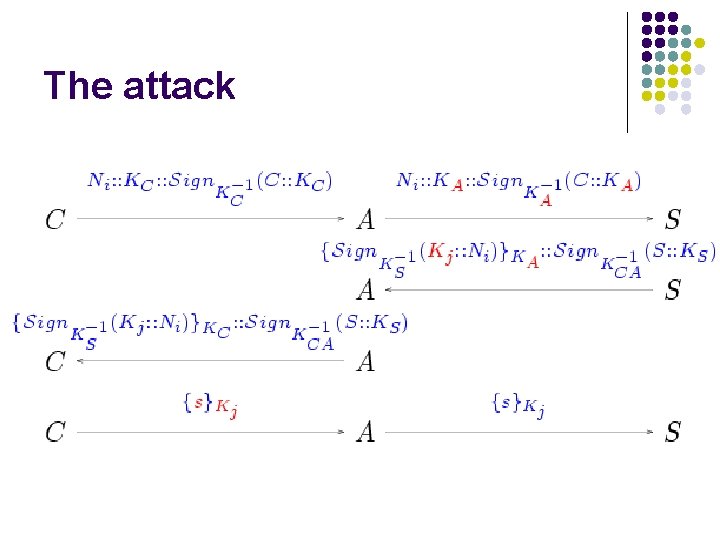

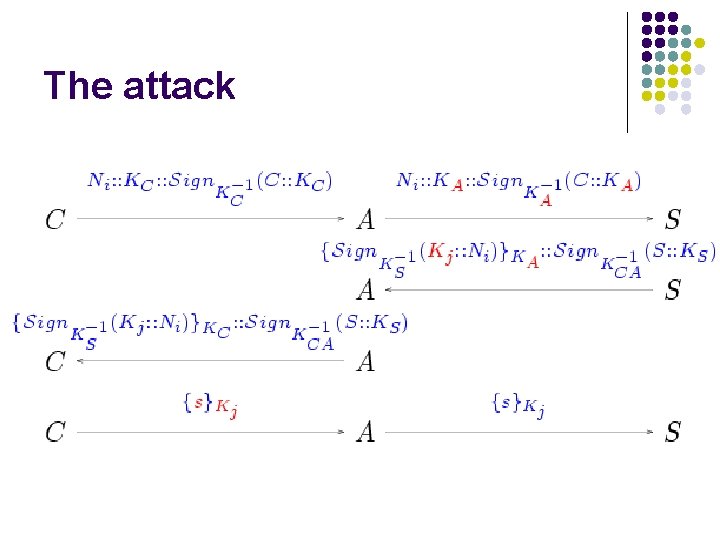

The attack

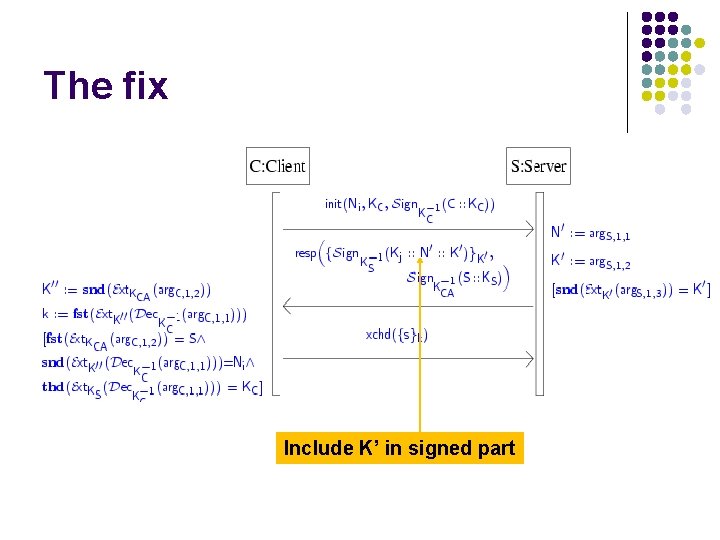

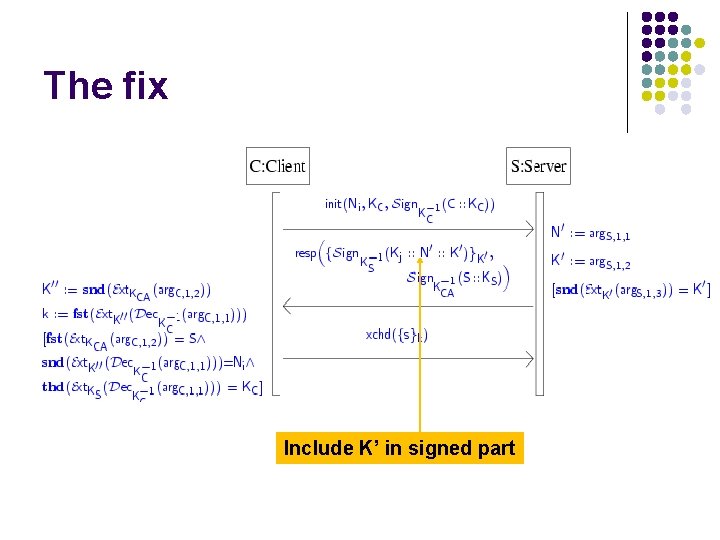

The fix
- Include K' in signed part

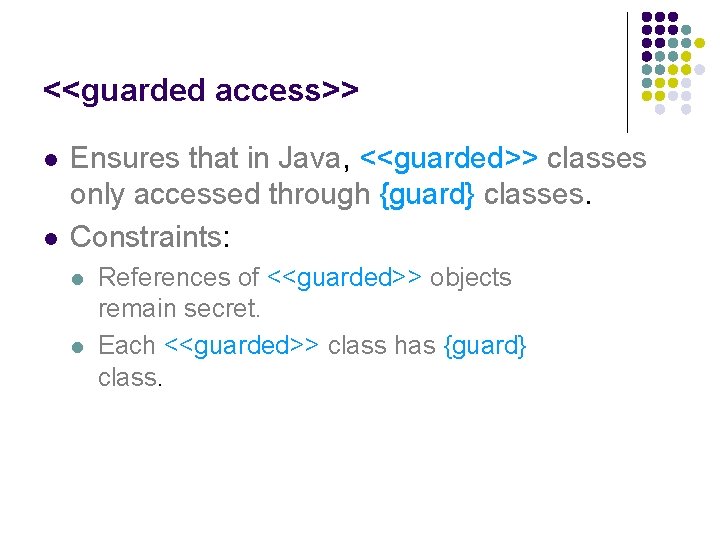

<<guarded access>>
- Ensures that in Java, <<guarded>> classes are only accessed through {guard} classes.
- Constraints:
  - References of <<guarded>> objects remain secret.
  - Each <<guarded>> class has {guard} class.
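The second constraint is a structural check over the class model. A minimal sketch, assuming classes are described by a dictionary of stereotypes and tags; the data layout and function name are hypothetical, and the first constraint (references remain secret) needs behavioral analysis and is not covered here.

```python
def guarded_access_violations(classes):
    """Return names of <<guarded>> classes lacking a valid {guard} class.

    classes: dict mapping class name to
             {"stereotypes": set of str, "tags": dict of tag name to value}
    """
    violations = []
    for name, info in classes.items():
        if "guarded" not in info["stereotypes"]:
            continue
        guard = info["tags"].get("guard")
        # The guard must be named and must itself be a class in the model.
        if guard is None or guard not in classes:
            violations.append(name)
    return violations


if __name__ == "__main__":
    model = {
        "MicSi": {"stereotypes": {"guarded"}, "tags": {"guard": "MicGd"}},
        "MicGd": {"stereotypes": set(), "tags": {}},
        "StoFi": {"stereotypes": {"guarded"}, "tags": {}},
    }
    print(guarded_access_violations(model))
```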

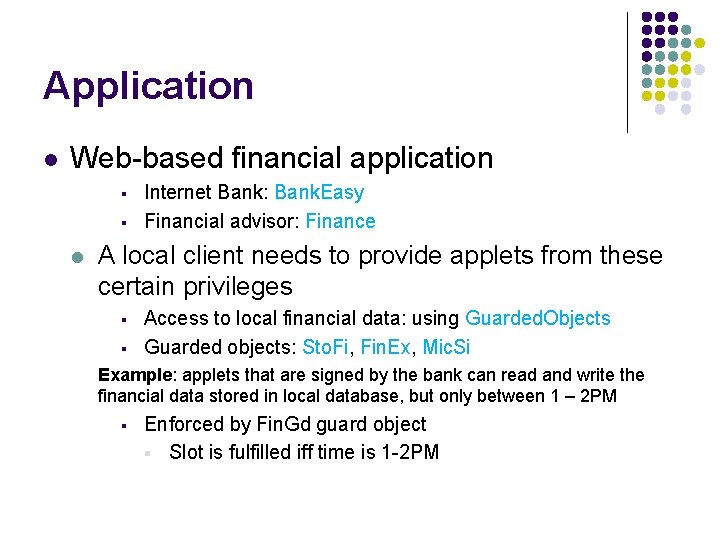

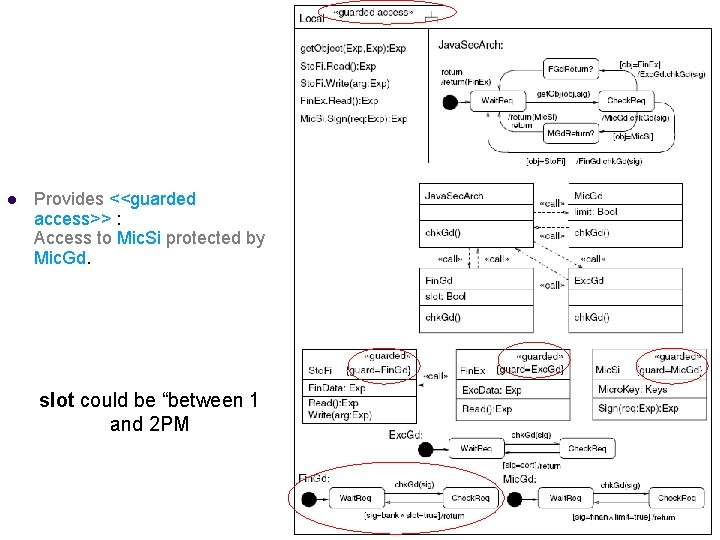

Application
- Web-based financial application
  - Internet bank: BankEasy
  - Financial advisor: Finance
- A local client needs to grant applets from these sources certain privileges
  - Access to local financial data: using GuardedObjects
  - Guarded objects: StoFi, FinEx, MicSi
- Example: applets that are signed by the bank can read and write the financial data stored in local database, but only between 1 and 2 PM
  - Enforced by FinGd guard object
  - Slot is fulfilled iff time is 1-2 PM
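The time-window rule can be sketched as a guard that is consulted before the protected object is handed out. The slides refer to Java's GuardedObject mechanism; the Python classes below are a hypothetical analogue written for illustration, with the signer name and the half-open interval [13:00, 14:00) as assumptions.

```python
from datetime import time


class TimeWindowGuard:
    """Hypothetical guard: allows access only for one signer within a time window."""

    def __init__(self, signer, start, end):
        self.signer = signer
        self.start = start
        self.end = end

    def check(self, signed_by, now):
        if signed_by != self.signer:
            raise PermissionError("applet not signed by " + self.signer)
        if not (self.start <= now < self.end):
            raise PermissionError("outside the permitted time window")


class GuardedObject:
    """Hands out the wrapped object only if the guard check passes."""

    def __init__(self, obj, guard):
        self._obj = obj
        self._guard = guard

    def get(self, signed_by, now):
        self._guard.check(signed_by, now)
        return self._obj


if __name__ == "__main__":
    fin_gd = TimeWindowGuard("bank", time(13, 0), time(14, 0))
    financial_data = GuardedObject({"balance": 100}, fin_gd)
    print(financial_data.get("bank", time(13, 30)))
```

Passing the current time in explicitly, rather than reading the clock inside the guard, keeps the sketch deterministic and easy to test.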

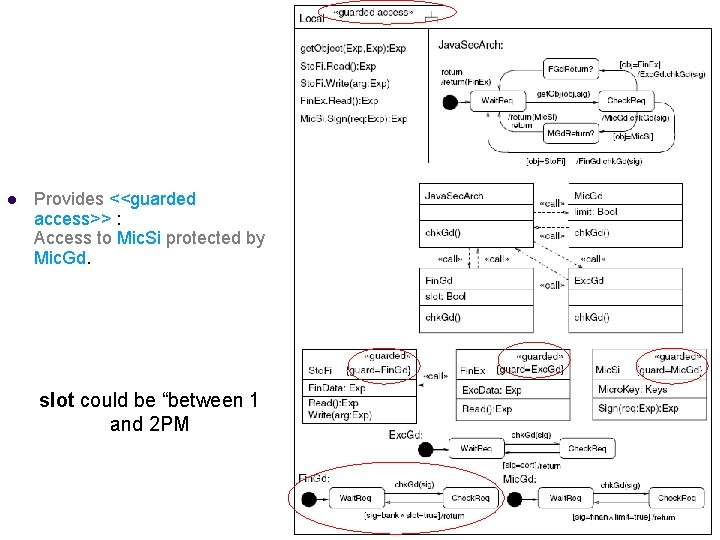

Example <<guarded access>>
- Provides <<guarded access>>: access to MicSi protected by MicGd.
- Slot could be "between 1 and 2 PM".

Does UMLsec meet requirements?
- Security requirements: <<secrecy>>, …
- Threat scenarios: Use Threats_adv(ster).
- Security concepts: e.g. <<smart card>>.
- Security mechanisms: e.g. <<guarded access>>.
- Security primitives: Encryption built in.
- Physical security: Given in deployment diagrams.
- Security management: Use activity diagrams.
- Technology specific: Java, CORBA security.

Design Principles
- How principles are enforced
- Economy of mechanism
  - Guidance on employment of security mechanisms to developers: use simple mechanism where appropriate
- Fail-safe defaults
  - Check on relevant invariants, e.g., when interrupted
- Complete mediation
  - E.g., guarded access
- Open design
  - Approach does not use secrecy of design

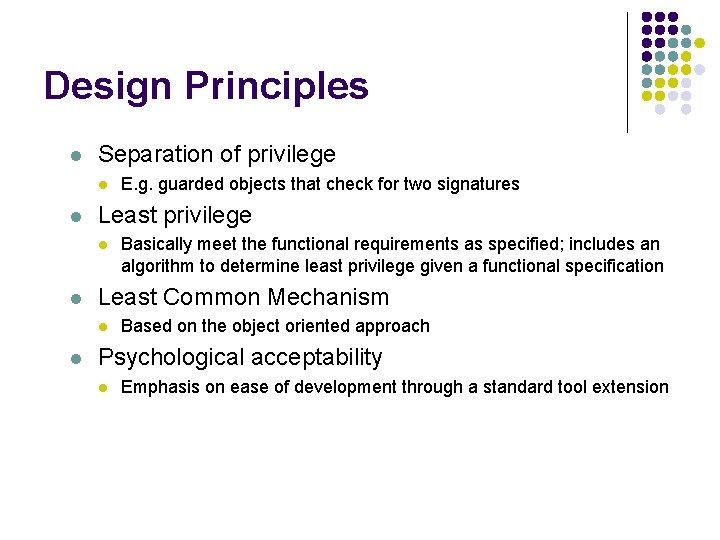

Design Principles
- Separation of privilege
  - E.g. guarded objects that check for two signatures
- Least privilege
  - Basically meet the functional requirements as specified; includes an algorithm to determine least privilege given a functional specification
- Least common mechanism
  - Based on the object oriented approach
- Psychological acceptability
  - Emphasis on ease of development through a standard tool extension

Umlsec

Umlsec Umlsec

Umlsec Umlsec

Umlsec Anthem of poland

Anthem of poland March 2013

March 2013 March 27, 2013

March 27, 2013 01:640:244 lecture notes - lecture 15: plat, idah, farad

01:640:244 lecture notes - lecture 15: plat, idah, farad Geogrphic

Geogrphic March 30 1981

March 30 1981 Slice march

Slice march Macro march

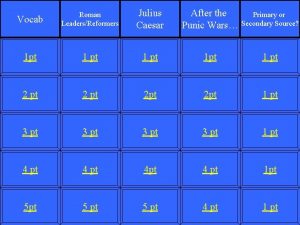

Macro march Ides of march punic wars

Ides of march punic wars 23 march 1996

23 march 1996 March 1, 1803

March 1, 1803 Texas revolution battles map

Texas revolution battles map Glasgow 5th march 1971

Glasgow 5th march 1971 Path of sherman's march to the sea

Path of sherman's march to the sea Inurl:bug bounty intext:token of appreciation

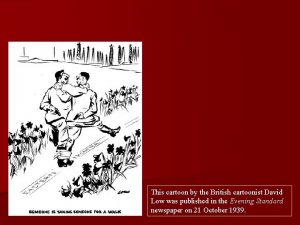

Inurl:bug bounty intext:token of appreciation Cartoon by david low 1933

Cartoon by david low 1933 Glasgow 5th march 1971

Glasgow 5th march 1971 Entertainment identifier registry

Entertainment identifier registry London 1890 rizal

London 1890 rizal March 2 success.com

March 2 success.com Poem for 8 march

Poem for 8 march Cscape envisionrv

Cscape envisionrv Jan feb mar

Jan feb mar March algorithm

March algorithm March 5 2021

March 5 2021 March 1917 revolution

March 1917 revolution How to find trade discount rate

How to find trade discount rate March 25 2011

March 25 2011 Counter column march

Counter column march Salt march

Salt march Talasemi minor kan tablosu

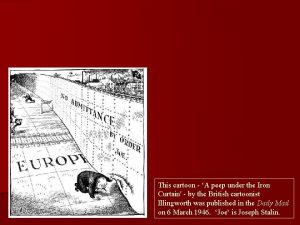

Talasemi minor kan tablosu Political cartoon iron curtain

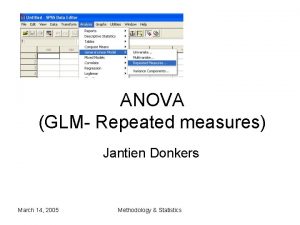

Political cartoon iron curtain Glm repeated measures

Glm repeated measures Nine line medevac

Nine line medevac Sousa march mania

Sousa march mania Why is the long march important

Why is the long march important March joint powers authority

March joint powers authority Sigma gamma rho march of dimes

Sigma gamma rho march of dimes Path of sherman's march to the sea

Path of sherman's march to the sea What is my zodiac sign chinese

What is my zodiac sign chinese Royal march banner

Royal march banner Death march edward yourdon

Death march edward yourdon Sansculottes

Sansculottes Grihalakshmi magazine march 2019

Grihalakshmi magazine march 2019 Kaggle march madness 2019

Kaggle march madness 2019 January february maruary

January february maruary Offset printing march

Offset printing march Joyful mystery pictures

Joyful mystery pictures March of progress view

March of progress view March on the pentagon 1967

March on the pentagon 1967 May is summer?

May is summer? March newsletter kindergarten

March newsletter kindergarten Sherman's march to the sea map

Sherman's march to the sea map Letter to senator robert wagner, march 7, 1934.

Letter to senator robert wagner, march 7, 1934. Kilusang nasyonalismo sa china

Kilusang nasyonalismo sa china 25 march 2008

25 march 2008 20 mile march

20 mile march Ausias march poema xiii

Ausias march poema xiii Aleida march de la torre

Aleida march de la torre