Introduction to Machine Learning and Knowledge Representation Florian

- Slides: 43

Introduction to Machine Learning and Knowledge Representation
Florian Gyarfas
COMP 790-072 (Robotics)

Outline
• Introduction, definitions
• Common knowledge representations
• Types of learning
• Deductive Learning (rules of inference)
• Explanation-based learning
• Inductive Learning – some approaches
• Concept Learning
• Decision-Tree Learning
• Clustering
• Summary, references

Introduction
• What is Knowledge Representation?
  – Formalisms that represent knowledge (facts about the world) and mechanisms to manipulate such knowledge (for example, to derive new facts from existing knowledge)
• What is Machine Learning?
  – Mitchell: “A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E.”
  – Example:
    » T: playing checkers
    » P: percent of games won against opponents
    » E: playing practice games against itself

Common Knowledge Representations
• Logic
  – propositional
  – predicate
  – other
• Structured Knowledge Representations:
  – Frames
  – Semantic nets
  – …

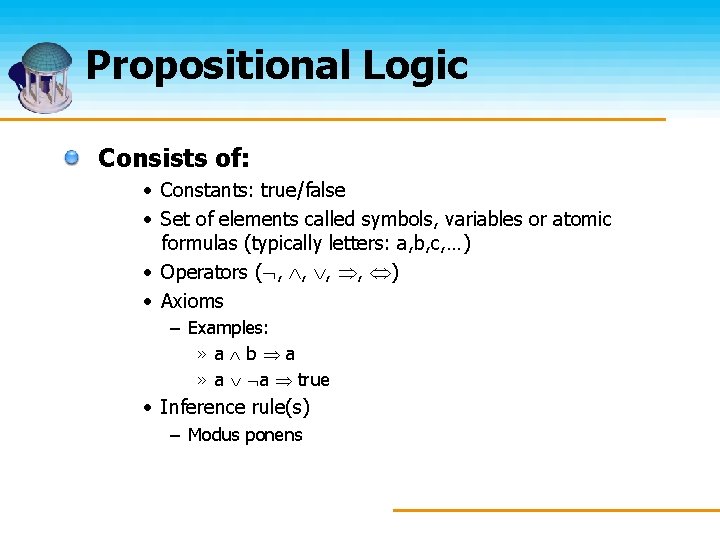

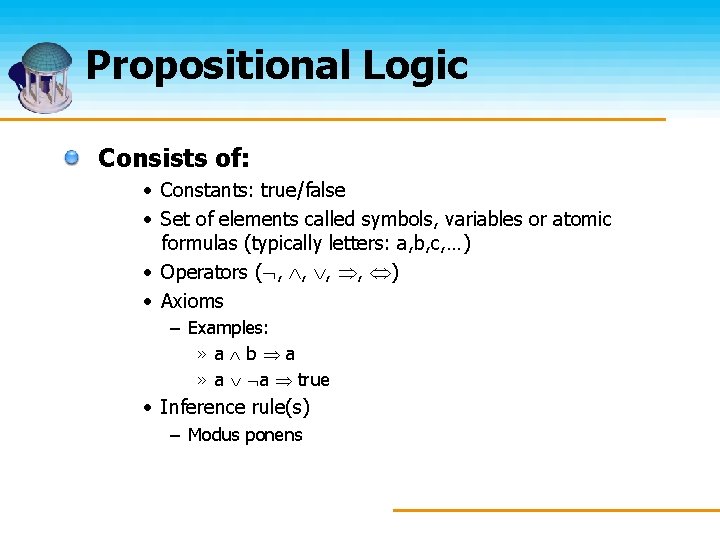

Propositional Logic
Consists of:
• Constants: true/false
• Set of elements called symbols, variables or atomic formulas (typically letters: a, b, c, …)
• Operators (¬, ∧, ∨)
• Axioms
  – Examples:
    » a ∧ b → a
    » a ∨ ¬a ↔ true
• Inference rule(s)
  – Modus ponens

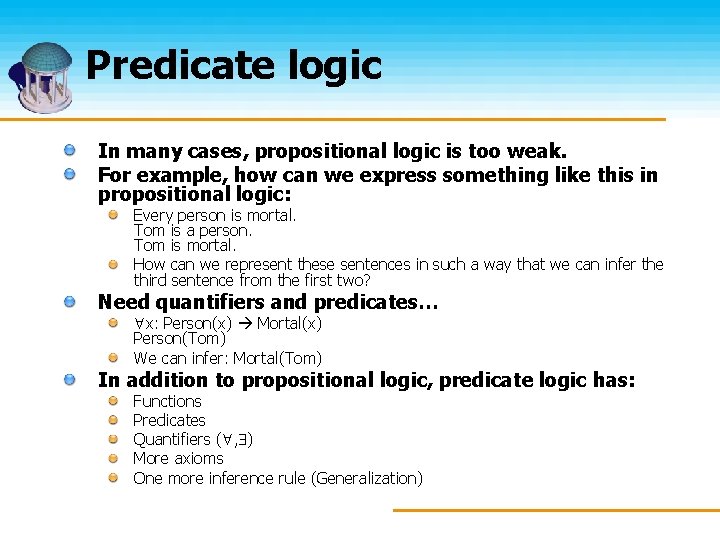

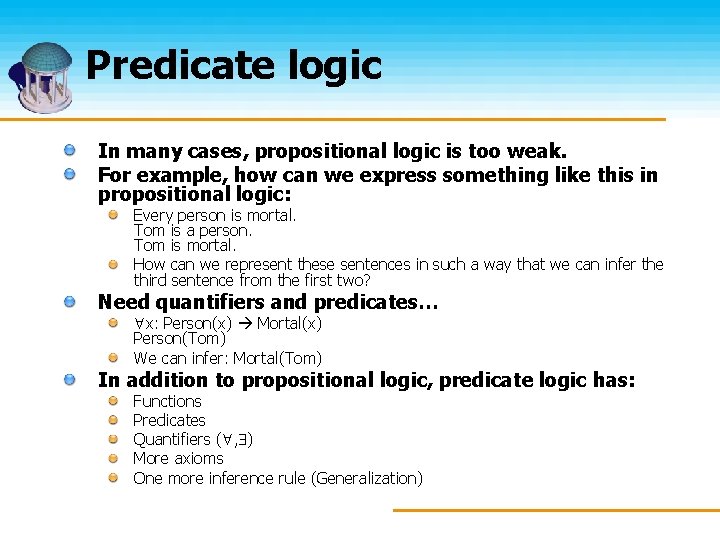

Predicate logic
• In many cases, propositional logic is too weak. For example, how can we express something like this in propositional logic?
  – Every person is mortal.
  – Tom is a person.
  – Tom is mortal.
• How can we represent these sentences in such a way that we can infer the third sentence from the first two?
• We need quantifiers and predicates:
  – ∀x: Person(x) → Mortal(x)
  – Person(Tom)
  – We can infer: Mortal(Tom)
• In addition to propositional logic, predicate logic has:
  – Functions
  – Predicates
  – Quantifiers (∀, ∃)
  – More axioms
  – One more inference rule (Generalization)

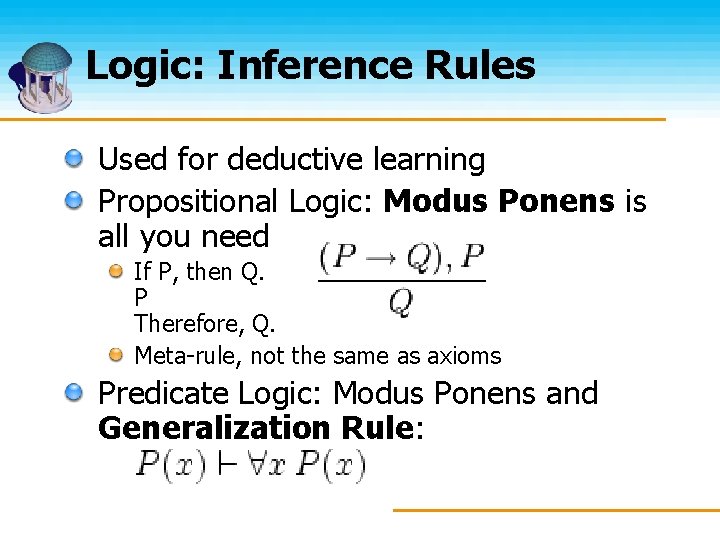

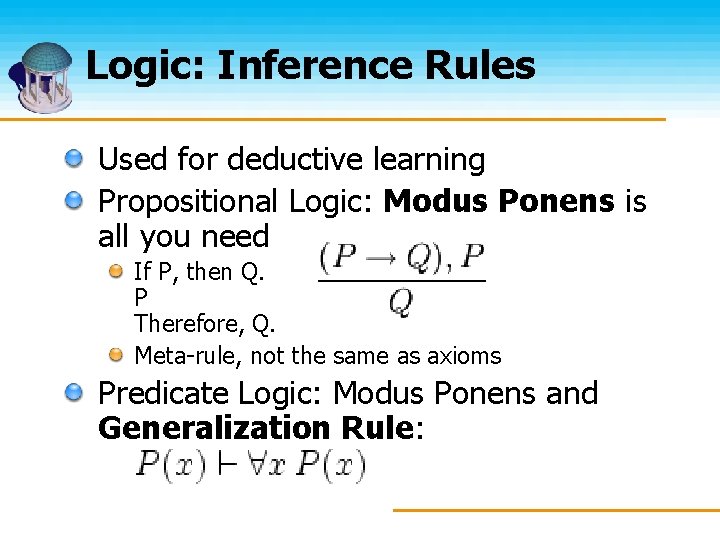

Logic: Inference Rules
• Used for deductive learning
• Propositional Logic: Modus Ponens is all you need
  – If P, then Q.
  – P.
  – Therefore, Q.
• Meta-rule, not the same as axioms
• Predicate Logic: Modus Ponens and Generalization Rule:

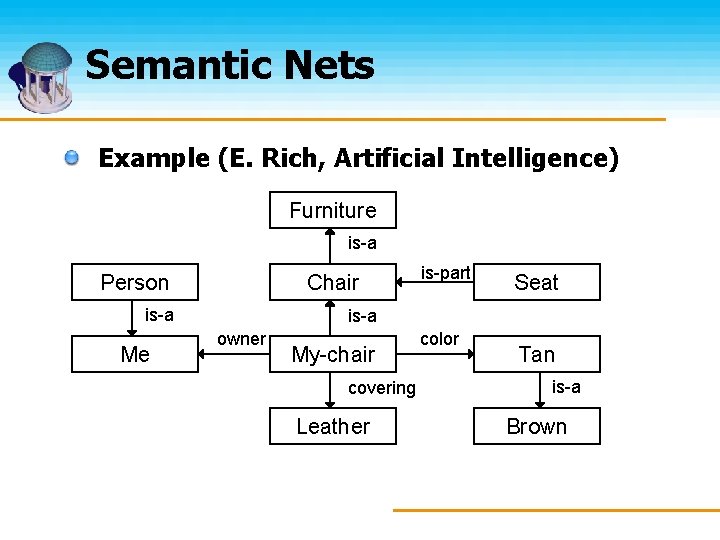

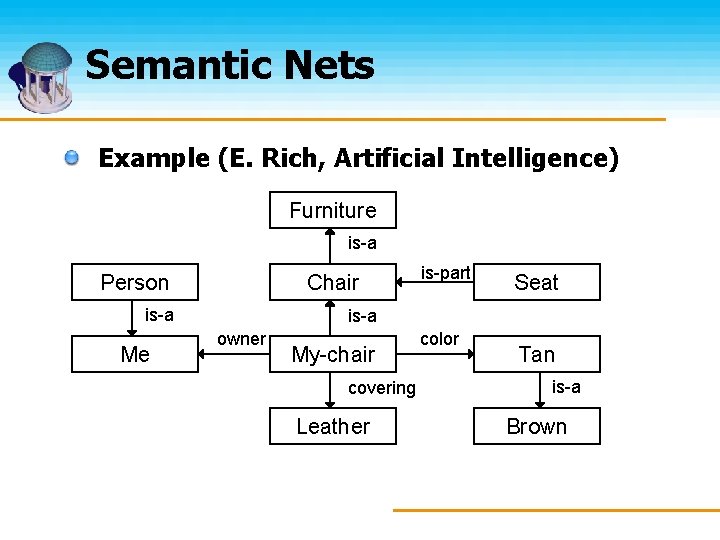
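As a minimal sketch of how modus ponens drives deduction, the following Python snippet applies rules of the form "if every premise is known, conclude the consequent" until nothing new can be derived. The rule encoding and the name `forward_chain` are illustrative assumptions, not part of the lecture. The quantified rule Person(x) → Mortal(x) is instantiated by hand for Tom, so no unification is needed.

```python
def forward_chain(facts, rules):
    """Apply modus ponens repeatedly until no new fact appears.

    facts: iterable of strings, the statements known to be true.
    rules: list of (premises, conclusion) pairs, read as
           "if every premise is known, then the conclusion holds".
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)  # modus ponens: P, P -> Q, therefore Q
                changed = True
    return known


# Hand-instantiated version of "Every person is mortal" for Tom.
facts = {"Person(Tom)"}
rules = [(("Person(Tom)",), "Mortal(Tom)")]
derived = forward_chain(facts, rules)
print(sorted(derived))
```

The derived set contains Mortal(Tom) even though it was never stated as a fact, which is the sense in which deduction only makes existing knowledge explicit.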

Structured Knowledge Representations
• Semantic nets
  – Really just graphs that represent knowledge
  – Nodes represent concepts
  – Arcs represent binary relationships between concepts
• Frames
  – Extension of semantic nets
  – Entities have attributes
  – Class/subclass hierarchy that supports inheritance; classes inherit attributes from superclasses

Semantic Nets Example (E. Rich, Artificial Intelligence)
• Chair is-a Furniture
• Me is-a Person
• My-chair is-a Chair
• Seat is-part Chair
• My-chair owner Me
• My-chair covering Leather
• My-chair color Tan
• Tan is-a Brown
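A semantic net can be stored as a list of (node, arc, node) triples. The sketch below encodes one plausible reading of the flattened figure above; the direction of each arc is an assumption, and the helper names `related` and `is_a` are hypothetical. It answers class-membership questions by following is-a arcs transitively.

```python
# (subject, relation, object) triples; arc directions are an assumed reading
# of the semantic net example, not taken verbatim from the figure.
TRIPLES = [
    ("Chair", "is-a", "Furniture"),
    ("Me", "is-a", "Person"),
    ("My-chair", "is-a", "Chair"),
    ("Seat", "is-part", "Chair"),
    ("My-chair", "owner", "Me"),
    ("My-chair", "covering", "Leather"),
    ("My-chair", "color", "Tan"),
    ("Tan", "is-a", "Brown"),
]


def related(node, relation):
    """Return every node reachable from `node` over one `relation` arc."""
    return [obj for subj, rel, obj in TRIPLES if subj == node and rel == relation]


def is_a(node, concept):
    """Follow is-a arcs transitively to test whether `node` falls under `concept`."""
    stack, seen = [node], set()
    while stack:
        current = stack.pop()
        if current == concept:
            return True
        if current in seen:
            continue
        seen.add(current)
        stack.extend(related(current, "is-a"))
    return False


print(is_a("My-chair", "Furniture"))  # My-chair -> Chair -> Furniture
print(related("My-chair", "owner"))
```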

Types of Learning
• Deductive – Inductive
• Supervised – Unsupervised
• Symbolic – Non-symbolic

Deductive vs. inductive
• Deductive learning
  – Knowledge is deduced from existing knowledge by means of truth-preserving transformations (this is nothing more than a reformulation of existing knowledge).
  – If the premises are true, the conclusion must be true!
  – Example: propositional/predicate logic with rules of inference

Deductive vs. inductive
• Inductive learning
  – Generalization from examples
  – Example: “All observed crows are black” ⇒ “All crows are black”
  – A process of reasoning in which the premises of an argument are believed to support the conclusion but do not ensure it

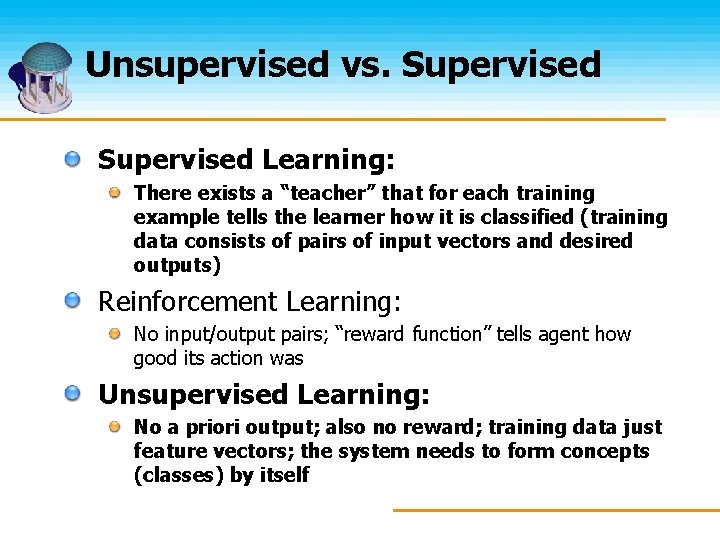

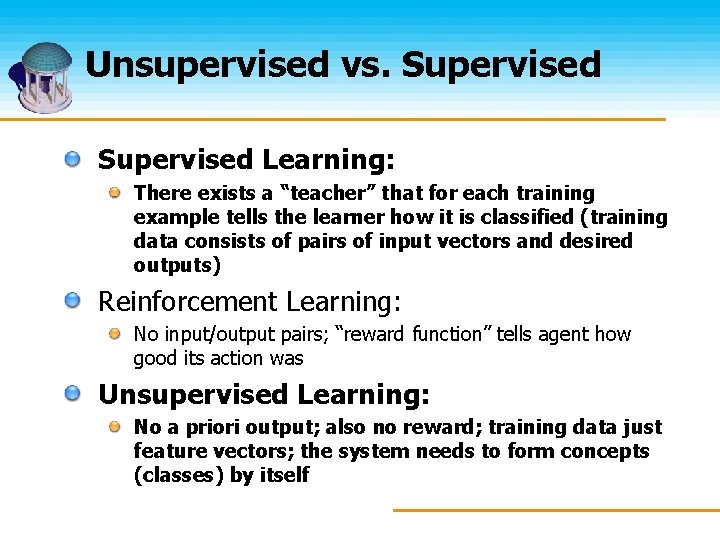

Unsupervised vs. Supervised
• Supervised Learning: There exists a “teacher” that tells the learner, for each training example, how it is classified (training data consists of pairs of input vectors and desired outputs)
• Reinforcement Learning: No input/output pairs; a “reward function” tells the agent how good its action was
• Unsupervised Learning: No a priori output and no reward; the training data consists only of feature vectors, and the system needs to form concepts (classes) by itself

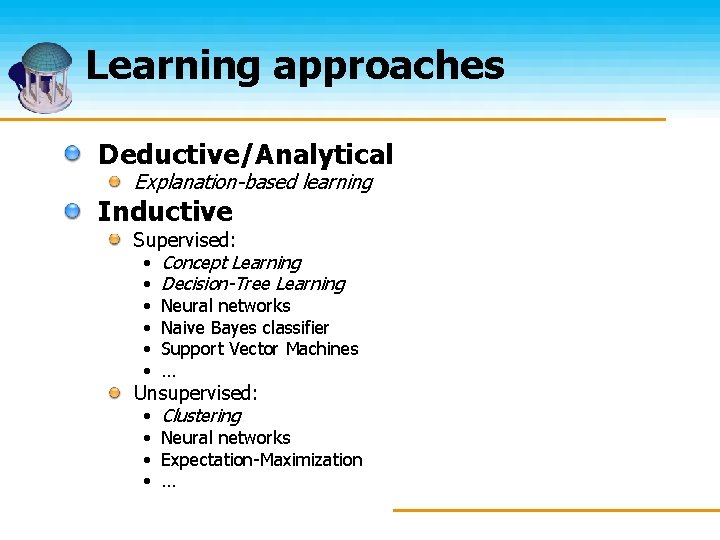

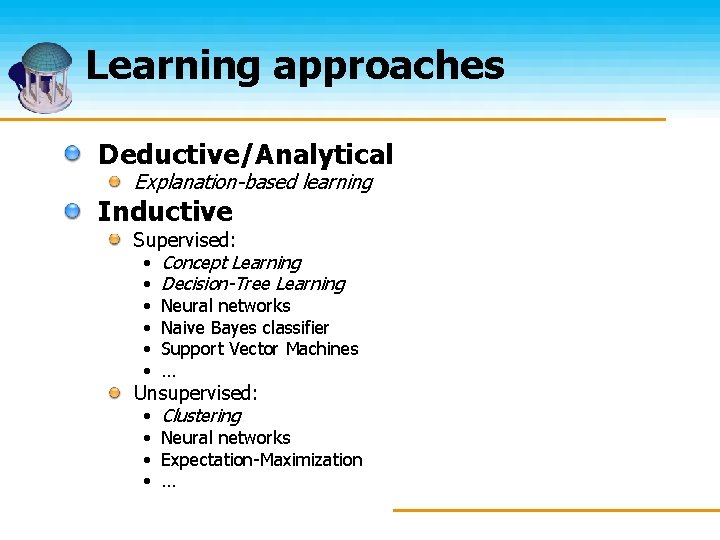

Learning approaches
• Deductive/Analytical
  – Explanation-based learning
• Inductive
  – Supervised:
    » Concept Learning
    » Decision-Tree Learning
    » Neural networks
    » Naive Bayes classifier
    » Support Vector Machines
    » …
  – Unsupervised:
    » Clustering
    » Neural networks
    » Expectation-Maximization
    » …

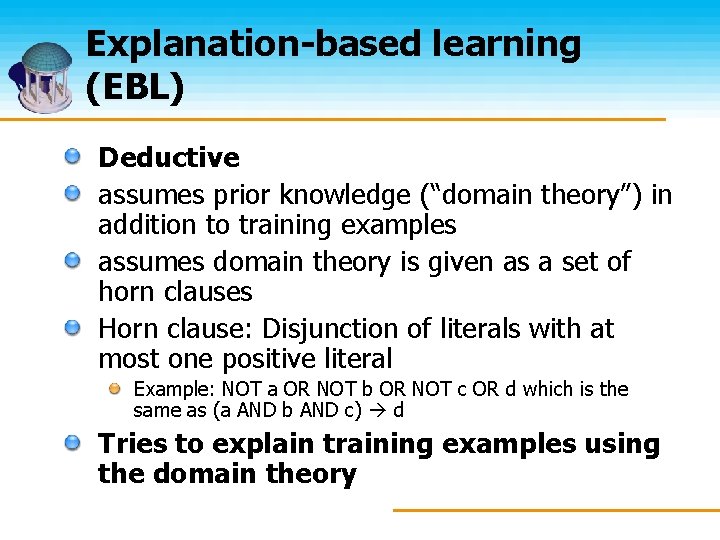

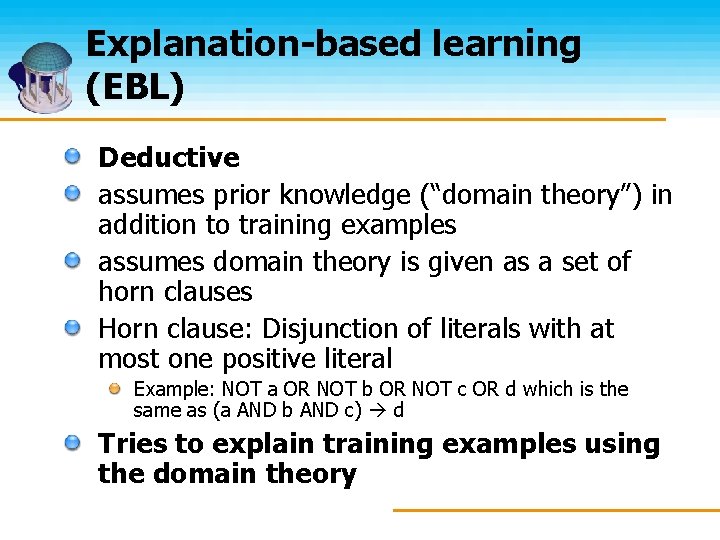

Explanation-based learning (EBL)
• Deductive
• Assumes prior knowledge (“domain theory”) in addition to training examples
• Assumes the domain theory is given as a set of Horn clauses
  – Horn clause: disjunction of literals with at most one positive literal
  – Example: NOT a OR NOT b OR NOT c OR d, which is the same as (a AND b AND c) → d
• Tries to explain training examples using the domain theory
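The equivalence between the disjunctive and the implication form of the example Horn clause can be checked by brute force over all 16 truth assignments. This is only a sanity check; the function names are illustrative, and implication "p → q" is taken in its usual meaning "NOT p OR q".

```python
from itertools import product


def horn_clause(a, b, c, d):
    """Disjunctive form: NOT a OR NOT b OR NOT c OR d."""
    return (not a) or (not b) or (not c) or d


def implication(a, b, c, d):
    """Rule form: (a AND b AND c) -> d, with p -> q read as NOT p OR q."""
    return (not (a and b and c)) or d


# Compare both forms on every assignment of truth values to a, b, c, d.
equivalent = all(
    horn_clause(*values) == implication(*values)
    for values in product([False, True], repeat=4)
)
print(equivalent)
```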

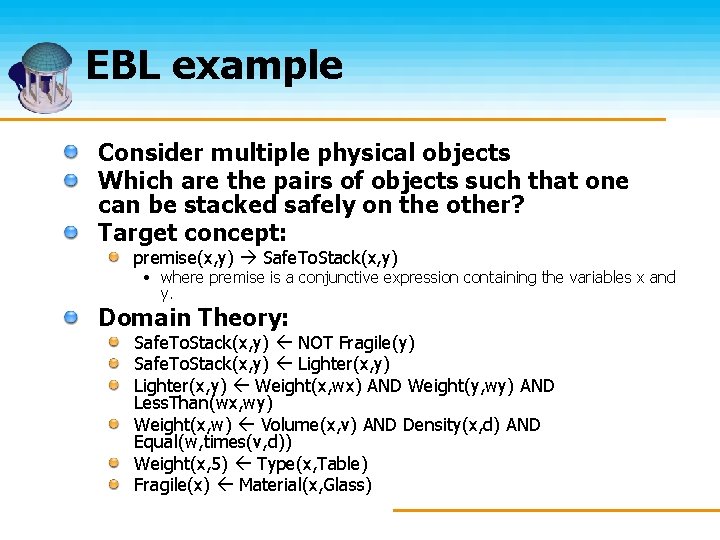

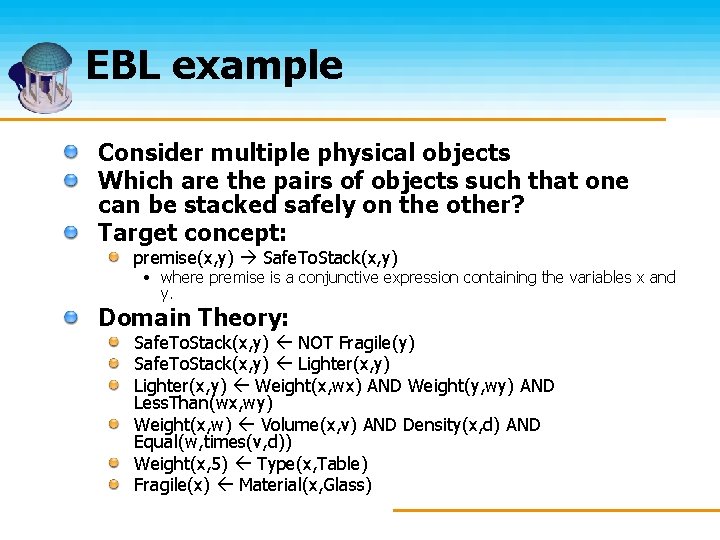

EBL example
• Consider multiple physical objects
• Which are the pairs of objects such that one can be stacked safely on the other?
• Target concept: premise(x, y) → SafeToStack(x, y)
  – where premise is a conjunctive expression containing the variables x and y.
• Domain Theory:
  – SafeToStack(x, y) ← NOT Fragile(y)
  – SafeToStack(x, y) ← Lighter(x, y)
  – Lighter(x, y) ← Weight(x, wx) AND Weight(y, wy) AND LessThan(wx, wy)
  – Weight(x, w) ← Volume(x, v) AND Density(x, d) AND Equal(w, times(v, d))
  – Weight(x, 5) ← Type(x, Table)
  – Fragile(x) ← Material(x, Glass)

EBL example (2)
Training example:
• On(Obj1, Obj2)
• Type(Obj1, Box)
• Type(Obj2, Table)
• Color(Obj1, Red)
• Color(Obj2, Blue)
• Volume(Obj1, 2)
• Density(Obj1, 0.3)
• Material(Obj1, Cardboard)
• Material(Obj2, Wood)
• SafeToStack(Obj1, Obj2)

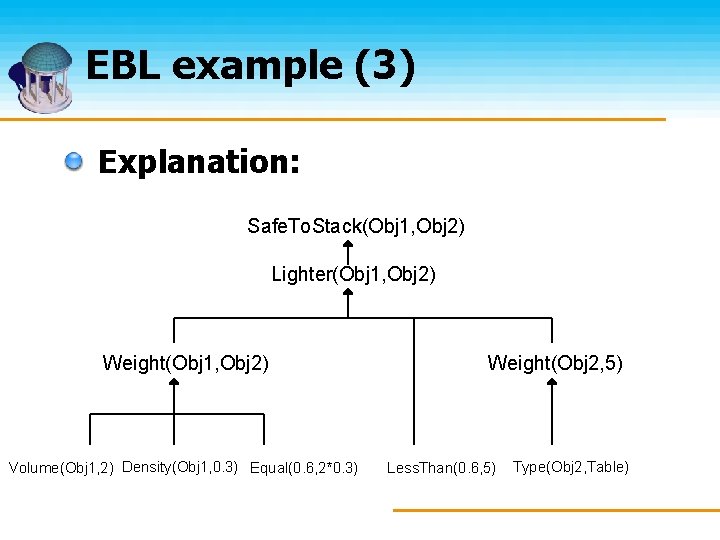

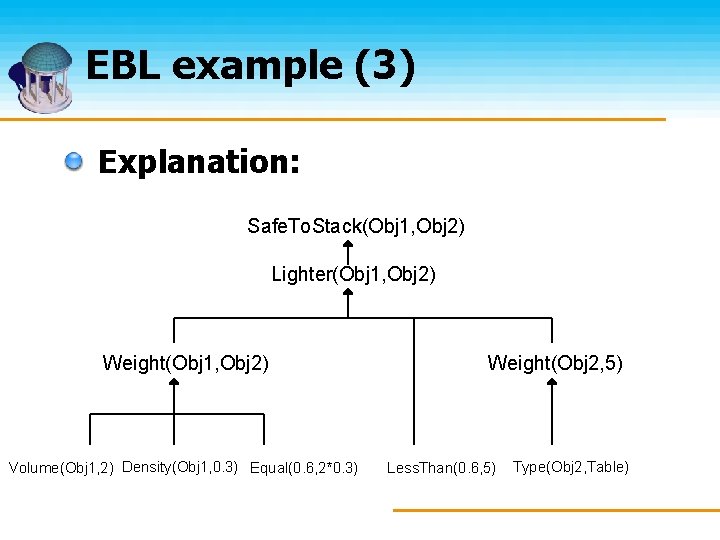

EBL example (3)
Explanation (each indented line supports the line above it):
SafeToStack(Obj1, Obj2)
  Lighter(Obj1, Obj2)
    Weight(Obj1, 0.6)
      Volume(Obj1, 2)
      Density(Obj1, 0.3)
      Equal(0.6, 2*0.3)
    LessThan(0.6, 5)
    Weight(Obj2, 5)
      Type(Obj2, Table)

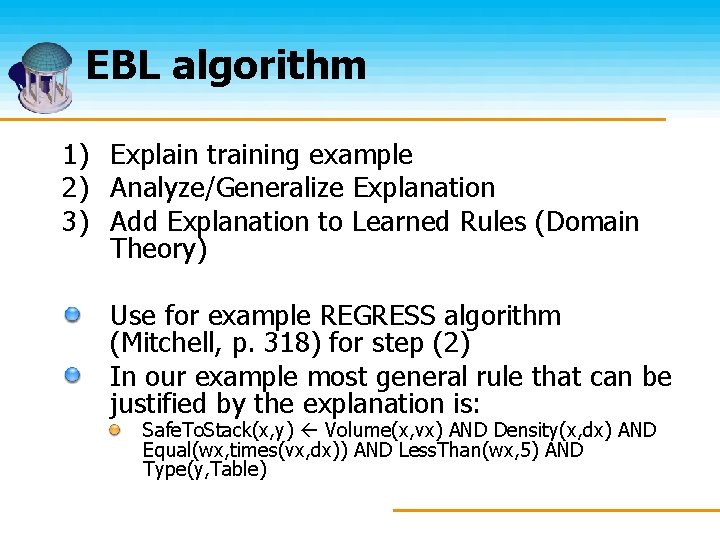

EBL algorithm
1) Explain training example
2) Analyze/Generalize Explanation
3) Add Explanation to Learned Rules (Domain Theory)
• Use for example the REGRESS algorithm (Mitchell, p. 318) for step (2)
• In our example, the most general rule that can be justified by the explanation is:
  SafeToStack(x, y) ← Volume(x, vx) AND Density(x, dx) AND Equal(wx, times(vx, dx)) AND LessThan(wx, 5) AND Type(y, Table)
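To make the learned rule concrete, here is a small sketch that evaluates it directly on the facts of the training example. The dictionary layout `FACTS` and the function name `safe_to_stack_learned` are assumptions made for illustration. The point is that the rule refers only to directly observable features (volume, density, type), so no chain of inference through Lighter and Weight is needed at query time.

```python
# Observable facts from the training example, keyed by predicate then object.
# This storage layout is an illustrative assumption.
FACTS = {
    "Type": {"Obj1": "Box", "Obj2": "Table"},
    "Volume": {"Obj1": 2},
    "Density": {"Obj1": 0.3},
}


def safe_to_stack_learned(x, y, facts=FACTS):
    """Evaluate the learned rule:
    SafeToStack(x, y) <- Volume(x, vx) AND Density(x, dx)
                         AND Equal(wx, times(vx, dx))
                         AND LessThan(wx, 5) AND Type(y, Table)
    Returns False when the rule's preconditions cannot be established;
    that does not prove that stacking is unsafe.
    """
    volume = facts["Volume"].get(x)
    density = facts["Density"].get(x)
    if volume is None or density is None:
        return False
    weight_x = volume * density  # Equal(wx, times(vx, dx))
    return weight_x < 5 and facts["Type"].get(y) == "Table"


print(safe_to_stack_learned("Obj1", "Obj2"))
```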

EBL - Remarks
• Knowledge reformulation: EBL just restates what the learner already knows
• You don’t really gain new knowledge!
• Why do we need it then?
  – In principle, we can compute everything we need using just the domain theory
  – In practice, however, this might not work. Consider chess: does knowing all the rules make you a perfect player?
  – So EBL reformulates existing knowledge into a more operational form, which can be much more effective, especially under certain constraints

Concept Learning
• Inductive
• Learn a general concept definition from specific training examples
• Search through a predefined space of potential hypotheses for the target concept
• Pick the one that best fits the training examples

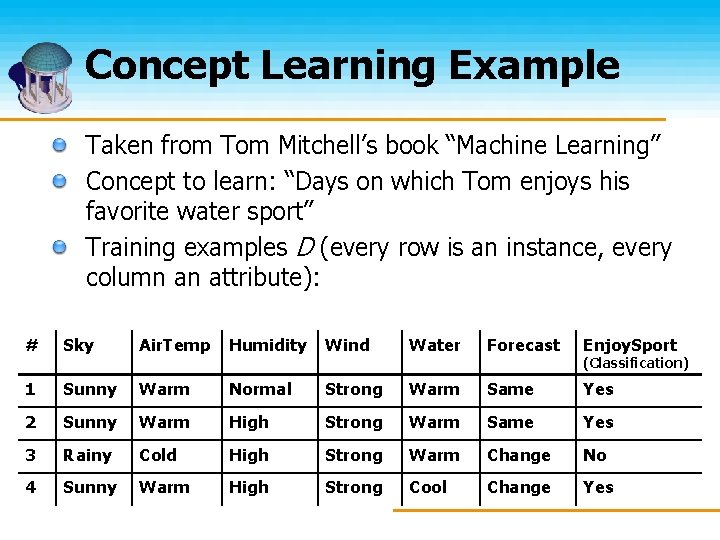

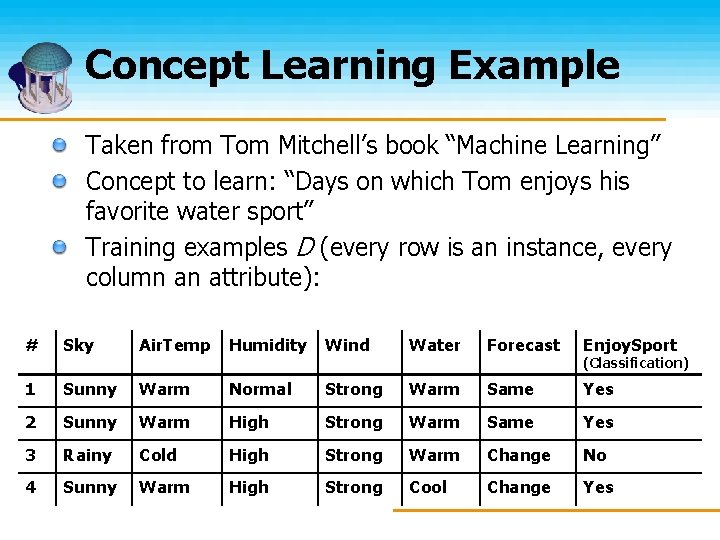

Concept Learning Example
• Taken from Tom Mitchell’s book “Machine Learning”
• Concept to learn: “Days on which Tom enjoys his favorite water sport”
• Training examples D (every row is an instance, every column an attribute; EnjoySport is the classification):

| # | Sky | AirTemp | Humidity | Wind | Water | Forecast | EnjoySport |
|---|-------|---------|----------|--------|-------|----------|------------|
| 1 | Sunny | Warm | Normal | Strong | Warm | Same | Yes |
| 2 | Sunny | Warm | High | Strong | Warm | Same | Yes |
| 3 | Rainy | Cold | High | Strong | Warm | Change | No |
| 4 | Sunny | Warm | High | Strong | Cool | Change | Yes |

Concept Learning
• X = set of all instances (instance = combination of attribute values), D = set of all training examples (D ⊆ X)
• The “target concept” is a function (target function) c(x) that for a given instance x is either 0 or 1 (in our example 0 if EnjoySport = No, 1 if EnjoySport = Yes)
• We do not know the target concept, but we can come up with hypotheses. We would like to find a hypothesis h(x) such that h(x) = c(x) at least for all x in D (D = training examples)
• Hypothesis representation: let us assume that the target concept is expressed as a conjunction of constraints on the instance attributes. Then we can write a hypothesis like this: AirTemp = Cold ∧ Humidity = High. Or, in short: <?, Cold, High, ?, ?, ?>, where “?” means any value is acceptable for this attribute. We use “∅” to indicate that no value is acceptable for an attribute.

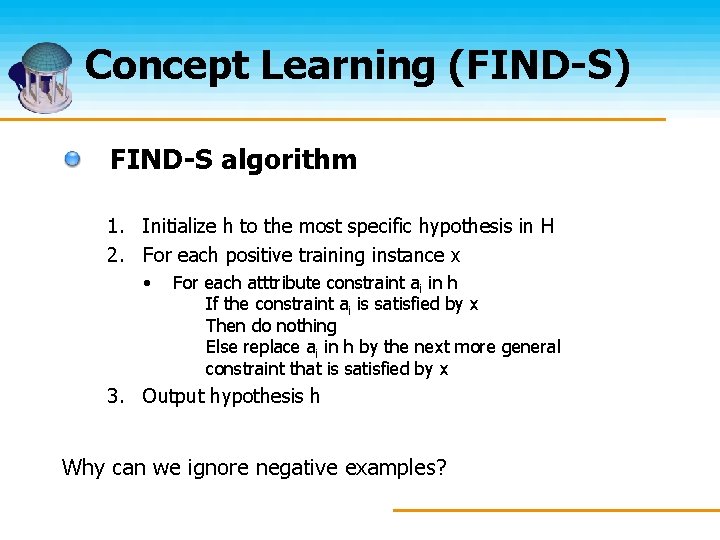

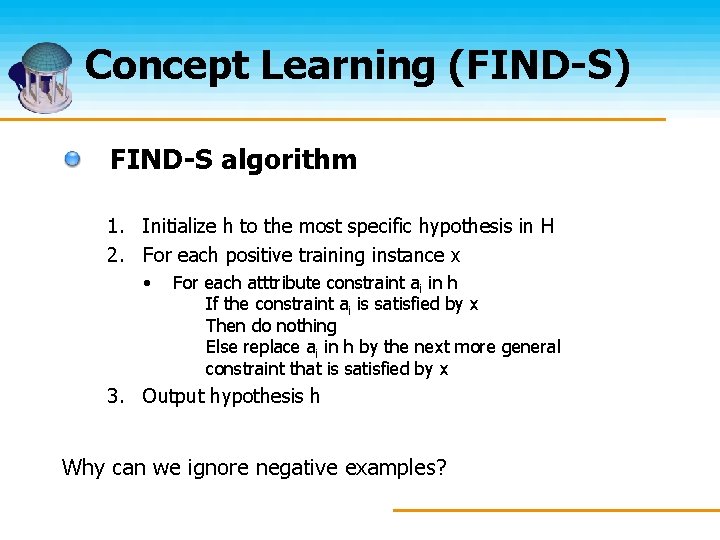

Concept Learning
• Most general hypothesis: <?, ?, ?, ?, ?, ?>
• Most specific hypothesis: <∅, ∅, ∅, ∅, ∅, ∅>
• General-to-specific ordering of hypotheses: ≥g (more general than or equal to) defines a partial order over the hypothesis space H
• The FIND-S algorithm finds the most specific hypothesis
  – For our example: <Sunny, Warm, ?, Strong, ?, ?>

Concept Learning (FIND-S)
FIND-S algorithm
1. Initialize h to the most specific hypothesis in H
2. For each positive training instance x
   • For each attribute constraint ai in h
     – If the constraint ai is satisfied by x, then do nothing
     – Else replace ai in h by the next more general constraint that is satisfied by x
3. Output hypothesis h
Why can we ignore negative examples?
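The FIND-S steps above can be sketched in a few lines of Python, run here on the four EnjoySport training examples. The encoding is an assumption made for illustration: `None` stands for the "no value acceptable" constraint and the string "?" for "any value acceptable".

```python
# Training examples: (Sky, AirTemp, Humidity, Wind, Water, Forecast), EnjoySport
TRAINING = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), True),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), True),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), False),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), True),
]

EMPTY = None  # constraint that no value satisfies
ANY = "?"     # constraint that every value satisfies


def find_s(examples):
    """Return the most specific conjunctive hypothesis covering all positives."""
    n_attributes = len(examples[0][0])
    h = [EMPTY] * n_attributes          # step 1: most specific hypothesis
    for instance, is_positive in examples:
        if not is_positive:
            continue                    # step 2 looks at positive examples only
        for i, value in enumerate(instance):
            if h[i] is EMPTY:
                h[i] = value            # generalize "nothing" to this one value
            elif h[i] != ANY and h[i] != value:
                h[i] = ANY              # two different values seen: accept any
    return tuple(h)                     # step 3


print(find_s(TRAINING))
```

For this data the result is the hypothesis <Sunny, Warm, ?, Strong, ?, ?>.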

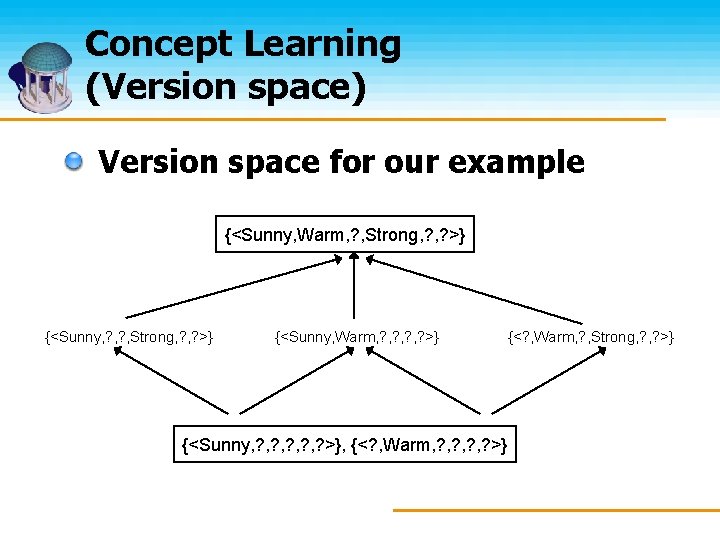

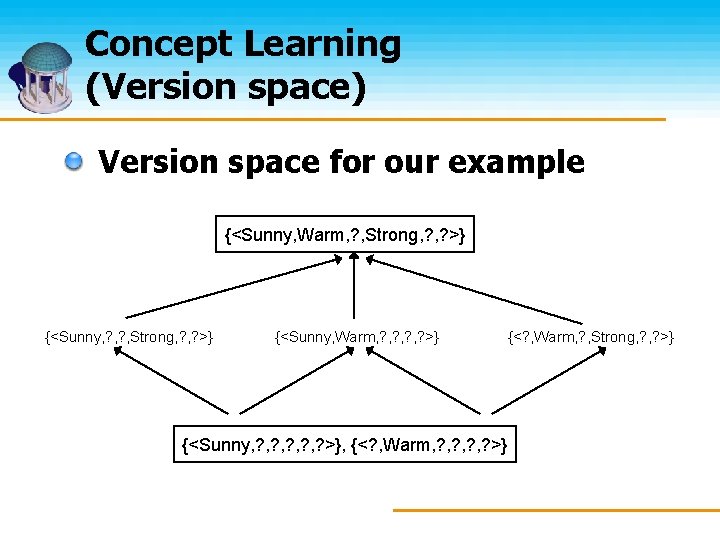

Concept Learning
• FIND-S only computes the most specific hypothesis
• Another approach to concept learning: CANDIDATE-ELIMINATION finds all hypotheses in the version space
• The version space, denoted VS_{H,D}, with respect to hypothesis space H and training examples D, is the subset of hypotheses from H consistent with the training examples in D:
  VS_{H,D} = {h ∈ H | Consistent(h, D)}

Concept Learning (Version space)
Version space for our example, from most specific to most general:
• S: {<Sunny, Warm, ?, Strong, ?, ?>}
• In between: <Sunny, ?, ?, Strong, ?, ?>, <Sunny, Warm, ?, ?, ?, ?>, <?, Warm, ?, Strong, ?, ?>
• G: {<Sunny, ?, ?, ?, ?, ?>, <?, Warm, ?, ?, ?, ?>}
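The definition VS_{H,D} = {h ∈ H | Consistent(h, D)} can be applied literally by enumerating H and keeping the consistent hypotheses. The sketch below does this by brute force; it is not the Candidate-Elimination algorithm. As a simplifying assumption, each attribute's candidate constraints are "?" plus the values that occur in the training data, and the all-empty hypothesis is left out because it cannot cover any positive example.

```python
from itertools import product

# Training examples: (Sky, AirTemp, Humidity, Wind, Water, Forecast), EnjoySport
TRAINING = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), True),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), True),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), False),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), True),
]
ANY = "?"


def covers(h, x):
    """True if hypothesis h classifies instance x as positive."""
    return all(c == ANY or c == v for c, v in zip(h, x))


def consistent(h, examples):
    """True if h agrees with the label of every training example."""
    return all(covers(h, x) == label for x, label in examples)


def version_space(examples):
    """Enumerate the conjunctive hypotheses and keep the consistent ones."""
    n = len(examples[0][0])
    # Assumption: constraints per attribute are "?" plus the values seen in D.
    choices = [[ANY] + sorted({x[i] for x, _ in examples}) for i in range(n)]
    return [h for h in product(*choices) if consistent(h, examples)]


vs = version_space(TRAINING)
for h in vs:
    print(h)
```

For the four EnjoySport examples this enumeration yields six hypotheses, bounded by the most specific hypothesis <Sunny, Warm, ?, Strong, ?, ?> and the two most general ones.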

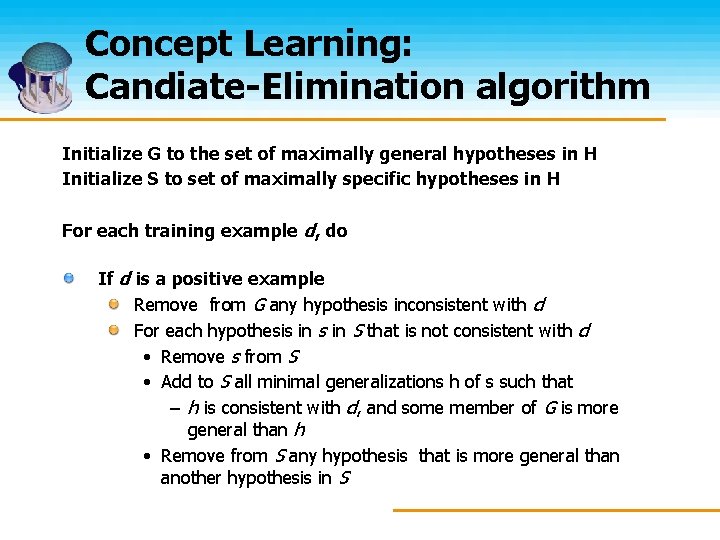

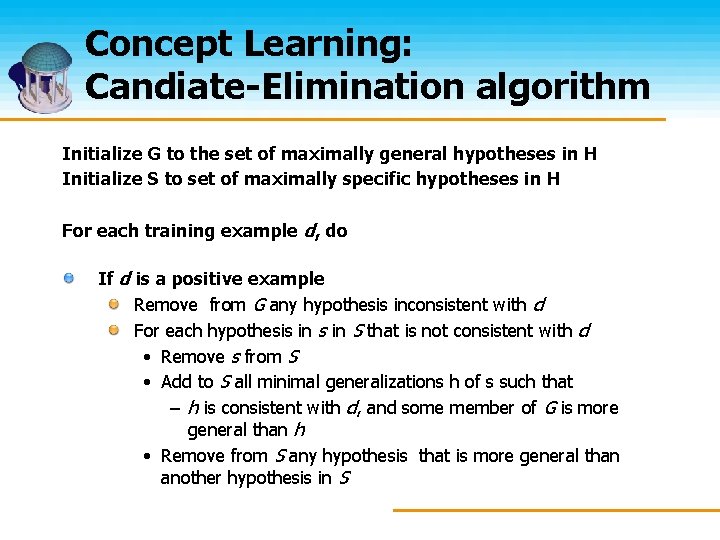

Concept Learning: Candidate-Elimination algorithm
• Initialize G to the set of maximally general hypotheses in H
• Initialize S to the set of maximally specific hypotheses in H
• For each training example d, do
  – If d is a positive example
    » Remove from G any hypothesis inconsistent with d
    » For each hypothesis s in S that is not consistent with d
      • Remove s from S
      • Add to S all minimal generalizations h of s such that h is consistent with d and some member of G is more general than h
      • Remove from S any hypothesis that is more general than another hypothesis in S

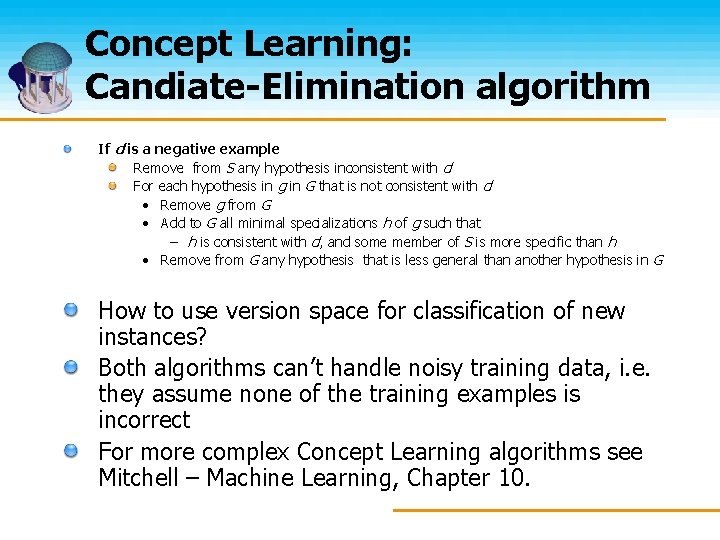

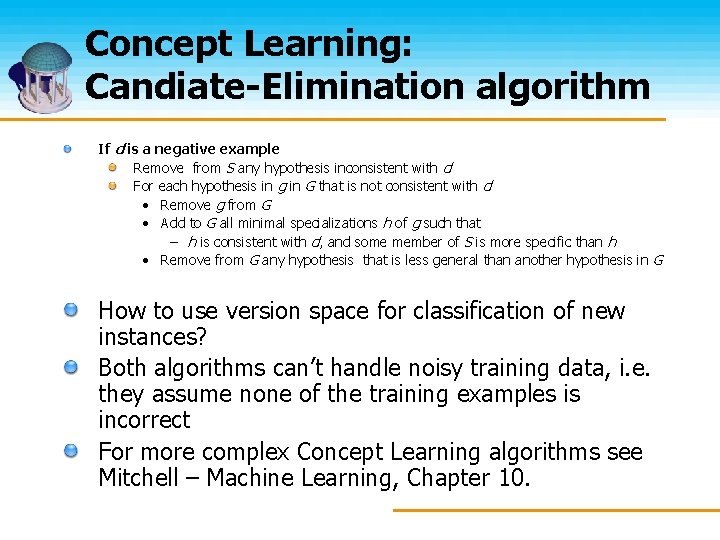

Concept Learning: Candidate-Elimination algorithm
  – If d is a negative example
    » Remove from S any hypothesis inconsistent with d
    » For each hypothesis g in G that is not consistent with d
      • Remove g from G
      • Add to G all minimal specializations h of g such that h is consistent with d and some member of S is more specific than h
      • Remove from G any hypothesis that is less general than another hypothesis in G
• How to use the version space for classification of new instances?
• Both algorithms can’t handle noisy training data, i.e. they assume none of the training examples is incorrect
• For more complex Concept Learning algorithms see Mitchell – Machine Learning, Chapter 10.

Inductive bias
• For both algorithms, we assumed that the target concept was contained in the hypothesis space
• Our hypothesis space was the set of all hypotheses that can be expressed as a conjunction of attribute constraints
• Such an assumption is called an inductive bias
• What if the target concept is not a conjunction of constraints?
• Why not consider all possible hypotheses?

Decision Tree Learning
• Another inductive, supervised learning method for approximating discrete-valued target functions
• Learned function is represented by a tree
• Leaf nodes provide the classification
• Each internal node specifies a test of some attribute of the instance

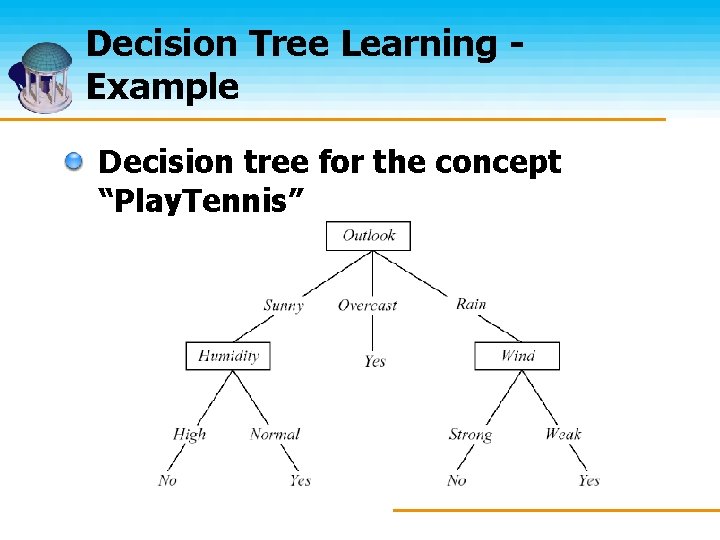

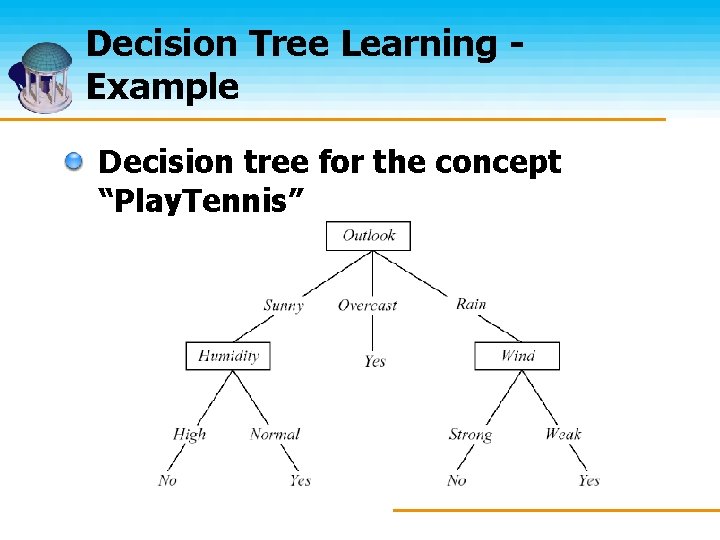

Decision Tree Learning Example
Decision tree for the concept “PlayTennis”

Decision Tree Learning
• Classification starts at the root node
• Example tree corresponds to the expression:
  (Outlook = Sunny AND Humidity = Normal) OR (Outlook = Overcast) OR (Outlook = Rain AND Wind = Weak)
• Using the tree to classify new instances is easy, but how do we construct a decision tree from training examples?

Decision Tree Learning: ID3 Algorithm
• Constructs the tree top-down
• Order of attributes?
  – Evaluate each attribute using a statistical test (see next slide) to determine how well it alone classifies the training examples
  – Use the attribute that best classifies the training examples as the test at the root node
  – Create a descendant for each possible value of that attribute
  – Repeat the process for each descendant…
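Classifying with a given tree amounts to following one branch per test until a leaf is reached. The sketch below encodes the PlayTennis tree implied by the expression above as nested tuples and dictionaries. That representation is an illustrative choice, and the "No" leaves are inferred as the complement of the three "Yes" paths in the expression.

```python
# A tree is either a leaf label ("Yes"/"No") or a pair
# (attribute to test, {attribute value: subtree}).
TREE = ("Outlook", {
    "Sunny": ("Humidity", {"High": "No", "Normal": "Yes"}),
    "Overcast": "Yes",
    "Rain": ("Wind", {"Strong": "No", "Weak": "Yes"}),
})


def classify(tree, instance):
    """Walk from the root to a leaf, testing one attribute per internal node."""
    while not isinstance(tree, str):
        attribute, branches = tree
        tree = branches[instance[attribute]]
    return tree


day = {"Outlook": "Sunny", "Humidity": "High", "Wind": "Weak"}
print(classify(TREE, day))
```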

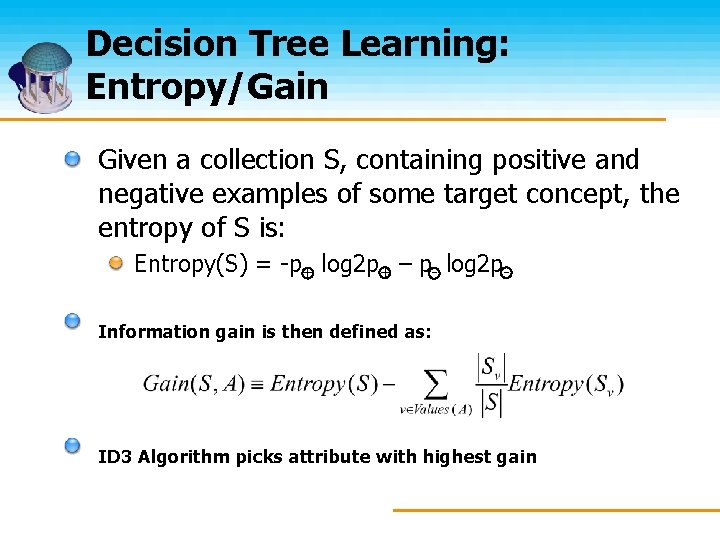

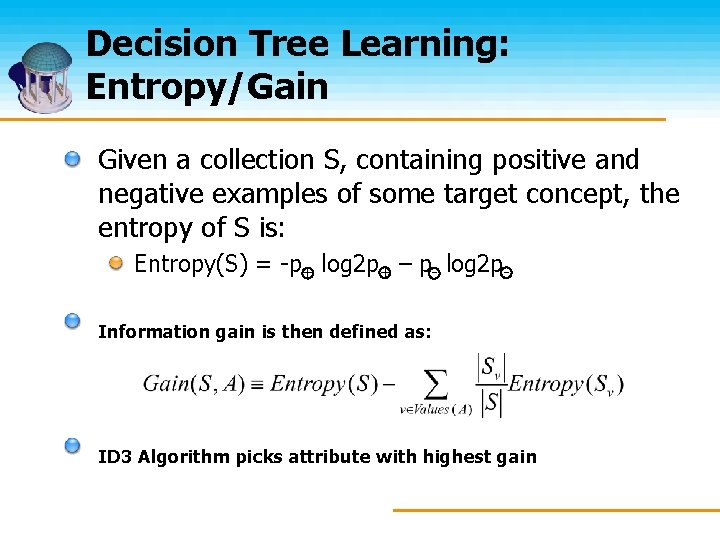

Decision Tree Learning: Entropy/Gain
• Given a collection S containing positive and negative examples of some target concept, the entropy of S is:
  $Entropy(S) = -p_{+} \log_2 p_{+} - p_{-} \log_2 p_{-}$
  where $p_{+}$ and $p_{-}$ are the proportions of positive and negative examples in S.
• Information gain of an attribute A is then defined as:
  $Gain(S, A) = Entropy(S) - \sum_{v \in Values(A)} \frac{|S_v|}{|S|} Entropy(S_v)$
  where $S_v$ is the subset of S for which attribute A has value v.
• The ID3 algorithm picks the attribute with the highest gain

Decision Trees - Remarks
• The tree represents a hypothesis; thus, ID3 determines only a single hypothesis, unlike Candidate-Elimination
• Unlike both Concept Learning approaches, ID3 makes no assumptions regarding the hypothesis space; every possible hypothesis can be represented by some tree
• Inductive bias: shorter trees are preferred over larger trees
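A minimal sketch of the two quantities, using the standard definitions of entropy and information gain. The function names and the row format (one dictionary per example) are illustrative assumptions. The numbers describe a collection of 9 positive and 5 negative examples, of which 8 have Wind = Weak (6 positive, 2 negative) and 6 have Wind = Strong (3 positive, 3 negative).

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Entropy of a list of class labels, in bits."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(rows, labels, attribute):
    """Expected reduction in entropy from splitting on `attribute`."""
    total = len(labels)
    remainder = 0.0
    for value in {row[attribute] for row in rows}:
        subset = [lab for row, lab in zip(rows, labels) if row[attribute] == value]
        remainder += len(subset) / total * entropy(subset)
    return entropy(labels) - remainder


data = (
    [("Weak", "Yes")] * 6 + [("Weak", "No")] * 2
    + [("Strong", "Yes")] * 3 + [("Strong", "No")] * 3
)
rows = [{"Wind": wind} for wind, _ in data]
labels = [label for _, label in data]

print(round(entropy(labels), 3))                         # about 0.940
print(round(information_gain(rows, labels, "Wind"), 3))  # about 0.048
```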

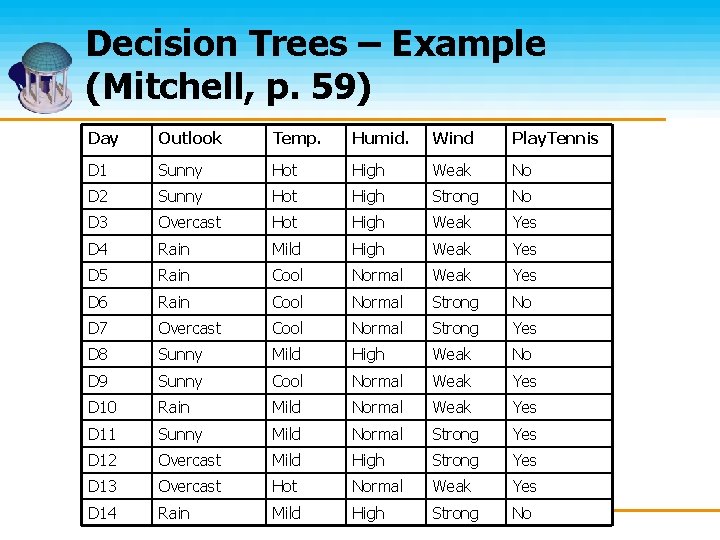

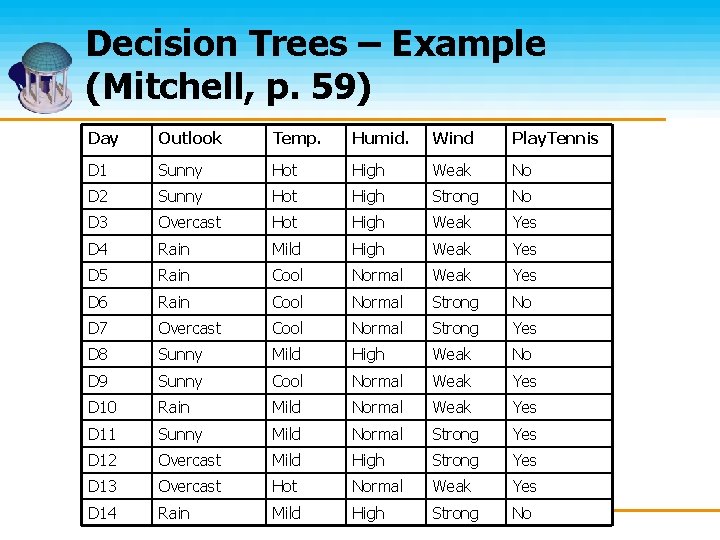

Decision Trees – Example (Mitchell, p. 59)

| Day | Outlook | Temp. | Humid. | Wind | PlayTennis |
|-----|----------|-------|--------|--------|------------|
| D1 | Sunny | Hot | High | Weak | No |
| D2 | Sunny | Hot | High | Strong | No |
| D3 | Overcast | Hot | High | Weak | Yes |
| D4 | Rain | Mild | High | Weak | Yes |
| D5 | Rain | Cool | Normal | Weak | Yes |
| D6 | Rain | Cool | Normal | Strong | No |
| D7 | Overcast | Cool | Normal | Strong | Yes |
| D8 | Sunny | Mild | High | Weak | No |
| D9 | Sunny | Cool | Normal | Weak | Yes |
| D10 | Rain | Mild | Normal | Weak | Yes |
| D11 | Sunny | Mild | Normal | Strong | Yes |
| D12 | Overcast | Mild | High | Strong | Yes |
| D13 | Overcast | Hot | Normal | Weak | Yes |
| D14 | Rain | Mild | High | Strong | No |

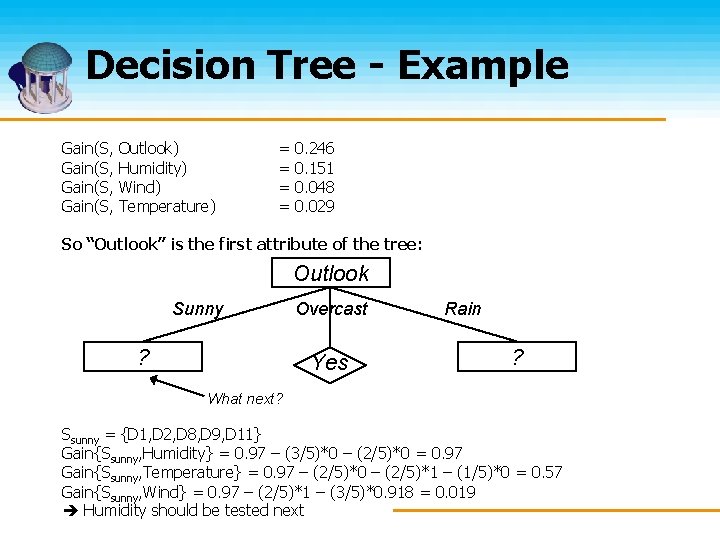

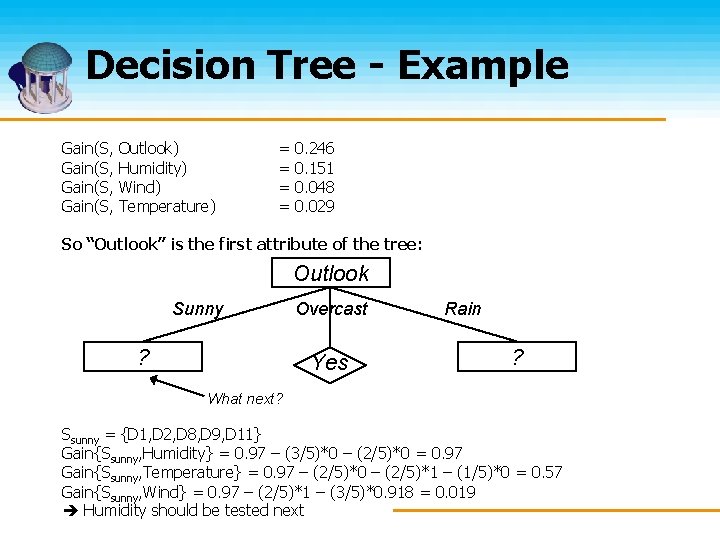

Decision Tree - Example
• Gain(S, Outlook) = 0.246
• Gain(S, Humidity) = 0.151
• Gain(S, Wind) = 0.048
• Gain(S, Temperature) = 0.029
• So “Outlook” is the first attribute of the tree:
  Outlook
  – Sunny: ?
  – Overcast: Yes
  – Rain: ?
• What next?
  – S_sunny = {D1, D2, D8, D9, D11}
  – Gain(S_sunny, Humidity) = 0.97 - (3/5)*0 - (2/5)*0 = 0.97
  – Gain(S_sunny, Temperature) = 0.97 - (2/5)*0 - (2/5)*1 - (1/5)*0 = 0.57
  – Gain(S_sunny, Wind) = 0.97 - (2/5)*1 - (3/5)*0.918 = 0.019
• Humidity should be tested next

Learning algorithms in robotics
• EBL has been used for planning algorithms (example: PRODIGY system (Carbonell et al., 1990))
• Could use EBL, concept learning, decision tree learning for things like object recognition (CL and DTL can easily be extended to more than 2 categories)
• However, while symbolic learning algorithms are simple and easy to understand, they are not very flexible and powerful
• In many applications, robots need to learn to classify something they perceive (using cameras, sensors etc.)
• Sensor inputs etc. are normally numeric
• Non-symbolic approaches such as NN, Reinforcement Learning or HMM are better suited and thus more commonly used in practice
• Combined approaches exist, for example EBNN (Explanation-based Neural Networks) (see for example the paper “Explanation Based Learning for Mobile Robot Perception” by J. O’Sullivan, T. Mitchell and S. Thrun)

Unsupervised Learning
• Training data only consists of feature vectors and does not include classifications
• Unsupervised Learning algorithms try to find patterns in the data
• Classic examples:
  – Clustering
  – Fitting Gaussian density functions to data
  – Dimensionality reduction
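The gains above can be reproduced with a compact ID3 sketch over the 14 PlayTennis examples. This is a simplified illustration rather than a full implementation: there is no pruning and no handling of missing or numeric values. The tree representation (a label string, or a pair of attribute name and branch dictionary) is an assumption made for this example.

```python
from collections import Counter
from math import log2

ATTRIBUTES = ("Outlook", "Temperature", "Humidity", "Wind")
DATA = [  # (Outlook, Temperature, Humidity, Wind, PlayTennis) for D1..D14
    ("Sunny", "Hot", "High", "Weak", "No"),
    ("Sunny", "Hot", "High", "Strong", "No"),
    ("Overcast", "Hot", "High", "Weak", "Yes"),
    ("Rain", "Mild", "High", "Weak", "Yes"),
    ("Rain", "Cool", "Normal", "Weak", "Yes"),
    ("Rain", "Cool", "Normal", "Strong", "No"),
    ("Overcast", "Cool", "Normal", "Strong", "Yes"),
    ("Sunny", "Mild", "High", "Weak", "No"),
    ("Sunny", "Cool", "Normal", "Weak", "Yes"),
    ("Rain", "Mild", "Normal", "Weak", "Yes"),
    ("Sunny", "Mild", "Normal", "Strong", "Yes"),
    ("Overcast", "Mild", "High", "Strong", "Yes"),
    ("Overcast", "Hot", "Normal", "Weak", "Yes"),
    ("Rain", "Mild", "High", "Strong", "No"),
]


def entropy(rows):
    """Entropy of the class label (last column) over the given rows."""
    counts = Counter(row[-1] for row in rows)
    return -sum(n / len(rows) * log2(n / len(rows)) for n in counts.values())


def gain(rows, index):
    """Information gain of splitting `rows` on the attribute at `index`."""
    remainder = 0.0
    for value in {row[index] for row in rows}:
        subset = [row for row in rows if row[index] == value]
        remainder += len(subset) / len(rows) * entropy(subset)
    return entropy(rows) - remainder


def id3(rows, indices):
    """Build a tree top-down, always testing the highest-gain attribute."""
    labels = {row[-1] for row in rows}
    if len(labels) == 1:
        return labels.pop()                      # pure node becomes a leaf
    if not indices:                              # no tests left: majority label
        return Counter(row[-1] for row in rows).most_common(1)[0][0]
    best = max(indices, key=lambda i: gain(rows, i))
    rest = [i for i in indices if i != best]
    return (ATTRIBUTES[best], {
        value: id3([row for row in rows if row[best] == value], rest)
        for value in sorted({row[best] for row in rows})
    })


for i, name in enumerate(ATTRIBUTES):
    print(name, round(gain(DATA, i), 3))
tree = id3(DATA, list(range(len(ATTRIBUTES))))
print(tree)
```

On this data the sketch puts Outlook at the root, tests Humidity under Sunny and Wind under Rain, and makes Overcast a "Yes" leaf. The printed gains are rounded to three decimals, so they can differ from the slide's values in the last digit.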

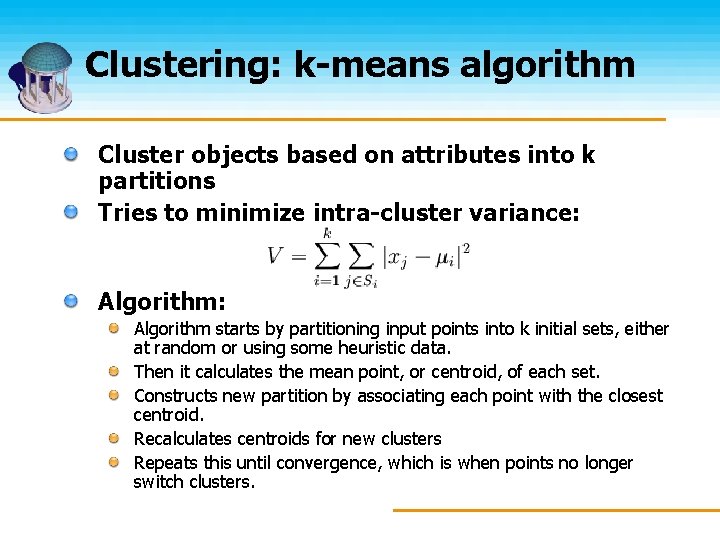

Clustering: k-means algorithm
• Clusters objects based on their attributes into k partitions
• Tries to minimize the intra-cluster variance:
  $V = \sum_{i=1}^{k} \sum_{x_j \in S_i} \lVert x_j - \mu_i \rVert^2$
  where $S_1, \dots, S_k$ are the k clusters and $\mu_i$ is the centroid (mean point) of cluster $S_i$
• Algorithm:
  1. Start by partitioning the input points into k initial sets, either at random or using some heuristic
  2. Calculate the mean point, or centroid, of each set
  3. Construct a new partition by associating each point with the closest centroid
  4. Recalculate the centroids for the new clusters
  5. Repeat steps 3 and 4 until convergence, which is when points no longer switch clusters

Summary
• This presentation mainly covered symbol-based learning algorithms
  – Deductive
    » EBL
  – Inductive
    » Concept Learning
    » Decision-Tree Learning
    » Unsupervised: Clustering (not necessarily symbol-based), COBWEB
• Non-symbol-based learning algorithms such as neural networks: part of the next lecture?

References (Books)
• Tom Mitchell: Machine Learning. McGraw-Hill, 1997.
• Stuart Russell, Peter Norvig: Artificial Intelligence – a modern approach. Prentice Hall, 2003.
• George Luger: Artificial Intelligence. Addison-Wesley, 2002.
• Elaine Rich: Artificial Intelligence. McGraw-Hill, 1983.
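The procedure described above can be sketched in plain Python as follows. The details are assumptions made for illustration: the initial partition is a shuffled round-robin assignment (so every cluster starts non-empty when there are at least k points), distances are squared Euclidean, and a cluster that becomes empty keeps its previous centroid.

```python
import random


def squared_distance(p, q):
    """Squared Euclidean distance between two points given as tuples."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def mean(points):
    """Coordinate-wise mean (centroid) of a non-empty list of points."""
    return tuple(sum(coords) / len(points) for coords in zip(*points))


def k_means(points, k, seed=0, max_iterations=100):
    """Return (centroids, assignment) for `points`; requires len(points) >= k."""
    rng = random.Random(seed)
    # Initial partition: shuffle the indices, then deal them out round-robin.
    order = list(range(len(points)))
    rng.shuffle(order)
    assignment = [0] * len(points)
    for rank, index in enumerate(order):
        assignment[index] = rank % k
    centroids = [None] * k
    for _ in range(max_iterations):
        # Recompute the centroid of every non-empty cluster.
        for i in range(k):
            members = [p for p, a in zip(points, assignment) if a == i]
            if members:
                centroids[i] = mean(members)
        # Re-associate each point with its closest centroid.
        new_assignment = [
            min(range(k), key=lambda i: squared_distance(p, centroids[i]))
            for p in points
        ]
        if new_assignment == assignment:
            break  # converged: no point switched clusters
        assignment = new_assignment
    return centroids, assignment


points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, assignment = k_means(points, k=2)
print(centroids, assignment)
```

Which cluster gets index 0 depends on the random initial partition, so only the grouping of the points is meaningful, not the cluster numbers.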