
Introduction to Convolution Neural Network (CNN)
Xingang Li
Mechanical Engineering, University of Arkansas

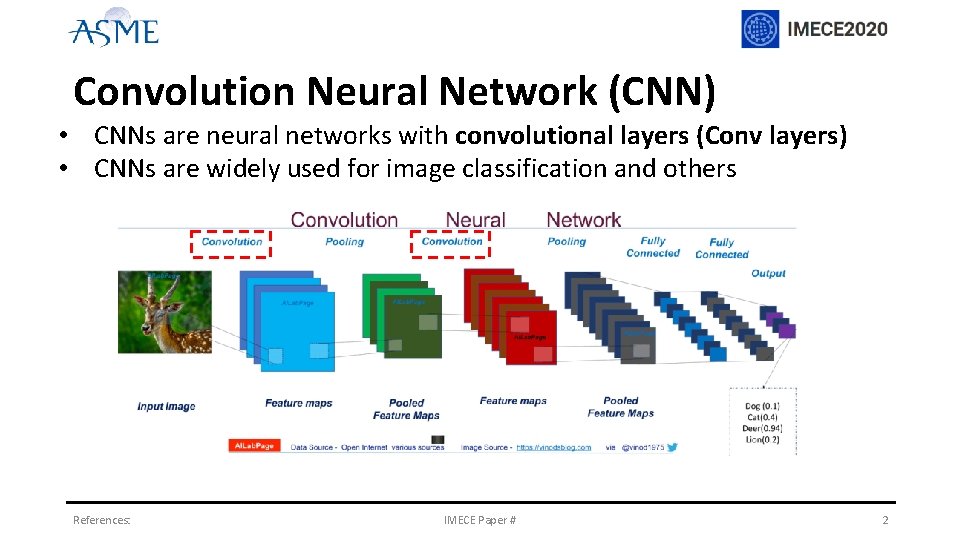

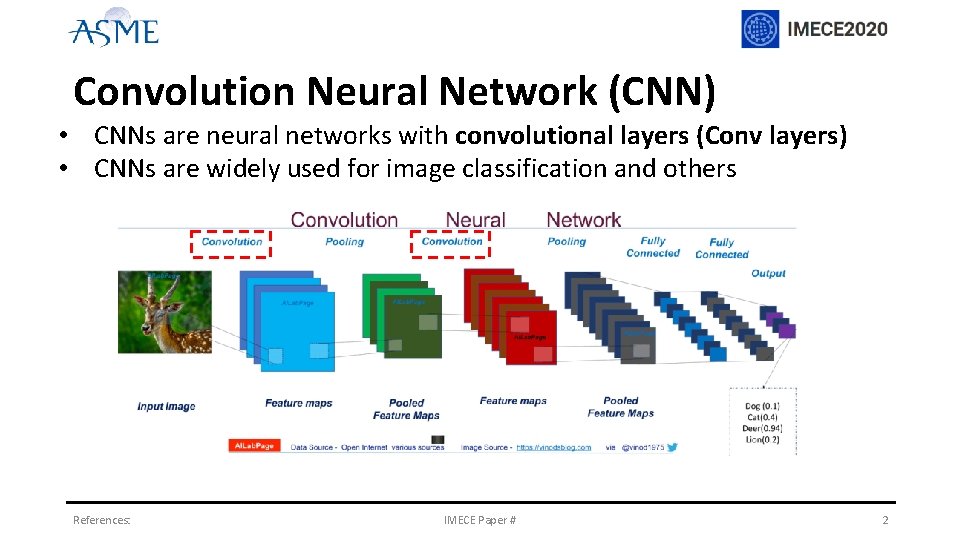

Convolutional Neural Network (CNN)
• CNNs are neural networks that contain convolutional layers (Conv layers)
• CNNs are widely used for image classification and many other tasks
References: IMECE Paper #

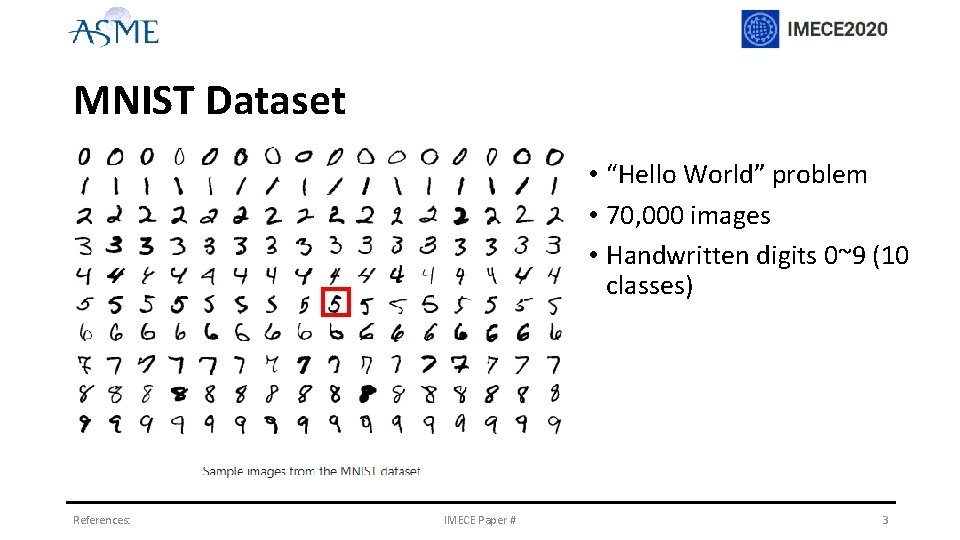

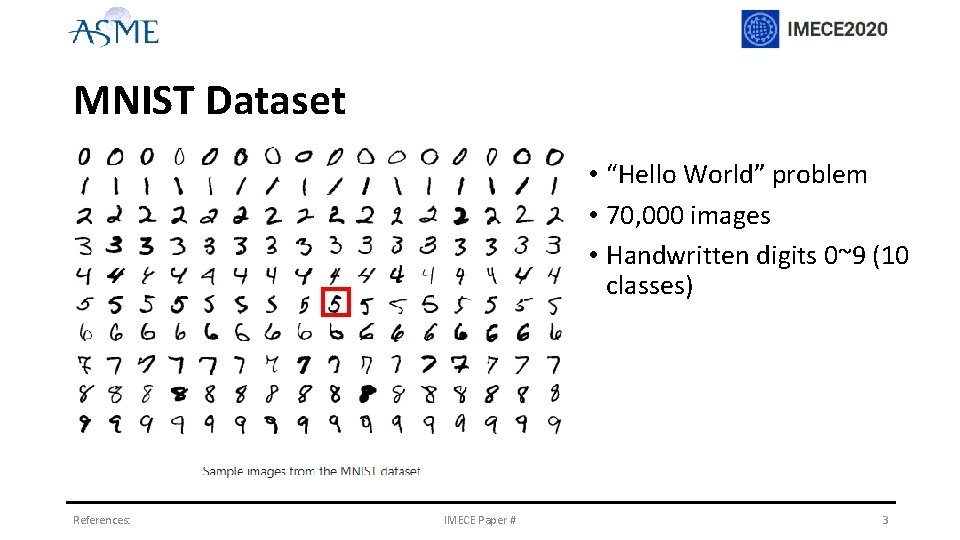

MNIST Dataset
• The “Hello World” problem of deep learning
• 70,000 images (60,000 for training, 10,000 for testing), each 28 × 28 pixels in grayscale
• Handwritten digits 0-9 (10 classes)

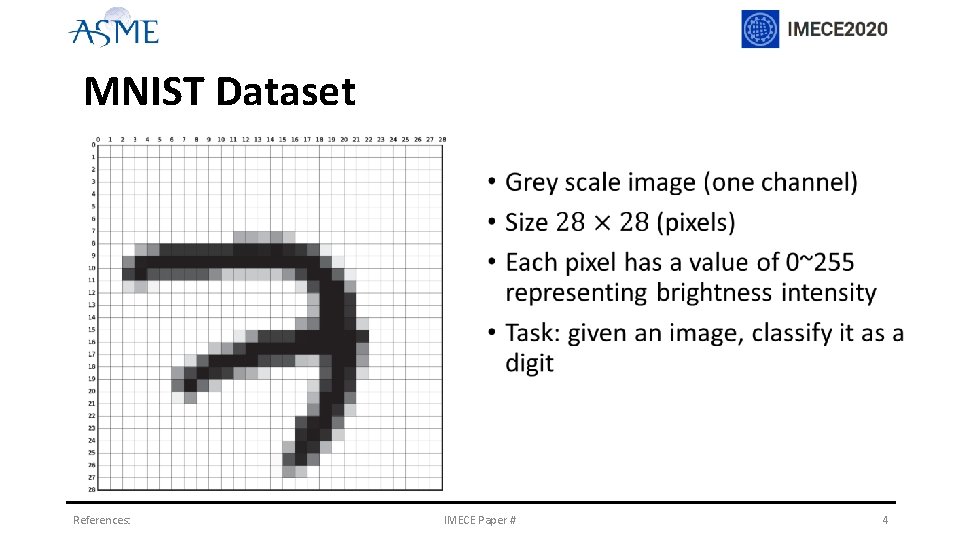

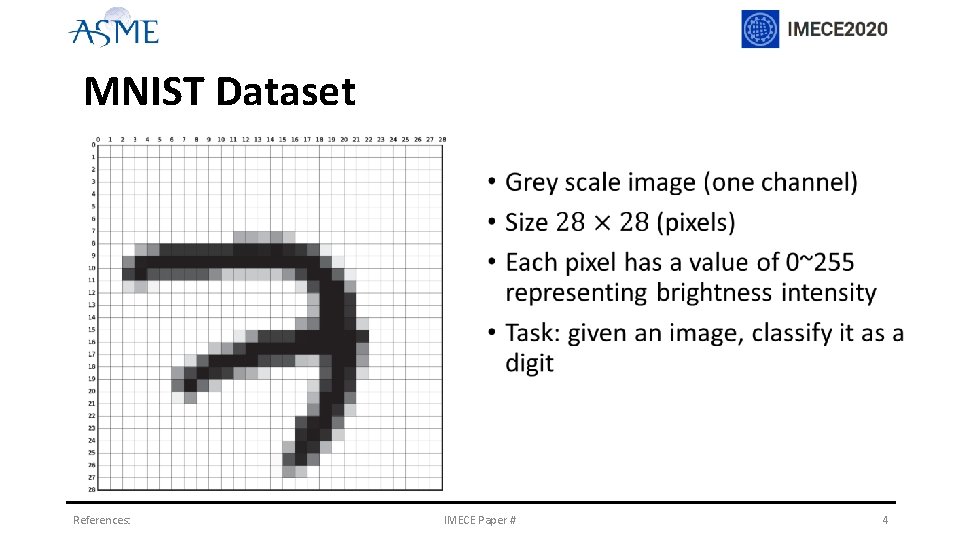

MNIST Dataset

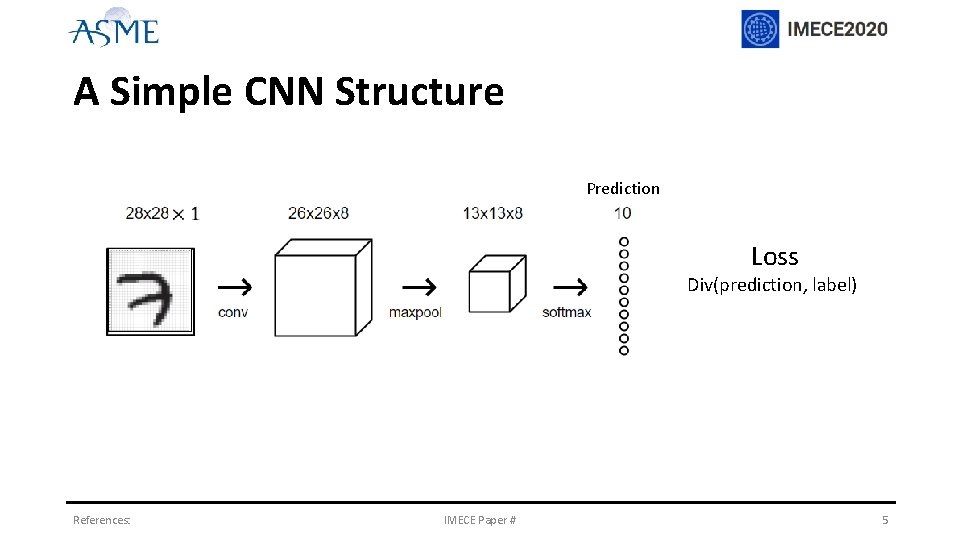

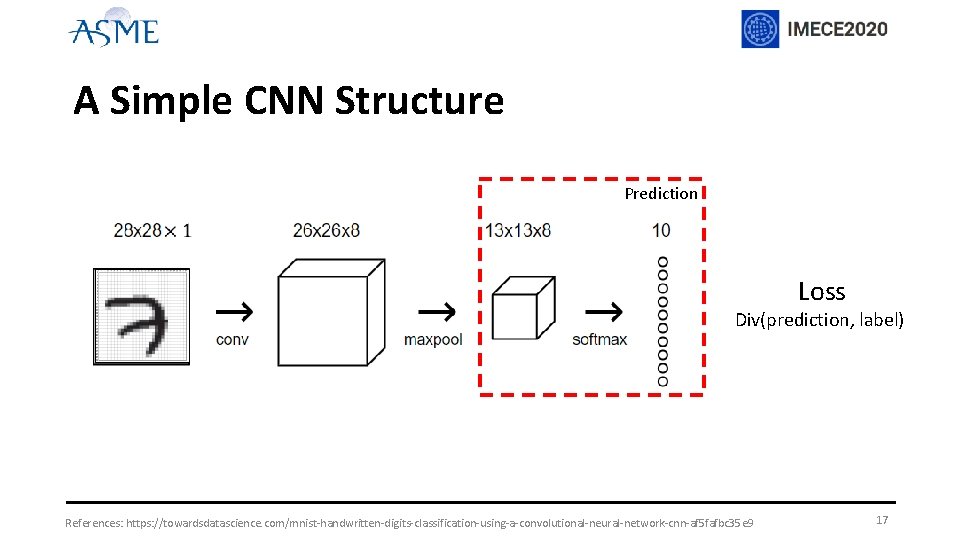

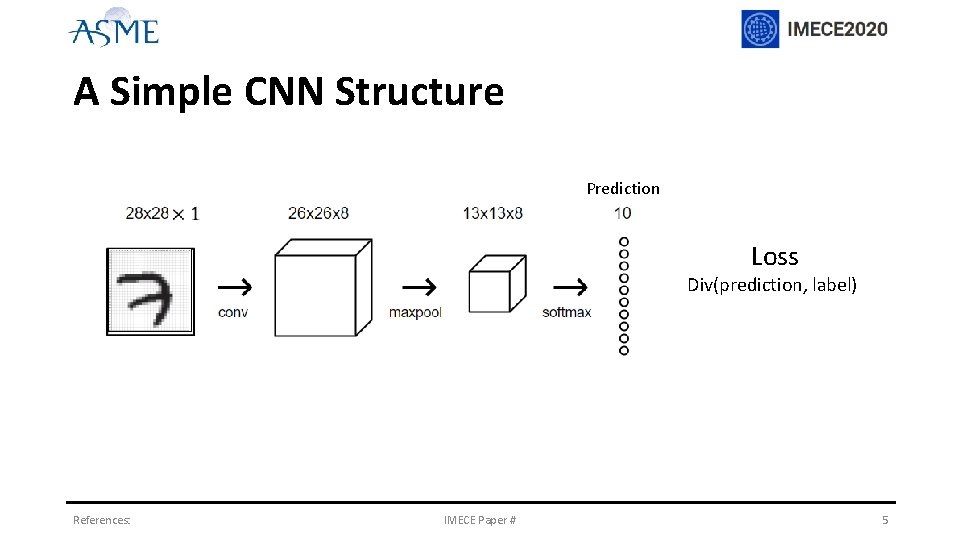

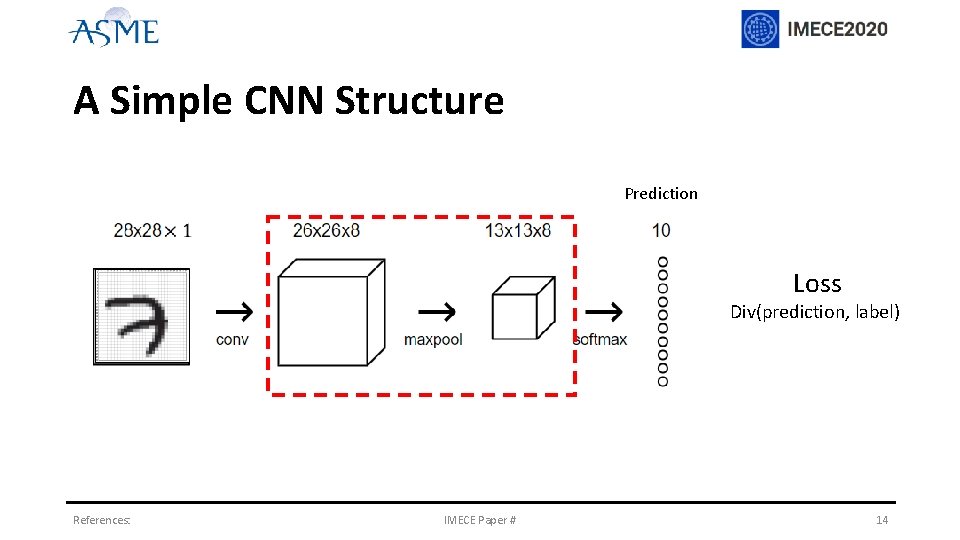

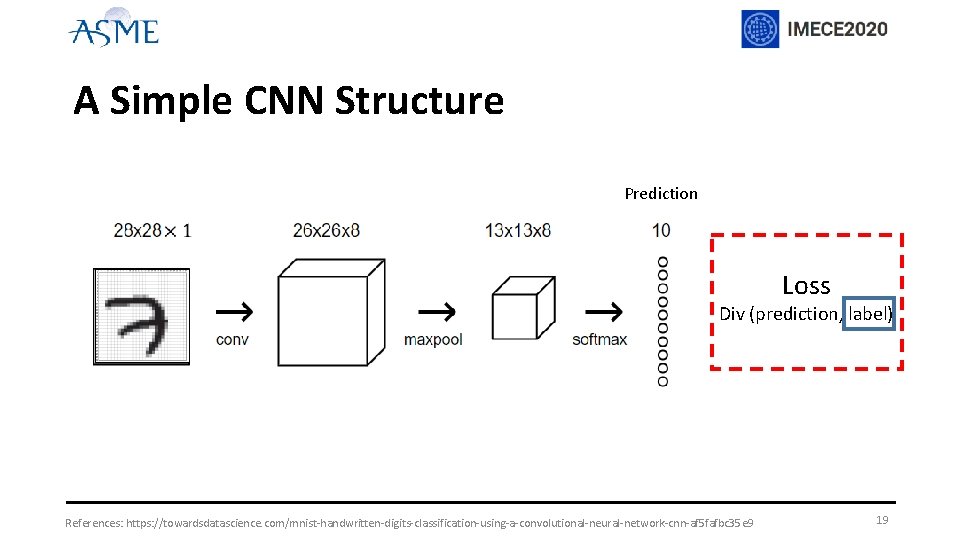

A Simple CNN Structure
(Figure labels: Prediction; Loss = Div(prediction, label))

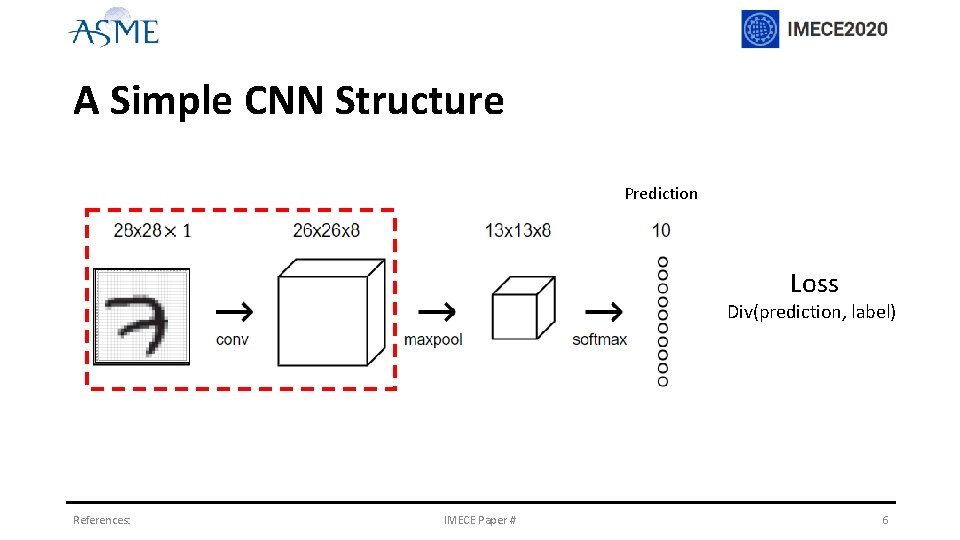

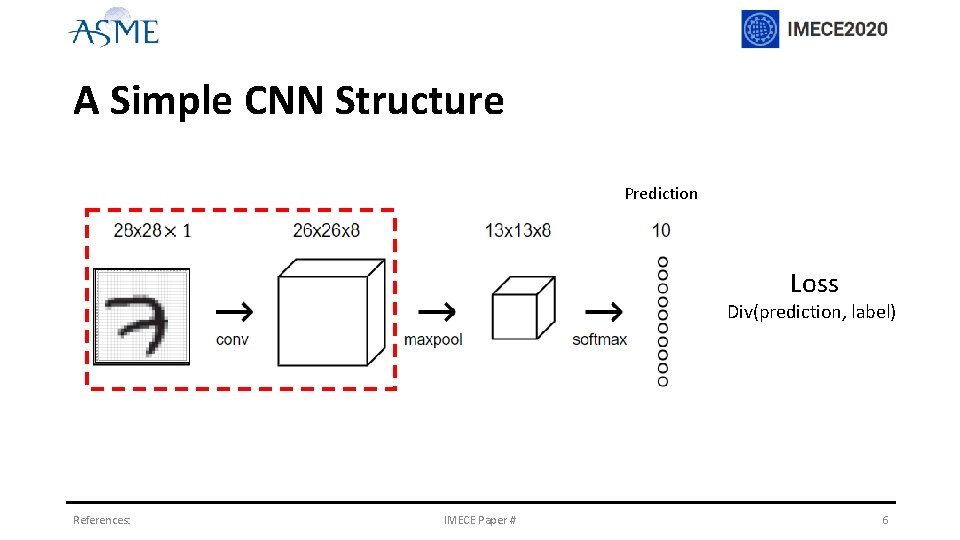

A Simple CNN Structure
(Figure labels: Prediction; Loss = Div(prediction, label))

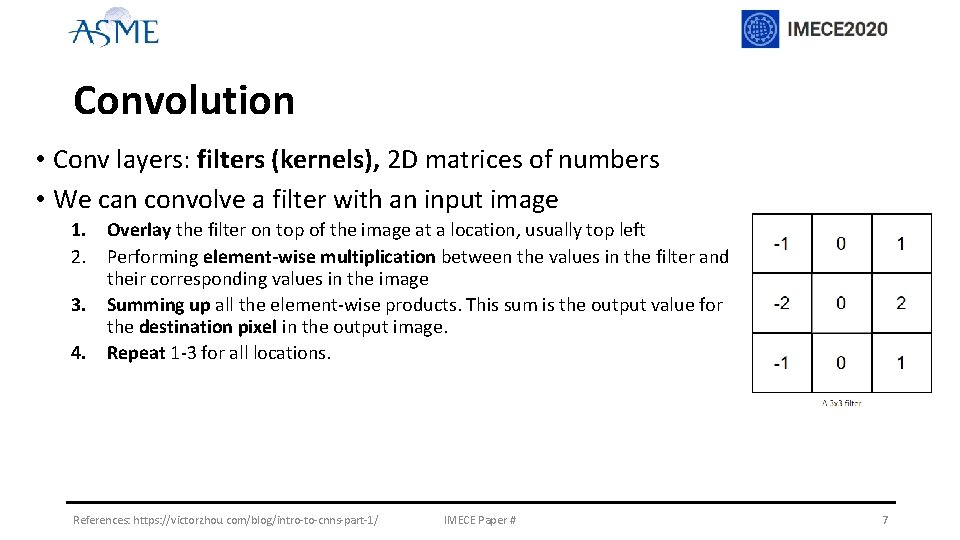

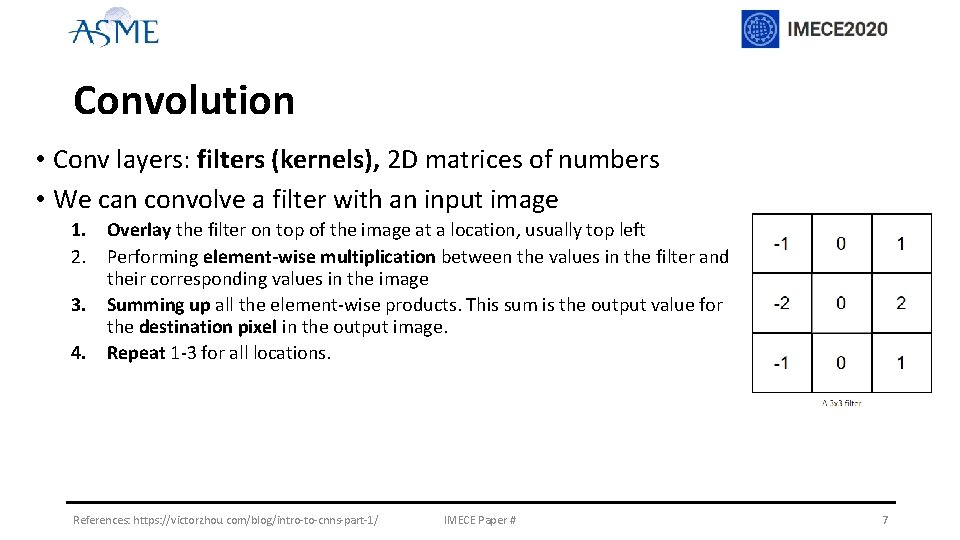

Convolution
• Conv layers contain filters (kernels): 2D matrices of numbers
• We convolve a filter with an input image as follows:
1. Overlay the filter on top of the image at some location, usually starting at the top left.
2. Perform element-wise multiplication between the values in the filter and their corresponding values in the image.
3. Sum up all the element-wise products. This sum is the output value for the destination pixel in the output image.
4. Repeat steps 1-3 for all locations.
References: https://victorzhou.com/blog/intro-to-cnns-part-1/
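The four steps above can be sketched in plain Python (no NumPy) as a minimal convolution with stride 1 and no padding. As in most deep-learning libraries, the filter is not flipped, so strictly speaking this is cross-correlation. The function name `convolve2d` and the image and filter values are illustrative assumptions, not taken from the slides' figures.

```python
def convolve2d(image, kernel):
    """Convolve `kernel` over `image` with stride 1 and no padding.

    The kernel is not flipped (as in most deep-learning libraries),
    so strictly speaking this computes a cross-correlation.
    """
    out_h = len(image) - len(kernel) + 1
    out_w = len(image[0]) - len(kernel[0]) + 1
    output = [[0] * out_w for _ in range(out_h)]
    for i in range(out_h):                 # Step 4: repeat for every row position...
        for j in range(out_w):             # ...and every column position
            total = 0
            for m in range(len(kernel)):   # Step 1: overlay the kernel with its top-left at (i, j)
                for n in range(len(kernel[0])):
                    # Step 2: multiply matching values; Step 3: accumulate the sum
                    total += image[i + m][j + n] * kernel[m][n]
            output[i][j] = total           # destination pixel of the feature map
    return output


# Illustrative 4x4 grayscale image and a 3x3 vertical-edge (Sobel) filter.
image = [
    [0, 50, 0, 29],
    [0, 80, 31, 2],
    [33, 90, 0, 75],
    [0, 9, 0, 95],
]
kernel = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]
print(convolve2d(image, kernel))  # [[29, -192], [-35, -22]]
```

A 4 × 4 image and a 3 × 3 filter give a 2 × 2 output, because the filter fits in only two positions along each direction.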

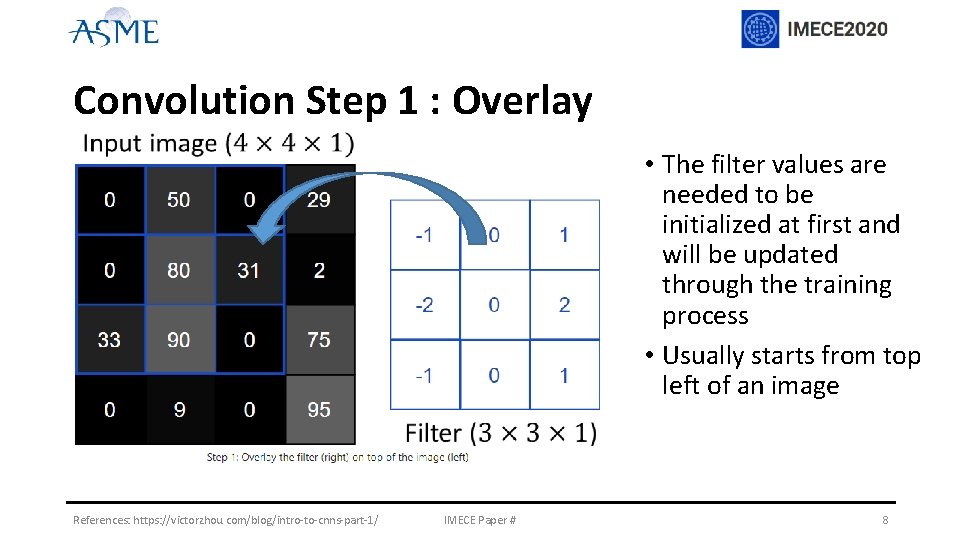

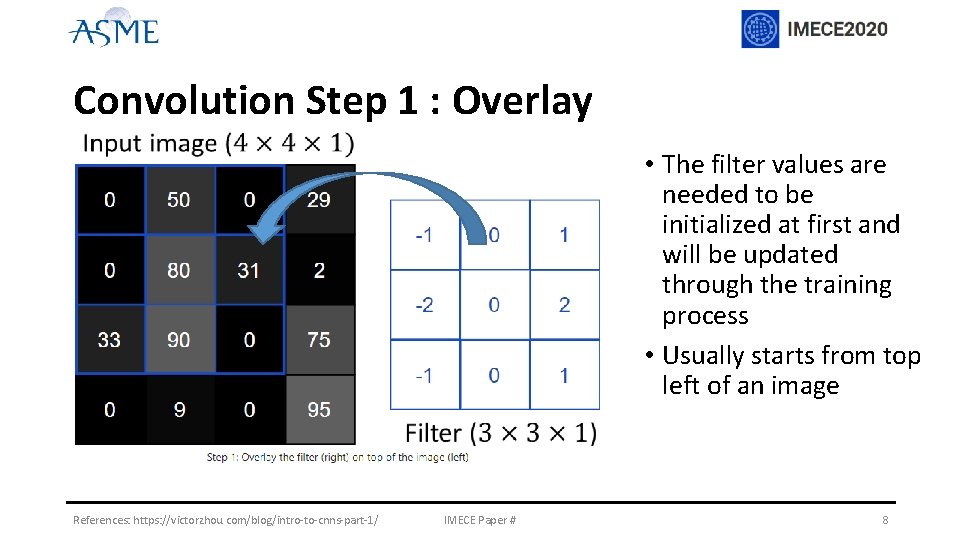

Convolution Step 1: Overlay
• The filter values must first be initialized and are then updated during training
• The overlay usually starts at the top left of the image
References: https://victorzhou.com/blog/intro-to-cnns-part-1/
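A minimal sketch of Step 1, assuming filters are drawn from a standard normal distribution and scaled by 1/(K·K). That scaling is an illustrative assumption; real frameworks typically use schemes such as Glorot (Xavier) or He initialization. The helper names `init_filters` and `overlay` are hypothetical.

```python
import random


def init_filters(num_filters, size, seed=None):
    """Create `num_filters` random size x size filters.

    Dividing by size*size (an assumed, illustrative choice) keeps the
    initial outputs small; training then updates these values.
    """
    rng = random.Random(seed)
    return [
        [[rng.gauss(0.0, 1.0) / (size * size) for _ in range(size)] for _ in range(size)]
        for _ in range(num_filters)
    ]


def overlay(image, size, row=0, col=0):
    """Return the size x size patch of `image` covered by a filter whose
    top-left corner sits at (row, col); (0, 0) is the usual starting point."""
    return [r[col:col + size] for r in image[row:row + size]]


filters = init_filters(num_filters=8, size=3, seed=0)
image = [[r * 4 + c for c in range(4)] for r in range(4)]  # toy 4x4 image: 0..15
print(len(filters), len(filters[0]), len(filters[0][0]))   # 8 3 3
print(overlay(image, 3))  # [[0, 1, 2], [4, 5, 6], [8, 9, 10]]
```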

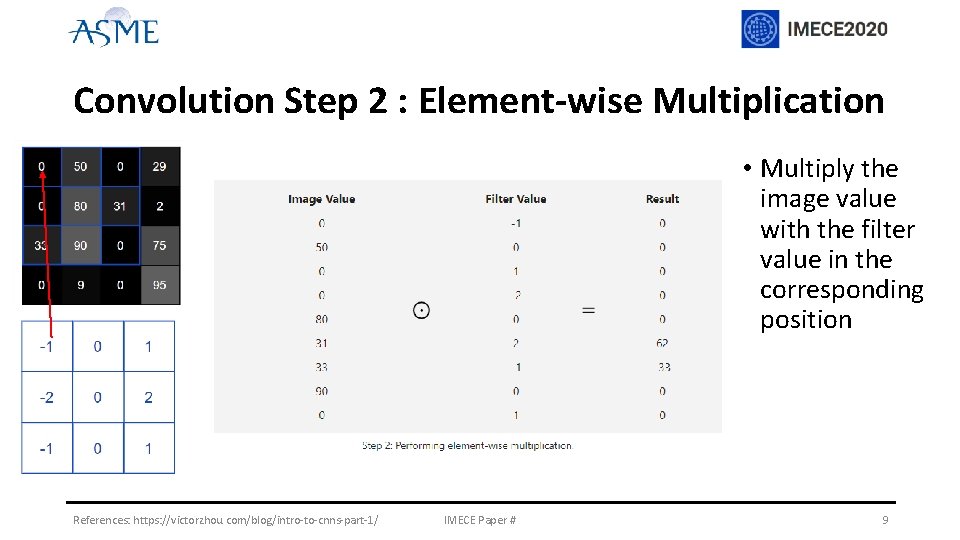

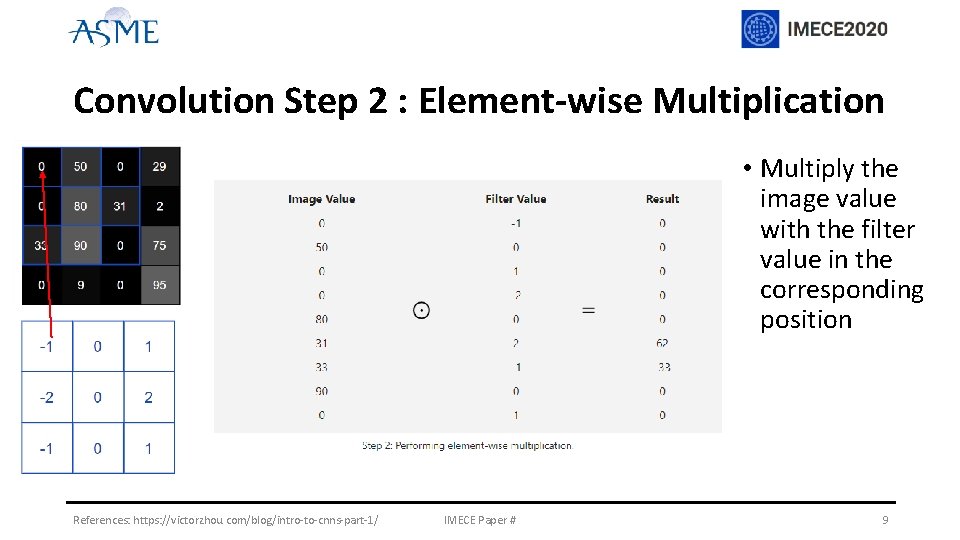

Convolution Step 2: Element-wise Multiplication
• Multiply each image value by the filter value in the corresponding position
References: https://victorzhou.com/blog/intro-to-cnns-part-1/
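Step 2 on its own, as a sketch: the image patch under the filter is multiplied position by position with the filter. The helper `elementwise_product` and the patch and filter values are hypothetical.

```python
def elementwise_product(patch, kernel):
    """Multiply each image value by the filter value in the same position."""
    return [
        [p * k for p, k in zip(patch_row, kernel_row)]
        for patch_row, kernel_row in zip(patch, kernel)
    ]


patch = [[0, 50, 0], [0, 80, 31], [33, 90, 0]]   # image region under the filter
kernel = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]    # illustrative 3x3 filter
print(elementwise_product(patch, kernel))        # [[0, 0, 0], [0, 0, 62], [-33, 0, 0]]
```

The result has the same shape as the filter; Step 3 then reduces it to a single number.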

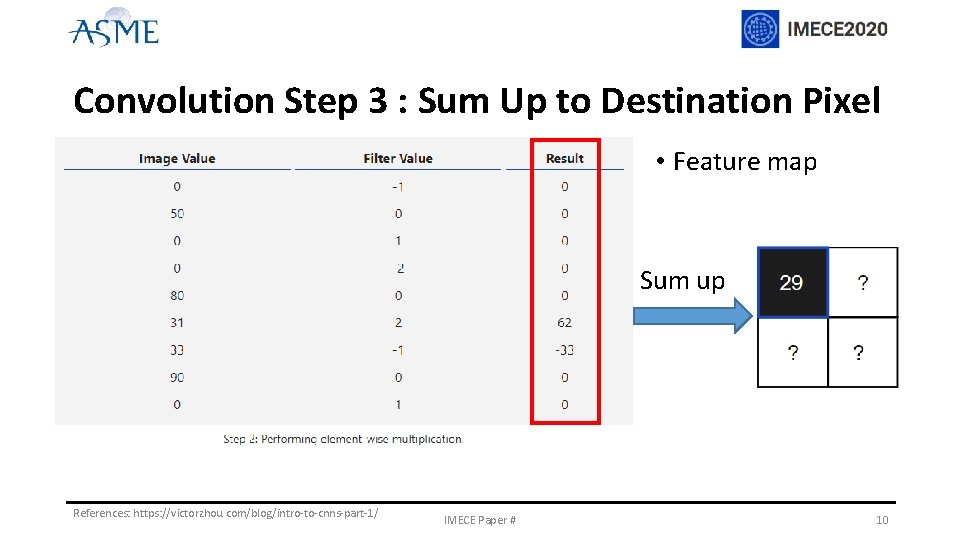

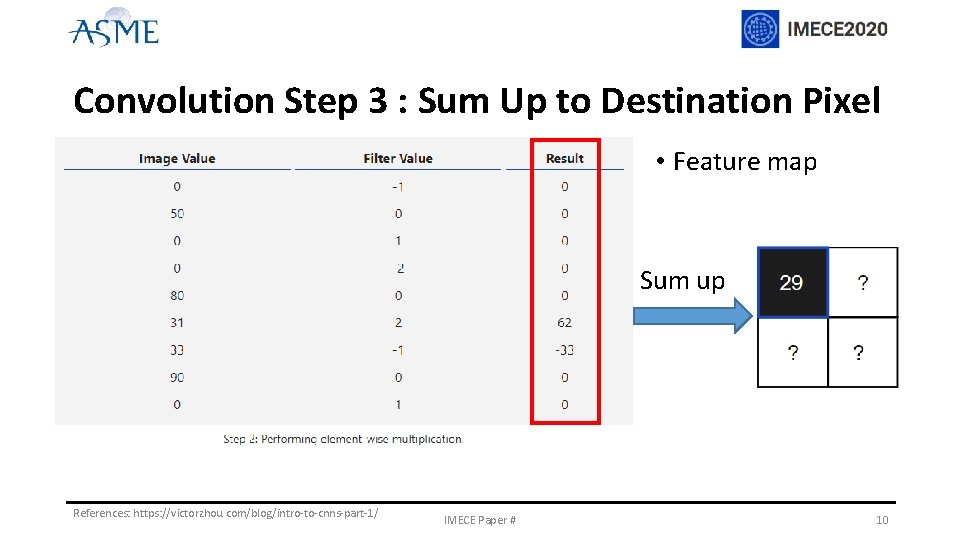

Convolution Step 3: Sum Up to Destination Pixel
• Sum up the element-wise products; the sum becomes the destination pixel in the output image, which is called the feature map
References: https://victorzhou.com/blog/intro-to-cnns-part-1/
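Written as an equation, each destination pixel is the sum of the element-wise products. The notation here is assumed for illustration: x is the L × L input, w the K × K filter, y the feature map and S the stride (the slides use S = 1).

```latex
y_{i,j} = \sum_{m=0}^{K-1} \sum_{n=0}^{K-1} w_{m,n}\, x_{iS+m,\; jS+n},
\qquad 0 \le i,\, j \le \frac{L-K}{S}
```

The index range reproduces the output size (L - K)/S + 1 used on the stride slide.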

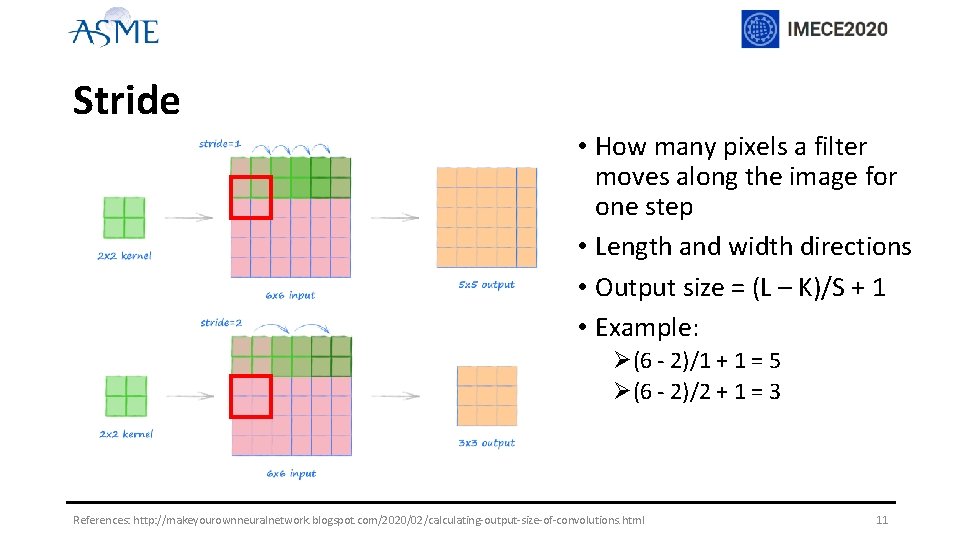

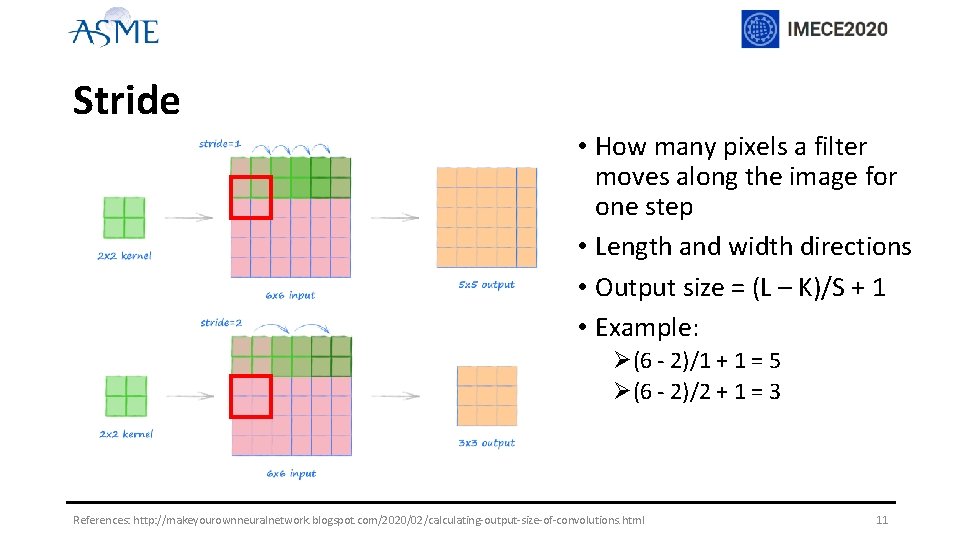

Stride
• The number of pixels the filter moves along the image in one step
• Applies in both the length and width directions
• Output size = (L - K)/S + 1, where L is the input size, K the filter size, and S the stride
• Example (L = 6, K = 2):
Ø S = 1: (6 - 2)/1 + 1 = 5
Ø S = 2: (6 - 2)/2 + 1 = 3
References: http://makeyourownneuralnetwork.blogspot.com/2020/02/calculating-output-size-of-convolutions.html
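The output-size formula can be checked with a small helper (a sketch; `conv_output_size` is a hypothetical name). It uses integer division, which assumes the common convention of dropping positions where the filter would run past the image edge when L - K is not a multiple of S.

```python
def conv_output_size(L, K, S=1):
    """Output length along one dimension: (L - K) / S + 1, with no padding."""
    if K > L:
        raise ValueError("filter is larger than the input")
    # Floor division discards a final partial step that would leave the image.
    return (L - K) // S + 1


print(conv_output_size(6, 2, 1))  # 5
print(conv_output_size(6, 2, 2))  # 3
```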

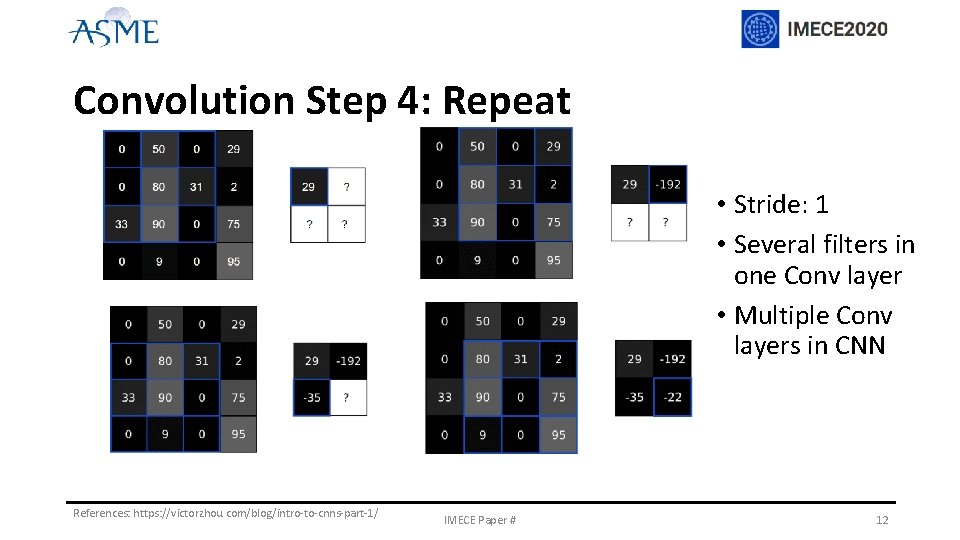

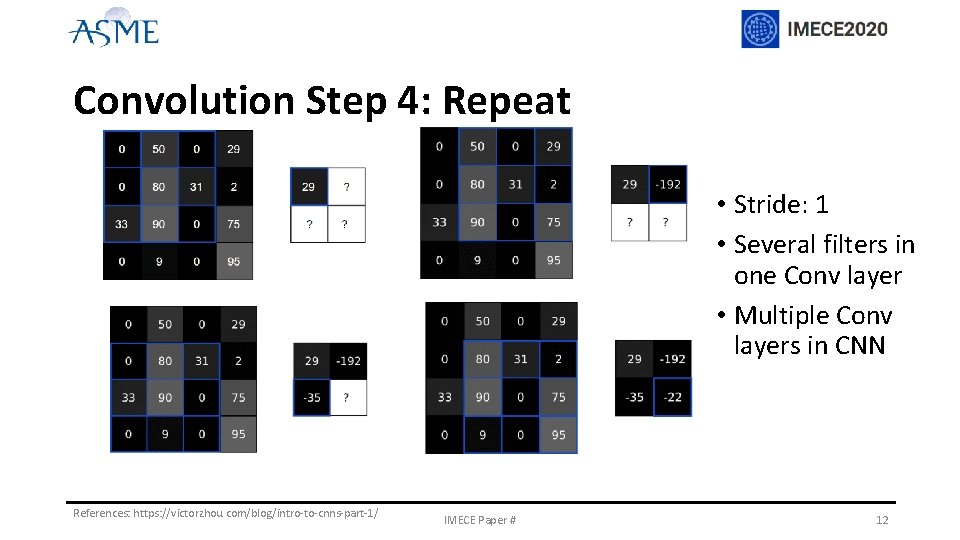

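Convolution Step 4: Repeat
• Slide the filter across the image and repeat steps 1-3 at every location (stride: 1 in this example)
• A Conv layer usually has several filters, each producing its own feature map
• A CNN usually stacks multiple Conv layers
References: https://victorzhou.com/blog/intro-to-cnns-part-1/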
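A sketch of Step 4 that lets the filter move by any stride and applies several filters, so one Conv layer yields one feature map per filter. It assumes square filters and no padding; the helper names and the toy image and filter values are illustrative.

```python
def convolve2d_strided(image, kernel, stride=1):
    """Convolution with no padding (kernel not flipped) and a given stride."""
    k = len(kernel)  # assumes a square k x k kernel
    out_h = (len(image) - k) // stride + 1
    out_w = (len(image[0]) - k) // stride + 1
    return [
        [
            sum(
                image[i * stride + m][j * stride + n] * kernel[m][n]
                for m in range(k)
                for n in range(k)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def conv_layer(image, filters, stride=1):
    """One Conv layer: apply every filter, giving one feature map per filter."""
    return [convolve2d_strided(image, f, stride) for f in filters]


image = [[1] * 6 for _ in range(6)]  # toy 6x6 image of ones
filters = [[[1, 0], [0, 1]], [[0, 1], [1, 0]], [[1, 1], [1, 1]]]  # three 2x2 filters
maps = conv_layer(image, filters, stride=1)
print(len(maps), len(maps[0]), len(maps[0][0]))  # 3 5 5
```

Three filters give three 5 × 5 feature maps; with `stride=2` each map would shrink to 3 × 3, matching (6 - 2)/2 + 1 = 3.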

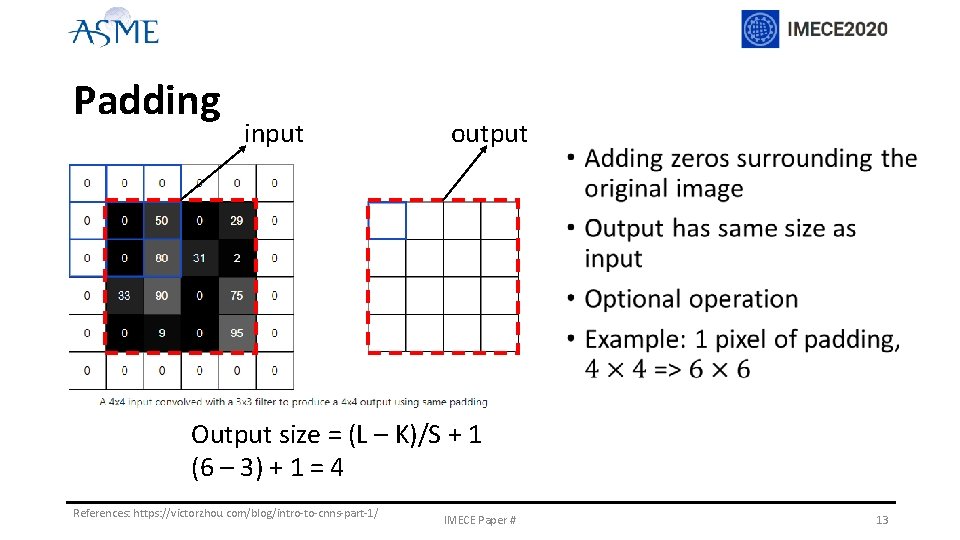

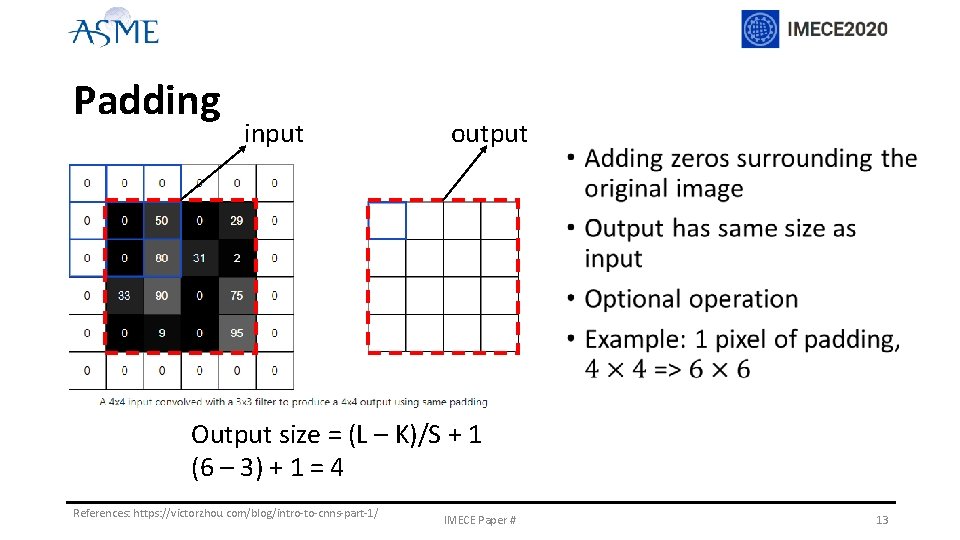

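Padding
(Figure labels: input, output)
• Padding adds extra pixels (usually zeros) around the border of the input, so the output can keep the input's size
• Output size = (L - K)/S + 1, with L the size of the padded input; for a 3 × 3 filter and stride 1: (6 - 3)/1 + 1 = 4
References: https://victorzhou.com/blog/intro-to-cnns-part-1/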
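A sketch of padding, assuming zero padding of P pixels on every side (the most common choice). With padding, the output-size formula becomes (L + 2P - K)/S + 1. For a hypothetical 4 × 4 input with P = 1 and a 3 × 3 filter, the padded size is 6 and the output is (6 - 3)/1 + 1 = 4, the same size as the input. The helper names are illustrative.

```python
def zero_pad(image, p):
    """Surround `image` with a border of `p` zeros on every side."""
    width = len(image[0]) + 2 * p
    padded = [[0] * width for _ in range(p)]                 # top border rows
    for row in image:
        padded.append([0] * p + list(row) + [0] * p)         # left/right borders
    padded.extend([0] * width for _ in range(p))             # bottom border rows
    return padded


def padded_output_size(L, K, S=1, P=0):
    """(L + 2P - K) // S + 1: the output-size formula with padding P."""
    return (L + 2 * P - K) // S + 1


image = [[1, 2, 3, 4] for _ in range(4)]   # toy 4x4 input
padded = zero_pad(image, 1)
print(len(padded), len(padded[0]))         # 6 6
print(padded_output_size(4, 3, S=1, P=1))  # 4, so the output keeps the 4x4 size
```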

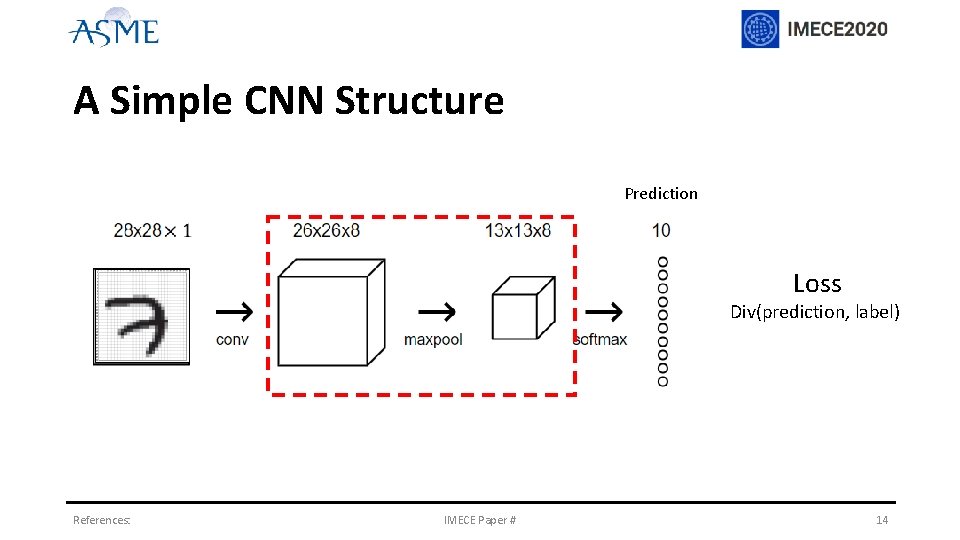

A Simple CNN Structure
(Figure labels: Prediction; Loss = Div(prediction, label))

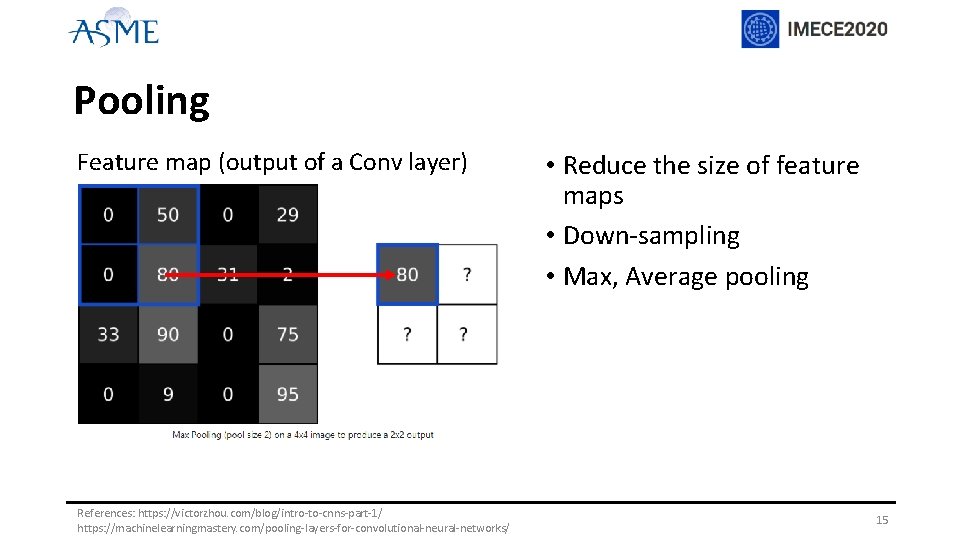

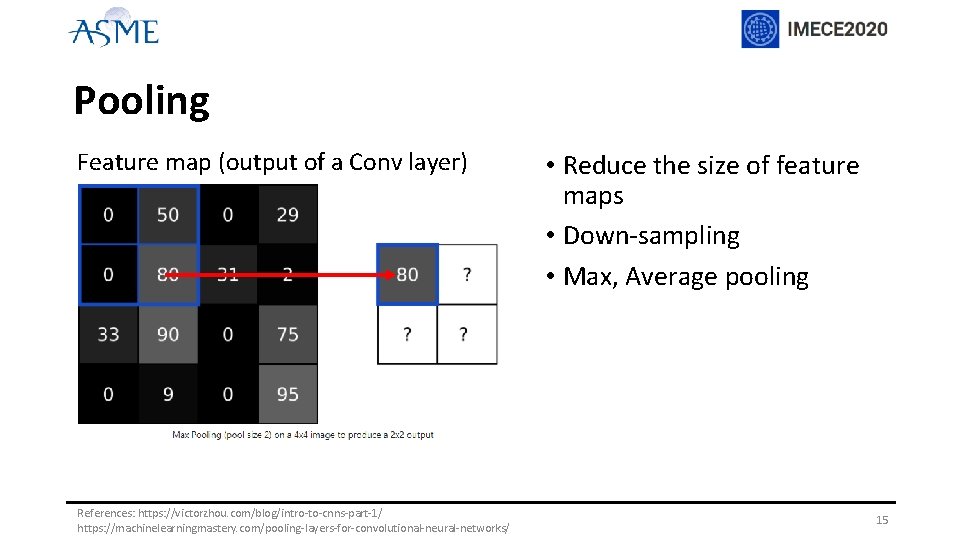

Pooling
(Figure label: Feature map (output of a Conv layer))
• Reduces the size of feature maps
• A form of down-sampling
• Common types: max pooling and average pooling
References: https://victorzhou.com/blog/intro-to-cnns-part-1/, https://machinelearningmastery.com/pooling-layers-for-convolutional-neural-networks/
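Both pooling types can be sketched with one function that slides a window over the feature map and keeps either the maximum or the mean of each window. The helper name `pool2d` and the toy feature map are illustrative assumptions.

```python
def _mean(values):
    return sum(values) / len(values)


# Supported pooling operations: keep the largest value or the average of each window.
POOLING_FUNCTIONS = {"max": max, "average": _mean}


def pool2d(feature_map, size=2, stride=2, mode="max"):
    """Down-sample `feature_map` by reducing each size x size window to one value."""
    reduce_fn = POOLING_FUNCTIONS[mode]  # raises KeyError for an unsupported mode
    out_h = (len(feature_map) - size) // stride + 1
    out_w = (len(feature_map[0]) - size) // stride + 1
    return [
        [
            reduce_fn([
                feature_map[i * stride + m][j * stride + n]
                for m in range(size)
                for n in range(size)
            ])
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Toy 4x4 feature map (illustrative values).
fm = [
    [1, 3, 2, 1],
    [4, 6, 5, 0],
    [7, 2, 9, 8],
    [1, 0, 3, 4],
]
print(pool2d(fm, mode="max"))      # [[6, 5], [7, 9]]
print(pool2d(fm, mode="average"))  # [[3.5, 2.0], [2.5, 6.0]]
```

Pooling has no learned parameters: it only summarizes each window, which is why it is cheap to apply after every Conv layer.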

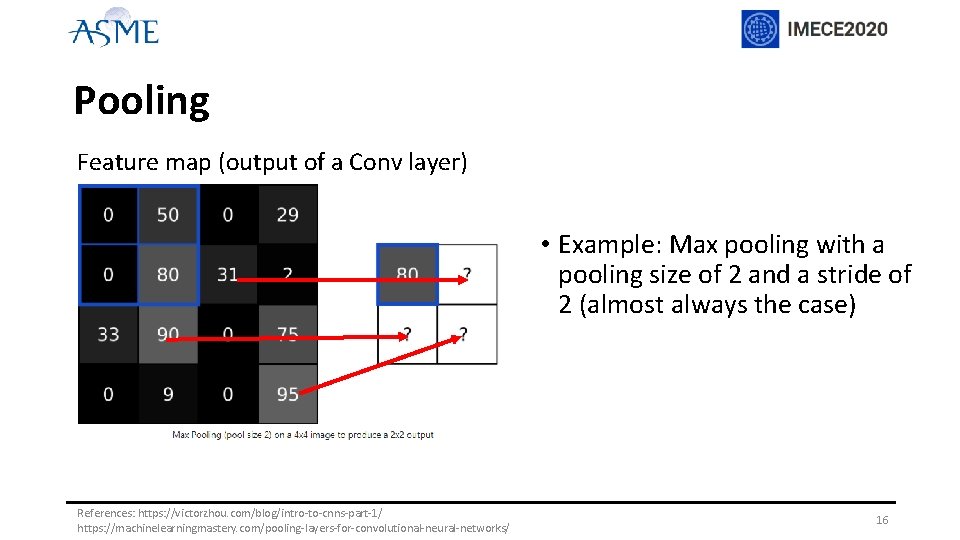

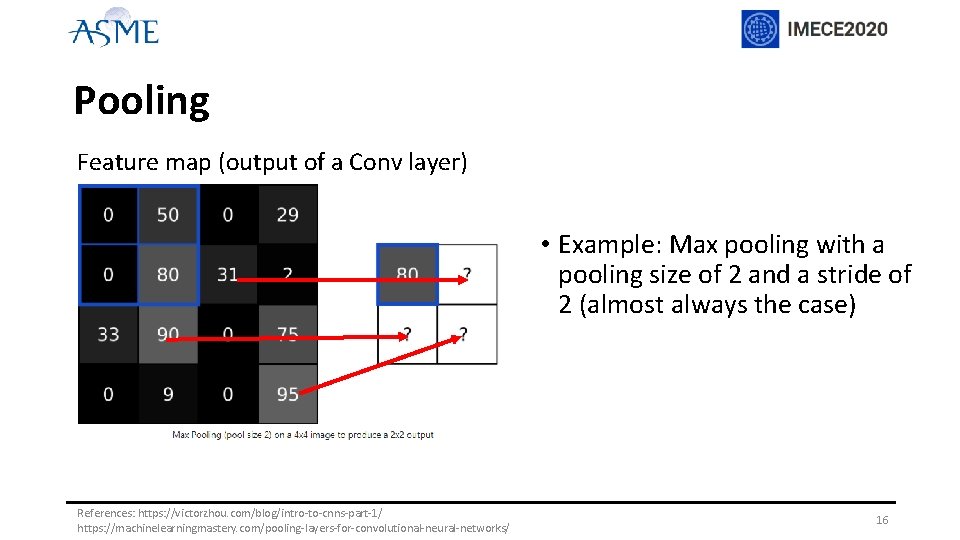

Pooling
(Figure label: Feature map (output of a Conv layer))
• Example: max pooling with a pooling size of 2 and a stride of 2 (the most common setting)
References: https://victorzhou.com/blog/intro-to-cnns-part-1/, https://machinelearningmastery.com/pooling-layers-for-convolutional-neural-networks/
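Applying the convolution output-size formula to pooling, with window K = 2 and stride S = 2, shows why this setting halves each dimension of a feature map whose side length L is even:

```latex
\frac{L - 2}{2} + 1 = \frac{L}{2}, \qquad \text{e.g. } L = 26:\ \frac{26 - 2}{2} + 1 = 13
```

So a 26 × 26 feature map becomes 13 × 13, keeping one value out of every 2 × 2 block.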

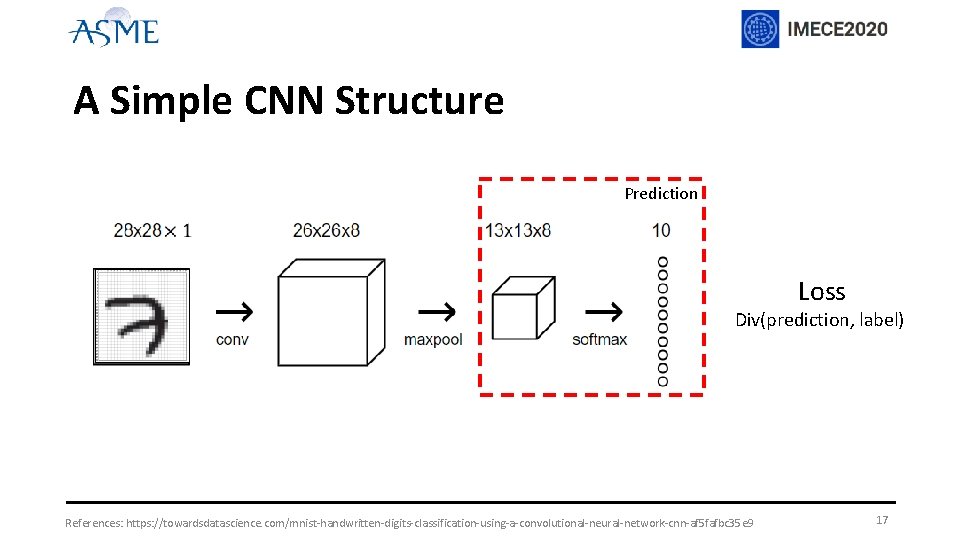

A Simple CNN Structure
(Figure labels: Prediction; Loss = Div(prediction, label))
References: https://towardsdatascience.com/mnist-handwritten-digits-classification-using-a-convolutional-neural-network-cnn-af5fafbc35e9

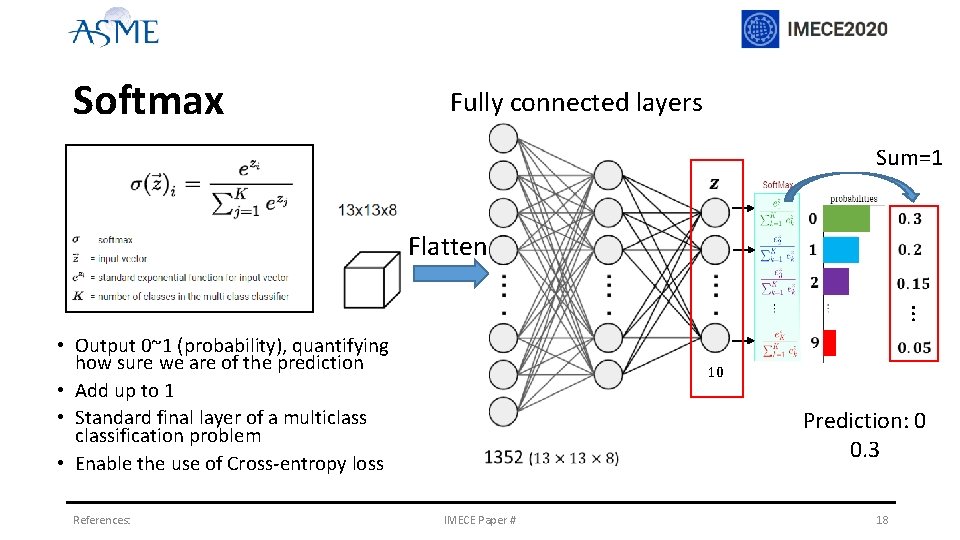

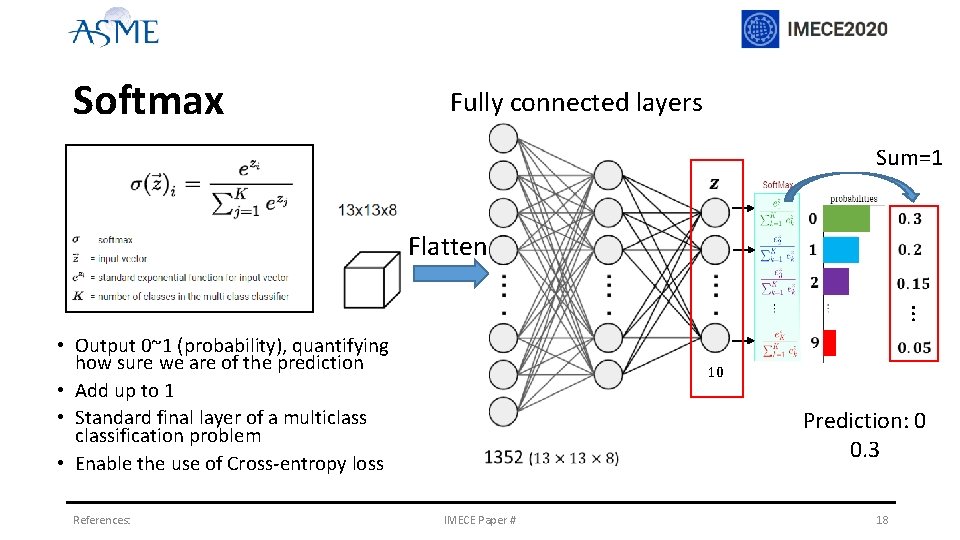

Softmax
(Figure labels: Flatten …, Fully connected layers, 10 outputs, Sum = 1, Prediction: 0, 0.3)
• Outputs values between 0 and 1 (probabilities) that quantify how sure the network is of each prediction
• The outputs add up to 1
• Standard final layer for multi-class classification problems
• Enables the use of cross-entropy loss
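A minimal softmax sketch. Subtracting the largest score before exponentiating is a standard numerical-stability trick that leaves the result unchanged. The ten scores stand in for hypothetical outputs of the last fully connected layer.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities between 0 and 1 that add up to 1."""
    m = max(logits)                          # shift for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for the 10 digit classes (0-9).
scores = [2.0, 0.5, 0.1, -1.0, 0.3, 0.0, 1.2, -0.5, 0.8, 0.2]
probs = softmax(scores)
prediction = max(range(len(probs)), key=probs.__getitem__)  # class with highest probability
print(prediction)            # 0
print(round(sum(probs), 6))  # 1.0
```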

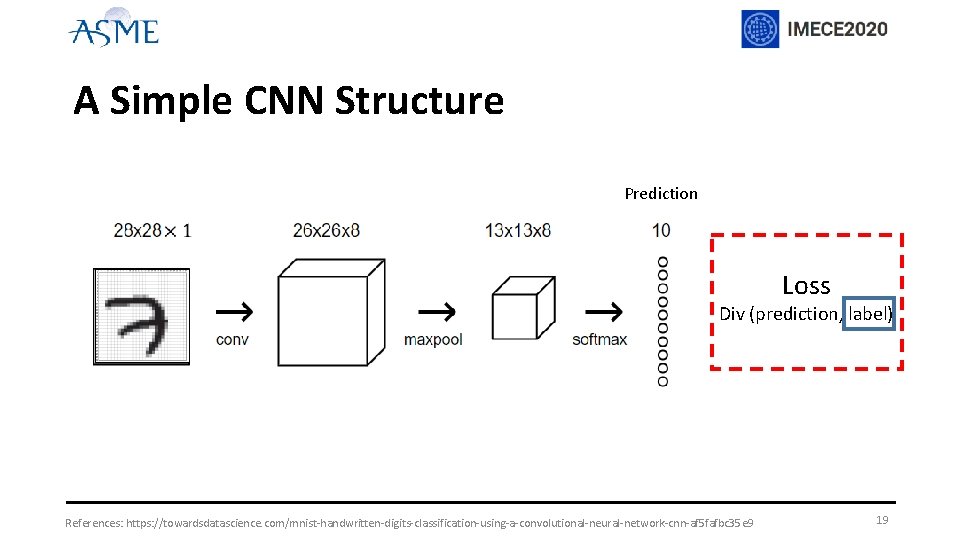

A Simple CNN Structure
(Figure labels: Prediction; Loss = Div(prediction, label))
References: https://towardsdatascience.com/mnist-handwritten-digits-classification-using-a-convolutional-neural-network-cnn-af5fafbc35e9

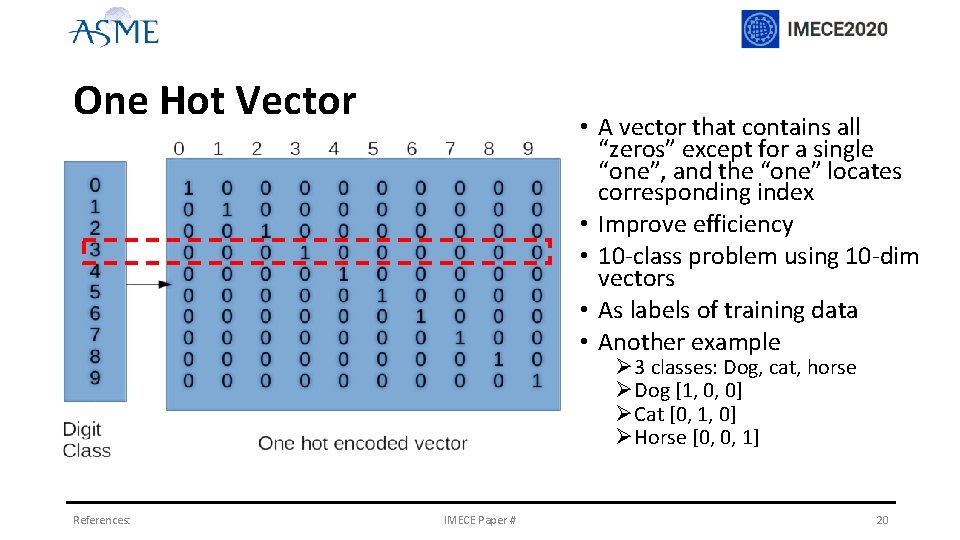

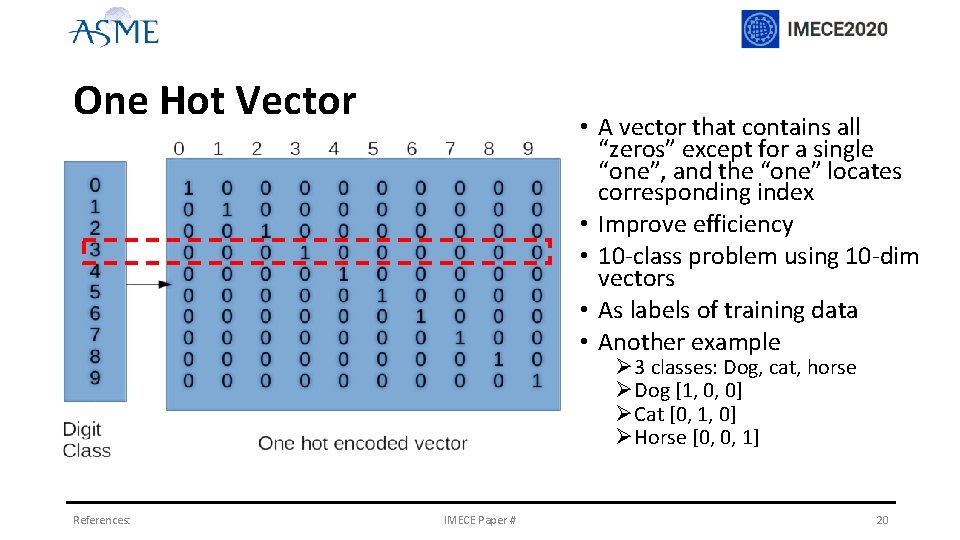

One-Hot Vector
• A vector of all zeros except for a single one, located at the index of the corresponding class
• Improves efficiency
• A 10-class problem uses 10-dimensional vectors
• Used as the labels of the training data
• Another example:
Ø 3 classes: dog, cat, horse
Ø Dog [1, 0, 0]
Ø Cat [0, 1, 0]
Ø Horse [0, 0, 1]
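A one-hot encoder in a few lines; `one_hot` is a hypothetical helper, not a library function. It reproduces the dog/cat/horse example and builds a 10-dimensional MNIST label.

```python
def one_hot(index, num_classes):
    """Vector of zeros with a single 1 at position `index`."""
    if not 0 <= index < num_classes:
        raise ValueError("class index out of range")
    vec = [0] * num_classes
    vec[index] = 1
    return vec


classes = ["dog", "cat", "horse"]
for i, name in enumerate(classes):
    print(name, one_hot(i, len(classes)))
# dog [1, 0, 0]
# cat [0, 1, 0]
# horse [0, 0, 1]
print(one_hot(7, 10))  # label for the handwritten digit 7: [0, 0, 0, 0, 0, 0, 0, 1, 0, 0]
```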

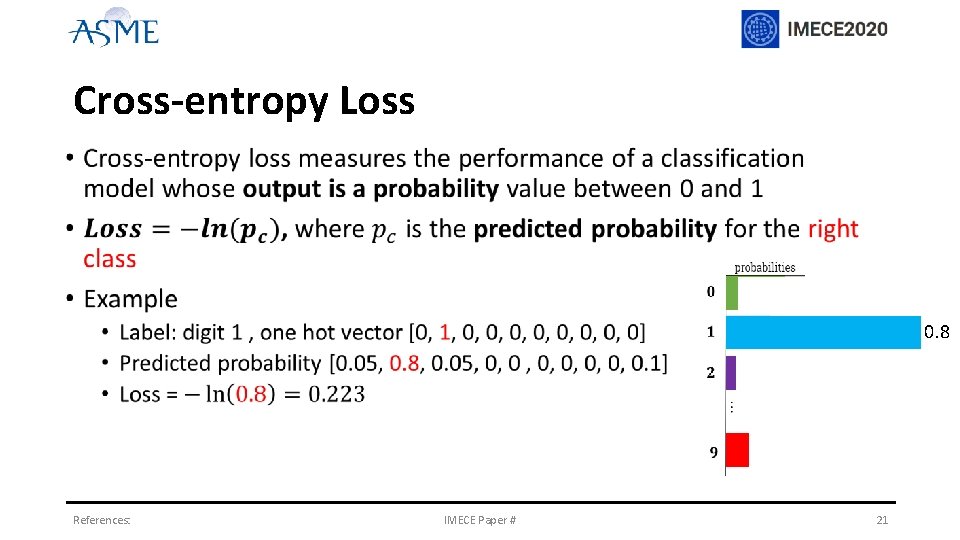

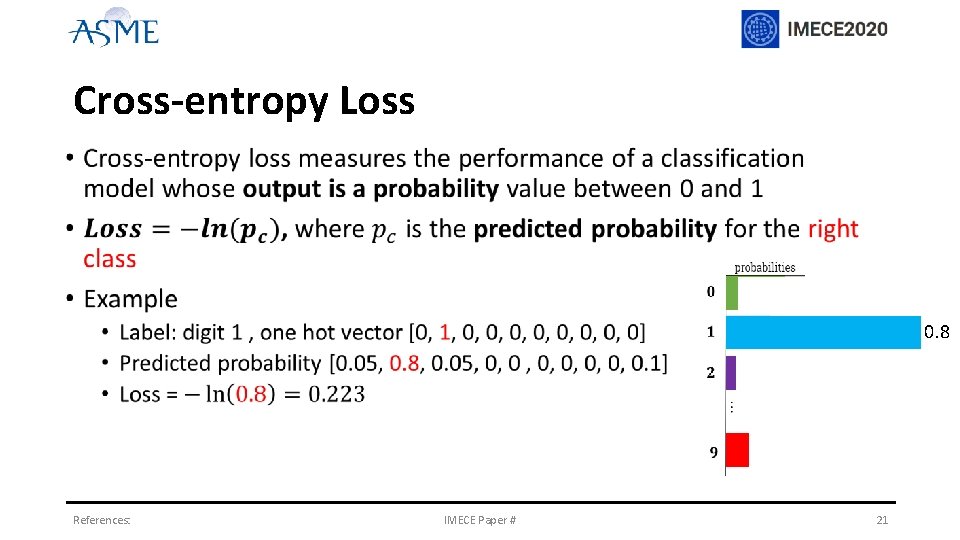

Cross-entropy Loss
(Figure label: 0.8)
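The slide's formula did not survive extraction, so this sketch assumes the standard definition with the natural logarithm: for a softmax output p and a one-hot label y, L = -Σᵢ yᵢ ln pᵢ, which reduces to -ln p of the true class. Reading the slide's 0.8 as the probability given to the correct class is also an assumption; under it, the loss would be -ln 0.8 ≈ 0.223. The helper name `cross_entropy` is hypothetical.

```python
import math


def cross_entropy(probs, one_hot_label):
    """Cross-entropy -sum_i y_i * ln(p_i) between a softmax output and a one-hot label.

    Terms with y_i = 0 contribute nothing, so they are skipped (this also
    avoids evaluating log(0) for classes the network rules out entirely).
    """
    return -sum(y * math.log(p) for p, y in zip(probs, one_hot_label) if y != 0)


label = [1, 0, 0]                             # one-hot label: class 0 is correct
print(cross_entropy([0.8, 0.1, 0.1], label))  # about 0.223 (confident and right)
print(cross_entropy([0.1, 0.8, 0.1], label))  # about 2.303 (confident and wrong)
```

The loss is small when the true class gets a high probability and grows quickly as that probability approaches 0, which is what drives training.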

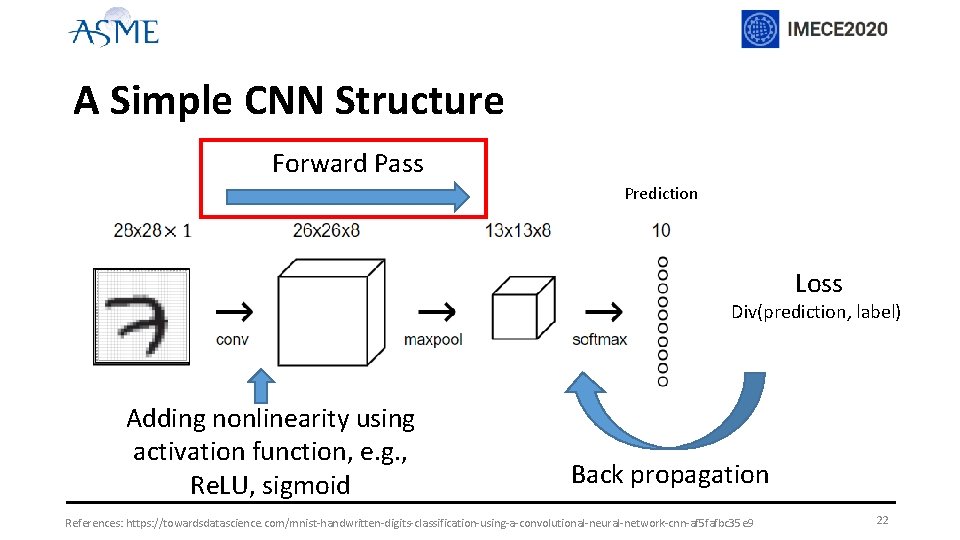

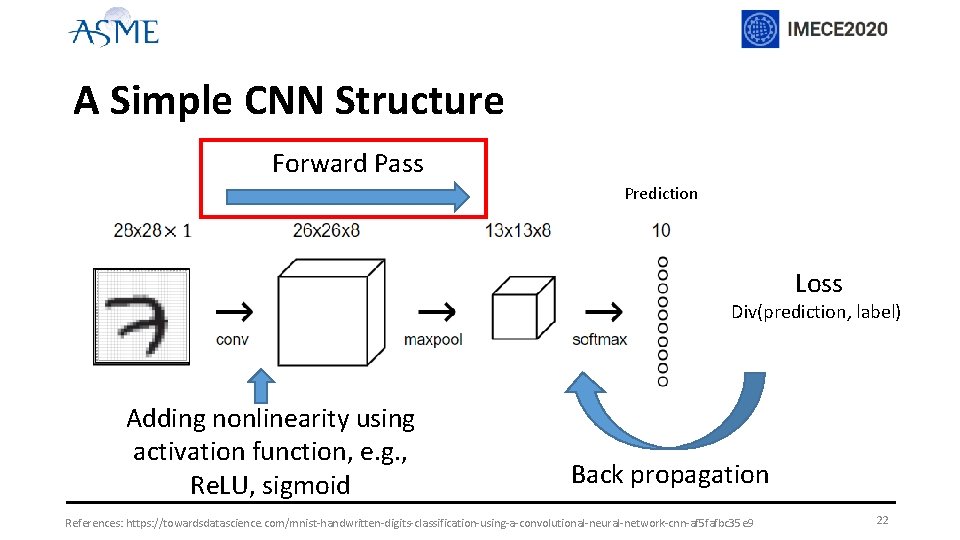

A Simple CNN Structure
(Figure labels: Forward Pass; Prediction; Loss = Div(prediction, label); Back propagation)
• Nonlinearity is added with an activation function, e.g., ReLU or sigmoid
References: https://towardsdatascience.com/mnist-handwritten-digits-classification-using-a-convolutional-neural-network-cnn-af5fafbc35e9
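The two activation functions named above, sketched in plain Python and applied element-wise to an illustrative feature map. The sigmoid uses a branch for negative inputs so that `math.exp` never overflows.

```python
import math


def relu(x):
    """ReLU: pass positive values through, replace negative values with 0."""
    return max(0, x)


def sigmoid(x):
    """Sigmoid 1 / (1 + e^-x), written to avoid overflow for large negative x."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


# Illustrative raw outputs of a Conv layer (a 2x2 feature map).
feature_map = [[29, -192], [-35, -22]]
activated = [[relu(v) for v in row] for row in feature_map]
print(activated)     # [[29, 0], [0, 0]]
print(sigmoid(0.0))  # 0.5
```

Without such a nonlinearity, stacked Conv and fully connected layers would collapse into a single linear map.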

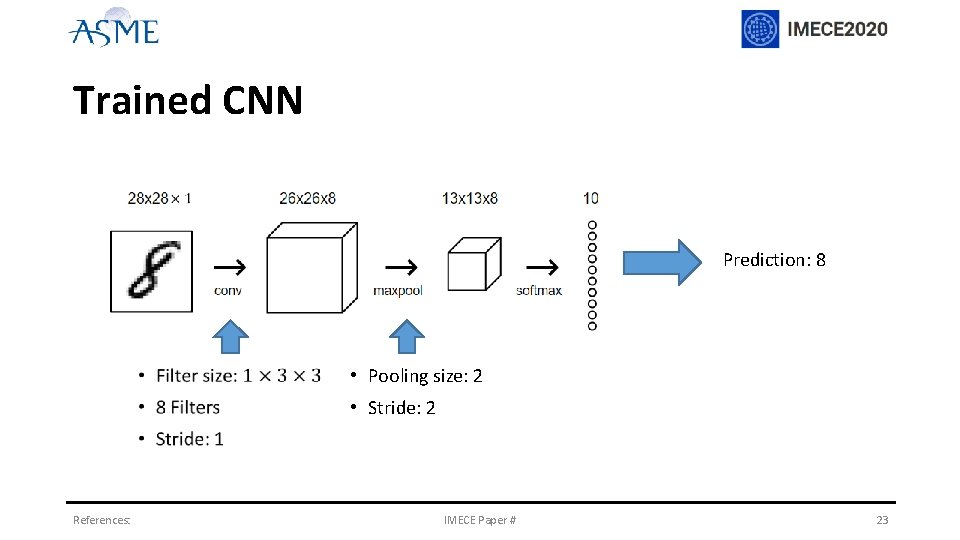

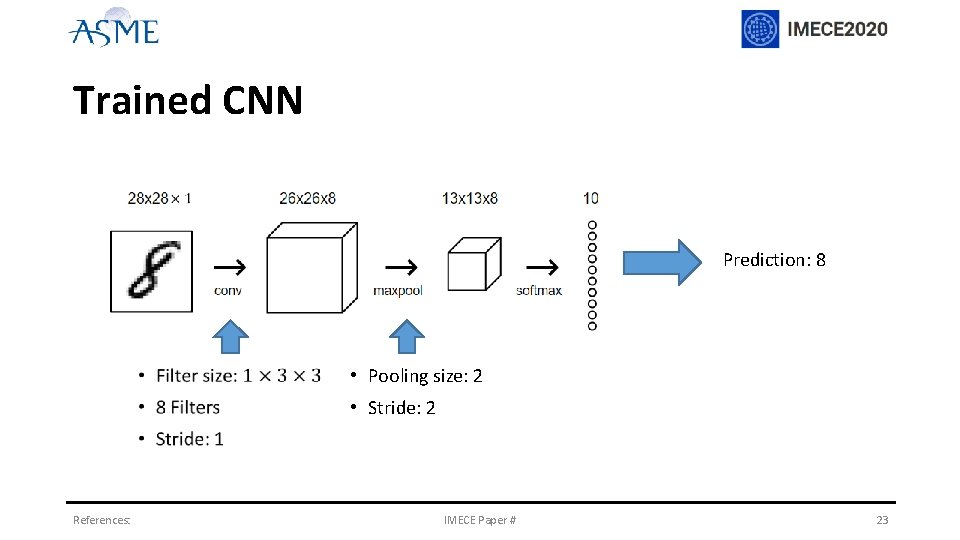

Trained CNN
(Figure label: Prediction: 8)
• Pooling size: 2
• Stride: 2
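A sketch that traces the output shape of each layer through a hypothetical MNIST network: 28 × 28 grayscale input, one Conv layer with 8 filters of size 3 × 3 (stride 1, no padding), max pooling with size 2 and stride 2, then flatten, a fully connected layer, and softmax over 10 classes. These layer sizes are assumptions for illustration, not the configuration of the trained CNN shown on the slide.

```python
def output_size(L, K, S=1, P=0):
    """(L + 2P - K) // S + 1, the output-size formula with optional padding P."""
    return (L + 2 * P - K) // S + 1


def trace_shapes(input_size=28, num_filters=8, kernel=3, pool=2, pool_stride=2, num_classes=10):
    """Return (layer name, output shape) pairs for a hypothetical small CNN."""
    shapes = [("input", (input_size, input_size, 1))]
    conv = output_size(input_size, kernel)         # Conv layer, stride 1, no padding
    shapes.append(("conv", (conv, conv, num_filters)))
    pooled = output_size(conv, pool, pool_stride)  # pooling window and stride
    shapes.append(("max pool", (pooled, pooled, num_filters)))
    shapes.append(("flatten", (pooled * pooled * num_filters,)))
    shapes.append(("fully connected + softmax", (num_classes,)))
    return shapes


for name, shape in trace_shapes():
    print(name, shape)
```

Under these assumptions the shapes go 28 × 28 × 1 → 26 × 26 × 8 → 13 × 13 × 8 → 1352 → 10, and the prediction is the class with the highest softmax probability.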

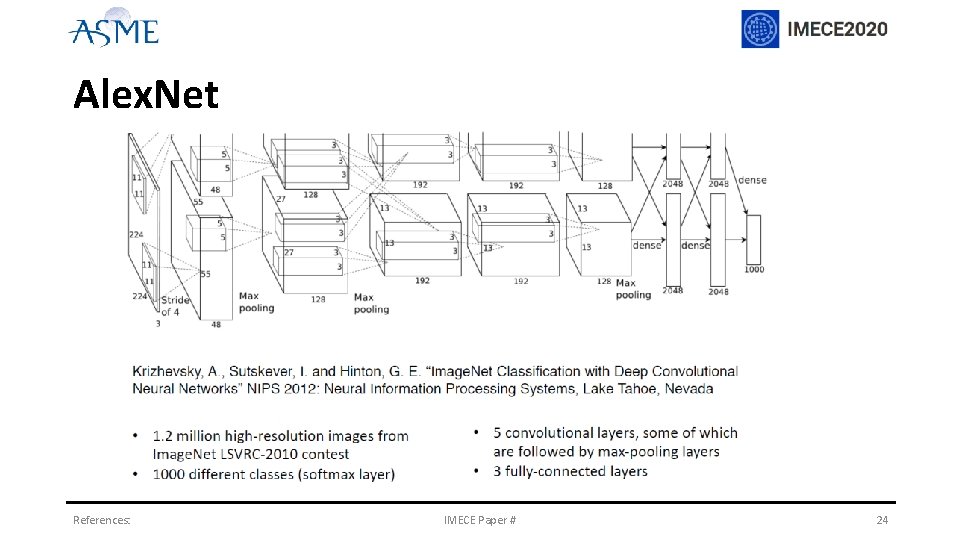

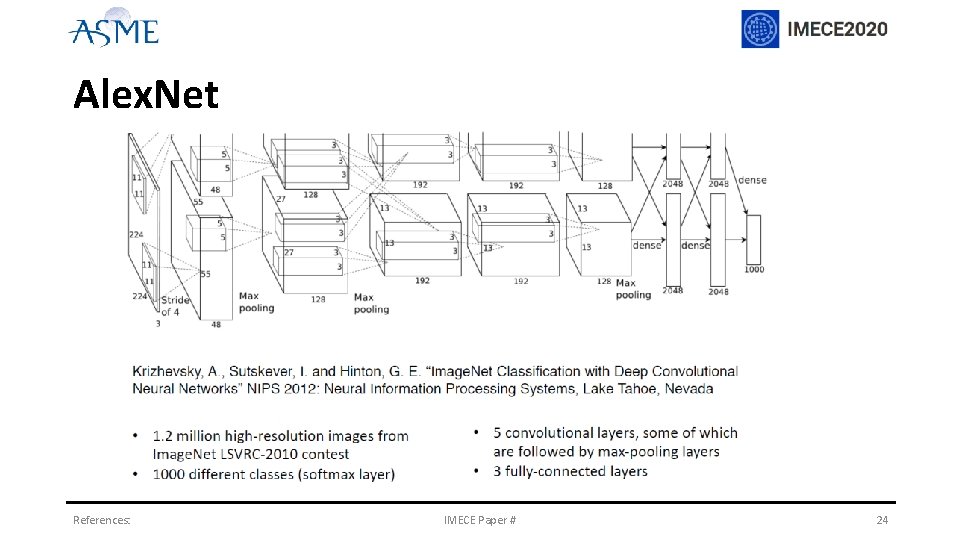

AlexNet

Conclusion
• This introduction covered the forward pass of a CNN
• Backward propagation is left for your own exploration
• Learning materials:
Ø https://victorzhou.com/blog/intro-to-cnns-part-1/
Ø https://victorzhou.com/blog/intro-to-cnns-part-2/
Ø https://towardsdatascience.com/a-comprehensive-guide-to-convolutional-neural-networks-the-eli5-way-3bd2b1164a53

Thank you! Questions?
Xingang Li, xl038@uark.edu