
- Slides: 22

Convolutional Neural Network (CNN) Munif

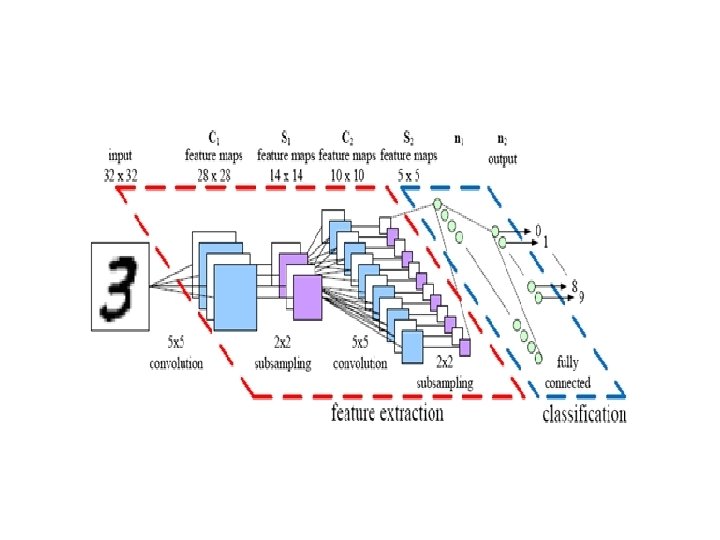

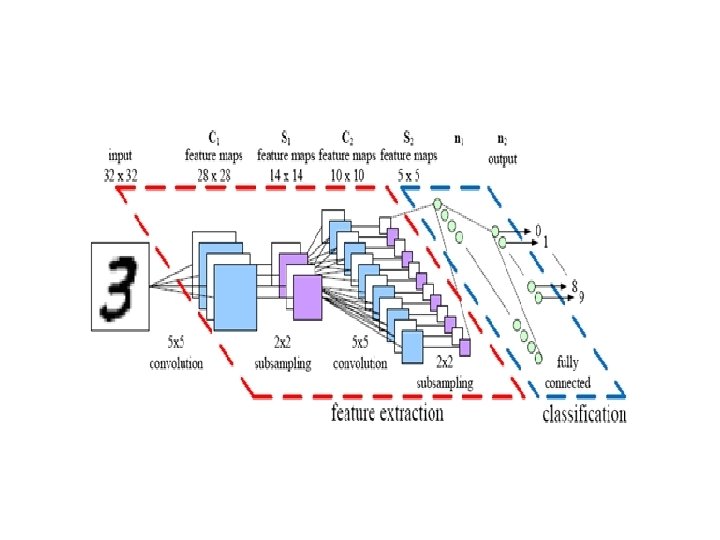

CNN

- A CNN consists of:
  - Convolutional layers
  - Subsampling layers
  - Fully connected layers
- Has achieved state-of-the-art results for the recognition of handwritten digits

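Neural Network and CNN

- A CNN has fewer connections than a fully connected neural network, because weights are replicated (shared)
- Fewer weights make it easier to train and reduce the training time
- Works with large-scale datasets
- Inputs are images (2D)
- A CNN can still be tricky to train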

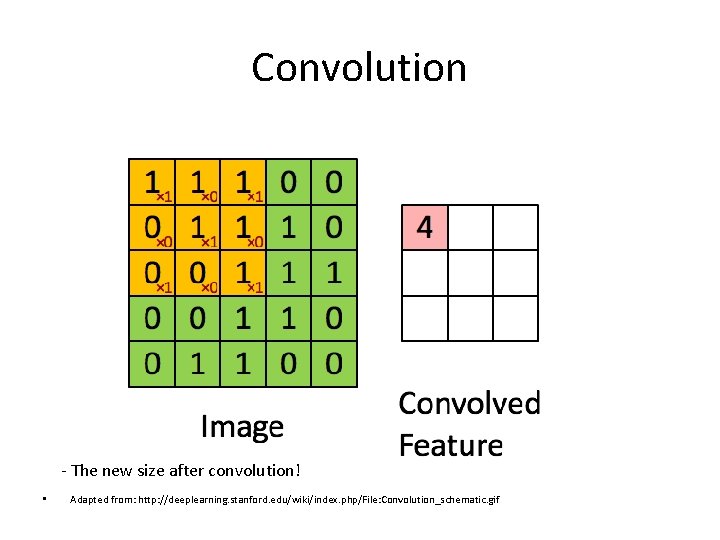

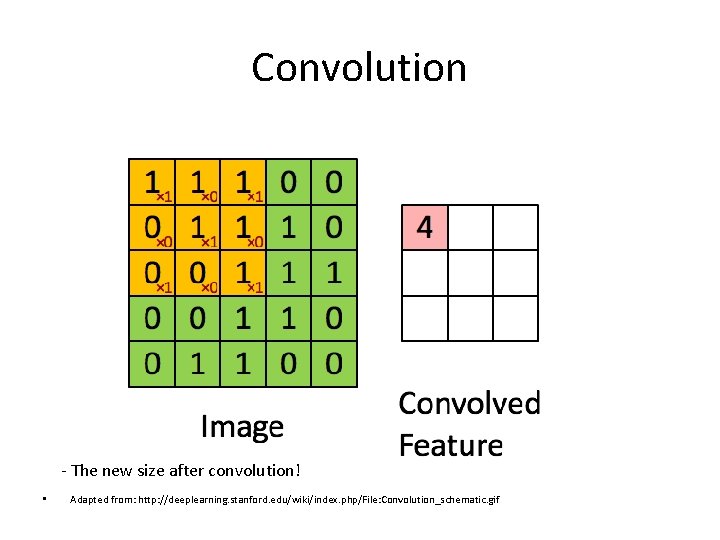

Convolution

- The output has a new size after convolution.
- Adapted from: http://deeplearning.stanford.edu/wiki/index.php/File:Convolution_schematic.gif

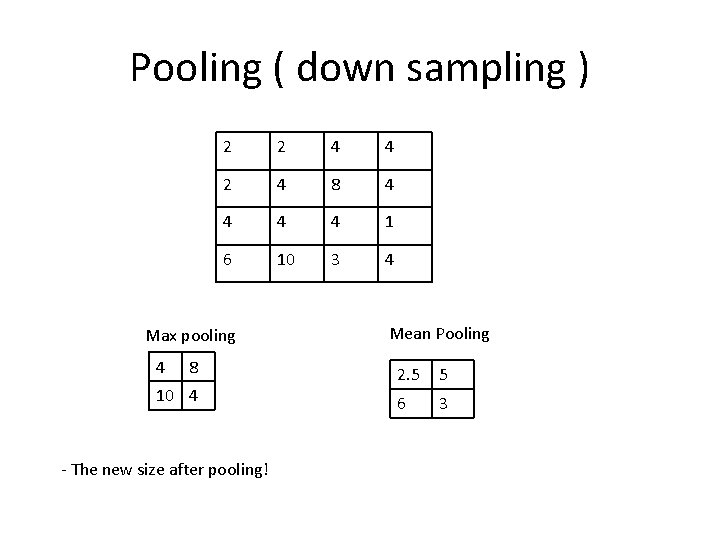

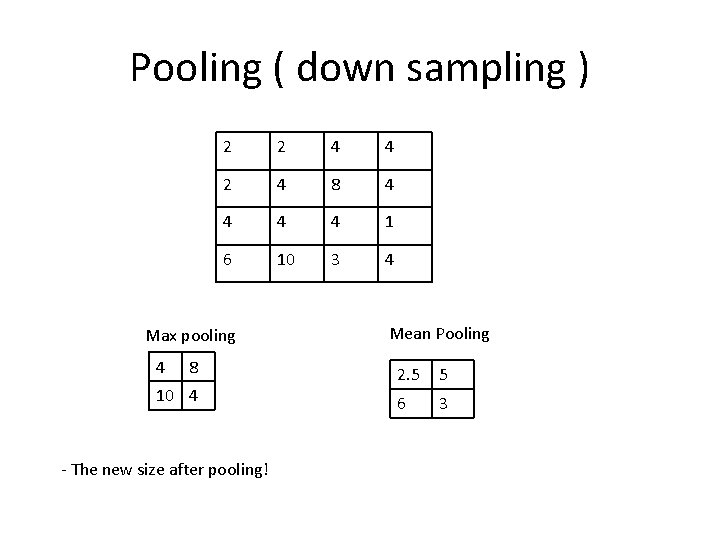

Pooling (down-sampling)

- Input values (figure): 2 2 4 4 2 4 8 4 4 1 6 10 3 4
- Max pooling result: [4 8; 10 4]
- Mean pooling result: [2.5 5; 6 3]
- The output has a new size after pooling.
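A minimal sketch of 2 × 2 max and mean pooling in plain Python. The 4 × 4 input below is hypothetical: it is chosen so that the outputs agree with the max-pooling and mean-pooling results on the slide, and is not claimed to be the slide's exact input. The helper name `pool2x2` is also an assumption made for illustration.

```python
def pool2x2(grid, reduce_fn):
    """Apply reduce_fn to each non-overlapping 2x2 block of a 2D list."""
    out = []
    for r in range(0, len(grid), 2):
        row = []
        for c in range(0, len(grid[0]), 2):
            block = [grid[r][c], grid[r][c + 1],
                     grid[r + 1][c], grid[r + 1][c + 1]]
            row.append(reduce_fn(block))
        out.append(row)
    return out


def mean(values):
    return sum(values) / len(values)


# Hypothetical 4x4 input, chosen to be consistent with the slide's results.
x = [[2, 2, 4, 4],
     [2, 4, 8, 4],
     [4, 10, 1, 4],
     [6, 4, 3, 4]]

max_pooled = pool2x2(x, max)    # [[4, 8], [10, 4]]
mean_pooled = pool2x2(x, mean)  # [[2.5, 5.0], [6.0, 3.0]]
```

Both variants halve each side of the map; they differ only in how the four values of a block are summarized.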

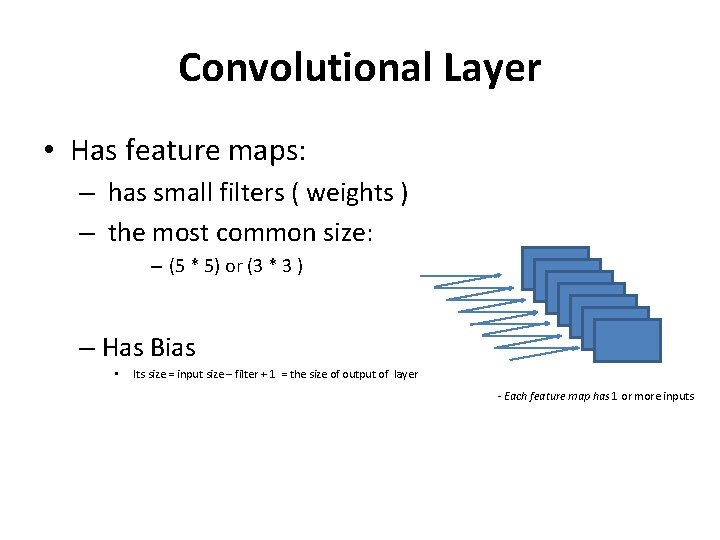

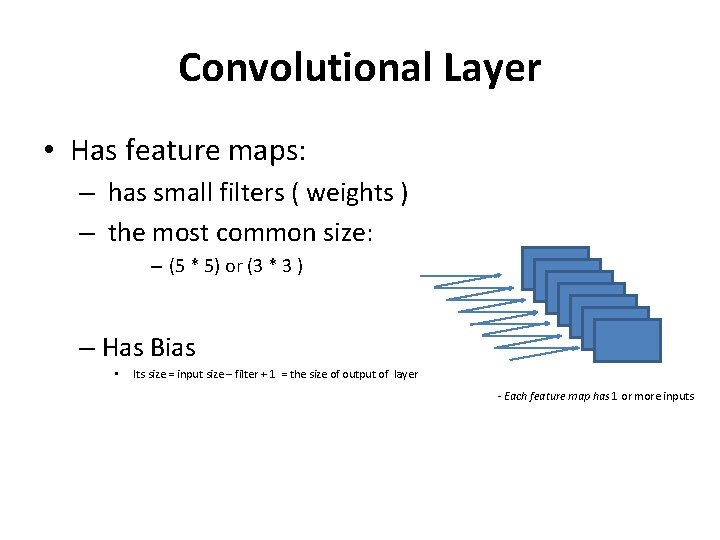

Convolutional Layer

- Has feature maps:
  - Each has small filters (weights)
  - The most common filter sizes are 5 × 5 and 3 × 3
  - Each has a bias
- Output size of the layer = input size - filter size + 1
- Each feature map has 1 or more inputs
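The size rule above can be checked with a small sketch of an unpadded ("valid") convolution in plain Python. The 5 × 5 input and 3 × 3 filter are illustrative values, the function name `conv2d_valid` is hypothetical, and the sketch assumes a square input, a square filter, and a stride of 1. The filter is applied without flipping; for this symmetric filter that gives the same result as a flipped convolution.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image without padding (stride 1)."""
    n, k = len(image), len(kernel)
    out_size = n - k + 1  # input size - filter size + 1
    out = [[0] * out_size for _ in range(out_size)]
    for i in range(out_size):
        for j in range(out_size):
            out[i][j] = sum(image[i + u][j + v] * kernel[u][v]
                            for u in range(k) for v in range(k))
    return out


# Illustrative 5x5 binary input and 3x3 filter.
image = [[1, 1, 1, 0, 0],
         [0, 1, 1, 1, 0],
         [0, 0, 1, 1, 1],
         [0, 0, 1, 1, 0],
         [0, 1, 1, 0, 0]]
kernel = [[1, 0, 1],
          [0, 1, 0],
          [1, 0, 1]]

feature_map = conv2d_valid(image, kernel)
# 5 - 3 + 1 = 3, so the result is 3x3: [[4, 3, 4], [2, 4, 3], [2, 3, 4]]
```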

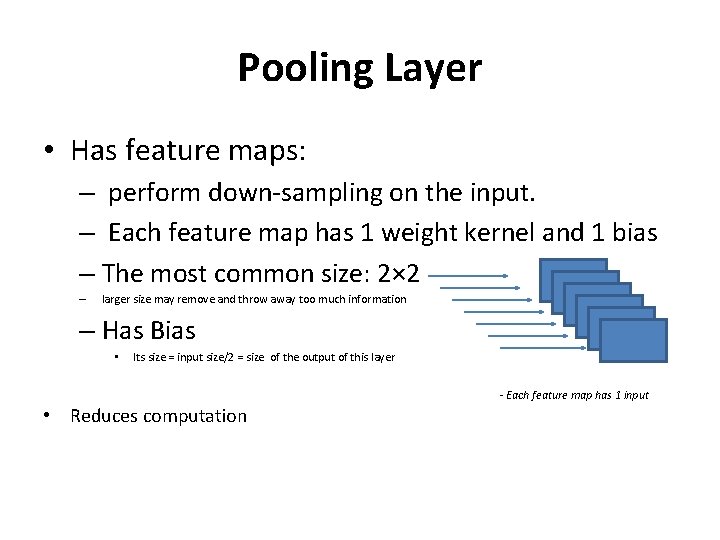

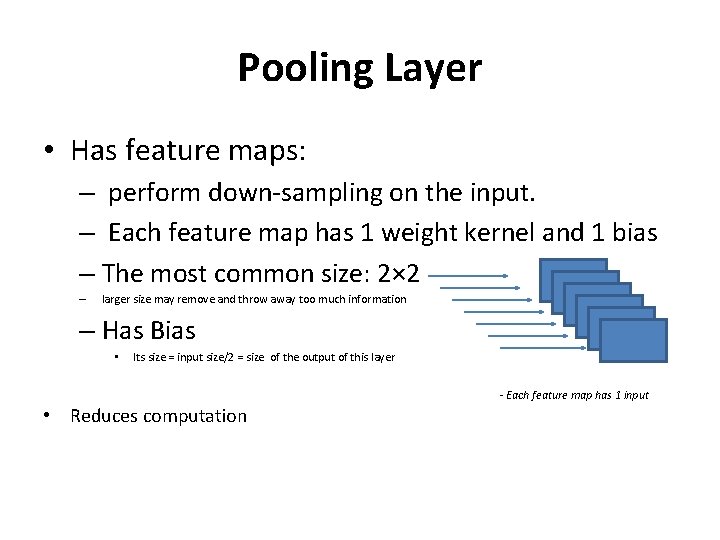

Pooling Layer

- Has feature maps:
  - Each performs down-sampling on its input
  - Each feature map has 1 weight kernel and 1 bias
  - The most common size is 2 × 2
  - A larger size may throw away too much information
- Output size of the layer = input size / 2 (for 2 × 2 pooling)
- Each feature map has 1 input
- Reduces computation

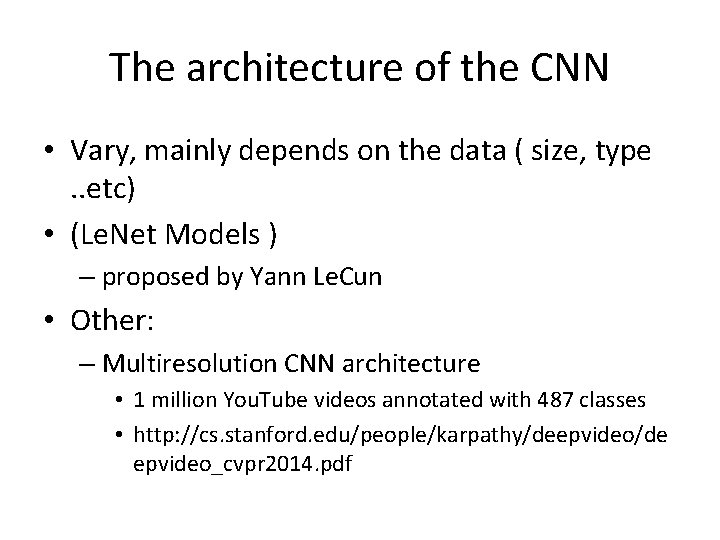

The architecture of the CNN

- Varies, mainly depending on the data (size, type, etc.)
- LeNet models, proposed by Yann LeCun
- Other:
  - Multiresolution CNN architecture
    - 1 million YouTube videos annotated with 487 classes
    - http://cs.stanford.edu/people/karpathy/deepvideo/deepvideo_cvpr2014.pdf

The Connections Between Layers

- These connections are also referred to as receptive fields (RF):
  - Custom table connections
  - Random connections
  - Fully connected layers

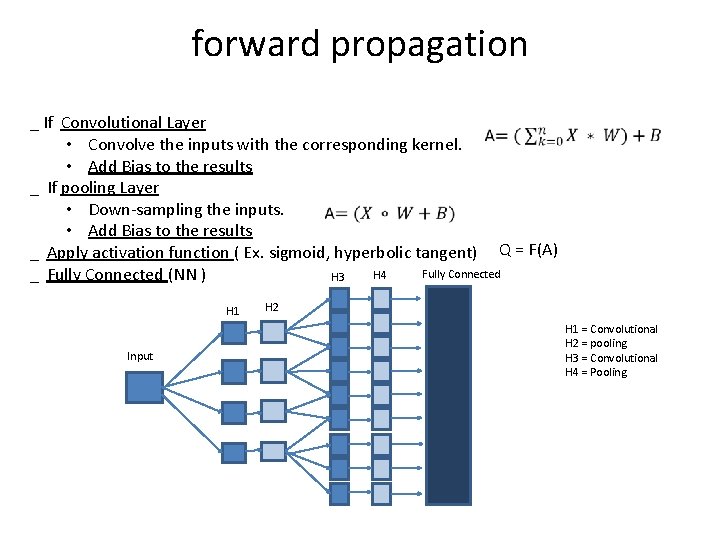

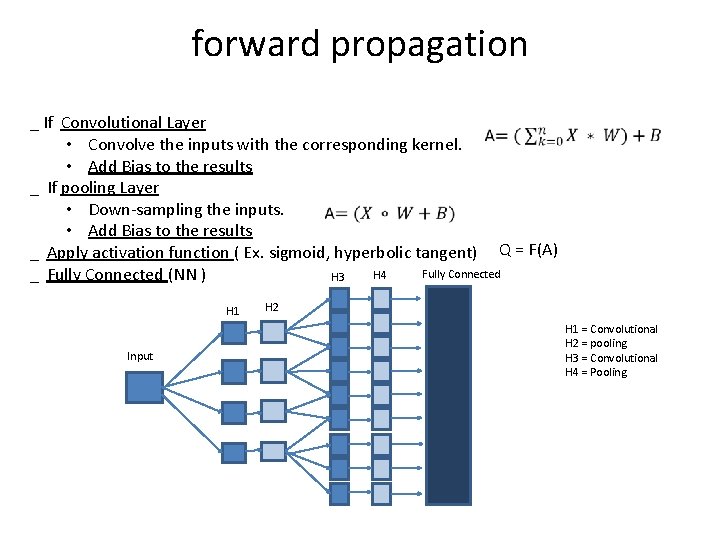

Forward propagation

- If convolutional layer:
  - Convolve the inputs with the corresponding kernel.
  - Add the bias to the results.
- If pooling layer:
  - Down-sample the inputs.
  - Add the bias to the results.
- Apply the activation function (e.g. sigmoid, hyperbolic tangent): Q = F(A)
- Fully connected (NN) layer
- Network in the figure: Input → H1 (convolutional) → H2 (pooling) → H3 (convolutional) → H4 (pooling) → fully connected
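The forward steps can be sketched for one convolutional map followed by one pooling map. This is a simplified illustration under stated assumptions: a single input map, a sigmoid activation, mean pooling, and no trainable pooling weight. The function names, the all-zero input, and the kernel values are hypothetical.

```python
import math


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def conv_forward(x, kernel, bias):
    """Convolve (no padding), add the bias, then apply F: Q = F(A)."""
    k = len(kernel)
    size = len(x) - k + 1
    q = []
    for i in range(size):
        row = []
        for j in range(size):
            a = bias + sum(x[i + u][j + v] * kernel[u][v]
                           for u in range(k) for v in range(k))
            row.append(sigmoid(a))
        q.append(row)
    return q


def pool_forward(x, bias, scale=2):
    """Mean-pool scale x scale blocks, add the bias, then apply F."""
    size = len(x) // scale
    q = []
    for i in range(size):
        row = []
        for j in range(size):
            block = [x[i * scale + u][j * scale + v]
                     for u in range(scale) for v in range(scale)]
            row.append(sigmoid(sum(block) / len(block) + bias))
        q.append(row)
    return q


x = [[0.0] * 6 for _ in range(6)]       # hypothetical 6x6 input
kernel = [[0.1] * 3 for _ in range(3)]  # hypothetical 3x3 kernel
h1 = conv_forward(x, kernel, bias=0.0)  # H1: 4x4 map
h2 = pool_forward(h1, bias=0.0)         # H2: 2x2 map
```

Stacking a second convolution and pooling pair on `h2` would give H3 and H4 in the same way, before the result is flattened for the fully connected layer.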

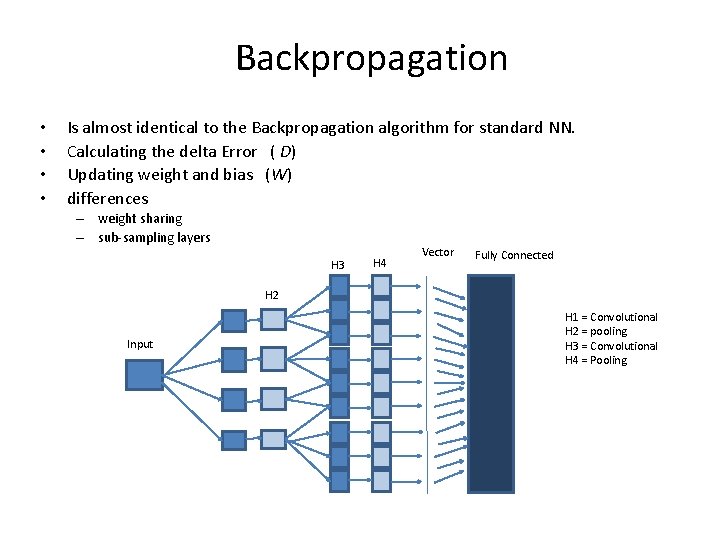

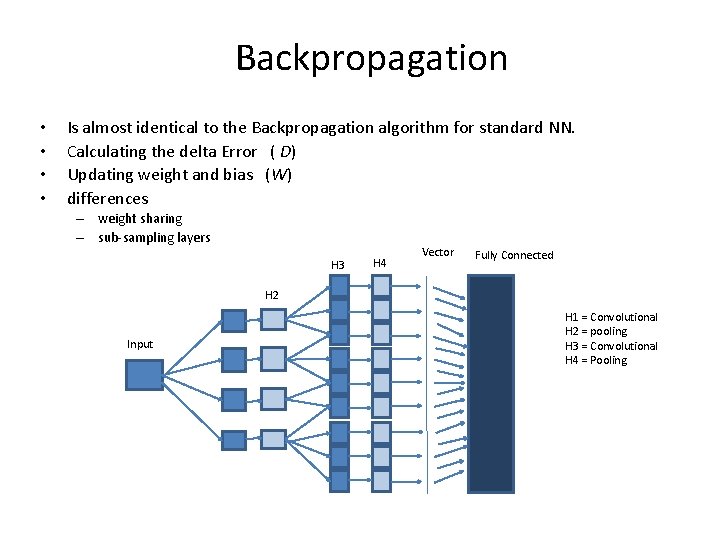

Backpropagation

- Is almost identical to the backpropagation algorithm for a standard NN:
  - Calculating the delta error (D)
  - Updating weight and bias (W)
- Differences:
  - Weight sharing
  - Sub-sampling layers
- Network in the figure: Input → H1 (convolutional) → H2 (pooling) → H3 (convolutional) → H4 (pooling) → vector → fully connected

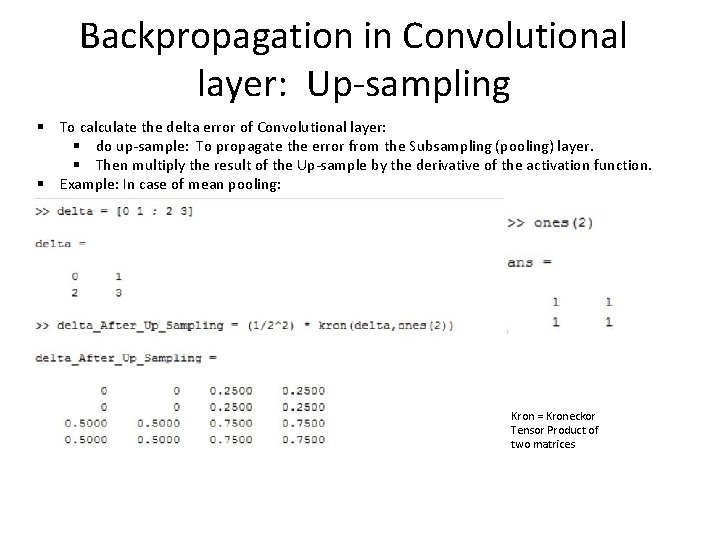

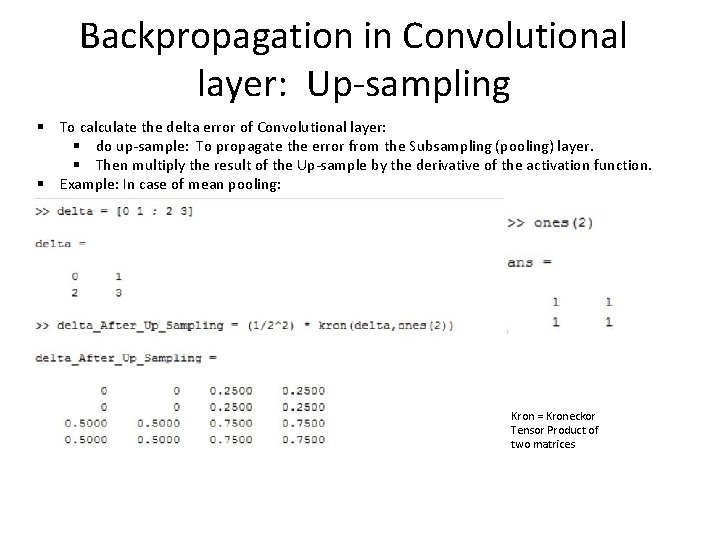

Backpropagation in Convolutional layer: Up-sampling

- To calculate the delta error of a convolutional layer:
  - Up-sample, to propagate the error back from the subsampling (pooling) layer.
  - Then multiply the up-sampled result by the derivative of the activation function.
- Example, in the case of mean pooling: the up-sampling uses Kron, the Kronecker tensor product of two matrices.
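A minimal sketch of the up-sampling step for mean pooling, written in plain Python. It assumes 2 × 2 pooling and a sigmoid activation (whose derivative is a(1 - a)); the division by the block area reflects that each input contributed one quarter of the pooled mean. The function names and the delta values are hypothetical.

```python
def kron(a, b):
    """Kronecker product of two matrices given as 2D lists."""
    rows_b, cols_b = len(b), len(b[0])
    return [[a[i // rows_b][j // cols_b] * b[i % rows_b][j % cols_b]
             for j in range(len(a[0]) * cols_b)]
            for i in range(len(a) * rows_b)]


def upsample_mean(delta_pool, scale=2):
    """Spread each pooled delta evenly over its scale x scale block."""
    ones = [[1] * scale for _ in range(scale)]
    expanded = kron(delta_pool, ones)
    return [[v / (scale * scale) for v in row] for row in expanded]


def conv_layer_delta(delta_pool, activation, scale=2):
    """Up-sampled delta times the sigmoid derivative a * (1 - a)."""
    up = upsample_mean(delta_pool, scale)
    return [[up[i][j] * activation[i][j] * (1.0 - activation[i][j])
             for j in range(len(up[0]))]
            for i in range(len(up))]


# Hypothetical deltas arriving from the pooling layer.
delta_pool = [[4.0, 8.0],
              [12.0, 16.0]]
up = upsample_mean(delta_pool)
# [[1.0, 1.0, 2.0, 2.0],
#  [1.0, 1.0, 2.0, 2.0],
#  [3.0, 3.0, 4.0, 4.0],
#  [3.0, 3.0, 4.0, 4.0]]

# Hypothetical conv-layer outputs, all 0.5, so the derivative is 0.25.
activation = [[0.5] * 4 for _ in range(4)]
delta_conv = conv_layer_delta(delta_pool, activation)
```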

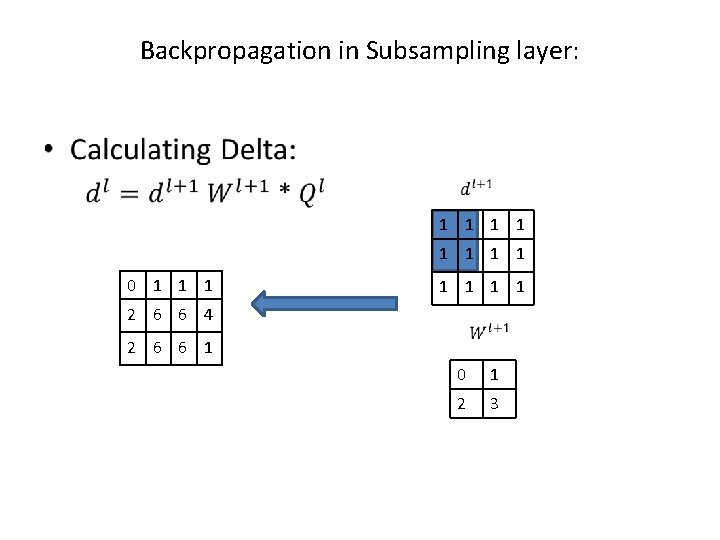

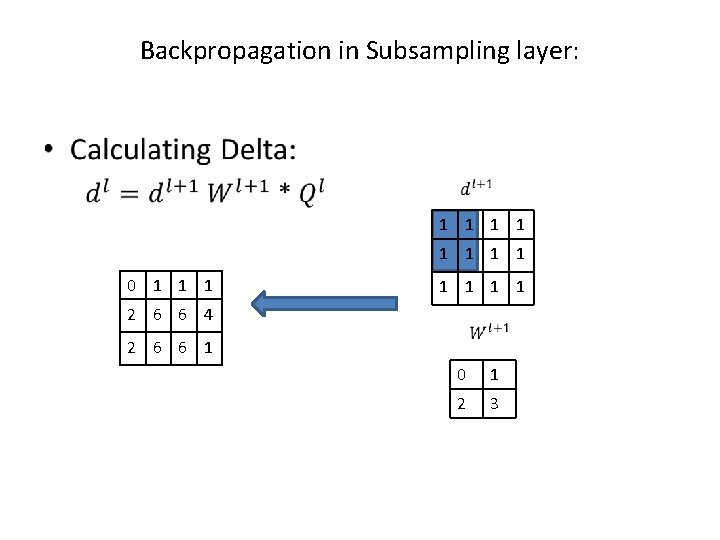

Backpropagation in Subsampling layer: numeric example (figure)

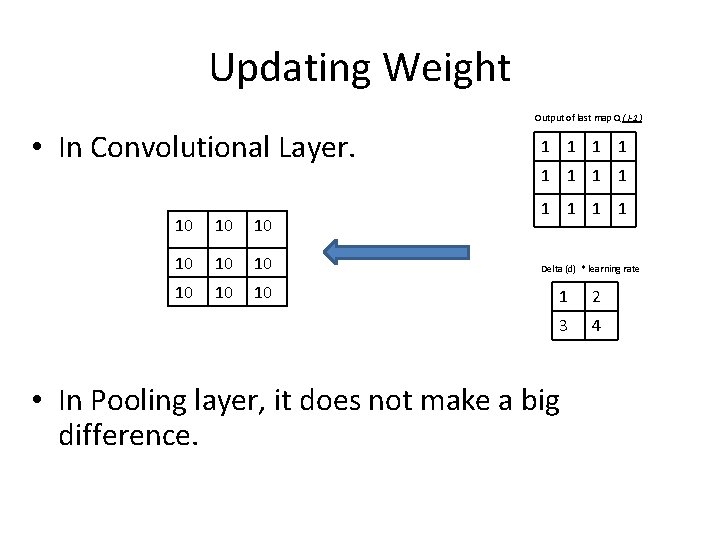

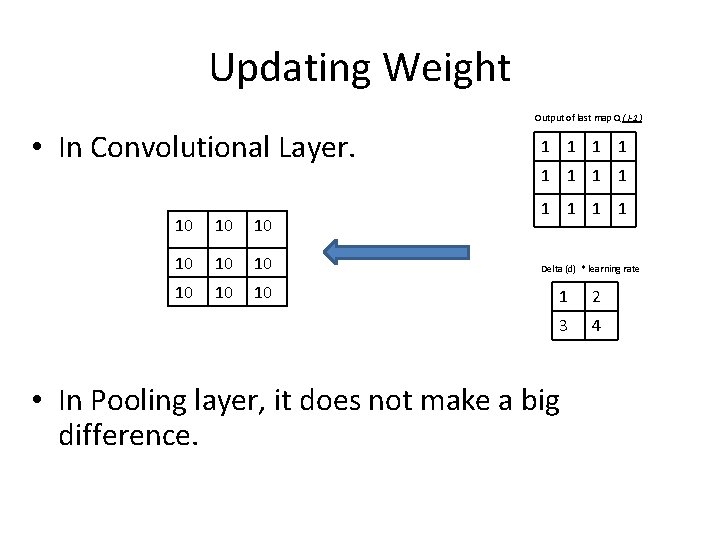

Updating Weight

- In a convolutional layer: the update is computed from the output of the previous map, Q(l-1), and the delta (d), multiplied by the learning rate.
- In a pooling layer, it does not make a big difference.
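One common way to form the convolutional weight update is sketched below in plain Python. It is an illustration under assumptions, not necessarily the exact computation on the slide: a single input map, a forward pass that applies the kernel without flipping, and plain gradient descent. The function names and all numeric values are hypothetical.

```python
def weight_gradient(q_prev, delta):
    """dW[u][v] = sum over i, j of q_prev[i + u][j + v] * delta[i][j]."""
    d = len(delta)
    k = len(q_prev) - d + 1  # kernel size implied by the two map sizes
    return [[sum(q_prev[i + u][j + v] * delta[i][j]
                 for i in range(d) for j in range(d))
             for v in range(k)]
            for u in range(k)]


def update_kernel(kernel, q_prev, delta, learning_rate):
    """Gradient-descent step: W = W - learning_rate * dW."""
    grad = weight_gradient(q_prev, delta)
    return [[kernel[u][v] - learning_rate * grad[u][v]
             for v in range(len(kernel))]
            for u in range(len(kernel))]


def update_bias(bias, delta, learning_rate):
    """The bias gradient is the sum of all deltas of the map."""
    return bias - learning_rate * sum(sum(row) for row in delta)


q_prev = [[1, 2, 3],
          [4, 5, 6],
          [7, 8, 9]]           # hypothetical output of the previous map
delta = [[1, 0],
         [0, 1]]               # hypothetical delta of the current map
kernel = [[0.0, 0.0],
          [0.0, 0.0]]          # hypothetical 2x2 kernel

grad = weight_gradient(q_prev, delta)            # [[6, 8], [12, 14]]
new_kernel = update_kernel(kernel, q_prev, delta, learning_rate=0.1)
new_bias = update_bias(0.0, delta, learning_rate=0.1)
```

Because the same kernel is shared across every position of the map, each weight's gradient sums contributions from all output positions.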

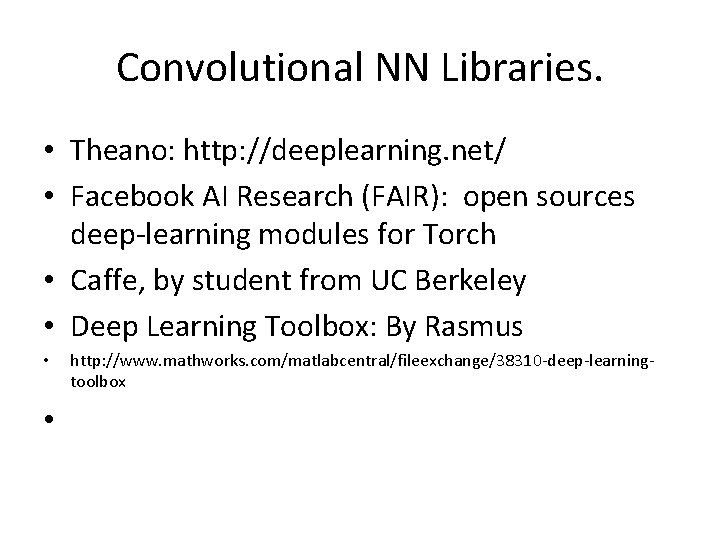

Convolutional NN Libraries

- Theano: http://deeplearning.net/
- Facebook AI Research (FAIR): open-source deep-learning modules for Torch
- Caffe, by a student from UC Berkeley
- Deep Learning Toolbox, by Rasmus: http://www.mathworks.com/matlabcentral/fileexchange/38310-deep-learning-toolbox

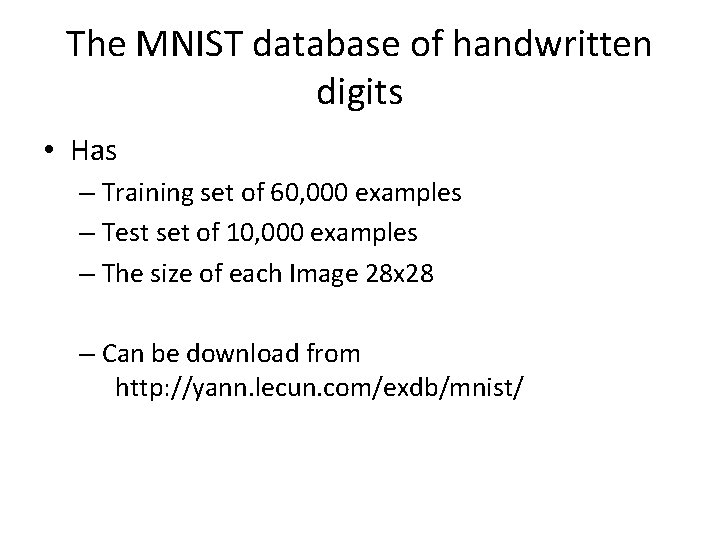

The MNIST database of handwritten digits

- Has:
  - Training set of 60,000 examples
  - Test set of 10,000 examples
  - Each image is 28 x 28
- Can be downloaded from http://yann.lecun.com/exdb/mnist/

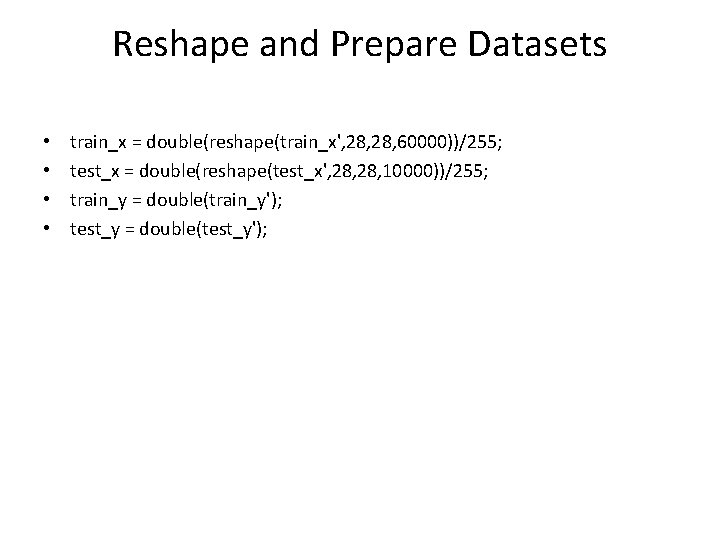

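Reshape and Prepare Datasets

    % reshape each example into a 28 x 28 image and scale pixel values to [0, 1]
    train_x = double(reshape(train_x', 28, 28, 60000)) / 255;
    test_x = double(reshape(test_x', 28, 28, 10000)) / 255;
    % transpose the labels so that each column is one example
    train_y = double(train_y');
    test_y = double(test_y');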

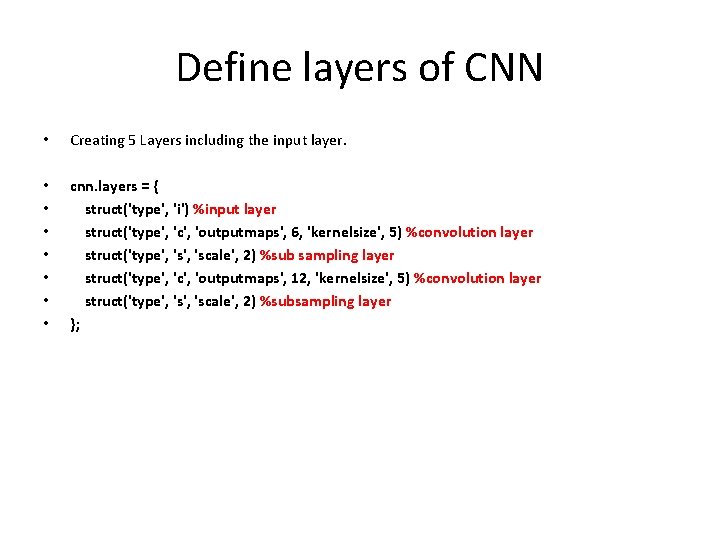

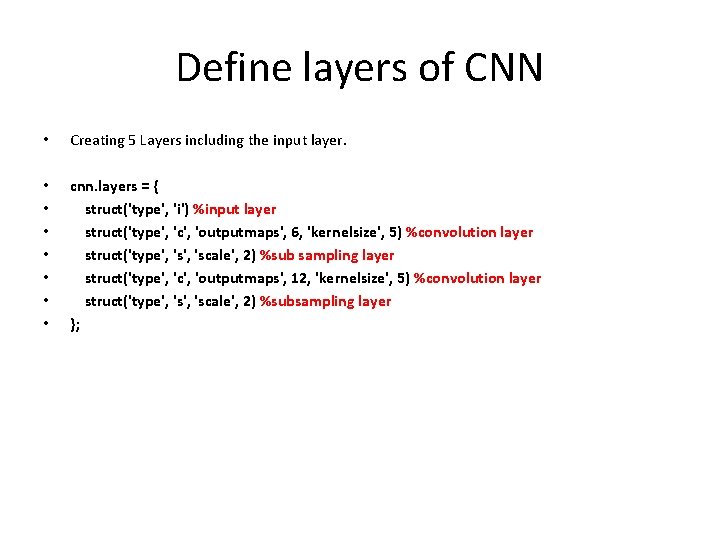

Define layers of CNN

Creating 5 layers, including the input layer:

    cnn.layers = {
        struct('type', 'i')                                    % input layer
        struct('type', 'c', 'outputmaps', 6, 'kernelsize', 5)  % convolution layer
        struct('type', 's', 'scale', 2)                        % subsampling layer
        struct('type', 'c', 'outputmaps', 12, 'kernelsize', 5) % convolution layer
        struct('type', 's', 'scale', 2)                        % subsampling layer
    };
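As a rough check of this architecture, the feature-map sizes can be traced with the two size rules from the earlier slides (convolution: input size - filter size + 1; pooling: input size / scale). The sketch below mirrors the layer list as Python dictionaries, assuming 28 x 28 MNIST inputs and sub-sampling layers of type `'s'`; the function name `feature_map_sizes` is hypothetical.

```python
def feature_map_sizes(input_size, layers):
    """Track the side length of the feature maps through the layers."""
    sizes = [input_size]
    size = input_size
    for layer in layers:
        if layer["type"] == "c":
            size = size - layer["kernelsize"] + 1
        elif layer["type"] == "s":
            size = size // layer["scale"]
        sizes.append(size)
    return sizes


# Python mirror of the four layers that follow the input layer.
layers = [
    {"type": "c", "outputmaps": 6, "kernelsize": 5},
    {"type": "s", "scale": 2},
    {"type": "c", "outputmaps": 12, "kernelsize": 5},
    {"type": "s", "scale": 2},
]

sizes = feature_map_sizes(28, layers)  # [28, 24, 12, 8, 4]
# 12 maps of 4 x 4 feed the fully connected part: 192 features.
features = layers[2]["outputmaps"] * sizes[-1] ** 2
```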

Define the Parameters

    opts.alpha = 0.1;     % learning rate
    opts.batchsize = 2;   % examples per mini-batch
    opts.numepochs = 50;  % passes over the training set

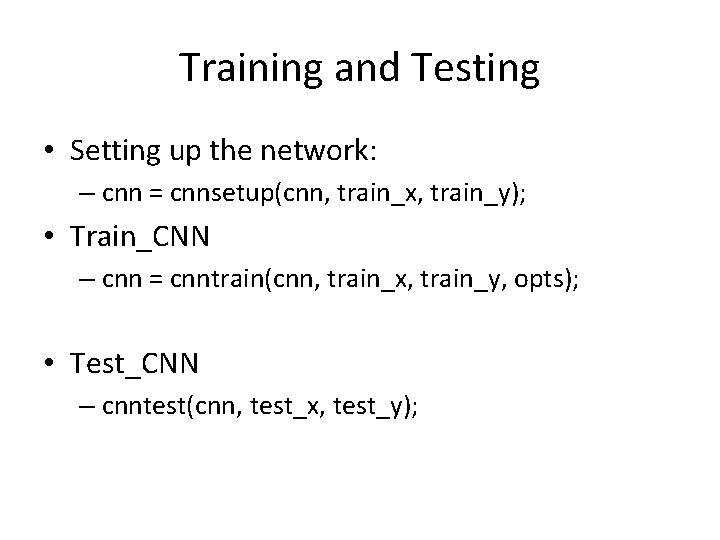

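Training and Testing

- Setting up the network: `cnn = cnnsetup(cnn, train_x, train_y);`
- Training the CNN: `cnn = cnntrain(cnn, train_x, train_y, opts);`
- Testing the CNN: `[er, bad] = cnntest(cnn, test_x, test_y);` (`er` is the error rate on the test set, `bad` the indices of the misclassified examples)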

Thank You