
- Slides: 52

(Inter)Network Protocols: Theory and Practice Lecture 5 Dr. Michael Schapira

Quick Recap: Intradomain Traffic Engineering

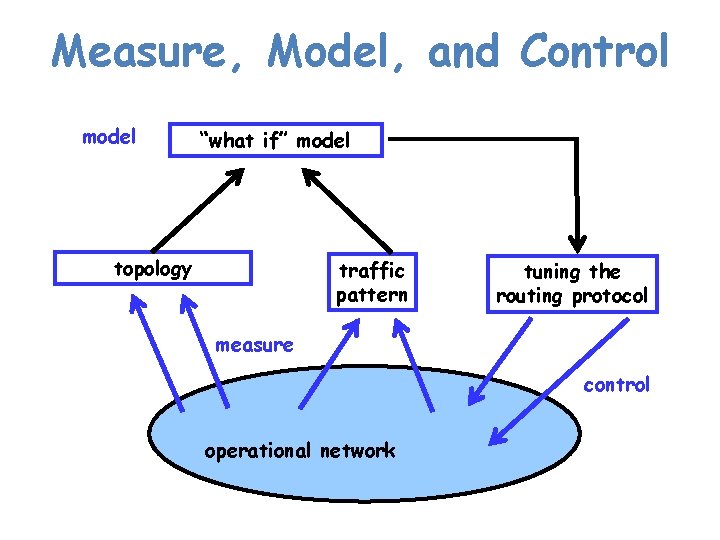

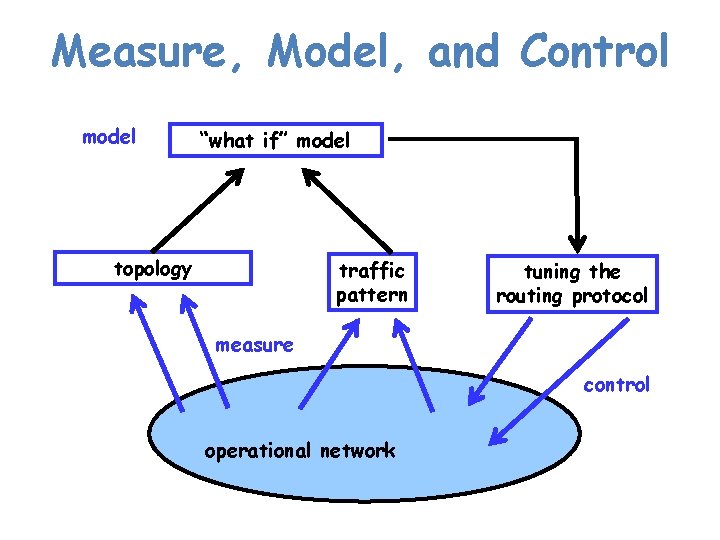

Measure, Model, and Control (figure: measure the topology and traffic pattern of the operational network, evaluate “what if” scenarios in a model, and control the network by tuning the routing protocol)

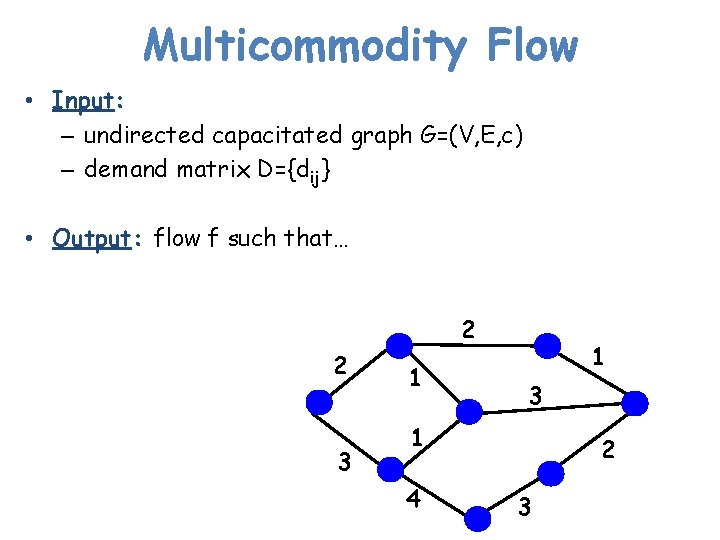

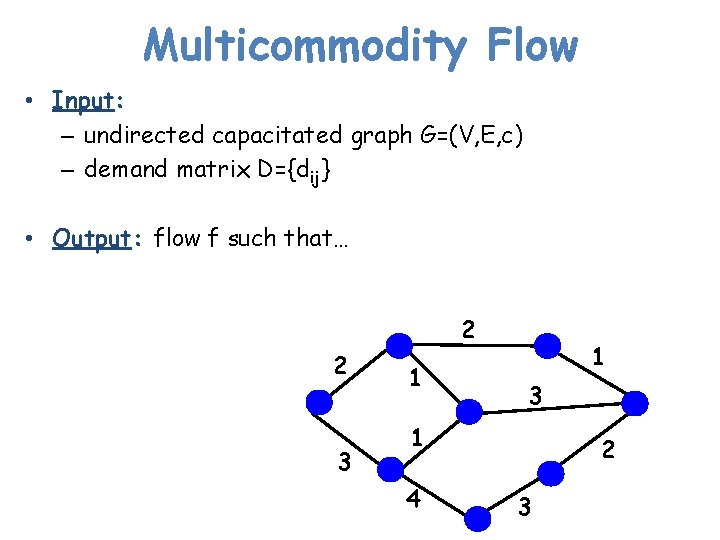

Multicommodity Flow • Input: – undirected capacitated graph $G=(V, E, c)$ – demand matrix $D=\{d_{ij}\}$ • Output: flow $f$ such that… (figure: example graph with link capacities)

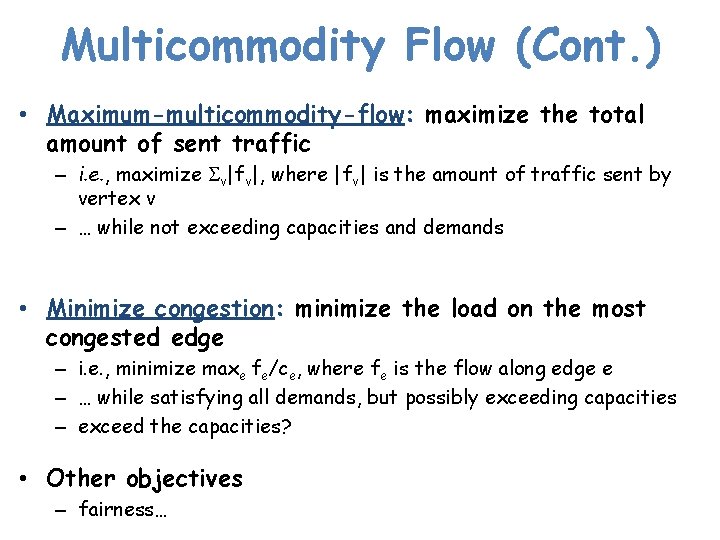

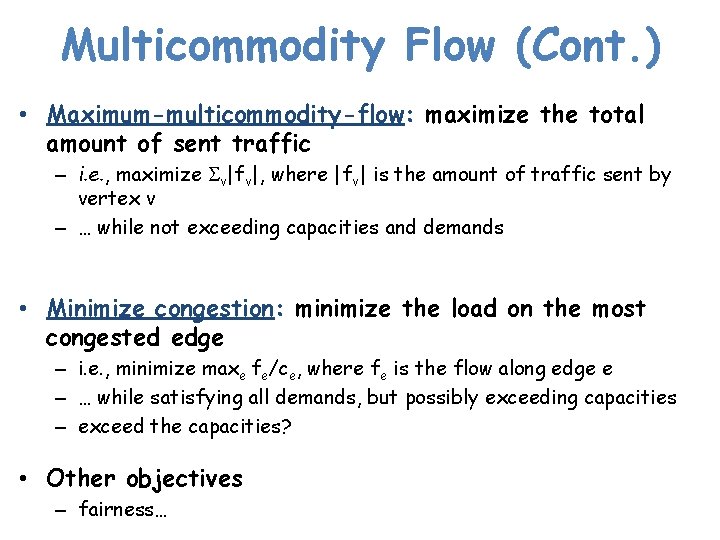

Multicommodity Flow (Cont.) • Maximum multicommodity flow: maximize the total amount of sent traffic – i.e., maximize $\sum_v |f_v|$, where $|f_v|$ is the amount of traffic sent by vertex $v$ – … while not exceeding capacities and demands • Minimize congestion: minimize the load on the most congested edge – i.e., minimize $\max_e f_e/c_e$, where $f_e$ is the flow along edge $e$ – … while satisfying all demands, but possibly exceeding capacities – why allow exceeding capacities? If the optimum is above 1, the demands simply cannot all fit, and the value says by how much • Other objectives – fairness…
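To make the two objectives concrete, here is a minimal Python sketch that evaluates them for a flow that has already been computed (finding the optimal flow would need an LP solver, which is outside this sketch). Edges are keyed as unordered node pairs; the edge names and numbers are hypothetical.

```python
def edge(u, v):
    """Undirected edge key: edge(u, v) == edge(v, u)."""
    return frozenset((u, v))

def total_sent(sent):
    """sum_v |f_v|: total traffic sent, where sent[v] = |f_v|."""
    return sum(sent.values())

def congestion(flow, capacity):
    """max_e f_e / c_e: load on the most congested edge (edges without flow count as 0)."""
    return max(flow.get(e, 0.0) / c for e, c in capacity.items())

# Hypothetical example: two edges, one of them overloaded.
capacity = {edge("a", "b"): 2.0, edge("b", "c"): 1.0}
flow = {edge("a", "b"): 1.0, edge("c", "b"): 1.5}
print(total_sent({"a": 1.0, "b": 0.5}))  # 1.5
print(congestion(flow, capacity))        # 1.5 > 1, so edge b-c is over capacity
```

A congestion value above 1 is exactly the “exceeding capacities” case: every demand is routed, and the number says how badly the worst link is overloaded.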

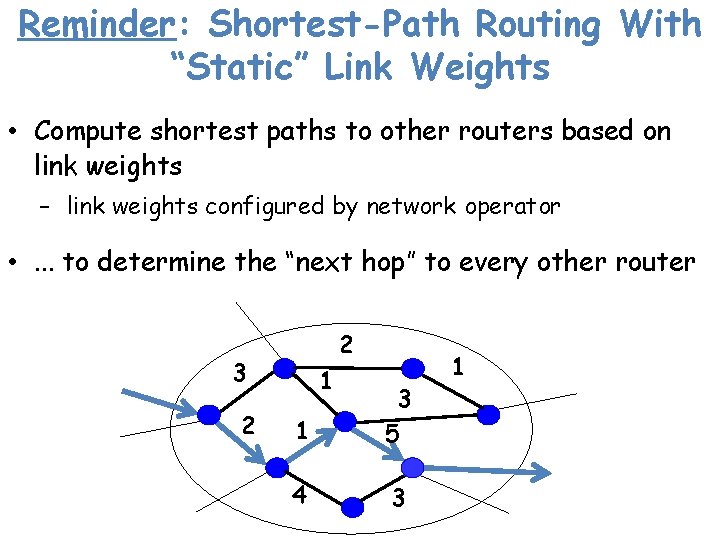

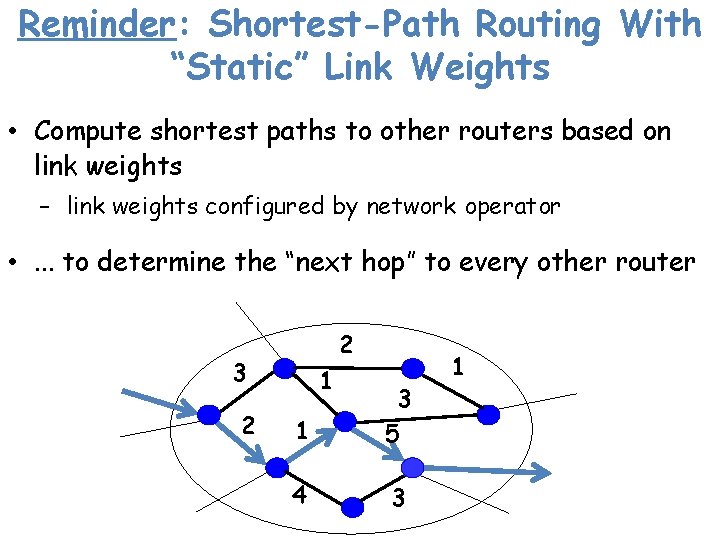

Reminder: Shortest-Path Routing With “Static” Link Weights • Compute shortest paths to other routers based on link weights – link weights configured by network operator • … to determine the “next hop” to every other router (figure: example graph with link weights)
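The next-hop computation can be sketched with Dijkstra's algorithm. This Python version keeps one next hop per destination (ties go to whichever path is found first); the graph and its weights are hypothetical.

```python
import heapq

def next_hops(graph, src):
    """Dijkstra from src; graph is {node: {neighbor: link_weight}}.

    Returns {destination: next hop from src on one shortest path}.
    """
    dist = {src: 0}
    first_hop = {src: None}
    heap = [(0, src)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist[u]:
            continue  # stale entry: u was already reached by a shorter path
        for v, w in graph[u].items():
            if v not in dist or d + w < dist[v]:
                dist[v] = d + w
                # A neighbor of src is its own next hop; otherwise inherit u's.
                first_hop[v] = v if u == src else first_hop[u]
                heapq.heappush(heap, (d + w, v))
    return {v: h for v, h in first_hop.items() if v != src}

graph = {
    "A": {"B": 1, "C": 2},
    "B": {"A": 1, "C": 2, "D": 5},
    "C": {"A": 2, "B": 2, "D": 1},
    "D": {"B": 5, "C": 1},
}
print(next_hops(graph, "A"))  # {'B': 'B', 'C': 'C', 'D': 'C'}
```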

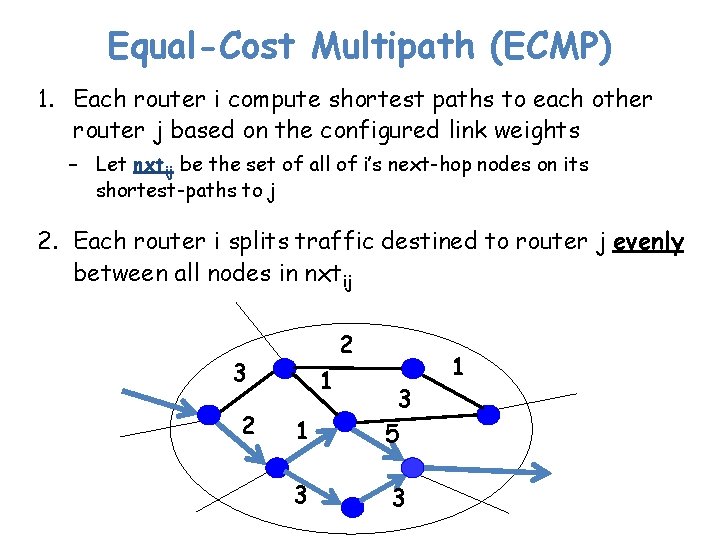

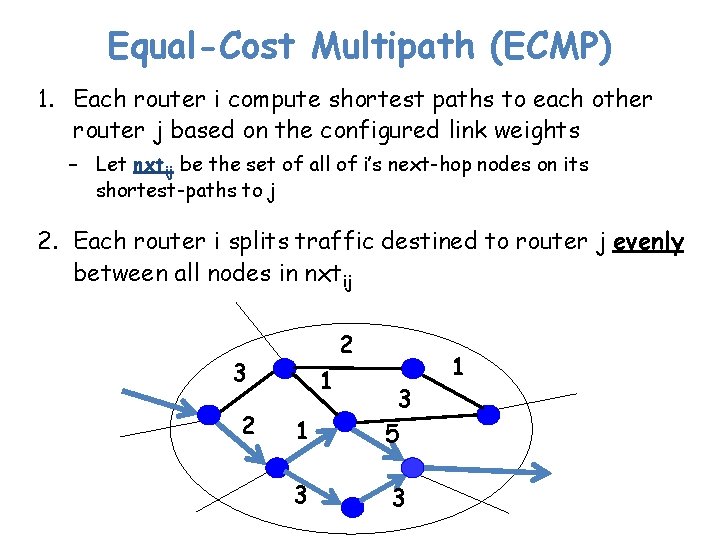

Equal-Cost Multipath (ECMP) 1. Each router $i$ computes shortest paths to each other router $j$ based on the configured link weights – let $nxt_{ij}$ be the set of all of $i$’s next-hop nodes on its shortest paths to $j$ 2. Each router $i$ splits traffic destined to router $j$ evenly among all nodes in $nxt_{ij}$ (figure: example graph with link weights)
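The two ECMP steps can be sketched in Python for a single source–destination demand; summing the result over all pairs in the demand matrix would give the full ECMP flow. The sketch assumes a connected graph with positive link weights, and the example topology is hypothetical, chosen so that the splits come out as 0.5 and 0.25.

```python
import heapq
from collections import defaultdict

def dist_to(graph, t):
    """Shortest-path distance from every node to t (undirected, positive weights)."""
    dist = {t: 0}
    heap = [(0, t)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist[u]:
            continue
        for v, w in graph[u].items():
            if v not in dist or d + w < dist[v]:
                dist[v] = d + w
                heapq.heappush(heap, (d + w, v))
    return dist

def ecmp_split(graph, s, t, demand=1.0):
    """Load on each directed link (i, j) when s sends `demand` to t under ECMP."""
    dist = dist_to(graph, t)
    # nxt[i] plays the role of nxt_it: every neighbor j on some shortest path to t.
    nxt = {i: [j for j, w in graph[i].items() if w + dist[j] == dist[i]]
           for i in dist if i != t}
    inflow = defaultdict(float)
    inflow[s] = demand
    load = {}
    # Farthest nodes first, so all traffic entering i is known before i splits it.
    for i in sorted(nxt, key=lambda n: dist[n], reverse=True):
        if inflow[i] == 0:
            continue
        share = inflow[i] / len(nxt[i])  # even split among all next hops
        for j in nxt[i]:
            load[(i, j)] = load.get((i, j), 0.0) + share
            inflow[j] += share
    return load

g = {
    "s": {"a": 1, "b": 1}, "a": {"s": 1, "t": 2},
    "b": {"s": 1, "c": 1, "d": 1}, "c": {"b": 1, "t": 1},
    "d": {"b": 1, "t": 1}, "t": {"a": 2, "c": 1, "d": 1},
}
# s->a, s->b, a->t carry 0.5 each; b->c, b->d, c->t, d->t carry 0.25 each.
print(ecmp_split(g, "s", "t"))
```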

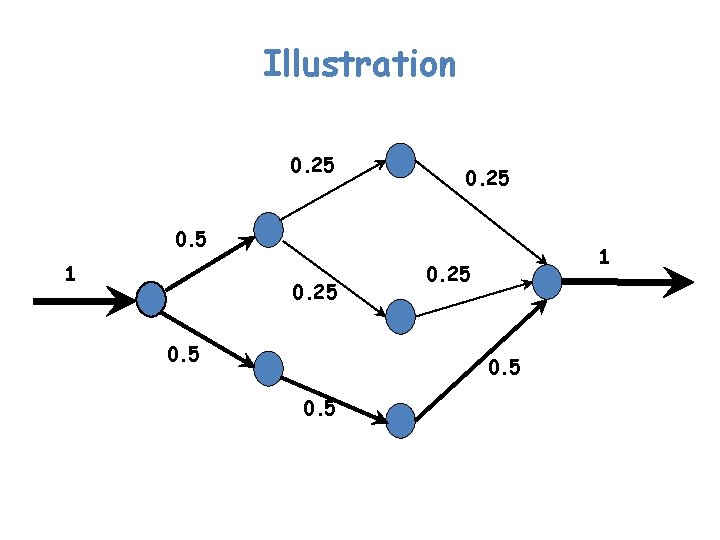

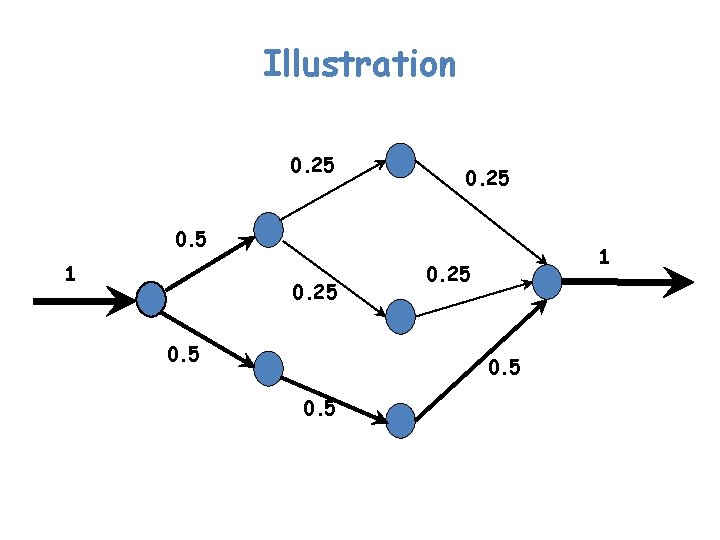

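Illustration (figure: fractions of the traffic carried on each link under ECMP, with values 1, 0.5, and 0.25 as traffic is split evenly at each branching router)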

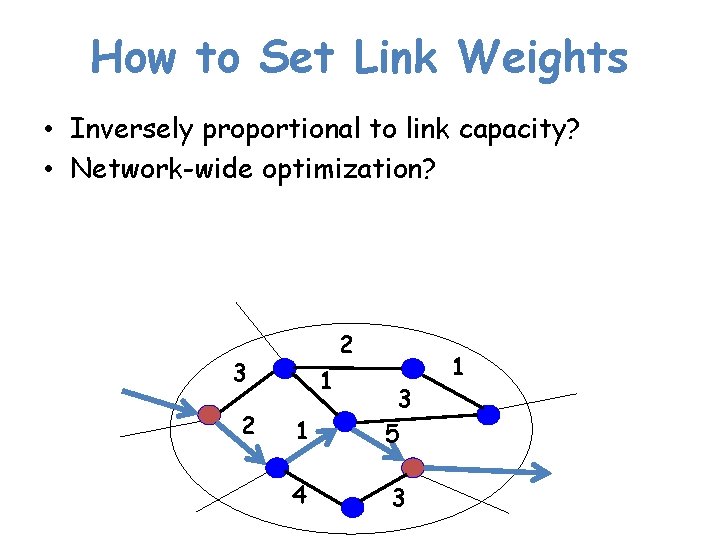

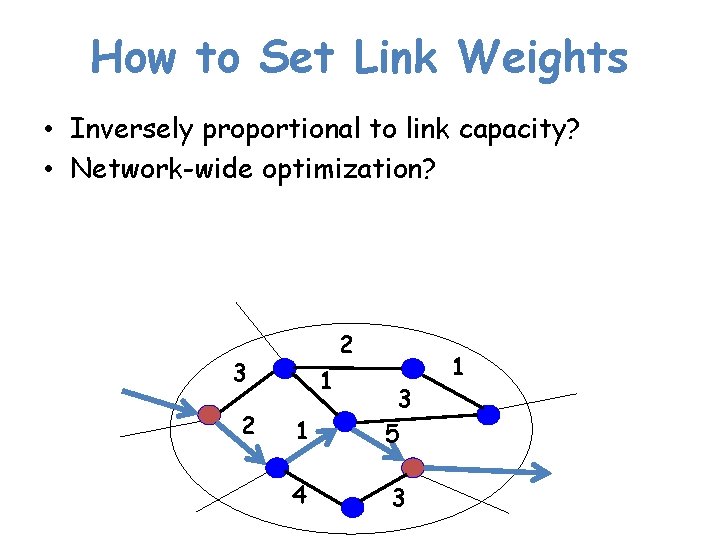

How to Set Link Weights • Inversely proportional to link capacity? • Network-wide optimization? (figure: example graph with link weights)
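The first option is easy to sketch: make each weight inversely proportional to capacity, so that shortest paths prefer high-capacity links. This is similar in spirit to the default interface cost in many OSPF implementations (a reference bandwidth divided by the link bandwidth, truncated to an integer); the 100 Gbps reference value and the link names below are assumptions. Note that it ignores the demand matrix entirely, which is what network-wide optimization addresses.

```python
def inverse_capacity_weights(capacity_mbps, reference_mbps=100_000):
    """Link weight = reference bandwidth // link capacity, never below 1.

    capacity_mbps: {link_name: capacity in Mbps}. The reference value is a
    hypothetical choice (100 Gbps); links at or above it all get weight 1.
    """
    return {link: max(1, reference_mbps // cap) for link, cap in capacity_mbps.items()}

weights = inverse_capacity_weights(
    {"A-B": 10_000, "B-C": 40_000, "A-C": 1_000, "C-D": 400_000}
)
print(weights)  # {'A-B': 10, 'B-C': 2, 'A-C': 100, 'C-D': 1}
```

The clamp to 1 is why the reference bandwidth must be chosen with the fastest links in mind: otherwise a 100 Gbps link and a 400 Gbps link would look identical to the routing protocol.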

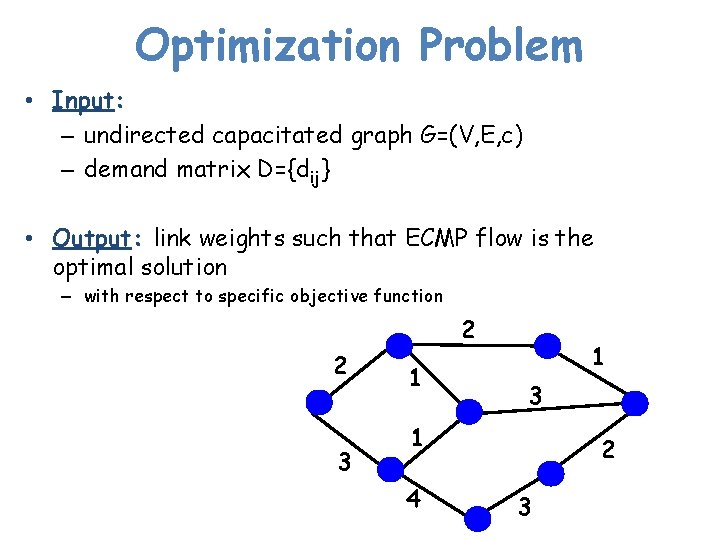

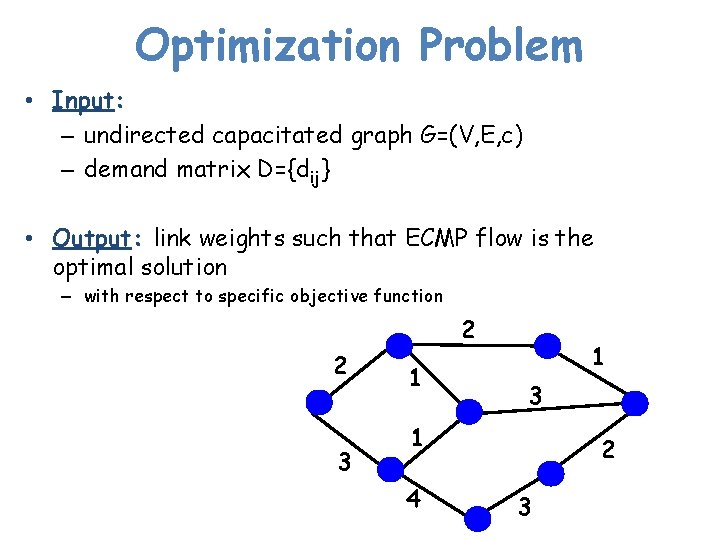

Optimization Problem • Input: – undirected capacitated graph $G=(V, E, c)$ – demand matrix $D=\{d_{ij}\}$ • Output: link weights such that the ECMP flow is the optimal solution – with respect to a specific objective function (figure: example graph with link capacities)

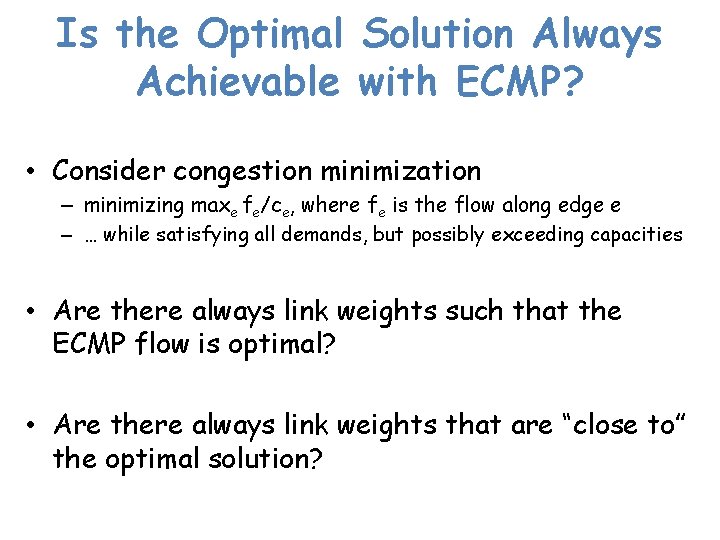

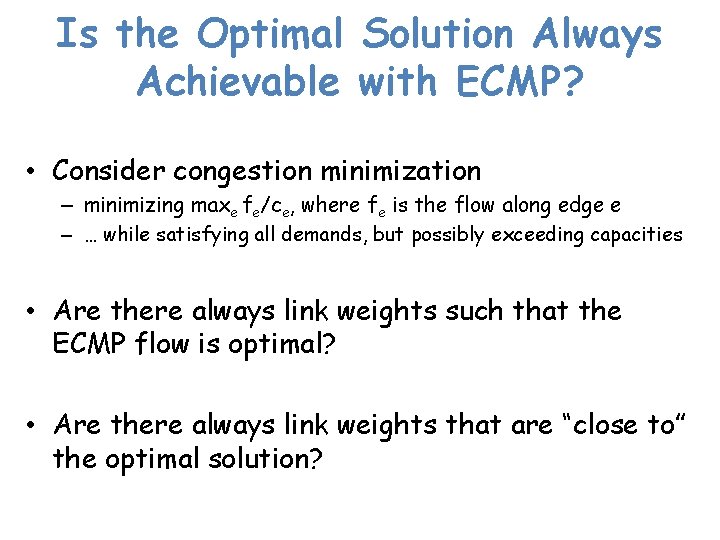

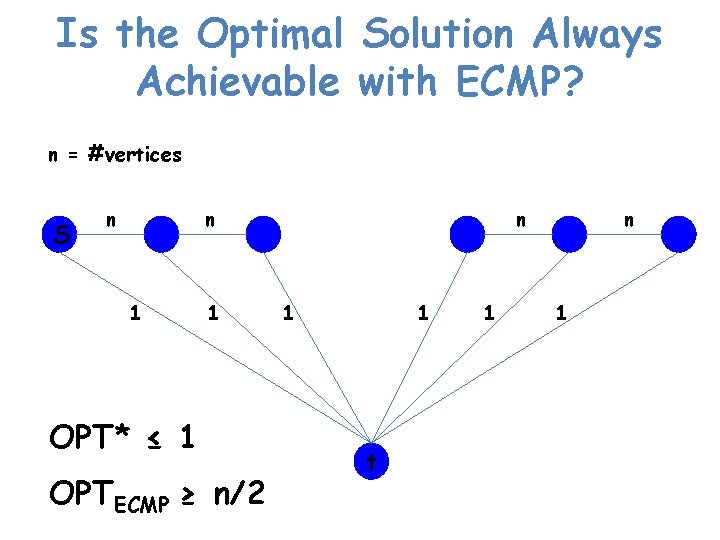

Is the Optimal Solution Always Achievable with ECMP? • Consider congestion minimization – minimizing $\max_e f_e/c_e$, where $f_e$ is the flow along edge $e$ – … while satisfying all demands, but possibly exceeding capacities • Are there always link weights such that the ECMP flow is optimal? • Are there always link weights that are “close to” the optimal solution?

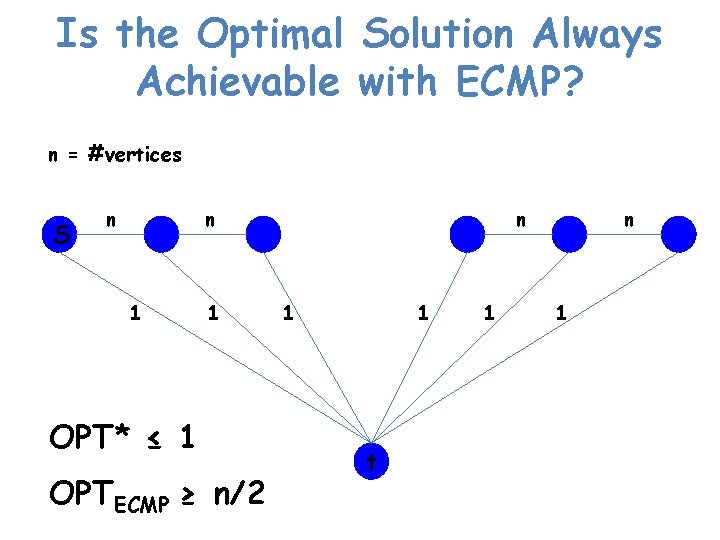

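Is the Optimal Solution Always Achievable with ECMP? (figure: a graph on $n$ vertices from source $s$ to target $t$, with link capacities of 1 and $n$, where $\mathrm{OPT}^* \le 1$ but $\mathrm{OPT}_{\mathrm{ECMP}} \ge n/2$, so the best ECMP flow can be a factor of $n/2$ worse than optimal)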

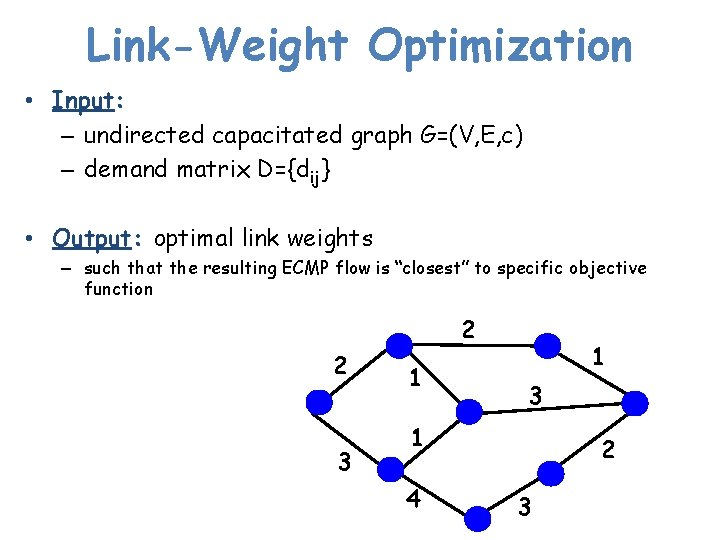

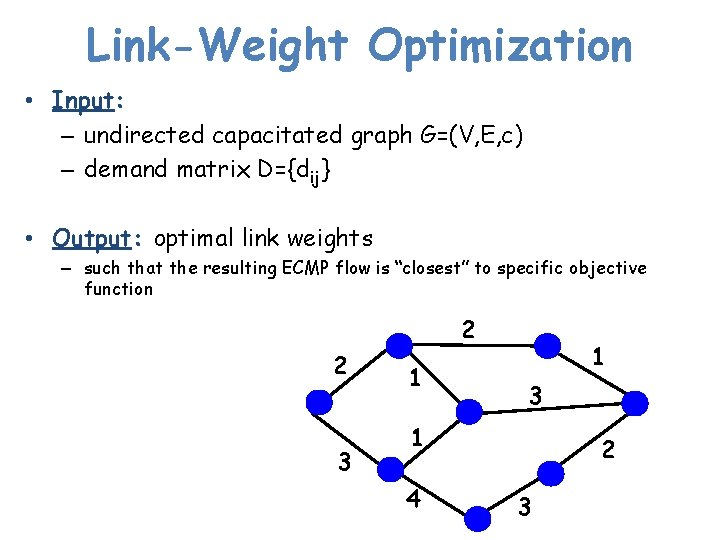

Link-Weight Optimization • Input: – undirected capacitated graph $G=(V, E, c)$ – demand matrix $D=\{d_{ij}\}$ • Output: optimal link weights – such that the resulting ECMP flow comes “closest” to the optimum of a specific objective function (figure: example graph with link capacities)

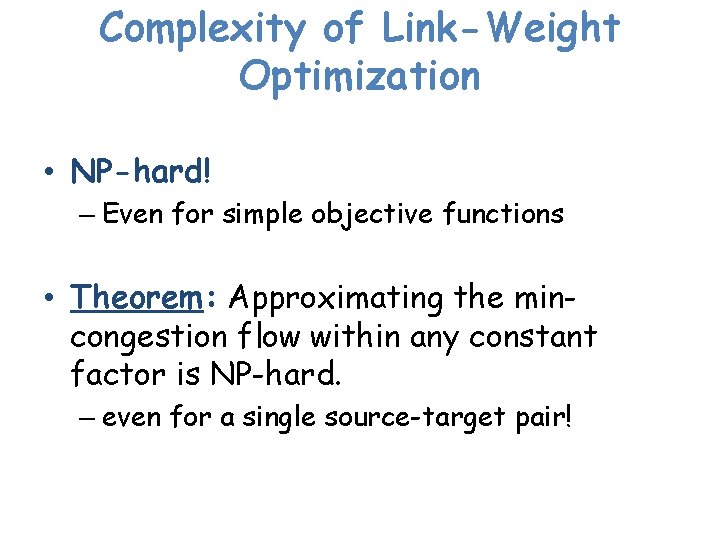

Complexity of Link-Weight Optimization • NP-hard! – even for simple objective functions • Theorem: Approximating the min-congestion ECMP flow within any constant factor is NP-hard. – even for a single source-target pair!

Proof Idea • Theorem: Approximating the min-congestion ECMP flow within a factor $\alpha$ is NP-hard for some constant $\alpha > 1$. – even for a single source-target pair! • Now, amplify the gap! – recursive construction…

So, What Do Network Operators Do? • Heuristics: searching through weight settings • Clearly suboptimal, but shown to be effective in some real-life environments – fast computation of the link weights – good performance, compared to the “optimal” solution – resilience to failures
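As an illustration of “searching through weight settings”, here is a minimal local-search sketch in Python: starting from given weights, it repeatedly nudges one link weight by ±1 and keeps the change whenever the ECMP congestion $\max_e f_e/c_e$ drops. It is a toy, not the heuristic any particular operator uses. It assumes positive weights, a connected graph, and that traffic in both directions counts against an undirected link's capacity; the three-node example is hypothetical.

```python
import heapq
from collections import defaultdict

def dist_to(graph, t):
    """Shortest-path distance from every node to t."""
    dist, heap = {t: 0}, [(0, t)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist[u]:
            continue
        for v, w in graph[u].items():
            if v not in dist or d + w < dist[v]:
                dist[v] = d + w
                heapq.heappush(heap, (d + w, v))
    return dist

def ecmp_load(graph, s, t, demand):
    """Load per undirected link when s sends `demand` to t with even ECMP splits."""
    dist = dist_to(graph, t)
    nxt = {i: [j for j, w in graph[i].items() if w + dist[j] == dist[i]]
           for i in dist if i != t}
    inflow, load = defaultdict(float), defaultdict(float)
    inflow[s] = demand
    for i in sorted(nxt, key=lambda n: dist[n], reverse=True):
        if inflow[i] == 0:
            continue
        share = inflow[i] / len(nxt[i])
        for j in nxt[i]:
            load[frozenset((i, j))] += share
            inflow[j] += share
    return load

def build_graph(weights):
    """weights: {frozenset({u, v}): w} -> adjacency dict {u: {v: w}}."""
    graph = defaultdict(dict)
    for e, w in weights.items():
        u, v = tuple(e)
        graph[u][v] = graph[v][u] = w
    return graph

def max_utilization(weights, capacity, demands):
    """max_e f_e / c_e for the ECMP flow induced by `weights`."""
    graph, load = build_graph(weights), defaultdict(float)
    for (s, t), d in demands.items():
        for e, x in ecmp_load(graph, s, t, d).items():
            load[e] += x
    return max(load[e] / c for e, c in capacity.items())

def local_search(weights, capacity, demands, max_weight=20):
    """Hill-climb over integer weights in [1, max_weight]; stop at a local optimum."""
    best = dict(weights)
    best_cost = max_utilization(best, capacity, demands)
    improved = True
    while improved:
        improved = False
        for e in list(best):
            for delta in (-1, 1):
                w = best[e] + delta
                if not 1 <= w <= max_weight:
                    continue
                trial = {**best, e: w}
                cost = max_utilization(trial, capacity, demands)
                if cost < best_cost:
                    best, best_cost, improved = trial, cost, True
    return best, best_cost

def link(u, v):
    return frozenset((u, v))

weights = {link("s", "t"): 1, link("s", "a"): 1, link("a", "t"): 1}
capacity = {e: 1.0 for e in weights}
demands = {("s", "t"): 2.0}
print(max_utilization(weights, capacity, demands))  # 2.0: all traffic on s-t
best, cost = local_search(weights, capacity, demands)
print(best[link("s", "t")], cost)  # 2 1.0: both paths now tie and ECMP splits
```

Each candidate is scored by recomputing the full ECMP flow, which is what makes such searches expensive on large networks and why practical heuristics care about “fast computation of the link weights”.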

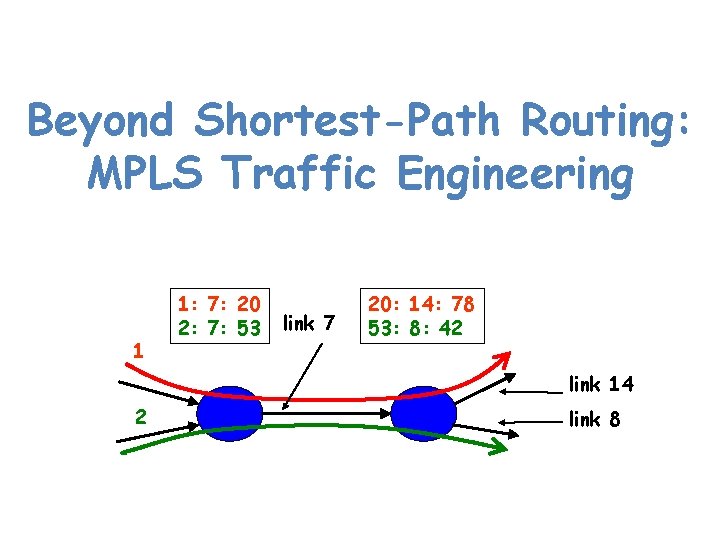

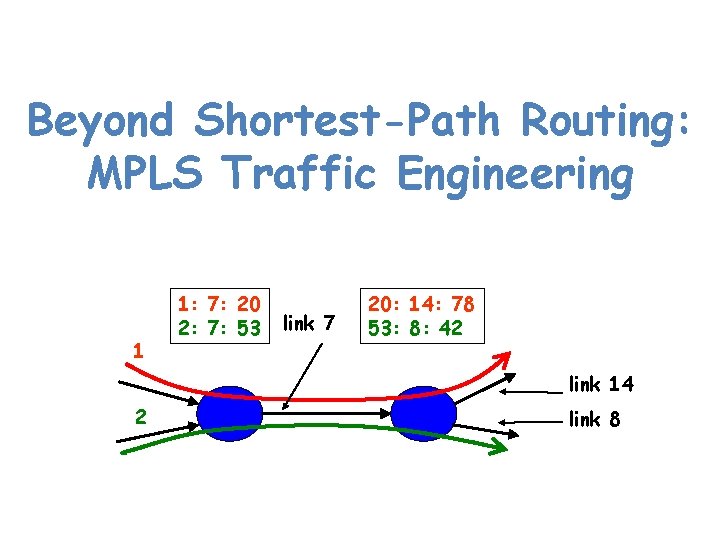

Beyond Shortest-Path Routing: MPLS Traffic Engineering (figure: two virtual circuits forwarded by per-hop label-swapping tables over links 7, 14, and 8)

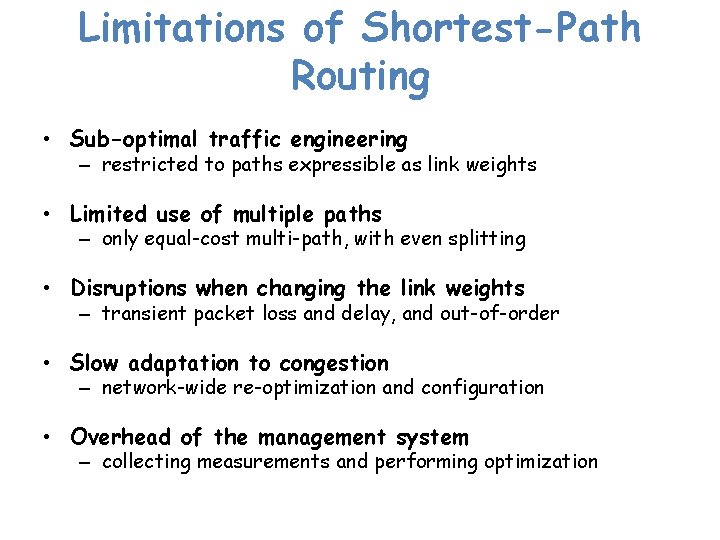

Limitations of Shortest-Path Routing • Sub-optimal traffic engineering – restricted to paths expressible as link weights • Limited use of multiple paths – only equal-cost multi-path, with even splitting • Disruptions when changing the link weights – transient packet loss and delay, and out-of-order delivery • Slow adaptation to congestion – network-wide re-optimization and configuration • Overhead of the management system – collecting measurements and performing optimization

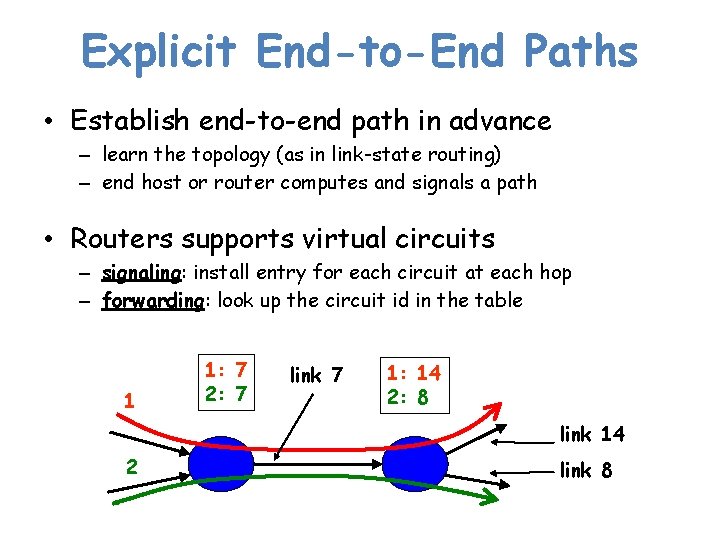

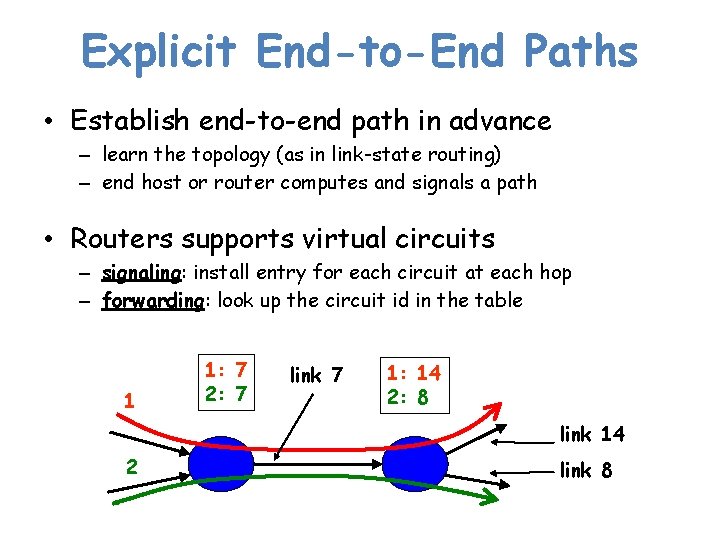

Explicit End-to-End Paths • Establish end-to-end path in advance – learn the topology (as in link-state routing) – end host or router computes and signals a path • Routers support virtual circuits – signaling: install entry for each circuit at each hop – forwarding: look up the circuit id in the table (figure: circuits 1 and 2 both leave the first router on link 7, with table entries 1: 7 and 2: 7; the next router sends circuit 1 out on link 14 and circuit 2 out on link 8, with entries 1: 14 and 2: 8)

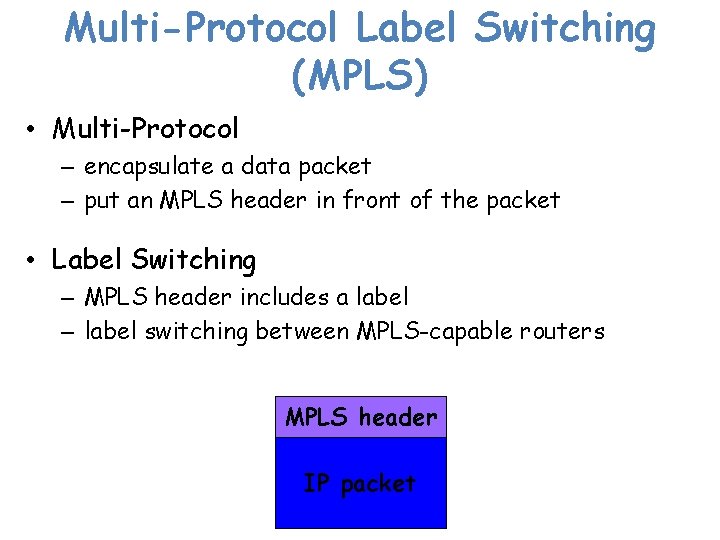

Multi-Protocol Label Switching (MPLS) • Multi-Protocol – encapsulate a data packet – put an MPLS header in front of the packet • Label Switching – MPLS header includes a label – label switching between MPLS-capable routers (figure: MPLS header | IP packet)
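The slide does not show what the MPLS header contains. As a sketch based on the label stack entry format of RFC 3032 (a 20-bit label, 3 traffic-class bits originally called EXP, a 1-bit bottom-of-stack flag, and an 8-bit TTL, 32 bits in total), encapsulation amounts to packing that word in front of the IP packet. The function names and the placeholder payload are hypothetical.

```python
import struct

def encode_entry(label, tc=0, bottom=True, ttl=64):
    """Pack one 32-bit MPLS label stack entry: label | TC | S | TTL."""
    if not 0 <= label < 2**20:
        raise ValueError("label must fit in 20 bits")
    word = (label << 12) | ((tc & 0x7) << 9) | (int(bottom) << 8) | (ttl & 0xFF)
    return struct.pack("!I", word)  # network byte order

def decode_entry(data):
    """Unpack the first 4 bytes of `data` as a label stack entry."""
    (word,) = struct.unpack("!I", data[:4])
    return {"label": word >> 12, "tc": (word >> 9) & 0x7,
            "bottom": bool((word >> 8) & 1), "ttl": word & 0xFF}

def encapsulate(ip_packet, label, ttl=64):
    """Put a single MPLS header (bottom of stack) in front of an IP packet."""
    return encode_entry(label, bottom=True, ttl=ttl) + ip_packet

packet = encapsulate(b"<ip packet bytes>", label=20)
print(decode_entry(packet))  # {'label': 20, 'tc': 0, 'bottom': True, 'ttl': 64}
```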

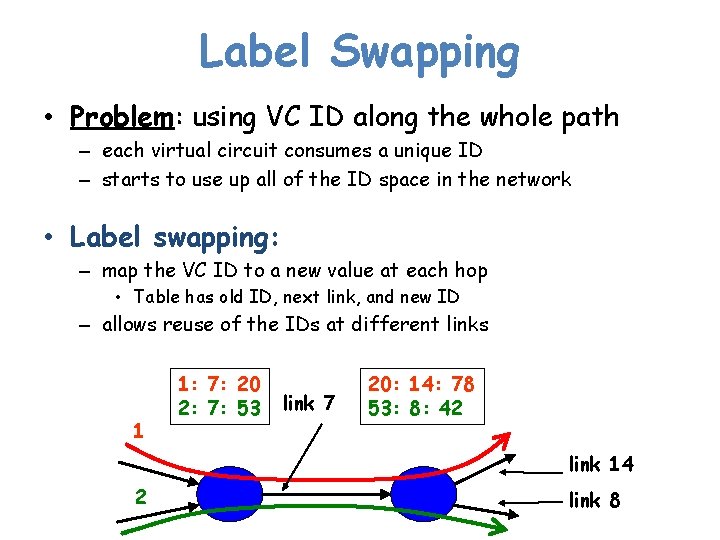

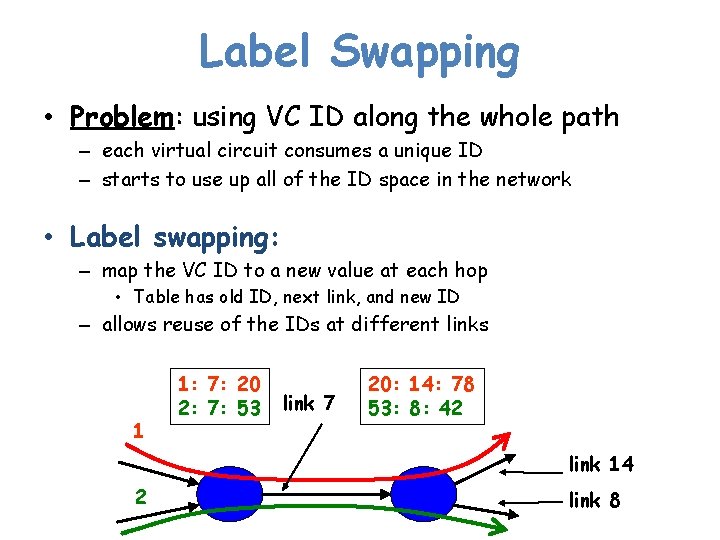

Label Swapping • Problem: using the VC ID along the whole path – each virtual circuit consumes a unique ID – starts to use up all of the ID space in the network • Label swapping: – map the VC ID to a new value at each hop • Table has old ID, next link, and new ID – allows reuse of the IDs at different links (figure: the first router maps 1 → link 7, label 20 and 2 → link 7, label 53; the next router maps 20 → link 14, label 78 and 53 → link 8, label 42)
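The label-swapping tables from the figure can be replayed in a few lines of Python. Each router's table maps an incoming label to an (outgoing link, outgoing label) pair; the router names R1 and R2 are hypothetical, while the link and label numbers come from the figure.

```python
# Per-router tables: in_label -> (out_link, out_label). Router names are hypothetical.
TABLES = {
    "R1": {1: (7, 20), 2: (7, 53)},
    "R2": {20: (14, 78), 53: (8, 42)},
}

def forward(router, label):
    """One hop: look up the incoming label and swap it for the outgoing one."""
    out_link, out_label = TABLES[router][label]
    return out_link, out_label

def trace(path, label):
    """Follow a packet through the routers in `path`, recording each swap."""
    hops = []
    for router in path:
        link, label = forward(router, label)
        hops.append((router, link, label))
    return hops

print(trace(["R1", "R2"], 1))  # [('R1', 7, 20), ('R2', 14, 78)]
print(trace(["R1", "R2"], 2))  # [('R1', 7, 53), ('R2', 8, 42)]
```

Labels 20 and 53 only have to be distinct on link 7, where both circuits share the link; the same values could be reused elsewhere without ambiguity, which is the point of swapping.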

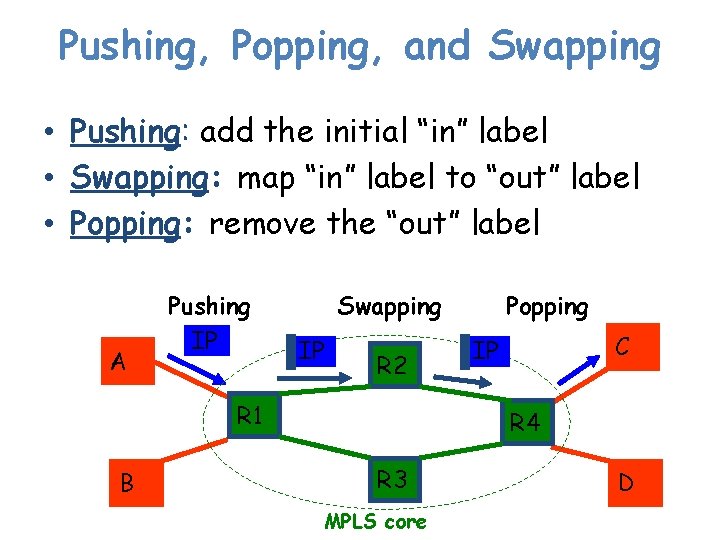

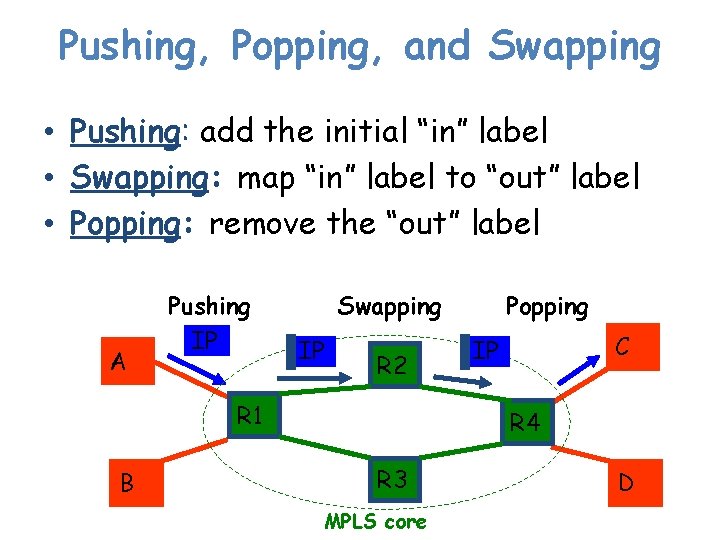

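Pushing, Popping, and Swapping • Pushing: add the initial “in” label • Swapping: map “in” label to “out” label • Popping: remove the “out” label (figure: hosts A, B, C, and D attached to an MPLS core of routers R1–R4; a label is pushed onto the IP packet at the ingress router, swapped inside the core, and popped at the egress router)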
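The three operations act on a stack of labels carried in front of the IP packet. A minimal sketch, with the stack as a Python list (last element on top) and hypothetical label values and per-router tables:

```python
def push(stack, label):
    """Ingress router: add a label on top of the stack."""
    return stack + [label]

def swap(stack, table):
    """Core router: replace the top label using this router's in -> out mapping."""
    return stack[:-1] + [table[stack[-1]]]

def pop(stack):
    """Egress router: remove the top label; an empty stack is a plain IP packet."""
    return stack[:-1]

stack = push([], 17)            # plain IP packet enters the core, gets label 17
stack = swap(stack, {17: 42})   # first core router
stack = swap(stack, {42: 99})   # second core router
print(stack)                    # [99]
print(pop(stack))               # []
```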

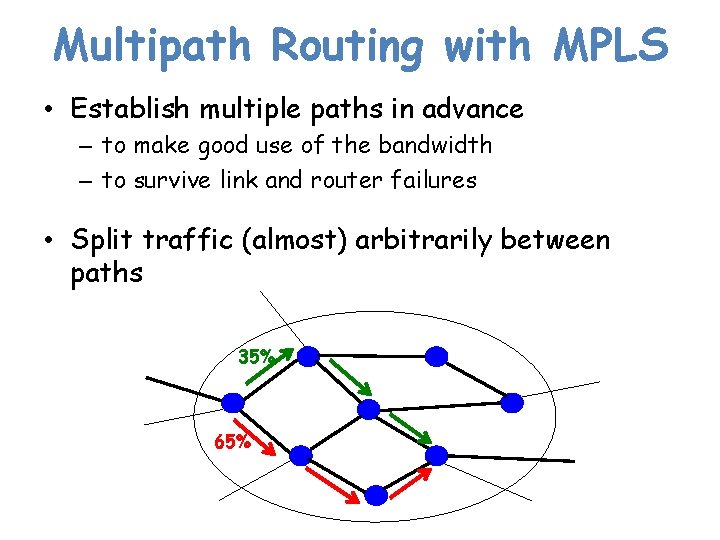

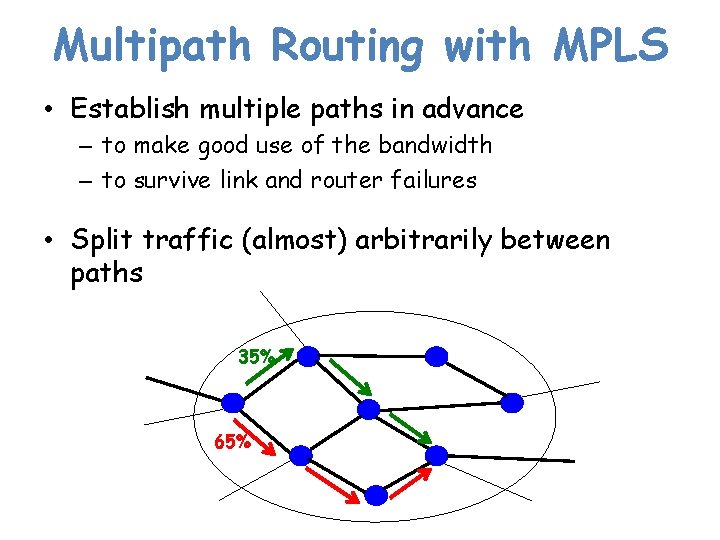

Multipath Routing with MPLS • Establish multiple paths in advance – to make good use of the bandwidth – to survive link and router failures • Split traffic (almost) arbitrarily between paths (figure: traffic split 35% / 65% over two paths)
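“Almost” arbitrary splitting is commonly done per flow rather than per packet, so that the packets of one flow stay on one path and are not reordered. A minimal sketch, assuming hash-based splitting: hash a flow identifier (here a hypothetical string standing in for the 5-tuple) into 100 buckets and give each path a range of buckets proportional to its share.

```python
import hashlib

def pick_path(flow_id, paths):
    """Map a flow to one path; paths is [(name, percent), ...] summing to 100.

    The same flow_id always hashes to the same bucket, hence the same path.
    """
    digest = hashlib.sha256(flow_id.encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    for name, pct in paths:
        if bucket < pct:
            return name
        bucket -= pct
    return paths[-1][0]  # guard in case the percentages sum to less than 100

paths = [("path 1", 35), ("path 2", 65)]
flows = [f"flow-{i}" for i in range(10_000)]
share = sum(pick_path(f, paths) == "path 1" for f in flows) / len(flows)
print(round(share, 3))  # close to 0.35
```

The split is only approximate: it holds for the number of flows, and bytes follow only if flows are of similar size.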

Traffic Engineering with MPLS • Can do (almost) anything – even optimize multicommodity flow! • But… at a cost – multiple label-switching paths

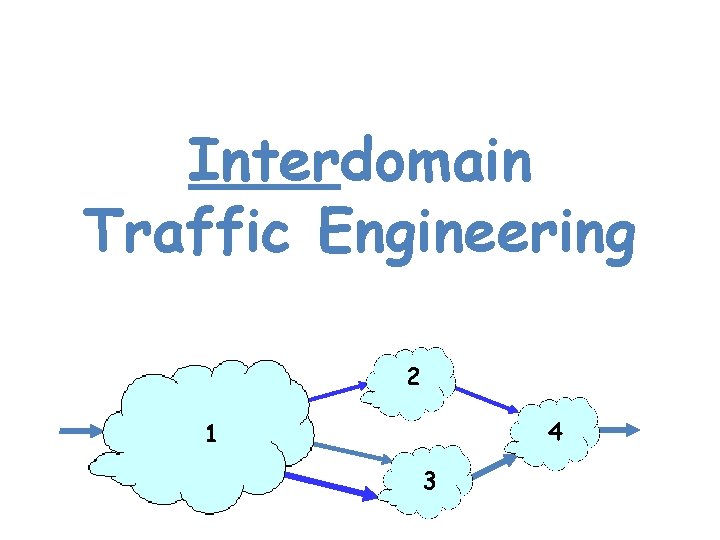

Interdomain Traffic Engineering (figure: ASes 1, 2, 3, and 4)

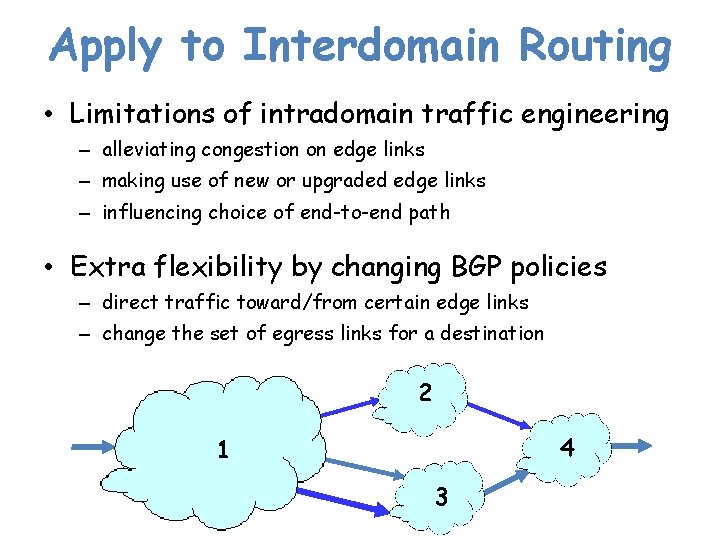

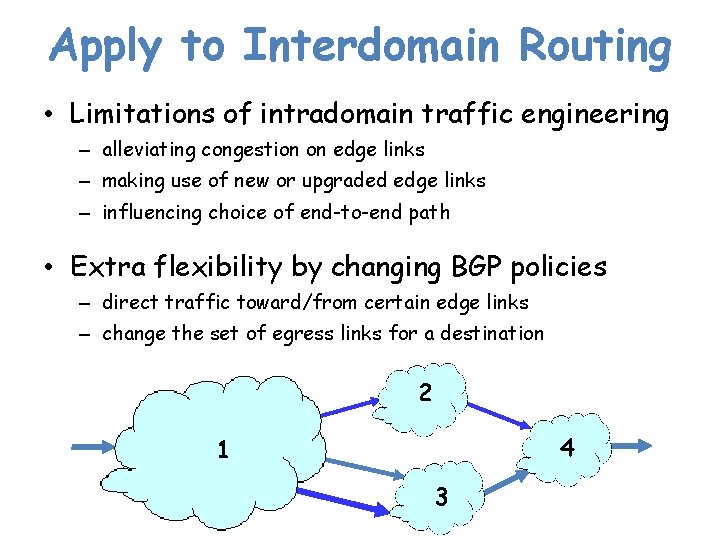

Apply to Interdomain Routing • Limitations of intradomain traffic engineering – alleviating congestion on edge links – making use of new or upgraded edge links – influencing choice of end-to-end path • Extra flexibility by changing BGP policies – direct traffic toward/from certain edge links – change the set of egress links for a destination

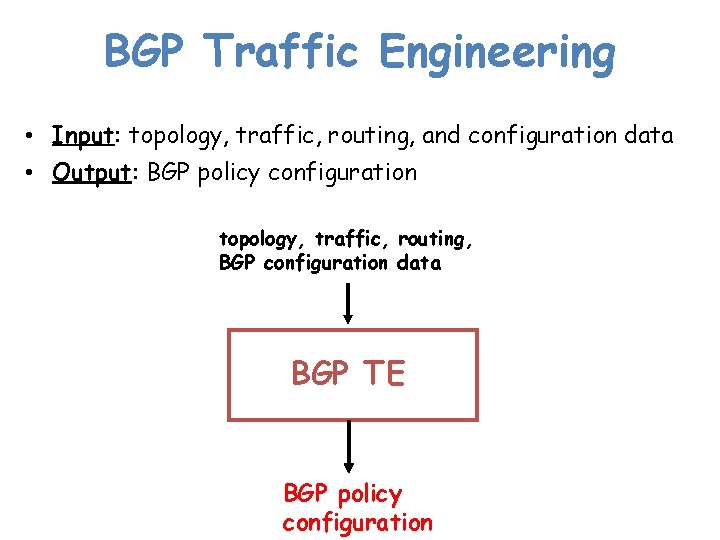

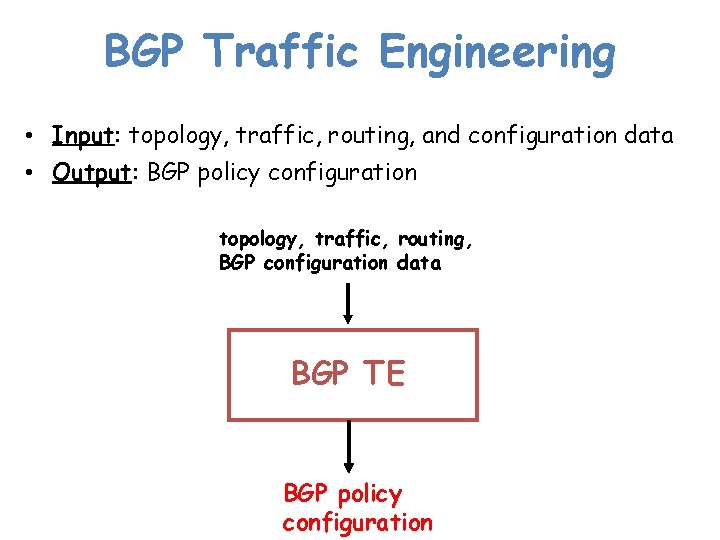

BGP Traffic Engineering • Input: topology, traffic, routing, and configuration data • Output: BGP policy configuration (figure: topology, traffic, routing, and BGP configuration data feed into a BGP TE tool, which outputs a BGP policy configuration)

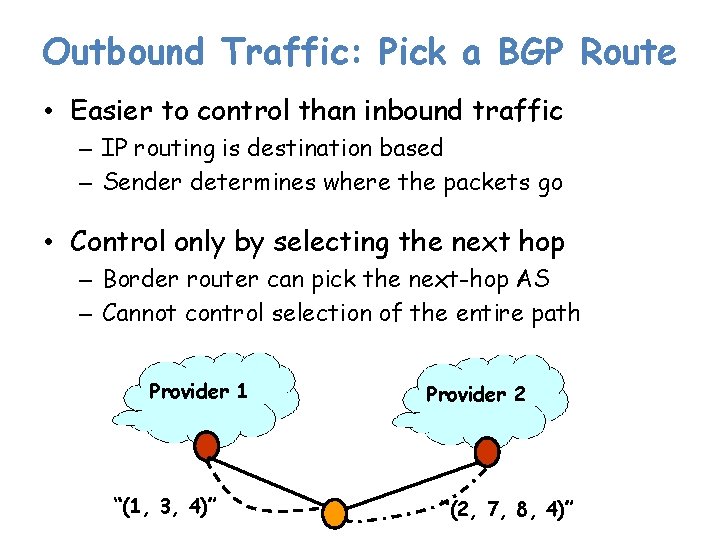

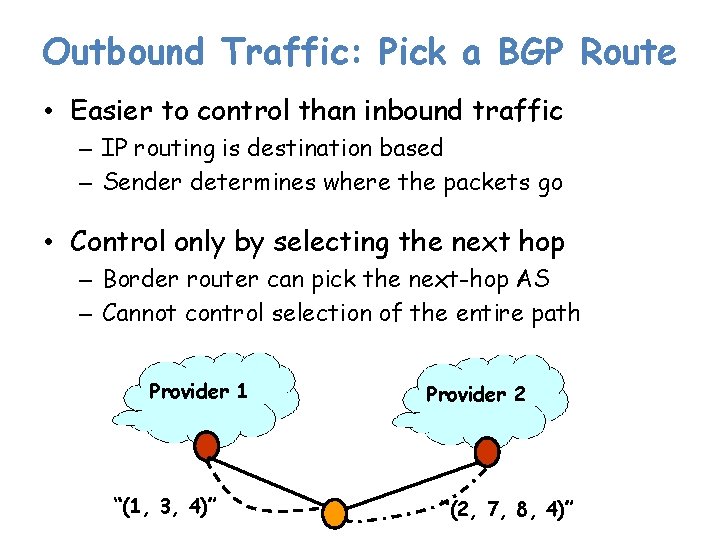

Outbound Traffic: Pick a BGP Route • Easier to control than inbound traffic – IP routing is destination based – Sender determines where the packets go • Control only by selecting the next hop – Border router can pick the next-hop AS – Cannot control selection of the entire path (figure: Provider 1 advertises the AS path “(1, 3, 4)” and Provider 2 advertises “(2, 7, 8, 4)”)
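How the border router picks among these advertisements can be sketched with the first two steps of the BGP decision process: highest LocalPref, then shortest AS path. Real routers apply further tie-breakers (origin, MED, eBGP over iBGP, IGP cost to the next hop, router ID), which this sketch omits; the LocalPref values are hypothetical.

```python
def best_route(routes):
    """Highest LocalPref wins; among equal LocalPrefs, the shortest AS path wins."""
    return max(routes, key=lambda r: (r["local_pref"], -len(r["as_path"])))

routes = [
    {"next_hop": "Provider 1", "as_path": (1, 3, 4), "local_pref": 100},
    {"next_hop": "Provider 2", "as_path": (2, 7, 8, 4), "local_pref": 100},
]
print(best_route(routes)["next_hop"])  # Provider 1 (shorter AS path)

# Outbound TE: raise LocalPref on Provider 2's route to steer traffic there.
routes[1]["local_pref"] = 120
print(best_route(routes)["next_hop"])  # Provider 2
```

Only the first hop changes: once the packets reach Provider 2, the rest of the path (7, 8, 4) is up to the other ASes.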

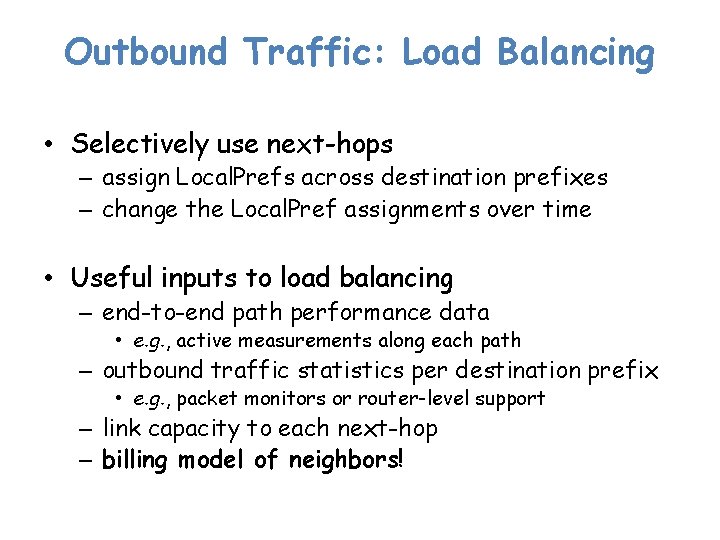

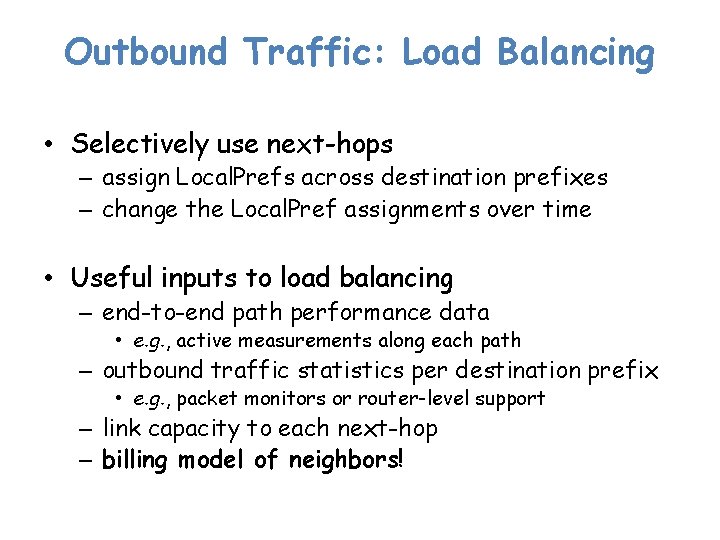

Outbound Traffic: Load Balancing • Selectively use next hops – assign LocalPrefs across destination prefixes – change the LocalPref assignments over time • Useful inputs to load balancing – end-to-end path performance data • e.g., active measurements along each path – outbound traffic statistics per destination prefix • e.g., packet monitors or router-level support – link capacity to each next hop – billing model of neighbors!
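One simple way to turn per-prefix traffic statistics and link capacities into LocalPref assignments is a greedy pass: place the heaviest prefixes first, each on the next hop with the most spare capacity. This is a hypothetical sketch, not a method from the slides; it ignores path performance and billing, and the prefixes, rates, and LocalPref values 200/100 are made up.

```python
def assign_local_prefs(prefix_traffic, capacity, high=200, low=100):
    """Greedy sketch: give each prefix a high LocalPref toward one next hop.

    prefix_traffic: {prefix: Mbps}; capacity: {next_hop: Mbps}.
    Returns ({prefix: {next_hop: local_pref}}, {next_hop: Mbps assigned}).
    """
    used = {nh: 0.0 for nh in capacity}
    prefs = {}
    for prefix, mbps in sorted(prefix_traffic.items(), key=lambda kv: -kv[1]):
        nh = max(capacity, key=lambda n: capacity[n] - used[n])  # most spare capacity
        used[nh] += mbps
        prefs[prefix] = {n: (high if n == nh else low) for n in capacity}
    return prefs, used

prefs, used = assign_local_prefs(
    {"10.1.0.0/16": 600, "10.2.0.0/16": 300, "10.3.0.0/16": 200},
    {"P1": 1000, "P2": 500},
)
print(used)  # {'P1': 800.0, 'P2': 300.0}
```

Rerunning it as traffic statistics change corresponds to “change the LocalPref assignments over time”.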

Outbound Traffic: How to Probe? • Lots of options – HTTP transfer – UDP traffic – TCP traffic – Traceroute – Ping • Pros and cons for each – accuracy – overhead – dropped by routers? – sets off intrusion detection systems? • How to monitor the “paths not taken”?

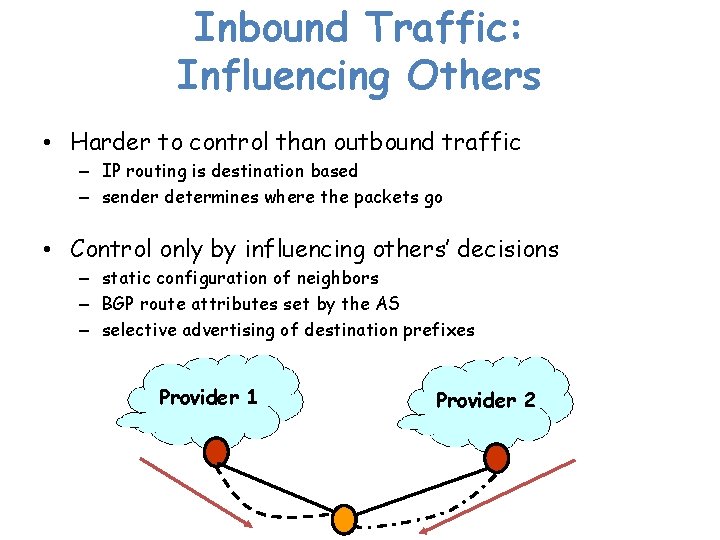

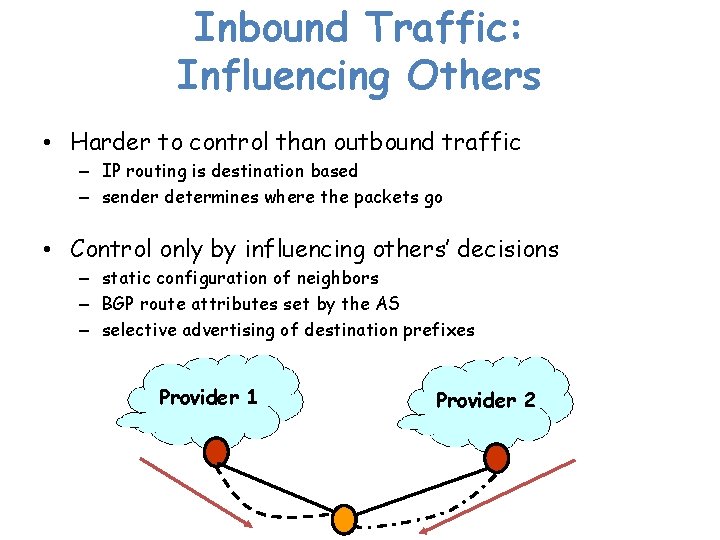

Inbound Traffic: Influencing Others • Harder to control than outbound traffic – IP routing is destination based – sender determines where the packets go • Control only by influencing others’ decisions – static configuration of neighbors – BGP route attributes set by the AS – selective advertising of destination prefixes (figure: a customer AS connected to Provider 1 and Provider 2)

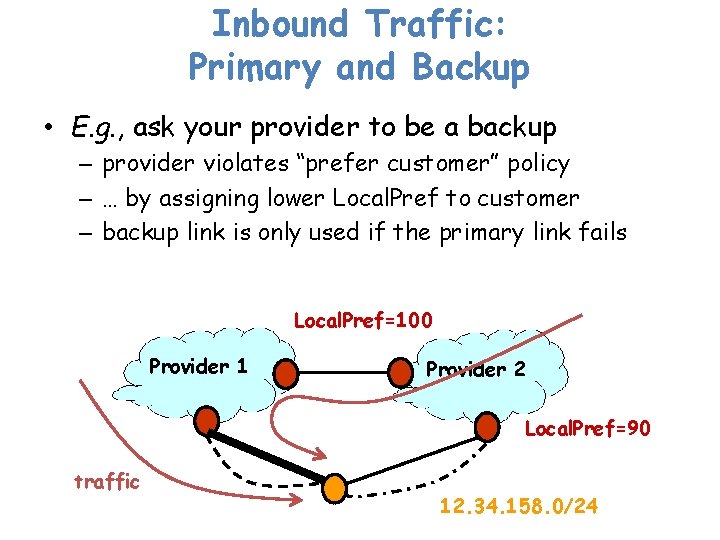

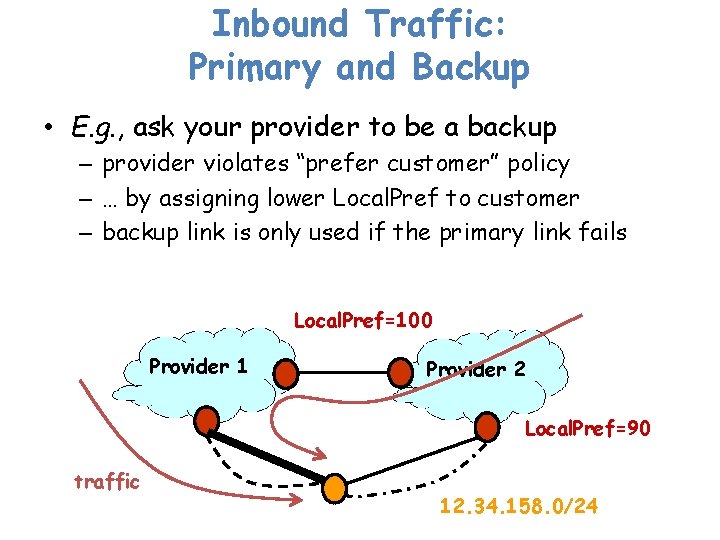

Inbound Traffic: Primary and Backup • E.g., ask your provider to be a backup – provider violates “prefer customer” policy – … by assigning lower LocalPref to customer – backup link is only used if the primary link fails (figure: prefix 12.34.158.0/24 reachable through Provider 1 and Provider 2, with routes labeled LocalPref=100 and LocalPref=90; traffic uses the primary link)
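The backup arrangement can be sketched from the backup provider's point of view, assuming that it also learns the customer's prefix through Provider 1 with the normal LocalPref of 100, while it assigns 90 to the route over its own link to the customer. The route entries are hypothetical.

```python
def chosen_route(routes):
    """Pick the route with the highest LocalPref (None if no route is left)."""
    if not routes:
        return None
    return max(routes, key=lambda r: r["local_pref"])["via"]

# Backup provider's routes to 12.34.158.0/24 (hypothetical entries).
routes = [
    {"via": "Provider 1", "local_pref": 100},
    {"via": "backup link to customer", "local_pref": 90},
]
print(chosen_route(routes))  # Provider 1

# Primary link fails: Provider 1 withdraws its route, leaving only the backup.
routes = [r for r in routes if r["via"] != "Provider 1"]
print(chosen_route(routes))  # backup link to customer
```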

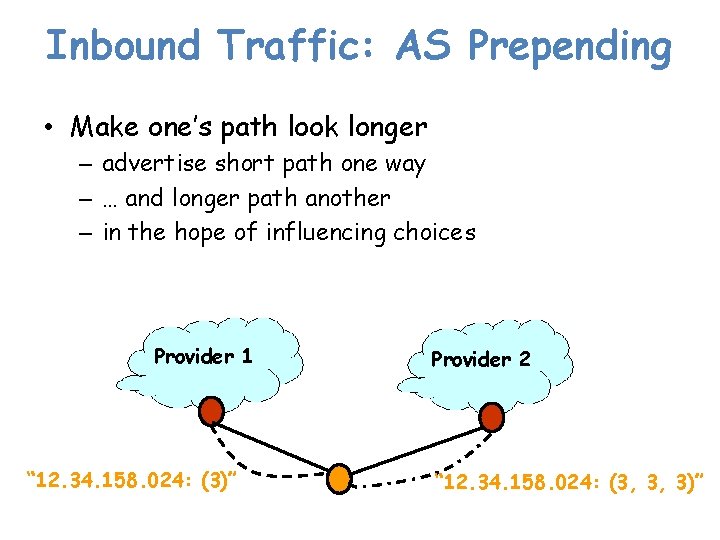

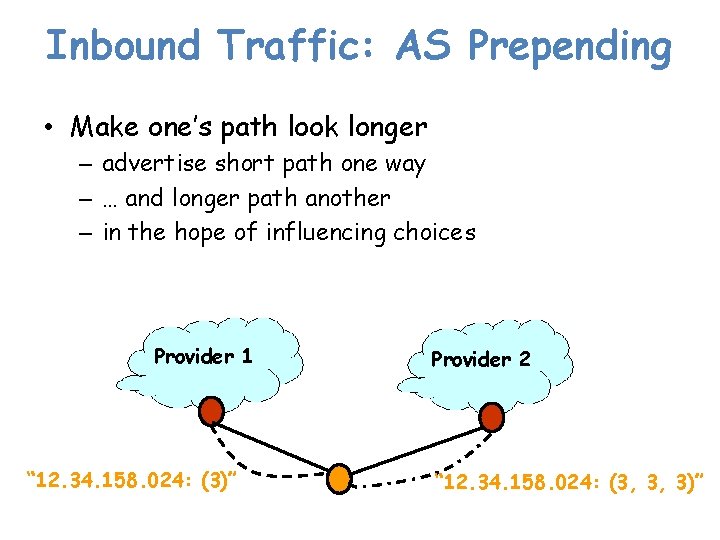

Inbound Traffic: AS Prepending • Make one’s path look longer – advertise short path one way – … and longer path another – in the hope of influencing choices (figure: AS 3 advertises “12.34.158.0/24: (3)” to Provider 1 and “12.34.158.0/24: (3, 3, 3)” to Provider 2)
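Prepending only affects the AS-path-length step of route selection. A minimal sketch, assuming a distant AS that hears the customer's prefix through both providers and gives both routes the same LocalPref; the provider AS numbers 1 and 2 are assumptions.

```python
def advertise(origin_as, prepend=0):
    """AS path the origin announces: its AS number repeated 1 + prepend times."""
    return (origin_as,) * (1 + prepend)

def via(provider_as, path):
    """Each provider prepends its own AS number when re-advertising."""
    return (provider_as,) + path

# AS 3 sends a short path to Provider 1 (AS 1) and a padded one to Provider 2 (AS 2).
heard_elsewhere = {
    "via Provider 1": via(1, advertise(3)),
    "via Provider 2": via(2, advertise(3, prepend=2)),
}
print(heard_elsewhere)  # {'via Provider 1': (1, 3), 'via Provider 2': (2, 3, 3, 3)}
# With equal LocalPref, the decision falls through to AS-path length:
print(min(heard_elsewhere, key=lambda k: len(heard_elsewhere[k])))  # via Provider 1
```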

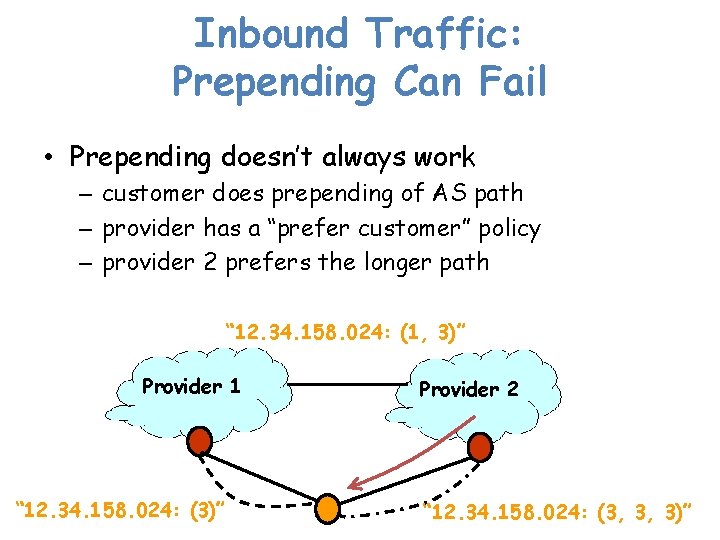

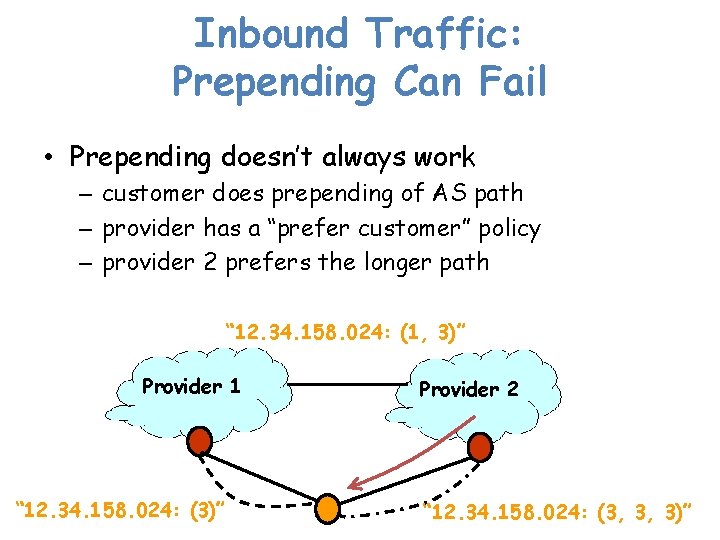

Inbound Traffic: Prepending Can Fail • Prepending doesn’t always work – customer does prepending of AS path – provider has a “prefer customer” policy – Provider 2 prefers the longer path, because LocalPref is compared before AS-path length (figure: Provider 1 hears “12.34.158.0/24: (3)” and passes “12.34.158.0/24: (1, 3)” on to Provider 2, which also hears “12.34.158.0/24: (3, 3, 3)” directly from the customer)
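A sketch of Provider 2's choice, assuming hypothetical LocalPrefs of 100 for customer routes and 90 for the route learned from Provider 1 (treated here as a non-customer neighbor). The prepended customer route wins despite being longer.

```python
CUSTOMER_PREF, NON_CUSTOMER_PREF = 100, 90  # hypothetical "prefer customer" policy

routes_at_provider2 = [
    {"learned_from": "customer AS 3", "as_path": (3, 3, 3), "local_pref": CUSTOMER_PREF},
    {"learned_from": "Provider 1", "as_path": (1, 3), "local_pref": NON_CUSTOMER_PREF},
]

def best_route(routes):
    # LocalPref is compared before AS-path length in the BGP decision process.
    return max(routes, key=lambda r: (r["local_pref"], -len(r["as_path"])))

chosen = best_route(routes_at_provider2)
print(chosen["learned_from"], chosen["as_path"])  # customer AS 3 (3, 3, 3)
```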

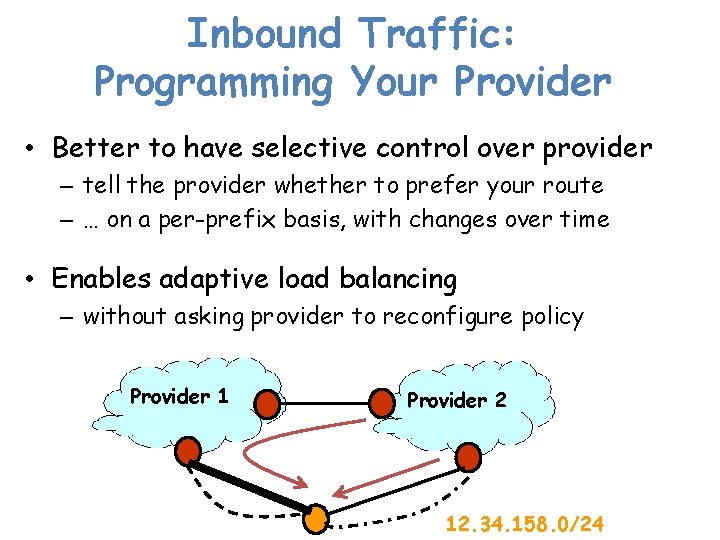

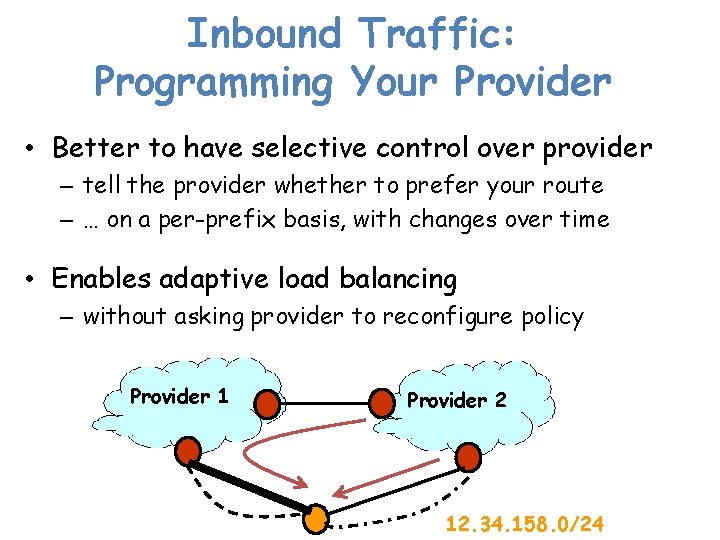

Inbound Traffic: Programming Your Provider • Better to have selective control over provider – tell the provider whether to prefer your route – … on a per-prefix basis, with changes over time • Enables adaptive load balancing – without asking provider to reconfigure policy (figure: customer prefix 12.34.158.0/24 reachable via Provider 1 and Provider 2)

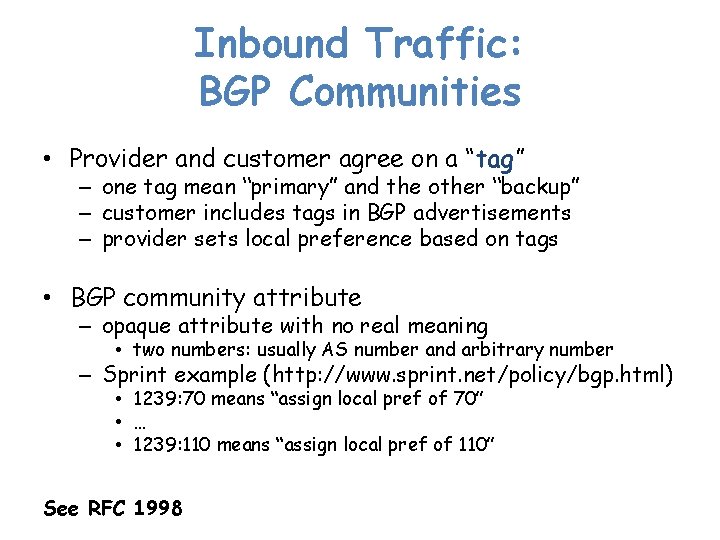

Inbound Traffic: BGP Communities • Provider and customer agree on a “tag” – one tag means “primary” and the other “backup” – customer includes tags in BGP advertisements – provider sets local preference based on tags • BGP community attribute – opaque attribute with no predefined meaning • two numbers: usually AS number and arbitrary number – Sprint example (http://www.sprint.net/policy/bgp.html) • 1239:70 means “assign local pref of 70” • … • 1239:110 means “assign local pref of 110” See RFC 1998
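A provider-side sketch of community-based LocalPref, following the Sprint-style convention on the slide (ASN:N means “assign LocalPref N”). Per RFC 1997, a community is a 32-bit value conventionally written as two 16-bit halves, AS number and value. Accepting any N is a simplifying assumption; a real provider would honor only the values it publishes, such as 70 and 110. The function names are hypothetical.

```python
def parse_community(text):
    """'1239:70' -> (1239, 70); each half must fit in 16 bits."""
    asn, value = (int(x) for x in text.split(":"))
    if not (0 <= asn < 2**16 and 0 <= value < 2**16):
        raise ValueError("community halves must fit in 16 bits")
    return asn, value

def as_u32(text):
    """The 32-bit wire value: AS number in the high half, value in the low half."""
    asn, value = parse_community(text)
    return (asn << 16) | value

def local_pref_from_communities(communities, provider_asn, default=100):
    """A tag 'ASN:N' carrying the provider's own ASN sets LocalPref to N."""
    for c in communities:
        asn, value = parse_community(c)
        if asn == provider_asn:
            return value
    return default

print(local_pref_from_communities(["1239:70"], provider_asn=1239))  # 70
print(local_pref_from_communities(["65000:1"], provider_asn=1239))  # 100
```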

Data Centers

Cloud Computing • Computing as a service! • Elastic resources – expand and contract resources – pay-per-use – infrastructure on demand

Cloud Service Models • Software as a Service – provider licenses applications to users as a service – e.g., customer relationship management, e-mail, … – avoid costs of installation, maintenance, patches, … • Platform as a Service – provider offers software platform for building applications – e.g., Google’s App Engine – avoid worrying about scalability of platform • Infrastructure as a Service – provider offers raw computing, storage, and network – e.g., Amazon’s Elastic Compute Cloud (EC2) – avoid buying servers and estimating resource needs

Cloud Computing: Challenges • Multi-tenancy – multiple independent users – security and resource isolation – amortize the cost of the (shared) infrastructure • Reliable and efficient service – resiliency: isolate failure of servers and storage – workload movement: move work to other locations

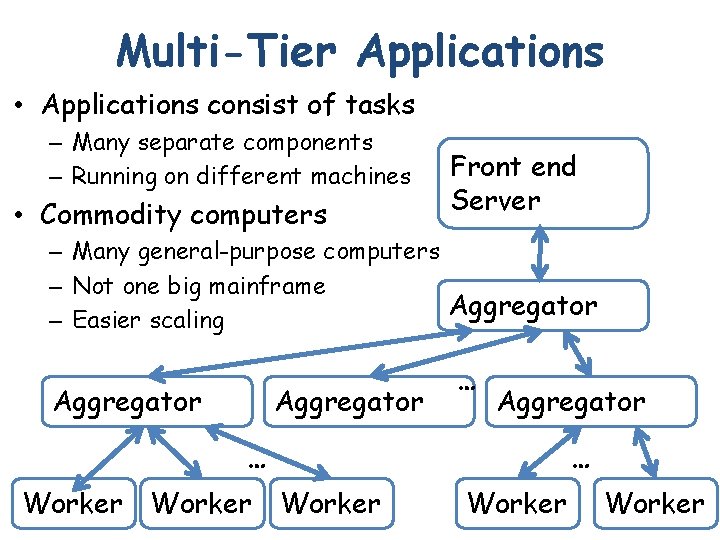

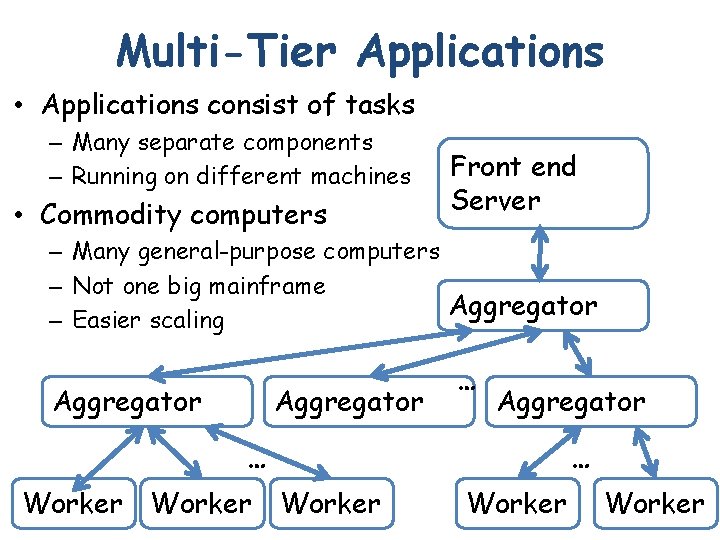

Multi-Tier Applications • Applications consist of tasks – Many separate components – Running on different machines • Commodity computers – Many general-purpose computers – Not one big mainframe – Easier scaling (figure: a front-end server fans requests out to aggregators, each of which fans out to many workers)
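The front end / aggregator / worker structure is a partition–aggregate pattern. A minimal single-process sketch: in a real deployment each call would be a request to a different machine, issued in parallel. The documents, the substring match, and ranking by length are all hypothetical stand-ins.

```python
def worker(shard, query):
    """Leaf task: scan one shard of documents for the query."""
    return [doc for doc in shard if query in doc]

def aggregator(shards, query):
    """Fan the query out to the workers below, then merge their answers."""
    results = []
    for shard in shards:
        results.extend(worker(shard, query))
    return results

def front_end(partitions, query, k=3):
    """Fan out to aggregators, merge, and return the top k (here: shortest) matches."""
    merged = []
    for shards in partitions:
        merged.extend(aggregator(shards, query))
    return sorted(merged, key=len)[:k]

partitions = [
    [["net", "network"], ["cloud"]],        # aggregator 1 with two workers
    [["netflix"], ["internet", "nets"]],    # aggregator 2 with two workers
]
print(front_end(partitions, "net"))  # ['net', 'nets', 'network']
```

The response time of the whole request is set by the slowest worker, which is one reason the network between tiers matters so much.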

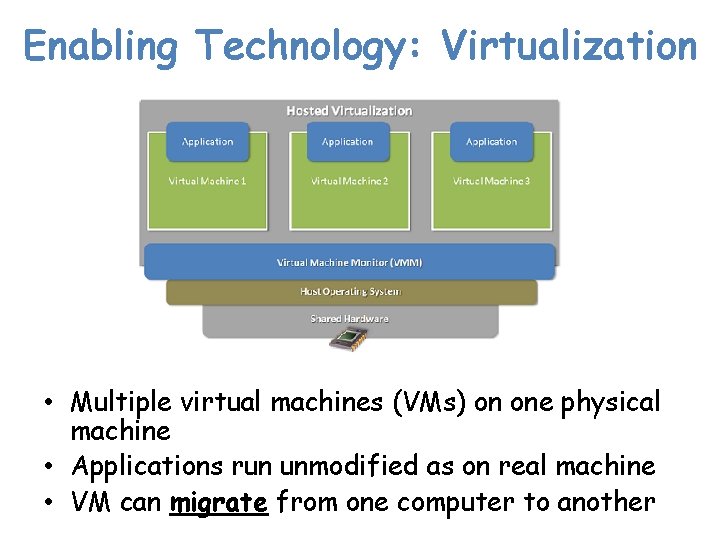

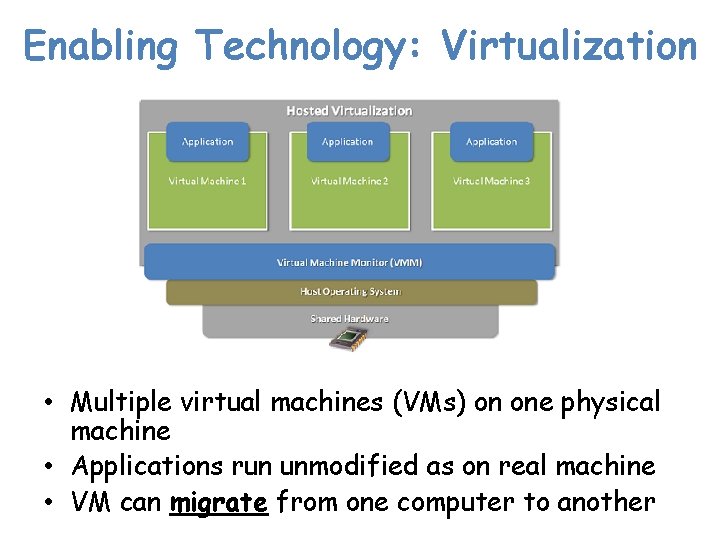

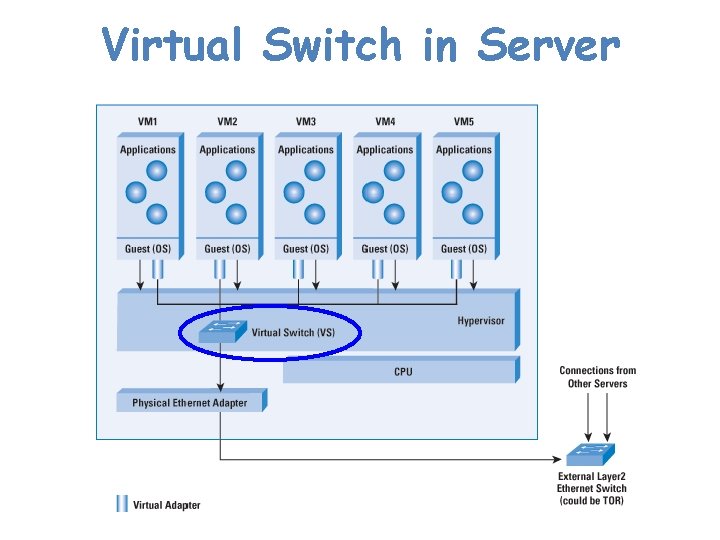

Enabling Technology: Virtualization • Multiple virtual machines (VMs) on one physical machine • Applications run unmodified as on real machine • VM can migrate from one computer to another

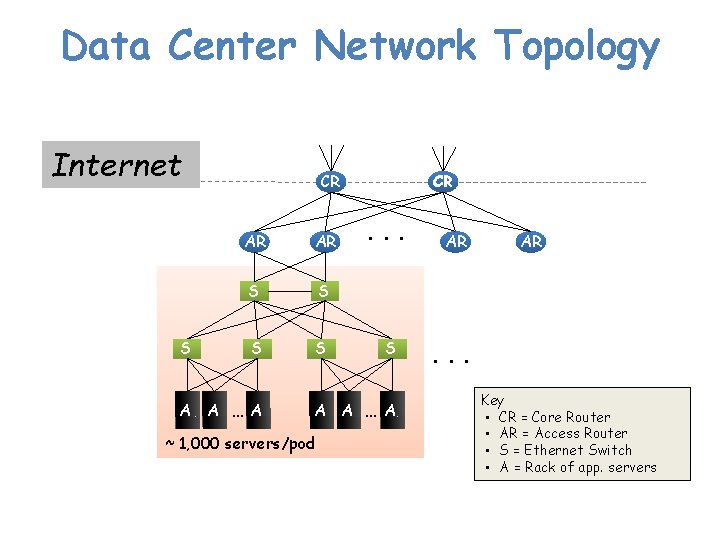

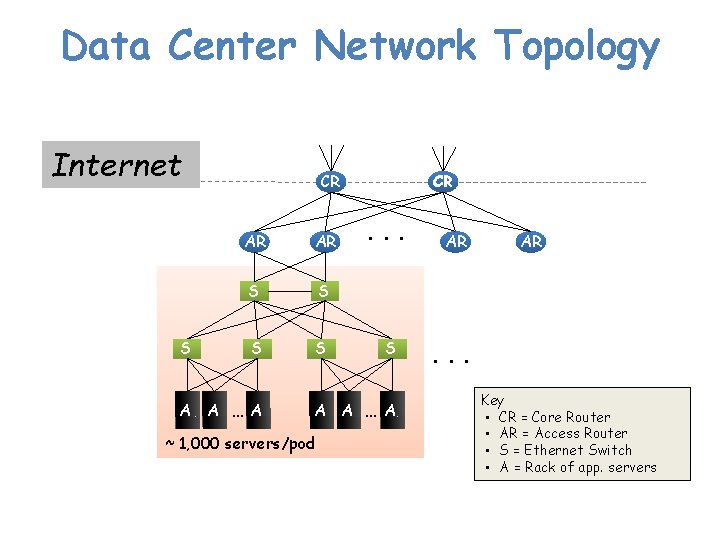

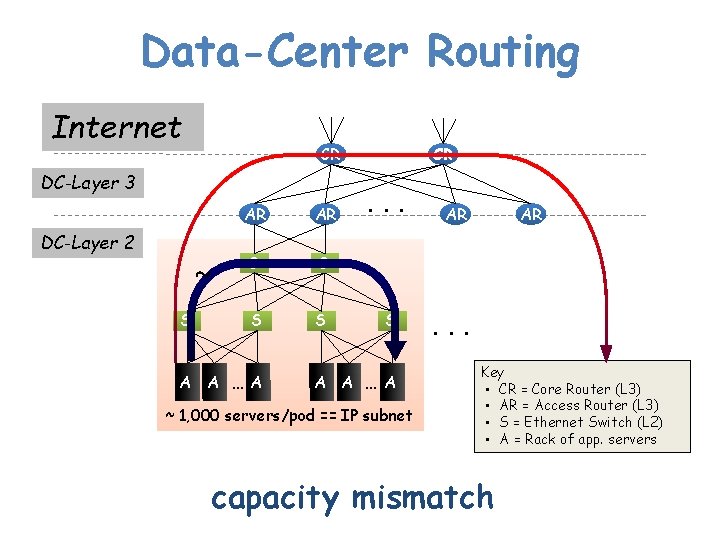

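Data Center Network Topology (figure: the Internet connects to core routers (CR), which connect to access routers (AR), which connect to a tree of Ethernet switches (S) above racks of application servers (A); ~1,000 servers per pod) Key • CR = Core Router • AR = Access Router • S = Ethernet Switch • A = Rack of app. servers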

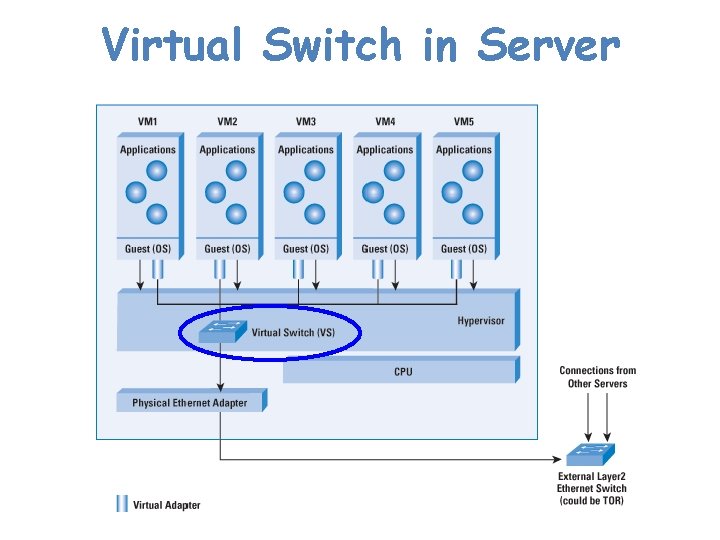

Virtual Switch in Server

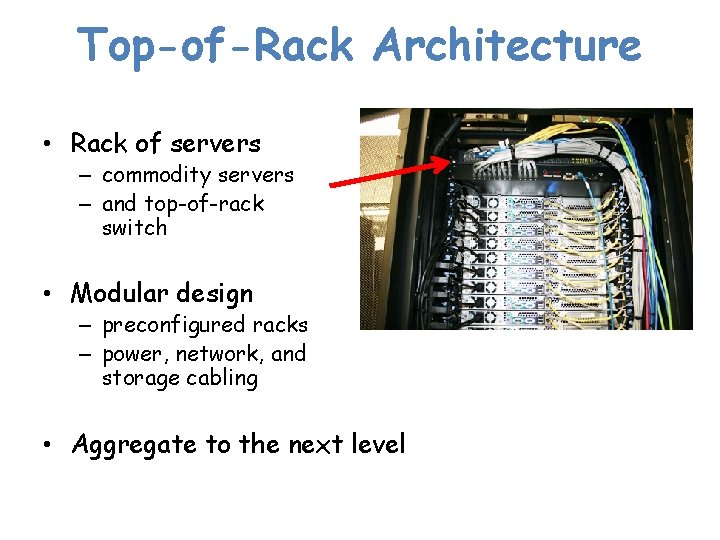

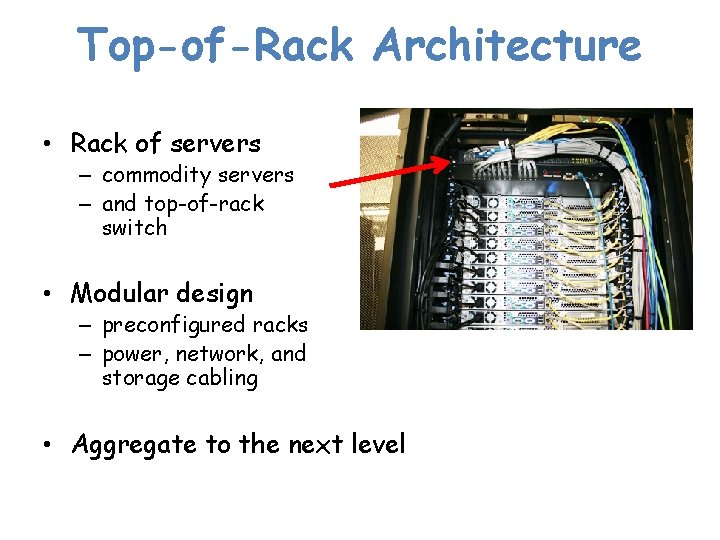

Top-of-Rack Architecture • Rack of servers – commodity servers – and top-of-rack switch • Modular design – preconfigured racks – power, network, and storage cabling • Aggregate to the next level

Modularity, Modularity

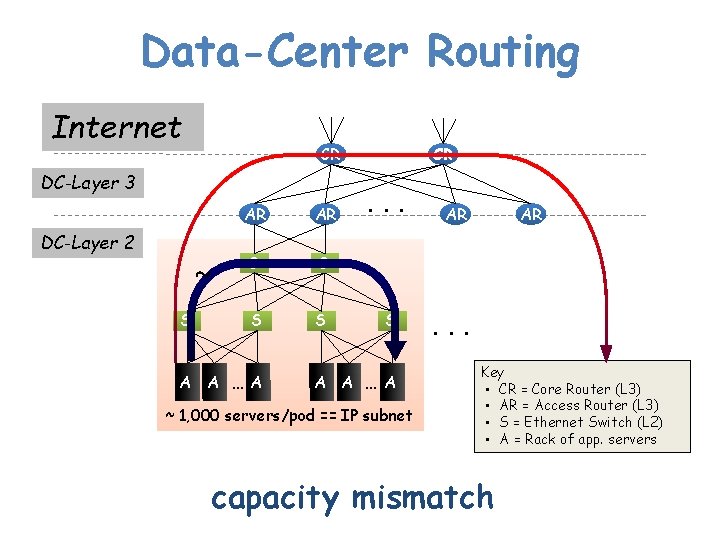

Data-Center Routing (figure: the Internet connects to core routers (CR) and access routers (AR), forming DC-Layer 3; below them, Ethernet switches (S) and racks of application servers (A) form DC-Layer 2; each pod of ~1,000 servers is one IP subnet; the figure is annotated “capacity mismatch”) Key • CR = Core Router (L3) • AR = Access Router (L3) • S = Ethernet Switch (L2) • A = Rack of app. servers
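The “capacity mismatch” is usually quantified as an oversubscription ratio: how much bandwidth the servers below a switch can offer versus how much the switch can send upward. A minimal sketch with hypothetical numbers; when every server sends upward at once, the ratios at successive layers multiply, which is why traffic between pods can see far less capacity than traffic within a rack.

```python
import math

def oversubscription(servers, server_gbps, uplinks, uplink_gbps):
    """Downstream capacity divided by upstream capacity at one switch layer."""
    return (servers * server_gbps) / (uplinks * uplink_gbps)

tor = oversubscription(servers=40, server_gbps=10, uplinks=4, uplink_gbps=40)
print(tor)                  # 2.5, i.e., 2.5:1 at the top-of-rack switch
print(math.prod([tor, 4]))  # 10.0 if the next layer is (hypothetically) 4:1
```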

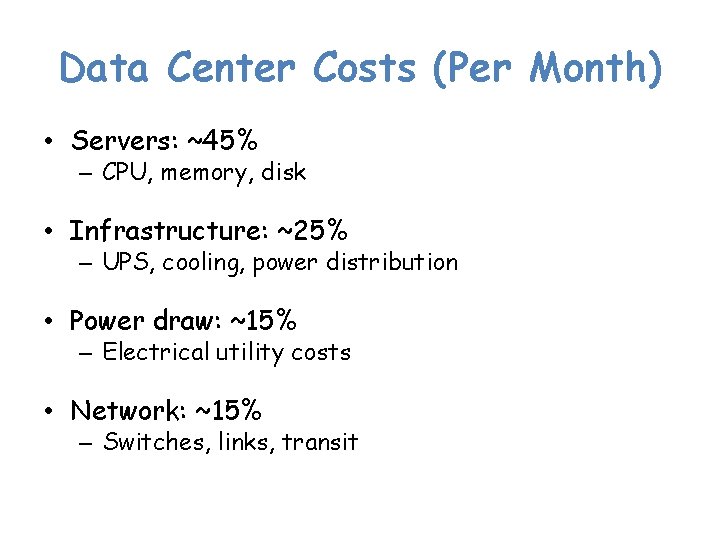

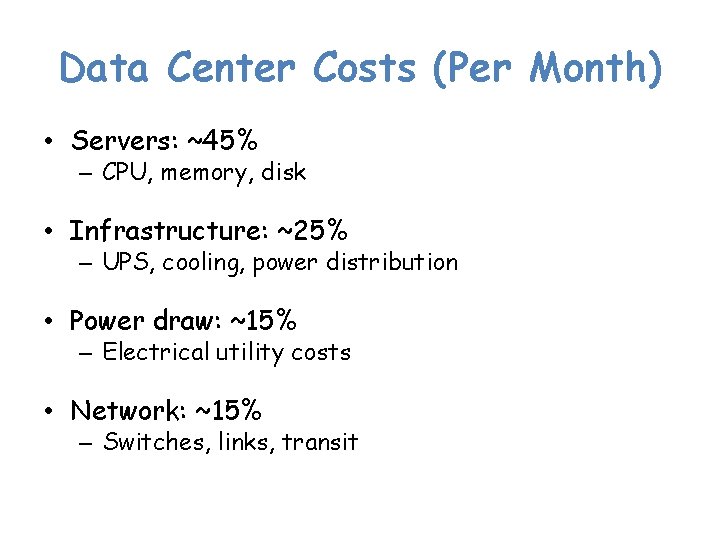

Data Center Costs (Per Month) • Servers: ~45% – CPU, memory, disk • Infrastructure: ~25% – UPS, cooling, power distribution • Power draw: ~15% – Electrical utility costs • Network: ~15% – Switches, links, transit

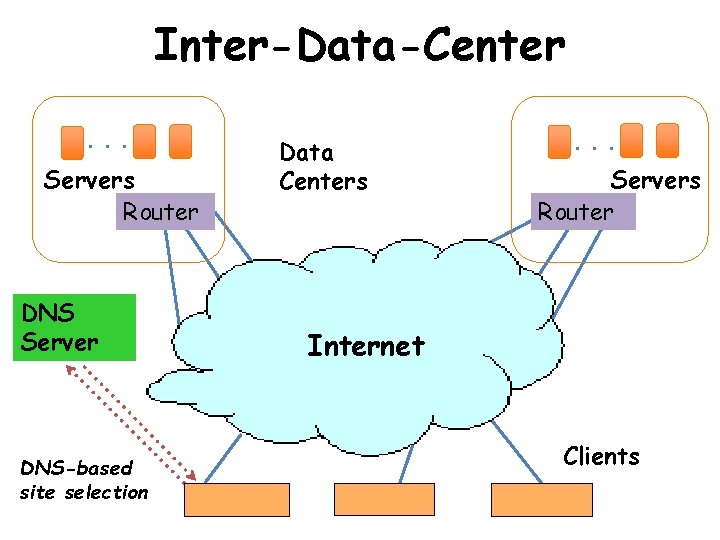

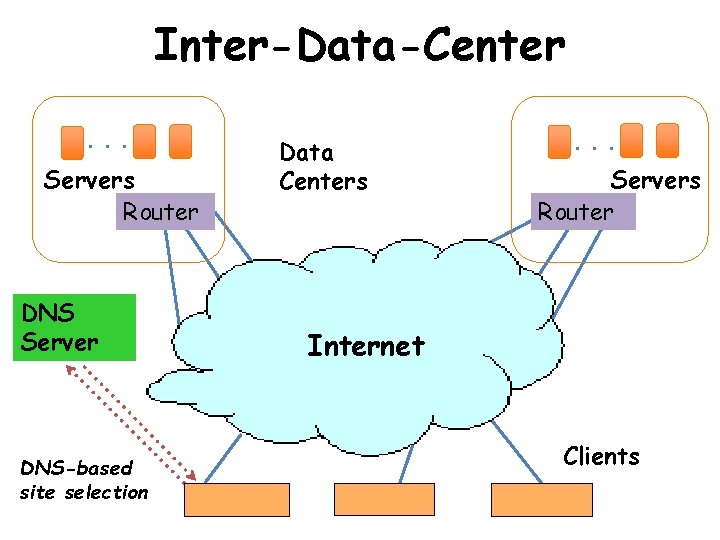

Inter-Data-Center (figure: DNS-based site selection; a DNS server directs Internet clients to one of several data centers, each with its own router and servers)
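DNS-based site selection can be sketched as the DNS server's answer policy: reply to each query with the address of a healthy data center close to the client. Everything here is hypothetical (region names, the RTT table, and the documentation-range IP addresses); real systems also weigh load and cost.

```python
def select_site(client_region, sites, rtt_ms):
    """Return the address of the healthy site with the lowest RTT to the client."""
    healthy = [s for s in sites if s["healthy"]]
    if not healthy:
        raise RuntimeError("no healthy data center")
    best = min(healthy, key=lambda s: rtt_ms[(client_region, s["name"])])
    return best["address"]

sites = [
    {"name": "dc-us", "address": "192.0.2.10", "healthy": True},
    {"name": "dc-eu", "address": "198.51.100.10", "healthy": True},
]
rtt_ms = {("eu", "dc-us"): 90, ("eu", "dc-eu"): 15,
          ("us", "dc-us"): 20, ("us", "dc-eu"): 85}
print(select_site("eu", sites, rtt_ms))  # 198.51.100.10
```

Because clients cache DNS answers, shifting load this way takes effect only as records expire, so the record TTL bounds how quickly traffic can be moved between data centers.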

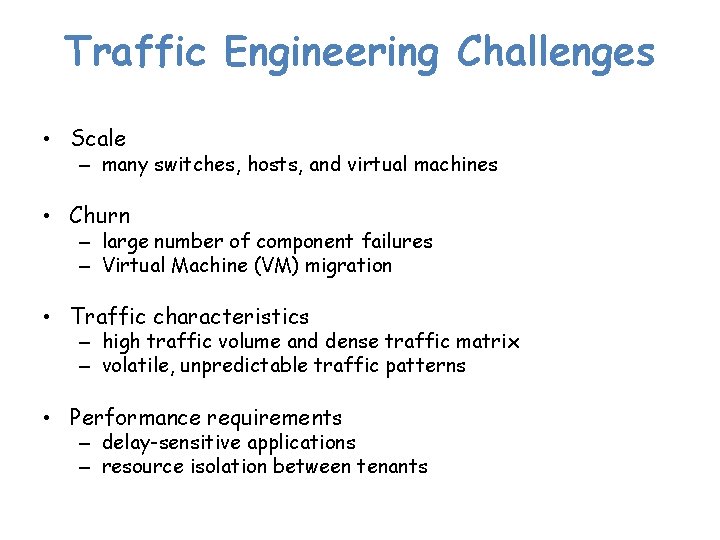

Traffic Engineering Challenges • Scale – many switches, hosts, and virtual machines • Churn – large number of component failures – Virtual Machine (VM) migration • Traffic characteristics – high traffic volume and dense traffic matrix – volatile, unpredictable traffic patterns • Performance requirements – delay-sensitive applications – resource isolation between tenants

Traffic Engineering Opportunities • Efficient network – low propagation delay and high capacity • Specialized topology – Fat tree, Clos network, etc. – opportunities for hierarchical addressing • Control over both network and hosts – joint optimization of routing and server placement – can move network functionality into the end host • Flexible movement of workload – services replicated at multiple servers and data centers – Virtual Machine (VM) migration

Much Work Along These Lines • Draws inspiration from research on interconnection networks (telephone networks, parallel computation, network on chip, …) • Many proposed architectures: – topologies – routing schemes – flow control – …