
Lecture 19: Coherence Protocols

- Topics: coherence protocols for symmetric and distributed shared-memory multiprocessors (Sections 6.3-6.5)

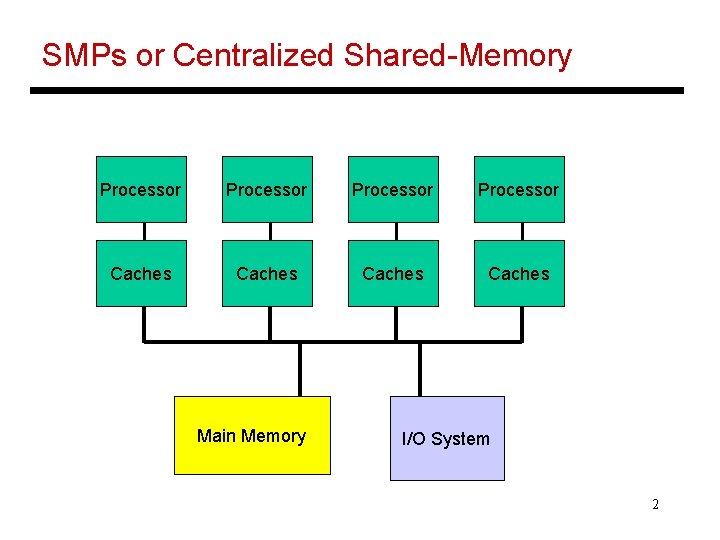

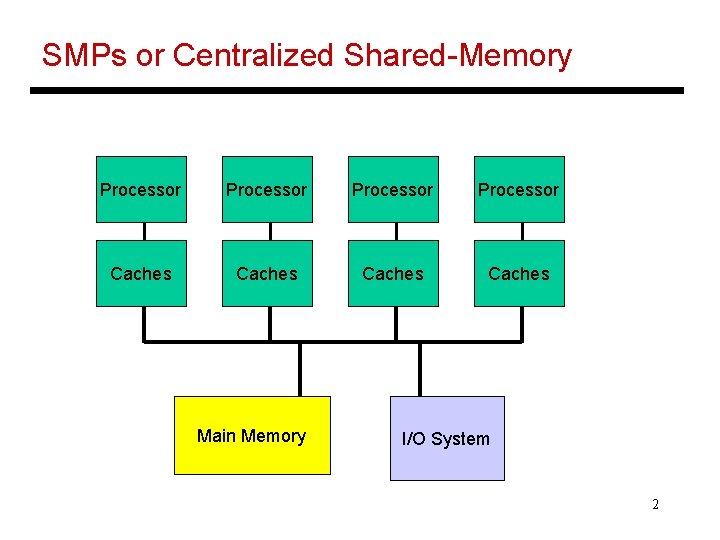

SMPs or Centralized Shared-Memory

Figure: processors, each with caches, sharing a main memory and an I/O system.

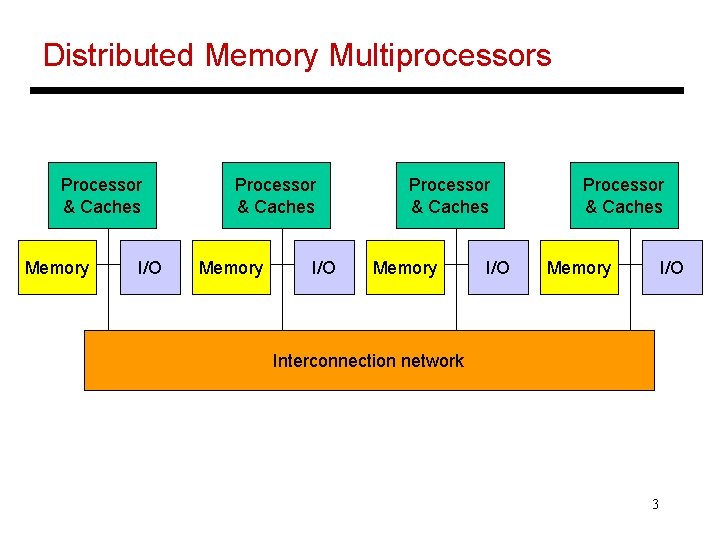

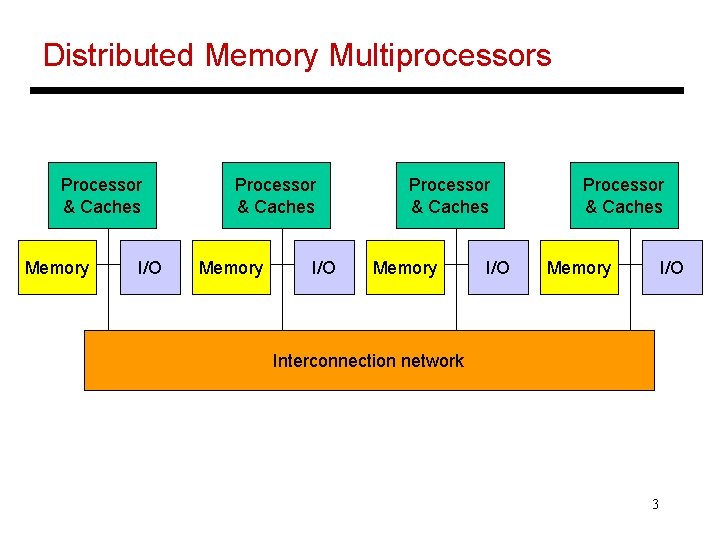

Distributed Memory Multiprocessors

Figure: nodes, each containing a processor and caches, memory, and I/O, connected by an interconnection network.

Shared-Memory vs. Message-Passing

Shared-memory:
- Well-understood programming model
- Communication is implicit and hardware handles protection
- Hardware-controlled caching

Message-passing:
- No cache coherence, hence simpler hardware
- Explicit communication, hence easier for the programmer to restructure code
- Sender can initiate data transfer

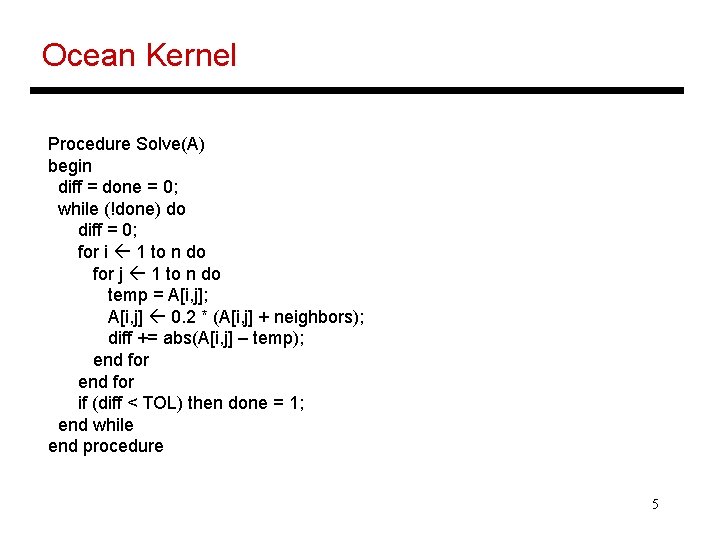

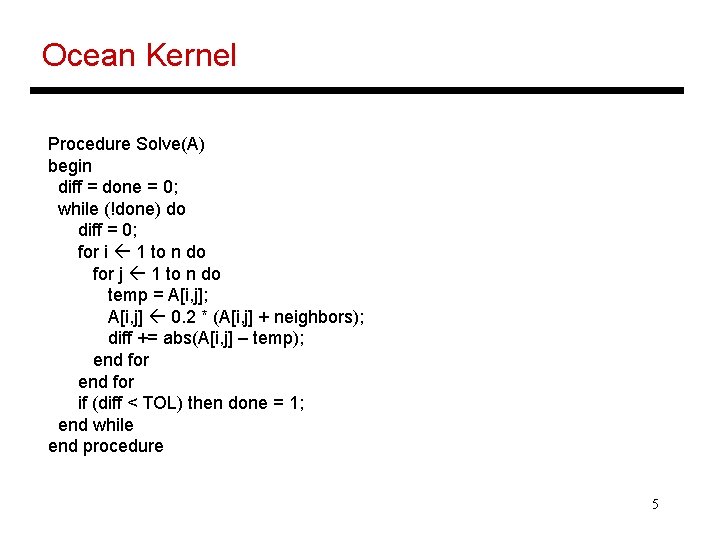

Ocean Kernel

    Procedure Solve(A)
    begin
      diff = done = 0;
      while (!done) do
        diff = 0;
        for i ← 1 to n do
          for j ← 1 to n do
            temp = A[i, j];
            A[i, j] ← 0.2 * (A[i, j] + neighbors);
            diff += abs(A[i, j] - temp);
          end for
        end for
        if (diff < TOL) then done = 1;
      end while
    end procedure
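The kernel above can be sketched as runnable Python. This is only an illustration under stated assumptions: "neighbors" is taken to mean the sum of the four adjacent grid points, the grid carries fixed boundary rows and columns at index 0 and n + 1, and the names `solve`, `tol`, and `max_sweeps` are hypothetical, not from the slides.

```python
def solve(A, tol=1e-3, max_sweeps=10_000):
    """Sweep the interior of grid A in place until the total change is below tol.

    A is a list of lists with n + 2 rows and columns. Row/column 0 and n + 1
    hold fixed boundary values (an assumption; the slide does not show them).
    Returns the number of sweeps performed.
    """
    n = len(A) - 2
    for sweep in range(1, max_sweeps + 1):
        diff = 0.0
        for i in range(1, n + 1):
            for j in range(1, n + 1):
                temp = A[i][j]
                # Assumed meaning of "neighbors": the four adjacent points.
                neighbors = A[i - 1][j] + A[i + 1][j] + A[i][j - 1] + A[i][j + 1]
                A[i][j] = 0.2 * (A[i][j] + neighbors)
                diff += abs(A[i][j] - temp)
        if diff < tol:
            return sweep
    return max_sweeps


# Example: 4x4 interior, top boundary held at 1.0, everything else starts at 0.
grid = [[0.0] * 6 for _ in range(6)]
grid[0] = [1.0] * 6
sweeps = solve(grid)
```

Because each point is updated in place, later points in a sweep already see the new values of earlier ones, which is what creates the ordering dependences the parallel versions have to deal with.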

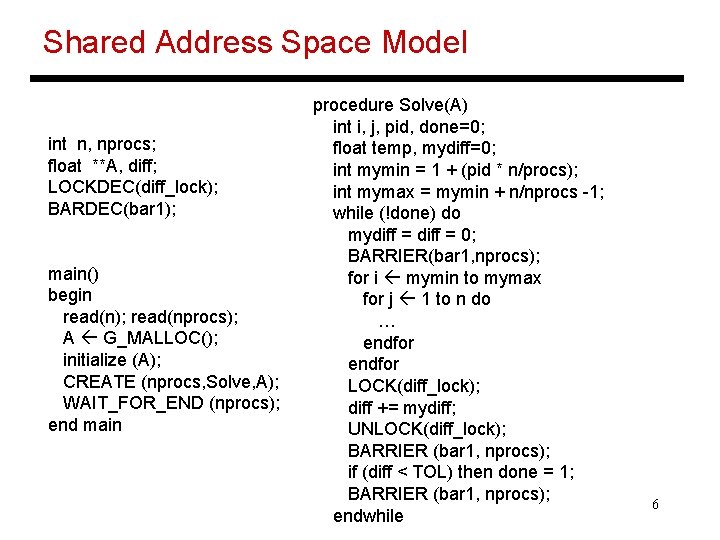

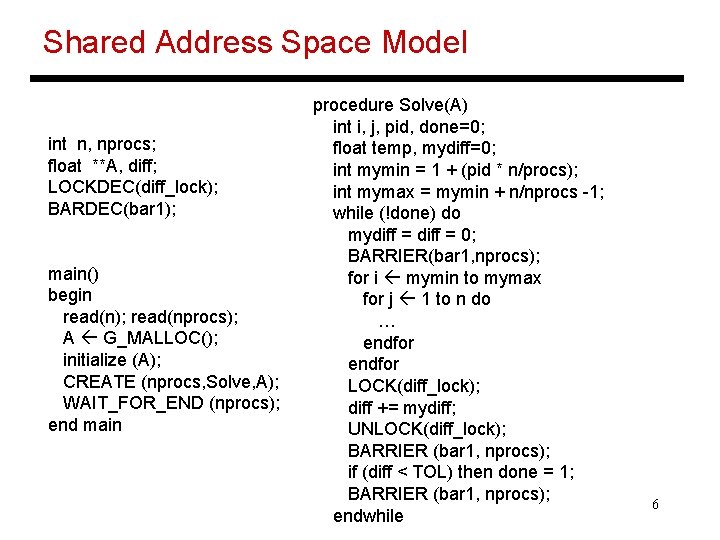

Shared Address Space Model

    int n, nprocs;
    float **A, diff;
    LOCKDEC(diff_lock);
    BARDEC(bar1);

    main()
    begin
      read(n); read(nprocs);
      A ← G_MALLOC();
      initialize (A);
      CREATE (nprocs, Solve, A);
      WAIT_FOR_END (nprocs);
    end main

    procedure Solve(A)
      int i, j, pid, done=0;
      float temp, mydiff=0;
      int mymin = 1 + (pid * n/nprocs);
      int mymax = mymin + n/nprocs - 1;
      while (!done) do
        mydiff = diff = 0;
        BARRIER(bar1, nprocs);
        for i ← mymin to mymax
          for j ← 1 to n do
            …
          endfor
        endfor
        LOCK(diff_lock);
        diff += mydiff;
        UNLOCK(diff_lock);
        BARRIER (bar1, nprocs);
        if (diff < TOL) then done = 1;
        BARRIER (bar1, nprocs);
      endwhile
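A rough Python rendering of the shared address space version is given below. It assumes Python threads stand in for the processes started by CREATE, `threading.Lock` for LOCK/UNLOCK, and `threading.Barrier` for BARRIER; the elided loop body is filled with the same four-neighbor update assumed for the sequential kernel. The name `parallel_solve` and its parameters are hypothetical.

```python
import threading


def parallel_solve(A, nprocs, tol=1e-3, max_sweeps=10_000):
    """Relax grid A in place using nprocs threads; returns the sweep count."""
    n = len(A) - 2                      # interior size; assumes nprocs divides n
    shared = {"diff": 0.0}              # plays the role of the global diff
    diff_lock = threading.Lock()        # LOCKDEC(diff_lock)
    bar1 = threading.Barrier(nprocs)    # BARDEC(bar1)
    sweeps_done = [0] * nprocs

    def solve(pid):
        mymin = 1 + pid * (n // nprocs)
        mymax = mymin + n // nprocs - 1
        done = False
        while not done and sweeps_done[pid] < max_sweeps:
            mydiff = 0.0
            shared["diff"] = 0.0
            bar1.wait()                 # everyone has reset before anyone adds
            for i in range(mymin, mymax + 1):
                for j in range(1, n + 1):
                    temp = A[i][j]
                    neighbors = (A[i - 1][j] + A[i + 1][j]
                                 + A[i][j - 1] + A[i][j + 1])
                    A[i][j] = 0.2 * (A[i][j] + neighbors)
                    mydiff += abs(A[i][j] - temp)
            with diff_lock:             # LOCK ... UNLOCK around the global sum
                shared["diff"] += mydiff
            bar1.wait()                 # all partial sums are in
            done = shared["diff"] < tol
            sweeps_done[pid] += 1
            bar1.wait()                 # all threads have read diff before it is reset

    threads = [threading.Thread(target=solve, args=(pid,)) for pid in range(nprocs)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sweeps_done[0]


grid = [[0.0] * 6 for _ in range(6)]
grid[0] = [1.0] * 6                     # assumed boundary condition
sweeps = parallel_solve(grid, nprocs=2)
```

The third barrier matters: without it, a fast thread could start the next iteration and reset the shared sum before a slow thread has tested it against the tolerance.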

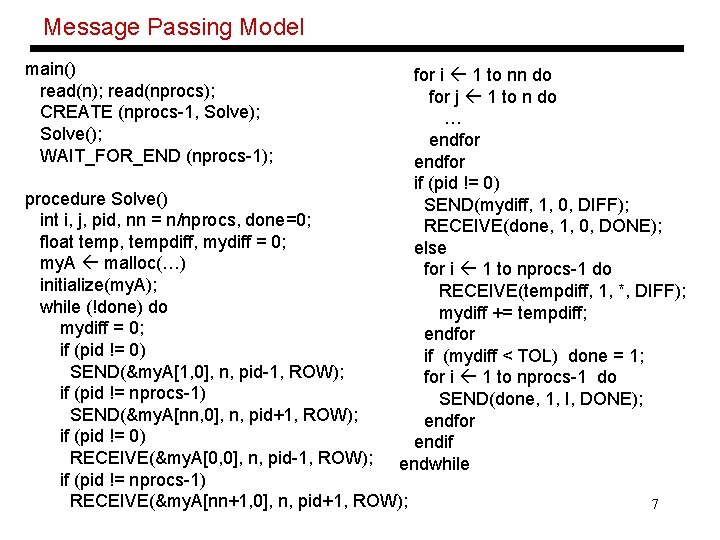

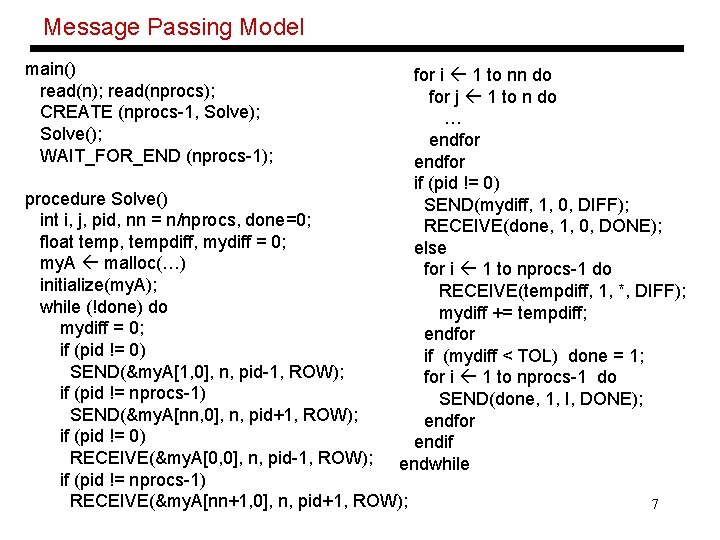

Message Passing Model

    main()
      read(n); read(nprocs);
      CREATE (nprocs-1, Solve);
      Solve();
      WAIT_FOR_END (nprocs-1);

    procedure Solve()
      int i, j, pid, nn = n/nprocs, done=0;
      float temp, tempdiff, mydiff = 0;
      myA ← malloc(…)
      initialize(myA);
      while (!done) do
        mydiff = 0;
        if (pid != 0) SEND(&myA[1, 0], n, pid-1, ROW);
        if (pid != nprocs-1) SEND(&myA[nn, 0], n, pid+1, ROW);
        if (pid != 0) RECEIVE(&myA[0, 0], n, pid-1, ROW);
        if (pid != nprocs-1) RECEIVE(&myA[nn+1, 0], n, pid+1, ROW);
        for i ← 1 to nn do
          for j ← 1 to n do
            …
          endfor
        endfor
        if (pid != 0)
          SEND(mydiff, 1, 0, DIFF);
          RECEIVE(done, 1, 0, DONE);
        else
          for i ← 1 to nprocs-1 do
            RECEIVE(tempdiff, 1, *, DIFF);
            mydiff += tempdiff;
          endfor
          if (mydiff < TOL) done = 1;
          for i ← 1 to nprocs-1 do
            SEND(done, 1, i, DONE);
          endfor
        endif
      endwhile
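The message-passing structure can be imitated in Python with threads that share nothing except explicit queues. This is a sketch under several assumptions: `queue.Queue` objects stand in for SEND/RECEIVE, the wildcard receive (`*`) is replaced by receiving from each source in turn, the elided loop body uses the four-neighbor update, and the top boundary is held at 1.0. All names (`run_message_passing`, `send`, `receive`) are hypothetical.

```python
import queue
import threading


def run_message_passing(n, nprocs, tol=1e-3, max_sweeps=10_000):
    nn = n // nprocs  # rows owned by each process; assumes nprocs divides n
    # One FIFO per (source, destination, tag), created up front so threads
    # never modify the dictionary concurrently.
    chan = {(s, d, t): queue.Queue()
            for s in range(nprocs) for d in range(nprocs)
            for t in ("ROW", "DIFF", "DONE")}

    def send(data, src, dest, tag):
        chan[(src, dest, tag)].put(data)      # non-blocking, like a buffered send

    def receive(src, dest, tag):
        return chan[(src, dest, tag)].get()   # blocks until a message arrives

    results = [None] * nprocs

    def solve(pid):
        # Private grid: rows 1..nn are owned, rows 0 and nn+1 are ghost/boundary rows.
        myA = [[0.0] * (n + 2) for _ in range(nn + 2)]
        if pid == 0:
            myA[0] = [1.0] * (n + 2)          # assumed global top boundary
        done, sweeps = False, 0
        while not done and sweeps < max_sweeps:
            mydiff = 0.0
            if pid != 0:
                send(list(myA[1]), pid, pid - 1, "ROW")
            if pid != nprocs - 1:
                send(list(myA[nn]), pid, pid + 1, "ROW")
            if pid != 0:
                myA[0] = receive(pid - 1, pid, "ROW")
            if pid != nprocs - 1:
                myA[nn + 1] = receive(pid + 1, pid, "ROW")
            for i in range(1, nn + 1):
                for j in range(1, n + 1):
                    temp = myA[i][j]
                    neighbors = (myA[i - 1][j] + myA[i + 1][j]
                                 + myA[i][j - 1] + myA[i][j + 1])
                    myA[i][j] = 0.2 * (myA[i][j] + neighbors)
                    mydiff += abs(myA[i][j] - temp)
            if pid != 0:
                send(mydiff, pid, 0, "DIFF")
                done = receive(0, pid, "DONE")
            else:
                for src in range(1, nprocs):  # explicit sources instead of "*"
                    mydiff += receive(src, 0, "DIFF")
                done = mydiff < tol
                for dest in range(1, nprocs):
                    send(done, 0, dest, "DONE")
            sweeps += 1
        results[pid] = (sweeps, myA)

    threads = [threading.Thread(target=solve, args=(pid,)) for pid in range(nprocs)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


results = run_message_passing(n=4, nprocs=2)
```

Sending before receiving works here only because the queues buffer messages; with synchronous sends the same ordering could deadlock.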

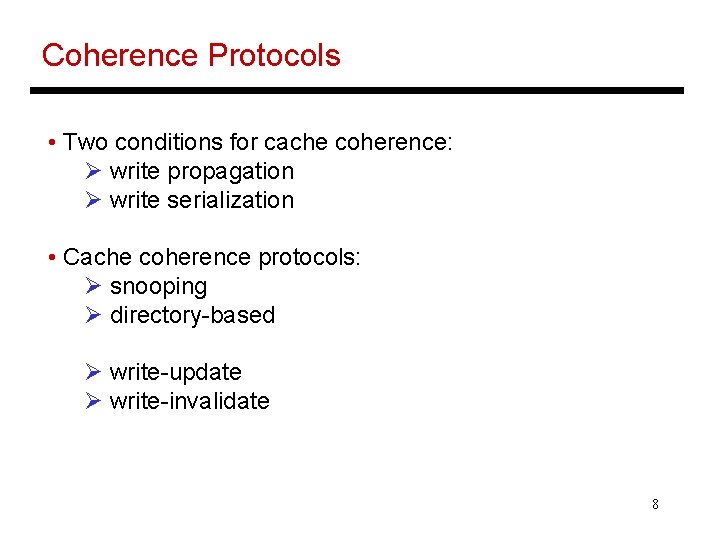

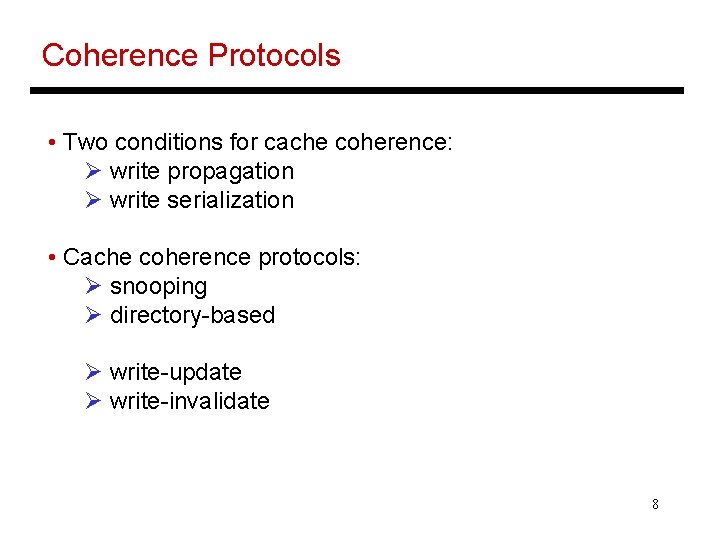

Coherence Protocols

- Two conditions for cache coherence:
  - write propagation
  - write serialization
- Cache coherence protocols:
  - snooping
  - directory-based
  - write-update
  - write-invalidate

SMP Example

Figure: Processors A, B, C, and D, each with caches, connected to main memory and the I/O system.

Requests: A: Rd, B: Rd, C: Rd, A: Wr, C: Wr, B: Rd, A: Rd, B: Wr (blocks X and Y)

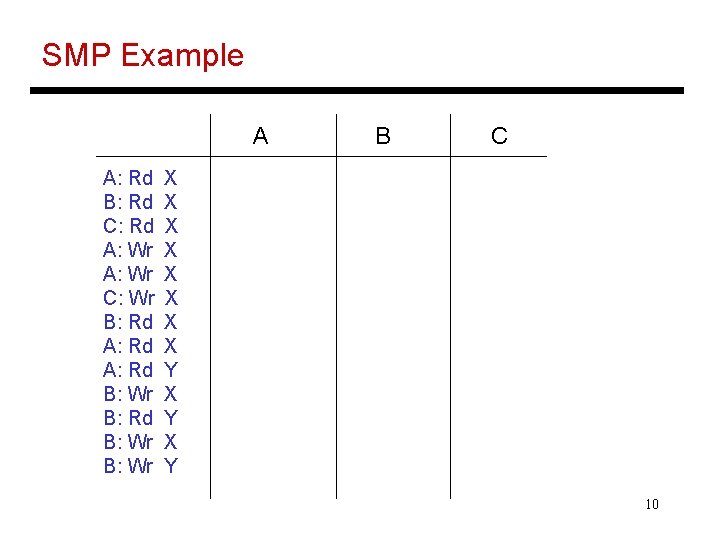


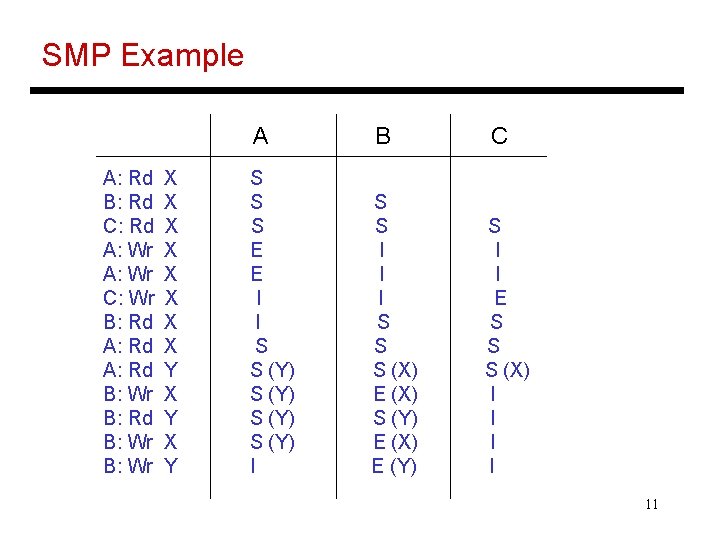

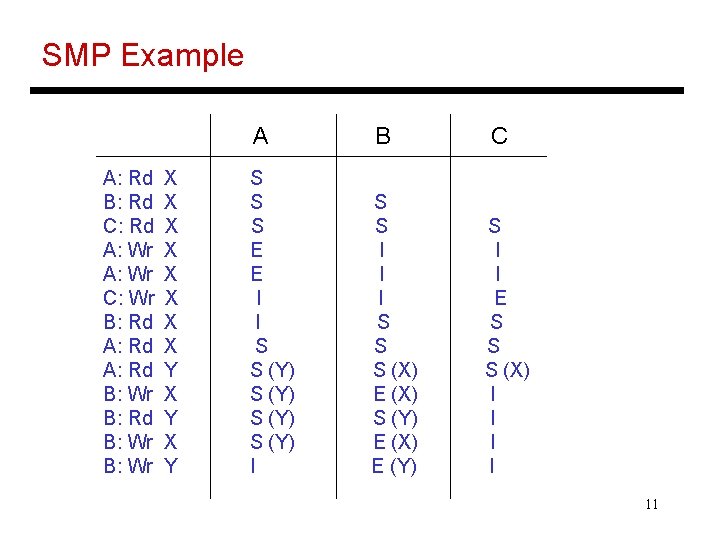

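SMP Example: cache states per request (S = shared, E = exclusive, I = invalid)

- Requests: A: Rd, B: Rd, C: Rd, A: Wr, C: Wr, B: Rd, A: Rd, B: Wr (blocks X and Y)
- States in caches A and B: S S S E E I I S S(Y) I S S I I I S S S(X) E(X) S(Y) E(X) E(Y)
- States in cache C: S I I E S S S(X) I I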

Example Protocol

| Request | Source | Block state | Action |
|---|---|---|---|
| Read hit | Proc | Shared/excl | Read data in cache |
| Read miss | Proc | Invalid | Place read miss on bus |
| Read miss | Proc | Shared | Conflict miss: place read miss on bus |
| Read miss | Proc | Exclusive | Conflict miss: write back block, place read miss on bus |
| Write hit | Proc | Exclusive | Write data in cache |
| Write hit | Proc | Shared | Place write miss on bus |
| Write miss | Proc | Invalid | Place write miss on bus |
| Write miss | Proc | Shared | Conflict miss: place write miss on bus |
| Write miss | Proc | Exclusive | Conflict miss: write back, place write miss on bus |
| Read miss | Bus | Shared | No action; allow memory to respond |
| Read miss | Bus | Exclusive | Place block on bus; change to shared |
| Write miss | Bus | Shared | Invalidate block |
| Write miss | Bus | Exclusive | Write back block; change to invalid |
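The table can be exercised with a small Python model that tracks one block across several caches. It is a sketch, not the protocol's definitive implementation: it handles a single block (so conflict misses never arise), and the requester's next state (shared after a read miss, exclusive after any write) is an assumption, since the table lists only actions for processor requests. The class and method names are hypothetical.

```python
INVALID, SHARED, EXCLUSIVE = "I", "S", "E"


class SnoopingBus:
    """Tracks the state of one block in each cache attached to a shared bus."""

    def __init__(self, names):
        self.state = {name: INVALID for name in names}
        self.log = []  # data-supplying actions taken by snooping caches

    def _snoop(self, requester, kind):
        # Every other cache sees the bus request and reacts per the "Bus" rows.
        for name, st in self.state.items():
            if name == requester:
                continue
            if kind == "read miss" and st == EXCLUSIVE:
                self.log.append(f"{name}: place block on bus")
                self.state[name] = SHARED
            elif kind == "write miss" and st == SHARED:
                self.state[name] = INVALID
            elif kind == "write miss" and st == EXCLUSIVE:
                self.log.append(f"{name}: write back block")
                self.state[name] = INVALID
            # Read miss seen in shared state: no action, memory responds.

    def read(self, name):
        if self.state[name] == INVALID:          # read miss
            self._snoop(name, "read miss")
            self.state[name] = SHARED            # assumed next state
        # Read hit in shared/exclusive: read data in cache, no bus traffic.

    def write(self, name):
        if self.state[name] != EXCLUSIVE:        # write miss, or write hit in shared
            self._snoop(name, "write miss")
            self.state[name] = EXCLUSIVE         # assumed next state
        # Write hit in exclusive: write data in cache, no bus traffic.


bus = SnoopingBus(["A", "B", "C"])
trace = []
for proc, op in [("A", "Rd"), ("B", "Rd"), ("C", "Rd"),
                 ("A", "Wr"), ("C", "Wr"), ("B", "Rd")]:
    bus.read(proc) if op == "Rd" else bus.write(proc)
    trace.append(dict(bus.state))
```

Each entry of `trace` is a snapshot of the three cache states after one request, which makes it easy to compare against a hand-worked example.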

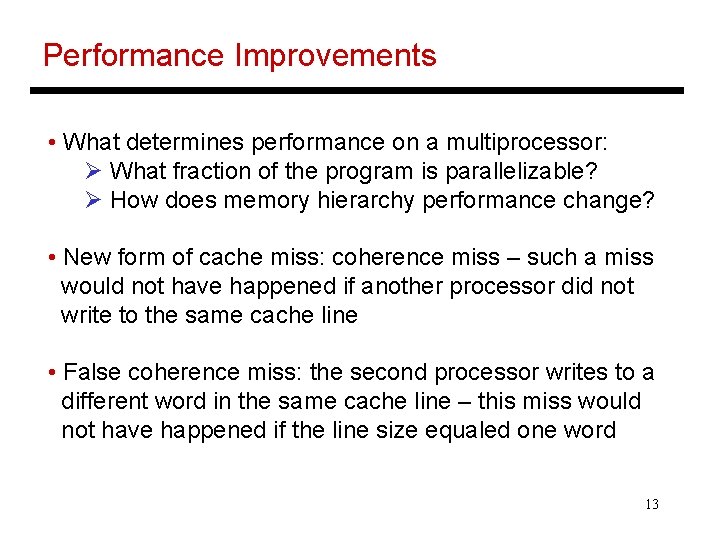

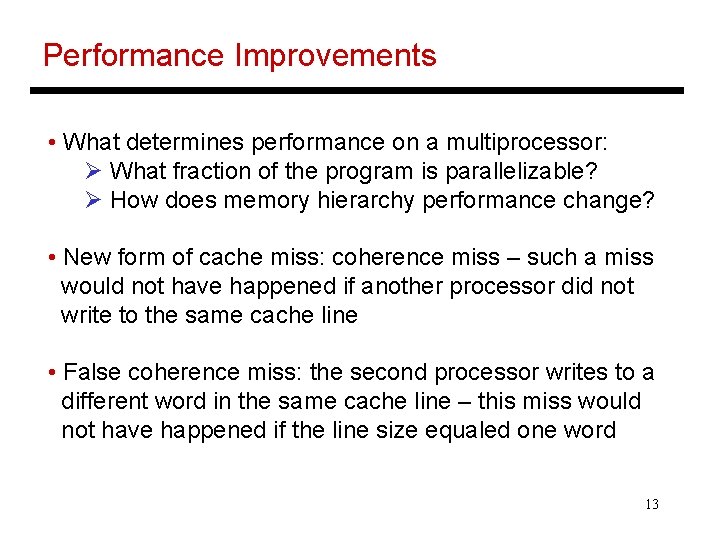

Performance Improvements

- What determines performance on a multiprocessor:
  - What fraction of the program is parallelizable?
  - How does memory hierarchy performance change?
- New form of cache miss: coherence miss. Such a miss would not have happened if another processor had not written to the same cache line
- False coherence miss: the second processor writes to a different word in the same cache line. This miss would not have happened if the line size equaled one word
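The distinction between true and false coherence misses can be illustrated with a small classifier. This is a deliberately simplified sketch: it assumes word-granularity addresses and looks only at one remote write and one subsequent local access; the function name and parameters are hypothetical.

```python
def classify_coherence_miss(written_word, accessed_word, words_per_line):
    """Classify the miss taken when a local access follows a remote write.

    written_word:   word address written by the other processor
    accessed_word:  word address this processor then accesses
    words_per_line: cache line size in words
    """
    if written_word // words_per_line != accessed_word // words_per_line:
        # Different cache lines: the remote write did not invalidate this line.
        return "not a coherence miss"
    if written_word == accessed_word:
        return "true"    # the processors really communicate through this word
    return "false"       # same line, different word: an artifact of line size


same_word = classify_coherence_miss(5, 5, words_per_line=4)
same_line = classify_coherence_miss(4, 5, words_per_line=4)
one_word_lines = classify_coherence_miss(4, 5, words_per_line=1)
```

The last call shows the point made in the final bullet: with one-word lines, the access that was a false coherence miss no longer misses because of the remote write at all.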

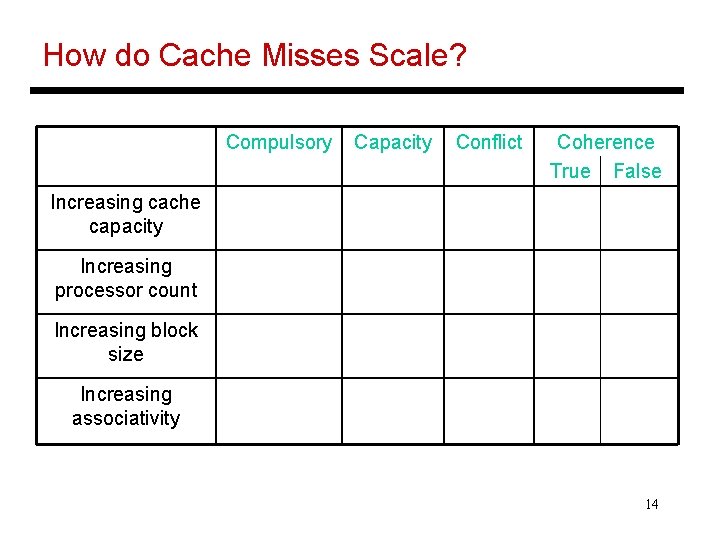

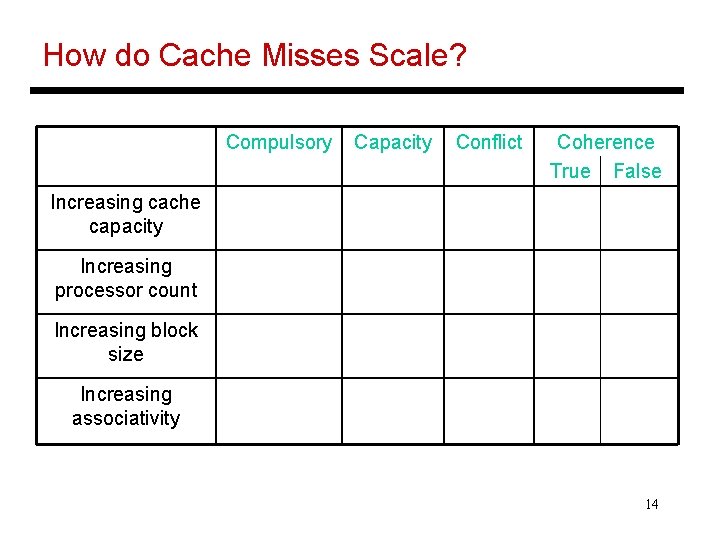

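How do Cache Misses Scale?

| | Compulsory | Capacity | Conflict | Coherence (True) | Coherence (False) |
|---|---|---|---|---|---|
| Increasing cache capacity | | | | | |
| Increasing processor count | | | | | |
| Increasing block size | | | | | |
| Increasing associativity | | | | | |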

Simplifying Assumptions

- All transactions on a read or write are atomic: on a write miss, the miss is sent on the bus, a block is fetched from memory/remote cache, and the block is marked exclusive
- Potential problem if the actions are non-atomic: P1 sends a write miss on the bus, then P2 sends a write miss on the bus. Since the block is still invalid in P1, P2 does not realize that it should write after receiving the block from P1; instead, it receives the block from memory
- Most problems are fixable by keeping track of more state: for example, don’t acquire the bus unless all outstanding transactions for the block have completed

Coherence in Distributed Memory Multiprocs

- Distributed memory systems are typically larger, so bus-based snooping may not work well
- Option 1: software-based mechanisms, i.e. message-passing systems or software-controlled cache coherence
- Option 2: hardware-based mechanisms, i.e. directory-based cache coherence

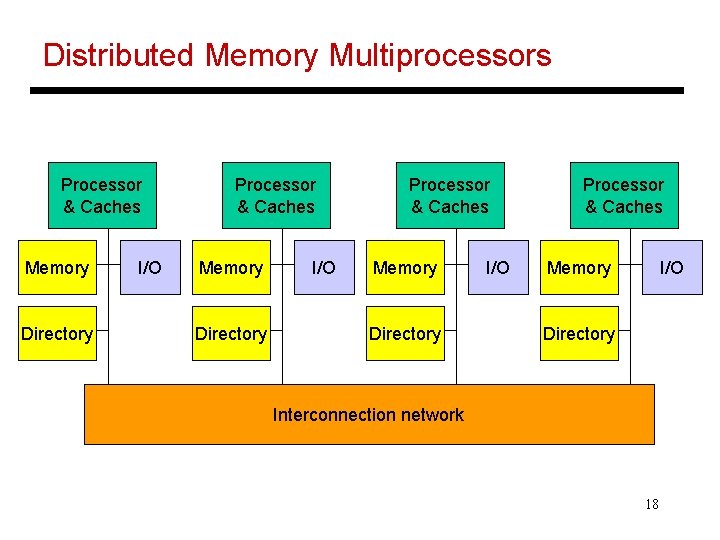

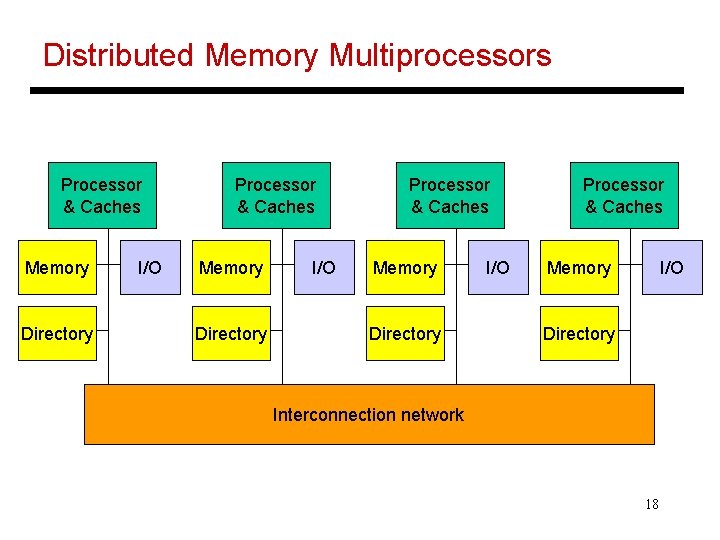

Directory-Based Cache Coherence

- The physical memory is distributed among all processors
- The directory is also distributed along with the corresponding memory
- The physical address is enough to determine the location of memory
- The (many) processing nodes are connected with a scalable interconnect (not a bus)
  - hence, messages are no longer broadcast, but routed from sender to receiver
  - since the processing nodes can no longer snoop, the directory keeps track of sharing state
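As an illustration of how a physical address alone can identify the node that holds a block's memory and directory entry, the sketch below assumes memory is split into equal contiguous ranges, one per node. Real machines may interleave differently; the function names and the sizes used are hypothetical.

```python
def home_node(phys_addr, bytes_per_node):
    """Node whose memory (and directory) holds this address.

    Assumes node k owns addresses [k * bytes_per_node, (k + 1) * bytes_per_node).
    """
    return phys_addr // bytes_per_node


def directory_index(phys_addr, bytes_per_node, block_size):
    """Index of the block's entry within its home node's directory."""
    return (phys_addr % bytes_per_node) // block_size


node = home_node(0x2040, bytes_per_node=0x1000)
entry = directory_index(0x2040, bytes_per_node=0x1000, block_size=64)
```

With this mapping a requester can route a miss directly to the home node without any broadcast.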

Distributed Memory Multiprocessors

Figure: nodes, each containing a processor and caches, memory, a directory, and I/O, connected by an interconnection network.

Cache Block States

- What are the different states a block of memory can have within the directory?
- Note that we need information for each cache so that invalidate messages can be sent
- The block state is also stored in the cache for efficiency
- The directory now serves as the arbitrator: if multiple write attempts happen simultaneously, the directory determines the ordering

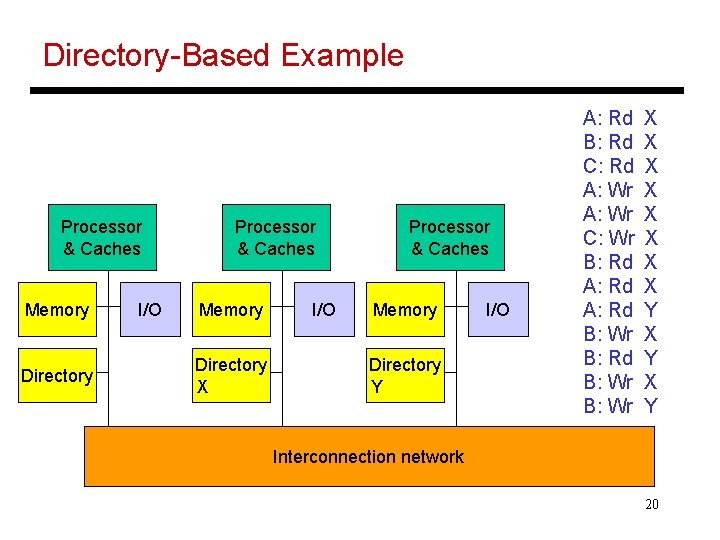

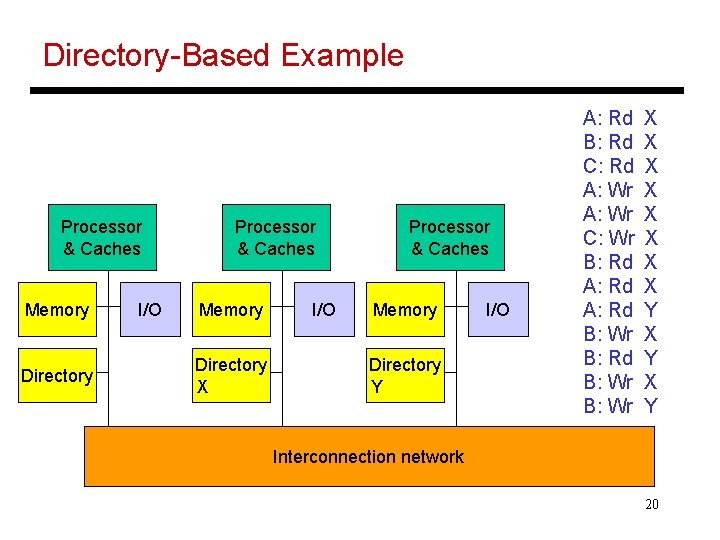

Directory-Based Example

Figure: three nodes, each containing a processor and caches, memory, a directory, and I/O, connected by an interconnection network. One directory is labeled X and another Y.

Requests: A: Rd, B: Rd, C: Rd, A: Wr, C: Wr, B: Rd, A: Rd, B: Wr (blocks X and Y)

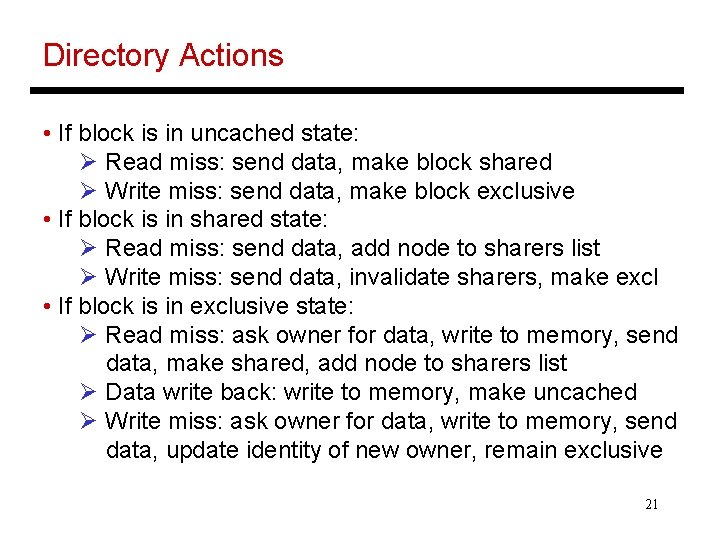

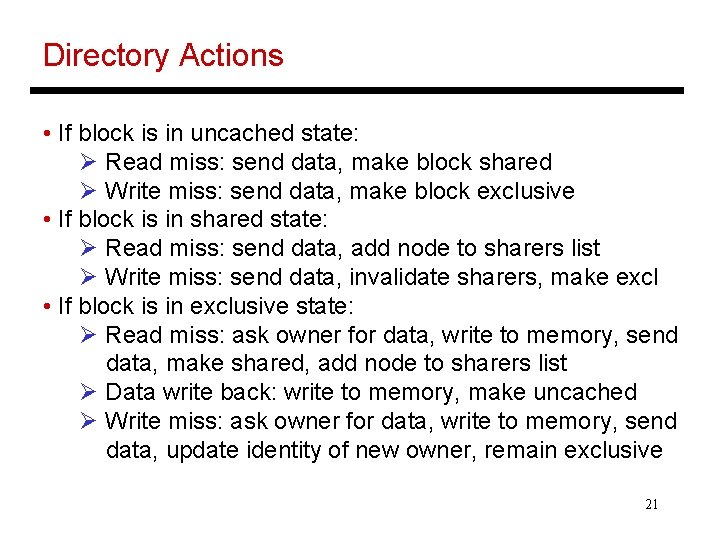

Directory Actions

- If block is in uncached state:
  - Read miss: send data, make block shared
  - Write miss: send data, make block exclusive
- If block is in shared state:
  - Read miss: send data, add node to sharers list
  - Write miss: send data, invalidate sharers, make exclusive
- If block is in exclusive state:
  - Read miss: ask owner for data, write to memory, send data, make shared, add node to sharers list
  - Data write back: write to memory, make uncached
  - Write miss: ask owner for data, write to memory, send data, update identity of new owner, remain exclusive
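The directory actions listed above can be sketched as a small state machine for one block. This is an illustrative model only: messages are represented as strings in the order the slide lists them, the sharers list is a Python set (for an exclusive block it holds just the owner), and the class and method names are hypothetical.

```python
UNCACHED, SHARED, EXCLUSIVE = "uncached", "shared", "exclusive"


class DirectoryEntry:
    """Directory state for a single memory block."""

    def __init__(self):
        self.state = UNCACHED
        self.sharers = set()  # for an exclusive block: exactly the owner

    def read_miss(self, node):
        msgs = []
        if self.state == EXCLUSIVE:
            (owner,) = self.sharers
            msgs += [f"ask {owner} for data", "write to memory"]
        msgs.append(f"send data to {node}")
        self.sharers.add(node)       # a former owner stays on the sharers list
        self.state = SHARED
        return msgs

    def write_miss(self, node):
        msgs = []
        if self.state == EXCLUSIVE:
            (owner,) = self.sharers
            msgs += [f"ask {owner} for data", "write to memory"]
        msgs.append(f"send data to {node}")
        if self.state == SHARED:
            msgs += [f"invalidate {s}" for s in sorted(self.sharers - {node})]
        self.sharers = {node}        # requester becomes the (new) owner
        self.state = EXCLUSIVE
        return msgs

    def write_back(self, node):
        if self.state != EXCLUSIVE or self.sharers != {node}:
            raise ValueError("only the owner of an exclusive block can write back")
        self.sharers = set()
        self.state = UNCACHED
        return ["write to memory"]


x = DirectoryEntry()
x.read_miss("A"); x.read_miss("B"); x.read_miss("C")
a_write = x.write_miss("A")          # shared -> exclusive, B and C invalidated
c_write = x.write_miss("C")          # ownership moves from A to C
b_read = x.read_miss("B")            # exclusive -> shared with C and B
```

Because every request for the block goes through this one entry, the order in which the directory processes requests also serializes competing writes.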
