Human Competitive Results of Evolutionary Computation


- Slides: 49

- Presenter: Mati Bot
- Course: Advanced Seminar in Algorithms (Prof. Yefim Dinitz)

Outline

- Definition of human-competitiveness
- Evolving hyper-heuristics using genetic programming
  - Rush Hour (bronze Humies prize in 2009, by Ami Hauptman)
  - FreeCell (gold Humies prize in 2011, by Achiya Elyasaf)
- Other examples

What is Human-Competitive?

- John Koza defined "human-competitiveness" in his book Genetic Programming IV (2003).
- There are 8 criteria by which a result can be considered human-competitive (explained on the following slides).
- Our mission: creating innovative, human-competitive solutions by means of evolution.

Koza's 8 Criteria for Human-Competitiveness

- (A) The result was patented in the past, improves on an existing patent, or would qualify as a new patent.
- (B) The result is equal to or better than another result that was published in a journal.
- (C) The result is equal to or better than a result in a recognized database of results.
- (D) The result is publishable in its own right as a new scientific result, independent of the fact that it was mechanically created.
- (E) The result is equal to or better than the best human-created solution.
- (F) The result is equal to or better than a result that was considered an achievement in its field at the time it was first discovered.
- (G) The result solves a problem of indisputable difficulty in its field.
- (H) The result holds its own or wins a regulated competition involving human contestants (in the form of either live human players or human-written computer programs).

Humies Competition

- The annual "Humies" competition, held at the GECCO conference, awards cash prizes for the best human-competitive results.
- Gold, silver, and bronze prizes go to the best entries.
- BGU has won 1 gold, 1 silver, and 6 bronze prizes since 2005.
- I counted more than 75 human-competitive results on the Humies competition site.
- http://www.sigevo.org/gecco-2012/
- http://www.genetic-programming.org/hc2011/combined.html

Evolving Hyper-Heuristics using Genetic Programming Ami Hauptman and Achiya Elyasaf

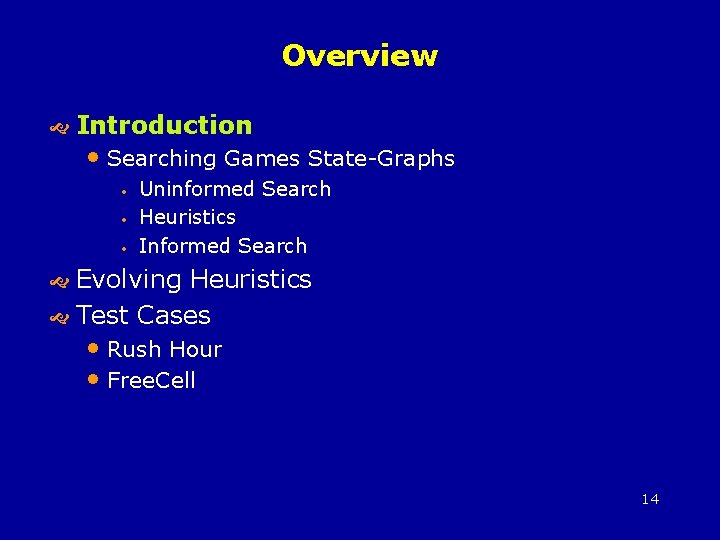

Overview

- Introduction
- Searching games state-graphs
  - Uninformed search
  - Heuristics
  - Informed search
- Evolving heuristics
- Test cases
  - Rush Hour
  - FreeCell

Representing Games as State-Graphs

Every puzzle or game can be represented as a state graph:

- In puzzles, board games, and the like, every piece move counts as an edge (transition) between states.
- In computer war games and the like, the positions of the player and the enemy, together with all other parameters (health, shield, ...), define a state.

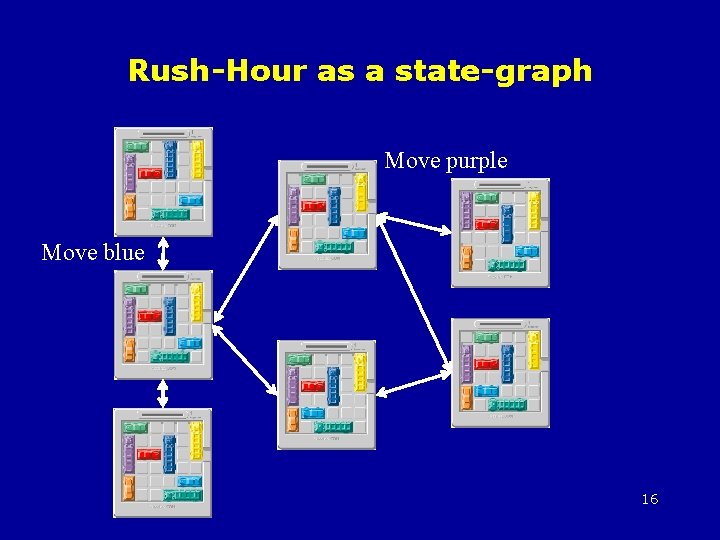

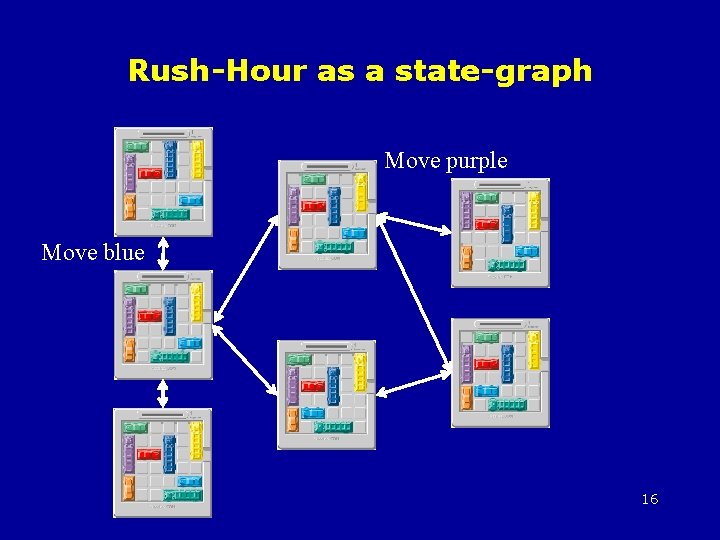

Rush Hour as a state graph (figure: transitions labeled "Move purple" and "Move blue")

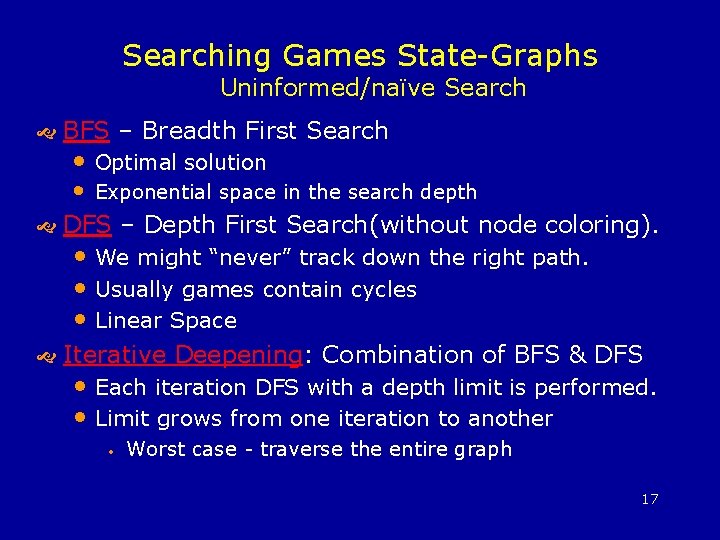

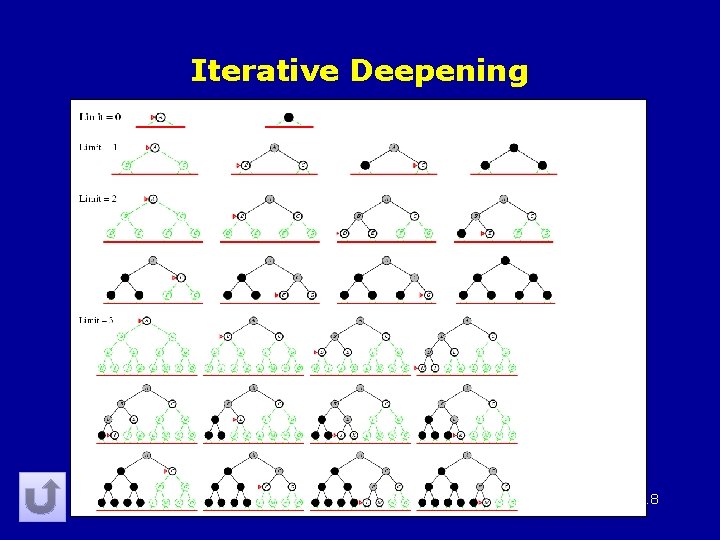

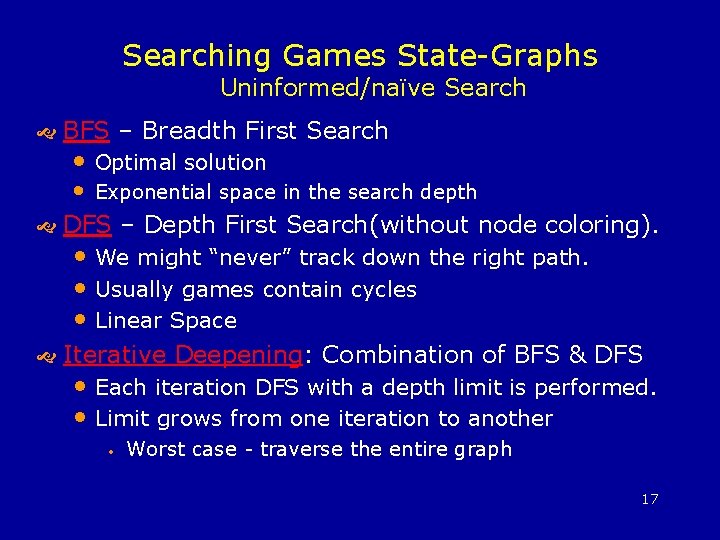

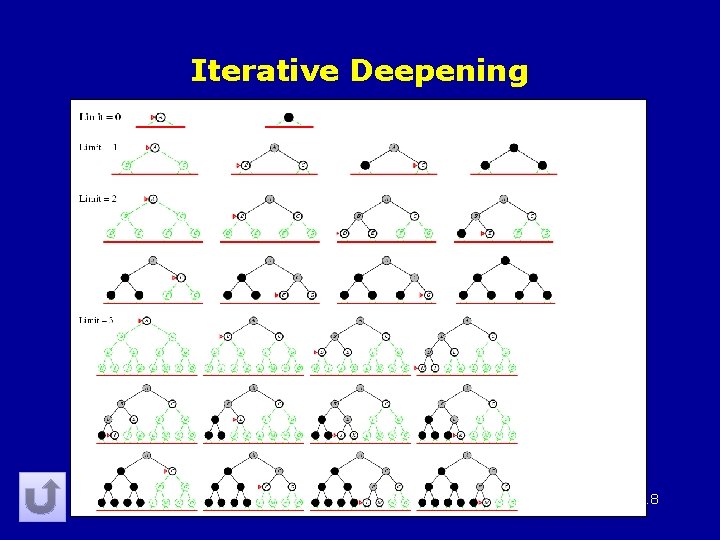

Searching Games State-Graphs: Uninformed (Naïve) Search

- BFS (breadth-first search)
  - Optimal solution
  - Exponential space in the search depth
- DFS (depth-first search, without node coloring)
  - We might "never" track down the right path
  - Games usually contain cycles
  - Linear space
- Iterative deepening: a combination of BFS and DFS
  - Each iteration performs a DFS with a depth limit
  - The limit grows from one iteration to the next
  - Worst case: traverse the entire graph

Iterative Deepening (figure)
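
The depth-limited DFS loop described above can be sketched as follows. This is a minimal illustration, not the presenter's code: the toy graph, the function names, and the `max_depth` cap are assumptions, and the check against the current path is a light substitute for node coloring.

```python
def depth_limited_dfs(state, is_goal, successors, limit, path):
    """DFS that stops at `limit` edges; returns a path to a goal or None."""
    if is_goal(state):
        return path
    if limit == 0:
        return None
    for nxt in successors(state):
        if nxt in path:  # skip states already on the current path (avoids cycles)
            continue
        found = depth_limited_dfs(nxt, is_goal, successors, limit - 1, path + [nxt])
        if found is not None:
            return found
    return None


def iterative_deepening(start, is_goal, successors, max_depth=50):
    """Run depth-limited DFS with limits 0, 1, 2, ... up to max_depth (assumed cap)."""
    for limit in range(max_depth + 1):
        result = depth_limited_dfs(start, is_goal, successors, limit, [start])
        if result is not None:
            return result
    return None


# Hypothetical toy graph with a cycle (A <-> B); G is the goal.
GRAPH = {"A": ["B", "C"], "B": ["A", "D"], "C": ["G"], "D": [], "G": []}
path = iterative_deepening("A", lambda s: s == "G", lambda s: GRAPH[s])
print(path)  # ['A', 'C', 'G']
```

Only the current path is stored, so space stays linear in the depth, while the growing limit guarantees that the shallowest goal is found first.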

Searching Games State-Graphs: Uninformed Search

- Most game domains are PSPACE-complete!
- Worst case: traverse the entire graph.
- We need an informed search (or an intelligent approach to traversing the graph).

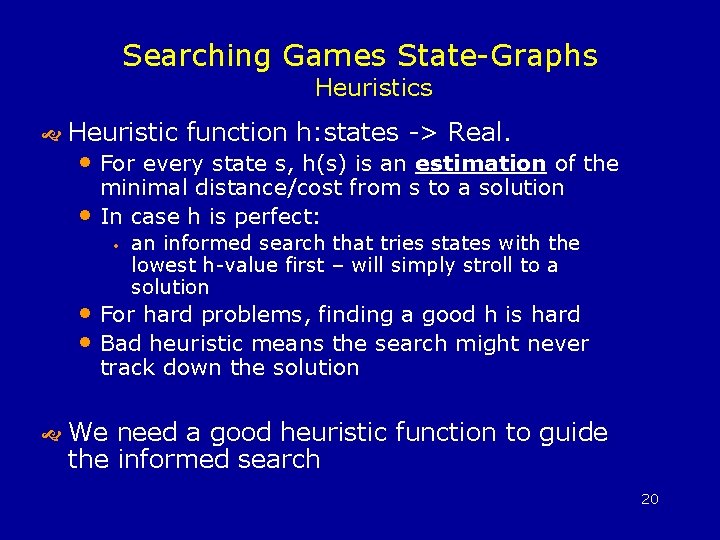

Searching Games State-Graphs: Heuristics

- Heuristic function h: states -> Real.
- For every state s, h(s) is an estimate of the minimal distance/cost from s to a solution.
- If h is perfect, an informed search that tries states with the lowest h-value first will simply stroll to a solution.
- For hard problems, finding a good h is hard.
- A bad heuristic means the search might never track down the solution.

We need a good heuristic function to guide the informed search.

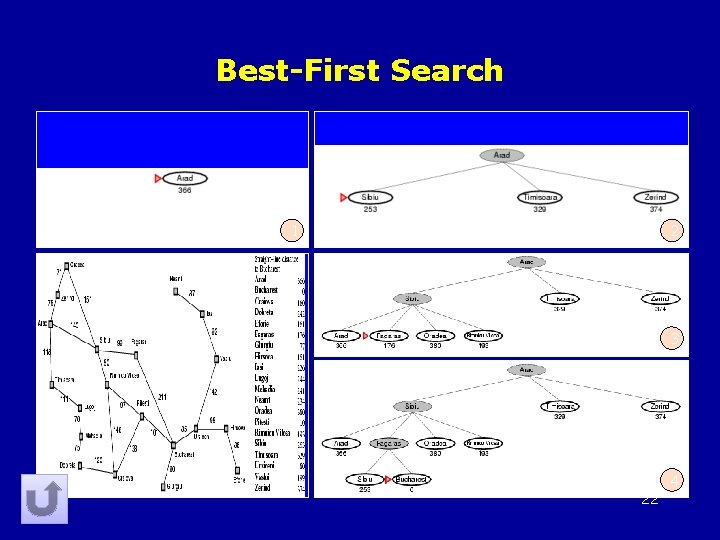

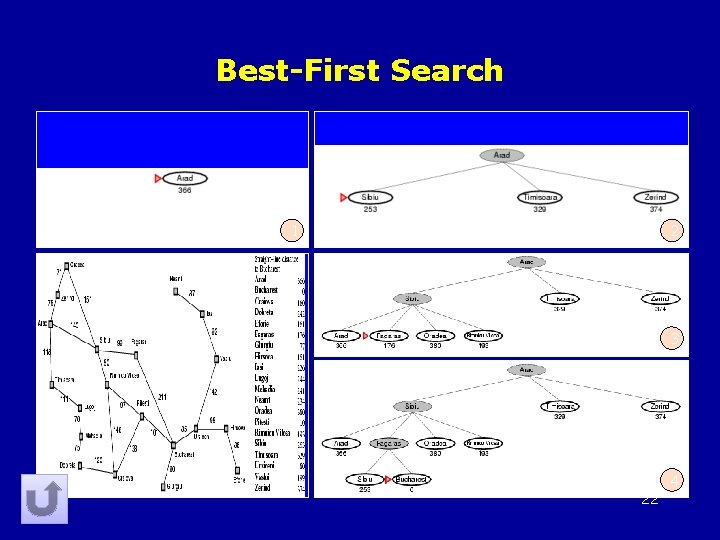

Searching Games State-Graphs: Informed Search

- Best-first search: like DFS, but selects the node with the best heuristic value (lowest h) first.
- Not necessarily optimal.

Best-First Search (figure: steps labeled 1 to 4)
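
A greedy best-first search can be sketched with a priority queue ordered by h alone. The graph and the h-values below are made up for illustration; they are chosen so that the search reaches the goal by a longer route than necessary, which shows why best-first is not necessarily optimal.

```python
import heapq


def best_first_search(start, is_goal, successors, h):
    """Greedy best-first search: always expand the open node with the lowest h."""
    open_heap = [(h(start), start)]
    parent = {start: None}  # also serves as the set of states seen so far
    while open_heap:
        _, state = heapq.heappop(open_heap)
        if is_goal(state):
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in successors(state):
            if nxt not in parent:
                parent[nxt] = state
                heapq.heappush(open_heap, (h(nxt), nxt))
    return None


# Hypothetical graph: S-B-G is the shortest route, but h prefers the A branch.
GRAPH = {"S": ["A", "B"], "A": ["C"], "C": ["D"], "D": ["G"], "B": ["G"], "G": []}
H = {"S": 3, "A": 1, "C": 1, "D": 1, "B": 2, "G": 0}

greedy_path = best_first_search("S", lambda s: s == "G", lambda s: GRAPH[s], H.get)
print(greedy_path)  # ['S', 'A', 'C', 'D', 'G']
```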

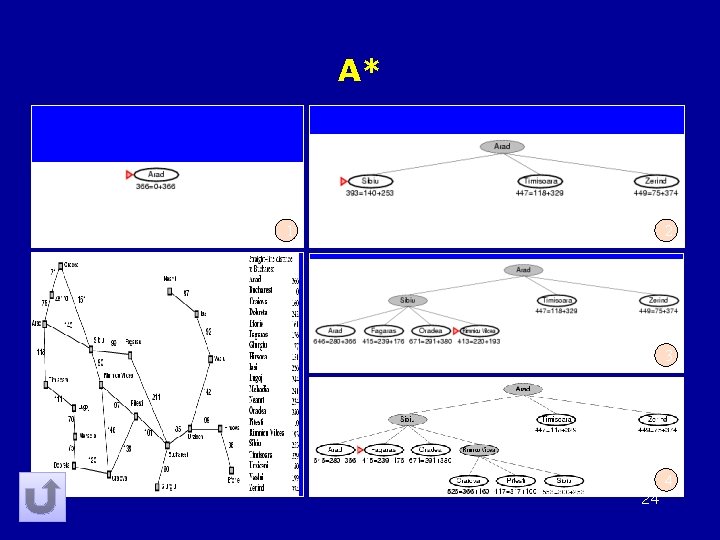

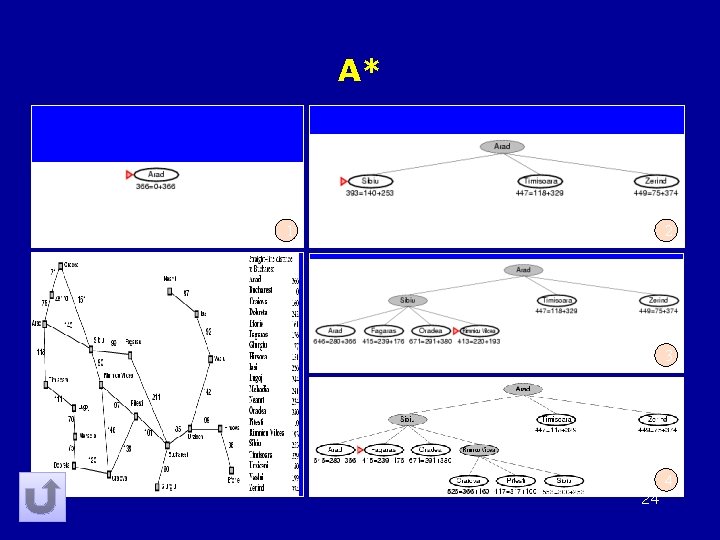

Searching Games State-Graphs: Informed Search

A*:

- G(s) = cost from the root to s
- H(s) = heuristic estimate
- F(s) = G(s) + H(s)
- Holds a closed list and a sorted open list (the list of states that still need to be checked).
- The best node (lowest F(s)) of all open nodes is selected.

A* (figure: steps labeled 1 to 4)
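
A compact sketch of A* with F(s) = G(s) + H(s), an open list kept as a heap, and a closed set. The graph, unit edge costs, and h-values are illustrative assumptions; h is chosen to be admissible here so that the returned path is a cheapest one.

```python
import heapq


def a_star(start, is_goal, successors, h):
    """A* search; successors(state) yields (next_state, step_cost) pairs."""
    g = {start: 0}                   # G(s): best known cost from the root
    parent = {start: None}
    open_heap = [(h(start), start)]  # ordered by F(s) = G(s) + H(s)
    closed = set()
    while open_heap:
        _, state = heapq.heappop(open_heap)
        if state in closed:
            continue                 # stale heap entry
        if is_goal(state):
            cost = g[state]
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1], cost
        closed.add(state)
        for nxt, step_cost in successors(state):
            new_g = g[state] + step_cost
            if nxt not in g or new_g < g[nxt]:
                g[nxt] = new_g
                parent[nxt] = state
                closed.discard(nxt)  # reopen if a cheaper route was found
                heapq.heappush(open_heap, (new_g + h(nxt), nxt))
    return None


# Hypothetical graph with unit costs; h never overestimates the true distance.
GRAPH = {
    "S": [("A", 1), ("B", 1)], "A": [("C", 1)], "C": [("D", 1)],
    "D": [("G", 1)], "B": [("G", 1)], "G": [],
}
H = {"S": 2, "A": 1, "C": 1, "D": 1, "B": 1, "G": 0}

result = a_star("S", lambda s: s == "G", lambda s: GRAPH[s], H.get)
print(result)  # (['S', 'B', 'G'], 2)
```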

Overview

- Introduction
- Searching games state-graphs
  - Uninformed search
  - Heuristics
  - Informed search
- Evolving heuristics
- Previous work
  - Rush Hour
  - FreeCell

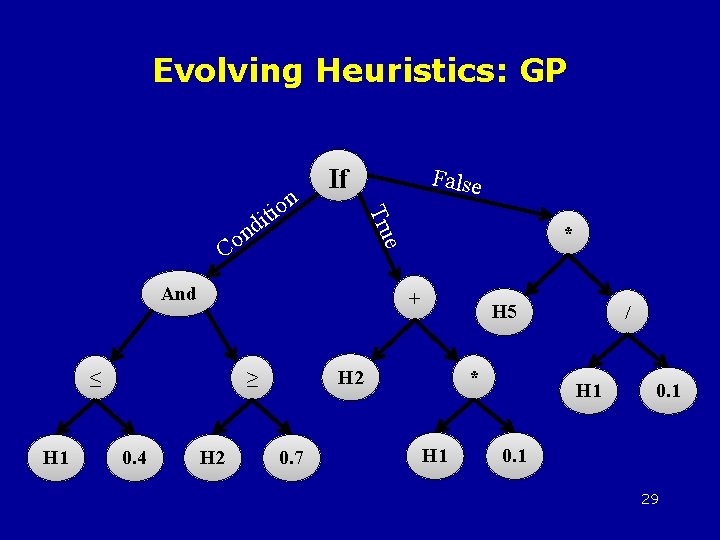

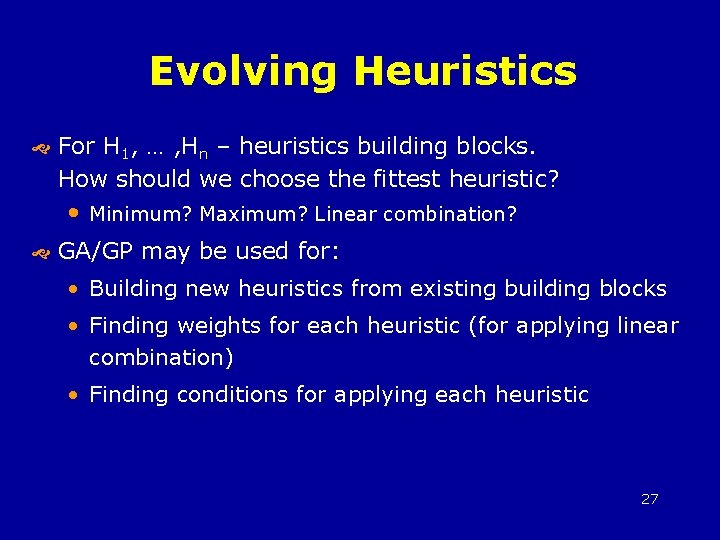

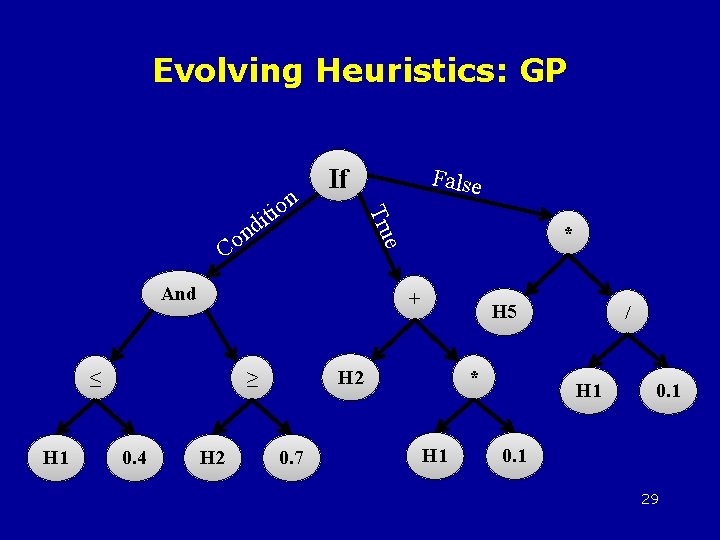

Evolving Heuristics

Given heuristic building blocks H1, ..., Hn, how should we choose the fittest heuristic?

- Minimum? Maximum? Linear combination?

GA/GP may be used for:

- Building new heuristics from existing building blocks
- Finding weights for each heuristic (for applying a linear combination)
- Finding conditions for applying each heuristic

Evolving Heuristics: GA

W1 = 0.3, W2 = 0.01, W3 = 0.2, ..., Wn = 0.1
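
One way to read the GA slide: an individual is a weight vector, and the heuristic it encodes is the weighted sum of the building blocks. The sketch below assumes exactly that; the toy building blocks and the Gaussian mutation operator are hypothetical stand-ins, not taken from the slides.

```python
import random


def combined_heuristic(weights, heuristics):
    """Return h(state) = W1*H1(state) + ... + Wn*Hn(state)."""
    def h(state):
        return sum(w * h_i(state) for w, h_i in zip(weights, heuristics))
    return h


def mutate(weights, rng, sigma=0.05):
    """Hypothetical GA mutation: nudge one weight, clamped to [0, 1]."""
    child = list(weights)
    i = rng.randrange(len(child))
    child[i] = min(1.0, max(0.0, child[i] + rng.gauss(0.0, sigma)))
    return child


# Toy building blocks over a state (x, y); stand-ins for real heuristics.
HEURISTICS = [lambda s: s[0], lambda s: s[1], lambda s: s[0] + s[1]]
WEIGHTS = [0.3, 0.01, 0.2]

h = combined_heuristic(WEIGHTS, HEURISTICS)
value = h((2, 3))  # 0.3*2 + 0.01*3 + 0.2*5
child = mutate(WEIGHTS, random.Random(0))
```

The GA then only has to search the space of weight vectors; the building blocks themselves stay fixed.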

Evolving Heuristics: GP (figure: an If tree with Condition, True, and False branches, built from And, ≤, ≥, +, *, and / over the heuristics H1, H2, H5 and the constants 0.4, 0.7, 0.1)
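
A GP individual of this kind is an expression tree that is evaluated on the heuristic values of a state. The sketch below encodes trees as nested tuples; the encoding, the function table, and the example tree are assumptions made for illustration and do not reproduce the tree on the slide.

```python
import operator

# Binary functions available to the tree (assumed set).
FUNCTIONS = {
    "+": operator.add,
    "*": operator.mul,
    "<=": operator.le,
    ">=": operator.ge,
    "and": lambda a, b: a and b,
}


def evaluate(node, features):
    """Evaluate a tree: numbers are constants, strings name heuristic values."""
    if isinstance(node, (int, float)):
        return node
    if isinstance(node, str):
        return features[node]
    op, *args = node
    if op == "if":
        condition, true_branch, false_branch = args
        chosen = true_branch if evaluate(condition, features) else false_branch
        return evaluate(chosen, features)
    a, b = (evaluate(arg, features) for arg in args)
    return FUNCTIONS[op](a, b)


# Hypothetical tree: if (H1 <= H2 and H5 >= 0.4) then 0.7 * H1 else H2 + 0.1
TREE = ("if",
        ("and", ("<=", "H1", "H2"), (">=", "H5", 0.4)),
        ("*", 0.7, "H1"),
        ("+", "H2", 0.1))

when_true = evaluate(TREE, {"H1": 2, "H2": 3, "H5": 0.5})   # condition holds
when_false = evaluate(TREE, {"H1": 5, "H2": 3, "H5": 0.5})  # condition fails
```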

Evolving Heuristics: Policies

| Condition | Result |
|---|---|
| Condition 1 | Hyper Heuristics 1 |
| Condition 2 | Hyper Heuristics 2 |
| Condition n | Hyper Heuristics n |
| Default | Hyper Heuristics |
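
A policy of this shape can be read as an ordered rule list: the first condition that holds selects the heuristic to apply, and the default applies otherwise. The sketch below assumes that first-match reading; the feature names, conditions, and coefficients are invented for illustration.

```python
def evaluate_policy(rules, default, state):
    """Return the value of the first rule whose condition holds, else the default."""
    for condition, heuristic in rules:
        if condition(state):
            return heuristic(state)
    return default(state)


# Hypothetical policy over a state described by a few precomputed features.
RULES = [
    (lambda s: s["g"] <= 2,
     lambda s: s["blockers"]),
    (lambda s: s["blockers"] >= 3,
     lambda s: 0.5 * s["blockers"] + 0.1 * s["goal_distance"]),
]
DEFAULT = lambda s: s["goal_distance"]

early = evaluate_policy(RULES, DEFAULT, {"g": 1, "blockers": 4, "goal_distance": 16})
blocked = evaluate_policy(RULES, DEFAULT, {"g": 3, "blockers": 4, "goal_distance": 16})
fallback = evaluate_policy(RULES, DEFAULT, {"g": 5, "blockers": 1, "goal_distance": 16})
```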

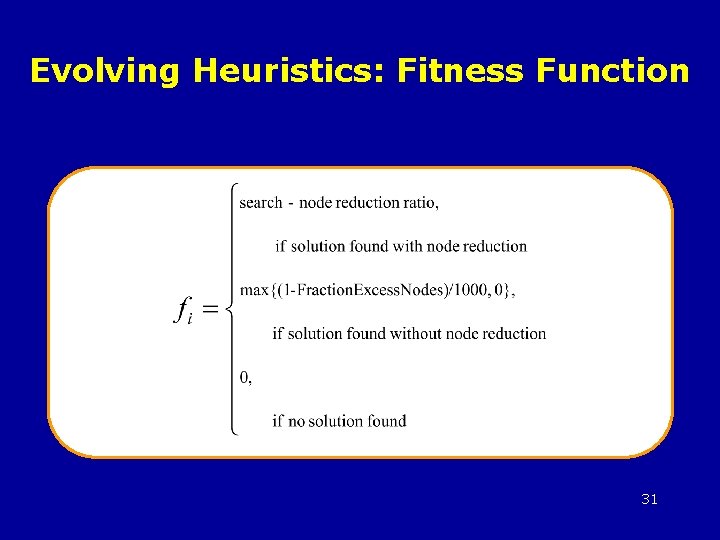

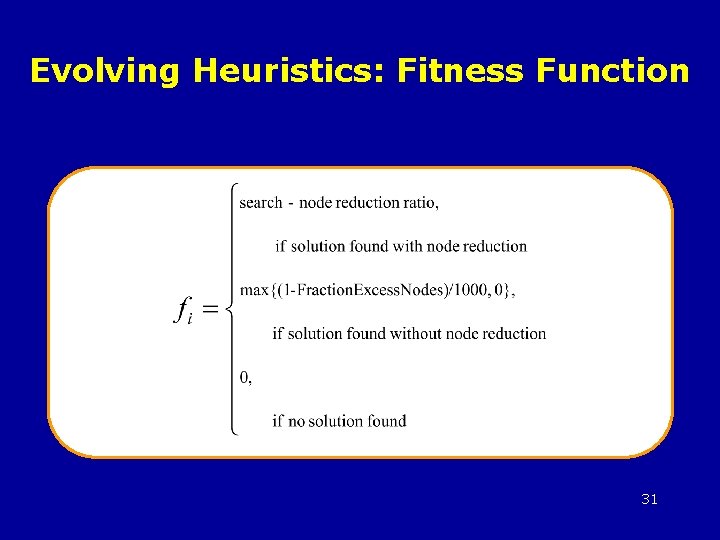

Evolving Heuristics: Fitness Function

Overview

- Introduction
- Searching games state-graphs
  - Uninformed search
  - Heuristics
  - Informed search
- Evolving heuristics
- Test cases
  - Rush Hour
  - FreeCell

![Rush Hour GP-Rush [Hauptman et al, 2009] Bronze Humies award](https://slidetodoc.com/presentation_image_h/da6b6c992c3ec027d71e1dd36110e172/image-32.jpg)

Rush Hour: GP-Rush [Hauptman et al, 2009], bronze Humies award

Domain-Specific Heuristics

Hand-crafted heuristics and guides:

- Blockers estimation: a lower bound (admissible).
- Goal distance: Manhattan distance.
- Hybrid blockers distance: combines the two above.
- Is Move To Secluded: did the car enter a secluded area? (The last move blocks all other cars.)
- Is a Releasing Move: did the last move increase the number of free cars?

Blockers Estimation

- A lower bound on the number of steps to the goal.
- Computed by counting the moves needed to free the blocking cars.
- Example: O is blocking RED. We need at least four moves (move O, move C, move B, move A), so H = 4.

Goal Distance

- Deduce the goal.
- Use the "Manhattan distance" from the goal as the h measure (16 in the pictured example).

Hybrid

"Manhattan distance" + blockers estimation (in the pictured example, 16 + 8 = 24).

Policy "Ingredients"

Functions and terminals:

| | Conditions | Results |
|---|---|---|
| Terminals | IsMoveToSecluded, IsReleasingMove, g, PhaseByDistance, PhaseByBlockers, NumberOfSiblings, DifficultyLevel, BlockersLowerBound, GoalDistance, Hybrid, 0, 0.1, ..., 0.9, 1 | BlockersLowerBound, GoalDistance, Hybrid, 0, 0.1, ..., 0.9, 1 |
| Functions | If, AND, OR, ≤, ≥ | +, * |

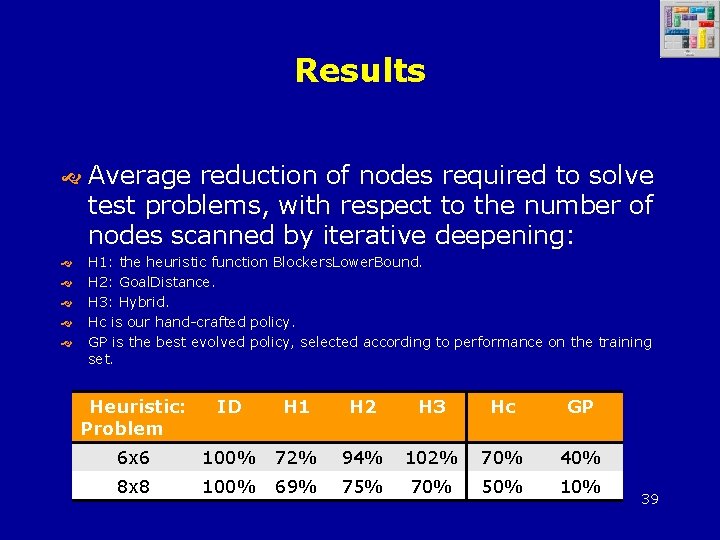

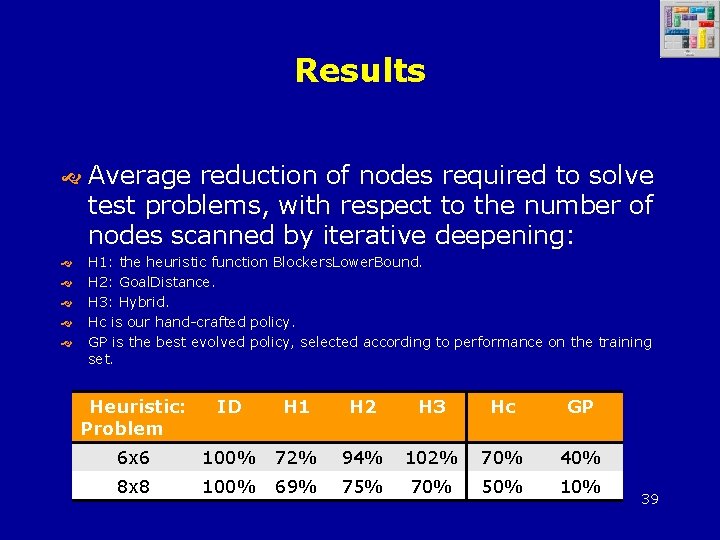

Results

Average percentage of nodes required to solve the test problems, relative to the number of nodes scanned by iterative deepening (ID, shown as 100%):

- H1: the heuristic function BlockersLowerBound.
- H2: GoalDistance.
- H3: Hybrid.
- Hc: our hand-crafted policy.
- GP: the best evolved policy, selected according to performance on the training set.

| Problem | ID | H1 | H2 | H3 | Hc | GP |
|---|---|---|---|---|---|---|
| 6x6 | 100% | 72% | 94% | 102% | 70% | 40% |
| 8x8 | 100% | 69% | 75% | 70% | 50% | 10% |

Results (cont'd)

Time (in seconds) required to solve problems JAM01 ... JAM40: ID is iterative deepening, Hi is the average of our three hand-crafted heuristics, Hc is our hand-crafted policy, GP is our best evolved policy, and the human-player figure is the average of the top 5.

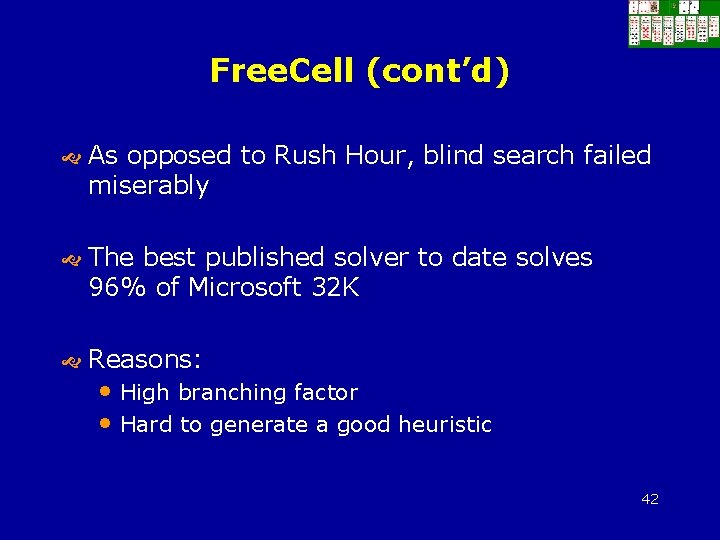

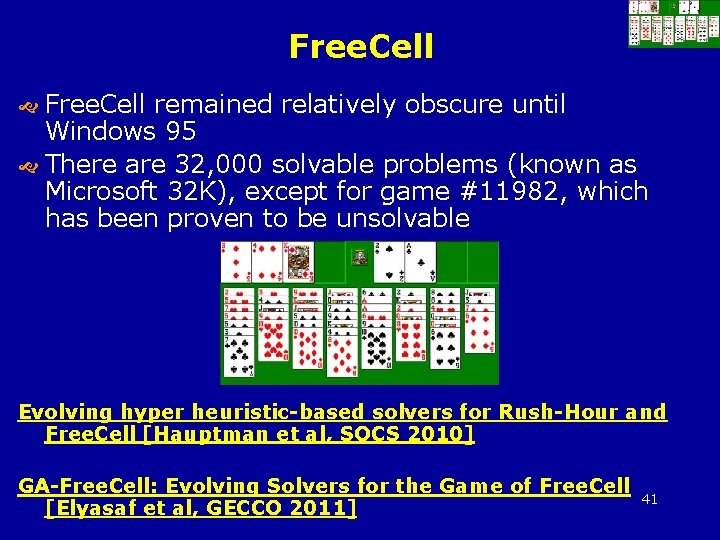

FreeCell

- FreeCell remained relatively obscure until Windows 95.
- There are 32,000 problems (known as Microsoft 32K), all solvable except for game #11982, which has been proven to be unsolvable.
- Evolving hyper heuristic-based solvers for Rush-Hour and FreeCell [Hauptman et al, SOCS 2010]
- GA-FreeCell: Evolving Solvers for the Game of FreeCell [Elyasaf et al, GECCO 2011]

FreeCell (cont'd)

- As opposed to Rush Hour, blind search failed miserably.
- The best published solver to date solves 96% of Microsoft 32K.
- Reasons:
  - High branching factor
  - Hard to generate a good heuristic

Learning Methods: Random Deals

Which card deals should we use for training?

First method tested: random deals.

- This is what we did in Rush Hour.
- Here it yielded poor results.
- A very hard domain.

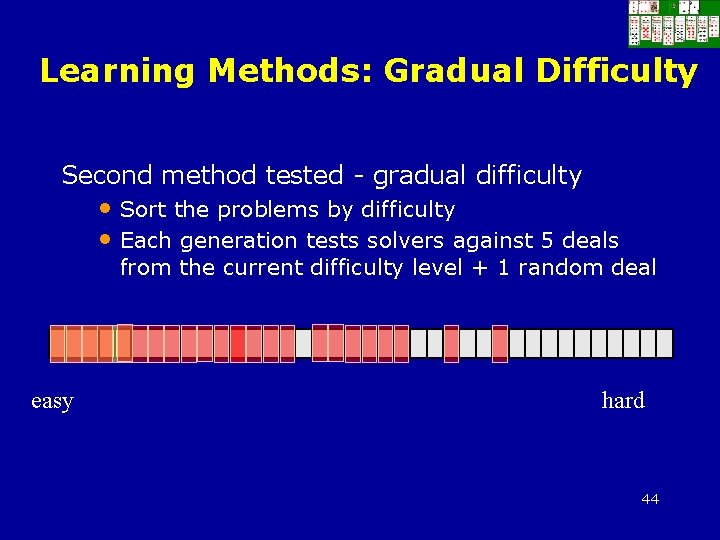

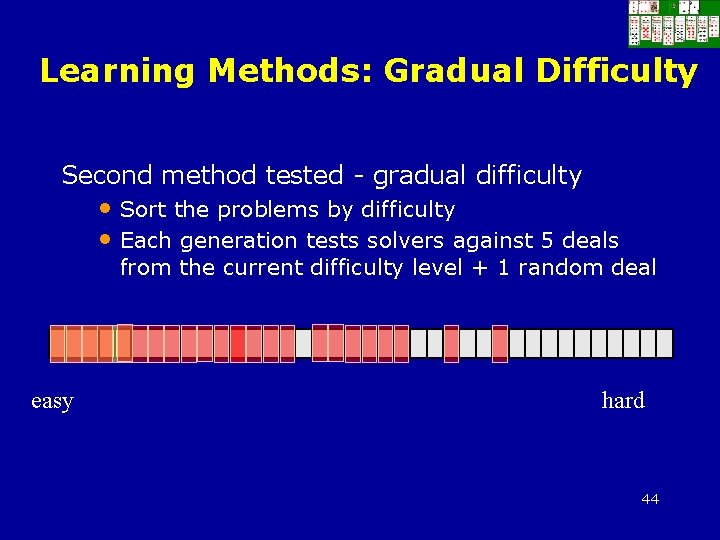

Learning Methods: Gradual Difficulty

Second method tested: gradual difficulty.

- Sort the problems by difficulty (figure: deals ordered from easy to hard).
- Each generation tests solvers against 5 deals from the current difficulty level plus 1 random deal.
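
Choosing the training deals for one generation under gradual difficulty might look like the sketch below. The deal identifiers and the grouping into levels are hypothetical, and the slides do not say when the current level advances, so that part is left out.

```python
import random


def training_deals(levels, current_level, rng, per_level=5, extra_random=1):
    """Pick 5 deals from the current difficulty level plus 1 deal from any level."""
    level_deals = rng.sample(levels[current_level], per_level)
    all_deals = [deal for level in levels for deal in level]
    # The extra deal is drawn from the whole pool, so it may repeat a level deal.
    random_deals = rng.sample(all_deals, extra_random)
    return level_deals + random_deals


# Hypothetical deal ids grouped from easy (index 0) to hard (index 2).
LEVELS = [list(range(1, 11)), list(range(11, 21)), list(range(21, 31))]
batch = training_deals(LEVELS, current_level=1, rng=random.Random(42))
```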

A few words on Co-evolution

Two populations are tested against each other for fitness:

- Population 1: solutions, solvers. Examples: FreeCell solver, Rush Hour solver, chess player.
- Population 2: problems, adversaries. Examples: FreeCell deals, Rush Hour boards, another chess player.

Learning Methods: Hillis-Style Co-evolution

Third method tested: Hillis-style co-evolution using a "Hall of Fame":

- The deal population is composed of 40 deals (= 40 individuals) plus 10 deals that represent a hall of fame.
- Each hyper-heuristic is tested against 4 deal individuals and 2 hall-of-fame deals.
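
The sampling step of this scheme can be sketched as follows. The deal identifiers, the toy solver, and the fraction-solved fitness are placeholders; the actual fitness function is not given in the slide text.

```python
import random


def sample_test_set(deal_population, hall_of_fame, rng, n_population=4, n_fame=2):
    """Pick 4 deals from the evolving deal population and 2 from the hall of fame."""
    return rng.sample(deal_population, n_population) + rng.sample(hall_of_fame, n_fame)


def fraction_solved(solver, deals):
    """Placeholder fitness: share of the sampled deals that the solver solves."""
    return sum(1 for deal in deals if solver(deal)) / len(deals)


# Hypothetical ids: 40 deal individuals and 10 hall-of-fame deals.
DEAL_POPULATION = list(range(100, 140))
HALL_OF_FAME = list(range(1, 11))

test_set = sample_test_set(DEAL_POPULATION, HALL_OF_FAME, random.Random(7))
toy_solver = lambda deal: deal % 2 == 0  # stand-in for running a real solver
score = fraction_solved(toy_solver, test_set)
```

Keeping a few hall-of-fame deals in every test set means a solver cannot score well only because the current deal population happens to be easy.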

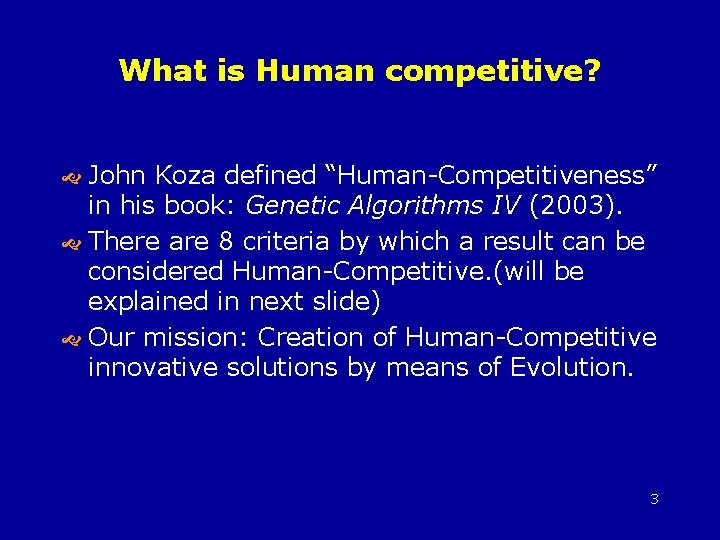

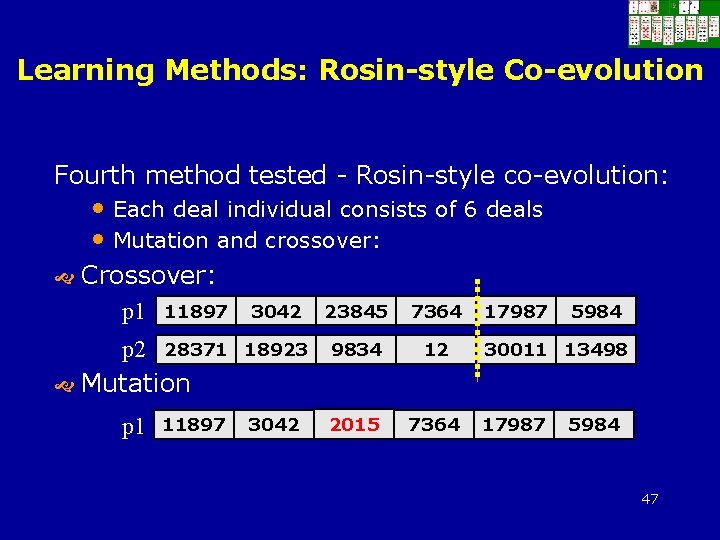

Learning Methods: Rosin-Style Co-evolution

Fourth method tested: Rosin-style co-evolution.

- Each deal individual consists of 6 deals.
- Mutation and crossover operate on these lists of deal numbers (figure: example individuals p1 and p2, starting with deals 11897 and 28371, under crossover and mutation).
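
Genetic operators on a deal individual (a list of 6 deal numbers) could be sketched as below. The exact operators are only shown in the slide's figure, so one-point crossover and single-deal replacement are assumptions; the parent lists reuse deal numbers that appear on the slide, but their grouping into p1 and p2 is also assumed.

```python
import random


def crossover(p1, p2, rng):
    """One-point crossover (assumed operator): swap the tails of two individuals."""
    point = rng.randrange(1, len(p1))
    return p1[:point] + p2[point:], p2[:point] + p1[point:]


def mutate(individual, rng, n_deals=32000):
    """Assumed mutation: replace one deal with a random deal number in 1..n_deals."""
    child = list(individual)
    child[rng.randrange(len(child))] = rng.randint(1, n_deals)
    return child


# Illustrative parents of 6 deals each.
P1 = [11897, 3042, 23845, 7364, 17987, 5984]
P2 = [28371, 18923, 9834, 12, 30011, 13498]

rng = random.Random(3)
c1, c2 = crossover(P1, P2, rng)
mutant = mutate(P1, rng)
```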

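Results

| Learning Method | Run | Node Reduction | Time Reduction | Length Reduction | Solved |
|---|---|---|---|---|---|
| | HSD | 100% | 100% | 100% | 96% |
| Gradual Difficulty | GA-1 | 23% | 31% | 1% | 71% |
| | GA-2 | 27% | 30% | 103% | 70% |
| | GP | - | - | - | - |
| | Policy | 28% | 36% | 6% | 36% |
| Rosin-style coevolution | GA | 87% | 93% | 41% | 98% |
| | Policy | 89% | 90% | 40% | 99% |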

Other Human-Competitive Results

- Antenna design for the International Space Station
- Automatically finding patches using genetic programming
- Evolvable malware
- And many more on the Humies site.

Thank you for listening. Any questions?

What is the least competitive market structure

What is the least competitive market structure Competitive antagonist

Competitive antagonist Slow-cycle market companies examples

Slow-cycle market companies examples Luis von ahn human computation

Luis von ahn human computation Human resource management gaining a competitive advantage

Human resource management gaining a competitive advantage Human resource management gaining a competitive advantage

Human resource management gaining a competitive advantage Human resource management gaining a competitive advantage

Human resource management gaining a competitive advantage Human resource management: gaining a competitive advantage

Human resource management: gaining a competitive advantage One way linkage hrm

One way linkage hrm Human resource management gaining a competitive advantage

Human resource management gaining a competitive advantage Shqw

Shqw 2. srednja sklanjatev

2. srednja sklanjatev Notasi bukaan jendela

Notasi bukaan jendela Danica mati djuraki

Danica mati djuraki Litanije matere

Litanije matere Kesalahpahaman tentang allah tritunggal

Kesalahpahaman tentang allah tritunggal Mati lepikson

Mati lepikson Kronosov sin

Kronosov sin Kata selalu besinonim dengan

Kata selalu besinonim dengan Ants kaljurand

Ants kaljurand Telegonos

Telegonos Mati tekla

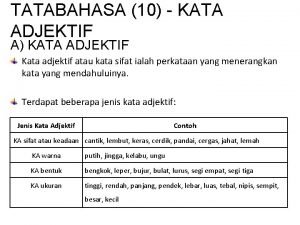

Mati tekla Adjektif

Adjektif Vanesa mati

Vanesa mati Saya sarapan bersama keluarga yang disediakan pembantu saya

Saya sarapan bersama keluarga yang disediakan pembantu saya Bantu membantu kata ganda

Bantu membantu kata ganda Idghom mitsli

Idghom mitsli Hauen mäti käsittely

Hauen mäti käsittely Umpama

Umpama Peribahasa untuk ponteng sekolah

Peribahasa untuk ponteng sekolah Eksodermis

Eksodermis Nafs

Nafs Jaringan pengangkut

Jaringan pengangkut Mati ombler

Mati ombler Väldete määramine

Väldete määramine Tabel mati

Tabel mati Sebuah dadu yang homogen bermata enam dilempar satu kali

Sebuah dadu yang homogen bermata enam dilempar satu kali Mati žalostna je stala besedilo

Mati žalostna je stala besedilo Mati cohen

Mati cohen Mati ombler

Mati ombler Zgodbe o prešernu obnova

Zgodbe o prešernu obnova Mati murru

Mati murru Mate mati

Mate mati Kurt marti happy end text

Kurt marti happy end text Maksud nyawa nyawa ikan

Maksud nyawa nyawa ikan Withholding tax on compensation computation

Withholding tax on compensation computation Expanded withholding tax computation

Expanded withholding tax computation Expanded withholding tax computation

Expanded withholding tax computation Job order tax

Job order tax Fertilizer computation examples

Fertilizer computation examples