Federating cloud computing resources for scientific computing

- Slides: 49

Federating cloud computing resources for scientific computing

Marian Bubak

Department of Computer Science and ACC Cyfronet, AGH University of Science and Technology, Krakow, Poland, http://dice.cyfronet.pl

Informatics Institute, System and Network Engineering, University of Amsterdam, www.science.uva.nl

SKG Conference, Beijing, China, September 15-17, 2016

Coauthors

- Maciej Malawski, Bartosz Balis, Dariusz Krol, Bartosz Kryza, Jacek Kitowski, Renata Slota (dice.cyfronet.pl)
- Adam Belloum, Reggie Cushing, Michael Gerhards (www.science.uva.nl)

Outline

- Motivation and research objectives
- Cloud platform PaaSage
- Workflows on cloud resources (HyperFlow)
- Scheduling workflows on cloud resources
- Parameter study on clouds with Scalarm
- In-network data processing for IoT
- Addressing reproducibility
- Conclusions

Motivation

- Many different cloud platforms
  - heterogeneous
  - APIs and architectures are not standardized
  - interdependence between the client application and the cloud platform
  - porting an existing application to one of the cloud platforms is still a challenging task
  - lack of support and tools for analysing and porting existing applications
- "Developing once and deploying on many clouds" is not the reality today
  - missing support and tools for deployment and execution (vendor lock-in)
- ICT businesses (and indeed all businesses) need
  - an appropriate methodology
  - a flexible development and deployment platform for a technology-neutral approach that targets best performance while abstracting away technology-specific details

Challenges

- Accessing cloud resources in a seamless and efficient way
- Analysing and managing architectural changes
- Integration with other applications in and outside the cloud or in different clouds
- Specifying Key Performance Indicators for cloud applications in a solution-independent way and monitoring these
- Understanding resource and cost models of clouds and mapping application needs to these models
- Increased complexity of legacy applications

Major objective of the PaaSage project

To deliver an open and integrated platform to support both design and deployment of cloud applications, together with an accompanying methodology that allows model-based development, configuration, optimisation, and deployment of existing and new applications independently of the existing underlying cloud infrastructures.

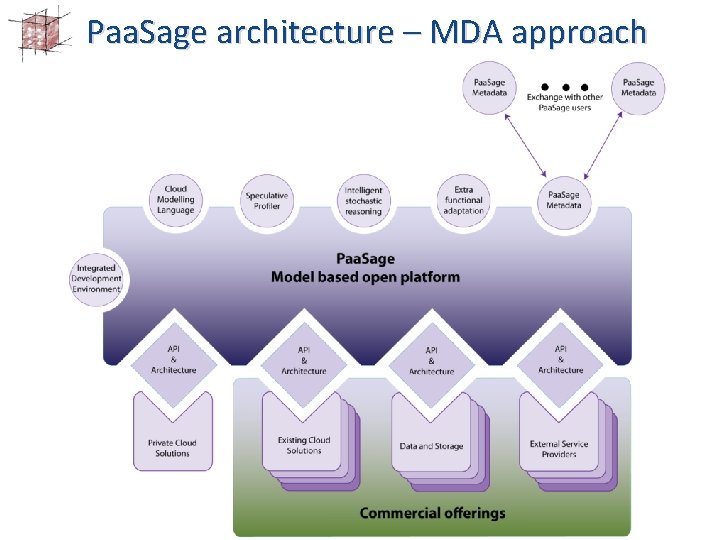

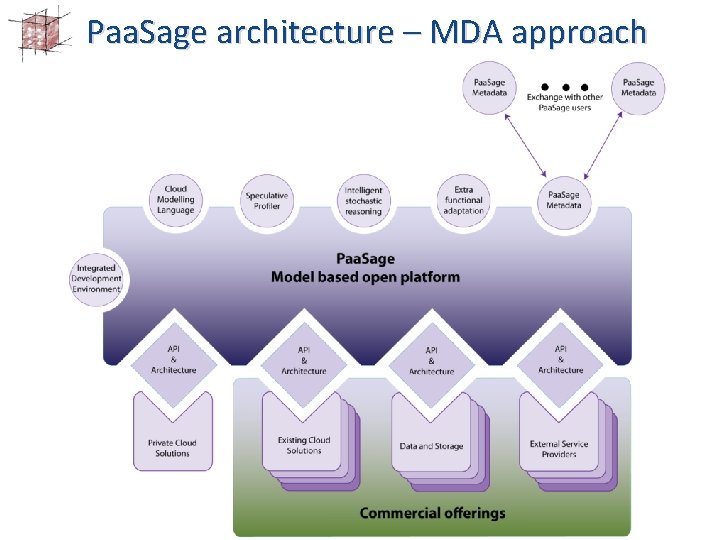

PaaSage architecture – MDA approach

Model-based development and deployment of cloud applications

PaaSage cloud platform:

- CAMEL: Cloud Application Modeling and Execution Language
  - Deployment model: components, connections
  - Requirements model
  - Scalability model
- Multi-cloud application deployment
- Autoscaling, adaptation

Integration with workflow systems:

- CAMEL app model is generated from a workflow description
- Workflow monitoring information triggers workflow autoscaling

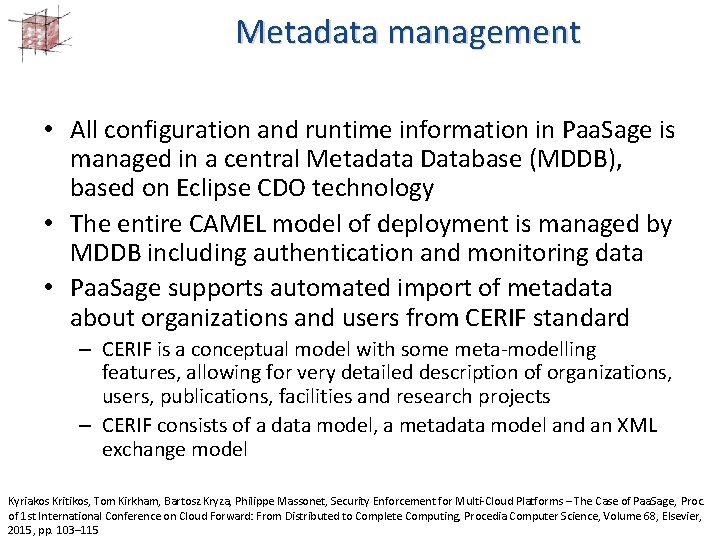

Metadata management

- All configuration and runtime information in PaaSage is managed in a central Metadata Database (MDDB), based on Eclipse CDO technology
- The entire CAMEL model of deployment is managed by MDDB, including authentication and monitoring data
- PaaSage supports automated import of metadata about organizations and users from the CERIF standard
  - CERIF is a conceptual model with some meta-modelling features, allowing for very detailed description of organizations, users, publications, facilities and research projects
  - CERIF consists of a data model, a metadata model and an XML exchange model

Kyriakos Kritikos, Tom Kirkham, Bartosz Kryza, Philippe Massonet, Security Enforcement for Multi-Cloud Platforms – The Case of PaaSage, Proc. of 1st International Conference on Cloud Forward: From Distributed to Complete Computing, Procedia Computer Science, Volume 68, Elsevier, 2015, pp. 103–115

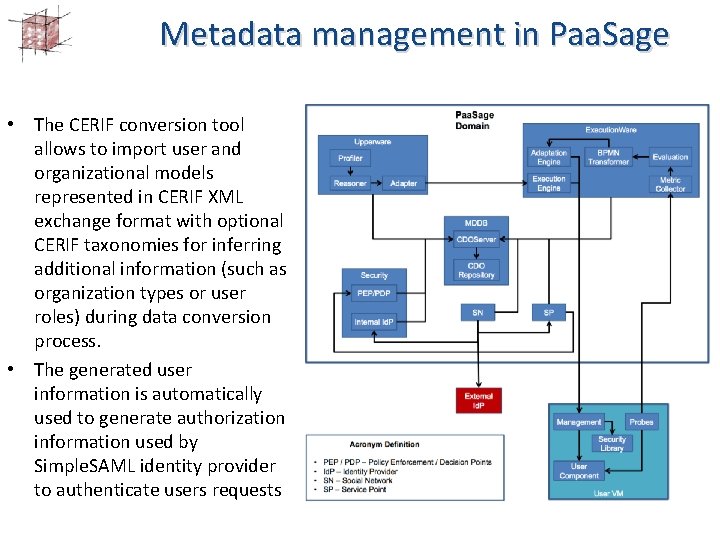

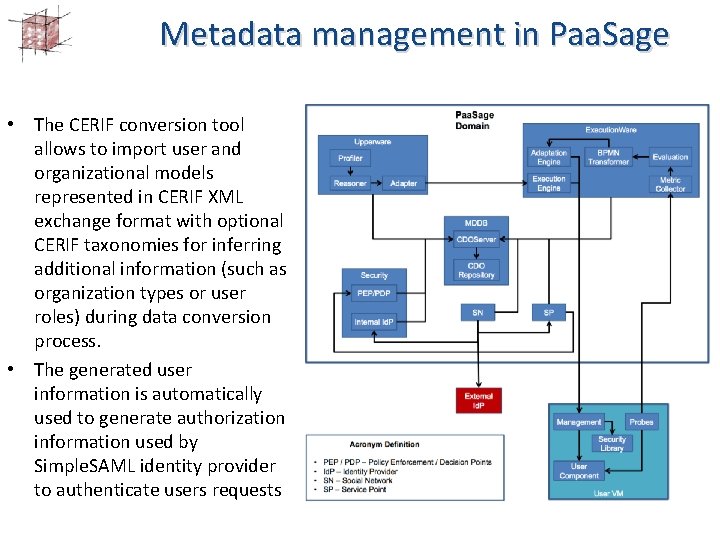

Metadata management in PaaSage

- The CERIF conversion tool imports user and organizational models represented in the CERIF XML exchange format. Optional CERIF taxonomies can be used to infer additional information (such as organization types or user roles) during the data conversion process.
- The imported user information is automatically used to generate the authorization information that the SimpleSAML identity provider uses to authenticate user requests

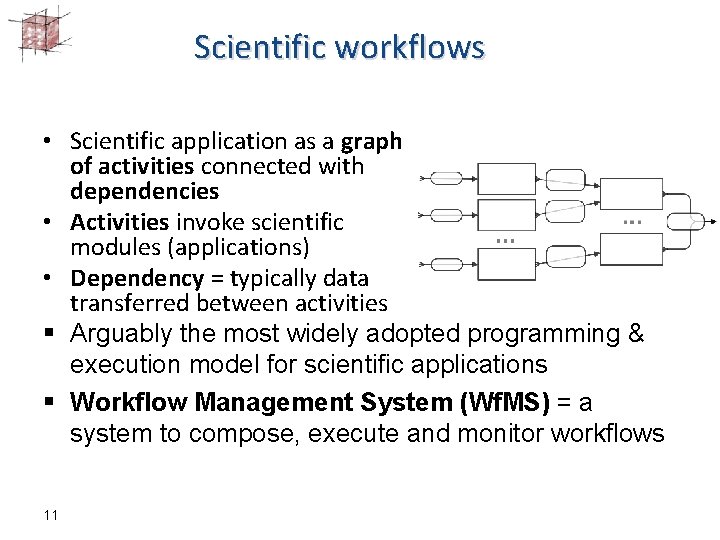

Scientific workflows

- Scientific application as a graph of activities connected with dependencies
- Activities invoke scientific modules (applications)
- Dependency = typically data transferred between activities
- Arguably the most widely adopted programming and execution model for scientific applications
- Workflow Management System (WfMS) = a system to compose, execute and monitor workflows

Workflows on cloud resources

- Scientific cloud = a complex ecosystem
  - Users, advanced middleware services, security requirements, resource usage quotas, etc.
- Challenge: need a solution for deployment of scientific workflows on cloud infrastructures which
  - is adaptable to diverse cloud infrastructures
  - is transparent to the user
  - is lightweight: minimizes the user's effort (setup, configuration)
  - is maintainable: easy to integrate and fast to update
  - leverages cloud elasticity for autoscaling of scientific workflows
  - offers loosely coupled integration with cloud management platforms (e.g. PaaSage)

Maciej Malawski, Bartosz Balis, Kamil Figiela, Maciej Pawlik, Marian Bubak: Support for Scientific Workflows in a Model-Based Cloud Platform. UCC 2015: 412-413

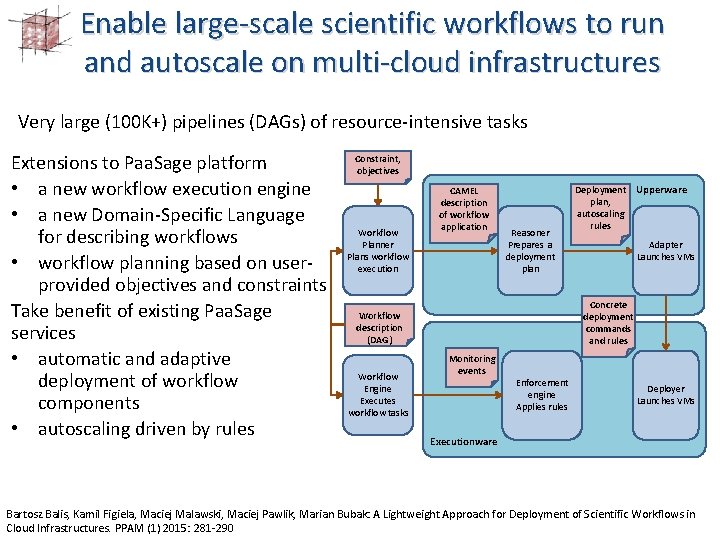

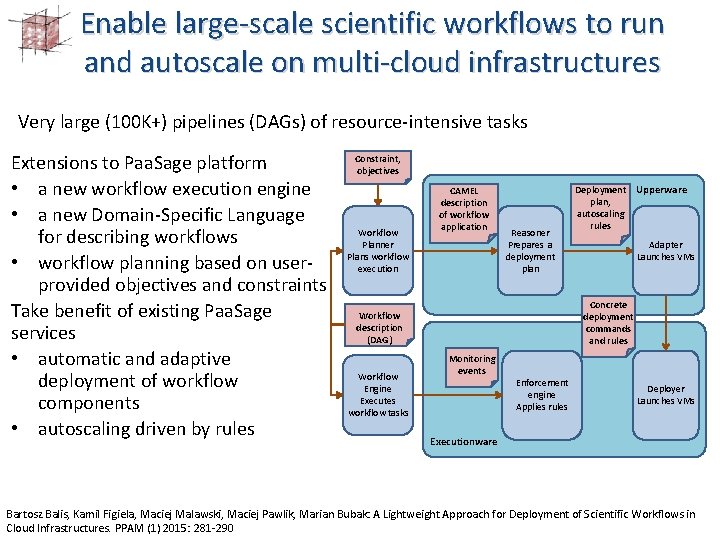

Enable large-scale scientific workflows to run and autoscale on multi-cloud infrastructures: very large (100K+) pipelines (DAGs) of resource-intensive tasks.

Extensions to the PaaSage platform:

- a new workflow execution engine
- a new Domain-Specific Language for describing workflows
- workflow planning based on user-provided objectives and constraints

Benefit from existing PaaSage services:

- automatic and adaptive deployment of workflow components
- autoscaling driven by rules

[Architecture diagram. Components: Workflow Planner (plans workflow execution), Reasoner (prepares a deployment plan), Adapter, Workflow Engine (executes workflow tasks), Enforcement engine (applies rules), Deployer (launches VMs), grouped into Upperware and Executionware. Labeled flows: constraints and objectives; workflow description (DAG); CAMEL description of workflow application; deployment plan and autoscaling rules; concrete deployment commands and rules; monitoring events.]

Bartosz Balis, Kamil Figiela, Maciej Malawski, Maciej Pawlik, Marian Bubak: A Lightweight Approach for Deployment of Scientific Workflows in Cloud Infrastructures. PPAM (1) 2015: 281-290

eScience workflows on the PaaSage platform

- eScience workflows
  - PL-Grid community: bioinformatics (genomics, proteomics)
  - metals engineering (complex metallurgical processes)
  - Virtual Physiological Human (Taverna and DataFluo workflows)
  - multiscale applications: fusion (Kepler workflows)
  - military mission planning support (EDA)
  - astronomy (Pegasus workflows)
- Results
  - HyperFlow: workflow execution engine based on the REST paradigm
  - Scalarm: massively self-scalable platform for data farming

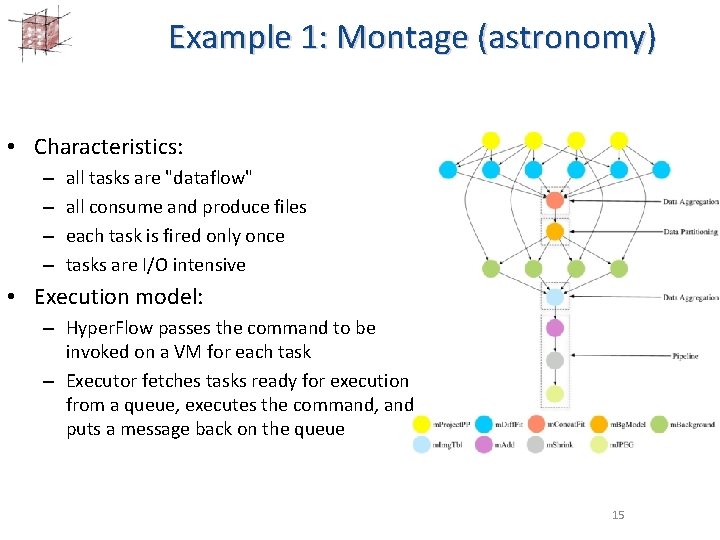

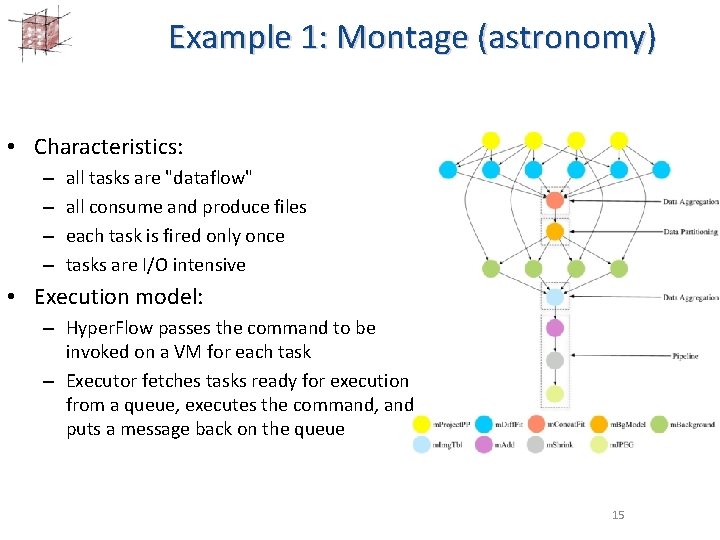

Example 1: Montage (astronomy)

- Characteristics:
  - all tasks are "dataflow"
  - all consume and produce files
  - each task is fired only once
  - tasks are I/O intensive
- Execution model:
  - HyperFlow passes the command to be invoked on a VM for each task
  - Executor fetches tasks ready for execution from a queue, executes the command, and puts a message back on the queue
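The execution model above can be sketched roughly as follows. This is a minimal illustration, not HyperFlow code: in-memory arrays stand in for the message queue, `runCommand` is a hypothetical placeholder for launching a command on a VM, and the task arguments are made up.

```javascript
// Minimal sketch of the executor loop: fetch a ready task, run its command,
// and report completion back on a queue. In-memory arrays replace the real
// message queue; all names here are illustrative assumptions.
const taskQueue = [
  { id: 1, command: "mProjectPP", args: ["in1.fits", "out1.fits"] },
  { id: 2, command: "mDiffFit", args: ["out1.fits", "diff1.fits"] },
];
const resultQueue = [];

// Hypothetical stand-in for executing a command on a VM.
function runCommand(task) {
  return { exitCode: 0, ran: `${task.command} ${task.args.join(" ")}` };
}

function executorLoop(tasks, results) {
  while (tasks.length > 0) {
    const task = tasks.shift();                    // fetch a task ready for execution
    const outcome = runCommand(task);              // execute the command
    results.push({ taskId: task.id, ...outcome }); // put a message back on the queue
  }
}

executorLoop(taskQueue, resultQueue);
console.log(resultQueue);
```

Because workers only talk to the queue, more executors can be added without changing the engine, which is what makes this model convenient for autoscaling.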

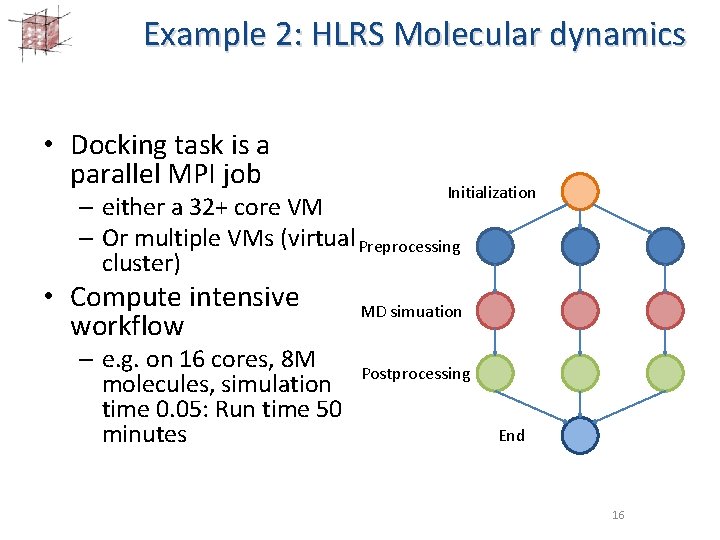

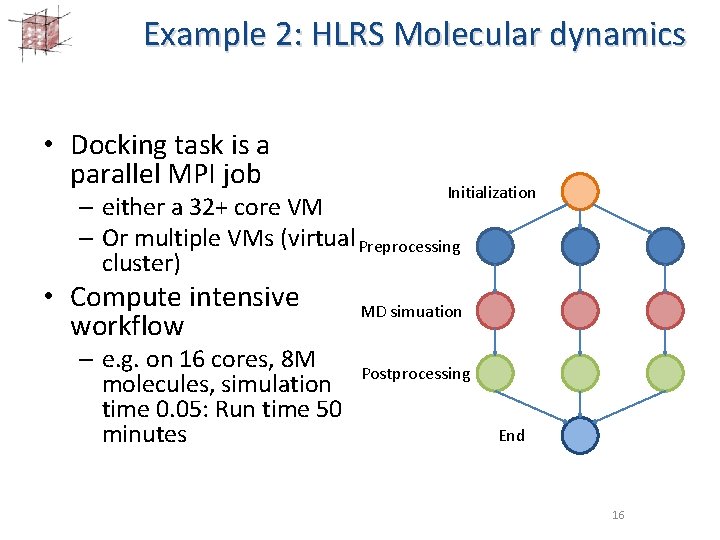

Example 2: HLRS Molecular dynamics

- Docking task is a parallel MPI job
  - either a 32+ core VM
  - or multiple VMs (virtual cluster)
- Compute-intensive workflow
  - e.g. on 16 cores, 8 M molecules, simulation time 0.05: run time 50 minutes

[Workflow diagram: Initialization, Preprocessing, MD simulation, Postprocessing, End]

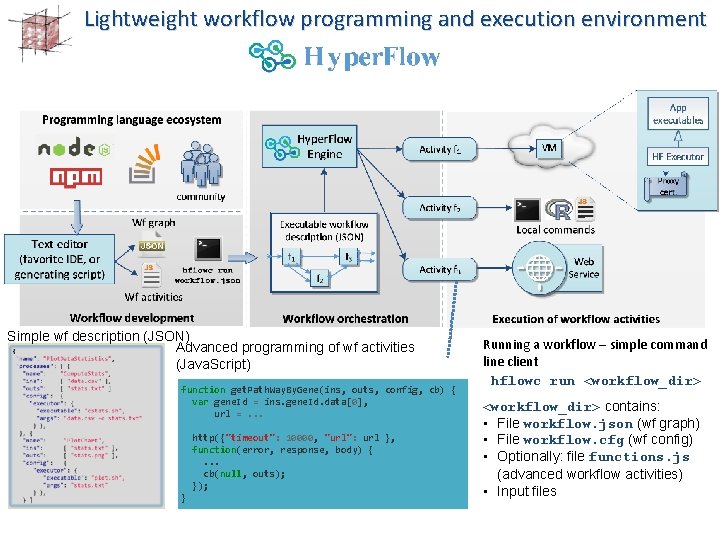

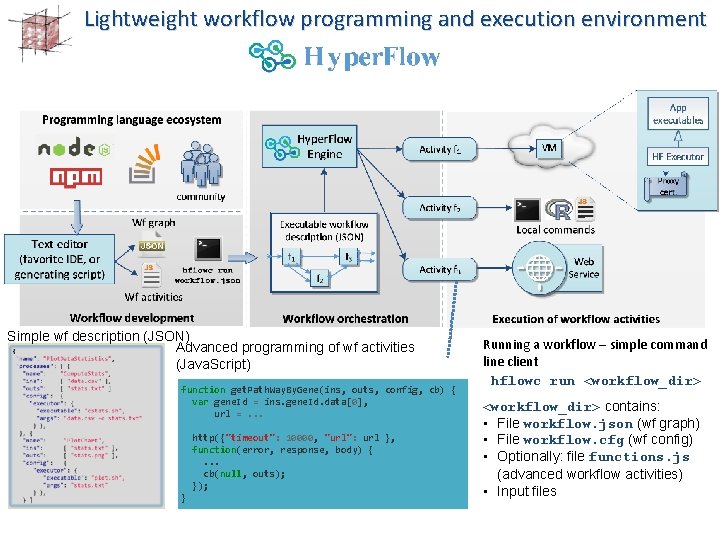

Lightweight workflow programming and execution environment

Simple workflow description (JSON)

Advanced programming of workflow activities (JavaScript):

    function getPathWayByGene(ins, outs, config, cb) {
      var geneId = ins.geneId.data[0], url = ...
      http({"timeout": 10000, "url": url}, function(error, response, body) {
        ...
        cb(null, outs);
      });
    }

Running a workflow with a simple command-line client: `hflowc run <workflow_dir>`

`<workflow_dir>` contains:

- File workflow.json (workflow graph)
- File workflow.cfg (workflow configuration)
- Optionally: file functions.js (advanced workflow activities)
- Input files
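To make the activity signature concrete, here is a small self-contained sketch. The `(ins, outs, config, cb)` signature, the `ins.<name>.data[0]` access and the `cb(null, outs)` call are taken from the slide; the signal names `number` and `square`, writing results to `outs.<name>.data`, and the mock invocation are assumptions made for illustration only.

```javascript
// Sketch of a workflow activity using the (ins, outs, config, cb) signature
// shown on the slide. The signal names "number" and "square" and the mock
// invocation below are assumptions made for illustration.
function square(ins, outs, config, cb) {
  const value = ins.number.data[0];   // read the first input data element
  outs.square.data = [value * value]; // write the result to the output signal
  cb(null, outs);                     // signal completion and pass outputs on
}

// Mock invocation; in a real run the workflow engine would call the function.
const ins = { number: { data: [7] } };
const outs = { square: { data: [] } };
let result = null;
square(ins, outs, {}, (err, produced) => {
  if (err) throw err;
  result = produced.square.data[0];
});
console.log(result);
```

The callback-last style lets an activity finish asynchronously, for example after an HTTP request as in the slide's example.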

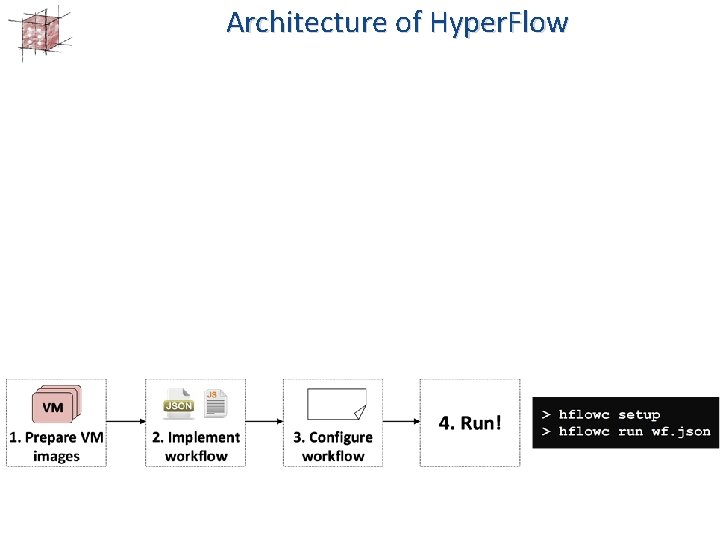

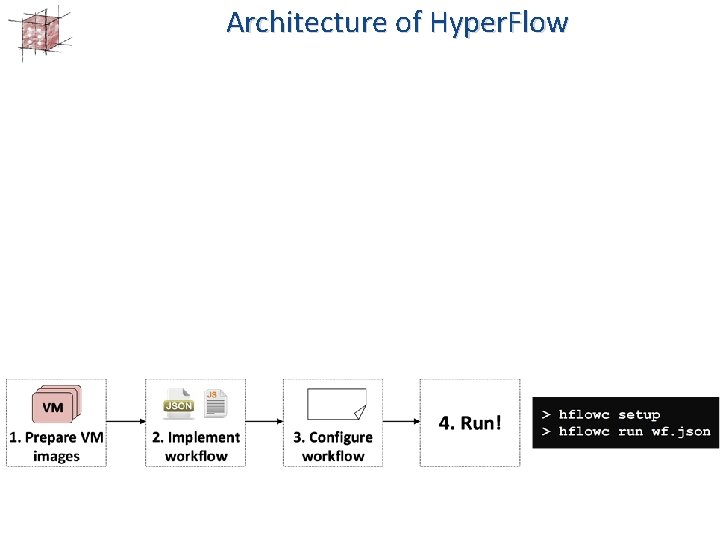

Architecture of HyperFlow

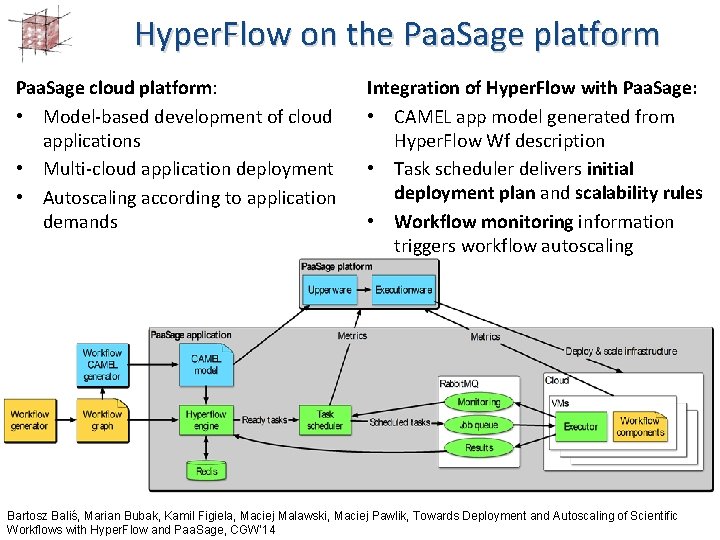

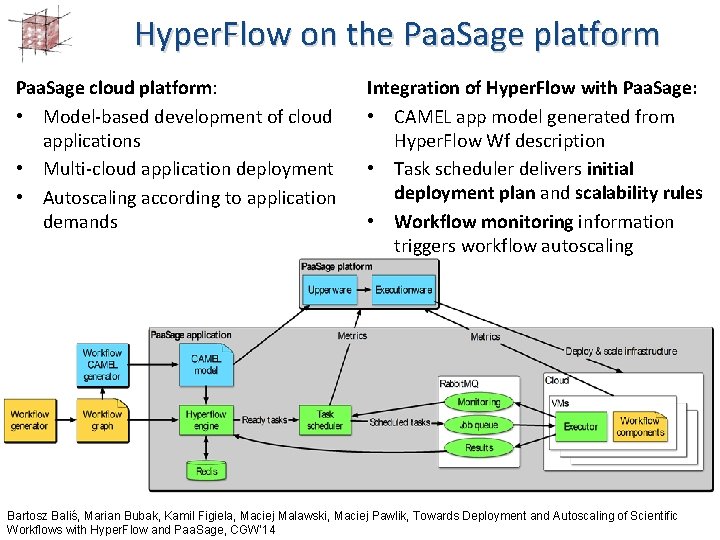

HyperFlow on the PaaSage platform

PaaSage cloud platform:

- Model-based development of cloud applications
- Multi-cloud application deployment
- Autoscaling according to application demands

Integration of HyperFlow with PaaSage:

- CAMEL app model generated from the HyperFlow workflow description
- Task scheduler delivers initial deployment plan and scalability rules
- Workflow monitoring information triggers workflow autoscaling

Bartosz Baliś, Marian Bubak, Kamil Figiela, Maciej Malawski, Maciej Pawlik, Towards Deployment and Autoscaling of Scientific Workflows with HyperFlow and PaaSage, CGW'14

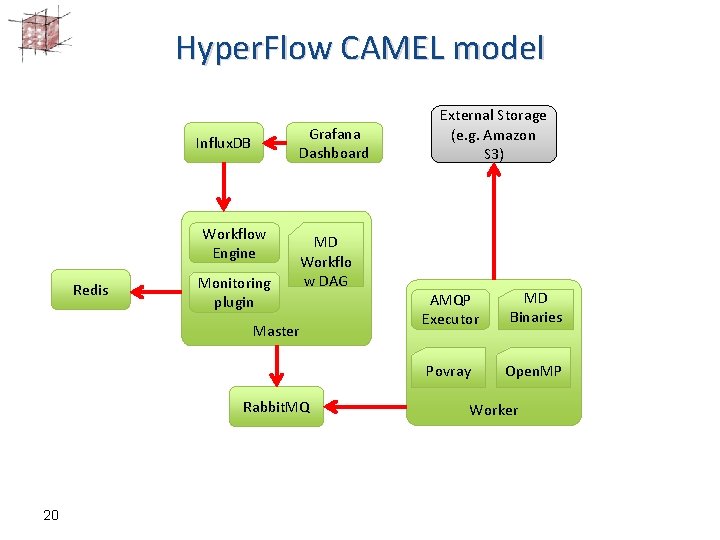

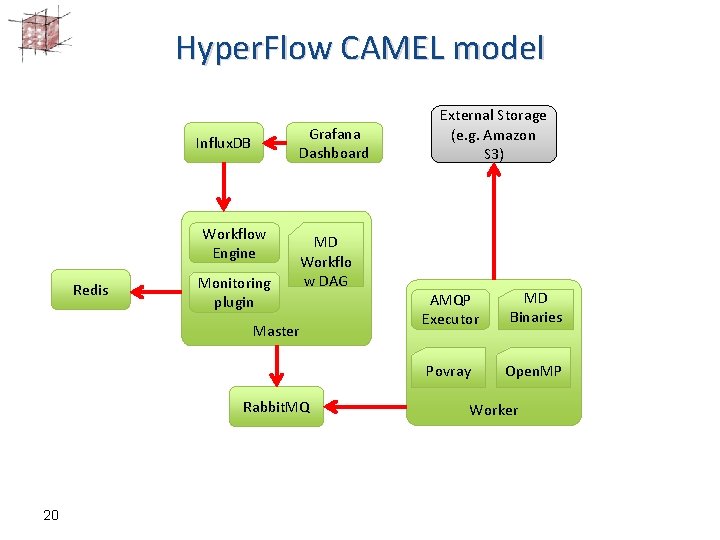

HyperFlow CAMEL model

[Diagram labels: Grafana dashboard, InfluxDB, Workflow Engine, Redis, monitoring plugin, MD workflow DAG, Master, RabbitMQ, external storage (e.g. Amazon S3), AMQP Executor, MD binaries, Povray, OpenMP, Worker]

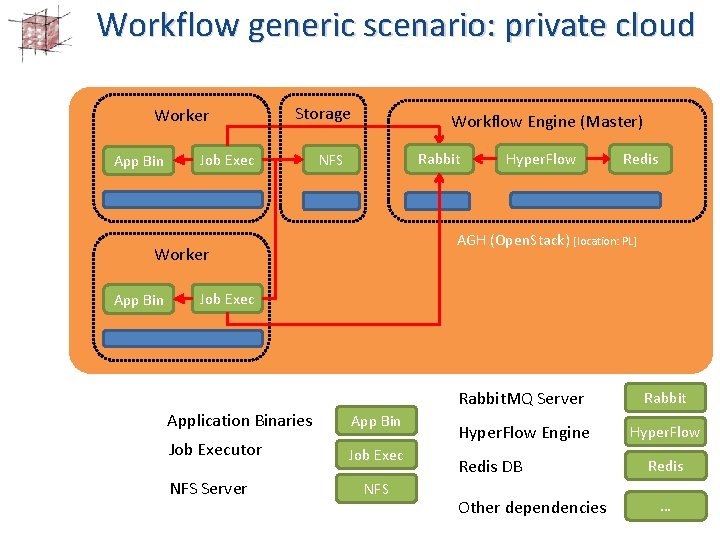

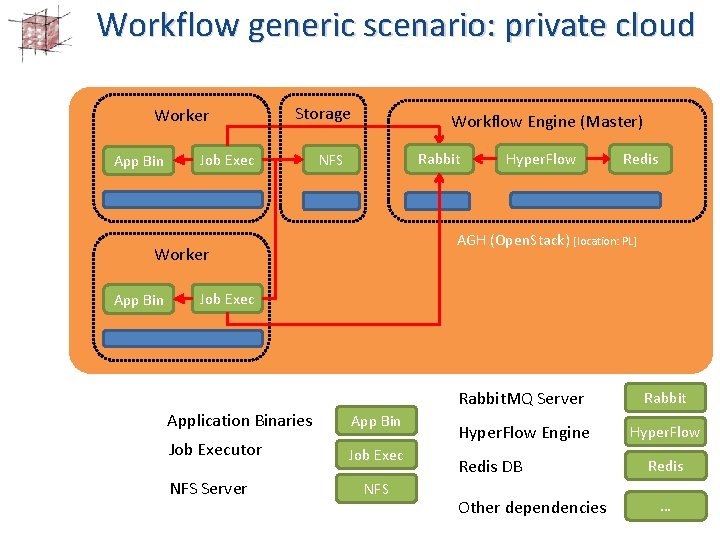

Workflow generic scenario: private cloud

[Deployment diagram: Workers (App Bin, Job Exec), Storage (NFS) and Workflow Engine (Master) (Rabbit, HyperFlow, Redis), all on AGH (OpenStack) [location: PL]. Legend: App Bin = Application Binaries, Job Exec = Job Executor, NFS = NFS Server, Rabbit = RabbitMQ Server, HyperFlow = HyperFlow Engine, Redis = Redis DB, … = Other dependencies]

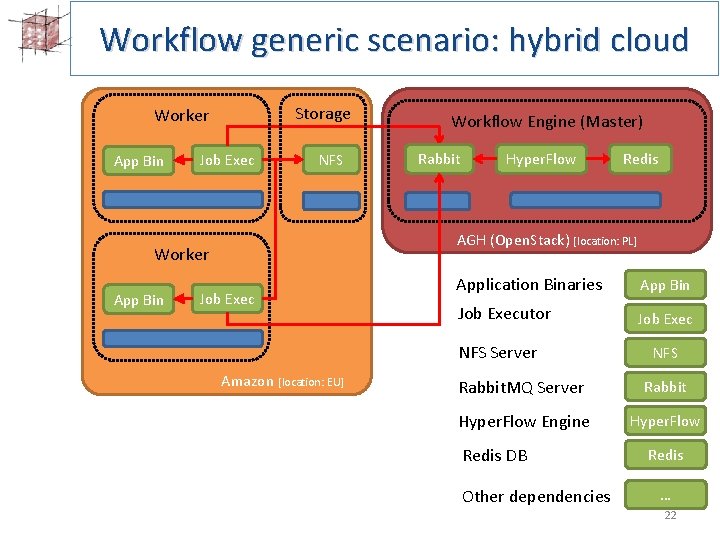

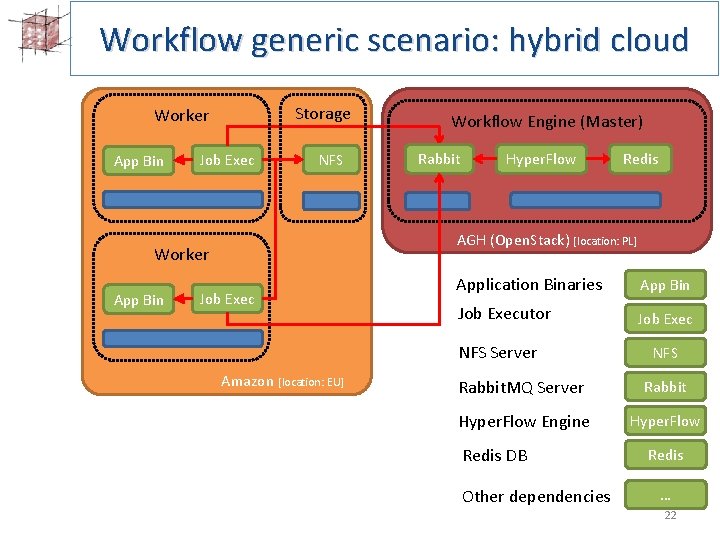

Workflow generic scenario: hybrid cloud

[Deployment diagram: Workers (App Bin, Job Exec), Storage (NFS) and Workflow Engine (Master) (Rabbit, HyperFlow, Redis), deployed across AGH (OpenStack) [location: PL] and Amazon [location: EU]. Legend: App Bin = Application Binaries, Job Exec = Job Executor, NFS = NFS Server, Rabbit = RabbitMQ Server, HyperFlow = HyperFlow Engine, Redis = Redis DB, … = Other dependencies]

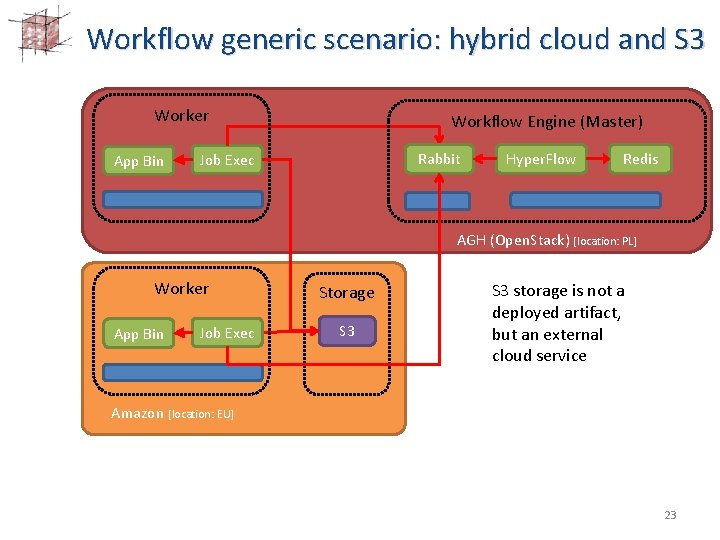

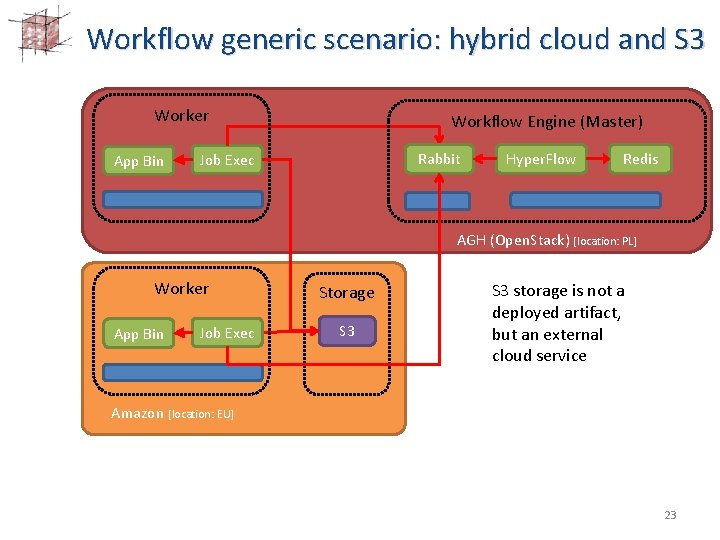

Workflow generic scenario: hybrid cloud and S3

S3 storage is not a deployed artifact, but an external cloud service.

[Deployment diagram: Workers (App Bin, Job Exec), Workflow Engine (Master) (Rabbit, HyperFlow, Redis) and Storage, across AGH (OpenStack) [location: PL] and Amazon [location: EU]]

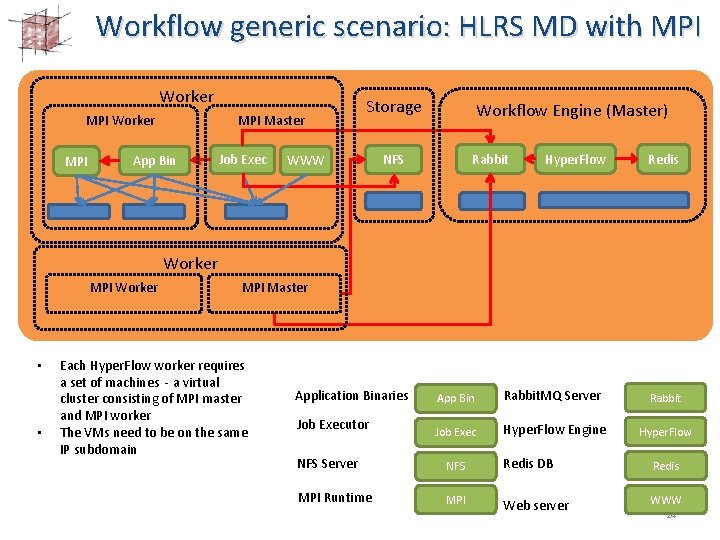

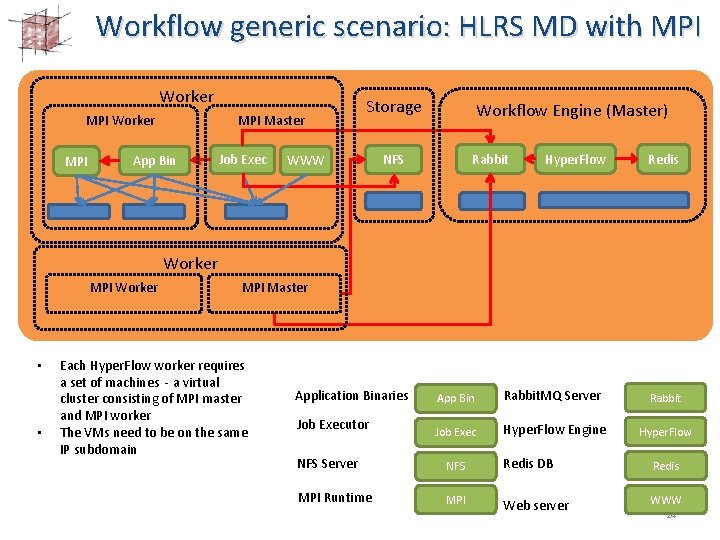

Workflow generic scenario: HLRS MD with MPI

- Each HyperFlow worker requires a set of machines: a virtual cluster consisting of an MPI master and MPI workers
- The VMs need to be on the same IP subdomain

[Deployment diagram: Workers (MPI Master and MPI Worker, App Bin, Job Exec), Storage (NFS), WWW and Workflow Engine (Master) (Rabbit, HyperFlow, Redis) on Flexiant [location: UK]. Legend: App Bin = Application Binaries, Job Exec = Job Executor, Rabbit = RabbitMQ Server, HyperFlow = HyperFlow Engine, NFS = NFS Server, Redis = Redis DB, MPI = MPI Runtime, WWW = Web server]

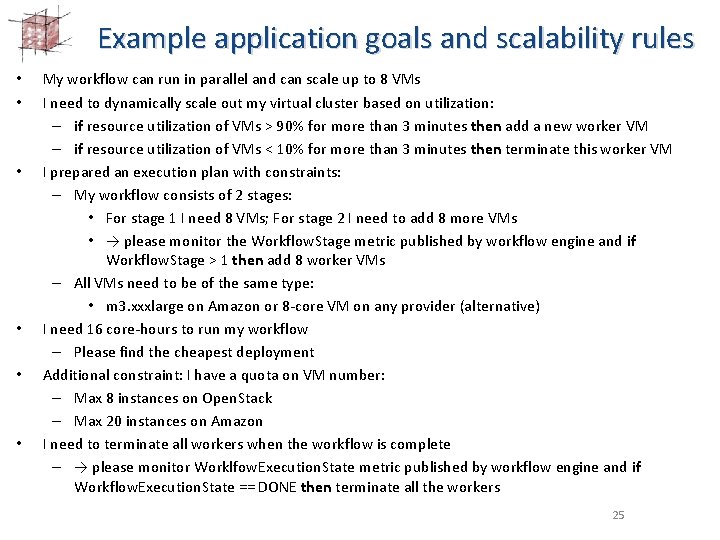

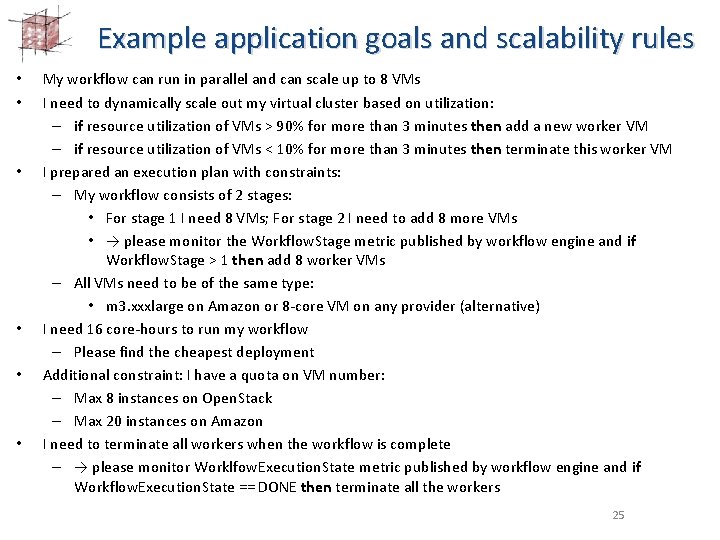

Example application goals and scalability rules

- My workflow can run in parallel and can scale up to 8 VMs
- I need to dynamically scale out my virtual cluster based on utilization:
  - if resource utilization of VMs > 90% for more than 3 minutes then add a new worker VM
  - if resource utilization of VMs < 10% for more than 3 minutes then terminate this worker VM
- I prepared an execution plan with constraints:
  - My workflow consists of 2 stages:
    - For stage 1 I need 8 VMs; for stage 2 I need to add 8 more VMs
    - → please monitor the WorkflowStage metric published by the workflow engine and if WorkflowStage > 1 then add 8 worker VMs
  - All VMs need to be of the same type:
    - m3.xxxlarge on Amazon or 8-core VM on any provider (alternative)
- I need 16 core-hours to run my workflow
  - Please find the cheapest deployment
- Additional constraint: I have a quota on the number of VMs:
  - Max 8 instances on OpenStack
  - Max 20 instances on Amazon
- I need to terminate all workers when the workflow is complete
  - → please monitor the WorkflowExecutionState metric published by the workflow engine and if WorkflowExecutionState == DONE then terminate all the workers
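The utilization-based rules above could be evaluated along the following lines. This is only a sketch under stated assumptions: utilization is sampled once per minute, "more than 3 minutes" is approximated by the last four samples all crossing the threshold, and the function name, option names and the minimum of one worker are hypothetical. It does not show how PaaSage or CAMEL express such rules.

```javascript
// Sketch of a threshold-based scaling rule. Assumptions: utilization is
// sampled once per minute, and "more than 3 minutes" is approximated by the
// last four one-minute samples all crossing the threshold.
function decideScaling(samples, workers, opts = {}) {
  const { high = 0.9, low = 0.1, window = 4, minWorkers = 1, maxWorkers = 8 } = opts;
  if (samples.length < window) return "none"; // not enough history yet
  const recent = samples.slice(-window);
  if (recent.every((u) => u > high) && workers < maxWorkers) return "add";
  if (recent.every((u) => u < low) && workers > minWorkers) return "terminate";
  return "none";
}

const busy = [0.95, 0.97, 0.93, 0.99];
const idle = [0.05, 0.02, 0.04, 0.01];
console.log(decideScaling(busy, 4)); // sustained high load, below the VM limit
console.log(decideScaling(busy, 8)); // sustained high load, already at the limit
console.log(decideScaling(idle, 4)); // sustained low load
```

Requiring the whole window to cross the threshold avoids reacting to short spikes, at the price of a delayed response.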

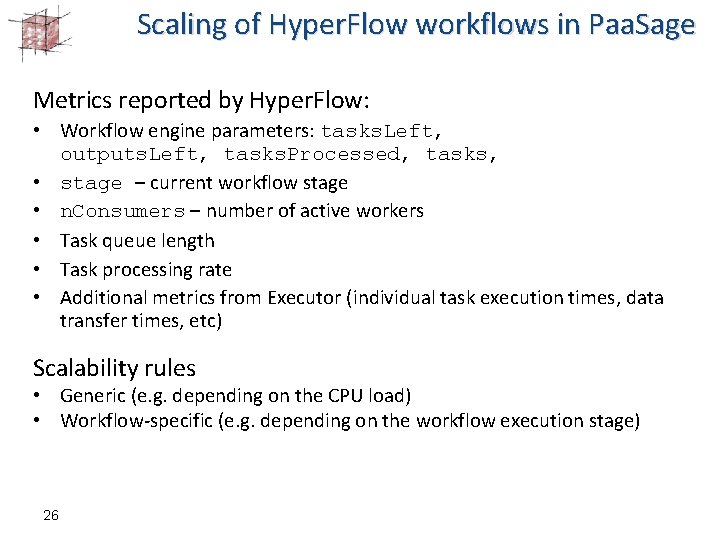

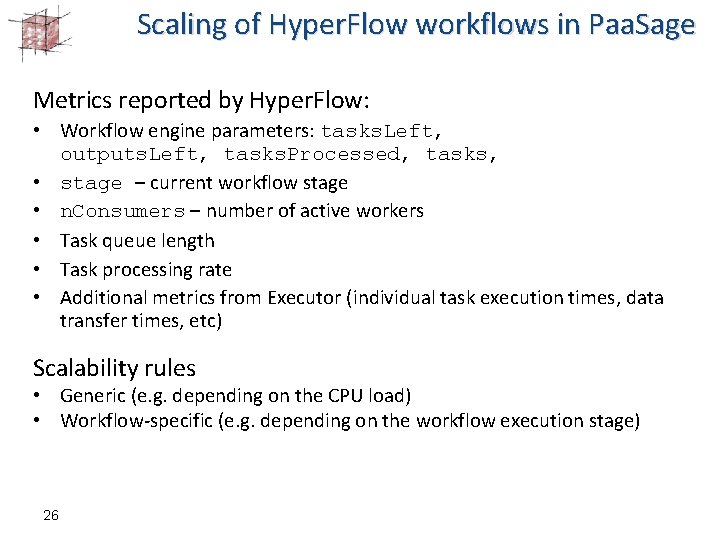

Scaling of HyperFlow workflows in PaaSage

Metrics reported by HyperFlow:

- Workflow engine parameters: tasksLeft, outputsLeft, tasksProcessed, tasks
- stage: current workflow stage
- nConsumers: number of active workers
- Task queue length
- Task processing rate
- Additional metrics from Executor (individual task execution times, data transfer times, etc.)

Scalability rules:

- Generic (e.g. depending on the CPU load)
- Workflow-specific (e.g. depending on the workflow execution stage)
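A workflow-specific rule can be driven directly by the published metrics. The sketch below is hypothetical: the metric names `stage`, `tasksLeft` and `nConsumers` come from the list above, while the shape of the metrics object, the action names, the batch of 8 workers and the use of `tasksLeft === 0` as a completion signal are assumptions for illustration.

```javascript
// Sketch of workflow-specific rules driven by metrics the engine publishes.
// The metric object shape and the action names are illustrative assumptions.
function workflowRules(metrics, state) {
  const actions = [];
  // Stage rule: when the workflow moves past stage 1, add 8 workers (once).
  if (metrics.stage > 1 && !state.stage2Scaled) {
    actions.push({ action: "addWorkers", count: 8 });
    state.stage2Scaled = true;
  }
  // Completion rule: no tasks left means all workers can be terminated.
  if (metrics.tasksLeft === 0) {
    actions.push({ action: "terminateAllWorkers" });
  }
  return actions;
}

const state = { stage2Scaled: false };
const first = workflowRules({ stage: 2, tasksLeft: 40, nConsumers: 8 }, state);
const second = workflowRules({ stage: 2, tasksLeft: 12, nConsumers: 16 }, state);
const last = workflowRules({ stage: 2, tasksLeft: 0, nConsumers: 16 }, state);
console.log(first, second, last);
```

Keeping a small piece of state prevents the stage rule from firing on every monitoring event while the workflow stays in the same stage.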

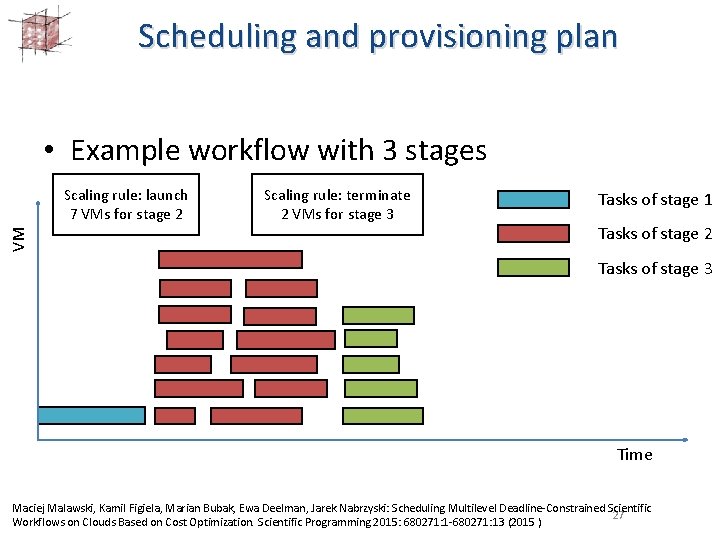

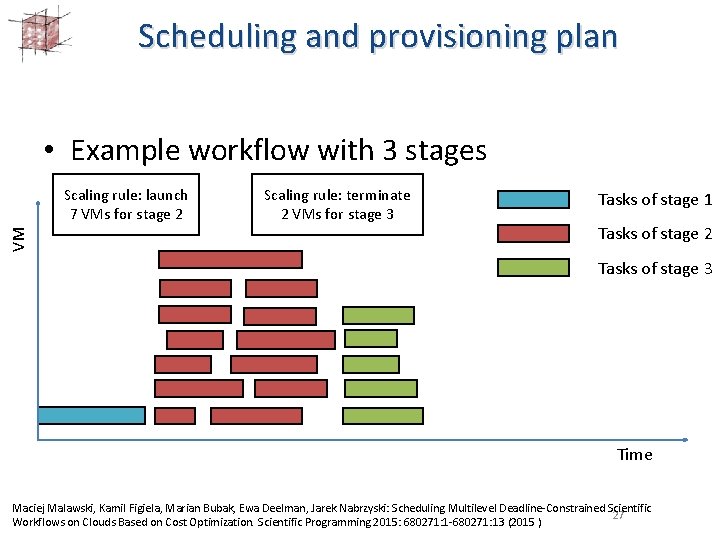

Scheduling and provisioning plan

- Example workflow with 3 stages

[Figure: number of VMs over time for the tasks of stages 1, 2 and 3. Scaling rule: launch 7 VMs for stage 2. Scaling rule: terminate 2 VMs for stage 3.]

Maciej Malawski, Kamil Figiela, Marian Bubak, Ewa Deelman, Jarek Nabrzyski: Scheduling Multilevel Deadline-Constrained Scientific Workflows on Clouds Based on Cost Optimization. Scientific Programming 2015: 680271:1-680271:13 (2015)

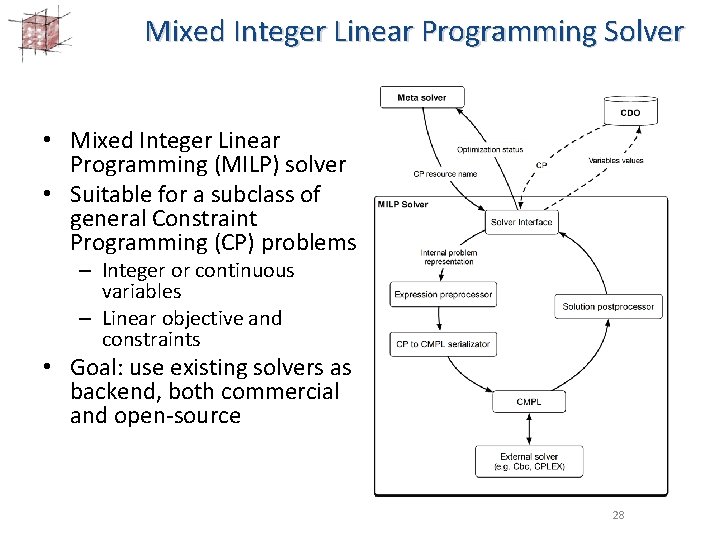

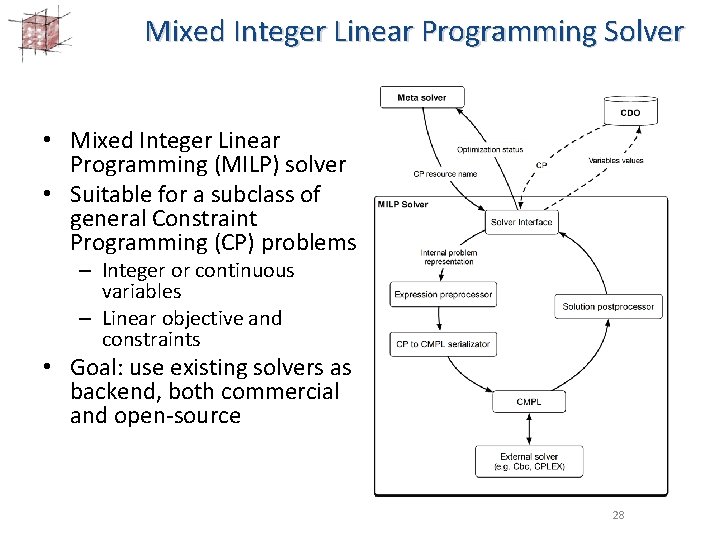

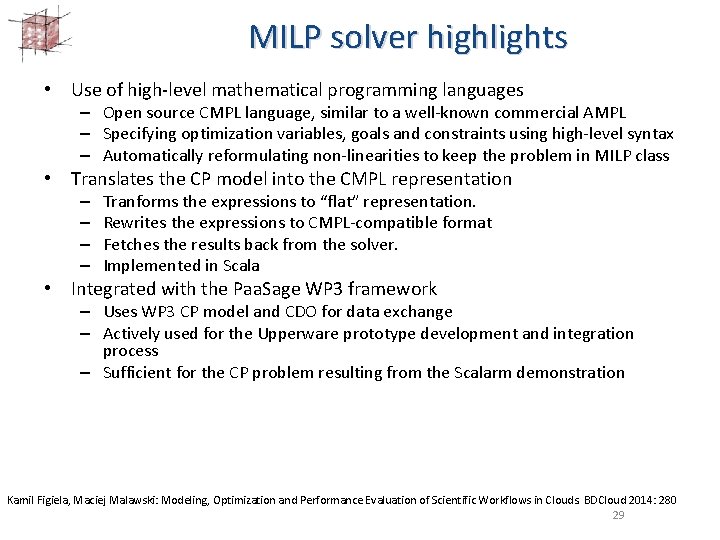

Mixed Integer Linear Programming Solver

- Mixed Integer Linear Programming (MILP) solver
- Suitable for a subclass of general Constraint Programming (CP) problems
  - Integer or continuous variables
  - Linear objective and constraints
- Goal: use existing solvers as backend, both commercial and open-source
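As an illustration of the problem class (integer variables, linear objective and linear constraints), consider a toy formulation that is not taken from the cited work. Assume a set $V$ of VM types, where type $v$ has hourly price $p_v$, $c_v$ cores and a quota of $q_v$ instances; a workload of $W$ core-hours must finish within a deadline of $D$ whole hours; the workload is assumed to parallelize perfectly; and $n_v$ is the number of VMs of type $v$ leased for the whole period.

```latex
\begin{aligned}
\min_{n_v} \quad & \sum_{v \in V} p_v \, D \, n_v \\
\text{s.t.} \quad & \sum_{v \in V} c_v \, D \, n_v \ge W, \\
& 0 \le n_v \le q_v, \quad n_v \in \mathbb{Z} \quad \forall v \in V .
\end{aligned}
```

Since $D$, $p_v$, $c_v$, $q_v$ and $W$ are constants, both the objective and the constraints are linear in the integer variables $n_v$, so the problem falls in the MILP class.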

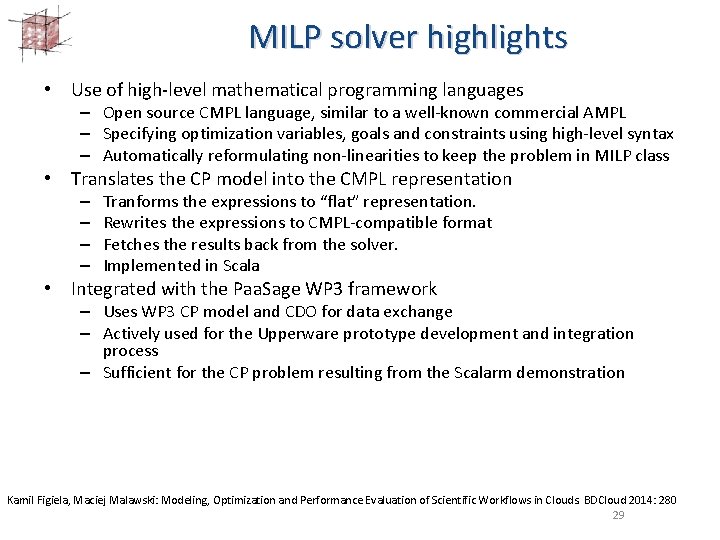

MILP solver highlights

- Use of high-level mathematical programming languages
  - Open-source CMPL language, similar to the well-known commercial AMPL
  - Specifying optimization variables, goals and constraints using high-level syntax
  - Automatically reformulating non-linearities to keep the problem in the MILP class
- Translates the CP model into the CMPL representation
  - Transforms the expressions to a "flat" representation
  - Rewrites the expressions to a CMPL-compatible format
  - Fetches the results back from the solver
  - Implemented in Scala
- Integrated with the PaaSage WP3 framework
  - Uses the WP3 CP model and CDO for data exchange
  - Actively used for the Upperware prototype development and integration process
  - Sufficient for the CP problem resulting from the Scalarm demonstration

Kamil Figiela, Maciej Malawski: Modeling, Optimization and Performance Evaluation of Scientific Workflows in Clouds. BDCloud 2014: 280

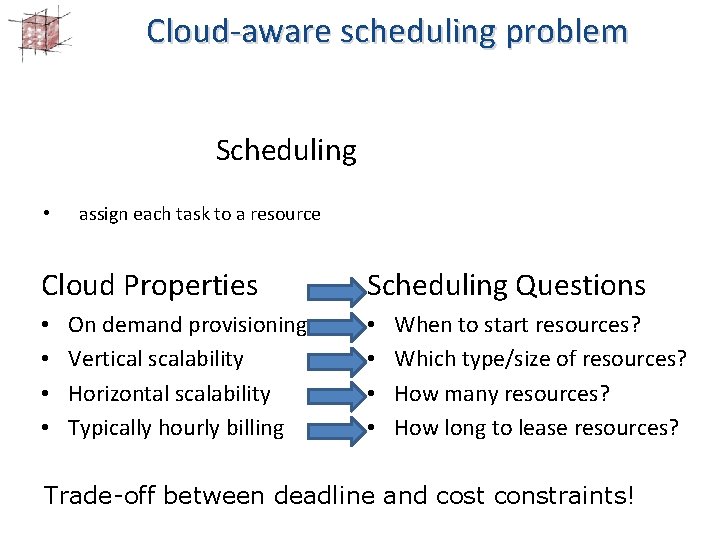

Cloud-aware scheduling problem

Cloud-aware scheduling:

- selecting a cost-optimized set of resources
- assigning each task to a resource

Cloud properties:

- On-demand provisioning
- Vertical scalability
- Horizontal scalability
- Typically hourly billing

Scheduling questions:

- When to start resources?
- Which type/size of resources?
- How many resources?
- How long to lease resources?

Trade-off between deadline and cost constraints!

Resource allocation plans for workflows

- resource 1 is executing the bottleneck task
- resource 2 is paid for while idling (hourly billing model)
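The effect of hourly billing on an idling resource can be shown with a little arithmetic. The prices and durations below are made-up illustration values, and the function names are hypothetical.

```javascript
// Sketch of cost under hourly billing: every started hour is charged in full,
// so a resource that idles while waiting for a bottleneck task still costs money.
// Prices and durations are made-up illustration values.
function leaseCost(hoursUsed, pricePerHour) {
  return Math.ceil(hoursUsed) * pricePerHour; // round up to whole billed hours
}

function planCost(leases) {
  return leases.reduce((sum, l) => sum + leaseCost(l.hours, l.pricePerHour), 0);
}

// Resource 1 runs the bottleneck task for 2.2 h; resource 2 computes for
// 0.5 h but is kept for the same 2.2 h, idling the rest of the time.
const keepIdle = planCost([
  { hours: 2.2, pricePerHour: 0.5 },
  { hours: 2.2, pricePerHour: 0.5 },
]);
// Alternative plan: release resource 2 after its 0.5 h of work.
const releaseEarly = planCost([
  { hours: 2.2, pricePerHour: 0.5 },
  { hours: 0.5, pricePerHour: 0.5 },
]);
console.log(keepIdle, releaseEarly);
```

In this toy case releasing the idle resource saves two billed hours, which is the kind of trade-off a resource allocation plan has to weigh against the deadline.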

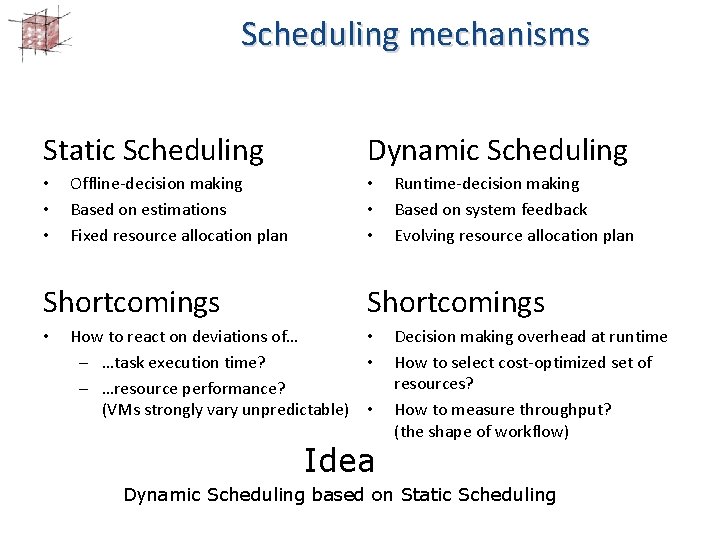

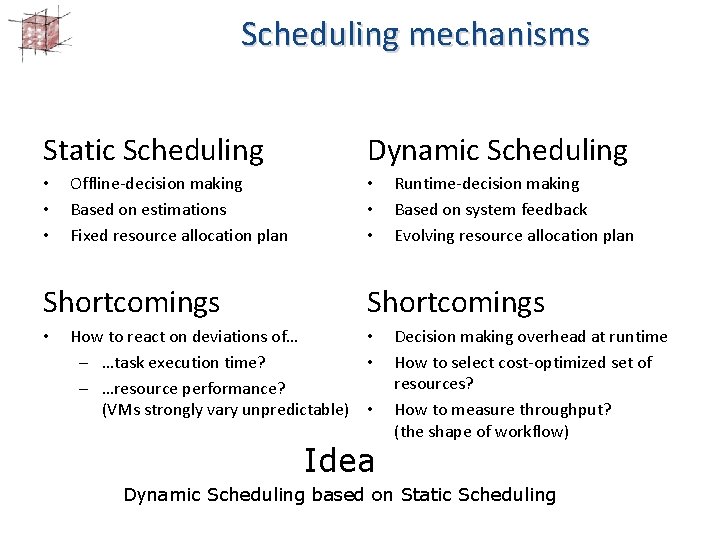

Scheduling mechanisms

Static scheduling:

- Offline decision making
- Based on estimations
- Fixed resource allocation plan
- Shortcomings: how to react to deviations of
  - task execution time?
  - resource performance? (VM performance varies strongly and unpredictably)

Dynamic scheduling:

- Runtime decision making
- Based on system feedback
- Evolving resource allocation plan
- Shortcomings:
  - Decision-making overhead at runtime
  - How to select a cost-optimized set of resources?
  - How to measure throughput? (the shape of the workflow)

Idea: dynamic scheduling based on static scheduling

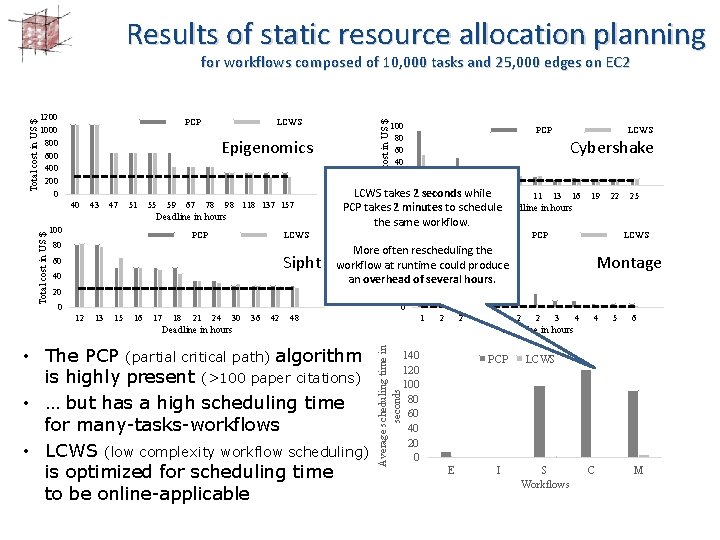

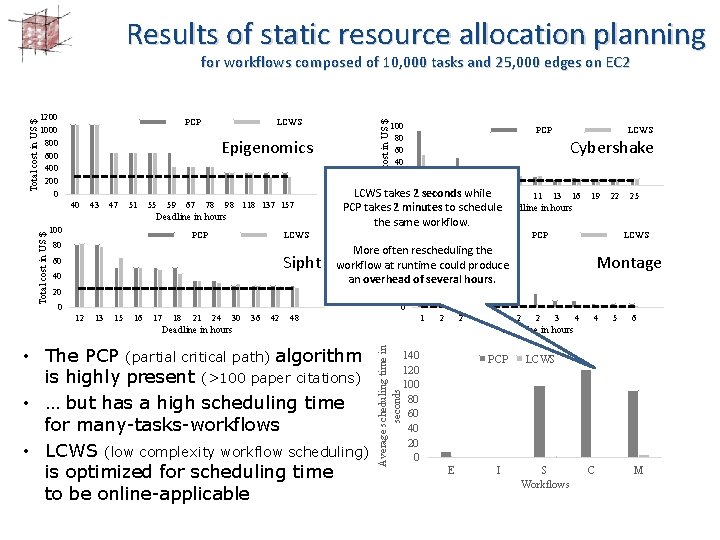

Results of static resource allocation planning

[Charts: total cost in US $ versus deadline in hours, PCP compared with LCWS, for the Epigenomics, Sipht, Cybershake and Montage workflows, each composed of 10,000 tasks and 25,000 edges, on EC2. A further chart shows the average scheduling time in seconds of PCP and LCWS per workflow (labeled E, I, S, C, M).]

LCWS takes 2 seconds while PCP takes 2 minutes to schedule the same workflow. Rescheduling the workflow more often at runtime could produce an overhead of several hours.

- The PCP (partial critical path) algorithm is widely used (>100 paper citations)
- … but has a high scheduling time for many-task workflows
- LCWS (low complexity workflow scheduling) is optimized for scheduling time so that it can be applied online

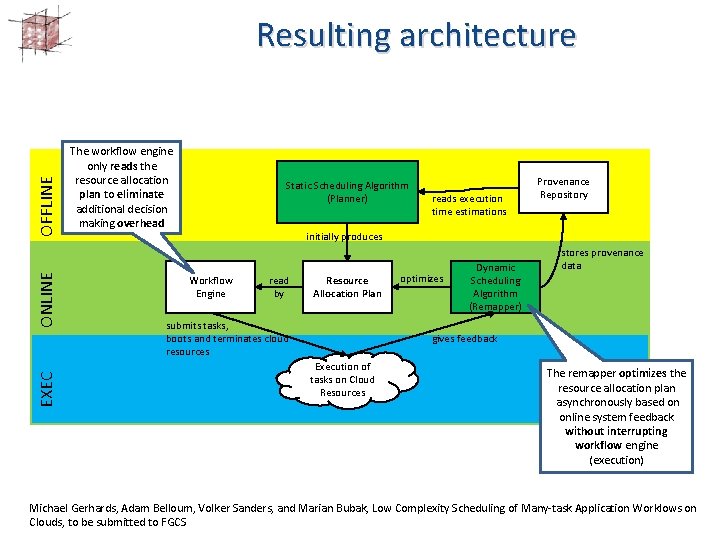

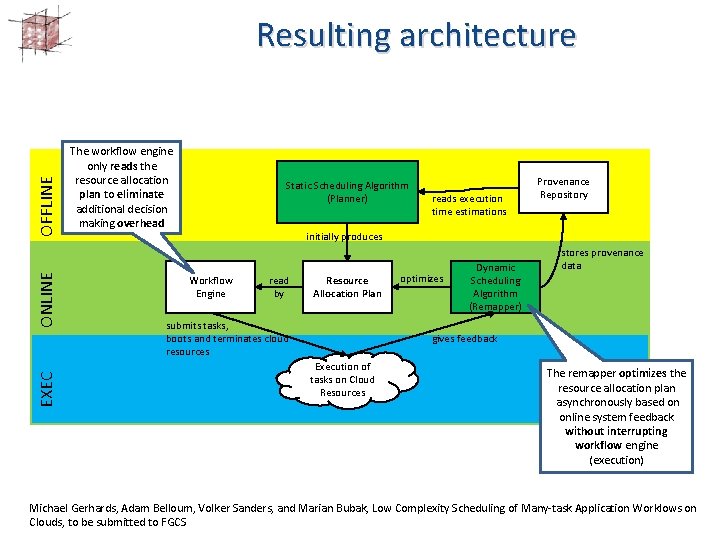

Resulting architecture

- The workflow engine only reads the resource allocation plan, to eliminate additional decision-making overhead
- The remapper optimizes the resource allocation plan asynchronously, based on online system feedback, without interrupting the workflow engine (execution)

[Architecture diagram with three areas: OFFLINE, EXEC, ONLINE. The Static Scheduling Algorithm (Planner) reads execution time estimations from the Provenance Repository and initially produces the Resource Allocation Plan. The plan is read by the Workflow Engine, which submits tasks and boots and terminates cloud resources. Execution of tasks on cloud resources stores provenance data and gives feedback. The Dynamic Scheduling Algorithm (Remapper) optimizes the Resource Allocation Plan.]

Michael Gerhards, Adam Belloum, Volker Sanders, and Marian Bubak, Low Complexity Scheduling of Many-task Application Workflows on Clouds, to be submitted to FGCS

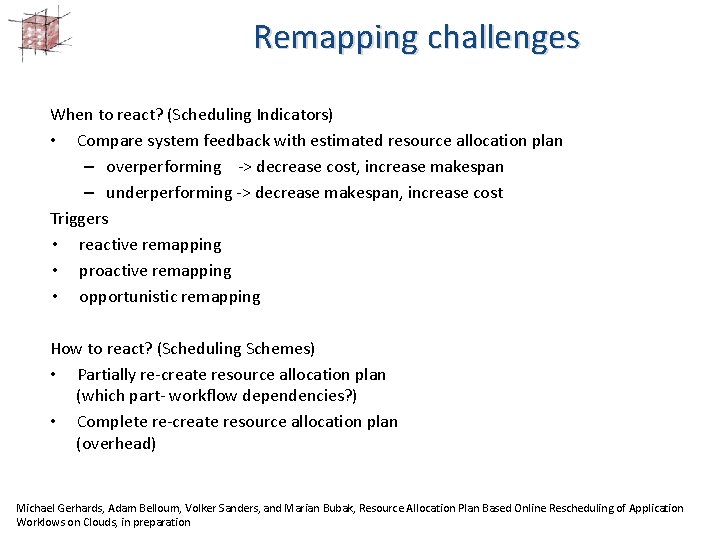

Remapping challenges

When to react? (Scheduling indicators)

- Compare system feedback with the estimated resource allocation plan
  - overperforming -> decrease cost, increase makespan
  - underperforming -> decrease makespan, increase cost

Triggers:

- reactive remapping
- proactive remapping
- opportunistic remapping

How to react? (Scheduling schemes)

- Partially re-create the resource allocation plan (which part? workflow dependencies?)
- Completely re-create the resource allocation plan (overhead)

Michael Gerhards, Adam Belloum, Volker Sanders, and Marian Bubak, Resource Allocation Plan Based Online Rescheduling of Application Workflows on Clouds, in preparation
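A scheduling indicator of the kind described above might look like the following sketch. The 10% tolerance, the use of completed-task counts as the feedback signal, and all names are assumptions; the slides do not specify how deviations are measured.

```javascript
// Sketch of a scheduling indicator: compare observed progress with the
// resource allocation plan. The tolerance and the feedback signal (number of
// tasks done at a checkpoint) are assumptions made for illustration.
const responses = {
  overperforming: "decrease cost, increase makespan",
  underperforming: "decrease makespan, increase cost",
  "on-plan": "keep the current plan",
};

function remapIndicator(plannedDone, actualDone, tolerance = 0.1) {
  // plannedDone is assumed to be greater than zero.
  const deviation = (actualDone - plannedDone) / plannedDone;
  let status = "on-plan";
  if (deviation > tolerance) status = "overperforming";
  else if (deviation < -tolerance) status = "underperforming";
  return { status, response: responses[status] };
}

console.log(remapIndicator(100, 130));
console.log(remapIndicator(100, 80));
console.log(remapIndicator(100, 105));
```

A tolerance band keeps the remapper from reacting to small, expected fluctuations in VM performance.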

Parameter studies with Scalarm and PaaSage

- Parameter studies: an approach to scientific computing where the same application is executed multiple times with different input parameter values
- Data farming: a methodology of studying complex systems with computer simulations, oriented on data generation and analysis with parameter studies

Problem statement

How to conduct parameter studies and data farming experiments in cross-cloud environments in an automated, cost- and performance-effective way?

Challenges:

- deployment description in a cloud-independent way
- automated deployment in cross-cloud environments
- cost- and performance-based deployment optimization
- scaling out/in based on application-specific metrics

Proposed solution

- Use an existing platform, Scalarm, to orchestrate parameter studies and data farming experiments
- Use the PaaSage framework to manage Scalarm deployment in cross-cloud environments
- Model Scalarm (services, deployment and scaling) with the CAMEL language in a cloud-provider-independent way
- Publish the solution in the PaaSage social network for non-experienced users

D. Król, M. Orzechowski, J. Liput, R. Słota, and J. Kitowski. Model-based execution of scientific applications on cloud infrastructures: Scalarm case study, in: Proceedings of Cracow Grid Workshop, pp. 77-78, 2014.

D. Król, R. Da Silva, E. Deelman and V. Lynch, Workflow Performance Profiles: Development and Analysis, accepted: The International Workshop on Algorithms, Models and Tools for Parallel Computing on Heterogeneous Platforms (HeteroPar'2016), Euro-Par 2016.

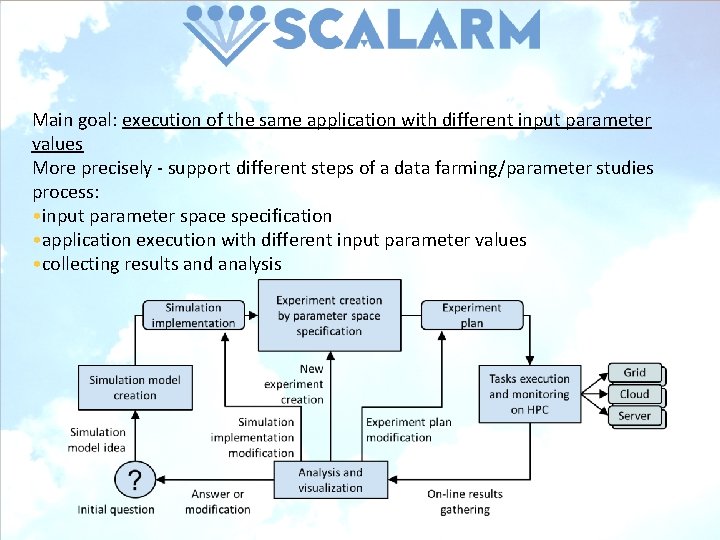

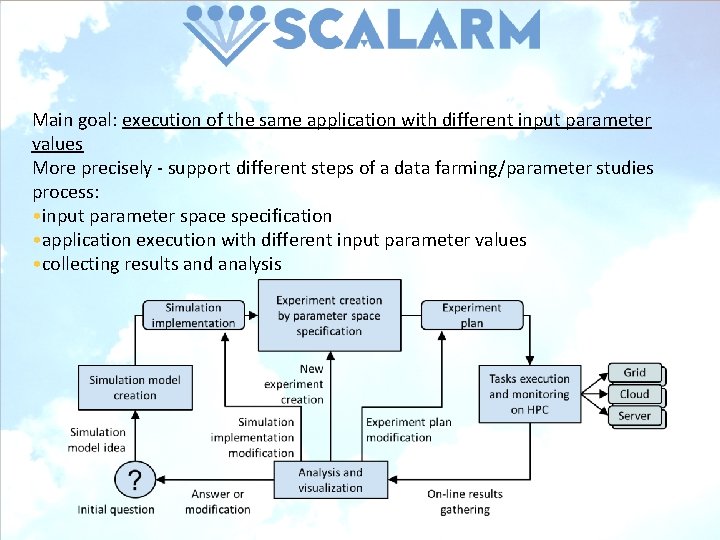

Main goal: execution of the same application with different input parameter values

More precisely, support different steps of a data farming/parameter studies process:

- input parameter space specification
- application execution with different input parameter values
- collecting results and analysis
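The three steps above can be sketched in a few lines. This is a generic illustration rather than the Scalarm API: the parameter names, the `simulate` function (a placeholder for a real application) and the full-factorial enumeration are assumptions.

```javascript
// Sketch of a parameter study: enumerate the input parameter space, run the
// same application for every combination, and collect the results.
// The "simulation" below is a made-up stand-in for a real application.
function parameterSpace(ranges) {
  // Cartesian product of all parameter value lists (full-factorial design).
  return Object.entries(ranges).reduce(
    (points, [name, values]) =>
      points.flatMap((p) => values.map((v) => ({ ...p, [name]: v }))),
    [{}]
  );
}

function simulate({ temperature, pressure }) {
  return temperature * pressure; // placeholder computation
}

// Step 1: input parameter space specification.
const points = parameterSpace({ temperature: [280, 300, 320], pressure: [1, 2] });
// Step 2: application execution with different input parameter values.
const results = points.map((p) => ({ ...p, output: simulate(p) }));
// Step 3: collecting results and analysis (here: pick the largest output).
const best = results.reduce((a, b) => (b.output > a.output ? b : a));
console.log(results.length, best);
```

Each point is independent of the others, which is why such studies distribute naturally over many cloud workers.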

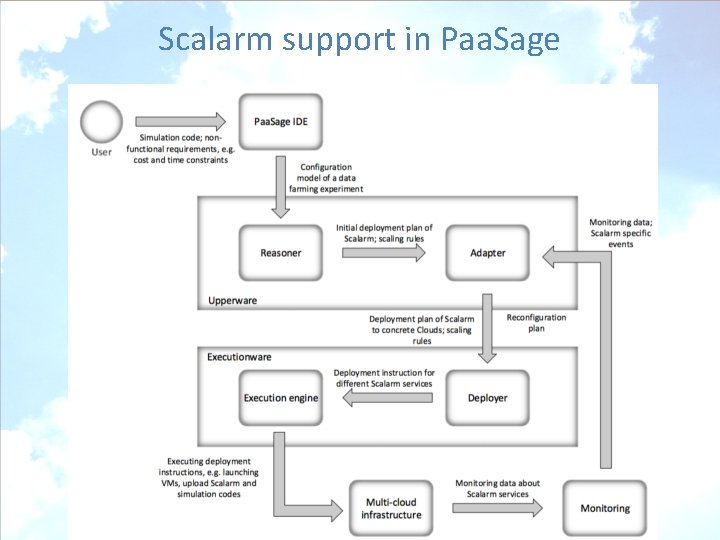

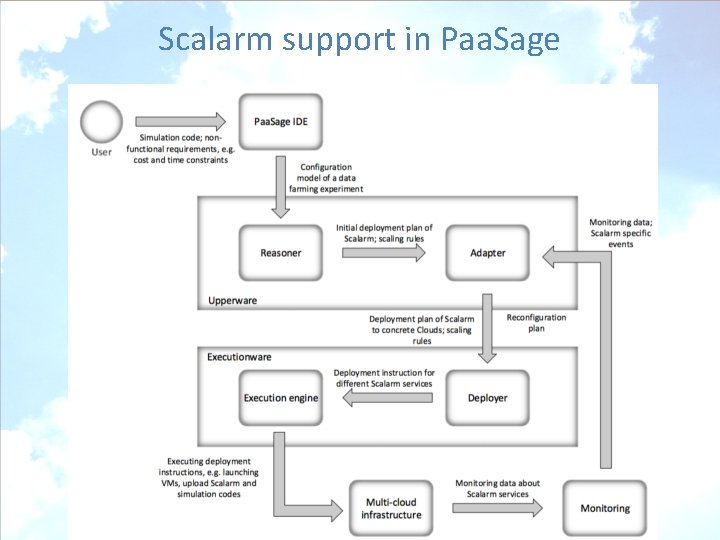

Scalarm support in PaaSage

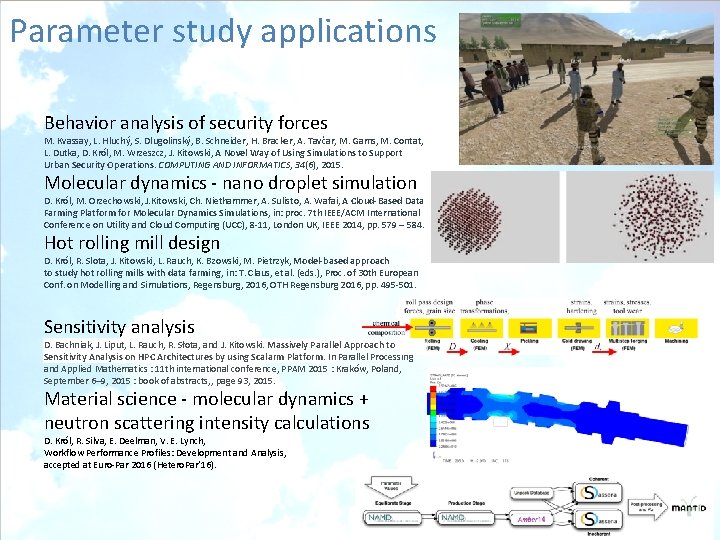

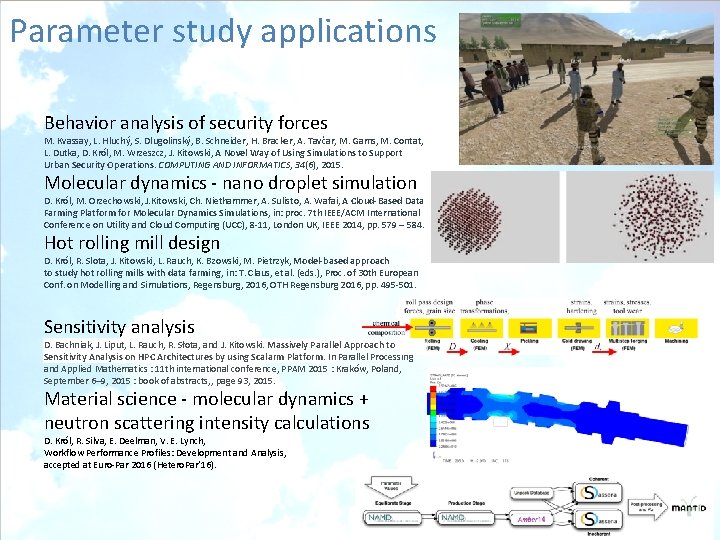

Parameter study applications

- Behavior analysis of security forces: M. Kvassay, L. Hluchý, S. Dlugolinský, B. Schneider, H. Bracker, A. Tavčar, M. Gams, M. Contat, L. Dutka, D. Król, M. Wrzeszcz, J. Kitowski, A Novel Way of Using Simulations to Support Urban Security Operations. Computing and Informatics, 34(6), 2015.
- Molecular dynamics, nano droplet simulation: D. Król, M. Orzechowski, J. Kitowski, Ch. Niethammer, A. Sulisto, A. Wafai, A Cloud-Based Data Farming Platform for Molecular Dynamics Simulations, in: Proc. 7th IEEE/ACM International Conference on Utility and Cloud Computing (UCC), 8-11, London UK, IEEE 2014, pp. 579–584.
- Hot rolling mill design: D. Król, R. Slota, J. Kitowski, L. Rauch, K. Bzowski, M. Pietrzyk, Model-based approach to study hot rolling mills with data farming, in: T. Claus, et al. (eds.), Proc. of 30th European Conf. on Modelling and Simulations, Regensburg, 2016, OTH Regensburg 2016, pp. 495-501.
- Sensitivity analysis: D. Bachniak, J. Liput, L. Rauch, R. Słota, and J. Kitowski. Massively Parallel Approach to Sensitivity Analysis on HPC Architectures by using Scalarm Platform. In Parallel Processing and Applied Mathematics: 11th international conference, PPAM 2015, Kraków, Poland, September 6–9, 2015: book of abstracts, page 93, 2015.
- Material science, molecular dynamics + neutron scattering intensity calculations: D. Król, R. Silva, E. Deelman, V. E. Lynch, Workflow Performance Profiles: Development and Analysis, accepted at Euro-Par 2016 (HeteroPar'16).

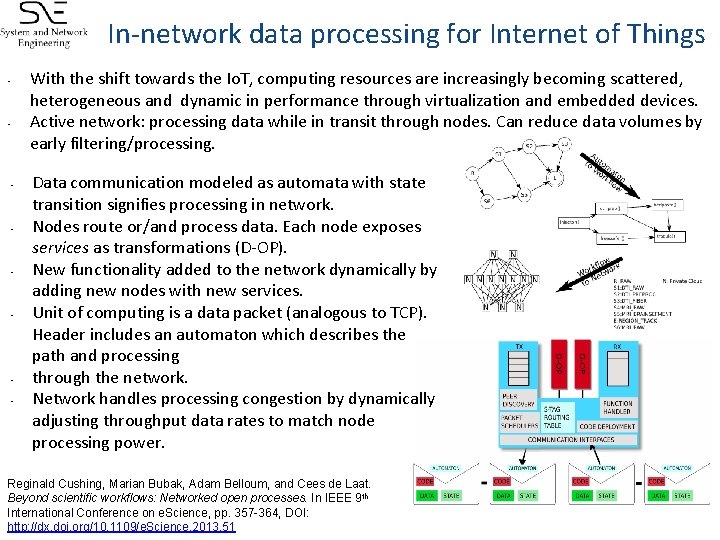

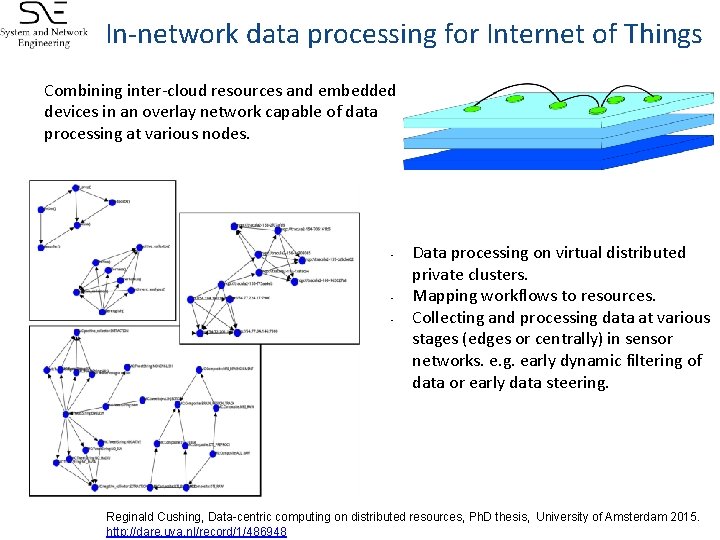

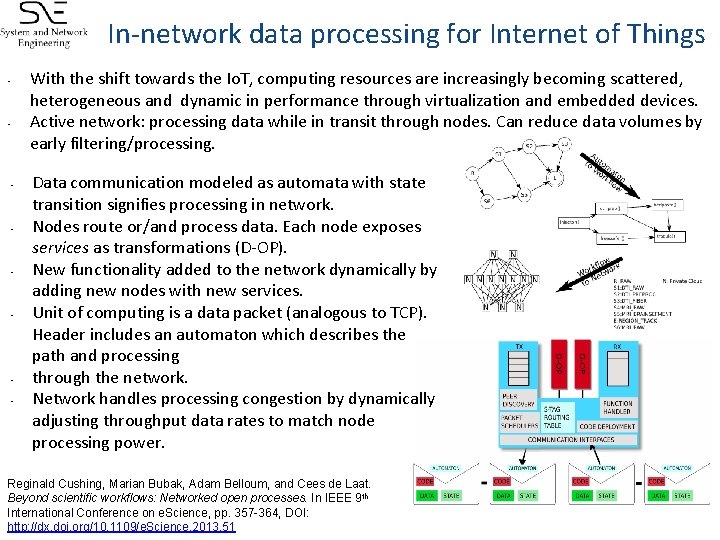

In-network data processing for Internet of Things

- With the shift towards the IoT, computing resources are increasingly becoming scattered, heterogeneous and dynamic in performance through virtualization and embedded devices.
- Active network: processing data while in transit through nodes. Can reduce data volumes by early filtering/processing.
- Data communication is modeled as automata, where a state transition signifies processing in the network.
- Nodes route and/or process data. Each node exposes services as transformations (D-OP). New functionality is added to the network dynamically by adding new nodes with new services.
- The unit of computing is a data packet (analogous to TCP). The header includes an automaton which describes the path and processing through the network.
- The network handles processing congestion by dynamically adjusting throughput data rates to match node processing power.

Reginald Cushing, Marian Bubak, Adam Belloum, and Cees de Laat. Beyond scientific workflows: Networked open processes. In IEEE 9th International Conference on eScience, pp. 357-364, DOI: http://dx.doi.org/10.1109/eScience.2013.51
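The idea of a packet header carrying an automaton can be sketched as follows. Everything concrete here is an assumption for illustration: the header layout, the operation names and the single-process loop that stands in for a network of nodes. It is not the protocol from the cited papers.

```javascript
// Sketch of in-network processing: a packet header carries a small automaton,
// and each state transition names the operation a node should apply.
// Operation names, the header layout, and the node table are assumptions.
const operations = {
  filterPositive: (data) => data.filter((x) => x > 0),
  double: (data) => data.map((x) => x * 2),
  sum: (data) => [data.reduce((a, b) => a + b, 0)],
};

function processPacket(packet) {
  let { state } = packet.header;
  const { transitions, finalState } = packet.header;
  let payload = packet.payload;
  const visited = [];
  while (state !== finalState) {
    const { op, next } = transitions[state]; // a transition is a processing step
    payload = operations[op](payload);       // a node applies its service
    visited.push(op);
    state = next;
  }
  return { payload, visited };
}

const packet = {
  header: {
    state: "raw",
    finalState: "done",
    transitions: {
      raw: { op: "filterPositive", next: "filtered" },
      filtered: { op: "double", next: "scaled" },
      scaled: { op: "sum", next: "done" },
    },
  },
  payload: [3, -1, 4, -5],
};
const out = processPacket(packet);
console.log(out);
```

Because the processing description travels with the data, a new operation only needs a node that offers it; the packets themselves say when it should be applied.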

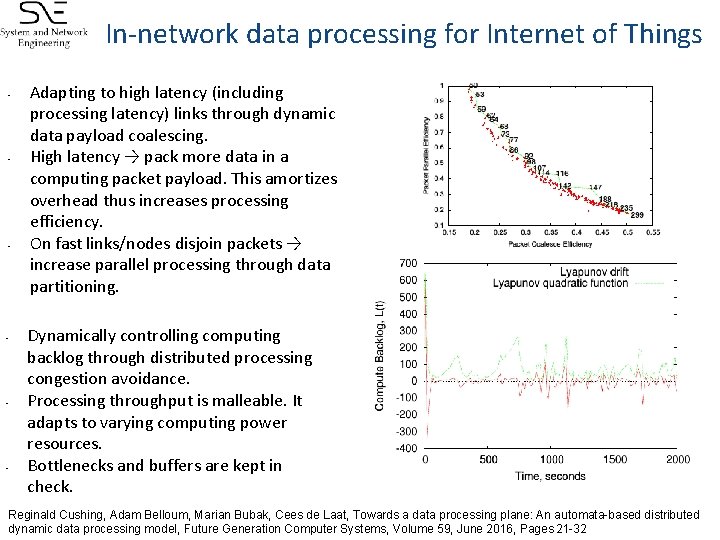

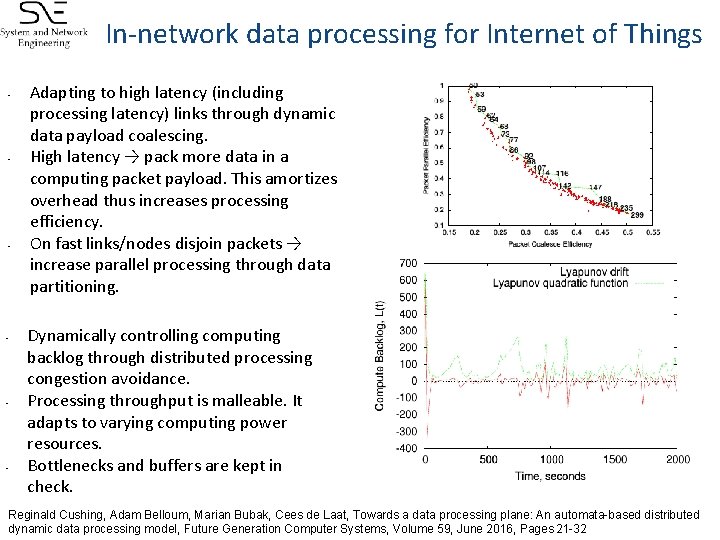

In-network data processing for Internet of Things

- Adapting to high-latency links (including processing latency) through dynamic data payload coalescing. High latency → pack more data in a computing packet payload. This amortizes overhead and thus increases processing efficiency.
- On fast links/nodes, disjoin packets → increase parallel processing through data partitioning.
- Dynamically controlling the computing backlog through distributed processing congestion avoidance. Processing throughput is malleable. It adapts to varying computing power resources. Bottlenecks and buffers are kept in check.

Reginald Cushing, Adam Belloum, Marian Bubak, Cees de Laat, Towards a data processing plane: An automata-based distributed dynamic data processing model, Future Generation Computer Systems, Volume 59, June 2016, Pages 21-32
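The amortization argument behind payload coalescing can be made concrete with a simple model. The model is an assumption, not taken from the cited paper: each packet incurs a fixed overhead (for example link latency) plus a per-item processing time, and efficiency is the fraction of time spent on useful work.

```javascript
// Sketch of payload coalescing: with a fixed per-packet overhead (e.g. link
// latency), packing more items per packet raises the useful-work fraction.
// The efficiency model and all numbers are illustrative assumptions.
function efficiency(itemsPerPacket, perItemMs, overheadMs) {
  const work = itemsPerPacket * perItemMs;
  return work / (work + overheadMs);
}

// Smallest payload size that reaches the target efficiency.
function itemsForTarget(target, perItemMs, overheadMs, maxItems = 10000) {
  for (let n = 1; n <= maxItems; n++) {
    if (efficiency(n, perItemMs, overheadMs) >= target) return n;
  }
  return maxItems;
}

const lowLatency = itemsForTarget(0.8, 2, 10);   // 10 ms overhead per packet
const highLatency = itemsForTarget(0.8, 2, 200); // 200 ms overhead per packet
console.log(lowLatency, highLatency);
```

Under this model the required payload grows in proportion to the overhead, which matches the slide's rule of thumb: the higher the latency, the more data per packet.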

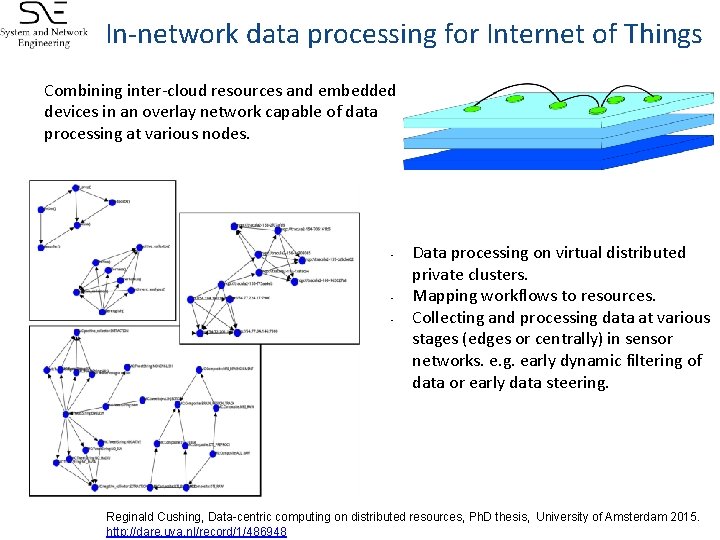

In-network data processing for Internet of Things

Combining inter-cloud resources and embedded devices in an overlay network capable of data processing at various nodes.

- Data processing on virtual distributed private clusters.
- Mapping workflows to resources.
- Collecting and processing data at various stages (edges or centrally) in sensor networks, e.g. early dynamic filtering of data or early data steering.

Reginald Cushing, Data-centric computing on distributed resources, PhD thesis, University of Amsterdam 2015. http://dare.uva.nl/record/1/486948

Towards reproducible research

- The core of science is reproducibility:
  - Can you reproduce an experiment reported in the research paper?
  - Can you reproduce the results of your PhD student?
- Reproducibility is a problem in computer/computational science
  - How to preserve the data, software, configuration, etc.?
  - One approach is to enforce cleanness of the environment: use standards, models, version control systems, etc.
  - Another is to "preserve the mess", e.g. freeze the snapshots of images used for the actual run

Reproducibility with PaaSage

- HyperFlow supports execution of workflows
- PaaSage supports deployment on multiple clouds
- HyperFlow + PaaSage can help solve reproducibility problems:
  - CAMEL: complete description of infrastructure
  - HyperFlow JSON: complete description of application

Conclusions

- On-demand deployment of the workflow runtime environment as part of the workflow application
- Workflow engine as another app component driving the execution of other components
- Avoidance of tight coupling to a particular cloud infrastructure and middleware

More at

- http://www.paasage.eu
- https://github.com/dice-cyfronet/hyperflow
- http://scalarm.com
- http://dice.cyfronet.pl
- bubak@agh.edu.pl

Acknowledgements

This research was supported by:

- EU FP-7 ICT Project PaaSage – 317715
- Polish Grant 3033/7PR/2014/2