Federating Grid and Cloud Storage in EUDAT

Federating Grid and Cloud Storage in EUDAT
International Symposium on Grids and Clouds 2014, 23-28 March 2014
Shaun de Witt, STFC
Maciej Brzeźniak, PSNC
Martin Hellmich, CERN

Agenda
• Introduction
• …
• …
• Test results
• Future work

3rd EUDAT Technical meeting in Bologna, 7th February 2013

Introduction
• We present and analyze the results of Grid and Cloud storage integration
• In EUDAT we used:
  – iRODS as the Grid storage federation mechanism
  – OpenStack Swift as a scalable object storage solution
• Scope:
  – Proof of concept
  – Pilot OpenStack Swift installation at PSNC
  – Production iRODS servers at PSNC (Poznan) and EPCC (Edinburgh)

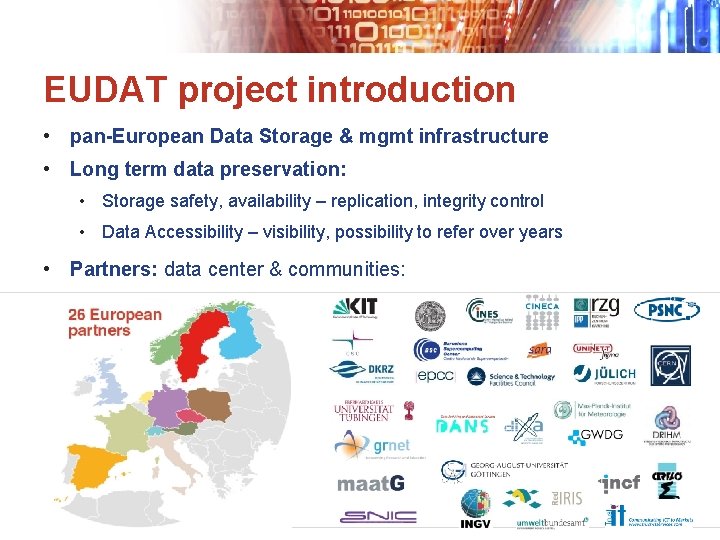

EUDAT project introduction
• Pan-European data storage & management infrastructure
• Long-term data preservation:
  – Storage safety and availability: replication, integrity control
  – Data accessibility: visibility, ability to refer to data over years
• Partners: data centers & communities:

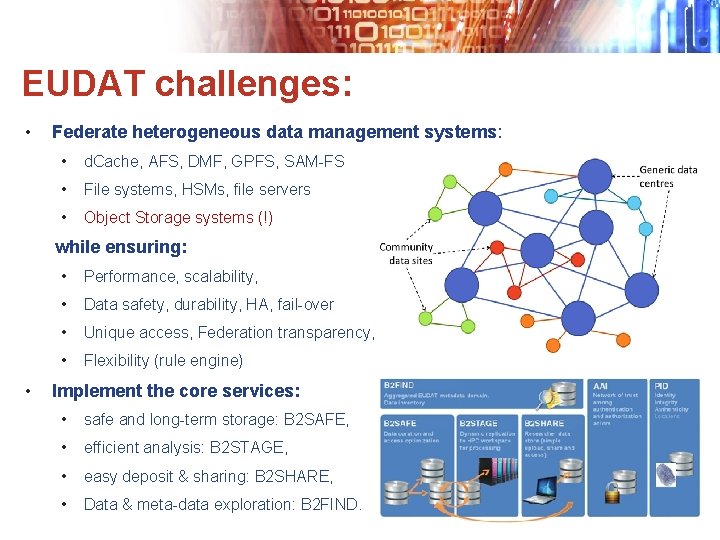

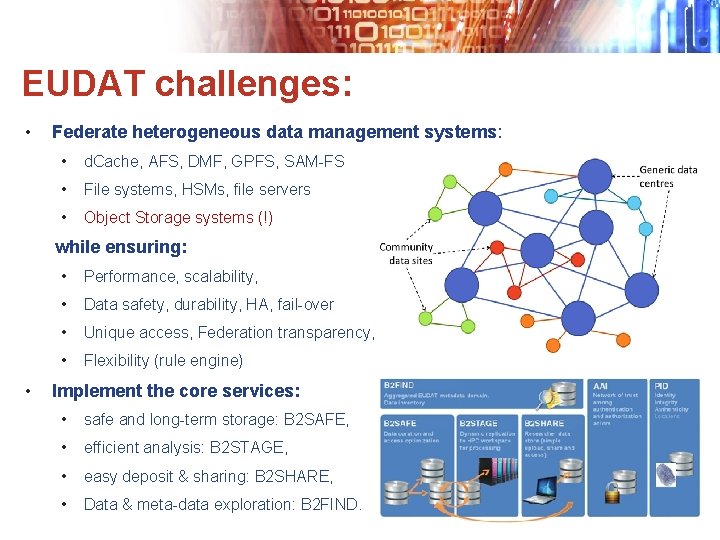

EUDAT challenges:
• Federate heterogeneous data management systems:
  – dCache, AFS, DMF, GPFS, SAM-FS
  – File systems, HSMs, file servers
  – Object storage systems (!)
• while ensuring:
  – Performance, scalability
  – Data safety, durability, HA, fail-over
  – Unique access, federation transparency
  – Flexibility (rule engine)
• Implement the core services:
  – Safe and long-term storage: B2SAFE
  – Efficient analysis: B2STAGE
  – Easy deposit & sharing: B2SHARE
  – Data & metadata exploration: B2FIND
Picture showing various storage systems federated under iRODS

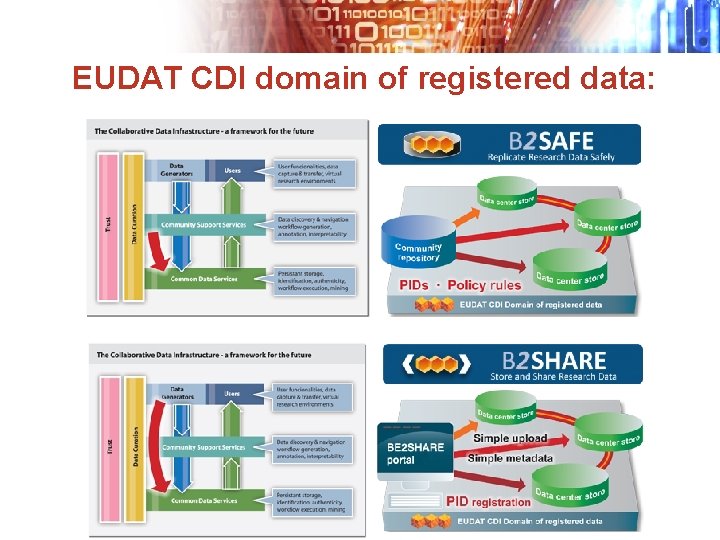

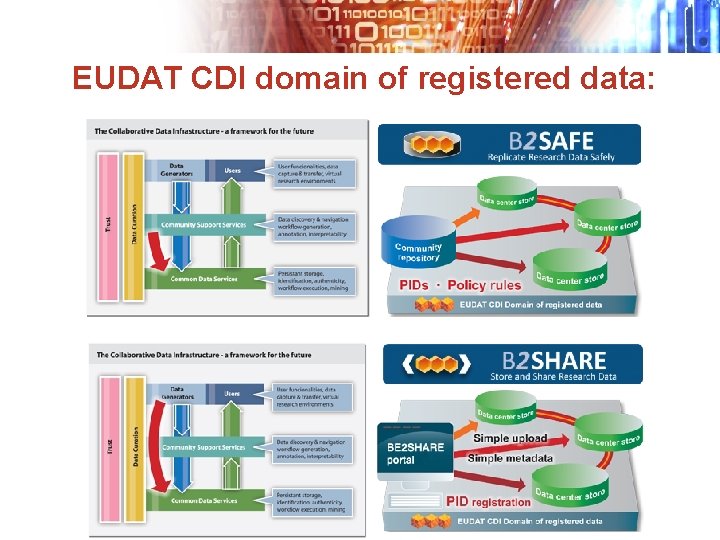

EUDAT CDI domain of registered data:

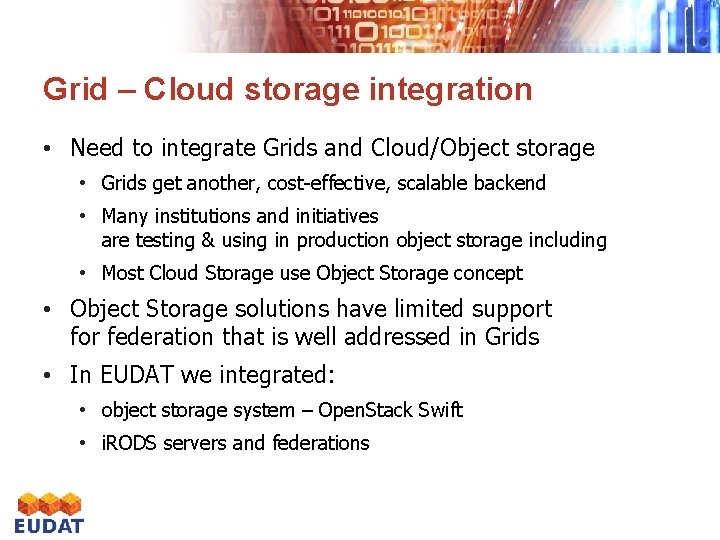

Grid – Cloud storage integration
• Need to integrate Grids and cloud/object storage:
  – Grids gain another cost-effective, scalable back-end
  – Many institutions and initiatives are testing object storage or already using it in production
  – Most cloud storage is built on the object storage concept
  – Object storage solutions have limited support for federation, which Grids already address well
• In EUDAT we integrated:
  – an object storage system: OpenStack Swift
  – iRODS servers and federations

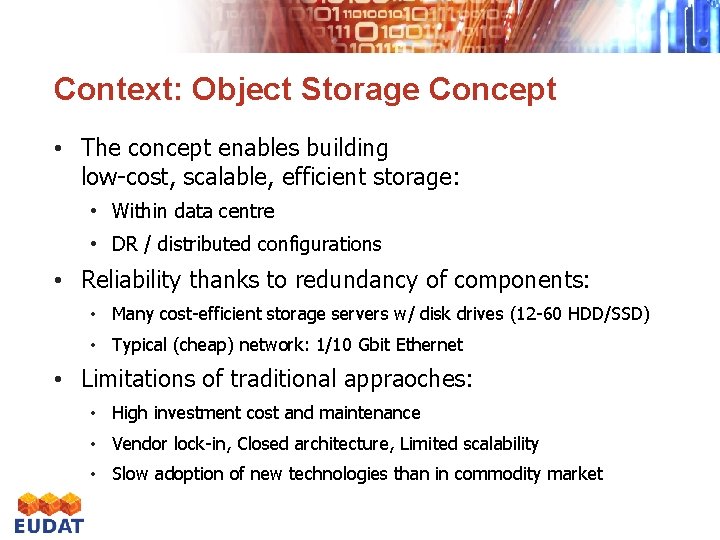

Context: Object Storage Concept
• The concept enables building low-cost, scalable, efficient storage:
  – within a data centre
  – in DR / distributed configurations
• Reliability through redundancy of components:
  – many cost-efficient storage servers with disk drives (12-60 HDD/SSD each)
  – typical (cheap) network: 1/10 Gbit Ethernet
• Limitations of traditional approaches:
  – high investment and maintenance cost
  – vendor lock-in, closed architecture, limited scalability
  – slower adoption of new technologies than in the commodity market

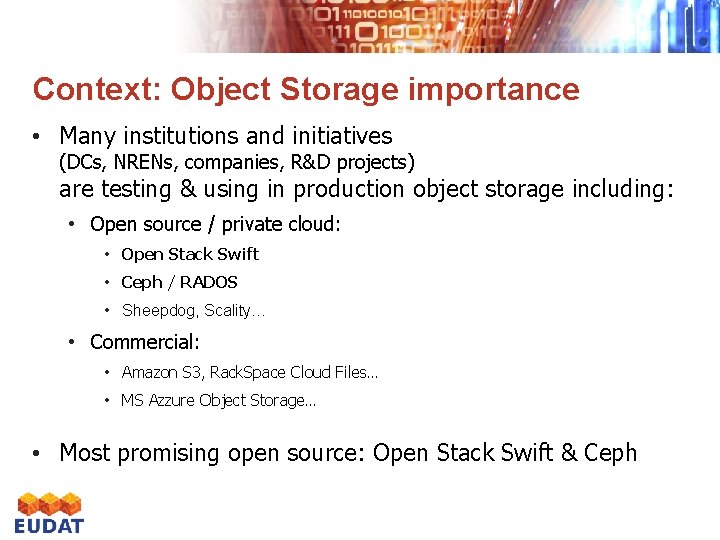

Context: Object Storage importance
• Many institutions and initiatives (DCs, NRENs, companies, R&D projects) are testing object storage or using it in production, including:
  – Open source / private cloud: OpenStack Swift, Ceph / RADOS, Sheepdog, Scality…
  – Commercial: Amazon S3, Rackspace Cloud Files…, MS Azure Object Storage…
• Most promising open source: OpenStack Swift & Ceph

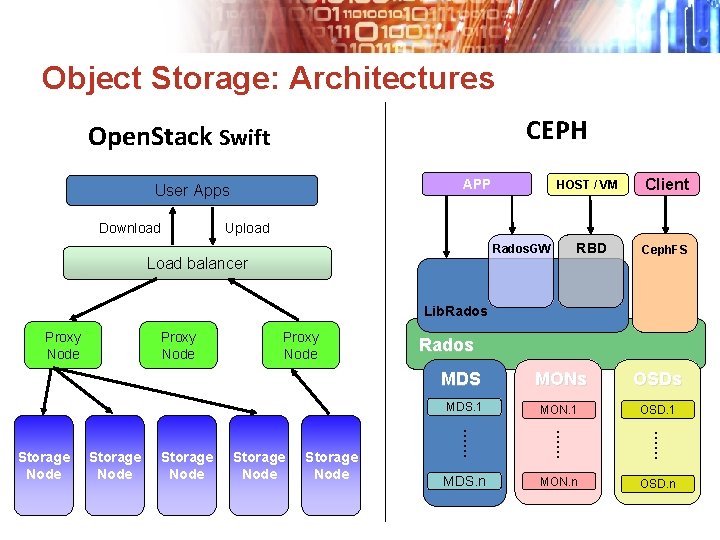

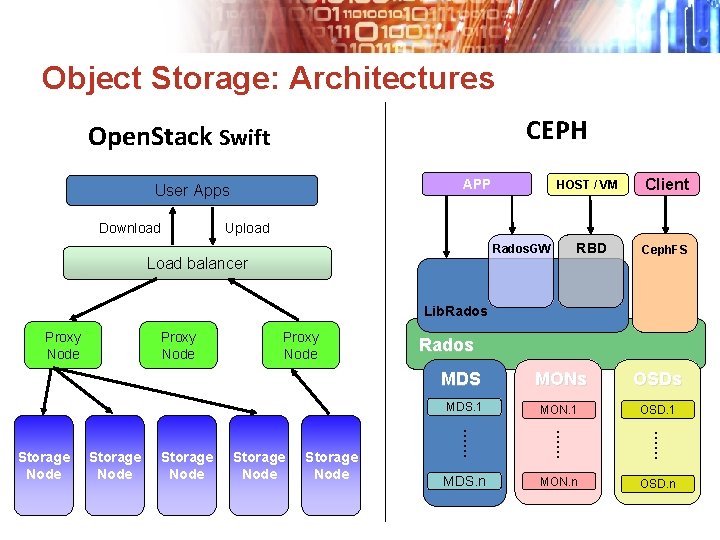

Object Storage: Architectures
Figure: Ceph vs OpenStack Swift architectures. Ceph: user apps and hosts/VMs access RADOS through RBD, RadosGW, CephFS or librados; RADOS runs MDS, MON and OSD daemons (MDS.1…MDS.n, MON.1…MON.n, OSD.1…OSD.n). OpenStack Swift: clients upload and download through a load balancer to proxy nodes, which store data on storage nodes.

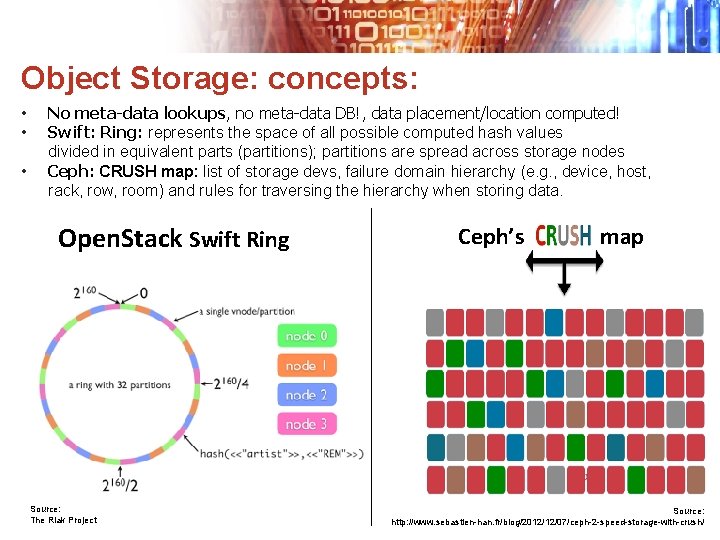

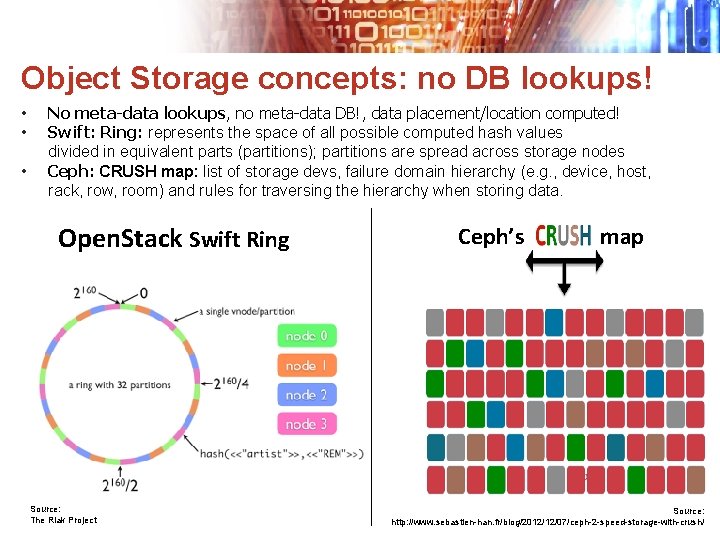

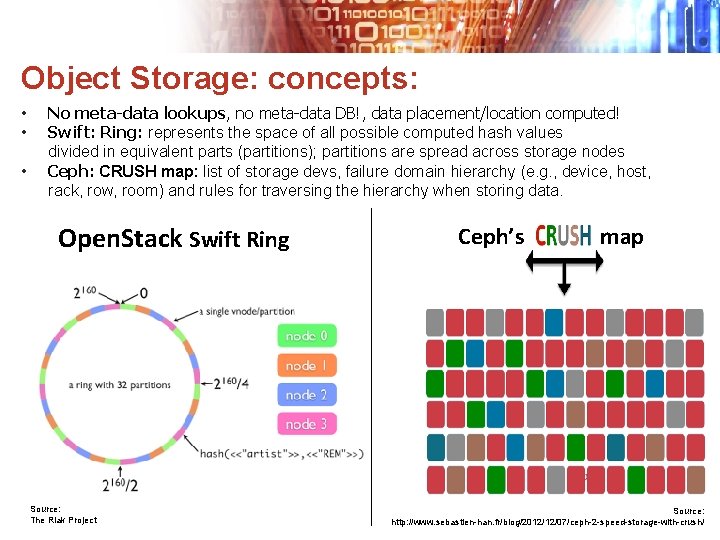

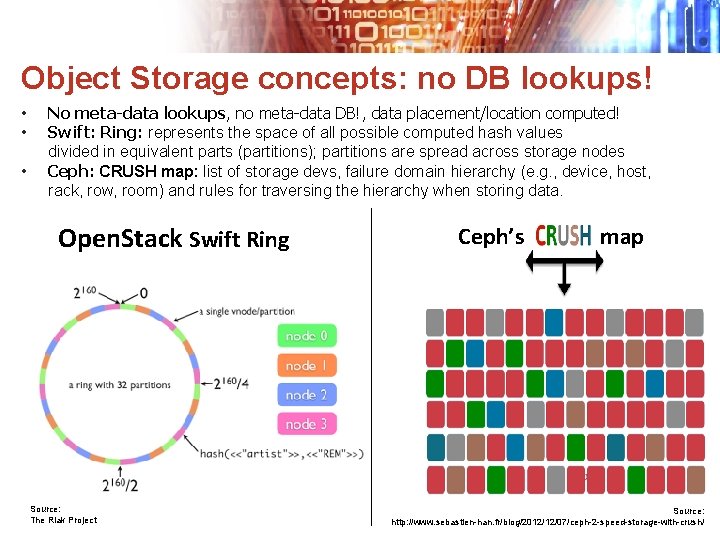

Object Storage concepts
• No metadata lookups, no metadata DB: data placement/location is computed!
• Swift: Ring: represents the space of all possible computed hash values, divided into equal parts (partitions); partitions are spread across storage nodes
• Ceph: CRUSH map: a list of storage devices, a failure-domain hierarchy (e.g. device, host, rack, row, room) and rules for traversing the hierarchy when storing data
Figures: OpenStack Swift Ring (source: The Riak Project); Ceph's map (source: http://www.sebastien-han.fr/blog/2012/12/07/ceph-2-speed-storage-with-crush/)
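
The computed-placement idea can be sketched in Python. This is a simplified model of a Swift-style ring, assuming a partition power of 8, 3 replicas and the five storage nodes of the pilot; real Swift also mixes a cluster-specific hash prefix/suffix into the MD5 input and assigns partitions to devices by weight and zone, which the round-robin table below does not attempt.

```python
import hashlib
import struct

# Assumed ring parameters (illustrative only): 2**8 partitions, 3 replicas,
# and five hypothetical storage node names.
PART_POWER = 8
PART_SHIFT = 32 - PART_POWER
REPLICAS = 3
NODES = ["storage1", "storage2", "storage3", "storage4", "storage5"]


def partition_for(account: str, container: str, obj: str) -> int:
    """Compute the partition from the object path alone: no metadata DB lookup."""
    path = f"/{account}/{container}/{obj}".encode("utf-8")
    digest = hashlib.md5(path).digest()
    # Keep the top PART_POWER bits of the first 4 bytes of the MD5 digest.
    return struct.unpack_from(">I", digest)[0] >> PART_SHIFT


def build_assignment(nodes, replicas):
    """Toy partition -> nodes table; real Swift balances by device weight and zone."""
    return [
        [nodes[(part + r) % len(nodes)] for r in range(replicas)]
        for part in range(2 ** PART_POWER)
    ]


ASSIGNMENT = build_assignment(NODES, REPLICAS)


def nodes_for(account: str, container: str, obj: str):
    """Nodes holding the replicas of one object, found by computation only."""
    return ASSIGNMENT[partition_for(account, container, obj)]
```

Any proxy holding the same table computes the same three nodes for, say, `nodes_for("AUTH_vph", "images", "scan-001.dcm")` (a hypothetical account, container and object), which is why no metadata database is consulted on the data path.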

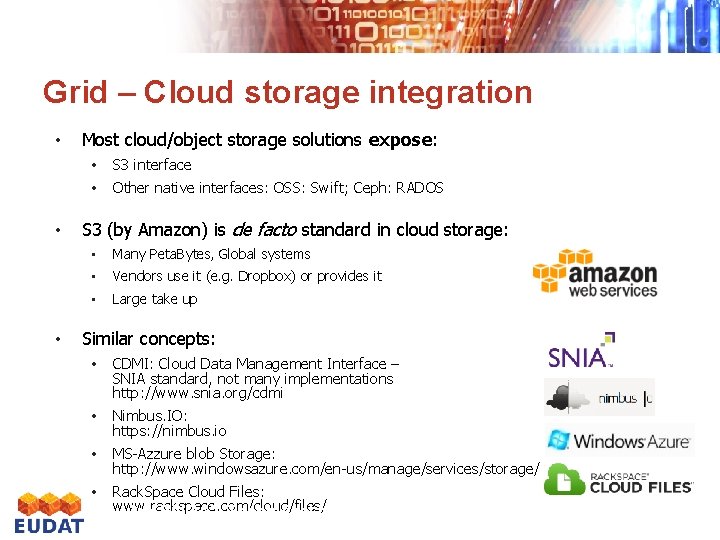

Grid – Cloud storage integration
• Most cloud/object storage solutions expose:
  – an S3 interface
  – other native interfaces: OSS: Swift; Ceph: RADOS
• S3 (by Amazon) is the de facto standard in cloud storage:
  – many petabytes, global systems
  – vendors use it (e.g. Dropbox) or provide it
  – large take-up
• Similar concepts:
  – CDMI: Cloud Data Management Interface, a SNIA standard with few implementations: http://www.snia.org/cdmi
  – NimbusIO: https://nimbus.io
  – MS Azure Blob Storage: http://www.windowsazure.com/en-us/manage/services/storage/
  – Rackspace Cloud Files: www.rackspace.com/cloud/files/
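
To make "S3 interface" concrete: S3 requests at the time were typically authenticated with AWS Signature Version 2, an HMAC-SHA1 over a canonical string built from the request. A minimal sketch follows, assuming path-style resources (`/bucket/key`), no sub-resources such as `?acl`, and placeholder credentials; current AWS regions expect Signature Version 4 instead, so treat this as an illustration of the interface rather than a client to deploy.

```python
import base64
import hashlib
import hmac
from typing import Dict, Optional


def string_to_sign(method: str, content_md5: str, content_type: str,
                   date: str, amz_headers: Dict[str, str], resource: str) -> str:
    """Build the Signature Version 2 string-to-sign."""
    # x-amz-* headers: lower-cased names, sorted, one "name:value\n" line each.
    canonical_amz = "".join(
        f"{name.lower()}:{value.strip()}\n"
        for name, value in sorted(amz_headers.items(), key=lambda kv: kv[0].lower())
    )
    return f"{method}\n{content_md5}\n{content_type}\n{date}\n{canonical_amz}{resource}"


def authorization_header(access_key: str, secret_key: str, method: str,
                         resource: str, date: str, content_md5: str = "",
                         content_type: str = "",
                         amz_headers: Optional[Dict[str, str]] = None) -> str:
    """Return the value of the Authorization header: 'AWS <key>:<signature>'."""
    sts = string_to_sign(method, content_md5, content_type, date,
                         amz_headers or {}, resource)
    digest = hmac.new(secret_key.encode("utf-8"), sts.encode("utf-8"),
                      hashlib.sha1).digest()
    signature = base64.b64encode(digest).decode("ascii")
    return f"AWS {access_key}:{signature}"
```

The `date` argument must match the request's `Date` header exactly; `email.utils.formatdate(usegmt=True)` produces a suitable RFC 1123 date string.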

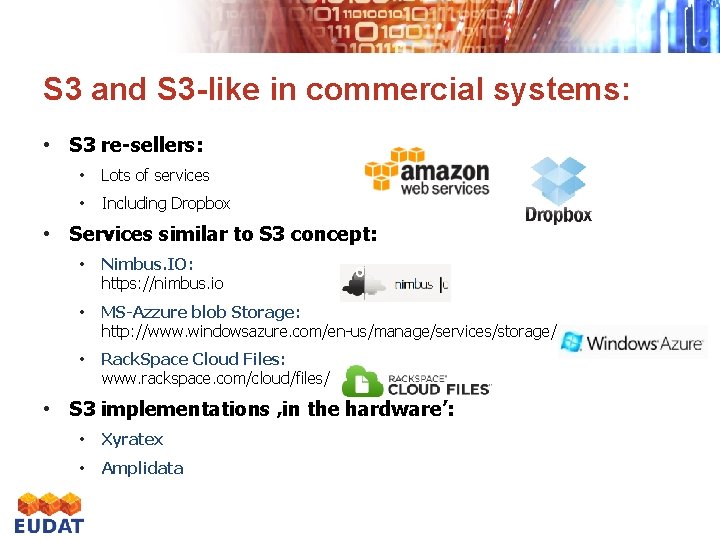

S3 and S3-like in commercial systems:
• S3 re-sellers:
  – lots of services
  – including Dropbox
• Services similar to the S3 concept:
  – NimbusIO: https://nimbus.io
  – MS Azure Blob Storage: http://www.windowsazure.com/en-us/manage/services/storage/
  – Rackspace Cloud Files: www.rackspace.com/cloud/files/
• S3 implementations 'in hardware':
  – Xyratex
  – Amplidata

Why build PRIVATE S3-like storage?
• Features/benefits:
  – reliable storage on top of commodity hardware
  – users remain in control of their data
  – easy scalability: the system can grow
  – resources can be added and data redistributed non-disruptively
• Open source software solutions and standards are available:
  – e.g. OpenStack Swift: OpenStack native API and S3 API
  – other S3-enabled storage: e.g. RADOS
  – CDMI: Cloud Data Management Interface
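
Non-disruptive growth follows from computed placement: when a node is added, only the objects that the placement function now maps to the new node have to move. The sketch below shows this with rendezvous (highest-random-weight) hashing, chosen here for brevity as a stand-in; it is neither Swift's ring nor Ceph's CRUSH algorithm, and the node and object names are hypothetical.

```python
import hashlib
from typing import Iterable, List, Sequence


def score(node: str, key: str) -> int:
    """Deterministic pseudo-random weight for a (node, key) pair."""
    digest = hashlib.sha256(f"{node}/{key}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def place(key: str, nodes: Sequence[str]) -> str:
    """Pick the node with the highest score; every client computes the same answer."""
    return max(nodes, key=lambda node: score(node, key))


def moved_keys(keys: Iterable[str], old: Sequence[str], new: Sequence[str]) -> List[str]:
    """Keys whose placement changes when the node list changes."""
    return [k for k in keys if place(k, old) != place(k, new)]


old_nodes = [f"node{i}" for i in range(1, 6)]
new_nodes = old_nodes + ["node6"]
keys = [f"obj-{i}" for i in range(10_000)]
moved = moved_keys(keys, old_nodes, new_nodes)
print(f"{len(moved)} of {len(keys)} objects move")
```

With six equally weighted nodes, roughly one object in six is expected to move, and every moved object lands on the new node; all other objects stay where they were.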

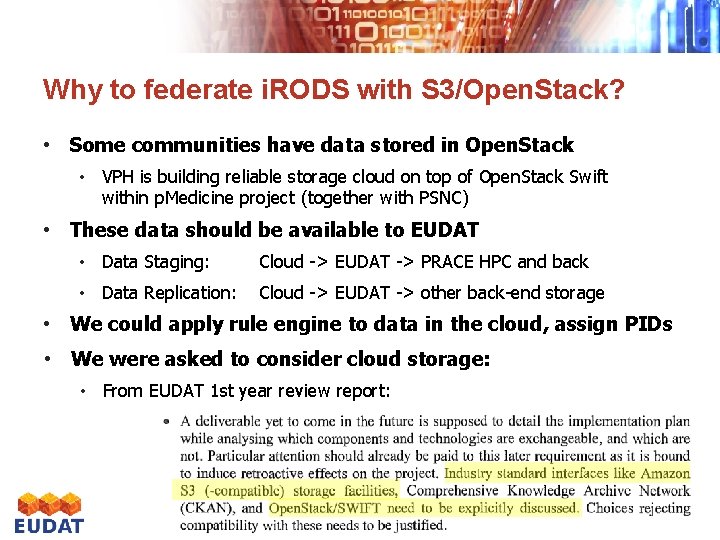

Why federate iRODS with S3/OpenStack?
• Some communities have data stored in OpenStack:
  – VPH is building a reliable storage cloud on top of OpenStack Swift within the pMedicine project (together with PSNC)
• These data should be available to EUDAT:
  – Data staging: Cloud -> EUDAT -> PRACE HPC and back
  – Data replication: Cloud -> EUDAT -> other back-end storage
  – We could apply the rule engine to data in the cloud and assign PIDs
• We were asked to consider cloud storage:
  – From the EUDAT 1st year review report:

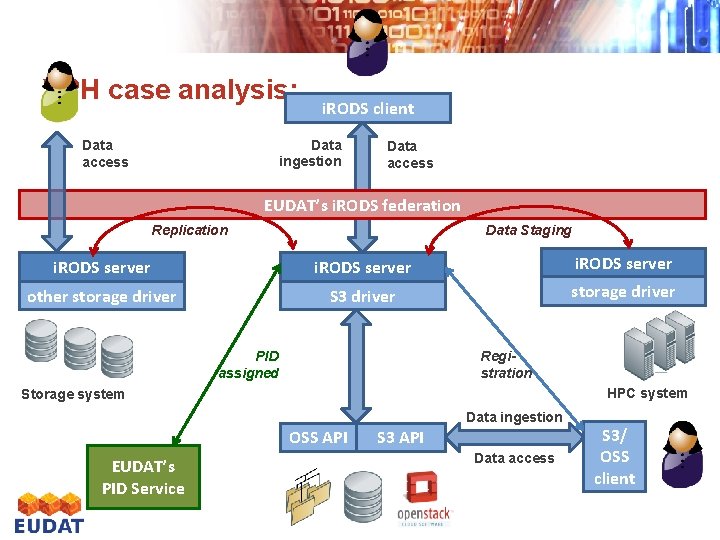

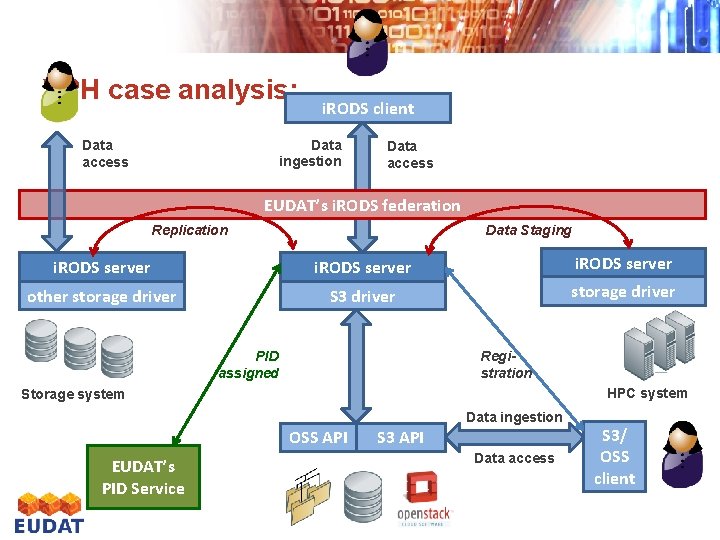

VPH case analysis
Figure: data are ingested into and accessed from the VPH storage system through its OSS API or S3 API (S3/OSS clients). An iRODS server in EUDAT's iRODS federation reaches the storage system through its S3 driver (alongside other storage drivers), registers the data with EUDAT's PID Service (PID assigned), replicates them to other storage and stages them to an HPC system; iRODS clients ingest and access data through the federation.

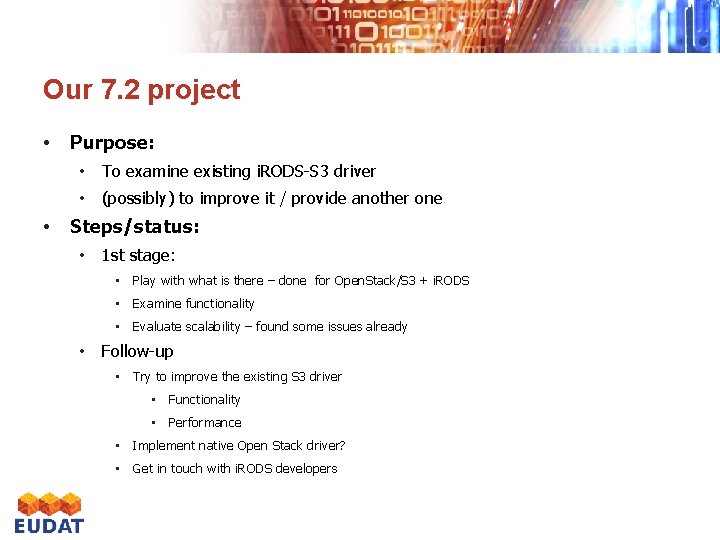

Our 7.2 project
• Purpose:
  – to examine the existing iRODS-S3 driver
  – (possibly) to improve it / provide another one
• Steps/status:
  – 1st stage:
    – play with what is there: done for OpenStack/S3 + iRODS
    – examine functionality
    – evaluate scalability: some issues found already
  – Follow-up:
    – try to improve the existing S3 driver (functionality, performance)
    – implement a native OpenStack driver?
    – get in touch with iRODS developers

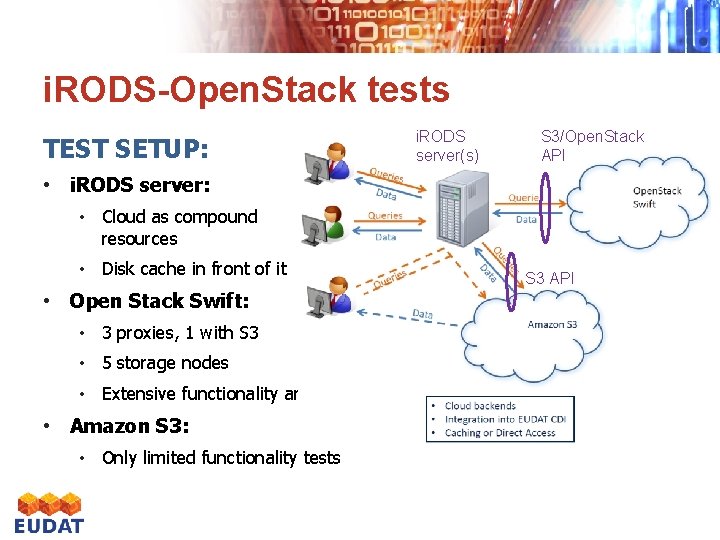

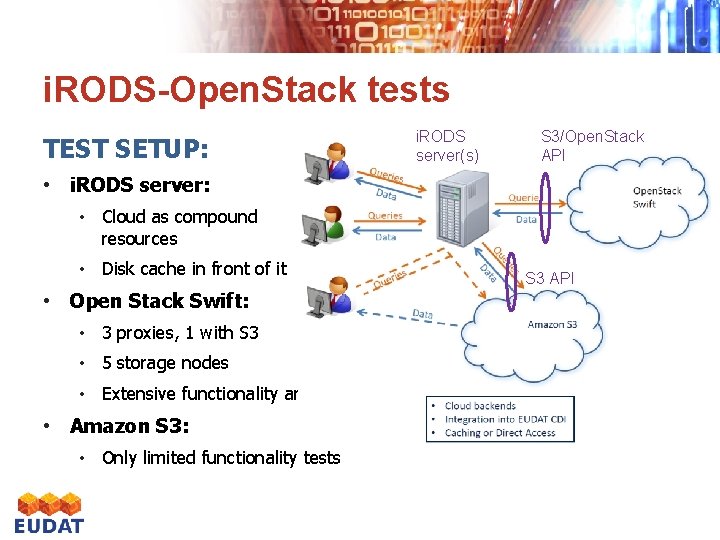

iRODS-OpenStack tests
TEST SETUP: iRODS server(s) accessing storage through the S3/OpenStack API
• iRODS server:
  – cloud as a compound resource
  – disk cache in front of it
• OpenStack Swift:
  – 3 proxies, 1 with S3
  – 5 storage nodes
  – extensive functionality and performance tests
• Amazon S3:
  – only limited functionality tests
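
The "cloud as compound resource with a disk cache in front" setup can be modelled as below. This is a toy sketch in plain Python, not iRODS code: a dictionary stands in for the S3/Swift bucket, and the class and method names are hypothetical. It shows why reads of cached data never touch object storage, while a read after the cache copy has been purged first stages the object back from the archive.

```python
class CachedCompoundResource:
    """Toy model: a disk cache resource in front of an S3-backed archive resource."""

    def __init__(self, archive):
        self.cache = {}          # logical path -> bytes held on the cache disk
        self.archive = archive   # stand-in for the S3/Swift bucket
        self.archive_reads = 0   # how often data had to be staged from the archive

    def put(self, path, data):
        # Write to the cache first, then synchronise a replica to the archive.
        self.cache[path] = data
        self.archive[path] = data

    def get(self, path):
        if path in self.cache:
            return self.cache[path]      # served from local disk
        data = self.archive[path]        # stage from object storage
        self.archive_reads += 1
        self.cache[path] = data          # keep a cache replica for later reads
        return data

    def purge_cache(self, path):
        # Drop the cache replica; the archive replica remains.
        self.cache.pop(path, None)
```

In this model only the first read after a purge pays the object-storage cost; subsequent reads are served from the cache.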

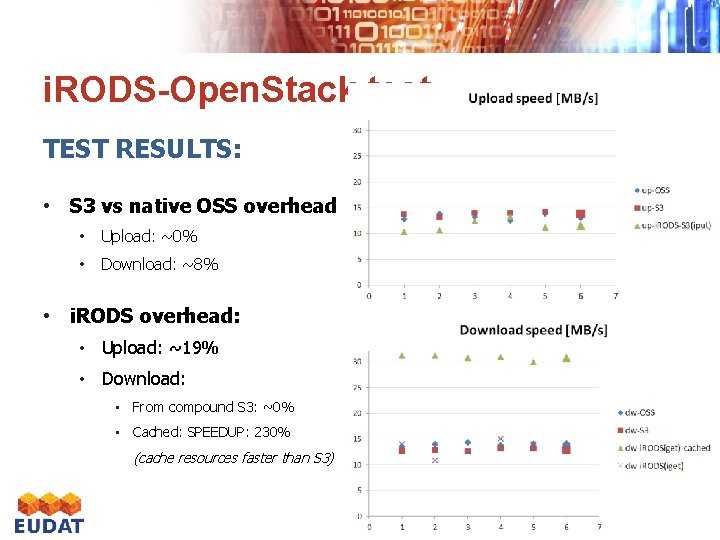

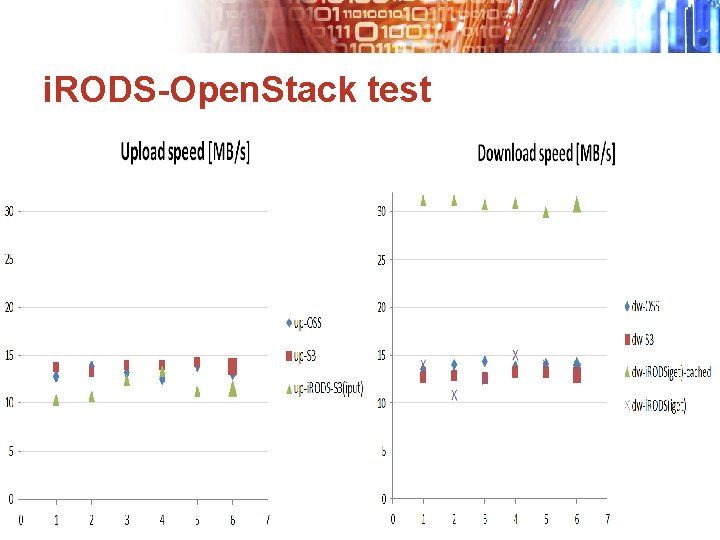

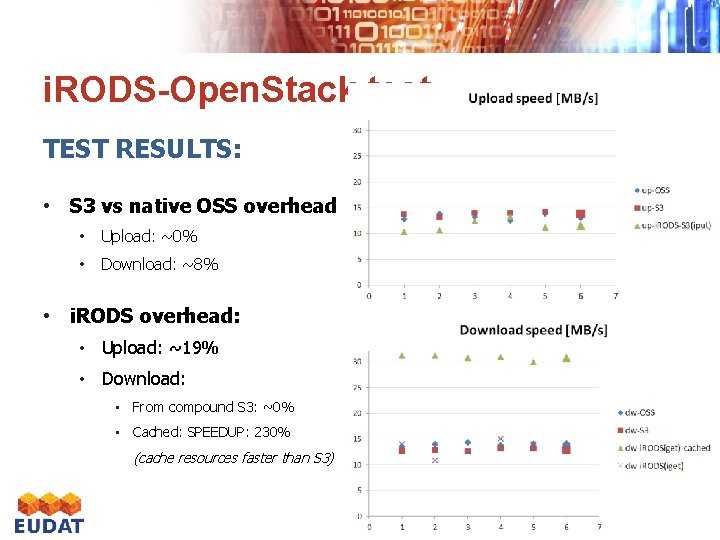

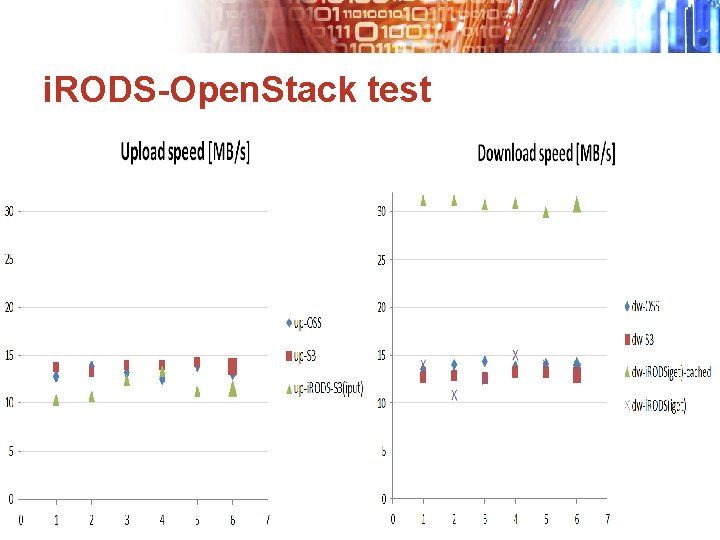

iRODS-OpenStack test
TEST RESULTS:
• S3 vs native OSS overhead:
  – upload: ~0%
  – download: ~8%
• iRODS overhead:
  – upload: ~19%
  – download:
    – from compound S3: ~0%
    – cached: SPEEDUP 230% (cache resources faster than S3)
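
The slide gives percentages without spelling out how they were computed. Assuming, for illustration, that overhead is the relative increase in transfer time over the direct path ($t$ = elapsed time) and that speedup is the relative throughput gain ($B$ = throughput), the figures read as:

$$
\text{overhead} = \frac{t_{\text{via iRODS}} - t_{\text{direct}}}{t_{\text{direct}}},
\qquad
\text{speedup} = \frac{B_{\text{cache}} - B_{\text{S3}}}{B_{\text{S3}}}
$$

Under this reading, a ~19% upload overhead means an upload through iRODS takes about 1.19 times as long as a direct upload, and a 230% speedup means cached reads deliver about $1 + 2.3 = 3.3$ times the throughput of reads from the S3-backed resource.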

iRODS-OpenStack test

Conclusions and future plans:
• Conclusions:
  – Performance-wise, iRODS does not add much overhead for files <2 GB
  – Problems arise for files >2 GB: the iRODS-S3 driver does not support multipart upload, which prevents iRODS from storing files >2 GB in clouds
  – Some functional limits (e.g. the imv problem)
  – Using iRODS to federate S3 clouds at large scale would require improving the existing driver or developing a new one
• Future plans:
  – test the integration with VPH's cloud using the existing driver
  – ask SAF to support the driver development
  – get in touch with iRODS developers to ensure the sustainability of our work
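
Multipart upload is the S3 mechanism for storing a large object as separately uploaded parts. A driver adding it would first need to split a file into parts within S3's documented limits (parts of 5 MiB to 5 GiB, except that the last part may be smaller; at most 10,000 parts; objects up to 5 TiB). The sketch below plans that split only; the function name and the 64 MiB default part size are illustrative choices, and the actual S3 requests are left out.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3
TIB = 1024 ** 4

# S3 multipart limits (every part except the last must be at least MIN_PART).
MIN_PART = 5 * MIB
MAX_PART = 5 * GIB
MAX_PARTS = 10_000
MAX_OBJECT = 5 * TIB


def plan_parts(file_size, part_size=64 * MIB):
    """Split a file into (part_number, offset, length) ranges for a multipart upload."""
    if file_size > MAX_OBJECT:
        raise ValueError("object larger than the S3 maximum of 5 TiB")
    if not MIN_PART <= part_size <= MAX_PART:
        raise ValueError("part size outside S3 limits")
    # Double the part size until the file fits in at most MAX_PARTS parts;
    # for objects up to 5 TiB this stays well below MAX_PART.
    while -(-file_size // part_size) > MAX_PARTS:
        part_size *= 2
    return [
        (number, offset, min(part_size, file_size - offset))
        for number, offset in enumerate(range(0, file_size, part_size), start=1)
    ]
```

Each tuple would map to one `UploadPart` request between `CreateMultipartUpload` and `CompleteMultipartUpload`; parts can be uploaded in parallel and retried individually.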

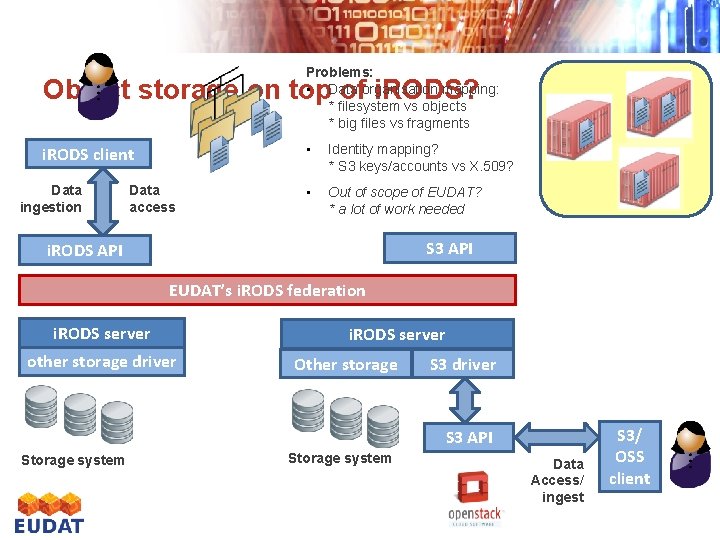

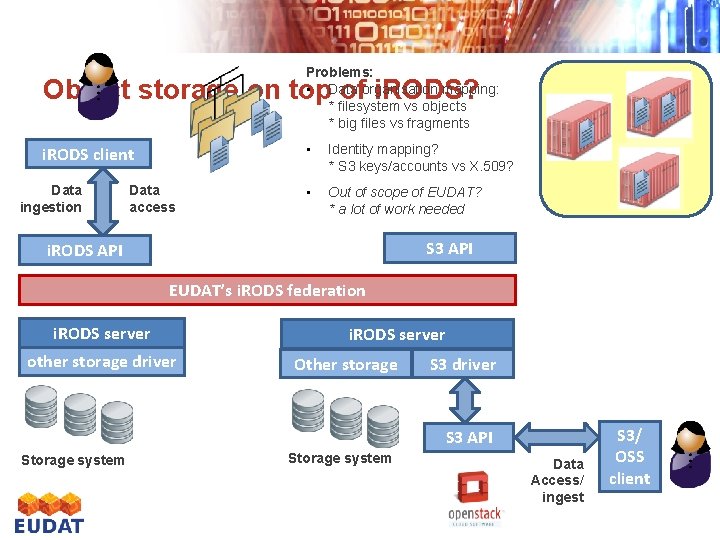

Problems:
• Data organisation mapping:
  – filesystem vs objects
  – big files vs fragments
• Identity mapping?
  – S3 keys/accounts vs X.509?
• Out of scope of EUDAT?
  – a lot of work needed
Figure: Object storage on top of iRODS? S3/OSS clients ingest and access data through an S3 API exposed by an iRODS server, while iRODS clients use the iRODS API; within EUDAT's iRODS federation, iRODS servers use an S3 driver or other storage drivers to reach the underlying storage systems.
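
The "filesystem vs objects" mapping problem can be illustrated with a minimal sketch, assuming each iRODS collection tree is stored in one bucket with `/`-separated keys (the zone path and function names are hypothetical). Listing a "directory" becomes a prefix-and-delimiter query, as in S3's `ListObjects`, and renaming a collection touches every object beneath it, because S3 has no rename operation, only copy and delete.

```python
def path_to_key(logical_path: str, collection_root: str) -> str:
    """Map an iRODS logical path under collection_root to a flat object key."""
    prefix = collection_root.rstrip("/") + "/"
    if not logical_path.startswith(prefix):
        raise ValueError(f"{logical_path} is not under {collection_root}")
    return logical_path[len(prefix):]


def list_level(keys, prefix: str = "", delimiter: str = "/"):
    """Emulate a delimiter listing: objects and 'sub-collections' at one level."""
    objects, common_prefixes = [], set()
    for key in sorted(keys):
        if not key.startswith(prefix):
            continue
        head, sep, _ = key[len(prefix):].partition(delimiter)
        if sep:
            common_prefixes.add(prefix + head + delimiter)  # a "sub-collection"
        else:
            objects.append(key)                             # a data object
    return objects, sorted(common_prefixes)


def rename_collection(store: dict, old_prefix: str, new_prefix: str) -> int:
    """No rename in object storage: each object under the prefix is copied and deleted."""
    moved = 0
    for key in [k for k in store if k.startswith(old_prefix)]:
        store[new_prefix + key[len(old_prefix):]] = store.pop(key)
        moved += 1
    return moved
```

A filesystem-style rename is a single metadata update, whereas here its cost grows with the number of objects in the collection.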