Extensions to the K-means Algorithm for Clustering Large Data Sets with Categorical Values


Extensions to the K-means Algorithm for Clustering Large Data Sets with Categorical Values

- Author: Zhexue Huang
- Advisor: Dr. Hsu
- Graduate: Yu-Wei Su
- 2001/11/06 The Lab of Intelligent Database System, IDS

Outline

- Motivation
- Objective
- Research review
- Notation
- K-means Algorithm
- K-modes Algorithm
- K-prototypes Algorithm
- Experiment
- Conclusion
- Personal opinion

Motivation

- K-means methods are efficient for processing large data sets.
- K-means is limited to numeric data.
- Real-world data sets often mix numeric and categorical values and can contain millions of objects.

Objective

- Extending k-means to categorical domains and to domains with mixed numeric and categorical values

Research review

- Partitioning methods: a partitioning algorithm organizes the objects into K partitions (K < N)
  - K-means [MacQueen, 1967]
  - K-medoids [Kaufman and Rousseeuw, 1990]
  - CLARANS [Ng and Han, 1994]


Notation

- $A_1, A_2, \ldots, A_m$ denote the $m$ attributes; each $A_j$ describes a domain of values, denoted by $\mathrm{DOM}(A_j)$.
- $X = \{X_1, X_2, \ldots, X_n\}$ is a set of $n$ objects; object $X_i$ is represented as $[x_{i,1}, x_{i,2}, \ldots, x_{i,m}]$.
- $X_i = X_k$ if $x_{i,j} = x_{k,j}$ for $1 \le j \le m$.
- For mixed data, $X_i = [x^r_{i,1}, \ldots, x^r_{i,p}, x^c_{i,p+1}, \ldots, x^c_{i,m}]$: the first $p$ elements are numeric values and the rest are categorical values.

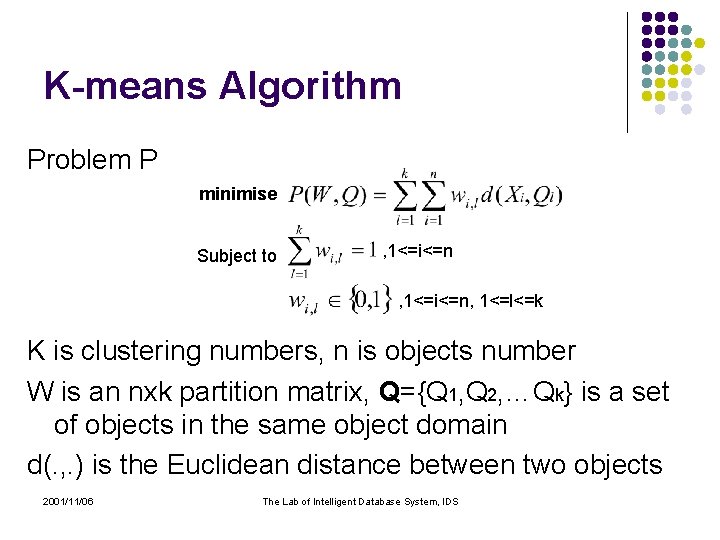

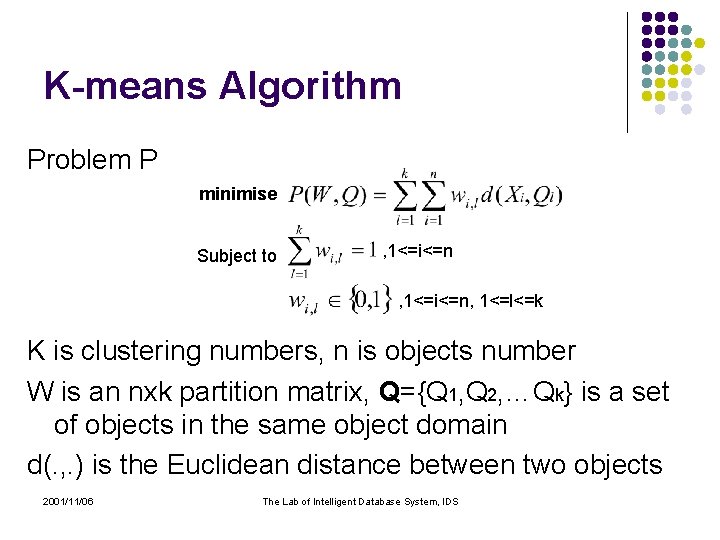

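K-means Algorithm

Problem P: minimise

$$P(W, Q) = \sum_{l=1}^{k} \sum_{i=1}^{n} w_{i,l}\, d(X_i, Q_l)$$

subject to $\sum_{l=1}^{k} w_{i,l} = 1$ for $1 \le i \le n$, and $w_{i,l} \in \{0, 1\}$ for $1 \le i \le n$, $1 \le l \le k$.

- $k$ is the number of clusters and $n$ is the number of objects.
- $W$ is an $n \times k$ partition matrix, and $Q = \{Q_1, Q_2, \ldots, Q_k\}$ is a set of objects in the same object domain.
- $d(\cdot, \cdot)$ is the squared Euclidean distance between two objects, $d(X_i, Q_l) = \sum_{j=1}^{m} (x_{i,j} - q_{l,j})^2$.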
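A minimal Python sketch of the cost in Problem P, assuming objects are lists of floats, W is a list-of-lists 0/1 partition matrix, and d is the squared Euclidean distance. The function names `squared_euclidean` and `kmeans_cost` are hypothetical, chosen only for illustration.

```python
def squared_euclidean(x, q):
    """d(X_i, Q_l): squared Euclidean distance between two numeric objects."""
    return sum((a - b) ** 2 for a, b in zip(x, q))


def kmeans_cost(X, W, Q):
    """P(W, Q) = sum over l and i of w[i][l] * d(X[i], Q[l]).

    X: n objects, W: n x k partition matrix of 0/1 entries, Q: k centres.
    """
    n, k = len(X), len(Q)
    for row in W:
        # Constraints of Problem P: entries are 0 or 1 and each row sums to 1.
        if len(row) != k or any(w not in (0, 1) for w in row) or sum(row) != 1:
            raise ValueError("W violates the partition constraints")
    return sum(W[i][l] * squared_euclidean(X[i], Q[l])
               for l in range(k) for i in range(n))


X = [[0.0, 0.0], [1.0, 0.0], [10.0, 10.0]]
W = [[1, 0], [1, 0], [0, 1]]
Q = [[0.5, 0.0], [10.0, 10.0]]
print(kmeans_cost(X, W, Q))  # 0.5
```

Because each row of W has exactly one 1, the double sum simply adds each object's distance to the centre of its own cluster.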

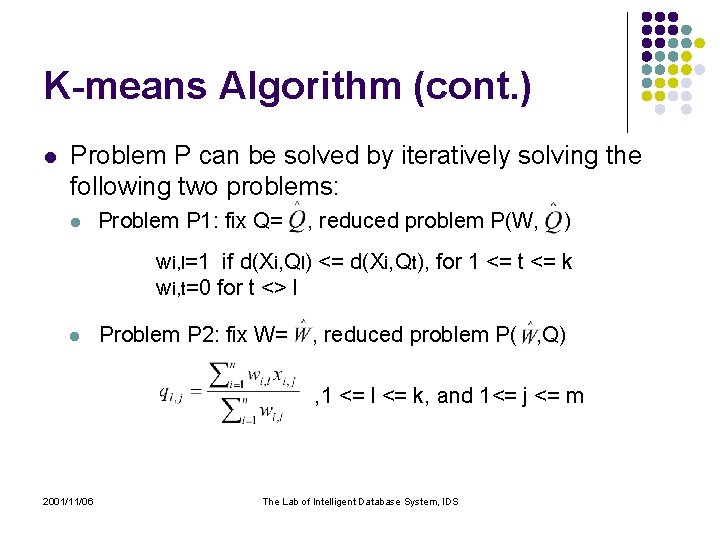

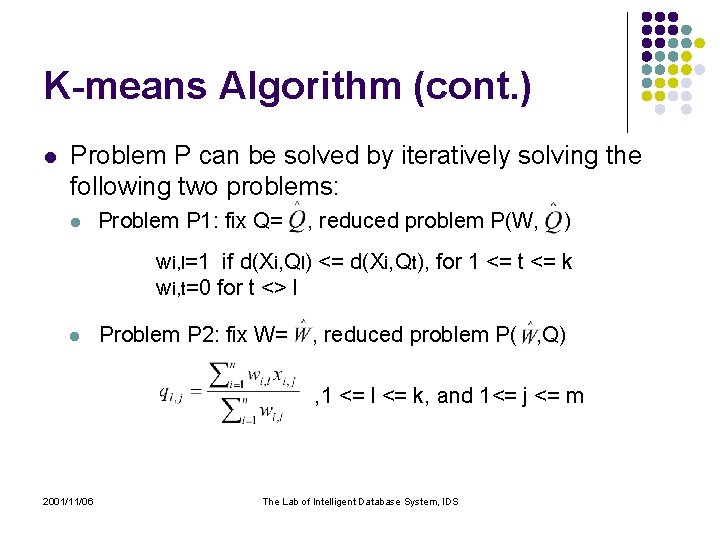

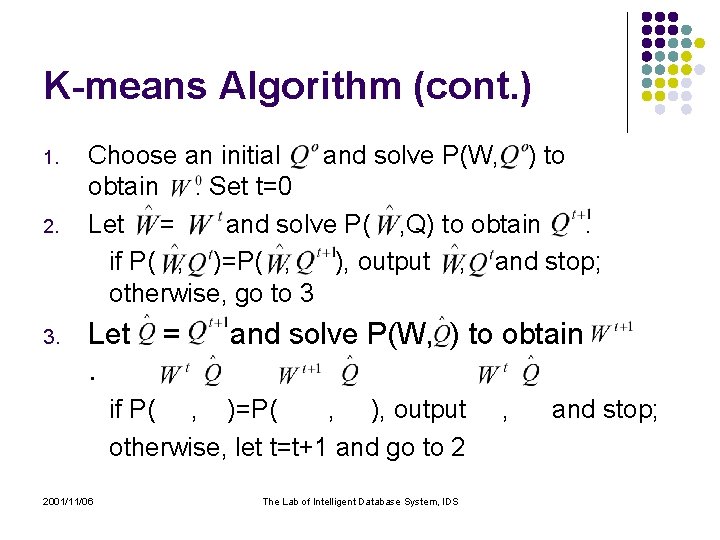

K-means Algorithm (cont.)

Problem P can be solved by iteratively solving the following two problems:

- Problem P1: fix $Q = \hat{Q}$ and solve the reduced problem $P(W, \hat{Q})$. The solution is $w_{i,l} = 1$ if $d(X_i, Q_l) \le d(X_i, Q_t)$ for $1 \le t \le k$, and $w_{i,t} = 0$ for $t \ne l$.
- Problem P2: fix $W = \hat{W}$ and solve the reduced problem $P(\hat{W}, Q)$. The solution is $q_{l,j} = \sum_{i=1}^{n} w_{i,l}\, x_{i,j} \big/ \sum_{i=1}^{n} w_{i,l}$ for $1 \le l \le k$ and $1 \le j \le m$.
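The two reduced problems can be sketched in Python as follows, assuming the same list-based representation as before. `solve_p1` and `solve_p2` are hypothetical names; ties in P1 are broken in favour of the lowest cluster index, which is one valid choice among several.

```python
def squared_euclidean(x, q):
    return sum((a - b) ** 2 for a, b in zip(x, q))


def solve_p1(X, Q):
    """Problem P1 (Q fixed): w[i][l] = 1 for the nearest centre, 0 elsewhere.

    Ties are broken in favour of the lowest cluster index.
    """
    k = len(Q)
    W = []
    for x in X:
        dists = [squared_euclidean(x, q) for q in Q]
        l = dists.index(min(dists))
        W.append([1 if t == l else 0 for t in range(k)])
    return W


def solve_p2(X, W):
    """Problem P2 (W fixed): q[l][j] = sum_i w[i][l] * x[i][j] / sum_i w[i][l]."""
    n, m, k = len(X), len(X[0]), len(W[0])
    Q = []
    for l in range(k):
        size = sum(W[i][l] for i in range(n))
        if size == 0:
            # The formula is undefined for an empty cluster; this sketch rejects it.
            raise ValueError(f"cluster {l} has no objects")
        Q.append([sum(W[i][l] * X[i][j] for i in range(n)) / size
                  for j in range(m)])
    return Q


X = [[0.0, 0.0], [1.0, 0.0], [10.0, 10.0]]
W = solve_p1(X, [[0.0, 0.0], [9.0, 9.0]])
print(W)               # [[1, 0], [1, 0], [0, 1]]
print(solve_p2(X, W))  # [[0.5, 0.0], [10.0, 10.0]]
```

P2 is exactly the per-cluster mean, which is why the algorithm keeps the name k-means.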

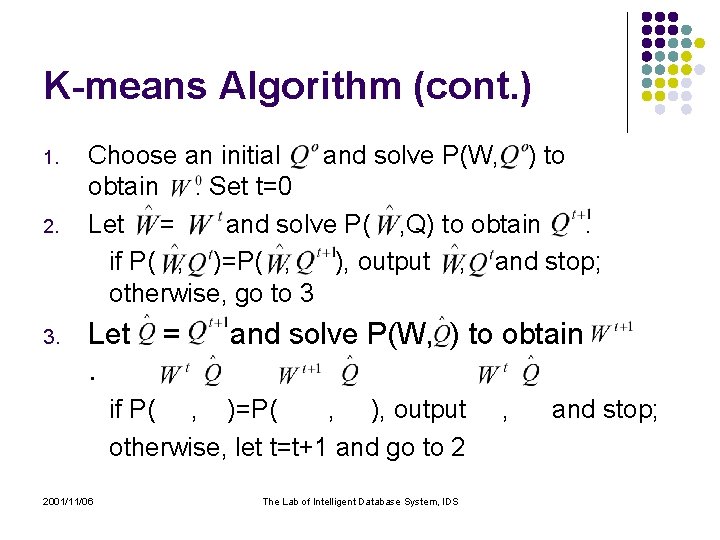

K-means Algorithm (cont.)

1. Choose an initial $Q^0$ and solve $P(W, Q^0)$ to obtain $W^0$. Set $t = 0$.
2. Let $\hat{W} = W^t$ and solve $P(\hat{W}, Q)$ to obtain $Q^{t+1}$. If $P(\hat{W}, Q^t) = P(\hat{W}, Q^{t+1})$, output $\hat{W}, Q^t$ and stop; otherwise, go to 3.
3. Let $\hat{Q} = Q^{t+1}$ and solve $P(W, \hat{Q})$ to obtain $W^{t+1}$. If $P(W^t, \hat{Q}) = P(W^{t+1}, \hat{Q})$, output $W^t, \hat{Q}$ and stop; otherwise, let $t = t + 1$ and go to 2.
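A self-contained Python sketch of steps 1 to 3, assuming list-based objects and non-empty clusters throughout. The names `cost`, `solve_p1`, `solve_p2` and `kmeans`, and the `max_iter` safety cap, are hypothetical additions that are not part of the slide.

```python
def squared_euclidean(x, q):
    return sum((a - b) ** 2 for a, b in zip(x, q))


def cost(X, W, Q):
    """P(W, Q) for a 0/1 partition matrix W."""
    return sum(W[i][l] * squared_euclidean(X[i], Q[l])
               for i in range(len(X)) for l in range(len(Q)))


def solve_p1(X, Q):
    """Assign each object to its nearest centre (lowest index on ties)."""
    W = []
    for x in X:
        dists = [squared_euclidean(x, q) for q in Q]
        l = dists.index(min(dists))
        W.append([1 if t == l else 0 for t in range(len(Q))])
    return W


def solve_p2(X, W):
    """Recompute each centre as the mean of its members (assumes none is empty)."""
    k, m = len(W[0]), len(X[0])
    Q = []
    for l in range(k):
        members = [x for x, row in zip(X, W) if row[l] == 1]
        Q.append([sum(x[j] for x in members) / len(members) for j in range(m)])
    return Q


def kmeans(X, Q0, max_iter=100):
    """Steps 1 to 3; max_iter is an added safety cap."""
    W, Q = solve_p1(X, Q0), Q0              # step 1: W^0 from Q^0
    for _ in range(max_iter):
        Q_next = solve_p2(X, W)             # step 2: Q^{t+1} with W fixed
        if cost(X, W, Q) == cost(X, W, Q_next):
            return W, Q
        Q = Q_next
        W_next = solve_p1(X, Q)             # step 3: W^{t+1} with Q fixed
        if cost(X, W, Q) == cost(X, W_next, Q):
            return W, Q
        W = W_next
    return W, Q


X = [[0.0, 0.0], [1.0, 0.0], [10.0, 10.0], [11.0, 10.0]]
W, Q = kmeans(X, [[0.0, 0.0], [1.0, 0.0]])
print(W)  # [[1, 0], [1, 0], [0, 1], [0, 1]]
print(Q)  # [[0.5, 0.0], [10.5, 10.0]]
```

The stopping tests compare costs exactly, as in the slide; since P2 is recomputed deterministically from the same W, an unchanged partition yields identical centres and therefore an identical cost.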

K-modes Algorithm

- Uses a simple matching dissimilarity measure for categorical objects
- Replaces the means of clusters with modes
- Uses a frequency-based method to find the modes

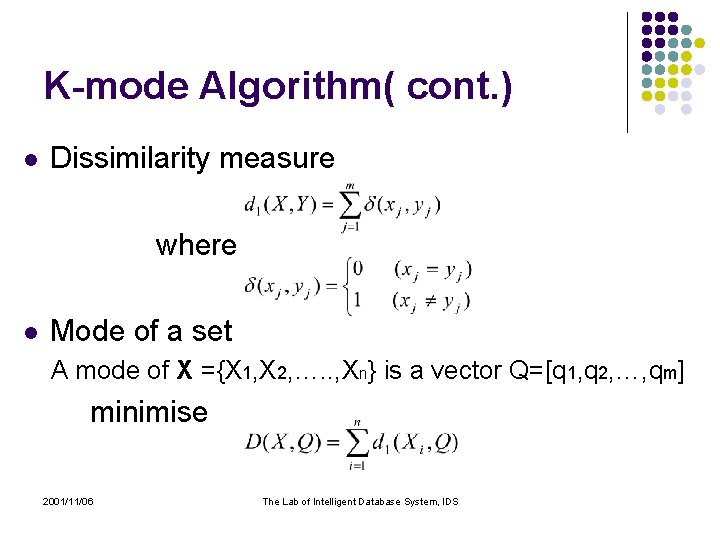

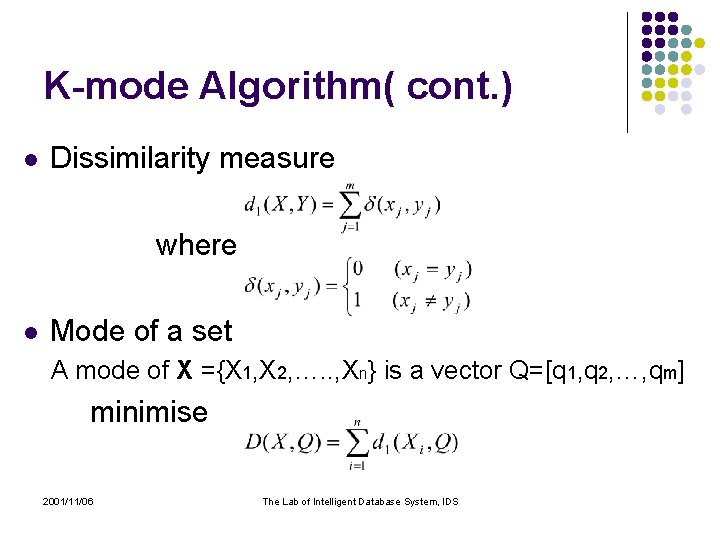

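K-modes Algorithm (cont.)

- Dissimilarity measure: $d_1(X, Y) = \sum_{j=1}^{m} \delta(x_j, y_j)$, where $\delta(x_j, y_j) = 0$ if $x_j = y_j$ and $\delta(x_j, y_j) = 1$ if $x_j \ne y_j$.
- Mode of a set: a mode of $X = \{X_1, X_2, \ldots, X_n\}$ is a vector $Q = [q_1, q_2, \ldots, q_m]$ that minimises $D(X, Q) = \sum_{i=1}^{n} d_1(X_i, Q)$. $Q$ is not necessarily an element of $X$.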
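The dissimilarity measure and the quantity a mode minimises can be sketched in Python like this, assuming categorical objects are lists of strings. `matching_dissimilarity` and `total_dissimilarity` are hypothetical names for $d_1$ and $D$.

```python
def matching_dissimilarity(x, y):
    """d1(X, Y): number of attributes on which two categorical objects differ."""
    if len(x) != len(y):
        raise ValueError("objects must have the same number of attributes")
    return sum(1 for a, b in zip(x, y) if a != b)


def total_dissimilarity(X, Q):
    """D(X, Q) = sum_i d1(X_i, Q), the quantity a mode of X minimises."""
    return sum(matching_dissimilarity(x, Q) for x in X)


print(matching_dissimilarity(["red", "small", "round"],
                             ["red", "large", "square"]))  # 2
X = [["a", "x"], ["a", "y"], ["b", "x"]]
print(total_dissimilarity(X, ["a", "x"]))  # 2
```

Each mismatching attribute counts 1 regardless of which categories are involved, so the measure needs no ordering or distance between category values.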

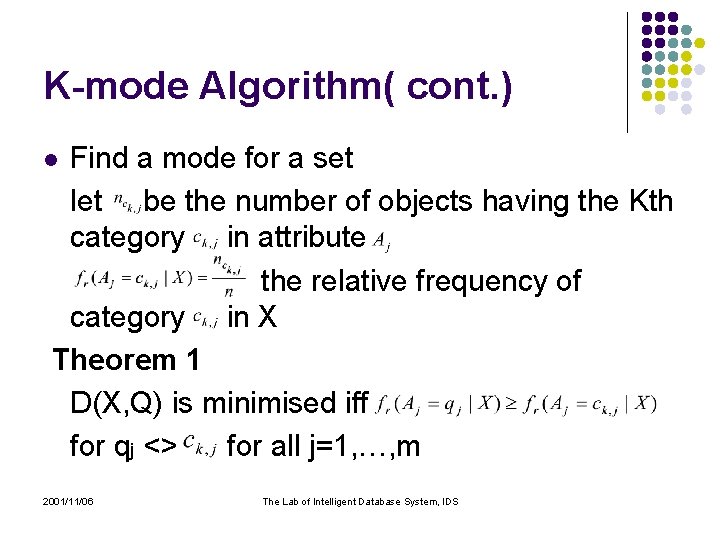

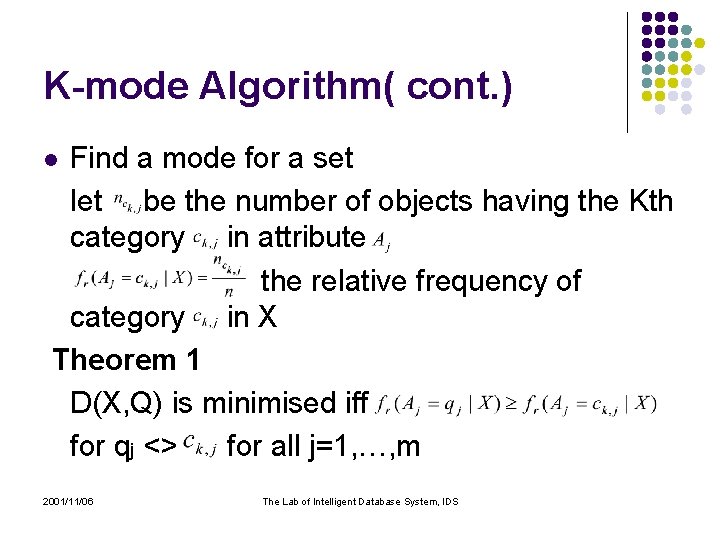

K-modes Algorithm (cont.)

- Finding a mode for a set: let $n_{c_{k,j}}$ be the number of objects having the $k$th category $c_{k,j}$ in attribute $A_j$, and let $f_r(A_j = c_{k,j} \mid X) = n_{c_{k,j}} / n$ be the relative frequency of category $c_{k,j}$ in $X$.
- Theorem 1: $D(X, Q)$ is minimised iff $f_r(A_j = q_j \mid X) \ge f_r(A_j = c_{k,j} \mid X)$ for $q_j \ne c_{k,j}$, for all $j = 1, \ldots, m$.
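Theorem 1 means a mode can be built one attribute at a time by taking a most frequent category. A minimal Python sketch, with hypothetical names `relative_frequency` and `find_mode`, using `collections.Counter` to count categories:

```python
from collections import Counter


def relative_frequency(X, j, category):
    """f_r(A_j = category | X): share of objects whose attribute j equals category."""
    return sum(1 for x in X if x[j] == category) / len(X)


def find_mode(X):
    """Build Q attribute by attribute, picking a most frequent category (Theorem 1).

    Counter.most_common orders equal counts by first occurrence, so ties are
    resolved in favour of the category seen first in X.
    """
    m = len(X[0])
    return [Counter(x[j] for x in X).most_common(1)[0][0] for j in range(m)]


X = [["a", "x", "p"], ["a", "y", "q"], ["b", "y", "p"]]
Q = find_mode(X)
print(Q)                              # ['a', 'y', 'p']
print(Q in X)                         # False: a mode need not be an object of X
print(relative_frequency(X, 0, "a"))  # 0.6666666666666666
```

The example also illustrates the remark above: the mode `['a', 'y', 'p']` does not occur in X.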

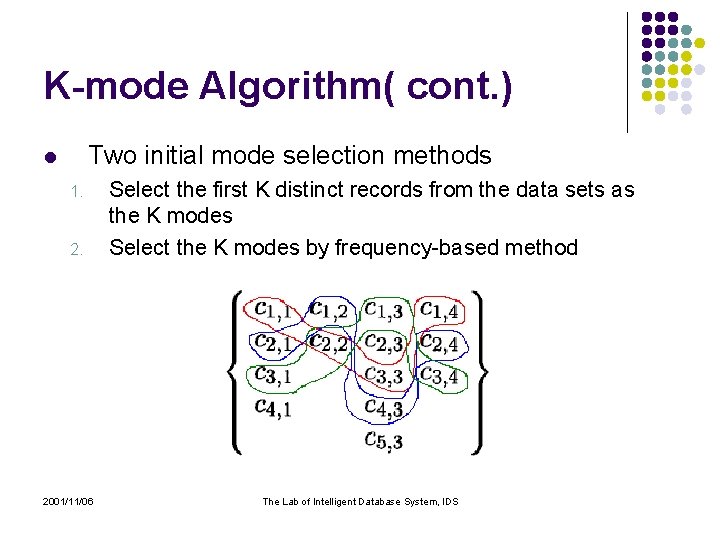

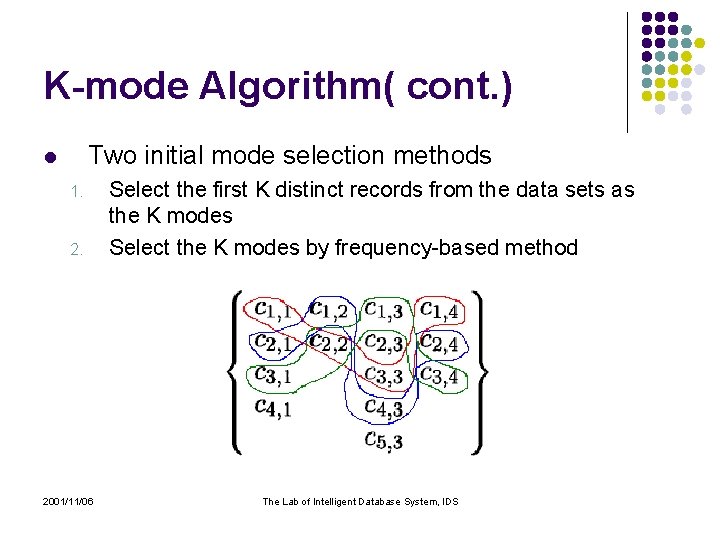

K-modes Algorithm (cont.)

Two initial mode selection methods:

1. Select the first $k$ distinct records from the data set as the $k$ modes.
2. Select the $k$ modes by a frequency-based method.
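A Python sketch of the first initial mode selection method, assuming records are lists of category values. `first_k_distinct` is a hypothetical name; the frequency-based method involves more bookkeeping and is not sketched here.

```python
def first_k_distinct(records, k):
    """Method 1: the first k distinct records in data-set order become the modes."""
    modes, seen = [], set()
    for r in records:
        key = tuple(r)  # lists are unhashable, so compare records as tuples
        if key not in seen:
            seen.add(key)
            modes.append(list(r))
            if len(modes) == k:
                return modes
    raise ValueError(f"the data set has fewer than {k} distinct records")


records = [["a", "x"], ["a", "x"], ["b", "y"], ["c", "z"]]
print(first_k_distinct(records, 2))  # [['a', 'x'], ['b', 'y']]
```

Requiring distinct records avoids starting with two identical modes, which would leave one of the clusters unable to attract any object.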

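K-modes Algorithm (cont.)

$$P(W, Q) = \sum_{l=1}^{k} \sum_{i=1}^{n} \sum_{j=1}^{m} w_{i,l}\, \delta(x_{i,j}, q_{l,j})$$

where $w_{i,l} \in W$ and $Q_l = [q_{l,1}, q_{l,2}, \ldots, q_{l,m}] \in Q$.

- Calculating the total cost $P$ against the whole data set each time a new $Q$ or $W$ is obtained is expensive, so in practice the following procedure is used instead.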
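The k-modes cost can be sketched in Python as below. As an assumption for brevity, the partition matrix W is represented by a label list, with $w_{i,l} = 1$ exactly when `labels[i] == l`; `delta` and `kmodes_cost` are hypothetical names.

```python
def delta(a, b):
    """delta(x, q) = 0 when the categories match, 1 otherwise."""
    return 0 if a == b else 1


def kmodes_cost(X, labels, Q):
    """P(W, Q) = sum_l sum_i sum_j w[i][l] * delta(x[i][j], q[l][j]).

    W is represented implicitly: w[i][l] = 1 exactly when labels[i] == l,
    so only the mode of each object's own cluster contributes.
    """
    return sum(delta(xj, qj)
               for x, l in zip(X, labels)
               for xj, qj in zip(x, Q[l]))


X = [["a", "x"], ["a", "y"], ["b", "y"]]
print(kmodes_cost(X, [0, 0, 1], [["a", "x"], ["b", "y"]]))  # 1
```

Evaluating this sum touches every attribute of every object, which is the full-data-set pass the slide describes as costly to repeat after every update.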

K-modes Algorithm (cont.)

1. Select $k$ initial modes, one for each cluster.
2. Allocate an object to the cluster whose mode is the nearest to it according to $d_1$. Update the mode of the cluster after each allocation according to Theorem 1.

K-modes Algorithm (cont.)

3. After all objects have been allocated to clusters, retest the dissimilarity of objects against the current modes. If an object is found whose nearest mode belongs to another cluster rather than its current one, reallocate the object to that cluster and update the modes of both clusters.
4. Repeat step 3 until no object has changed clusters.
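Steps 1 to 4 can be sketched in Python as follows. This is an illustrative reading of the slides, not a definitive implementation: `kmodes`, `mode_of` and the `max_cycles` cap are hypothetical, a move happens only on a strict improvement (so ties cannot cause oscillation), and a cluster that becomes empty keeps its last mode.

```python
from collections import Counter


def d1(x, y):
    """Simple matching dissimilarity."""
    return sum(1 for a, b in zip(x, y) if a != b)


def mode_of(members):
    """Theorem 1: most frequent category per attribute (first seen wins ties)."""
    return [Counter(col).most_common(1)[0][0] for col in zip(*members)]


def kmodes(X, initial_modes, max_cycles=100):
    k = len(initial_modes)
    modes = [list(q) for q in initial_modes]   # step 1: k initial modes
    members = [[] for _ in range(k)]           # object indices per cluster
    labels = []

    def nearest(x):
        return min(range(k), key=lambda c: d1(x, modes[c]))

    # Step 2: allocate each object, updating that cluster's mode immediately.
    for i, x in enumerate(X):
        l = nearest(x)
        labels.append(l)
        members[l].append(i)
        modes[l] = mode_of([X[t] for t in members[l]])

    # Steps 3 and 4: retest all objects until a full pass moves none.
    for _ in range(max_cycles):
        moved = False
        for i, x in enumerate(X):
            old, new = labels[i], nearest(x)
            if new != old and d1(x, modes[new]) < d1(x, modes[old]):
                members[old].remove(i)
                members[new].append(i)
                labels[i] = new
                for c in (old, new):           # update the modes of both clusters
                    if members[c]:
                        modes[c] = mode_of([X[t] for t in members[c]])
                moved = True
        if not moved:
            break
    return labels, modes


X = [["a", "x", "p"], ["a", "x", "q"], ["b", "y", "r"], ["b", "y", "s"]]
labels, modes = kmodes(X, [["a", "x", "p"], ["b", "y", "r"]])
print(labels)  # [0, 0, 1, 1]
print(modes)   # [['a', 'x', 'p'], ['b', 'y', 'r']]
```

Updating a mode right after each allocation, rather than once per full pass, is what lets the procedure avoid recomputing the total cost P over the whole data set.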

K-prototypes Algorithm

- Integrates the k-means and k-modes algorithms to cluster mixed-type objects.
- An object is $X = [x^r_1, \ldots, x^r_p, x^c_{p+1}, \ldots, x^c_m]$, where $m$ is the number of attributes; the first $p$ attributes are numeric and the rest are categorical.

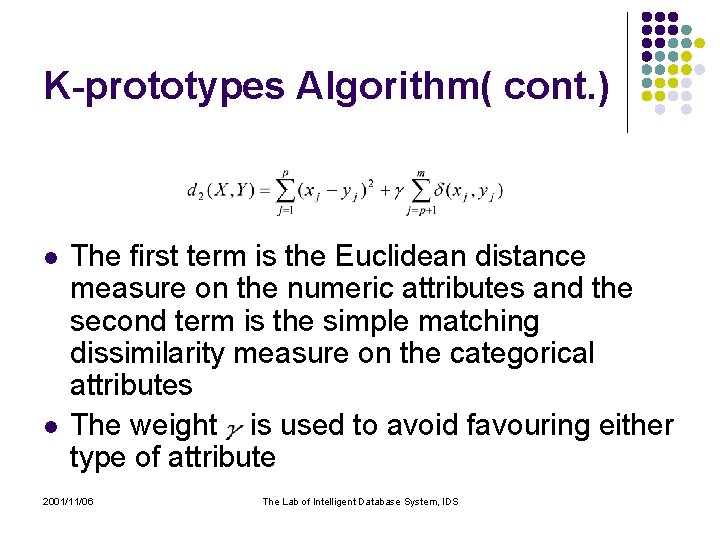

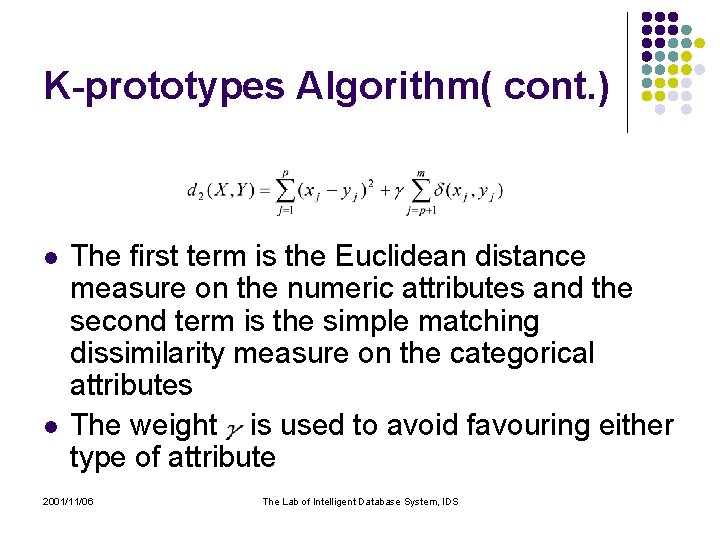

K-prototypes Algorithm (cont.)

- The first term is the Euclidean distance measure on the numeric attributes, and the second term is the simple matching dissimilarity measure on the categorical attributes.
- The weight $\gamma$ is used to avoid favouring either type of attribute.
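The combined measure itself appears only in the slide images. This Python sketch assumes it takes the form $d_2(X, Y) = \sum_{j=1}^{p} (x_j - y_j)^2 + \gamma \sum_{j=p+1}^{m} \delta(x_j, y_j)$, that is, the squared form of the Euclidean term used in the k-means cost. `mixed_dissimilarity` is a hypothetical name.

```python
def mixed_dissimilarity(x, y, p, gamma):
    """d2(X, Y) for mixed objects whose first p attributes are numeric.

    Numeric term: sum of squared differences over attributes 0..p-1.
    Categorical term: simple matching count over attributes p..m-1, weighted by gamma.
    """
    if len(x) != len(y):
        raise ValueError("objects must have the same number of attributes")
    numeric = sum((x[j] - y[j]) ** 2 for j in range(p))
    categorical = sum(1 for j in range(p, len(x)) if x[j] != y[j])
    return numeric + gamma * categorical


x = [1.0, 2.0, "red", "small"]
y = [0.0, 2.0, "blue", "small"]
print(mixed_dissimilarity(x, y, p=2, gamma=0.5))  # 1.5
print(mixed_dissimilarity(x, y, p=2, gamma=4.0))  # 5.0
```

The two calls show the role of $\gamma$: the same single categorical mismatch contributes 0.5 or 4.0, so a larger weight makes categorical attributes dominate the assignment.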

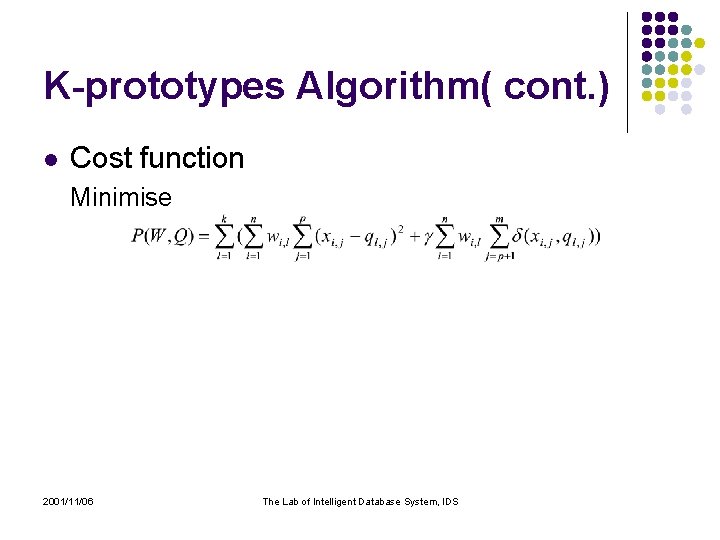

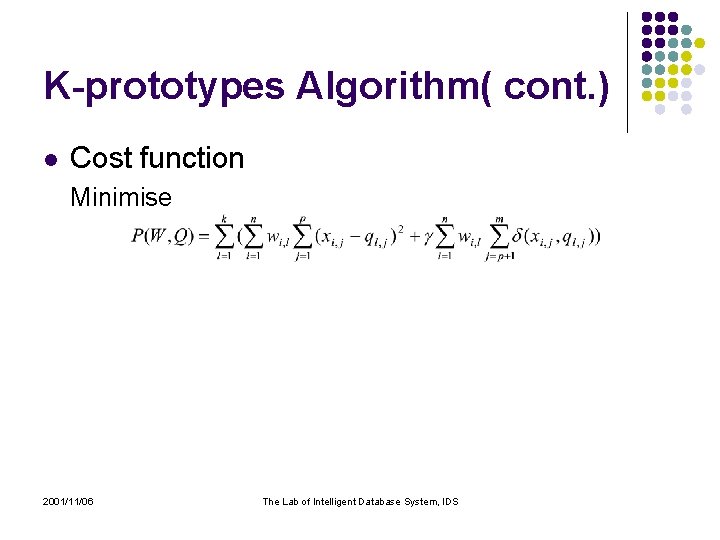

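K-prototypes Algorithm (cont.)

Cost function: minimise

$$P(W, Q) = \sum_{l=1}^{k} \left( \sum_{i=1}^{n} w_{i,l} \sum_{j=1}^{p} (x_{i,j} - q_{l,j})^2 + \gamma \sum_{i=1}^{n} w_{i,l} \sum_{j=p+1}^{m} \delta(x_{i,j}, q_{l,j}) \right)$$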
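A Python sketch of this cost, assuming as before that W is given as a label list ($w_{i,l} = 1$ exactly when `labels[i] == l`) and that the first `p` attributes are numeric. `kprototypes_cost` is a hypothetical name.

```python
def kprototypes_cost(X, labels, Q, p, gamma):
    """Sum over clusters of (numeric term + gamma * categorical term)."""
    total = 0.0
    for l, q in enumerate(Q):
        members = [x for x, lab in zip(X, labels) if lab == l]
        # Numeric term: squared differences to the prototype on attributes 0..p-1.
        numeric = sum((x[j] - q[j]) ** 2 for x in members for j in range(p))
        # Categorical term: mismatches with the prototype on attributes p..m-1.
        categorical = sum(1 for x in members
                          for j in range(p, len(x)) if x[j] != q[j])
        total += numeric + gamma * categorical
    return total


X = [[0.0, "a"], [2.0, "b"], [10.0, "c"]]
print(kprototypes_cost(X, [0, 0, 1], [[1.0, "a"], [10.0, "c"]], p=1, gamma=2.0))  # 4.0
```

With $\gamma = 0$ the cost reduces to the k-means cost on the numeric attributes, and with $p = 0$ it is $\gamma$ times the k-modes cost.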

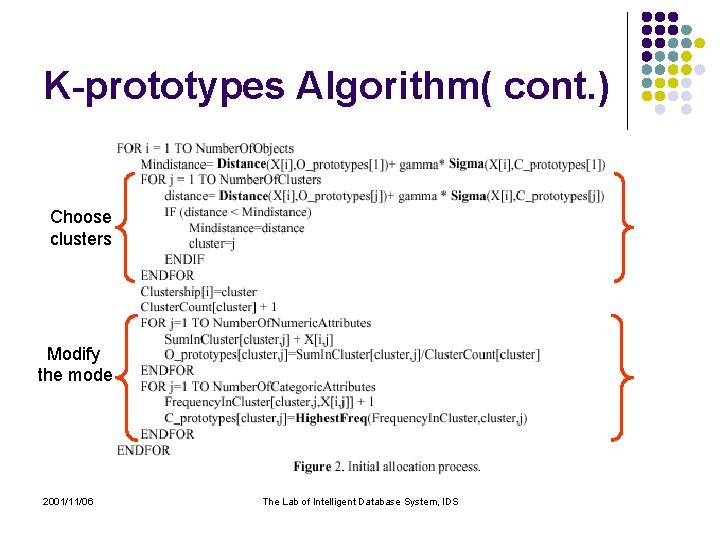

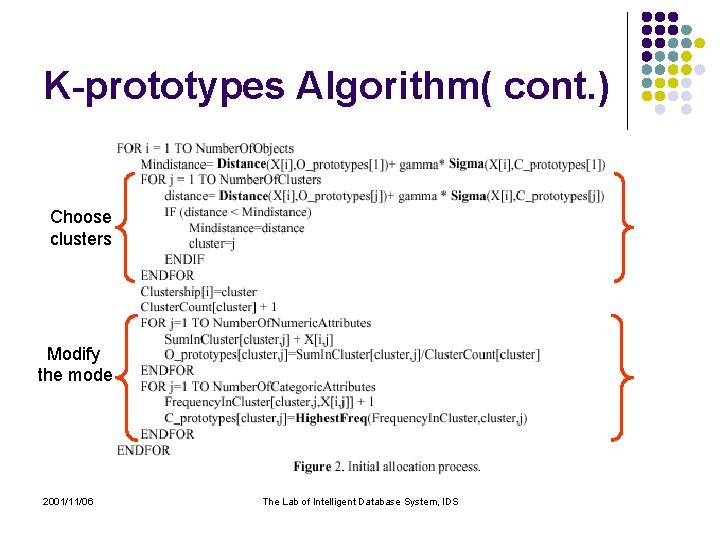

K-prototypes Algorithm (cont.)

- Choose clusters
- Modify the mode

K-prototypes Algorithm (cont.)

- Modify the mode
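The slide text only names the steps, so this Python sketch is an assumption: it supposes k-prototypes follows the same allocate-then-retest structure as the k-modes steps, with each prototype updated to the mean of its numeric attributes and the mode of its categorical attributes. `kprototypes`, `prototype`, `d2` and `max_cycles` are hypothetical names.

```python
from collections import Counter


def d2(x, y, p, gamma):
    """Squared Euclidean on the first p attributes plus gamma * simple matching."""
    return (sum((x[j] - y[j]) ** 2 for j in range(p))
            + gamma * sum(1 for j in range(p, len(x)) if x[j] != y[j]))


def prototype(members, p):
    """Mean of each numeric attribute, most frequent category of each categorical one."""
    cols = list(zip(*members))
    numeric = [sum(cols[j]) / len(members) for j in range(p)]
    categorical = [Counter(col).most_common(1)[0][0] for col in cols[p:]]
    return numeric + categorical


def kprototypes(X, initial, p, gamma, max_cycles=100):
    k = len(initial)
    protos = [list(q) for q in initial]
    members = [[] for _ in range(k)]
    labels = []

    def nearest(x):
        return min(range(k), key=lambda c: d2(x, protos[c], p, gamma))

    # Choose clusters: allocate each object and update its prototype at once.
    for i, x in enumerate(X):
        l = nearest(x)
        labels.append(l)
        members[l].append(i)
        protos[l] = prototype([X[t] for t in members[l]], p)

    # Retest until no object moves; update both prototypes after each move.
    for _ in range(max_cycles):
        moved = False
        for i, x in enumerate(X):
            old, new = labels[i], nearest(x)
            if new != old and d2(x, protos[new], p, gamma) < d2(x, protos[old], p, gamma):
                members[old].remove(i)
                members[new].append(i)
                labels[i] = new
                for c in (old, new):
                    if members[c]:
                        protos[c] = prototype([X[t] for t in members[c]], p)
                moved = True
        if not moved:
            break
    return labels, protos


X = [[0.0, "a"], [1.0, "a"], [10.0, "b"], [11.0, "b"]]
labels, protos = kprototypes(X, [[0.0, "a"], [10.0, "b"]], p=1, gamma=1.0)
print(labels)  # [0, 0, 1, 1]
print(protos)  # [[0.5, 'a'], [10.5, 'b']]
```

Keeping the numeric and categorical parts of a prototype separate means each half is updated by the rule that minimises its own term of the cost.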

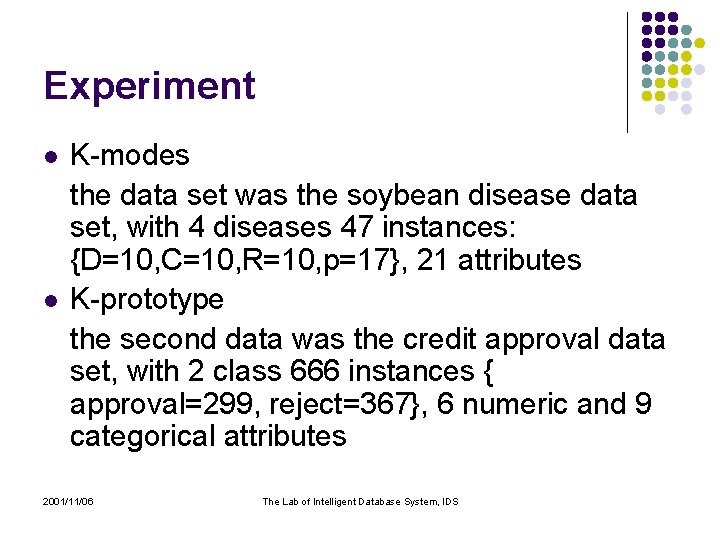

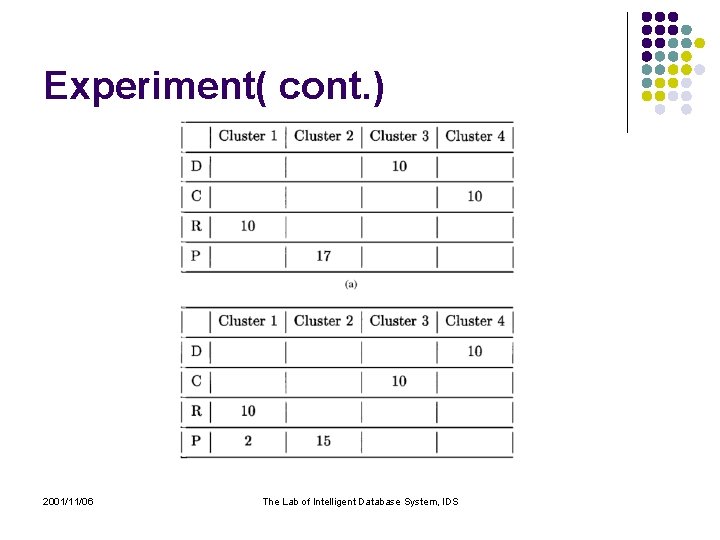

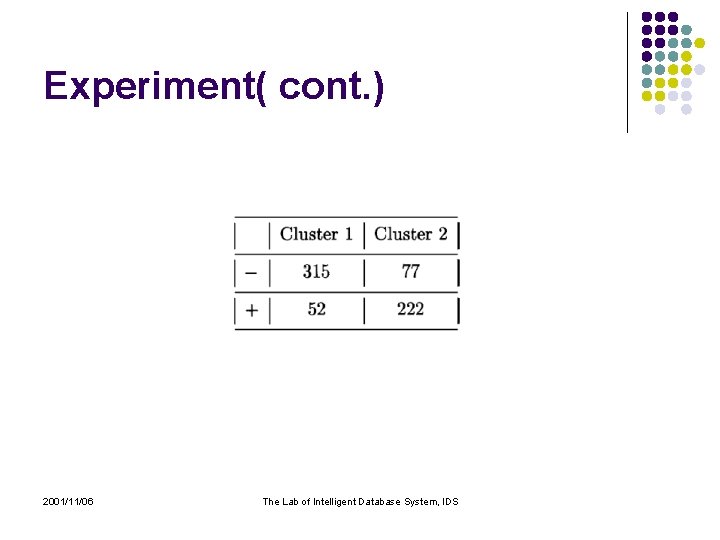

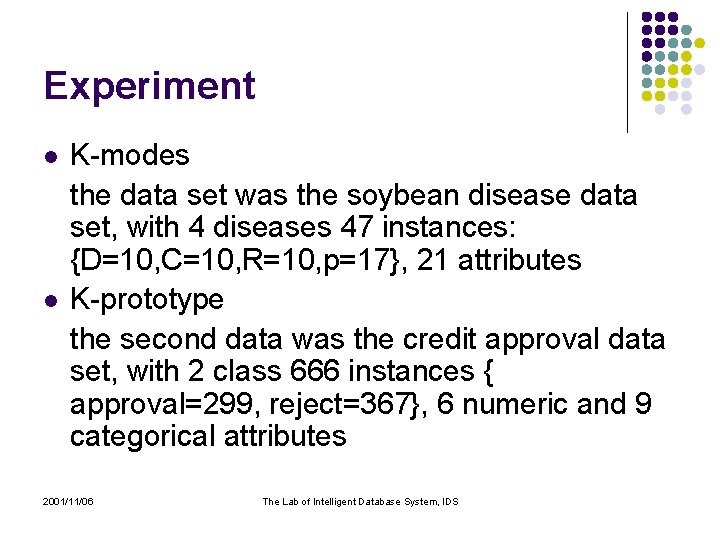

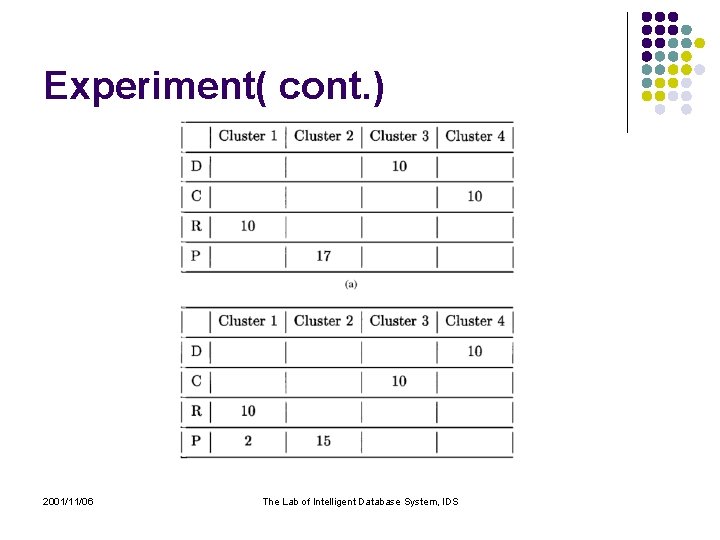

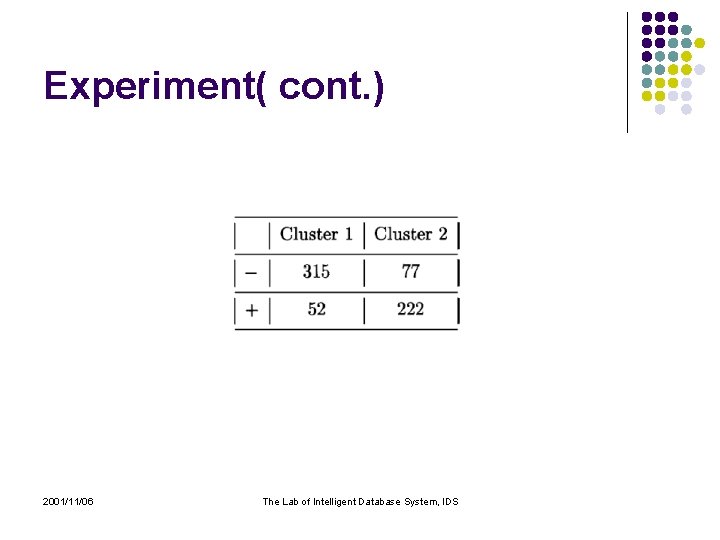

Experiment

- K-modes: the data set was the soybean disease data set, with 4 diseases and 47 instances ({D=10, C=10, R=10, P=17}) described by 21 attributes.
- K-prototypes: the second data set was the credit approval data set, with 2 classes and 666 instances ({approval=299, reject=367}) described by 6 numeric and 9 categorical attributes.

Experiment (cont.)

Experiment (cont.)

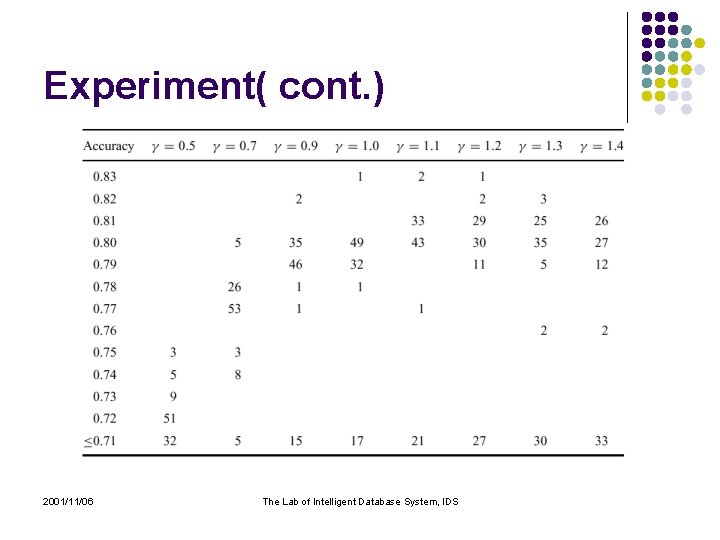

Experiment (cont.)

Conclusion

- The k-modes algorithm is faster than the k-means and k-prototypes algorithms because it needs fewer iterations to converge.
- How many clusters are in the data?
- Choosing the weight $\gamma$ adds an additional problem.

Personal opinion

- Conceptual inclusion relationships
- Outlier problem
- Massive data sets cause efficiency problems