Large Margin Classification Using the Perceptron Algorithm

Large Margin Classification Using the Perceptron Algorithm. COSC 878 Doctoral Seminar, Georgetown University. Presenters: Sicong Zhang, Henry Tan. March 16, 2015.

Freund, Yoav, and Robert E. Schapire. "Large margin classification using the perceptron algorithm." Machine Learning 37.3 (1999): 277-296.

Two key points of this work:

- What is large margin classification? What is an SVM?
- What is the perceptron? What is the perceptron algorithm?

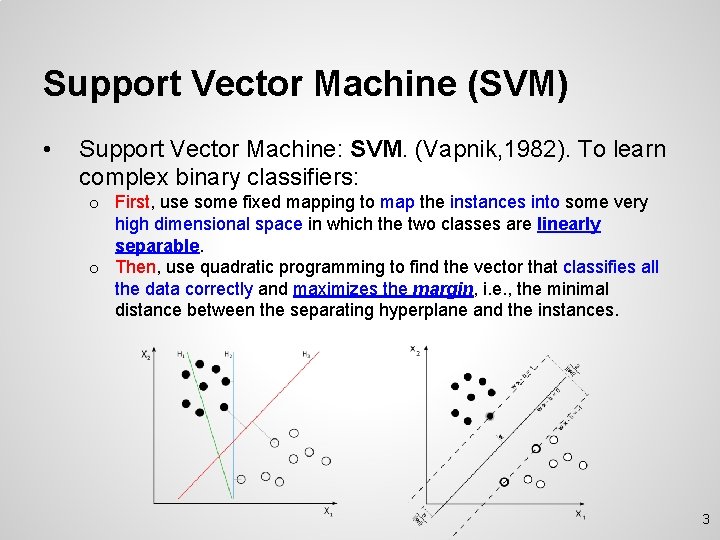

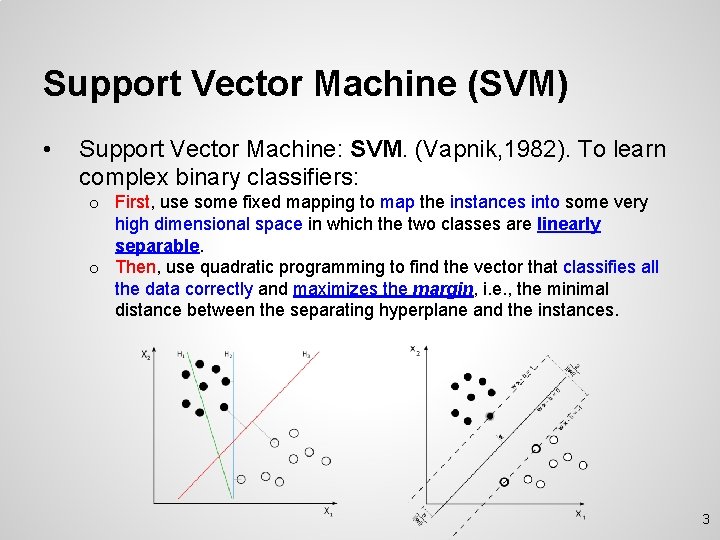

Support Vector Machine (SVM)

- Support Vector Machine: SVM (Vapnik, 1982). To learn complex binary classifiers:
  - First, use some fixed mapping to map the instances into a very high-dimensional space in which the two classes are linearly separable.
  - Then, use quadratic programming to find the vector that classifies all the data correctly and maximizes the margin, i.e., the minimal distance between the separating hyperplane and the instances.
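The two SVM steps can be illustrated on a toy case. This is a minimal sketch, assuming the classic XOR data and a hypothetical hand-picked feature map `phi(x) = (x1, x2, x1*x2)`. XOR cannot be separated by a line in the plane, but after the mapping the third coordinate alone separates the classes. The helper `geometric_margin` computes the quantity an SVM maximizes for a hyperplane through the origin, min over i of y_i (w · φ(x_i)) / ||w||. It evaluates a hand-chosen `w` and does not solve the quadratic program.

```python
import math

# XOR toy data: no line through the plane separates the +1 and -1 points.
X = [(1.0, 1.0), (-1.0, -1.0), (1.0, -1.0), (-1.0, 1.0)]
y = [+1, +1, -1, -1]

def phi(x):
    """Hypothetical fixed feature map into R^3: (x1, x2, x1 * x2)."""
    x1, x2 = x
    return (x1, x2, x1 * x2)

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def geometric_margin(w, points, labels):
    """Smallest signed distance y_i * (w . z_i) / ||w|| over the data.
    It is positive only if the hyperplane w . z = 0 classifies every point correctly."""
    norm = math.sqrt(dot(w, w))
    return min(yi * dot(w, zi) for zi, yi in zip(points, labels)) / norm

Z = [phi(x) for x in X]
w = (0.0, 0.0, 1.0)  # a separating direction in feature space, chosen by hand
print(geometric_margin(w, Z, y))  # 1.0
```

An SVM would search over all `w` for the largest such margin. The sketch only shows that, after the mapping, a positive margin exists at all.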

![SVM and Large Margin Classification](https://slidetodoc.com/presentation_image_h2/721aa1903b4feb59c7e380bea40fbbce/image-4.jpg)

SVM and Large Margin Classification

- Contributions of SVM:
  - [Guarantee Effectiveness]: A proof of a new bound on the difference between the training error and the test error of a linear classifier that maximizes the margin. The significance of this bound is that it depends only on the size of the margin (or the number of support vectors) and not on the dimension.
  - [Guarantee Efficiency]: A method for computing the maximal-margin classifier efficiently for some specific high-dimensional mappings, based on the idea of kernel functions.
- The main part of algorithms for finding the maximal-margin classifier is solving a large quadratic program.
- In this paper, the authors introduce a new and simpler algorithm for linear classification that takes advantage of data that are linearly separable with large margins: the voted-perceptron.
  - It is based on the perceptron algorithm (Rosenblatt, 1958, 1962) and on a transformation of online learning algorithms into batch learning algorithms developed by Helmbold and Warmuth (1995).
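The efficiency point depends on kernel functions. A kernel computes inner products in the high-dimensional feature space without building the mapped vectors. This minimal sketch uses the degree-2 polynomial kernel K(x, z) = (x · z)^2 on R^2 as an assumed example, since the slide does not name a particular kernel. It checks that the kernel matches the inner product under one explicit map φ into R^3.

```python
import math

def poly2_kernel(x, z):
    """Degree-2 homogeneous polynomial kernel on R^2: K(x, z) = (x . z)^2."""
    return (x[0] * z[0] + x[1] * z[1]) ** 2

def phi(x):
    """Explicit feature map whose inner product reproduces poly2_kernel."""
    return (x[0] ** 2, x[1] ** 2, math.sqrt(2) * x[0] * x[1])

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

x, z = (1.0, 2.0), (3.0, -1.0)
print(poly2_kernel(x, z))   # 1.0, since x . z = 3 - 2 = 1
print(dot(phi(x), phi(z)))  # the same value up to floating-point rounding
```

For inputs in R^n, the explicit map for this kernel has on the order of n^2 coordinates. The kernel itself costs only one n-dimensional dot product, and that gap is what makes very high-dimensional mappings affordable.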

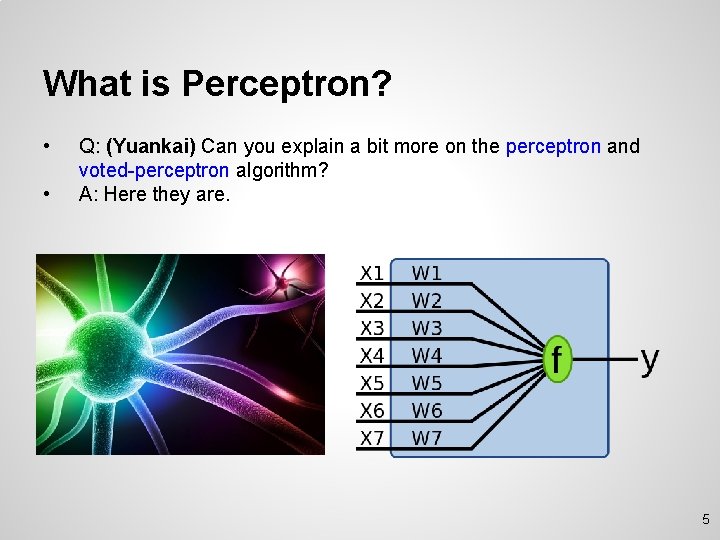

What is the Perceptron?

- Q: (Yuankai) Can you explain a bit more about the perceptron and voted-perceptron algorithms?
- A: Here they are.

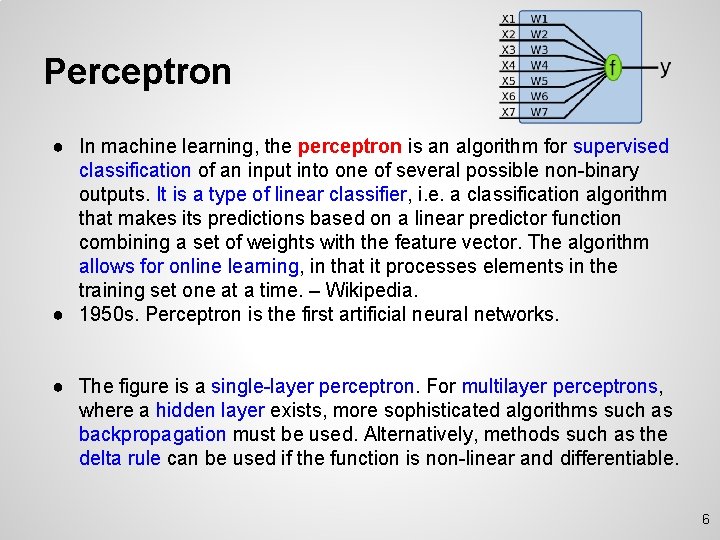

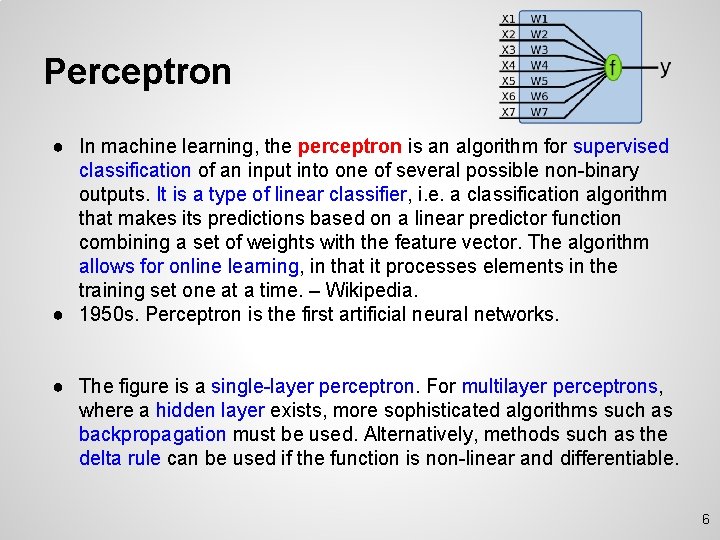

Perceptron

- In machine learning, the perceptron is an algorithm for supervised learning of binary classifiers. It is a type of linear classifier, i.e., a classification algorithm that makes its predictions based on a linear predictor function combining a set of weights with the feature vector. The algorithm allows for online learning, in that it processes elements in the training set one at a time. (Adapted from Wikipedia.)
- Introduced in the 1950s, the perceptron was one of the first artificial neural networks.
- The figure shows a single-layer perceptron. Multilayer perceptrons have a hidden layer and require more sophisticated training algorithms, such as backpropagation. Alternatively, methods such as the delta rule can be used if the function is non-linear and differentiable.

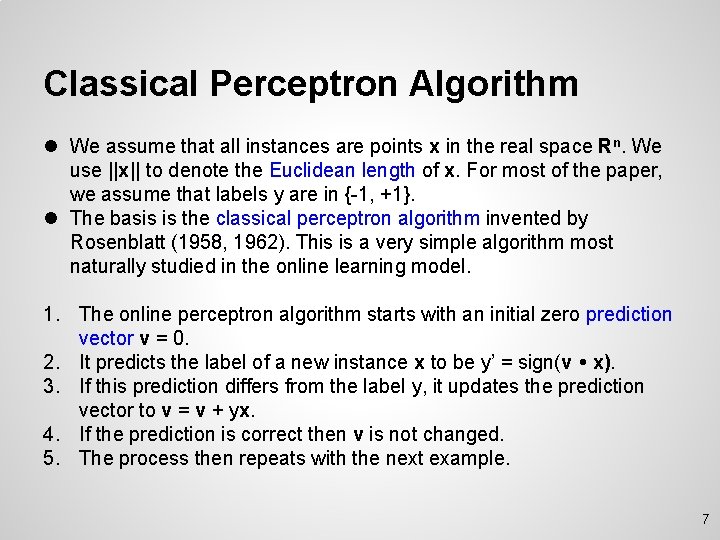

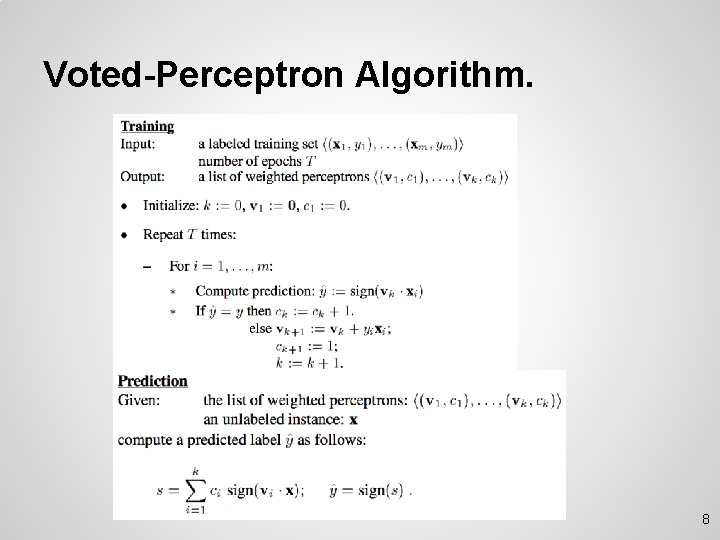

Classical Perceptron Algorithm

- We assume that all instances are points x in the real space R^n. We use ||x|| to denote the Euclidean length of x. For most of the paper, we assume that labels y are in {-1, +1}.
- The basis is the classical perceptron algorithm invented by Rosenblatt (1958, 1962). This very simple algorithm is most naturally studied in the online learning model:
  1. The online perceptron algorithm starts with an initial zero prediction vector v = 0.
  2. It predicts the label of a new instance x to be ŷ = sign(v · x).
  3. If this prediction differs from the label y, it updates the prediction vector to v = v + yx.
  4. If the prediction is correct, then v is not changed.
  5. The process then repeats with the next example.
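The five steps above map directly onto a single loop. This is a minimal sketch under a few assumptions: vectors are plain Python lists, and a score of exactly 0 is predicted as -1, because the slide does not say how sign(0) is resolved. The name `online_perceptron` is our own.

```python
def sign(s):
    # Tie-breaking assumption: a score of exactly 0 is predicted as -1.
    return 1 if s > 0 else -1

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def online_perceptron(examples, n):
    """One pass of the online perceptron over (x, y) pairs with y in {-1, +1}.
    Returns the final prediction vector and the number of mistakes made."""
    v = [0.0] * n                      # step 1: start from v = 0
    mistakes = 0
    for x, y in examples:              # step 5: process examples one at a time
        y_hat = sign(dot(v, x))        # step 2: predict sign(v . x)
        if y_hat != y:                 # step 3: on a mistake, v <- v + y x
            v = [vi + y * xi for vi, xi in zip(v, x)]
            mistakes += 1
        # step 4: on a correct prediction, v is left unchanged
    return v, mistakes

data = [([2.0, 1.0], +1), ([-1.0, -2.0], -1), ([1.0, 3.0], +1), ([-2.0, 0.5], -1)]
v, m = online_perceptron(data, 2)
print(v, m)  # [2.0, 1.0] 1
```

Only the first example triggers an update. The resulting vector [2, 1] already classifies the remaining three examples correctly.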

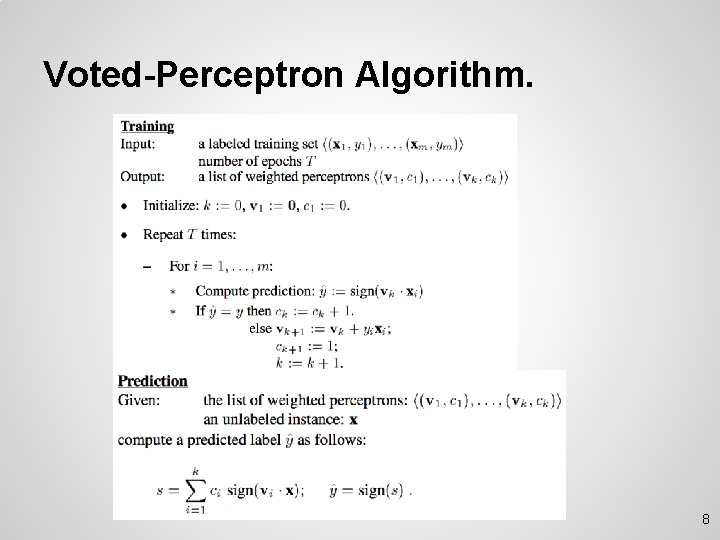

Voted-Perceptron Algorithm

Voted-Perceptron Algorithm (Cont.)

- If the data are linearly separable, then the perceptron algorithm will eventually converge on some consistent hypothesis (i.e., a prediction vector that is correct on all of the training examples).
- Thus, for linearly separable data, as the number of passes T → ∞, the voted-perceptron algorithm converges to the regular use of the perceptron algorithm, which is to predict using the final prediction vector. The vote of the final, consistent vector keeps growing with every pass, while the votes of the earlier vectors stay fixed.
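The algorithm figure for these slides survives only as an image. The sketch below follows our reading of Figure 1 in Freund and Schapire (1999). Training keeps every prediction vector v_k together with its count c_k, which is set to 1 when v_k is created and incremented for each example it then classifies correctly. Prediction takes the c_k-weighted vote of sign(v_k · x). The function names, the list representation, and the sign(0) = -1 tie-break are assumptions of this sketch.

```python
def sign(s):
    # Tie-breaking assumption: a score of exactly 0 maps to -1.
    return 1 if s > 0 else -1

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def train_voted_perceptron(examples, n, T):
    """Run T passes over the examples; return the list of (v_k, c_k) pairs."""
    v, c = [0.0] * n, 0
    pairs = []
    for _ in range(T):
        for x, y in examples:
            if sign(dot(v, x)) == y:
                c += 1                                  # v survives one more example
            else:
                pairs.append((v, c))                    # retire v with its vote c
                v = [vi + y * xi for vi, xi in zip(v, x)]
                c = 1                                   # new vector starts with count 1
    pairs.append((v, c))                                # keep the last vector as well
    return pairs

def predict_voted(pairs, x):
    """Weighted majority vote: sign(sum_k c_k * sign(v_k . x))."""
    return sign(sum(c * sign(dot(v, x)) for v, c in pairs))

data = [([2.0, 1.0], +1), ([-1.0, -2.0], -1), ([1.0, 3.0], +1), ([-2.0, 0.5], -1)]
pairs = train_voted_perceptron(data, 2, T=2)
print(pairs)                             # [([0.0, 0.0], 0), ([2.0, 1.0], 8)]
print(predict_voted(pairs, [3.0, 1.0]))  # 1
```

On this separable toy data, the perceptron stops making mistakes after the first example. From then on, only the count of the last vector grows with T, which matches the T → ∞ behaviour described above.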

Questions

- Q: (Yifang) Is the algorithm described in Figure 1 a batch learning algorithm or an online learning algorithm?
- Q: (Grace) Could you please show the connections between today's paper and online learning?
- A: Batch learning. It is an example of converting an online learning algorithm into a batch learning algorithm. A batch learning algorithm has both a training phase and a prediction phase, while an online learning algorithm has only one phase.
- A: I think this paper is consistent with the online learning paper’s idea that "online learning can perform no worse than batch learning, if processing same amount of training instances". Henry will discuss this further in the experiments part.

Questions

- Q: (Brad) Is my understanding correct that the order in which the algorithm works through the data will affect the weights of the prediction vectors?
- A: Yes. The algorithm also adds another <v, c> pair (prediction vector and its weight) every time the prediction vector is updated.
- Q: (Brad) If so, is that the reason why increasing T improves results?
- A: Probably. The authors point out that "As we shall see in the experiments, the algorithm actually continues to improve performance after. We have no theoretical explanation for this improvement."
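Brad's point about ordering can be seen on a toy case. This minimal sketch uses two hypothetical positive examples in R^2 and treats a score of 0 as a -1 prediction, which is an assumption. One pass of the online perceptron over the same two examples, presented in the two possible orders, ends with different prediction vectors.

```python
def sign(s):
    # Assumption: a score of exactly 0 is predicted as -1.
    return 1 if s > 0 else -1

def final_vector(examples):
    """One pass of the online perceptron in R^2; returns the final prediction vector."""
    v = [0.0, 0.0]
    for x, y in examples:
        if sign(v[0] * x[0] + v[1] * x[1]) != y:
            v = [v[0] + y * x[0], v[1] + y * x[1]]
    return v

p = ([1.0, 0.0], +1)
r = ([1.0, 2.0], +1)
print(final_vector([p, r]))  # [1.0, 0.0]
print(final_vector([r, p]))  # [1.0, 2.0]
```

In both orders, the first example causes the only update, and the resulting vector already classifies the second example correctly. Which vector survives therefore depends entirely on which example is seen first.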

Questions

- Q: (Yifang) As shown in Figure 1, does the final prediction vector rely heavily on the order in which the training points are visited? Does c_1 always have a greater chance than c_n of being updated?
- A: The prediction step uses the votes c_i as weights rather than depending only on the final vector v, so the training order of the instances matters less.
- Q: (Jiyun) Will a prediction vector be chosen first, discarded, and chosen again? What will its survival time be?
- A: If that were the case, the precision of the final prediction vector, along with the survival times between vector updates, might depend heavily on the order of the training instances, which is not good for a machine learning algorithm. I would suggest not doing it this way.

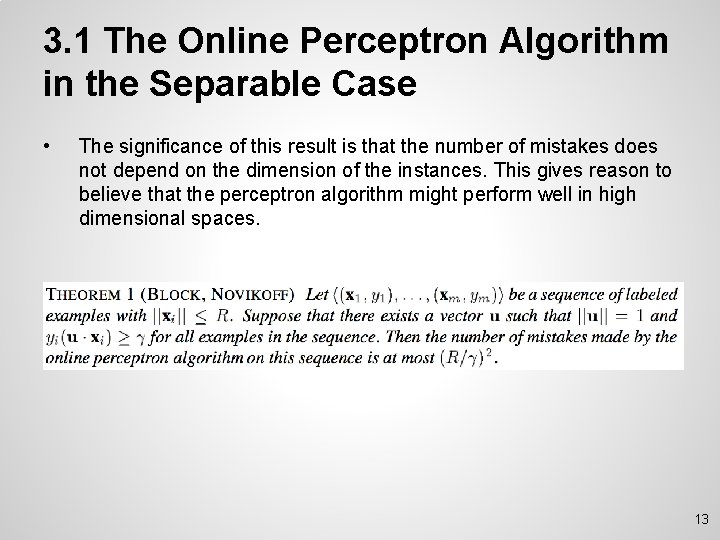

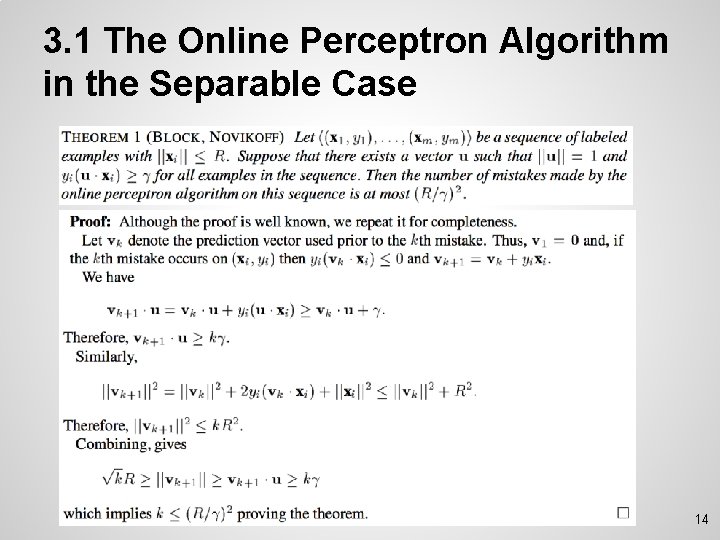

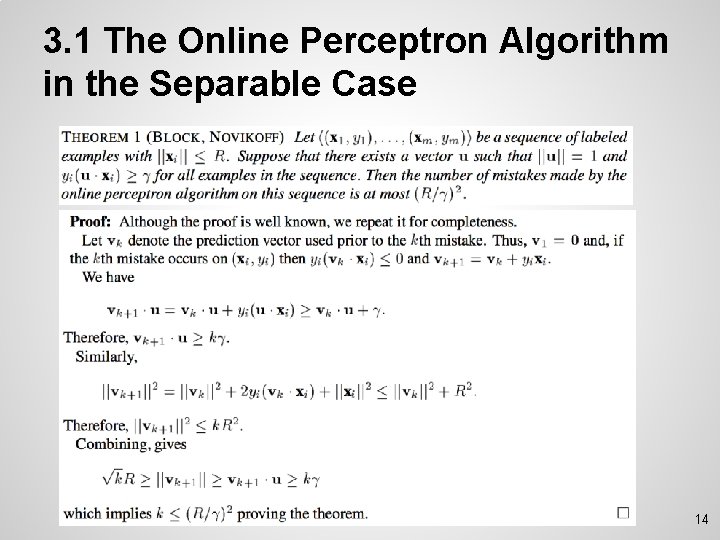

3.1 The Online Perceptron Algorithm in the Separable Case

- The significance of this result (Theorem 1) is that the number of mistakes does not depend on the dimension of the instances. This gives reason to believe that the perceptron algorithm might perform well in high-dimensional spaces.
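Theorem 1 appears on the slide only as an image. It says that if every ||x_i|| ≤ R and some unit vector u satisfies y_i (u · x_i) ≥ γ > 0 for all i, the online perceptron makes at most (R/γ)^2 mistakes on the sequence. The bound holds however many passes are made and whatever the dimension n is. This minimal sketch uses a small hypothetical dataset and a hand-picked unit vector u. It computes R and γ, then cycles the perceptron through the data until a full pass is mistake-free and compares the total mistake count with the bound.

```python
import math

def sign(s):
    # Assumption: a score of exactly 0 is predicted as -1.
    return 1 if s > 0 else -1

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def total_mistakes(examples, n, max_passes=1000):
    """Cycle the online perceptron through the data until one full pass makes
    no mistakes (or max_passes is reached); return the total mistake count."""
    v, total = [0.0] * n, 0
    for _ in range(max_passes):
        errors = 0
        for x, y in examples:
            if sign(dot(v, x)) != y:
                v = [vi + y * xi for vi, xi in zip(v, x)]
                errors += 1
        total += errors
        if errors == 0:
            break
    return total

data = [([2.0, 1.0], +1), ([-1.0, -2.0], -1), ([1.0, 3.0], +1), ([-2.0, 0.5], -1)]
u = [1 / math.sqrt(2), 1 / math.sqrt(2)]        # hand-picked unit vector
R = max(math.sqrt(dot(x, x)) for x, _ in data)  # R = sqrt(10)
gamma = min(y * dot(u, x) for x, y in data)     # gamma = 1.5 / sqrt(2)
bound = (R / gamma) ** 2
mistakes = total_mistakes(data, 2)
print(mistakes, f"{bound:.2f}")  # 1 8.89
```

The bound is loose here: one mistake against a bound of about 8.89. Neither number would change if the same points were embedded in a space with many more coordinates.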

3.1 The Online Perceptron Algorithm in the Separable Case (cont.)
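The proof on this slide survives only as images. What follows is the usual argument for the separable-case bound, written as a sketch in the slides' notation rather than a transcription of the slides. Assume every ||x_i|| ≤ R, some unit vector u has y_i (u · x_i) ≥ γ > 0 for all i, and v_k denotes the prediction vector just before the k-th mistake, with v_1 = 0.

```latex
\begin{align*}
&\text{$k$-th mistake, on example } (x_i, y_i):\quad v_{k+1} = v_k + y_i x_i,
 \qquad y_i\,(v_k \cdot x_i) \le 0.\\
&\text{Progress along } u:\quad v_{k+1}\cdot u = v_k\cdot u + y_i\,(u\cdot x_i) \ge v_k\cdot u + \gamma
 \;\Rightarrow\; v_{k+1}\cdot u \ge k\gamma.\\
&\text{Growth of the norm:}\quad \|v_{k+1}\|^2 = \|v_k\|^2 + 2y_i\,(v_k\cdot x_i) + \|x_i\|^2 \le \|v_k\|^2 + R^2
 \;\Rightarrow\; \|v_{k+1}\|^2 \le kR^2.\\
&\text{Combine (Cauchy--Schwarz, } \|u\| = 1\text{):}\quad k\gamma \le v_{k+1}\cdot u \le \|v_{k+1}\| \le \sqrt{k}\,R
 \;\Rightarrow\; k \le (R/\gamma)^2.
\end{align*}
```

Neither step involves the dimension n, which is why the mistake bound is dimension-free.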

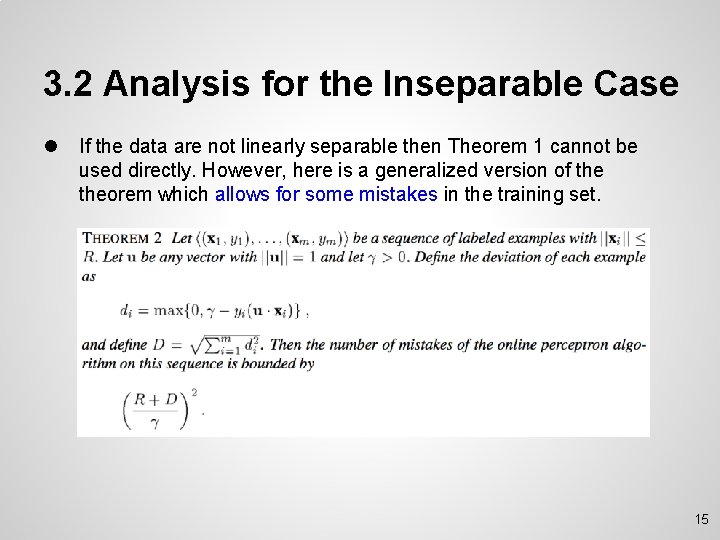

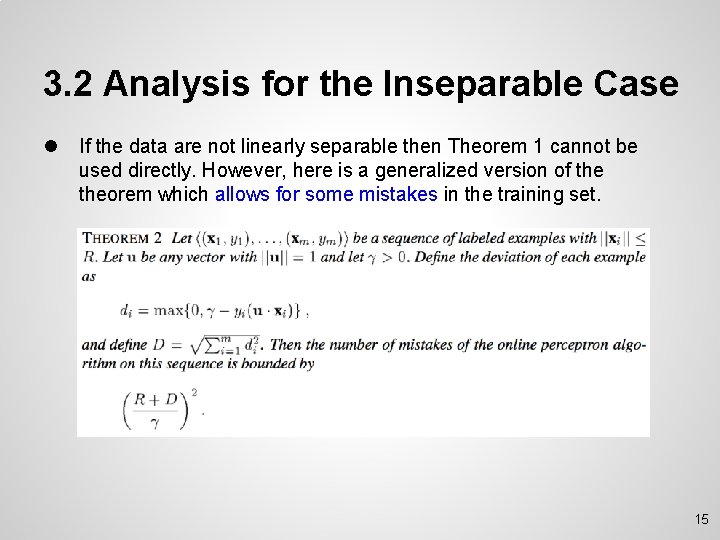

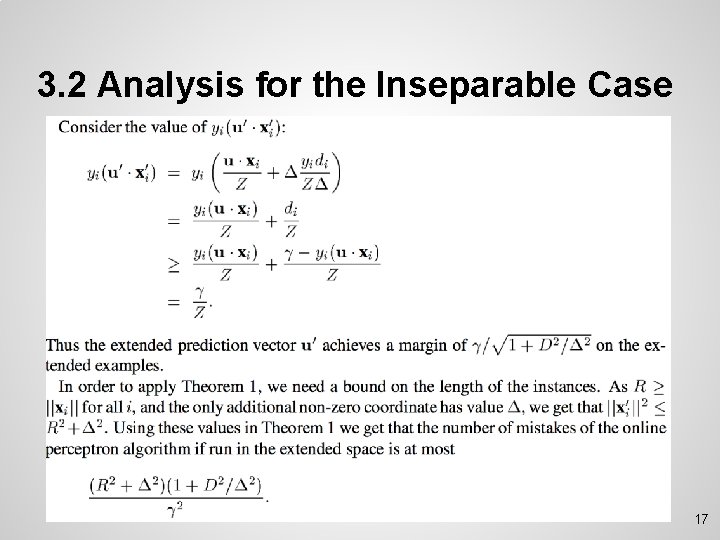

3.2 Analysis for the Inseparable Case

- If the data are not linearly separable, then Theorem 1 cannot be used directly. However, a generalized version of the theorem (Theorem 2) allows for some mistakes in the training set.
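As we read the paper, Theorem 2 (shown on the slides only as an image) works as follows. Take any unit vector u and any γ > 0. Give each example the deviation d_i = max{0, γ − y_i (u · x_i)} and let D = sqrt(Σ d_i^2). Then the online perceptron makes at most ((R + D)/γ)^2 mistakes on the sequence, and with D = 0 this reduces to the separable bound (R/γ)^2. This minimal sketch uses a small hypothetical inseparable dataset and a hand-picked u. It computes D and the bound, and compares them with the mistakes of a single pass.

```python
import math

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def theorem2_bound(examples, u, gamma):
    """Return (D, bound): d_i = max(0, gamma - y_i (u . x_i)), D = sqrt(sum d_i^2),
    R = max ||x_i||, bound = ((R + D) / gamma)^2. Assumes ||u|| = 1 and gamma > 0."""
    R = max(math.sqrt(dot(x, x)) for x, _ in examples)
    D = math.sqrt(sum(max(0.0, gamma - y * dot(u, x)) ** 2 for x, y in examples))
    return D, ((R + D) / gamma) ** 2

def one_pass_mistakes(examples, n):
    """Mistakes made by one pass of the online perceptron (score 0 predicted as -1)."""
    v, mistakes = [0.0] * n, 0
    for x, y in examples:
        y_hat = 1 if dot(v, x) > 0 else -1
        if y_hat != y:
            v = [vi + y * xi for vi, xi in zip(v, x)]
            mistakes += 1
    return mistakes

# Hypothetical data: [1, 1] lies between two positive points but is labeled -1,
# so no hyperplane through the origin separates the set.
data = [([2.0, 1.0], +1), ([-1.0, -2.0], -1), ([1.0, 3.0], +1),
        ([-2.0, 0.5], -1), ([1.0, 1.0], -1)]
u = [1 / math.sqrt(2), 1 / math.sqrt(2)]  # hand-picked unit vector
D, bound = theorem2_bound(data, u, gamma=1.0)
print(round(D, 4), round(bound, 2), one_pass_mistakes(data, 2))  # 2.4142 31.1 2
```

Only the mislabeled point has a nonzero deviation, so it alone contributes D = 1 + sqrt(2).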

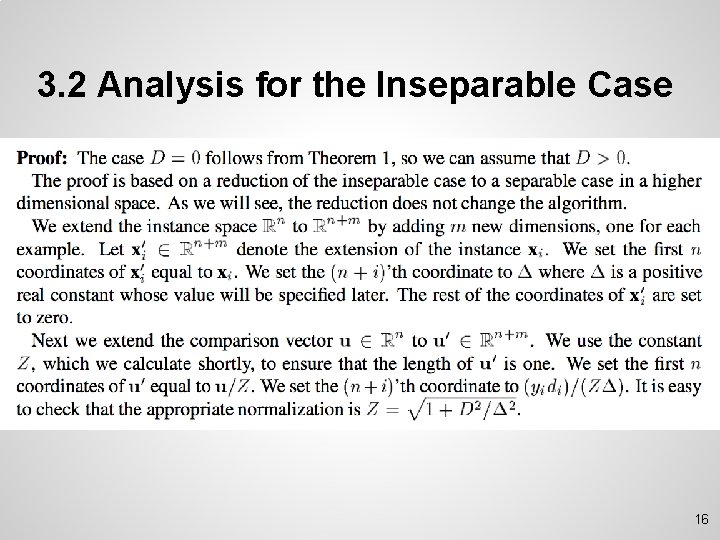

3.2 Analysis for the Inseparable Case (cont.)

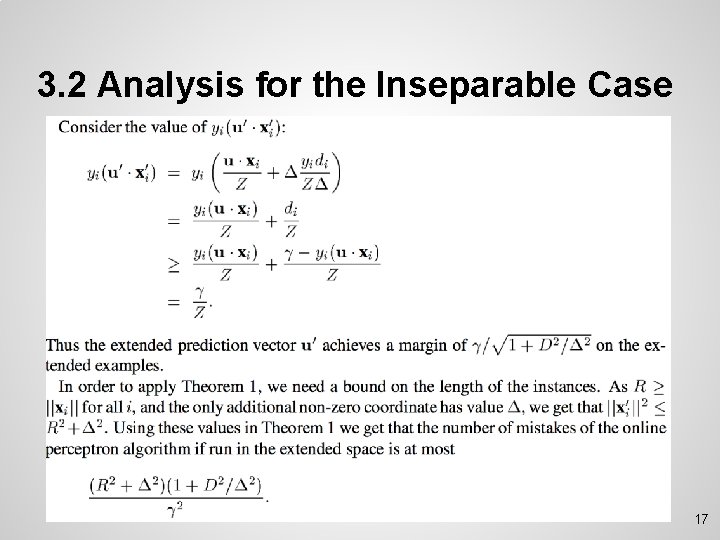

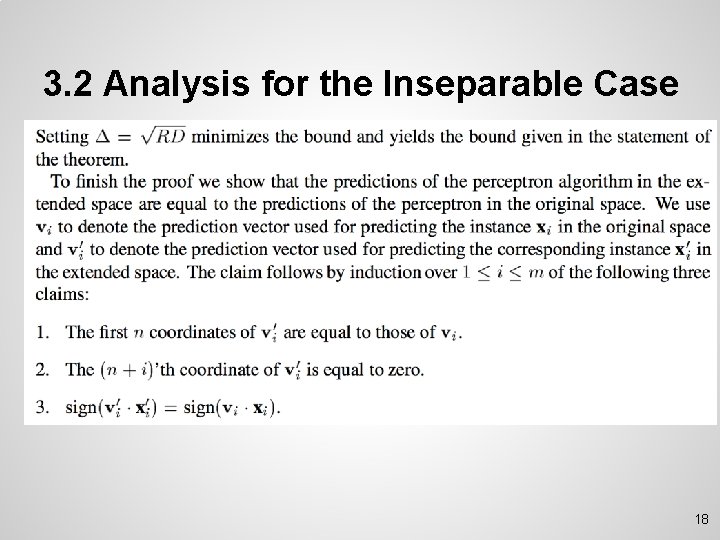

3.2 Analysis for the Inseparable Case (cont.)

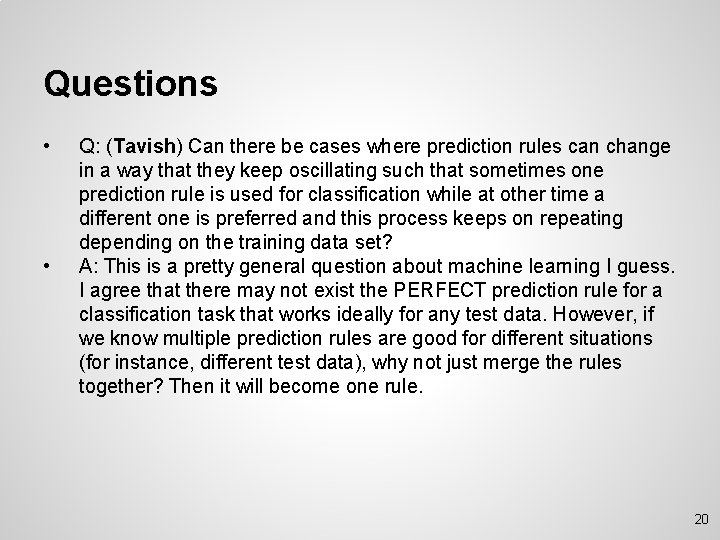

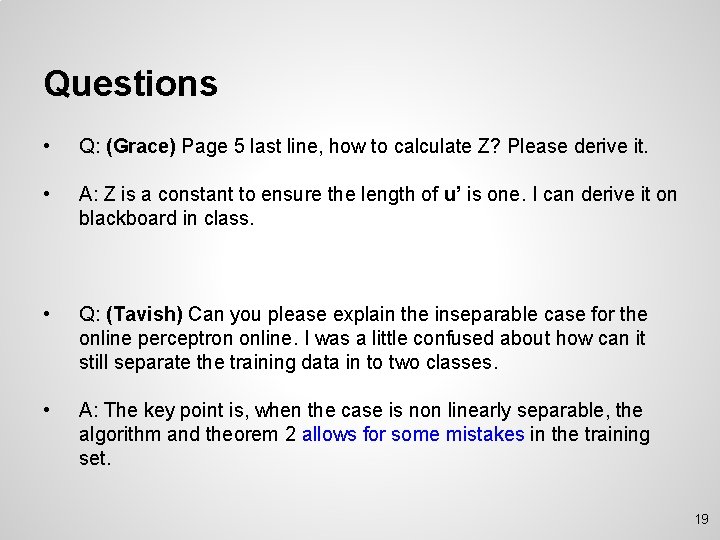

3.2 Analysis for the Inseparable Case (cont.)

Questions

- Q: (Grace) Page 5, last line: how do you calculate Z? Please derive it.
- A: Z is a normalizing constant that ensures the length of u' is one. I can derive it on the blackboard in class.
- Q: (Tavish) Can you please explain the inseparable case for the online perceptron? I was a little confused about how it can still separate the training data into two classes.
- A: The key point is that when the data are not linearly separable, the algorithm and Theorem 2 allow for some mistakes in the training set.
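Since the derivation of Z was left for the blackboard, here is a brief version. It assumes D > 0 and the reduction we understand the paper to use for Theorem 2, which the slides show only as images. Each x_i ∈ R^n is lifted to R^{n+m} by writing Δ > 0 into the extra coordinate n + i. The vector u is extended by the entries y_i d_i / Δ and then rescaled by Z. Coordinate n + i is touched only by example i, so the perceptron makes the same predictions on the lifted sequence as on the original one. Theorem 1 applied to the lifted data therefore bounds the original mistakes.

```latex
\begin{align*}
x_i' &= (x_i,\ 0,\dots,0,\ \underbrace{\Delta}_{\text{coord. } n+i},\ 0,\dots,0),
\qquad
u' = \frac{1}{Z}\Big(u,\ \frac{y_1 d_1}{\Delta},\ \dots,\ \frac{y_m d_m}{\Delta}\Big)\\
\|u'\|^2 &= \frac{1}{Z^2}\Big(\|u\|^2 + \sum_{i=1}^{m} \frac{d_i^2}{\Delta^2}\Big)
 = \frac{1}{Z^2}\Big(1 + \frac{D^2}{\Delta^2}\Big) = 1
 \;\Longrightarrow\; Z = \sqrt{1 + \frac{D^2}{\Delta^2}}\\
y_i\,(u'\cdot x_i') &= \frac{y_i\,(u\cdot x_i) + d_i}{Z} \ge \frac{\gamma}{Z},
\qquad \|x_i'\|^2 \le R^2 + \Delta^2\\
\text{mistakes} &\le \frac{(R^2+\Delta^2)\,Z^2}{\gamma^2}
 = \frac{(R^2+\Delta^2)\,(1 + D^2/\Delta^2)}{\gamma^2}
 \;\xrightarrow{\ \Delta^2 = RD\ }\; \Big(\frac{R+D}{\gamma}\Big)^2
\end{align*}
```

The third line uses y_i^2 = 1 and d_i ≥ γ − y_i (u · x_i). The last step sets Δ^2 = RD, which turns R^2 + RD + RD + D^2 into (R + D)^2.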

Questions

- Q: (Tavish) Can there be cases where prediction rules keep oscillating? That is, sometimes one prediction rule is used for classification and at other times a different one is preferred, and this process keeps repeating depending on the training data set.
- A: This is a pretty general question about machine learning, I guess. I agree that there may not exist a PERFECT prediction rule for a classification task that works ideally for any test data. However, if we know that multiple prediction rules are good for different situations (for instance, different test data), why not just merge the rules together? Then they become one rule.

Questions

- Q: (Brendan) What's the significance of R and gamma, and how would you choose gamma in the inseparable case to minimize the bound, since it also affects the value of D?
- A: R is a property of the input data: a bound on the length ||x|| of every instance. Gamma is just a number used in the proof that relates to characteristics of the input. It isn't actually chosen by the algorithm.
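On Brendan's question: Theorem 2 holds for every γ > 0, so nothing has to be fixed in advance, and the tightest statement is the minimum of ((R + D(γ))/γ)^2 over γ. A larger γ enlarges the denominator but also increases every deviation d_i and hence D. This minimal sketch uses a hypothetical 1-D dataset and a fixed u = [1.0], and scans a grid of γ values for the smallest bound. The algorithm itself never sees γ.

```python
import math

def bound_for_gamma(examples, u, gamma):
    """Theorem 2 bound ((R + D) / gamma)^2 for a fixed unit vector u and gamma > 0."""
    R = max(math.sqrt(sum(xi * xi for xi in x)) for x, _ in examples)
    D = math.sqrt(sum(max(0.0, gamma - y * sum(ui * xi for ui, xi in zip(u, x))) ** 2
                      for x, y in examples))
    return ((R + D) / gamma) ** 2

# Hypothetical 1-D data: four points with margin 1 and one mislabeled point at 0.1.
data = [([1.0], +1), ([-1.0], -1), ([1.0], +1), ([-1.0], -1), ([0.1], -1)]
u = [1.0]

# Every gamma on the grid yields a valid bound, so the smallest value is the tightest.
grid = [k / 100 for k in range(1, 501)]
best_gamma = min(grid, key=lambda g: bound_for_gamma(data, u, g))
print(best_gamma, round(bound_for_gamma(data, u, best_gamma), 2))
```

Here the best γ lies strictly between 1 and 2. Below it, the margin term dominates. Above it, the deviations of the four "good" points start to inflate D.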

Converting Online to Batch

- The "leave-one-out" method converts an online learning algorithm into a batch learning algorithm.
- Given a training set <(x_1, y_1), …, (x_m, y_m)> and an unlabeled instance x, we select a number r in {0, …, m} uniformly at random. We then take the first r examples in the training sequence and append the unlabeled instance to the end of this subsequence. We run the online algorithm on this sequence of length r + 1 and use its prediction on the last, unlabeled instance.
- For the deterministic leave-one-out conversion, we modify the randomized conversion in the obvious way by choosing the most likely prediction.
- Q: (Jiyun) Can you explain the "leave-one-out conversion"? Will it use the instances <x_{r+1}, y_{r+1}> … <x_m, y_m>? If not, why throw them away? What is the benefit of doing so?
- A: No, a single run does not use them. But r is drawn anew each time, so different runs use different prefixes of the training set. We are not throwing the later examples away.
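Both conversions can be sketched in a few lines. This minimal sketch uses the online perceptron as the online algorithm and predicts -1 for a score of exactly 0; the function names are hypothetical. Running the online algorithm on the first r examples and then predicting on x is the same as predicting with the vector v^(r) it holds after r examples. The deterministic version is therefore a majority vote over r = 0, …, m, with ties broken toward -1.

```python
import random

def sign(s):
    # Assumption: a score of exactly 0 is predicted as -1.
    return 1 if s > 0 else -1

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def prefix_vectors(examples, n):
    """Prediction vectors v^(0), ..., v^(m) of the online perceptron,
    where v^(r) is the vector held after processing the first r examples."""
    v = [0.0] * n
    vectors = [v]
    for x, y in examples:
        if sign(dot(v, x)) != y:
            v = [vi + y * xi for vi, xi in zip(v, x)]
        vectors.append(v)
    return vectors

def randomized_leave_one_out(examples, n, x, rng=random):
    """Pick r uniformly from {0, ..., m}, run the online algorithm on the first r
    examples, and return its prediction on the unlabeled instance x."""
    r = rng.randint(0, len(examples))  # randint includes both endpoints
    return sign(dot(prefix_vectors(examples[:r], n)[-1], x))

def deterministic_leave_one_out(examples, n, x):
    """Most likely prediction of the randomized version: a majority vote of
    sign(v^(r) . x) over r = 0, ..., m, each r having probability 1 / (m + 1)."""
    return sign(sum(sign(dot(v, x)) for v in prefix_vectors(examples, n)))

data = [([2.0, 1.0], +1), ([-1.0, -2.0], -1), ([1.0, 3.0], +1), ([-2.0, 0.5], -1)]
x_new = [3.0, 1.0]
print(deterministic_leave_one_out(data, 2, x_new))                    # 1
print(randomized_leave_one_out(data, 2, x_new, rng=random.Random(0)))  # depends on the draw
```

In the deterministic vote, each vector counts once for every prefix that ends while it is current, so longer-surviving vectors get more say. This is the same weighting idea the voted-perceptron uses.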

Thank you, and let’s welcome Henry!