EECS 252 Graduate Computer Architecture Lecture 4 Review

- Slides: 50

EECS 252 Graduate Computer Architecture
Lecture 4 Review Continued: Caching & VM, Exceptions
January 30th, 2012
John Kubiatowicz
Electrical Engineering and Computer Sciences, University of California, Berkeley
http://www.eecs.berkeley.edu/~kubitron/cs252

Review: Pipelining
• Just overlap tasks; easy if tasks are independent
• Speedup ≤ Pipeline Depth; if ideal CPI is 1, then:
  $$\text{Speedup} = \frac{\text{Pipeline depth}}{1 + \text{Pipeline stall CPI}} \times \frac{\text{Cycle Time}_{\text{unpipelined}}}{\text{Cycle Time}_{\text{pipelined}}}$$
• Hazards limit performance on computers:
  – Structural: need more HW resources
  – Data (RAW, WAR, WAW): need forwarding, compiler scheduling
  – Control: delayed branch, prediction
• Exceptions, Interrupts add complexity
1/30/2012 cs252-S12, Lecture 04

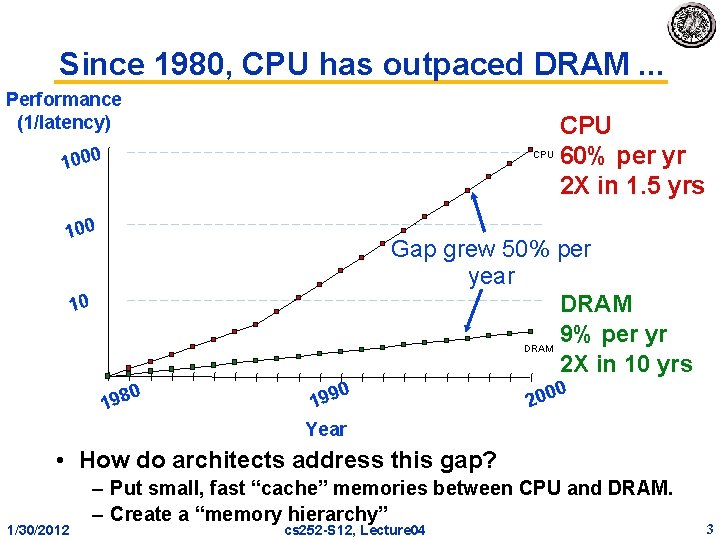

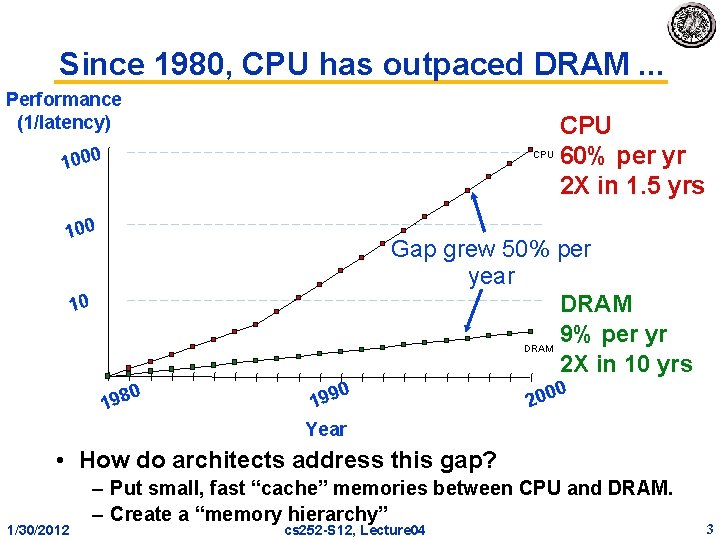

Since 1980, CPU has outpaced DRAM...
[Figure: Performance (1/latency) vs. Year, 1980 to 2000, log scale. CPU: 60% per year (2X in 1.5 years). DRAM: 9% per year (2X in 10 years). Gap grew 50% per year.]
• How do architects address this gap?
  – Put small, fast “cache” memories between CPU and DRAM.
  – Create a “memory hierarchy”

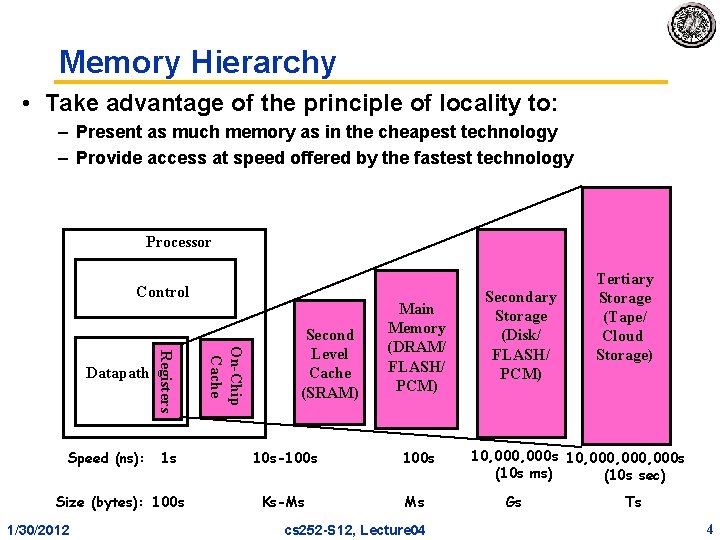

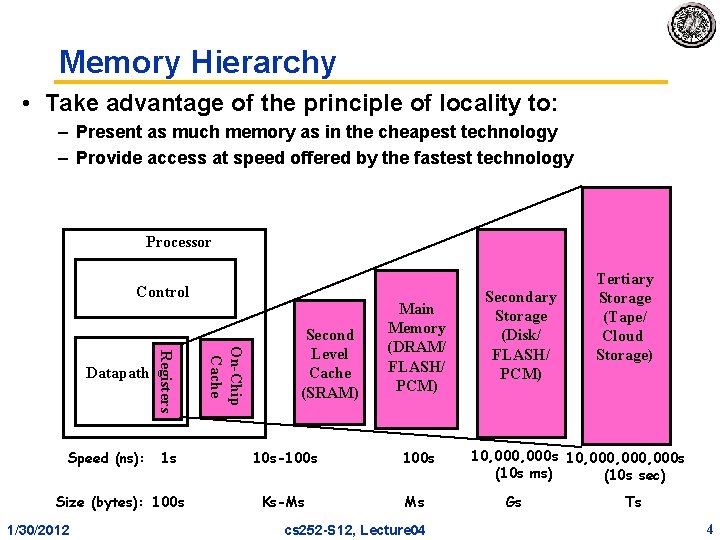

Memory Hierarchy
• Take advantage of the principle of locality to:
  – Present as much memory as in the cheapest technology
  – Provide access at speed offered by the fastest technology
[Figure: hierarchy levels from fastest and smallest to slowest and largest: Processor (Control, Datapath, Registers); On-Chip Cache; Second Level Cache (SRAM); Main Memory (DRAM/FLASH/PCM); Secondary Storage (Disk/FLASH/PCM); Tertiary Storage (Tape/Cloud Storage). Speed ranges from 1s of ns at the registers to 10s of seconds at tertiary storage; size ranges from 100s of bytes at the registers to Ts at tertiary storage.]

The Principle of Locality
• The Principle of Locality:
  – Programs access a relatively small portion of the address space at any instant of time.
• Two Different Types of Locality:
  – Temporal Locality (Locality in Time): If an item is referenced, it will tend to be referenced again soon (e.g., loops, reuse)
  – Spatial Locality (Locality in Space): If an item is referenced, items whose addresses are close by tend to be referenced soon (e.g., straight-line code, array access)
• Last 15 years, HW relied on locality for speed

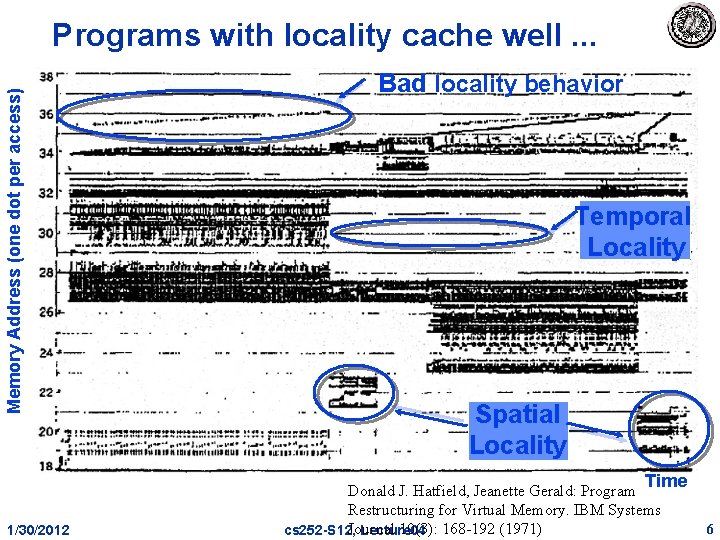

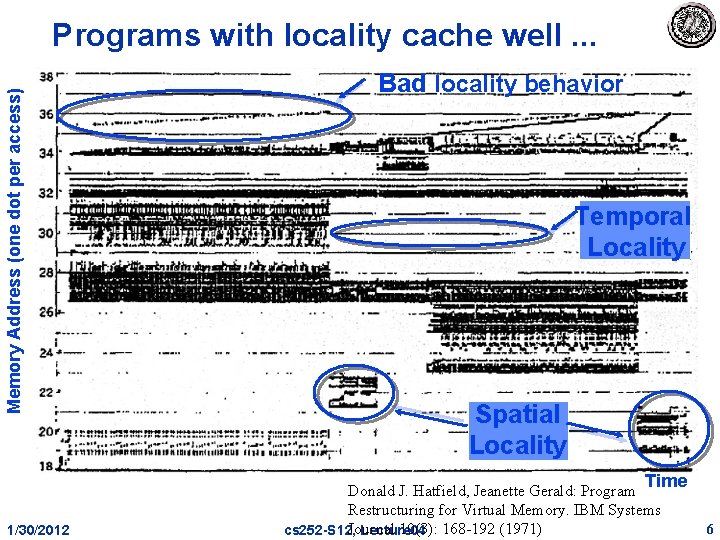

Programs with locality cache well...
[Figure: Memory Address (one dot per access) vs. Time, with regions labeled Temporal Locality, Spatial Locality, and Bad locality behavior.]
Source: Donald J. Hatfield, Jeanette Gerald: Program Restructuring for Virtual Memory. IBM Systems Journal 10(3): 168-192 (1971)

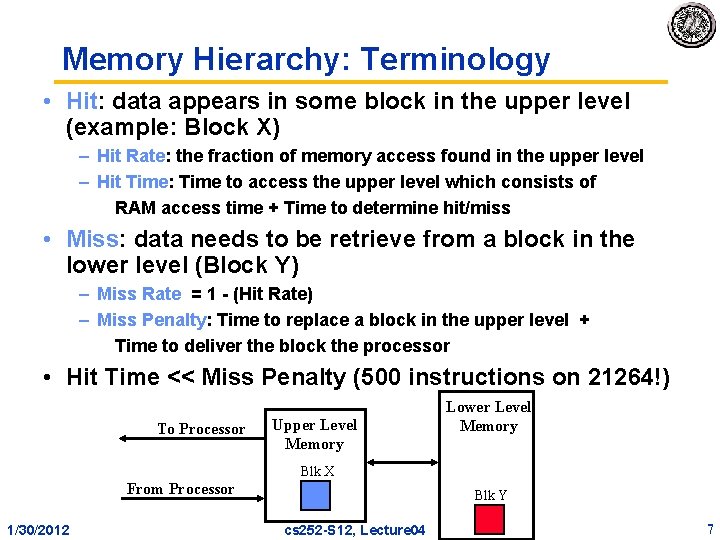

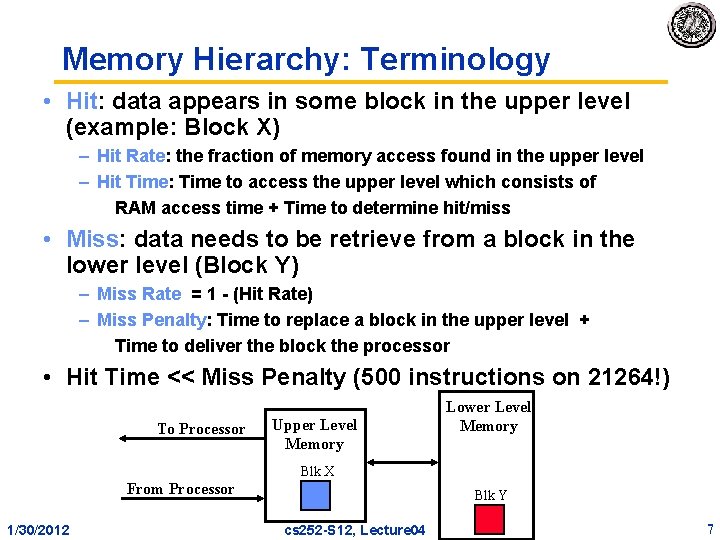

Memory Hierarchy: Terminology
• Hit: data appears in some block in the upper level (example: Block X)
  – Hit Rate: the fraction of memory accesses found in the upper level
  – Hit Time: time to access the upper level, which consists of RAM access time + time to determine hit/miss
• Miss: data needs to be retrieved from a block in the lower level (Block Y)
  – Miss Rate = 1 - (Hit Rate)
  – Miss Penalty: time to replace a block in the upper level + time to deliver the block to the processor
• Hit Time << Miss Penalty (500 instructions on 21264!)
[Figure: Upper Level Memory holding Blk X exchanges data to and from the Processor; Lower Level Memory holds Blk Y.]
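The three terms above combine into the standard average memory access time formula, AMAT = Hit Time + Miss Rate × Miss Penalty. The slide does not state this formula; the sketch below is an illustration, and the function name and the numbers (1-cycle hit, 2% miss rate, 500-cycle penalty) are assumptions chosen only to show the arithmetic.

```python
def average_memory_access_time(hit_time, miss_rate, miss_penalty):
    """AMAT = hit time + miss rate * miss penalty (all times in the same unit)."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return hit_time + miss_rate * miss_penalty


# Hypothetical numbers, not measurements: 1-cycle hit, 2% misses, 500-cycle penalty.
amat = average_memory_access_time(hit_time=1, miss_rate=0.02, miss_penalty=500)
print(f"AMAT = {amat:.1f} cycles")
```

Even with a 98% hit rate, the large miss penalty dominates the average, which is why Hit Time << Miss Penalty matters so much.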

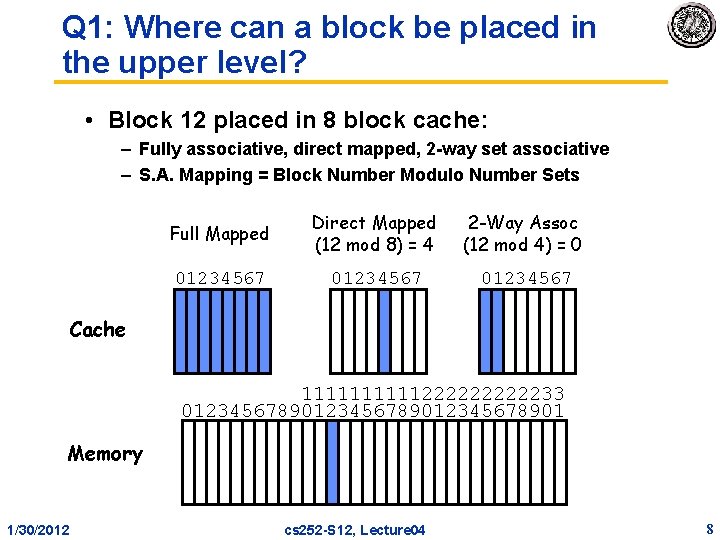

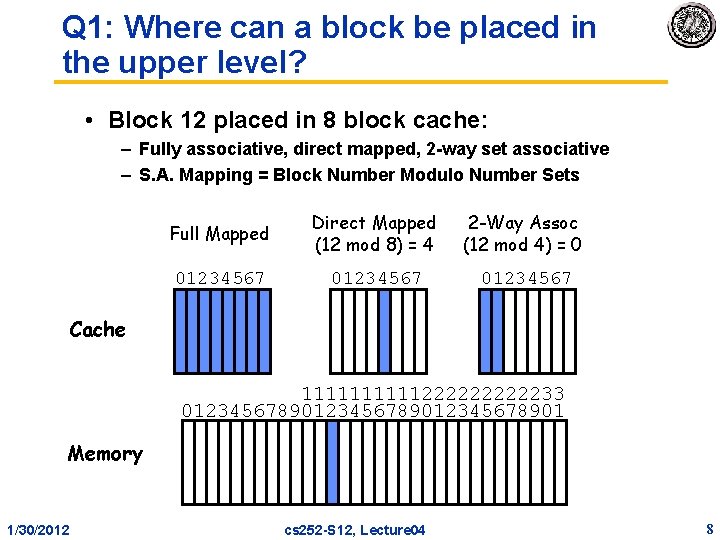

Q1: Where can a block be placed in the upper level?
• Block 12 placed in an 8-block cache:
  – Fully associative, direct mapped, 2-way set associative
  – S.A. Mapping = Block Number Modulo Number of Sets
  – Fully associative: block 12 can go anywhere
  – Direct mapped: (12 mod 8) = 4
  – 2-way set associative: (12 mod 4) = 0
[Figure: an 8-block cache (blocks 0 to 7) shown three times, one per organization, above a 32-block memory (blocks 0 to 31).]
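The modulo mapping above can be sketched in a few lines. The function name and the assumption that the frames of one set are numbered consecutively are illustrative choices, not something the slide specifies.

```python
def candidate_frames(block_number, num_frames, ways):
    """Return the cache frames where a memory block may be placed.

    ways == 1 is direct mapped; ways == num_frames is fully associative.
    Assumption: frames belonging to one set are numbered consecutively.
    """
    if ways <= 0 or num_frames % ways != 0:
        raise ValueError("ways must evenly divide num_frames")
    num_sets = num_frames // ways
    set_index = block_number % num_sets   # block number modulo number of sets
    first = set_index * ways
    return list(range(first, first + ways))


print(candidate_frames(12, 8, 1))  # direct mapped: frame 12 mod 8
print(candidate_frames(12, 8, 2))  # 2-way: both frames of set 12 mod 4
print(candidate_frames(12, 8, 8))  # fully associative: any frame
```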

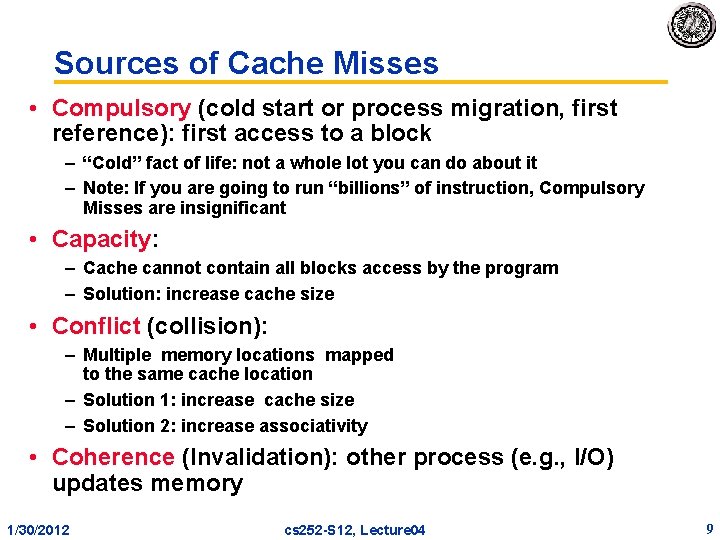

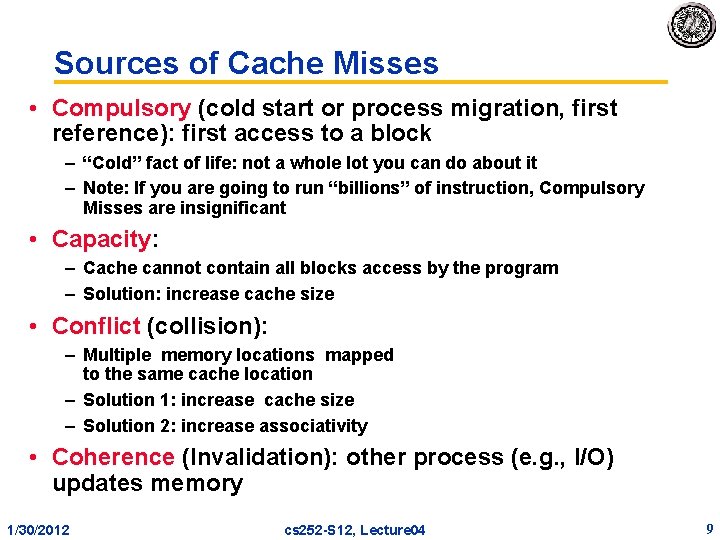

Sources of Cache Misses
• Compulsory (cold start or process migration, first reference): first access to a block
  – “Cold” fact of life: not a whole lot you can do about it
  – Note: If you are going to run “billions” of instructions, Compulsory Misses are insignificant
• Capacity:
  – Cache cannot contain all blocks accessed by the program
  – Solution: increase cache size
• Conflict (collision):
  – Multiple memory locations mapped to the same cache location
  – Solution 1: increase cache size
  – Solution 2: increase associativity
• Coherence (Invalidation): other process (e.g., I/O) updates memory

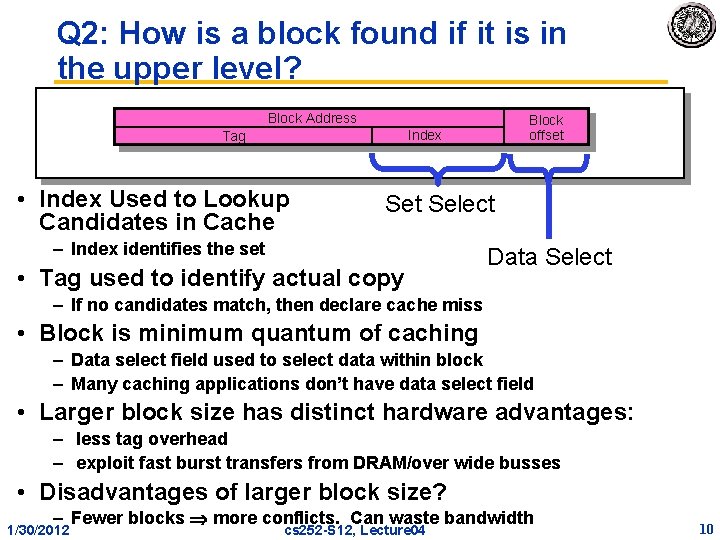

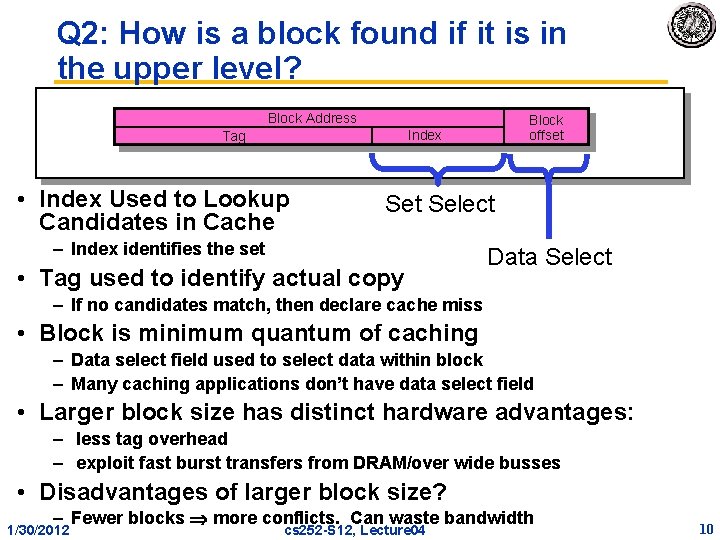

Q2: How is a block found if it is in the upper level?
[Figure: address divided into Block Address (Tag, Index) and Block offset; the Index performs Set Select and the Block offset performs Data Select.]
• Index used to look up candidates in cache
  – Index identifies the set
• Tag used to identify actual copy
  – If no candidates match, then declare cache miss
• Block is minimum quantum of caching
  – Data select field used to select data within block
  – Many caching applications don’t have data select field
• Larger block size has distinct hardware advantages:
  – Less tag overhead
  – Exploit fast burst transfers from DRAM/over wide busses
• Disadvantages of larger block size?
  – Fewer blocks ⇒ more conflicts. Can waste bandwidth

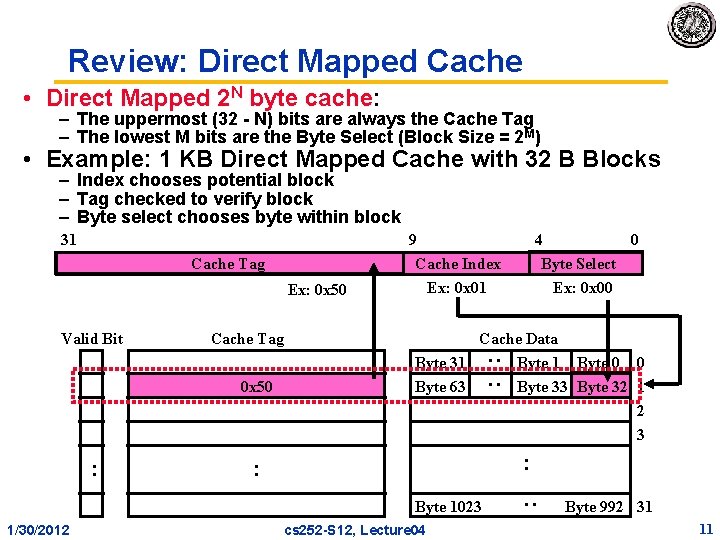

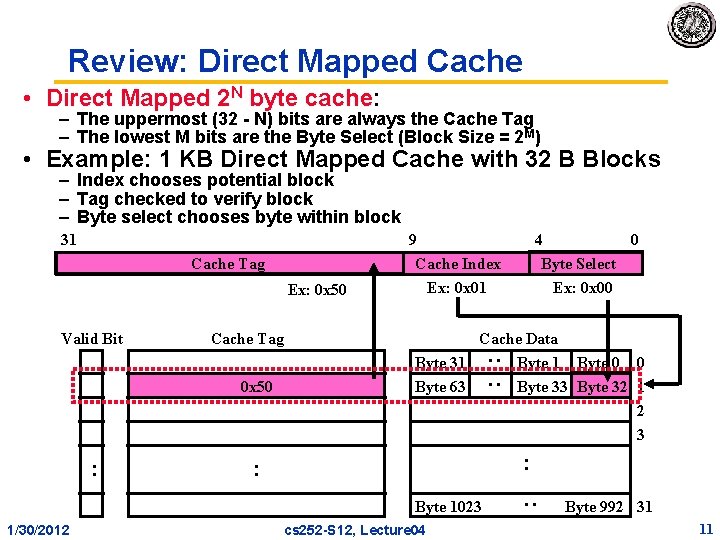

Review: Direct Mapped Cache
• Direct Mapped 2^N byte cache:
  – The uppermost (32 - N) bits are always the Cache Tag
  – The lowest M bits are the Byte Select (Block Size = 2^M)
• Example: 1 KB Direct Mapped Cache with 32 B Blocks
  – Index chooses potential block
  – Tag checked to verify block
  – Byte select chooses byte within block
[Figure: 32-bit address split into Cache Tag (bits 31 to 10, Ex: 0x50), Cache Index (bits 9 to 5, Ex: 0x01), and Byte Select (bits 4 to 0, Ex: 0x00). The cache has 32 rows (index 0 to 31), each with a Valid Bit, a Cache Tag, and 32 bytes of Cache Data: row 0 holds Byte 0 to Byte 31, row 1 holds Byte 32 to Byte 63 (tag 0x50 in the example), and row 31 holds Byte 992 to Byte 1023.]
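The field extraction for the 1 KB, 32 B-block example can be checked with a short sketch. The function name and default parameters are illustrative, and the code assumes both sizes are powers of two.

```python
def split_address(addr, cache_bytes=1024, block_bytes=32):
    """Split an address into (tag, index, byte_select) for a direct-mapped cache.

    Assumes cache_bytes and block_bytes are powers of two.
    """
    offset_bits = block_bytes.bit_length() - 1   # M = log2(block size)
    cache_bits = cache_bytes.bit_length() - 1    # N = log2(cache size)
    index_bits = cache_bits - offset_bits
    byte_select = addr & (block_bytes - 1)
    index = (addr >> offset_bits) & ((1 << index_bits) - 1)
    tag = addr >> cache_bits                     # uppermost (32 - N) bits
    return tag, index, byte_select


# Rebuild the slide's example address: tag 0x50, index 0x01, byte select 0x00.
addr = (0x50 << 10) | (0x01 << 5) | 0x00
tag, index, byte_select = split_address(addr)
print(hex(addr), hex(tag), hex(index), hex(byte_select))
```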

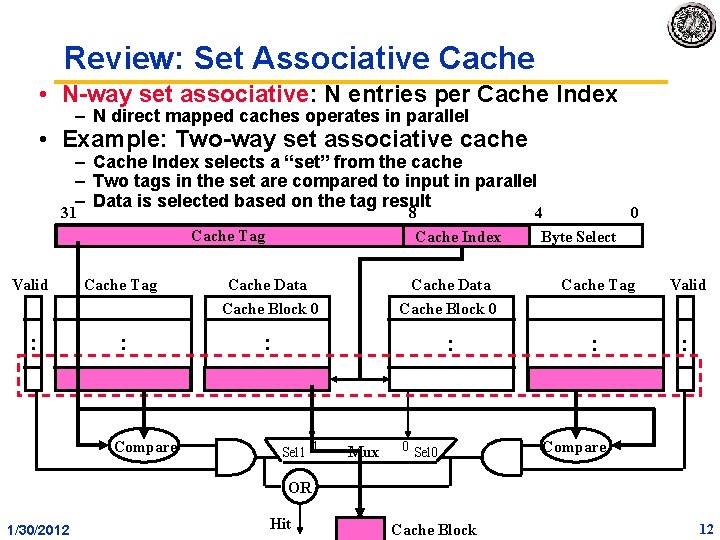

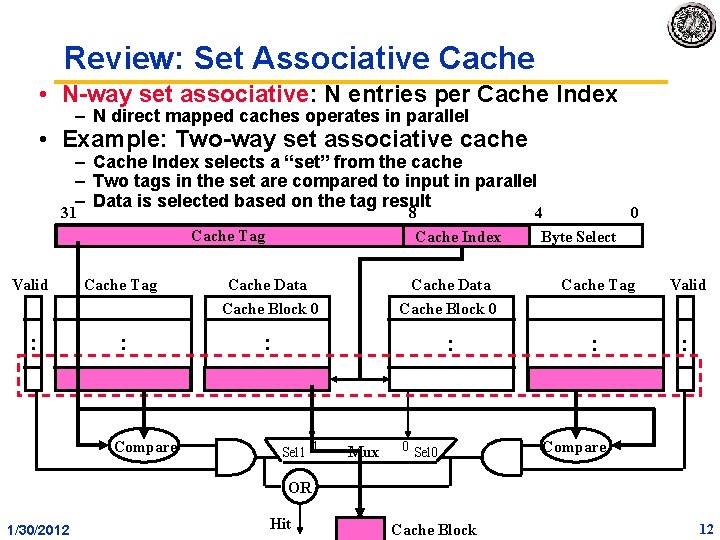

Review: Set Associative Cache
• N-way set associative: N entries per Cache Index
  – N direct mapped caches operate in parallel
• Example: Two-way set associative cache
  – Cache Index selects a “set” from the cache
  – Two tags in the set are compared to input in parallel
  – Data is selected based on the tag result
[Figure: two-way set-associative lookup. Address fields: Cache Tag (bits 31 to 9), Cache Index (bits 8 to 5), Byte Select (bits 4 to 0). Each way holds Valid, Cache Tag, and Cache Data (Cache Block 0, ...). Two comparators drive Sel0 and Sel1 of a mux that picks the Cache Block; the OR of the two compare results is Hit.]

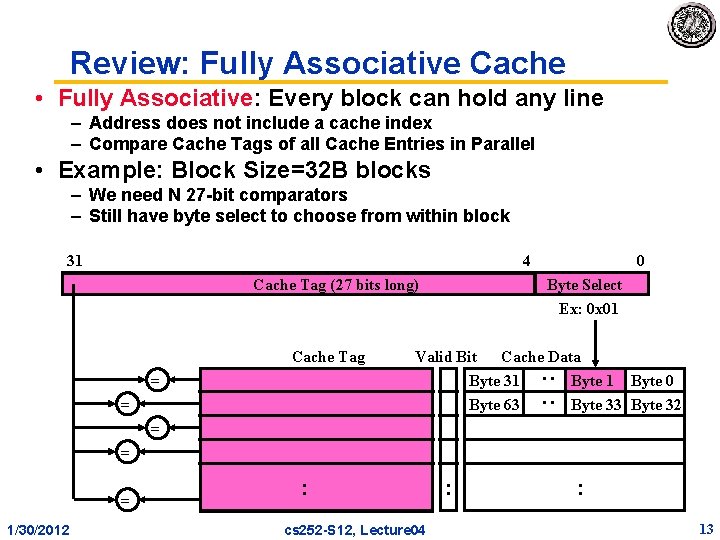

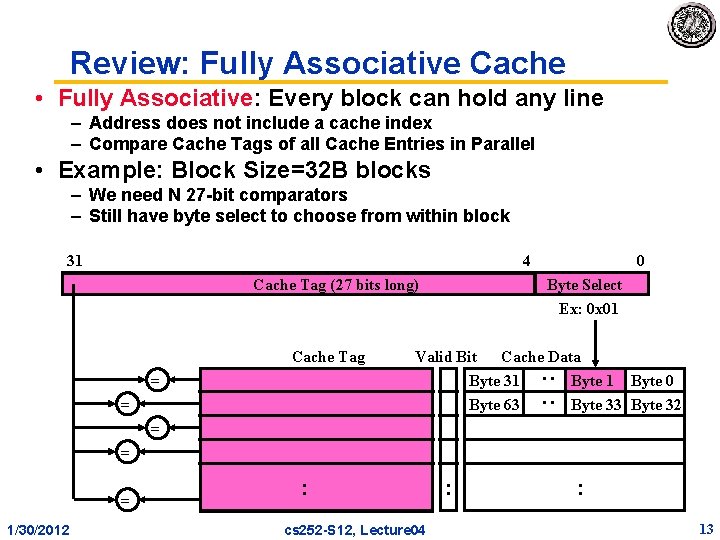

Review: Fully Associative Cache
• Fully Associative: Every block can hold any line
  – Address does not include a cache index
  – Compare Cache Tags of all Cache Entries in Parallel
• Example: Block Size = 32 B
  – We need N 27-bit comparators
  – Still have byte select to choose from within block
[Figure: address split into Cache Tag (bits 31 to 5, 27 bits long) and Byte Select (bits 4 to 0, Ex: 0x01). Every entry has a Valid Bit, a Cache Tag with its own comparator (=), and Cache Data (Byte 0 to Byte 31, Byte 32 to Byte 63, ...).]

Q3: Which block should be replaced on a miss?
• Easy for Direct Mapped
• Set Associative or Fully Associative:
  – LRU (Least Recently Used): Appealing, but hard to implement for high associativity
  – Random: Easy, but how well does it work?

Miss rates by associativity and replacement policy:

| Size | 2-way LRU | 2-way Random | 4-way LRU | 4-way Random | 8-way LRU | 8-way Random |
|------|-----------|--------------|-----------|--------------|-----------|--------------|
| 16K  | 5.2%      | 5.7%         | 4.7%      | 5.3%         | 4.4%      | 5.0%         |
| 64K  | 1.9%      | 2.0%         | 1.5%      | 1.7%         | 1.4%      | 1.5%         |
| 256K | 1.15%     | 1.17%        | 1.13%     | 1.13%        | 1.12%     | 1.12%        |

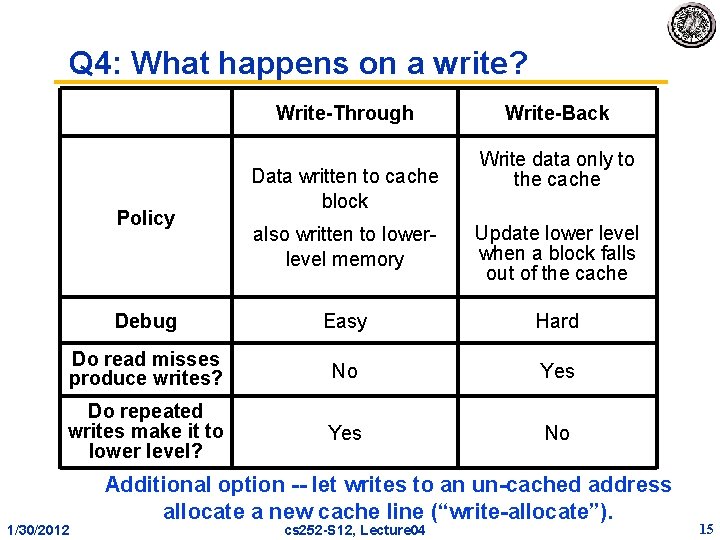

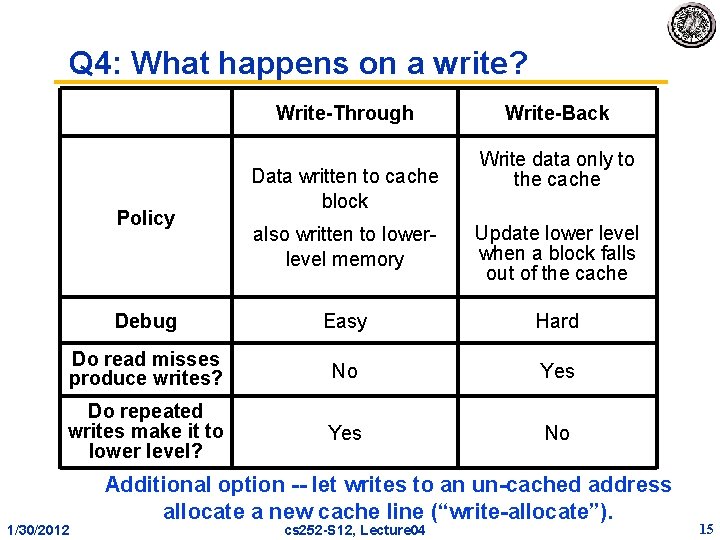

Q4: What happens on a write?

|                                            | Write-Through | Write-Back |
|--------------------------------------------|---------------|------------|
| Policy                                     | Data written to cache block is also written to lower-level memory | Write data only to the cache; update lower level when a block falls out of the cache |
| Debug                                      | Easy          | Hard       |
| Do read misses produce writes?             | No            | Yes        |
| Do repeated writes make it to lower level? | Yes           | No         |

Additional option: let writes to an un-cached address allocate a new cache line (“write-allocate”).

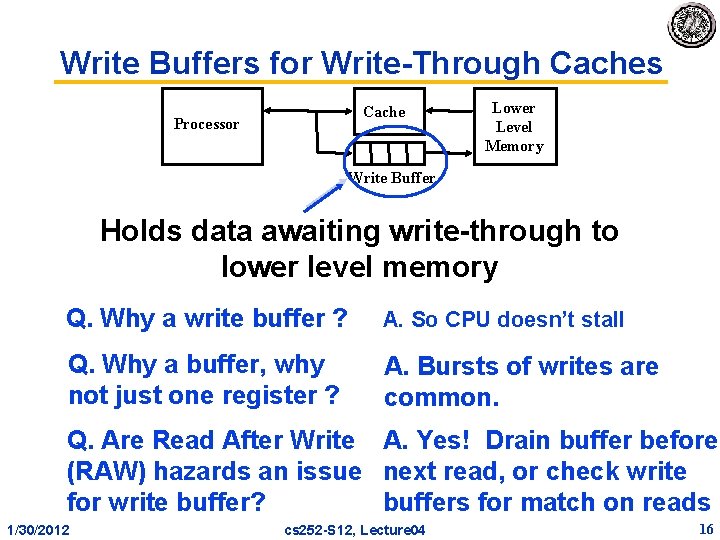

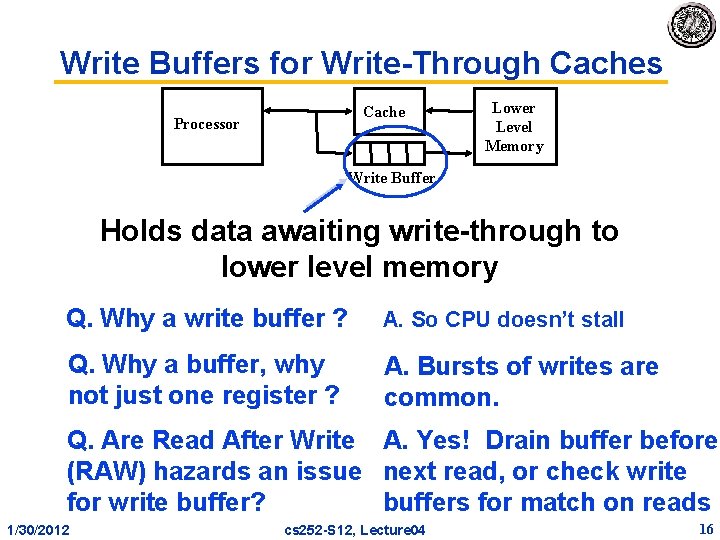

Write Buffers for Write-Through Caches
[Figure: Processor and Cache, with a Write Buffer between the Cache and Lower Level Memory. The Write Buffer holds data awaiting write-through to lower level memory.]
Q. Why a write buffer?
A. So CPU doesn’t stall.
Q. Why a buffer, why not just one register?
A. Bursts of writes are common.
Q. Are Read After Write (RAW) hazards an issue for write buffer?
A. Yes! Drain buffer before next read, or check write buffers for match on reads.
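The second answer to the RAW question (check the write buffer for a match on reads) can be sketched as follows. This is a minimal software model under stated assumptions: the class name, the dictionary standing in for lower-level memory, and the default value of 0 for untouched addresses are all illustrative, not hardware detail from the lecture.

```python
from collections import deque


class WriteBuffer:
    """Toy write buffer in front of a lower-level memory (a dict here)."""

    def __init__(self, memory):
        self.memory = memory      # address -> value, models lower-level memory
        self.pending = deque()    # (address, value) pairs in program order

    def write(self, addr, value):
        # The CPU does not stall: the write is queued, not performed.
        self.pending.append((addr, value))

    def drain_one(self):
        # Retire the oldest pending write to lower-level memory.
        if self.pending:
            addr, value = self.pending.popleft()
            self.memory[addr] = value

    def read(self, addr):
        # RAW check: the newest matching pending write wins over memory.
        for pending_addr, value in reversed(self.pending):
            if pending_addr == addr:
                return value
        return self.memory.get(addr, 0)


memory = {0x100: 1}
wb = WriteBuffer(memory)
wb.write(0x100, 7)
print(wb.read(0x100), memory[0x100])   # buffered value vs. stale memory value
wb.drain_one()
print(wb.read(0x100), memory[0x100])
```

Without the search in `read`, the first read would return the stale value still sitting in memory.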

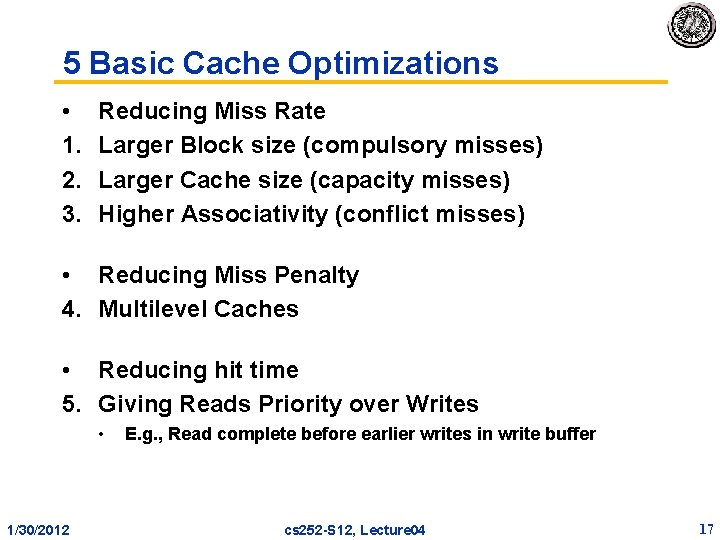

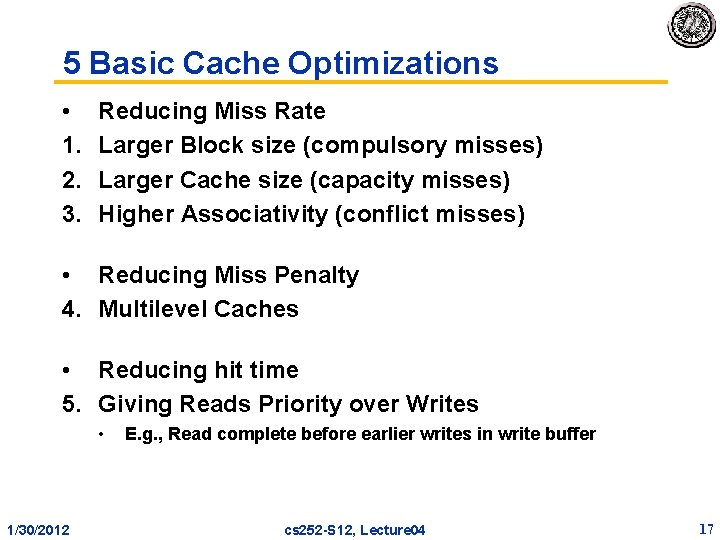

5 Basic Cache Optimizations
• Reducing Miss Rate
  1. Larger Block size (compulsory misses)
  2. Larger Cache size (capacity misses)
  3. Higher Associativity (conflict misses)
• Reducing Miss Penalty
  4. Multilevel Caches
• Reducing hit time
  5. Giving Reads Priority over Writes
     » E.g., Read completes before earlier writes in write buffer

CS252 Administrivia
• Textbook Reading for next few lectures:
  – Computer Architecture: A Quantitative Approach, Chapter 2
• Don’t forget to try to do the prerequisite exams (look at handouts page):
  – See if you have good enough understanding of prerequisite material
• Reading assignments for this week are up. Will work to get much further ahead.
  – Should be using web form to submit your summaries
  – Remember: I’ve given you 5 slip days on paper summaries
  – Technically, these paper summaries are due before class (1:00)
• Go to handouts page:
  – All readings put up so far are there
• Start thinking about projects!
  – Find a partner, start thinking of topics

Precise Exceptions: Ability to Undo!
• Readings for today:
  – James Smith and Andrew Pleszkun, “Implementation of Precise Interrupts in Pipelined Processors”
  – Gurindar Sohi and Sriram Vajapeyam, “Instruction Issue Logic for High-Performance, Interruptable Pipelined Processors”
• Basic ideas:
  – Prevent out of order commit
  – Either delay execution or delay commit
• Options:
  – Reorder Buffer with/without bypassing
  – Future File
• We will see an explicit use of both reorder buffer and future file in next couple of lectures

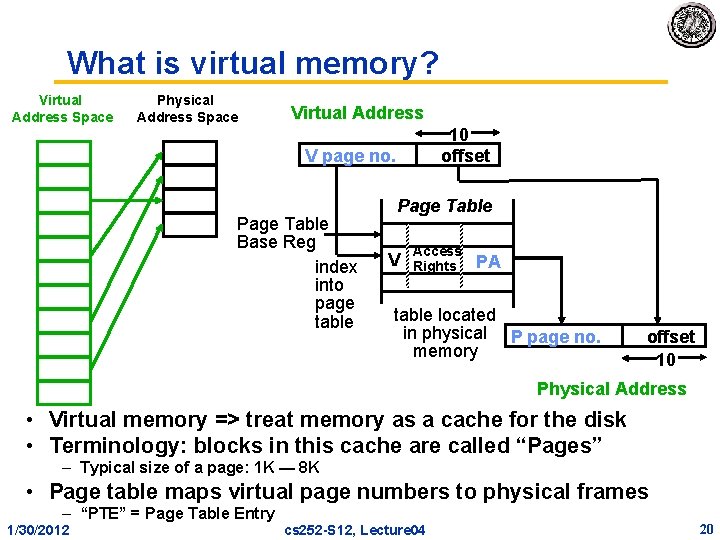

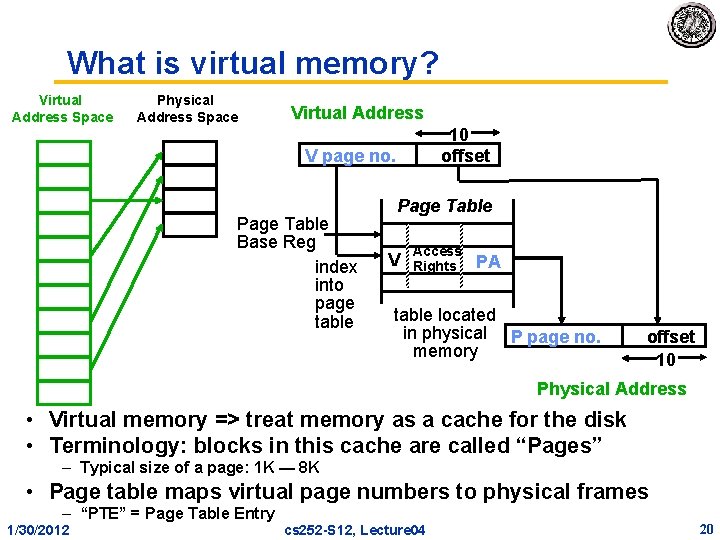

What is virtual memory?
[Figure: the Virtual Address (V page no., 10-bit offset) is translated to the Physical Address (P page no., 10-bit offset). The Page Table Base Reg plus the V page no. index into the Page Table, which is located in physical memory; each entry holds V (valid), Access Rights, and PA. The mapping takes the Virtual Address Space to the Physical Address Space.]
• Virtual memory => treat memory as a cache for the disk
• Terminology: blocks in this cache are called “Pages”
  – Typical size of a page: 1K to 8K
• Page table maps virtual page numbers to physical frames
  – “PTE” = Page Table Entry

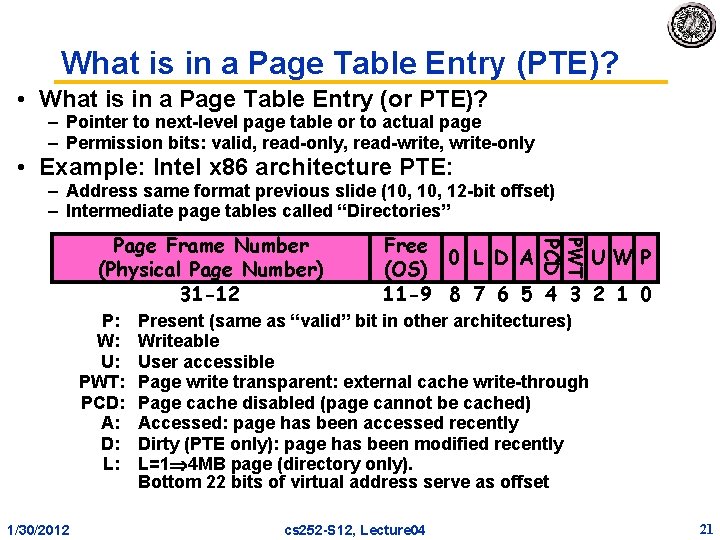

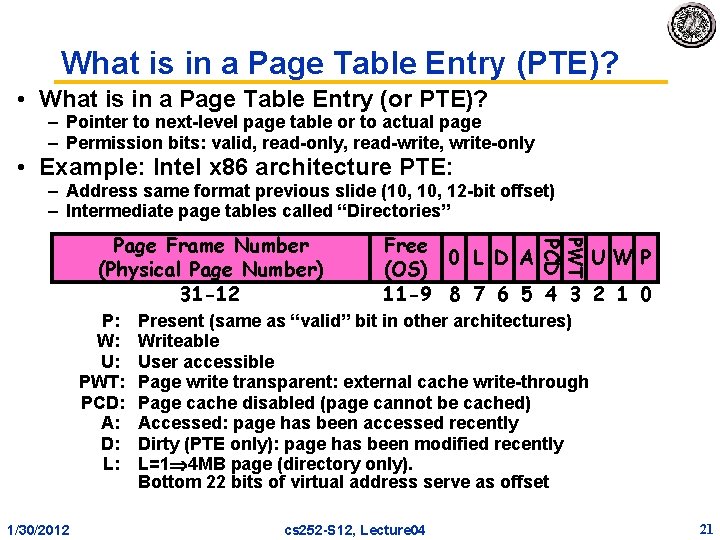

What is in a Page Table Entry (PTE)?
• What is in a Page Table Entry (or PTE)?
  – Pointer to next-level page table or to actual page
  – Permission bits: valid, read-only, read-write, write-only
• Example: Intel x86 architecture PTE:
  – Address same format as previous slide (10, 10, 12-bit offset)
  – Intermediate page tables called “Directories”

| Bits  | 31-12 | 11-9 | 8 | 7 | 6 | 5 | 4 | 3 | 2 | 1 | 0 |
|-------|-------|------|---|---|---|---|---|---|---|---|---|
| Field | Page Frame Number (Physical Page Number) | Free (OS) | 0 | L | D | A | PCD | PWT | U | W | P |

  – P: Present (same as “valid” bit in other architectures)
  – W: Writeable
  – U: User accessible
  – PWT: Page write transparent: external cache write-through
  – PCD: Page cache disabled (page cannot be cached)
  – A: Accessed: page has been accessed recently
  – D: Dirty (PTE only): page has been modified recently
  – L: L=1 ⇒ 4 MB page (directory only). Bottom 22 bits of virtual address serve as offset
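The bit layout above translates directly into shifts and masks. The sketch below decodes a 32-bit PTE using the field positions listed on the slide; the function name, the dictionary of flag positions, and the example value are illustrative.

```python
# Bit positions of the flag fields, as listed on the slide (32-bit x86 PTE).
PTE_FLAG_BITS = {"P": 0, "W": 1, "U": 2, "PWT": 3, "PCD": 4, "A": 5, "D": 6, "L": 7}


def decode_pte(pte):
    """Return (page_frame_number, flags) for a 32-bit PTE value."""
    flags = {name: bool((pte >> bit) & 1) for name, bit in PTE_FLAG_BITS.items()}
    frame = (pte >> 12) & 0xFFFFF   # bits 31-12: page frame number
    return frame, flags


# Hypothetical PTE: frame 0x12345, present, writeable, user, accessed, dirty.
frame, flags = decode_pte(0x12345067)
print(hex(frame), [name for name, is_set in flags.items() if is_set])
```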

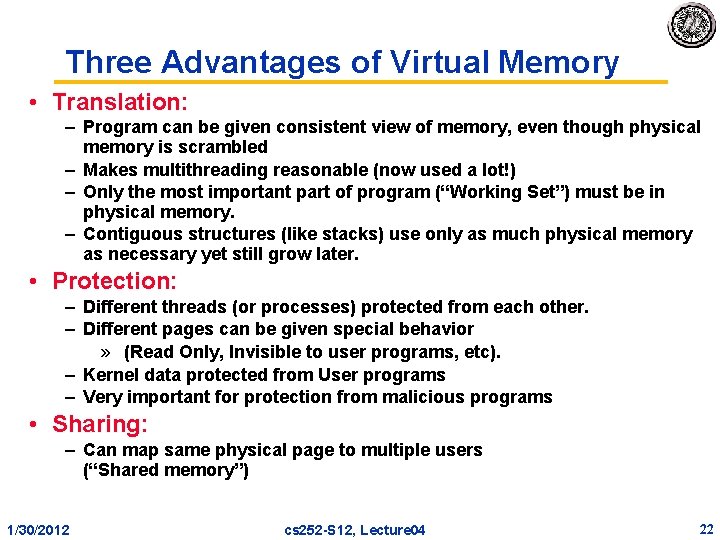

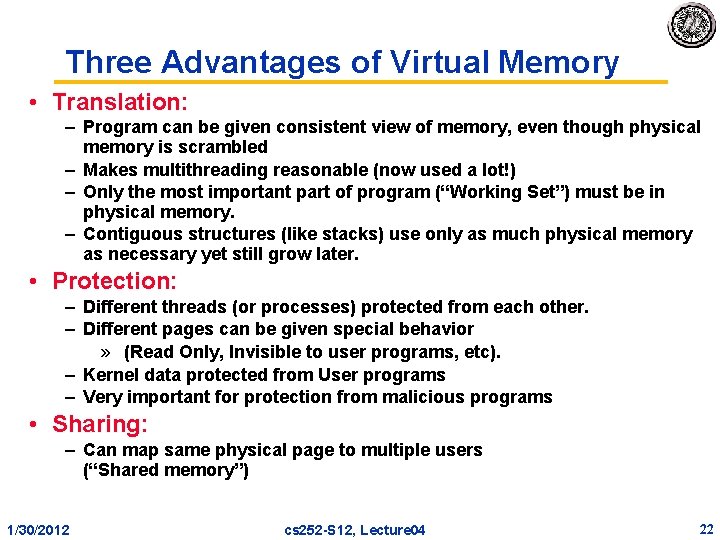

Three Advantages of Virtual Memory
• Translation:
  – Program can be given consistent view of memory, even though physical memory is scrambled
  – Makes multithreading reasonable (now used a lot!)
  – Only the most important part of program (“Working Set”) must be in physical memory.
  – Contiguous structures (like stacks) use only as much physical memory as necessary yet still grow later.
• Protection:
  – Different threads (or processes) protected from each other.
  – Different pages can be given special behavior
    » (Read Only, Invisible to user programs, etc).
  – Kernel data protected from User programs
  – Very important for protection from malicious programs
• Sharing:
  – Can map same physical page to multiple users (“Shared memory”)

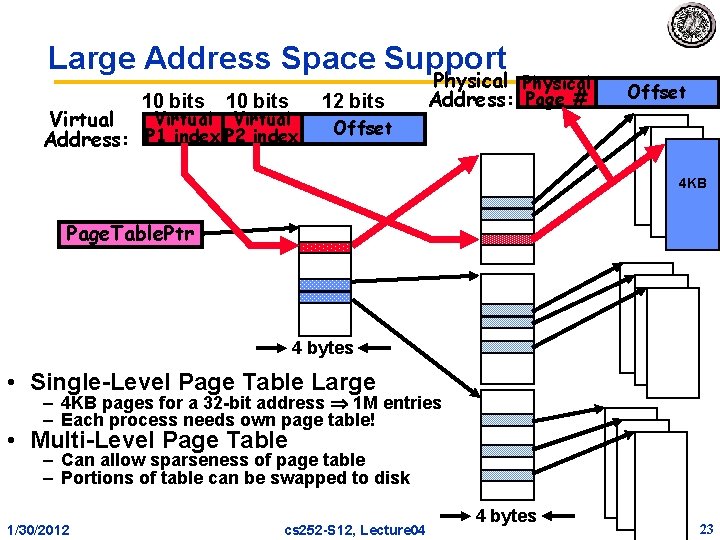

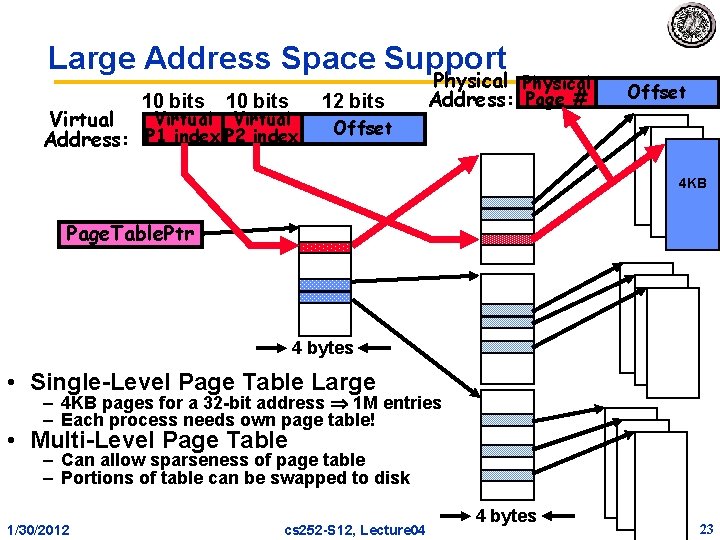

Large Address Space Support
[Figure: two-level translation. Virtual Address = P1 index (10 bits), P2 index (10 bits), Offset (12 bits). PageTablePtr points to the first-level table; entries at both levels are 4 bytes; pages are 4KB. Physical Address = Page #, Offset.]
• Single-Level Page Table is large
  – 4KB pages for a 32-bit address ⇒ 1M entries
  – Each process needs its own page table!
• Multi-Level Page Table
  – Can allow sparseness of page table
  – Portions of table can be swapped to disk
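A two-level walk with the 10/10/12 split can be sketched as below. Nested dictionaries stand in for the first- and second-level tables so that missing entries model the sparseness the slide mentions; the names `translate` and `PageFault` and the example mapping are assumptions made for illustration.

```python
PAGE_SHIFT = 12                       # 4 KB pages: 12-bit offset
INDEX_BITS = 10                       # 10-bit P1 and P2 indices
INDEX_MASK = (1 << INDEX_BITS) - 1
OFFSET_MASK = (1 << PAGE_SHIFT) - 1


class PageFault(Exception):
    """Raised when either level has no valid entry for the address."""


def translate(vaddr, page_directory):
    """Walk a two-level table: page_directory[p1][p2] -> physical frame number."""
    p1 = (vaddr >> (PAGE_SHIFT + INDEX_BITS)) & INDEX_MASK
    p2 = (vaddr >> PAGE_SHIFT) & INDEX_MASK
    offset = vaddr & OFFSET_MASK
    second_level = page_directory.get(p1)
    if second_level is None:          # whole 4 MB region unmapped: no table needed
        raise PageFault(f"no second-level table for P1 index {p1}")
    frame = second_level.get(p2)
    if frame is None:
        raise PageFault(f"no mapping for P2 index {p2}")
    return (frame << PAGE_SHIFT) | offset


# Hypothetical address space with a single mapped page.
directory = {1: {3: 0x55}}
print(hex(translate(0x00403ABC, directory)))
```

Only the second-level tables that are actually used need to exist, which is the space saving over a flat 1M-entry table.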

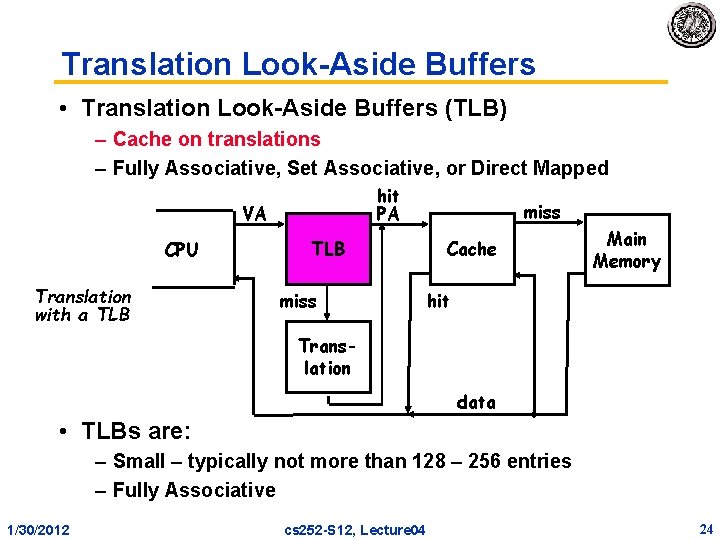

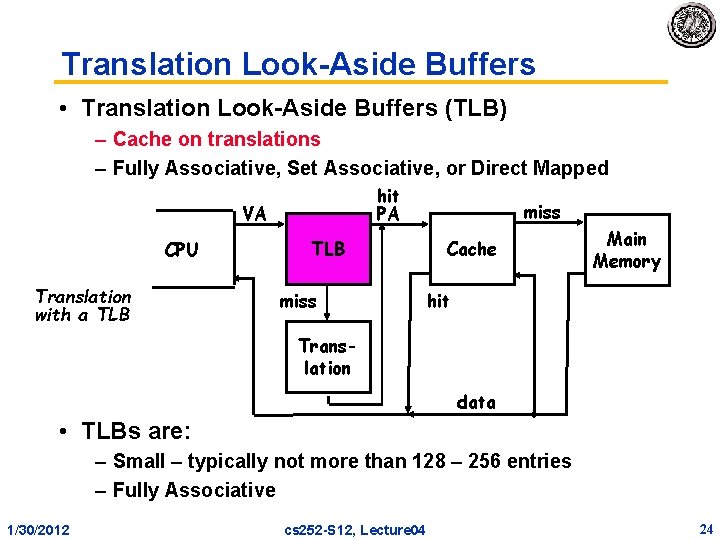

Translation Look-Aside Buffers
• Translation Look-Aside Buffers (TLB)
  – Cache on translations
  – Fully Associative, Set Associative, or Direct Mapped
[Figure: translation with a TLB. The CPU sends the VA to the TLB; on a TLB hit the PA goes to the Cache, and on a cache miss to Main Memory; on a TLB miss the full Translation is performed first. Data returns to the CPU on a cache hit.]
• TLBs are:
  – Small: typically not more than 128 to 256 entries
  – Fully Associative

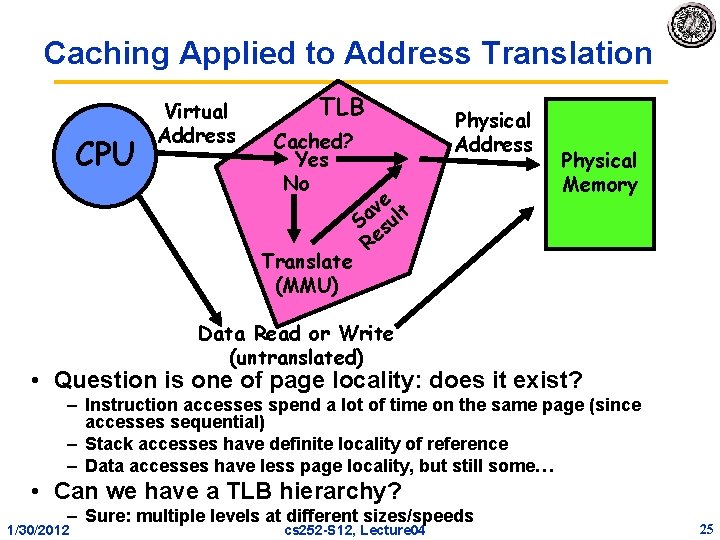

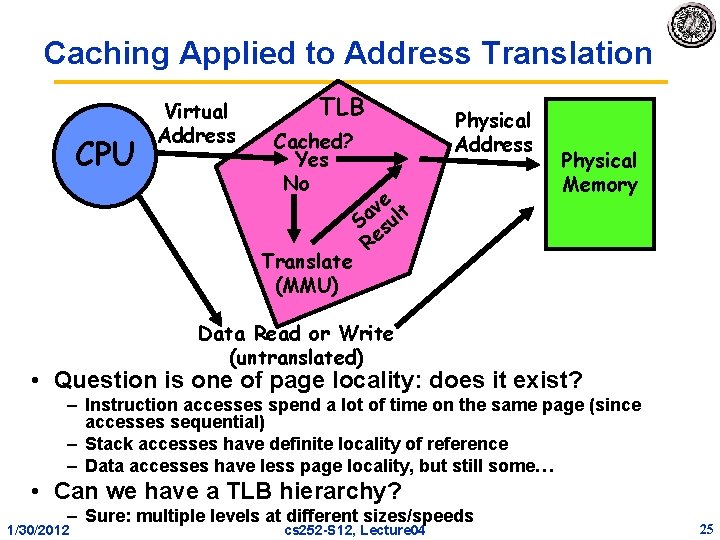

Caching Applied to Address Translation
[Figure: the CPU issues a Virtual Address to the TLB. If the translation is cached (Yes), the Physical Address goes straight to Physical Memory; if not (No), the MMU translates and the result is saved in the TLB. Data reads or writes pass untranslated.]
• Question is one of page locality: does it exist?
  – Instruction accesses spend a lot of time on the same page (since accesses are sequential)
  – Stack accesses have definite locality of reference
  – Data accesses have less page locality, but still some…
• Can we have a TLB hierarchy?
  – Sure: multiple levels at different sizes/speeds

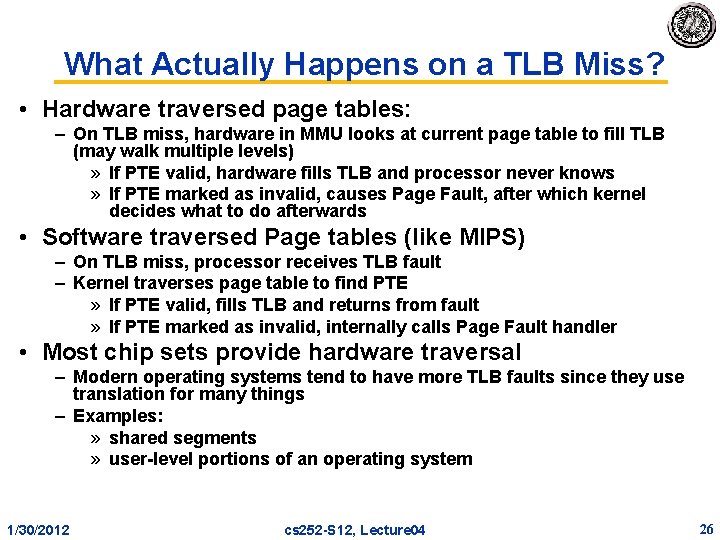

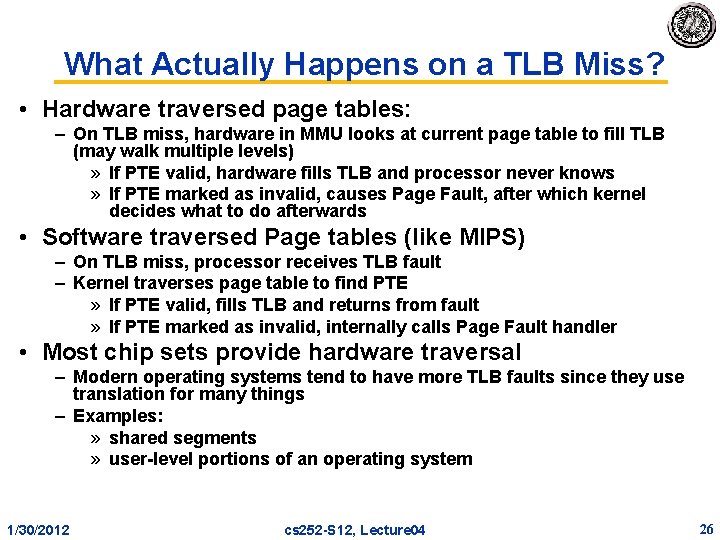

What Actually Happens on a TLB Miss?
• Hardware traversed page tables:
  – On TLB miss, hardware in MMU looks at current page table to fill TLB (may walk multiple levels)
    » If PTE valid, hardware fills TLB and processor never knows
    » If PTE marked as invalid, causes Page Fault, after which kernel decides what to do
• Software traversed page tables (like MIPS)
  – On TLB miss, processor receives TLB fault
  – Kernel traverses page table to find PTE
    » If PTE valid, fills TLB and returns from fault
    » If PTE marked as invalid, internally calls Page Fault handler
• Most chip sets provide hardware traversal
  – Modern operating systems tend to have more TLB faults since they use translation for many things
  – Examples:
    » shared segments
    » user-level portions of an operating system

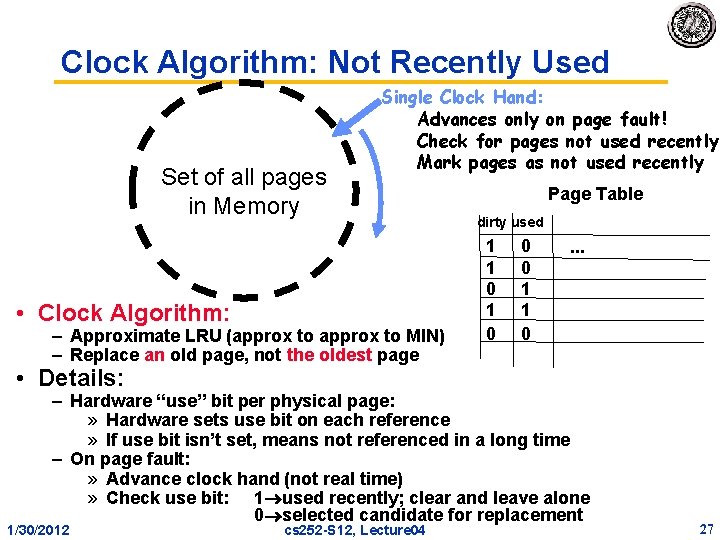

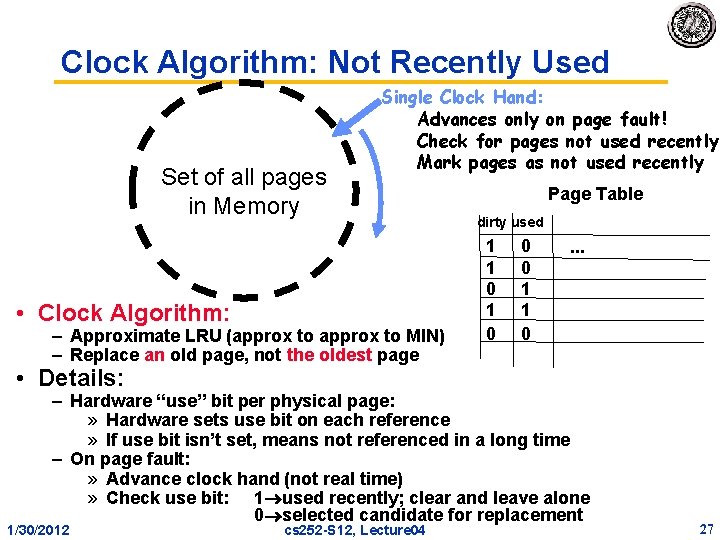

Clock Algorithm: Not Recently Used
[Figure: the set of all pages in memory arranged in a circle with a single clock hand, which advances only on page fault, checks for pages not used recently, and marks pages as not used recently. The page table carries “dirty” and “used” bits per page.]
• Clock Algorithm:
  – Approximate LRU (approx to MIN)
  – Replace an old page, not the oldest page
• Details:
  – Hardware “use” bit per physical page:
    » Hardware sets use bit on each reference
    » If use bit isn’t set, means not referenced in a long time
  – On page fault:
    » Advance clock hand (not real time)
    » Check use bit: 1 ⇒ used recently; clear and leave alone. 0 ⇒ selected candidate for replacement
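The page-fault steps above can be sketched as a small class. The class and method names are illustrative; `reference` stands in for the hardware setting the use bit, and the dirty bit is left out to keep the sketch short.

```python
class ClockReplacer:
    """Toy clock (not-recently-used) replacement over a fixed set of frames."""

    def __init__(self, num_frames):
        self.use = [False] * num_frames   # one "use" bit per physical page
        self.hand = 0                     # single clock hand

    def reference(self, frame):
        # Models the hardware setting the use bit on each reference.
        self.use[frame] = True

    def choose_victim(self):
        # Called on a page fault: advance the hand until a page with use == 0.
        while True:
            frame = self.hand
            self.hand = (self.hand + 1) % len(self.use)
            if self.use[frame]:
                self.use[frame] = False   # used recently: clear and leave alone
            else:
                return frame              # not used recently: replace it


clock = ClockReplacer(4)
for frame in (0, 1, 3):
    clock.reference(frame)
print(clock.choose_victim())   # frame 2 was never referenced
print(clock.choose_victim())   # bits cleared on the first sweep now matter
```

The loop always terminates: after at most one full sweep every use bit has been cleared, so some frame qualifies.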

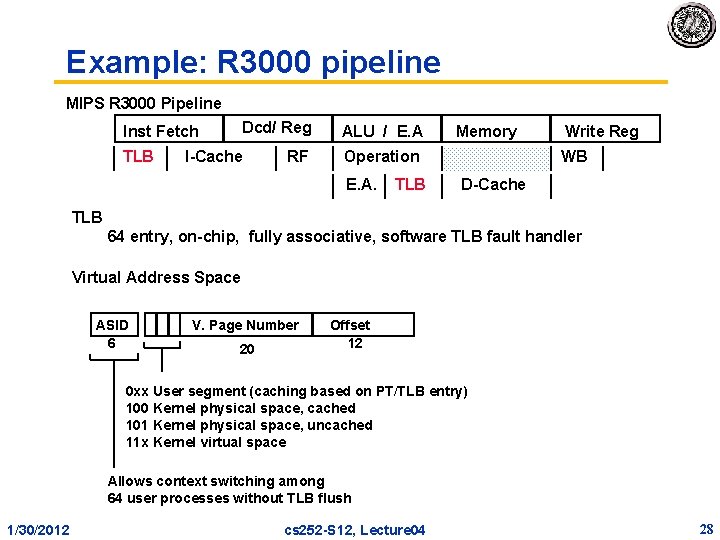

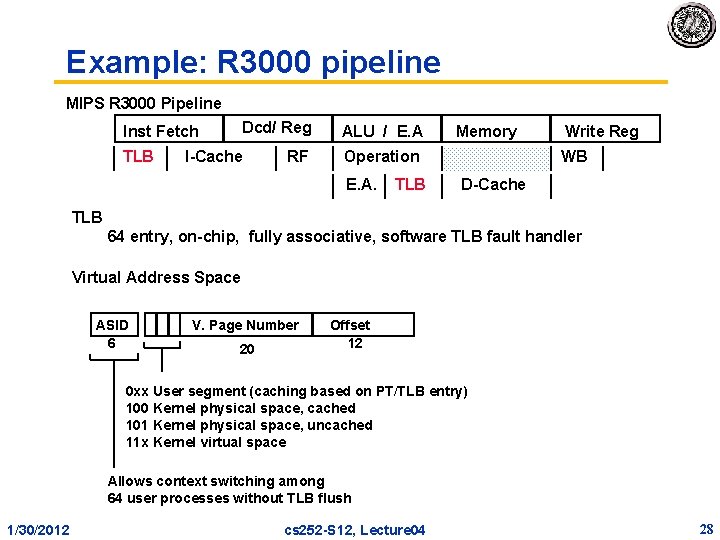

Example: R3000 pipeline

MIPS R3000 Pipeline:

| Inst Fetch   | Dcd/Reg | ALU / E.A. | Memory             | Write Reg |
|--------------|---------|------------|--------------------|-----------|
| TLB, I-Cache | RF      | Operation  | E.A. TLB, D-Cache  | WB        |

TLB: 64 entry, on-chip, fully associative, software TLB fault handler

Virtual address: ASID (6 bits), V. Page Number (20 bits), Offset (12 bits)

Virtual Address Space, by top address bits:
  – 0xx: User segment (caching based on PT/TLB entry)
  – 100: Kernel physical space, cached
  – 101: Kernel physical space, uncached
  – 11x: Kernel virtual space

Allows context switching among 64 user processes without TLB flush

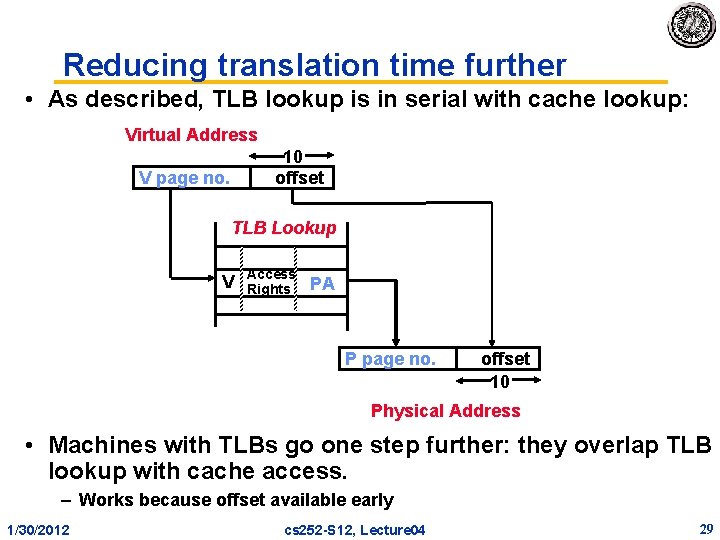

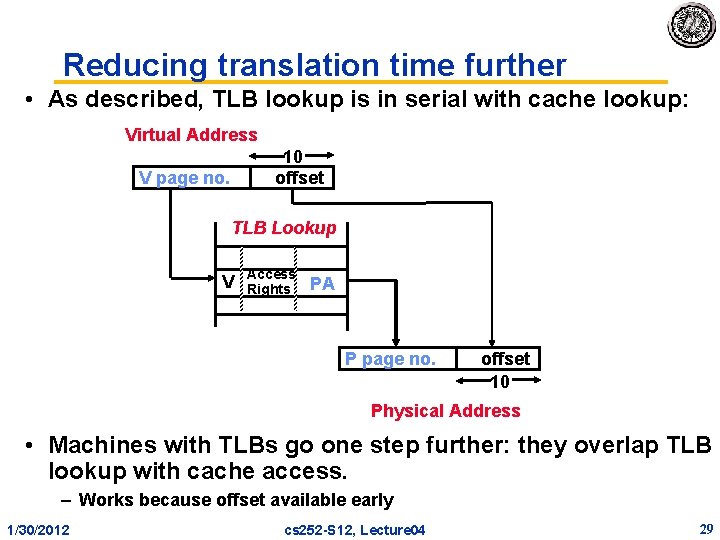

Reducing translation time further
• As described, TLB lookup is in serial with cache lookup:
[Figure: the Virtual Address (V page no., 10-bit offset) goes through TLB Lookup, whose entries hold V, Access Rights, and PA, to produce the Physical Address (P page no., 10-bit offset).]
• Machines with TLBs go one step further: they overlap TLB lookup with cache access.
  – Works because offset available early

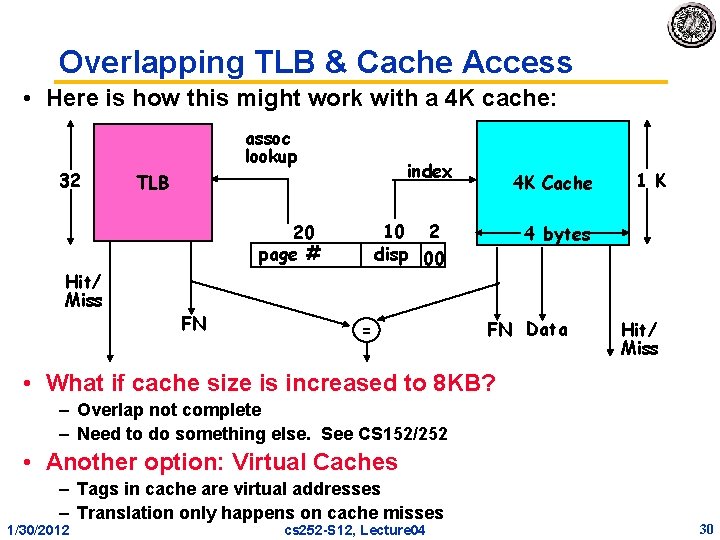

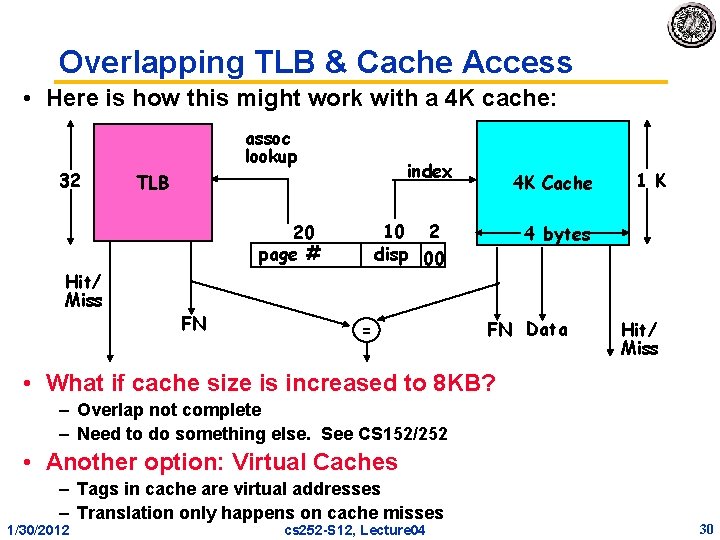

Overlapping TLB & Cache Access
• Here is how this might work with a 4K cache:
[Figure: the 20-bit page # goes to an associative TLB lookup, producing the frame number (FN) and TLB Hit/Miss. In parallel, the 12-bit displacement (10-bit index plus 2 bits “00”) indexes a 4K cache organized as 1K entries of 4 bytes. The FN stored in the cache is compared (=) with the FN from the TLB to produce cache Hit/Miss and Data.]
• What if cache size is increased to 8KB?
  – Overlap not complete
  – Need to do something else. See CS152/252
• Another option: Virtual Caches
  – Tags in cache are virtual addresses
  – Translation only happens on cache misses

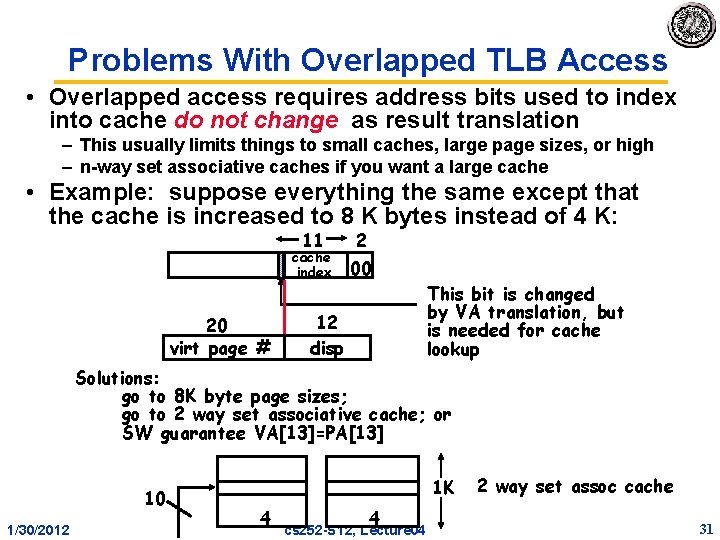

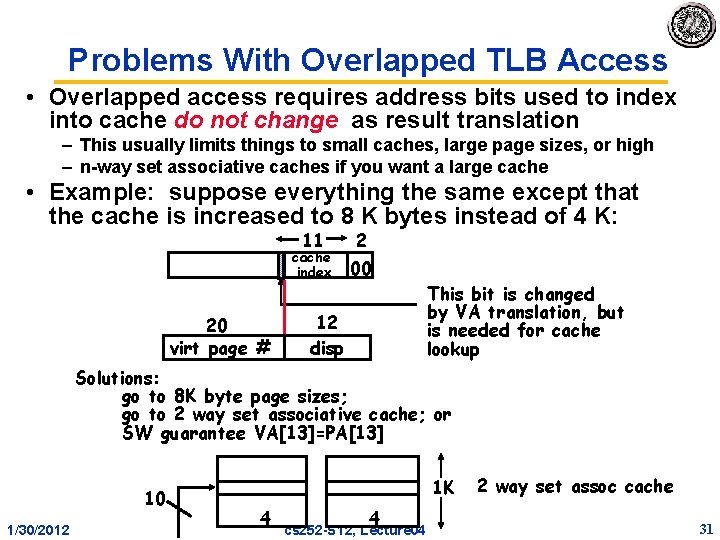

Problems With Overlapped TLB Access
• Overlapped access requires that the address bits used to index into the cache do not change as a result of translation
  – This usually limits things to small caches, large page sizes, or high n-way set associative caches if you want a large cache
• Example: suppose everything is the same except that the cache is increased to 8K bytes instead of 4K:
[Figure: address with a 20-bit virt page # and a 12-bit disp; the 11-bit cache index (plus 2 bits “00”) now reaches one bit into the page number. This bit is changed by VA translation, but is needed for cache lookup.]
• Solutions: go to 8K byte page sizes; go to 2-way set associative cache; or SW guarantee VA[13]=PA[13]
[Figure: 2-way set associative cache with a 10-bit index into two banks of 1K entries of 4 bytes each.]
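The constraint above reduces to a size check: the cache index plus byte offset must fit inside the page offset, which holds when the bytes per way do not exceed the page size. The function name below is an illustrative choice, and the check ignores the software-guarantee option.

```python
def index_fits_in_page_offset(cache_bytes, ways, page_bytes):
    """True if the cache can be indexed using untranslated page-offset bits only.

    Each way spans cache_bytes // ways bytes, so index + byte-offset bits
    stay within the page offset exactly when that span is <= page_bytes.
    """
    if cache_bytes % ways != 0:
        raise ValueError("ways must evenly divide cache_bytes")
    return cache_bytes // ways <= page_bytes


KB = 1024
print(index_fits_in_page_offset(4 * KB, 1, 4 * KB))   # 4K direct mapped, 4K pages
print(index_fits_in_page_offset(8 * KB, 1, 4 * KB))   # 8K direct mapped, 4K pages
print(index_fits_in_page_offset(8 * KB, 2, 4 * KB))   # fix: 2-way set associative
print(index_fits_in_page_offset(8 * KB, 1, 8 * KB))   # fix: 8K pages
```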

Exceptions: Traps and Interrupts (Hardware)

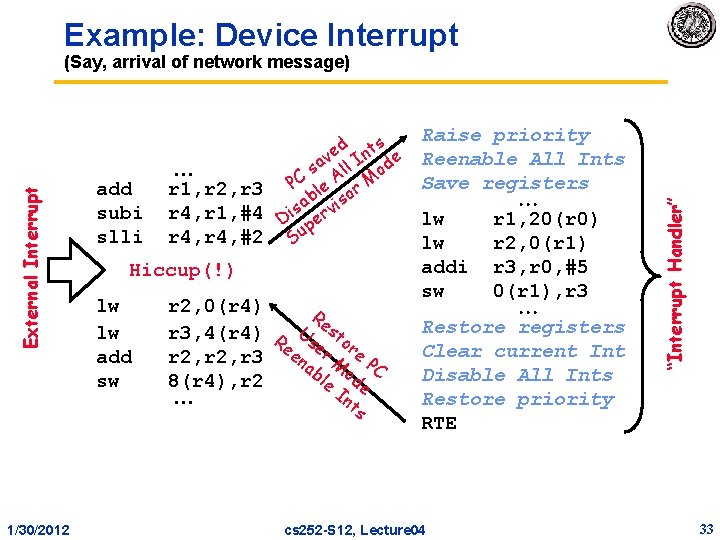

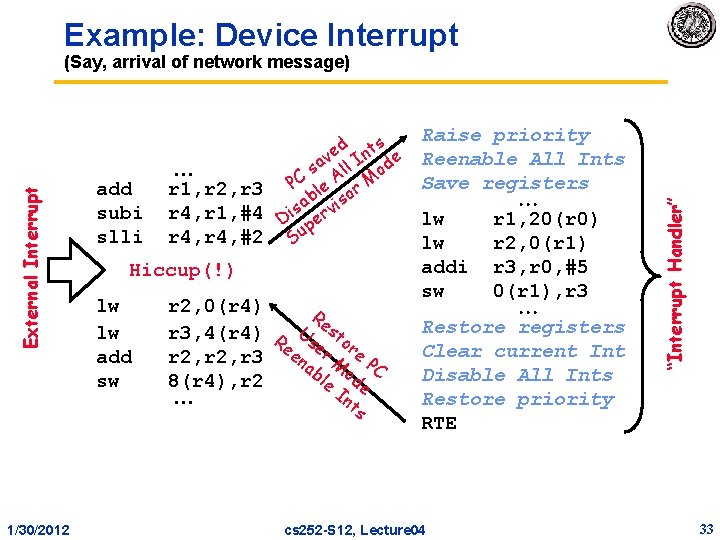

Example: Device Interrupt
External Interrupt (say, arrival of network message)
• Interrupted program:
  – add r1,r2,r3
  – subi r4,r1,#4
  – slli r4,r4,#2
  – Hiccup(!): the interrupt is taken here
  – lw r2,0(r4)
  – lw r3,4(r4)
  – add r2,r2,r3
  – sw 8(r4),r2
• On entry: PC saved, Disable All Ints, Supervisor Mode
• “Interrupt Handler”:
  – Raise priority, Reenable All Ints, Save registers
  – lw r1,20(r0)
  – lw r2,0(r1)
  – addi r3,r0,#5
  – sw 0(r1),r3
  – Restore registers, Clear current Int, Disable All Ints, Restore priority, RTE
• On return: Restore PC, User Mode, Reenable Ints

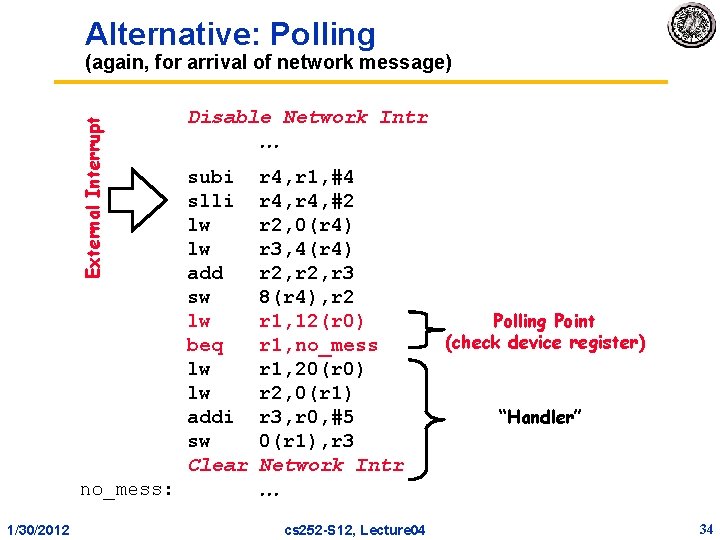

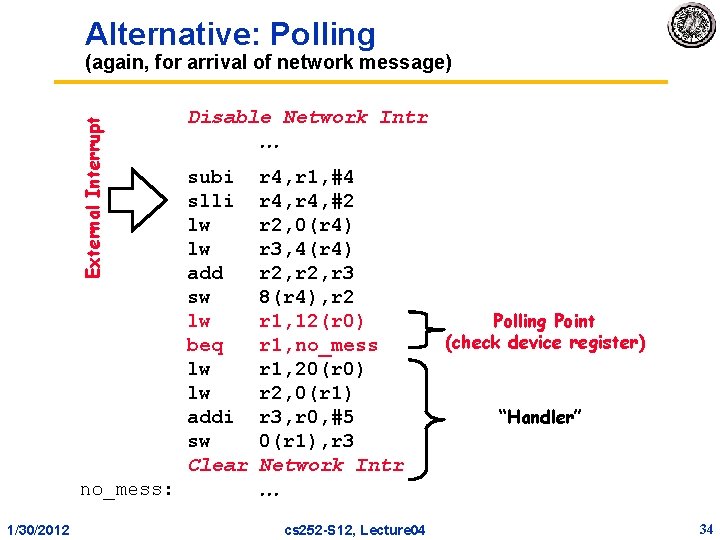

Alternative: Polling
External Interrupt (again, for arrival of network message)
• Program with polling:
  – Disable Network Intr
  – subi r4,r1,#4
  – slli r4,r4,#2
  – lw r2,0(r4)
  – lw r3,4(r4)
  – add r2,r2,r3
  – sw 8(r4),r2
  – lw r1,12(r0): Polling Point (check device register)
  – beq r1,no_mess
  – “Handler”: lw r1,20(r0); lw r2,0(r1); addi r3,r0,#5; sw 0(r1),r3; Clear Network Intr
  – no_mess: program continues

Polling is faster/slower than Interrupts.
• Polling is faster than interrupts because
  – Compiler knows which registers are in use at polling point. Hence, do not need to save and restore registers (or not as many).
  – Other interrupt overhead avoided (pipeline flush, trap priorities, etc).
• Polling is slower than interrupts because
  – Overhead of polling instructions is incurred regardless of whether or not handler is run. This could add to inner-loop delay.
  – Device may have to wait for service for a long time.
• When to use one or the other?
  – Multi-axis tradeoff
    » Frequent/regular events good for polling, as long as device can be controlled at user level.
    » Interrupts good for infrequent/irregular events
    » Interrupts good for ensuring regular/predictable service of events.

Trap/Interrupt classifications
• Traps: relevant to the current process
  – Faults, arithmetic traps, and synchronous traps
  – Invoke software on behalf of the currently executing process
• Interrupts: caused by asynchronous, outside events
  – I/O devices requiring service (disk, network)
  – Clock interrupts (real time scheduling)
• Machine Checks: caused by serious hardware failure
  – Not always restartable
  – Indicate that bad things have happened.
    » Non-recoverable ECC error
    » Machine room fire
    » Power outage

A related classification: Synchronous vs. Asynchronous
• Synchronous: means related to the instruction stream, i.e. during the execution of an instruction
  – Must stop an instruction that is currently executing
  – Page fault on load or store instruction
  – Arithmetic exception
  – Software Trap Instructions
• Asynchronous: means unrelated to the instruction stream, i.e. caused by an outside event.
  – Does not have to disrupt instructions that are already executing
  – Interrupts are asynchronous
  – Machine checks are asynchronous
• Semi-Synchronous (or high-availability interrupts):
  – Caused by external event but may have to disrupt current instructions in order to guarantee service

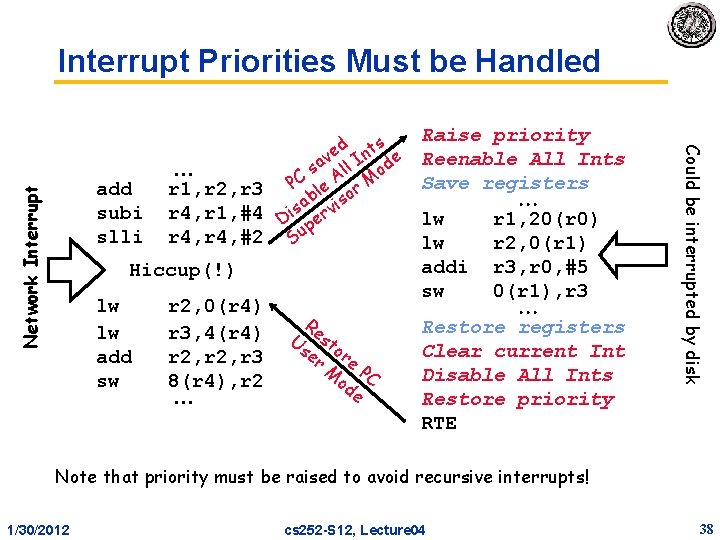

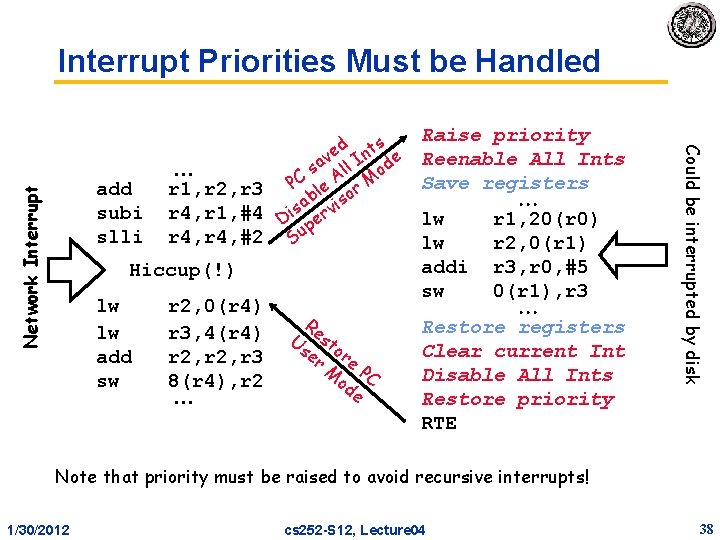

Interrupt Priorities Must be Handled
[Figure: the device-interrupt example repeated for a Network Interrupt. The program (add r1,r2,r3; subi r4,r1,#4; slli r4,r4,#2; Hiccup(!); lw r2,0(r4); lw r3,4(r4); add r2,r2,r3; sw 8(r4),r2) is interrupted: PC saved, Disable All Ints, Supervisor Mode. The handler runs Raise priority, Reenable All Ints, Save registers, lw r1,20(r0), lw r2,0(r1), addi r3,r0,#5, sw 0(r1),r3, Restore registers, Clear current Int, Disable All Ints, Restore priority, RTE, then Restore PC, User Mode. While interrupts are reenabled, the handler could be interrupted by disk.]
• Note that priority must be raised to avoid recursive interrupts!

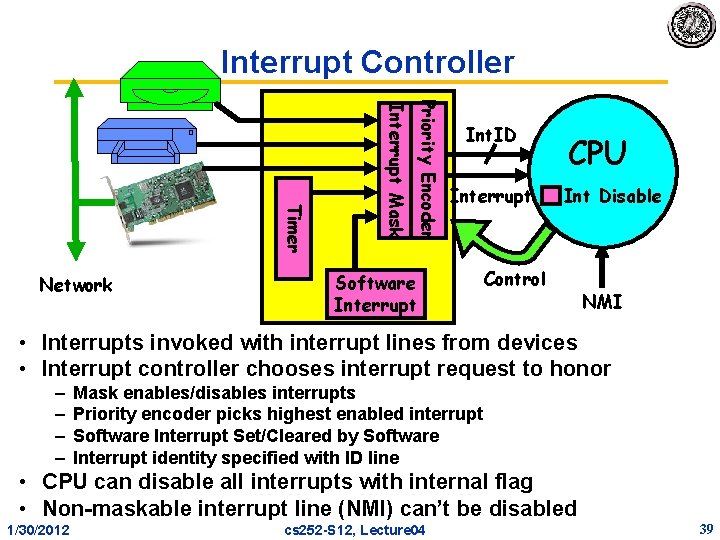

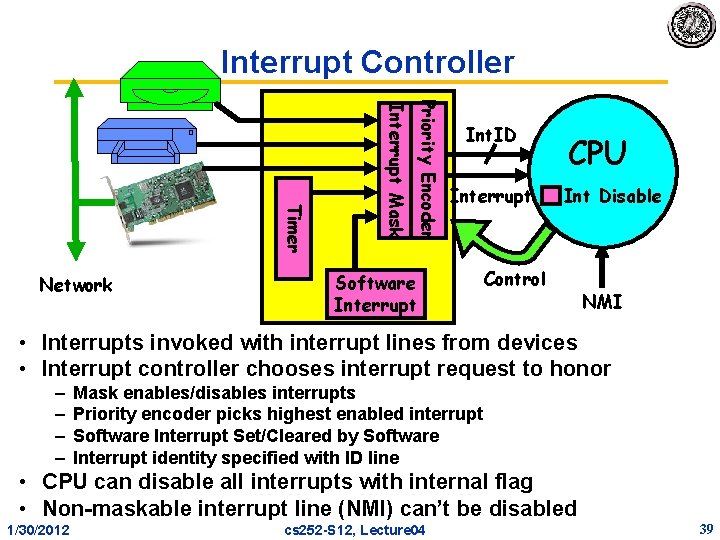

Interrupt Controller
[Figure: interrupt lines (Timer, Network, Software Interrupt, ...) pass through the Interrupt Mask into a Priority Encoder, which drives the Interrupt and Int. ID lines to the CPU. The CPU has an Int Disable flag and Control; NMI goes to the CPU directly.]
• Interrupts invoked with interrupt lines from devices
• Interrupt controller chooses interrupt request to honor
  – Mask enables/disables interrupts
  – Priority encoder picks highest enabled interrupt
  – Software Interrupt Set/Cleared by Software
  – Interrupt identity specified with ID line
• CPU can disable all interrupts with internal flag
• Non-maskable interrupt line (NMI) can’t be disabled
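The mask, priority encoder, disable flag, and NMI rules above can be sketched as one selection function. The bit-vector encoding, the convention that a higher line number means higher priority, and the `"NMI"` return value are all assumptions made for this illustration; real controllers differ.

```python
def select_interrupt(pending, mask, interrupts_enabled=True, nmi=False):
    """Pick the interrupt to deliver, or None if nothing should be delivered.

    pending: bit i set means line i is requesting service.
    mask:    bit i set means line i is enabled.
    Assumption: a higher line number means higher priority.
    """
    if nmi:
        return "NMI"                  # non-maskable: ignores mask and CPU flag
    if not interrupts_enabled:
        return None                   # CPU-internal disable flag
    eligible = pending & mask         # mask enables/disables individual lines
    if eligible == 0:
        return None
    return eligible.bit_length() - 1  # priority encoder: highest enabled line


# Lines 1 and 2 are pending; line 2 is masked off, so line 1 is delivered.
print(select_interrupt(pending=0b0110, mask=0b0011))
```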

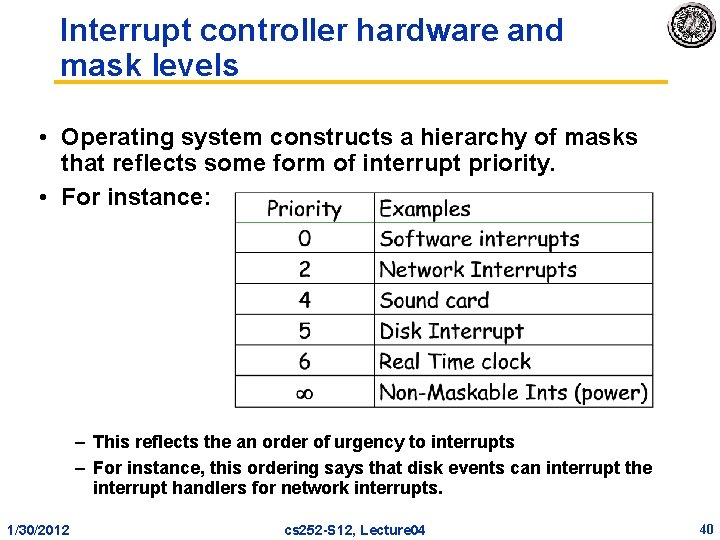

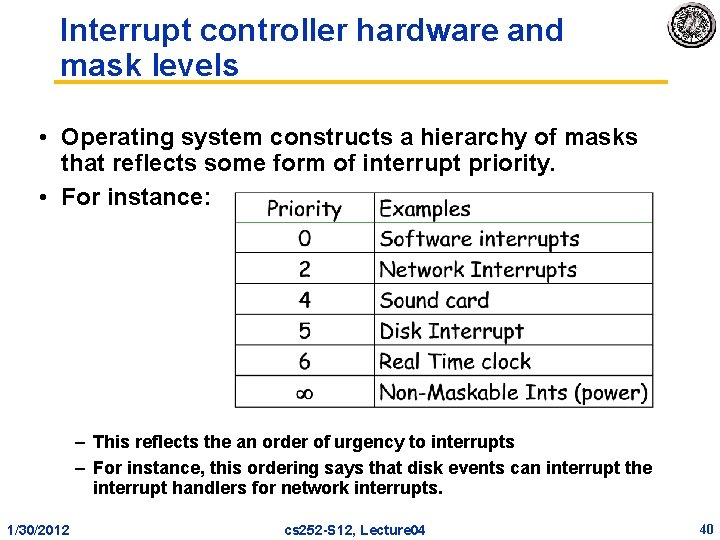

Interrupt controller hardware and mask levels
• Operating system constructs a hierarchy of masks that reflects some form of interrupt priority.
• For instance:
  – This reflects an order of urgency to interrupts
  – For instance, this ordering says that disk events can interrupt the interrupt handlers for network interrupts.

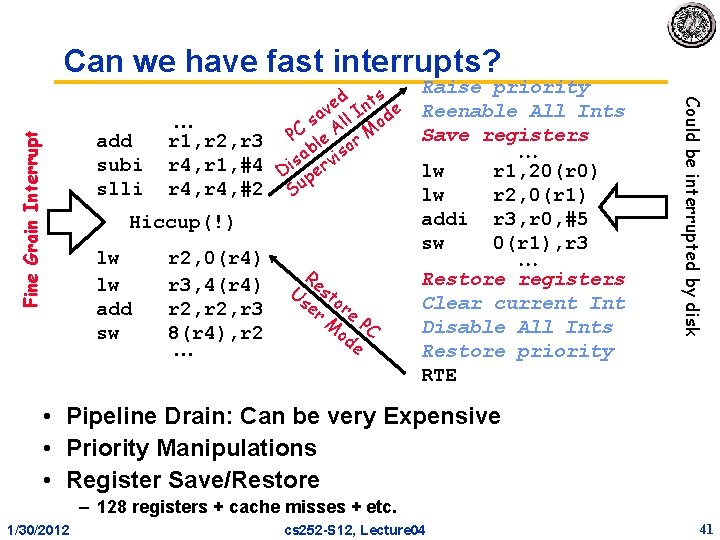

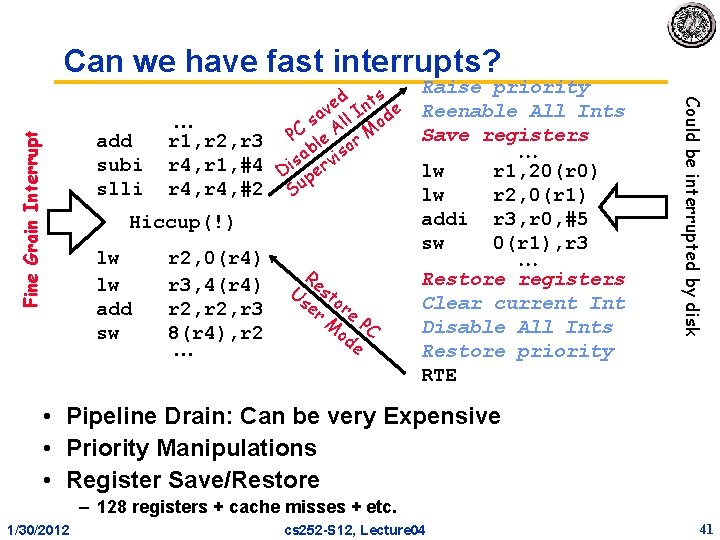

Can we have fast interrupts?
[Figure: the device-interrupt example again, labeled Fine Grain Interrupt: the program is interrupted at Hiccup(!), the handler raises priority, reenables interrupts, saves registers, does its work, restores registers and priority, and returns with RTE. The handler could be interrupted by disk.]
• Pipeline Drain: Can be very Expensive
• Priority Manipulations
• Register Save/Restore
  – 128 registers + cache misses + etc.

SPARC (and RISC I) had register windows
• On interrupt or procedure call, simply switch to a different set of registers
• Really saves on interrupt overhead
  – Interrupts can happen at any point in the execution, so compiler cannot help with knowledge of live registers.
  – Conservative handlers must save all registers
  – Short handlers might be able to save only a few, but this analysis is complicated
• Not as big a deal with procedure calls
  – Original statement by Patterson was that Berkeley didn’t have a compiler team, so they used a hardware solution
  – Good compilers can allocate registers across procedure boundaries
  – Good compilers know what registers are live at any one time
• However, register windows have returned!
  – IA64 has them
  – Many other processors have shadow registers for interrupts

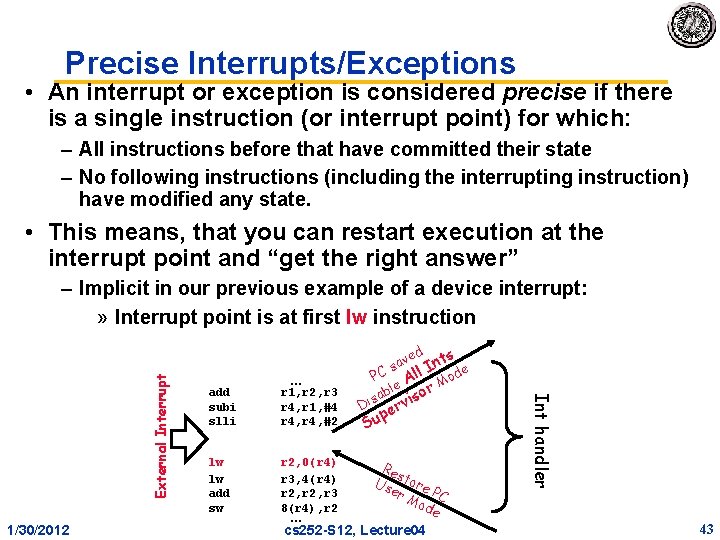

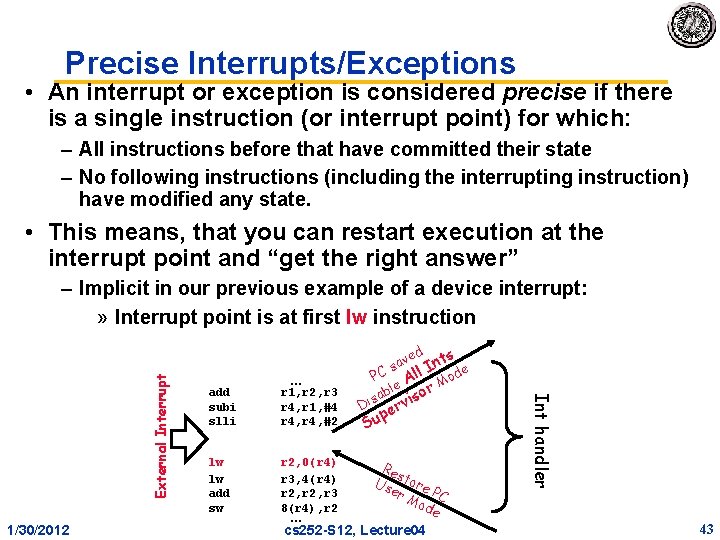

Precise Interrupts/Exceptions
• An interrupt or exception is considered precise if there is a single instruction (or interrupt point) for which:
  – All instructions before that have committed their state
  – No following instructions (including the interrupting instruction) have modified any state.
• This means that you can restart execution at the interrupt point and “get the right answer”
  – Implicit in our previous example of a device interrupt:
    » Interrupt point is at first lw instruction
[Figure: the program add r1,r2,r3; subi r4,r1,#4; slli r4,r4,#2; lw r2,0(r4); lw r3,4(r4); add r2,r2,r3; sw 8(r4),r2 with an External Interrupt arriving before the first lw. PC saved, Disable All Ints, Supervisor Mode; the Int handler runs; Restore PC, User Mode.]

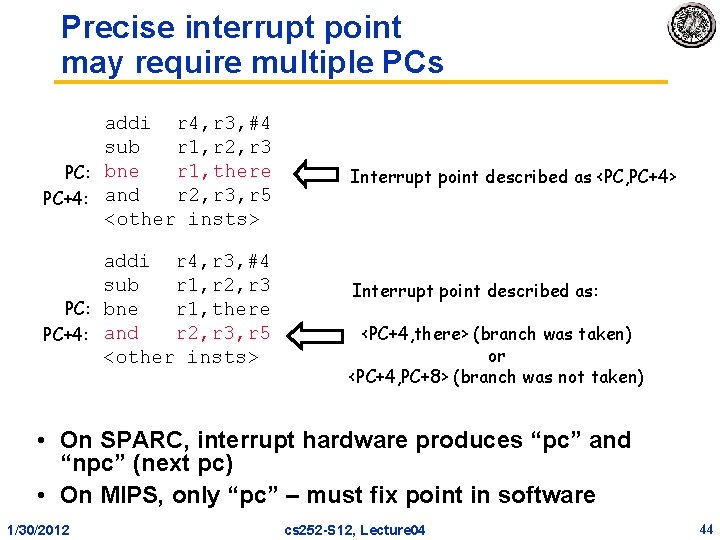

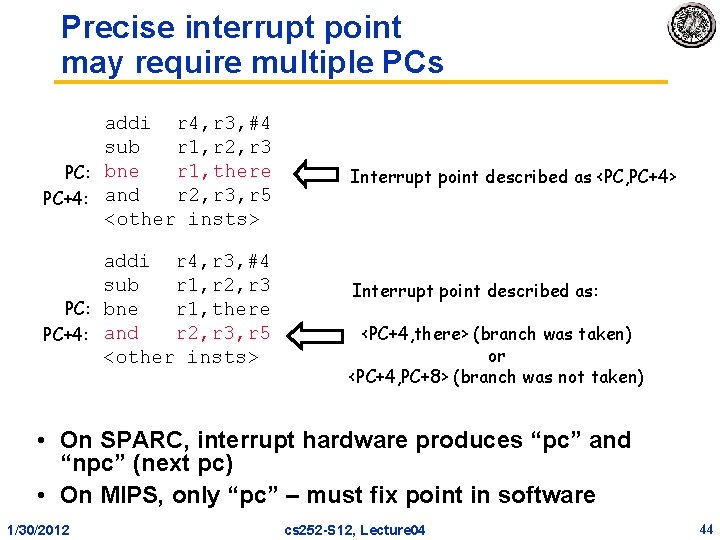

Precise interrupt point may require multiple PCs
• First case: addi r4,r3,#4; sub r1,r2,r3; bne r1,there; PC: and r2,r3,r5; PC+4: <other insts>
  – Interrupt point described as <PC, PC+4>
• Second case: addi r4,r3,#4; sub r1,r2,r3; PC: bne r1,there; PC+4: and r2,r3,r5; <other insts>
  – Interrupt point described as: <PC+4, there> (branch was taken) or <PC+4, PC+8> (branch was not taken)
• On SPARC, interrupt hardware produces “pc” and “npc” (next pc)
• On MIPS, only “pc”: must fix point in software

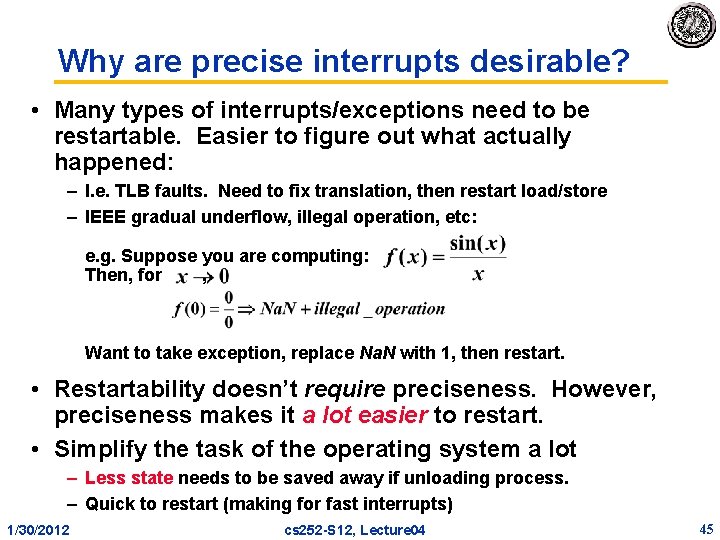

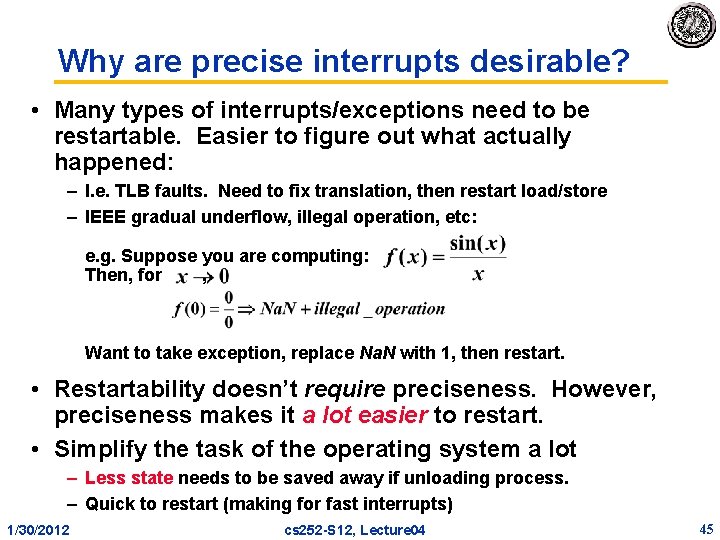

Why are precise interrupts desirable?
• Many types of interrupts/exceptions need to be restartable. Easier to figure out what actually happened:
  – E.g., TLB faults. Need to fix translation, then restart load/store
  – IEEE gradual underflow, illegal operation, etc. E.g., suppose you are computing $f(x) = \frac{\sin(x)}{x}$. Then, for $x \to 0$, $f(0) = \frac{0}{0}$ yields NaN and an illegal operation. Want to take exception, replace NaN with 1, then restart.
• Restartability doesn’t require preciseness. However, preciseness makes it a lot easier to restart.
• Simplify the task of the operating system a lot
  – Less state needs to be saved away if unloading process.
  – Quick to restart (making for fast interrupts)

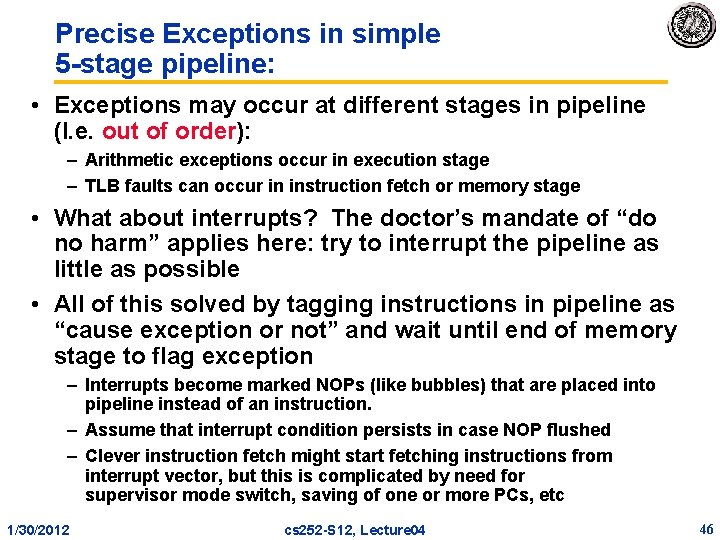

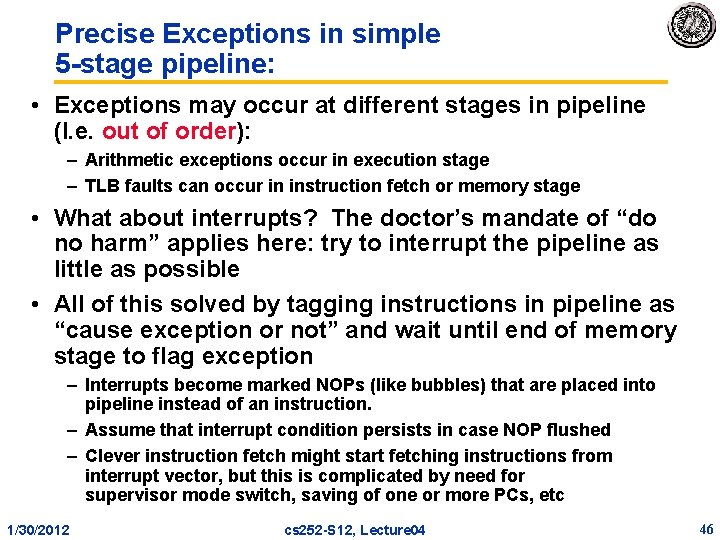

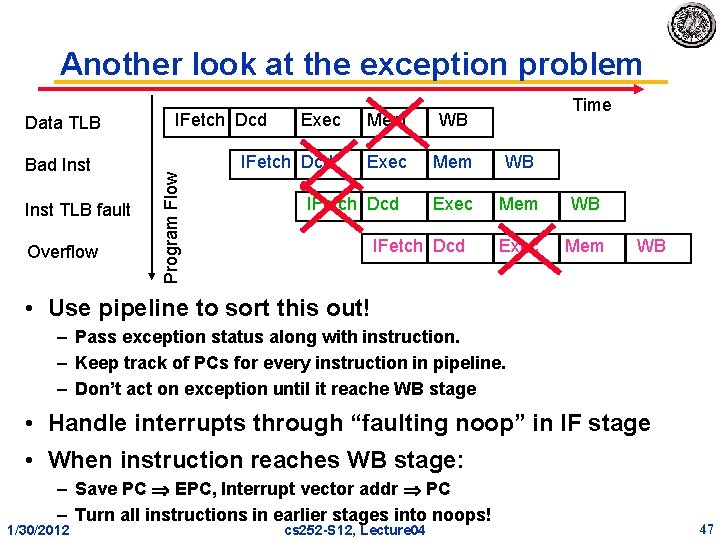

Precise Exceptions in simple 5-stage pipeline:
• Exceptions may occur at different stages in pipeline (i.e. out of order):
  – Arithmetic exceptions occur in execution stage
  – TLB faults can occur in instruction fetch or memory stage
• What about interrupts? The doctor’s mandate of “do no harm” applies here: try to interrupt the pipeline as little as possible
• All of this is solved by tagging instructions in pipeline as “cause exception or not” and waiting until end of memory stage to flag exception
  – Interrupts become marked NOPs (like bubbles) that are placed into pipeline instead of an instruction.
  – Assume that interrupt condition persists in case NOP flushed
  – Clever instruction fetch might start fetching instructions from interrupt vector, but this is complicated by need for supervisor mode switch, saving of one or more PCs, etc

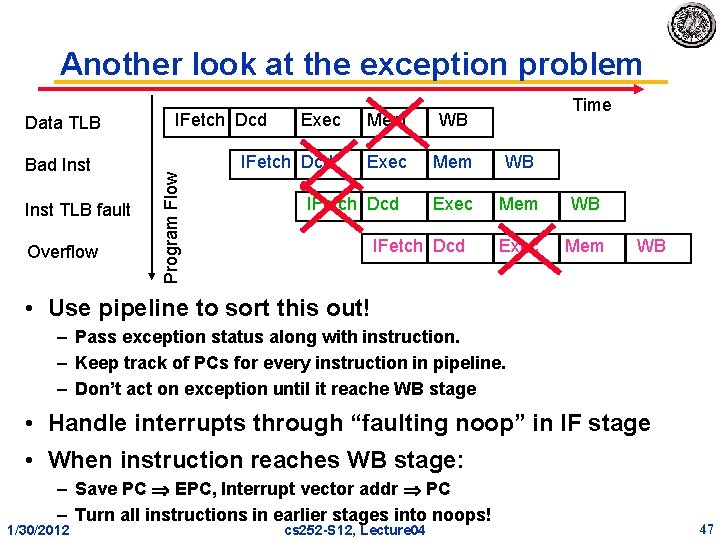

Another look at the exception problem
[Figure: Program Flow vs. Time. Successive instructions move through IFetch, Dcd, Exec, Mem, WB, one stage apart. Exceptions are raised in different stages of different instructions: Bad Inst, Inst TLB fault, Overflow, Data TLB.]
• Use pipeline to sort this out!
  – Pass exception status along with instruction.
  – Keep track of PCs for every instruction in pipeline.
  – Don’t act on exception until it reaches WB stage
• Handle interrupts through “faulting noop” in IF stage
• When instruction reaches WB stage:
  – Save PC ⇒ EPC, Interrupt vector addr ⇒ PC
  – Turn all instructions in earlier stages into noops!
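The effect of deferring exceptions to WB can be seen in a toy model. The sketch below assumes a stall-free 5-stage pipeline in which instruction i occupies stage s at cycle i + s; the function name, the `faults` dictionary format, and the example faults are hypothetical. It logs when each exception is detected and reports which instruction's exception is actually taken.

```python
STAGES = ["IF", "ID", "EX", "MEM", "WB"]


def simulate(num_instrs, faults):
    """Return (detect_log, taken) for a stall-free 5-stage pipeline.

    faults maps instruction index -> stage name where its exception is raised.
    detect_log lists (cycle, instr) in detection order; taken is the first
    faulting instruction to reach WB (None if no instruction faults).
    """
    detect_log = []
    for cycle in range(num_instrs + len(STAGES) - 1):
        for instr in range(num_instrs):          # oldest instruction first
            stage = cycle - instr                # instr i is in stage s at cycle i + s
            if not 0 <= stage < len(STAGES):
                continue
            if faults.get(instr) == STAGES[stage]:
                detect_log.append((cycle, instr))   # status travels with instr
            if STAGES[stage] == "WB" and instr in faults:
                return detect_log, instr         # act only at WB; younger ones squashed
    return detect_log, None


# Instruction 0 gets a data TLB fault in MEM; instruction 1 is a bad instruction in ID.
log, taken = simulate(3, {0: "MEM", 1: "ID"})
print(log, taken)
```

The younger instruction's fault is detected first in time, yet the older instruction's exception is the one taken, which is what keeps the exception precise.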

Summary: Caches
• The Principle of Locality:
  – Programs access a relatively small portion of the address space at any instant of time.
    » Temporal Locality: Locality in Time
    » Spatial Locality: Locality in Space
• Three Major Categories of Cache Misses:
  – Compulsory Misses: sad facts of life. Example: cold start misses.
  – Capacity Misses: increase cache size
  – Conflict Misses: increase cache size and/or associativity. Nightmare Scenario: ping pong effect!
• Write Policy: Write Through vs. Write Back
• Today CPU time is a function of (ops, cache misses) vs. just f(ops): affects Compilers, Data structures, and Algorithms

Summary: TLB, Virtual Memory
• Page tables map virtual address to physical address
• TLBs are important for fast translation
  – TLB misses are significant in processor performance
  – Funny times, as most systems can’t access all of 2nd level cache without TLB misses!
• Caches, TLBs, Virtual Memory all understood by examining how they deal with 4 questions:
  1) Where can block be placed?
  2) How is block found?
  3) What block is replaced on miss?
  4) How are writes handled?
• Today VM allows many processes to share single memory without having to swap all processes to disk.

Summary: Interrupts
• Interrupts and Exceptions either interrupt the current instruction or happen between instructions
  – Possibly large quantities of state must be saved before interrupting
• Machines with precise exceptions provide one single point in the program to restart execution
  – All instructions before that point have completed
  – No instructions after or including that point have completed