CMPE 252A: Computer Networks. An Introduction to Software Defined Networking (SDN) & OpenFlow

- Slides: 63

CMPE 252A: Computer Networks

An Introduction to Software Defined Networking (SDN) & OpenFlow

Huazhe Wang, huazhe.wang@ucsc.edu

Some slides are borrowed from http://www.cs.princeton.edu/courses/archive/spr12/cos461/ and http://www-net.cs.umass.edu/kurose-ross-ppt-6e/

The Internet: A Remarkable Story

- Tremendous success
  - From research experiment to global infrastructure
- Brilliance of under-specifying
  - Network: best-effort packet delivery
  - Hosts: arbitrary applications
- Enables innovation in applications
  - Web, P2P, VoIP, social networks, virtual worlds
- But change is easy only at the edge…

Inside the 'Net: A Different Story…

- Closed equipment
  - Software bundled with hardware
  - Vendor-specific interfaces
- Over-specified
  - Slow protocol standardization
- Few people can innovate
  - Equipment vendors write the code
  - Long delays to introduce new features

Impacts performance, security, reliability, cost…

Networks are Hard to Manage

- Operating a network is expensive
  - More than half the cost of a network
  - Yet, operator error causes most outages
- Buggy software in the equipment
  - Routers with 20+ million lines of code
  - Cascading failures, vulnerabilities, etc.
- The network is "in the way"
  - Especially a problem in data centers
  - … and home networks

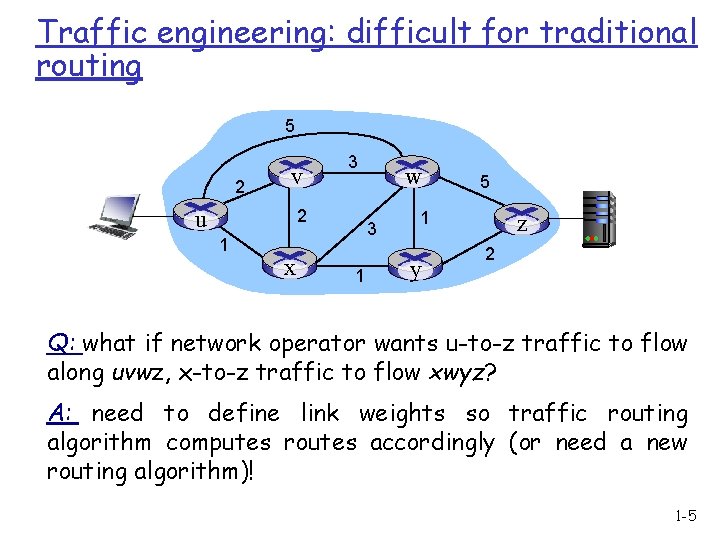

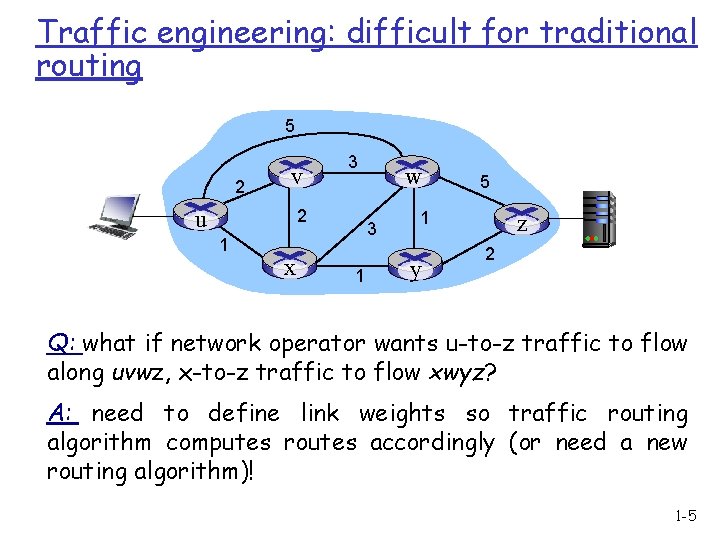

Traffic engineering: difficult for traditional routing

[Figure: network graph with nodes u, v, w, x, y, z and weighted links]

Q: What if the network operator wants u-to-z traffic to flow along uvwz, and x-to-z traffic to flow along xwyz?

A: The operator needs to define link weights so that the traffic routing algorithm computes routes accordingly (or needs a new routing algorithm)!
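To make the link-weight problem concrete, the sketch below runs Dijkstra's algorithm on a six-node graph. The link weights are an assumption: the figure's weights cannot be recovered reliably from the slide text, so the values follow the familiar Kurose and Ross textbook example. `build_graph` and `least_cost_path` are hypothetical helper names.

```python
import heapq

# Assumed link weights; the slide's figure is not recoverable from the text.
LINKS = {
    ("u", "v"): 2, ("u", "x"): 1, ("u", "w"): 5,
    ("v", "x"): 2, ("v", "w"): 3, ("x", "w"): 3,
    ("x", "y"): 1, ("w", "y"): 1, ("w", "z"): 5, ("y", "z"): 2,
}


def build_graph(links):
    """Turn undirected weighted links into an adjacency map."""
    graph = {}
    for (a, b), cost in links.items():
        graph.setdefault(a, {})[b] = cost
        graph.setdefault(b, {})[a] = cost
    return graph


def least_cost_path(graph, src, dst):
    """Dijkstra's algorithm; returns (path, cost). Assumes dst is reachable."""
    dist = {src: 0}
    prev = {}
    heap = [(0, src)]
    done = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in done:
            continue
        done.add(node)
        if node == dst:
            break
        for nbr, cost in graph[node].items():
            nd = d + cost
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    # Walk the predecessor chain back from dst to src.
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return list(reversed(path)), dist[dst]


graph = build_graph(LINKS)
print(least_cost_path(graph, "u", "z"))
print(least_cost_path(graph, "x", "z"))
```

Under these assumed weights the least-cost route from u to z is u-x-y-z, not the desired uvwz, so the operator would have to retune weights across the network to get the intended paths.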

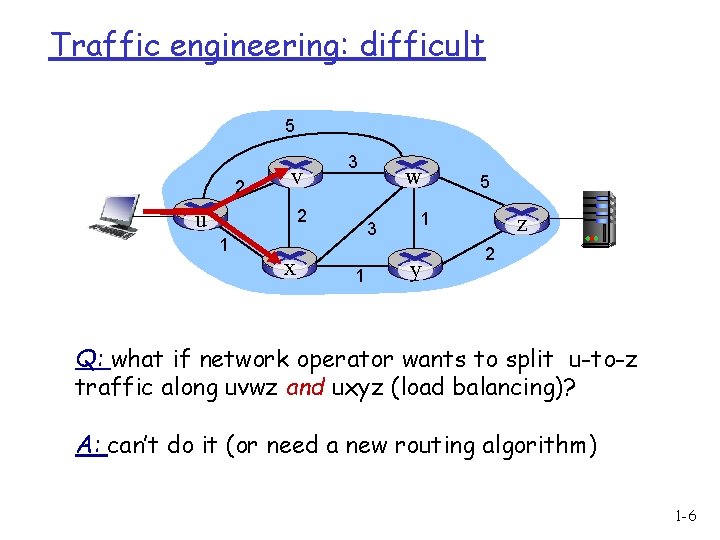

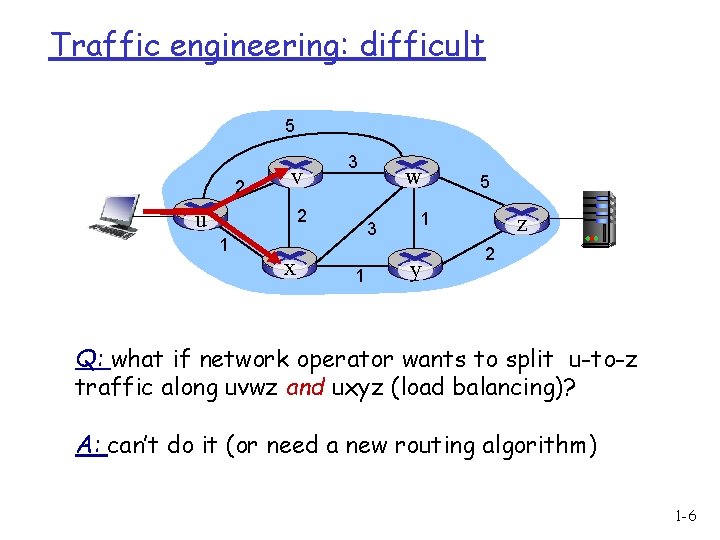

Traffic engineering: difficult

[Figure: network graph with nodes u, v, w, x, y, z and weighted links]

Q: What if the network operator wants to split u-to-z traffic along uvwz and uxyz (load balancing)?

A: Can't do it (or need a new routing algorithm).

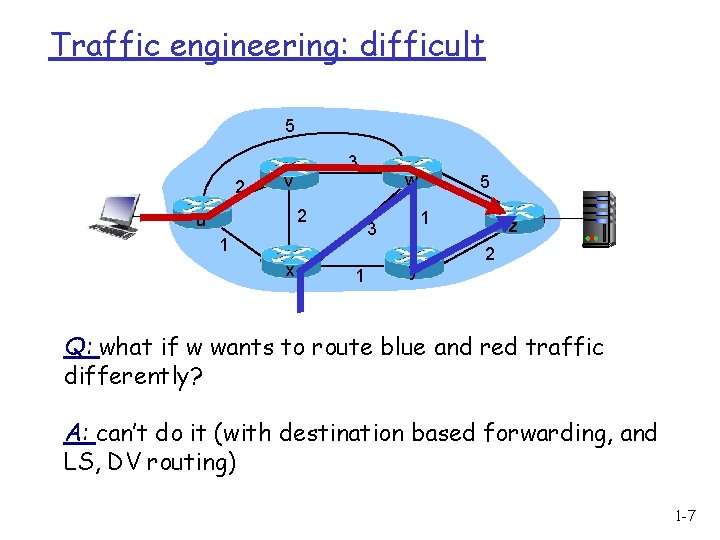

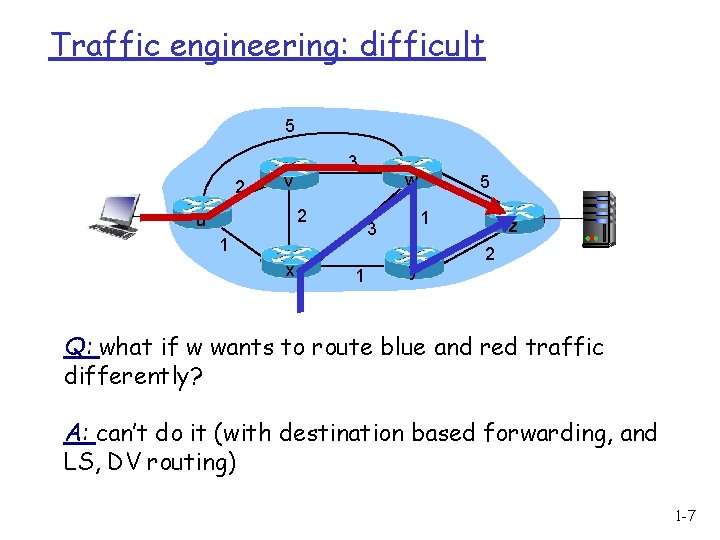

Traffic engineering: difficult

[Figure: network graph with nodes u, v, w, x, y, z; blue and red traffic both pass through w]

Q: What if w wants to route blue and red traffic differently?

A: Can't do it (with destination-based forwarding, and LS, DV routing).

Rethinking the “Division of Labor”

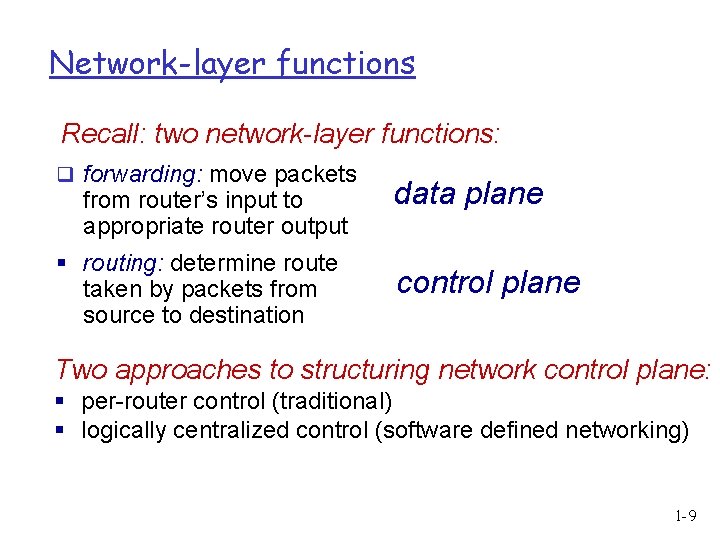

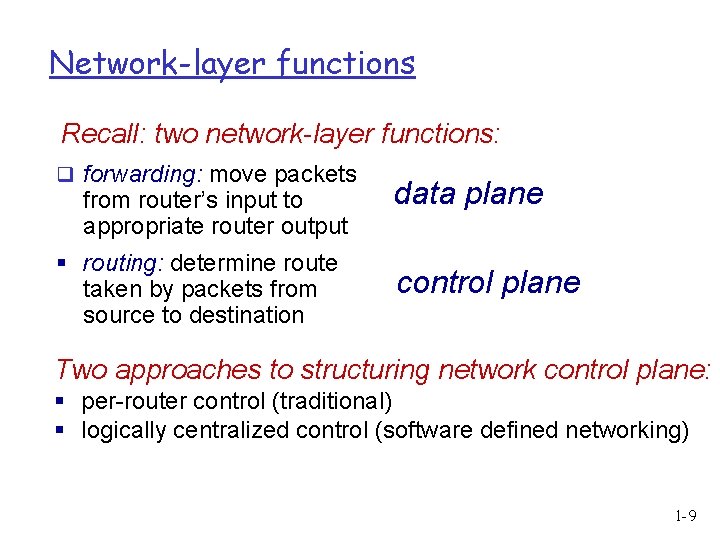

Network-layer functions

Recall: two network-layer functions:
- forwarding: move packets from router's input to appropriate router output (data plane)
- routing: determine route taken by packets from source to destination (control plane)

Two approaches to structuring the network control plane:
- per-router control (traditional)
- logically centralized control (software defined networking)

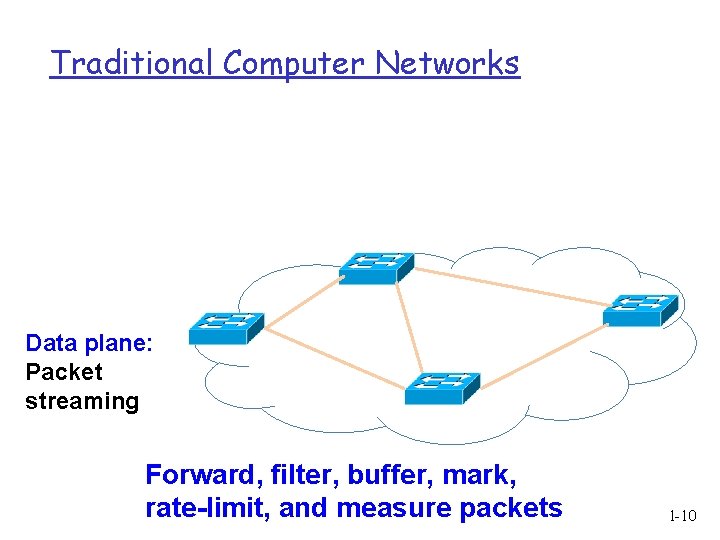

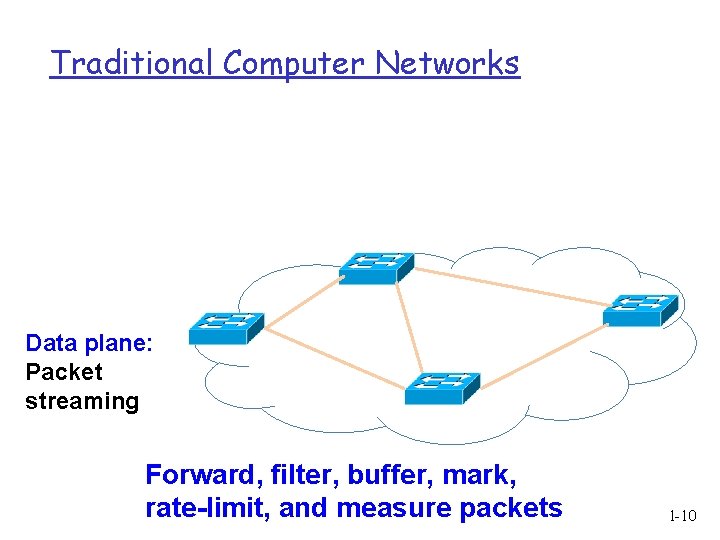

Traditional Computer Networks

Data plane: packet streaming. Forward, filter, buffer, mark, rate-limit, and measure packets.

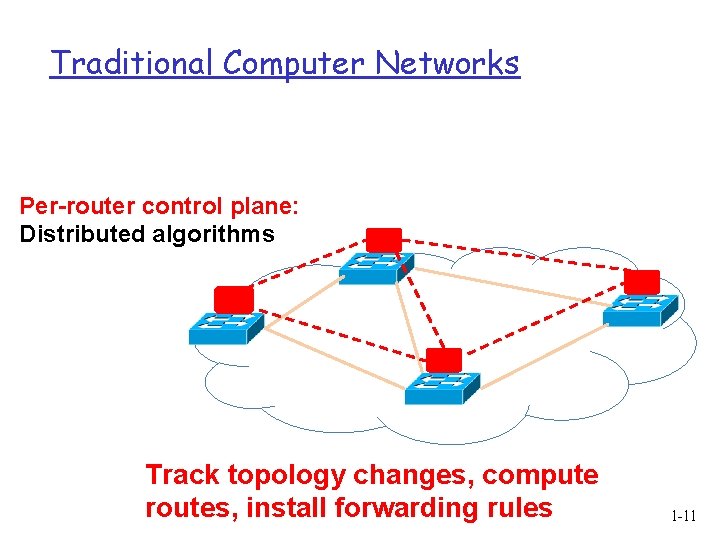

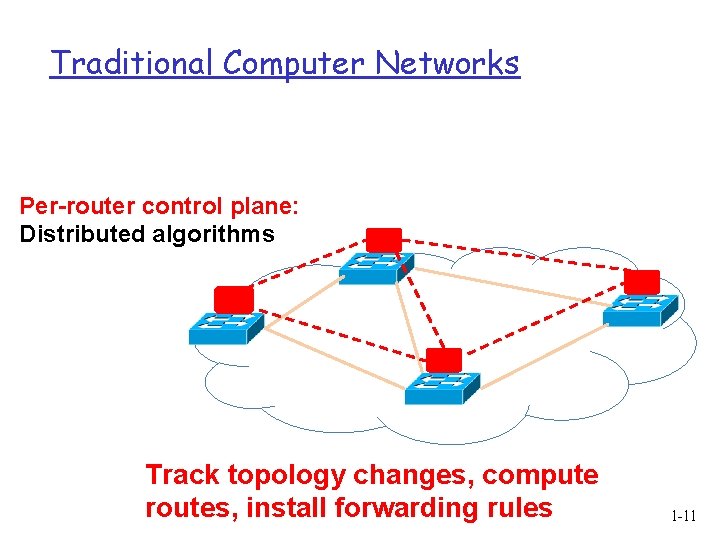

Traditional Computer Networks

Per-router control plane: distributed algorithms. Track topology changes, compute routes, install forwarding rules.

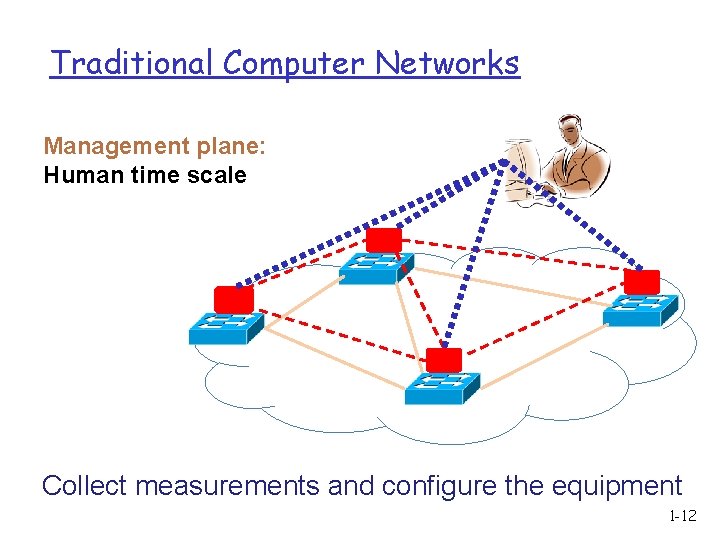

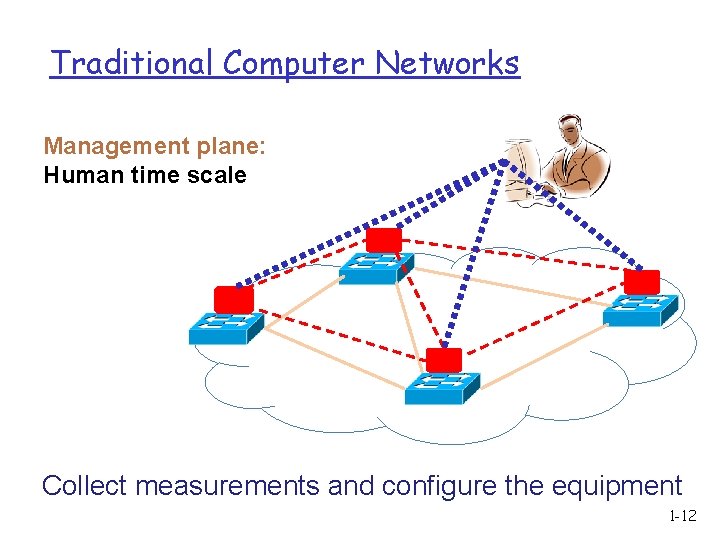

Traditional Computer Networks

Management plane: human time scale. Collect measurements and configure the equipment.

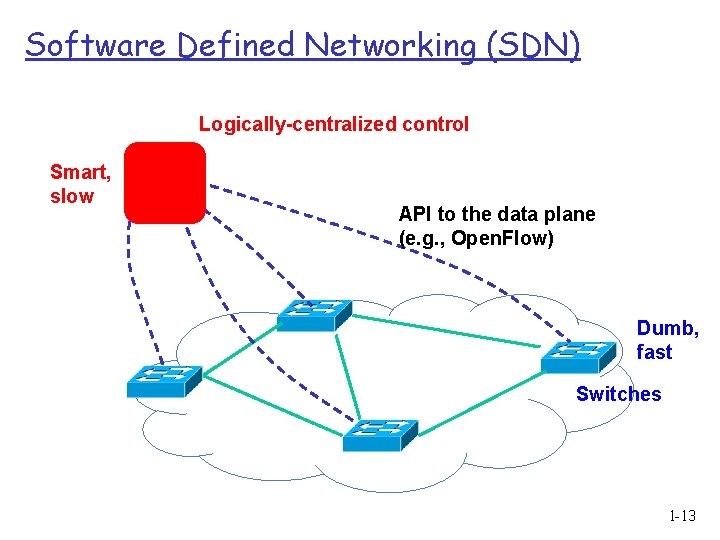

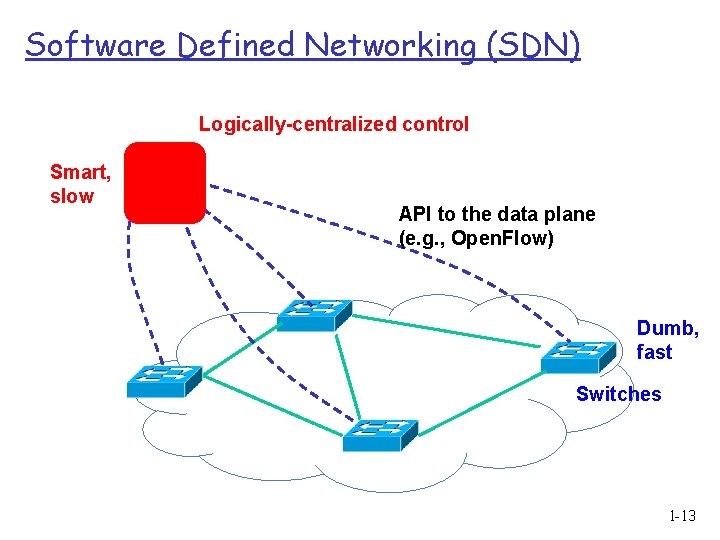

Software Defined Networking (SDN)

- Logically centralized control: smart, slow
- API to the data plane (e.g., OpenFlow)
- Switches: dumb, fast

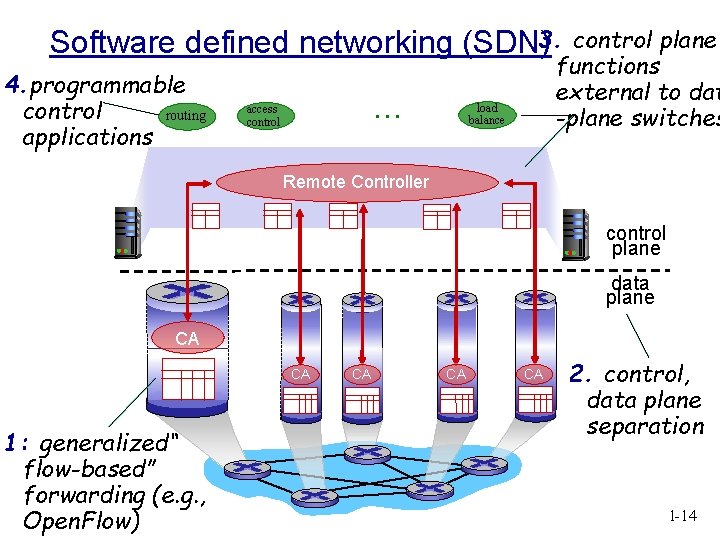

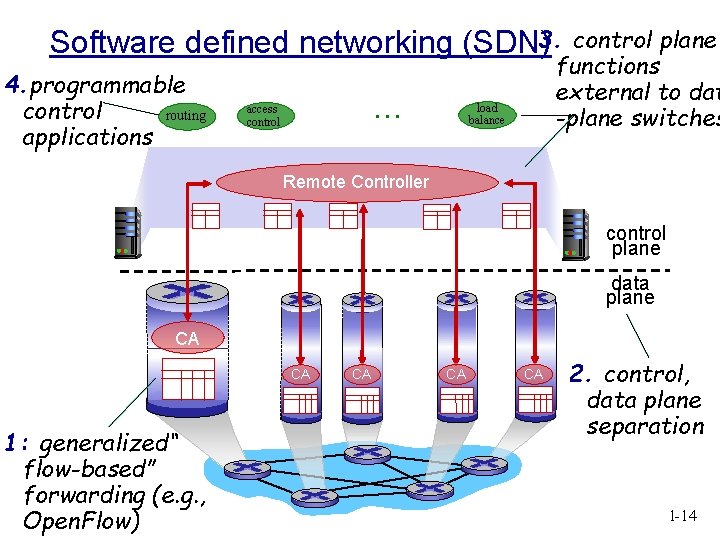

Software defined networking (SDN)

1. generalized "flow-based" forwarding (e.g., OpenFlow)
2. control, data plane separation
3. control plane functions external to data-plane switches
4. programmable control applications: routing, access control, load balance, …

[Figure: remote controller in the control plane; control agents (CA) in the data-plane switches]

Software defined networking (SDN)

Why a logically centralized control plane?
- easier network management: avoid router misconfigurations, greater flexibility of traffic flows
- table-based forwarding allows "programming" routers
  - centralized "programming" is easier: compute tables centrally and distribute
  - distributed "programming" is more difficult: compute tables as result of distributed algorithm (protocol) implemented in each and every router
- open (non-proprietary) implementation of control plane

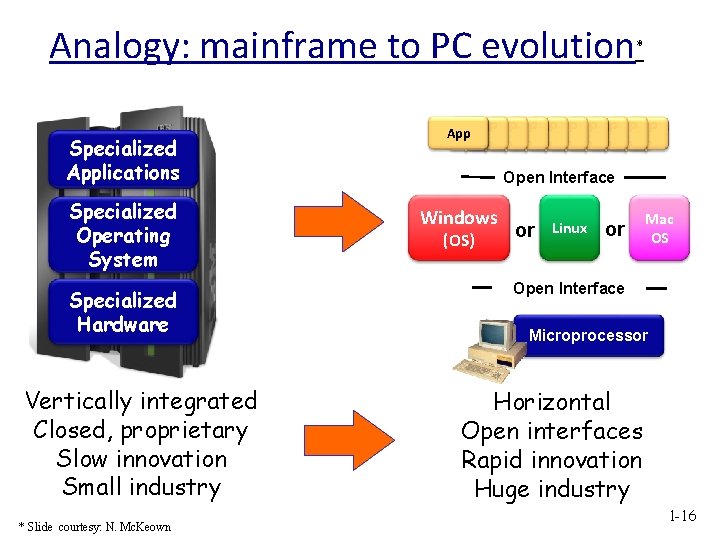

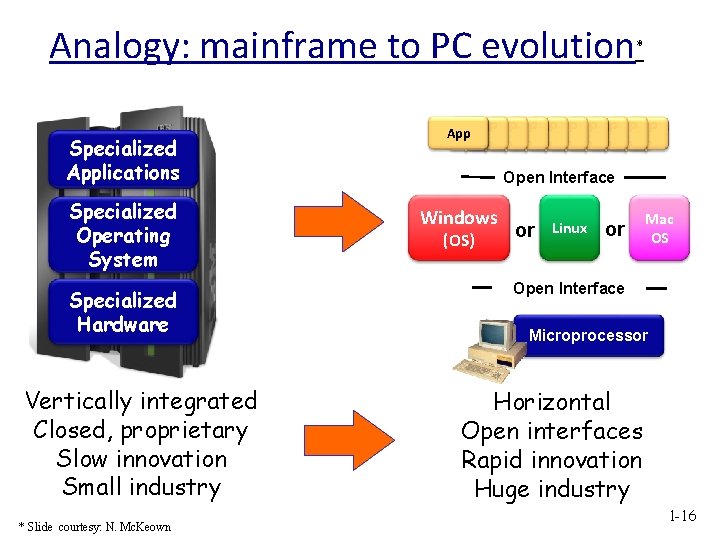

Analogy: mainframe to PC evolution

- Mainframe: specialized applications, specialized operating system, specialized hardware. Vertically integrated; closed, proprietary; slow innovation; small industry.
- PC: apps on an open interface to the OS (Windows, Linux, or Mac OS), which sits on an open interface to the microprocessor. Horizontal; open interfaces; rapid innovation; huge industry.

Slide courtesy: N. McKeown

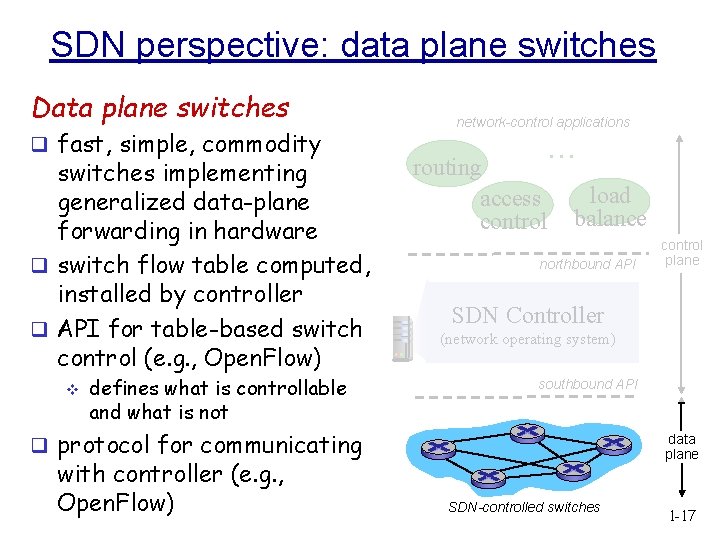

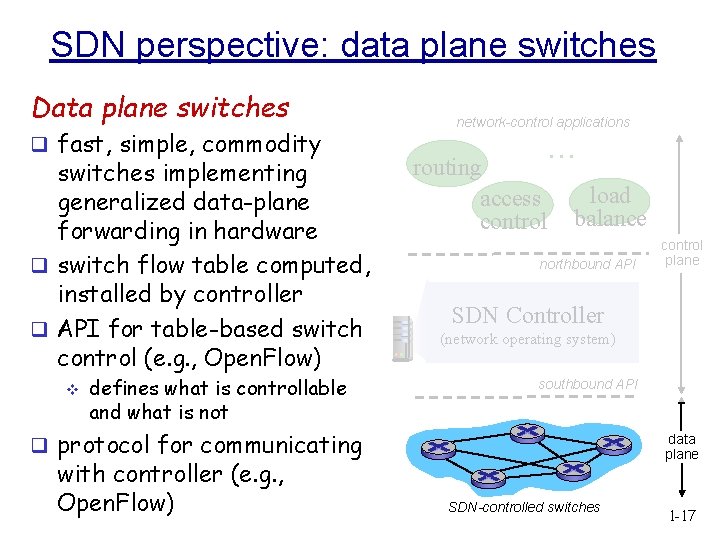

SDN perspective: data plane switches

Data plane switches:
- fast, simple, commodity switches implementing generalized data-plane forwarding in hardware
- switch flow table computed, installed by controller
- API for table-based switch control (e.g., OpenFlow)
  - defines what is controllable and what is not
- protocol for communicating with controller (e.g., OpenFlow)

[Figure: network-control applications (routing, access control, load balance, …) sit above the northbound API of the SDN controller (network operating system); the southbound API connects the controller to the SDN-controlled switches. Applications and controller form the control plane; the switches form the data plane.]

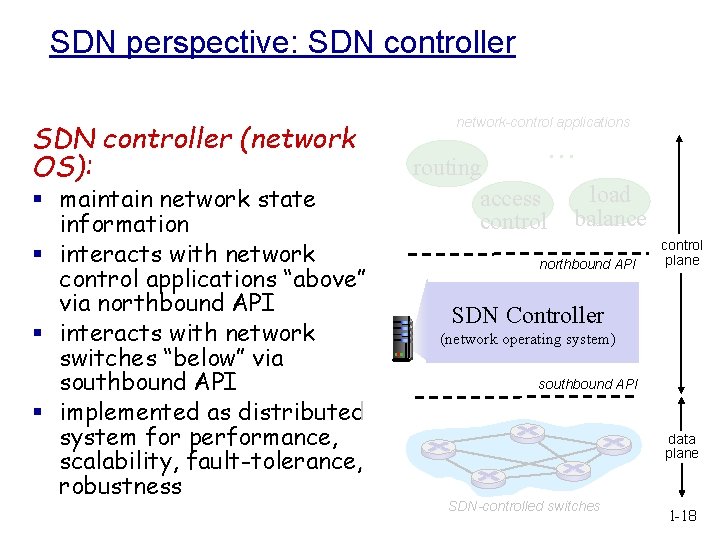

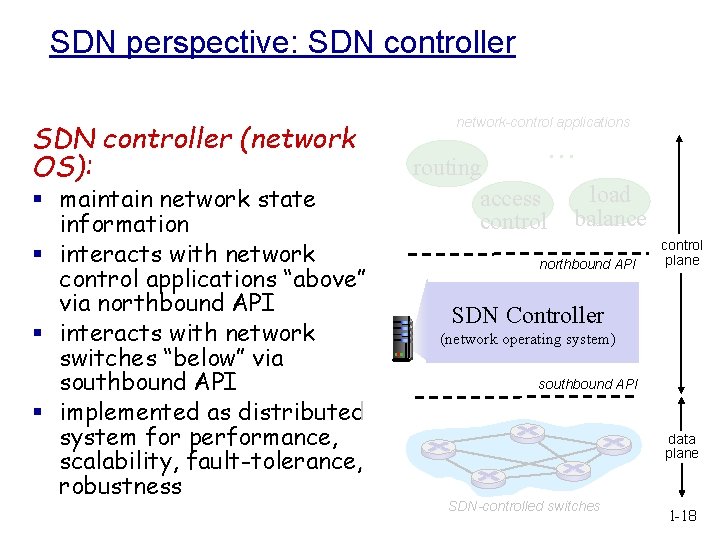

SDN perspective: SDN controller

SDN controller (network OS):
- maintains network state information
- interacts with network control applications "above" via northbound API
- interacts with network switches "below" via southbound API
- implemented as distributed system for performance, scalability, fault-tolerance, robustness

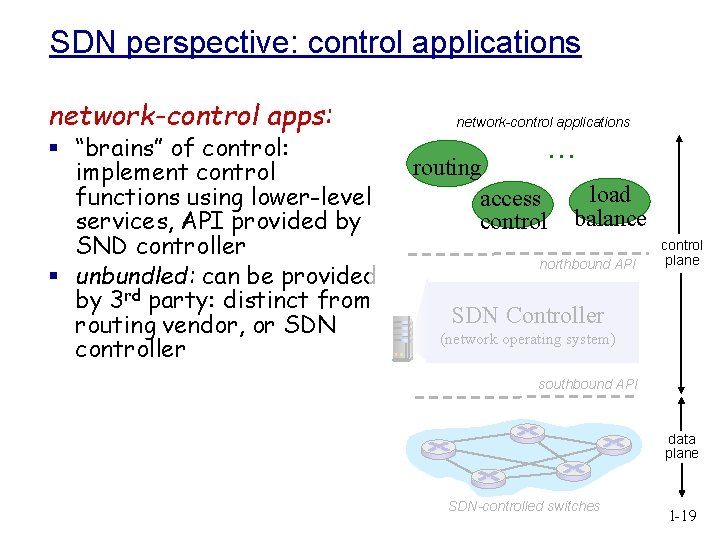

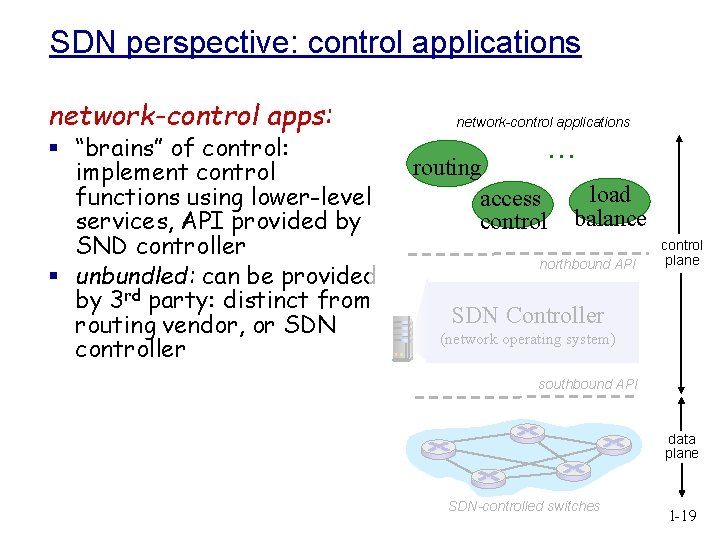

SDN perspective: control applications

Network-control apps:
- "brains" of control: implement control functions using lower-level services, API provided by SDN controller
- unbundled: can be provided by a 3rd party, distinct from the routing vendor or the SDN controller

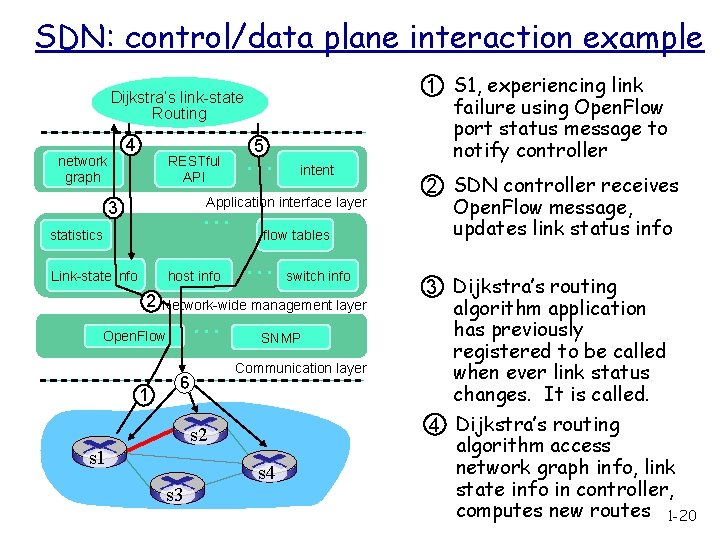

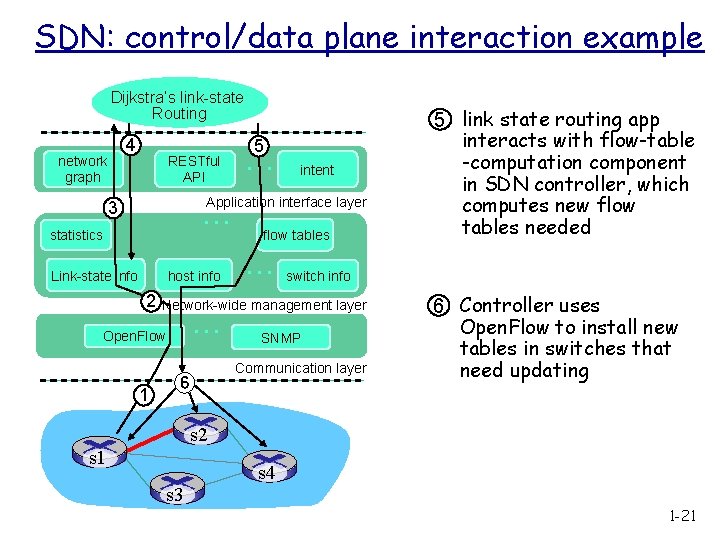

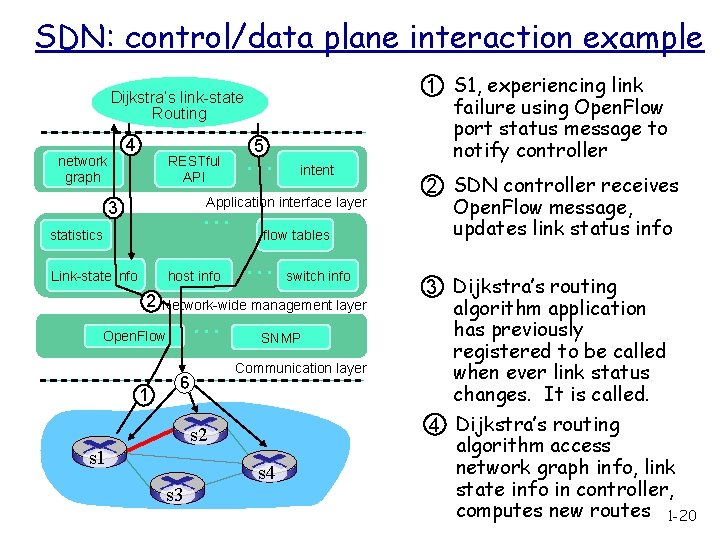

SDN: control/data plane interaction example

[Figure: SDN controller layers. Application interface layer (network graph, RESTful API, intent) serving Dijkstra's link-state routing app; network-wide management layer (statistics, link-state info, host info, switch info, flow tables); communication layer (OpenFlow, SNMP) connecting to switches s1, s2, s3, s4. Numbers 1 to 6 mark the steps.]

1. S1, experiencing link failure, uses an OpenFlow port-status message to notify the controller
2. SDN controller receives the OpenFlow message, updates link status info
3. Dijkstra's routing algorithm application has previously registered to be called whenever link status changes. It is called.
4. Dijkstra's routing algorithm accesses network graph info and link state info in the controller, computes new routes

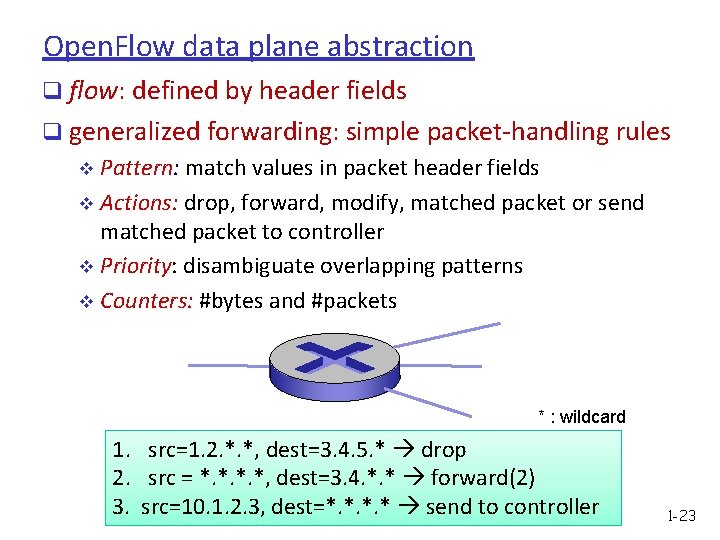

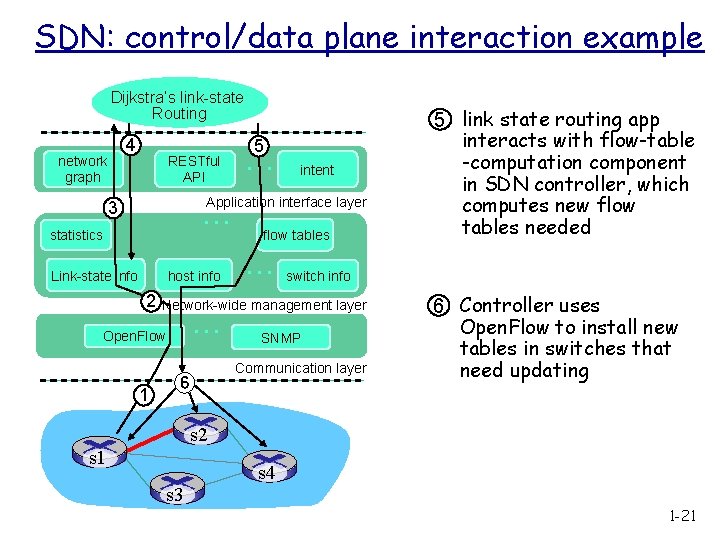

SDN: control/data plane interaction example

5. Link state routing app interacts with flow-table-computation component in SDN controller, which computes new flow tables needed
6. Controller uses OpenFlow to install new tables in switches that need updating
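The six-step interaction can be sketched as a small event-driven program. This is a toy model, not a real controller API: `ToyController`, `on_port_status`, `install`, and `shortest_path_app` are all hypothetical names, links are assumed to have unit cost (so breadth-first search stands in for Dijkstra's algorithm and yields shortest-hop routes), and "installing" a table is just a dictionary assignment rather than OpenFlow messages.

```python
from collections import deque


class ToyController:
    """Hypothetical stand-in for an SDN controller; not a real controller API."""

    def __init__(self, links):
        self.links = {frozenset(link) for link in links}  # link-state info
        self.listeners = []                               # apps registered for link changes
        self.flow_tables = {}                             # switch -> {destination: next hop}

    def register(self, app):
        self.listeners.append(app)

    def on_port_status(self, a, b, up):
        # Steps 1-2: a switch reports a port change; update link-state info.
        link = frozenset((a, b))
        if up:
            self.links.add(link)
        else:
            self.links.discard(link)
        # Step 3: call every app that registered for link-status changes.
        for app in self.listeners:
            app(self)

    def install(self, tables):
        # Step 6: a real controller would send OpenFlow modify-state messages here.
        self.flow_tables = tables


def shortest_path_app(ctrl):
    # Step 4: read the network graph from the controller's link-state info.
    nbrs = {}
    for link in ctrl.links:
        a, b = tuple(link)
        nbrs.setdefault(a, set()).add(b)
        nbrs.setdefault(b, set()).add(a)
    # Step 5: compute next hops. BFS outward from each destination; the node
    # a switch is discovered from is that switch's next hop toward dst.
    tables = {}
    for dst in nbrs:
        seen = {dst}
        queue = deque([dst])
        while queue:
            node = queue.popleft()
            for n in sorted(nbrs[node]):
                if n not in seen:
                    seen.add(n)
                    tables.setdefault(n, {})[dst] = node
                    queue.append(n)
    ctrl.install(tables)


ctrl = ToyController([("s1", "s2"), ("s2", "s4"), ("s1", "s3"), ("s3", "s4")])
ctrl.register(shortest_path_app)
shortest_path_app(ctrl)                      # initial route computation
print(ctrl.flow_tables["s1"]["s4"])          # next hop before the failure
ctrl.on_port_status("s1", "s2", up=False)    # s1 reports the s1-s2 link down
print(ctrl.flow_tables["s1"]["s4"])          # next hop after recomputation
```

The routing app never talks to a switch directly: it reads state from the controller and hands back tables, which is the separation the slide's layered figure describes.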

OpenFlow (OF) defines the communication protocol that enables the SDN controller to directly interact with the forwarding plane of network devices.

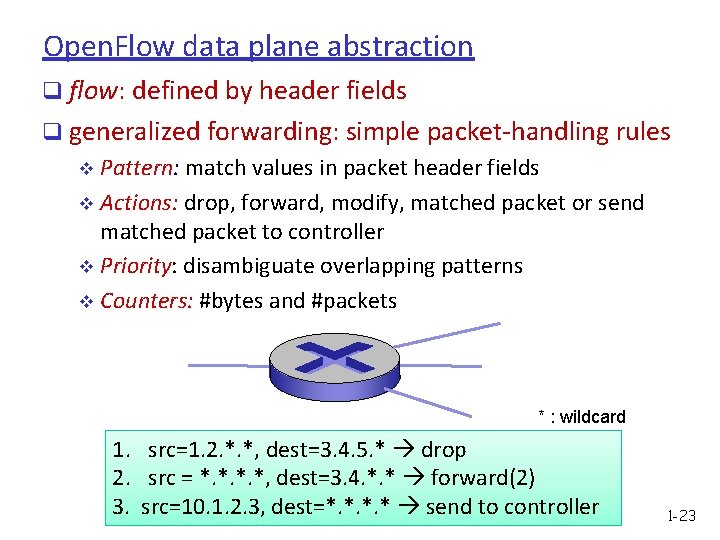

OpenFlow data plane abstraction

- flow: defined by header fields
- generalized forwarding: simple packet-handling rules
  - Pattern: match values in packet header fields
  - Actions: drop, forward, or modify the matched packet, or send the matched packet to the controller
  - Priority: disambiguate overlapping patterns
  - Counters: #bytes and #packets

Example rules (* : wildcard):
1. src=1.2.*.*, dest=3.4.5.* → drop
2. src=*.*.*.*, dest=3.4.*.* → forward(2)
3. src=10.1.2.3, dest=*.*.*.* → send to controller
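The pattern, action, priority, and counter fields can be illustrated with a toy flow table. This is a sketch only: `Rule`, `octets_match`, and `lookup` are hypothetical names, the priority values are assumed (the slide gives none), and the table-miss behaviour (send to controller) is an assumption that varies across OpenFlow versions and switch configurations.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    priority: int          # assumed values; higher wins when patterns overlap
    src: str               # dotted pattern, "*" wildcards one octet
    dst: str
    action: str
    packet_count: int = 0  # counters updated on every match
    byte_count: int = 0


def octets_match(pattern, addr):
    """True if every octet of addr equals the pattern octet or the pattern is '*'."""
    return all(p == "*" or p == a
               for p, a in zip(pattern.split("."), addr.split(".")))


def lookup(table, src, dst, size):
    """Return the action of the highest-priority matching rule."""
    for rule in sorted(table, key=lambda r: r.priority, reverse=True):
        if octets_match(rule.src, src) and octets_match(rule.dst, dst):
            rule.packet_count += 1
            rule.byte_count += size
            return rule.action
    return "send to controller"  # assumed table-miss behaviour


table = [
    Rule(30, "1.2.*.*", "3.4.5.*", "drop"),
    Rule(20, "*.*.*.*", "3.4.*.*", "forward(2)"),
    Rule(10, "10.1.2.3", "*.*.*.*", "send to controller"),
]

print(lookup(table, "1.2.9.9", "3.4.5.6", 1500))   # matches rules 1 and 2
print(lookup(table, "10.1.2.3", "3.4.7.7", 64))    # matches rules 2 and 3
print(lookup(table, "10.1.2.3", "8.8.8.8", 64))    # matches rule 3 only
```

The first packet matches two patterns; the priority field is what makes the outcome (drop) unambiguous.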

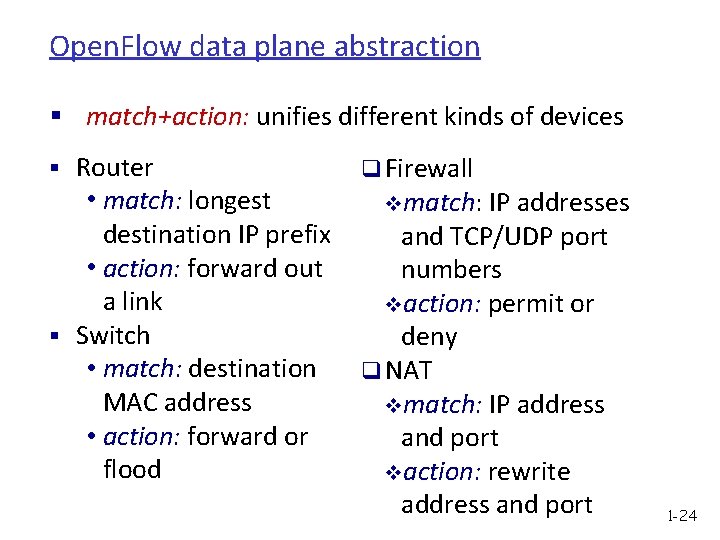

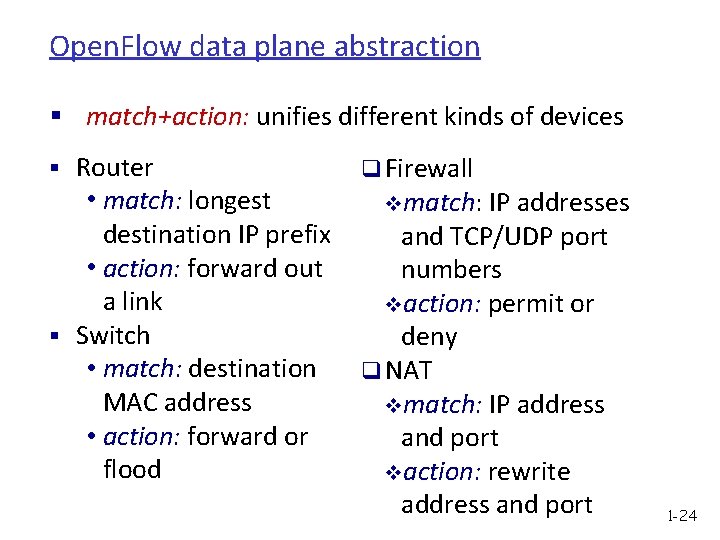

OpenFlow data plane abstraction

match+action: unifies different kinds of devices
- Router
  - match: longest destination IP prefix
  - action: forward out a link
- Switch
  - match: destination MAC address
  - action: forward or flood
- Firewall
  - match: IP addresses and TCP/UDP port numbers
  - action: permit or deny
- NAT
  - match: IP address and port
  - action: rewrite address and port

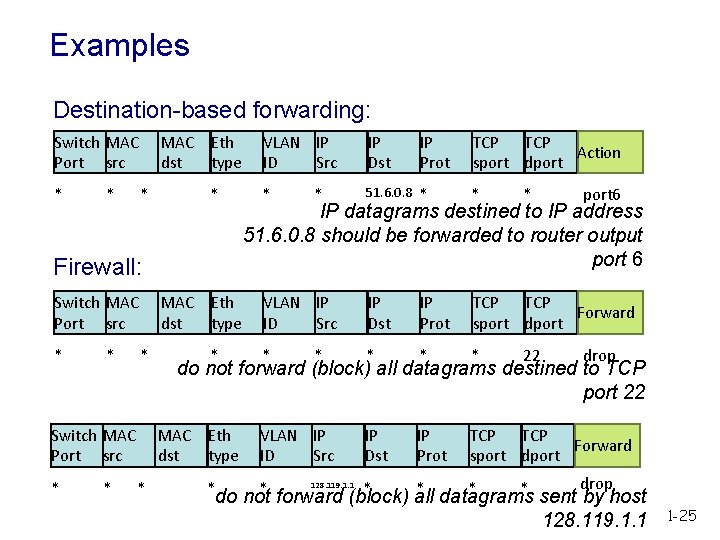

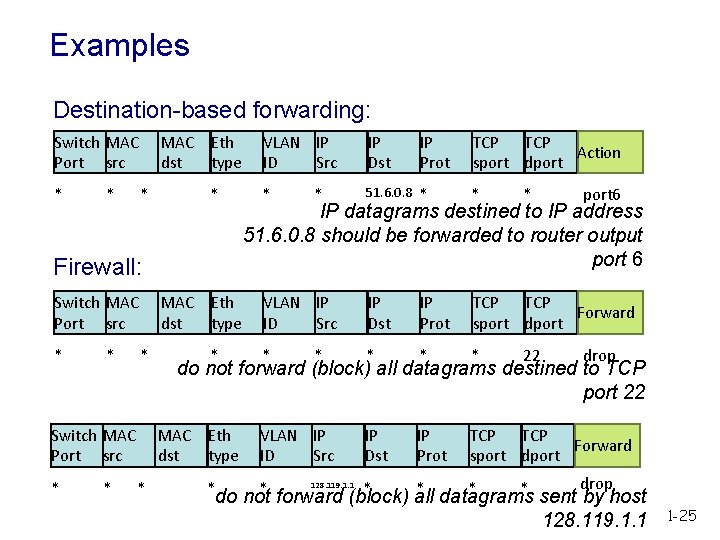

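Examples

Destination-based forwarding:

| Switch Port | MAC src | MAC dst | Eth type | VLAN ID | IP Src | IP Dst | IP Prot | TCP sport | TCP dport | Action |
|---|---|---|---|---|---|---|---|---|---|---|
| * | * | * | * | * | * | 51.6.0.8 | * | * | * | forward to port 6 |

IP datagrams destined to IP address 51.6.0.8 should be forwarded to router output port 6.

Firewall:

| Switch Port | MAC src | MAC dst | Eth type | VLAN ID | IP Src | IP Dst | IP Prot | TCP sport | TCP dport | Action |
|---|---|---|---|---|---|---|---|---|---|---|
| * | * | * | * | * | * | * | * | * | 22 | drop |

Do not forward (block) all datagrams destined to TCP port 22.

| Switch Port | MAC src | MAC dst | Eth type | VLAN ID | IP Src | IP Dst | IP Prot | TCP sport | TCP dport | Action |
|---|---|---|---|---|---|---|---|---|---|---|
| * | * | * | * | * | 128.119.1.1 | * | * | * | * | drop |

Do not forward (block) all datagrams sent by host 128.119.1.1.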

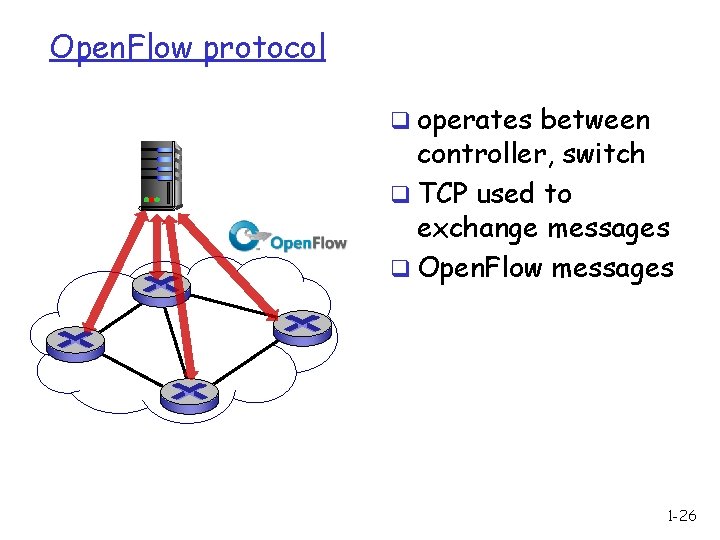

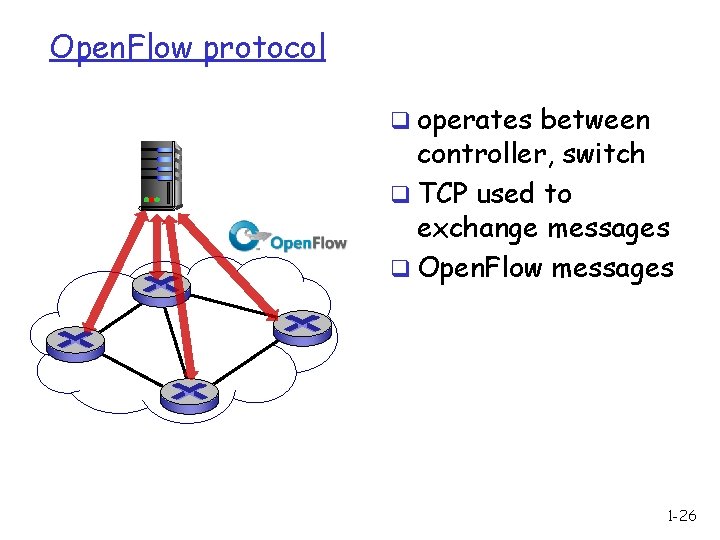

OpenFlow protocol

- operates between controller, switch
- TCP used to exchange messages
- OpenFlow messages

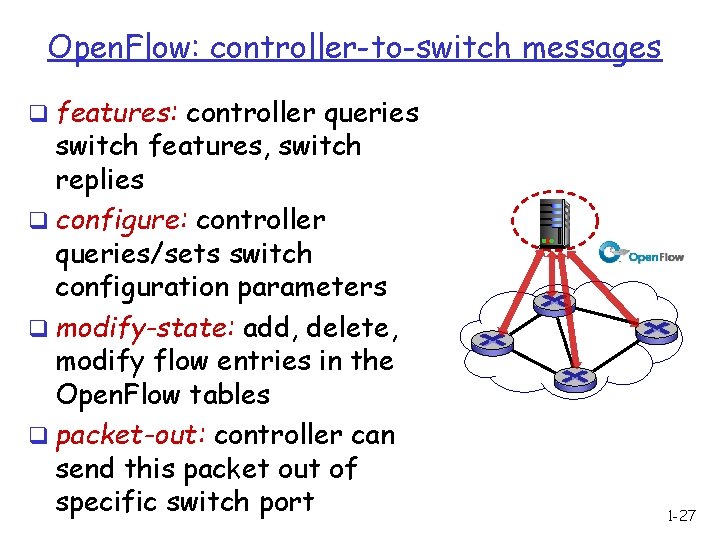

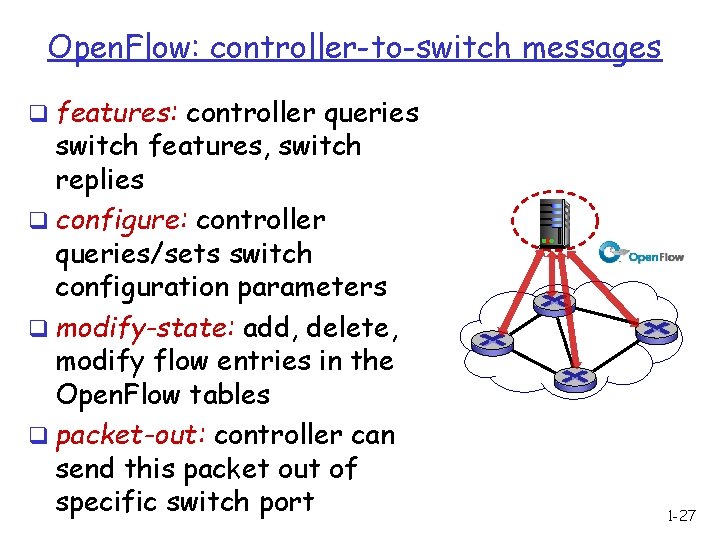

OpenFlow: controller-to-switch messages

- features: controller queries switch features, switch replies
- configure: controller queries/sets switch configuration parameters
- modify-state: add, delete, modify flow entries in the OpenFlow tables
- packet-out: controller can send this packet out of specific switch port

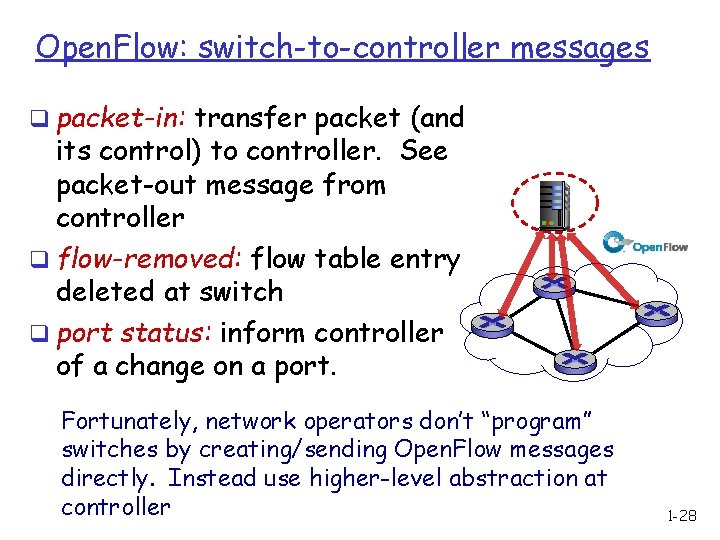

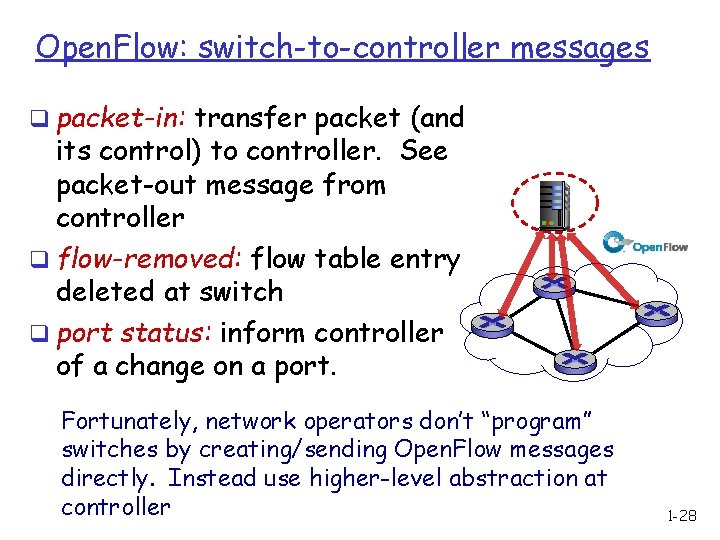

OpenFlow: switch-to-controller messages

- packet-in: transfer packet (and its control) to controller. See packet-out message from controller
- flow-removed: flow table entry deleted at switch
- port status: inform controller of a change on a port

Fortunately, network operators don't "program" switches by creating/sending OpenFlow messages directly. Instead, they use a higher-level abstraction at the controller.

OpenFlow in the Wild

- Open Networking Foundation
  - Google, Facebook, Microsoft, Yahoo, Verizon, Deutsche Telekom, and many other companies
- Commercial OpenFlow switches
  - HP, NEC, Quanta, Dell, IBM, Juniper, …
- Network operating systems
  - NOX, Beacon, Floodlight, OpenDaylight, POX, Pyretic
- Network deployments
  - Eight campuses, and two research backbone networks
  - Commercial deployments (e.g., Google backbone)

Challenges

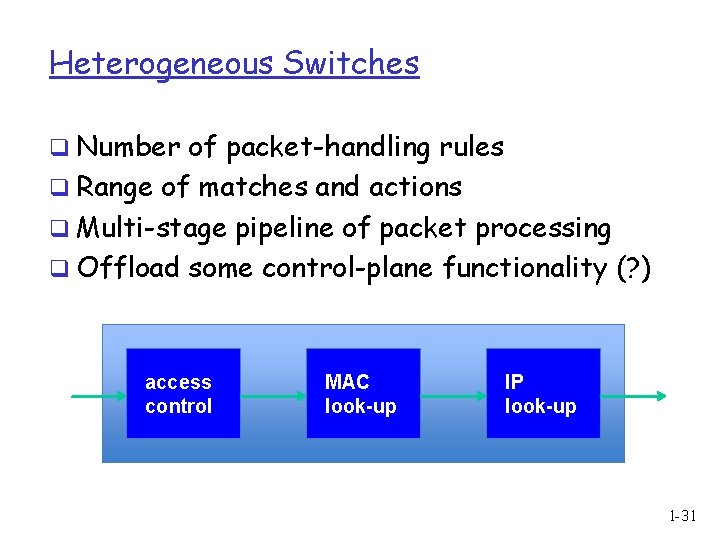

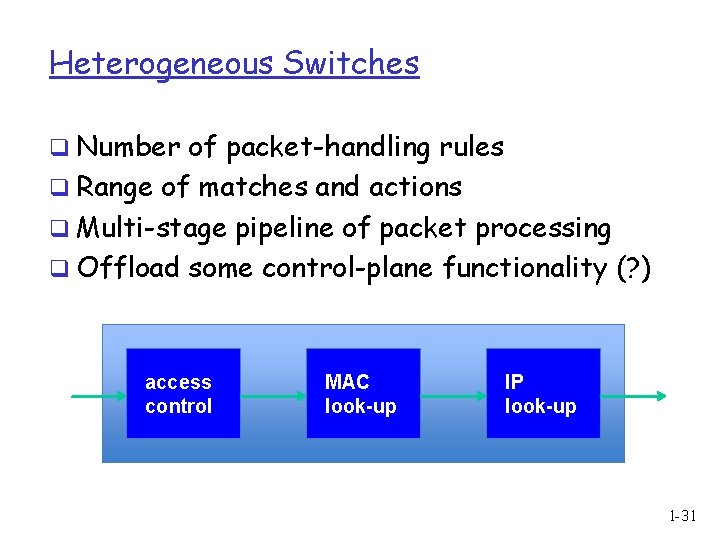

Heterogeneous Switches

- Number of packet-handling rules
- Range of matches and actions
- Multi-stage pipeline of packet processing
- Offload some control-plane functionality (?)

[Figure: pipeline stages: access control, MAC look-up, IP look-up]

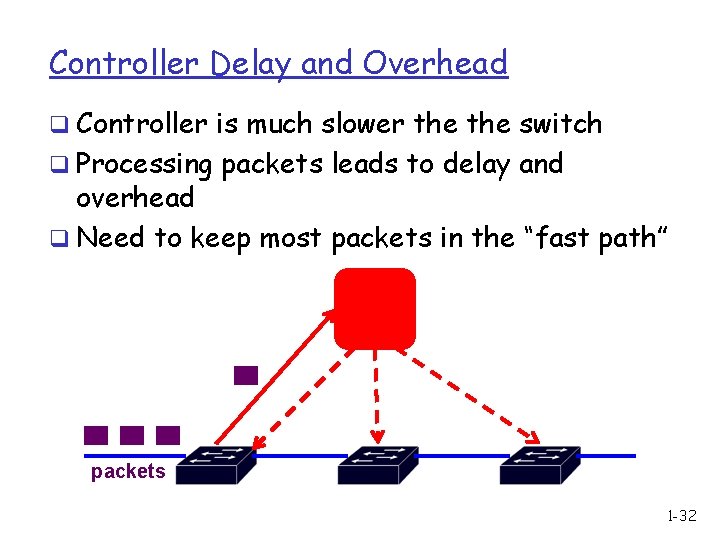

Controller Delay and Overhead

- Controller is much slower than the switch
- Processing packets leads to delay and overhead
- Need to keep most packets in the "fast path"

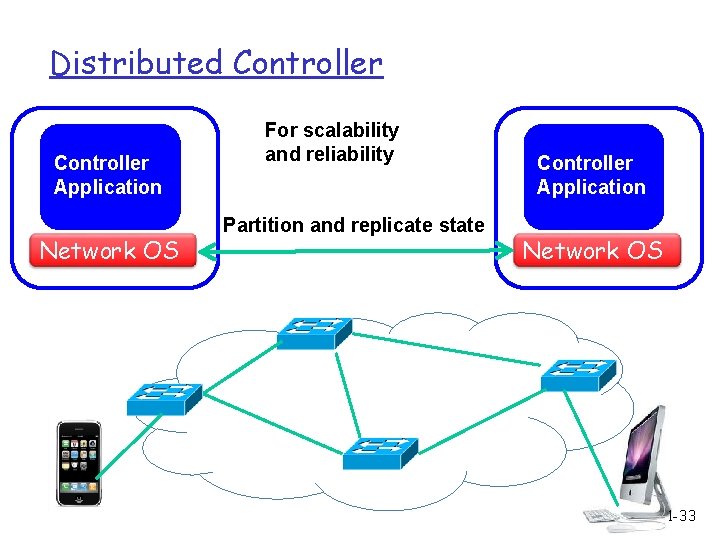

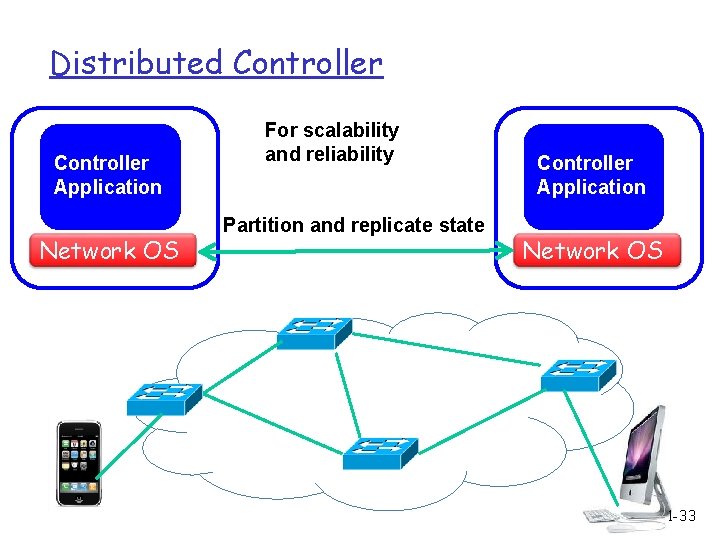

Distributed Controller

- For scalability and reliability
- Partition and replicate state

[Figure: multiple controller instances, each a controller application running on a network OS]

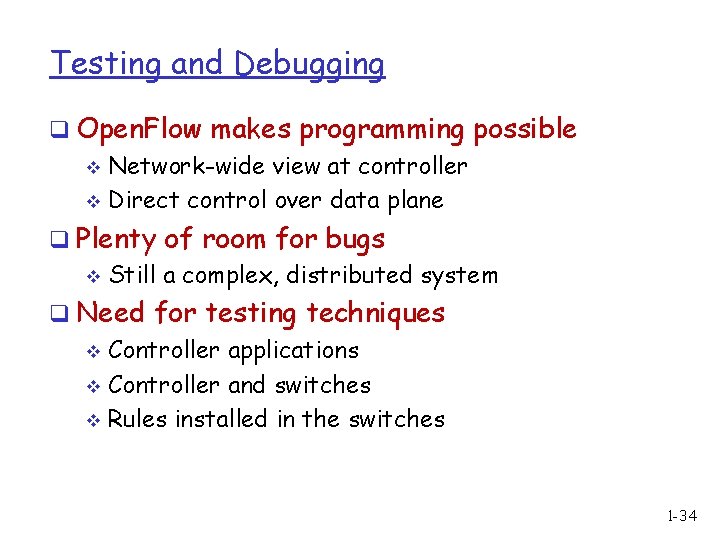

Testing and Debugging

- OpenFlow makes programming possible
  - Network-wide view at controller
  - Direct control over data plane
- Plenty of room for bugs
  - Still a complex, distributed system
- Need for testing techniques
  - Controller applications
  - Controller and switches
  - Rules installed in the switches

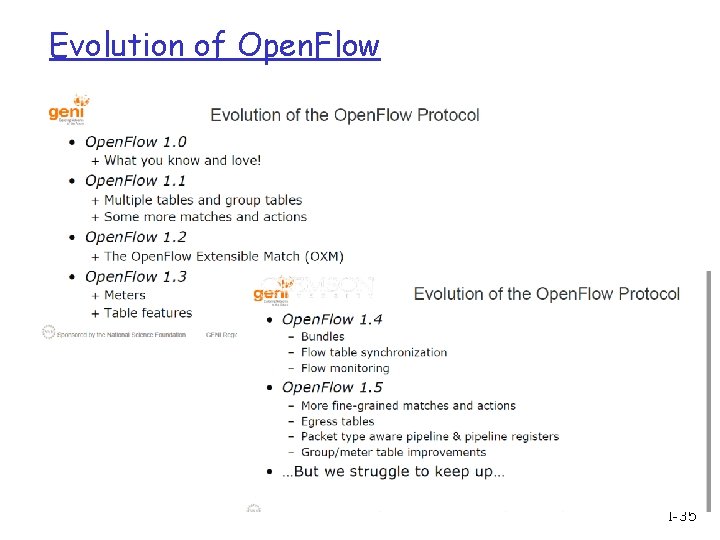

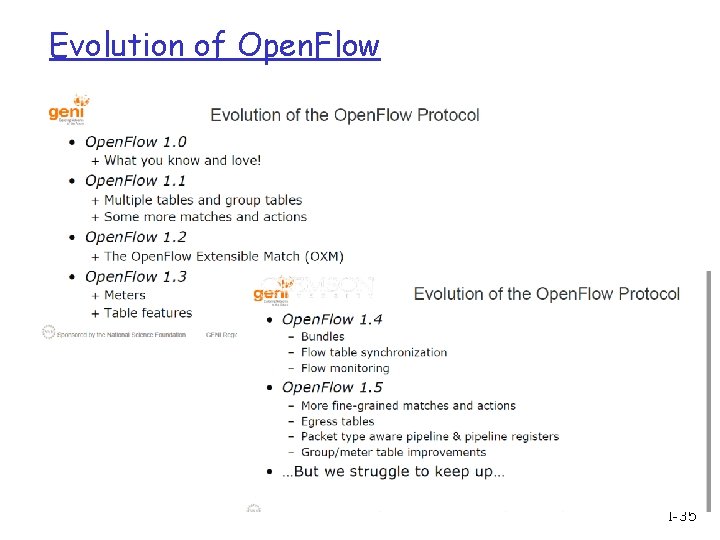

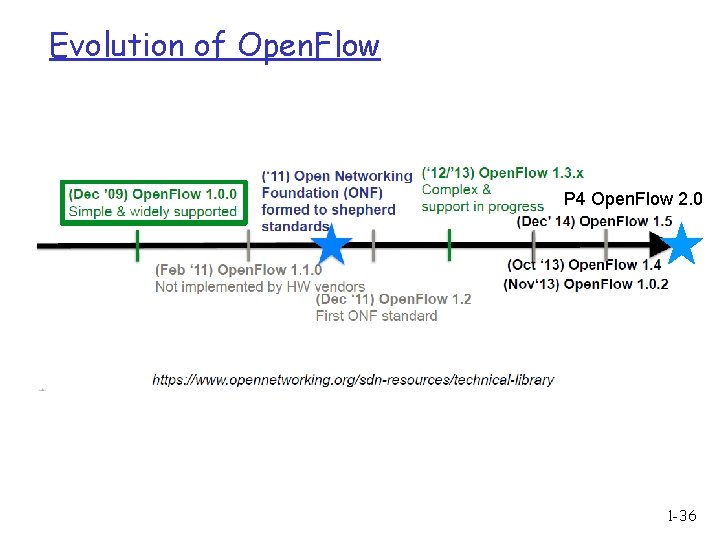

Evolution of OpenFlow

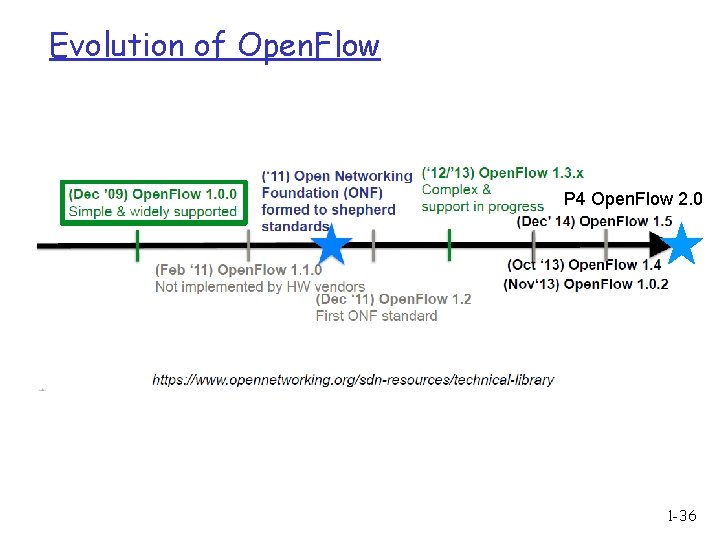

Evolution of OpenFlow

P4, OpenFlow 2.0

Run OpenFlow Experiments

- Mininet
- Open vSwitch (OVS)

Hedera: Dynamic Flow Scheduling for Data Center Networks

Mohammad Al-Fares, Sivasankar Radhakrishnan, Barath Raghavan*, Nelson Huang, Amin Vahdat

UC San Diego, *Williams College

Slides modified from https://people.eecs.berkeley.edu/~istoica/classes/cs294/.../21-Hedera-TD.pptx

Problem
- MapReduce/Hadoop-style workloads have substantial BW requirements
- ECMP-based multi-path load balancing often leads to oversubscription → jobs bottlenecked by network

Key insight/idea
- Identify large flows and periodically rearrange them to balance the load across core switches

Challenges
- Estimate bandwidth demand of flows
- Find optimal allocation of network paths for flows

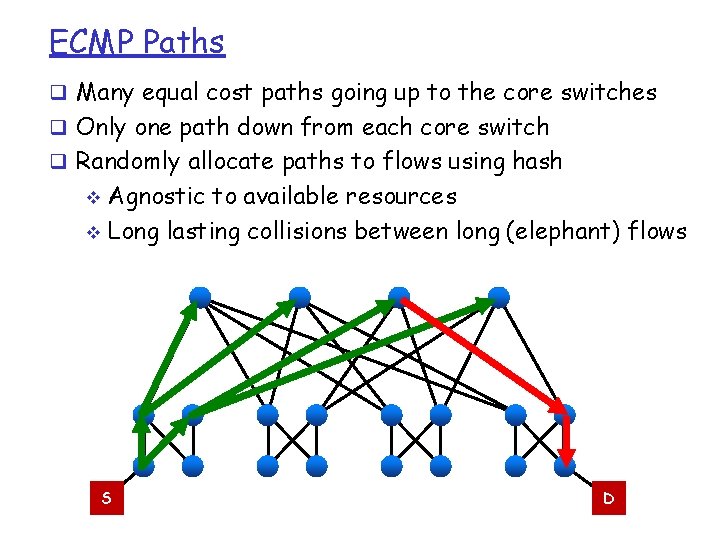

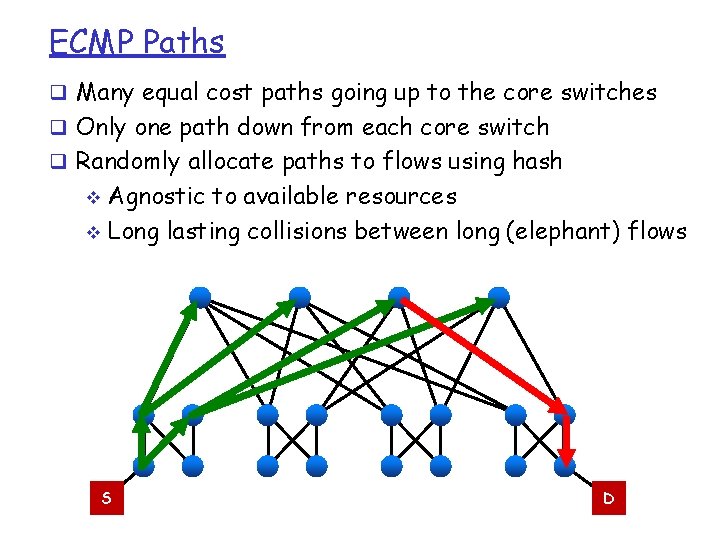

ECMP Paths

- Many equal-cost paths going up to the core switches
- Only one path down from each core switch
- Randomly allocate paths to flows using hash
  - Agnostic to available resources
  - Long-lasting collisions between long (elephant) flows
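Hash-based path selection can be sketched in a few lines. This is an illustration under stated assumptions: `ecmp_path` is a hypothetical helper, the flow is identified by an assumed 5-tuple (source IP, destination IP, protocol, source port, destination port), and SHA-256 stands in for whatever hash a real switch uses.

```python
import hashlib


def ecmp_path(flow, n_paths):
    """Map a flow's 5-tuple to one of n_paths equal-cost paths via a hash."""
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:4], "big") % n_paths


# Five long-lived flows, four equal-cost paths: the hash ignores load,
# so at least two flows must share a path no matter how large they are.
flows = [("10.0.0.1", "10.0.1.2", 6, 5000 + i, 80) for i in range(5)]
for f in flows:
    print(f, "-> path", ecmp_path(f, 4))
```

Because the mapping depends only on header fields, two elephant flows that hash to the same path stay collided for their whole lifetime, which is the problem Hedera targets.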

Collisions of elephant flows

- Collisions possible in two different ways
  - Upward path
  - Downward path

[Figure: sources S1, S2, S3, S4 and destinations D1, D2, D3, D4]

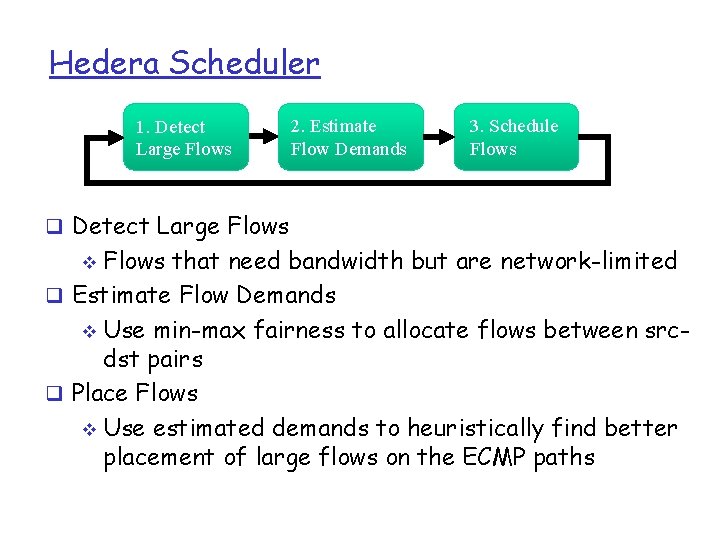

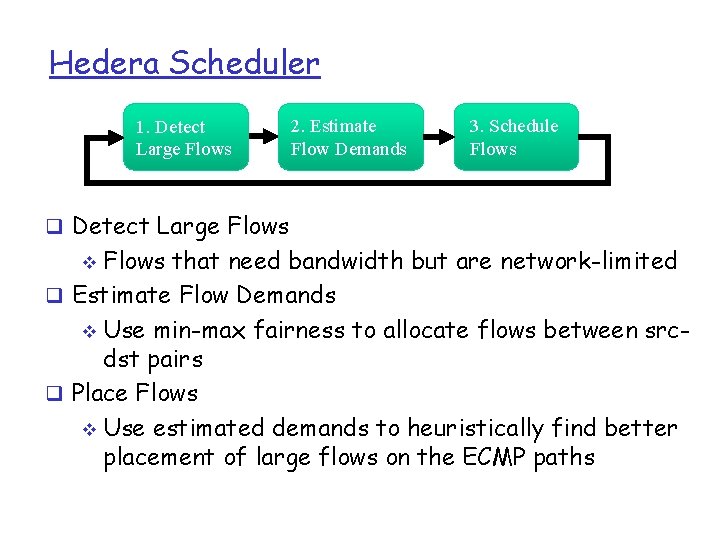

Hedera Scheduler

1. Detect Large Flows → 2. Estimate Flow Demands → 3. Schedule Flows

- Detect Large Flows
  - Flows that need bandwidth but are network-limited
- Estimate Flow Demands
  - Use max-min fairness to allocate flows between src-dst pairs
- Place Flows
  - Use estimated demands to heuristically find better placement of large flows on the ECMP paths

Elephant Detection

- Scheduler continually polls edge switches for flow byte-counts
  - Flows exceeding B/s threshold are "large"
    - More than 10% of the host's link capacity (i.e. > 100 Mbps)
- What if only mice on host?
  - Default ECMP load-balancing efficient for small flows
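The threshold test can be sketched as a comparison of two successive byte-counter polls. `find_elephants` is a hypothetical helper; the 1 Gbps link speed is implied by the slide's "10% = 100 Mbps", while the 5-second polling interval in the usage example is an assumption.

```python
def find_elephants(prev_bytes, curr_bytes, interval_s,
                   link_bps=1_000_000_000, fraction=0.10):
    """Return flows whose rate since the last poll exceeds fraction * link_bps.

    prev_bytes / curr_bytes: {flow_id: cumulative byte count} from two polls.
    """
    threshold_bps = link_bps * fraction
    large = []
    for flow, count in curr_bytes.items():
        rate_bps = (count - prev_bytes.get(flow, 0)) * 8 / interval_s
        if rate_bps > threshold_bps:
            large.append(flow)
    return large


# Assumed 5 s between polls: flow "A" moved 100 MB (160 Mbps), flow "B" 1 MB (1.6 Mbps).
previous = {"A": 0, "B": 0}
current = {"A": 100_000_000, "B": 1_000_000}
print(find_elephants(previous, current, interval_s=5))
```

Only flow "A" crosses the 100 Mbps threshold, so only it would be handed to the scheduler; "B" stays on its default ECMP path.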

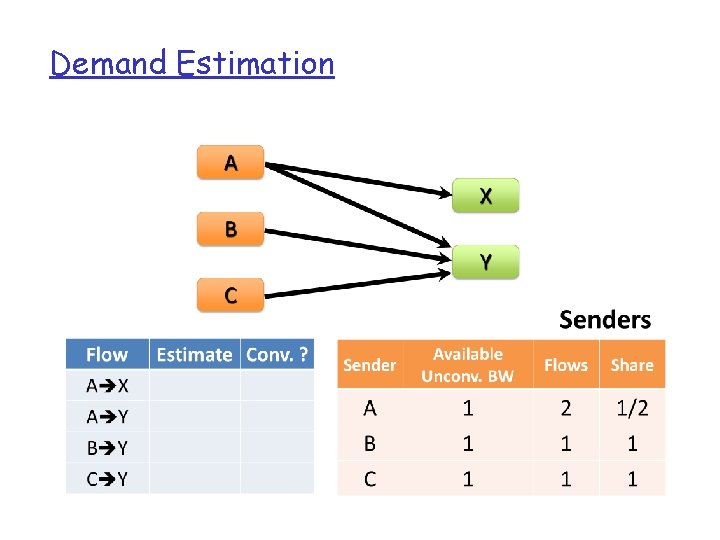

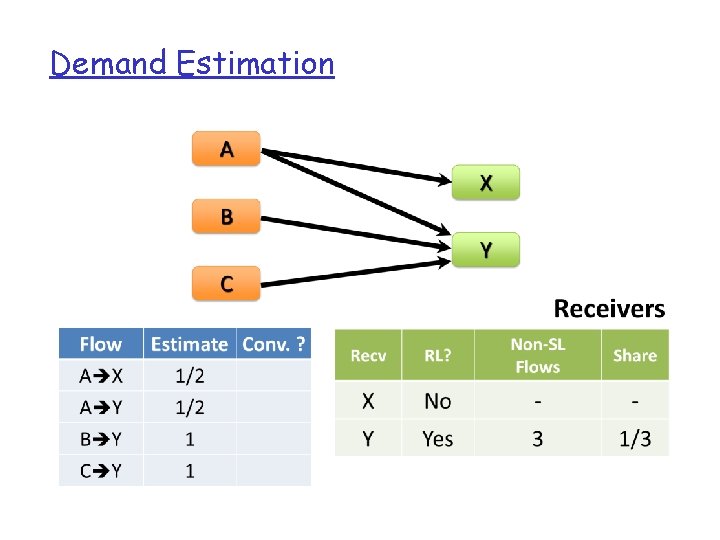

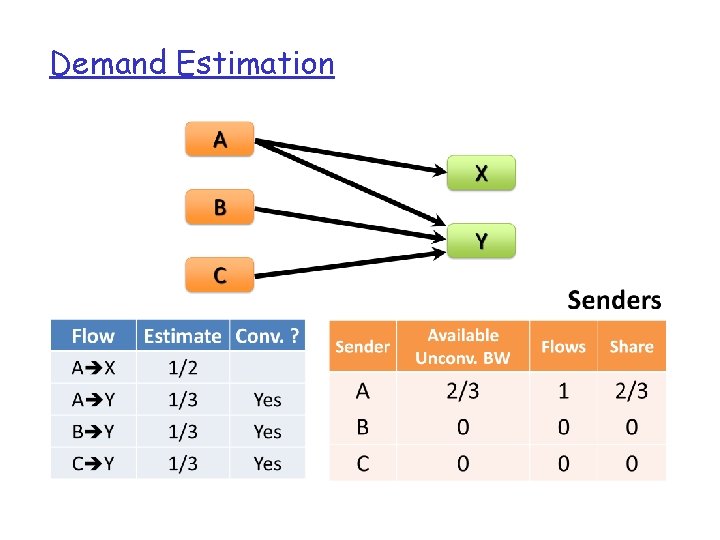

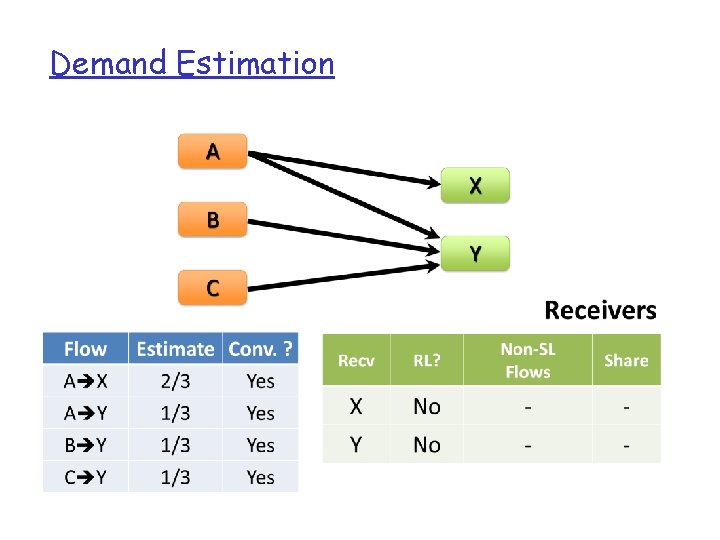

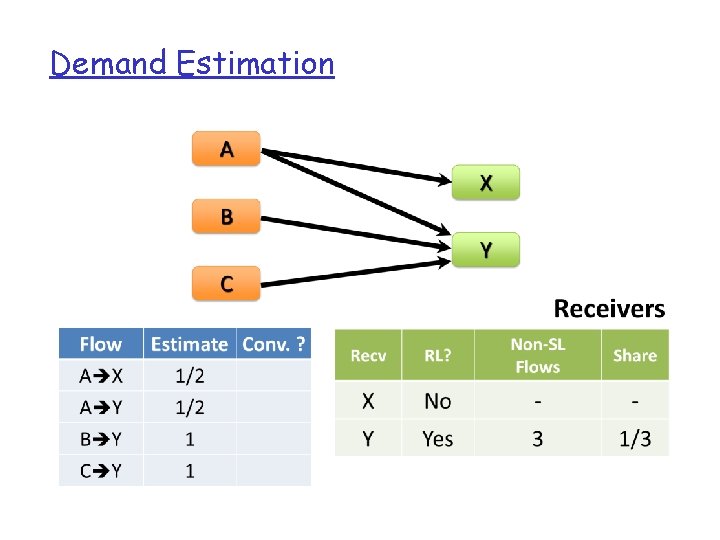

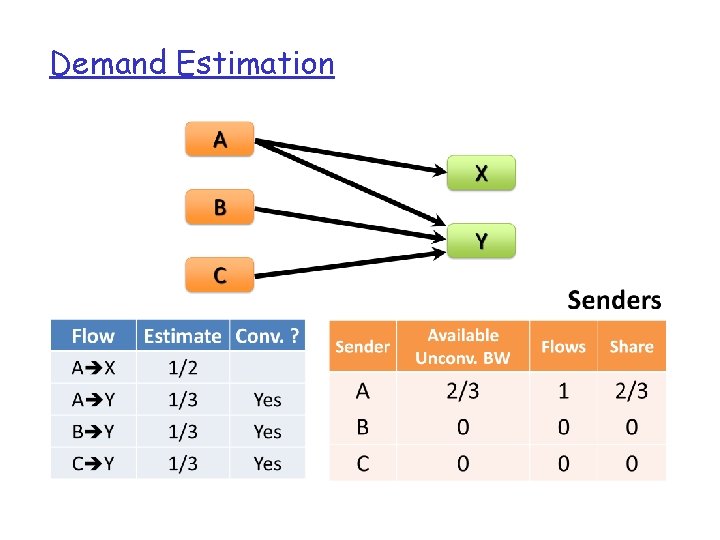

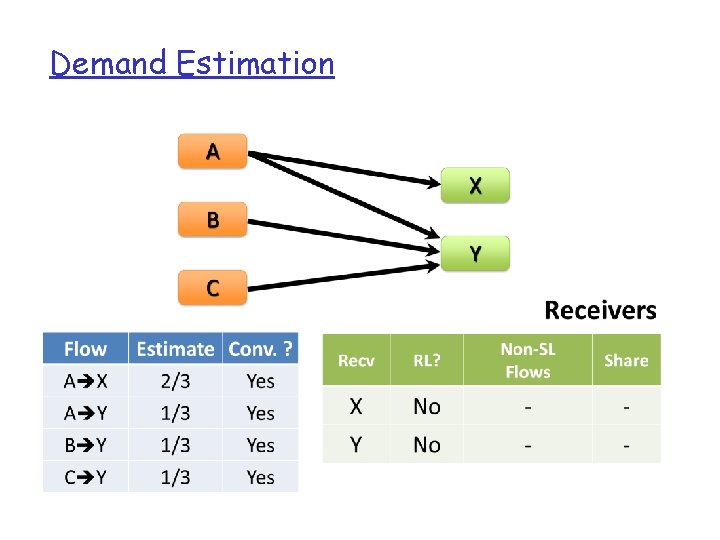

Demand Estimation

- Measured flow rate is misleading
- Need to find a flow's "natural" bandwidth requirement when not limited by the network
- Find max-min fair bandwidth allocation

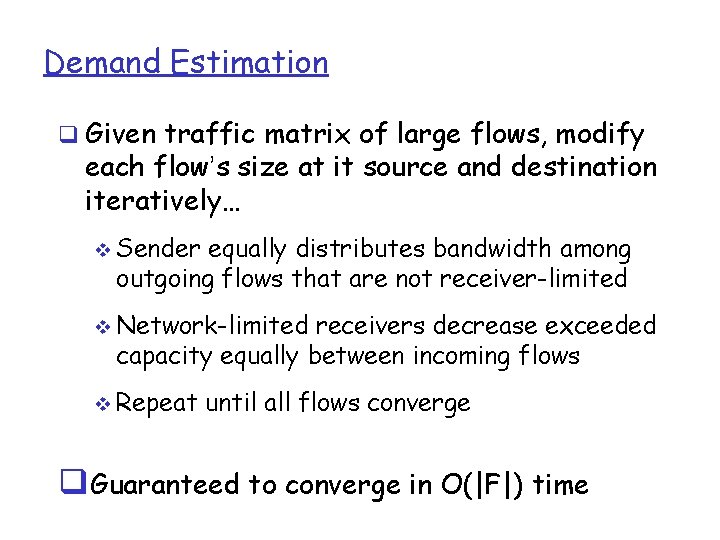

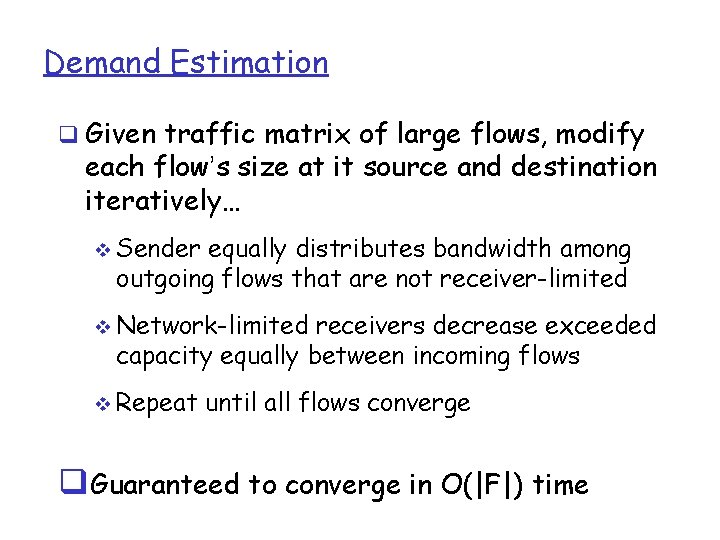

Demand Estimation

- Given traffic matrix of large flows, modify each flow's size at its source and destination iteratively…
  - Sender equally distributes bandwidth among outgoing flows that are not receiver-limited
  - Network-limited receivers decrease exceeded capacity equally between incoming flows
  - Repeat until all flows converge
- Guaranteed to converge in O(|F|) time
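The sender and receiver passes above can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: `estimate_demands` is a hypothetical function, each flow is a (source, destination) pair, every host link has capacity 1.0, and `max_rounds` is a safety cap added for illustration.

```python
def estimate_demands(flows, max_rounds=100):
    """flows: list of (src, dst). Returns per-flow demand as a fraction of link capacity."""
    demand = [0.0] * len(flows)
    converged = [False] * len(flows)      # True once a flow is receiver-limited
    hosts = sorted({h for f in flows for h in f})

    for _ in range(max_rounds):
        before = list(demand)

        # Sender pass: split what is left of the sender's link equally
        # among its flows that are not receiver-limited.
        for h in hosts:
            out = [i for i, (s, _) in enumerate(flows) if s == h]
            fixed = sum(demand[i] for i in out if converged[i])
            free = [i for i in out if not converged[i]]
            for i in free:
                demand[i] = (1.0 - fixed) / len(free)

        # Receiver pass: an oversubscribed receiver caps its large incoming
        # flows at an equal share; small flows keep their demand.
        for h in hosts:
            inc = [i for i, (_, d) in enumerate(flows) if d == h]
            if sum(demand[i] for i in inc) <= 1.0:
                continue
            limited = set(inc)
            share = 1.0 / len(limited)
            small_total = 0.0
            while True:
                small = {i for i in limited if demand[i] < share}
                if not small:
                    break
                small_total += sum(demand[i] for i in small)
                limited -= small
                share = (1.0 - small_total) / len(limited)
            for i in limited:
                demand[i] = share
                converged[i] = True

        # Stop once a full round changes nothing.
        if all(abs(a - b) < 1e-12 for a, b in zip(before, demand)):
            break
    return demand


# Host A sends to B and C; hosts D and E also send to C.
flows = [("A", "B"), ("A", "C"), ("D", "C"), ("E", "C")]
print(estimate_demands(flows))
```

In this example receiver C is the bottleneck, so each flow into C settles at one third of a link, and A's flow to B picks up the bandwidth A cannot use toward C (two thirds). That is the "natural" demand the measured rate alone would not reveal.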

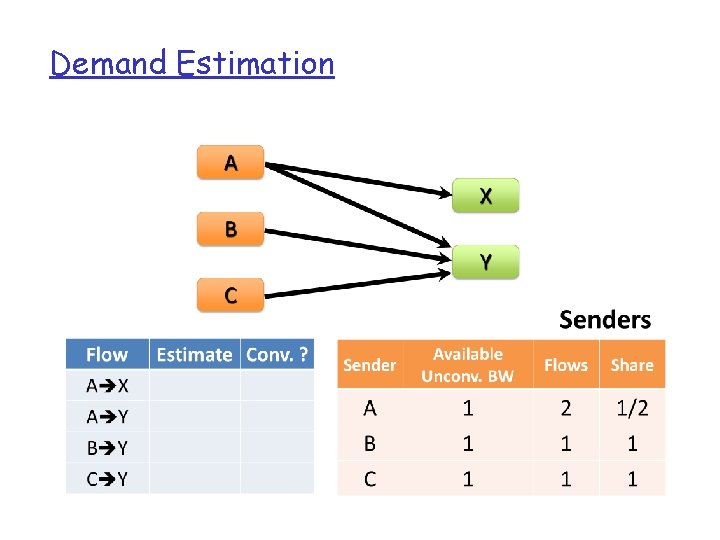

Demand Estimation


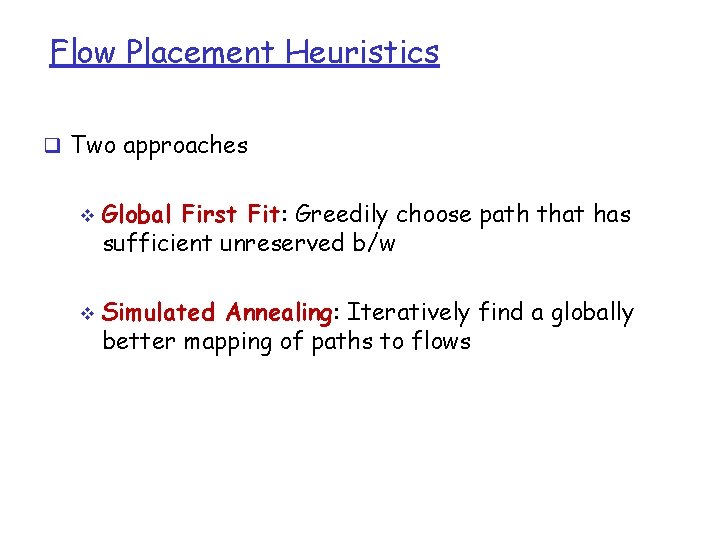

Flow Placement Heuristics

- Two approaches
  - Global First Fit: greedily choose a path that has sufficient unreserved b/w
  - Simulated Annealing: iteratively find a globally better mapping of paths to flows

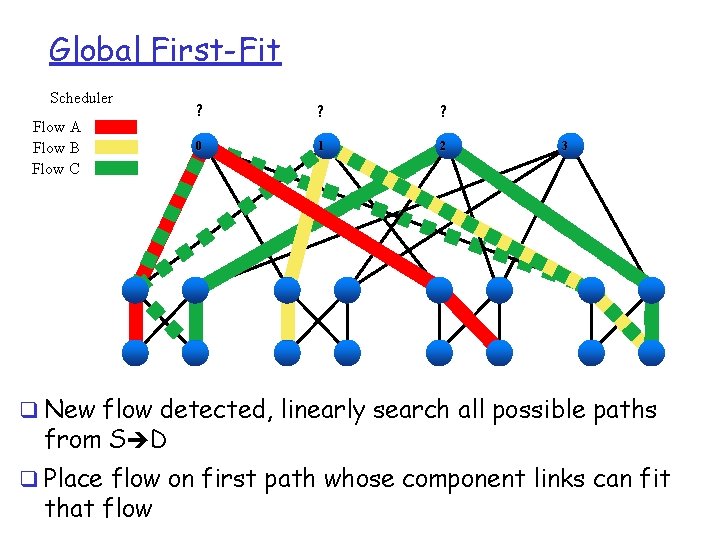

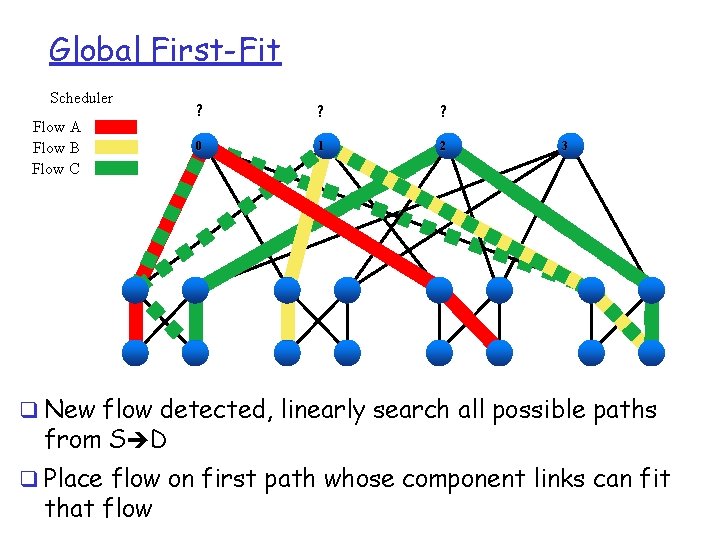

Global First-Fit Scheduler

[Figure: scheduler placing Flow A, Flow B, Flow C across core switches 0, 1, 2, 3]

- New flow detected, linearly search all possible paths from S to D
- Place flow on first path whose component links can fit that flow

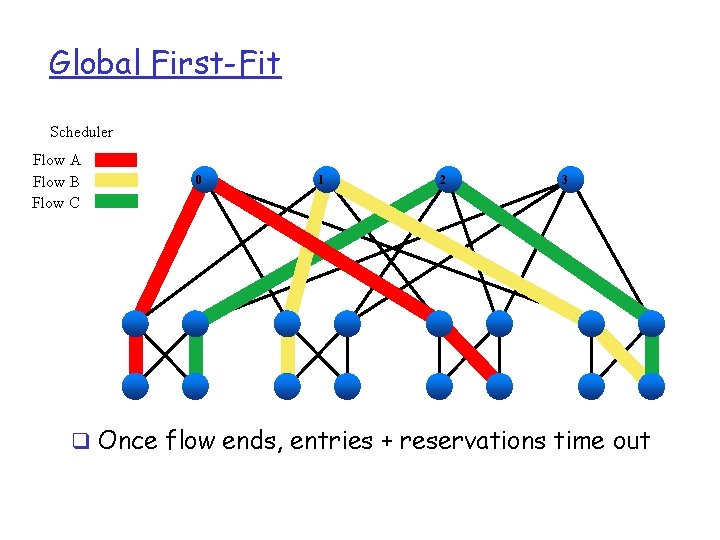

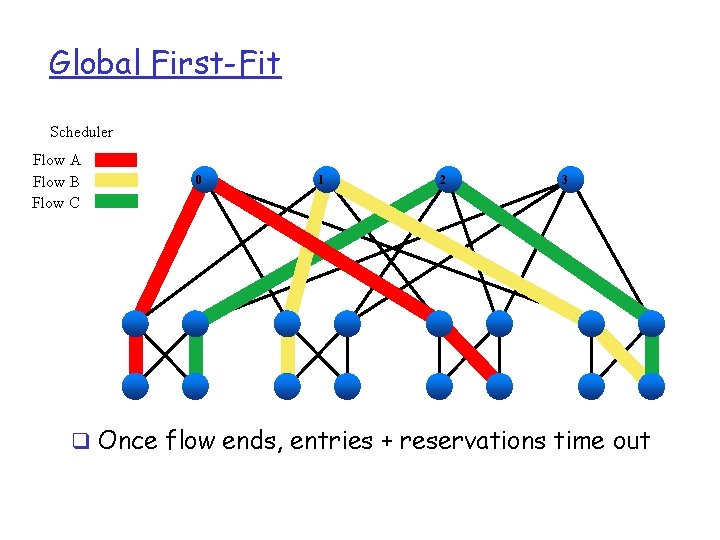

Global First-Fit Scheduler

- Once flow ends, entries + reservations time out
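A first-fit placement with link reservations can be sketched as below. The names `global_first_fit` and `release` are hypothetical, link capacity is normalized to 1.0, the two-path topology is invented for illustration, and returning `None` when nothing fits (leaving the flow on its default path) is an assumption.

```python
def global_first_fit(flow_demand, paths, reserved, capacity=1.0):
    """Reserve flow_demand on the first path whose links all have room.

    paths: candidate paths, each a list of link identifiers.
    reserved: {link: bandwidth already reserved}, updated in place.
    """
    for path in paths:
        if all(reserved.get(link, 0.0) + flow_demand <= capacity for link in path):
            for link in path:
                reserved[link] = reserved.get(link, 0.0) + flow_demand
            return path
    return None  # assumed: no path fits, flow keeps its default placement


def release(flow_demand, path, reserved):
    """Clear a finished flow's reservation (stands in for the timeout)."""
    for link in path:
        reserved[link] -= flow_demand


# Two candidate paths from S to D, one through each of two core switches.
via_core0 = [("S", "core0"), ("core0", "D")]
via_core1 = [("S", "core1"), ("core1", "D")]
paths = [via_core0, via_core1]
reserved = {}

print(global_first_fit(0.6, paths, reserved))  # Flow A
print(global_first_fit(0.6, paths, reserved))  # Flow B
print(global_first_fit(0.3, paths, reserved))  # Flow C
```

Flow A takes the first path, Flow B no longer fits there and moves to the second, and Flow C is small enough to share the first path with A.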

Simulated Annealing

- Simulated Annealing:
  - A numerical optimization technique for searching for a solution in a space otherwise too large for ordinary search methods to yield results
  - Probabilistic search for good flow-to-core mappings

Simulated Annealing

- State: a set of mappings from destination hosts to core switches
- Neighbor State: swap core switches between 2 hosts
  - Within same pod,
  - Within same edge,
  - etc.

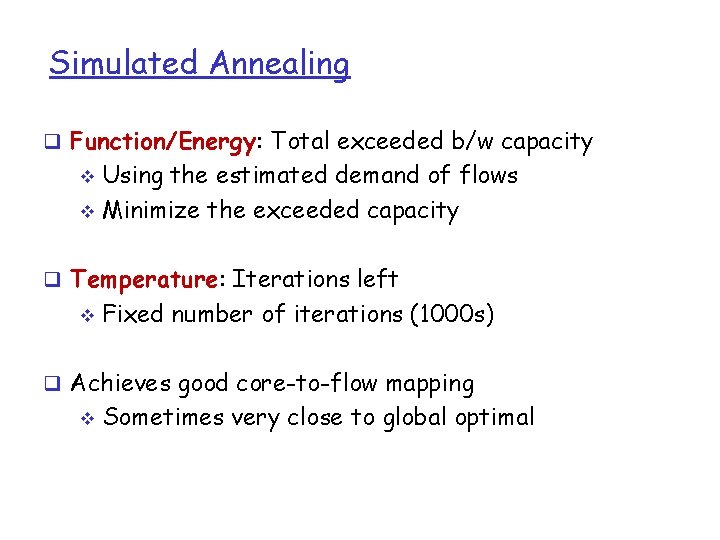

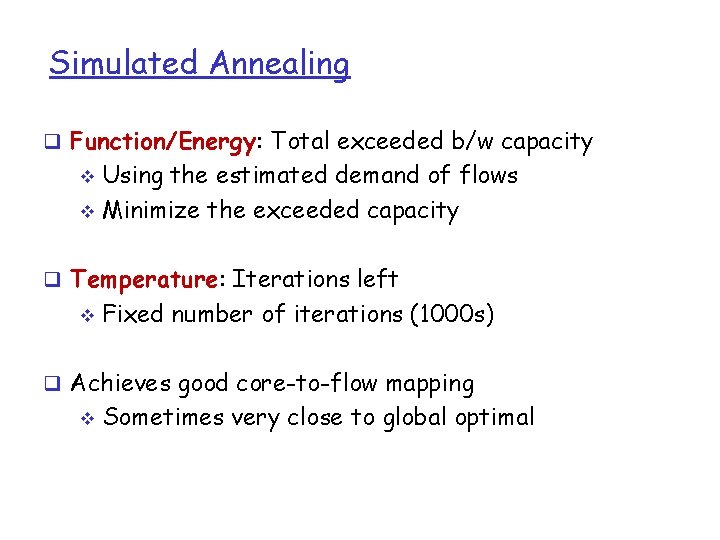

Simulated Annealing

- Function/Energy: total exceeded b/w capacity
  - Using the estimated demand of flows
  - Minimize the exceeded capacity
- Temperature: iterations left
  - Fixed number of iterations (1000s)
- Achieves good core-to-flow mapping
  - Sometimes very close to global optimal

Simulated Annealing Scheduler

[Figure: Flow A, Flow B, Flow C mapped to core switches 0, 1, 2, 3, with the core assignment changing across iterations]

- Example run: 3 flows, 3 iterations

Simulated Annealing Scheduler

- Final state is published to the switches and used as the initial state for next round

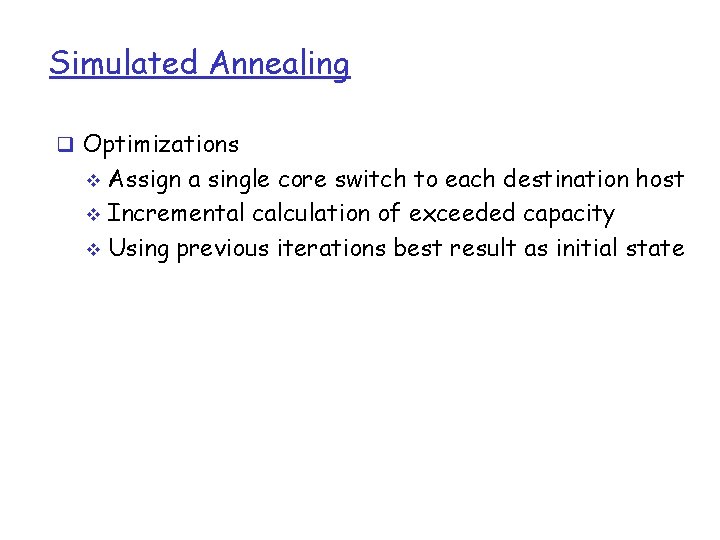

Simulated Annealing

- Optimizations
  - Assign a single core switch to each destination host
  - Incremental calculation of exceeded capacity
  - Using previous iteration's best result as initial state
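The state, neighbor, energy, and temperature definitions from these slides can be combined into a short sketch. Several parts are assumptions made for illustration: each core switch is modelled as a single link of capacity 1.0, the neighbor move swaps cores between any two hosts (ignoring pods and edges), the acceptance rule and its `accept_scale` constant are a generic simulated-annealing choice, and `exceeded_capacity` and `anneal` are hypothetical names.

```python
import math
import random


def exceeded_capacity(assignment, flows, capacity=1.0):
    """Energy: demand above capacity, summed over core switches.

    Assumed simplification: each core switch is one link of the given capacity.
    flows: list of (destination host, estimated demand).
    """
    load = {}
    for dst, demand in flows:
        core = assignment[dst]
        load[core] = load.get(core, 0.0) + demand
    return sum(max(0.0, used - capacity) for used in load.values())


def anneal(flows, initial, iterations=1000, accept_scale=0.5, seed=0):
    """Return (best assignment, best energy) found within a fixed iteration budget."""
    rng = random.Random(seed)
    hosts = sorted(initial)
    state = dict(initial)              # e.g. the previous round's best result
    energy = exceeded_capacity(state, flows)
    best, best_energy = dict(state), energy
    if len(hosts) < 2:
        return best, best_energy

    for temp in range(iterations, 0, -1):       # temperature = iterations left
        a, b = rng.sample(hosts, 2)             # neighbor: swap two hosts' cores
        neighbor = dict(state)
        neighbor[a], neighbor[b] = state[b], state[a]
        n_energy = exceeded_capacity(neighbor, flows)

        # Always accept improvements; accept regressions with a probability
        # that shrinks as the temperature drops (assumed acceptance rule).
        worse_ok = rng.random() < math.exp(
            accept_scale * iterations * (energy - n_energy) / temp)
        if n_energy < energy or worse_ok:
            state, energy = neighbor, n_energy
            if energy < best_energy:
                best, best_energy = dict(state), energy
    return best, best_energy


# Two heavy and two light destinations; the initial mapping piles both heavy ones on core 0.
flows = [("h1", 0.8), ("h2", 0.8), ("h3", 0.1), ("h4", 0.1)]
initial = {"h1": 0, "h2": 0, "h3": 1, "h4": 1}
print(exceeded_capacity(initial, flows))
print(anneal(flows, initial))
```

The sketch recomputes the energy from scratch on every iteration for clarity; the incremental calculation listed among the optimizations would update only the two cores touched by the swap.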

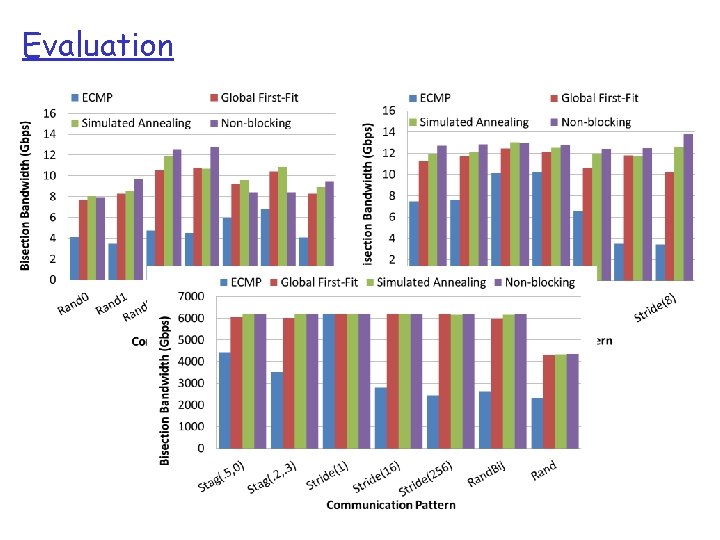

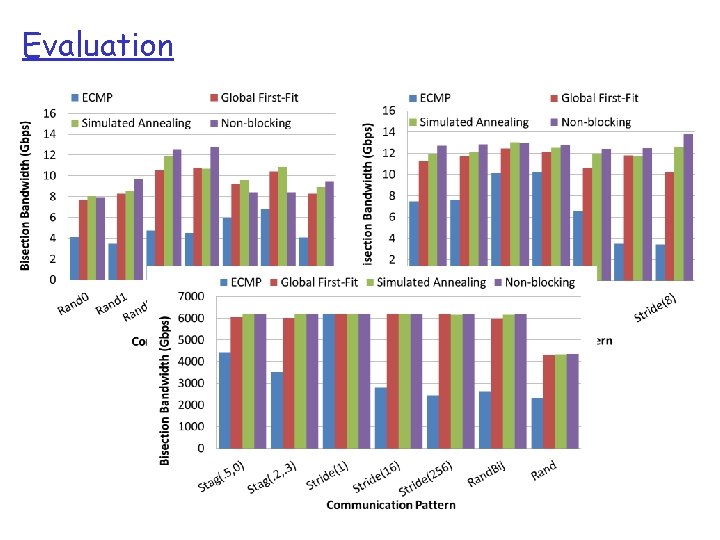

Evaluation

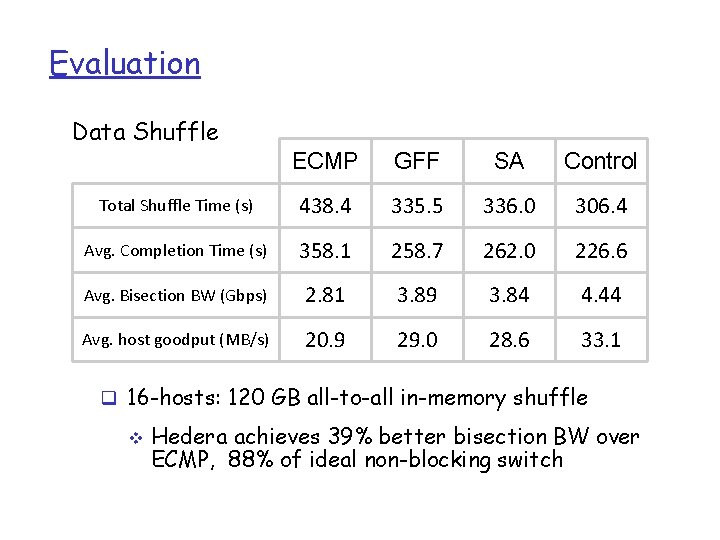

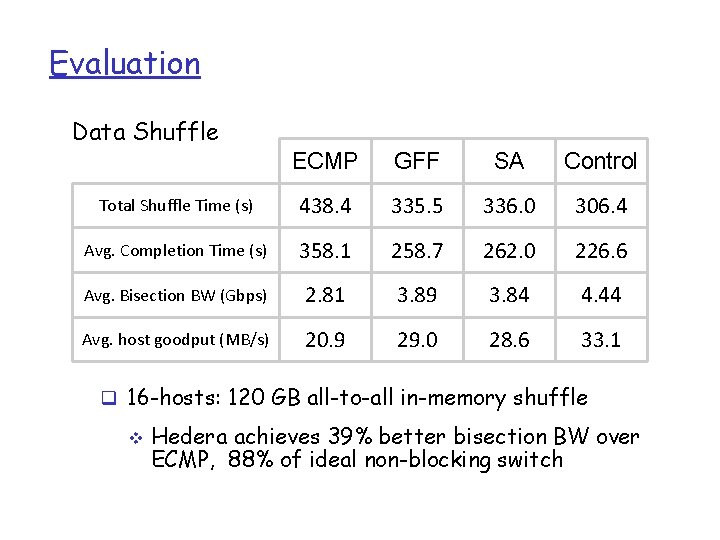

Evaluation: Data Shuffle

| | ECMP | GFF | SA | Control |
|---|---|---|---|---|
| Total Shuffle Time (s) | 438.4 | 335.5 | 336.0 | 306.4 |
| Avg. Completion Time (s) | 358.1 | 258.7 | 262.0 | 226.6 |
| Avg. Bisection BW (Gbps) | 2.81 | 3.89 | 3.84 | 4.44 |
| Avg. host goodput (MB/s) | 20.9 | 29.0 | 28.6 | 33.1 |

- 16 hosts: 120 GB all-to-all in-memory shuffle
  - Hedera achieves 39% better bisection BW over ECMP, 88% of ideal non-blocking switch

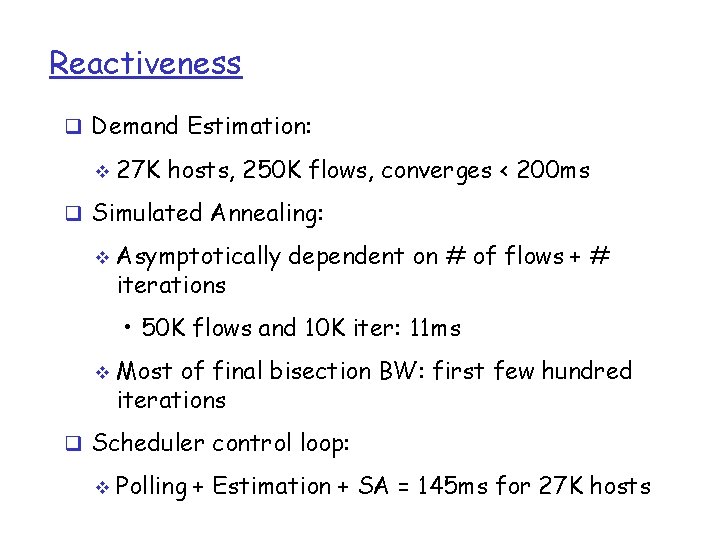

Reactiveness

- Demand Estimation:
  - 27K hosts, 250K flows, converges < 200 ms
- Simulated Annealing:
  - Asymptotically dependent on # of flows + # iterations
    - 50K flows and 10K iter: 11 ms
  - Most of final bisection BW: first few hundred iterations
- Scheduler control loop:
  - Polling + Estimation + SA = 145 ms for 27K hosts

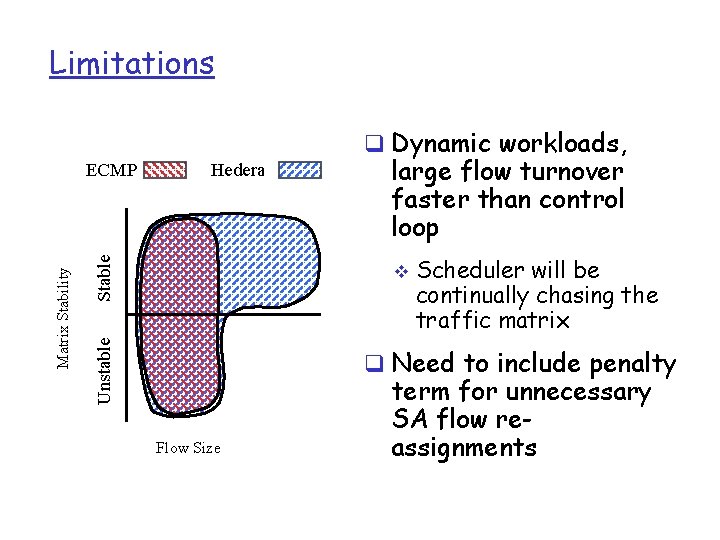

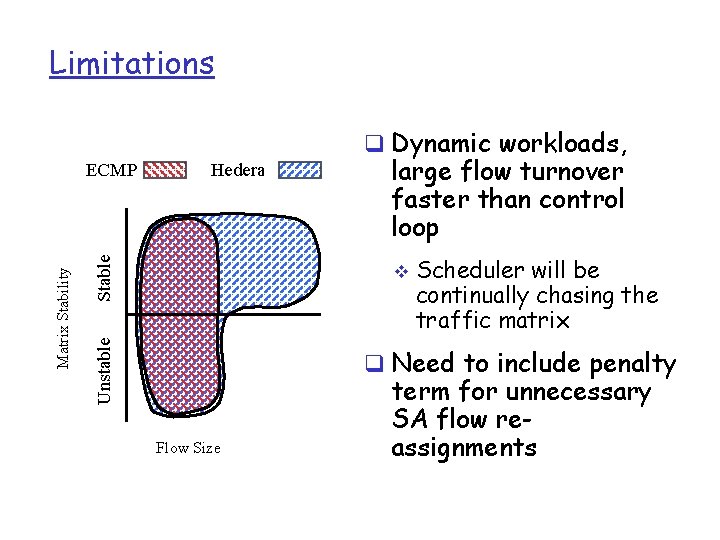

Limitations

- Dynamic workloads, large flow turnover faster than control loop
  - Scheduler will be continually chasing the traffic matrix
- Need to include penalty term for unnecessary SA flow reassignments

[Figure: axes Matrix Stability (Stable to Unstable) and Flow Size, with regions labeled ECMP and Hedera]

Conclusion

- Rethinking networking
  - Open interfaces to the data plane
  - Separation of control and data
  - Leveraging techniques from distributed systems
- Significant momentum
  - In both research and industry