Design Tradeoffs for SSD Performance

Nitin Agrawal*, Vijayan Prabhakaran, Ted Wobber, John D. Davis, Mark Manasse, Rina Panigrahy

*University of Wisconsin-Madison; Microsoft Research, Silicon Valley

Different?

Can we understand performance characteristics of SSDs?

SSDs have huge potential

- Performance
  - Excellent random read performance
- Lower cost in terms of $/IOPS/GB
  - Replace multiple hard disks with single SSD
- Low power consumption compared to disks
  - No spin-up/spin-down cost
- Better reliability in absence of mechanical parts
- Form factor, noise, …

Benefits do not come for free

Challenges in achieving potential

1. Idiosyncrasies of writing to NAND flash
   - Cannot overwrite data in place
   - Block erasure required before page re-write
2. Limited serial bandwidth to flash chips
3. Finite erase cycles over media lifetime

Need to first overcome several constraints of NAND-flash media

Goals of this work

- Understand the constraints of NAND technology
- Identify the challenges in SSD design
  - Little published knowledge on flash disks
- Propose architectures to address the challenges
- Analyze performance tradeoffs
  - What happens for I/O intensive workloads?

What we did

- Studied flash chip specifications
- Devised SSD algorithms and data structures
- Gathered I/O workload traces from large-scale running systems
  - TPC-C and MS Exchange
- Analyzed algorithms under trace-based simulator

Key findings

- Tradeoffs in almost all aspects of design
- Performance and lifetime are workload sensitive
- Significant hardware and software interplay
  - Example: parallelism and interconnect density
- High-level systems issues appear in firmware
  - Example: load-balancing, free-block management
- Careful design choices help sustain workloads with high I/O rates and increase device lifetime

Outline

- Introduction
- NAND flash and SSD primer
- Simulator framework
- Challenges for SSD design
- Conclusion

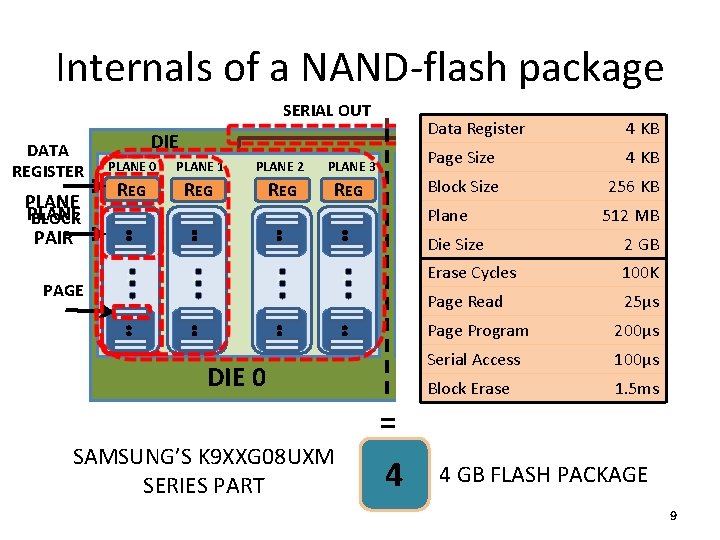

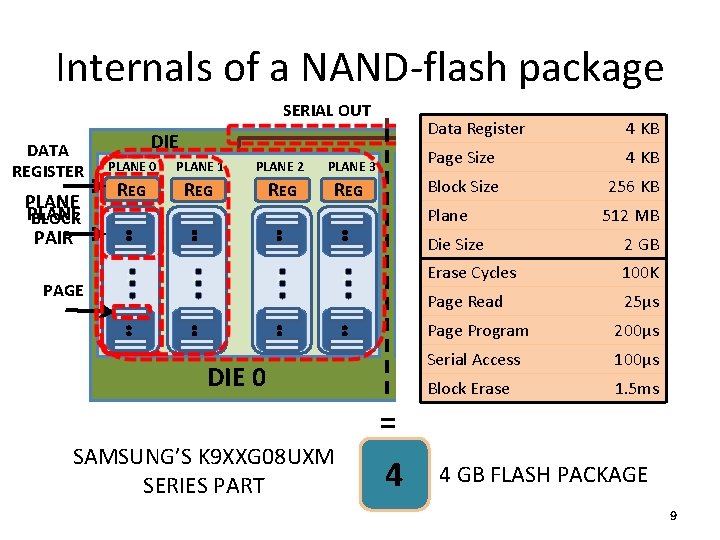

Internals of a NAND-flash package

[Diagram: 4 GB flash package; each die contains four planes (Plane 0 to Plane 3), each plane with its own data register, feeding a serial output]

Samsung's K9XXG08UXM series part:

| Parameter | Value |
| --- | --- |
| Page size | 4 KB |
| Block size | 256 KB |
| Plane size | 512 MB |
| Die size | 2 GB |
| Data register | 4 KB |
| Erase cycles | 100 K |
| Page read | 25 μs |
| Page program | 200 μs |
| Serial access | 100 μs |
| Block erase | 1.5 ms |

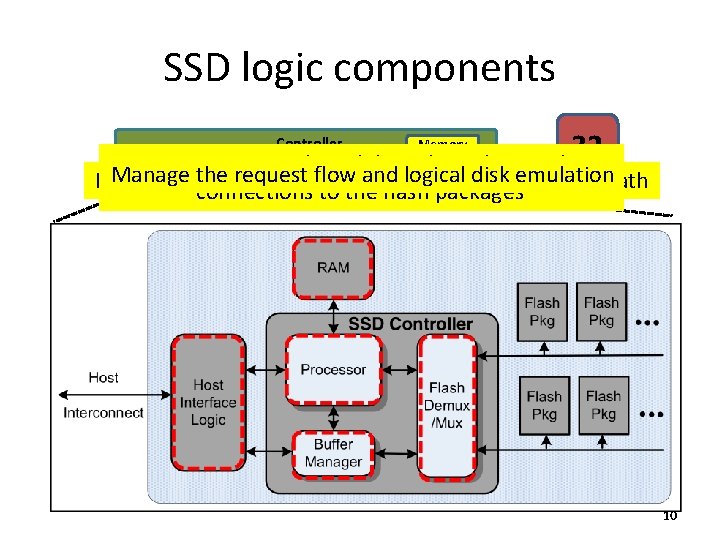

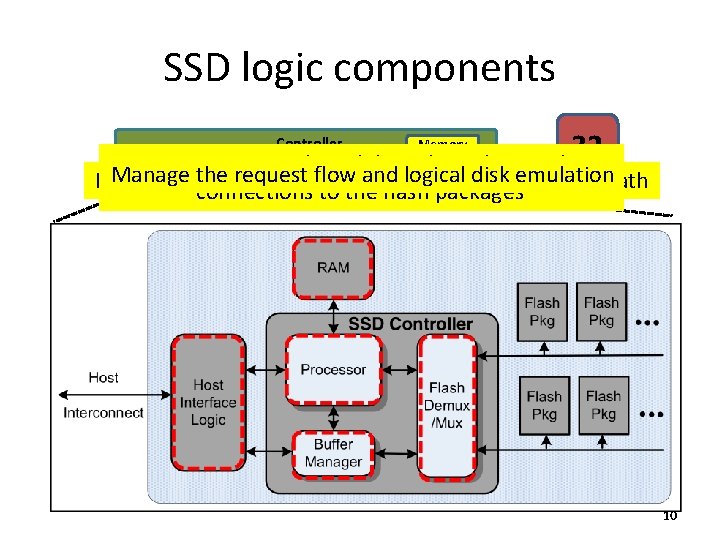

SSD logic components

[Diagram: 32 GB SSD with a controller, memory, and serial connections to the flash packages]

- Support some form of host connection such as USB, fiber channel, PCI express or SATA
- Holds pending and satisfied requests along the data path
- Emits commands and data along the serial connections to the flash packages
- Manage the request flow, mappings and logical disk emulation
- Maintain data structures

![Simulation framework](https://slidetodoc.com/presentation_image_h2/67685ed2da3a05423387d264cd023bf1/image-11.jpg)

Simulation framework

- Trace-driven simulator
  - Modified version of DiskSim [Ganger 93]
- SSD-specific module based on Samsung specs
  - Support for multiple request streams
  - Additional data structures for SSD state
  - Configuration parameters for proposed SSD designs
- Workloads
  - TPC-C, MS Exchange, IOzone, Postmark

Outline

- Introduction
- NAND flash and SSD primer
- Simulator framework
- Challenges for SSD design
- Conclusion

Challenge 1: Idiosyncrasies of writing to flash

- Cannot overwrite data in place
- Block erasure required before page re-write

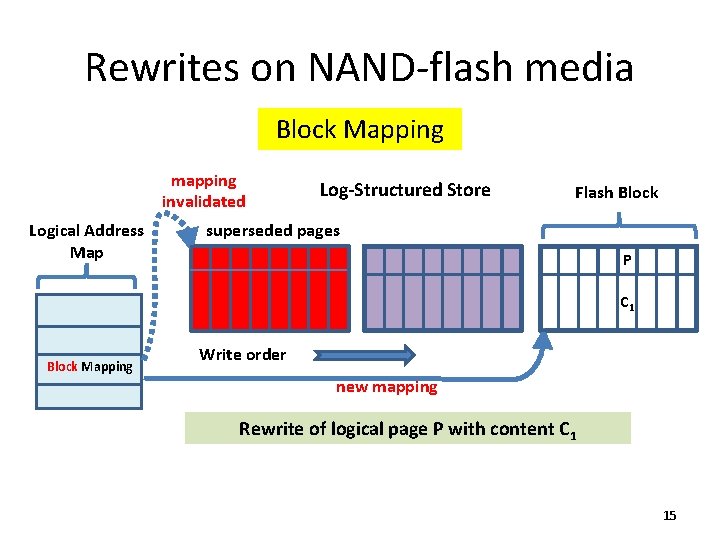

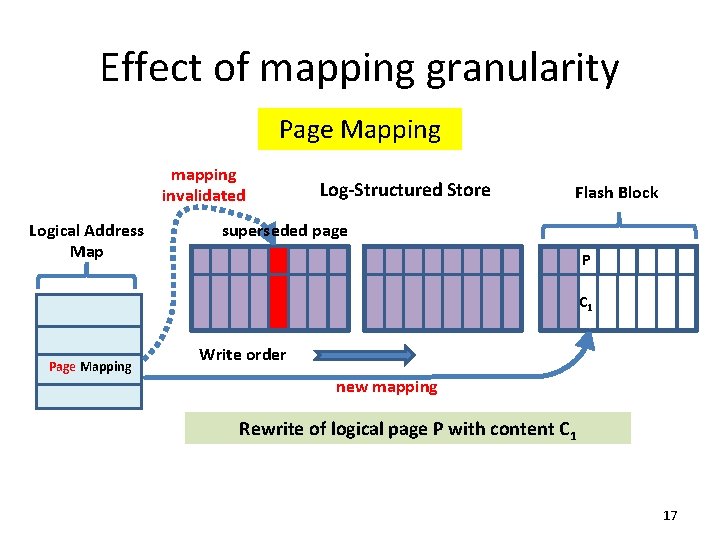

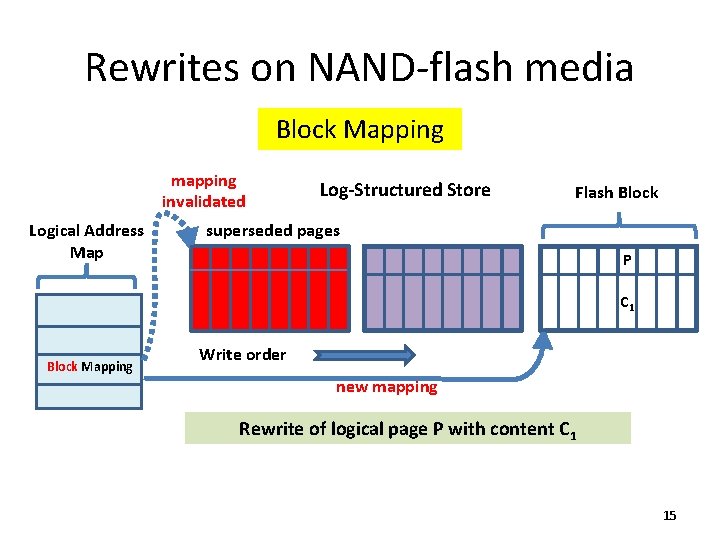

Page write mechanics

- How writes work on flash media:
  - Initially all bits set to 1
  - During page write, bits of page are set to 0
  - Writes in ascending page order within block
  - Erase block before page rewrite to reset bits to 1
- Rewrites must be written to new location
  - Old page superseded and available for cleaning
- Pages grouped into blocks for erasure efficiency
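The write rules on this slide can be sketched as a toy model of a single flash block. This is only an illustration, not the simulator used in the talk: the class name `FlashBlock`, its methods, and the default of 64 pages per block (256 KB block / 4 KB page) are assumptions made for the example.

```python
class FlashBlock:
    """Toy model of one NAND block (hypothetical, for illustration only)."""

    def __init__(self, pages_per_block=64, page_bytes=4096):
        self.pages_per_block = pages_per_block
        self.page_bytes = page_bytes
        self.erase_count = 0
        self._reset()

    def _reset(self):
        # Erased state: every bit is 1, so every byte is 0xFF.
        self.pages = [b"\xff" * self.page_bytes
                      for _ in range(self.pages_per_block)]
        self.next_page = 0  # pages are programmed in ascending order

    def program(self, page_no, data):
        # A page that was already written cannot be programmed again
        # until the whole block has been erased.
        if page_no != self.next_page or page_no >= self.pages_per_block:
            raise ValueError("program pages in ascending order; "
                             "erase the block before rewriting a page")
        if len(data) != self.page_bytes:
            raise ValueError("data must be exactly one page")
        old = self.pages[page_no]
        # Programming can only clear bits (1 -> 0), modeled as a bitwise AND.
        self.pages[page_no] = bytes(o & n for o, n in zip(old, data))
        self.next_page += 1

    def erase(self):
        # Erasure resets all bits in the block to 1 and costs one erase cycle.
        self._reset()
        self.erase_count += 1
```

Because an in-place rewrite is rejected, a caller has to place the new version of a page somewhere else and leave the old copy to be reclaimed later, which is the behavior the following slides build on.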

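Rewrites on NAND-flash media: block mapping

[Diagram: a logical address map (block mapping) points into a log-structured store. A rewrite of logical page P with content C1 is written in write order to a new flash block; the old mapping is invalidated, a new mapping is installed, and the pages of the old block (including C0) are superseded.]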

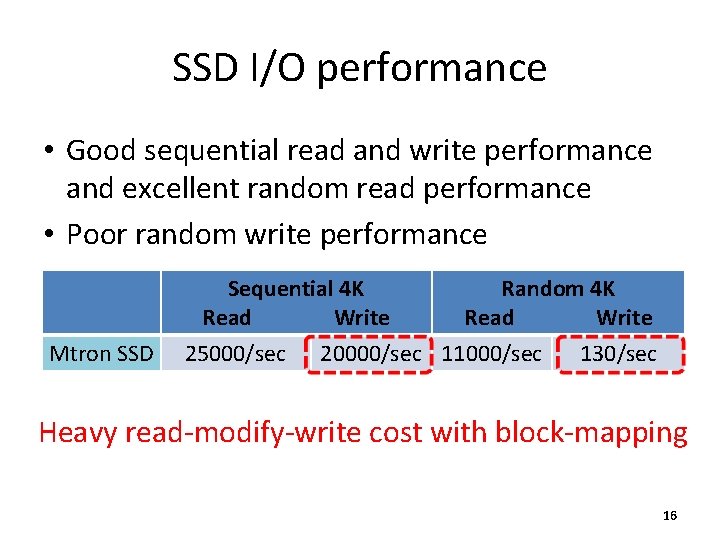

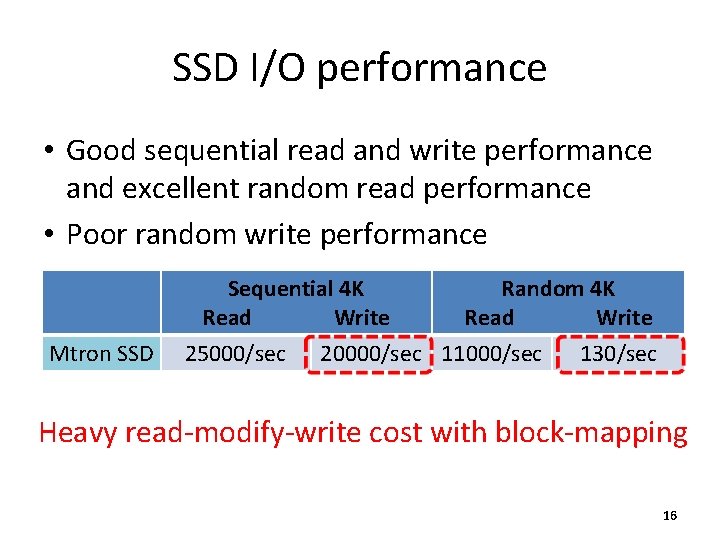

SSD I/O performance

- Good sequential read and write performance and excellent random read performance
- Poor random write performance

| Device | Sequential 4K read | Sequential 4K write | Random 4K read | Random 4K write |
| --- | --- | --- | --- | --- |
| Mtron SSD | 25000/sec | 20000/sec | 11000/sec | 130/sec |

Heavy read-modify-write cost with block-mapping

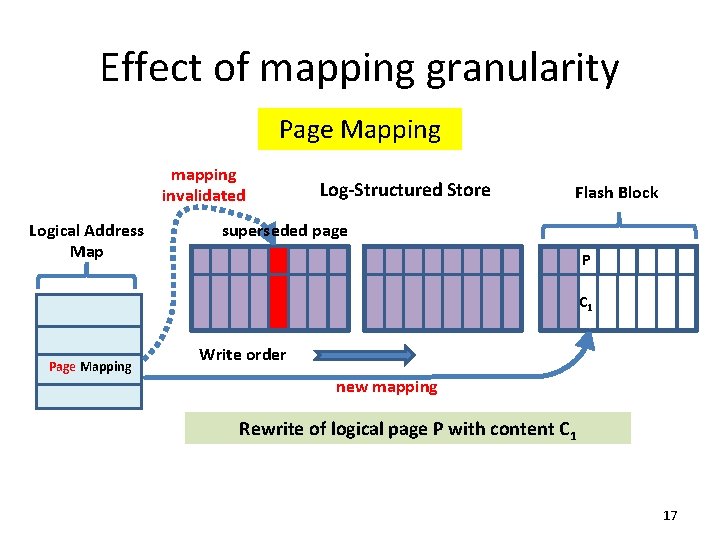

Effect of mapping granularity: page mapping

[Diagram: a logical address map (page mapping) points into a log-structured store. A rewrite of logical page P with content C1 is appended in write order; the old mapping is invalidated, a new mapping is installed, and only the single old page (C0) is superseded.]

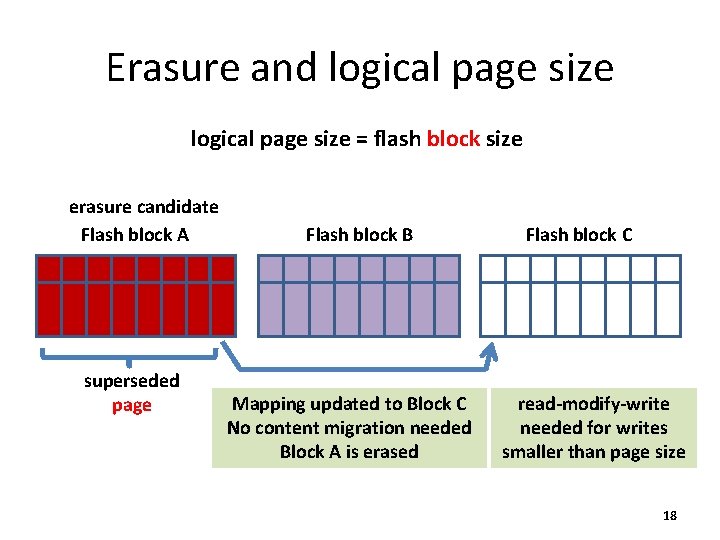

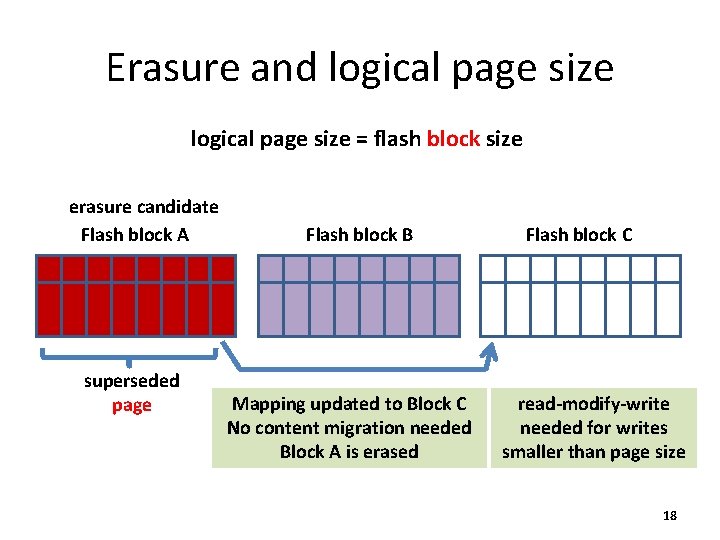

Erasure when logical page size = flash block size

- Flash block A holds the superseded page and is the erasure candidate
- Mapping updated to Block C
- No content migration needed
- Block A is erased
- Read-modify-write needed for writes smaller than page size

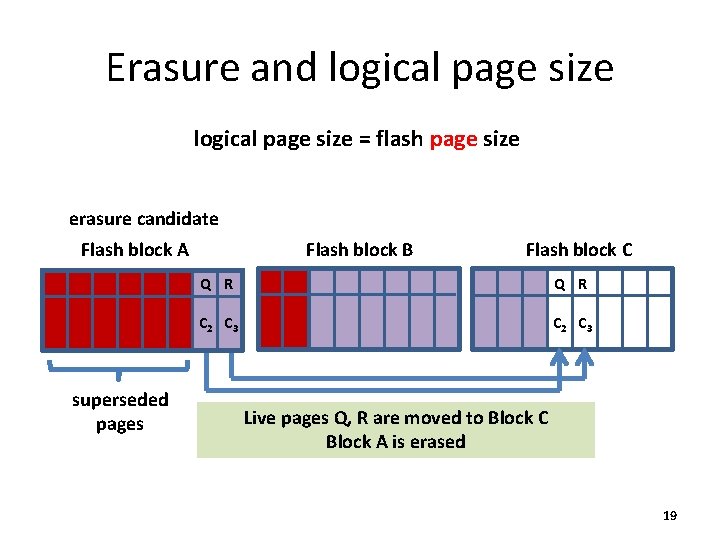

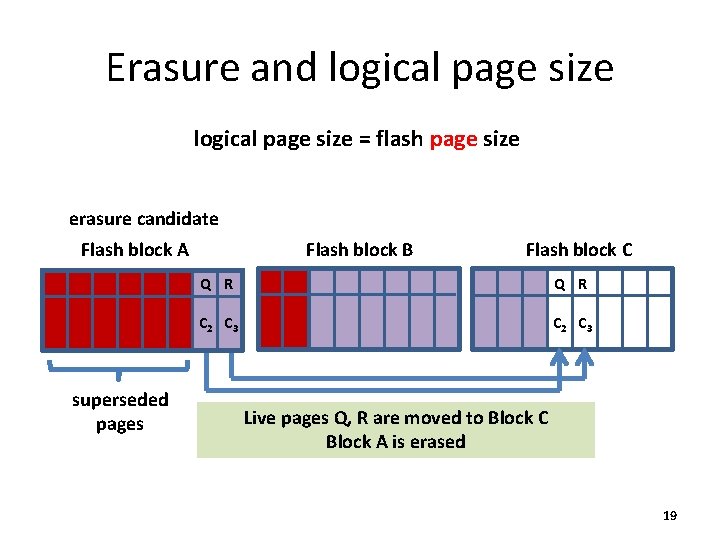

Erasure when logical page size = flash page size

[Diagram: flash blocks A, B and C; block A is the erasure candidate and holds superseded pages alongside live pages Q and R (contents C2, C3)]

- Live pages Q, R are moved to Block C
- Block A is erased

Page-mapping: summary

- Fundamental problem with NAND-flash
  - Cannot overwrite data in place
- Design consequences
  - Log structured store for improved performance
  - Maintain logical to physical address mapping
  - Cleaning to reclaim superseded pages
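A minimal sketch of these design consequences, assuming a page-granularity map over an append-only log. The names `PageMappedLog`, `write` and `read` are hypothetical, and cleaning is deliberately left out; the point is only how a rewrite installs a new mapping and supersedes the old page.

```python
class PageMappedLog:
    """Hypothetical page-mapped, log-structured store (no cleaning)."""

    def __init__(self, num_blocks, pages_per_block):
        self.pages_per_block = pages_per_block
        self.capacity = num_blocks * pages_per_block
        self.mapping = {}                      # logical page -> physical page
        self.state = ["free"] * self.capacity  # free / valid / superseded
        self.data = [None] * self.capacity
        self.write_ptr = 0                     # next physical page in the log

    def write(self, logical_page, content):
        if self.write_ptr >= self.capacity:
            raise RuntimeError("log is full; cleaning would be needed here")
        old = self.mapping.get(logical_page)
        if old is not None:
            # The old copy cannot be overwritten in place, so it is only
            # marked superseded and left for the cleaner to reclaim.
            self.state[old] = "superseded"
        physical = self.write_ptr
        self.data[physical] = content
        self.state[physical] = "valid"
        self.mapping[logical_page] = physical  # install the new mapping
        self.write_ptr += 1
        return physical

    def read(self, logical_page):
        return self.data[self.mapping[logical_page]]
```

For example, writing logical page `"P"` with content `"C0"` and then `"C1"` leaves physical page 0 superseded and maps `"P"` to physical page 1.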

Logical page size tradeoffs

- Flash blocks need to be erased before reuse
  - Cleaning of valid pages needed before erasure
  - Greedy cleaning picks block with most superseded pages
- Small logical page size
  - Fine-grained control over placement
  - More work to move valid pages from erasure candidates
- Logical page size equal to flash block size
  - Erasure simplified; costly read-modify-write for small writes
- SSDs overprovisioned with spare capacity
  - Reduce foreground cleaning load at cost of available capacity
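Greedy cleaning as described above can be sketched in a few lines. The function names and the per-block list of page states are assumptions for illustration; cleaning efficiency is taken as the ratio of superseded (overwritten) pages to total pages in a block, which is the definition given on the deck's data placement backup slide.

```python
def cleaning_efficiency(page_states):
    """Fraction of a block's pages that are superseded."""
    return page_states.count("superseded") / len(page_states)


def pick_erasure_candidate(blocks):
    """Greedy choice: the block with the highest cleaning efficiency."""
    return max(blocks, key=lambda name: cleaning_efficiency(blocks[name]))


def pages_to_migrate(page_states):
    """Valid pages that must be copied elsewhere before the block is erased."""
    return page_states.count("valid")


# Hypothetical state of three blocks with four pages each.
blocks = {
    "A": ["superseded", "superseded", "valid", "valid"],
    "B": ["superseded", "valid", "valid", "valid"],
    "C": ["free", "free", "free", "free"],
}
victim = pick_erasure_candidate(blocks)
```

Here block A is chosen, and its two valid pages have to be moved before erasure. With a logical page as large as a flash block, that migration step disappears, at the price of read-modify-write for small writes.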

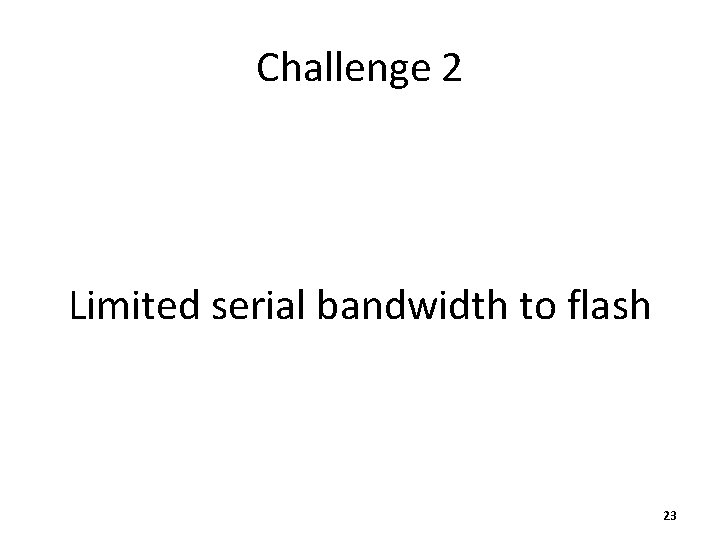

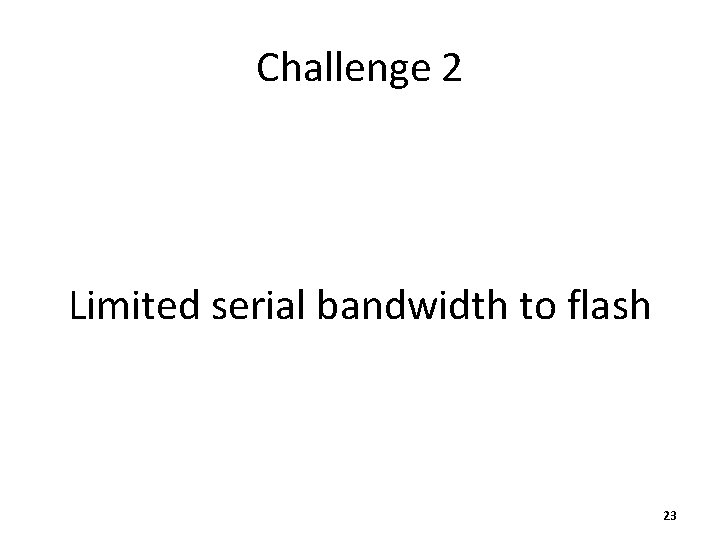

Logical page size vs. I/O latency

- Page size equals flash block (256 KB)
  - Average I/O latency for TPC-C is 20 ms
- Page size equals flash page (4 KB)
  - Average I/O latency for TPC-C is 0.2 ms

100X reduction in I/O latency

Challenge 2: Limited serial bandwidth to flash

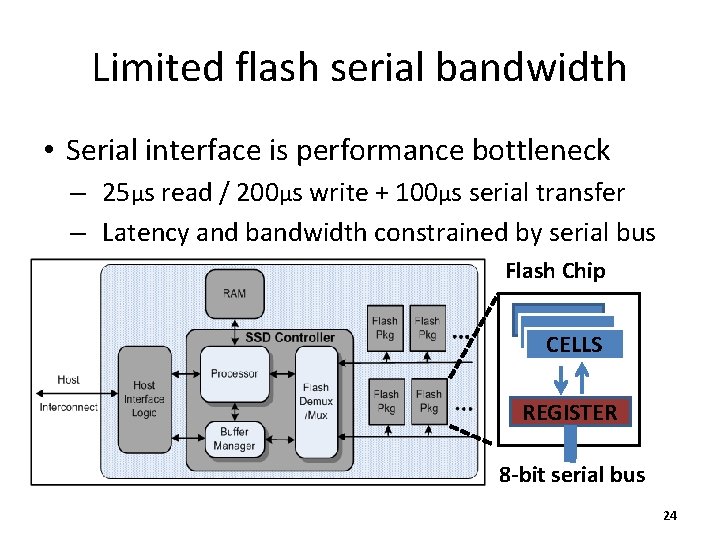

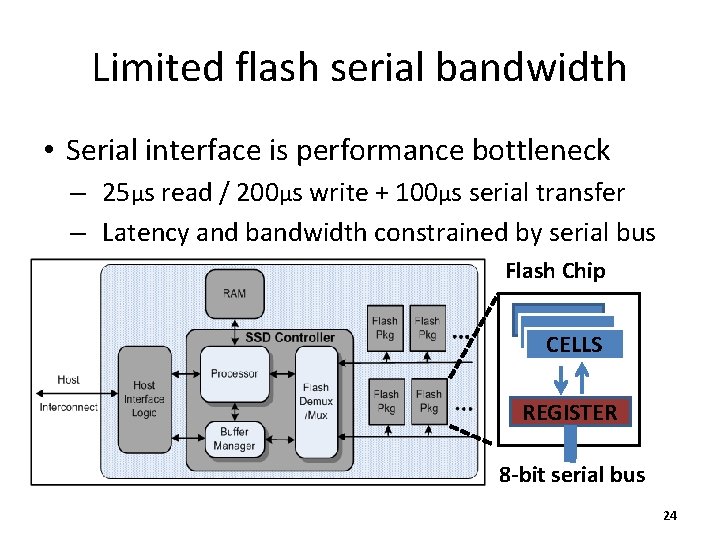

Limited flash serial bandwidth

- Serial interface is performance bottleneck
  - 25 μs read / 200 μs write + 100 μs serial transfer
  - Latency and bandwidth constrained by serial bus

[Diagram: flash chip with cells and register, connected through an 8-bit serial bus]

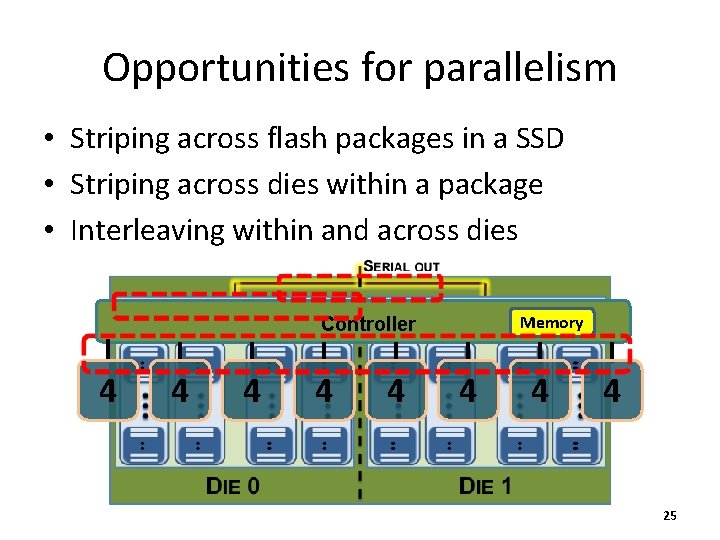

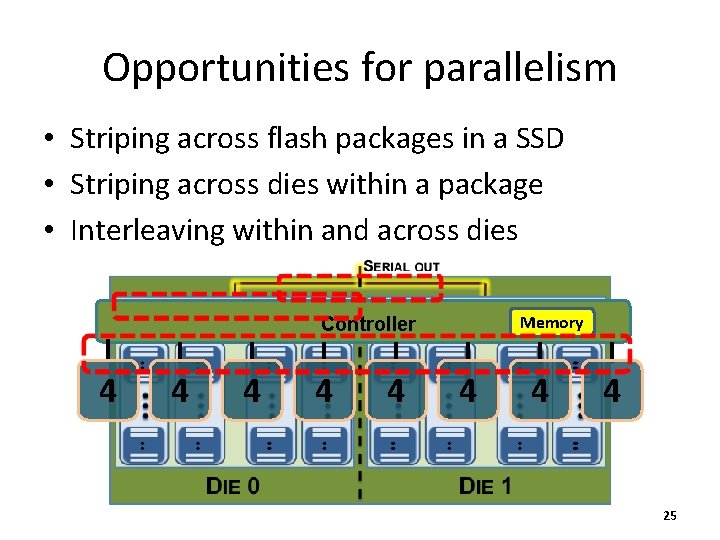

Opportunities for parallelism

- Striping across flash packages in an SSD
- Striping across dies within a package
- Interleaving within and across dies

[Diagram: memory and controller connected to the flash packages]

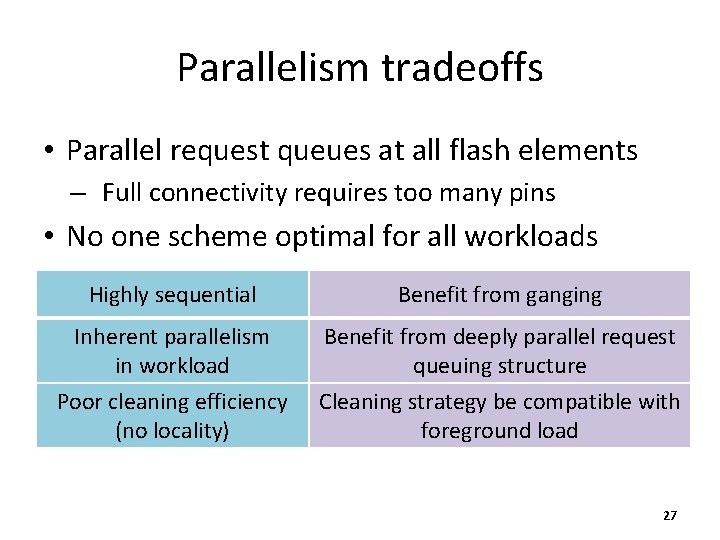

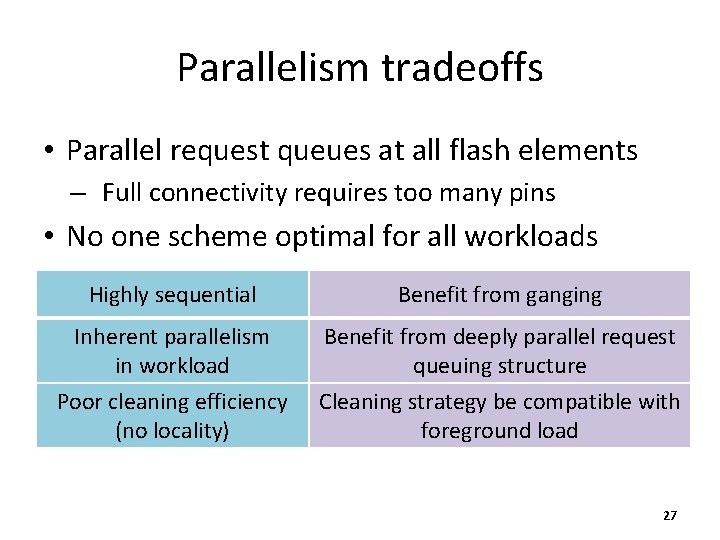

Exploiting parallelism

- Gangs of packages operating in synchrony
  - Practical parallelism by reducing required pin count
  - (1) Shared bus gang
    - Increases addressability
    - Reduces bandwidth
  - (2) Shared control gang
    - Allows multi-chip ops
    - Ops must be in lock step
- Interleaving
  - Improves bandwidth, hides latency of costly ops
  - Long running erase on one chip, read/write to other
  - Interleaved ops on same plane-pair disallowed
  - Vendors provide options for multiple in-flight ops
- Background cleaning on idle components
  - Internal copy-back operation avoids slow serial pins

Parallelism tradeoffs

- Parallel request queues at all flash elements
  - Full connectivity requires too many pins
- No one scheme optimal for all workloads
  - Highly sequential: benefit from ganging
  - Inherent parallelism in workload: benefit from deeply parallel request queuing structure
  - Poor cleaning efficiency (no locality): cleaning strategy must be compatible with foreground load

Impact of interleaving

- TPC-C and Exchange
  - No queuing, no benefit
- IOzone and Postmark
  - Sequential I/O component results in queuing
  - Increased throughput

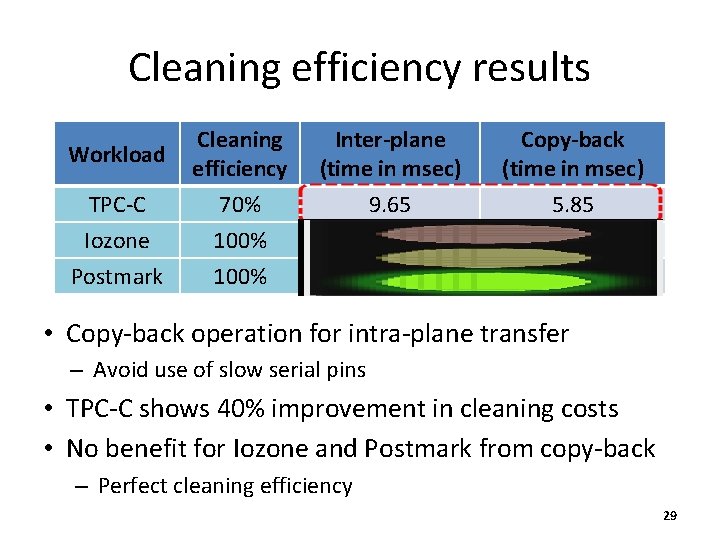

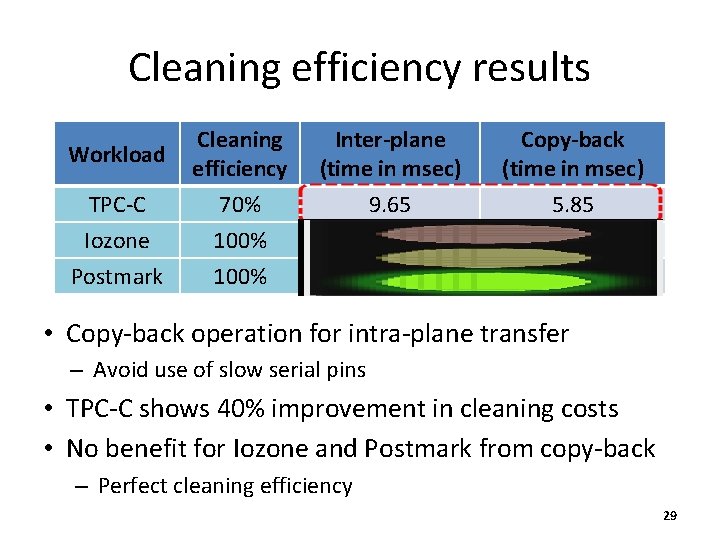

Cleaning efficiency results

| Workload | Cleaning efficiency | Inter-plane (time in msec) | Copy-back (time in msec) |
| --- | --- | --- | --- |
| TPC-C | 70% | 9.65 | 5.85 |
| IOzone | 100% | 1.5 | 1.5 |
| Postmark | 100% | 1.5 | 1.5 |

- Copy-back operation for intra-plane transfer
  - Avoid use of slow serial pins
- TPC-C shows 40% improvement in cleaning costs
- No benefit for IOzone and Postmark from copy-back
  - Perfect cleaning efficiency

Challenge 3: Finite number of block erase cycles

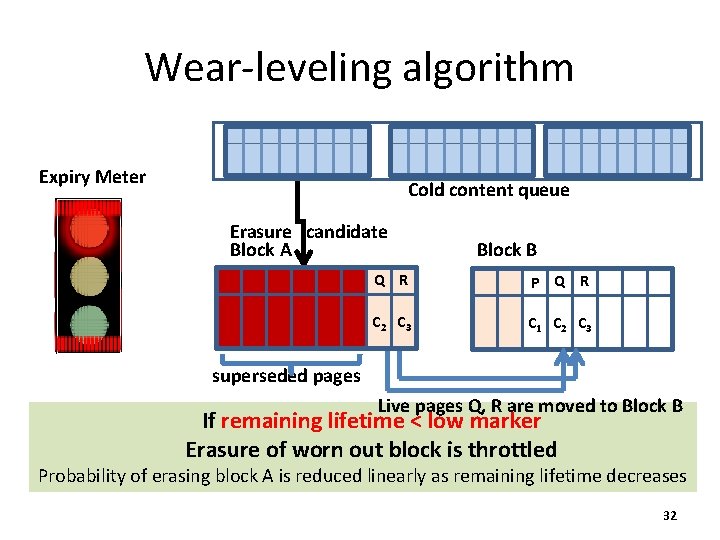

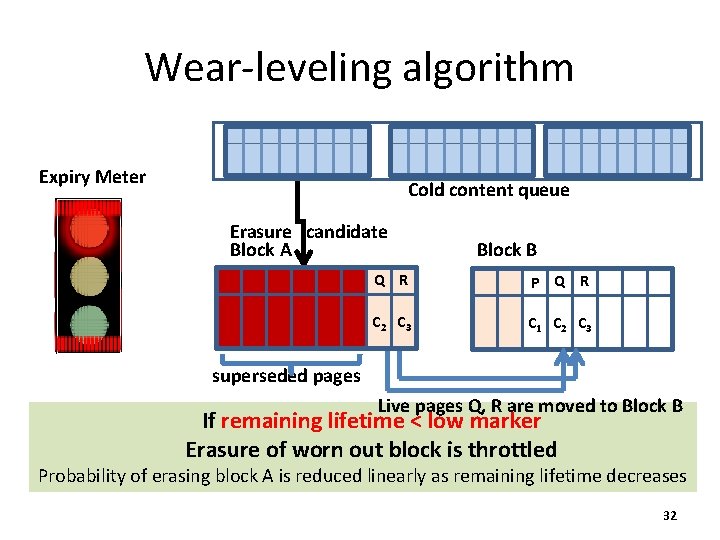

Wear-leveling

- Flash blocks erasable only a finite number of times (~100 K)
  - Need to ensure all blocks wear out evenly
- Greedy cleaning implies poor wear-leveling
  - Repeated cleaning of high-cleaning-efficiency blocks
- Modified algorithm using block remaining lifetime
  - Maintain high and low markers for avg. remaining lifetime
  - Maintain queue of target blocks with cold data

Wear-leveling algorithm

[Diagram: expiry meter, cold content queue, and flash blocks A and B. Block A is the erasure candidate and holds superseded pages; live pages Q, R are moved to Block B.]

- Initially blocks are far from expiry
  - Greedy cleaning picks block with highest cleaning efficiency
- If remaining lifetime < high marker
  - Erasure of worn out block is throttled
  - Probability of erasing block A is reduced linearly as block remaining lifetime decreases
- If remaining lifetime < low marker
  - In addition, migrate cold data into block after erasure
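The two markers can be read as a pair of checks applied to the block that greedy cleaning picked. The sketch below is one possible interpretation, not the authors' implementation: the function names, the marker values (0.8 and 0.4 of the average remaining lifetime), and the exact shape of the linear ramp are all assumptions.

```python
import random


def may_erase(remaining, avg_remaining, high_marker=0.8, rng=random.random):
    """Rate-limiting: decide whether the chosen block may be erased now.

    Above the high marker the block is always eligible. Below it, the
    probability of erasure falls linearly to 0 as remaining lifetime
    approaches 0 (marker value and ramp are assumptions).
    """
    threshold = high_marker * avg_remaining
    if remaining >= threshold:
        return True
    return rng() < remaining / threshold


def needs_cold_migration(remaining, avg_remaining, low_marker=0.4):
    """Below the low marker, cold data is migrated into the block after
    erasure so that it stops being rewritten (marker value is assumed)."""
    return remaining < low_marker * avg_remaining
```

Passing `rng` explicitly makes the probabilistic branch easy to exercise deterministically, for example `may_erase(40, 100, rng=lambda: 0.49)` with an erase probability of 0.5.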

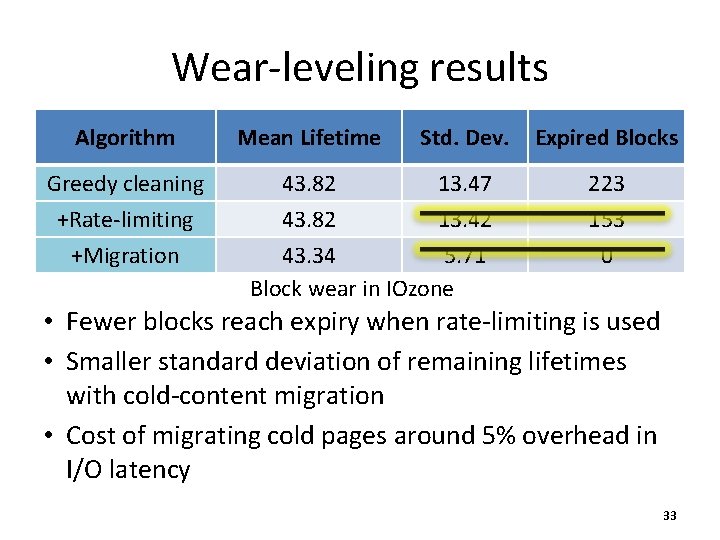

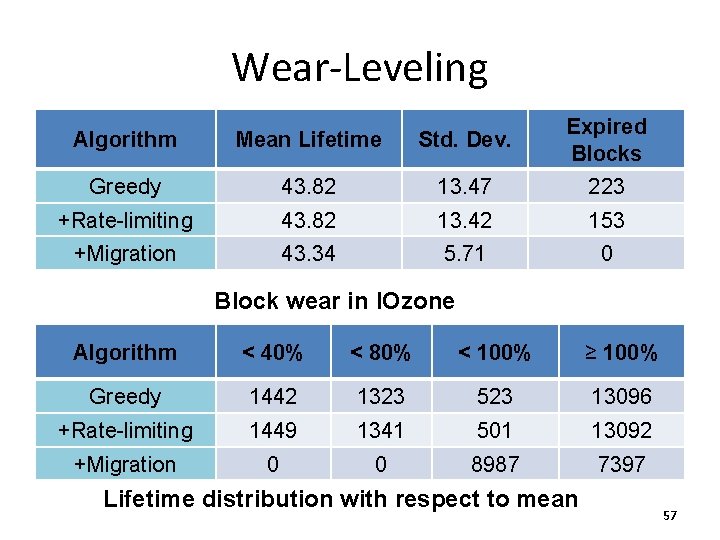

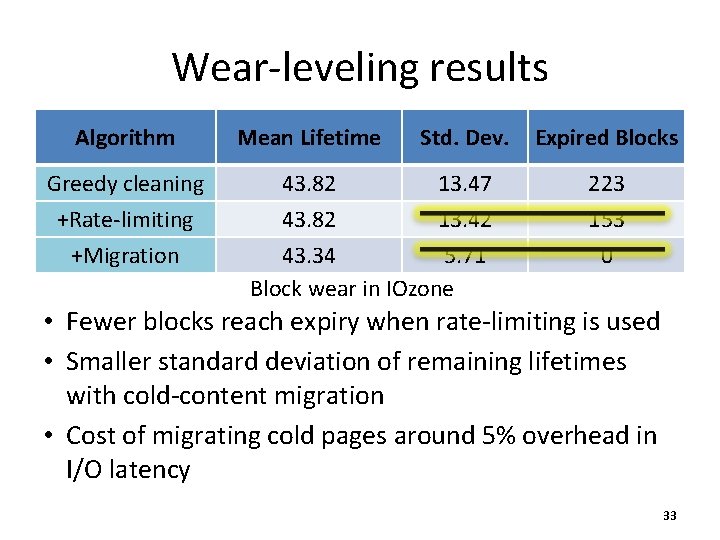

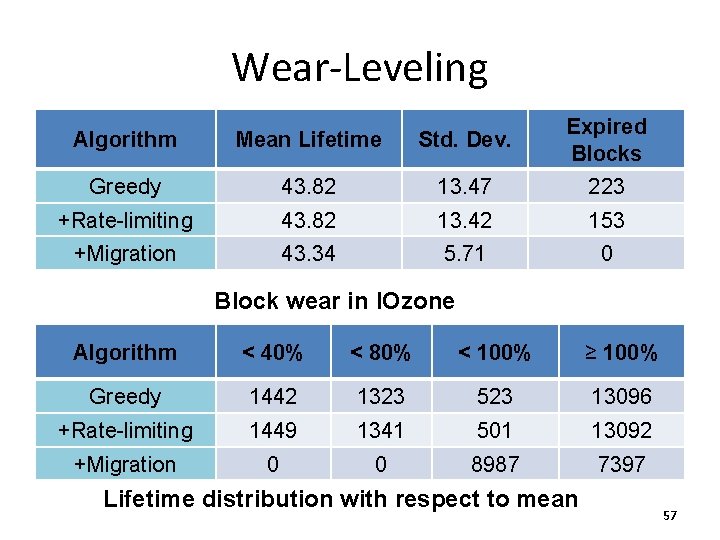

Wear-leveling results

Block wear in IOzone:

| Algorithm | Mean lifetime | Std. dev. | Expired blocks |
| --- | --- | --- | --- |
| Greedy cleaning | 43.82 | 13.47 | 223 |
| +Rate-limiting | 43.82 | 13.42 | 153 |
| +Migration | 43.34 | 5.71 | 0 |

- Fewer blocks reach expiry when rate-limiting is used
- Smaller standard deviation of remaining lifetimes with cold-content migration
- Cost of migrating cold pages around 5% overhead in I/O latency

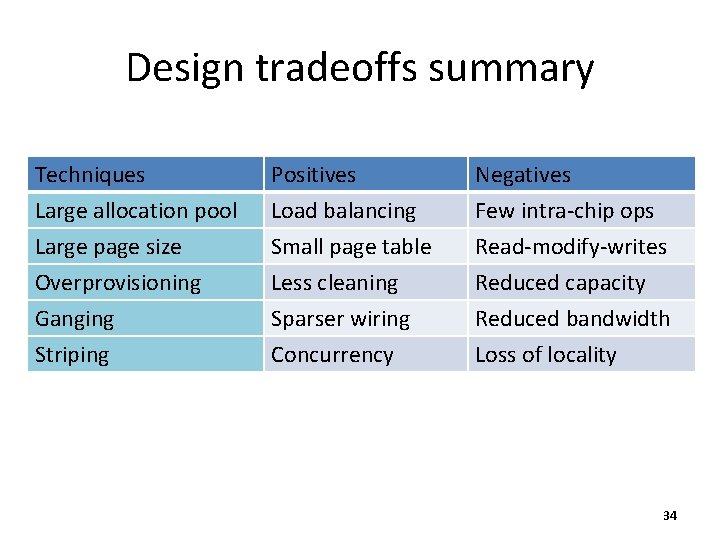

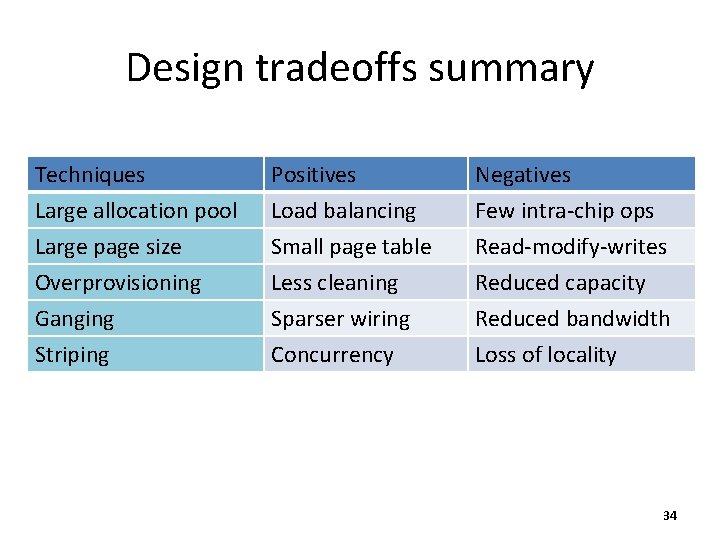

Design tradeoffs summary

| Techniques | Positives | Negatives |
| --- | --- | --- |
| Large allocation pool | Load balancing | Few intra-chip ops |
| Large page size | Small page table | Read-modify-writes |
| Overprovisioning | Less cleaning | Reduced capacity |
| Ganging | Sparser wiring | Reduced bandwidth |
| Striping | Concurrency | Loss of locality |

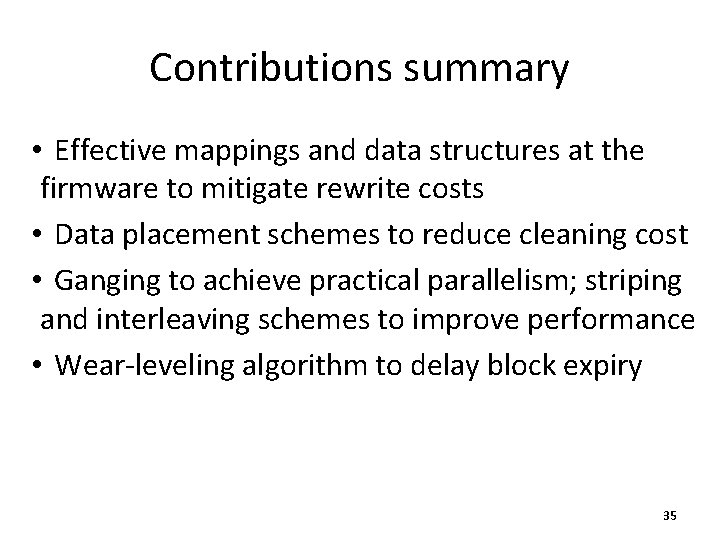

Contributions summary

- Effective mappings and data structures at the firmware to mitigate rewrite costs
- Data placement schemes to reduce cleaning cost
- Ganging to achieve practical parallelism; striping and interleaving schemes to improve performance
- Wear-leveling algorithm to delay block expiry

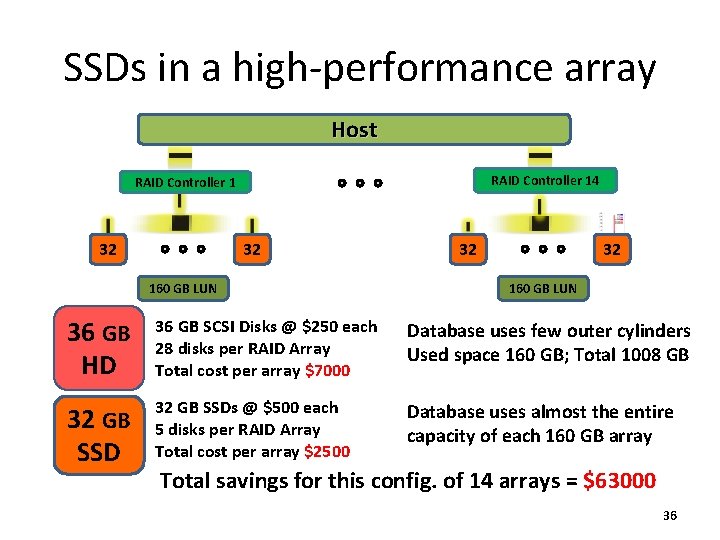

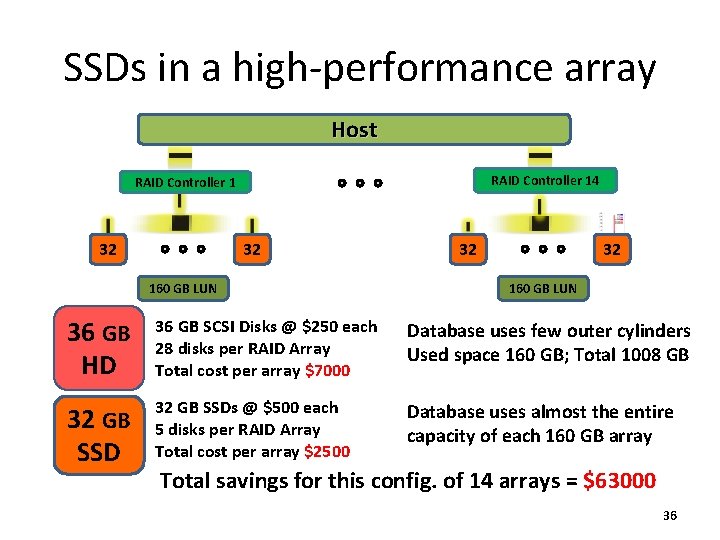

SSDs in a high-performance array

[Diagram: host connected to RAID controllers 1 to 14; each controller exposes a 1008 GB LUN when built from hard disks, or a 160 GB LUN when built from SSDs]

- Hard disks
  - 36 GB SCSI disks @ $250 each
  - 28 disks per RAID array
  - Total cost per array $7000
  - Database uses few outer cylinders
  - Used space 160 GB; total 1008 GB
- SSDs
  - 32 GB SSDs @ $500 each
  - 5 disks per RAID array
  - Total cost per array $2500
  - Database uses almost the entire capacity of each 160 GB array
- Total savings for this config. of 14 arrays = $63000
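The cost comparison on this slide is plain arithmetic and can be checked directly. The variable names are chosen for the example; all input figures come from the slide.

```python
ARRAYS = 14

# Hard-disk configuration: 28 SCSI disks of 36 GB at $250 each.
hdd_cost_per_array = 28 * 250
hdd_capacity_gb = 28 * 36

# SSD configuration: 5 SSDs of 32 GB at $500 each.
ssd_cost_per_array = 5 * 500
ssd_capacity_gb = 5 * 32

# Savings across all arrays when SSDs replace the hard disks.
total_savings = (hdd_cost_per_array - ssd_cost_per_array) * ARRAYS

print(hdd_cost_per_array, ssd_cost_per_array, total_savings)
```

The SSD array provides 160 GB, which matches the space the database actually uses on each 1008 GB hard-disk array.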

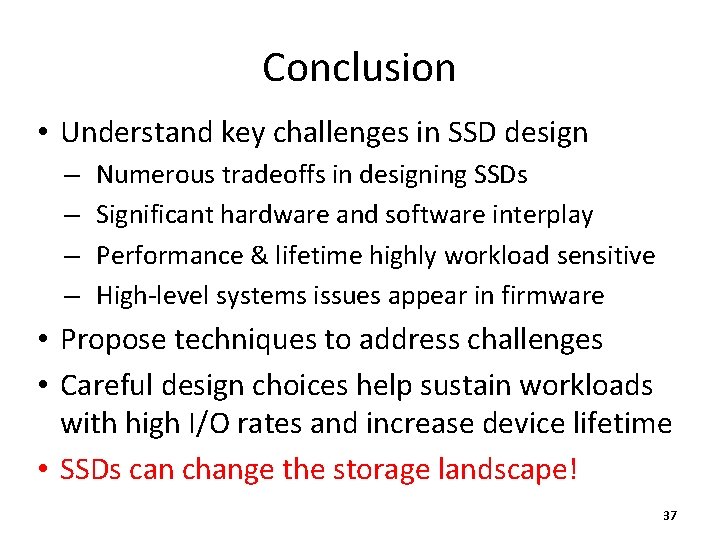

Conclusion

- Understand key challenges in SSD design
  - Numerous tradeoffs in designing SSDs
  - Significant hardware and software interplay
  - Performance and lifetime highly workload sensitive
  - High-level systems issues appear in firmware
- Propose techniques to address challenges
- Careful design choices help sustain workloads with high I/O rates and increase device lifetime
- SSDs can change the storage landscape!

Thanks!

Nitin Agrawal*, Vijayan Prabhakaran, Ted Wobber, John D. Davis, Mark Manasse, Rina Panigrahy

*University of Wisconsin-Madison; Microsoft Research, Silicon Valley

Backup Slides

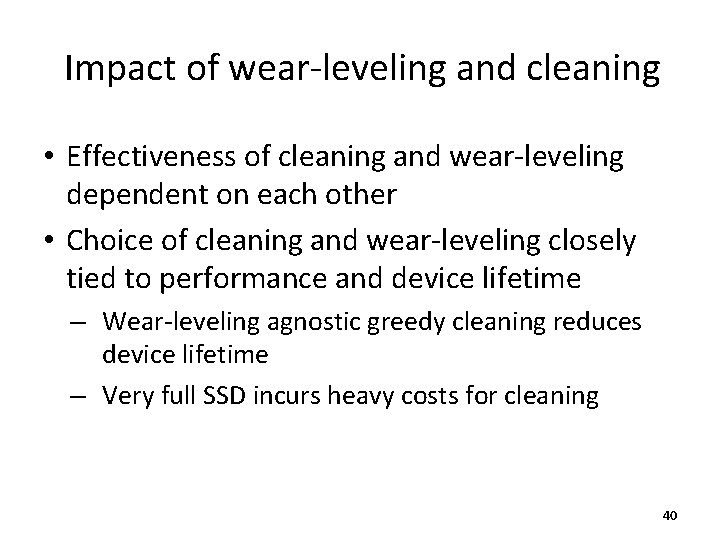

Impact of wear-leveling and cleaning

- Effectiveness of cleaning and wear-leveling dependent on each other
- Choice of cleaning and wear-leveling closely tied to performance and device lifetime
  - Wear-leveling agnostic greedy cleaning reduces device lifetime
  - Very full SSD incurs heavy costs for cleaning

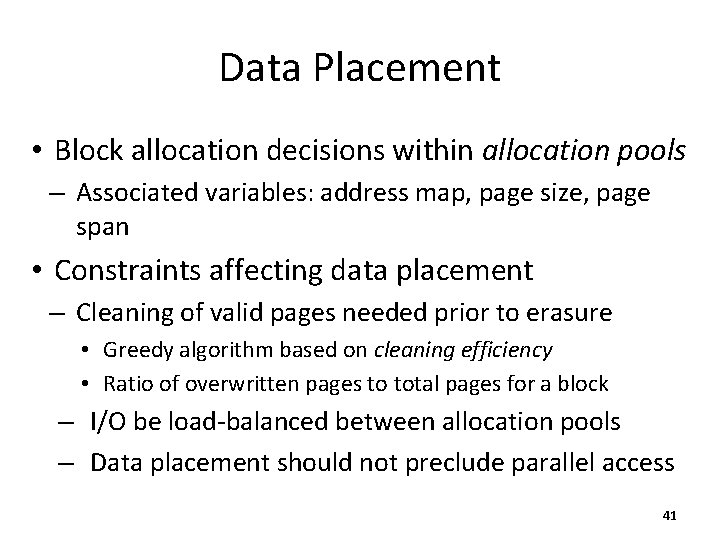

Data Placement

- Block allocation decisions within allocation pools
  - Associated variables: address map, page size, page span
- Constraints affecting data placement
  - Cleaning of valid pages needed prior to erasure
    - Greedy algorithm based on cleaning efficiency
    - Cleaning efficiency: ratio of overwritten pages to total pages for a block
  - I/O should be load-balanced between allocation pools
  - Data placement should not preclude parallel access

Example Mapping Tradeoffs

- Static mapping of LBAs
  - Simple, but little scope for load-balancing
- Contiguous LBAs mapped to same die
  - Performance of sequential access in large chunks suffers

Data structures at the SSD controller

- Use the flash disk as a log [Birrell 07]
  - Writes performed sequentially whenever possible
- Maintain logical to physical address mappings
  - Per-page granularity to optimize block reuse
  - Avoid high read-modify-write costs
- Reserve pool of erased blocks
  - Sustain large sequential transfers/bad blocks
- Last page of block used as persistent store
  - Rapidly reconstructed on power up

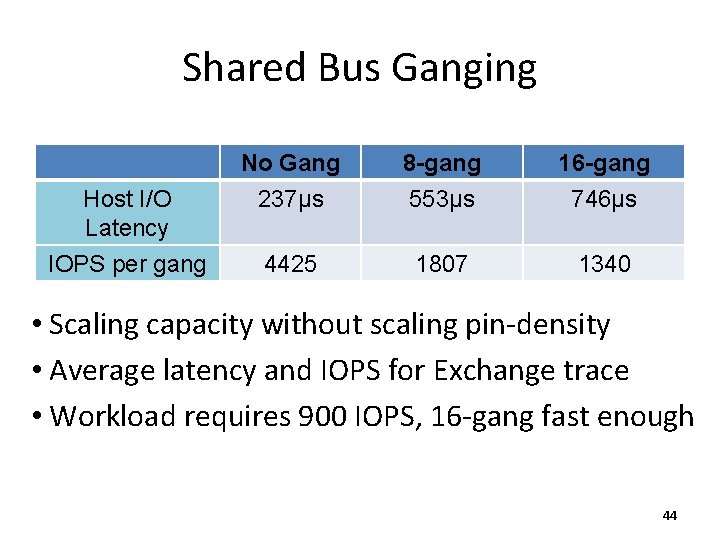

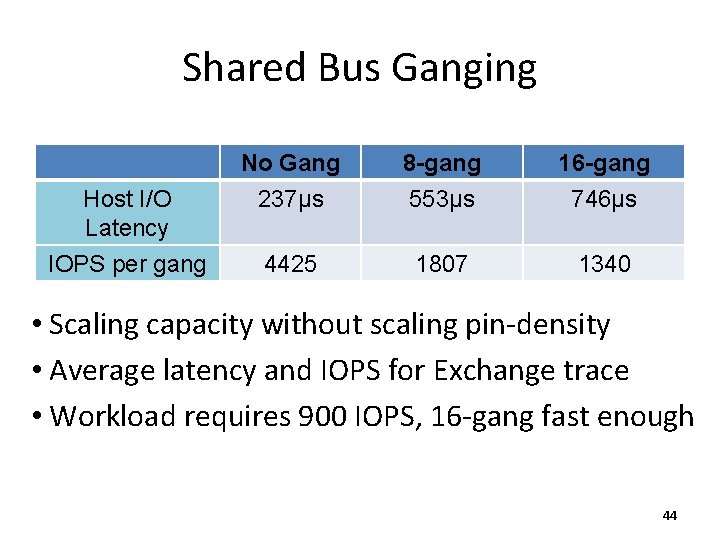

Shared Bus Ganging

| Configuration | Host I/O latency | IOPS per gang |
| --- | --- | --- |
| No gang | 237 μs | 4425 |
| 8-gang | 553 μs | 1807 |
| 16-gang | 746 μs | 1340 |

- Scaling capacity without scaling pin-density
- Average latency and IOPS for Exchange trace
- Workload requires 900 IOPS, 16-gang fast enough

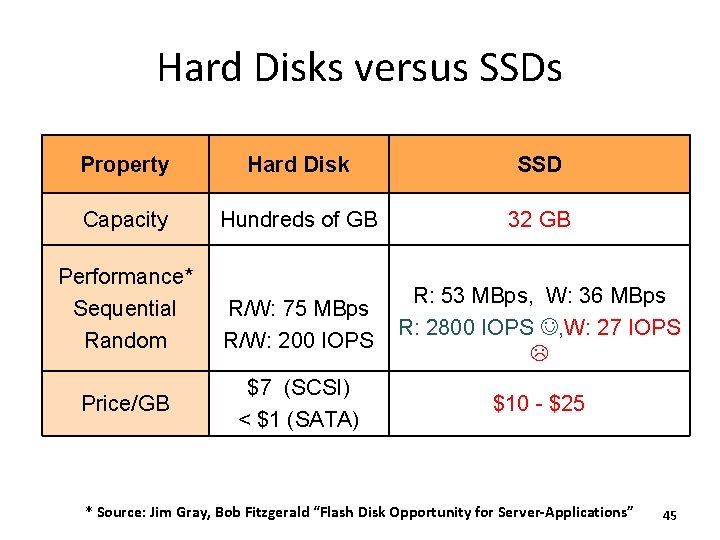

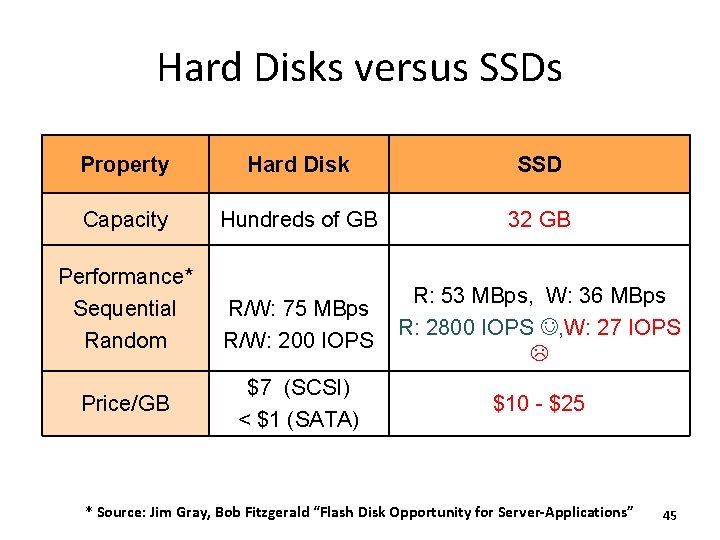

Hard Disks versus SSDs

| Property | Hard Disk | SSD |
| --- | --- | --- |
| Capacity | Hundreds of GB | 32 GB |
| Performance*, sequential | R/W: 75 MBps | R: 53 MBps, W: 36 MBps |
| Performance*, random | R/W: 200 IOPS | R: 2800 IOPS, W: 27 IOPS |
| Price/GB | $7 (SCSI), < $1 (SATA) | $10 - $25 |

* Source: Jim Gray, Bob Fitzgerald, "Flash Disk Opportunity for Server-Applications"

Workloads

- TPC-C
  - 30-min trace from configuration of 16000 warehouses
  - 14 RAID fibre-channel controllers, each with 28 disks of 36 GB
  - 6.8 million events: requests for multiples of 8 KB blocks
  - 2:1 read-to-write ratio
  - Benchmark uses only 160 GB per controller out of 1 TB
  - Trace misalignment (LBA mod 8 = 7) fixed by post-processing
- Microsoft Exchange Server
  - 15-min trace from server running Microsoft Exchange
  - Specialized database workload with 3:2 read-to-write ratio
  - 65 K events from one controller, requests to 250 GB capacity

Shared Control Ganging

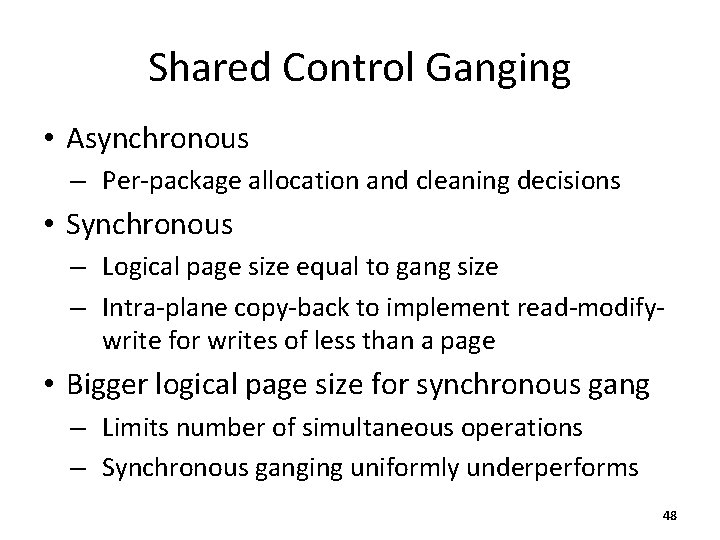

Shared Control Ganging

- Asynchronous
  - Per-package allocation and cleaning decisions
- Synchronous
  - Logical page size equal to gang size
  - Intra-plane copy-back to implement read-modify-write for writes of less than a page
- Bigger logical page size for synchronous gang
  - Limits number of simultaneous operations
  - Synchronous ganging uniformly underperforms

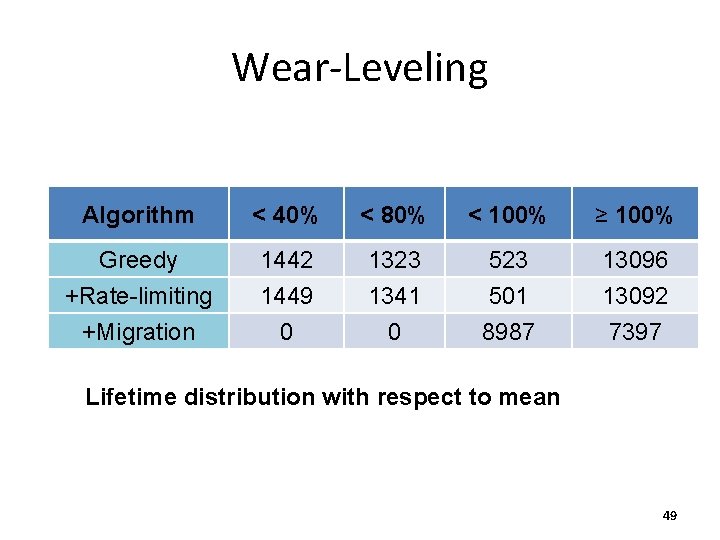

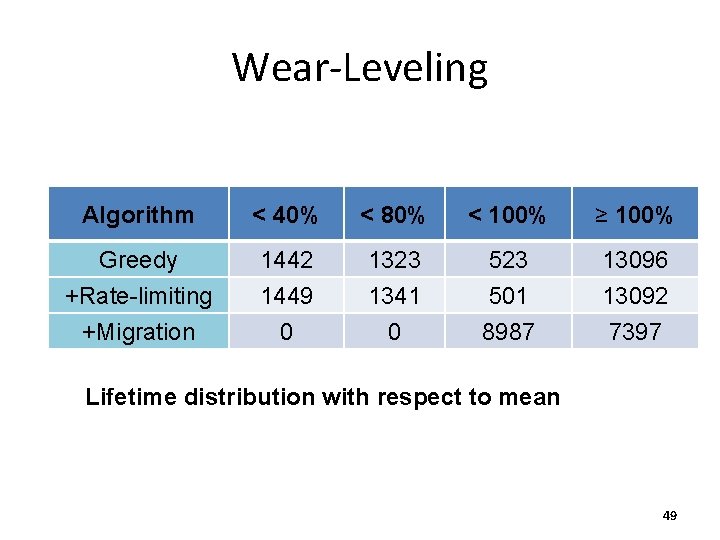

Wear-Leveling

Lifetime distribution with respect to mean:

| Algorithm | < 40% | < 80% | < 100% | ≥ 100% |
| --- | --- | --- | --- | --- |
| Greedy | 1442 | 1323 | 523 | 13096 |
| +Rate-limiting | 1449 | 1341 | 501 | 13092 |
| +Migration | 0 | 0 | 8987 | 7397 |

Summary of Findings

- This slide will contain specific summary of findings and results
- Significant hardware and software interplay
  - Must cooperate to meet performance goals of workload
  - Design and evaluate several data-placement schemes
  - Algorithms for cleaning and wear-leveling
- Hardware
  - Interface and package organization dictate theoretical performance bounds
- Software
  - Properties of allocation pool, load balancing, data placement, block management (wear-leveling and cleaning)
  - Workload characteristics
  - All designs benefit from plane interleaving and overprovisioning

Example Parallelism Tradeoffs

- SSD capacity scaling
  - Full connectivity between controller and flash packages difficult
- No one choice optimal for all workloads
  - Highly sequential
    - Benefit from ganging
  - Inherent parallelism in workload
    - Benefit from deeply parallel request queuing structure
  - Poor cleaning efficiency (no locality)
    - Cleaning strategy must be compatible with foreground load

I/O Workload

TPC-C traces obtained from Server Performance group

System configuration

- 14 array controllers
  - 28 15K RPM 36 GB disks in RAID-0 LUN, outermost cylinders used for the database
- TPC-C configuration
  - 16,000 warehouses, 300 users, 1.5 TB total space

Properties

- Use few tracks per disk to get high performance; most space goes to waste
- Reads and writes are uniformly spread across and within LUNs
- Read/write ratio: 4.1 M reads and 2.4 M writes per LUN during half-hour
- In total about 57 M reads and 34 M writes across all LUNs: 91 M I/Os

Design: Controller Data Structures

Details in the OSR paper: Birrell et al., April 2007

- Use the flash disk as a log
- Maintain logical to physical address mappings at the page granularity to optimize block reuse
- Reserve pool of erased blocks to sustain large sequential transfers/bad blocks
- Last page of each block used as persistent store for volatile data structures
- Data structures can be rapidly reconstructed on power up

Impact of Cleaning

- At 2.4 M writes per LUN, the simple block read-modify-write strategy gives
  - 2.4 M / (5*16) = 30 K writes/die = 3.66 writes/block
  - Around 7.3 writes/block-hr = 64 K writes/block-year
  - This gives a little over a year's lifetime, as chips are rated ~100 K writes/block
  - Choice of cleaning algorithm can improve this by 3-5 times
- A very full array will incur heavy costs for cleaning
  - Since a disk block is never freed, a volume is always full
  - If an array is 90% full, 90% of writes will be for cleaning for a truly random workload, if we do simple read-modify-writes
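The lifetime estimate on this slide can be retraced step by step. The slide does not explain the factor 5*16; the sketch below reads it as 5 SSDs per LUN with 16 dies per 32 GB SSD, and takes 8192 blocks per die from the 2 GB die and 256 KB block sizes quoted earlier in the deck. Both readings are assumptions, although they do reproduce the slide's 3.66 writes/block.

```python
writes_per_lun = 2.4e6        # writes per LUN in the half-hour trace
ssds_per_lun = 5              # assumption: the "5" in 5*16
dies_per_ssd = 16             # assumption: 32 GB SSD / 2 GB die
blocks_per_die = (2 * 1024**3) // (256 * 1024)   # 2 GB die, 256 KB blocks
rated_cycles = 100_000        # rated ~100 K writes (erase cycles) per block

writes_per_die = writes_per_lun / (ssds_per_lun * dies_per_ssd)
writes_per_block = writes_per_die / blocks_per_die

# The trace covers half an hour, so double it to get an hourly rate.
writes_per_block_hour = writes_per_block * 2
writes_per_block_year = writes_per_block_hour * 24 * 365

lifetime_years = rated_cycles / writes_per_block_year

print(f"{writes_per_die:.0f} writes/die, {writes_per_block:.2f} writes/block")
print(f"{writes_per_block_hour:.1f} writes/block-hr, "
      f"{writes_per_block_year:.0f} writes/block-year")
print(f"estimated lifetime: {lifetime_years:.2f} years")
```

The estimate assumes writes are spread evenly over all blocks and that each write costs one block erase, which is what the simple block read-modify-write strategy implies.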

Design: Controller Data Structures

- Maintain logical-physical mapping at page granularity
  - Avoid high RMW costs
- Reserve pool of erased blocks
  - Sustain large sequential transfers/bad blocks
- Maintain current active page (pointer for next write)
- Last page of each block used as persistent store for volatile data structures
- Power up sequence to reconstruct data structures
- Write chunk size coincides with twice the page size

Interleaving is Great

- Serial interface is primary bottleneck
  - Transferring a page from on-chip register to off-chip controller is 4X slower than reading the page from NAND cells (100 μs vs. 25 μs)
  - 32 MB/sec for a single flash package
  - 40 MB/sec with interleaving within die
- Writes at 13 MB/sec without, and 26 MB/sec with interleaving
- Greater degree of interleaving possible
  - Two die operations and one serial transfer can all proceed in parallel
- Considerable speedups when operation latency is greater than serial access latency
  - Example: erase operation
- Use choreographed interleaving using the serial pins, or if available, use the intra-plane copy-back operation
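The bandwidth figures above follow roughly from the chip timings (25 μs page read, 200 μs page program, 100 μs serial transfer of a 4 KB page). The sketch below is a back-of-the-envelope model, not the simulator's: the interleaved cases assume the serial transfer of one page overlaps with the array operation of another, and the write case assumes two pages complete per transfer-plus-program period. It uses a 4096-byte page and MB = 10^6 bytes, so results land near the slide's rounded numbers rather than exactly on them.

```python
PAGE_BYTES = 4096
T_READ = 25e-6      # page read from NAND cells into the register
T_PROGRAM = 200e-6  # page program from the register into NAND cells
T_SERIAL = 100e-6   # page transfer over the serial pins


def mb_per_sec(num_bytes, seconds):
    return num_bytes / seconds / 1e6


# No interleaving: every page pays for both the array op and the transfer.
read_plain = mb_per_sec(PAGE_BYTES, T_READ + T_SERIAL)
write_plain = mb_per_sec(PAGE_BYTES, T_SERIAL + T_PROGRAM)

# Interleaved reads: the next page is read while the current one is being
# transferred, so the slower stage (the serial bus) sets the pace.
read_interleaved = mb_per_sec(PAGE_BYTES, max(T_READ, T_SERIAL))

# Interleaved writes (assumed two-way): one page is transferred while the
# other is being programmed, so two pages finish every T_SERIAL + T_PROGRAM.
write_interleaved = mb_per_sec(2 * PAGE_BYTES, T_SERIAL + T_PROGRAM)

for name, value in [("read", read_plain),
                    ("read, interleaved", read_interleaved),
                    ("write", write_plain),
                    ("write, interleaved", write_interleaved)]:
    print(f"{name}: {value:.1f} MB/sec")
```

In this model interleaving doubles write throughput, while reads gain less because the serial transfer already dominates each read.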

Wear-Leveling

Block wear in IOzone:

| Algorithm | Mean lifetime | Std. dev. | Expired blocks |
| --- | --- | --- | --- |
| Greedy | 43.82 | 13.47 | 223 |
| +Rate-limiting | 43.82 | 13.42 | 153 |
| +Migration | 43.34 | 5.71 | 0 |

Lifetime distribution with respect to mean:

| Algorithm | < 40% | < 80% | < 100% | ≥ 100% |
| --- | --- | --- | --- | --- |
| Greedy | 1442 | 1323 | 523 | 13096 |
| +Rate-limiting | 1449 | 1341 | 501 | 13092 |
| +Migration | 0 | 0 | 8987 | 7397 |