DEEP REINFORCEMENT LEARNING ON THE ROAD TO SKYNET

- Slides: 24

UW CSE Deep Learning – Felix Leeb

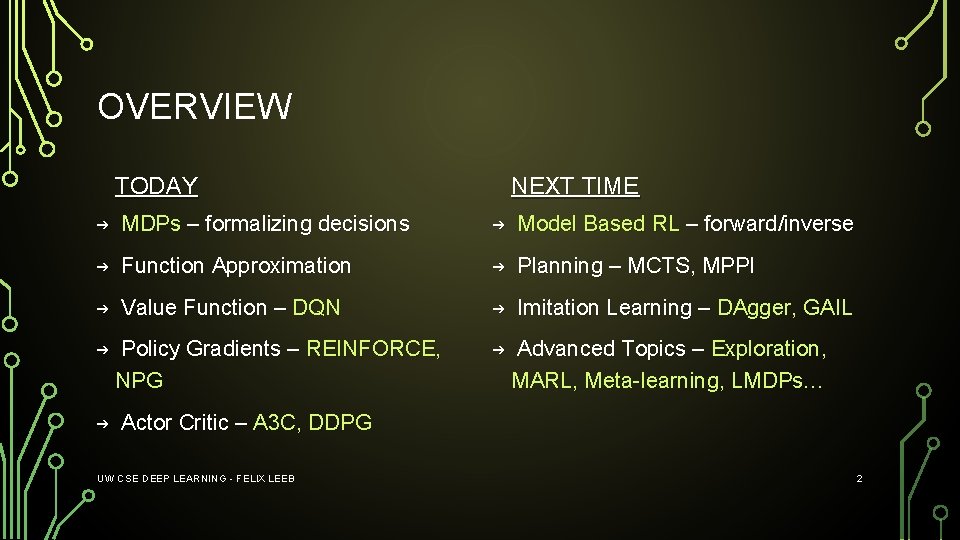

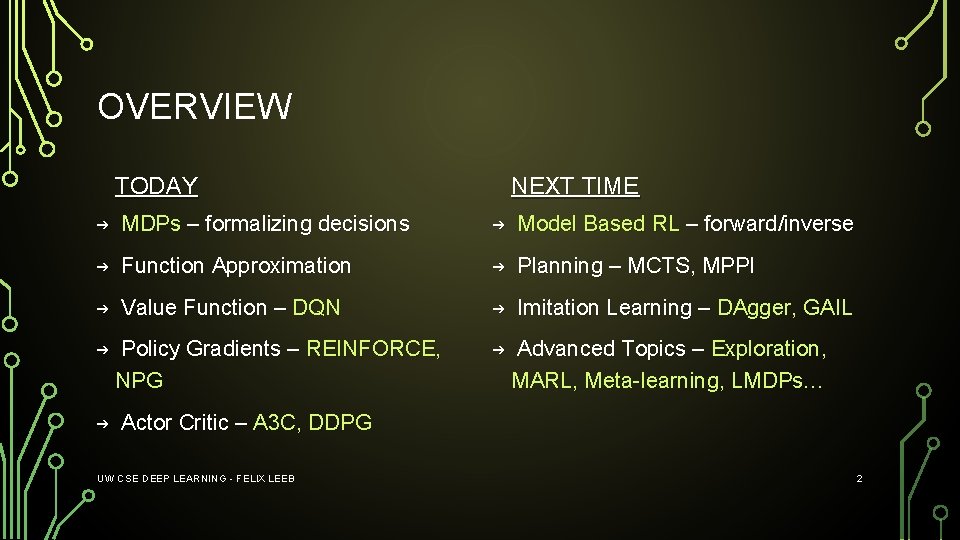

OVERVIEW
TODAY
→ MDPs – formalizing decisions
→ Function Approximation
→ Value Function – DQN
→ Policy Gradients – REINFORCE, NPG
→ Actor Critic – A3C, DDPG
NEXT TIME
→ Model Based RL – forward/inverse
→ Planning – MCTS, MPPI
→ Imitation Learning – DAgger, GAIL
→ Advanced Topics – Exploration, MARL, Meta-learning, LMDPs…

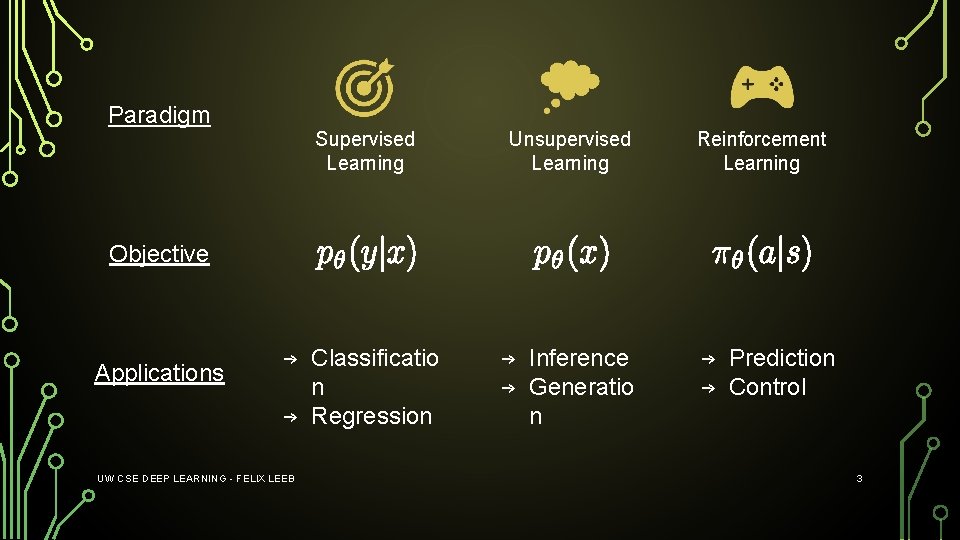

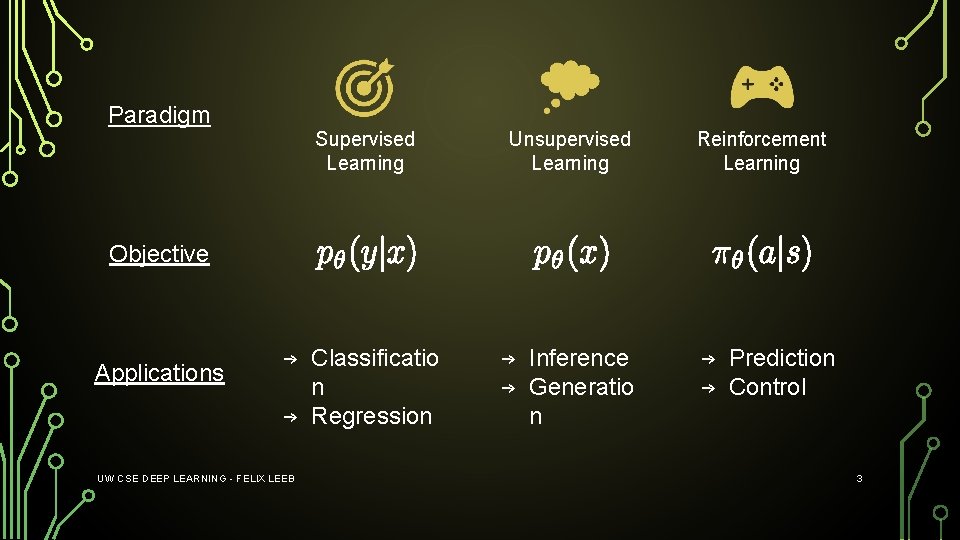

| Paradigm | Supervised Learning | Unsupervised Learning | Reinforcement Learning |
| --- | --- | --- | --- |
| Objective | | | |
| Applications | Classification, Regression | Inference, Generation | Prediction, Control |

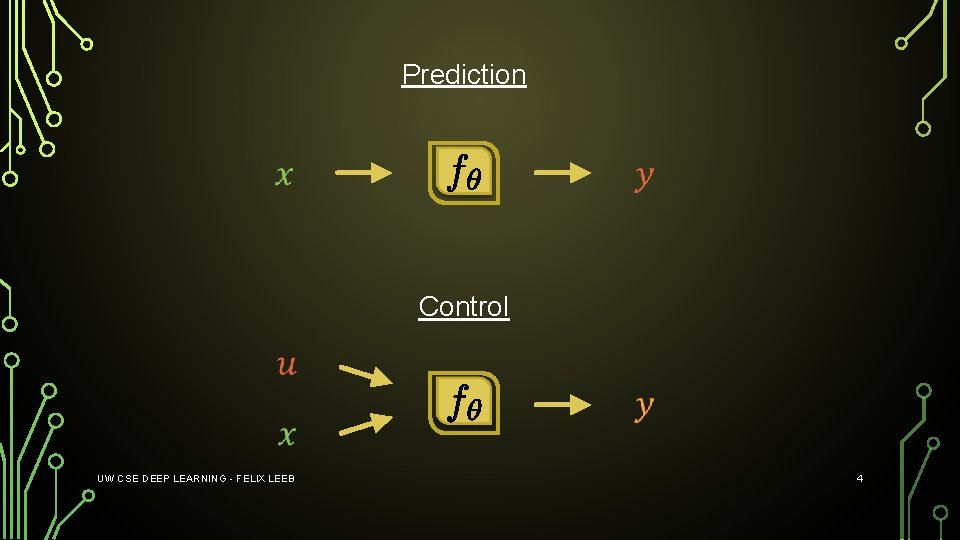

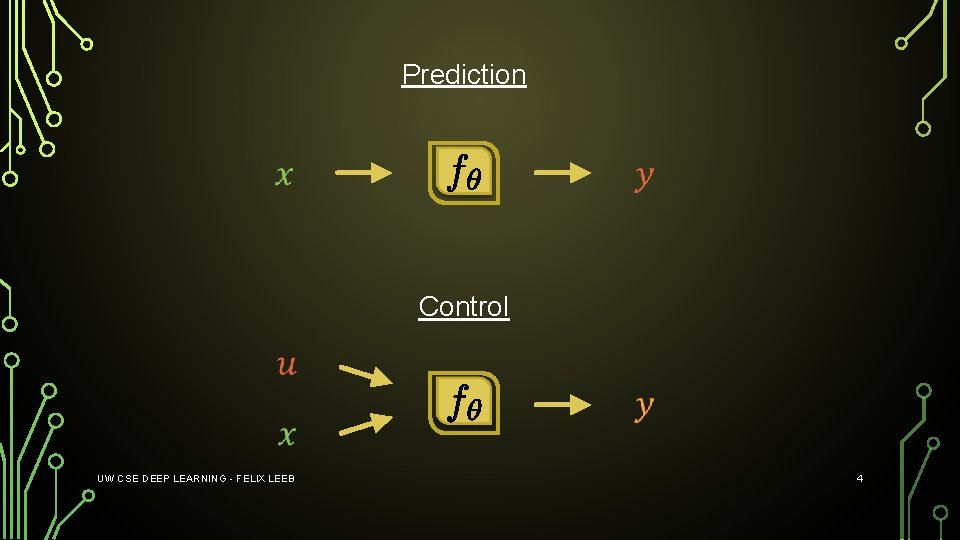

Prediction, Control

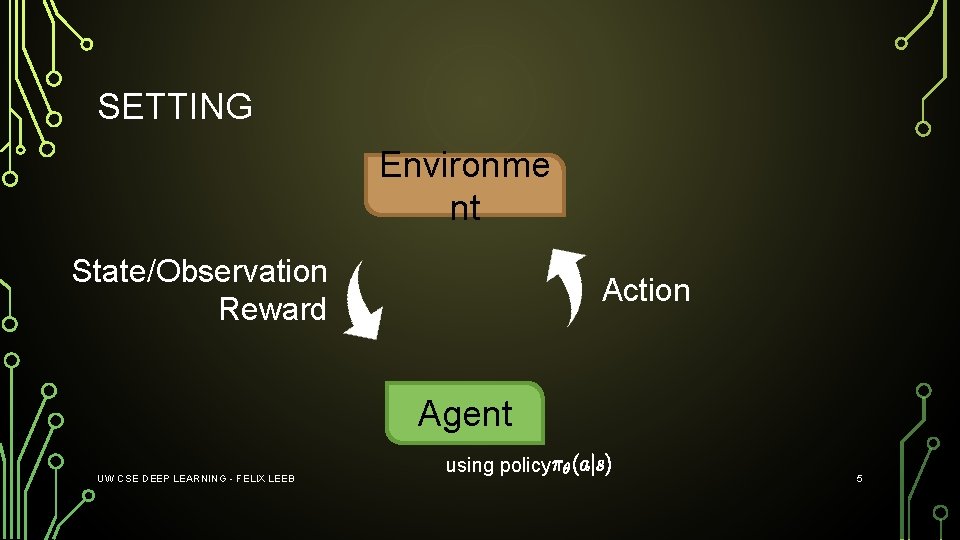

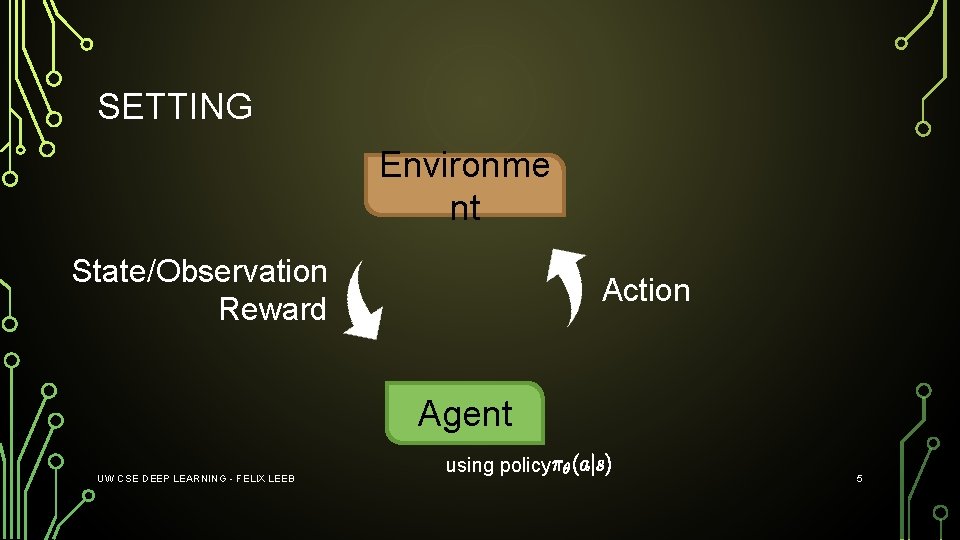

SETTING
→ The Environment sends a State/Observation and a Reward to the Agent
→ The Agent, using its policy, sends an Action back to the Environment

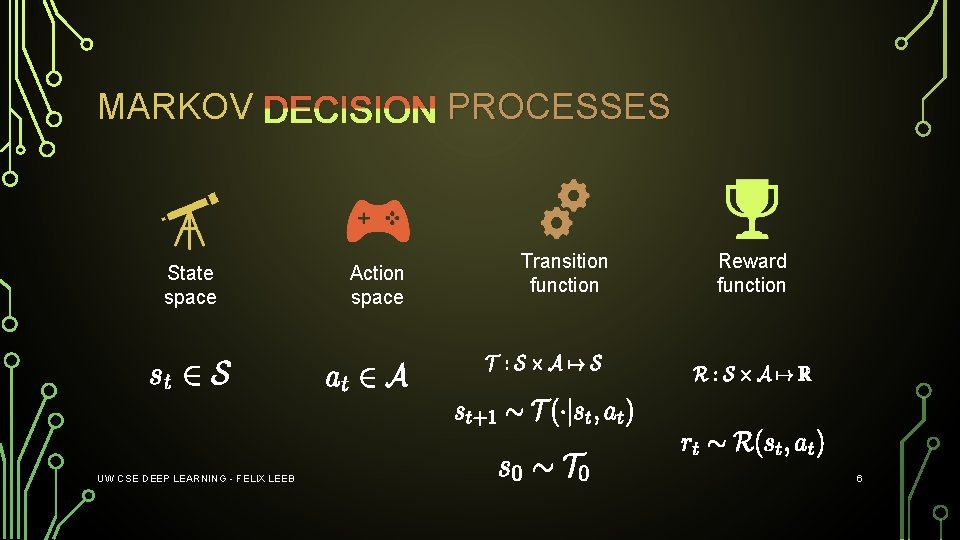

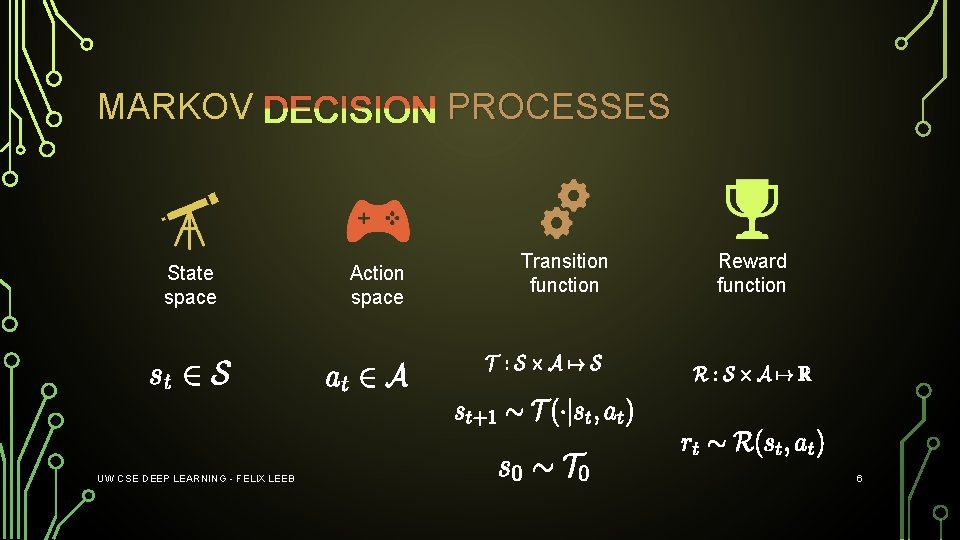

MARKOV DECISION PROCESSES
→ State space
→ Action space
→ Transition function
→ Reward function

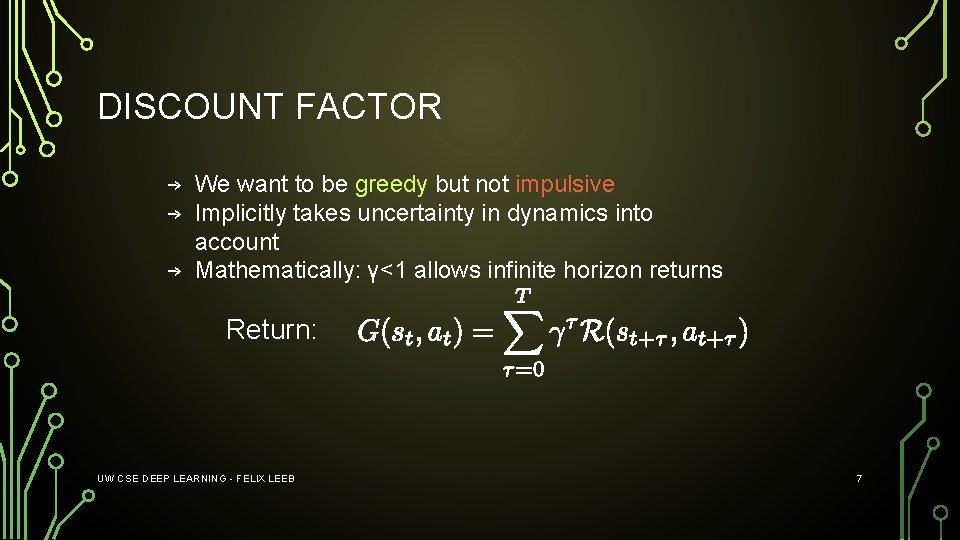

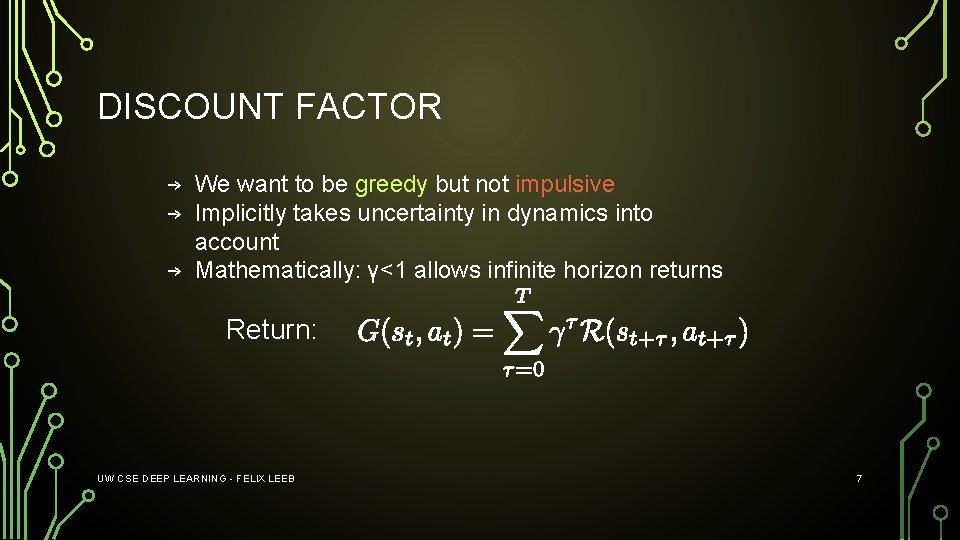

DISCOUNT FACTOR
→ We want to be greedy but not impulsive
→ Implicitly takes uncertainty in dynamics into account
→ Mathematically: γ < 1 allows infinite horizon returns
Return:
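As a minimal sketch of the discounted return, assuming the standard definition (the sum of rewards weighted by increasing powers of γ), the function below accumulates rewards from the end of a trajectory backwards. The name `discounted_return` and the example reward sequences are illustrative assumptions, not taken from the slides.

```python
def discounted_return(rewards, gamma):
    """Compute sum_k gamma**k * rewards[k] for a finite reward sequence."""
    g = 0.0
    # Work backwards so each step is just: g = r + gamma * g
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# Three rewards of 1 with gamma = 0.5: 1 + 0.5 + 0.25
short_return = discounted_return([1.0, 1.0, 1.0], 0.5)

# With gamma < 1 a long stream of constant reward stays bounded:
# it approaches 1 / (1 - gamma) = 10 for gamma = 0.9.
long_return = discounted_return([1.0] * 1000, 0.9)
```

The second call illustrates the "infinite horizon" point: with γ < 1 the return converges instead of growing without bound.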

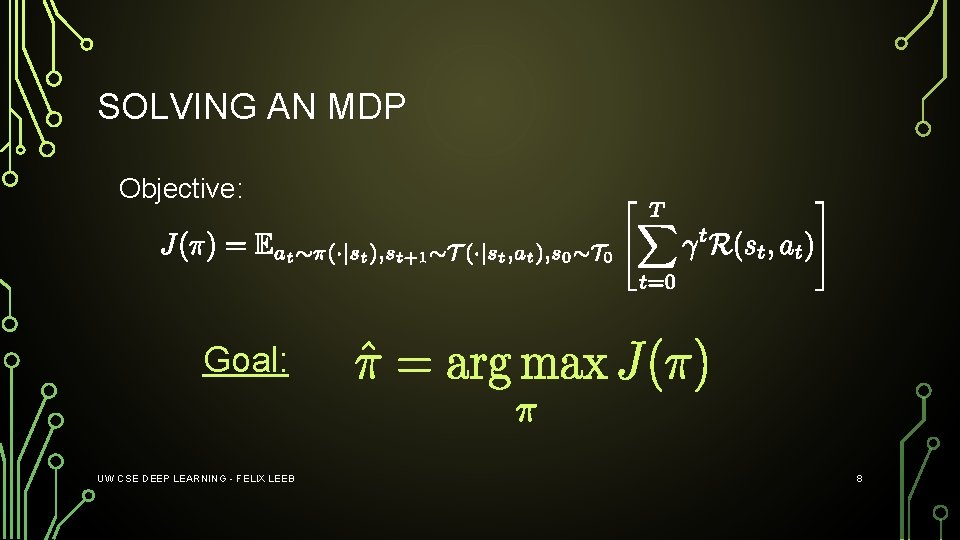

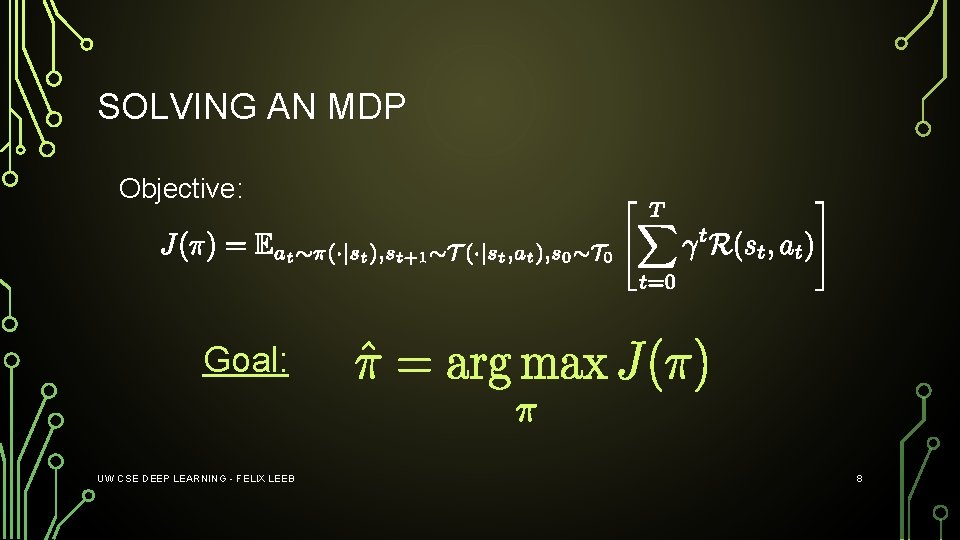

SOLVING AN MDP
Objective:
Goal:

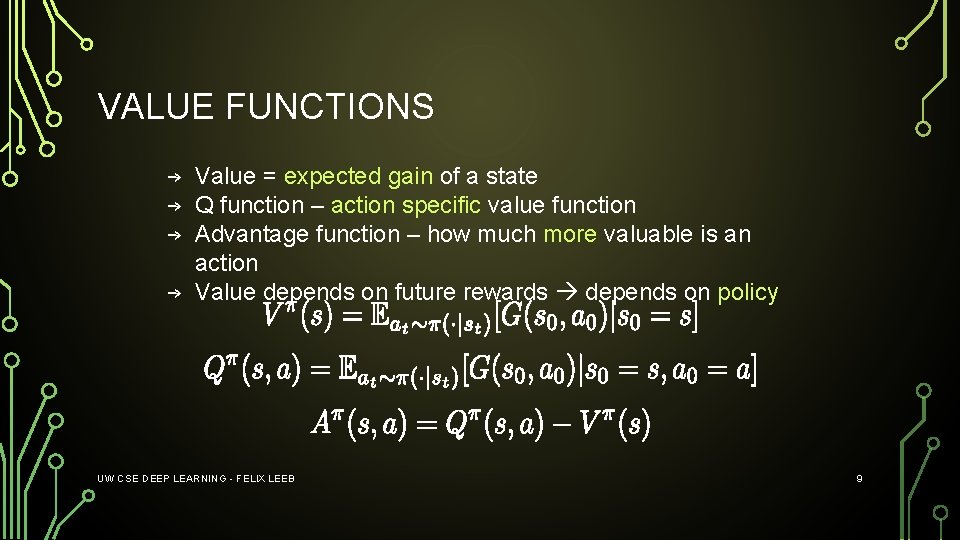

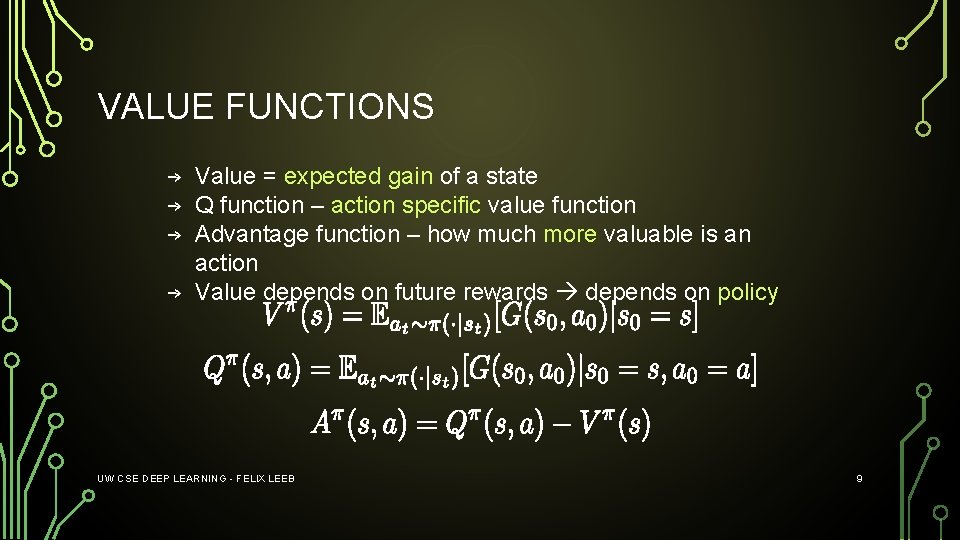

VALUE FUNCTIONS
→ Value = expected gain of a state
→ Q function – action-specific value function
→ Advantage function – how much more valuable an action is than the state's value
→ Value depends on future rewards, which in turn depend on the policy
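For reference, the three quantities can be written in the usual textbook form. The notation here (π for the policy, γ for the discount factor, r for rewards) is an assumption and may differ from the symbols used on the original slide.

```latex
\begin{aligned}
V^{\pi}(s)   &= \mathbb{E}_{\pi}\!\left[\sum_{k=0}^{\infty} \gamma^{k} r_{t+k} \,\middle|\, s_t = s\right] \\
Q^{\pi}(s,a) &= \mathbb{E}_{\pi}\!\left[\sum_{k=0}^{\infty} \gamma^{k} r_{t+k} \,\middle|\, s_t = s,\ a_t = a\right] \\
A^{\pi}(s,a) &= Q^{\pi}(s,a) - V^{\pi}(s)
\end{aligned}
```

All three carry the superscript π because the expectation is taken over trajectories generated by that policy.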

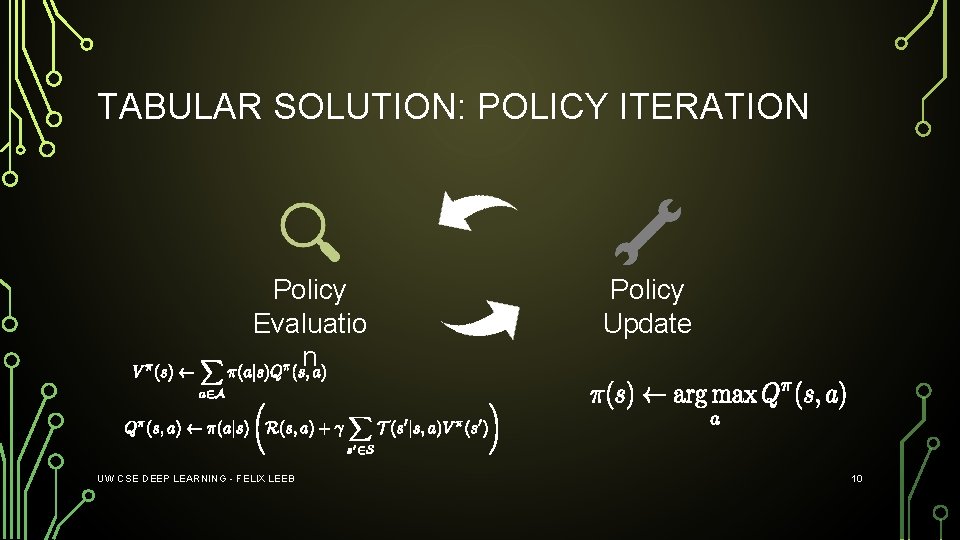

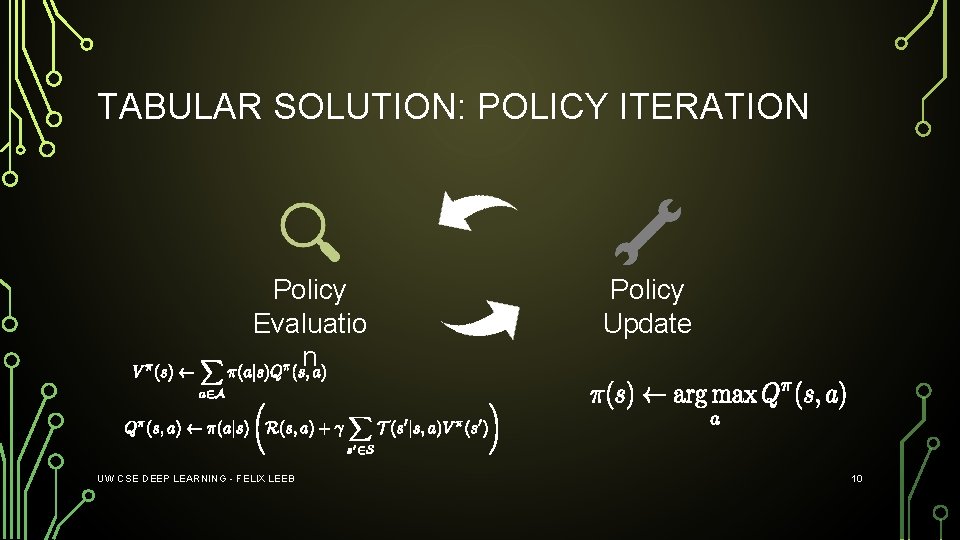

TABULAR SOLUTION: POLICY ITERATION
→ Policy Evaluation
→ Policy Update
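The alternation between policy evaluation and policy update can be sketched as follows. This is an illustrative implementation under assumptions: the two-state `TOY_MDP`, the function names, and the `(probability, next_state, reward)` encoding are all hypothetical and not taken from the slides.

```python
# Hypothetical toy MDP: TOY_MDP[state][action] is a list of
# (probability, next_state, reward) outcomes.
TOY_MDP = [
    [[(1.0, 0, 0.0)], [(1.0, 1, 1.0)]],  # state 0: stay (r=0) or move to 1 (r=1)
    [[(1.0, 1, 2.0)], [(1.0, 0, 0.0)]],  # state 1: stay (r=2) or move to 0 (r=0)
]


def action_value(P, V, s, a, gamma):
    """Expected one-step reward plus discounted value of the next state."""
    return sum(p * (r + gamma * V[s2]) for p, s2, r in P[s][a])


def policy_evaluation(P, policy, gamma, tol=1e-8):
    """Iterate the Bellman expectation backup until values stop changing."""
    V = [0.0] * len(P)
    while True:
        delta = 0.0
        for s in range(len(P)):
            v = action_value(P, V, s, policy[s], gamma)
            delta = max(delta, abs(v - V[s]))
            V[s] = v
        if delta < tol:
            return V


def policy_update(P, V, gamma):
    """Make the policy greedy with respect to the current value estimate."""
    return [
        max(range(len(P[s])), key=lambda a: action_value(P, V, s, a, gamma))
        for s in range(len(P))
    ]


def policy_iteration(P, gamma=0.9):
    policy = [0] * len(P)
    while True:
        V = policy_evaluation(P, policy, gamma)
        new_policy = policy_update(P, V, gamma)
        if new_policy == policy:  # stable policy: stop
            return policy, V
        policy = new_policy


best_policy, best_values = policy_iteration(TOY_MDP)
```

On this toy problem the loop stops once the greedy policy no longer changes: move to state 1 from state 0, then stay there.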

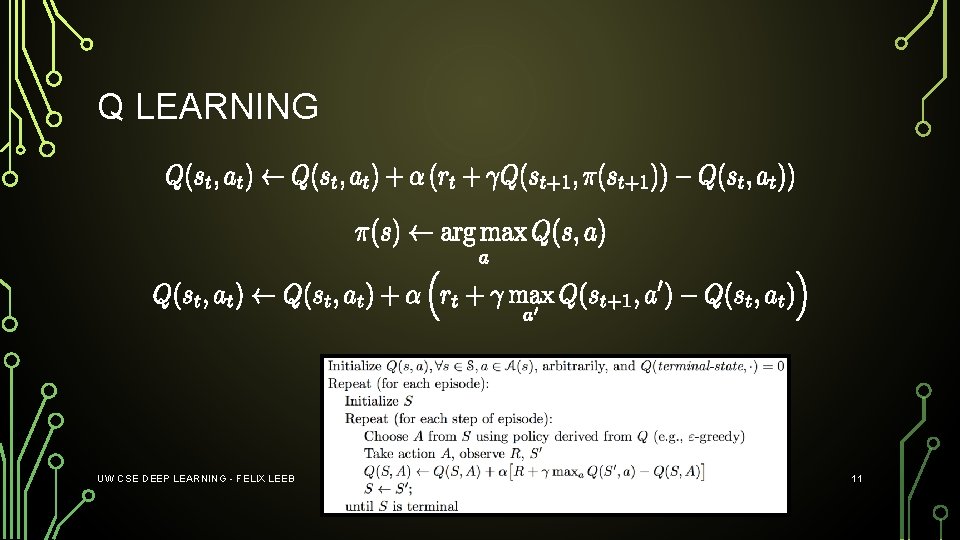

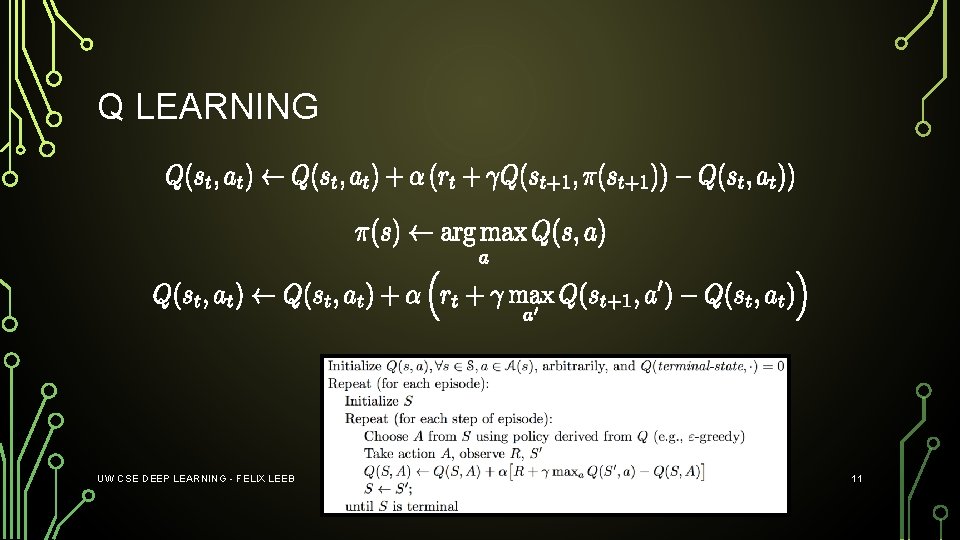

Q LEARNING
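A minimal tabular Q-learning sketch, assuming the standard update (move Q(s, a) toward r + γ max Q(s', ·)) with ε-greedy exploration. The two-state `STEP` table, the hyperparameters, and the function name are hypothetical choices made for illustration.

```python
import random

# Hypothetical deterministic toy MDP: STEP[state][action] -> (next_state, reward)
STEP = {
    0: {0: (0, 0.0), 1: (1, 1.0)},
    1: {0: (1, 2.0), 1: (0, 0.0)},
}


def q_learning(steps=20000, gamma=0.9, alpha=0.1, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0], [0.0, 0.0]]  # Q[state][action]
    s = 0
    for _ in range(steps):
        # Epsilon-greedy action selection
        if rng.random() < epsilon:
            a = rng.randrange(2)
        else:
            a = max((0, 1), key=lambda act: Q[s][act])
        s2, r = STEP[s][a]
        # Bootstrapped target uses the greedy value of the next state
        target = r + gamma * max(Q[s2])
        Q[s][a] += alpha * (target - Q[s][a])
        s = s2
    return Q


Q = q_learning()
greedy_policy = [max((0, 1), key=lambda act: Q[s][act]) for s in (0, 1)]
```

Note that the target uses the maximum over next actions regardless of which action the agent actually takes next, which is what makes the method off-policy.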

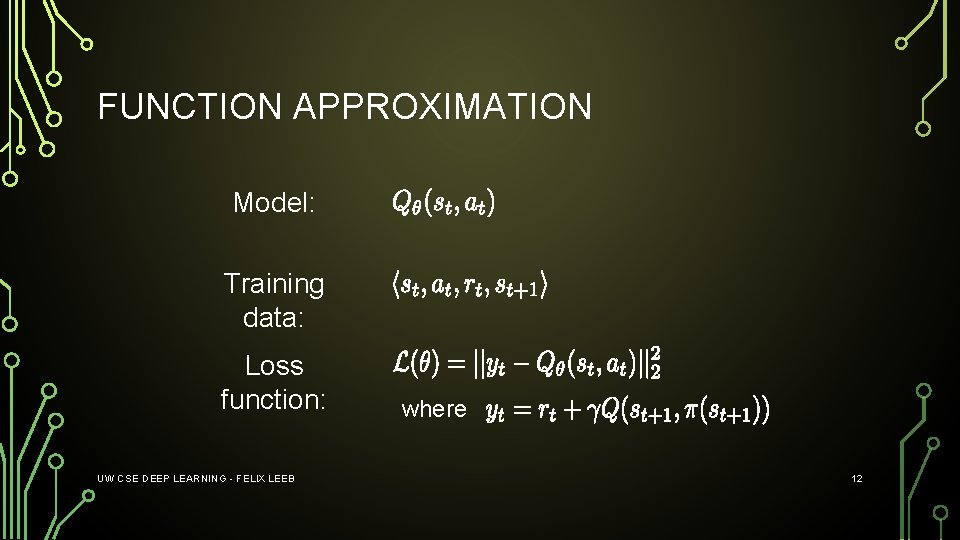

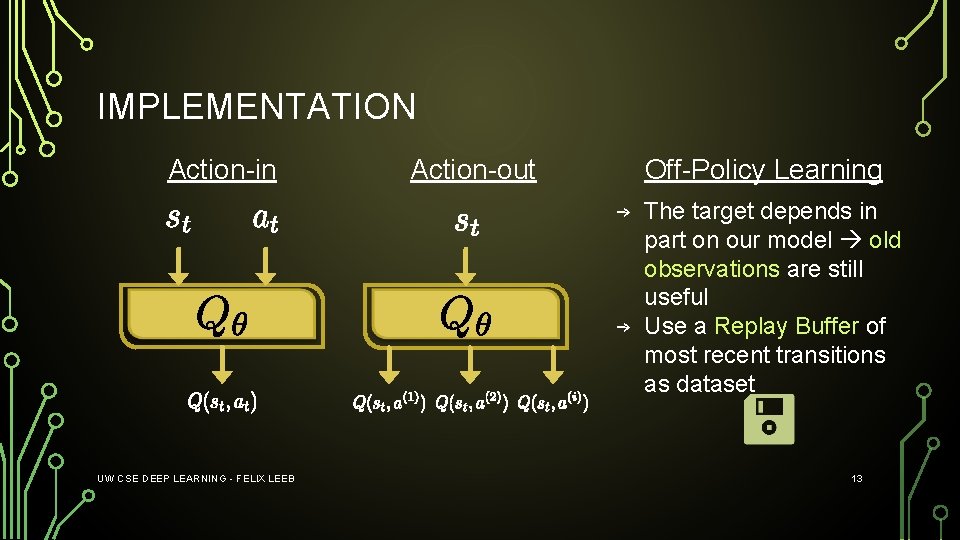

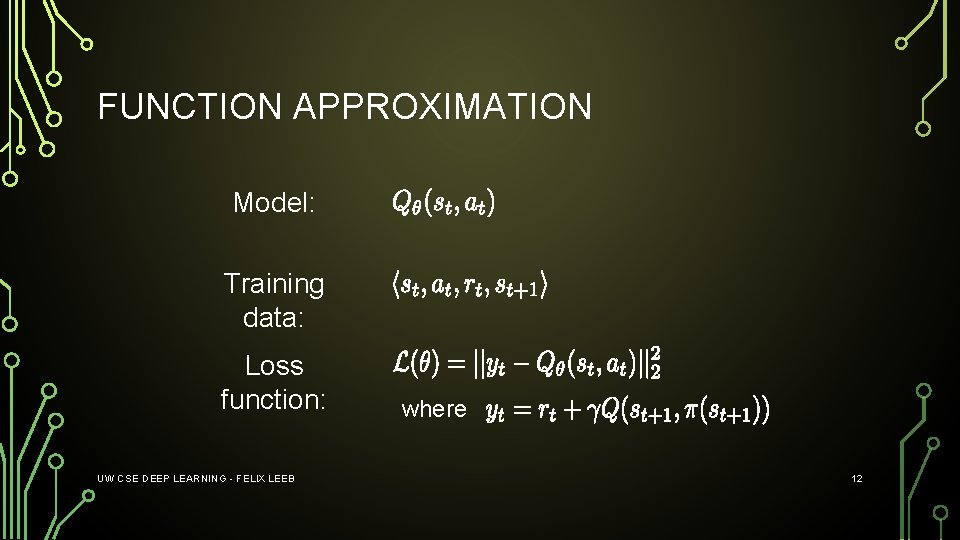

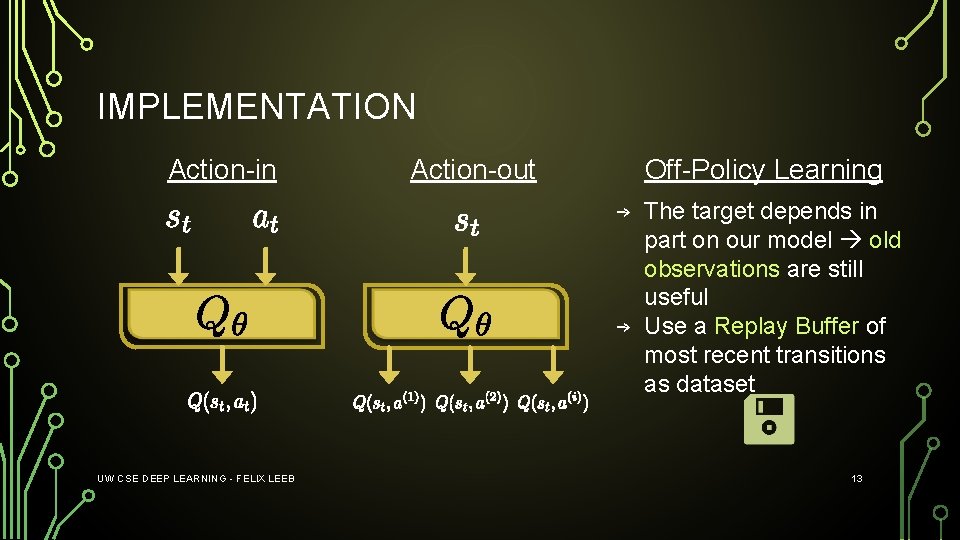

FUNCTION APPROXIMATION
Model:
Training data:
Loss function:
where
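The formulas for this slide did not survive extraction. One common way to fill in these four labels, given here as an assumption rather than as the slide's exact content, is Q-learning posed as regression with parameters θ:

```latex
\begin{aligned}
\text{Model:} \quad & Q_{\theta}(s, a) \\
\text{Training data:} \quad & \{(s_i, a_i, r_i, s'_i)\}_{i=1}^{N} \\
\text{Loss function:} \quad & L(\theta) = \sum_{i=1}^{N} \bigl(Q_{\theta}(s_i, a_i) - y_i\bigr)^{2} \\
\text{where} \quad & y_i = r_i + \gamma \max_{a'} Q_{\theta}(s'_i, a')
\end{aligned}
```

In this form the target y depends on the model itself, so it is typically treated as a constant when differentiating the loss.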

IMPLEMENTATION
→ Action-in
→ Action-out
Off-Policy Learning
→ The target depends in part on our model, so old observations are still useful
→ Use a Replay Buffer of most recent transitions as dataset
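A replay buffer of the most recent transitions can be sketched with a bounded deque. The class name, the transition layout `(state, action, reward, next_state, done)`, and uniform sampling are assumptions made for illustration.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity store of recent transitions with uniform sampling."""

    def __init__(self, capacity, seed=None):
        # deque with maxlen silently drops the oldest item when full
        self.buffer = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling without replacement from the stored transitions
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


buffer = ReplayBuffer(capacity=3, seed=0)
for t in range(5):
    buffer.add(state=t, action=0, reward=1.0, next_state=t + 1, done=False)
batch = buffer.sample(2)
```

After five insertions into a buffer of capacity three, only the transitions starting in states 2, 3 and 4 remain.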

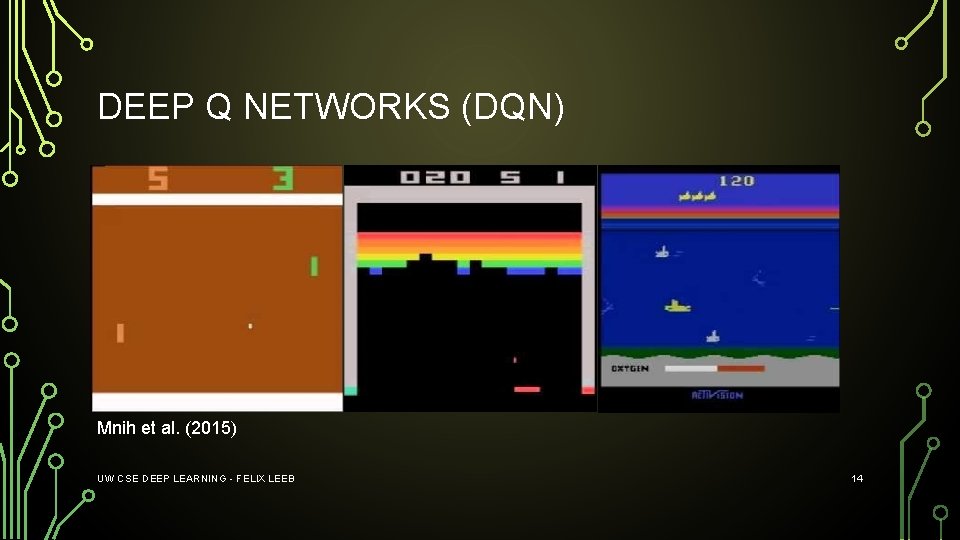

DEEP Q NETWORKS (DQN)
Mnih et al. (2015)

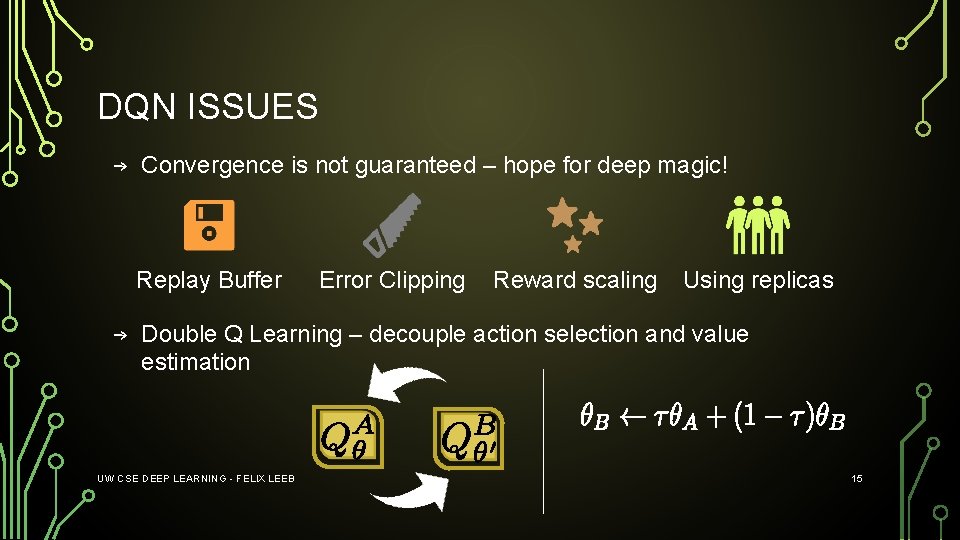

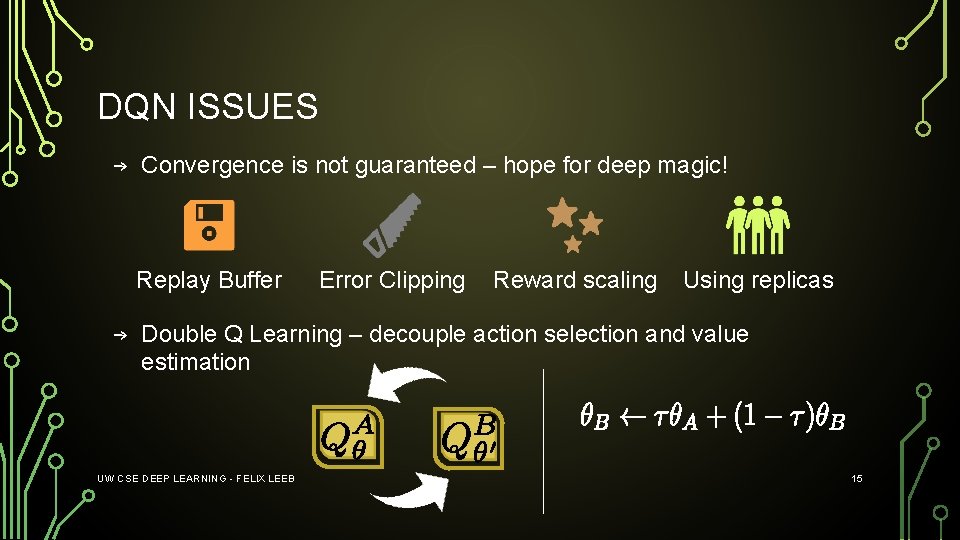

DQN ISSUES
→ Convergence is not guaranteed – hope for deep magic!
Replay Buffer, Error Clipping, Reward scaling, Using replicas
→ Double Q Learning – decouple action selection and value estimation
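The decoupling in Double Q Learning can be illustrated by comparing the two targets for a single transition. This sketch assumes that "replicas" refers to a separately held copy of the Q estimates (often called a target network); the function names and the example values are hypothetical.

```python
def q_learning_target(reward, gamma, q_target_next, done):
    """Standard target: the same estimate both selects and evaluates the action."""
    if done:
        return reward
    return reward + gamma * max(q_target_next)


def double_q_target(reward, gamma, q_online_next, q_target_next, done):
    """Double Q target: the online estimate selects, the replica evaluates."""
    if done:
        return reward
    best_action = max(range(len(q_online_next)), key=q_online_next.__getitem__)
    return reward + gamma * q_target_next[best_action]


# Hypothetical next-state action values from the two estimators
q_online_next = [1.0, 3.0]
q_target_next = [2.0, 0.5]

standard = q_learning_target(1.0, 0.9, q_target_next, done=False)
double = double_q_target(1.0, 0.9, q_online_next, q_target_next, done=False)
```

Here the standard target takes the maximum of the replica's values, while the double target evaluates the action the online estimate prefers, which tends to reduce overestimation.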

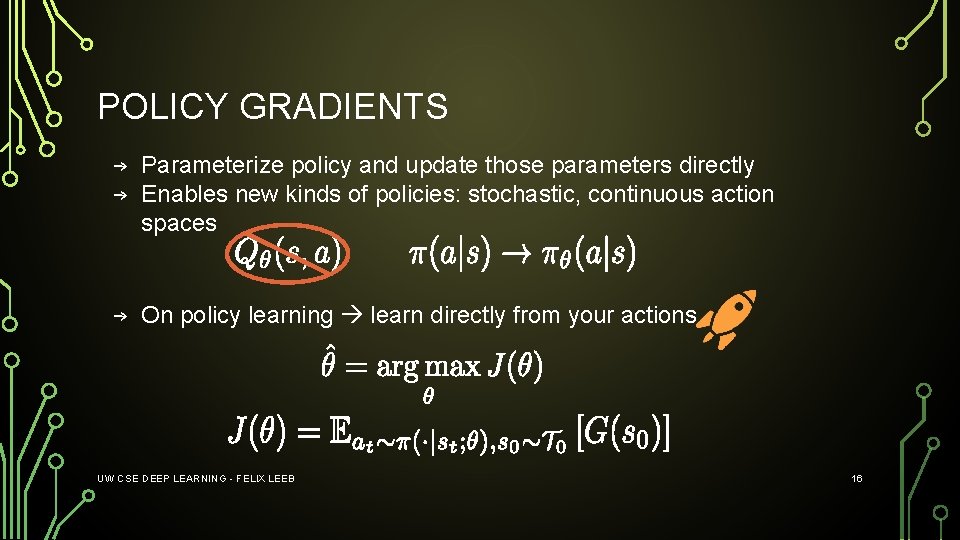

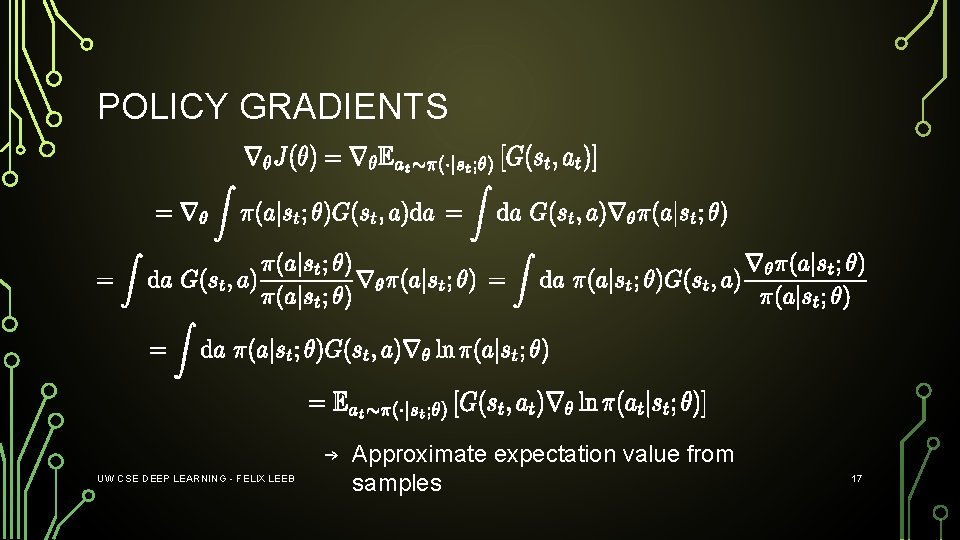

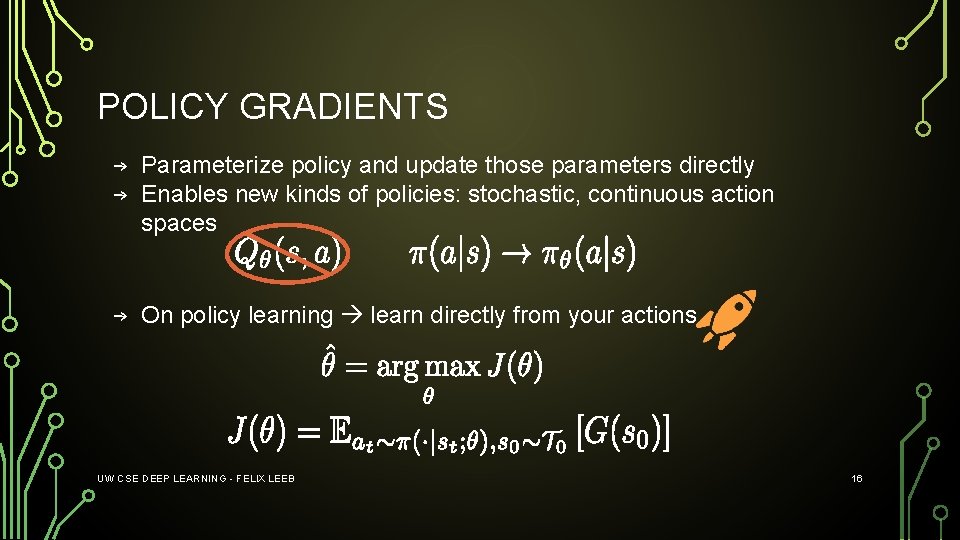

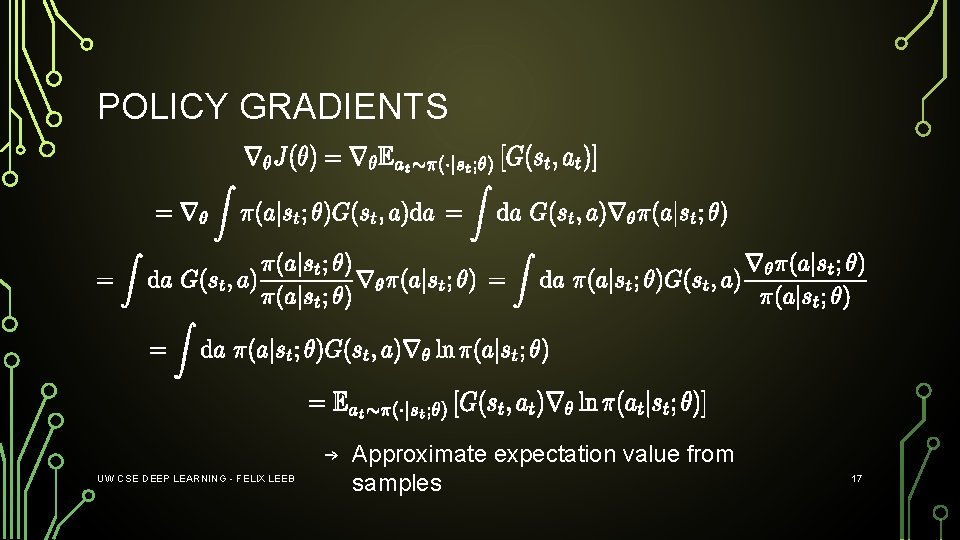

POLICY GRADIENTS
→ Parameterize policy and update those parameters directly
→ Enables new kinds of policies: stochastic, continuous action spaces
→ On-policy learning: learn directly from your actions

POLICY GRADIENTS
→ Approximate expectation value from samples
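The derivation on this slide was lost in extraction. In its usual form, stated here with assumed notation (τ for a trajectory, R(τ) for its return, N sampled trajectories), the gradient of the expected return is an expectation that can be estimated by averaging over samples:

```latex
\begin{aligned}
\nabla_{\theta} J(\theta)
  &= \mathbb{E}_{\tau \sim \pi_{\theta}}\!\left[ \sum_{t} \nabla_{\theta} \log \pi_{\theta}(a_t \mid s_t)\, R(\tau) \right] \\
  &\approx \frac{1}{N} \sum_{i=1}^{N} \sum_{t} \nabla_{\theta} \log \pi_{\theta}\bigl(a_t^{(i)} \mid s_t^{(i)}\bigr)\, R\bigl(\tau^{(i)}\bigr)
\end{aligned}
```

The second line is the sample-based approximation: trajectories are drawn by running the current policy, which is why the method is on-policy.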

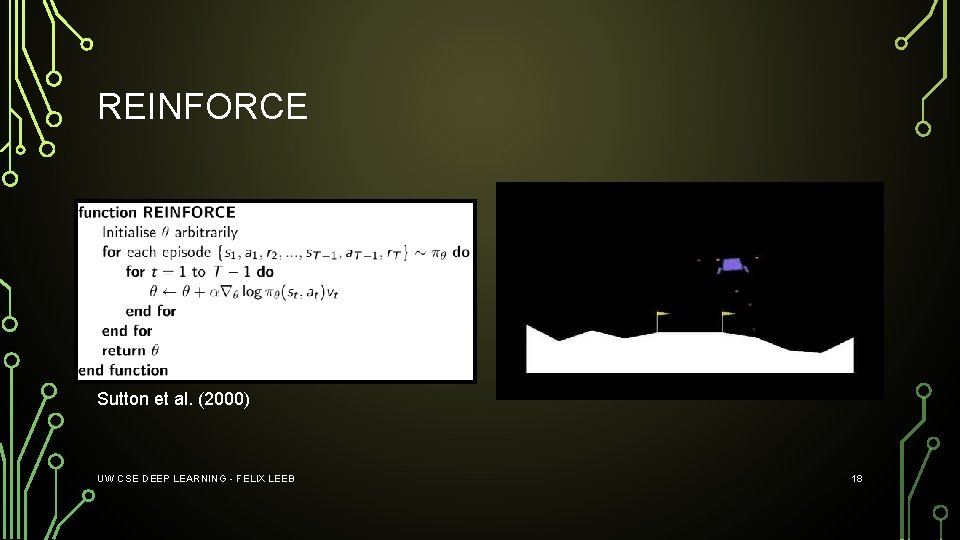

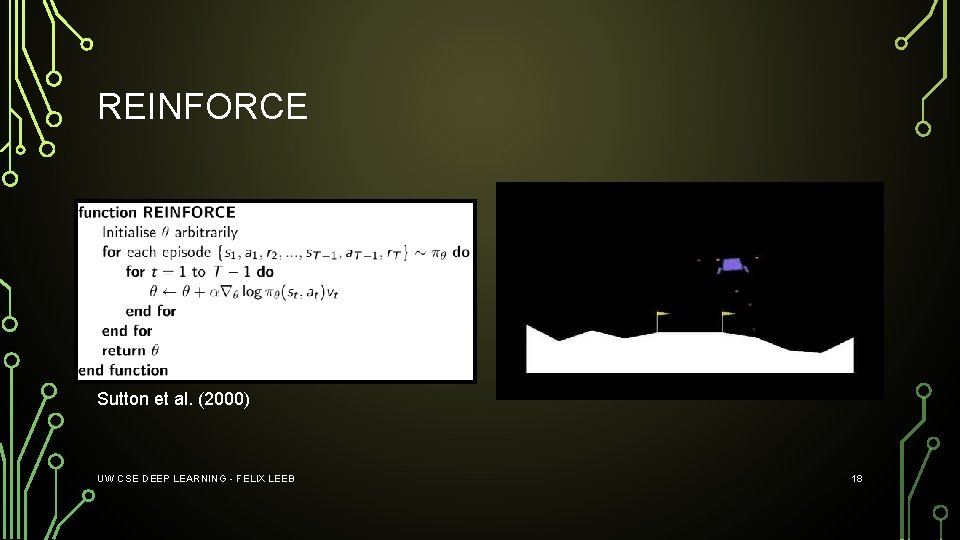

REINFORCE
Sutton et al. (2000)
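A deliberately small REINFORCE sketch: a softmax policy over two actions in a one-step problem, so the return of each episode is just the immediate reward. The bandit setup, reward values, learning rate, and function names are all assumptions for illustration; the slide's own pseudocode is in the image.

```python
import math
import random


def softmax(theta):
    m = max(theta)  # subtract the max for numerical stability
    exps = [math.exp(t - m) for t in theta]
    z = sum(exps)
    return [e / z for e in exps]


def reinforce_bandit(rewards=(0.0, 1.0), steps=2000, lr=0.1, seed=0):
    rng = random.Random(seed)
    theta = [0.0] * len(rewards)  # one logit per action
    for _ in range(steps):
        probs = softmax(theta)
        # Sample an action from the current policy (on-policy)
        a = rng.choices(range(len(rewards)), weights=probs)[0]
        G = rewards[a]  # one-step episode: return equals the reward
        for i in range(len(theta)):
            # Gradient of log softmax: indicator(i == a) - probs[i]
            grad_log_pi = (1.0 if i == a else 0.0) - probs[i]
            theta[i] += lr * G * grad_log_pi
    return softmax(theta)


final_probs = reinforce_bandit()
```

After training, almost all probability mass sits on the rewarded action, since only rewarded samples move the parameters.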

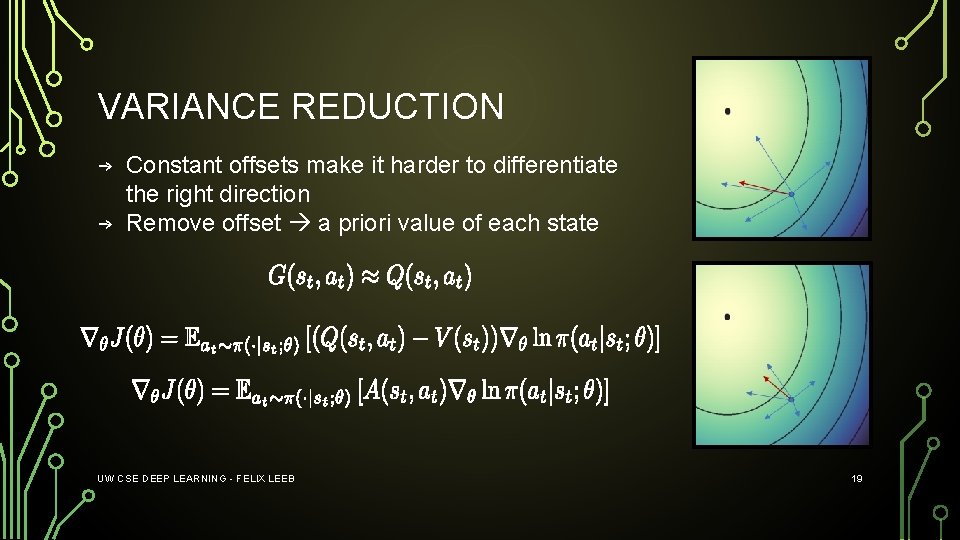

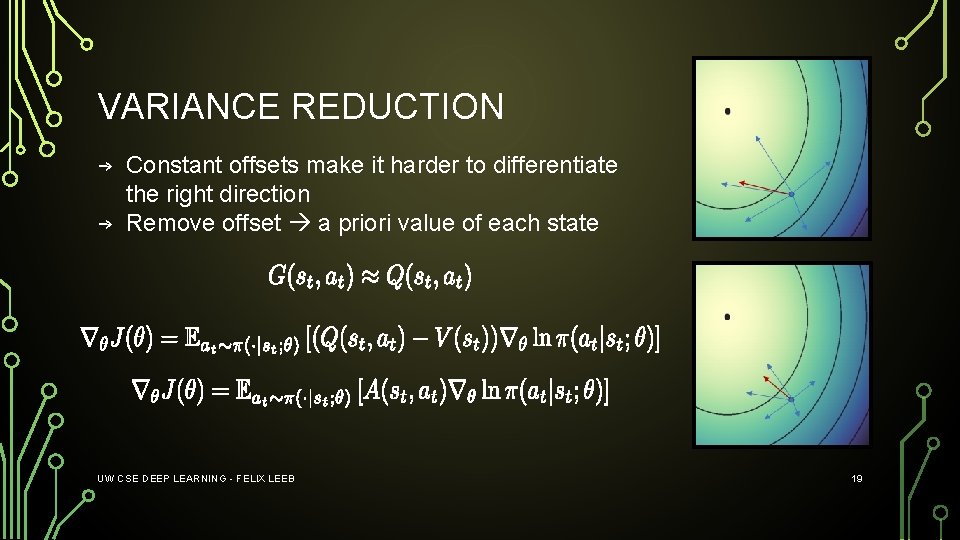

VARIANCE REDUCTION
→ Constant offsets make it harder to differentiate the right direction
→ Remove the offset by subtracting the a priori value of each state
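A short worked step shows why subtracting a state-dependent baseline is allowed. The notation (b(s) for the baseline, G_t for the return from time t) is assumed, since the slide's formula is in the image.

```latex
\begin{aligned}
\mathbb{E}_{a \sim \pi_{\theta}(\cdot \mid s)}\!\left[ \nabla_{\theta} \log \pi_{\theta}(a \mid s)\, b(s) \right]
  &= b(s) \sum_{a} \pi_{\theta}(a \mid s)\, \nabla_{\theta} \log \pi_{\theta}(a \mid s) \\
  &= b(s)\, \nabla_{\theta} \sum_{a} \pi_{\theta}(a \mid s)
   = b(s)\, \nabla_{\theta} 1 = 0 \\[4pt]
\Rightarrow \quad \nabla_{\theta} J(\theta)
  &= \mathbb{E}\!\left[ \sum_{t} \nabla_{\theta} \log \pi_{\theta}(a_t \mid s_t)\, \bigl(G_t - b(s_t)\bigr) \right]
\end{aligned}
```

The baseline term vanishes in expectation, so the gradient stays unbiased while its variance can drop; choosing b(s) as an estimate of the state's value turns the weight into an advantage estimate.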

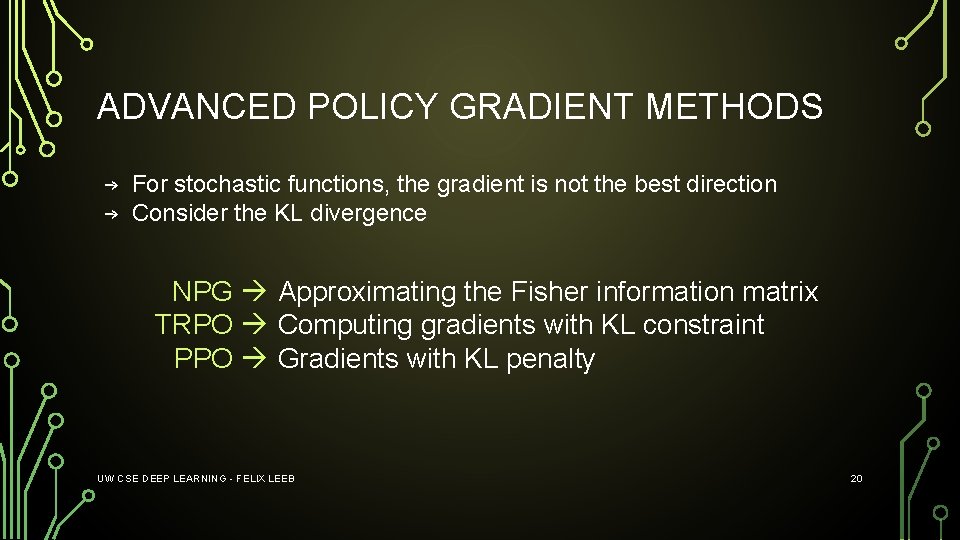

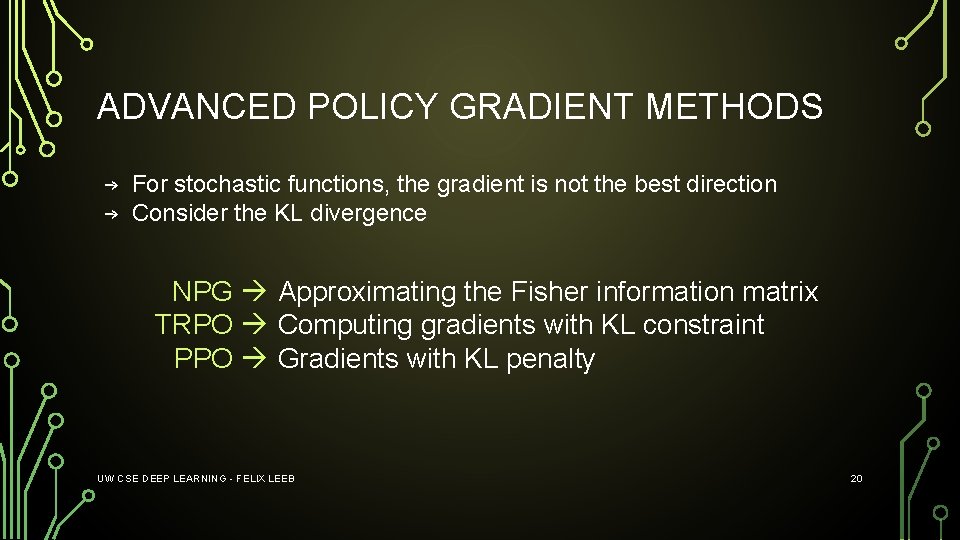

ADVANCED POLICY GRADIENT METHODS
→ For stochastic functions, the gradient is not the best direction
→ Consider the KL divergence
NPG – Approximating the Fisher information matrix
TRPO – Computing gradients with KL constraint
PPO – Gradients with KL penalty

ADVANCED POLICY GRADIENT METHODS
Rajeswaran et al. (2017)
Heess et al. (2017)

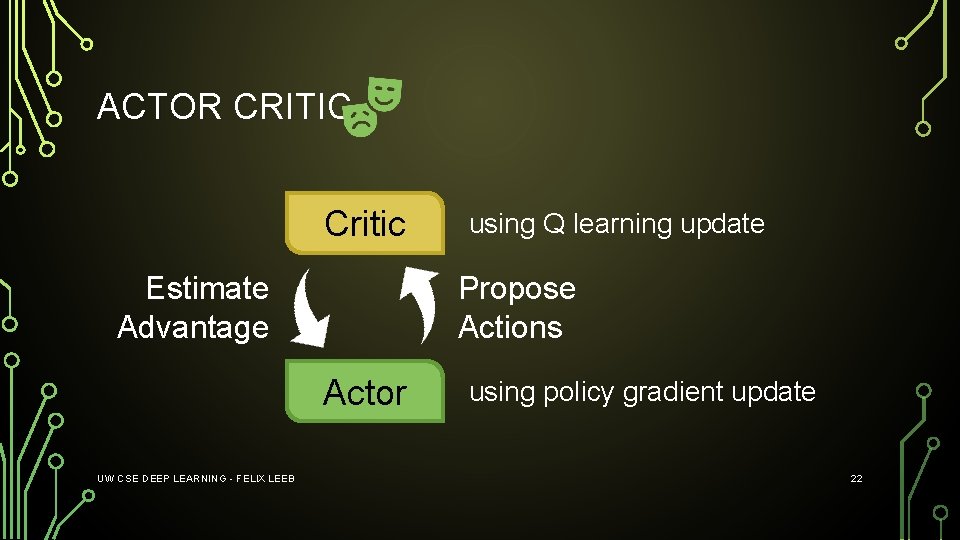

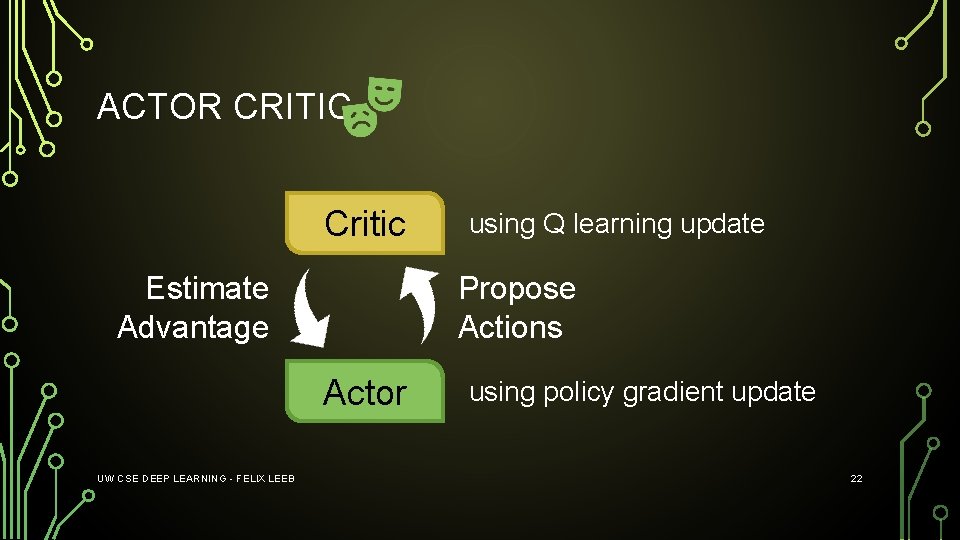

ACTOR CRITIC
→ Critic – Estimate Advantage – update using Q learning
→ Actor – Propose Actions – update using policy gradient

ASYNC ADVANTAGE ACTOR-CRITIC (A3C)
Mnih et al. (2016)

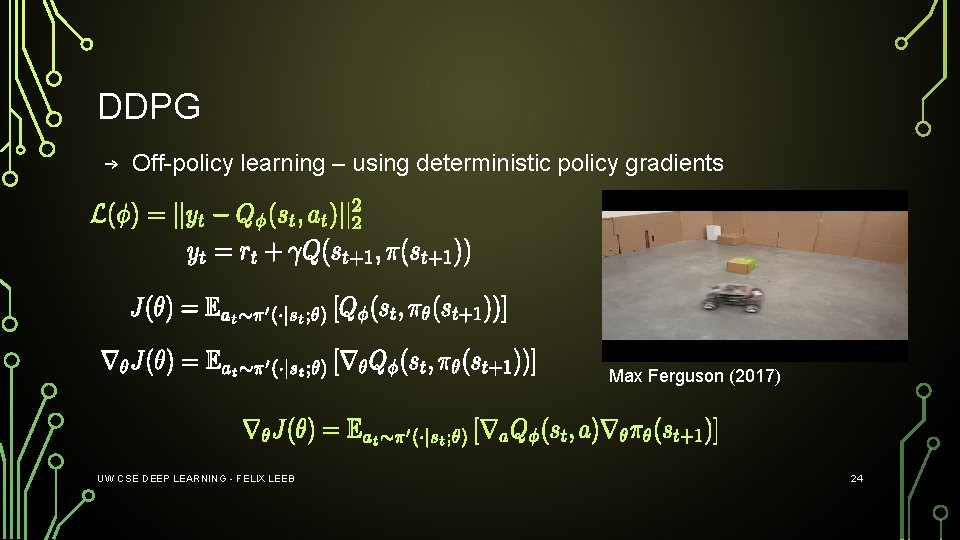

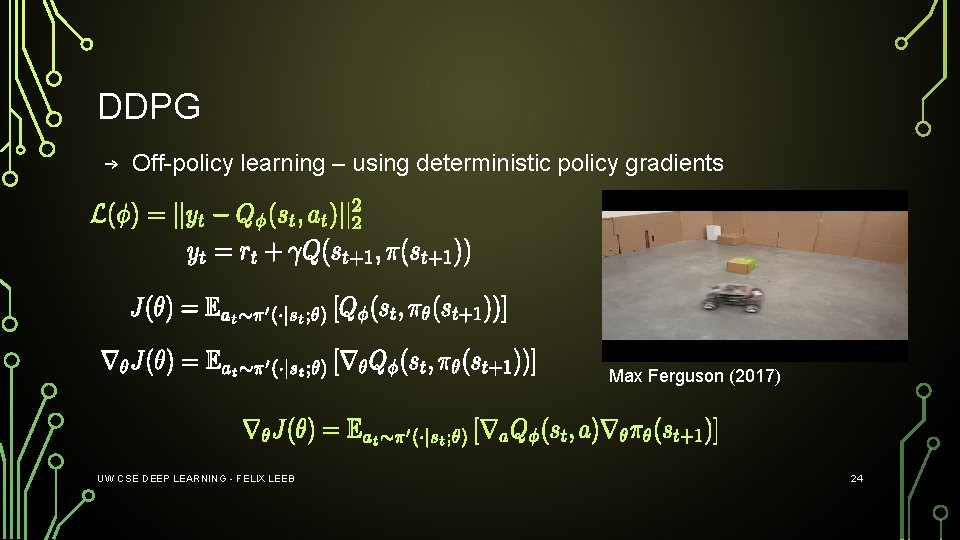

DDPG
→ Off-policy learning – using deterministic policy gradients
Max Ferguson (2017)