Deep Reinforcement Learning in Navigation Anwica Kashfeen

- Slides: 32

Reinforcement Learning • An agent interacts with an environment, which provides numeric rewards • Goal: learn to take actions that maximize reward

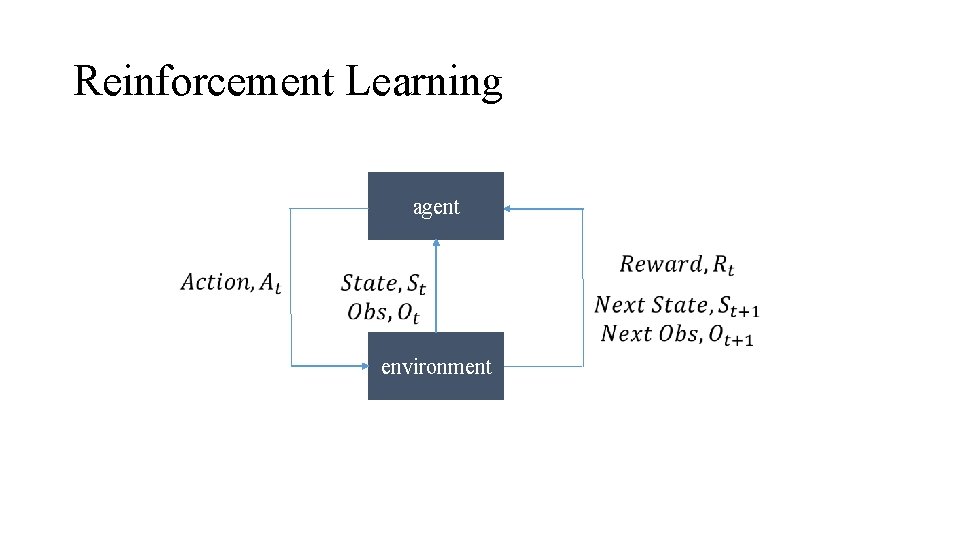

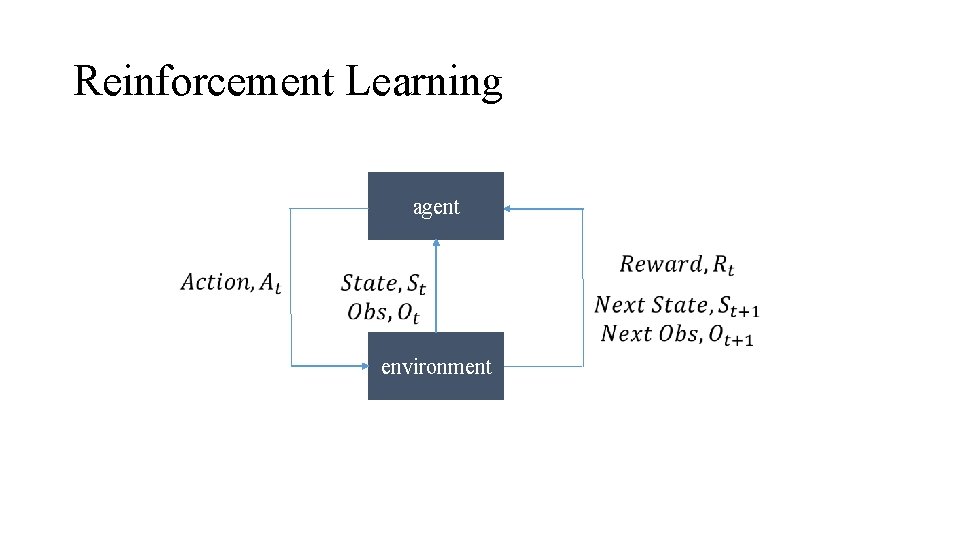

Reinforcement Learning (figure labels: agent, environment)

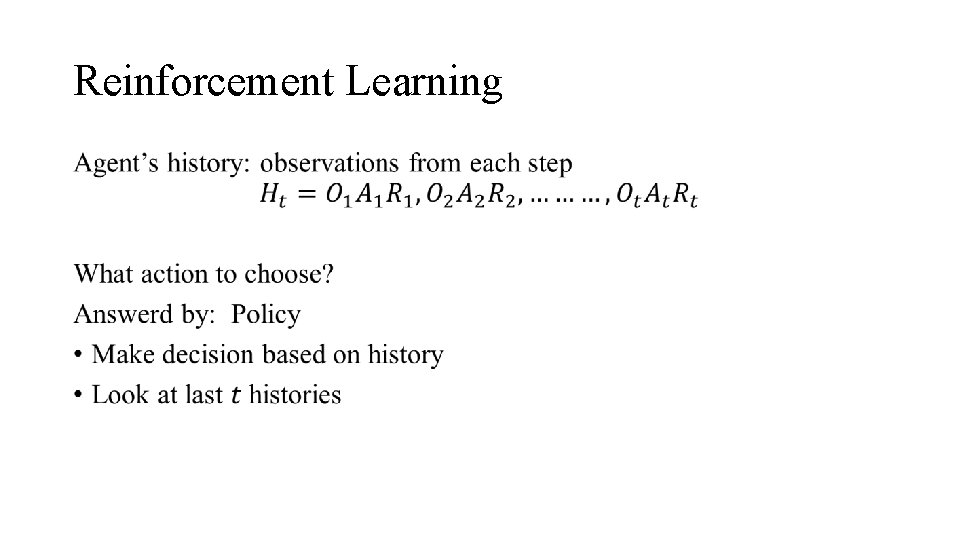

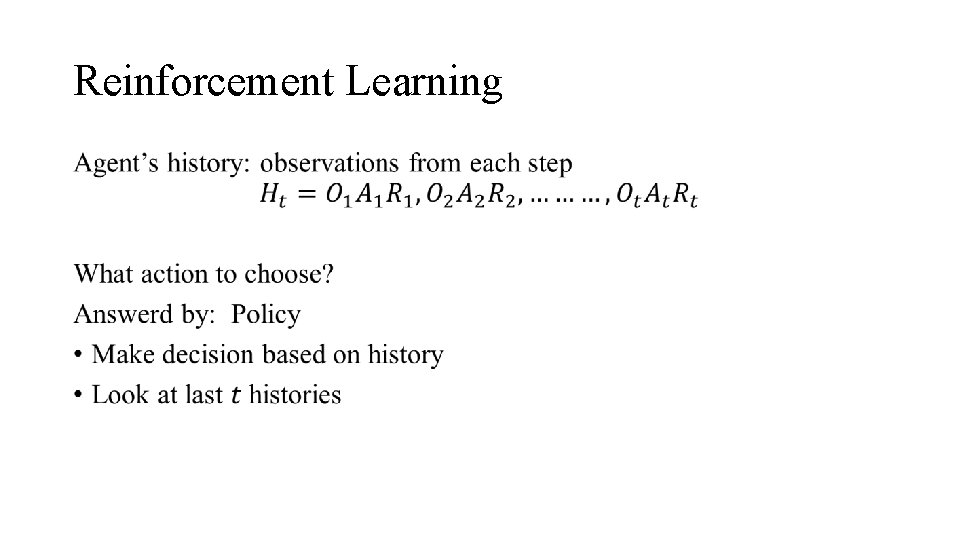

Reinforcement Learning •

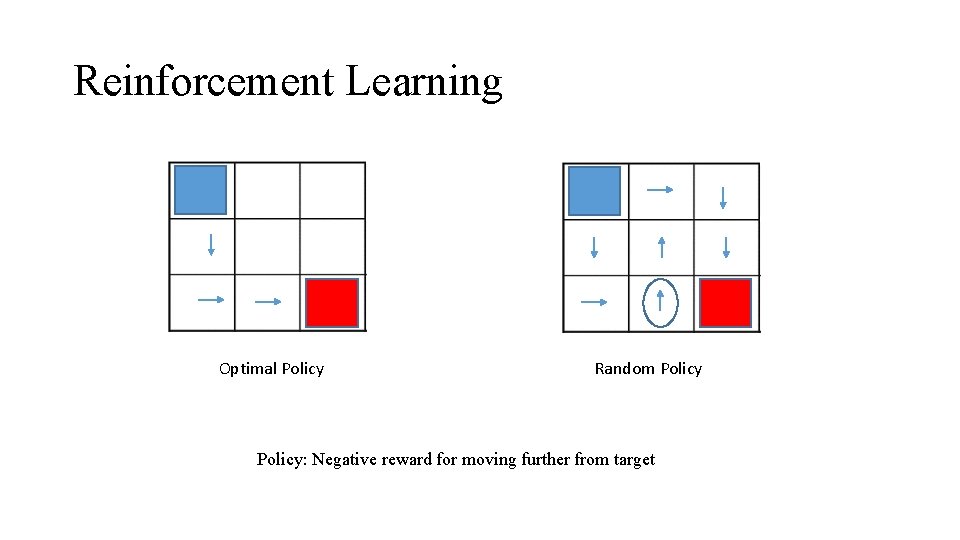

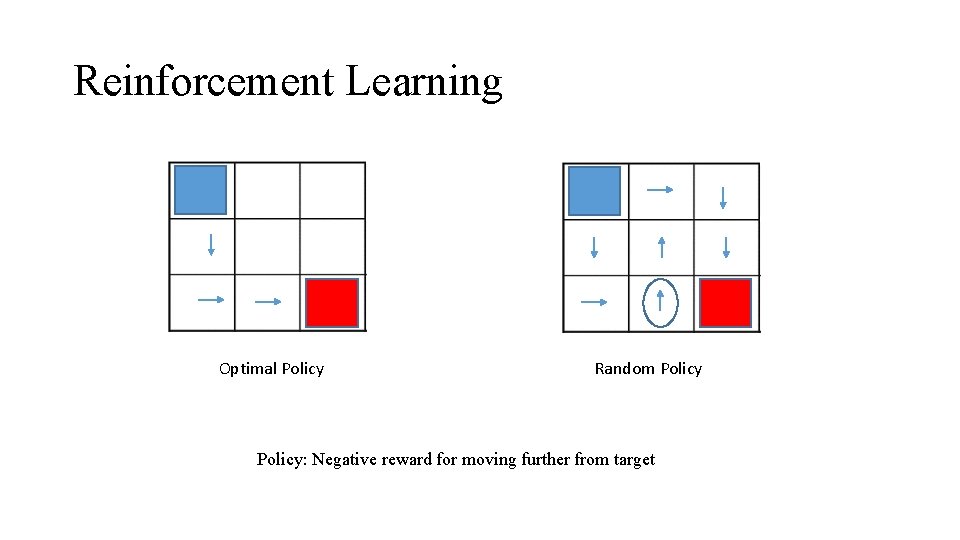

Reinforcement Learning • Optimal policy vs. random policy • Negative reward for moving further from the target
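To make the comparison concrete, here is a minimal sketch of the agent-environment loop under stated assumptions. The `Corridor` world, its size, and the exact reward values (-1 for a step that increases the distance to the target, 0 otherwise) are hypothetical and chosen only for illustration; they are not taken from the slides.

```python
import random


class Corridor:
    """Hypothetical 1-D world: cells 0..target, the agent starts at cell 0."""

    def __init__(self, target=4):
        self.target = target
        self.pos = 0

    def reset(self):
        self.pos = 0
        return self.pos

    def step(self, action):
        """action is +1 (toward the target) or -1 (away from it)."""
        old_dist = self.target - self.pos
        # Positions are clipped to the corridor [0, target].
        self.pos = max(0, min(self.target, self.pos + action))
        new_dist = self.target - self.pos
        # Assumed reward: penalize only steps that move further from the target.
        reward = -1.0 if new_dist > old_dist else 0.0
        done = self.pos == self.target
        return self.pos, reward, done


def run_episode(env, policy, max_steps=100):
    """Run the agent-environment loop once and return the total reward."""
    state = env.reset()
    total = 0.0
    for _ in range(max_steps):
        state, reward, done = env.step(policy(state))
        total += reward
        if done:
            break
    return total


def optimal_policy(state):
    """Always step toward the target."""
    return +1


def make_random_policy(seed=0):
    """Pick a direction uniformly at random (seeded for repeatability)."""
    rng = random.Random(seed)
    return lambda state: rng.choice([-1, +1])
```

In this toy setting the optimal policy never collects a penalty, while the random policy can only do as well or worse, which is the gap that learning is meant to close.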

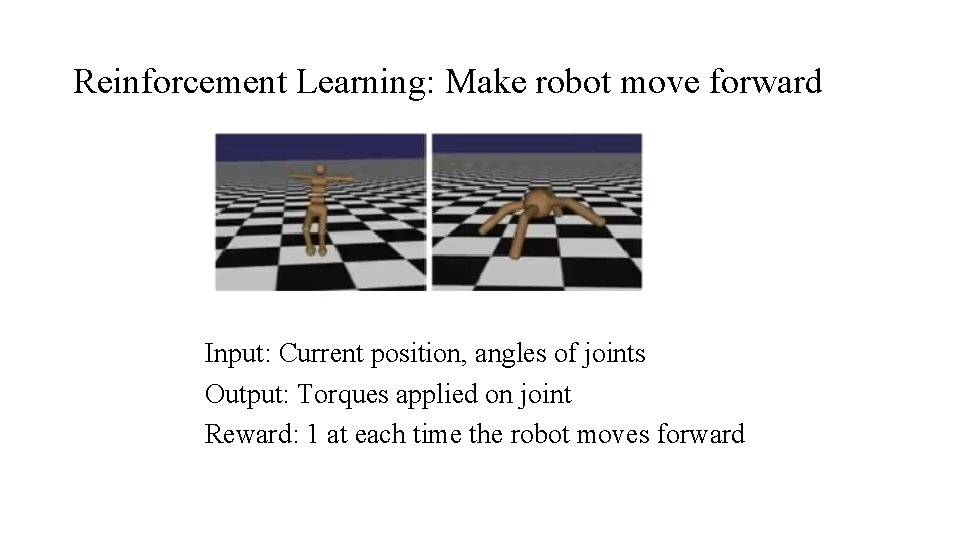

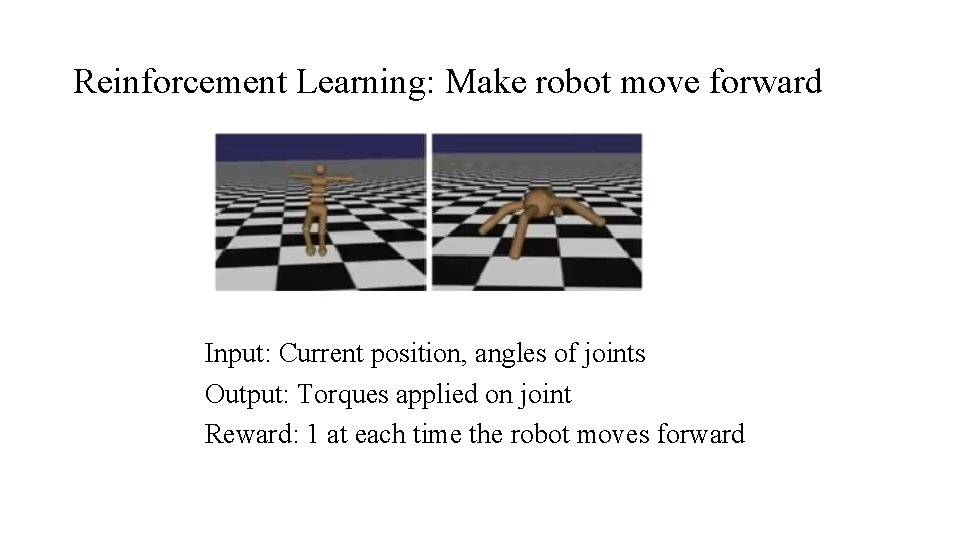

Reinforcement Learning: Make a robot move forward • Input: current position, angles of joints • Output: torques applied to the joints • Reward: 1 at each time step the robot moves forward

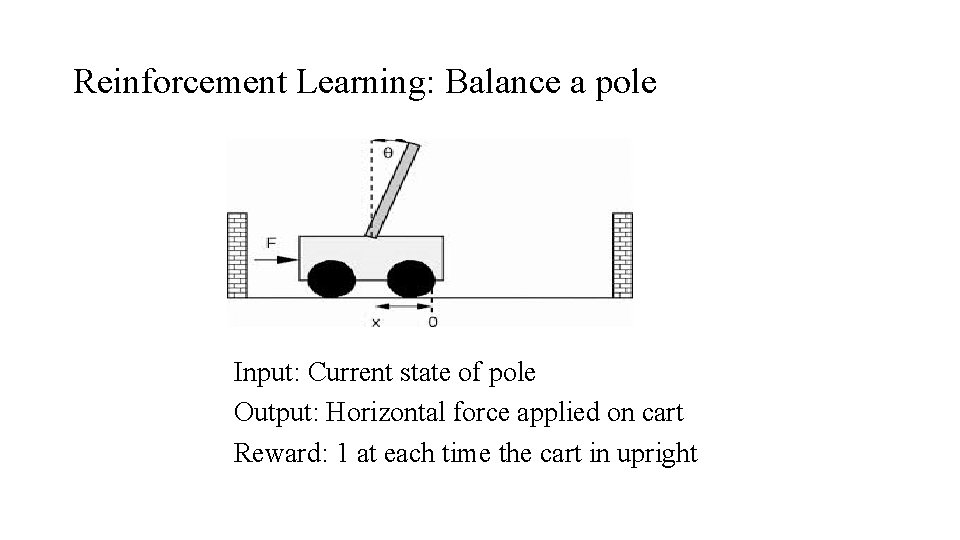

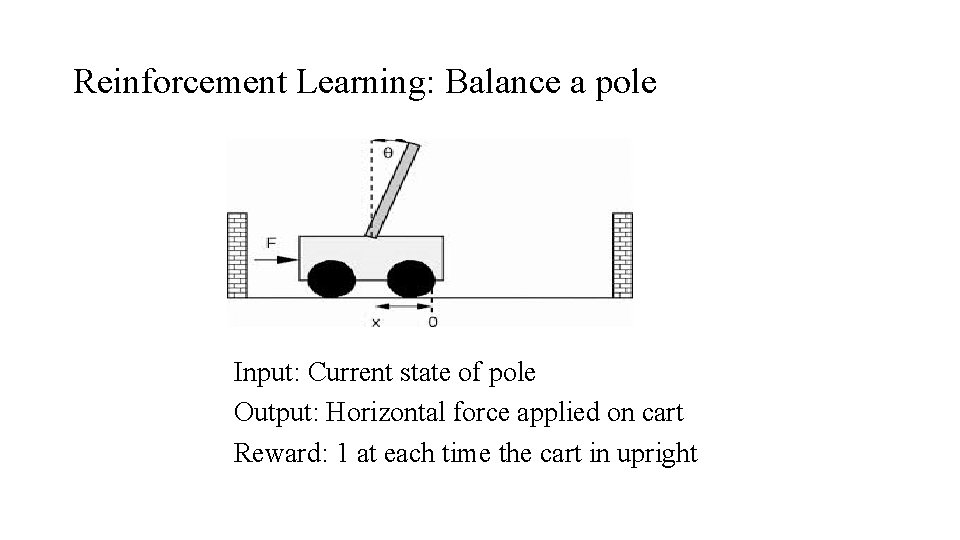

Reinforcement Learning: Balance a pole • Input: current state of the pole • Output: horizontal force applied to the cart • Reward: 1 at each time step the pole is upright

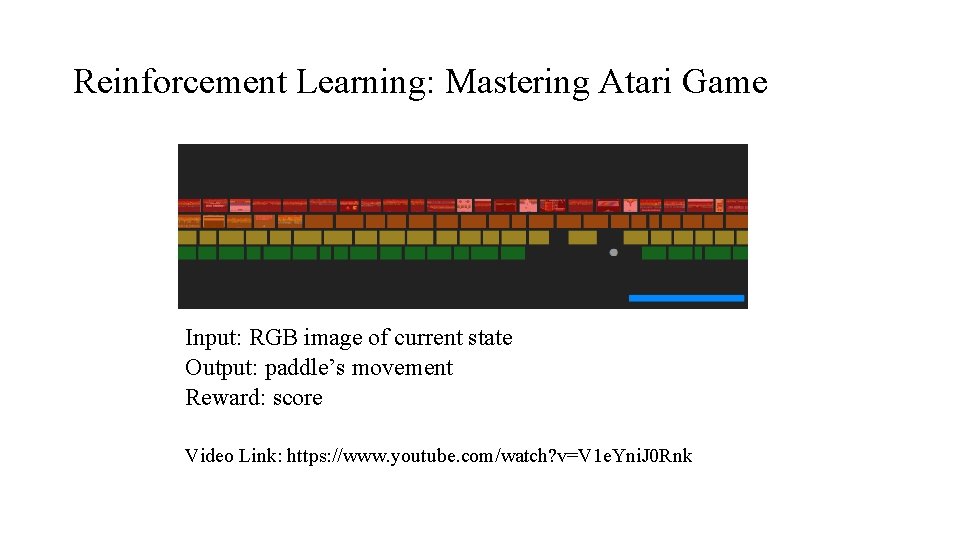

Reinforcement Learning: Mastering Atari Games • Input: RGB image of the current state • Output: paddle movement • Reward: score • Video link: https://www.youtube.com/watch?v=V1eYniJ0Rnk

Challenges • Complicated input signals • No supervisor • No instantaneous feedback • The agent’s actions affect the environment • Model design criterion: use the environment’s criticism of the agent’s actions

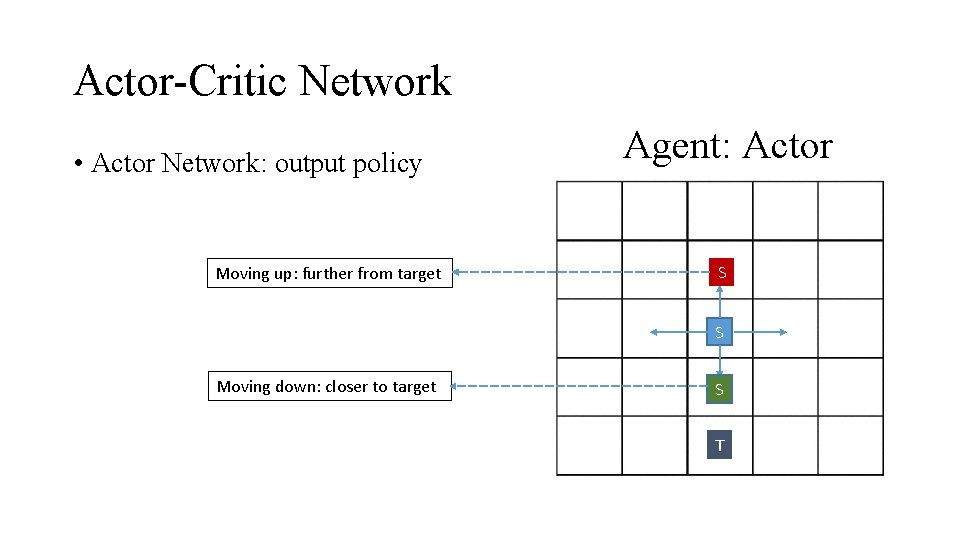

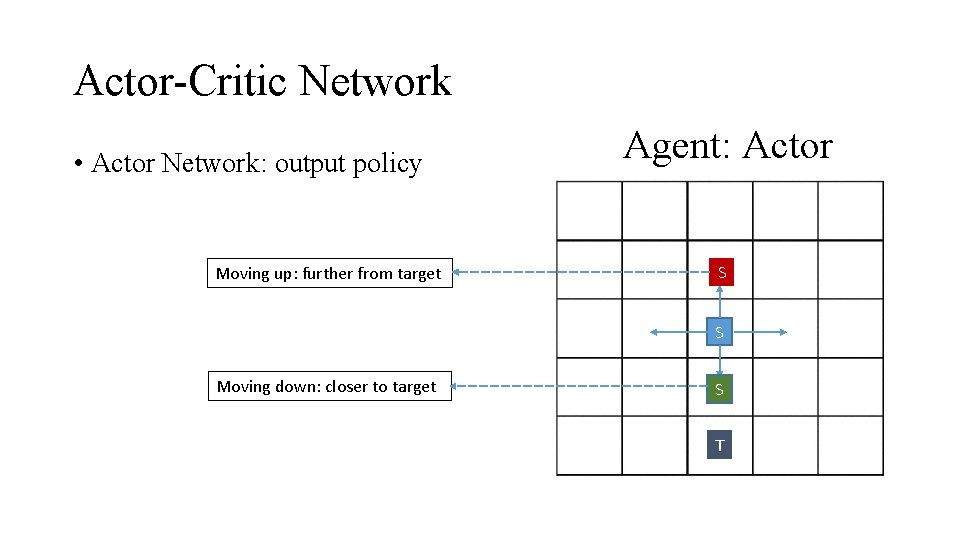

Actor-Critic Network • Actor network: outputs the policy • The agent is the actor • Moving up: further from the target • Moving down: closer to the target (figure: grid cells labeled S and T)

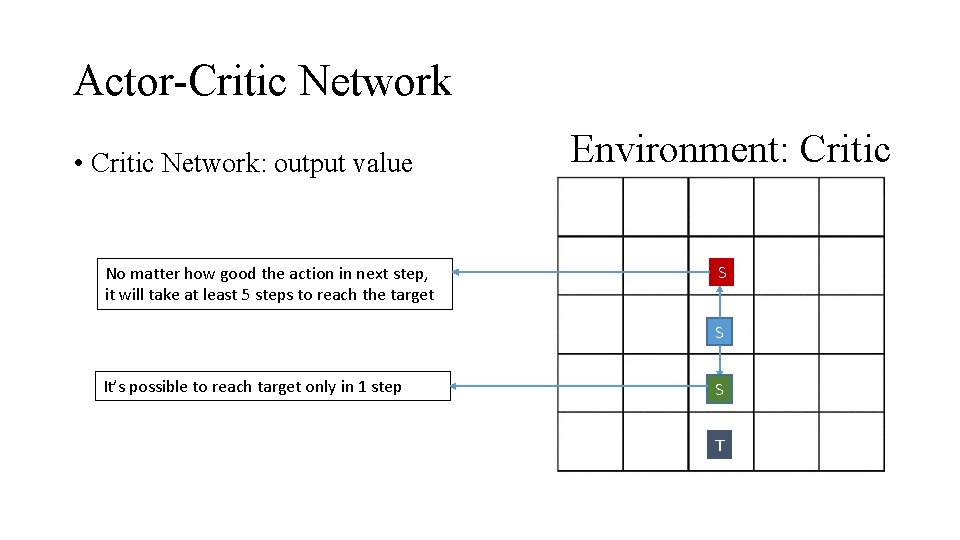

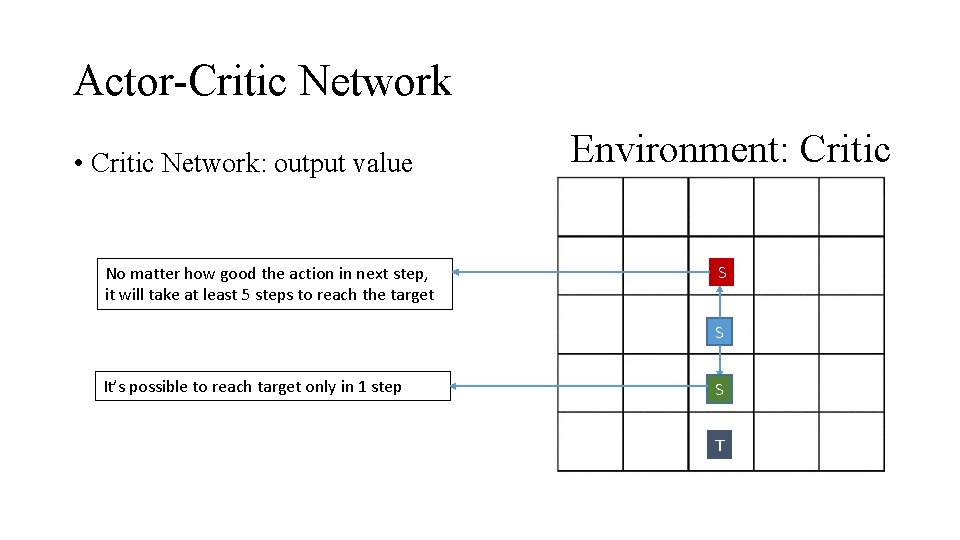

Actor-Critic Network • Critic network: outputs the value • The environment is the critic • From one state: no matter how good the action in the next step is, it takes at least 5 steps to reach the target • From another state: the target can be reached in only 1 step (figure: grid cells labeled S and T)

Actor-Critic Network • One single network for both actor and critic: shares network parameters • Two different networks: do not share network parameters • The actor needs to know the advantage of being in the current state • Choose the network model depending on the task
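The "advantage" the actor needs is commonly estimated from the critic's values with a one-step temporal-difference formula, A = r + γ·V(s') − V(s). The sketch below assumes that estimator; the function name `td_advantage`, the discount `gamma`, and the example numbers (a reward of -1 per step, values equal to minus the number of steps to the target) are illustrative assumptions rather than details from the slides.

```python
def td_advantage(reward, value_s, value_next, gamma=0.99, done=False):
    """One-step advantage estimate: A = r + gamma * V(s') - V(s).

    value_s and value_next are the critic's estimates for the current and
    next state; when the episode has ended there is no next state to
    bootstrap from, so only the reward counts.
    """
    bootstrap = 0.0 if done else gamma * value_next
    return reward + bootstrap - value_s


# Assumed toy setting: each step costs -1 and V(s) = -(steps to target).
# From a state 5 steps away, moving closer is exactly as good as expected,
# while moving away is worse than expected.
closer = td_advantage(-1.0, value_s=-5.0, value_next=-4.0, gamma=1.0)
further = td_advantage(-1.0, value_s=-5.0, value_next=-6.0, gamma=1.0)
```

A positive advantage tells the actor to make the chosen action more likely in that state; a negative one tells it to make the action less likely.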

Reinforcement Learning • Target-Driven Navigation • Collision Avoidance

Target-Driven Navigation • Objective • Avoid collisions with static objects in the environment • Find the optimal path from source to target

Target-Driven Navigation • Global planning: requires a map; hard to deal with dynamic objects • Local planning: requires perfect sensing of the environment

Target-Driven Navigation • Local planning • Input: RGB images of the current and target states • Output: policy (decides the agent’s next step) and value (value of the new state) • Reward: +10 for reaching the goal, +1 for a small step

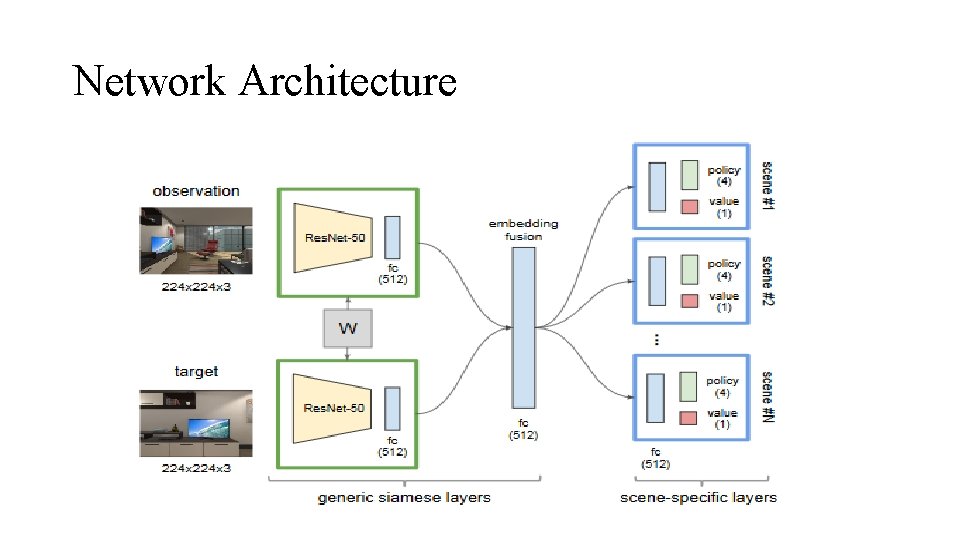

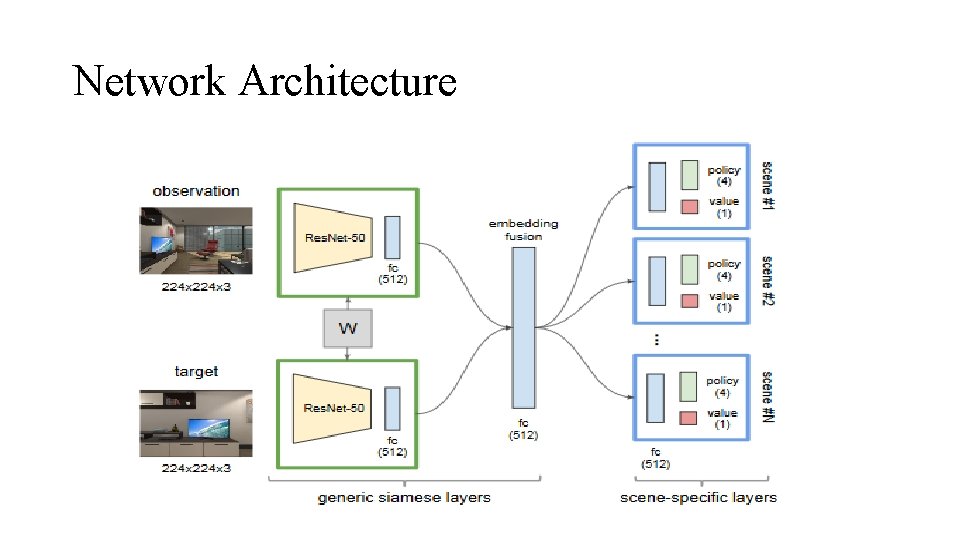

Network Architecture

Network Architecture • One network • Optimizes policy and value concurrently • Jointly embeds the target and current state • Video link: https://www.youtube.com/watch?v=SmBxMDiOrvs
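A rough sketch of what "one network" with a joint embedding can look like: the current and target observations pass through the same embedding layer, the two embeddings are fused, and a policy head and a value head read from the shared features. Everything concrete here is an assumption for illustration: small feature vectors stand in for RGB images, and the class name `SharedActorCritic`, layer sizes, and number of actions are hypothetical.

```python
import math
import random


def linear(n_in, n_out, rng):
    """Create a dense layer as (weights, biases) with small random weights."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def forward(layer, x, relu=True):
    """Apply a dense layer, optionally followed by a ReLU."""
    weights, biases = layer
    out = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
    return [max(0.0, o) for o in out] if relu else out


def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


class SharedActorCritic:
    """One network with two heads; all sizes are illustrative assumptions."""

    def __init__(self, n_features=8, n_embed=6, n_hidden=5, n_actions=4, seed=0):
        rng = random.Random(seed)
        self.embed = linear(n_features, n_embed, rng)    # same weights for both inputs
        self.fuse = linear(2 * n_embed, n_hidden, rng)   # joint embedding of both
        self.policy_head = linear(n_hidden, n_actions, rng)
        self.value_head = linear(n_hidden, 1, rng)

    def __call__(self, current, target):
        e_cur = forward(self.embed, current)
        e_tgt = forward(self.embed, target)
        hidden = forward(self.fuse, e_cur + e_tgt)       # list concatenation
        policy = softmax(forward(self.policy_head, hidden, relu=False))
        value = forward(self.value_head, hidden, relu=False)[0]
        return policy, value


net = SharedActorCritic()
policy, value = net([0.1] * 8, [0.5] * 8)
```

Because the target is an input rather than something baked into the weights, the same parameters can be queried with a different target observation without changing the network.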

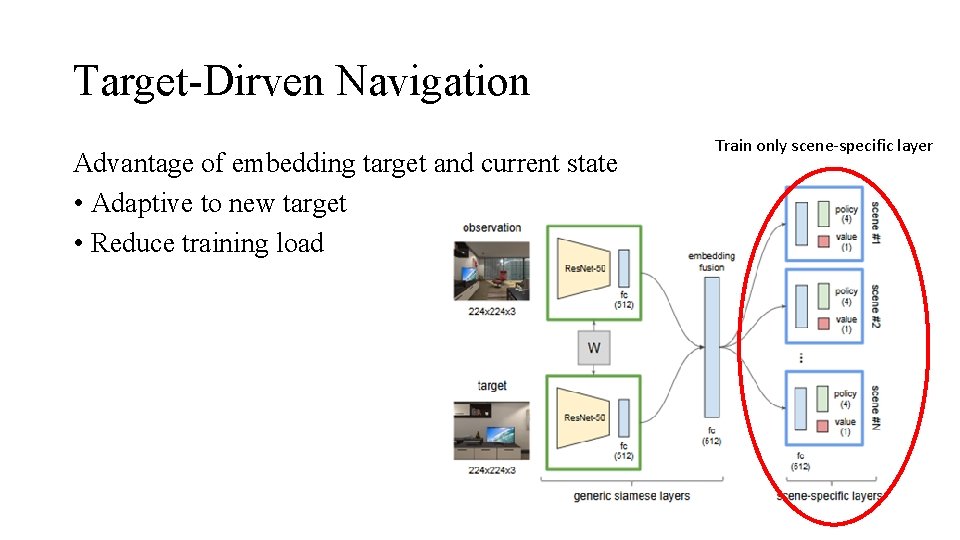

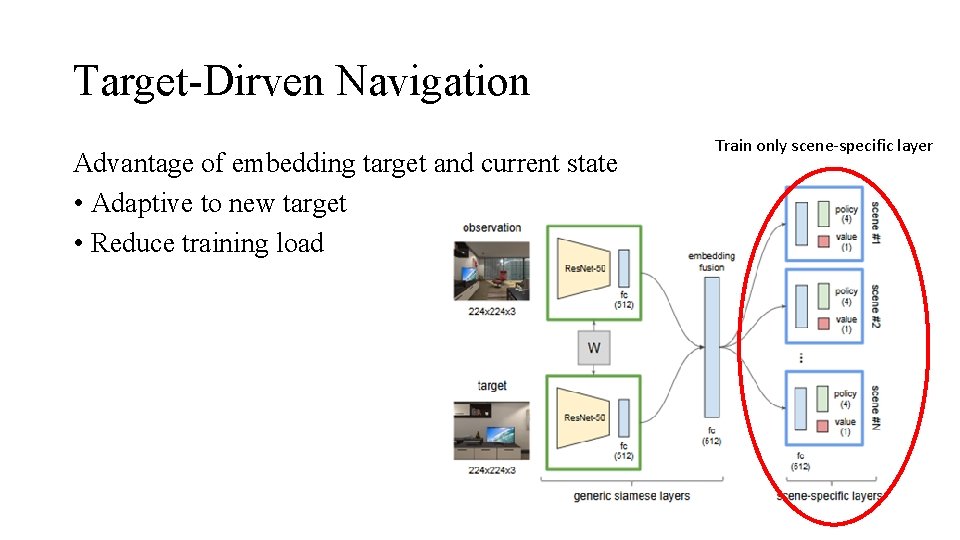

Target-Driven Navigation • Advantages of embedding the target and current state • Adaptive to new targets • Reduces training load: train only the scene-specific layer

Collision Avoidance Objective • Avoid collision with static objects in environment • Avoid collision with other agents

Collision Avoidance • Centralized method: • Each agent is aware of other agents’ position and velocity • Needs perfect communication between each agent and server. • Decentralized method: • Each agent is aware of only its neighbor agents’ position and velocity • Needs perfect sensing capability to obtain neighbor agent’s information

Collision Avoidance • Social Force: each agent is treated as a mass particle; an agent keeps a certain distance from other agents and borders • RVO (Reciprocal Velocity Obstacle): each agent acts independently and selects a velocity outside the RVO; same policy for all agents • ORCA (Optimal Reciprocal Collision Avoidance): identify a collision, then find an alternative collision-free velocity
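The "identify collision, then find an alternative velocity" idea can be illustrated with a much simpler stand-in than RVO or ORCA themselves, which rely on geometric constructions rather than sampling. The sketch below assumes two disc-shaped agents moving at constant velocity, computes when they would first touch, and picks the sampled candidate velocity closest to the preferred one that stays collision-free within a time horizon. The function names, the horizon, and the candidate set are hypothetical.

```python
import math


def time_to_collision(p_a, v_a, p_b, v_b, radius_sum):
    """Earliest t >= 0 at which two constant-velocity discs touch, else None."""
    px, py = p_b[0] - p_a[0], p_b[1] - p_a[1]    # position of B relative to A
    vx, vy = v_a[0] - v_b[0], v_a[1] - v_b[1]    # velocity of A relative to B
    # Solve |p - v t|^2 = radius_sum^2 for t.
    a = vx * vx + vy * vy
    b = -2.0 * (px * vx + py * vy)
    c = px * px + py * py - radius_sum * radius_sum
    if c <= 0.0:
        return 0.0                               # already overlapping
    if a == 0.0:
        return None                              # no relative motion
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None                              # paths never get close enough
    t = (-b - math.sqrt(disc)) / (2.0 * a)
    return t if t >= 0.0 else None               # negative: moving apart


def pick_velocity(p_a, v_pref, candidates, p_b, v_b, radius_sum, horizon=5.0):
    """Return the safe candidate closest to v_pref, or None if none is safe."""
    safe = []
    for v in candidates:
        t = time_to_collision(p_a, v, p_b, v_b, radius_sum)
        if t is None or t > horizon:
            safe.append(v)
    if not safe:
        return None
    return min(safe, key=lambda v: math.dist(v, v_pref))


# Two agents heading straight at each other along the x-axis.
t_hit = time_to_collision((0.0, 0.0), (1.0, 0.0), (10.0, 0.0), (-1.0, 0.0), 1.0)
chosen = pick_velocity((0.0, 0.0), (1.0, 0.0),
                       [(1.0, 0.0), (1.0, 0.5), (0.0, 1.0)],
                       (10.0, 0.0), (-1.0, 0.0), 1.0)
```

This version treats the other agent's velocity as fixed, so it does not capture the reciprocal sharing of avoidance effort that RVO and ORCA are built around.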

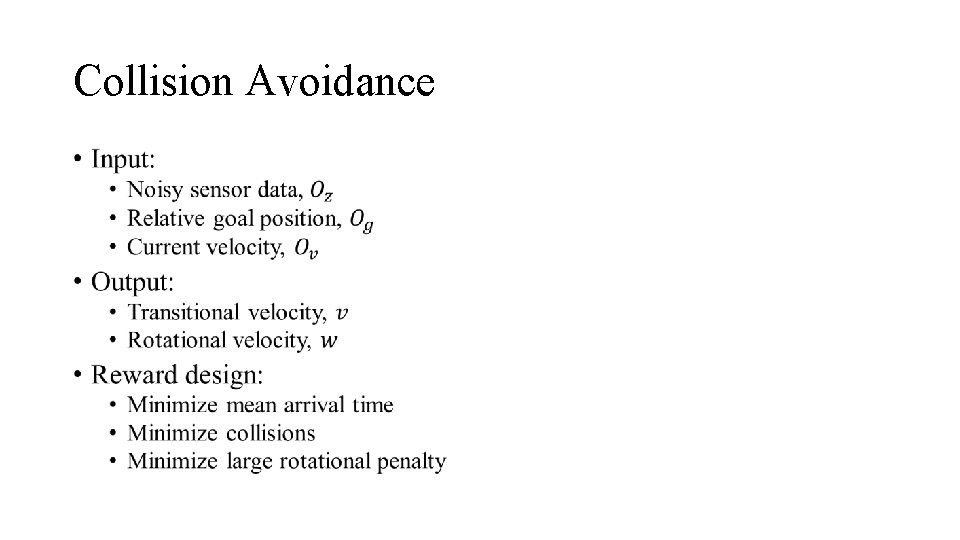

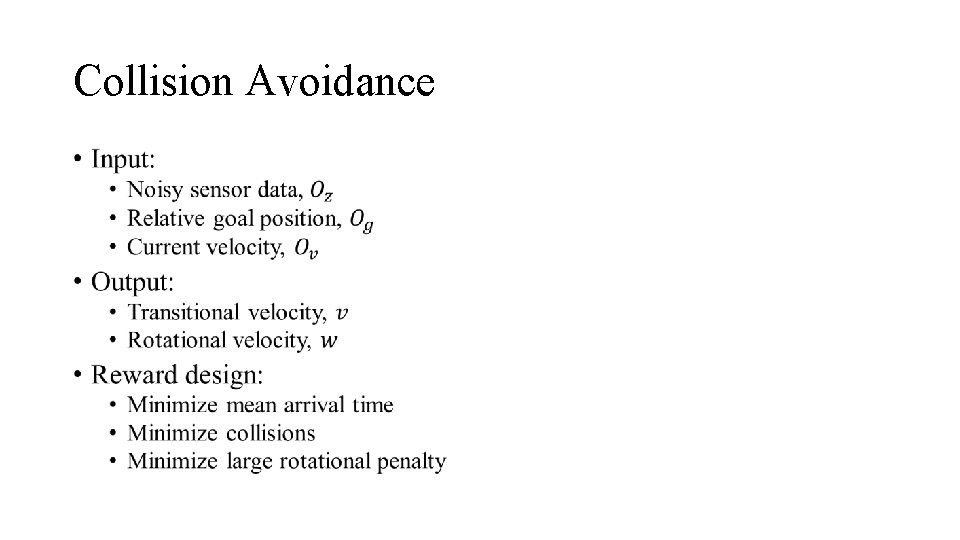

Collision Avoidance •

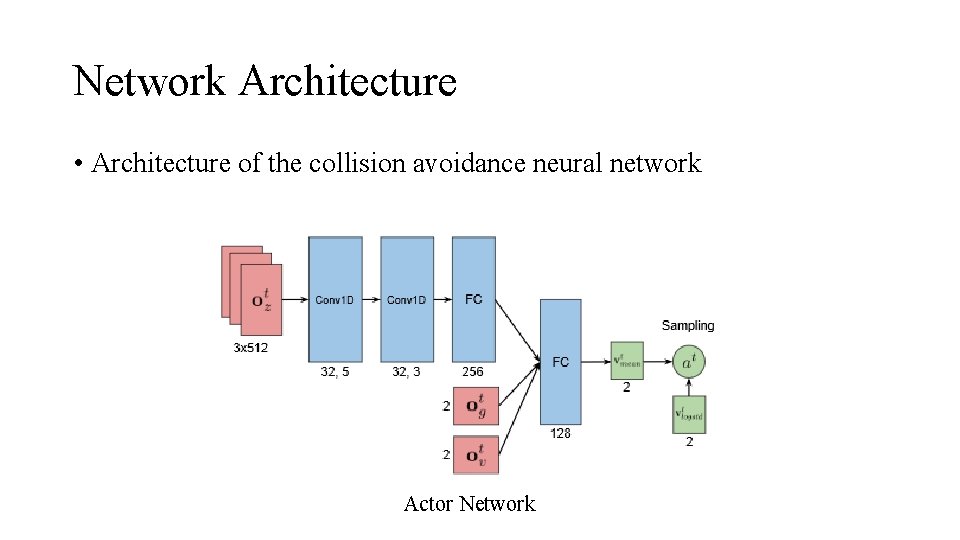

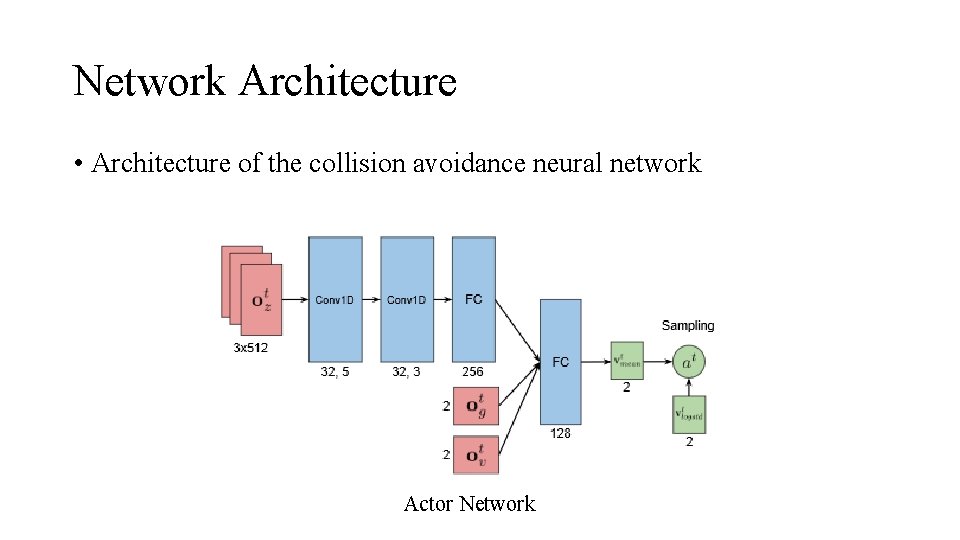

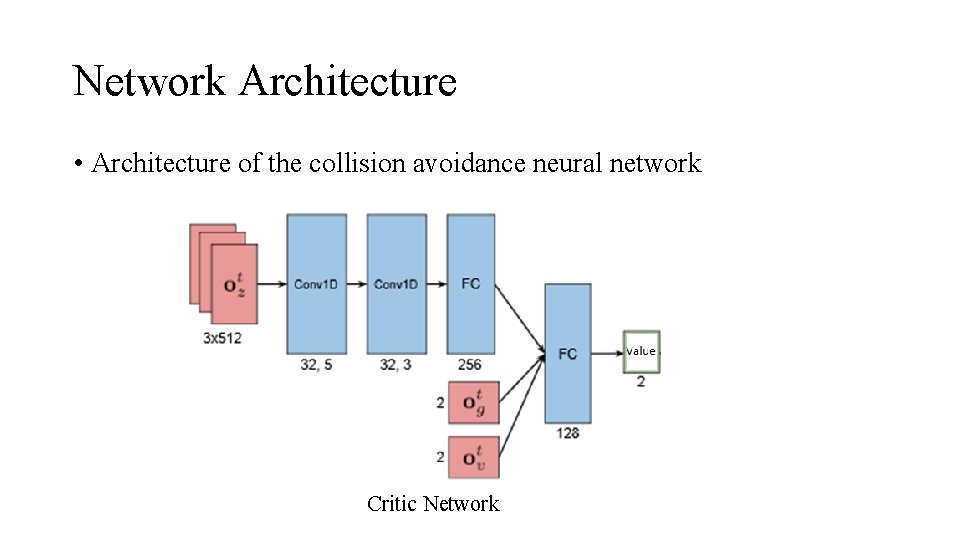

Network Architecture • Architecture of the collision avoidance neural network: actor network

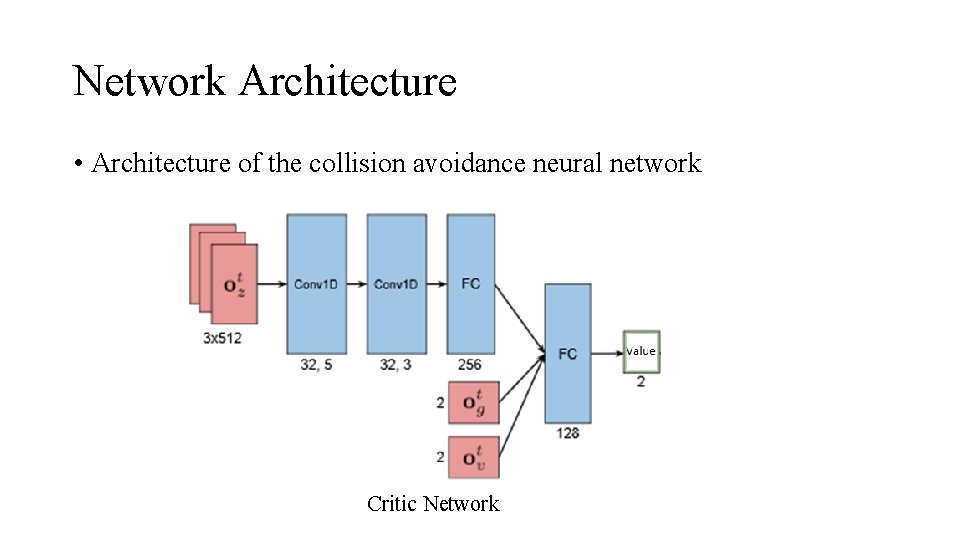

Network Architecture • Architecture of the collision avoidance neural network: critic network

Network Architecture • Two networks • Actor: policy network • Critic: value network • The parameters of the two networks are updated independently • The critic’s value is incorporated in the policy network’s update
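As an illustration of separate parameters with the critic's judgement feeding the actor, here is a minimal tabular one-step actor-critic sketch. It is a generic textbook-style update, not necessarily the training algorithm used in the referenced collision avoidance work; the chain world, learning rates, and function names are assumptions.

```python
import math
import random


def softmax(prefs):
    """Turn action preferences into probabilities."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def actor_critic_step(actor, critic, s, a, r, s_next, done,
                      gamma=0.95, lr_actor=0.2, lr_critic=0.2):
    """Update both parameter sets in place for one transition; return the TD error."""
    target = r if done else r + gamma * critic[s_next]
    td_error = target - critic[s]                 # the critic's verdict on this step
    probs = softmax(actor[s])
    critic[s] += lr_critic * td_error             # touches critic parameters only
    for b in range(len(actor[s])):                # touches actor parameters only
        grad_log_pi = (1.0 if b == a else 0.0) - probs[b]
        actor[s][b] += lr_actor * td_error * grad_log_pi
    return td_error


def train(n_states=5, episodes=300, seed=0):
    """Assumed chain world: start at state 0, reward 1 on reaching the last state."""
    rng = random.Random(seed)
    actor = [[0.0, 0.0] for _ in range(n_states)]  # preferences: 0 = left, 1 = right
    critic = [0.0] * n_states                      # state-value estimates
    goal = n_states - 1
    for _ in range(episodes):
        s = 0
        for _ in range(100):
            a = 0 if rng.random() < softmax(actor[s])[0] else 1
            s_next = max(0, min(goal, s + (1 if a == 1 else -1)))
            done = s_next == goal
            actor_critic_step(actor, critic, s, a, 1.0 if done else 0.0, s_next, done)
            s = s_next
            if done:
                break
    return actor, critic
```

The two tables never share entries: the critic learns values from the TD error, and the actor reuses that same TD error only as a scaling factor for its own update.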

Collision Avoidance • Generalizes well to avoiding dynamic obstacles • Generalizes to heterogeneous groups of agents • Video link: https://www.youtube.com/watch?v=Uj1yAmlL5lk

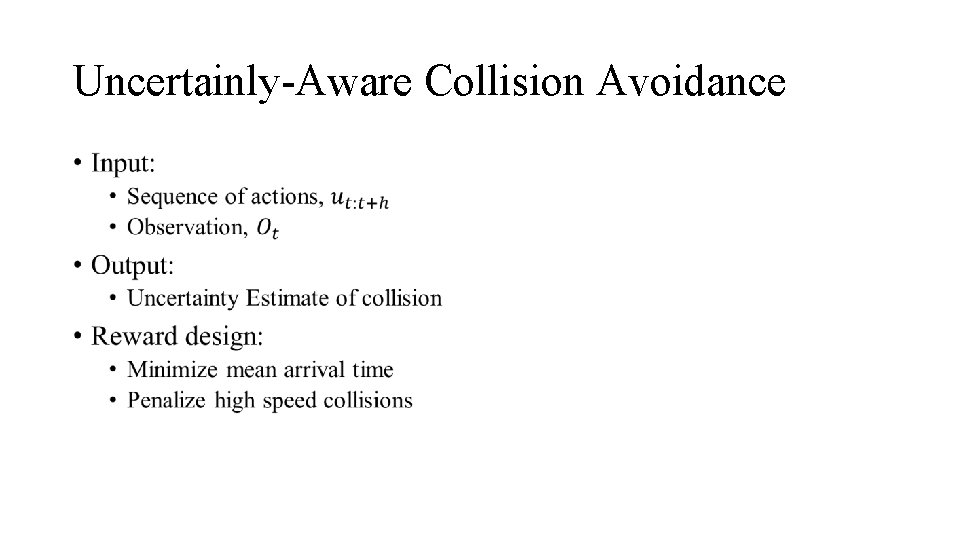

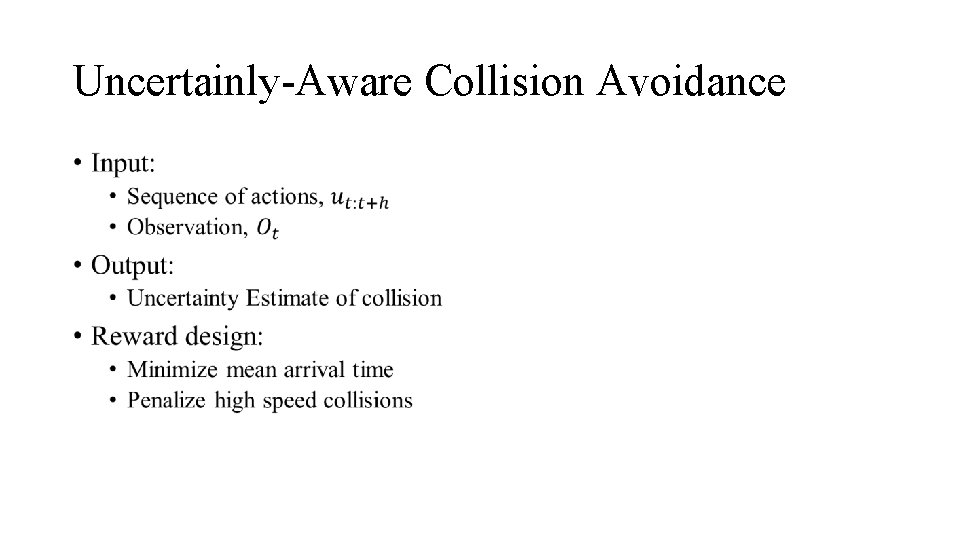

Uncertainty-Aware Collision Avoidance • Objective • Avoid collisions with static objects in the environment • Move cautiously in an unknown environment

Uncertainty-Aware Collision Avoidance •

Uncertainty-Aware Collision Avoidance • Output of the neural network: uncertainty, not an action • Cost function: favors slow movement
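One way a cost function can favor slow movement under uncertainty is sketched below, under stated assumptions: an ensemble of models each predicts a collision probability, disagreement among them is read as uncertainty, and the collision cost grows with speed. The specific cost form, the weights, and the names `collision_risk` and `choose_speed` are hypothetical and only loosely modeled on the idea in the slides.

```python
import statistics


def collision_risk(predictions, risk_weight=1.0):
    """Pessimistic collision estimate: ensemble mean plus weighted spread."""
    mean = statistics.fmean(predictions)
    spread = statistics.pstdev(predictions)   # disagreement = uncertainty
    return mean + risk_weight * spread


def choose_speed(candidate_speeds, ensemble_predict,
                 goal_weight=1.0, collision_weight=10.0, risk_weight=1.0):
    """Pick the speed with the lowest cost.

    ensemble_predict(speed) is assumed to return one collision probability
    per ensemble member for moving at that speed.
    """
    def cost(speed):
        risk = collision_risk(ensemble_predict(speed), risk_weight)
        # Collision cost scales with speed; progress is rewarded with speed.
        return collision_weight * speed * risk - goal_weight * speed

    return min(candidate_speeds, key=cost)


speeds = [0.5, 1.0, 2.0]
# Familiar surroundings: the ensemble agrees that collision is unlikely.
fast = choose_speed(speeds, lambda s: [0.01, 0.02, 0.01])
# Unknown surroundings: the ensemble disagrees, so the risk estimate is high.
slow = choose_speed(speeds, lambda s: [0.0, 0.5, 0.1])
```

With these assumed weights, the speed-scaled collision term outweighs the progress term once the pessimistic risk exceeds 0.1, so high uncertainty pushes the choice toward the slowest candidate.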

Conclusion • Reinforcement learning is used in three different ways • Target-Driven Navigation: uses the traditional actor-critic model, with one single network for both • Decentralized Multi-Robot Collision Avoidance: uses the traditional actor-critic model, with separate networks for actor and critic • Uncertainty-Aware Reinforcement Learning for Collision Avoidance: does not use the traditional actor-critic model; the cost function favors the desired action

References • Gregory Kahn, Adam Villaflor, Vitchyr Pong, Pieter Abbeel, Sergey Levine. Uncertainty-Aware Reinforcement Learning for Collision Avoidance. Berkeley AI Research (BAIR), University of California, Berkeley; OpenAI • Pinxin Long, Tingxiang Fan, Xinyi Liao, Wenxi Liu, Hao Zhang, Jia Pan. Towards Optimally Decentralized Multi-Robot Collision Avoidance via Deep Reinforcement Learning • Yuke Zhu, Roozbeh Mottaghi, Eric Kolve, Joseph J. Lim, Abhinav Gupta, Li Fei-Fei, Ali Farhadi. Target-driven Visual Navigation in Indoor Scenes using Deep Reinforcement Learning