CS 33 Introduction to Computer Organization Week 8

- Slides: 29

CS 33: Introduction to Computer Organization, Week 8 Discussion Section
Atefeh Sohrabizadeh, atefehsz@cs.ucla.edu
11/22/19

Agenda
♦ Virtual Memory
♦ Threading and Basic Synchronization

Virtual Memory
♦ As demand on the CPU increases, processes slow down.
♦ But if processes need too much memory, some of them may not be able to run.
  § When a program runs out of memory space, it can’t run.
♦ Solution: Virtual Memory (VM)
  § There is only one DRAM unit in the machine.
  § Provide each process with a large, uniform, private address space, so that each process behaves as if it had the entire DRAM to itself.
  § Main memory (DRAM) is treated as a cache for an address space stored on disk.
  § Memory management is simpler
    • Since each process has a uniform address space
  § Address spaces are isolated
    • The address space of each process is protected from corruption by other processes

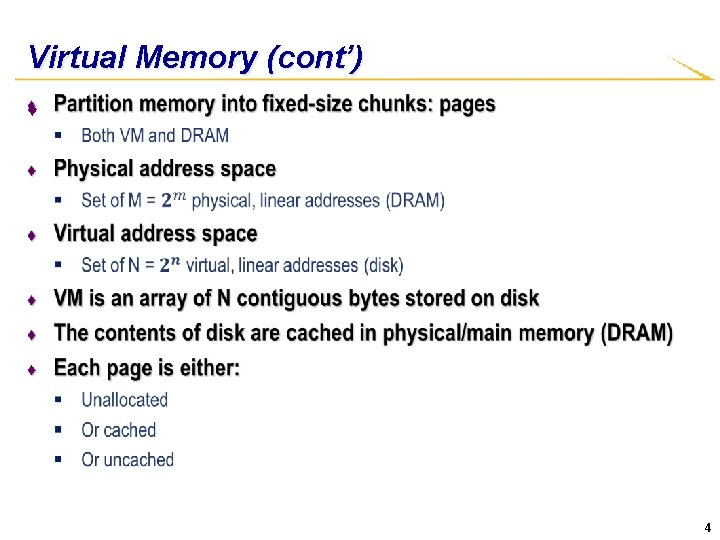

Virtual Memory (cont.)

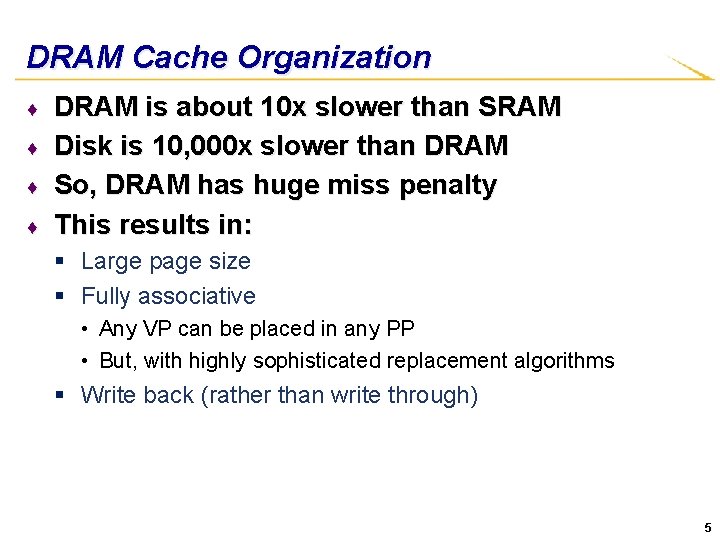

DRAM Cache Organization
♦ DRAM is about 10× slower than SRAM.
♦ Disk is about 10,000× slower than DRAM.
♦ So a miss in the DRAM cache (a page fault) has a huge penalty. This results in:
  § Large page size (typically 4 KB to 2 MB)
  § Full associativity
    • Any virtual page (VP) can be placed in any physical page (PP)
    • But this requires highly sophisticated replacement algorithms
  § Write-back (rather than write-through)

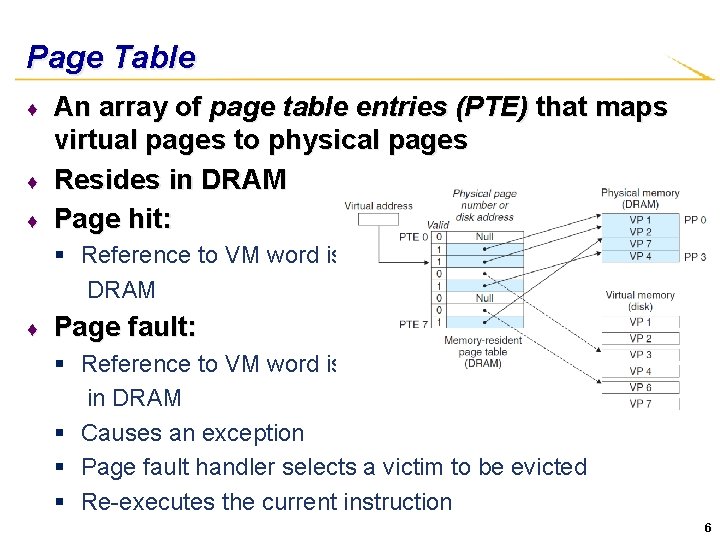

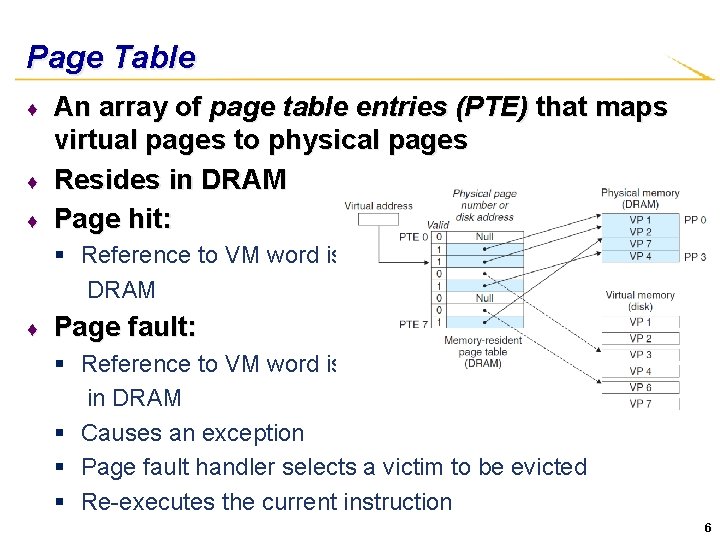

Page Table
♦ An array of page table entries (PTEs) that maps virtual pages to physical pages
♦ Resides in DRAM
♦ Page hit:
  § The referenced VM word is in DRAM
♦ Page fault:
  § The referenced VM word is not in DRAM
  § Causes an exception
  § The page fault handler selects a victim page to evict (writing it back to disk if it was modified) and loads the requested page
  § The faulting instruction is then re-executed

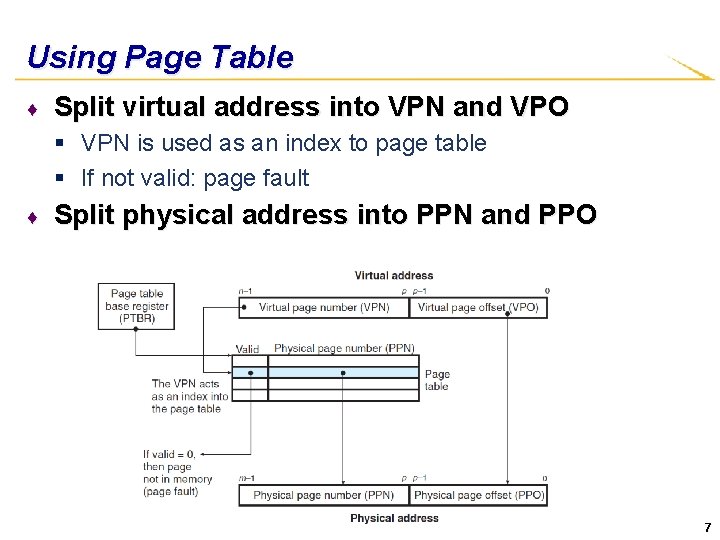

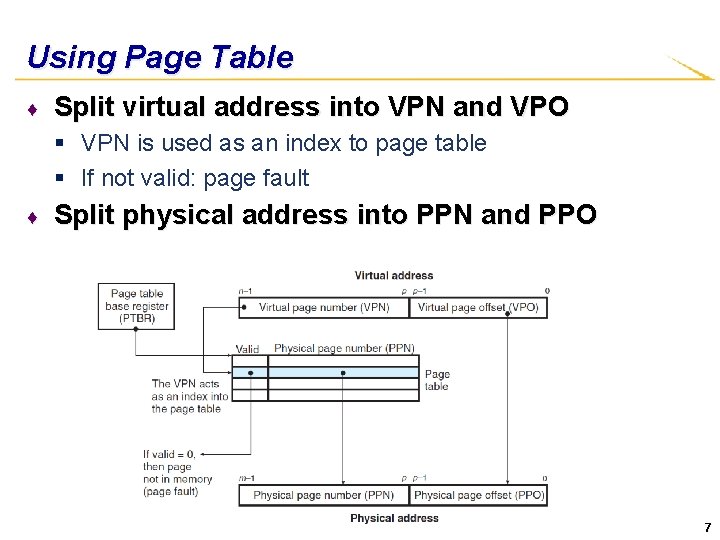

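Using the Page Table
♦ Split the virtual address into a virtual page number (VPN) and a virtual page offset (VPO)
  § The VPN is used as an index into the page table
  § If the PTE is not valid: page fault
♦ The physical address splits into a physical page number (PPN) and a physical page offset (PPO)
  § The PPN comes from the PTE; the PPO is identical to the VPO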

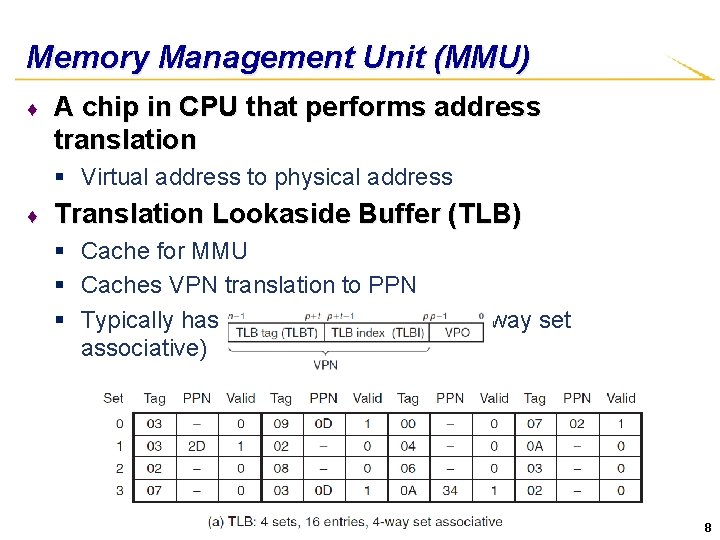

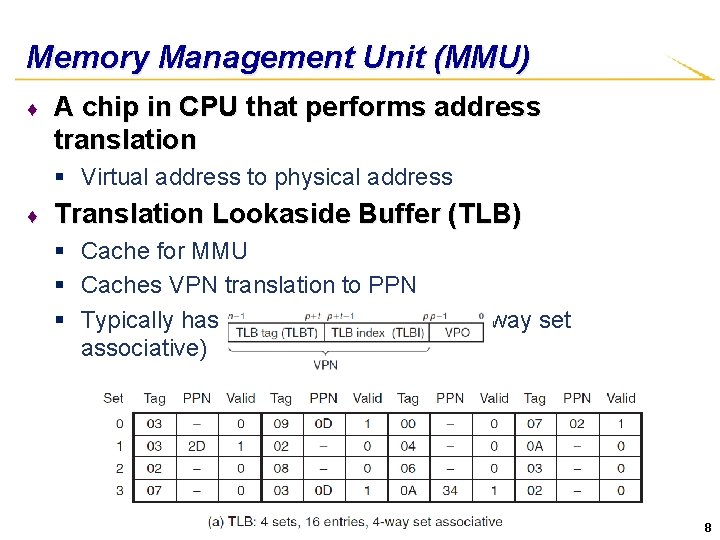

Memory Management Unit (MMU)
♦ Dedicated hardware on the CPU chip that performs address translation
  § Virtual address to physical address
♦ Translation Lookaside Buffer (TLB)
  § A small cache inside the MMU
  § Caches VPN-to-PPN translations (PTEs)
  § Typically has high associativity (e.g., 4-way set associative)

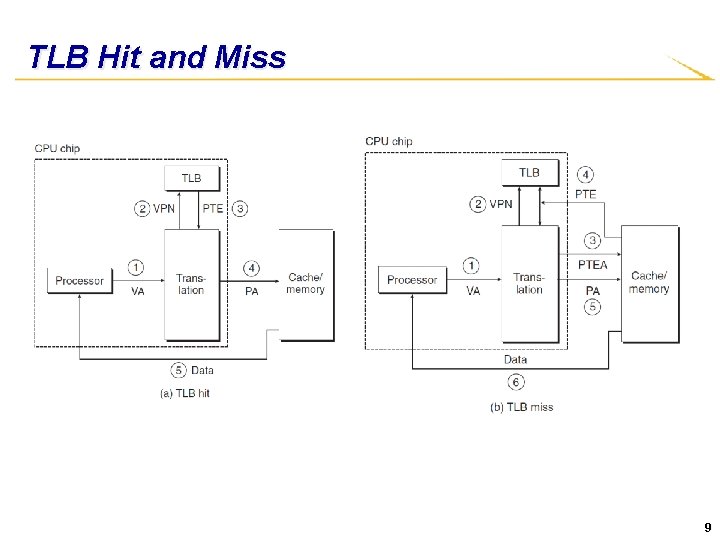

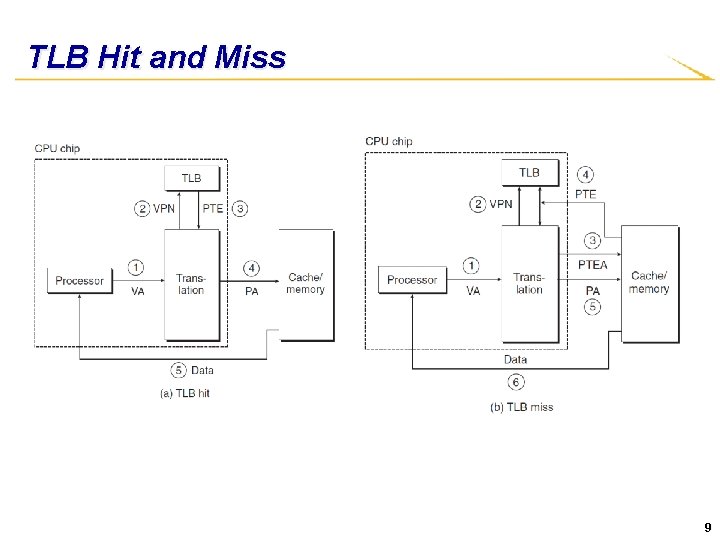

TLB Hit and Miss

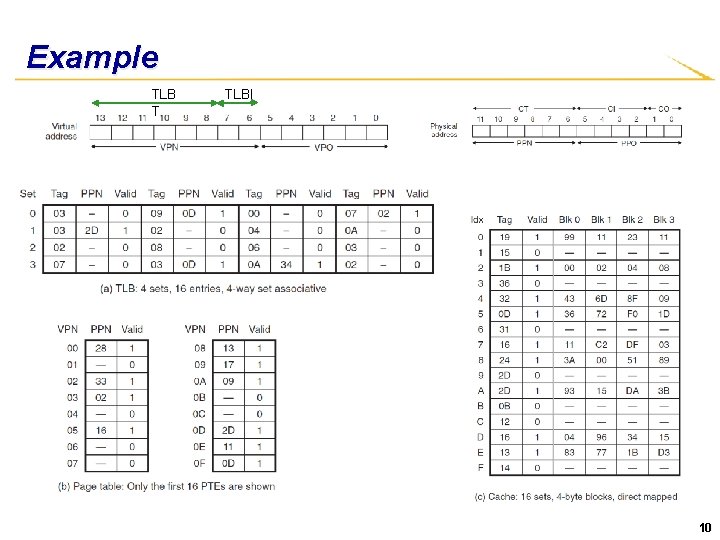

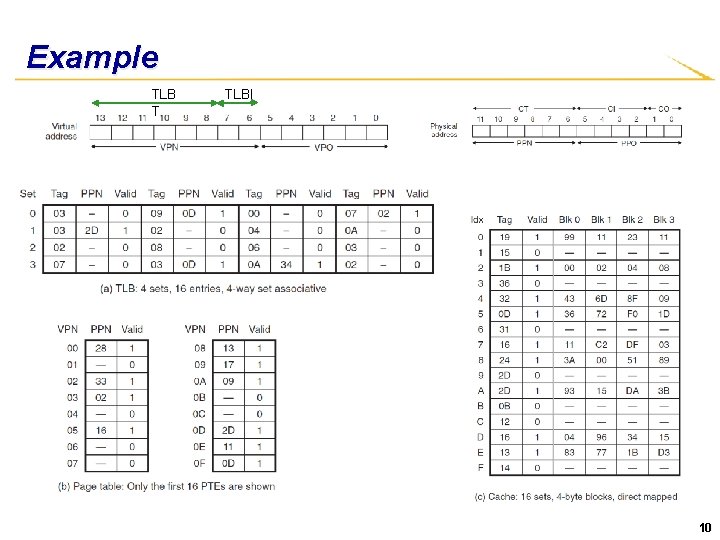

Example TLB
(Figure: the VPN split into the TLB tag, TLBT, and the TLB index, TLBI)

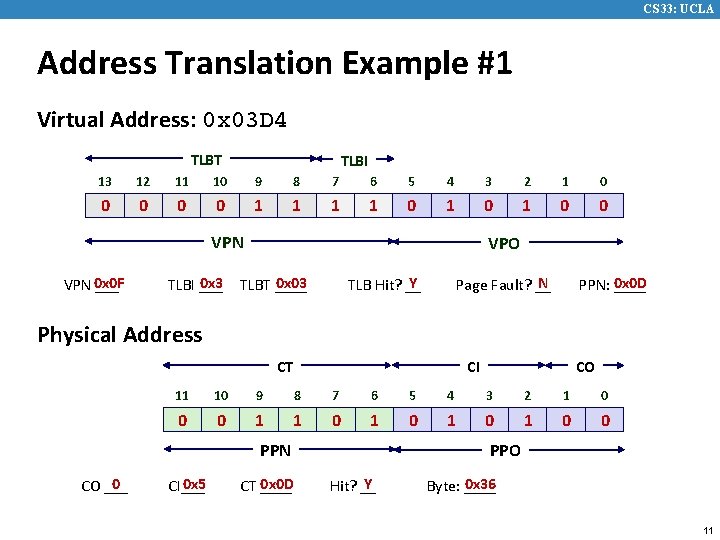

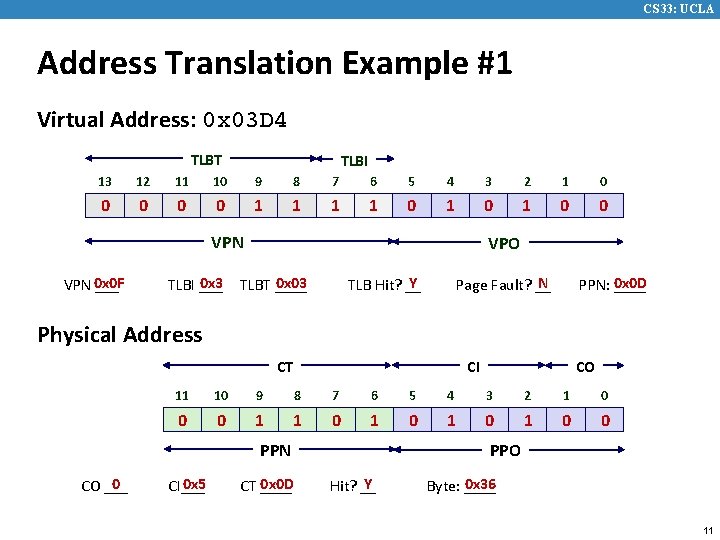

CS 33: UCLA
Address Translation Example #1
♦ Virtual address: 0x03D4 = 00 0011 1101 0100 (bits 13 to 0)
  § VPN: 0x0F   TLBI: 0x3   TLBT: 0x03   (VPO: 0x14)
  § TLB hit? Y   Page fault? N   PPN: 0x0D
♦ Physical address: 0x354 = 0011 0101 0100 (bits 11 to 0)
  § CO: 0x0   CI: 0x5   CT: 0x0D   (PPO: 0x14)
  § Cache hit? Y   Byte: 0x36

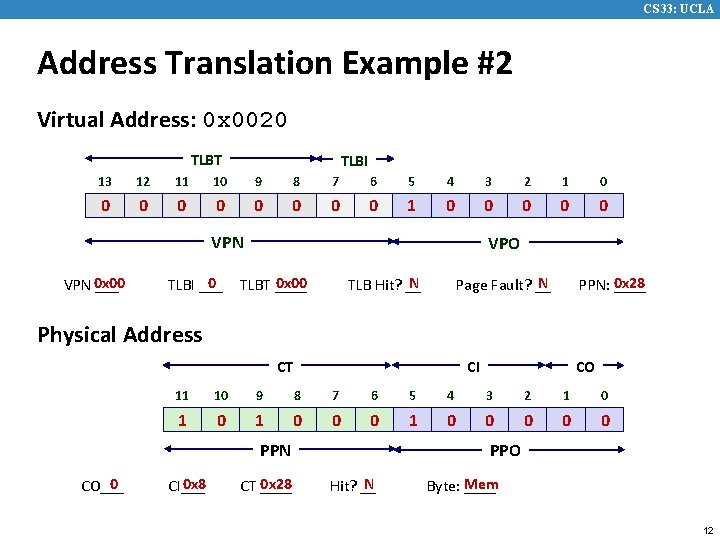

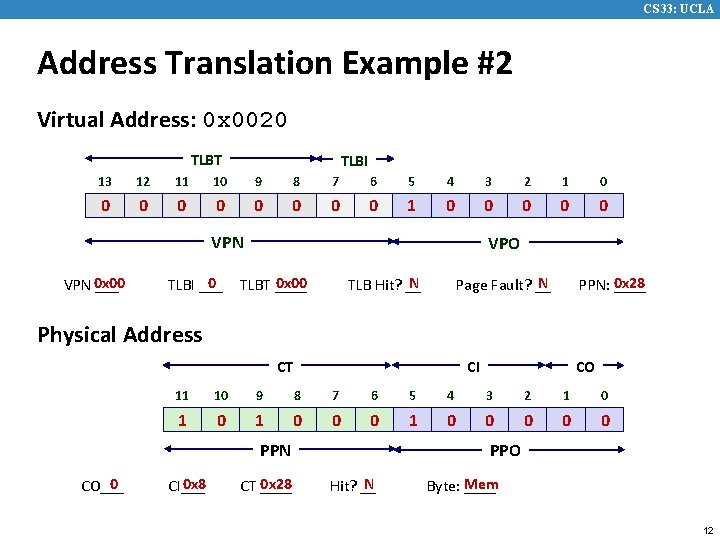

Address Translation Example #2
♦ Virtual address: 0x0020 = 00 0000 0010 0000 (bits 13 to 0)
  § VPN: 0x00   TLBI: 0x0   TLBT: 0x00   (VPO: 0x20)
  § TLB hit? N   Page fault? N   PPN: 0x28 (from the page table)
♦ Physical address: 0xA20 = 1010 0010 0000 (bits 11 to 0)
  § CO: 0x0   CI: 0x8   CT: 0x28   (PPO: 0x20)
  § Cache hit? N   Byte: must be fetched from memory ("Mem")

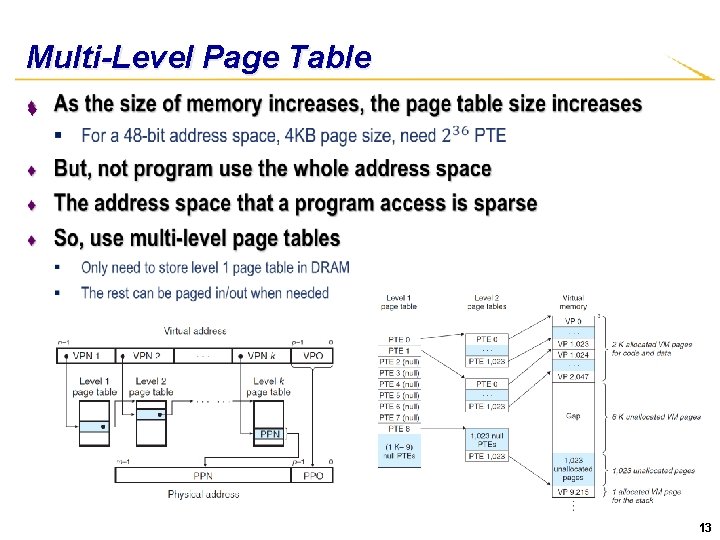

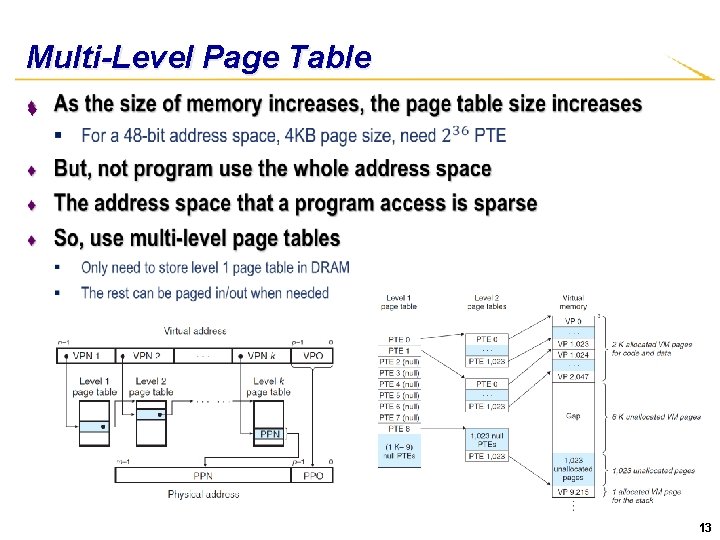

Multi-Level Page Table

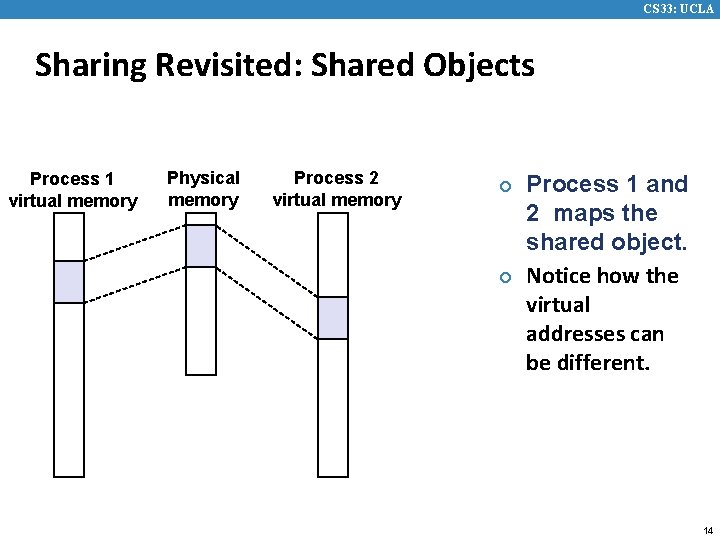

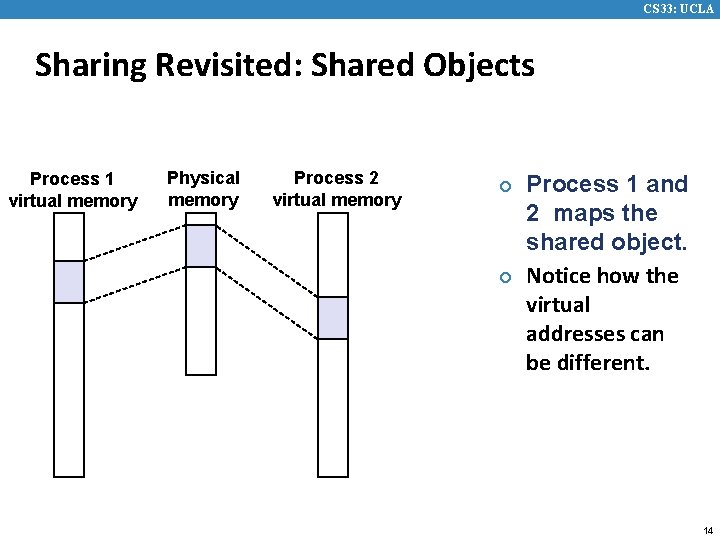

Sharing Revisited: Shared Objects
(Figure: Process 1 virtual memory, physical memory, Process 2 virtual memory)
♦ Processes 1 and 2 both map the shared object.
♦ Notice that the virtual addresses can be different.

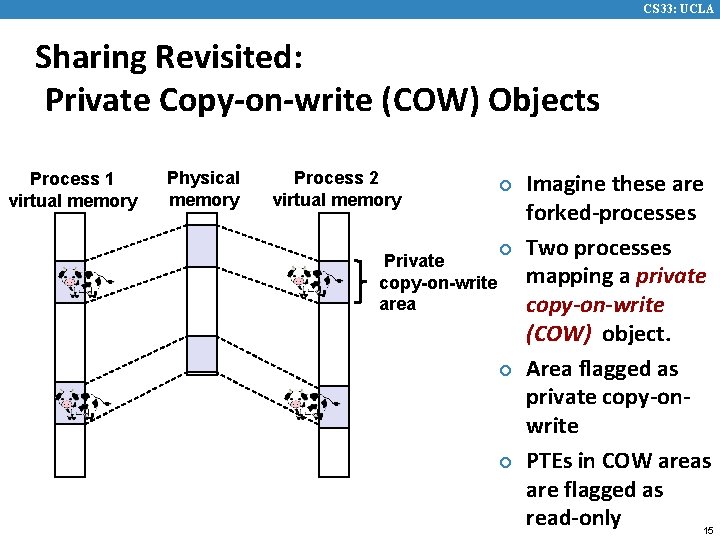

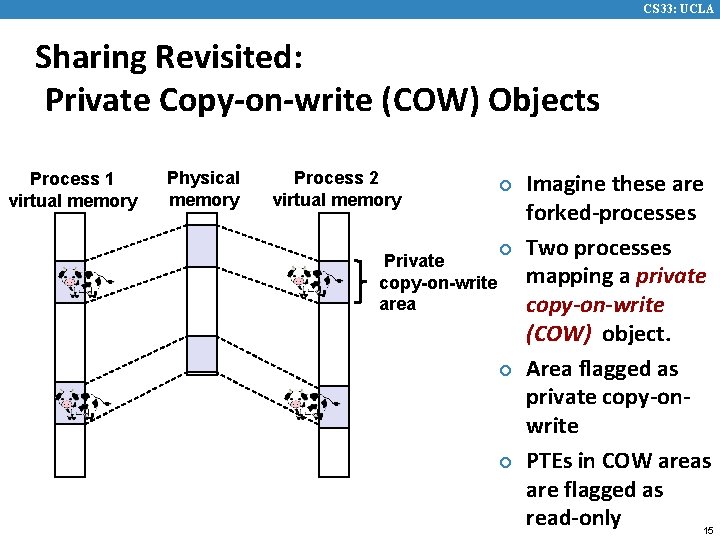

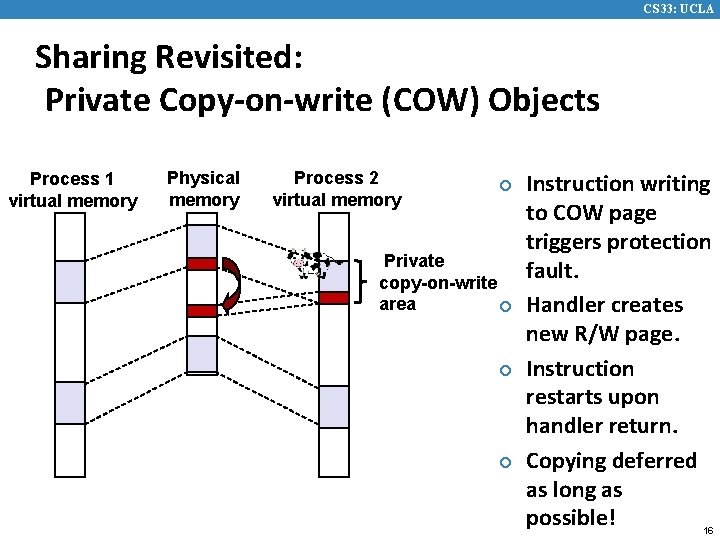

Sharing Revisited: Private Copy-on-Write (COW) Objects
(Figure: Process 1 virtual memory, physical memory, Process 2 virtual memory, private copy-on-write area)
♦ Imagine these are forked processes.
♦ Two processes map a private copy-on-write (COW) object.
  § The area is flagged as private copy-on-write.
  § PTEs in COW areas are flagged as read-only.

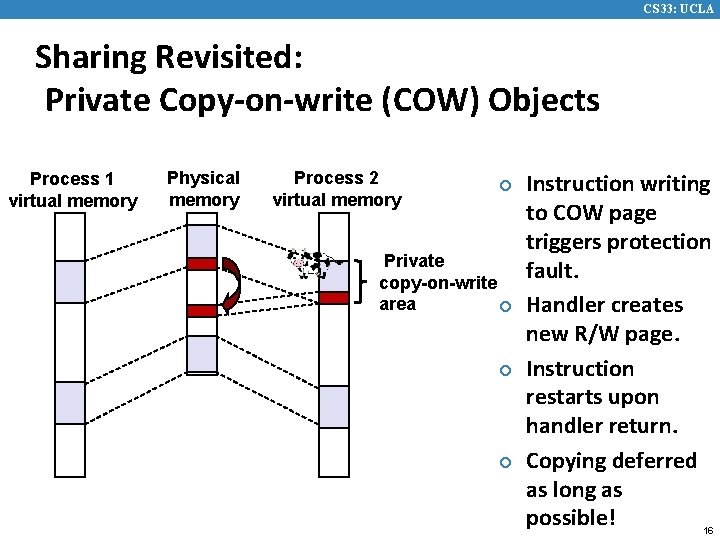

Sharing Revisited: Private Copy-on-Write (COW) Objects (cont.)
(Figure: Process 1 virtual memory, physical memory, Process 2 virtual memory, private copy-on-write area)
♦ An instruction writing to a COW page triggers a protection fault.
♦ The handler creates a new, writable (R/W) copy of the page.
♦ The instruction restarts when the handler returns.
♦ Copying is deferred as long as possible!

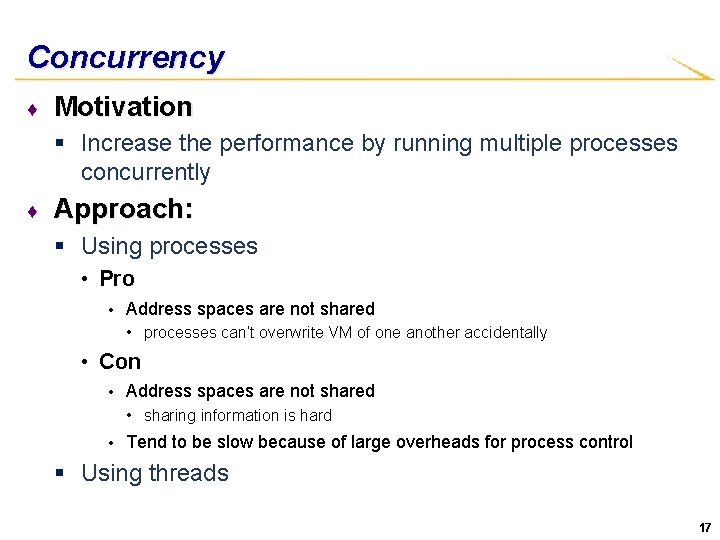

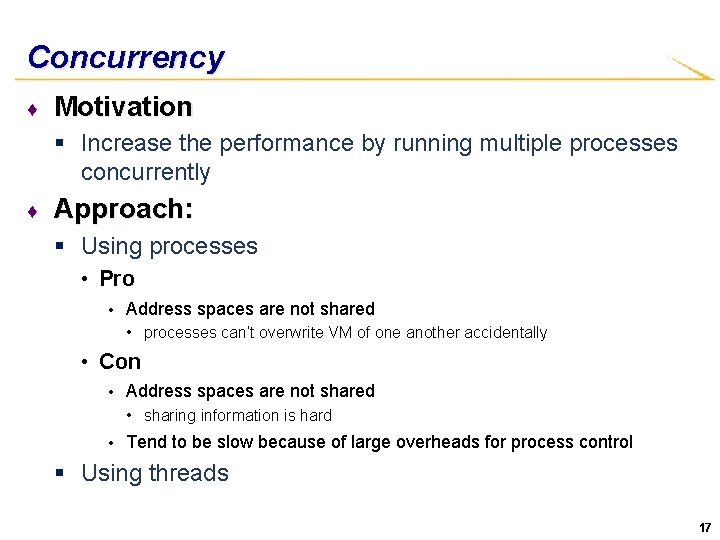

Concurrency
♦ Motivation
  § Increase performance by running multiple logical flows concurrently
♦ Approaches:
  § Using processes
    • Pro: address spaces are not shared, so processes can’t accidentally overwrite each other’s virtual memory
    • Con: address spaces are not shared, so sharing information is hard
    • Con: tends to be slow because of the large overhead of process control
  § Using threads

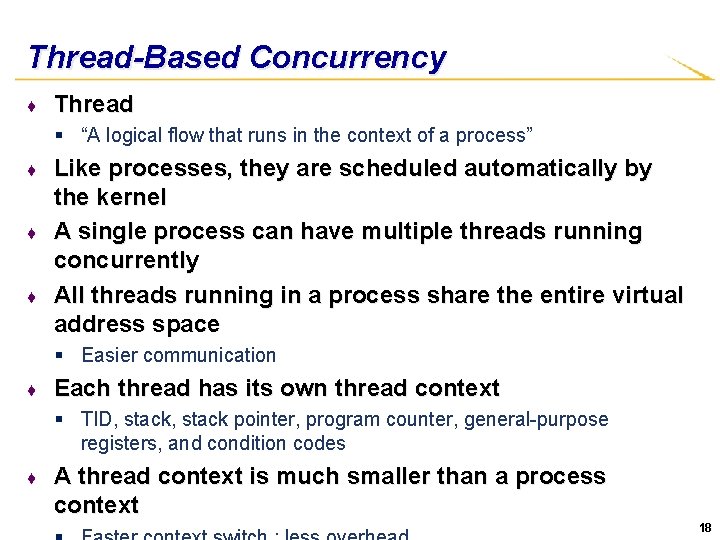

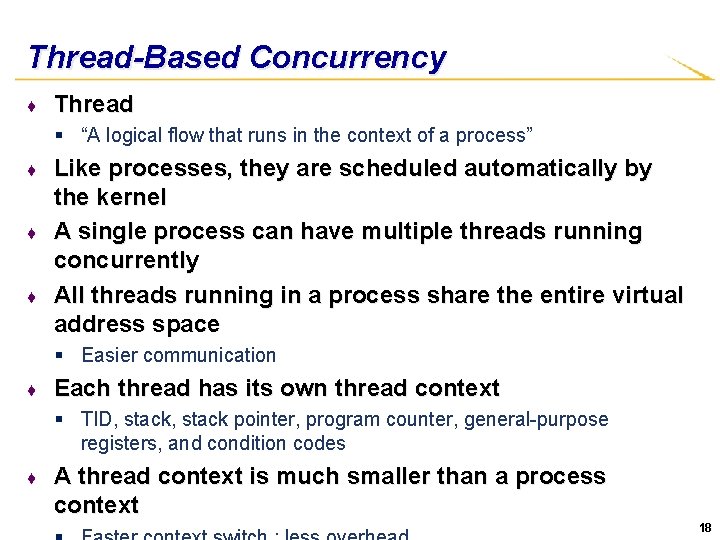

Thread-Based Concurrency
♦ Thread
  § “A logical flow that runs in the context of a process”
♦ Like processes, threads are scheduled automatically by the kernel.
♦ A single process can have multiple threads running concurrently.
♦ All threads running in a process share the entire virtual address space.
  § Easier communication
♦ Each thread has its own thread context.
  § TID, stack, stack pointer, program counter, general-purpose registers, and condition codes
♦ A thread context is much smaller than a process context.

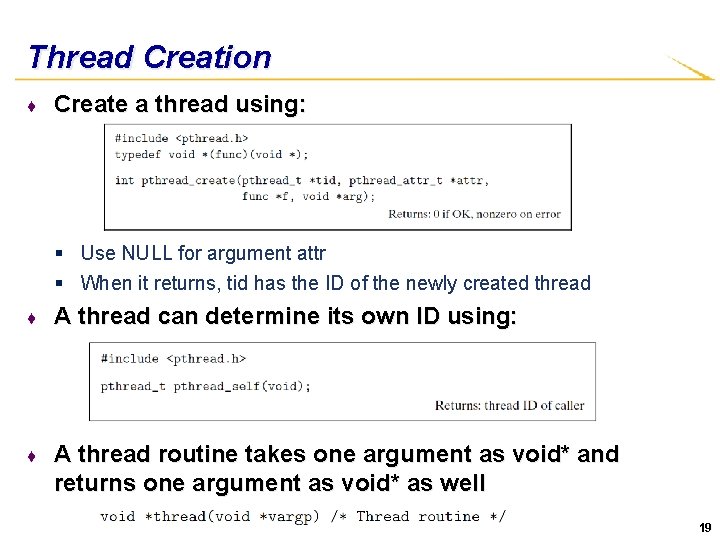

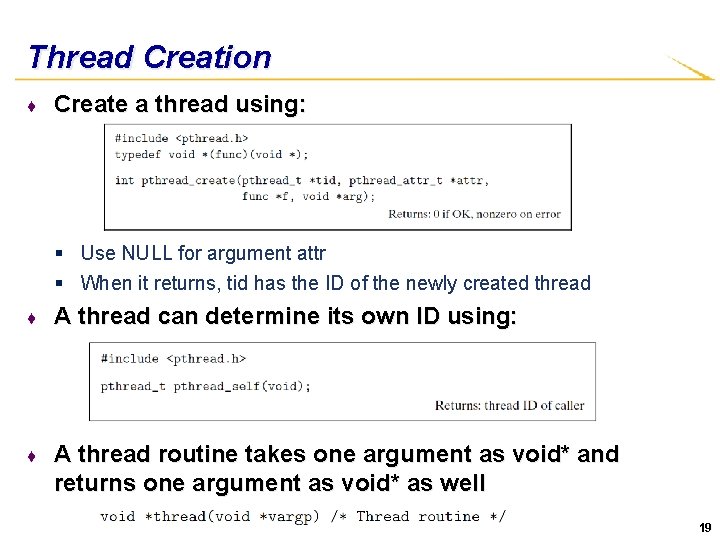

Thread Creation
♦ Create a thread using pthread_create:
  § Use NULL for the attr argument (default attributes)
  § When it returns, tid holds the ID of the newly created thread
♦ A thread can determine its own ID using pthread_self
♦ A thread routine takes one argument of type void* and returns a value of type void*

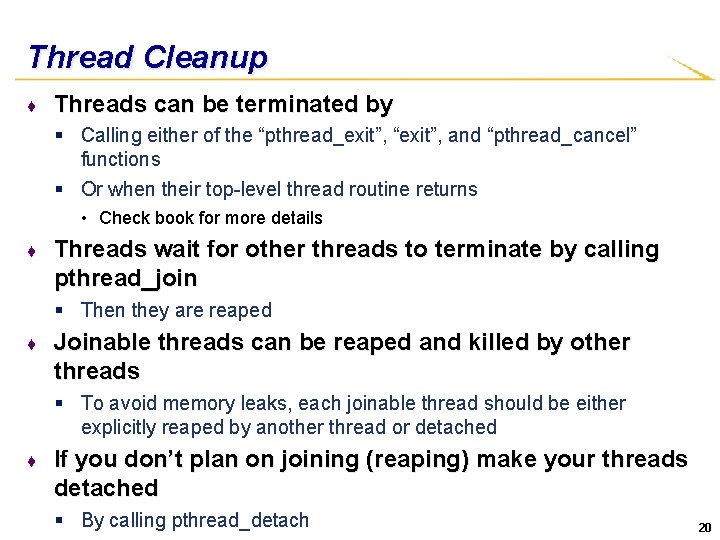

Thread Cleanup
♦ A thread terminates when
  § It calls pthread_exit, any thread calls exit (which terminates the whole process), or another thread cancels it with pthread_cancel
  § Or its top-level thread routine returns
    • Check the book for more details
♦ A thread waits for another thread to terminate by calling pthread_join
  § The terminated thread is then reaped
♦ Joinable threads can be reaped and killed by other threads
  § To avoid memory leaks, each joinable thread should be either explicitly reaped by another thread or detached
♦ If you don’t plan on joining (reaping) a thread, make it detached
  § By calling pthread_detach

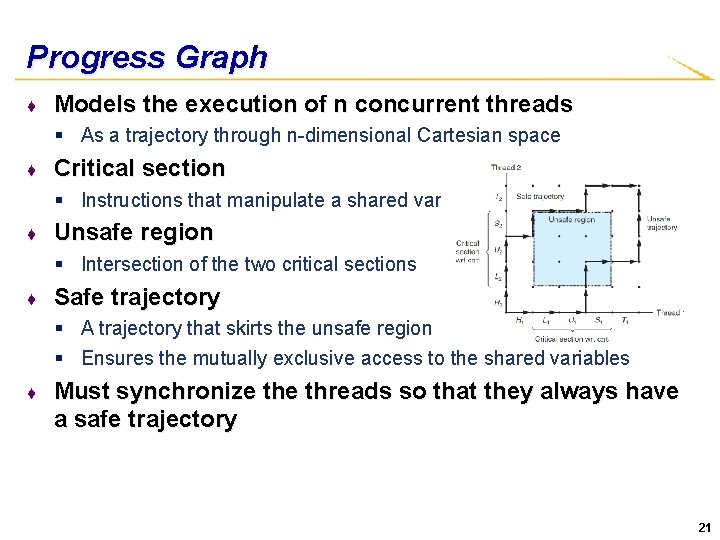

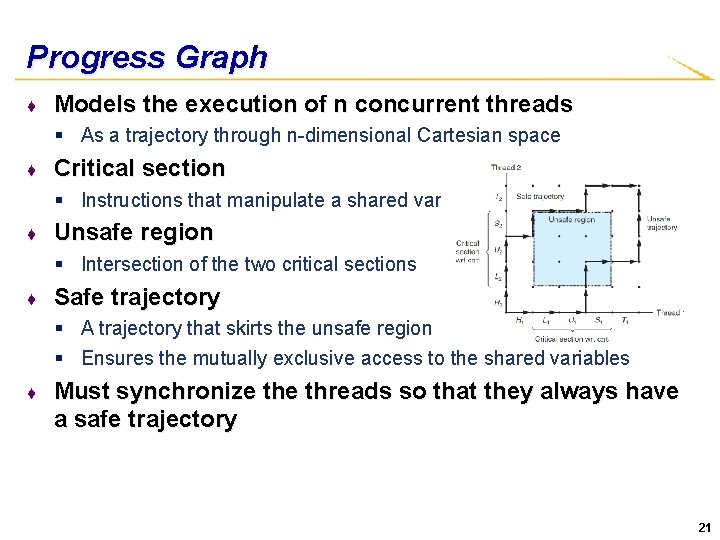

Progress Graph
♦ Models the execution of n concurrent threads
  § As a trajectory through an n-dimensional Cartesian space
♦ Critical section
  § A sequence of instructions that manipulates a shared variable
♦ Unsafe region
  § The intersection of the two critical sections (with two threads, the states where both threads are inside their critical sections)
♦ Safe trajectory
  § A trajectory that skirts the unsafe region
  § Ensures mutually exclusive access to the shared variables
♦ Threads must be synchronized so that they always follow a safe trajectory

Semaphore
♦ Everything in the process context except the thread contexts is shared among the threads
  § E.g., global variables and static local variables
♦ Shared variables may cause synchronization errors
♦ (One) solution: use semaphores
  § A global variable with a nonnegative integer value
  § Can act as a counter (a counting semaphore)
  § A mutex is a binary semaphore (its value is always 0 or 1)
  § Associate a semaphore (a mutex, initially 1) with each shared variable

Semaphore Functions
♦ int sem_init(sem_t *sem, int pshared, unsigned int value);
  § Initializes semaphore sem to value (pass 0 for pshared to share it among the threads of one process)
♦ void P(sem_t *s);
  § “I want access”
  § If s is nonzero, decrements s by one and returns immediately
    • Grabs the lock (locks the mutex)
  § If s is zero, waits until s becomes nonzero, then decrements it and returns
♦ void V(sem_t *s);
  § “I’m done”
  § Increments s by one
    • Unlocks the mutex
  § If any threads are waiting at a P operation, wakes up one of them
♦ P and V are the textbook’s wrappers for sem_wait and sem_post

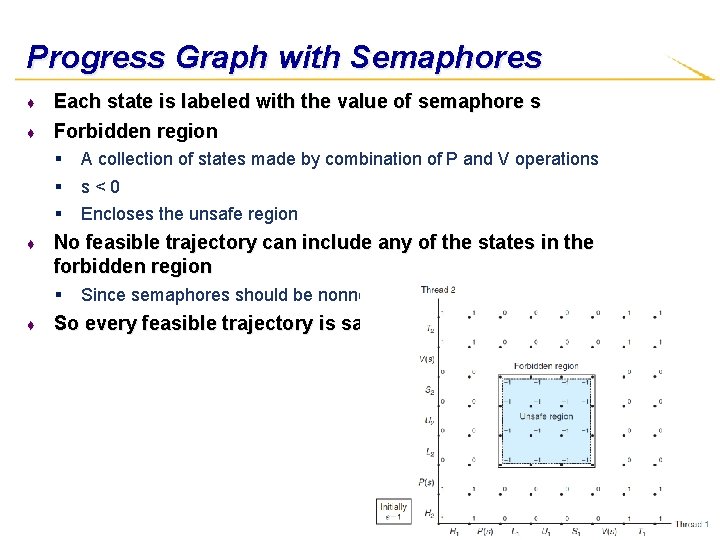

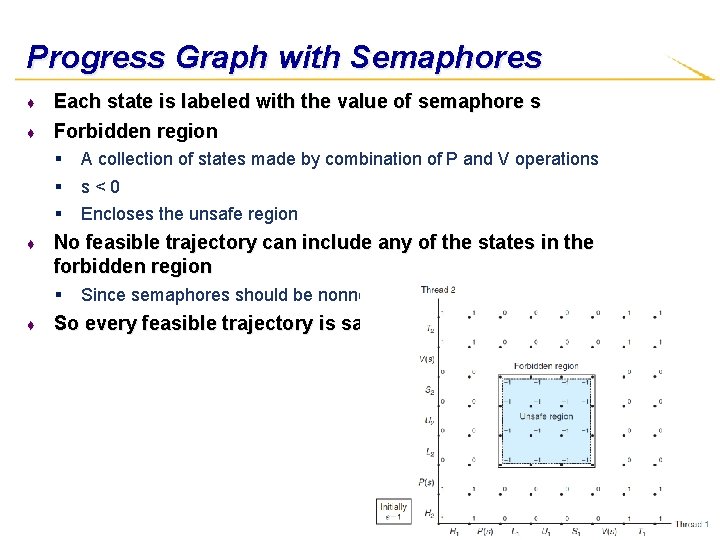

Progress Graph with Semaphores
♦ Each state is labeled with the value of semaphore s
♦ Forbidden region
  § The collection of states where s < 0, created by the combination of P and V operations
  § Encloses the unsafe region
  § No feasible trajectory can include any state in the forbidden region, since semaphores must stay nonnegative
♦ So every feasible trajectory is safe

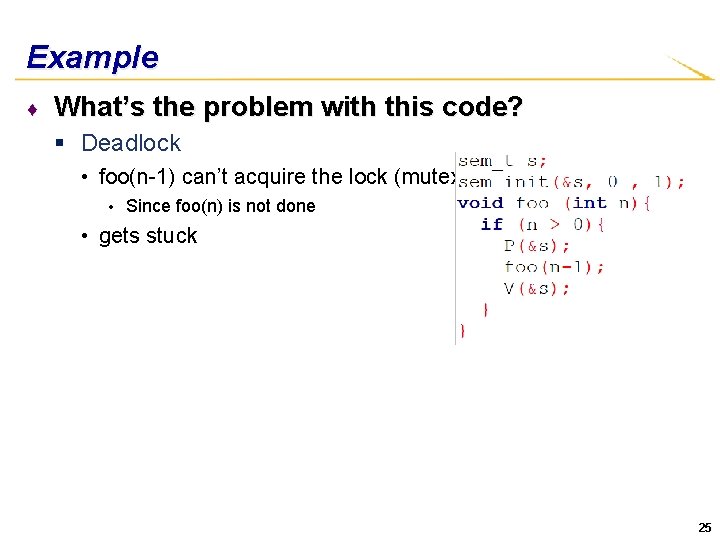

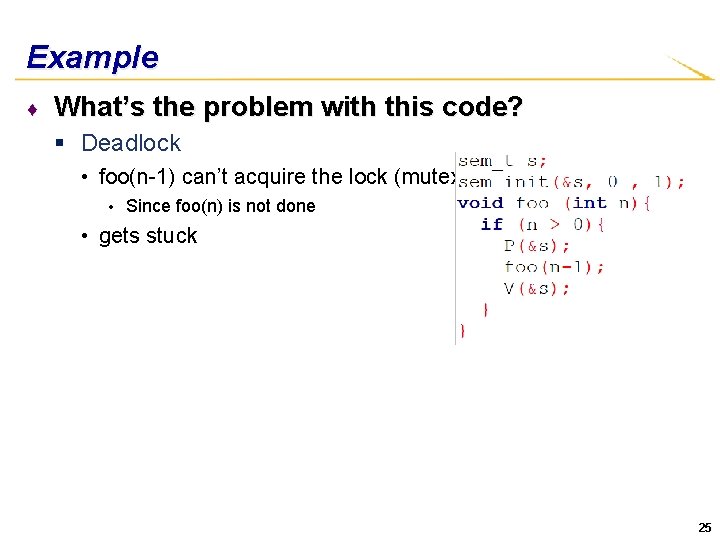

Example
♦ What’s the problem with this code?
  § Deadlock
    • The recursive call foo(n-1) can’t acquire the lock (mutex)
    • Since foo(n) still holds it and is not done
    • So the thread gets stuck

Concurrency Issues
♦ Thread safety
  § A function is thread-safe iff it always produces correct results when called repeatedly from multiple concurrent threads
♦ Carefully handle shared variables and use proper synchronization to make a function thread-safe
♦ Reentrant functions
  § A special form of thread-safe functions
  § Do not reference any shared data when called by multiple threads
♦ Deadlock
  § A collection of threads is blocked, waiting for a condition that will never be true

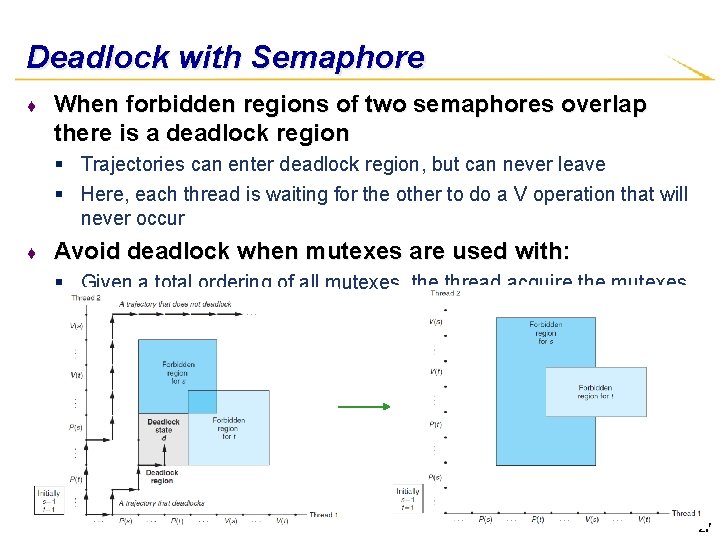

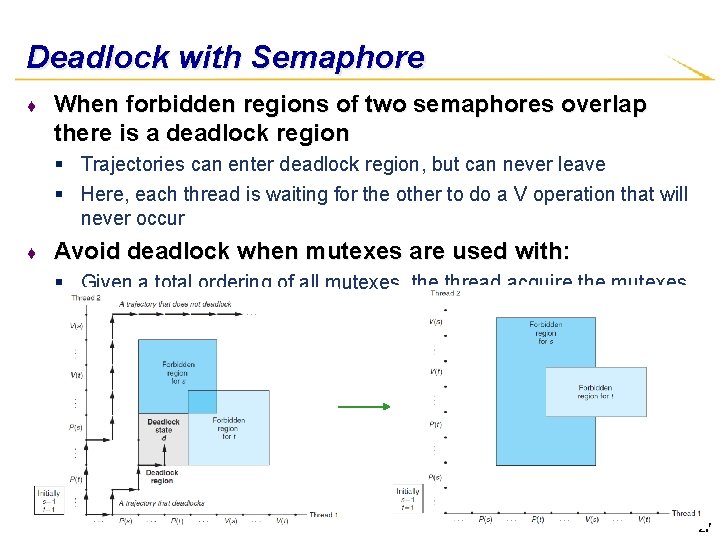

Deadlock with Semaphores
♦ When the forbidden regions of two semaphores overlap, there is a deadlock region
  § Trajectories can enter the deadlock region, but can never leave it
  § Here, each thread is waiting for the other to do a V operation that will never occur
♦ Avoiding deadlock when mutexes are used (mutex lock ordering rule):
  § Given a total ordering of all mutexes, each thread acquires its mutexes in that order

Class Attendance
♦ Use this link:
  § https://onlinepoll.ucla.edu/polls/3734
♦ Open until 3:50 PM

Acknowledgment
♦ The contents and figures are taken from
  § “Computer Systems: A Programmer’s Perspective”, 3rd ed., Bryant and O’Hallaron
  § Lecture slides