
- Slides: 31

CS 3214 Computer Systems Automatic Memory Management/GC

Some of the following slides are taken with permission from Complete Powerpoint Lecture Notes for Computer Systems: A Programmer's Perspective (CS:APP), Randal E. Bryant and David R. O'Hallaron, http://csapp.cs.cmu.edu/public/lectures.html

Part 2: Memory Management

CS 3214 Spring 2015

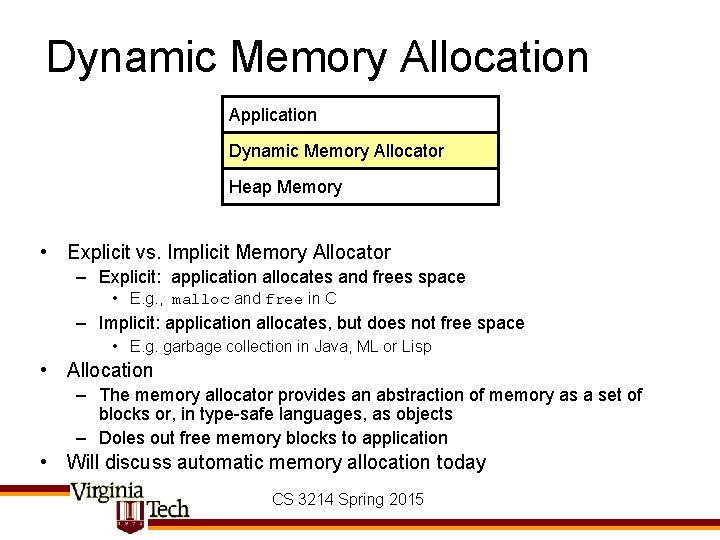

Dynamic Memory Allocation (figure: Application, Dynamic Memory Allocator, Heap Memory)
- Explicit vs. Implicit Memory Allocator
  - Explicit: application allocates and frees space
    - E.g., malloc and free in C
  - Implicit: application allocates, but does not free space
    - E.g., garbage collection in Java, ML, or Lisp
- Allocation
  - The memory allocator provides an abstraction of memory as a set of blocks or, in type-safe languages, as objects
  - Doles out free memory blocks to the application
- Will discuss automatic memory management today

Implicit Memory Management
- Motivation: manually (or explicitly) reclaiming memory is difficult:
  - Too early: risk of access-after-free errors
  - Too late: memory leaks
- Requires principled design
  - Programmer must reason about ownership of objects
- Difficult & error prone, especially in the presence of object sharing
- Complicates design of APIs

Manual Reference Counting
- Idea: keep track of how many references there are to each object in a reference counter stored with each object
  - Copy a reference to an object (`globalvar = q`): increment count (“addref”)
  - Remove a reference (`p = NULL`): decrement count (“release”)
- Uses a set of rules programmers must follow
  - E.g., must ‘release’ a reference obtained from an OUT parameter in a function call
  - Must ‘addref’ when storing into a global
  - May not have to use addref/release for references copied within one function
- Programmer must use addref/release correctly
  - Still somewhat error prone, but the rules are such that the correctness of the code can be established locally without consulting the API documentation of any functions being called; parameter annotations (IN, INOUT, return value) imply the reference counting rules
- Used in Microsoft COM & Netscape XPCOM
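The addref/release discipline can be sketched in C++ as follows. This is a minimal illustration, not COM's or XPCOM's actual interface: the type `Obj`, the functions `obj_new`, `addref`, `release`, `store_global`, and `get_object`, and the `live_objects` counter are hypothetical names chosen for this example.

```cpp
#include <cassert>

// Hypothetical reference-counted object in the spirit of COM/XPCOM.
struct Obj {
    int refcount = 1;   // the creator holds the first reference
    int payload = 0;
};

int live_objects = 0;   // number of Obj instances not yet freed

Obj *obj_new(int payload) {
    Obj *o = new Obj;
    o->payload = payload;
    ++live_objects;
    return o;
}

// "addref": a new reference to o has been created.
void addref(Obj *o) { ++o->refcount; }

// "release": a reference to o has been dropped; the last release frees
// the object immediately.
void release(Obj *o) {
    assert(o->refcount > 0);
    if (--o->refcount == 0) {
        delete o;
        --live_objects;
    }
}

Obj *globalvar = nullptr;

// Rule: storing into a global requires an addref. The new reference is
// taken before the old one is dropped so storing the same object twice is safe.
void store_global(Obj *q) {
    addref(q);
    if (globalvar != nullptr)
        release(globalvar);
    globalvar = q;
}

// Rule: an OUT parameter hands the caller a reference it must release.
void get_object(Obj **out) {
    *out = obj_new(42);
}
```

A caller that receives an object through `get_object` owns one reference and must `release` it; `store_global` takes its own reference, so the object survives until the global lets go of it.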

Automatic Reference Counting
- Idea: force automatic reference count updates when pointers are assigned/copied
- Most common variant:
  - C++ “smart pointers”
  - C++ allows the programmer to interpose on assignments and copies via operator overloading/special-purpose constructors
- A disadvantage of all reference counting schemes is their inability to handle cycles
  - But a great advantage is immediate reclamation: no “drag” between last access & reclamation
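The cycle problem can be reproduced with `std::shared_ptr`, the standard C++ smart pointer that updates a shared reference count on every copy and destruction. The sketch assumes a hypothetical `Node` type and a `destroyed` counter used only to observe reclamation; `std::weak_ptr` is one standard way to express a back edge without creating a strong cycle.

```cpp
#include <cassert>
#include <memory>

int destroyed = 0;   // counts Node destructor runs

struct Node {
    std::shared_ptr<Node> next;   // strong reference: counted
    std::weak_ptr<Node> prev;     // weak reference: not counted
    ~Node() { ++destroyed; }
};

// Build a -> b -> a with strong pointers only. When the local shared_ptrs
// go out of scope, each node's count drops from 2 to 1, never to 0, so
// neither node is freed. A weak_ptr to `a` is returned only so the caller
// can observe that it is still alive.
std::weak_ptr<Node> make_strong_cycle() {
    auto a = std::make_shared<Node>();
    auto b = std::make_shared<Node>();
    a->next = b;
    b->next = a;
    assert(a.use_count() == 2);   // local `a` plus b->next
    return a;
}

// Same shape, but the back edge is weak: there is no strong cycle, so
// both nodes are freed as soon as the locals go out of scope.
void make_weak_back_edge() {
    auto a = std::make_shared<Node>();
    auto b = std::make_shared<Node>();
    a->next = b;
    b->prev = a;
}
```

In the weak-back-edge variant both nodes are destroyed the moment the last strong reference disappears, which illustrates the "no drag" advantage; the strong cycle is never reclaimed unless someone breaks it by hand.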

Garbage Collection
- Determine which objects may be accessed in the future
  - We don’t know which ones will be, but we can determine which ones can’t be accessed because there are no pointers to them
  - Requires that all pointers are identifiable (e.g., no pointer/int conversion)
- Invented in 1960 by McCarthy for LISP
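Determining which objects can still be accessed amounts to a graph traversal starting at the roots. A minimal sketch, assuming a toy heap in which objects are numbered and each object records the indices of the objects it points to; the `Heap` type and the `mark_reachable` function are hypothetical names for illustration only.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Toy heap: edges[i] lists the objects that object i points to.
// (A real collector would find these pointers by scanning object fields.)
struct Heap {
    std::vector<std::vector<std::size_t>> edges;
};

// Mark everything reachable from the roots (e.g., pointers held in thread
// stacks and globals) using an explicit worklist. Unmarked objects have no
// path from any root, so they can never be accessed again.
std::vector<bool> mark_reachable(const Heap &heap,
                                 const std::vector<std::size_t> &roots) {
    std::vector<bool> marked(heap.edges.size(), false);
    std::vector<std::size_t> worklist(roots.begin(), roots.end());
    while (!worklist.empty()) {
        std::size_t obj = worklist.back();
        worklist.pop_back();
        assert(obj < marked.size());
        if (marked[obj])
            continue;   // already visited; this also terminates cycles
        marked[obj] = true;
        for (std::size_t child : heap.edges[obj])
            if (!marked[child])
                worklist.push_back(child);
    }
    return marked;
}
```

For example, with edges 0 → 1 → 2, a separate cycle 3 ↔ 4, and object 0 as the only root, objects 3 and 4 stay unmarked: unlike reference counting, tracing identifies unreachable cycles as garbage.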

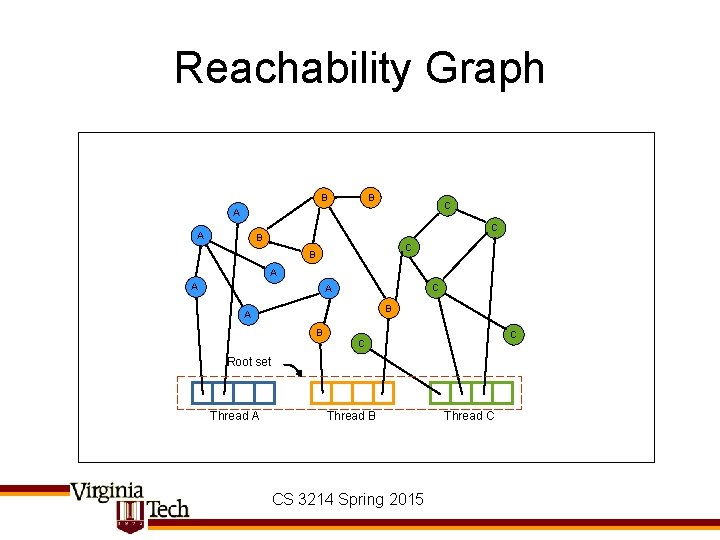

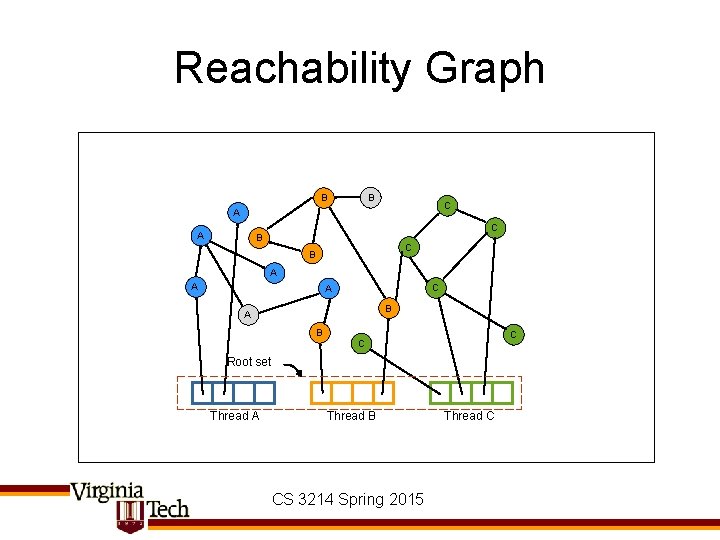

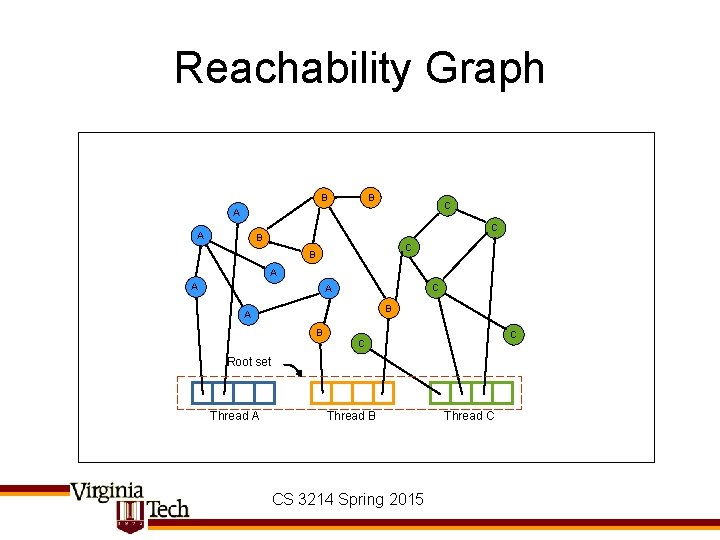

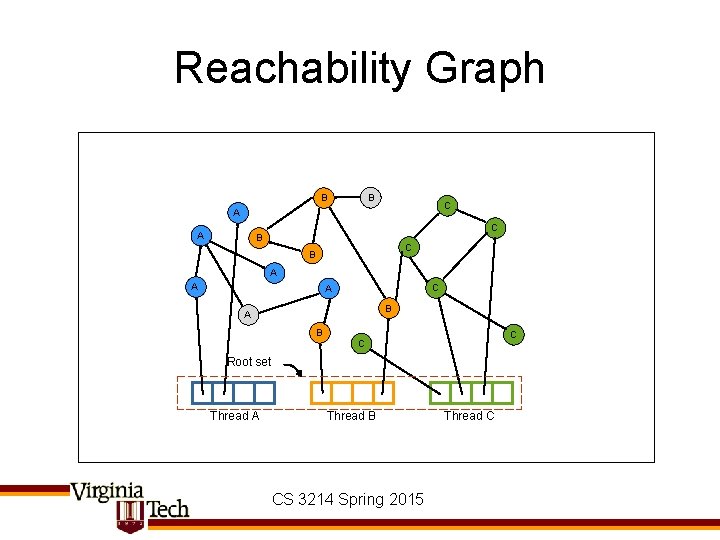

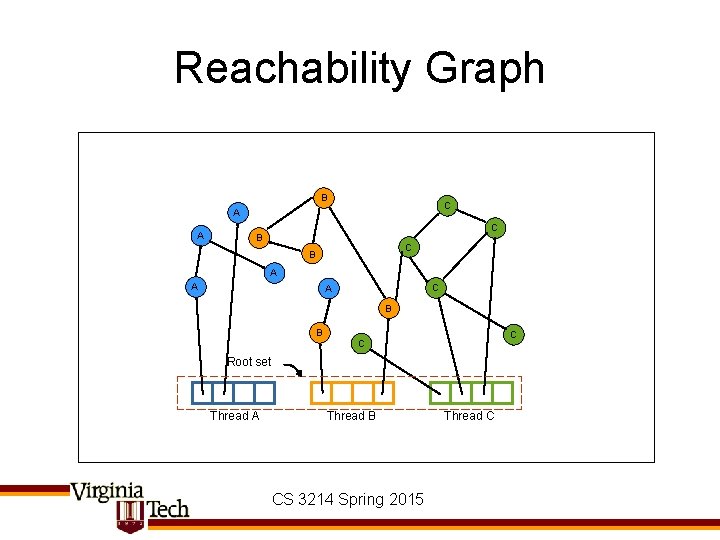

Reachability Graph (figure: root set containing Threads A, B, and C, pointing into a graph of heap objects labeled A, B, and C)


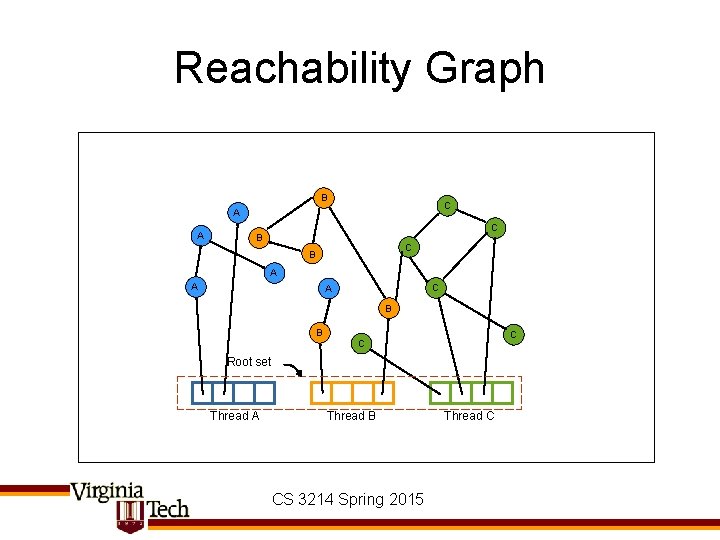

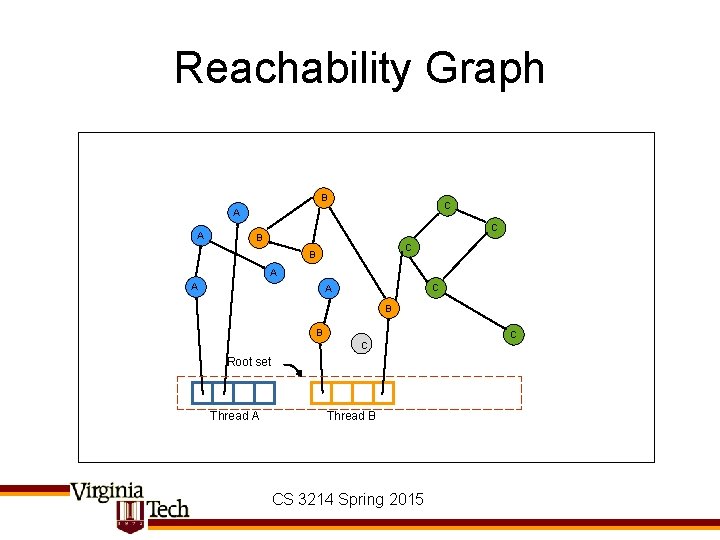

Reachability Graph (figure, next step: root set containing Threads A, B, and C)

Reachability Graph (figure, next step: root set containing Threads A and B)

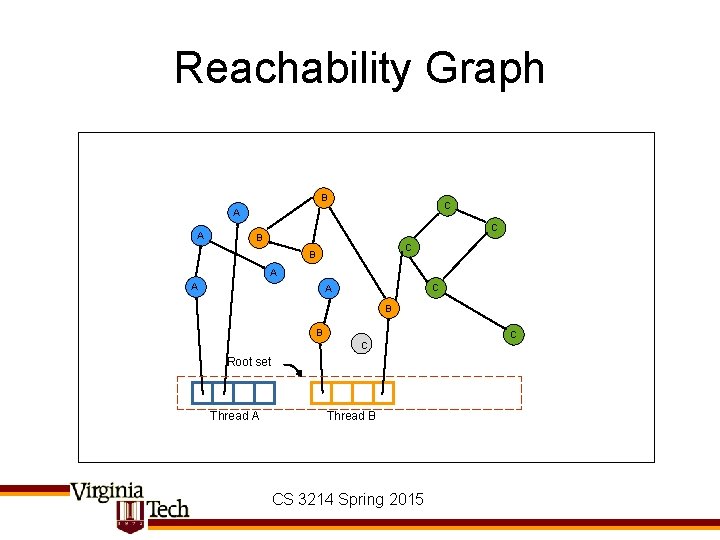

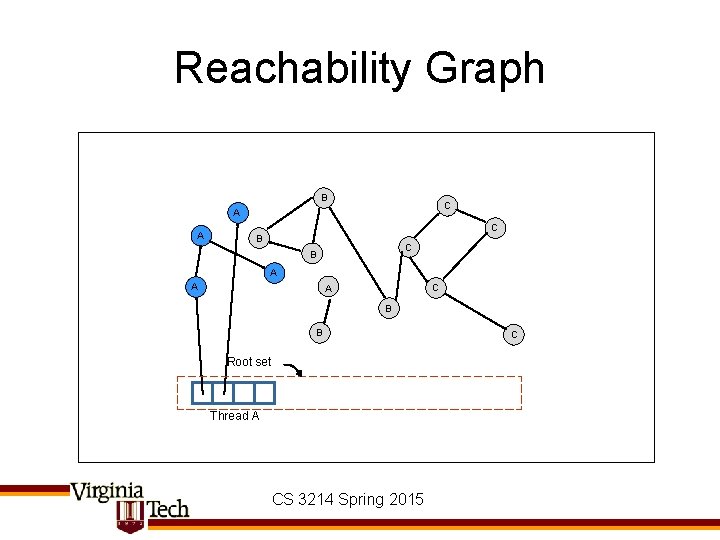

Reachability Graph (figure, next step: root set containing only Thread A)

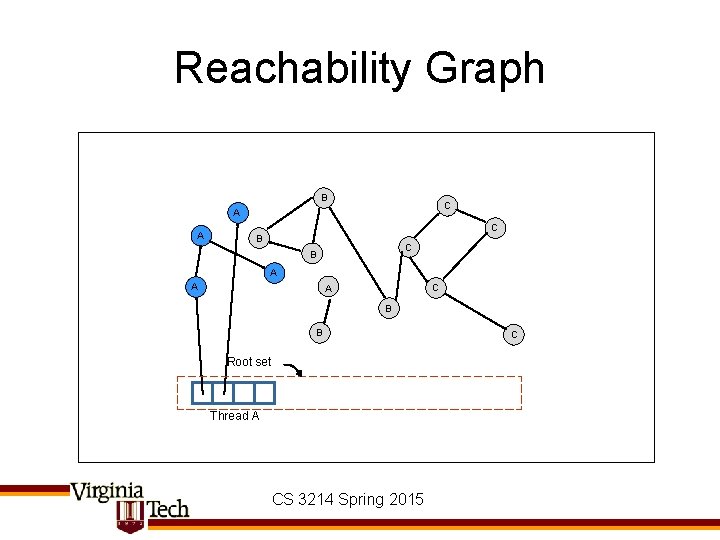

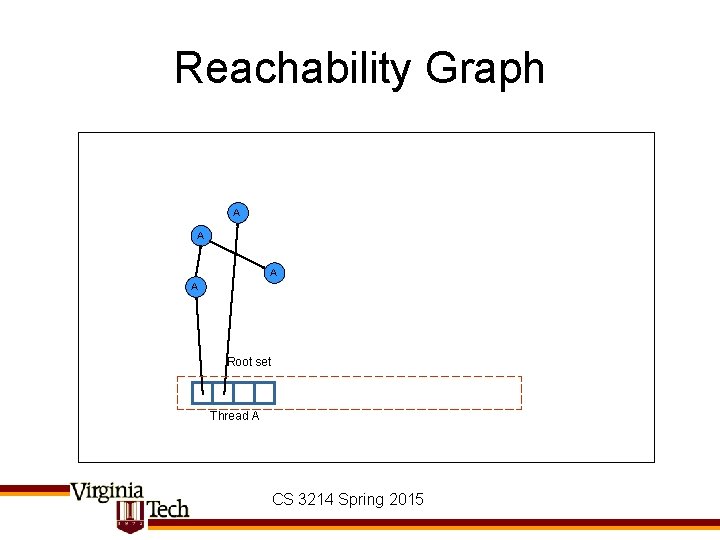

Reachability Graph (figure, final step: root set containing only Thread A, with the remaining objects labeled A)

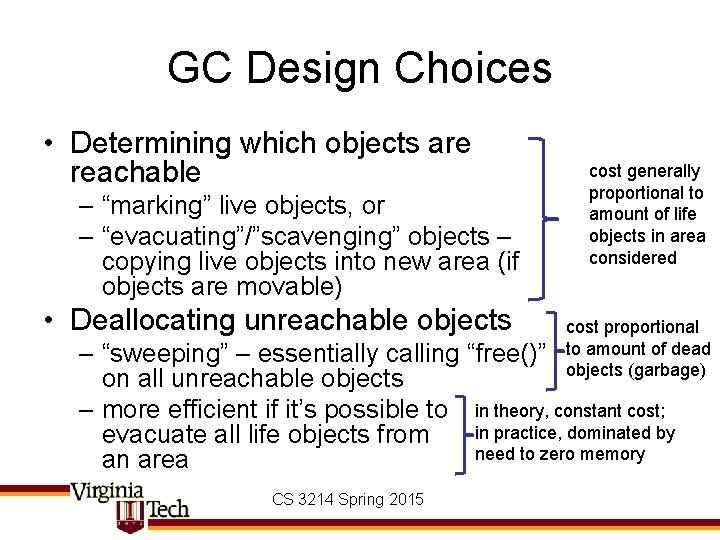

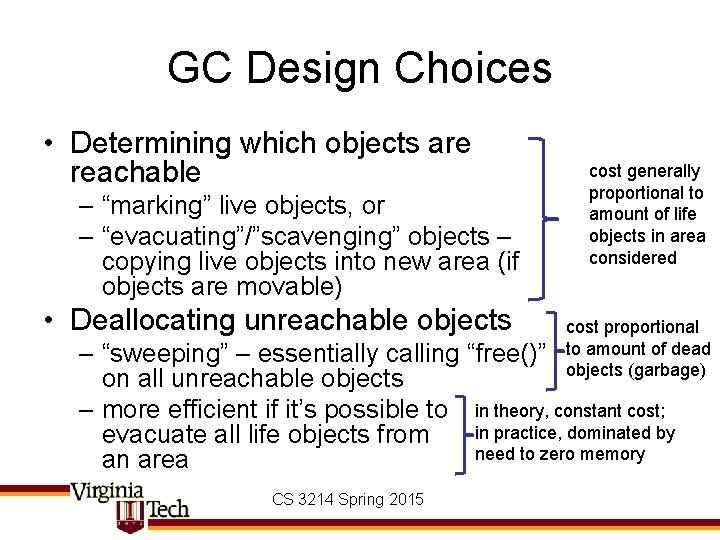

GC Design Choices
- Determining which objects are reachable (cost generally proportional to the amount of live objects in the area considered)
  - “marking” live objects, or
  - “evacuating”/“scavenging” objects: copying live objects into a new area (if objects are movable)
- Deallocating unreachable objects
  - “sweeping”: essentially calling `free()` on all unreachable objects (cost proportional to the amount of dead objects, i.e., garbage)
  - more efficient if it’s possible to evacuate all live objects from an area (in theory, constant cost; in practice, dominated by the need to zero memory)
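The “evacuating” option can be sketched as a Cheney-style copying collector, one well-known way to implement it. The model is an assumption for illustration: objects live in a `std::vector`, pointers are indices, and `NIL`, `Object`, `Space`, `evacuate`, and `collect` are hypothetical names. It shows why the cost tracks the number of live objects: garbage in from-space is never touched.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Index value used as a null pointer in this toy model.
constexpr std::size_t NIL = static_cast<std::size_t>(-1);

// Toy object: pointer fields are indices into the space it currently lives in.
struct Object {
    std::vector<std::size_t> fields;
    std::size_t forward = NIL;   // new index once copied to to-space
};

using Space = std::vector<Object>;

// Copy one object to to-space unless it was already copied; either way,
// return its to-space index (its forwarding address).
std::size_t evacuate(Space &from, Space &to, std::size_t idx) {
    if (idx == NIL)
        return NIL;
    assert(idx < from.size());
    Object &obj = from[idx];
    if (obj.forward == NIL) {
        obj.forward = to.size();
        Object copy;
        copy.fields = obj.fields;   // still from-space indices; fixed during the scan
        to.push_back(copy);
    }
    return obj.forward;
}

// Evacuate the roots, then scan the copies in order, evacuating whatever
// they point to and rewriting their fields to to-space indices. Only live
// objects are visited; from-space, garbage included, is discarded as a whole.
Space collect(Space &from, std::vector<std::size_t> &roots) {
    Space to;
    to.reserve(from.size());   // to-space never outgrows from-space
    for (std::size_t &r : roots)
        r = evacuate(from, to, r);
    for (std::size_t scan = 0; scan < to.size(); ++scan) {
        for (std::size_t i = 0; i < to[scan].fields.size(); ++i) {
            std::size_t moved = evacuate(from, to, to[scan].fields[i]);
            to[scan].fields[i] = moved;
        }
    }
    return to;
}
```

With from-space objects 0 and 2 pointing at each other, objects 1 and 3 unreachable, and object 0 as the root, `collect` copies only objects 0 and 2 (to indices 0 and 1) and rewrites both pointers to the new locations. A sweeping collector would instead have to visit the dead objects 1 and 3 to free them.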

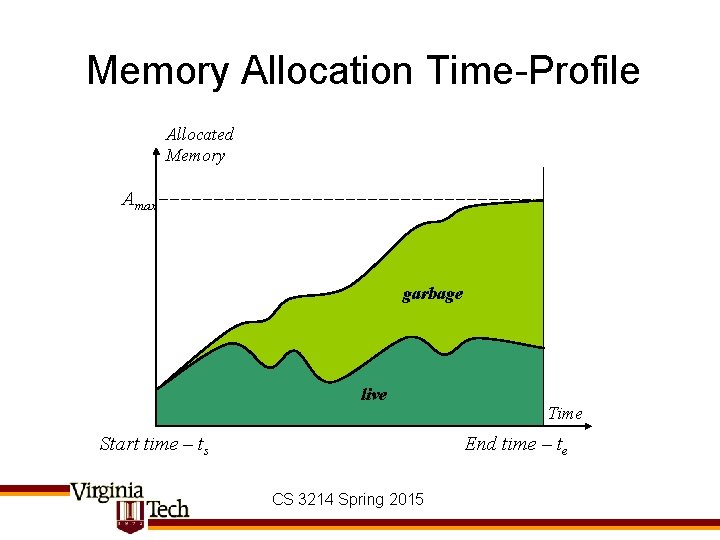

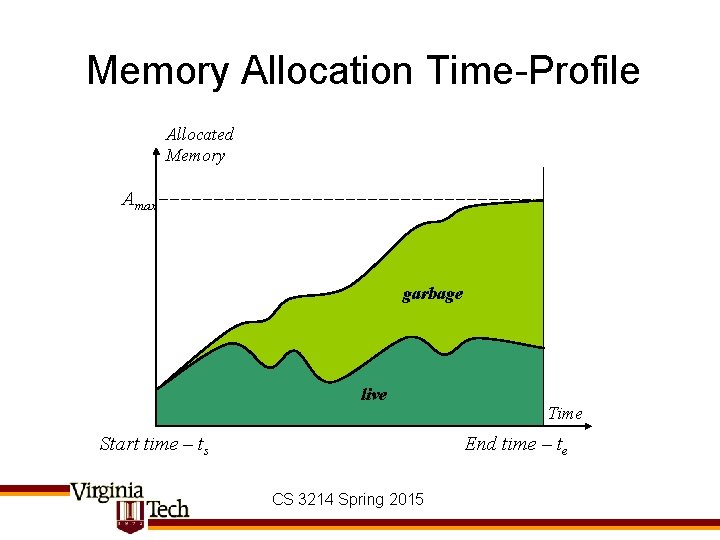

Memory Allocation Time-Profile (figure: allocated memory over time from start time t_s to end time t_e, split into live data and garbage, with peak A_max)

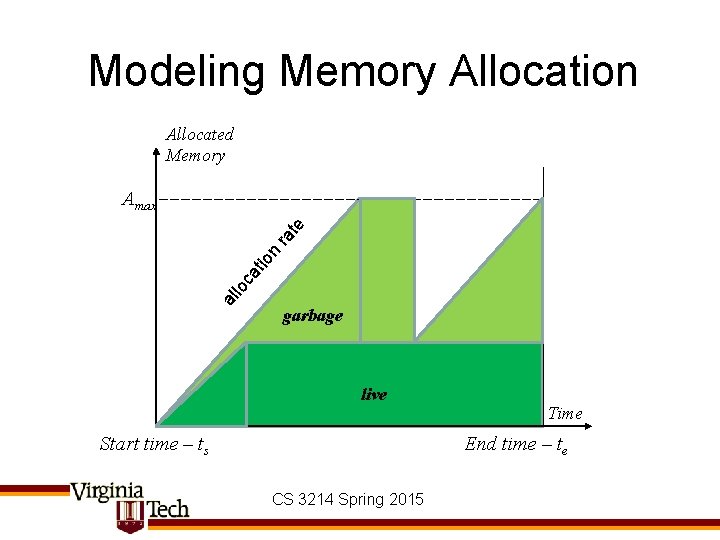

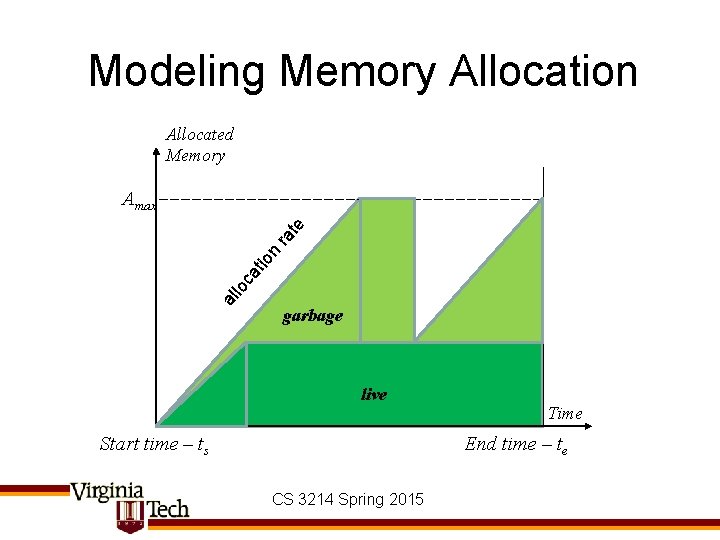

Modeling Memory Allocation (figure: allocated memory over time from start time t_s to end time t_e, growing at the allocation rate and split into live data and garbage, with peak A_max)

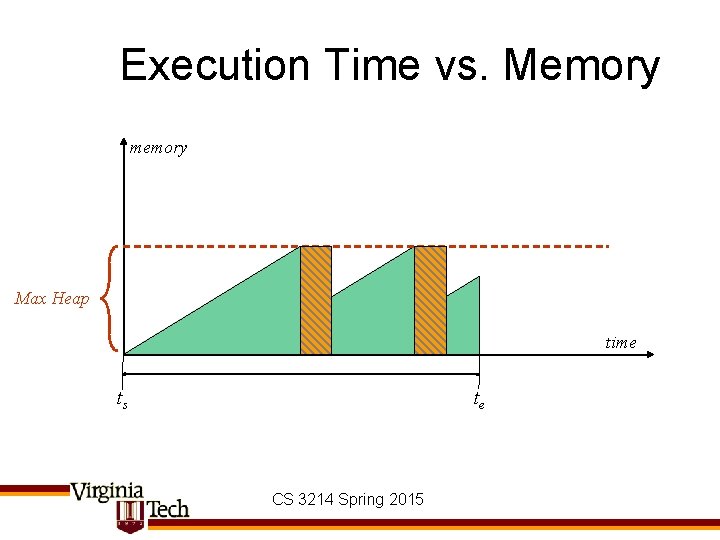

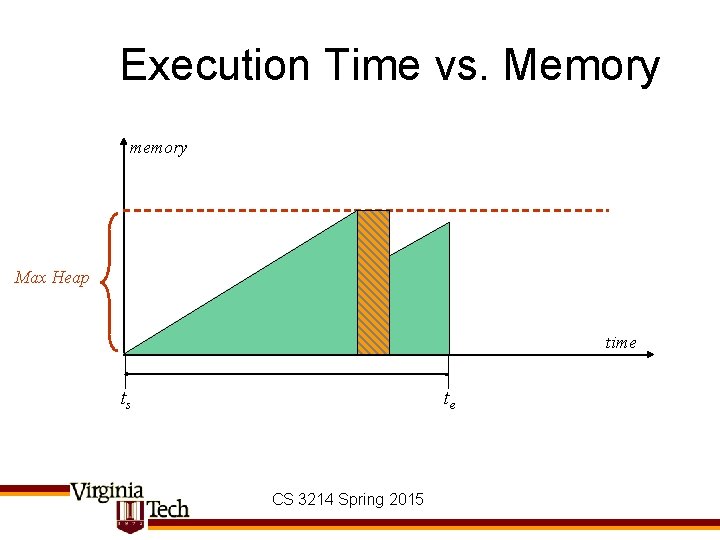

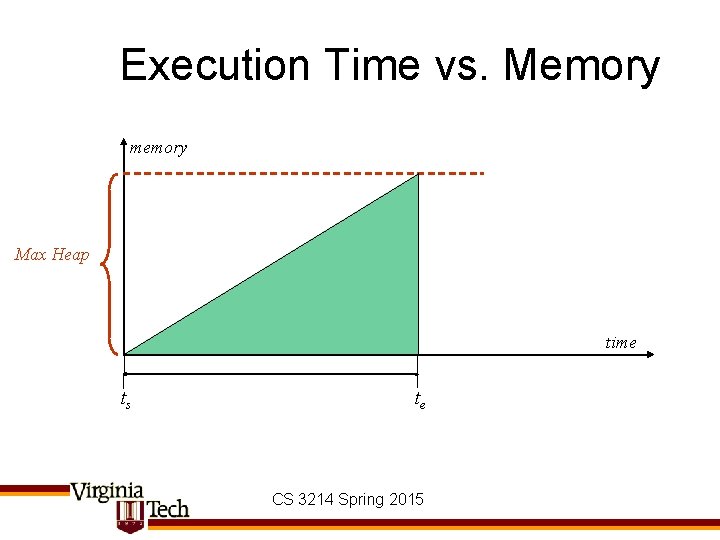

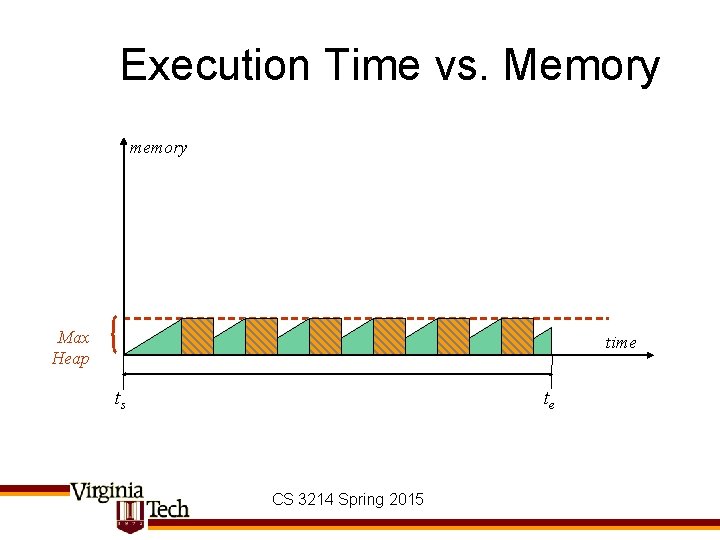

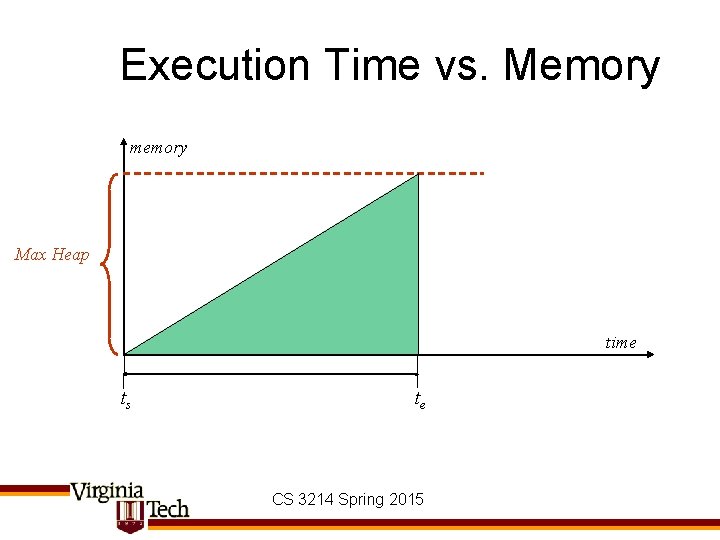

Execution Time vs. Memory (figure: memory use over time from t_s to t_e, with the maximum heap size marked)

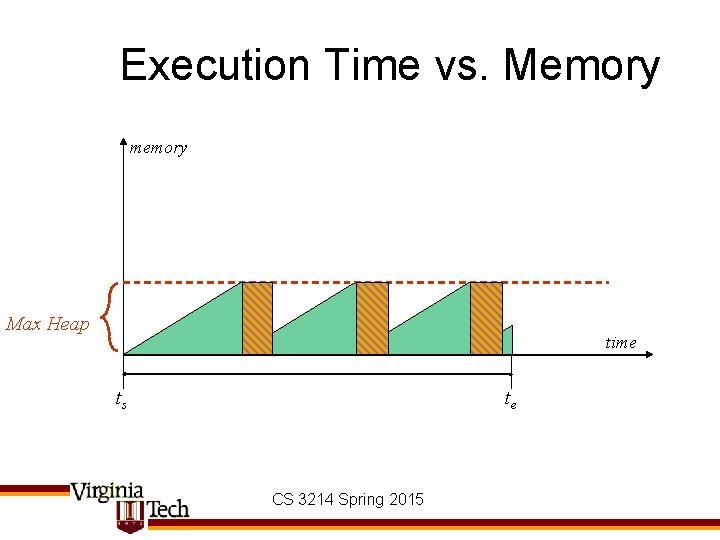


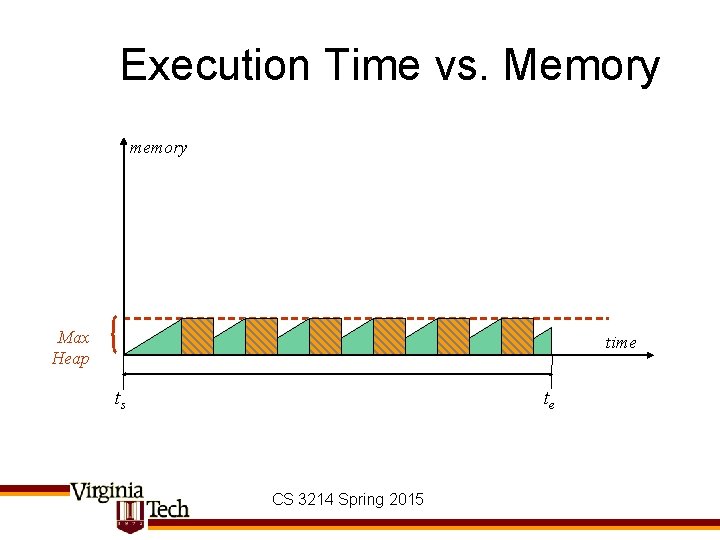

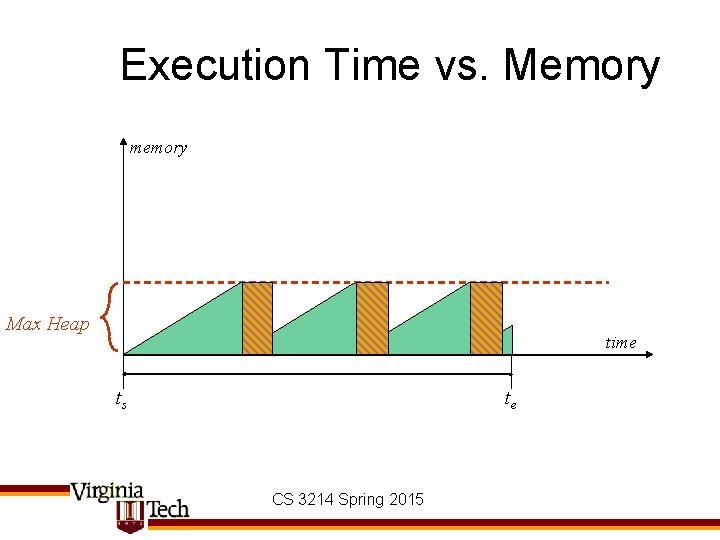

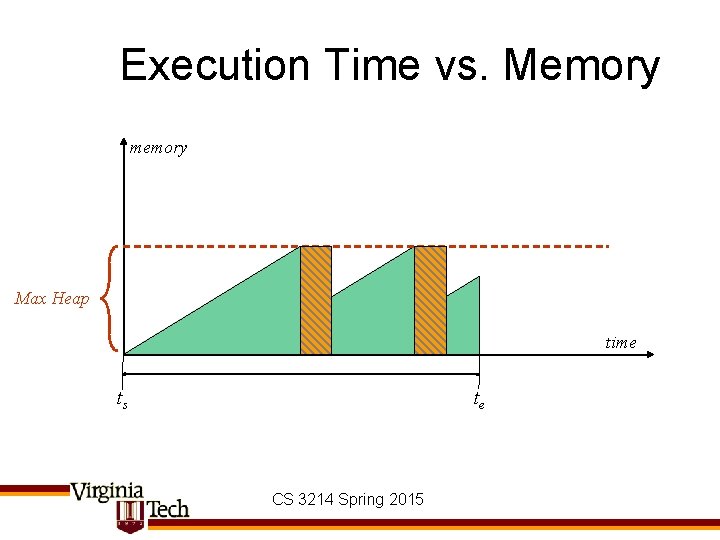

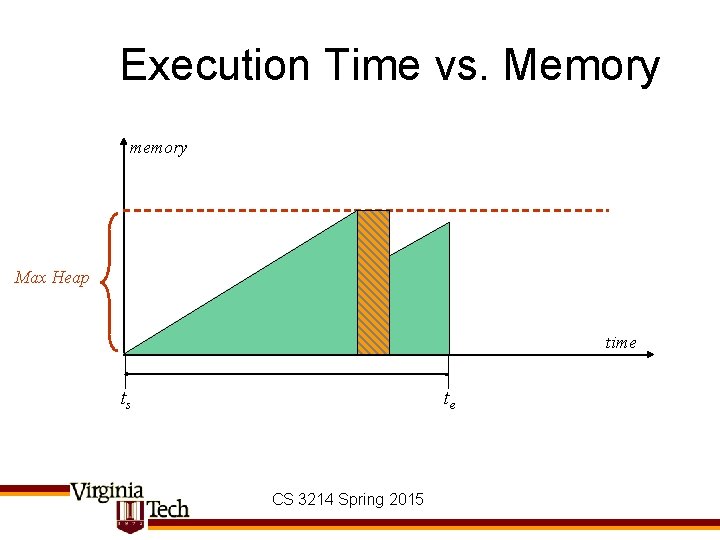


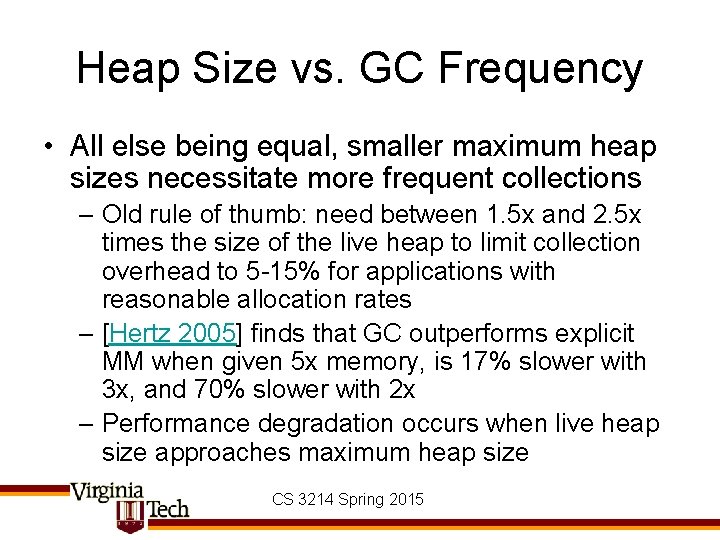

Heap Size vs. GC Frequency
- All else being equal, smaller maximum heap sizes necessitate more frequent collections
  - Old rule of thumb: need between 1.5x and 2.5x the size of the live heap to limit collection overhead to 5-15% for applications with reasonable allocation rates
  - [Hertz 2005] finds that GC matches the performance of explicit memory management when given 5x memory, is 17% slower with 3x, and 70% slower with 2x
  - Performance degradation occurs when the live heap size approaches the maximum heap size
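A simple model helps explain why overhead rises so sharply as the heap limit approaches the live size. Assume (as a simplification, not a result from the cited study) that the live data stays at a constant size L, the maximum heap is k·L, and each collection costs time proportional to L. Each collection then makes room for (k - 1)·L bytes of new allocation, so a program allocating A bytes triggers about A / ((k - 1)·L) collections, and total tracing work is about A / (k - 1). The function names are hypothetical.

```cpp
#include <cassert>

// Idealized model (an assumption, not a measurement): the live data has a
// constant size `live`, the heap limit is `multiple * live`, and each
// collection reclaims everything except the live data.

// Collections needed while the program allocates `bytes_allocated` bytes:
// each collection frees (multiple - 1) * live bytes of headroom.
double estimated_collections(double bytes_allocated, double live, double multiple) {
    assert(multiple > 1.0);   // the heap must be larger than the live data
    double headroom = (multiple - 1.0) * live;
    return bytes_allocated / headroom;
}

// If each collection traces the live data once, total tracing work is
// collections * live, which simplifies to bytes_allocated / (multiple - 1).
double estimated_tracing_work(double bytes_allocated, double live, double multiple) {
    return estimated_collections(bytes_allocated, live, multiple) * live;
}
```

For A = 1000 and L = 100, going from k = 2 (10 collections) to k = 1.5 (20 collections) doubles the work, while k = 5 cuts it to 2.5 collections. Real collectors deviate from this idealized model, but the 1/(k - 1) shape matches the qualitative trend described above.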

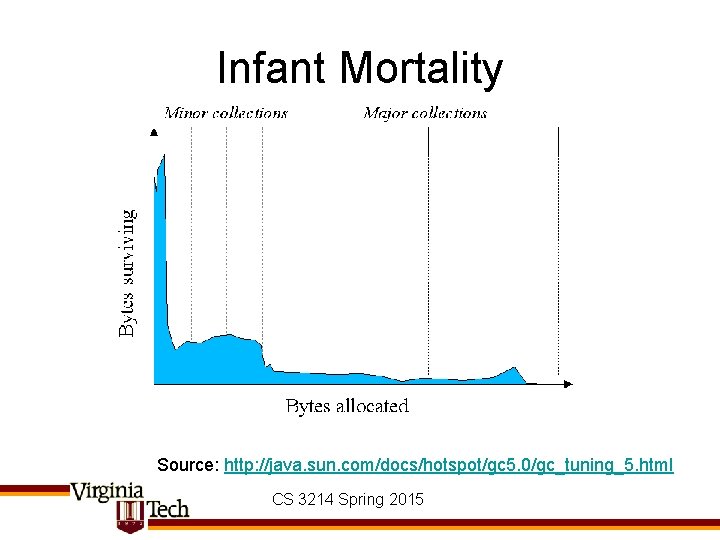

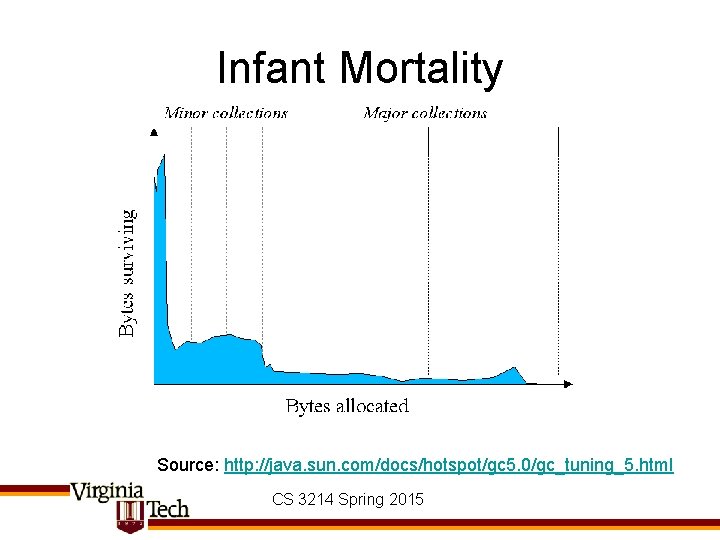

Infant Mortality (figure; source: http://java.sun.com/docs/hotspot/gc5.0/gc_tuning_5.html)

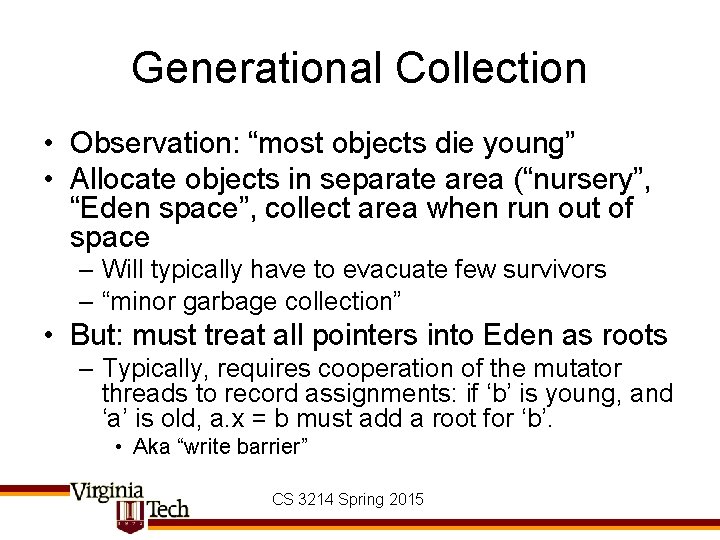

Generational Collection
- Observation: “most objects die young”
- Allocate objects in a separate area (“nursery”, “Eden space”); collect that area when it runs out of space
  - Will typically have to evacuate only a few survivors
  - “minor garbage collection”
- But: must treat all pointers from older objects into Eden as roots
  - Typically requires cooperation of the mutator threads to record assignments: if ‘b’ is young and ‘a’ is old, `a.x = b` must add a root for ‘b’
  - Aka “write barrier”
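A write barrier can be sketched as a function that every pointer store goes through. The sketch assumes a hypothetical object layout (`HeapObj` with a generation flag `old` and one pointer field `x`) and a hypothetical global `remembered_set`. It records the old object `a` rather than `b` itself, a common variant that lets a minor collection treat `a->x` as a root and also update that field if `b` is moved.

```cpp
#include <cassert>
#include <unordered_set>

// Hypothetical object layout: a generation flag and one pointer field.
struct HeapObj {
    bool old = false;          // true once promoted out of the nursery
    HeapObj *x = nullptr;      // a pointer field the mutator may assign
};

// Remembered set: old objects that may point into the nursery. A minor
// collection scans their fields as additional roots.
std::unordered_set<HeapObj *> remembered_set;

// Write barrier for the store `a.x = b`; a compiler or runtime would
// emit this check at every pointer store.
void write_x(HeapObj *a, HeapObj *b) {
    assert(a != nullptr);
    if (a->old && b != nullptr && !b->old)
        remembered_set.insert(a);   // an old-to-young pointer was created
    a->x = b;
}
```

Young-to-young and young-to-old stores skip the recording step, since a minor collection already scans the nursery and does not collect old objects; this keeps the common-case barrier cheap.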

When to collect
- “Stop-the-world”
  - All mutators stop while collection is ongoing
- Incremental
  - Mutators perform small chunks of marking during each allocation
- Concurrent/Parallel
  - Garbage collection happens in a concurrently running thread
  - Requires some kind of synchronization between mutator & collector
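Incremental marking can be sketched by giving the collector a gray worklist and letting each allocation perform a bounded `step`. This is a toy model under stated assumptions: objects are indices, objects allocated during a marking cycle are not modeled, and `IncrementalMarker` and its members are hypothetical names. The `write` method shows one kind of mutator/collector synchronization, a Dijkstra-style insertion barrier that shades the new target of a pointer store while marking is in progress.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Toy incremental marker over an index-based object graph. Each call to
// step() scans at most `budget` gray objects, so marking work is spread
// across many allocations.
struct IncrementalMarker {
    std::vector<std::vector<std::size_t>> edges;  // edges[i] = pointers in object i
    std::vector<bool> marked;                     // gray or black
    std::vector<std::size_t> gray;                // marked but not yet scanned
    bool marking = false;

    void start(const std::vector<std::size_t> &roots) {
        marked.assign(edges.size(), false);
        gray.clear();
        marking = true;
        for (std::size_t r : roots)
            shade(r);
    }

    // Mark an object and queue it for scanning if it was unmarked.
    void shade(std::size_t obj) {
        assert(obj < marked.size());
        if (!marked[obj]) {
            marked[obj] = true;
            gray.push_back(obj);
        }
    }

    // Perform a bounded chunk of marking; returns true once marking is done.
    bool step(std::size_t budget) {
        while (budget > 0 && !gray.empty()) {
            std::size_t obj = gray.back();
            gray.pop_back();
            for (std::size_t child : edges[obj])
                shade(child);
            --budget;
        }
        if (gray.empty())
            marking = false;
        return gray.empty();
    }

    // Mutator pointer store. While marking is in progress, shade the new
    // target so an already-scanned object cannot hide an unmarked one.
    void write(std::size_t src, std::size_t slot, std::size_t target) {
        edges[src][slot] = target;
        if (marking)
            shade(target);
    }
};
```

An allocator built this way would call `step(budget)` on each allocation and start reclaiming only after `step` reports that the gray list is empty.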

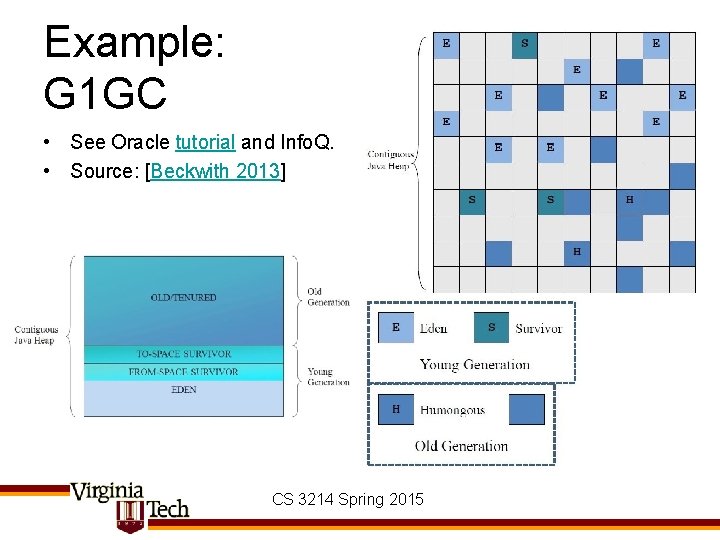

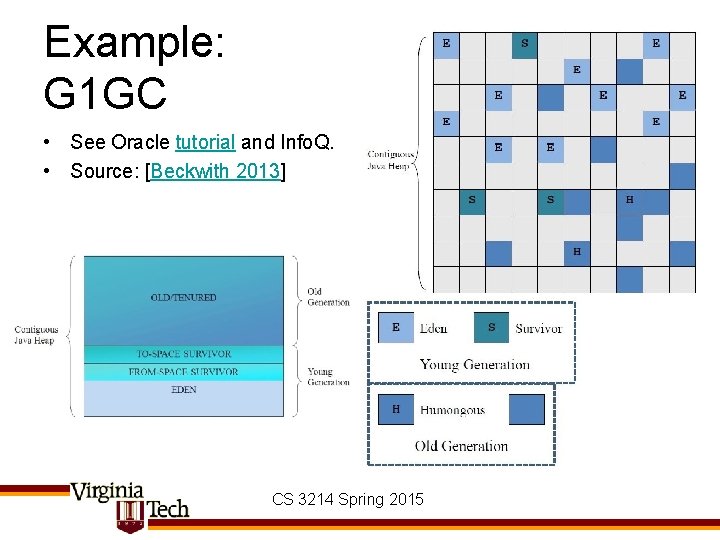

Example: G1 GC
- See Oracle tutorial and InfoQ.
- Source: [Beckwith 2013]

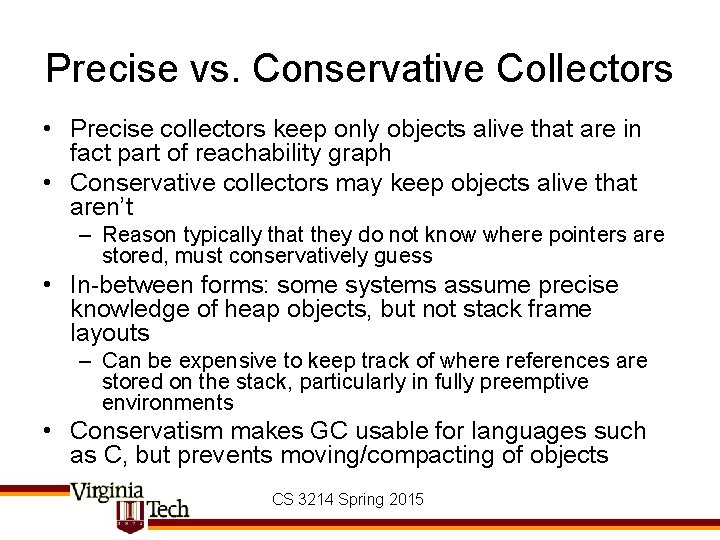

Precise vs. Conservative Collectors
- Precise collectors keep alive only objects that are in fact part of the reachability graph
- Conservative collectors may keep alive objects that aren’t
  - Typically because they do not know where pointers are stored and must conservatively guess
- In-between forms: some systems assume precise knowledge of heap objects, but not of stack frame layouts
  - Can be expensive to keep track of where references are stored on the stack, particularly in fully preemptive environments
- Conservatism makes GC usable for languages such as C, but prevents moving/compacting of objects
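The core of a conservative root scan can be sketched as follows. The model is an assumption for illustration: heap blocks are described by hypothetical `Block` records holding an address range and a mark bit, a region such as a stack is given as a vector of machine words, and a linear search stands in for the faster lookup structures a real conservative collector would use.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical description of one heap block: its address range and a mark bit.
struct Block {
    std::uintptr_t start;
    std::size_t size;
    bool marked;
};

// Treat every word in a region (e.g., a thread's stack) as a possible
// pointer. Any word whose value falls inside [start, start + size) of a
// block marks that block, whether the word is a real pointer or just an
// integer that happens to look like one.
void scan_conservatively(const std::vector<std::uintptr_t> &words,
                         std::vector<Block> &blocks) {
    for (std::uintptr_t w : words) {
        for (Block &b : blocks) {
            if (w >= b.start && w - b.start < b.size)
                b.marked = true;
        }
    }
}
```

An integer on the stack that happens to equal an address inside a block keeps that block alive. Because the collector cannot know whether the word really is a pointer, it also cannot rewrite it, which is why such blocks cannot be moved.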

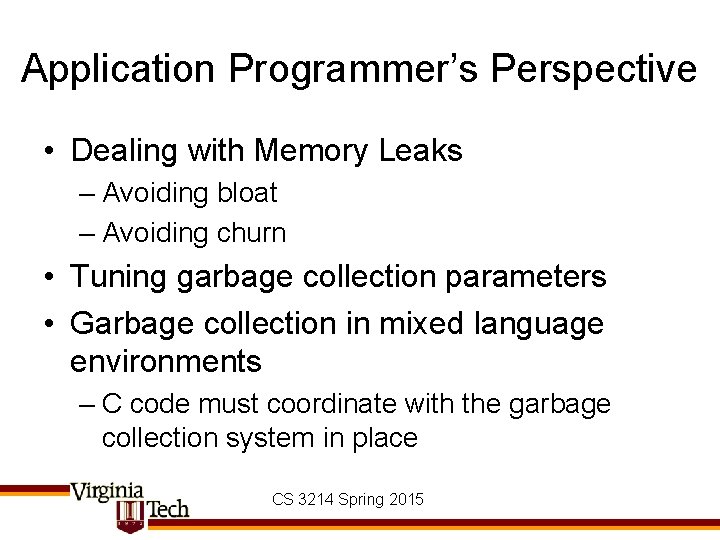

Application Programmer’s Perspective
- Dealing with Memory Leaks
  - Avoiding bloat
  - Avoiding churn
- Tuning garbage collection parameters
- Garbage collection in mixed language environments
  - C code must coordinate with the garbage collection system in place

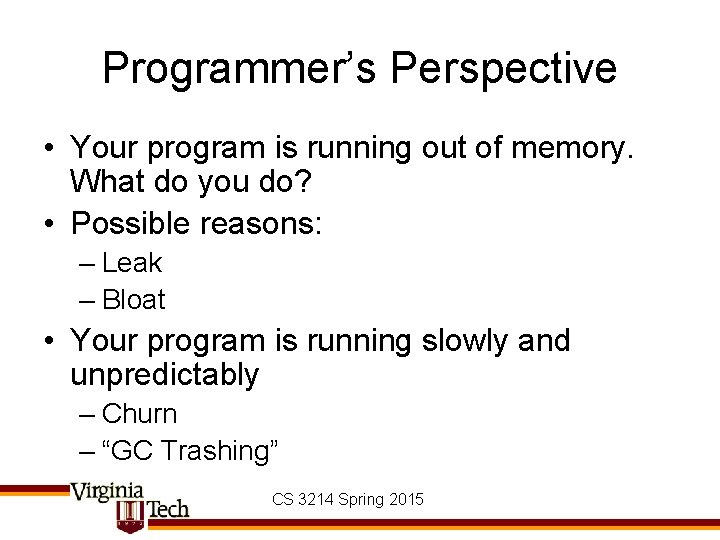

Programmer’s Perspective
- Your program is running out of memory. What do you do?
- Possible reasons:
  - Leak
  - Bloat
- Your program is running slowly and unpredictably
  - Churn
  - “GC Thrashing”

Memory Leaks
- Objects that remain reachable, but will not be accessed in the future
  - Due to application semantics
- Will ultimately lead to an out-of-memory condition
  - But will degrade performance before that
- Common problem, particularly in multi-layer frameworks
  - Containers are a frequent culprit
  - Heap profilers can help
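The container pattern can be sketched in C++, where `std::shared_ptr` ownership plays the role of reachability: anything a container holds a strong reference to stays alive. `Session`, `LeakyRegistry`, and `WeakRegistry` are hypothetical names for illustration; storing `std::weak_ptr` entries and pruning expired ones is one possible fix, assuming the registry was never meant to own its entries.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

struct Session {
    int id;
};

// Holds strong references: every registered Session stays alive (reachable)
// for as long as the registry does, even after the program is done with it.
struct LeakyRegistry {
    std::vector<std::shared_ptr<Session>> sessions;
    void add(const std::shared_ptr<Session> &s) { sessions.push_back(s); }
};

// Holds weak references, which do not keep a Session alive; prune() drops
// entries whose Session has already been freed and returns how many it removed.
struct WeakRegistry {
    std::vector<std::weak_ptr<Session>> sessions;
    void add(const std::shared_ptr<Session> &s) { sessions.push_back(s); }
    std::size_t prune() {
        std::size_t before = sessions.size();
        sessions.erase(std::remove_if(sessions.begin(), sessions.end(),
                                      [](const std::weak_ptr<Session> &w) { return w.expired(); }),
                       sessions.end());
        return before - sessions.size();
    }
};
```

A session held by `LeakyRegistry` outlives its last real use, while one registered with `WeakRegistry` is freed as soon as its owner drops it, leaving only an expired entry for `prune` to remove.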

Bloat and Churn
- Bloat: use of inefficient, pointer-intensive data structures increases overall memory consumption [Chis et al. 2011]
  - E.g., HashMaps for small objects
- Churn: frequent and avoidable allocation of objects that turn into garbage quickly
- Caches:
  - How to implement and size caches
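One common way to keep a cache from turning into a leak is to bound it. The sketch below is a least-recently-used (LRU) cache with a fixed entry count; the class name `LruCache`, the `int`/`std::string` key and value types, and sizing by entry count rather than bytes are all assumptions made for brevity.

```cpp
#include <cassert>
#include <cstddef>
#include <list>
#include <string>
#include <unordered_map>
#include <utility>

// Bounded LRU cache: at most `capacity` entries stay reachable through the
// cache; inserting into a full cache evicts the least recently used entry.
class LruCache {
public:
    explicit LruCache(std::size_t capacity) : capacity_(capacity) {}

    // Returns a pointer to the cached value, or nullptr on a miss.
    // A hit moves the entry to the front (most recently used).
    const std::string *get(int key) {
        auto it = index_.find(key);
        if (it == index_.end())
            return nullptr;
        order_.splice(order_.begin(), order_, it->second);
        return &it->second->second;
    }

    void put(int key, std::string value) {
        if (capacity_ == 0)
            return;                                  // nothing may be cached
        auto it = index_.find(key);
        if (it != index_.end()) {                    // update an existing entry
            it->second->second = std::move(value);
            order_.splice(order_.begin(), order_, it->second);
            return;
        }
        if (order_.size() == capacity_) {            // full: evict the LRU entry
            index_.erase(order_.back().first);
            order_.pop_back();
        }
        order_.emplace_front(key, std::move(value));
        index_[key] = order_.begin();
        assert(index_.size() == order_.size());
    }

    std::size_t size() const { return order_.size(); }

private:
    using Entry = std::pair<int, std::string>;
    std::size_t capacity_;
    std::list<Entry> order_;                                      // front = most recently used
    std::unordered_map<int, std::list<Entry>::iterator> index_;   // key -> position in order_
};
```

The capacity is the sizing knob: a larger cache avoids more recomputation but pins more memory as live data, which in a garbage-collected system also leaves less headroom and raises the cost of each collection.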