
Computer Architecture Guidance
Keio University
AMANO, Hideharu
hunga@am.ics.keio.ac.jp

Contents
- Techniques on Parallel Processing
  - Parallel Architectures
  - Parallel Programming → on real machines
- Advanced uni-processor architecture → Special Course of Microprocessors (by Prof. Yamasaki, Fall term)

Class
- Lectures use PowerPoint.
- The ppt file is uploaded to the web site http://www.am.ics.keio.ac.jp, so you can download/print it before the lecture.
  - Please check it every Friday morning.
- Homework: mail to hunga@am.ics.keio.ac.jp

Evaluation
- Exercise on parallel programming using a GPU (50%)
  - Caution! If your program does not run, the credit cannot be given even if you finish all the other exercises.
  - This year, a new GPU (P100) is being prepared.
- Homework: after every lecture (50%)

Computer Architecture 1: Introduction to Parallel Architectures
Keio University
AMANO, Hideharu
hunga@am.ics.keio.ac.jp

Parallel Architecture
A parallel architecture consists of multiple processing units that work simultaneously. → Thread-level parallelism
- Purposes
- Classifications
- Terms
- Trends

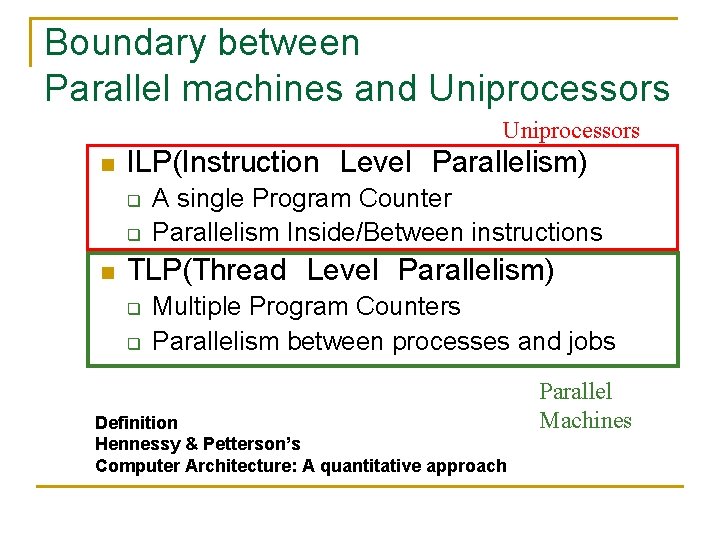

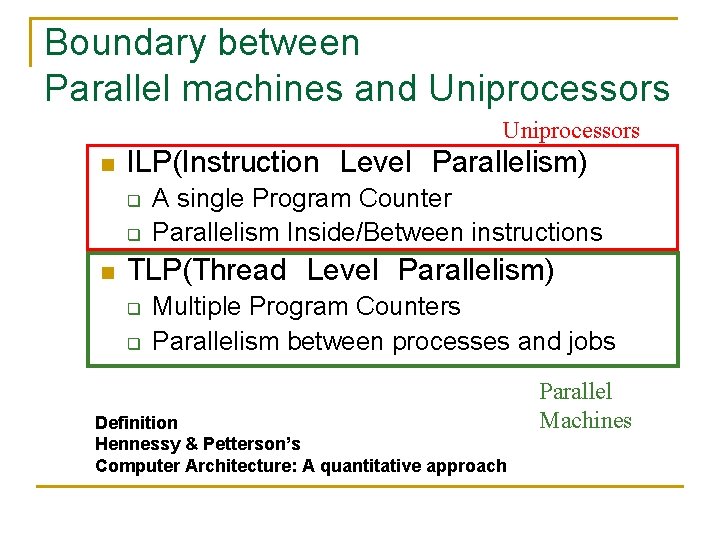

Boundary between parallel machines and uniprocessors
- ILP (Instruction Level Parallelism)
  - A single program counter
  - Parallelism inside/between instructions
- TLP (Thread Level Parallelism) → parallel machines
  - Multiple program counters
  - Parallelism between processes and jobs

Definition: Hennessy & Patterson's Computer Architecture: A Quantitative Approach

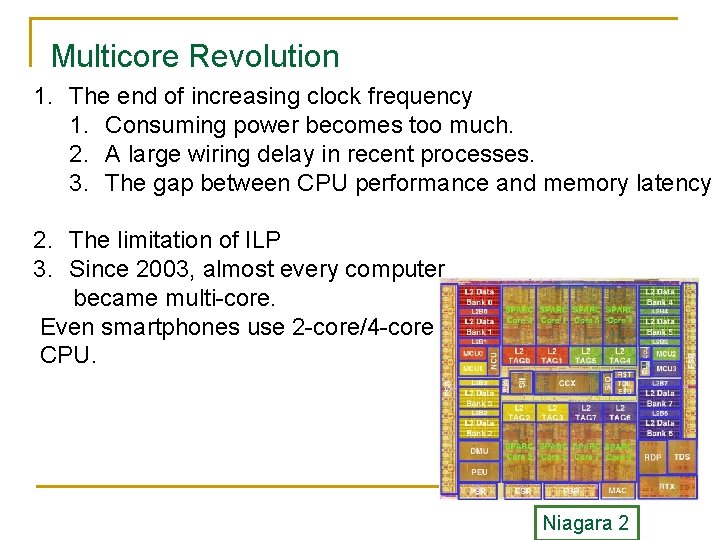

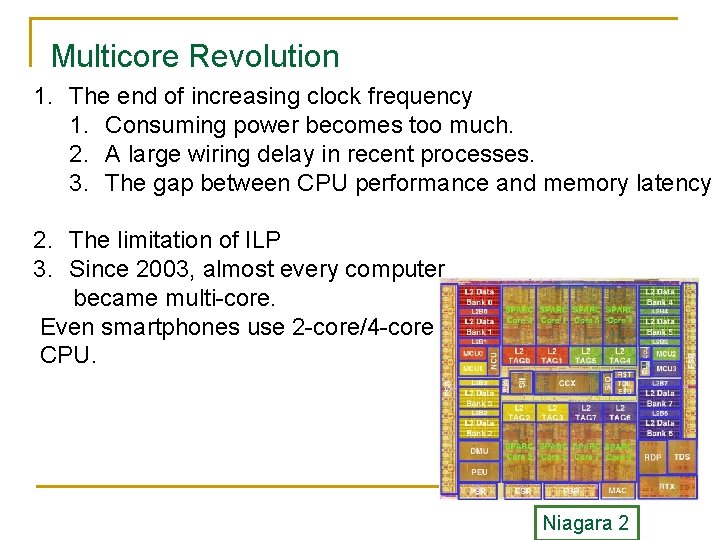

Multicore Revolution
1. The end of increasing clock frequency
   1. Power consumption became too large.
   2. Wiring delay is large in recent processes.
   3. The gap between CPU performance and memory latency
2. The limitation of ILP
3. Since 2003, almost every computer has become multicore. Even smartphones use 2-core/4-core CPUs.

(Figure: Niagara 2)

End of Moore's Law in computer performance
There is no way to increase performance other than increasing the number of cores.
(Figure: performance growth rates of 1.2×/year, 1.5×/year (= Moore's Law), and 1.25×/year.)

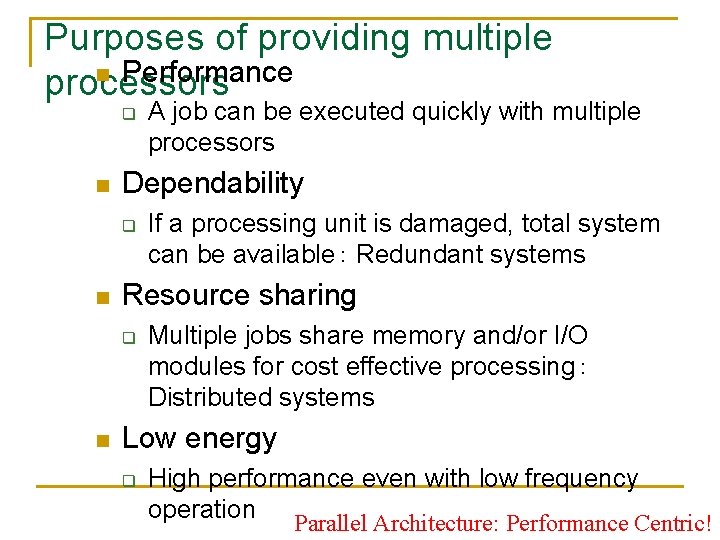

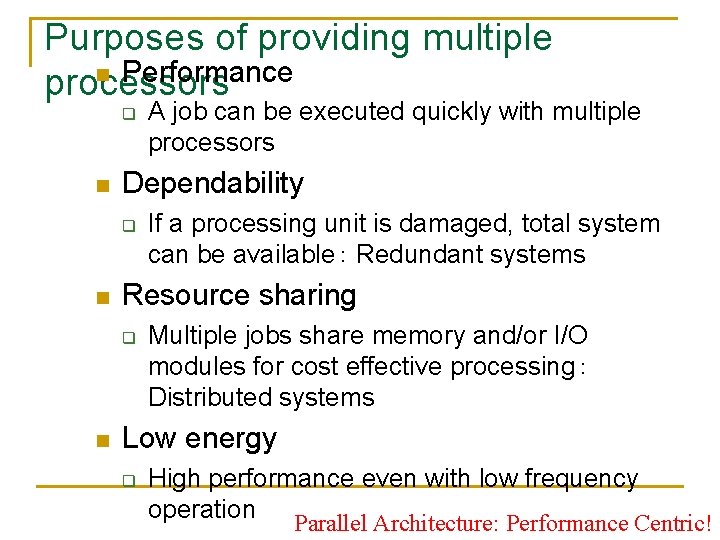

Purposes of providing multiple processors
- Performance
  - A job can be executed quickly with multiple processors.
- Dependability
  - If a processing unit is damaged, the total system can remain available: redundant systems.
- Resource sharing
  - Multiple jobs share memory and/or I/O modules for cost-effective processing: distributed systems.
- Low energy
  - High performance even with low-frequency operation

Parallel Architecture: Performance Centric!

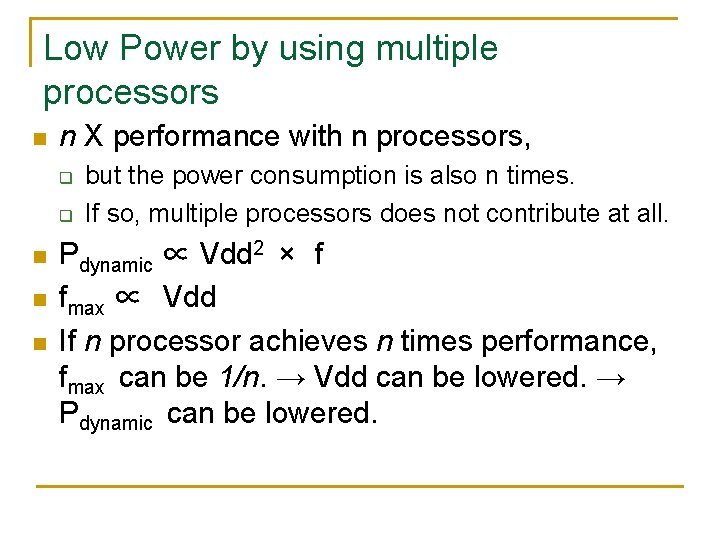

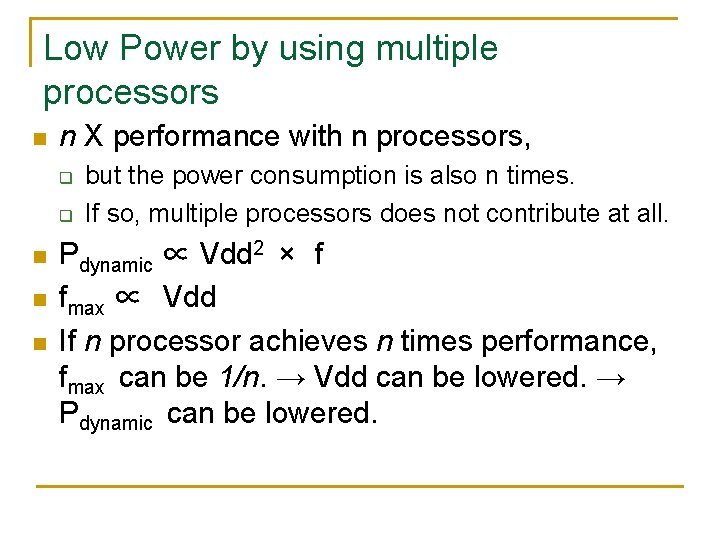

Low Power by using multiple processors
- n× performance with n processors, but the power consumption is also n times. If so, multiple processors do not contribute at all.
- $P_{\mathrm{dynamic}} \propto V_{dd}^2 \times f$ and $f_{\max} \propto V_{dd}$
- If n processors achieve n times the performance, the clock frequency can be reduced to 1/n. → $V_{dd}$ can be lowered. → $P_{\mathrm{dynamic}}$ can be lowered.
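The scaling argument above can be checked with a few lines of arithmetic. This is a minimal sketch under idealized assumptions: only dynamic power is modeled (leakage is ignored), the proportionality constant of $P_{\mathrm{dynamic}} \propto V_{dd}^2 \times f$ is folded into a reference measurement, and `ideal_parallel_power` assumes the supply voltage can be scaled exactly in proportion to the clock frequency. All function names are hypothetical.

```python
def scaled_power(p_ref: float, v_ref: float, f_ref: float,
                 v_new: float, f_new: float) -> float:
    """Dynamic power of one processor moved from (v_ref, f_ref) to (v_new, f_new).

    Uses P_dynamic ∝ Vdd^2 × f, so only the ratios to a reference point matter.
    """
    return p_ref * (v_new / v_ref) ** 2 * (f_new / f_ref)


def parallel_power(p_ref: float, v_ref: float, f_ref: float,
                   v_new: float, f_new: float, n: int) -> float:
    """Total dynamic power of n identical processors, each running at (v_new, f_new)."""
    return n * scaled_power(p_ref, v_ref, f_ref, v_new, f_new)


def ideal_parallel_power(p_ref: float, n: int) -> float:
    """Idealized case (assumption): n processors each run at f/n with Vdd lowered to Vdd/n.

    Each processor's power drops by (1/n)^2 * (1/n) = 1/n^3, so the total is p_ref / n^2
    for the same overall performance, assuming a perfect n-fold parallel speedup.
    """
    return parallel_power(p_ref, 1.0, 1.0, 1.0 / n, 1.0 / n, n)
```

For example, `ideal_parallel_power(8.0, 2)` returns 2.0: two processors at half the frequency and half the voltage deliver the same throughput for a quarter of the power. In practice Vdd cannot be lowered indefinitely, which is why `parallel_power` takes the actual new voltage as a separate parameter.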

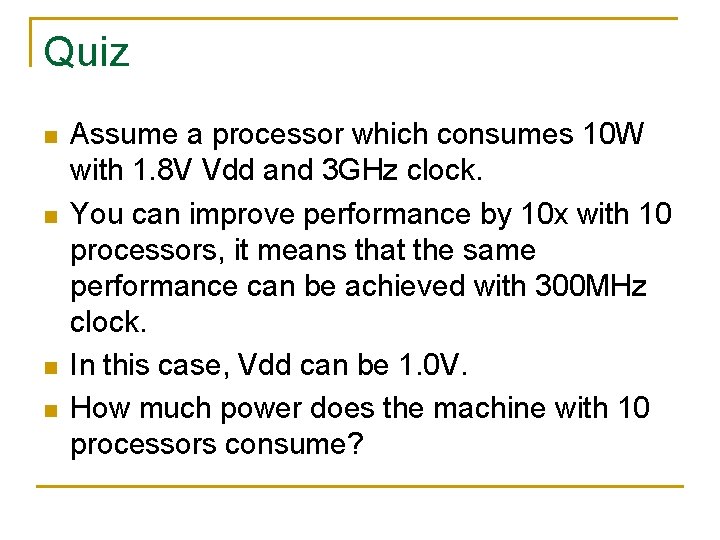

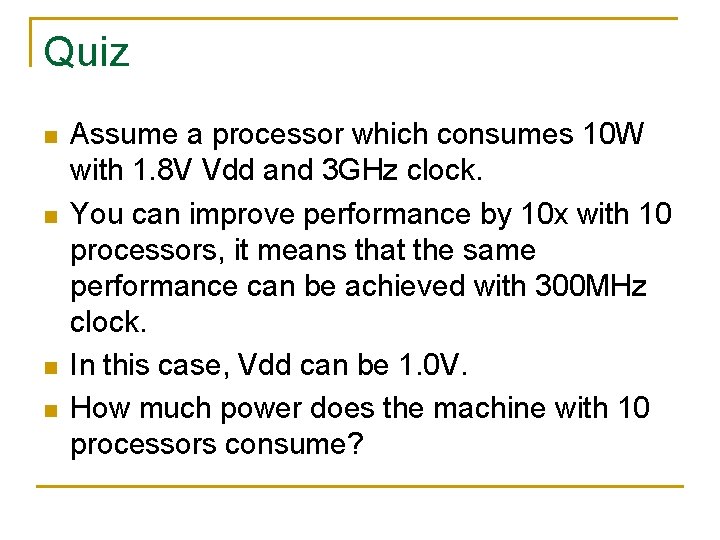

Quiz
- Assume a processor that consumes 10 W with a 1.8 V Vdd and a 3 GHz clock.
- You can improve performance by 10× with 10 processors, which means the same performance can be achieved with a 300 MHz clock. In this case, Vdd can be 1.0 V.
- How much power does the machine with 10 processors consume?

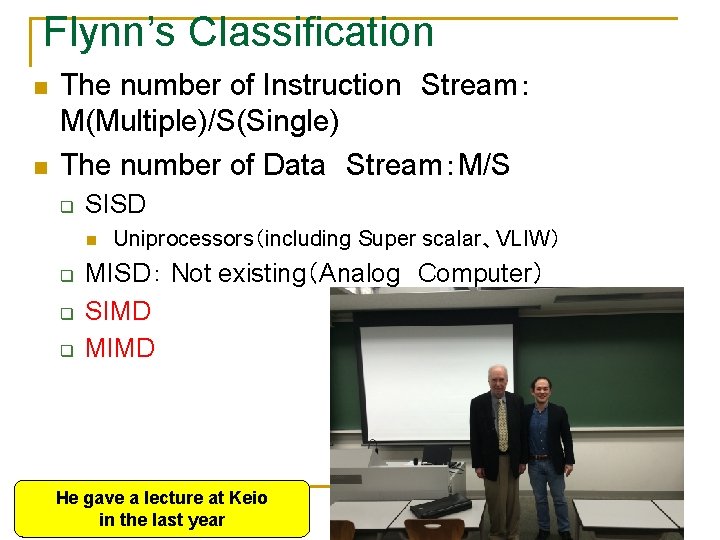

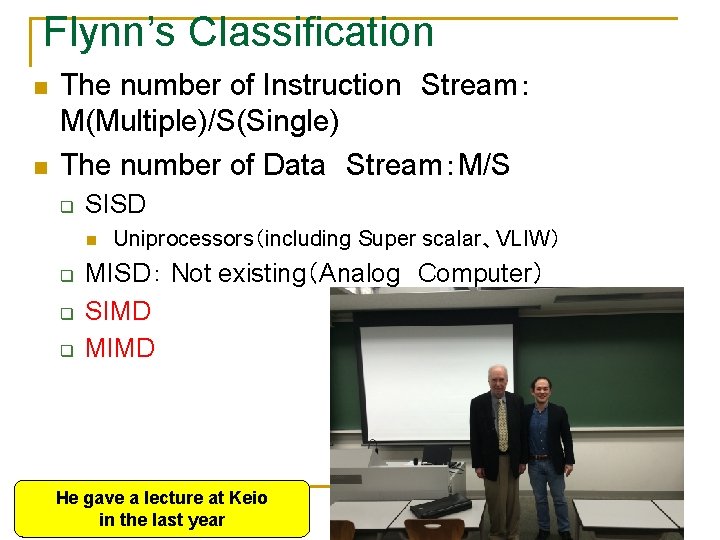

Flynn's Classification
- The number of instruction streams: M (Multiple) / S (Single)
- The number of data streams: M/S
  - SISD: uniprocessors (including superscalar, VLIW)
  - MISD: not existing (analog computer)
  - SIMD
  - MIMD

Flynn gave a lecture at Keio last year.
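Flynn's scheme is just a pair of two-valued attributes, which a small lookup captures. A minimal sketch: the `Streams` enum, `flynn_class`, and the `EXAMPLES` table are hypothetical names, and the example machines are the ones named on these slides.

```python
from enum import Enum


class Streams(Enum):
    """Number of streams: Single or Multiple."""
    SINGLE = "S"
    MULTIPLE = "M"


# Representative machines per class, taken from the slides.
EXAMPLES = {
    "SISD": "uniprocessors (including superscalar, VLIW)",
    "MISD": "not existing (analog computer)",
    "SIMD": "Illiac-IV, CM-2, multimedia instructions, GPGPU",
    "MIMD": "multicore processors, NUMA machines, PC clusters",
}


def flynn_class(instruction: Streams, data: Streams) -> str:
    """Combine the instruction-stream and data-stream counts into Flynn's class name."""
    return f"{instruction.value}I{data.value}D"
```

For example, `flynn_class(Streams.SINGLE, Streams.MULTIPLE)` gives `"SIMD"`, and `EXAMPLES` maps that name to the machines listed for it.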

SIMD (Single Instruction Stream, Multiple Data Streams)
- All processing units execute the same instruction.
- Low degree of flexibility
- Illiac-IV / MMX instructions / ClearSpeed / IMAP / GP-GPU (coarse grain)
- CM-2 (fine grain)

(Figure: an instruction memory broadcasts each instruction to all processing units, each of which has its own data memory.)
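The lockstep behavior described above can be sketched by broadcasting one operation over per-unit data. This is a sequential simulation under stated assumptions (real SIMD hardware applies the operation to all elements at once), and the masked variant shows a common way SIMD machines handle data-dependent branches; masking is a general technique, not something these slides specify. Function names are hypothetical.

```python
import operator
from typing import Callable, List, Sequence


def simd_execute(op: Callable[[int, int], int],
                 a: Sequence[int], b: Sequence[int]) -> List[int]:
    """Broadcast one instruction (op) to every processing unit.

    PU i applies the same op to its own local data a[i], b[i]: there is a single
    instruction stream and len(a) data streams.
    """
    if len(a) != len(b):
        raise ValueError("each processing unit needs one element of each operand")
    return [op(x, y) for x, y in zip(a, b)]


def simd_execute_masked(op: Callable[[int, int], int],
                        a: Sequence[int], b: Sequence[int],
                        mask: Sequence[bool]) -> List[int]:
    """Same broadcast, but PUs whose mask bit is False sit idle and keep a[i].

    A branch is handled by issuing both paths to all PUs with complementary masks,
    one source of SIMD's low flexibility.
    """
    if not len(a) == len(b) == len(mask):
        raise ValueError("operand and mask lengths must match")
    return [op(x, y) if m else x for x, y, m in zip(a, b, mask)]


if __name__ == "__main__":
    data = [1, 2, 3, 4]
    print(simd_execute(operator.add, data, [10, 20, 30, 40]))  # [11, 22, 33, 44]
    # "if x is even: x *= 10, else: x += 100" executed as two masked SIMD steps
    even = [x % 2 == 0 for x in data]
    step1 = simd_execute_masked(operator.mul, data, [10] * 4, even)
    step2 = simd_execute_masked(operator.add, step1, [100] * 4, [not e for e in even])
    print(step2)  # [101, 20, 103, 40]
```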

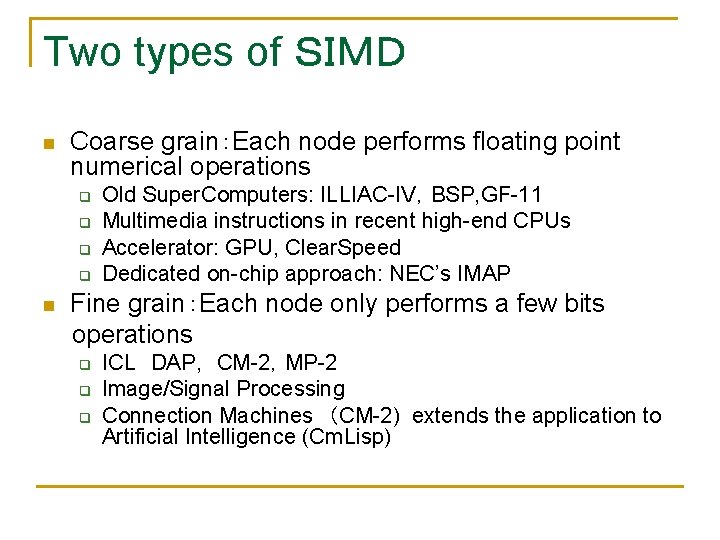

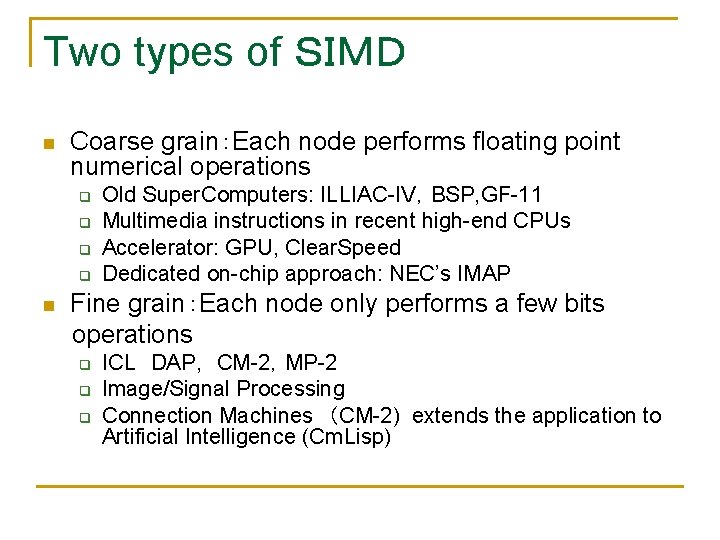

Two types of SIMD
- Coarse grain: each node performs floating-point numerical operations.
  - Old supercomputers: ILLIAC-IV, BSP, GF-11
  - Multimedia instructions in recent high-end CPUs
  - Accelerators: GPU, ClearSpeed
  - Dedicated on-chip approach: NEC's IMAP
- Fine grain: each node performs only a few bits of operations.
  - ICL DAP, CM-2, MP-2
  - Image/signal processing
  - The Connection Machine (CM-2) extended the application to artificial intelligence (CmLisp).

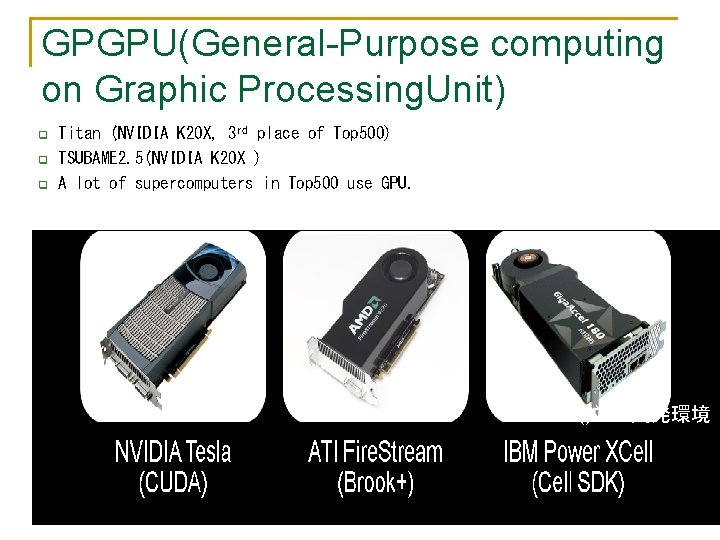

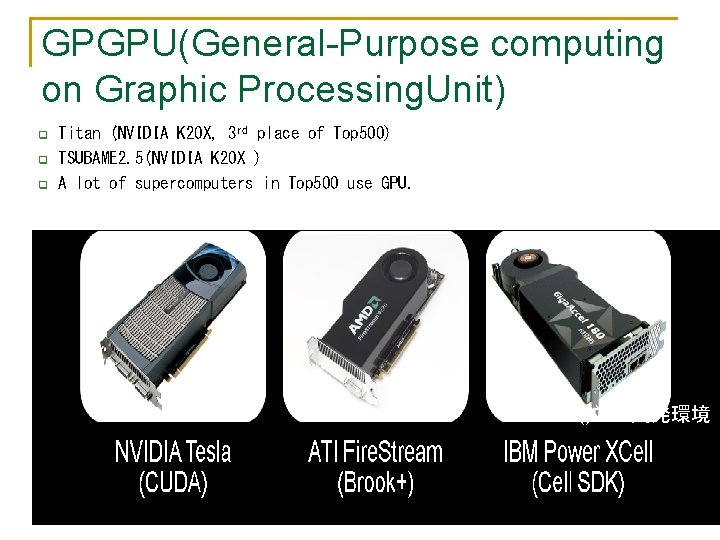

GPGPU (General-Purpose computing on Graphics Processing Units)
- Titan (NVIDIA K20X, 3rd place in the Top500)
- TSUBAME 2.5 (NVIDIA K20X)
- A lot of supercomputers in the Top500 use GPUs.

※ The items in parentheses indicate the development environment.

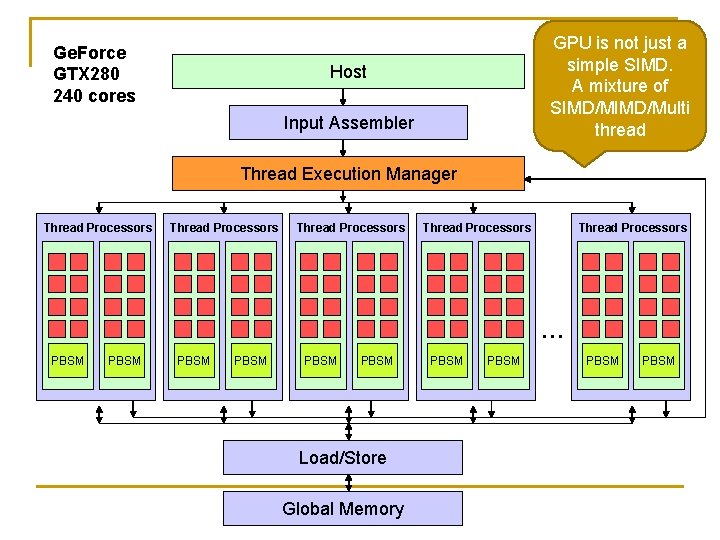

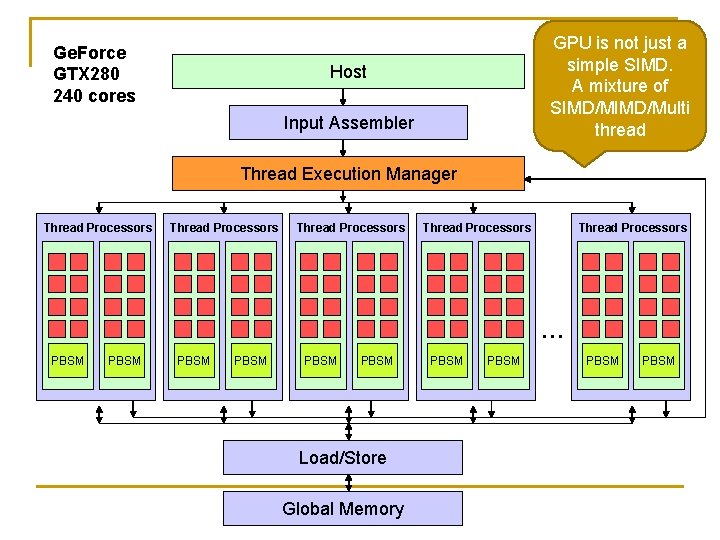

GeForce GTX 280: 240 cores
The GPU is not just a simple SIMD machine: it is a mixture of SIMD and multithreading.
(Figure: block diagram with Host, Input Assembler, Thread Execution Manager, arrays of Thread Processors each paired with a PBSM, Load/Store units, and Global Memory.)

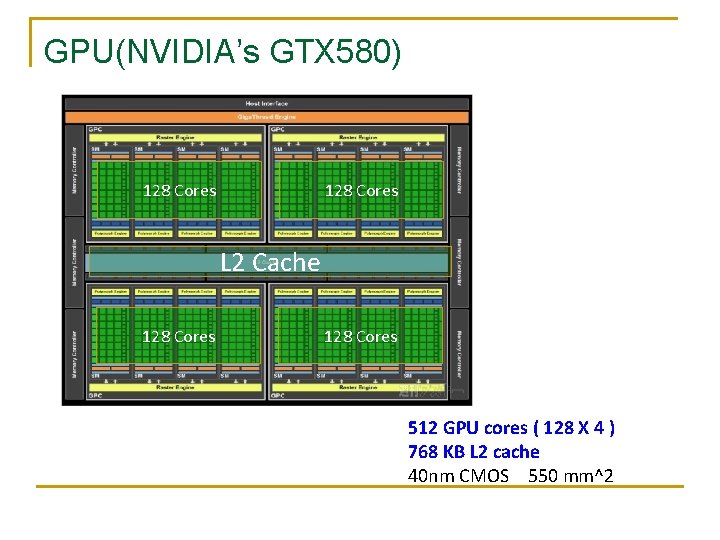

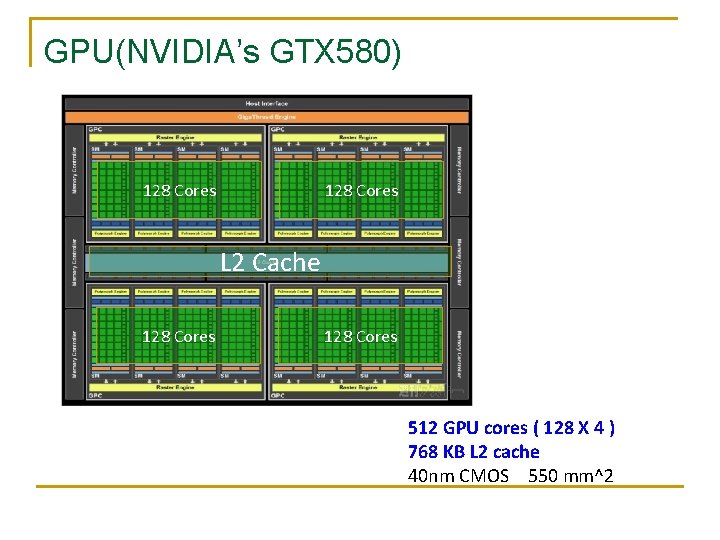

GPU (NVIDIA's GTX 580)
- 512 GPU cores (128 × 4)
- 768 KB L2 cache
- 40 nm CMOS, 550 mm²

(Figure: die photo showing blocks of 128 cores and the L2 cache.)

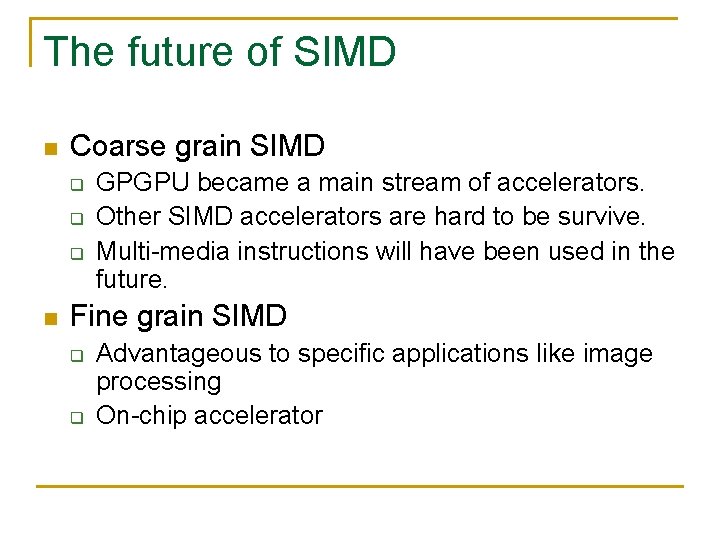

The future of SIMD
- Coarse-grain SIMD
  - GPGPU has become the mainstream of accelerators.
  - Other SIMD accelerators will find it hard to survive.
  - Multimedia instructions will continue to be used in the future.
- Fine-grain SIMD
  - Advantageous for specific applications like image processing
  - On-chip accelerator

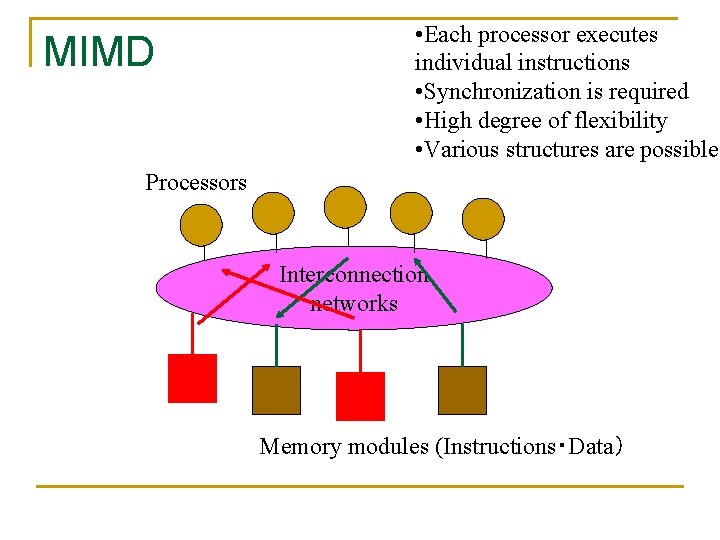

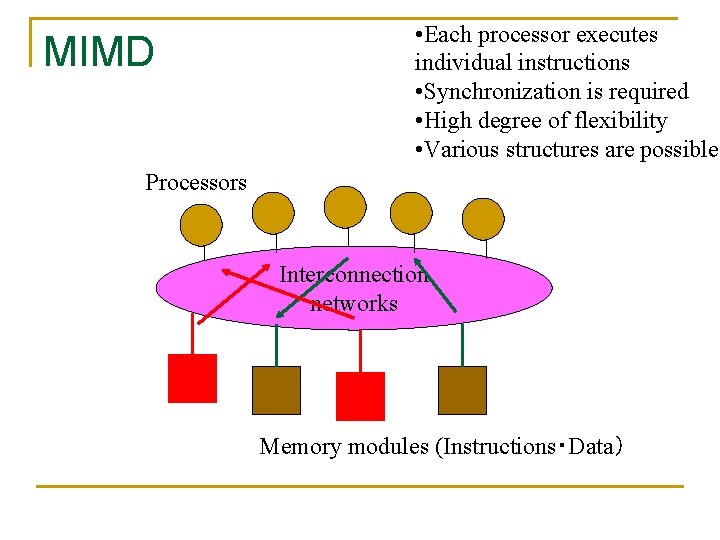

MIMD
- Each processor executes individual instructions.
- Synchronization is required.
- High degree of flexibility
- Various structures are possible.

(Figure: processors connected through interconnection networks to memory modules holding instructions and data.)
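The two MIMD properties above, independent instruction streams and the need for synchronization, can be sketched with threads and a barrier. This is an illustration of structure, not of speedup: CPython threads do not run CPU-bound code truly in parallel. `run_mimd` is a hypothetical name; `threading.Barrier` is the standard-library barrier.

```python
import threading
from typing import Callable, List


def run_mimd(programs: List[Callable[[], int]]) -> List[int]:
    """Run one independent program per 'processor' and synchronize them with a barrier."""
    n = len(programs)
    partial = [0] * n             # shared memory: one slot per processor
    totals = [0] * n
    barrier = threading.Barrier(n)

    def processor(pid: int) -> None:
        # Phase 1: each processor follows its own instruction stream.
        partial[pid] = programs[pid]()
        # Synchronization: nobody reads partial[] until every processor has written its slot.
        barrier.wait()
        # Phase 2: now it is safe to read the other processors' results.
        totals[pid] = sum(partial)

    threads = [threading.Thread(target=processor, args=(pid,)) for pid in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return totals
```

For example, `run_mimd([lambda: sum(range(10)), lambda: 6 * 7, lambda: len("MIMD")])` runs three different programs and returns `[91, 91, 91]`. Without the barrier, a fast processor could sum `partial` before a slow one had written its result.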

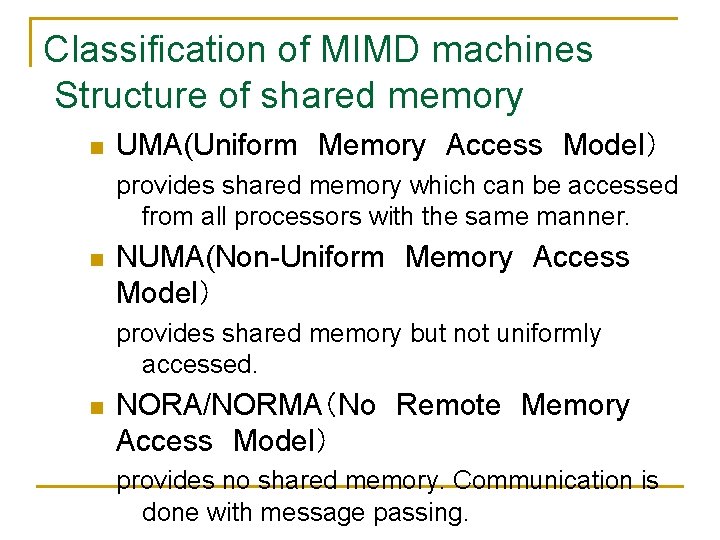

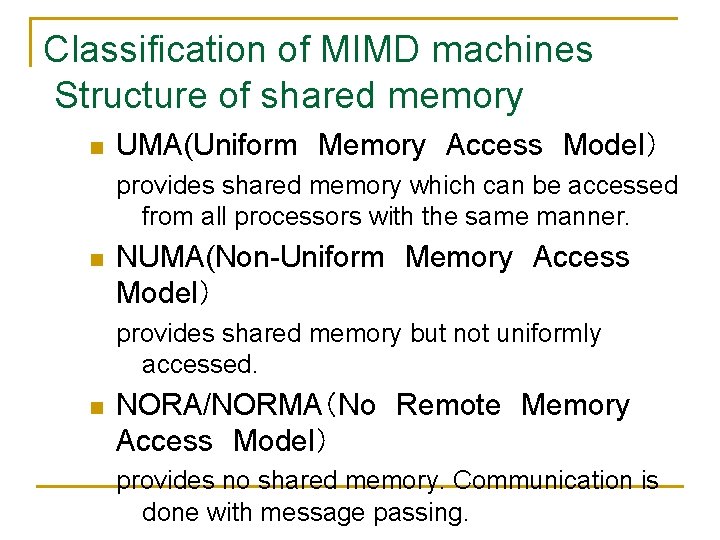

Classification of MIMD machines by the structure of shared memory
- UMA (Uniform Memory Access model) provides shared memory that can be accessed from all processors in the same manner.
- NUMA (Non-Uniform Memory Access model) provides shared memory, but it is not accessed uniformly.
- NORA/NORMA (No Remote Memory Access model) provides no shared memory. Communication is done with message passing.

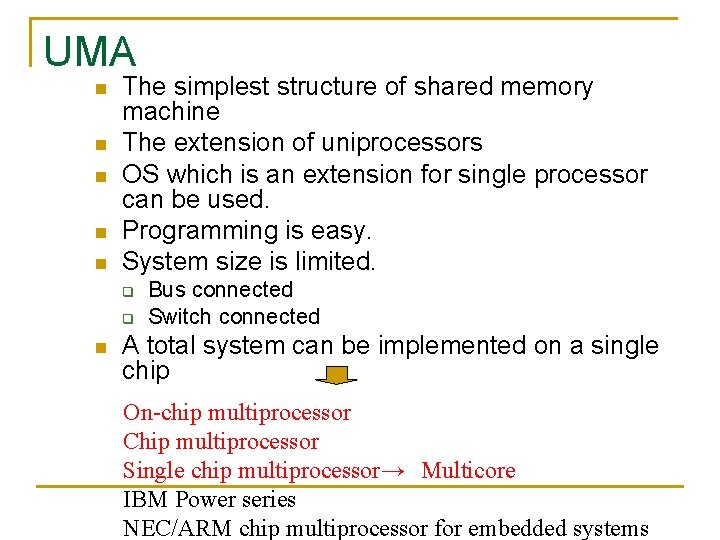

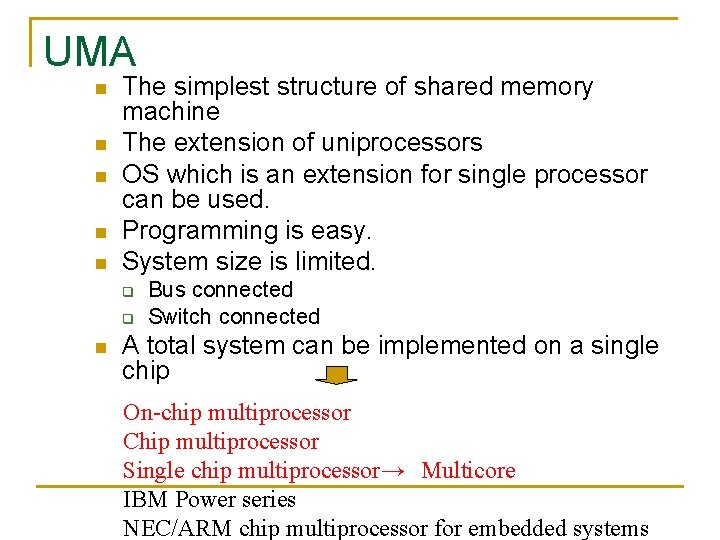

UMA
- The simplest structure of a shared-memory machine
- An extension of uniprocessors
  - An OS extended from the single-processor one can be used.
  - Programming is easy.
- System size is limited.
  - Bus connected
  - Switch connected
- A total system can be implemented on a single chip.
  - On-chip multiprocessor / chip multiprocessor / single-chip multiprocessor → multicore
  - IBM Power series
  - NEC/ARM chip multiprocessor for embedded systems

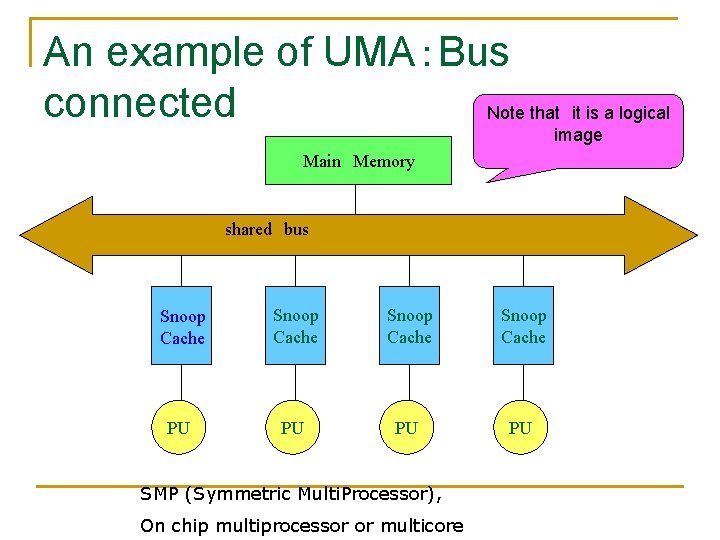

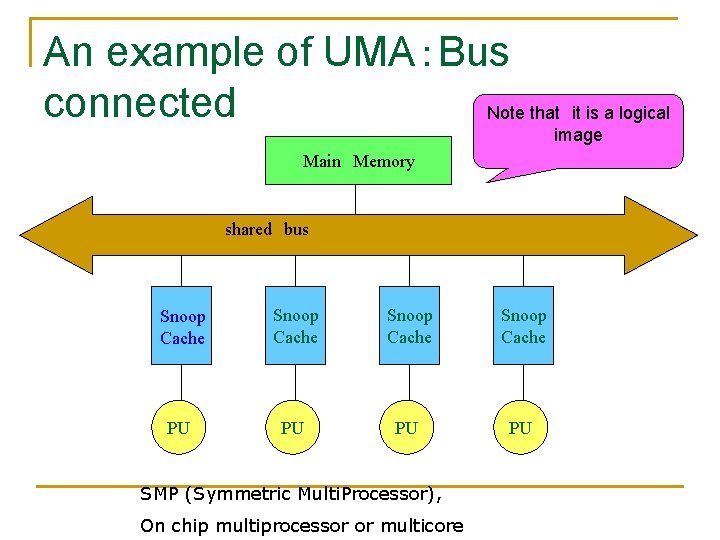

An example of UMA: bus connected (note that this is a logical image)
(Figure: PUs, each with a snoop cache, connected through a shared bus to main memory.)
- SMP (Symmetric MultiProcessor), on-chip multiprocessor, or multicore
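The snoop caches in the figure keep copies consistent by watching every bus transaction. The slide does not name a protocol, so this minimal sketch assumes a write-back, write-invalidate scheme with MSI states (Modified, Shared, Invalid), one word per block, and no eviction. The classes and method names are hypothetical.

```python
from typing import Dict, List, Tuple

MODIFIED, SHARED = "M", "S"   # a block absent from a cache is Invalid


class Bus:
    """Shared bus: every cache attached to it observes (snoops) every transaction."""

    def __init__(self, memory: Dict[int, int]):
        self.memory = memory
        self.caches: List["SnoopCache"] = []


class SnoopCache:
    def __init__(self, bus: Bus):
        self.bus = bus
        self.lines: Dict[int, Tuple[str, int]] = {}   # addr -> (state, value)
        bus.caches.append(self)

    def _others(self) -> List["SnoopCache"]:
        return [c for c in self.bus.caches if c is not self]

    def read(self, addr: int) -> int:
        if addr in self.lines:                         # hit in M or S
            return self.lines[addr][1]
        # Read miss goes on the bus; a cache holding the block Modified
        # snoops it, writes the dirty value back, and downgrades to Shared.
        for c in self._others():
            entry = c.lines.get(addr)
            if entry is not None and entry[0] == MODIFIED:
                self.bus.memory[addr] = entry[1]
                c.lines[addr] = (SHARED, entry[1])
        value = self.bus.memory.get(addr, 0)
        self.lines[addr] = (SHARED, value)
        return value

    def write(self, addr: int, value: int) -> None:
        # Broadcast an invalidation: every other copy becomes Invalid.
        # (One word per block here, so a dirty copy elsewhere is simply overwritten.)
        for c in self._others():
            c.lines.pop(addr, None)
        # Write-back: memory is updated only when another cache later reads the block.
        self.lines[addr] = (MODIFIED, value)
```

For example, after two caches read address 0 and one of them writes it, the other copy disappears; the next read by the second cache pulls the new value through a write-back.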

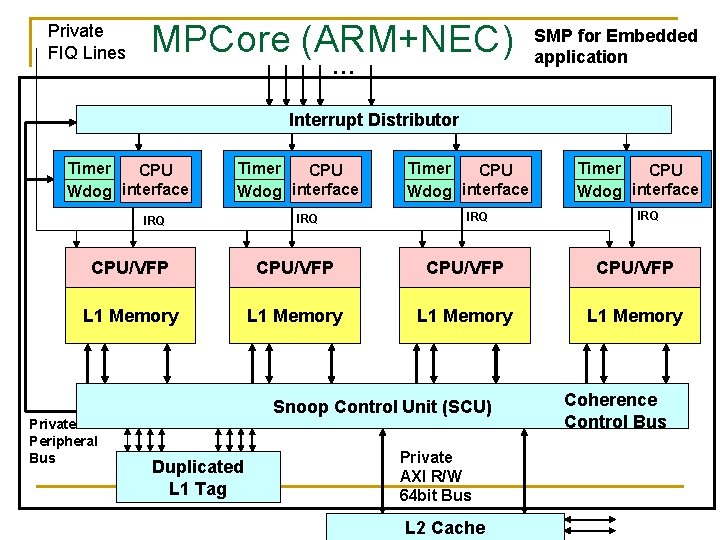

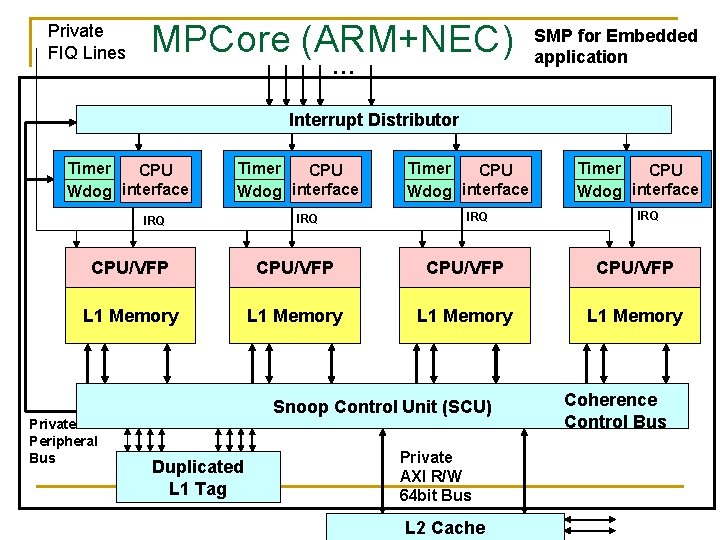

MPCore (ARM+NEC): SMP for embedded applications
(Figure: block diagram with an Interrupt Distributor and private FIQ lines; per-CPU interface, timer, and watchdog; CPU/VFP cores with L1 memory; a Private Peripheral Bus; a Snoop Control Unit (SCU) with duplicated L1 tags; private 64-bit AXI R/W buses to the L2 cache; and a coherence control bus.)

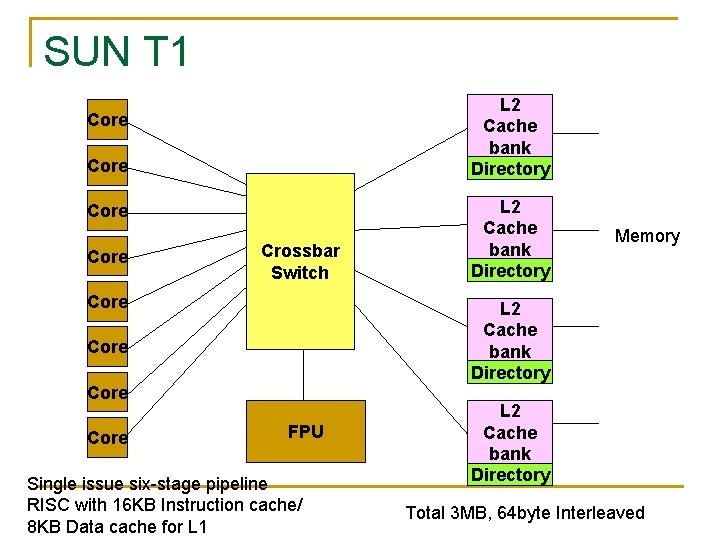

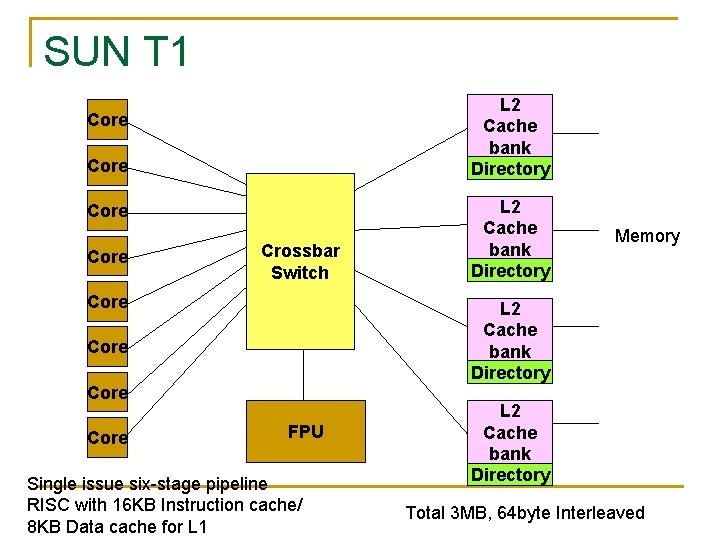

SUN T1
(Figure: cores connected through a crossbar switch to four L2 cache banks, each with a directory, plus an FPU and memory.)
- Core: single-issue, six-stage pipeline RISC with a 16 KB instruction cache and an 8 KB data cache for L1
- L2 cache: 3 MB in total, 64-byte interleaved

Multi-Core (Intel's Nehalem-EX)
- 8 CPU cores
- 24 MB L3 cache
- 45 nm CMOS, 600 mm²

(Figure: die photo with CPU cores around the shared L3 cache.)

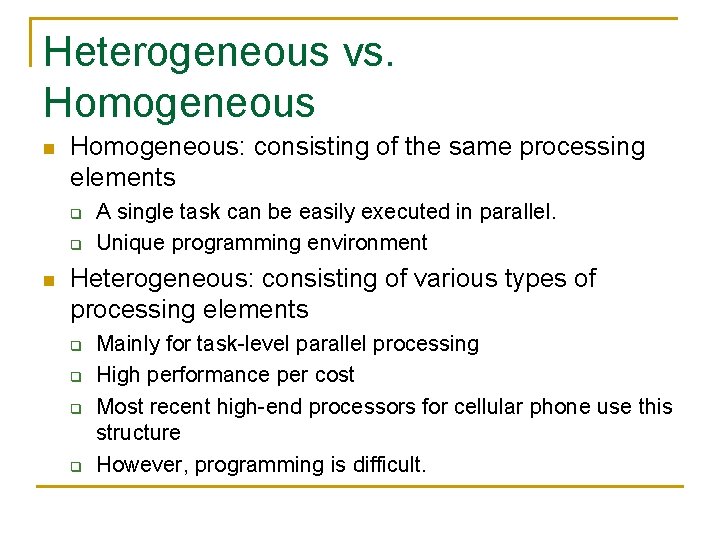

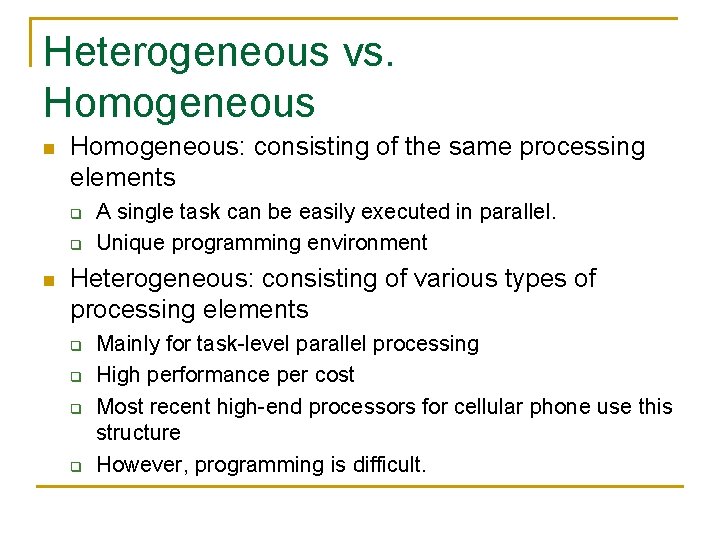

Heterogeneous vs. Homogeneous
- Homogeneous: consisting of the same processing elements
  - A single task can easily be executed in parallel.
  - A single, uniform programming environment
- Heterogeneous: consisting of various types of processing elements
  - Mainly for task-level parallel processing
  - High performance per cost
  - Most recent high-end processors for cellular phones use this structure.
  - However, programming is difficult.

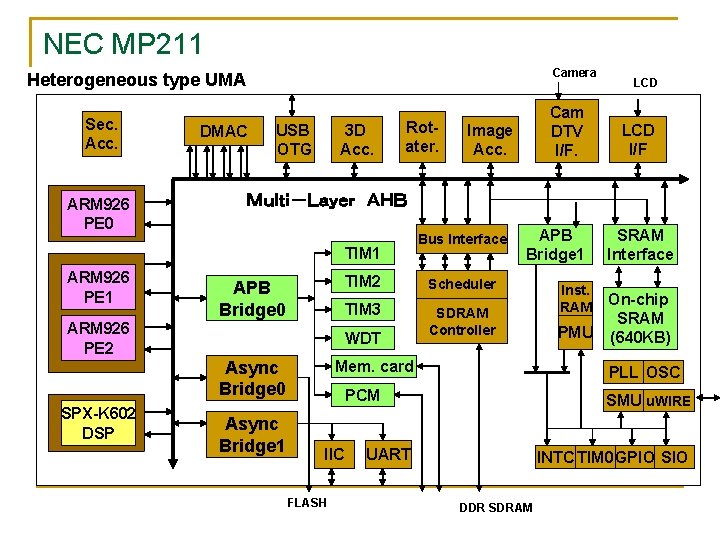

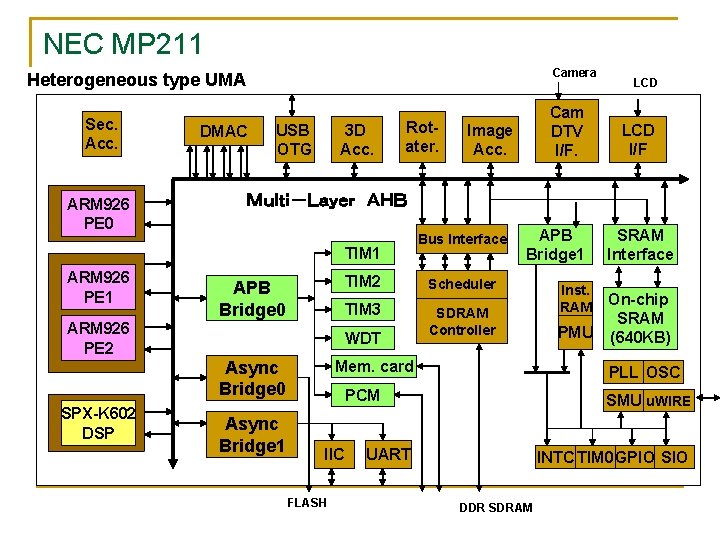

NEC MP211: heterogeneous-type UMA
(Figure: block diagram with three ARM926 PEs (PE0, PE1, PE2), an SPX-K602 DSP, security, 3D, image, and rotator accelerators, a DMAC, a multi-layer AHB bus with APB and async bridges, a scheduler, 640 KB on-chip SRAM, SDRAM, DDR SDRAM, and SRAM controllers, and peripherals including camera, LCD, DTV, USB OTG, memory card, flash, timers, watchdog, UART, IIC, PCM, GPIO, and an interrupt controller.)

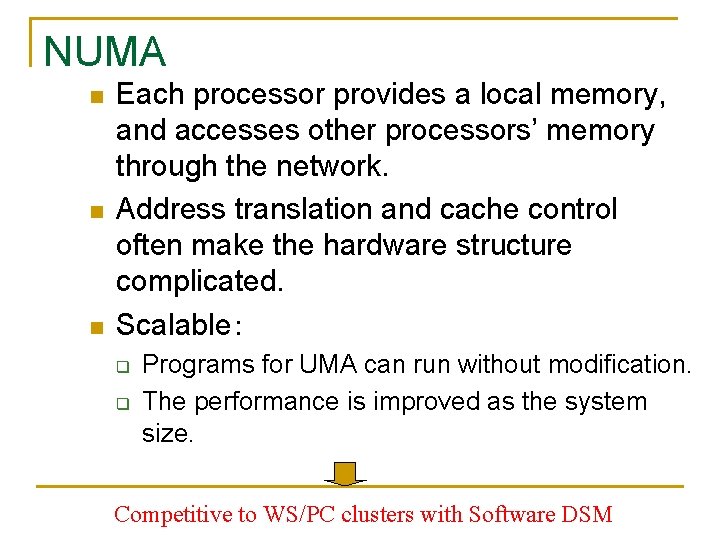

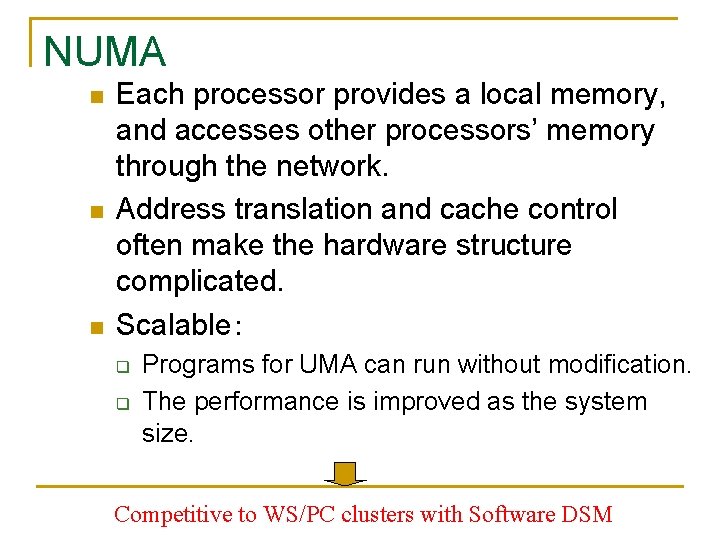

NUMA
- Each processor provides a local memory and accesses other processors' memories through the network.
- Address translation and cache control often make the hardware structure complicated.
- Scalable:
  - Programs for UMA can run without modification.
  - Performance improves as the system size grows.
- Competitive with WS/PC clusters using software DSM

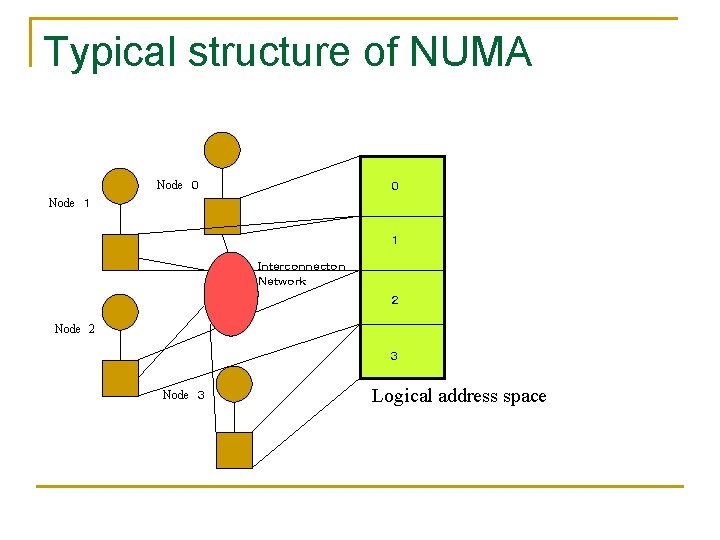

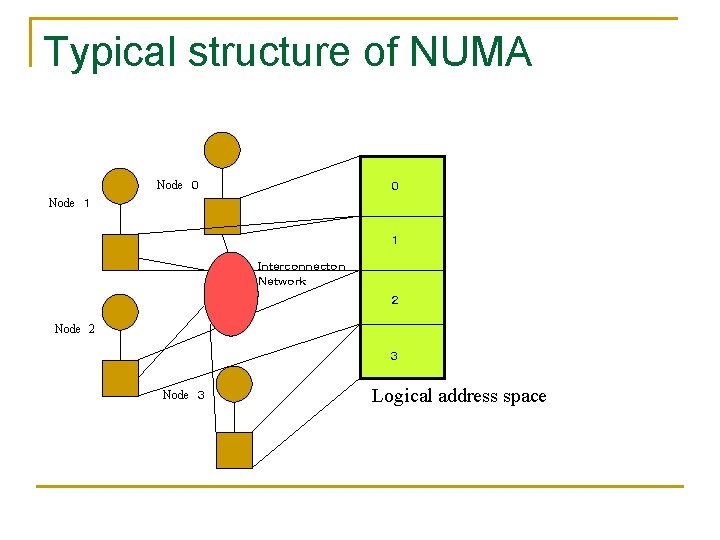

Typical structure of NUMA
(Figure: Nodes 0 to 3 connected by an interconnection network; the logical address space is divided into regions 0 to 3, each held in the local memory of the corresponding node.)
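The figure's mapping, where each node holds one contiguous region of the logical address space, can be sketched as an address-to-home-node function. A minimal sketch, assuming equal-sized contiguous regions per node; `locate`, `access_cost`, and the cost values are hypothetical placeholders, not measurements of any machine.

```python
from typing import Tuple


def locate(address: int, bytes_per_node: int, num_nodes: int) -> Tuple[int, int]:
    """Map a logical address to (home node, offset in that node's local memory).

    Assumes node k owns addresses [k * bytes_per_node, (k + 1) * bytes_per_node).
    """
    node, offset = divmod(address, bytes_per_node)
    if not 0 <= node < num_nodes:
        raise ValueError(f"address {address:#x} is outside the global address space")
    return node, offset


def access_cost(pid: int, address: int, bytes_per_node: int, num_nodes: int,
                local_cost: int = 1, remote_cost: int = 3) -> int:
    """Illustrative cost: local accesses are cheap, remote ones cross the network.

    The default costs are placeholders chosen only to show the non-uniformity.
    """
    node, _ = locate(address, bytes_per_node, num_nodes)
    return local_cost if node == pid else remote_cost
```

With four nodes of 1 GiB each, address `3 * 2**30 + 5` lives at offset 5 on node 3, so it is local for processor 3 and remote for the others. This non-uniform cost is what distinguishes NUMA from UMA.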

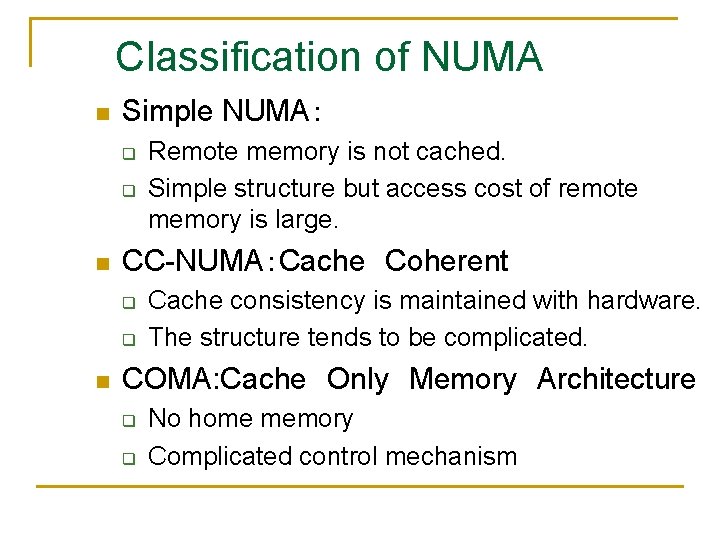

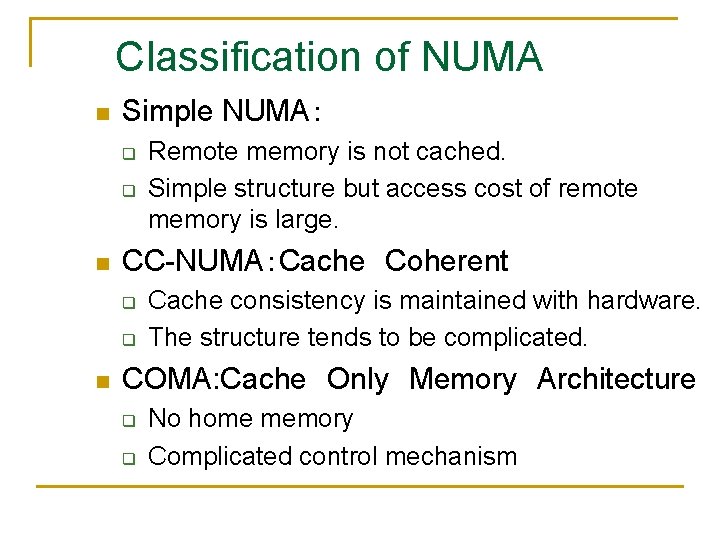

Classification of NUMA
- Simple NUMA:
  - Remote memory is not cached.
  - Simple structure, but the access cost of remote memory is large.
- CC-NUMA (Cache Coherent):
  - Cache consistency is maintained by hardware.
  - The structure tends to be complicated.
- COMA (Cache Only Memory Architecture):
  - No home memory
  - Complicated control mechanism

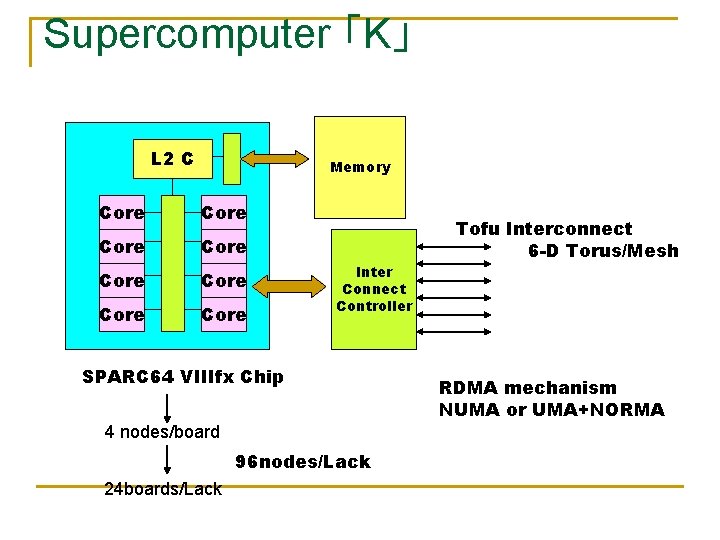

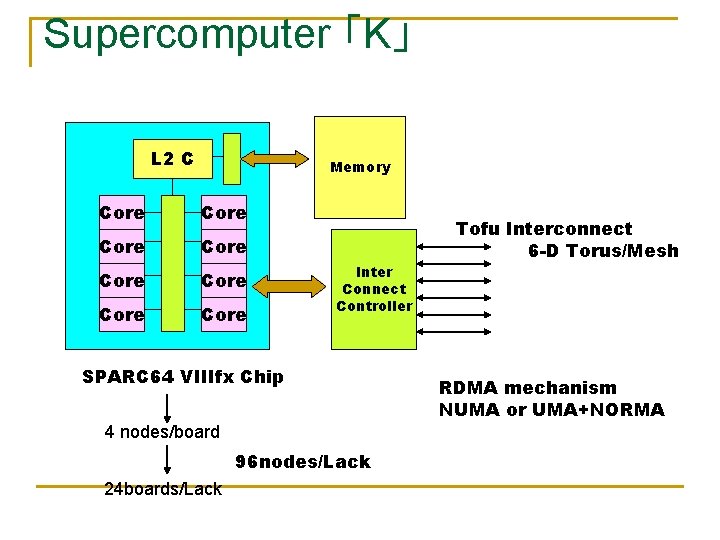

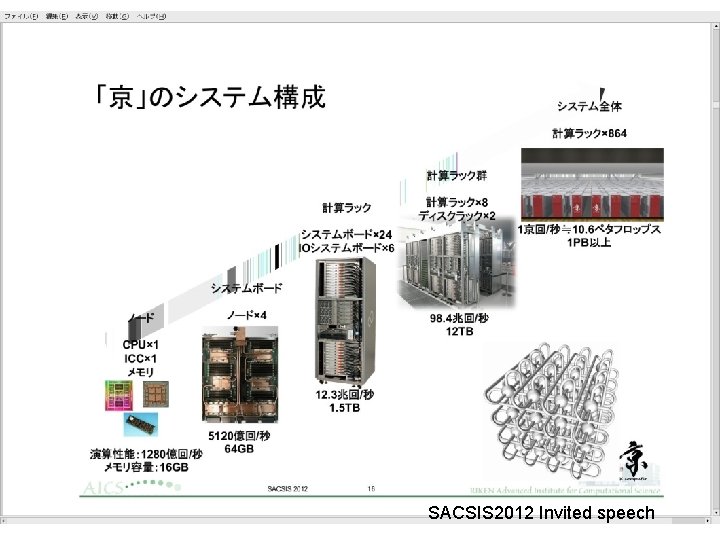

Supercomputer "K"
- SPARC64 VIIIfx chip: cores, L2 cache, memory, and interconnect controller (figure)
- 4 nodes/board, 24 boards/rack, 96 nodes/rack
- Tofu interconnect: 6-D torus/mesh with an RDMA mechanism
- NUMA or UMA+NORMA

SACSIS 2012 Invited speech
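The slide names Tofu as a 6-D torus/mesh. The generic difference between a mesh and a torus is whether each axis has wrap-around links, which can be sketched as a neighbor calculation. This is not a model of Tofu itself, whose dimension sizes and link details are not given here; the function names and the example sizes are hypothetical.

```python
from typing import List, Tuple

Coord = Tuple[int, ...]


def mesh_neighbors(coord: Coord, dims: Coord) -> List[Coord]:
    """Neighbors in an n-D mesh: +/-1 along each axis, no wrap-around at the edges."""
    result = []
    for axis, size in enumerate(dims):
        for step in (-1, 1):
            v = coord[axis] + step
            if 0 <= v < size:
                result.append(coord[:axis] + (v,) + coord[axis + 1:])
    return result


def torus_neighbors(coord: Coord, dims: Coord) -> List[Coord]:
    """Neighbors in an n-D torus: like a mesh, but each axis wraps around."""
    result: List[Coord] = []
    for axis, size in enumerate(dims):
        for step in (-1, 1):
            n = coord[:axis] + ((coord[axis] + step) % size,) + coord[axis + 1:]
            if n != coord and n not in result:   # axes of size 1 or 2 would create duplicates
                result.append(n)
    return result
```

On a 4 × 4 grid, corner node `(0, 0)` has only two mesh neighbors but four torus neighbors, because the wrap-around links connect it to `(3, 0)` and `(0, 3)`. In six dimensions with every axis of size 4 or more, each torus node has 12 neighbors.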

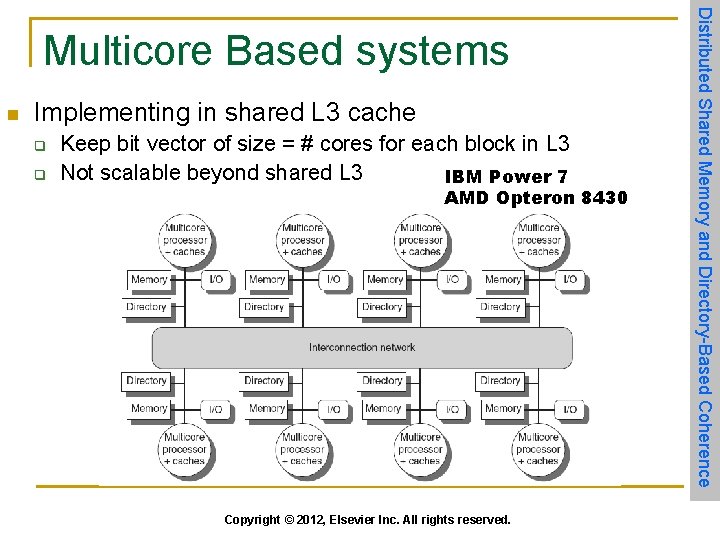

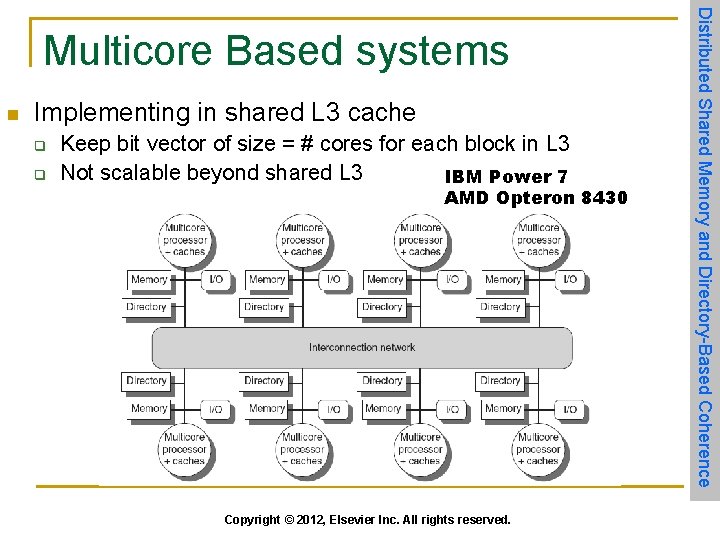

Distributed Shared Memory and Directory-Based Coherence: Multicore-Based Systems
- Implementing in a shared L3 cache
  - Keep a bit vector of size = # cores for each block in L3
  - Not scalable beyond a shared L3
- Examples: IBM Power 7, AMD Opteron 8430

Copyright © 2012, Elsevier Inc. All rights reserved.
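The per-block bit vector described above can be sketched as a small directory. Unlike a snooping bus, the directory knows exactly which cores hold a block, so invalidations go only to those cores. A minimal sketch, assuming MSI-style semantics (one owner when modified) and ignoring message timing and evictions; the class and method names are hypothetical.

```python
from typing import Dict, List, Optional


class Directory:
    """Directory kept alongside a shared L3: one presence bit per core for each block."""

    def __init__(self, num_cores: int):
        self.num_cores = num_cores
        self.presence: Dict[int, int] = {}          # block -> bit vector (bit i = core i has a copy)
        self.owner: Dict[int, Optional[int]] = {}   # block -> core holding it Modified, if any

    def sharers(self, block: int) -> List[int]:
        bits = self.presence.get(block, 0)
        return [c for c in range(self.num_cores) if (bits >> c) & 1]

    def read(self, core: int, block: int) -> Optional[int]:
        """Record a read miss; return the core that must supply the dirty copy, if any."""
        owner = self.owner.get(block)
        supplier = owner if owner is not None and owner != core else None
        if supplier is not None:
            self.owner[block] = None                # the Modified copy is downgraded to Shared
        self.presence[block] = self.presence.get(block, 0) | (1 << core)
        return supplier

    def write(self, core: int, block: int) -> List[int]:
        """Record a write; return the cores that must be sent an invalidation."""
        victims = [c for c in self.sharers(block) if c != core]
        self.presence[block] = 1 << core            # only the writer keeps a copy
        self.owner[block] = core
        return victims

    def storage_bits(self, num_blocks: int) -> int:
        """Presence-vector storage grows as blocks × cores, one reason it does not scale."""
        return num_blocks * self.num_cores
```

For example, after cores 0 and 2 read block 10, a write by core 1 returns `[0, 2]` as the only cores to invalidate, and a later read by core 3 returns 1 as the core that must supply the modified data.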

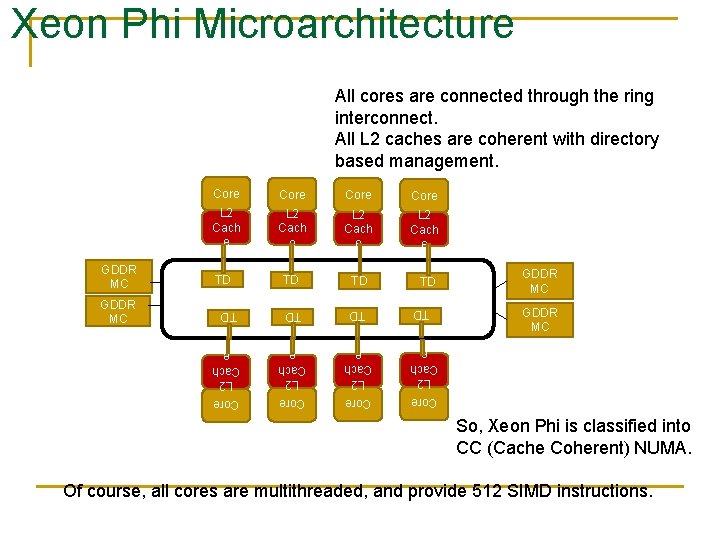

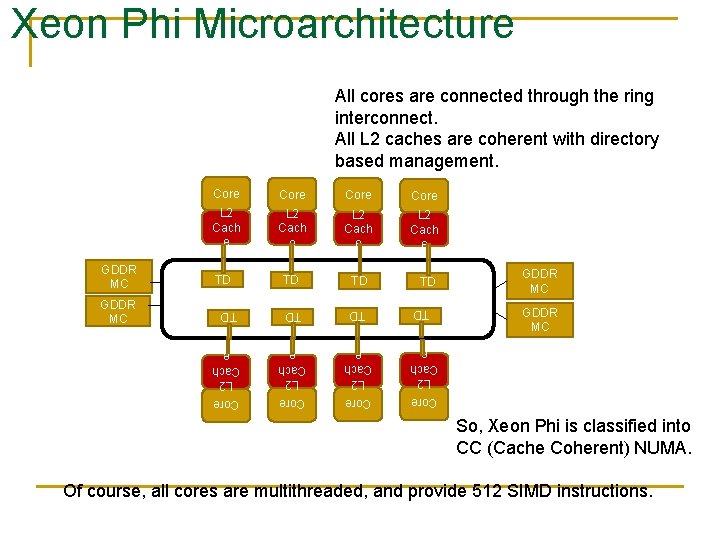

Xeon Phi Microarchitecture
- All cores are connected through the ring interconnect.
- All L2 caches are kept coherent with directory-based management.

(Figure: cores, each with an L2 cache and a tag directory (TD), on the ring, together with GDDR memory controllers.)

So, Xeon Phi is classified as a CC (Cache Coherent) NUMA. Of course, all cores are multithreaded and provide 512-bit SIMD instructions.

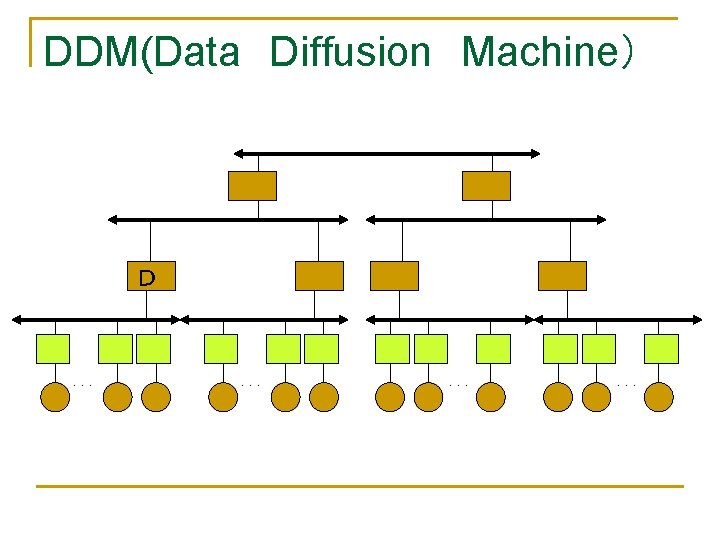

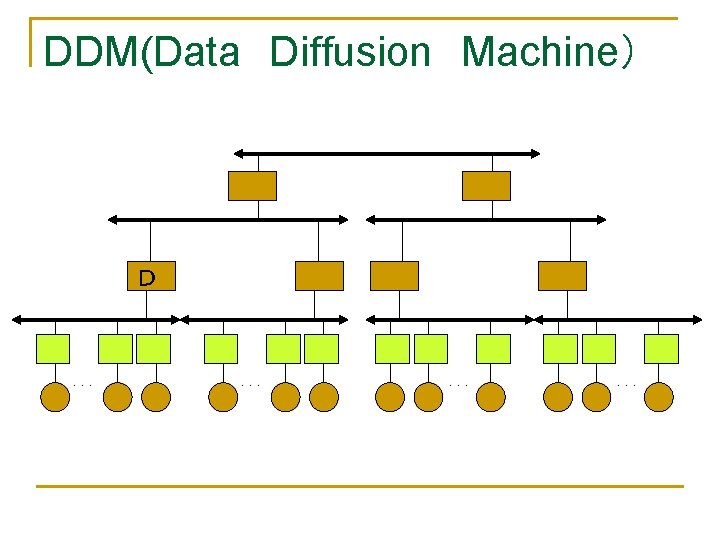

DDM (Data Diffusion Machine)

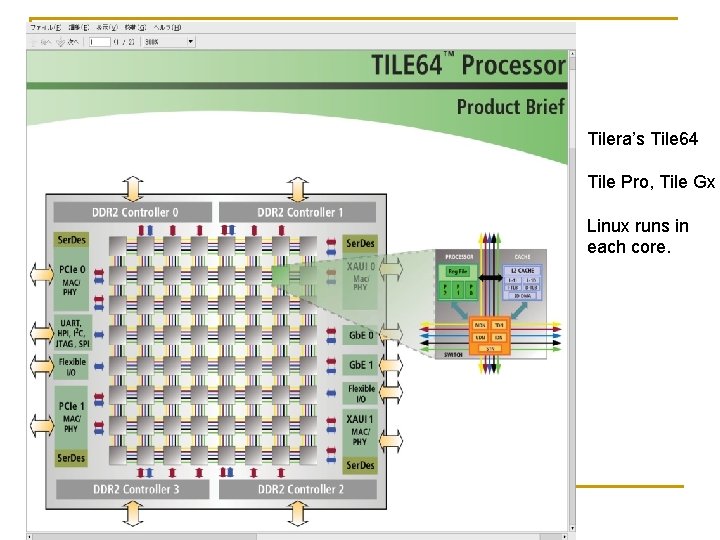

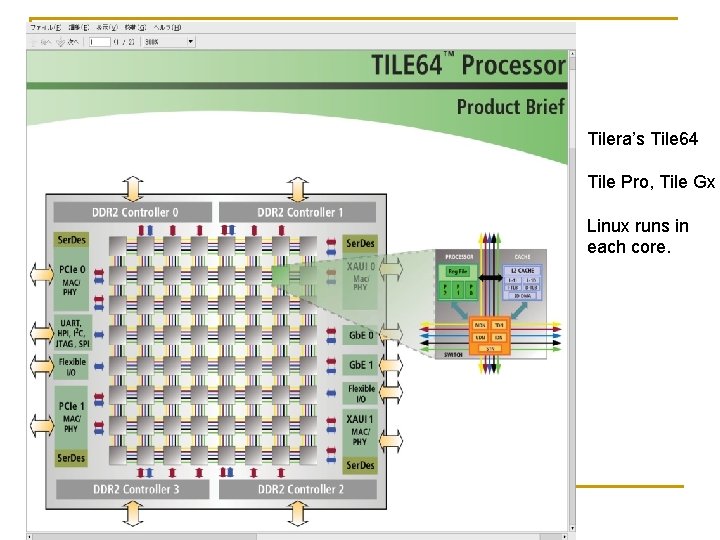

NORA/NORMA
- No shared memory
- Communication is done with message passing.
- Simple structure but high peak performance
- Cost-effective solution
- Hard to program
  - Inter-PU communications
- Cluster computing
- Tile processors: on-chip NORMA for embedded applications
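In a NORMA machine, a PU can learn another PU's data only by receiving a message. The sketch below emulates that discipline: each PU keeps its value in private variables and computes the global sum by passing messages around a ring. Python threads actually share memory, so this only mimics the communication pattern; on a real cluster it would be written with a message-passing library such as MPI. `ring_allreduce_sum` is a hypothetical name.

```python
import queue
import threading
from typing import List


def ring_allreduce_sum(values: List[int]) -> List[int]:
    """Every PU ends up with the global sum, using only send/receive on a ring.

    Data moves between PUs only through the per-PU mailboxes, mimicking
    NORMA message passing.
    """
    n = len(values)
    mailbox = [queue.Queue() for _ in range(n)]   # one receive queue per PU
    results = [0] * n                             # used only to hand results back to the caller

    def pu(rank: int) -> None:
        local = values[rank]                      # private data of this PU
        total, msg = local, local
        right = (rank + 1) % n
        for _ in range(n - 1):
            mailbox[right].put(msg)               # send to the right neighbor (queue is unbounded)
            msg = mailbox[rank].get()             # receive from the left neighbor (blocking)
            total += msg
        results[rank] = total

    threads = [threading.Thread(target=pu, args=(r,)) for r in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

At step s each PU receives the value originally owned by the PU s positions to its left, so after n - 1 steps every PU has seen all values. The cost is n - 1 communication steps, which illustrates why inter-PU communication makes NORMA harder to program and tune.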

Early hypercube machine: nCUBE 2
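In a hypercube, node numbers double as coordinates: two nodes are linked exactly when their binary addresses differ in one bit. The sketch below shows neighbor calculation and dimension-order (e-cube) routing, a standard routing scheme for hypercubes; it is a generic illustration, not a description of nCUBE 2's router, and the function names are hypothetical.

```python
from typing import List


def hypercube_neighbors(node: int, dim: int) -> List[int]:
    """Neighbors of `node` in a dim-dimensional hypercube of 2**dim nodes.

    Two nodes are linked when their binary addresses differ in exactly one bit.
    """
    if not 0 <= node < (1 << dim):
        raise ValueError("node number out of range")
    return [node ^ (1 << k) for k in range(dim)]


def ecube_route(src: int, dst: int) -> List[int]:
    """Dimension-order (e-cube) route: fix the differing address bits from lowest to highest."""
    path = [src]
    current, diff, k = src, src ^ dst, 0
    while diff >> k:
        if (diff >> k) & 1:
            current ^= 1 << k
            path.append(current)
        k += 1
    return path
```

For example, in a 3-cube node 5 (`101`) has neighbors 4, 7, and 1, and `ecube_route(0, 5)` returns `[0, 1, 5]`. The hop count always equals the number of differing bits, so the diameter of a d-cube is d.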

Fujitsu's NORA AP1000 (1990)
- Mesh connection
- SPARC

Intel's Paragon XP/S (1991)
- Mesh connection
- i860

PC Cluster
- Beowulf Cluster (NASA's Beowulf Project, 1994, by Sterling)
  - Commodity components
  - TCP/IP
  - Free software
- Others
  - Commodity components
  - High-performance networks like Myrinet/InfiniBand
  - Dedicated software

RHiNET-2 cluster

Tilera's TILE64, TILEPro, TILE-Gx: Linux runs on each core.

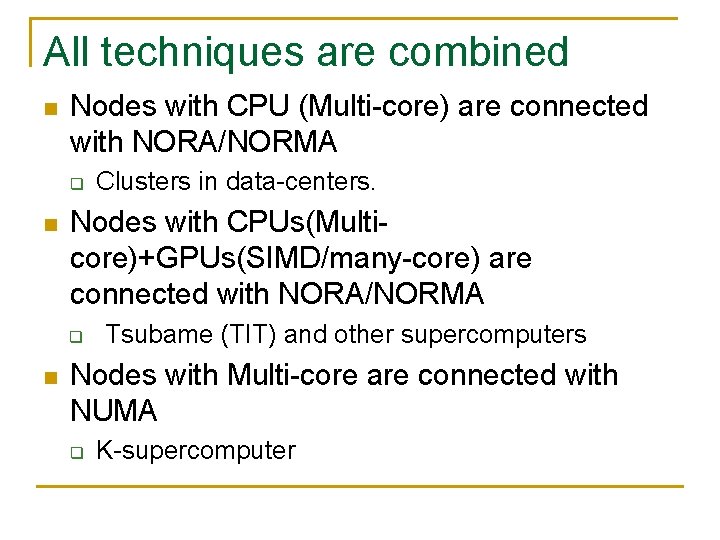

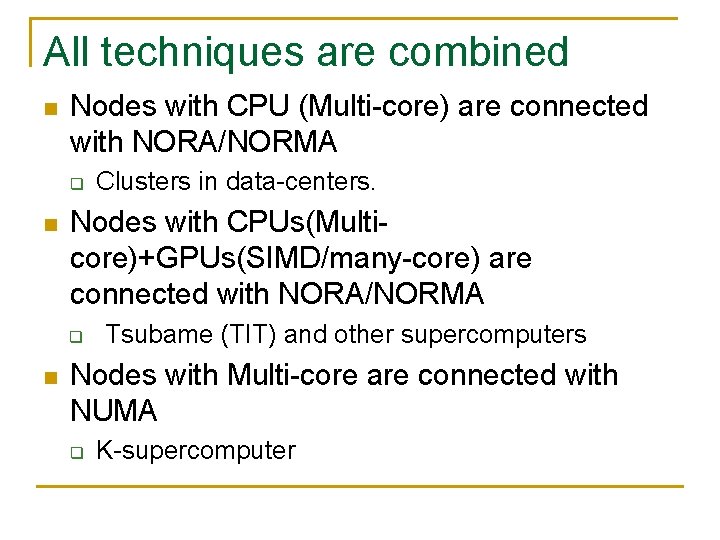

All techniques are combined
- Nodes with CPUs (multicore) are connected with NORA/NORMA.
  - Clusters in data centers
- Nodes with CPUs (multicore) + GPUs (SIMD/many-core) are connected with NORA/NORMA.
  - TSUBAME (TIT) and other supercomputers
- Nodes with multicore are connected with NUMA.
  - K supercomputer

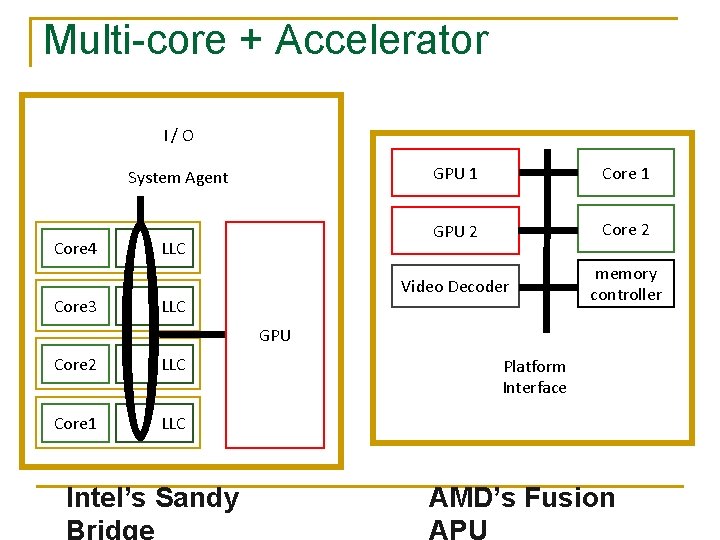

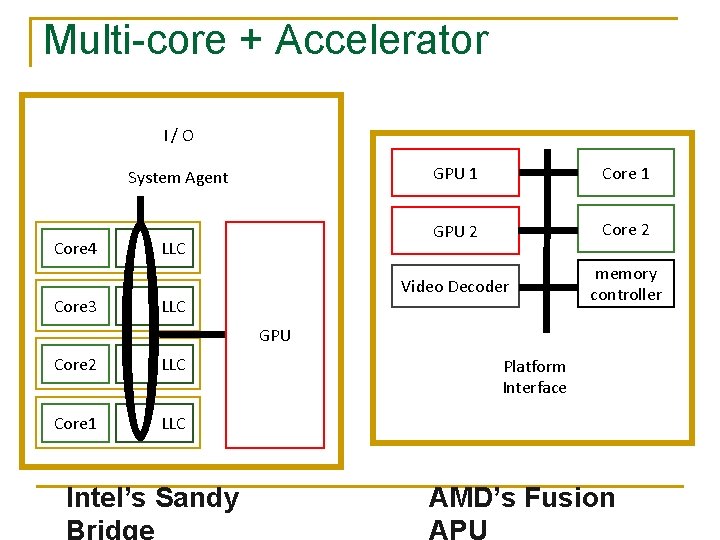

Multi-core + Accelerator
(Figure: die layouts of Intel's Sandy Bridge and AMD's Fusion, each combining CPU cores, last-level caches (LLC), and a GPU on one chip, along with a memory controller, video decoder, system agent, and I/O/platform interface.)

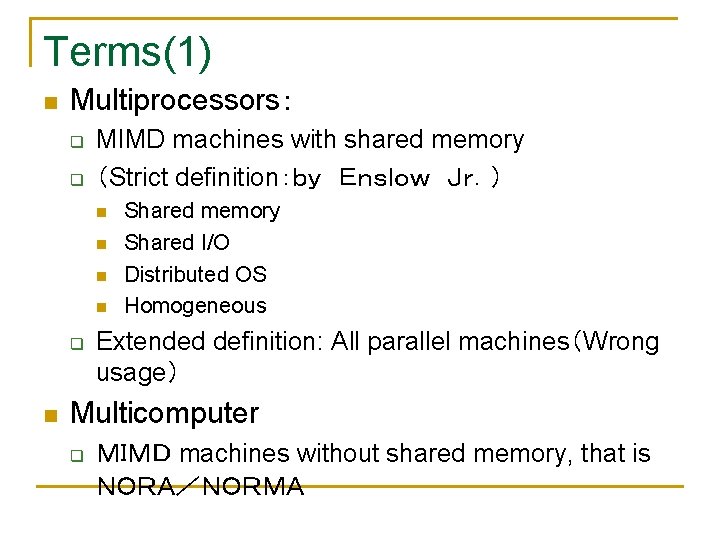

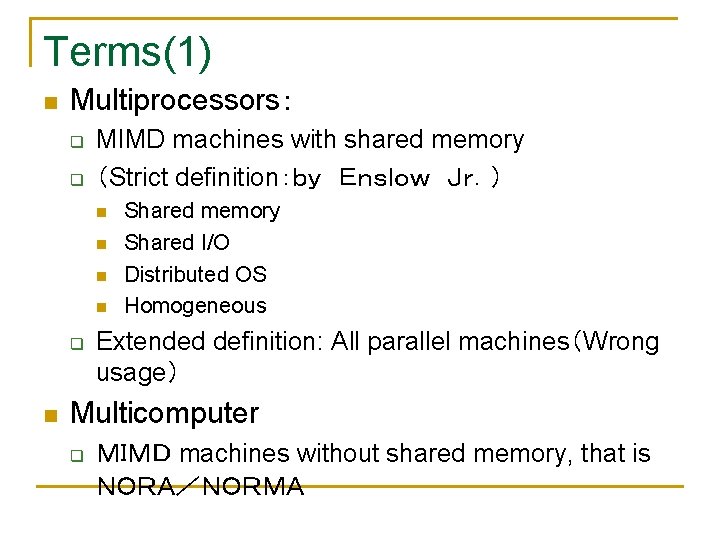

Terms (1)
- Multiprocessors:
  - MIMD machines with shared memory (strict definition by Enslow Jr.)
    - Shared memory
    - Shared I/O
    - Distributed OS
    - Homogeneous
  - Extended definition: all parallel machines (wrong usage)
- Multicomputer
  - MIMD machines without shared memory, that is, NORA/NORMA

Terms (2)
- Multicore
  - On-chip multiprocessor
  - Mostly UMA
  - Symmetric Multi-Processor (SMP)
    - Historically, the term SMP was used for multi-chip multiprocessors.
- Manycore
  - On-chip multiprocessor with a lot of cores
  - GPUs are also referred to as "manycore".

Classification
- Stored programming based
  - SIMD
    - Fine grain
    - Coarse grain
  - MIMD
    - Multiprocessors
      - Bus connected UMA
      - Switch connected UMA
      - Simple NUMA
      - CC-NUMA
      - COMA
    - Multicomputers
      - NORA
- Others
  - Systolic architecture
  - Data flow architecture
  - Mixed control
  - Demand driven architecture

Exercise 1
- Pezy's supercomputer Gyoukou took 4th place in the TOP500 in November 2017. However, the president of Pezy was later arrested for fraud.
- It uses the PEZY-SC2 as an accelerator. Into which type is the PEZY-SC2 classified?
- If you take this class, send the answer with your name and student number to hunga@am.ics.keio.ac.jp. You can use either Japanese or English.

Keio university architecture

Keio university architecture Peta

Peta Bruce hayes

Bruce hayes Keio sdm

Keio sdm Sdm project management

Sdm project management Keio sdm

Keio sdm Direct guidance examples

Direct guidance examples Cuspal angulation

Cuspal angulation 沈榮麟

沈榮麟 Lord of the flies table of contents

Lord of the flies table of contents Air pollution contents

Air pollution contents Dr rami shaarawy

Dr rami shaarawy Table of contents background

Table of contents background Blue ocean strategy table of contents

Blue ocean strategy table of contents Sas contents

Sas contents Table of contents science

Table of contents science Company profile table of contents

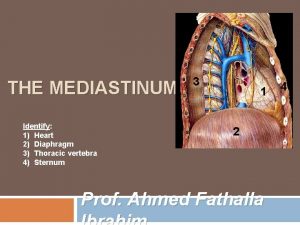

Company profile table of contents Anterior mediastinum contents

Anterior mediastinum contents Table of contents error

Table of contents error Perineum

Perineum Pericardium and mediastinum

Pericardium and mediastinum Spermatic cord contents rule of 3's

Spermatic cord contents rule of 3's Deep perineal pouch contents

Deep perineal pouch contents Contents

Contents Persepolis table

Persepolis table Planetary systems

Planetary systems Table of contents scrapbook

Table of contents scrapbook Table of contents annex

Table of contents annex Mla table of contents

Mla table of contents Table of contents slide

Table of contents slide Clovis unified special education

Clovis unified special education Posterior mediastinum

Posterior mediastinum Science table of contents

Science table of contents Boundaries of anterior triangle

Boundaries of anterior triangle Dlginit

Dlginit Contents page epq

Contents page epq Contents of curriculum vitae

Contents of curriculum vitae Electronic table of contents

Electronic table of contents Civics interactive notebook

Civics interactive notebook Ip contents

Ip contents Contents of a dead man's pocket conflict

Contents of a dead man's pocket conflict Adductor hiatus

Adductor hiatus Contents of a dead man's pocket conflict

Contents of a dead man's pocket conflict Processus vaginalis anatomy

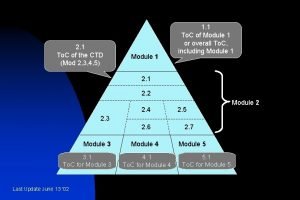

Processus vaginalis anatomy Module 3 ctd table contents

Module 3 ctd table contents Suprasternal space of burns

Suprasternal space of burns Cell contents assignment to a non-cell array object.

Cell contents assignment to a non-cell array object. I-need-a-few-pointers-98hpou5

I-need-a-few-pointers-98hpou5 Writing appendix in a report

Writing appendix in a report Golden lampstand tabernacle

Golden lampstand tabernacle Transverse humeral ligament attachments

Transverse humeral ligament attachments Contents of spermatic cord mnemonic

Contents of spermatic cord mnemonic Ctd module 5 table of contents

Ctd module 5 table of contents