CMPT 371 Data Communications and Networking Chapter 2


- Slides: 59

CMPT 371 Data Communications and Networking Chapter 2 Application Layer - 2 2: Application Layer 1

Chapter 2 outline

- 2.1 Principles of app layer protocols
- 2.2 Web and HTTP
- 2.3 FTP
- 2.4 Electronic Mail
  - SMTP, POP3, IMAP
- 2.5 DNS
- 2.6 Content distribution
  - Network Web caching
  - Content distribution networks
  - P2P file sharing
- 2.7 Cloud

Content Distribution

- Problem of a single server
  - Bottleneck, single point of failure, …
- Content distribution
  - Distribute (replicate) content at different places
  - Direct requests to appropriate places

Client-side Caching

Limit of Client-side Caching

Not shared!

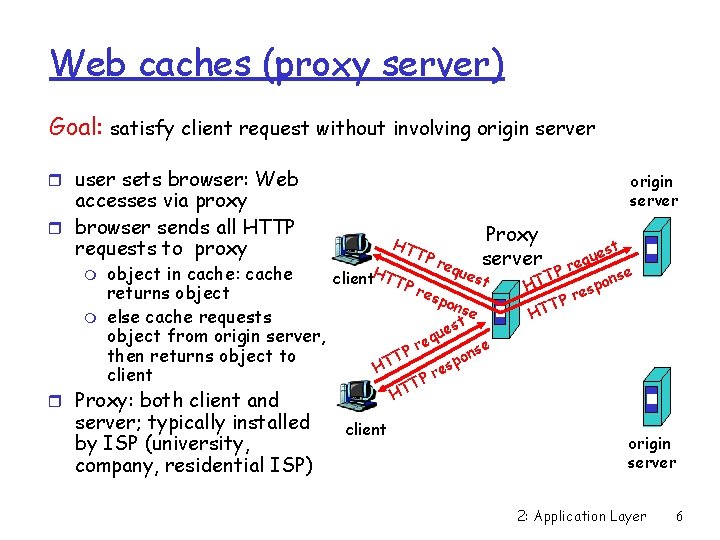

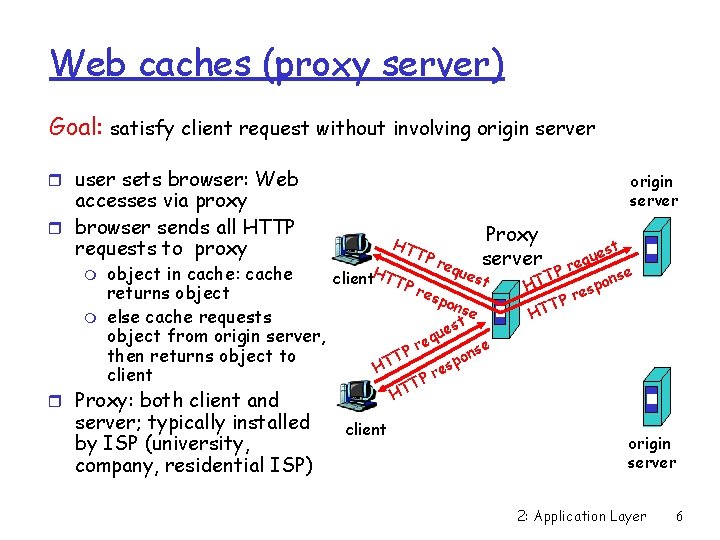

Web caches (proxy server)

Goal: satisfy client request without involving origin server

- user sets browser: Web accesses via proxy
- browser sends all HTTP requests to proxy
  - object in cache: cache returns object
  - else cache requests object from origin server, then returns object to client
- Proxy: both client and server; typically installed by ISP (university, company, residential ISP)

Figure: clients exchange HTTP requests and responses with the proxy server, which exchanges HTTP requests and responses with the origin servers.

More about Web caching

Why Web caching?

- Reduce response time for client requests.
- Reduce traffic on an institution's access link.
- An Internet dense with caches enables "poor" content providers to deliver content effectively.

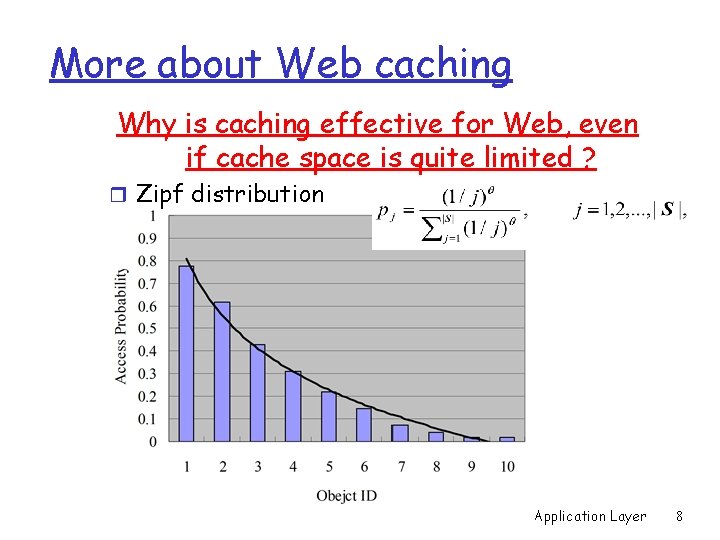

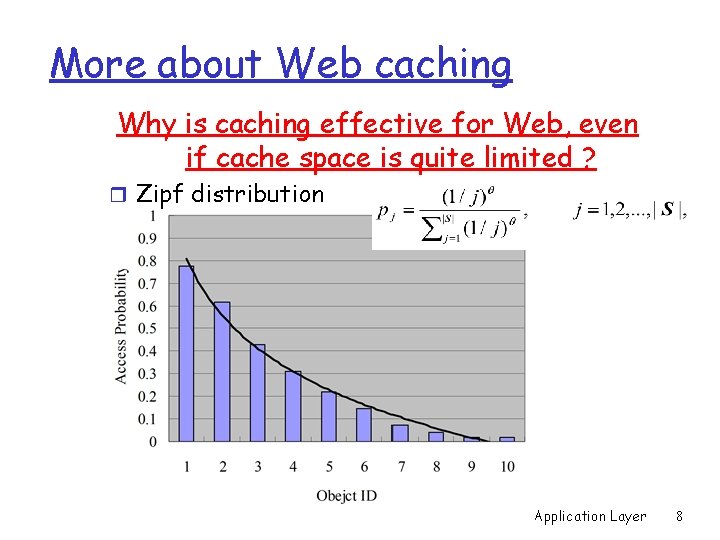

More about Web caching

Why is caching effective for the Web, even if cache space is quite limited?

- Zipf distribution: request popularity is highly skewed, so a small set of popular objects accounts for a large share of all requests

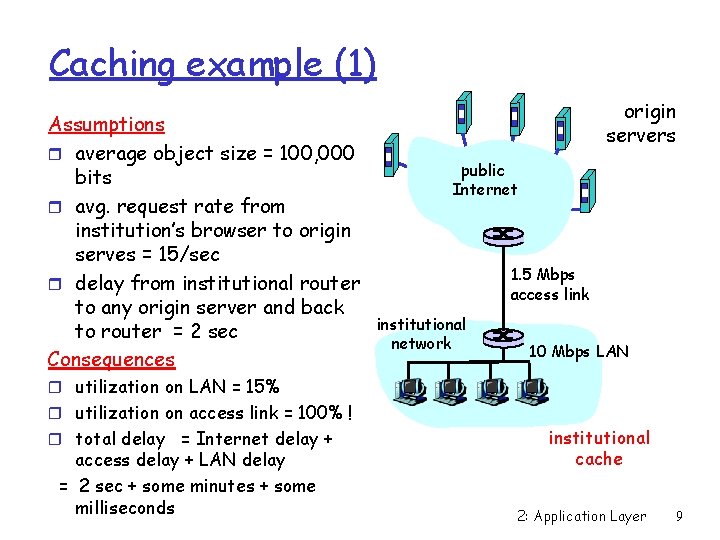

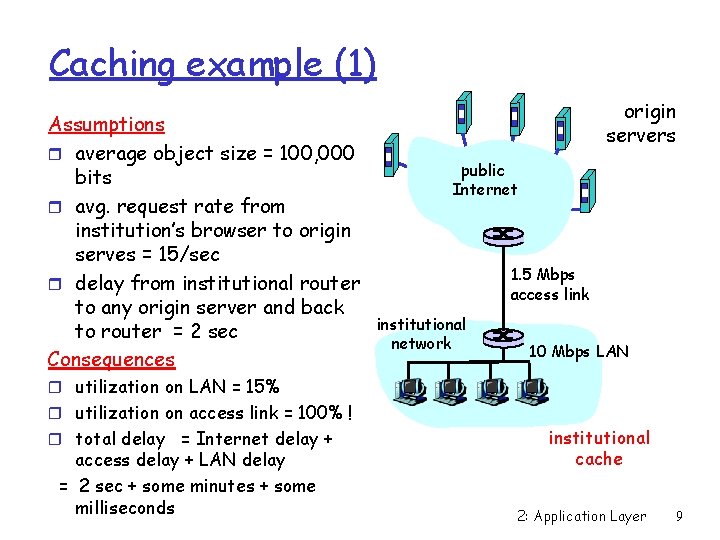

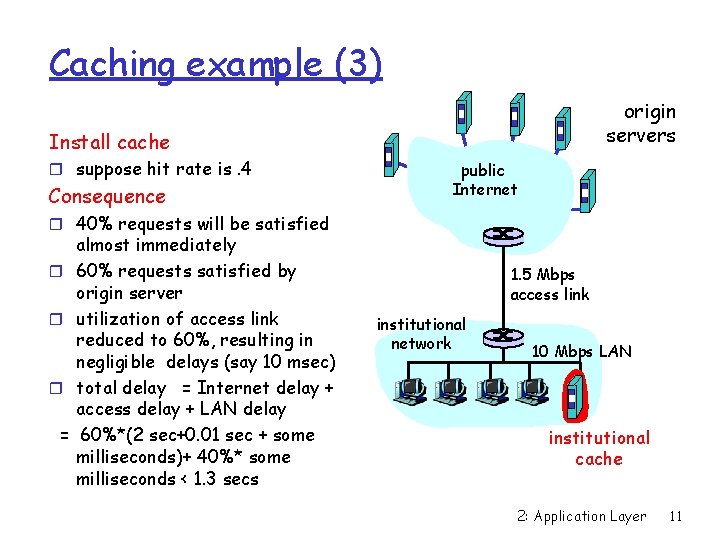

Caching example (1)

Assumptions

- average object size = 100,000 bits
- avg. request rate from institution's browsers to origin servers = 15/sec
- delay from institutional router to any origin server and back to router = 2 sec

Consequences

- utilization on LAN = 15%
- utilization on access link = 100%!
- total delay = Internet delay + access delay + LAN delay = 2 sec + some minutes + some milliseconds

Figure: origin servers on the public Internet, a 1.5 Mbps access link, and an institutional network with a 10 Mbps LAN and an institutional cache.

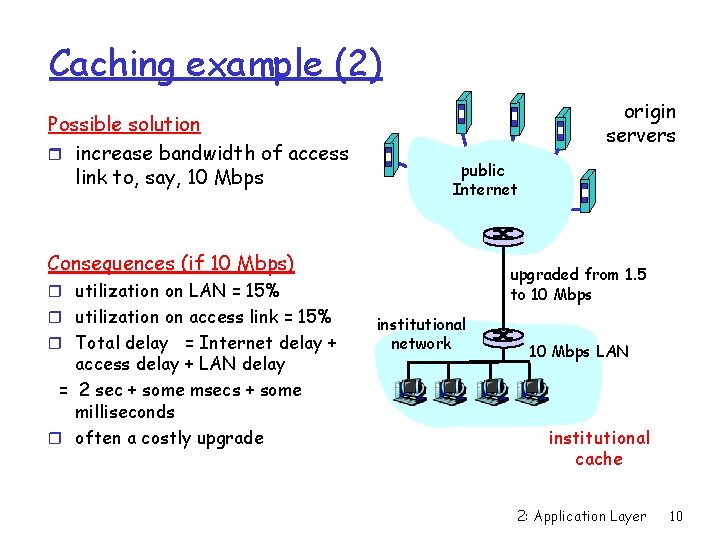

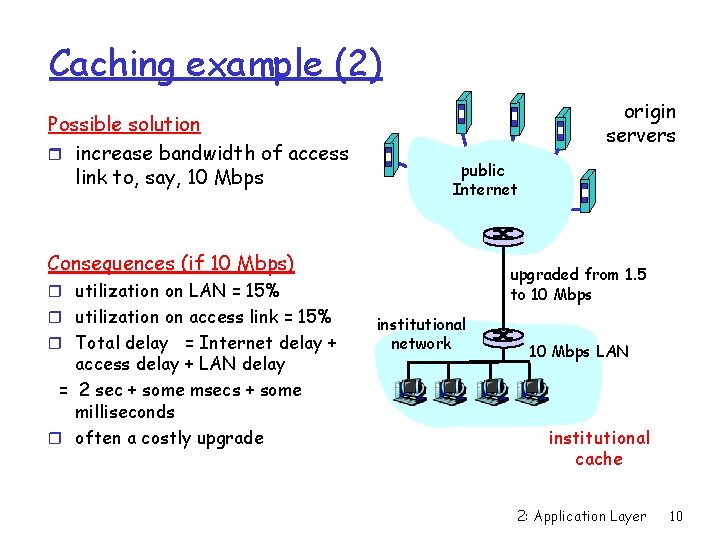

Caching example (2)

Possible solution

- increase bandwidth of access link to, say, 10 Mbps

Consequences (if upgraded from 1.5 to 10 Mbps)

- utilization on LAN = 15%
- utilization on access link = 15%
- total delay = Internet delay + access delay + LAN delay = 2 sec + some msecs + some milliseconds
- often a costly upgrade

Figure: origin servers on the public Internet, the upgraded 10 Mbps access link, and an institutional network with a 10 Mbps LAN and an institutional cache.

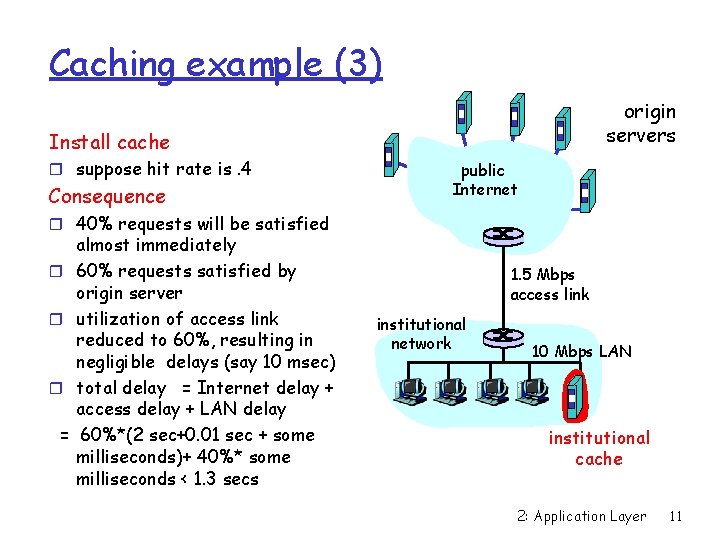

Caching example (3)

Install cache

- suppose hit rate is 0.4

Consequence

- 40% of requests will be satisfied almost immediately
- 60% of requests satisfied by origin server
- utilization of access link reduced to 60%, resulting in negligible delays (say 10 msec)
- total delay = Internet delay + access delay + LAN delay = 60% × (2 sec + 0.01 sec + some milliseconds) + 40% × some milliseconds < 1.3 secs

Figure: origin servers on the public Internet, a 1.5 Mbps access link, and an institutional network with a 10 Mbps LAN and an institutional cache.
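The arithmetic in the three caching examples can be checked with a short sketch. The request rate, object size, link speeds, hit rate, and the 2 sec and 10 msec delays come from the slides; the 5 msec LAN delay is an assumed stand-in for "some milliseconds", and the function names are illustrative only.

```python
def utilization(requests_per_sec, object_bits, link_bps):
    """Fraction of a link's capacity consumed by the offered load."""
    return requests_per_sec * object_bits / link_bps


def average_delay(hit_rate, internet_delay, access_delay, lan_delay):
    """Expected delay: a hit costs only the LAN delay, a miss costs the full path."""
    miss = (1 - hit_rate) * (internet_delay + access_delay + lan_delay)
    hit = hit_rate * lan_delay
    return miss + hit


RATE = 15          # requests per second (from the slides)
OBJ = 100_000      # bits per object (from the slides)
LAN_DELAY = 0.005  # seconds; ASSUMED value for "some milliseconds"

lan = utilization(RATE, OBJ, 10e6)            # 10 Mbps LAN
access = utilization(RATE, OBJ, 1.5e6)        # 1.5 Mbps access link
access_with_cache = (1 - 0.4) * access        # only misses cross the access link
delay = average_delay(0.4, 2.0, 0.01, LAN_DELAY)

print(f"LAN utilization: {lan:.0%}")
print(f"access link utilization, no cache: {access:.0%}")
print(f"access link utilization, hit rate 0.4: {access_with_cache:.0%}")
print(f"average delay with cache: {delay:.3f} s")
```

Under these assumptions the average delay stays below the 1.3 sec bound on the slide, without paying for the link upgrade.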

More about Web caching

- Problems of Web caching
  - Extra space/machine (proxy)
  - Inconsistency (out-of-date objects…)

Consistency of Cached Objects

- Solution 1: no caching

Consistency of Cached Objects

- Solution 2: manually update

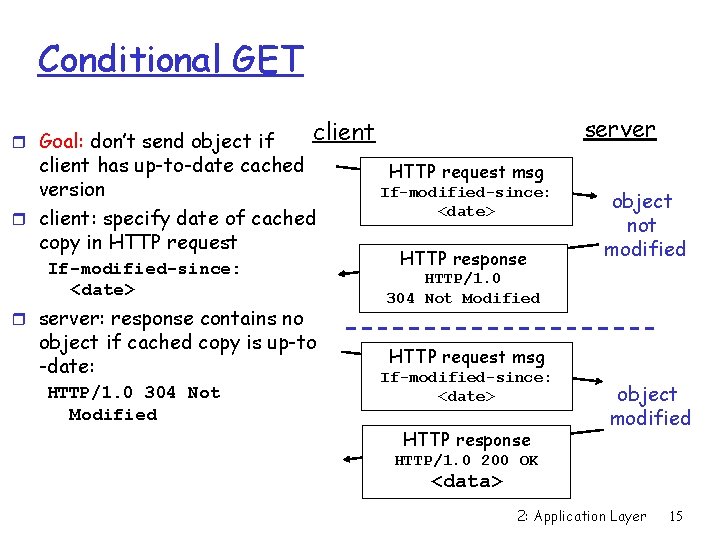

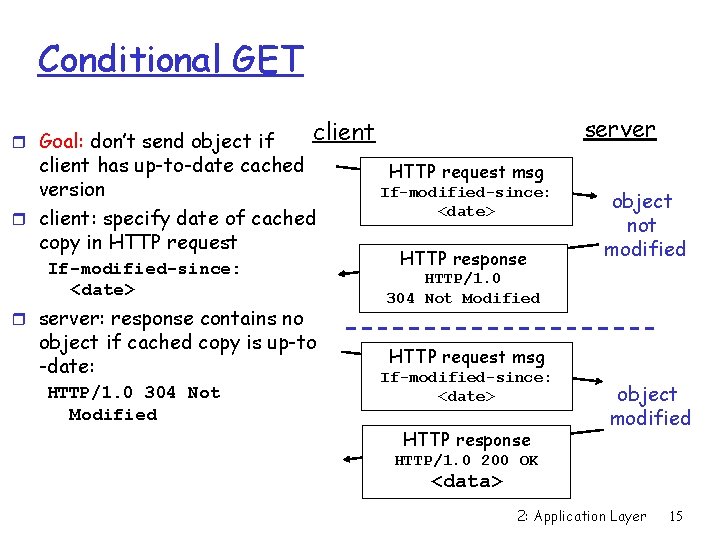

Conditional GET

- Goal: don't send object if client has up-to-date cached version
- client: specify date of cached copy in HTTP request: If-modified-since: <date>
- server: response contains no object if cached copy is up-to-date: HTTP/1.0 304 Not Modified

Figure (client/server exchange):

- object not modified: HTTP request msg with If-modified-since: <date>; HTTP response HTTP/1.0 304 Not Modified
- object modified: HTTP request msg with If-modified-since: <date>; HTTP response HTTP/1.0 200 OK <data>
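The server-side decision can be sketched in a few lines. This is a minimal illustration, not a real HTTP server: the function name `conditional_get` and its return shape are assumptions, and only the date comparison behind the 304/200 choice is modelled.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def conditional_get(last_modified, if_modified_since=None):
    """Return (status_line, send_body) for a GET on an object.

    last_modified: timezone-aware datetime of the object's last change.
    if_modified_since: value of the If-modified-since header, or None.
    """
    if if_modified_since is not None:
        cached = parsedate_to_datetime(if_modified_since)
        # HTTP dates have one-second resolution, so drop microseconds.
        if last_modified.replace(microsecond=0) <= cached:
            return "HTTP/1.0 304 Not Modified", False
    return "HTTP/1.0 200 OK", True


# Hypothetical object, last changed on 9 Sep 2015 at 09:23:24 GMT.
changed = datetime(2015, 9, 9, 9, 23, 24, tzinfo=timezone.utc)

print(conditional_get(changed, "Wed, 09 Sep 2015 09:23:24 GMT"))  # cached copy is current
print(conditional_get(changed, "Tue, 08 Sep 2015 09:23:24 GMT"))  # cached copy is stale
print(conditional_get(changed))                                   # plain GET, no header
```

In the first call the response carries no object, which is the bandwidth saving the slide describes.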

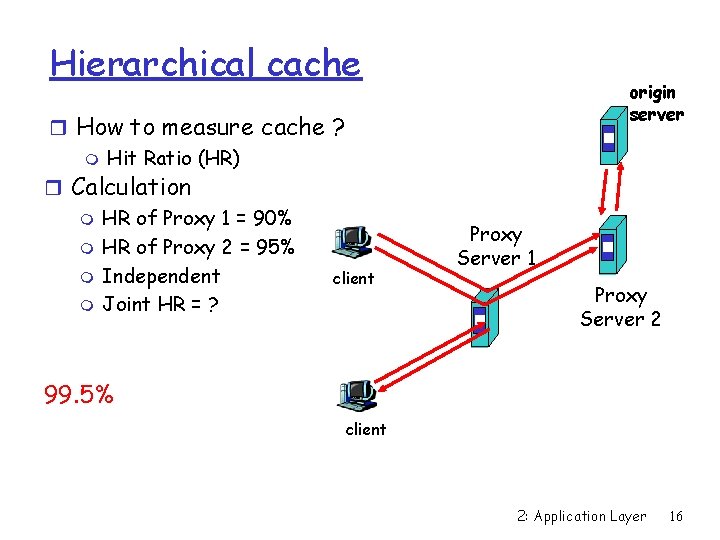

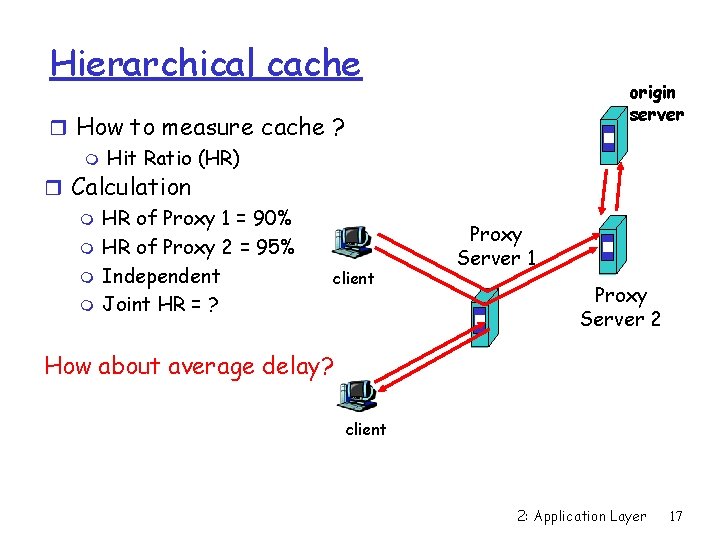

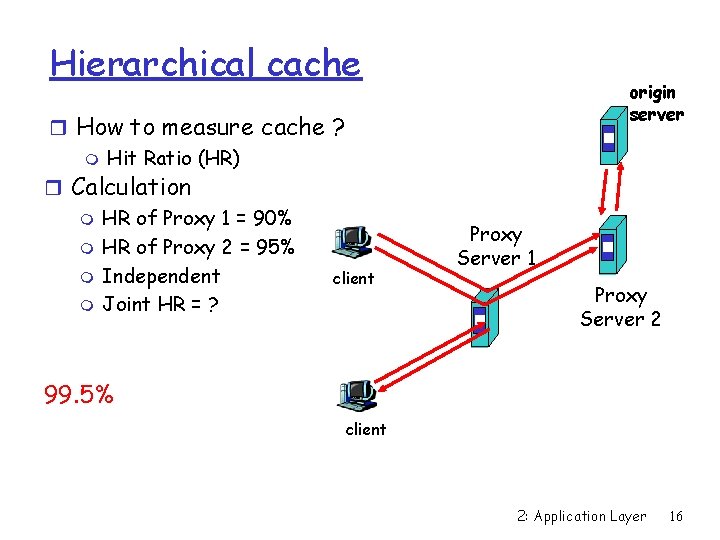

Hierarchical cache

- How to measure a cache?
  - Hit Ratio (HR)
- Calculation
  - HR of Proxy 1 = 90%
  - HR of Proxy 2 = 95%
  - Independent client
  - Joint HR = ? 99.5%

Figure: origin server, Proxy Server 1, Proxy Server 2, client.

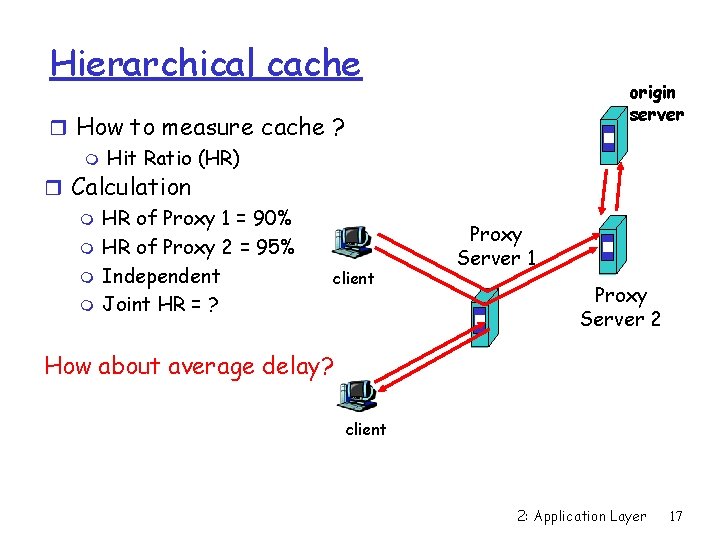

Hierarchical cache

- How to measure a cache?
  - Hit Ratio (HR)
- Calculation
  - HR of Proxy 1 = 90%
  - HR of Proxy 2 = 95%
  - Independent client
  - Joint HR = ?
- How about average delay?

Figure: origin server, Proxy Server 1, Proxy Server 2, client.
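If hits at the two proxies are independent, a request misses the hierarchy only when it misses both proxies, so the joint hit ratio is 1 − (1 − 0.90)(1 − 0.95) = 99.5%. The sketch below computes that and one possible answer to the average-delay question. The order in which the proxies are tried and all delay values are assumptions made for illustration; the slides do not give them.

```python
def joint_hit_ratio(hit_ratios):
    """Probability that at least one cache in the chain hits (independent hits)."""
    miss = 1.0
    for hr in hit_ratios:
        miss *= 1.0 - hr
    return 1.0 - miss


def average_delay(hit_ratios, delays, origin_delay):
    """Expected delay when caches are tried in order.

    delays[i] is the ASSUMED total delay when level i serves the request;
    origin_delay is the ASSUMED total delay when every cache misses.
    """
    expected = 0.0
    reach = 1.0  # probability that the request gets as far as this level
    for hr, d in zip(hit_ratios, delays):
        expected += reach * hr * d
        reach *= 1.0 - hr
    return expected + reach * origin_delay


print(joint_hit_ratio([0.90, 0.95]))
# Hypothetical delays: 10 ms at the first proxy, 50 ms at the second, 2 s at the origin.
print(average_delay([0.90, 0.95], [0.01, 0.05], 2.0))
```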

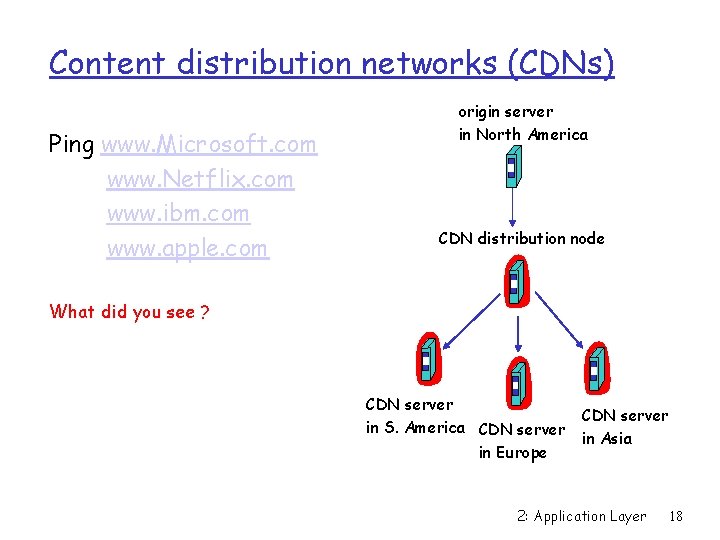

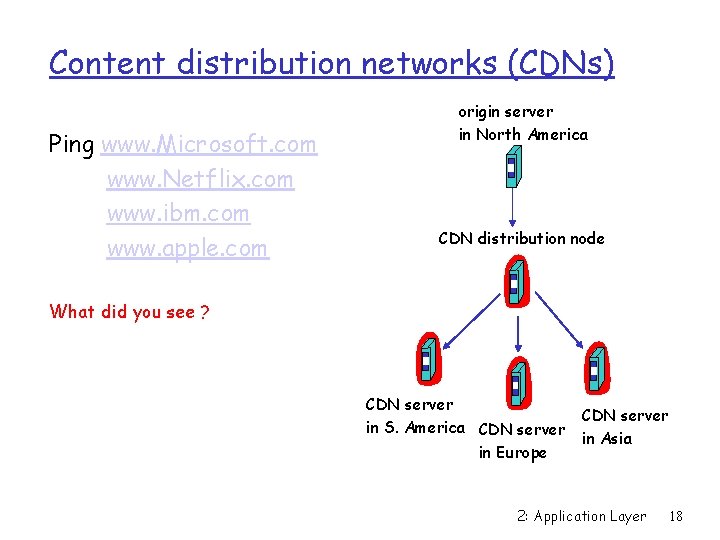

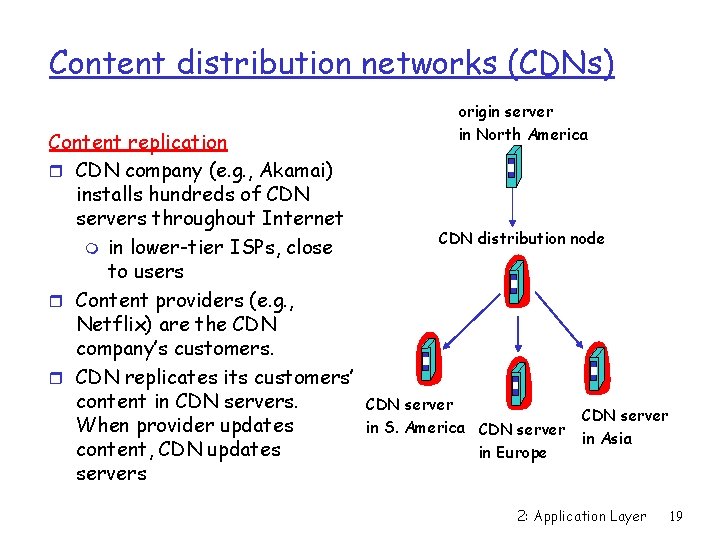

Content distribution networks (CDNs)

Ping

- www.Microsoft.com
- www.Netflix.com
- www.ibm.com
- www.apple.com

What did you see?

Figure: origin server in North America, CDN distribution node, and CDN servers in S. America, Europe, and Asia.

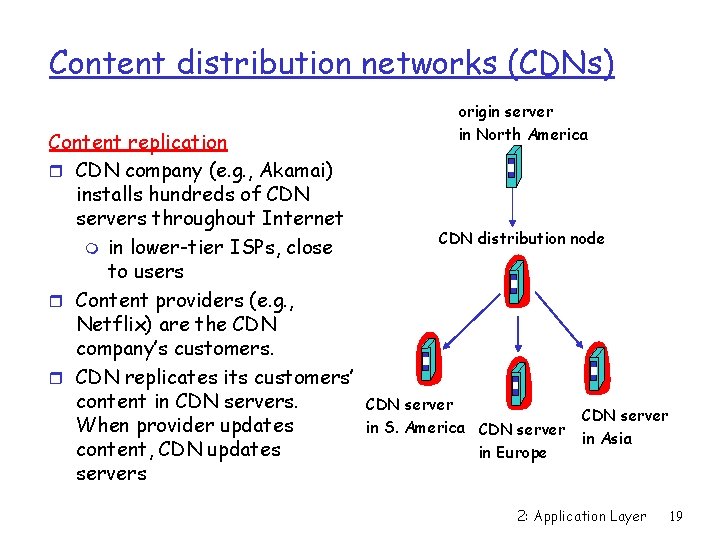

Content distribution networks (CDNs)

Content replication

- CDN company (e.g., Akamai) installs hundreds of CDN servers throughout the Internet
  - in lower-tier ISPs, close to users
- Content providers (e.g., Netflix) are the CDN company's customers.
- CDN replicates its customers' content in CDN servers. When a provider updates content, the CDN updates its servers.

Figure: origin server in North America, CDN distribution node, and CDN servers in S. America, Europe, and Asia.

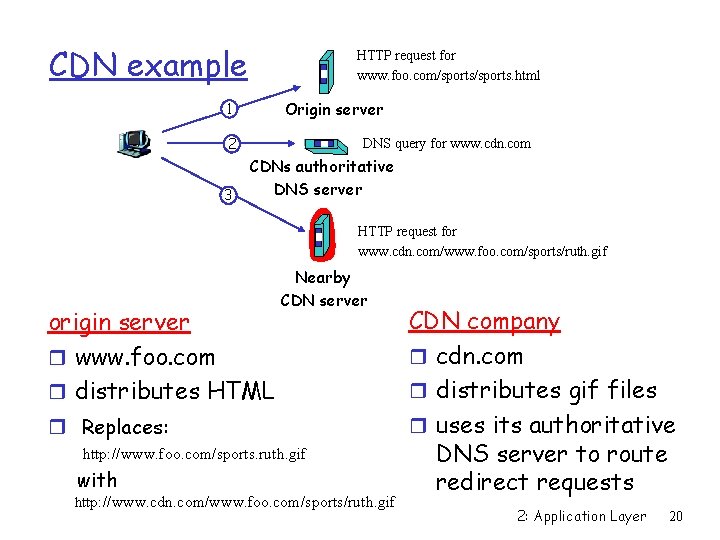

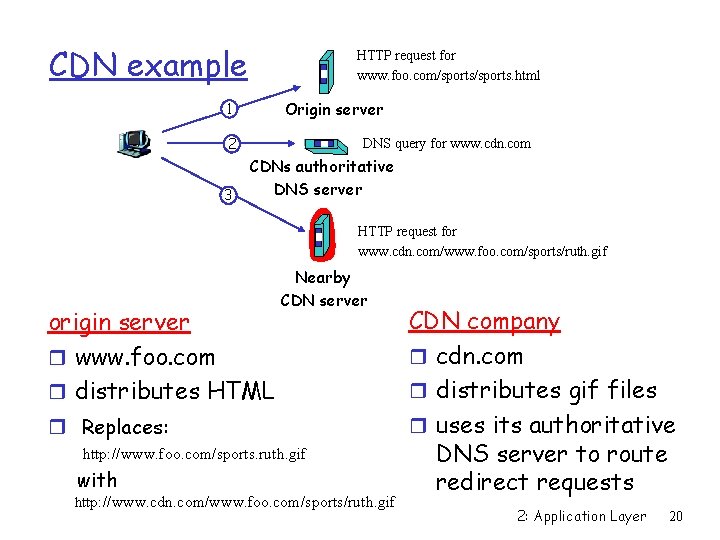

CDN example

Figure (numbered messages):

- (1) HTTP request for www.foo.com/sports.html, sent to the origin server
- (2) DNS query for www.cdn.com, sent to the CDN's authoritative DNS server
- (3) HTTP request for www.cdn.com/www.foo.com/sports/ruth.gif, sent to a nearby CDN server

origin server

- www.foo.com
- distributes HTML
- Replaces: http://www.foo.com/sports/ruth.gif with http://www.cdn.com/www.foo.com/sports/ruth.gif

CDN company

- cdn.com
- distributes gif files
- uses its authoritative DNS server to route redirect requests

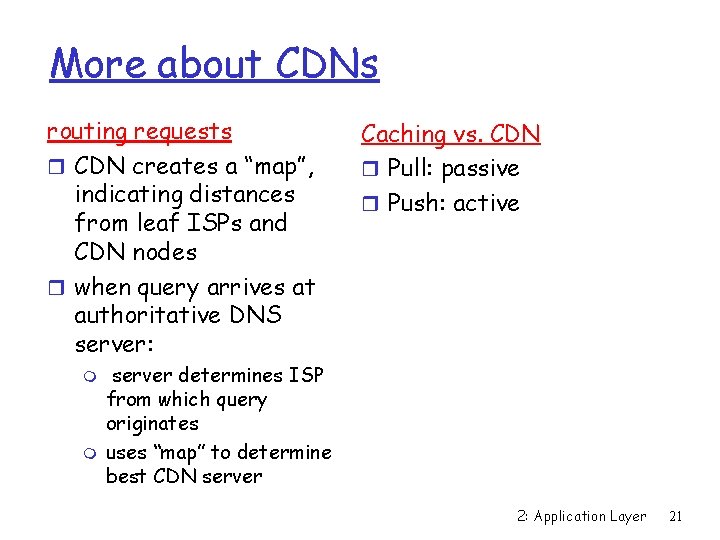

More about CDNs

routing requests

- CDN creates a "map", indicating distances from leaf ISPs and CDN nodes
- when query arrives at authoritative DNS server:
  - server determines ISP from which query originates
  - uses "map" to determine best CDN server

Caching vs. CDN

- Pull: passive
- Push: active
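The "map" lookup can be pictured as a table keyed by the querying ISP. The sketch below is only an illustration: the ISP names, server names, and distance values are invented, and a real CDN would use far richer measurements than a single number.

```python
def best_cdn_server(distance_map, client_isp):
    """Pick the CDN server with the smallest recorded distance to the querying ISP."""
    distances = distance_map[client_isp]
    return min(distances, key=distances.get)


# Hypothetical map: leaf ISP -> {CDN server: distance metric}.
distance_map = {
    "isp-vancouver": {"cdn-north-america": 12, "cdn-europe": 140, "cdn-asia": 110},
    "isp-berlin": {"cdn-north-america": 120, "cdn-europe": 9, "cdn-asia": 190},
}

print(best_cdn_server(distance_map, "isp-vancouver"))
print(best_cdn_server(distance_map, "isp-berlin"))
```

The authoritative DNS server would then answer the query with the address of the chosen server.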

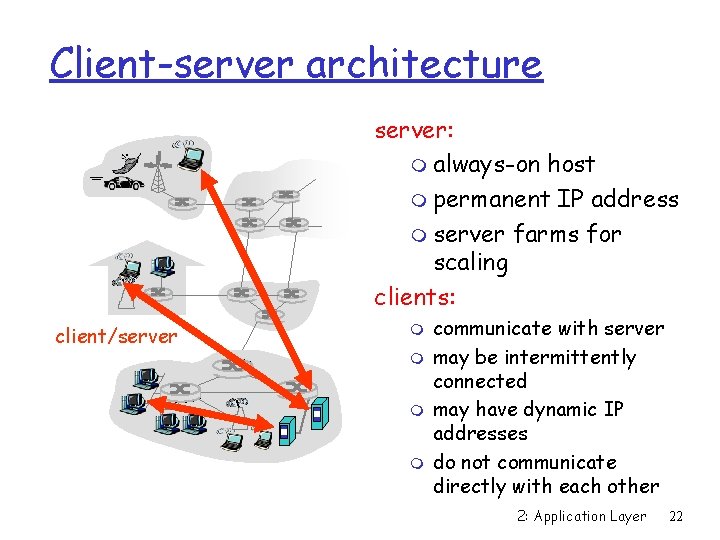

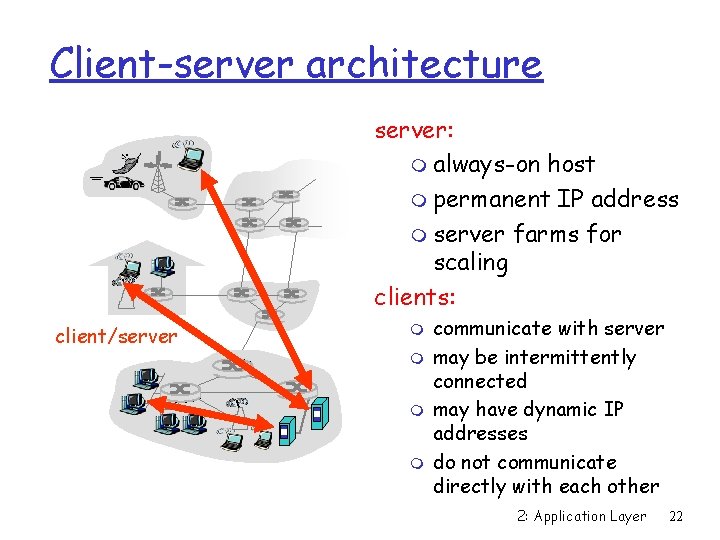

Client-server architecture

server:

- always-on host
- permanent IP address
- server farms for scaling

clients:

- communicate with server
- may be intermittently connected
- may have dynamic IP addresses
- do not communicate directly with each other

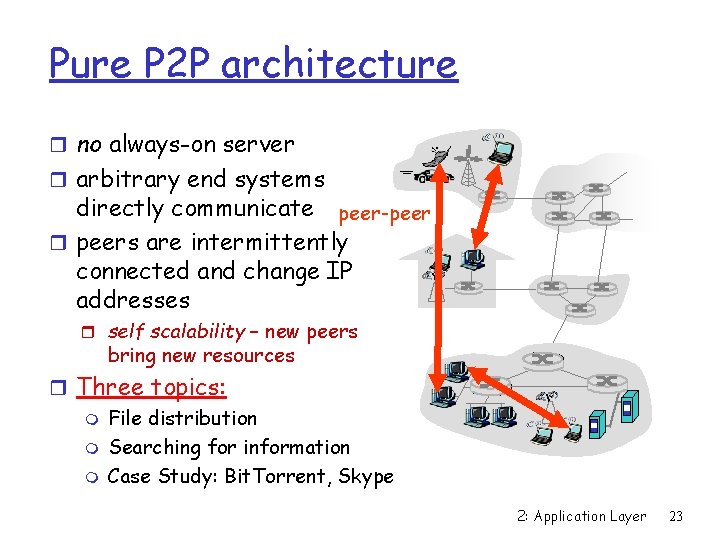

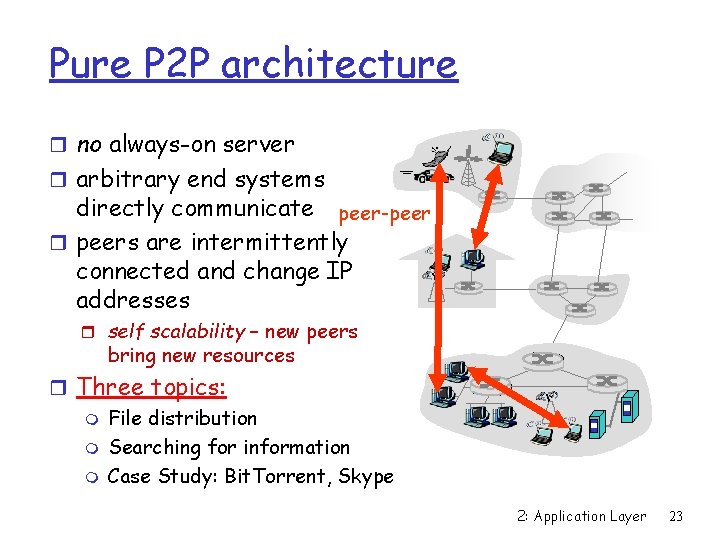

Pure P2P architecture

- no always-on server
- arbitrary end systems directly communicate (peer-peer)
- peers are intermittently connected and change IP addresses
- self scalability: new peers bring new resources
- Three topics:
  - File distribution
  - Searching for information
  - Case study: BitTorrent, Skype

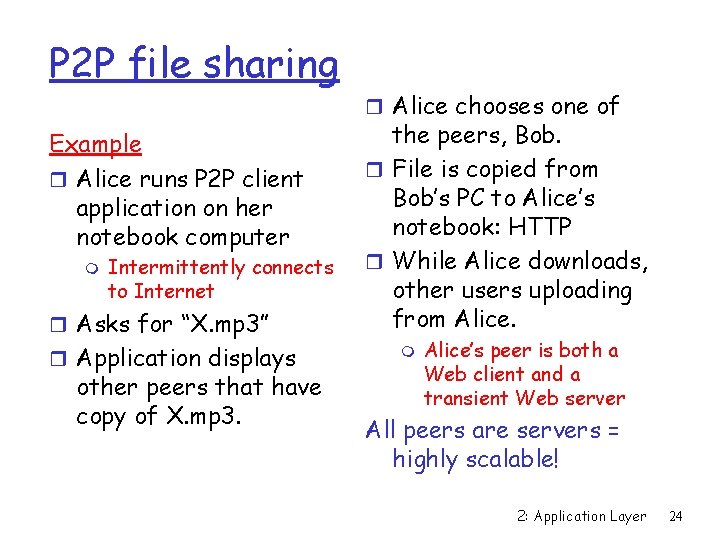

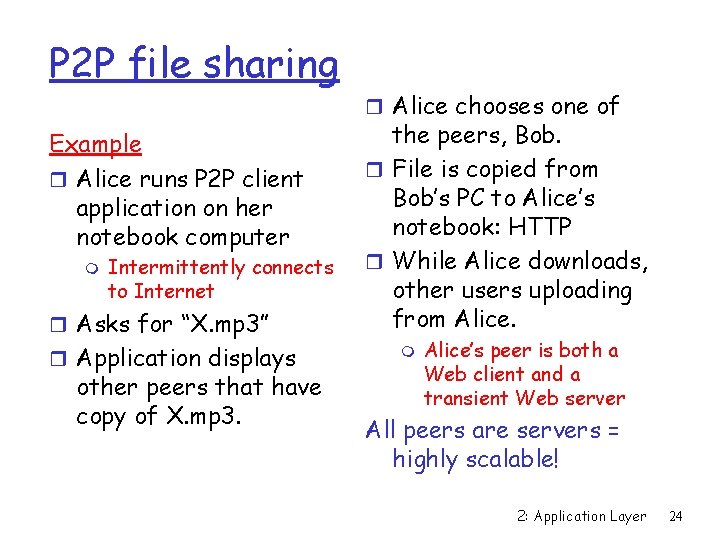

P2P file sharing

Example

- Alice runs a P2P client application on her notebook computer
  - Intermittently connects to Internet
- Asks for "X.mp3"
- Application displays other peers that have a copy of X.mp3.
- Alice chooses one of the peers, Bob.
- File is copied from Bob's PC to Alice's notebook: HTTP
- While Alice downloads, other users are uploading from Alice
- Alice's peer is both a Web client and a transient Web server

All peers are servers = highly scalable!

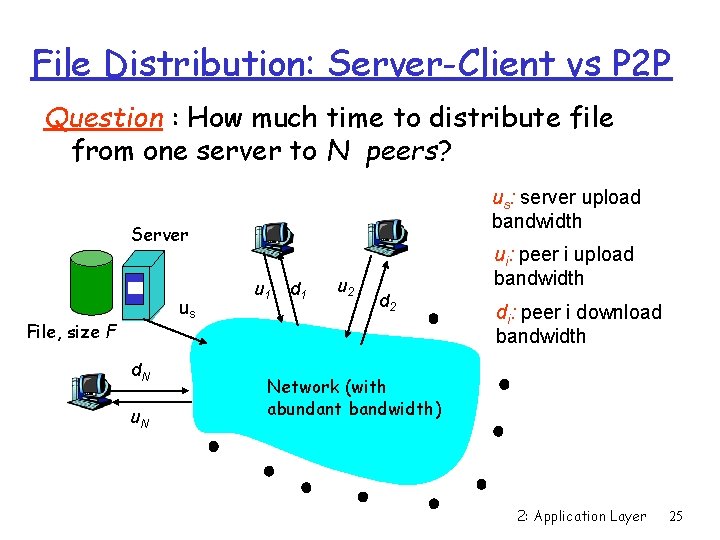

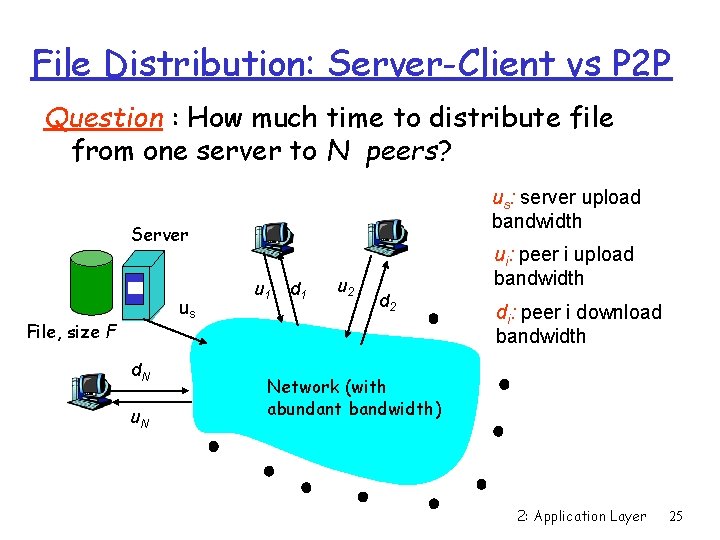

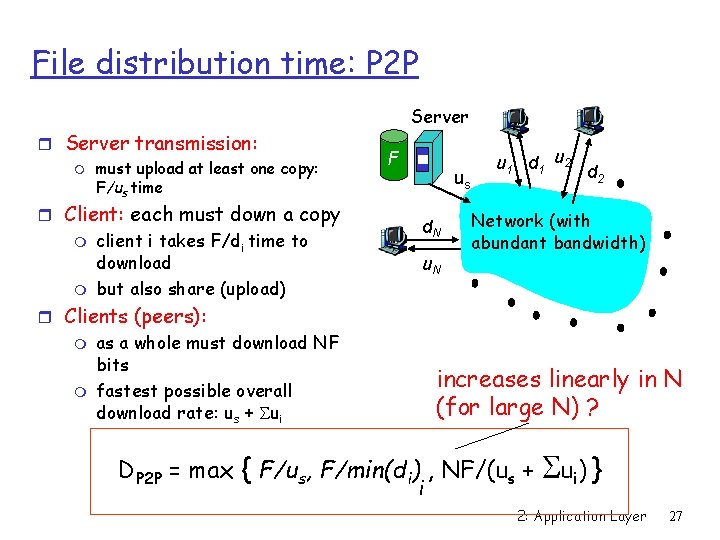

File Distribution: Server-Client vs P2P

Question: How much time to distribute a file from one server to N peers?

- $u_s$: server upload bandwidth
- $u_i$: peer i upload bandwidth
- $d_i$: peer i download bandwidth

Figure: a server with upload bandwidth $u_s$ holds a file of size $F$; peers 1 … N, with upload bandwidths $u_i$ and download bandwidths $d_i$, connect through a network with abundant bandwidth.

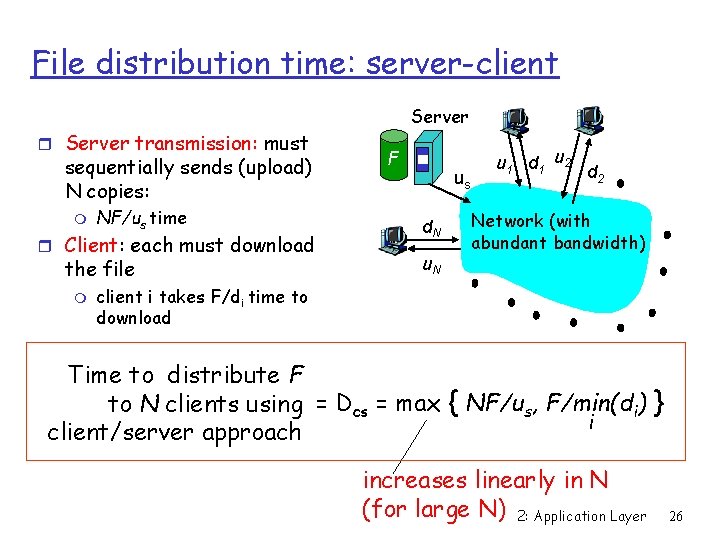

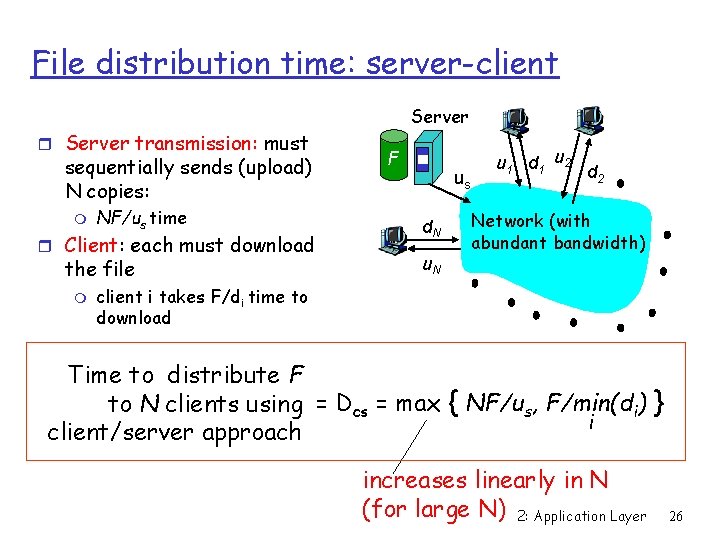

File distribution time: server-client

- Server transmission: must sequentially send (upload) N copies:
  - $NF/u_s$ time
- Client: each must download the file
  - client i takes $F/d_i$ time to download

Time to distribute F to N clients using the client/server approach:

$$D_{cs} = \max\{\, NF/u_s,\ F/\min_i d_i \,\}$$

increases linearly in N (for large N)

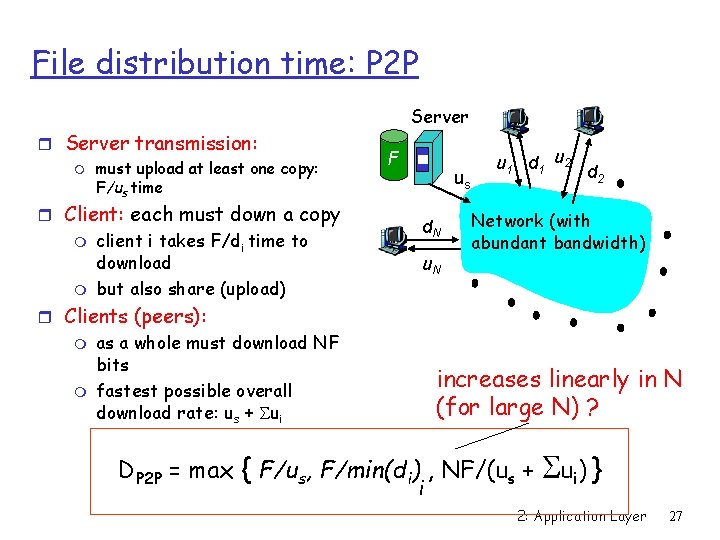

File distribution time: P2P

- Server transmission: must upload at least one copy:
  - $F/u_s$ time
- Client: each must download a copy
  - client i takes $F/d_i$ time to download
  - but also shares (uploads)
- Clients (peers):
  - as a whole must download $NF$ bits
  - fastest possible overall download rate: $u_s + \sum_i u_i$

$$D_{P2P} = \max\{\, F/u_s,\ F/\min_i d_i,\ NF/(u_s + \sum_i u_i) \,\}$$

increases linearly in N (for large N)?

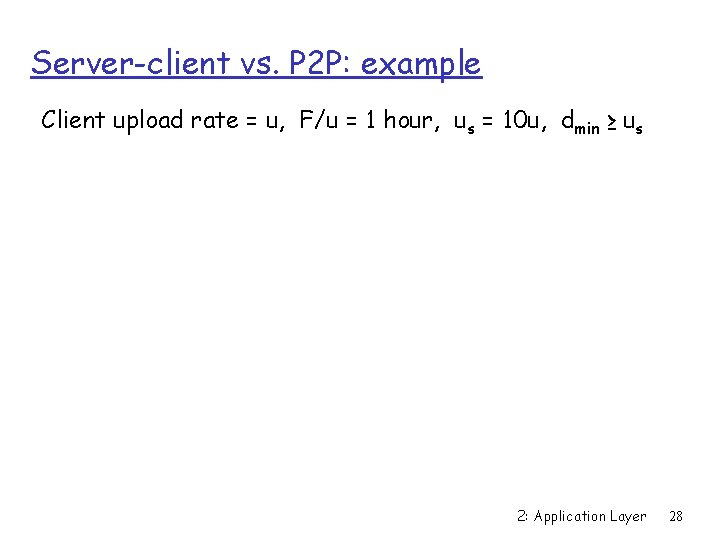

Server-client vs. P2P: example

Client upload rate = $u$, $F/u$ = 1 hour, $u_s = 10u$, $d_{min} \ge u_s$
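The two distribution-time bounds can be evaluated directly for the example parameters. In this sketch time is measured in hours and rates are scaled so that u = 1 and F = 1 (hence F/u = 1 hour); setting every download rate equal to u_s is an assumption that satisfies d_min ≥ u_s. The function names are illustrative.

```python
def d_cs(F, u_s, d):
    """Lower bound on client/server distribution time to len(d) clients."""
    n = len(d)
    return max(n * F / u_s, F / min(d))


def d_p2p(F, u_s, d, u):
    """Lower bound on P2P distribution time; u[i] is peer i's upload rate."""
    n = len(d)
    return max(F / u_s, F / min(d), n * F / (u_s + sum(u)))


F, U, U_S = 1.0, 1.0, 10.0  # F/u = 1 hour, u_s = 10u

for n in (5, 10, 20, 30):
    downloads = [U_S] * n   # ASSUMED: d_i = u_s for every peer
    uploads = [U] * n
    print(n, d_cs(F, U_S, downloads), round(d_p2p(F, U_S, downloads, uploads), 3))
```

The client/server time grows as N/10 hours, while the P2P time N/(10 + N) never reaches one hour, which answers the question posed on the P2P slide for this example.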

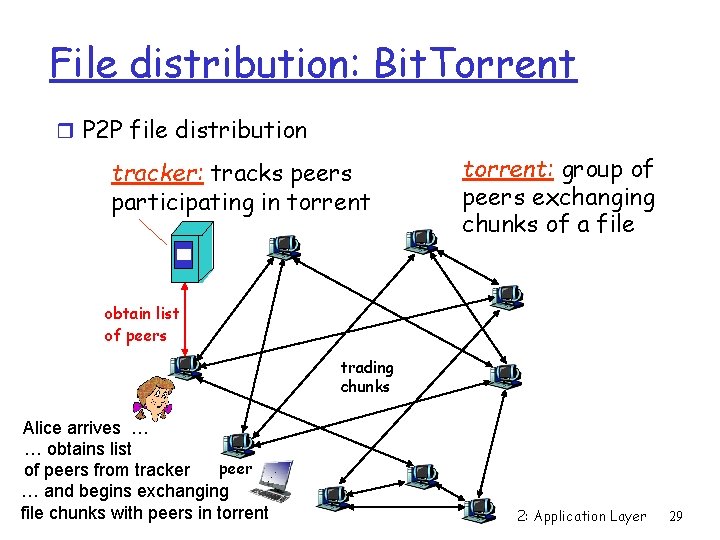

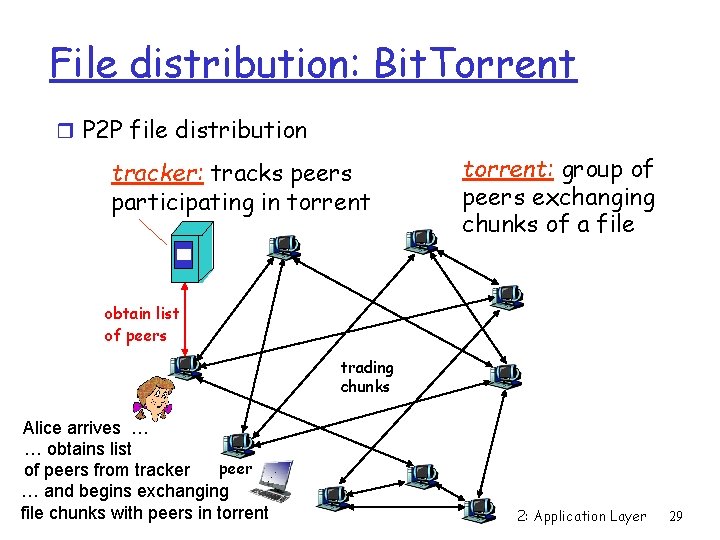

File distribution: BitTorrent

- P2P file distribution
- tracker: tracks peers participating in torrent
- torrent: group of peers exchanging chunks of a file

Figure: Alice arrives, obtains a list of peers from the tracker, and begins exchanging file chunks with peers in the torrent.

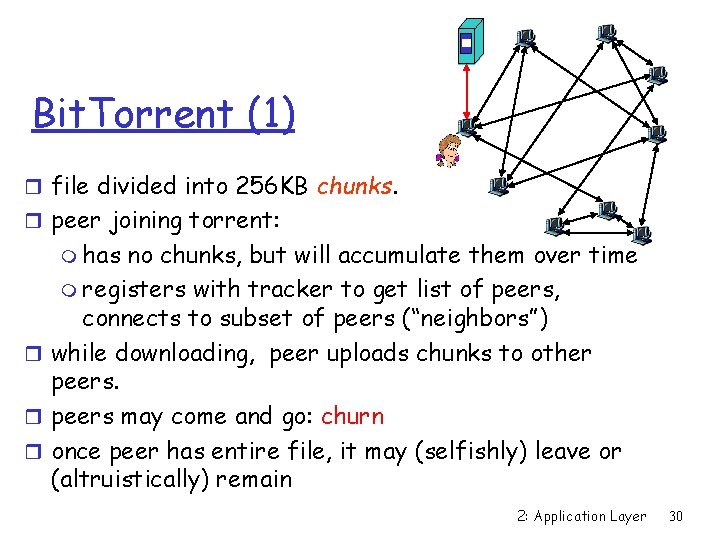

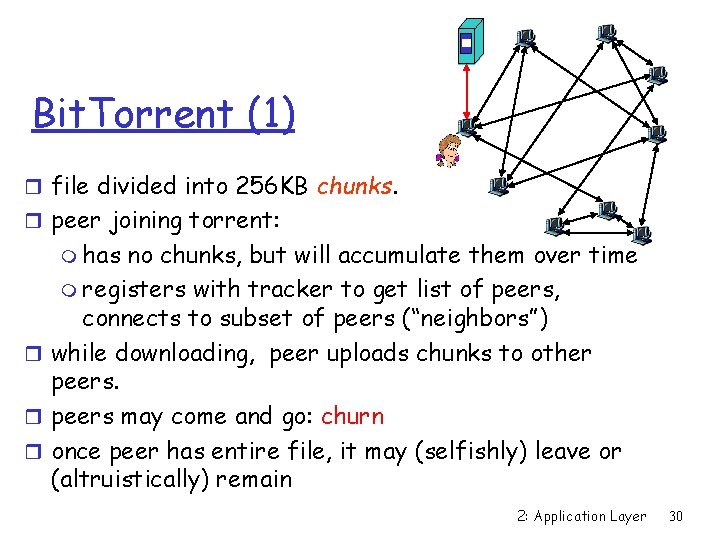

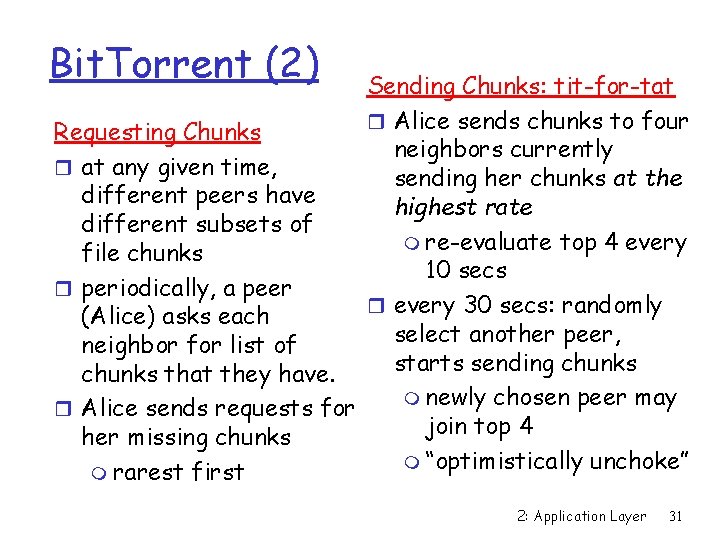

BitTorrent (1)

- file divided into 256 KB chunks.
- peer joining torrent:
  - has no chunks, but will accumulate them over time
  - registers with tracker to get list of peers, connects to subset of peers ("neighbors")
- while downloading, peer uploads chunks to other peers
- peers may come and go: churn
- once peer has entire file, it may (selfishly) leave or (altruistically) remain

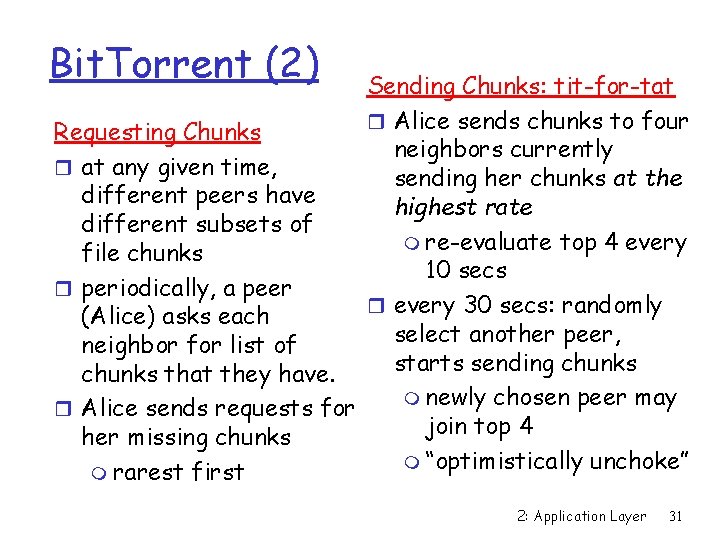

BitTorrent (2)

Requesting chunks

- at any given time, different peers have different subsets of file chunks
- periodically, a peer (Alice) asks each neighbor for the list of chunks that they have.
- Alice sends requests for her missing chunks
  - rarest first

Sending chunks: tit-for-tat

- Alice sends chunks to the four neighbors currently sending her chunks at the highest rate
  - re-evaluate top 4 every 10 secs
- every 30 secs: randomly select another peer, start sending it chunks
  - newly chosen peer may join top 4
  - "optimistically unchoke"
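Both policies are easy to sketch. The code below is an illustration of the two rules on the slide, not the BitTorrent wire protocol: the data structures, function names, and tie-breaking rules are assumptions.

```python
import random
from collections import Counter


def rarest_first(my_chunks, neighbor_chunks):
    """Order the chunks Alice is missing by how few neighbors hold them."""
    counts = Counter()
    for chunks in neighbor_chunks.values():
        counts.update(chunks)  # each neighbor's chunk set counts once per chunk
    missing = [c for c in counts if c not in my_chunks]
    return sorted(missing, key=lambda c: (counts[c], c))  # ties broken by chunk id


def choose_unchoked(rates, top_n=4, rng=random):
    """Return (top senders, optimistically unchoked peer or None).

    rates maps each neighbor to the rate at which it currently sends to Alice.
    """
    ranked = sorted(rates, key=lambda p: (-rates[p], p))
    top, rest = ranked[:top_n], ranked[top_n:]
    optimistic = rng.choice(rest) if rest else None
    return top, optimistic


# Hypothetical torrent state.
neighbors = {"bob": {0, 1, 2}, "carol": {1, 2}, "dave": {2, 3}}
print(rarest_first({2}, neighbors))

rates = {"a": 5, "b": 9, "c": 1, "d": 7, "e": 3, "f": 2}
print(choose_unchoked(rates))
```

A client would rerun `choose_unchoked` on the 10-second and 30-second timers given on the slide; the timers themselves are left out of the sketch.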

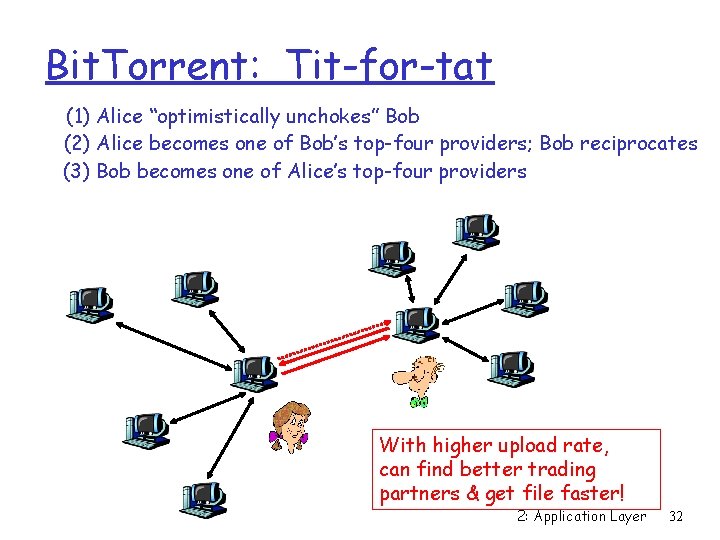

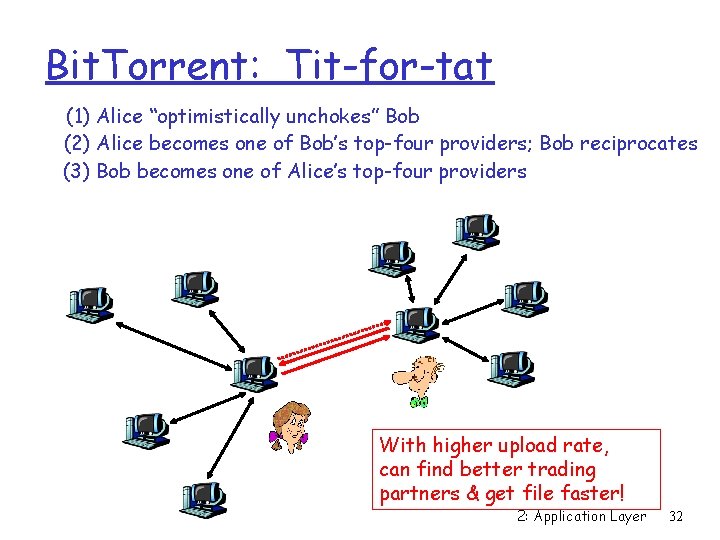

BitTorrent: Tit-for-tat

- (1) Alice "optimistically unchokes" Bob
- (2) Alice becomes one of Bob's top-four providers; Bob reciprocates
- (3) Bob becomes one of Alice's top-four providers

With a higher upload rate, a peer can find better trading partners and get the file faster!

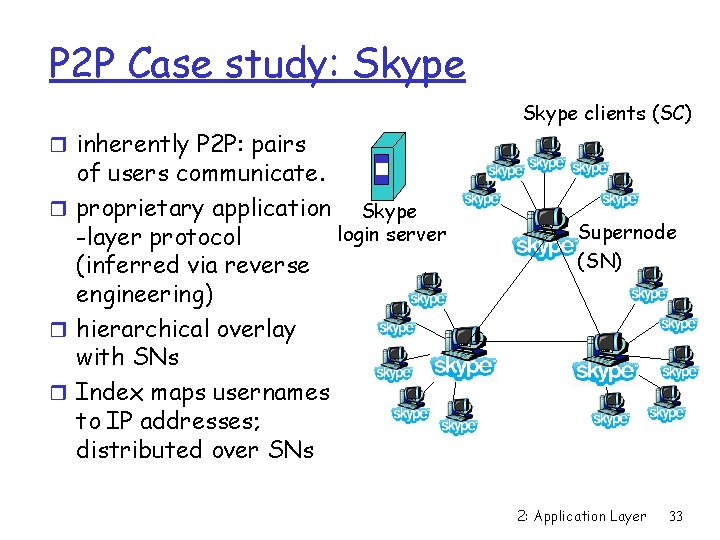

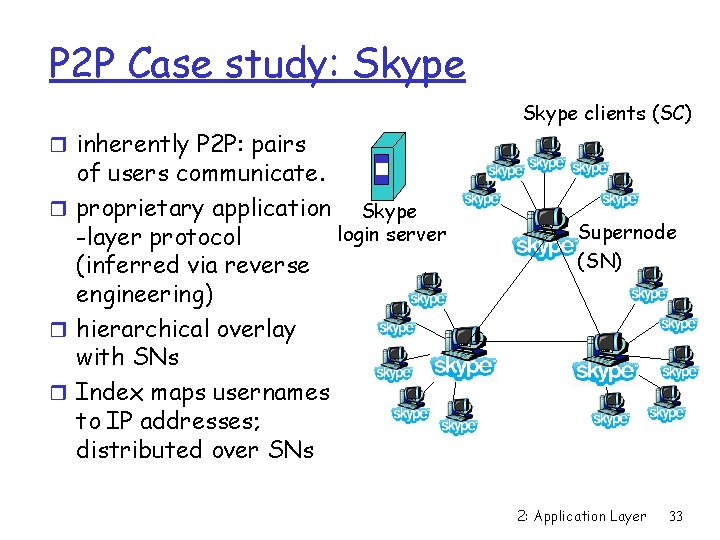

P2P Case study: Skype

- inherently P2P: pairs of users communicate.
- proprietary application-layer protocol (inferred via reverse engineering)
- hierarchical overlay with SNs
- Index maps usernames to IP addresses; distributed over SNs

Figure: Skype clients (SC), supernodes (SN), and the Skype login server.

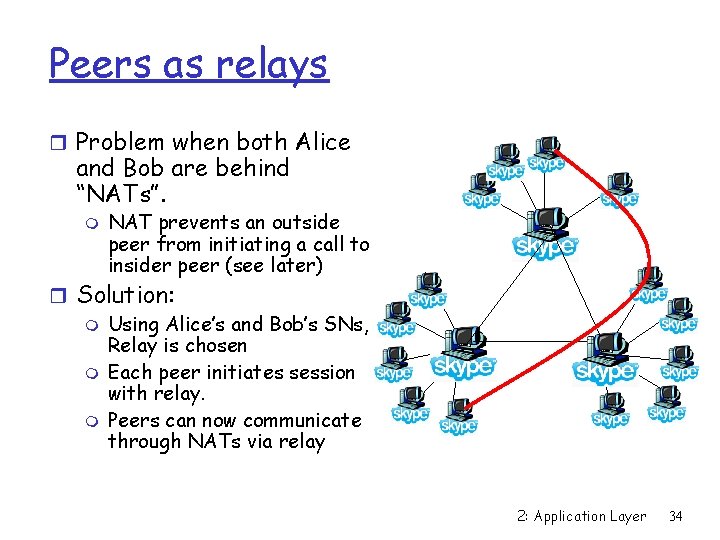

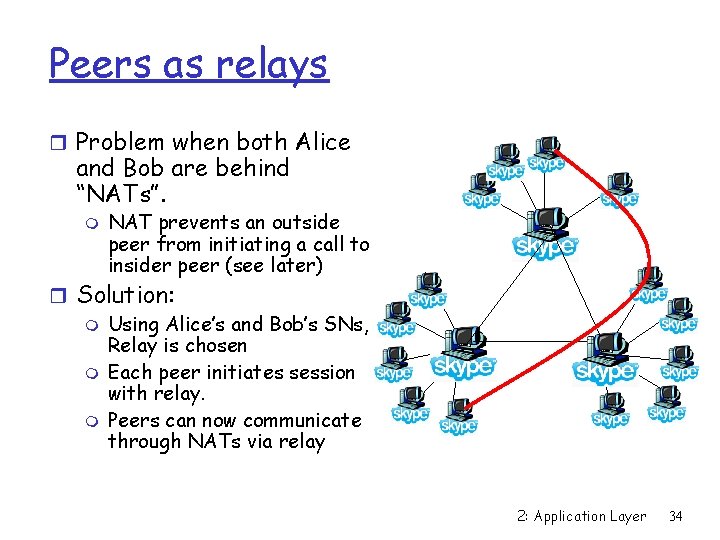

Peers as relays

- Problem when both Alice and Bob are behind "NATs".
  - NAT prevents an outside peer from initiating a call to an inside peer (see later)
- Solution:
  - Using Alice's and Bob's SNs, a relay is chosen
  - Each peer initiates a session with the relay.
  - Peers can now communicate through NATs via the relay

P2P: searching for information

- So many files
- But where are they?

Index in P2P system: maps information to peer location (location = IP address & port number)

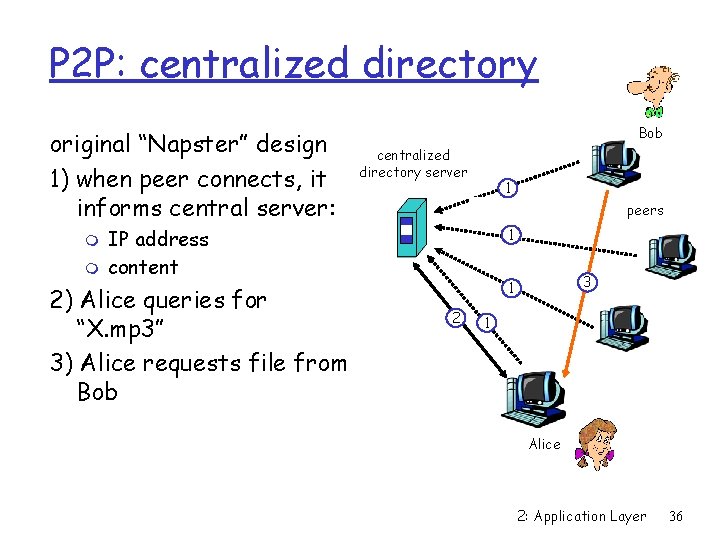

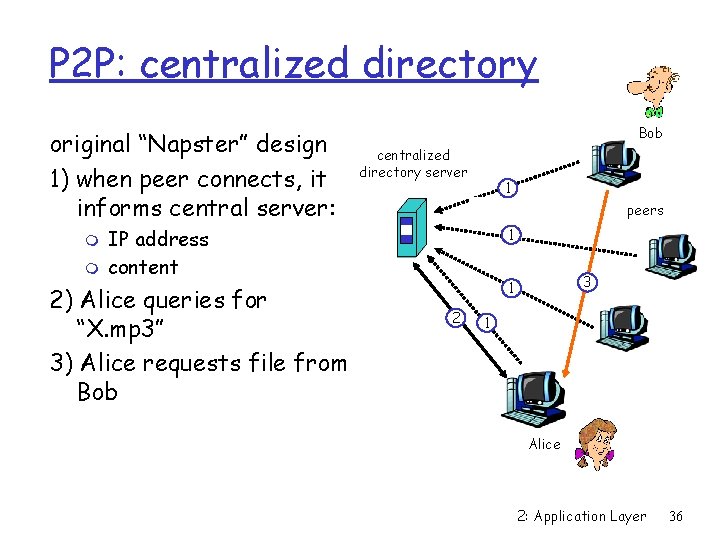

P2P: centralized directory

original "Napster" design

- 1) when peer connects, it informs central server:
  - IP address
  - content
- 2) Alice queries for "X.mp3"
- 3) Alice requests file from Bob

Figure: peers (including Alice and Bob) and the centralized directory server, with arrows numbered 1 to 3 for the steps above.

P2P: problems with centralized directory

- Single point of failure
- Performance bottleneck
- Copyright infringement

File transfer is decentralized, but locating content is highly centralized.

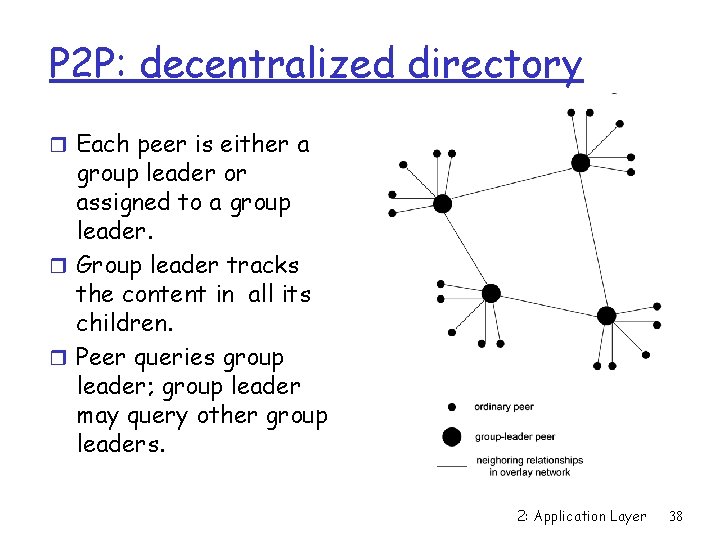

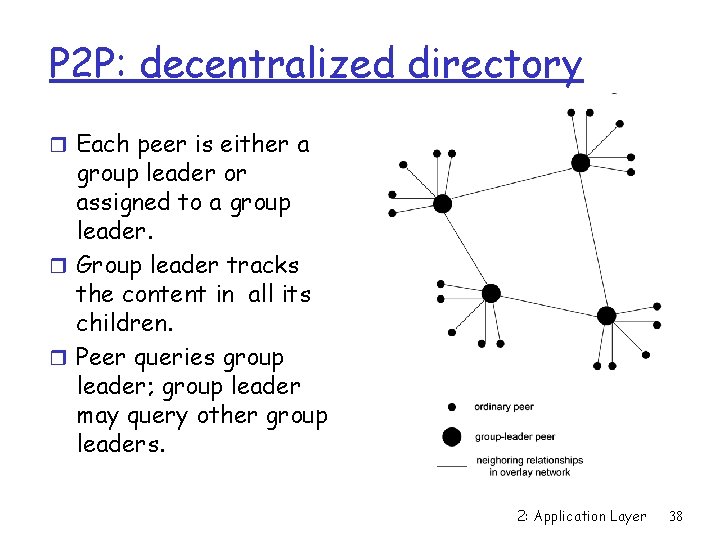

P2P: decentralized directory

- Each peer is either a group leader or assigned to a group leader.
- Group leader tracks the content in all its children.
- Peer queries group leader; group leader may query other group leaders.

More about decentralized directory

advantages of approach

- no centralized directory server
  - location service distributed over peers
  - more difficult to shut down

disadvantages of approach

- bootstrap node needed
- group leaders can get overloaded

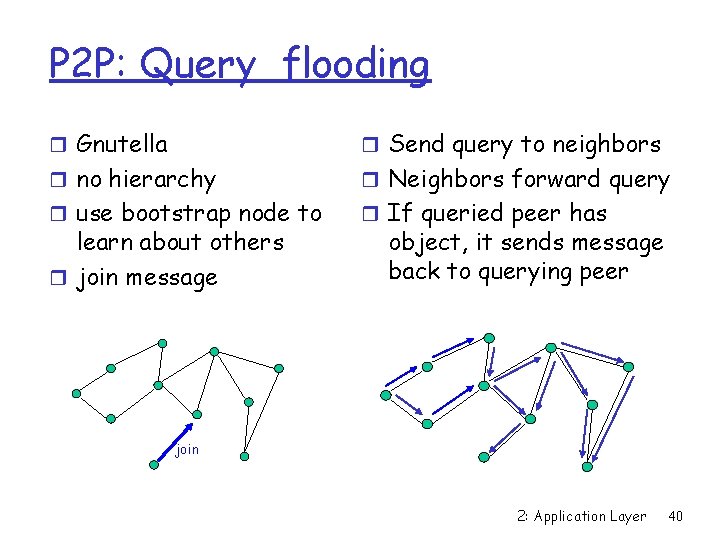

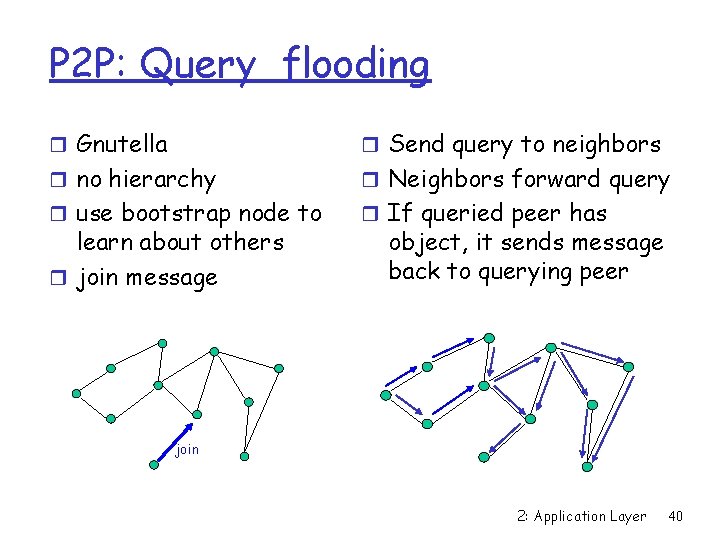

P2P: Query flooding

- Gnutella
- no hierarchy
- use bootstrap node to learn about others
- join message

Query handling

- Send query to neighbors
- Neighbors forward query
- If queried peer has object, it sends message back to querying peer

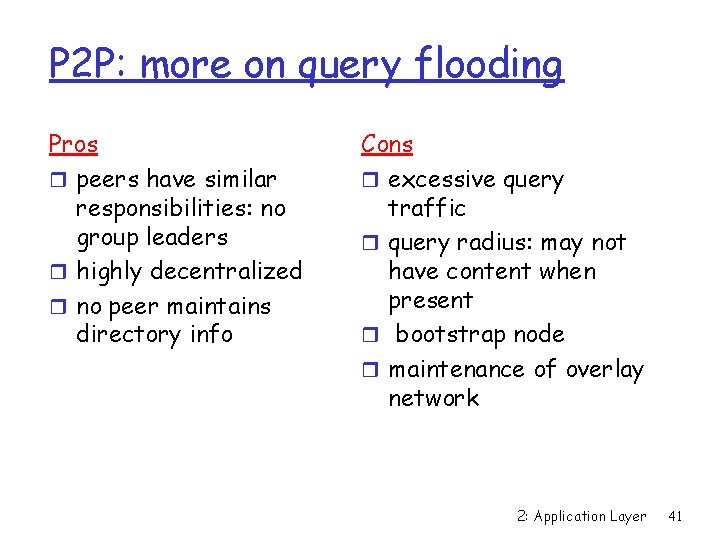

P2P: more on query flooding

Pros

- peers have similar responsibilities: no group leaders
- highly decentralized
- no peer maintains directory info

Cons

- excessive query traffic
- query radius: may not find content even when it is present
- bootstrap node
- maintenance of overlay network
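The first two cons can be seen in a small simulation. The sketch below floods a query over a hypothetical overlay with a hop limit (the "query radius"); the overlay, peer names, and the choice to count one message per forwarded copy are all assumptions, and real Gnutella messages carry more state than this.

```python
from collections import deque


def flood_query(overlay, holders, start, ttl):
    """Flood a query up to ttl hops from start.

    overlay maps each peer to its list of neighbors; holders is the set of
    peers that have the object. Returns (peers that answered, messages sent).
    """
    seen = {start}
    frontier = deque([(start, 0)])
    hits, messages = [], 0
    while frontier:
        peer, hops = frontier.popleft()
        if peer in holders and peer != start:
            hits.append(peer)          # this peer replies to the querying peer
        if hops == ttl:
            continue                   # query radius reached: do not forward
        for neighbor in overlay[peer]:
            messages += 1              # every forwarded copy costs a message
            if neighbor not in seen:   # duplicate copies are dropped on arrival
                seen.add(neighbor)
                frontier.append((neighbor, hops + 1))
    return hits, messages


# Hypothetical overlay; only peer E holds the object.
overlay = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": ["A", "D"],
    "D": ["B", "C", "E"],
    "E": ["D"],
}

print(flood_query(overlay, {"E"}, "A", ttl=2))  # E is 3 hops away: not found
print(flood_query(overlay, {"E"}, "A", ttl=3))  # found, at the cost of more messages
```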

DHT: A New Story…

- Motivation:
  - Frustrated by popularity of all these "half-baked" P2P apps
  - We can do better!
  - Guaranteed lookup success for files in system
  - Provable bounds on search time
  - Provable scalability to millions of nodes

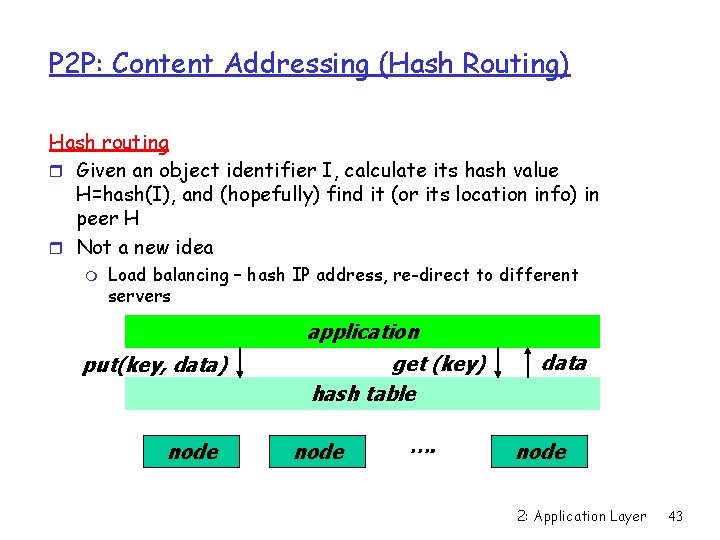

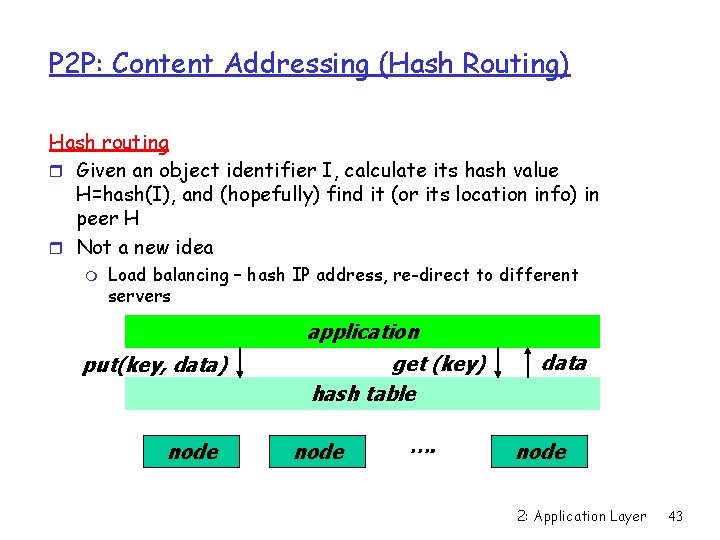

P2P: Content Addressing (Hash Routing)

Hash routing

- Given an object identifier I, calculate its hash value H = hash(I), and (hopefully) find it (or its location info) in peer H
- Not a new idea
  - Load balancing: hash IP address, re-direct to different servers

Figure: an application calls put(key, data) and get(key) on a hash table whose entries are spread over several nodes; get returns the data.

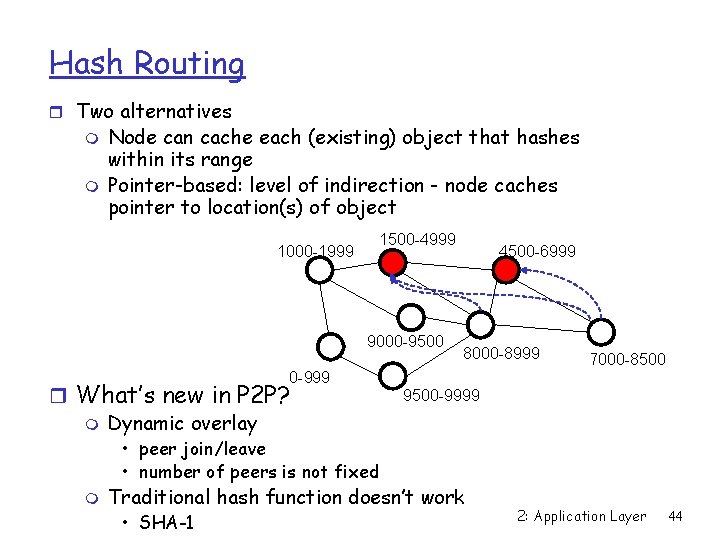

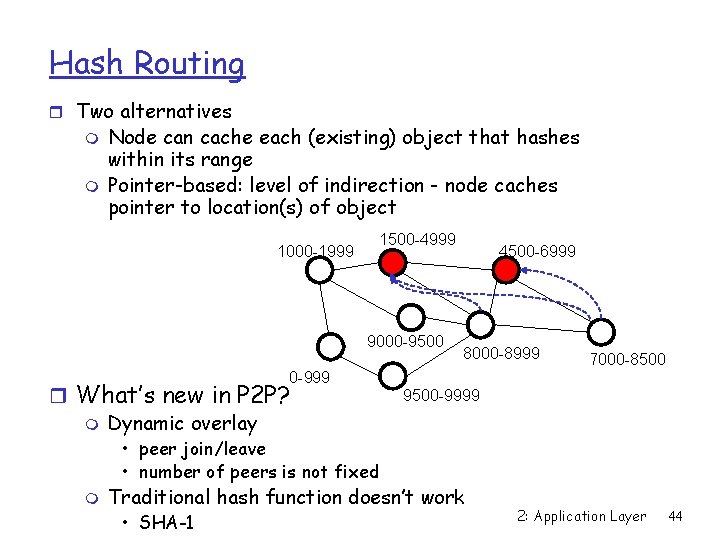

Hash Routing

- Two alternatives
  - Node can cache each (existing) object that hashes within its range
  - Pointer-based: level of indirection, node caches pointer to location(s) of object
- What's new in P2P?
  - Dynamic overlay
    - peer join/leave
    - number of peers is not fixed
  - Traditional hash function doesn't work
    - SHA-1

Figure: nodes labelled with the hash ranges they cover: 0-999, 1000-1999, 1500-4999, 4500-6999, 7000-8500, 8000-8999, 9000-9500, 9500-9999.
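One way to see why a static hash assignment breaks down when the number of peers changes is to count how many objects change owner after a single join. The sketch below assumes the simplest static scheme, node index = hash(key) mod N, which is an illustration chosen here and not necessarily the exact scheme the slide has in mind; the key names are made up.

```python
import hashlib


def h(value):
    """Map a string to a large integer using SHA-1."""
    return int.from_bytes(hashlib.sha1(value.encode()).digest(), "big")


def owner(key, n_nodes):
    """Static hash routing: node index = hash(key) mod number of nodes."""
    return h(key) % n_nodes


def fraction_moved(keys, old_n, new_n):
    """Share of keys whose owner changes when the node count changes."""
    moved = sum(owner(k, old_n) != owner(k, new_n) for k in keys)
    return moved / len(keys)


keys = [f"file-{i}" for i in range(1000)]  # hypothetical object identifiers
print(fraction_moved(keys, 10, 11))        # one peer joins a 10-node system
```

With mod-N assignment roughly 10 out of every 11 keys are expected to move after one join, which is the cost that repartitioning schemes for dynamic overlays try to avoid.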

Distributed Hash Table (DHT)

Challenges

- For each object, node(s) whose range(s) cover that object must be reachable via a "short" path
- Number of neighbors for each node should scale well (e.g., should not be O(N))
- Fully distributed (no centralized bottleneck/single point of failure)
- DHT mechanism should gracefully handle nodes joining/leaving
  - need to repartition the range space over existing nodes
  - need to reorganize neighbor set
  - need bootstrap mechanism to connect new nodes into the existing DHT infrastructure

Case Studies

- Structured overlay (P2P) systems: Consistent Hashing
  - Chord
  - CAN (Content Addressable Network)
- Key Questions
  - Q1: How is the hash space divided "evenly" among existing nodes?
  - Q2: How is routing implemented that connects an arbitrary node to the node responsible for a given object?
  - Q3: How is the hash space repartitioned when nodes join/leave?
- Let N be the number of nodes in the overlay
- Let H be the size of the range of the hash function (when applicable)

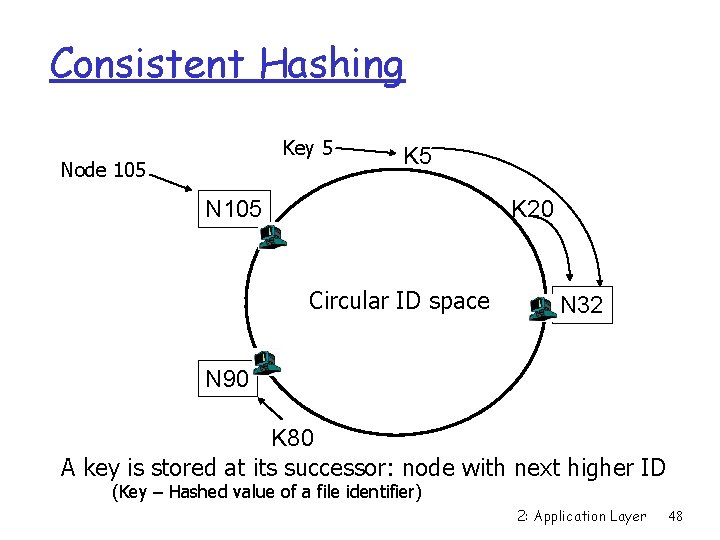

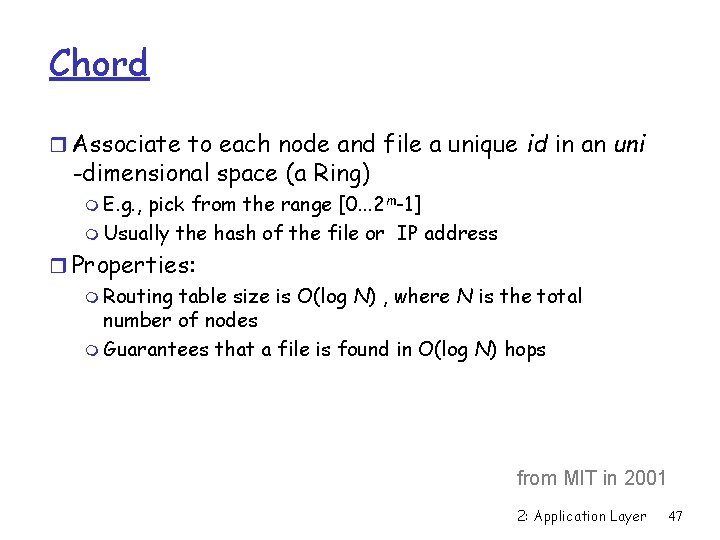

Chord

- Associate to each node and file a unique id in a uni-dimensional space (a ring)
  - E.g., pick from the range $[0 \ldots 2^m - 1]$
  - Usually the hash of the file or IP address
- Properties:
  - Routing table size is O(log N), where N is the total number of nodes
  - Guarantees that a file is found in O(log N) hops

from MIT in 2001

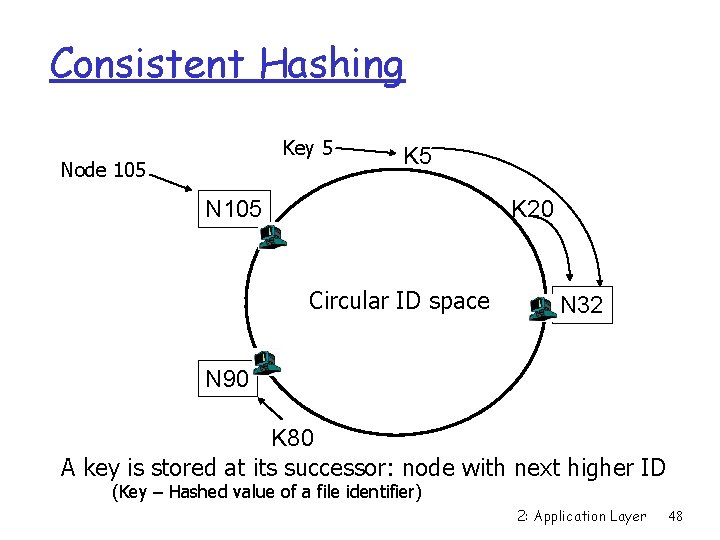

Consistent Hashing

Figure: circular ID space with nodes N32, N90, and N105 and keys K5, K20, and K80 (K5 = Key 5, N105 = Node 105).

A key is stored at its successor: the node with the next higher ID.

(Key: hashed value of a file identifier)
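The successor rule can be sketched with a sorted list of node IDs. The node and key IDs below are the ones in the figure; treating a key whose ID equals a node ID as stored on that node, and wrapping past the highest node to the lowest, follow the usual Chord convention and are stated here as assumptions.

```python
from bisect import bisect_left


def successor(node_ids, key):
    """Return the node responsible for key: the first node ID >= key, wrapping around."""
    ring = sorted(node_ids)
    index = bisect_left(ring, key)
    return ring[index % len(ring)]  # past the last node, wrap to the first


nodes = [32, 90, 105]
for key in (5, 20, 80):
    print(f"K{key} -> N{successor(nodes, key)}")
```

K5 and K20 both land on N32 and K80 lands on N90, matching the figure.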

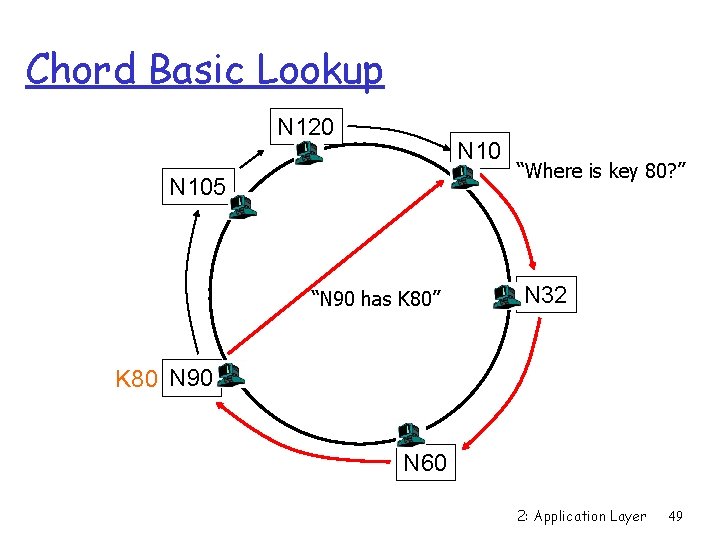

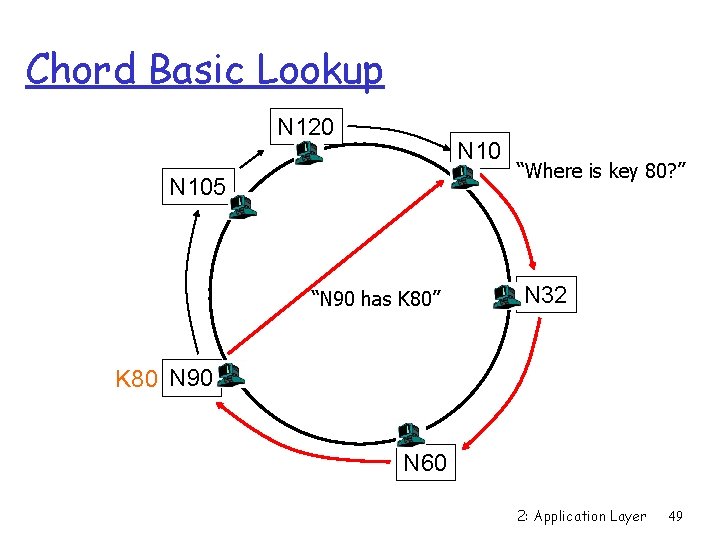

Chord Basic Lookup

Figure: ring with nodes N32, N60, N90, N105, and N120, and key K80 stored at N90. Query: "Where is key 80?" Answer: "N90 has K80".

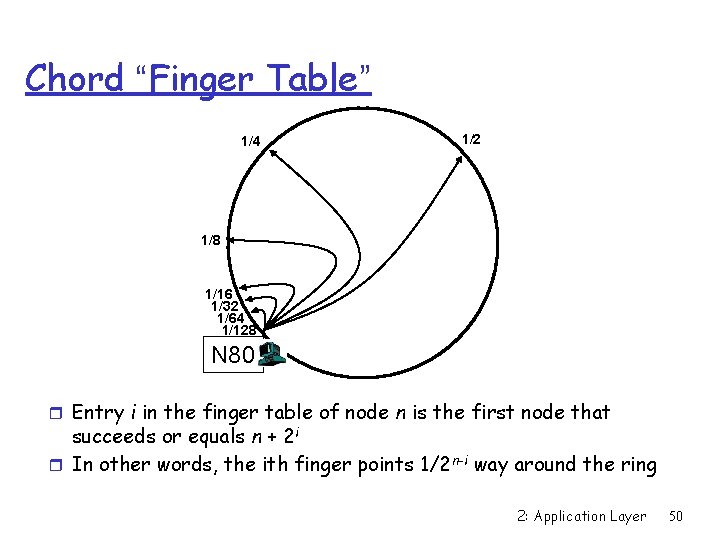

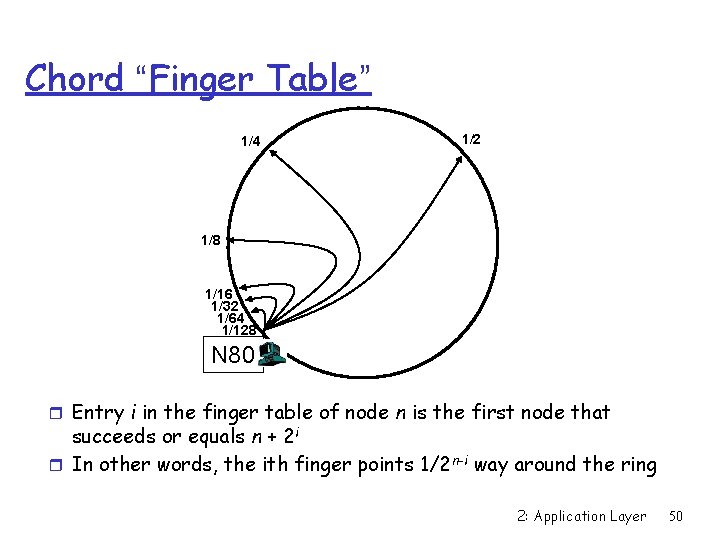

Chord "Finger Table"

Figure: the fingers of node N80 span 1/2, 1/4, 1/8, 1/16, 1/32, 1/64, and 1/128 of the ring.

- Entry i in the finger table of node n is the first node that succeeds or equals $n + 2^i$
- In other words, the ith finger points $1/2^{m-i}$ of the way around the ring, where $m$ is the number of bits in the ID space

![Chord Join r Assume a hash space 0 7 r Node n 1 Chord Join r Assume a hash space [0. . 7] r Node n 1](https://slidetodoc.com/presentation_image/da552b68ef0dc6889f2f5c05256ba3e2/image-51.jpg)

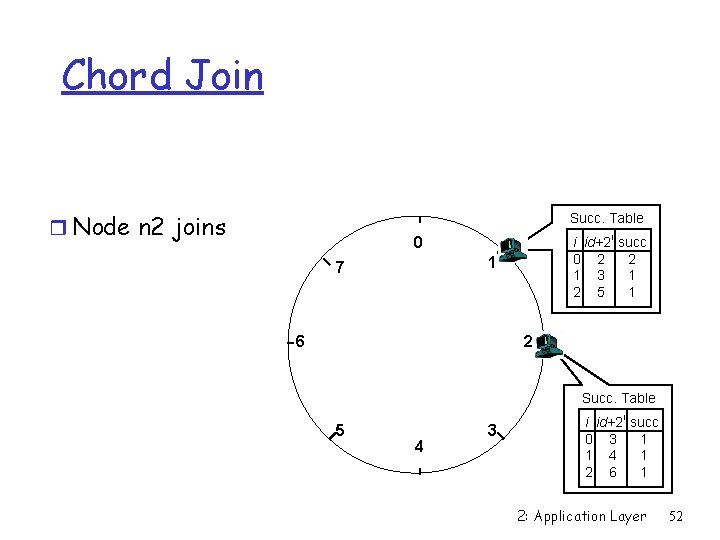

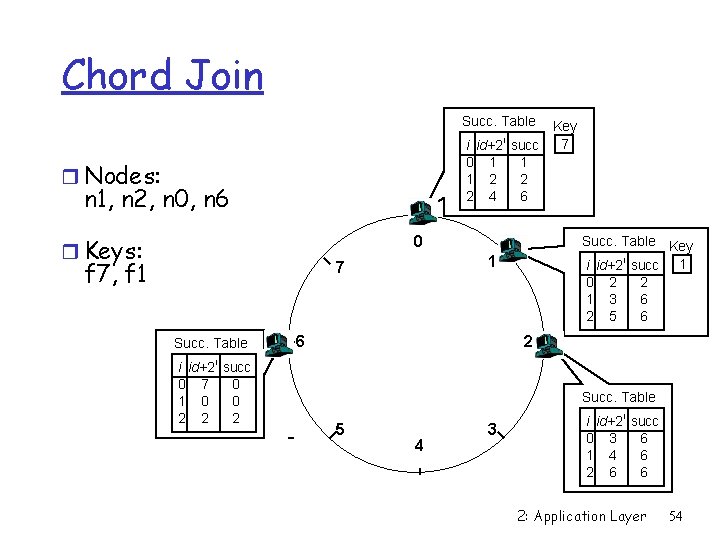

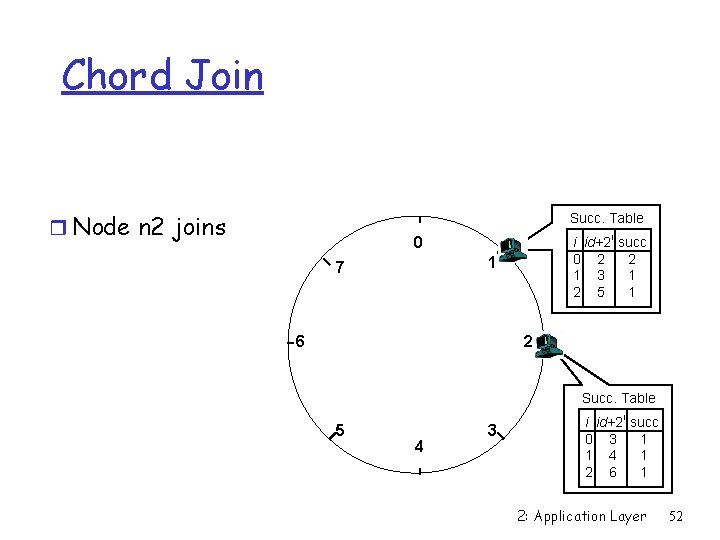

Chord Join

- Assume a hash space [0..7]
- Node n1 joins

Succ. Table of n1:

| i | id+2^i | succ |
|---|--------|------|
| 0 | 2 | 1 |
| 1 | 3 | 1 |
| 2 | 5 | 1 |

Figure: ring with positions 0 to 7.

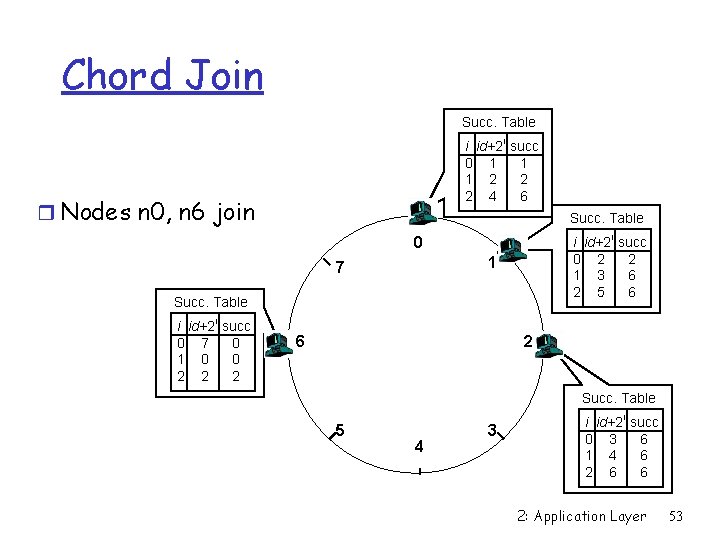

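Chord Join

- Node n2 joins

Succ. Table of n1:

| i | id+2^i | succ |
|---|--------|------|
| 0 | 2 | 2 |
| 1 | 3 | 1 |
| 2 | 5 | 1 |

Succ. Table of n2:

| i | id+2^i | succ |
|---|--------|------|
| 0 | 3 | 1 |
| 1 | 4 | 1 |
| 2 | 6 | 1 |

Figure: ring with positions 0 to 7.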

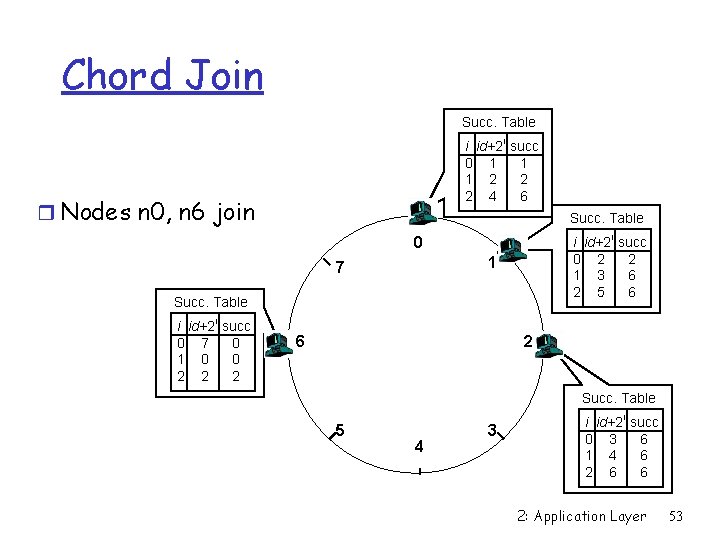

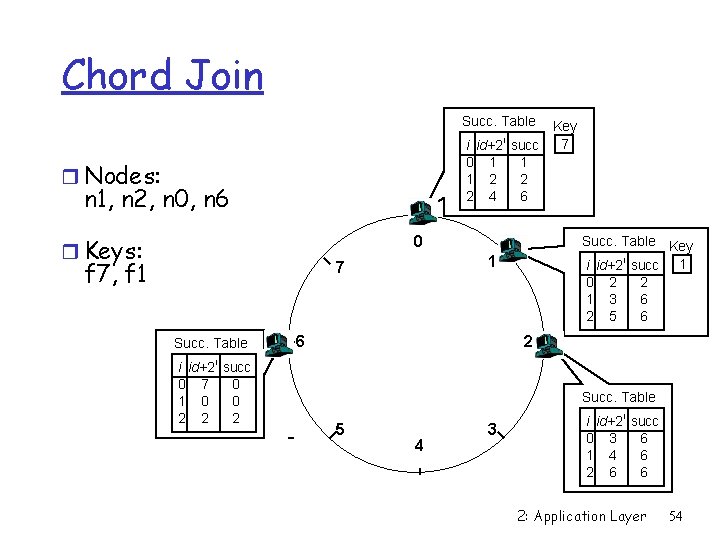

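Chord Join

- Nodes n0, n6 join

Succ. Table of n0:

| i | id+2^i | succ |
|---|--------|------|
| 0 | 1 | 1 |
| 1 | 2 | 2 |
| 2 | 4 | 6 |

Succ. Table of n1:

| i | id+2^i | succ |
|---|--------|------|
| 0 | 2 | 2 |
| 1 | 3 | 6 |
| 2 | 5 | 6 |

Succ. Table of n6:

| i | id+2^i | succ |
|---|--------|------|
| 0 | 7 | 0 |
| 1 | 0 | 0 |
| 2 | 2 | 2 |

Succ. Table of n2:

| i | id+2^i | succ |
|---|--------|------|
| 0 | 3 | 6 |
| 1 | 4 | 6 |
| 2 | 6 | 6 |

Figure: ring with positions 0 to 7.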

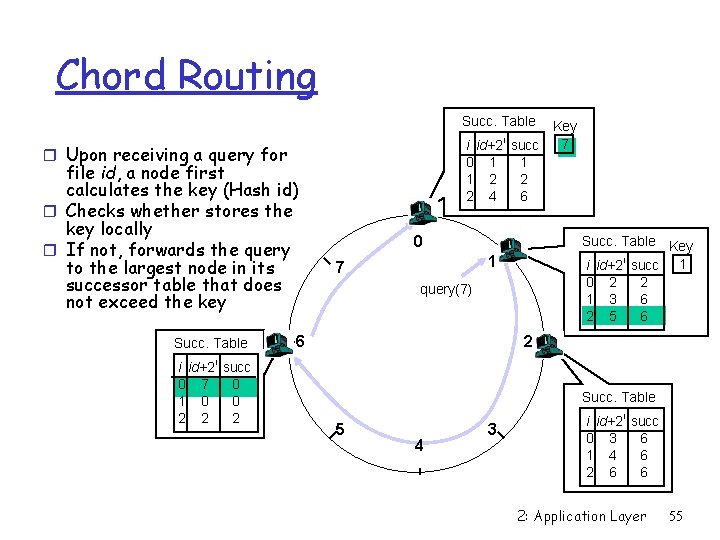

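Chord Join

- Nodes: n1, n2, n0, n6
- Keys: f7, f1

Succ. Table of n0 (stores key 7):

| i | id+2^i | succ |
|---|--------|------|
| 0 | 1 | 1 |
| 1 | 2 | 2 |
| 2 | 4 | 6 |

Succ. Table of n1 (stores key 1):

| i | id+2^i | succ |
|---|--------|------|
| 0 | 2 | 2 |
| 1 | 3 | 6 |
| 2 | 5 | 6 |

Succ. Table of n6:

| i | id+2^i | succ |
|---|--------|------|
| 0 | 7 | 0 |
| 1 | 0 | 0 |
| 2 | 2 | 2 |

Succ. Table of n2:

| i | id+2^i | succ |
|---|--------|------|
| 0 | 3 | 6 |
| 1 | 4 | 6 |
| 2 | 6 | 6 |

Figure: ring with positions 0 to 7.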
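The successor tables on the Chord Join slides can be regenerated from the set of node IDs alone. The sketch below assumes a global view of the ring, which a real Chord node does not have (it learns its entries through the join protocol); the function names are illustrative.

```python
def successor(nodes, ident, m):
    """First node whose ID is >= ident on a ring of 2**m IDs, wrapping around."""
    ident %= 2 ** m
    ring = sorted(nodes)
    for node in ring:
        if node >= ident:
            return node
    return ring[0]


def succ_table(nodes, node, m):
    """Rows (id + 2**i mod 2**m, succ) for i = 0 .. m-1, as on the slides."""
    return [((node + 2 ** i) % 2 ** m, successor(nodes, node + 2 ** i, m))
            for i in range(m)]


M = 3  # hash space [0..7]
for joined in ({1}, {1, 2}, {0, 1, 2, 6}):
    print("nodes:", sorted(joined))
    for node in sorted(joined):
        print(f"  n{node}:", succ_table(joined, node, M))

# Keys f7 and f1 are stored at the successors of their IDs.
print(successor({0, 1, 2, 6}, 7, M), successor({0, 1, 2, 6}, 1, M))
```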

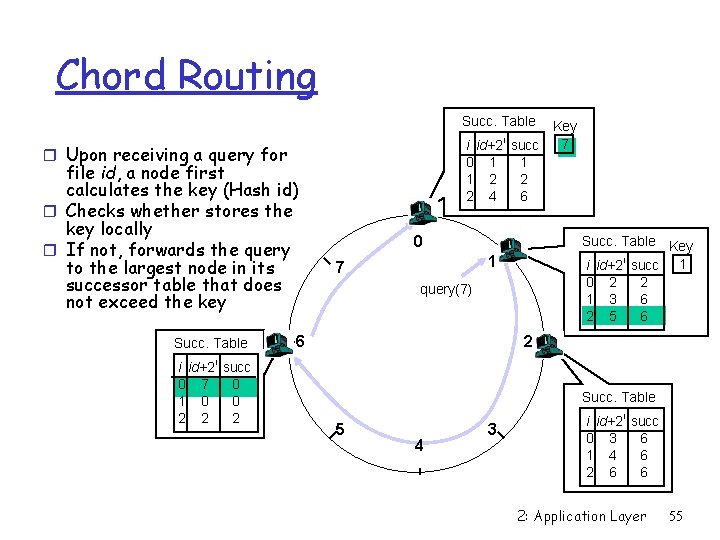

Chord Routing

- Upon receiving a query for file id, a node first calculates the key (hash of id)
- Checks whether it stores the key locally
- If not, forwards the query to the largest node in its successor table that does not exceed the key

Figure: the ring with nodes n0, n1, n2, and n6, their successor tables, key 7 stored at n0 and key 1 stored at n1, with query(7) being forwarded.
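The forwarding rule can be traced on the four-node ring. The sketch below hard-codes the successor tables and key placement from the slides and reads "does not exceed the key" in ring order (clockwise distance from the current node), which is an interpretation needed for the wrap-around at n6. Starting query(7) at n1 is also an assumption, since the slide text does not say where the query enters.

```python
def clockwise(a, b, size):
    """Clockwise distance from ID a to ID b on a ring of `size` IDs."""
    return (b - a) % size


def route(tables, stored, start, key, m):
    """Follow the forwarding rule; return (nodes visited, whether key was found)."""
    size = 2 ** m
    node, path = start, [start]
    while key not in stored[node]:
        target = clockwise(node, key, size)
        if target == 0:
            break  # this node is responsible for the key but does not store it
        # Table entries that lie between this node and the key, in ring order.
        ahead = [f for f in tables[node] if 0 < clockwise(node, f, size) <= target]
        if not ahead:
            # Key falls between this node and its immediate successor,
            # so the successor is the responsible node: final hop.
            node = tables[node][0]
            path.append(node)
            break
        node = max(ahead, key=lambda f: clockwise(node, f, size))
        path.append(node)
    return path, key in stored[node]


# "succ" columns and stored keys from the Chord Join slides, hash space [0..7].
tables = {0: [1, 2, 6], 1: [2, 6, 6], 2: [6, 6, 6], 6: [0, 0, 2]}
stored = {0: {7}, 1: {1}, 2: set(), 6: set()}

print(route(tables, stored, start=1, key=7, m=3))  # query(7) issued at n1
print(route(tables, stored, start=2, key=1, m=3))
print(route(tables, stored, start=0, key=5, m=3))  # key 5 is not in the system
```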

Chord Summary

- Routing table size?
  - log N fingers
- Routing time?
  - Each hop is expected to halve the distance to the desired key => expect O(log N) hops.

Note: so far only the basic Chord; many practical issues remain (not covered in this course though …)

A few words about BitCoin (and other digital/virtual currency)

- Two key issues for a currency
  - Generation (where does it come from?)
  - Distribution (how to use it, i.e., buy/sell transactions?)
- BitCoin: open source P2P currency
  - Mining (hashing)
  - Verification (blockchain)

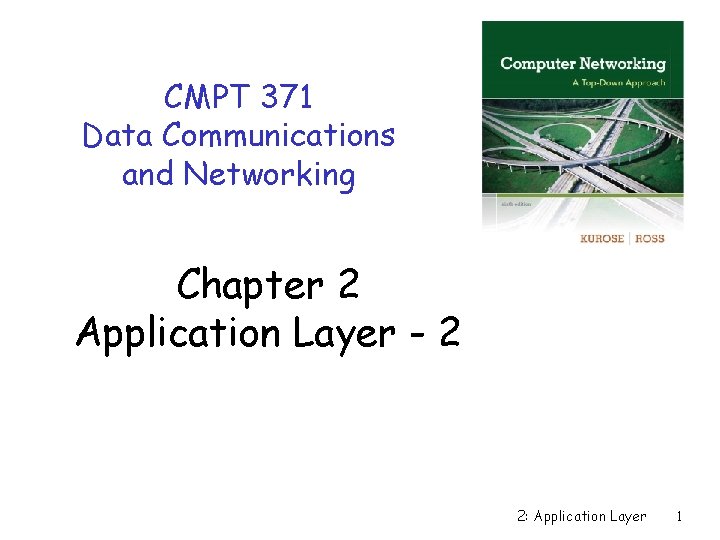

Chapter 2: Summary

Our study of network apps is now complete!

- application service requirements:
  - reliability, bandwidth, delay
- client-server paradigm
- Internet transport service model
  - connection-oriented, reliable: TCP
  - unreliable, datagrams: UDP
- specific protocols:
  - HTTP
  - FTP
  - SMTP, POP, IMAP
  - DNS
- content distribution
  - caches, CDNs
  - P2P
- Cloud

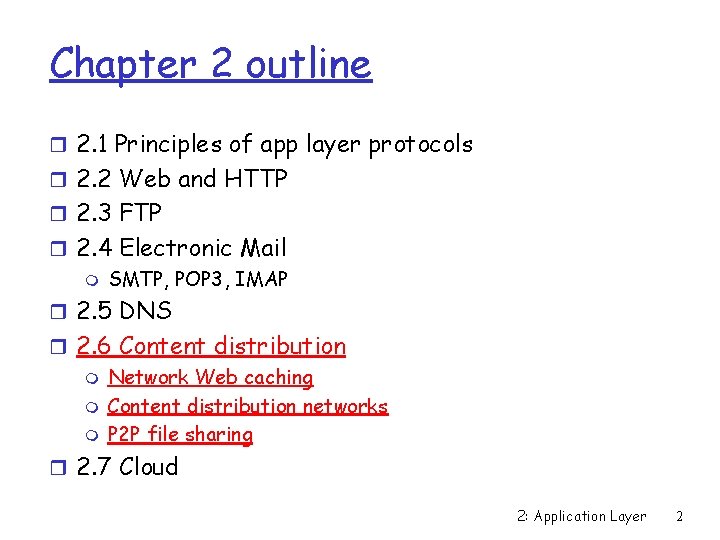

Chapter 2: Summary

More importantly: learned about protocols

- typical request/reply message exchange:
  - client requests info or service
  - server responds with data, status code
- message formats:
  - headers: fields giving info about data
  - data: info being communicated
- control vs. data msgs
  - in-band, out-of-band
- centralized vs. decentralized
- stateless vs. stateful
- reliable vs. unreliable msg transfer
- "complexity at network edge": many protocols
- security: authentication