CENG 334 Introduction to Operating Systems Synchronization Topics



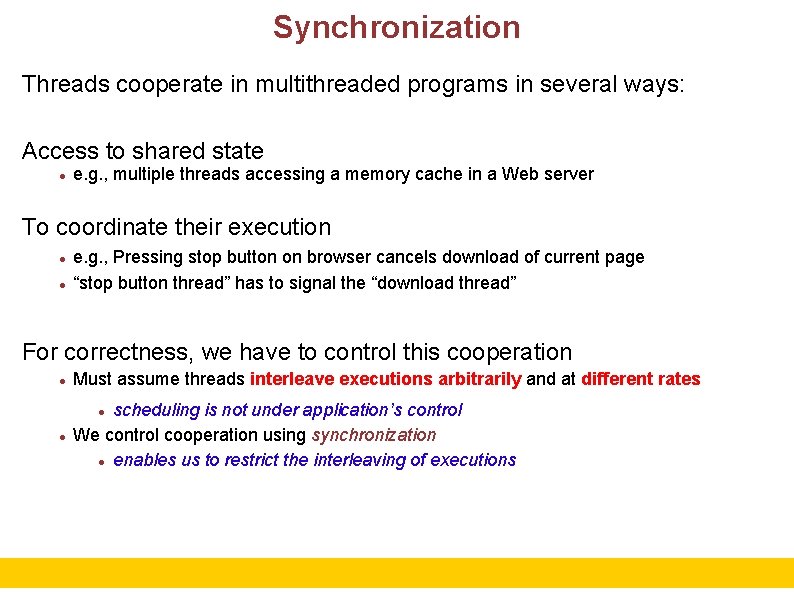

CENG 334 Introduction to Operating Systems

Synchronization

Topics:
- Synchronization problem
- Race conditions and critical sections
- Mutual exclusion
- Locks
- Spinlocks
- Mutexes

Erol Sahin, Dept of Computer Eng., Middle East Technical University, Ankara, TURKEY
URL: http://kovan.ceng.metu.edu.tr/~erol/Courses/CENG334

Some of the following slides are adapted from Matt Welsh, Harvard Univ.

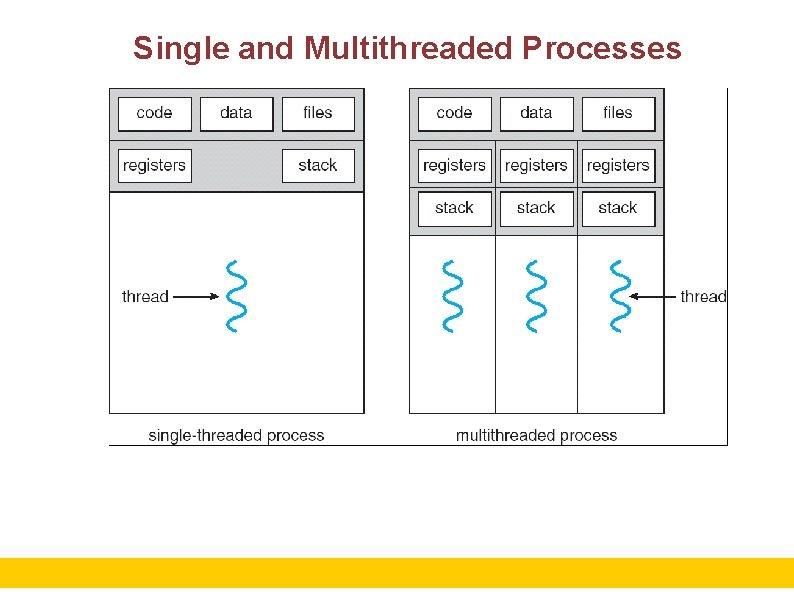

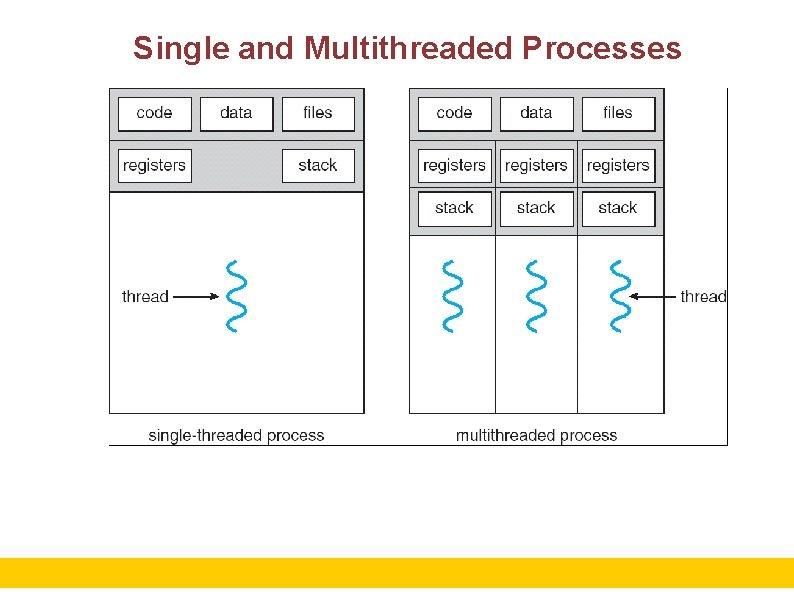

Single and Multithreaded Processes

Synchronization

Threads cooperate in multithreaded programs in several ways:
- Access to shared state, e.g., multiple threads accessing a memory cache in a Web server
- To coordinate their execution, e.g., pressing the stop button on a browser cancels the download of the current page: the “stop button thread” has to signal the “download thread”

For correctness, we have to control this cooperation:
- Must assume threads interleave executions arbitrarily and at different rates
- Scheduling is not under the application’s control

We control cooperation using synchronization, which enables us to restrict the interleaving of executions.

Shared Resources

We’ll focus on coordinating access to shared resources.

Basic problem:
- Two concurrent threads are accessing a shared variable
- If the variable is read/modified/written by both threads, then access to the variable must be controlled
- Otherwise, unexpected results may occur

We’ll look at:
- Mechanisms to control access to shared resources
  - Low-level mechanisms: locks
  - Higher-level mechanisms: mutexes, semaphores, monitors, and condition variables
- Patterns for coordinating access to shared resources: bounded buffer, producer-consumer, …

This stuff is complicated and rife with pitfalls. Details are important for completing assignments. Expect questions on the midterm/final!

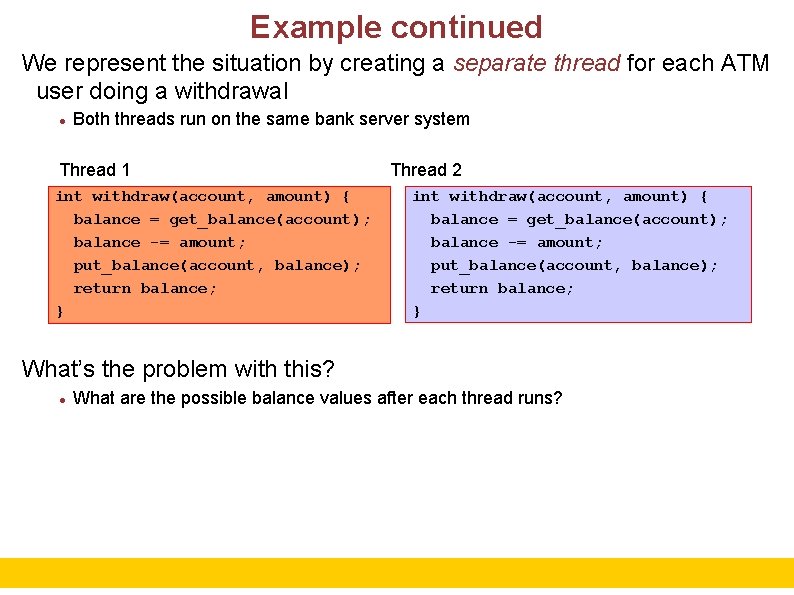

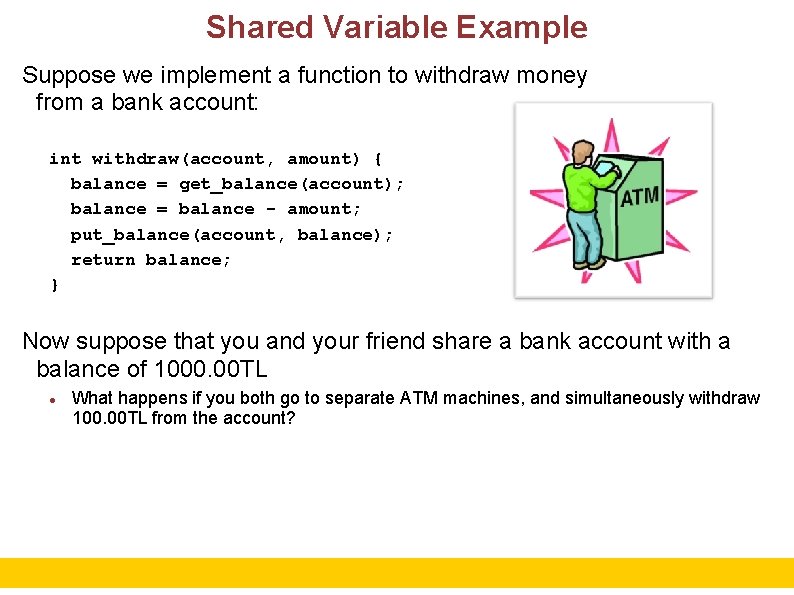

Shared Variable Example

Suppose we implement a function to withdraw money from a bank account:

    int withdraw(account, amount) {
        balance = get_balance(account);
        balance = balance - amount;
        put_balance(account, balance);
        return balance;
    }

Now suppose that you and your friend share a bank account with a balance of 1000.00 TL. What happens if you both go to separate ATMs and simultaneously withdraw 100.00 TL from the account?

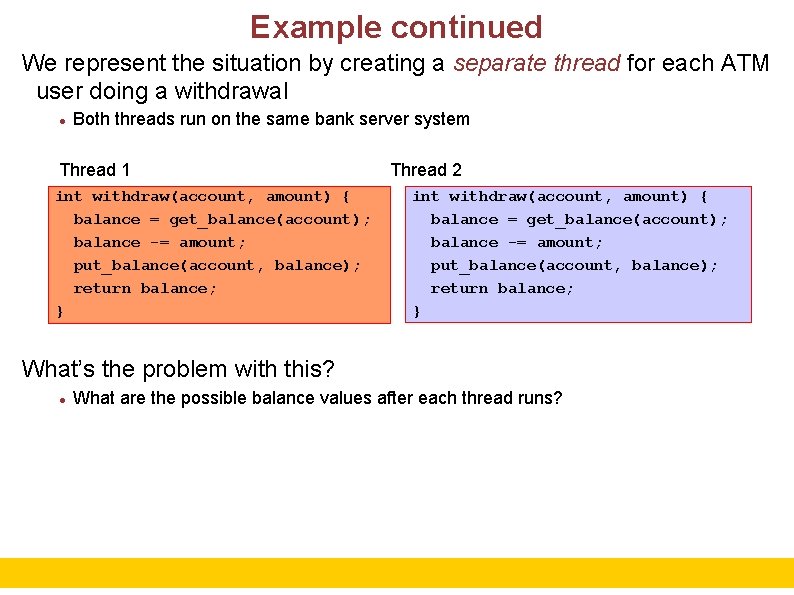

Example continued

We represent the situation by creating a separate thread for each ATM user doing a withdrawal. Both threads run on the same bank server system, and both execute the same code:

    // Thread 1 and Thread 2
    int withdraw(account, amount) {
        balance = get_balance(account);
        balance -= amount;
        put_balance(account, balance);
        return balance;
    }

What’s the problem with this? What are the possible balance values after each thread runs?

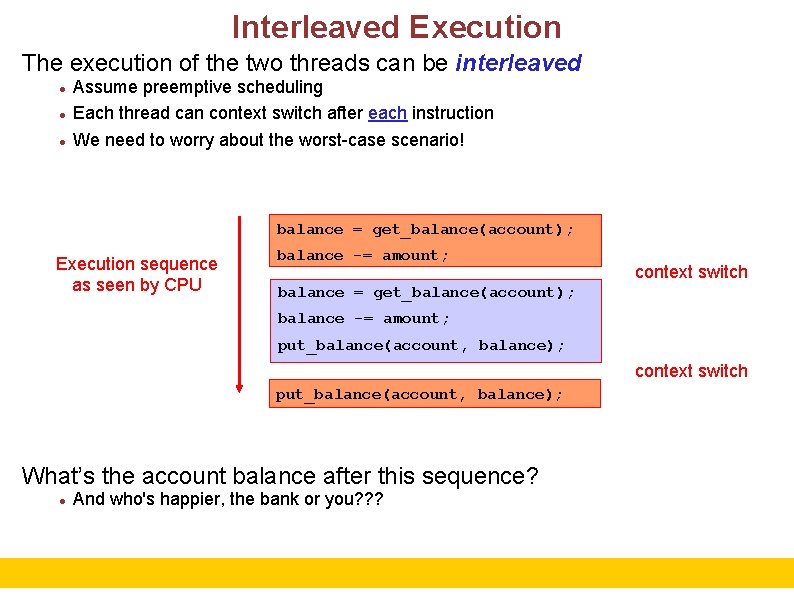

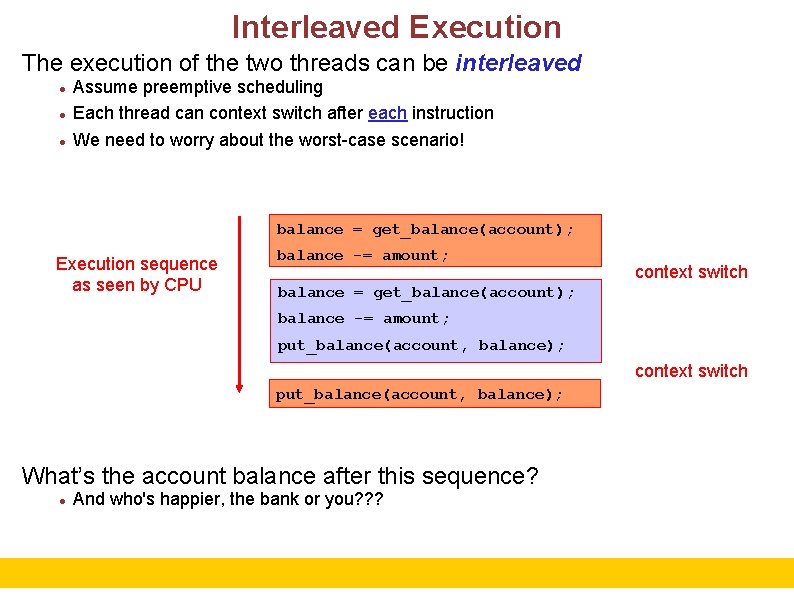

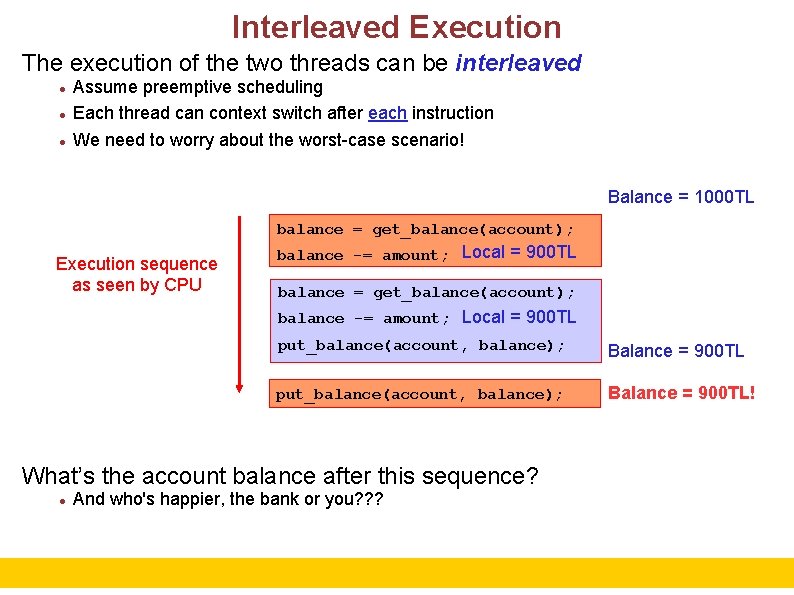

Interleaved Execution

The execution of the two threads can be interleaved:
- Assume preemptive scheduling
- Each thread can context switch after each instruction
- We need to worry about the worst-case scenario!

Execution sequence as seen by CPU:

    balance = get_balance(account);     // Thread 1
    balance -= amount;                  // Thread 1
    // context switch
    balance = get_balance(account);     // Thread 2
    balance -= amount;                  // Thread 2
    put_balance(account, balance);      // Thread 2
    // context switch
    put_balance(account, balance);      // Thread 1

What’s the account balance after this sequence? And who's happier, the bank or you?

Interleaved Execution (annotated)

The same interleaving, starting from Balance = 1000 TL and with each withdrawal being 100 TL:

    balance = get_balance(account);     // Thread 1
    balance -= amount;                  // Thread 1: Local = 900 TL
    balance = get_balance(account);     // Thread 2
    balance -= amount;                  // Thread 2: Local = 900 TL
    put_balance(account, balance);      // Thread 2
    put_balance(account, balance);      // Thread 1: Balance = 900 TL!

Two withdrawals of 100 TL were made, but the balance dropped by only 100 TL. Who's happier, the bank or you?
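The lost update can be replayed deterministically on a single thread by giving each "thread" its own local copy of the balance and following the slide's schedule by hand. This is only a sketch: `account_balance`, the one-argument `get_balance()`/`put_balance()` helpers, and `replay_interleaving()` are hypothetical stand-ins for the slide's functions.

```c
#include <assert.h>

/* Hypothetical in-memory account standing in for the bank's storage. */
static int account_balance;

static int  get_balance(void)  { return account_balance; }
static void put_balance(int b) { account_balance = b; }

/* Replays the slide's worst-case schedule and returns the final balance.
 * local1 and local2 model the private "balance" variable of each thread. */
int replay_interleaving(int start, int amount) {
    assert(amount <= start);         /* keep the example's numbers sensible */
    account_balance = start;

    int local1 = get_balance();      /* Thread 1 reads the balance       */
    local1 -= amount;                /* Thread 1 computes its new value  */
    /* context switch */
    int local2 = get_balance();      /* Thread 2 reads the SAME balance  */
    local2 -= amount;
    put_balance(local2);             /* Thread 2 writes its result       */
    /* context switch */
    put_balance(local1);             /* Thread 1 overwrites it           */

    return get_balance();
}
```

Calling `replay_interleaving(1000, 100)` returns 900 rather than 800: one of the two withdrawals is lost.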

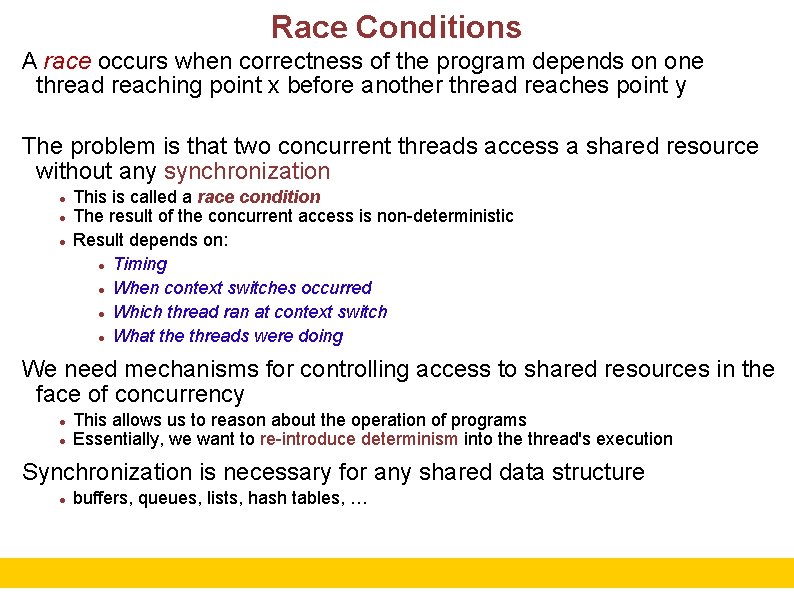

Race Conditions

A race occurs when correctness of the program depends on one thread reaching point x before another thread reaches point y.

The problem is that two concurrent threads access a shared resource without any synchronization. This is called a race condition.
- The result of the concurrent access is non-deterministic
- The result depends on:
  - Timing
  - When context switches occurred
  - Which thread ran at each context switch
  - What the threads were doing

We need mechanisms for controlling access to shared resources in the face of concurrency.
- This allows us to reason about the operation of programs
- Essentially, we want to re-introduce determinism into the threads' execution

Synchronization is necessary for any shared data structure: buffers, queues, lists, hash tables, …

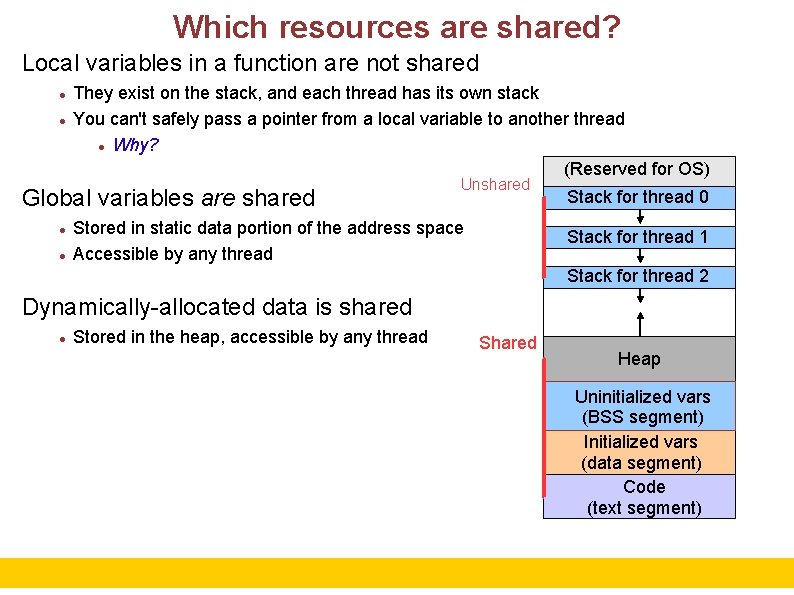

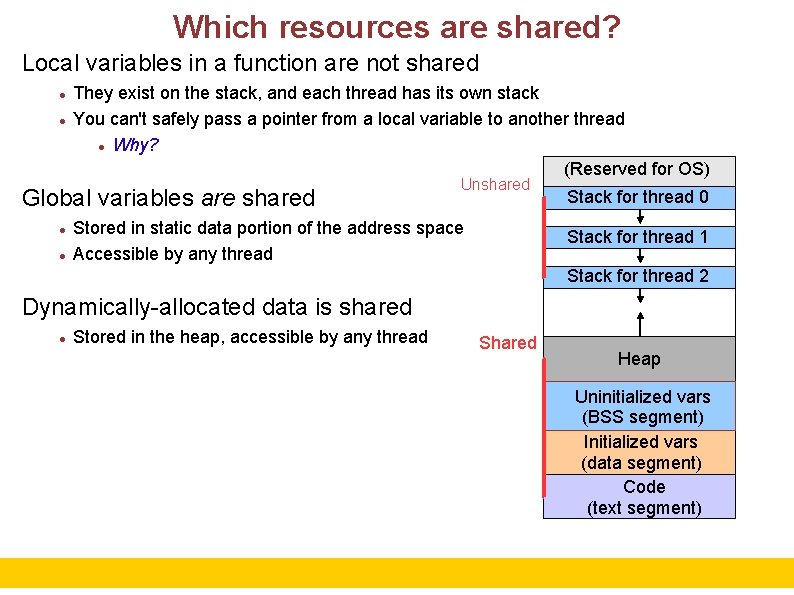

Which resources are shared?

- Local variables in a function are not shared
  - They exist on the stack, and each thread has its own stack
  - You can't safely pass a pointer to a local variable to another thread. Why?
- Global variables are shared
  - Stored in the static data portion of the address space
  - Accessible by any thread
- Dynamically-allocated data is shared
  - Stored in the heap, accessible by any thread

Address space layout (figure):
- (Reserved for OS)
- Unshared: stack for thread 0, stack for thread 1, stack for thread 2
- Shared: heap, uninitialized vars (BSS segment), initialized vars (data segment), code (text segment)

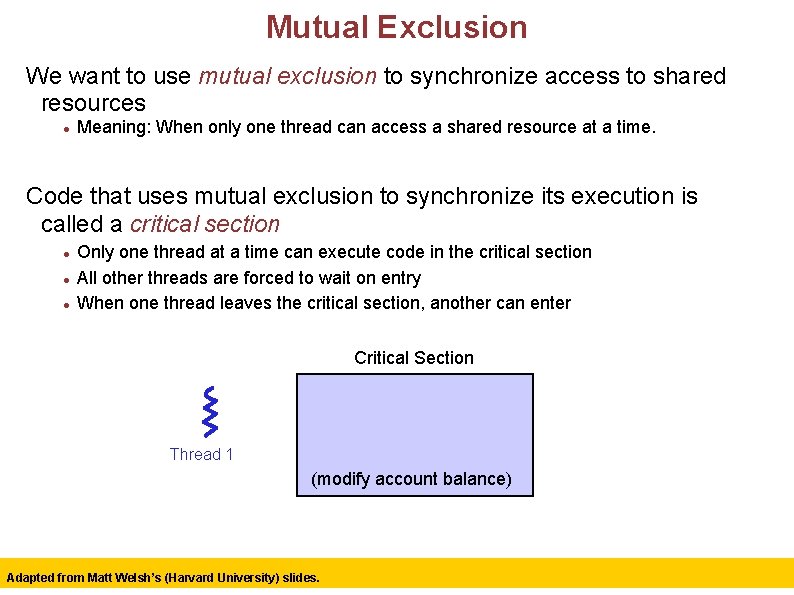

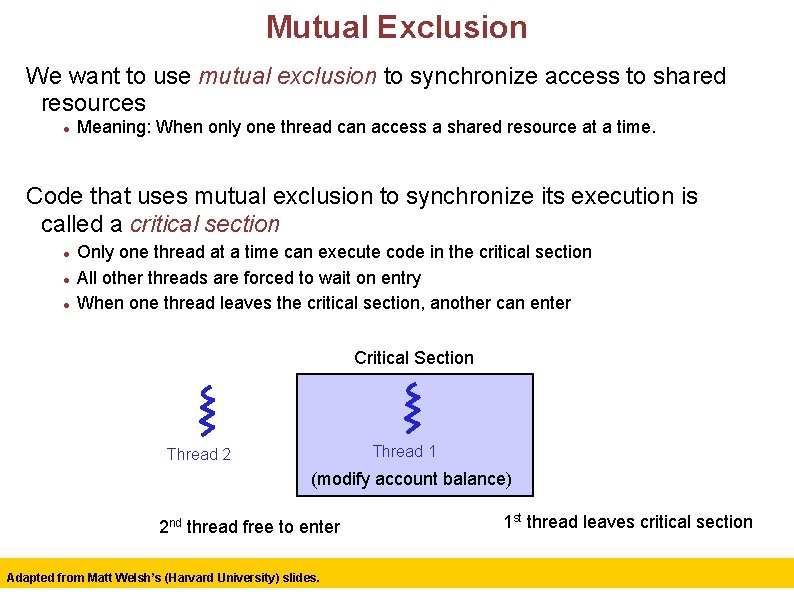

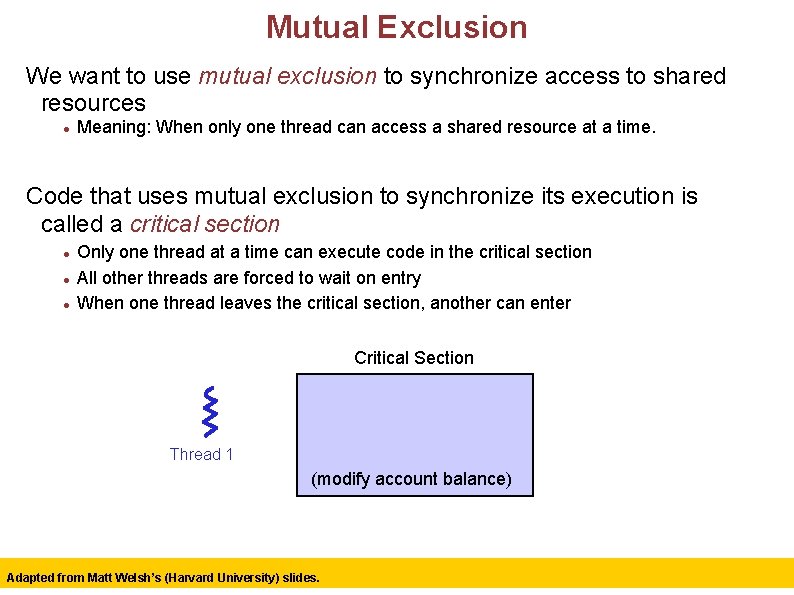

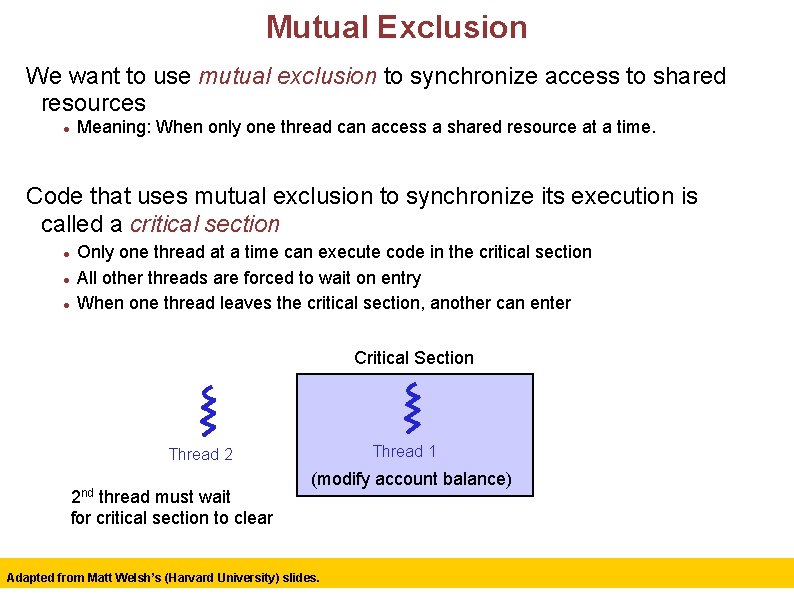

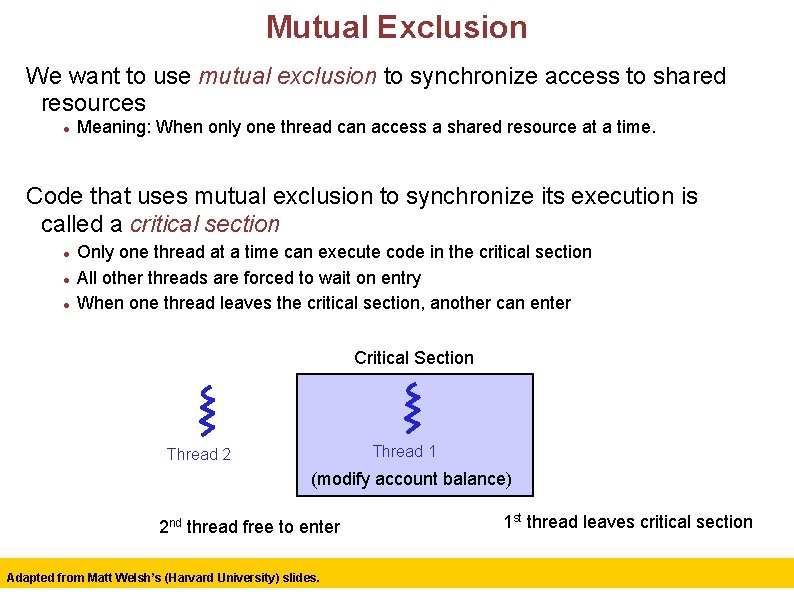

Mutual Exclusion

We want to use mutual exclusion to synchronize access to shared resources.
- Mutual exclusion means that only one thread can access a shared resource at a time.

Code that uses mutual exclusion to synchronize its execution is called a critical section.
- Only one thread at a time can execute code in the critical section
- All other threads are forced to wait on entry
- When one thread leaves the critical section, another can enter

Figure: Thread 1 is inside the critical section (modify account balance).

Adapted from Matt Welsh’s (Harvard University) slides.

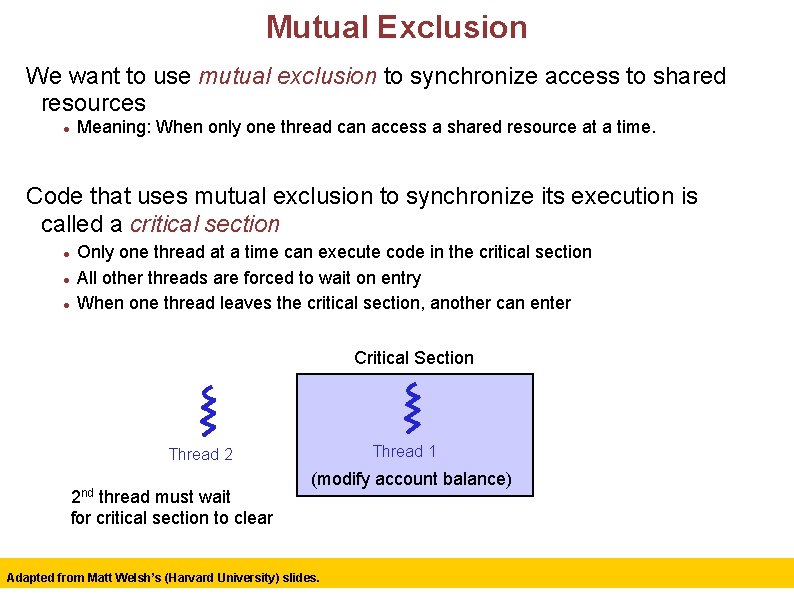

Mutual Exclusion (continued)

Figure: Thread 1 is inside the critical section (modify account balance). Thread 2 arrives and must wait for the critical section to clear.

Mutual Exclusion (continued)

Figure: Thread 1 leaves the critical section (modify account balance). Thread 2 is now free to enter.

Critical Section Requirements

- Mutual exclusion: at most one thread is currently executing in the critical section
- Progress: if thread T1 is outside the critical section, then T1 cannot prevent T2 from entering the critical section
- Bounded waiting (no starvation): if thread T1 is waiting on the critical section, then T1 will eventually enter the critical section
  - Assumes threads eventually leave critical sections
- Performance: the overhead of entering and exiting the critical section is small with respect to the work being done within it

Locks

A lock is an object (in memory) that provides the following two operations:
- acquire(): a thread calls this before entering a critical section
  - May require waiting to enter the critical section
- release(): a thread calls this after leaving a critical section
  - Allows another thread to enter the critical section

A call to acquire() must have a corresponding call to release().
- Between acquire() and release(), the thread holds the lock
- acquire() does not return until the caller holds the lock
- At most one thread can hold a lock at a time (usually!). We'll talk about the exceptions later...

What can happen if acquire() and release() calls are not paired?

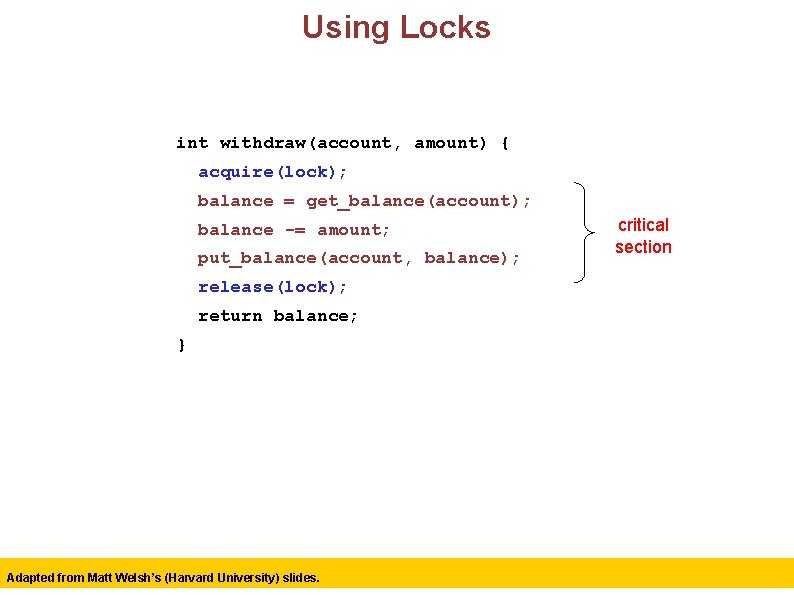

Using Locks

    int withdraw(account, amount) {
        acquire(lock);
        balance = get_balance(account);     // critical section
        balance -= amount;                  // critical section
        put_balance(account, balance);      // critical section
        release(lock);
        return balance;
    }
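As a rough sketch of the same idea with a real lock, the version below uses a POSIX `pthread_mutex_t` in place of the slide's abstract `acquire()`/`release()`. The global `account_balance` and the helper `run_two_withdrawals()` are assumptions made for illustration; they are not part of the original slides.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

static pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER;
static int account_balance;             /* hypothetical in-memory account */

static int withdraw(int amount) {
    pthread_mutex_lock(&lock);          /* acquire(lock)                  */
    int balance = account_balance;      /* get_balance(account)           */
    balance -= amount;
    account_balance = balance;          /* put_balance(account, balance)  */
    pthread_mutex_unlock(&lock);        /* release(lock)                  */
    return balance;
}

static void *atm_user(void *arg) {
    withdraw(*(int *)arg);
    return NULL;
}

/* Two ATM users withdraw concurrently; returns the final balance. */
int run_two_withdrawals(int start, int amount) {
    pthread_t t1, t2;
    account_balance = start;
    int rc1 = pthread_create(&t1, NULL, atm_user, &amount);
    int rc2 = pthread_create(&t2, NULL, atm_user, &amount);
    assert(rc1 == 0 && rc2 == 0);
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    return account_balance;
}
```

With the lock in place, `run_two_withdrawals(1000, 100)` yields 800 regardless of how the two threads are scheduled.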

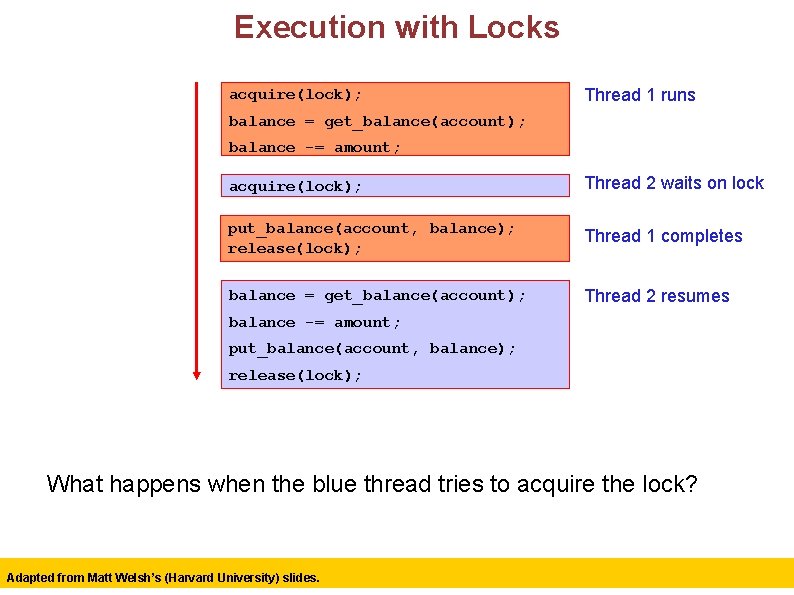

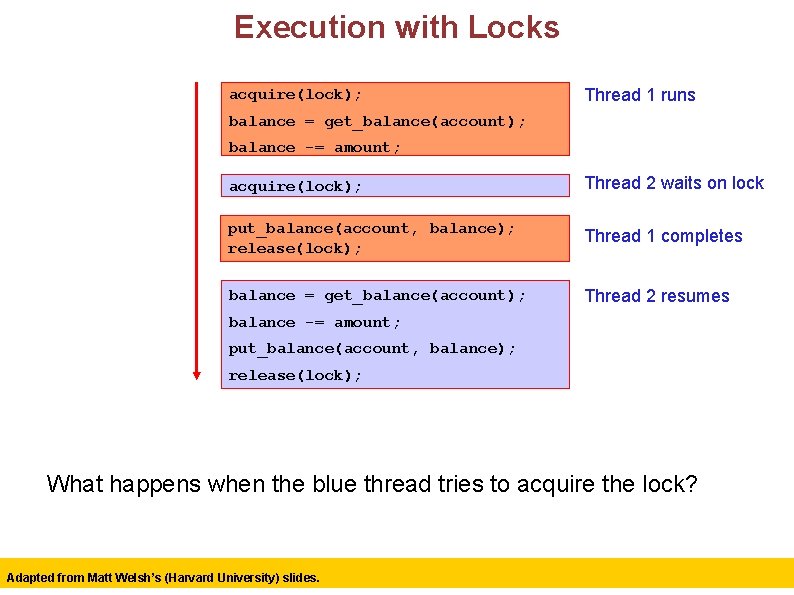

Execution with Locks

    acquire(lock);                      // Thread 1 runs
    balance = get_balance(account);
    balance -= amount;
    acquire(lock);                      // Thread 2 waits on lock
    put_balance(account, balance);
    release(lock);                      // Thread 1 completes
    balance = get_balance(account);     // Thread 2 resumes
    balance -= amount;
    put_balance(account, balance);
    release(lock);

What happens when the second thread (blue on the slide) tries to acquire the lock?

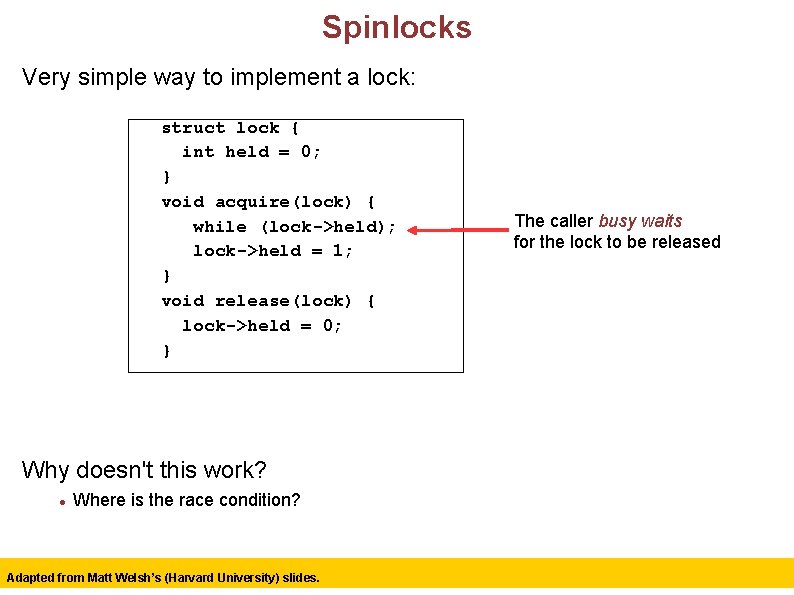

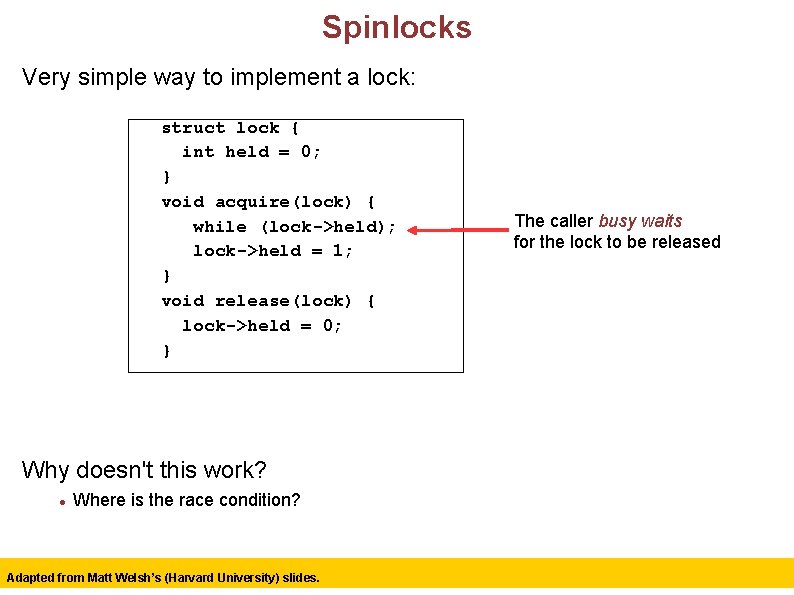

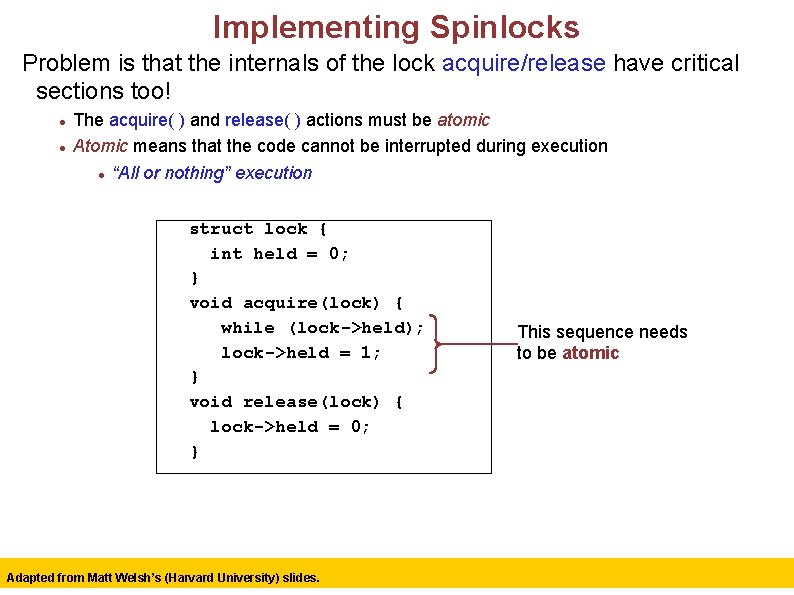

Spinlocks

Very simple way to implement a lock:

    struct lock {
        int held = 0;
    }
    void acquire(lock) {
        while (lock->held);
        lock->held = 1;
    }
    void release(lock) {
        lock->held = 0;
    }

The caller busy-waits for the lock to be released.

Why doesn't this work? Where is the race condition?

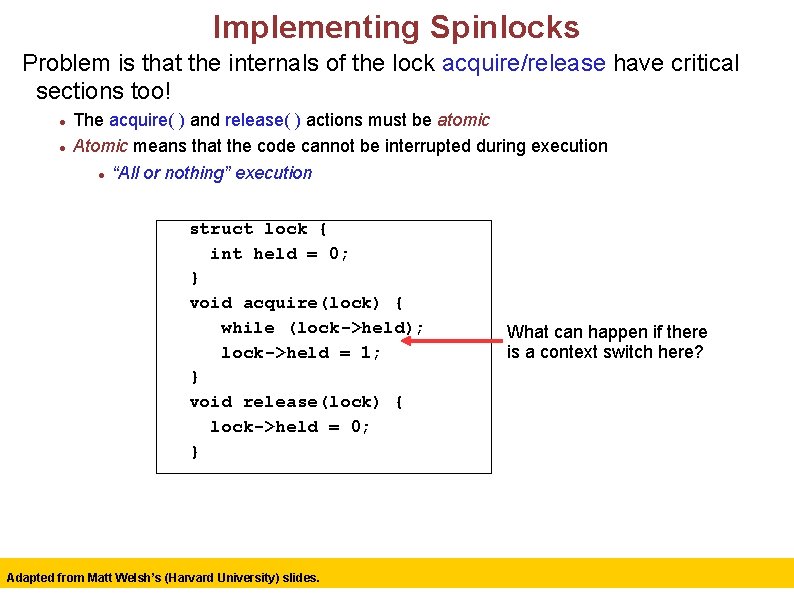

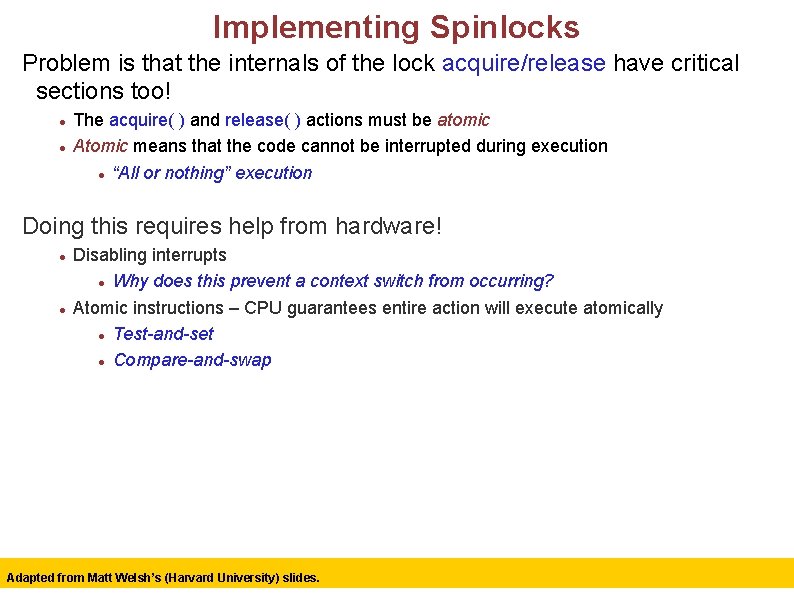

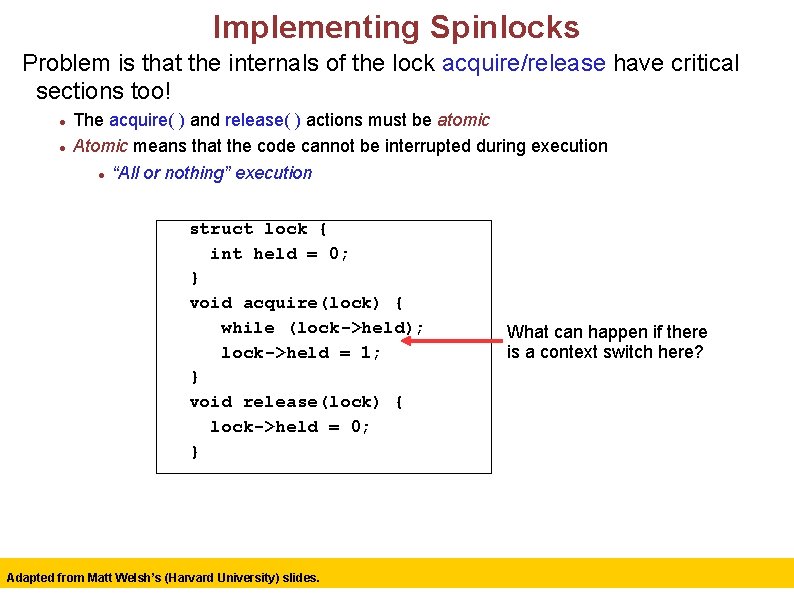

Implementing Spinlocks

The problem is that the internals of the lock acquire/release have critical sections too!
- The acquire() and release() actions must be atomic
- Atomic means that the code cannot be interrupted during execution: “all or nothing” execution

    struct lock {
        int held = 0;
    }
    void acquire(lock) {
        while (lock->held);
        // What can happen if there is a context switch here?
        lock->held = 1;
    }
    void release(lock) {
        lock->held = 0;
    }

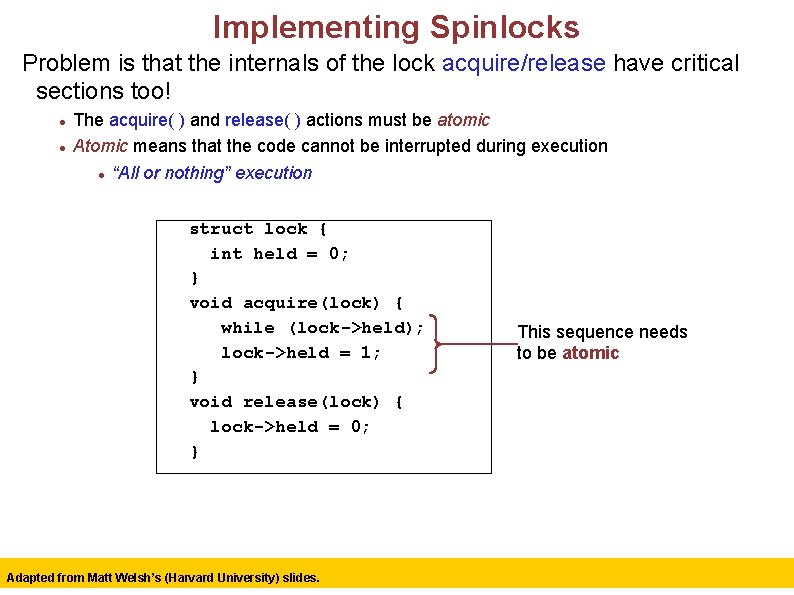

Implementing Spinlocks (continued)

In acquire(), the test of lock->held and the assignment lock->held = 1 form a sequence that needs to be atomic:

    void acquire(lock) {
        while (lock->held);     // this test and
        lock->held = 1;         // this store must execute atomically
    }

Implementing Spinlocks (continued)

Making acquire() and release() atomic requires help from hardware!
- Disabling interrupts
  - Why does this prevent a context switch from occurring?
- Atomic instructions: the CPU guarantees the entire action will execute atomically
  - Test-and-set
  - Compare-and-swap
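To make compare-and-swap concrete, here is a minimal sketch using C11 `<stdatomic.h>`, whose `atomic_compare_exchange_strong()` compiles down to the hardware's atomic instruction. The `cas_lock` type and its functions are hypothetical names chosen for this example.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

struct cas_lock {
    atomic_int held;                /* 0 = free, 1 = held */
};

/* One attempt: atomically change held from 0 to 1.
 * Returns true only for the caller that performed the change. */
bool cas_try_acquire(struct cas_lock *l) {
    int expected = 0;
    return atomic_compare_exchange_strong(&l->held, &expected, 1);
}

void cas_acquire(struct cas_lock *l) {
    while (!cas_try_acquire(l))
        ;                           /* spin until the swap succeeds */
}

void cas_release(struct cas_lock *l) {
    int prev = atomic_exchange(&l->held, 0);
    assert(prev == 1);              /* releasing a free lock is a bug */
    (void)prev;
}
```

Because the test and the store happen in one indivisible step, there is no window in which two threads can both observe `held == 0` and both proceed.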

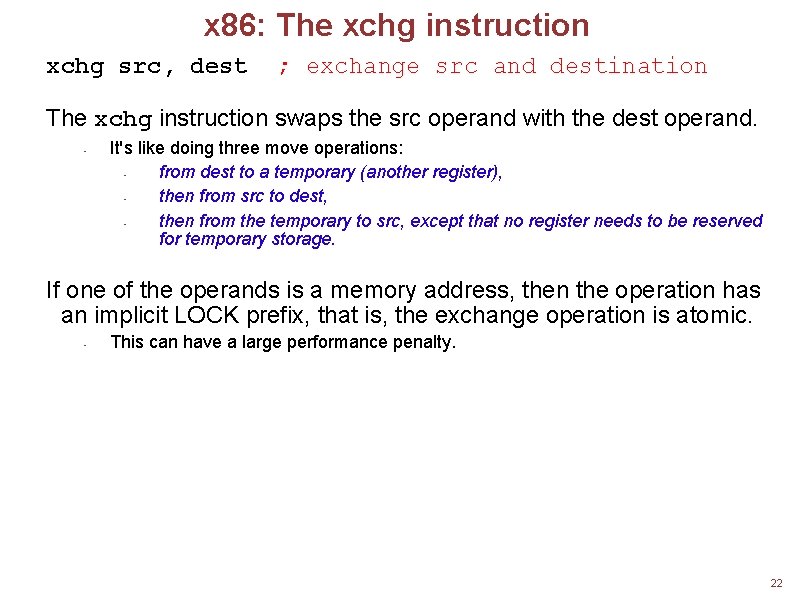

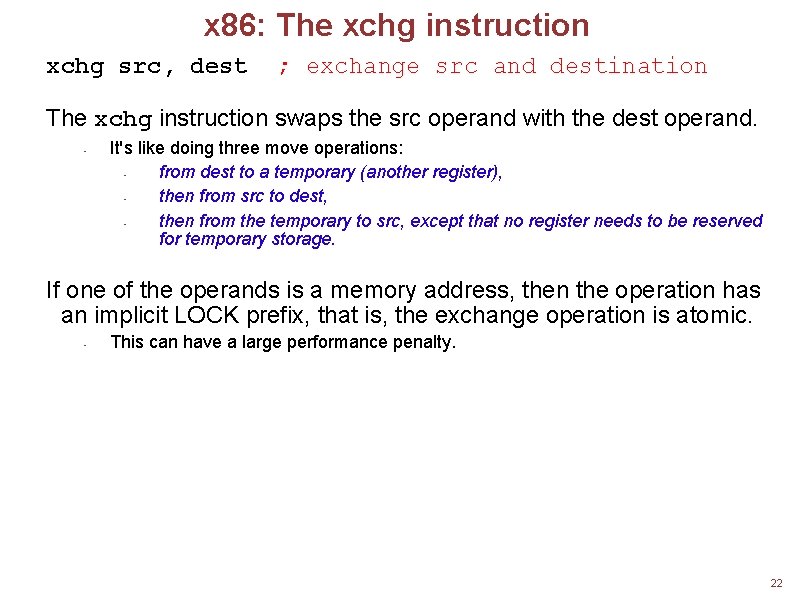

x86: The xchg instruction

    xchg src, dest      ; exchange src and destination

The xchg instruction swaps the src operand with the dest operand.
- It's like doing three move operations:
  - from dest to a temporary (another register),
  - then from src to dest,
  - then from the temporary to src,
  except that no register needs to be reserved for temporary storage.

If one of the operands is a memory address, then the operation has an implicit LOCK prefix, that is, the exchange operation is atomic.
- This can have a large performance penalty.

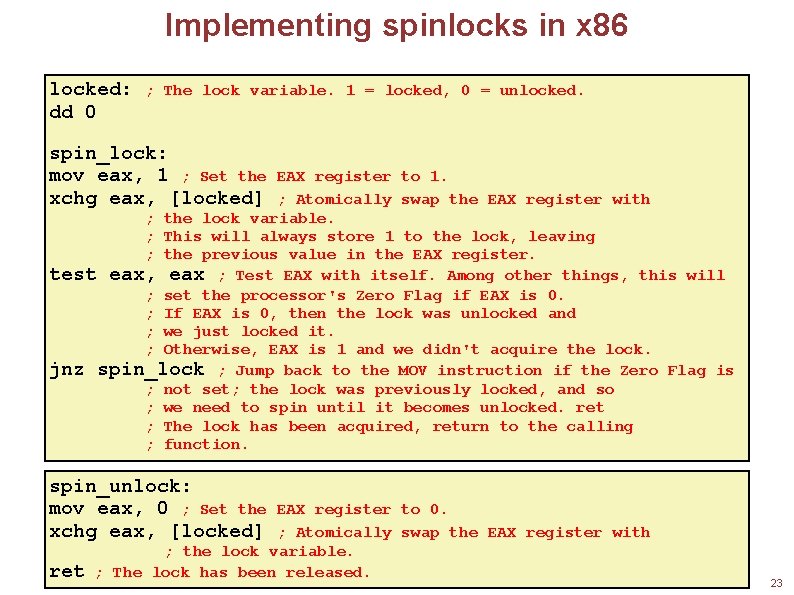

Implementing spinlocks in x86

    locked:
        dd      0               ; The lock variable. 1 = locked, 0 = unlocked.

    spin_lock:
        mov     eax, 1          ; Set the EAX register to 1.
        xchg    eax, [locked]   ; Atomically swap the EAX register with
                                ; the lock variable.
                                ; This will always store 1 to the lock, leaving
                                ; the previous value in the EAX register.
        test    eax, eax        ; Test EAX with itself. Among other things, this will
                                ; set the processor's Zero Flag if EAX is 0.
                                ; If EAX is 0, then the lock was unlocked and
                                ; we just locked it.
                                ; Otherwise, EAX is 1 and we didn't acquire the lock.
        jnz     spin_lock       ; Jump back to the MOV instruction if the Zero Flag is
                                ; not set; the lock was previously locked, and so
                                ; we need to spin until it becomes unlocked.
        ret                     ; The lock has been acquired, return to the calling
                                ; function.

    spin_unlock:
        mov     eax, 0          ; Set the EAX register to 0.
        xchg    eax, [locked]   ; Atomically swap the EAX register with
                                ; the lock variable.
        ret                     ; The lock has been released.

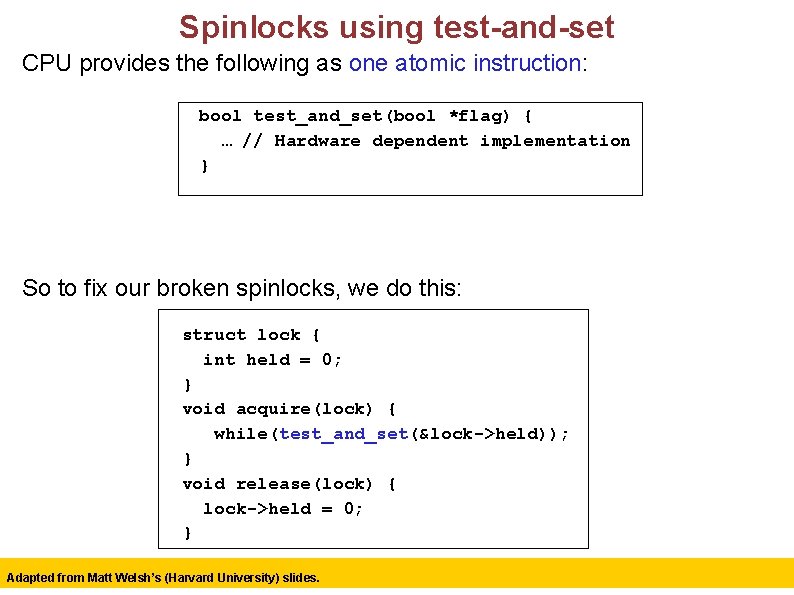

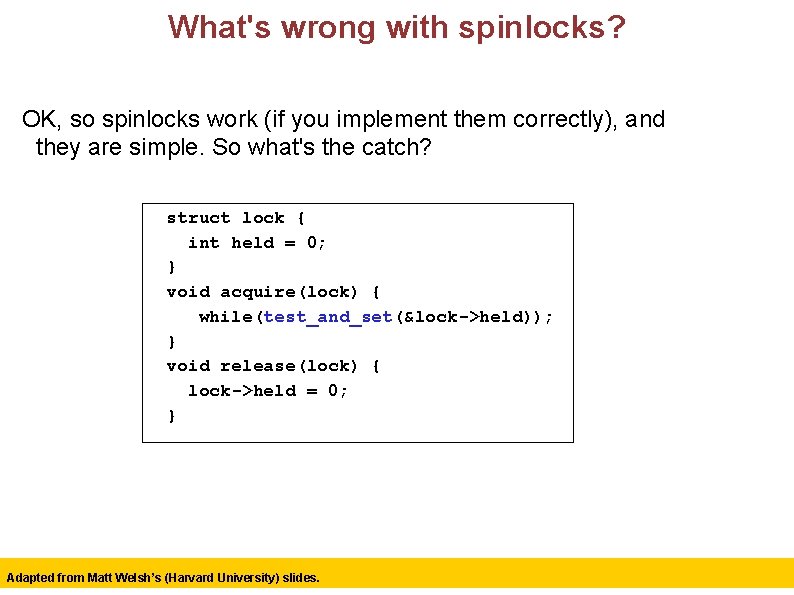

Spinlocks using test-and-set

The CPU provides the following as one atomic instruction:

    bool test_and_set(bool *flag) {
        … // Hardware dependent implementation
    }

So to fix our broken spinlocks, we do this:

    struct lock {
        int held = 0;
    }
    void acquire(lock) {
        while (test_and_set(&lock->held));
    }
    void release(lock) {
        lock->held = 0;
    }
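In portable C11, the closest counterpart to the slide's `test_and_set()` is `atomic_flag_test_and_set()`, which sets the flag and returns its previous value in one atomic step. The sketch below builds the slide's spinlock on it and exercises it with a few threads; `struct spinlock`, `counter`, and `run_spinlock_demo()` are names assumed for this example.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stddef.h>

struct spinlock {
    atomic_flag held;                       /* clear = free, set = held */
};

static struct spinlock counter_lock = { ATOMIC_FLAG_INIT };
static long counter;                        /* shared data the lock protects */

void spin_acquire(struct spinlock *l) {
    /* Returns the old value: keep spinning while it was already set. */
    while (atomic_flag_test_and_set(&l->held))
        ;
}

void spin_release(struct spinlock *l) {
    atomic_flag_clear(&l->held);
}

static void *worker(void *arg) {
    long n = *(long *)arg;
    for (long i = 0; i < n; i++) {
        spin_acquire(&counter_lock);
        counter++;                          /* critical section */
        spin_release(&counter_lock);
    }
    return NULL;
}

/* Runs nthreads workers (at most 8); returns the final counter value. */
long run_spinlock_demo(int nthreads, long increments) {
    pthread_t tid[8];
    assert(nthreads >= 1 && nthreads <= 8);
    counter = 0;
    for (int i = 0; i < nthreads; i++) {
        int rc = pthread_create(&tid[i], NULL, worker, &increments);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < nthreads; i++)
        pthread_join(tid[i], NULL);
    return counter;
}
```

Every increment happens while the flag is held, so no update is lost and the final count equals `nthreads * increments`.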

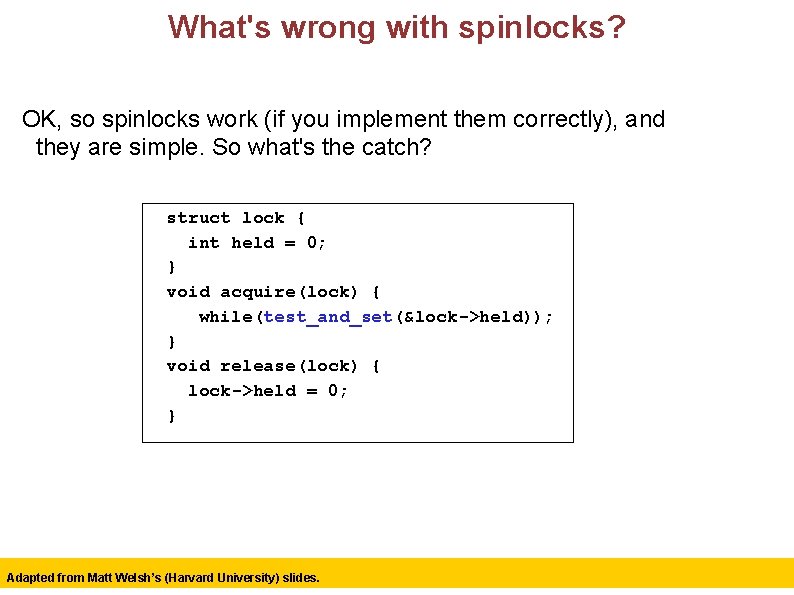

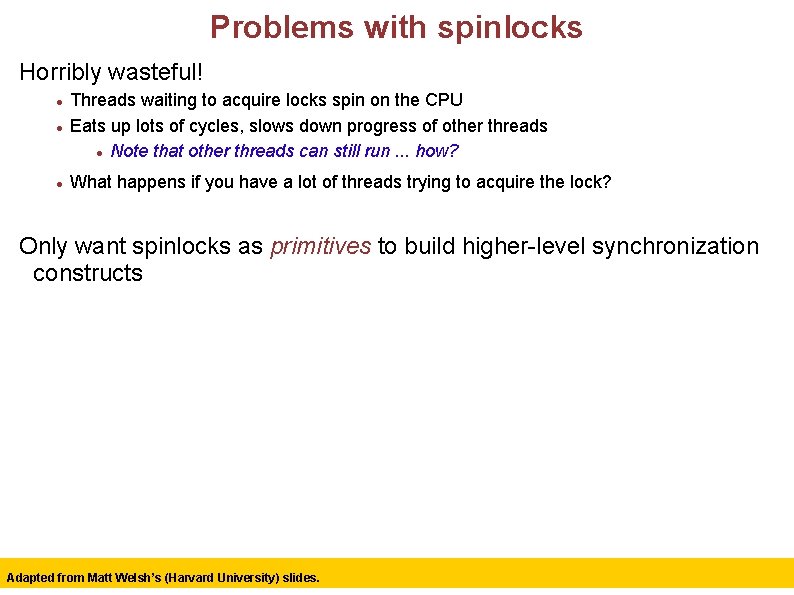

What's wrong with spinlocks?

OK, so spinlocks work (if you implement them correctly), and they are simple. So what's the catch?

    void acquire(lock) {
        while (test_and_set(&lock->held));
    }
    void release(lock) {
        lock->held = 0;
    }

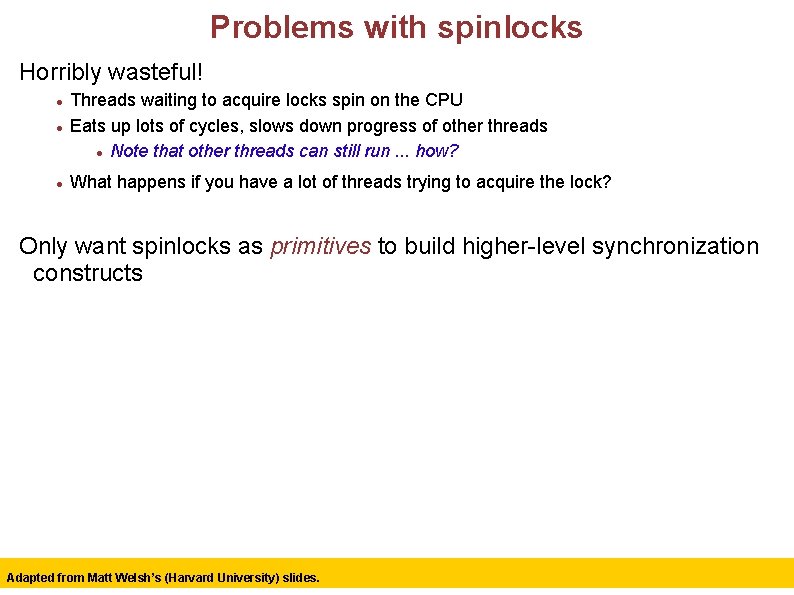

Problems with spinlocks

Horribly wasteful!
- Threads waiting to acquire locks spin on the CPU
- Eats up lots of cycles, slows down progress of other threads
  - Note that other threads can still run... how?
- What happens if you have a lot of threads trying to acquire the lock?

We only want spinlocks as primitives to build higher-level synchronization constructs.

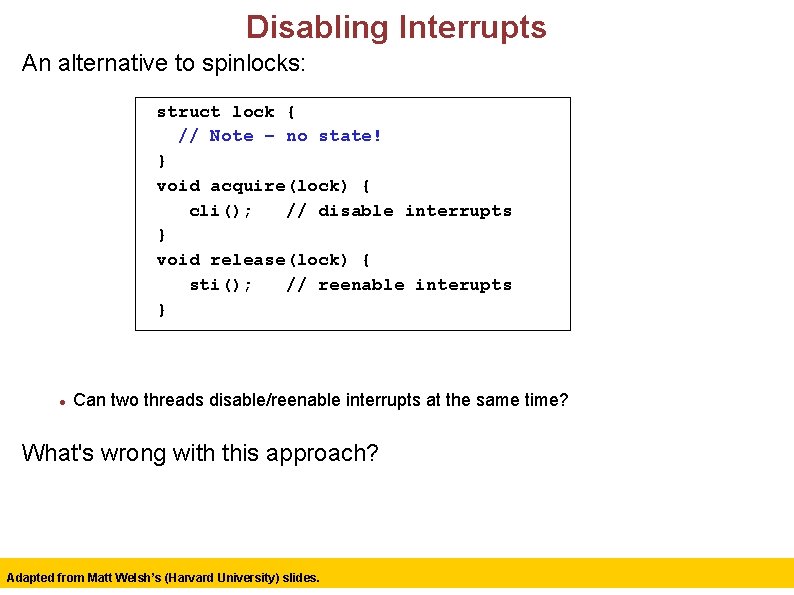

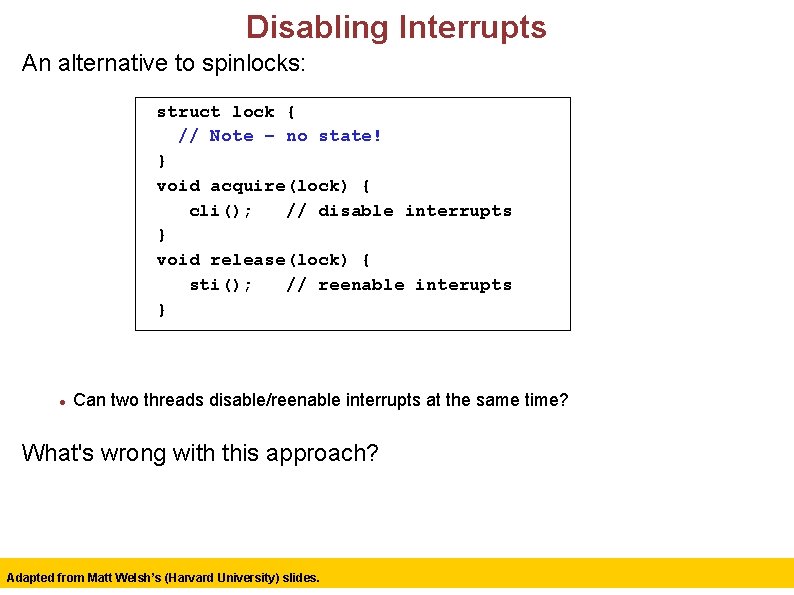

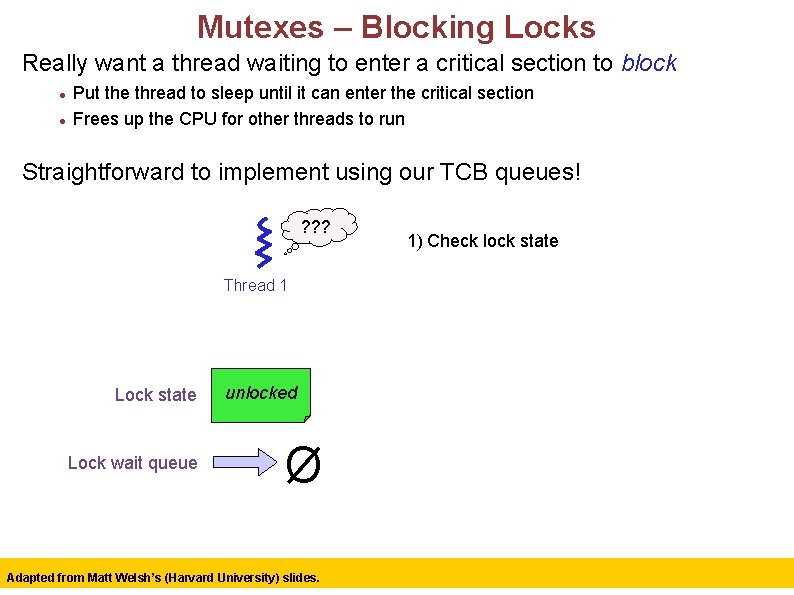


Disabling Interrupts

An alternative to spinlocks:

    struct lock {
        // Note: no state!
    }
    void acquire(lock) {
        cli();      // disable interrupts
    }
    void release(lock) {
        sti();      // reenable interrupts
    }

Can two threads disable/reenable interrupts at the same time?

What's wrong with this approach?
- Can only be implemented at kernel level (why?)
- Inefficient on a multiprocessor system (why?)
- All locks in the system are mutually exclusive
  - No separation between different locks for different bank accounts


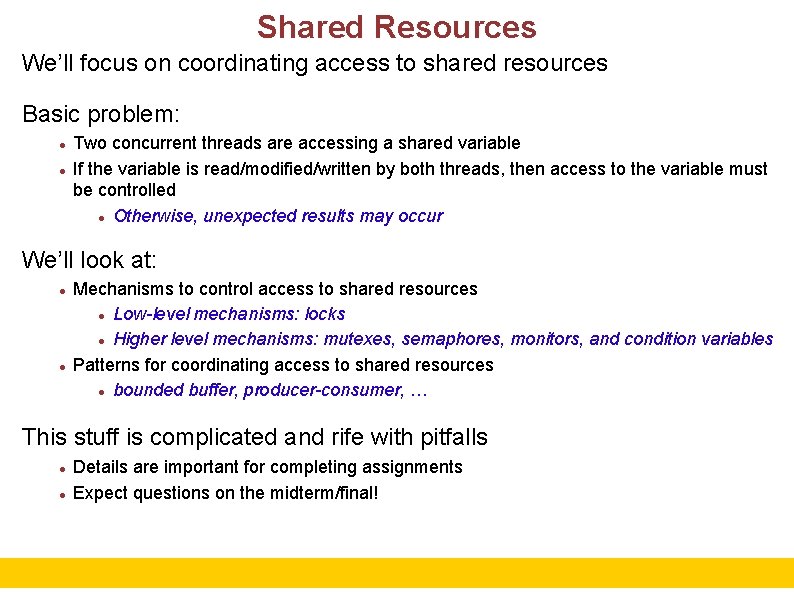

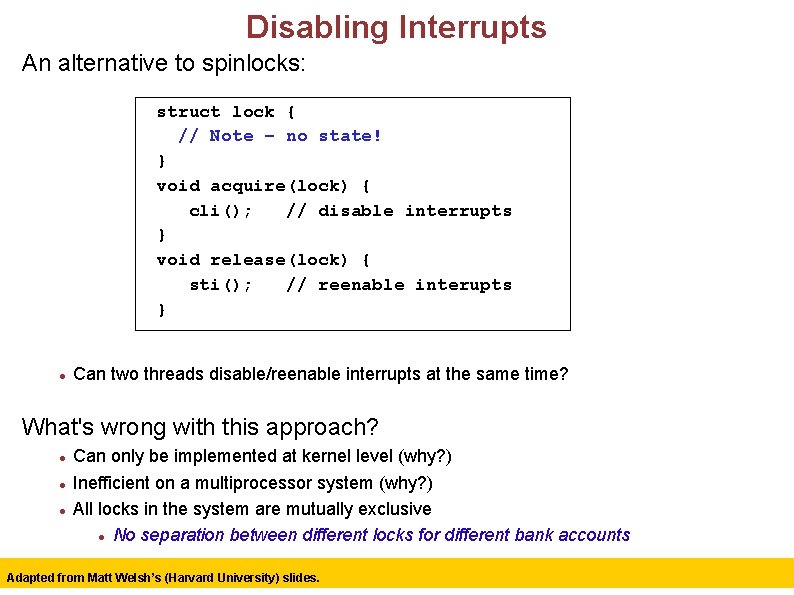

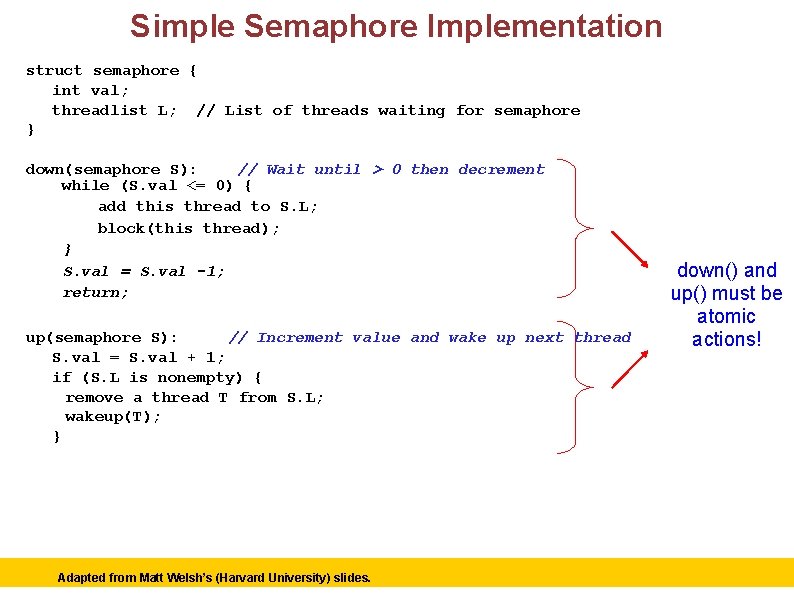

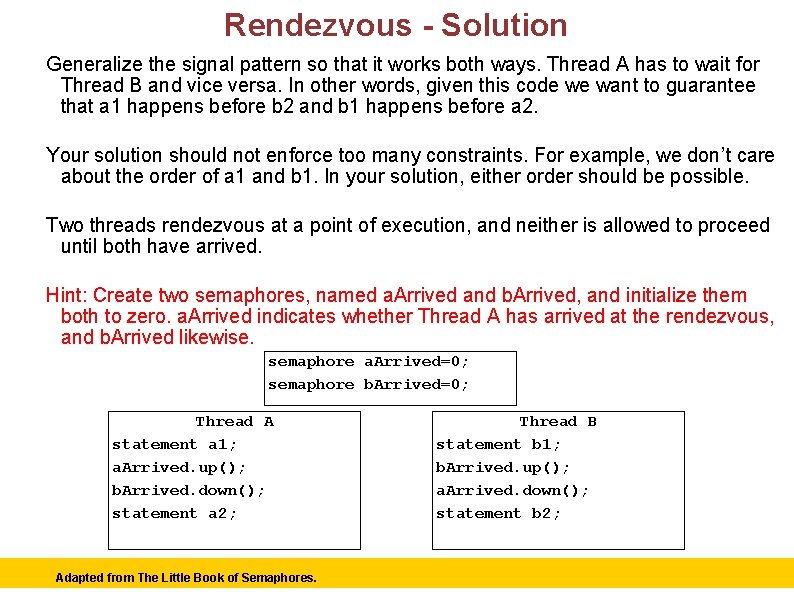

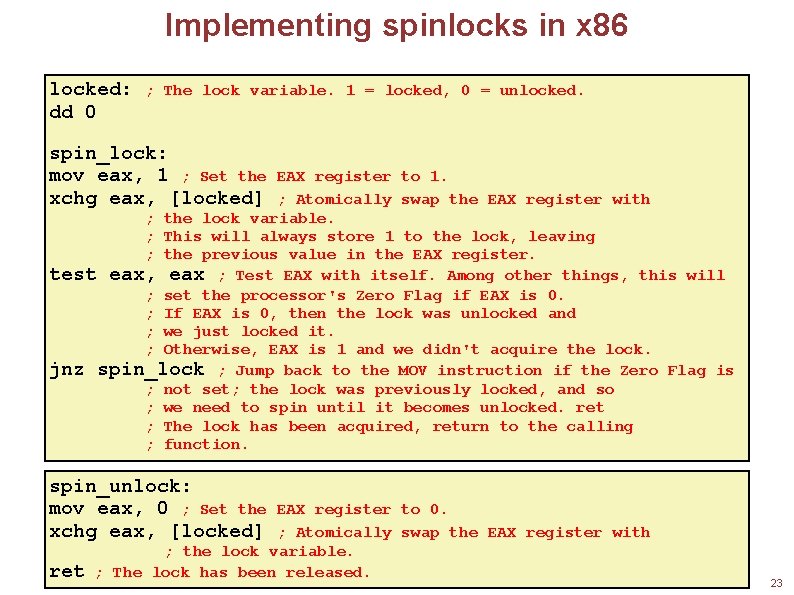

Peterson’s Algorithm (for two threads)

    int flag[2];
    int turn;

    void init() {
        flag[0] = flag[1] = 0;      // 1 -> thread wants to grab lock
        turn = 0;                   // whose turn? (thread 0 or 1?)
    }
    void lock() {
        int other;
        flag[self] = 1;             // self: thread ID of caller, 0 or 1
        other = 1 - self;
        turn = other;               // make it other thread’s turn
        while ((flag[other] == 1) && (turn == other))
            ;                       // spin-wait
    }
    void unlock() {
        flag[self] = 0;             // simply undo your intent
    }

The algorithm uses two variables, flag and turn. A flag value of 1 indicates that the thread intends to enter the critical section. The variable turn holds the ID of the thread whose turn it is. Entrance to the critical section is granted for thread T0 if T1 does not want to enter its critical section or if T1 has given priority to T0 by setting turn to 0.
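The pseudocode above assumes that loads and stores take effect in program order, which plain `int` variables do not guarantee on modern compilers and CPUs. A hedged sketch of a runnable version therefore uses C11 sequentially consistent atomics for `flag` and `turn`. Passing `self` as a parameter, the `sched_yield()` in the wait loop, and `run_peterson_demo()` are choices made for this example, not part of the slide.

```c
#include <assert.h>
#include <pthread.h>
#include <sched.h>
#include <stdatomic.h>
#include <stddef.h>

static atomic_int flag[2];          /* 1 -> thread wants to grab lock */
static atomic_int turn;             /* whose turn? (thread 0 or 1)    */
static long shared_counter;         /* data protected by the lock     */

static void peterson_lock(int self) {
    int other = 1 - self;
    atomic_store(&flag[self], 1);   /* announce intent               */
    atomic_store(&turn, other);     /* make it other thread's turn   */
    while (atomic_load(&flag[other]) == 1 && atomic_load(&turn) == other)
        sched_yield();              /* spin-wait, politely           */
}

static void peterson_unlock(int self) {
    atomic_store(&flag[self], 0);   /* simply undo your intent       */
}

struct job { int self; long n; };

static void *worker(void *arg) {
    struct job *j = arg;
    for (long i = 0; i < j->n; i++) {
        peterson_lock(j->self);
        shared_counter++;           /* critical section */
        peterson_unlock(j->self);
    }
    return NULL;
}

/* Two threads each add n to the counter; returns the final value. */
long run_peterson_demo(long n) {
    pthread_t t[2];
    struct job jobs[2] = { { 0, n }, { 1, n } };
    atomic_store(&flag[0], 0);
    atomic_store(&flag[1], 0);
    atomic_store(&turn, 0);
    shared_counter = 0;
    for (int i = 0; i < 2; i++) {
        int rc = pthread_create(&t[i], NULL, worker, &jobs[i]);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < 2; i++)
        pthread_join(t[i], NULL);
    return shared_counter;
}
```

The algorithm needs no special atomic read-modify-write instruction, only ordered loads and stores, but it works for exactly two threads.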

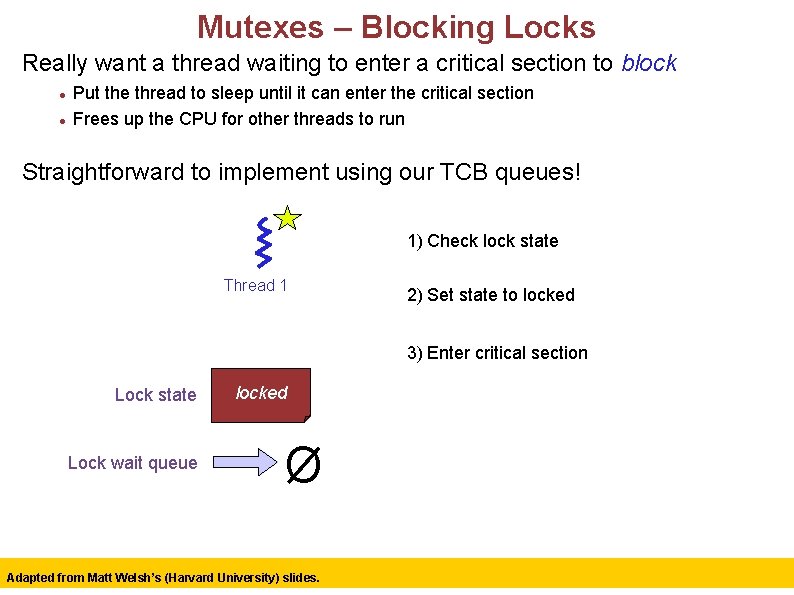

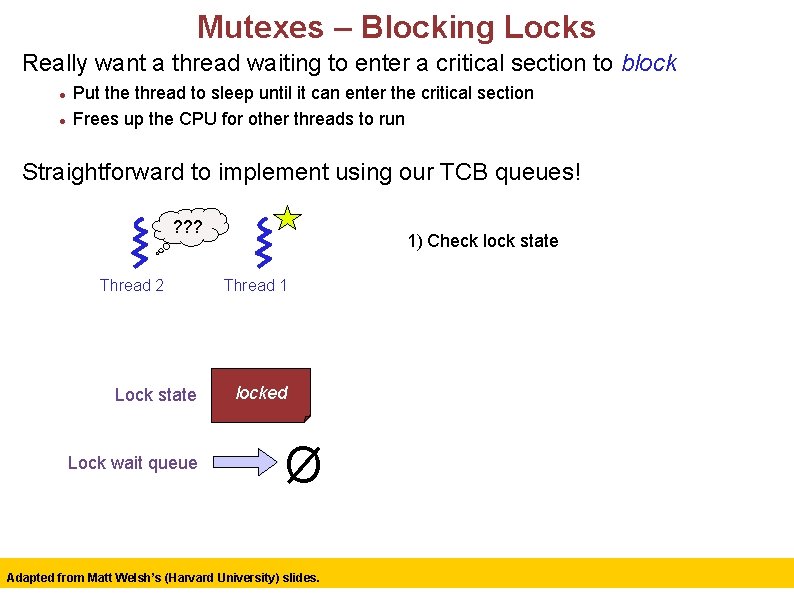

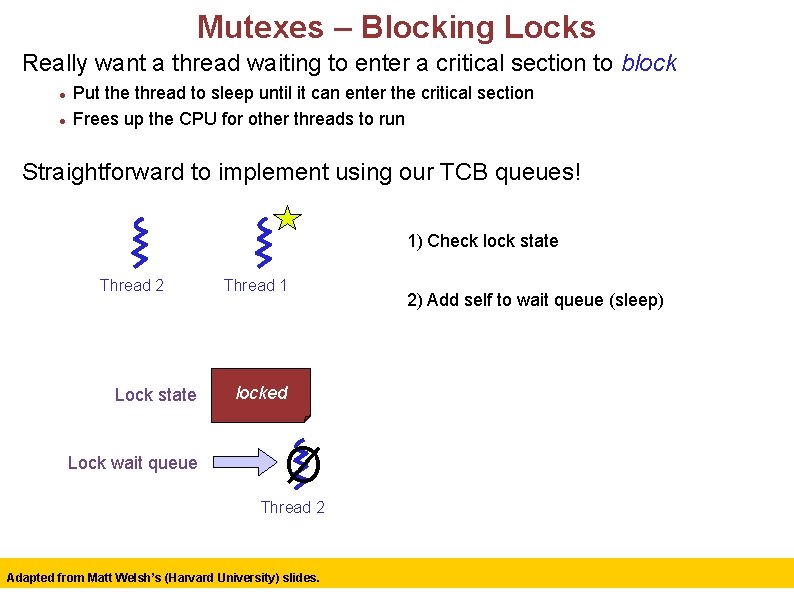

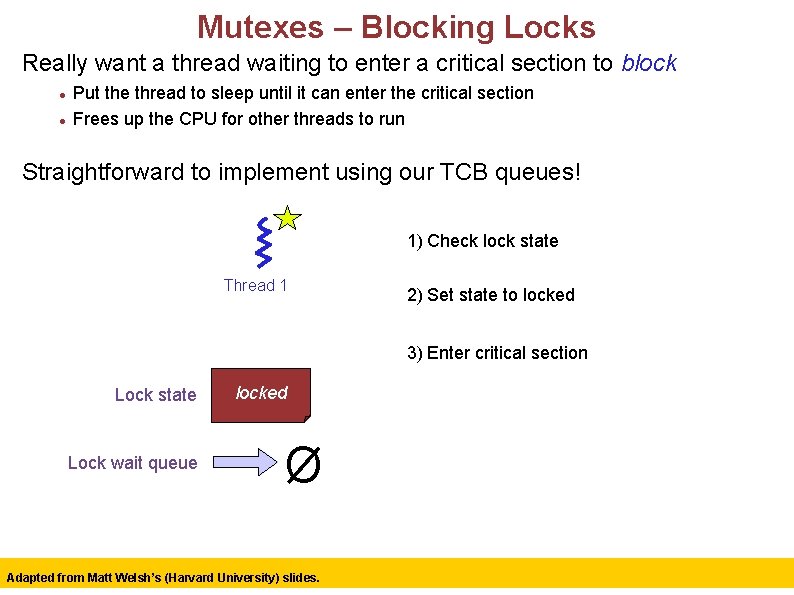

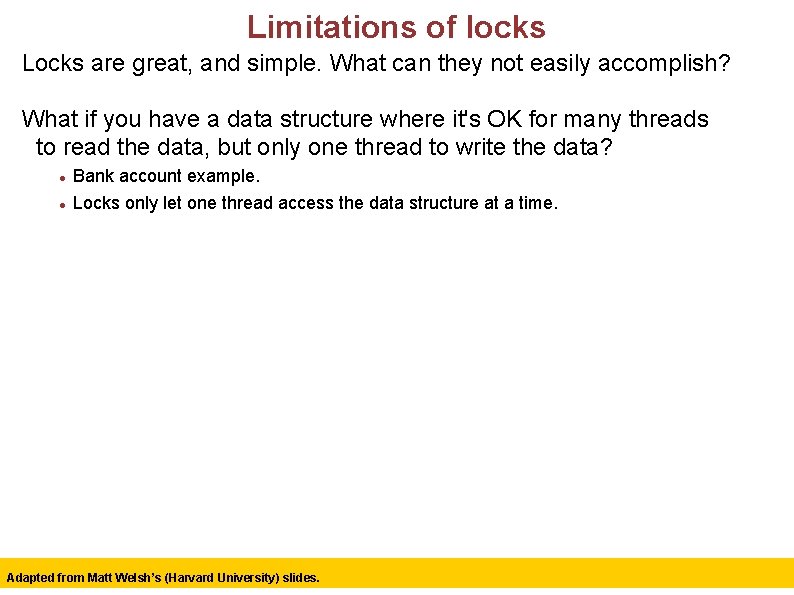

Mutexes – Blocking Locks

We really want a thread waiting to enter a critical section to block:
- Put the thread to sleep until it can enter the critical section
- Frees up the CPU for other threads to run

Straightforward to implement using our TCB queues!

Figure, step by step:
- Thread 1: 1) check lock state
- Lock state: unlocked. Lock wait queue: empty (Ø)

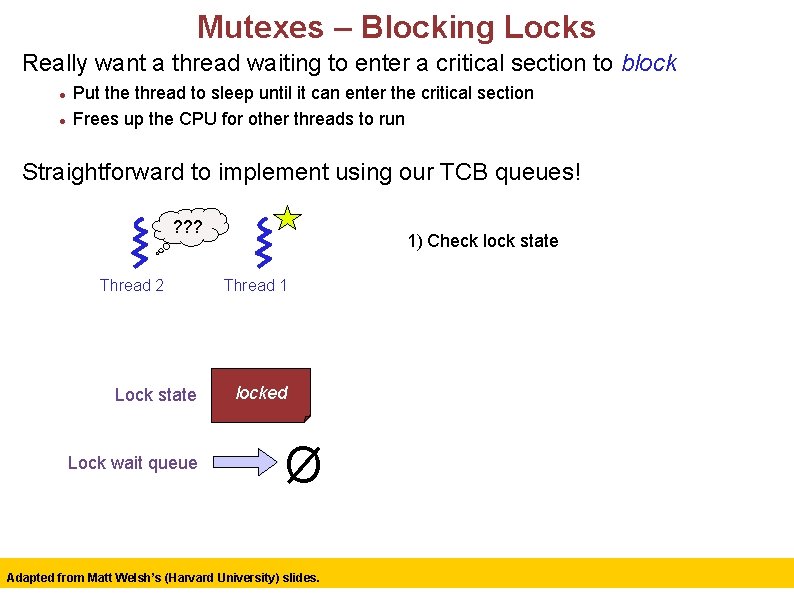

Mutexes – Blocking Locks (continued)

- Thread 1: 1) check lock state, 2) set state to locked, 3) enter critical section
- Lock state: locked. Lock wait queue: empty (Ø)

Mutexes – Blocking Locks (continued)

- Thread 1 holds the lock
- Thread 2 arrives: 1) check lock state
- Lock state: locked. Lock wait queue: empty (Ø)

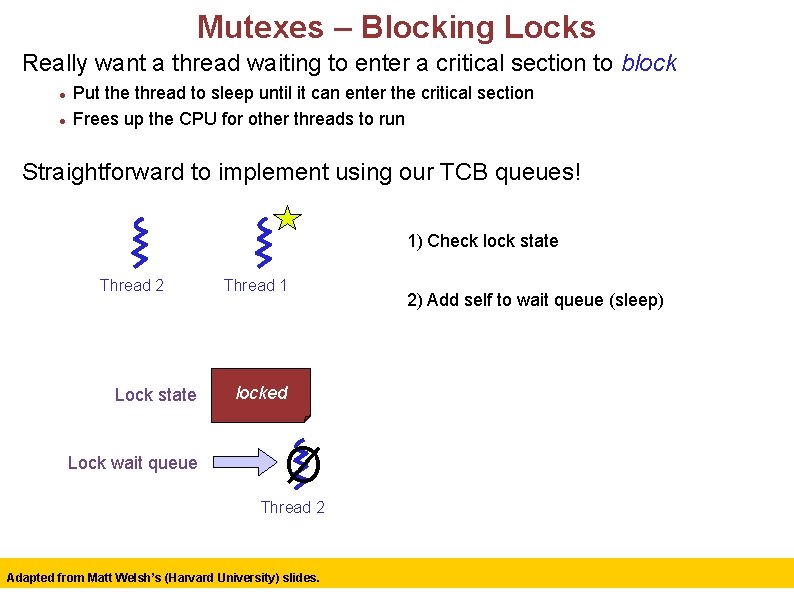

Mutexes – Blocking Locks (continued)

- Thread 2: 1) check lock state, 2) add self to wait queue (sleep)
- Lock state: locked (held by Thread 1). Lock wait queue: Thread 2

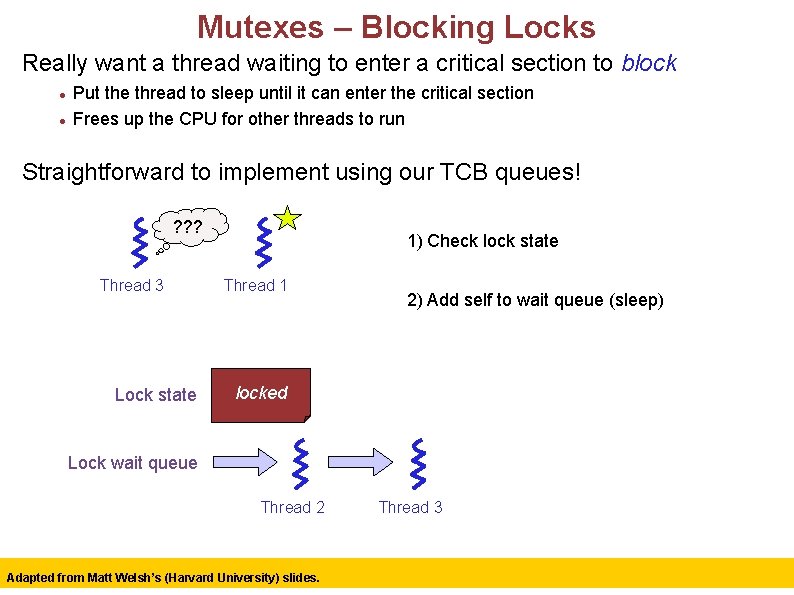

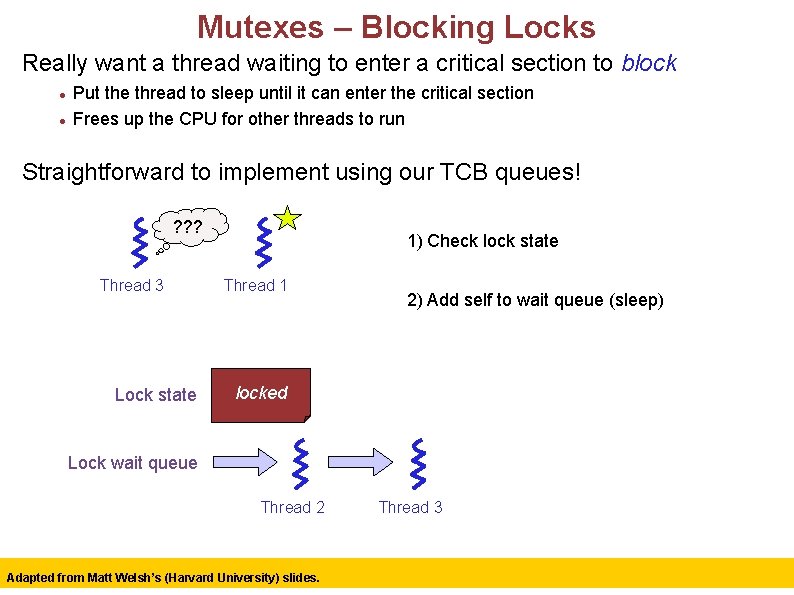

Mutexes – Blocking Locks (continued)

- Thread 3 arrives: 1) check lock state, 2) add self to wait queue (sleep)
- Lock state: locked (held by Thread 1). Lock wait queue: Thread 2, Thread 3

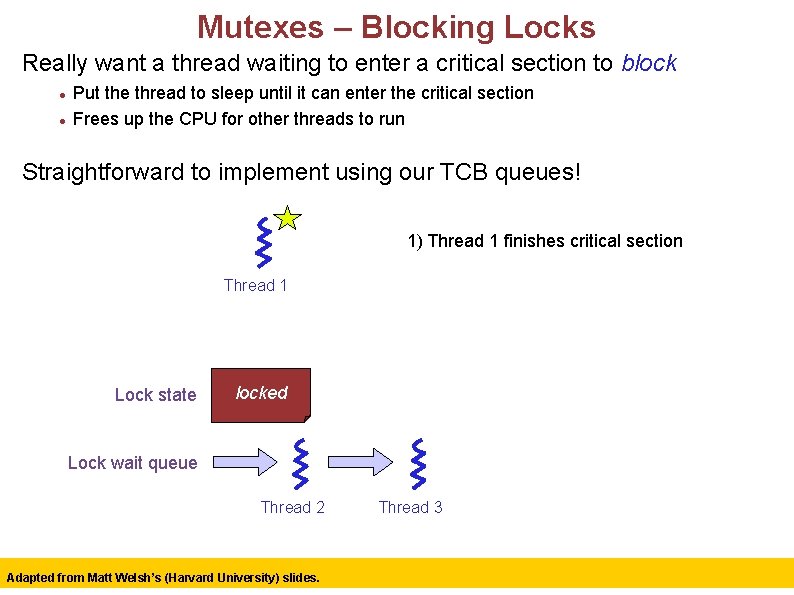

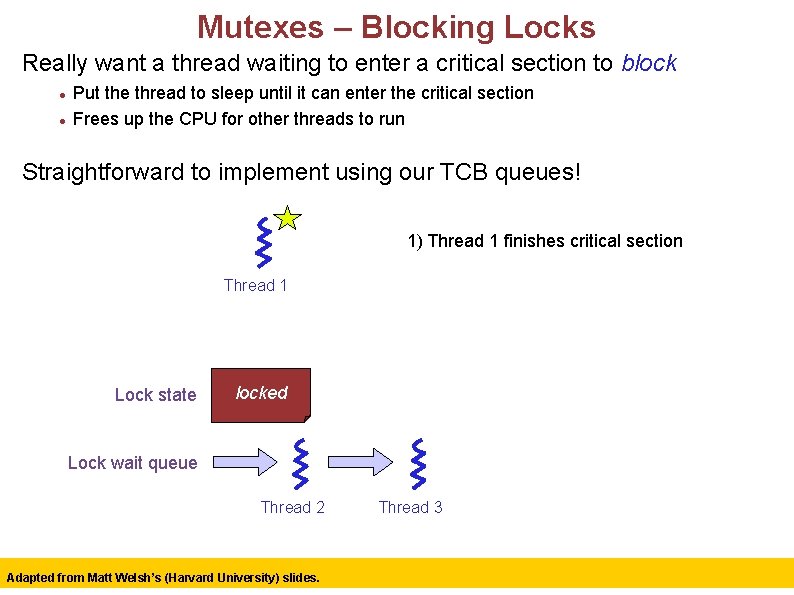

Mutexes – Blocking Locks (continued)

- Thread 1: 1) finishes critical section
- Lock state: locked. Lock wait queue: Thread 2, Thread 3

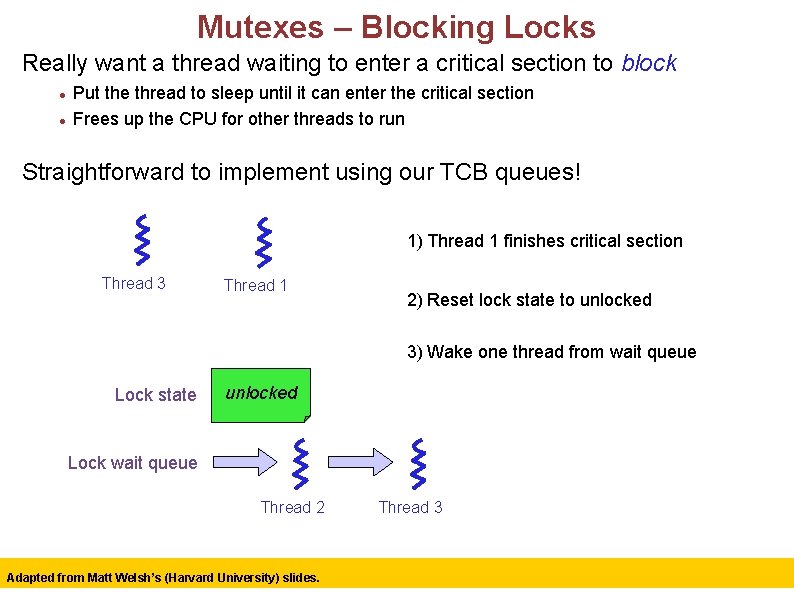

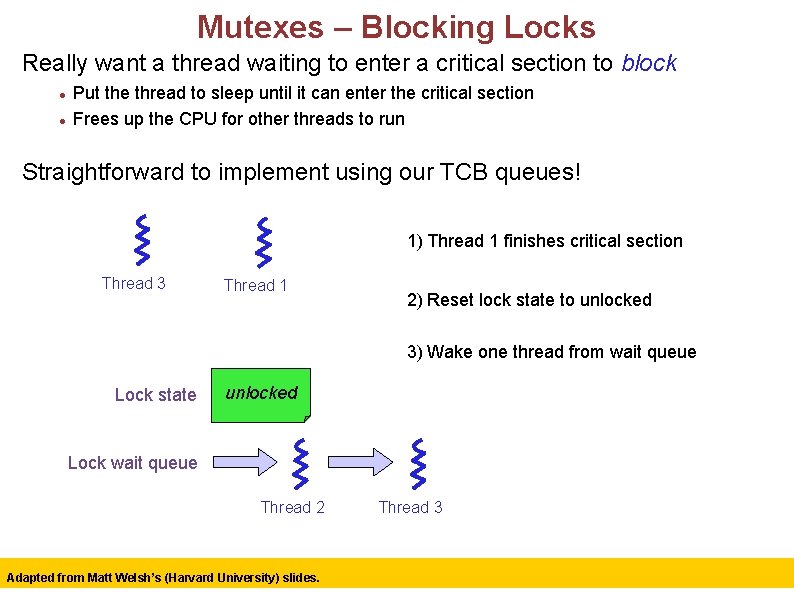

Mutexes – Blocking Locks (continued)

- Thread 1: 1) finishes critical section, 2) resets lock state to unlocked, 3) wakes one thread from the wait queue (Thread 3 in the figure)
- Lock state: unlocked. Lock wait queue: Thread 2

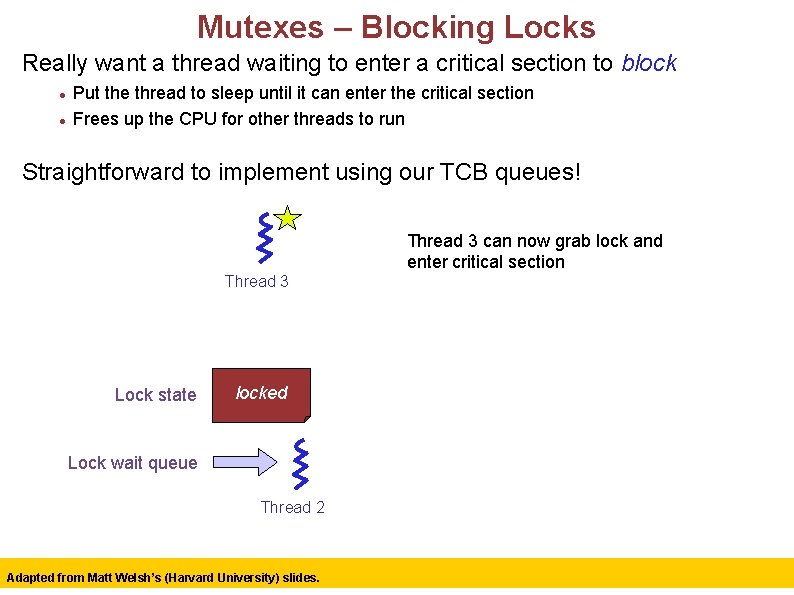

Mutexes – Blocking Locks (continued)

- Thread 3 can now grab the lock and enter the critical section
- Lock state: locked. Lock wait queue: Thread 2
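The steps in the figures (check the state, sleep on the wait queue, wake one waiter on release) can be sketched in user space as follows. This is an illustration under stated assumptions, not the kernel's TCB-queue implementation: a `pthread_cond_t` stands in for the lock wait queue, and a short `pthread_mutex_t` named `guard` protects the lock's own state. All names are hypothetical.

```c
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>

struct blocking_lock {
    pthread_mutex_t guard;      /* protects 'locked' itself             */
    pthread_cond_t  waiters;    /* stands in for the lock wait queue    */
    bool            locked;     /* the lock state from the figures      */
};

static struct blocking_lock lk = {
    PTHREAD_MUTEX_INITIALIZER, PTHREAD_COND_INITIALIZER, false
};
static long counter;

void blocking_acquire(struct blocking_lock *l) {
    pthread_mutex_lock(&l->guard);
    while (l->locked)                               /* 1) check lock state   */
        pthread_cond_wait(&l->waiters, &l->guard);  /* 2) sleep on the queue */
    l->locked = true;                               /* set state to locked   */
    pthread_mutex_unlock(&l->guard);
}

void blocking_release(struct blocking_lock *l) {
    pthread_mutex_lock(&l->guard);
    l->locked = false;                      /* reset lock state to unlocked */
    pthread_cond_signal(&l->waiters);       /* wake one thread from queue   */
    pthread_mutex_unlock(&l->guard);
}

static void *worker(void *arg) {
    long n = *(long *)arg;
    for (long i = 0; i < n; i++) {
        blocking_acquire(&lk);
        counter++;                          /* critical section */
        blocking_release(&lk);
    }
    return NULL;
}

/* Three threads each add n to the counter; returns the final value. */
long run_blocking_lock_demo(long n) {
    pthread_t t[3];
    counter = 0;
    for (int i = 0; i < 3; i++) {
        int rc = pthread_create(&t[i], NULL, worker, &n);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < 3; i++)
        pthread_join(t[i], NULL);
    return counter;
}
```

The woken thread re-checks `locked` in a loop because another thread may grab the lock between the wakeup and the moment the sleeper actually runs.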


Limitations of locks

Locks are great, and simple. What can they not easily accomplish?

- What if you have a data structure where it's OK for many threads to read the data, but only one thread to write the data?
  - Bank account example: locks only let one thread access the data structure at a time.
- What if you want to protect access to two (or more) data structures at a time?
  - e.g., transferring money from one bank account to another
  - Simple approach: use a separate lock for each
  - What happens if you have a transfer from account A -> account B at the same time as a transfer from account B -> account A?
  - Hmmmmm... tricky. We will get into this next time.

Now...

Higher-level synchronization primitives: how to do fancier stuff than just locks
- Semaphores, monitors, and condition variables
- Implemented using basic locks as a primitive
- Allow applications to perform more complicated coordination schemes

CENG 334 Introduction to Operating Systems

Semaphores

Topics:
- Need for higher-level synchronization primitives
- Semaphores and their implementation
- The Producer/Consumer problem and its solution with semaphores
- The Reader/Writer problem and its solution with semaphores

Erol Sahin, Dept of Computer Eng., Middle East Technical University, Ankara, TURKEY
URL: http://kovan.ceng.metu.edu.tr/~erol/Courses/CENG334

Some of the following slides are adapted from Matt Welsh, Harvard Univ.

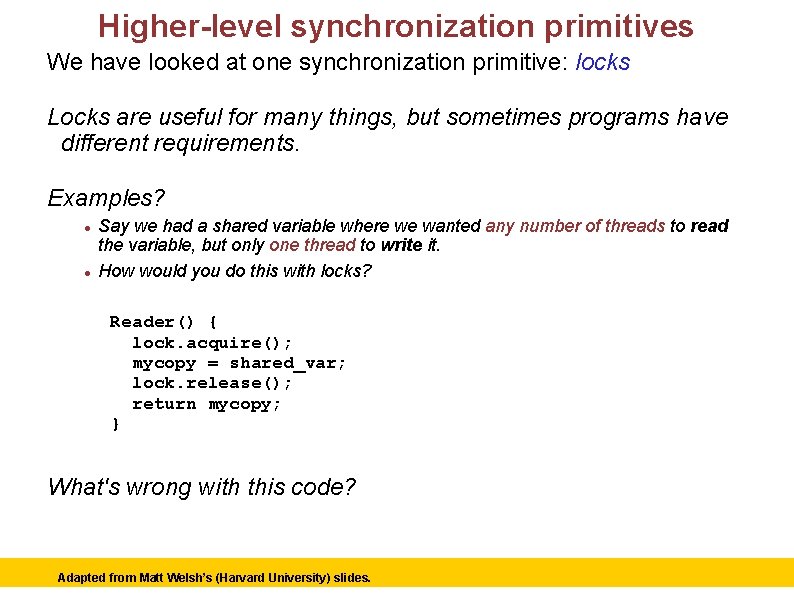

Higher-level synchronization primitives

We have looked at one synchronization primitive: locks. Locks are useful for many things, but sometimes programs have different requirements.

Examples? Say we had a shared variable where we wanted any number of threads to read the variable, but only one thread to write it. How would you do this with locks?

    Reader() {
        lock.acquire();
        mycopy = shared_var;
        lock.release();
        return mycopy;
    }

    Writer() {
        lock.acquire();
        shared_var = NEW_VALUE;
        lock.release();
    }

What's wrong with this code?

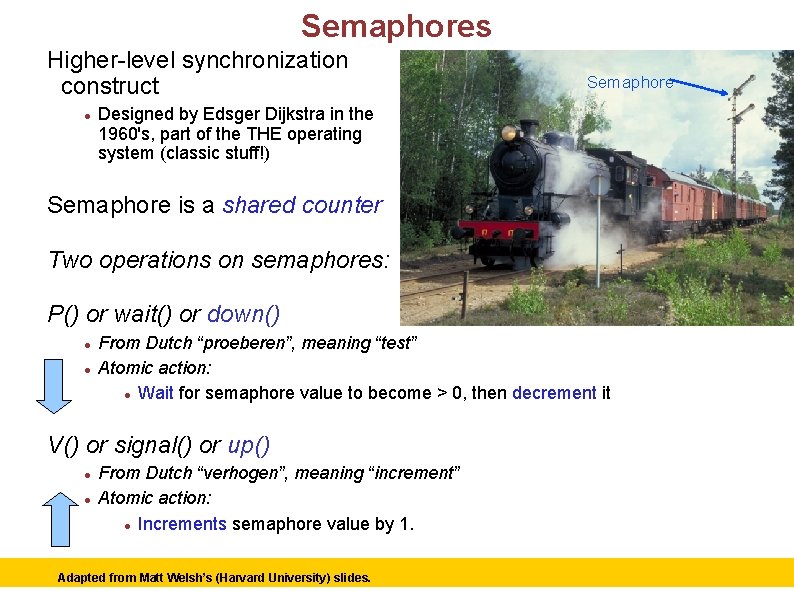

Semaphores

Higher-level synchronization construct
- Designed by Edsger Dijkstra in the 1960s, part of the THE operating system (classic stuff!)

A semaphore is a shared counter. Two operations on semaphores:
- P() or wait() or down()
  - From Dutch “proberen”, meaning “test”
  - Atomic action: wait for the semaphore value to become > 0, then decrement it
- V() or signal() or up()
  - From Dutch “verhogen”, meaning “increment”
  - Atomic action: increments the semaphore value by 1

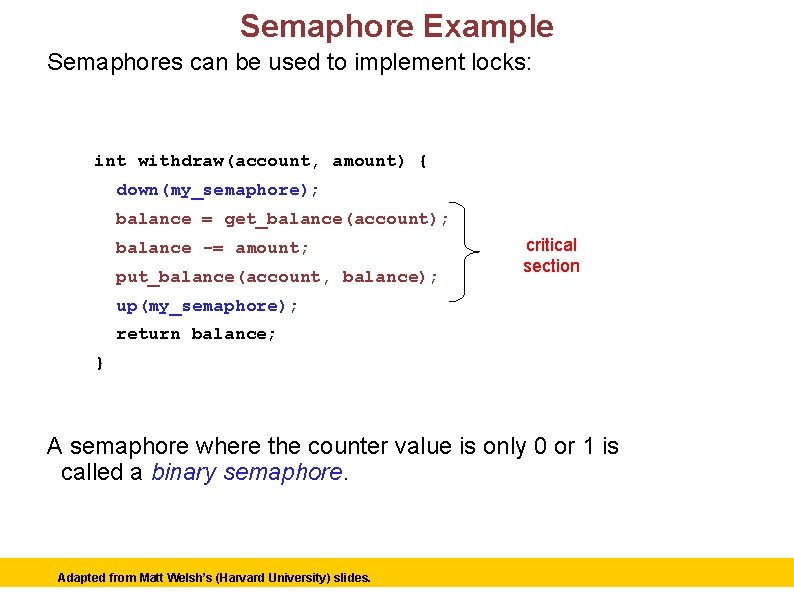

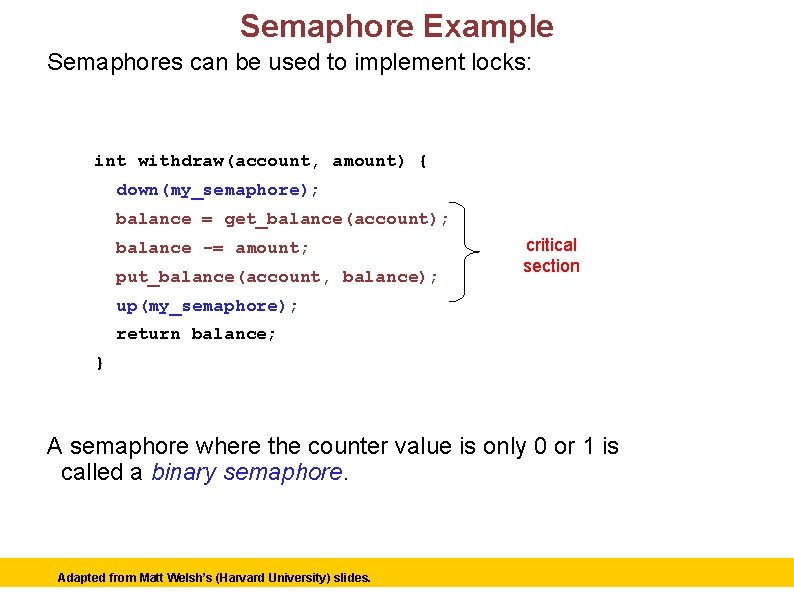

Semaphore Example

Semaphores can be used to implement locks:

    Semaphore my_semaphore = 1;     // Initialize to nonzero

    int withdraw(account, amount) {
        down(my_semaphore);
        balance = get_balance(account);     // critical section
        balance -= amount;                  // critical section
        put_balance(account, balance);      // critical section
        up(my_semaphore);
        return balance;
    }

A semaphore where the counter value is only 0 or 1 is called a binary semaphore.

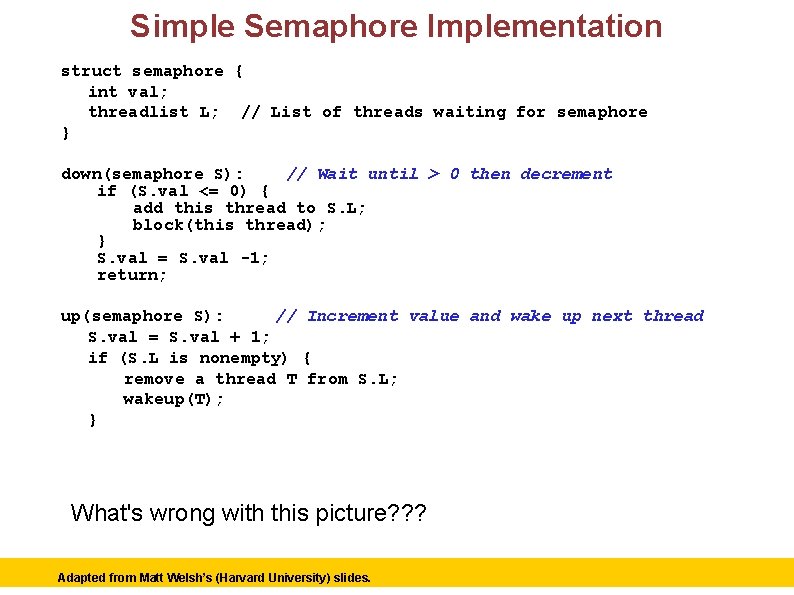

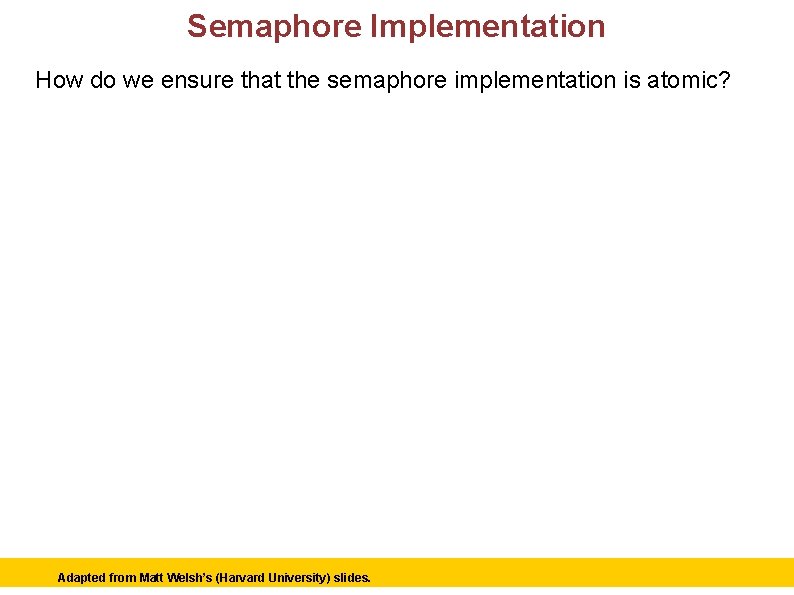

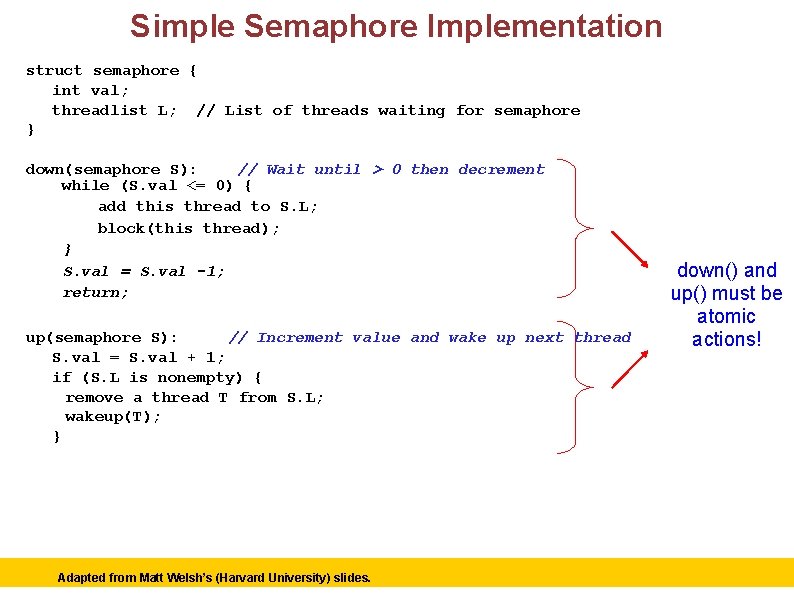

Simple Semaphore Implementation

    struct semaphore {
        int val;
        threadlist L;       // List of threads waiting for semaphore
    }

    down(semaphore S):      // Wait until > 0 then decrement
        if (S.val <= 0) {
            add this thread to S.L;
            block(this thread);
        }
        S.val = S.val - 1;
        return;

    up(semaphore S):        // Increment value and wake up next thread
        S.val = S.val + 1;
        if (S.L is nonempty) {
            remove a thread T from S.L;
            wakeup(T);
        }

What's wrong with this picture?

Simple Semaphore Implementation (fixed)

The `if` in down() becomes a `while`, so a woken thread re-checks the value before decrementing:

    struct semaphore {
        int val;
        threadlist L;       // List of threads waiting for semaphore
    }

    down(semaphore S):      // Wait until > 0 then decrement
        while (S.val <= 0) {
            add this thread to S.L;
            block(this thread);
        }
        S.val = S.val - 1;
        return;

    up(semaphore S):        // Increment value and wake up next thread
        S.val = S.val + 1;
        if (S.L is nonempty) {
            remove a thread T from S.L;
            wakeup(T);
        }

down() and up() must be atomic actions!
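One way to sketch this semaphore in runnable C is to let a `pthread_mutex_t` make `down()` and `up()` atomic and a `pthread_cond_t` play the role of the thread list `L`. This is an assumption-laden illustration rather than the course's implementation; `semaphore_init()` and `semaphore_value()` are hypothetical helpers added for the example.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

struct semaphore {
    pthread_mutex_t guard;      /* makes down()/up() atomic            */
    pthread_cond_t  nonzero;    /* stands in for the waiting list S.L  */
    int             val;
};

void semaphore_init(struct semaphore *s, int initial) {
    assert(initial >= 0);
    pthread_mutex_init(&s->guard, NULL);
    pthread_cond_init(&s->nonzero, NULL);
    s->val = initial;
}

/* Wait until val > 0, then decrement. */
void down(struct semaphore *s) {
    pthread_mutex_lock(&s->guard);
    while (s->val <= 0)                             /* 'while', not 'if' */
        pthread_cond_wait(&s->nonzero, &s->guard);  /* block this thread */
    s->val = s->val - 1;
    pthread_mutex_unlock(&s->guard);
}

/* Increment val and wake up one waiting thread, if any. */
void up(struct semaphore *s) {
    pthread_mutex_lock(&s->guard);
    s->val = s->val + 1;
    pthread_cond_signal(&s->nonzero);
    pthread_mutex_unlock(&s->guard);
}

/* Snapshot of the counter, for inspection only. */
int semaphore_value(struct semaphore *s) {
    pthread_mutex_lock(&s->guard);
    int v = s->val;
    pthread_mutex_unlock(&s->guard);
    return v;
}
```

The `while` loop matters here as well: between the wakeup and the moment the sleeper reacquires `guard`, another thread may already have taken the unit that `up()` added.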

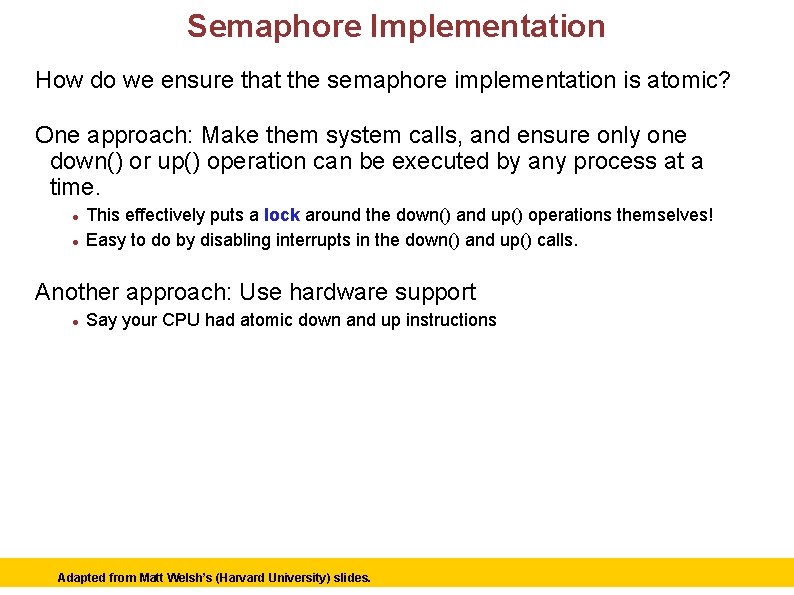


Semaphore Implementation

How do we ensure that the semaphore implementation is atomic?

- One approach: make them system calls, and ensure only one down() or up() operation can be executed by any process at a time.
  - This effectively puts a lock around the down() and up() operations themselves!
  - Easy to do by disabling interrupts in the down() and up() calls.
- Another approach: use hardware support
  - Say your CPU had atomic down and up instructions

OK, but why are semaphores useful?

A binary semaphore (counter is always 0 or 1) is basically a lock.

The real value of semaphores becomes apparent when the counter can be initialized to a value other than 0 or 1.

Say we initialize a semaphore's counter to 50. What does this mean about down() and up() operations?
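A semaphore initialized to N lets up to N threads pass `down()` before anyone blocks, so it can cap how many threads are in a region at once. The sketch below uses POSIX `sem_t` (`sem_wait` as down, `sem_post` as up) with a small limit of 3 instead of 50 to keep the demo short. `SLOTS`, `max_inside`, and `run_slots_demo()` are assumptions of this example; unnamed POSIX semaphores via `sem_init` are assumed to be available, as on Linux.

```c
#include <assert.h>
#include <pthread.h>
#include <sched.h>
#include <semaphore.h>
#include <stdatomic.h>
#include <stddef.h>

#define SLOTS 3                     /* the slide's example uses 50 */

static sem_t slots;
static atomic_int inside;           /* threads currently in the region  */
static atomic_int max_inside;       /* highest value 'inside' reached   */

static void *worker(void *arg) {
    (void)arg;
    sem_wait(&slots);                               /* down() */
    int now = atomic_fetch_add(&inside, 1) + 1;
    int seen = atomic_load(&max_inside);
    /* Record a new maximum; 'seen' is refreshed when the CAS fails. */
    while (now > seen &&
           !atomic_compare_exchange_weak(&max_inside, &seen, now))
        ;
    sched_yield();                                  /* linger a little */
    atomic_fetch_sub(&inside, 1);
    sem_post(&slots);                               /* up() */
    return NULL;
}

/* Runs nthreads workers (at most 16); returns the peak occupancy. */
int run_slots_demo(int nthreads) {
    pthread_t tid[16];
    assert(nthreads >= 1 && nthreads <= 16);
    atomic_store(&inside, 0);
    atomic_store(&max_inside, 0);
    sem_init(&slots, 0, SLOTS);
    for (int i = 0; i < nthreads; i++) {
        int rc = pthread_create(&tid[i], NULL, worker, NULL);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < nthreads; i++)
        pthread_join(tid[i], NULL);
    sem_destroy(&slots);
    return atomic_load(&max_inside);
}
```

However the threads are scheduled, the peak occupancy never exceeds `SLOTS`; the exact peak varies from run to run.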

The Producer/Consumer Problem

Also called the Bounded Buffer problem.

- Producer pushes items into the buffer.
- Consumer pulls items from the buffer.
- Producer needs to wait when the buffer is full.
- Consumer needs to wait when the buffer is empty.

Figure: a producer filling a buffer with donuts and a consumer (“Mmmm... donuts”) emptying it; when the buffer is empty, the consumer sleeps (“zzzzz..”).


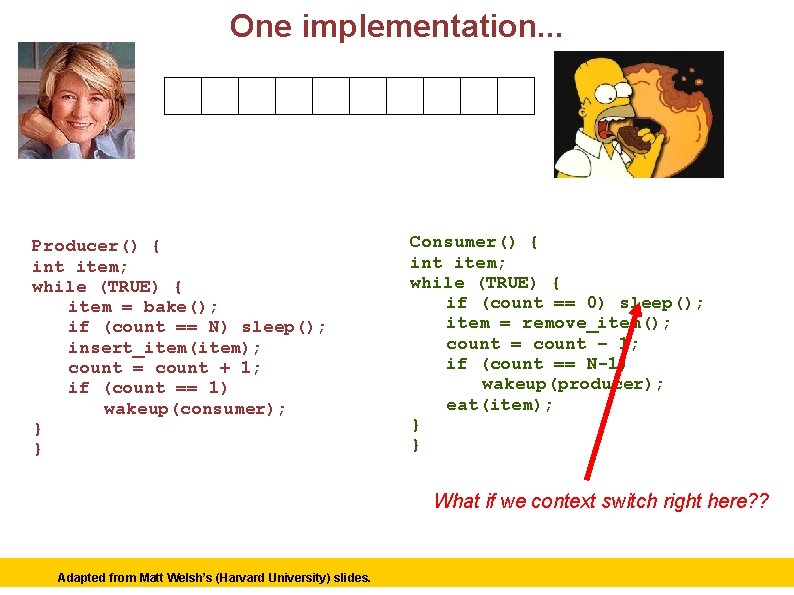

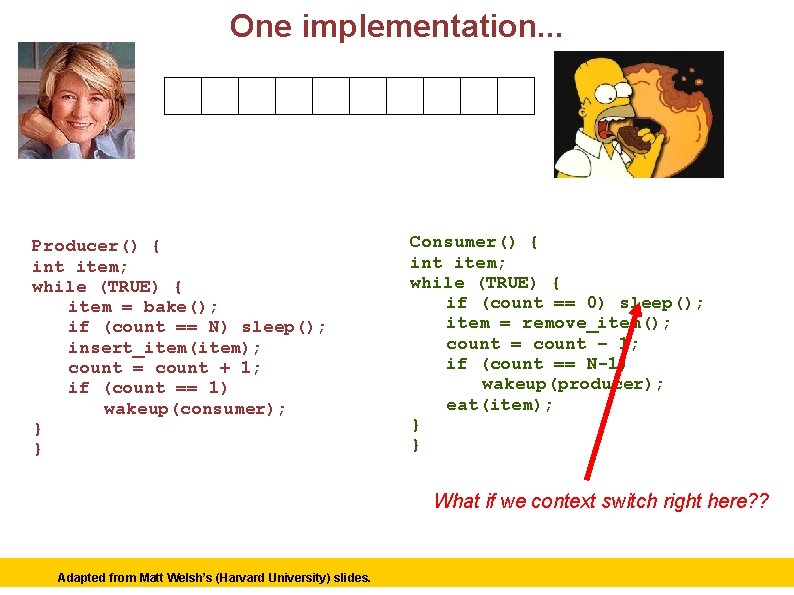

One implementation...

    int count = 0;

    Producer() {
        int item;
        while (TRUE) {
            item = bake();
            if (count == N) sleep();
            insert_item(item);
            count = count + 1;
            if (count == 1) wakeup(consumer);
        }
    }

    Consumer() {
        int item;
        while (TRUE) {
            if (count == 0) sleep();
            item = remove_item();
            count = count - 1;
            if (count == N-1) wakeup(producer);
            eat(item);
        }
    }

What's wrong with this code? What if we context switch at the point marked on the slide, for example right after a thread has tested count but before it calls sleep()?

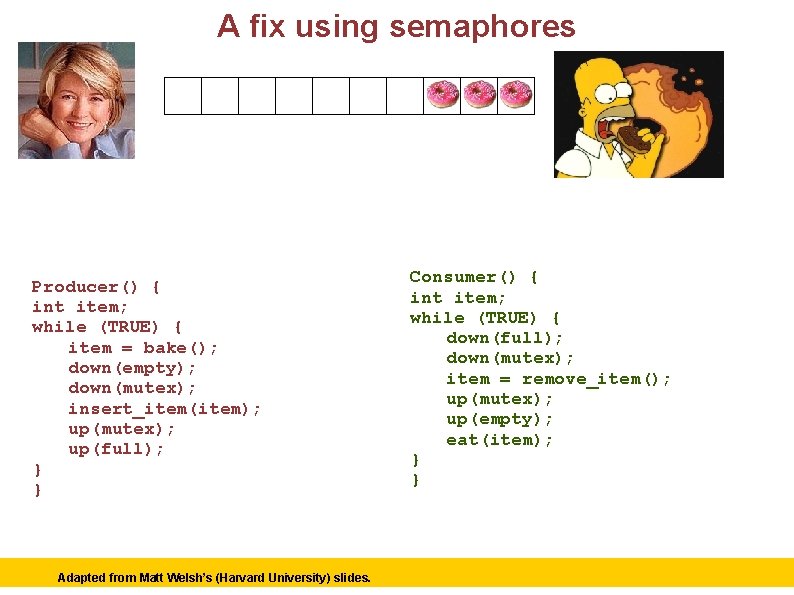

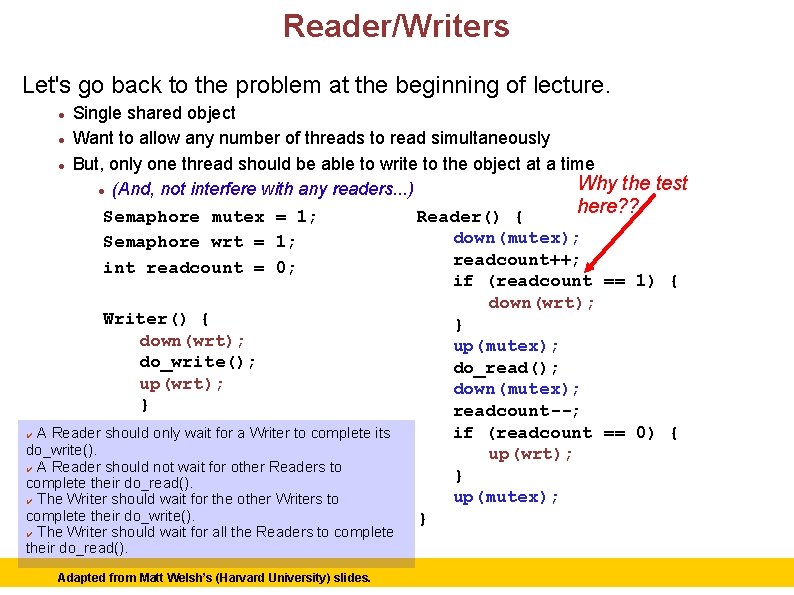

A fix using semaphores

    Semaphore mutex = 1;
    Semaphore empty = N;
    Semaphore full = 0;

    Producer() {
        int item;
        while (TRUE) {
            item = bake();
            down(empty);
            down(mutex);
            insert_item(item);
            up(mutex);
            up(full);
        }
    }

    Consumer() {
        int item;
        while (TRUE) {
            down(full);
            down(mutex);
            item = remove_item();
            up(mutex);
            up(empty);
            eat(item);
        }
    }
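A runnable sketch of this solution with POSIX semaphores follows, where `sem_wait()` plays the role of `down()` and `sem_post()` the role of `up()`. The ring buffer, the fixed item count, and `run_bounded_buffer()` are assumptions added so that the example terminates and can be checked; the slide's version loops forever.

```c
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>
#include <stddef.h>

#define N 4                         /* buffer capacity */

static int   buffer[N];
static int   head, tail;            /* remove at head, insert at tail */
static sem_t mutex, empty, full;
static long  consumed_sum;          /* touched only by the consumer   */

static void insert_item(int item) { buffer[tail] = item; tail = (tail + 1) % N; }
static int  remove_item(void)     { int it = buffer[head]; head = (head + 1) % N; return it; }

static void *producer(void *arg) {
    int count = *(int *)arg;
    for (int item = 1; item <= count; item++) {   /* item = bake() */
        sem_wait(&empty);           /* down(empty): wait for a free slot */
        sem_wait(&mutex);           /* down(mutex)                       */
        insert_item(item);
        sem_post(&mutex);           /* up(mutex)                         */
        sem_post(&full);            /* up(full): one more item available */
    }
    return NULL;
}

static void *consumer(void *arg) {
    int count = *(int *)arg;
    for (int i = 0; i < count; i++) {
        sem_wait(&full);            /* down(full): wait for an item      */
        sem_wait(&mutex);
        int item = remove_item();
        sem_post(&mutex);
        sem_post(&empty);           /* up(empty): one more free slot     */
        consumed_sum += item;       /* eat(item)                         */
    }
    return NULL;
}

/* Produces the items 1..count and returns the sum the consumer saw. */
long run_bounded_buffer(int count) {
    pthread_t p, c;
    head = tail = 0;
    consumed_sum = 0;
    sem_init(&mutex, 0, 1);
    sem_init(&empty, 0, N);
    sem_init(&full, 0, 0);
    int rc1 = pthread_create(&p, NULL, producer, &count);
    int rc2 = pthread_create(&c, NULL, consumer, &count);
    assert(rc1 == 0 && rc2 == 0);
    pthread_join(p, NULL);
    pthread_join(c, NULL);
    sem_destroy(&mutex);
    sem_destroy(&empty);
    sem_destroy(&full);
    return consumed_sum;
}
```

Note the order of the two `down()` calls: taking `mutex` before `empty` (or before `full`) could leave a thread asleep while holding the mutex, blocking the other side forever.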

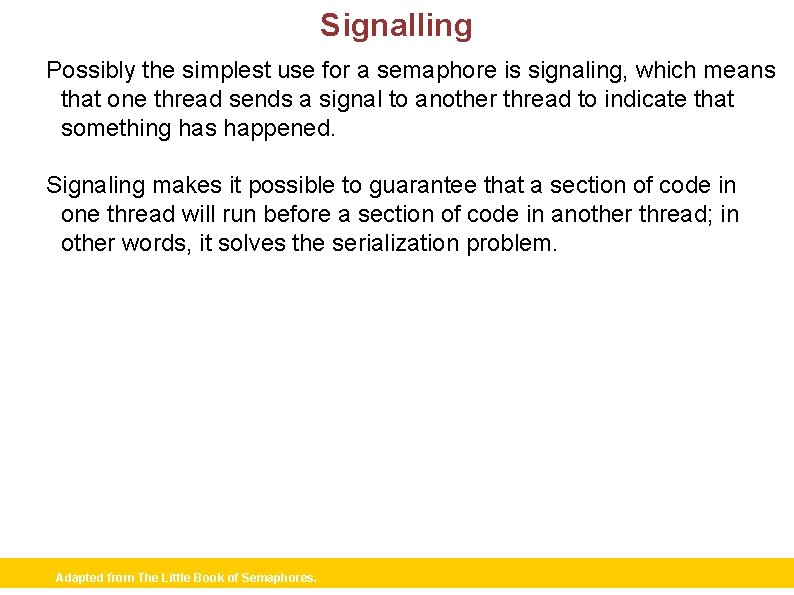

Reader/Writers

Let's go back to the problem at the beginning of lecture.
- Single shared object
- Want to allow any number of threads to read simultaneously
- But, only one thread should be able to write to the object at a time (and, not interfere with any readers...)

    Semaphore mutex = 1;
    Semaphore wrt = 1;
    int readcount = 0;

    Writer() {
        down(wrt);
        do_write();
        up(wrt);
    }

    Reader() {
        down(mutex);
        readcount++;
        if (readcount == 1) {       // Why the test here??
            down(wrt);
        }
        up(mutex);
        do_read();
        down(mutex);
        readcount--;
        if (readcount == 0) {
            up(wrt);
        }
        up(mutex);
    }

✔ A Reader should only wait for a Writer to complete its do_write().
✔ A Reader should not wait for other Readers to complete their do_read().
✔ The Writer should wait for the other Writers to complete their do_write().
✔ The Writer should wait for all the Readers to complete their do_read().
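The following sketch exercises the same reader/writer protocol with POSIX semaphores. To make the invariant checkable, writers raise a hypothetical `writer_active` flag while writing and readers count a violation if they ever see it set. The thread counts, `shared_value`, and `run_readers_writers()` are likewise assumptions of this example.

```c
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>
#include <stdatomic.h>
#include <stddef.h>

static sem_t mutex, wrt;
static int   readcount;
static long  shared_value;              /* the single shared object      */
static atomic_int writer_active;        /* 1 while a writer is writing   */
static atomic_int violations;           /* readers that saw a writer     */

static void *writer(void *arg) {
    long n = *(long *)arg;
    for (long i = 0; i < n; i++) {
        sem_wait(&wrt);                 /* down(wrt) */
        atomic_store(&writer_active, 1);
        shared_value++;                 /* do_write() */
        atomic_store(&writer_active, 0);
        sem_post(&wrt);                 /* up(wrt) */
    }
    return NULL;
}

static void *reader(void *arg) {
    long n = *(long *)arg;
    for (long i = 0; i < n; i++) {
        sem_wait(&mutex);
        readcount++;
        if (readcount == 1)             /* first reader locks out writers */
            sem_wait(&wrt);
        sem_post(&mutex);

        if (atomic_load(&writer_active))    /* do_read(): must never */
            atomic_fetch_add(&violations, 1); /* overlap with a writer */

        sem_wait(&mutex);
        readcount--;
        if (readcount == 0)             /* last reader lets writers in    */
            sem_post(&wrt);
        sem_post(&mutex);
    }
    return NULL;
}

/* 2 writers and 3 readers, n rounds each. Returns the final value,
 * or -1 if any reader ever overlapped with a writer. */
long run_readers_writers(long n) {
    pthread_t t[5];
    readcount = 0;
    shared_value = 0;
    atomic_store(&writer_active, 0);
    atomic_store(&violations, 0);
    sem_init(&mutex, 0, 1);
    sem_init(&wrt, 0, 1);
    for (int i = 0; i < 5; i++) {
        int rc = pthread_create(&t[i], NULL, i < 2 ? writer : reader, &n);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < 5; i++)
        pthread_join(t[i], NULL);
    sem_destroy(&mutex);
    sem_destroy(&wrt);
    return atomic_load(&violations) == 0 ? shared_value : -1;
}
```

Only the first reader in and the last reader out touch `wrt`, which is why the `readcount` tests are there: readers in between neither wait for nor release the writers' semaphore.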

Issues with Semaphores

Much of the power of semaphores derives from calls to down() and up() that are unmatched.
- See previous example!

Unlike the acquire() and release() calls of a lock, down() and up() are not always paired.
- This means it is a lot easier to get into trouble with semaphores.
- “More rope”

Would be nice if we had some clean, well-defined language support for synchronization...
- Java does!

CENG 334 Introduction to Operating Systems

Synchronization patterns

Topics:
- Signalling
- Rendezvous
- Barrier

Erol Sahin, Dept of Computer Eng., Middle East Technical University, Ankara, TURKEY
URL: http://kovan.ceng.metu.edu.tr/ceng334

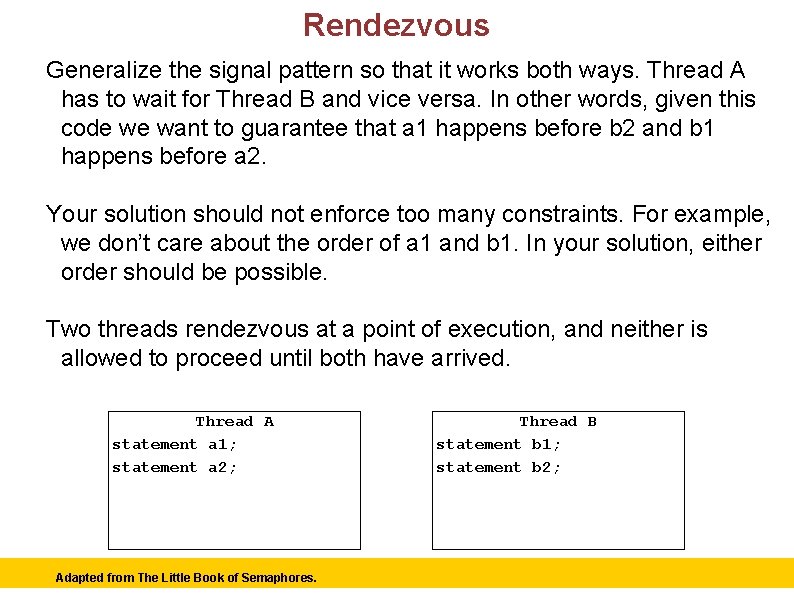

Signalling

Possibly the simplest use for a semaphore is signaling, which means that one thread sends a signal to another thread to indicate that something has happened.

Signaling makes it possible to guarantee that a section of code in one thread will run before a section of code in another thread; in other words, it solves the serialization problem.

Adapted from The Little Book of Semaphores.

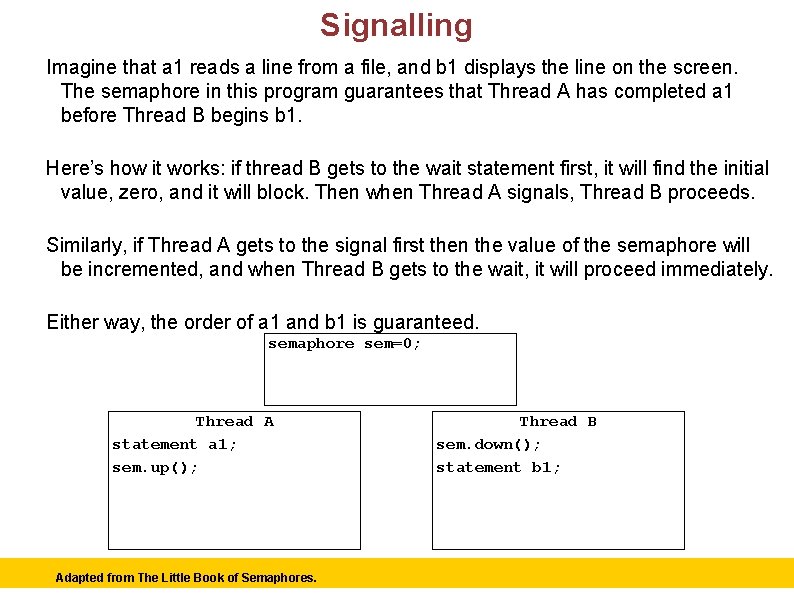


Signalling

Imagine that a1 reads a line from a file, and b1 displays the line on the screen. The semaphore in this program guarantees that Thread A has completed a1 before Thread B begins b1.

Here’s how it works: if Thread B gets to the wait statement first, it will find the initial value, zero, and it will block. Then when Thread A signals, Thread B proceeds. Similarly, if Thread A gets to the signal first, then the value of the semaphore will be incremented, and when Thread B gets to the wait, it will proceed immediately. Either way, the order of a1 and b1 is guaranteed.

    semaphore sem = 0;

    Thread A                    Thread B
    statement a1;               sem.down();
    sem.up();                   statement b1;
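A minimal sketch of the signalling pattern with a POSIX semaphore is shown below. The buffers `line` and `shown`, the literal text, and `run_signalling_demo()` are made up for illustration: a1 "reads a line" by filling `line`, and b1 "displays" it by copying it into `shown`.

```c
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>
#include <stddef.h>
#include <string.h>

static sem_t sem;                   /* semaphore sem = 0 */
static char  line[32];              /* written by a1     */
static char  shown[32];             /* written by b1     */

static void *thread_a(void *arg) {
    (void)arg;
    strcpy(line, "hello");          /* statement a1: read a line    */
    sem_post(&sem);                 /* sem.up()                     */
    return NULL;
}

static void *thread_b(void *arg) {
    (void)arg;
    sem_wait(&sem);                 /* sem.down()                   */
    strcpy(shown, line);            /* statement b1: display it     */
    return NULL;
}

/* Starts B first on purpose; returns what B displayed. */
const char *run_signalling_demo(void) {
    pthread_t a, b;
    line[0] = shown[0] = '\0';
    sem_init(&sem, 0, 0);
    int rc1 = pthread_create(&b, NULL, thread_b, NULL);
    int rc2 = pthread_create(&a, NULL, thread_a, NULL);
    assert(rc1 == 0 && rc2 == 0);
    pthread_join(a, NULL);
    pthread_join(b, NULL);
    sem_destroy(&sem);
    return shown;
}
```

Even though Thread B is created first, it cannot copy the line until Thread A has produced it, so the result is always "hello".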

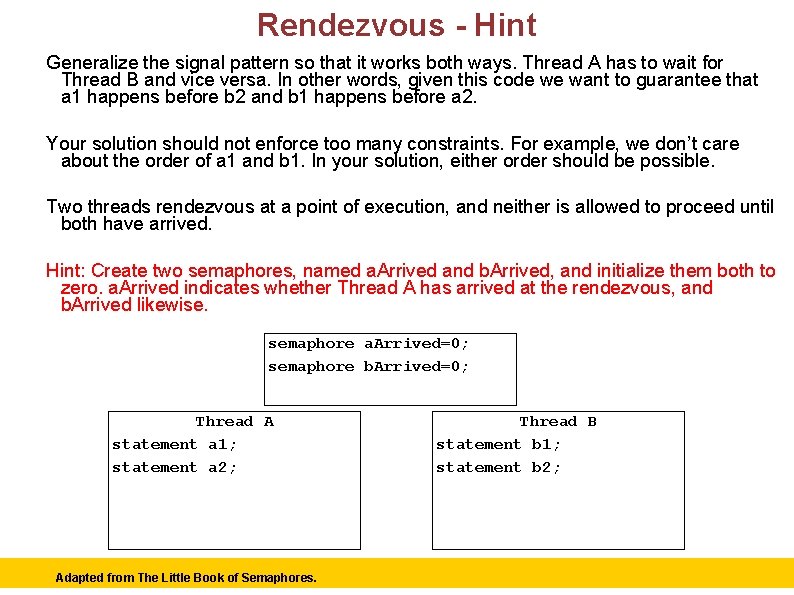

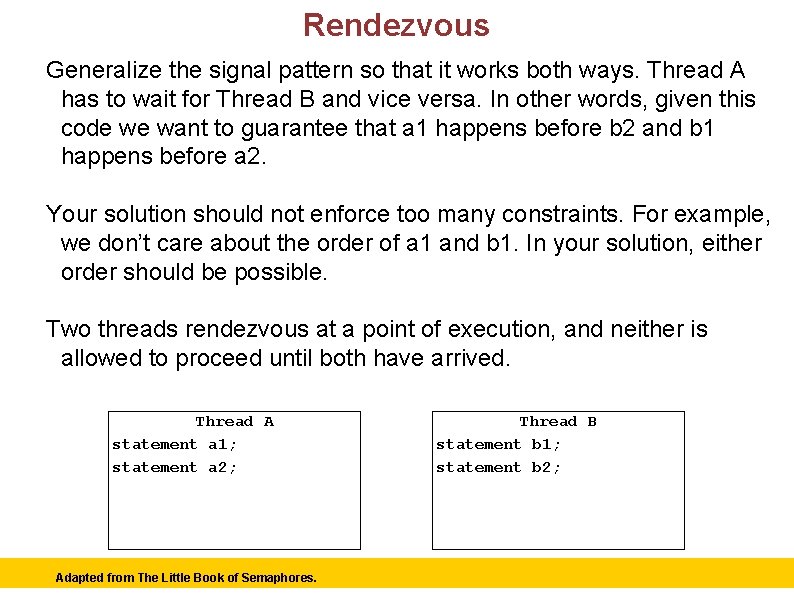

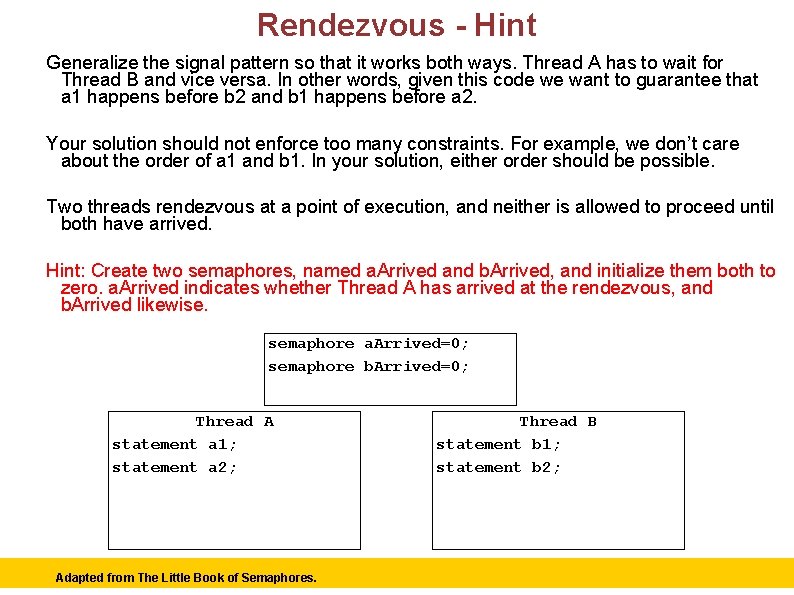


Rendezvous - Hint

Hint: Create two semaphores, named aArrived and bArrived, and initialize them both to zero. aArrived indicates whether Thread A has arrived at the rendezvous, and bArrived likewise.

    semaphore aArrived = 0;
    semaphore bArrived = 0;

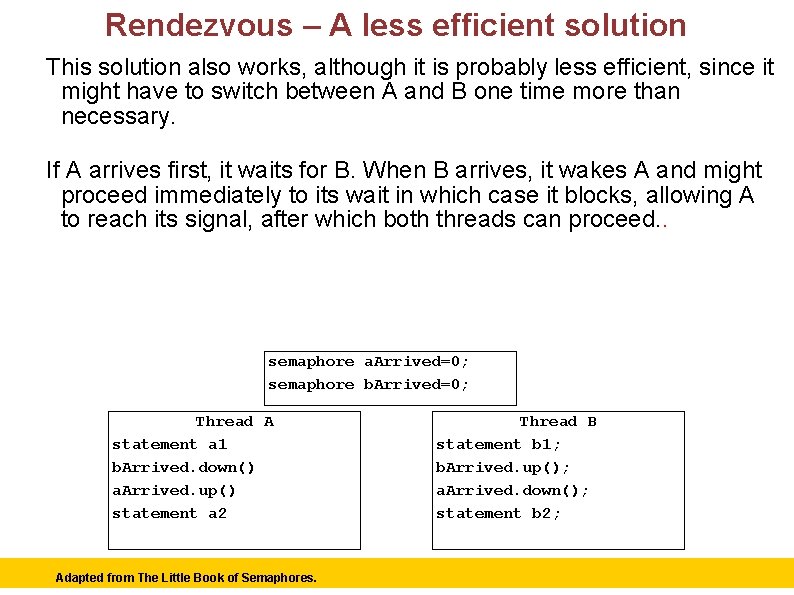

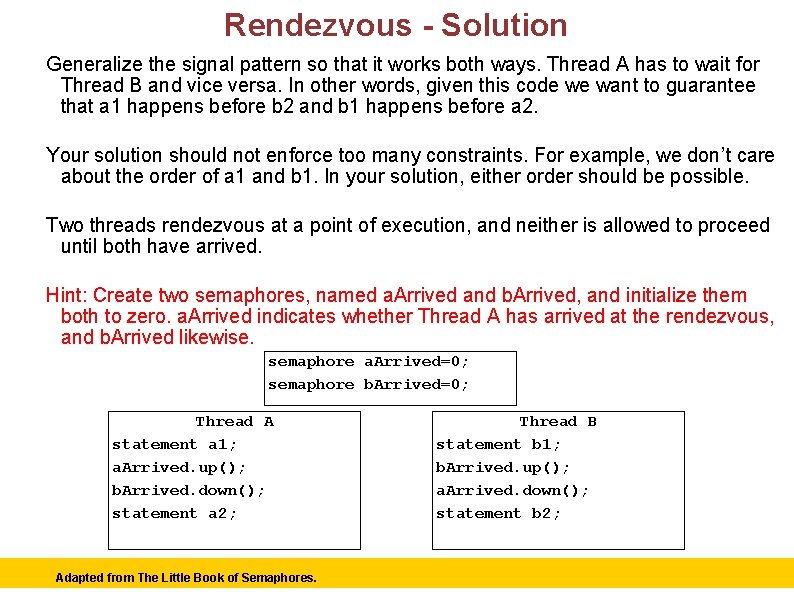

Rendezvous - Solution

    semaphore aArrived = 0;
    semaphore bArrived = 0;

| Thread A | Thread B |
| --- | --- |
| `statement a1;` | `statement b1;` |
| `aArrived.up();` | `bArrived.up();` |
| `bArrived.down();` | `aArrived.down();` |
| `statement a2;` | `statement b2;` |

Each thread first signals its own arrival and then waits for the other's, so a1 happens before b2 and b1 happens before a2, while a1 and b1 may run in either order.
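As a rough check of the two-semaphore rendezvous, the sketch below records the order of the four statements in Python. It assumes `threading.Semaphore` as the semaphore type (`release()` for `up()`, `acquire()` for `down()`); `run_rendezvous` and the `order` list are hypothetical names introduced for the illustration.

```python
import threading

def run_rendezvous():
    """Run the rendezvous once and return the order of a1, a2, b1, b2."""
    a_arrived = threading.Semaphore(0)   # semaphore aArrived = 0
    b_arrived = threading.Semaphore(0)   # semaphore bArrived = 0
    order = []

    def thread_a():
        order.append("a1")    # statement a1
        a_arrived.release()   # aArrived.up(): announce that A has arrived
        b_arrived.acquire()   # bArrived.down(): wait for B to arrive
        order.append("a2")    # statement a2

    def thread_b():
        order.append("b1")    # statement b1
        b_arrived.release()   # bArrived.up(): announce that B has arrived
        a_arrived.acquire()   # aArrived.down(): wait for A to arrive
        order.append("b2")    # statement b2

    threads = [threading.Thread(target=thread_a),
               threading.Thread(target=thread_b)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return order

order = run_rendezvous()
print(order)
# The exact interleaving varies between runs, but these two constraints hold.
assert order.index("a1") < order.index("b2")
assert order.index("b1") < order.index("a2")
```

The printed order differs from run to run, which is the point: only the two required orderings are enforced, and a1 and b1 remain unordered.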

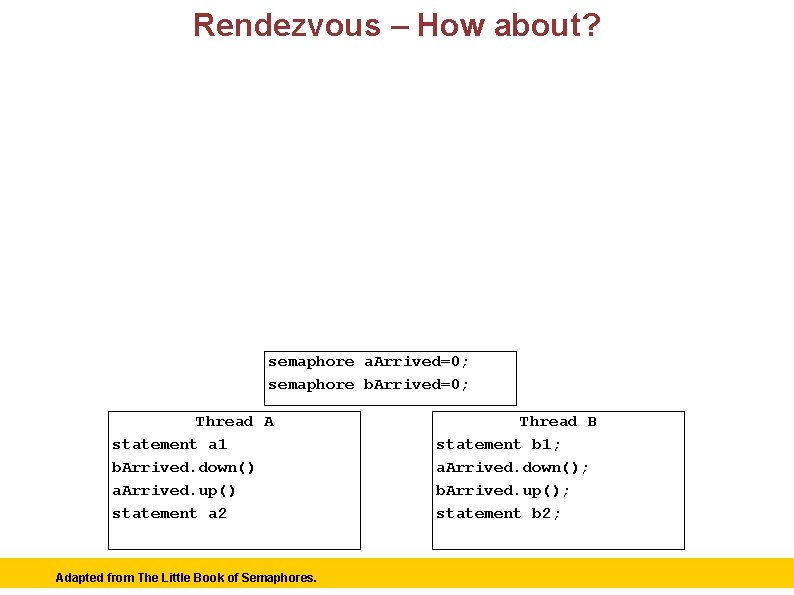

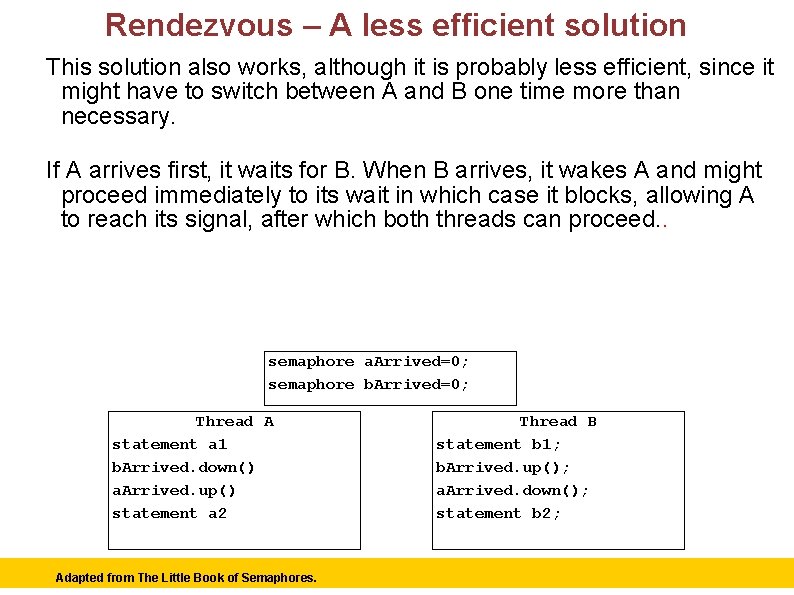

Rendezvous – A less efficient solution

This solution also works, although it is probably less efficient, since it might have to switch between A and B one time more than necessary. If A arrives first, it waits for B. When B arrives, it wakes A and might proceed immediately to its wait, in which case it blocks, allowing A to reach its signal, after which both threads can proceed.

    semaphore aArrived = 0;
    semaphore bArrived = 0;

| Thread A | Thread B |
| --- | --- |
| `statement a1;` | `statement b1;` |
| `bArrived.down();` | `bArrived.up();` |
| `aArrived.up();` | `aArrived.down();` |
| `statement a2;` | `statement b2;` |

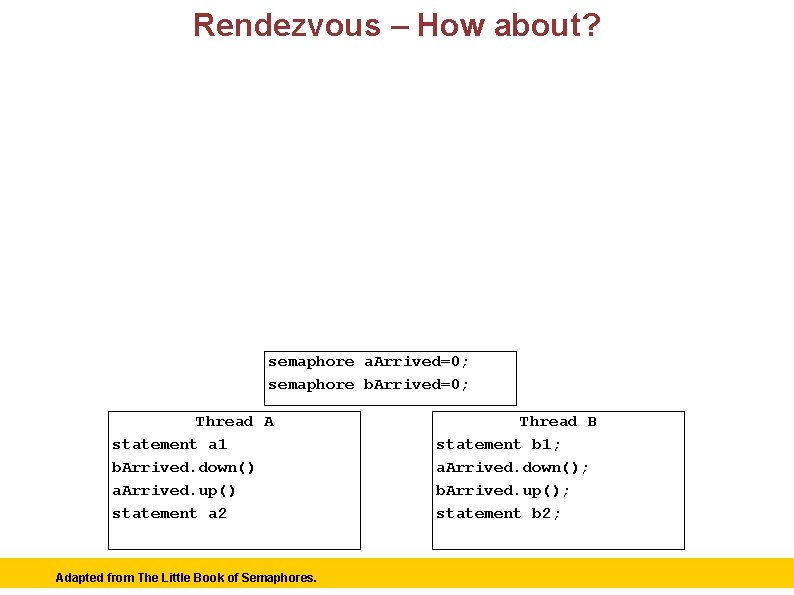

Rendezvous – How about?

    semaphore aArrived = 0;
    semaphore bArrived = 0;

| Thread A | Thread B |
| --- | --- |
| `statement a1;` | `statement b1;` |
| `bArrived.down();` | `aArrived.down();` |
| `aArrived.up();` | `bArrived.up();` |
| `statement a2;` | `statement b2;` |
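In this variant both threads wait before either one signals, so each blocks on a semaphore that only the other, already blocked, thread could raise: a deadlock. The Python sketch below illustrates this under some assumptions: `threading.Semaphore` stands in for the slide's semaphore, and a timeout on `acquire()` replaces the indefinite block so that the demo terminates. The name `run_wait_first` and the `results` dictionary are hypothetical.

```python
import threading

def run_wait_first(timeout=0.2):
    """Run the wait-then-signal variant; report whether each thread got past its wait."""
    a_arrived = threading.Semaphore(0)   # semaphore aArrived = 0
    b_arrived = threading.Semaphore(0)   # semaphore bArrived = 0
    results = {}

    def thread_a():
        # bArrived.down(), bounded by a timeout so the demo can finish
        got = b_arrived.acquire(timeout=timeout)
        results["A"] = "passed" if got else "stuck"
        if got:
            a_arrived.release()          # aArrived.up(): never reached here

    def thread_b():
        # aArrived.down(), bounded by a timeout so the demo can finish
        got = a_arrived.acquire(timeout=timeout)
        results["B"] = "passed" if got else "stuck"
        if got:
            b_arrived.release()          # bArrived.up(): never reached here

    threads = [threading.Thread(target=thread_a),
               threading.Thread(target=thread_b)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results

results = run_wait_first()
print(results["A"], results["B"])   # stuck stuck
```

Without the timeouts, neither `acquire()` call would ever return, since the only `release()` calls sit behind them.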

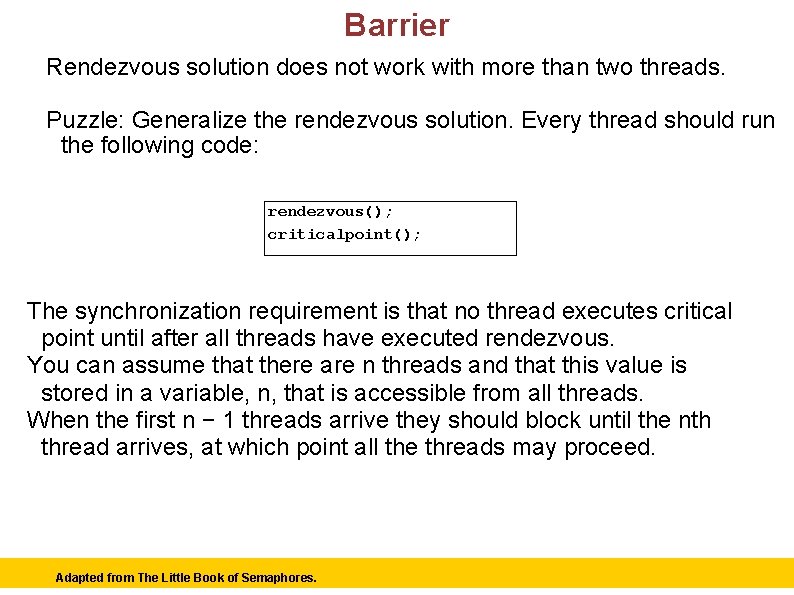

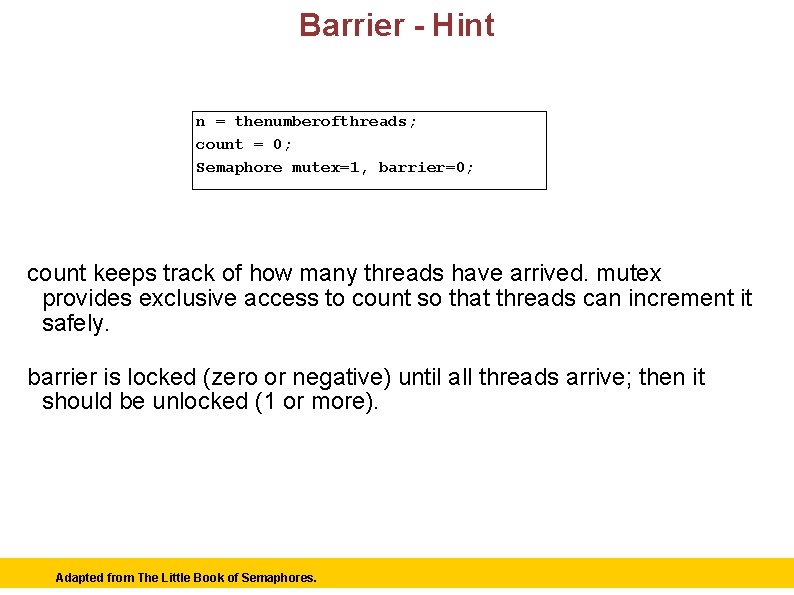

Barrier

The rendezvous solution does not work with more than two threads.

Puzzle: Generalize the rendezvous solution. Every thread should run the following code:

    rendezvous();
    criticalpoint();

The synchronization requirement is that no thread executes criticalpoint() until after all threads have executed rendezvous(). You can assume that there are n threads and that this value is stored in a variable, n, that is accessible from all threads. When the first n − 1 threads arrive, they should block until the nth thread arrives, at which point all the threads may proceed.

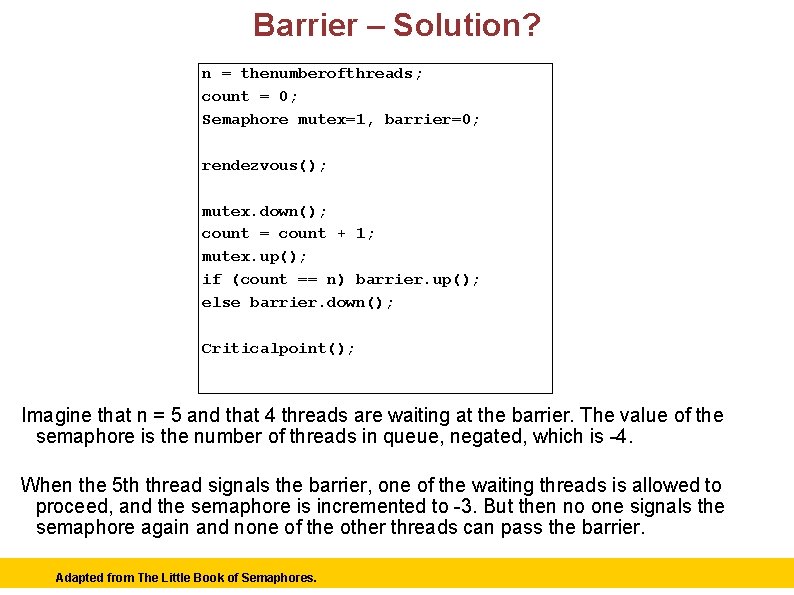

Barrier - Hint

    n = the number of threads;
    count = 0;
    Semaphore mutex = 1, barrier = 0;

- count keeps track of how many threads have arrived.
- mutex provides exclusive access to count so that threads can increment it safely.
- barrier is locked (zero or negative) until all threads arrive; then it should be unlocked (1 or more).

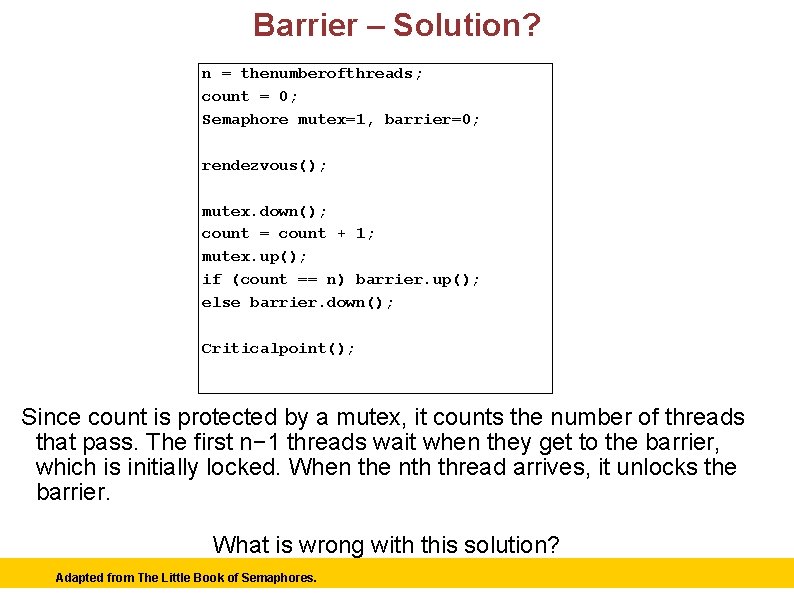

Barrier – Solution?

    n = the number of threads;
    count = 0;
    Semaphore mutex = 1, barrier = 0;

    rendezvous();

    mutex.down();
    count = count + 1;
    mutex.up();

    if (count == n)
        barrier.up();
    else
        barrier.down();

    criticalpoint();

Since count is protected by a mutex, it counts the number of threads that pass. The first n − 1 threads wait when they get to the barrier, which is initially locked. When the nth thread arrives, it unlocks the barrier.

What is wrong with this solution?

Barrier – Solution?

Imagine that n = 5 and that 4 threads are waiting at the barrier. The value of the semaphore is the number of threads in the queue, negated, which is -4. When the 5th thread signals the barrier, one of the waiting threads is allowed to proceed, and the semaphore is incremented to -3. But then no one signals the semaphore again, so none of the other three threads can ever pass the barrier.
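The n = 5 scenario can be reproduced with a small Python sketch, under several assumptions. `threading.Semaphore` replaces the slide's semaphore; it never exposes a negative value, so the sketch counts threads instead of inspecting the semaphore. The wait on the barrier uses a timeout so that the stuck threads eventually give up, and each thread takes a snapshot of `count` while still holding the mutex to keep the outcome deterministic. `run_broken_barrier` and `outcomes` are hypothetical names.

```python
import threading

def run_broken_barrier(n=5, timeout=0.5):
    """Return (passed, stuck) thread counts for the single-signal barrier attempt."""
    mutex = threading.Semaphore(1)     # Semaphore mutex = 1
    barrier = threading.Semaphore(0)   # Semaphore barrier = 0
    count = 0
    outcomes = []

    def worker():
        nonlocal count
        mutex.acquire()                # mutex.down()
        count += 1
        arrived = count                # snapshot under the mutex (demo assumption)
        mutex.release()                # mutex.up()
        if arrived == n:
            barrier.release()          # barrier.up(): the only signal ever sent
            outcomes.append("passed")
        elif barrier.acquire(timeout=timeout):   # barrier.down(), bounded for the demo
            outcomes.append("passed")
        else:
            outcomes.append("stuck")   # would block forever without the timeout

    threads = [threading.Thread(target=worker) for _ in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return outcomes.count("passed"), outcomes.count("stuck")

print(run_broken_barrier())
```

As long as all five threads start within the timeout, the call should report 2 threads passed (the nth thread and the single waiter it woke) and 3 stuck, matching the slide's account.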

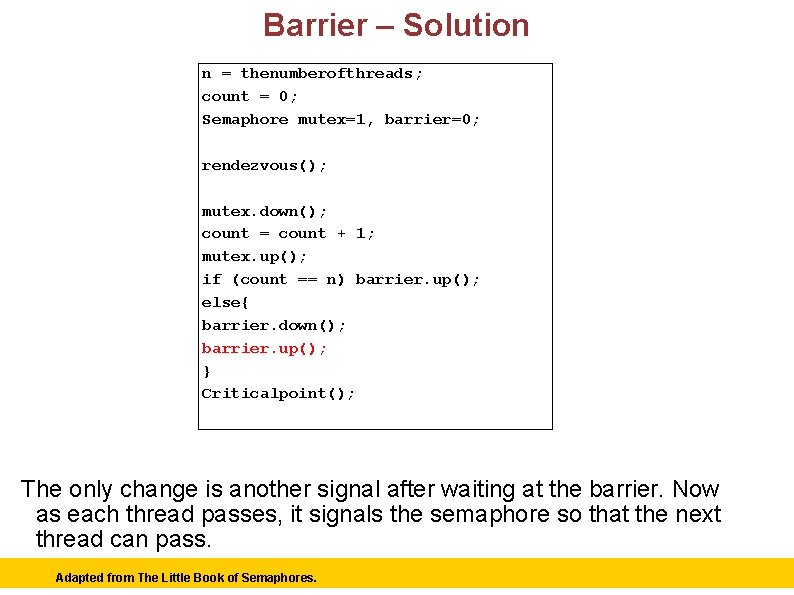

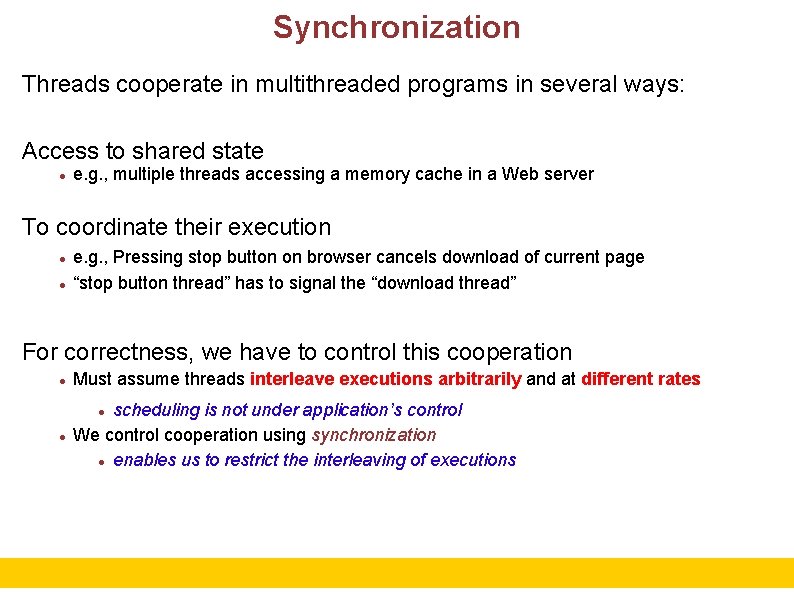

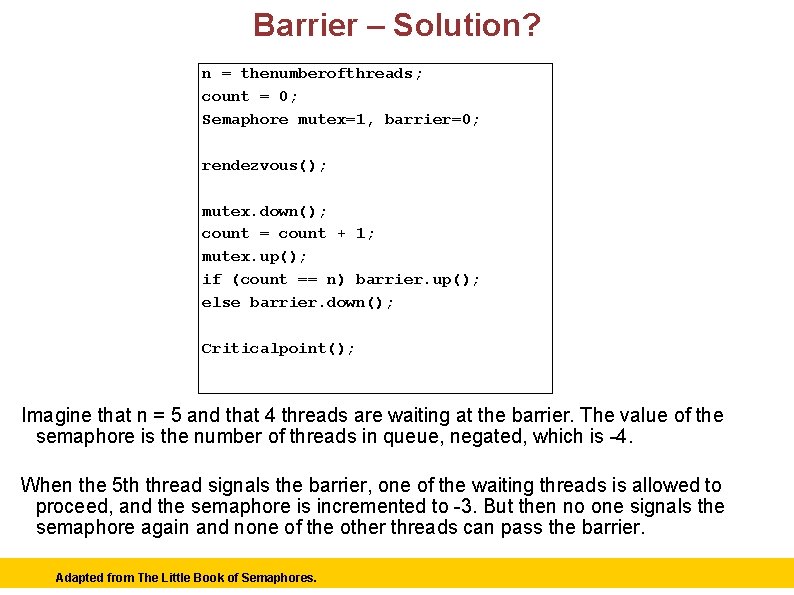

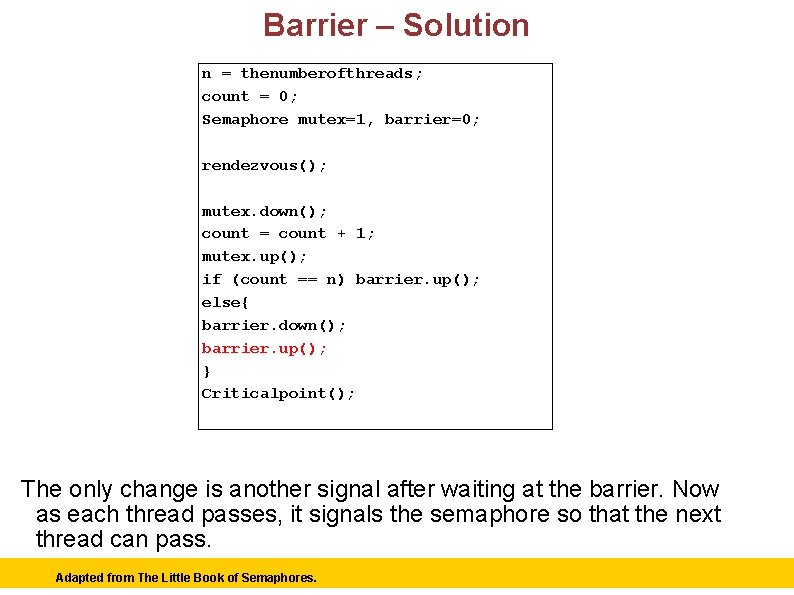

Barrier – Solution

    n = the number of threads;
    count = 0;
    Semaphore mutex = 1, barrier = 0;

    rendezvous();

    mutex.down();
    count = count + 1;
    mutex.up();

    if (count == n)
        barrier.up();
    else {
        barrier.down();
        barrier.up();
    }

    criticalpoint();

The only change is another signal after waiting at the barrier. Now, as each thread passes, it signals the semaphore so that the next thread can pass.
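A minimal Python sketch of this barrier follows, assuming `threading.Semaphore` for both `mutex` and `barrier` (`release()` for `up()`, `acquire()` for `down()`). The names `run_barrier` and `log` are hypothetical. One small deviation from the slide: the sketch takes a snapshot of `count` while still holding the mutex, rather than reading it after `mutex.up()`.

```python
import threading

def run_barrier(n):
    """Run n threads through the barrier; return the log of events in order."""
    mutex = threading.Semaphore(1)     # Semaphore mutex = 1
    barrier = threading.Semaphore(0)   # Semaphore barrier = 0
    count = 0
    log = []

    def worker(i):
        nonlocal count
        log.append(("rendezvous", i))  # rendezvous()
        mutex.acquire()                # mutex.down()
        count += 1
        arrived = count                # snapshot under the mutex (deviation from the slide)
        mutex.release()                # mutex.up()
        if arrived == n:
            barrier.release()          # last arrival unlocks the barrier
        else:
            barrier.acquire()          # wait until the barrier is unlocked
            barrier.release()          # pass the signal on to the next waiter
        log.append(("critical", i))    # criticalpoint()

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log

log = run_barrier(5)
kinds = [kind for kind, _ in log]
# Every rendezvous entry precedes every critical entry.
assert kinds == ["rendezvous"] * 5 + ["critical"] * 5
print(kinds)
```

Each waiter re-signals after it wakes, so the single `release()` from the last arrival is handed from thread to thread. The barrier is left with a value of 1 afterwards, so this sketch is meant for one-time use only.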

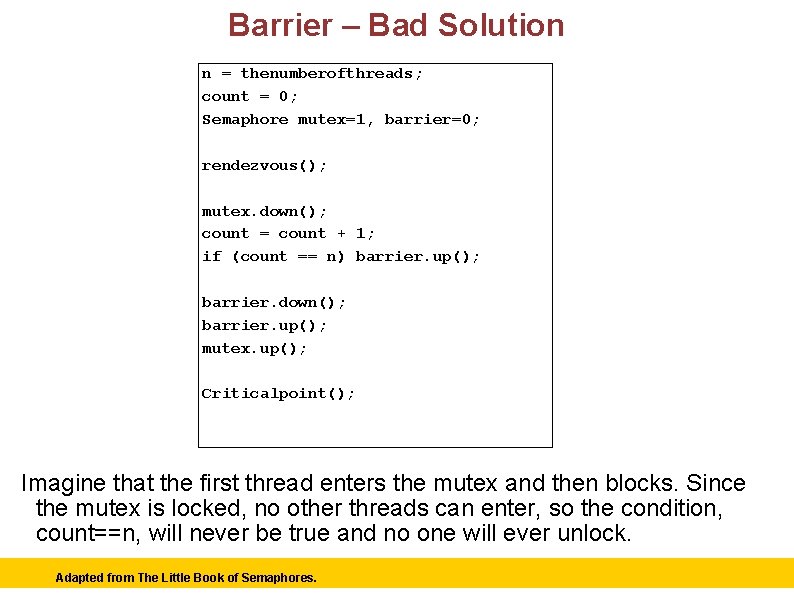

Barrier – Bad Solution

    n = the number of threads;
    count = 0;
    Semaphore mutex = 1, barrier = 0;

    rendezvous();

    mutex.down();
    count = count + 1;
    if (count == n) barrier.up();
    barrier.down();
    barrier.up();
    mutex.up();

    criticalpoint();

Imagine that the first thread enters the mutex and then blocks on barrier.down(). Since the mutex is still locked, no other thread can enter, so the condition count == n will never be true and no one will ever unlock the barrier.
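The deadlock can be observed with a short Python sketch, assuming `threading.Semaphore` as the semaphore type and timeouts on `acquire()` in place of blocking forever. A thread that times out returns without releasing anything, which models a thread that stays blocked. `run_bad_barrier` and `stuck_at` are hypothetical names for this illustration.

```python
import threading

def run_bad_barrier(n=3, timeout=0.3):
    """Return a sorted list naming the semaphore on which each thread got stuck."""
    mutex = threading.Semaphore(1)     # Semaphore mutex = 1
    barrier = threading.Semaphore(0)   # Semaphore barrier = 0
    count = 0
    stuck_at = []

    def worker():
        nonlocal count
        if not mutex.acquire(timeout=timeout):     # mutex.down(), bounded for the demo
            stuck_at.append("mutex")
            return
        count += 1
        if count == n:
            barrier.release()                      # barrier.up()
        if not barrier.acquire(timeout=timeout):   # barrier.down() while holding mutex
            stuck_at.append("barrier")
            return   # mutex deliberately left locked: the real thread never proceeds
        barrier.release()                          # barrier.up()
        mutex.release()                            # mutex.up()

    threads = [threading.Thread(target=worker) for _ in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sorted(stuck_at)

print(run_bad_barrier())   # ['barrier', 'mutex', 'mutex']
```

The first thread to take the mutex waits on the barrier while holding it, so every other thread waits on the mutex and `count` never reaches n.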