CENG 334 Operating Systems 03 Threads Synchronization Asst

- Slides: 142

CENG 334 – Operating Systems 03 - Threads & Synchronization Asst. Prof. Yusuf Sahillioğlu Computer Eng. Dept, , Turkey

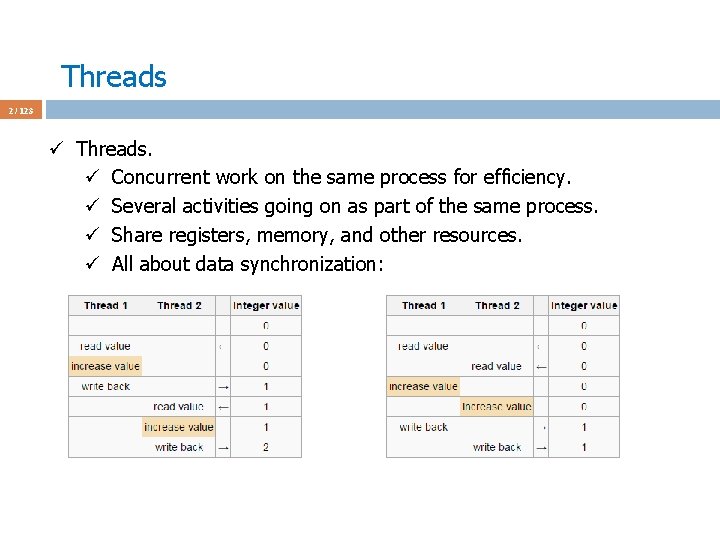

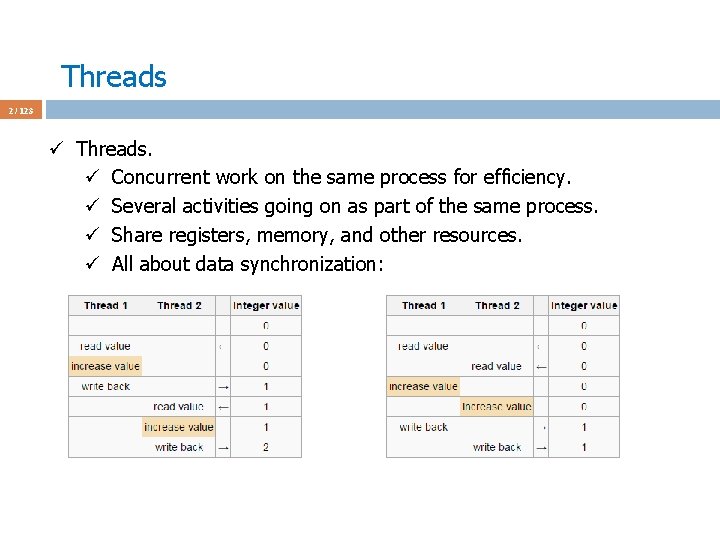

Threads 2 / 123

- Threads: concurrent work within the same process, for efficiency.
- Several activities go on as part of the same process.
- Threads share memory and other process resources; each thread keeps its own registers and stack.
- Working with threads is all about data synchronization:

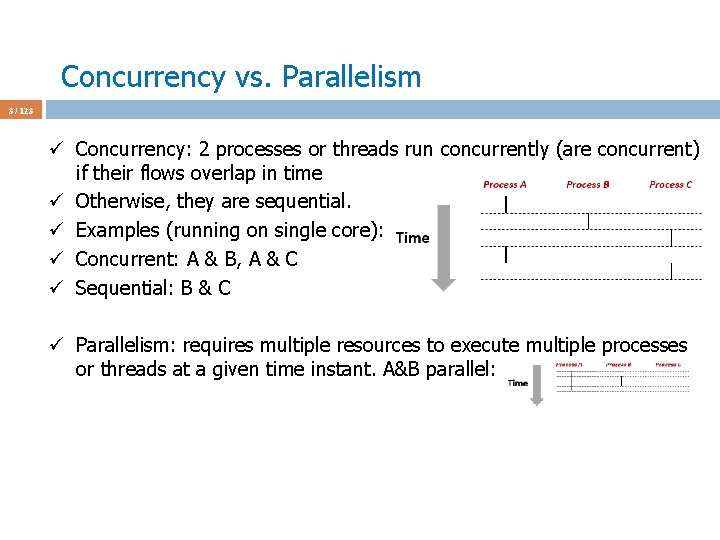

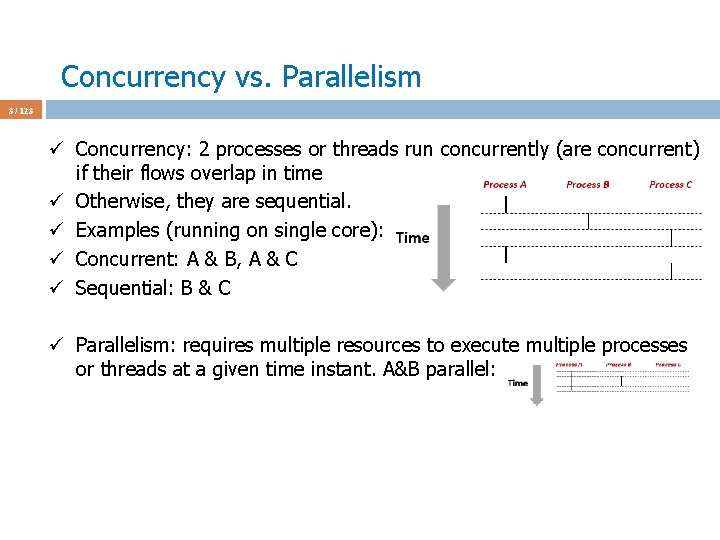

Concurrency vs. Parallelism 3 / 123 ü Concurrency: 2 processes or threads run concurrently (are concurrent) if their flows overlap in time ü Otherwise, they are sequential. ü Examples (running on single core): ü Concurrent: A & B, A & C ü Sequential: B & C ü Parallelism: requires multiple resources to execute multiple processes or threads at a given time instant. A&B parallel:

Concurrent Programming 4 / 123

- Many programs want to do many things "at once":
  - Web browser: download web pages, read cache files, accept user input, ...
  - Web server: handle incoming connections from multiple clients at once.
  - Scientific programs: process different parts of a data set on different CPUs.
- In each case, we would like to share memory across these activities:
  - Web browser: share a buffer for the HTML page and inlined images.
  - Web server: share a memory cache of recently accessed pages.
  - Scientific programs: share the memory of the data set being processed.
- Can't we simply do this with multiple processes?

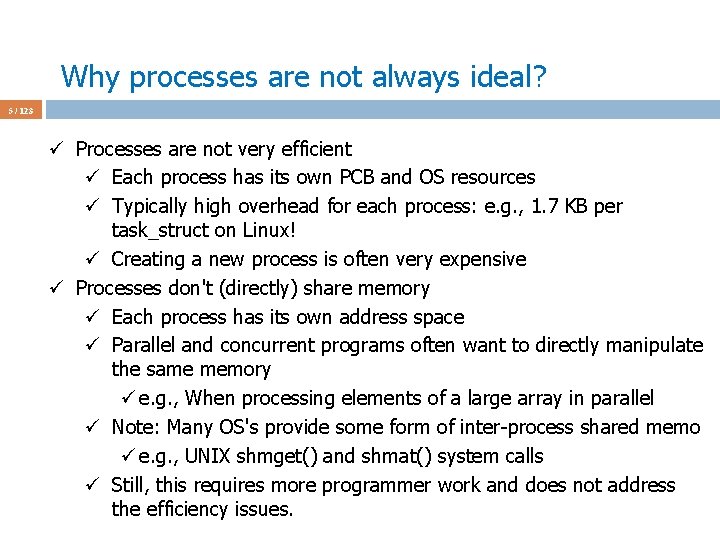

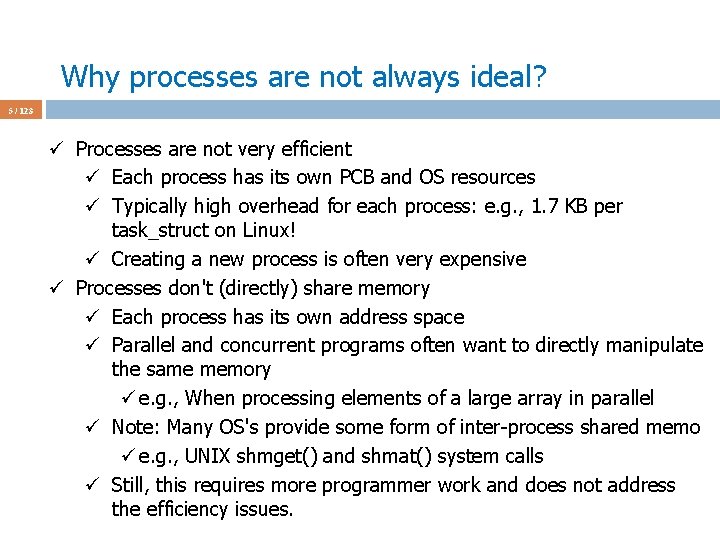

Why are processes not always ideal? 5 / 123

- Processes are not very efficient:
  - Each process has its own PCB and OS resources.
  - Overhead per process is typically high, e.g., 1.7 KB per task_struct on Linux.
  - Creating a new process is often very expensive.
- Processes don't (directly) share memory:
  - Each process has its own address space.
  - Parallel and concurrent programs often want to manipulate the same memory directly, e.g., when processing elements of a large array in parallel.
  - Note: many OSes provide some form of inter-process shared memory, e.g., the UNIX shmget() and shmat() system calls. Still, this requires more programmer work and does not address the efficiency issues.

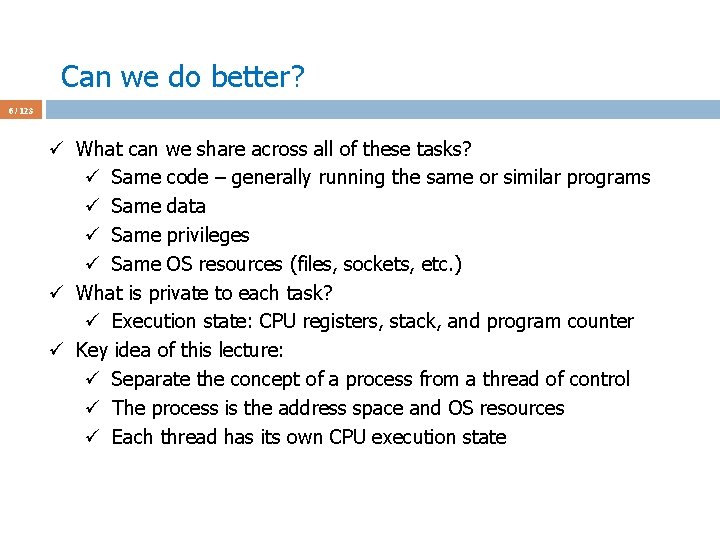

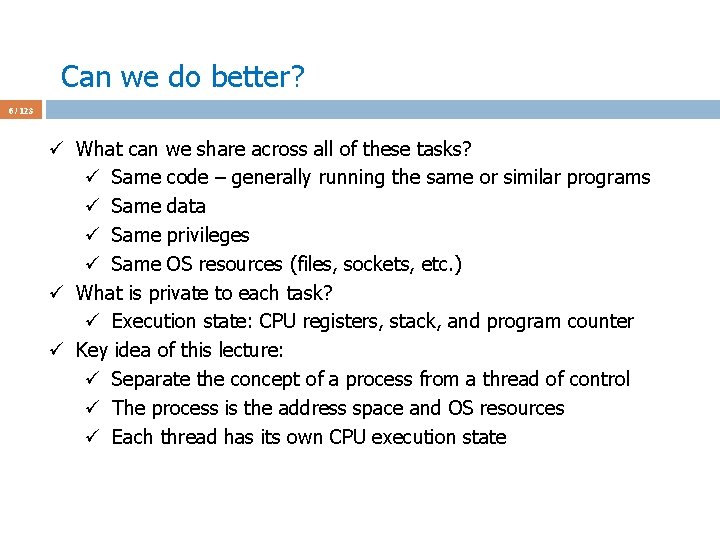

Can we do better? 6 / 123 ü What can we share across all of these tasks? ü Same code – generally running the same or similar programs ü Same data ü Same privileges ü Same OS resources (files, sockets, etc. ) ü What is private to each task? ü Execution state: CPU registers, stack, and program counter ü Key idea of this lecture: ü Separate the concept of a process from a thread of control ü The process is the address space and OS resources ü Each thread has its own CPU execution state

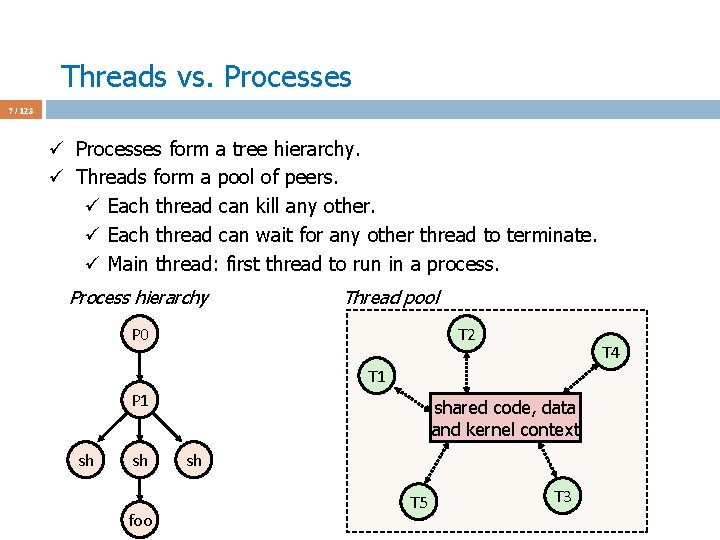

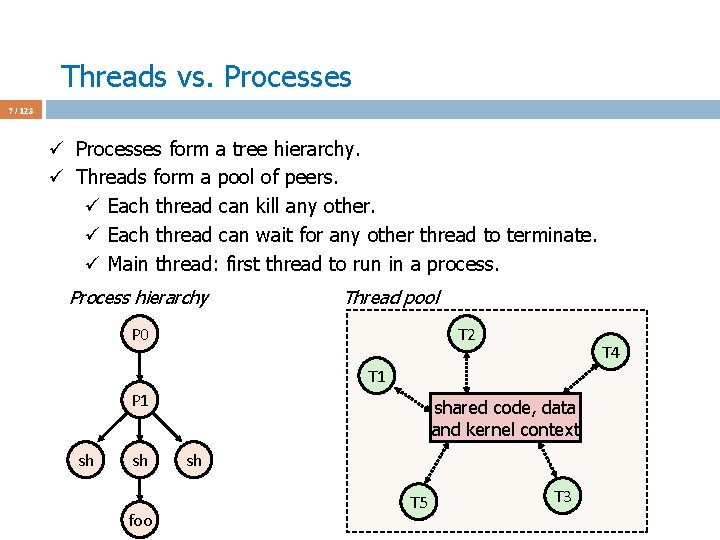

Threads vs. Processes 7 / 123 ü Processes form a tree hierarchy. ü Threads form a pool of peers. ü Each thread can kill any other. ü Each thread can wait for any other thread to terminate. ü Main thread: first thread to run in a process. Process hierarchy Thread pool P 0 T 2 T 4 T 1 P 1 sh sh foo shared code, data and kernel context sh T 5 T 3

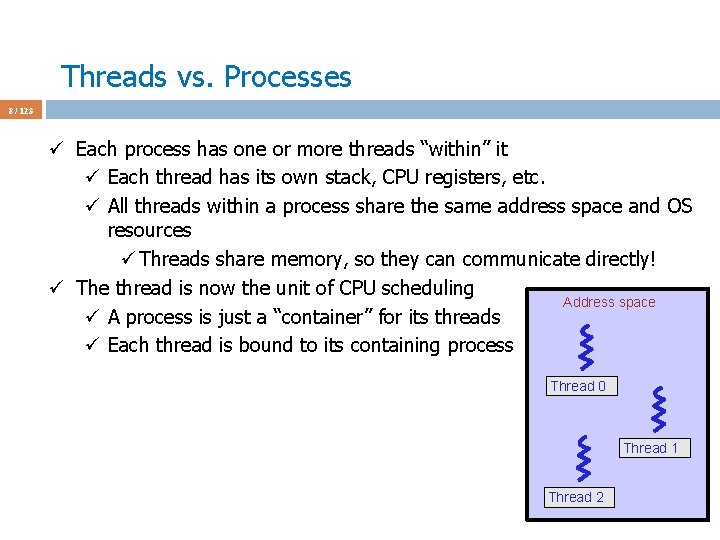

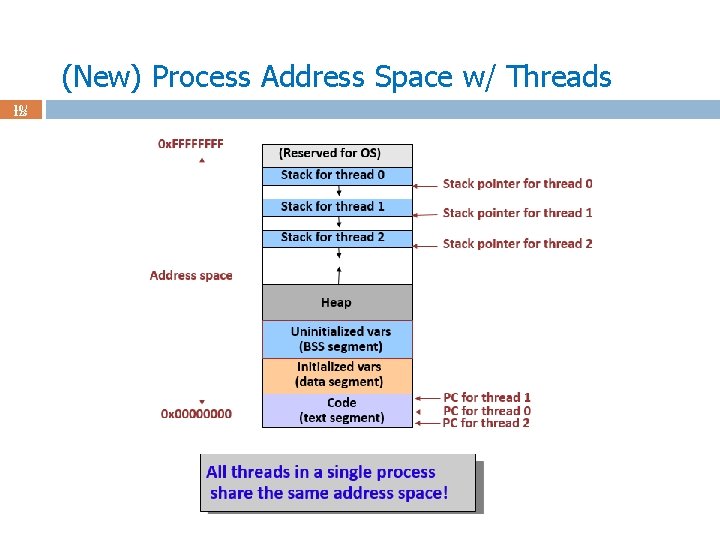

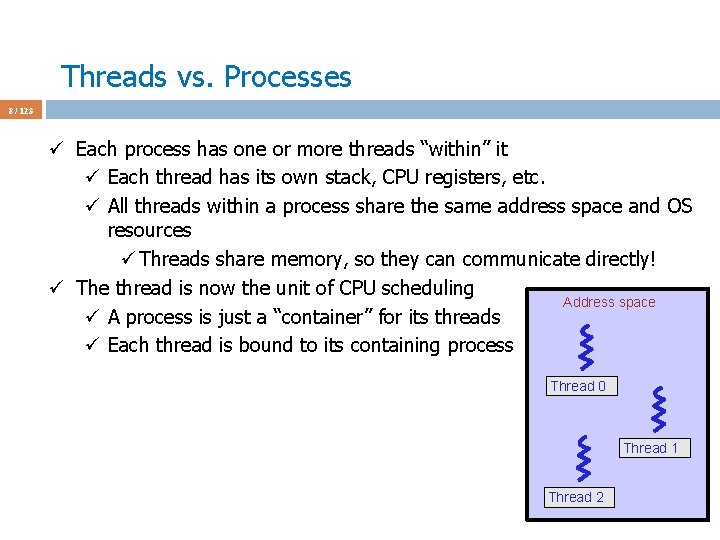

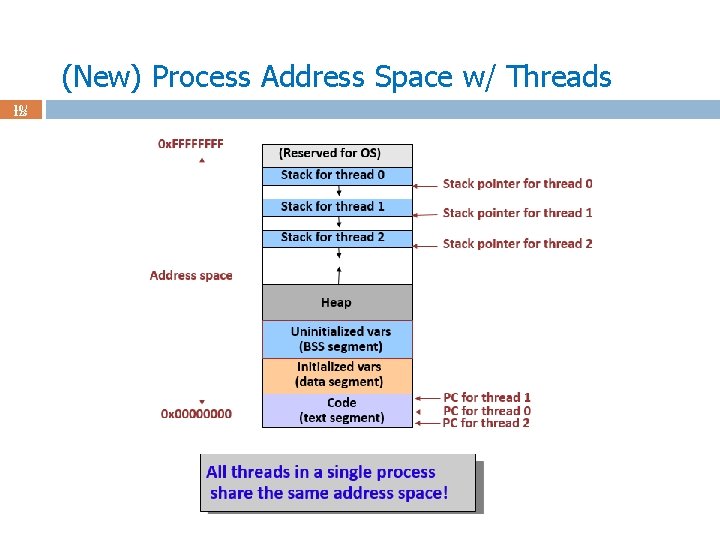

Threads vs. Processes 8 / 123 ü Each process has one or more threads “within” it ü Each thread has its own stack, CPU registers, etc. ü All threads within a process share the same address space and OS resources ü Threads share memory, so they can communicate directly! ü The thread is now the unit of CPU scheduling Address space ü A process is just a “container” for its threads ü Each thread is bound to its containing process Thread 0 Thread 1 Thread 2

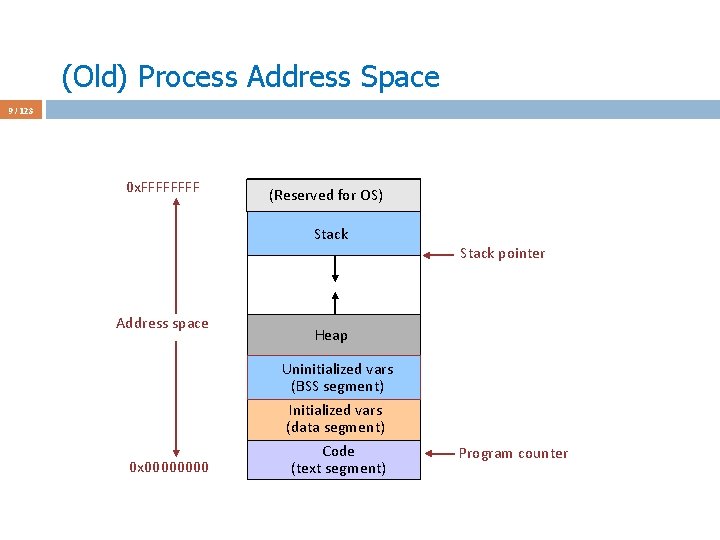

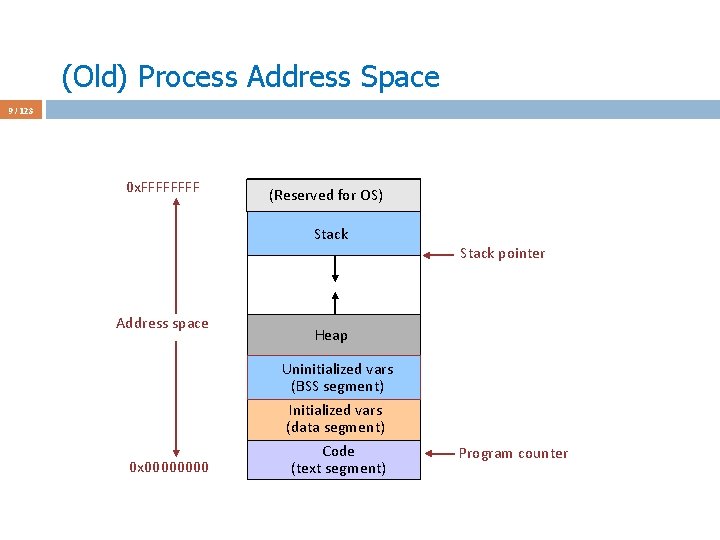

(Old) Process Address Space 9 / 123 0 x. FFFF (Reserved for OS) Stack Address space 0 x 0000 Stack pointer Heap Uninitialized vars (BSS segment) Initialized vars (data segment) Code (text segment) Program counter

(New) Process Address Space w/ Threads 10 / 123

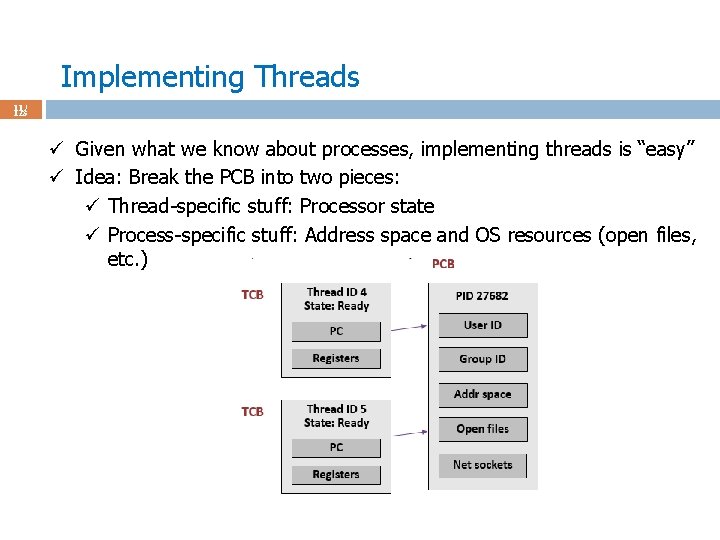

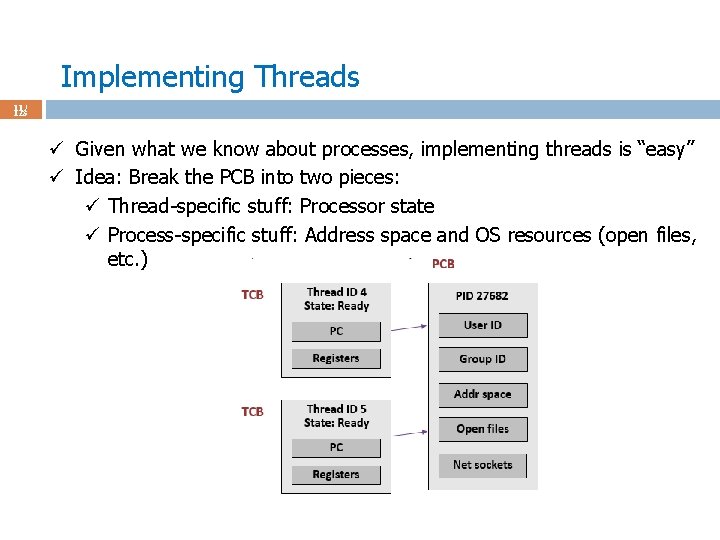

Implementing Threads 11 / 123 ü Given what we know about processes, implementing threads is “easy” ü Idea: Break the PCB into two pieces: ü Thread-specific stuff: Processor state ü Process-specific stuff: Address space and OS resources (open files, etc. )

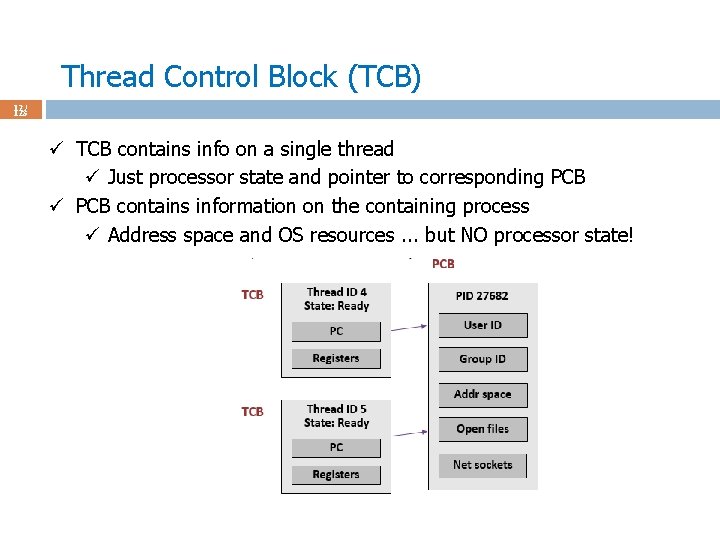

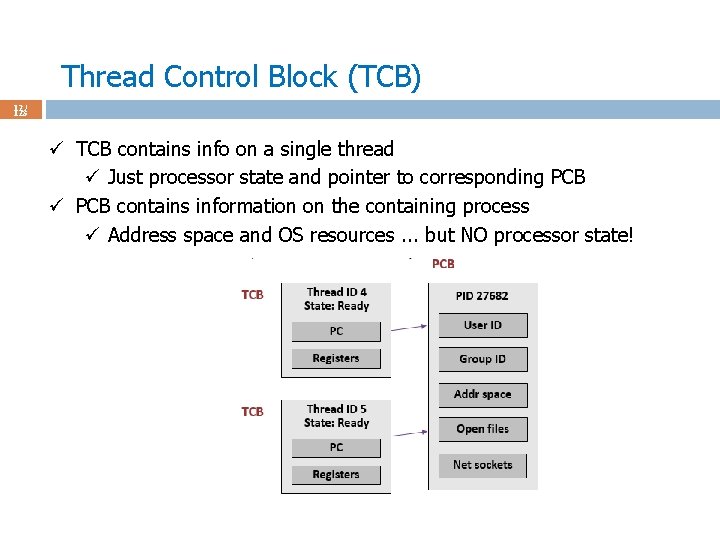

Thread Control Block (TCB) 12 / 123 ü TCB contains info on a single thread ü Just processor state and pointer to corresponding PCB ü PCB contains information on the containing process ü Address space and OS resources. . . but NO processor state!

Thread Control Block (TCB) 13 / 123 ü TCB's are smaller and cheaper than processes ü Linux TCB (thread_struct) has 24 fields ü Linux PCB (task_struct) has 106 fields ü Hence context switching threads is cheaper than context switching processes.

Context Switching 14 / 123 ü TCB is now the unit of a context switch ü Ready queue, wait queues, etc. now contain pointers to TCB's ü Context switch causes CPU state to be copied to/from the TCB ü Context switch between two threads in the same process: ü No need to change address space ü Context switch between two threads in different processes: ü Must change address space, sometimes invalidating cache ü This will become relevant when we talk about virtual memory.

Thread State 15 / 123 ü State shared by all threads in process: ü Memory content (global variables, heap, code, etc). ü I/O (files, network connections, etc). ü A change in the global variable will be seen by all other threads (unlike processes). ü State private to each thread: ü Kept in TCB (Thread Control Block). ü CPU registers, program counter. ü Stack (what functions it is calling, parameters, local variables, return addresses). ü Pointer to enclosing process (PCB).

Thread Behavior 16 / 123 ü Some useful applications with threads: ü ü One thread listens to connections; others handle page requests. One thread handles GUI; other computations. One thread paints the left part, other the right part. . .

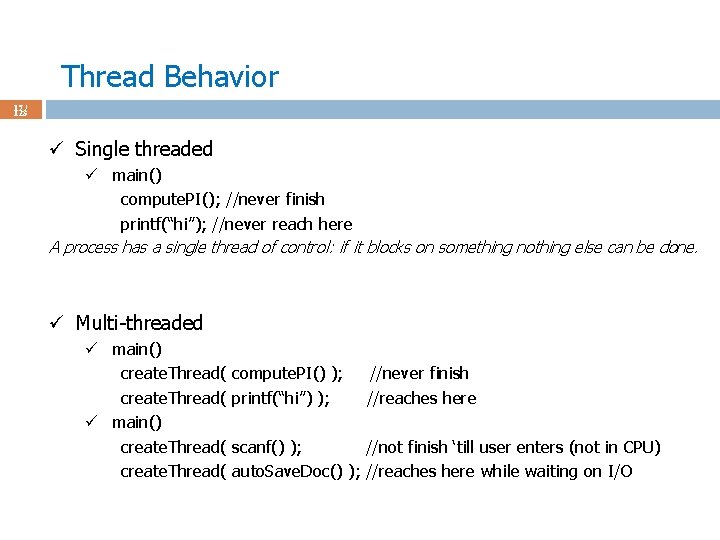

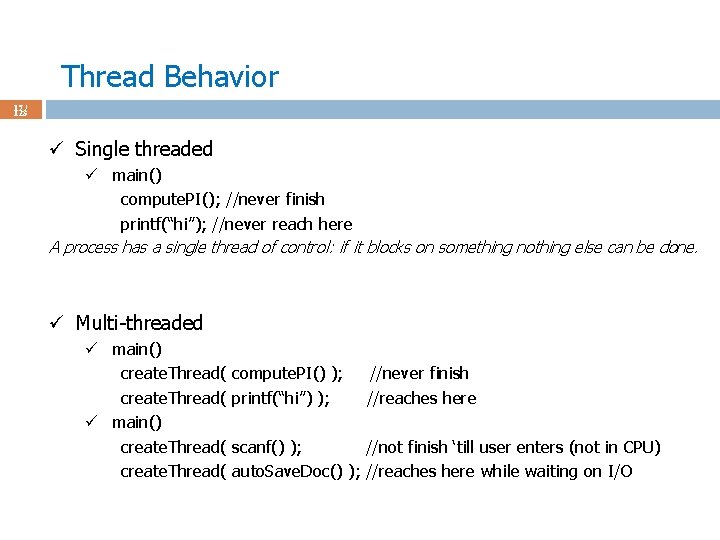

Thread Behavior 17 / 123

- Single-threaded: a process has a single thread of control; if it blocks on something, nothing else can be done.

    main()
      computePI();                   //never finishes
      printf("hi");                  //never reached

- Multi-threaded:

    main()
      createThread( computePI() );   //never finishes
      printf("hi");                  //reaches here
      createThread( scanf() );       //does not finish until the user enters input (not in CPU)
      createThread( autoSaveDoc() ); //reaches here while waiting on I/O

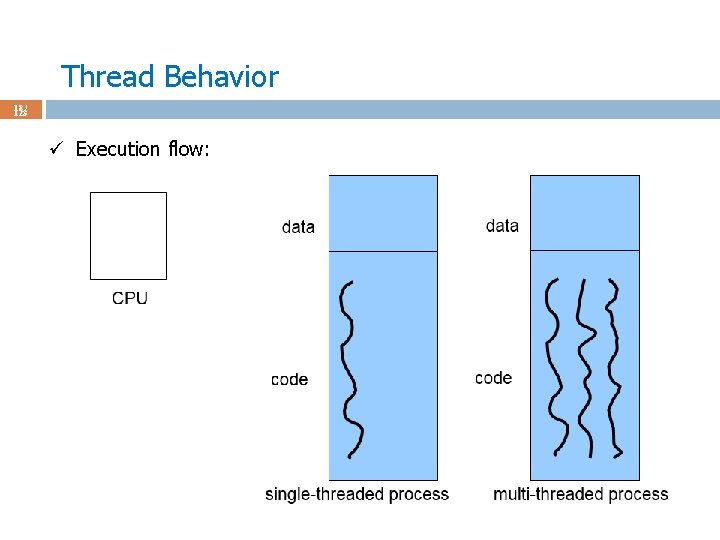

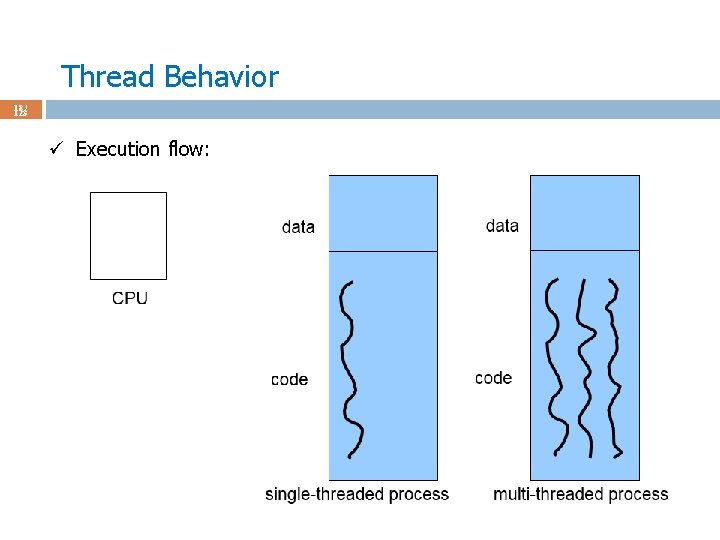

Thread Behavior 18 / 123 ü Execution flow:

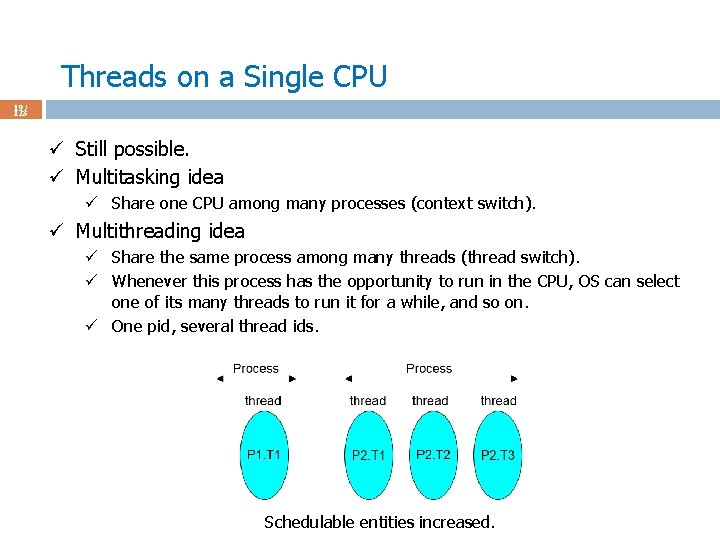

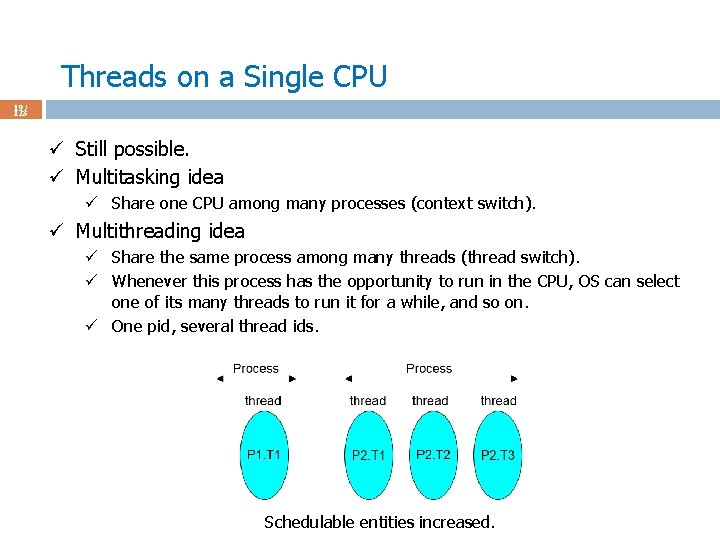

Threads on a Single CPU 19 / 123 ü Still possible. ü Multitasking idea ü Share one CPU among many processes (context switch). ü Multithreading idea ü Share the same process among many threads (thread switch). ü Whenever this process has the opportunity to run in the CPU, OS can select one of its many threads to run it for a while, and so on. ü One pid, several thread ids. Schedulable entities increased.

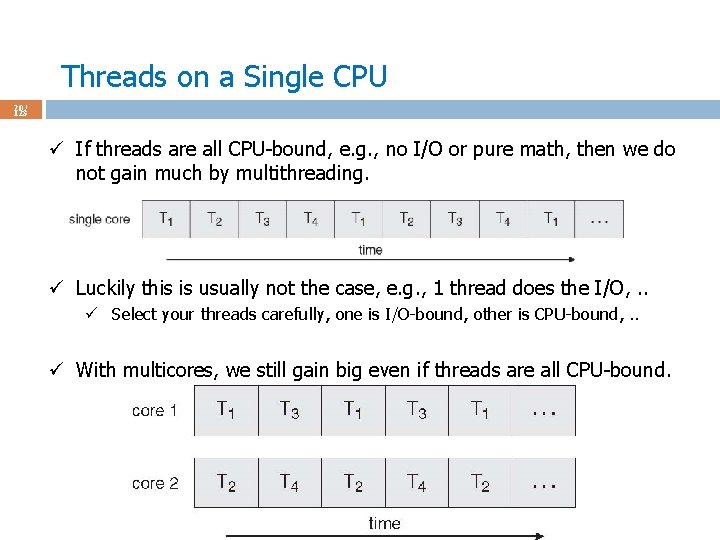

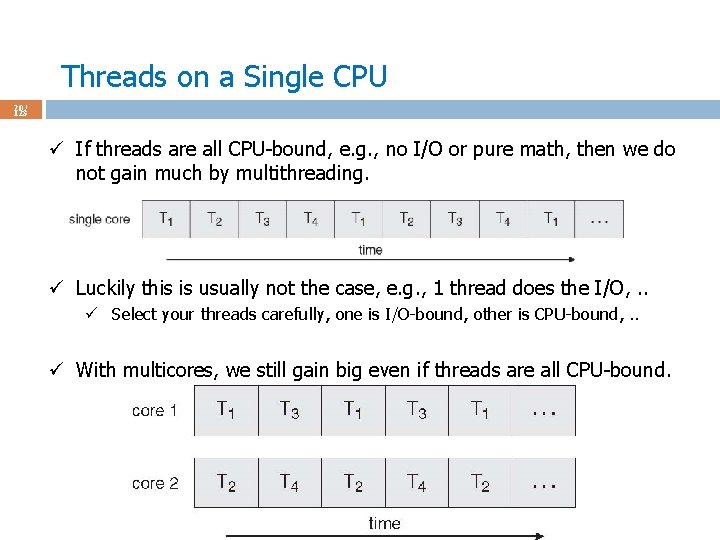

Threads on a Single CPU 20 / 123 ü If threads are all CPU-bound, e. g. , no I/O or pure math, then we do not gain much by multithreading. ü Luckily this is usually not the case, e. g. , 1 thread does the I/O, . . ü Select your threads carefully, one is I/O-bound, other is CPU-bound, . . ü With multicores, we still gain big even if threads are all CPU-bound.

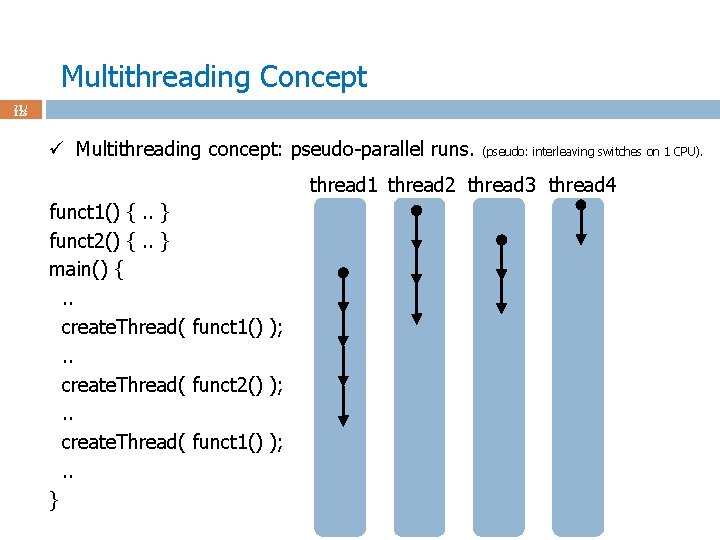

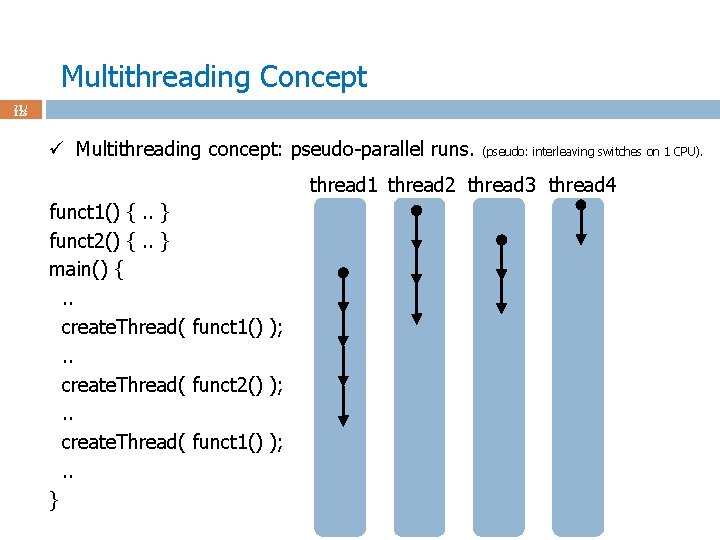

Multithreading Concept 21 / 123

- Multithreading concept: pseudo-parallel runs (pseudo: interleaving switches on 1 CPU).
- Four threads result below: the main thread plus the three created ones.

    funct1() {..}
    funct2() {..}
    main() {
      ..
      createThread( funct1() );
      ..
      createThread( funct2() );
      ..
      createThread( funct1() );
      ..
    }

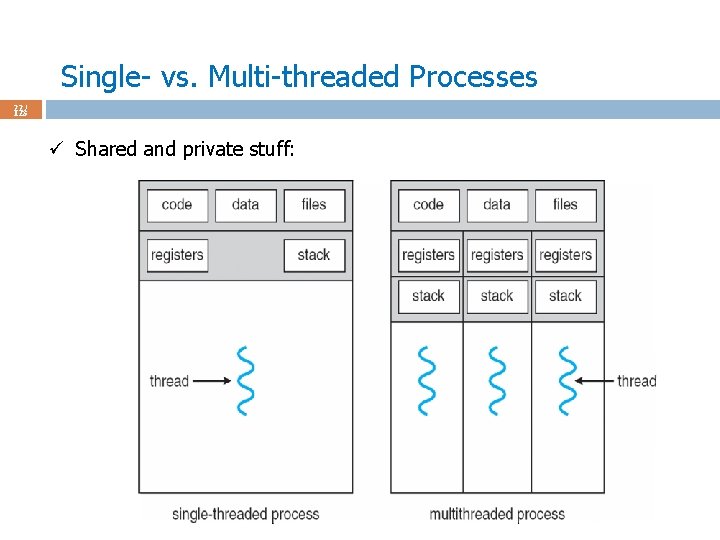

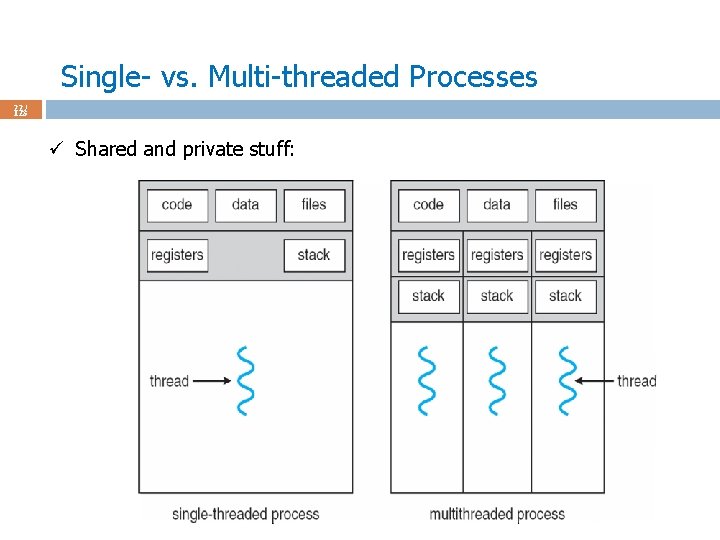

Single- vs. Multi-threaded Processes 22 / 123 ü Shared and private stuff:

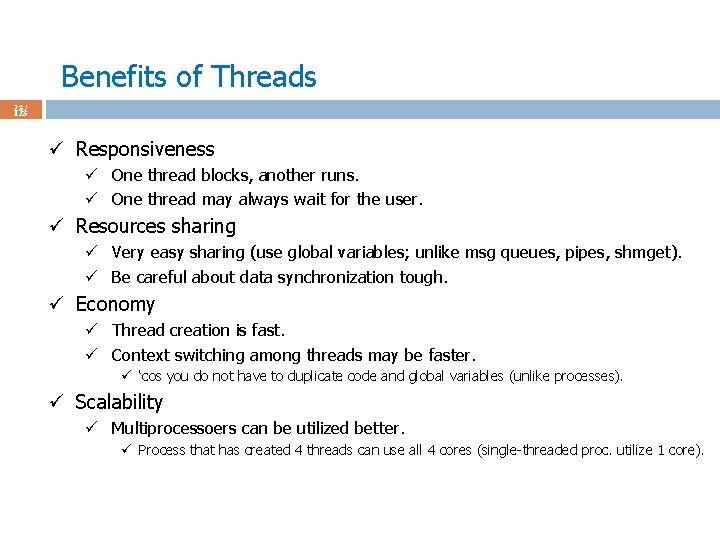

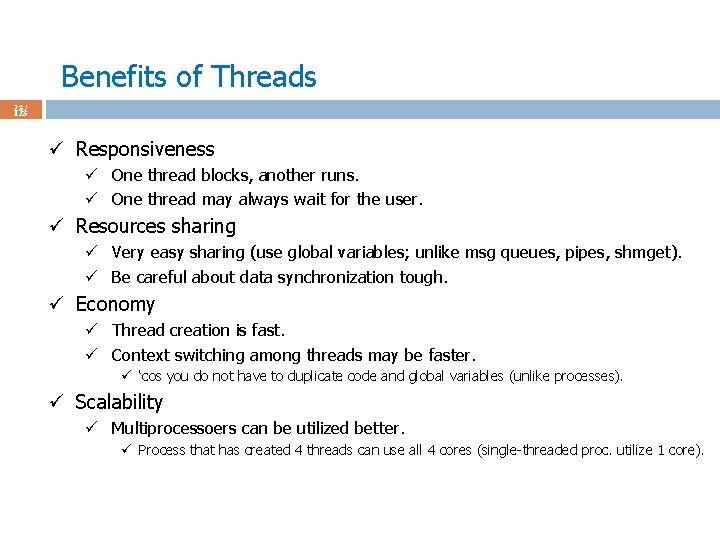

Benefits of Threads 23 / 123

- Responsiveness
  - One thread blocks, another runs.
  - One thread may always wait for the user.
- Resource sharing
  - Very easy sharing (use global variables; unlike message queues, pipes, shmget).
  - Be careful about data synchronization, though.
- Economy
  - Thread creation is fast.
  - Context switching among threads may be faster, because you do not have to duplicate code and global variables (unlike processes).
- Scalability
  - Multiprocessors can be utilized better.
  - A process that has created 4 threads can use all 4 cores (a single-threaded process utilizes 1 core).

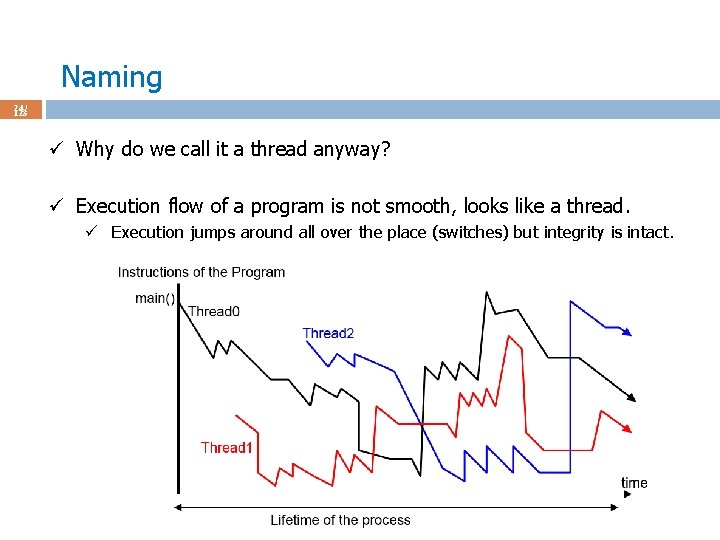

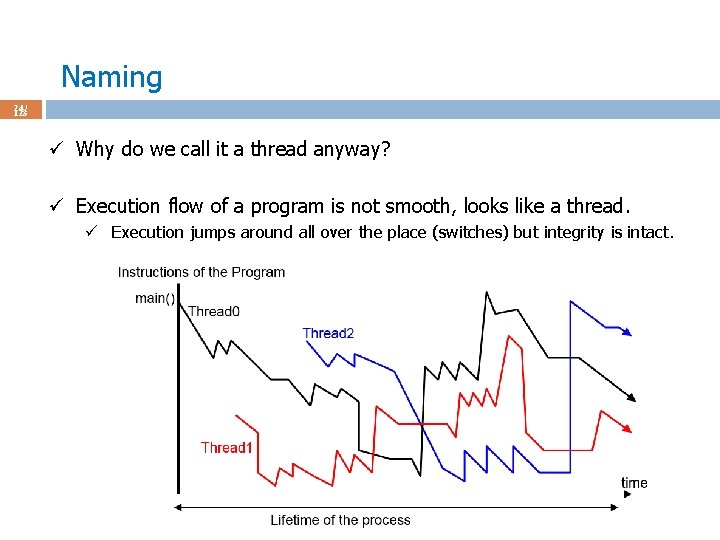

Naming 24 / 123 ü Why do we call it a thread anyway? ü Execution flow of a program is not smooth, looks like a thread. ü Execution jumps around all over the place (switches) but integrity is intact.

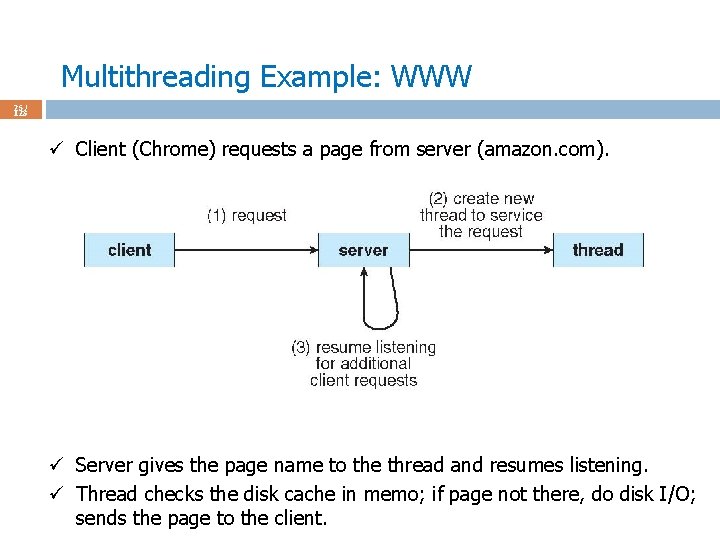

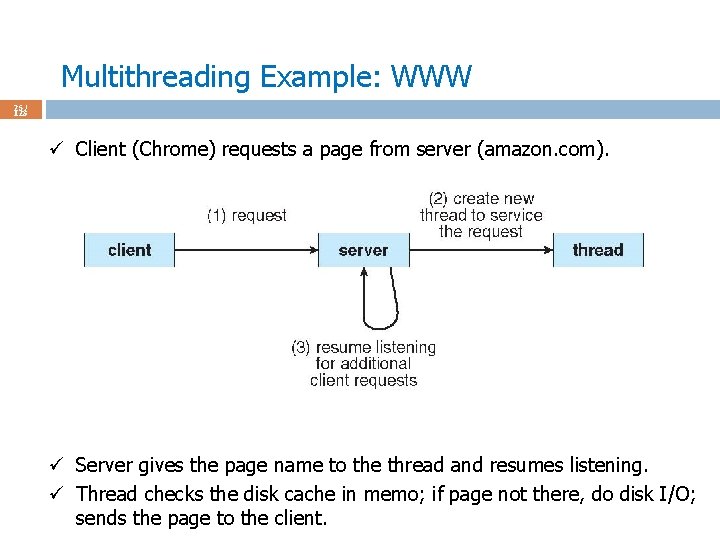

Multithreading Example: WWW 25 / 123

- Client (Chrome) requests a page from the server (amazon.com).
- Server gives the page name to a thread and resumes listening.
- The thread checks the disk cache in memory; if the page is not there, it does disk I/O; then it sends the page to the client.

Threading Support 26 / 123 ü User-level threads: are threads that the OS is not aware of. They exist entirely within a process, and are scheduled to run within that process's time slices. ü Kernel-level threads: The OS is aware of kernel-level threads. Kernel threads are scheduled by the OS's scheduling algorithm, and require a "lightweight" context switch to switch between (that is, registers, PC, and SP must be changed, but the memory context remains the same among kernel threads in the same process).

Threading Support 27 / 123 ü User-level threads are much faster to switch between, as there is no context switch; further, a problem-domain-dependent algorithm can be used to schedule among them. CPU-bound tasks with interdependent computations, or a task that will switch among threads often, might best be handled by user-level threads.

Threading Support 28 / 123 ü Kernel-level threads are scheduled by the OS, and each thread can be granted its own time slices by the scheduling algorithm. The kernel scheduler can thus make intelligent decisions among threads, and avoid scheduling processes which consist of entirely idle threads (or I/O bound threads). A task that has multiple threads that are I/O bound, or that has many threads (and thus will benefit from the additional time slices that kernel threads will receive) might best be handled by kernel threads. ü Kernel-level threads require a system call for the switch to occur; userlevel threads do not.

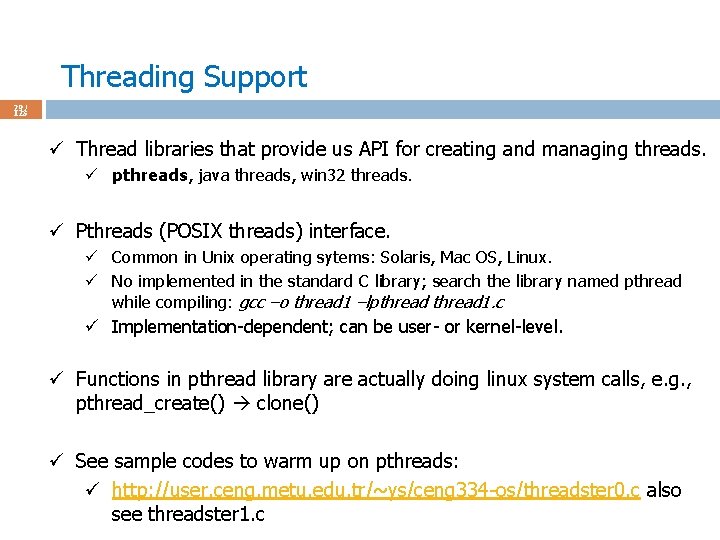

Threading Support 29 / 123

- Thread libraries provide an API for creating and managing threads: pthreads, Java threads, Win32 threads.
- Pthreads (POSIX threads) interface:
  - Common in Unix operating systems: Solaris, Mac OS, Linux.
  - Not implemented in the standard C library; link against the library named pthread while compiling: gcc -o thread1 thread1.c -lpthread
  - Implementation-dependent; can be user- or kernel-level.
  - Functions in the pthread library actually make Linux system calls, e.g., pthread_create() calls clone().
- See sample codes to warm up on pthreads: http://user.ceng.metu.edu.tr/~ys/ceng334-os/threadster0.c (also see threadster1.c).

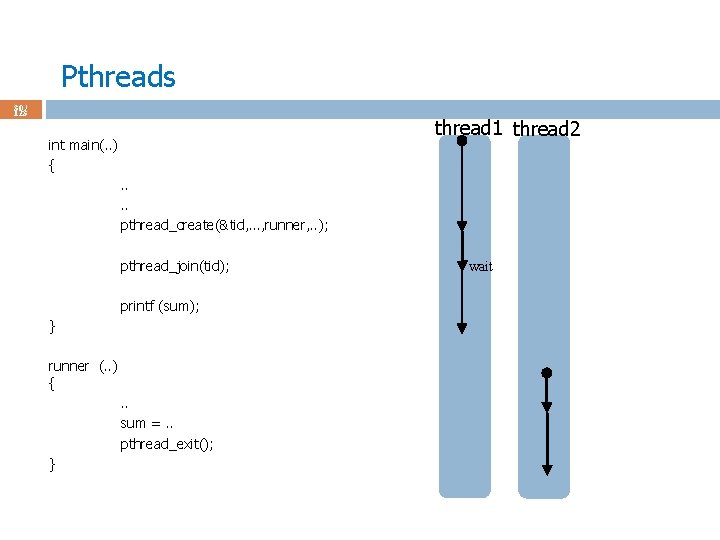

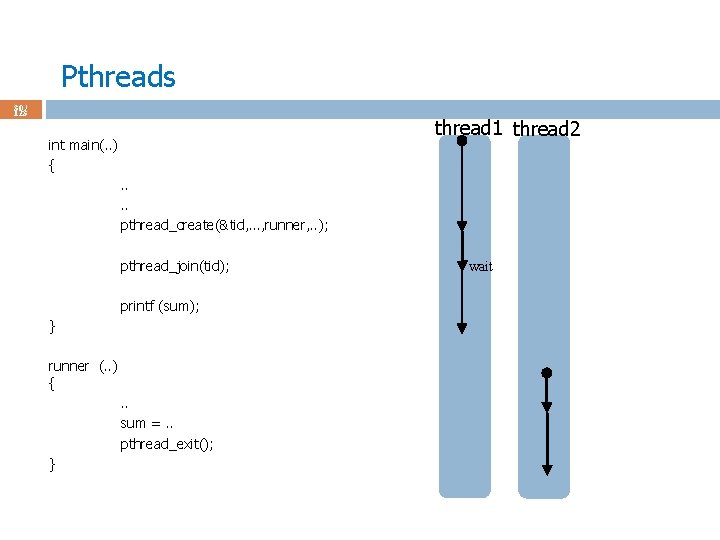

Pthreads 30 / 123

    int main(..) {                          //thread 1
      ..
      pthread_create(&tid, …, runner, ..);  //starts thread 2
      pthread_join(tid);                    //thread 1 waits here
      printf(sum);
    }

    runner(..) {                            //thread 2
      ..
      sum = ..
      pthread_exit();
    }
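The create/join flow on this slide can be written out as a small compilable sketch. The function name `sum_up_to` and the choice to add the numbers 1..n are assumptions made for illustration; only `pthread_create`, `pthread_join`, and `pthread_exit` come from the slide.

```c
#include <pthread.h>

/* Shared between the main thread and the worker, as on the slide. */
static long sum;

/* Thread routine (thread 2): adds 1..n into the shared variable sum. */
static void *runner(void *arg)
{
    long n = *(long *)arg;
    sum = 0;
    for (long i = 1; i <= n; i++)
        sum += i;
    pthread_exit(NULL);   /* same effect as "return NULL" at this point */
}

/* Hypothetical helper (thread 1): starts one worker, waits for it,
   and returns the result. Returns -1 if create or join fails. */
long sum_up_to(long n)
{
    pthread_t tid;
    if (pthread_create(&tid, NULL, runner, &n) != 0)
        return -1;
    if (pthread_join(tid, NULL) != 0)   /* thread 1 blocks here */
        return -1;
    return sum;   /* safe to read: the worker has terminated */
}
```

Calling `sum_up_to(100)` from `main()` and printing the result mirrors the `printf(sum)` step on the slide. No lock is needed here because the main thread reads `sum` only after the join.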

Single- to Multi-thread Conversion 31 / 123 ü In a simple world ü Identify functions as parallel activities. ü Run them as separate threads. ü In real world ü Single-threaded programs use global variables, library functions (malloc). ü Be careful with them. ü Global variables are good for easy-communication but need special care.

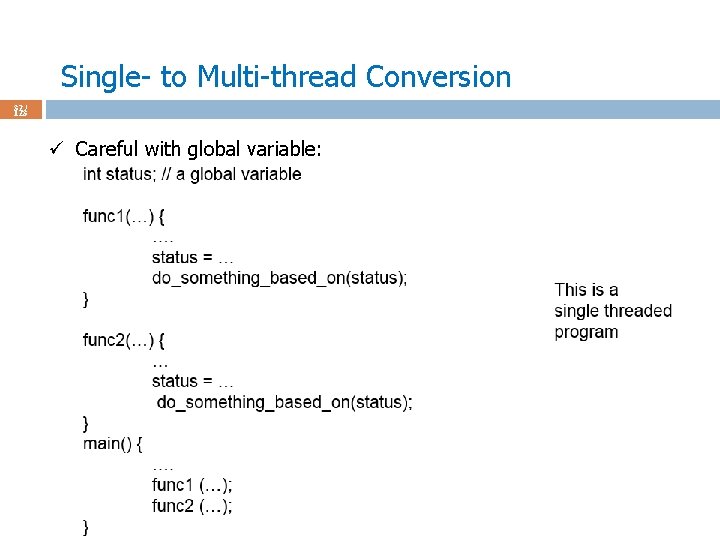

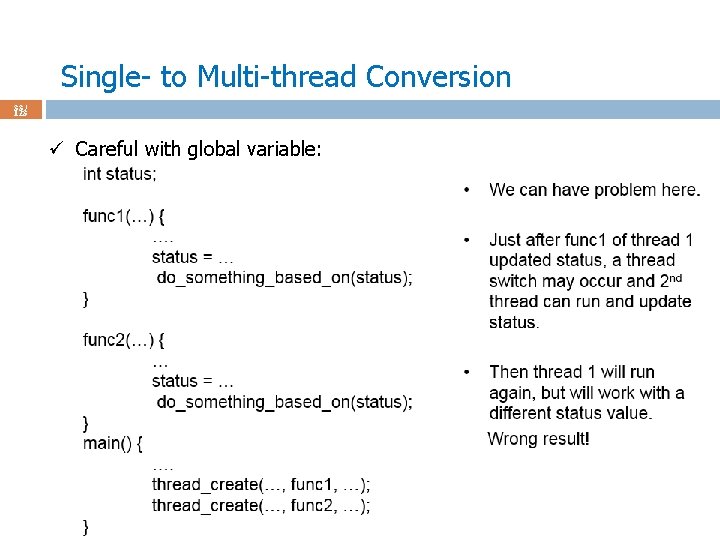

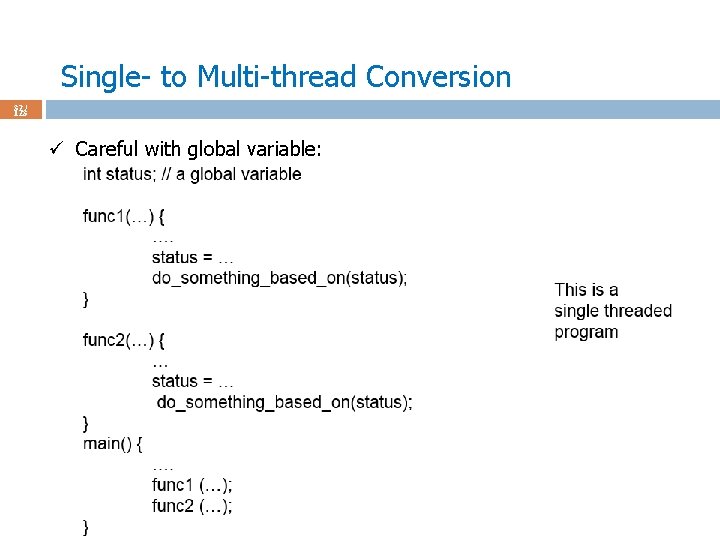

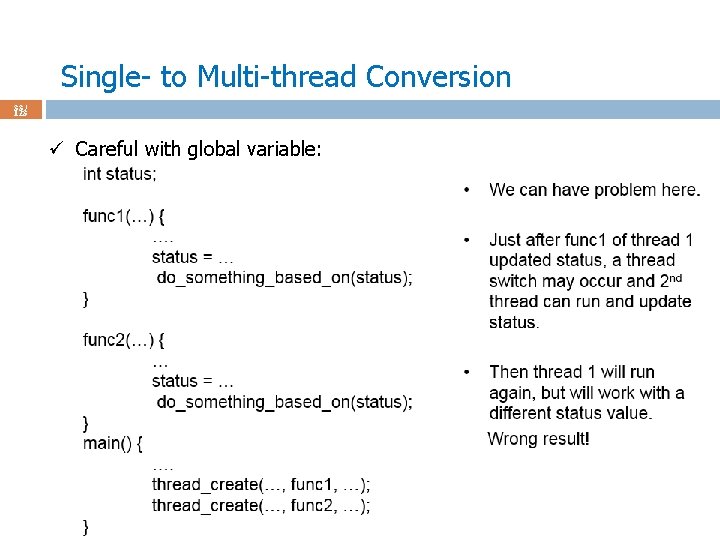

Single- to Multi-thread Conversion 32 / 123 ü Careful with global variable:


Single- to Multi-thread Conversion 34 / 123

- Global, local, and thread-specific variables.
- Thread-specific: global inside a thread but not for the whole process, i.e., other threads cannot access it, but all the functions of the thread can (no problem, because functions within a thread are executed sequentially).
- Classic C (before C11) has no language support for this variable type; C11 added the _Thread_local storage class.
- The thread API has special functions to create such variables.
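As one possible illustration of the "special functions" mentioned above, the pthread API offers `pthread_key_create`, `pthread_setspecific`, and `pthread_getspecific`. The names `slot_key`, `read_my_value`, and `tsd_demo` below are hypothetical, and the sketch omits error checking.

```c
#include <pthread.h>
#include <stdint.h>

static pthread_key_t slot_key;                      /* one key, one private value per thread */
static pthread_once_t key_once = PTHREAD_ONCE_INIT;

static void make_key(void) { pthread_key_create(&slot_key, NULL); }

/* Any function called by a thread sees that thread's own value. */
static long read_my_value(void)
{
    return (long)(intptr_t)pthread_getspecific(slot_key);
}

static void *worker(void *arg)
{
    pthread_once(&key_once, make_key);      /* create the key exactly once */
    pthread_setspecific(slot_key, arg);     /* store this thread's private value */
    return (void *)(intptr_t)(read_my_value() * 2);
}

/* Starts two threads with different private values.
   Returns 1 if each thread saw only its own value. */
int tsd_demo(void)
{
    pthread_t t1, t2;
    void *r1, *r2;
    pthread_create(&t1, NULL, worker, (void *)(intptr_t)10);
    pthread_create(&t2, NULL, worker, (void *)(intptr_t)20);
    pthread_join(t1, &r1);
    pthread_join(t2, &r2);
    return (intptr_t)r1 == 20 && (intptr_t)r2 == 40;
}
```

Both threads use the same key, yet neither can overwrite the other's value, which is the "global inside the thread only" behavior described on the slide.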

Single- to Multi-thread Conversion 35 / 123

- Use thread-safe (reentrant, reenterable) library routines.
- Multiple malloc() calls are executed sequentially in single-threaded code.
- Say one thread is suspended inside malloc(); another thread calls malloc() and re-enters it while the first call has not finished.
- Library functions should be designed to be reentrant, i.e., designed to tolerate a second call from the same process before the first one has finished.
- To do so, do not use global variables.

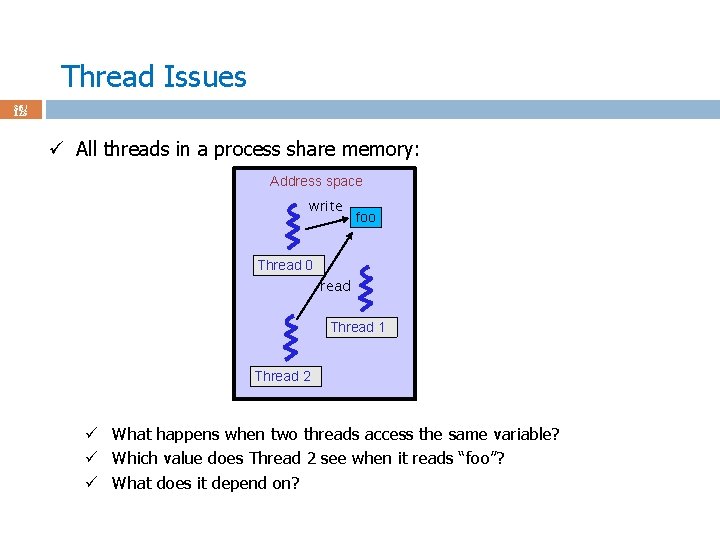

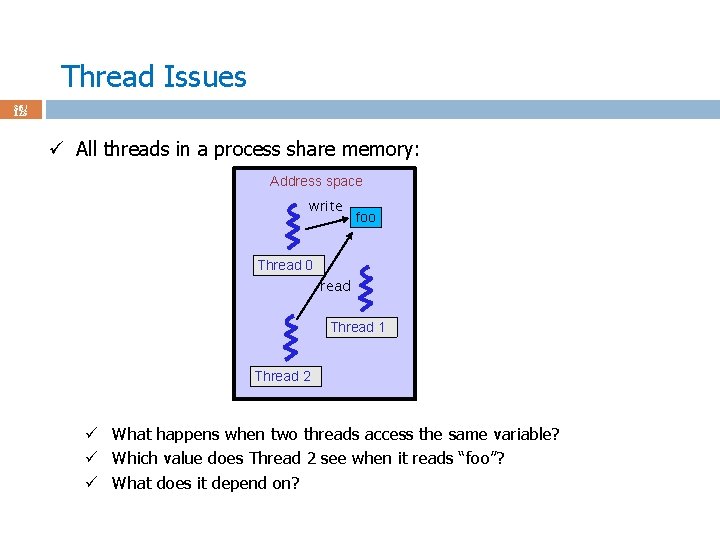

Thread Issues 36 / 123 ü All threads in a process share memory: Address space write foo Thread 0 read Thread 1 Thread 2 ü What happens when two threads access the same variable? ü Which value does Thread 2 see when it reads “foo”? ü What does it depend on?

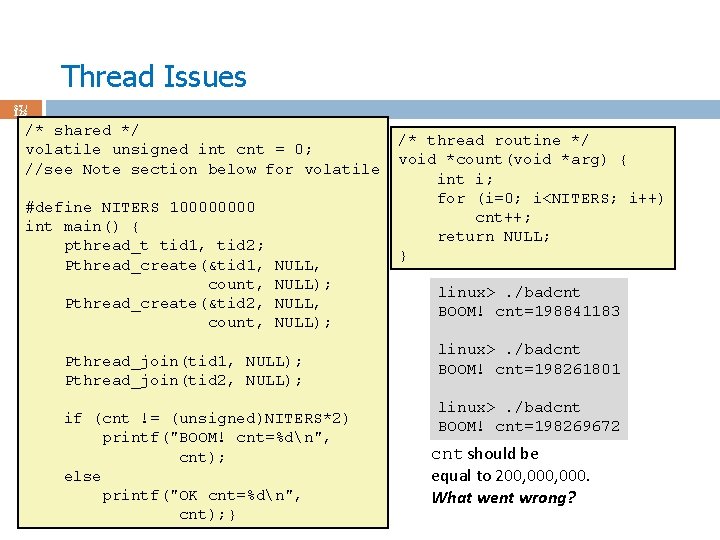

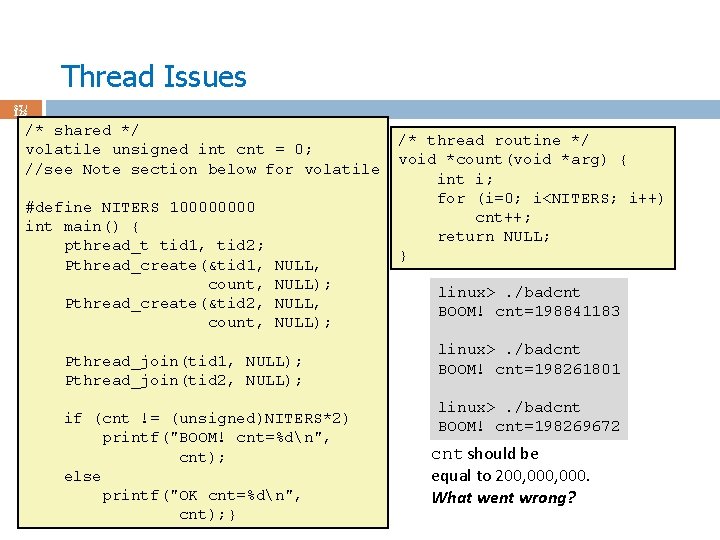

Thread Issues 37 / 123

    /* shared */
    volatile unsigned int cnt = 0;   //see Note section below for volatile
    #define NITERS 100000000

    int main() {
      pthread_t tid1, tid2;
      Pthread_create(&tid1, NULL, count, NULL);
      Pthread_create(&tid2, NULL, count, NULL);
      Pthread_join(tid1, NULL);
      Pthread_join(tid2, NULL);
      if (cnt != (unsigned)NITERS*2)
        printf("BOOM! cnt=%d\n", cnt);
      else
        printf("OK cnt=%d\n", cnt);
    }

    /* thread routine */
    void *count(void *arg) {
      int i;
      for (i=0; i<NITERS; i++)
        cnt++;
      return NULL;
    }

    linux> ./badcnt
    BOOM! cnt=198841183
    linux> ./badcnt
    BOOM! cnt=198261801
    linux> ./badcnt
    BOOM! cnt=198269672

cnt should be equal to 200,000,000. What went wrong?

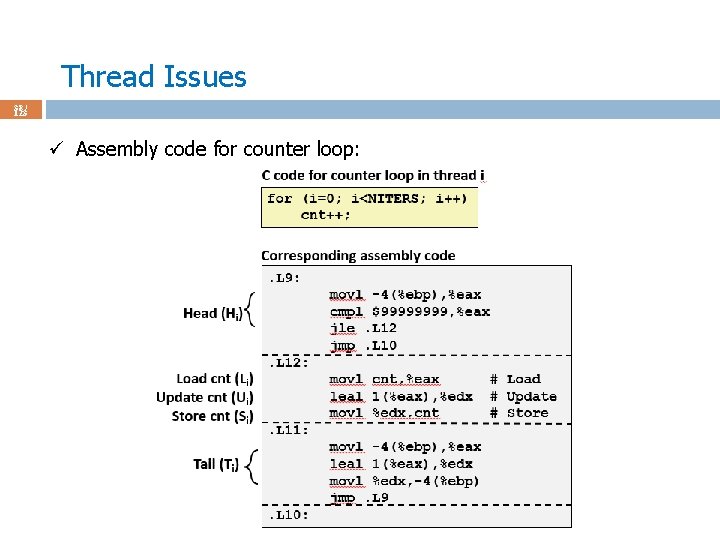

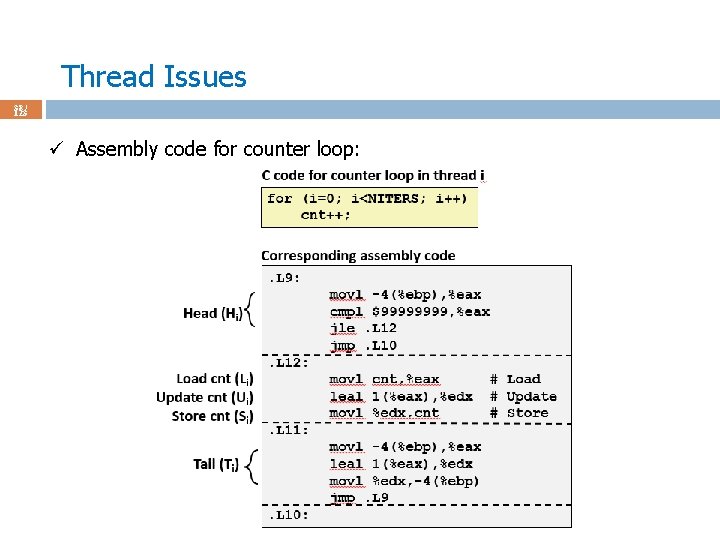

Thread Issues 38 / 123 ü Assembly code for counter loop:

Thread Issues 39 / 123 ü Assembly code for counter loop. ü Unpredictable switches of threads by scheduler will create inconsistencies on the shared data, e. g. , global variable cnt. ü Handling this is arguably the most important topic of this class: Synchronization.

Synchronization 40 / 123 ü Synchronize threads/coordinate their activities so that when you access the shared data (e. g. , global variables) you are not having a trouble. ü Multiple processes sharing a file or shared memory segment also require synchronization (= critical section handling).

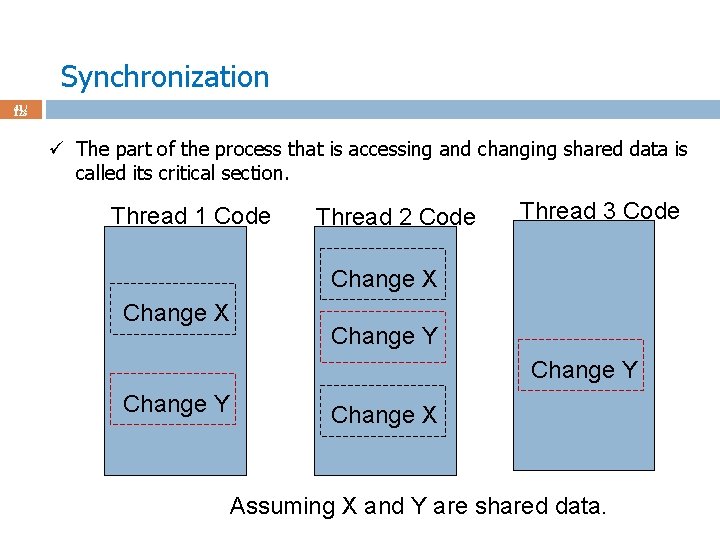

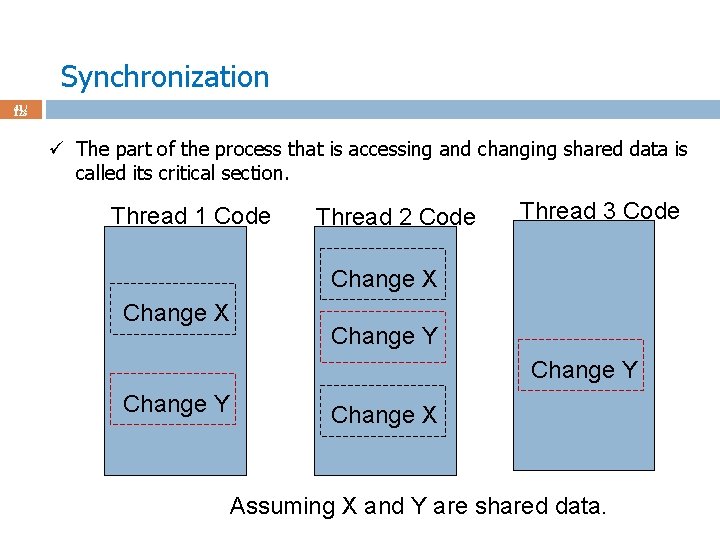

Synchronization 41 / 123 ü The part of the process that is accessing and changing shared data is called its critical section. Thread 1 Code Thread 2 Code Thread 3 Code Change X Change Y Change X Assuming X and Y are shared data.

Synchronization 42 / 123 ü Solution: No 2 processes/threads are in their critical section at the same time, aka Mutual Exclusion (mutex). ü Must assume processes/threads interleave executions arbitrarily (preemptive scheduling) and at different rates. ü Scheduling is not under application’s control. ü We control coordination using data synchronization. ü We restrict interleaving of executions to ensure consistency. ü Low-level mechanism to do this: locks, ü High-level mechanisms: mutexes, semaphores, monitors, condition variables.

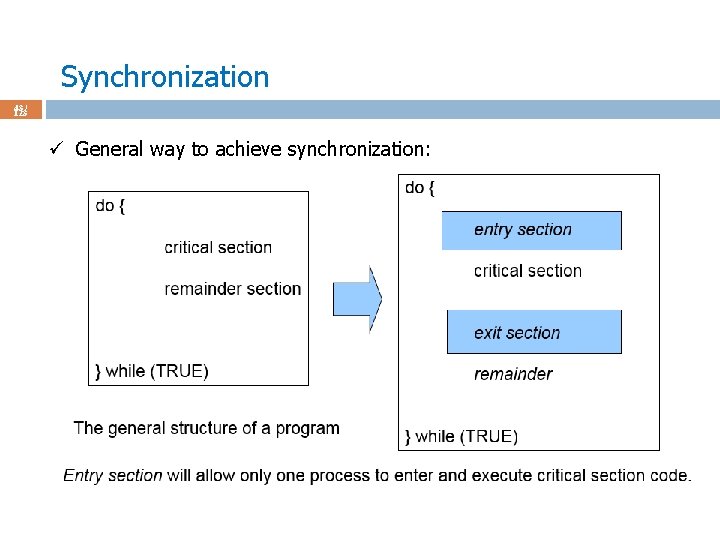

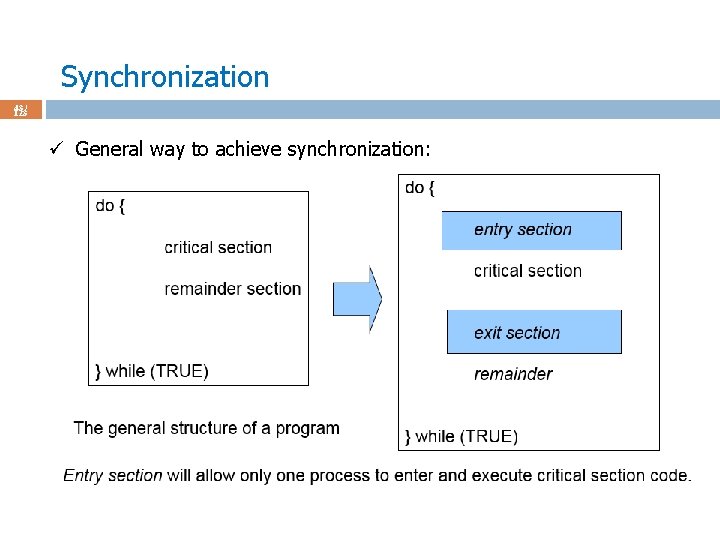

Synchronization 43 / 123 ü General way to achieve synchronization:

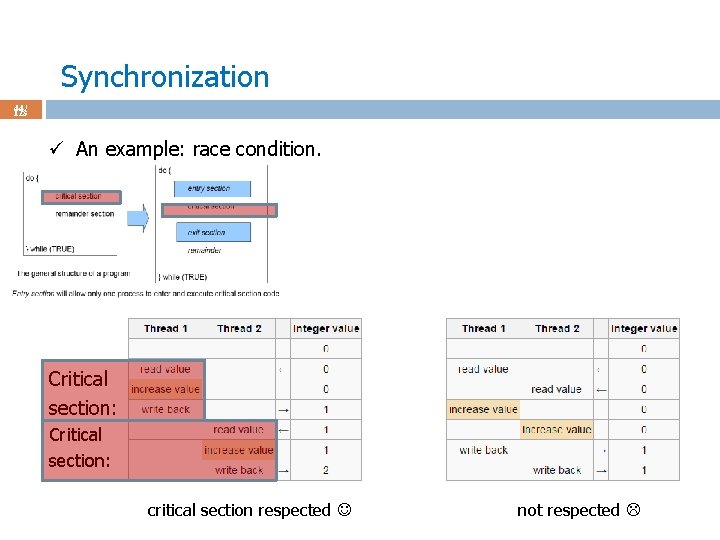

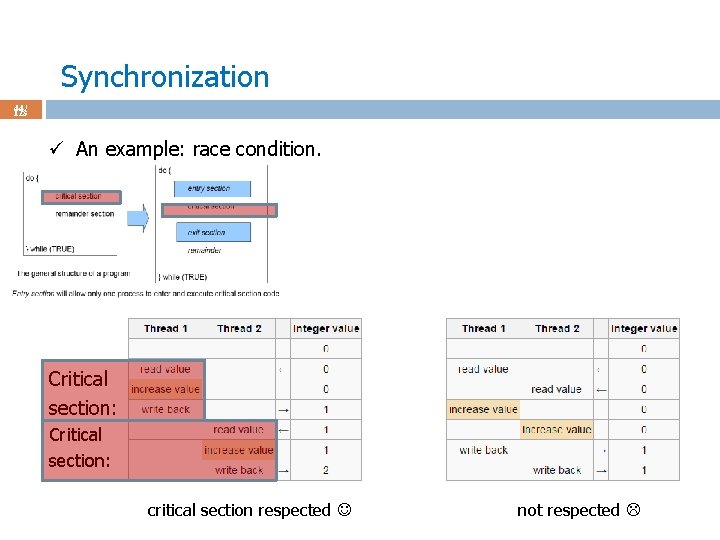

Synchronization 44 / 123 ü An example: race condition. Critical section: critical section respected not respected

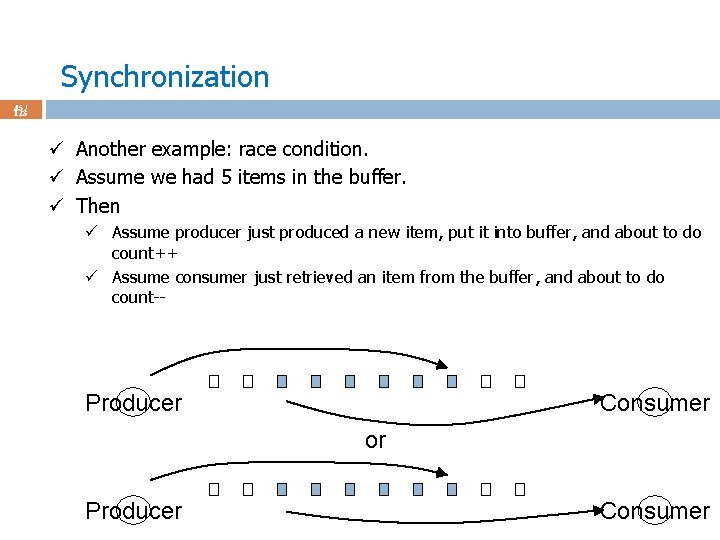

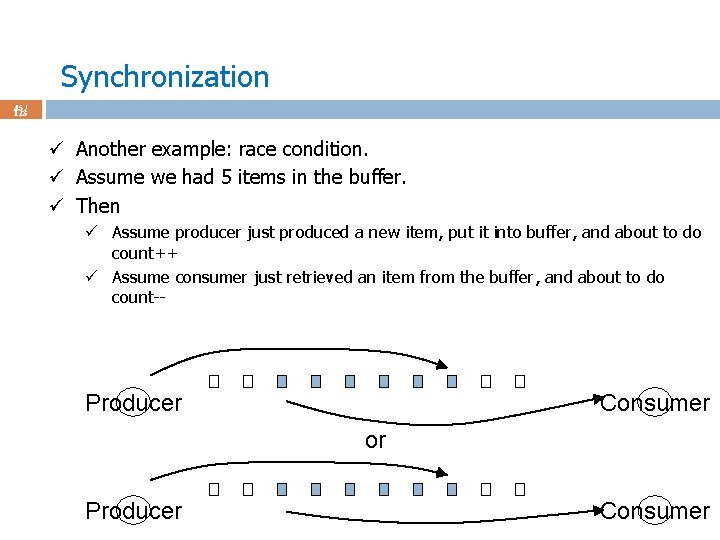

Synchronization 45 / 123 ü Another example: race condition. ü Assume we had 5 items in the buffer. ü Then ü Assume producer just produced a new item, put it into buffer, and about to do count++ ü Assume consumer just retrieved an item from the buffer, and about to do count-- Producer Consumer or Producer Consumer

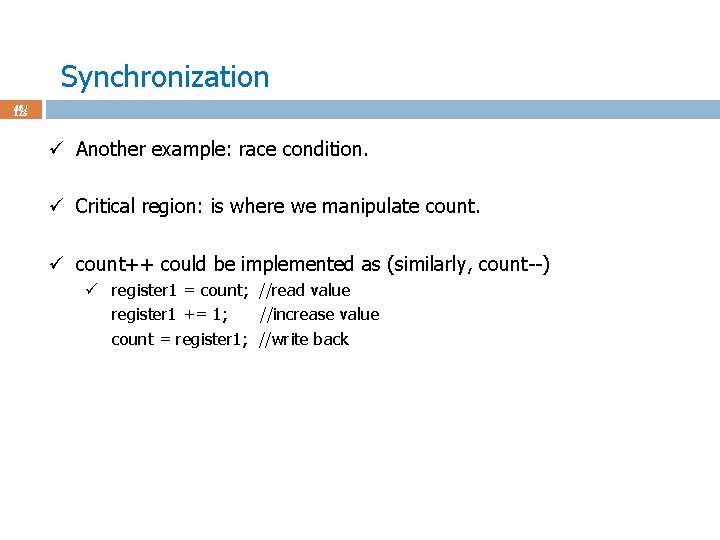

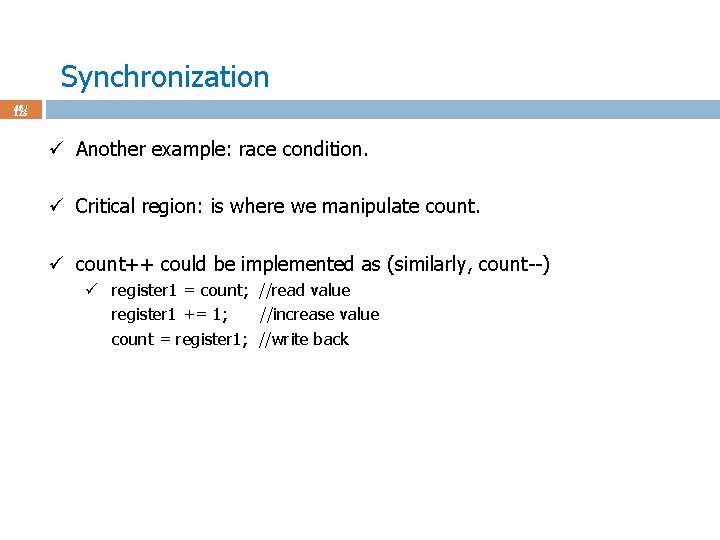

Synchronization 46 / 123 ü Another example: race condition. ü Critical region: is where we manipulate count. ü count++ could be implemented as (similarly, count--) ü register 1 = count; //read value register 1 += 1; //increase value count = register 1; //write back

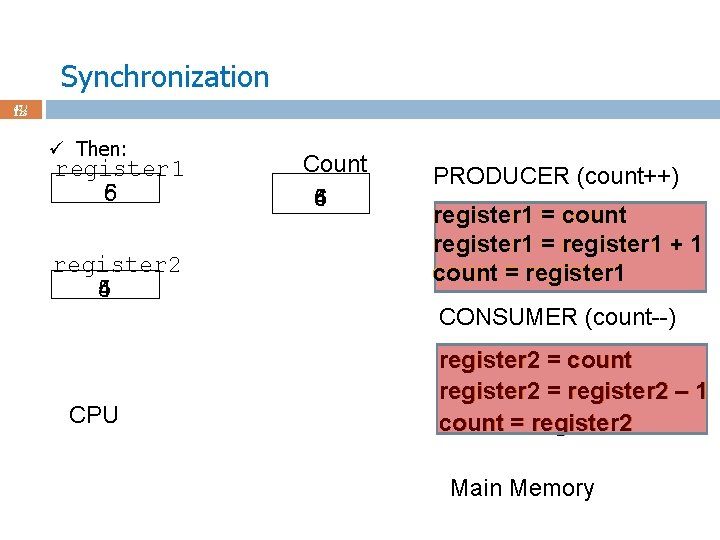

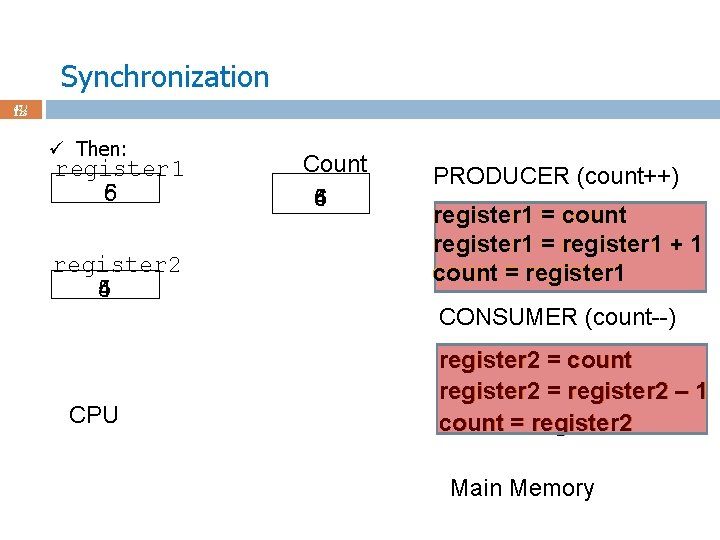

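Synchronization 47 / 123

- Then, starting from count = 5 in main memory:
  - PRODUCER (count++): register1 = count (5); register1 = register1 + 1 (6); count = register1.
  - CONSUMER (count--): register2 = count (5); register2 = register2 - 1 (4); count = register2.
  - If both threads read count before either one writes back, count ends up as 6 or 4 (whichever write-back runs last) instead of 5.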
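The lost update can be replayed deterministically. The sketch below is single-threaded on purpose: the hypothetical function `replay` executes the three register-level steps of `count++` and `count--` in whatever order the caller spells out, so the effect of a particular interleaving can be checked by hand.

```c
/* Replays the three machine-level steps of count++ (producer, 'P') and
   count-- (consumer, 'C') in the order given by schedule, e.g. "PPCCPC".
   Returns the final value of count. No real threads are involved; the
   interleaving is fixed by the caller instead of by a scheduler. */
int replay(int count, const char *schedule)
{
    int register1 = 0, register2 = 0;   /* each thread's private register */
    int pstep = 0, cstep = 0;           /* next step of producer / consumer */

    for (const char *s = schedule; *s != '\0'; s++) {
        if (*s == 'P') {
            if (pstep == 0)      register1 = count;          /* read value   */
            else if (pstep == 1) register1 = register1 + 1;  /* increase     */
            else if (pstep == 2) count = register1;          /* write back   */
            pstep++;
        } else if (*s == 'C') {
            if (cstep == 0)      register2 = count;          /* read value   */
            else if (cstep == 1) register2 = register2 - 1;  /* decrease     */
            else if (cstep == 2) count = register2;          /* write back   */
            cstep++;
        }
    }
    return count;
}
```

With count starting at 5, the sequential schedule `"PPPCCC"` returns 5, while `"PPCCPC"` returns 4 and `"PPCCCP"` returns 6, matching the two wrong outcomes of the race.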

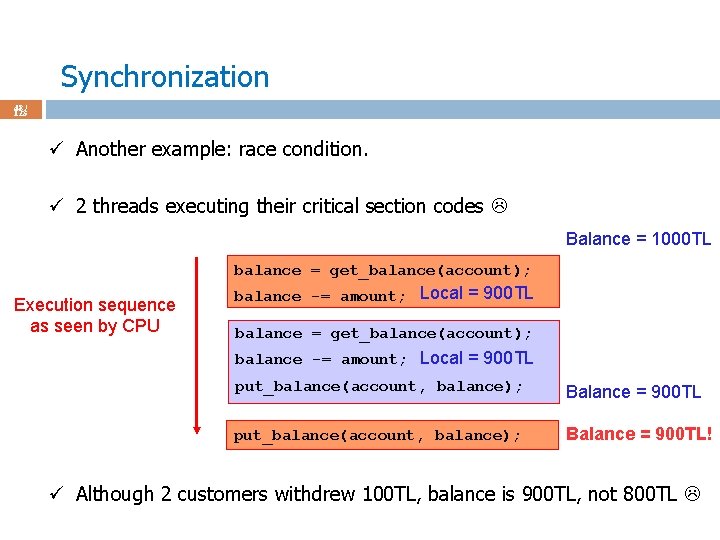

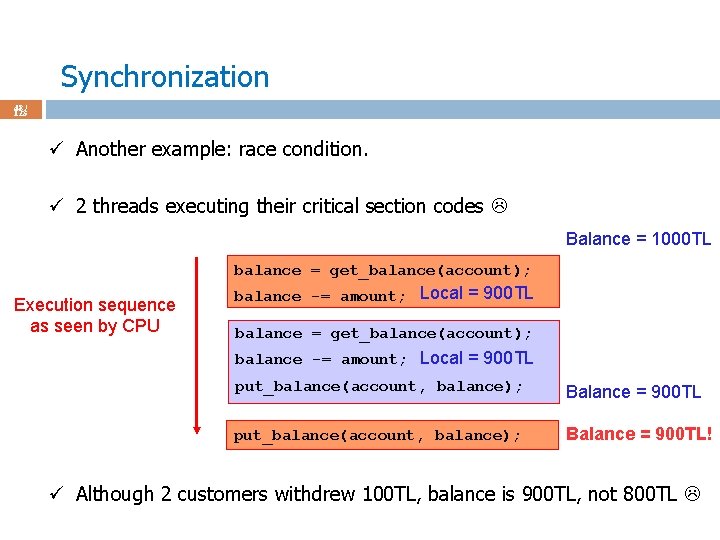

Synchronization 48 / 123

- Another example: race condition.
- 2 threads executing their critical section code, with Balance = 1000 TL initially. Execution sequence as seen by the CPU:

    balance = get_balance(account);   //thread 1
    balance -= amount;                //thread 1: local = 900 TL
    balance = get_balance(account);   //thread 2
    balance -= amount;                //thread 2: local = 900 TL
    put_balance(account, balance);    //thread 1: Balance = 900 TL
    put_balance(account, balance);    //thread 2: Balance = 900 TL!

- Although 2 customers withdrew 100 TL each, the balance is 900 TL, not 800 TL.

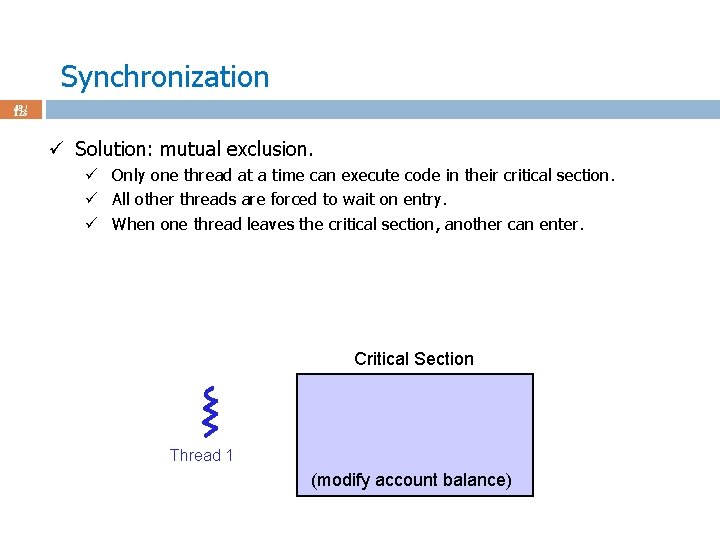

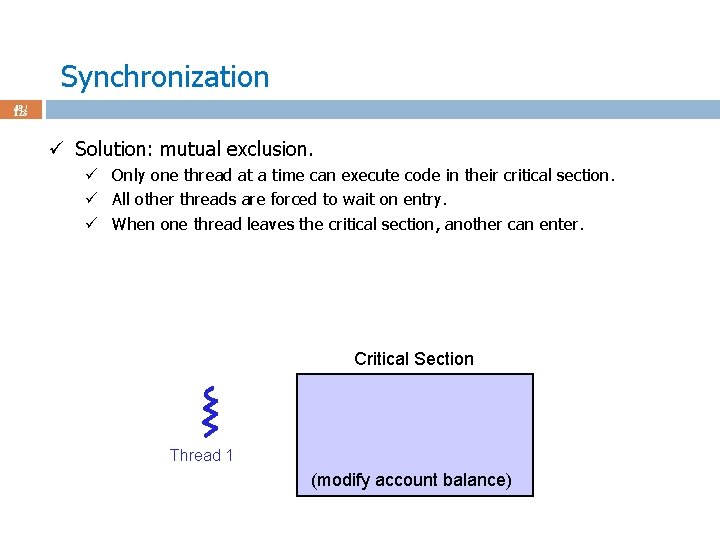

Synchronization 49 / 123 ü Solution: mutual exclusion. ü Only one thread at a time can execute code in their critical section. ü All other threads are forced to wait on entry. ü When one thread leaves the critical section, another can enter. Critical Section Thread 1 (modify account balance)

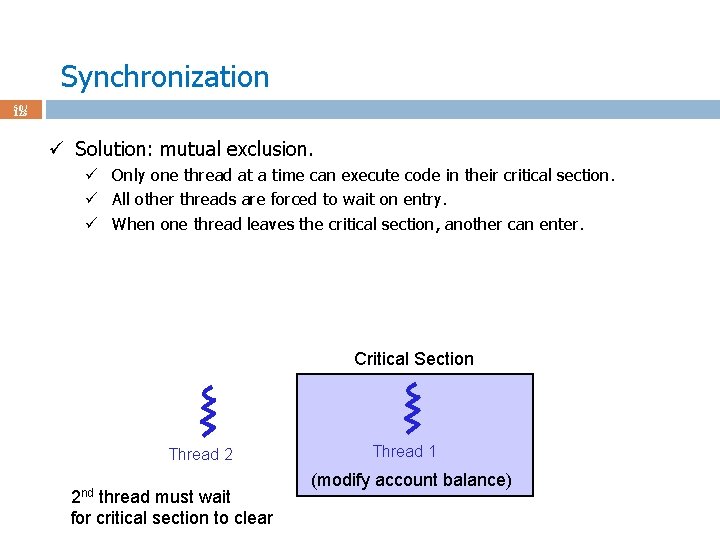

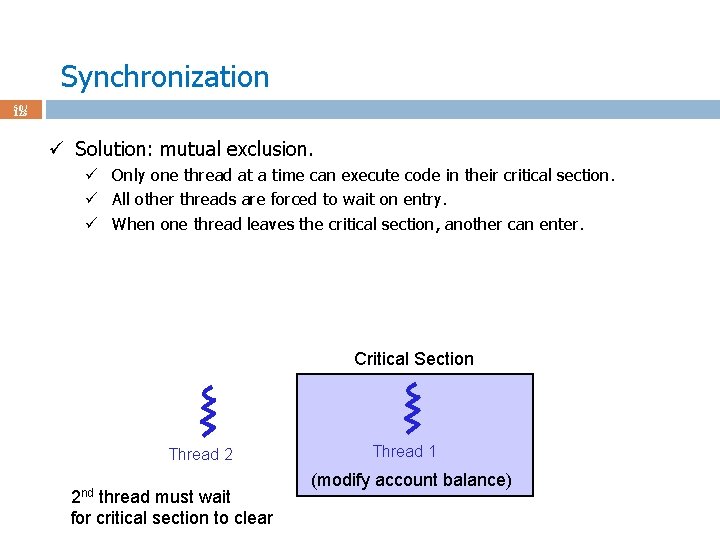

Synchronization 50 / 123 ü Solution: mutual exclusion. ü Only one thread at a time can execute code in their critical section. ü All other threads are forced to wait on entry. ü When one thread leaves the critical section, another can enter. Critical Section Thread 2 2 nd thread must wait for critical section to clear Thread 1 (modify account balance)

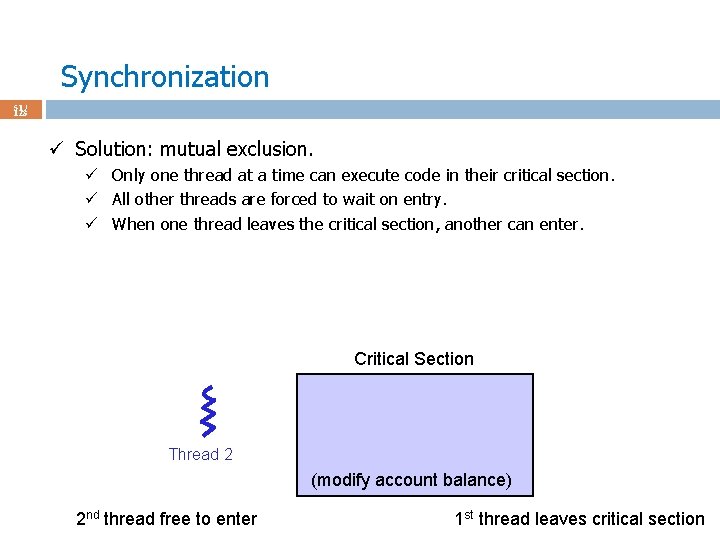

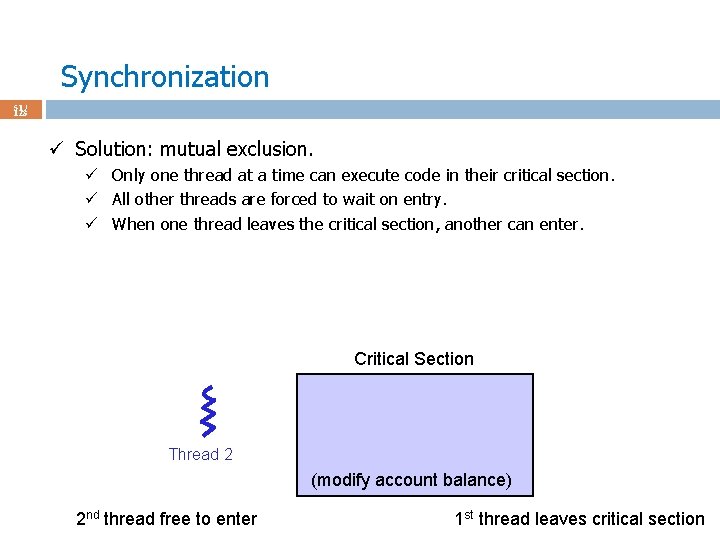

Synchronization 51 / 123 ü Solution: mutual exclusion. ü Only one thread at a time can execute code in their critical section. ü All other threads are forced to wait on entry. ü When one thread leaves the critical section, another can enter. Critical Section Thread 2 (modify account balance) 2 nd thread free to enter 1 st thread leaves critical section

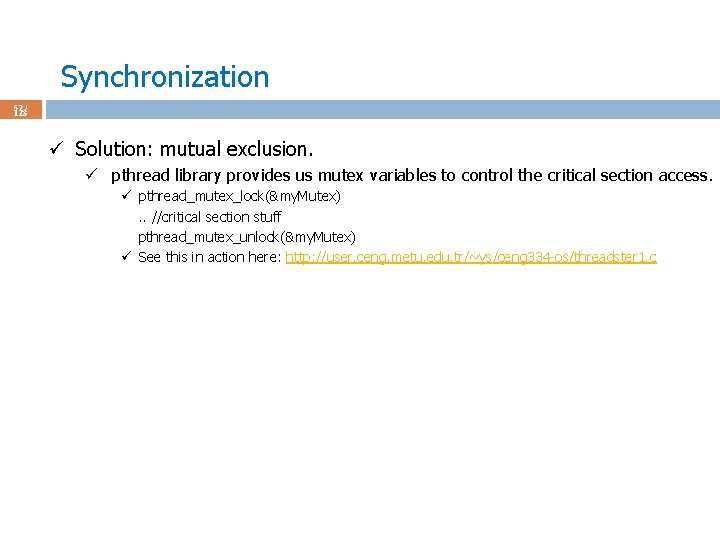

Synchronization 52 / 123

- Solution: mutual exclusion.
- The pthread library provides mutex variables to control critical section access:

    pthread_mutex_lock(&myMutex);
    ..   //critical section stuff
    pthread_mutex_unlock(&myMutex);

- See this in action here: http://user.ceng.metu.edu.tr/~ys/ceng334-os/threadster1.c
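Applied to the shared counter from the earlier badcnt example, the lock/unlock pair could look like the sketch below. The names `cnt_mutex` and `run_two_counters` are assumptions, NITERS is set to an arbitrary smaller value, and error checking is omitted.

```c
#include <pthread.h>

#define NITERS 100000            /* iterations per thread (arbitrary value) */

static unsigned int cnt;         /* shared counter */
static pthread_mutex_t cnt_mutex = PTHREAD_MUTEX_INITIALIZER;

/* Thread routine: every increment happens inside the critical section. */
static void *count(void *arg)
{
    (void)arg;
    for (int i = 0; i < NITERS; i++) {
        pthread_mutex_lock(&cnt_mutex);     /* entry section */
        cnt++;                              /* critical section */
        pthread_mutex_unlock(&cnt_mutex);   /* exit section */
    }
    return NULL;
}

/* Hypothetical driver: runs two counting threads and returns the total. */
unsigned int run_two_counters(void)
{
    pthread_t tid1, tid2;
    cnt = 0;
    pthread_create(&tid1, NULL, count, NULL);
    pthread_create(&tid2, NULL, count, NULL);
    pthread_join(tid1, NULL);
    pthread_join(tid2, NULL);
    return cnt;
}
```

Because only one thread at a time can be between lock and unlock, the read-increment-write sequence of `cnt++` can no longer interleave, and the total is always `2 * NITERS`. Locking once per increment is slow; it is done here only to keep the critical section as small as on the slide.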

Synchronization 53 / 123 ü Critical section requirements. ü Mutual exclusion: at most 1 thread is currently executing in the critical section. ü Progress: if thread T 1 is outside the critical section, then T 1 cannot prevent T 2 from entering the critical section. ü No starvation: if T 1 is waiting for the critical section, it’ll eventually enter. ü Assuming threads eventually leave critical sections. ü Performance: the overhead of entering/exiting critical section is small w. r. t. the work being done within it.

Synchronization 54 / 123

- Solution: Peterson's solution to mutual exclusion.
- Programming at the application level (software solution; no hardware or kernel support).

    Peterson.enter   //similar to pthread_mutex_lock(&myMutex)
    ..               //critical section stuff
    Peterson.exit    //similar to pthread_mutex_unlock(&myMutex)

- Works for 2 threads/processes (not more).
- First, a naive attempt. Is this solution OK?
  - Set global variable lock = 1.
  - A thread that wants to enter the critical section checks lock == 1.
  - If true, enter and do lock--.
  - If false, another thread has decremented it, so do not enter.
- No: this solution is broken because lock itself is a shared global variable; two threads can both see lock == 1 before either one decrements it.
- Just using a single variable without any other protection is not enough.
- Back to Peterson's algorithm.

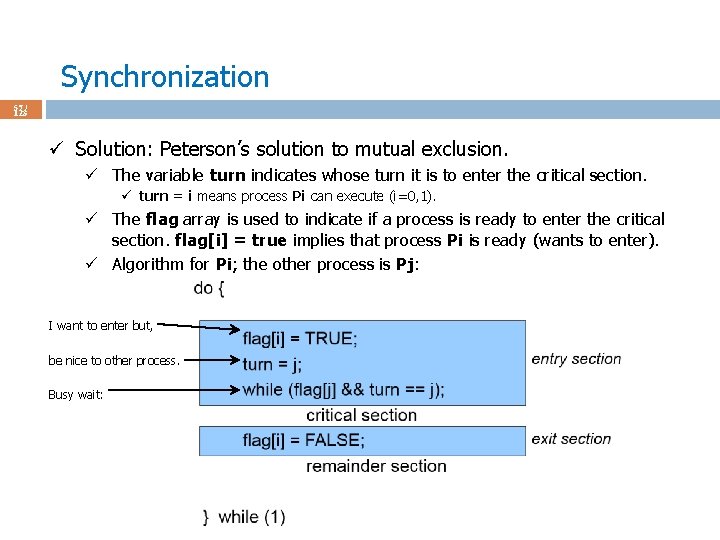

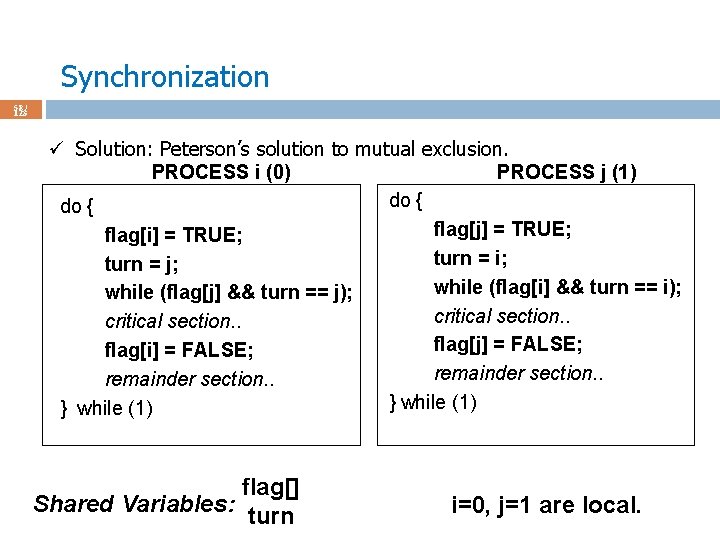

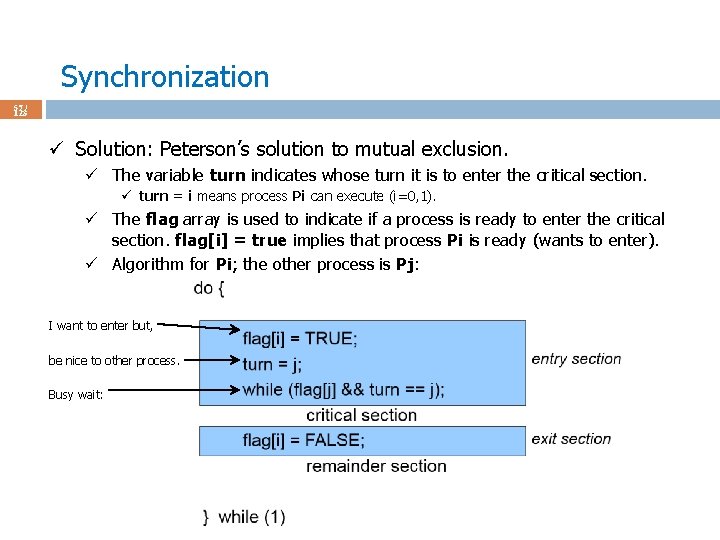

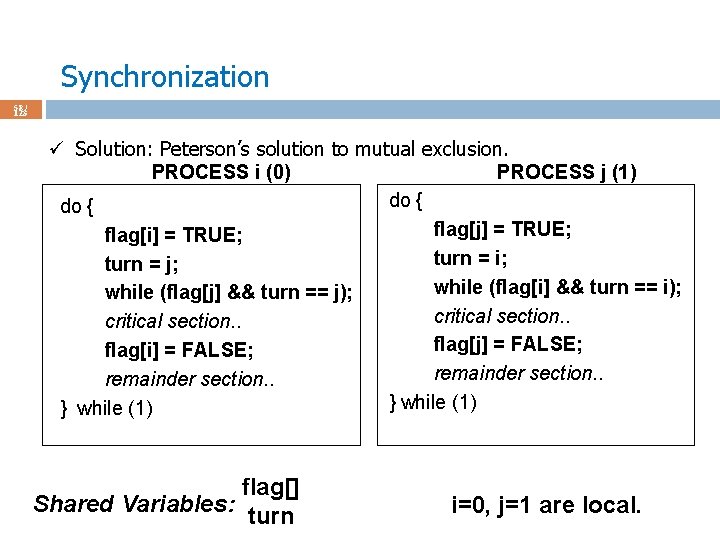

Synchronization 56 / 123 ü Solution: Peterson’s solution to mutual exclusion. ü Programming at the application (sw solution; no hw or kernel support). ü Peterson. enter //similar to pthread_mutex_lock(&my. Mutex). . //critical section stuff Peterson. exit //similar to pthread_mutex_unlock(&my. Mutex) ü Works for 2 threads/processes (not more). ü Assume that the LOAD and STORE machine instructions are atomic; that is, cannot be interrupted. ü The two processes share two variables: ü int turn; ü boolean flag[2]; ü The variable turn indicates whose turn it is to enter the critical section. ü turn = i means process Pi can execute (i=0, 1). ü The flag array is used to indicate if a process is ready to enter the critical section. flag[i] = true implies that process Pi is ready (wants to enter).

Synchronization 57 / 123 ü Solution: Peterson’s solution to mutual exclusion. ü The variable turn indicates whose turn it is to enter the critical section. ü turn = i means process Pi can execute (i=0, 1). ü The flag array is used to indicate if a process is ready to enter the critical section. flag[i] = true implies that process Pi is ready (wants to enter). ü Algorithm for Pi; the other process is Pj: I want to enter but, be nice to other process. Busy wait:

Synchronization 58 / 123

- Solution: Peterson's solution to mutual exclusion.
- Shared variables: flag[] and turn. i=0 and j=1 are local.

    PROCESS i (0)                        PROCESS j (1)
    do {                                 do {
      flag[i] = TRUE;                      flag[j] = TRUE;
      turn = j;                            turn = i;
      while (flag[j] && turn == j);        while (flag[i] && turn == i);
      critical section..                   critical section..
      flag[i] = FALSE;                     flag[j] = FALSE;
      remainder section..                  remainder section..
    } while (1);                         } while (1);
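A compilable sketch of the algorithm is given below. It assumes C11 `<stdatomic.h>` with the default sequentially consistent operations, because on modern compilers and CPUs plain loads and stores may be reordered and the textbook version can then fail. The names `peterson_enter`, `peterson_exit`, `peterson_demo`, and `guarded_counter` are hypothetical, and the `sched_yield()` in the wait loop is an addition to be polite on a single core.

```c
#include <pthread.h>
#include <sched.h>
#include <stdatomic.h>
#include <stdbool.h>

#define PETERSON_ITERS 20000

static atomic_bool flag[2];     /* flag[i]: thread i wants to enter */
static atomic_int turn;         /* which thread may go first on a tie */
static long guarded_counter;    /* plain variable protected by the algorithm */

static void peterson_enter(int i)
{
    int j = 1 - i;                          /* the other thread */
    atomic_store(&flag[i], true);           /* I want to enter ... */
    atomic_store(&turn, j);                 /* ... but be nice to the other one */
    while (atomic_load(&flag[j]) && atomic_load(&turn) == j)
        sched_yield();                      /* busy wait */
}

static void peterson_exit(int i)
{
    atomic_store(&flag[i], false);
}

static void *peterson_worker(void *arg)
{
    int id = *(int *)arg;
    for (int k = 0; k < PETERSON_ITERS; k++) {
        peterson_enter(id);
        guarded_counter++;                  /* critical section */
        peterson_exit(id);
    }
    return NULL;
}

/* Runs exactly two threads (the algorithm supports no more). */
long peterson_demo(void)
{
    pthread_t t[2];
    int ids[2] = {0, 1};
    guarded_counter = 0;
    atomic_store(&flag[0], false);
    atomic_store(&flag[1], false);
    atomic_store(&turn, 0);
    for (int i = 0; i < 2; i++)
        pthread_create(&t[i], NULL, peterson_worker, &ids[i]);
    for (int i = 0; i < 2; i++)
        pthread_join(t[i], NULL);
    return guarded_counter;
}
```

The slide's assumption that LOAD and STORE are atomic corresponds here to the atomic types; the ordering guarantee is the extra ingredient that real hardware needs.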

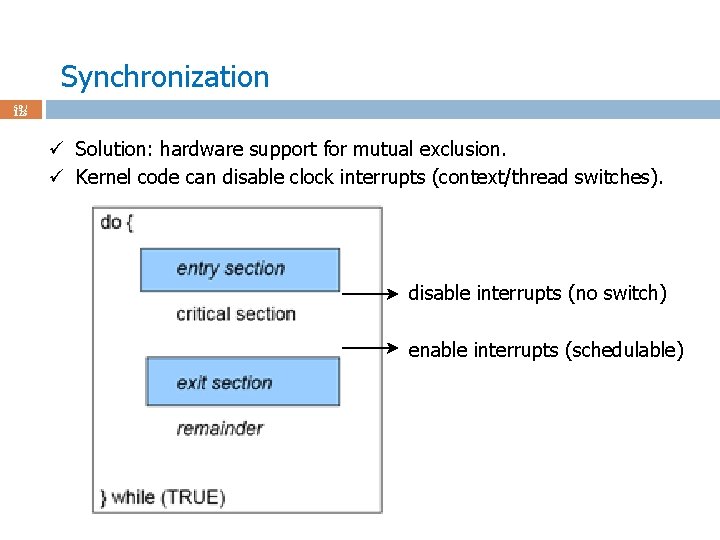

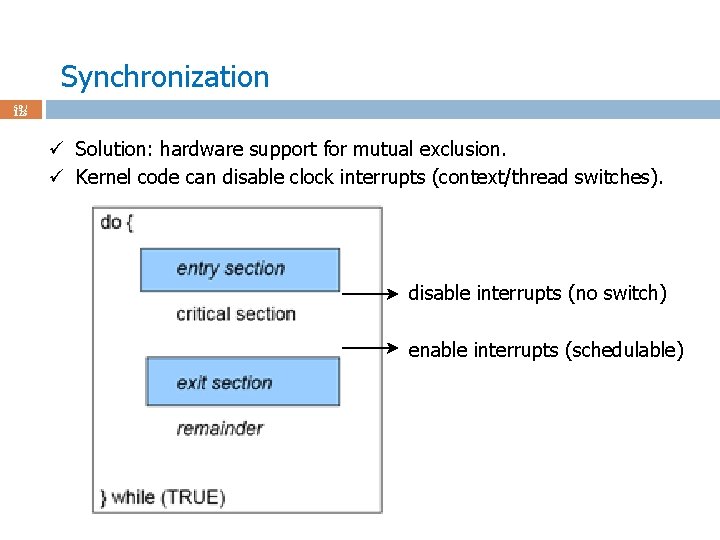

Synchronization 59 / 123 ü Solution: hardware support for mutual exclusion. ü Kernel code can disable clock interrupts (context/thread switches). disable interrupts (no switch) enable interrupts (schedulable)

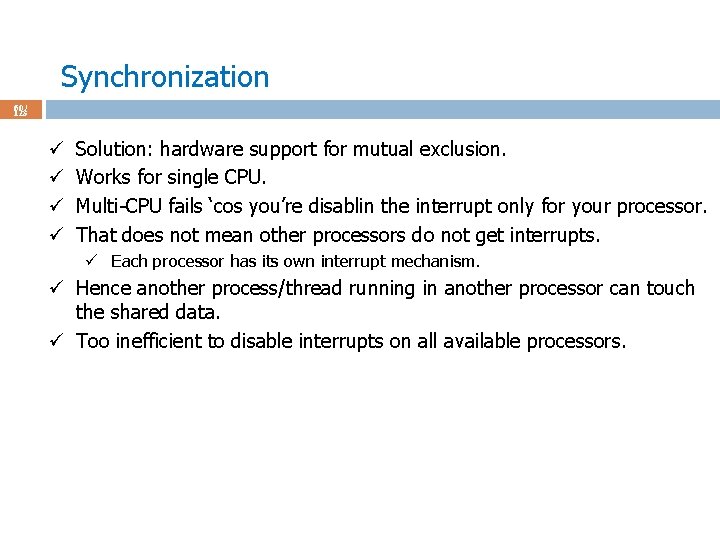

Synchronization 60 / 123

- Solution: hardware support for mutual exclusion.
- Works for a single CPU. Fails on multiple CPUs because you are disabling interrupts only for your own processor; that does not mean other processors do not get interrupts.
- Each processor has its own interrupt mechanism.
- Hence another process/thread running on another processor can touch the shared data.
- Too inefficient to disable interrupts on all available processors.

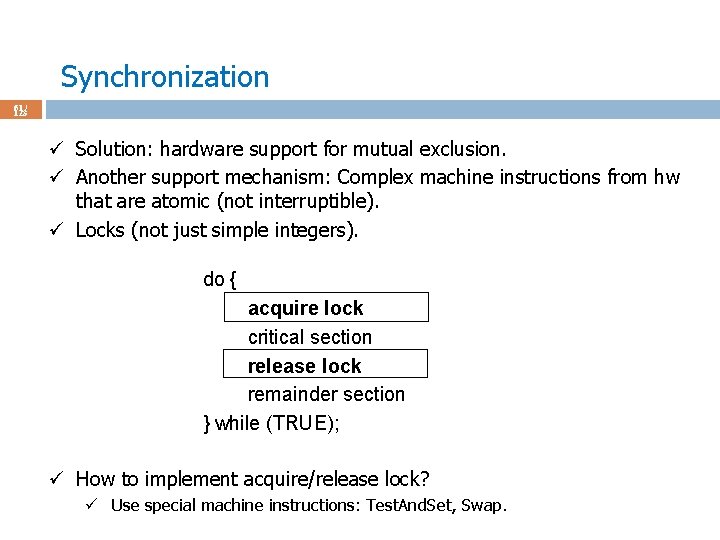

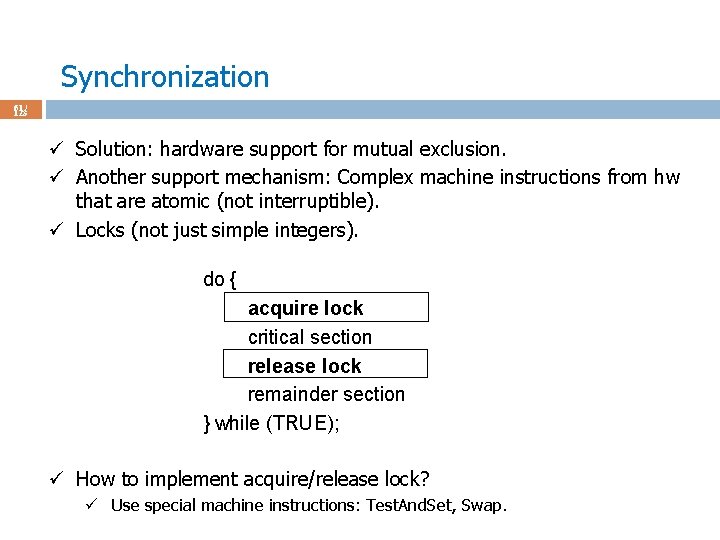

Synchronization 61 / 123 ü Solution: hardware support for mutual exclusion. ü Another support mechanism: Complex machine instructions from hw that are atomic (not interruptible). ü Locks (not just simple integers). do { acquire lock critical section release lock remainder section } while (TRUE); ü How to implement acquire/release lock? ü Use special machine instructions: Test. And. Set, Swap.

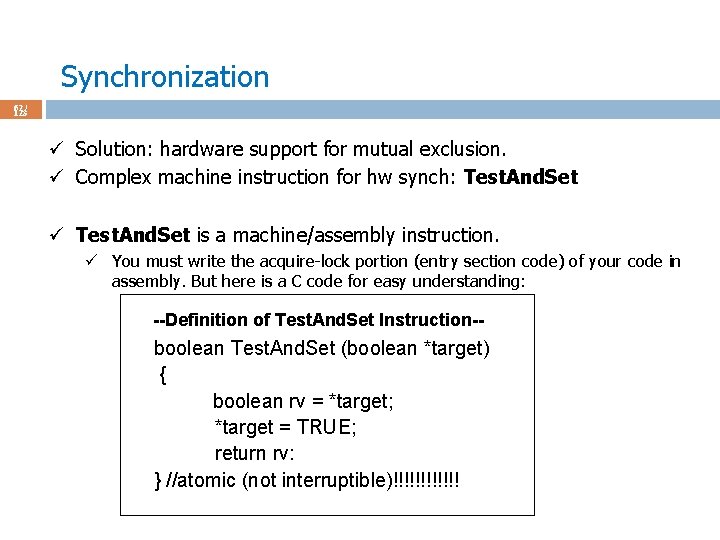

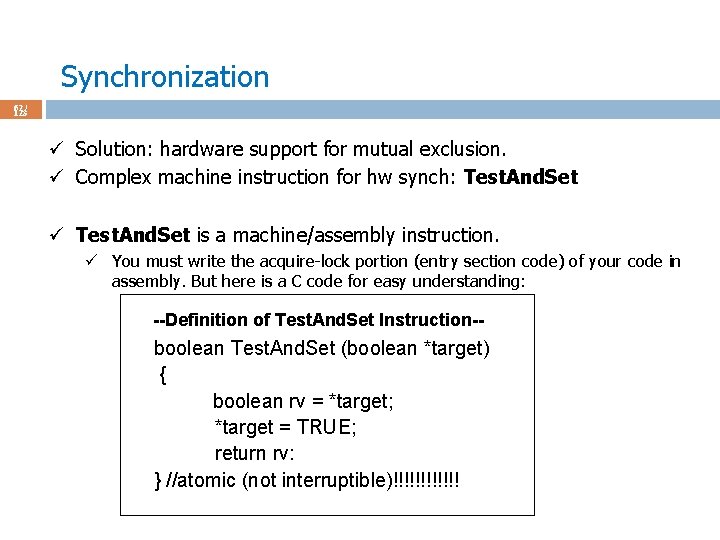

Synchronization 62 / 123

- Solution: hardware support for mutual exclusion.
- Complex machine instruction for hardware synchronization: TestAndSet.
- TestAndSet is a machine/assembly instruction.
- You must write the acquire-lock portion (entry section code) of your code in assembly. Here is C code for easy understanding:

    //Definition of the TestAndSet instruction
    boolean TestAndSet (boolean *target) {
      boolean rv = *target;
      *target = TRUE;
      return rv;
    } //atomic (not interruptible)!

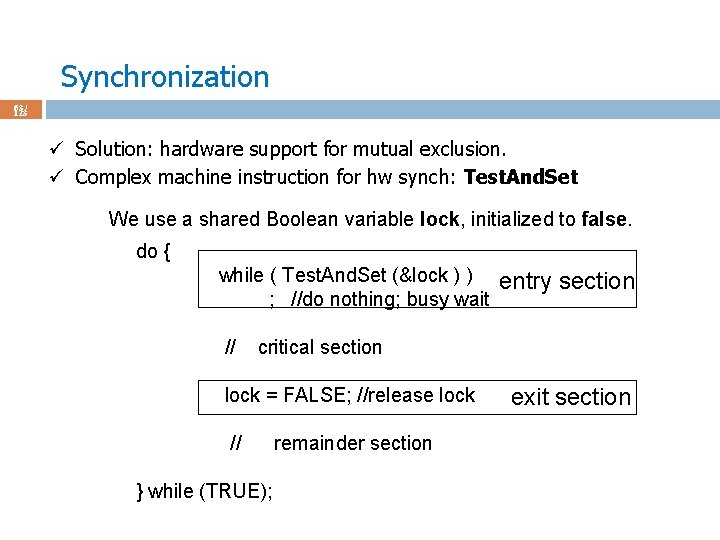

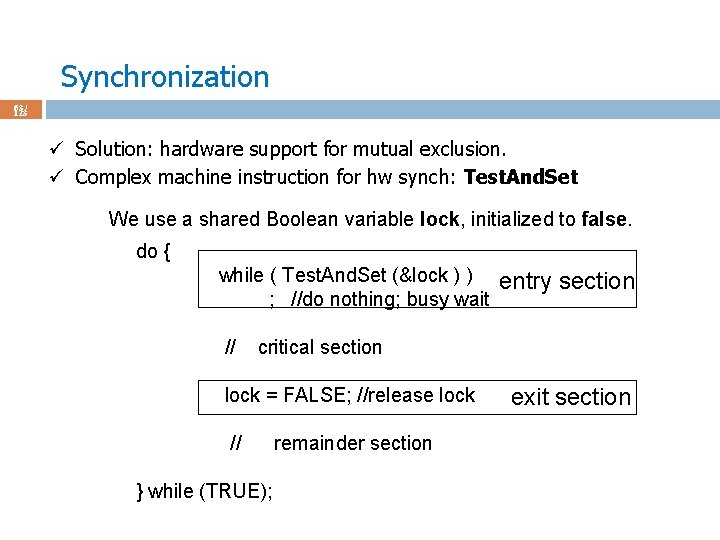

Synchronization 63 / 123

- Solution: hardware support for mutual exclusion.
- Complex machine instruction for hardware synchronization: TestAndSet.
- We use a shared Boolean variable lock, initialized to FALSE.

    do {
      while ( TestAndSet (&lock) )
        ;              //entry section: do nothing; busy wait
      // critical section
      lock = FALSE;    //exit section: release lock
      // remainder section
    } while (TRUE);
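In portable C, the closest standard counterpart to TestAndSet is `atomic_flag_test_and_set` from C11 `<stdatomic.h>`, which sets the flag and returns its previous value in one atomic step. The sketch below builds the slide's spinlock on top of it; `spin_acquire`, `spin_release`, `spinlock_demo`, and the thread and iteration counts are assumptions for illustration.

```c
#include <pthread.h>
#include <sched.h>
#include <stdatomic.h>

#define SPIN_THREADS 4
#define SPIN_ITERS   50000

static atomic_flag spin = ATOMIC_FLAG_INIT;   /* the shared lock, initially clear */
static long spin_total;                       /* data protected by the lock */

static void spin_acquire(void)
{
    /* Returns the old value: true means somebody else holds the lock. */
    while (atomic_flag_test_and_set(&spin))
        sched_yield();                        /* busy wait (yield is a courtesy) */
}

static void spin_release(void)
{
    atomic_flag_clear(&spin);                 /* lock = FALSE */
}

static void *spin_worker(void *arg)
{
    (void)arg;
    for (int k = 0; k < SPIN_ITERS; k++) {
        spin_acquire();                       /* entry section */
        spin_total++;                         /* critical section */
        spin_release();                       /* exit section */
    }
    return NULL;
}

long spinlock_demo(void)
{
    pthread_t t[SPIN_THREADS];
    spin_total = 0;
    for (int i = 0; i < SPIN_THREADS; i++)
        pthread_create(&t[i], NULL, spin_worker, NULL);
    for (int i = 0; i < SPIN_THREADS; i++)
        pthread_join(t[i], NULL);
    return spin_total;
}
```

Unlike Peterson's algorithm, this lock works for any number of threads, but it gives no guarantee about which waiting thread gets in next.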

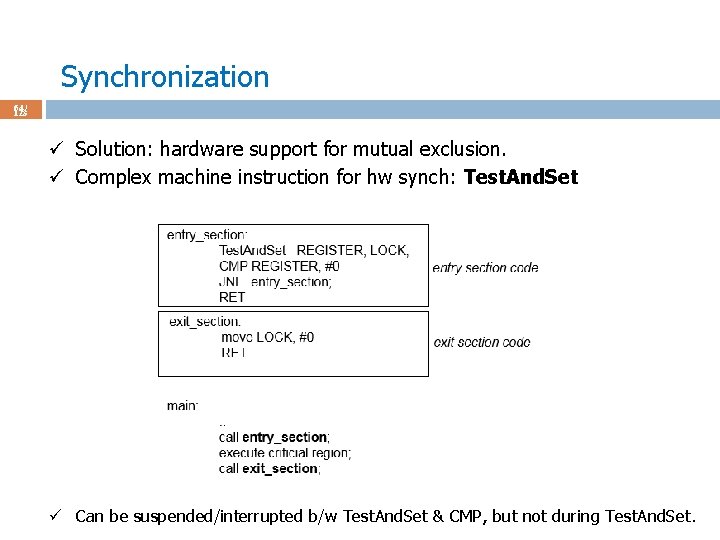

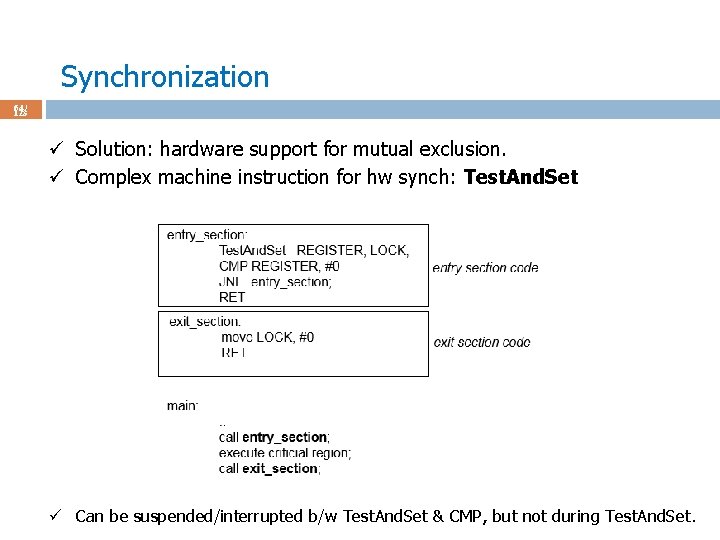

Synchronization 64 / 123

- Solution: hardware support for mutual exclusion.
- Complex machine instruction for hardware synchronization: TestAndSet.
- A thread can be suspended/interrupted between TestAndSet and CMP, but not during TestAndSet.

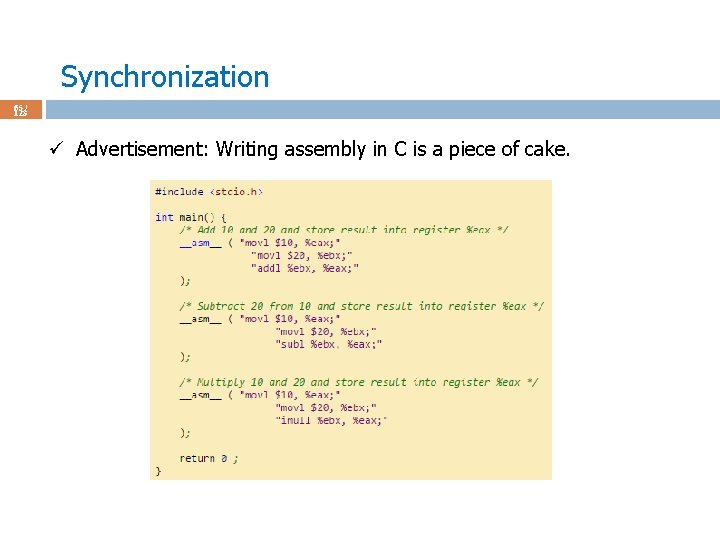

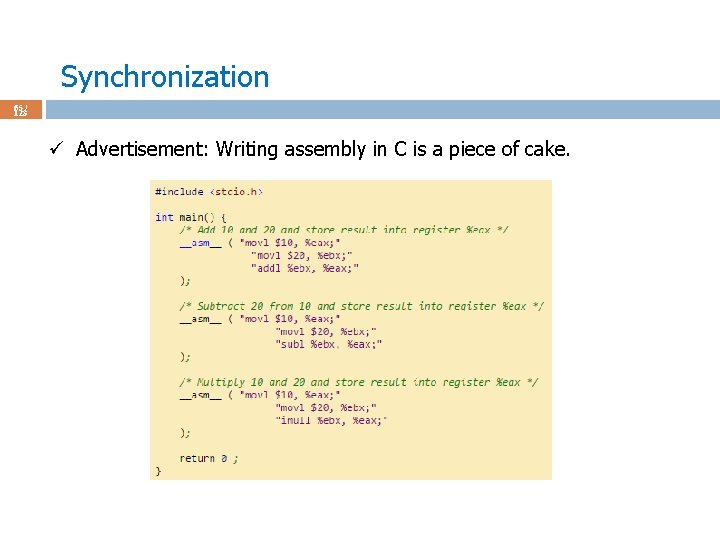

Synchronization 65 / 123 ü Advertisement: Writing assembly in C is a piece of cake.

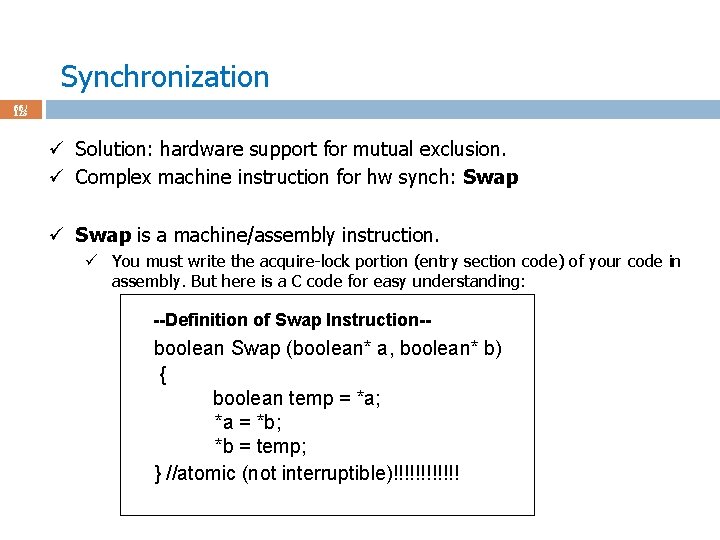

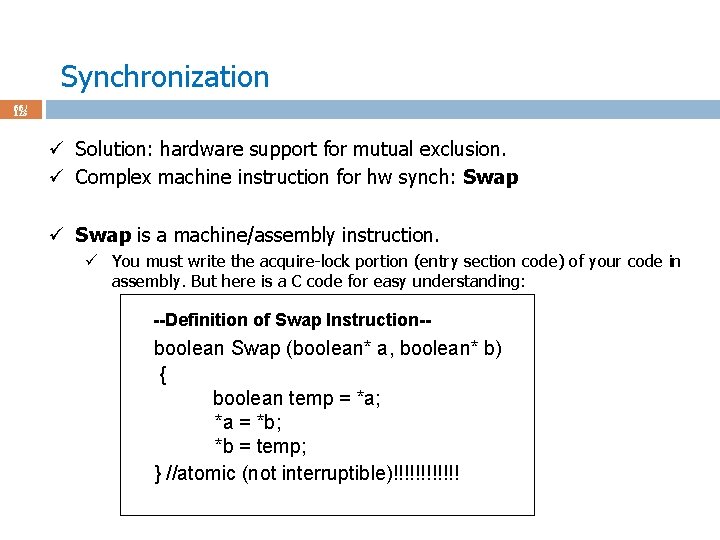

Synchronization 66 / 123

- Solution: hardware support for mutual exclusion.
- Complex machine instruction for hardware synchronization: Swap.
- Swap is a machine/assembly instruction.
- You must write the acquire-lock portion (entry section code) of your code in assembly. Here is C code for easy understanding:

    //Definition of the Swap instruction
    void Swap (boolean *a, boolean *b) {
      boolean temp = *a;
      *a = *b;
      *b = temp;
    } //atomic (not interruptible)!

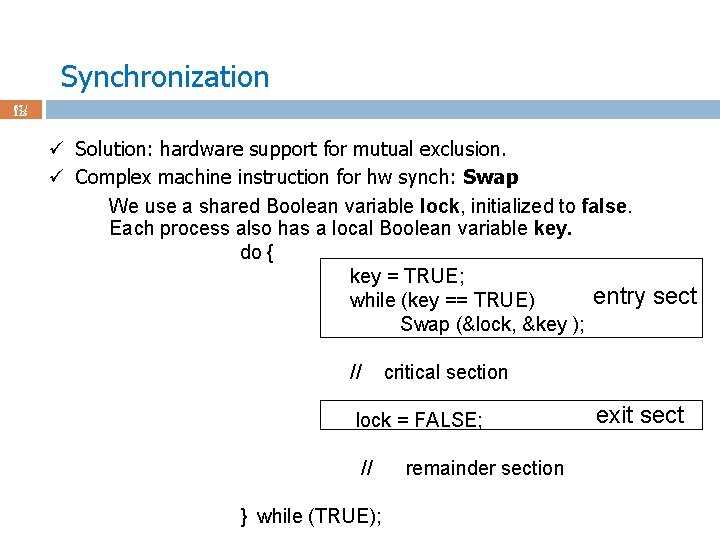

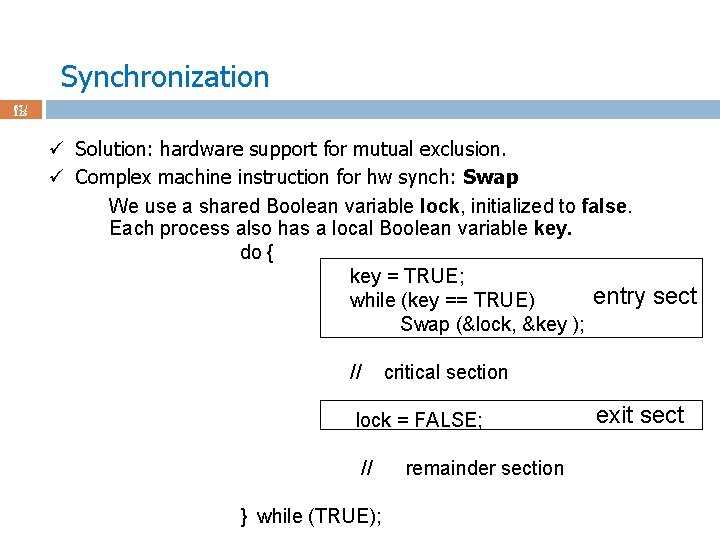

Synchronization 67 / 123

- Solution: hardware support for mutual exclusion.
- Complex machine instruction for hardware synchronization: Swap.
- We use a shared Boolean variable lock, initialized to FALSE. Each process also has a local Boolean variable key.

    do {
      key = TRUE;                //entry section
      while (key == TRUE)
        Swap (&lock, &key);
      // critical section
      lock = FALSE;              //exit section
      // remainder section
    } while (TRUE);

Synchronization 68 / 123

- Solution: hardware support for mutual exclusion.
- A comment on TestAndSet and Swap:
  - Although they both guarantee mutual exclusion, they can make one process (X) wait a lot: X may be waiting while the other process Y enters the critical region repeatedly.
  - One toy/bad solution: keep the remainder section code so long that the scheduler kicks Y off the CPU before it gets back to the entry section.

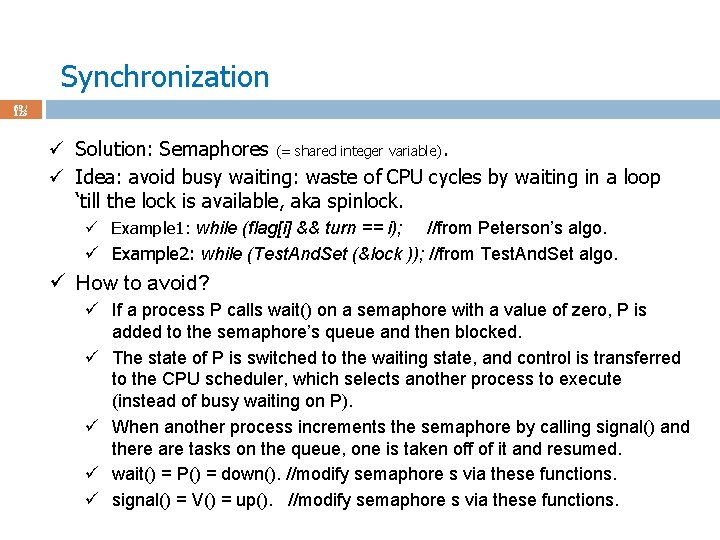

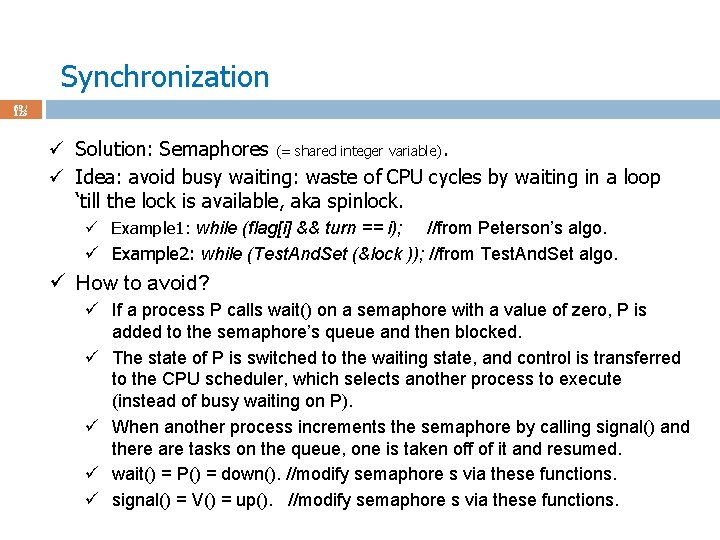

Synchronization 69 / 123

- Solution: Semaphores (= shared integer variable).
- Idea: avoid busy waiting, i.e., wasting CPU cycles by waiting in a loop until the lock is available (aka spinlock).
  - Example 1: while (flag[i] && turn == i); //from Peterson's algorithm
  - Example 2: while (TestAndSet (&lock)); //from the TestAndSet algorithm
- How to avoid it?
  - If a process P calls wait() on a semaphore with a value of zero, P is added to the semaphore's queue and then blocked.
  - The state of P is switched to the waiting state, and control is transferred to the CPU scheduler, which selects another process to execute (instead of P busy waiting).
  - When another process increments the semaphore by calling signal() and there are tasks on the queue, one is taken off it and resumed.
- wait() = P() = down(). //modify semaphore s via these functions
- signal() = V() = up(). //modify semaphore s via these functions

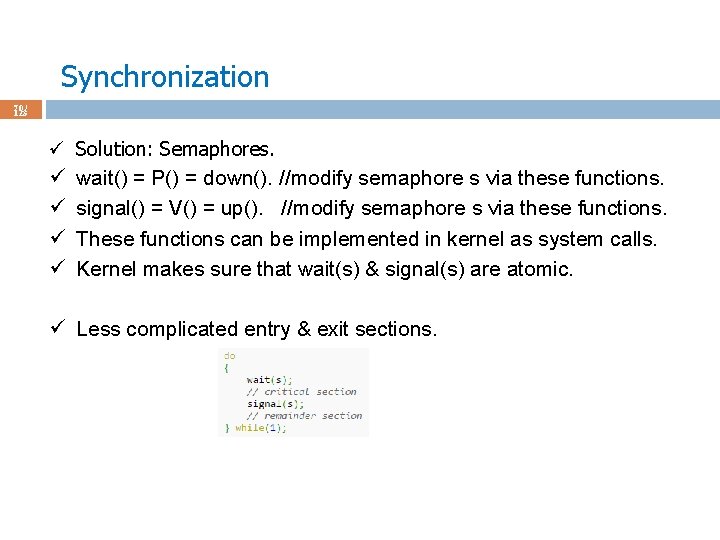

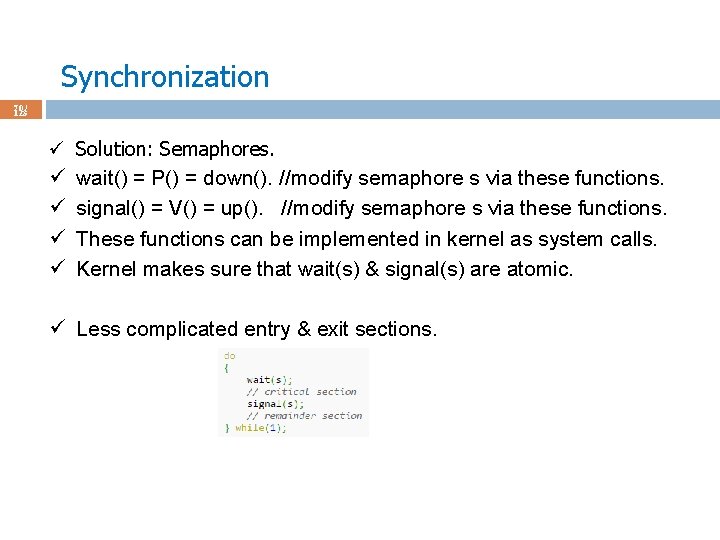

Synchronization 70 / 123 ü ü ü Solution: Semaphores. wait() = P() = down(). //modify semaphore s via these functions. signal() = V() = up(). //modify semaphore s via these functions. These functions can be implemented in kernel as system calls. Kernel makes sure that wait(s) & signal(s) are atomic. ü Less complicated entry & exit sections.

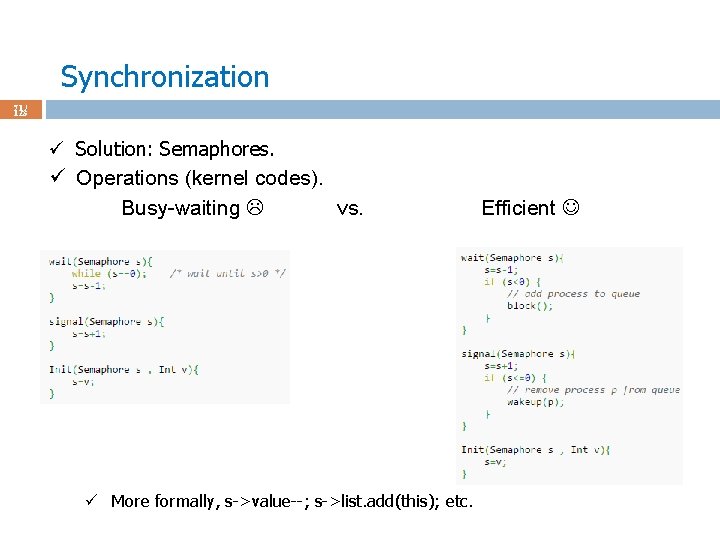

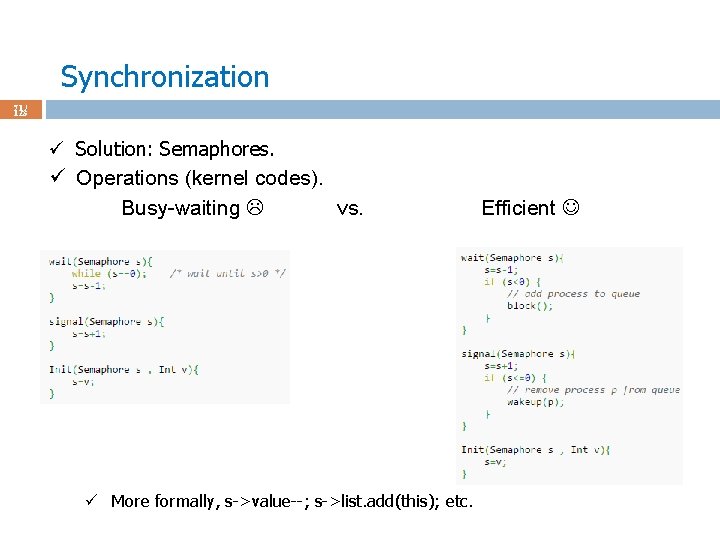

Synchronization 71 / 123 ü Solution: Semaphores. ü Operations (kernel codes). Busy-waiting vs. ü More formally, s->value--; s->list. add(this); etc. Efficient

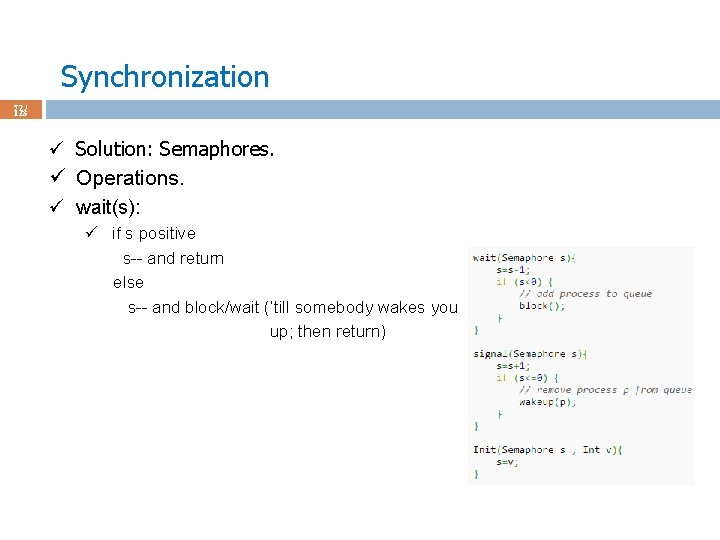

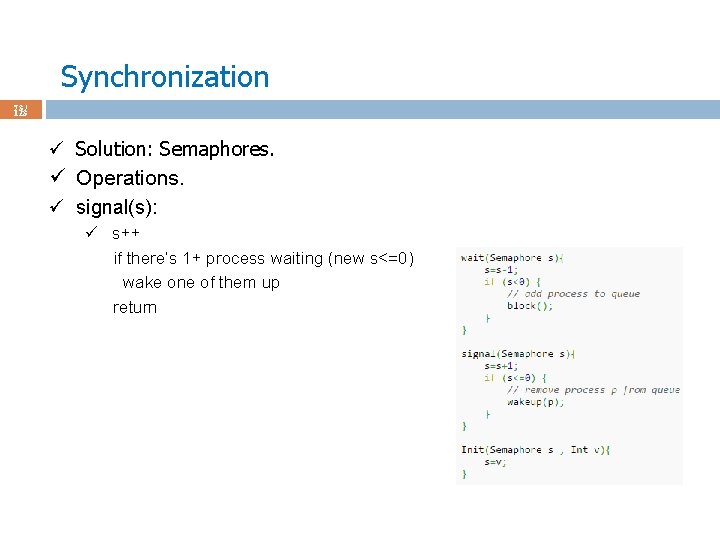

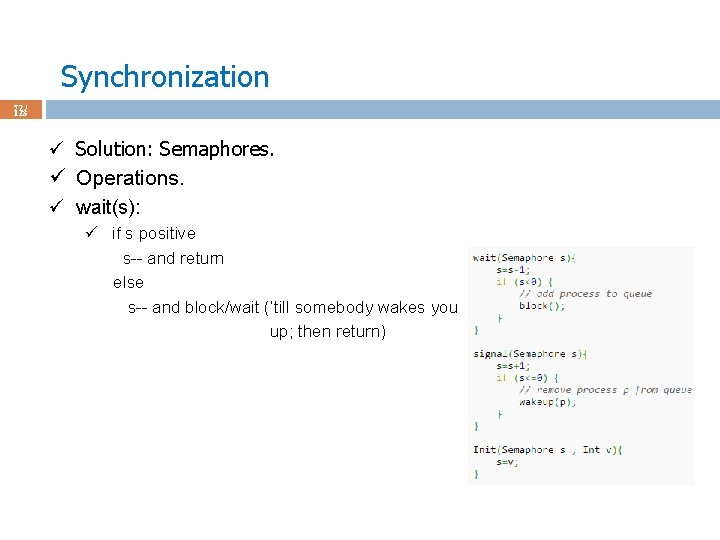

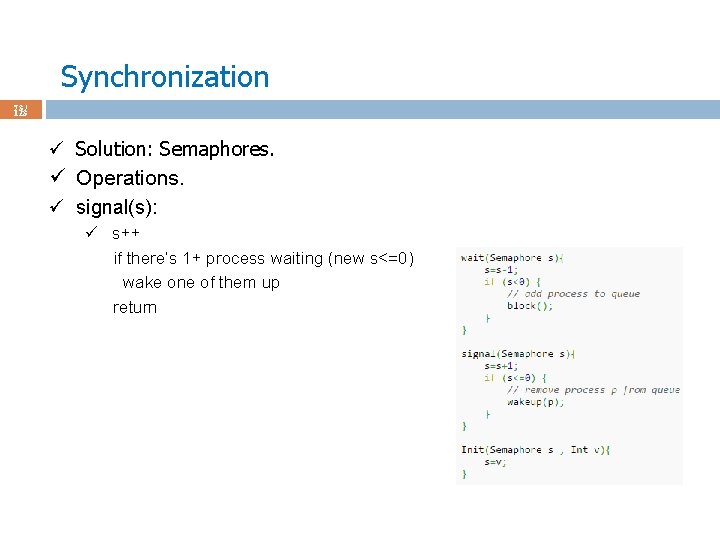

Synchronization 72 / 123

- Solution: Semaphores.
- Operations.
- wait(s):

    if s is positive
      s-- and return
    else
      s-- and block/wait (until somebody wakes you up; then return)

Synchronization 73 / 123

- Solution: Semaphores.
- Operations.
- signal(s):

    s++
    if there is at least 1 process waiting (new s <= 0)
      wake one of them up
    return
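The wait(s) and signal(s) rules can be mimicked in user space with a pthread mutex and condition variable. This is only a sketch of the semantics, not the kernel implementation the slides refer to: the mutex stands in for the kernel's atomicity guarantee, the condition variable stands in for the queue of sleeping processes, and the `wakeups` field and all function names are assumptions.

```c
#include <pthread.h>

typedef struct {
    int value;                /* if negative: number of sleeping threads */
    int wakeups;              /* wake-ups issued but not yet consumed */
    pthread_mutex_t mutex;    /* makes wait/signal atomic */
    pthread_cond_t cond;      /* where blocked threads sleep */
} semaphore;

void semaphore_init(semaphore *s, int value)
{
    s->value = value;
    s->wakeups = 0;
    pthread_mutex_init(&s->mutex, NULL);
    pthread_cond_init(&s->cond, NULL);
}

void semaphore_wait(semaphore *s)
{
    pthread_mutex_lock(&s->mutex);
    s->value--;
    if (s->value < 0) {                     /* was not positive: block */
        do {
            pthread_cond_wait(&s->cond, &s->mutex);
        } while (s->wakeups < 1);           /* ignore spurious wake-ups */
        s->wakeups--;
    }
    pthread_mutex_unlock(&s->mutex);
}

void semaphore_signal(semaphore *s)
{
    pthread_mutex_lock(&s->mutex);
    s->value++;
    if (s->value <= 0) {                    /* somebody is waiting */
        s->wakeups++;
        pthread_cond_signal(&s->cond);      /* wake one of them up */
    }
    pthread_mutex_unlock(&s->mutex);
}

/* Tiny demo: the main thread sleeps until the sender has written message. */
static semaphore ready;
static int message;

static void *sender(void *arg)
{
    (void)arg;
    message = 42;
    semaphore_signal(&ready);
    return NULL;
}

int semaphore_demo(void)
{
    pthread_t t;
    message = 0;
    semaphore_init(&ready, 0);
    pthread_create(&t, NULL, sender, NULL);
    semaphore_wait(&ready);                 /* sleeps instead of spinning */
    int seen = message;
    pthread_join(t, NULL);
    return seen;
}
```

The blocked thread consumes no CPU while it sleeps, which is the point of semaphores compared with the spinlocks shown earlier.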

Synchronization 74 / 123 ü Solution: Semaphores. ü Types. ü Binary semaphore ü Integer value can range only between 0 and 1; can be simpler to implement; aka mutex locks. ü Provides mutual exclusion; can be used for the critical section problem. ü Counting semaphore ü Integer value can range over an unrestricted domain. ü Can be used for other synchronization problems; for example for resource allocation. ü Example: you have 10 instances of a resource. Init semaphore s to 10 in this case.

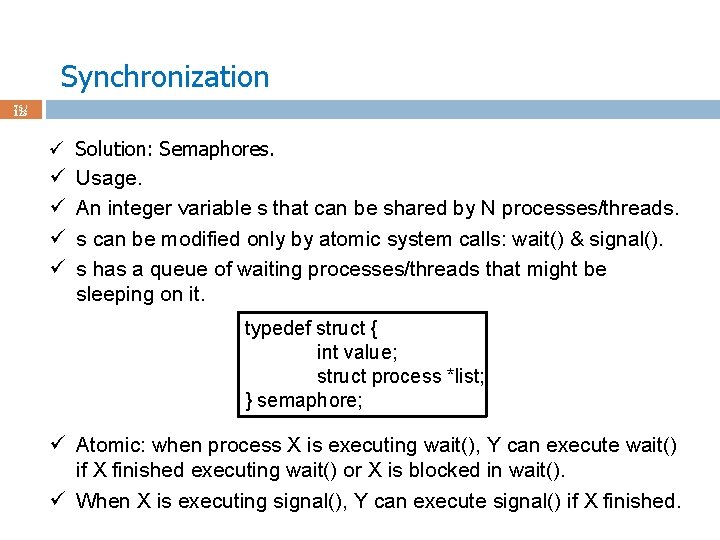

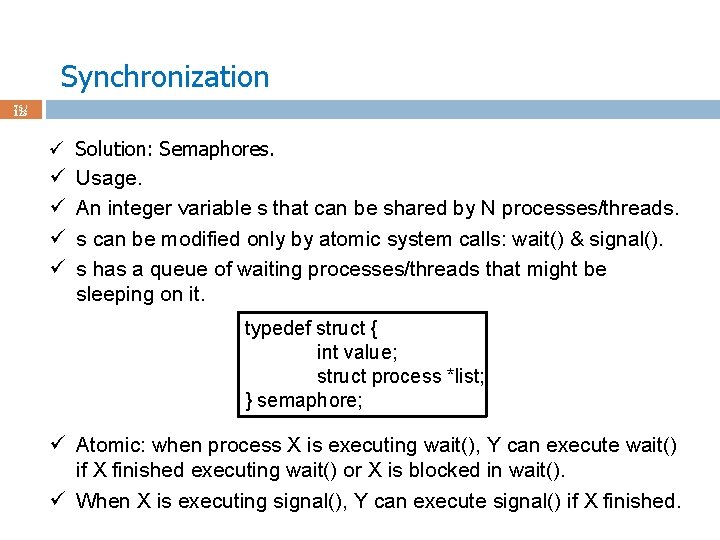

Synchronization 75 / 123

- Solution: Semaphores.
- Usage.
- An integer variable s that can be shared by N processes/threads.
- s can be modified only by the atomic system calls wait() and signal().
- s has a queue of waiting processes/threads that might be sleeping on it.

    typedef struct {
      int value;
      struct process *list;
    } semaphore;

- Atomic: while process X is executing wait(), Y can execute wait() only once X has finished executing wait() or X is blocked in wait().
- While X is executing signal(), Y can execute signal() only once X has finished.

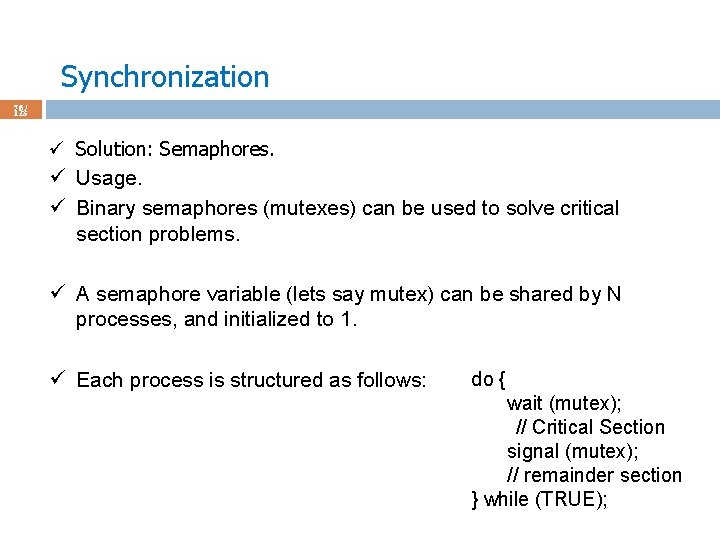

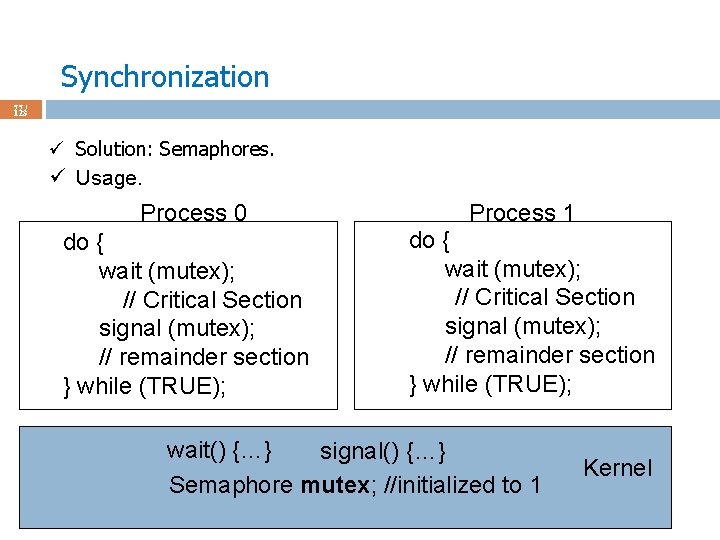

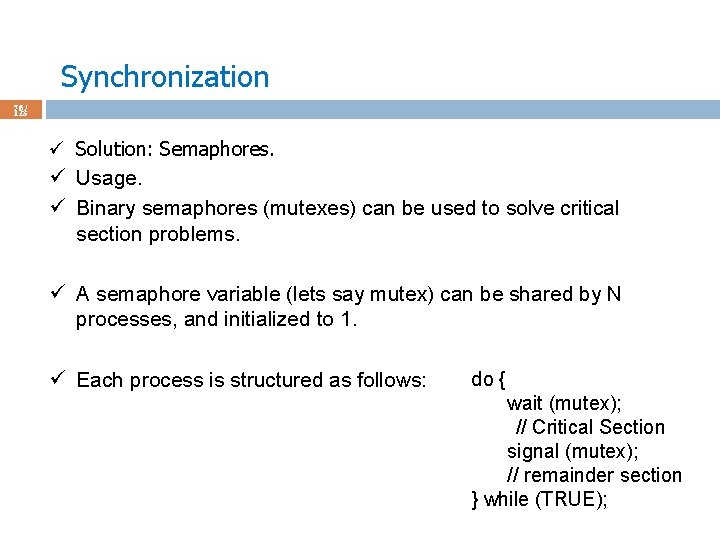

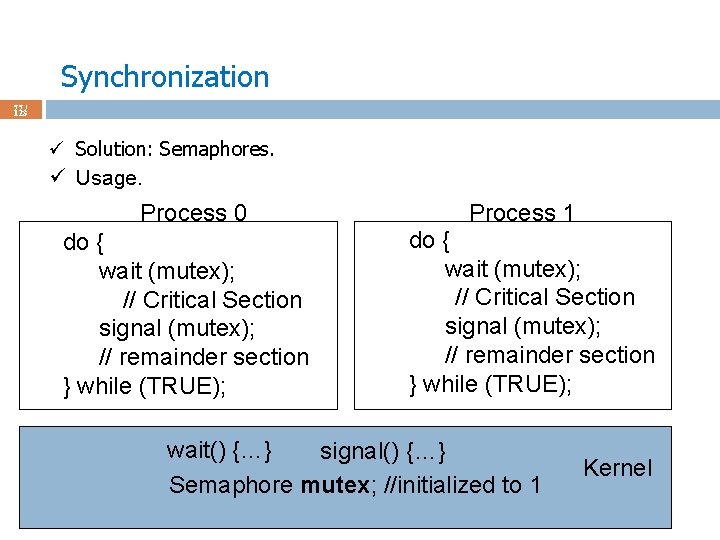

Synchronization 76 / 123 ü Solution: Semaphores. ü Usage. ü Binary semaphores (mutexes) can be used to solve critical section problems. ü A semaphore variable (lets say mutex) can be shared by N processes, and initialized to 1. ü Each process is structured as follows: do { wait (mutex); // Critical Section signal (mutex); // remainder section } while (TRUE);

Synchronization 77 / 123 ü Solution: Semaphores. ü Usage. Process 0 do { wait (mutex); // Critical Section signal (mutex); // remainder section } while (TRUE); Process 1 do { wait (mutex); // Critical Section signal (mutex); // remainder section } while (TRUE); wait() {…} signal() {…} Semaphore mutex; //initialized to 1 Kernel

Synchronization 78 / 123 ü Solution: Semaphores. ü Usage. ü Kernel puts processes/threads waiting on s in a FIFO queue. Why FIFO?

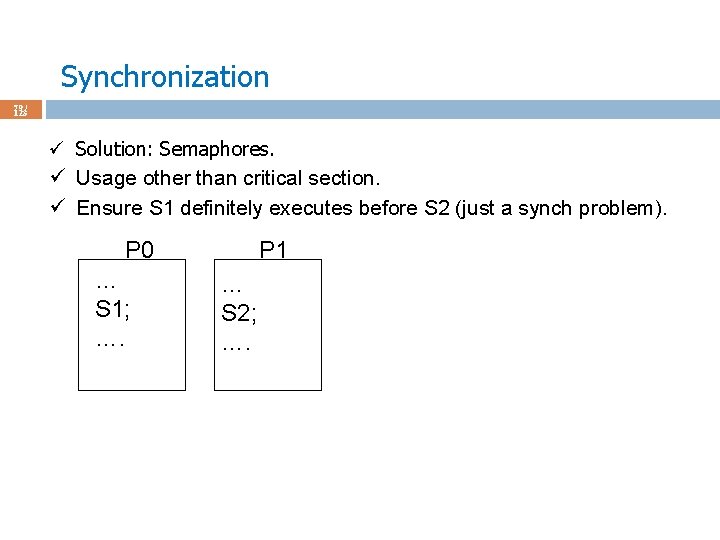

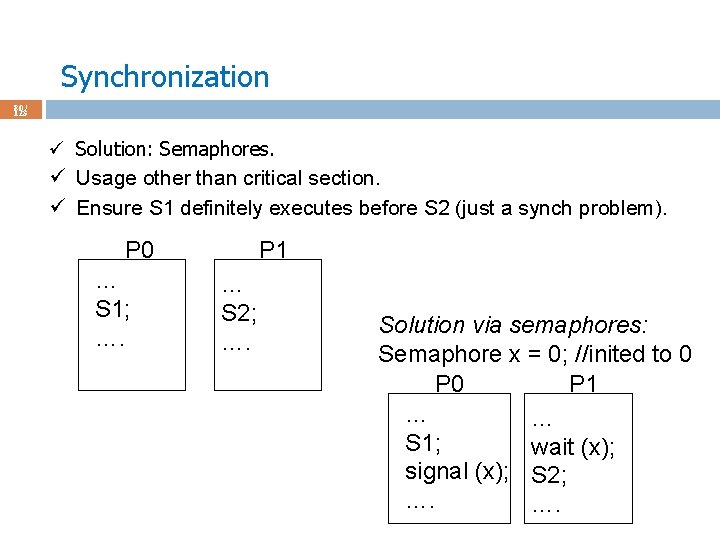

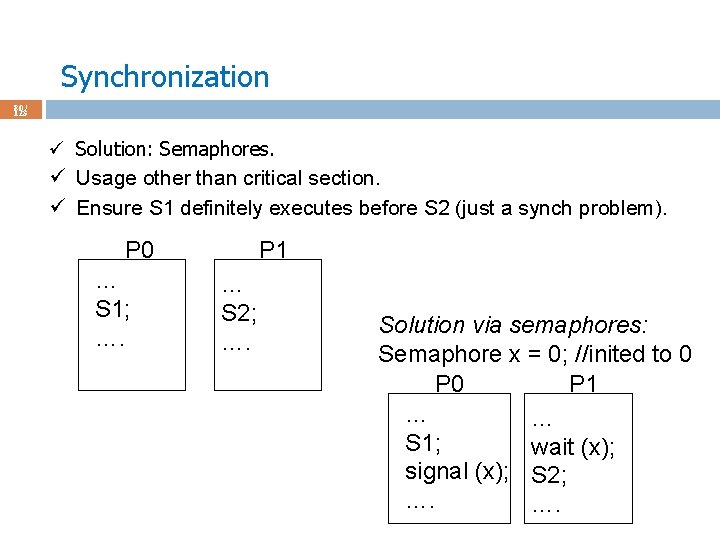

Synchronization 79 / 123

- Solution: Semaphores.
- Usage other than critical section.
- Ensure S1 definitely executes before S2 (just a synchronization problem), where P0 runs "…; S1; …" and P1 runs "…; S2; …".
- Solution via semaphores:

    Semaphore x = 0;  //initialized to 0

    P0              P1
    …               …
    S1;             wait (x);
    signal (x);     S2;
    …               …
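The S1-before-S2 pattern can be tried out with POSIX unnamed semaphores, where `sem_wait` and `sem_post` play the roles of wait() and signal(). This sketch assumes a platform that supports `sem_init` (Linux does; some systems, such as macOS, do not). The log array and the function name `ordering_demo` are hypothetical.

```c
#include <pthread.h>
#include <semaphore.h>

static sem_t x;               /* initialized to 0 below */
static int order_log[2];      /* records which statement ran first */
static int order_len;

static void *p0(void *arg)
{
    (void)arg;
    order_log[order_len++] = 1;   /* S1 */
    sem_post(&x);                 /* signal(x) */
    return NULL;
}

static void *p1(void *arg)
{
    (void)arg;
    sem_wait(&x);                 /* wait(x): sleeps until S1 is done */
    order_log[order_len++] = 2;   /* S2 */
    return NULL;
}

/* Returns 1 if S1 ran before S2. */
int ordering_demo(void)
{
    pthread_t a, b;
    order_len = 0;
    sem_init(&x, 0, 0);
    pthread_create(&b, NULL, p1, NULL);   /* start P1 first on purpose */
    pthread_create(&a, NULL, p0, NULL);
    pthread_join(a, NULL);
    pthread_join(b, NULL);
    sem_destroy(&x);
    return order_log[0] == 1 && order_log[1] == 2;
}
```

Even though P1 is created first, it cannot reach S2 until P0 has posted, so the order is fixed regardless of scheduling.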

Synchronization 81 / 123

- Solution: Semaphores.
- Usage other than critical section.
- Resource allocation (just another synchronization problem).
- We have N processes that want a resource R that has 5 instances.
- Solution:
  - Semaphore rs = 5;
  - Every process that wants to use R does wait(rs);
    - If some instance is available, rs stays nonnegative after the decrement: no blocking.
    - If all 5 instances are in use, rs becomes negative: the process blocks until an instance is released.
  - Every process that finishes with R does signal(rs);
    - One blocked process, if any, changes state from waiting to ready.
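A sketch of this resource-allocation scheme with a POSIX counting semaphore follows. Note one difference from the slides: a POSIX `sem_t` never goes negative, it simply blocks at zero. The thread count, the bookkeeping variables `in_use` and `peak`, and the function name `peak_users` are assumptions; `sched_yield()` merely stands in for "using R".

```c
#include <pthread.h>
#include <sched.h>
#include <semaphore.h>

#define INSTANCES 5    /* instances of resource R */
#define USERS     12   /* N competing threads (arbitrary) */

static sem_t rs;
static pthread_mutex_t stats_mutex = PTHREAD_MUTEX_INITIALIZER;
static int in_use, peak;      /* bookkeeping to observe the limit */

static void *user(void *arg)
{
    (void)arg;
    sem_wait(&rs);                        /* wait(rs): take one instance */

    pthread_mutex_lock(&stats_mutex);
    if (++in_use > peak)
        peak = in_use;
    pthread_mutex_unlock(&stats_mutex);

    sched_yield();                        /* pretend to use R for a while */

    pthread_mutex_lock(&stats_mutex);
    in_use--;
    pthread_mutex_unlock(&stats_mutex);

    sem_post(&rs);                        /* signal(rs): give it back */
    return NULL;
}

/* Returns the largest number of threads that held R at the same time. */
int peak_users(void)
{
    pthread_t t[USERS];
    in_use = 0;
    peak = 0;
    sem_init(&rs, 0, INSTANCES);
    for (int i = 0; i < USERS; i++)
        pthread_create(&t[i], NULL, user, NULL);
    for (int i = 0; i < USERS; i++)
        pthread_join(t[i], NULL);
    sem_destroy(&rs);
    return peak;
}
```

However the 12 threads are scheduled, the reported peak can never exceed 5, because the sixth `sem_wait` blocks until some thread posts.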

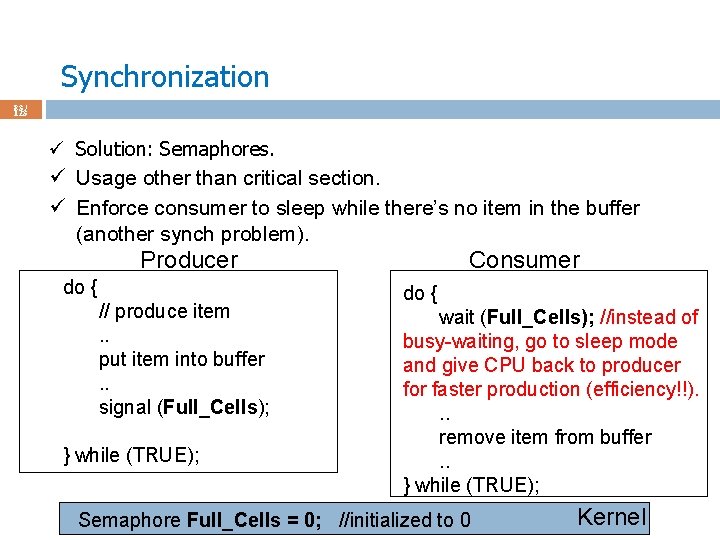

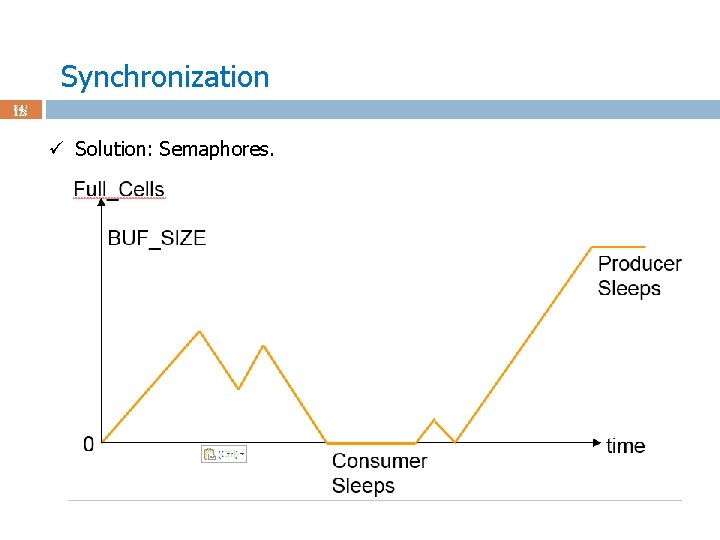

Synchronization 83 / 123

- Solution: Semaphores.
- Usage other than critical section.
- Force the consumer to sleep while there is no item in the buffer (another synchronization problem).

    Semaphore Full_Cells = 0;  //initialized to 0; lives in the kernel

    Producer
    do {
      // produce item
      ..
      put item into buffer
      ..
      signal (Full_Cells);
    } while (TRUE);

    Consumer
    do {
      wait (Full_Cells);  //instead of busy-waiting, go to sleep and give the CPU
                          //back to the producer for faster production (efficiency!)
      ..
      remove item from buffer
      ..
    } while (TRUE);

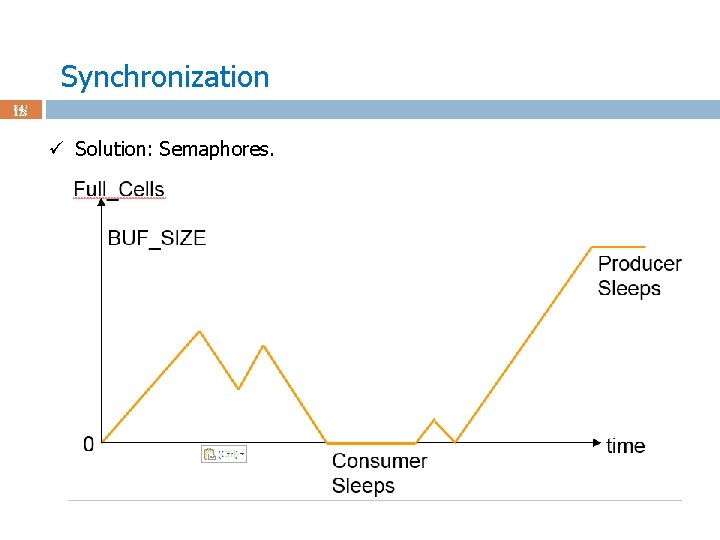

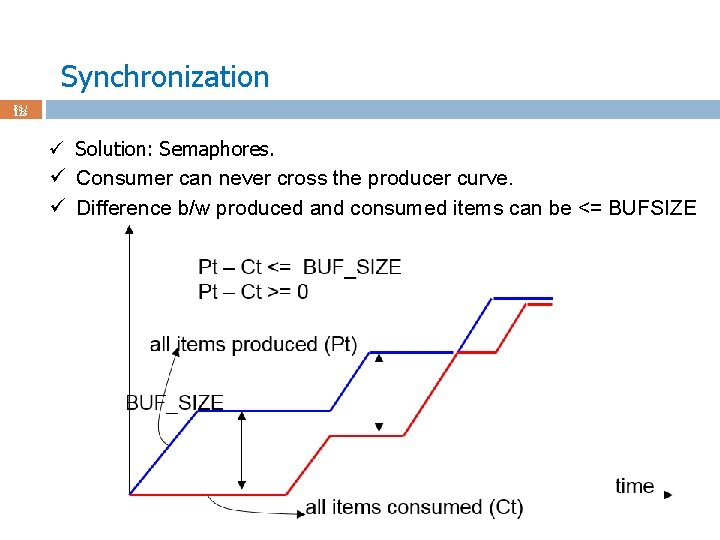

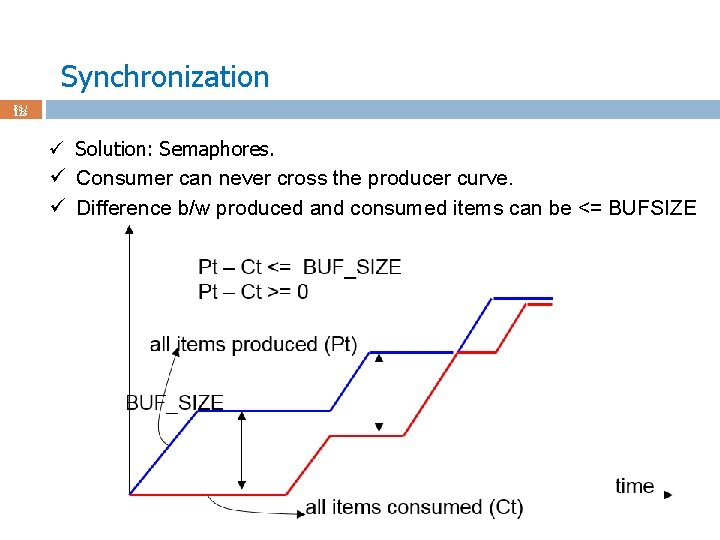


Synchronization 85 / 123
- Solution: Semaphores.
- The consumer can never cross the producer curve.
- The difference between the numbers of produced and consumed items is at most BUFSIZE.

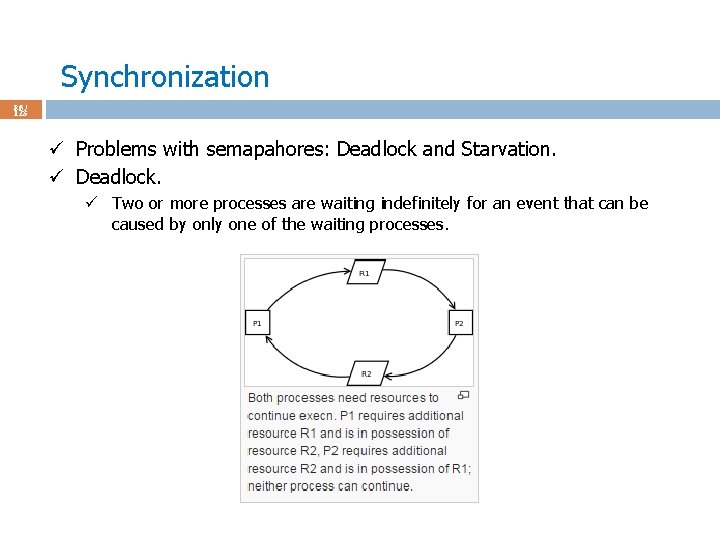

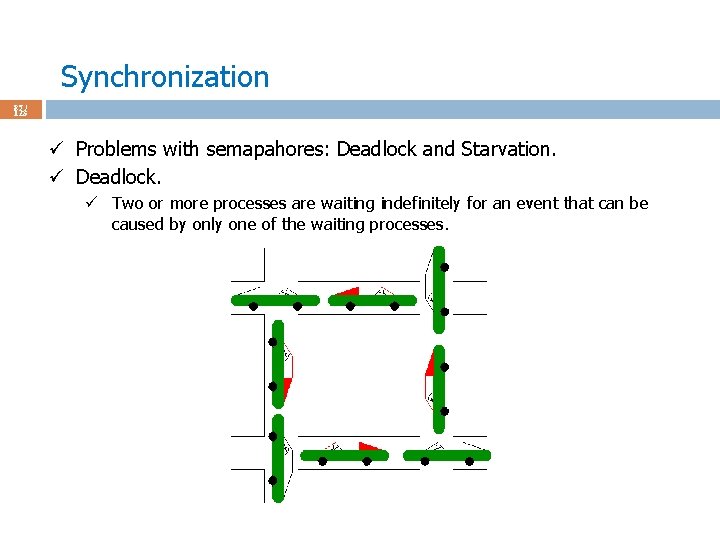

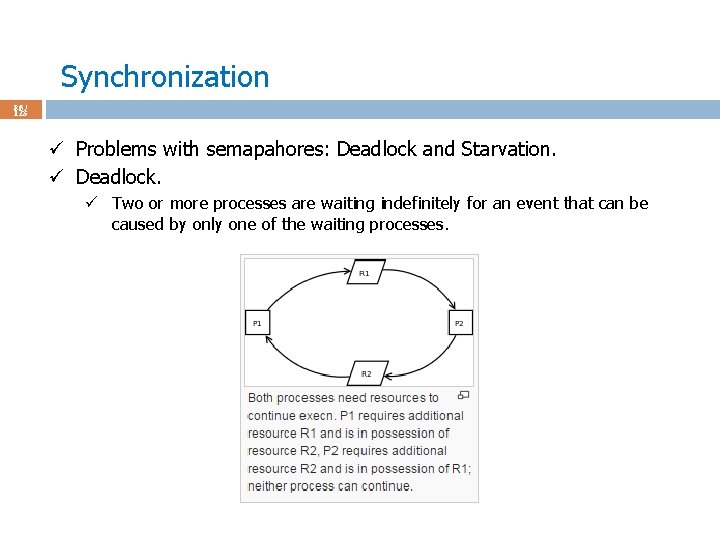

Synchronization 86 / 123
- Problems with semaphores: Deadlock and Starvation.
- Deadlock.
  - Two or more processes are waiting indefinitely for an event that can be caused only by one of the waiting processes.



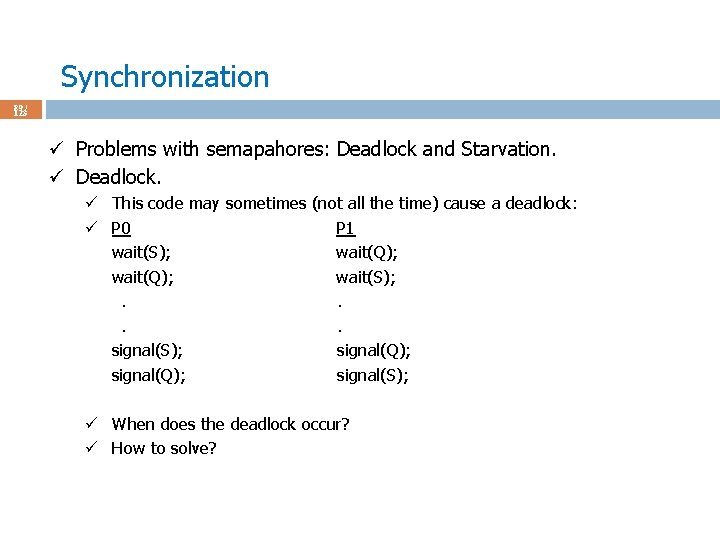

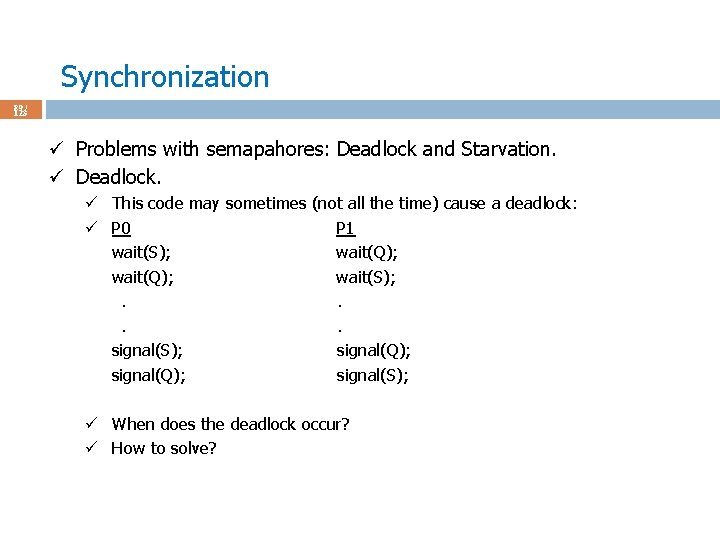

Synchronization 89 / 123
- Problems with semaphores: Deadlock and Starvation.
- Deadlock.
  - This code may sometimes (not always) cause a deadlock:

    P0:                P1:
      wait(S);           wait(Q);
      wait(Q);           wait(S);
      ...                ...
      signal(S);         signal(Q);
      signal(Q);         signal(S);

  - When does the deadlock occur?
  - How to solve?
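One common answer to "how to solve" is to make every process acquire the semaphores in the same global order. The sketch below is an assumption about what the slide is hinting at, not the lecturer's own code: both threads take S first and then Q, so neither can hold Q while waiting for S, and no circular wait can form. The loop count and the `done` list are illustrative.

```python
import threading

S = threading.Semaphore(1)
Q = threading.Semaphore(1)
done = []

def worker(name):
    # Both threads use the same order: S first, then Q.
    for _ in range(1000):
        S.acquire()           # wait(S)
        Q.acquire()           # wait(Q)
        # ... work that needs both resources ...
        Q.release()           # signal(Q)
        S.release()           # signal(S)
    done.append(name)

threads = [threading.Thread(target=worker, args=(n,)) for n in ("P0", "P1")]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(sorted(done))
```

With the original opposite orders, a context switch right after each thread's first wait() leaves P0 holding S and P1 holding Q, each waiting for the other.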

Synchronization 90 / 123
- Problems with semaphores: Deadlock and Starvation.
- Starvation (indefinite blocking): a process may never be removed from the semaphore queue in which it is suspended; it sleeps forever and never gets service.
  - When does it occur?
  - How to solve?
- Another problem:
  - A low-priority process may cause a high-priority process to wait.

Synchronization 91 / 123
- Classic Synchronization Problems to be solved with Semaphores.
  - Bounded-buffer problem.
  - Readers-Writers problem.
  - Dining philosophers problem.
  - Rendezvous problem.
  - Barrier problem.

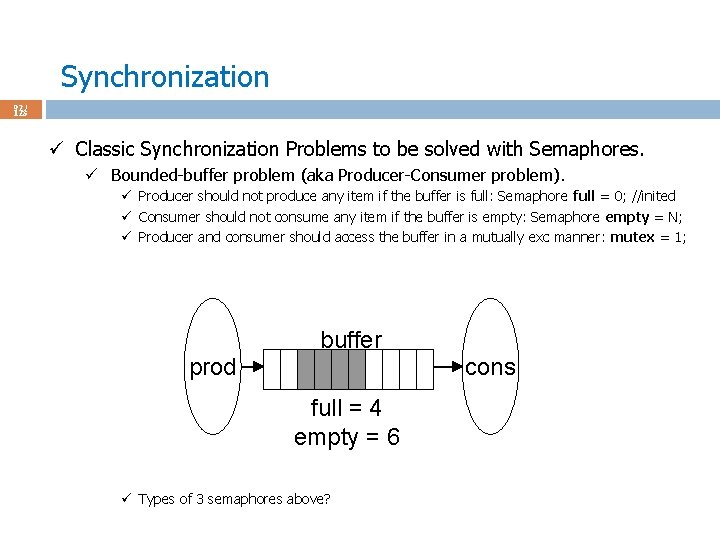

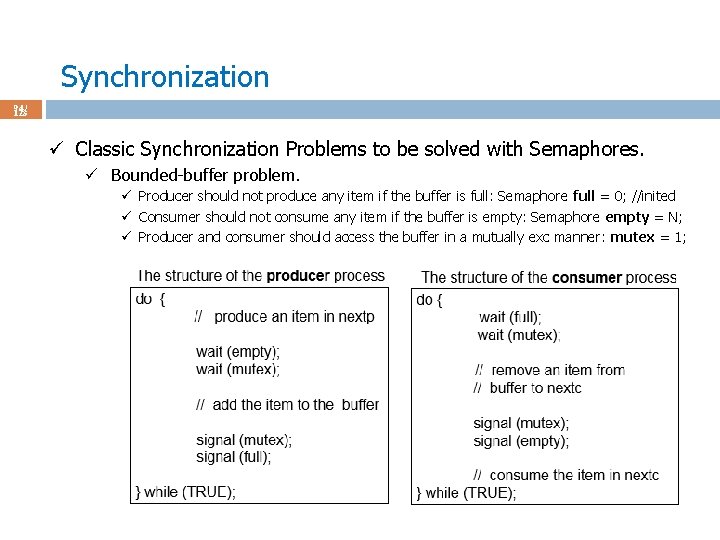

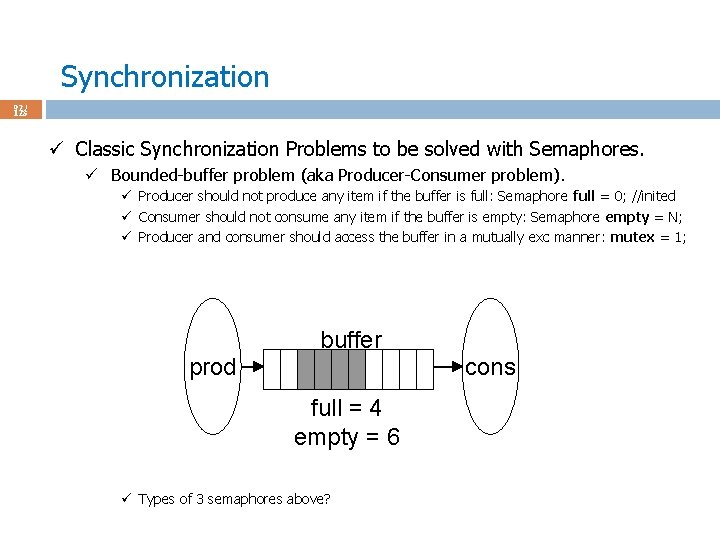

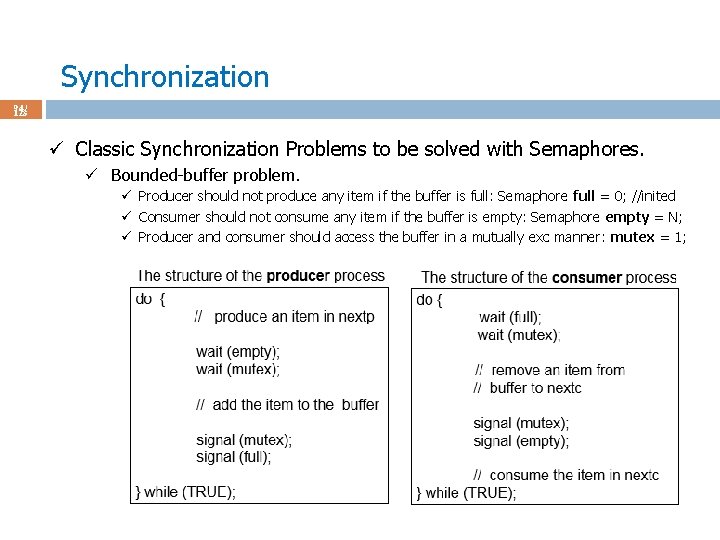

Synchronization 92 / 123
- Classic Synchronization Problems to be solved with Semaphores.
- Bounded-buffer problem (aka Producer-Consumer problem).
  - The producer should not produce any item if the buffer is full: Semaphore empty = N; counts the empty cells, and the producer waits on it.
  - The consumer should not consume any item if the buffer is empty: Semaphore full = 0; counts the full cells, and the consumer waits on it.
  - Producer and consumer should access the buffer in a mutually exclusive manner: Semaphore mutex = 1;
  - Example from the figure: with 4 occupied cells out of 10, full = 4 and empty = 6.
  - What are the types of the 3 semaphores above?



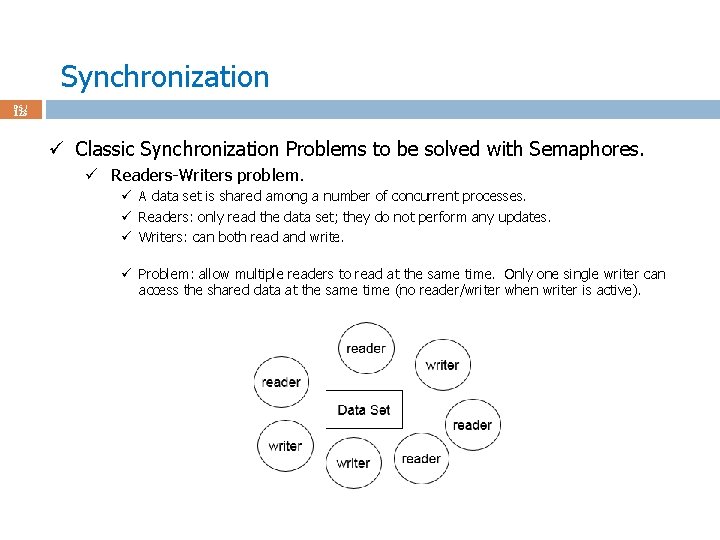

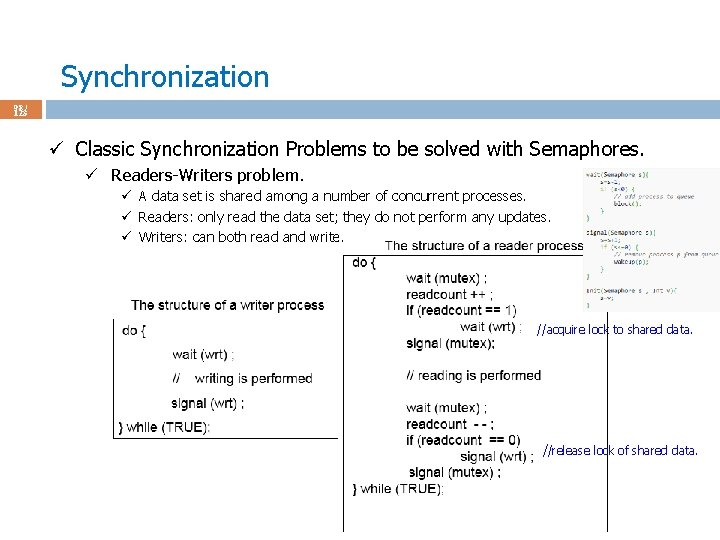

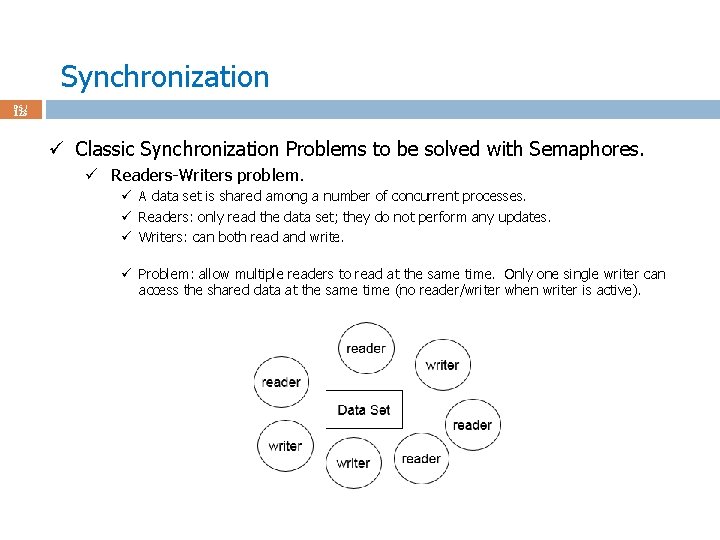

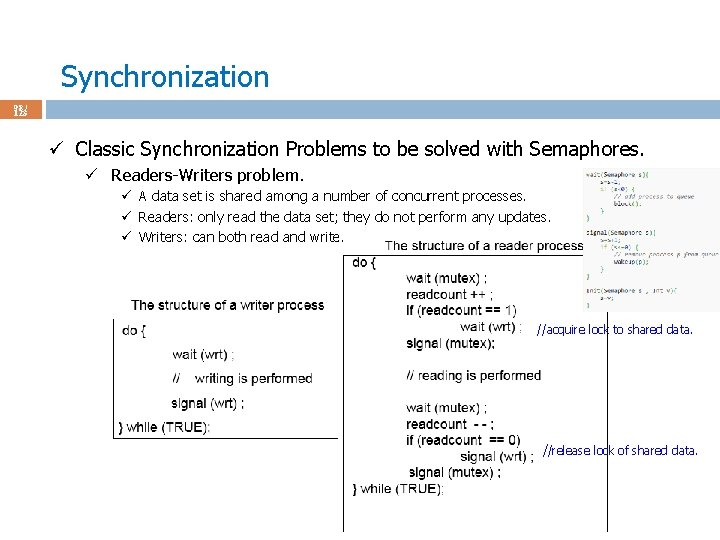
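For reference, the bounded-buffer scheme with the three semaphores can be sketched in Python as follows. This is an illustration rather than a transcription of the slide images: it assumes one producer and one consumer, and `N`, `ITEMS`, `consumed`, and `max_len` are illustrative names and values. Here `mutex` is used as a binary semaphore, while `empty` and `full` are counting semaphores.

```python
import threading
from collections import deque

N = 4                               # buffer capacity (illustrative value)
ITEMS = 20                          # number of items passed through the buffer
buffer = deque()
mutex = threading.Semaphore(1)      # binary semaphore guarding the buffer
empty = threading.Semaphore(N)      # counting semaphore: number of empty cells
full = threading.Semaphore(0)       # counting semaphore: number of full cells
consumed = []
max_len = 0                         # largest buffer occupancy observed

def producer():
    global max_len
    for item in range(ITEMS):
        empty.acquire()             # wait(empty): sleep while the buffer is full
        mutex.acquire()             # wait(mutex)
        buffer.append(item)
        max_len = max(max_len, len(buffer))
        mutex.release()             # signal(mutex)
        full.release()              # signal(full): one more full cell

def consumer():
    for _ in range(ITEMS):
        full.acquire()              # wait(full): sleep while the buffer is empty
        mutex.acquire()
        item = buffer.popleft()
        mutex.release()
        empty.release()             # signal(empty): one more empty cell
        consumed.append(item)

threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(consumed == list(range(ITEMS)), max_len <= N)
```

The order of the two waits matters: taking `mutex` before `empty` (or before `full`) would let a thread sleep while holding the mutex, which is the deadlock pattern discussed later for the barrier.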


Synchronization 96 / 123
- Classic Synchronization Problems to be solved with Semaphores.
- Readers-Writers problem.
  - A data set is shared among a number of concurrent processes.
  - Readers: only read the data set; they do not perform any updates.
  - Writers: can both read and write.
  - Problem: allow multiple readers to read at the same time, but only a single writer may access the shared data at a time (no other reader or writer while a writer is active).
  - Integer readcount initialized to 0: the number of readers reading the data at the moment.
  - Semaphore mutex initialized to 1: protects the readcount variable (multiple readers may try to modify it).
  - Semaphore wrt initialized to 1: protects the shared data (either one writer or the reader(s) access the data at a time).

Synchronization 97 / 123
- Classic Synchronization Problems to be solved with Semaphores.
- Readers-Writers problem.
  - Think about the code for this.
  - Reader and writer processes run in (pseudo) parallel.
  - Hint: the first and the last reader should do something special.

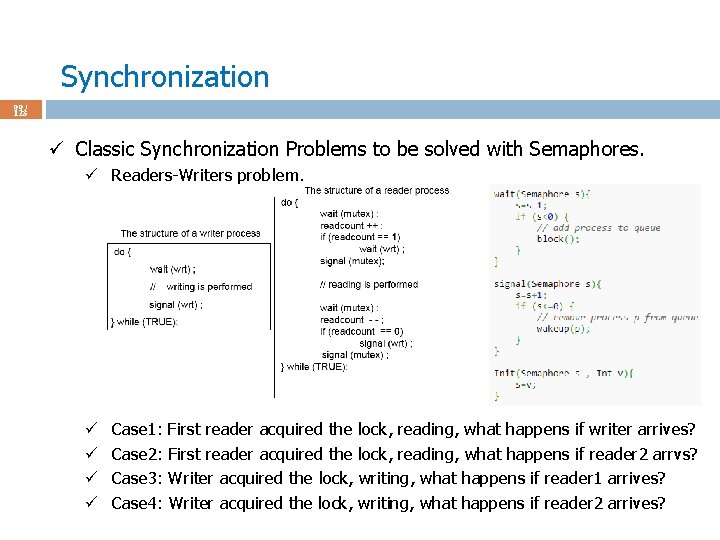

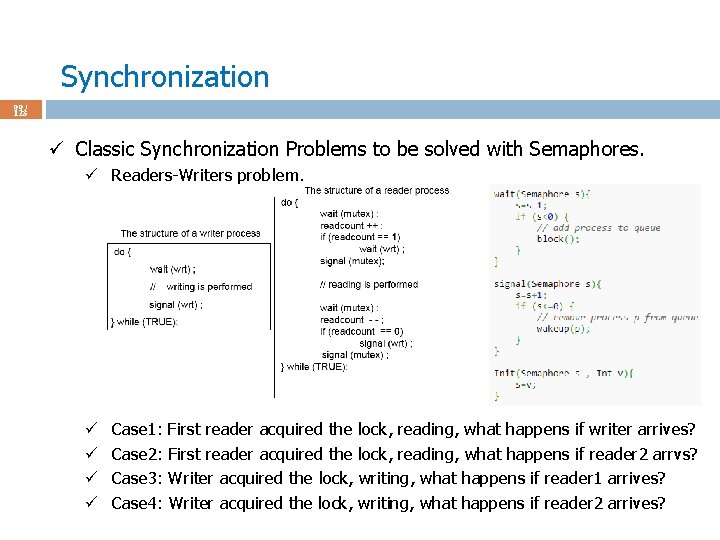

Synchronization 98 / 123
- Classic Synchronization Problems to be solved with Semaphores.
- Readers-Writers problem.
  - Annotations on the slide's code: "acquire lock on shared data" and "release lock of shared data".

Synchronization 99 / 123
- Classic Synchronization Problems to be solved with Semaphores.
- Readers-Writers problem.
  - Case 1: The first reader has acquired the lock and is reading; what happens if a writer arrives?
  - Case 2: The first reader has acquired the lock and is reading; what happens if reader 2 arrives?
  - Case 3: A writer has acquired the lock and is writing; what happens if reader 1 arrives?
  - Case 4: A writer has acquired the lock and is writing; what happens if reader 2 arrives?
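The four cases can be traced against a sketch like the one below. It follows the textbook readers-writers scheme with `readcount`, `mutex`, and `wrt` as named on the earlier slide; the `shared` dictionary, the `seen` list, and the thread counts are illustrative assumptions.

```python
import threading

mutex = threading.Semaphore(1)    # protects readcount
wrt = threading.Semaphore(1)      # protects the shared data
readcount = 0                     # number of readers currently reading
shared = {"value": 0}
seen = []

def reader():
    global readcount
    mutex.acquire()
    readcount += 1
    if readcount == 1:
        wrt.acquire()             # first reader locks out writers
    mutex.release()

    seen.append(shared["value"])  # read (other readers may read concurrently)

    mutex.acquire()
    readcount -= 1
    if readcount == 0:
        wrt.release()             # last reader lets writers in again
    mutex.release()

def writer():
    wrt.acquire()                 # acquire lock on shared data
    current = shared["value"]
    shared["value"] = current + 1 # write
    wrt.release()                 # release lock of shared data

threads = [threading.Thread(target=writer) for _ in range(3)]
threads += [threading.Thread(target=reader) for _ in range(6)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(shared["value"], len(seen))
```

In this scheme a writer arriving while readers are active blocks on `wrt` (Case 1), a second reader only touches `readcount` and proceeds (Case 2), the first reader arriving during a write blocks on `wrt` while holding `mutex` (Case 3), and any further reader then blocks on `mutex` (Case 4).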

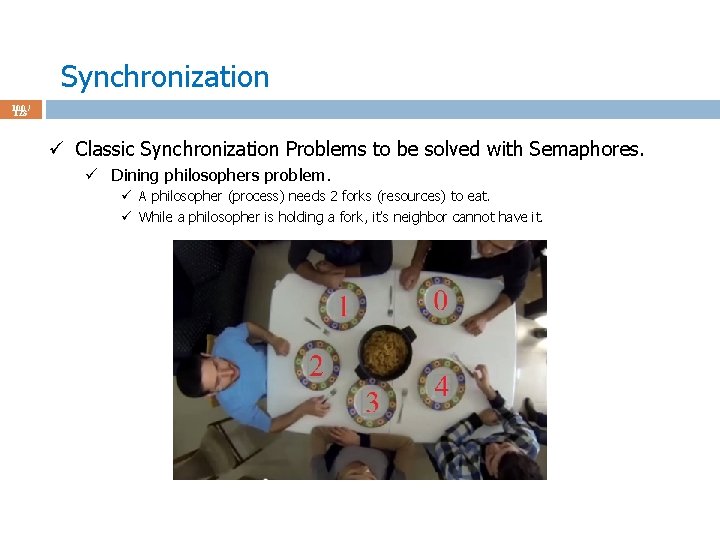

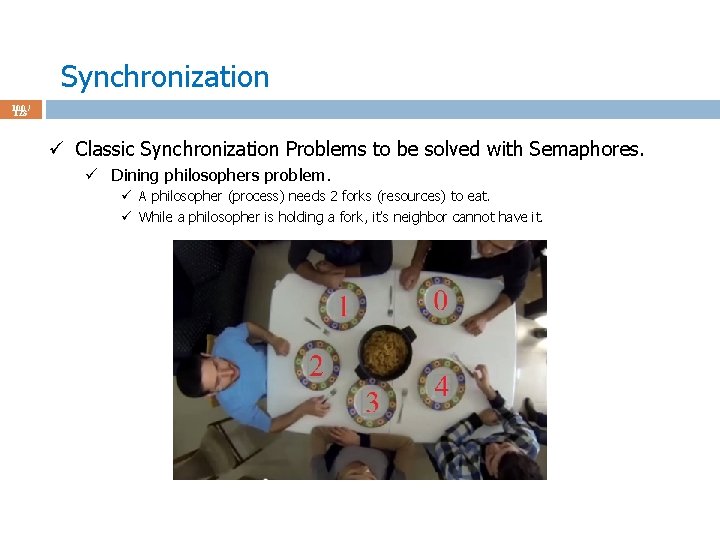

Synchronization 100 / 123
- Classic Synchronization Problems to be solved with Semaphores.
- Dining philosophers problem.
  - A philosopher (process) needs 2 forks (resources) to eat.
  - While a philosopher is holding a fork, its neighbor cannot have it.





Synchronization 105 / 123
- Classic Synchronization Problems to be solved with Semaphores.
- Dining philosophers problem.
  - A philosopher (process) needs 2 forks (resources) to eat.
  - While a philosopher is holding a fork, its neighbors cannot have it.
  - A philosopher is in one of 2 states: eating (needs forks) or thinking (does not need forks).
  - We want parallelism: e.g., 4 or 5 (but not 1 or 3) can be eating while 2 is eating.
  - We don't want deadlock: philosophers waiting for each other indefinitely.
  - We don't want starvation: no philosopher should wait forever (starve to death).

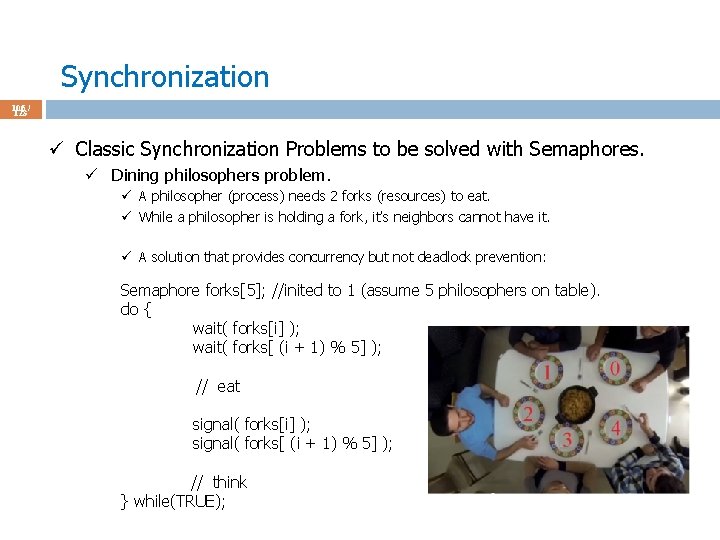

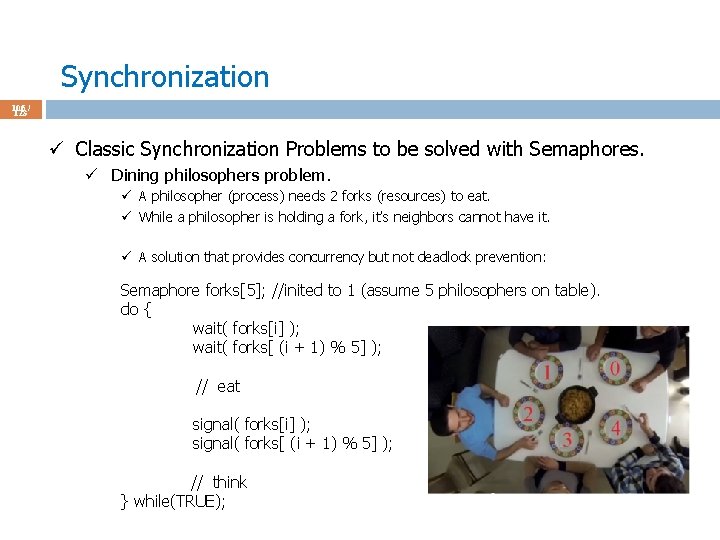

Synchronization 106 / 123
- Classic Synchronization Problems to be solved with Semaphores.
- Dining philosophers problem.
  - A philosopher (process) needs 2 forks (resources) to eat.
  - While a philosopher is holding a fork, its neighbors cannot have it.
  - A solution that provides concurrency but not deadlock prevention:

    Semaphore forks[5];   // each initialized to 1 (assume 5 philosophers at the table)

    // philosopher i
    do {
      wait(forks[i]);
      wait(forks[(i + 1) % 5]);
      // eat
      signal(forks[i]);
      signal(forks[(i + 1) % 5]);
      // think
    } while (TRUE);


Synchronization 108 / 123
- Classic Synchronization Problems to be solved with Semaphores.
- Dining philosophers problem.
  - A solution that provides concurrency but not deadlock prevention: how is deadlock possible?
    - Deadlock in a circular fashion: 4 gets its left fork, context switch (cs), 3 gets its left fork, cs, ..., 0 gets its left fork, cs; 4 now wants its right fork, which is held by 0 forever. Such an unlucky sequence of context switches is unlikely but possible.
  - A solution without deadlock danger is possible, again with semaphores.
    - Solution #1: put the left fork back if you cannot grab the right one.
    - Solution #2: grab both forks at once (atomically).
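Solution #1 might look like the Python sketch below. It assumes a non-blocking `acquire(blocking=False)` as the "try to grab" step; the random back-off before retrying is an addition of this sketch (not from the slide) so that philosophers are unlikely to keep retrying in lockstep. It removes the circular wait but does not by itself guarantee freedom from starvation. The names `meals` and `rounds` are illustrative.

```python
import random
import threading
import time

N = 5
forks = [threading.Semaphore(1) for _ in range(N)]   # each fork initialized to 1
meals = [0] * N

def philosopher(i, rounds=20):
    left, right = forks[i], forks[(i + 1) % N]
    eaten = 0
    while eaten < rounds:
        left.acquire()                          # wait(forks[i])
        if right.acquire(blocking=False):       # try the right fork without blocking
            meals[i] += 1                       # eat
            eaten += 1
            right.release()
            left.release()
        else:
            left.release()                      # put the left fork back
            time.sleep(random.uniform(0, 0.001))  # back off, then retry
        # think

threads = [threading.Thread(target=philosopher, args=(i,)) for i in range(N)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(meals)
```

No philosopher ever blocks while holding a fork, so the "everyone holds the left fork forever" state from the previous bullet cannot persist.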

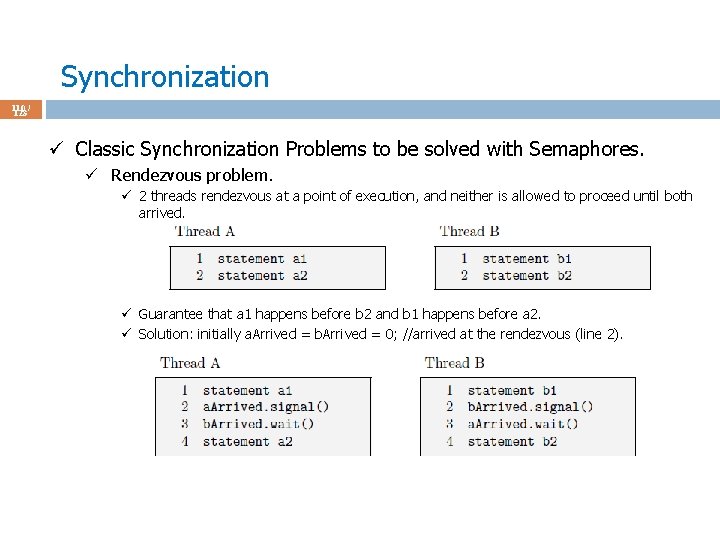

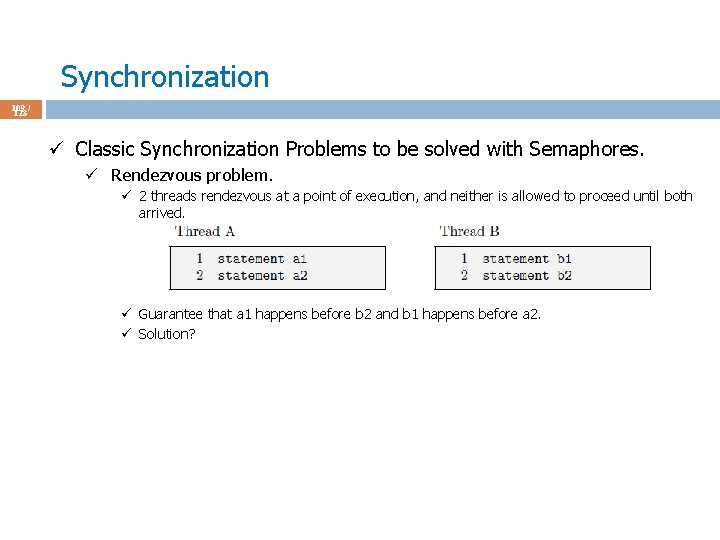

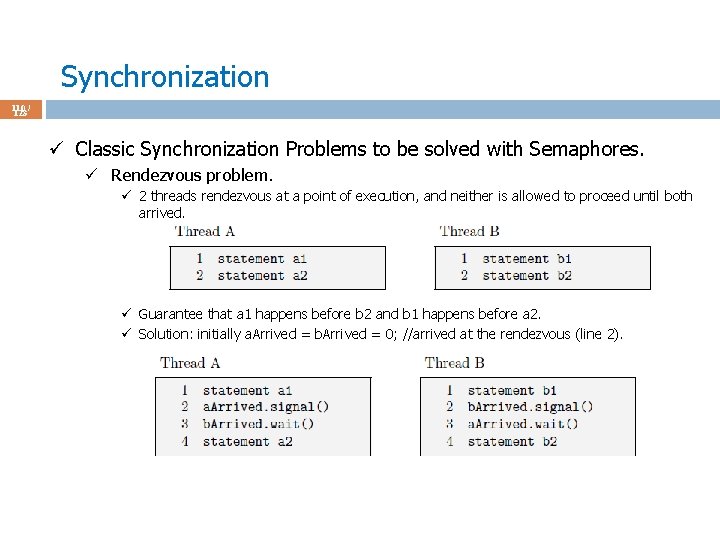


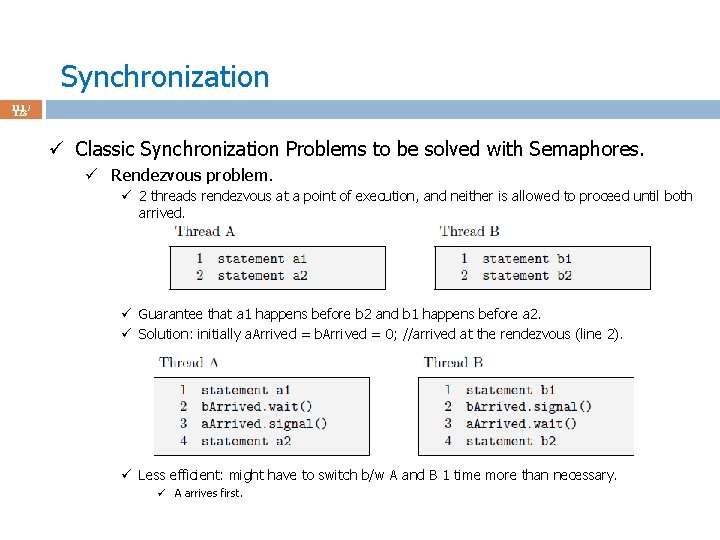

Synchronization 110 / 123
- Classic Synchronization Problems to be solved with Semaphores.
- Rendezvous problem.
  - 2 threads rendezvous at a point of execution, and neither is allowed to proceed until both have arrived.
  - Guarantee that a1 happens before b2 and b1 happens before a2.
  - Solution: initially aArrived = bArrived = 0; // each semaphore records that its thread has arrived at the rendezvous (line 2).

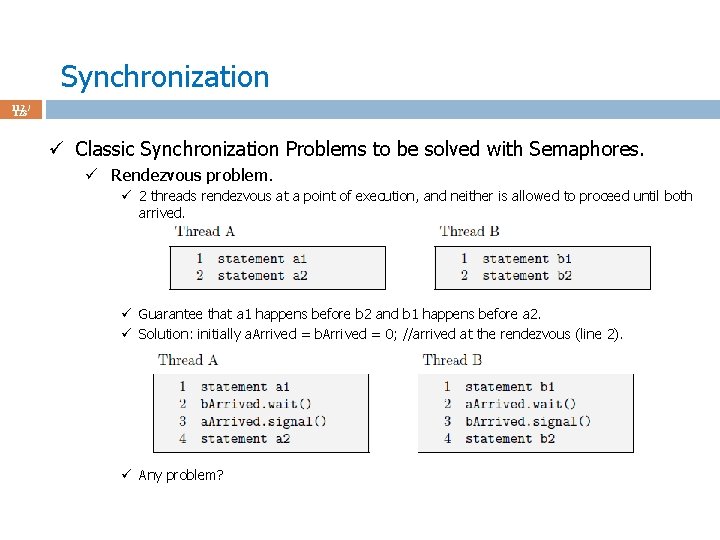

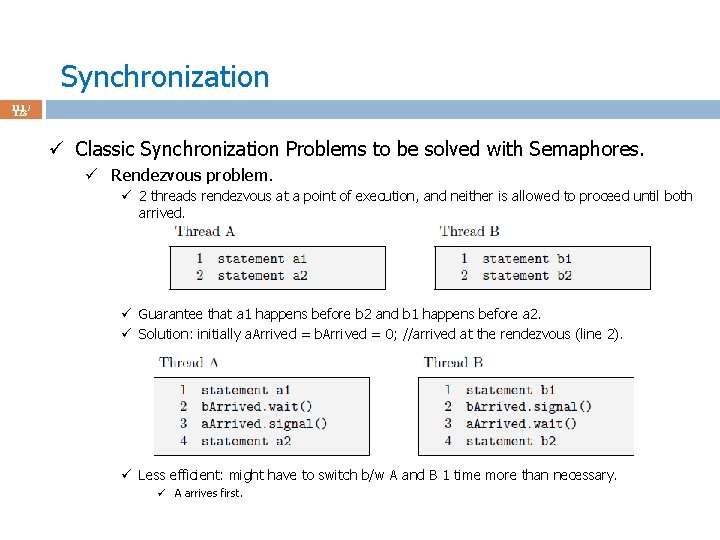

Synchronization 111 / 123
- Classic Synchronization Problems to be solved with Semaphores.
- Rendezvous problem.
  - Solution: initially aArrived = bArrived = 0; // arrived at the rendezvous (line 2).
  - This variant is less efficient: it might have to switch between A and B one more time than necessary.
  - Example: A arrives first.

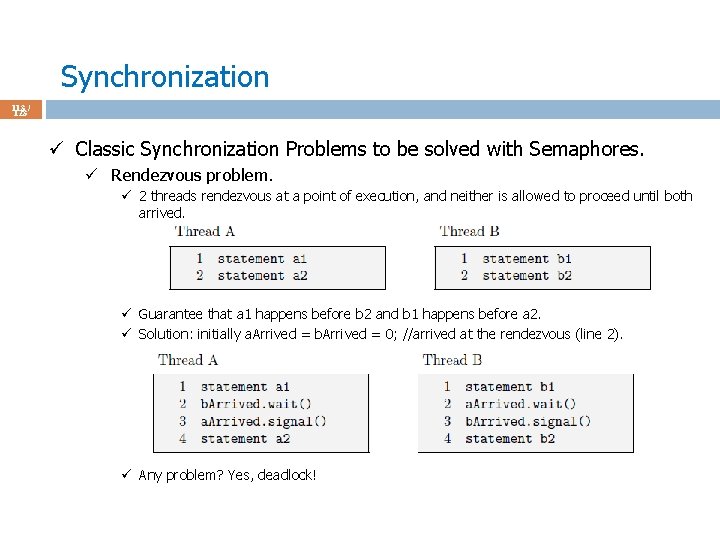

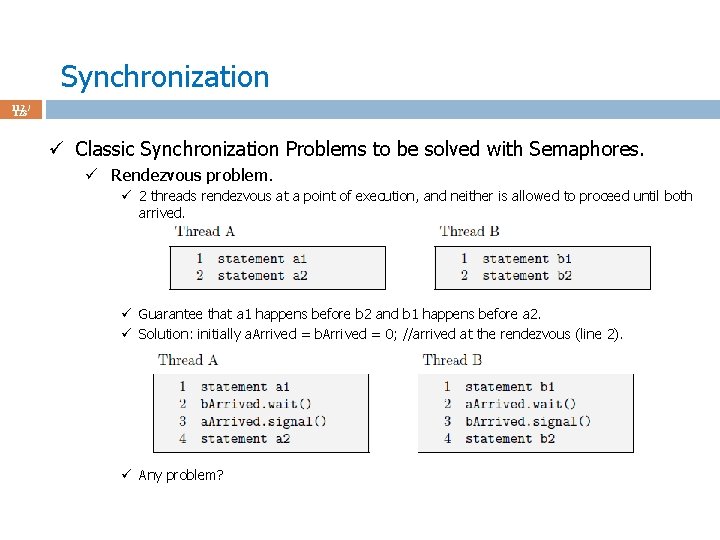

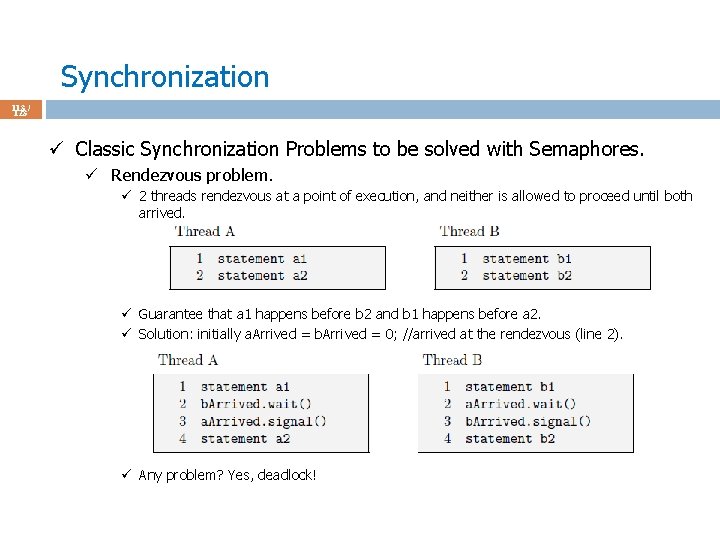


Synchronization 113 / 123
- Classic Synchronization Problems to be solved with Semaphores.
- Rendezvous problem.
  - 2 threads rendezvous at a point of execution, and neither is allowed to proceed until both have arrived.
  - Guarantee that a1 happens before b2 and b1 happens before a2.
  - Solution: initially aArrived = bArrived = 0; // arrived at the rendezvous (line 2).
  - Any problem with this variant? Yes, deadlock!
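A deadlock-free rendezvous can be sketched in Python as below. The slide images are not available here, so this follows the usual textbook form: each thread signals its own arrival first and only then waits for the other. The deadlocking variant is presumably the one where both threads wait before signalling. The names `a_arrived`, `b_arrived`, and `log` are illustrative.

```python
import threading

a_arrived = threading.Semaphore(0)   # aArrived = 0
b_arrived = threading.Semaphore(0)   # bArrived = 0
log = []

def thread_a():
    log.append("a1")
    a_arrived.release()    # signal(aArrived): A is at the rendezvous
    b_arrived.acquire()    # wait(bArrived): do not pass until B is there too
    log.append("a2")

def thread_b():
    log.append("b1")
    b_arrived.release()    # signal(bArrived)
    a_arrived.acquire()    # wait(aArrived)
    log.append("b2")

ta = threading.Thread(target=thread_a)
tb = threading.Thread(target=thread_b)
ta.start()
tb.start()
ta.join()
tb.join()
print(log)
```

If both threads called `acquire()` before `release()`, each would sleep on a semaphore that only the other (also sleeping) thread could signal.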

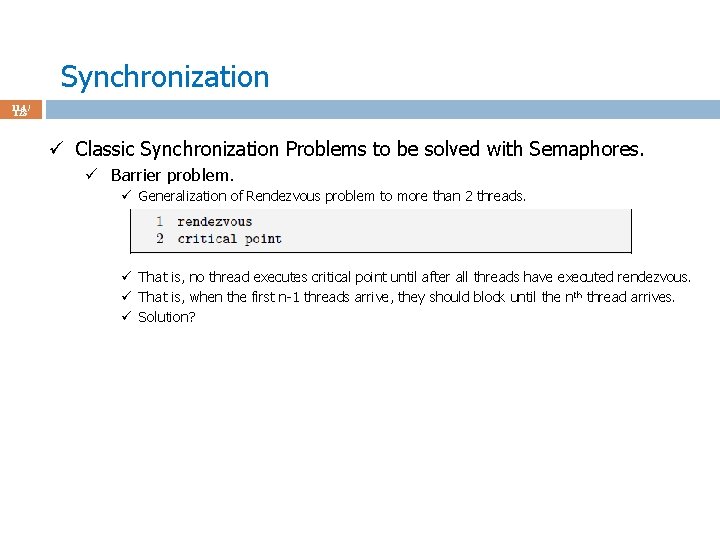

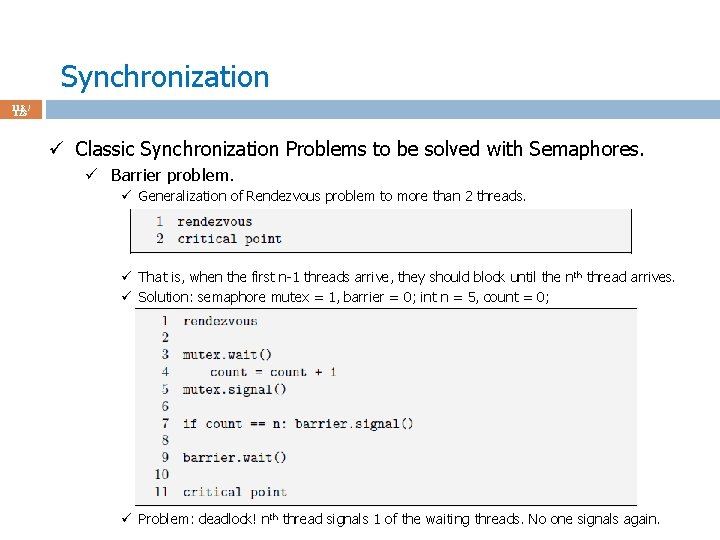

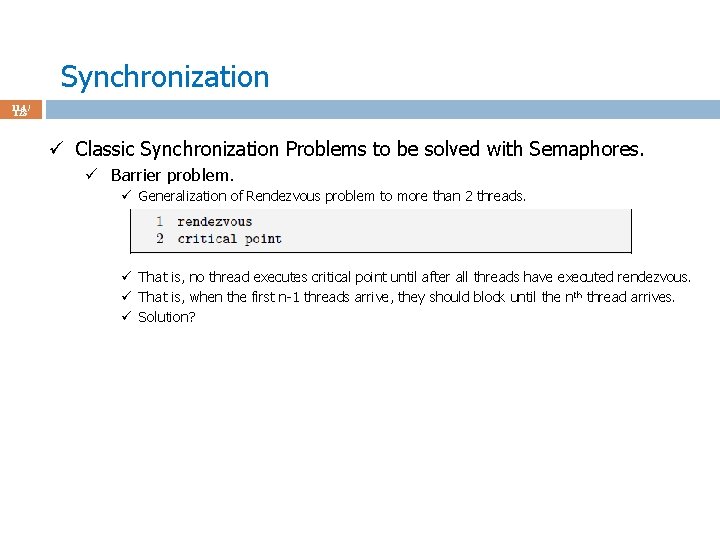

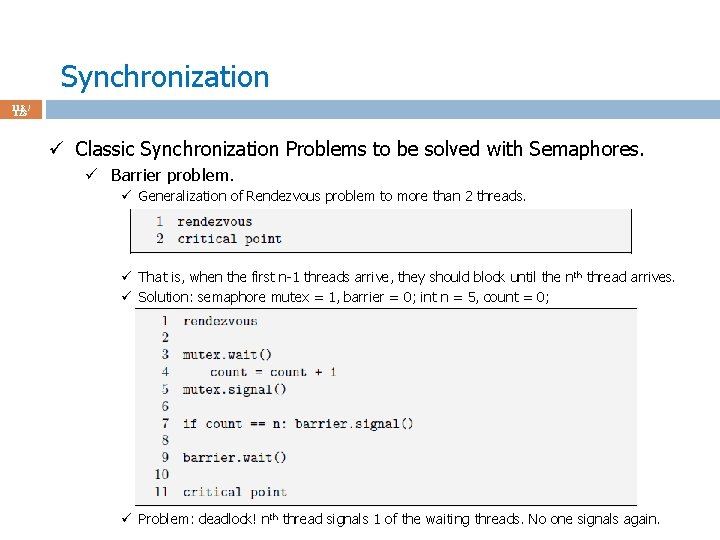

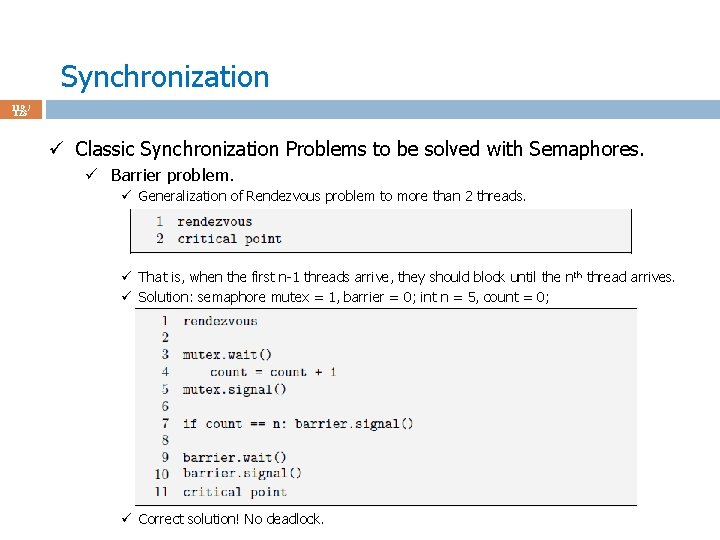

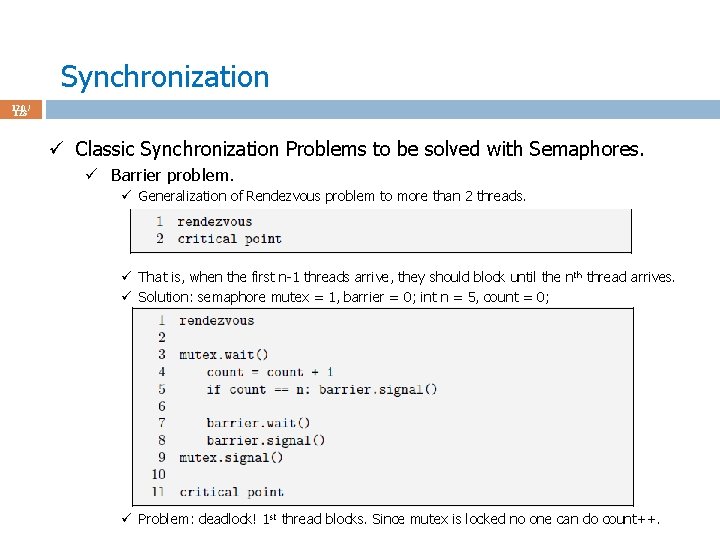

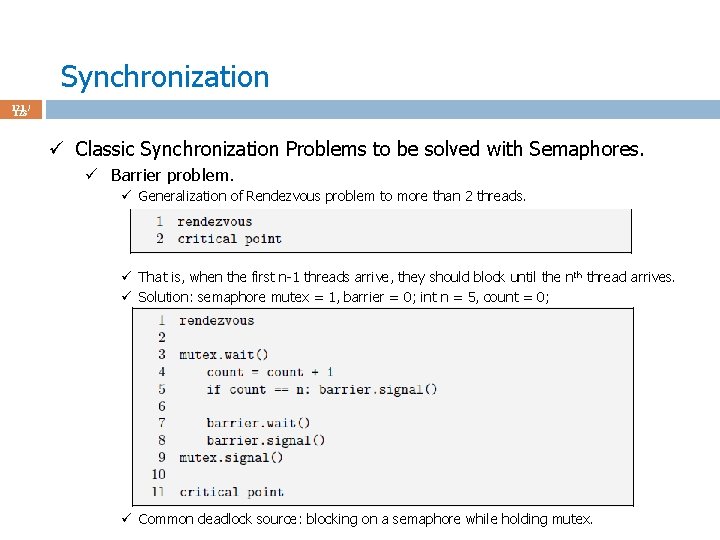

Synchronization 114 / 123
- Classic Synchronization Problems to be solved with Semaphores.
- Barrier problem.
  - Generalization of the Rendezvous problem to more than 2 threads.
  - That is, no thread executes the critical point until all threads have executed the rendezvous.
  - That is, when the first n-1 threads arrive, they should block until the nth thread arrives.
  - Solution?

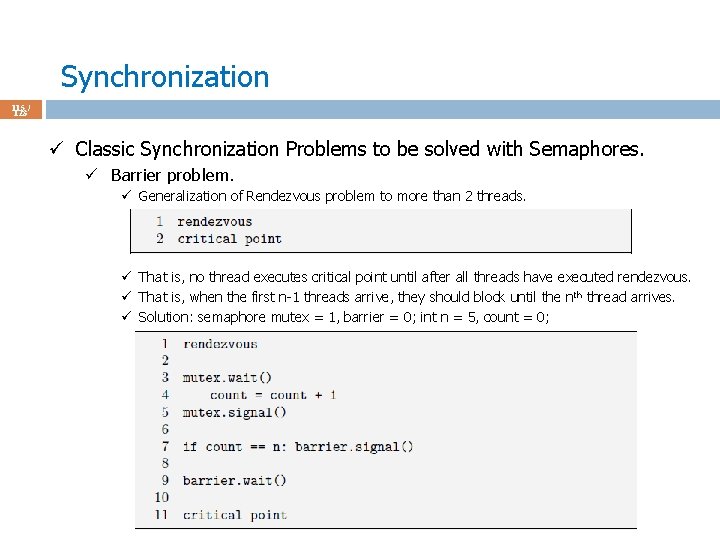

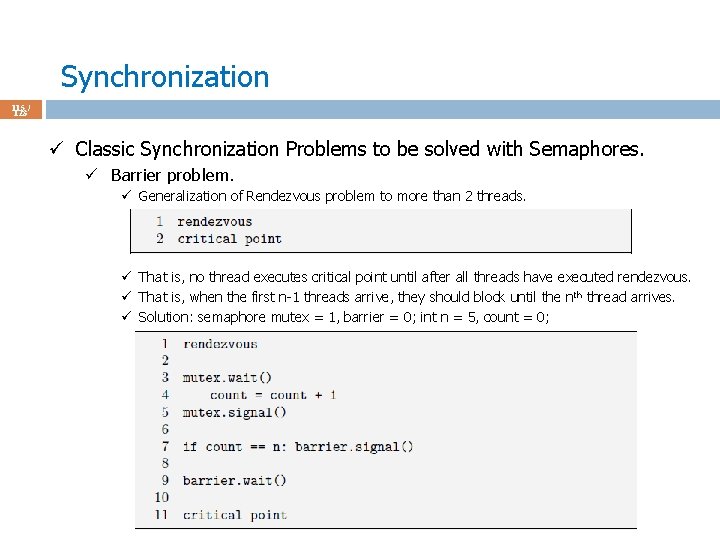

Synchronization 115 / 123
- Classic Synchronization Problems to be solved with Semaphores.
- Barrier problem.
  - Solution: semaphore mutex = 1, barrier = 0; int n = 5, count = 0;

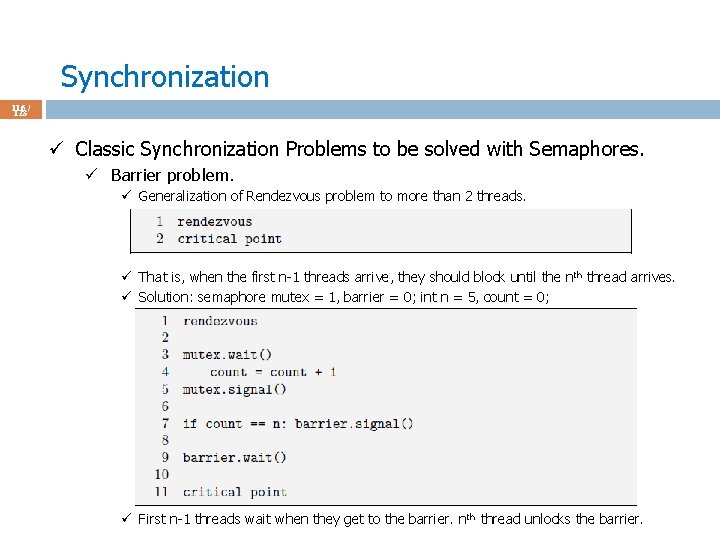

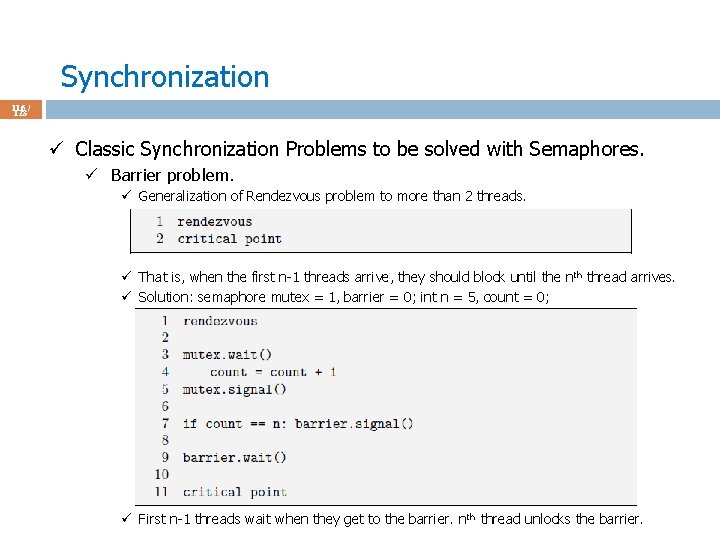

Synchronization 116 / 123
- Classic Synchronization Problems to be solved with Semaphores.
- Barrier problem.
  - Solution: semaphore mutex = 1, barrier = 0; int n = 5, count = 0;
  - The first n-1 threads wait when they get to the barrier. The nth thread unlocks the barrier.

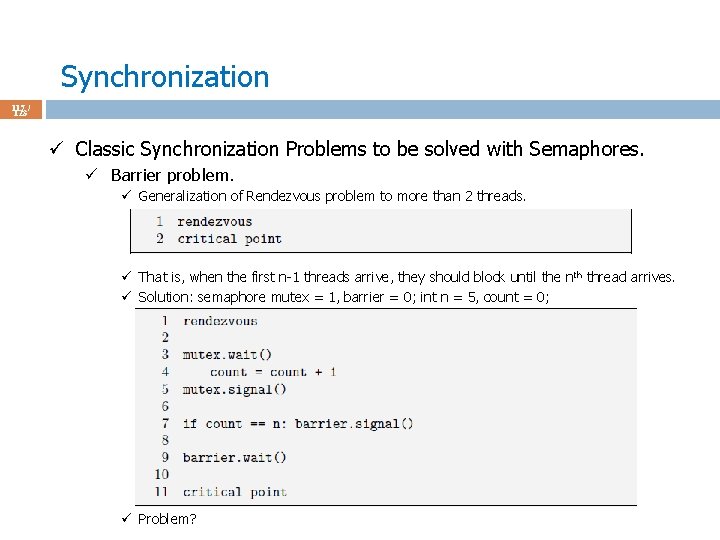

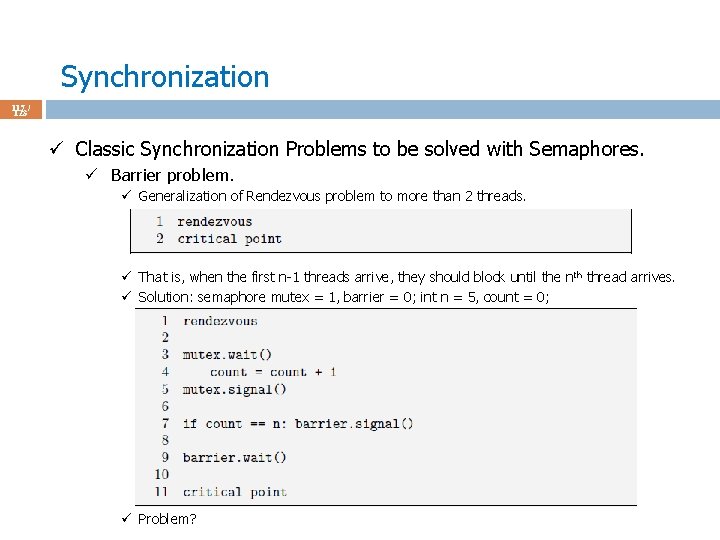


Synchronization 118 / 123
- Classic Synchronization Problems to be solved with Semaphores.
- Barrier problem.
  - Solution: semaphore mutex = 1, barrier = 0; int n = 5, count = 0;
  - Problem: deadlock! The nth thread signals only 1 of the waiting threads. No one signals again.

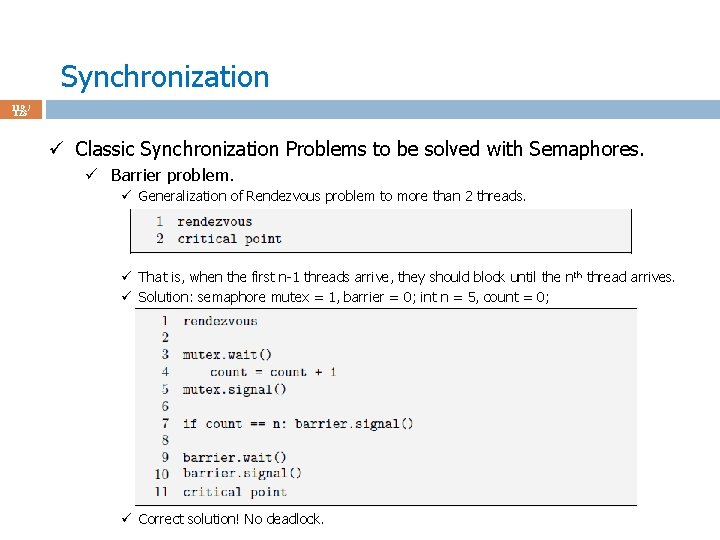

Synchronization 119 / 123
- Classic Synchronization Problems to be solved with Semaphores.
- Barrier problem.
  - Solution: semaphore mutex = 1, barrier = 0; int n = 5, count = 0;
  - Correct solution! No deadlock.

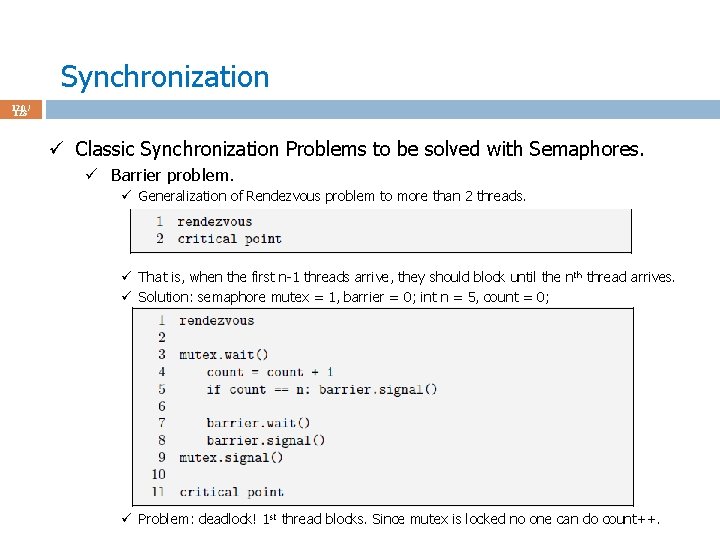

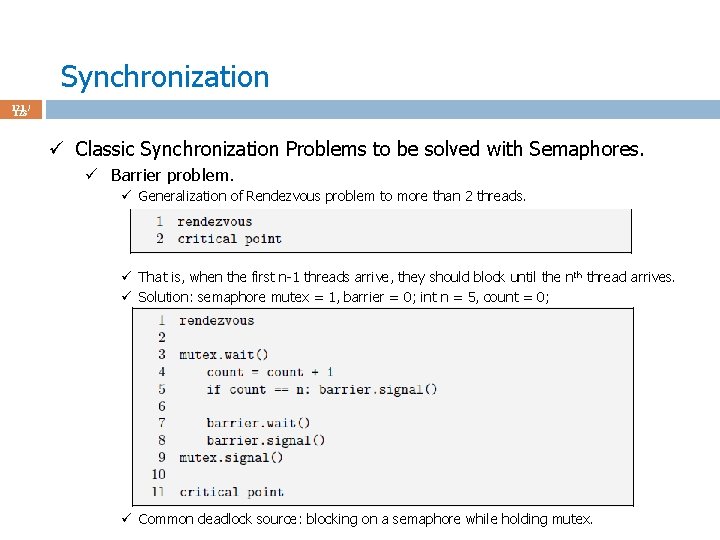

Synchronization 120 / 123
- Classic Synchronization Problems to be solved with Semaphores.
- Barrier problem.
  - Solution: semaphore mutex = 1, barrier = 0; int n = 5, count = 0;
  - Problem: deadlock! The 1st thread blocks on barrier. Since mutex is still locked, no one else can do count++.

Synchronization 121 / 123
- Classic Synchronization Problems to be solved with Semaphores.
- Barrier problem.
  - Solution: semaphore mutex = 1, barrier = 0; int n = 5, count = 0;
  - Common deadlock source: blocking on a semaphore while holding mutex.
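A barrier that avoids both deadlocks discussed on these slides can be sketched as follows. The slide images are not reproduced here, so this is the usual "turnstile" form and only presumably matches the slide's correct solution: the mutex is released before blocking on `barrier`, and every thread that passes the barrier signals it again for the next one. The `log` list is an illustrative addition.

```python
import threading

n = 5
count = 0
mutex = threading.Semaphore(1)     # protects count
barrier = threading.Semaphore(0)   # closed until the nth thread arrives
log = []

def worker(i):
    global count
    log.append(("rendezvous", i))  # code before the barrier

    mutex.acquire()
    count += 1
    if count == n:
        barrier.release()          # the nth thread opens the barrier
    mutex.release()                # never block on barrier while holding mutex

    barrier.acquire()              # turnstile: pass through ...
    barrier.release()              # ... and let the next thread through

    log.append(("critical", i))    # code after the barrier

threads = [threading.Thread(target=worker, args=(i,)) for i in range(n)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print([phase for phase, _ in log])
```

Dropping the second `barrier.release()` reproduces the "no one signals again" deadlock; moving `barrier.acquire()` inside the mutex-protected region reproduces the "1st thread blocks while holding mutex" deadlock.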

Synchronization 122 / 123
- Problems with semaphores.
- A careless programmer may write:
  - signal(mutex); ... wait(mutex);   // 2+ threads in the critical region (unprotected).
  - wait(mutex); ... wait(mutex);   // deadlock (indefinite waiting).
  - Forgetting the corresponding wait(mutex) or signal(mutex);   // unprotected region or deadlock.
- Need something else, something better, something easier to use:
  - Monitors.

Synchronization 123 / 123
- Solution: Monitors.
- Idea: get help not from the OS but from the programming language.
- High-level abstraction for process/thread synchronization.
- C does not provide monitors (use semaphores), but Java does.
- The compiler ensures that the critical regions of your code are protected.
  - You just identify the critical sections of the code and put them into a monitor; the compiler inserts the protection code.
- Monitor implementation using semaphores.
  - The compiler writer/language developer has to worry about this, not the casual application programmer.

Synchronization 124 / 123
- Solution: Monitors.
- A monitor is a construct in the language, like the class construct:

    monitor monitor-name {
      // shared variable declarations
      procedure P1 (...) { ... }
      ...
      procedure Pn (...) { ... }
      initialization code (...) { ... }
    }

- The monitor construct guarantees that only one process may be active within the monitor at a time.

Synchronization 125 / 123
- Solution: Monitors.
- The monitor construct guarantees that only one process may be active within the monitor at a time.
  - That is, if a process is running inside the monitor (= running a procedure, say P1()), then no other process can be active inside the monitor (= run P1() or any other procedure of the monitor) at the same time.
  - The compiler puts locks/semaphores at the beginning and end of these critical regions (procedures, shared variables, etc.).
  - So it is no longer the programmer's job to insert these locks/semaphores.

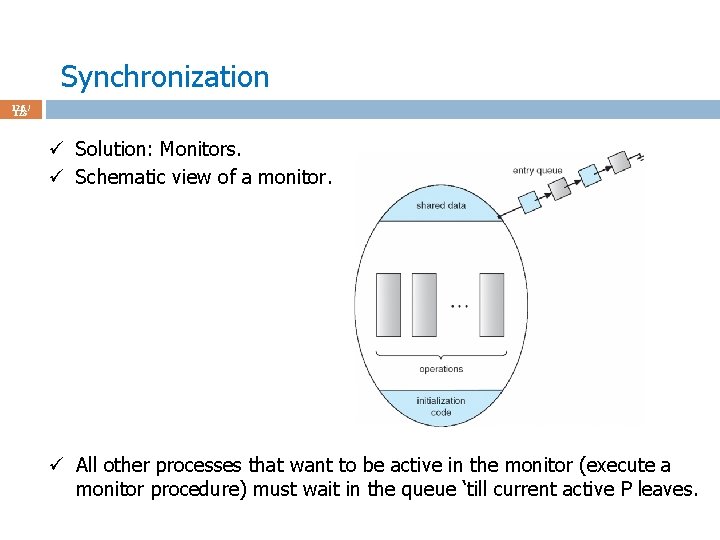

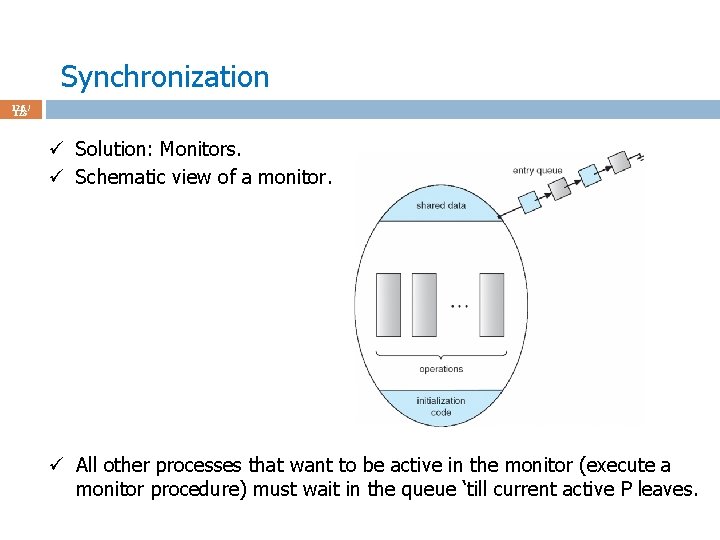

Synchronization 126 / 123
- Solution: Monitors.
- Schematic view of a monitor.
  - All other processes that want to be active in the monitor (execute a monitor procedure) must wait in the queue until the currently active process leaves.

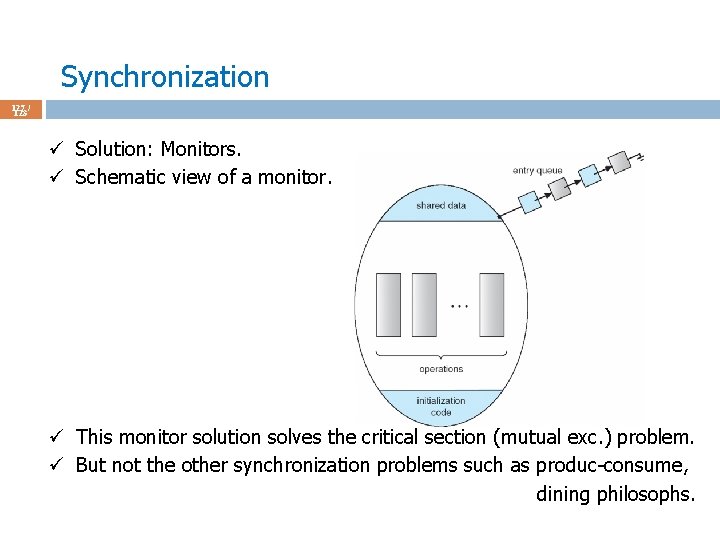

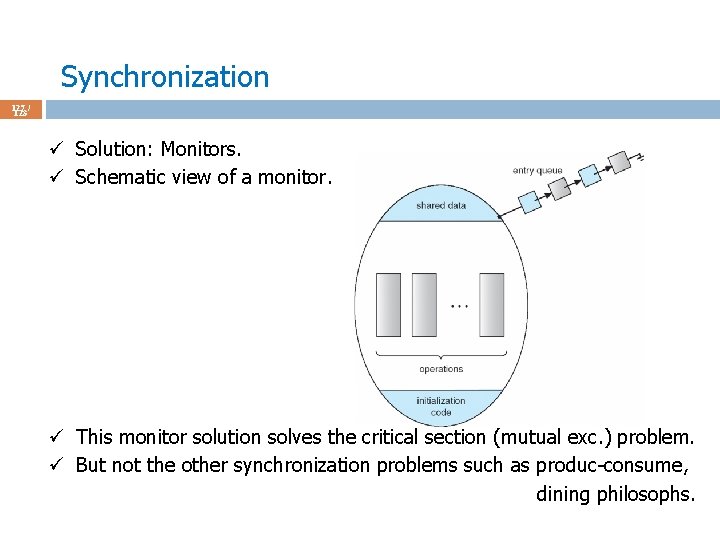

Synchronization 127 / 123
- Solution: Monitors.
- Schematic view of a monitor.
  - This monitor solves the critical section (mutual exclusion) problem.
  - But it does not solve the other synchronization problems, such as producer-consumer and dining philosophers.

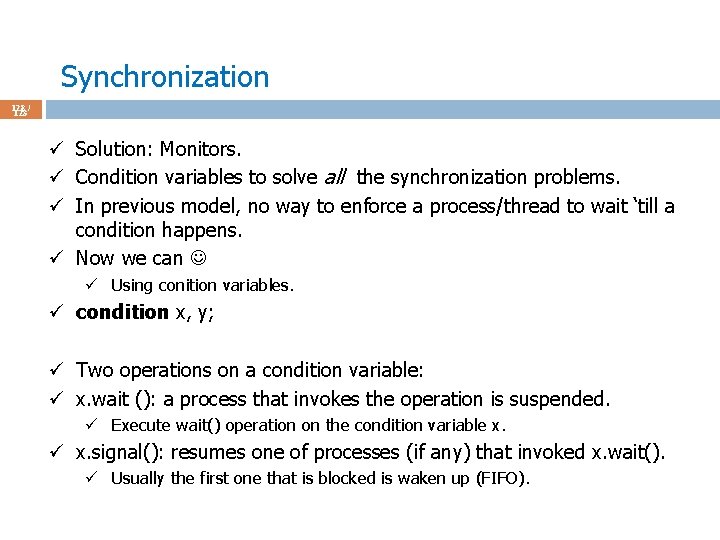

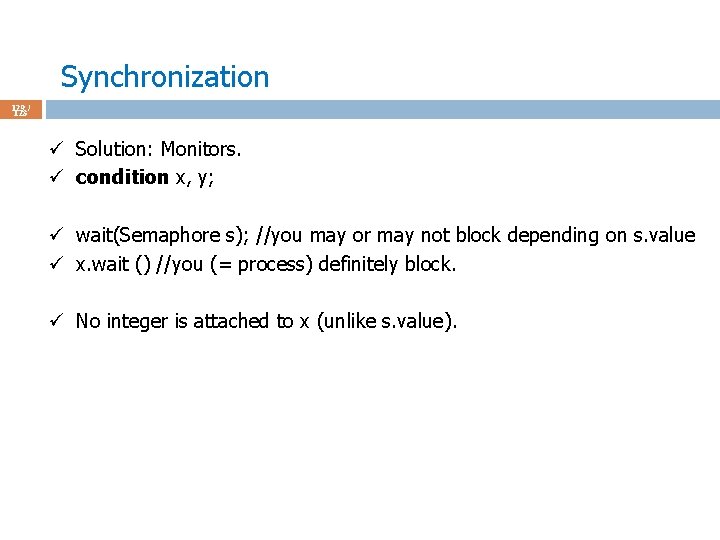

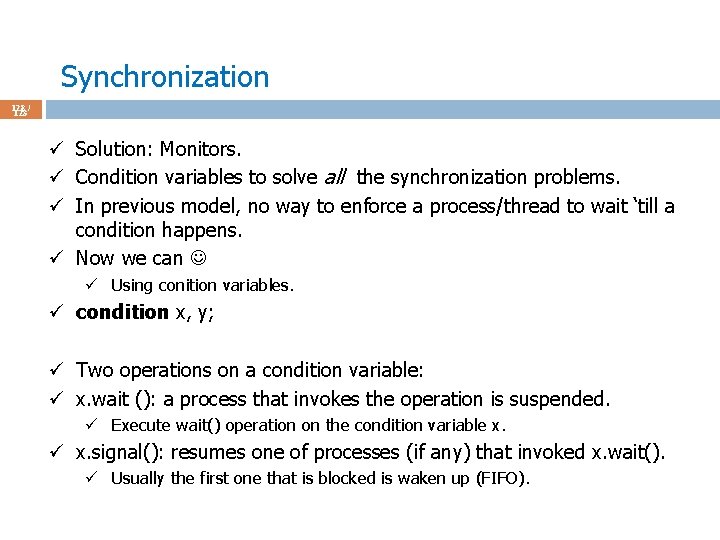

Synchronization 128 / 123
- Solution: Monitors.
- Condition variables to solve all the synchronization problems.
  - In the previous model, there is no way to force a process/thread to wait until a condition happens.
  - Now we can, using condition variables.
- condition x, y;
- Two operations on a condition variable:
  - x.wait(): executes the wait() operation on condition variable x; the process that invokes it is suspended.
  - x.signal(): resumes one of the processes (if any) that invoked x.wait().
    - Usually the one that blocked first is woken up (FIFO).

Synchronization 129 / 123
- Solution: Monitors.
- condition x, y;
  - wait(Semaphore s);   // you may or may not block, depending on s.value
  - x.wait();   // you (= the process) definitely block
  - No integer is attached to x (unlike s.value).

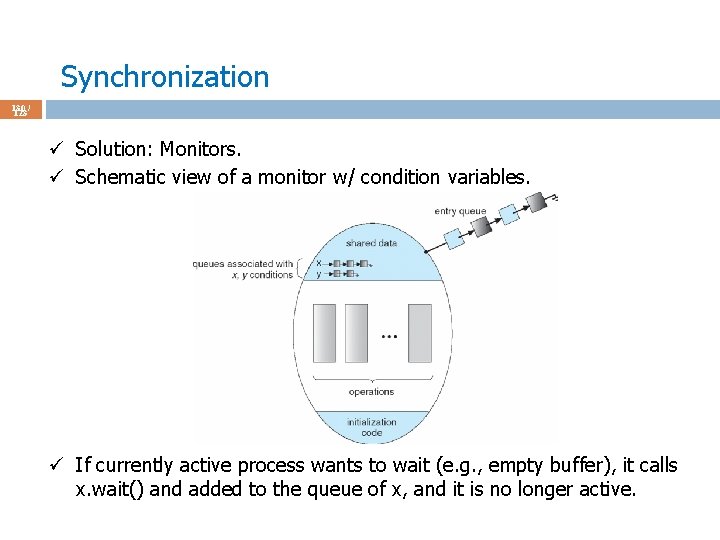

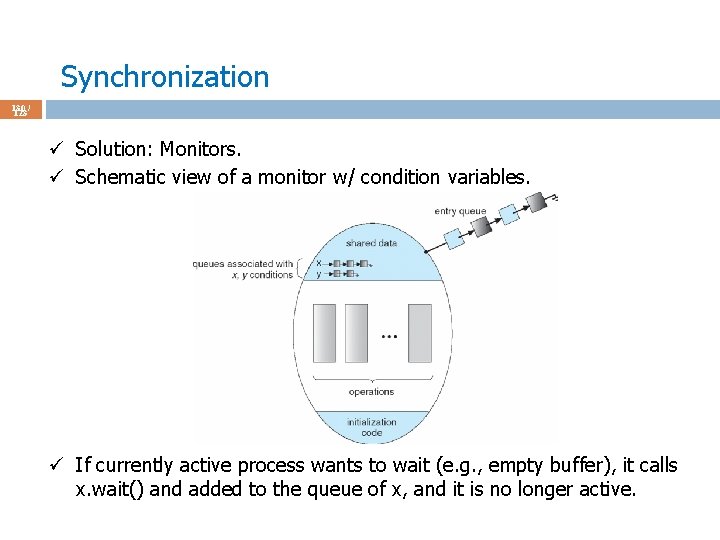

Synchronization 130 / 123
- Solution: Monitors.
- Schematic view of a monitor w/ condition variables.
  - If the currently active process wants to wait (e.g., on an empty buffer), it calls x.wait(), is added to the queue of x, and is no longer active.

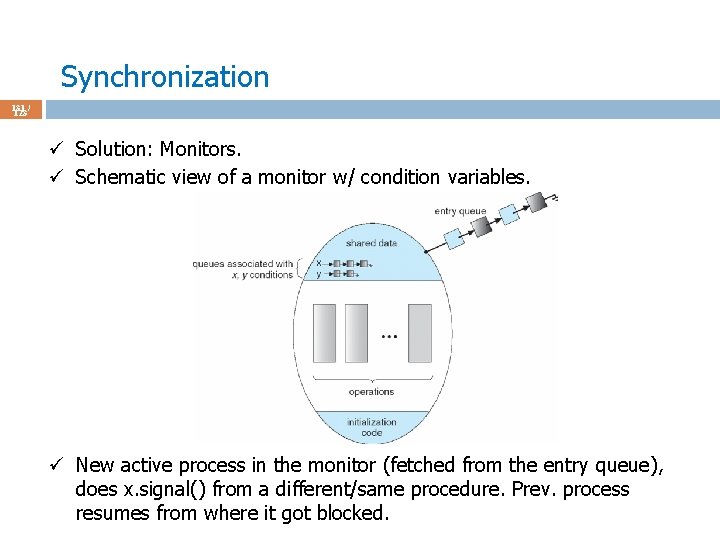

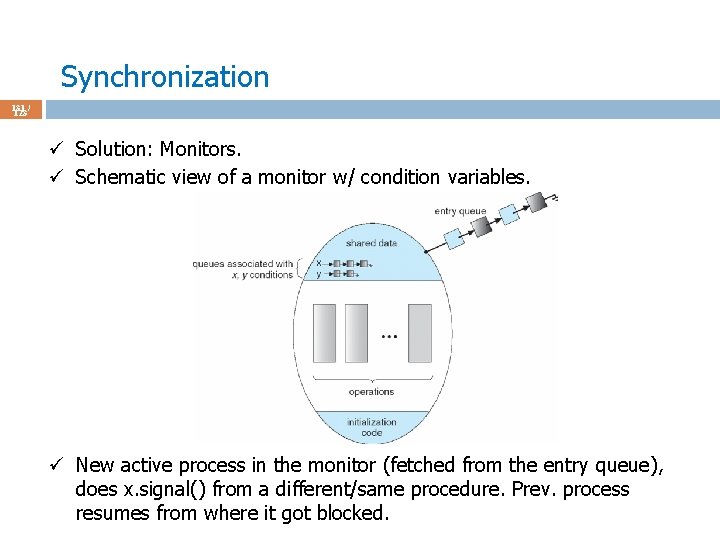

Synchronization 131 / 123
- Solution: Monitors.
- Schematic view of a monitor w/ condition variables.
  - The new active process in the monitor (fetched from the entry queue) does x.signal() from a different or the same procedure. The previously blocked process resumes from where it got blocked.

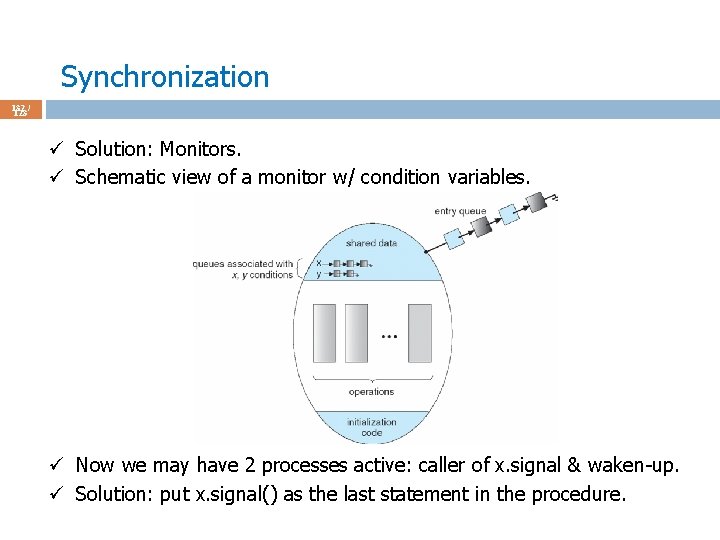

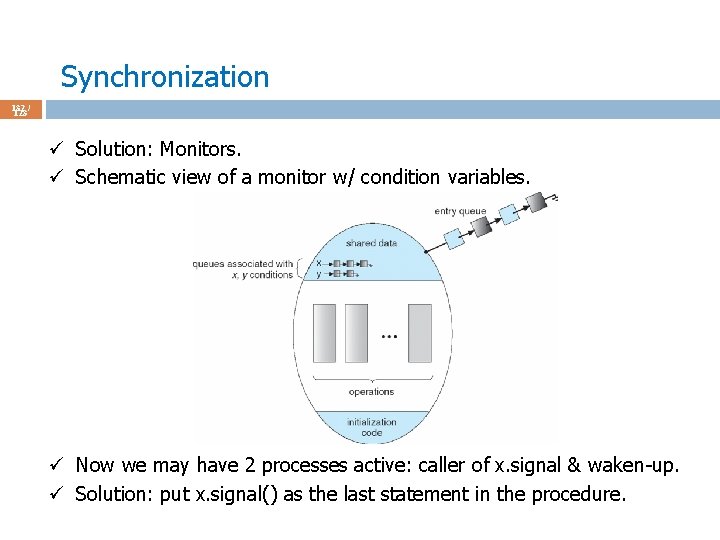

Synchronization 132 / 123
- Solution: Monitors.
- Schematic view of a monitor w/ condition variables.
  - Now we may have 2 processes active: the caller of x.signal() and the woken-up process.
  - Solution: put x.signal() as the last statement in the procedure.

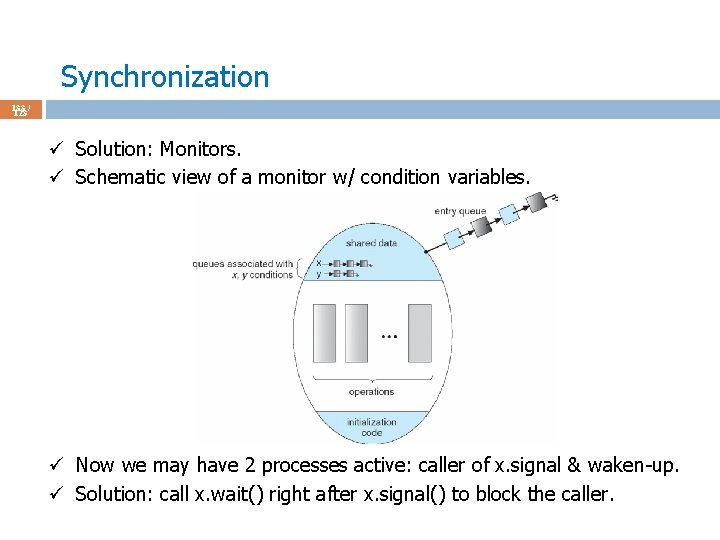

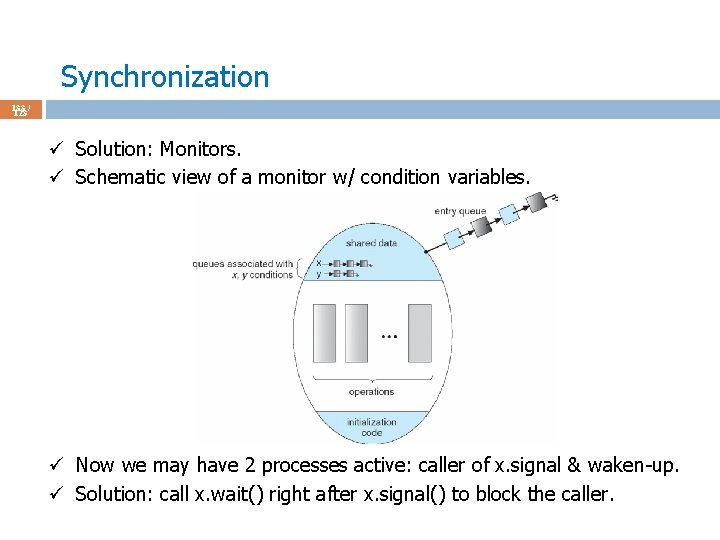

Synchronization 133 / 123
- Solution: Monitors.
- Schematic view of a monitor w/ condition variables.
  - Now we may have 2 processes active: the caller of x.signal() and the woken-up process.
  - Solution: call x.wait() right after x.signal() to block the caller.

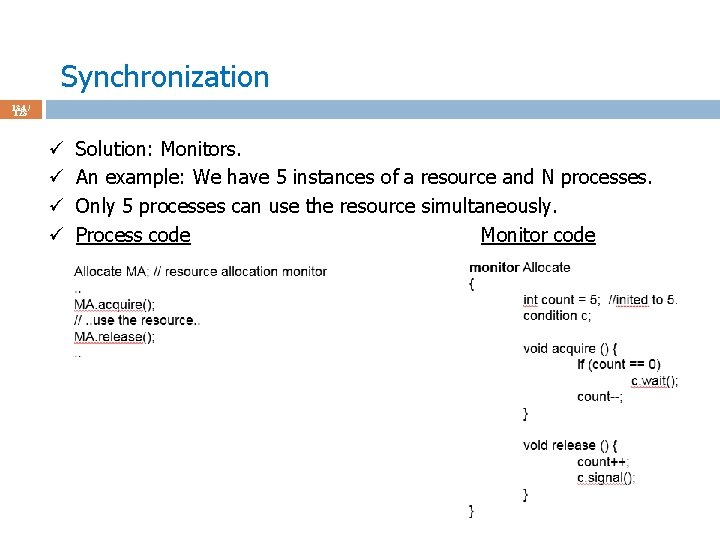

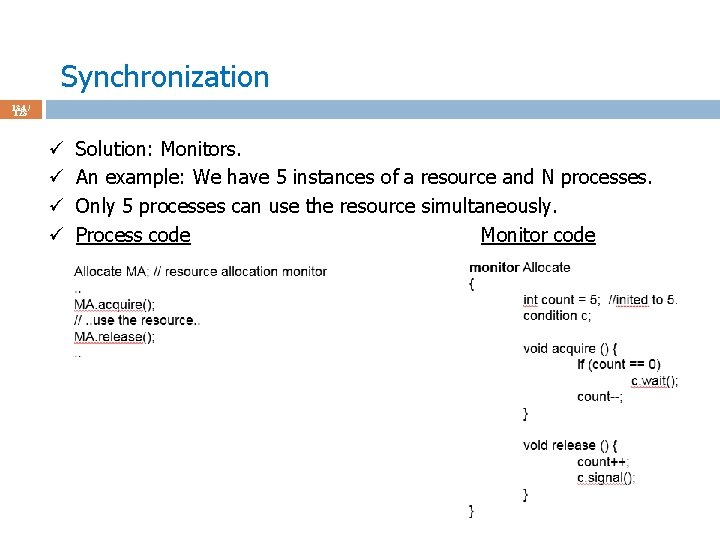

Synchronization 134 / 123
- Solution: Monitors.
- An example: we have 5 instances of a resource and N processes. Only 5 processes can use the resource simultaneously.
- The figures show the process code and the monitor code.
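Since the process and monitor code for this example live in the slide images, here is a hedged Python stand-in. Python has no monitor construct, so the sketch assumes a class whose methods all run under one lock, plus a `threading.Condition` as the condition variable. Python's condition variables do not hand control directly to the woken thread, so the wait sits in a `while` loop that rechecks the condition. `ResourcePool`, `peak`, and the thread count are illustrative names and values.

```python
import threading
import time

class ResourcePool:
    """Monitor-style allocator: every method body runs with the lock held."""

    def __init__(self, instances=5):
        self._lock = threading.Lock()                     # the monitor lock
        self._available = threading.Condition(self._lock) # condition variable
        self._free = instances

    def acquire(self):
        with self._lock:
            while self._free == 0:        # recheck after every wake-up
                self._available.wait()    # x.wait(): releases the lock while asleep
            self._free -= 1

    def release(self):
        with self._lock:
            self._free += 1
            self._available.notify()      # x.signal(): wake one waiter, if any

pool = ResourcePool(5)
stats_lock = threading.Lock()
in_use = 0
peak = 0

def process():
    global in_use, peak
    pool.acquire()
    with stats_lock:
        in_use += 1
        peak = max(peak, in_use)
    time.sleep(0.01)                      # use the resource
    with stats_lock:
        in_use -= 1
    pool.release()

threads = [threading.Thread(target=process) for _ in range(12)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print("peak concurrent users:", peak)
```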

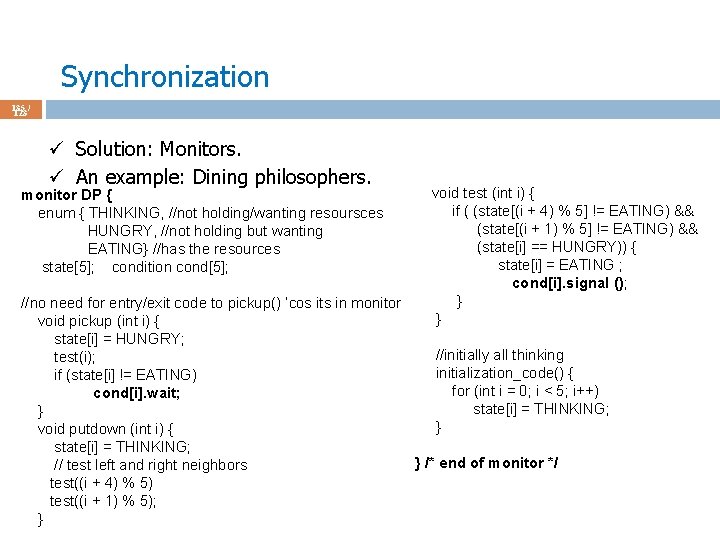

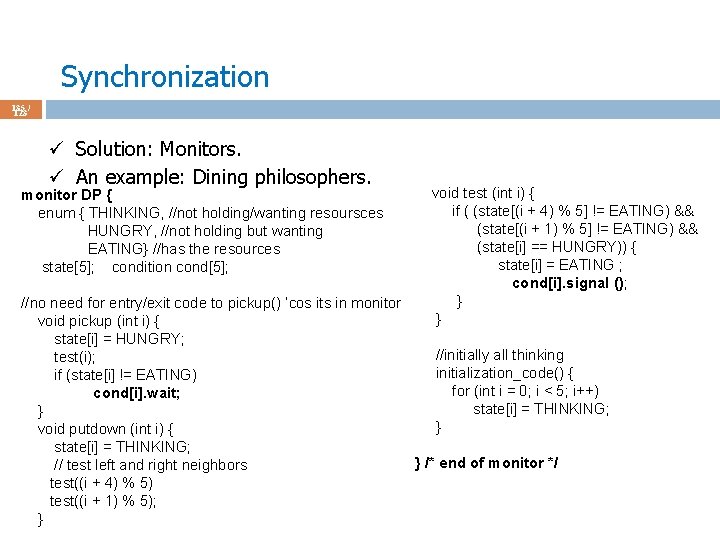

Synchronization 135 / 123
- Solution: Monitors.
- An example: Dining philosophers.

    monitor DP {
      enum { THINKING,   // not holding/wanting resources
             HUNGRY,     // not holding but wanting
             EATING }    // has the resources
        state[5];
      condition cond[5];

      // no need for entry/exit code in pickup() because it is in the monitor
      void pickup (int i) {
        state[i] = HUNGRY;
        test(i);
        if (state[i] != EATING)
          cond[i].wait();
      }

      void putdown (int i) {
        state[i] = THINKING;
        // test left and right neighbors
        test((i + 4) % 5);
        test((i + 1) % 5);
      }

      void test (int i) {
        if ((state[(i + 4) % 5] != EATING) &&
            (state[(i + 1) % 5] != EATING) &&
            (state[i] == HUNGRY)) {
          state[i] = EATING;
          cond[i].signal();
        }
      }

      initialization_code() {
        // initially all thinking
        for (int i = 0; i < 5; i++)
          state[i] = THINKING;
      }
    } /* end of monitor */
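The DP monitor can be approximated in Python as sketched below. This is an interpretation, not the slide's code: the monitor is emulated with one `threading.Lock` shared by five `threading.Condition` objects, and the `if` before the wait becomes a `while` because Python rechecks conditions after wake-up. The driver code, `meals`, `violations`, and `rounds` are illustrative additions used to check that no two neighbors ever eat together.

```python
import threading

THINKING, HUNGRY, EATING = range(3)

class DiningPhilosophers:
    def __init__(self, n=5):
        self.n = n
        self.lock = threading.Lock()                  # the monitor lock
        self.state = [THINKING] * n                   # initially all thinking
        self.cond = [threading.Condition(self.lock) for _ in range(n)]

    def _test(self, i):
        # Caller must hold self.lock. Let i eat if it is hungry and
        # neither neighbor is eating.
        left, right = (i + self.n - 1) % self.n, (i + 1) % self.n
        if (self.state[left] != EATING and self.state[right] != EATING
                and self.state[i] == HUNGRY):
            self.state[i] = EATING
            self.cond[i].notify()                     # cond[i].signal()

    def pickup(self, i):
        with self.lock:
            self.state[i] = HUNGRY
            self._test(i)
            while self.state[i] != EATING:
                self.cond[i].wait()                   # cond[i].wait()

    def putdown(self, i):
        with self.lock:
            self.state[i] = THINKING
            self._test((i + self.n - 1) % self.n)     # test left neighbor
            self._test((i + 1) % self.n)              # test right neighbor

dp = DiningPhilosophers()
meals = [0] * 5
violations = []

def philosopher(i, rounds=50):
    for _ in range(rounds):
        dp.pickup(i)
        # While i is EATING, neither neighbor can become EATING.
        if dp.state[(i + 4) % 5] == EATING or dp.state[(i + 1) % 5] == EATING:
            violations.append(i)
        meals[i] += 1                                 # eat
        dp.putdown(i)

threads = [threading.Thread(target=philosopher, args=(i,)) for i in range(5)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(meals, violations)
```

A philosopher never holds one fork while waiting for the other, because it only moves to EATING when both neighbors are not eating; this is the "grab both forks at once" idea expressed through the monitor.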

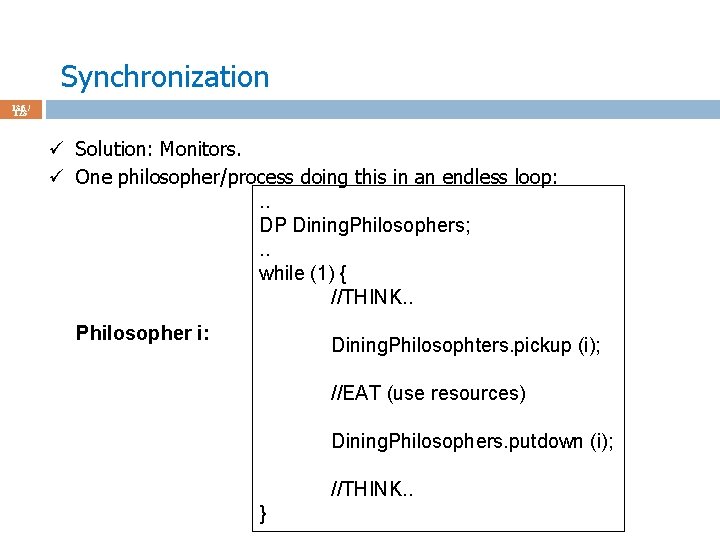

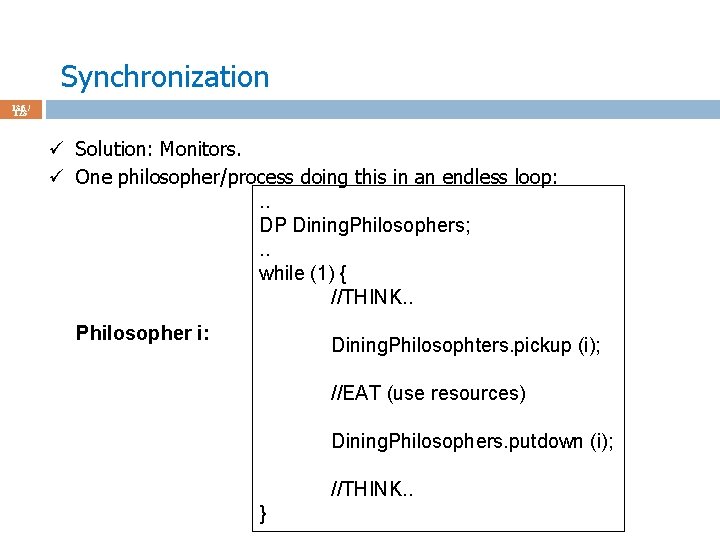

Synchronization 136 / 123
- Solution: Monitors.
- Philosopher i (one process) does this in an endless loop:

    DP DiningPhilosophers;
    ...
    while (1) {
      // THINK
      ...
      DiningPhilosophers.pickup(i);
      // EAT (use resources)
      DiningPhilosophers.putdown(i);
      // THINK
      ...
    }

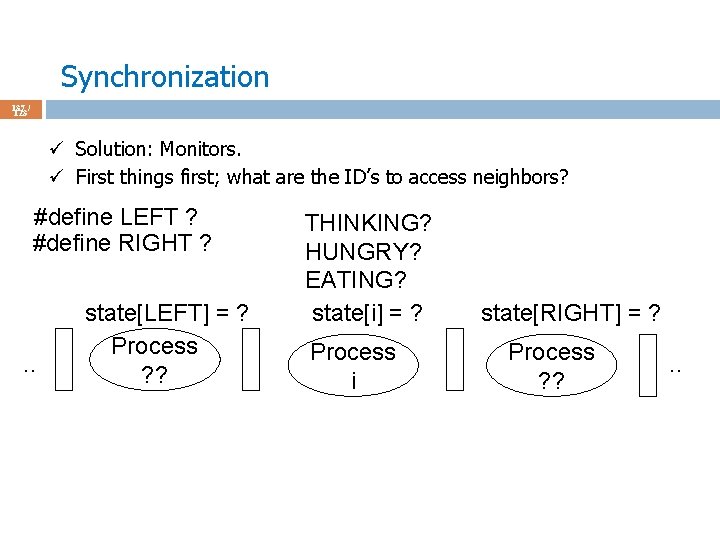

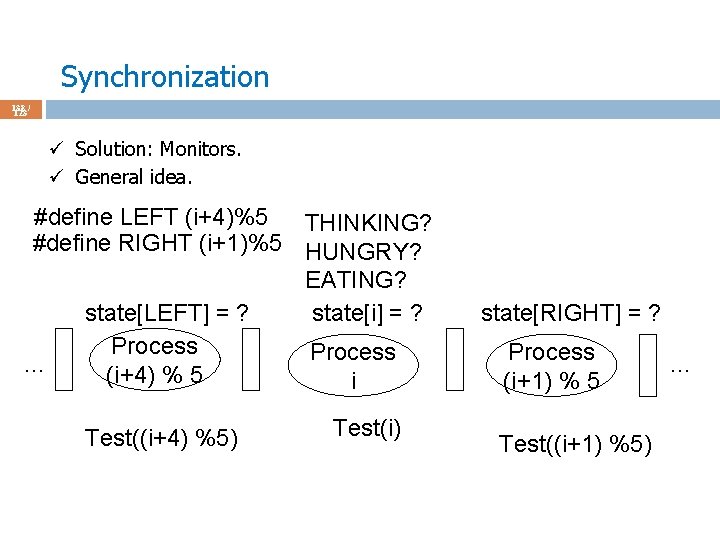

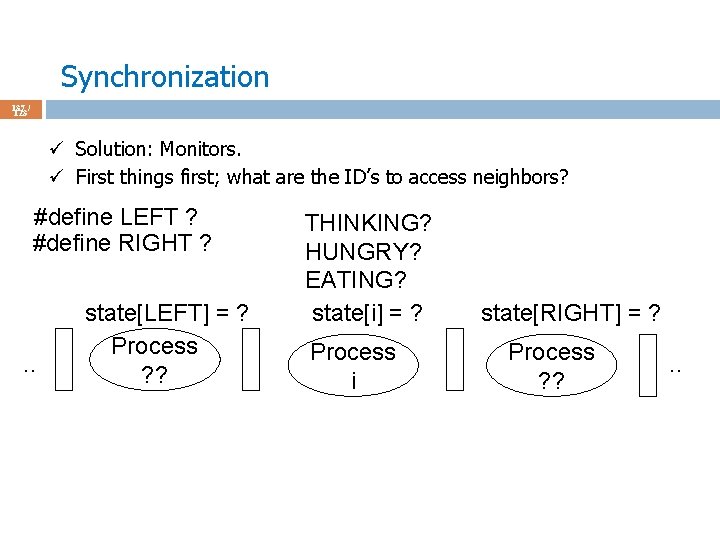

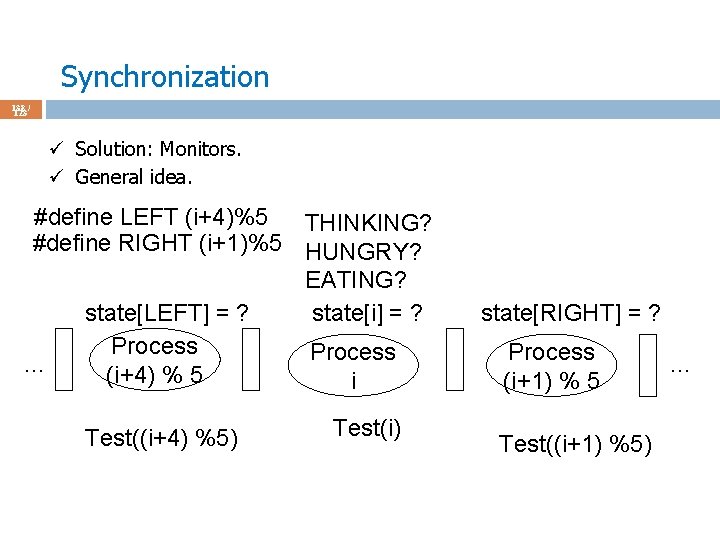

Synchronization 137 / 123
- Solution: Monitors.
- First things first: what are the IDs to access the neighbors?

    #define LEFT  ?
    #define RIGHT ?

    Process ?:  state[LEFT]  = ?   (THINKING? HUNGRY? EATING?)
    Process i:  state[i]     = ?
    Process ?:  state[RIGHT] = ?

Synchronization 138 / 123
- Solution: Monitors.
- General idea.

    #define LEFT  (i+4)%5
    #define RIGHT (i+1)%5

    Process (i+4)%5:  state[LEFT]  = ?   test((i+4)%5)   (THINKING? HUNGRY? EATING?)
    Process i:        state[i]     = ?   test(i)
    Process (i+1)%5:  state[RIGHT] = ?   test((i+1)%5)

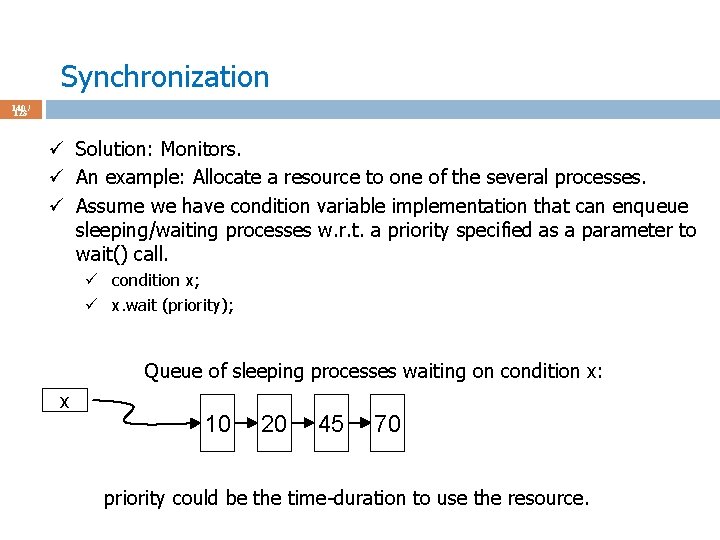

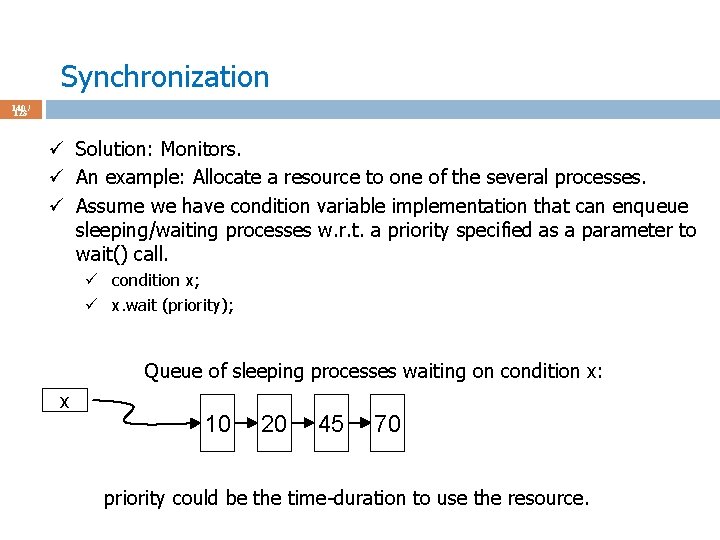

Synchronization 139 / 123
- Solution: Monitors.
- An example: allocate a resource to one of several processes.
  - Priority-based: among the processes (or threads) that want the resource, the one that will use it for the shortest (known) amount of time gets it first.

Synchronization 140 / 123
- Solution: Monitors.
- An example: allocate a resource to one of several processes.
  - Assume we have a condition variable implementation that can enqueue sleeping/waiting processes w.r.t. a priority specified as a parameter to the wait() call.
  - condition x;
  - x.wait(priority);
  - Queue of sleeping processes waiting on condition x, ordered by priority: 10, 20, 45, 70.
  - The priority could be the time duration for which the resource will be used.

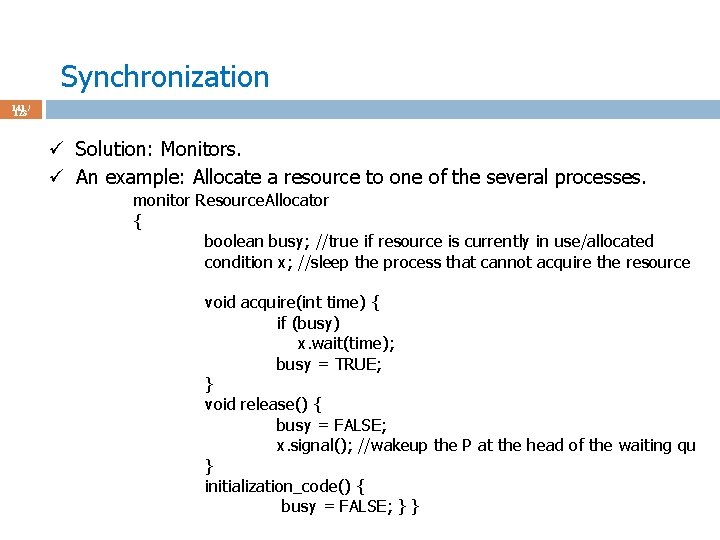

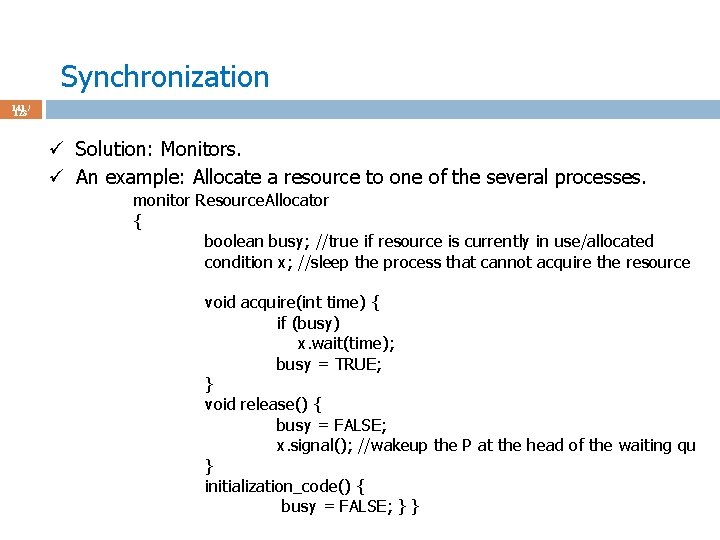

Synchronization 141 / 123
- Solution: Monitors.
- An example: allocate a resource to one of several processes.

    monitor ResourceAllocator {
      boolean busy;   // true if the resource is currently in use/allocated
      condition x;    // sleeps the process that cannot acquire the resource

      void acquire(int time) {
        if (busy)
          x.wait(time);
        busy = TRUE;
      }

      void release() {
        busy = FALSE;
        x.signal();   // wake up the process at the head of the waiting queue
      }

      initialization_code() {
        busy = FALSE;
      }
    }
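Python's `threading.Condition` has no priority parameter on `wait()`, so the sketch below emulates `x.wait(time)` with a min-heap of waiters, each with its own condition on the shared monitor lock. Unlike the pseudocode above, `release()` hands the resource directly to the chosen waiter and leaves `busy` set, which is a design choice of this sketch to keep a newly arriving thread from slipping in first. All names apart from `acquire`, `release`, and `busy` are illustrative.

```python
import heapq
import itertools
import threading
import time

class ResourceAllocator:
    def __init__(self):
        self._lock = threading.Lock()       # the monitor lock
        self._busy = False                  # True while the resource is allocated
        self._waiters = []                  # min-heap of (duration, seq, waiter)
        self._seq = itertools.count()       # tie-breaker for equal durations

    def acquire(self, duration):
        with self._lock:
            if not self._busy:
                self._busy = True
                return
            waiter = {"cond": threading.Condition(self._lock), "granted": False}
            heapq.heappush(self._waiters, (duration, next(self._seq), waiter))
            while not waiter["granted"]:    # plays the role of x.wait(duration)
                waiter["cond"].wait()

    def release(self):
        with self._lock:
            if self._waiters:
                _, _, waiter = heapq.heappop(self._waiters)
                waiter["granted"] = True    # hand over; busy stays True
                waiter["cond"].notify()     # plays the role of x.signal()
            else:
                self._busy = False

ra = ResourceAllocator()
order = []

def user(duration):
    ra.acquire(duration)
    order.append(duration)                  # use the resource
    ra.release()

ra.acquire(0)                               # main thread holds the resource first
threads = [threading.Thread(target=user, args=(d,)) for d in (10, 30, 25)]
for t in threads:
    t.start()
while len(ra._waiters) < 3:                 # wait until all three are queued
    time.sleep(0.001)
ra.release()
for t in threads:
    t.join()
print(order)
```

Once all three requests are queued, the waiter with the smallest requested duration is served first, then the next smallest, matching the priority queue described for condition x.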

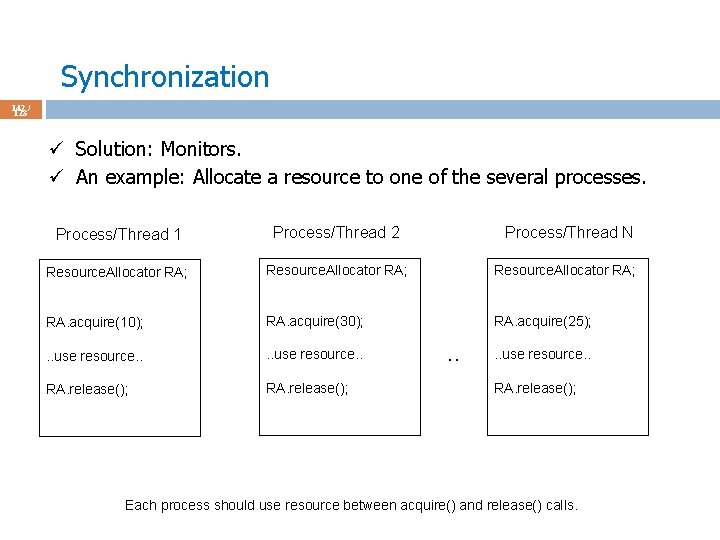

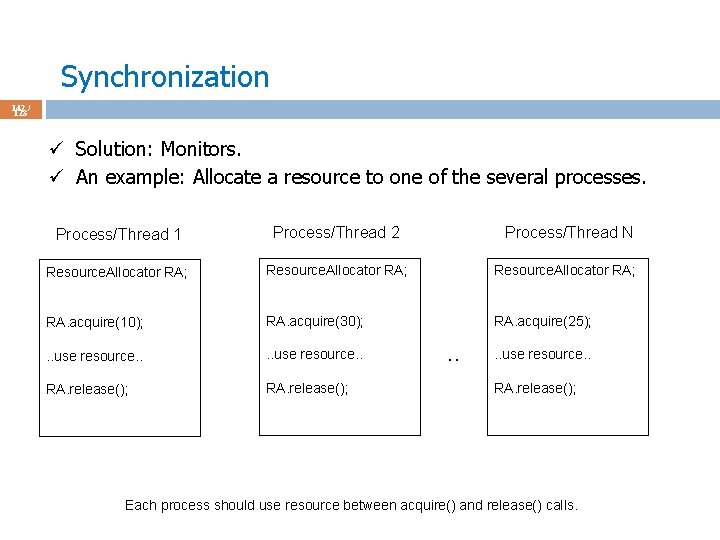

Synchronization 142 / 123
- Solution: Monitors.
- An example: allocate a resource to one of several processes.

    ResourceAllocator RA;

    Process/Thread 1:    Process/Thread 2:    Process/Thread N:
      RA.acquire(10);      RA.acquire(30);      RA.acquire(25);
      .. use resource ..   .. use resource ..   .. use resource ..
      RA.release();        RA.release();        RA.release();

- Each process should use the resource between its acquire() and release() calls.