CENG 334 Introduction to Operating Systems Filesystems Topics

- Slides: 55

CENG 334 Introduction to Operating Systems Filesystems Topics: Erol Sahin Dept of Computer Eng. Middle East Technical University Ankara, TURKEY URL: http: //kovan. ceng. metu. edu. tr/ceng 334 © 2006 Matt Welsh – Harvard University 1

File System Caching Most filesystems cache significant amounts of disk in memory e. g. , Linux tries to use all “free” physical memory as a giant cache Avoids huge overhead for going to disk for every I/O Issues: When do you commit a write to the disk? What happens if you write only to the memory cache and then the system crashes? How do you keep the memory and disk views of a file consistent? What if the file metadata (inodes, etc. ) is modified before the data blocks? Read-ahead Read a few extra blocks into memory when you do one read operation Amortize the cost of the seek Useful if the blocks of a file are laid out in contiguous blocks Take advantage of sequential access patterns on the file © 2006 Matt Welsh – Harvard University 2

Berkeley FFS Motivated by performance problems with older UNIX filesystems: Small blocks (512 bytes) Free list was unordered; no notion of allocating chunks of space at a time Inodes and data blocks may be located far from each other (long seek time) Related files (in same directory) might be very far apart No symbolic links, file locking, limited filenames (14 chars), no quotas Main goal of FFS was to improve performance: Use a larger block size – why does this help? ? Allocate blocks of a file (and files in same directory) near each other on the disk Entire filesystem described by a superblock Contains free block bitmap, location of root directory inode, etc. Copies of superblock stored at different locations on disk (for safety) © 2006 Matt Welsh – Harvard University 3

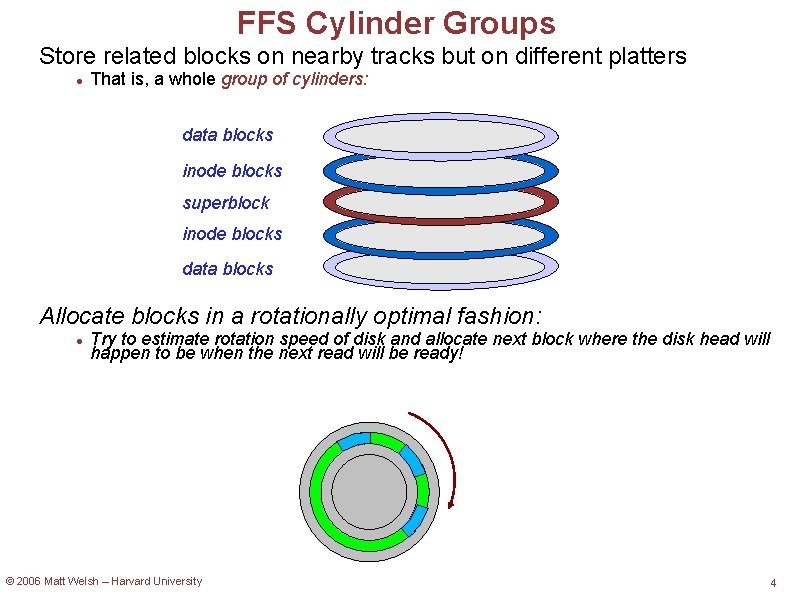

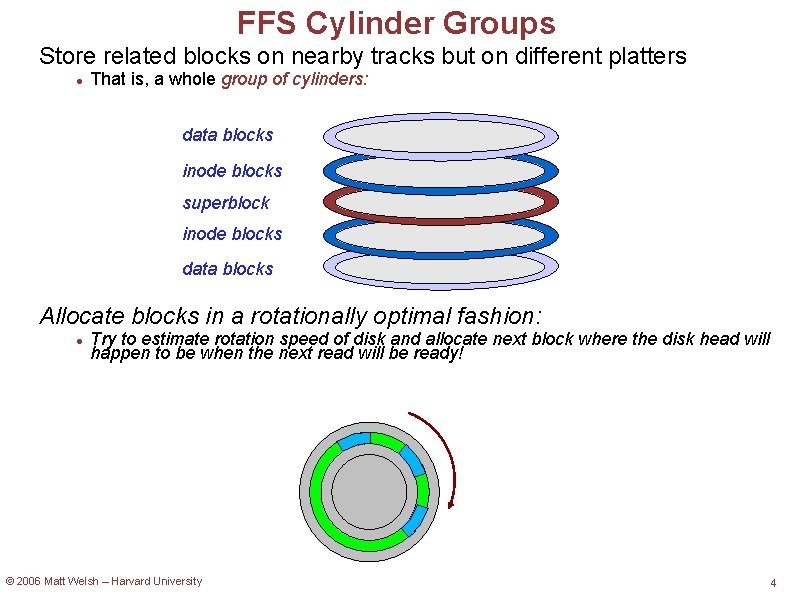

FFS Cylinder Groups Store related blocks on nearby tracks but on different platters That is, a whole group of cylinders: data blocks inode blocks superblock inode blocks data blocks Allocate blocks in a rotationally optimal fashion: Try to estimate rotation speed of disk and allocate next block where the disk head will happen to be when the next read will be ready! © 2006 Matt Welsh – Harvard University 4

Does this stuff matter anymore? Modern disks have a lot of internal buffering and logic Batch multiple write requests into a single write Internally reorder multiple outstanding requests Internal remapping of bad blocks to different places on the physical disk OS has little information on physical disk geometry anyway! Blocks with similar block #'s are usually close to each other, but that's about it. . . So, how useful are this fancy OS-driven block layout techniques? Clearly used to have significant impact on disk performance These days, not clear that they are so useful Still, lots of debate in the FS community about this Modern filesystems still use notion of block and cylinder grouping Argument that OS can know more about the workload, multiple users, different request priorities, and tradeoffs in terms of bandwidth vs. latency © 2006 Matt Welsh – Harvard University 5

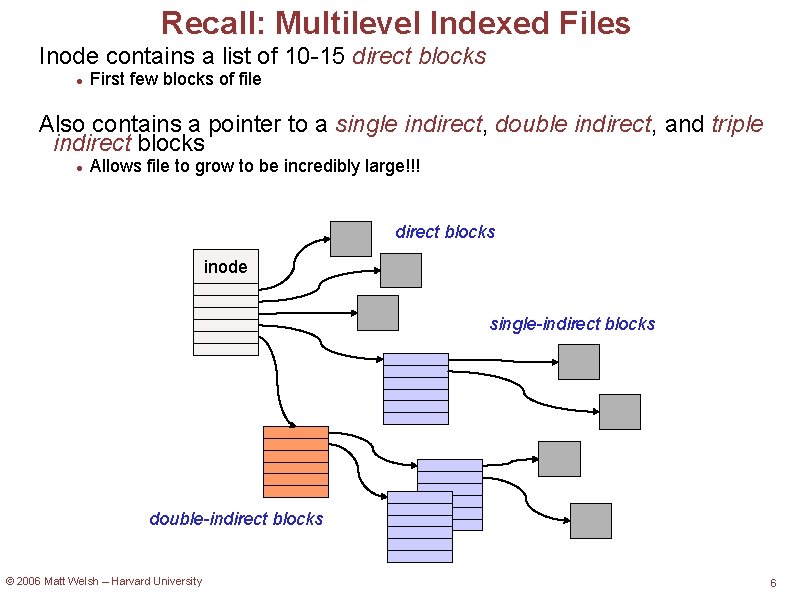

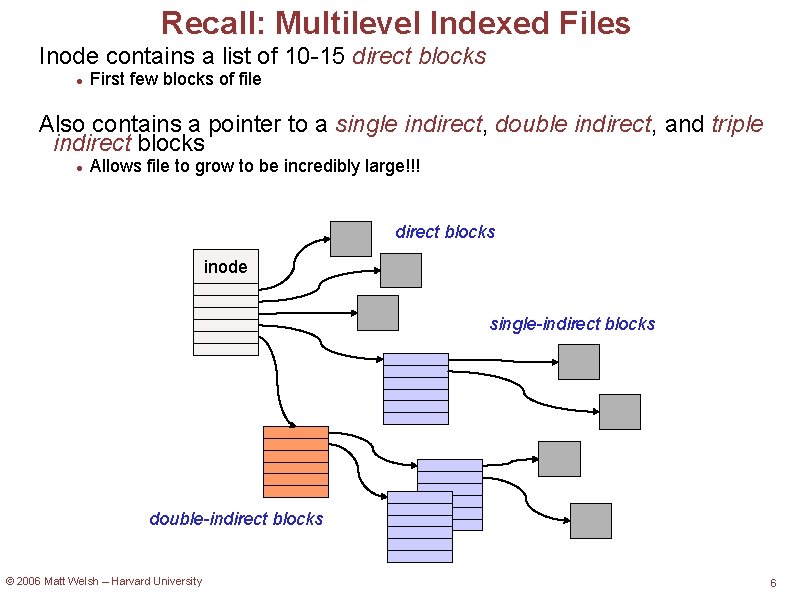

Recall: Multilevel Indexed Files Inode contains a list of 10 -15 direct blocks First few blocks of file Also contains a pointer to a single indirect, double indirect, and triple indirect blocks Allows file to grow to be incredibly large!!! direct blocks inode single-indirect blocks double-indirect blocks © 2006 Matt Welsh – Harvard University 6

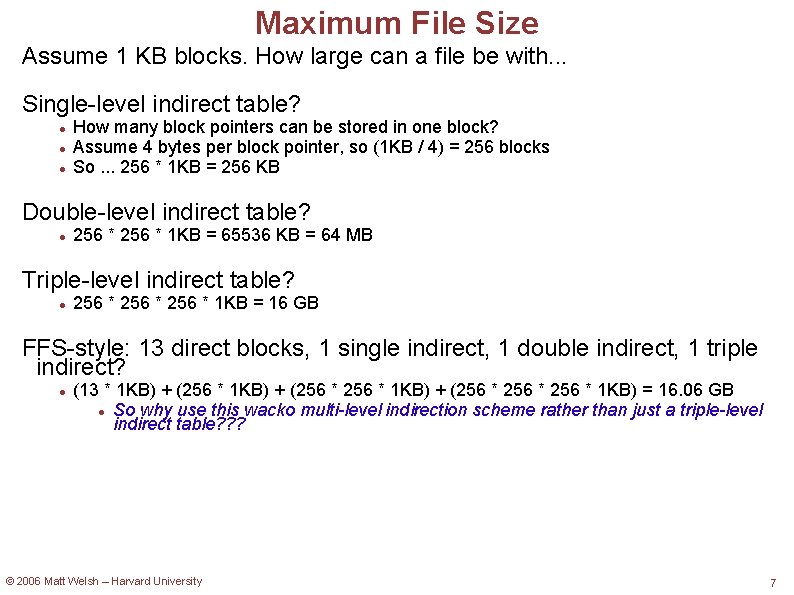

Maximum File Size Assume 1 KB blocks. How large can a file be with. . . Single-level indirect table? How many block pointers can be stored in one block? Assume 4 bytes per block pointer, so (1 KB / 4) = 256 blocks So. . . 256 * 1 KB = 256 KB Double-level indirect table? 256 * 1 KB = 65536 KB = 64 MB Triple-level indirect table? 256 * 1 KB = 16 GB FFS-style: 13 direct blocks, 1 single indirect, 1 double indirect, 1 triple indirect? (13 * 1 KB) + (256 * 256 * 1 KB) = 16. 06 GB So why use this wacko multi-level indirection scheme rather than just a triple-level indirect table? ? ? © 2006 Matt Welsh – Harvard University 7

FFS Block Sizes Older UNIX filesystems used small blocks (512 B or 1 KB) Low disk bandwidth utilization Maximum file size is limited (how many blocks can an inode keep track of) FFS introduced larger block sizes (4 KB) Allows multiple sectors to be read/written at once Introduces internal fragmentation: a whole block may not be used Fix: Block “fragments” (1 KB) The last block in a file may consist of 1, 2, or 3 fragments Fragments from different files stored on the same block © 2006 Matt Welsh – Harvard University 8

Log-structured Fileystems (LFS) Around '91, two trends in disk technology were emerging: Disk bandwidth was increasing rapidly (over 40% a year) Seek latency not improving much at all Machines had increasingly large main memories Large buffer caches absorb a large fraction of read I/Os Can use for writes as well! Coalesce several small writes into one larger write Some lingering problems with FFS. . . Writing to file metadata (inodes) was required to be synchronous Couldn't buffer metadata writes in memory Lots of small writes to file metadata means lots of seeks! LFS takes advantage of both to increase FS performance Mendel Rosenblum and John Ousterhout Mendel is now a prof at Stanford Also lots of contributions by our own Margo Seltzer © 2006 Matt Welsh – Harvard University 9

LFS: Basic Idea Treat the entire disk as one big append-only log for writes! Don't try to lay out blocks on disk in some predetermined order Whenever a file write occurs, append it to the end of the log Whenever file metadata changes, append it to the end of the log Collect pending writes in memory and stream out in one big write Maximizes disk bandwidth No “extra” seeks required (only those to move the end of the log) When do writes to the actual disk happen? © 2006 Matt Welsh – Harvard University 10

LFS: Basic Idea Treat the entire disk as one big append-only log for writes! Don't try to lay out blocks on disk in some predetermined order Whenever a file write occurs, append it to the end of the log Whenever file metadata changes, append it to the end of the log Collect pending writes in memory and stream out in one big write Maximizes disk bandwidth No “extra” seeks required (only those to move the end of the log) When do writes to the actual disk happen? When a user calls sync() -- synchronize data on disk for whole filesystem When a user calls fsync() -- synchronize data on disk for one file When OS needs to reclaim dirty buffer cache pages Note that this can often be avoided, eg. , by preferring clean pages Sounds simple. . . But lots of hairy details to deal with! © 2006 Matt Welsh – Harvard University 11

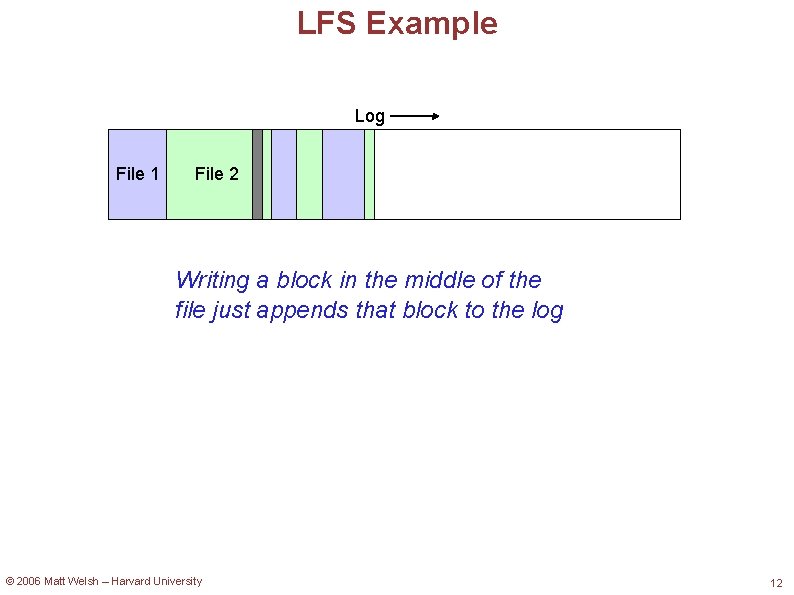

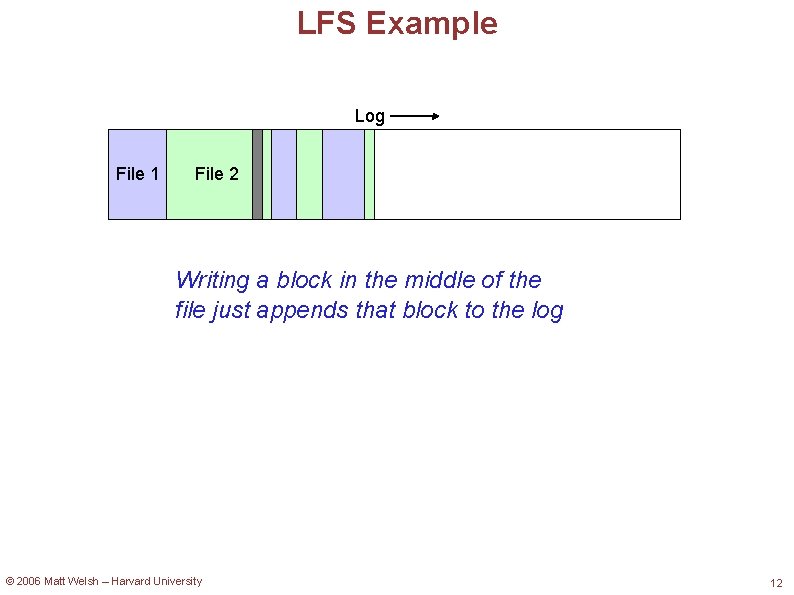

LFS Example Log File 1 File 2 Writing a block in the middle of the file just appends that block to the log © 2006 Matt Welsh – Harvard University 12

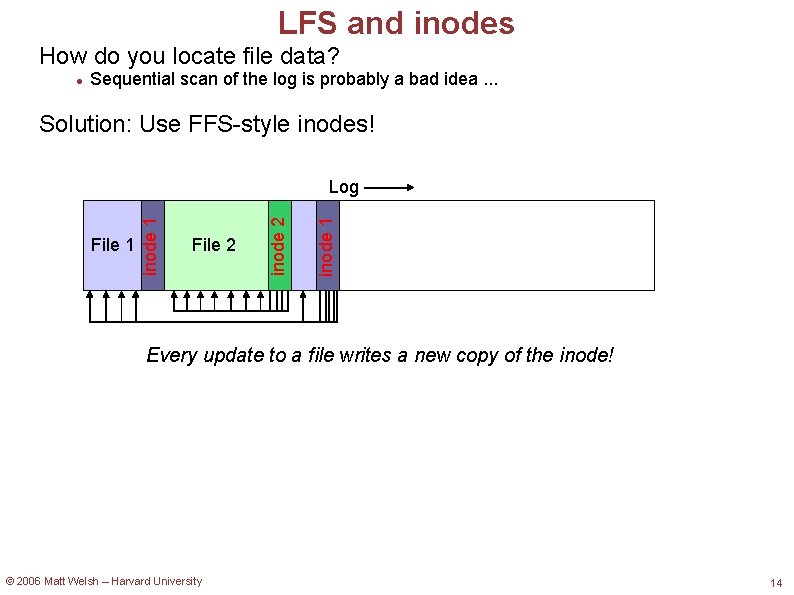

LFS and inodes How do you locate file data? Sequential scan of the log is probably a bad idea. . . Solution: Use FFS-style inodes! File 2 © 2006 Matt Welsh – Harvard University inode 2 File 1 inode 1 Log 13

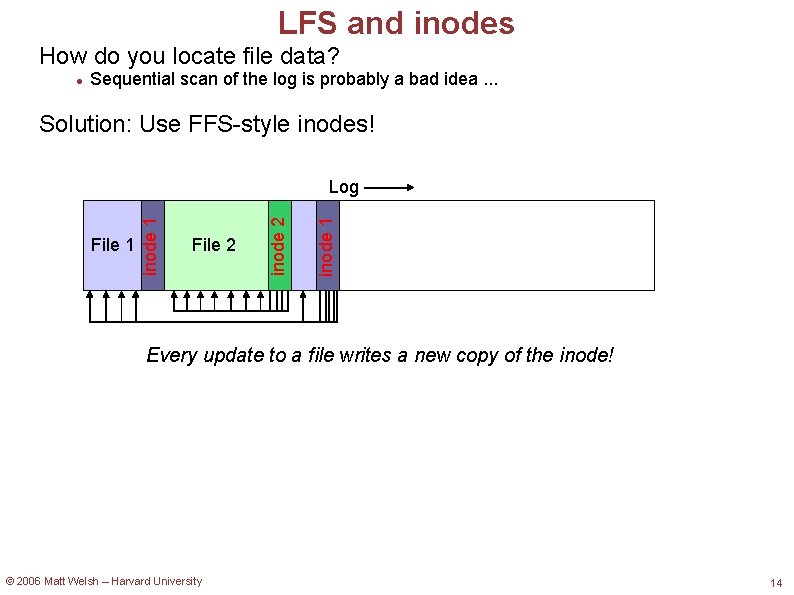

LFS and inodes How do you locate file data? Sequential scan of the log is probably a bad idea. . . Solution: Use FFS-style inodes! inode 1 File 2 inode 2 File 1 inode 1 Log Every update to a file writes a new copy of the inode! © 2006 Matt Welsh – Harvard University 14

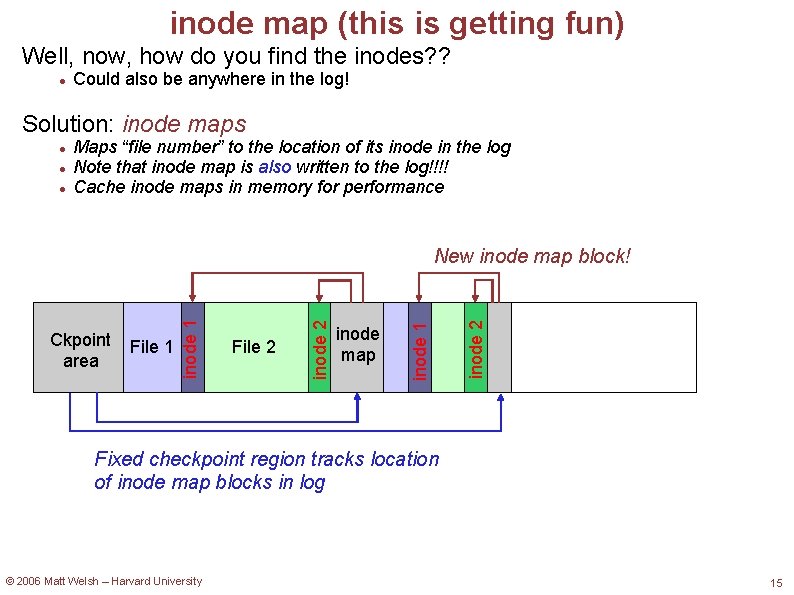

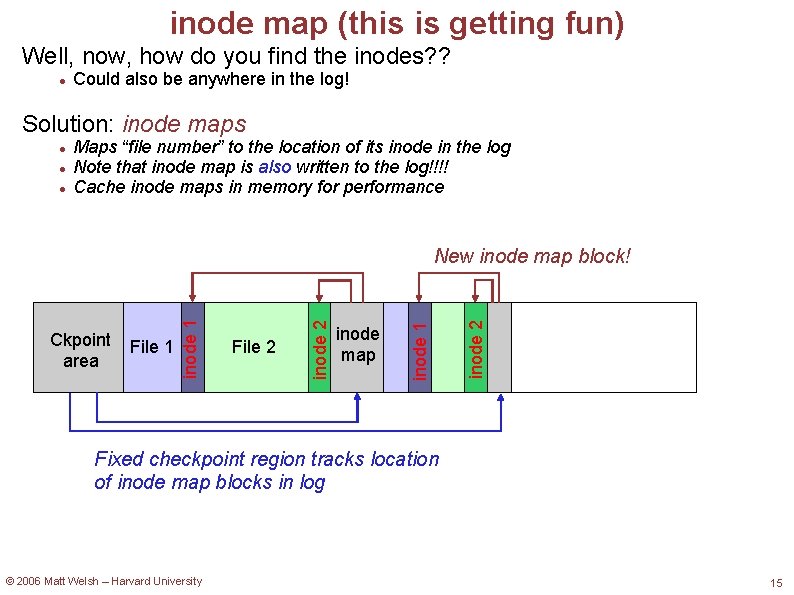

inode map (this is getting fun) Well, now, how do you find the inodes? ? Could also be anywhere in the log! Solution: inode maps Maps “file number” to the location of its inode in the log Note that inode map is also written to the log!!!! Cache inode maps in memory for performance inode map inode 2 File 2 inode 1 File 1 inode 2 Ckpoint area inode 1 New inode map block! Fixed checkpoint region tracks location of inode map blocks in log © 2006 Matt Welsh – Harvard University 15

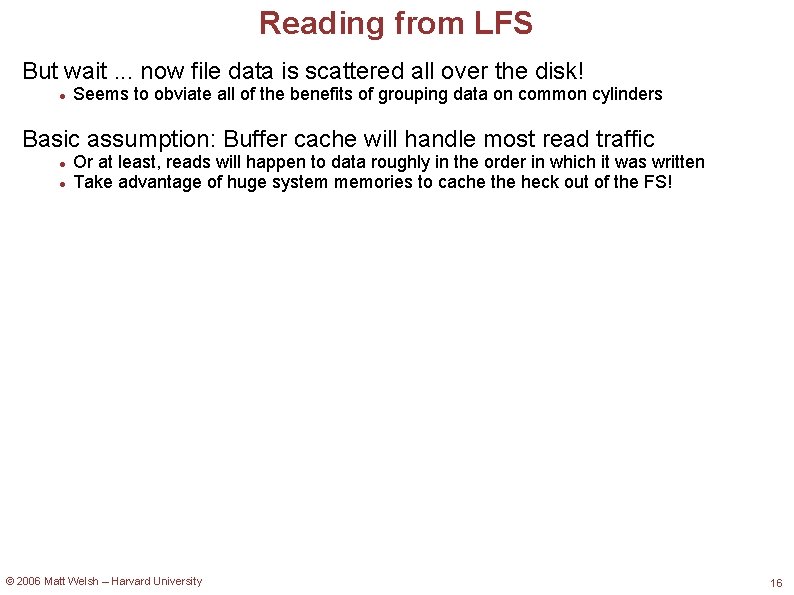

Reading from LFS But wait. . . now file data is scattered all over the disk! Seems to obviate all of the benefits of grouping data on common cylinders Basic assumption: Buffer cache will handle most read traffic Or at least, reads will happen to data roughly in the order in which it was written Take advantage of huge system memories to cache the heck out of the FS! © 2006 Matt Welsh – Harvard University 16

Log Cleaner With LFS, eventually the disk will fill up! Need some way to reclaim “dead space” What constitutes “dead space? ” Deleted files File blocks that have been “overwritten” Solution: Periodic “log cleaning” Scan the log and look for deleted or overwritten blocks Effectively, clear out stale log entries Copy live data to the end of the log The rest of the log (at the beginning) can now be reused! © 2006 Matt Welsh – Harvard University 17

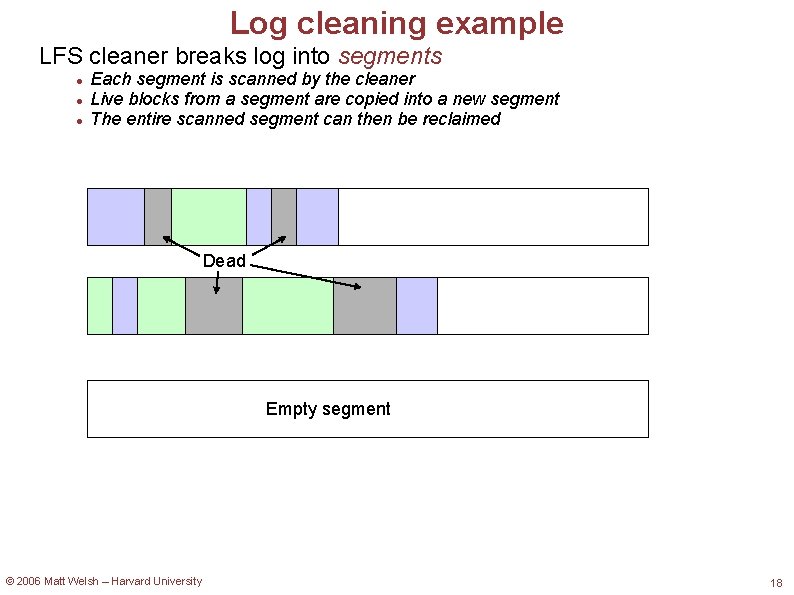

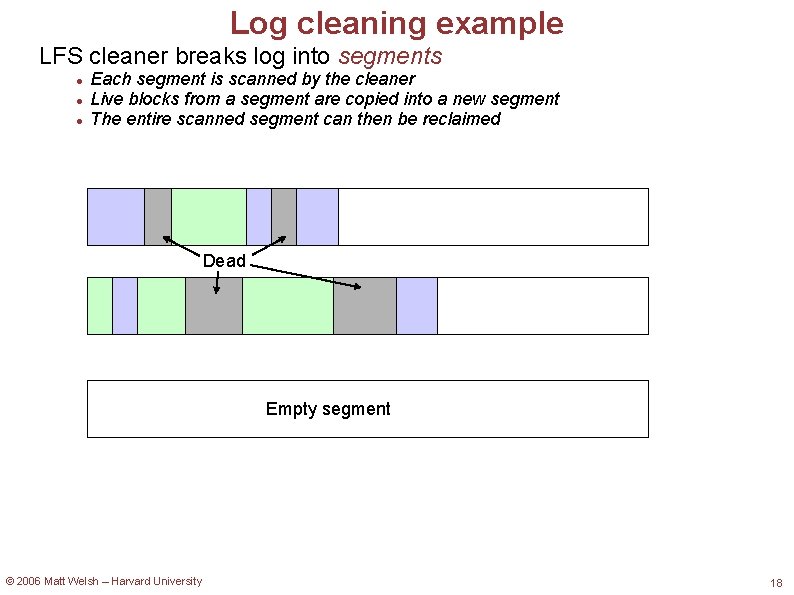

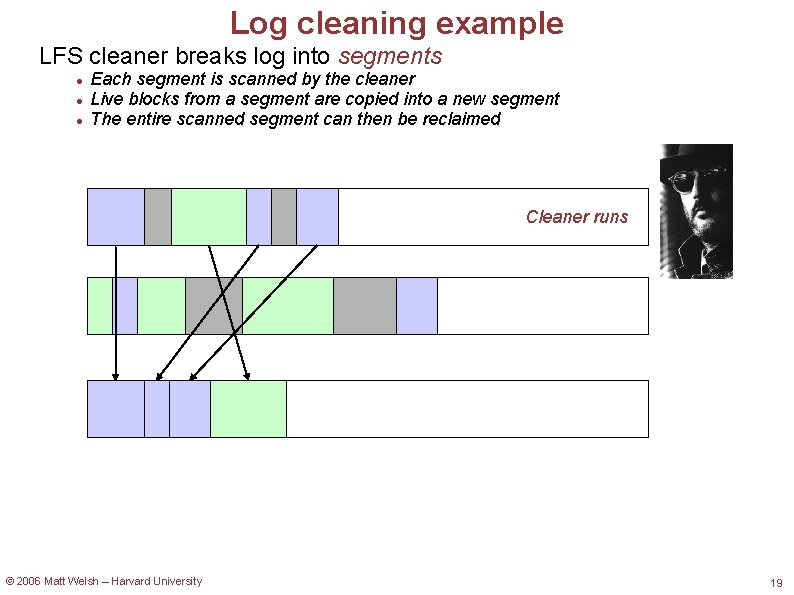

Log cleaning example LFS cleaner breaks log into segments Each segment is scanned by the cleaner Live blocks from a segment are copied into a new segment The entire scanned segment can then be reclaimed Dead Empty segment © 2006 Matt Welsh – Harvard University 18

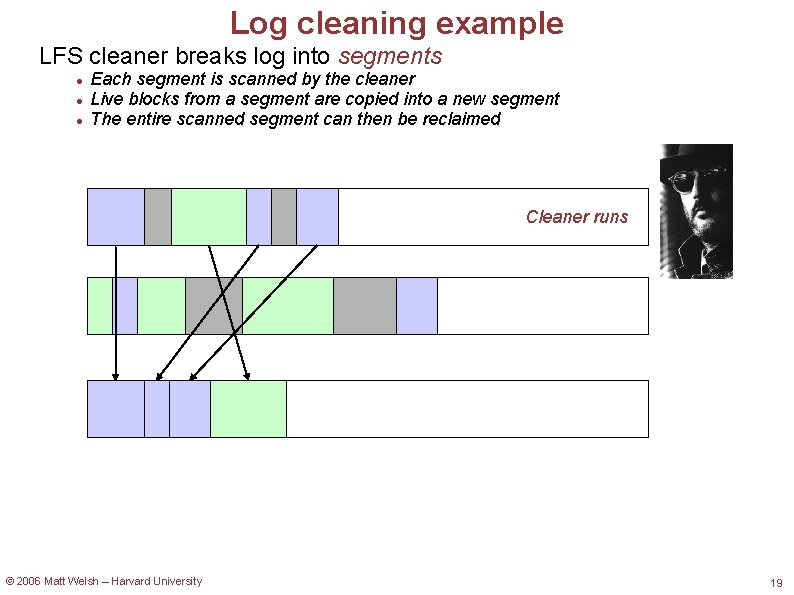

Log cleaning example LFS cleaner breaks log into segments Each segment is scanned by the cleaner Live blocks from a segment are copied into a new segment The entire scanned segment can then be reclaimed Cleaner runs © 2006 Matt Welsh – Harvard University 19

Log cleaning example LFS cleaner breaks log into segments Each segment is scanned by the cleaner Live blocks from a segment are copied into a new segment The entire scanned segment can then be reclaimed Cleaner runs © 2006 Matt Welsh – Harvard University 20

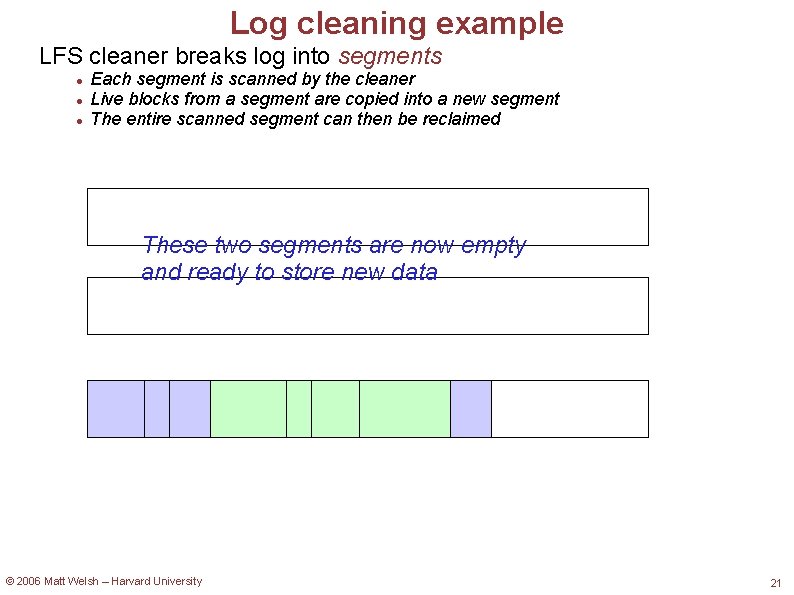

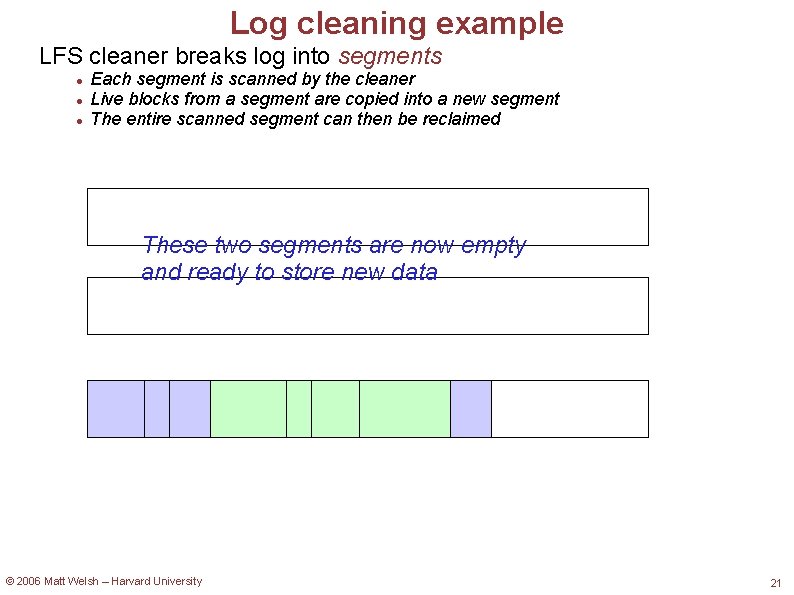

Log cleaning example LFS cleaner breaks log into segments Each segment is scanned by the cleaner Live blocks from a segment are copied into a new segment The entire scanned segment can then be reclaimed These two segments are now empty and ready to store new data © 2006 Matt Welsh – Harvard University 21

Cleaning Issues When does the cleaner run? Generally when the system (or at least the disk) is otherwise idle Can cause problems on a busy system with little idle time Cleaning a segment requires reading the whole thing! Can reduce this cost if the data to be written is already in cache How does segment size affect performance? © 2006 Matt Welsh – Harvard University 22

Cleaning Issues When does the cleaner run? Generally when the system (or at least the disk) is otherwise idle Can cause problems on a busy system with little idle time Cleaning a segment requires reading the whole thing! Can reduce this cost if the data to be written is already in cache How does segment size affect performance? Large segments amortize cost of access/seek time to read/write entire segment during cleaning Small segments introduce more variance in segment utilizations More segments will contain only dead blocks, making cleaning trivial Could imagine dynamically chaging segment sizes based on observed overhead for cleaning © 2006 Matt Welsh – Harvard University 23

LFS Debate First LFS paper by Rosenblum and Ousterhout in '91 1992 port of LFS to BSD by Margo Seltzer and others. . . Seltzer et al. Publish paper in USENIX'93 pointing out some flaws Ousterhout publishes critique of '93 LFS paper Seltzer publishes revised paper in '95 Ousterhout publishes critique of '95 paper Seltzer publishes response to critique Ousterhout publishes response to critique. . . “Lies, damn lies, and benchmarks” It is very difficult to come up with definitive benchmarks proving that one system is better than another Can always find a scenario where one system design outperforms another Difficult to extrapolate based on benchmark tests © 2006 Matt Welsh – Harvard University 24

Filesystem corruption What happens when you are making changes to a filesystem and the system crashes? Example: Modifying block 5 of a large directory, adding lots of new file entries System crashes while the block is being written The new files are “lost!” System runs fsck program on reboot Scans through the entire filesystem and locates corrupted inodes and directories Can typically find the bad directory, but may not be able to repair it! The directory could have been left in any state during the write fsck can take a very long time on large filesystems And, no guarantees that it fixes the problems anyway © 2006 Matt Welsh – Harvard University 25

Example: removing a file requires 1. Remove the file from its directory 2. Release the i-node to the pool of free i-nodes 3. Return all the disk blocks to the pool of free disk blocks In the absence of crashes the order these steps taken do not matter. In the presence of crashes, however, it does! © 2006 Matt Welsh – Harvard University 26

Example: removing a file requires 1. Remove the file from its directory 2. Release the i-node to the pool of free i-nodes 3. Return all the disk blocks to the pool of free disk blocks The inodes and file blocks will not be accessible from any file yet they will not be available for reassignment. © 2006 Matt Welsh – Harvard University 27

Example: removing a file requires 1. Remove the file from its directory 2. Release the i-node to the pool of free i-nodes 3. Return all the disk blocks to the pool of free disk blocks The directory node will point to an invalid inode or (if the inode is reassigned) point to a different file. The blocks of the file will not be available for reassignment. © 2006 Matt Welsh – Harvard University 28

Example: removing a file requires 1. Remove the file from its directory 2. Release the i-node to the pool of free i-nodes 3. Return all the disk blocks to the pool of free disk blocks The file will point to empty blocks, or (after reassignment) it will share the blocks of other files to which these were reassigned. . © 2006 Matt Welsh – Harvard University 29

Journaling Filesystems Ensure that changes to the filesystem are made atomically That is, a group of changes are made all together, or not at all In the directory modification example, this means that after the system reboots: The directory either looks exactly as it did before the block was modified Or the directory looks exactly as it did after the block was modified Cannot leave an FS entity (data block, inode, directory, etc. ) in an intermediate state! Idea: Maintain a log of all changes to the filesystem Log contains entries that indicate what was done e. g. , “Directory 2841 had inodes 404, 407, and 408 added to it” To make a filesystem change: 1. Write an intent-to-commit record to the log 2. Write the appropriate changes to the log Do not modify the filesystem data directly!!! 3. Write a commit record to the log This is essentially the same as the notion of database transactions © 2006 Matt Welsh – Harvard University 30

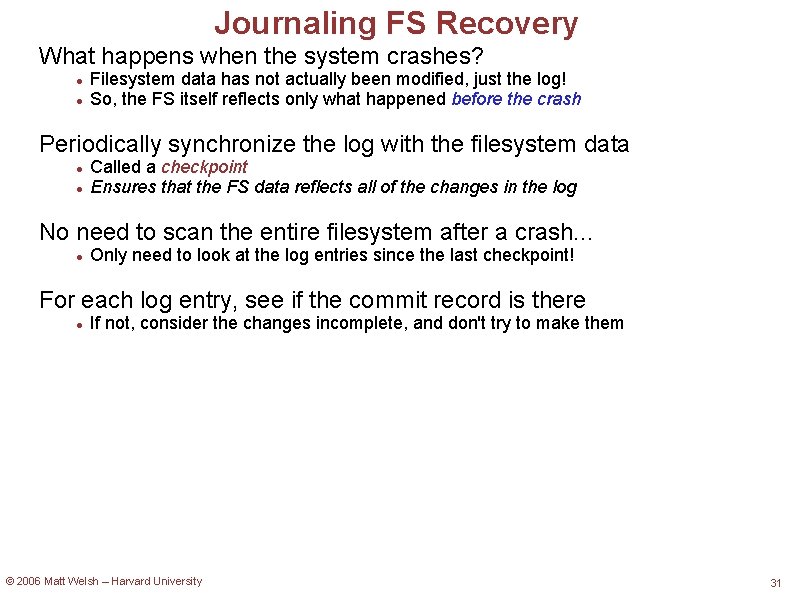

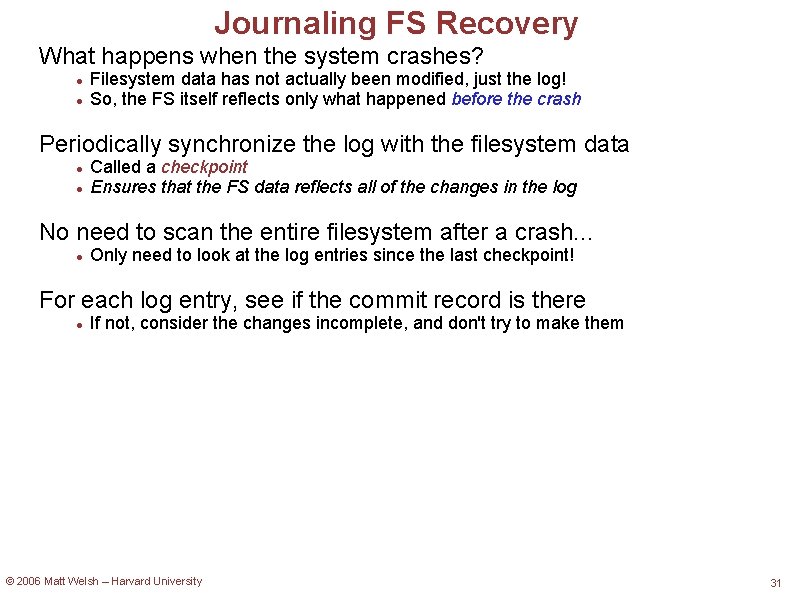

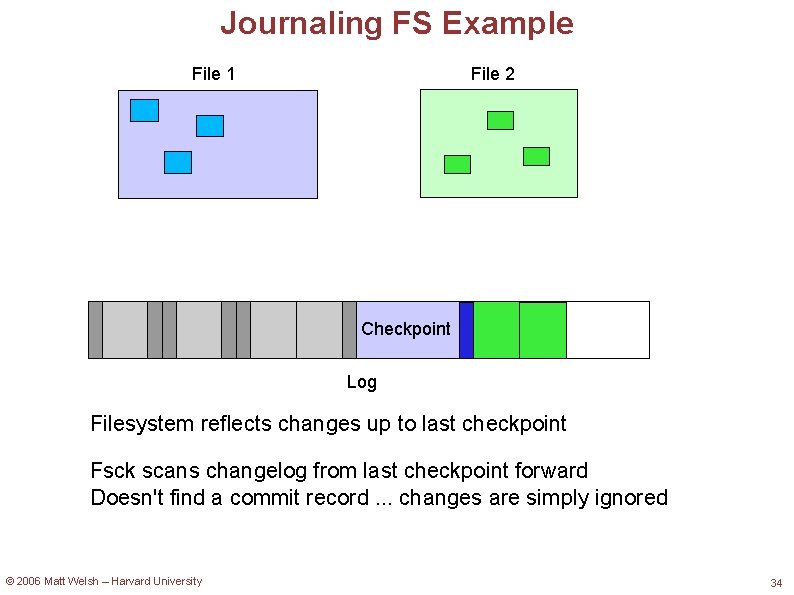

Journaling FS Recovery What happens when the system crashes? Filesystem data has not actually been modified, just the log! So, the FS itself reflects only what happened before the crash Periodically synchronize the log with the filesystem data Called a checkpoint Ensures that the FS data reflects all of the changes in the log No need to scan the entire filesystem after a crash. . . Only need to look at the log entries since the last checkpoint! For each log entry, see if the commit record is there If not, consider the changes incomplete, and don't try to make them © 2006 Matt Welsh – Harvard University 31

Journaling FS Example File 1 File 2 Checkpoint Log © 2006 Matt Welsh – Harvard University 32

Journaling FS Example File 1 File 2 Checkpoint Log © 2006 Matt Welsh – Harvard University 33

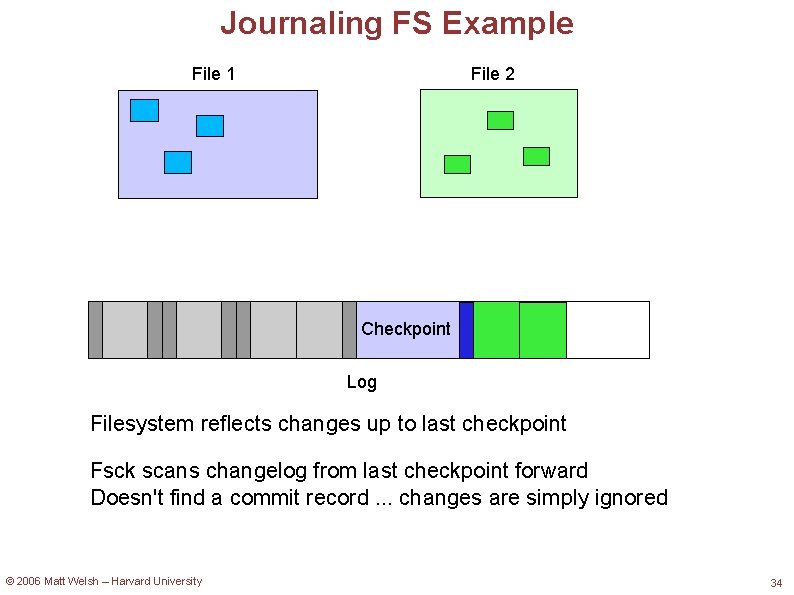

Journaling FS Example File 1 File 2 Checkpoint Log Filesystem reflects changes up to last checkpoint Fsck scans changelog from last checkpoint forward Doesn't find a commit record. . . changes are simply ignored © 2006 Matt Welsh – Harvard University 34

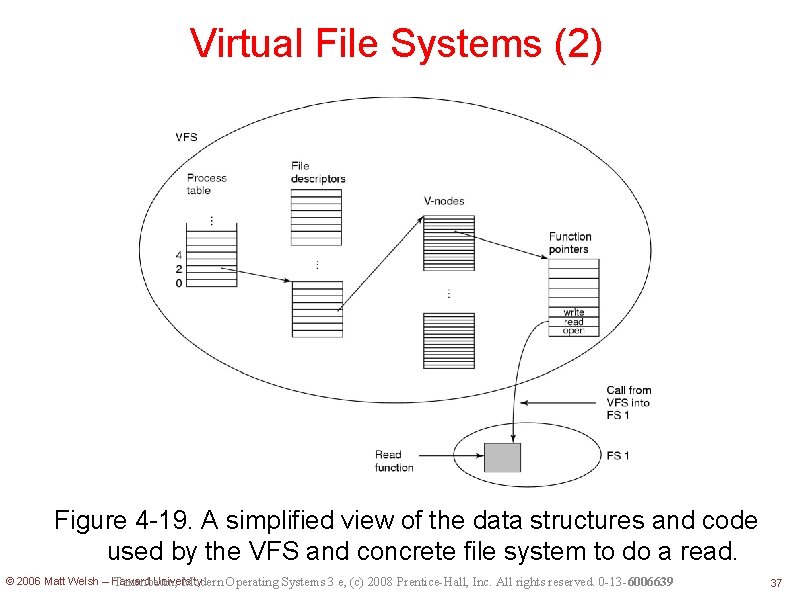

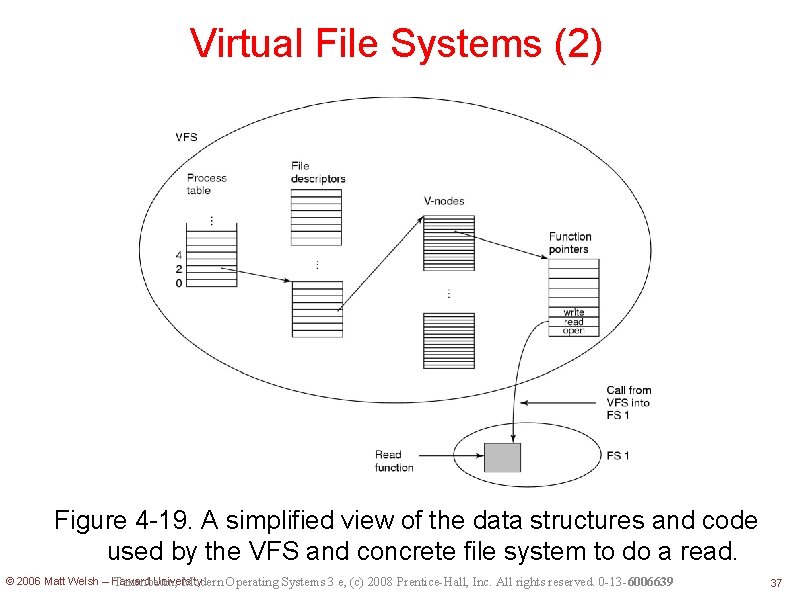

Virtual File Systems (1) VFS: another layer of abstraction Upper interface: for processes implementing POSIX interface Lower interface: for concrete file systems VFS translates the POSIX calls to the calls of the filesystems under it. Developed by Sun to support NFS (Network File System) protocol. © 2006 Matt Welsh – Harvard University Tanenbaum, Modern Operating Systems 3 e, (c) 2008 Prentice-Hall, Inc. All rights reserved. 0 -13 -6006639 35

At boot time, the root filesystem is registered with VFS. When other filesystems are mounted, they must also register with VFS. When a filesystem registers, it provides the list of addresses of the functions that the VFS demands, such as reading a block. After regitratin, when one opens a file: open(“/usr/include/unistd. h”, O_RDONLY) VFS creates a v-node and makes a call to the concrete filesystem to return all the information needed. The created v-node also contains pointers to the table of functions for the concrete filesystem that the file resides. © 2006 Matt Welsh – Harvard University 36

Virtual File Systems (2) Figure 4 -19. A simplified view of the data structures and code used by the VFS and concrete file system to do a read. © 2006 Matt Welsh – Harvard University Tanenbaum, Modern Operating Systems 3 e, (c) 2008 Prentice-Hall, Inc. All rights reserved. 0 -13 -6006639 37

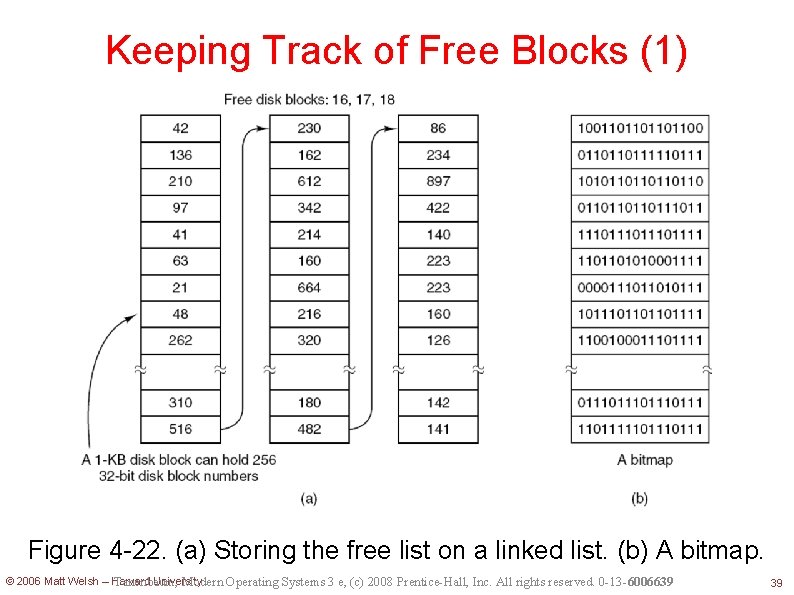

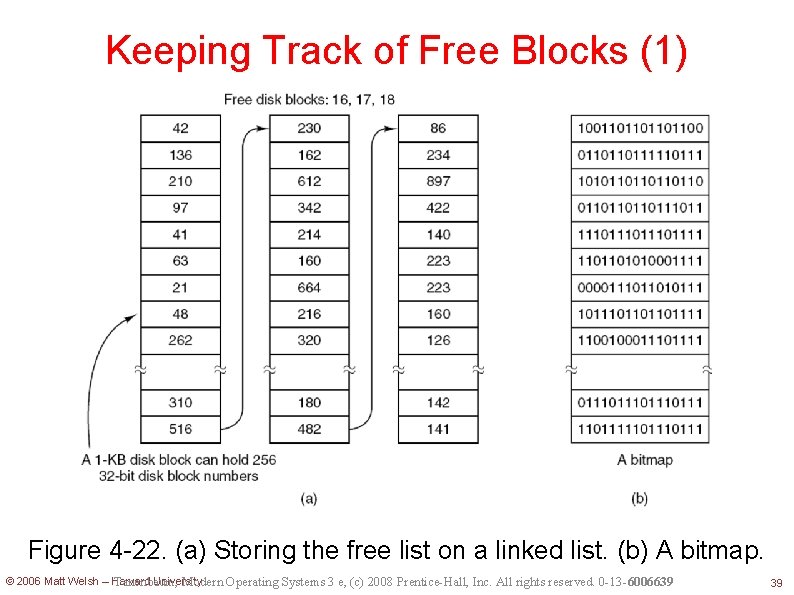

Keeping track of Free Blocks Two methods: Linked list of free blocks Bitmap structure © 2006 Matt Welsh – Harvard University 38

Keeping Track of Free Blocks (1) Figure 4 -22. (a) Storing the free list on a linked list. (b) A bitmap. © 2006 Matt Welsh – Harvard University Tanenbaum, Modern Operating Systems 3 e, (c) 2008 Prentice-Hall, Inc. All rights reserved. 0 -13 -6006639 39

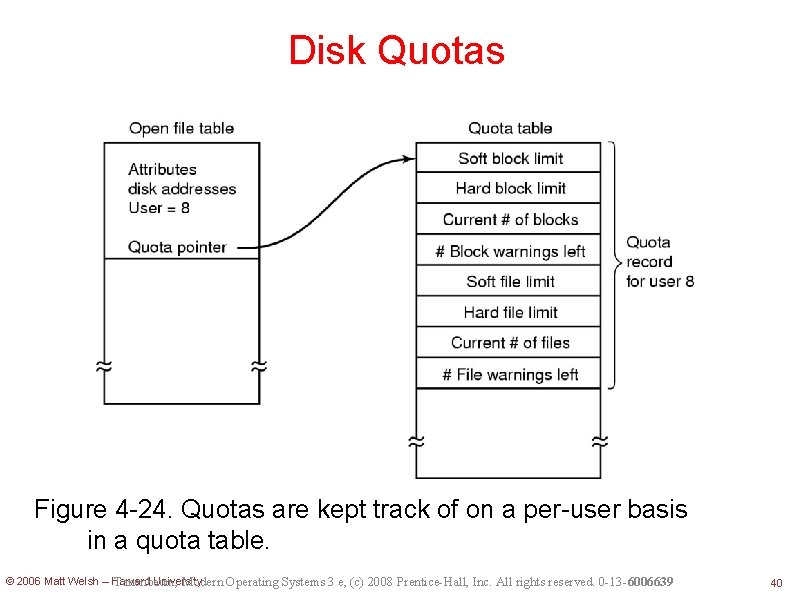

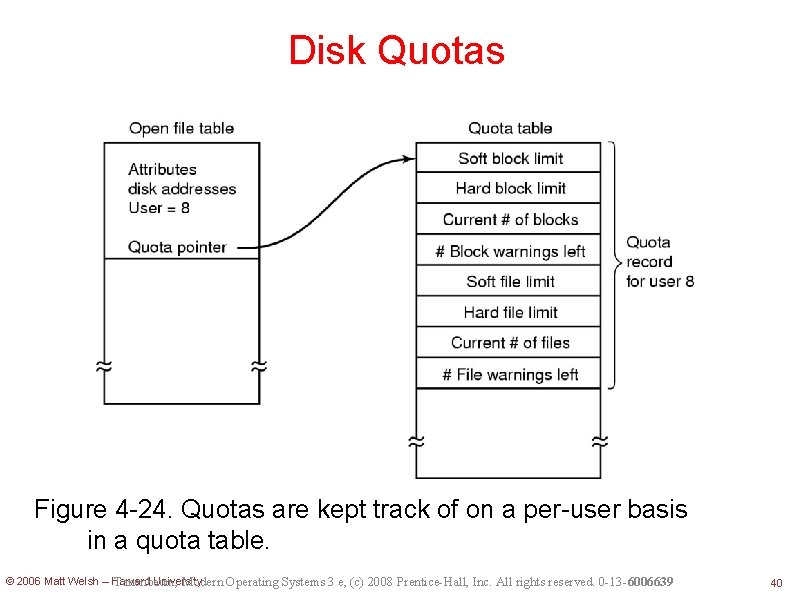

Disk Quotas Figure 4 -24. Quotas are kept track of on a per-user basis in a quota table. © 2006 Matt Welsh – Harvard University Tanenbaum, Modern Operating Systems 3 e, (c) 2008 Prentice-Hall, Inc. All rights reserved. 0 -13 -6006639 40

File System Backups (1) Backups to tape are generally made to handle one of two potential problems: • • Recover from disaster. Recover from stupidity. © 2006 Matt Welsh – Harvard University Tanenbaum, Modern Operating Systems 3 e, (c) 2008 Prentice-Hall, Inc. All rights reserved. 0 -13 -6006639 41

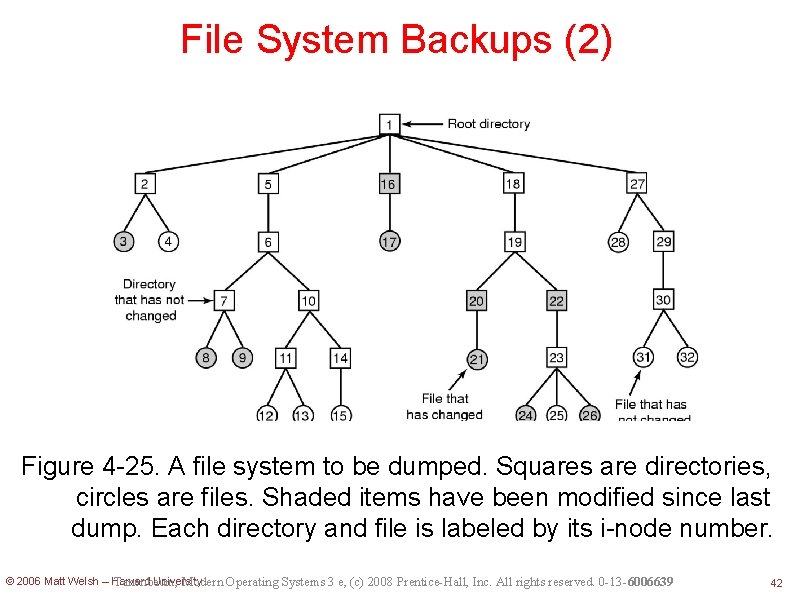

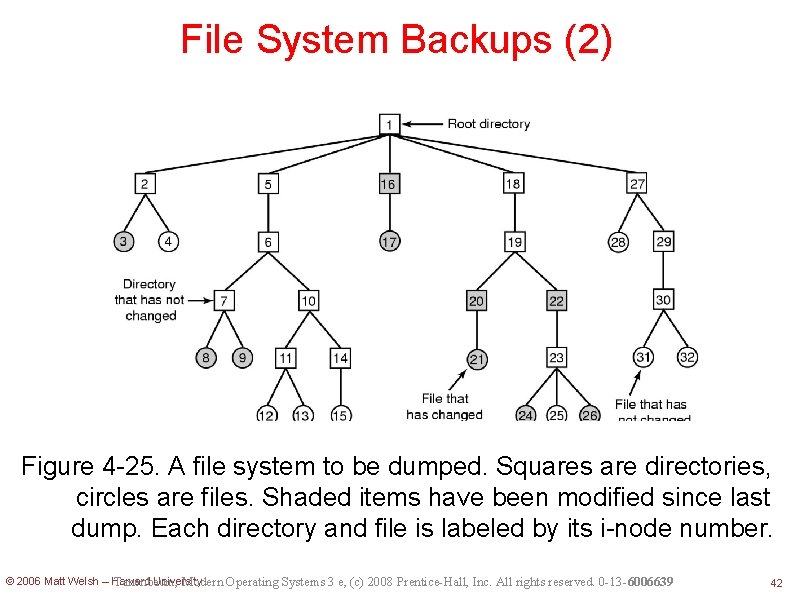

File System Backups (2) Figure 4 -25. A file system to be dumped. Squares are directories, circles are files. Shaded items have been modified since last dump. Each directory and file is labeled by its i-node number. © 2006 Matt Welsh – Harvard University Tanenbaum, Modern Operating Systems 3 e, (c) 2008 Prentice-Hall, Inc. All rights reserved. 0 -13 -6006639 42

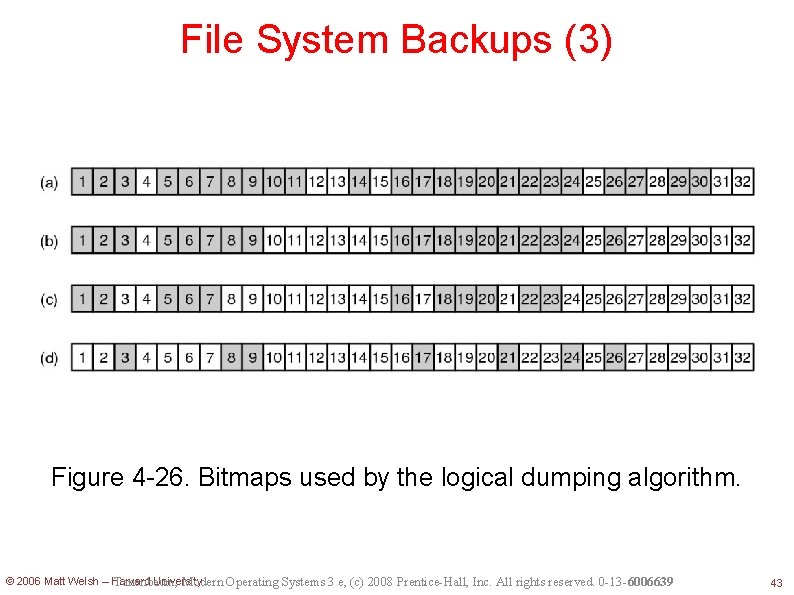

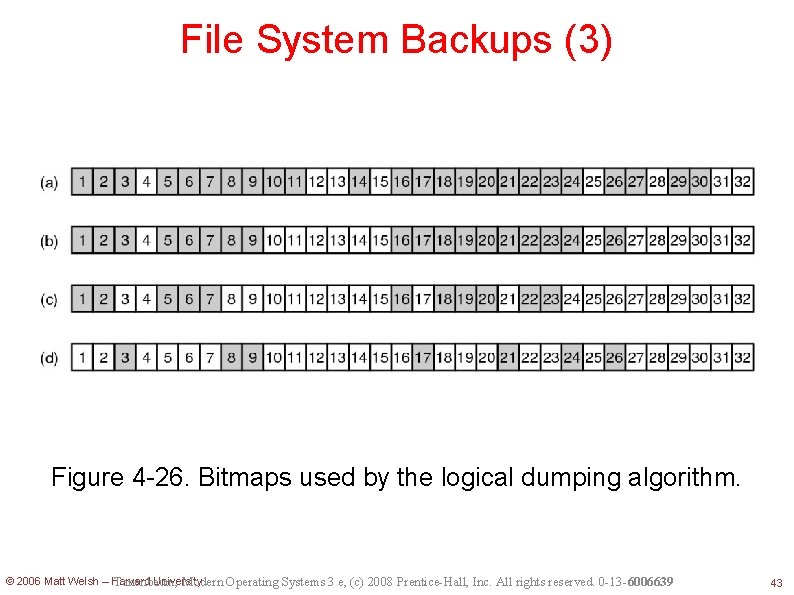

File System Backups (3) Figure 4 -26. Bitmaps used by the logical dumping algorithm. © 2006 Matt Welsh – Harvard University Tanenbaum, Modern Operating Systems 3 e, (c) 2008 Prentice-Hall, Inc. All rights reserved. 0 -13 -6006639 43

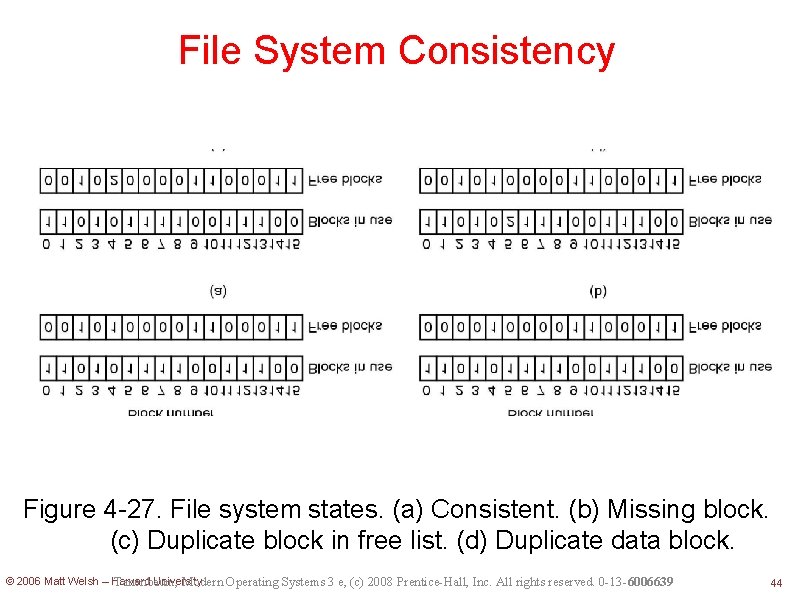

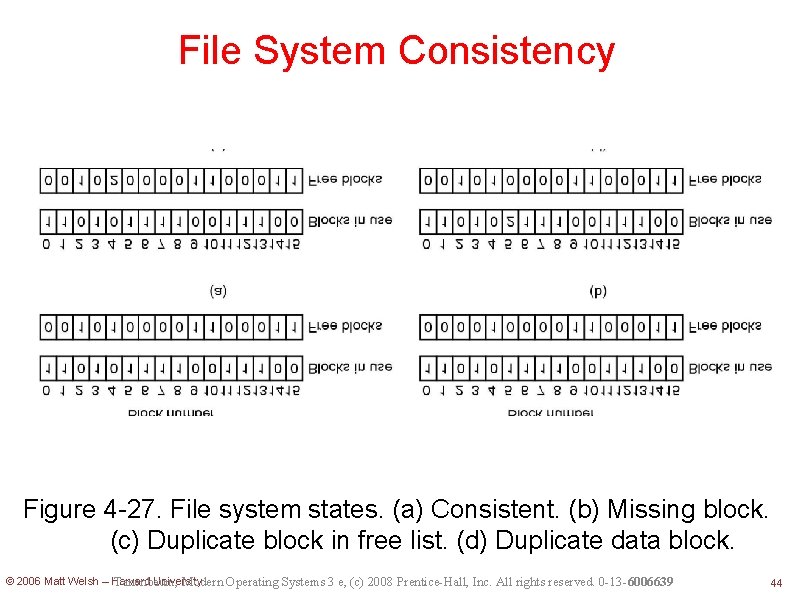

File System Consistency Figure 4 -27. File system states. (a) Consistent. (b) Missing block. (c) Duplicate block in free list. (d) Duplicate data block. © 2006 Matt Welsh – Harvard University Tanenbaum, Modern Operating Systems 3 e, (c) 2008 Prentice-Hall, Inc. All rights reserved. 0 -13 -6006639 44

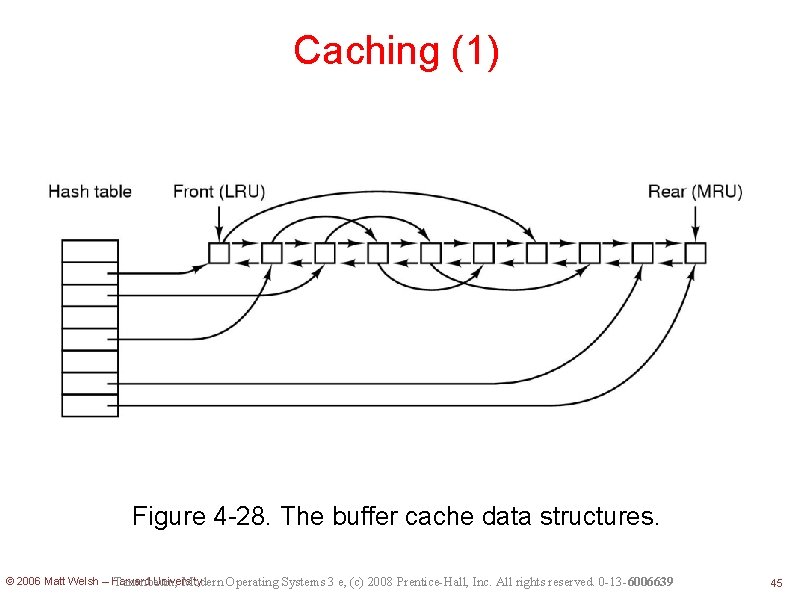

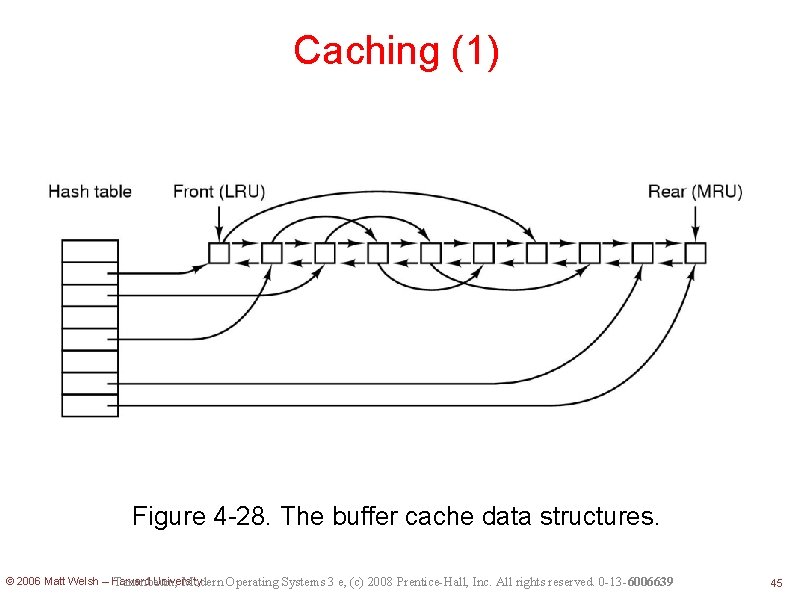

Caching (1) Figure 4 -28. The buffer cache data structures. © 2006 Matt Welsh – Harvard University Tanenbaum, Modern Operating Systems 3 e, (c) 2008 Prentice-Hall, Inc. All rights reserved. 0 -13 -6006639 45

Caching (2) • Some blocks, such as i-node blocks, are rarely referenced two times within a short interval. • Consider a modified LRU scheme, taking two factors into account: • Is the block likely to be needed again soon? • Is the block essential to the consistency of the file system? © 2006 Matt Welsh – Harvard University Tanenbaum, Modern Operating Systems 3 e, (c) 2008 Prentice-Hall, Inc. All rights reserved. 0 -13 -6006639 46

Reducing Disk Arm Motion Figure 4 -29. (a) I-nodes placed at the start of the disk. (b) Disk divided into cylinder groups, each with its own blocks and i-nodes. © 2006 Matt Welsh – Harvard University Tanenbaum, Modern Operating Systems 3 e, (c) 2008 Prentice-Hall, Inc. All rights reserved. 0 -13 -6006639 47

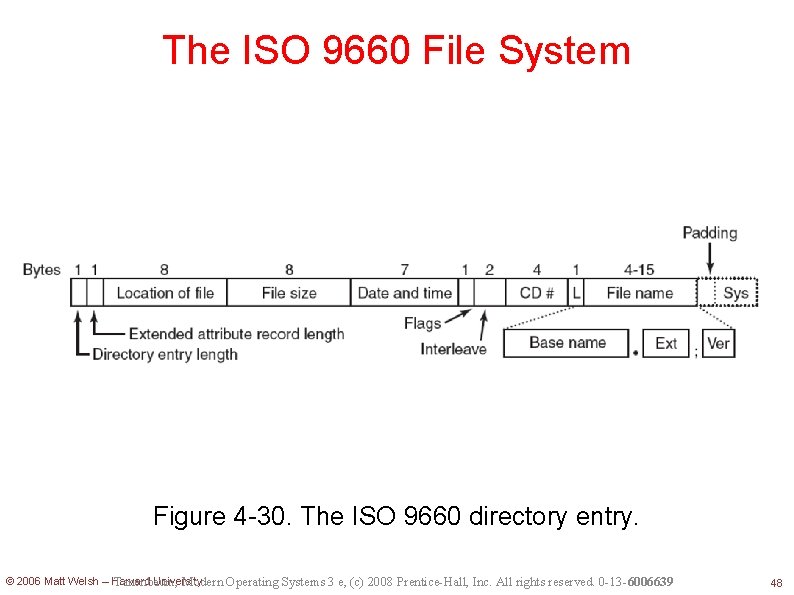

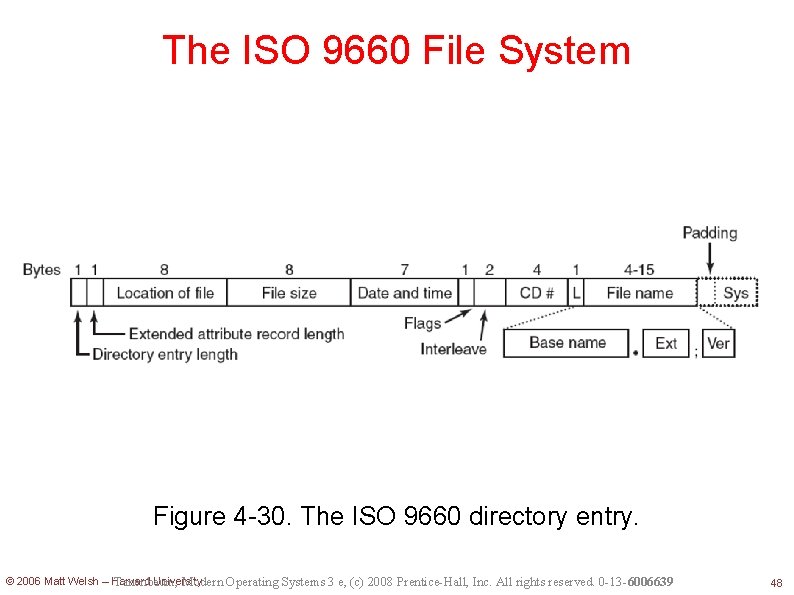

The ISO 9660 File System Figure 4 -30. The ISO 9660 directory entry. © 2006 Matt Welsh – Harvard University Tanenbaum, Modern Operating Systems 3 e, (c) 2008 Prentice-Hall, Inc. All rights reserved. 0 -13 -6006639 48

Rock Ridge Extensions Rock Ridge extension fields: • • PX - POSIX attributes. PN - Major and minor device numbers. SL - Symbolic link. NM - Alternative name. CL - Child location. PL - Parent location. RE - Relocation. TF - Time stamps. © 2006 Matt Welsh – Harvard University Tanenbaum, Modern Operating Systems 3 e, (c) 2008 Prentice-Hall, Inc. All rights reserved. 0 -13 -6006639 49

Joliet Extensions Joliet extension fields: • • Long file names. Unicode character set. Directory nesting deeper than eight levels. Directory names with extensions © 2006 Matt Welsh – Harvard University Tanenbaum, Modern Operating Systems 3 e, (c) 2008 Prentice-Hall, Inc. All rights reserved. 0 -13 -6006639 50

The MS-DOS File System (1) Figure 4 -31. The MS-DOS directory entry. © 2006 Matt Welsh – Harvard University Tanenbaum, Modern Operating Systems 3 e, (c) 2008 Prentice-Hall, Inc. All rights reserved. 0 -13 -6006639 51

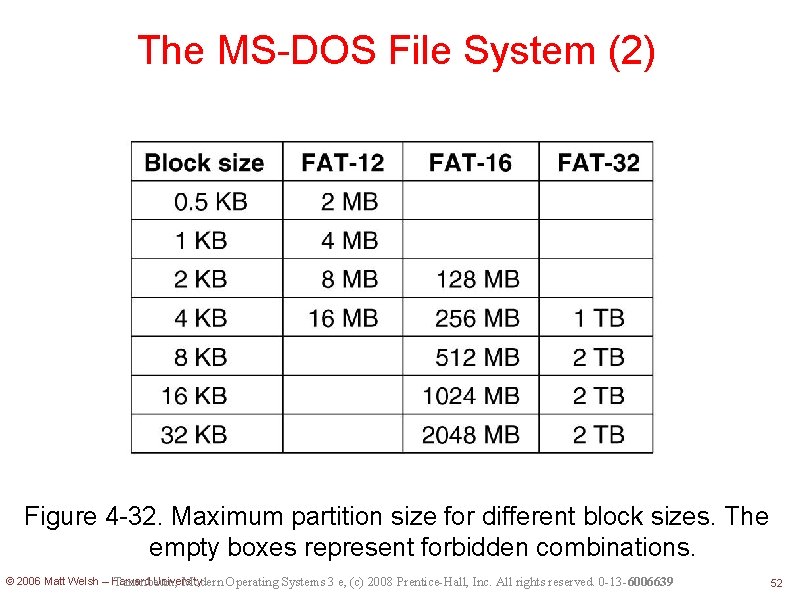

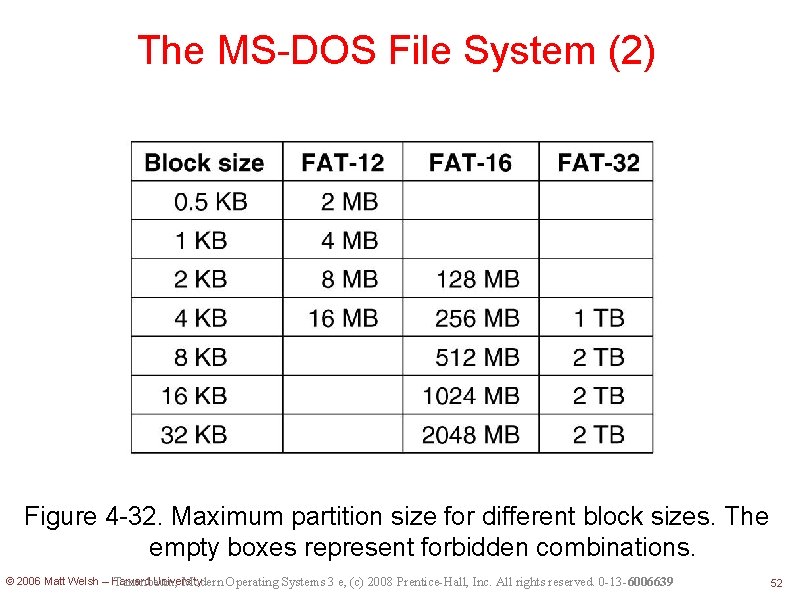

The MS-DOS File System (2) Figure 4 -32. Maximum partition size for different block sizes. The empty boxes represent forbidden combinations. © 2006 Matt Welsh – Harvard University Tanenbaum, Modern Operating Systems 3 e, (c) 2008 Prentice-Hall, Inc. All rights reserved. 0 -13 -6006639 52

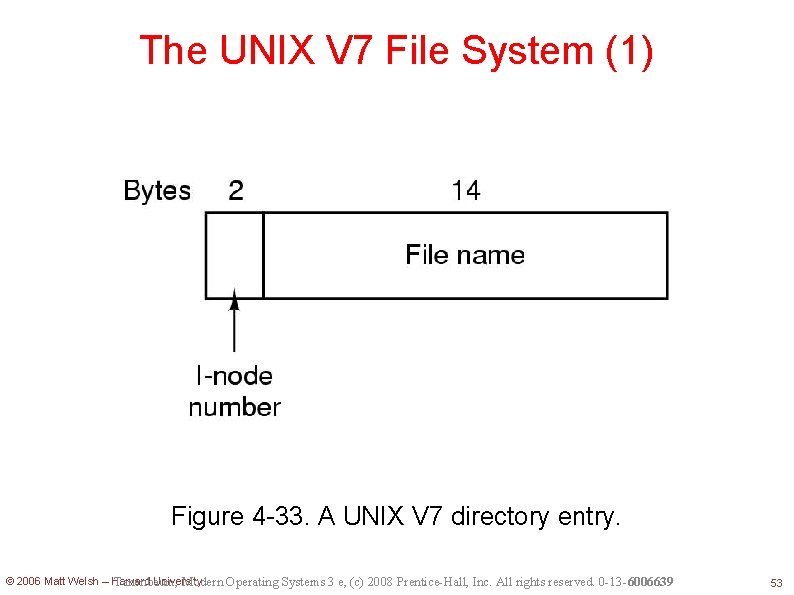

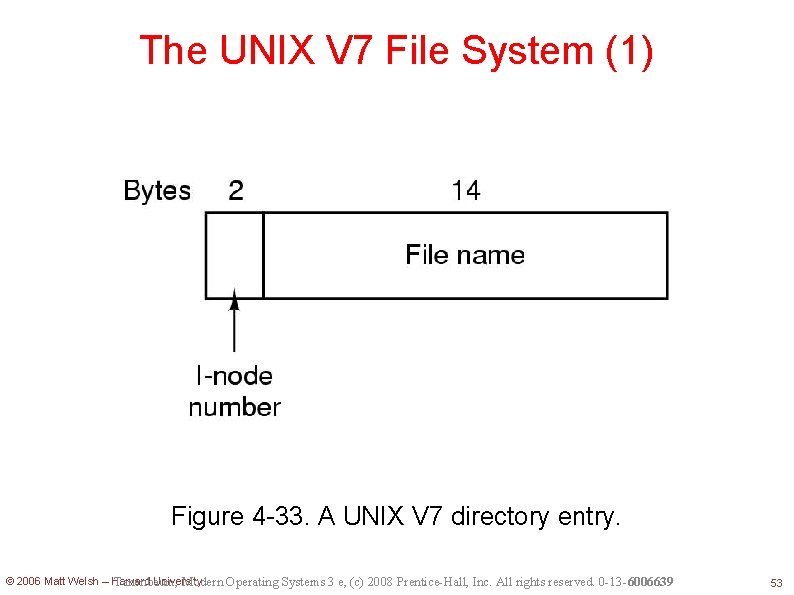

The UNIX V 7 File System (1) Figure 4 -33. A UNIX V 7 directory entry. © 2006 Matt Welsh – Harvard University Tanenbaum, Modern Operating Systems 3 e, (c) 2008 Prentice-Hall, Inc. All rights reserved. 0 -13 -6006639 53

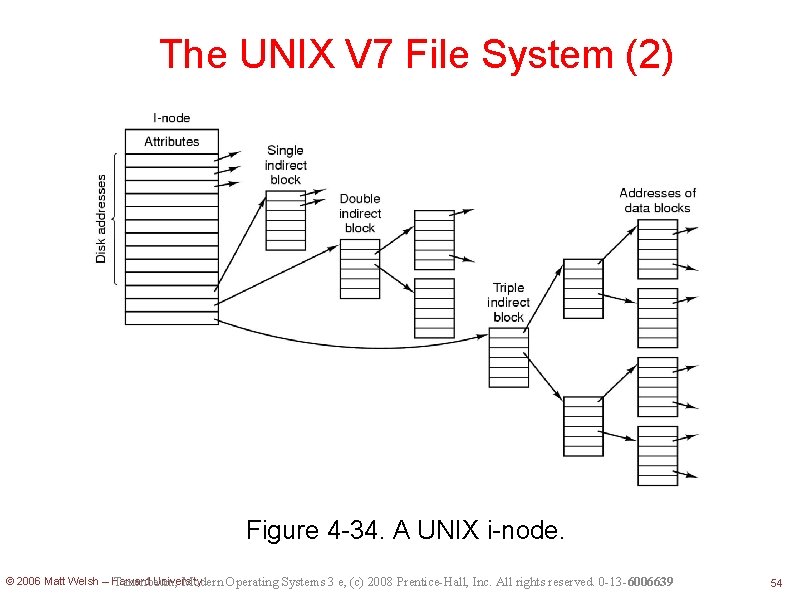

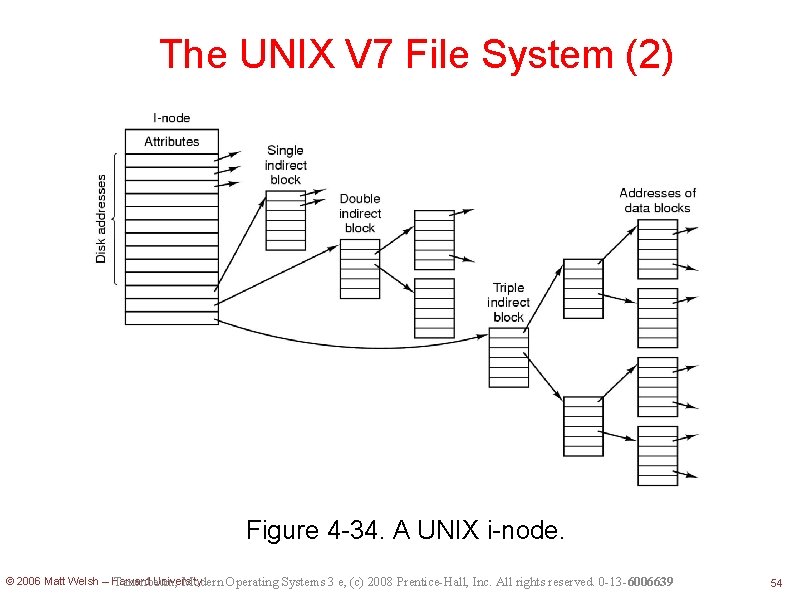

The UNIX V 7 File System (2) Figure 4 -34. A UNIX i-node. © 2006 Matt Welsh – Harvard University Tanenbaum, Modern Operating Systems 3 e, (c) 2008 Prentice-Hall, Inc. All rights reserved. 0 -13 -6006639 54

The UNIX V 7 File System (3) Figure 4 -35. The steps in looking up /usr/ast/mbox. © 2006 Matt Welsh – Harvard University Tanenbaum, Modern Operating Systems 3 e, (c) 2008 Prentice-Hall, Inc. All rights reserved. 0 -13 -6006639 55