Perception in 3D Computer Graphics CENG 505


- Slides: 73

Perception in 3D Computer Graphics CENG 505: Advanced Computer Graphics

Motivation
- "Too much rendering is slow!" (told by?)
- Take perception into account
- Don't waste resources
- Render less

Subtopics
- Visual Attention
  - Top-down, bottom-up perception
- Stereo Vision
  - Fusion
- Depth Perception
  - Depth cues
- Color perception

Introduction
- Utilize perceptual principles in CG
- What is perceived matters more than what is drawn on the screen
- Do not waste resources on details that are not perceived
- Determine visually important regions
- Visual attention point of view
  - Where do we attend?
  - How to utilize this information in CG?

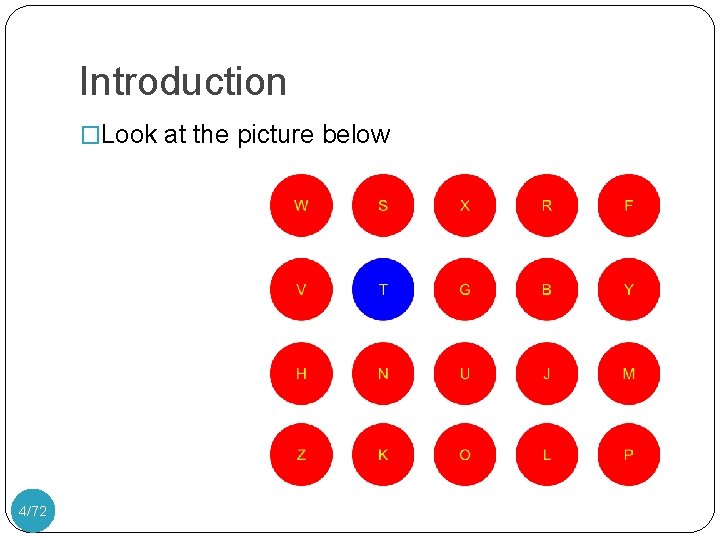

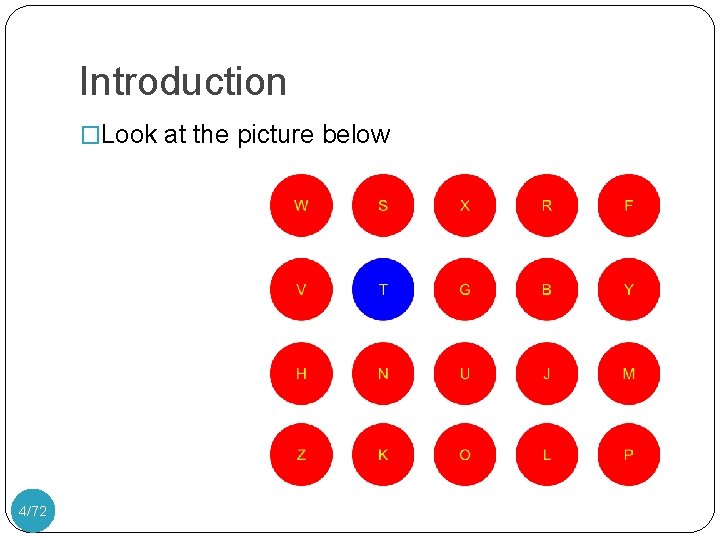

Introduction
- Look at the picture below

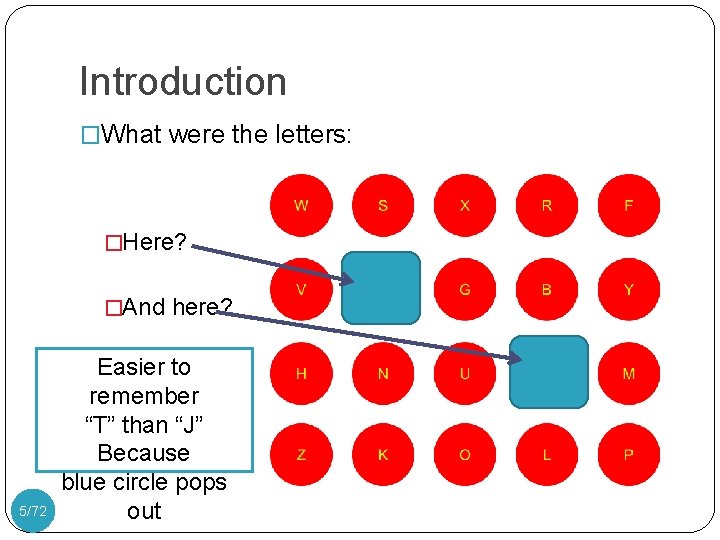

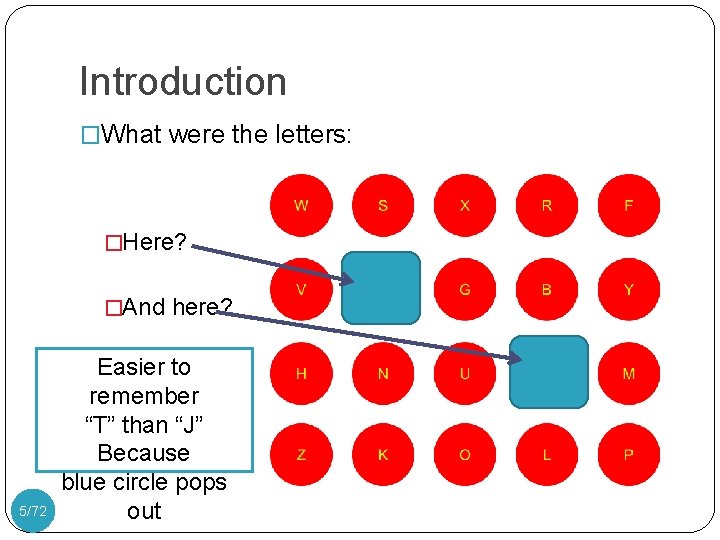

Introduction
- What were the letters:
  - Here?
  - And here?
- It is easier to remember "T" than "J" because the blue circle pops out

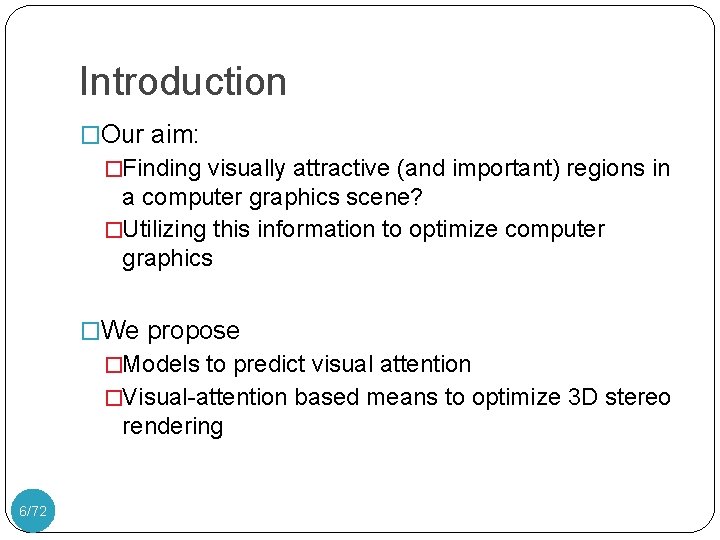

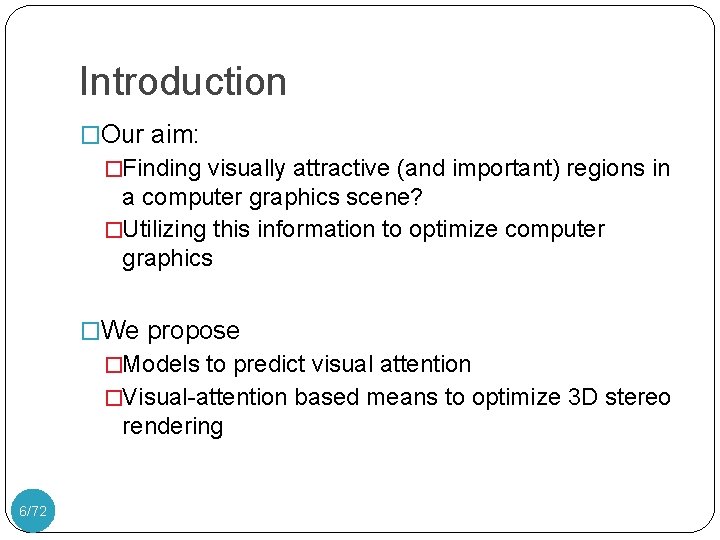

Introduction
- Our aim:
  - Finding visually attractive (and important) regions in a computer graphics scene
  - Utilizing this information to optimize computer graphics
- We propose:
  - Models to predict visual attention
  - Visual-attention-based means to optimize 3D stereo rendering

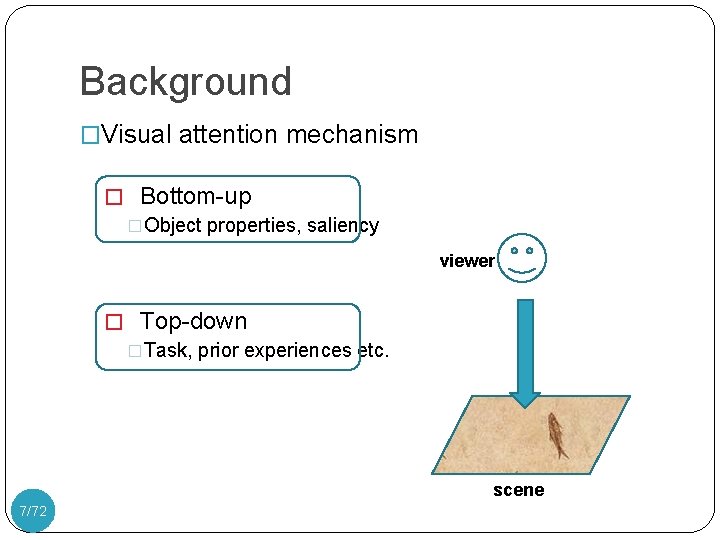

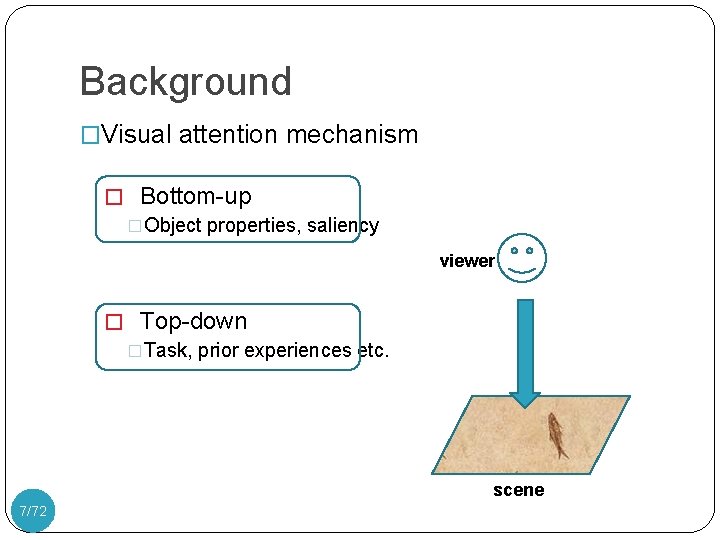

Background
- Visual attention mechanism
  - Bottom-up: object properties, saliency
  - Top-down: task, prior experiences, etc.
- Figure labels: viewer, scene

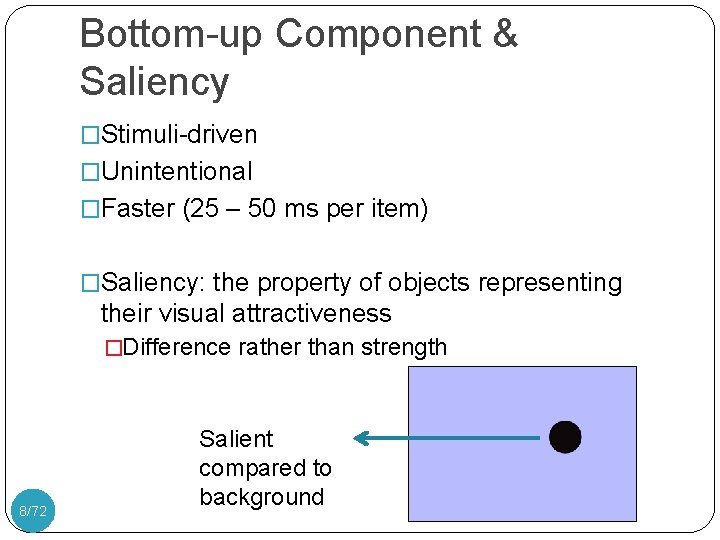

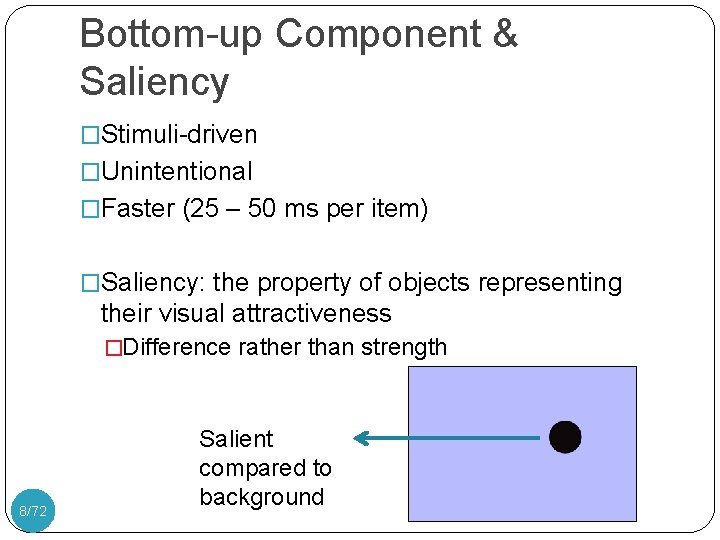

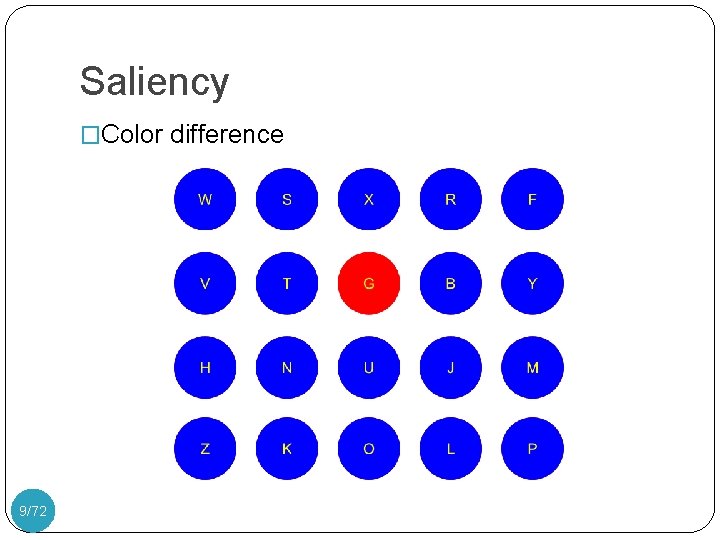

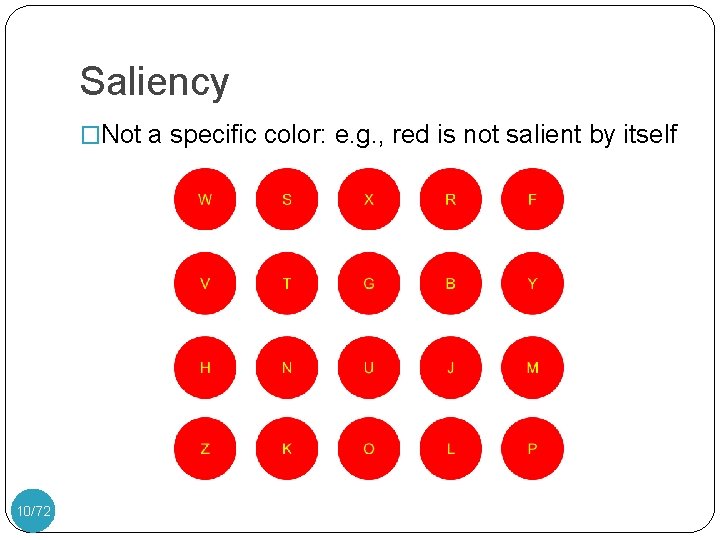

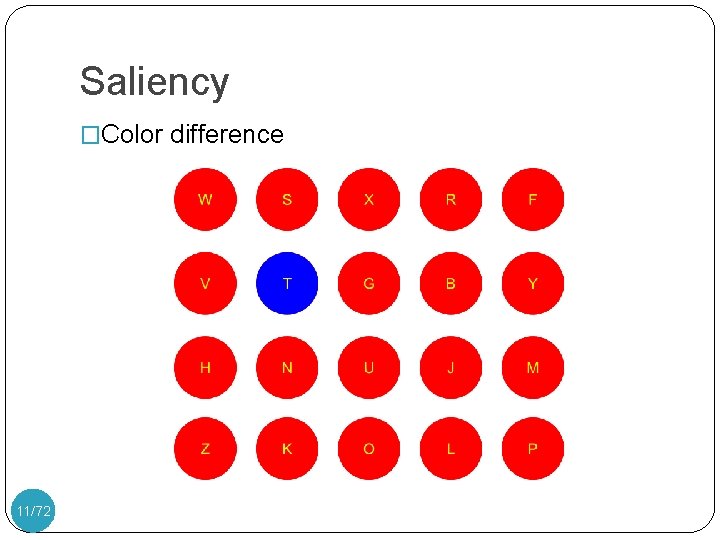

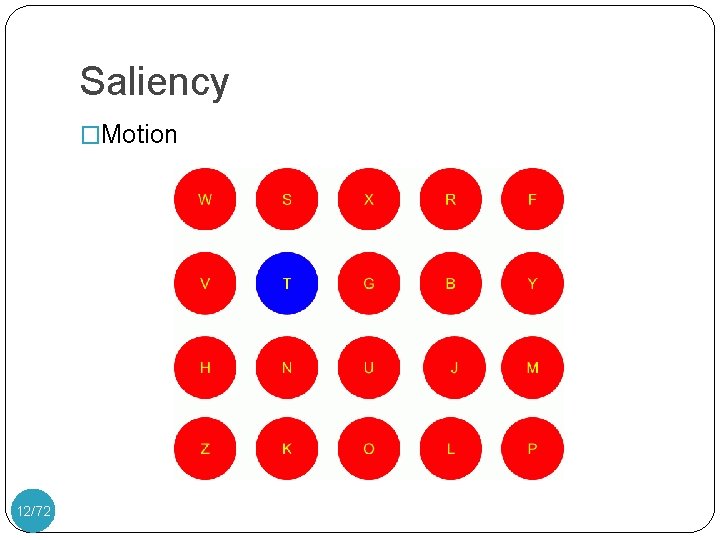

Bottom-up Component & Saliency
- Stimulus-driven
- Unintentional
- Faster (25–50 ms per item)
- Saliency: the property of objects representing their visual attractiveness
- Difference rather than strength: an object is salient compared to its background

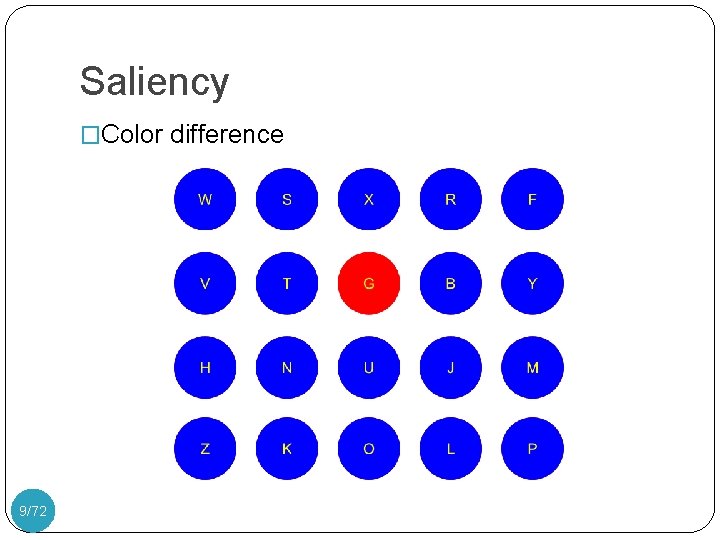

Saliency
- Color difference

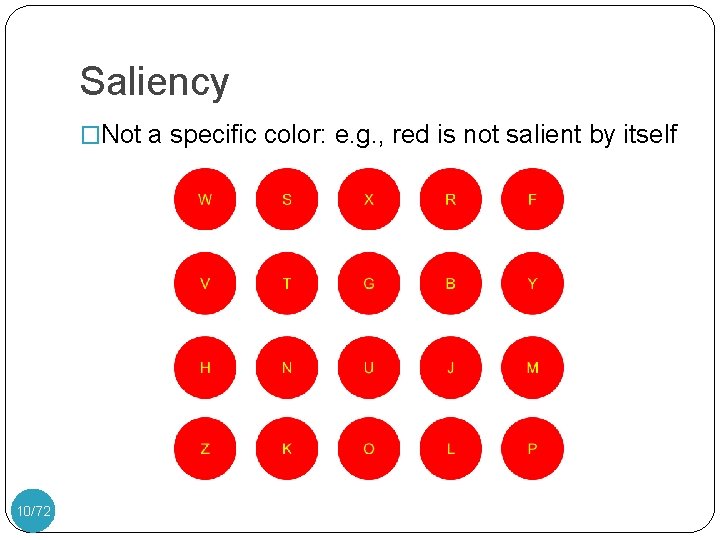

Saliency
- Not a specific color: e.g., red is not salient by itself

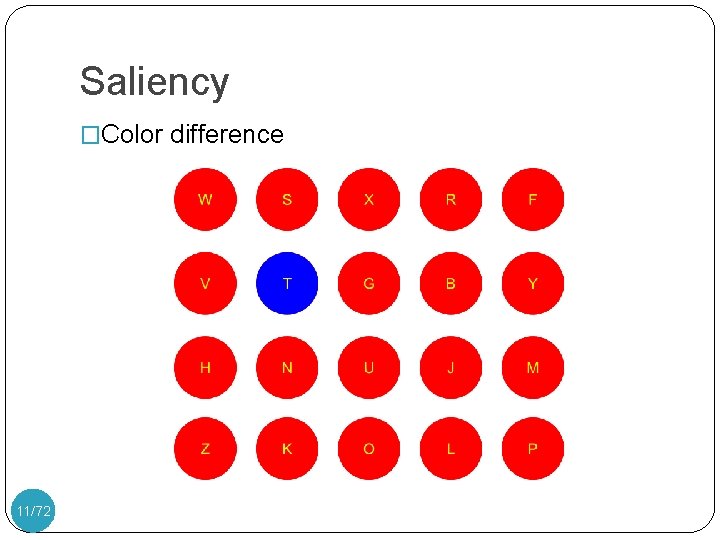

Saliency
- Color difference

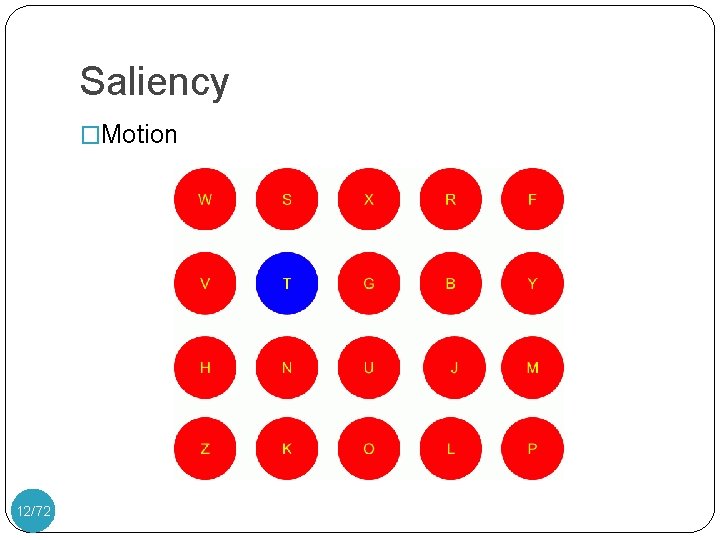

Saliency
- Motion

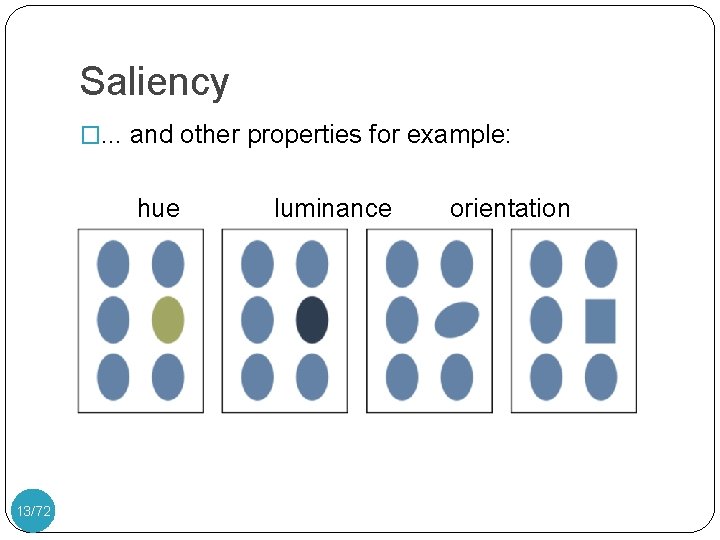

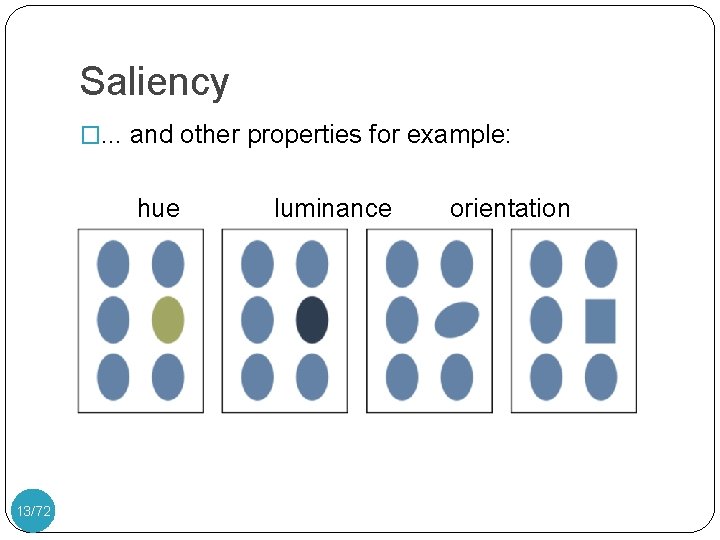

Saliency
- ... and other properties, for example: hue, shape, luminance, orientation

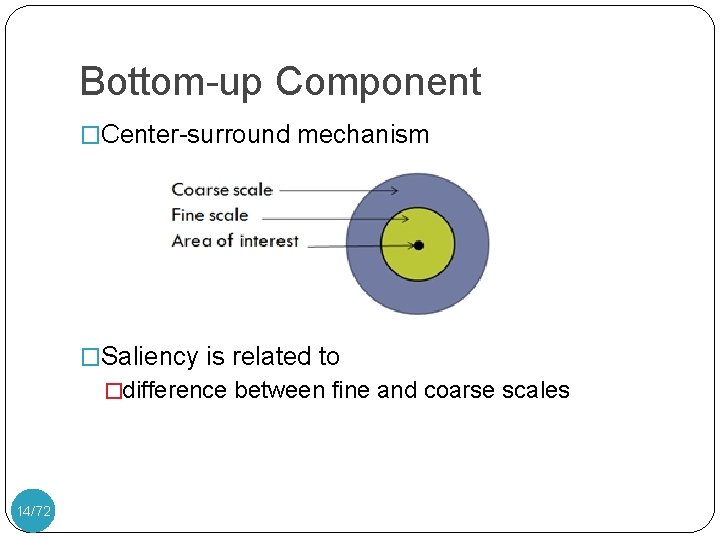

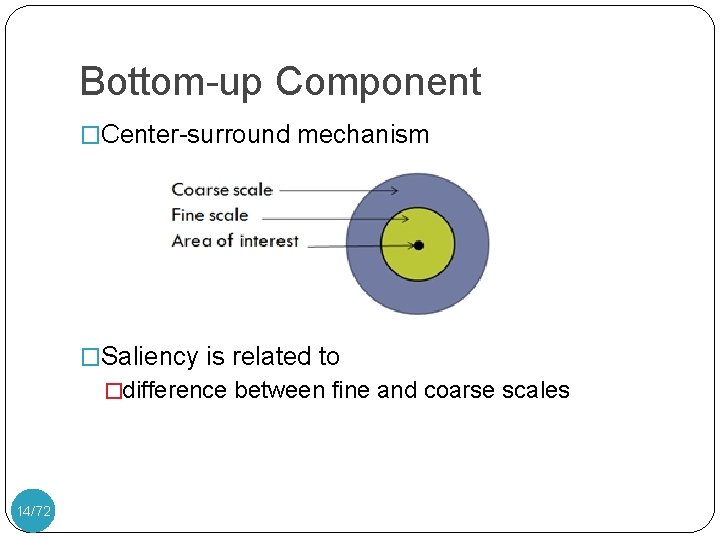

Bottom-up Component
- Center-surround mechanism
- Saliency is related to the difference between fine and coarse scales

Top-down Component
- Task-driven
- Prior experiences
- Intentional
- Slower (>200 ms)

![Top-down Component: eye movements on Repin's picture (Yarbus 67)](https://slidetodoc.com/presentation_image_h/9aed9cbf57880a0927e955352ffdf94e/image-17.jpg)

Top-down Component
- Eye movements on Repin's picture [Yarbus 67]

Top-down Component
- Without a task

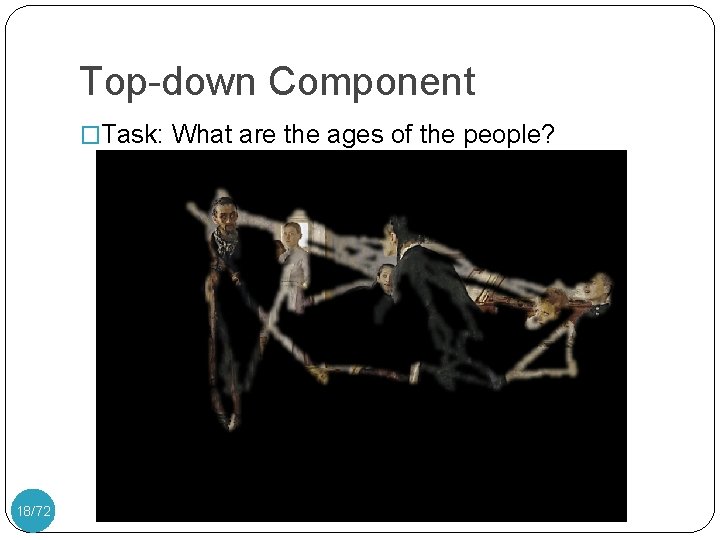

Top-down Component
- Task: What are the ages of the people?

Top-down Component
- Task: What were they doing before?

Bottom-up vs. Top-down
- Both components are important
- Top-down component:
  - Difficult to know tasks
  - Highly related to personalities, prior experiences, etc.
  - May require semantic knowledge and object recognition too
- Our primary interest is the bottom-up component
  - Purely based on scene properties

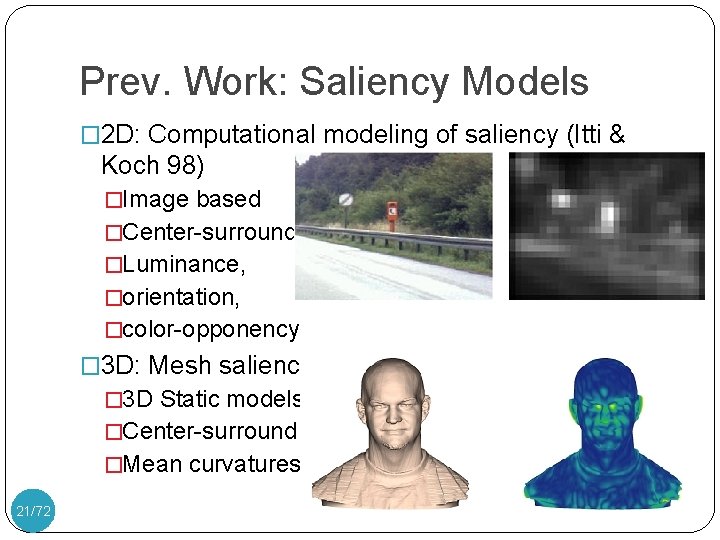

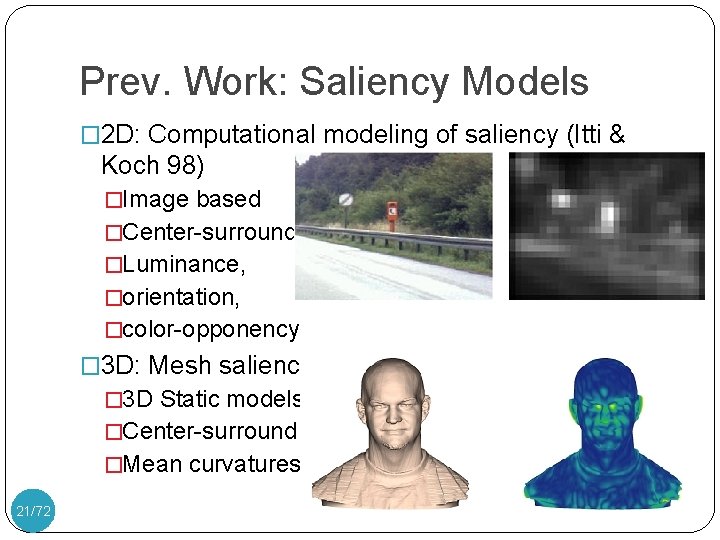

Prev. Work: Saliency Models
- 2D: Computational modeling of saliency (Itti & Koch 98)
  - Image based
  - Center-surround
  - Luminance, orientation, color opponency
- 3D: Mesh saliency (Lee et al. 05)
  - 3D static models
  - Center-surround
  - Mean curvatures

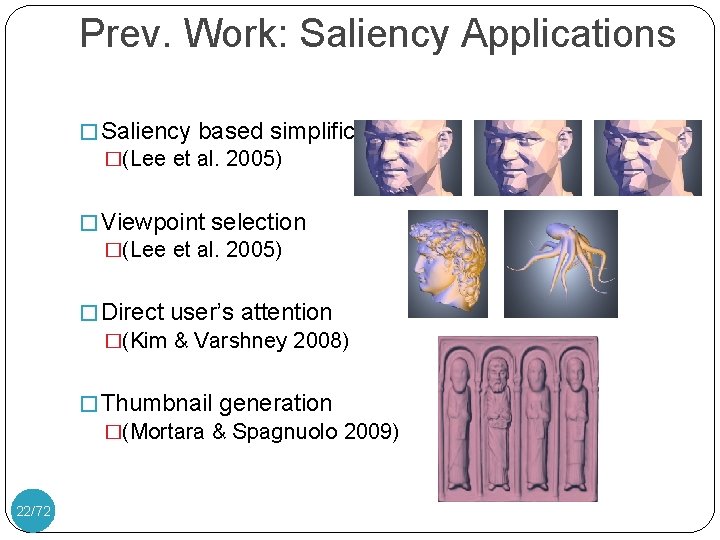

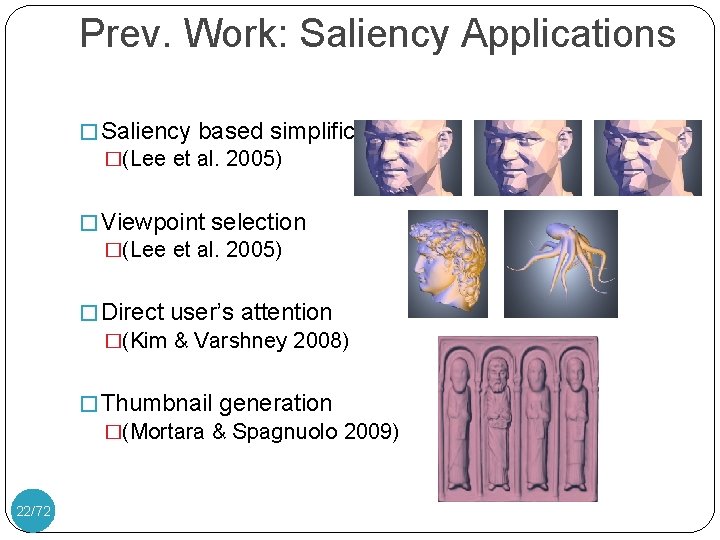

Prev. Work: Saliency Applications
- Saliency-based simplification (Lee et al. 2005)
- Viewpoint selection (Lee et al. 2005)
- Directing the user's attention (Kim & Varshney 2008)
- Thumbnail generation (Mortara & Spagnuolo 2009)

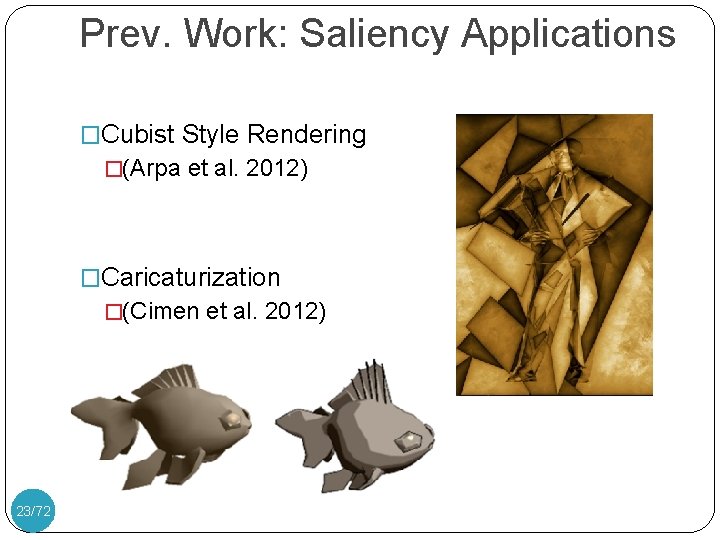

Prev. Work: Saliency Applications
- Cubist style rendering (Arpa et al. 2012)
- Caricaturization (Cimen et al. 2012)

Several studies

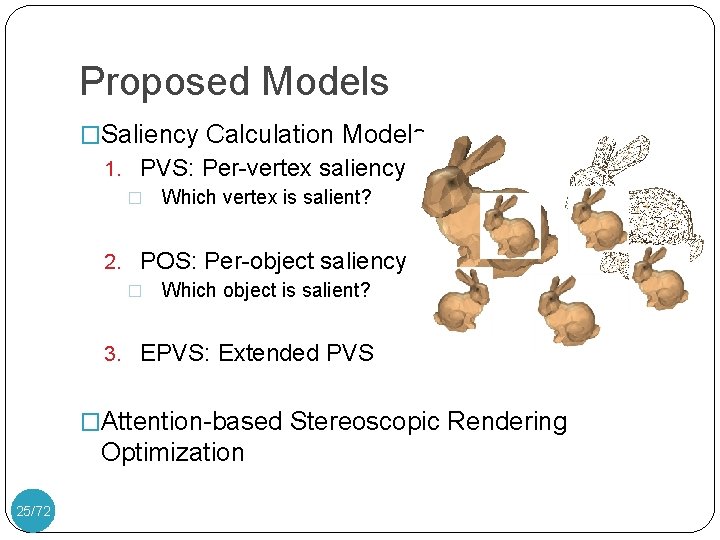

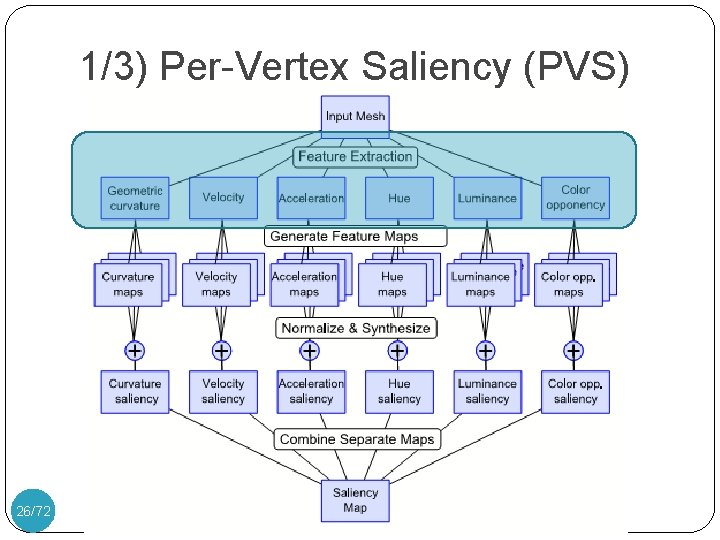

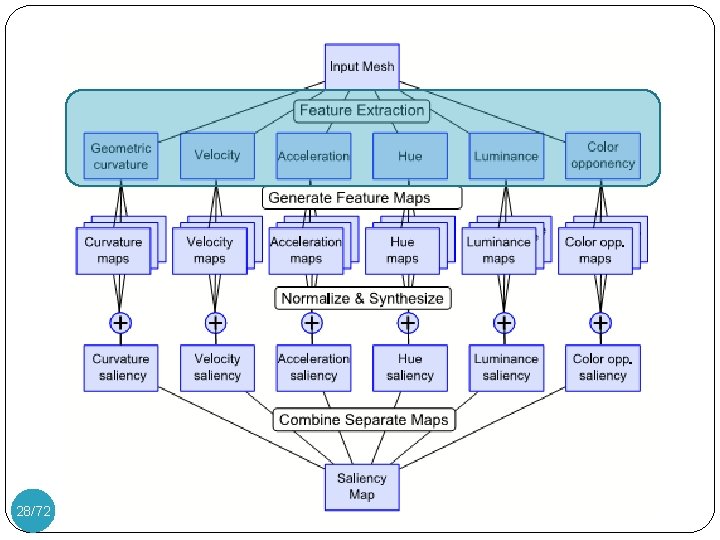

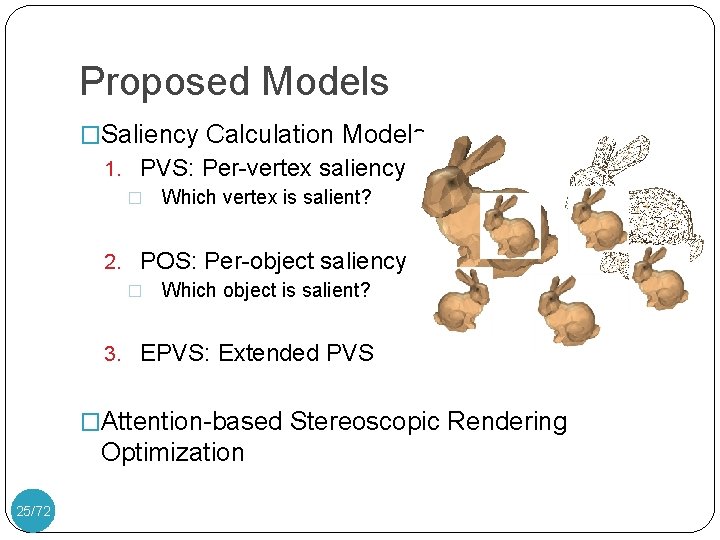

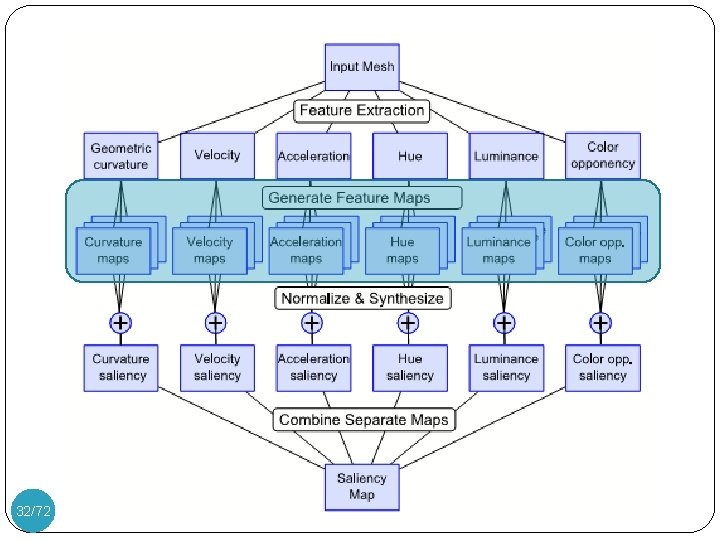

Proposed Models
- Saliency calculation models
  1. PVS: Per-vertex saliency model (Which vertex is salient?)
  2. POS: Per-object saliency model (Which object is salient?)
  3. EPVS: Extended PVS
- Attention-based stereoscopic rendering optimization

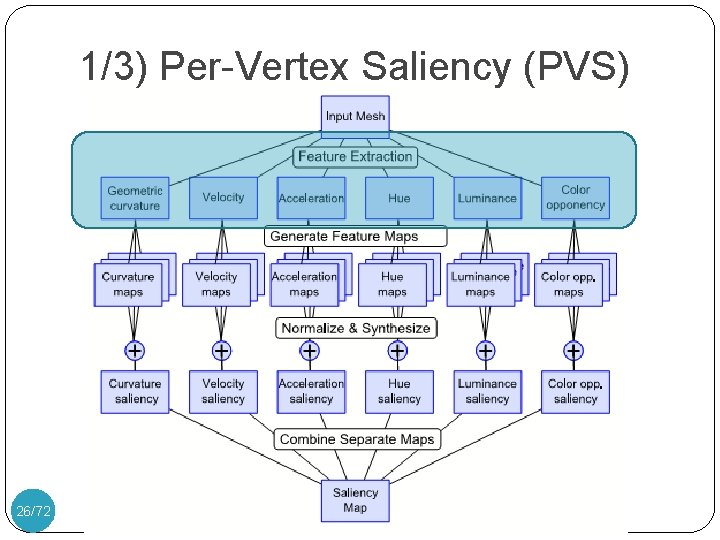

1/3) Per-Vertex Saliency (PVS)

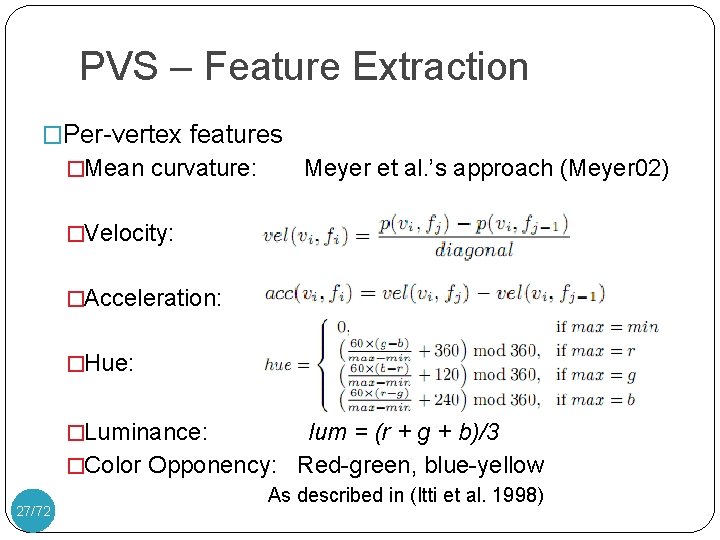

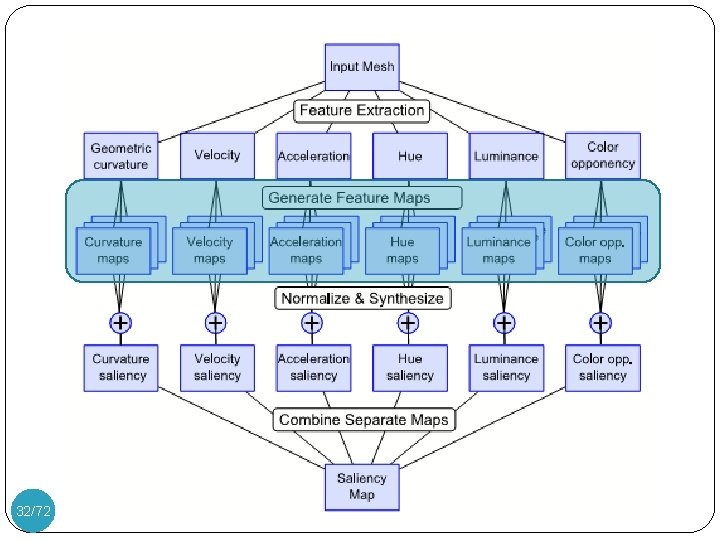

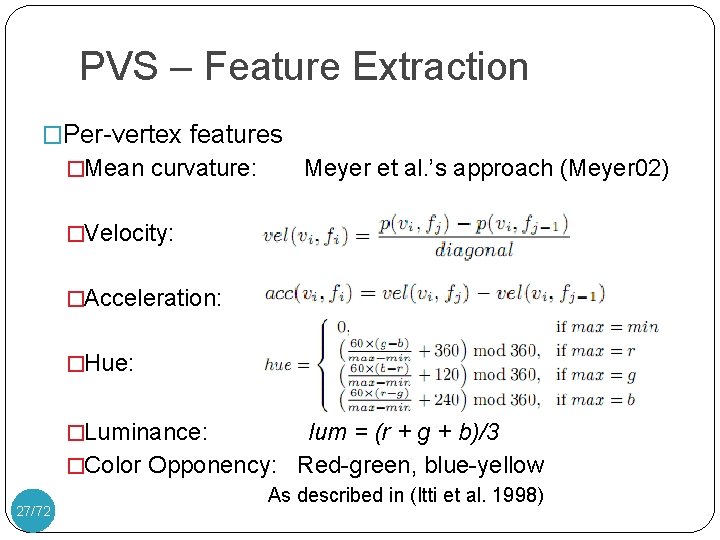

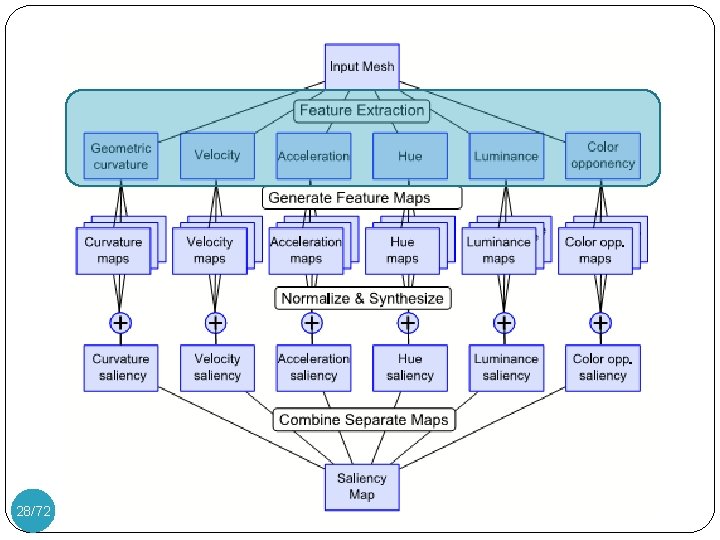

PVS – Feature Extraction
- Per-vertex features
  - Mean curvature: Meyer et al.'s approach (Meyer 02)
  - Velocity
  - Acceleration
  - Hue
  - Luminance: lum = (r + g + b)/3
  - Color opponency: red-green, blue-yellow, as described in (Itti et al. 1998)
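
The color features above can be sketched for a single vertex color as follows. This is a minimal illustration, not the authors' code: the luminance line is the slide's formula, while the opponency channels follow the broadly tuned R, G, B, Y channels of Itti et al. (1998) without that paper's intensity normalization step. The function name `color_features` and the returned dictionary keys are hypothetical.

```python
def color_features(r, g, b):
    """Luminance and opponency signals for one RGB vertex color in [0, 1]."""
    lum = (r + g + b) / 3.0  # luminance exactly as written on the slide

    # Broadly tuned color channels (Itti et al. 1998); negatives clamp to zero.
    red = max(0.0, r - (g + b) / 2.0)
    green = max(0.0, g - (r + b) / 2.0)
    blue = max(0.0, b - (r + g) / 2.0)
    yellow = max(0.0, (r + g) / 2.0 - abs(r - g) / 2.0 - b)

    # Opponency signals: red-green and blue-yellow.
    return {"lum": lum, "rg": red - green, "by": blue - yellow}


if __name__ == "__main__":
    print(color_features(1.0, 0.0, 0.0))  # pure red
    print(color_features(1.0, 1.0, 0.0))  # pure yellow
```

A gray vertex yields zero on both opponency channels, so only its luminance can contribute to saliency.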


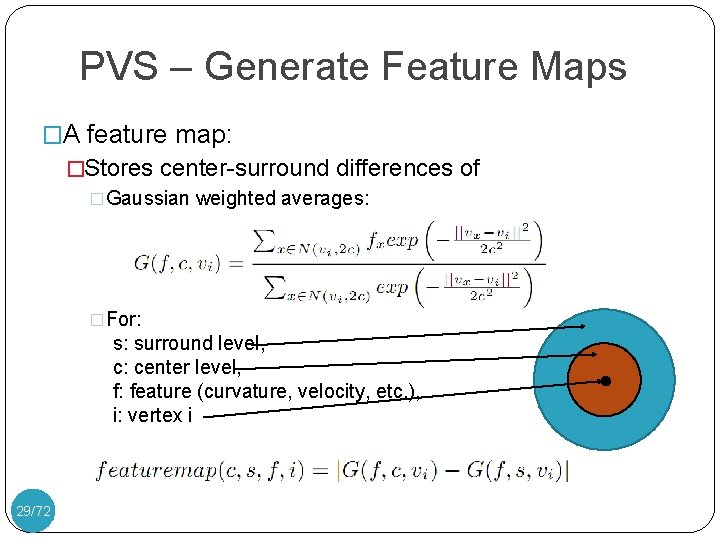

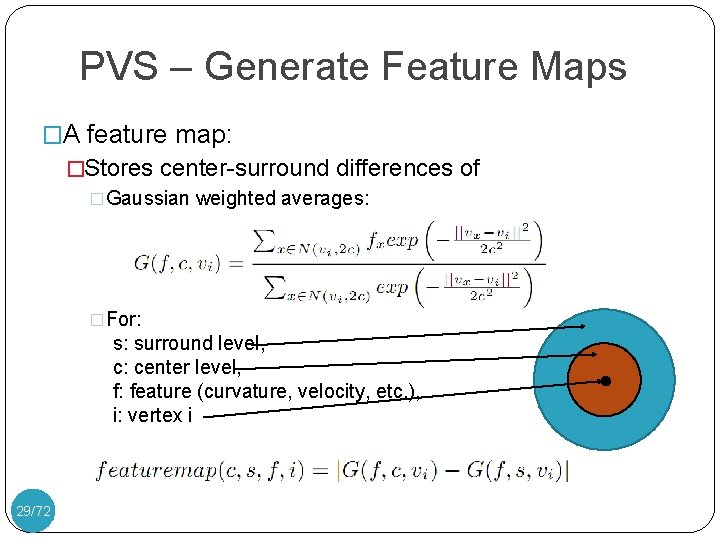

PVS – Generate Feature Maps
- A feature map stores center-surround differences of Gaussian-weighted averages
- Notation: s: surround level, c: center level, f: feature (curvature, velocity, etc.), i: vertex i
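
The slide's formula is not recoverable from the transcript, so the following is only a sketch of what a center-surround difference of Gaussian-weighted averages could look like. It assumes Euclidean distances between vertices and a cutoff radius of 2σ (as in Lee et al.'s mesh saliency); the function names are hypothetical.

```python
import math


def gaussian_average(positions, values, i, sigma):
    """Gaussian-weighted mean of `values` over vertices within 2*sigma of vertex i."""
    num = den = 0.0
    for p, v in zip(positions, values):
        d2 = sum((a - b) ** 2 for a, b in zip(positions[i], p))
        if d2 <= (2.0 * sigma) ** 2:  # assumed cutoff radius
            w = math.exp(-d2 / (2.0 * sigma ** 2))
            num += w * v
            den += w
    return num / den  # den >= 1 because vertex i always includes itself


def feature_map(positions, values, center, surround):
    """|average at center scale - average at surround scale| for every vertex."""
    return [
        abs(gaussian_average(positions, values, i, center)
            - gaussian_average(positions, values, i, surround))
        for i in range(len(positions))
    ]


if __name__ == "__main__":
    pts = [(float(x), 0.0, 0.0) for x in range(5)]
    curvature = [0.0, 0.0, 1.0, 0.0, 0.0]  # a single spike at vertex 2
    print(feature_map(pts, curvature, center=0.5, surround=1.0))
```

A vertex whose feature value differs from its wider neighborhood gets a large response, while a uniform feature produces a zero map.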

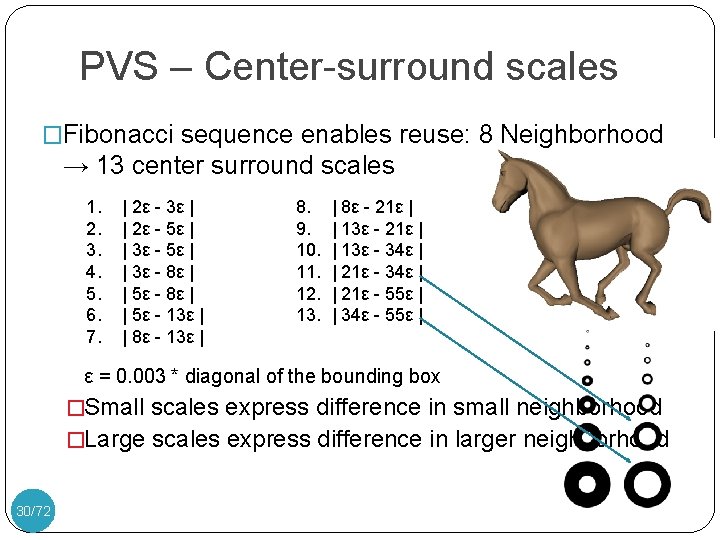

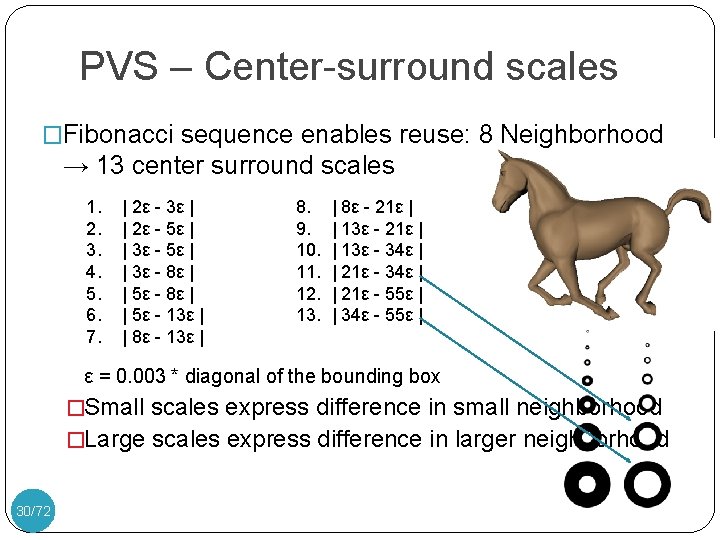

PVS – Center-surround scales
- Fibonacci sequence enables reuse: 8 neighborhoods → 13 center-surround scales
- Scales legible in the transcript (numbered 1 to 13 on the slide): |2ε - 3ε|, |2ε - 5ε|, |3ε - 8ε|, |5ε - 13ε|, |8ε - 13ε|, |8ε - 21ε|, |13ε - 34ε|, |21ε - 55ε|, |34ε - 55ε|
- ε = 0.003 × diagonal of the bounding box
- Small scales express difference in a small neighborhood
- Large scales express difference in a larger neighborhood
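
As a rough sketch of the scale setup, the code below derives the eight neighborhood radii from the bounding-box diagonal and enumerates center-surround pairs. The pairing rule (each level paired with the next and the next-but-one level) is an assumption: it yields 13 pairs and contains every pair legible in the transcript, but the slide does not confirm it. Function names are hypothetical.

```python
FIB_LEVELS = (2, 3, 5, 8, 13, 21, 34, 55)  # multiples of epsilon from the slide


def neighborhood_radii(bbox_diagonal, factor=0.003):
    """Radii of the 8 neighborhoods, with epsilon = factor * bounding-box diagonal."""
    eps = factor * bbox_diagonal
    return [k * eps for k in FIB_LEVELS]


def scale_pairs(levels=FIB_LEVELS):
    """Assumed rule: pair each level with the next and the next-but-one level."""
    pairs = []
    for idx, center in enumerate(levels):
        for step in (1, 2):
            if idx + step < len(levels):
                pairs.append((center, levels[idx + step]))
    return pairs


if __name__ == "__main__":
    print(neighborhood_radii(100.0))
    print(scale_pairs())
```

Because every radius is reused by several pairs, each Gaussian-weighted average needs to be computed only once per level.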


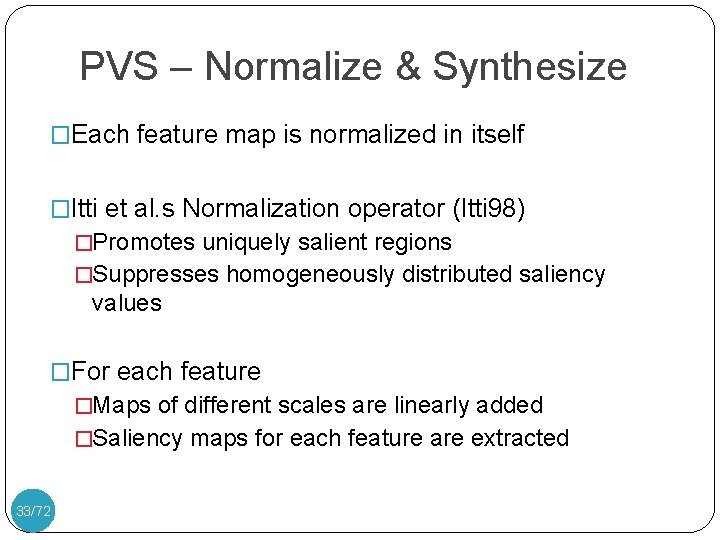

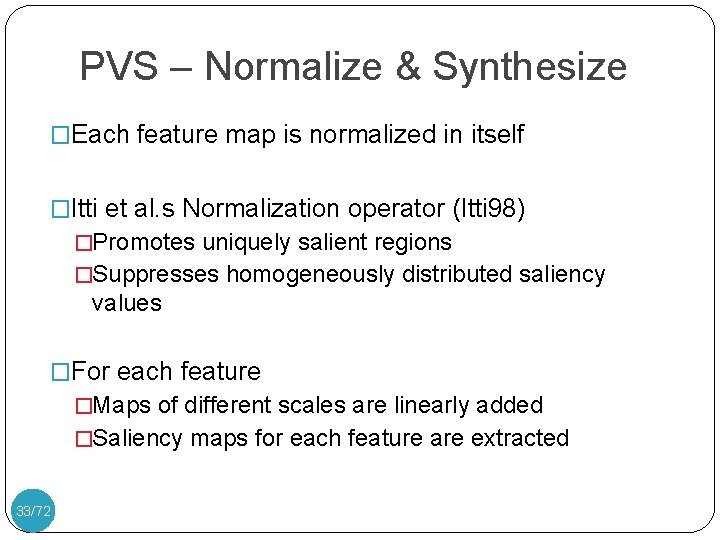

PVS – Normalize & Synthesize
- Each feature map is normalized in itself
  - Itti et al.'s normalization operator (Itti 98)
  - Promotes uniquely salient regions
  - Suppresses homogeneously distributed saliency values
- For each feature
  - Maps of different scales are linearly added
  - Saliency maps for each feature are extracted
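
The normalization step can be sketched as below. It follows the three steps of the operator described by Itti et al. (1998): rescale to a fixed range, find the global maximum M and the mean m of the other local maxima, then multiply the map by (M - m)². Adapting "local maximum" to a mesh through a vertex adjacency list is an assumption here, and the names `normalize_map` and `combine` are hypothetical.

```python
def normalize_map(values, neighbors, top=1.0):
    """Itti-style normalization on a vertex map; neighbors[i] lists adjacent vertices."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)  # a flat map carries no saliency
    scaled = [top * (v - lo) / (hi - lo) for v in values]

    # Index of the global maximum (first one if tied).
    g = max(range(len(scaled)), key=scaled.__getitem__)
    # Other strict local maxima: larger than every adjacent vertex.
    others = [
        scaled[i] for i in range(len(scaled))
        if i != g and all(scaled[i] > scaled[j] for j in neighbors[i])
    ]
    mean_others = sum(others) / len(others) if others else 0.0
    weight = (scaled[g] - mean_others) ** 2
    return [weight * v for v in scaled]


def combine(maps):
    """Linearly add maps of different scales, vertex by vertex."""
    return [sum(column) for column in zip(*maps)]


if __name__ == "__main__":
    path = [[1], [0, 2], [1, 3], [2, 4], [3]]  # 5 vertices in a chain
    print(normalize_map([0, 1, 0, 0, 0], path))  # one peak: kept
    print(normalize_map([0, 1, 0, 1, 0], path))  # two equal peaks: suppressed
```

A map with a single peak keeps its full strength, while a map with several comparable peaks is scaled toward zero before the maps are summed.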

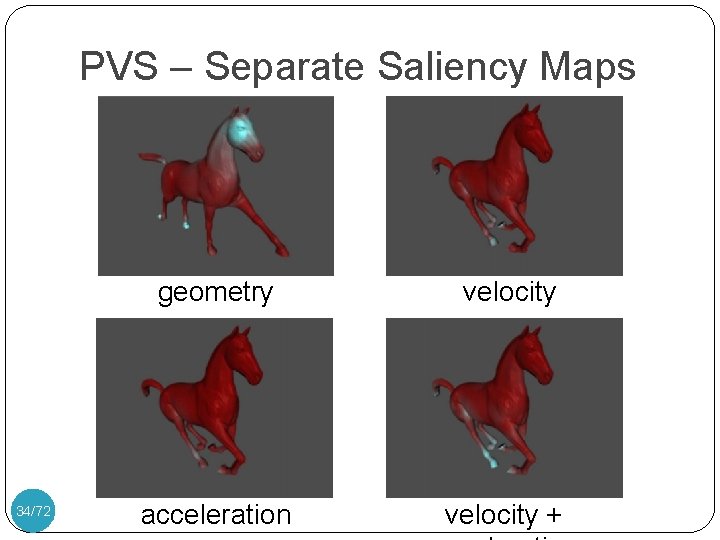

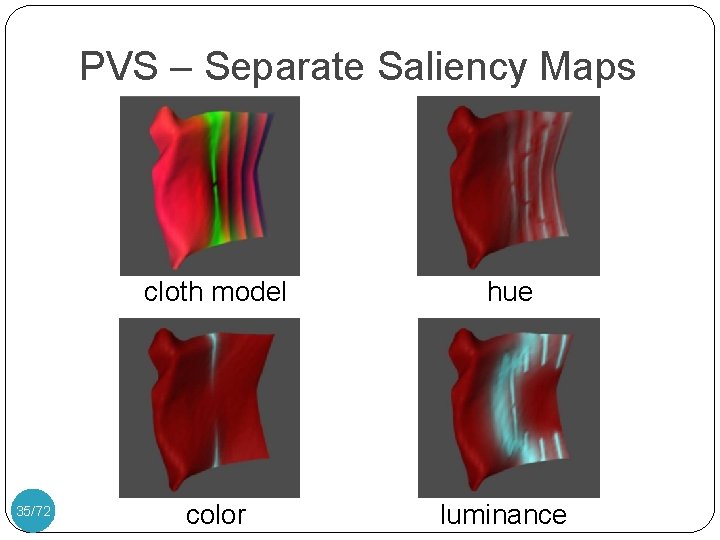

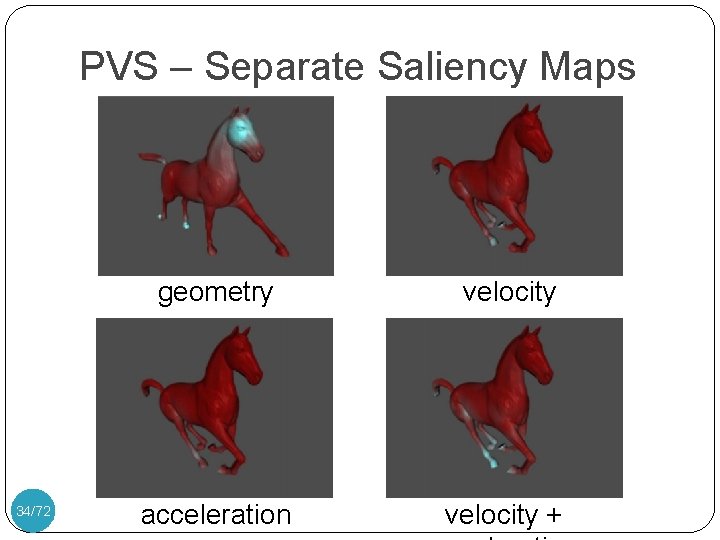

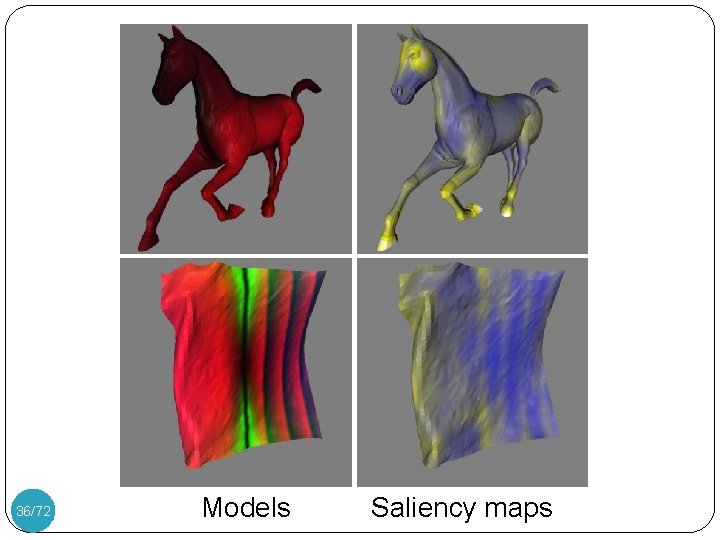

PVS – Separate Saliency Maps
- Panel labels: geometry, velocity, acceleration, velocity +

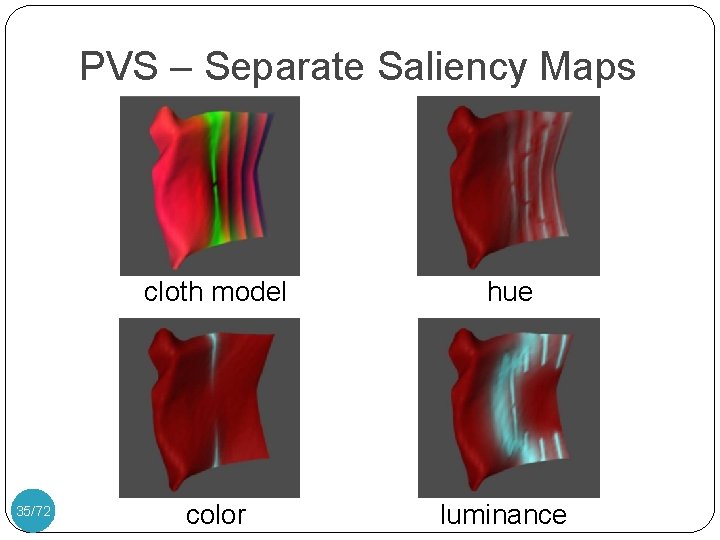

PVS – Separate Saliency Maps
- Panel labels: cloth model, hue, color, luminance

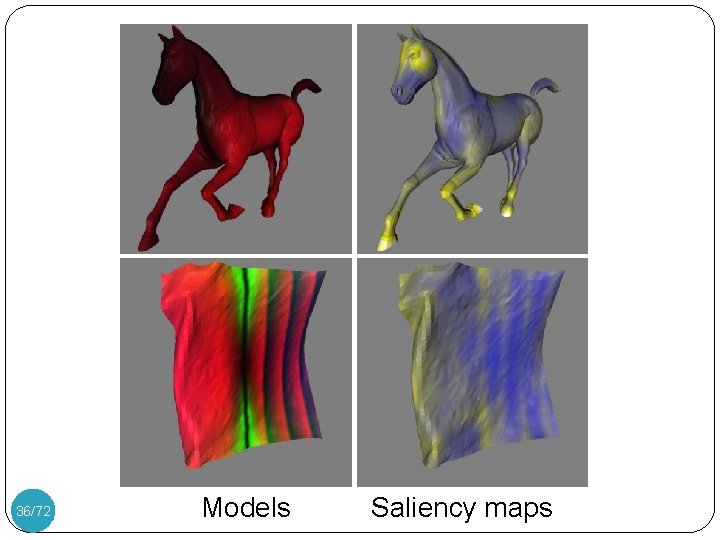

Panel labels: models, saliency maps

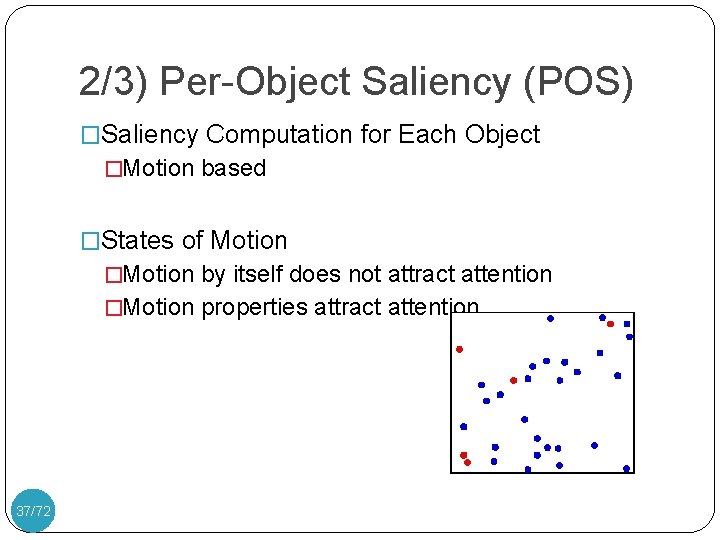

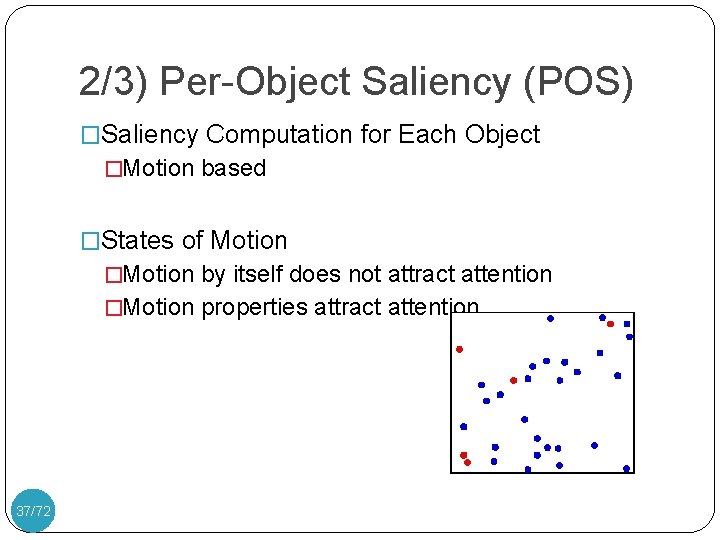

2/3) Per-Object Saliency (POS)
- Saliency computation for each object
- Motion based
- States of motion
  - Motion by itself does not attract attention
  - Motion properties attract attention

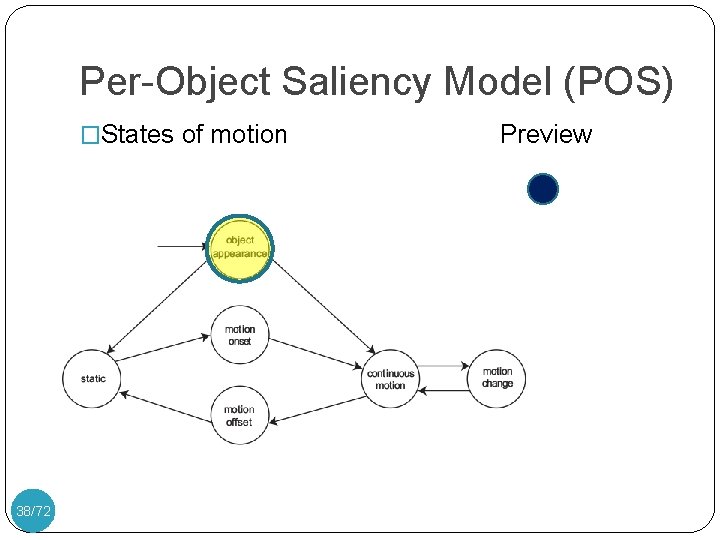

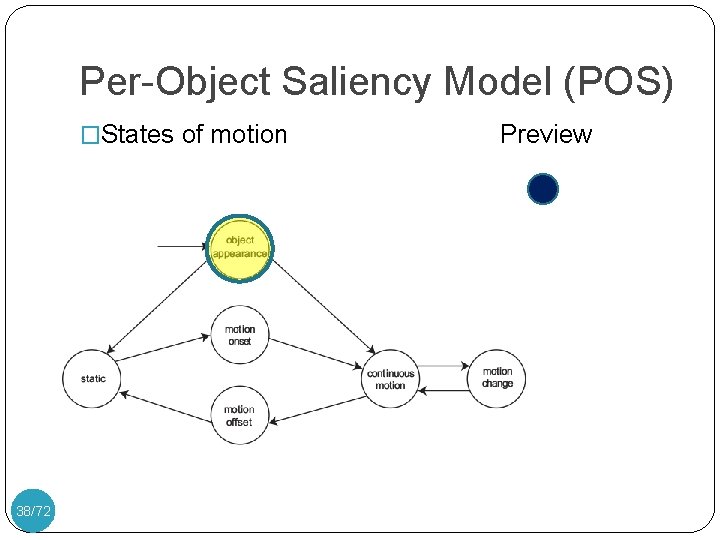

Per-Object Saliency Model (POS)
- States of motion

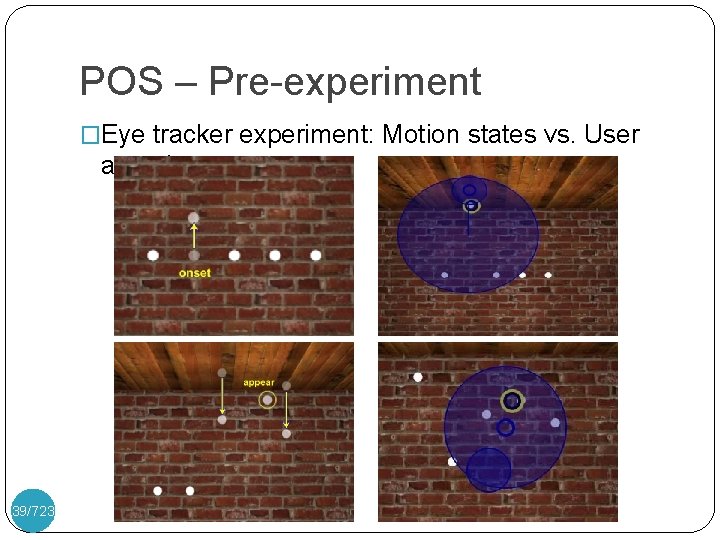

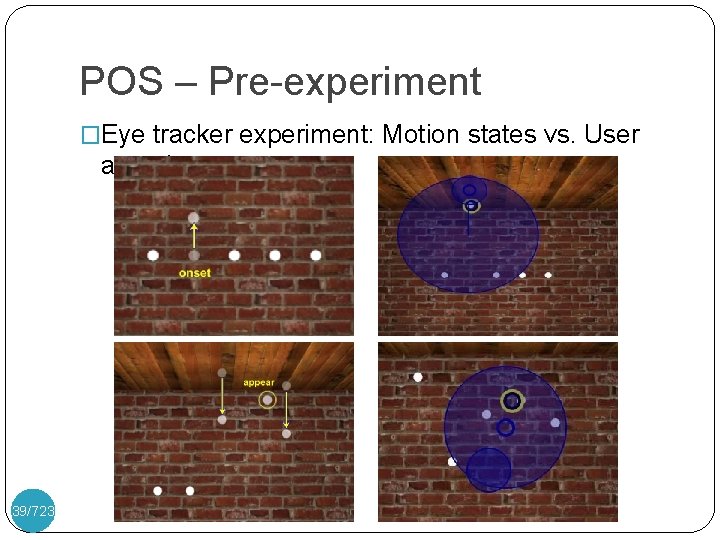

POS – Pre-experiment
- Eye tracker experiment: motion states vs. user attention

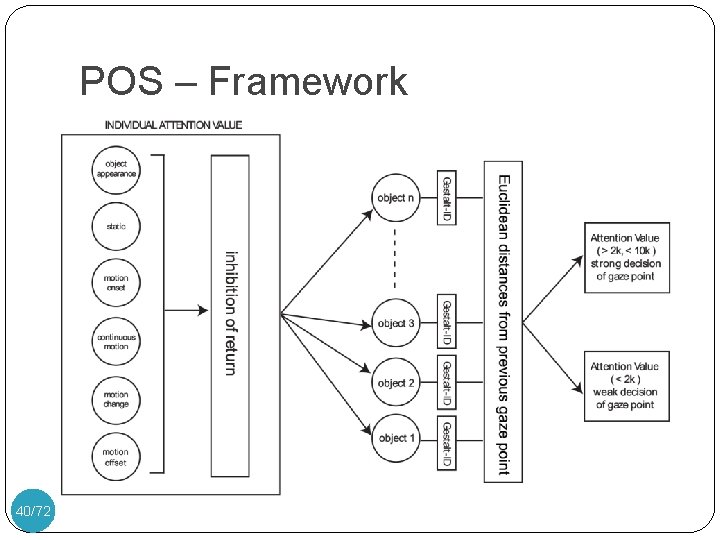

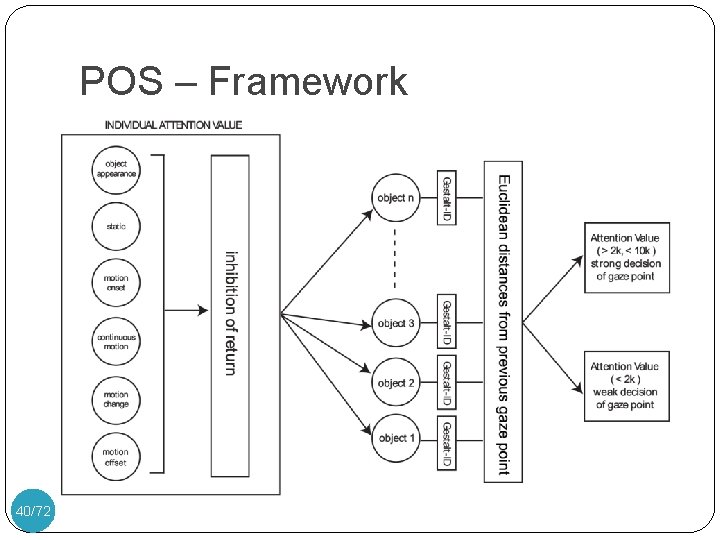

POS – Framework

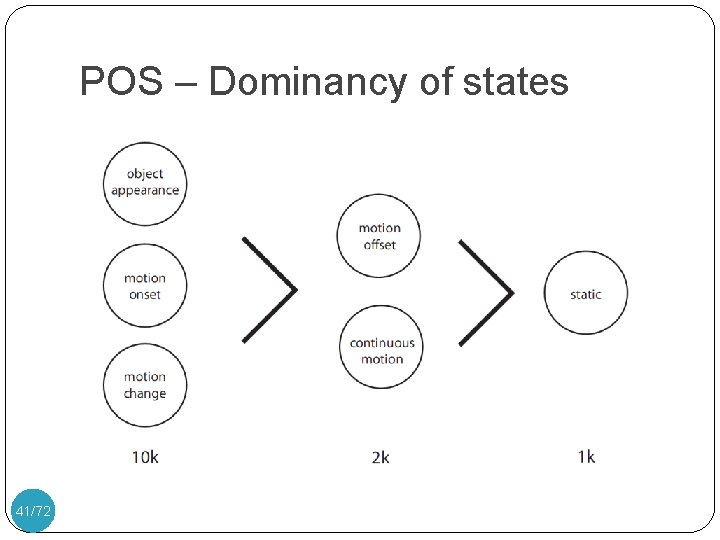

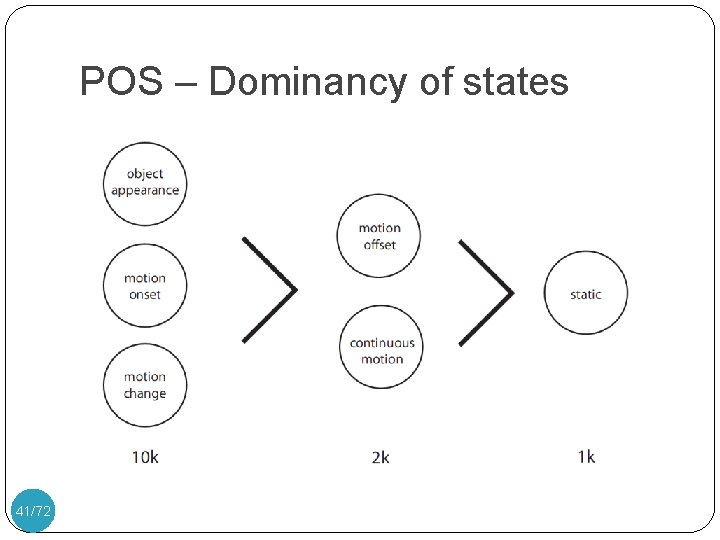

POS – Dominancy of states

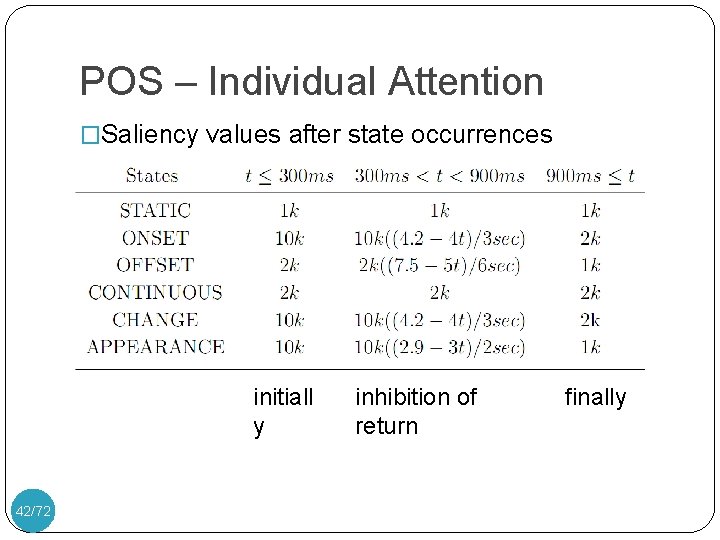

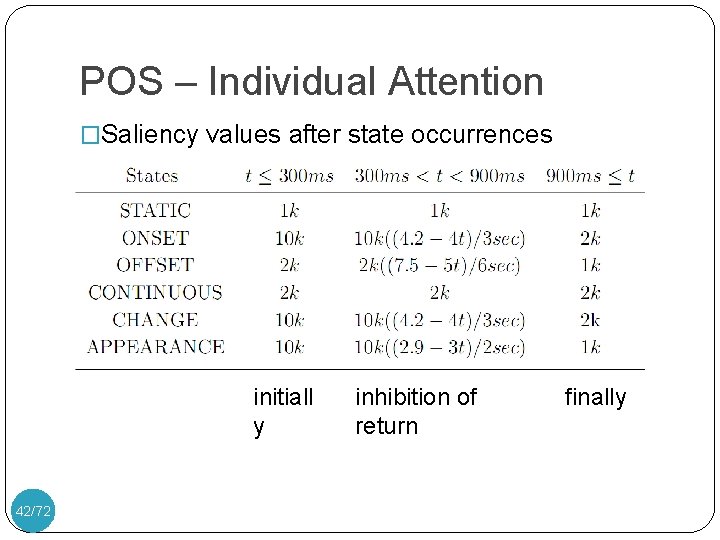

POS – Individual Attention
- Saliency values after state occurrences
- Figure labels: initially, inhibition of return, finally

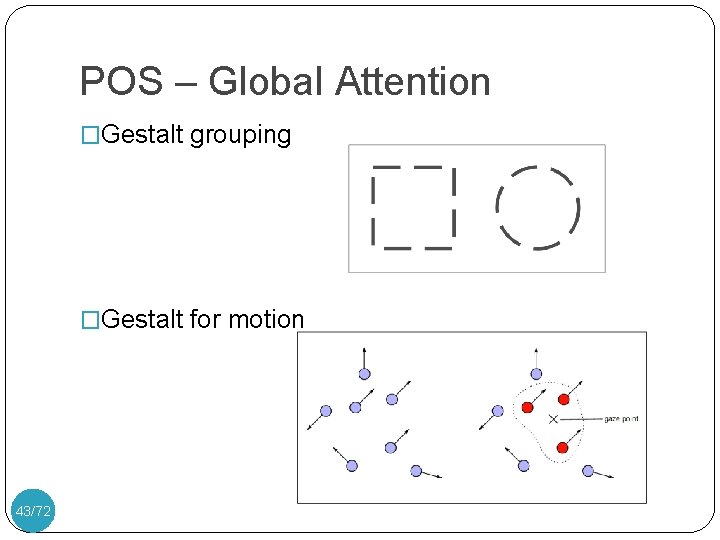

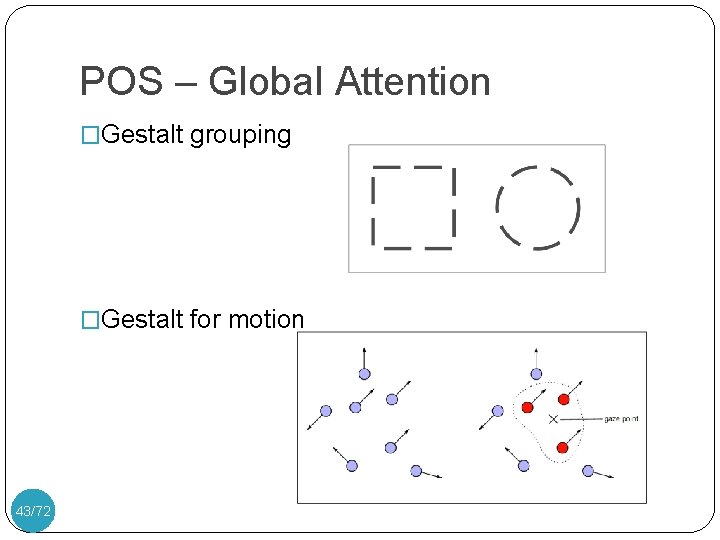

POS – Global Attention
- Gestalt grouping
- Gestalt for motion

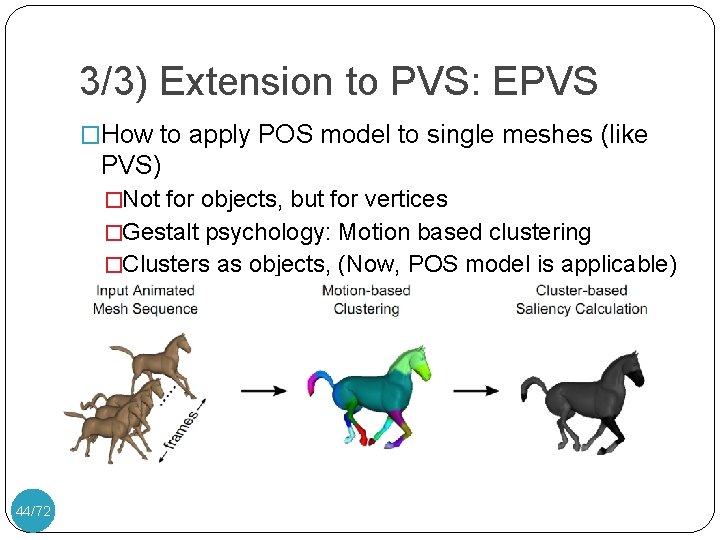

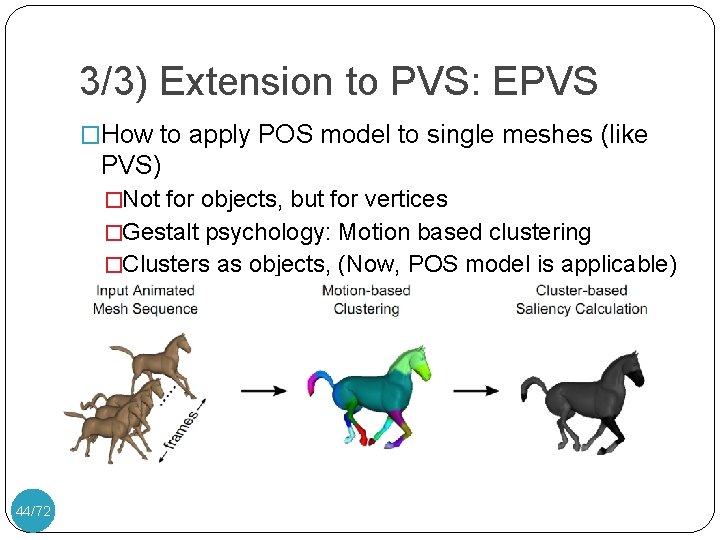

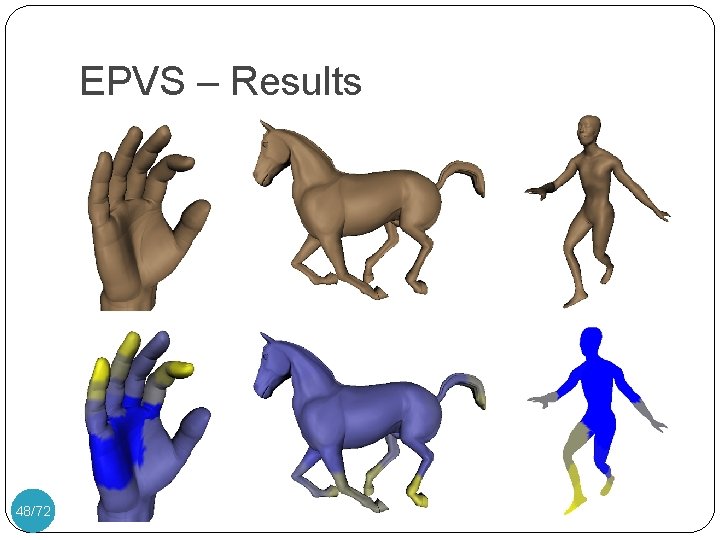

3/3) Extension to PVS: EPVS
- How to apply the POS model to single meshes (like PVS)?
  - Not for objects, but for vertices
- Gestalt psychology: motion-based clustering
  - Treat clusters as objects, so the POS model becomes applicable

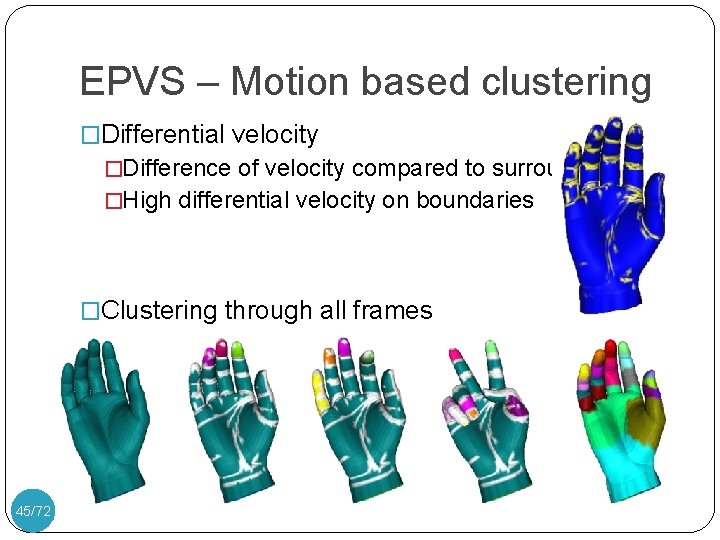

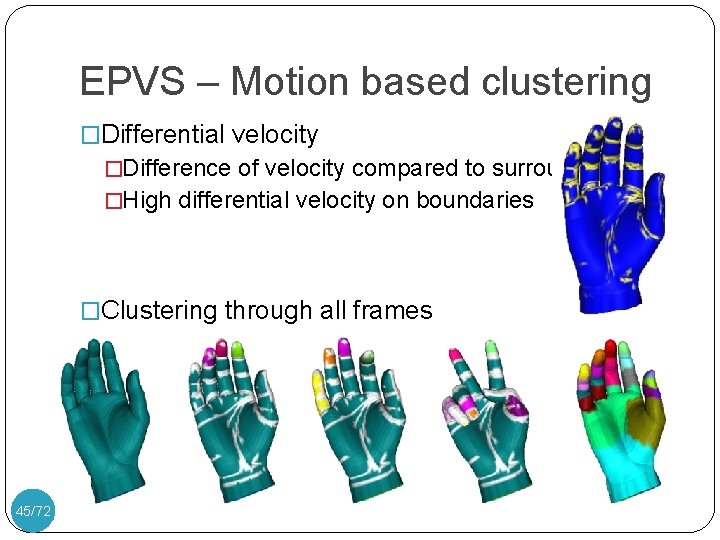

EPVS – Motion-based clustering
- Differential velocity
  - Difference of velocity compared to surroundings
  - High differential velocity on boundaries
- Clustering through all frames
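
A minimal sketch of differential velocity, assuming "surroundings" means the directly adjacent vertices and the difference is measured as the Euclidean distance between a vertex's velocity and the mean velocity of its neighbors. The slides do not give the exact definition, and the function name is hypothetical.

```python
import math


def differential_velocity(velocities, neighbors):
    """Distance between each vertex velocity and the mean velocity of its neighbors."""
    result = []
    for i, v in enumerate(velocities):
        ring = neighbors[i]
        if not ring:
            result.append(0.0)  # isolated vertex: nothing to compare against
            continue
        mean = [sum(velocities[j][k] for j in ring) / len(ring) for k in range(3)]
        result.append(math.dist(v, mean))
    return result


if __name__ == "__main__":
    # Chain of 4 vertices: the first two move along x, the last two are still.
    vel = [(1.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
    chain = [[1], [0, 2], [1, 3], [2]]
    print(differential_velocity(vel, chain))
```

In the example, only the two vertices on either side of the moving/still boundary get a nonzero value, which is the property the clustering relies on.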

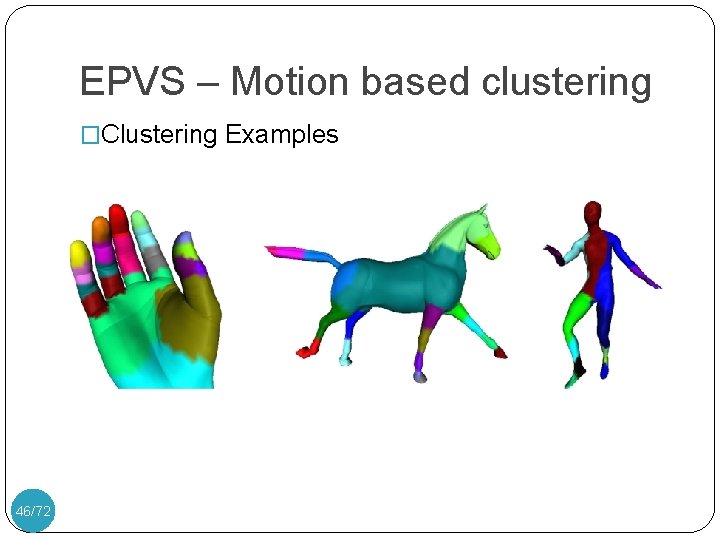

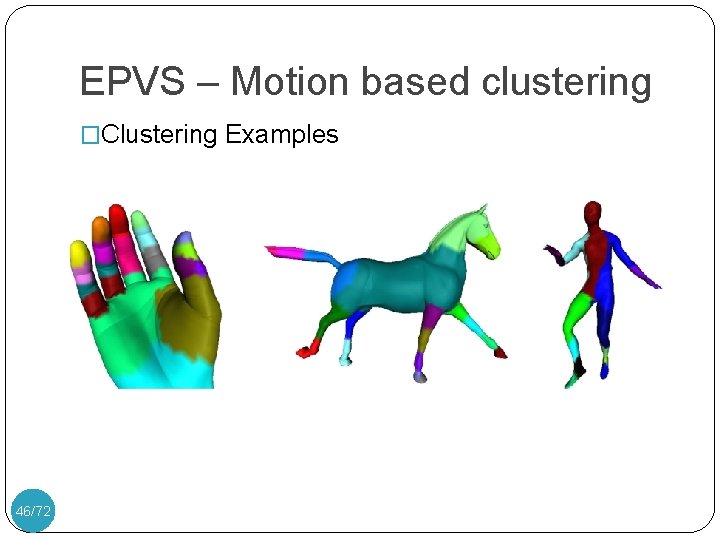

EPVS – Motion-based clustering
- Clustering examples

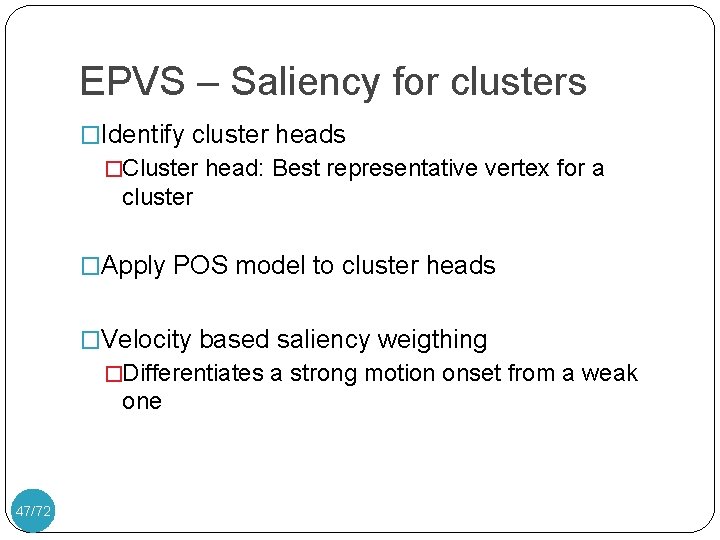

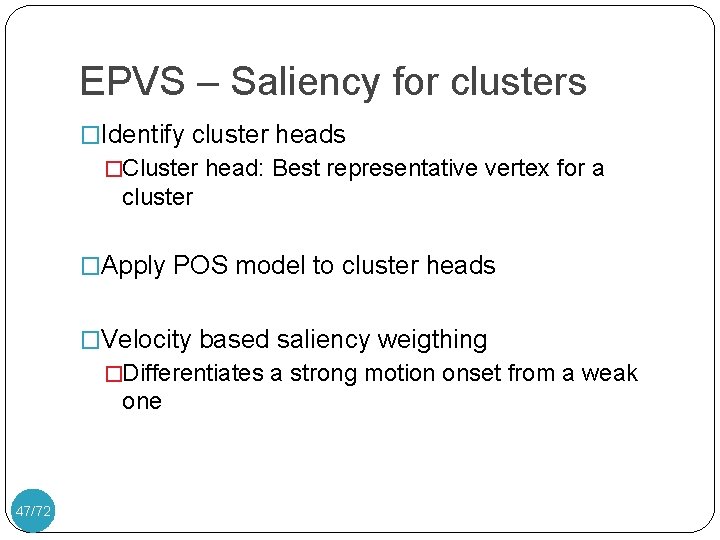

EPVS – Saliency for clusters
- Identify cluster heads
  - Cluster head: the best representative vertex for a cluster
- Apply the POS model to cluster heads
- Velocity-based saliency weighting
  - Differentiates a strong motion onset from a weak one

EPVS – Results

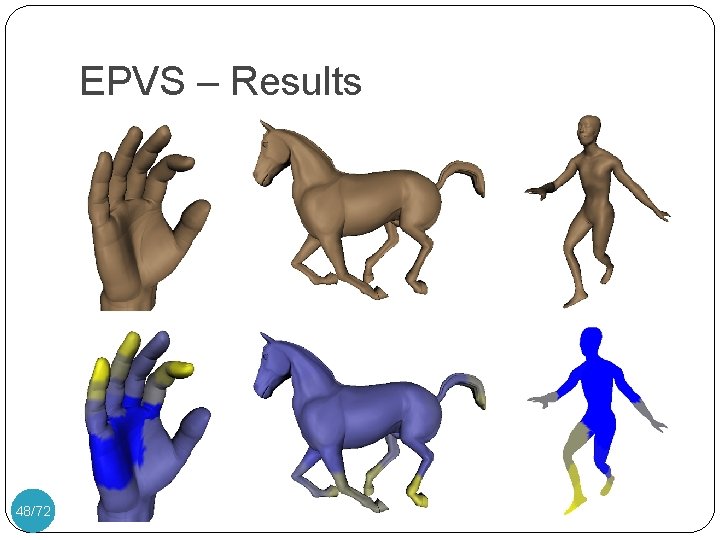

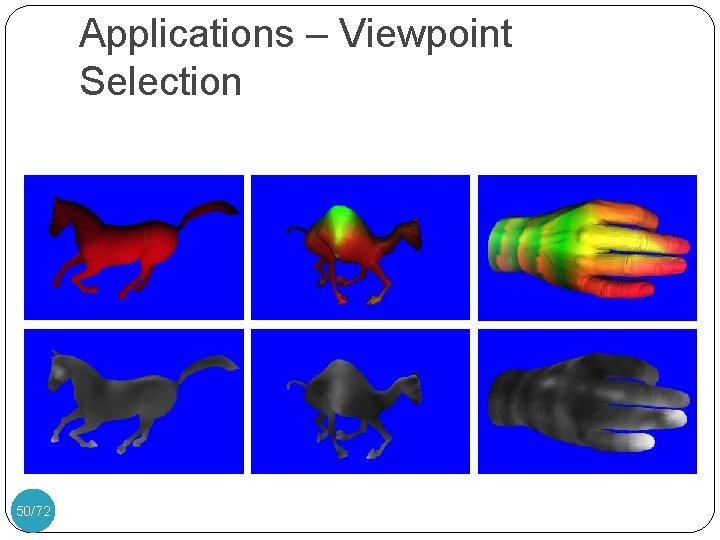

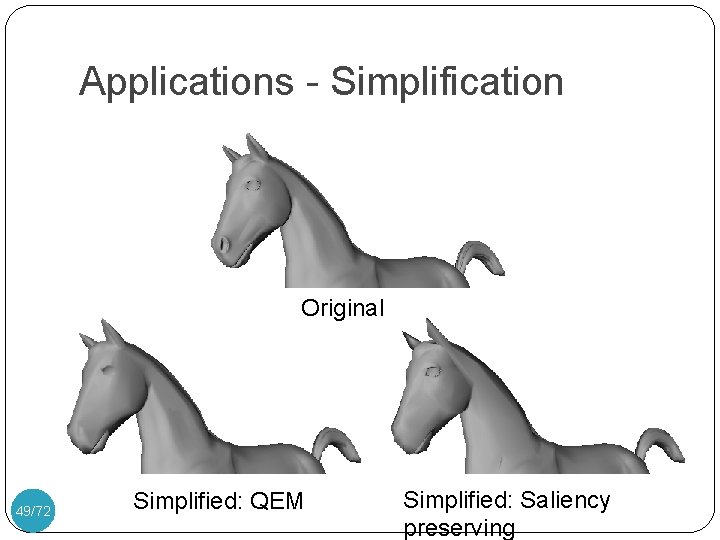

Applications – Simplification
- Panel labels: original; simplified: QEM; simplified: saliency preserving

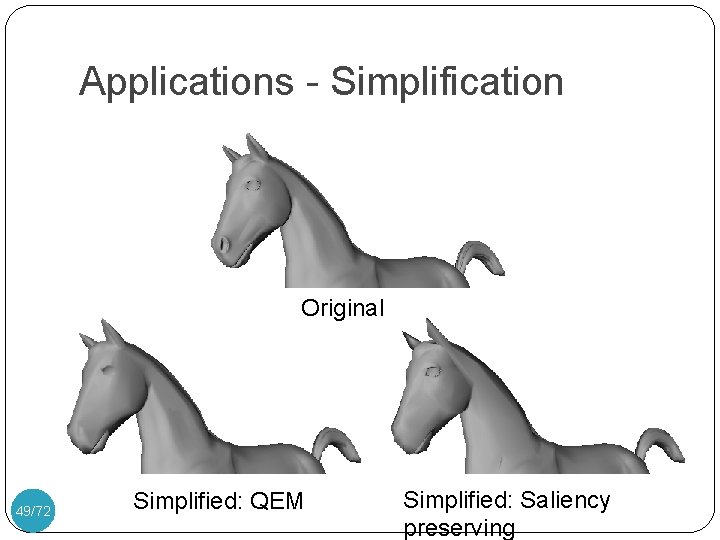

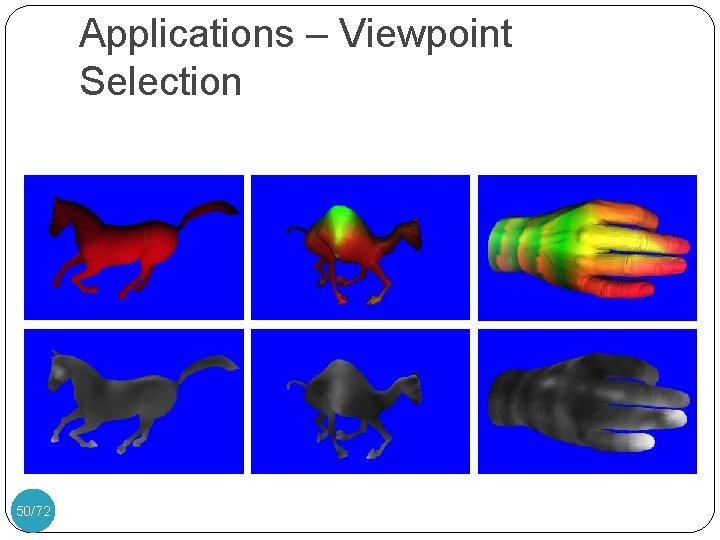

Applications – Viewpoint Selection

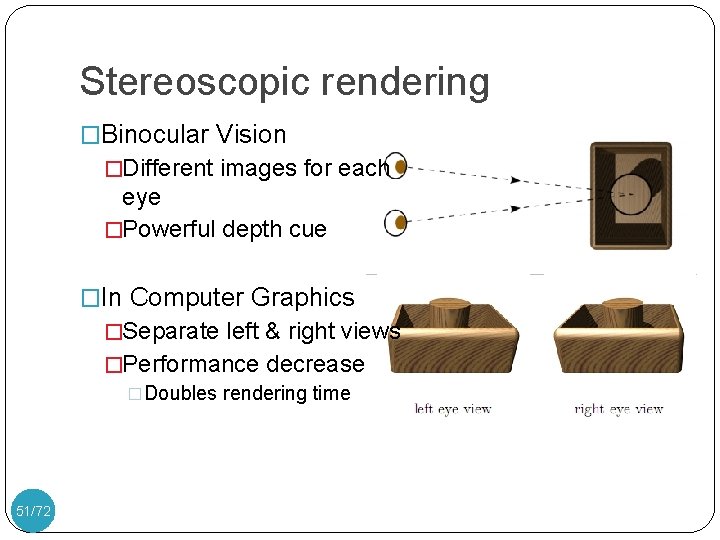

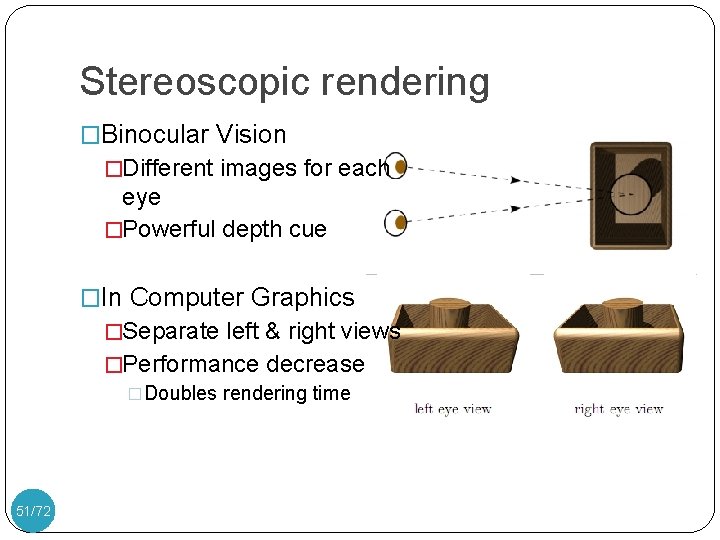

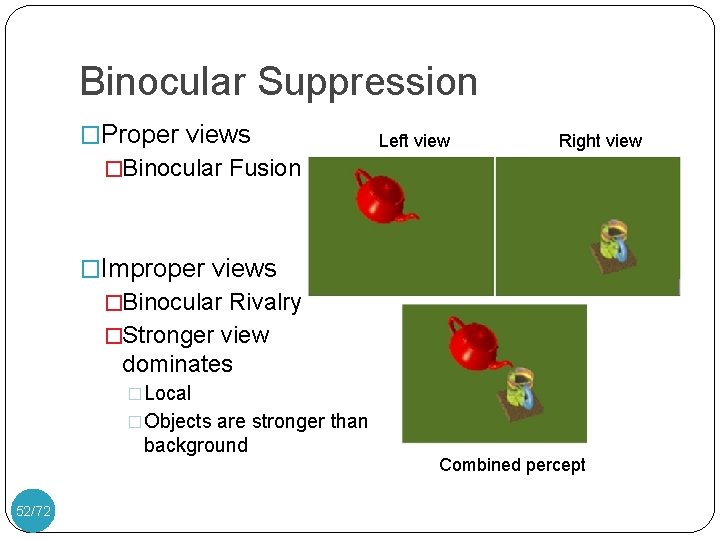

Stereoscopic rendering
- Binocular vision
  - Different images for each eye
  - Powerful depth cue
- In computer graphics
  - Separate left and right views
  - Performance decrease
  - Doubles rendering time

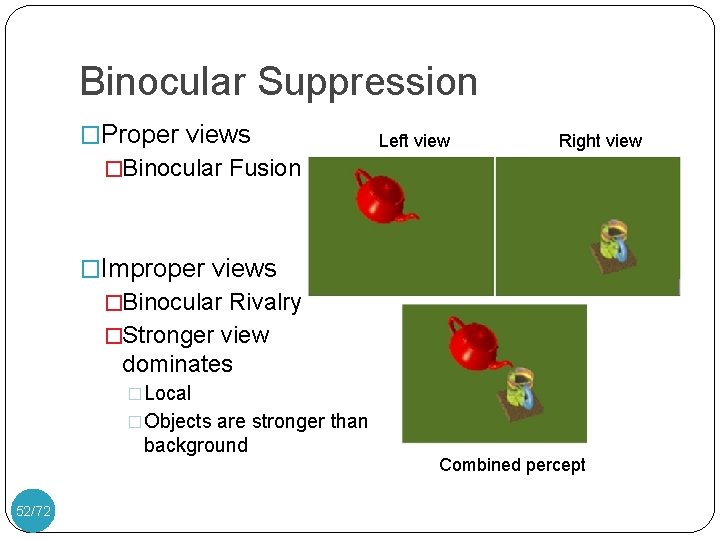

Binocular Suppression
- Proper views
  - Binocular fusion
- Improper views
  - Binocular rivalry
  - Stronger view dominates
  - Local
  - Objects are stronger than background
- Figure labels: left view, right view, combined percept

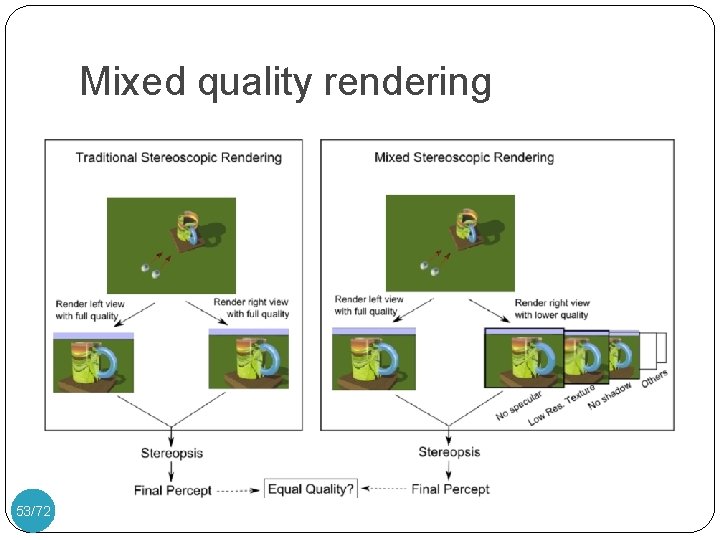

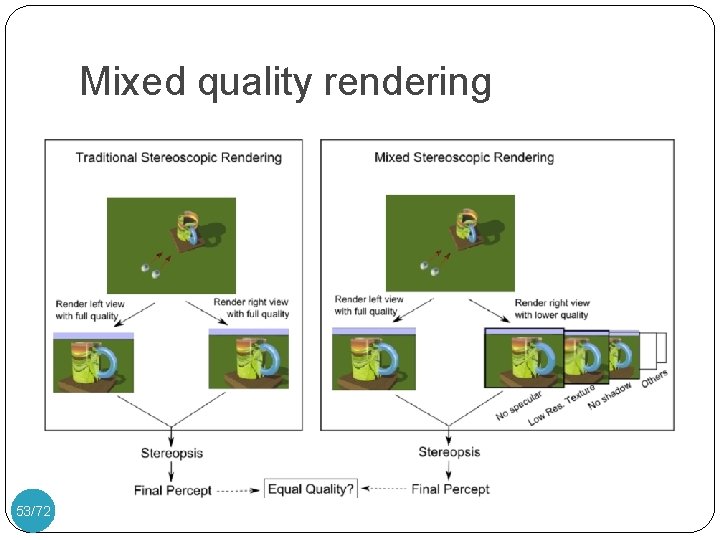

Mixed quality rendering

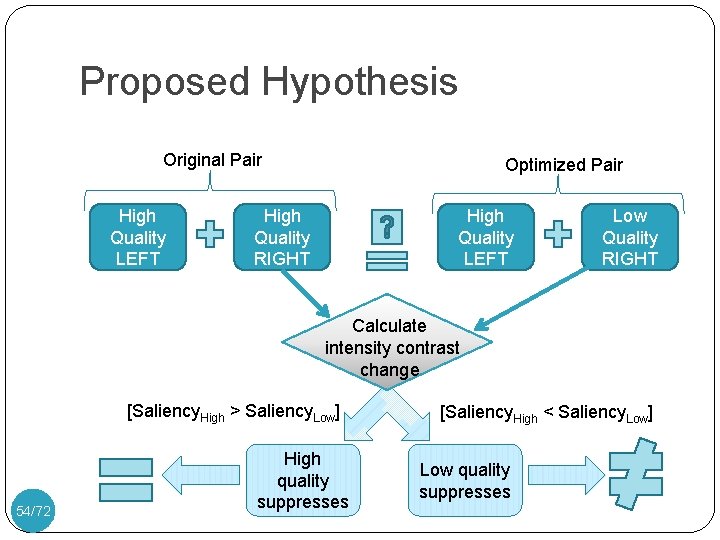

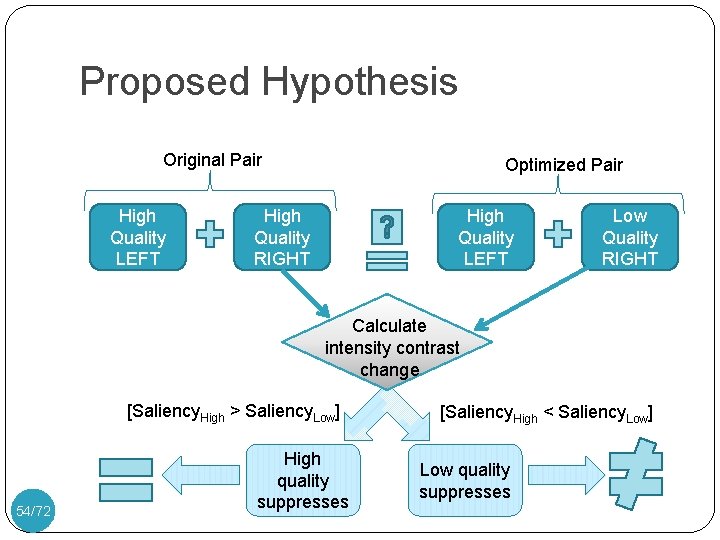

Proposed Hypothesis
- Original pair: high quality left, high quality right
- Optimized pair: high quality left, low quality right
- Calculate the intensity contrast change
  - Saliency.High > Saliency.Low: high quality suppresses
  - Saliency.High < Saliency.Low: low quality suppresses
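
The decision rule in the hypothesis can be sketched per pixel. This assumes two intensity contrast maps of equal size, one computed from the high-quality rendering and one from the low-quality rendering of the same view; the function names and the +1/-1/0 labels are illustrative choices, not from the slides.

```python
def suppression_map(contrast_high, contrast_low):
    """+1 where the high-quality view is predicted to suppress, -1 where the
    low-quality view is, 0 where the two contrasts are equal."""
    return [
        [(h > l) - (h < l) for h, l in zip(row_h, row_l)]
        for row_h, row_l in zip(contrast_high, contrast_low)
    ]


def high_quality_share(labels):
    """Fraction of pixels where the high-quality view is predicted to dominate."""
    cells = [v for row in labels for v in row]
    return sum(1 for v in cells if v > 0) / len(cells)


if __name__ == "__main__":
    high = [[0.9, 0.2], [0.5, 0.5]]
    low = [[0.1, 0.6], [0.5, 0.4]]
    labels = suppression_map(high, low)
    print(labels, high_quality_share(labels))
```

A large share of +1 labels would suggest that rendering only one view at high quality is a reasonable optimization for that method.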

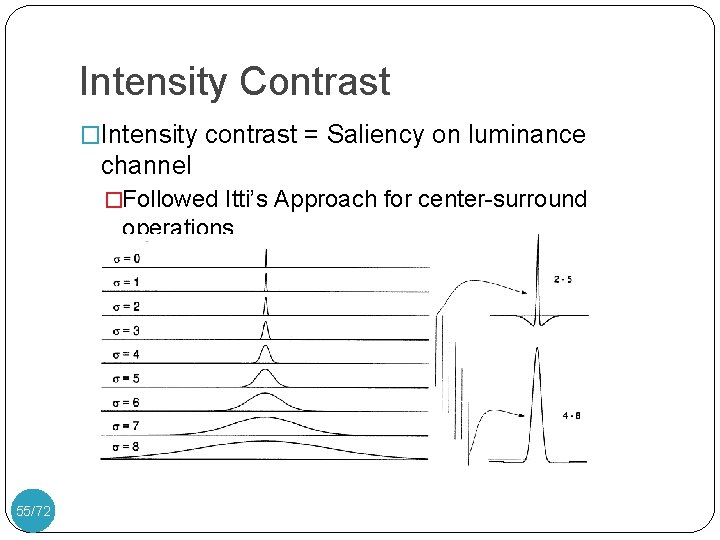

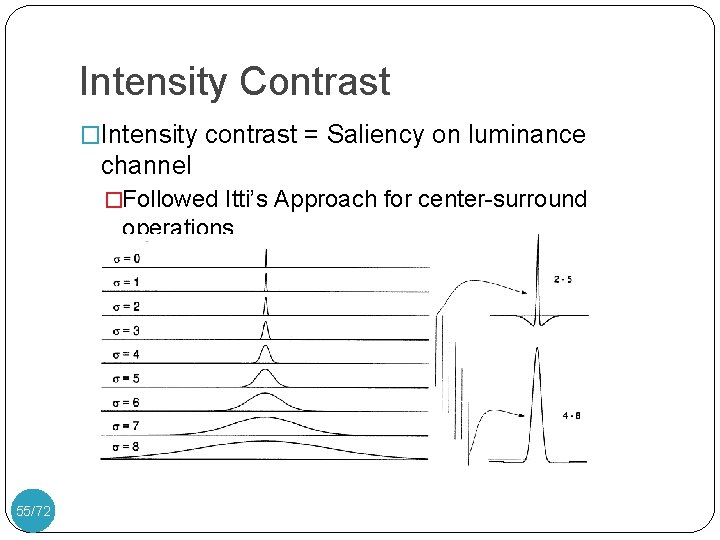

Intensity Contrast
- Intensity contrast = saliency on the luminance channel
- Followed Itti's approach for center-surround operations
- 6 DoG maps (2-5, 2-6, 3-7, 4-8)
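
A simplified sketch of an intensity contrast map in the spirit of Itti et al. (1998), where the six center-surround pairs come from center levels c ∈ {2, 3, 4} and surround levels s = c + δ with δ ∈ {3, 4}. Two simplifications are assumptions of this sketch: the pyramid uses 2×2 block averaging instead of Gaussian filtering, and the image is a square list of lists whose side is a multiple of 256. Map normalization is omitted and all function names are hypothetical.

```python
def downsample(img):
    """Halve the resolution by averaging 2x2 blocks (stand-in for Gaussian filtering)."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [
        [(img[2 * y][2 * x] + img[2 * y][2 * x + 1]
          + img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1]) / 4.0
         for x in range(w)]
        for y in range(h)
    ]


def pyramid(img, levels=9):
    """Levels 0..8, each half the resolution of the previous one."""
    pyr = [img]
    for _ in range(levels - 1):
        pyr.append(downsample(pyr[-1]))
    return pyr


def center_surround(pyr, c, s):
    """|center - surround| at level c; level s is upsampled by pixel repetition."""
    k = 2 ** (s - c)
    center, surround = pyr[c], pyr[s]
    return [
        [abs(center[y][x] - surround[y // k][x // k]) for x in range(len(center[0]))]
        for y in range(len(center))
    ]


def intensity_contrast(img):
    """Sum of the six center-surround maps, brought to the resolution of level 4."""
    pyr = pyramid(img)
    total = None
    for c in (2, 3, 4):
        for delta in (3, 4):
            m = center_surround(pyr, c, c + delta)
            for _ in range(4 - c):  # reduce levels 2 and 3 down to level 4
                m = downsample(m)
            if total is None:
                total = m
            else:
                total = [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(total, m)]
    return total
```

Running `intensity_contrast` on the original and on a degraded rendering, then subtracting the two results, gives a contrast change map of the kind shown in the blur and specular examples.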

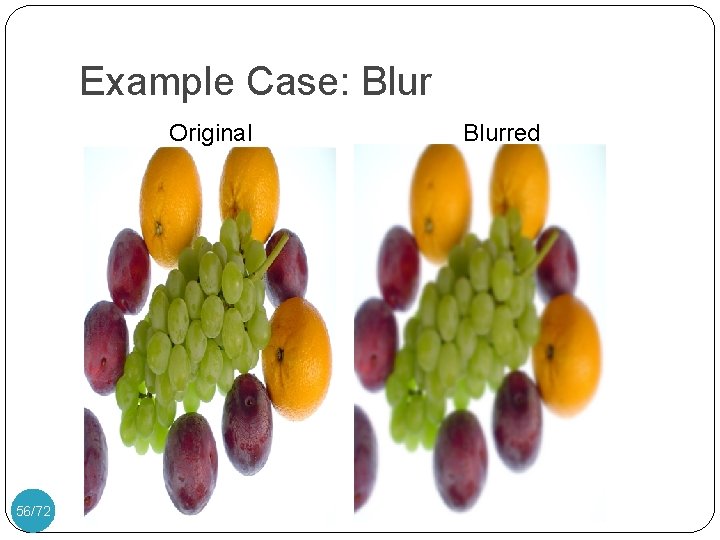

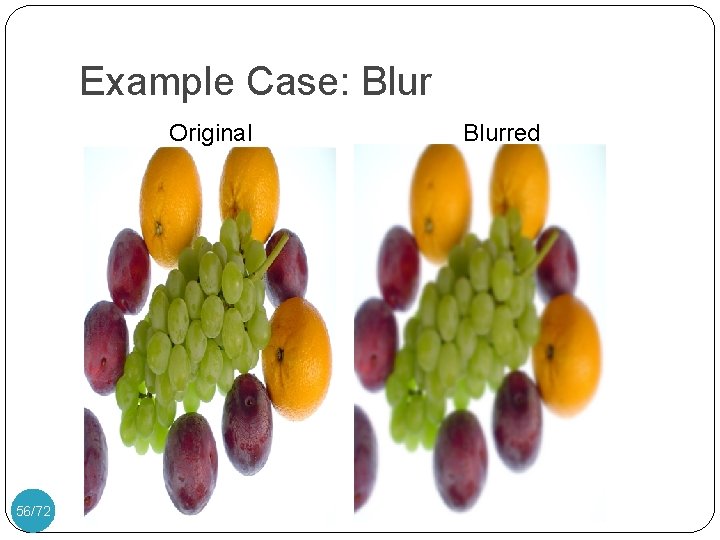

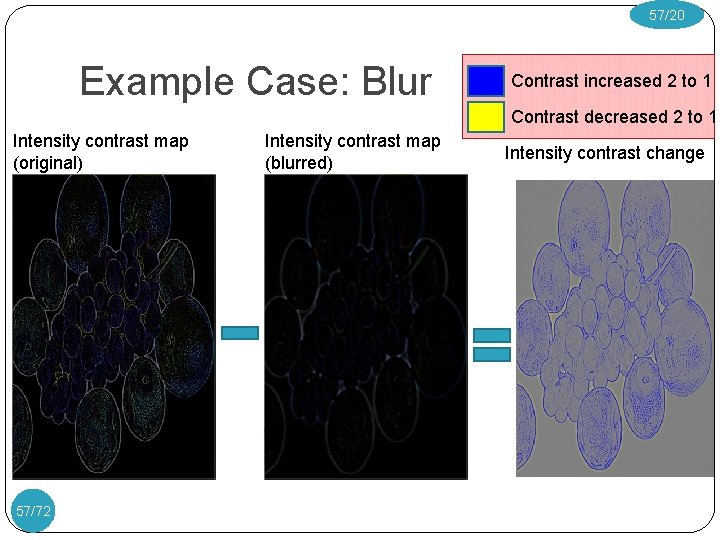

Example Case: Blur
- Panel labels: original, blurred

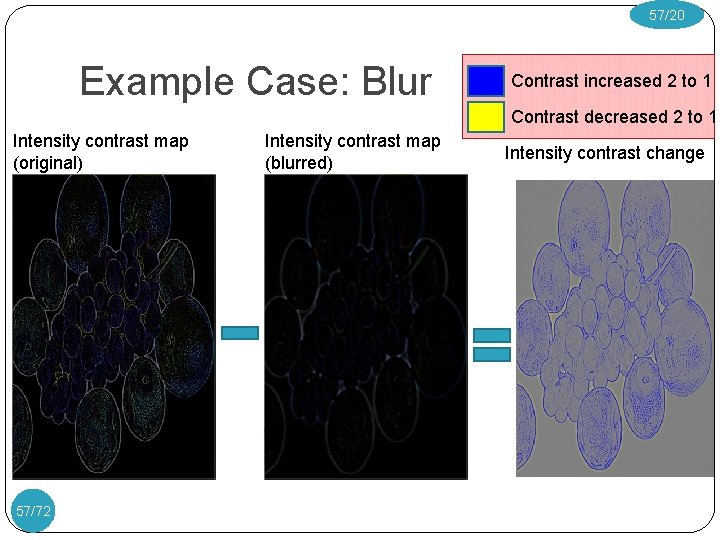

Example Case: Blur
- Panel labels: intensity contrast map (original), intensity contrast map (blurred), intensity contrast change
- Legend: contrast increased 2 to 1, contrast decreased 2 to 1

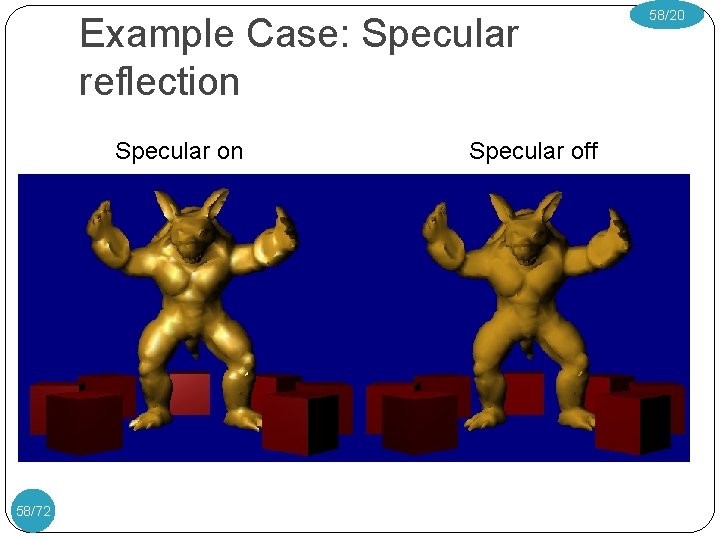

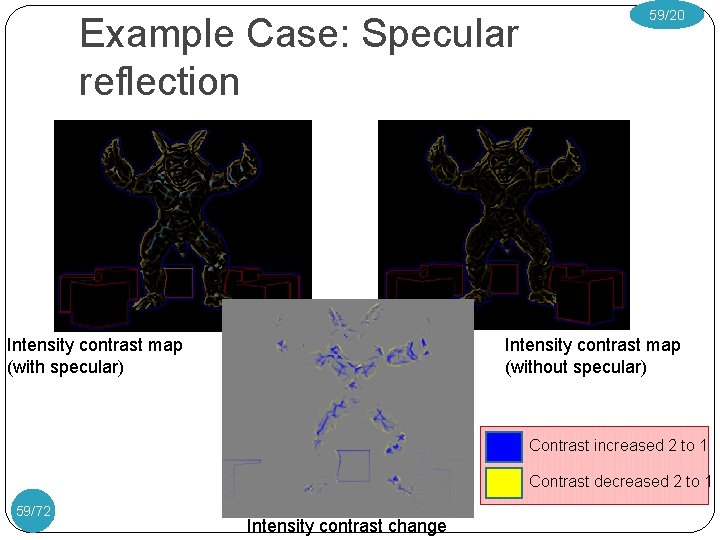

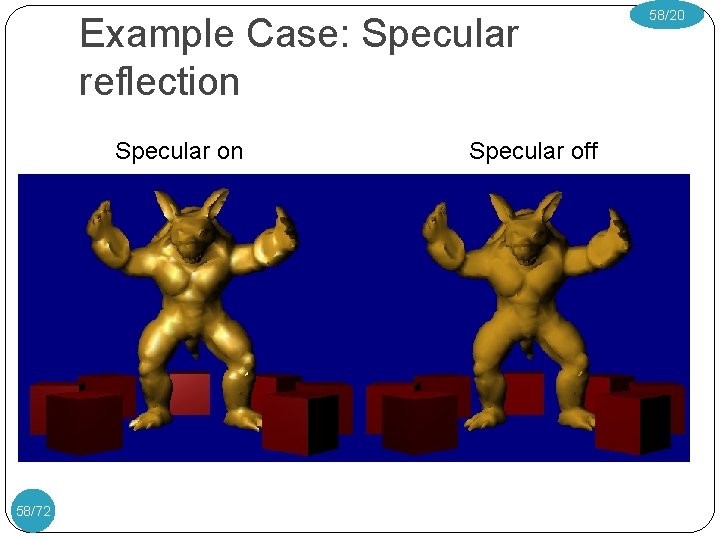

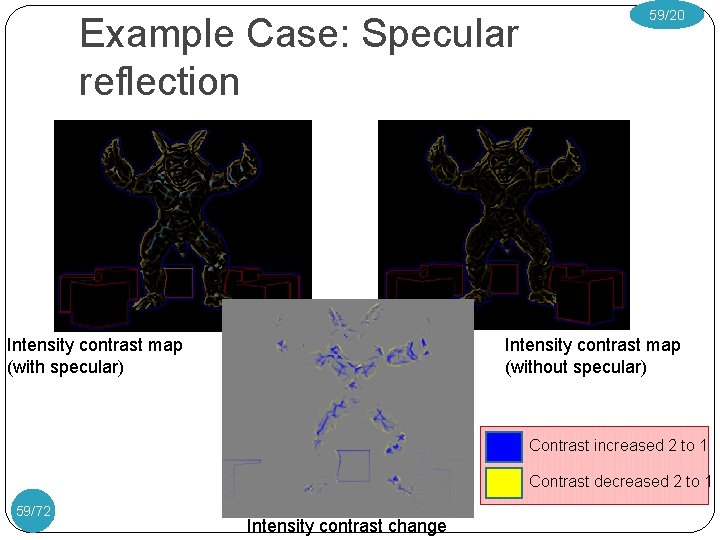

Example Case: Specular reflection
- Panel labels: specular on, specular off

Example Case: Specular reflection
- Panel labels: intensity contrast map (with specular), intensity contrast map (without specular), intensity contrast change
- Legend: contrast increased 2 to 1, contrast decreased 2 to 1

Evaluations
1. PVS Model
2. POS Model
3. EPVS Model
4. Stereo Rendering Optimization

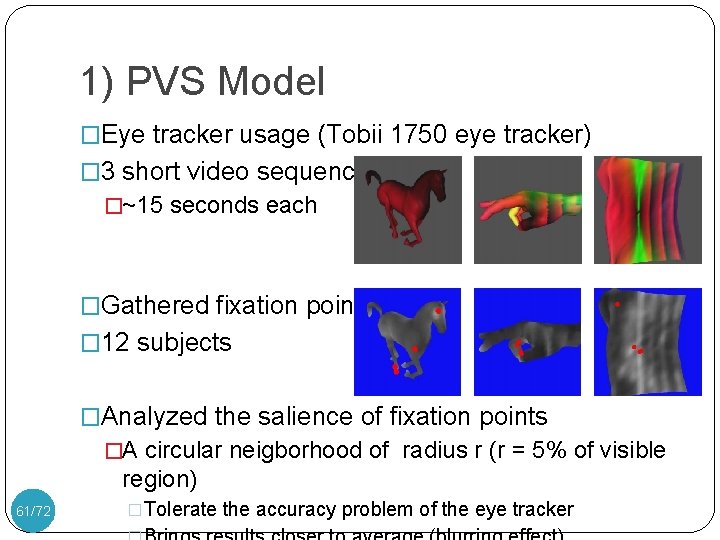

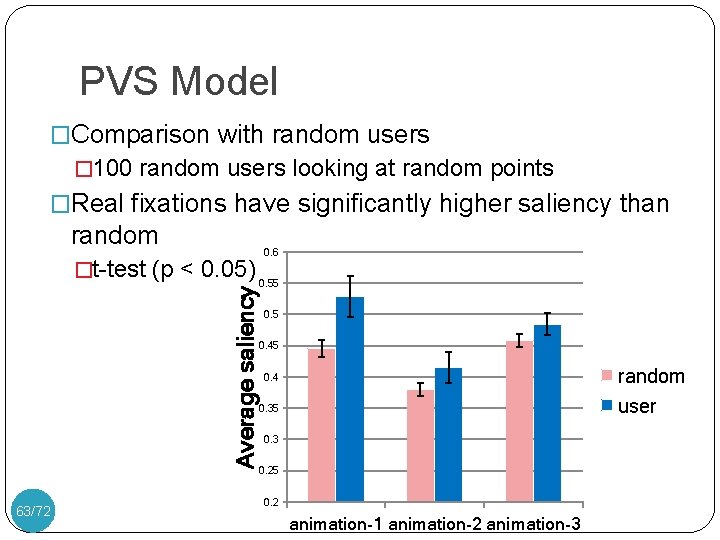

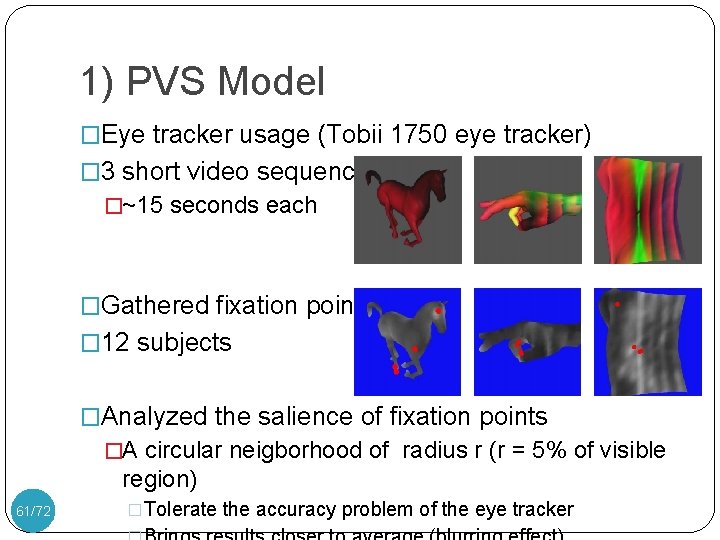

1) PVS Model
- Eye tracker usage (Tobii 1750 eye tracker)
- 3 short video sequences, ~15 seconds each
- Gathered fixation points from 12 subjects
- Analyzed the saliency of fixation points
  - Within a circular neighborhood of radius r (r = 5% of the visible region)
  - This tolerates the accuracy problem of the eye tracker
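
One way to read the neighborhood rule is sketched below. It assumes that vertices have already been projected to 2D screen coordinates, that "5% of the visible region" means 5% of the view width, and that the saliency of a fixation is the mean saliency of the vertices inside the circle. None of these choices is stated on the slide, and the function name is hypothetical.

```python
import math


def fixation_saliency(fixation, points, saliency, view_size, fraction=0.05):
    """Mean saliency of projected vertices within `fraction * view_size` of a fixation."""
    radius = fraction * view_size
    hits = [s for p, s in zip(points, saliency) if math.dist(fixation, p) <= radius]
    return sum(hits) / len(hits) if hits else 0.0


if __name__ == "__main__":
    screen_points = [(0.0, 0.0), (3.0, 3.0), (50.0, 50.0)]
    values = [1.0, 0.5, 0.0]
    print(fixation_saliency((0.0, 0.0), screen_points, values, view_size=100.0))
```

Averaging over a small disc rather than reading a single vertex keeps the measure stable when the reported gaze position is off by a few pixels.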

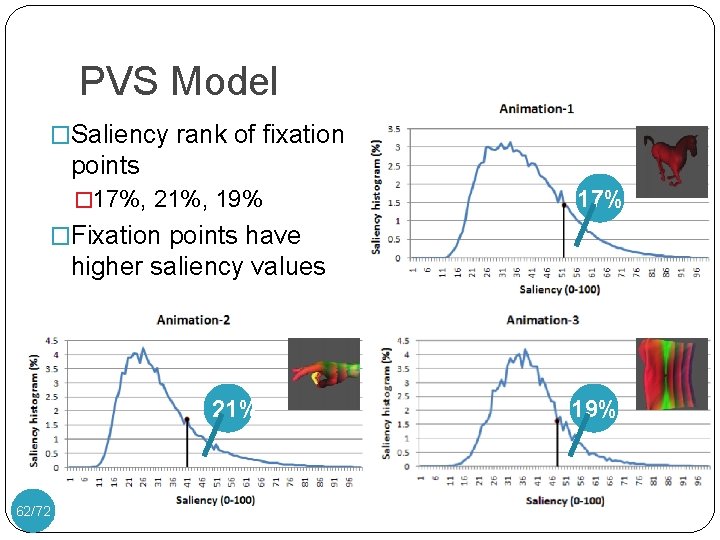

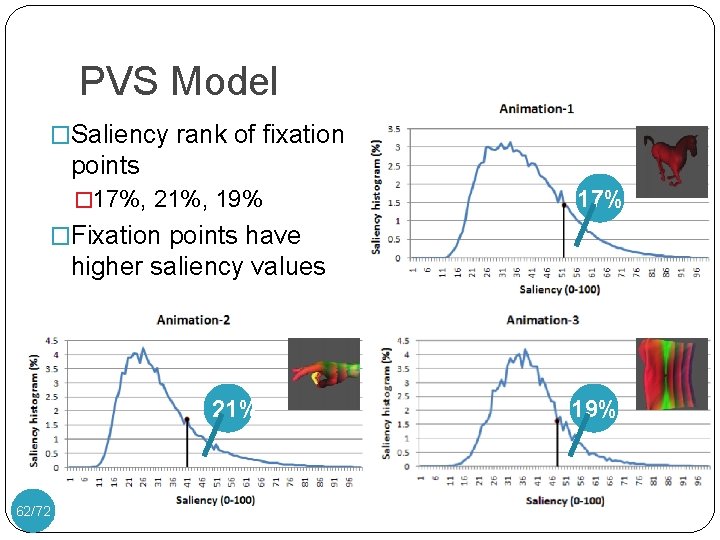

PVS Model
- Saliency rank of fixation points: 17%, 21%, 19%
- Fixation points have higher saliency values

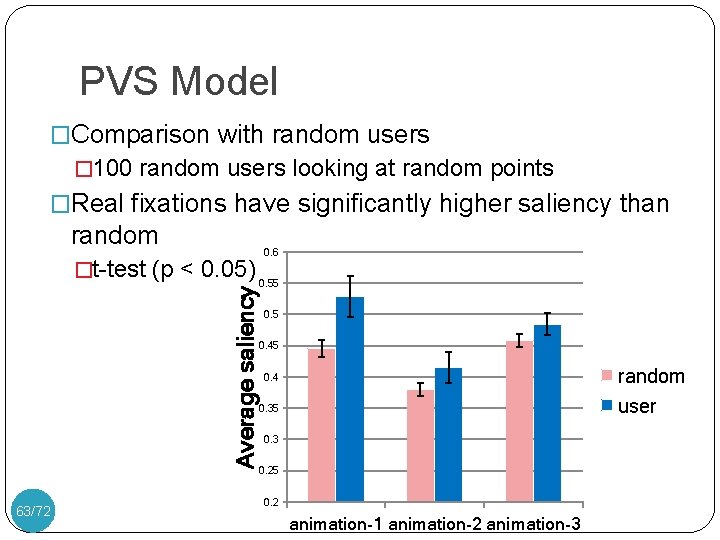

PVS Model
- Comparison with random users
  - 100 random users looking at random points
- Real fixations have significantly higher saliency than random ones
  - t-test (p < 0.05)
- Chart: average saliency for animation-1, animation-2, and animation-3, with a random-user series

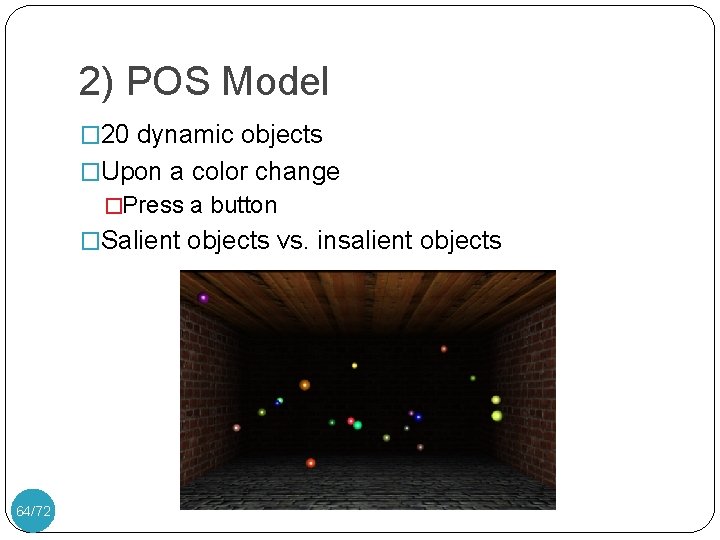

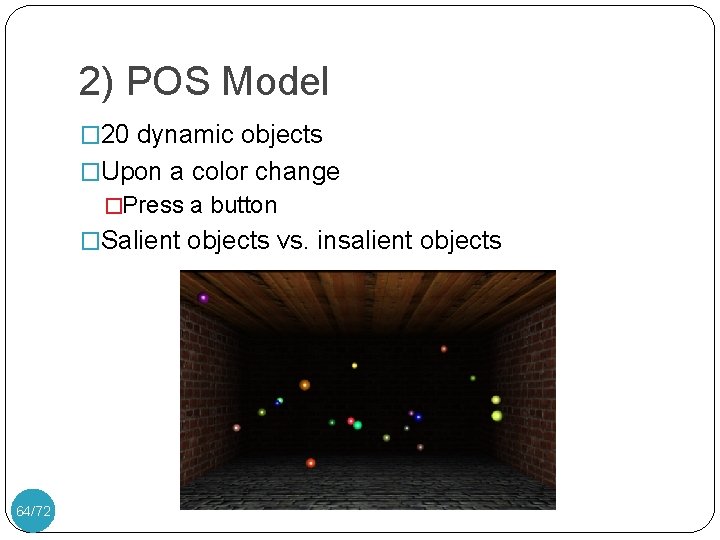

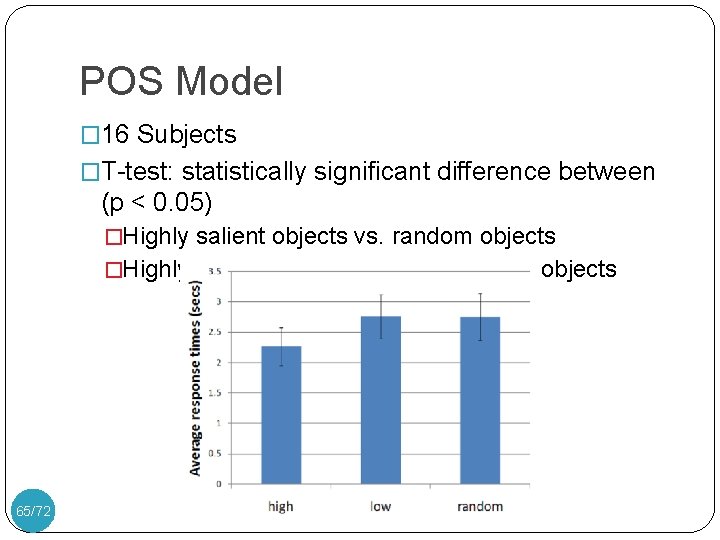

2) POS Model
- 20 dynamic objects
- Upon a color change, press a button
- Salient objects vs. non-salient objects

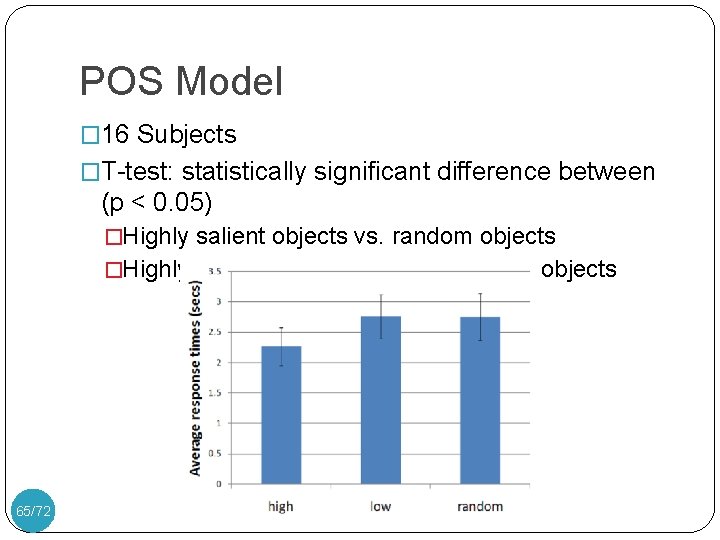

POS Model
- 16 subjects
- t-test: statistically significant difference (p < 0.05) between
  - Highly salient objects vs. random objects
  - Highly salient objects vs. lowly salient objects

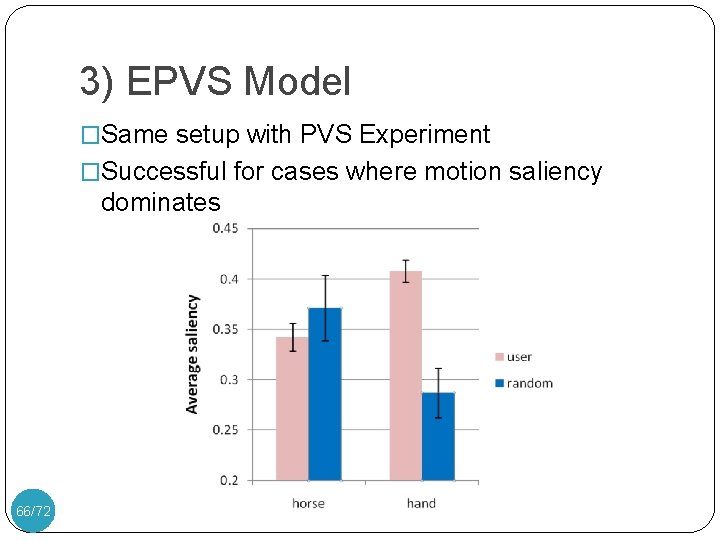

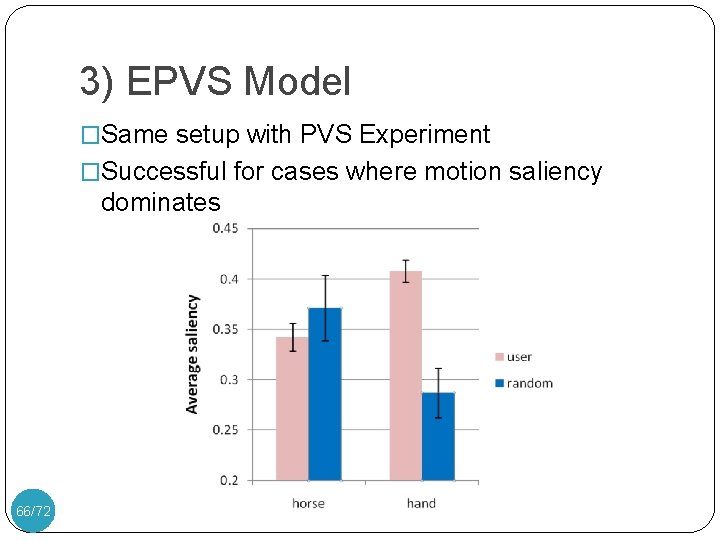

3) EPVS Model
- Same setup as the PVS experiment
- Successful for cases where motion saliency dominates

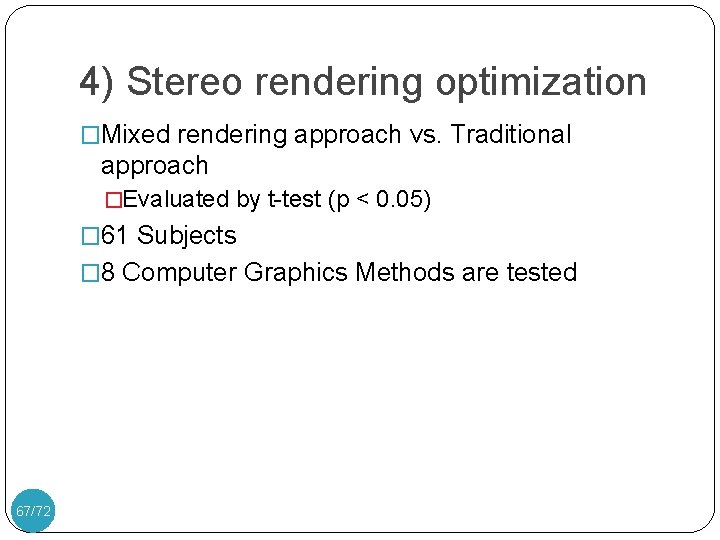

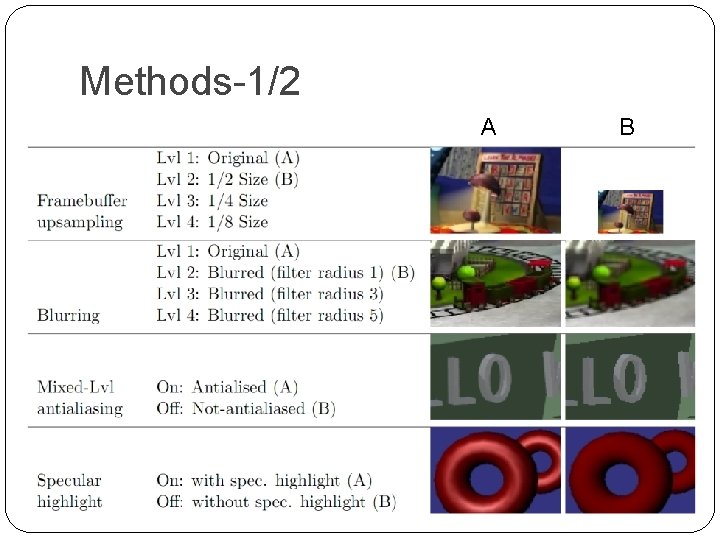

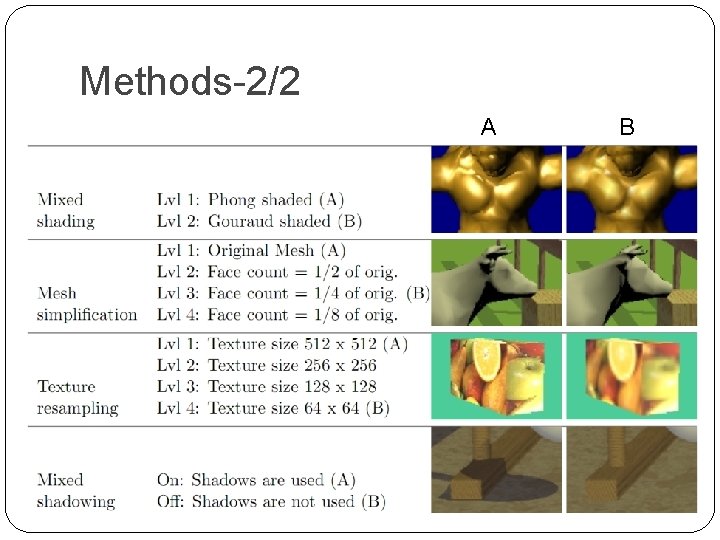

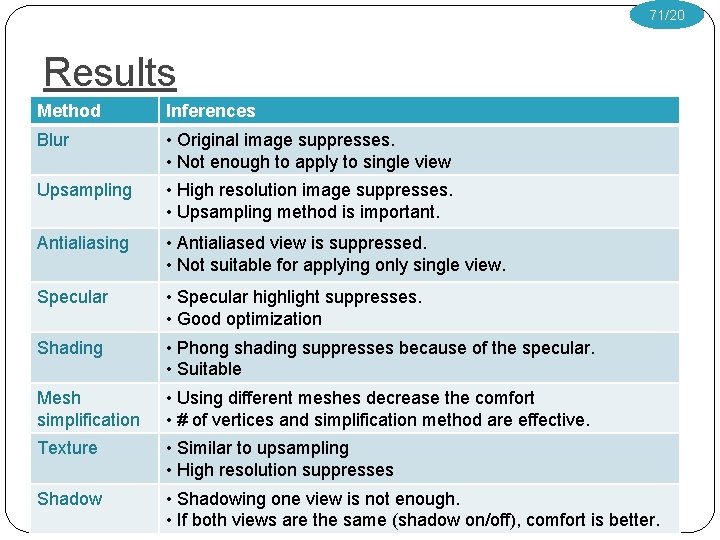

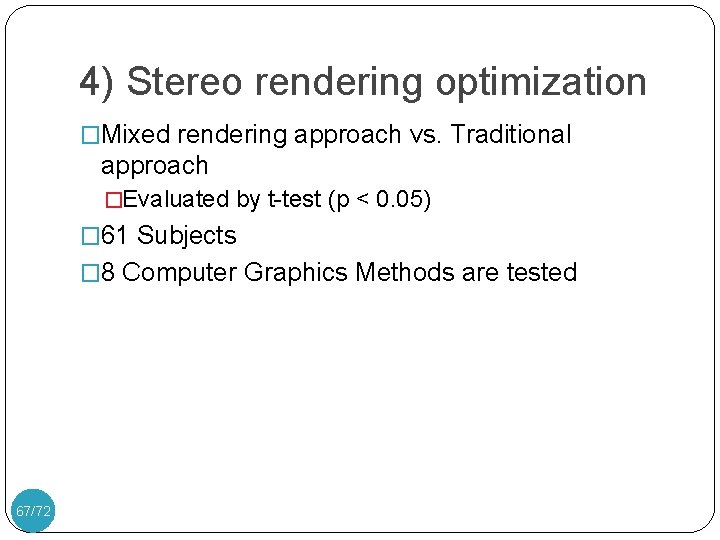

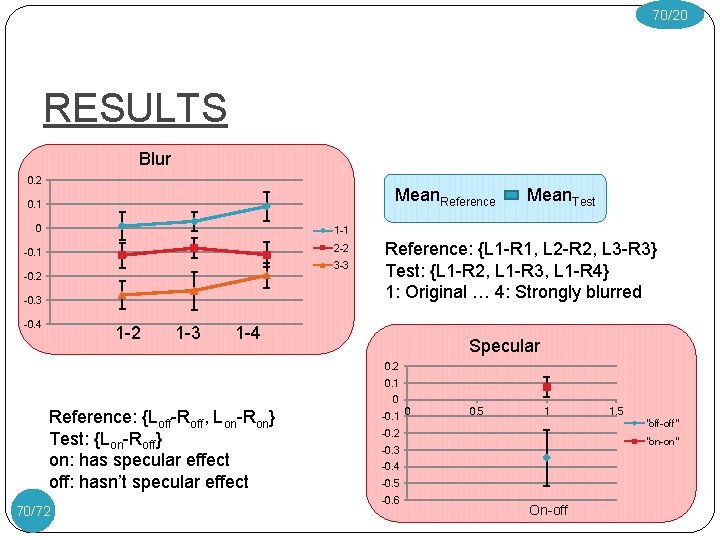

4) Stereo rendering optimization
- Mixed rendering approach vs. traditional approach
- Evaluated by t-test (p < 0.05)
- 61 subjects
- 8 computer graphics methods are tested

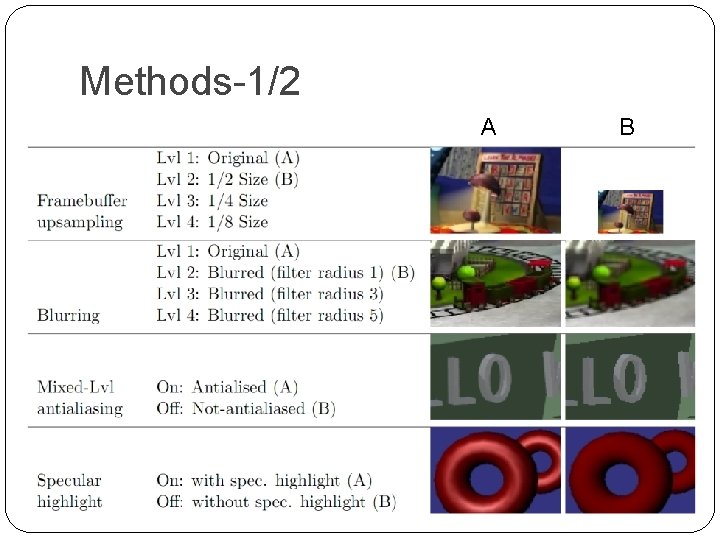

Methods-1/2 A B

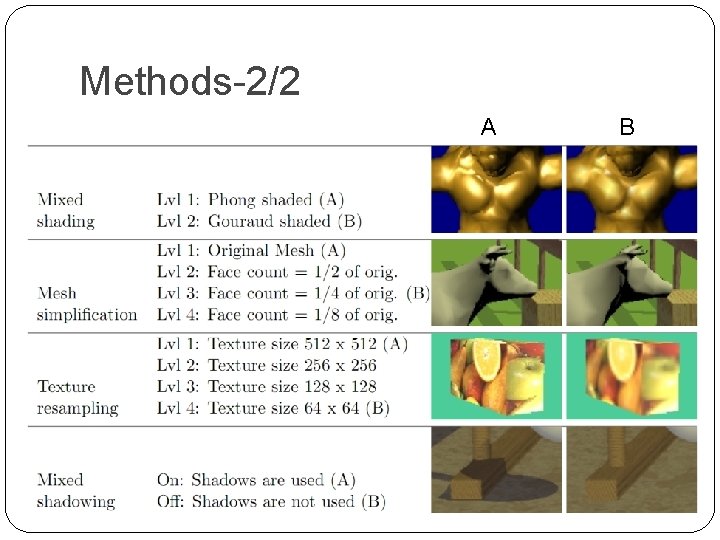

Methods-2/2 A B

Results
- Blur
  - Reference: {L1-R1, L2-R2, L3-R3}
  - Test: {L1-R2, L1-R3, L1-R4}
  - 1: original … 4: strongly blurred
- Specular
  - Reference: {Loff-Roff, Lon-Ron}
  - Test: {Lon-Roff}
  - on: has specular effect; off: has no specular effect
- Charts: Mean.Reference vs. Mean.Test for each stereo pair

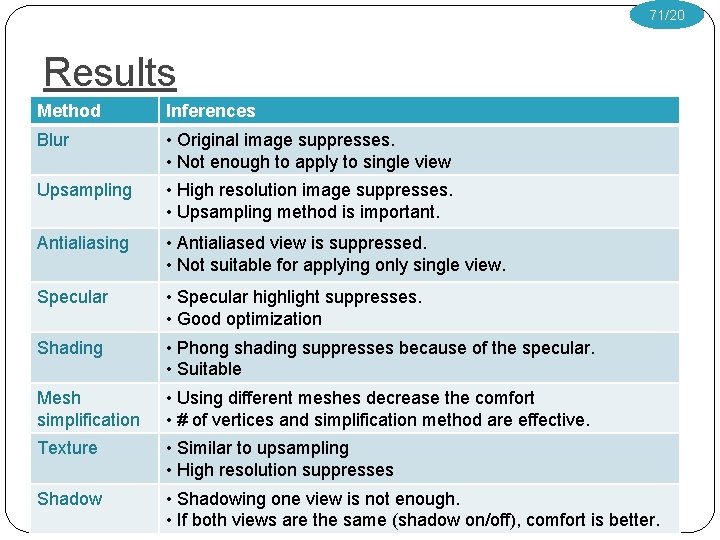

Results

| Method | Inferences |
| --- | --- |
| Blur | Original image suppresses. Not enough to apply to a single view. |
| Upsampling | High-resolution image suppresses. The upsampling method is important. |
| Antialiasing | Antialiased view is suppressed. Not suitable for applying to only a single view. |
| Specular | Specular highlight suppresses. Good optimization. |
| Shading | Phong shading suppresses because of the specular. Suitable. |
| Mesh simplification | Using different meshes decreases comfort. The number of vertices and the simplification method both have an effect. |
| Texture | Similar to upsampling. High resolution suppresses. |
| Shadow | Shadowing one view is not enough. If both views are the same (shadow on/off), comfort is better. |

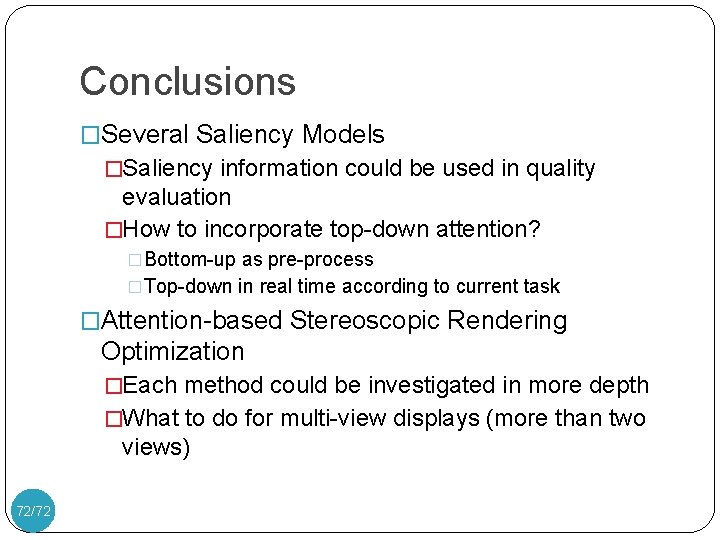

Conclusions
- Several saliency models
  - Saliency information could be used in quality evaluation
  - How to incorporate top-down attention?
    - Bottom-up as a pre-process
    - Top-down in real time according to the current task
- Attention-based stereoscopic rendering optimization
  - Each method could be investigated in more depth
  - What to do for multi-view displays (more than two views)?

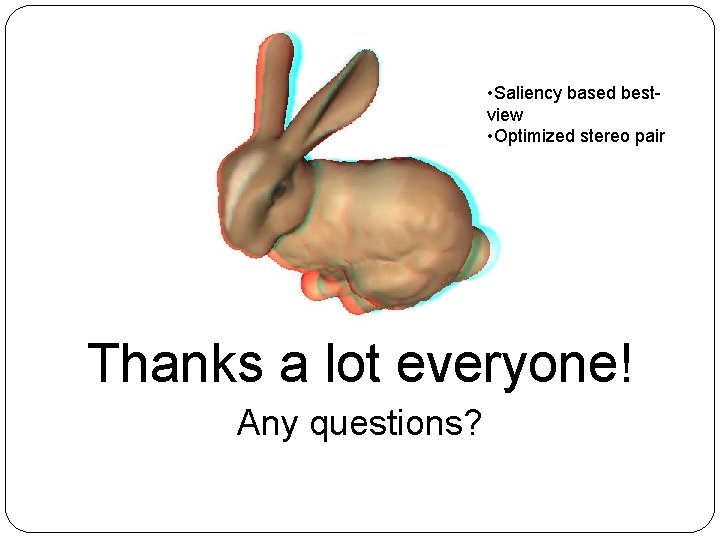

- Saliency-based best view
- Optimized stereo pair

Thanks a lot everyone! Any questions?