Anomaly Detection: Lecture Notes for Chapter 9 of Introduction to Data Mining

- Slides: 47

Anomaly Detection

Lecture Notes for Chapter 9, Introduction to Data Mining, 2nd Edition, by Tan, Steinbach, Karpatne, Kumar

11/26/2019

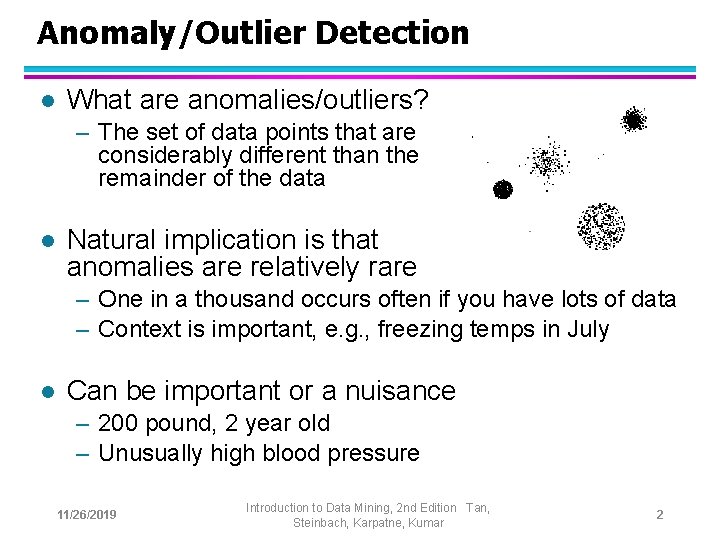

Anomaly/Outlier Detection

- What are anomalies/outliers?
  - The set of data points that are considerably different from the remainder of the data
- Natural implication is that anomalies are relatively rare
  - Even a one-in-a-thousand event occurs often if you have lots of data
  - Context is important, e.g., freezing temperatures in July
- Can be important or a nuisance
  - A 200-pound 2-year-old
  - Unusually high blood pressure

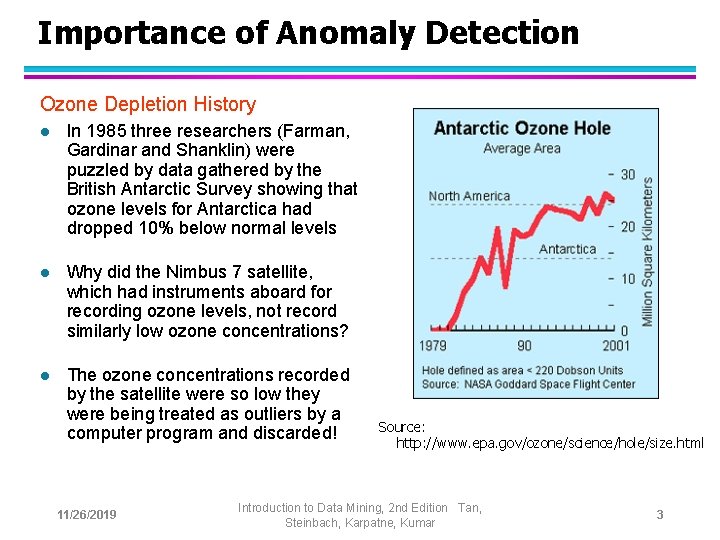

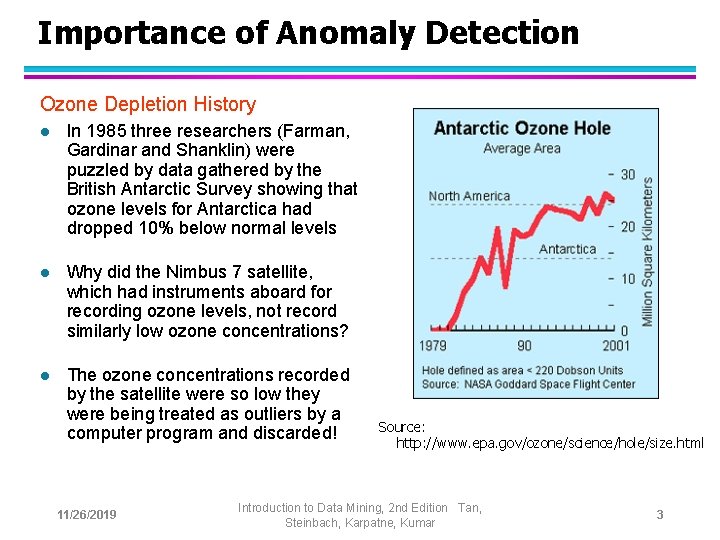

Importance of Anomaly Detection

Ozone Depletion History

- In 1985, three researchers (Farman, Gardiner, and Shanklin) were puzzled by data gathered by the British Antarctic Survey showing that ozone levels for Antarctica had dropped 10% below normal levels
- Why did the Nimbus 7 satellite, which had instruments aboard for recording ozone levels, not record similarly low ozone concentrations?
- The ozone concentrations recorded by the satellite were so low they were being treated as outliers by a computer program and discarded!

Source: http://www.epa.gov/ozone/science/hole/size.html

Causes of Anomalies

- Data from different classes
  - Measuring the weights of oranges, but a few grapefruit are mixed in
- Natural variation
  - Unusually tall people
- Data errors
  - A 200-pound 2-year-old

Distinction Between Noise and Anomalies

- Noise doesn’t necessarily produce unusual values or objects
- Noise is not interesting
- Noise and anomalies are related but distinct concepts

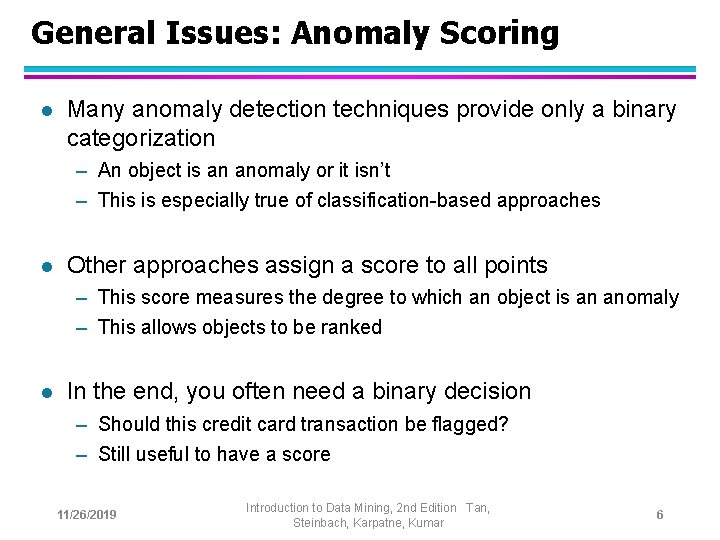

General Issues: Anomaly Scoring

- Many anomaly detection techniques provide only a binary categorization
  - An object is an anomaly or it isn’t
  - This is especially true of classification-based approaches
- Other approaches assign a score to all points
  - This score measures the degree to which an object is an anomaly
  - This allows objects to be ranked
- In the end, you often need a binary decision
  - Should this credit card transaction be flagged?
  - Still useful to have a score

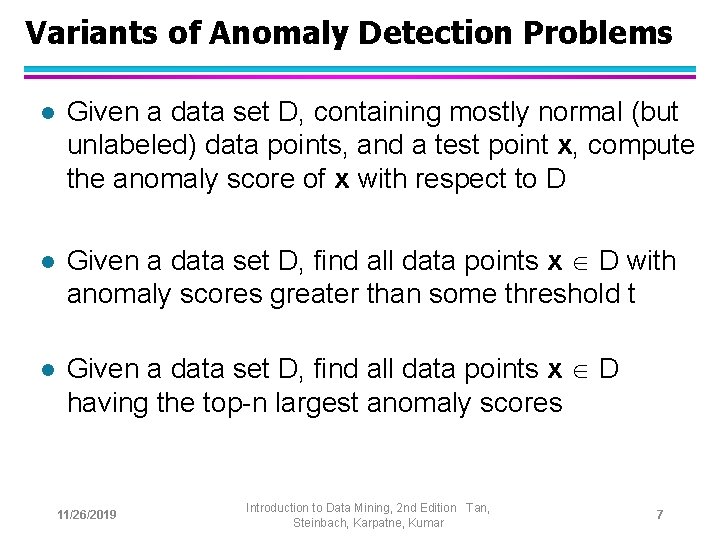

Variants of Anomaly Detection Problems

- Given a data set $D$, containing mostly normal (but unlabeled) data points, and a test point $x$, compute the anomaly score of $x$ with respect to $D$
- Given a data set $D$, find all data points $x \in D$ with anomaly scores greater than some threshold $t$
- Given a data set $D$, find all data points $x \in D$ having the top-$n$ largest anomaly scores
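The second and third variants differ only in how a list of scores is turned into a set of flagged points. A minimal sketch, assuming the scores have already been computed by some detector; the function names `above_threshold` and `top_n` and the score values are hypothetical:

```python
def above_threshold(scores, t):
    """Variant 2: indices of all points whose anomaly score is greater than t."""
    return [i for i, s in enumerate(scores) if s > t]


def top_n(scores, n):
    """Variant 3: indices of the n points with the largest anomaly scores."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:n]


scores = [0.1, 0.3, 2.5, 0.2, 1.7]   # hypothetical scores for five points
print(above_threshold(scores, 1.0))  # [2, 4]
print(top_n(scores, 1))              # [2]
```

A threshold fixes the score level and lets the number of flagged points vary; top-n fixes the number of flagged points instead.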

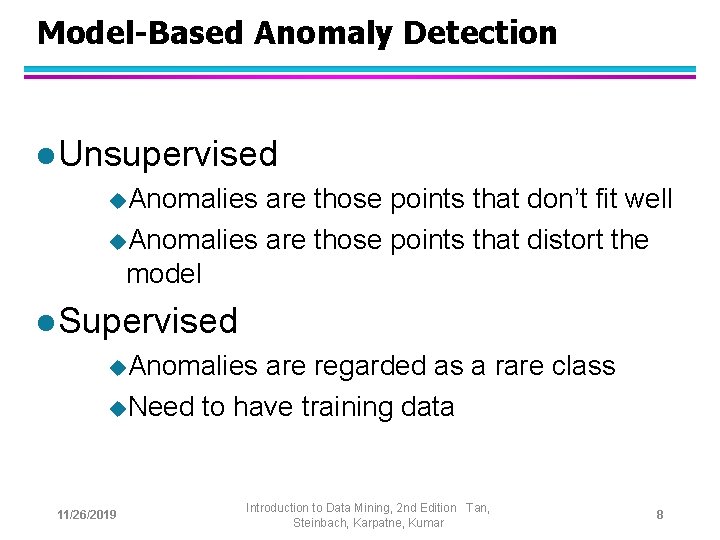

Model-Based Anomaly Detection

- Unsupervised
  - Anomalies are those points that don’t fit the model well
  - Anomalies are those points that distort the model
- Supervised
  - Anomalies are regarded as a rare class
  - Need to have labeled training data

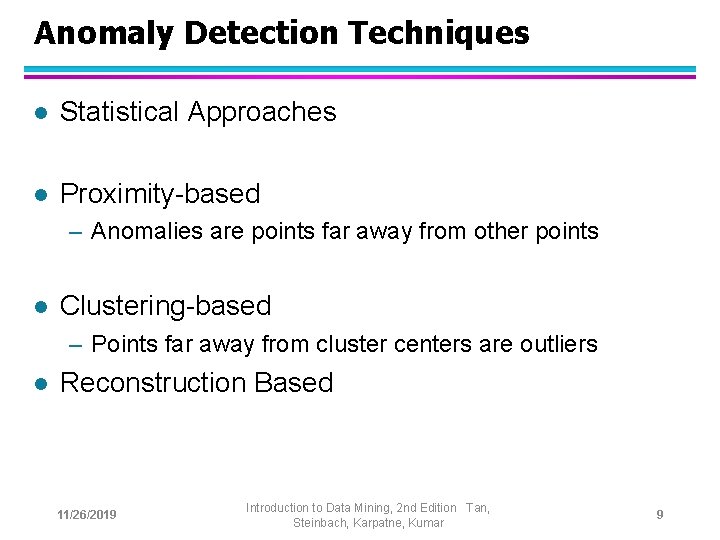

Anomaly Detection Techniques

- Statistical approaches
- Proximity-based
  - Anomalies are points far away from other points
- Clustering-based
  - Points far away from cluster centers are outliers
- Reconstruction-based

Statistical Approaches

Probabilistic definition of an outlier: An outlier is an object that has a low probability with respect to a probability distribution model of the data.

- Usually assume a parametric model describing the distribution of the data (e.g., normal distribution)
- Apply a statistical test that depends on
  - Data distribution
  - Parameters of distribution (e.g., mean, variance)
  - Number of expected outliers (confidence limit)
- Issues
  - Identifying the distribution of a data set
    - Heavy-tailed distribution
  - Number of attributes
  - Is the data a mixture of distributions?
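A minimal sketch of the probabilistic definition, assuming a univariate normal model whose mean and standard deviation are estimated from the data, and an illustrative cutoff of 0.003 on the two-sided tail probability. The function name `gaussian_tail_prob`, the cutoff, and the synthetic data are assumptions, not part of the slides:

```python
import math

import numpy as np


def gaussian_tail_prob(x):
    """Two-sided tail probability P(|Z| >= |z|) of each value under a normal fitted to x."""
    x = np.asarray(x, dtype=float)
    mu, sigma = x.mean(), x.std(ddof=1)  # parameters estimated from all data, outliers included
    z = np.abs(x - mu) / sigma
    return np.array([math.erfc(zi / math.sqrt(2)) for zi in z])


rng = np.random.default_rng(0)
data = np.append(rng.normal(0.0, 1.0, 200), 8.0)  # 200 normal values plus one planted value
prob = gaussian_tail_prob(data)
outliers = np.flatnonzero(prob < 0.003)  # 0.003 roughly matches the "3 sigma" rule
```

Because the planted value is included when the parameters are fitted, it inflates the estimated standard deviation; with fewer points or more outliers this can hide them, which is the "anomalies can distort the parameters" weakness of statistical approaches.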

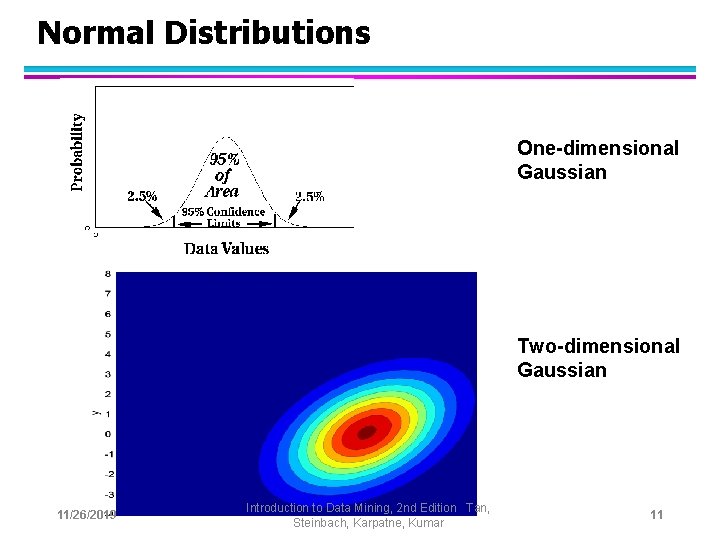

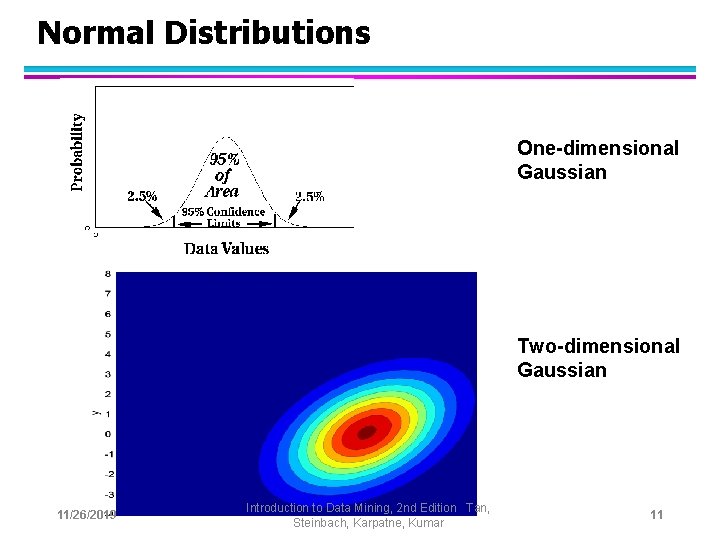

Normal Distributions

(figures: one-dimensional Gaussian; two-dimensional Gaussian)

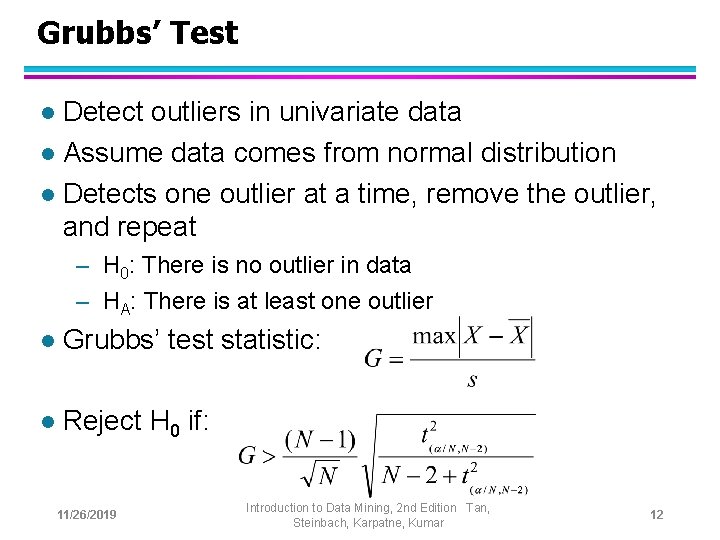

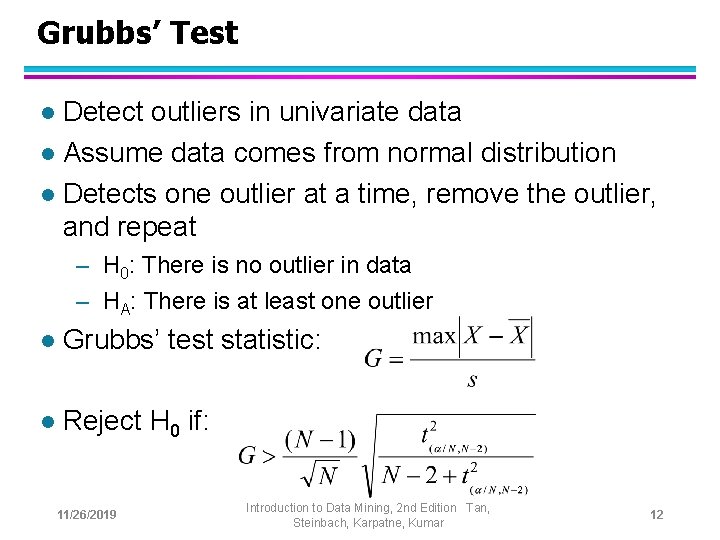

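Grubbs’ Test

- Detect outliers in univariate data
- Assume data comes from a normal distribution
- Detects one outlier at a time: remove the outlier, and repeat
  - $H_0$: There is no outlier in the data
  - $H_A$: There is at least one outlier
- Grubbs’ test statistic:
  $$G = \frac{\max_i |X_i - \bar{X}|}{s}$$
- Reject $H_0$ if:
  $$G > \frac{N-1}{\sqrt{N}} \sqrt{\frac{t^2_{\alpha/(2N),\,N-2}}{N-2+t^2_{\alpha/(2N),\,N-2}}}$$
  where $\bar{X}$ and $s$ are the sample mean and standard deviation of the $N$ values, and $t_{\alpha/(2N),\,N-2}$ is the upper critical value of the t-distribution with $N-2$ degrees of freedom at significance level $\alpha/(2N)$ (two-sided test)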
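A sketch of the iterated two-sided Grubbs’ test, assuming SciPy is available for the t-distribution quantile (`scipy.stats.t.ppf`). The function name `grubbs` and the sample values are made up for illustration:

```python
import numpy as np
from scipy import stats


def grubbs(values, alpha=0.05):
    """Iterated two-sided Grubbs' test; returns the values removed as outliers."""
    x = [float(v) for v in values]
    outliers = []
    while len(x) > 2:
        arr = np.asarray(x)
        n = len(arr)
        mean, sd = arr.mean(), arr.std(ddof=1)
        if sd == 0:
            break  # all remaining values identical: nothing to test
        idx = int(np.argmax(np.abs(arr - mean)))
        g = abs(arr[idx] - mean) / sd  # Grubbs' statistic G
        t = stats.t.ppf(1 - alpha / (2 * n), n - 2)  # upper critical value, N - 2 dof
        g_crit = (n - 1) / np.sqrt(n) * np.sqrt(t**2 / (n - 2 + t**2))
        if g <= g_crit:
            break  # H0 (no outlier) is not rejected: stop
        outliers.append(x.pop(idx))  # remove the outlier and repeat
    return outliers


data = [9.8, 10.1, 10.0, 9.9, 10.2, 10.0, 9.7, 10.3, 10.1, 9.9, 14.0]
print(grubbs(data))  # [14.0]
```

The loop stops at the first iteration in which $H_0$ is not rejected, which matches the "one outlier at a time" procedure.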

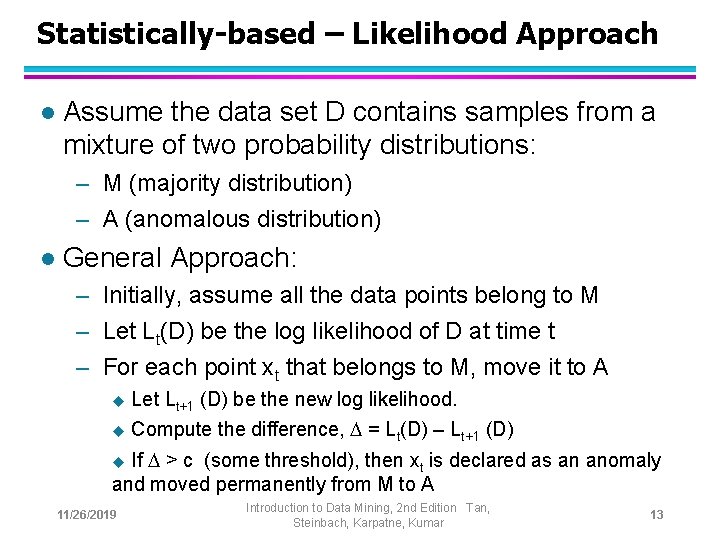

Statistically-Based: Likelihood Approach

- Assume the data set $D$ contains samples from a mixture of two probability distributions:
  - $M$ (majority distribution)
  - $A$ (anomalous distribution)
- General approach:
  - Initially, assume all the data points belong to $M$
  - Let $LL_t(D)$ be the log likelihood of $D$ at time $t$
  - For each point $x_t$ that belongs to $M$, move it to $A$
    - Let $LL_{t+1}(D)$ be the new log likelihood
    - Compute the change in log likelihood, $\Delta = LL_{t+1}(D) - LL_t(D)$
    - If $\Delta > c$ (some threshold), then $x_t$ is declared an anomaly and moved permanently from $M$ to $A$; otherwise it is returned to $M$

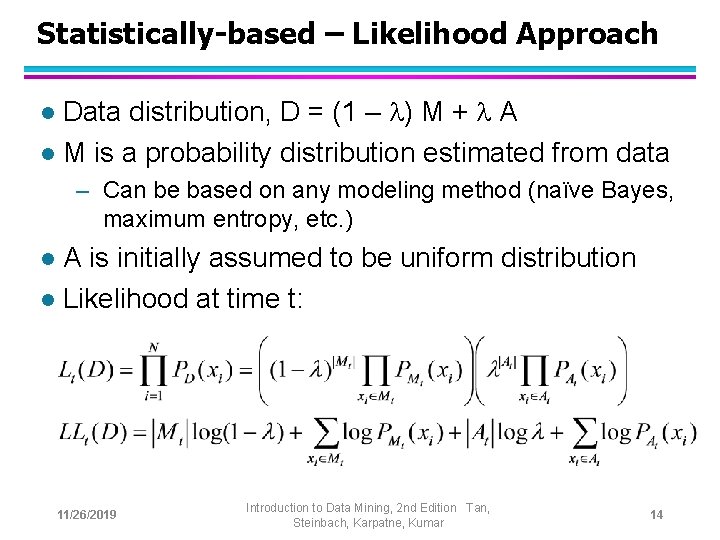

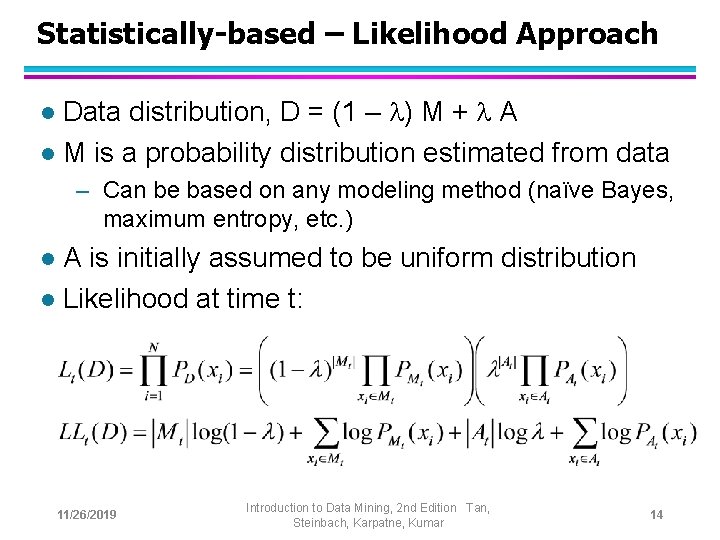

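Statistically-Based: Likelihood Approach

- Data distribution: $D = (1 - \lambda)\,M + \lambda\,A$, where $\lambda$ is the (small) probability that a point is anomalous
- $M$ is a probability distribution estimated from data
  - Can be based on any modeling method (naïve Bayes, maximum entropy, etc.)
- $A$ is initially assumed to be a uniform distribution
- Likelihood at time $t$, where $M_t$ and $A_t$ are the sets of points assigned to $M$ and $A$ at time $t$:
  $$L_t(D) = \prod_{i=1}^{N} P_D(x_i) = \Big( (1-\lambda)^{|M_t|} \prod_{x_i \in M_t} P_{M_t}(x_i) \Big) \Big( \lambda^{|A_t|} \prod_{x_i \in A_t} P_{A_t}(x_i) \Big)$$
  $$LL_t(D) = |M_t| \log(1-\lambda) + \sum_{x_i \in M_t} \log P_{M_t}(x_i) + |A_t| \log \lambda + \sum_{x_i \in A_t} \log P_{A_t}(x_i)$$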
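A one-dimensional sketch of the procedure, under assumptions the slides leave open: $M$ is a normal distribution re-fitted by maximum likelihood to the points currently in $M$, $A$ is uniform over the range of the whole data set, and $\lambda$ (`lam`) and the threshold `c` are arbitrary illustrative values. $\Delta$ is taken as the increase $LL_{t+1}(D) - LL_t(D)$: moving a genuine anomaly out of $M$ lets $M$ fit the remaining points much better, so the log likelihood goes up. Function names are hypothetical:

```python
import numpy as np


def log_likelihood(x, in_a, lam):
    """LL(D) for D = (1 - lam) M + lam A, given a boolean mask of points currently in A."""
    m, a = x[~in_a], x[in_a]
    mu, var = m.mean(), m.var()  # maximum likelihood normal fit for M
    ll_m = np.sum(-0.5 * np.log(2 * np.pi * var) - (m - mu) ** 2 / (2 * var))
    ll_a = -len(a) * np.log(x.max() - x.min())  # uniform density 1 / (max - min) for A
    return len(m) * np.log(1 - lam) + ll_m + len(a) * np.log(lam) + ll_a


def likelihood_outliers(x, lam=0.05, c=5.0):
    """Indices of points declared anomalous by the move-to-A procedure."""
    x = np.asarray(x, dtype=float)
    in_a = np.zeros(len(x), dtype=bool)  # initially every point belongs to M
    for t in range(len(x)):
        ll_t = log_likelihood(x, in_a, lam)
        in_a[t] = True  # tentatively move x_t from M to A
        delta = log_likelihood(x, in_a, lam) - ll_t
        if delta <= c:
            in_a[t] = False  # not anomalous enough: return x_t to M
    return np.flatnonzero(in_a)


rng = np.random.default_rng(1)
data = np.append(rng.normal(0.0, 1.0, 50), 10.0)  # 50 normal values plus one planted value
anomalies = likelihood_outliers(data)
```

For an ordinary point, the $\log \lambda$ penalty for sitting in $A$ outweighs the small improvement in the fit of $M$, so $\Delta$ stays negative and the point goes back to $M$.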

Strengths/Weaknesses of Statistical Approaches

- Firm mathematical foundation
- Can be very efficient
- Good results if distribution is known
- In many cases, data distribution may not be known
- For high-dimensional data, it may be difficult to estimate the true distribution
- Anomalies can distort the parameters of the distribution

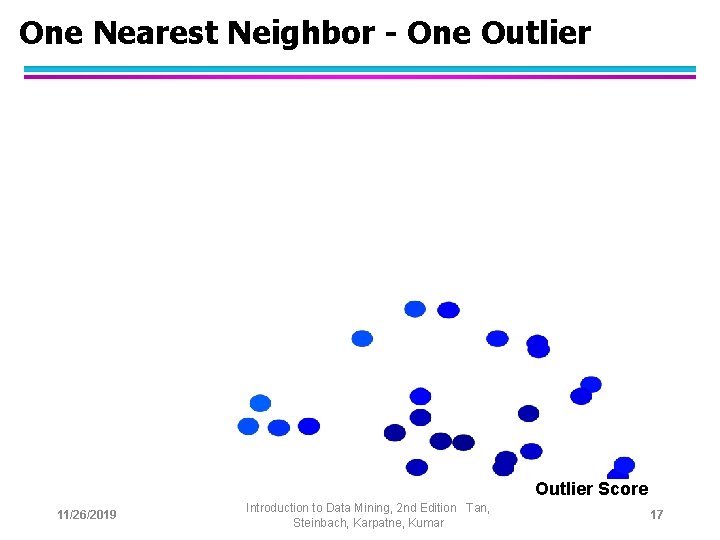

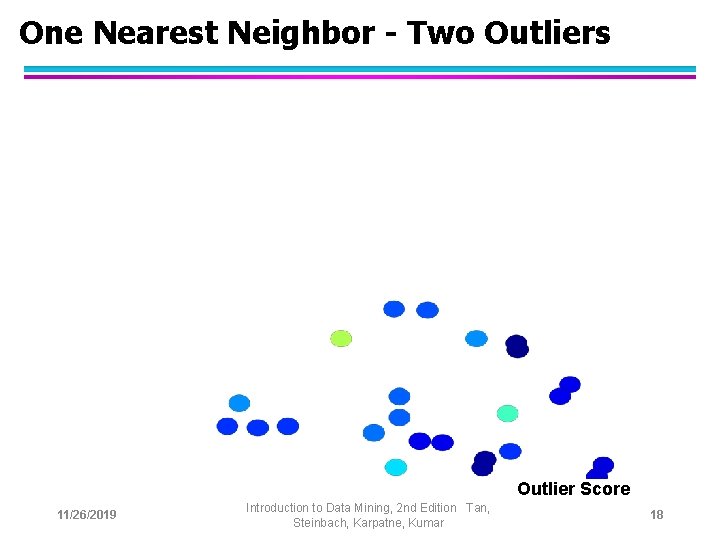

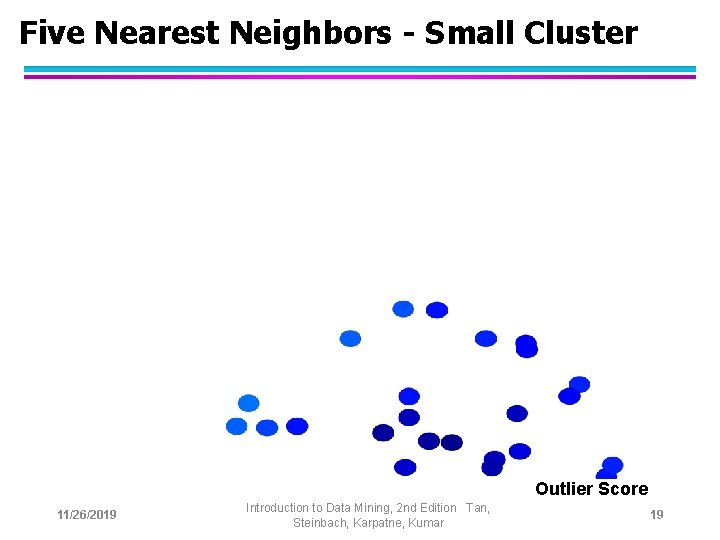

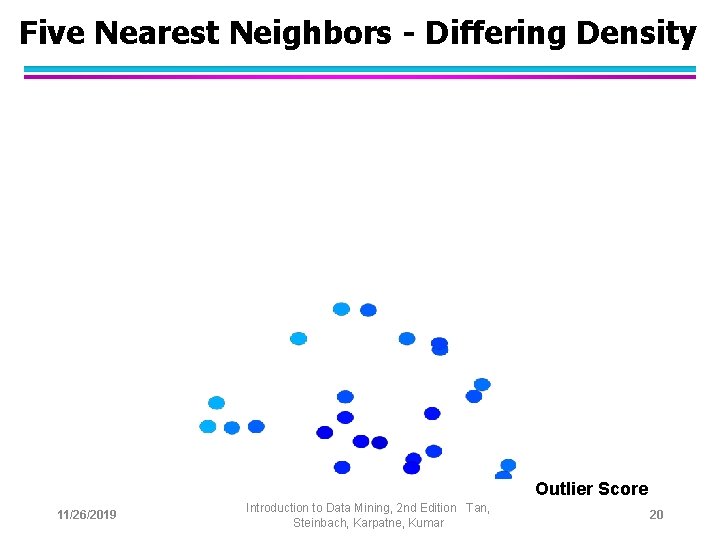

Distance-Based Approaches

- The outlier score of an object is the distance to its kth nearest neighbor
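A brute-force sketch of the kth-nearest-neighbor score in NumPy; the function name `knn_distance_score` and the toy points are hypothetical. The data set reproduces the effect shown on the two-outlier slide: with k = 1 a pair of nearby outliers are each other's nearest neighbor and score low, while k = 2 exposes them:

```python
import numpy as np


def knn_distance_score(X, k):
    """Outlier score of each point = distance to its k-th nearest neighbor (itself excluded)."""
    X = np.asarray(X, dtype=float)
    d = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)  # all pairwise distances, O(n^2)
    np.fill_diagonal(d, np.inf)  # a point is not its own neighbor
    return np.sort(d, axis=1)[:, k - 1]


# A unit square of normal points plus two outliers that are close to each other
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1], [5, 5], [5, 5.2]])
print(knn_distance_score(X, k=1))  # the two outliers score only 0.2 each
print(knn_distance_score(X, k=2))  # now they score above 5
```

The full distance matrix makes the $O(n^2)$ cost listed under the weaknesses explicit.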

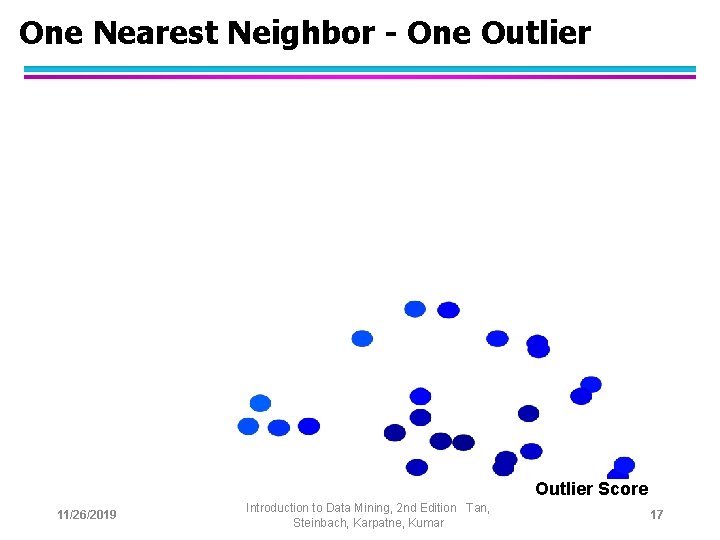

One Nearest Neighbor: One Outlier (figure: outlier score)

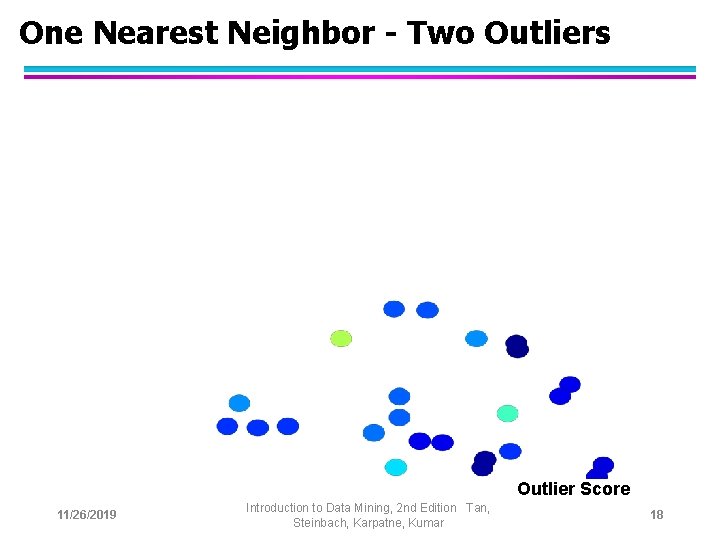

One Nearest Neighbor: Two Outliers (figure: outlier score)

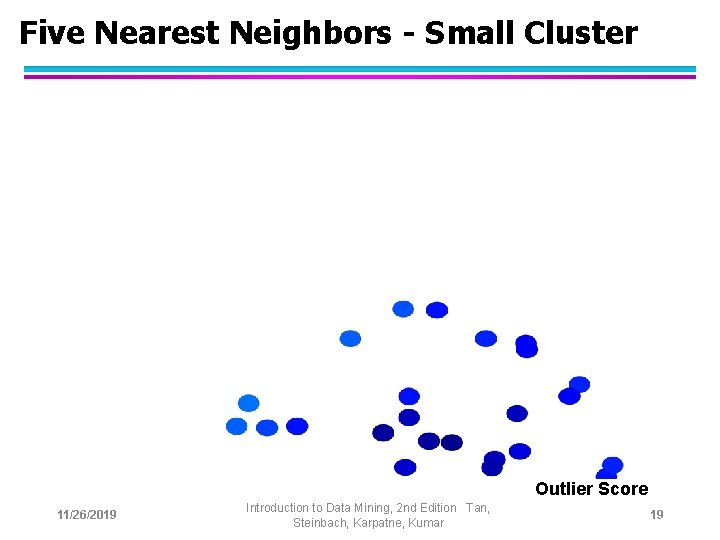

Five Nearest Neighbors: Small Cluster (figure: outlier score)

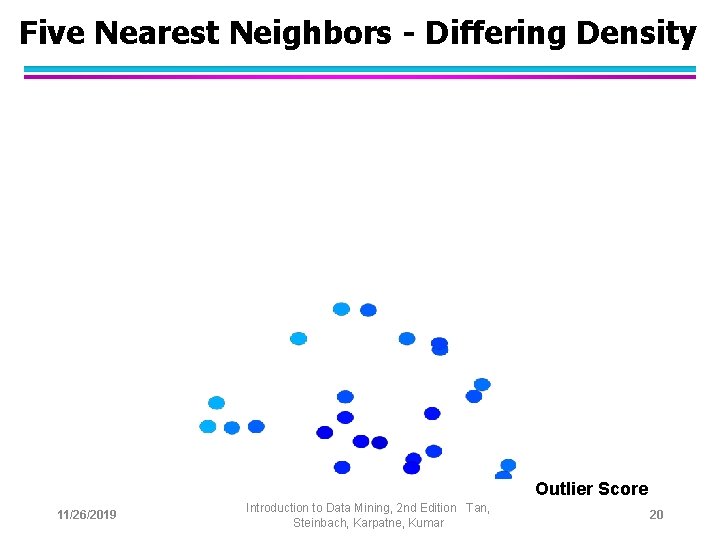

Five Nearest Neighbors: Differing Density (figure: outlier score)

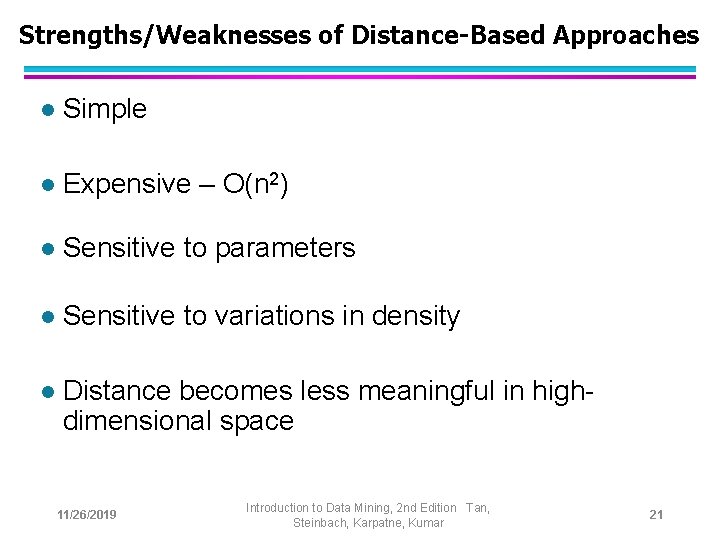

Strengths/Weaknesses of Distance-Based Approaches

- Simple
- Expensive: $O(n^2)$
- Sensitive to parameters
- Sensitive to variations in density
- Distance becomes less meaningful in high-dimensional space

Density-Based Approaches

- Density-based outlier: The outlier score of an object is the inverse of the density around the object
  - Density can be defined in terms of the k nearest neighbors
  - One definition: inverse of the distance to the kth neighbor
  - Another definition: inverse of the average distance to the k neighbors
  - DBSCAN definition
- If there are regions of different density, this approach can have problems
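A sketch of the "inverse of the average distance to the k neighbors" definition, with hypothetical names and toy data chosen to show the problem with regions of different density: one dense cluster, one sparse cluster, and a point just outside the dense cluster:

```python
import numpy as np


def knn_density(X, k):
    """Density of each point = inverse of the average distance to its k nearest neighbors."""
    X = np.asarray(X, dtype=float)
    d = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)  # exclude the point itself
    avg_dist = np.sort(d, axis=1)[:, :k].mean(axis=1)
    return 1.0 / avg_dist  # the outlier score would be 1 / density = avg_dist


X = np.array([
    [0, 0], [0.1, 0], [0, 0.1], [0.1, 0.1],   # dense cluster (indices 0-3)
    [10, 10], [11, 10], [10, 11], [11, 11],   # sparse cluster (indices 4-7)
    [0.6, 0.6],                               # point just outside the dense cluster (index 8)
])
density = knn_density(X, k=2)
```

With a single global density scale, point 8 looks less anomalous than every point of the sparse cluster, even though it is clearly out of place relative to its own neighbors.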

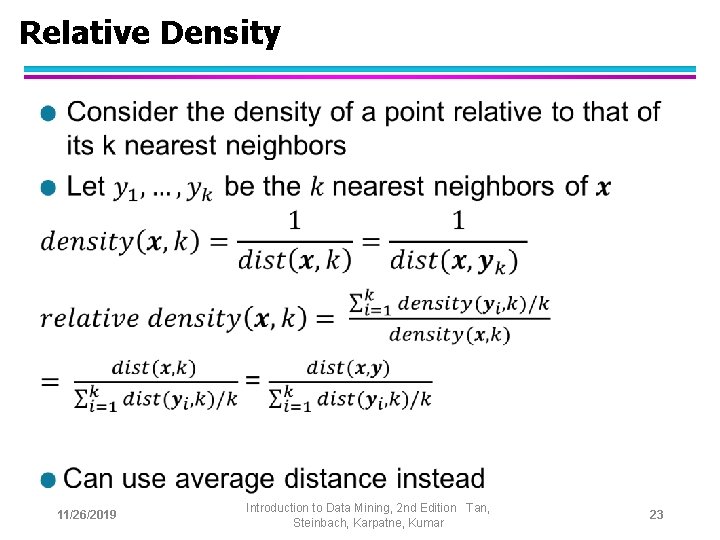

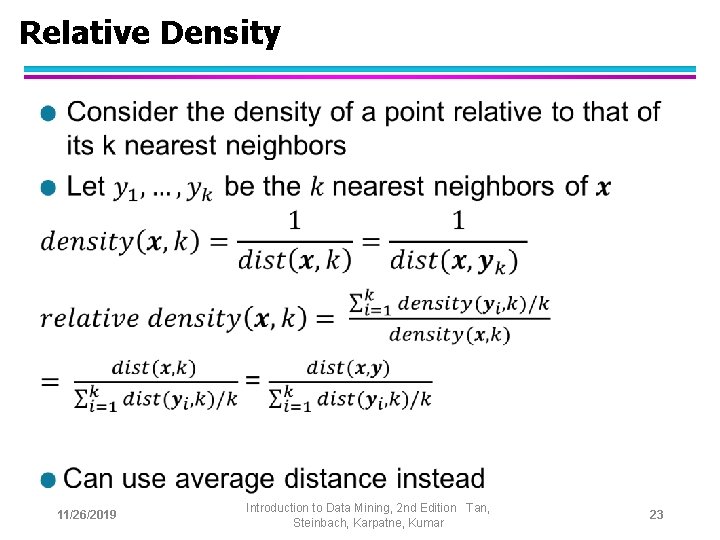

Relative Density

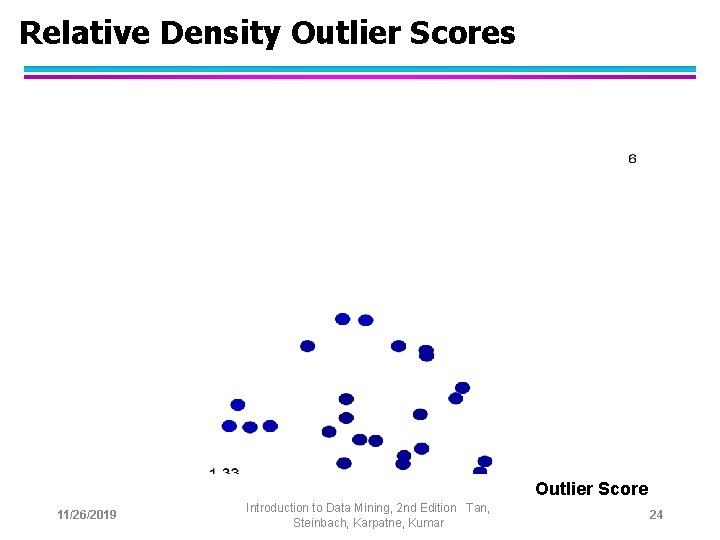

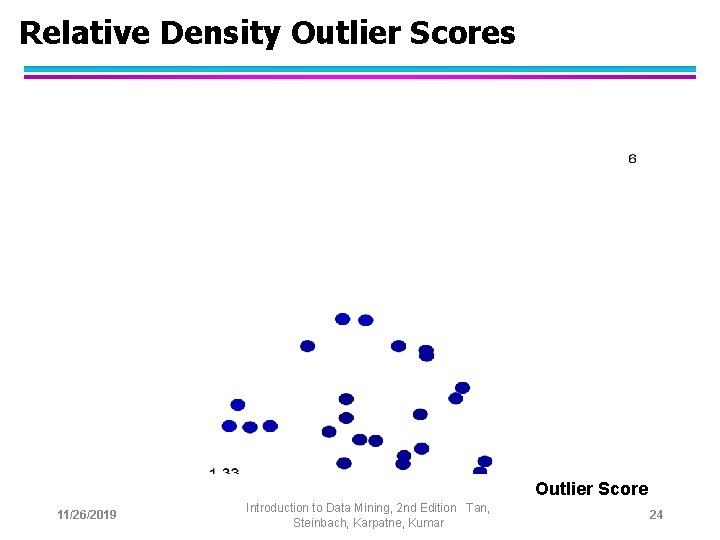

Relative Density Outlier Scores (figure: outlier score)

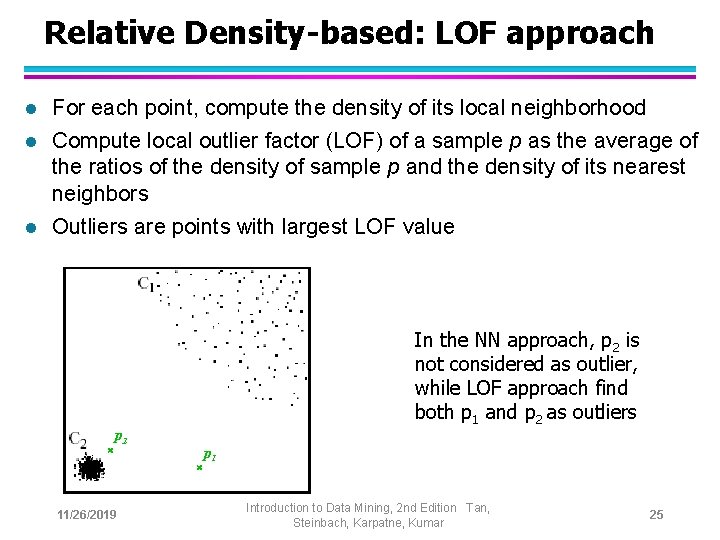

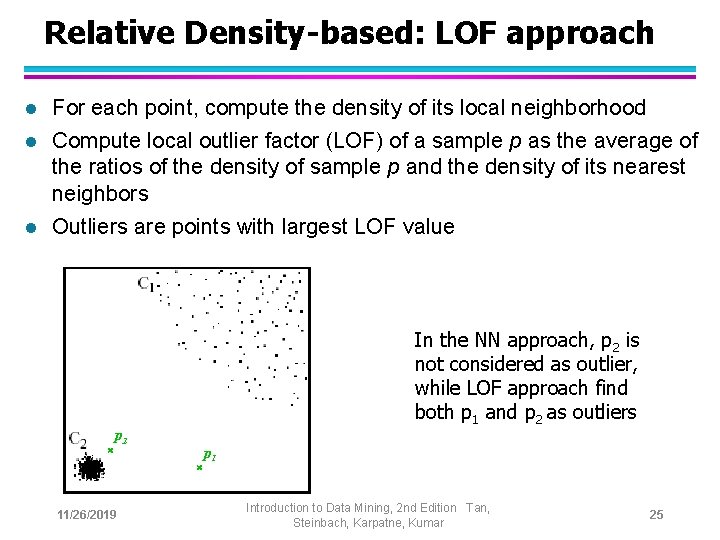

Relative Density-Based: LOF Approach

- For each point, compute the density of its local neighborhood
- Compute the local outlier factor (LOF) of a sample p as the average of the ratios of the density of each of p’s nearest neighbors to the density of p
- Outliers are points with the largest LOF value

In the figure, the NN approach does not consider p2 an outlier, while the LOF approach finds both p1 and p2 as outliers.
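A NumPy sketch of LOF. It follows the definition of Breunig et al., which uses a smoothed "reachability" density rather than the plain inverse distance; ties among neighbors are broken arbitrarily, a simplification. The function name and toy data are hypothetical:

```python
import numpy as np


def lof(X, k):
    """Local outlier factor of each point (reachability-based density, ties broken arbitrarily)."""
    X = np.asarray(X, dtype=float)
    n = len(X)
    d = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)
    nn = np.argsort(d, axis=1)[:, :k]  # indices of the k nearest neighbors
    k_dist = d[np.arange(n), nn[:, -1]]  # distance to the k-th nearest neighbor
    # reachability distance of p from neighbor o: max(k-distance(o), d(p, o))
    reach = np.maximum(d[np.arange(n)[:, None], nn], k_dist[nn])
    lrd = 1.0 / reach.mean(axis=1)  # local reachability density
    return lrd[nn].mean(axis=1) / lrd  # average ratio lrd(o) / lrd(p) over neighbors o


X = np.array([
    [0, 0], [0.1, 0], [0, 0.1], [0.1, 0.1],   # dense cluster
    [10, 10], [11, 10], [10, 11], [11, 11],   # sparse cluster
    [0.6, 0.6],                               # point just outside the dense cluster
])
print(np.round(lof(X, k=2), 2))  # both clusters score about 1; the last point scores far higher
```

Because each point's density is compared with that of its own neighbors, points in the sparse cluster score about 1 just like those in the dense cluster, while the point next to the dense cluster stands out.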

Strengths/Weaknesses of Density-Based Approaches

- Simple
- Expensive: $O(n^2)$
- Sensitive to parameters
- Density becomes less meaningful in high-dimensional space

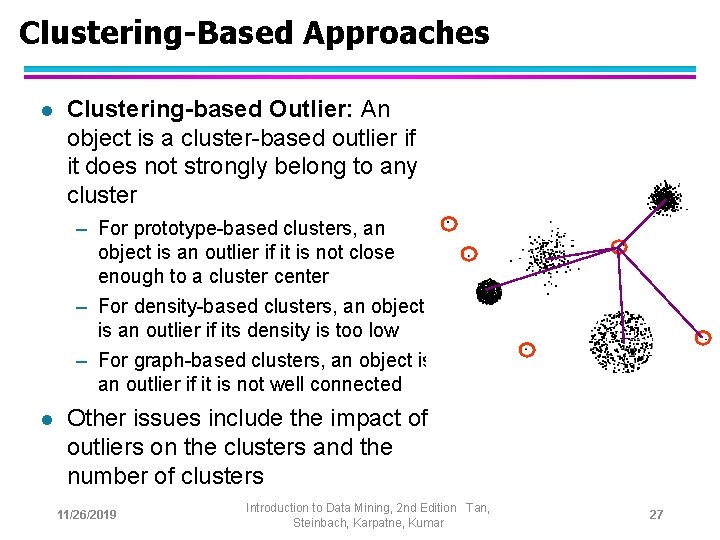

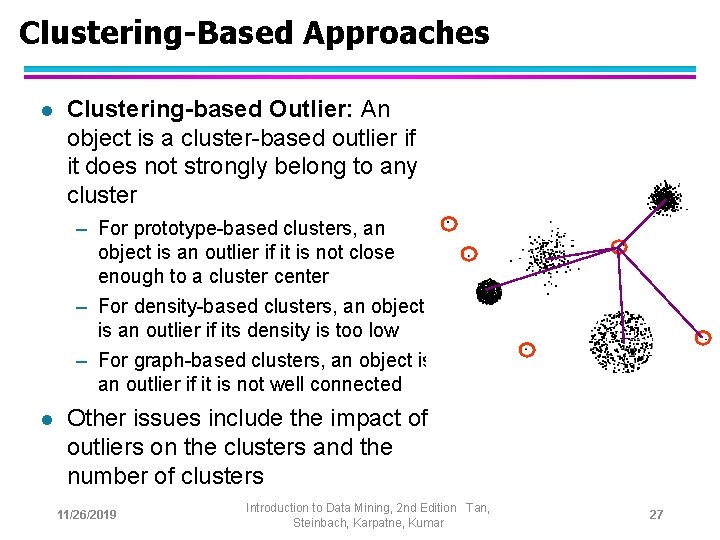

Clustering-Based Approaches

- Clustering-based outlier: An object is a cluster-based outlier if it does not strongly belong to any cluster
  - For prototype-based clusters, an object is an outlier if it is not close enough to a cluster center
  - For density-based clusters, an object is an outlier if its density is too low
  - For graph-based clusters, an object is an outlier if it is not well connected
- Other issues include the impact of outliers on the clusters and the number of clusters

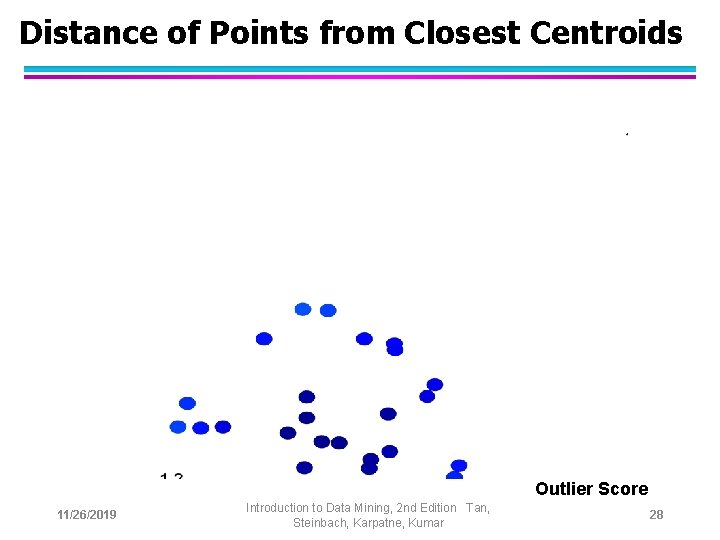

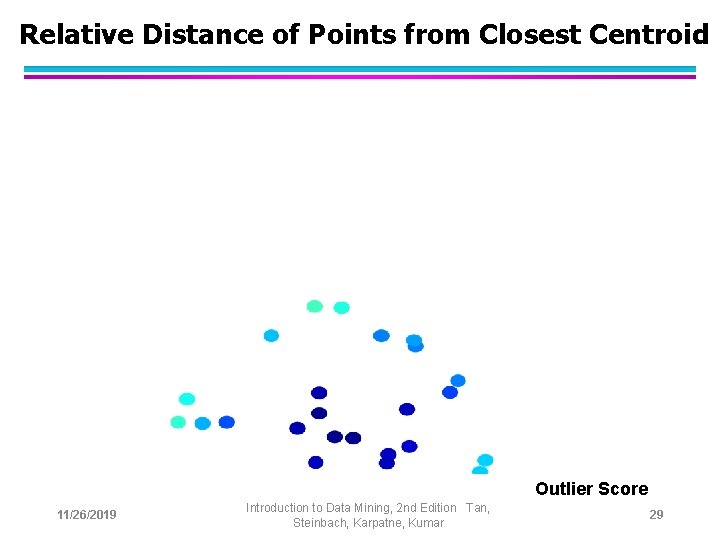

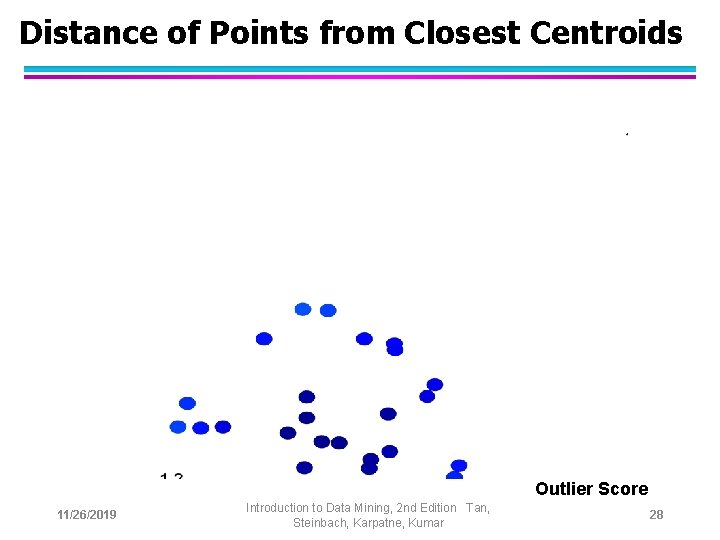

Distance of Points from Closest Centroids (figure: outlier score)

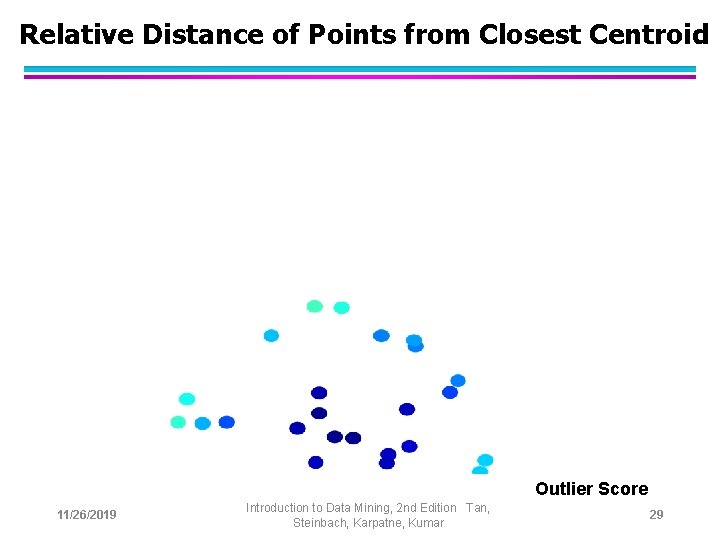

Relative Distance of Points from Closest Centroid (figure: outlier score)
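A sketch of both centroid-based scores, assuming k-means (plain Lloyd iterations from given starting centroids) as the clustering method, and taking the relative distance to be a point's distance divided by the median distance of its cluster's points to the same centroid. Function names and data are hypothetical, and empty clusters are not handled:

```python
import numpy as np


def nearest_centroid(X, centers):
    """Label of, and distance to, the closest centroid for every point."""
    d = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=-1)
    return d.argmin(axis=1), d.min(axis=1)


def kmeans(X, centers, iters=100):
    """Plain Lloyd iterations starting from the given centroids (assumes no cluster empties)."""
    centers = np.asarray(centers, dtype=float)
    for _ in range(iters):
        labels, _ = nearest_centroid(X, centers)
        new = np.array([X[labels == j].mean(axis=0) for j in range(len(centers))])
        if np.allclose(new, centers):
            break
        centers = new
    return centers


def centroid_scores(X, centers):
    """Absolute and relative distance of each point from its closest centroid."""
    labels, dist = nearest_centroid(X, centers)
    median = np.array([np.median(dist[labels == j]) for j in range(len(centers))])
    return dist, dist / median[labels]


X = np.array([
    [0, 0], [0, 1], [1, 0], [1, 1],                   # loose cluster
    [10, 10], [10, 10.2], [10.2, 10], [10.2, 10.2],   # tight cluster
    [10.6, 10.6],                                     # point drifting away from the tight cluster
], dtype=float)
centers = kmeans(X, X[[0, 4]])
dist, rel = centroid_scores(X, centers)
```

The last point is closer to its centroid than any point of the loose cluster is to theirs, so the absolute distance ranks it below them; dividing by each cluster's typical distance ranks it first.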

Strengths/Weaknesses of Clustering-Based Approaches

- Simple
- Many clustering techniques can be used
- Can be difficult to decide on a clustering technique
- Can be difficult to decide on the number of clusters
- Outliers can distort the clusters

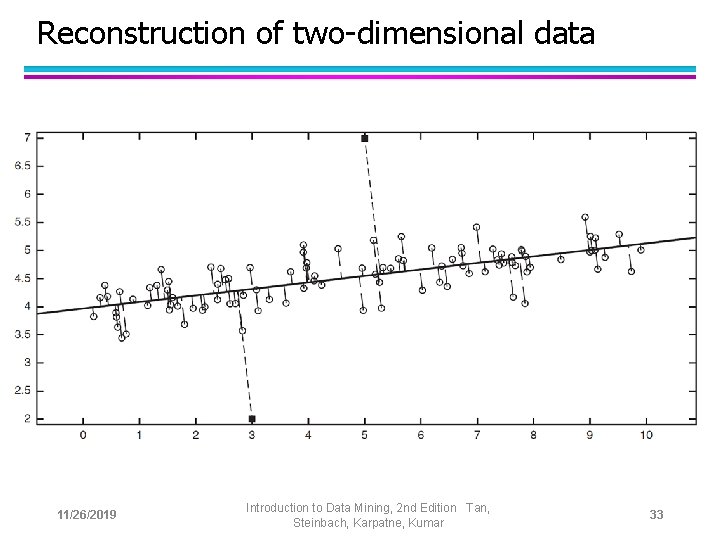

Reconstruction-Based Approaches

Based on the assumption that there are patterns in the distribution of the normal class that can be captured using lower-dimensional representations.

- Reduce data to lower-dimensional data
  - Can use Principal Components Analysis (PCA) or other dimensionality reduction techniques
  - Can also use neural networks
- Measure the reconstruction error for each object
  - The difference between the original object and its reconstruction from the lower-dimensional representation

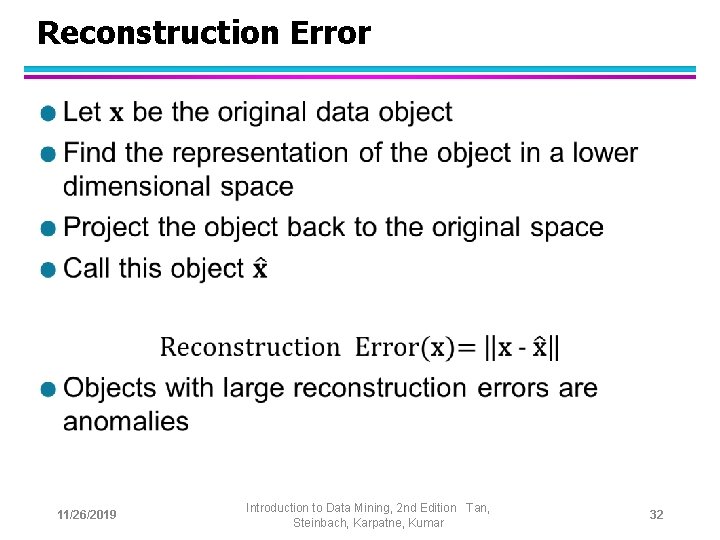

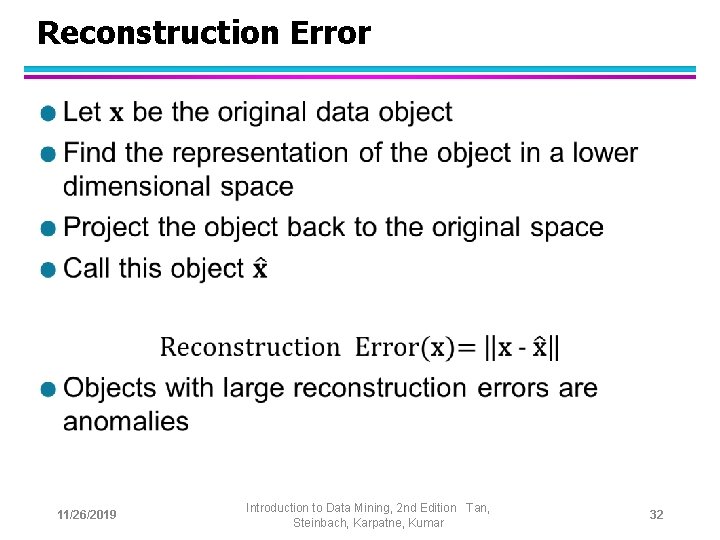

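Reconstruction Error

$$\text{Reconstruction Error}(\mathbf{x}) = \lVert \mathbf{x} - \hat{\mathbf{x}} \rVert$$

where $\hat{\mathbf{x}}$ is the reconstruction of $\mathbf{x}$, obtained by mapping its lower-dimensional representation back to the original space. Objects with large reconstruction errors are anomalies.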
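A sketch of reconstruction error with PCA as the dimensionality reduction step, computed through NumPy's SVD. The function name and the toy data (ten points on the line y = 2x plus one point off it) are made up for illustration:

```python
import numpy as np


def pca_reconstruction_error(X, n_components):
    """||x - x_hat|| for each row, where x_hat is the PCA reconstruction."""
    X = np.asarray(X, dtype=float)
    mean = X.mean(axis=0)
    Xc = X - mean
    _, _, vt = np.linalg.svd(Xc, full_matrices=False)
    V = vt[:n_components].T  # columns: top principal directions
    X_hat = Xc @ V @ V.T + mean  # reduce, then map back to the original space
    return np.linalg.norm(X - X_hat, axis=1)


xs = np.arange(10.0)
X = np.vstack([np.column_stack([xs, 2 * xs]), [[5.0, 0.0]]])  # ten points on y = 2x, one off it
err = pca_reconstruction_error(X, n_components=1)
```

Keeping as many components as there are attributes reconstructs every point exactly, so all errors drop to (numerically) zero; it is the reduction that makes unusual points stand out.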

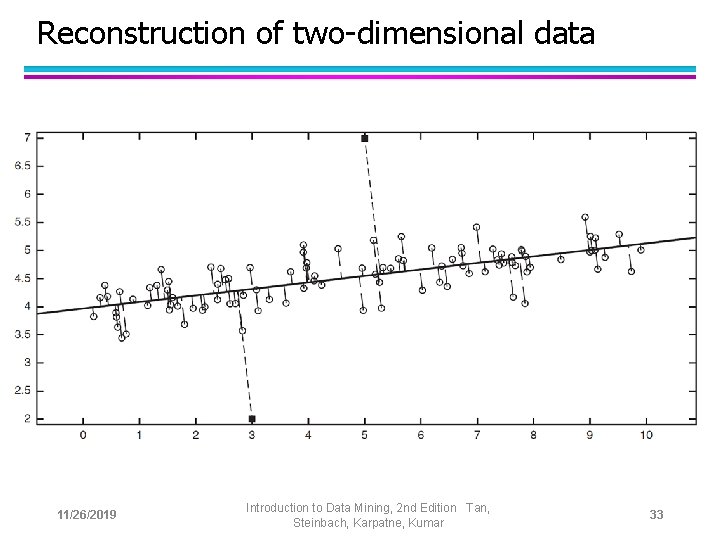

Reconstruction of two-dimensional data

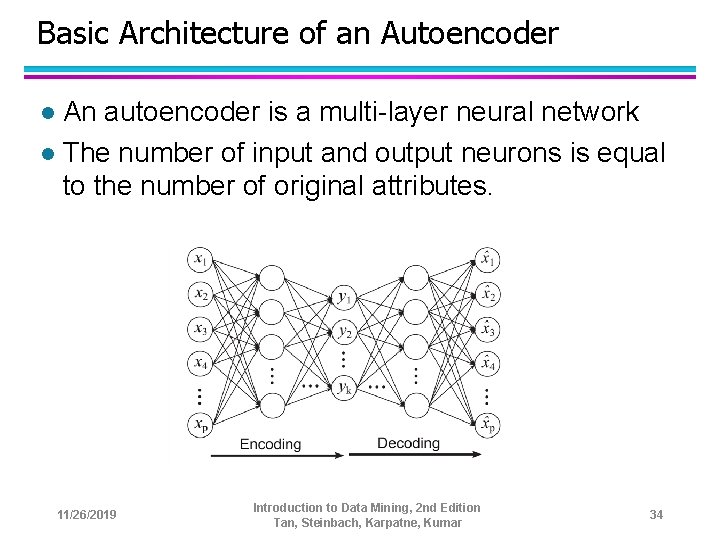

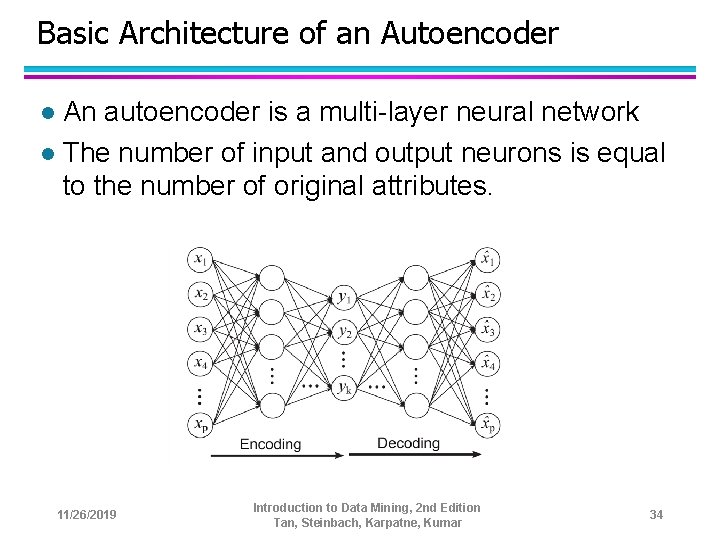

Basic Architecture of an Autoencoder

- An autoencoder is a multi-layer neural network
- The number of input and output neurons is equal to the number of original attributes
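The architecture can be sketched as a forward pass in NumPy. This is a minimal, untrained illustration under assumed sizes (8 attributes, a hypothetical 3-unit bottleneck, tanh activation, random weights); in practice the weights are trained to minimize the reconstruction error on the data, a step omitted here:

```python
import numpy as np

rng = np.random.default_rng(0)
n_attributes, n_hidden = 8, 3  # input/output width = number of attributes; smaller bottleneck

# Encoder: attributes -> bottleneck; decoder: bottleneck -> attributes (random, untrained weights)
W_enc = rng.normal(scale=0.1, size=(n_attributes, n_hidden))
b_enc = np.zeros(n_hidden)
W_dec = rng.normal(scale=0.1, size=(n_hidden, n_attributes))
b_dec = np.zeros(n_attributes)


def autoencode(X):
    """Map each row to the lower-dimensional code and back to the original space."""
    code = np.tanh(X @ W_enc + b_enc)  # hidden (reduced) representation
    return code @ W_dec + b_dec  # output layer has one neuron per original attribute


X = rng.normal(size=(5, n_attributes))
X_hat = autoencode(X)
errors = np.linalg.norm(X - X_hat, axis=1)  # reconstruction error per object
```

The only structural requirement from the slide is visible in the shapes: the output has exactly as many columns as the input, so the error can be computed in the original space.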

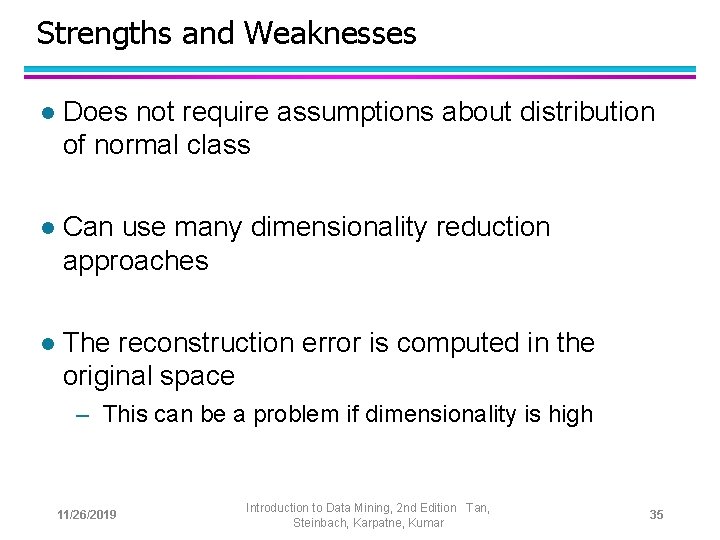

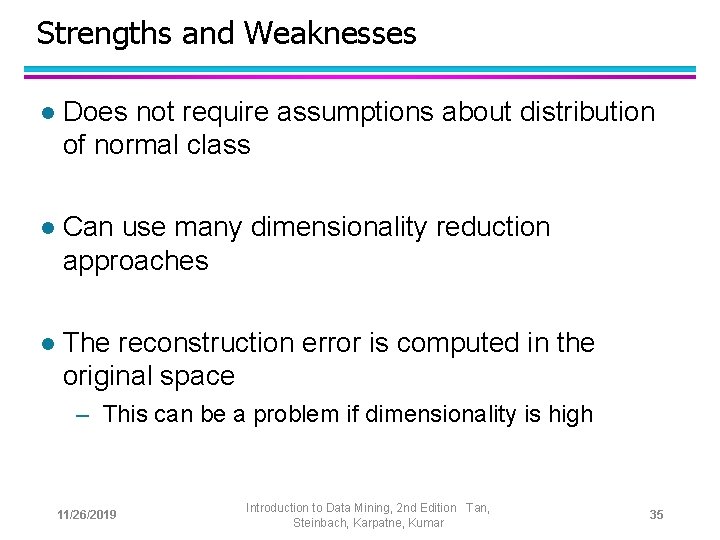

Strengths and Weaknesses of Reconstruction-Based Approaches

- Does not require assumptions about the distribution of the normal class
- Can use many dimensionality reduction approaches
- The reconstruction error is computed in the original space
  - This can be a problem if dimensionality is high

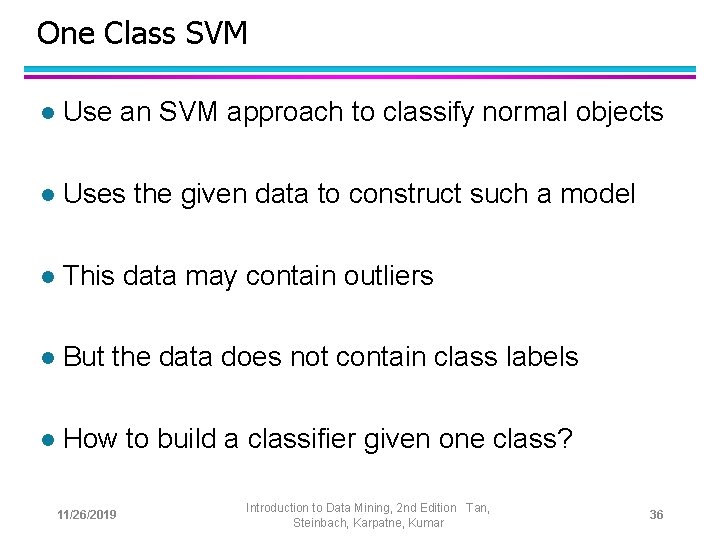

One-Class SVM

- Use an SVM approach to classify normal objects
- Uses the given data to construct such a model
- This data may contain outliers
- But the data does not contain class labels
- How to build a classifier given one class?

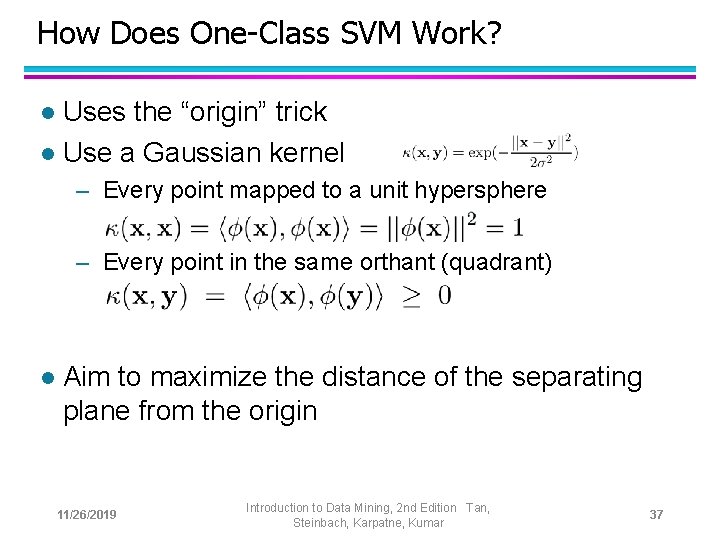

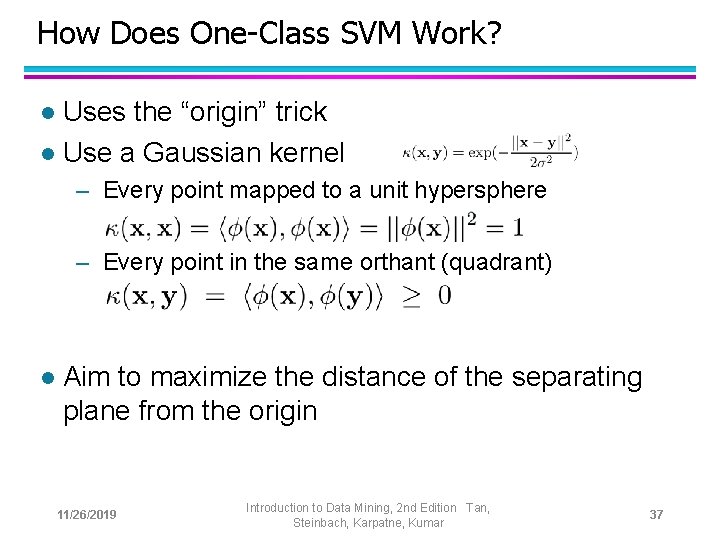

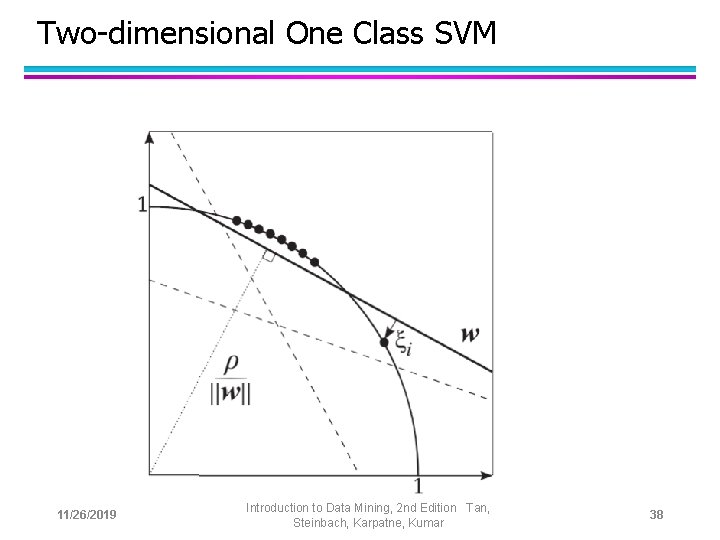

How Does One-Class SVM Work?

- Uses the “origin” trick
- Use a Gaussian kernel
  - Every point is mapped to a unit hypersphere
  - Every point is in the same orthant (quadrant)
- Aim to maximize the distance of the separating plane from the origin
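Both kernel properties can be checked numerically. For the Gaussian kernel $k(x, y) = \exp(-\gamma \lVert x - y \rVert^2)$, the squared norm of a mapped point is $k(x, x) = 1$, and every inner product $k(x, y)$ is positive. A sketch with random data and an arbitrary, assumed value of `gamma`:

```python
import numpy as np


def gaussian_kernel(X, gamma=0.5):
    """Gram matrix K[i, j] = exp(-gamma * ||x_i - x_j||^2)."""
    sq_dist = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    return np.exp(-gamma * sq_dist)


X = np.random.default_rng(0).normal(size=(20, 3))
K = gaussian_kernel(X)

# ||phi(x)||^2 = k(x, x) = exp(0) = 1: every mapped point lies on the unit hypersphere
on_unit_sphere = np.allclose(np.diag(K), 1.0)
# <phi(x), phi(y)> = k(x, y) > 0: all pairwise angles are below 90 degrees,
# consistent with all mapped points lying in one orthant
all_inner_products_positive = bool(np.all(K > 0))
```

Because all mapped points lie on one side of the origin, the origin can stand in for the missing "other class", and the SVM separates the data from it.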

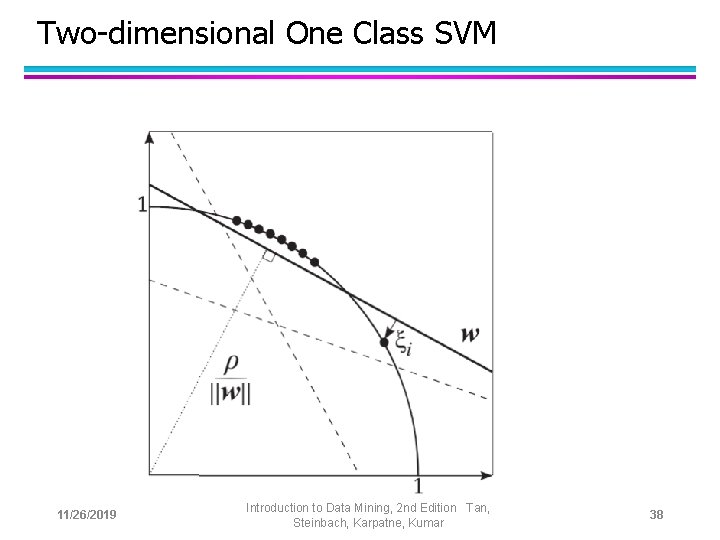

Two-dimensional One-Class SVM

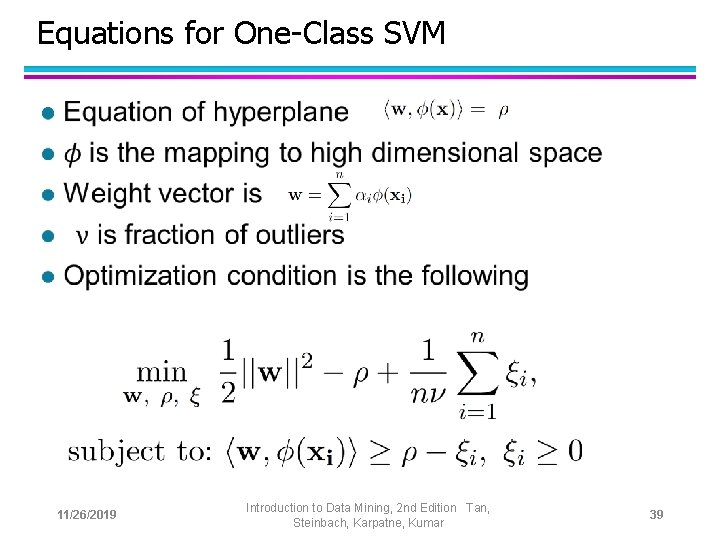

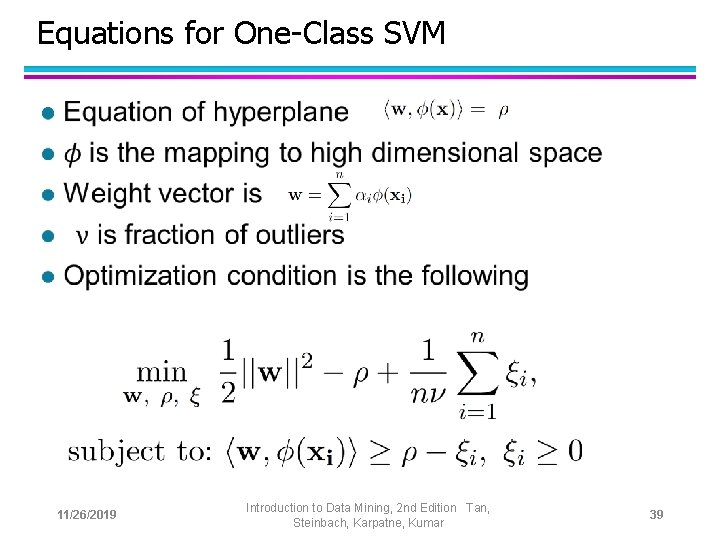

Equations for One-Class SVM
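The equations on this slide did not survive extraction. For reference, the standard ν-formulation of the one-class SVM (Schölkopf et al.), which matches the description of separating the mapped data from the origin, is given below; treat it as a likely reconstruction rather than the slide's exact notation. The hyperplane is $\langle \mathbf{w}, \phi(\mathbf{x}) \rangle = \rho$, where $\phi$ maps points to the kernel feature space, $\mathbf{w}$ is the weight vector, and $\nu \in (0, 1]$ is an upper bound on the fraction of outliers:

$$
\min_{\mathbf{w},\,\boldsymbol{\xi},\,\rho}\;\; \frac{1}{2}\lVert \mathbf{w} \rVert^2 + \frac{1}{\nu n}\sum_{i=1}^{n} \xi_i - \rho
\quad \text{subject to} \quad
\langle \mathbf{w}, \phi(\mathbf{x}_i) \rangle \ge \rho - \xi_i, \quad \xi_i \ge 0, \quad i = 1, \dots, n
$$

A point $\mathbf{x}$ is then flagged as an outlier when $\langle \mathbf{w}, \phi(\mathbf{x}) \rangle - \rho < 0$, i.e., when it falls on the origin's side of the hyperplane.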

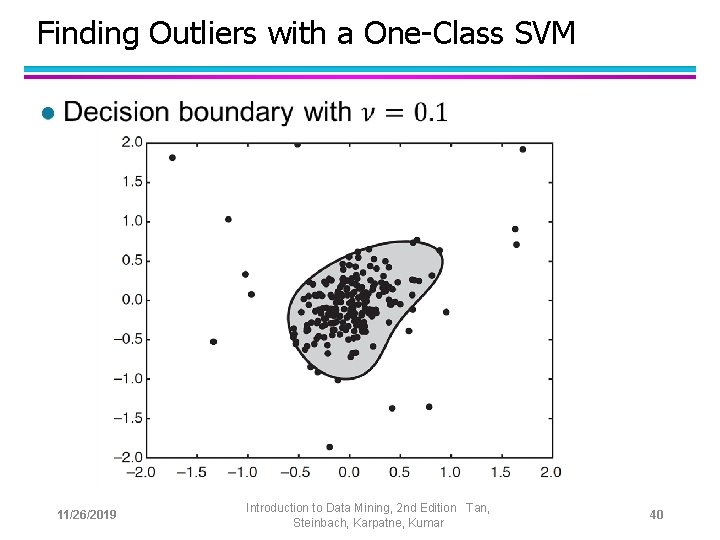

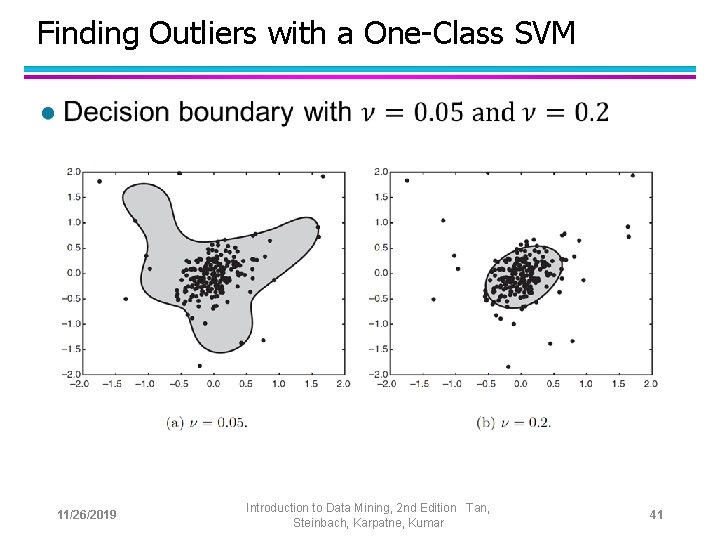

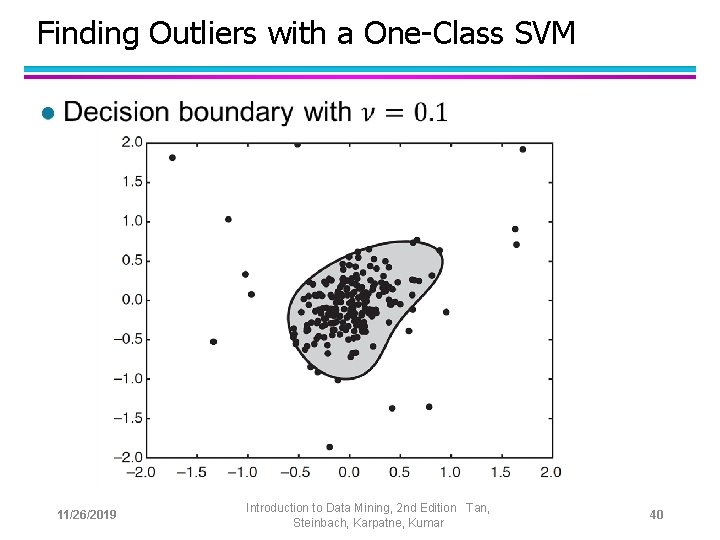

Finding Outliers with a One-Class SVM

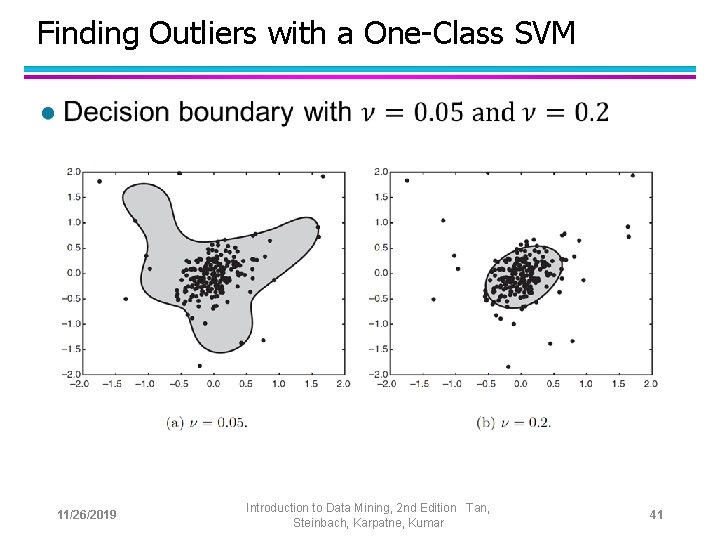

Finding Outliers with a One-Class SVM (continued)

Strengths and Weaknesses of One-Class SVM

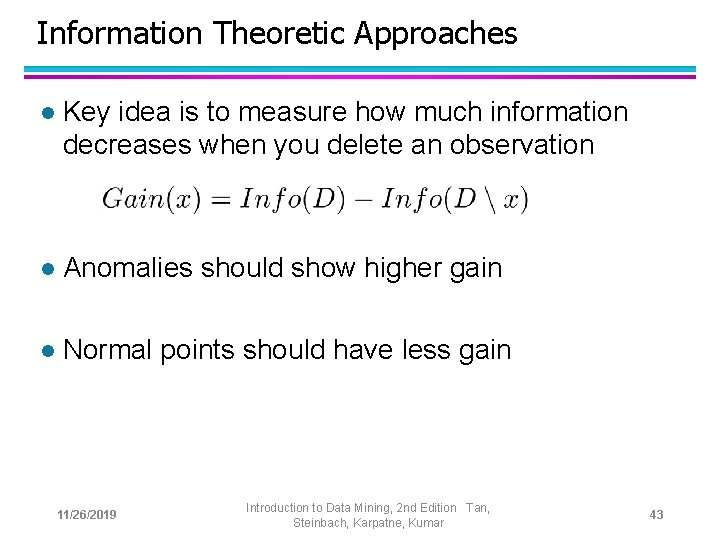

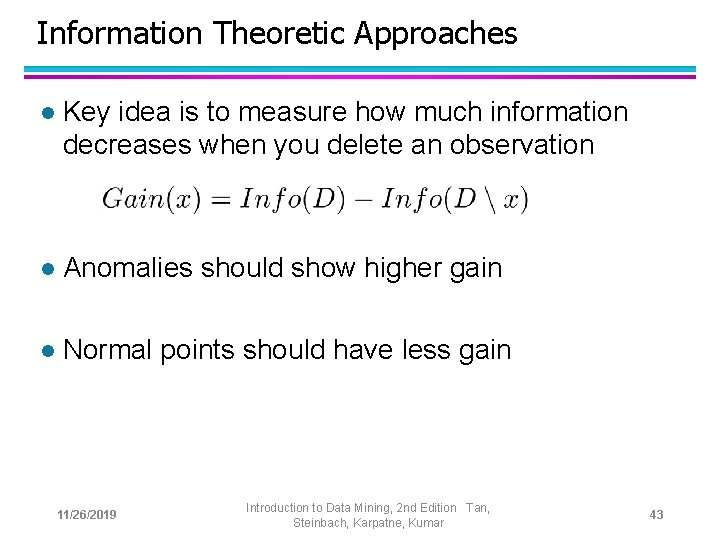

Information Theoretic Approaches

- The key idea is to measure how much information decreases when you delete an observation
- Anomalies should show a higher gain
- Normal points should show less gain

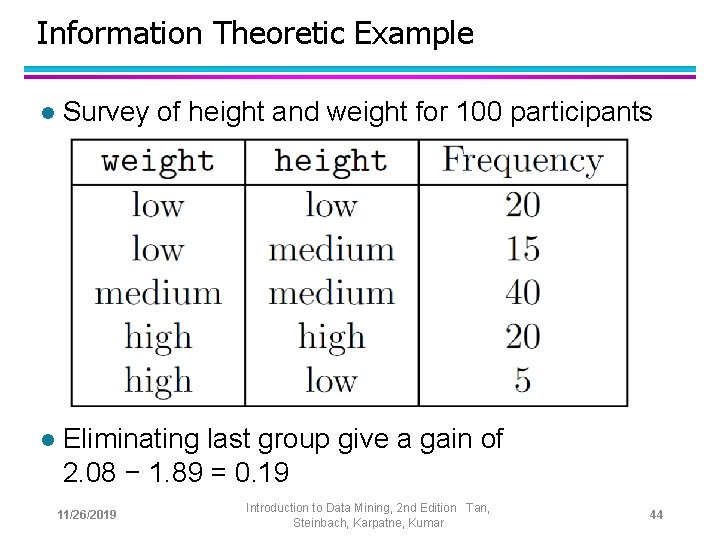

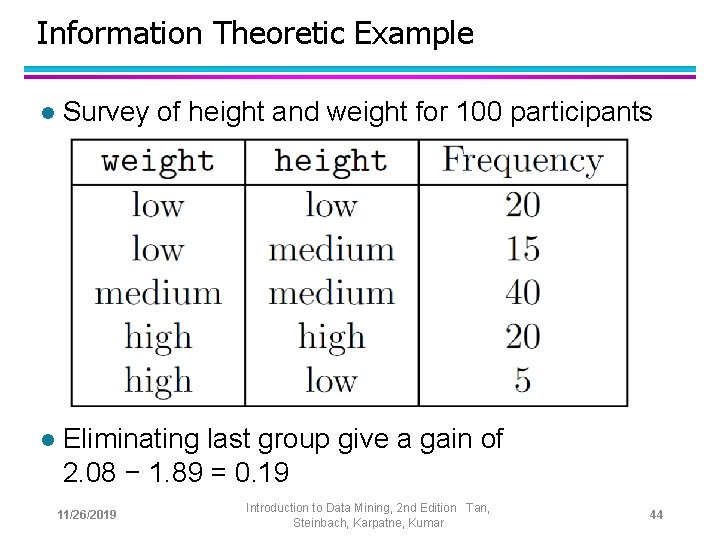

Information Theoretic Example

- Survey of height and weight for 100 participants
- Eliminating the last group gives a gain of 2.08 − 1.89 = 0.19
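A small sketch of the deletion-gain idea for a single categorical attribute. It assumes information is measured as the total code length n·H (the sum of −log2 p over all observations), which is one possible choice, and it uses hypothetical category counts, not the slide's survey table. Function names are hypothetical:

```python
import math
from collections import Counter


def total_information(counts):
    """Total information in bits, n * H = sum over observations of -log2 p(category)."""
    n = sum(counts.values())
    return sum(c * math.log2(n / c) for c in counts.values() if c > 0)


def deletion_gain(counts, category):
    """Decrease in total information when one observation of `category` is deleted."""
    reduced = Counter(counts)
    reduced[category] -= 1
    return total_information(counts) - total_information(reduced)


# Hypothetical (height, weight) category counts for 100 people, not the slide's table
counts = Counter({"medium/medium": 50, "tall/heavy": 25, "short/light": 20, "short/heavy": 5})
gains = {category: deletion_gain(counts, category) for category in counts}
print(max(gains, key=gains.get))  # short/heavy: the rarest category has the highest gain
```

Deleting one observation saves roughly its own self-information, −log2 p, so observations from rare categories yield the largest gains.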

Strengths and Weaknesses of Information Theoretic Approaches

- Solid theoretical foundation
- Theoretically applicable to all kinds of data
- Difficult and computationally expensive to implement in practice

Evaluation of Anomaly Detection

- If class labels are present, then use standard evaluation approaches for rare classes, such as precision, recall, or false positive rate (FPR)
  - FPR is also known as the false alarm rate
- For unsupervised anomaly detection, use measures provided by the anomaly detection method
  - E.g., reconstruction error or gain
- Can also look at histograms of anomaly scores
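Because anomalies are rare, accuracy is uninformative (flagging nothing is almost always right), which is why rare-class measures are used. A minimal sketch of those measures on hypothetical labels, with 1 marking an anomaly; the function name `evaluate` is hypothetical:

```python
def evaluate(y_true, y_pred):
    """Precision, recall, and false positive rate, with 1 = anomaly and 0 = normal."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    fpr = fp / (fp + tn) if fp + tn else 0.0  # false alarm rate
    return precision, recall, fpr


y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]  # hypothetical labels: two anomalies
y_pred = [0, 0, 0, 0, 0, 0, 1, 0, 1, 0]  # hypothetical detector output
print(evaluate(y_true, y_pred))  # (0.5, 0.5, 0.125)
```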

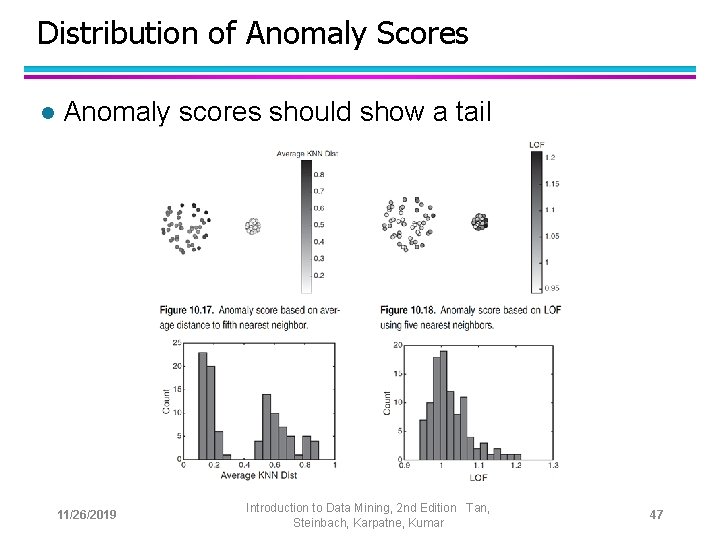

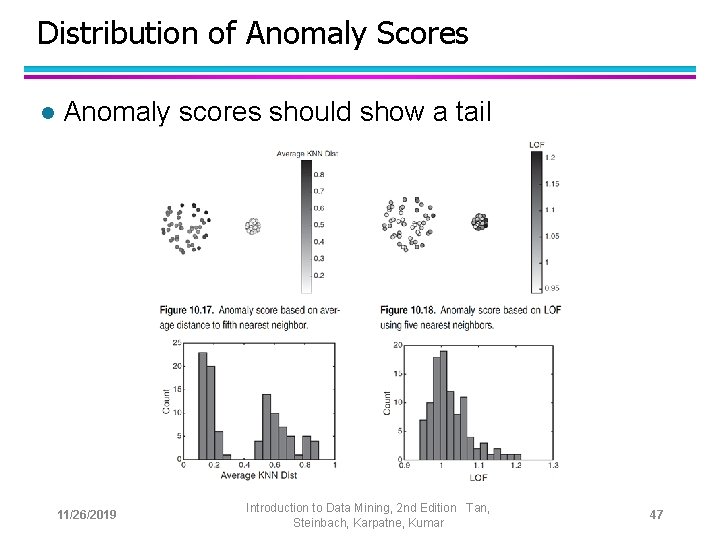

Distribution of Anomaly Scores

- Anomaly scores should show a tail