ADVANCED COMPUTER ARCHITECTURE CS 4330/6501 Processing-in-Memory Samira Khan

- Slides: 53

ADVANCED COMPUTER ARCHITECTURE CS 4330/6501 Processing-in-Memory Samira Khan University of Virginia Apr 22, 2019 The content and concept of this course are adapted from CMU ECE 740

LOGISTICS
• Apr 24: Student Presentation 5
  • Towards Federated Learning at Scale: System Design, arXiv 2019
  • TensorFlow: A System for Large-Scale Machine Learning, OSDI 2016
• May 2: Final presentations
  • 2:00 pm – 5:00 pm
  • Will serve food and drinks

Three Key Systems Trends
1. Data access is a major bottleneck
  • Applications are increasingly data hungry
2. Energy consumption is a key limiter
3. Data movement energy dominates compute
  • Especially true for off-chip to on-chip movement

Challenge and Opportunity for Future: High Performance, Energy Efficient, Sustainable

The Problem
Data access is the major performance and energy bottleneck.
Our current design principles cause great energy waste (and great performance loss).

The Problem
Processing of data is performed far away from the data.

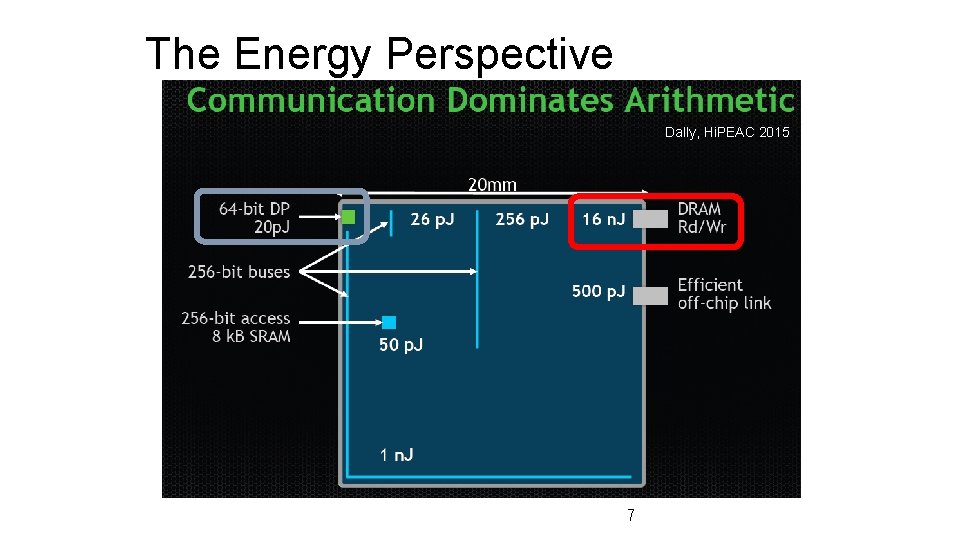

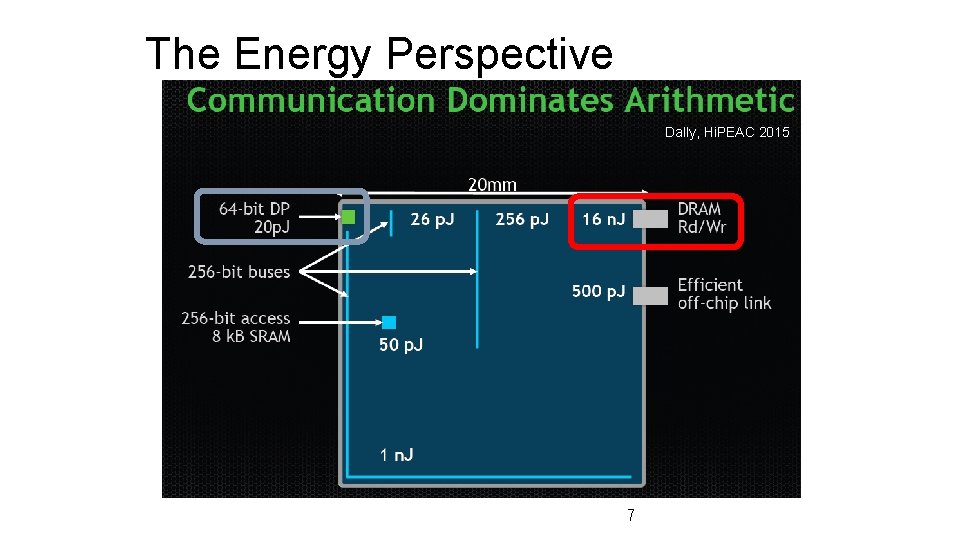

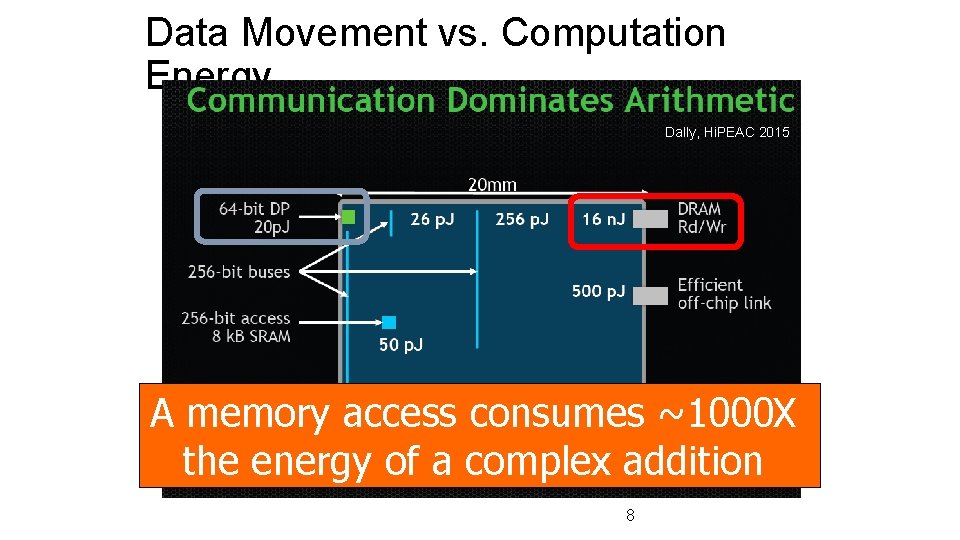

The Energy Perspective (Dally, HiPEAC 2015)

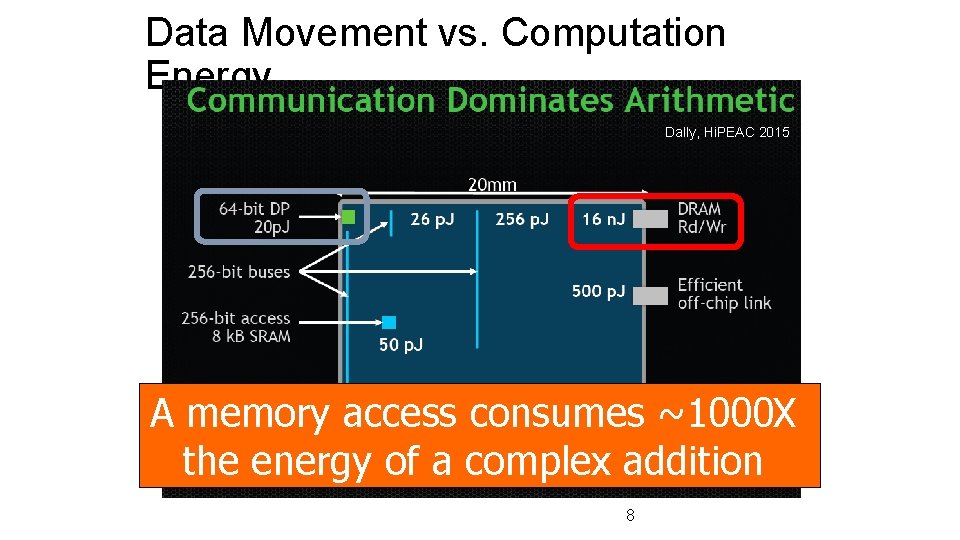

Data Movement vs. Computation Energy (Dally, HiPEAC 2015)
A memory access consumes ~1000X the energy of a complex addition.

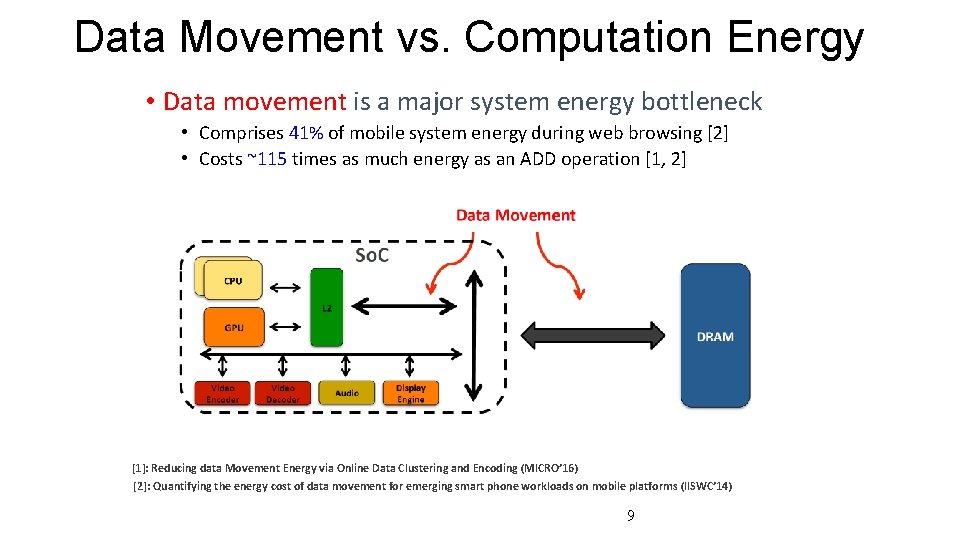

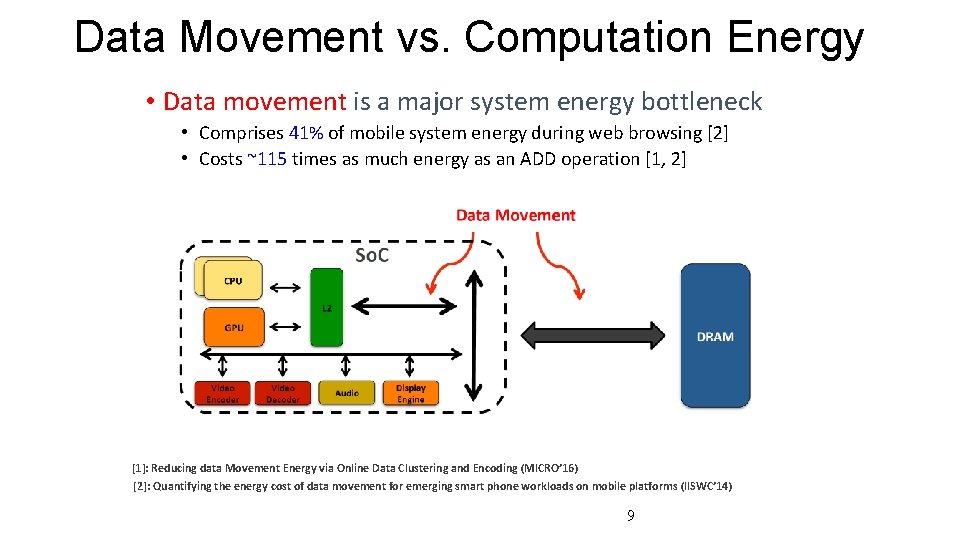

Data Movement vs. Computation Energy
• Data movement is a major system energy bottleneck
  • Comprises 41% of mobile system energy during web browsing [2]
  • Costs ~115 times as much energy as an ADD operation [1, 2]
[1]: Reducing Data Movement Energy via Online Data Clustering and Encoding (MICRO ’16)
[2]: Quantifying the energy cost of data movement for emerging smart phone workloads on mobile platforms (IISWC ’14)
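To make the ~115x figure concrete, here is a minimal arithmetic sketch. It assumes (this is not stated on the slide) that the figure can be treated as the cost of moving one operand, measured in units of one ADD; the function name `movement_fraction` is made up for this example.

```python
# Energy is expressed in units of "one ADD". Treating the ~115x figure
# as a per-operand movement cost is an assumption of this sketch.
ADD_ENERGY = 1.0
MOVE_ENERGY = 115.0


def movement_fraction(moves_per_add: float) -> float:
    """Fraction of total energy spent on data movement.

    moves_per_add: average number of operand movements per ADD executed.
    """
    move = moves_per_add * MOVE_ENERGY
    return move / (move + ADD_ENERGY)


if __name__ == "__main__":
    # Sweep from "every ADD needs a fresh operand" to "1 in 100 does".
    for moves in (1.0, 0.1, 0.01):
        share = movement_fraction(moves)
        print(f"{moves} moves per ADD: {share:.1%} of energy is movement")
```

Under these assumptions, even when only one ADD in a hundred needs an operand moved, movement still accounts for roughly half of the energy.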

We Need A Paradigm Shift To …
• Enable computation with minimal data movement
• Compute where it makes sense (where data resides)
• Make computing architectures more data-centric

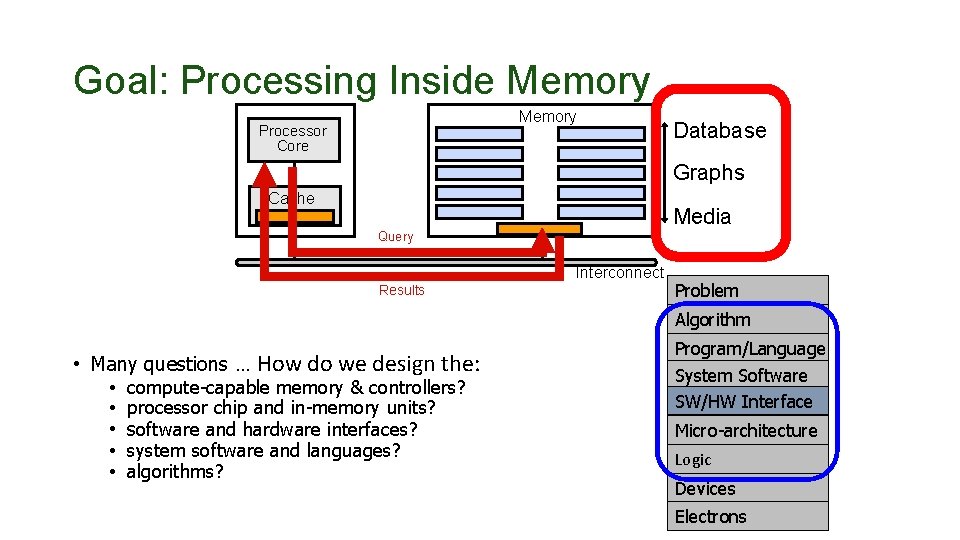

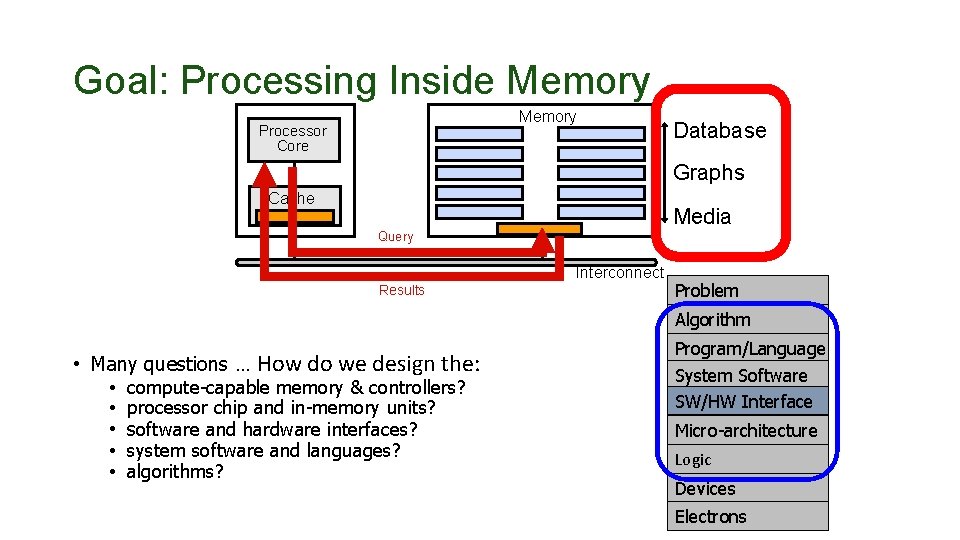

Goal: Processing Inside Memory
[Diagram labels: Processor Core, Cache, Interconnect, Database, Graphs, Media, Query, Results]
• Many questions … How do we design the:
  • compute-capable memory & controllers?
  • processor chip and in-memory units?
  • software and hardware interfaces?
  • system software and languages?
  • algorithms?
[Stack shown alongside: Problem, Algorithm, Program/Language, System Software, SW/HW Interface, Micro-architecture, Logic, Devices, Electrons]

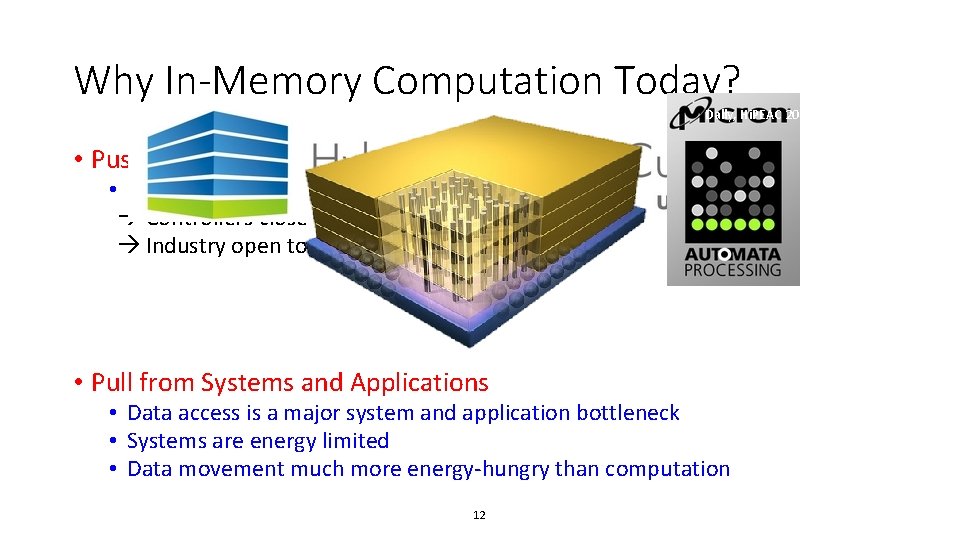

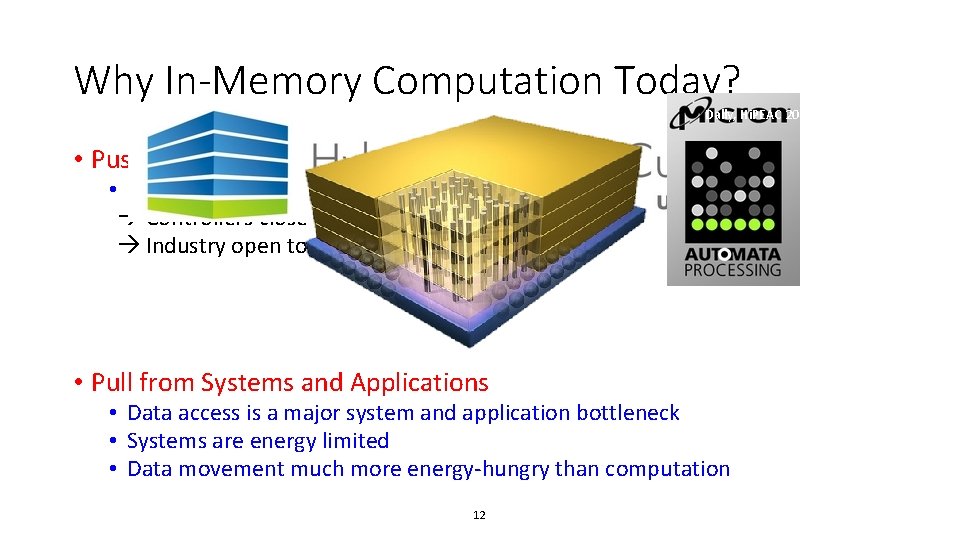

Why In-Memory Computation Today? (Dally, HiPEAC 2015)
• Push from Technology
  • DRAM scaling at jeopardy
  • Controllers close to DRAM
  • Industry open to new memory architectures
• Pull from Systems and Applications
  • Data access is a major system and application bottleneck
  • Systems are energy limited
  • Data movement much more energy-hungry than computation

Agenda
• Major Trends Affecting Main Memory
• The Need for Intelligent Memory Controllers
  • Bottom Up: Push from Circuits and Devices
  • Top Down: Pull from Systems and Applications
• Processing in Memory: Two Directions
  • Minimally Changing Memory Chips
  • Exploiting 3D-Stacked Memory
• How to Enable Adoption of Processing in Memory
• Conclusion

Processing in Memory: Two Approaches
1. Minimally changing memory chips
2. Exploiting 3D-stacked memory

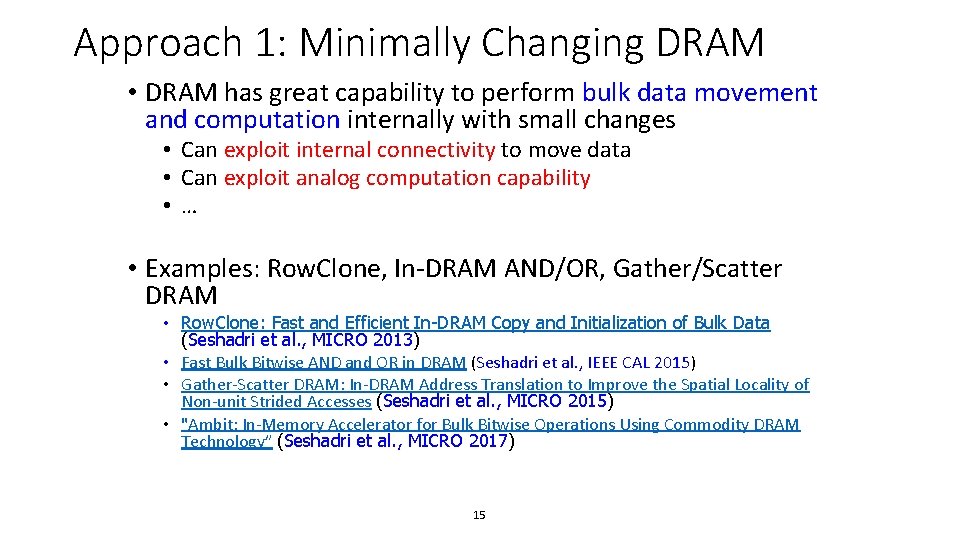

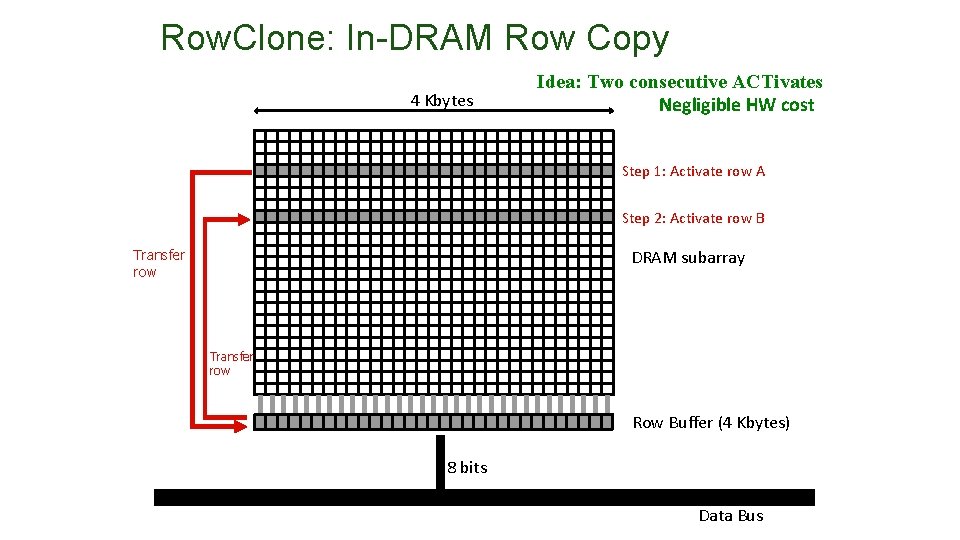

Approach 1: Minimally Changing DRAM
• DRAM has great capability to perform bulk data movement and computation internally with small changes
  • Can exploit internal connectivity to move data
  • Can exploit analog computation capability
  • …
• Examples: RowClone, In-DRAM AND/OR, Gather/Scatter DRAM
  • RowClone: Fast and Efficient In-DRAM Copy and Initialization of Bulk Data (Seshadri et al., MICRO 2013)
  • Fast Bulk Bitwise AND and OR in DRAM (Seshadri et al., IEEE CAL 2015)
  • Gather-Scatter DRAM: In-DRAM Address Translation to Improve the Spatial Locality of Non-unit Strided Accesses (Seshadri et al., MICRO 2015)
  • Ambit: In-Memory Accelerator for Bulk Bitwise Operations Using Commodity DRAM Technology (Seshadri et al., MICRO 2017)

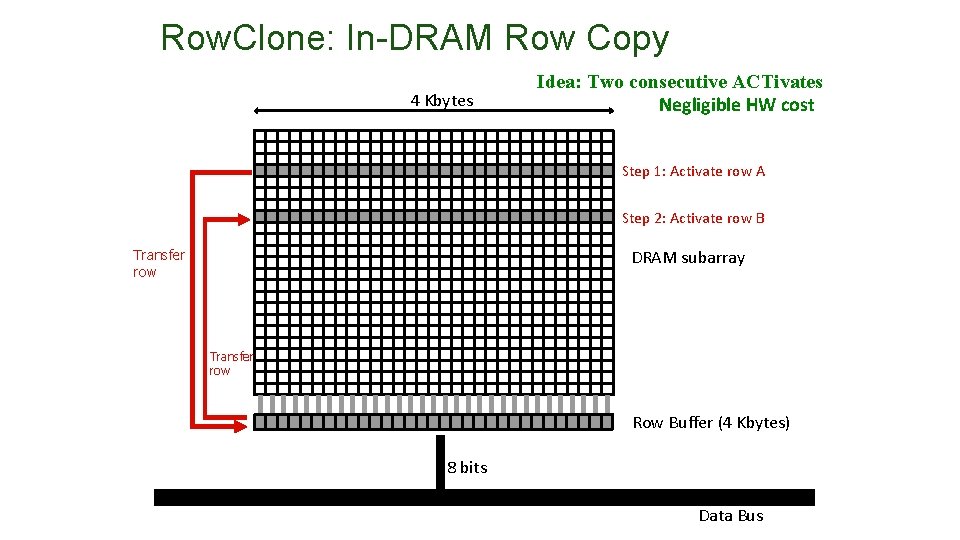

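RowClone: In-DRAM Row Copy
Idea: Two consecutive ACTivates
• Step 1: Activate row A (the 4-Kbyte row is transferred into the row buffer)
• Step 2: Activate row B (the row buffer contents are transferred into row B)
Negligible HW cost
[Diagram labels: DRAM subarray, Row Buffer (4 Kbytes), Data Bus (8 bits)]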
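The two-ACTIVATE idea can be illustrated with a toy software model. This is only a sketch: the `Subarray` class and its method names are invented here, and it models the behavior (a second ACTIVATE without an intervening precharge writes the latched row into the newly opened row), not the circuit.

```python
ROW_BYTES = 4096  # 4 Kbytes per row, as on the slide


class Subarray:
    """Toy model of one DRAM subarray with a shared row buffer."""

    def __init__(self, num_rows: int) -> None:
        self.rows = [bytearray(ROW_BYTES) for _ in range(num_rows)]
        self.row_buffer = None  # None models the precharged state
        self.bus_bytes = 0      # bytes that crossed the external data bus

    def activate(self, row: int) -> None:
        if self.row_buffer is None:
            # First ACTIVATE: the row buffer latches the row's contents.
            self.row_buffer = bytearray(self.rows[row])
        else:
            # Second ACTIVATE with no precharge in between: the latched
            # data is driven into the newly opened row.
            self.rows[row][:] = self.row_buffer

    def precharge(self) -> None:
        self.row_buffer = None

    def rowclone(self, src: int, dst: int) -> None:
        """Copy a whole row inside the subarray; nothing crosses the bus."""
        self.activate(src)
        self.activate(dst)
        self.precharge()

    def copy_over_bus(self, src: int, dst: int) -> None:
        """Conventional copy: every byte is read out and written back."""
        data = bytes(self.rows[src])
        self.bus_bytes += len(data)  # read out to the processor
        self.rows[dst][:] = data
        self.bus_bytes += len(data)  # written back to DRAM
```

In this model a `rowclone` leaves `bus_bytes` unchanged, while `copy_over_bus` moves each of the 4096 bytes across the bus twice.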


Processing in Memory: Two Approaches
1. Minimally changing memory chips
2. Exploiting 3D-stacked memory

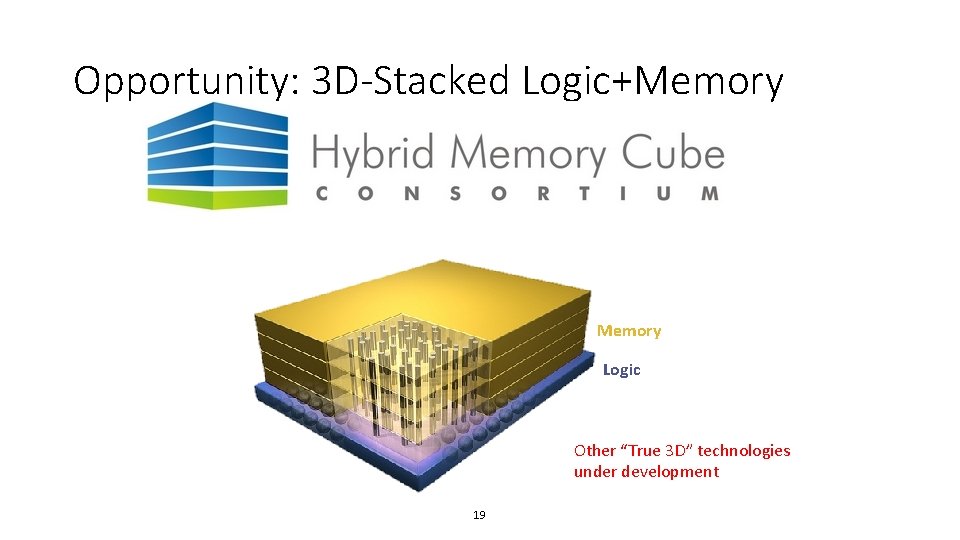

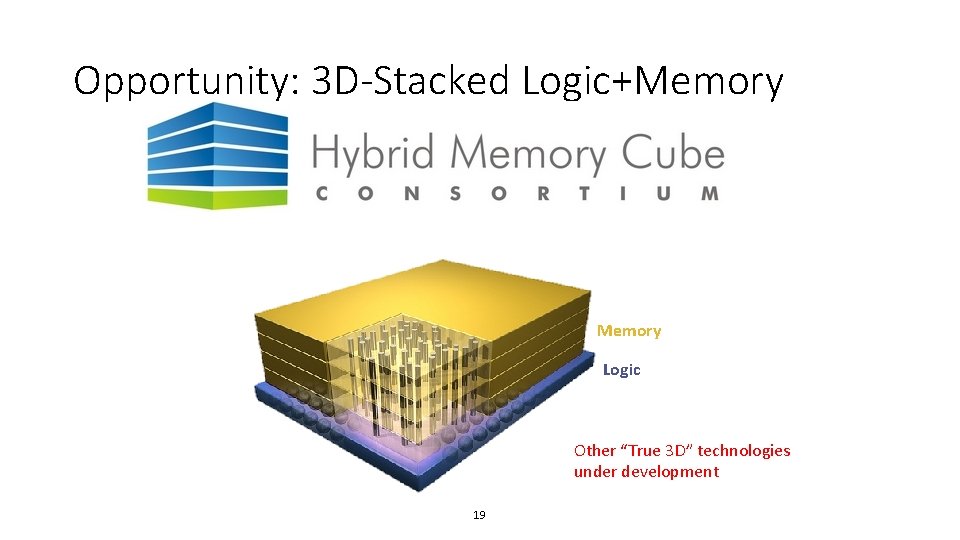

Opportunity: 3D-Stacked Logic+Memory
[Diagram label: Logic]
Other “True 3D” technologies under development

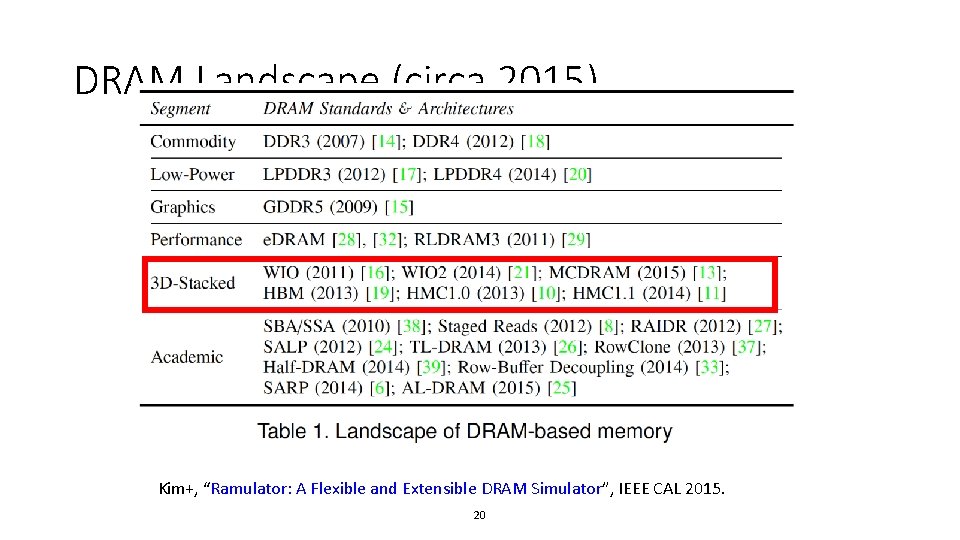

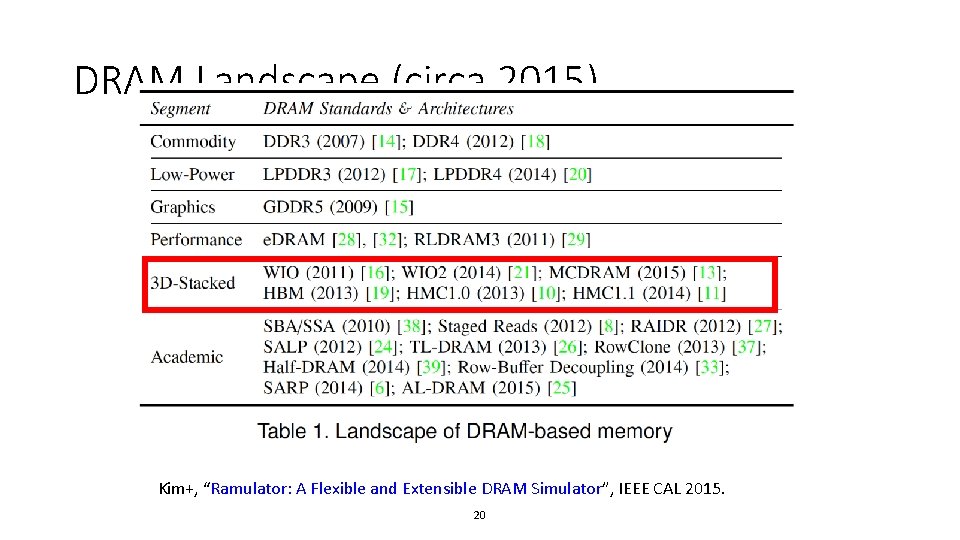

DRAM Landscape (circa 2015)
Kim+, “Ramulator: A Flexible and Extensible DRAM Simulator”, IEEE CAL 2015.

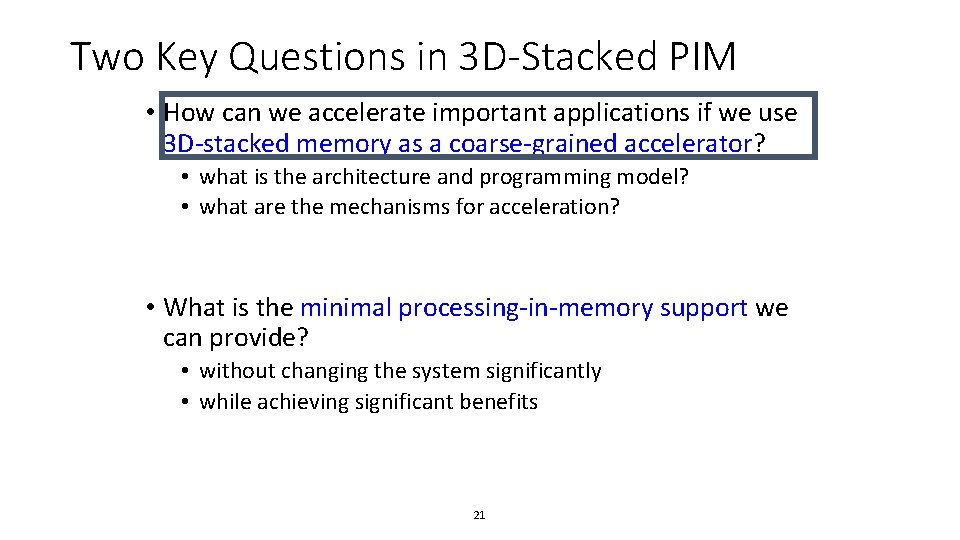

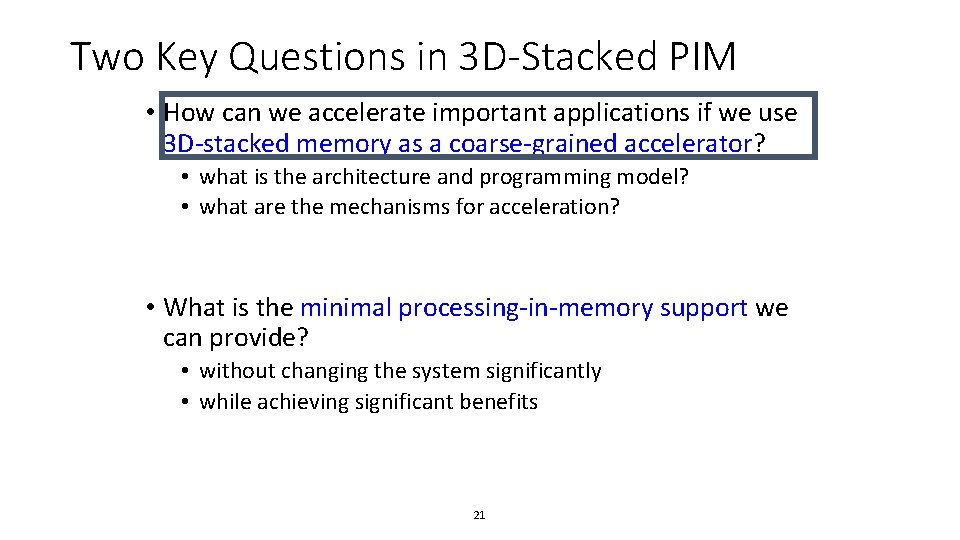

Two Key Questions in 3D-Stacked PIM
• How can we accelerate important applications if we use 3D-stacked memory as a coarse-grained accelerator?
  • what is the architecture and programming model?
  • what are the mechanisms for acceleration?
• What is the minimal processing-in-memory support we can provide?
  • without changing the system significantly
  • while achieving significant benefits

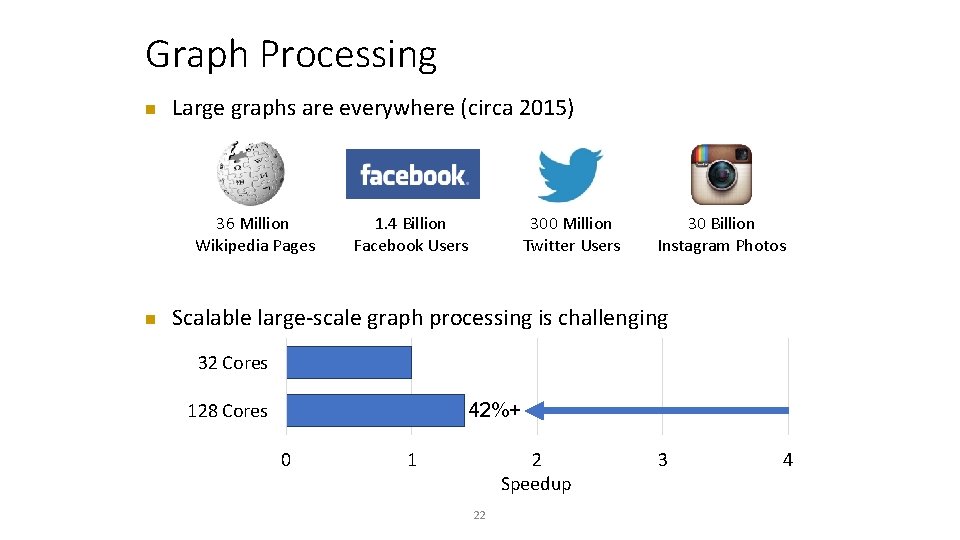

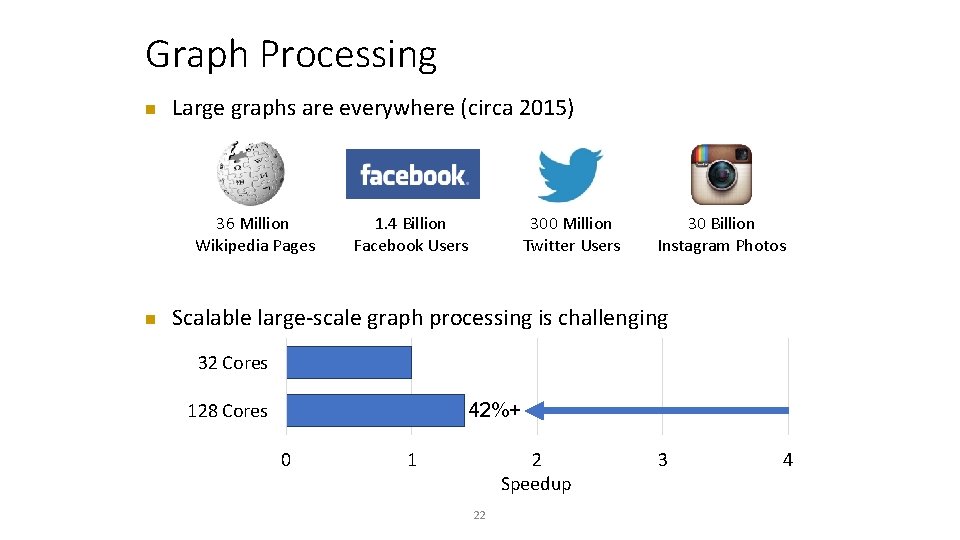

Graph Processing
• Large graphs are everywhere (circa 2015)
  • 36 Million Wikipedia Pages
  • 1.4 Billion Facebook Users
  • 300 Million Twitter Users
  • 30 Billion Instagram Photos
• Scalable large-scale graph processing is challenging
[Chart: speedup for 32 cores vs. 128 cores, annotated +42%]

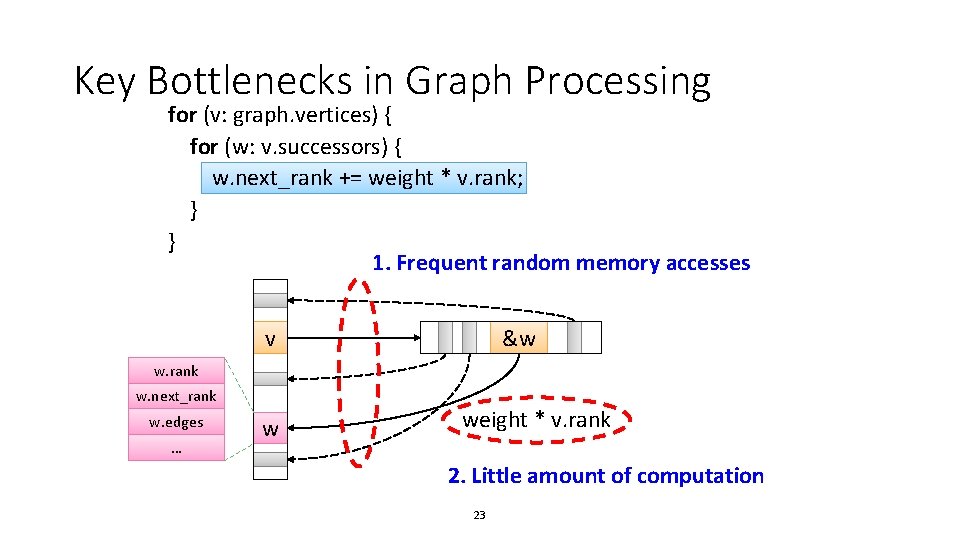

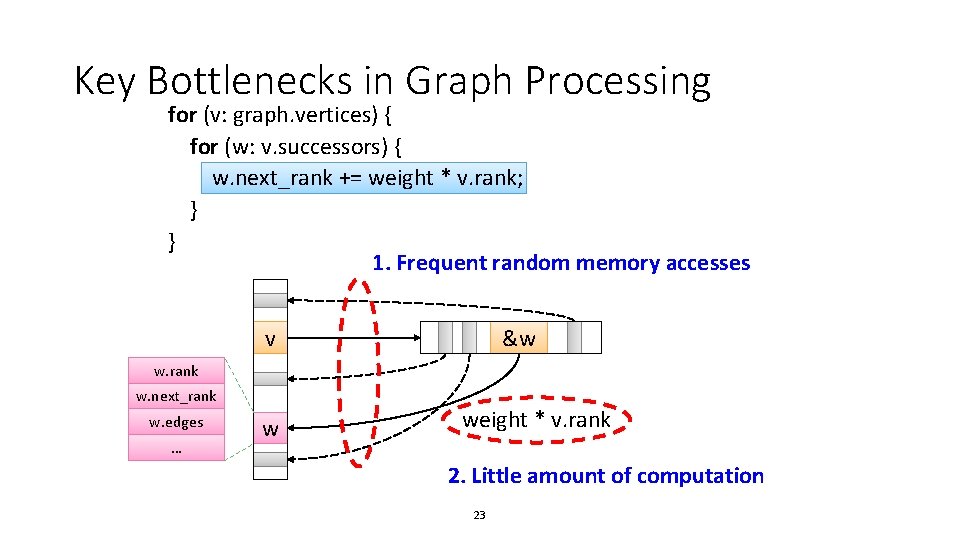

Key Bottlenecks in Graph Processing
    for (v: graph.vertices) {
      for (w: v.successors) {
        w.next_rank += weight * v.rank;
      }
    }
1. Frequent random memory accesses (following &w from v to reach w.rank, w.next_rank, w.edges, …)
2. Little amount of computation (weight * v.rank)
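A runnable version of the loop above, as a minimal sketch. The `Vertex` class and the `propagate` function are made up for this example, and `weight` is passed in as a plain parameter because the slide does not say how it is computed.

```python
from dataclasses import dataclass, field


@dataclass
class Vertex:
    rank: float
    next_rank: float = 0.0
    successors: list = field(default_factory=list)  # outgoing neighbors


def propagate(vertices, weight):
    """One pass of the slide's loop over every edge in the graph."""
    for v in vertices:
        for w in v.successors:
            # One multiply-add per edge, but w can live anywhere in
            # memory, so each update is a likely cache miss.
            w.next_rank += weight * v.rank


if __name__ == "__main__":
    a, b, c = Vertex(1.0), Vertex(1.0), Vertex(1.0)
    a.successors = [b, c]
    b.successors = [c]
    propagate([a, b, c], weight=0.5)
    print(a.next_rank, b.next_rank, c.next_rank)
```

The work per edge is a single multiply-add, while the operand `w` is reached through a pointer. This is the combination of random access and little computation that the slide names.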

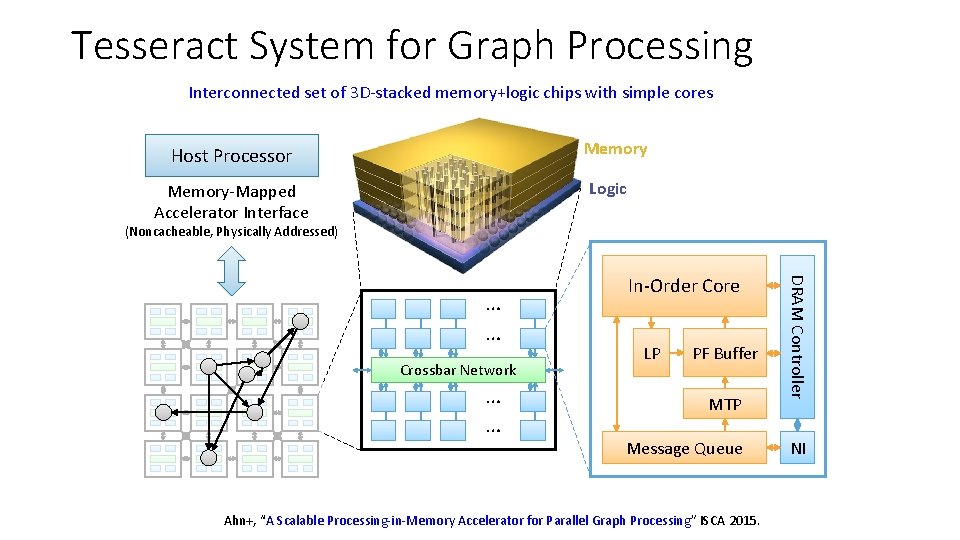

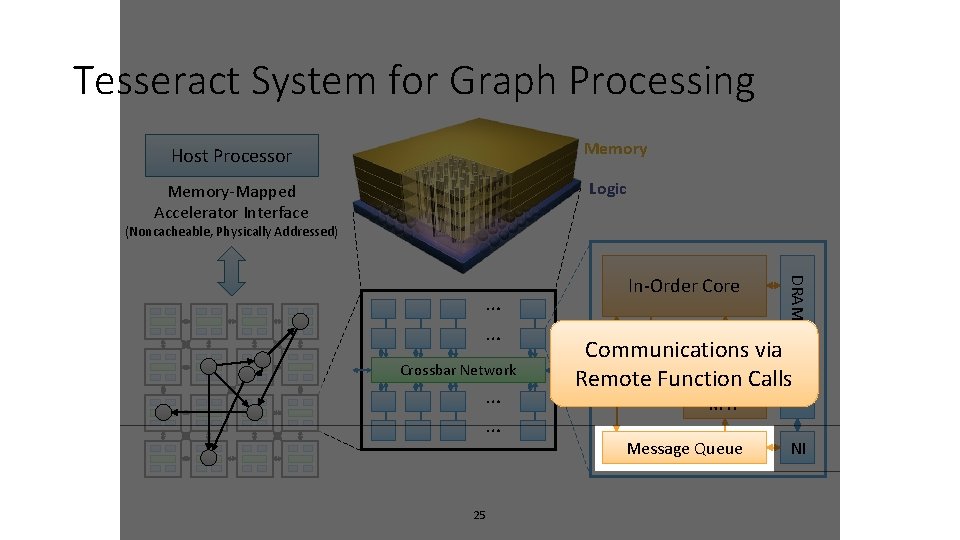

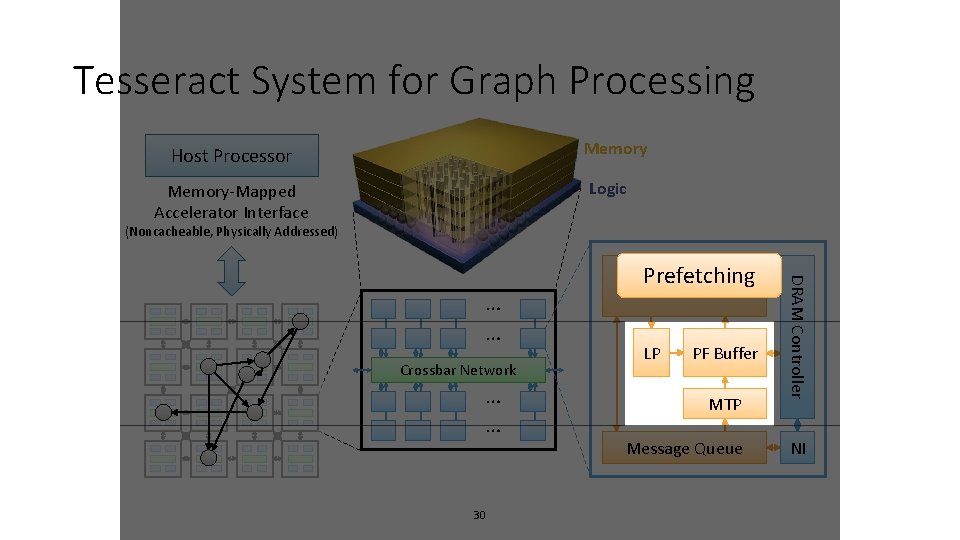

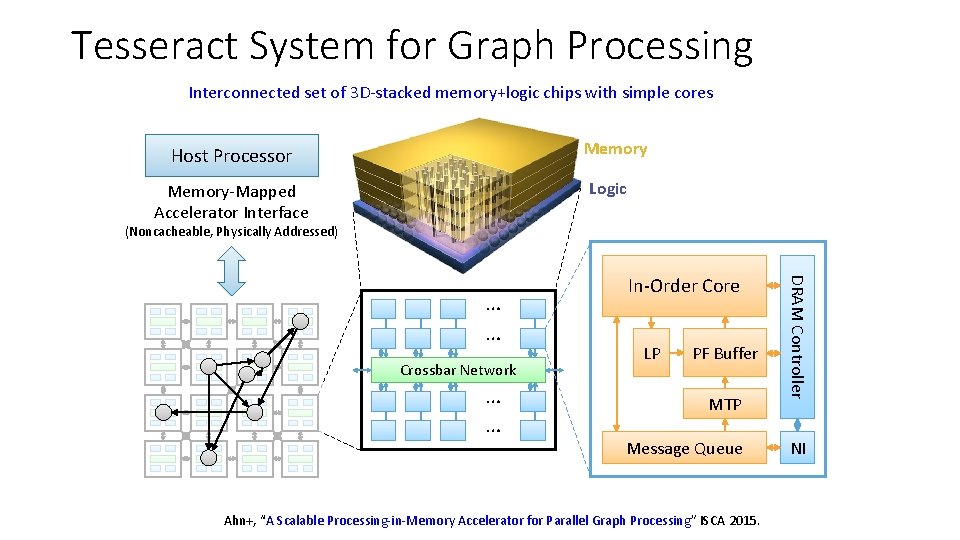

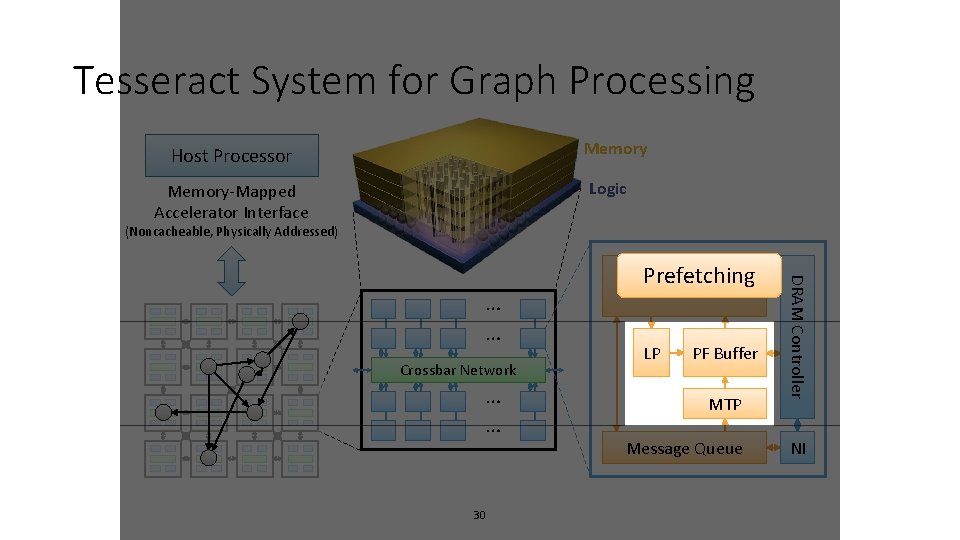

Tesseract System for Graph Processing
Interconnected set of 3D-stacked memory+logic chips with simple cores
[Diagram labels: Host Processor; Memory-Mapped Accelerator Interface (Noncacheable, Physically Addressed); Memory; Logic; Crossbar Network; DRAM Controller; In-Order Core; LP; PF Buffer; MTP; Message Queue; NI]
Ahn+, “A Scalable Processing-in-Memory Accelerator for Parallel Graph Processing” ISCA 2015.

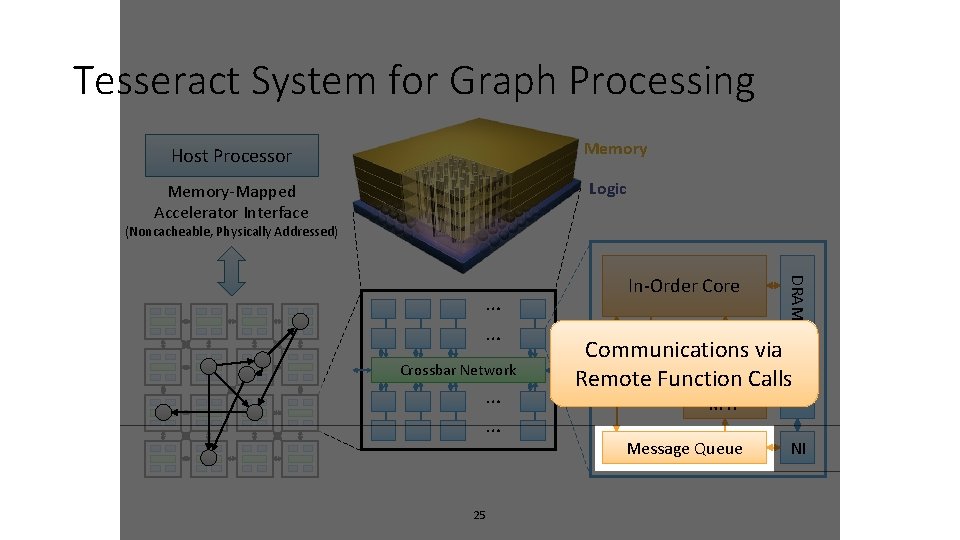

Tesseract System for Graph Processing
Highlighted: Communications via Remote Function Calls
[Diagram labels: Host Processor; Memory-Mapped Accelerator Interface (Noncacheable, Physically Addressed); Memory; Logic; Crossbar Network; DRAM Controller; In-Order Core; LP; PF Buffer; MTP; Message Queue; NI]

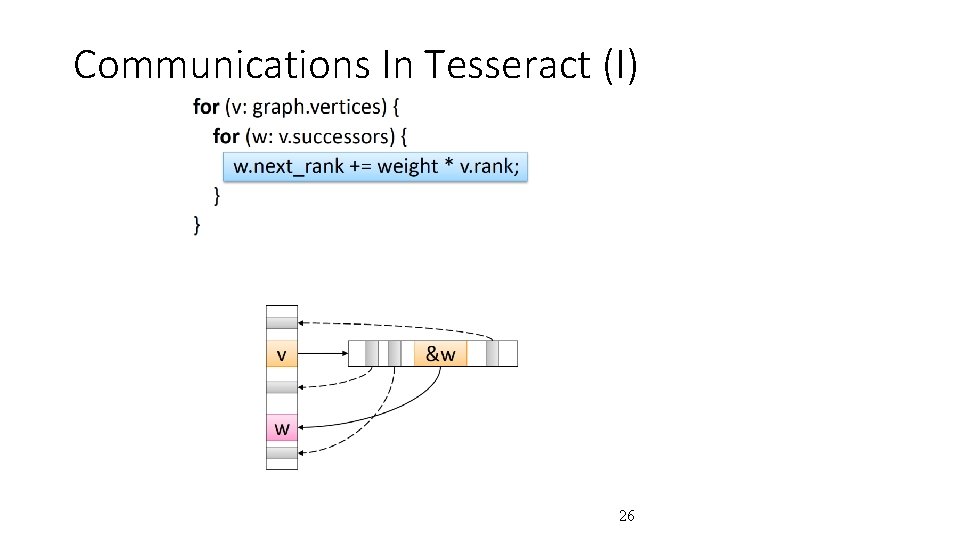

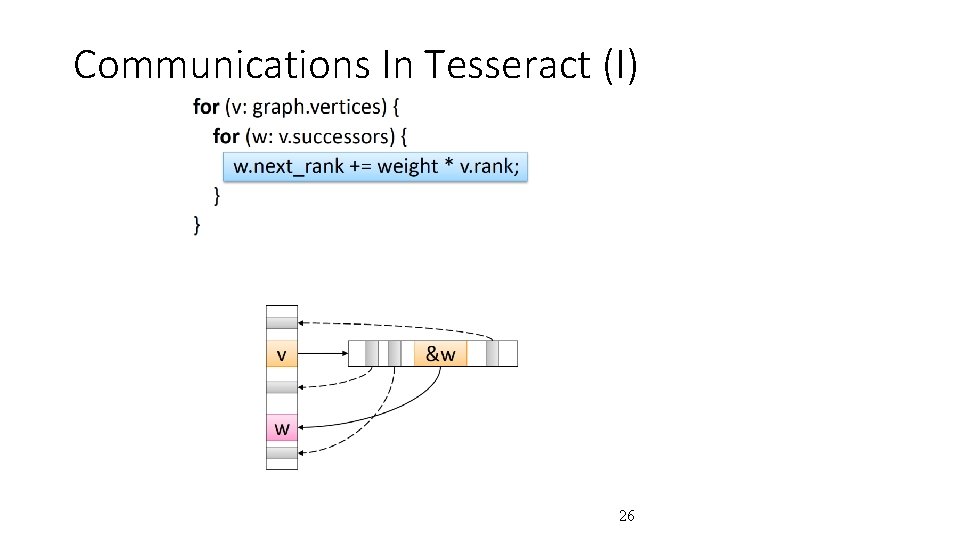

Communications In Tesseract (I)

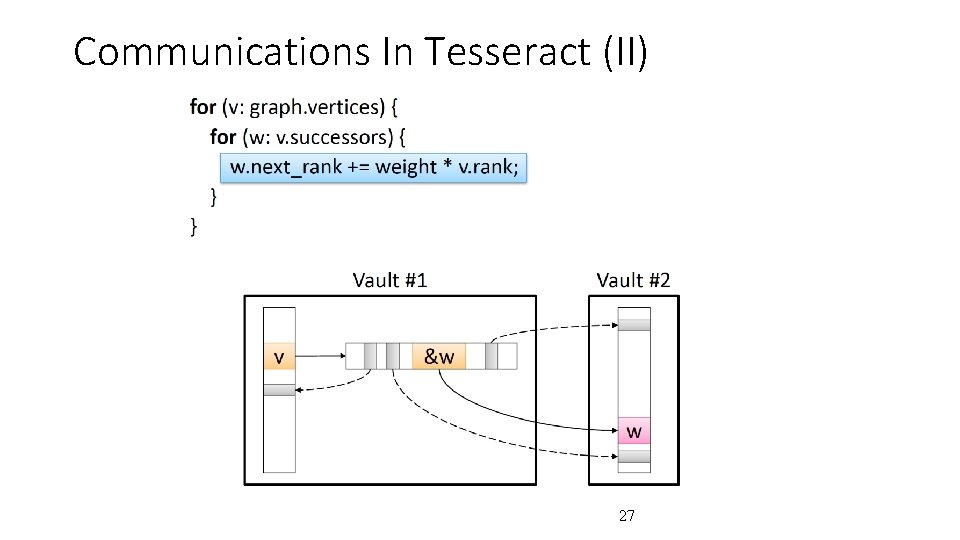

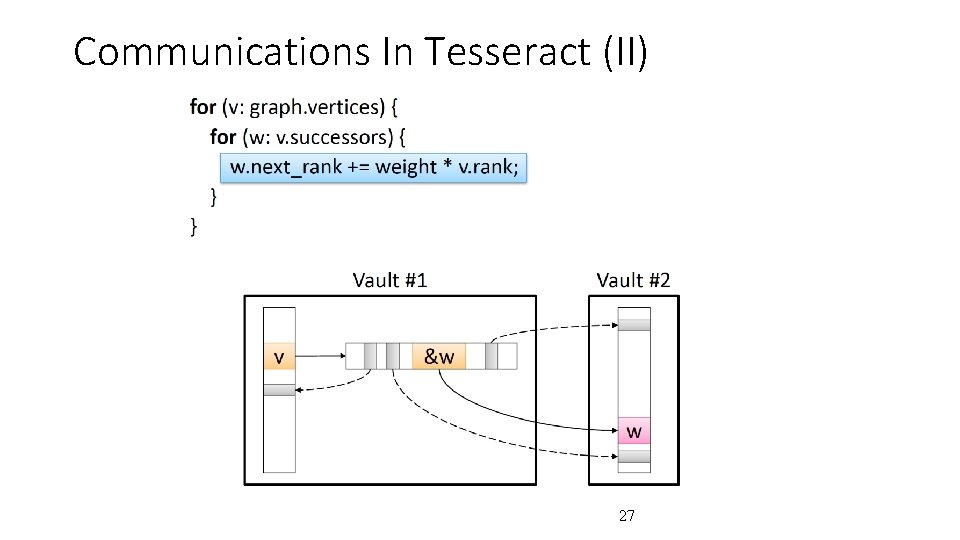

Communications In Tesseract (II)

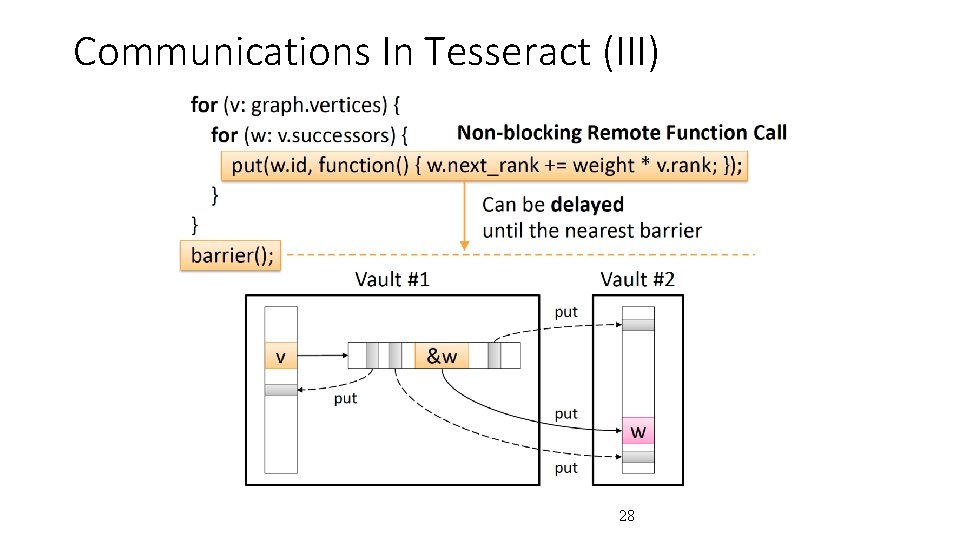

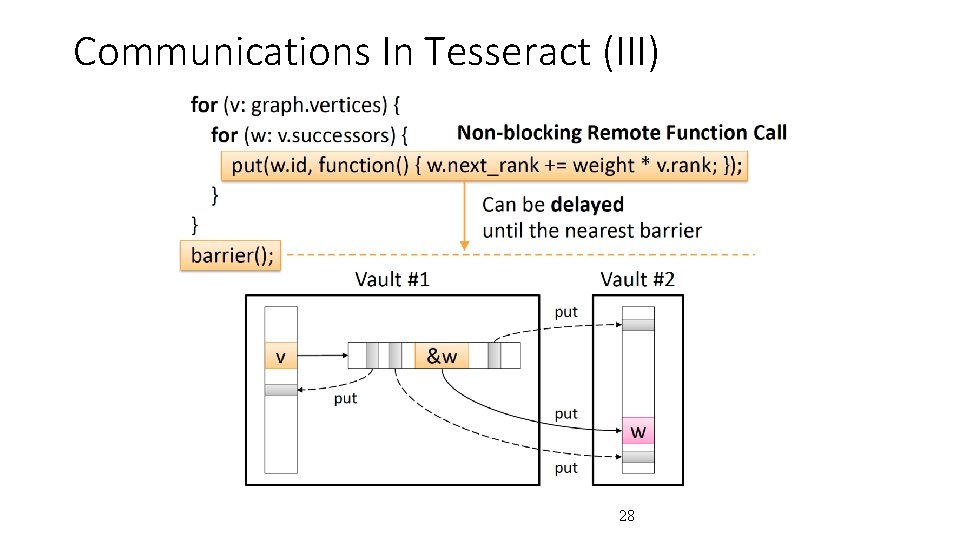

Communications In Tesseract (III)

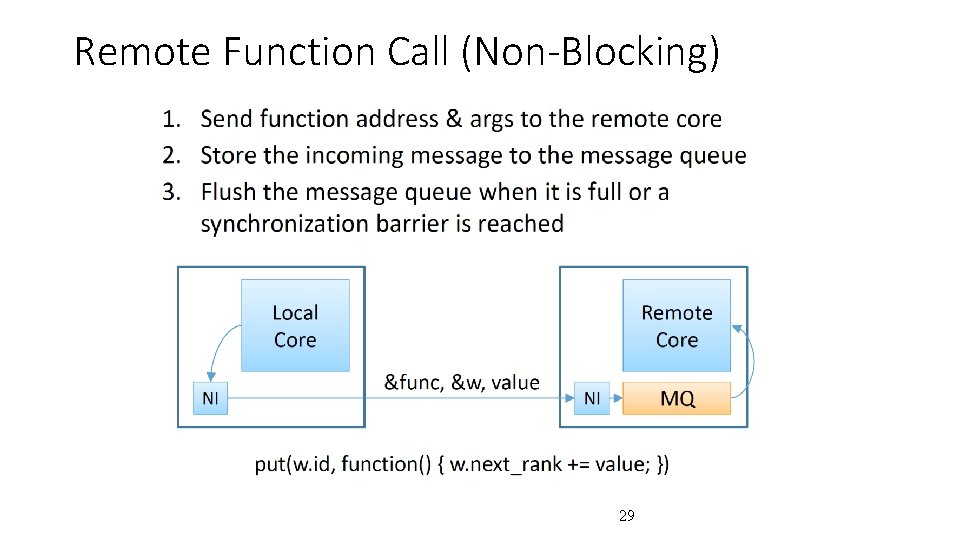

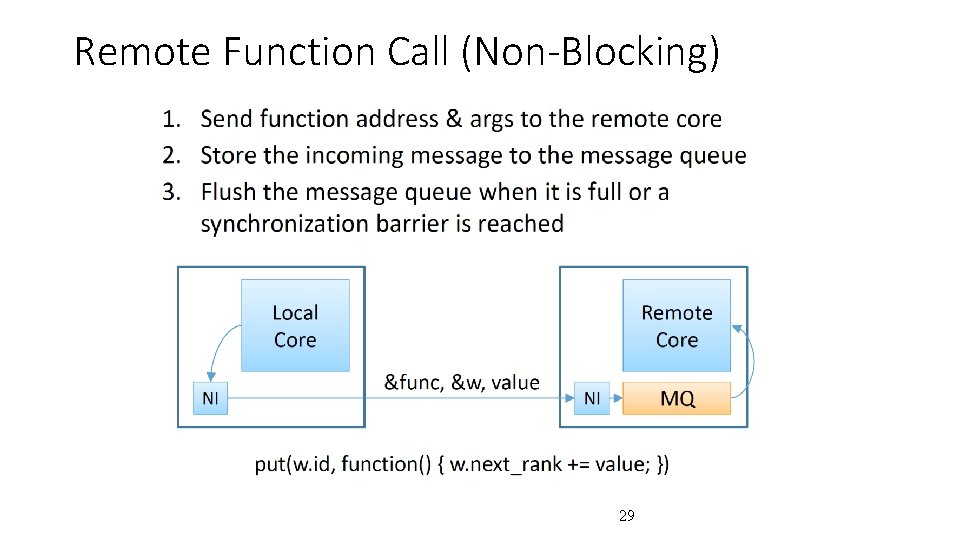

Remote Function Call (Non-Blocking)
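The slide figures are not reproduced in this text, so the following is a hedged sketch of what a non-blocking remote function call with per-vault message queues could look like. All names (`Vault`, `owner`, `put`, `barrier`, `add_rank`) and the modulo vertex-to-vault mapping are assumptions made for this sketch, not the accelerator's actual interface.

```python
from collections import deque

NUM_VAULTS = 4


class Vault:
    """One memory partition with its local data and a message queue."""

    def __init__(self) -> None:
        self.next_rank = {}   # vertex id -> accumulated value (local data)
        self.queue = deque()  # pending remote function calls


def owner(vertex_id: int) -> int:
    # Hypothetical data mapping: vertices are spread by id modulo.
    return vertex_id % NUM_VAULTS


def put(vaults, vertex_id, func, arg) -> None:
    """Non-blocking call: enqueue at the owning vault and return at once."""
    vaults[owner(vertex_id)].queue.append((func, vertex_id, arg))


def barrier(vaults) -> None:
    """Drain every queue so that all pending calls have taken effect."""
    for vault in vaults:
        while vault.queue:
            func, vertex_id, arg = vault.queue.popleft()
            func(vault, vertex_id, arg)


def add_rank(vault, vertex_id, value) -> None:
    """Runs at the vault that owns vertex_id, next to its data."""
    vault.next_rank[vertex_id] = vault.next_rank.get(vertex_id, 0.0) + value
```

The caller never waits for a result: the update `w.next_rank += value` becomes a `put` to the vault that owns `w`, and a later `barrier` guarantees that every queued update has been applied.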

Tesseract System for Graph Processing
Highlighted: Prefetching
[Diagram labels: Host Processor; Memory-Mapped Accelerator Interface (Noncacheable, Physically Addressed); Memory; Logic; Crossbar Network; DRAM Controller; In-Order Core; LP; PF Buffer; MTP; Message Queue; NI]

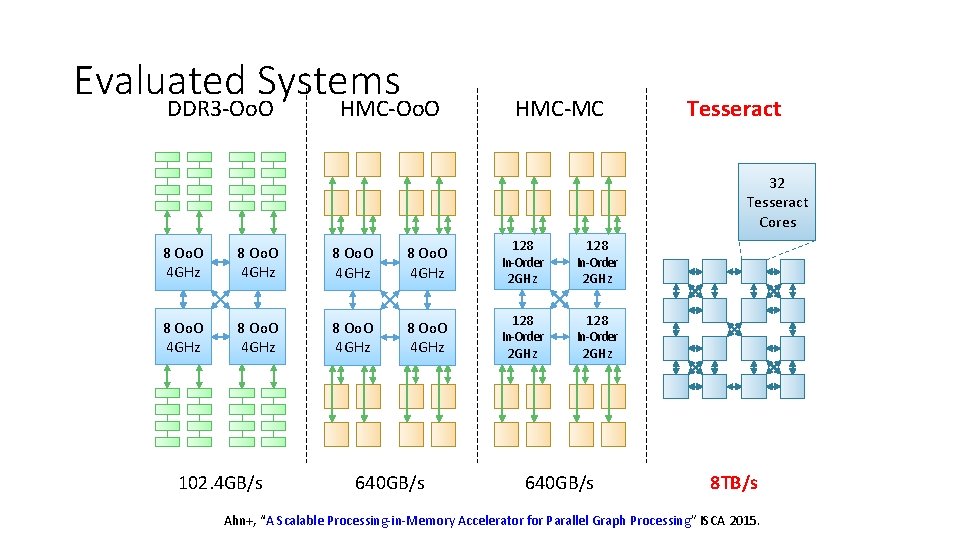

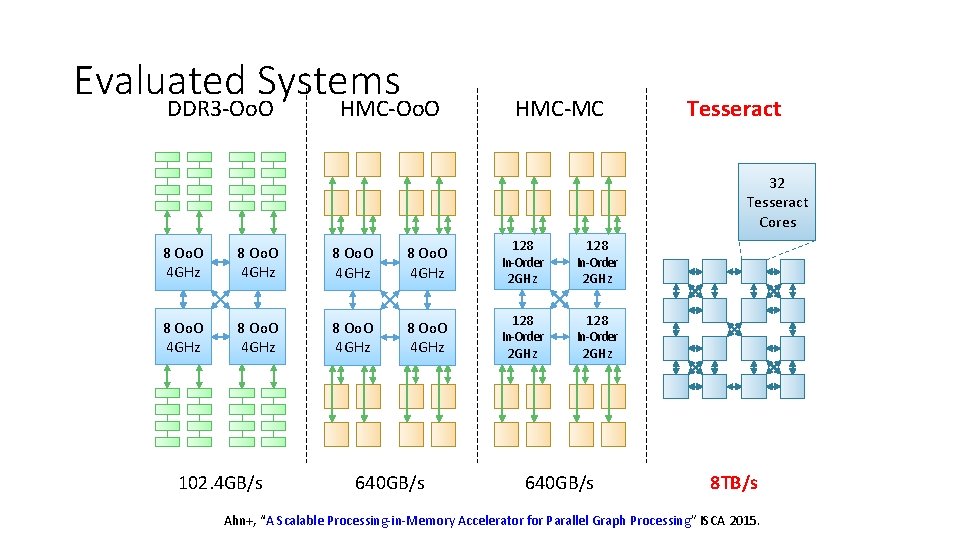

Evaluated Systems (labels from the slide diagram)
• DDR3-OoO: 8 OoO cores at 4 GHz; 102.4 GB/s
• HMC-MC: 128 in-order cores at 2 GHz; 640 GB/s
• Tesseract: 32 Tesseract cores; 8 TB/s
Ahn+, “A Scalable Processing-in-Memory Accelerator for Parallel Graph Processing” ISCA 2015.

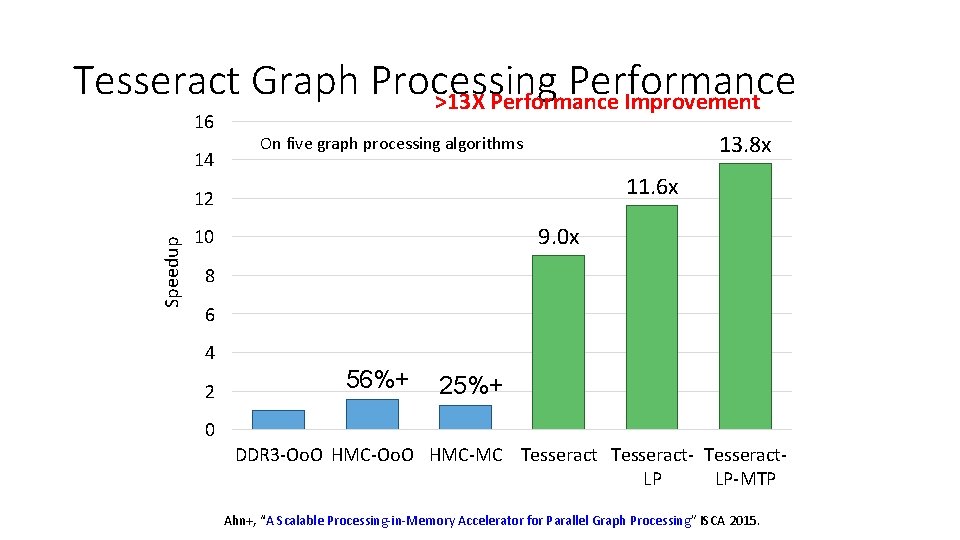

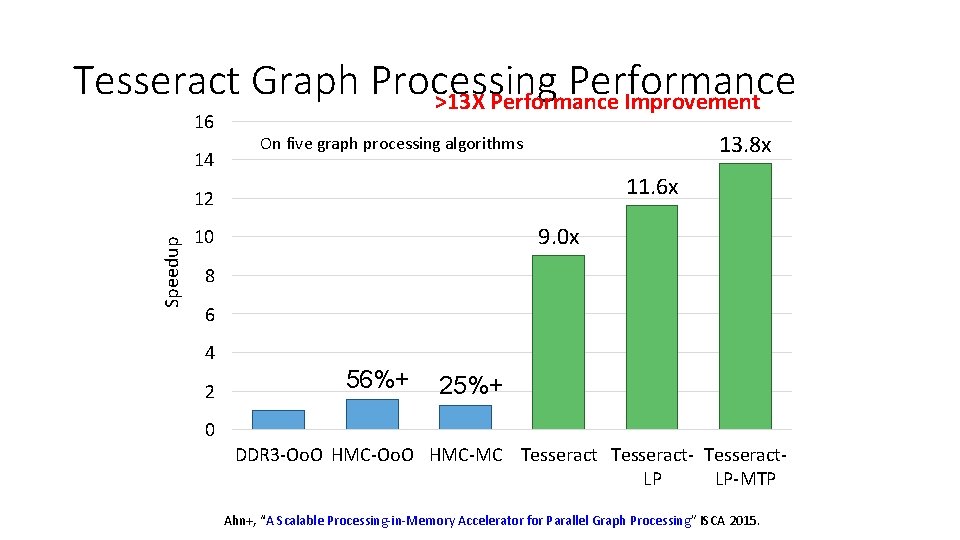

Tesseract Graph Processing Performance
>13X Performance Improvement on five graph processing algorithms
[Chart: speedup. Bar labels: +56%, +25%, 9.0x, 11.6x, 13.8x. Configurations on the x-axis: DDR3-OoO, HMC-MC, Tesseract, Tesseract-LP, Tesseract-LP-MTP]
Ahn+, “A Scalable Processing-in-Memory Accelerator for Parallel Graph Processing” ISCA 2015.

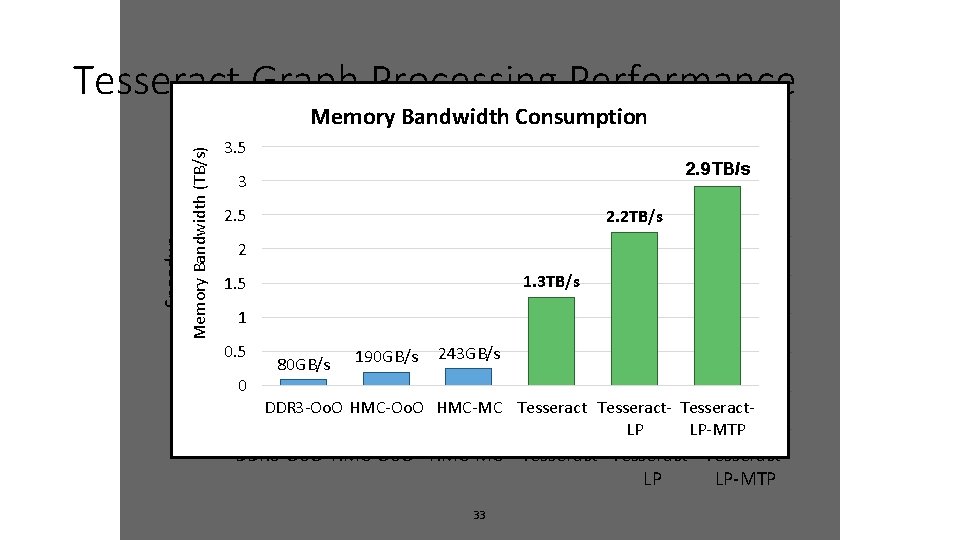

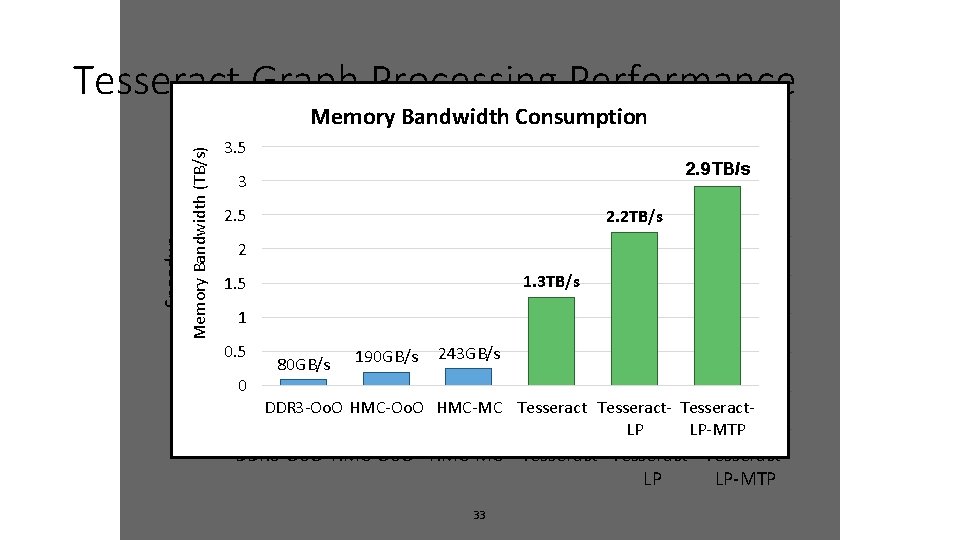

Tesseract Graph Processing Performance: Memory Bandwidth Consumption
[Chart: speedup and memory bandwidth (TB/s) per configuration]
• DDR3-OoO: 80 GB/s
• HMC-OoO: 190 GB/s (speedup +56%)
• HMC-MC: 243 GB/s (speedup +25%)
• Tesseract: 1.3 TB/s (speedup 9.0x)
• Tesseract-LP: 2.2 TB/s (speedup 11.6x)
• Tesseract-LP-MTP: 2.9 TB/s (speedup 13.8x)
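As a rough worked example, the peak bandwidths on the Evaluated Systems slide can be set against the consumed bandwidths reported here. The pairing of peak and consumed values, and the use of 1 TB/s = 1000 GB/s, are assumptions of this sketch; `SYSTEMS` and `utilization` are names invented for the example.

```python
# (peak GB/s, consumed GB/s) as read from the slides. Pairing the
# 8 TB/s peak with the Tesseract-LP-MTP measurement is an assumption.
SYSTEMS = {
    "DDR3-OoO": (102.4, 80.0),
    "HMC-MC": (640.0, 243.0),
    "Tesseract-LP-MTP": (8000.0, 2900.0),
}


def utilization(peak_gbs: float, used_gbs: float) -> float:
    """Fraction of the available memory bandwidth actually consumed."""
    return used_gbs / peak_gbs


if __name__ == "__main__":
    for name, (peak, used) in SYSTEMS.items():
        print(f"{name}: {used:g} of {peak:g} GB/s = {utilization(peak, used):.0%}")
```

Under these assumptions, HMC-MC consumes well under half of its 640 GB/s, while Tesseract consumes an order of magnitude more bandwidth in absolute terms than either conventional configuration.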

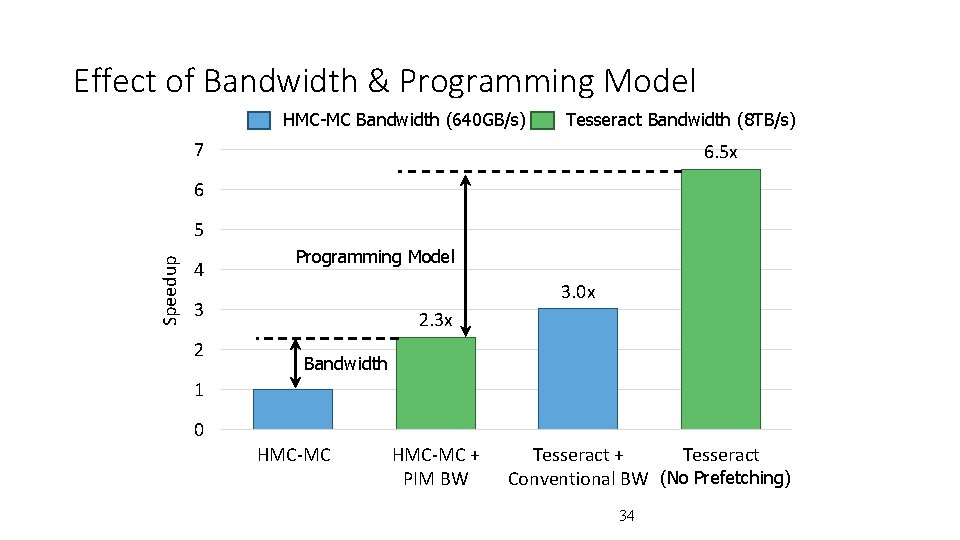

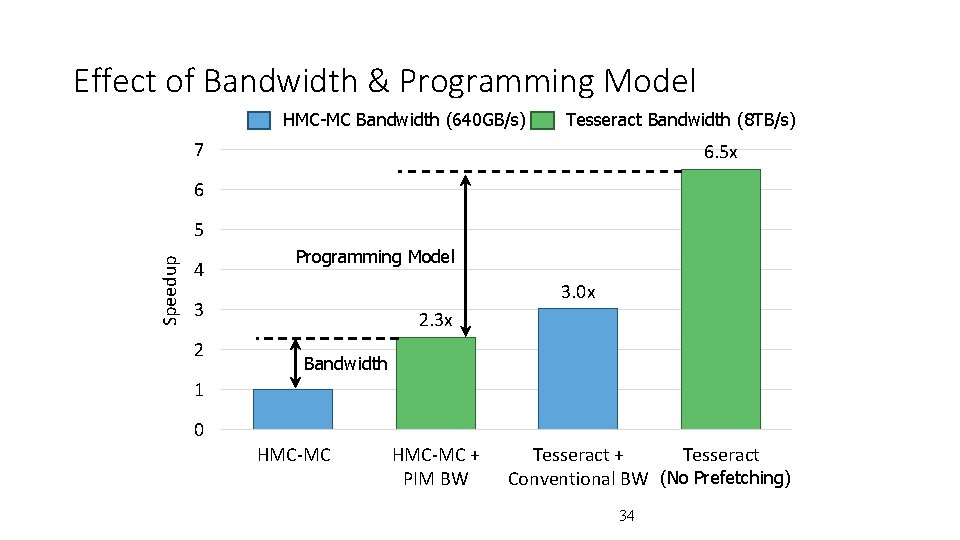

Effect of Bandwidth & Programming Model
[Chart: speedup, annotated with HMC-MC Bandwidth (640 GB/s) and Tesseract Bandwidth (8 TB/s); arrows labeled “Bandwidth” and “Programming Model”]
• HMC-MC + PIM BW: 2.3x
• Tesseract + Conventional BW: 3.0x
• Tesseract (No Prefetching): 6.5x

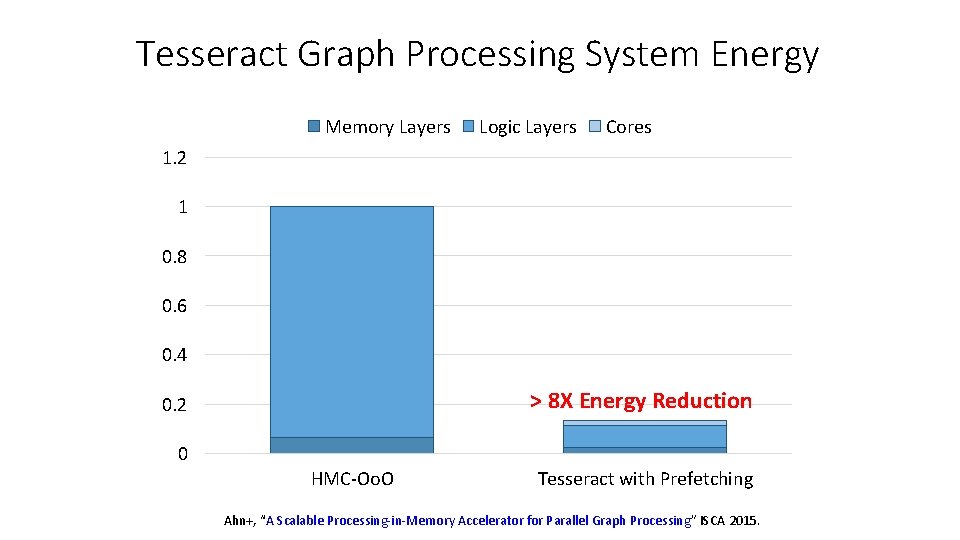

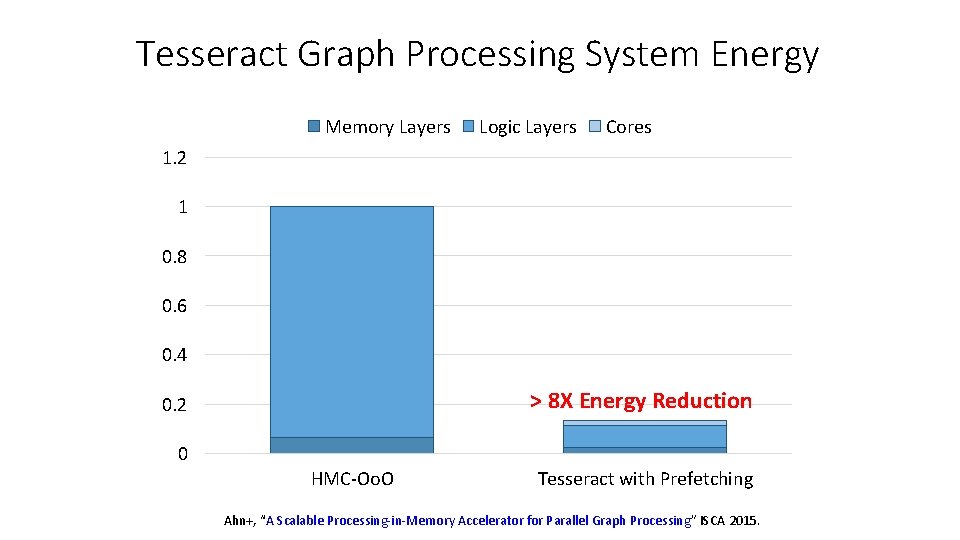

Tesseract Graph Processing System Energy
[Chart: normalized energy for HMC-OoO vs. Tesseract with Prefetching, broken down into Memory Layers, Logic Layers, and Cores]
> 8X Energy Reduction
Ahn+, “A Scalable Processing-in-Memory Accelerator for Parallel Graph Processing” ISCA 2015.

Tesseract: Advantages & Disadvantages
• Advantages
  + Specialized graph processing accelerator using PIM
  + Large system performance and energy benefits
  + Takes advantage of 3D stacking for an important workload
• Disadvantages
  - Changes a lot in the system
    - New programming model
    - Specialized Tesseract cores for graph processing
  - Cost
  - Scalability limited by off-chip links or graph partitioning

Eliminating the Adoption Barriers: How to Enable Adoption of Processing in Memory

Barriers to Adoption of PIM
1. Functionality of and applications for PIM
2. Ease of programming (interfaces and compiler/HW support)
3. System support: coherence & virtual memory
4. Runtime systems for adaptive scheduling, data mapping, access/sharing control
5. Infrastructures to assess benefits and feasibility

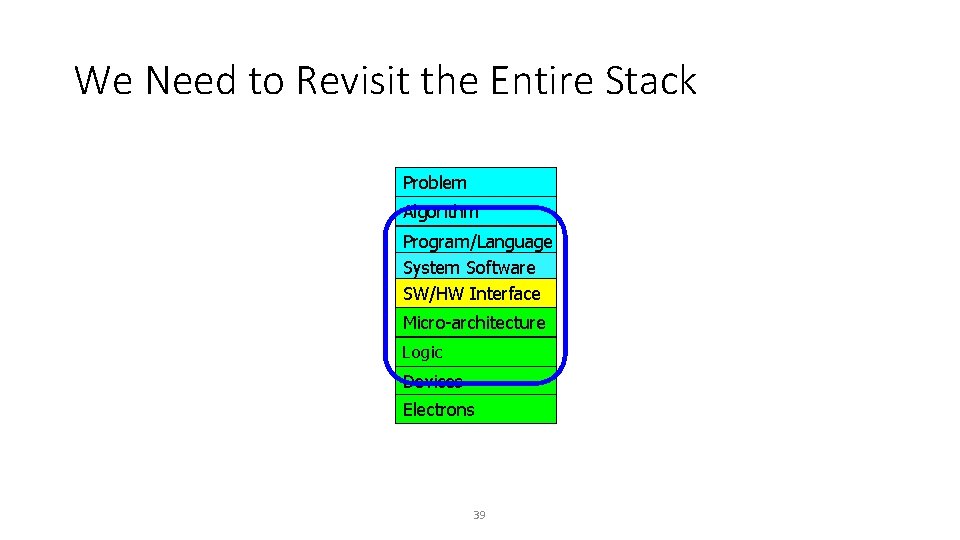

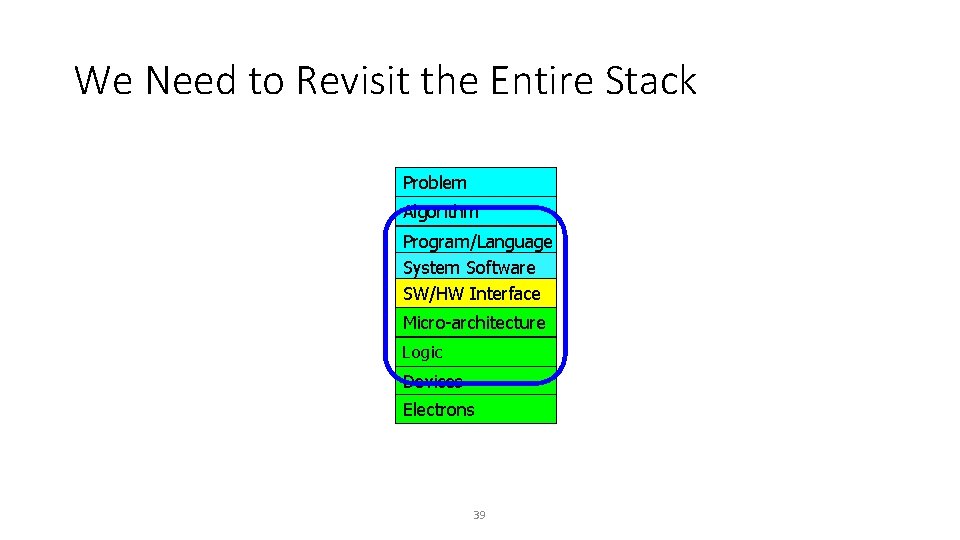

We Need to Revisit the Entire Stack
[Stack: Problem, Algorithm, Program/Language, System Software, SW/HW Interface, Micro-architecture, Logic, Devices, Electrons]

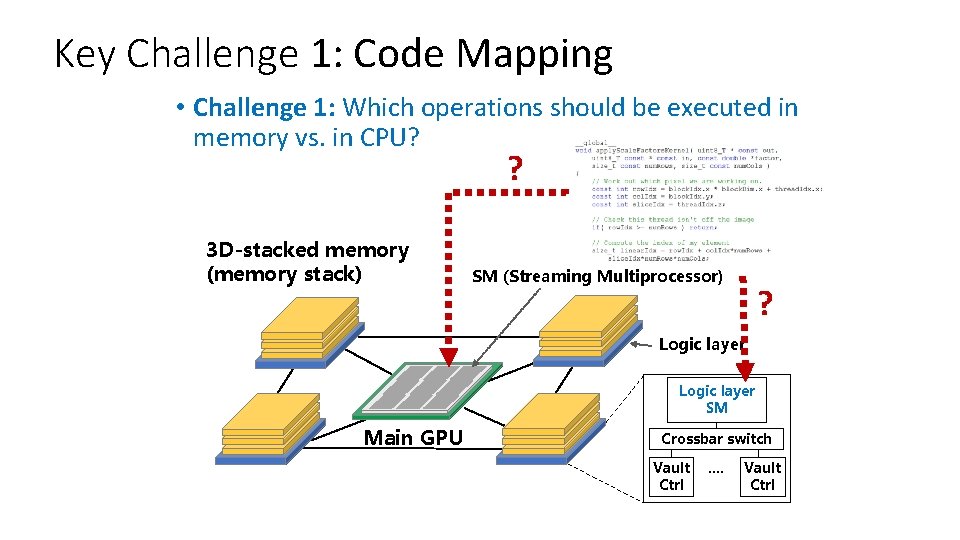

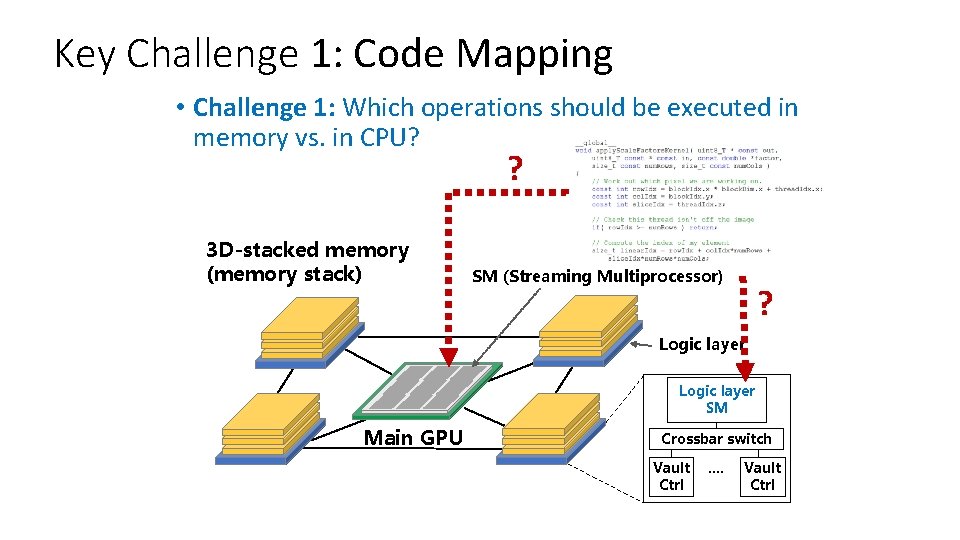

Key Challenge 1: Code Mapping
• Challenge 1: Which operations should be executed in memory vs. in CPU?
[Diagram labels: Main GPU, SM (Streaming Multiprocessor), 3D-stacked memory (memory stack), Logic layer, SM, Crossbar switch, Vault Ctrl]

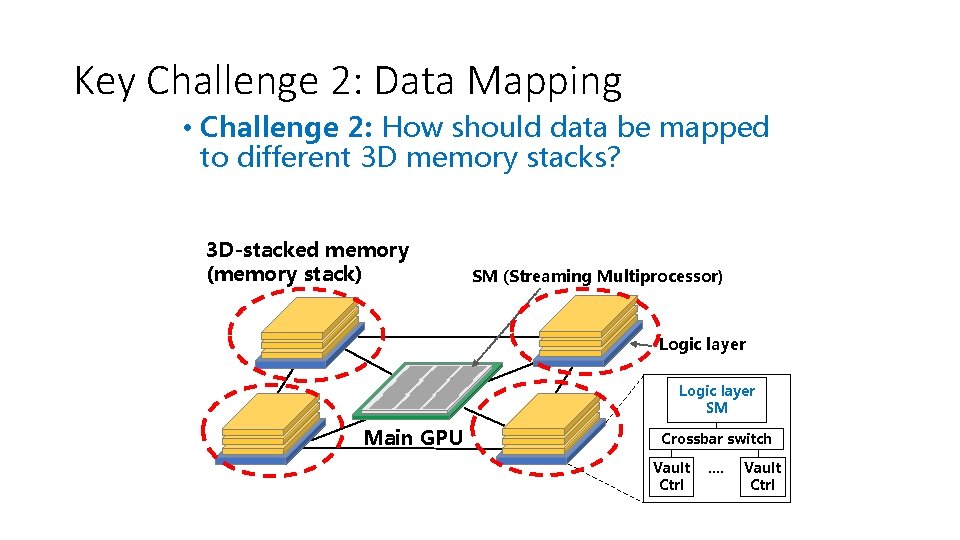

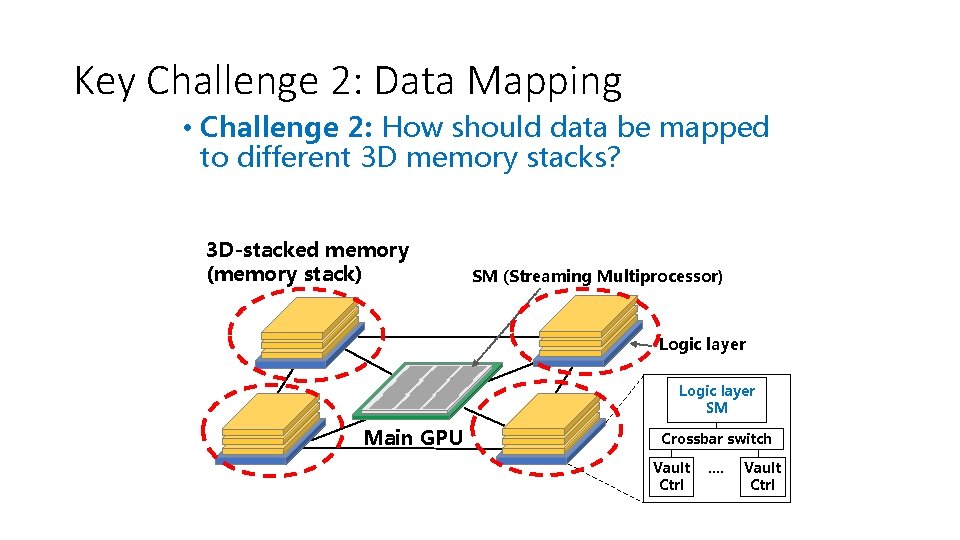

Key Challenge 2: Data Mapping
• Challenge 2: How should data be mapped to different 3D memory stacks?
[Diagram labels: Main GPU, SM (Streaming Multiprocessor), 3D-stacked memory (memory stack), Logic layer, SM, Crossbar switch, Vault Ctrl]

Enabling the Paradigm Shift

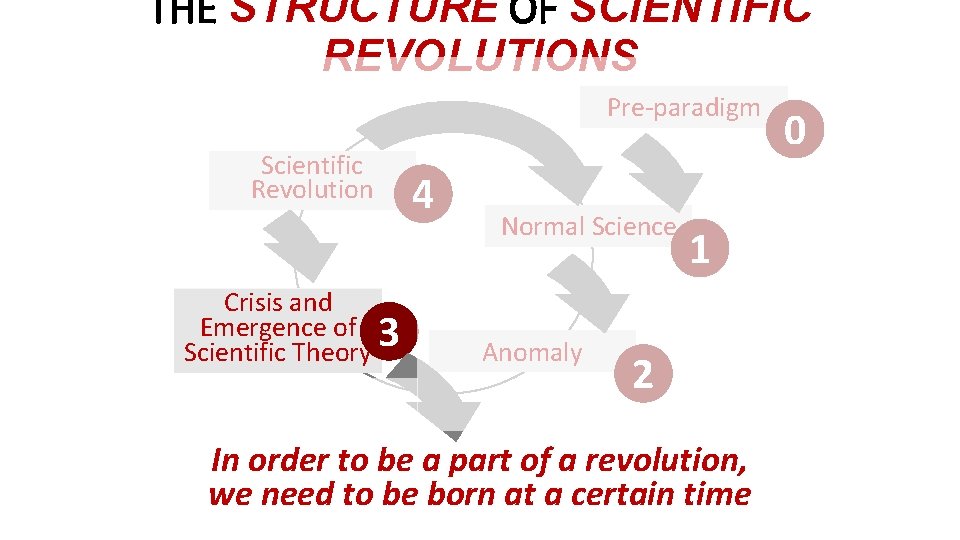

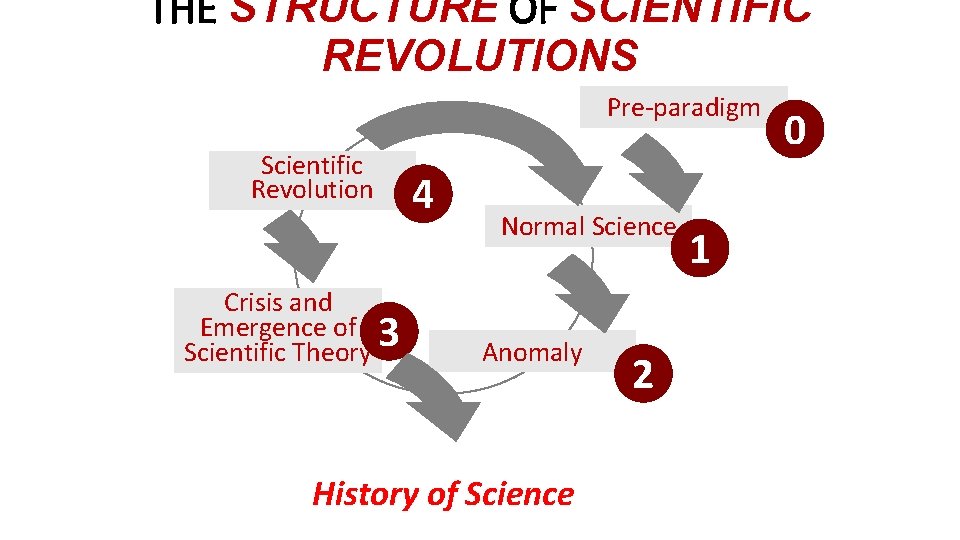

Computer Architecture Today
• You can revolutionize the way computers are built, if you understand both the hardware and the software (and change each accordingly)
• You can invent new paradigms for computation, communication, and storage
• Recommended book: Thomas Kuhn, “The Structure of Scientific Revolutions” (1962)
  • Pre-paradigm science: no clear consensus in the field
  • Normal science: dominant theory used to explain/improve things (business as usual); exceptions considered anomalies
  • Revolutionary science: underlying assumptions re-examined

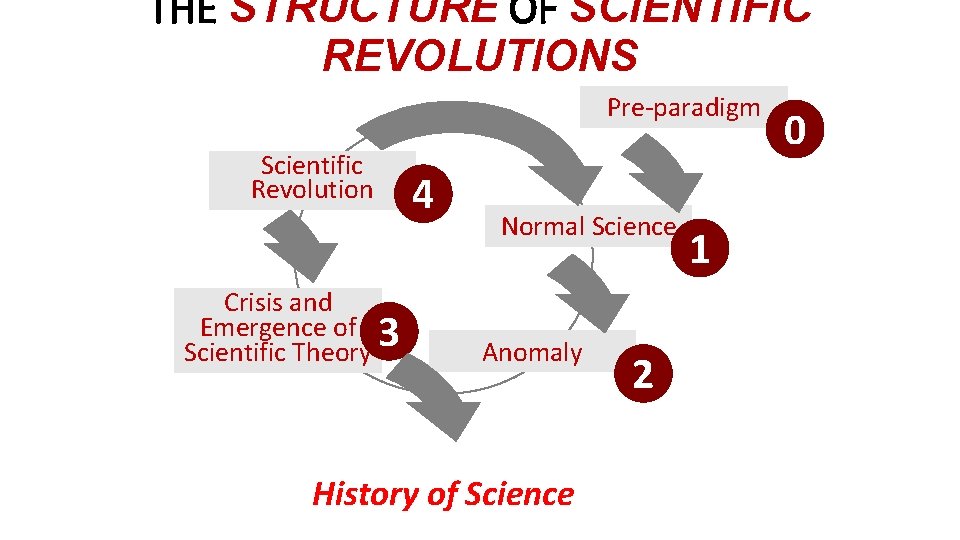

THE STRUCTURE OF SCIENTIFIC REVOLUTIONS: History of Science
[Cycle diagram: 0 Pre-paradigm; 1 Normal Science; 2 Anomaly; 3 Crisis and Emergence of Scientific Theory; 4 Scientific Revolution]

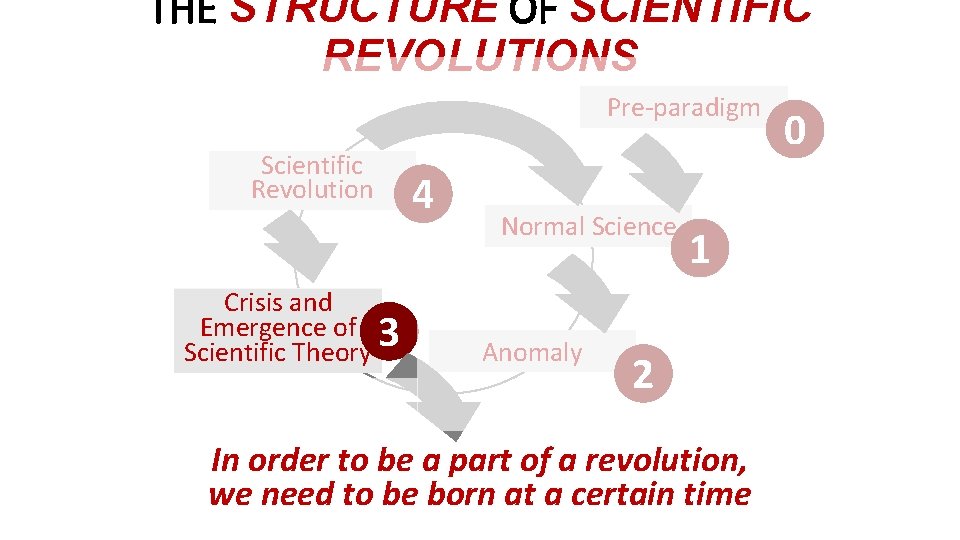

THE STRUCTURE OF SCIENTIFIC REVOLUTIONS
[Cycle diagram: 0 Pre-paradigm; 1 Normal Science; 2 Anomaly; 3 Crisis and Emergence of Scientific Theory; 4 Scientific Revolution]
In order to be a part of a revolution, we need to be born at a certain time

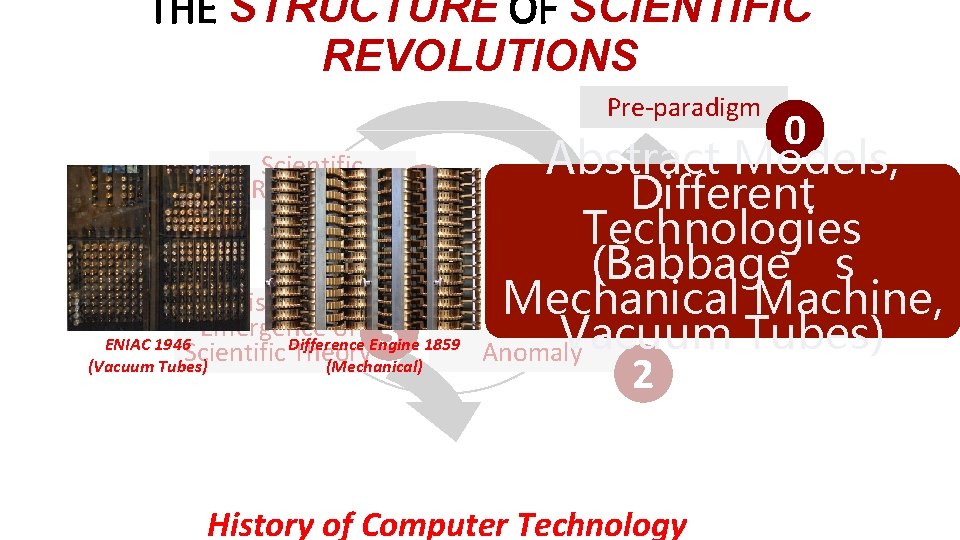

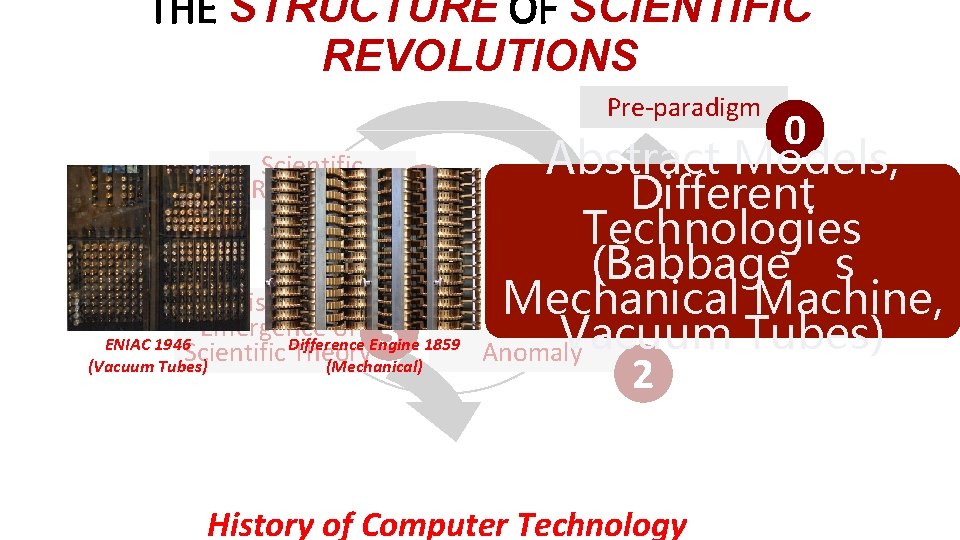

THE STRUCTURE OF SCIENTIFIC REVOLUTIONS: History of Computer Technology
[Cycle diagram: 0 Pre-paradigm; 1 Normal Science; 2 Anomaly; 3 Crisis and Emergence of Scientific Theory; 4 Scientific Revolution]
• 0 Pre-paradigm: abstract models, different technologies (Babbage’s Mechanical Machine, Vacuum Tubes)
  • 1859: Difference Engine (Mechanical)
  • 1946: ENIAC (Vacuum Tubes)

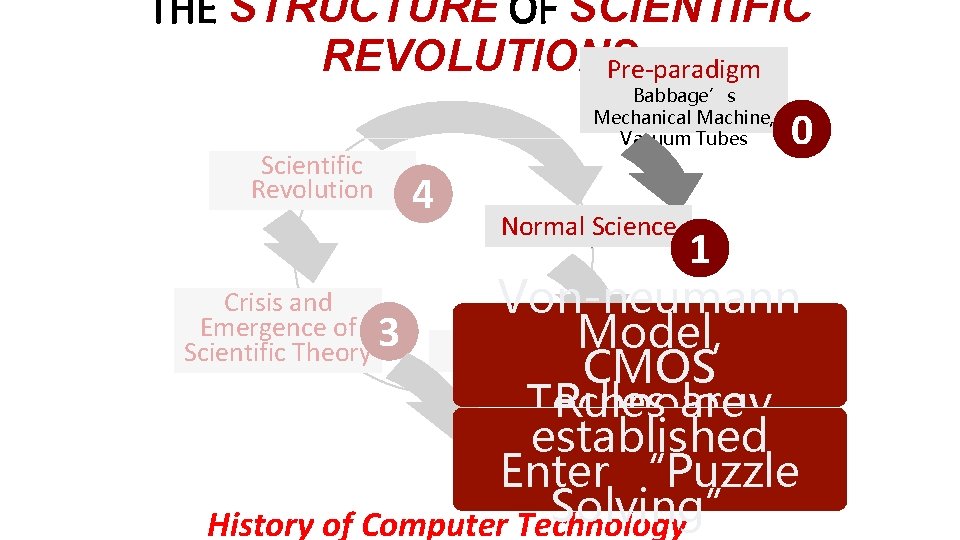

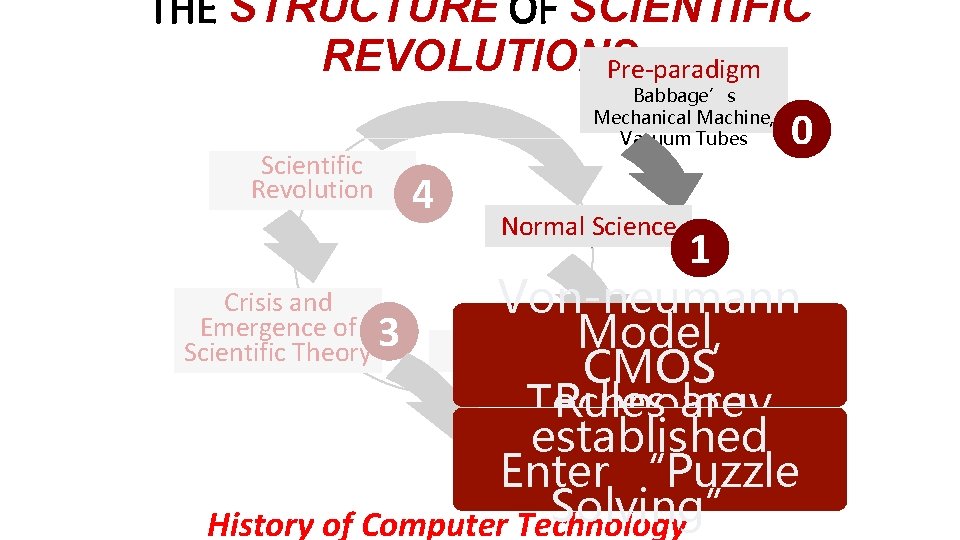

THE STRUCTURE OF SCIENTIFIC REVOLUTIONS: History of Computer Technology
[Cycle diagram: 0 Pre-paradigm; 1 Normal Science; 2 Anomaly; 3 Crisis and Emergence of Scientific Theory; 4 Scientific Revolution]
• 0 Pre-paradigm: Babbage’s Mechanical Machine, Vacuum Tubes
• 1 Normal Science: Von Neumann Model, CMOS Technology; rules are established; enter “Puzzle Solving”

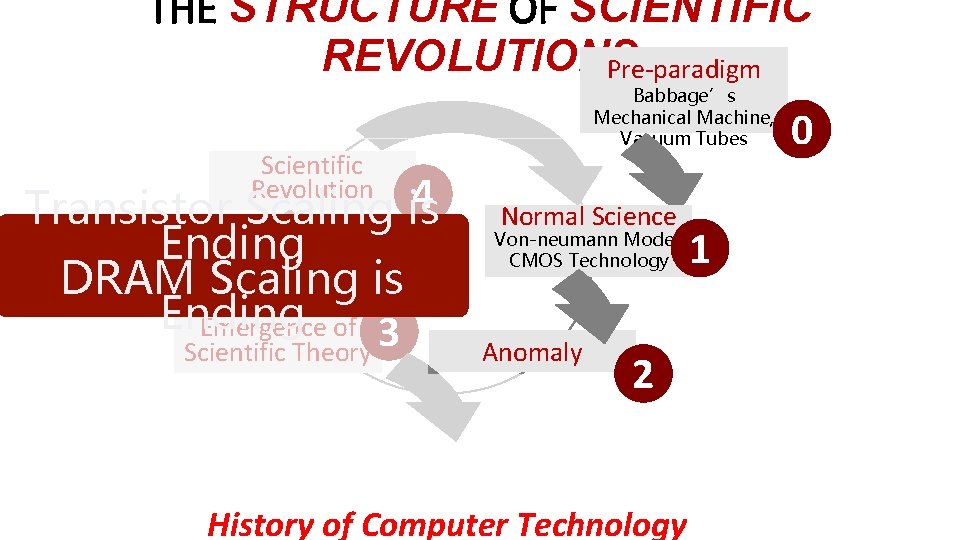

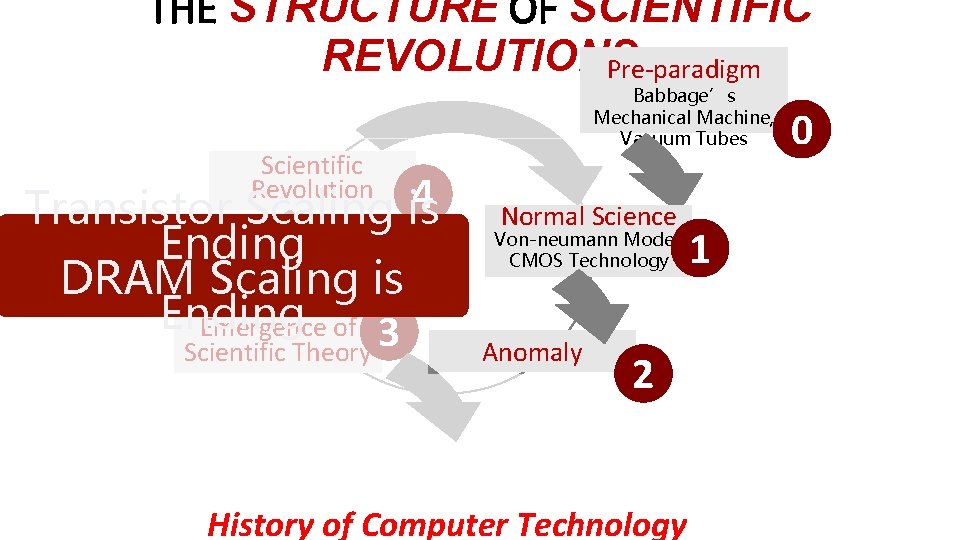

THE STRUCTURE OF SCIENTIFIC REVOLUTIONS: History of Computer Technology
[Cycle diagram: 0 Pre-paradigm; 1 Normal Science; 2 Anomaly; 3 Crisis and Emergence of Scientific Theory; 4 Scientific Revolution]
• 0 Pre-paradigm: Babbage’s Mechanical Machine, Vacuum Tubes
• 1 Normal Science: Von Neumann Model, CMOS Technology
• 2 Anomaly: Transistor Scaling is Ending, DRAM Scaling is Ending

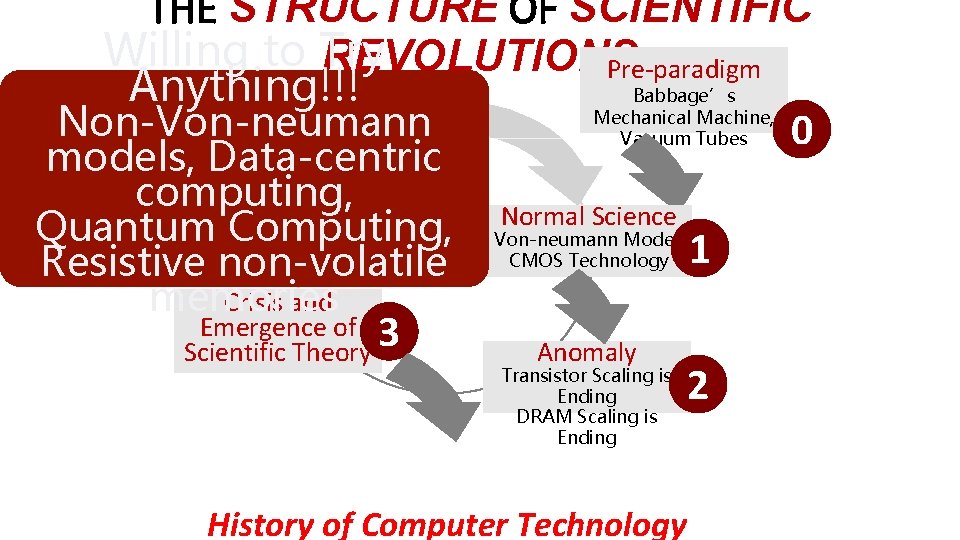

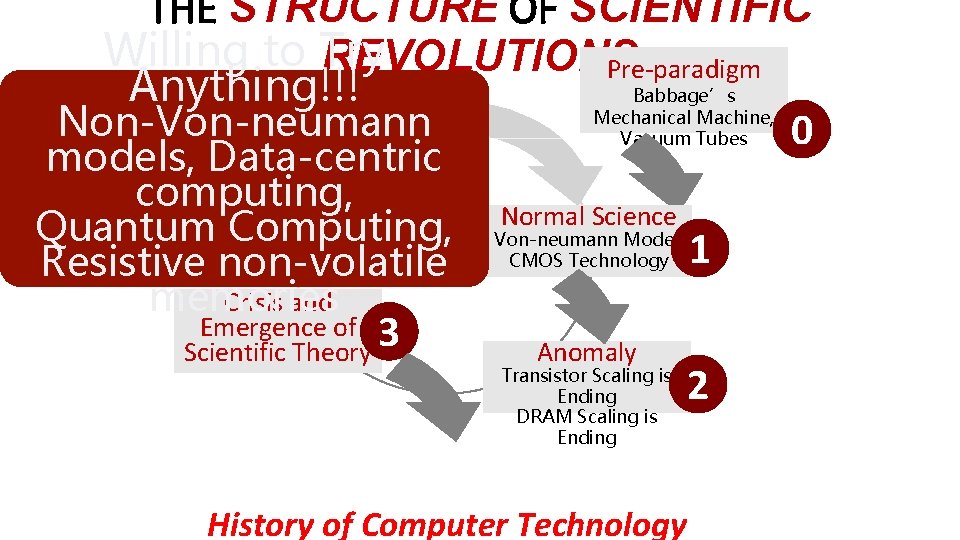

THE STRUCTURE OF SCIENTIFIC REVOLUTIONS: History of Computer Technology
[Cycle diagram: 0 Pre-paradigm; 1 Normal Science; 2 Anomaly; 3 Crisis and Emergence of Scientific Theory; 4 Scientific Revolution]
• 0 Pre-paradigm: Babbage’s Mechanical Machine, Vacuum Tubes
• 1 Normal Science: Von Neumann Model, CMOS Technology
• 2 Anomaly: Transistor Scaling is Ending, DRAM Scaling is Ending
• 3 Crisis and Emergence of Scientific Theory: Willing to Try Anything!!! Non-Von Neumann models, Data-centric computing, Quantum Computing, Resistive non-volatile memories

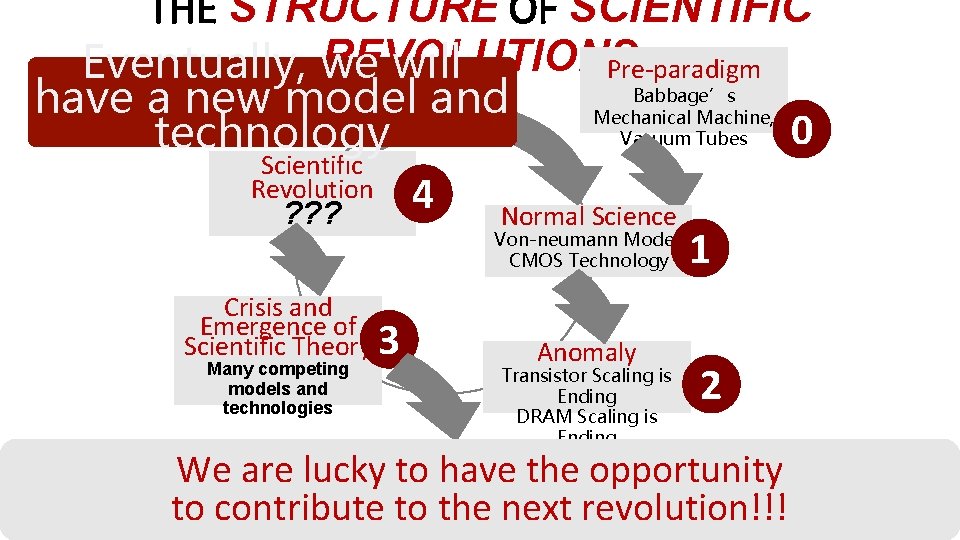

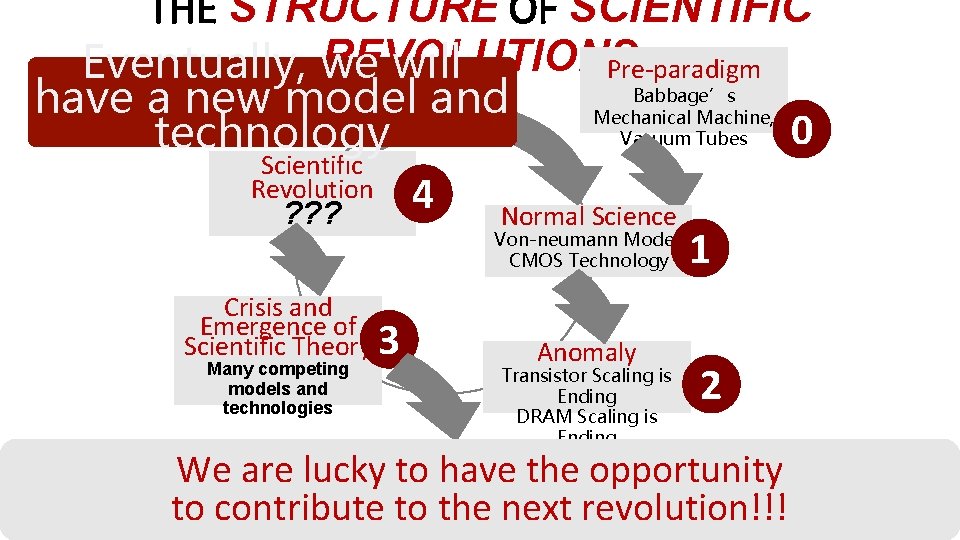

THE STRUCTURE OF SCIENTIFIC REVOLUTIONS: History of Computer Technology
[Cycle diagram: 0 Pre-paradigm; 1 Normal Science; 2 Anomaly; 3 Crisis and Emergence of Scientific Theory; 4 Scientific Revolution]
• 0 Pre-paradigm: Babbage’s Mechanical Machine, Vacuum Tubes
• 1 Normal Science: Von Neumann Model, CMOS Technology
• 2 Anomaly: Transistor Scaling is Ending, DRAM Scaling is Ending
• 3 Crisis and Emergence of Scientific Theory: Many competing models and technologies
• 4 Scientific Revolution: ??? Eventually, we will have a new model and technology
We are lucky to have the opportunity to contribute to the next revolution!!!

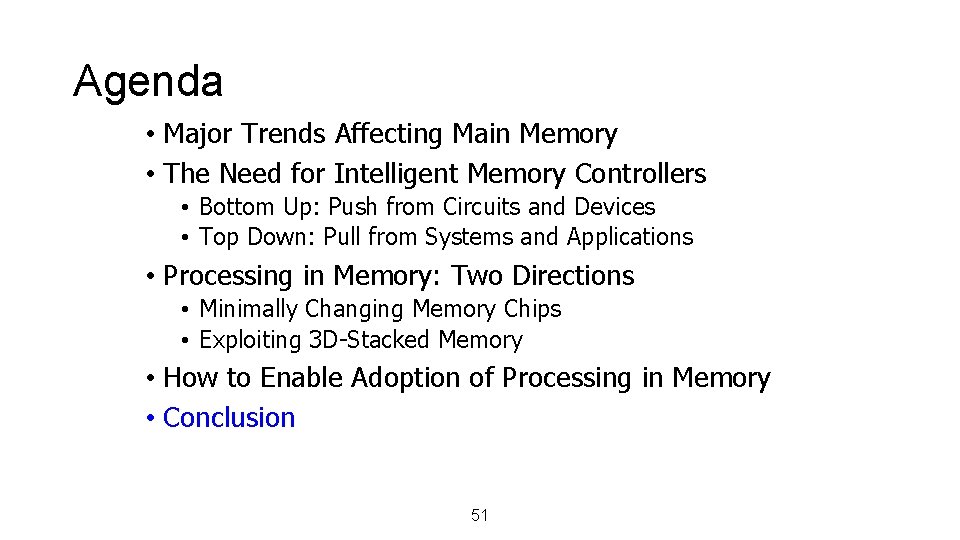


Challenge and Opportunity for Future: Fundamentally Energy-Efficient (Data-Centric) Computing Architectures
