COMPUTER ARCHITECTURE CS 6354 Asymmetric Multi-Cores Samira Khan

- Slides: 35

COMPUTER ARCHITECTURE CS 6354 Asymmetric Multi-Cores Samira Khan University of Virginia Sep 13, 2017 The content and concept of this course are adapted from CMU ECE 740

AGENDA
• Logistics
• Review from last lecture
  – Accelerating critical sections
• Asymmetric Multi-Core

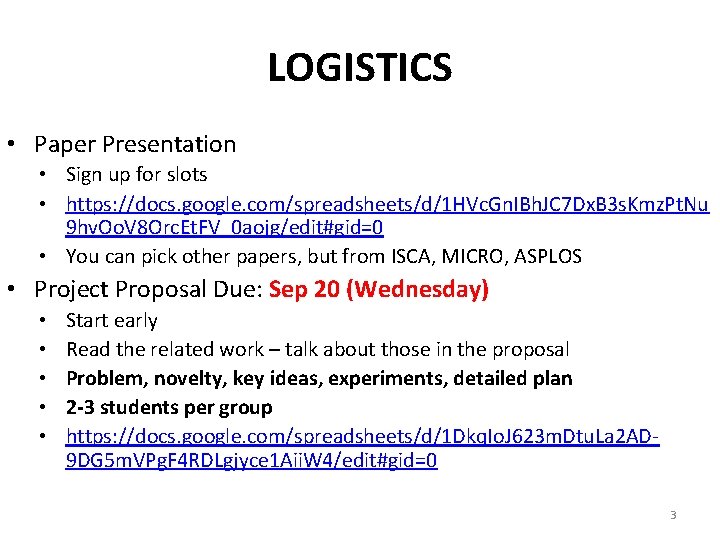

LOGISTICS
• Paper Presentation
  – Sign up for slots: https://docs.google.com/spreadsheets/d/1HVcGnIBhJC7DxB3sKmzPtNu9hvOoV8OrcEtFV_0aojg/edit#gid=0
  – You can pick other papers, but from ISCA, MICRO, ASPLOS
• Project Proposal
  – Due: Sep 20 (Wednesday)
  – Start early
  – Read the related work and discuss it in the proposal
  – Problem, novelty, key ideas, experiments, detailed plan
  – 2-3 students per group: https://docs.google.com/spreadsheets/d/1DkqIoJ623mDtuLa2AD9DG5mVPgF4RDLgjyce1AiiW4/edit#gid=0

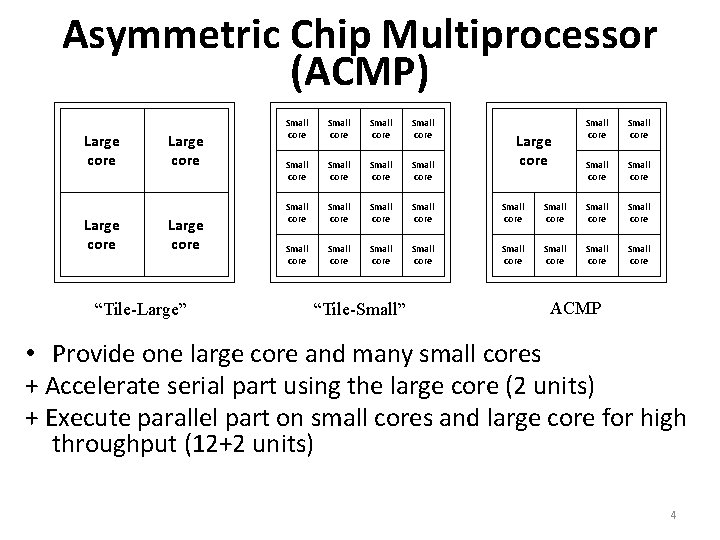

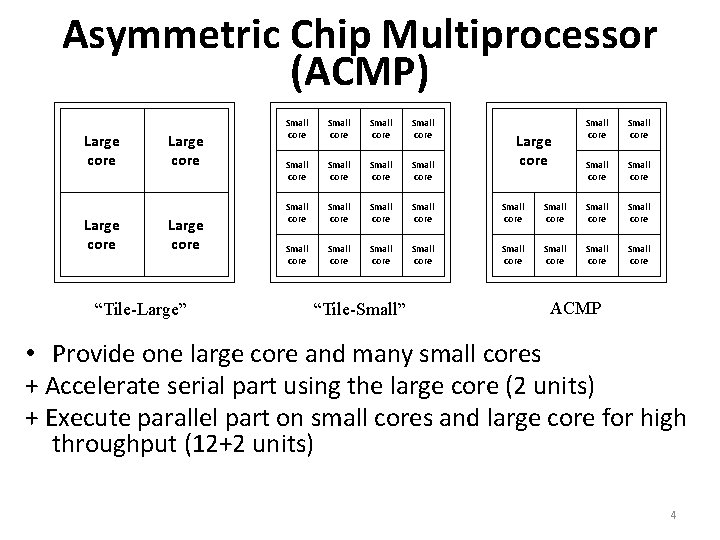

Asymmetric Chip Multiprocessor (ACMP)
[Figure: chip layouts labeled “Tile-Large”, “Tile-Small”, and ACMP, built from large and small cores]
• Provide one large core and many small cores
+ Accelerate serial part using the large core (2 units)
+ Execute parallel part on small cores and large core for high throughput (12+2 units)
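The unit counts on this slide can be turned into a quick Amdahl's-law estimate. The sketch below rests on assumptions that the slide does not state: a chip area of 16 small cores, a large core that occupies the area of four small cores and delivers 2 units of performance, and therefore Tile-Small = 16 small cores, Tile-Large = 4 large cores, ACMP = 1 large + 12 small cores. The function names and the chosen parallel fractions are illustrative only.

```python
def speedup(parallel_fraction, serial_units, parallel_units):
    """Amdahl-style speedup relative to a single small core (1 unit)."""
    serial_time = (1.0 - parallel_fraction) / serial_units
    parallel_time = parallel_fraction / parallel_units
    return 1.0 / (serial_time + parallel_time)


# Assumed configurations: (serial throughput, parallel throughput),
# both measured in small-core units.
CONFIGS = {
    "Tile-Small": (1, 16),   # 16 small cores (assumption)
    "Tile-Large": (2, 8),    # 4 large cores at 2 units each (assumption)
    "ACMP": (2, 12 + 2),     # unit counts taken from the slide
}


def compare(parallel_fraction):
    """Return the estimated speedup of every configuration."""
    return {
        name: speedup(parallel_fraction, serial, parallel)
        for name, (serial, parallel) in CONFIGS.items()
    }


if __name__ == "__main__":
    for fraction in (0.5, 0.9, 0.99):
        row = compare(fraction)
        print(fraction, {name: round(value, 2) for name, value in row.items()})
```

Under these assumptions the ACMP wins whenever the serial fraction is noticeable, while a fully parallel program still favors Tile-Small, since the large core costs more area than the throughput it returns.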

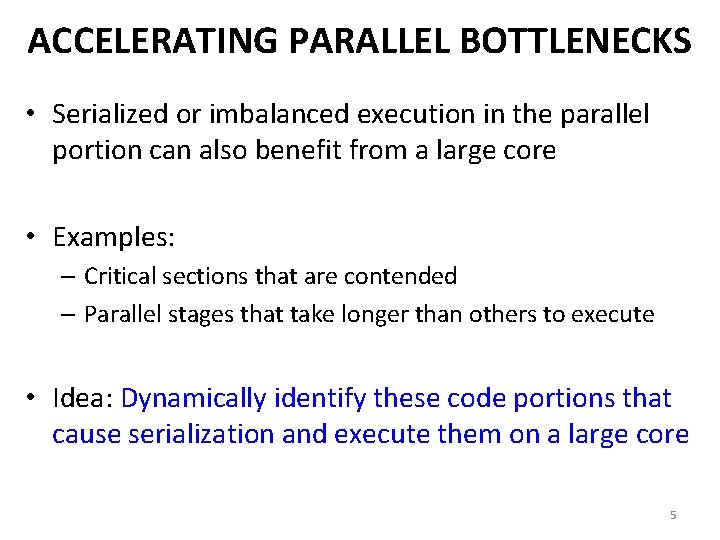

ACCELERATING PARALLEL BOTTLENECKS
• Serialized or imbalanced execution in the parallel portion can also benefit from a large core
• Examples:
  – Critical sections that are contended
  – Parallel stages that take longer than others to execute
• Idea: Dynamically identify the code portions that cause serialization and execute them on a large core

ACCELERATED CRITICAL SECTIONS (ACS)
• Original code on the small core
  – A = compute()
  – LOCK X
  – result = CS(A)
  – UNLOCK X
  – print result
• Small core with ACS
  – A = compute()
  – PUSH A
  – CSCALL X, Target PC
  – … (waits for the CSDONE response)
  – POP result
  – print result
• Large core with ACS
  – CSCALL request arrives: the small core sends X, TPC, STACK_PTR, CORE_ID
  – Request waits in the Critical Section Request Buffer (CSRB)
  – TPC: Acquire X
  – POP A
  – result = CS(A)
  – PUSH result
  – Release X
  – CSRET X
  – CSDONE response goes back to the small core
• Suleman et al., “Accelerating Critical Section Execution with Asymmetric Multi-Core Architectures,” ASPLOS 2009.
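The CSCALL/CSDONE handshake can be mimicked in software to make the order of events concrete. The sketch below is a single-threaded toy model, not the hardware mechanism from the paper: the class `LargeCore`, the helper `small_core`, and the example critical section are all hypothetical names, the CSRB is modeled as a FIFO queue, and a Python list stands in for the small core's stack.

```python
from collections import deque


class LargeCore:
    """Toy model of the large core and its Critical Section Request Buffer."""

    def __init__(self):
        self.csrb = deque()       # pending CSCALL requests, served in FIFO order
        self.held_locks = set()   # locks currently held by the large core

    def cscall(self, lock, target_pc, stack, core_id):
        # The small core sends the lock (X), the target PC (TPC),
        # its stack (STACK_PTR), and its own ID (CORE_ID).
        self.csrb.append((lock, target_pc, stack, core_id))

    def run(self):
        """Serve every buffered request; return core IDs in CSDONE order."""
        done = []
        while self.csrb:
            lock, target_pc, stack, core_id = self.csrb.popleft()
            assert lock not in self.held_locks
            self.held_locks.add(lock)        # Acquire X
            arg = stack.pop()                # POP A
            stack.append(target_pc(arg))     # result = CS(A); PUSH result
            self.held_locks.discard(lock)    # Release X
            done.append(core_id)             # CSRET X, then CSDONE response
        return done


shared_counter = {"value": 0}   # shared data, touched only on the large core


def critical_section(a):
    shared_counter["value"] += a
    return shared_counter["value"]


def small_core(core_id, large, a):
    stack = [a]                                            # PUSH A
    large.cscall("X", critical_section, stack, core_id)    # CSCALL X, TPC
    return stack                                           # result arrives here


if __name__ == "__main__":
    large = LargeCore()
    stacks = {cid: small_core(cid, large, a) for cid, a in [(0, 5), (1, 7)]}
    for cid in large.run():
        print(cid, stacks[cid].pop())   # POP result; print result
```

Because every request for lock X is executed by the same core, the shared counter never leaves that core, which is the locality benefit the ACS tradeoff slides describe.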

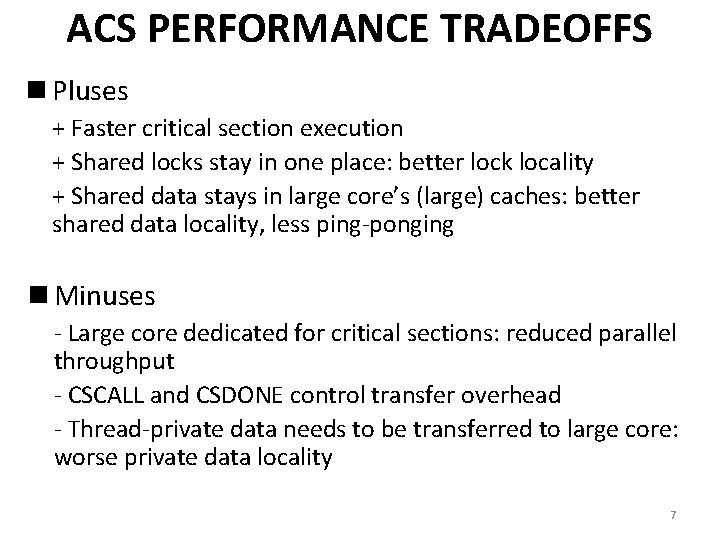

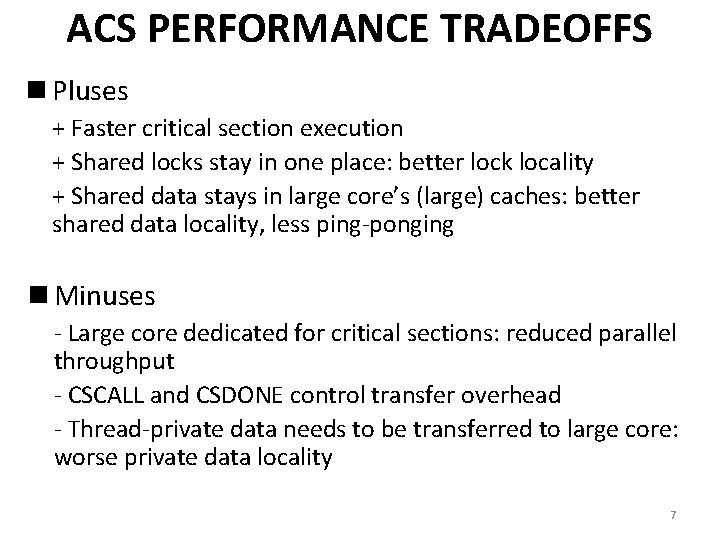

ACS PERFORMANCE TRADEOFFS
• Pluses
  + Faster critical section execution
  + Shared locks stay in one place: better lock locality
  + Shared data stays in large core’s (large) caches: better shared data locality, less ping-ponging
• Minuses
  - Large core dedicated for critical sections: reduced parallel throughput
  - CSCALL and CSDONE control transfer overhead
  - Thread-private data needs to be transferred to large core: worse private data locality

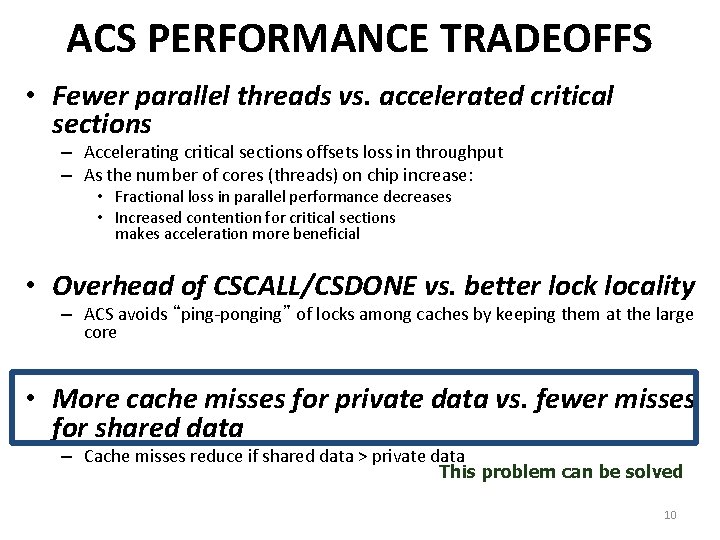

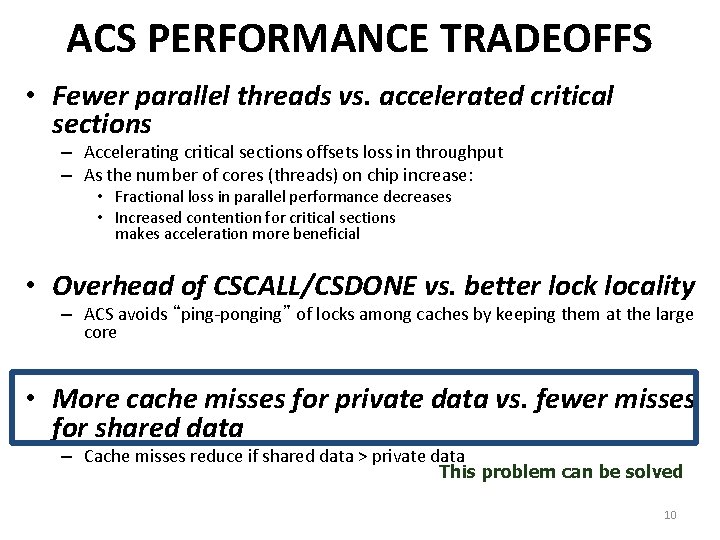

ACS PERFORMANCE TRADEOFFS
• Fewer parallel threads vs. accelerated critical sections
  – Accelerating critical sections offsets loss in throughput
  – As the number of cores (threads) on chip increases:
    • Fractional loss in parallel performance decreases
    • Increased contention for critical sections makes acceleration more beneficial
• Overhead of CSCALL/CSDONE vs. better lock locality
  – ACS avoids “ping-ponging” of locks among caches by keeping them at the large core
• More cache misses for private data vs. fewer misses for shared data

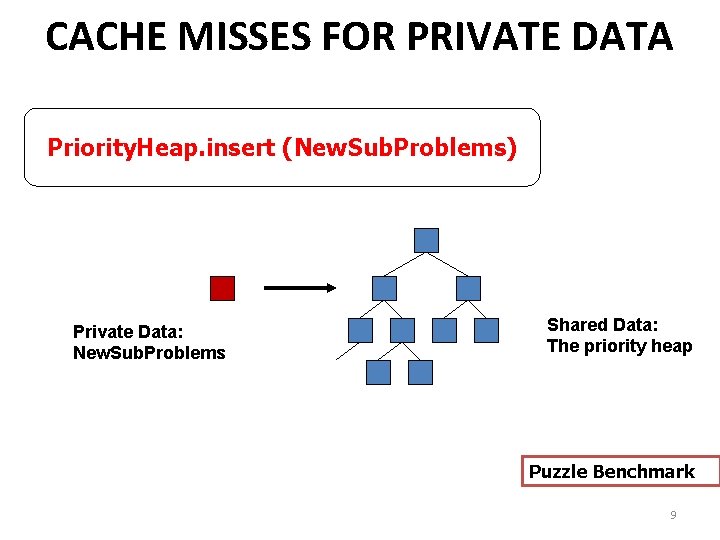

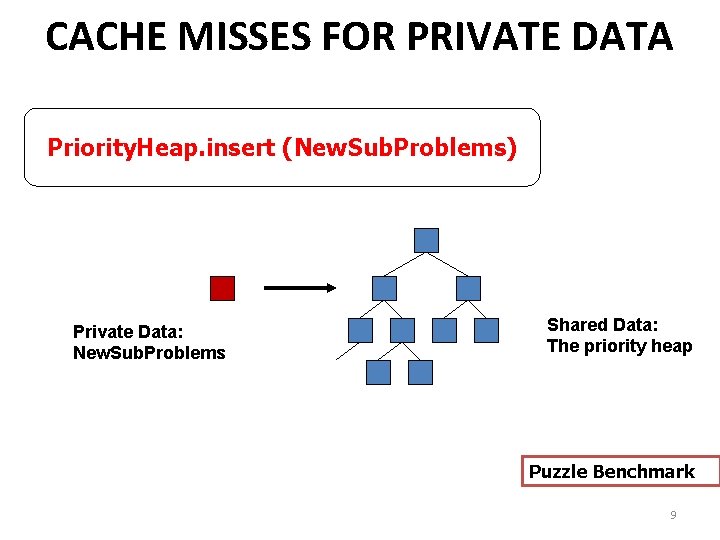

CACHE MISSES FOR PRIVATE DATA
• Example from the Puzzle benchmark: PriorityHeap.insert(NewSubProblems)
• Private data: NewSubProblems
• Shared data: the priority heap

ACS PERFORMANCE TRADEOFFS
• Fewer parallel threads vs. accelerated critical sections
  – Accelerating critical sections offsets loss in throughput
  – As the number of cores (threads) on chip increases:
    • Fractional loss in parallel performance decreases
    • Increased contention for critical sections makes acceleration more beneficial
• Overhead of CSCALL/CSDONE vs. better lock locality
  – ACS avoids “ping-ponging” of locks among caches by keeping them at the large core
• More cache misses for private data vs. fewer misses for shared data
  – Cache misses reduce if shared data > private data
  – This problem can be solved
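The last tradeoff can be read as a simple cache-line count. The sketch below is a toy model that does not come from the slides: it assumes each critical section touches a fixed number of shared and private cache lines, that without ACS the shared lines were last written by a different core (so all of them miss), and that with ACS only the private lines have to travel to the large core. The function names are illustrative.

```python
def misses_per_critical_section(shared_lines, private_lines, use_acs):
    """Cache misses for one critical section under the toy assumptions."""
    if use_acs:
        # Shared data stays in the large core's cache; private data is fetched.
        return private_lines
    # Private data is local to the small core; shared data ping-pongs.
    return shared_lines


def acs_reduces_misses(shared_lines, private_lines):
    """True when running the critical section on the large core misses less."""
    with_acs = misses_per_critical_section(shared_lines, private_lines, True)
    without_acs = misses_per_critical_section(shared_lines, private_lines, False)
    return with_acs < without_acs


if __name__ == "__main__":
    print(acs_reduces_misses(shared_lines=8, private_lines=2))
    print(acs_reduces_misses(shared_lines=2, private_lines=8))
```

In this model ACS helps exactly when a critical section touches more shared than private data, which matches the slide's condition "shared data > private data".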

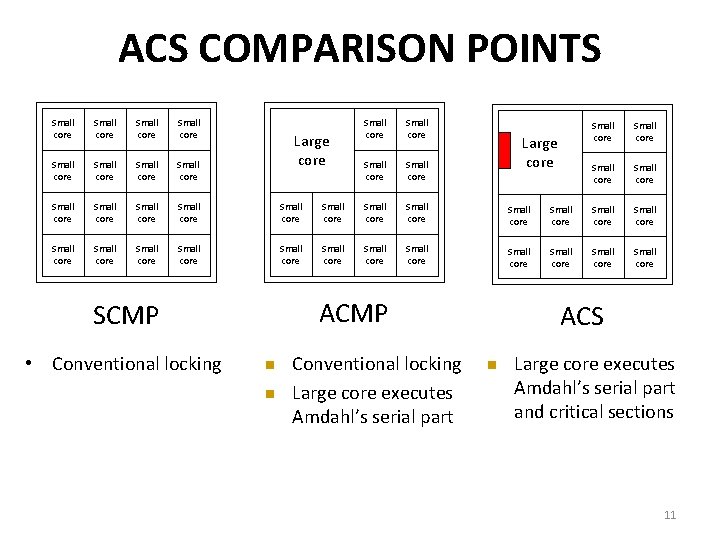

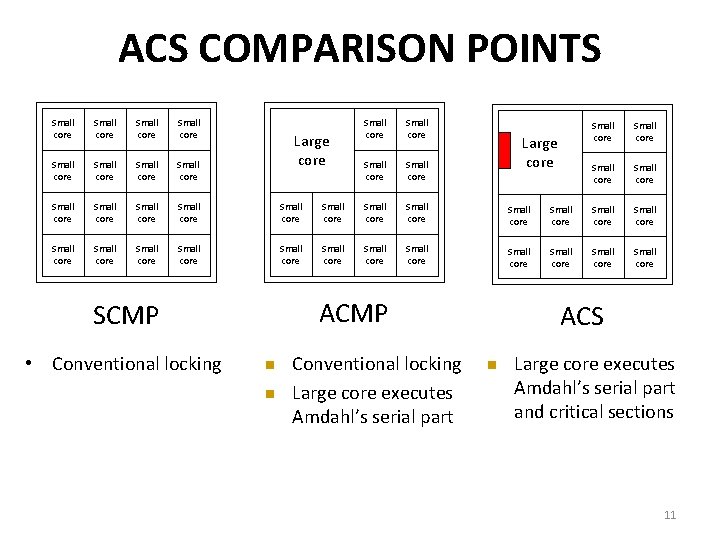

ACS COMPARISON POINTS
• SCMP (small cores only)
  – Conventional locking
• ACMP (one large core plus small cores)
  – Conventional locking
  – Large core executes Amdahl’s serial part
• ACS (one large core plus small cores)
  – Large core executes Amdahl’s serial part and critical sections

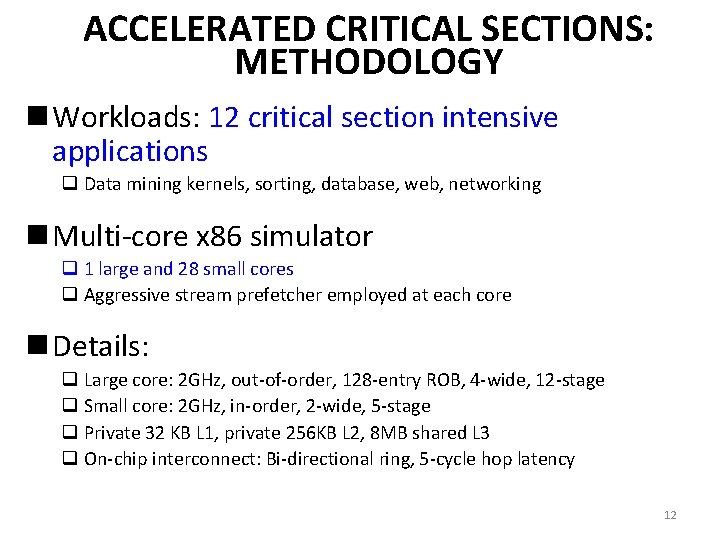

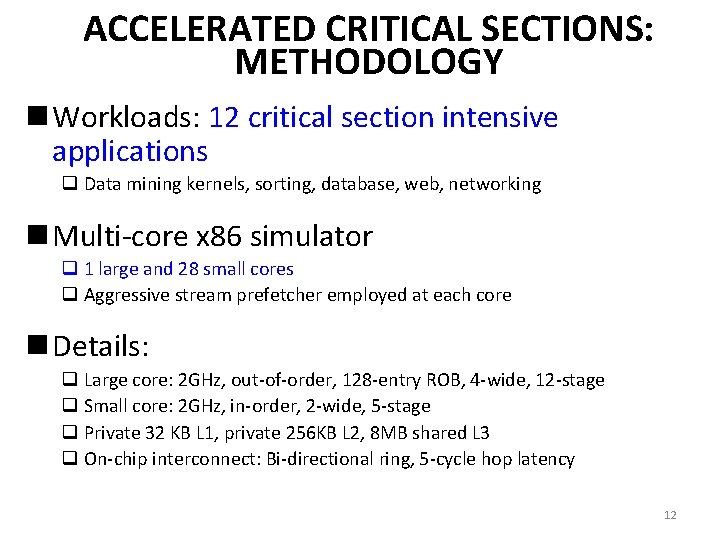

ACCELERATED CRITICAL SECTIONS: METHODOLOGY
• Workloads: 12 critical section intensive applications
  – Data mining kernels, sorting, database, web, networking
• Multi-core x86 simulator
  – 1 large and 28 small cores
  – Aggressive stream prefetcher employed at each core
• Details:
  – Large core: 2 GHz, out-of-order, 128-entry ROB, 4-wide, 12-stage
  – Small core: 2 GHz, in-order, 2-wide, 5-stage
  – Private 32 KB L1, private 256 KB L2, 8 MB shared L3
  – On-chip interconnect: bi-directional ring, 5-cycle hop latency

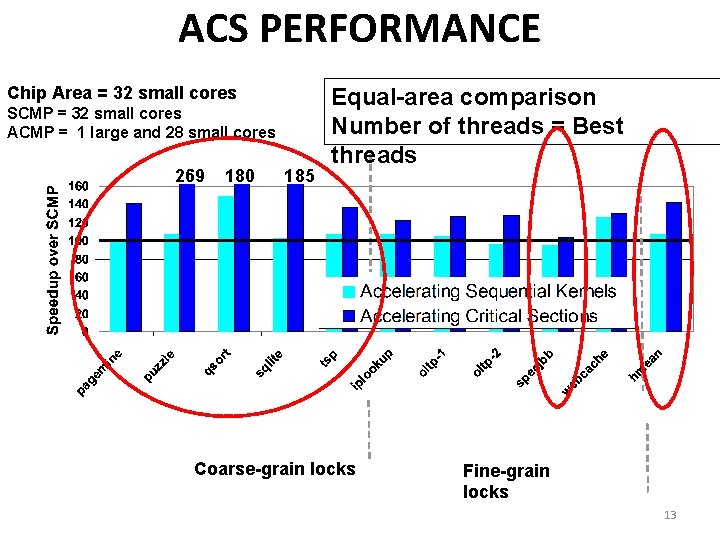

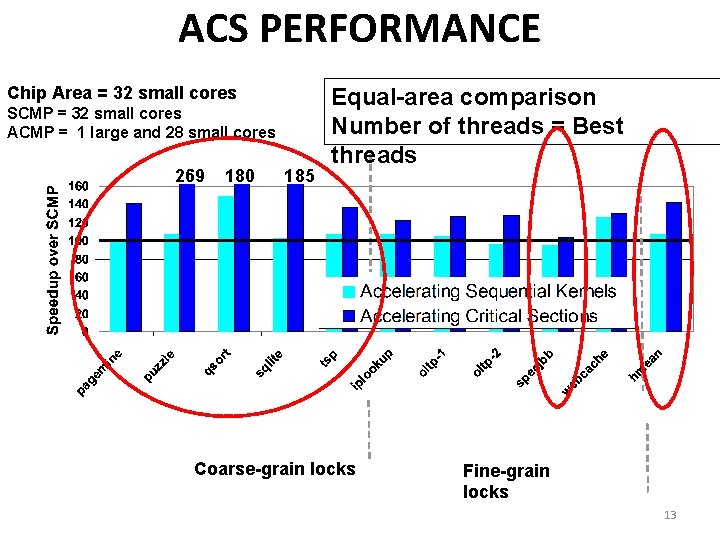

ACS PERFORMANCE
• Equal-area comparison; chip area = 32 small cores
  – SCMP = 32 small cores
  – ACMP = 1 large and 28 small cores
• Number of threads = best threads
[Figure: results grouped into coarse-grain locks and fine-grain locks; value labels 269, 180, 185]

EQUAL-AREA COMPARISONS
• Number of threads = No. of cores
[Figure: twelve panels comparing SCMP and ACS. x-axis: chip area (small cores), 0 to 32; y-axis: speedup over a small core. Panels: (a) ep, (b) is, (c) pagemine, (d) puzzle, (e) qsort, (f) tsp, (g) sqlite, (h) iplookup, (i) oltp-1, (j) oltp-2, (k) specjbb, (l) webcache]

ACS SUMMARY
• Critical sections reduce performance and limit scalability
• Accelerate critical sections by executing them on a powerful core
• ACS reduces average execution time by:
  – 34% compared to an equal-area SCMP
  – 23% compared to an equal-area ACMP
• ACS improves scalability of 7 of the 12 workloads
• Generalizing the idea: Accelerate all bottlenecks (“critical paths”) by executing them on a powerful core
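The two percentages are reductions in execution time, which is not the same thing as speedup. A minimal sketch of the conversion, assuming the usual definition speedup = old time / new time (the function name is illustrative):

```python
def speedup_from_time_reduction(reduction):
    """Convert a fractional execution-time reduction into a speedup factor."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return 1.0 / (1.0 - reduction)


if __name__ == "__main__":
    for label, reduction in (("vs. SCMP", 0.34), ("vs. ACMP", 0.23)):
        print(label, round(speedup_from_time_reduction(reduction), 2))
```

A 34% shorter run therefore corresponds to roughly a 1.5x speedup, and a 23% shorter run to roughly 1.3x.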

USES OF ASYMMETRY
• So far:
  – Improvement in serial performance (sequential bottleneck)
• What else can we do with asymmetry?
  – Energy reduction?
  – Energy/performance tradeoff?
  – Improvement in parallel portion?

USES OF CMPs
• Can you think about using these ideas to improve single-threaded performance?
• Implicit parallelization: thread-level speculation
  – Slipstream processors
  – Leader-follower architectures
• Helper threading
  – Prefetching
  – Branch prediction
• Exception handling
• Redundant execution to tolerate soft (and hard?) errors

SLIPSTREAM PROCESSORS
• Goal: use multiple hardware contexts to speed up single thread execution (implicitly parallelize the program)
• Idea: Divide program execution into two threads:
  – Advanced thread executes a reduced instruction stream, speculatively
  – Redundant thread uses results, prefetches, predictions generated by advanced thread and ensures correctness
• Benefit: Execution time of the overall program reduces
• Core idea is similar to many thread-level speculation approaches, except with a reduced instruction stream
• Sundaramoorthy et al., “Slipstream Processors: Improving both Performance and Fault Tolerance,” ASPLOS 2000.

SLIPSTREAMING
• “At speeds in excess of 190 m.p.h., high air pressure forms at the front of a race car and a partial vacuum forms behind it. This creates drag and limits the car’s top speed.
• A second car can position itself close behind the first (a process called slipstreaming or drafting). This fills the vacuum behind the lead car, reducing its drag. And the trailing car now has less wind resistance in front (and by some accounts, the vacuum behind the lead car actually helps pull the trailing car).
• As a result, both cars speed up by several m.p.h.: the two combined go faster than either can alone.”

SLIPSTREAM PROCESSORS
• Detect and remove ineffectual instructions; run a shortened “effectual” version of the program (Advanced or A-stream) in one thread context
• Ensure correctness by running a complete version of the program (Redundant or R-stream) in another thread context
• Shortened A-stream runs fast; R-stream consumes near-perfect control and data flow outcomes from A-stream and finishes close behind
• Two streams together lead to faster execution (by helping each other) than a single one alone

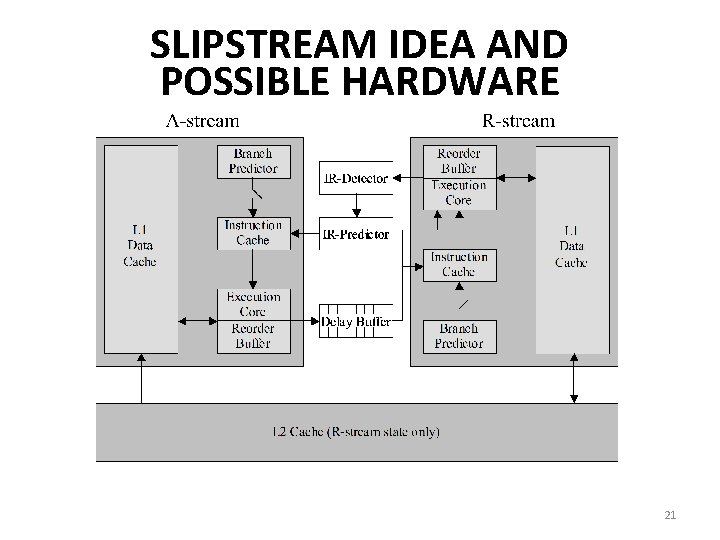

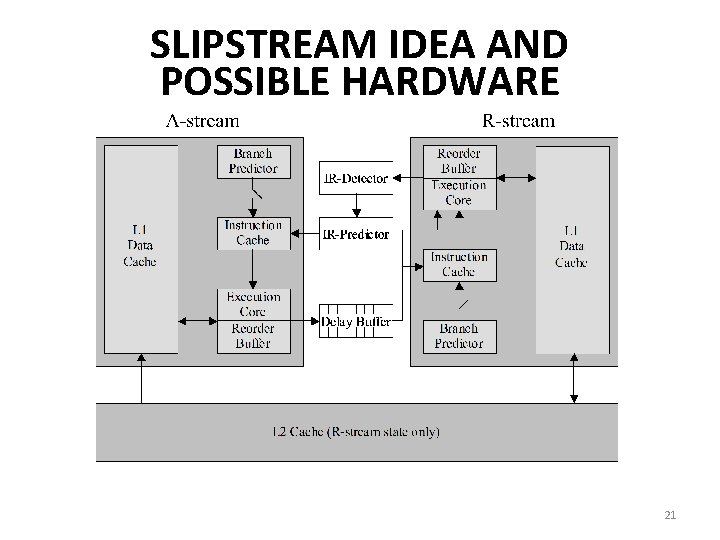

SLIPSTREAM IDEA AND POSSIBLE HARDWARE

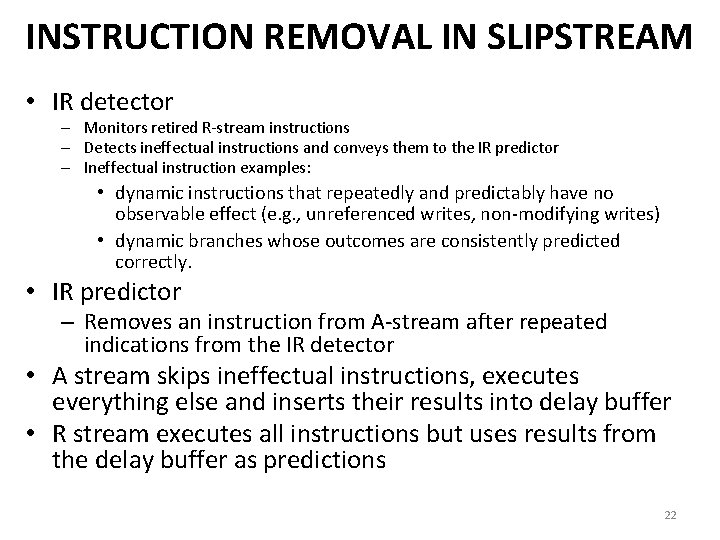

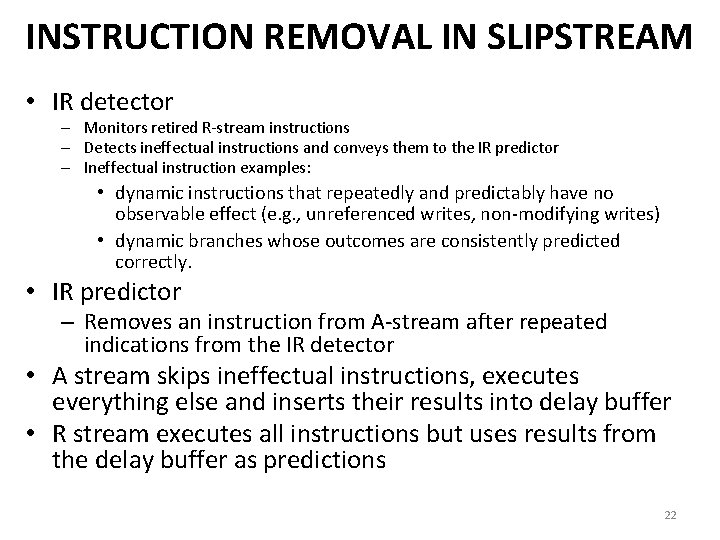

INSTRUCTION REMOVAL IN SLIPSTREAM
• IR detector
  – Monitors retired R-stream instructions
  – Detects ineffectual instructions and conveys them to the IR predictor
  – Ineffectual instruction examples:
    • dynamic instructions that repeatedly and predictably have no observable effect (e.g., unreferenced writes, non-modifying writes)
    • dynamic branches whose outcomes are consistently predicted correctly
• IR predictor
  – Removes an instruction from A-stream after repeated indications from the IR detector
• A-stream skips ineffectual instructions, executes everything else and inserts their results into delay buffer
• R-stream executes all instructions but uses results from the delay buffer as predictions
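The detector/predictor pair can be sketched in a few lines. This is an illustrative model, not the hardware described in the paper: the class names, the per-PC confidence counter, the reset-on-effectual policy, and the default threshold of 3 are all assumptions, and the detector only recognizes one of the listed cases (non-modifying writes).

```python
from collections import defaultdict


class IRPredictor:
    """Marks a static instruction removable after repeated detector hints."""

    def __init__(self, threshold=3):
        self.threshold = threshold           # assumed confidence threshold
        self.confidence = defaultdict(int)   # per-PC count of consecutive hints

    def train(self, pc, ineffectual):
        if ineffectual:
            self.confidence[pc] += 1
        else:
            self.confidence[pc] = 0          # one effectual instance resets it

    def should_remove(self, pc):
        return self.confidence[pc] >= self.threshold


class IRDetector:
    """Watches retired R-stream stores and flags non-modifying writes."""

    def __init__(self, predictor):
        self.predictor = predictor
        self.memory = {}                     # last value retired to each address

    def retire_store(self, pc, addr, value):
        ineffectual = self.memory.get(addr) == value   # write changes nothing
        self.memory[addr] = value
        self.predictor.train(pc, ineffectual)
        return ineffectual


if __name__ == "__main__":
    predictor = IRPredictor(threshold=2)
    detector = IRDetector(predictor)
    for _ in range(3):
        detector.retire_store(pc=0x40, addr=100, value=1)
    print(predictor.should_remove(0x40))
```

Requiring several consecutive hints before removal keeps an instruction that is only occasionally ineffectual in the A-stream, which limits how often the A-stream goes wrong.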

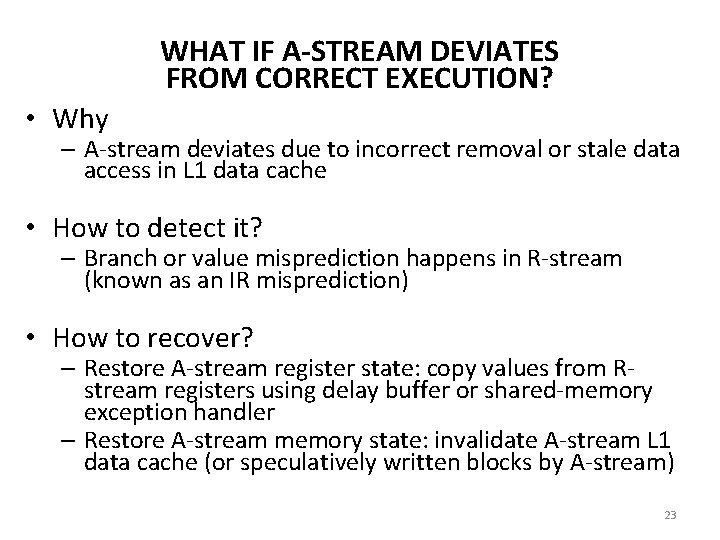

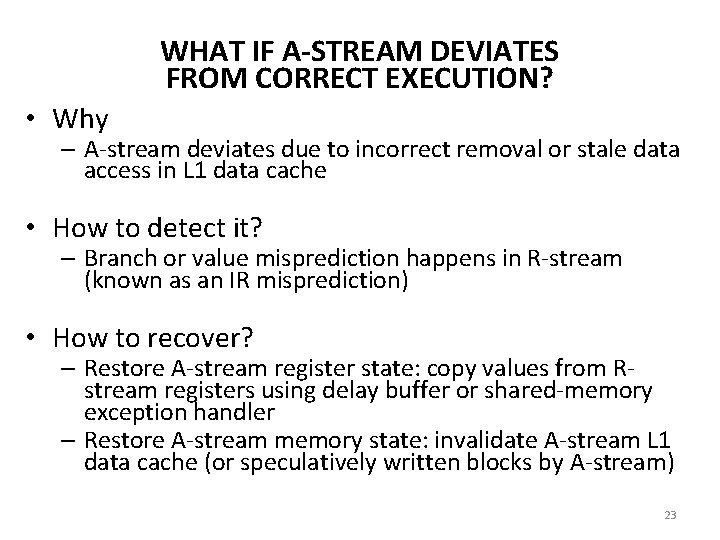

WHAT IF A-STREAM DEVIATES FROM CORRECT EXECUTION?
• Why?
  – A-stream deviates due to incorrect removal or stale data access in L1 data cache
• How to detect it?
  – Branch or value misprediction happens in R-stream (known as an IR misprediction)
• How to recover?
  – Restore A-stream register state: copy values from R-stream registers using delay buffer or shared-memory exception handler
  – Restore A-stream memory state: invalidate A-stream L1 data cache (or speculatively written blocks by A-stream)
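The detect-and-recover steps can be written as one check that the R-stream performs for each outcome it consumes. The sketch below is a simplified model with hypothetical names (`AStream`, `check_and_recover`); dictionaries stand in for the register file and the L1 data cache, and flushing the remaining delay-buffer entries on a mismatch is an assumption of this sketch, not something the slide states.

```python
from collections import deque


class AStream:
    """Minimal A-stream state: registers plus a private L1 data cache."""

    def __init__(self):
        self.regs = {}
        self.l1_cache = {}


def check_and_recover(delay_buffer, r_outcome, r_regs, a_stream):
    """Compare the R-stream's real outcome with the A-stream's prediction.

    Returns True if an IR misprediction was detected and recovery ran.
    """
    predicted = delay_buffer.popleft()
    if predicted == r_outcome:
        return False                  # prediction confirmed, keep going
    delay_buffer.clear()              # assumed: later entries are wrong-path
    a_stream.regs = dict(r_regs)      # restore registers from the R-stream
    a_stream.l1_cache.clear()         # invalidate the A-stream L1 data cache
    return True


if __name__ == "__main__":
    a = AStream()
    a.regs = {"r1": 99}
    a.l1_cache = {0x100: 7}
    buffer = deque(["taken", "not-taken"])
    print(check_and_recover(buffer, "taken", {"r1": 1}, a))
    print(check_and_recover(buffer, "taken", {"r1": 1}, a))
    print(a.regs, a.l1_cache)
```

Invalidating the whole cache is the simpler of the two memory-recovery options on the slide; tracking only the blocks the A-stream wrote speculatively would preserve more useful data at the cost of extra bookkeeping.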

Slipstream Questions
• How to construct the advanced thread
  – Original proposal:
    • Dynamically eliminate redundant instructions (silent stores, dynamically dead instructions)
    • Dynamically eliminate easy-to-predict branches
  – Other ways:
    • Dynamically ignore long-latency stalls
    • Static based on profiling
• How to speed up the redundant thread
  – Original proposal: Reuse instruction results (control and data flow outcomes from the A-stream)
  – Other ways: Only use branch results and prefetched data as predictions

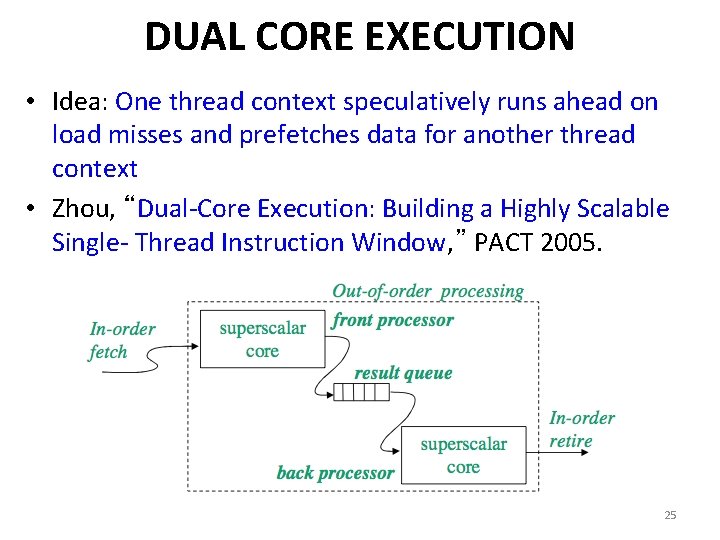

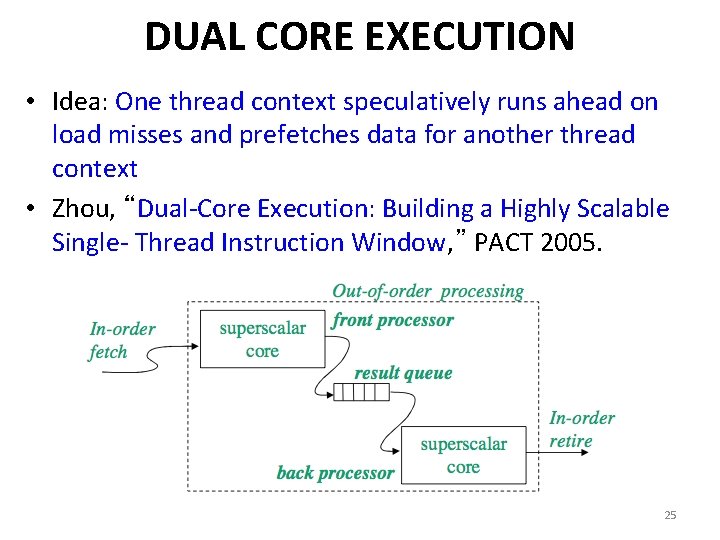

DUAL CORE EXECUTION
• Idea: One thread context speculatively runs ahead on load misses and prefetches data for another thread context
• Zhou, “Dual-Core Execution: Building a Highly Scalable Single-Thread Instruction Window,” PACT 2005.

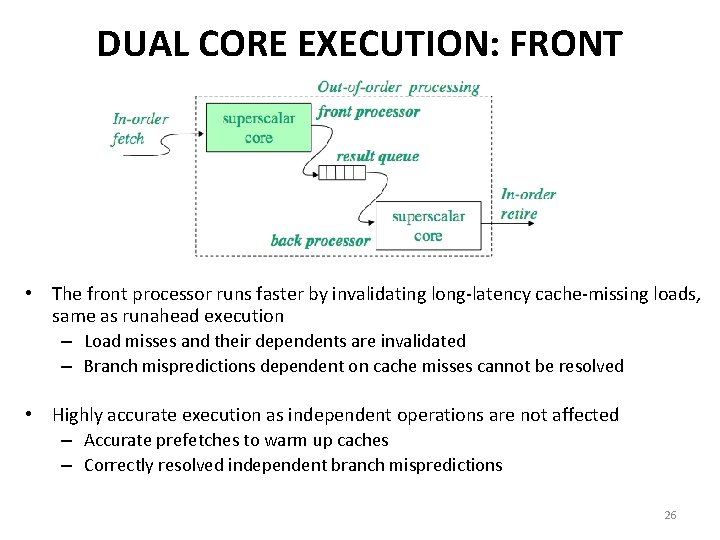

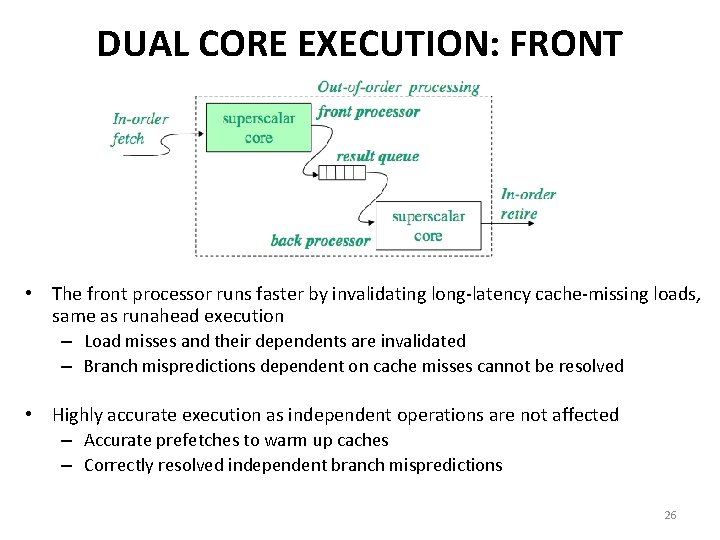

DUAL CORE EXECUTION: FRONT PROCESSOR
• The front processor runs faster by invalidating long-latency cache-missing loads, same as runahead execution
  – Load misses and their dependents are invalidated
  – Branch mispredictions dependent on cache misses cannot be resolved
• Highly accurate execution as independent operations are not affected
  – Accurate prefetches to warm up caches
  – Correctly resolved independent branch mispredictions
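The invalidation rule can be illustrated with a small dataflow walk. The sketch below is a toy model, not the DCE microarchitecture: the instruction tuples `(op, dest, srcs, addr)`, the function name, and the set-based cache are all assumptions. A missing load marks its destination register invalid and issues a prefetch instead of stalling; invalidity propagates to dependents; a branch with an invalid source is left for the back processor to resolve.

```python
def run_front_processor(program, cached_addrs):
    """Return (prefetched addresses, indices of unresolved branches)."""
    invalid = set()      # registers whose values are unknown (INV)
    prefetched = []      # addresses fetched on behalf of the back processor
    unresolved = []      # branches that depend on a missing load
    for index, (op, dest, srcs, addr) in enumerate(program):
        tainted = any(src in invalid for src in srcs)
        if op == "load":
            if tainted:
                invalid.add(dest)           # address depends on a missing load
            elif addr in cached_addrs:
                invalid.discard(dest)       # hit: the value is usable
            else:
                prefetched.append(addr)     # miss: start the fetch, do not wait
                invalid.add(dest)
        elif op == "alu":
            if tainted:
                invalid.add(dest)           # dependents of a miss are invalid
            else:
                invalid.discard(dest)
        elif op == "branch":
            if tainted:
                unresolved.append(index)    # cannot be checked in the front core
    return prefetched, unresolved


if __name__ == "__main__":
    program = [
        ("load", "r1", [], 0x100),      # misses
        ("alu", "r2", ["r1"], None),    # depends on the miss
        ("branch", None, ["r2"], None), # cannot be resolved here
        ("load", "r3", [], 0x200),      # independent miss, still prefetched
        ("load", "r4", [], 0x300),      # hits
        ("branch", None, ["r4"], None), # resolved in the front processor
    ]
    print(run_front_processor(program, cached_addrs={0x300}))
```

The independent load at index 3 is still prefetched even though an earlier miss is outstanding, which is where the "accurate prefetches to warm up caches" benefit comes from.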

DUAL CORE EXECUTION: BACK PROCESSOR
• Re-execution ensures correctness and provides precise program state
  – Resolve branch mispredictions dependent on long-latency cache misses
• Back processor makes faster progress with help from the front processor
  – Highly accurate instruction stream
  – Warmed up data caches

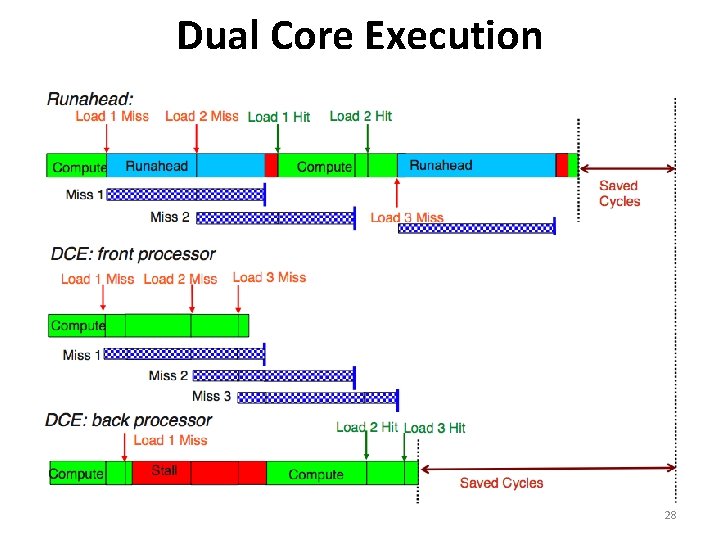

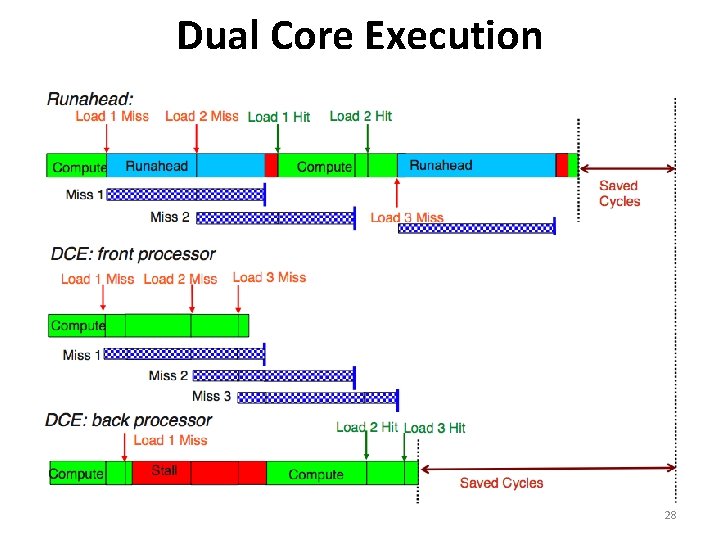

Dual Core Execution

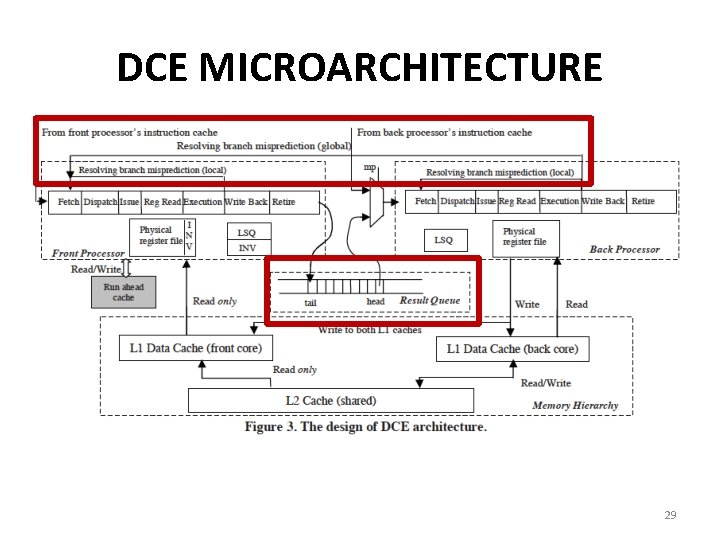

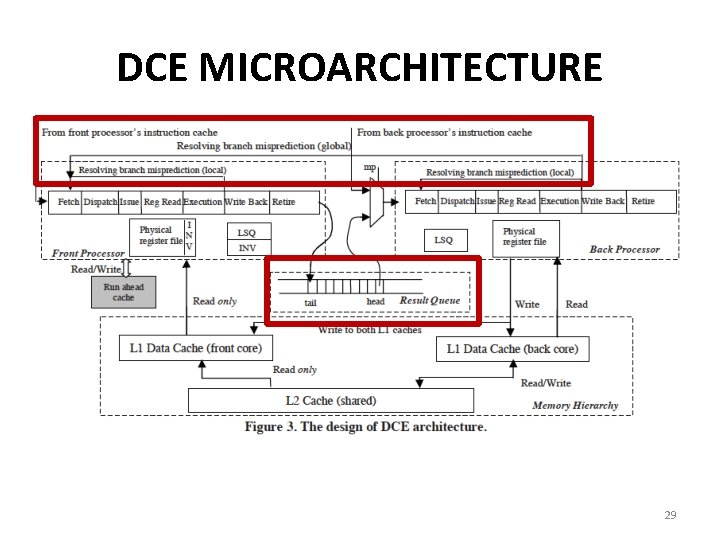

DCE MICROARCHITECTURE

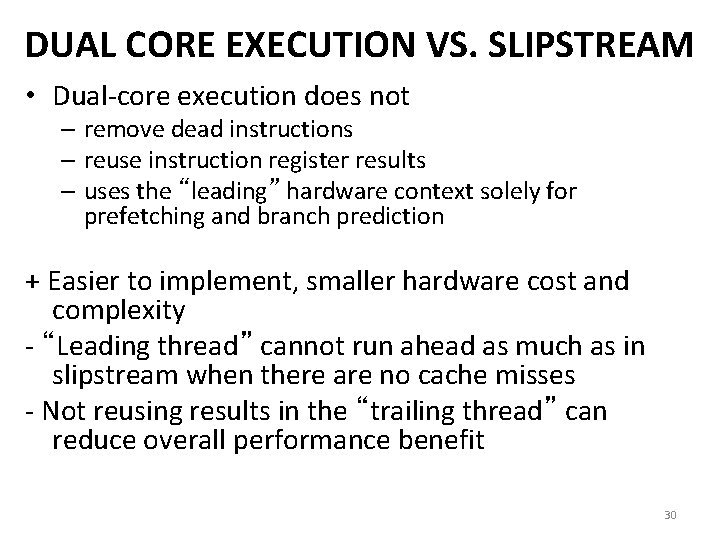

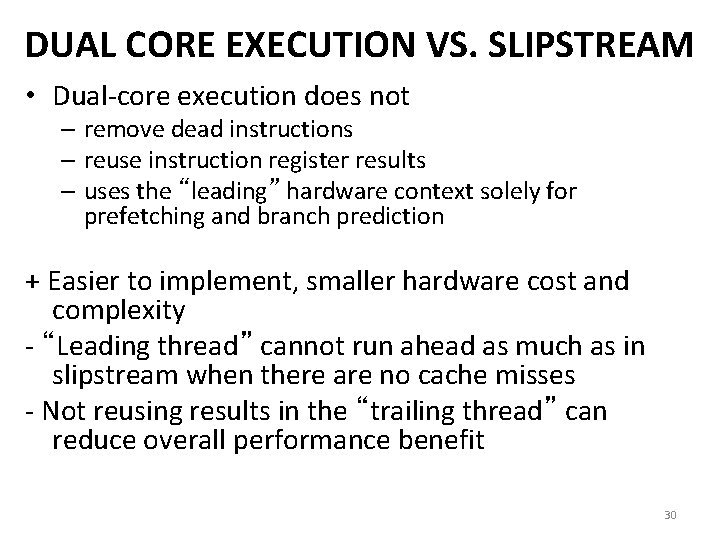

DUAL CORE EXECUTION VS. SLIPSTREAM
• Dual-core execution does not
  – remove dead instructions
  – reuse instruction register results
• Instead, it uses the “leading” hardware context solely for prefetching and branch prediction
+ Easier to implement, smaller hardware cost and complexity
- “Leading thread” cannot run ahead as much as in slipstream when there are no cache misses
- Not reusing results in the “trailing thread” can reduce overall performance benefit

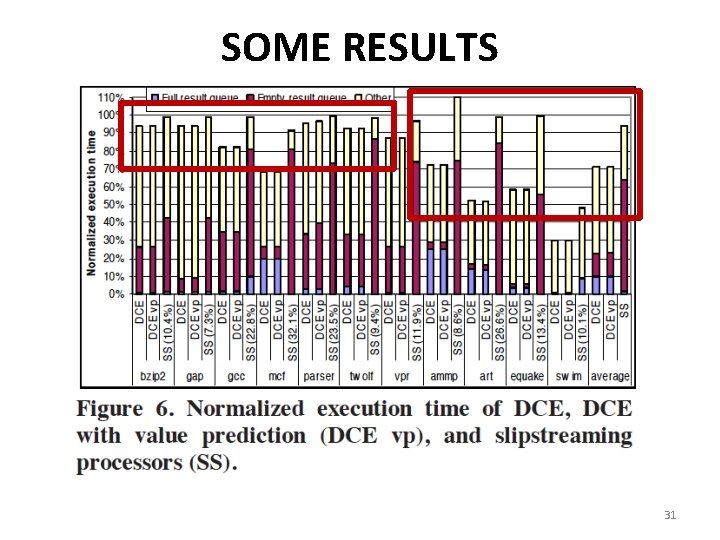

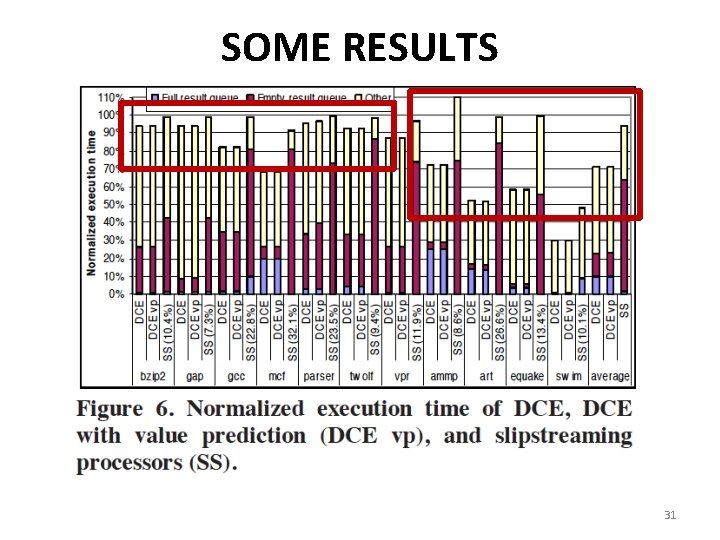

SOME RESULTS

HETEROGENEITY (ASYMMETRY) → SPECIALIZATION
• Heterogeneity and asymmetry have the same meaning
  – Contrast with homogeneity and symmetry
• Heterogeneity is a very general system design concept (and life concept, as well)
• Idea: Instead of making multiple instances of a “resource” identical (i.e., homogeneous or symmetric), design some instances to be different (i.e., heterogeneous or asymmetric)
• Different instances can be optimized to be more efficient in executing different types of workloads or satisfying different requirements/goals
  – Heterogeneity enables specialization/customization

WHY ASYMMETRY IN DESIGN? (I)
• Different workloads executing in a system can have different behavior
  – Different applications can have different behavior
  – Different execution phases of an application can have different behavior
  – The same application executing at different times can have different behavior (due to input set changes and dynamic events)
  – E.g., locality, predictability of branches, instruction-level parallelism, data dependencies, serial fraction, bottlenecks in parallel portion, interference characteristics, …
• Systems are designed to satisfy different metrics at the same time
  – There is almost never a single goal in design, depending on design point
  – E.g., performance, energy efficiency, fairness, predictability, reliability, availability, cost, memory capacity, latency, bandwidth, …

WHY ASYMMETRY IN DESIGN? (II)
• Problem: Symmetric design is one-size-fits-all
• It tries to fit a single-size design to all workloads and metrics
• It is very difficult to come up with a single design
  – that satisfies all workloads even for a single metric
  – that satisfies all design metrics at the same time
• This holds true for different system components, or resources
  – Cores, caches, memory, controllers, interconnect, disks, servers, …
  – Algorithms, policies, …

COMPUTER ARCHITECTURE CS 6354 Asymmetric Multi-Cores Samira Khan University of Virginia Sep 13, 2017 The content and concept of this course are adapted from CMU ECE 740

Computer architecture

Computer architecture Samira khan uva

Samira khan uva Dr samira khan

Dr samira khan Artemis

Artemis Samira zegrari

Samira zegrari Samira kazan

Samira kazan Fatemeh soltani

Fatemeh soltani Samira kazan origin

Samira kazan origin Samira block

Samira block How to counter samira

How to counter samira Bus architecture in computer organization

Bus architecture in computer organization Difference computer organization and architecture

Difference computer organization and architecture Design of a basic computer

Design of a basic computer Advantages of asymmetric encryption

Advantages of asymmetric encryption Symmetric and asymmetric matrix

Symmetric and asymmetric matrix Introduction to corporate communication

Introduction to corporate communication Symmetrical iugr

Symmetrical iugr Game theory asymmetric information

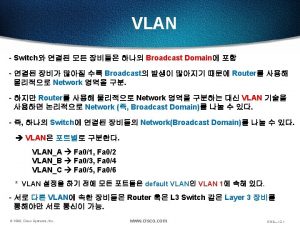

Game theory asymmetric information Asymmetric vlan

Asymmetric vlan Asymmetric information diagram

Asymmetric information diagram Asymmetric information diagram

Asymmetric information diagram Asymmetric communication

Asymmetric communication Vlan 1-4094

Vlan 1-4094 Define ihrm

Define ihrm Asymmetric encryption 歴史

Asymmetric encryption 歴史 Asymmetric vlan

Asymmetric vlan Asymmetric vlan

Asymmetric vlan Key distribution

Key distribution Asymmetric encryption java

Asymmetric encryption java Two-way asymmetric model

Two-way asymmetric model Projection bias

Projection bias Asymmetric dimethylarginine

Asymmetric dimethylarginine X=vxt

X=vxt Cryptography computer science

Cryptography computer science Asymmetric synthesis example

Asymmetric synthesis example Asymmetric dominance effect

Asymmetric dominance effect