COMPUTER ARCHITECTURE CS 6354 Pipelining, Samira Khan, University of Virginia


COMPUTER ARCHITECTURE CS 6354 Pipelining Samira Khan University of Virginia Feb 4, 2016 The content and concept of this course are adapted from CMU ECE 740

AGENDA
• Review from last lecture
  – ISA Tradeoffs
• Pipelining

REVIEW OF LAST LECTURE: ISA TRADEOFFS
• Complex vs. simple instructions: concept of semantic gap
• Use of translation to change the tradeoffs
• Fixed vs. variable length, uniform vs. non-uniform decode
• Number of registers
• What is the benefit of translating complex instructions to “simple instructions” before executing them?
  – In hardware (Intel, AMD)?
  – In software (Transmeta)?
• Which ISA is easier to extend: fixed length or variable length?
• How can you have a variable-length, uniform-decode ISA?

PROGRAMMER VS. (MICRO)ARCHITECT
• Many ISA features are designed to aid programmers
• But they complicate the hardware designer’s job
• Virtual memory
  – vs. overlay programming
  – Should the programmer be concerned about the size of code blocks?
• Unaligned memory access
  – Otherwise, the compiler/programmer needs to align data
• Transactional memory?
• VLIW vs. SIMD? Superscalar execution vs. SIMD?

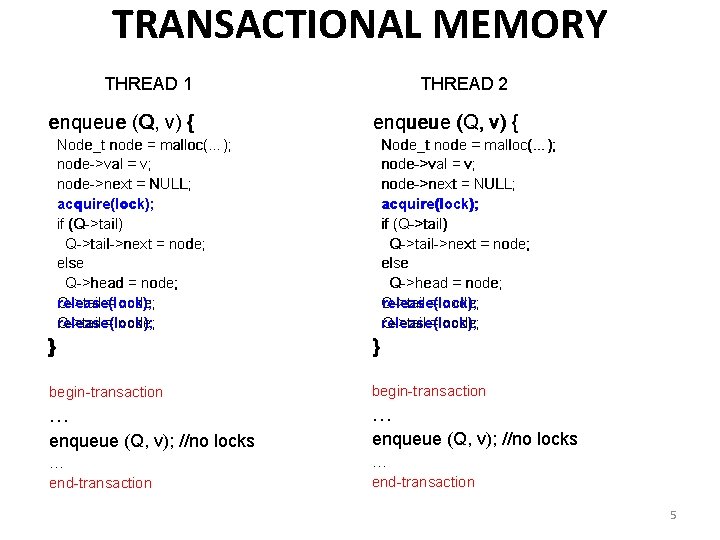

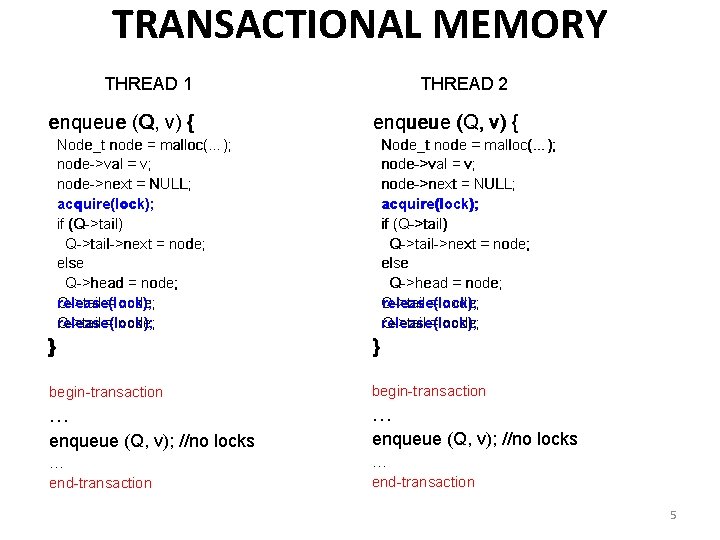

TRANSACTIONAL MEMORY
• With locks: THREAD 1 and THREAD 2 both run
    enqueue (Q, v) {
        Node_t *node = malloc(…);
        node->val = v;
        node->next = NULL;
        acquire(lock);
        if (Q->tail)
            Q->tail->next = node;
        else
            Q->head = node;
        Q->tail = node;
        release(lock);
    }
• With transactional memory:
    begin-transaction
    …
    enqueue (Q, v); //no locks
    …
    end-transaction

TRANSACTIONAL MEMORY
• A transaction is executed atomically: ALL or NONE
• If there is a data conflict between two transactions, only one of them completes; the other is rolled back
  – Both write to the same location
  – One reads from a location the other writes
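The two conflict conditions above can be written as a check on each transaction's read set and write set. This is a minimal sketch, assuming the sets are bitmasks over at most 64 memory locations; the type `Txn` and the function `txn_conflict` are hypothetical names, not part of any TM API.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical model: a transaction's read set and write set are bitmasks
   over up to 64 memory locations (bit i set = location i accessed). */
typedef struct {
    uint64_t read_set;
    uint64_t write_set;
} Txn;

/* Two concurrent transactions conflict if both write the same location,
   or if one reads a location that the other writes.
   Two readers of the same location do not conflict. */
bool txn_conflict(Txn a, Txn b) {
    bool write_write = (a.write_set & b.write_set) != 0;
    bool read_write = (a.read_set & b.write_set) != 0 ||
                      (a.write_set & b.read_set) != 0;
    return write_write || read_write;
}
```

For the enqueue example, two transactions that both read and write `Q->tail` conflict, so one of them is rolled back and re-executed; the serialization the lock provided now comes from conflict detection. Hardware implementations typically track these sets at cache-block granularity rather than per word.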

ISA-LEVEL TRADEOFF: SUPPORTING TM
• Still under research
• Pros:
  – Could make programming with threads easier
  – Could improve parallel program performance vs. locks. Why?
• Cons:
  – What if it does not pan out?
  – All future microarchitectures might have to support the new instructions (for backward compatibility reasons)
  – Complexity?
• How does the architect decide whether or not to support TM in the ISA? (How do we evaluate the whole stack?)

AGENDA
• Review from last lecture
  – ISA Tradeoffs
• Pipelining

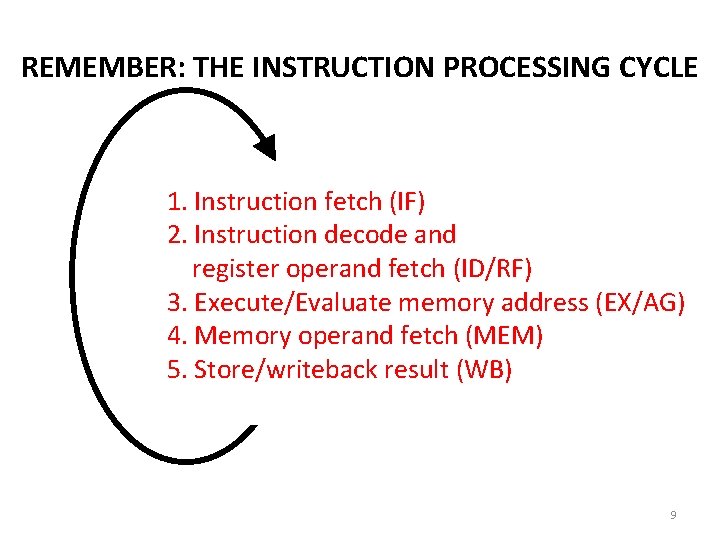

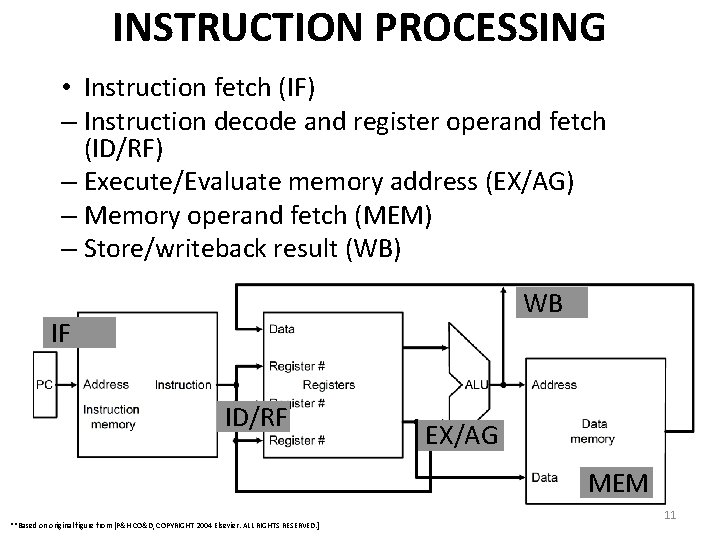

REMEMBER: THE INSTRUCTION PROCESSING CYCLE
Generic phases:
  – Fetch
  – Decode
  – Evaluate Address
  – Fetch Operands
  – Execute
  – Store Result
Five pipeline stages:
  1. Instruction fetch (IF)
  2. Instruction decode and register operand fetch (ID/RF)
  3. Execute/Evaluate memory address (EX/AG)
  4. Memory operand fetch (MEM)
  5. Store/writeback result (WB)

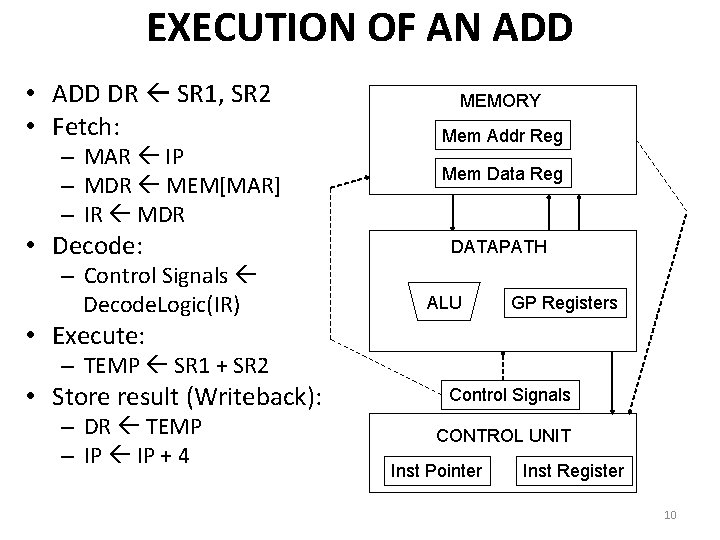

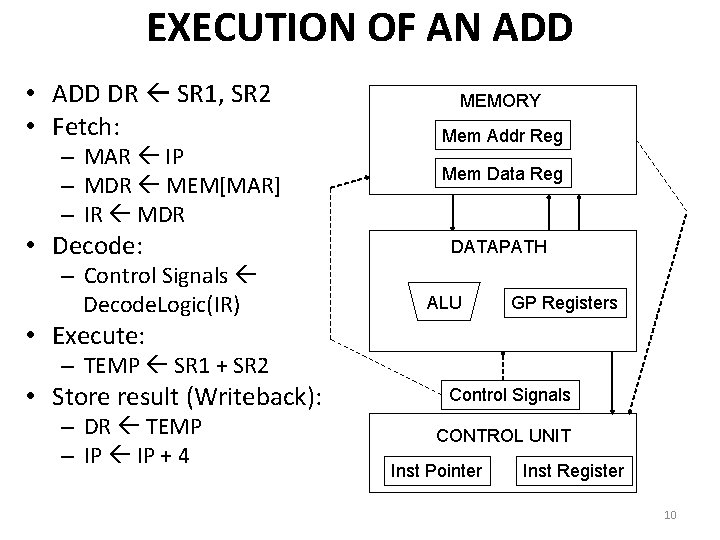

EXECUTION OF AN ADD
• ADD DR, SR1, SR2
• Fetch:
  – MAR ← IP
  – MDR ← MEM[MAR]
  – IR ← MDR
• Decode:
  – Control Signals ← DecodeLogic(IR)
• Execute:
  – TEMP ← SR1 + SR2
• Store result (Writeback):
  – DR ← TEMP
  – IP ← IP + 4
[Figure: MEMORY (Mem Addr Reg, Mem Data Reg), DATAPATH (ALU, GP Registers), and CONTROL UNIT (Inst Pointer, Inst Register) sending control signals to the datapath]
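The register transfers above can be traced on a toy machine. This is a minimal sketch under stated assumptions: the 9-bit ADD encoding (DR in bits 8:6, SR1 in 5:3, SR2 in 2:0), the word-addressed memory array, and the names `Machine`, `encode_add`, and `step_add` are all hypothetical, chosen only to mirror the slide's MAR/MDR/IR/TEMP steps.

```c
#include <assert.h>
#include <stdint.h>

/* Toy machine state; field names follow the slide (IP, MAR, MDR, IR, TEMP). */
typedef struct {
    uint32_t mem[64];   /* instruction memory, one 4-byte word per entry */
    uint32_t reg[8];    /* general-purpose registers */
    uint32_t ip, mar, mdr, ir, temp;
} Machine;

/* Hypothetical ADD encoding: bits [8:6] = DR, [5:3] = SR1, [2:0] = SR2. */
uint32_t encode_add(unsigned dr, unsigned sr1, unsigned sr2) {
    return (dr << 6) | (sr1 << 3) | sr2;
}

/* Execute one ADD, one phase at a time. */
void step_add(Machine *m) {
    /* Fetch */
    m->mar = m->ip;
    m->mdr = m->mem[m->mar / 4];   /* IP is a byte address; memory holds words */
    m->ir = m->mdr;
    /* Decode: pull the register specifiers out of IR */
    unsigned dr = (m->ir >> 6) & 7;
    unsigned sr1 = (m->ir >> 3) & 7;
    unsigned sr2 = m->ir & 7;
    /* Execute */
    m->temp = m->reg[sr1] + m->reg[sr2];
    /* Store result (writeback), then advance IP to the next instruction */
    m->reg[dr] = m->temp;
    m->ip += 4;
}
```

In a microcoded machine each of these phases occupies different cycles, and the fetch hardware sits idle while `TEMP ← SR1 + SR2` is computed; that idle time is what pipelining reclaims.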

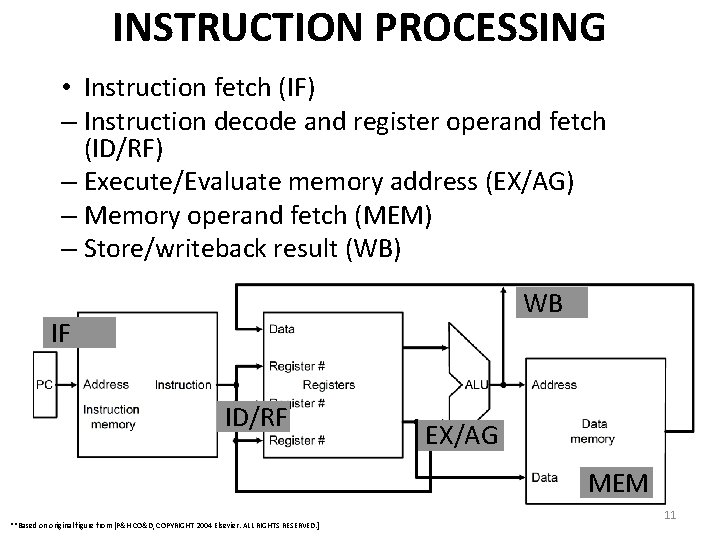

INSTRUCTION PROCESSING
• Instruction fetch (IF)
• Instruction decode and register operand fetch (ID/RF)
• Execute/Evaluate memory address (EX/AG)
• Memory operand fetch (MEM)
• Store/writeback result (WB)
[Figure: datapath divided into IF, ID/RF, EX/AG, MEM, and WB stages. Based on original figure from P&H CO&D, COPYRIGHT 2004 Elsevier. ALL RIGHTS RESERVED.]

INTRO TO PIPELINING (II)
• In the microcoded machine, some resources are idle in different stages of instruction processing
  – Fetch logic is idle when ADD is being decoded or executed
• Pipelined machines
  – Use idle resources to process other instructions
  – Each stage processes a different instruction
  – When decoding the ADD, fetch the next instruction
  – Think “assembly line”

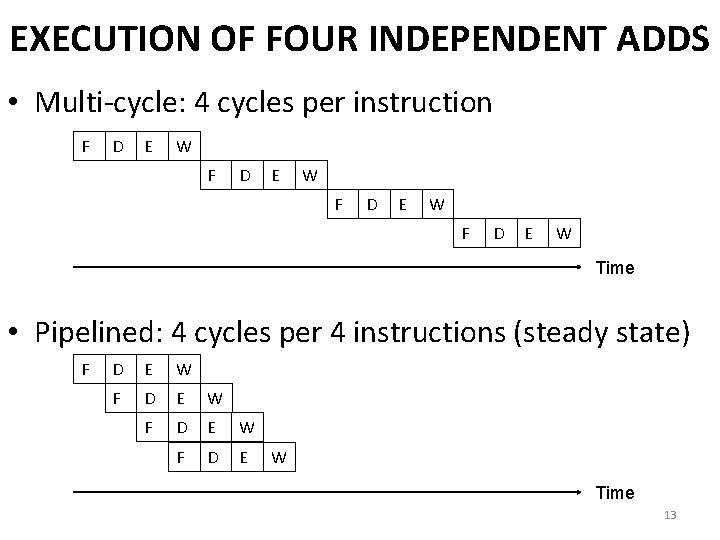

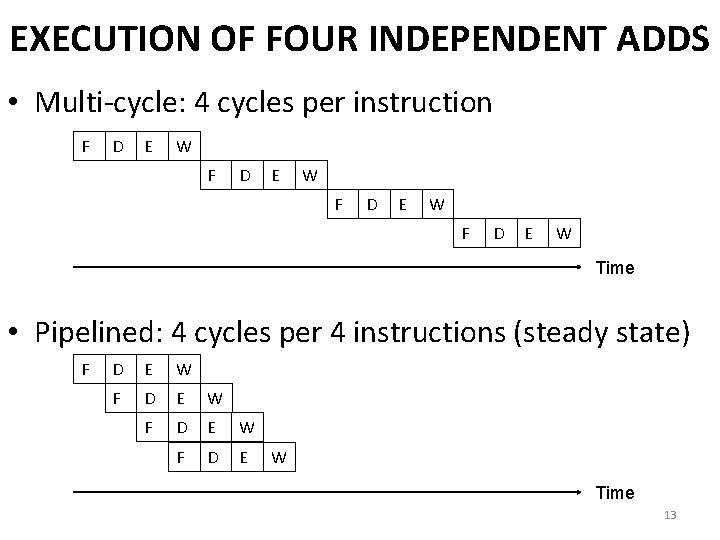

EXECUTION OF FOUR INDEPENDENT ADDS
• Multi-cycle: 4 cycles per instruction
    F D E W
            F D E W
                    F D E W
                            F D E W
    → Time
• Pipelined: 4 cycles per 4 instructions (steady state)
    F D E W
      F D E W
        F D E W
          F D E W
    → Time
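The cycle counts behind the two diagrams follow from a short formula. This sketch assumes every stage takes exactly one cycle and the instructions are fully independent (no stalls); `multicycle_cycles` and `pipelined_cycles` are illustrative names.

```c
#include <assert.h>

/* Cycles to run n independent instructions on a machine with k one-cycle
   stages, assuming no stalls. */
unsigned multicycle_cycles(unsigned n, unsigned k) {
    return n * k;            /* each instruction occupies the whole machine for k cycles */
}

unsigned pipelined_cycles(unsigned n, unsigned k) {
    if (n == 0)
        return 0;
    return k + (n - 1);      /* fill the pipeline once, then finish one instruction per cycle */
}
```

For the four ADDs with F D E W, the multi-cycle machine needs 16 cycles and the pipeline needs 7. For 1000 instructions the pipeline needs 1003 cycles, so CPI approaches 1 rather than 4, which is the "4 cycles per 4 instructions" steady state.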

PIPELINING TRADEOFFS?
• Pipelined vs. multi-cycle machines
  – Advantage: Improves instruction throughput (reduces CPI)
  – Disadvantage: Requires more logic, higher power consumption

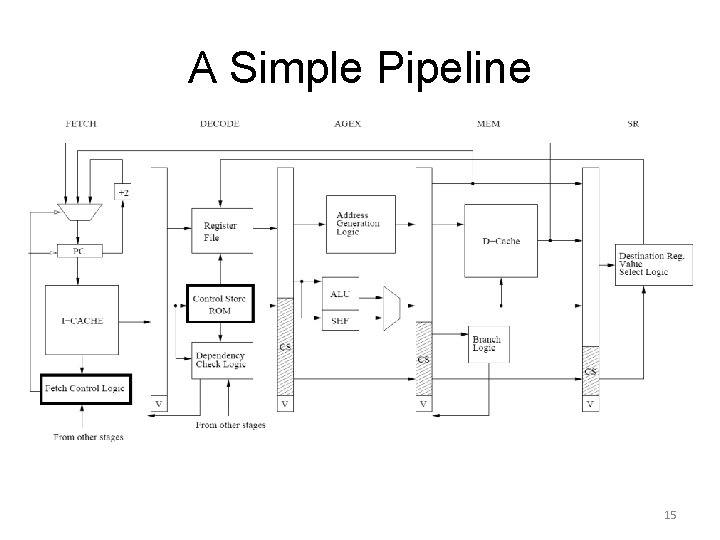

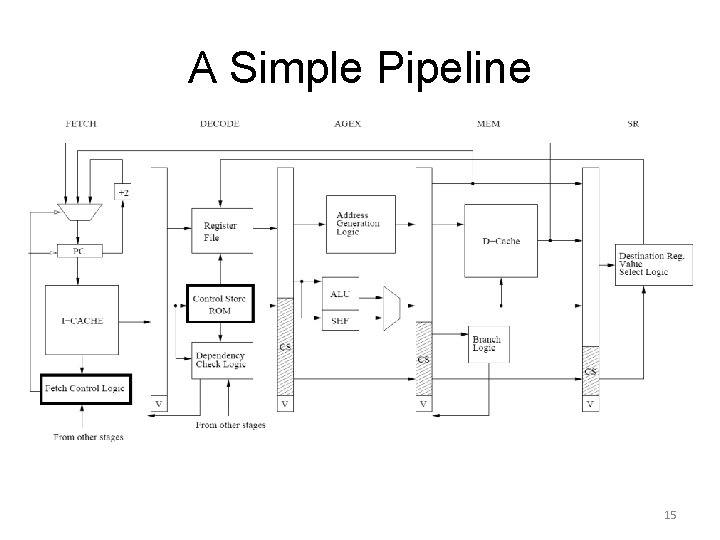

A Simple Pipeline

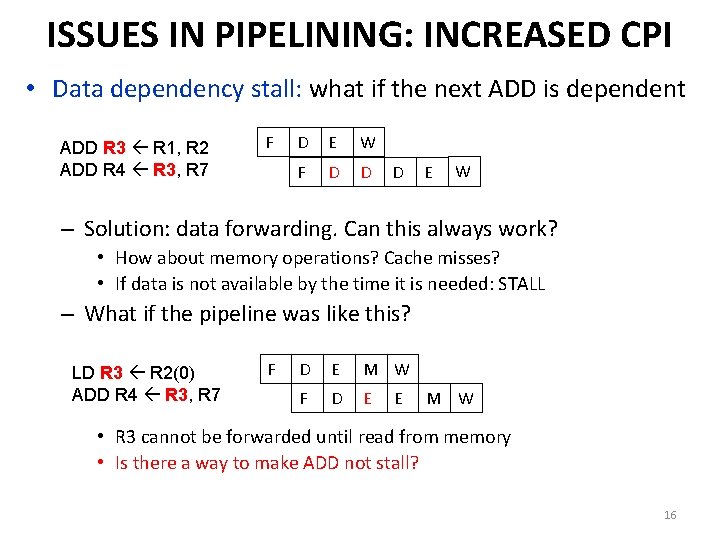

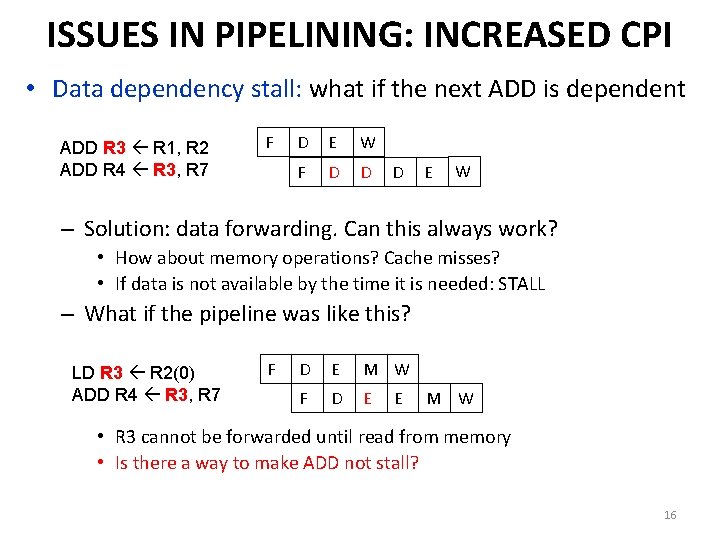

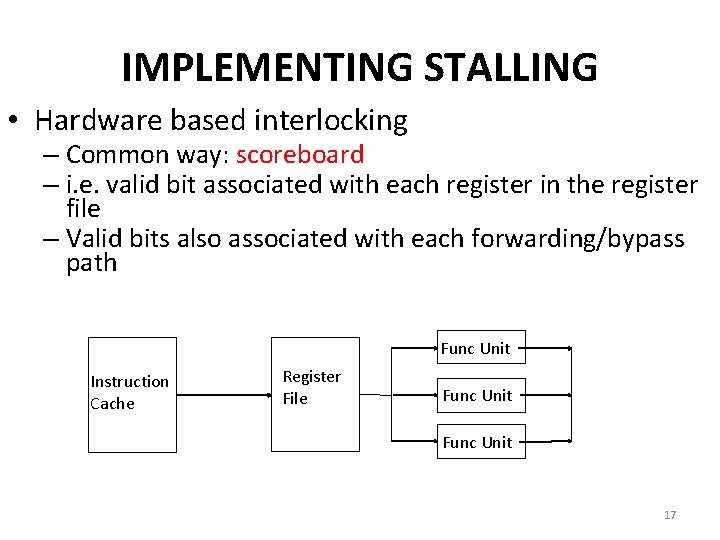

ISSUES IN PIPELINING: INCREASED CPI
• Data dependency stall: what if the next ADD is dependent?
    ADD R3 ← R1, R2    F D E W
    ADD R4 ← R3, R7      F D D D E W
  – Solution: data forwarding. Can this always work?
    • How about memory operations? Cache misses?
    • If data is not available by the time it is needed: STALL
  – What if the pipeline was like this?
    LD  R3 ← R2(0)     F D E M W
    ADD R4 ← R3, R7      F D E E M W
    • R3 cannot be forwarded until read from memory
    • Is there a way to make ADD not stall?
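The stall counts in these diagrams can be reproduced with a small timing model. This is a sketch under assumptions read off the slide: the consumer is fetched one cycle after the producer; without forwarding it reads the register file in a D cycle after the producer's W (as in F D D D E W); with forwarding the value reaches its E stage in the cycle after the producing stage. `Producer` and `stall_cycles` are hypothetical names.

```c
#include <assert.h>

/* Stage indices counted from F = 0: D = 1, E = 2, then M (if any) and W.
   The producer is fetched in cycle 1, so its stage s occurs in cycle 1 + s. */
typedef struct {
    unsigned result_stage;  /* stage that produces the value: E = 2 (ADD), M = 3 (LD) */
    unsigned wb_stage;      /* writeback stage: 3 for F D E W, 4 for F D E M W */
} Producer;

/* Cycles by which the dependent instruction's E stage is delayed. */
unsigned stall_cycles(Producer p, int forwarding) {
    unsigned consumer_e = 2 + 2;   /* consumer fetched in cycle 2; E is stage 2 */
    unsigned ready;                /* earliest cycle the consumer's E can run */
    if (forwarding)
        ready = (1 + p.result_stage) + 1;   /* bypassed right after the producing stage */
    else
        ready = (1 + p.wb_stage) + 2;       /* D reads the register file after W, then E */
    return ready > consumer_e ? ready - consumer_e : 0;
}
```

With these assumptions the ADD-to-ADD case stalls 2 cycles without forwarding and 0 with it, while the load-to-use case still stalls 1 cycle with forwarding, because the value only exists after M.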

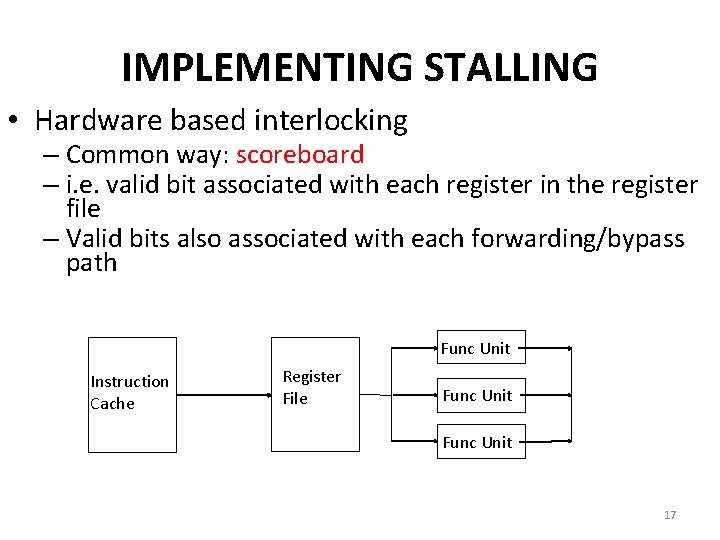

IMPLEMENTING STALLING
• Hardware-based interlocking
  – Common way: scoreboard
  – i.e., a valid bit associated with each register in the register file
  – Valid bits also associated with each forwarding/bypass path
[Figure: Instruction Cache, Register File, and Func Units]
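A register-file scoreboard reduces to one valid bit per register, cleared on issue and set on writeback. This is a minimal sketch assuming 8 registers and no bypass-path valid bits; `Scoreboard`, `sb_can_issue`, `sb_issue`, and `sb_writeback` are hypothetical names. As a design choice (an assumption, not from the slide) it also waits on a pending write to the destination, which keeps writes to one register in order.

```c
#include <assert.h>
#include <stdbool.h>

#define NUM_REGS 8

/* valid[r] == false means an in-flight instruction will still write r. */
typedef struct {
    bool valid[NUM_REGS];
} Scoreboard;

void sb_init(Scoreboard *sb) {
    for (int r = 0; r < NUM_REGS; r++)
        sb->valid[r] = true;
}

/* An instruction may leave decode only if its sources (and, in this sketch,
   its destination) have no pending writes; otherwise it stalls. */
bool sb_can_issue(const Scoreboard *sb, int dst, int src1, int src2) {
    return sb->valid[src1] && sb->valid[src2] && sb->valid[dst];
}

/* On issue, the destination's value is no longer in the register file. */
void sb_issue(Scoreboard *sb, int dst) { sb->valid[dst] = false; }

/* On writeback, the register file holds the value again. */
void sb_writeback(Scoreboard *sb, int dst) { sb->valid[dst] = true; }
```

For `ADD R3 ← R1, R2` followed by `ADD R4 ← R3, R7`, the second ADD sees R3 invalid after the first issues and stalls in decode until `sb_writeback(&sb, 3)`.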

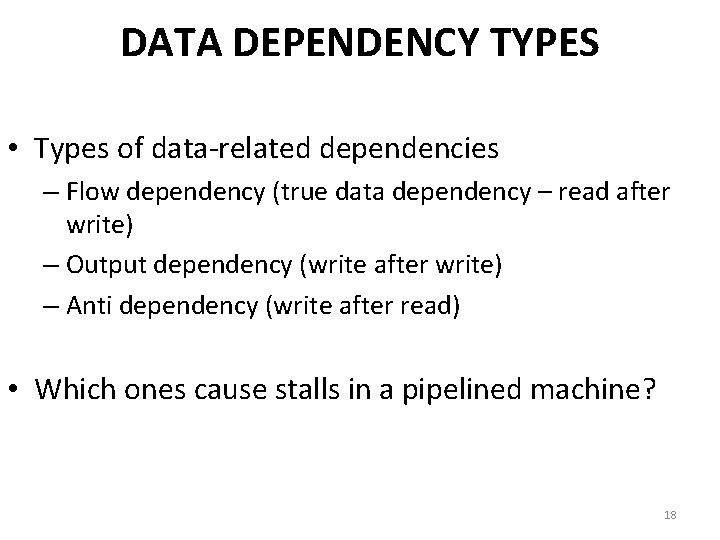

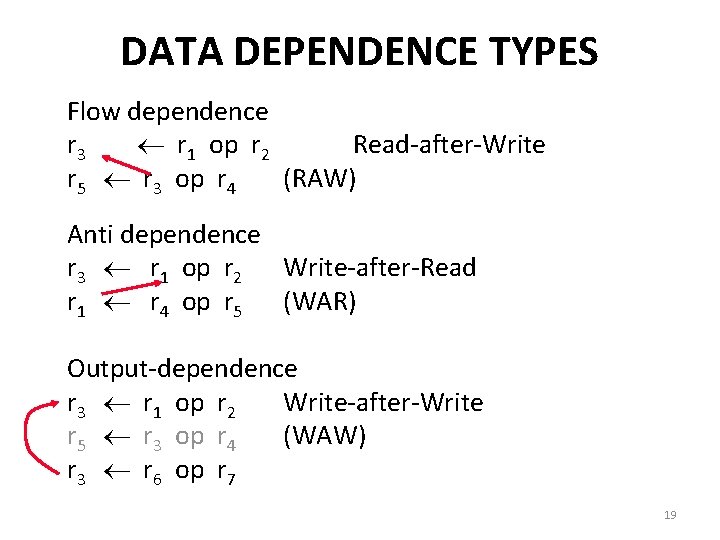

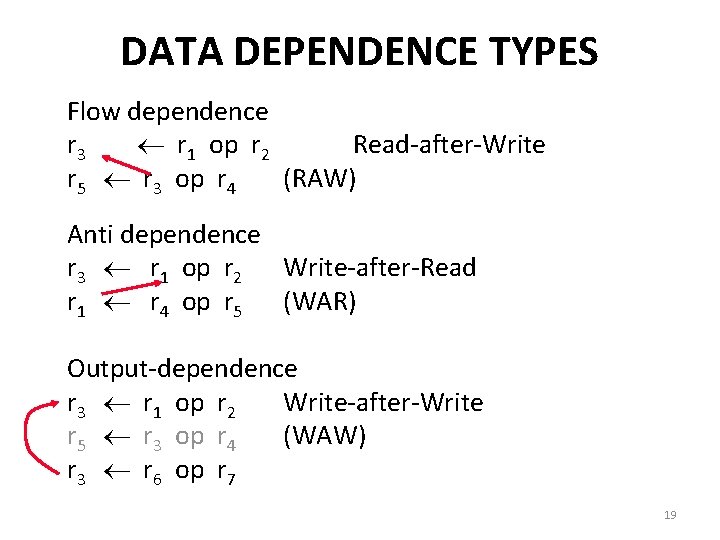

DATA DEPENDENCY TYPES
• Types of data-related dependencies
  – Flow dependency (true data dependency: read after write)
  – Output dependency (write after write)
  – Anti dependency (write after read)
• Which ones cause stalls in a pipelined machine?

DATA DEPENDENCE TYPES
Flow dependence: Read-after-Write (RAW)
    r3 ← r1 op r2
    r5 ← r3 op r4
Anti dependence: Write-after-Read (WAR)
    r3 ← r1 op r2
    r1 ← r4 op r5
Output dependence: Write-after-Write (WAW)
    r3 ← r1 op r2
    r5 ← r3 op r4
    r3 ← r6 op r7
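The three dependence types can be detected by comparing register numbers between an earlier and a later instruction. This sketch assumes three-operand instructions `dst ← src1 op src2` and only checks one pair at a time; `Instr` and `classify` are illustrative names.

```c
#include <assert.h>

/* A three-operand instruction: dst <- src1 op src2 (register numbers). */
typedef struct { int dst, src1, src2; } Instr;

enum { DEP_RAW = 1, DEP_WAR = 2, DEP_WAW = 4 };

/* Dependences of 'later' on 'earlier' (earlier comes first in program order).
   Returns a bitmask of DEP_* flags. */
int classify(Instr earlier, Instr later) {
    int deps = 0;
    if (later.src1 == earlier.dst || later.src2 == earlier.dst)
        deps |= DEP_RAW;   /* flow: later reads what earlier writes */
    if (later.dst == earlier.src1 || later.dst == earlier.src2)
        deps |= DEP_WAR;   /* anti: later overwrites what earlier reads */
    if (later.dst == earlier.dst)
        deps |= DEP_WAW;   /* output: both write the same register */
    return deps;
}
```

Applied to the slide's pairs with `r3 ← r1 op r2` as the earlier instruction, `r5 ← r3 op r4` yields RAW, `r1 ← r4 op r5` yields WAR, and `r3 ← r6 op r7` yields WAW.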

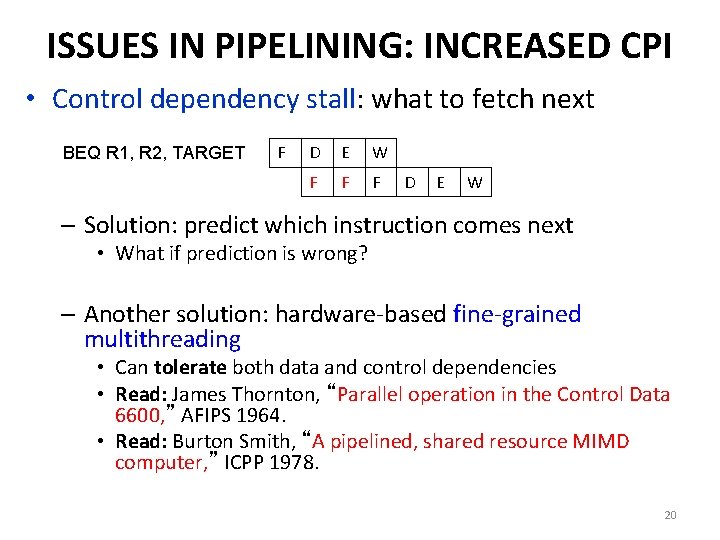

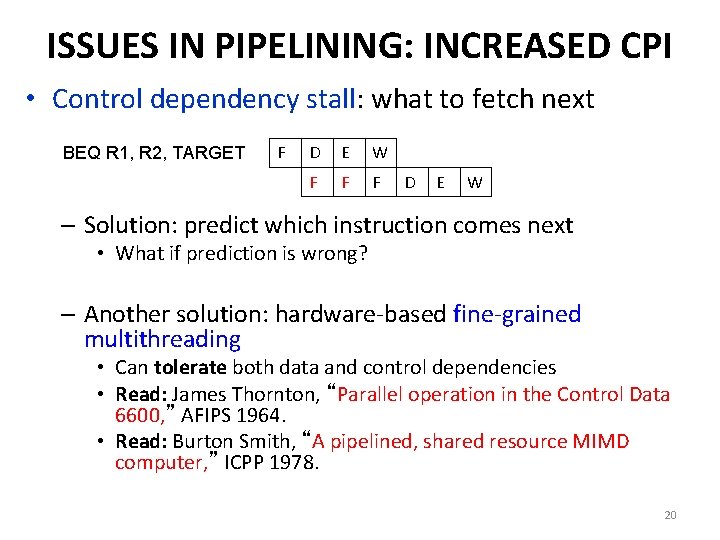

ISSUES IN PIPELINING: INCREASED CPI
• Control dependency stall: what to fetch next?
    BEQ R1, R2, TARGET   F D E W
                           F F F D E W
  – Solution: predict which instruction comes next
    • What if prediction is wrong?
  – Another solution: hardware-based fine-grained multithreading
    • Can tolerate both data and control dependencies
    • Read: James Thornton, “Parallel operation in the Control Data 6600,” AFIPS 1964.
    • Read: Burton Smith, “A pipelined, shared resource MIMD computer,” ICPP 1978.
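The cost of control-dependency stalls can be estimated from the branch penalty. This is a sketch assuming an ideal CPI of 1, branches as the only source of stalls, and a penalty paid only on wrong predictions (an accuracy of 0 is equivalent to stalling on every branch); the 2-cycle penalty comes from the diagram, while the branch fraction and accuracy are hypothetical inputs. `effective_cpi` is an illustrative name.

```c
#include <assert.h>

/* Effective CPI of a scalar pipeline whose only stalls are branch stalls.
   branch_frac : fraction of instructions that are branches (assumed input)
   penalty     : stall cycles per unresolved branch (2 in the F D E W diagram)
   accuracy    : fraction of branches predicted correctly (0 = always stall) */
double effective_cpi(double branch_frac, double penalty, double accuracy) {
    double mispredict = 1.0 - accuracy;   /* only wrong guesses pay the penalty */
    return 1.0 + branch_frac * mispredict * penalty;
}
```

For example, if 20% of instructions were branches, always stalling would give a CPI of 1.4, while a predictor that is right 90% of the time would bring it to about 1.04.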

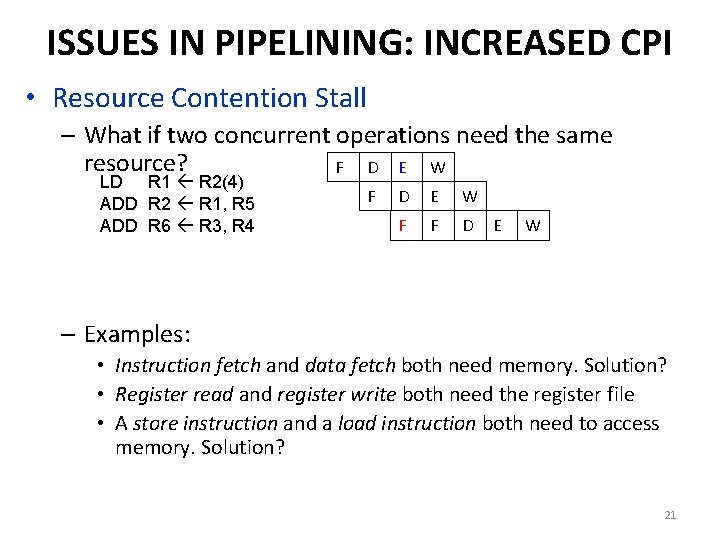

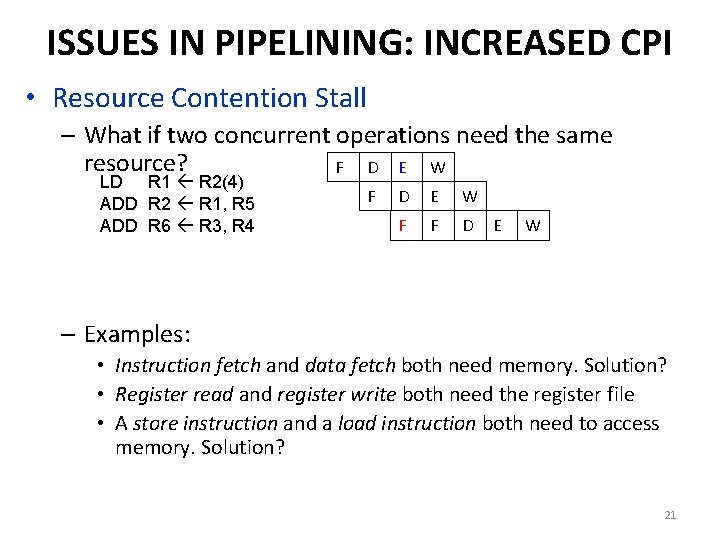

ISSUES IN PIPELINING: INCREASED CPI
• Resource contention stall
  – What if two concurrent operations need the same resource?
    LD  R1 ← R2(4)     F D E W
    ADD R2 ← R1, R5      F D E W
    ADD R6 ← R3, R4        F F D E W
  – Examples:
    • Instruction fetch and data fetch both need memory. Solution?
    • Register read and register write both need the register file
    • A store instruction and a load instruction both need to access memory. Solution?
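The delayed fetch in this diagram can be modeled as contention for a single memory port. This is a sketch under assumptions matching the picture: one port shared by instruction fetch and data access, a load using the port in its E stage (two cycles after its fetch), data access winning over fetch, and data-dependency stalls ignored so only the structural hazard shows. `schedule_fetches` is a hypothetical name.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_INSTRS 64
#define MAX_CYCLES (3 * MAX_INSTRS + 4)

/* Writes the (1-based) fetch cycle of each instruction into fetch[].
   is_load[i] says whether instruction i accesses data memory in its E stage. */
void schedule_fetches(const bool is_load[], int n, unsigned fetch[]) {
    assert(n <= MAX_INSTRS);
    bool port_busy[MAX_CYCLES] = { false };
    unsigned cycle = 1;
    for (int i = 0; i < n; i++) {
        while (port_busy[cycle])        /* memory is serving a load: fetch waits */
            cycle++;
        fetch[i] = cycle;
        if (is_load[i])
            port_busy[cycle + 2] = true; /* the load's E stage needs the port */
        cycle++;
    }
}
```

For LD, ADD, ADD this gives fetch cycles 1, 2, and 4: the second ADD tries to fetch in cycle 3, finds the port busy with the load, and repeats F, as in the diagram.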

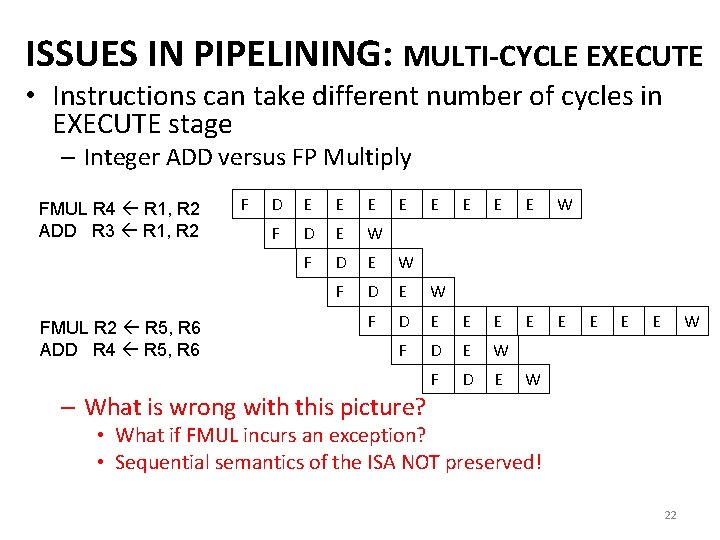

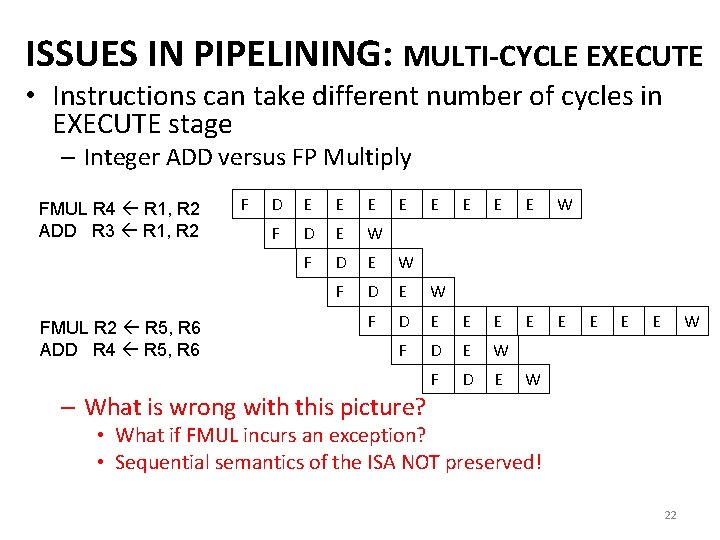

ISSUES IN PIPELINING: MULTI-CYCLE EXECUTE
• Instructions can take a different number of cycles in the EXECUTE stage
  – Integer ADD versus FP multiply
    FMUL R4 ← R1, R2
    ADD  R3 ← R1, R2
    FMUL R2 ← R5, R6
    ADD  R4 ← R5, R6
    [Figure: timing diagram; each FMUL spends several cycles in E while each ADD spends one, so the ADDs write back before the older FMULs]
  – What is wrong with this picture?
    • What if FMUL incurs an exception?
    • Sequential semantics of the ISA NOT preserved!
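Out-of-order writeback falls out of a simple timing model. This sketch assumes one instruction fetched per cycle, no stalls, one cycle each in F, D, and W, and a hypothetical 8-cycle FMUL versus a 1-cycle ADD (the slide does not fix the FMUL latency); `writeback_cycle` and `in_order_writeback` are illustrative names.

```c
#include <assert.h>
#include <stdbool.h>

/* Instruction i (0-based) is fetched in cycle i + 1, spends one cycle in D,
   exec_cycles cycles in E, and then writes back. */
unsigned writeback_cycle(unsigned i, unsigned exec_cycles) {
    unsigned fetch = i + 1;
    return fetch + 1 + exec_cycles + 1;   /* F cycle, +1 for D, +E cycles, +1 for W */
}

/* True if each instruction writes back strictly after the one before it,
   i.e. results become visible in program order. */
bool in_order_writeback(const unsigned exec_cycles[], unsigned n) {
    for (unsigned i = 1; i < n; i++)
        if (writeback_cycle(i, exec_cycles[i]) <=
            writeback_cycle(i - 1, exec_cycles[i - 1]))
            return false;
    return true;
}
```

With latencies {8, 1, 8, 1} for FMUL, ADD, FMUL, ADD, the first ADD writes back in cycle 5 while the first FMUL writes back in cycle 11, so if that FMUL raises an exception, a younger instruction has already changed architectural state. Giving every instruction the same E latency makes `in_order_writeback` return true, at the cost of slowing every short operation.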

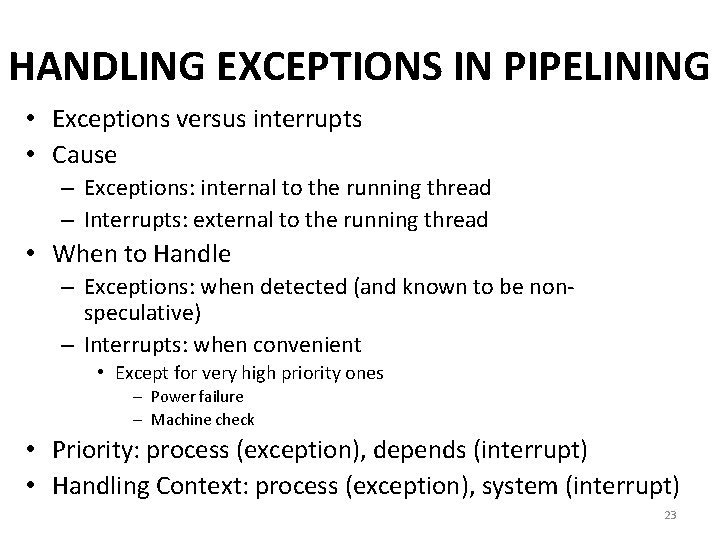

HANDLING EXCEPTIONS IN PIPELINING
• Exceptions versus interrupts
• Cause
  – Exceptions: internal to the running thread
  – Interrupts: external to the running thread
• When to handle
  – Exceptions: when detected (and known to be non-speculative)
  – Interrupts: when convenient
    • Except for very high priority ones
      – Power failure
      – Machine check
• Priority: process (exception), depends (interrupt)
• Handling context: process (exception), system (interrupt)

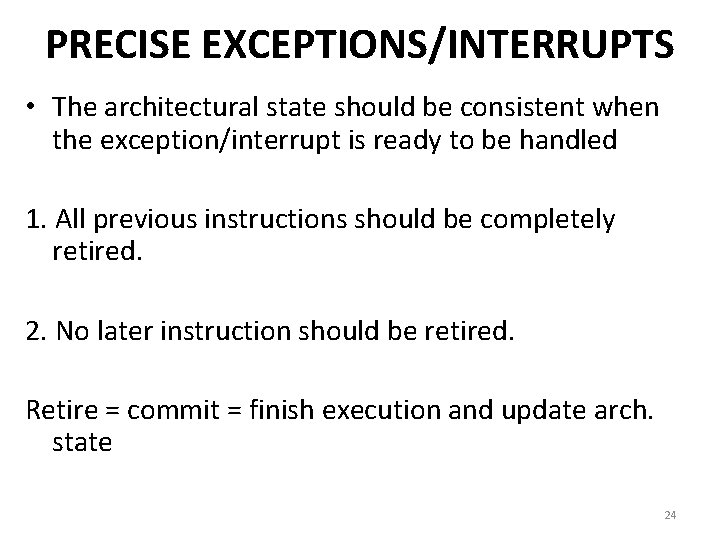

PRECISE EXCEPTIONS/INTERRUPTS
• The architectural state should be consistent when the exception/interrupt is ready to be handled
  1. All previous instructions should be completely retired.
  2. No later instruction should be retired.
• Retire = commit = finish execution and update architectural state

WHY DO WE WANT PRECISE EXCEPTIONS?
• Aid software debugging
• Enable (easy) recovery from exceptions, e.g., page faults
• Enable (easily) restartable processes
• Enable traps into software (e.g., software-implemented opcodes)

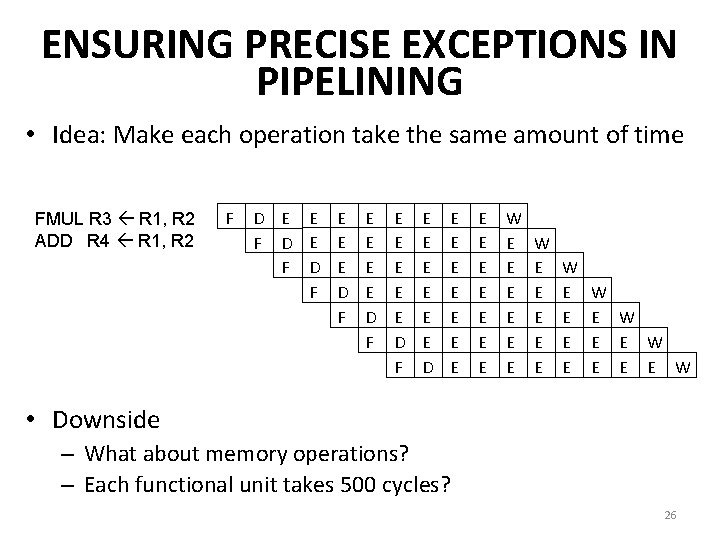

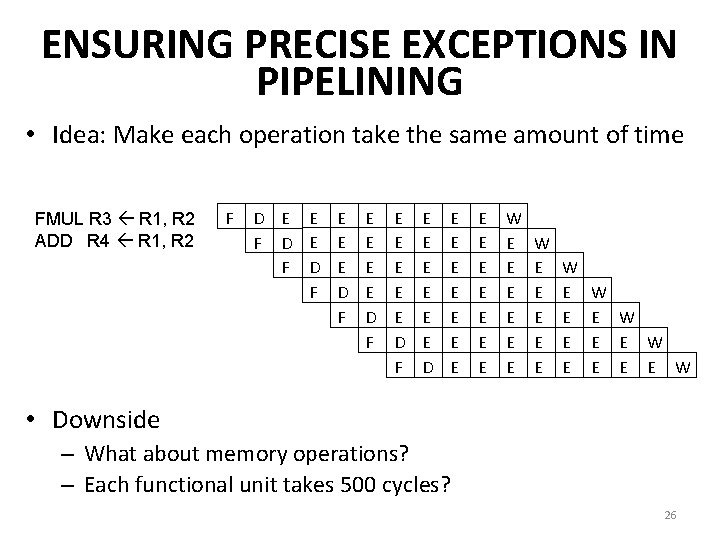

ENSURING PRECISE EXCEPTIONS IN PIPELINING
• Idea: Make each operation take the same amount of time
    FMUL R3 ← R1, R2
    ADD  R4 ← R1, R2
    [Figure: timing diagram in which every instruction spends the same number of cycles in E, so results are written back in program order]
• Downside
  – What about memory operations?
  – Each functional unit takes 500 cycles?

SOLUTIONS
• Reorder buffer
• History buffer
• Future register file
• Checkpointing
• Reading
  – Smith and Pleszkun, “Implementing Precise Interrupts in Pipelined Processors,” IEEE Trans. on Computers 1988 and ISCA 1985.
  – Hwu and Patt, “Checkpoint Repair for Out-of-order Execution Machines,” ISCA 1987.

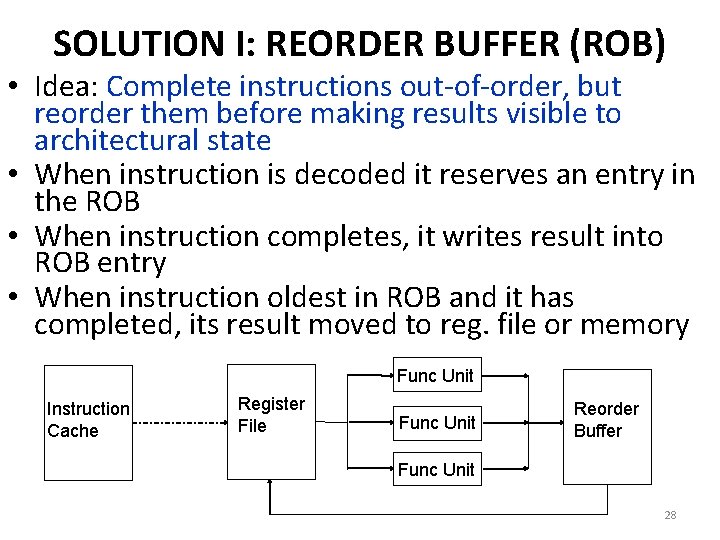

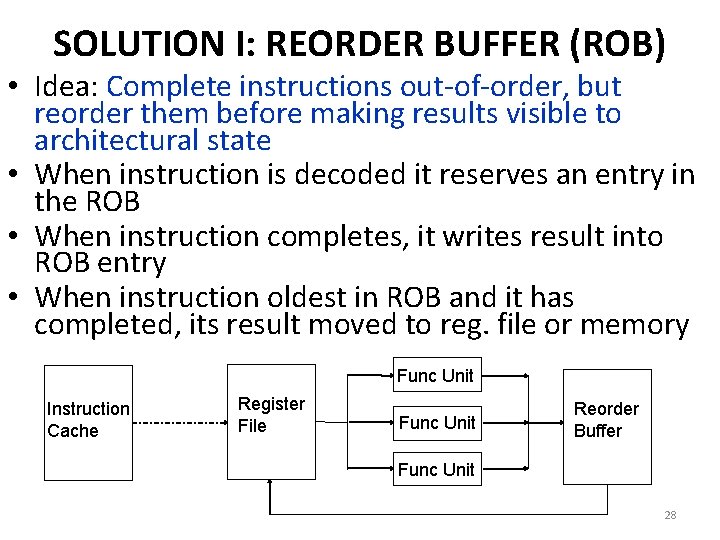

SOLUTION I: REORDER BUFFER (ROB)
• Idea: Complete instructions out of order, but reorder them before making results visible to architectural state
• When an instruction is decoded, it reserves an entry in the ROB
• When an instruction completes, it writes its result into its ROB entry
• When an instruction is the oldest in the ROB and has completed, its result is moved to the register file or memory
[Figure: Instruction Cache, Register File, Func Units, and Reorder Buffer]
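The three ROB steps (reserve at decode, write on completion, commit from the head) map onto a circular queue. This is a minimal sketch assuming register destinations only (no stores, branches, or exception bits) and an 8-entry ROB; `Rob`, `rob_alloc`, `rob_complete`, and `rob_commit` are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>

#define ROB_SIZE 8
#define NUM_REGS 8

typedef struct {
    bool valid;   /* entry allocated */
    bool done;    /* result has been written into the entry */
    int dest;     /* architectural destination register */
    int value;    /* result, meaningful once done */
} RobEntry;

typedef struct {
    RobEntry e[ROB_SIZE];
    int head, tail, count;    /* head = oldest instruction */
    int regfile[NUM_REGS];    /* architectural state */
} Rob;

/* Decode: reserve the next entry in program order; -1 if the ROB is full. */
int rob_alloc(Rob *r, int dest) {
    if (r->count == ROB_SIZE)
        return -1;
    int idx = r->tail;
    r->e[idx] = (RobEntry){ .valid = true, .done = false, .dest = dest, .value = 0 };
    r->tail = (r->tail + 1) % ROB_SIZE;
    r->count++;
    return idx;
}

/* Completion, in any order: the result goes into the ROB entry only. */
void rob_complete(Rob *r, int idx, int value) {
    assert(r->e[idx].valid && !r->e[idx].done);
    r->e[idx].value = value;
    r->e[idx].done = true;
}

/* Commit, in order: retire completed entries from the head.
   Returns the number of instructions retired. */
int rob_commit(Rob *r) {
    int retired = 0;
    while (r->count > 0 && r->e[r->head].done) {
        RobEntry *h = &r->e[r->head];
        r->regfile[h->dest] = h->value;   /* now architecturally visible */
        h->valid = false;
        r->head = (r->head + 1) % ROB_SIZE;
        r->count--;
        retired++;
    }
    return retired;
}
```

If an ADD completes before an older FMUL, `rob_commit` retires nothing until the FMUL finishes, so the register file never reflects a younger result ahead of an older one; an exception on the FMUL could then be taken with precise state.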

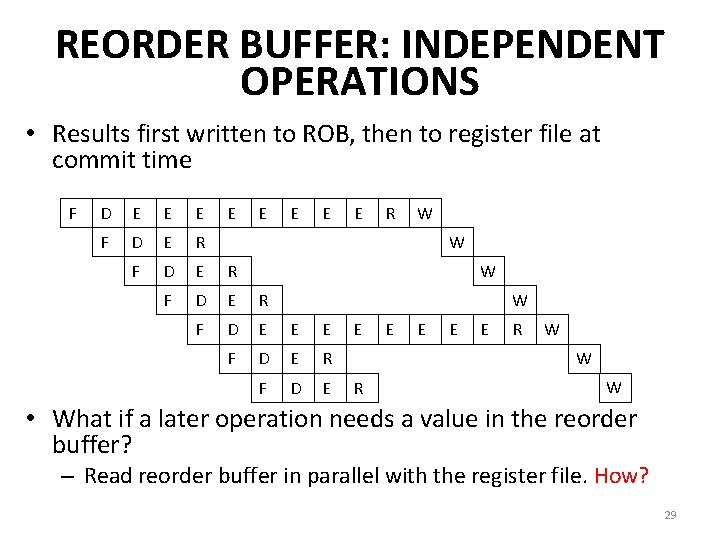

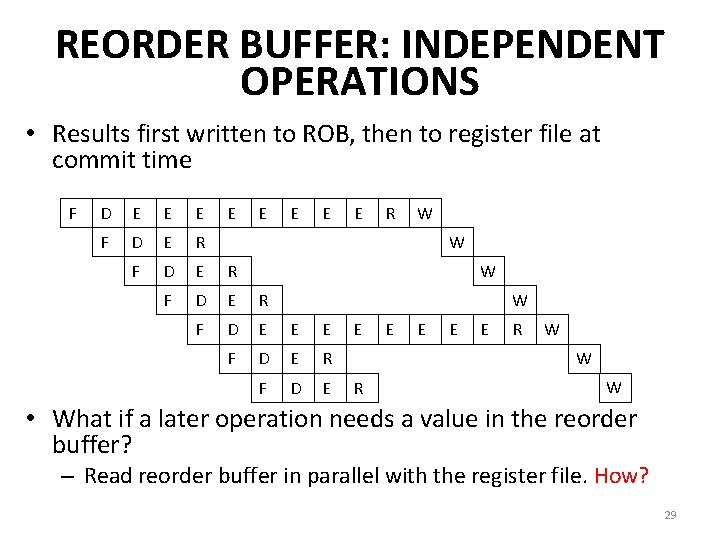

REORDER BUFFER: INDEPENDENT OPERATIONS
• Results are first written to the ROB, then to the register file at commit time
    [Figure: timing diagram of independent operations with different numbers of E cycles; each result is written into the ROB (R) when it completes and to the register file (W) in program order]
• What if a later operation needs a value in the reorder buffer?
  – Read the reorder buffer in parallel with the register file. How?

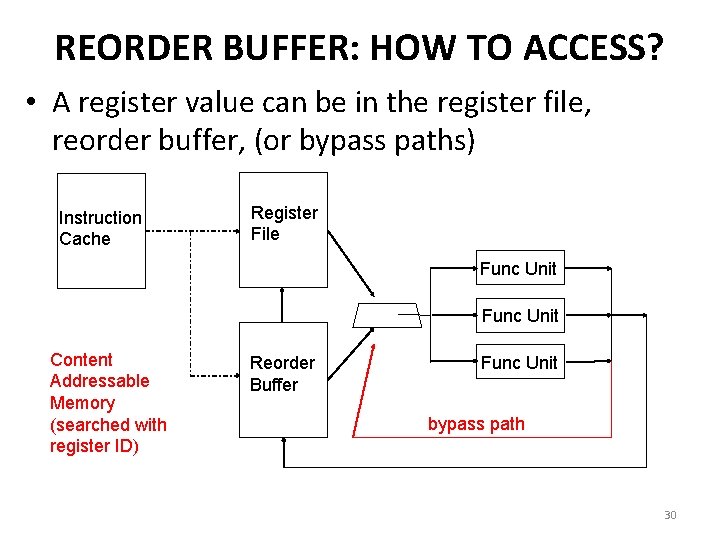

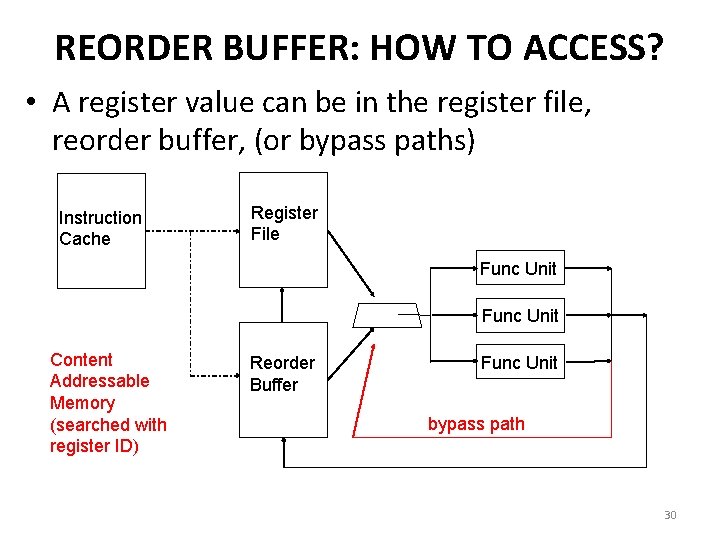

REORDER BUFFER: HOW TO ACCESS?
• A register value can be in the register file, the reorder buffer, (or the bypass paths)
[Figure: Instruction Cache, Register File, Func Units, and a Reorder Buffer implemented as a Content Addressable Memory (searched with register ID), plus a bypass path]

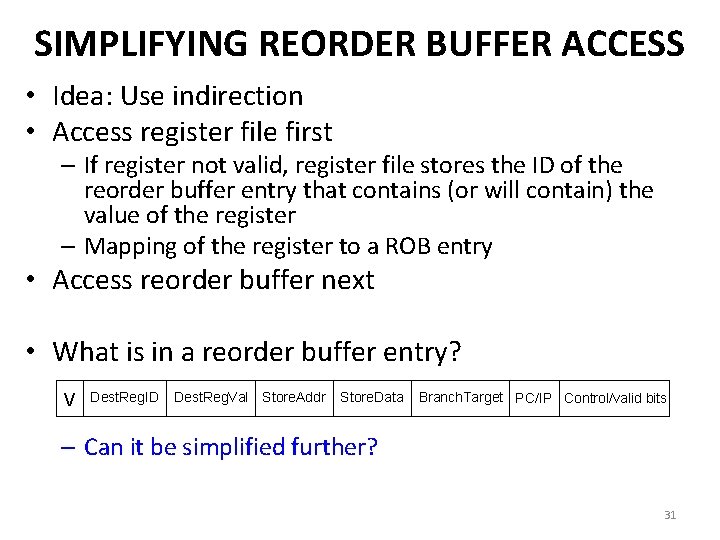

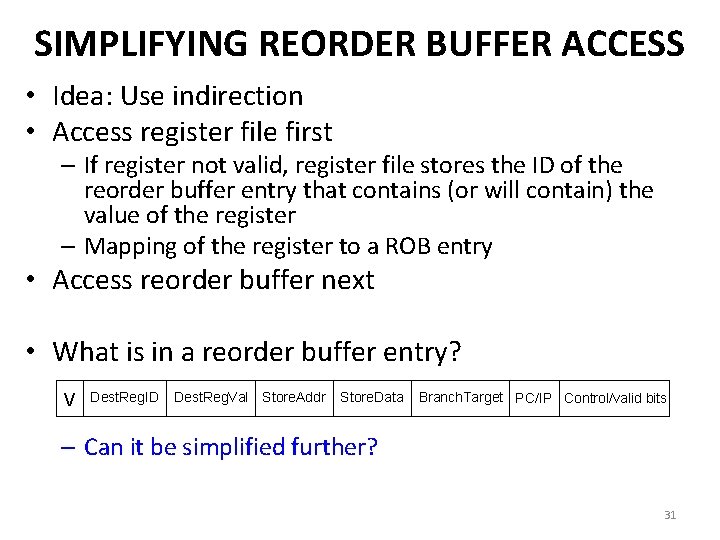

SIMPLIFYING REORDER BUFFER ACCESS
• Idea: Use indirection
• Access register file first
  – If the register is not valid, the register file stores the ID of the reorder buffer entry that contains (or will contain) the value of the register
  – Mapping of the register to a ROB entry
• Access reorder buffer next
• What is in a reorder buffer entry?
    V | Dest. Reg. ID | Dest. Reg. Val | Store Addr | Store Data | Branch Target | PC/IP | Control/valid bits
  – Can it be simplified further?
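The two-step lookup replaces a CAM search with an indexed read. This is a sketch assuming each register-file entry holds either a valid value or the index (tag) of the ROB entry that will produce it, and that a ROB entry only needs a done bit and a value for this purpose; `RegEntry`, `RobSlot`, `Operand`, and `read_operand` are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>

#define NUM_REGS 8
#define ROB_SIZE 8

/* Register file entry: a valid value, or (when !valid) the index of the
   ROB entry that contains or will contain the register's value. */
typedef struct { bool valid; int value; int rob_tag; } RegEntry;
typedef struct { bool done; int value; } RobSlot;

/* Result of reading one source operand at decode. */
typedef struct {
    bool ready;   /* value available now */
    int value;    /* meaningful only if ready */
    int tag;      /* ROB entry to wait on if !ready, else -1 */
} Operand;

Operand read_operand(const RegEntry rf[NUM_REGS], const RobSlot rob[ROB_SIZE], int reg) {
    const RegEntry *r = &rf[reg];
    if (r->valid)                               /* step 1: register file */
        return (Operand){ true, r->value, -1 };
    const RobSlot *s = &rob[r->rob_tag];        /* step 2: direct index, no CAM search */
    if (s->done)
        return (Operand){ true, s->value, -1 };
    return (Operand){ false, 0, r->rob_tag };   /* still executing: wait on the tag */
}
```

The price of avoiding the associative search is latency: the register file and ROB are accessed one after the other instead of in parallel.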

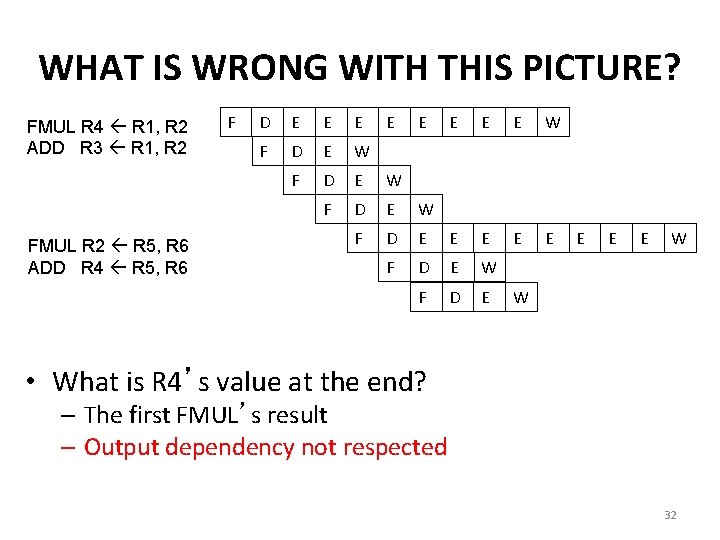

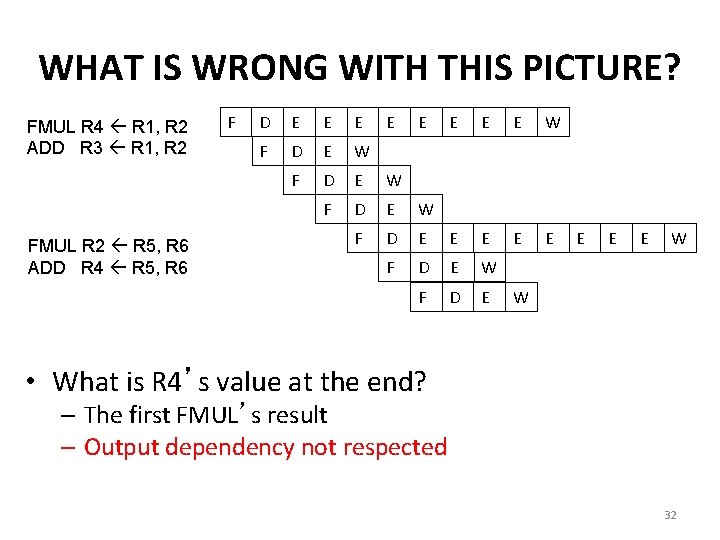

WHAT IS WRONG WITH THIS PICTURE?
    FMUL R4 ← R1, R2
    ADD  R3 ← R1, R2
    FMUL R2 ← R5, R6
    ADD  R4 ← R5, R6
    [Figure: timing diagram; each FMUL spends several cycles in E while each ADD spends one, so the ADD's write to R4 happens before the first FMUL's write to R4]
• What is R4’s value at the end?
  – The first FMUL’s result
  – Output dependency not respected

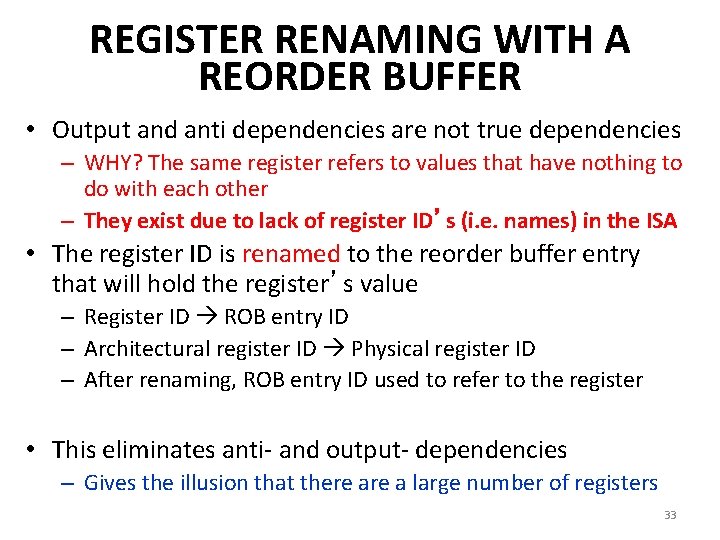

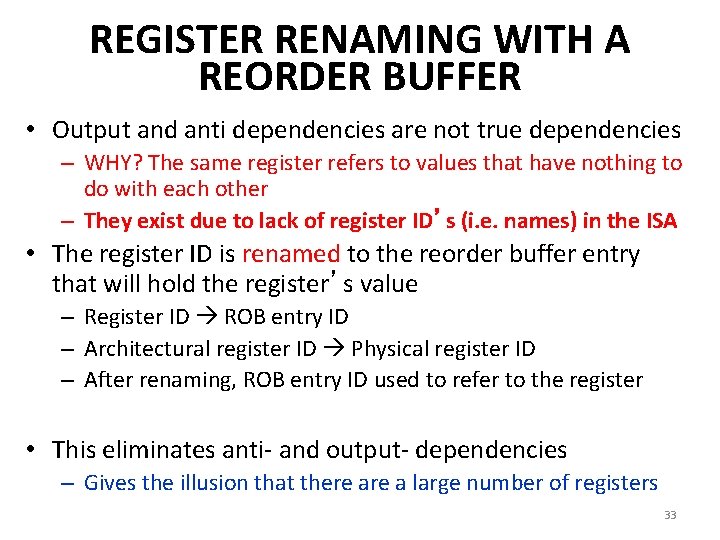

REGISTER RENAMING WITH A REORDER BUFFER
• Output and anti dependencies are not true dependencies
  – WHY? The same register refers to values that have nothing to do with each other
  – They exist due to the lack of register IDs (i.e., names) in the ISA
• The register ID is renamed to the reorder buffer entry that will hold the register’s value
  – Register ID → ROB entry ID
  – Architectural register ID → Physical register ID
  – After renaming, the ROB entry ID is used to refer to the register
• This eliminates anti- and output dependencies
  – Gives the illusion that there are a large number of registers
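Renaming can be sketched as a table from architectural register to the ROB entry that will produce it. This sketch assumes ROB entries are handed out sequentially with no wraparound or freeing, and that `NO_TAG` means "the value is in the register file"; `Renamer`, `rename_instr`, and the struct names are hypothetical. It renames the four-instruction FMUL/ADD sequence from the previous slide.

```c
#include <assert.h>

#define NUM_ARCH_REGS 8
#define NO_TAG (-1)

/* rat[r]: ROB entry that will produce architectural register r,
   or NO_TAG if the current value is already in the register file. */
typedef struct {
    int rat[NUM_ARCH_REGS];
    int next_tag;             /* next ROB entry to hand out (no wraparound here) */
} Renamer;

typedef struct { int dst, src1, src2; } ArchInstr;          /* dst <- src1 op src2 */
typedef struct { int dst_tag, src1_tag, src2_tag; } RenamedInstr;

void renamer_init(Renamer *rn) {
    for (int r = 0; r < NUM_ARCH_REGS; r++)
        rn->rat[r] = NO_TAG;
    rn->next_tag = 0;
}

RenamedInstr rename_instr(Renamer *rn, ArchInstr in) {
    RenamedInstr out;
    /* Read source mappings before updating the destination, so that
       R1 <- R1 op R2 reads the old R1. */
    out.src1_tag = rn->rat[in.src1];
    out.src2_tag = rn->rat[in.src2];
    /* Every write gets a fresh ROB entry, i.e. a new name for the new value. */
    out.dst_tag = rn->next_tag++;
    rn->rat[in.dst] = out.dst_tag;
    return out;
}
```

After renaming `FMUL R4 ← R1, R2`, `ADD R3 ← R1, R2`, `FMUL R2 ← R5, R6`, `ADD R4 ← R5, R6`, the two writes to R4 target different ROB entries (0 and 3), so they no longer overwrite each other out of order, and the second FMUL's write to R2 cannot disturb the earlier readers of R2. With in-order commit, R4 ends up holding the ADD's result.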

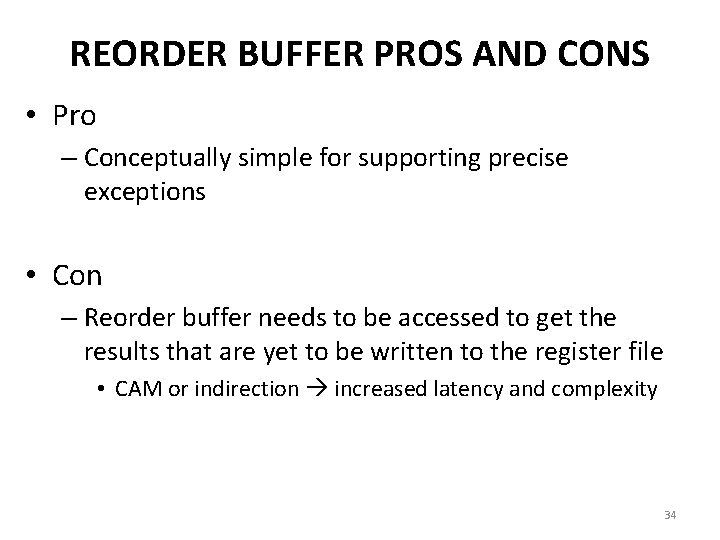

REORDER BUFFER PROS AND CONS
• Pro
  – Conceptually simple for supporting precise exceptions
• Con
  – Reorder buffer needs to be accessed to get the results that are yet to be written to the register file
    • CAM or indirection: increased latency and complexity
