Advanced Cache Design Topics (El Ayat, 9/15, cache)

- Slides: 34

Advanced Cache Design Topics El Ayat 9/15 cache. 1

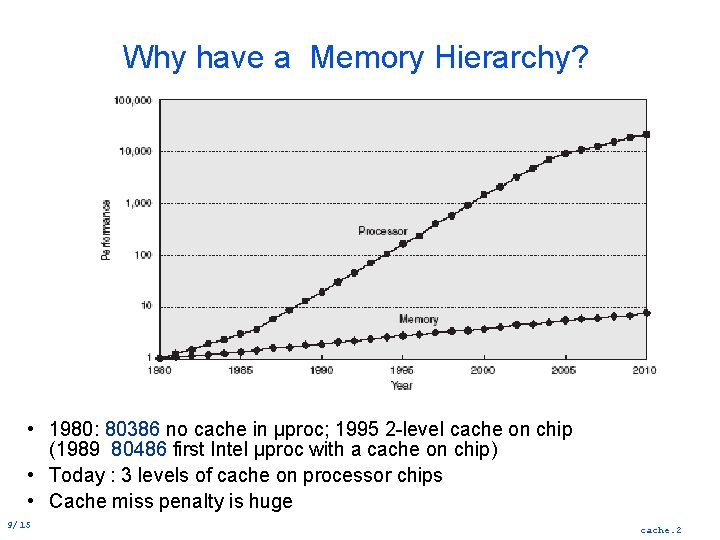

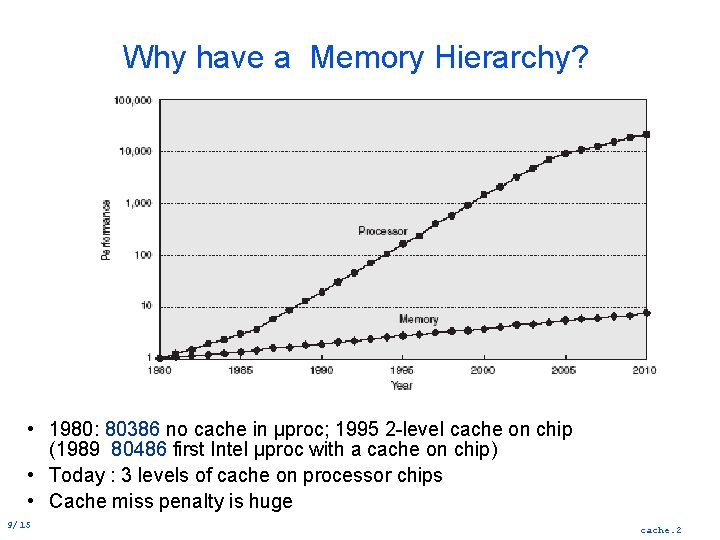

Why Have a Memory Hierarchy? The CPU-DRAM Gap

- 1980: no cache in microprocessors (the 80386, released in 1985, still had no on-chip cache)
- 1989: the 80486 was the first Intel microprocessor with a cache on chip
- 1995: two-level cache on chip
- Today: three levels of cache on processor chips
- The cache miss penalty is huge

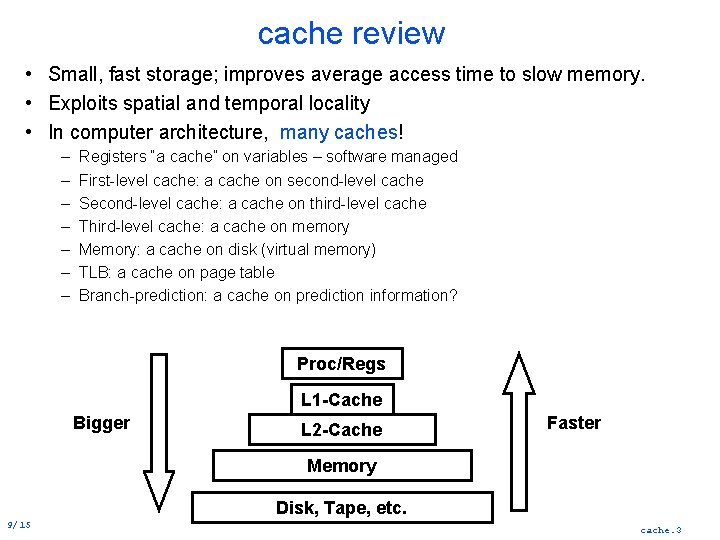

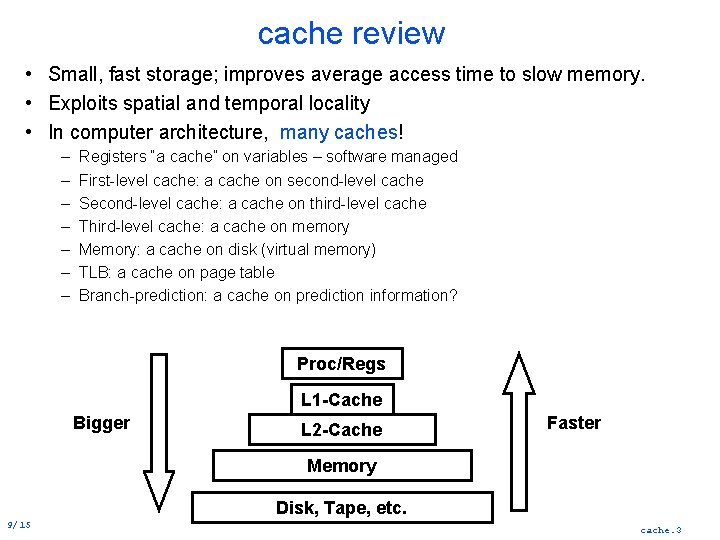

Cache Review

- A cache is small, fast storage that improves the average access time to slow memory.
- It exploits spatial and temporal locality.
- Computer architecture has many caches:
  - Registers: "a cache" on variables (software managed)
  - First-level cache: a cache on the second-level cache
  - Second-level cache: a cache on the third-level cache
  - Third-level cache: a cache on memory
  - Memory: a cache on disk (virtual memory)
  - TLB: a cache on the page table
  - Branch prediction: a cache on prediction information?
- (Figure: Proc/Regs, L1 cache, L2 cache, memory, disk/tape; levels get bigger toward the bottom and faster toward the top.)

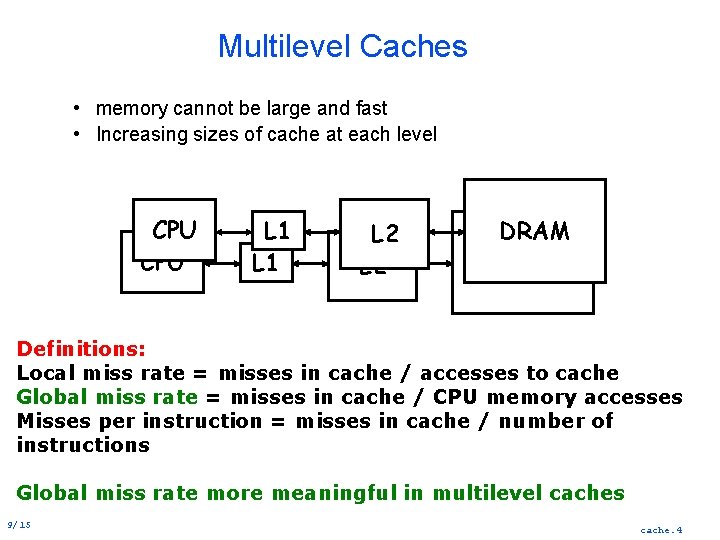

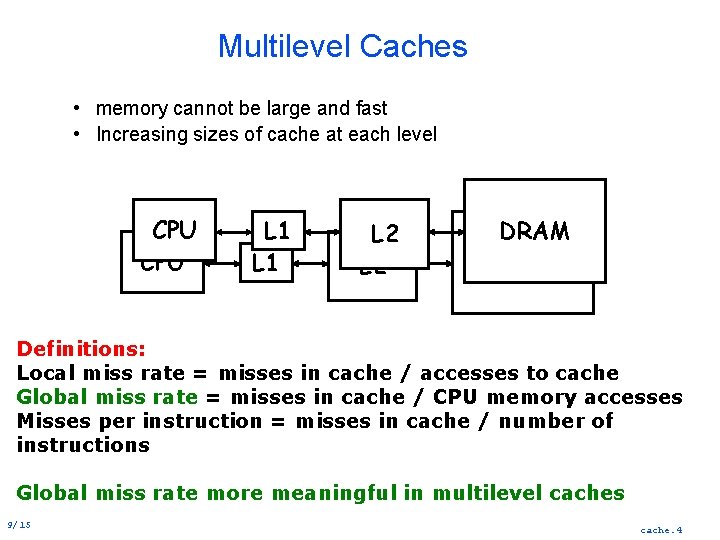

Multilevel Caches

- Memory cannot be both large and fast.
- Cache sizes increase at each level: CPU, L1, L2, DRAM.
- Definitions:
  - Local miss rate = misses in this cache / accesses to this cache
  - Global miss rate = misses in this cache / CPU memory accesses
  - Misses per instruction = misses in this cache / number of instructions
- The global miss rate is the more meaningful measure in multilevel caches.
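
The three definitions above differ only in their denominators. A minimal C sketch (function names are hypothetical, chosen for illustration) makes that explicit:

```c
/* Local miss rate: misses divided by the accesses that reached THIS cache. */
double local_miss_rate(long misses, long accesses_to_this_cache) {
    return (double)misses / (double)accesses_to_this_cache;
}

/* Global miss rate: misses divided by ALL memory accesses issued by the CPU. */
double global_miss_rate(long misses, long cpu_memory_accesses) {
    return (double)misses / (double)cpu_memory_accesses;
}

/* Misses per instruction: misses divided by instructions executed. */
double misses_per_instruction(long misses, long instructions) {
    return (double)misses / (double)instructions;
}
```

For example, with 1000 CPU memory references, 40 L1 misses and 20 L2 misses (illustrative numbers), the L1 local and global miss rates are both 4%, the L2 local miss rate is 20/40 = 50%, and the L2 global miss rate is 20/1000 = 2%.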

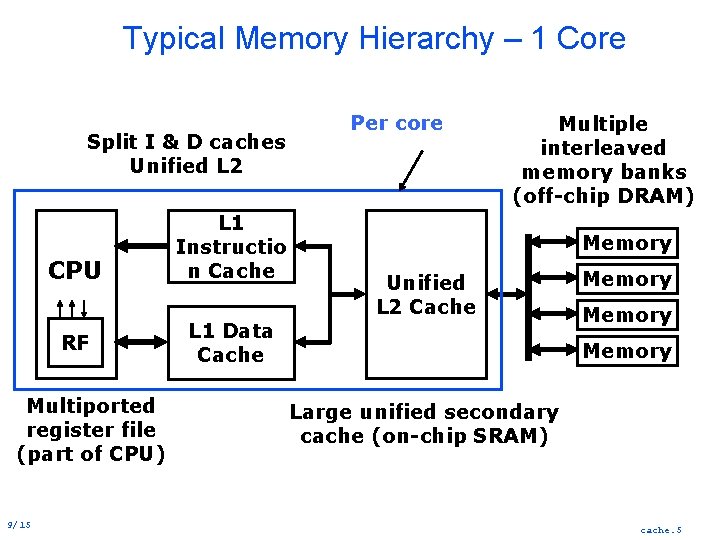

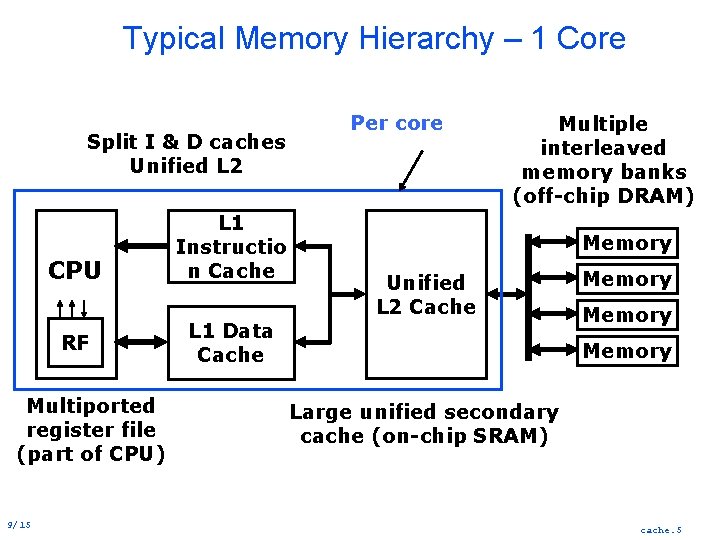

Typical Memory Hierarchy: 1 Core, Split I & D Caches, Unified L2

- CPU with a multiported register file (RF, part of the CPU)
- Per core: L1 instruction cache and L1 data cache
- Large unified secondary (L2) cache (on-chip SRAM)
- Memory: multiple interleaved memory banks (off-chip DRAM)

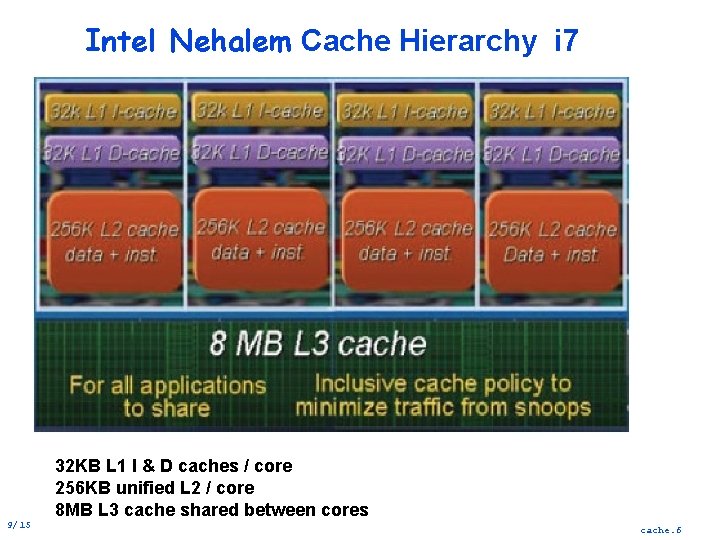

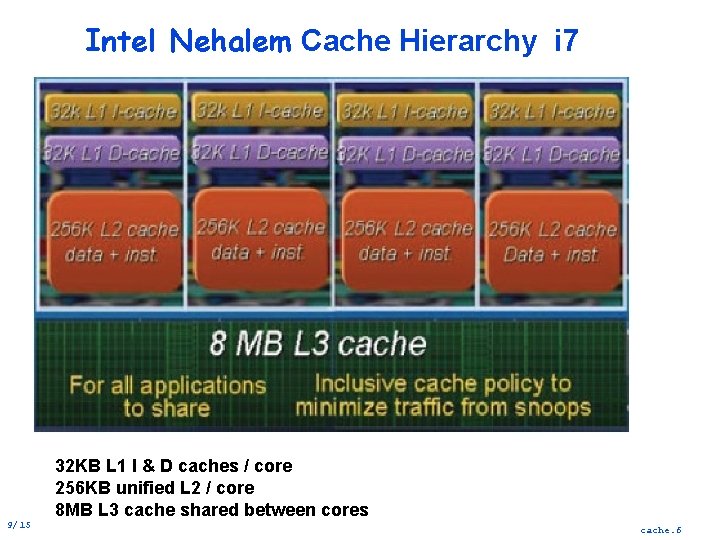

Intel Nehalem (Core i7) Cache Hierarchy

- 32 KB L1 instruction and 32 KB L1 data cache per core
- 256 KB unified L2 per core
- 8 MB L3 cache shared between cores

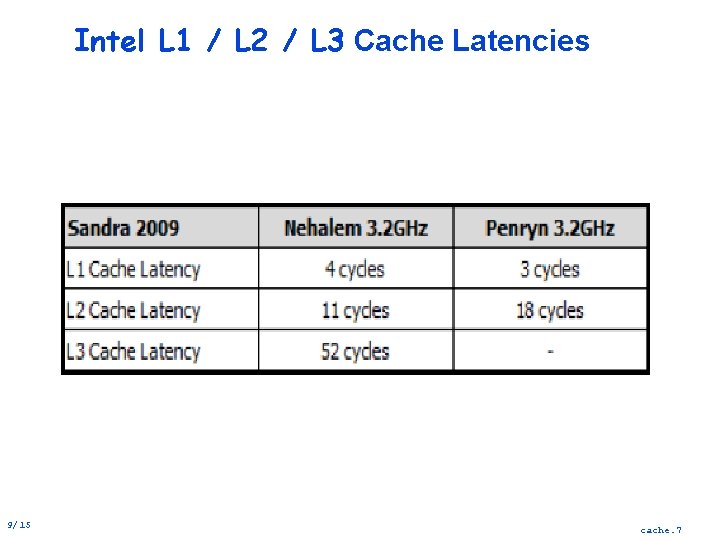

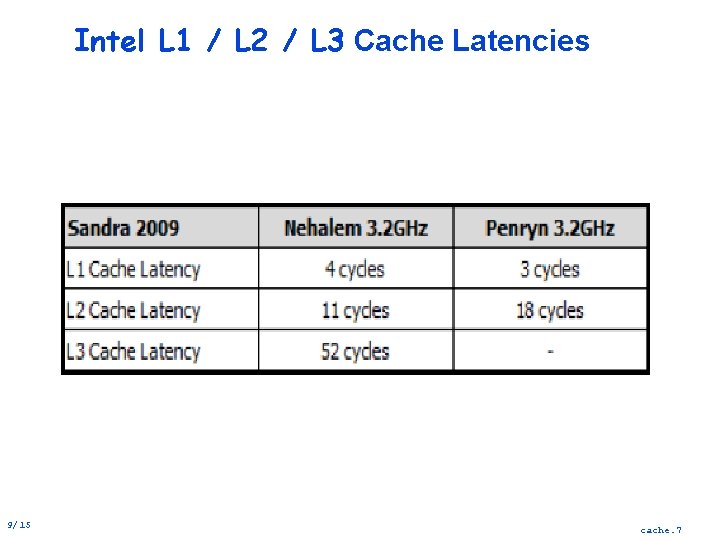

Intel L1 / L2 / L3 Cache Latencies

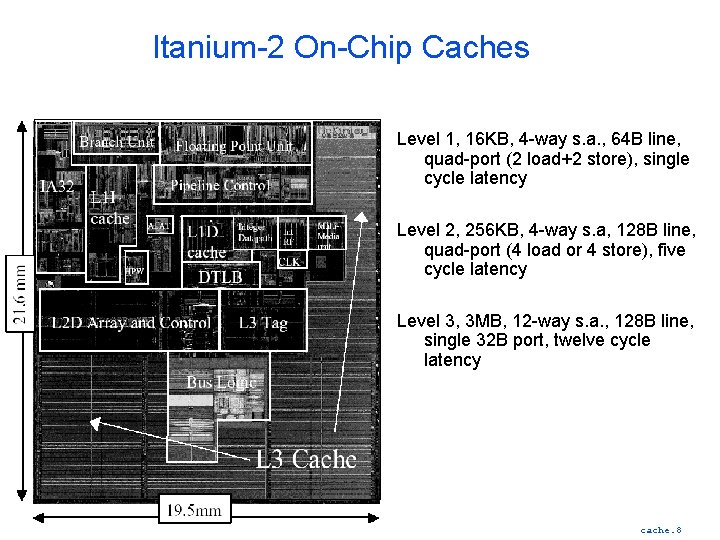

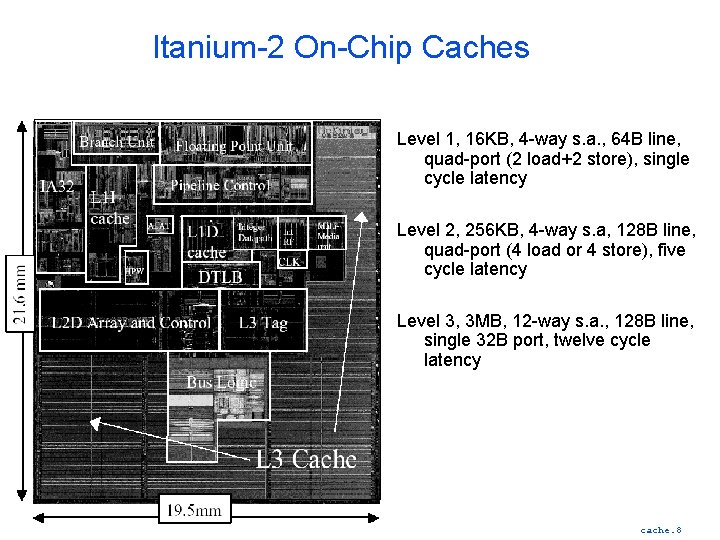

Itanium 2 On-Chip Caches (9/25/2007)

- Level 1: 16 KB, 4-way set associative, 64 B line, quad port (2 load + 2 store), single-cycle latency
- Level 2: 256 KB, 4-way set associative, 128 B line, quad port (4 load or 4 store), five-cycle latency
- Level 3: 3 MB, 12-way set associative, 128 B line, single 32 B port, twelve-cycle latency
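
Each size/associativity/line triple above fixes the number of sets and the width of the index and block-offset fields (sets = size / (ways x line)). A small C sketch of that arithmetic, assuming power-of-two line sizes and set counts; the struct and function names are illustrative:

```c
#include <stdint.h>

typedef struct {
    uint64_t size_bytes;   /* total capacity */
    unsigned ways;         /* associativity */
    unsigned line_bytes;   /* block (line) size */
} cache_geometry;

/* log2 of a power of two. */
static unsigned log2_exact(uint64_t x) {
    unsigned n = 0;
    while (x > 1) { x >>= 1; n++; }
    return n;
}

/* Number of sets = capacity / (ways x line size). */
uint64_t num_sets(cache_geometry g) {
    return g.size_bytes / ((uint64_t)g.ways * g.line_bytes);
}

/* Address bits that select a byte within a line. */
unsigned offset_bits(cache_geometry g) { return log2_exact(g.line_bytes); }

/* Address bits that select the set. */
unsigned index_bits(cache_geometry g) { return log2_exact(num_sets(g)); }
```

Applied to the three levels: L1 has 64 sets (6 index bits, 6 offset bits), L2 has 512 sets (9 index bits, 7 offset bits), and the 12-way L3 still comes out to a power of two, 2048 sets (11 index bits).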

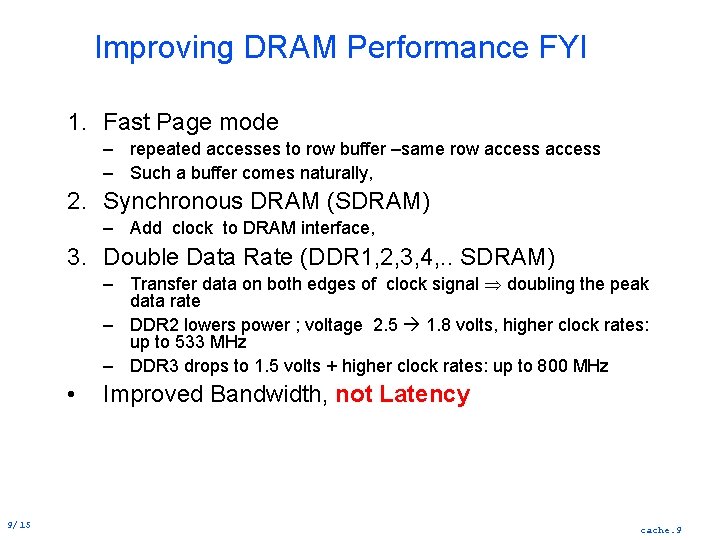

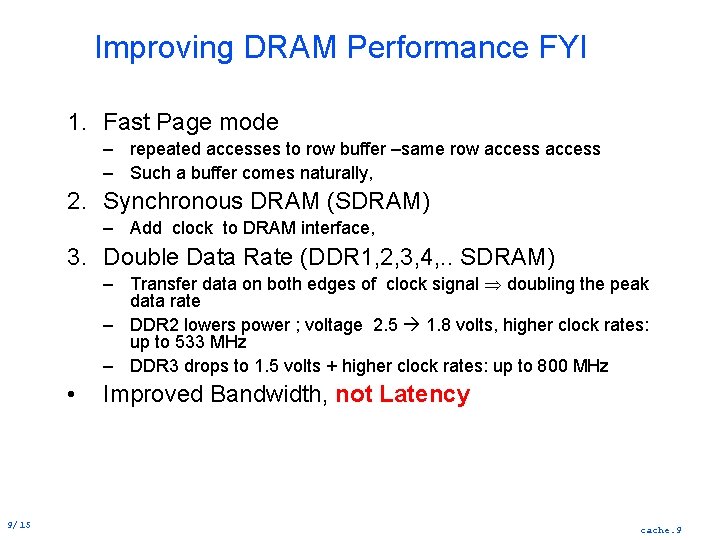

Improving DRAM Performance (FYI)

1. Fast page mode: repeated accesses to the row buffer (same-row accesses). Such a buffer comes naturally, since every DRAM access already latches a whole row.
2. Synchronous DRAM (SDRAM): add a clock to the DRAM interface.
3. Double Data Rate (DDR1, 2, 3, 4, ... SDRAM): transfer data on both edges of the clock signal, doubling the peak data rate.
   - DDR2 lowers power by dropping the voltage from 2.5 to 1.8 volts and offers higher clock rates: up to 533 MHz
   - DDR3 drops the voltage to 1.5 volts and offers higher clock rates: up to 800 MHz

Improved bandwidth, not latency.
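
Transferring on both clock edges doubles the *peak* transfer rate at the same bus clock; it does nothing for access latency. A minimal C sketch of the peak-bandwidth arithmetic, assuming a 64-bit (8-byte) data bus per DIMM (an assumption, not stated on the slide):

```c
#include <stdint.h>

/* Single data rate: one transfer per bus clock. */
uint64_t sdr_peak_bytes_per_sec(uint64_t bus_clock_hz, unsigned bus_width_bits) {
    return bus_clock_hz * (bus_width_bits / 8);
}

/* Double data rate: data moves on both clock edges, so 2 transfers per clock. */
uint64_t ddr_peak_bytes_per_sec(uint64_t bus_clock_hz, unsigned bus_width_bits) {
    uint64_t transfers_per_sec = 2 * bus_clock_hz;
    return transfers_per_sec * (bus_width_bits / 8);
}
```

At the 800 MHz DDR3 clock from the slide and a 64-bit bus, this gives 1600 million transfers/s x 8 bytes = 12.8 GB/s peak, twice what a single-data-rate interface at the same clock would deliver.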

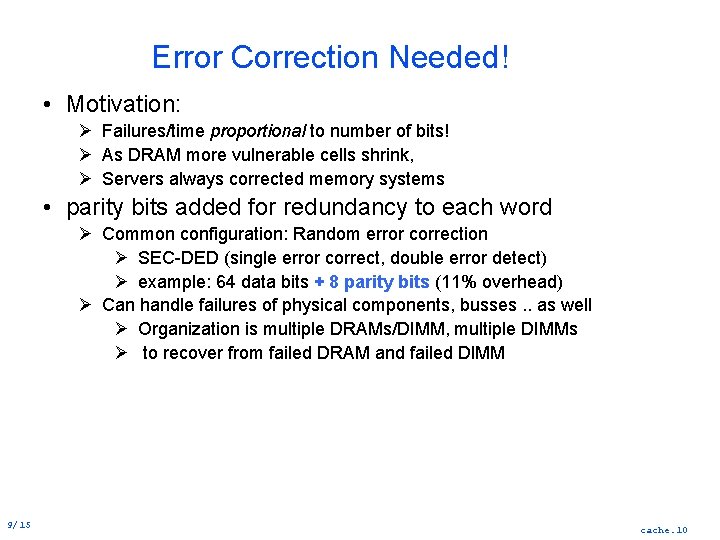

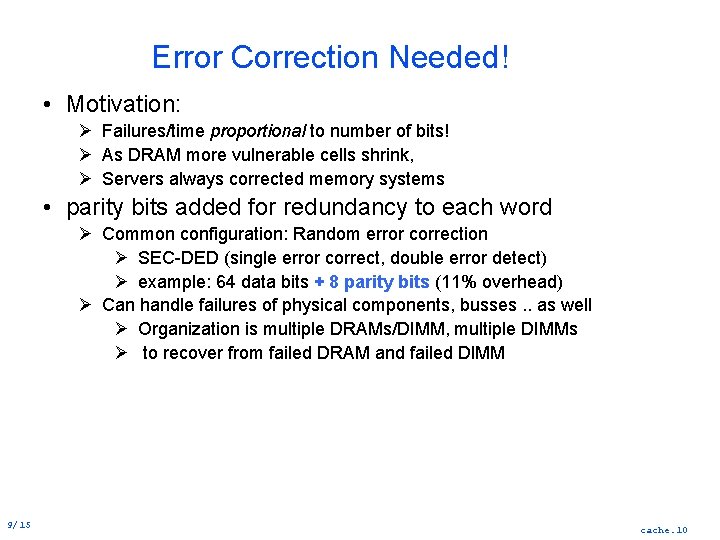

Error Correction Needed!

- Motivation:
  - Failures per unit time are proportional to the number of bits.
  - DRAM cells become more vulnerable as they shrink.
  - Servers have always used error-corrected memory systems.
- Parity (check) bits are added to each word for redundancy.
  - Common configuration for random errors: SEC-DED (single error correct, double error detect)
  - Example: 64 data bits + 8 check bits (8 of every 72 stored bits, about 11%; 12.5% relative to the data bits)
- ECC can also handle failures of physical components, buses, etc.
  - Memory is organized as multiple DRAMs per DIMM and multiple DIMMs, to recover from a failed DRAM and a failed DIMM.
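
The "64 + 8" figure follows from the Hamming bound: single-error correction of m data bits needs the smallest r with 2^r >= m + r + 1, and SEC-DED adds one overall parity bit for double-error detection. A short C sketch of that count (function names are illustrative):

```c
/* Hamming SEC: smallest r such that 2^r >= m + r + 1, so the r check bits
   can name every single-bit error position (m + r of them) plus "no error". */
unsigned sec_check_bits(unsigned m) {
    unsigned r = 0;
    while ((1u << r) < m + r + 1)
        r++;
    return r;
}

/* SEC-DED: one extra overall parity bit distinguishes single from double errors. */
unsigned secded_check_bits(unsigned m) {
    return sec_check_bits(m) + 1;
}
```

For 64 data bits this yields 7 + 1 = 8 check bits (a 72-bit word); for 32 data bits it yields 7, which is why wider ECC words have lower relative overhead.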

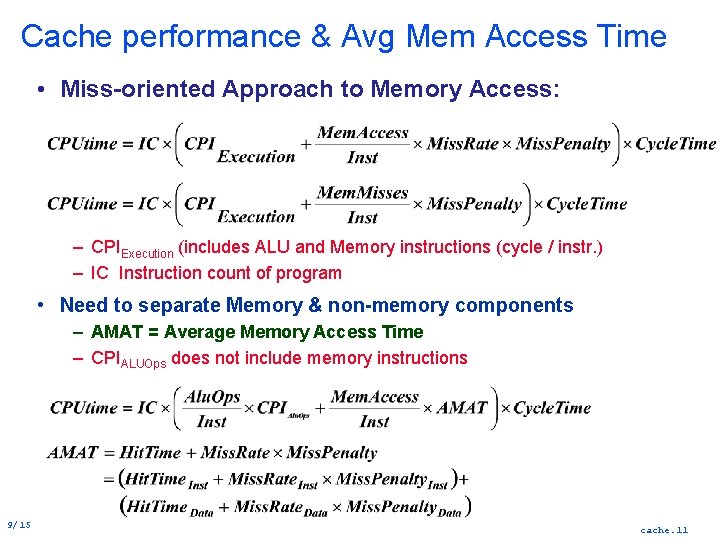

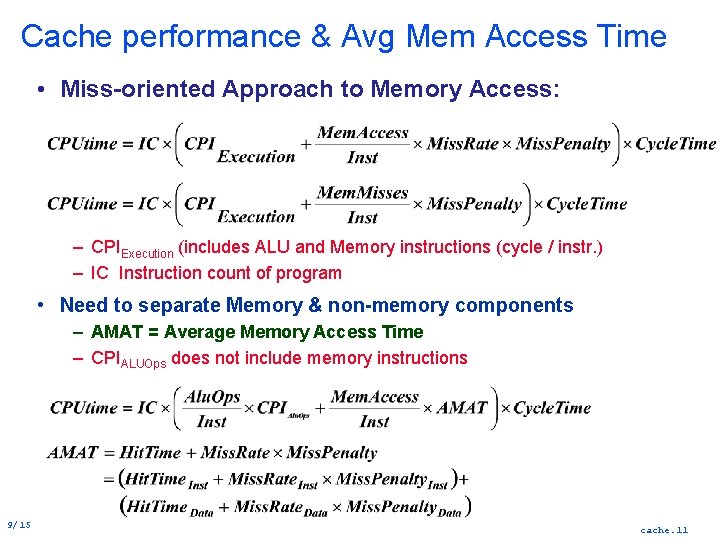

Cache Performance and Average Memory Access Time (AMAT)

- Miss-oriented approach to memory access:
  - CPI_Execution: includes ALU and memory instructions (cycles per instruction)
  - IC: instruction count of the program
- Separating the memory and non-memory components:
  - AMAT = average memory access time
  - CPI_ALUOps does not include memory instructions
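
The slide's equations did not survive extraction. Assuming they are the standard Hennessy and Patterson forms, the miss-oriented version is CPU time = IC x (CPI_Execution + MemAccesses/Inst x MissRate x MissPenalty) x CycleTime, and the separated version is CPU time = IC x (ALUOps/Inst x CPI_ALUOps + MemAccesses/Inst x AMAT) x CycleTime, with AMAT = HitTime + MissRate x MissPenalty. A C sketch of both (parameter names are illustrative):

```c
/* AMAT = hit time + miss rate x miss penalty (all in cycles). */
double amat(double hit_time, double miss_rate, double miss_penalty) {
    return hit_time + miss_rate * miss_penalty;
}

/* Miss-oriented form: memory stall cycles are added on top of CPI_Execution. */
double cpu_time_miss_oriented(double ic, double cpi_execution,
                              double mem_accesses_per_instr,
                              double miss_rate, double miss_penalty,
                              double cycle_time) {
    double stall_cpi = mem_accesses_per_instr * miss_rate * miss_penalty;
    return ic * (cpi_execution + stall_cpi) * cycle_time;
}

/* Separated form: non-memory ops use CPI_ALUOps, memory ops cost AMAT cycles. */
double cpu_time_amat(double ic, double alu_ops_per_instr, double cpi_alu_ops,
                     double mem_accesses_per_instr, double amat_cycles,
                     double cycle_time) {
    return ic * (alu_ops_per_instr * cpi_alu_ops
                 + mem_accesses_per_instr * amat_cycles) * cycle_time;
}
```

With illustrative numbers (1-cycle hit, 5% miss rate, 100-cycle penalty), AMAT is 1 + 0.05 x 100 = 6 cycles; in the second form the hit time is already inside AMAT, which is why CPI_ALUOps must exclude memory instructions.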

Improving Cache Performance

1. Reduce the miss rate.
2. Reduce the miss penalty.
3. Reduce the cache hit time.

Miss Rate Reduction

1. Larger cache
2. Larger block size
3. Higher associativity
4. Hardware prefetching of instructions and data
5. Software prefetching of data
6. Compiler optimizations

Cache Miss Classification: the 3 Cs

- Compulsory: the first access to a block cannot be in the cache, so the block must be brought into the cache. Also called cold-start or first-reference misses. (These misses occur even in an infinite cache.)
- Capacity: the cache cannot contain all the blocks needed, so blocks are discarded and later retrieved. (Non-compulsory misses that also occur in a fully associative cache of size X.)
- Conflict: in a set-associative or direct-mapped cache, a block is discarded and later retrieved because too many blocks map to the same index. Also called collision or interference misses. (Misses in an N-way associative cache of size X that a fully associative cache of size X would avoid.)
- A 4th "C": coherence misses, caused by cache coherence (invalidations from other processors).
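
The parenthetical definitions above translate directly into a simulation: run the same block-address trace through the real cache and through a fully associative LRU cache of the same size, and remember which blocks have ever been referenced. A minimal C sketch, assuming a direct-mapped cache of 4 blocks and whole-block addresses (sizes and names are illustrative):

```c
#include <stdbool.h>
#include <stddef.h>

#define NUM_BLOCKS 4     /* cache capacity in blocks (illustrative) */
#define MAX_SEEN   1024  /* distinct blocks tracked for compulsory misses */

typedef struct { unsigned compulsory, capacity, conflict, hits; } three_c;

/* Fully associative LRU cache with NUM_BLOCKS entries; fa[0] is the MRU block. */
static bool fa_access(unsigned long fa[], int *count, unsigned long block) {
    int pos = -1;
    for (int i = 0; i < *count; i++)
        if (fa[i] == block) { pos = i; break; }
    bool hit = (pos >= 0);
    if (!hit) {
        if (*count < NUM_BLOCKS) (*count)++;
        pos = *count - 1;                 /* empty slot, or the LRU victim */
    }
    for (int i = pos; i > 0; i--)         /* shift more recent blocks down */
        fa[i] = fa[i - 1];
    fa[0] = block;                        /* accessed block becomes MRU */
    return hit;
}

/* Direct-mapped cache with NUM_BLOCKS lines: index = block mod NUM_BLOCKS. */
static bool dm_access(unsigned long tags[], bool valid[], unsigned long block) {
    unsigned long idx = block % NUM_BLOCKS;
    bool hit = valid[idx] && tags[idx] == block;
    tags[idx] = block;
    valid[idx] = true;
    return hit;
}

/* Classify each miss of the direct-mapped cache:
   compulsory = first reference to the block,
   capacity   = also misses in the same-size fully associative cache,
   conflict   = hits in the fully associative cache but misses here. */
three_c classify_misses(const unsigned long *trace, size_t n) {
    three_c r = {0, 0, 0, 0};
    unsigned long seen[MAX_SEEN];
    size_t n_seen = 0;
    unsigned long fa[NUM_BLOCKS];
    int fa_count = 0;
    unsigned long tags[NUM_BLOCKS];
    bool valid[NUM_BLOCKS] = {false};

    for (size_t t = 0; t < n; t++) {
        unsigned long b = trace[t];
        bool first = true;
        for (size_t i = 0; i < n_seen; i++)
            if (seen[i] == b) { first = false; break; }
        if (first && n_seen < MAX_SEEN)   /* traces beyond MAX_SEEN blocks are not supported */
            seen[n_seen++] = b;

        bool fa_hit = fa_access(fa, &fa_count, b);
        bool dm_hit = dm_access(tags, valid, b);

        if (dm_hit)       r.hits++;
        else if (first)   r.compulsory++;
        else if (!fa_hit) r.capacity++;
        else              r.conflict++;
    }
    return r;
}
```

For the trace 0, 4, 0, 4 (blocks 0 and 4 share index 0), the first two misses are compulsory and the last two are conflict misses, since a fully associative cache would hold both blocks. For 0, 1, 2, 3, 4, 0, the final miss is a capacity miss: five distinct blocks do not fit in four.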

3 Cs Absolute Miss Rate (SPEC92)

(Figure: miss rate broken into its components, with the conflict component marked; compare with your cache simulation HW.)

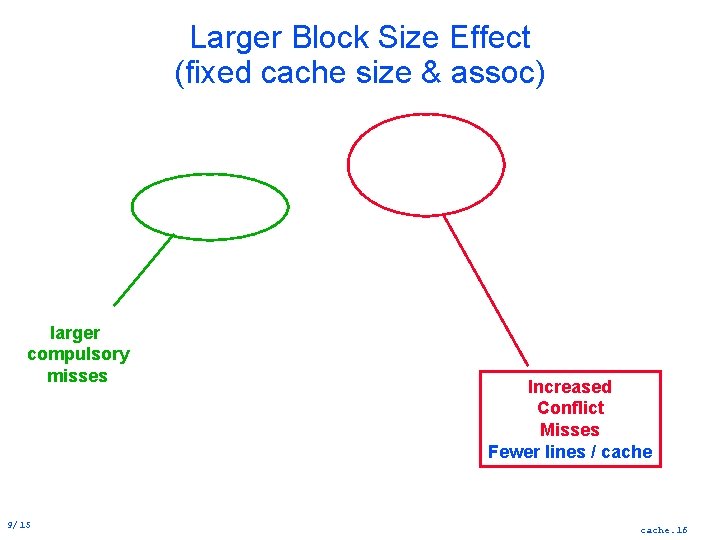

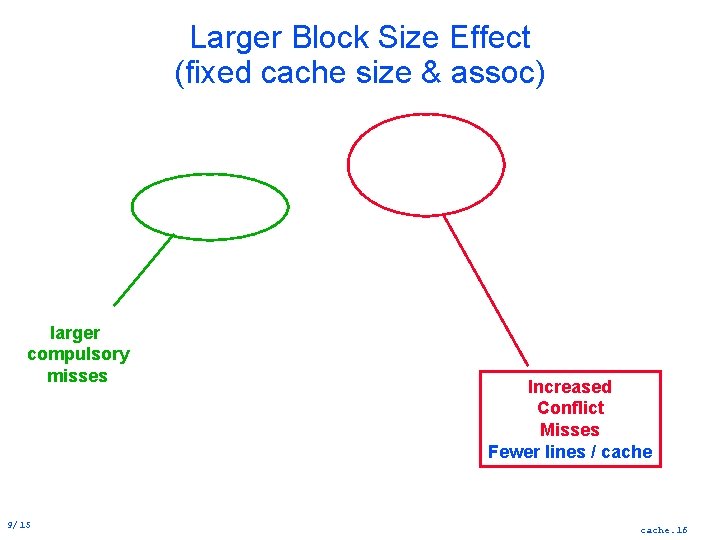

Larger Block Size Effect (fixed cache size and associativity)

- Small blocks: more compulsory misses
- Large blocks: fewer lines per cache, so increased conflict misses

Associativity and Conflict Misses

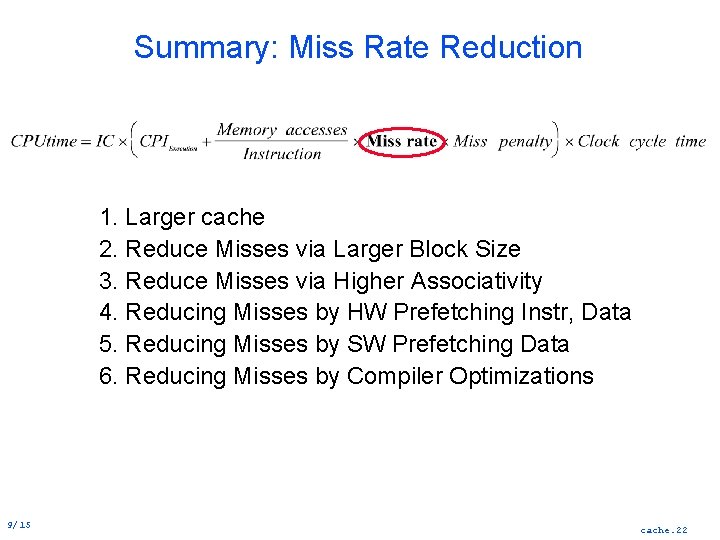

Miss Rate Reduction

Reduce misses by:

1. Larger cache
2. Larger block size
3. Higher associativity
4. Hardware prefetching of instructions and data
5. Software prefetching of data
6. Compiler optimizations

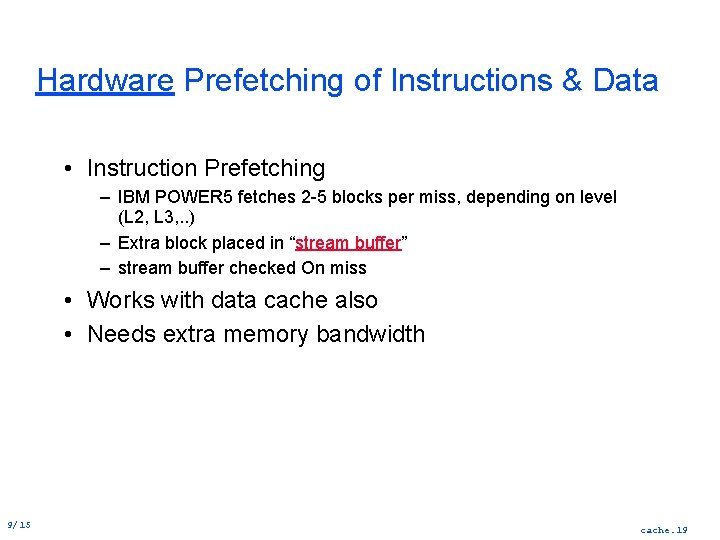

Hardware Prefetching of Instructions and Data

- Instruction prefetching:
  - The IBM POWER5 fetches 2-5 blocks per miss, depending on the level (L2, L3, ...)
  - The extra block is placed in a "stream buffer"
  - On a miss, the stream buffer is checked
- Works with the data cache as well
- Needs extra memory bandwidth
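
A stream buffer can be pictured as a small FIFO of sequentially prefetched blocks that is consulted only on a cache miss. The C sketch below is a minimal model under stated assumptions: a depth of 4 blocks, only the head entry is compared, and a miss in the buffer flushes it and restarts prefetching after the missed block. The depth, names, and policy are illustrative, not taken from the POWER5.

```c
#include <stdbool.h>

#define SB_DEPTH 4   /* illustrative stream-buffer depth (assumption) */

typedef struct {
    unsigned long blocks[SB_DEPTH];  /* circular FIFO of prefetched block addresses */
    int head;                        /* index of the oldest entry */
    int count;                       /* 0 until the first miss fills the buffer */
    unsigned long next_prefetch;     /* next sequential block to prefetch */
} stream_buffer;

/* Flush and restart the stream after a miss on block `miss`:
   prefetch miss+1, miss+2, ... until the buffer is full. */
static void sb_restart(stream_buffer *sb, unsigned long miss) {
    sb->head = 0;
    sb->count = 0;
    sb->next_prefetch = miss + 1;
    while (sb->count < SB_DEPTH)
        sb->blocks[sb->count++] = sb->next_prefetch++;
}

/* Called on a cache miss. Returns true if the stream buffer supplies the block. */
bool sb_lookup_on_miss(stream_buffer *sb, unsigned long block) {
    if (sb->count > 0 && sb->blocks[sb->head] == block) {
        /* Head hit: the block moves to the cache; its slot becomes the new
           tail and is refilled with the next sequential prefetch. */
        sb->blocks[sb->head] = sb->next_prefetch++;
        sb->head = (sb->head + 1) % SB_DEPTH;
        return true;
    }
    sb_restart(sb, block);   /* not in the buffer: fetch from memory, restart stream */
    return false;
}
```

On a sequential miss stream 10, 11, 12, the miss on 10 starts the stream and 11 and 12 are then supplied by the buffer; a jump to 50 flushes it, after which 51 hits again. Every prefetch consumes memory bandwidth whether or not it is used, which is the cost the slide notes.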

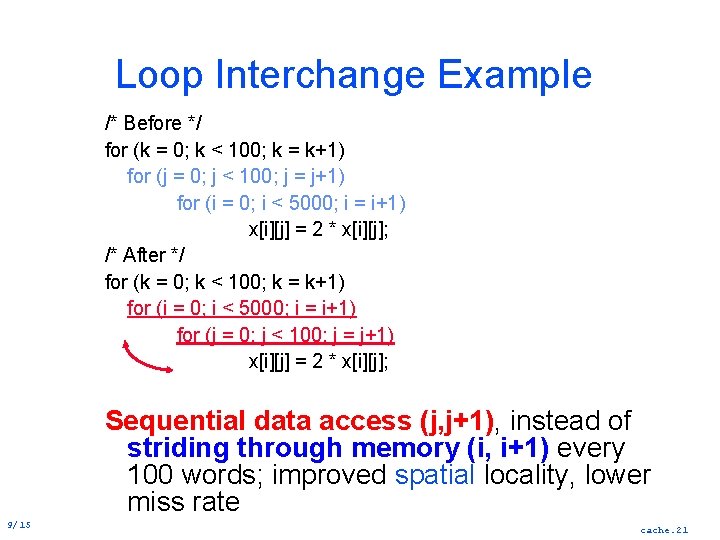

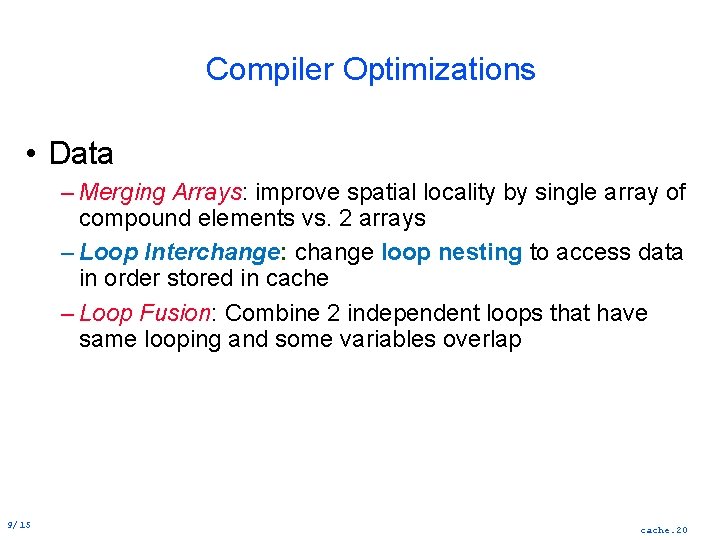

Compiler Optimizations (Data)

- Merging arrays: improve spatial locality by using a single array of compound elements instead of 2 arrays
- Loop interchange: change the loop nesting to access data in the order it is stored in memory
- Loop fusion: combine 2 independent loops that have the same looping structure and some overlapping variables
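
Merging arrays and loop fusion can be sketched in C as below; the array names, sizes, and loop bodies are illustrative choices, not code from the slides. Merging puts the two fields used together into one block; fusion reuses `a[i][j]` and `c[i][j]` immediately instead of sweeping through them twice.

```c
#define SIZE 1024
#define N 64

/* Merging arrays. Before: two parallel arrays, so val[i] and key[i]
   are SIZE * sizeof(int) bytes apart and usually in different blocks. */
int val[SIZE];
int key[SIZE];

/* After: one array of records, so val and key for the same i are adjacent. */
struct merge { int val; int key; };
struct merge merged_array[SIZE];

/* Loop fusion. */
double a[N][N], b[N][N], c[N][N], d[N][N];

/* Before: the second loop nest re-reads a and c after the first nest has
   swept through all of them; for large N they may have been evicted. */
void before_fusion(void) {
    for (int i = 0; i < N; i++)
        for (int j = 0; j < N; j++)
            a[i][j] = 1.0 / b[i][j] * c[i][j];
    for (int i = 0; i < N; i++)
        for (int j = 0; j < N; j++)
            d[i][j] = a[i][j] + c[i][j];
}

/* After: a[i][j] and c[i][j] are reused right away, while still cached. */
void after_fusion(void) {
    for (int i = 0; i < N; i++)
        for (int j = 0; j < N; j++) {
            a[i][j] = 1.0 / b[i][j] * c[i][j];
            d[i][j] = a[i][j] + c[i][j];
        }
}
```

Both versions compute the same results; fusion is legal here because each `d[i][j]` depends only on the `a[i][j]` computed in the same iteration.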

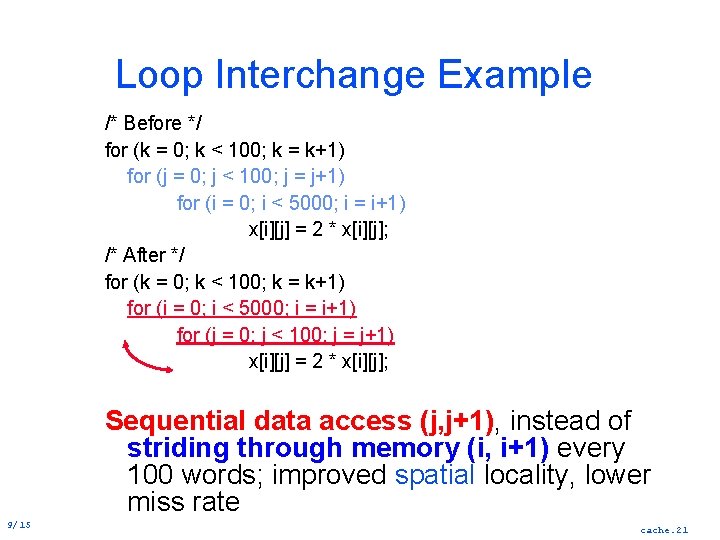

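Loop Interchange Example

```c
static double x[5000][100];  /* element type assumed; C arrays are row-major */

/* Before: the inner loop over i strides through memory every 100 words */
void before(void) {
    for (int k = 0; k < 100; k = k+1)
        for (int j = 0; j < 100; j = j+1)
            for (int i = 0; i < 5000; i = i+1)
                x[i][j] = 2 * x[i][j];
}

/* After: the inner loop over j touches consecutive words */
void after(void) {
    for (int k = 0; k < 100; k = k+1)
        for (int i = 0; i < 5000; i = i+1)
            for (int j = 0; j < 100; j = j+1)
                x[i][j] = 2 * x[i][j];
}
```

Sequential data access (j, j+1) replaces striding through memory every 100 words (i, i+1); this improves spatial locality and lowers the miss rate.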

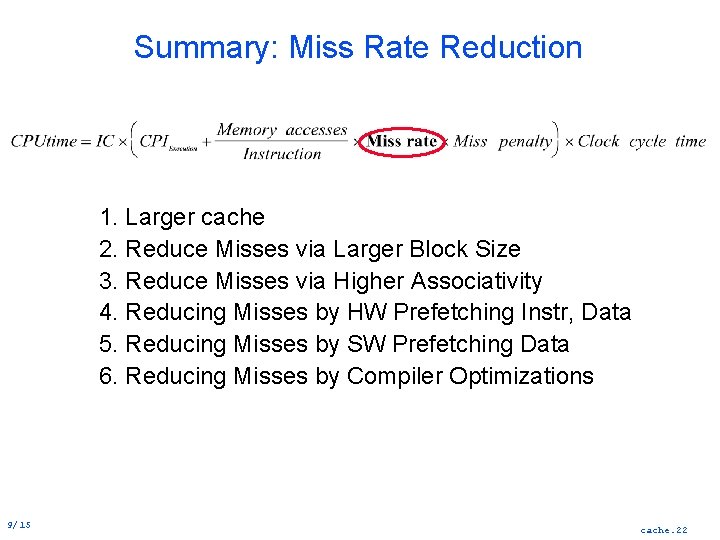

Summary: Miss Rate Reduction

1. Larger cache
2. Reduce misses via larger block size
3. Reduce misses via higher associativity
4. Reduce misses by hardware prefetching of instructions and data
5. Reduce misses by software prefetching of data
6. Reduce misses by compiler optimizations

Improving Cache Performance

1. Reduce the miss rate.
2. Reduce the miss penalty.
3. Reduce the cache hit time.

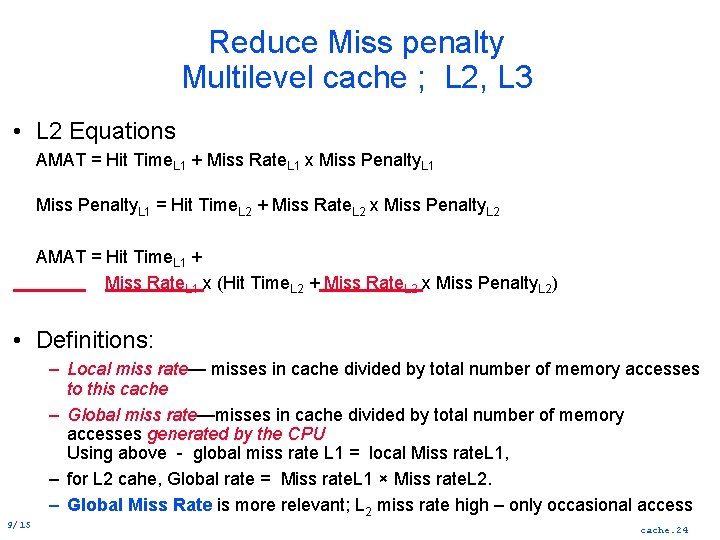

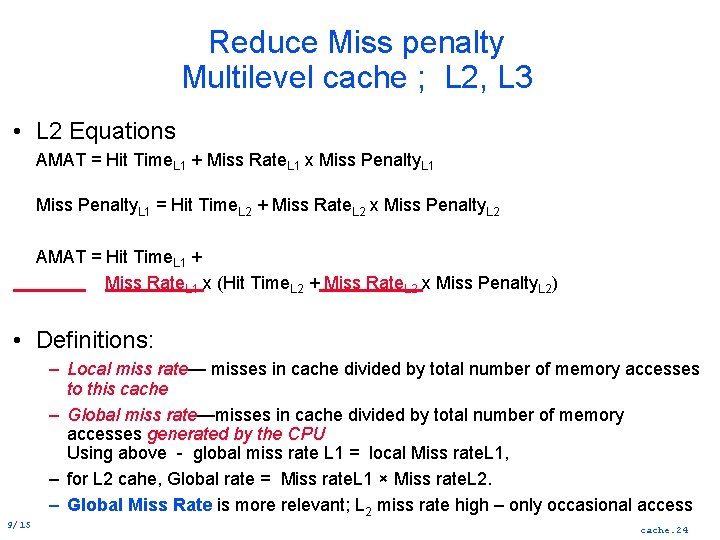

Reduce Miss Penalty: Multilevel Caches (L2, L3)

L2 equations:

$$\text{AMAT} = \text{HitTime}_{L1} + \text{MissRate}_{L1} \times \text{MissPenalty}_{L1}$$

$$\text{MissPenalty}_{L1} = \text{HitTime}_{L2} + \text{MissRate}_{L2} \times \text{MissPenalty}_{L2}$$

$$\text{AMAT} = \text{HitTime}_{L1} + \text{MissRate}_{L1} \times \left(\text{HitTime}_{L2} + \text{MissRate}_{L2} \times \text{MissPenalty}_{L2}\right)$$

Definitions:

- Local miss rate: misses in this cache divided by the total number of memory accesses to this cache ($\text{MissRate}_{L2}$ above is a local rate)
- Global miss rate: misses in this cache divided by the total number of memory accesses generated by the CPU
- For L1, the global miss rate equals the local miss rate, $\text{MissRate}_{L1}$.
- For L2, the global miss rate is $\text{MissRate}_{L1} \times \text{MissRate}_{L2}$.
- The global miss rate is more relevant: the local L2 miss rate is high because L2 sees only the occasional access that misses in L1.
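
The nesting in the third equation (L1's miss penalty is itself the average access time of L2) and the global L2 miss rate can be checked numerically with a small C sketch; function names and the numbers in the example are illustrative:

```c
/* Two-level AMAT: the L1 miss penalty is the average access time of L2,
   computed with L2's LOCAL miss rate. */
double amat_two_level(double hit_l1, double miss_rate_l1,
                      double hit_l2, double local_miss_rate_l2,
                      double miss_penalty_l2) {
    double miss_penalty_l1 = hit_l2 + local_miss_rate_l2 * miss_penalty_l2;
    return hit_l1 + miss_rate_l1 * miss_penalty_l1;
}

/* Global L2 miss rate = L1 miss rate x local L2 miss rate. */
double global_miss_rate_l2(double miss_rate_l1, double local_miss_rate_l2) {
    return miss_rate_l1 * local_miss_rate_l2;
}
```

With a 1-cycle L1 hit, 4% L1 miss rate, 10-cycle L2 hit, 50% local L2 miss rate and a 200-cycle memory penalty, AMAT = 1 + 0.04 x (10 + 0.5 x 200) = 5.4 cycles, while the global L2 miss rate is only 2%. The 50% local rate looks alarming only because L2 sees just the 4% of accesses that miss in L1.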

Reducing Miss Penalty: Read Priority over Write on Miss

- Write-through with write buffers: RAW conflicts with main-memory reads on cache misses
  - Waiting for the write buffer to empty might increase the read miss penalty
  - Instead, check the write buffer contents before the read; if there are no conflicts, let the memory access continue
- Write-back: a buffer holds displaced blocks
  - Read miss replacing a dirty block
  - Normal: write the dirty block to memory, then read the new block
  - Instead: copy the dirty block to the write buffer, do the read, and then do the write
  - The CPU stalls less, since it restarts as soon as the read completes
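
"Check write buffer contents before read" amounts to an associative search of the pending writes on every read miss. A minimal C sketch, assuming a 4-entry buffer, word-granularity addresses, and that a match is resolved by forwarding the buffered data (another option is to wait for that entry to drain). All names are hypothetical:

```c
#include <stdbool.h>
#include <stdint.h>

#define WB_ENTRIES 4   /* illustrative write-buffer size (assumption) */

typedef struct {
    uint64_t addr[WB_ENTRIES];   /* word addresses of pending writes */
    uint64_t data[WB_ENTRIES];
    int count;                   /* entries [0, count) in program order, oldest first */
} write_buffer;

/* Queue a write; returns false when the buffer is full (the CPU would stall). */
bool wb_push(write_buffer *wb, uint64_t addr, uint64_t data) {
    if (wb->count == WB_ENTRIES)
        return false;
    wb->addr[wb->count] = addr;
    wb->data[wb->count] = data;
    wb->count++;
    return true;
}

/* On a read miss: search newest-to-oldest so the latest write to addr wins.
   Returns true (RAW conflict, data forwarded into *out) on a match; false
   means no conflict, so the memory read may go ahead of the queued writes. */
bool wb_forward(const write_buffer *wb, uint64_t addr, uint64_t *out) {
    for (int i = wb->count - 1; i >= 0; i--) {
        if (wb->addr[i] == addr) {
            *out = wb->data[i];
            return true;
        }
    }
    return false;
}
```

Searching newest-first matters when the same address was written twice: a read must see the second value, not the first.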

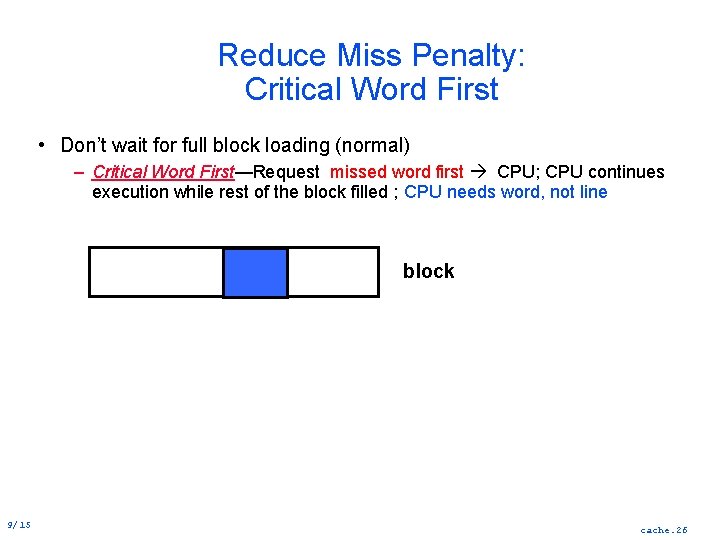

Reduce Miss Penalty: Critical Word First

- Normally, the CPU waits for the full block to load.
- Critical word first: request the missed word from memory first and send it to the CPU as soon as it arrives; the CPU continues execution while the rest of the block is filled.
- This works because the CPU needs a word, not the whole block.
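
Critical word first is usually implemented as a wrapped fetch: memory returns the missed word, continues to the end of the block, then wraps around to word 0. A C sketch of that order and of the restart time, assuming the first word arrives after `first_latency` cycles and each further word `per_word` cycles later (a simplified timing model; names are illustrative):

```c
/* Arrival order of the words of a block under critical word first
   with wrap-around: start at the missed word, wrap to word 0. */
void cwf_fill_order(unsigned words_per_block, unsigned critical_word,
                    unsigned order[]) {
    for (unsigned k = 0; k < words_per_block; k++)
        order[k] = (critical_word + k) % words_per_block;
}

/* Normal fill: the CPU restarts only after the last word has arrived. */
unsigned restart_cycles_normal(unsigned first_latency, unsigned per_word,
                               unsigned words) {
    return first_latency + (words - 1) * per_word;
}

/* Critical word first: the CPU restarts when the first (missed) word arrives. */
unsigned restart_cycles_cwf(unsigned first_latency, unsigned per_word,
                            unsigned words) {
    (void)per_word;   /* the rest of the block fills in the background */
    (void)words;
    return first_latency;
}
```

For an 8-word block missing on word 5, the words arrive in the order 5, 6, 7, 0, 1, 2, 3, 4. With a 40-cycle first-word latency and 2 cycles per additional word, the CPU restarts after 40 cycles instead of 54.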

Reduce Miss Penalty: Non-Blocking Caches Reduce Stalls on Misses

- A non-blocking cache lets the data cache keep supplying cache hits during a miss ("hit under miss")
  - Most useful with out-of-order execution
  - Requires multi-bank memories
- "Hit under multiple miss" (miss under miss): overlap multiple misses
  - Significantly increases cache controller complexity, since there are multiple outstanding memory accesses
  - Requires multiple memory banks
  - Example: 4 outstanding memory misses supported
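
Tracking "multiple outstanding memory accesses" is commonly done with a small table of miss status holding registers (MSHRs), one per outstanding block miss. The C sketch below assumes 4 entries (matching the slide's example), merges a second miss to an already-pending block into the existing entry, and stalls when all entries are busy. Hits are served normally and are not modeled; names are illustrative.

```c
#include <stdbool.h>
#include <stdint.h>

#define NUM_MSHR 4   /* 4 outstanding misses, as in the slide's example */

typedef struct {
    bool     busy;
    uint64_t block;    /* block address of the outstanding miss */
    int      waiting;  /* loads merged into this miss */
} mshr;

typedef enum { PRIMARY_MISS, SECONDARY_MISS, STALL } miss_result;

/* Handle a miss: merge with a pending miss to the same block,
   else allocate a free entry, else stall (all entries busy). */
miss_result mshr_on_miss(mshr table[NUM_MSHR], uint64_t block) {
    int free_slot = -1;
    for (int i = 0; i < NUM_MSHR; i++) {
        if (table[i].busy && table[i].block == block) {
            table[i].waiting++;
            return SECONDARY_MISS;        /* no new memory request needed */
        }
        if (!table[i].busy && free_slot < 0)
            free_slot = i;
    }
    if (free_slot < 0)
        return STALL;
    table[free_slot] = (mshr){ true, block, 1 };
    return PRIMARY_MISS;                  /* send a new request to memory */
}

/* Memory returns a block: free its entry and report how many loads it wakes. */
int mshr_on_fill(mshr table[NUM_MSHR], uint64_t block) {
    for (int i = 0; i < NUM_MSHR; i++) {
        if (table[i].busy && table[i].block == block) {
            int n = table[i].waiting;
            table[i].busy = false;
            return n;
        }
    }
    return 0;
}
```

A fifth distinct miss stalls until one of the four pending blocks returns. This search-and-merge logic on every miss and fill is the added controller complexity the slide refers to.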

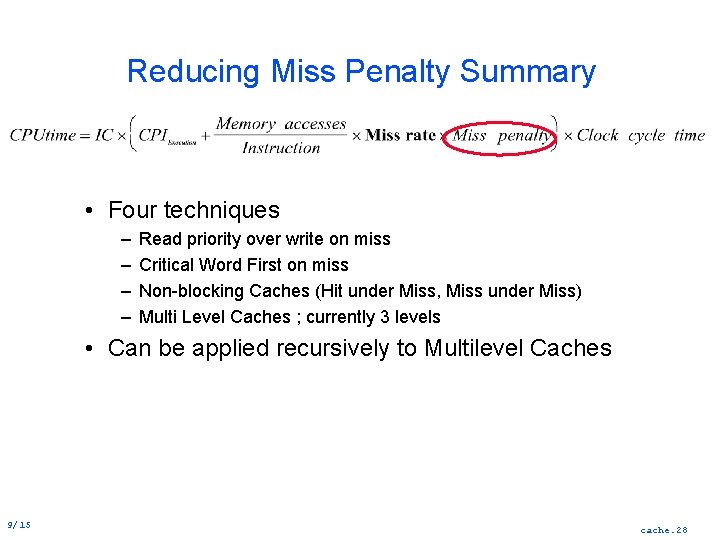

Reducing Miss Penalty Summary

- Four techniques:
  - Read priority over write on miss
  - Critical word first on miss
  - Non-blocking caches (hit under miss, miss under miss)
  - Multilevel caches (currently 3 levels)
- Can be applied recursively to multilevel caches

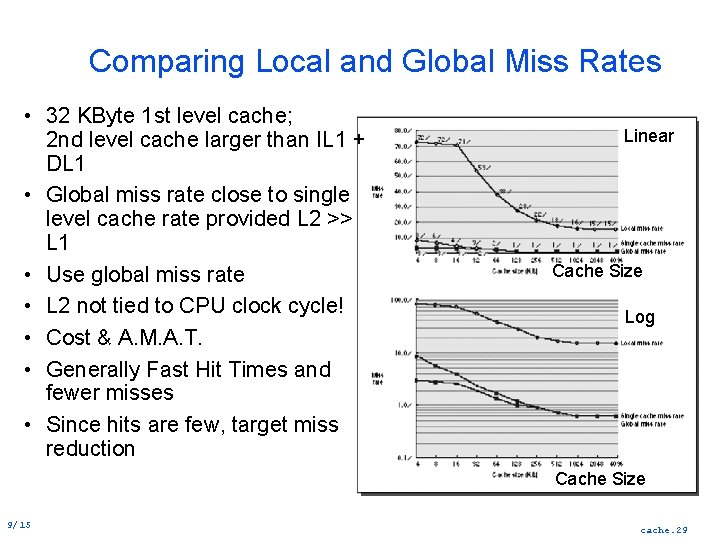

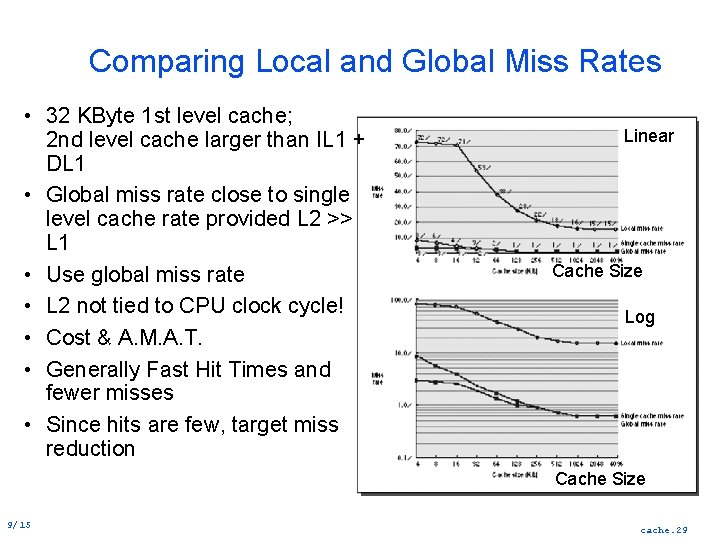

Comparing Local and Global Miss Rates

- 32 KB first-level cache; second-level cache larger than IL1 + DL1
- The global miss rate is close to the single-level cache miss rate, provided L2 >> L1
- Use the global miss rate
- L2 is not tied to the CPU clock cycle
- Trade-offs: cost and AMAT
- Generally: fast hit times and fewer misses
- Since L2 hits are few, target miss reduction
- (Figure panels: linear cache size, log cache size)

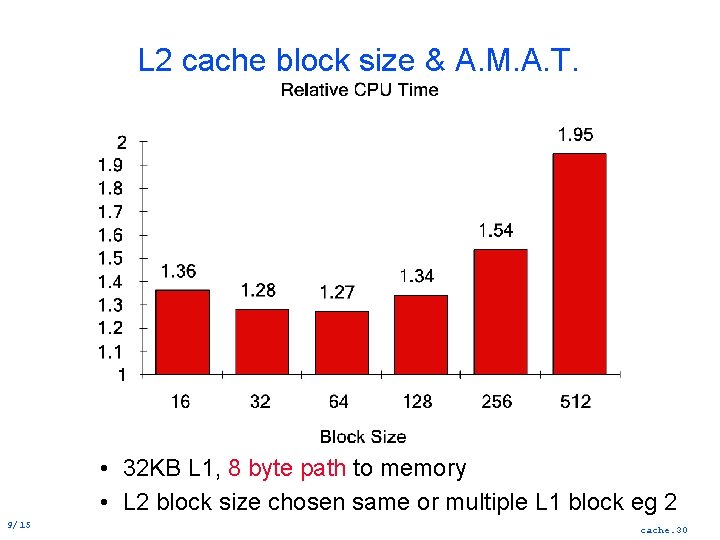

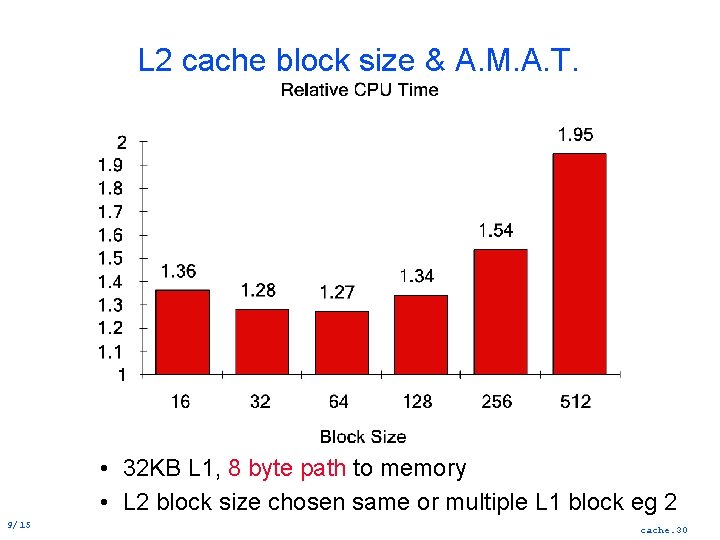

L2 Cache Block Size and AMAT

- 32 KB L1, 8-byte path to memory
- The L2 block size is chosen to be the same as, or a multiple of (e.g., 2x), the L1 block size

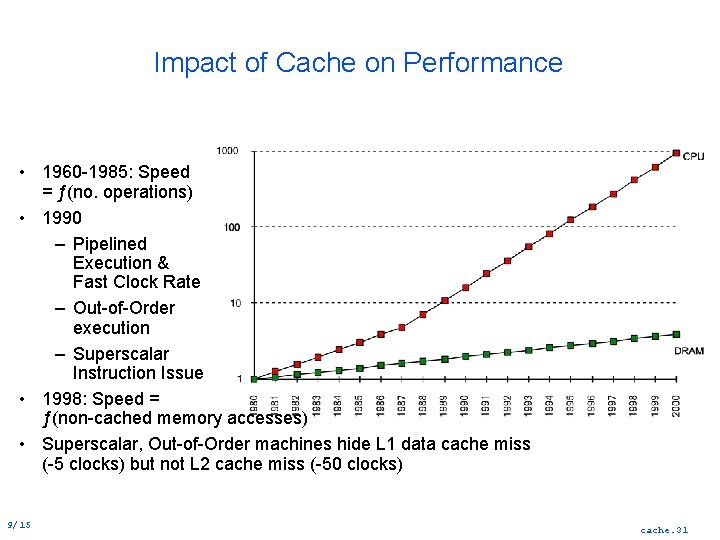

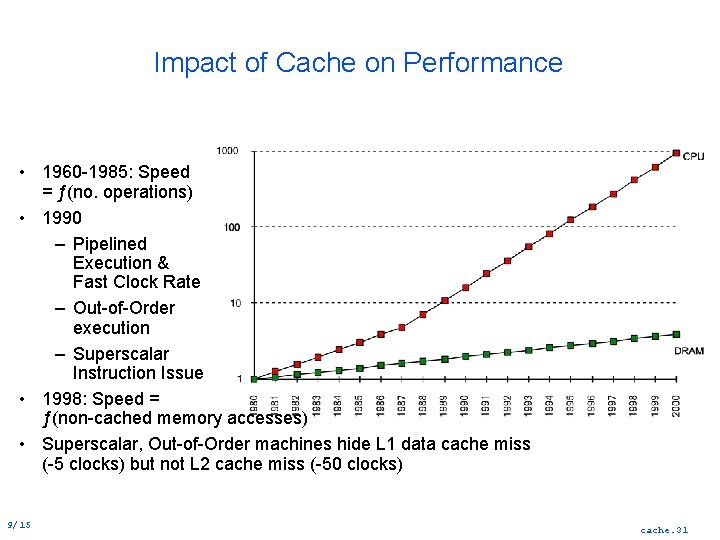

Impact of Cache on Performance

- 1960-1985: speed = f(number of operations)
- 1990: pipelined execution and fast clock rate; out-of-order execution; superscalar instruction issue
- 1998: speed = f(non-cached memory accesses)
- Superscalar, out-of-order machines hide an L1 data cache miss (about 5 clocks) but not an L2 cache miss (about 50 clocks)

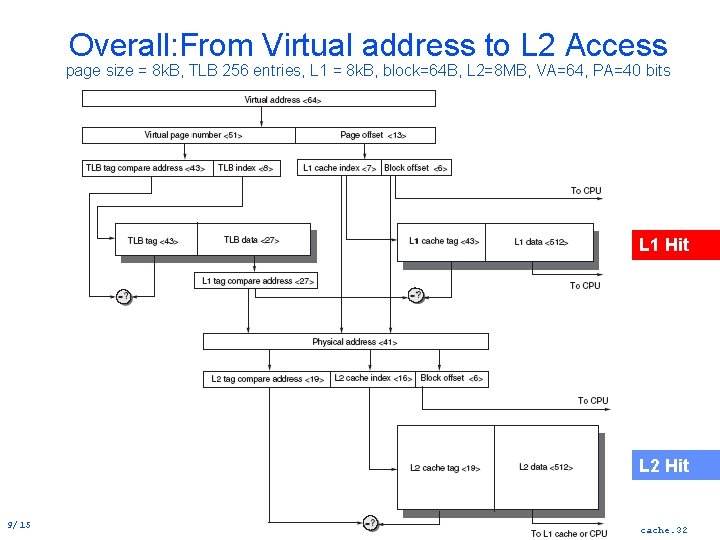

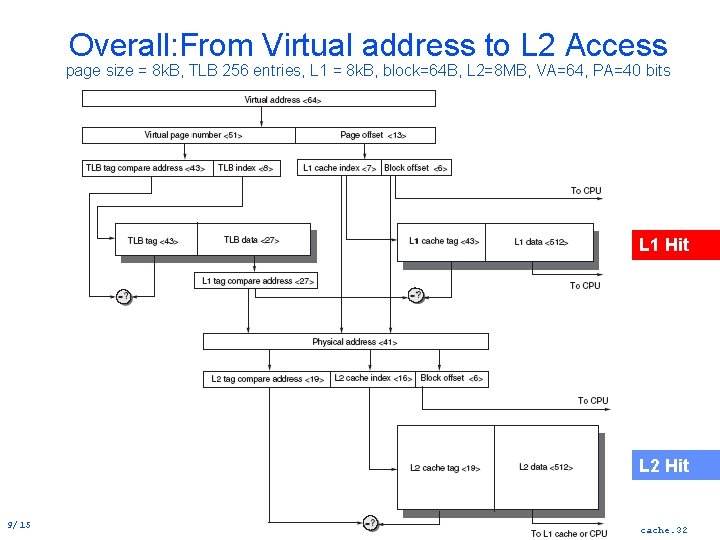

Overall: From Virtual Address to L2 Access

- Page size = 8 KB, TLB with 256 entries, L1 = 8 KB, block = 64 B, L2 = 8 MB, VA = 64 bits, PA = 40 bits
- (Figure paths: L1 hit, L2 hit)
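
The parameters above fix every field width along the path from virtual address to L2. The slide does not state associativities, so the C sketch below assumes the TLB, L1 and L2 are all direct mapped (an assumption; higher associativity would move bits from index to tag). Names are illustrative.

```c
#include <stdint.h>

typedef struct { unsigned tag, index, offset; } fields;

/* log2 of a power of two. */
static unsigned lg2(uint64_t x) {
    unsigned n = 0;
    while (x > 1) { x >>= 1; n++; }
    return n;
}

/* Split an addr_bits-wide address for a direct-mapped structure with `sets`
   entries and `unit_bytes`-byte units (cache blocks, or pages for a TLB). */
fields split_address(unsigned addr_bits, uint64_t sets, uint64_t unit_bytes) {
    fields f;
    f.offset = lg2(unit_bytes);
    f.index  = lg2(sets);
    f.tag    = addr_bits - f.index - f.offset;
    return f;
}
```

Under these assumptions the TLB splits the 64-bit VA into a 43-bit tag, 8-bit index and 13-bit page offset. L1 (128 blocks) uses a 27-bit physical tag, 7-bit index and 6-bit offset, and L2 (131072 blocks) a 17-bit tag and 17-bit index. Because L1's index plus offset (13 bits) fits inside the page offset, L1 can be indexed with untranslated address bits while the TLB lookup proceeds in parallel.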

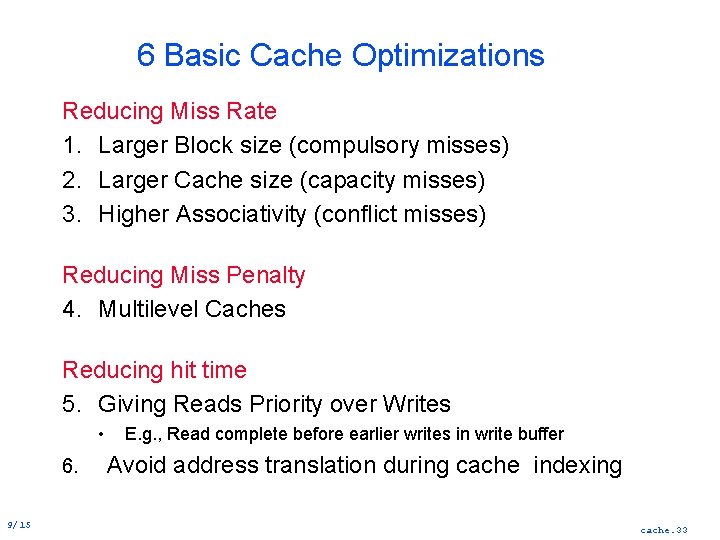

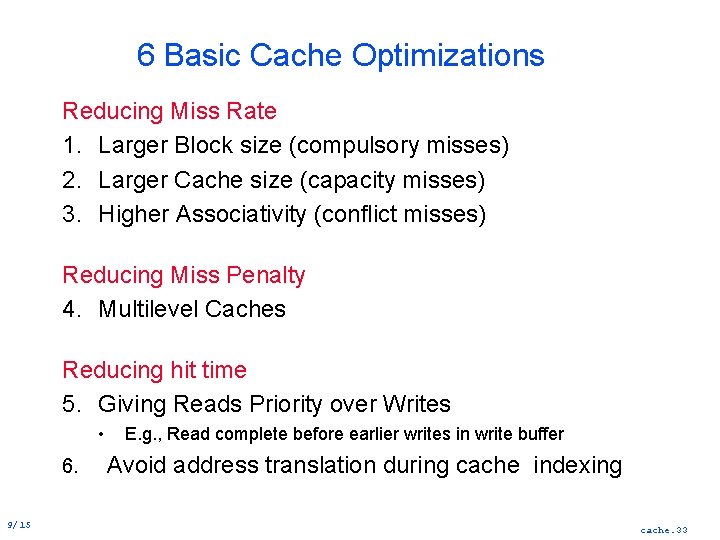

6 Basic Cache Optimizations

- Reducing miss rate:
  1. Larger block size (compulsory misses)
  2. Larger cache size (capacity misses)
  3. Higher associativity (conflict misses)
- Reducing miss penalty:
  4. Multilevel caches
  5. Giving reads priority over writes (e.g., a read completes before earlier writes in the write buffer)
- Reducing hit time:
  6. Avoid address translation during cache indexing

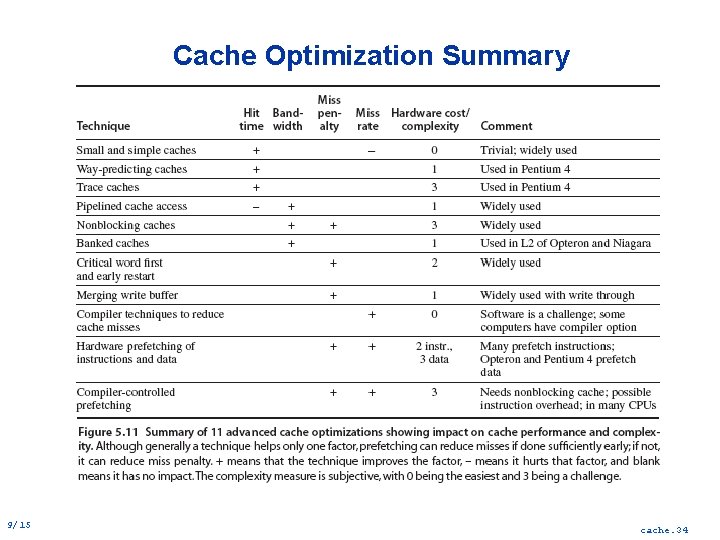

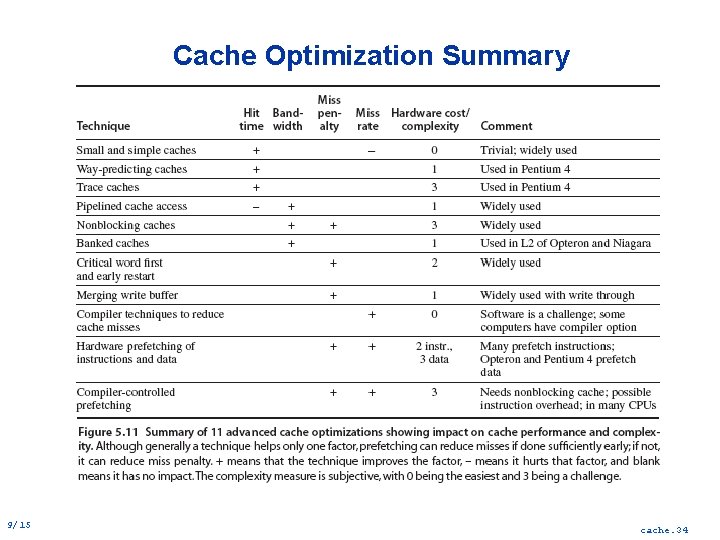

Cache Optimization Summary