A SYSTEMATIC APPROACH TO PLANTWIDE CONTROL (1985-2010)

- Slides: 40

A SYSTEMATIC APPROACH TO PLANTWIDE CONTROL (1985-2010-2025). Sigurd Skogestad, Department of Chemical Engineering, Norwegian University of Science and Technology (NTNU), Trondheim, Norway. DTU, March 2011

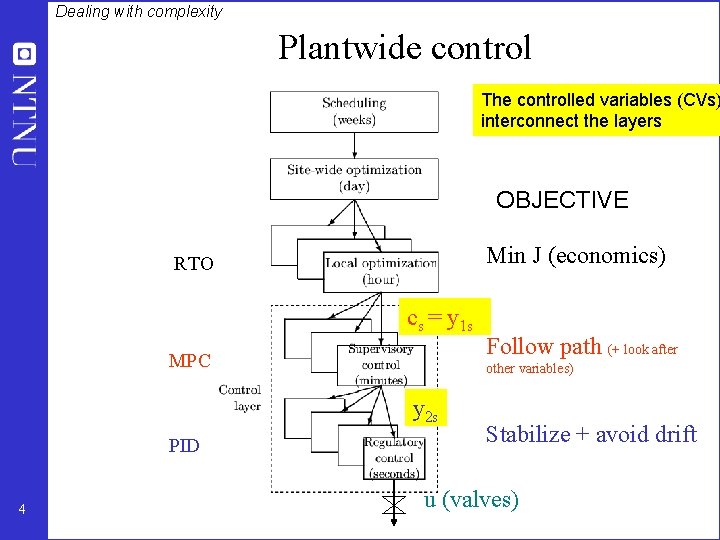

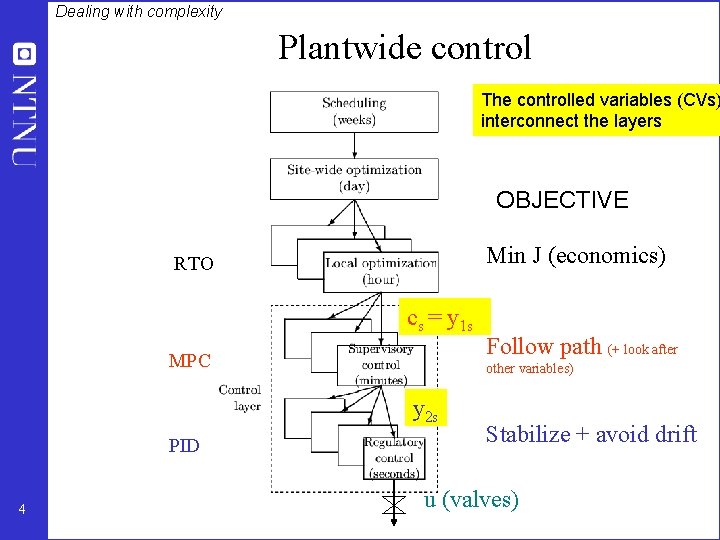

Dealing with complexity: plantwide control. The controlled variables (CVs) interconnect the layers:
• OBJECTIVE: min J (economics)
• RTO → setpoints cs = y1s
• MPC (follow path + look after other variables) → setpoints y2s
• PID (stabilize + avoid drift) → u (valves)

How do we design a control system for a complete chemical plant?
• Where do we start?
• What should we control? And why?
• etc.

• Alan Foss (“Critique of chemical process control theory”, AIChE Journal, 1973): “The central issue to be resolved... is the determination of control system structure. Which variables should be measured, which inputs should be manipulated and which links should be made between the two sets? There is more than a suspicion that the work of a genius is needed here, for without it the control configuration problem will likely remain in a primitive, hazily stated and wholly unmanageable form. The gap is present indeed, but contrary to the views of many, it is the theoretician who must close it.”

Previous work on plantwide control:
• Page Buckley (1964): chapter on “Overall process control” (still industrial practice)
• Greg Shinskey (1967): process control systems
• Alan Foss (1973): control system structure
• Bill Luyben et al. (1975-): case studies; “snowball effect”
• George Stephanopoulos and Manfred Morari (1980): synthesis of control structures for chemical processes
• Ruel Shinnar (1981-): “dominant variables”
• Jim Downs (1991): Tennessee Eastman challenge problem
• Larsson and Skogestad (2000): review of plantwide control

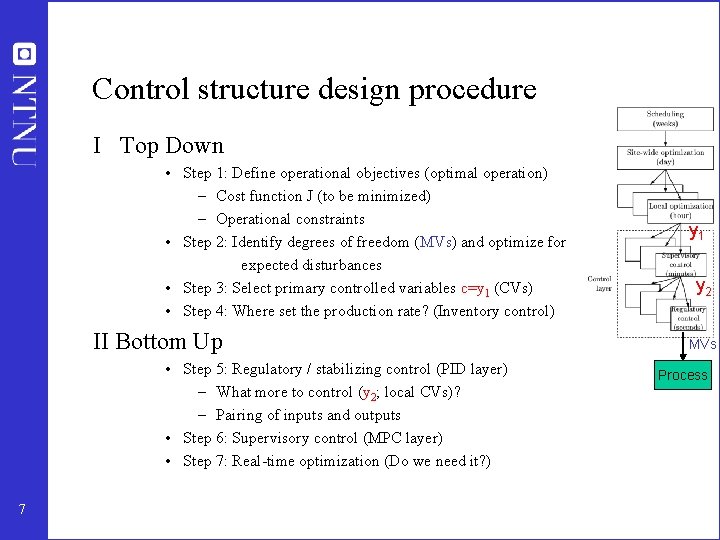

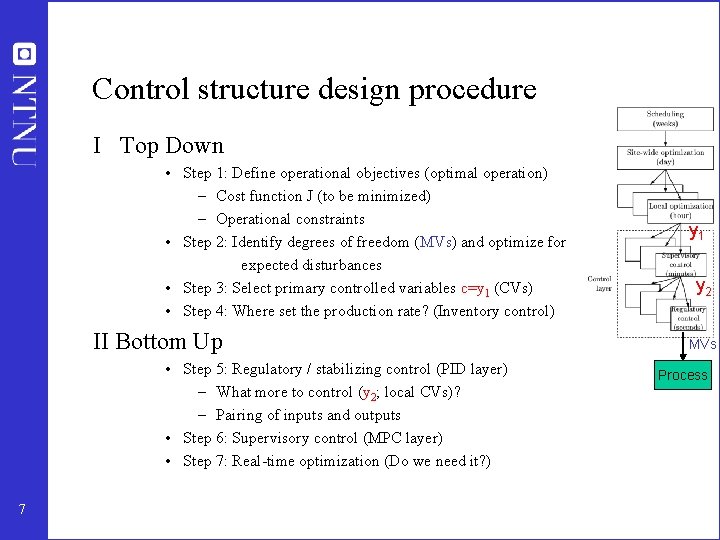

Control structure design procedure
I. Top-down
• Step 1: Define operational objectives (optimal operation)
 – Cost function J (to be minimized)
 – Operational constraints
• Step 2: Identify degrees of freedom (MVs) and optimize for expected disturbances
• Step 3: Select primary controlled variables c = y1 (CVs)
• Step 4: Where to set the production rate? (Inventory control)
II. Bottom-up
• Step 5: Regulatory/stabilizing control (PID layer)
 – What more to control (y2; local CVs)?
 – Pairing of inputs and outputs
• Step 6: Supervisory control (MPC layer)
• Step 7: Real-time optimization (Do we need it?)
(Figure: process with MVs, primary CVs y1 and local CVs y2.)

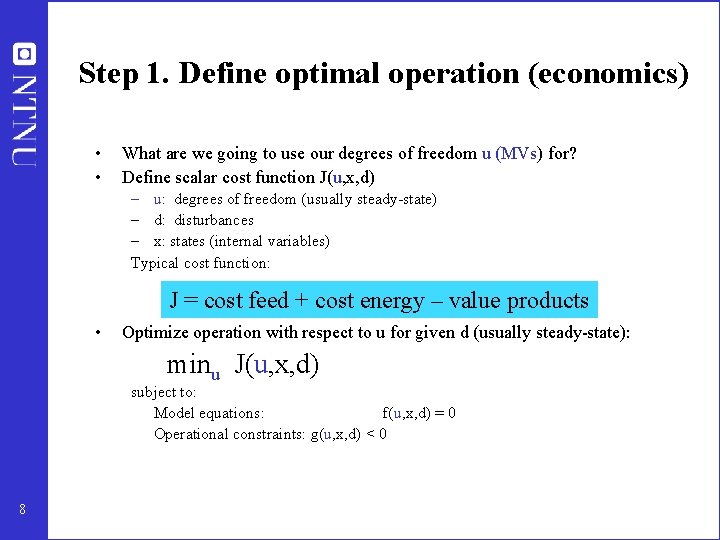

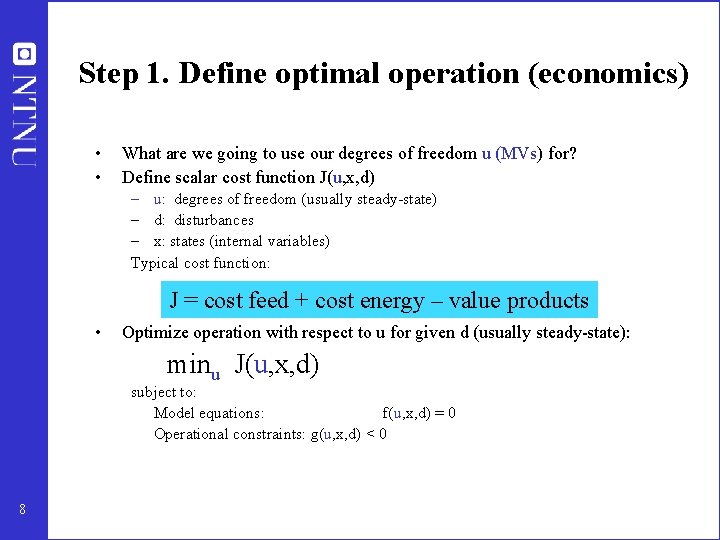

Step 1. Define optimal operation (economics)
• What are we going to use our degrees of freedom u (MVs) for?
• Define a scalar cost function $J(u, x, d)$:
 – u: degrees of freedom (usually steady-state)
 – d: disturbances
 – x: states (internal variables)
 Typical cost function: J = cost feed + cost energy - value products
• Optimize operation with respect to u for given d (usually steady-state):
 $\min_u J(u, x, d)$ subject to:
 Model equations: $f(u, x, d) = 0$
 Operational constraints: $g(u, x, d) \le 0$
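The Step 1 formulation can be illustrated with a toy problem. This is a minimal sketch under loudly stated assumptions: the model equation, cost and constraint (the hypothetical functions `model_state`, `cost` and `constraint`, with $x = u - d$, $J = x^2 - u$ and $u \le 1$) are invented only so that the answer can be checked by hand, and the optimizer is a plain grid search rather than the NLP solver one would use with a real plant model.

```python
"""Toy steady-state optimization: min_u J(u, x, d) s.t. f(u, x, d) = 0, g(u, x, d) <= 0.

All model functions below are hypothetical illustrations, not a real process model.
"""


def model_state(u: float, d: float) -> float:
    """Solve the hypothetical model equation f(u, x, d) = x - (u - d) = 0 for x."""
    return u - d


def cost(u: float, d: float) -> float:
    """Hypothetical economic cost J = x^2 - u, with x taken from the model equation."""
    x = model_state(u, d)
    return x ** 2 - u


def constraint(u: float, d: float, u_max: float = 1.0) -> float:
    """Hypothetical operational constraint g(u, x, d) = u - u_max <= 0."""
    return u - u_max


def optimize(d: float, lo: float = -2.0, hi: float = 3.0, n: int = 5001):
    """Brute-force grid search over u; returns (u_opt, J_opt) for the given disturbance d."""
    best_u, best_j = None, float("inf")
    for i in range(n):
        u = lo + (hi - lo) * i / (n - 1)
        if constraint(u, d) > 0.0:  # skip infeasible inputs
            continue
        j = cost(u, d)
        if j < best_j:
            best_u, best_j = u, j
    return best_u, best_j


if __name__ == "__main__":
    for d in (0.0, 1.0):
        u_opt, j_opt = optimize(d)
        print(f"d = {d}: u_opt = {u_opt:.3f}, J_opt = {j_opt:.3f}")
```

For d = 0 the optimum is unconstrained (u_opt = 0.5); for d = 1 the constraint u ≤ 1 becomes active, which is the kind of change in active set that Step 2 maps out.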

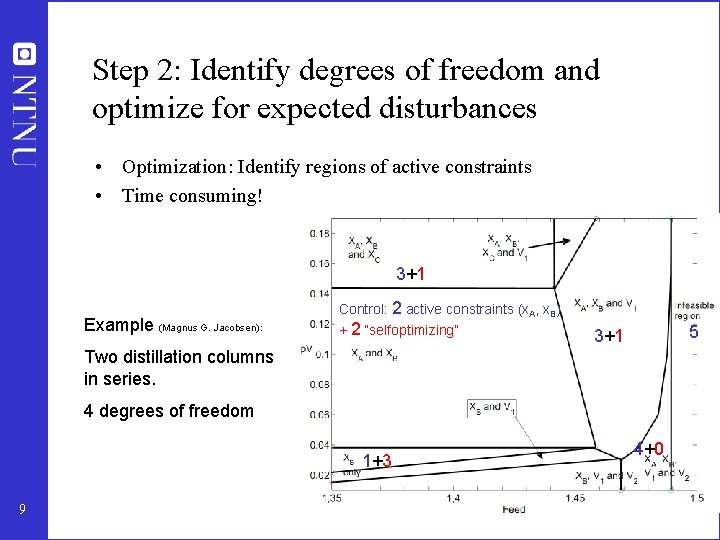

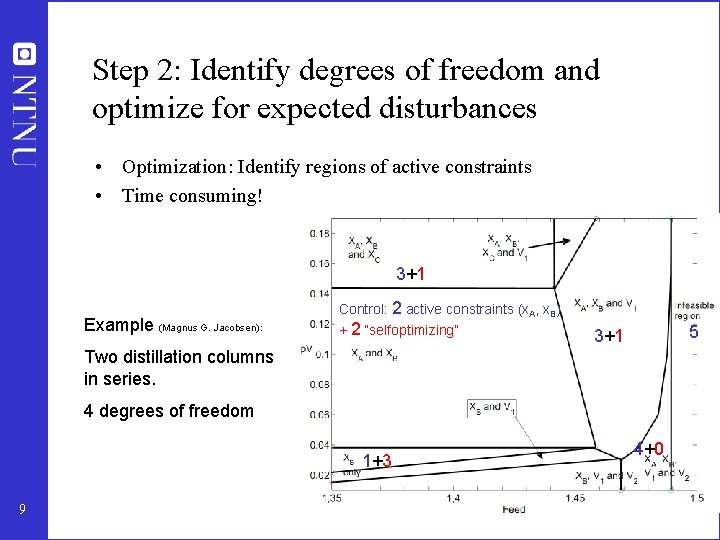

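Step 2: Identify degrees of freedom and optimize for expected disturbances
• Optimization: identify regions of active constraints
• Time consuming!
Example (Magnus G. Jacobsen): two distillation columns in series, 4 degrees of freedom. In one region, control 2 active constraints (xA, xB) + 2 “self-optimizing” variables.
(Figure: map of active-constraint regions with labels such as 4+0, 3+1 and 1+3, i.e. number of active constraints + number of unconstrained degrees of freedom.)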
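Mapping regions of active constraints amounts to re-optimizing over a range of disturbances and recording which constraints are active. The sketch below does this for a hypothetical one-input problem, $J = (u - d)^2 - u$ with bounds $0 \le u \le 1$: the unconstrained optimum is $u^* = d + 0.5$ and, because the cost is convex in u, the constrained optimum is simply $u^*$ clipped to the bounds. The model, the bounds and the function names are assumptions for illustration; for a real plant each point needs a full optimization, which is why this step is time consuming.

```python
"""Hypothetical sketch: map regions of active constraints over a disturbance range."""

U_MIN, U_MAX = 0.0, 1.0  # hypothetical input bounds


def unconstrained_optimum(d: float) -> float:
    """For J(u, d) = (u - d)^2 - u: dJ/du = 2(u - d) - 1 = 0 gives u* = d + 0.5."""
    return d + 0.5


def active_region(d: float) -> str:
    """Classify the active set; valid because J is convex in u (optimum = u* clipped to bounds)."""
    u_star = unconstrained_optimum(d)
    if u_star >= U_MAX:
        return "u_max active"
    if u_star <= U_MIN:
        return "u_min active"
    return "unconstrained"


def region_map(disturbances):
    """Group consecutive disturbance values with the same active set: (region, d_first, d_last)."""
    regions = []
    for d in disturbances:
        region = active_region(d)
        if regions and regions[-1][0] == region:
            regions[-1][2] = d
        else:
            regions.append([region, d, d])
    return [tuple(r) for r in regions]


if __name__ == "__main__":
    grid = [-1.0 + 0.25 * i for i in range(9)]
    for region, d_first, d_last in region_map(grid):
        print(f"{region:>14}: d in [{d_first}, {d_last}]")
```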

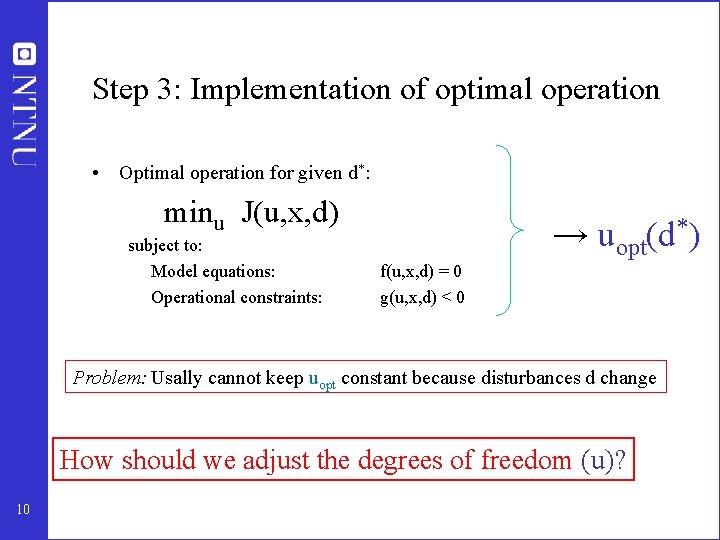

Step 3: Implementation of optimal operation
• Optimal operation for given $d^*$: $\min_u J(u, x, d)$ subject to model equations $f(u, x, d) = 0$ and operational constraints $g(u, x, d) \le 0$, giving $u_{opt}(d^*)$
• Problem: we usually cannot keep $u_{opt}$ constant, because the disturbances d change
• How should we adjust the degrees of freedom (u)?

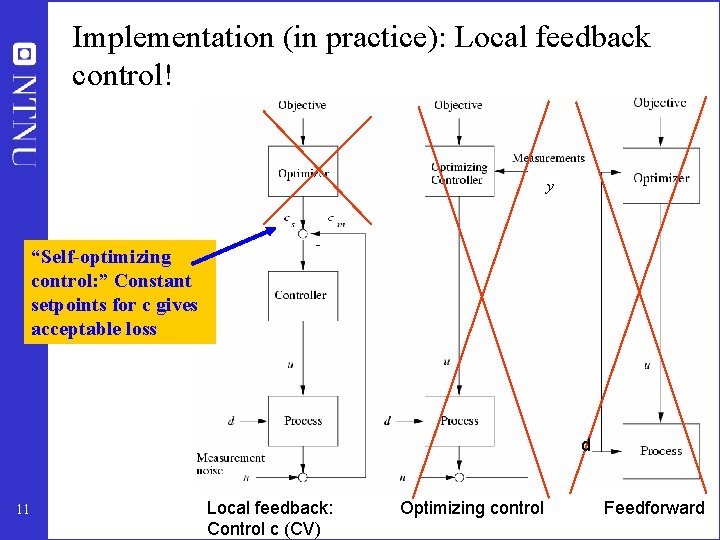

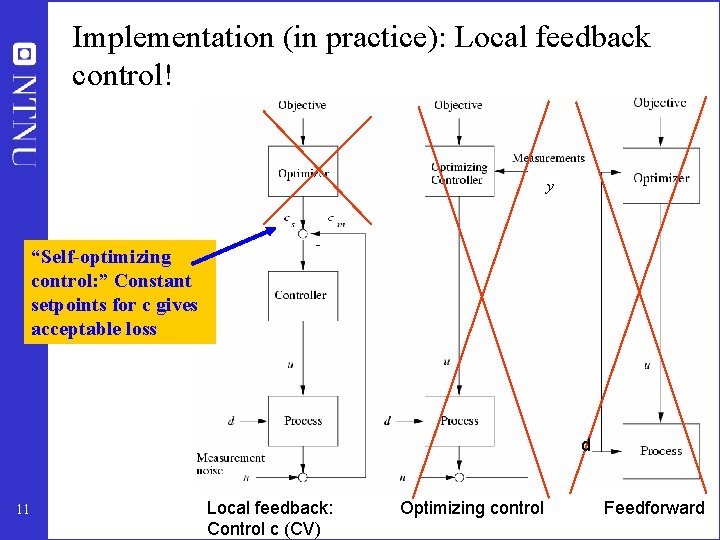

Implementation (in practice): local feedback control!
• “Self-optimizing control”: constant setpoints for c give an acceptable loss
• Local feedback: control c (CV)
(Figure panels: optimizing control; feedforward; local feedback.)
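Self-optimizing control can be checked numerically by comparing the steady-state loss $L = J(u, d) - J_{opt}(d)$ of two policies: keeping the input u constant, and keeping a measured CV c constant by feedback. The sketch assumes a hypothetical cost $J = (u - d)^2 + 1$ (so $u_{opt} = d$) and a hypothetical candidate CV $c = 2u - 1.6d$; at steady state, integral action holds c at its setpoint, so the resulting u can be found by inverting the measurement equation.

```python
"""Hypothetical comparison: constant input vs. constant CV (self-optimizing control)."""


def cost(u: float, d: float) -> float:
    return (u - d) ** 2 + 1.0  # hypothetical cost with u_opt(d) = d


def u_opt(d: float) -> float:
    return d


def measurement(u: float, d: float) -> float:
    return 2.0 * u - 1.6 * d  # hypothetical candidate CV c


def u_with_constant_c(c_s: float, d: float) -> float:
    """Steady state reached when feedback holds c = c_s (invert c = 2u - 1.6d)."""
    return (c_s + 1.6 * d) / 2.0


def loss(u: float, d: float) -> float:
    return cost(u, d) - cost(u_opt(d), d)


def compare_policies(d: float, d_nominal: float = 0.0):
    """Return (loss with constant u, loss with constant c), both chosen optimal at d_nominal."""
    u_s = u_opt(d_nominal)             # constant-input policy
    c_s = measurement(u_s, d_nominal)  # setpoint = optimal c at the nominal disturbance
    return loss(u_s, d), loss(u_with_constant_c(c_s, d), d)


if __name__ == "__main__":
    for d in (-1.0, 0.5, 1.0):
        loss_u, loss_c = compare_policies(d)
        print(f"d = {d:4}: loss(constant u) = {loss_u:.3f}, loss(constant c) = {loss_c:.3f}")
```

In this toy case the constant-c policy cuts the loss by a factor of 25 for every d, because $c_{opt}(d) = 0.4d$ moves little relative to the gain 2 from u to c, whereas $u_{opt}(d) = d$ moves one-to-one.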

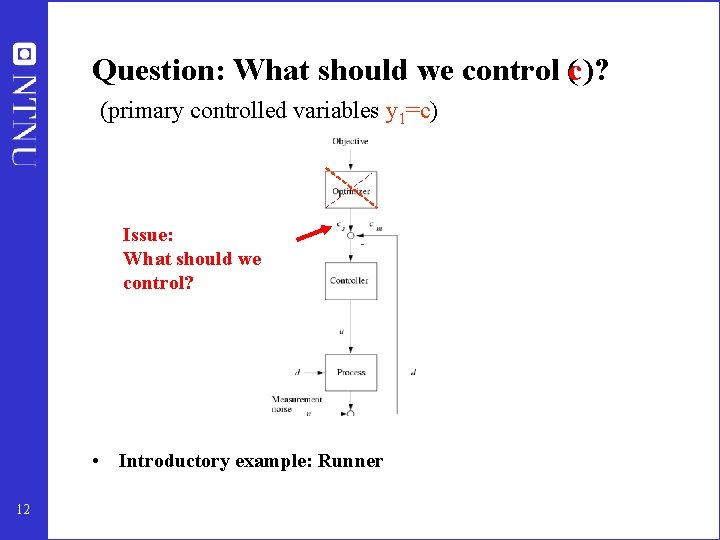

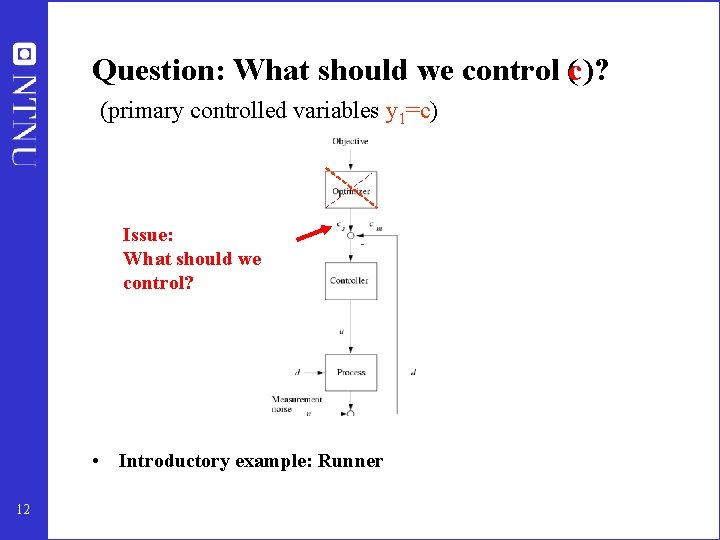

Question: What should we control (c)? (primary controlled variables y1 = c)
• Introductory example: Runner

Optimal operation - Runner
Optimal operation of runner
– Cost to be minimized, J = T (time)
– One degree of freedom (u = power)
– What should we control?

Optimal operation - Runner
Sprinter (100 m)
• 1. Optimal operation of sprinter, J = T
 – Active constraint control:
 • Maximum speed (“no thinking required”)

Optimal operation - Runner
Marathon (40 km)
• 2. Optimal operation of marathon runner, J = T
• Unconstrained optimum!
• Any “self-optimizing” variable c (to control at constant setpoint)?
 – c1 = distance to leader of race
 – c2 = speed
 – c3 = heart rate
 – c4 = level of lactate in muscles

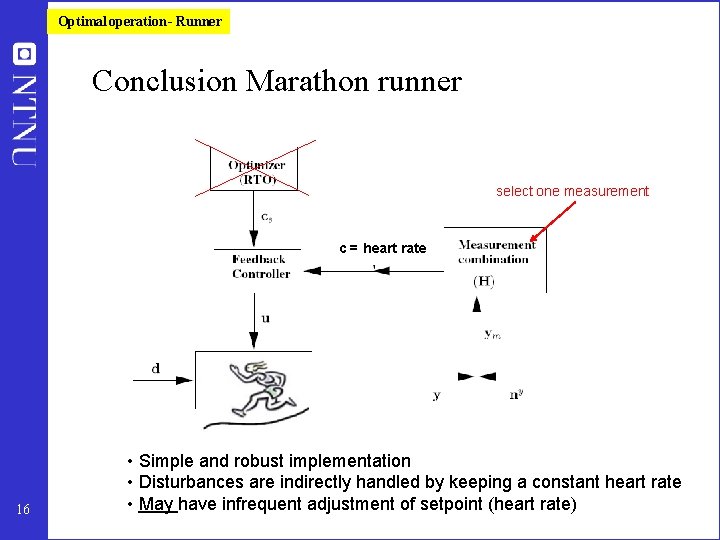

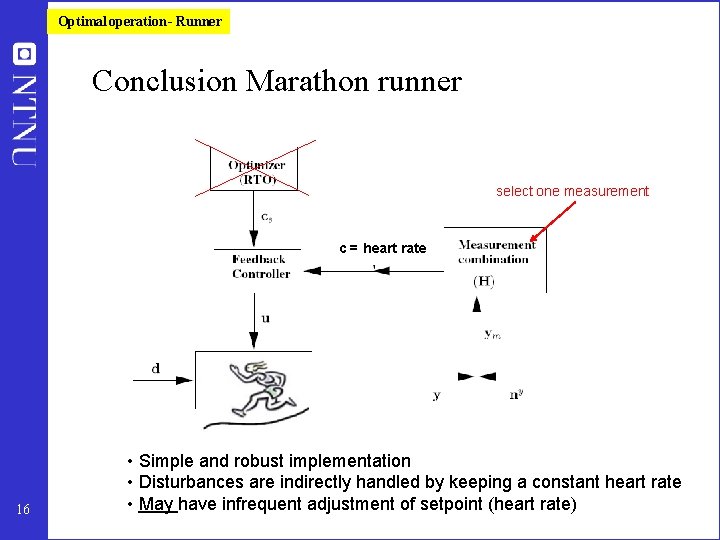

Optimal operation - Runner
Conclusion: the marathon runner selects one measurement, c = heart rate
• Simple and robust implementation
• Disturbances are indirectly handled by keeping a constant heart rate
• May have infrequent adjustment of the setpoint (heart rate)

Step 3. What should we control (c)? (primary controlled variables y1 = c)
Selection of controlled variables c:
1. Control active constraints!
2. Unconstrained variables: control self-optimizing variables!

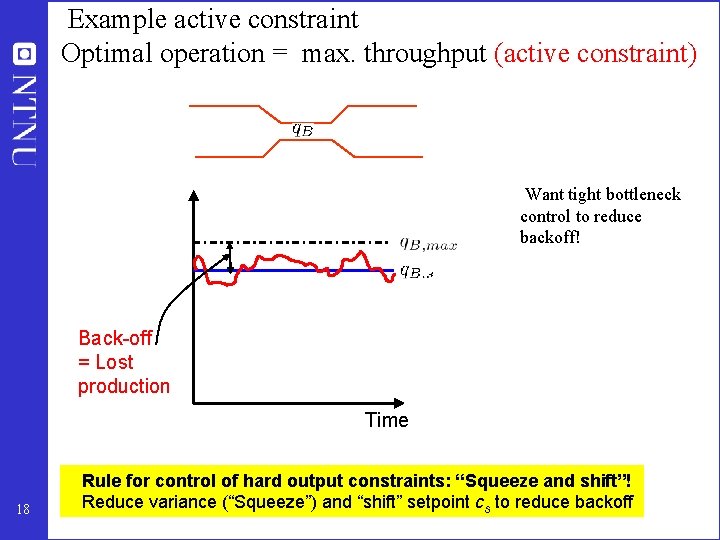

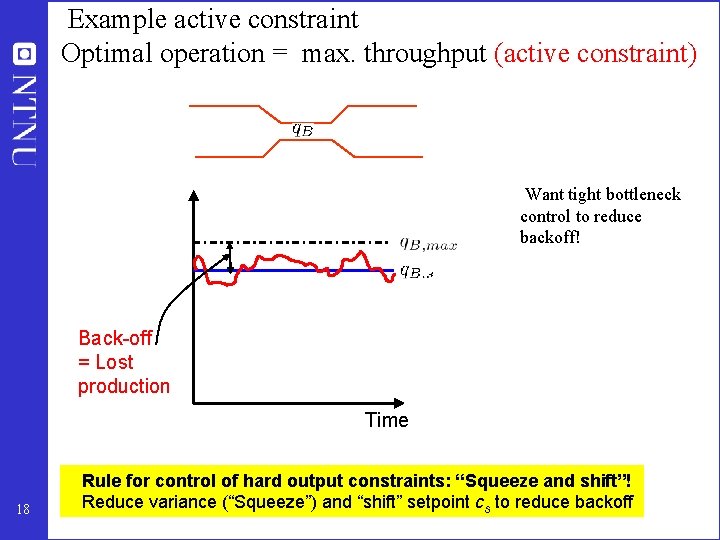

Example: active constraint
Optimal operation = max. throughput (active constraint)
• Want tight bottleneck control to reduce backoff!
• Back-off = lost production
(Figure: throughput versus time, showing the back-off from the constraint.)
Rule for control of hard output constraints: “Squeeze and shift”! Reduce the variance (“squeeze”) and “shift” the setpoint cs to reduce the backoff.
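The “squeeze and shift” rule is simple arithmetic once the back-off is tied to the variability. The sketch assumes (hypothetically) that the constrained variable fluctuates around its setpoint with standard deviation σ and that the setpoint is placed a fixed multiple z of σ below the hard limit; for Gaussian variations and z = 2, roughly 2.3% of samples would exceed the limit. The limit and σ values are illustrative only.

```python
"""Hypothetical 'squeeze and shift' arithmetic for a hard max constraint."""


def backed_off_setpoint(c_max: float, sigma: float, z: float = 2.0):
    """Return (setpoint, backoff) with the setpoint z standard deviations below c_max."""
    backoff = z * sigma
    return c_max - backoff, backoff


if __name__ == "__main__":
    c_max = 100.0  # hypothetical throughput limit, e.g. % of bottleneck capacity
    for label, sigma in (("before squeeze", 2.0), ("after squeeze", 0.5)):
        c_s, backoff = backed_off_setpoint(c_max, sigma)
        print(f"{label}: sigma = {sigma}, setpoint = {c_s}, backoff (lost production) = {backoff}")
```

Squeezing σ from 2 to 0.5 lets the setpoint shift from 96 to 99, recovering 3 units of throughput in this example.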

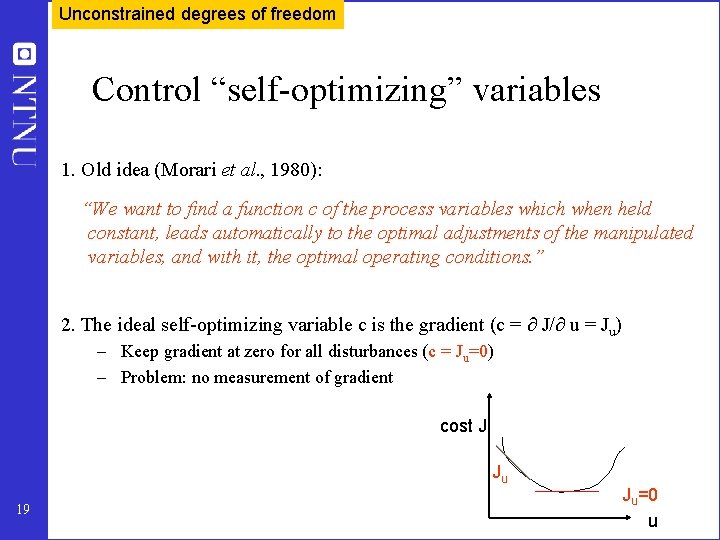

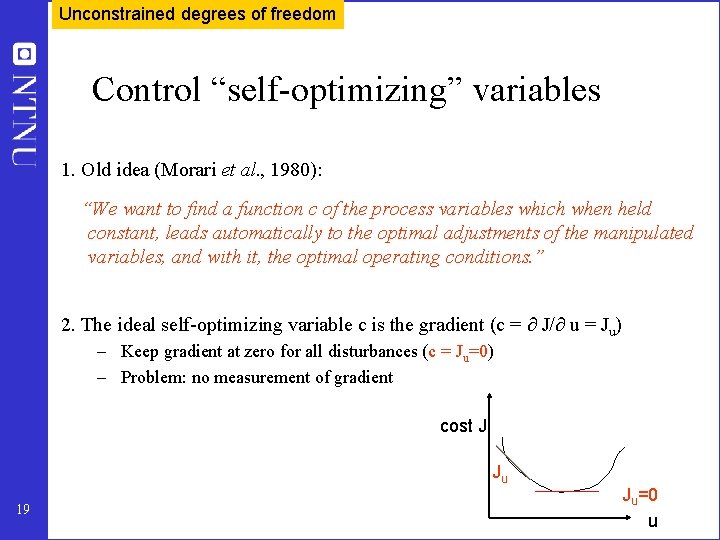

Unconstrained degrees of freedom: control “self-optimizing” variables
1. Old idea (Morari et al., 1980): “We want to find a function c of the process variables which when held constant, leads automatically to the optimal adjustments of the manipulated variables, and with it, the optimal operating conditions.”
2. The ideal self-optimizing variable c is the gradient, $c = \partial J/\partial u = J_u$
 – Keep the gradient at zero for all disturbances ($c = J_u = 0$)
 – Problem: there is no measurement of the gradient
(Figure: cost J versus u, with the optimum where $J_u = 0$.)

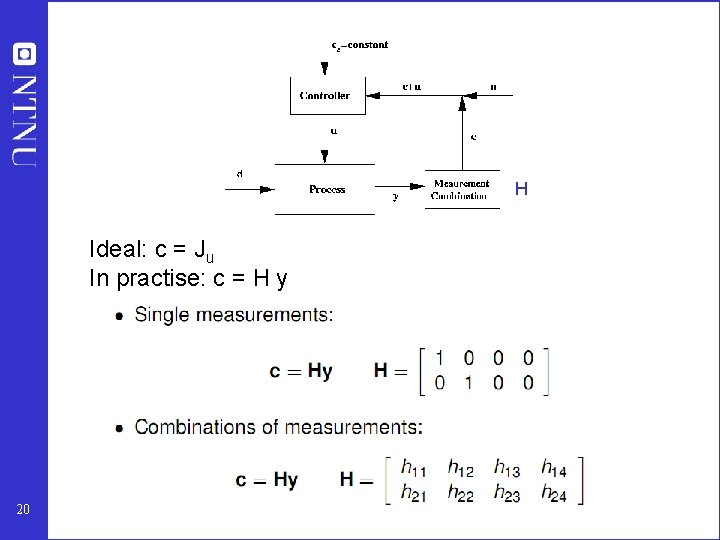

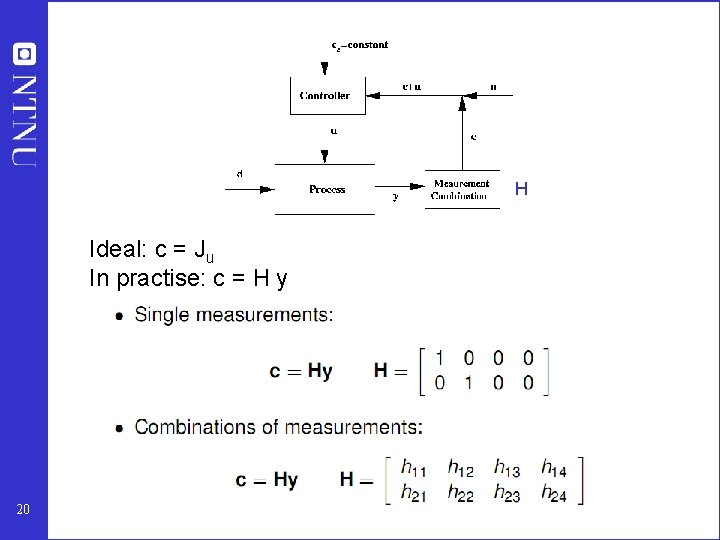

Ideal: $c = J_u$. In practice: $c = Hy$, a combination of the measurements y.

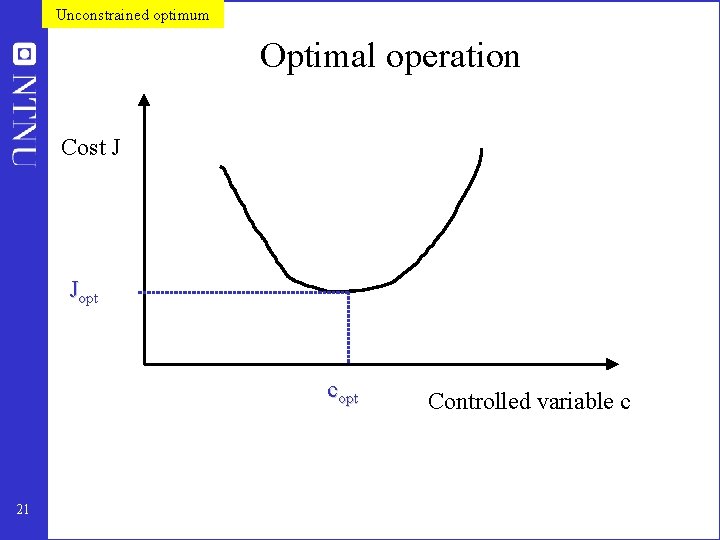

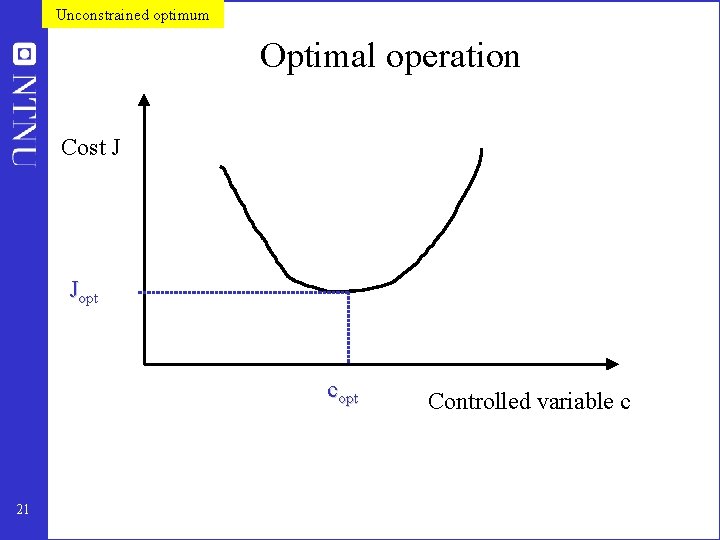

Unconstrained optimum
(Figure: optimal operation; cost J versus controlled variable c, with minimum $J_{opt}$ at $c_{opt}$.)

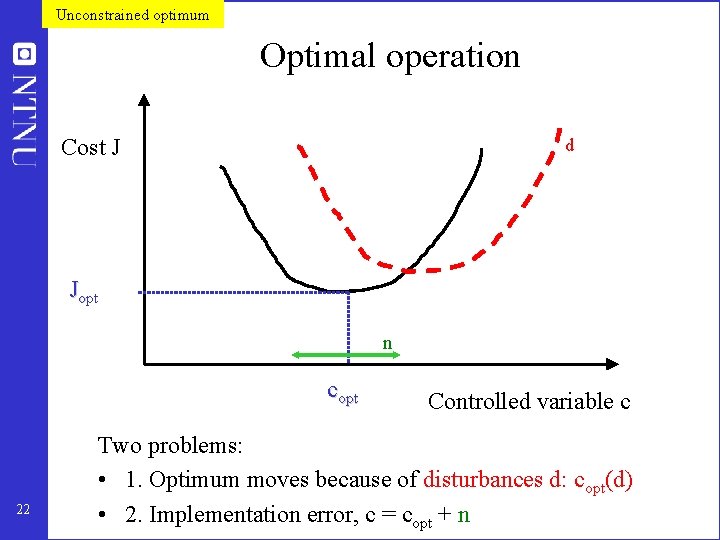

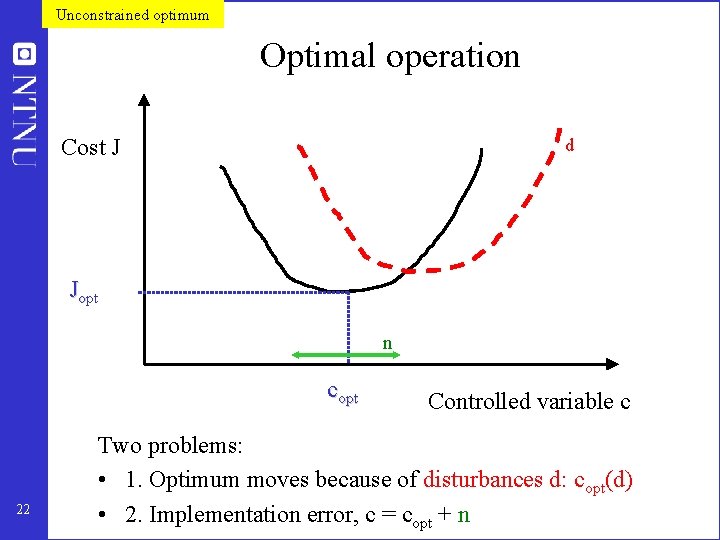

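Unconstrained optimum
(Figure: optimal operation; cost J versus controlled variable c, showing the optimum shifting with d and the implementation error n around $c_{opt}$.)
Two problems:
• 1. The optimum moves because of disturbances d: $c_{opt}(d)$
• 2. Implementation error: $c = c_{opt} + n$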
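Near an unconstrained optimum the loss is approximately quadratic, $L \approx \tfrac{1}{2} J_{uu}(u - u_{opt})^2$, and with a local gain $G = dc/du$ this becomes $L \approx \tfrac{1}{2}(J_{uu}/G^2)(c - c_{opt})^2$. With c held at a constant setpoint, $c - c_{opt}$ combines the two problems above: the shift of the optimum and the implementation error n. The sketch evaluates each contribution and their worst-case sum; the linear shift $c_{opt}(d) = c_{opt}(d^*) + F(d - d^*)$ and all numbers are assumptions for illustration.

```python
"""Local (second-order) loss from a moving optimum and an implementation error."""


def local_loss(juu: float, gain: float, c_error: float) -> float:
    """L = 1/2 * Juu * (u - u_opt)^2 with u - u_opt = (c - c_opt) / G."""
    return 0.5 * juu * (c_error / gain) ** 2


def loss_breakdown(juu, gain, f, delta_d, n):
    """Loss from the optimum shift F*delta_d alone, from n alone, and their worst-case sum."""
    setpoint_error = abs(f * delta_d)  # c_opt moves away from the constant setpoint
    return {
        "disturbance only": local_loss(juu, gain, setpoint_error),
        "noise only": local_loss(juu, gain, abs(n)),
        "worst case": local_loss(juu, gain, setpoint_error + abs(n)),
    }


if __name__ == "__main__":
    # hypothetical values: Juu = 2, G = dc/du = 4, F = dc_opt/dd = 0.5, |delta d| = 1, |n| = 0.2
    for name, value in loss_breakdown(2.0, 4.0, 0.5, 1.0, 0.2).items():
        print(f"{name:>16}: {value:.6f}")
```

The worst-case loss (about 0.0306) exceeds the sum of the two separate losses (about 0.0181), because the two errors add before the result is squared.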

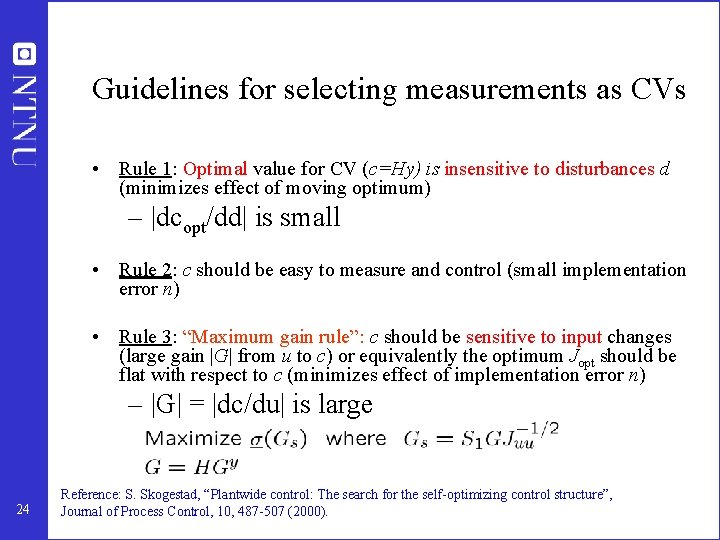

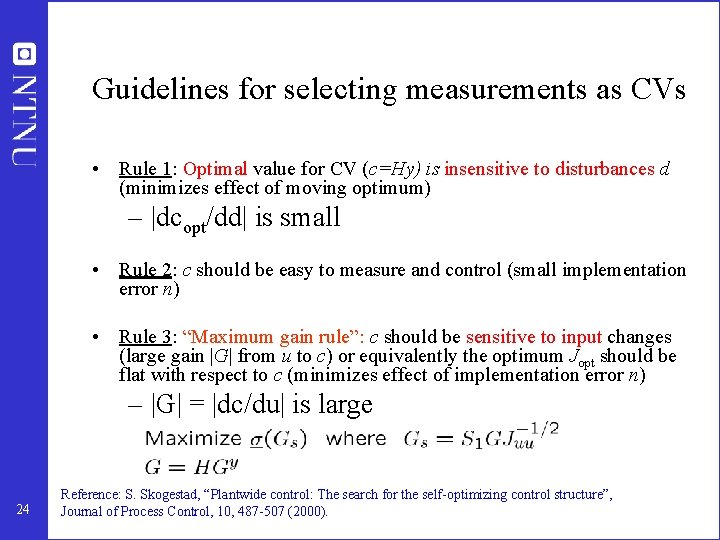

Guidelines for selecting measurements as CVs
• Rule 1: The optimal value of the CV ($c = Hy$) is insensitive to disturbances d (minimizes the effect of the moving optimum)
 – $|dc_{opt}/dd|$ is small
• Rule 2: c should be easy to measure and control (small implementation error n)
• Rule 3: “Maximum gain rule”: c should be sensitive to input changes (large gain $|G|$ from u to c), or equivalently, the optimum $J_{opt}$ should be flat with respect to c (minimizes the effect of implementation error n)
 – $|G| = |dc/du|$ is large
Reference: S. Skogestad, “Plantwide control: The search for the self-optimizing control structure”, Journal of Process Control, 10, 487-507 (2000).
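Rules 1 to 3 can be combined into one number per candidate by scaling the gain with the expected variation of $c - c_{opt}$. The sketch assumes a common scalar form of the rule: $\text{span}(c) = \sum_j |F_j \Delta d_j| + |n|$ with $F_j = \partial c_{opt}/\partial d_j$, a local worst-case loss of about $\tfrac{1}{2} J_{uu}(\text{span}(c)/|G|)^2$, and therefore a preference for the largest scaled gain $|G|/\text{span}(c)$. The candidate names (`y_a`, `y_b`, `y_c`) and all numbers are hypothetical.

```python
"""Hypothetical ranking of single-measurement CV candidates by the maximum gain rule."""
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    gain: float  # G = dc/du
    f: list      # F_j = dc_opt/dd_j for each disturbance
    noise: float # expected implementation error |n|


def span(c: Candidate, delta_d: list) -> float:
    """Expected variation of c - c_opt: optimum shift for each disturbance plus noise."""
    return sum(abs(fj * dj) for fj, dj in zip(c.f, delta_d)) + abs(c.noise)


def rank_candidates(cands, delta_d, juu):
    """Sort by scaled gain |G|/span (largest first); also report the local worst-case loss."""
    rows = []
    for c in cands:
        scaled_gain = abs(c.gain) / span(c, delta_d)
        rows.append((c.name, scaled_gain, 0.5 * juu / scaled_gain ** 2))
    return sorted(rows, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    candidates = [
        Candidate("y_a", gain=4.0, f=[0.5, 0.1], noise=0.2),
        Candidate("y_b", gain=10.0, f=[3.0, 0.0], noise=0.1),
        Candidate("y_c", gain=1.0, f=[0.02, 0.03], noise=0.05),
    ]
    for name, g_scaled, loss in rank_candidates(candidates, delta_d=[1.0, 1.0], juu=2.0):
        print(f"{name}: scaled gain = {g_scaled:.2f}, worst-case loss = {loss:.4f}")
```

Note that the candidate with the largest raw gain (`y_b`) ranks last, because its optimal value moves strongly with the first disturbance (Rule 1).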

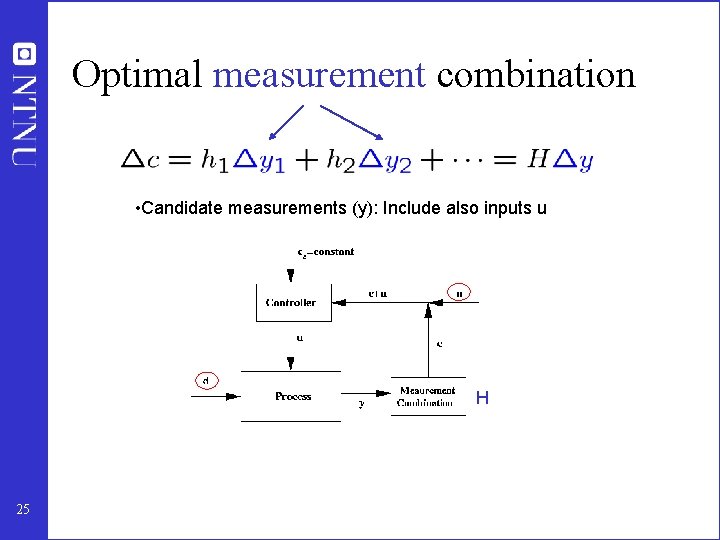

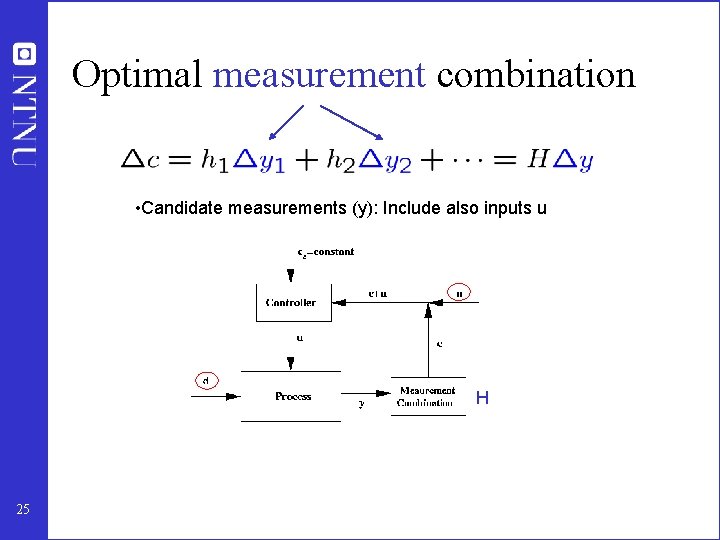

Optimal measurement combination
• Candidate measurements (y): include also the inputs u

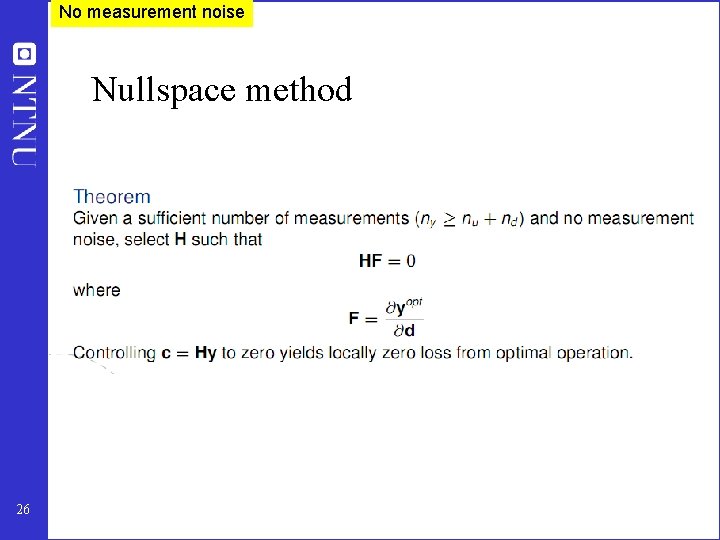

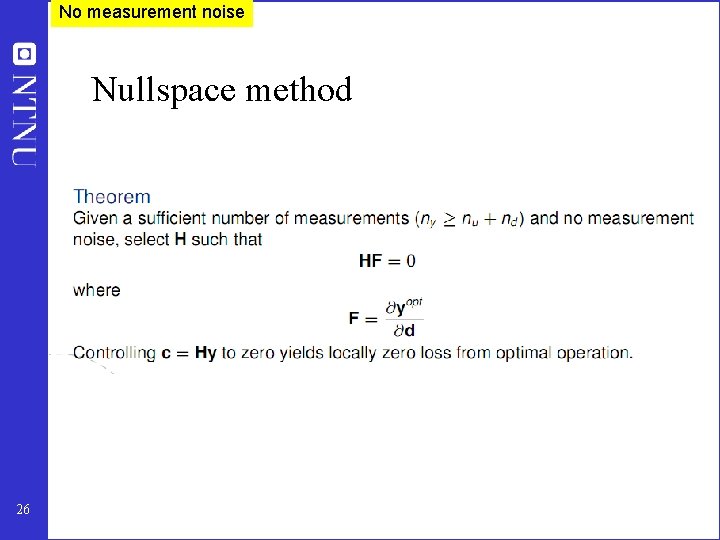

No measurement noise: nullspace method
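In the nullspace method (Alstad and Skogestad, 2007, in the reference list), the optimal sensitivity matrix $F = \partial y_{opt}/\partial d$ is used and H is chosen so that $HF = 0$; the optimal value of $c = Hy$ is then locally insensitive to the disturbances, which requires at least $n_u + n_d$ measurements. The sketch covers only the special case of one input ($n_u = 1$) with exactly $n_y = n_d + 1$ measurements, where a row vector h with $hF = 0$ can be built from signed minors of F (a generalized cross product). The F in the example is hypothetical; in general one would compute a basis for the left null space of F, for example with an SVD.

```python
"""Nullspace method for one input (n_u = 1) and n_y = n_d + 1 measurements: find h with h F = 0."""


def det(matrix):
    """Determinant by Gaussian elimination with partial pivoting."""
    a = [list(row) for row in matrix]
    n = len(a)
    result = 1.0
    for k in range(n):
        pivot = max(range(k, n), key=lambda i: abs(a[i][k]))
        if abs(a[pivot][k]) < 1e-15:
            return 0.0
        if pivot != k:
            a[k], a[pivot] = a[pivot], a[k]
            result = -result  # a row swap flips the sign
        result *= a[k][k]
        for i in range(k + 1, n):
            factor = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= factor * a[k][j]
    return result


def nullspace_h(f):
    """Return h (length n_y) with sum_i h[i] * F[i][j] = 0 for every disturbance j.

    h[i] = (-1)^i * det(F with row i removed), i.e. the cofactors of the augmented
    matrix [F_j, F], whose determinant is zero because it has a repeated column.
    """
    n_y, n_d = len(f), len(f[0])
    if n_y != n_d + 1:
        raise ValueError("this sketch needs exactly n_y = n_d + 1 measurements")
    return [(-1) ** i * det([f[r] for r in range(n_y) if r != i]) for i in range(n_y)]


if __name__ == "__main__":
    F = [[1.0, 2.0], [3.0, 4.0], [5.0, 7.0]]  # hypothetical dy_opt/dd (3 measurements, 2 disturbances)
    h = nullspace_h(F)
    print("h =", h)
    print("h F =", [sum(h[i] * F[i][j] for i in range(3)) for j in range(2)])
```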

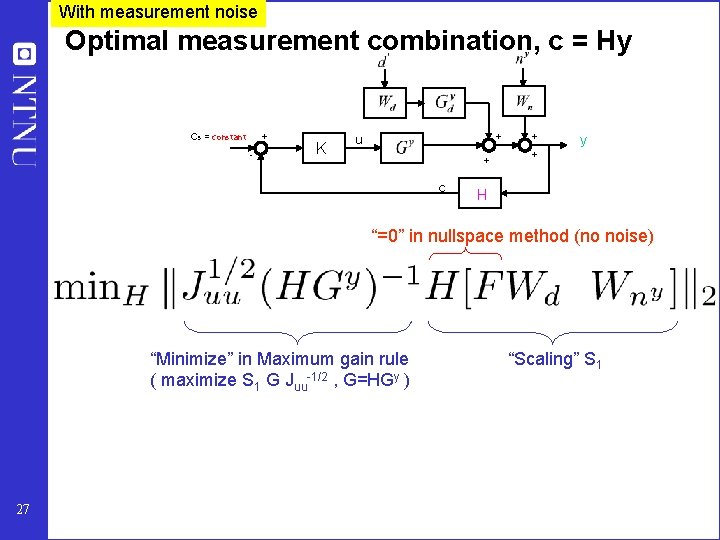

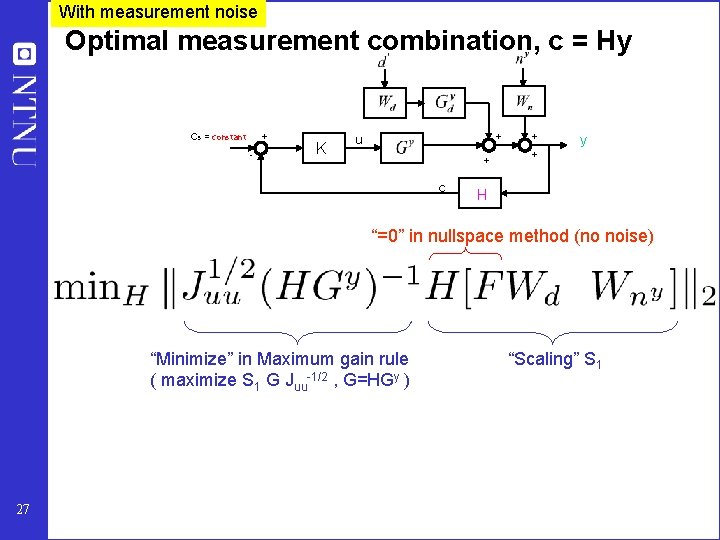

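With measurement noise: optimal measurement combination, c = Hy
(Block diagram: controller K adjusts u so that c = Hy, computed from the noisy measurements y, is kept at a constant setpoint cs.)
• “= 0” in the nullspace method (no noise)
• “Minimize” in the maximum gain rule (maximize $S_1 G J_{uu}^{-1/2}$, with $G = HG^y$ and “scaling” $S_1$)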
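With noise present, a candidate H can be judged by its local loss. The sketch assumes the worst-case loss expression used in the exact local method (see Halvorsen et al., 2003, in the reference list), $L_{wc} = \tfrac{1}{2}\bar\sigma(M)^2$ with $M = J_{uu}^{1/2}(HG^y)^{-1}H\,[F W_d \;\; W_n]$, where $W_d$ and $W_n$ are (here diagonal) magnitudes of the disturbances and the measurement noise. For one input M is a row vector, so $\bar\sigma(M)$ is its Euclidean norm. The matrices are hypothetical; the example compares using $y_1$ alone with a nullspace combination ($HF = 0$).

```python
"""Exact local method, one input: worst-case loss of c = H y with disturbances and noise."""
import math


def worst_case_loss(h, gy, f, wd, wn, juu):
    """L_wc = 1/2 * ||M||^2 with M = sqrt(Juu) * (H Gy)^-1 * H [F Wd, Wn] (a row vector for n_u = 1).

    h, gy: length-n_y lists; f: n_y x n_d list of lists (dy_opt/dd);
    wd, wn: diagonals of the disturbance and noise magnitude matrices.
    """
    h_gy = sum(hi * gi for hi, gi in zip(h, gy))
    if abs(h_gy) < 1e-12:
        raise ValueError("H Gy is (near) zero: c = H y does not respond to u")
    n_y, n_d = len(f), len(f[0])
    h_f_wd = [sum(h[i] * f[i][j] for i in range(n_y)) * wd[j] for j in range(n_d)]
    h_wn = [h[i] * wn[i] for i in range(n_y)]
    scale = math.sqrt(juu) / h_gy
    return 0.5 * sum((scale * v) ** 2 for v in h_f_wd + h_wn)


if __name__ == "__main__":
    gy = [1.0, 2.0]      # hypothetical dy/du
    f = [[0.5], [-1.0]]  # hypothetical dy_opt/dd (one disturbance)
    wd, wn, juu = [1.0], [0.1, 0.1], 2.0
    for name, h in (("y1 alone", [1.0, 0.0]), ("nullspace combination", [1.0, 0.5])):
        print(f"{name}: worst-case loss = {worst_case_loss(h, gy, f, wd, wn, juu):.6f}")
```

Here the nullspace combination removes the disturbance term entirely, so only the noise contribution remains (0.003125 versus 0.26 for $y_1$ alone).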

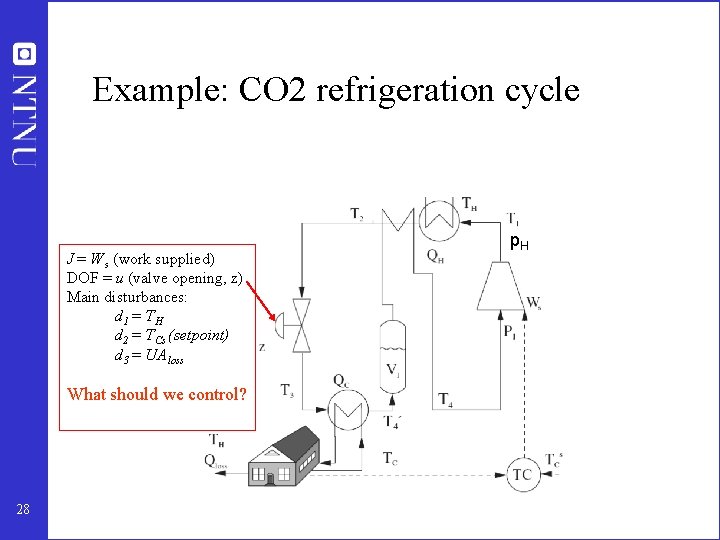

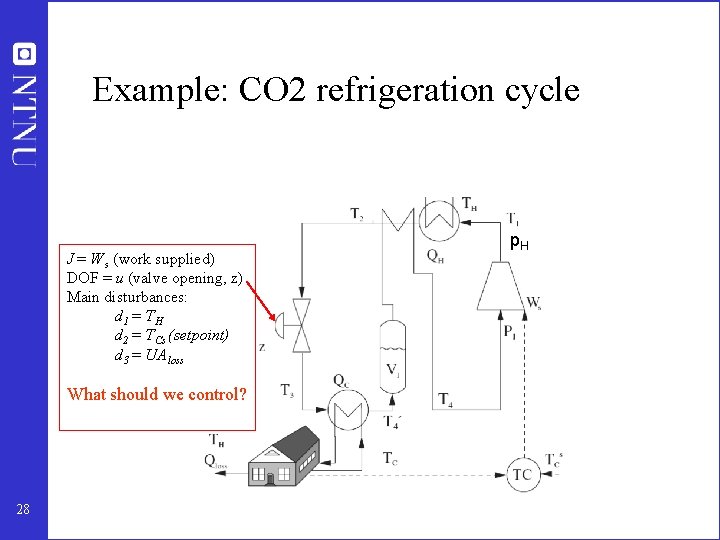

Example: CO2 refrigeration cycle
• J = Ws (work supplied)
• DOF = u (valve opening, z)
• Main disturbances: d1 = TH, d2 = TCs (setpoint), d3 = UAloss
• What should we control?

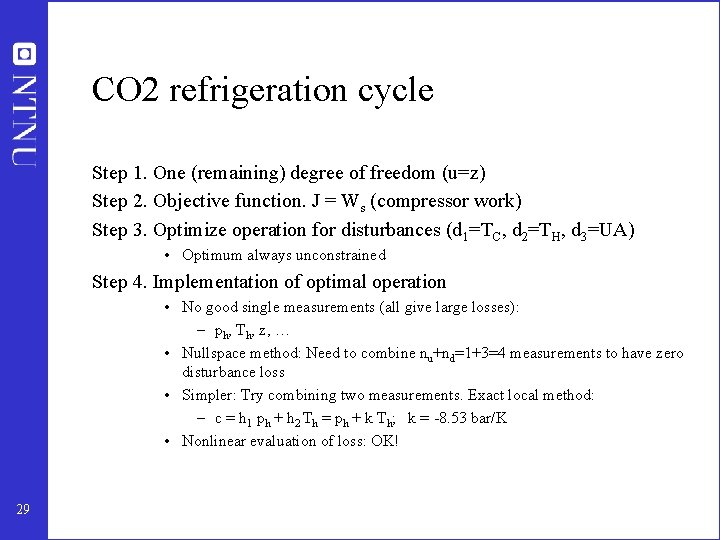

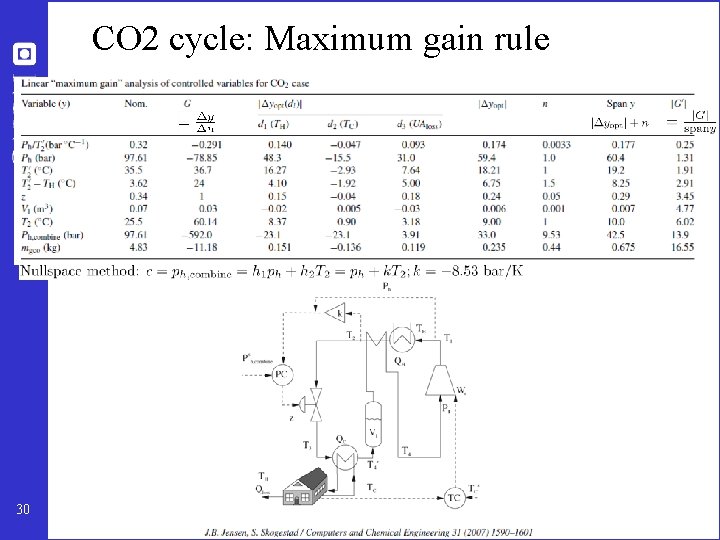

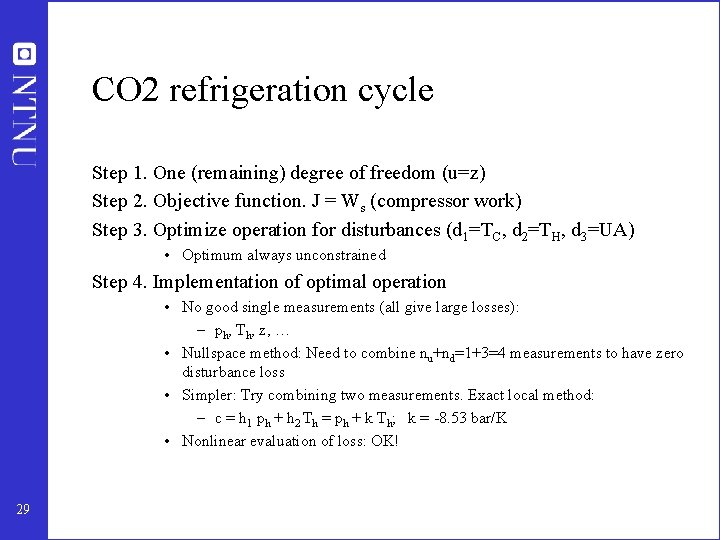

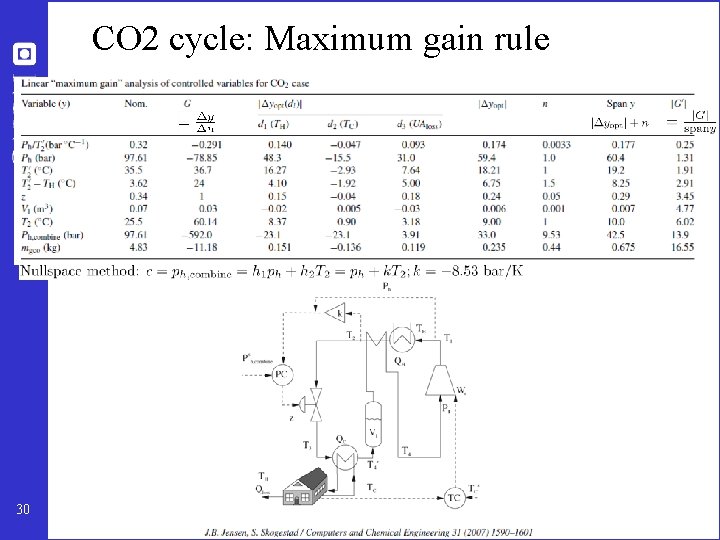

CO2 refrigeration cycle
Step 1. One (remaining) degree of freedom (u = z)
Step 2. Objective function: J = Ws (compressor work)
Step 3. Optimize operation for disturbances (d1 = TH, d2 = TCs, d3 = UAloss)
• Optimum always unconstrained
Step 4. Implementation of optimal operation
• No good single measurements (all give large losses):
 – ph, Th, z, …
• Nullspace method: need to combine nu + nd = 1 + 3 = 4 measurements to have zero disturbance loss
• Simpler: try combining two measurements. Exact local method:
 – $c = h_1 p_h + h_2 T_h = p_h + k T_h$; $k = -8.53$ bar/K
• Nonlinear evaluation of loss: OK!
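The proposed CV is a two-measurement combination normalized so that the coefficient on $p_h$ is one: $c = h_1 p_h + h_2 T_h = h_1(p_h + kT_h)$ with $k = h_2/h_1$, and scaling H by a constant does not change what is controlled. Holding $c = p_h + kT_h$ constant with $k = -8.53$ bar/K means the high-pressure setpoint is in effect $p_{h,s} = c_s - kT_h$, i.e. it rises by 8.53 bar for every kelvin rise in $T_h$. The sketch shows only this arithmetic; the nominal pressure and temperatures are hypothetical.

```python
"""Temperature-corrected high pressure c = p_h + k*T_h for the CO2 cycle example (k from the slide)."""

K_BAR_PER_K = -8.53  # k = h2/h1 in bar/K


def normalized_k(h1: float, h2: float) -> float:
    """Scale c = h1*p_h + h2*T_h so the p_h coefficient is 1; returns k = h2/h1."""
    return h2 / h1


def cv(p_h_bar: float, t_h_kelvin: float, k: float = K_BAR_PER_K) -> float:
    """Controlled variable c = p_h + k*T_h."""
    return p_h_bar + k * t_h_kelvin


def pressure_setpoint(c_s: float, t_h_kelvin: float, k: float = K_BAR_PER_K) -> float:
    """High pressure that keeps c at its setpoint c_s for the current T_h."""
    return c_s - k * t_h_kelvin


if __name__ == "__main__":
    c_s = cv(90.0, 303.0)  # hypothetical nominal point: p_h = 90 bar, T_h = 303 K
    for t_h in (303.0, 304.0, 305.0):
        print(f"T_h = {t_h} K -> p_h setpoint = {pressure_setpoint(c_s, t_h):.2f} bar")
```

Since the setpoint $c_s$ is constant, the absolute temperature scale only shifts $c_s$; what matters is that $T_h$ is measured in consistent units.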

CO2 cycle: Maximum gain rule

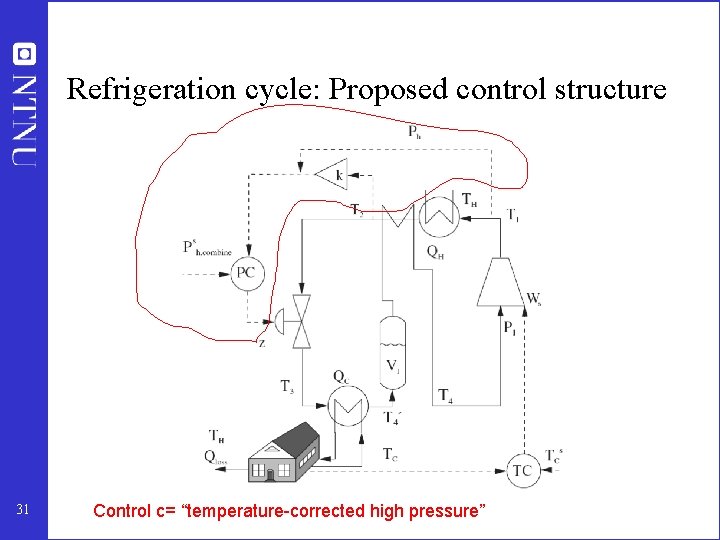

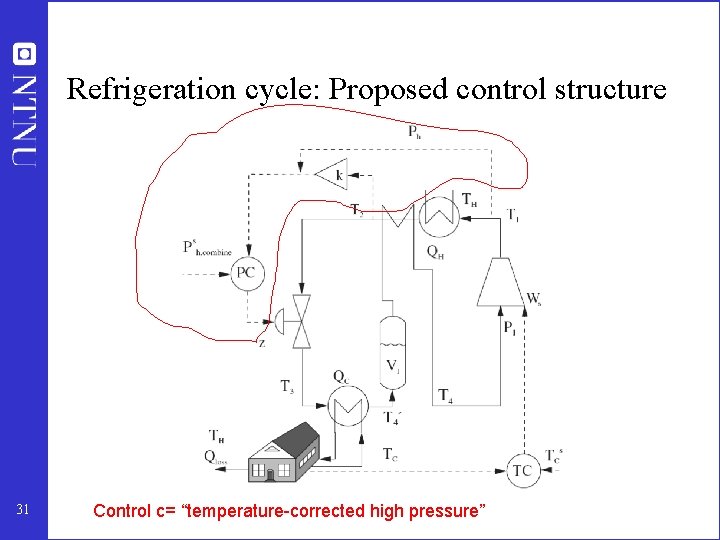

Refrigeration cycle: Proposed control structure
Control c = “temperature-corrected high pressure”

Step 4. Where to set the production rate?
• Where to locate the TPM (throughput manipulator)?
• Very important! Determines the structure of the remaining inventory (level) control system
• Set the production rate at the (dynamic) bottleneck
• Link between the top-down and bottom-up parts

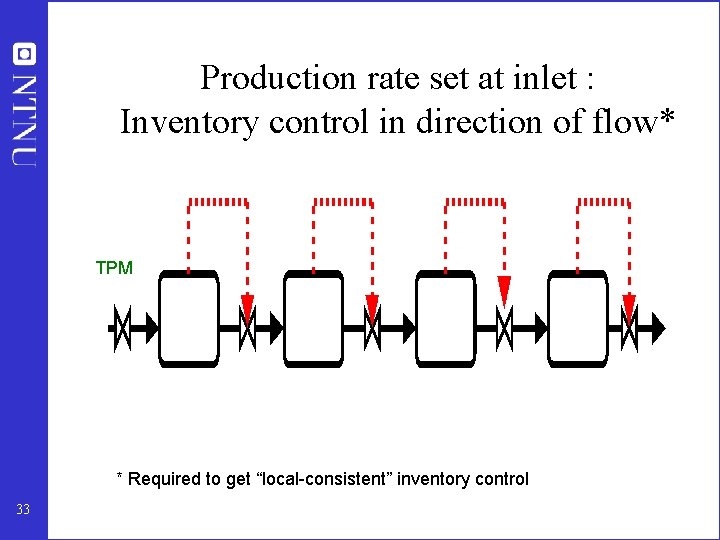

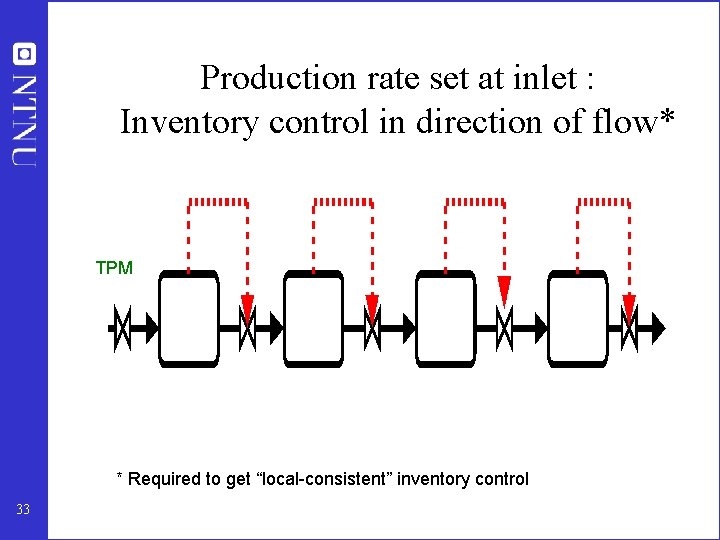

Production rate set at inlet: inventory control in the direction of flow*
* Required to get “local-consistent” inventory control

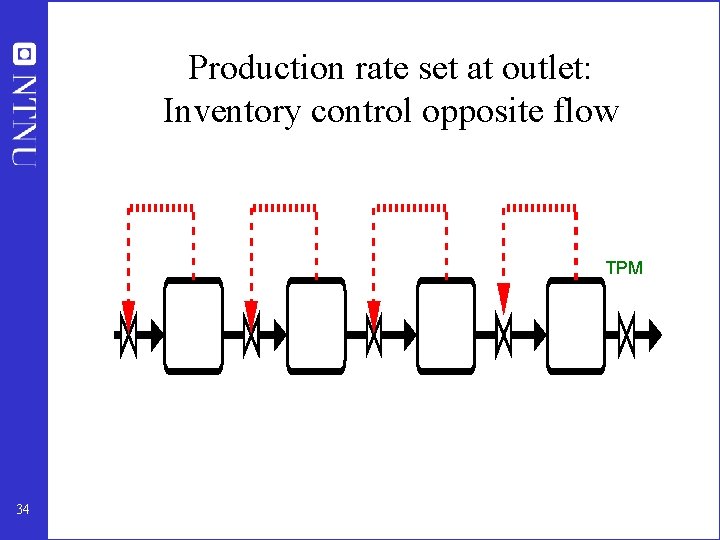

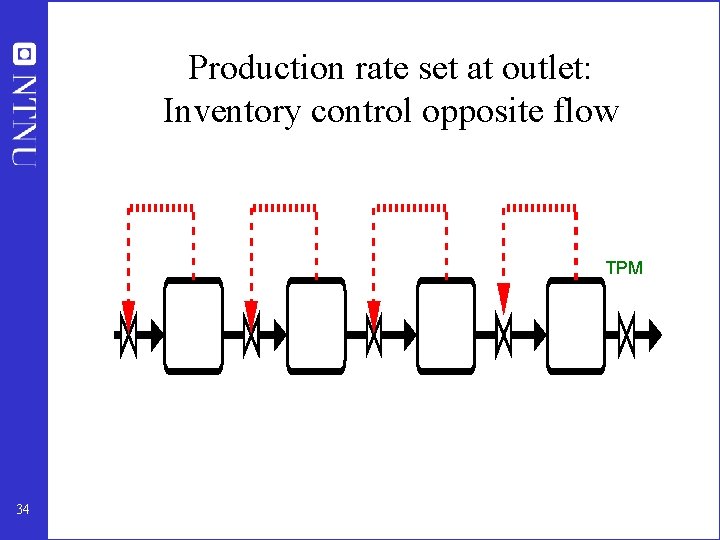

Production rate set at outlet: inventory control opposite to the flow

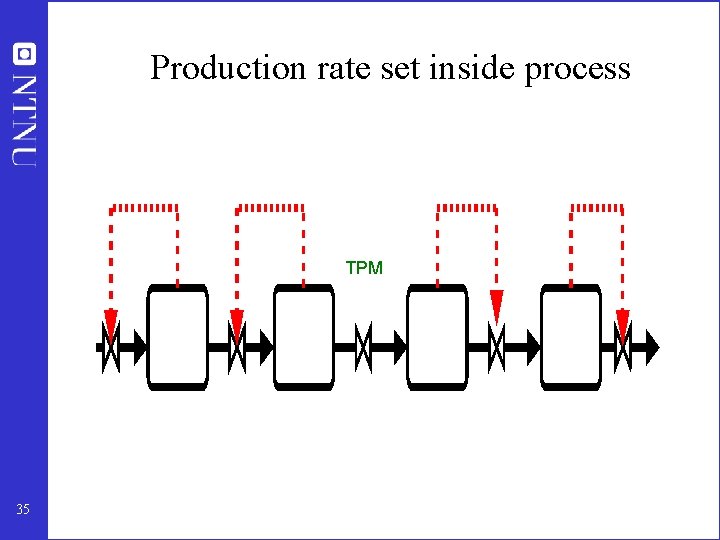

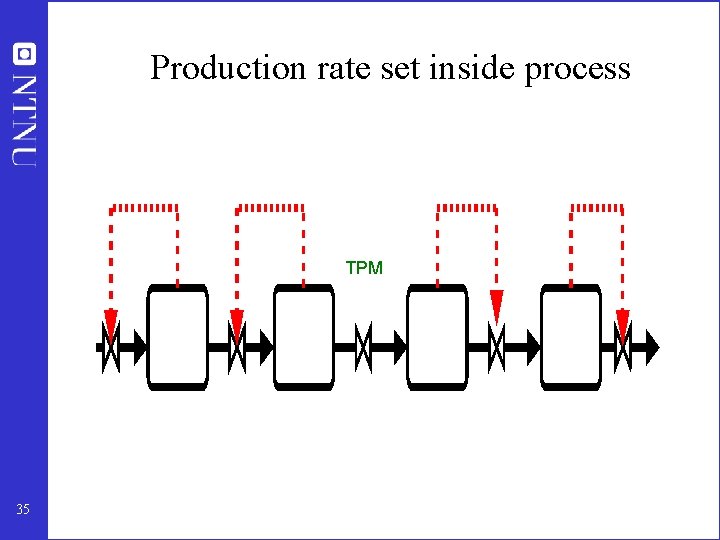

Production rate set inside the process

LOCATE TPM?
• Default choice: place the TPM at the feed
• Consider moving it if there is an important active constraint that could otherwise not be well controlled
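The inventory control directions for the three TPM locations can be expressed as one rule for units in series: level controllers upstream of the TPM manipulate their inflow (control opposite to the flow), and those downstream manipulate their outflow (control in the direction of flow). The sketch applies this rule to a hypothetical chain of units with streams F0 (feed) to Fn (product), where unit i has inflow Fi and outflow F(i+1); the naming is an assumption, and plants with recycles and splits need the more general consistency analysis in the inventory-control references.

```python
"""Hypothetical sketch: pair level controllers for units in series given the TPM location."""


def inventory_pairings(n_units: int, tpm_stream: int) -> dict:
    """Map level controller LCi (unit i) to the stream it manipulates.

    Streams are F0 (feed) ... F{n_units} (product); unit i has inflow Fi and outflow F{i+1}.
    The TPM stream is set by the operator or supervisory layer and is not used for level control.
    """
    if not 0 <= tpm_stream <= n_units:
        raise ValueError("TPM must be one of the streams F0..Fn")
    pairings = {}
    for i in range(n_units):
        if i < tpm_stream:
            pairings[f"LC{i}"] = f"F{i}"      # upstream of TPM: use inflow (opposite to flow)
        else:
            pairings[f"LC{i}"] = f"F{i + 1}"  # downstream of TPM: use outflow (direction of flow)
    return pairings


if __name__ == "__main__":
    for tpm in (0, 2, 4):
        print(f"TPM = F{tpm}:", inventory_pairings(4, tpm))
```

In every case each stream other than the TPM is used by exactly one level loop, which is a quick sanity check for this simple series case.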

Step 5: Regulatory control layer
Step 5. Choose the structure of the regulatory (stabilizing) layer
(a) Identify “stabilizing” CV2s (levels, pressures, reactor temperature, one temperature in each column, etc.). In addition, active constraints (CV1) that require tight control (small backoff) may be assigned to the regulatory layer. (Comment: tight control of the unconstrained CVs is usually not necessary, because the optimum is usually relatively flat.)
(b) Identify pairings (MVs to be used to control CV2), taking into account:
 – Want “local consistency” for the inventory control
 – Want tight control of important active constraints
 – Avoid MVs that may saturate in the regulatory layer, because this would require either
 • reassigning the regulatory loop (complication penalty), or
 • back-off for the MV (economic penalty)
Preferably, the same regulatory layer should be used for all operating regions without the need for reassigning inputs or outputs.
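For pairing in step (b), one widely used screening tool (not prescribed on this slide) is the relative gain array (RGA). For a 2×2 steady-state gain matrix G, $\lambda_{11} = g_{11}g_{22}/(g_{11}g_{22} - g_{12}g_{21})$, and the other elements follow because each row and column sums to one; pairings on RGA elements close to 1 are preferred and pairings on negative elements are avoided. The gain matrices are hypothetical, and the RGA does not address the saturation issue raised above.

```python
"""Relative gain array (RGA) for a 2x2 gain matrix, used as a pairing screen."""


def rga_2x2(g):
    """Return the RGA [[l11, 1 - l11], [1 - l11, l11]] of a 2x2 steady-state gain matrix g."""
    (g11, g12), (g21, g22) = g
    det = g11 * g22 - g12 * g21
    if det == 0.0:
        raise ValueError("singular gain matrix: the two outputs cannot be controlled independently")
    l11 = g11 * g22 / det
    return [[l11, 1.0 - l11], [1.0 - l11, l11]]


def preferred_pairing(g) -> str:
    """Pick the pairing whose RGA element is closest to 1 (diagonal: u1-y1, u2-y2)."""
    rga = rga_2x2(g)
    return "diagonal" if abs(rga[0][0] - 1.0) <= abs(rga[0][1] - 1.0) else "off-diagonal"


if __name__ == "__main__":
    examples = {
        "weak interaction": [[1.0, 0.2], [0.1, 1.0]],
        "strong interaction": [[87.8, -86.4], [108.2, -109.6]],
        "reversed": [[0.1, 1.0], [1.0, 0.2]],
    }
    for name, g in examples.items():
        l11 = rga_2x2(g)[0][0]
        print(f"{name}: lambda11 = {l11:.2f}, preferred pairing = {preferred_pairing(g)}")
```

A large $\lambda_{11}$ (about 35 in the second case) signals strong two-way interaction and sensitivity to model error, even though the diagonal pairing is still the better of the two.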

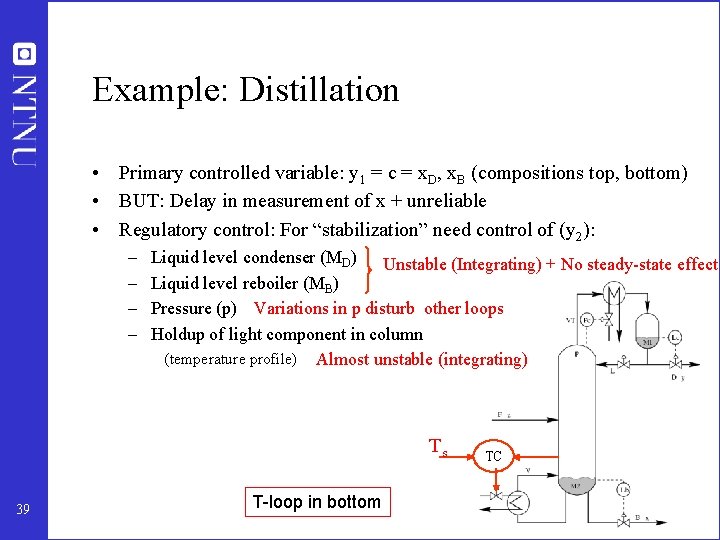

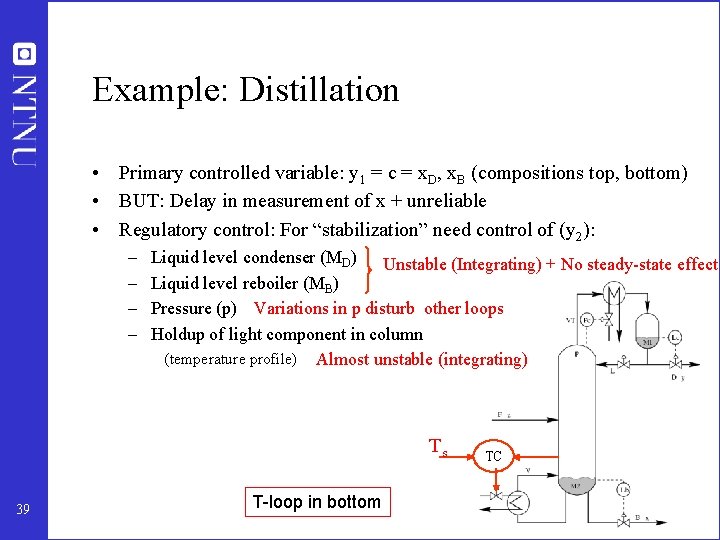

Example: Distillation
• Primary controlled variables: y1 = c = xD, xB (top and bottom compositions)
• BUT: the measurement of x is delayed and unreliable
• Regulatory control: for “stabilization” we need control of (y2):
 – Liquid level in condenser (MD): unstable (integrating) + no steady-state effect
 – Liquid level in reboiler (MB): unstable (integrating) + no steady-state effect
 – Pressure (p): variations in p disturb other loops
 – Holdup of light component in column (temperature profile): almost unstable (integrating)
(Figure: temperature loop TC in the bottom section, with setpoint Ts.)

Why simplified configurations? Why control layers? Why not one “big” multivariable controller?
• Fundamental: save on modelling effort
• Other:
 – easy to understand
 – easy to tune and retune
 – insensitive to model uncertainty
 – possible to design for failure tolerance
 – fewer links
 – reduced computation load

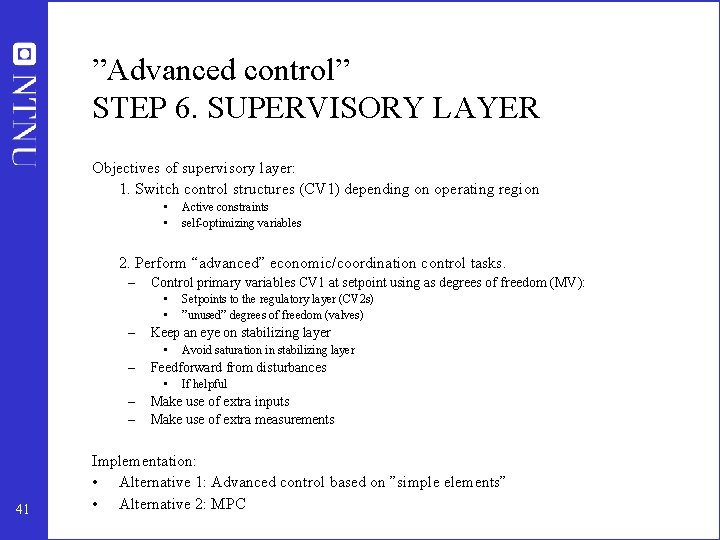

“Advanced control”: STEP 6. SUPERVISORY LAYER
Objectives of the supervisory layer:
1. Switch control structures (CV1) depending on the operating region
 • Active constraints
 • Self-optimizing variables
2. Perform “advanced” economic/coordination control tasks
 – Control the primary variables CV1 at setpoint, using as degrees of freedom (MV):
 • Setpoints to the regulatory layer (CV2s)
 • “Unused” degrees of freedom (valves)
 – Keep an eye on the stabilizing layer
 • Avoid saturation in the stabilizing layer
 – Feedforward from disturbances
 • If helpful
 – Make use of extra inputs
 – Make use of extra measurements
Implementation:
• Alternative 1: Advanced control based on “simple elements”
• Alternative 2: MPC
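As one example of switching CV1 with “simple elements”, a common arrangement is a min-selector between two controllers acting on the same input: one controls a self-optimizing variable c, the other controls a constrained variable z at its limit, and the smaller requested input is applied, so the constraint controller takes over only when the constraint becomes active. The sketch assumes a hypothetical static process ($c = u$, $z = u + d$, $z \le z_{max}$) and pure integral action in velocity form, where both controllers update from the applied input, which avoids windup in the inactive one. The function name and tuning are illustrative assumptions.

```python
"""Hypothetical min-selector: switch between a self-optimizing CV and an active constraint."""


def run_selector(d: float, c_s: float = 5.0, z_max: float = 6.0, ki: float = 0.5, steps: int = 100):
    """Simulate the static process c = u, z = u + d with two integral controllers and a min-selector.

    Returns (final input u, name of the controller selected in the last step).
    """
    u = 0.0
    selected = None
    for _ in range(steps):
        c, z = u, u + d                       # hypothetical steady-state process responses
        u_self_opt = u + ki * (c_s - c)       # integral (velocity form) controller for c
        u_constraint = u + ki * (z_max - z)   # integral controller holding z at its limit
        if u_self_opt <= u_constraint:        # min-selector: apply the smaller request
            u, selected = u_self_opt, "self-optimizing"
        else:
            u, selected = u_constraint, "constraint"
    return u, selected


if __name__ == "__main__":
    for d in (0.0, 2.0):
        u, selected = run_selector(d)
        print(f"d = {d}: u = {u:.3f}, active controller = {selected}")
```

With d = 0 the constraint z ≤ 6 is inactive and c is held at 5; with d = 2 the constraint becomes active (u = 4 gives z = 6) and control of the self-optimizing variable is given up.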

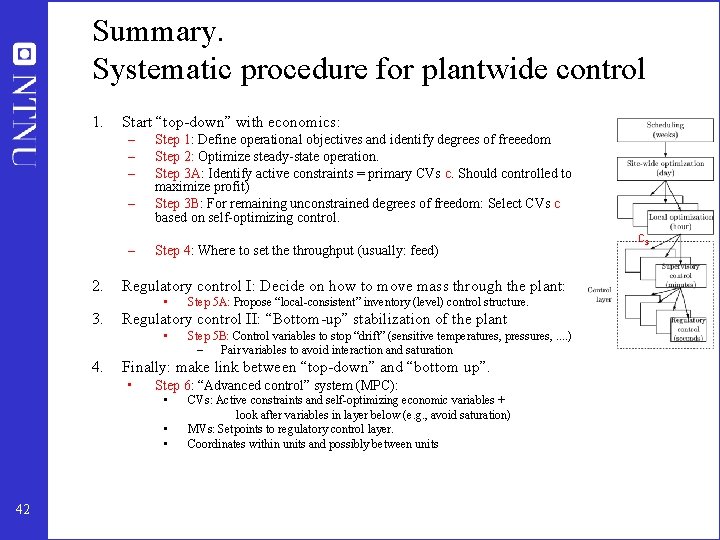

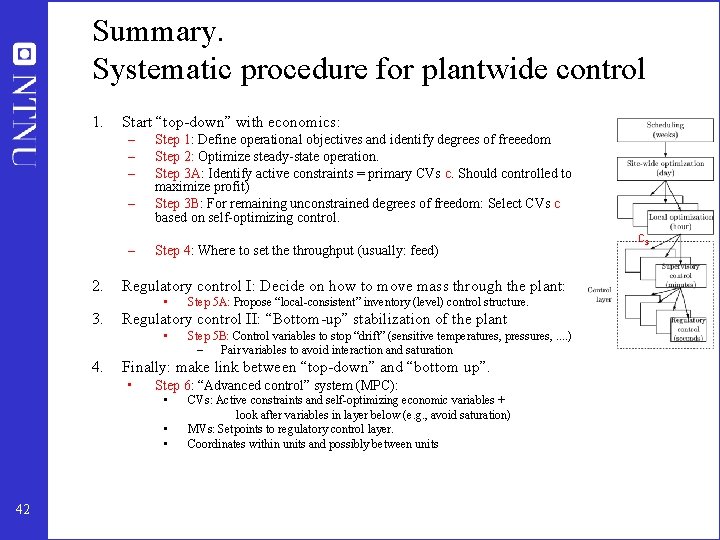

Summary. Systematic procedure for plantwide control
1. Start “top-down” with economics:
 – Step 1: Define operational objectives and identify degrees of freedom
 – Step 2: Optimize steady-state operation
 – Step 3A: Identify active constraints = primary CVs c. These should be controlled to maximize profit
 – Step 3B: For the remaining unconstrained degrees of freedom: select CVs c based on self-optimizing control
 – Step 4: Where to set the throughput (usually: feed)
2. Regulatory control I: Decide on how to move mass through the plant:
 • Step 5A: Propose a “local-consistent” inventory (level) control structure
3. Regulatory control II: “Bottom-up” stabilization of the plant
 • Step 5B: Control variables to stop “drift” (sensitive temperatures, pressures, etc.)
 – Pair variables to avoid interaction and saturation
4. Finally: make the link between “top-down” and “bottom-up”
 • Step 6: “Advanced control” system (MPC):
 – CVs: active constraints and self-optimizing economic variables + look after variables in the layer below (e.g., avoid saturation)
 – MVs: setpoints to the regulatory control layer
 – Coordinates within units and possibly between units

Summary and references
• The following paper summarizes the procedure:
 – S. Skogestad, “Control structure design for complete chemical plants”, Computers and Chemical Engineering, 28 (1-2), 219-234 (2004).
• There are many approaches to plantwide control, as discussed in the following review paper:
 – T. Larsson and S. Skogestad, “Plantwide control: A review and a new design procedure”, Modeling, Identification and Control, 21, 209-240 (2000).

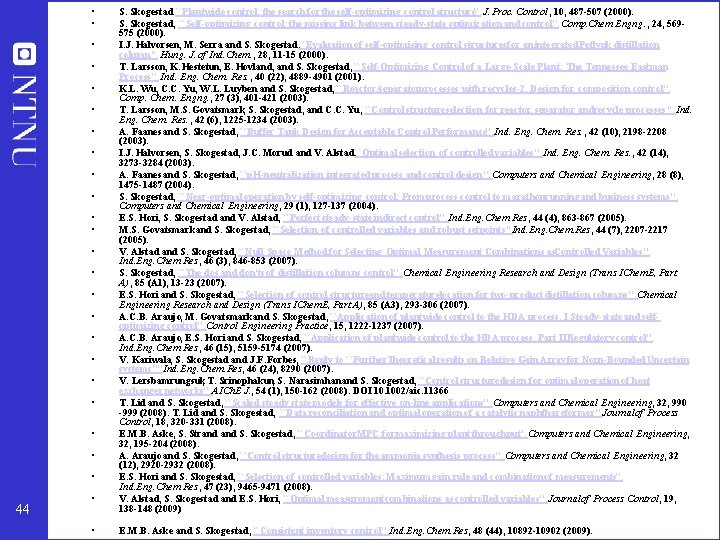

• S. Skogestad, “Plantwide control: the search for the self-optimizing control structure”, J. Proc. Control, 10, 487-507 (2000).
• S. Skogestad, “Self-optimizing control: the missing link between steady-state optimization and control”, Comp. Chem. Engng., 24, 569-575 (2000).
• I. J. Halvorsen, M. Serra and S. Skogestad, “Evaluation of self-optimising control structures for an integrated Petlyuk distillation column”, Hung. J. of Ind. Chem., 28, 11-15 (2000).
• T. Larsson, K. Hestetun, E. Hovland and S. Skogestad, “Self-Optimizing Control of a Large-Scale Plant: The Tennessee Eastman Process”, Ind. Eng. Chem. Res., 40 (22), 4889-4901 (2001).
• K. L. Wu, C. C. Yu, W. L. Luyben and S. Skogestad, “Reactor/separator processes with recycles-2. Design for composition control”, Comp. Chem. Engng., 27 (3), 401-421 (2003).
• T. Larsson, M. S. Govatsmark, S. Skogestad and C. C. Yu, “Control structure selection for reactor, separator and recycle processes”, Ind. Eng. Chem. Res., 42 (6), 1225-1234 (2003).
• A. Faanes and S. Skogestad, “Buffer Tank Design for Acceptable Control Performance”, Ind. Eng. Chem. Res., 42 (10), 2198-2208 (2003).
• I. J. Halvorsen, S. Skogestad, J. C. Morud and V. Alstad, “Optimal selection of controlled variables”, Ind. Eng. Chem. Res., 42 (14), 3273-3284 (2003).
• A. Faanes and S. Skogestad, “pH-neutralization: integrated process and control design”, Computers and Chemical Engineering, 28 (8), 1475-1487 (2004).
• S. Skogestad, “Near-optimal operation by self-optimizing control: From process control to marathon running and business systems”, Computers and Chemical Engineering, 29 (1), 127-137 (2004).
• E. S. Hori, S. Skogestad and V. Alstad, “Perfect steady-state indirect control”, Ind. Eng. Chem. Res., 44 (4), 863-867 (2005).
• M. S. Govatsmark and S. Skogestad, “Selection of controlled variables and robust setpoints”, Ind. Eng. Chem. Res., 44 (7), 2207-2217 (2005).
• V. Alstad and S. Skogestad, “Null Space Method for Selecting Optimal Measurement Combinations as Controlled Variables”, Ind. Eng. Chem. Res., 46 (3), 846-853 (2007).
• S. Skogestad, “The dos and don'ts of distillation column control”, Chemical Engineering Research and Design (Trans IChemE, Part A), 85 (A1), 13-23 (2007).
• E. S. Hori and S. Skogestad, “Selection of control structure and temperature location for two-product distillation columns”, Chemical Engineering Research and Design (Trans IChemE, Part A), 85 (A3), 293-306 (2007).
• A. C. B. Araujo, M. Govatsmark and S. Skogestad, “Application of plantwide control to the HDA process. I: Steady-state and self-optimizing control”, Control Engineering Practice, 15, 1222-1237 (2007).
• A. C. B. Araujo, E. S. Hori and S. Skogestad, “Application of plantwide control to the HDA process. Part II: Regulatory control”, Ind. Eng. Chem. Res., 46 (15), 5159-5174 (2007).
• V. Kariwala, S. Skogestad and J. F. Forbes, “Reply to ‘Further Theoretical Results on Relative Gain Array for Norm-Bounded Uncertain Systems’”, Ind. Eng. Chem. Res., 46 (24), 8290 (2007).
• V. Lersbamrungsuk, T. Srinophakun, S. Narasimhan and S. Skogestad, “Control structure design for optimal operation of heat exchanger networks”, AIChE J., 54 (1), 150-162 (2008). DOI 10.1002/aic.11366
• T. Lid and S. Skogestad, “Scaled steady state models for effective on-line applications”, Computers and Chemical Engineering, 32, 990-999 (2008).
• T. Lid and S. Skogestad, “Data reconciliation and optimal operation of a catalytic naphtha reformer”, Journal of Process Control, 18, 320-331 (2008).
• E. M. B. Aske, S. Strand and S. Skogestad, “Coordinator MPC for maximizing plant throughput”, Computers and Chemical Engineering, 32, 195-204 (2008).
• A. Araujo and S. Skogestad, “Control structure design for the ammonia synthesis process”, Computers and Chemical Engineering, 32 (12), 2920-2932 (2008).
• E. S. Hori and S. Skogestad, “Selection of controlled variables: Maximum gain rule and combination of measurements”, Ind. Eng. Chem. Res., 47 (23), 9465-9471 (2008).
• V. Alstad, S. Skogestad and E. S. Hori, “Optimal measurement combinations as controlled variables”, Journal of Process Control, 19, 138-148 (2009).
• E. M. B. Aske and S. Skogestad, “Consistent inventory control”, Ind. Eng. Chem. Res., 48 (44), 10892-10902 (2009).