Chapter 8: Data Compression. Eyad Alshareef

- Slides: 39


Topics: Introduction, Basic Coding Schemes, Huffman Coding

What is Data Compression? Data compression is the representation of an information source (e.g. a data file, a speech signal, an image, or a video signal) as accurately as possible using the fewest number of bits. Compressed data can only be understood if the decoding method is known by the receiver.

Data Compression: Compression reduces the size of a file, to save space when storing it and to save time when transmitting it. Most files have lots of redundancy. Who needs compression? Text, images, sound, video, … Basic concepts are ancient (1950s); the best technology was developed recently.

Applications: Multimedia. Images: GIF, JPEG. Sound: MP3. Video: MPEG, DivX™, HDTV. Databases. Google.

Why Data Compression? Data storage and transmission cost money. This cost can be reduced by processing the data so that it takes less memory and less transmission time. Disadvantage of data compression: compressed data must be decompressed to be viewed (or heard), so extra processing is required. The design of data compression schemes therefore involves trade-offs between various factors, including the degree of compression, the amount of distortion introduced (if using a lossy compression scheme), and the computational resources required to compress and decompress the data.

How is data compression possible? Compression is possible because information usually contains redundancies, or information that is often repeated. Examples include recurring letters, numbers or pixels. File compression programs remove this redundancy.

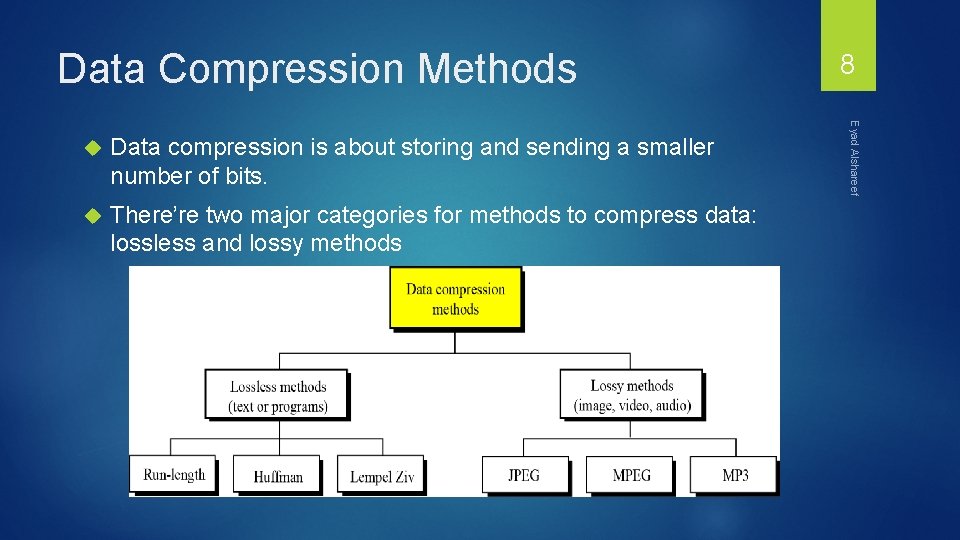

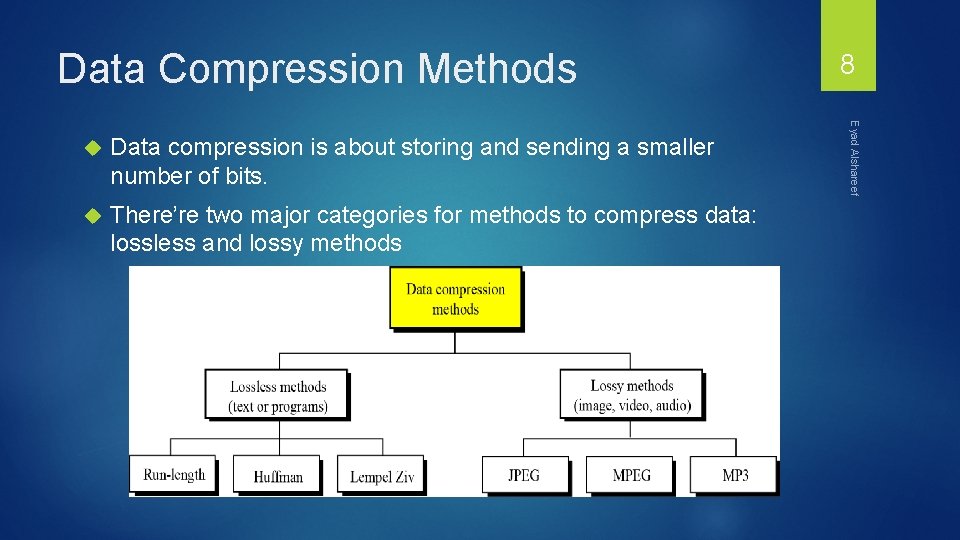

Data Compression Methods: Data compression is about storing and sending a smaller number of bits. There are two major categories of methods to compress data: lossless and lossy.

Data Compression Methods: Lossless techniques enable exact reconstruction of the original document from the compressed information. They exploit redundancy in data and are applied to general data. Examples: run-length, Huffman, LZ77, LZ78, and LZW. Lossy compression reduces a file by permanently eliminating certain redundant information. It exploits redundancy and human perception and is applied to audio, image, and video. Examples: JPEG and MPEG. Lossy techniques usually achieve higher compression rates than lossless ones.

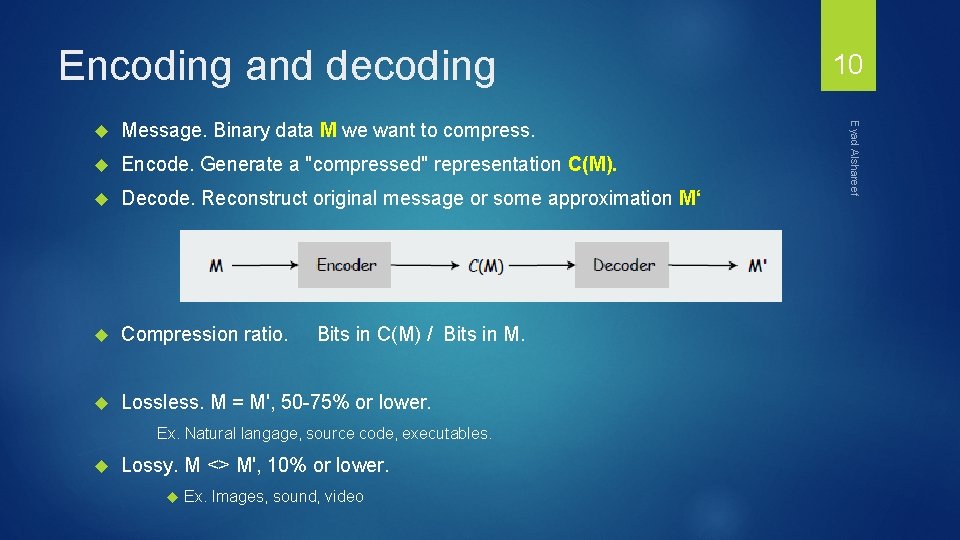

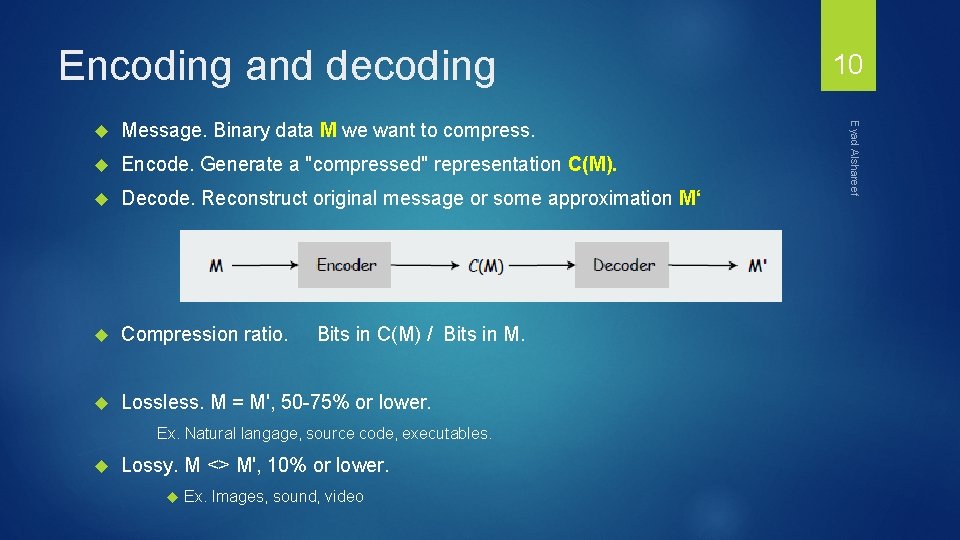

Encoding and decoding: Message: binary data M we want to compress. Encode: generate a "compressed" representation C(M). Decode: reconstruct the original message or some approximation M'. Compression ratio: bits in C(M) / bits in M. Lossless: M = M', ratio 50-75% or lower. Ex.: natural language, source code, executables. Lossy: M ≠ M', ratio 10% or lower. Ex.: images, sound, video.
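The encode/decode round trip and the ratio "bits in C(M) / bits in M" can be tried out with a minimal sketch. It assumes Python's standard `zlib` module as the lossless compressor (the slides do not name one), and the sample message `M` is made up for illustration.

```python
import zlib

# M: a highly redundant message (hypothetical sample data, not from the slides).
M = b"duke blue devils " * 200

C = zlib.compress(M)          # Encode: compressed representation C(M)
M_prime = zlib.decompress(C)  # Decode: reconstruct the message

# Lossless: the reconstruction is exact, M = M'.
lossless = (M_prime == M)

# Compression ratio as defined on the slide: bits in C(M) / bits in M.
ratio = (8 * len(C)) / (8 * len(M))
print(lossless, f"{ratio:.1%}")
```

Because the sample repeats one short phrase, the ratio comes out far below the 50-75% quoted for typical lossless data; realistic text compresses less well.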

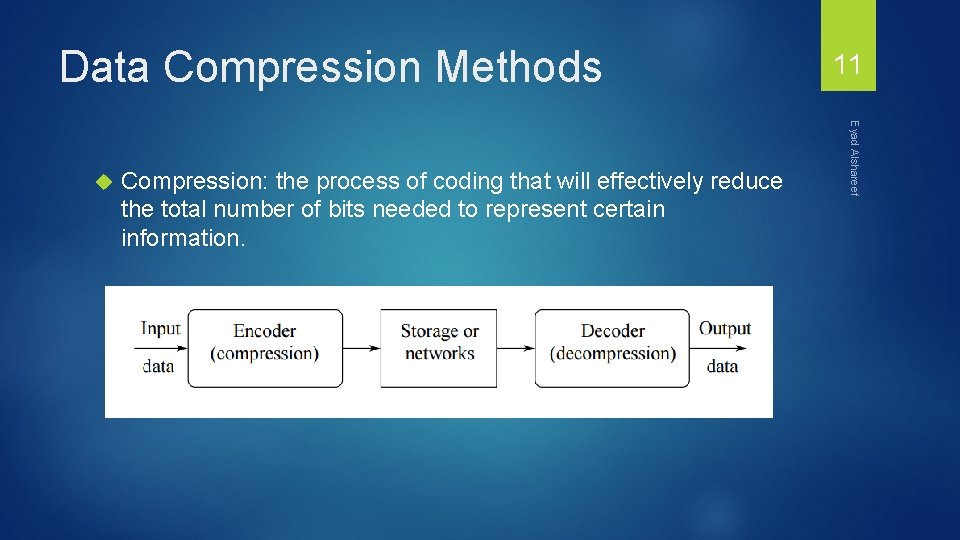

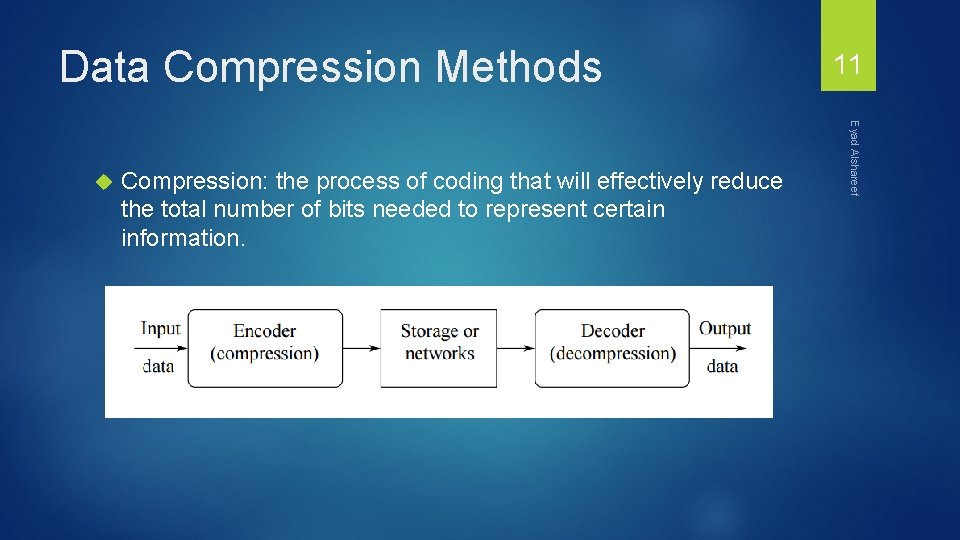

Data Compression Methods: Compression is the process of coding that will effectively reduce the total number of bits needed to represent certain information.

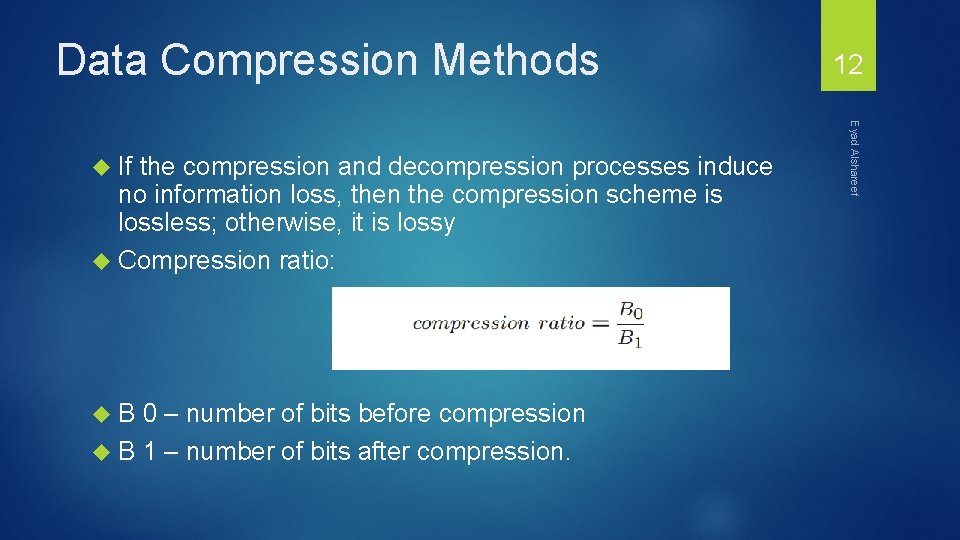

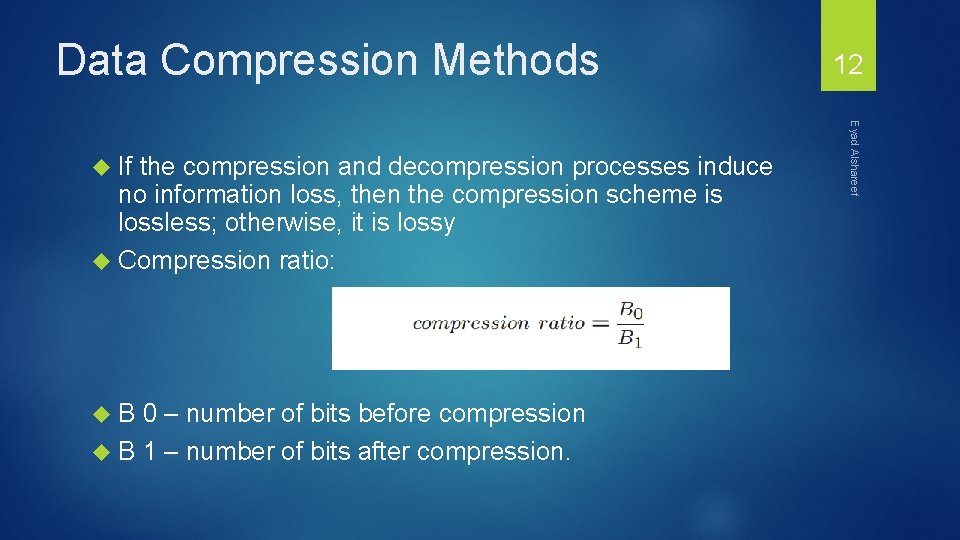

Data Compression Methods: If the compression and decompression processes induce no information loss, then the compression scheme is lossless; otherwise, it is lossy. The compression ratio is defined from B0, the number of bits before compression, and B1, the number of bits after compression.

Lossless Compression Methods: In lossless methods, the original data and the data after compression and decompression are exactly the same. Redundant data is removed in compression and added back during decompression. Lossless methods are used when we can't afford to lose any data: legal and medical documents, computer programs.

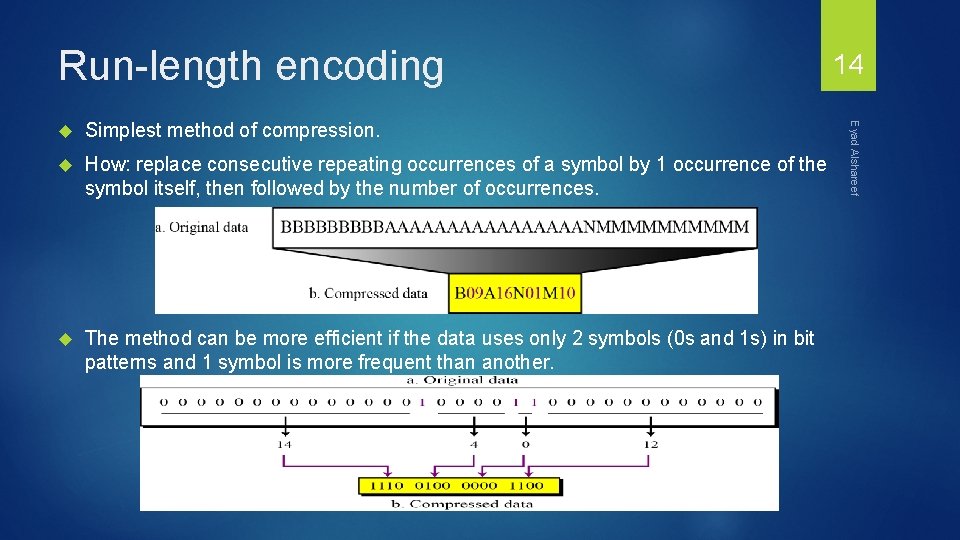

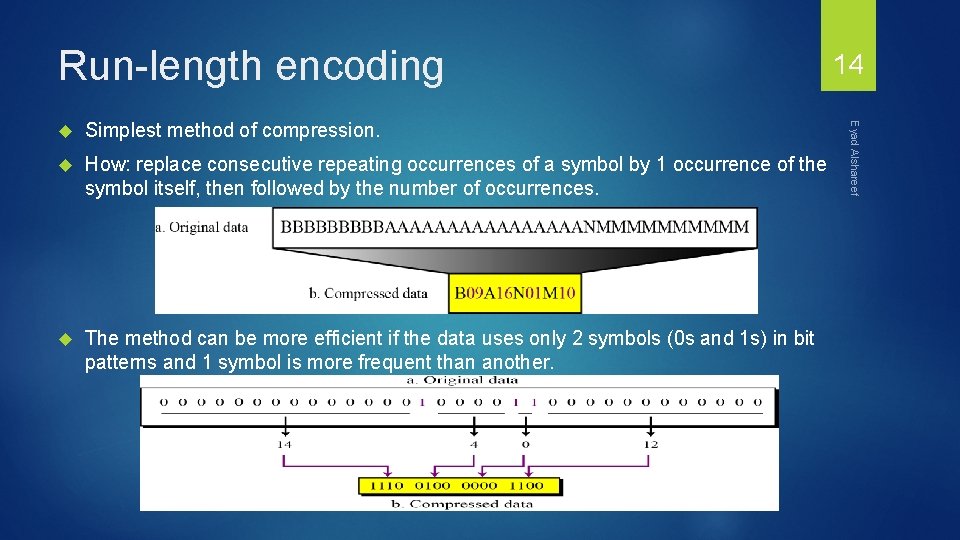

Run-length encoding: The simplest method of compression. How: replace consecutive repeating occurrences of a symbol by one occurrence of the symbol itself, followed by the number of occurrences. The method can be more efficient if the data uses only two symbols (0s and 1s) in bit patterns and one symbol is more frequent than the other.
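A minimal sketch of run-length encoding as described above: each run becomes the symbol followed by its count. The function names and the sample string are illustrative assumptions, not taken from the slides.

```python
from itertools import groupby

def rle_encode(text):
    """Replace each run of a repeated symbol by a (symbol, run length) pair."""
    return [(symbol, len(list(run))) for symbol, run in groupby(text)]

def rle_decode(pairs):
    """Expand (symbol, run length) pairs back into the original text."""
    return "".join(symbol * count for symbol, count in pairs)

sample = "BBBBBBBBBAAAAAAAAAAAAAAAANMMMMMMMMMM"  # hypothetical input
encoded = rle_encode(sample)
print(encoded)
print(rle_decode(encoded) == sample)
```

Note that a run of length 1 (the single `N` here) still costs a symbol plus a count, which is why the method pays off only when long runs dominate.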

Variable-length encoding: Some characters occur more frequently than others. It's possible to represent frequently occurring characters with a smaller number of bits during transmission. This may be accomplished by a variable-length code, as opposed to a fixed-length code like ASCII. An example of a simple variable-length code is Morse code. “E” occurs more frequently than “Z”, so we represent “E” with a shorter code: E = . , T = - , Z = --.. , Q = --.-

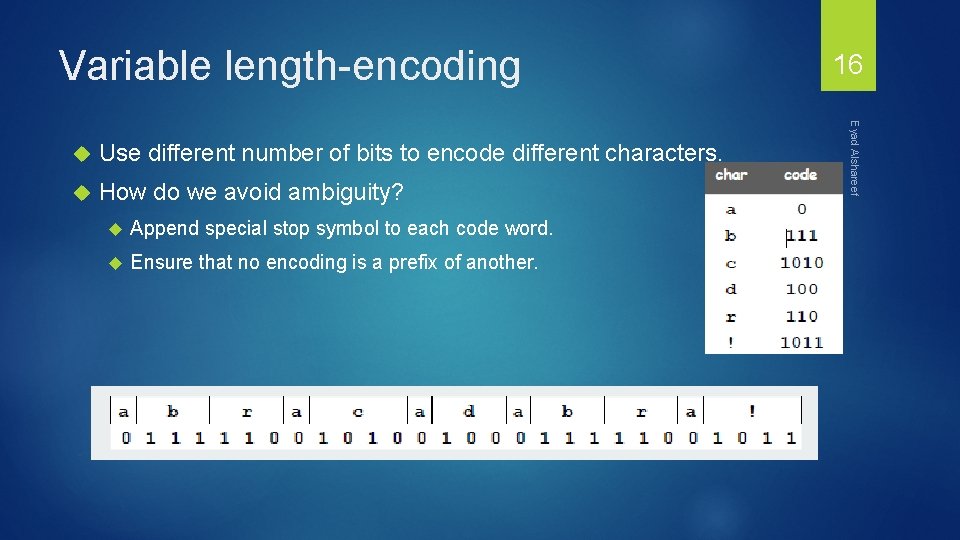

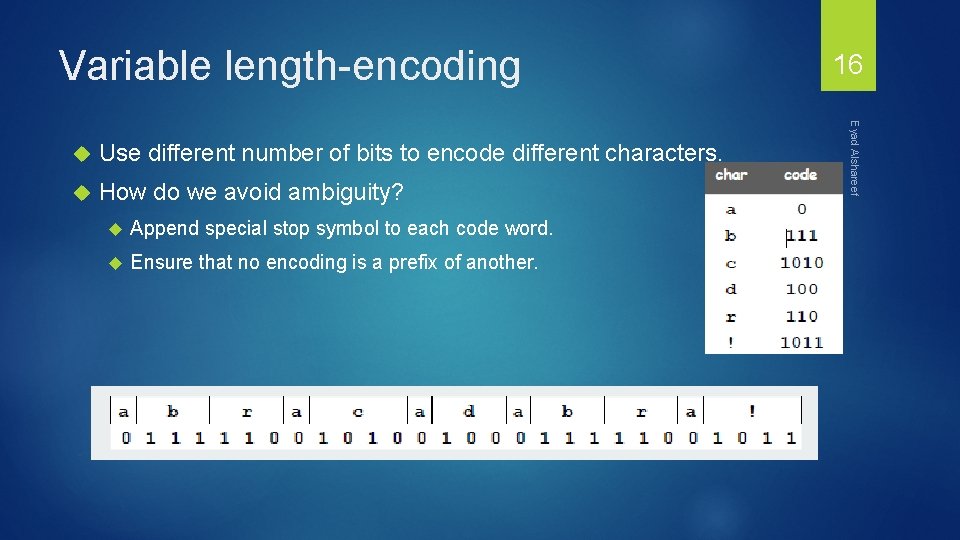

Variable-length encoding: Use a different number of bits to encode different characters. How do we avoid ambiguity? Either append a special stop symbol to each code word, or ensure that no encoding is a prefix of another.
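The "no encoding is a prefix of another" condition can be checked mechanically. The sketch below is one possible check, with a hypothetical helper name `is_prefix_free`. It uses the four Morse codes quoted earlier, plus a made-up three-symbol code.

```python
def is_prefix_free(codes):
    """Return True if no code word is a prefix of another code word."""
    words = sorted(codes.values())
    # After sorting, a word that is a prefix of any other word
    # is also a prefix of its immediate successor.
    return not any(b.startswith(a) for a, b in zip(words, words[1:]))

morse = {"E": ".", "T": "-", "Z": "--..", "Q": "--.-"}
binary = {"a": "0", "b": "10", "c": "11"}  # hypothetical prefix-free code

print(is_prefix_free(morse))   # "-" (T) is a prefix of "--.." (Z)
print(is_prefix_free(binary))
```

Morse code fails the check, which is why it relies on pauses between letters, the "stop symbol" option.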

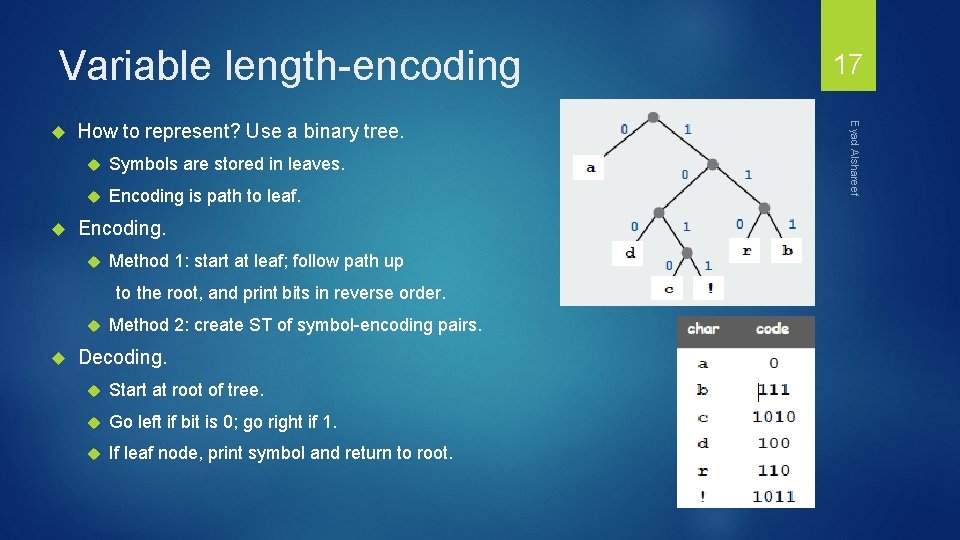

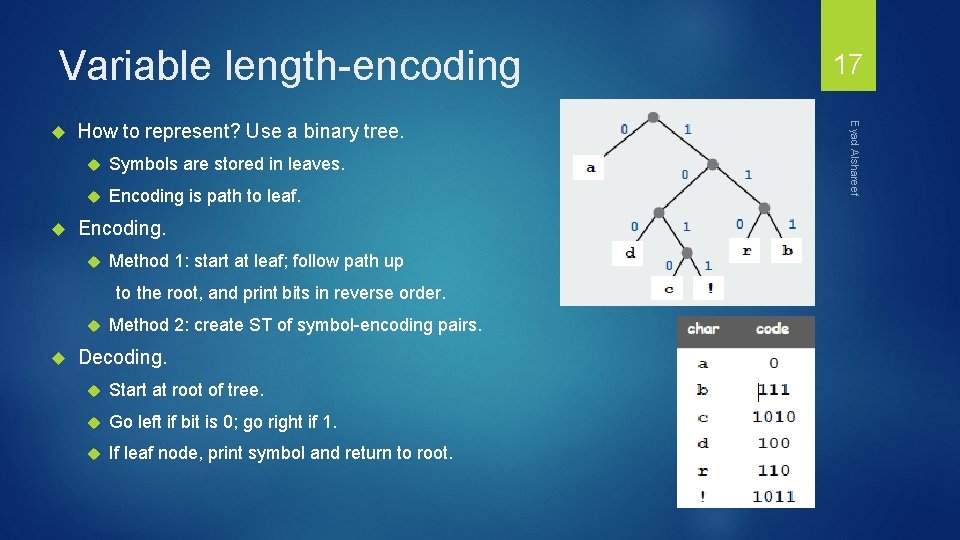

Variable-length encoding: How do we represent the code? Use a binary tree. Symbols are stored in leaves; the encoding of a symbol is the path to its leaf. Encoding. Method 1: start at the leaf, follow the path up to the root, and print the bits in reverse order. Method 2: create a symbol table (ST) of symbol-encoding pairs. Decoding. Start at the root of the tree. Go left if the bit is 0; go right if it is 1. At a leaf node, print the symbol and return to the root.
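The tree representation can be sketched as follows, assuming a small hypothetical three-symbol tree (a = 0, b = 10, c = 11). The `Node` class with a parent pointer is an illustrative choice that makes Method 1 (leaf to root, bits reversed) straightforward. Decoding follows the root-to-leaf walk described above.

```python
class Node:
    def __init__(self, symbol=None, left=None, right=None):
        self.symbol, self.left, self.right = symbol, left, right
        self.parent = None
        for child in (left, right):
            if child is not None:
                child.parent = self

# Hypothetical tree: a = 0, b = 10, c = 11.
leaves = {s: Node(s) for s in "abc"}
root = Node(left=leaves["a"], right=Node(left=leaves["b"], right=leaves["c"]))

def encode_symbol(leaf):
    """Method 1: climb from the leaf to the root, then reverse the bits."""
    bits, node = [], leaf
    while node.parent is not None:
        bits.append("0" if node.parent.left is node else "1")
        node = node.parent
    return "".join(reversed(bits))

def decode(bits, root):
    """Go left on 0, right on 1; at a leaf, emit the symbol and restart."""
    out, node = [], root
    for bit in bits:
        node = node.left if bit == "0" else node.right
        if node.symbol is not None:  # reached a leaf
            out.append(node.symbol)
            node = root
    return "".join(out)

message = "abcab"
bits = "".join(encode_symbol(leaves[s]) for s in message)
print(bits, decode(bits, root))
```

Method 2 would precompute `encode_symbol` once per leaf and store the results in a dictionary, trading memory for faster encoding.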

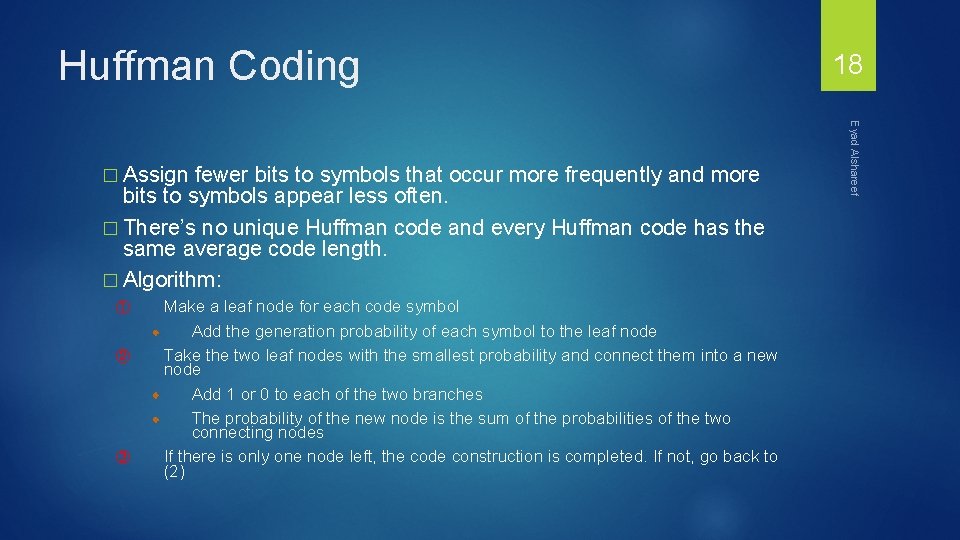

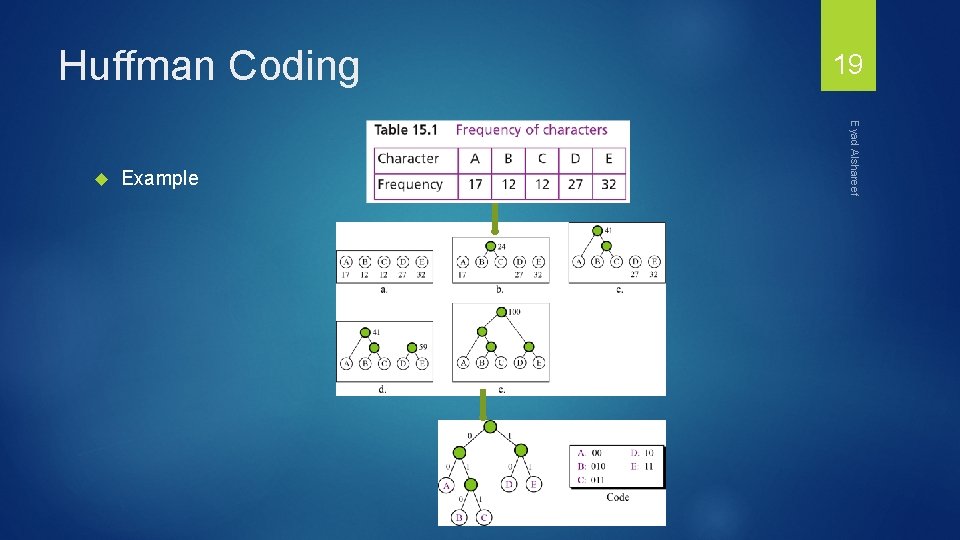

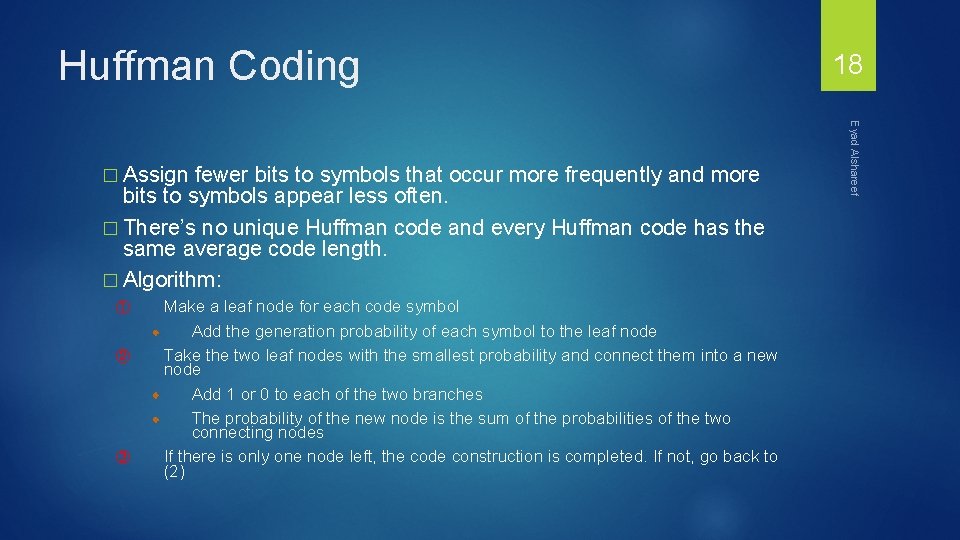

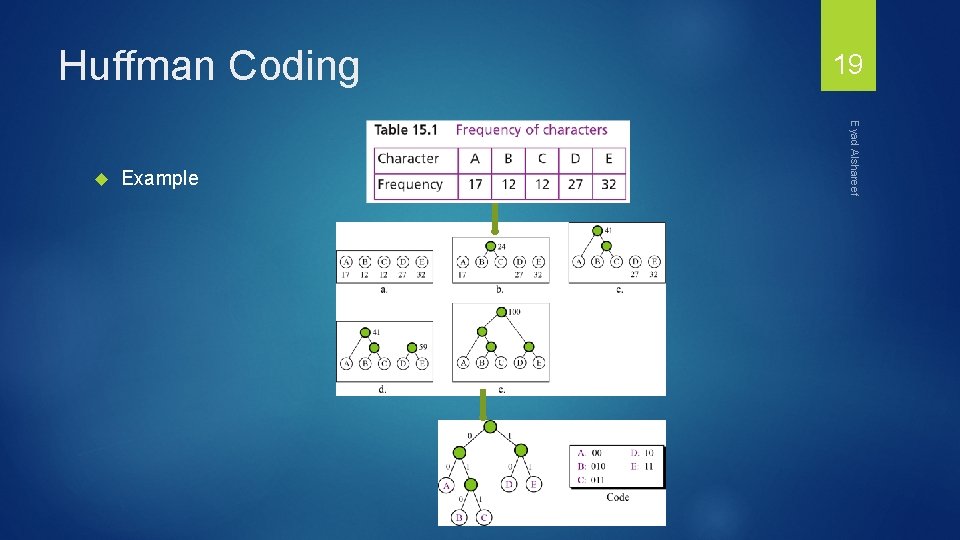

Huffman Coding: Assign fewer bits to symbols that occur more frequently and more bits to symbols that appear less often. There is no unique Huffman code, and every Huffman code has the same average code length. Algorithm: (1) Make a leaf node for each code symbol, and add the generation probability of each symbol to its leaf node. (2) Take the two nodes with the smallest probability and connect them into a new node. Add 1 or 0 to each of the two branches. The probability of the new node is the sum of the probabilities of the two connected nodes. (3) If there is only one node left, the code construction is completed. If not, go back to (2).
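The three steps can be sketched with a priority queue. This is one possible implementation, not the one used to draw the slides. The function name `huffman_codes`, the integer weights standing in for probabilities, and the tie-breaking counter are all assumptions. Symbols are assumed to be plain strings.

```python
import heapq
from itertools import count

def huffman_codes(freqs):
    """Build a Huffman code from a non-empty {symbol: weight} mapping."""
    tiebreak = count()  # keeps heap entries comparable when weights tie
    # Step 1: one leaf per symbol. Entry = (weight, tiebreak, tree),
    # where a tree is either a symbol or a (left, right) pair.
    heap = [(w, next(tiebreak), sym) for sym, w in freqs.items()]
    heapq.heapify(heap)
    # Steps 2 and 3: merge the two lightest nodes until one remains.
    while len(heap) > 1:
        w1, _, t1 = heapq.heappop(heap)
        w2, _, t2 = heapq.heappop(heap)
        heapq.heappush(heap, (w1 + w2, next(tiebreak), (t1, t2)))
    codes = {}
    def walk(tree, prefix):
        if isinstance(tree, tuple):
            walk(tree[0], prefix + "0")  # 0 on one branch
            walk(tree[1], prefix + "1")  # 1 on the other
        else:
            codes[tree] = prefix or "0"  # lone-symbol edge case
    walk(heap[0][2], "")
    return codes

# Hypothetical weights (percentages) for four symbols.
codes = huffman_codes({"a": 40, "b": 30, "c": 20, "d": 10})
print(codes)
```

Different tie-breaking rules give different trees and code words, but the weighted total code length is the same for every Huffman code, as the slide states.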

Huffman Coding Example

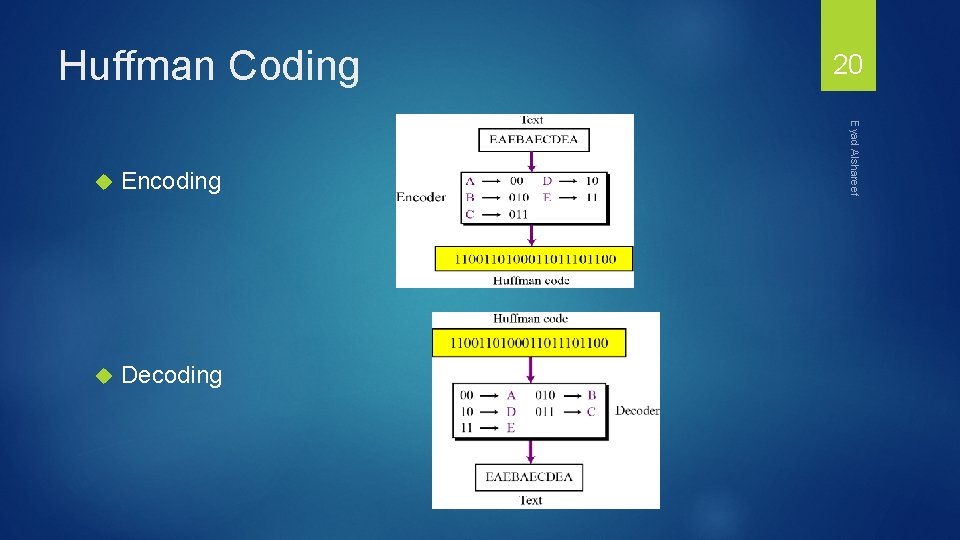

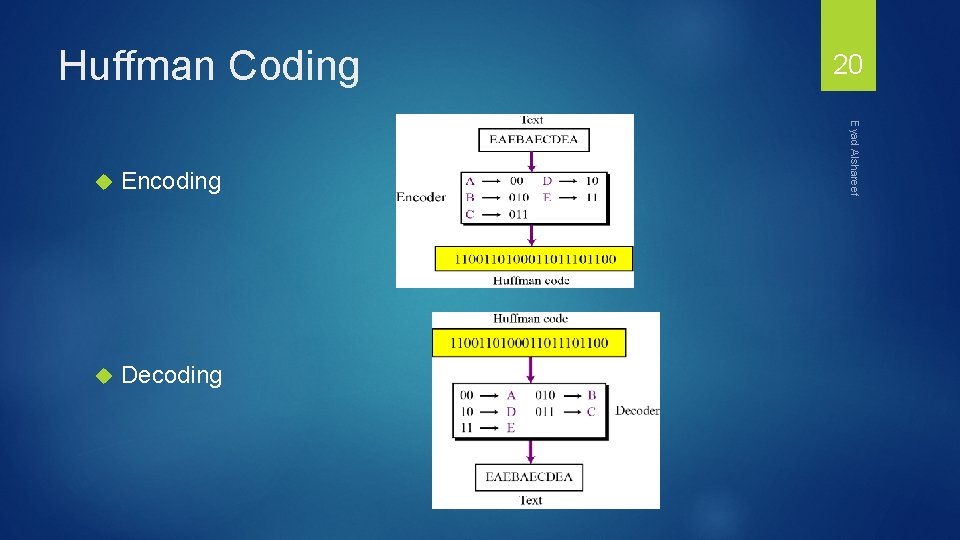

Huffman Coding: Encoding and Decoding


Huffman Coding: Huffman codes can be used to compress information, as WinZip does, although WinZip does not use the Huffman algorithm on its own (its Deflate method combines LZ77 with Huffman coding). JPEGs do use Huffman as part of their compression process. The basic idea is that instead of storing each character in a file as an 8-bit ASCII value, we store the more frequently occurring characters using fewer bits and the less frequently occurring characters using more bits. On average this should decrease the file size (usually ½).

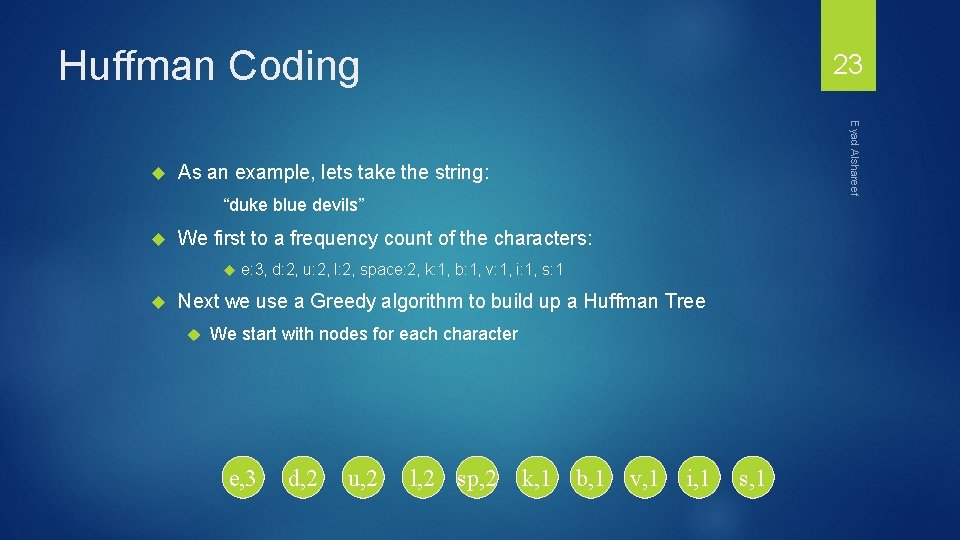

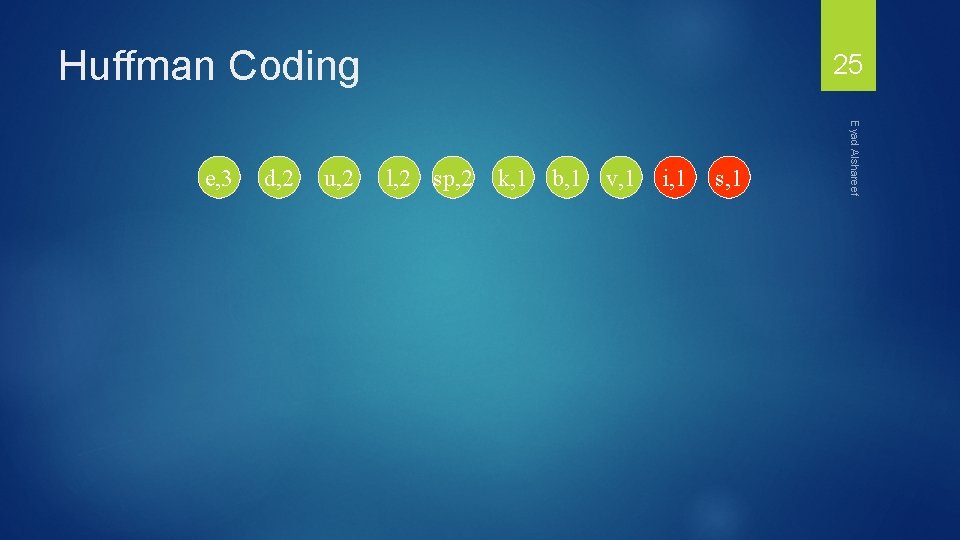

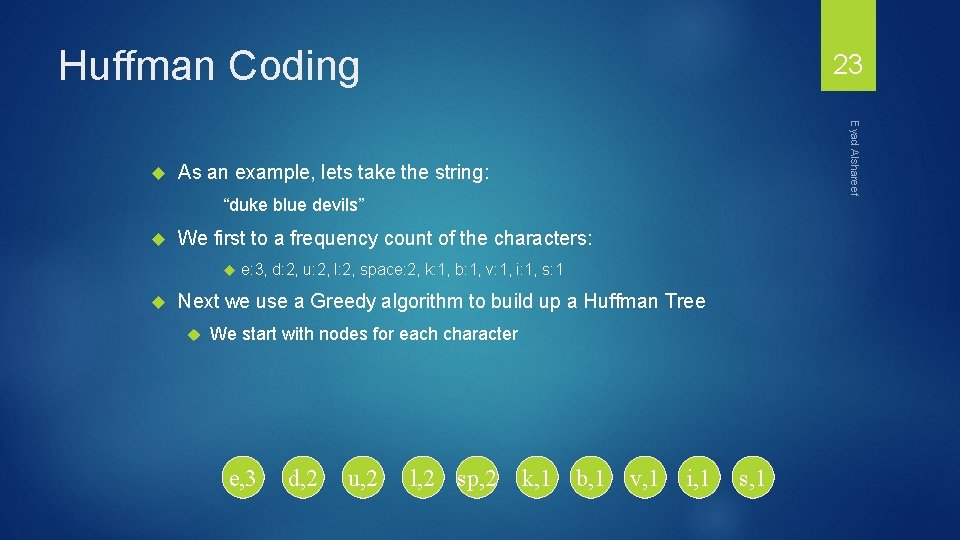

Huffman Coding: As an example, let's take the string “duke blue devils”. We first do a frequency count of the characters: e: 3, d: 2, u: 2, l: 2, space: 2, k: 1, b: 1, v: 1, i: 1, s: 1. Next we use a greedy algorithm to build up a Huffman tree. We start with a node for each character: e, 3; d, 2; u, 2; l, 2; sp, 2; k, 1; b, 1; v, 1; i, 1; s, 1.
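The frequency count can be reproduced in a couple of lines. This sketch assumes Python's `collections.Counter`, which the slides do not mention.

```python
from collections import Counter

freq = Counter("duke blue devils")

# Print symbols from most to least frequent; repr() makes the space visible.
for symbol, n in freq.most_common():
    print(repr(symbol), n)
```

The counts sum to 16, the length of the string, and match the slide's table.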

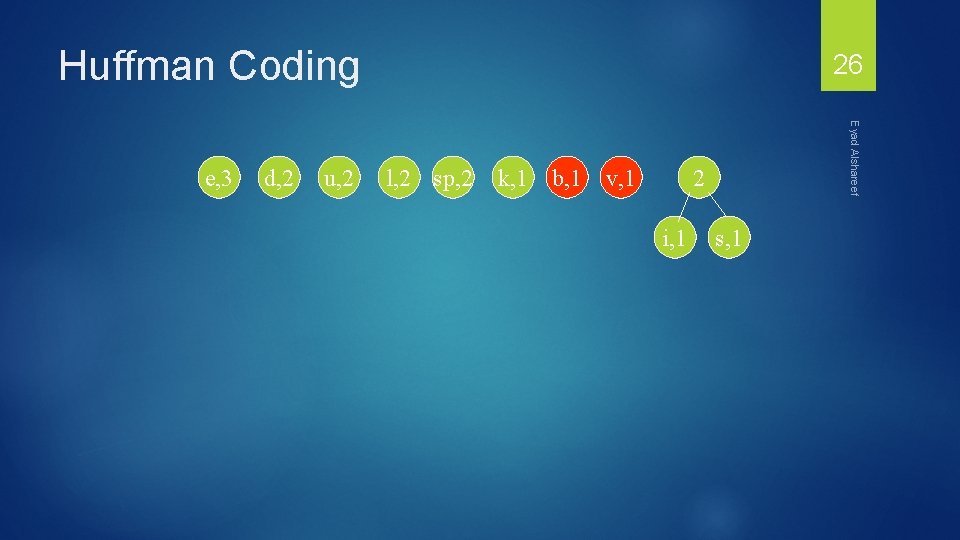

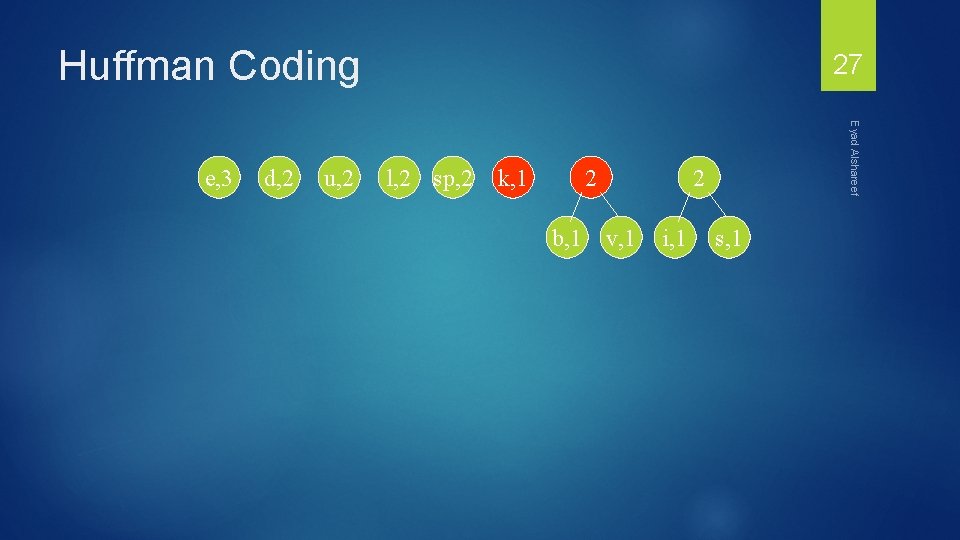

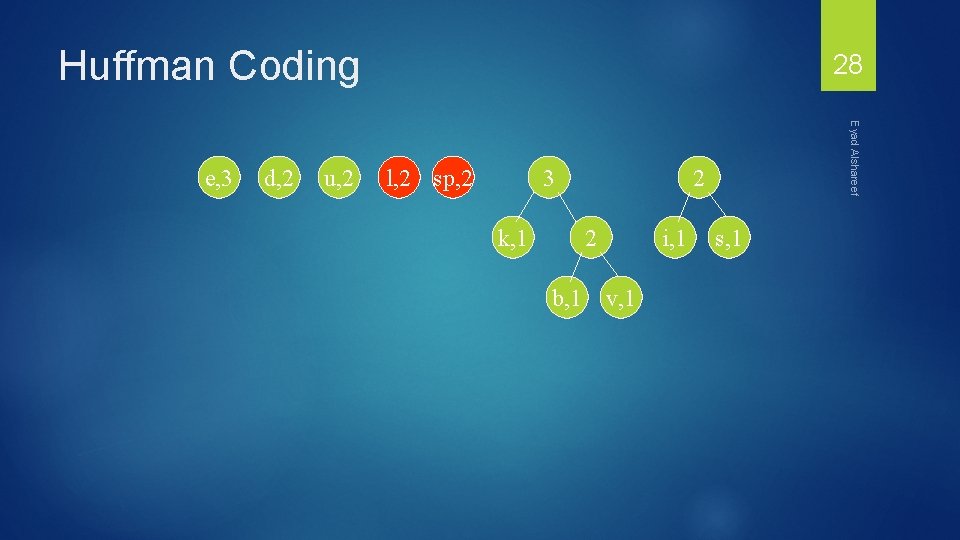

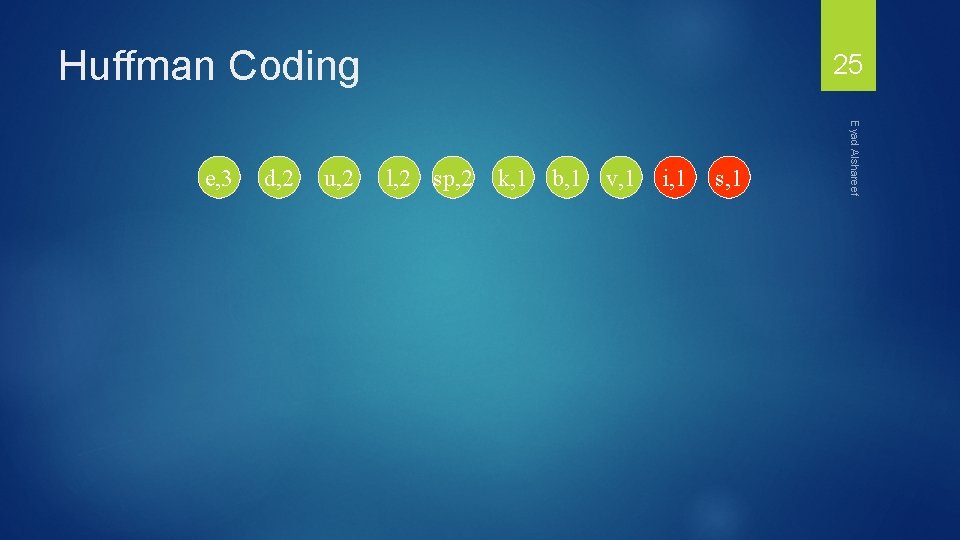

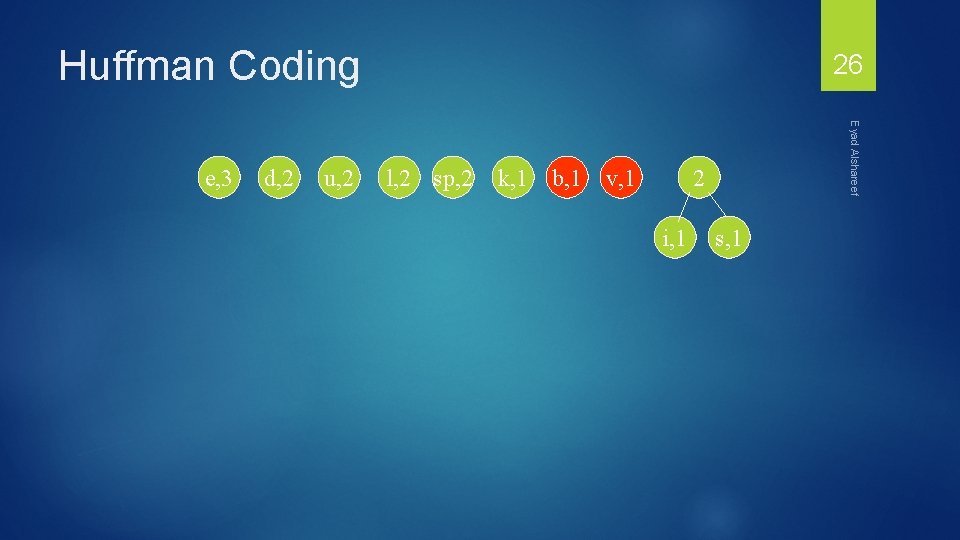

Huffman Coding: We then pick the two nodes with the smallest frequency and combine them to form a new node. The selection of these nodes is the greedy part. The two selected nodes are removed from the set and replaced by the combined node. This continues until we have only one node left in the set.

Huffman Coding (initial set): e, 3; d, 2; u, 2; l, 2; sp, 2; k, 1; b, 1; v, 1; i, 1; s, 1.

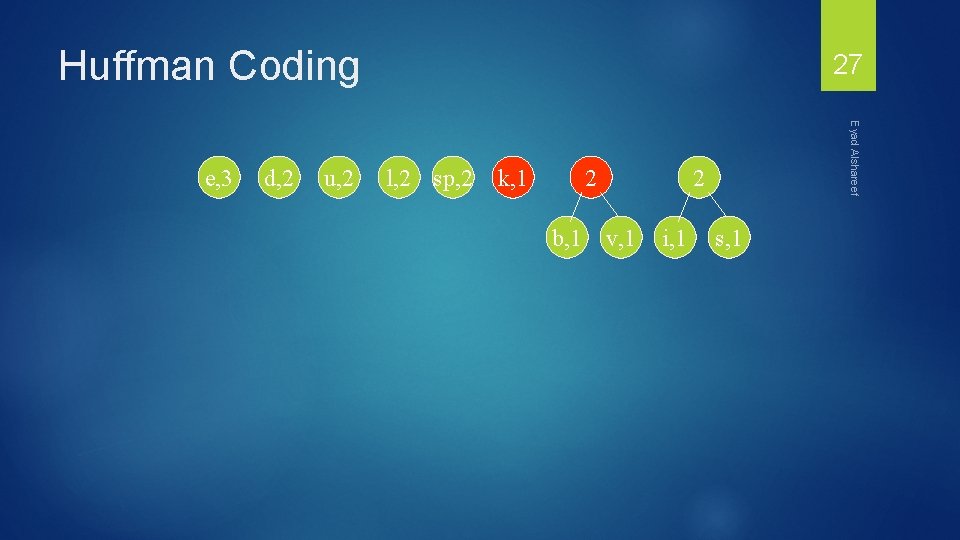

Huffman Coding (step 1): combine i, 1 and s, 1 into a node of weight 2. Set: e, 3; d, 2; u, 2; l, 2; sp, 2; k, 1; b, 1; v, 1; 2 (i, s).

Huffman Coding (step 2): combine b, 1 and v, 1 into a node of weight 2. Set: e, 3; d, 2; u, 2; l, 2; sp, 2; k, 1; 2 (b, v); 2 (i, s).

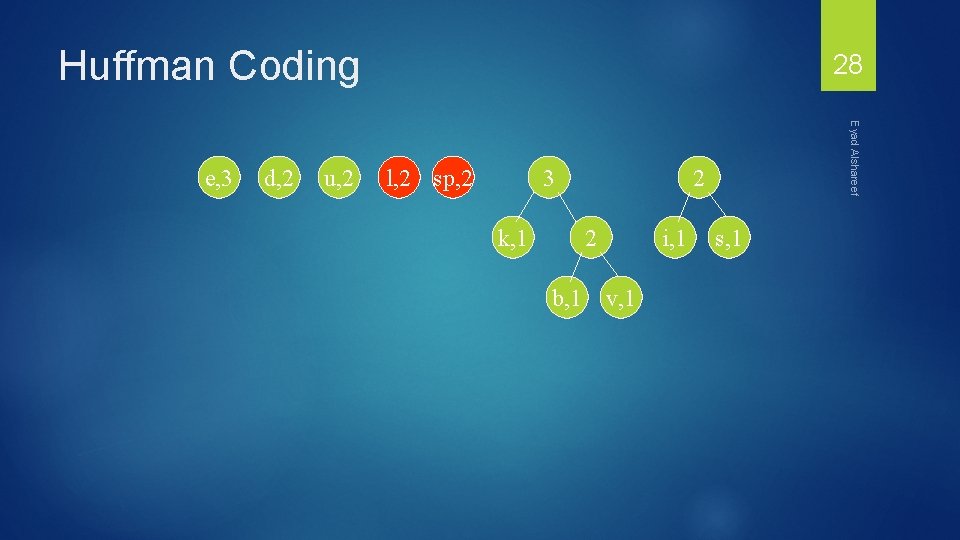

Huffman Coding (step 3): combine k, 1 and the node 2 (b, v) into a node of weight 3. Set: e, 3; d, 2; u, 2; l, 2; sp, 2; 3 (k, (b, v)); 2 (i, s).

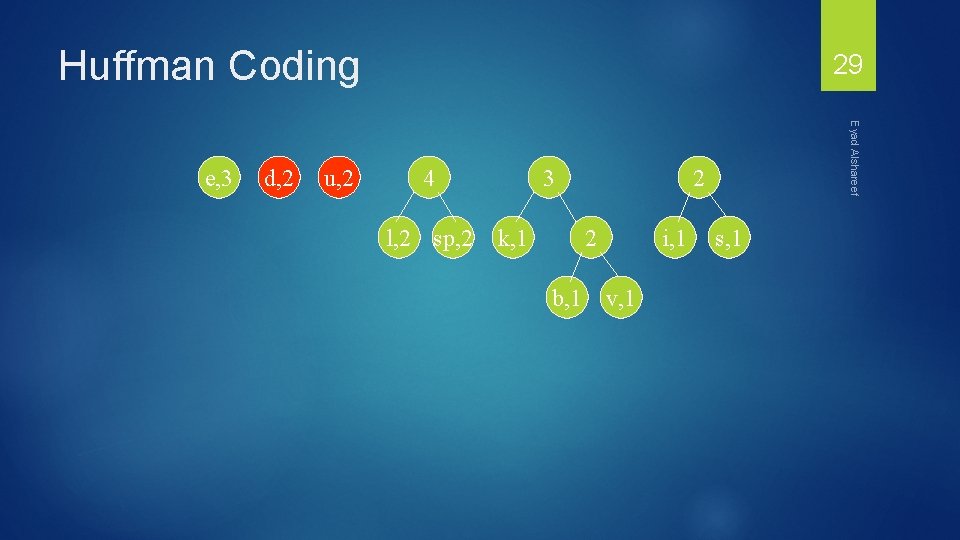

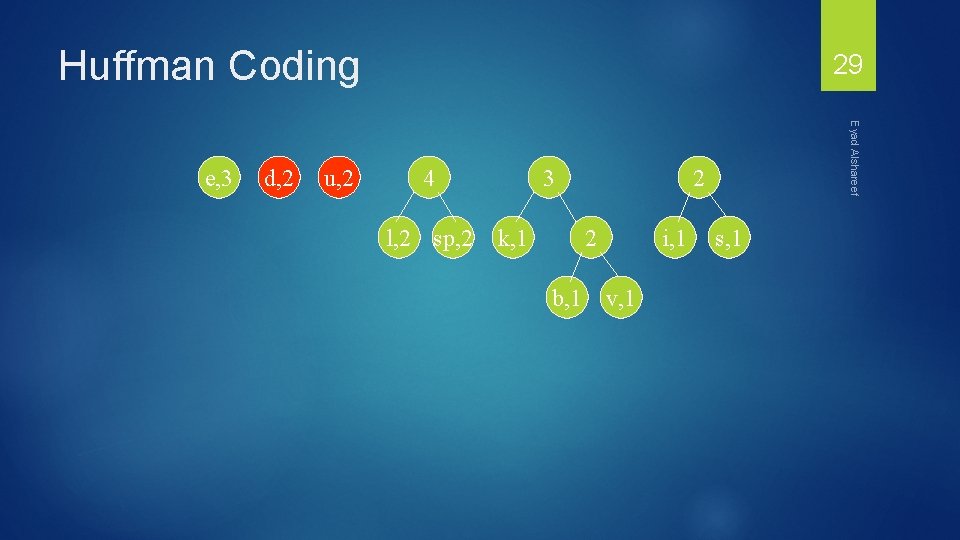

Huffman Coding (step 4): combine l, 2 and sp, 2 into a node of weight 4. Set: e, 3; d, 2; u, 2; 4 (l, sp); 3 (k, (b, v)); 2 (i, s).

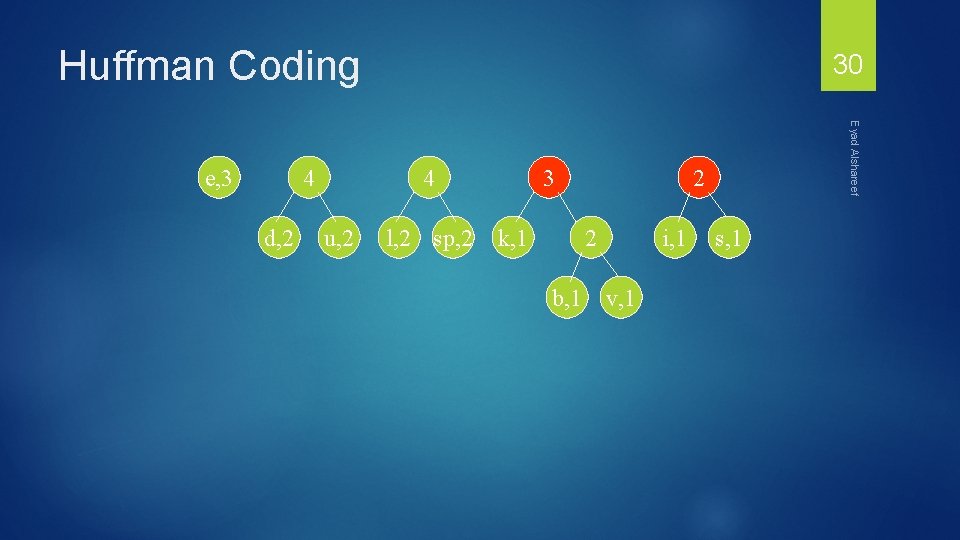

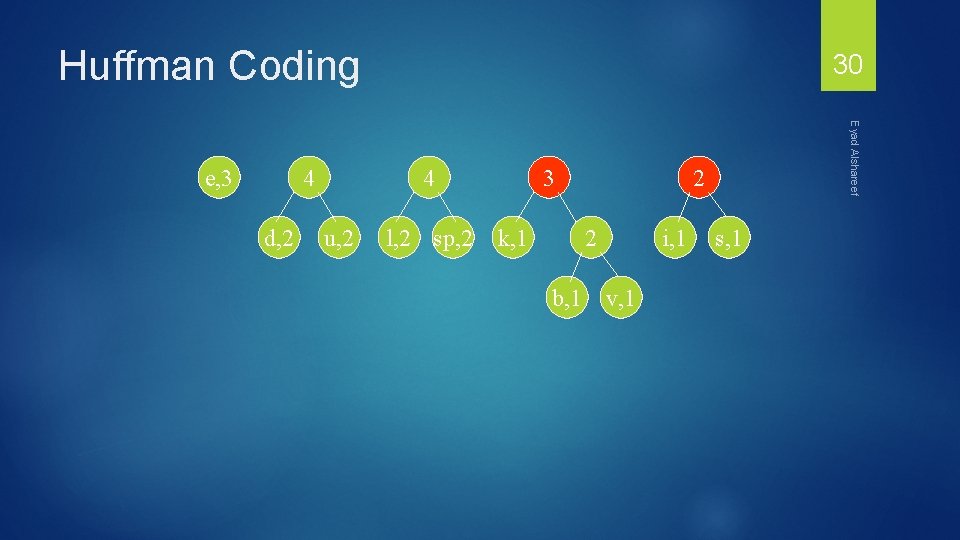

Huffman Coding (step 5): combine d, 2 and u, 2 into a node of weight 4. Set: e, 3; 4 (d, u); 4 (l, sp); 3 (k, (b, v)); 2 (i, s).

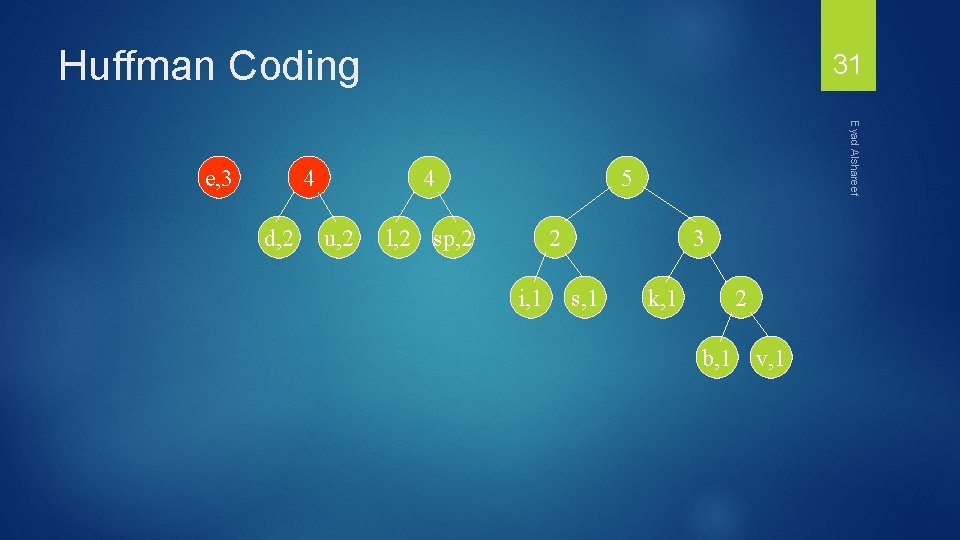

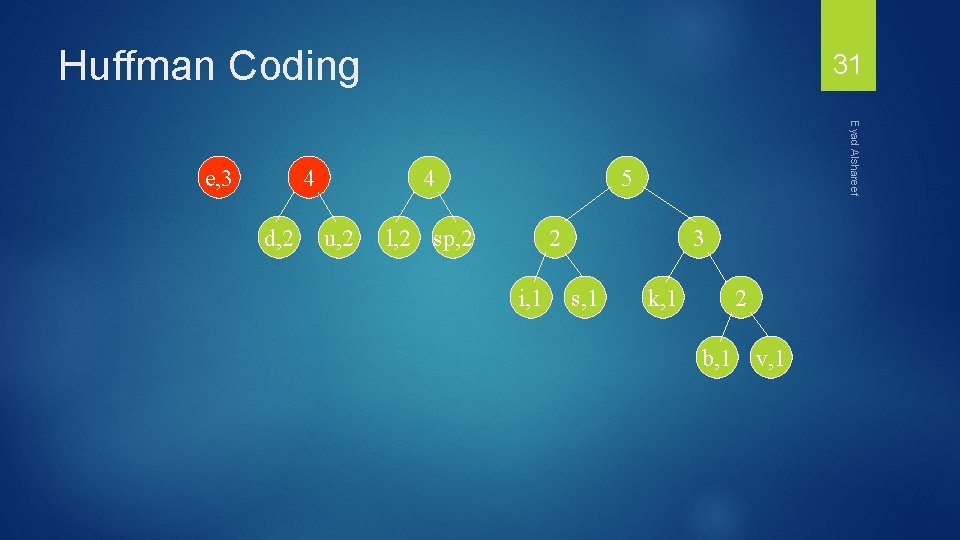

Huffman Coding (step 6): combine the nodes 2 (i, s) and 3 (k, (b, v)) into a node of weight 5. Set: e, 3; 4 (d, u); 4 (l, sp); 5 ((i, s), (k, (b, v))).

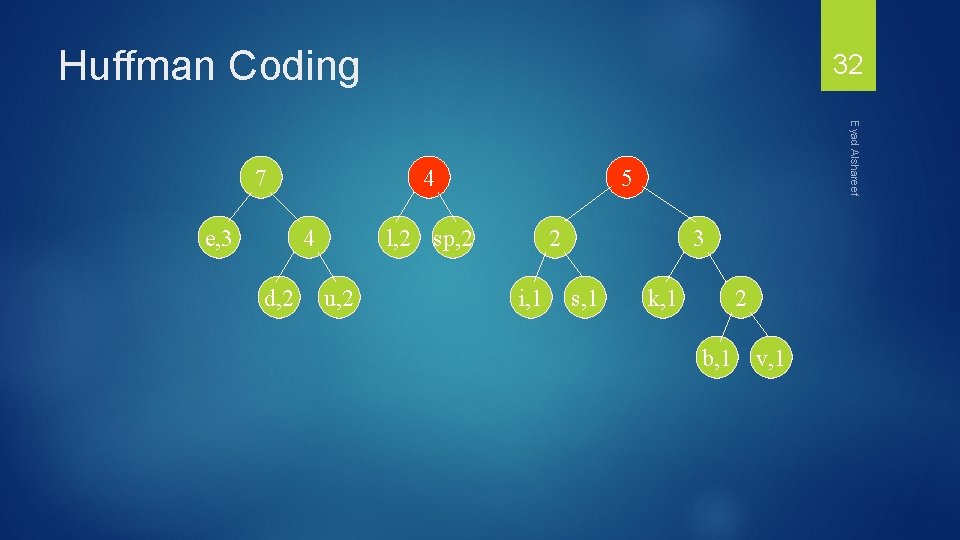

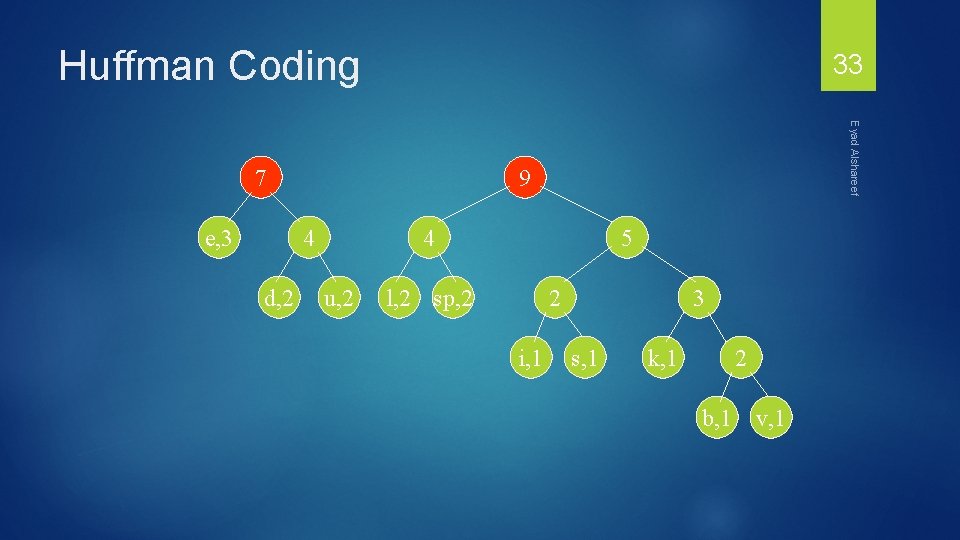

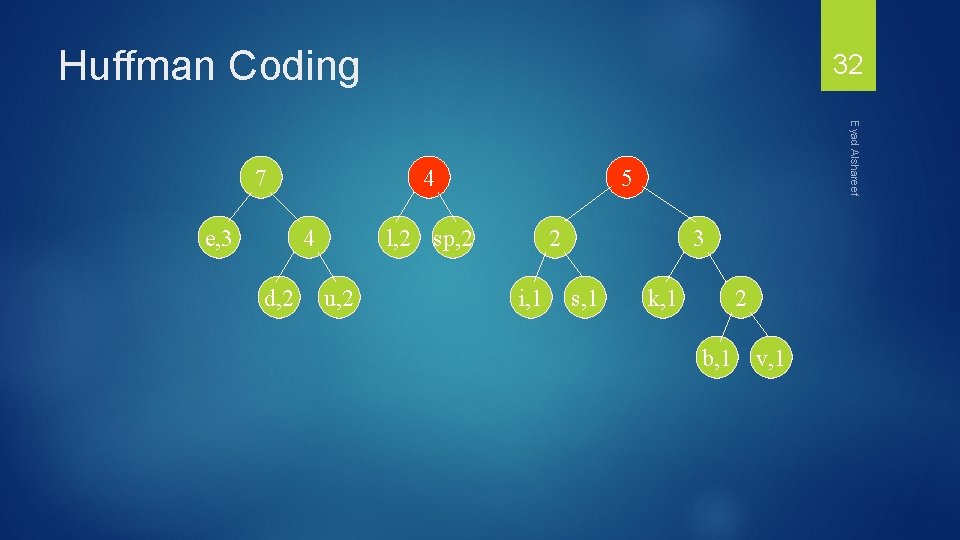

Huffman Coding (step 7): combine e, 3 and the node 4 (d, u) into a node of weight 7. Set: 7 (e, (d, u)); 4 (l, sp); 5 ((i, s), (k, (b, v))).

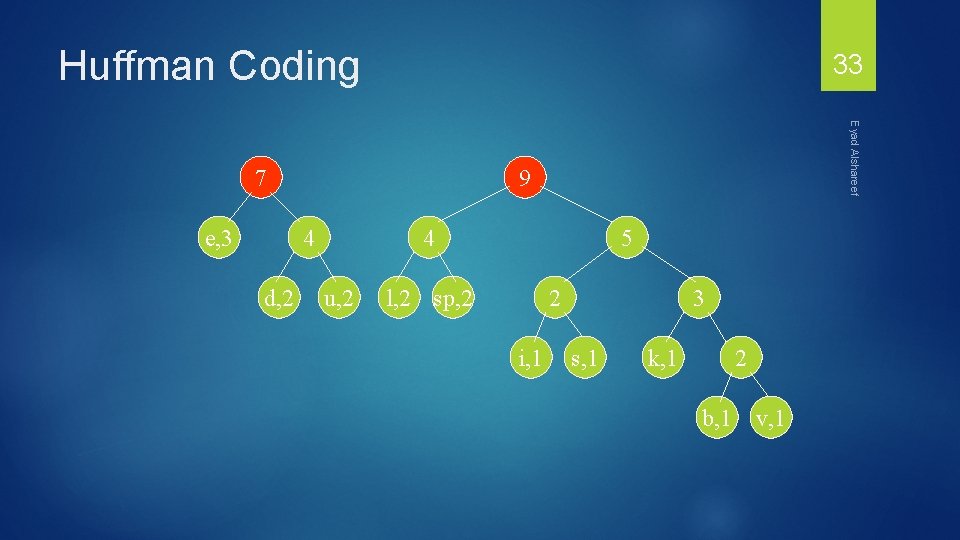

Huffman Coding (step 8): combine the nodes 4 (l, sp) and 5 ((i, s), (k, (b, v))) into a node of weight 9. Set: 7 (e, (d, u)); 9 ((l, sp), ((i, s), (k, (b, v)))).

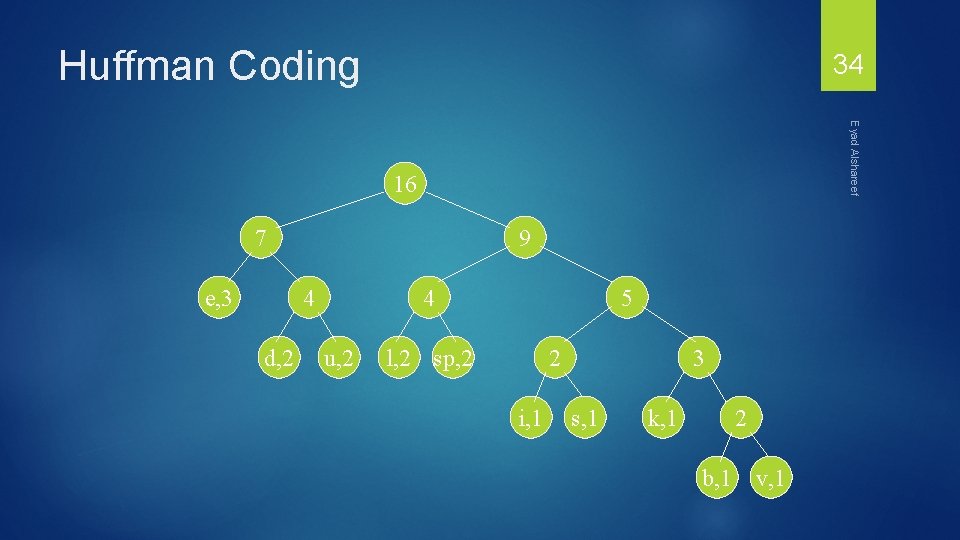

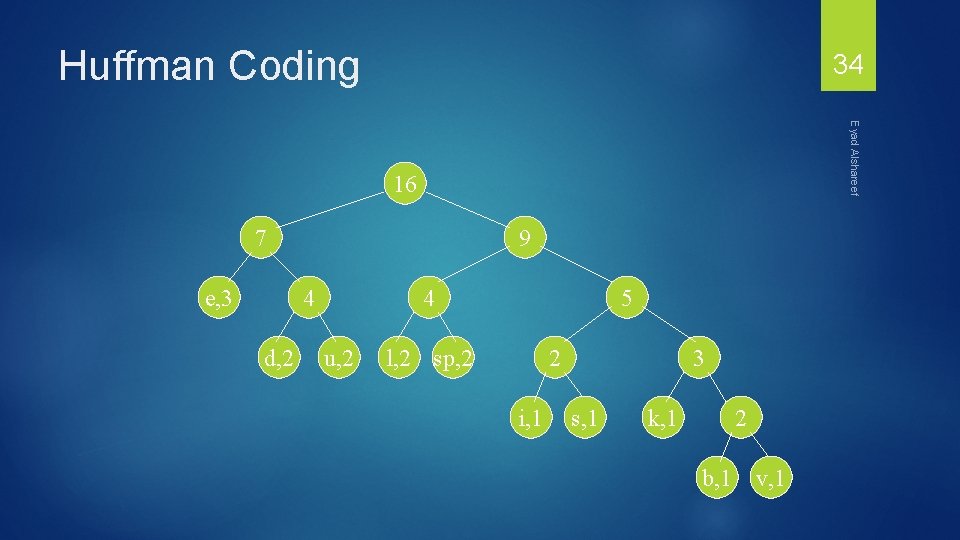

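Huffman Coding (step 9): combine the nodes of weight 7 and 9 into the root, weight 16. Only one node is left, so the tree is complete: left subtree 7 (e, (d, u)); right subtree 9 ((l, sp), ((i, s), (k, (b, v)))).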

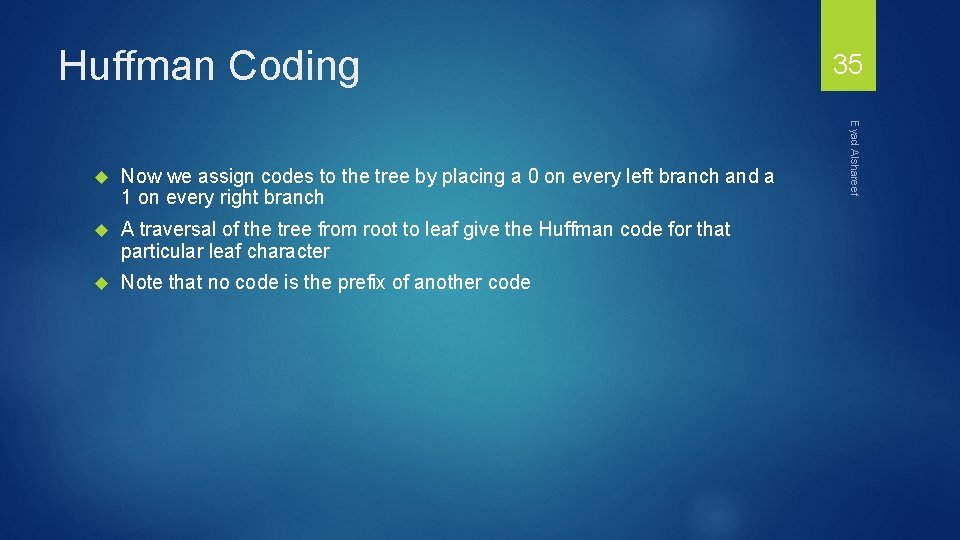

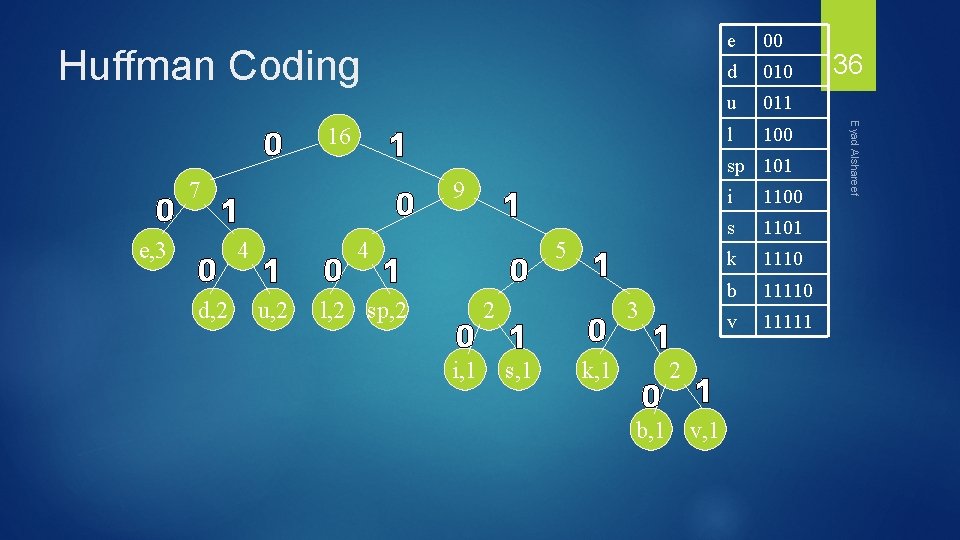

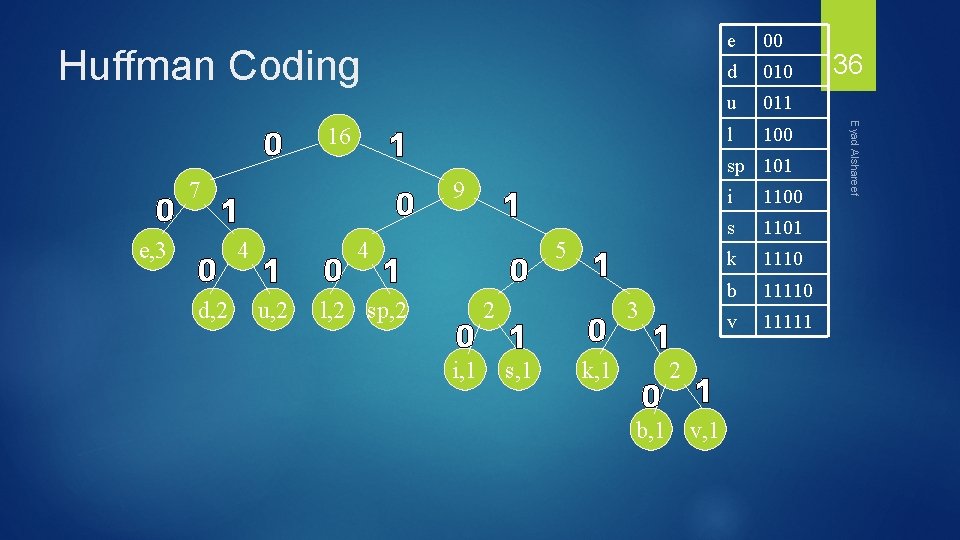

Huffman Coding: Now we assign codes to the tree by placing a 0 on every left branch and a 1 on every right branch. A traversal of the tree from the root to a leaf gives the Huffman code for that particular leaf character. Note that no code is the prefix of another code.
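The root-to-leaf traversal can be sketched on the finished tree. The nested-tuple layout below is an assumed encoding of the tree from the walkthrough, with left subtree first and `"sp"` standing for the space. The helper name `assign_codes` is illustrative.

```python
# Final tree as nested (left, right) pairs; leaves are symbols.
tree = (("e", ("d", "u")),
        (("l", "sp"), (("i", "s"), ("k", ("b", "v")))))

def assign_codes(node, prefix="", table=None):
    """Append 0 for each left branch and 1 for each right branch."""
    table = {} if table is None else table
    if isinstance(node, tuple):
        assign_codes(node[0], prefix + "0", table)
        assign_codes(node[1], prefix + "1", table)
    else:
        table[node] = prefix  # a leaf: the path so far is its code
    return table

codes = assign_codes(tree)
for symbol, code in codes.items():
    print(symbol, code)
```

Frequent symbols sit near the root (e gets 2 bits), while the rarest ones (b and v) end up five levels deep.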

Huffman Coding (resulting codes, root weight 16): e 00, d 010, u 011, l 100, sp 101, i 1100, s 1101, k 1110, b 11110, v 11111.

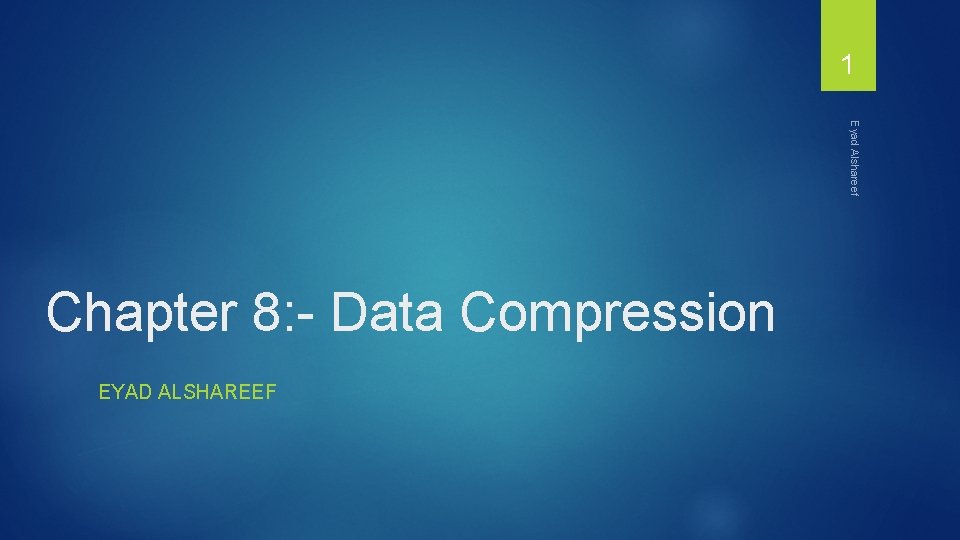

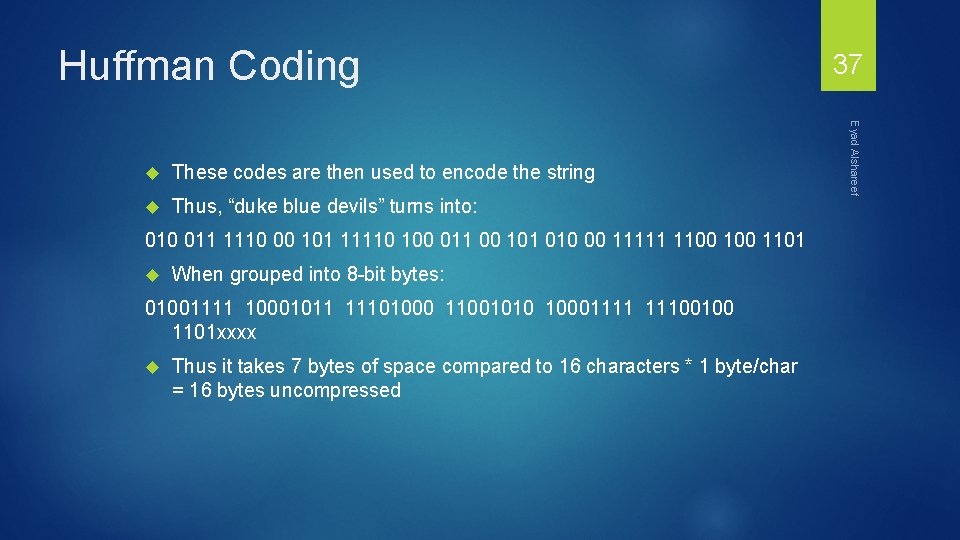

Huffman Coding: These codes are then used to encode the string. Thus, “duke blue devils” turns into: 010 011 1110 00 101 11110 100 011 00 101 010 00 11111 1100 100 1101. When grouped into 8-bit bytes: 01001111 10001011 11101000 11001010 10001111 11100100 1101xxxx. Thus it takes 7 bytes of space (52 bits plus 4 bits of padding), compared to 16 characters * 1 byte/char = 16 bytes uncompressed.
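The encoding step can be checked with a short sketch that uses the code table from the example. Padding the last byte with zeros is an assumption, since the slides leave the padding bits unspecified ("xxxx").

```python
# Code table from the example; " " is the space ("sp" on the slides).
codes = {"e": "00", "d": "010", "u": "011", "l": "100", " ": "101",
         "i": "1100", "s": "1101", "k": "1110", "b": "11110", "v": "11111"}

text = "duke blue devils"
bits = "".join(codes[ch] for ch in text)

# Pad the final byte with zeros (assumed), then pack 8 bits per byte.
padded = bits + "0" * (-len(bits) % 8)
groups = [padded[i:i + 8] for i in range(0, len(padded), 8)]
packed = bytes(int(group, 2) for group in groups)

print(len(bits), "bits ->", len(packed), "bytes, versus", len(text), "bytes")
print(" ".join(groups))
```

The ratio for this string is 7/16, roughly 44%, not counting the header needed to transmit the code table.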

Huffman Coding: Uncompressing works by reading in the file bit by bit. Start at the root of the tree. If a 0 is read, head left; if a 1 is read, head right. When a leaf is reached, decode that character and start over again at the root of the tree. Thus, we need to save the Huffman table information as a header in the compressed file. This doesn't add a significant amount of size for large files (which are the ones you want to compress anyway). Alternatively, we could use a fixed universal set of codes/frequencies.
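A minimal sketch of the decoder follows, assuming the header carries the code table and the number of encoded symbols. The symbol count is an added assumption: without it the zero padding bits would decode as extra characters. The tree is rebuilt from the table as nested dictionaries, and the helper names are illustrative.

```python
codes = {"e": "00", "d": "010", "u": "011", "l": "100", " ": "101",
         "i": "1100", "s": "1101", "k": "1110", "b": "11110", "v": "11111"}

def build_tree(codes):
    """Rebuild the decoding tree: dicts are internal nodes, strings are leaves."""
    root = {}
    for symbol, code in codes.items():
        node = root
        for bit in code[:-1]:
            node = node.setdefault(bit, {})
        node[code[-1]] = symbol
    return root

def huffman_decode(bits, root, n_symbols):
    out, node = [], root
    for bit in bits:
        node = node[bit]           # "0" heads left, "1" heads right
        if isinstance(node, str):  # leaf reached: emit and restart at root
            out.append(node)
            node = root
            if len(out) == n_symbols:
                break              # remaining bits are padding
    return "".join(out)

# The seven bytes from the example, with the padding written as zeros.
stream = ("01001111 10001011 11101000 11001010 "
          "10001111 11100100 11010000").replace(" ", "")
print(huffman_decode(stream, build_tree(codes), 16))
```

Storing a symbol count is only one way to mark the end of the data; a dedicated end-of-stream symbol in the code table is a common alternative.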
