The CMS Grid Analysis Environment (GAE): The CAIGEE Architecture

- Slides: 27

The CMS Grid Analysis Environment (GAE): The CAIGEE Architecture

CAIGEE: CMS Analysis – an Interactive Grid Enabled Environment

See http://ultralight.caltech.edu/gaeweb

The CMS Collaboration

Outline

- The current Grid system used by CMS
- The CMS Grid Analysis Environment being developed at Caltech, Florida and FNAL
- Summary

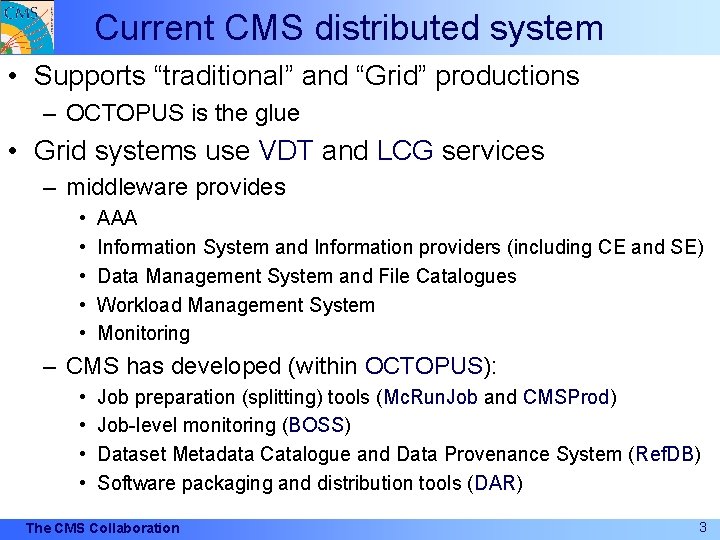

Current CMS distributed system

- Supports “traditional” and “Grid” productions
  - OCTOPUS is the glue
- Grid systems use VDT and LCG services
  - the middleware provides:
    - AAA
    - Information System and Information providers (including CE and SE)
    - Data Management System and File Catalogues
    - Workload Management System
    - Monitoring
- CMS has developed (within OCTOPUS):
  - job preparation (splitting) tools (McRunJob and CMSProd)
  - job-level monitoring (BOSS)
  - Dataset Metadata Catalogue and Data Provenance System (RefDB)
  - software packaging and distribution tools (DAR)
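The job preparation (splitting) step mentioned above can be illustrated with a short sketch. It is not McRunJob or CMSProd code: the `JobSpec` structure, the `split_assignment` function and its parameters are hypothetical, and it assumes an assignment is simply a number of events divided into fixed-size jobs (Python 3.9+).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JobSpec:
    """One job of a split assignment (hypothetical structure)."""
    job_id: int
    first_event: int  # index of the first event this job processes
    n_events: int     # number of events this job processes


def split_assignment(total_events: int, events_per_job: int) -> list[JobSpec]:
    """Split an assignment into jobs of at most `events_per_job` events each."""
    if total_events < 0 or events_per_job <= 0:
        raise ValueError("need total_events >= 0 and events_per_job > 0")
    jobs = []
    for job_id, first in enumerate(range(0, total_events, events_per_job)):
        n = min(events_per_job, total_events - first)  # the last job may be shorter
        jobs.append(JobSpec(job_id, first, n))
    return jobs


if __name__ == "__main__":
    for job in split_assignment(2500, 1000):
        print(job)
```

A real production tool would also attach per-job configuration (random seeds, output file names, target environment); this sketch covers only the event-range arithmetic.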

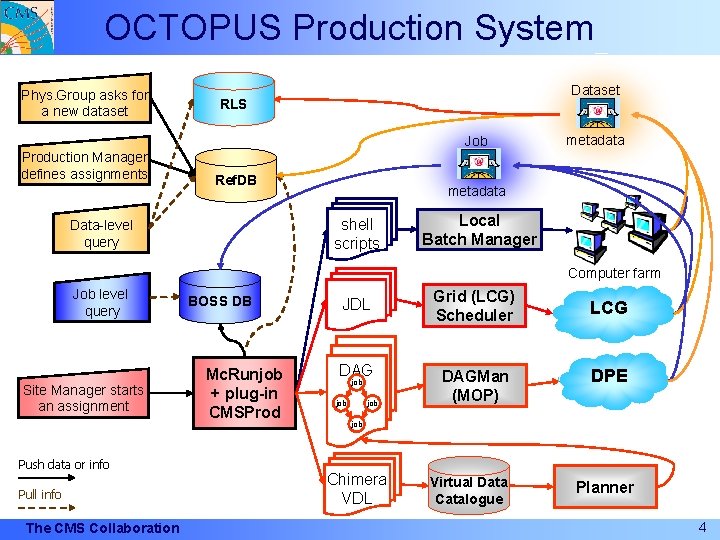

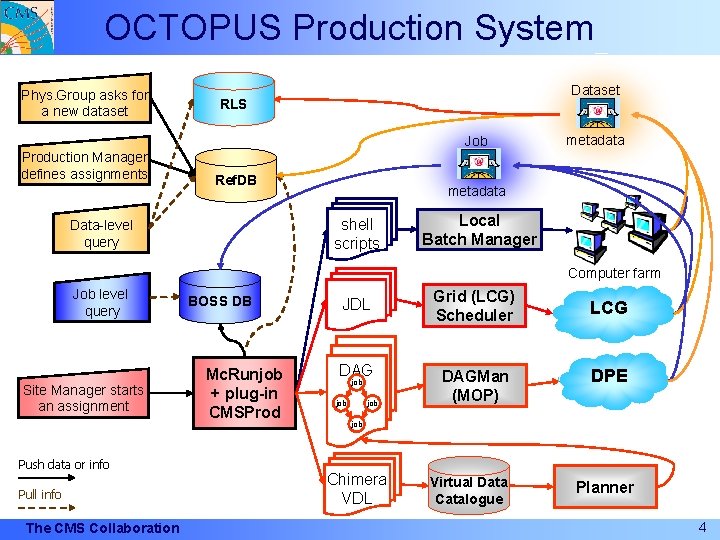

OCTOPUS Production System

[Diagram: a physics group asks for a new dataset; the Production Manager defines assignments in RefDB; a Site Manager starts an assignment. McRunJob (+ plug-in) and CMSProd prepare jobs as shell scripts for a local batch manager and computer farm, as JDL for the Grid (LCG) scheduler, or as DAGs for DAGMan (MOP), alongside Chimera VDL, the Virtual Data Catalogue and Planner. Job-level queries go to the BOSS DB and data-level queries to RefDB and RLS. Arrows distinguish pushed data or info from pulled info.]

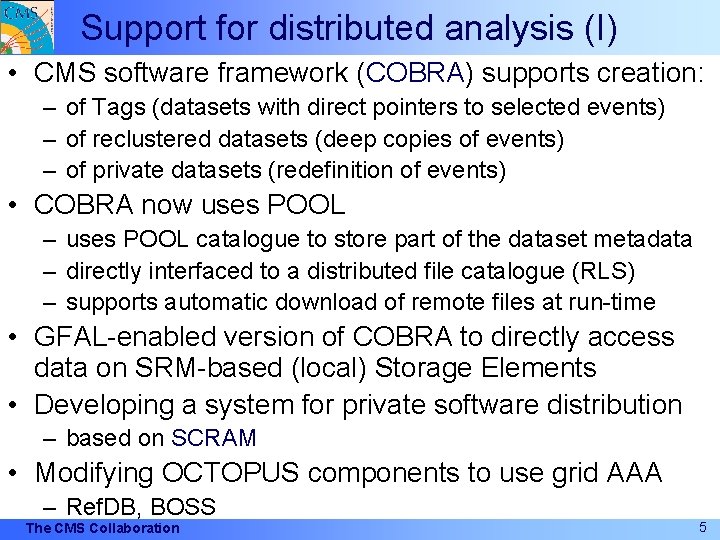

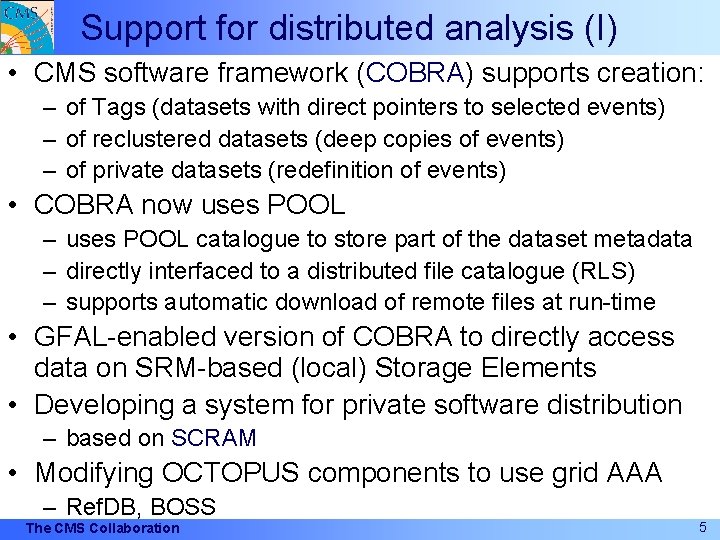

Support for distributed analysis (I)

- The CMS software framework (COBRA) supports the creation of:
  - Tags (datasets with direct pointers to selected events)
  - reclustered datasets (deep copies of events)
  - private datasets (redefinition of events)
- COBRA now uses POOL:
  - it uses the POOL catalogue to store part of the dataset metadata
  - it is directly interfaced to a distributed file catalogue (RLS)
  - it supports automatic download of remote files at run-time
- A GFAL-enabled version of COBRA directly accesses data on SRM-based (local) Storage Elements
- Developing a system for private software distribution
  - based on SCRAM
- Modifying OCTOPUS components to use grid AAA
  - RefDB, BOSS
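The difference between a Tag (pointers to selected events) and a reclustered dataset (deep copies) can be shown in plain Python. This is a toy model, not COBRA code: the dictionary event store, the `Tag` class and the `make_tag`/`recluster` helpers are hypothetical stand-ins for COBRA's persistent event references (Python 3.9+).

```python
import copy
from dataclasses import dataclass

# Toy dataset: event records keyed by event number (hypothetical model).
Dataset = dict[int, dict]


@dataclass
class Tag:
    """A Tag stores only pointers (dataset + event numbers) to selected events."""
    dataset: Dataset
    event_ids: list[int]

    def events(self) -> list[dict]:
        # Pointers are resolved at access time; no event data is copied.
        return [self.dataset[i] for i in self.event_ids]


def make_tag(dataset: Dataset, select) -> Tag:
    return Tag(dataset, [i for i, ev in dataset.items() if select(ev)])


def recluster(dataset: Dataset, select) -> Dataset:
    """A reclustered dataset holds independent deep copies of the selected events."""
    return {i: copy.deepcopy(ev) for i, ev in dataset.items() if select(ev)}


if __name__ == "__main__":
    data = {1: {"n_muons": 2}, 2: {"n_muons": 0}, 3: {"n_muons": 1}}
    has_muon = lambda ev: ev["n_muons"] > 0
    tag, copied = make_tag(data, has_muon), recluster(data, has_muon)
    data[1]["n_muons"] = 5
    print(tag.events()[0], copied[1])  # the Tag sees the change, the copy does not
```

The trade-off the sketch makes visible: a Tag is cheap to create but depends on the original data staying accessible, while a reclustered dataset costs storage but can be moved or shipped independently.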

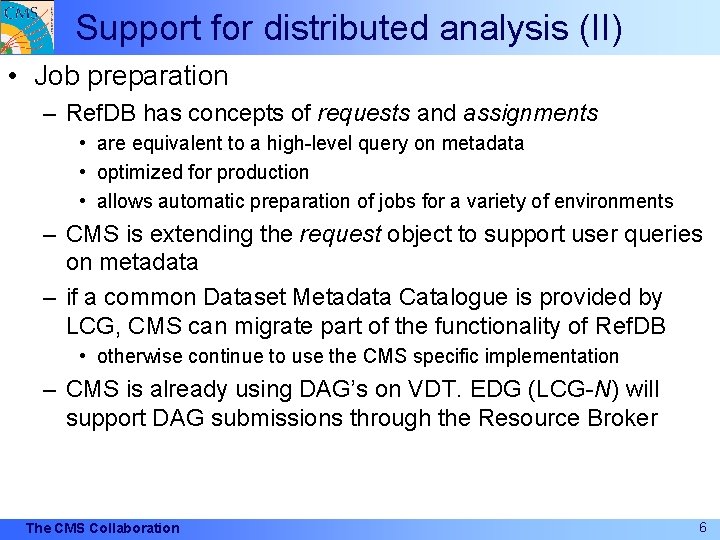

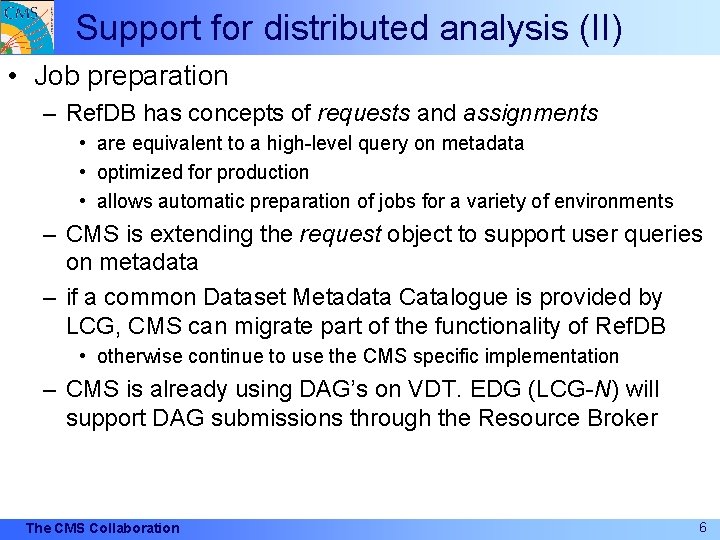

Support for distributed analysis (II)

- Job preparation
  - RefDB has the concepts of requests and assignments, which:
    - are equivalent to a high-level query on metadata
    - are optimized for production
    - allow automatic preparation of jobs for a variety of environments
  - CMS is extending the request object to support user queries on metadata
  - if a common Dataset Metadata Catalogue is provided by LCG, CMS can migrate part of the functionality of RefDB
    - otherwise CMS will continue to use its own specific implementation
  - CMS is already using DAGs on VDT; EDG (LCG-N) will support DAG submissions through the Resource Broker
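Submitting work as a DAG means a job starts only after all the jobs it depends on have finished. A minimal sketch of that ordering, using Python's standard `graphlib` module (Python 3.9+) rather than DAGMan or the EDG Resource Broker; the job names and dependencies are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical production DAG: each job maps to the set of jobs it depends on.
dag = {
    "simulate": set(),
    "digitize": {"simulate"},
    "reconstruct": {"digitize"},
    "register_output": {"reconstruct"},
    "analyze": {"reconstruct"},
}


def submission_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group jobs into waves; every job's parents all sit in earlier waves."""
    ts = TopologicalSorter(graph)
    ts.prepare()  # raises graphlib.CycleError if the graph is not a DAG
    waves = []
    while ts.is_active():
        ready = sorted(ts.get_ready())  # jobs whose dependencies are all done
        waves.append(ready)
        ts.done(*ready)                 # assume these jobs completed successfully
    return waves


if __name__ == "__main__":
    for i, wave in enumerate(submission_waves(dag)):
        print(i, wave)
```

Jobs in the same wave (here `analyze` and `register_output`) have no ordering constraint between them and could run in parallel on different resources.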

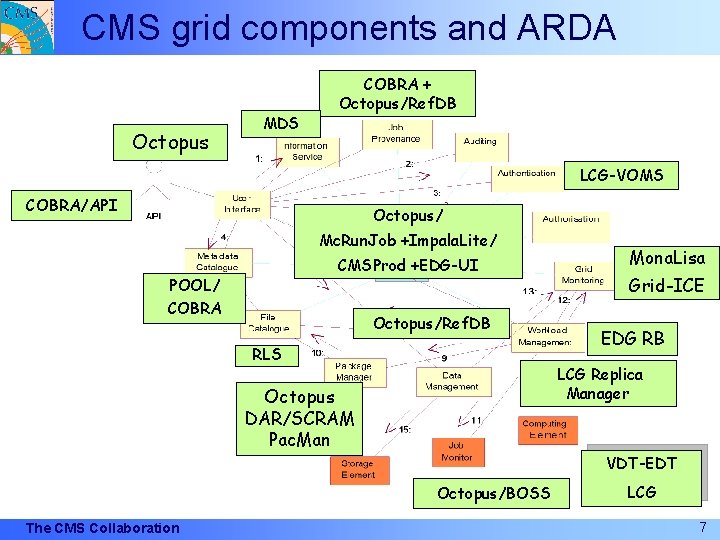

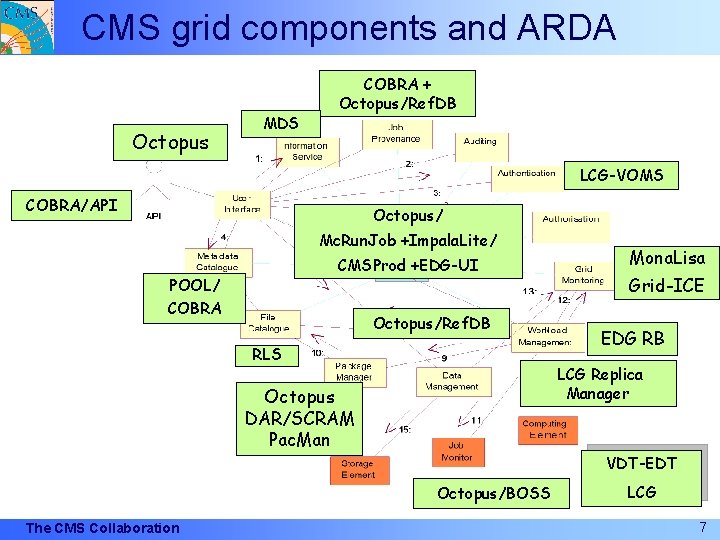

CMS grid components and ARDA

[Diagram: CMS grid components placed against the ARDA services. Components shown: Octopus, MDS, COBRA + Octopus/RefDB, LCG-VOMS, COBRA/API, Octopus/McRunJob + ImpalaLite/CMSProd + EDG-UI, POOL/COBRA, Octopus/RefDB, RLS, MonALISA, Grid-ICE, EDG RB, LCG Replica Manager, Octopus DAR/SCRAM, PacMan, VDT-EDT, Octopus/BOSS, LCG.]

The Grid Analysis Environment (GAE)

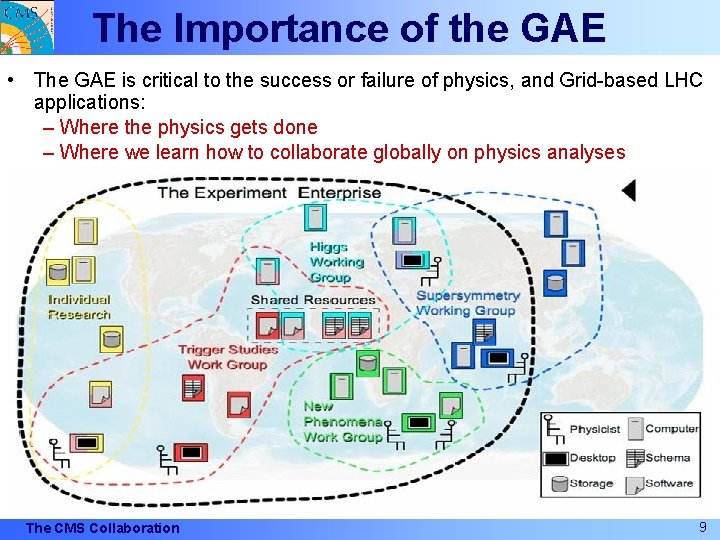

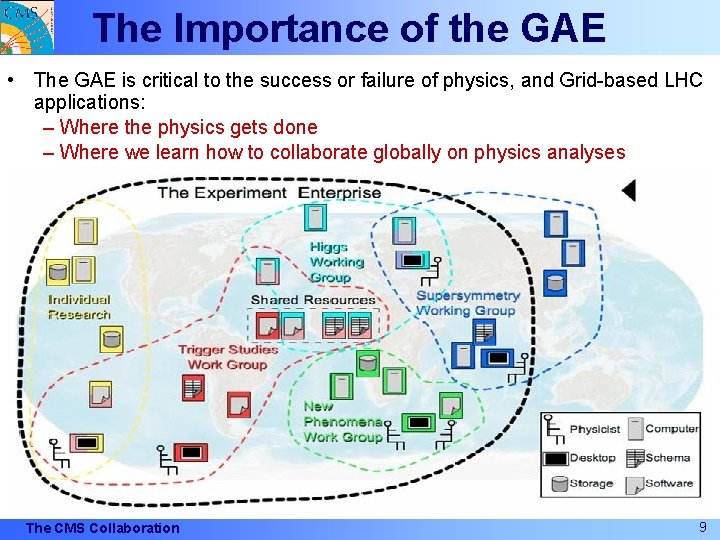

The Importance of the GAE

- The GAE is critical to the success or failure of physics and of Grid-based LHC applications:
  - it is where the physics gets done
  - it is where we learn how to collaborate globally on physics analyses

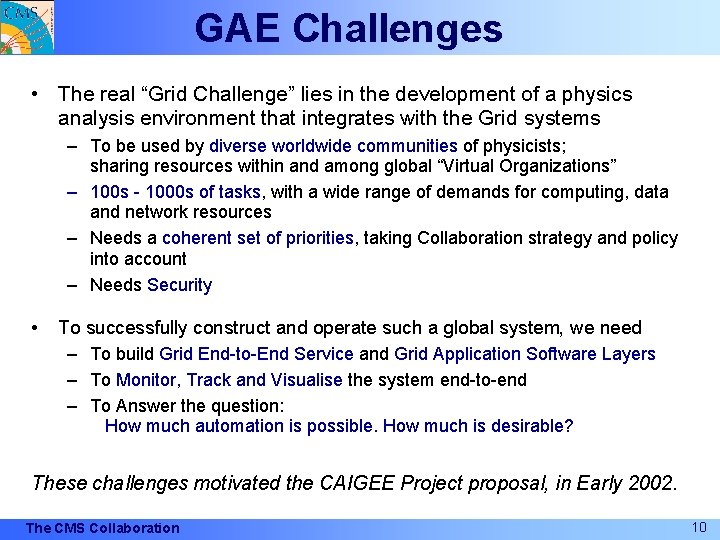

GAE Challenges

- The real “Grid Challenge” lies in developing a physics analysis environment that integrates with the Grid systems. This environment:
  - is to be used by diverse worldwide communities of physicists, sharing resources within and among global “Virtual Organizations”
  - must handle 100s to 1000s of tasks, with a wide range of demands for computing, data and network resources
  - needs a coherent set of priorities, taking Collaboration strategy and policy into account
  - needs security
- To successfully construct and operate such a global system, we need:
  - to build Grid end-to-end service and Grid application software layers
  - to monitor, track and visualise the system end-to-end
  - to answer the question: how much automation is possible, and how much is desirable?

These challenges motivated the CAIGEE project proposal in early 2002.

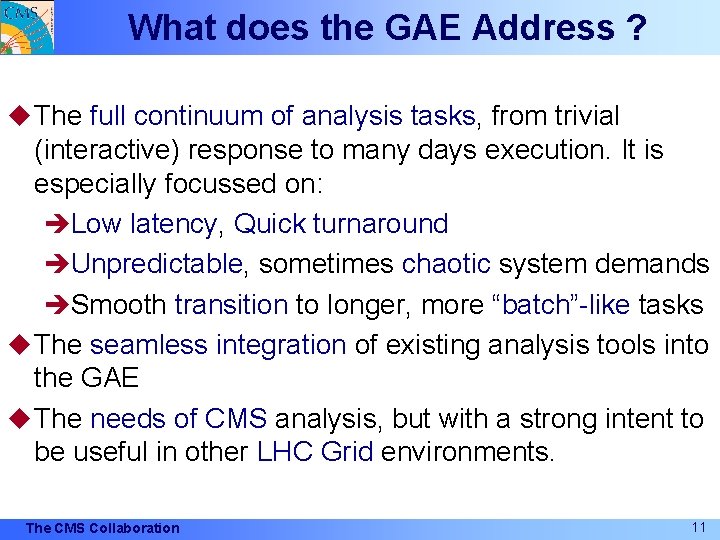

What does the GAE address?

- The full continuum of analysis tasks, from trivial (interactive) response to many days of execution. It is especially focussed on:
  - low latency, quick turnaround
  - unpredictable, sometimes chaotic system demands
  - a smooth transition to longer, more “batch”-like tasks
- The seamless integration of existing analysis tools into the GAE
- The needs of CMS analysis, with a strong intent to be useful in other LHC Grid environments as well

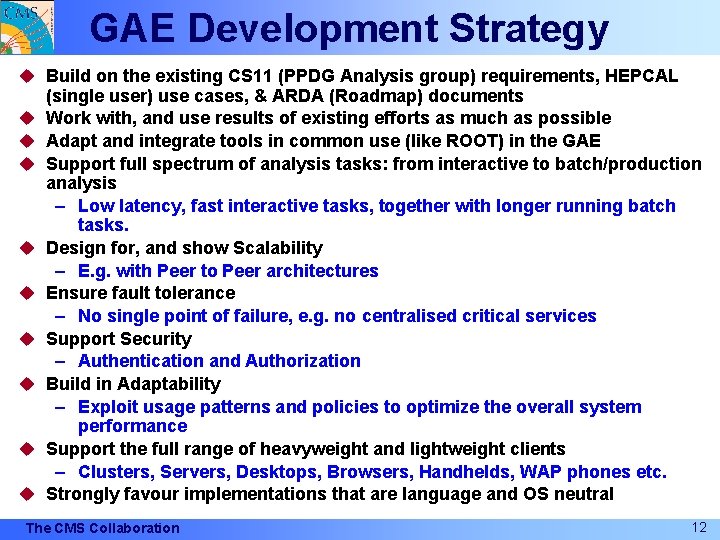

GAE Development Strategy

- Build on the existing CS 11 (PPDG Analysis group) requirements, HEPCAL (single user) use cases and ARDA (Roadmap) documents
- Work with, and use the results of, existing efforts as much as possible
- Adapt and integrate tools in common use (like ROOT) in the GAE
- Support the full spectrum of analysis tasks, from interactive to batch/production analysis
  - low-latency, fast interactive tasks, together with longer-running batch tasks
- Design for, and demonstrate, scalability
  - e.g. with Peer-to-Peer architectures
- Ensure fault tolerance
  - no single point of failure, e.g. no centralised critical services
- Support security
  - authentication and authorization
- Build in adaptability
  - exploit usage patterns and policies to optimize overall system performance
- Support the full range of heavyweight and lightweight clients
  - clusters, servers, desktops, browsers, handhelds, WAP phones, etc.
- Strongly favour implementations that are language and OS neutral

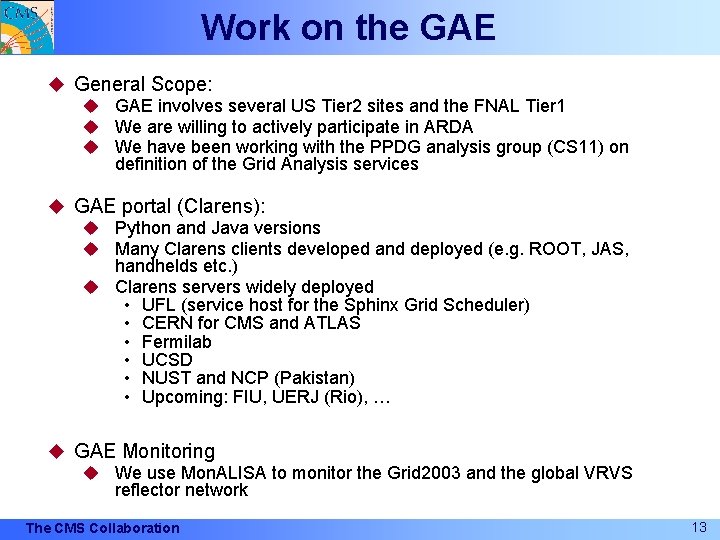

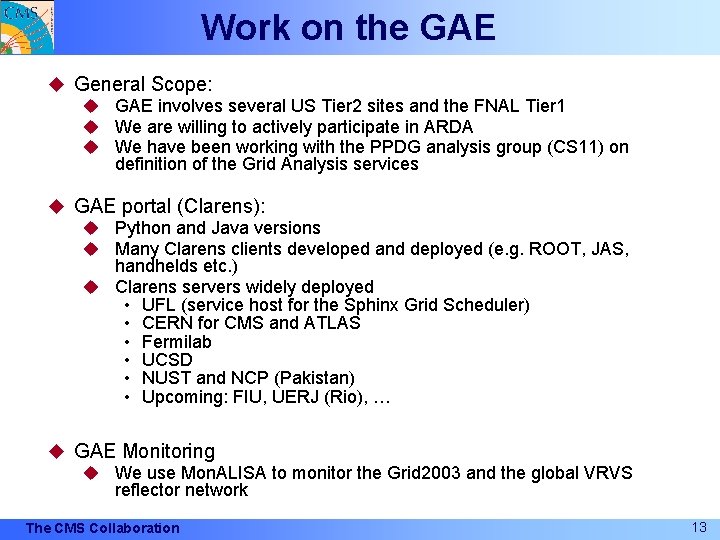

Work on the GAE

- General scope:
  - GAE involves several US Tier 2 sites and the FNAL Tier 1
  - we are willing to actively participate in ARDA
  - we have been working with the PPDG analysis group (CS 11) on the definition of the Grid Analysis services
- GAE portal (Clarens):
  - Python and Java versions
  - many Clarens clients developed and deployed (e.g. ROOT, JAS, handhelds)
  - Clarens servers widely deployed:
    - UFL (service host for the Sphinx Grid Scheduler)
    - CERN for CMS and ATLAS
    - Fermilab
    - UCSD
    - NUST and NCP (Pakistan)
    - upcoming: FIU, UERJ (Rio), …
- GAE monitoring:
  - we use MonALISA to monitor Grid2003 and the global VRVS reflector network

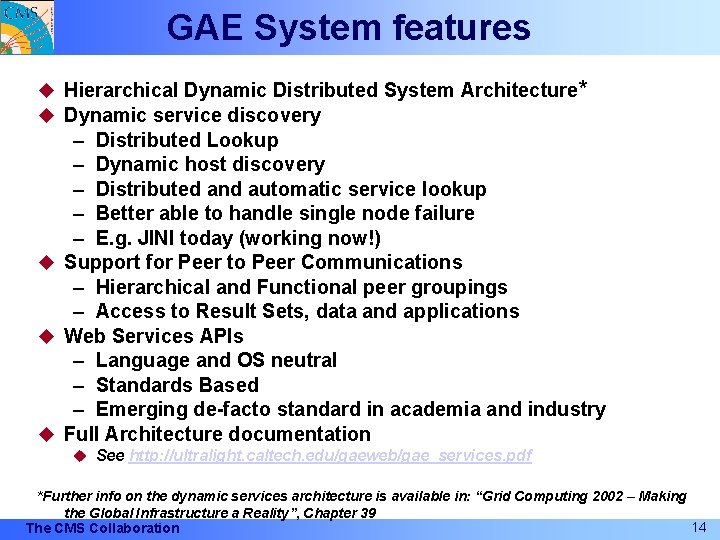

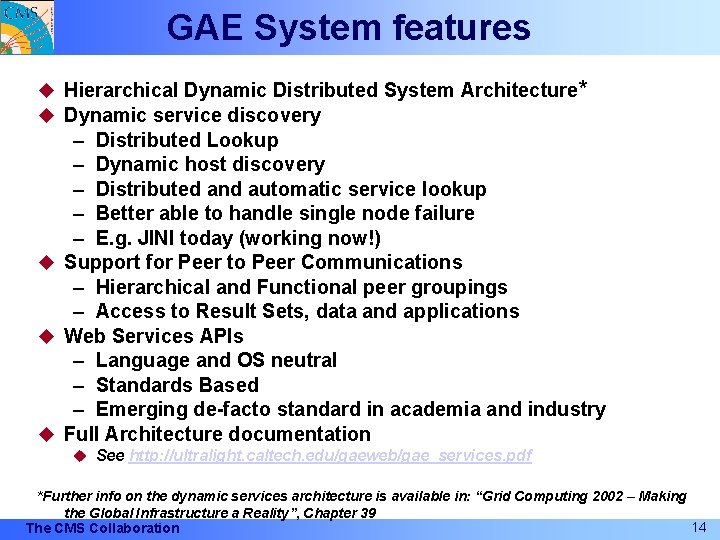

GAE System features

- Hierarchical Dynamic Distributed System Architecture*
- Dynamic service discovery
  - distributed lookup
  - dynamic host discovery
  - distributed and automatic service lookup
  - better able to handle single-node failure
  - e.g. JINI today (working now!)
- Support for Peer-to-Peer communications
  - hierarchical and functional peer groupings
  - access to result sets, data and applications
- Web Services APIs
  - language and OS neutral
  - standards based
  - emerging de-facto standard in academia and industry
- Full architecture documentation
  - see http://ultralight.caltech.edu/gaeweb/gae_services.pdf

*Further information on the dynamic services architecture is available in “Grid Computing 2002 – Making the Global Infrastructure a Reality”, Chapter 39.
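Jini-style discovery relies on services registering with a lookup service under a time-limited lease, so entries for failed nodes disappear on their own once their leases lapse. The single-process sketch below illustrates that idea only; the `LookupService` class, its methods and the lease length are hypothetical and are not the Jini API.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class LookupService:
    """Toy lookup service: registrations expire unless their lease is renewed."""
    lease_seconds: float = 30.0
    clock: Callable[[], float] = time.monotonic  # injectable clock, handy for tests
    _entries: dict = field(default_factory=dict, init=False, repr=False)

    def register(self, service: str, host: str) -> None:
        """Register, or renew the lease for, `service` offered by `host`."""
        self._entries[(service, host)] = self.clock() + self.lease_seconds

    def lookup(self, service: str) -> list[str]:
        """Return the hosts whose lease for `service` is still valid."""
        now = self.clock()
        # Drop expired leases: a host that stopped renewing is treated as failed.
        self._entries = {k: t for k, t in self._entries.items() if t > now}
        return sorted(h for (s, h) in self._entries if s == service)
```

Because no central component has to detect failures explicitly, a crashed host simply stops renewing and drops out of lookups, which matches the slide's point about handling single-node failure.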

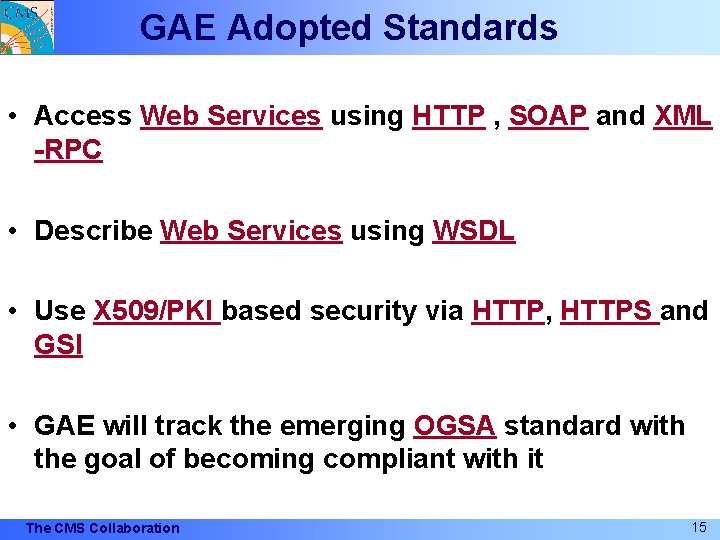

GAE Adopted Standards

- Access Web Services using HTTP, SOAP and XML-RPC
- Describe Web Services using WSDL
- Use X.509/PKI-based security via HTTP, HTTPS and GSI
- GAE will track the emerging OGSA standard, with the goal of becoming compliant with it
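To make the XML-RPC point concrete: a request is a small XML document naming a method and its parameters, and a response is encoded the same way. Python's standard `xmlrpc.client` module can build and parse these payloads offline. The method name `catalog.query` and its arguments are hypothetical examples, not a documented Clarens call.

```python
import xmlrpc.client

# Build the XML body of an XML-RPC request (no network access needed).
request_xml = xmlrpc.client.dumps(("/store/user/data", 10), methodname="catalog.query")
print(request_xml)

# A server parses it back into (params, methodname).
params, method = xmlrpc.client.loads(request_xml)
print(method, params)

# Responses use the same encoding, flagged with methodresponse=True.
response_xml = xmlrpc.client.dumps((["a.root", "b.root"],), methodresponse=True)
result, _ = xmlrpc.client.loads(response_xml)
print(result[0])
```

Calling a live server would instead go through `xmlrpc.client.ServerProxy(url)`, which needs a reachable endpoint and is therefore omitted here.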

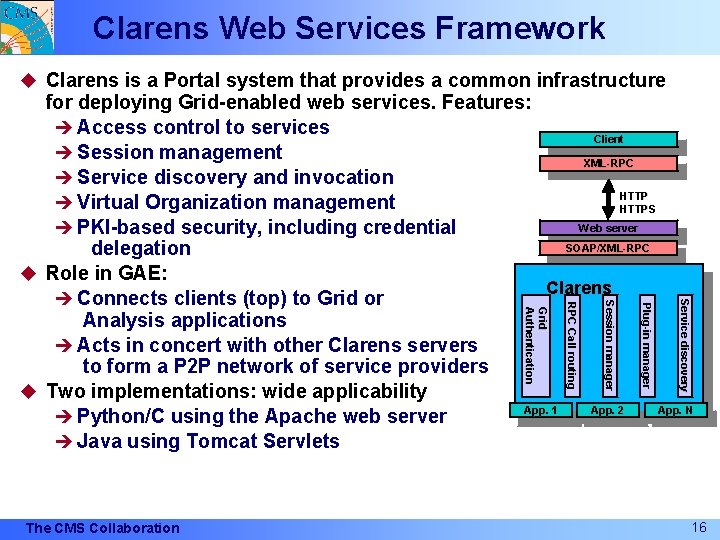

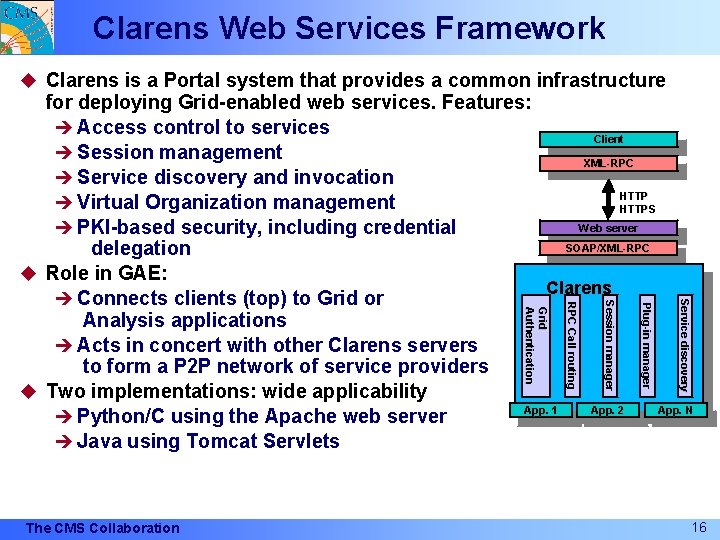

Clarens Web Services Framework

- Clarens is a portal system that provides a common infrastructure for deploying Grid-enabled web services. Features:
  - access control to services
  - session management
  - service discovery and invocation
  - Virtual Organization management
  - PKI-based security, including credential delegation
- Role in GAE:
  - connects clients to Grid or Analysis applications
  - acts in concert with other Clarens servers to form a P2P network of service providers
- Two implementations, for wide applicability:
  - Python/C using the Apache web server
  - Java using Tomcat servlets

[Diagram: clients connect over HTTP or HTTPS, using SOAP or XML-RPC, to a web server hosting Clarens; its components (Grid authentication, session manager, plug-in manager, service discovery, RPC call routing) dispatch calls to applications App. 1 … App. N.]
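The call routing and access control described above can be sketched as a dispatcher that maps dotted method names (`module.method`) to plug-in functions and checks the caller's identity before invoking them. All names here (`Dispatcher`, `register`, the ACL format, the example distinguished name) are hypothetical; this is not Clarens source code, and the `caller` string stands in for an identity taken from an already verified certificate.

```python
from typing import Any, Callable


class AccessDenied(Exception):
    pass


class Dispatcher:
    """Routes 'module.method' calls to registered plug-ins, enforcing per-method ACLs."""

    def __init__(self) -> None:
        self._methods: dict[str, Callable[..., Any]] = {}
        self._acl: dict[str, set[str]] = {}  # method name -> allowed caller identities

    def register(self, module: str, func: Callable[..., Any], allowed: set[str]) -> None:
        """Plug-in registration: expose `func` as '<module>.<function name>'."""
        name = f"{module}.{func.__name__}"
        self._methods[name] = func
        self._acl[name] = set(allowed)

    def list_methods(self) -> list[str]:
        # Service discovery: clients can ask what this server offers.
        return sorted(self._methods)

    def call(self, caller: str, name: str, *args: Any) -> Any:
        """Check the ACL for `name`, then route the call to its plug-in."""
        if name not in self._methods:
            raise KeyError(f"unknown method {name!r}")
        if caller not in self._acl[name]:
            raise AccessDenied(f"{caller} may not call {name}")
        return self._methods[name](*args)
```

For example, after `d.register("math", add, {"/O=Grid/CN=Alice"})`, a call to `"math.add"` succeeds for that identity and raises `AccessDenied` for any other.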

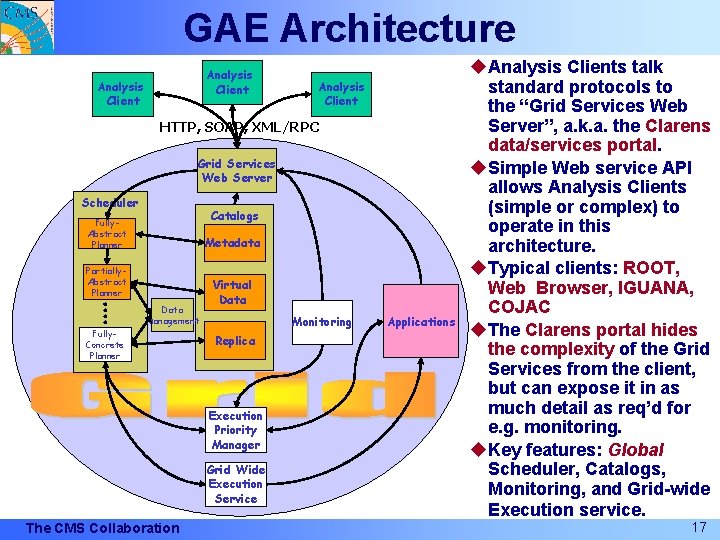

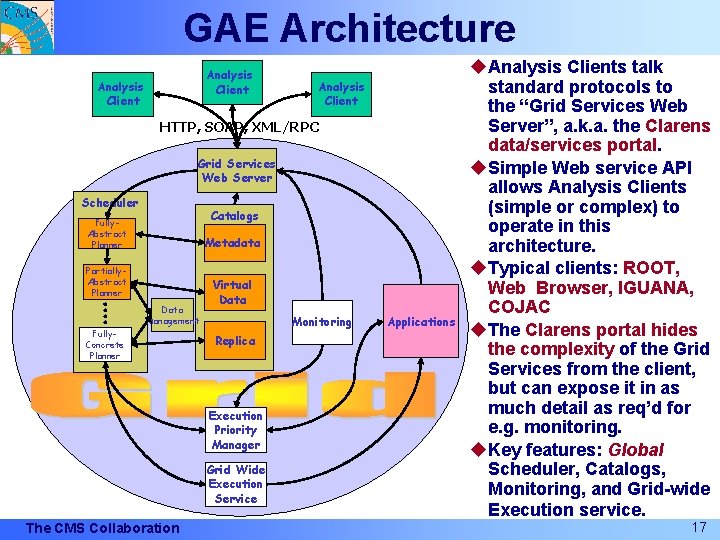

GAE Architecture

[Diagram: an Analysis Client talks HTTP, SOAP or XML-RPC to the Grid Services Web Server, which fronts the Scheduler (Fully-Abstract, Partially-Abstract and Fully-Concrete Planners), Catalogs (Metadata, Virtual Data, Replica), Data Management, Monitoring, the Execution Priority Manager, the Grid Wide Execution Service and the Applications.]

- Analysis Clients talk standard protocols to the “Grid Services Web Server”, a.k.a. the Clarens data/services portal.
- A simple Web service API allows Analysis Clients (simple or complex) to operate in this architecture.
- Typical clients: ROOT, Web browser, IGUANA, COJAC
- The Clarens portal hides the complexity of the Grid Services from the client, but can expose it in as much detail as required, e.g. for monitoring.
- Key features: Global Scheduler, Catalogs, Monitoring and Grid-wide Execution service.
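The three planner stages can be read as successive bindings of a request. As a hedged sketch, assume a fully abstract plan names only logical files, a partially abstract plan binds each file to the sites holding replicas, and a fully concrete plan also fixes an execution site. The catalog contents, site names and selection rule (least-loaded site holding every input) are all hypothetical (Python 3.9+).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AbstractPlan:
    transformation: str
    inputs: tuple[str, ...]  # logical file names only


@dataclass(frozen=True)
class PartialPlan:
    transformation: str
    replicas: dict           # logical file name -> sites holding a replica


@dataclass(frozen=True)
class ConcretePlan:
    transformation: str
    site: str                # where the job will run


def bind_replicas(plan: AbstractPlan, replica_catalog: dict[str, list[str]]) -> PartialPlan:
    """Partially abstract: look up where each logical input is stored."""
    return PartialPlan(plan.transformation, {lfn: replica_catalog[lfn] for lfn in plan.inputs})


def choose_site(plan: PartialPlan, site_load: dict[str, float]) -> ConcretePlan:
    """Fully concrete: pick the least-loaded site that holds every input."""
    site_sets = [set(sites) for sites in plan.replicas.values()]
    candidates = set.intersection(*site_sets) if site_sets else set()
    if not candidates:
        raise RuntimeError("no single site holds all inputs; a transfer would be needed")
    return ConcretePlan(plan.transformation, min(candidates, key=lambda s: (site_load[s], s)))
```

Keeping the stages separate lets each binding be deferred until the information it needs (replica locations, current load) is available, which is one plausible reason for a staged planner design.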

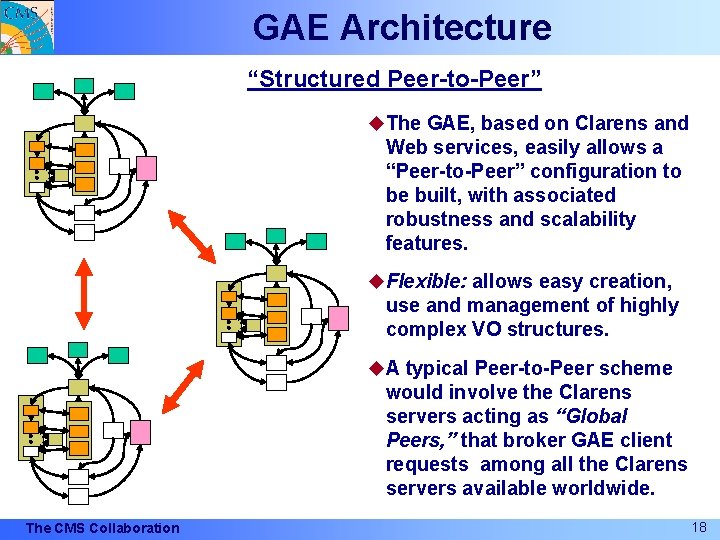

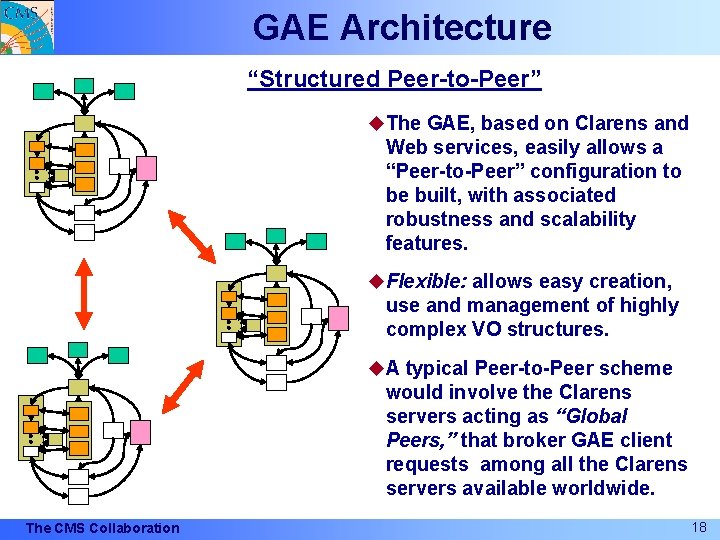

GAE Architecture: “Structured Peer-to-Peer”

- The GAE, based on Clarens and Web services, easily allows a “Peer-to-Peer” configuration to be built, with the associated robustness and scalability features.
- Flexible: allows easy creation, use and management of highly complex VO structures.
- A typical Peer-to-Peer scheme would involve the Clarens servers acting as “Global Peers” that broker GAE client requests among all the Clarens servers available worldwide.
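One way a Global Peer might broker a request: find the Clarens servers that offer the requested service, skip those that are down, and forward to the least loaded. This is an illustrative assumption, not the documented GAE brokering algorithm; the `Peer` fields and the selection rule are hypothetical (Python 3.9+).

```python
from dataclasses import dataclass, field


@dataclass
class Peer:
    name: str
    services: set[str] = field(default_factory=set)
    load: float = 0.0   # e.g. fraction of busy slots, as a monitoring system might report
    alive: bool = True


def broker(peers: list[Peer], service: str) -> Peer:
    """Pick the least-loaded live peer offering `service`; no single peer is critical."""
    candidates = [p for p in peers if p.alive and service in p.services]
    if not candidates:
        raise LookupError(f"no live peer offers {service!r}")
    return min(candidates, key=lambda p: (p.load, p.name))
```

If the chosen peer fails, the request can simply be brokered again among the remaining candidates, which is where the robustness of the peer-to-peer configuration comes from.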

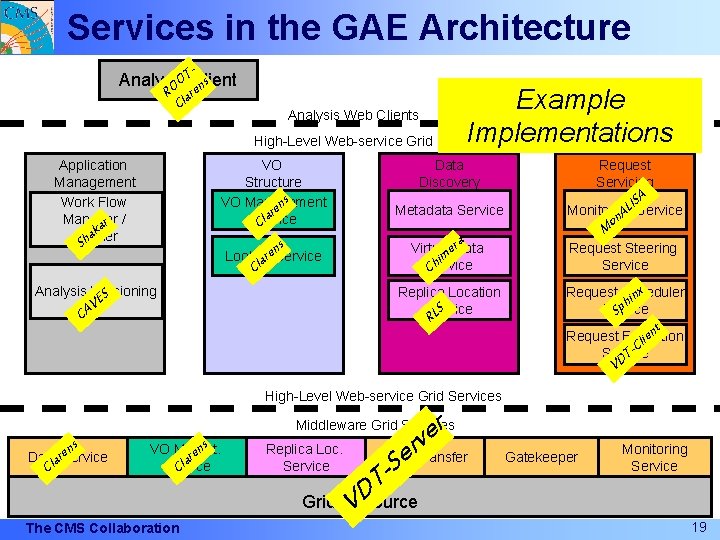

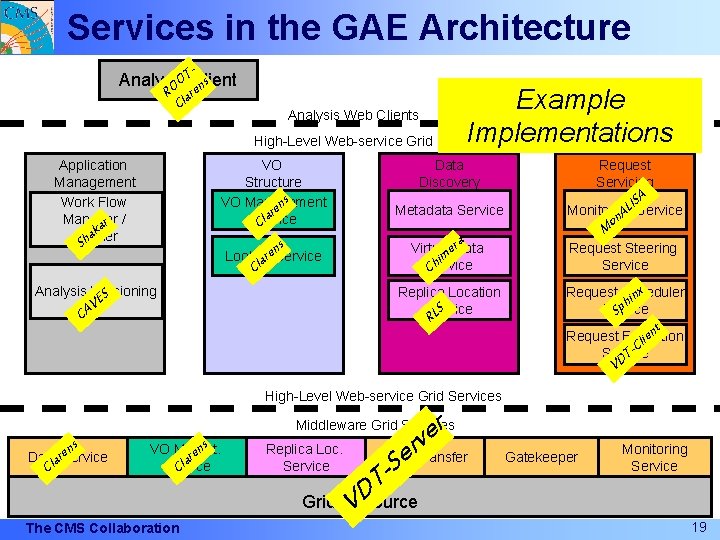

Services in the GAE Architecture

[Diagram: layered GAE services, with example implementations in parentheses. Analysis clients (ROOT, Clarens) and application management (Work Flow Manager/Builder) sit on top of the high-level Web-service Grid services: VO structure and VO management (Clarens), service lookup (Clarens), analysis versioning (CAVES), data discovery, request servicing, metadata service, monitoring (MonALISA), virtual data (Chimera), request steering, replica location (RLS), request scheduling (Sphinx) and request execution (VDT client). Below them are the middleware Grid services: data service and VO management (Clarens), replica location, data transfer, Grid resource gatekeeper (VDT) and monitoring.]

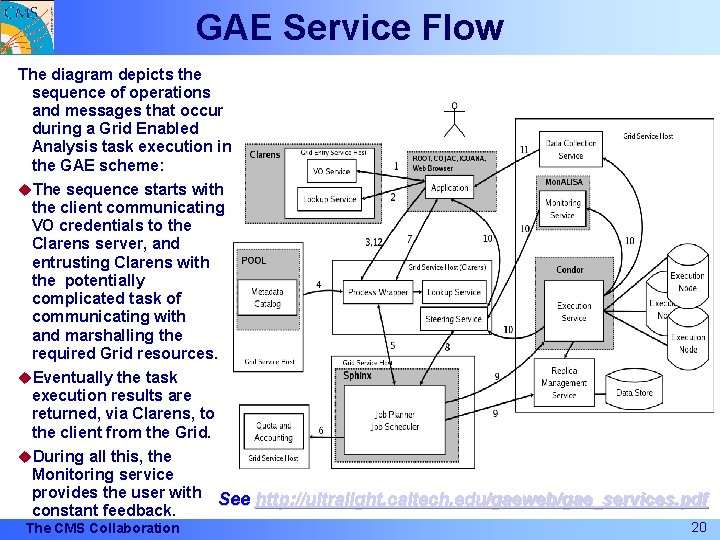

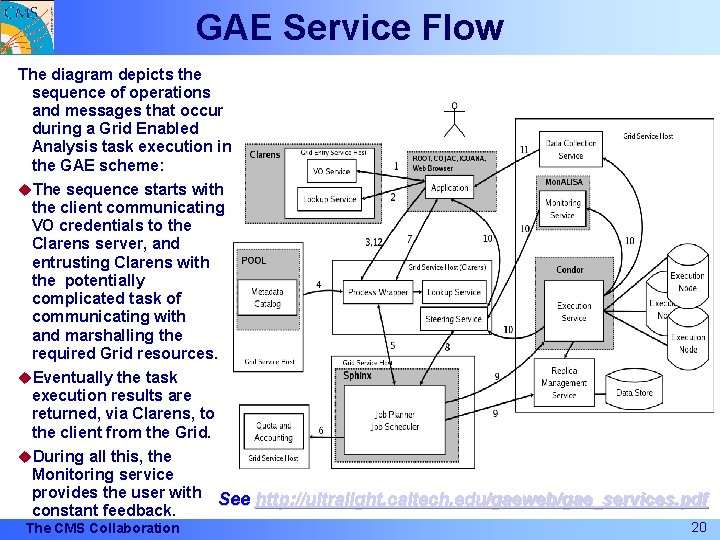

GAE Service Flow

The diagram depicts the sequence of operations and messages that occur when a Grid-enabled analysis task is executed in the GAE scheme:

- The sequence starts with the client communicating its VO credentials to the Clarens server and entrusting Clarens with the potentially complicated task of communicating with, and marshalling, the required Grid resources.
- Eventually the task execution results are returned from the Grid to the client, via Clarens.
- Throughout, the Monitoring service provides the user with constant feedback.

See http://ultralight.caltech.edu/gaeweb/gae_services.pdf
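The flow can be mimicked in a few lines: the client hands its credential and task to a portal, the portal checks VO membership, runs the task on a stubbed Grid backend, and reports progress through a monitoring callback. Everything here (the `Portal` class, the membership check, the event strings) is hypothetical and stands in for Clarens, the real VO services and MonALISA.

```python
from typing import Callable

Monitor = Callable[[str], None]


class Portal:
    """Stand-in for a Clarens server brokering a task on the client's behalf."""

    def __init__(self, vo_members: set[str], backend: Callable[[str], str]) -> None:
        self.vo_members = vo_members
        self.backend = backend  # stub for the Grid resources the portal marshals

    def submit(self, credential: str, task: str, monitor: Monitor) -> str:
        if credential not in self.vo_members:
            monitor("rejected")
            raise PermissionError(f"{credential} is not a VO member")
        monitor("accepted")
        monitor("running")
        result = self.backend(task)  # the portal, not the client, talks to the Grid
        monitor("done")
        return result
```

The client only ever sees the portal and the monitoring feedback; swapping the backend stub for real Grid services would not change the client side.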

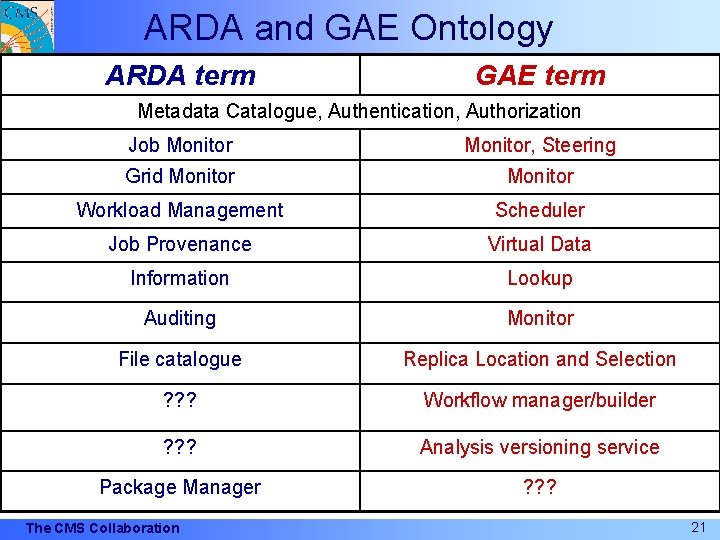

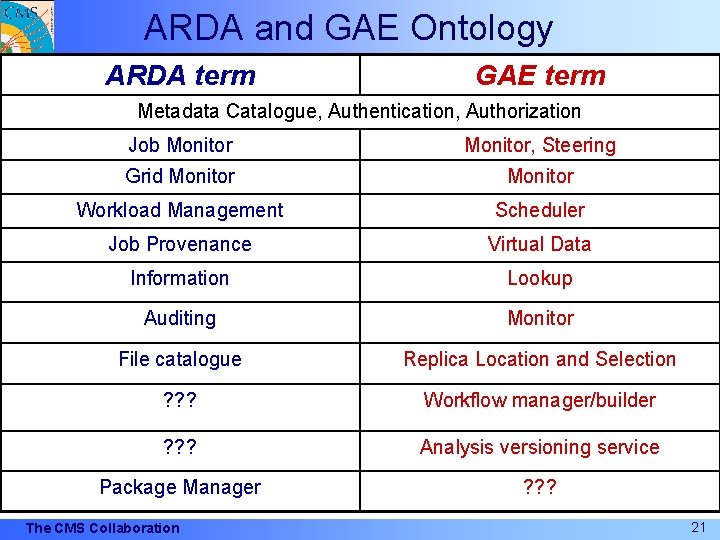

ARDA and GAE Ontology

[Table: ARDA terms and their GAE counterparts. Recoverable pairs (ARDA → GAE): Workload Management → Scheduler; Job Provenance → Virtual Data; Information → Lookup; Auditing → Monitor; File catalogue → Replica Location and Selection; ??? → Workflow manager/builder; ??? → Analysis versioning service; Package Manager → ???. Other entries: Metadata Catalogue, Authentication, Authorization, Job Monitor, Steering, Grid Monitor.]

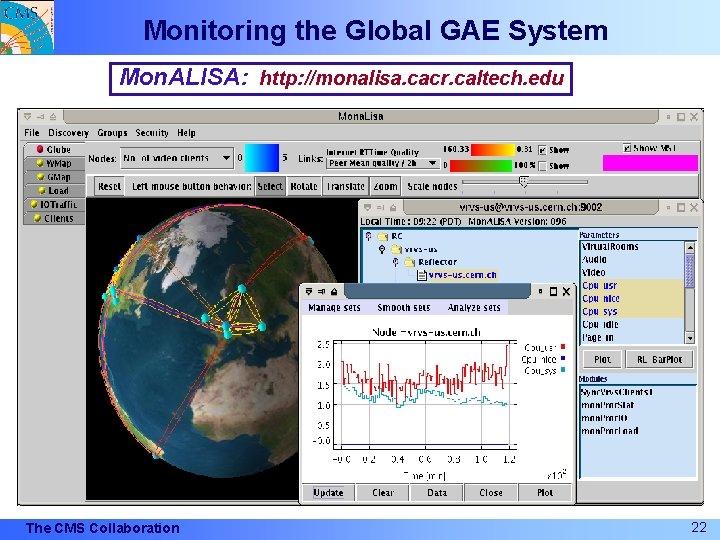

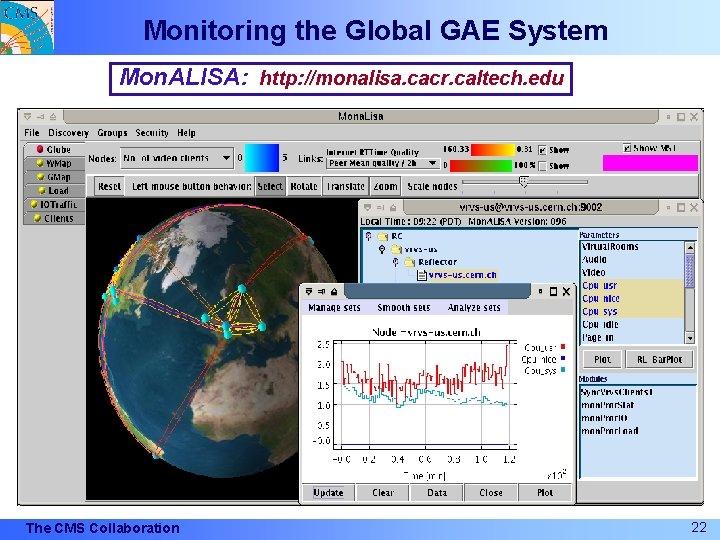

Monitoring the Global GAE System

MonALISA: http://monalisa.cacr.caltech.edu

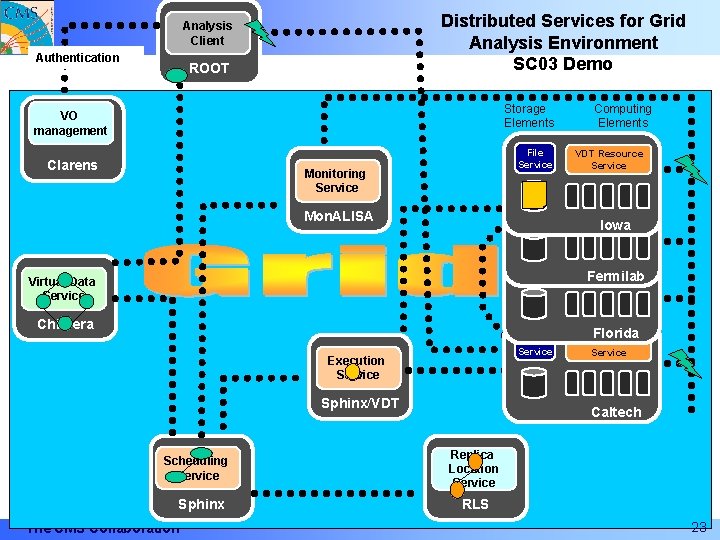

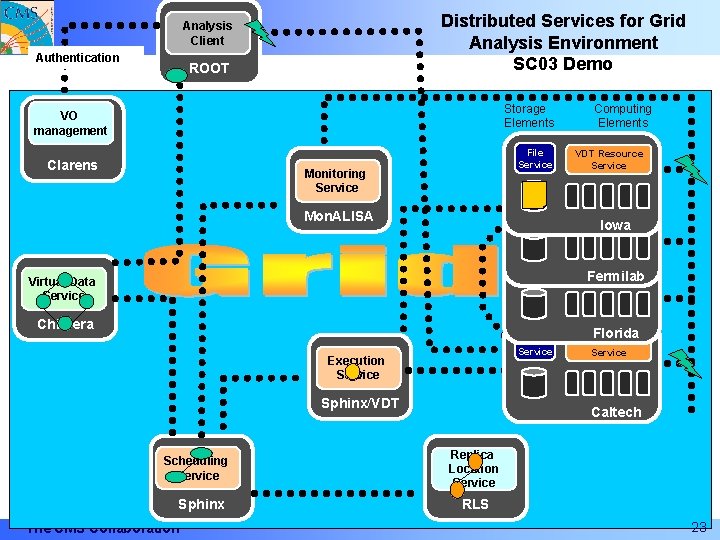

Distributed Services for Grid Analysis Environment: SC03 Demo

[Diagram: a ROOT analysis client connects through Clarens (authentication, VO management) to a monitoring service (MonALISA), a virtual data service (Chimera), file services and VDT resource services (including at Fermilab), an execution service (Sphinx/VDT), a scheduling service (Sphinx) and a replica location service (RLS), with storage and computing elements at Iowa, Florida and Caltech.]

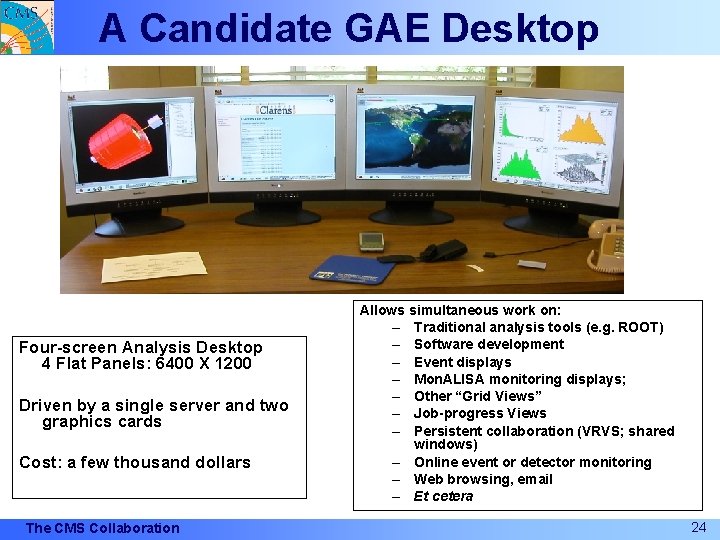

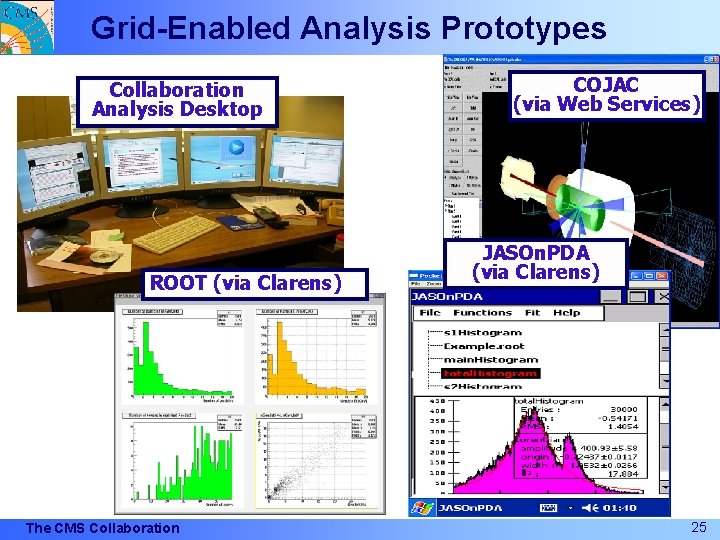

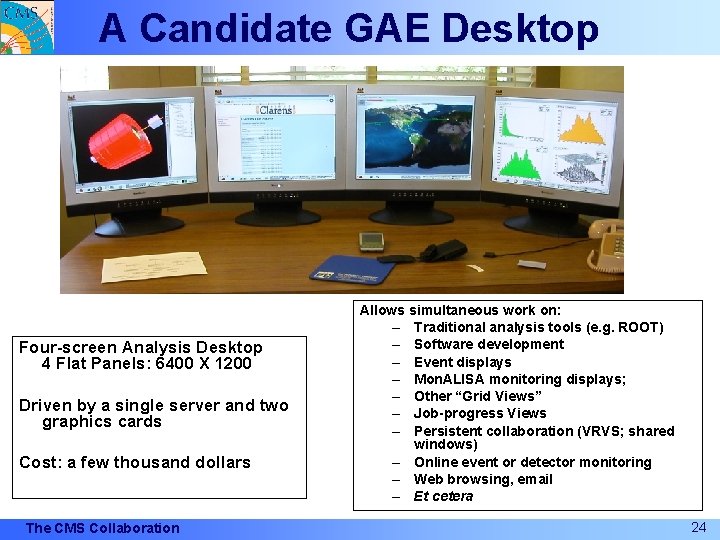

A Candidate GAE Desktop

- Four-screen analysis desktop
- 4 flat panels: 6400 × 1200 pixels in total (four 1600 × 1200 panels side by side)
- Driven by a single server and two graphics cards
- Cost: a few thousand dollars
- Allows simultaneous work on:
  - traditional analysis tools (e.g. ROOT)
  - software development
  - event displays
  - MonALISA monitoring displays
  - other “Grid Views”
  - job-progress views
  - persistent collaboration (VRVS; shared windows)
  - online event or detector monitoring
  - web browsing, email
  - et cetera

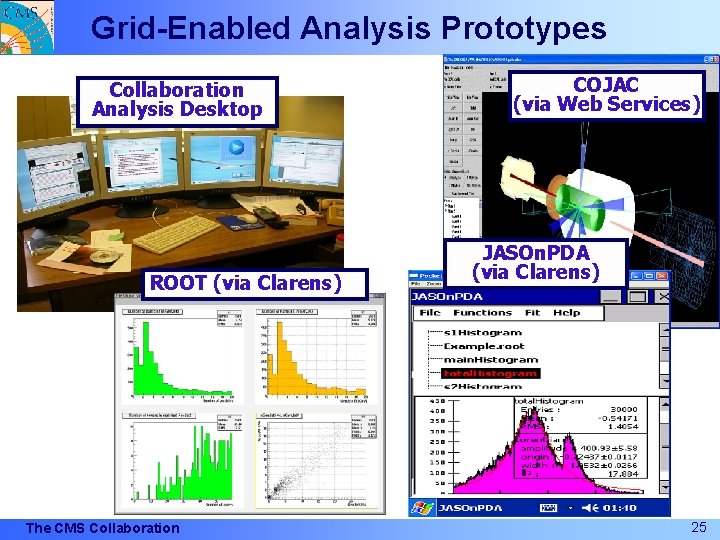

Grid-Enabled Analysis Prototypes

- Collaboration Analysis Desktop
- ROOT (via Clarens)
- COJAC (via Web Services)
- JASOnPDA (via Clarens)

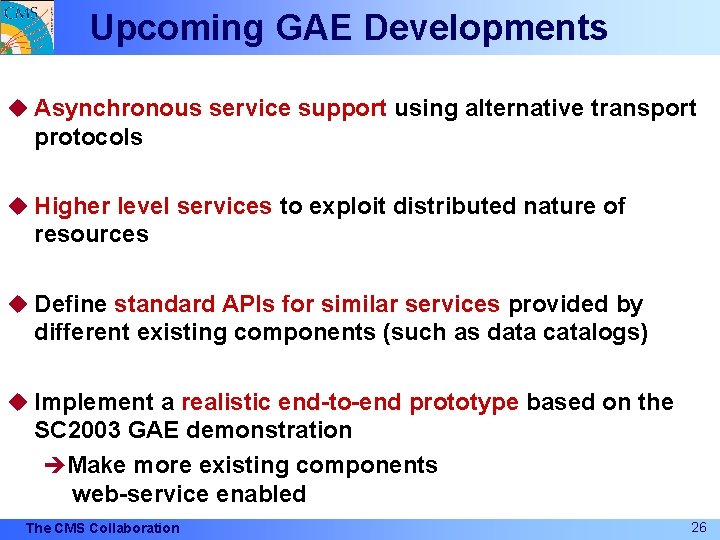

Upcoming GAE Developments

- Asynchronous service support using alternative transport protocols
- Higher-level services to exploit the distributed nature of resources
- Define standard APIs for similar services provided by different existing components (such as data catalogs)
- Implement a realistic end-to-end prototype based on the SC2003 GAE demonstration
  - make more existing components web-service enabled
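Asynchronous service support lets a client issue several requests without blocking on each one in turn. A minimal sketch with Python's standard `asyncio`; the service names and the simulated delays stand in for real remote calls and are hypothetical.

```python
import asyncio


async def call_service(name: str, delay: float) -> str:
    """Simulated remote call; a real client would await network I/O here."""
    await asyncio.sleep(delay)
    return f"{name}: ok"


async def query_all() -> list[str]:
    # Issue all requests concurrently: total time is about max(delay), not the sum.
    return await asyncio.gather(
        call_service("catalog", 0.3),
        call_service("scheduler", 0.2),
        call_service("monitor", 0.25),
    )


if __name__ == "__main__":
    print(asyncio.run(query_all()))
```

`asyncio.gather` returns results in argument order regardless of which call finishes first, so the client can match answers to requests without extra bookkeeping.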

Summary

- Distributed Production
  - CMS is already successfully using LCG and VDT grid middleware to build its distributed production system
  - a distributed batch-analysis system is being developed on top of the same LCG and VDT software
  - CMS suggests that, in the short term (6 months), ARDA extend the functionality of the LCG middleware to meet the architecture described in the ARDA document
- Grid Analysis Environment
  - CMS asks that the GAE be adopted as the basis for the ARDA work on a system that supports interactive and batch analysis. Support for interactive analysis is the crucial goal in this context.