The Center for Astrophysical Thermonuclear Flashes: The Flash Code


The Center for Astrophysical Thermonuclear Flashes

The Flash Code

Bruce Fryxell, Leader, Code Group

Year 3 Site Review, Argonne National Laboratory, Oct. 30, 2000

An Accelerated Strategic Computing Initiative (ASCI) Academic Strategic Alliances Program (ASAP) Center at The University of Chicago

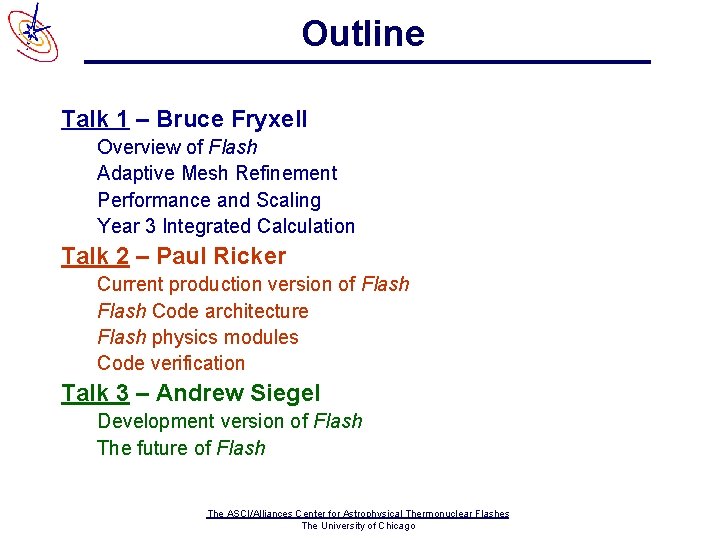

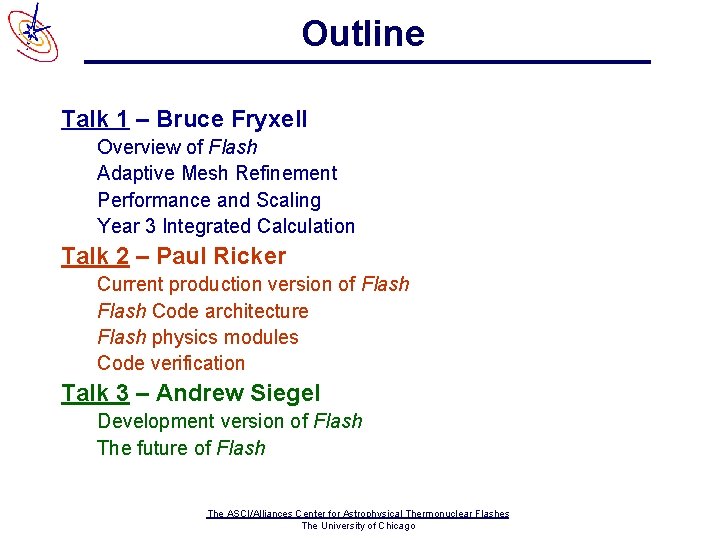

Outline

- Talk 1: Bruce Fryxell
  - Overview of Flash
  - Adaptive Mesh Refinement
  - Performance and Scaling
  - Year 3 Integrated Calculation
- Talk 2: Paul Ricker
  - Current production version of Flash
  - Code architecture
  - Flash physics modules
  - Code verification
- Talk 3: Andrew Siegel
  - Development version of Flash
  - The future of Flash

The ASCI/Alliances Center for Astrophysical Thermonuclear Flashes, The University of Chicago

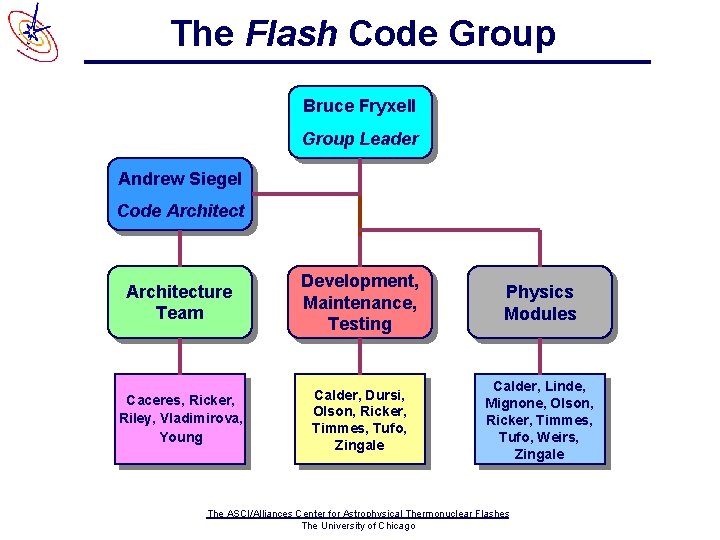

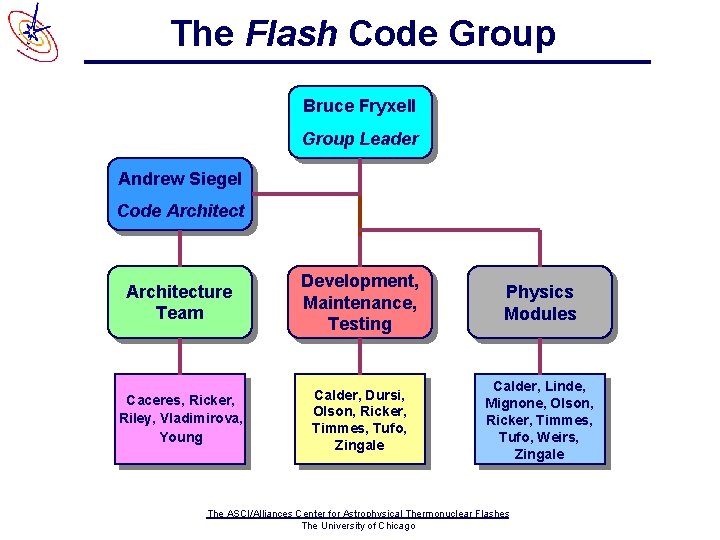

The Flash Code Group

- Bruce Fryxell, Group Leader
- Andrew Siegel, Code Architecture Team
- Development, Maintenance, Testing
- Physics Modules
- Team rosters, in the order they appear on the slide:
  - Caceres, Ricker, Riley, Vladimirova, Young
  - Calder, Dursi, Olson, Ricker, Timmes, Tufo, Zingale
  - Calder, Linde, Mignone, Olson, Ricker, Timmes, Tufo, Weirs, Zingale

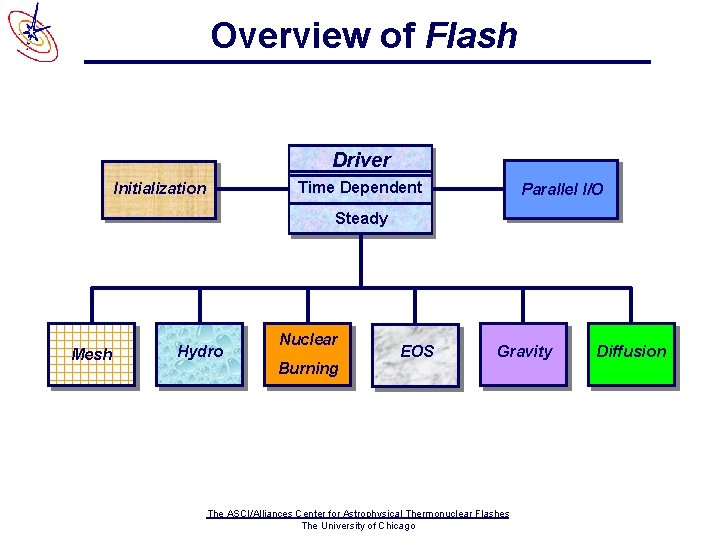

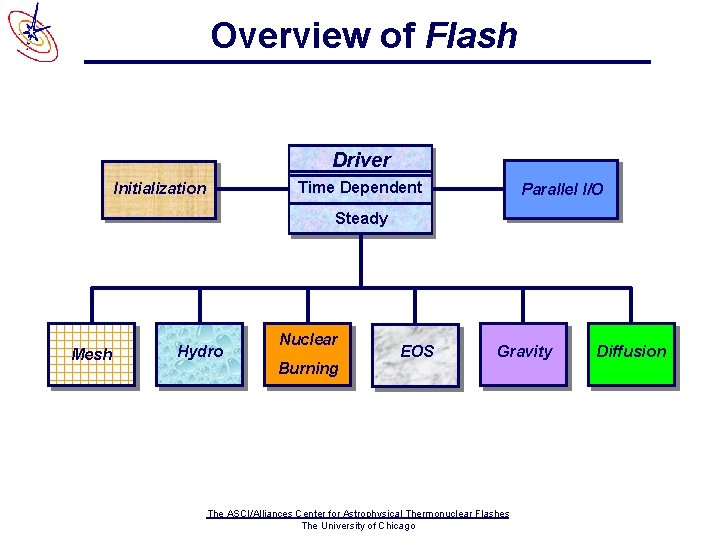

Overview of Flash

[Diagram of code components: Driver, Time Dependent, Steady, Initialization, Parallel I/O, Mesh, Hydro, Nuclear Burning, EOS, Gravity, Diffusion]

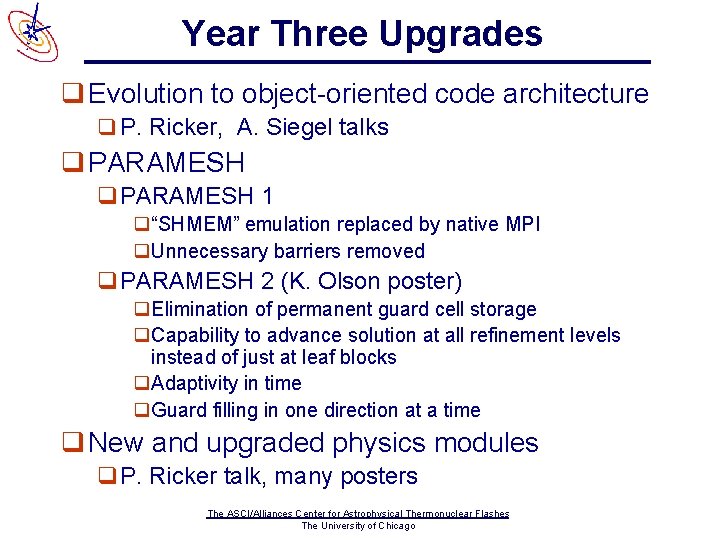

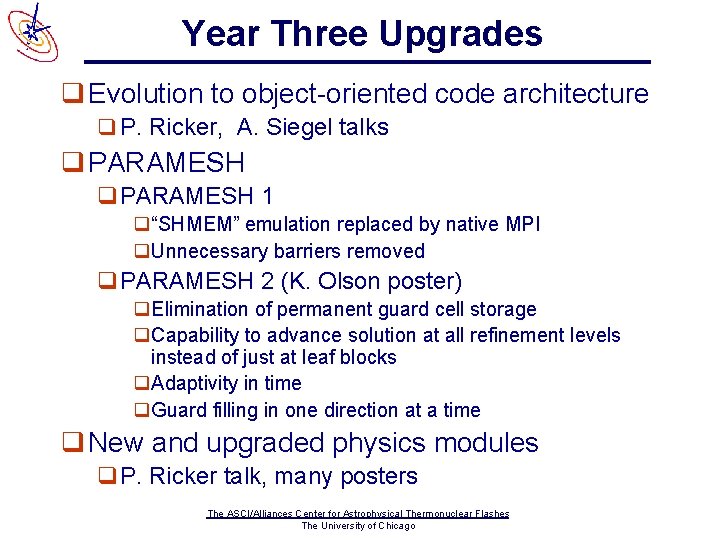

Year Three Upgrades

- Evolution to object-oriented code architecture
  - P. Ricker, A. Siegel talks
- PARAMESH
  - PARAMESH 1
    - "SHMEM" emulation replaced by native MPI
    - Unnecessary barriers removed
  - PARAMESH 2 (K. Olson poster)
    - Elimination of permanent guard cell storage
    - Capability to advance solution at all refinement levels instead of just at leaf blocks
    - Adaptivity in time
    - Guard filling in one direction at a time
- New and upgraded physics modules
  - P. Ricker talk, many posters

Other Accomplishments

- Parallel I/O
  - HDF5
  - 10x improvement in I/O throughput
- Documentation
  - Comprehensive user manual
    - http://flash.uchicago.edu/flashcode/doc
  - The physics and algorithms used in Flash
    - http://flash.uchicago.edu/flashcode/pubs
- Code release
  - Friendly users: May 2000
  - Astrophysics community: Oct. 2000

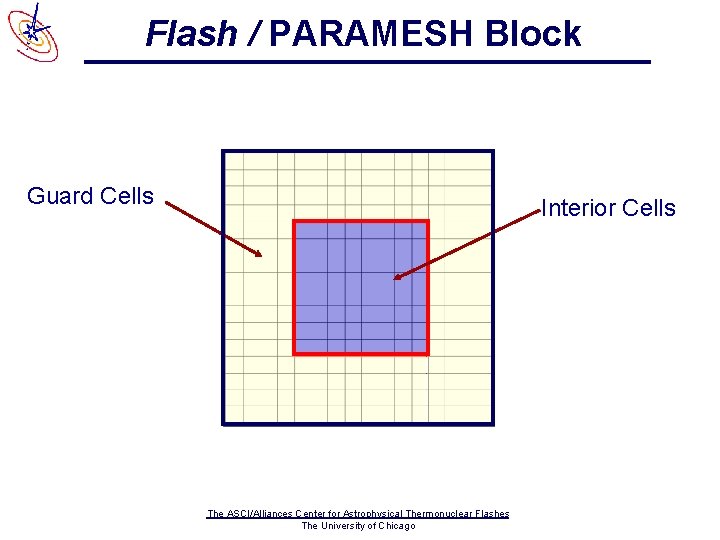

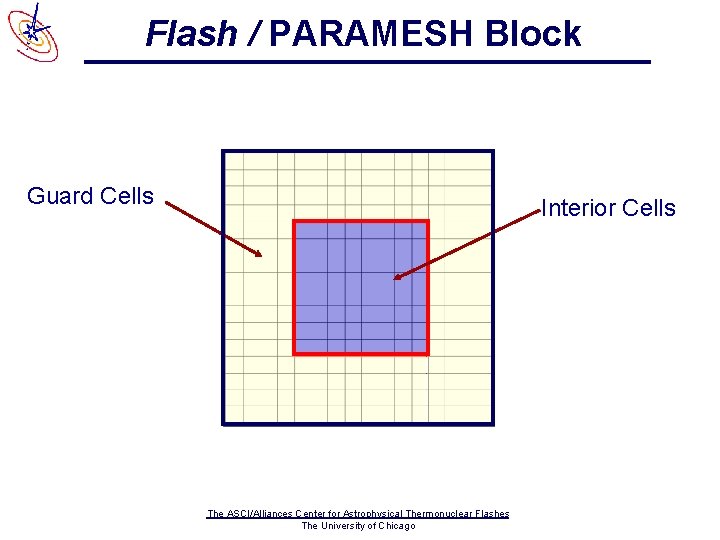

Adaptive Mesh Refinement

- Reduces time to solution and improves accuracy by concentrating grid points in regions that require high resolution
- PARAMESH (NASA / GSFC)
- Block-structured refinement (8 x 8 blocks)
- User-defined refinement criterion; currently using second derivatives of density and pressure
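The refinement criterion above can be illustrated with a minimal sketch. The slides say only that second derivatives of density and pressure are used; the normalized second-difference form, the `eps` filter, and the `threshold` value below are assumptions chosen for illustration, and the function names are hypothetical, not Flash or PARAMESH routines.

```python
import numpy as np

def second_derivative_indicator(u, eps=1e-2):
    """Normalized second difference of a 1D profile (illustrative form only).

    Returns one value per interior zone, between 0 (locally linear)
    and roughly 1 (sharp jump). `eps` damps the indicator where the
    variations are tiny compared with the data itself.
    """
    u = np.asarray(u, dtype=float)
    left, mid, right = u[:-2], u[1:-1], u[2:]
    num = np.abs(right - 2.0 * mid + left)
    den = (np.abs(right - mid) + np.abs(mid - left)
           + eps * (np.abs(right) + 2.0 * np.abs(mid) + np.abs(left)))
    return num / den

def needs_refinement(density, pressure, threshold=0.8):
    """Flag a block if either variable exceeds the (assumed) threshold."""
    err = max(second_derivative_indicator(density).max(),
              second_derivative_indicator(pressure).max())
    return bool(err > threshold)

# A smooth ramp is left alone; a sharp density jump is flagged.
ramp = np.linspace(1.0, 2.0, 8)
jump = np.array([1.0] * 4 + [0.1] * 4)
print(needs_refinement(ramp, ramp))   # smooth block
print(needs_refinement(jump, ramp))   # block containing a discontinuity
```

Taking the maximum over both variables means a block is refined as soon as either density or pressure shows strong curvature, which matches the "density and pressure" wording on the slide.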

Flash / PARAMESH Block

[Figure labels: Guard Cells, Interior Cells]

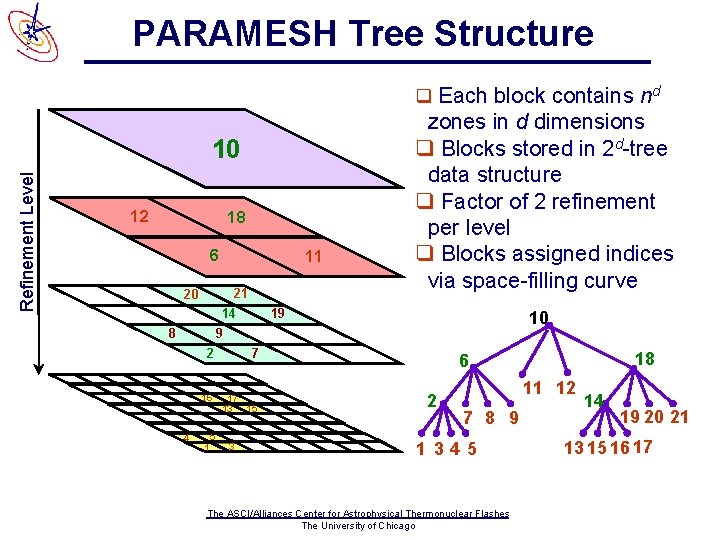

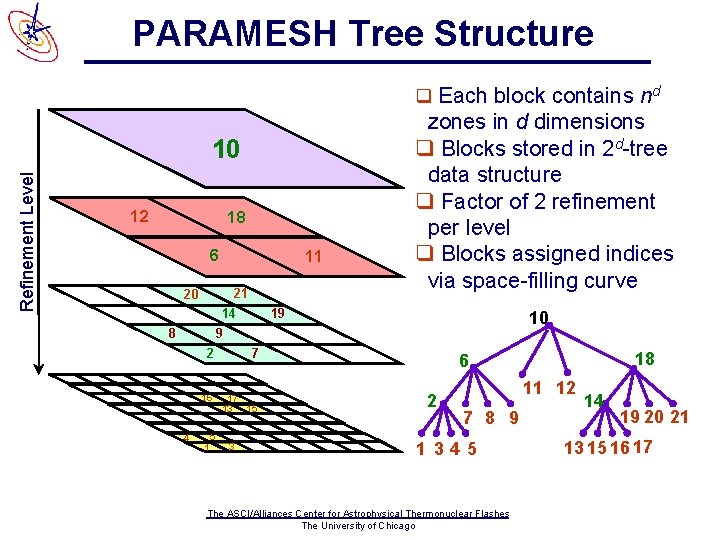

PARAMESH Tree Structure

- Each block contains $n^d$ zones in $d$ dimensions
- Blocks stored in $2^d$-tree data structure
- Factor of 2 refinement per level
- Blocks assigned indices via space-filling curve

[Figure: blocks numbered 1 to 21 along the space-filling curve, shown both on the mesh and in the tree by refinement level]
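The tree structure can be sketched in a few lines. This is an illustration of the $2^d$-tree idea under stated assumptions, not PARAMESH's actual data layout; the `Block` class, its fields, and `zones_per_block` are hypothetical names.

```python
from dataclasses import dataclass, field
from itertools import product

@dataclass
class Block:
    level: int          # refinement level (root = 1)
    origin: tuple       # lower corner of the block, one entry per dimension
    size: float         # edge length of the block
    children: list = field(default_factory=list)

    def refine(self):
        """Split into 2^d children, each half the parent's edge length."""
        half = self.size / 2.0
        self.children = [
            Block(self.level + 1,
                  tuple(o + s * half for o, s in zip(self.origin, shift)),
                  half)
            for shift in product((0, 1), repeat=len(self.origin))
        ]
        return self.children

    def leaves(self):
        """Return the leaf blocks (blocks with no children)."""
        if not self.children:
            return [self]
        return [leaf for child in self.children for leaf in child.leaves()]

def zones_per_block(n, d):
    """Every block holds n^d zones regardless of its level."""
    return n ** d

root = Block(level=1, origin=(0.0, 0.0), size=1.0)
kids = root.refine()      # 4 children in 2D
kids[0].refine()          # refine one child again
print(len(root.leaves()), zones_per_block(8, 2))
```

Because every block carries the same number of zones, halving the block size at each level is what gives the factor-of-2 resolution increase per level.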

Load Balancing

- Work-weighted Morton space-filling curve
- Performance insensitive to choice of space-filling curve
- Refinement and redistribution of blocks every four time steps
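A work-weighted Morton ordering can be sketched as follows. This is a simplified 2D illustration that assumes all blocks sit on one refinement level and are identified by integer coordinates; the function names and the midpoint assignment rule are assumptions, not the PARAMESH implementation.

```python
def morton_key_2d(ix, iy, bits=16):
    """Interleave the bits of (ix, iy) to get the block's Morton index."""
    key = 0
    for b in range(bits):
        key |= ((ix >> b) & 1) << (2 * b)
        key |= ((iy >> b) & 1) << (2 * b + 1)
    return key

def partition_by_work(blocks, nprocs):
    """Split blocks into contiguous Morton-curve segments of similar work.

    `blocks` is a list of (ix, iy, work) tuples with positive total work.
    Each block goes to the processor whose share of the cumulative work
    contains the midpoint of that block's own work interval.
    """
    ordered = sorted(blocks, key=lambda b: morton_key_2d(b[0], b[1]))
    total = sum(b[2] for b in ordered)
    parts = [[] for _ in range(nprocs)]
    running = 0.0
    for block in ordered:
        midpoint = running + 0.5 * block[2]
        proc = min(int(midpoint * nprocs / total), nprocs - 1)
        parts[proc].append(block)
        running += block[2]
    return parts

# 4 x 4 blocks of equal work on 4 processors: each gets one 2 x 2 quadrant.
blocks = [(ix, iy, 1.0) for ix in range(4) for iy in range(4)]
for rank, part in enumerate(partition_by_work(blocks, 4)):
    print(rank, [(ix, iy) for ix, iy, _ in part])
```

Cutting the curve into contiguous segments keeps each processor's blocks spatially close, so most guard cell exchanges stay on processor.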

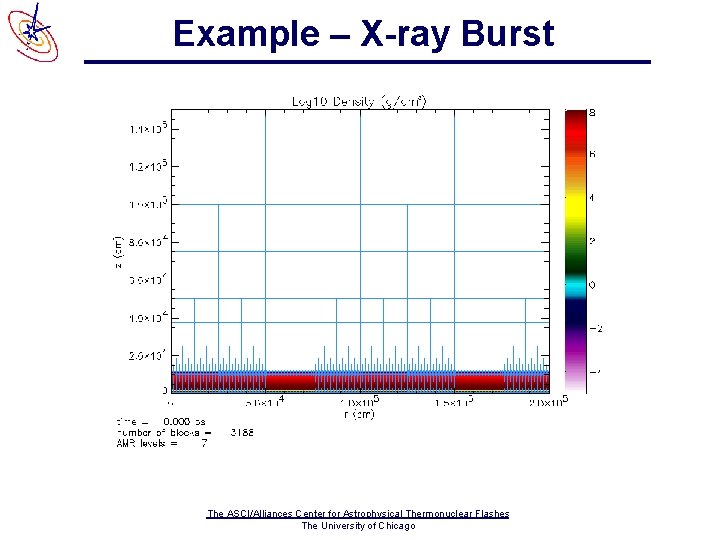

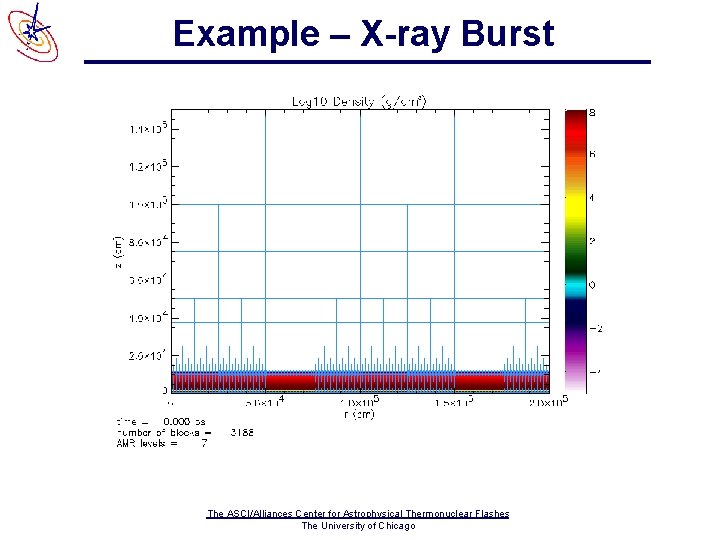

Example: X-ray Burst

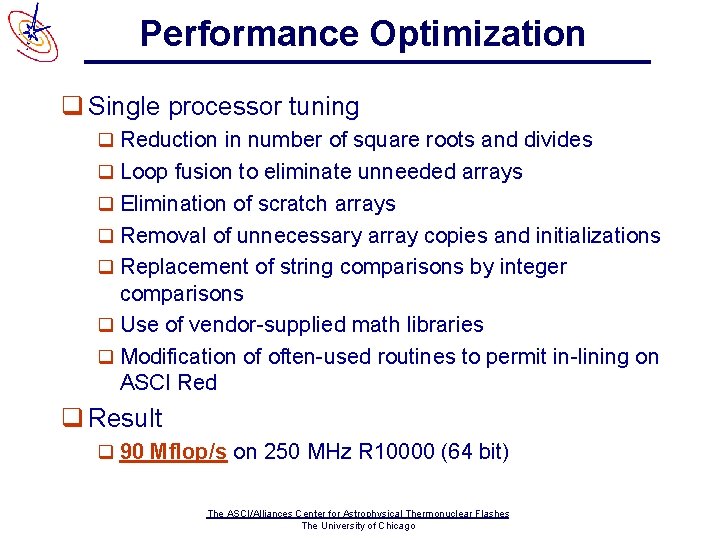

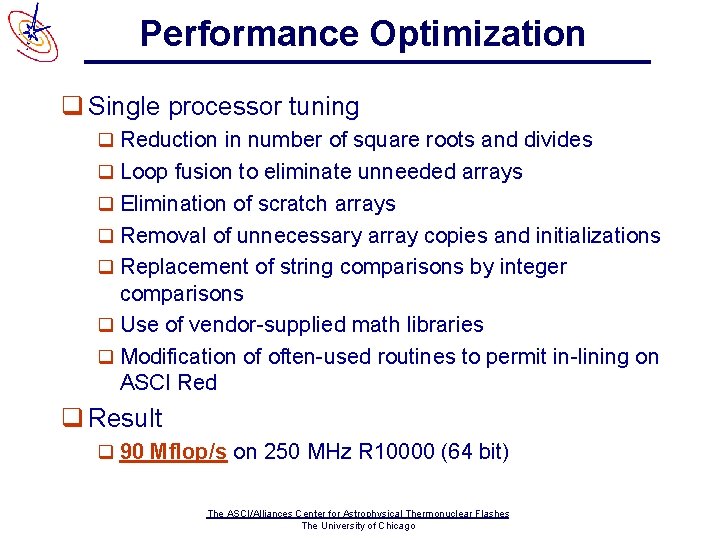

Performance Optimization

- Single processor tuning
  - Reduction in number of square roots and divides
  - Loop fusion to eliminate unneeded arrays
  - Elimination of scratch arrays
  - Removal of unnecessary array copies and initializations
  - Replacement of string comparisons by integer comparisons
  - Use of vendor-supplied math libraries
  - Modification of often-used routines to permit in-lining on ASCI Red
- Result
  - 90 Mflop/s on 250 MHz R10000 (64 bit)

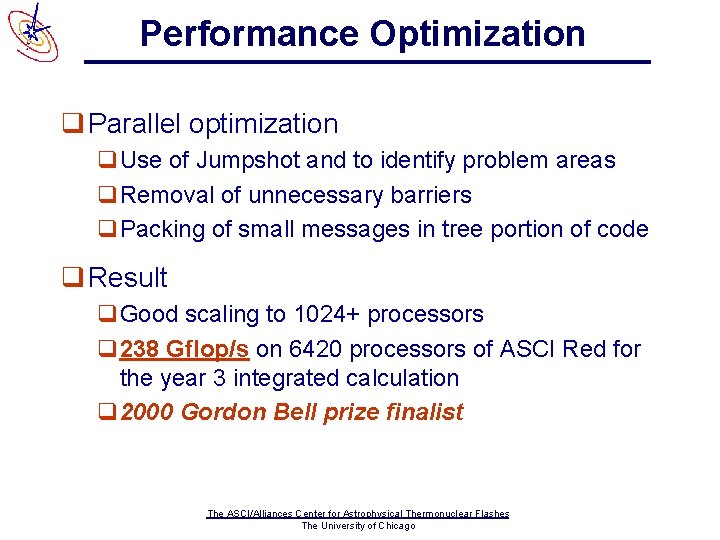

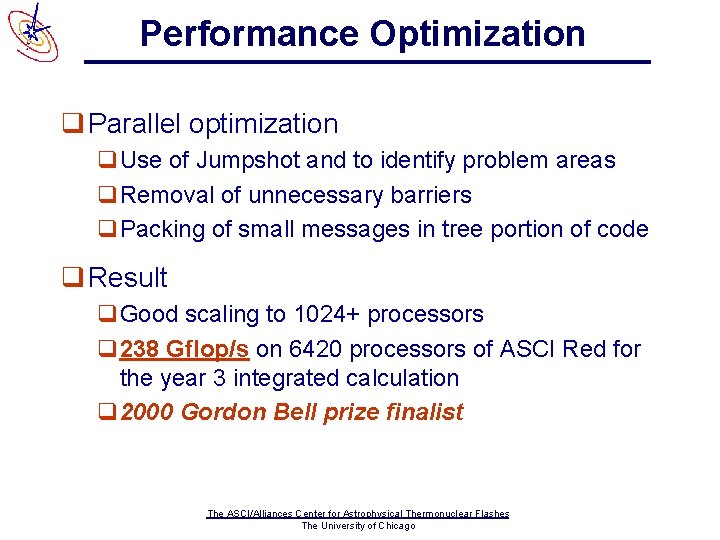

Performance Optimization

- Parallel optimization
  - Use of Jumpshot and [...] to identify problem areas
  - Removal of unnecessary barriers
  - Packing of small messages in tree portion of code
- Result
  - Good scaling to 1024+ processors
  - 238 Gflop/s on 6420 processors of ASCI Red for the Year 3 integrated calculation
  - 2000 Gordon Bell prize finalist

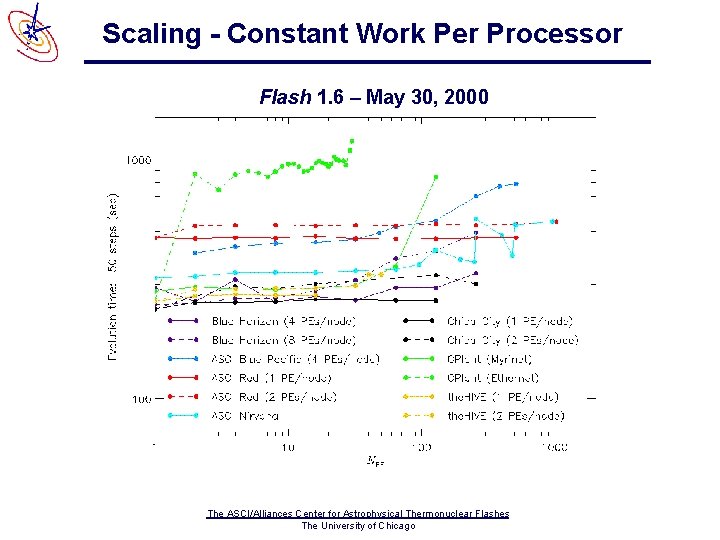

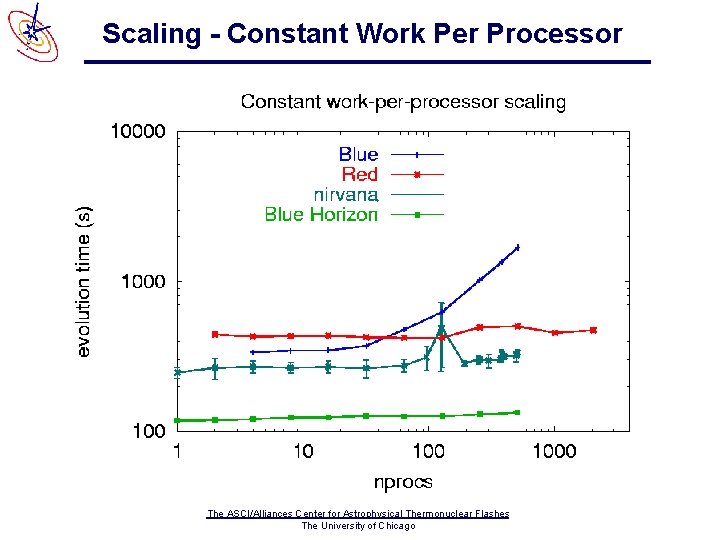

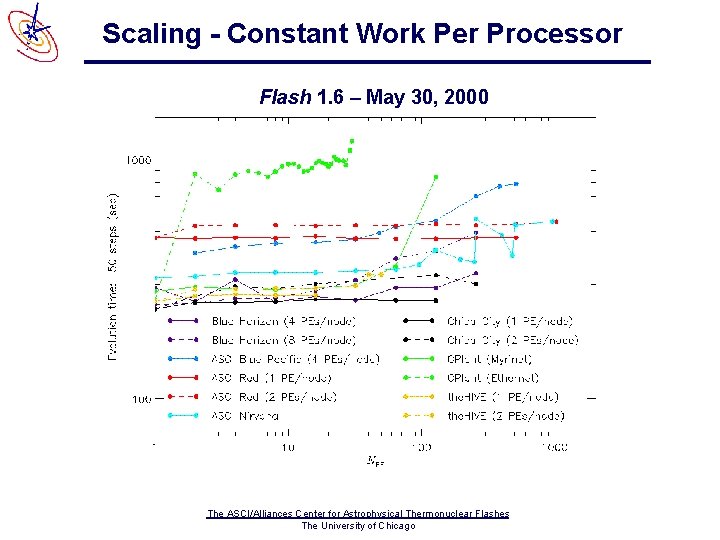

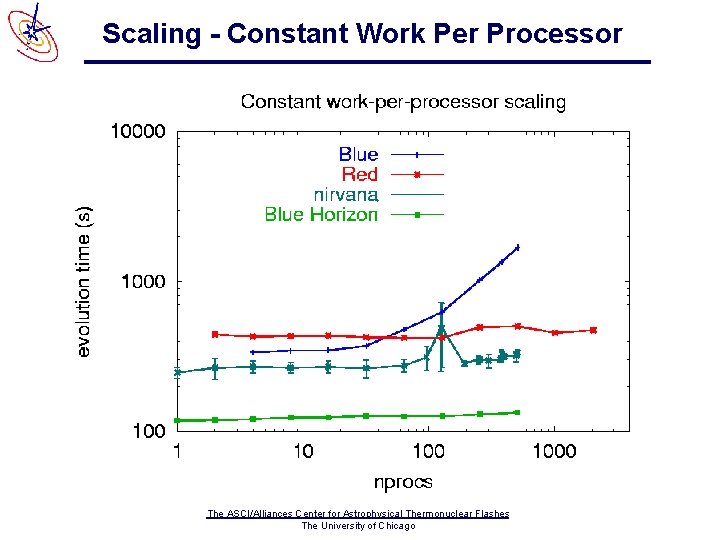

Scaling

- Constant work per processor scaling
  - Shock tube simulation
  - Two-dimensional
  - Hydrodynamics, Adaptive Mesh Refinement, gamma-law equation of state
  - Relatively high communication to computation cost

Scaling - Constant Work Per Processor

Flash 1.6, May 30, 2000

Scaling - Constant Work Per Processor

Scaling - Constant Work Per Processor

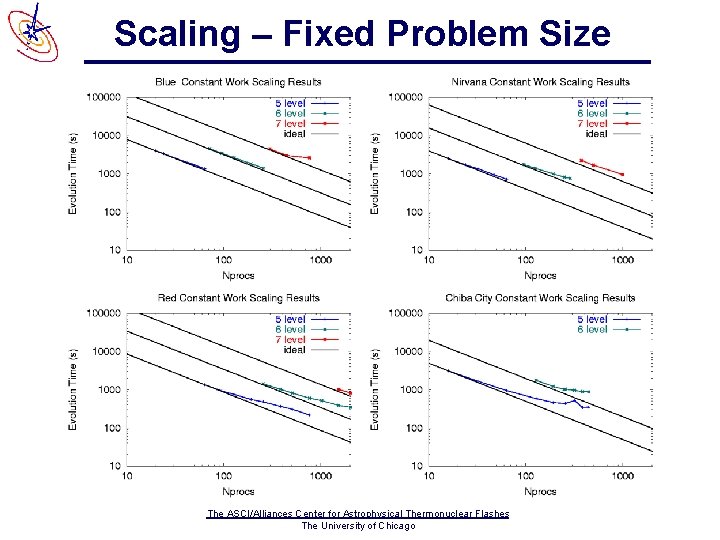

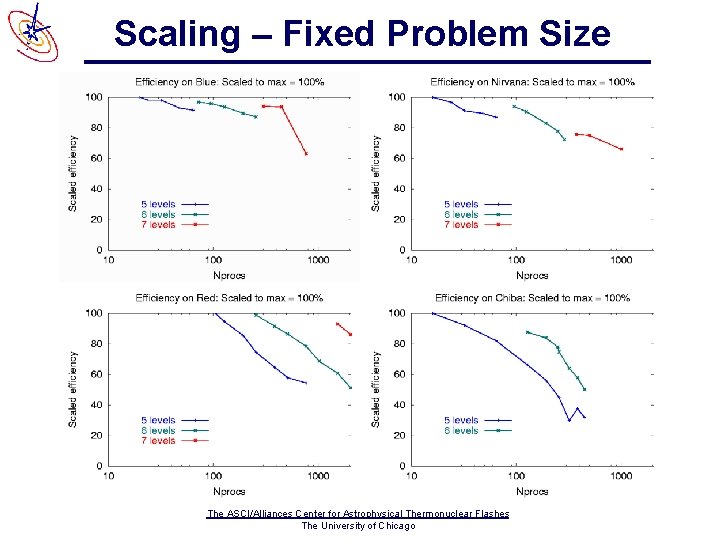

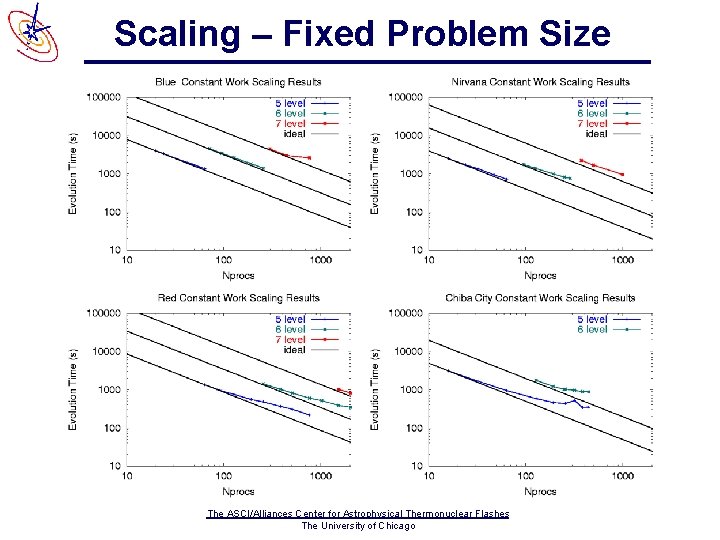

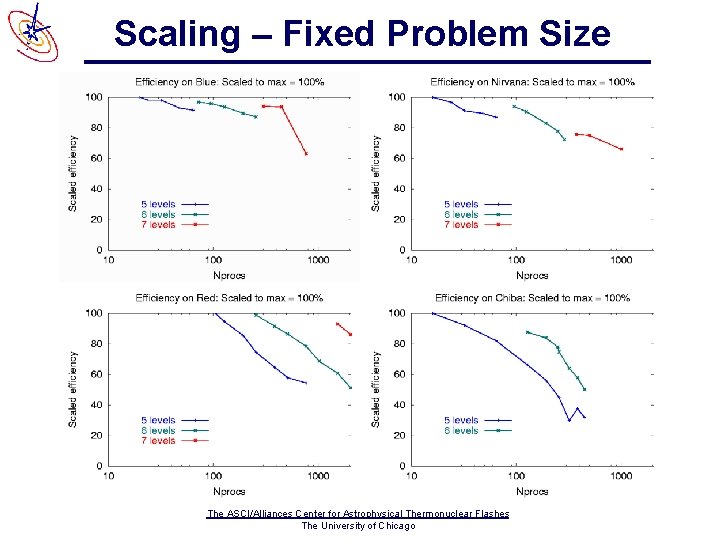

Scaling

- Fixed problem size scaling
  - Cellular detonation
  - Three-dimensional
  - Uses most of the major physics modules in the code
  - Relatively low communication to computation cost

Scaling: Fixed Problem Size

Scaling: Fixed Problem Size

Summary of Scaling

- As the number of blocks per processor decreases, a larger fraction of the blocks must get their guard cell information from off processor
- This causes deviation from ideal scaling when the number of blocks per processor drops too low
- Of the three ASCI machines, this effect is most noticeable on Red, due to its relatively small memory per processor
- Significant variation in timings on Nirvana between identical simulations
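The first point can be made concrete with a toy estimate. It assumes each processor owns a compact cube of m x m x m equal-sized blocks, so that exactly the blocks on the cube's surface need guard cell data from another processor. Real Morton-curve segments are not perfect cubes, so this is an idealized, hypothetical model rather than a measurement from Flash.

```python
def off_processor_fraction(m, dim=3):
    """Fraction of a processor's m^dim blocks that touch its boundary."""
    if m <= 2:
        return 1.0  # every block lies on the surface
    return 1.0 - ((m - 2) / m) ** dim

for m in (16, 8, 4, 2):
    blocks = m ** 3
    print(f"{blocks:5d} blocks/processor -> "
          f"{off_processor_fraction(m):.0%} need off-processor guard cells")
```

In this model the fraction grows from about a third at 4096 blocks per processor to all blocks at 8 per processor, which is the trend the slide describes.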

Summary of Scaling

- Significant improvement in cross-box scaling on Nirvana can be achieved by tuning MPI environment variables
- Scalability on Blue Pacific is highly dependent on operating system revisions
- Parallel efficiency for memory-bound jobs
  - More than 90% on Blue Pacific and Red
  - More than 75% on Nirvana
- Typical performance: 10-15% of peak on 1024 processors
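For reference, the two kinds of scaling test in this talk lead to two different efficiency formulas. The sketch below uses the standard textbook definitions; the slides do not state which formula was used for the quoted percentages, and the timings in the example are made up for illustration.

```python
def weak_scaling_efficiency(t_ref, t_n):
    """Constant work per processor: ideal run time stays flat."""
    return t_ref / t_n

def strong_scaling_efficiency(t_ref, n_ref, t_n, n):
    """Fixed problem size: ideal run time falls as n_ref / n."""
    return (t_ref * n_ref) / (t_n * n)

# Hypothetical timings in seconds, not taken from the slides.
print(f"{weak_scaling_efficiency(100.0, 110.0):.1%}")
print(f"{strong_scaling_efficiency(800.0, 64, 60.0, 1024):.1%}")
```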

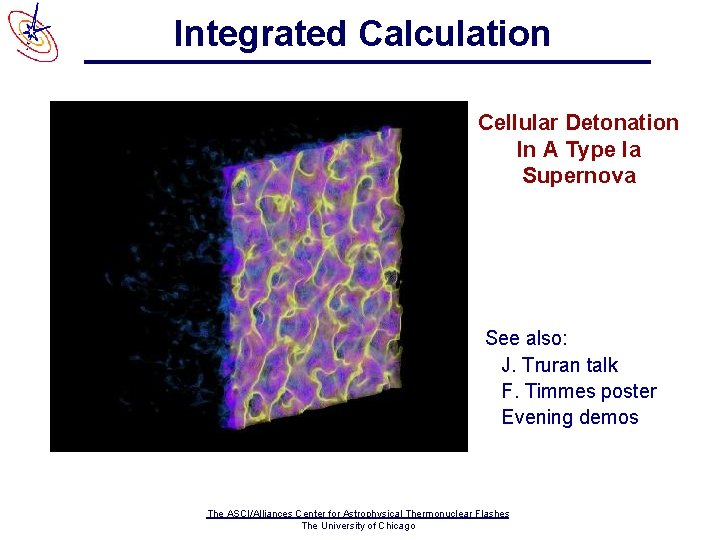

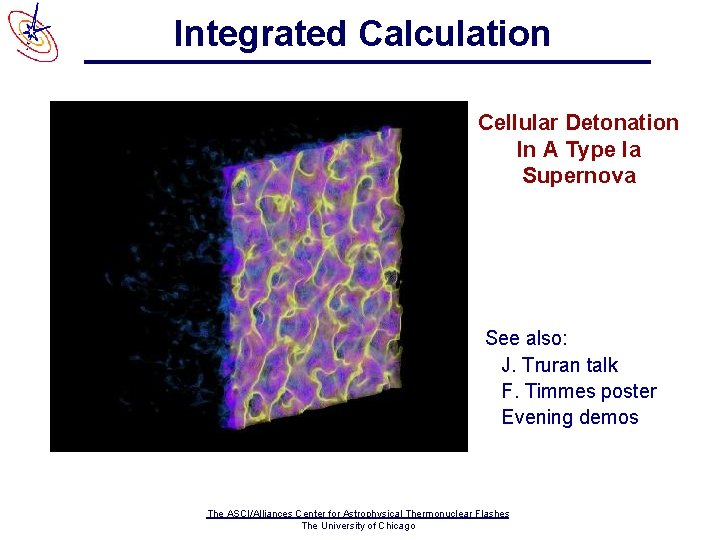

Integrated Calculation

Cellular Detonation in a Type Ia Supernova

See also:

- J. Truran talk
- F. Timmes poster
- Evening demos

Why a Cellular Detonation?

- Two of our target astrophysics calculations (X-ray bursts and Type Ia supernovae) involve detonations
- We cannot resolve the structure of the detonation front in a calculation that contains the entire star
- We want to study a small portion of the detonation front to see whether a subgrid model is necessary to compute
  - The detonation speed
  - The nucleosynthesis
- This problem exercises most of the major modules in the code and thus serves as a good test of overall code performance

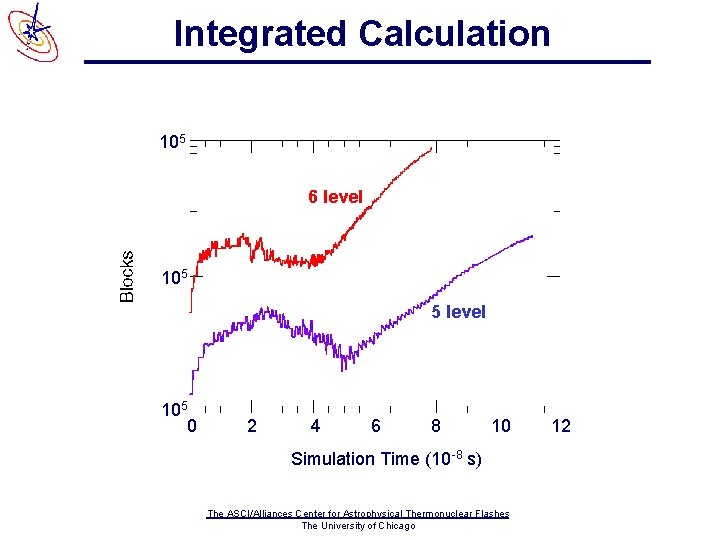

Integrated Calculation

- 1000 processors on ASCI Blue Pacific
- Effective grid size (if fully refined)
  - 256 x 256 x 5120 = 335 million grid points
- Actual grid size
  - 6 million points at beginning of calculation
  - 45 million points at end of calculation
- Savings from using AMR
  - 40-50x for first half of calculation
  - 7x at end of calculation
- Total wall clock time ~ 70 hours
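The grid-size figures can be checked with a short calculation. It assumes the effective grid is 256 x 256 x 5120, which is the reading consistent with the stated 335 million points, and it compares point counts only. The slides do not say how the quoted savings were measured, so these ratios are rough cross-checks rather than a reproduction of them.

```python
# Effective (fully refined) grid, assuming 256 x 256 x 5120 zones.
effective_points = 256 * 256 * 5120

def amr_point_ratio(actual_points):
    """How many times fewer points the AMR grid holds than the uniform grid."""
    return effective_points / actual_points

print(f"effective grid: {effective_points:,} points")
print(f"start of run:   {amr_point_ratio(6e6):.1f}x fewer points")
print(f"end of run:     {amr_point_ratio(45e6):.1f}x fewer points")
```

The end-of-run ratio of about 7.5 lines up with the quoted 7x saving.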

Integrated Calculation

- Generated 1.2 Tbyte of data
- Half of wall clock time required for I/O
- 0.2 Tbyte transferred to ANL by network for visualization
  - Used GridFTP to transfer files
  - 7 parallel streams to 7 separate disks
  - Throughput ~ 4 Mbytes/s
  - Total transfer time < 1 day
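The transfer figures are mutually consistent, as a quick estimate shows. The sketch assumes decimal units (1 Tbyte = 10^12 bytes, 1 Mbyte = 10^6 bytes). The slide does not say whether 4 Mbytes/s is the aggregate rate or the rate per stream, so both readings are computed.

```python
def transfer_hours(total_bytes, bytes_per_second, streams=1):
    """Hours needed to move total_bytes at the given rate per stream."""
    return total_bytes / (bytes_per_second * streams) / 3600.0

data = 0.2e12   # 0.2 Tbyte
rate = 4e6      # 4 Mbytes/s

print(f"aggregate rate:  {transfer_hours(data, rate):.1f} hours")
print(f"per-stream rate: {transfer_hours(data, rate, streams=7):.1f} hours")
```

Either reading gives a total under 24 hours, in line with the "< 1 day" on the slide.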

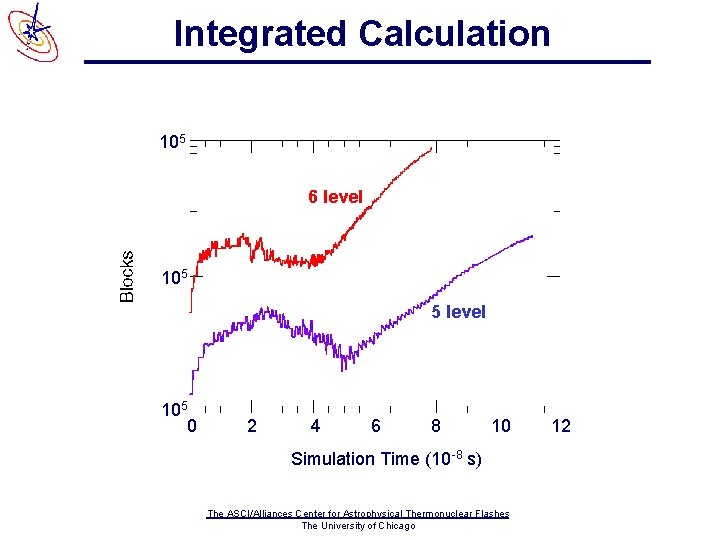

Integrated Calculation

[Plot: curves for 5-level and 6-level refinement versus simulation time (10^-8 s), time axis from 0 to 12]

Summary

1. Substantial progress made in Year 3 in improving and extending Flash
2. Flash is now being used to address many of our target astrophysics problems and is producing important scientific results
3. Flash achieves good performance on all three ASCI computers and scales to thousands of processors
4. Large 3D integrated calculation completed on ASCI Blue Pacific and data successfully transferred back to Chicago for analysis and visualization

Arcetri astrophysical observatory

Arcetri astrophysical observatory Astrophysical journal keywords

Astrophysical journal keywords Digital photography with flash and no-flash image pairs

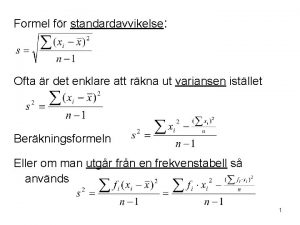

Digital photography with flash and no-flash image pairs Stickprovsvariansen

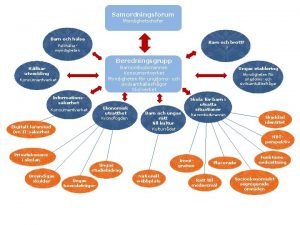

Stickprovsvariansen Informationskartläggning

Informationskartläggning Datorkunskap för nybörjare

Datorkunskap för nybörjare Tack för att ni har lyssnat

Tack för att ni har lyssnat Klassificeringsstruktur för kommunala verksamheter

Klassificeringsstruktur för kommunala verksamheter Vad står k.r.å.k.a.n för

Vad står k.r.å.k.a.n för Läkarutlåtande för livränta

Läkarutlåtande för livränta Påbyggnader för flakfordon

Påbyggnader för flakfordon Tack för att ni lyssnade

Tack för att ni lyssnade Egg för emanuel

Egg för emanuel Tack för att ni har lyssnat

Tack för att ni har lyssnat Rutin för avvikelsehantering

Rutin för avvikelsehantering Vanlig celldelning

Vanlig celldelning Programskede byggprocessen

Programskede byggprocessen Tidbok för yrkesförare

Tidbok för yrkesförare Iso 22301 utbildning

Iso 22301 utbildning Myndigheten för delaktighet

Myndigheten för delaktighet Presentera för publik crossboss

Presentera för publik crossboss Mall debattartikel

Mall debattartikel Kung dog 1611

Kung dog 1611 Tobinskatten för och nackdelar

Tobinskatten för och nackdelar Tack för att ni har lyssnat

Tack för att ni har lyssnat Boverket ka

Boverket ka Referatmarkeringar

Referatmarkeringar Mjälthilus

Mjälthilus Varför kallas perioden 1918-1939 för mellankrigstiden

Varför kallas perioden 1918-1939 för mellankrigstiden